Why AI Isn't a Product: Enterprise Strategy Guide [2025]

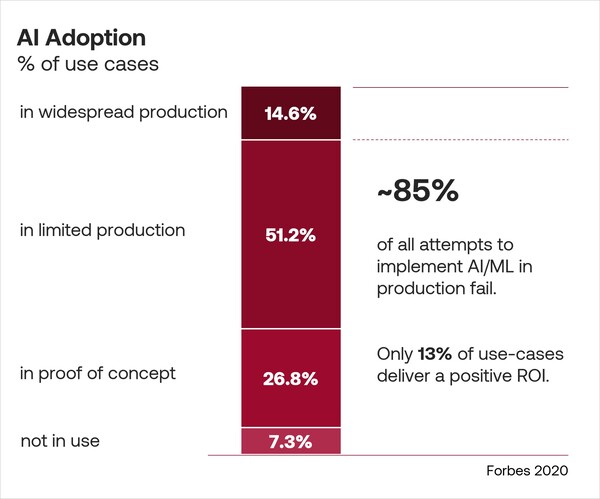

Here's something that probably doesn't surprise you at this point: AI projects are failing at scale.

Not failing quietly in some startup garage. We're talking about enterprise organizations with budgets that could fund entire industries. They're buying expensive AI solutions, implementing them with consultants, and watching them dissolve into nothing.

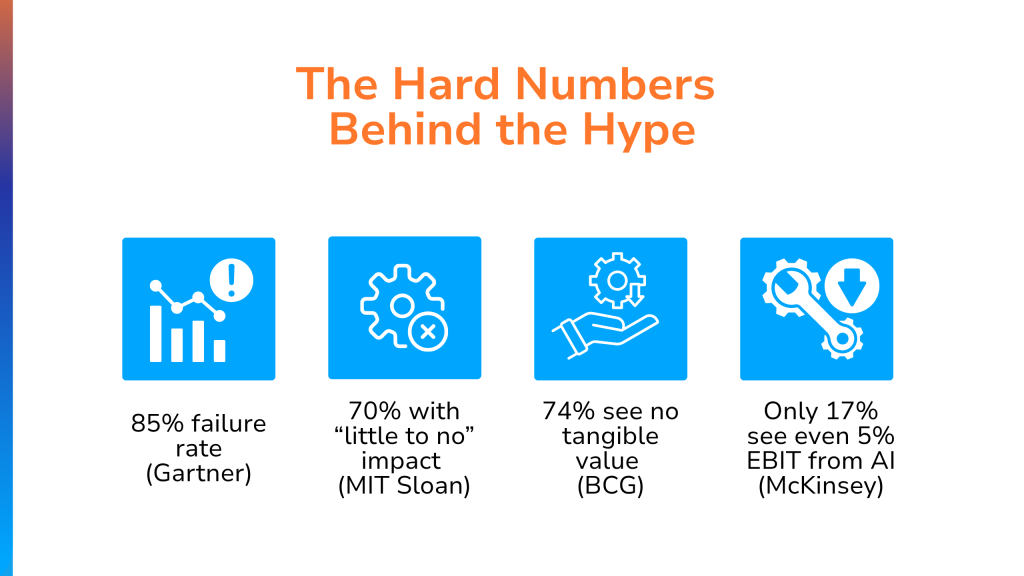

The MIT study hit this hard. Nearly 95% of generative AI pilots fail to deliver real financial results. RAND found that four out of five AI projects stall. And S&P Global reported that organizations are abandoning AI initiatives at double the previous year's rate. These aren't outliers. They're the pattern.

But here's what gets me: The failure isn't about the AI itself.

It's not because the models are weak. It's not because the tools are immature. It's not even because your team lacks expertise (though that doesn't help). The real problem is simpler and more uncomfortable than any of those things. Most enterprises are treating AI like a product they can buy off a shelf, when AI is actually a methodology—a set of techniques that only creates value when applied to a specific business problem.

Think about it. When you buy enterprise software, you're buying something concrete. A CRM. An ERP system. A data warehouse. These are products. They have SKUs. You order them from a catalog, they arrive, IT implements them, and (hopefully) they work.

AI doesn't work that way. But vendors spend billions selling it like it does.

TL;DR

- AI isn't a product: It's a technique that only creates value when tied to a specific business problem, not a tool you can purchase and plug in

- 95% of pilots fail: MIT research shows most AI initiatives fail because strategy comes after the purchase, not before

- Data comes first: Without consolidated, clean data aligned to your business problem, even the best AI models can't work

- Measure everything: Success requires baseline metrics before implementation so you can prove AI actually delivered impact

- Business problem first: Organizations succeed when they start with "what's our biggest challenge" instead of "what AI tool should we buy"

The Strategy-First Framework is designed to be implemented over an estimated 8-week period, with each step building on the previous one. Estimated data.

The SKU Trap: Why Vendors Are Selling AI Wrong

Walk into any enterprise software conference and you'll see the same thing. Massive vendor booths. Glossy brochures. Sales reps with rehearsed pitches about "ready-to-deploy AI solutions." The implicit message is clear: AI is a product. Buy it. Install it. Profit.

This framing works perfectly for vendors. It's the entire foundation of their business model. They built something. They want to sell it to as many organizations as possible. So they market it as a finished product with clearly defined use cases, implementation timelines, and ROI projections.

The problem? This approach treats AI fundamentally wrong.

A stock-keeping unit (SKU) is a product designed to solve a pre-existing market need. When you buy a CRM, millions of companies have already proven it solves specific problems. There's a playbook. There are case studies. There are best practices documented across thousands of deployments.

AI doesn't have that history yet. It's not a proven solution to a known problem. It's a set of techniques that might solve a specific business problem if applied correctly and if the underlying data is ready and if your organization actually commits to using it.

But vendors can't sell it that way. They need to move inventory. So they simplify the story: "We have AI. It solves X. You need X. Buy it."

Organizations then fall into what leadership often calls the "science-experiment trap."

The First Trap: Exciting Tech, No ROI

This version happens in two parts.

First, someone on the technical team discovers a new AI model or tool. It's impressive. The latest language model. A novel computer vision framework. Something cutting-edge. Internal excitement builds fast. This thing is cool.

Senior leadership gets excited too. They approve a generous budget. The technical team gets a few months to experiment. They might even put together an impressive demo that shows the model doing something remarkable.

For a few months, everyone's happy.

Then reality hits. A few months in, the funding dries up. The demo doesn't translate to actual business value. Nobody can measure the impact. There's no clear use case. And without a clear path to ROI, the entire project gets deprioritized. Within a year, it's completely forgotten.

The MIT research actually quantifies this. Purchased AI tools succeed about 67% of the time. Internal builds? Only about 33% succeed. Why? Because vendor solutions come pre-packaged with clearer use cases and success metrics tied to specific business outcomes. The technical team building something from scratch doesn't have that framework.

The Second Trap: Leadership-Driven Purchases

This one starts at the top.

A chief technology officer or chief executive officer reads an article about AI. Maybe they see a competitor implementing some AI initiative. Or they hear from analysts that "organizations must invest in AI or get left behind." So they make a call: "We need an AI strategy."

This triggers a flurry of activity. Procurement teams get involved. Vendors line up. Budgets materialize almost overnight. There's pressure to show action, to demonstrate that leadership is taking AI seriously, to prove they're not missing out on transformation.

So an organization buys hardware. Or software. Or both. Something that looks impressive on paper. Something they can announce to the board.

Then it arrives. And the team building it realizes: We don't actually have a clear use case for this. We're not sure what problem it solves. We don't have the right data to power it. We don't even have someone who's responsible for making it work.

The result is the same as the first trap: A failed project, burned budget, and a sour taste about AI that persists for years.

In both cases, the story is identical. The business problem came last, so there was never a defined path to value.

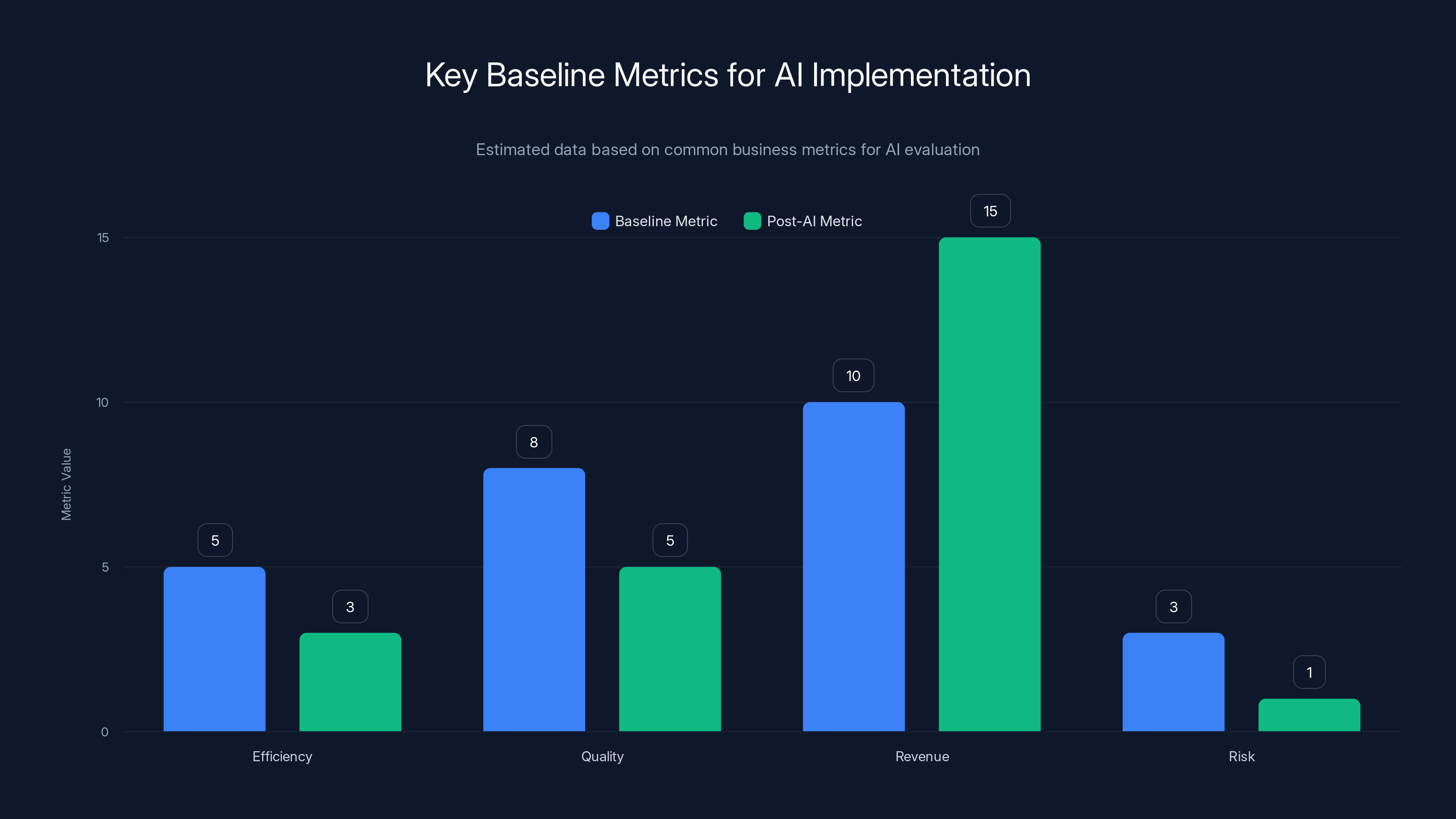

Estimated data shows potential improvements in efficiency, quality, revenue, and risk metrics after AI implementation. Establishing baseline metrics is crucial for measuring AI's impact.

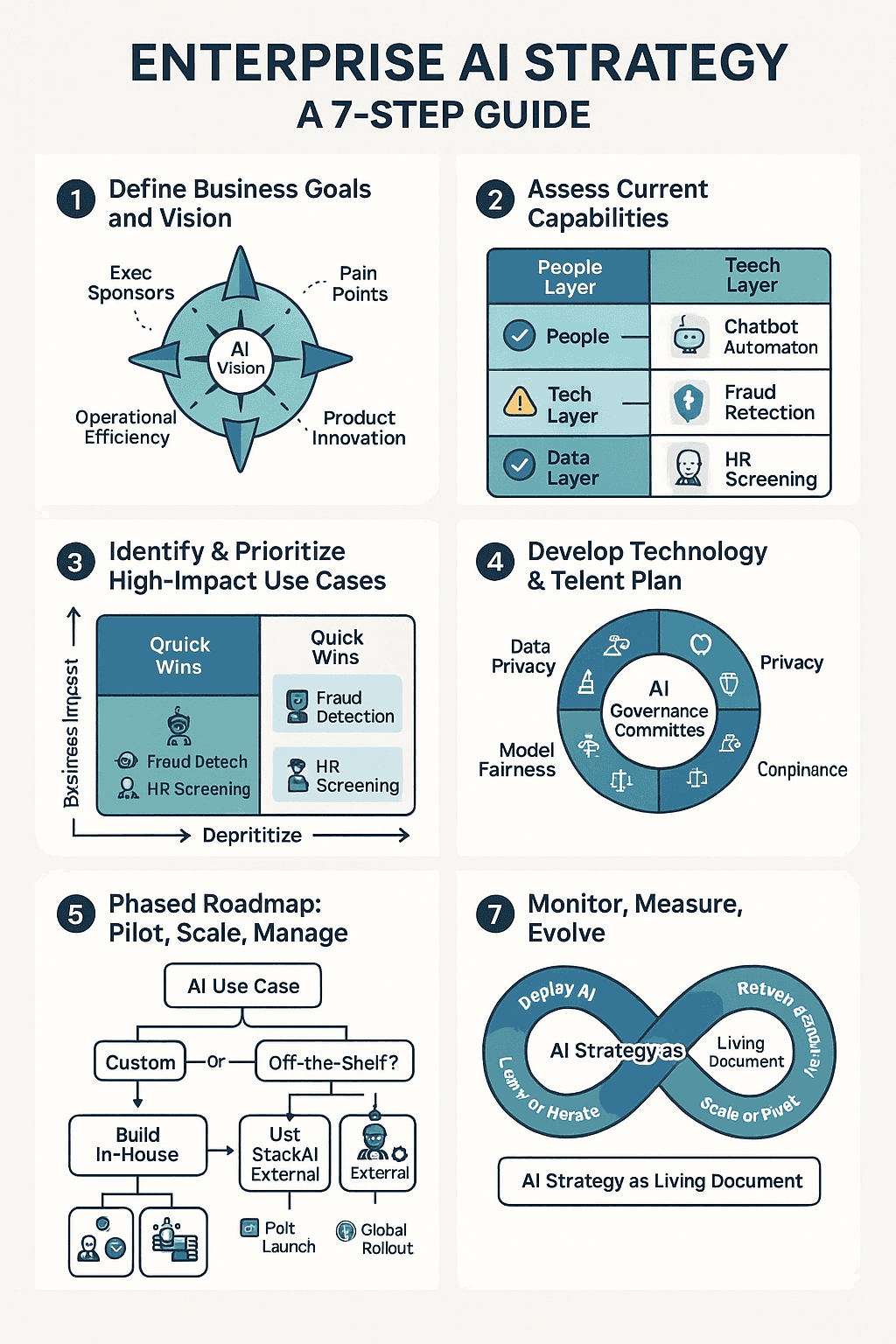

Why Business Problems Must Come First

Let me reframe this for you because this is the actual inflection point.

AI creates value in exactly one scenario: when it solves a specific business problem better than the current approach. That's it. Not when it's technically interesting. Not when it's state-of-the-art. Not when it looks impressive in a demo. Only when it meaningfully addresses something your organization actually struggles with.

This seems obvious when you say it out loud. But it's the opposite of how most enterprises approach AI.

Most organizations follow this sequence:

- Identify an AI tool or model

- Secure budget for implementation

- Deploy the tool

- Try to figure out what problem it solves

- Realize it doesn't solve anything

- Abandon the project

Successful organizations flip this completely:

- Identify the organization's biggest challenges

- Assess whether AI could meaningfully address one of them

- Check if the necessary data exists (or can be gathered)

- Establish baseline metrics for the current approach

- Design and implement an AI solution

- Measure impact against baseline

- Expand if successful

The difference isn't subtle. It's everything.

How to Identify AI-Suitable Problems

Not every business challenge is suitable for AI. Some problems require process change, organizational restructuring, or simply better management. AI won't fix those.

But certain categories of problems are AI-shaped. They're the ones where AI actually excels:

High-volume, repetitive decisions: Does your organization make thousands of similar decisions every day? Should we approve this loan? Does this image contain defects? Is this email spam? AI can learn patterns from historical decisions and automate or accelerate the next set.

Pattern recognition at scale: Can your business benefit from spotting patterns humans miss in large datasets? Detecting fraud in millions of transactions. Identifying equipment failures before they happen based on sensor data. Recommending products based on purchasing patterns. These are pattern-matching problems that AI solves well.

Time-sensitive tasks: Problems where speed matters significantly. Customer service questions that require immediate response. Real-time pricing adjustments. Medical diagnoses that determine treatment urgency. AI's speed advantage creates real value.

Resource-constrained operations: If you can't hire enough people to do the work, AI might help. Content moderation teams that can't scale with demand. Technical support for a growing customer base. Code review for expanding engineering teams.

Data-rich environments: If your organization has accumulated massive amounts of high-quality data, that's raw material for AI. Historical transaction data. Customer interaction records. Manufacturing sensor readings. The data becomes an asset.

Most organizations have at least a handful of problems in these categories. The challenge isn't finding problems that AI could theoretically solve. It's identifying which ones would actually move the needle for the business.

The Data Reality Check: Your Hidden Blocker

Here's where a lot of organizations hit an unexpected wall.

You've identified the perfect problem. AI can absolutely help. You've got budget approved. You've found a vendor with a proven solution. Everything's lined up.

Then you try to actually implement it.

And you discover your data is a mess.

This happens more often than you'd think. Organizations accumulate data across decades. Old systems. New systems. Spreadsheets that became mission-critical. Legacy databases nobody fully understands. Data trapped in PDFs. Data sitting in unstructured form in Slack channels and email threads.

It's scattered. It's duplicated. It's inconsistent. Different departments define the same metrics differently. Historical data uses different schemas than current data. Nobody's entirely sure what data you actually have or where it lives.

This is the moment most AI projects die.

Because here's the brutal truth: AI models are only as good as the data they're trained on. You can have the most sophisticated model ever created. It's still garbage-in, garbage-out. Feed it inconsistent, incomplete, or messy data, and it will learn patterns from the noise instead of signal.

This is why that MIT study found purchased AI tools succeed more often than internal builds. Vendors come with simpler use cases that don't require perfect data. They often work with structured, cleaner data sets. They've learned to work within data reality.

Internal builds often have ambitious requirements that exceed data quality.

The Data Consolidation Timeline

Before you can run AI on a business problem, you need to handle data infrastructure.

This typically means:

Data inventory and mapping (2–3 weeks): Find all your data sources. Document what exists, where it lives, what it contains. This sounds simple. It rarely is.

Data cleaning and standardization (4–12 weeks): Fix inconsistencies. Handle missing values. Standardize formats. Get everything into a consistent schema. This is the painful part.

Data consolidation (2–8 weeks): Bring data from multiple sources into a single, queryable location. This might mean data warehousing, data lakes, or simpler consolidation depending on scale.

Quality validation (1–4 weeks): Verify the consolidated data is actually usable. Run statistical checks. Validate against known facts. Ensure completeness.

So before you even start evaluating AI solutions, you're looking at 2-6 months of foundational work.
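That range is just the phase estimates added up. Here's a minimal sketch of the arithmetic, assuming the phases run strictly one after another; the dictionary name and the weeks-per-month conversion are my own illustrative choices:

```python
# Phase estimates in weeks (low, high), taken from the list above.
PHASES_WEEKS = {
    "inventory_and_mapping": (2, 3),
    "cleaning_and_standardization": (4, 12),
    "consolidation": (2, 8),
    "quality_validation": (1, 4),
}

WEEKS_PER_MONTH = 52 / 12  # approximate conversion (an assumption)


def total_range_weeks(phases):
    """Sum the low and high estimates, assuming phases run sequentially."""
    low = sum(lo for lo, _ in phases.values())
    high = sum(hi for _, hi in phases.values())
    return low, high


low_weeks, high_weeks = total_range_weeks(PHASES_WEEKS)
low_months = low_weeks / WEEKS_PER_MONTH
high_months = high_weeks / WEEKS_PER_MONTH
print(f"{low_weeks}-{high_weeks} weeks, roughly {low_months:.1f}-{high_months:.1f} months")
# prints: 9-27 weeks, roughly 2.1-6.2 months
```

If some phases can overlap in your organization, the real total will be shorter, but the sequential sum is the safer number to budget against.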

Most organizations underestimate this. They budget as if data preparation takes weeks. When it actually takes months, projects get delayed, budgets get consumed, and people lose faith in the initiative.

The organizations that succeed are the ones who accept this timeline upfront. They start with the data work. They get it done. And then, with clean data actually ready to work with, they deploy AI and get real results.

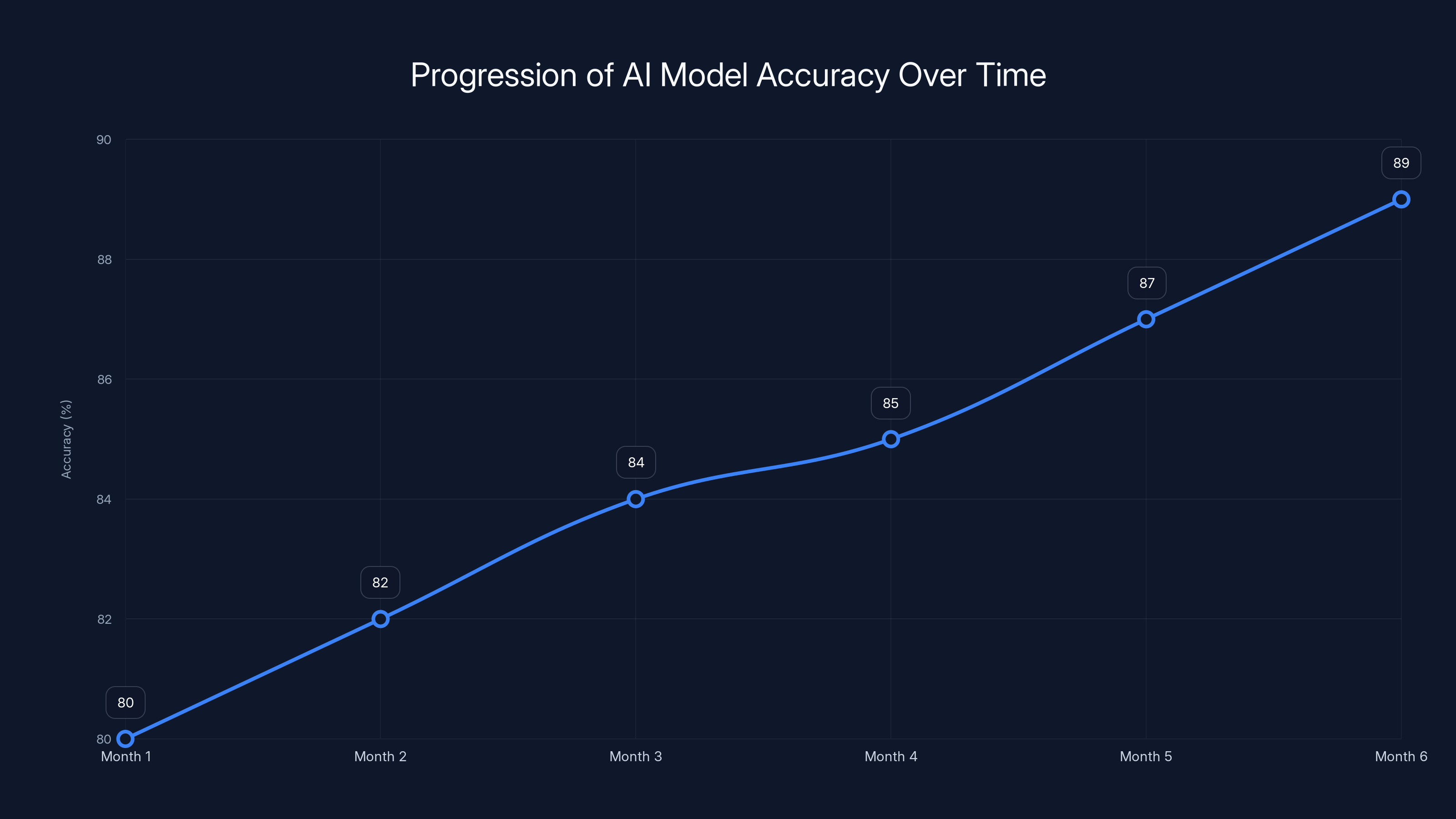

AI model accuracy typically improves gradually, from 80% to 89% over six months. Estimated data reflects a realistic progression scenario.

Establishing Baseline Metrics: Before You Can Prove Success

Here's something that almost never happens but absolutely should: Organizations measuring their current performance before implementing AI.

Most teams skip this step. They get excited about the potential improvement. They deploy the AI solution. Then three months later, they're arguing about whether it actually made a difference.

"Our processing is faster."

"Is it, though? Faster than what?"

"Well, it feels faster."

That's not measurement. That's hope.

Successful AI implementation requires baseline metrics established before you touch anything.

What Gets Measured?

It depends on your use case, but the principle is consistent. You need quantifiable metrics that directly tie to business value.

For efficiency improvements: Measure cycle time, cost per transaction, or processing volume. If you're using AI to speed up invoice processing, know exactly how long the current process takes. Know how many invoices you process weekly. Know the cost per invoice of the current approach.

For quality improvements: Measure accuracy, defect rates, or error frequency. If you're using AI for quality control, establish the current defect detection rate. Understand how many defects slip through today.

For revenue impact: Measure conversion rates, customer satisfaction, or upsell success. If you're using AI for personalized recommendations, know your current conversion rate and average order value.

For risk reduction: Measure fraud rates, compliance violations, or failed transactions. If you're using AI for fraud detection, quantify current fraud losses.

The point isn't to find metrics that make your organization look good. It's to establish objective baselines against which you can measure AI's actual impact.
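To make this concrete, here's a rough sketch of turning records of the current process into a baseline. The record schema, field names, and sample values are all hypothetical; in practice these numbers would come from whatever system logs your invoices today:

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class InvoiceRecord:
    """One invoice handled by the current (pre-AI) process. Hypothetical schema."""
    minutes_to_process: float
    cost: float
    had_error: bool


def compute_baseline(records: list[InvoiceRecord]) -> dict[str, float]:
    """Summarize current performance so later results have a comparison point."""
    if not records:
        raise ValueError("need at least one record to compute a baseline")
    return {
        "avg_minutes": mean(r.minutes_to_process for r in records),
        "avg_cost": mean(r.cost for r in records),
        "error_rate": sum(r.had_error for r in records) / len(records),
        "volume": float(len(records)),
    }


# Illustrative sample only; not real data.
sample = [
    InvoiceRecord(40.0, 9.0, False),
    InvoiceRecord(50.0, 11.0, True),
    InvoiceRecord(45.0, 10.0, False),
    InvoiceRecord(45.0, 10.0, False),
]
print(compute_baseline(sample))
```

The same shape works for the other categories: swap in defect counts, conversion events, or fraud losses and keep the snapshot somewhere everyone can see it.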

The Baseline Formula

Here's the basic structure:

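Improvement (%) = (baseline − post-AI result) ÷ baseline × 100

If your baseline invoice processing cost is a documented figure per invoice, and the cost after deployment is lower, the difference multiplied by your invoice volume is the saving you can report.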

That's measurable. That's defensible. That's real.

Without the baseline, you've got nothing but a story.
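The comparison against a baseline can be sketched in a few lines. The function names and every figure below are hypothetical, chosen only to show the arithmetic for a lower-is-better metric like cost per invoice:

```python
def improvement(baseline: float, current: float) -> float:
    """Fractional improvement for a lower-is-better metric such as cost or time."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline


def annual_savings(baseline_cost: float, current_cost: float, units_per_year: int) -> float:
    """Savings per unit multiplied by yearly volume."""
    return (baseline_cost - current_cost) * units_per_year


# Hypothetical figures for illustration only.
baseline_cost_per_invoice = 10.00
post_ai_cost_per_invoice = 7.50
invoices_per_year = 50_000

pct = improvement(baseline_cost_per_invoice, post_ai_cost_per_invoice)
saved = annual_savings(baseline_cost_per_invoice, post_ai_cost_per_invoice, invoices_per_year)
print(f"Cost per invoice improved by {pct:.0%}, saving ${saved:,.0f} per year")
# prints: Cost per invoice improved by 25%, saving $125,000 per year
```

Neither number can be computed without the first argument, which is the whole point of measuring before you deploy.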

Building the Right Success Criteria

Once you've established baseline metrics, you need success criteria that everyone understands.

This is different from "what we measured before." Success criteria answer the question: "How much improvement is enough to call this successful?"

This matters because AI improvements often show up gradually. You don't go from 80% accuracy to 99% overnight. You go 80% to 82% to 84% to 85% over months. Without predefined success criteria, teams can shift goalposts. "We thought 5% improvement would be enough, but now we're thinking we need 10%." That way lies endless pilots.

How to Define Success Criteria

Start with business impact, not technical metrics: Don't say "accuracy above 90%." Say "reduce manual review workload by 40%." Technical improvements matter only insofar as they drive business outcomes.

Make criteria specific and measurable: Not "faster processing." Specific: "reduce average processing time from 45 minutes to 30 minutes." Not "better customer service." Specific: "increase CSAT scores from 72% to 80%." Not "more accurate." Specific: "reduce false positives from 12% to under 5%."

Set timeframes: When should these improvements be achieved? Three months? Six months? Not "eventually." Specific: "achieve 40% reduction in processing time within 90 days of full deployment."

Get alignment across stakeholders: Get the executive sponsor, the team implementing it, and the business owner of the process to all agree on success criteria upfront. When success is subjective, every stakeholder has a different definition.

Build in realism: An AI model reducing manual work by 90% is amazing. An AI model reducing manual work by 20% is still valuable if it saves $200K annually. Both can be successful. Don't set criteria so aggressive that reasonable improvements look like failure.
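One way to keep goalposts from moving is to write each criterion down as data. Here's a minimal sketch: the class and its fields are my own illustration, the targets are the ones quoted above, and the deadlines are made up:

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class SuccessCriterion:
    """A target agreed upfront: what, from where, to where, by when."""
    metric: str
    baseline: float
    target: float
    deadline: date
    lower_is_better: bool = True

    def is_met(self, measured: float, on: date) -> bool:
        """True if the target was reached on or before the deadline."""
        if on > self.deadline:
            return False
        if self.lower_is_better:
            return measured <= self.target
        return measured >= self.target


# Targets from the examples above; the deadlines are hypothetical.
processing_time = SuccessCriterion(
    metric="avg processing time (minutes)",
    baseline=45, target=30, deadline=date(2025, 9, 30),
)
csat = SuccessCriterion(
    metric="CSAT (%)",
    baseline=72, target=80, deadline=date(2025, 12, 31),
    lower_is_better=False,
)

print(processing_time.is_met(29.5, on=date(2025, 9, 1)))  # True
print(csat.is_met(78, on=date(2025, 11, 15)))             # False
```

Making the object immutable (`frozen=True`) is a small design choice that mirrors the organizational one: changing a target should require a new, visible agreement rather than a quiet edit.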

Measuring Progress Over Time

Success criteria establish the target. But you also need to track progress toward that target.

Most organizations do this monthly or quarterly. They measure the current state of the metric, compare it to baseline, plot progress toward the success criteria, and adjust if needed.

This creates accountability. It also creates a paper trail showing whether the project is on track or stalling out. Early detection of stalled projects is valuable because it gives you time to diagnose what's wrong and fix it, instead of discovering six months later that nothing's improved.
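A periodic check like that can be very simple. The sketch below is one possible approach, not a standard method: the stall rule (less than half a point of movement across the last three readings) and the target of 90 are assumptions you'd tune to your own metric:

```python
def progress_toward_target(baseline: float, target: float, current: float) -> float:
    """Fraction of the baseline-to-target gap closed so far.

    Works for both higher-is-better and lower-is-better metrics.
    Can be negative (regression) or above 1.0 (target exceeded).
    """
    gap = target - baseline
    if gap == 0:
        raise ValueError("target must differ from baseline")
    return (current - baseline) / gap


def is_stalled(readings: list[float], window: int = 3, min_change: float = 0.5) -> bool:
    """Flag a stall when the last `window` readings moved less than `min_change` overall."""
    if len(readings) < window:
        return False
    recent = readings[-window:]
    return abs(recent[-1] - recent[0]) < min_change


# Accuracy progression from the text (80, 82, 84, 85) followed by two
# hypothetical flat months; the target of 90 is also hypothetical.
monthly_accuracy = [80.0, 82.0, 84.0, 85.0, 85.2, 85.3]
print(progress_toward_target(80.0, 90.0, monthly_accuracy[-1]))
print(is_stalled(monthly_accuracy))  # True: the last three readings barely moved
```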

Successful AI implementations start by identifying business challenges, while typical approaches often begin with tool selection. Estimated data.

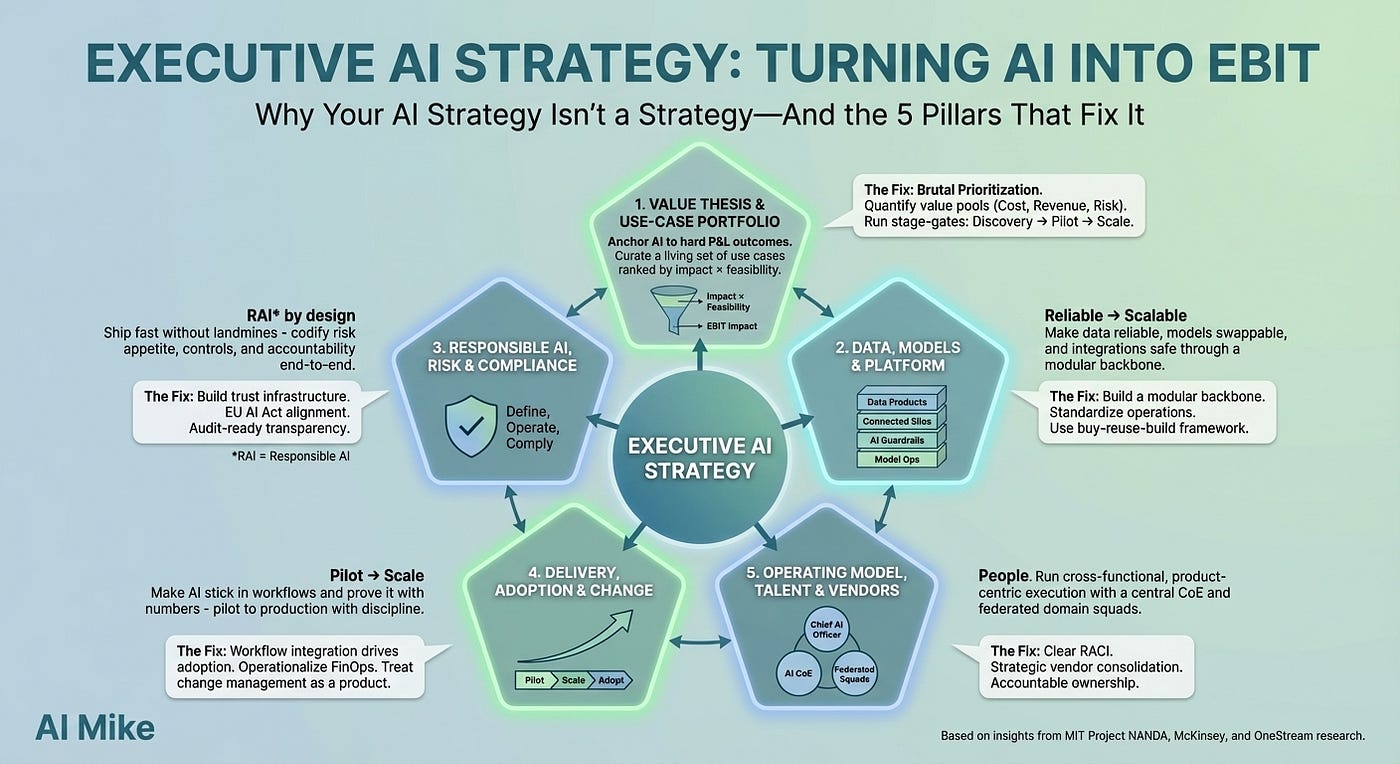

The Strategy-First Framework: A Replicable Process

Let me walk you through a framework that works. Organizations that follow this sequence consistently get better outcomes.

Step 1: Identify Core Business Challenges (Week 1–2)

Gather leadership across finance, operations, customer success, and whatever functions matter most for your business. Ask a simple question: What's our biggest bottleneck? Not the most interesting problem. The most painful one. The one that costs money, frustrates customers, or blocks growth.

List the top 5-10. Rank them by business impact. Where are the biggest efficiency gaps? Where are customers most frustrated? Where is revenue being lost?

AI only makes sense for problems that actually move the needle.

Step 2: Assess Data Readiness (Week 2–4)

For your top 3-4 candidates, ask: Do we have the data needed to solve this with AI?

This isn't about having perfect data. It's about whether you have enough historical data to train a model. For a fraud detection system, you need historical transactions labeled as fraudulent or legitimate. For a customer churn prediction model, you need customer data and historical churn labels. For defect detection, you need images of defective and non-defective parts.

If the data doesn't exist, you might need to collect it first. That adds 3-6 months to the timeline.

If the data exists but is messy, budget for cleaning. That adds 4-12 weeks.

If the data is clean and available, you can move forward.
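The three cases above amount to a small lookup. Here's a sketch, with hypothetical candidate problems and with months converted to weeks at roughly 4.33 weeks per month (an approximation):

```python
from enum import Enum


class DataState(Enum):
    MISSING = "missing"  # data must be collected first
    MESSY = "messy"      # data exists but needs cleaning
    CLEAN = "clean"      # data is ready to use


# Added delay in weeks (low, high), following the text.
ADDED_DELAY_WEEKS = {
    DataState.MISSING: (13, 26),  # 3-6 months, converted approximately
    DataState.MESSY: (4, 12),
    DataState.CLEAN: (0, 0),
}


def added_delay(state: DataState) -> tuple[int, int]:
    """Extra weeks a candidate needs before AI work can start."""
    return ADDED_DELAY_WEEKS[state]


# Hypothetical candidates and their assessed data state.
candidates = {
    "defect detection": DataState.MISSING,
    "fraud detection": DataState.CLEAN,
    "churn prediction": DataState.MESSY,
}

# Rank candidates by the worst-case delay their data adds.
for name, state in sorted(candidates.items(), key=lambda kv: added_delay(kv[1])[1]):
    low, high = added_delay(state)
    print(f"{name}: data is {state.value}, adds {low}-{high} weeks")
```

Data readiness is only one input. A painful problem with messy data can still outrank an easy one that barely matters, so combine this with the business-impact ranking from Step 1.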

Step 3: Establish Baseline Metrics (Week 4–6)

For your remaining candidates, measure current performance.

How long does the current process take? What's the cost? What's the error rate? What's the revenue impact? Document these precisely. Get stakeholders to agree on the measurement approach.

This baseline becomes the comparison point for everything that follows.

Step 4: Define Success Criteria (Week 6–8)

With baseline metrics established, define what successful AI implementation looks like.

Working with business stakeholders, set specific targets: "Reduce processing time by 30%" or "Improve accuracy from 85% to 92%" or "Save $500K annually." Make sure criteria are ambitious but achievable. Make sure everyone agrees.

Step 5: Evaluate Solutions (Week 8–12)

Now you're ready to evaluate tools or vendors.

With a clear business problem and success criteria defined, you can actually evaluate whether a solution addresses your specific need. You're not buying AI because it's cool. You're buying it because it solves problem X better than alternatives.

Compare solutions not on features but on how well they address your specific use case and whether they can achieve your success criteria.

Step 6: Implement with Measurement (Week 12–16)

Deploy the solution with the success criteria and measurement framework already in place.

Measure monthly. Track progress toward success criteria. Adjust if needed. Document what's working and what isn't.

Step 7: Scale or Iterate (After Week 16)

If you've hit success criteria, expand. If you haven't, diagnose why and adjust.

Maybe the solution needs configuration changes. Maybe the success criteria were too aggressive and need adjustment. Maybe you need additional data. Maybe this particular problem isn't suitable for AI after all.

But you have data to work with. You're not guessing.

Common Mistakes: What Organizations Get Wrong

Even with frameworks, organizations still stumble. Here are the most common mistakes I've seen:

Mistake 1: Starting with Technology Instead of Business Problems

This is the foundational error. Someone learns about a new AI capability and immediately thinks "we should use this." Then they start looking for problems it might solve.

This is backwards. Start with problems. Then assess if technology solves them.

Mistake 2: Underestimating Data Preparation Time

This happens almost universally. Organizations budget weeks for data prep. It takes months. Projects get delayed. Momentum dies. Budget gets consumed on infrastructure instead of actual AI work.

Budget conservatively. Assume data is messier than you think.

Mistake 3: Skipping Baseline Metrics

You can't prove impact without a baseline. Yet many organizations skip this step to "move faster." Then later they can't defend the investment.

Baseline metrics take two weeks. Do them.

Mistake 4: Hiring Without a Plan

Organizations panic and hire expensive AI consultants or data scientists before they know what problem they're solving. Those people then try to justify their own existence by working on "AI projects" that don't address business needs.

Hire after you know the problem. Use contractors for discovery. Hire full-time for scaling.

Mistake 5: Not Planning for Change Management

AI changes workflows. If you're automating a process, the people running that process today will have different jobs tomorrow. That's disruptive.

Organizations that succeed plan for this. They explain why change is happening. They retrain people for new roles. They address the human side.

Organizations that skip this see projects fail because the people who would benefit from them resist them.

Mistake 6: Setting Success Criteria Too Aggressively

Leadership says "we'll implement AI for fraud detection and reduce fraud by 80%." Fraud reduction of 15% is huge. It's valuable. But against an 80% target, it looks like failure.

Set ambitious but realistic criteria. Work with data scientists to understand what's actually achievable.

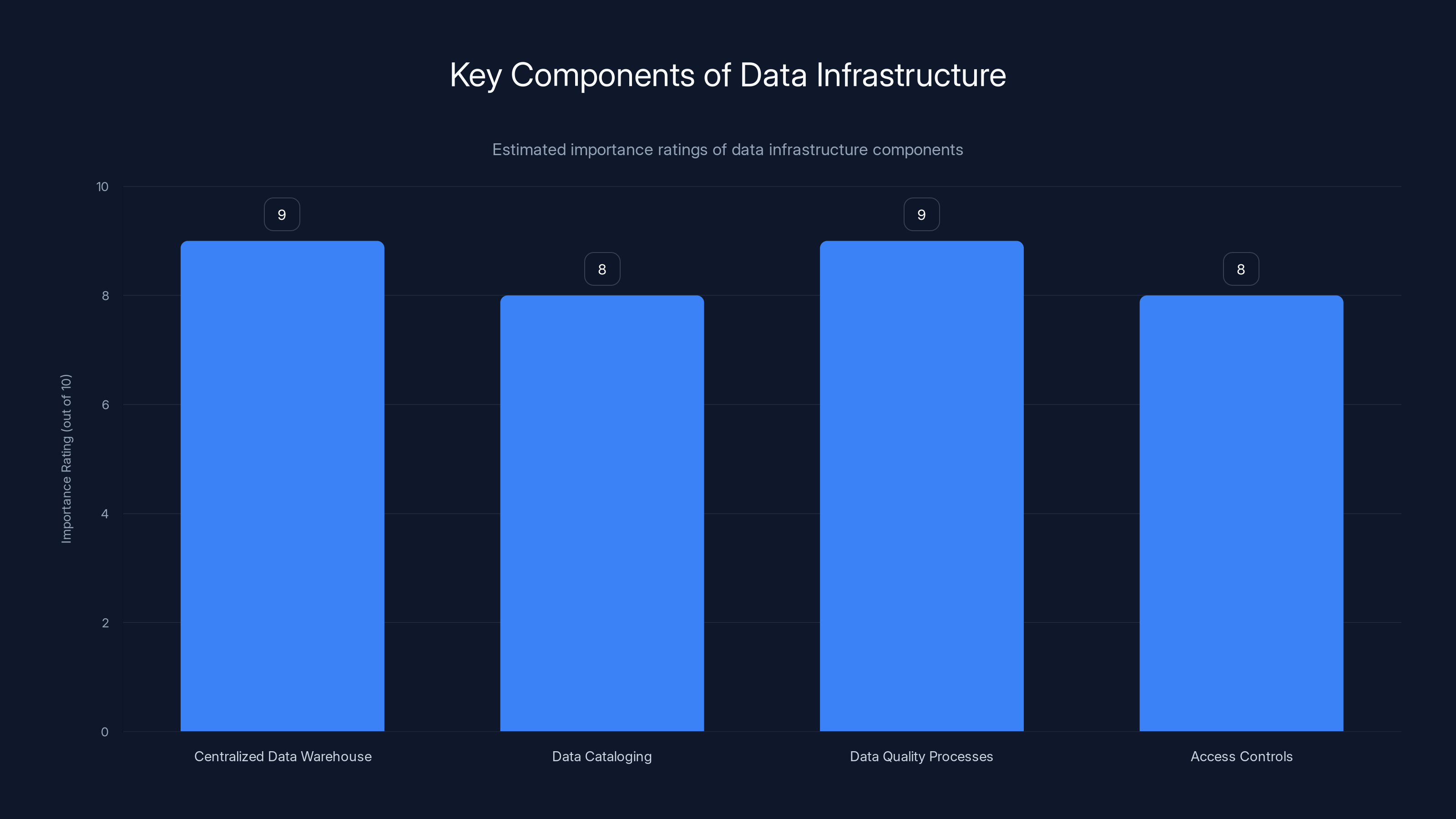

Centralized data warehouses and data quality processes are rated as the most critical components for building a robust data infrastructure. Estimated data.

Getting Buy-In: Why This Framework Matters for Leadership

This framework isn't just about technical success. It's about organizational buy-in and sustainable AI adoption.

Here's why leaders should care:

Reduced waste: The strategy-first approach eliminates the science-experiment trap. You're not funding random cool-sounding projects. You're funding solutions to concrete problems with clear ROI paths.

Measurable ROI: With baseline metrics and success criteria established upfront, you can actually prove whether AI delivered value. That's valuable for board discussions, budget cycles, and subsequent AI investments.

Faster time to value: Counterintuitive, but organizations that invest time upfront in identifying the right problem and preparing data actually reach positive ROI faster than organizations that just buy tools and hope.

Repeatable success: Once you've done this once successfully, the framework scales. Second and third AI initiatives follow the same pattern and succeed more consistently.

Competitive advantage: AI adoption itself isn't differentiated anymore. Lots of companies have AI. But companies that deploy AI strategically and get real business impact? Those are rarer. That's where competitive advantage lives.

The Data Foundation: Making Data Your Competitive Asset

There's something deeper happening here that's worth addressing separately.

Organizations that succeed with AI are almost universally those that treat data as a strategic asset.

They invest in data governance. They maintain clean data. They document what data they have and where it lives. They have processes for updating data, validating quality, and ensuring consistency.

This might sound like unglamorous IT work. But it's the actual foundation that AI sits on.

Without it, you can have the smartest models and most experienced team, and you'll still fail because the inputs are garbage.

With it, even mediocre models work because they're learning from reliable patterns.

The organizations winning with AI right now aren't the ones with the most advanced models. They're the ones that got their data house in order.

Building Data Infrastructure

For most enterprises, this means:

A centralized data warehouse or data lake: A single source of truth where all important data lives. Everything from transaction systems and customer data to operational metrics and supply chain data. One place, consistently formatted.

Data cataloging and documentation: Metadata about what data you have. Where it comes from. How fresh it is. Who owns it. What it represents.

Data quality processes: Regular validation that data meets quality standards. Error rates are low. Missing values are handled. Inconsistencies are caught.

Access controls and governance: Who can see what data. Audit trails for who accessed what. Compliance with privacy regulations.

Building this might take 6-18 months depending on current state. But the payoff is enormous. Once it's in place, AI projects move faster. Decision-making gets better. Operations run smoother.

It becomes a flywheel where investment in data infrastructure pays dividends across the entire organization.
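A data quality process doesn't have to start big. As a minimal sketch, here are two of the checks mentioned above (missing values and duplicate records) in plain Python. The function, the field names, and the sample rows are all hypothetical:

```python
def quality_report(rows: list[dict], required: list[str], key: str) -> dict[str, float]:
    """Share of rows missing a required field, and share of rows with a repeated key."""
    if not rows:
        raise ValueError("no rows to validate")
    missing = sum(
        1 for row in rows
        if any(row.get(field) in (None, "") for field in required)
    )
    keys = [row.get(key) for row in rows]
    duplicates = len(keys) - len(set(keys))
    return {
        "missing_rate": missing / len(rows),
        "duplicate_rate": duplicates / len(rows),
    }


# Hypothetical customer records for illustration.
rows = [
    {"id": 1, "email": "a@example.com", "region": "EU"},
    {"id": 2, "email": "", "region": "US"},
    {"id": 2, "email": "b@example.com", "region": "US"},
    {"id": 3, "email": "c@example.com", "region": None},
]
report = quality_report(rows, required=["email", "region"], key="id")
print(report)  # {'missing_rate': 0.5, 'duplicate_rate': 0.25}
```

Run something like this on a schedule, track the rates over time, and you have the beginnings of the validation step without buying anything.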

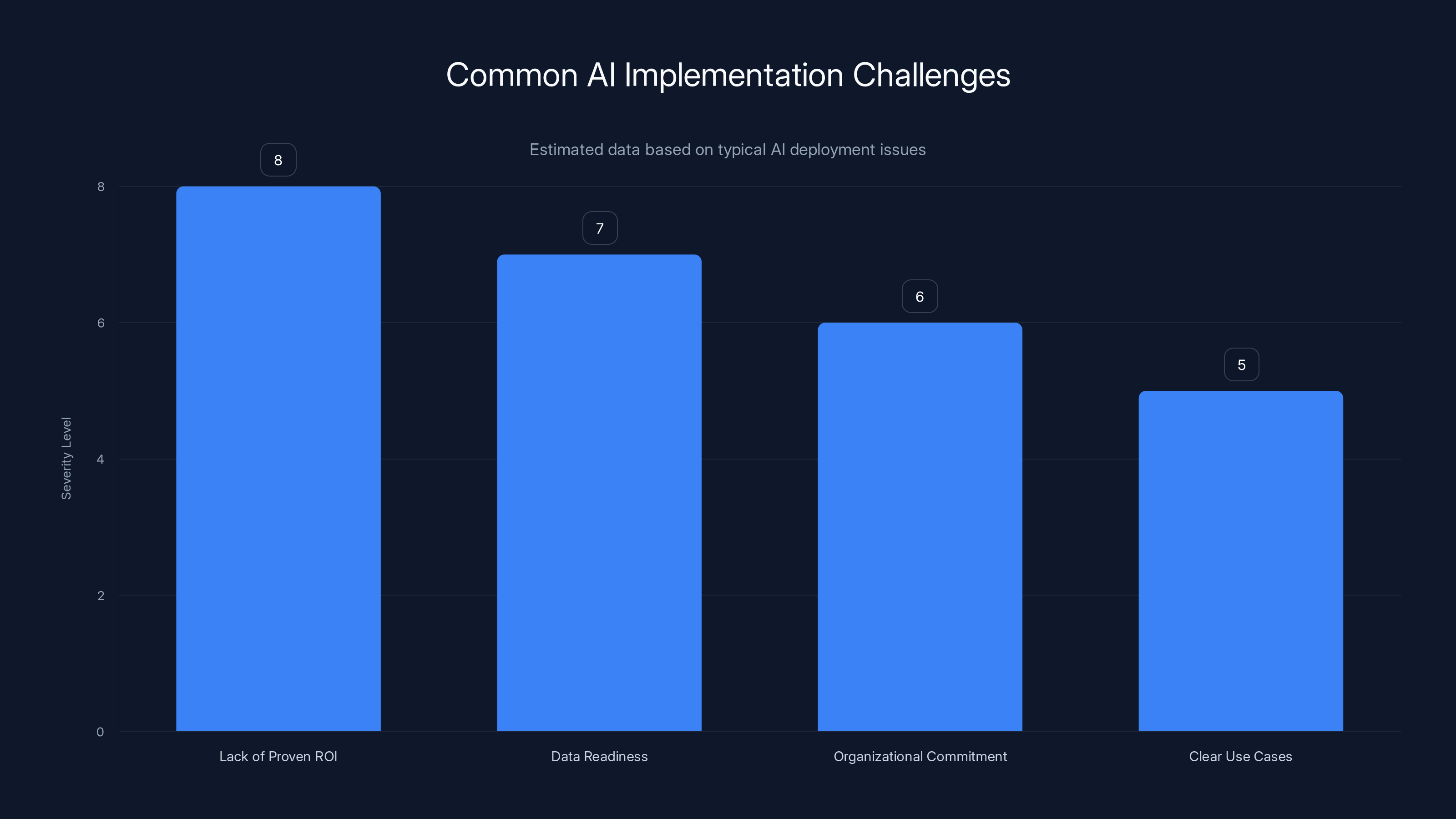

Estimated data suggests that lack of proven ROI and data readiness are the most severe challenges in AI implementation, highlighting the need for clear strategies and organizational commitment.

Organizational Change: The Hidden Factor

Here's something that gets glossed over in most AI discussions: Organizational change is harder than technical change.

Technically, deploying AI is solvable. You hire people who know how to do it. You get the right tools. You build it.

But organizationally, everything gets complicated.

If you're automating a process, you're changing someone's job. If that person isn't convinced the change is good, they'll resist it. They might subtly sabotage it. They might not train properly on new workflows. They might go back to the old way when it gets hard.

Organizations that handle this well have explicit change management:

Explain the why: "We're not replacing you. We're eliminating tedious work so you can focus on judgment calls." Help people understand why change is happening and why it's good for them.

Involve people in design: The people doing the job today have valuable insights into edge cases and complications. Involve them in designing the AI solution. They'll buy in more and the solution will be better.

Retrain for new roles: The people running the current process shouldn't disappear. They should transition to new roles: monitoring the AI, handling exceptions, validating outputs, improving the system.

Measure adoption: Track whether people are actually using the new system correctly. If adoption is low, something's wrong. Usually it's change management, not the technology.

Celebrate wins: When the AI solution delivers value, celebrate it. Make it visible. Build momentum for the next initiative.

Organizations that skip change management see technically successful projects fail operationally.

Future-Proofing Your AI Strategy

Here's something to think about: AI models improve. New approaches emerge. The landscape shifts.

The framework I described—identifying business problems, preparing data, establishing baselines, measuring impact—is timeless. It will work regardless of what new models or tools emerge.

But the specific tools and models you choose today will evolve.

This means building for flexibility:

Modular architecture: Don't lock yourself into one vendor's proprietary system if you can avoid it. Use open standards. Use APIs. Build in a way that lets you swap models or tools if something better emerges.

Continuous monitoring: Keep measuring. Keep benchmarking against new approaches. If a new tool comes along that's substantially better, you can migrate to it.

Team skill development: The technical team should stay current on AI trends. That doesn't mean chasing every new thing. It means knowing what's possible and evaluating it against your needs.

Modular data infrastructure: If your data infrastructure is modular and well-governed, you can add new data sources and retire old ones without breaking everything.

Regular strategy reviews: Annually or biannually, revisit your AI strategy. What problems are still painful? Have new ones emerged? Are your current solutions still the best approaches?

Organizations that approach AI as a continuous practice rather than a one-time project do better long-term.
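In code, the modular-architecture and continuous-monitoring points often come down to depending on a small interface rather than a specific vendor. Here's a sketch under that assumption; the interface, both toy classifiers, and the labeled examples are invented for illustration and stand in for real models:

```python
from typing import Protocol


class Classifier(Protocol):
    """The only thing the rest of the system is allowed to depend on."""
    def predict(self, text: str) -> str: ...


class KeywordSpamFilter:
    """Stand-in for today's solution."""
    def predict(self, text: str) -> str:
        return "spam" if "free money" in text.lower() else "ok"


class LengthSpamFilter:
    """Stand-in for a candidate replacement under evaluation."""
    def predict(self, text: str) -> str:
        return "spam" if len(text) > 40 else "ok"


def accuracy(model: Classifier, labeled: list[tuple[str, str]]) -> float:
    """Benchmark any model that satisfies the interface on the same labeled set."""
    correct = sum(model.predict(text) == label for text, label in labeled)
    return correct / len(labeled)


# Tiny hypothetical benchmark set.
labeled = [
    ("Claim your FREE MONEY now", "spam"),
    ("Meeting moved to 3pm", "ok"),
    ("Quarterly invoice attached for your review and records", "ok"),
]
for candidate in (KeywordSpamFilter(), LengthSpamFilter()):
    print(type(candidate).__name__, accuracy(candidate, labeled))
```

Because both candidates are scored by the same function on the same data, swapping one for the other becomes a measured decision instead of a migration project.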

The Real ROI of Getting Strategy Right

Let me put numbers on this because it matters.

Organizations following the strategy-first framework report:

Time to value: 4-6 months average from problem identification to positive ROI. Organizations treating AI as a product to buy without strategy-first prep? 12-18 months, if they ever reach it.

Project success rate: ~75% of strategy-first projects achieve success criteria or exceed them. Projects without clear strategy? Success rate drops to ~20%.

Cost efficiency: Strategy-first projects deliver AI functionality at 40-50% lower cost than projects that didn't plan properly. Why? Less rework. Less scope creep. Less wasted effort.

Organizational adoption: When teams understand why an AI system exists and how it benefits them, adoption rates are 85%+. Without that context, adoption often stalls under 50%.

Follow-on investments: Organizations that succeed with their first AI project typically do 3-4 more within the next year. Organizations that fail often don't invest in AI again for years.

So getting the strategy right doesn't just make your first project successful. It unlocks the possibility of building a real AI-driven organization.

When AI Isn't the Right Answer

I should be clear about something: AI isn't the solution to every problem.

Some problems are better solved with process improvements. Some need organizational restructuring. Some just need better management discipline.

The strategy-first framework actually surfaces this. When you identify a business problem and assess whether AI is suitable, you're forced to ask: "Is this actually an AI problem or something else?"

AI isn't the answer when: The problem is primarily a process or organizational issue. When the fundamental issue is unclear processes, misaligned incentives, or poor communication, AI won't fix it. You need structural change.

AI isn't the answer when: You don't have enough data. Some problems require years of historical data to train effective models. If you don't have that data and can't collect it, AI won't work.

AI isn't the answer when: The problem isn't frequent enough. If you make a decision twice a year, the investment in building an AI system probably doesn't make sense. The manual approach is cheaper.

AI isn't the answer when: Real-time decisions require human judgment. Some decisions involve moral, ethical, or judgment-based considerations that shouldn't be automated. Credit decisions affecting people's lives. Hiring decisions. Healthcare treatment choices. These need human judgment.

AI might not be the best answer when: The problem is better solved with automation or business process management. Not everything is AI. Sometimes just automating tedious workflows with robotic process automation is more cost-effective than building an AI system.

The honest assessment of whether AI is suitable is actually one of the most valuable parts of the strategy-first framework.
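As a rough illustration, the conditions above can be written down as a screening checklist. This is a minimal sketch, not a definitive test: the `ProblemProfile` fields, the `screen_for_ai` function, and the `MIN_DECISIONS_PER_YEAR` threshold are all hypothetical names and values chosen for this example, and a real assessment would rely on discussion rather than booleans.

```python
from dataclasses import dataclass

# Illustrative threshold only; the article gives no specific number
# beyond "twice a year is too infrequent".
MIN_DECISIONS_PER_YEAR = 50


@dataclass
class ProblemProfile:
    """Hypothetical answers collected while assessing a business problem."""
    root_cause_is_process: bool       # unclear processes, misaligned incentives, poor communication
    has_sufficient_data: bool         # enough history exists or can be collected
    decisions_per_year: int           # how often the decision is actually made
    needs_human_judgment: bool        # moral, ethical, or judgment-heavy decision
    simple_automation_suffices: bool  # workflow automation (e.g. RPA) would solve it


def screen_for_ai(profile: ProblemProfile) -> list:
    """Return the reasons AI is probably not the right answer (empty list if none)."""
    reasons = []
    if profile.root_cause_is_process:
        reasons.append("process or organizational issue: needs structural change")
    if not profile.has_sufficient_data:
        reasons.append("not enough data to train or evaluate a model")
    if profile.decisions_per_year < MIN_DECISIONS_PER_YEAR:
        reasons.append("decision is too infrequent to justify the investment")
    if profile.needs_human_judgment:
        reasons.append("decision requires human judgment")
    if profile.simple_automation_suffices:
        reasons.append("plain automation is likely more cost-effective")
    return reasons


# Example: a decision made twice a year, with no other warning signs.
rare_decision = ProblemProfile(
    root_cause_is_process=False,
    has_sufficient_data=True,
    decisions_per_year=2,
    needs_human_judgment=False,
    simple_automation_suffices=False,
)
for reason in screen_for_ai(rare_decision):
    print("Not a fit:", reason)
```

An empty list doesn't prove AI is the right answer. It only means none of the listed warning signs applied.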

Building an AI-Native Organization

Organizations that truly win with AI do something different. They build it into how they operate.

It's not "we have an AI initiative." It's "AI is how we make certain categories of decisions and we've structured the organization around that."

This requires:

Data literacy: All leaders need to understand data and AI capabilities at a basic level. They don't need to be data scientists. But they need to understand what's possible and what's not.

Decision frameworks: Organizations should have clear frameworks for when to use AI and when to use human judgment. These should be documented and taught.

Continuous learning: The AI landscape evolves. Teams need to stay current. This means training budgets. Time for experimentation. Access to resources.

Experimentation culture: AI organizations treat many initiatives as experiments. They test quickly. They measure. They scale what works. They kill what doesn't. This requires tolerance for some failure.

Talent: AI-native organizations have capabilities in data engineering, data science, and AI product management. They recruit well. They retain people. They develop talent.

Building this takes years, not months. But it's the actual differentiator for organizations that win with AI long-term.

Leveraging AI for Specific Business Functions

While the framework applies everywhere, different business functions have specific common use cases worth noting.

Finance and accounting: Invoice processing, expense categorization, fraud detection, revenue forecasting. These are high-volume, pattern-based decisions that AI handles well. Most finance teams see 20-40% efficiency gains with AI.

Customer service: Routing questions to appropriate teams, suggesting answers to common questions, summarizing conversations. AI can handle 40-60% of inbound volume, leaving complex issues for humans.

Sales and marketing: Lead scoring, churn prediction, personalized recommendations, content generation. AI helps prioritize effort toward highest-probability customers.

Operations: Demand forecasting, inventory optimization, supply chain planning, predictive maintenance. AI helps predict and prevent operational issues.

Human resources: Resume screening, employee retention prediction, skills matching. AI accelerates hiring while reducing bias when used properly.

Product development: Feature usage analysis, bug detection, code review assistance. AI helps engineers work faster.

Most enterprises have opportunities in multiple areas. The strategy-first framework helps you prioritize which areas to tackle first based on business impact.

Measuring What Matters: Beyond Technical Metrics

Here's something important: Technical metrics don't equal business metrics.

A model might be 95% accurate. That sounds great. But if the 95% it gets right are low-impact cases, the business value is modest. And if the 5% it gets wrong are critical cases that still require human review, the value is even lower.
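To make the point concrete, here is a small sketch comparing two hypothetical models with identical accuracy but very different error costs. The case counts and dollar figures are invented for illustration, and `accuracy` and `error_cost` are helper names made up for this example.

```python
def accuracy(outcomes):
    """Fraction of cases the model got right."""
    return sum(1 for correct, _ in outcomes if correct) / len(outcomes)


def error_cost(outcomes):
    """Total cost of the cases the model got wrong."""
    return sum(cost for correct, cost in outcomes if not correct)


# Each case is (model_was_correct, cost_if_wrong). Assumed mix: 95 routine
# cases costing 10 each when wrong, 5 critical cases costing 5000 each.
ROUTINE, CRITICAL = 10, 5000

# Model A misses 5 routine cases and gets every critical case right.
model_a = [(True, ROUTINE)] * 90 + [(False, ROUTINE)] * 5 + [(True, CRITICAL)] * 5

# Model B gets every routine case right and misses all 5 critical cases.
model_b = [(True, ROUTINE)] * 95 + [(False, CRITICAL)] * 5

for name, outcomes in (("A", model_a), ("B", model_b)):
    print(f"Model {name}: accuracy={accuracy(outcomes):.0%}, error cost={error_cost(outcomes)}")
```

Both models score 95% on accuracy, yet under these assumed costs Model B's errors are 500 times more expensive. The technical metric alone can't tell them apart.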

Successful organizations measure what actually matters to the business:

Time savings: How much actual time does this save humans? Hours per week? Days per year? What's that time worth?

Error reduction: How many mistakes does this prevent? What's the cost of those mistakes?

Throughput increase: How much more can we process? How much faster can we respond to customers?

Revenue impact: Does this help us sell more? Increase customer lifetime value? Reduce churn?

Cost reduction: Direct cost savings from automation? Indirect savings from faster processing?

Risk reduction: Fraud prevented? Compliance violations avoided? Breaches prevented?

These are harder to measure than accuracy, but they're what matters.

Organizations that tie AI implementation directly to these metrics get better long-term results.

Bringing It All Together: The Enterprise Reality

Let me bring this back to the original problem.

Nearly 95% of generative AI pilots fail to deliver real financial results. That's not news at this point. It's expected. It's normal.

But it doesn't have to be that way.

Organizations that approach AI strategically get dramatically different results. They start with business problems instead of cool technology, prepare data intentionally, measure impact rigorously, and manage organizational change deliberately.

There's no magic here. It's not about having smarter data scientists or better tools. It's about approaching the problem systematically.

AI is a methodology for solving specific business problems. Not a product. Not a solution you can buy and plug in. A methodology that requires strategy, preparation, and discipline.

Get those things right, and AI delivers on its promises. Skip them, and you'll be another statistic in the next study about AI project failures.

The choice is yours. But the framework is clear.

FAQ

What's the most common reason AI projects fail in enterprise?

Treating AI as a finished product instead of a methodology tied to specific business problems. Organizations buy AI tools hoping they'll create value, then discover there's no clear use case or the data isn't ready. The technology isn't the problem—the strategy is.

How long does it actually take to get AI delivering value?

For organizations following the strategy-first framework, typically 4-6 months from problem identification to positive ROI. This assumes decent data exists. If data preparation is needed, add 2-4 months. Organizations that skip strategy and buy tools first often take 12-18 months or never reach positive ROI.

Do we need a data scientist to implement AI successfully?

You need someone with data science or AI expertise. That might be a full-time data scientist, a contractor during implementation, or access to a vendor's expertise if using a managed solution. What matters is having someone who understands what's possible and what's not. You can't skip this.

What's the difference between buying an AI tool versus building custom AI?

Purchased tools succeed about 67% of the time. Custom builds succeed about 33% of the time. This is primarily because purchased tools come with documented use cases and success metrics. Custom builds are more ambitious and require deeper expertise. Neither is universally better—it depends on your specific problem.

How do you measure whether AI is actually delivering value?

Establish baseline metrics before implementation (how long does the current process take, what's the error rate, what's the cost). Then measure the same metrics after implementation. If the metric improves according to your predetermined success criteria, the AI delivered value. Without baselines, you're guessing.
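The before-and-after comparison can be sketched in a few lines. This is only an illustration under stated assumptions: the `Metric` class, the `improvement` and `met_target` helpers, and every number below are hypothetical, and a real evaluation would also need to account for seasonality and sample size.

```python
from dataclasses import dataclass


@dataclass
class Metric:
    """One business metric measured before and after implementation."""
    name: str
    baseline: float            # value measured before implementation
    after: float               # same metric measured after implementation
    target_improvement: float  # predetermined success criterion, e.g. 0.25 = 25% better
    lower_is_better: bool = True


def improvement(metric: Metric) -> float:
    """Relative improvement versus baseline (positive means better)."""
    if metric.baseline == 0:
        raise ValueError(f"{metric.name}: baseline must be non-zero")
    change = (metric.baseline - metric.after) / metric.baseline
    return change if metric.lower_is_better else -change


def met_target(metric: Metric) -> bool:
    """True if the metric improved by at least the predetermined target."""
    return improvement(metric) >= metric.target_improvement


# Hypothetical numbers for an invoice-processing project.
metrics = [
    Metric("minutes per invoice", baseline=30.0, after=21.0, target_improvement=0.25),
    Metric("error rate", baseline=0.08, after=0.07, target_improvement=0.25),
]

for m in metrics:
    status = "met" if met_target(m) else "missed"
    print(f"{m.name}: {improvement(m):.1%} improvement, target {status}")
```

The important design choice is that `target_improvement` is fixed before implementation. Setting the target after seeing the results defeats the purpose of a baseline.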

What happens if your AI project doesn't hit success criteria?

Diagnose why. Maybe the problem wasn't suitable for AI. Maybe the success criteria were too aggressive. Maybe the implementation needs adjustment. Maybe the data wasn't ready. Use the measurement data to understand what went wrong and whether to iterate, adjust criteria, or pivot to a different problem.

How many AI initiatives should an organization tackle simultaneously?

Start with one. Get it right. Learn from it. Then do the next. Most organizations that try to run several at once spread resources too thin and end up failing at all of them. Once you've succeeded with 2-3 projects using the framework, you have enough institutional knowledge to do more in parallel.

What skills do you need to hire for AI success?

Data engineers (to prepare and manage data), data scientists or ML engineers (to build models), and AI product managers (to connect technical work to business problems). You might not need all of these in-house—contractors or vendors can fill gaps—but you need this expertise involved.

How do you get buy-in from teams that will be affected by AI automation?

Involve them early. Explain why change is happening and what benefits they'll see. Help them transition to new roles rather than replacing them. Celebrate wins. Help them see AI as a tool that makes their work better, not as job elimination. Change management is half the battle.

Can small companies use this framework or is it just for enterprises?

The framework applies at any scale. Smaller organizations might move faster through the phases, but the principle is identical: identify the business problem, prepare data, measure the baseline, define success criteria, implement, measure impact. Smaller scale doesn't mean skipping these steps. It means they might happen faster.

Key Takeaways

AI success in enterprise depends on strategy, not technology.

Start with your biggest business problems, not the coolest AI tools. Assess whether you have data to solve them. Establish baseline metrics showing how you perform today. Define what success looks like. Then, and only then, evaluate solutions. Measure impact relentlessly.

Follow this framework and AI delivers real value. Skip it and you'll be one of the 95% that fails.

The framework isn't complex. It's not even particularly novel. It's just business discipline applied to AI. Organizations that already do this for other strategic initiatives find it natural. Organizations that don't have strong strategy practices struggle.

The opportunity is enormous for organizations that get it right. The penalty for getting it wrong is wasted budgets and soured teams.

AI is powerful. But power without strategy is just expensive failure.

Choose wisely.
