OpenAI vs Anthropic: Enterprise AI Model Adoption Trends [2025]

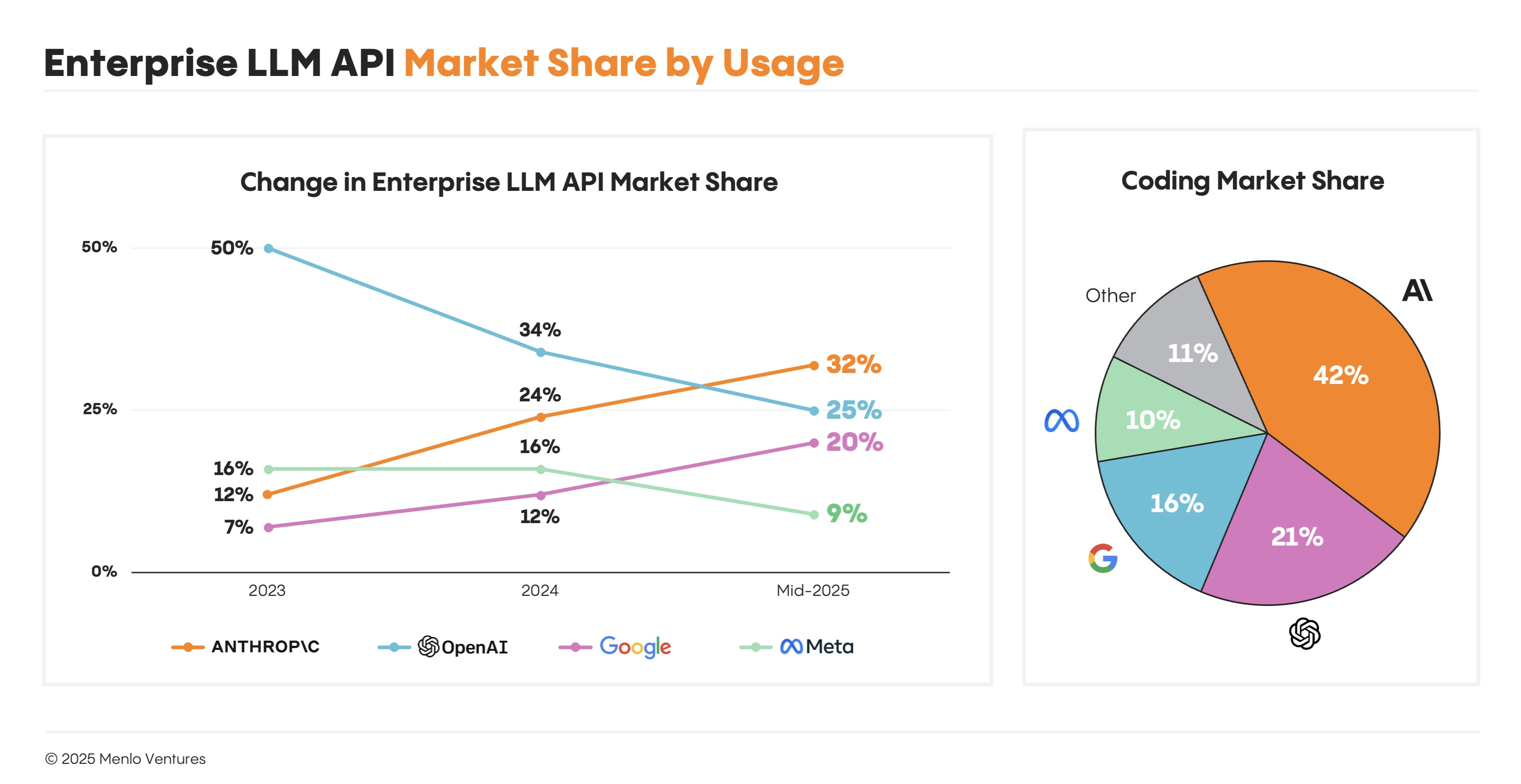

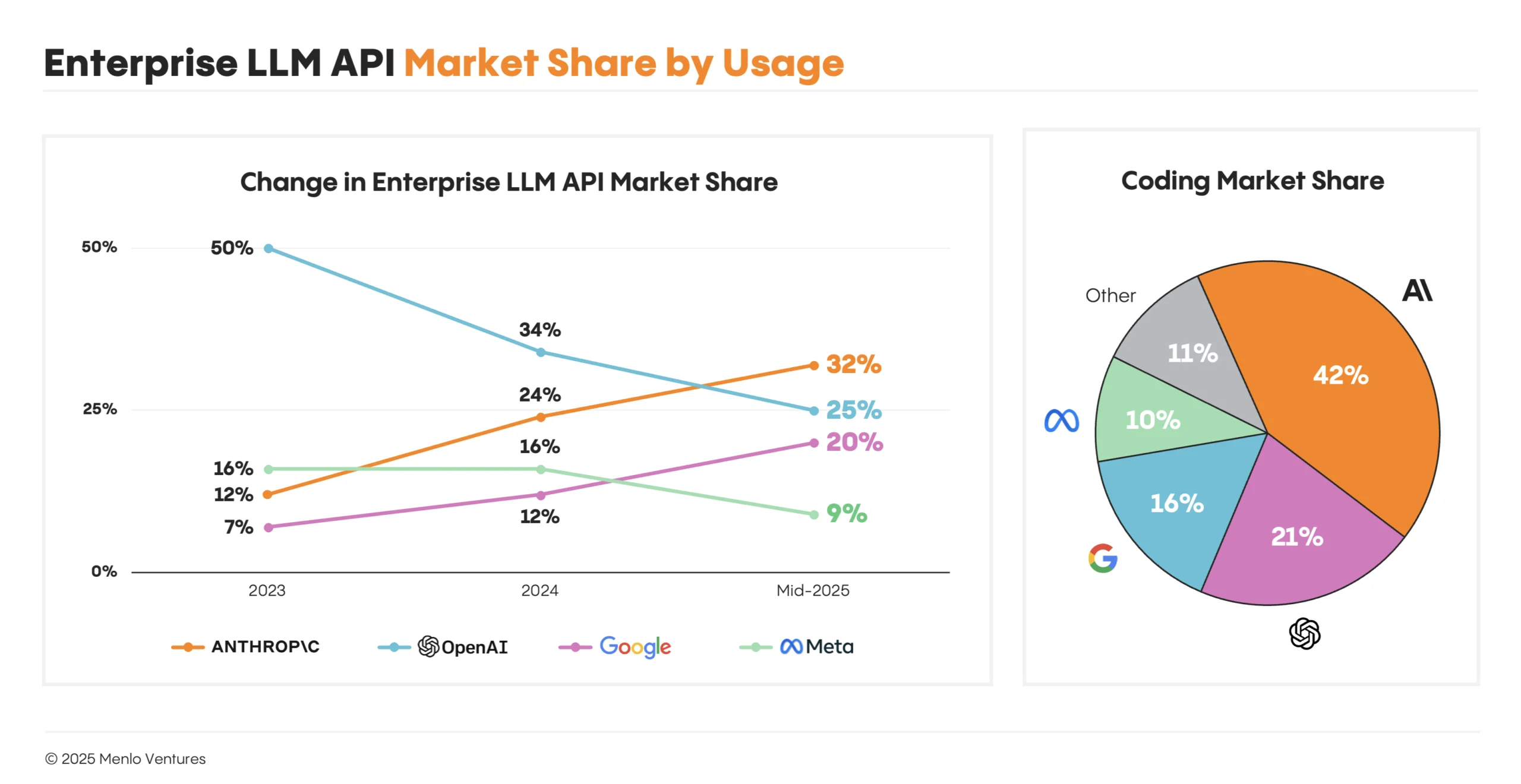

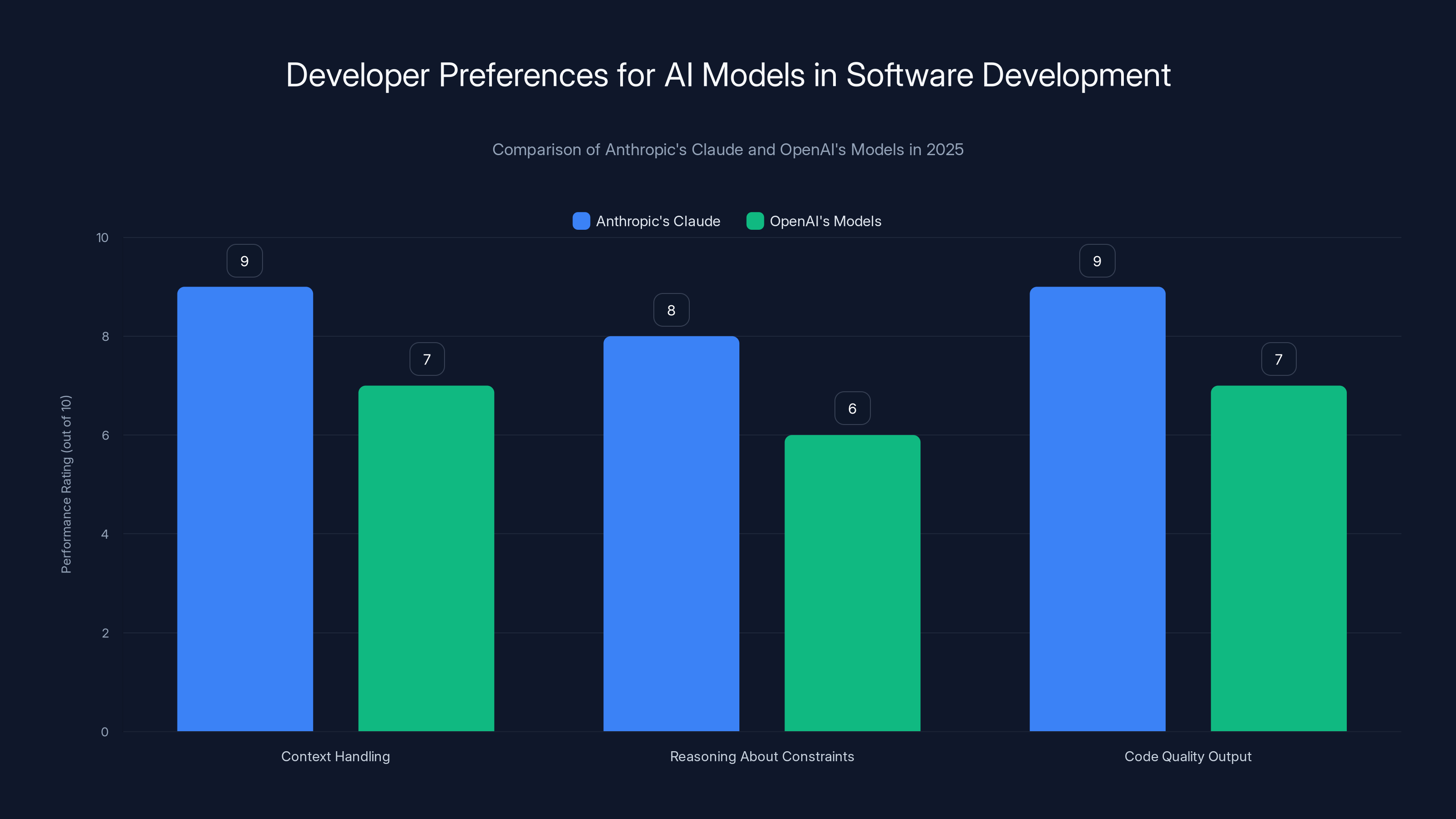

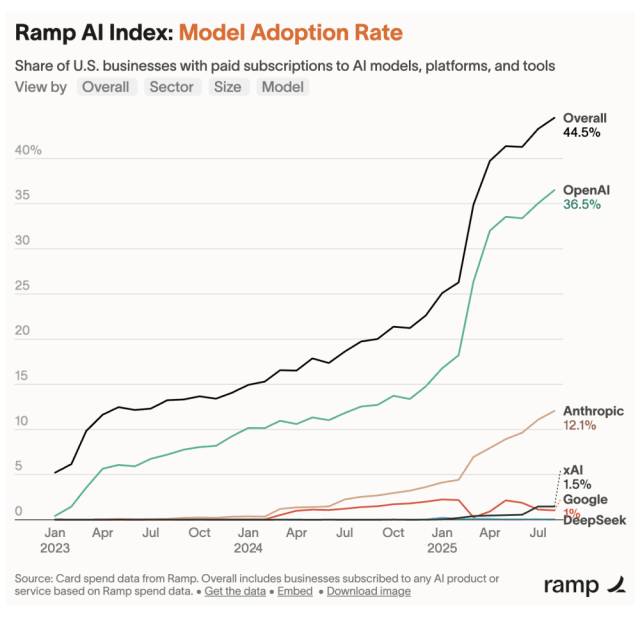

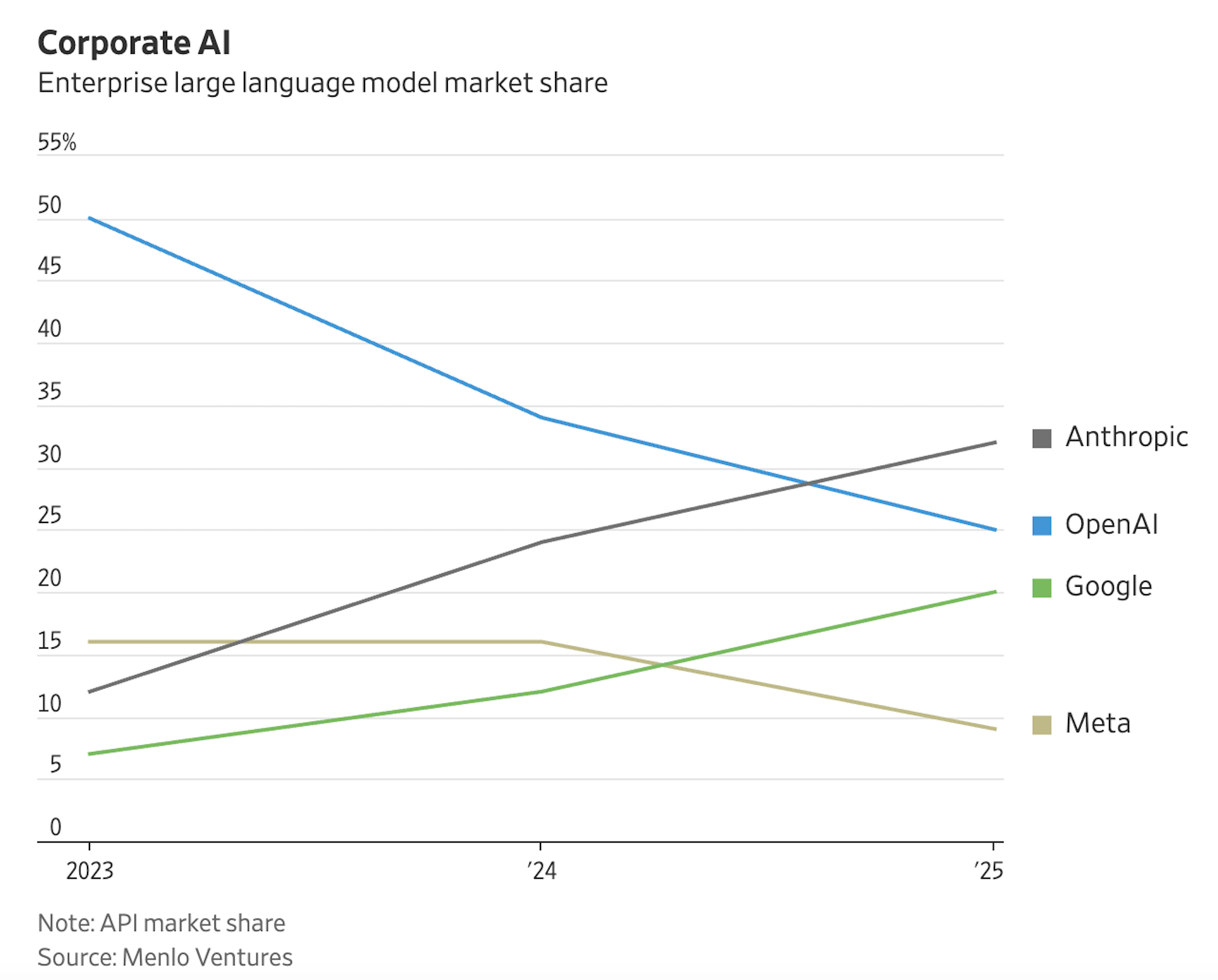

When enterprise leaders started evaluating AI models in 2024, the conversation was simple: OpenAI or nothing. Fast forward to 2025, and the landscape looks completely different.

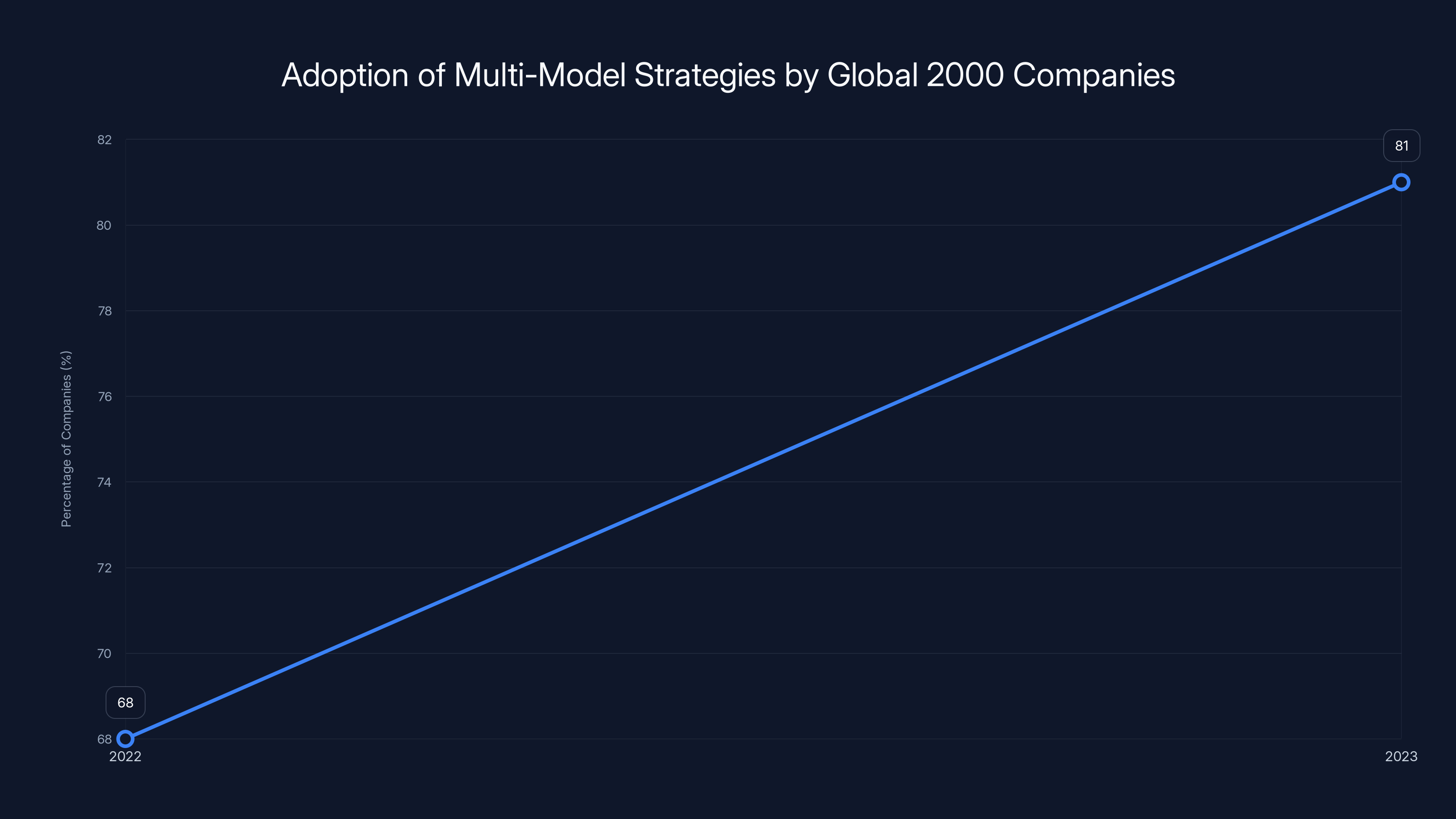

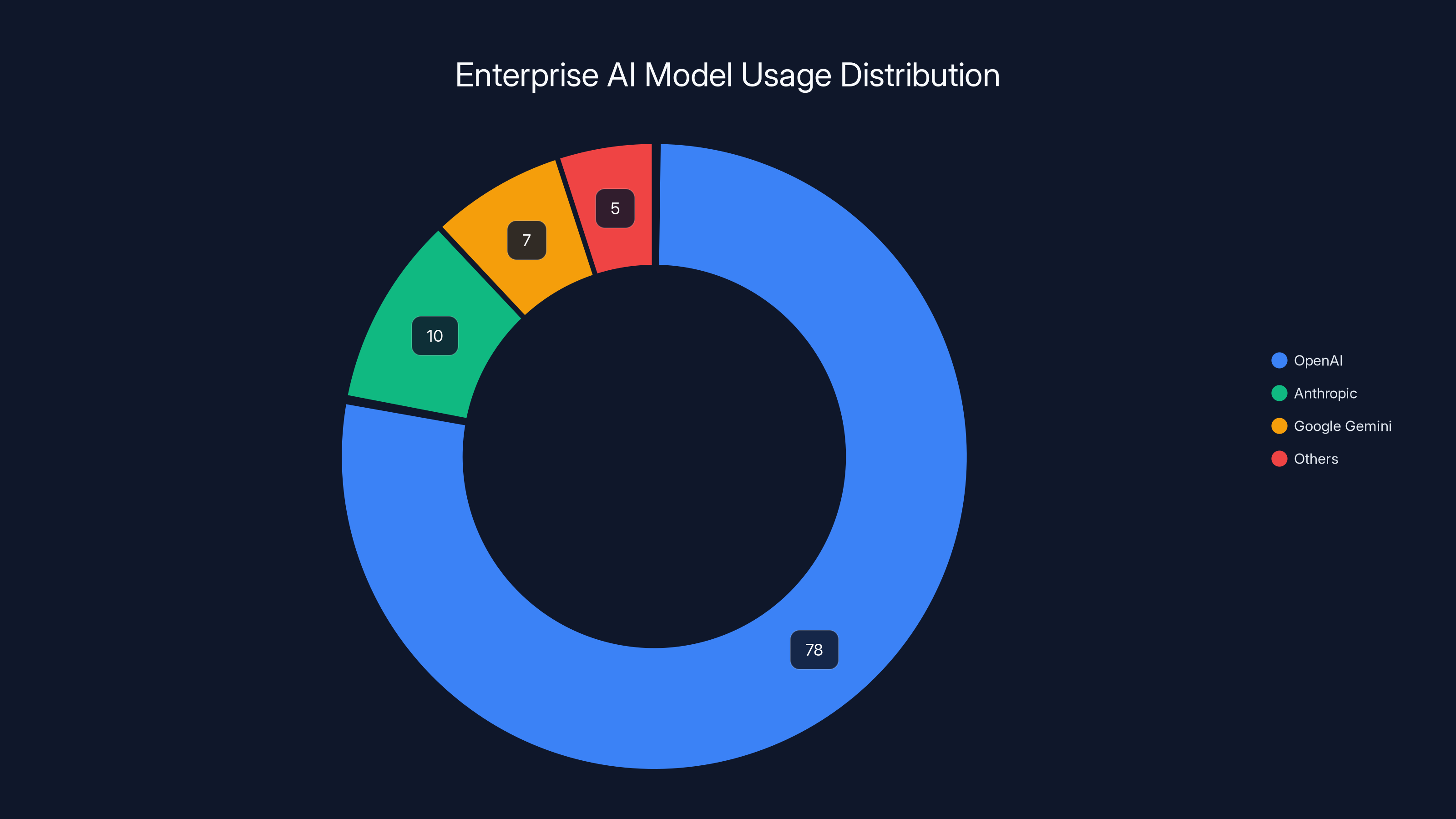

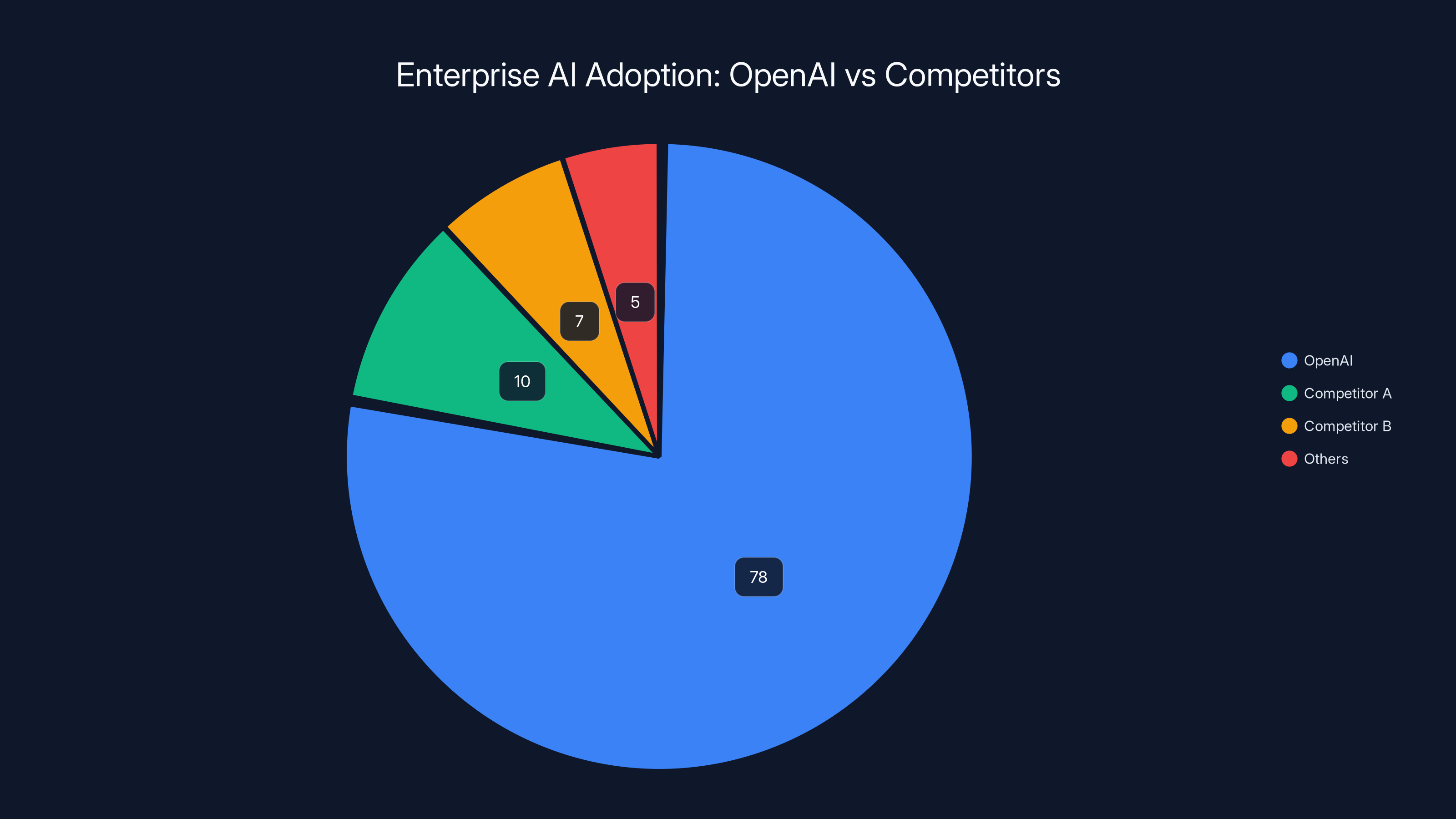

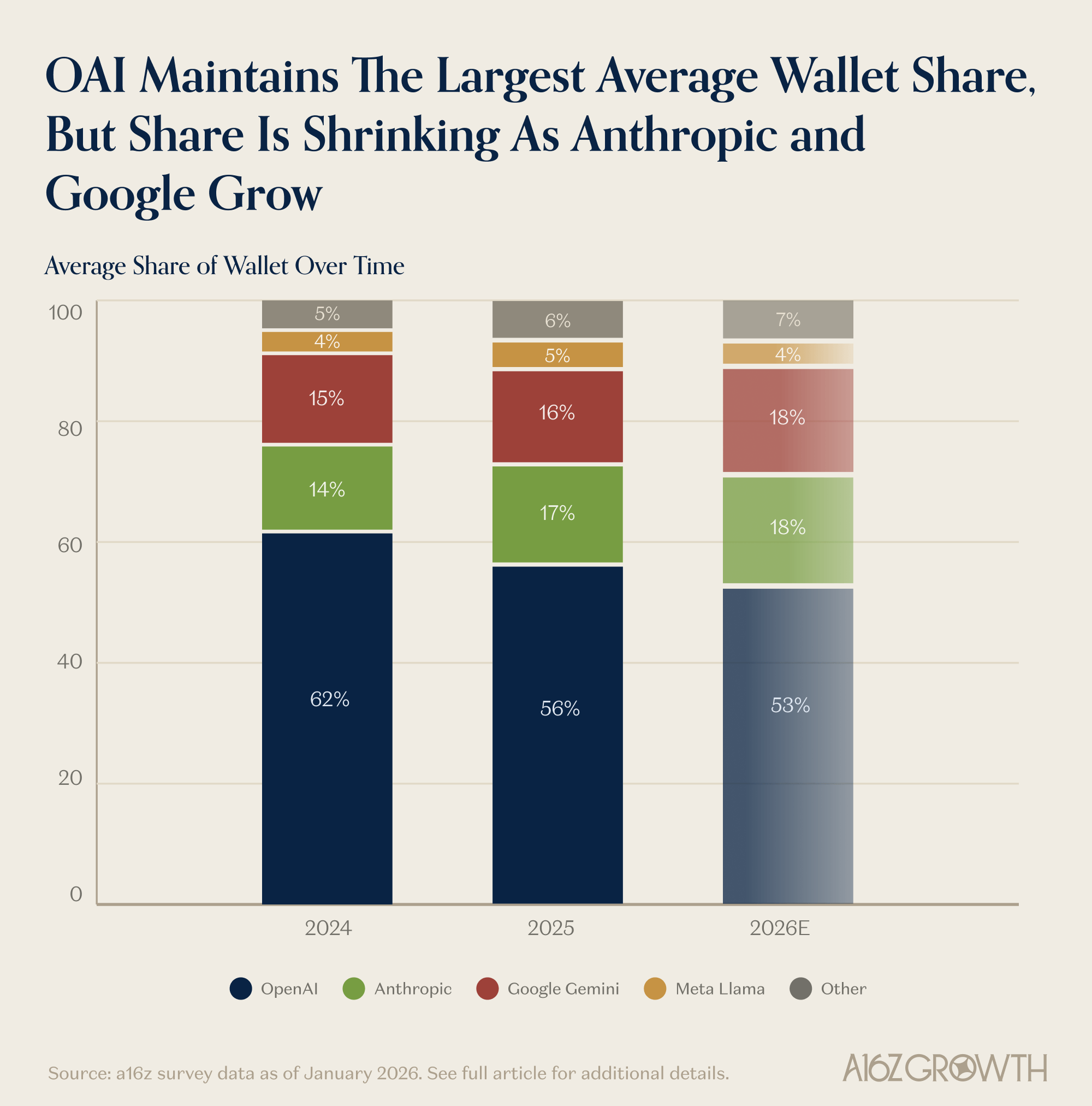

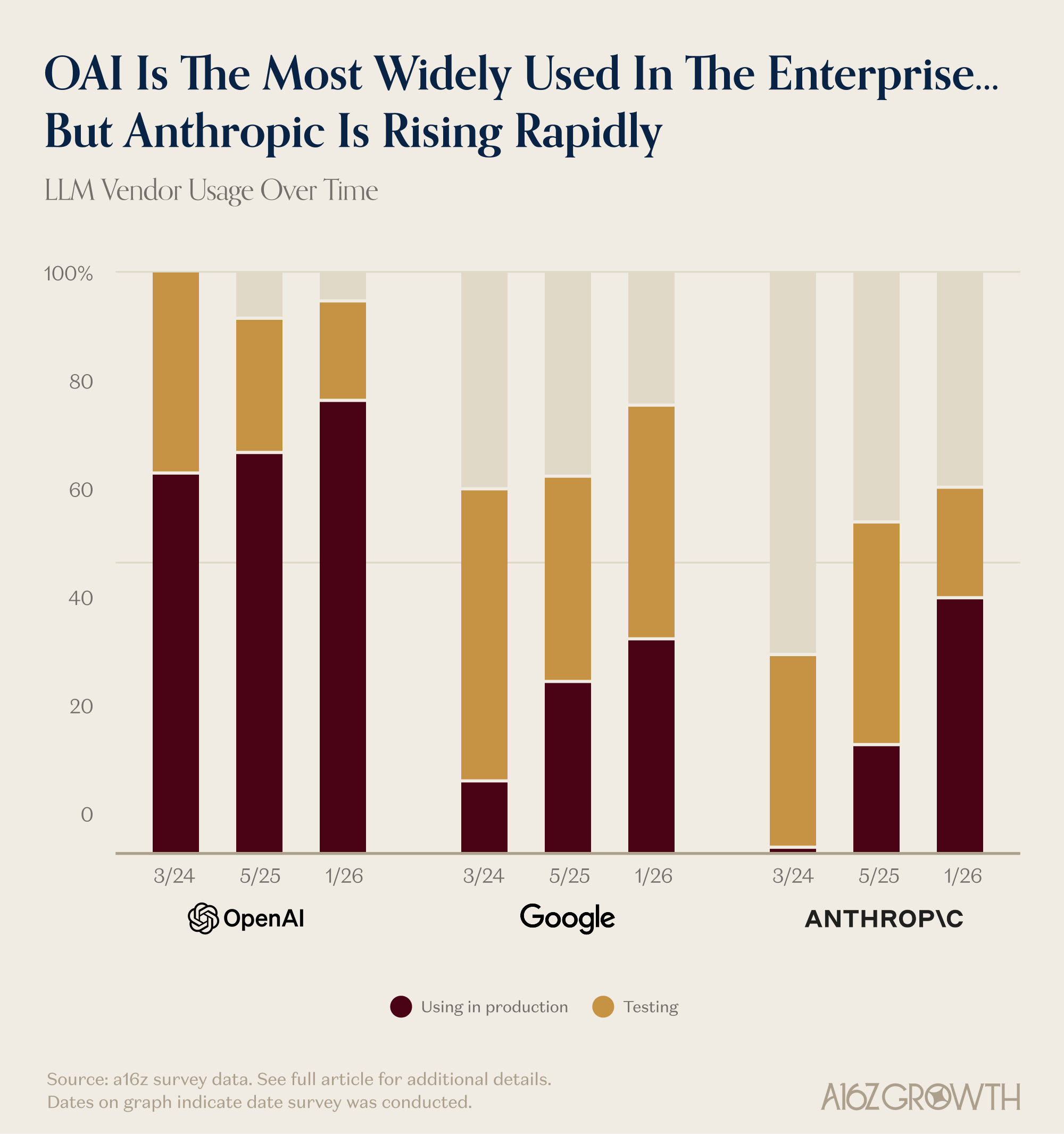

OpenAI still commands the market, with 78% of Global 2000 companies choosing its models for production workloads. But here's what caught my attention in the latest data from venture capital giant Andreessen Horowitz: 81% of enterprises are now running three or more AI model families simultaneously. That number was just 68% a year ago.

This shift tells you everything. Enterprises aren't consolidating on a single vendor anymore. They're building multi-model strategies, testing different families, and deploying based on what actually works for their specific problems.

I spent the last two weeks digging through enterprise AI adoption patterns, talking to CTOs, and analyzing what's driving these decisions. The real story isn't about OpenAI versus Anthropic. It's about how enterprises are making intelligent trade-offs, discovering which models excel at which tasks, and building AI strategies that aren't dependent on any single company.

Let's break down what's actually happening in the enterprise AI world right now.

TL;DR

- OpenAI maintains market leadership at 78% adoption among Global 2000 companies, with broad use across chat, knowledge management, and customer support

- Anthropic is rapidly gaining ground, especially for software development and data analysis tasks, with 75% of their users running Sonnet or Opus models

- Multi-model strategies now dominate enterprise deployments, with 81% of companies using three or more AI model families (up from 68% in one year)

- Use case specificity drives model selection, not brand loyalty—enterprises choose based on performance for their particular problem

- Microsoft 365 Copilot significantly outpaces Google Gemini for Workspace adoption in the enterprise

- Model generation matters more than you'd think: fewer than half of OpenAI customers have upgraded to GPT-5.2, while 75% of Anthropic users are on newer models

The adoption of multi-model strategies among Global 2000 companies has increased from 68% to 81% in one year, indicating a shift toward deployment diversity over single-vendor optimization.

Why OpenAI Still Dominates Enterprise AI

Let's start with the obvious: OpenAI's 78% adoption rate among Global 2000 companies isn't accidental. It's built on several concrete advantages that enterprises depend on.

First, there's the stability factor. OpenAI has been shipping reliable, predictable models for longer than anyone else. When you're running customer-facing chat systems, content moderation, or knowledge management systems, you need models that don't surprise you. OpenAI's track record of consistent performance across model generations gives CIOs confidence.

Second, the ecosystem is mature. ChatGPT has over a million business customers. That means there are thousands of case studies, integration patterns, and best practices documented. Your engineers can find answers to their problems on Stack Overflow before they even ask the question.

Third, OpenAI's general-purpose performance is genuinely strong. Its models excel in broad use cases: conversational AI, knowledge retrieval, content summarization, and customer support interactions. If you're building something that doesn't require specialized expertise, OpenAI's models get the job done without heavy optimization.

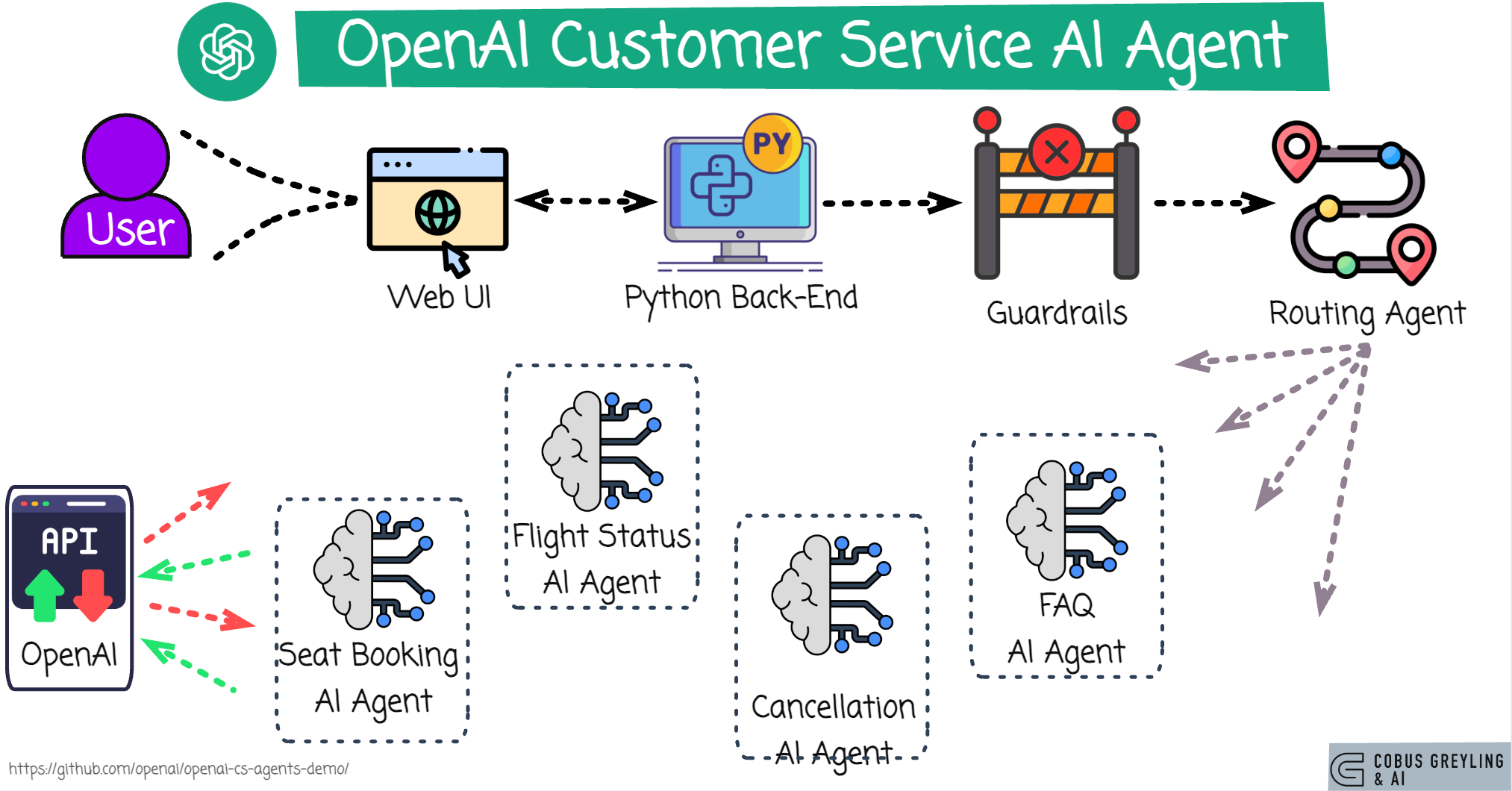

The Customer Support and Knowledge Management Play

Enterprise adoption of OpenAI models is heavily driven by customer-facing use cases. When Salesforce integrates AI into Service Cloud, or when enterprises build internal knowledge assistants, they typically reach for OpenAI models for three reasons:

- Ease of integration: OpenAI's APIs are clean, well-documented, and have been battle-tested by hundreds of thousands of developers

- Cost predictability: Pricing is straightforward; you pay per token, and the pricing hasn't moved dramatically month to month

- Balanced capability: OpenAI models are strong enough for these tasks without being overkill, which keeps costs down

For knowledge management specifically, enterprises deploy OpenAI models to surface internal documentation, answer employee questions, and reduce the load on customer support teams. The companies doing this at scale report a 25-40% reduction in support ticket volume after implementing RAG (retrieval-augmented generation) systems with OpenAI models.
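For readers who haven't built one, the retrieval step of a RAG system can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: it assumes a toy keyword-overlap scorer where production systems typically use vector embeddings, and the documents and the `retrieve`/`build_prompt` helpers are hypothetical. The resulting prompt would then go to whichever model handles the workload.

```python
import re

# Hypothetical internal knowledge base: document ID -> text.
DOCS = {
    "vpn": "To connect to the VPN, install the client and sign in with SSO.",
    "expenses": "Submit expense reports in the finance portal within 30 days.",
    "pto": "Request paid time off through the HR system at least two weeks ahead.",
}

def tokenize(text: str) -> set[str]:
    """Lowercased word set; a toy stand-in for embedding similarity."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, k: int = 2) -> list[str]:
    """IDs of the k documents sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(DOCS, key=lambda doc_id: len(q & tokenize(DOCS[doc_id])), reverse=True)
    return ranked[:k]

def build_prompt(question: str, k: int = 2) -> str:
    """Ground the answer in retrieved internal docs rather than the model's training data."""
    context = "\n".join(f"[{doc_id}] {DOCS[doc_id]}" for doc_id in retrieve(question, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

print(build_prompt("How do I connect to the VPN?", k=1))
```

The instruction to answer only from the supplied context is what lets a general-purpose model answer company-specific questions it was never trained on.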

What surprised me was how many enterprises are still running older OpenAI models. Fewer than half of OpenAI's enterprise customers have deployed GPT-5.2 in production. That's not because GPT-5.2 is worse; it's because migration creates friction. They've optimized prompts, tuned token usage, and built deployment infrastructure around GPT-4. Switching means revalidating everything.

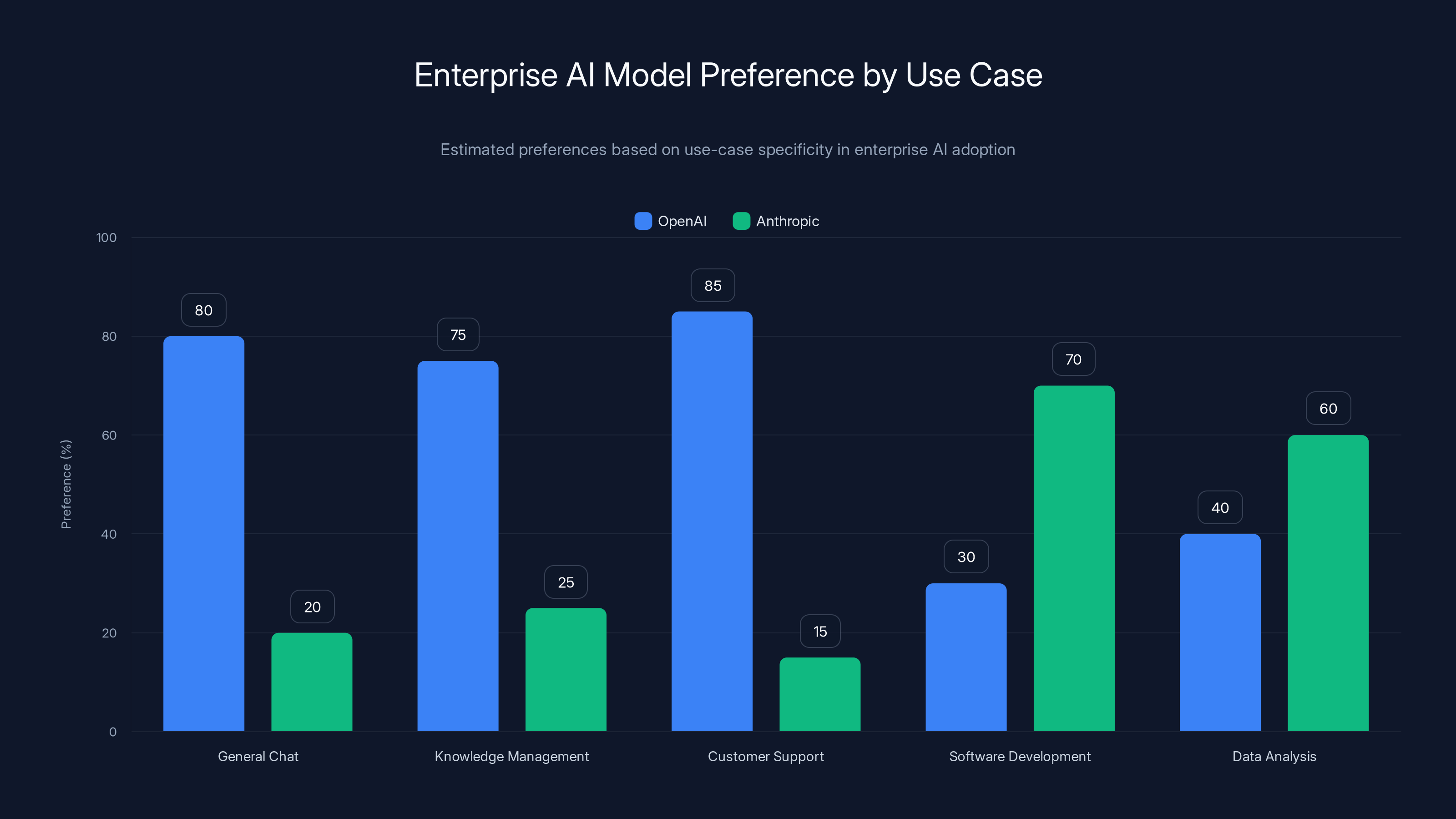

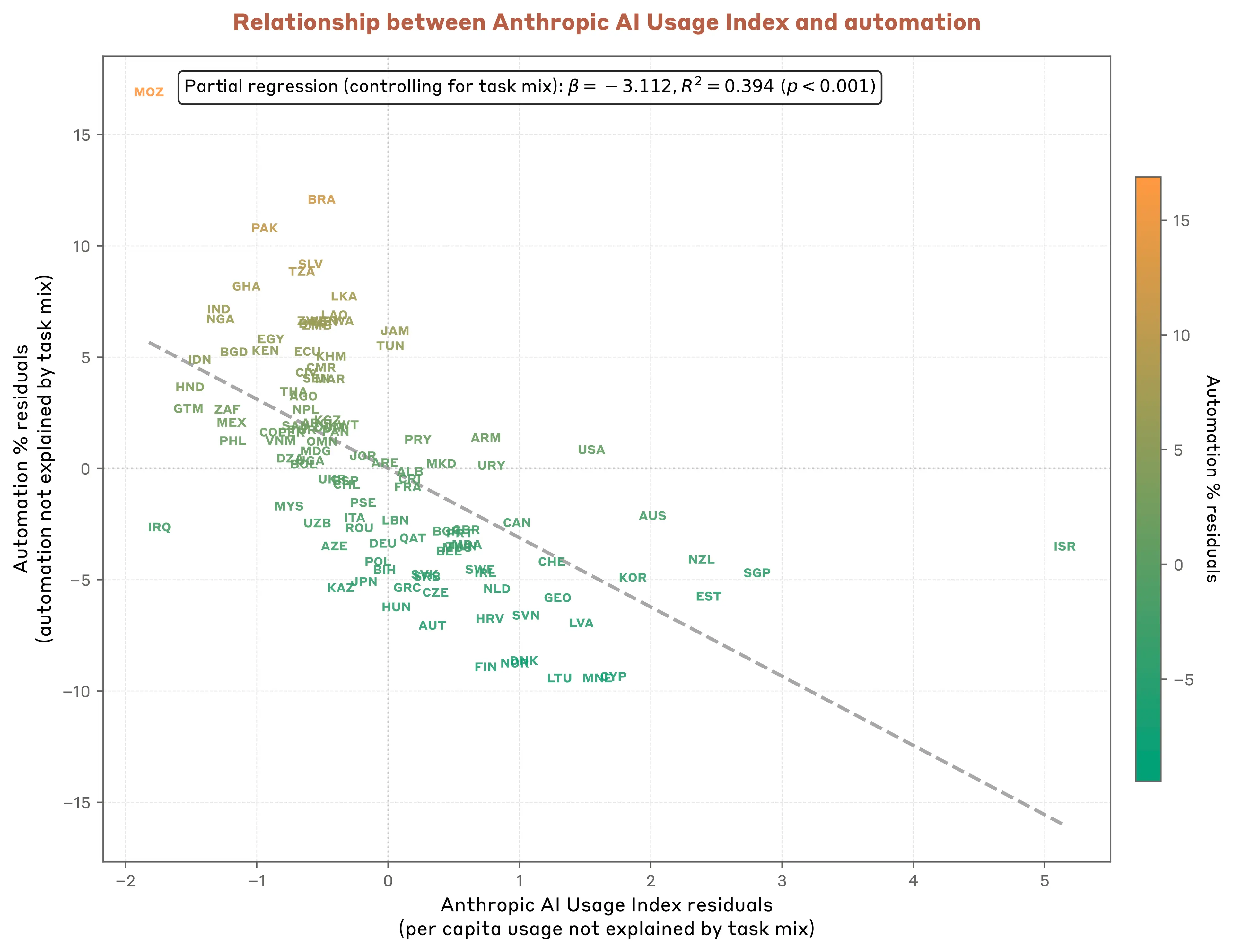

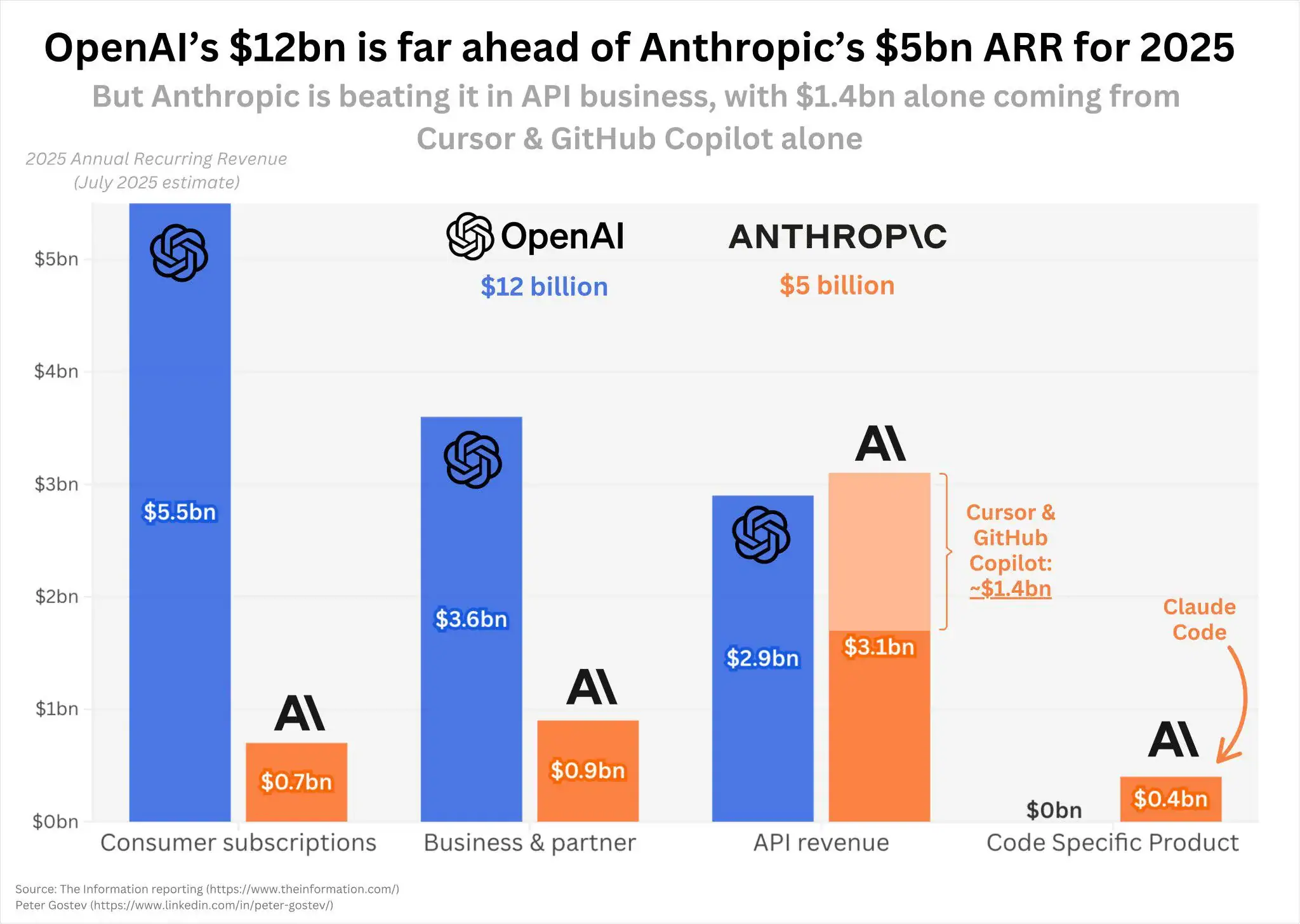

Enterprises prefer OpenAI for general chat and customer support, while Anthropic is favored for software development and data analysis due to its higher accuracy. (Estimated data)

Anthropic's Rapid Rise: The Developer's Choice

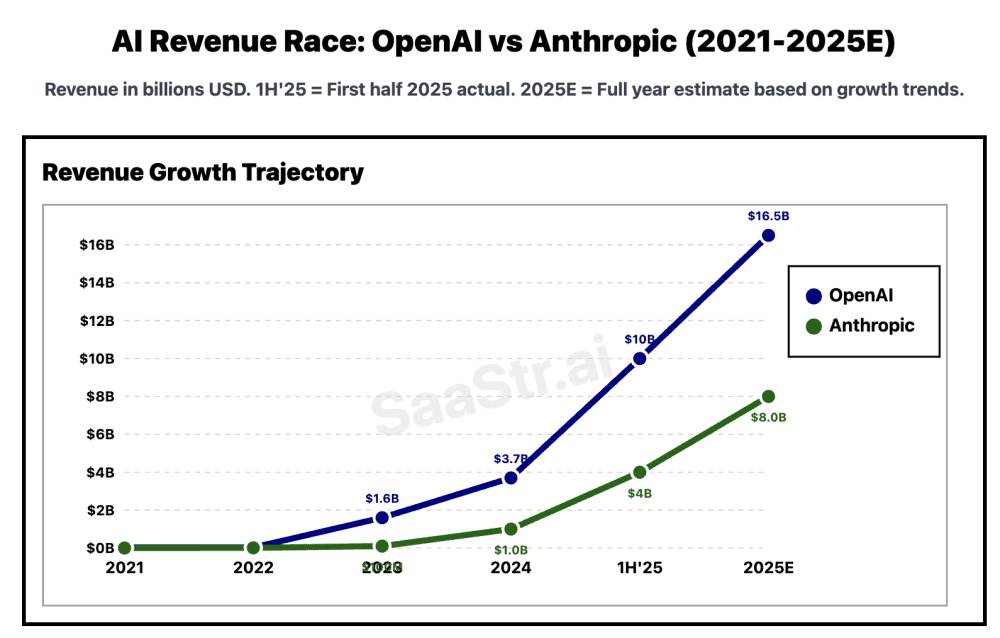

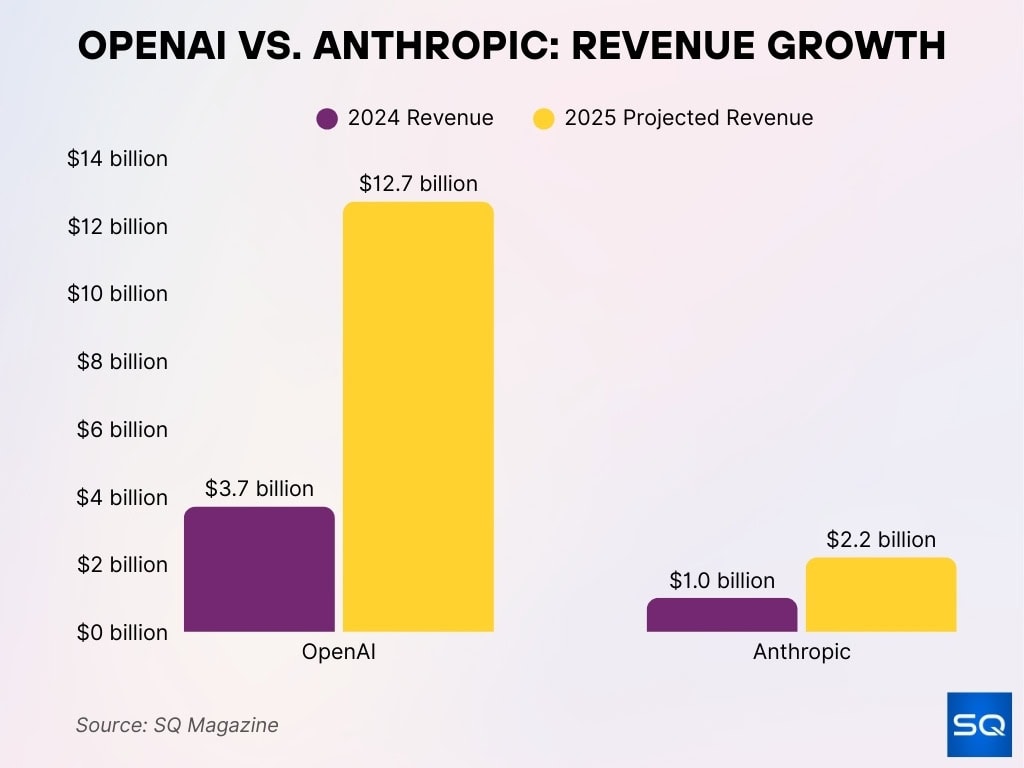

Here's where things get interesting. Anthropic wasn't on most enterprises' radar two years ago. Now it's the second most popular choice, and the data shows it's winning in specific, high-value domains.

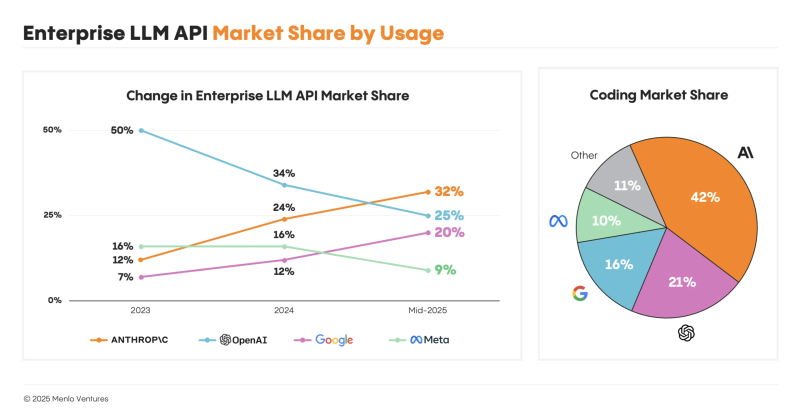

Anthropic's growth started picking up in mid-2025, and the reasons are concrete. CIOs report that Anthropic models perform better at software development tasks and data analysis. That's not marketing speak—that's coming from engineering teams who actually ran benchmarks.

The numbers back this up: 75% of Anthropic's enterprise customers are running Claude Sonnet 4.5 or Opus 4.5. That's a much higher adoption rate for newer models than in OpenAI's customer base. This tells you something important: Anthropic's customers believe the newer models are worth upgrading to.

Why Developers Prefer Claude for Coding Tasks

When you talk to engineering leads about why they're choosing Anthropic for code generation, refactoring, and technical analysis, three things consistently come up:

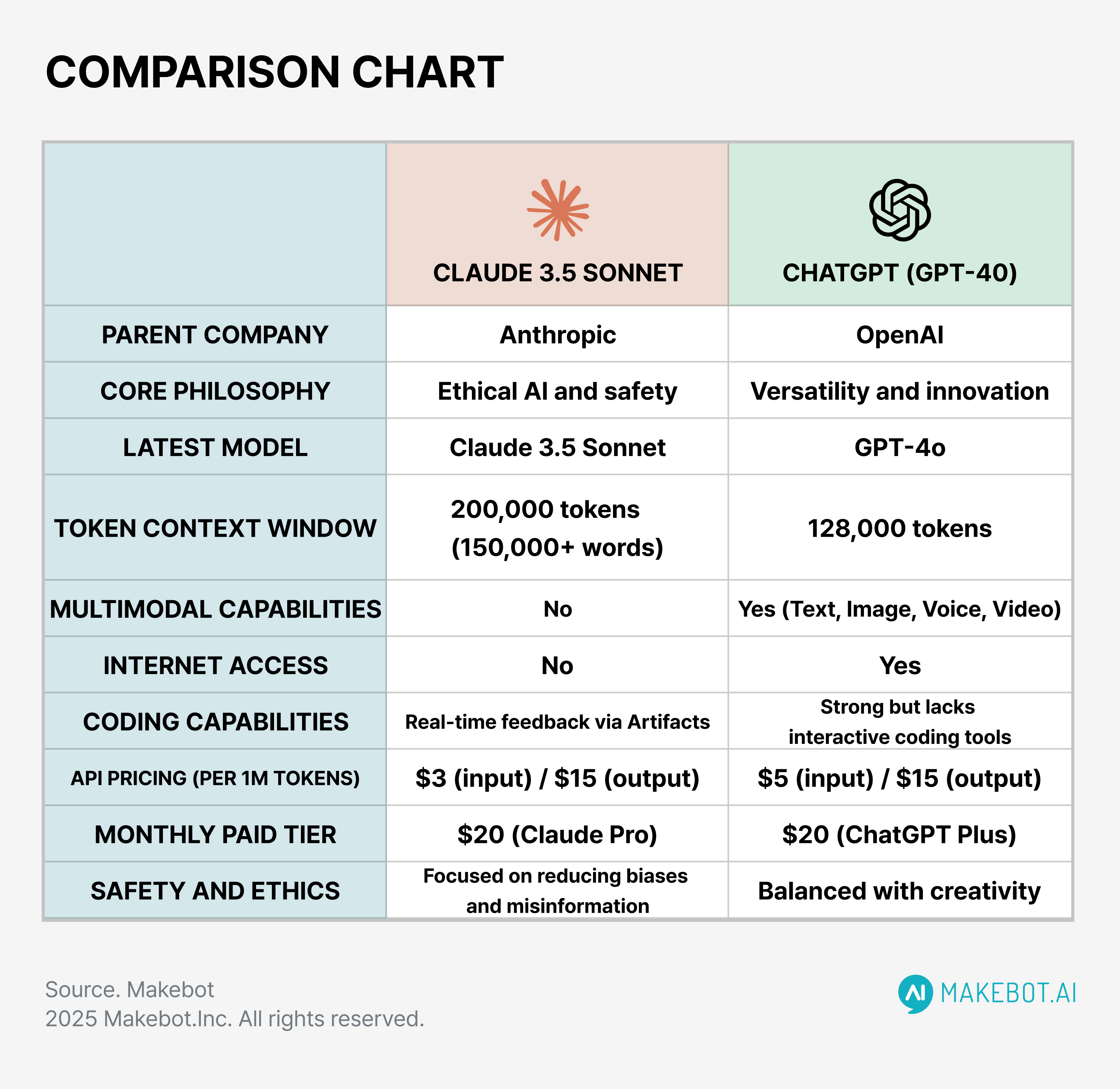

1. Better context handling: Claude models can ingest large portions of a codebase without losing coherence. For large-system refactoring, you can feed thousands of lines of code into Claude and ask it to identify patterns or suggest architectural improvements. The model maintains context quality across longer sequences.

2. Stronger reasoning about constraints: Building software isn't about writing code in isolation. It's about understanding dependencies, trade-offs, and architectural patterns. Claude's reasoning capabilities excel here. Engineers report fewer hallucinations about API availability and library versions compared to other models.

3. Better code quality output: Anthropic invests heavily in constitutional AI training, which has the side effect of producing code that's more aligned with common patterns. The model is less likely to suggest overly clever solutions that work in isolation but break in real systems.
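Even long-context models have a finite window, so feeding "thousands of lines" into Claude in practice means deciding which files fit. A minimal sketch, assuming the rough rule of thumb of about four characters per token (real tokenizers vary, so use the provider's token counter for actual budgets); `SourceFile` and `pack_files` are hypothetical helpers that pack whole files in priority order and report what was left out.

```python
from dataclasses import dataclass

CHARS_PER_TOKEN = 4  # Rough heuristic (assumption); real tokenizers differ by language and content.

@dataclass
class SourceFile:
    path: str
    text: str

def estimate_tokens(text: str) -> int:
    """Approximate token count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)

def pack_files(files: list[SourceFile], budget_tokens: int) -> tuple[str, list[str]]:
    """Concatenate whole files in priority order until the token budget is used.

    Files that would overflow the budget are skipped and reported, rather than
    silently truncated mid-file.
    """
    parts: list[str] = []
    skipped: list[str] = []
    used = 0
    for f in files:
        chunk = f"### {f.path}\n{f.text}\n"  # Path header so the model can cite files.
        cost = estimate_tokens(chunk)
        if used + cost > budget_tokens:
            skipped.append(f.path)
            continue
        parts.append(chunk)
        used += cost
    return "".join(parts), skipped

# Hypothetical files: the large one doesn't fit, the two small ones do.
files = [SourceFile("a.py", "x" * 40), SourceFile("b.py", "y" * 400), SourceFile("c.py", "z" * 40)]
packed, skipped = pack_files(files, budget_tokens=30)
print(skipped)  # ['b.py']
```

Reporting skipped files matters: a model asked about architecture while silently missing a key module is a common source of confident but wrong answers.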

Data Analysis: Where Claude Outperforms

For data analysis tasks, the advantage is even clearer. When enterprises use AI to analyze datasets, identify anomalies, or generate SQL queries, Claude performs better because it maintains stronger logical consistency through multi-step reasoning.

One CTO told me: "We tested both OpenAI and Claude on our data warehouse queries. Claude generated correct SQL 94% of the time. OpenAI was at 73%. That difference matters when you're running queries against production data."

This is why Anthropic is seeing traction in analytics, financial modeling, and business intelligence teams. These use cases require not just language understanding but logical precision.
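Figures like "94% correct SQL" depend on how correctness is scored. One common approach, sketched here under stated assumptions, is execution accuracy: run each generated query and a hand-written reference query against the same test data and compare the result sets. The table, the test cases, and the `execution_accuracy` helper are hypothetical, and the "generated" SQL is hard-coded where a model call would go.

```python
import sqlite3
from collections import Counter

def make_db() -> sqlite3.Connection:
    """Fresh in-memory database standing in for a warehouse test snapshot."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(1, "EU", 120.0), (2, "US", 80.0), (3, "EU", 40.0)],
    )
    return conn

def run(conn: sqlite3.Connection, sql: str):
    """Result rows as a multiset (row order ignored), or None if the query fails."""
    try:
        return Counter(conn.execute(sql).fetchall())
    except sqlite3.Error:
        return None  # Syntax errors, unknown columns, etc. count as misses.

def execution_accuracy(cases: list[tuple[str, str]]) -> float:
    """Share of (generated_sql, reference_sql) pairs whose results match."""
    hits = 0
    for generated, reference in cases:
        # Separate fresh databases, so a destructive query can't skew the comparison.
        expected = run(make_db(), reference)
        got = run(make_db(), generated)
        if got is not None and got == expected:
            hits += 1
    return hits / len(cases)

# Hypothetical cases; in practice the first element comes from the model under test.
CASES = [
    ("SELECT region, SUM(amount) FROM orders GROUP BY region",
     "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"),
    ("SELECT SUM(amount) FROM orders WHERE region = 'EU'",
     "SELECT SUM(amount) FROM orders WHERE region = 'EU'"),
    ("SELECT SUM(amount) FROM orders",  # missing the region filter
     "SELECT SUM(amount) FROM orders WHERE region = 'US'"),
    ("SELECT total FROM orders",  # nonexistent column
     "SELECT amount FROM orders"),
]
print(execution_accuracy(CASES))  # 0.5
```

Comparing results rather than SQL text means a differently written but equivalent query still counts as correct, which is closer to what a data team actually cares about.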

The Trust Factor

Anthropic has positioned itself around AI safety from day one. For enterprises dealing with regulated data or risk-sensitive applications, this matters. Claude's training includes explicit focus on reducing harmful outputs and maintaining transparency about model limitations.

This isn't just ethical positioning. It's practical. When you're using AI in financial services, healthcare, or legal contexts, compliance teams need to understand how the model makes decisions. Anthropic publishes detailed documentation on how their models behave, which makes compliance easier.

The Multi-Model Enterprise Strategy

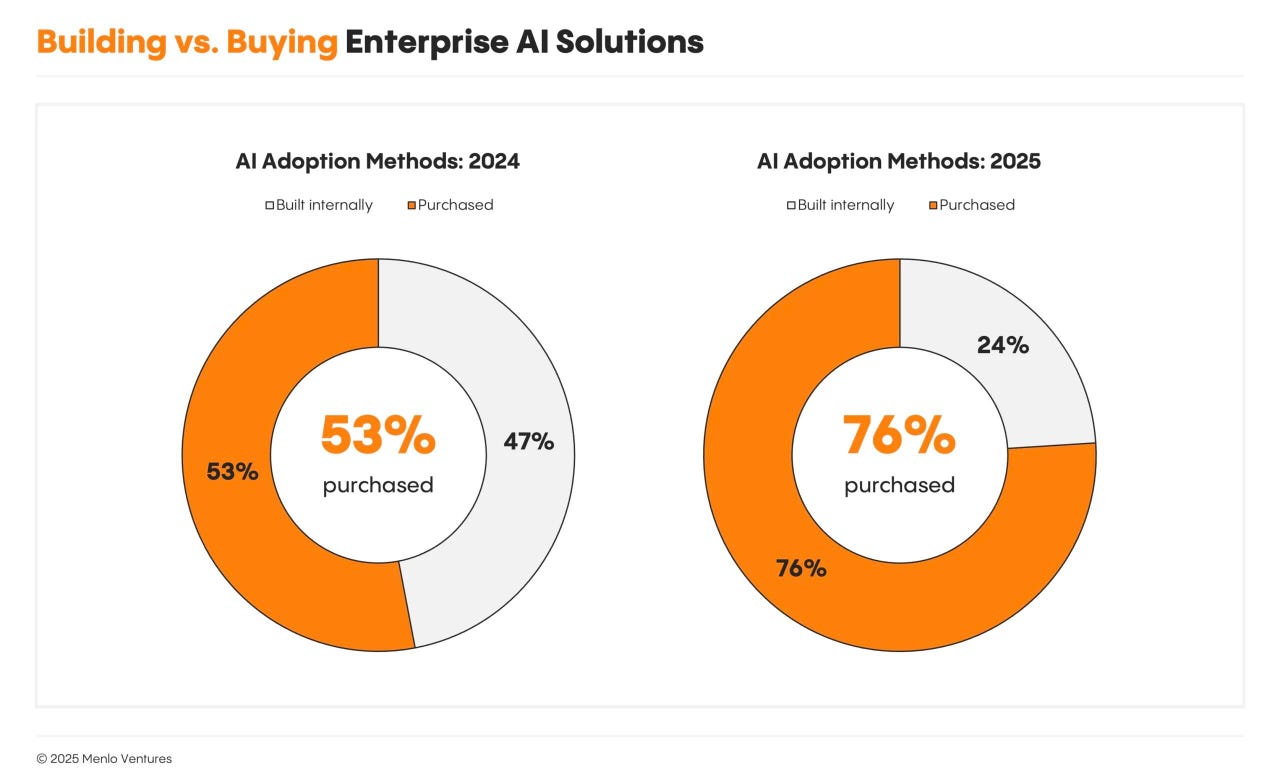

This is the most important shift happening right now, and most people are missing it.

81% of Global 2000 companies now use three or more AI model families at testing or production stages. One year ago, that number was 68%. The trajectory is clear: enterprises are moving away from single-vendor strategies.

Why? Because the cost of optimization exceeds the cost of deployment diversity.

Let me explain. If you commit entirely to one model provider, you need to optimize everything for that provider. Your prompts, your fine-tuning, your infrastructure, your token budgeting—everything is tailored to one company's API.

But if you deploy multiple models, you lose some optimization but you gain optionality. You can route different request types to different models. You can switch providers if one starts underperforming or raises prices dramatically. You can A/B test model performance in production with real users.

How Enterprises Are Organizing Multi-Model Deployments

The enterprises doing this well follow a specific pattern:

Tier 1: General-purpose tasks (OpenAI): Customer support, internal chatbots, content summarization. These are high-volume, low-complexity tasks where OpenAI's general strength makes sense.

Tier 2: Specialized tasks (Anthropic, sometimes Google): Code generation, data analysis, complex reasoning. Route these to models that excel at the specific task type.

Tier 3: Experimental (new providers): Keep one slot open for testing emerging models. This year, that might be Mistral or a specialized fine-tuned model.

This architecture buys you flexibility. If OpenAI's pricing increases, you can shift volume to Anthropic without rebuilding your entire system. If Anthropic releases a new model that's better for your specific use case, you can adopt it incrementally.
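The tiered pattern can be expressed as a routing table that application code consults instead of hard-coding a vendor. A minimal sketch: the task categories mirror the three tiers, but the provider labels and model identifiers are placeholders, not real model names, and `route` is a hypothetical helper.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Route:
    provider: str  # Placeholder vendor label
    model: str     # Placeholder identifier, not a real model name

# Hypothetical routing table mirroring the three tiers.
ROUTES: dict[str, Route] = {
    "support_chat": Route("openai", "general-model"),       # Tier 1
    "summarization": Route("openai", "general-model"),      # Tier 1
    "code_generation": Route("anthropic", "coding-model"),  # Tier 2
    "sql_generation": Route("anthropic", "coding-model"),   # Tier 2
    "experimental": Route("newvendor", "trial-model"),      # Tier 3
}

def route(task_type: str, routes: dict[str, Route] = ROUTES) -> Route:
    """Look up the model for a task type, falling back to the general-purpose tier."""
    return routes.get(task_type, routes["support_chat"])

print(route("code_generation"))  # Route(provider='anthropic', model='coding-model')

# If pricing changes, shift a workload by editing configuration, not application code.
repriced = {**ROUTES, "summarization": Route("anthropic", "coding-model")}
print(route("summarization", repriced).provider)  # anthropic
```

Keeping the table in configuration is what makes "shift volume without rebuilding" a one-line change; the incremental adoption described above becomes an edit to a single entry.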

The Cost Dynamics of Multi-Model Strategies

You'd think maintaining multiple integrations would be expensive. Technically, yes. But the actual cost math works in favor of multi-model approaches for enterprises at scale.

Let's say you process 10 million API calls per month across all your AI workloads. If you optimize everything for one model, you might spend $150K monthly. But you're also paying a consistency tax: you're forcing all workloads through a model that isn't ideal for all of them.

With a multi-model approach, you might spend:

- $80K on OpenAI (general-purpose, high volume)

- $50K on Anthropic (specialized tasks, better performance)

- $5K on experimentation

Total: $135K—actually cheaper than the single-model approach, plus you get better overall performance and flexibility.
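The arithmetic behind that comparison, reproduced with the article's illustrative monthly figures (example numbers, not real vendor prices):

```python
CALLS_PER_MONTH = 10_000_000

single_model = 150_000  # Illustrative: every workload on one provider
multi_model = {"openai": 80_000, "anthropic": 50_000, "experimentation": 5_000}  # Illustrative split

multi_total = sum(multi_model.values())
savings = single_model - multi_total

print(f"Multi-model total: ${multi_total:,}")                  # Multi-model total: $135,000
print(f"Savings: ${savings:,} ({savings / single_model:.0%})")  # Savings: $15,000 (10%)
print(f"Per call: ${single_model / CALLS_PER_MONTH:.4f} vs ${multi_total / CALLS_PER_MONTH:.4f}")
```

This comparison leaves out the engineering cost of maintaining the extra integrations; that overhead has to stay below the $15K monthly difference for the multi-model setup to come out ahead on cost alone.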

Anthropic's Claude models outperform OpenAI's models in context handling, reasoning about constraints, and code quality output, making them a preferred choice among developers (Estimated data).

Use Case Specificity: The Real Driver of Adoption

Here's what most analysts get wrong about the enterprise AI market: they talk about "OpenAI vs Anthropic" as if enterprises are choosing a primary vendor.

That's not how it works anymore.

Enterprises choose models for specific use cases, then aggregate those choices into a portfolio. The organization doesn't say "we're an Anthropic shop." They say "we use Anthropic for software development and OpenAI for customer support."

This use-case-driven approach is why the data looks so interesting. CIOs prefer OpenAI for general chat, knowledge management, and customer support, but those are broad, low-specificity categories. When you get specific about the task, Anthropic often wins.

Software Development and Code Generation

The data here is clear: Anthropic is winning. Engineering leaders prefer Claude for code-related tasks because the model's reasoning capabilities produce fewer errors. When you're generating code that will actually run in production, an accuracy gap of roughly 20 percentage points matters immensely.

Enterprise engineering teams report using Claude for:

- Code refactoring and modernization

- Bug analysis and root cause investigation

- Database query generation

- Documentation generation from code

- Architectural review and decision support

Data Analysis and BI Tasks

For analytics and business intelligence, Anthropic's logical consistency provides an advantage. When your finance team asks an AI to analyze quarterly trends or forecast revenue, accuracy matters more than speed.

SQL query generation accuracy is where this shows up most. In enterprise testing, Claude generated SQL that worked on the first try 94% of the time, versus 73% for OpenAI. A 21-point gap may not sound dramatic, but consider the cost of errors in production databases: a single misguided DELETE query can consume more investigation time than 20 correct queries save.
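One common safeguard against that failure mode is to execute model-generated SQL on a connection that cannot write. A minimal sketch using SQLite's `query_only` pragma through Python's standard `sqlite3` module; a production warehouse would more likely use a read-only database role or replica, and the `run_generated_sql` helper and sample table are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance REAL)")
conn.executemany("INSERT INTO accounts (balance) VALUES (?)", [(100.0,), (250.0,)])
conn.commit()

# From here on, this connection rejects statements that would modify the database.
conn.execute("PRAGMA query_only = ON")

def run_generated_sql(sql: str):
    """Execute AI-generated SQL; writes raise an error instead of touching data."""
    try:
        return conn.execute(sql).fetchall()
    except sqlite3.DatabaseError as exc:
        return f"rejected: {exc}"

print(run_generated_sql("DELETE FROM accounts"))           # a "rejected: ..." message
print(run_generated_sql("SELECT COUNT(*) FROM accounts"))  # [(2,)]: data untouched
```

Enforcing read-only access at the connection level, rather than by inspecting the SQL text for keywords, also catches writes hidden inside more complex statements.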

Customer Support and Conversational AI

Here, OpenAI maintains the advantage. Its models excel at the constant micro-adjustments required for customer-facing chat: maintaining tone, understanding context, handling edge cases, and knowing when to escalate.

OpenAI's broader training on conversational data gives its models better intuition for human interaction. They're less likely to overcomplicate responses or get stuck in logical loops.

Knowledge Management and Retrieval

For internal knowledge systems (think intranet, documentation search, or employee Q&A), OpenAI's general strength is valuable. These systems need to handle a broad range of questions without being specialized for any one type.

OpenAI's models excel here because they've seen more diverse training data and can handle the weird, context-dependent questions that come from actual employees.

Google Gemini: The Third Player (With a Caveat)

Google's entering this market from a position of strength—they have distribution, cloud infrastructure, and a massive platform. But the adoption numbers tell a different story.

CIOs note that Gemini performs well across a broad range of tasks but lags in some coding use cases. This matters because coding is increasingly a key evaluation criterion. If a model can't handle your engineers' needs, executives are reluctant to sign enterprise contracts.

Google's advantage is infrastructure and integration. If you're already on Google Cloud, Workspace, and Android, adding Gemini makes sense economically. But that advantage only applies to a subset of enterprises.

Microsoft 365 Copilot: The Enterprise Favorite

Here's the thing that surprised me in the data: Microsoft 365 Copilot far outpaces Gemini for Workspace in enterprise adoption.

This is happening for a simple reason: Microsoft integrated AI directly into the products enterprises already use daily. You don't need to log into a separate interface or learn new workflows. You hit a button in Word, Outlook, or Teams, and AI is there.

Google is trying the same approach with Gemini for Workspace, but the adoption lag suggests they're either arriving too late or their integration isn't compelling enough yet. Enterprises have already optimized their workflows around Microsoft 365.

78% of enterprises use OpenAI models, but many also integrate Anthropic and Google Gemini for specific tasks. Estimated data for Anthropic, Google Gemini, and Others.

The Model Generation Question: Why Newer Isn't Always Better

One of the most interesting findings from the data is how unevenly model adoption is distributed across generations.

Only 46% of OpenAI's enterprise customers have GPT-5.2 in production, even though it is OpenAI's newest flagship model. Why the slow adoption?

Because enterprises have optimized for GPT-4.

They've built systems around GPT-4's capabilities, limitations, and quirks. Their prompts are tuned for it. Their cost calculations are based on it. Their compliance processes are validated for it. Switching to GPT-5.2 means re-validating everything.

For many enterprise use cases, GPT-4 is good enough. The incremental improvements from GPT-5.2 don't justify the migration cost.

Contrast this with Anthropic: 75% of their enterprise customers are running Sonnet 4.5 or Opus 4.5. That's dramatically higher adoption of newer models.

Why? Several reasons:

- Anthropic's customers adopted more recently, so they didn't have as much optimization debt around older models

- The performance improvements are more visible in the task categories where Anthropic dominates (code, analysis)

- Anthropic has positioned newer models as major upgrades, so there's perception of differentiation

This matters for the future. As both companies release new models, enterprises will be more willing to adopt Anthropic's improvements than OpenAI's simply because migration is easier.

Enterprise ROI Metrics: What Actually Matters

When enterprise leaders evaluate AI investments, they're measuring success through a specific lens. The data shows companies are still primarily focused on productivity and cost savings metrics.

But that's changing.

Four in five Global 2000 companies expect to break even or deliver higher ROI on their AI investments. That's a remarkably high confidence level. But more importantly, the metrics being tracked are diversifying.

Productivity Gains

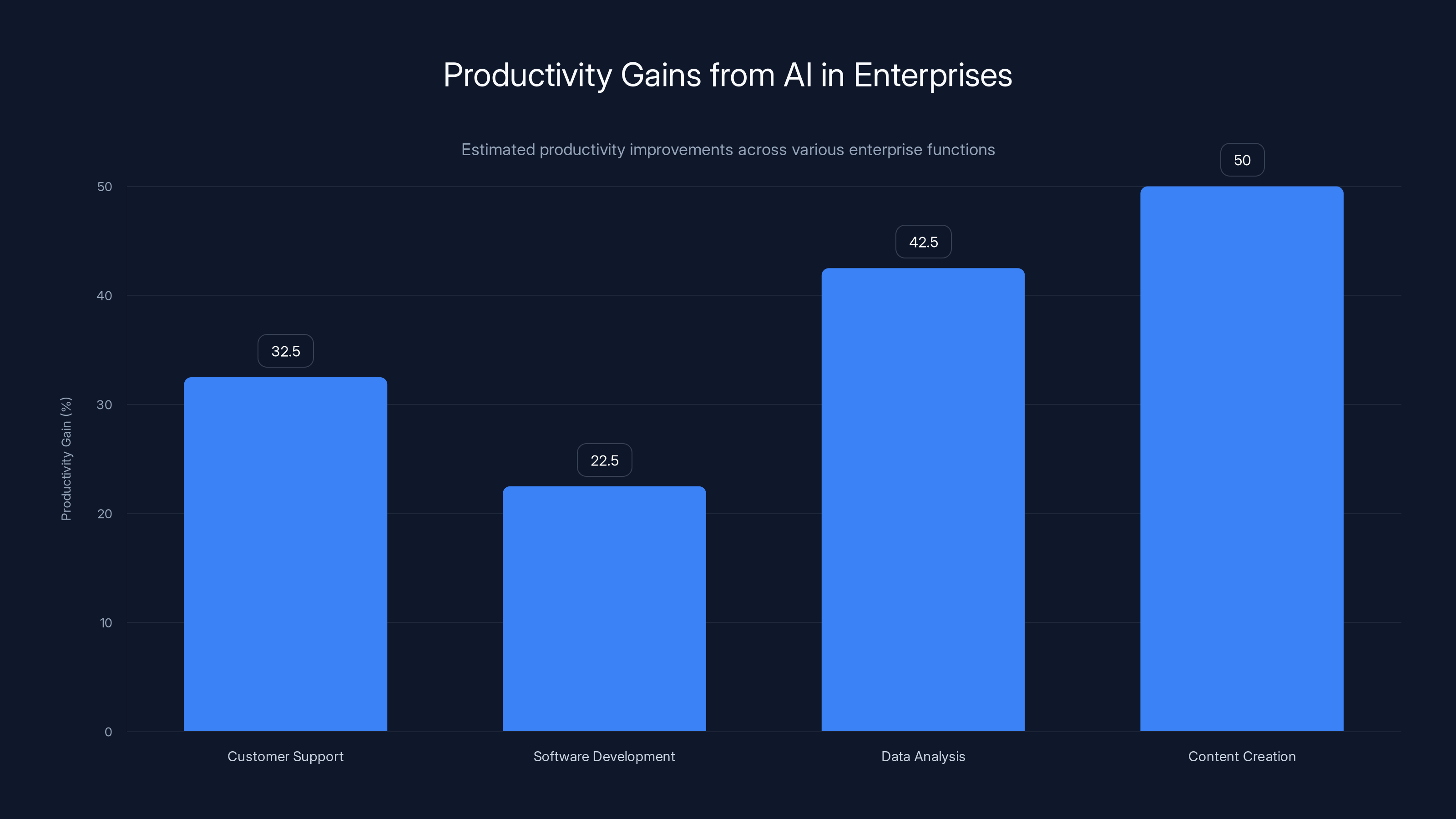

Most enterprises measure this first. It's easy to quantify: "We reduced time spent on task X by Y%." For customer support, this means faster ticket resolution. For code generation, this means faster feature development.

Typical productivity gains reported:

- Customer support: 25-40% reduction in average resolution time

- Software development: 15-30% faster code completion for new features

- Data analysis: 35-50% reduction in time to insight

- Content creation: 40-60% reduction in drafting time

These numbers matter because they directly impact headcount planning. If AI accelerates your support team's productivity by 30%, you need fewer support staff to handle the same volume.
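One subtlety in that headcount math: a 30% productivity gain does not mean 30% fewer staff. If each person handles 1.3 times the volume, the same workload needs 1/1.3 ≈ 77% of the original team, or about 23% fewer people. A quick check with a hypothetical 100-person team and a hypothetical `staff_needed` helper:

```python
import math

def staff_needed(current_staff: int, productivity_gain: float) -> int:
    """Headcount for the same volume when each person is `productivity_gain` more productive (0.30 = 30%)."""
    return math.ceil(current_staff / (1 + productivity_gain))

print(staff_needed(100, 0.30))  # 77, i.e. about 23% fewer people, not 30%
```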

Cost Savings

The second metric enterprises track is pure cost reduction. How much cheaper is it to run operations with AI?

For customer support, the math is straightforward: automated responses handle 40-60% of inbound queries. Each query resolved without human time is a cost saving.

But here's what enterprises often underestimate: the cost of incorrect AI responses. If an AI system confidently gives the wrong answer, you've created customer frustration and an escalation cost.
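A sketch of how that escalation cost can be folded into a savings estimate. The `net_monthly_savings` function and every input value are hypothetical placeholders to replace with your own numbers; the point is the structure: deflected queries save human handling cost, but each wrong answer adds an escalation cost, and the model is paid for on every query it attempts.

```python
def net_monthly_savings(
    queries: int,
    deflection_rate: float,       # share of queries the AI resolves without a human (e.g. 0.5)
    wrong_answer_rate: float,     # share of AI-resolved queries that were actually wrong
    human_cost_per_query: float,  # fully loaded cost of a human-handled query
    escalation_cost: float,       # cost of a wrong answer: re-handling plus goodwill
    ai_cost_per_query: float,     # model/API cost, paid on every query the AI attempts
) -> float:
    deflected = queries * deflection_rate
    gross = deflected * human_cost_per_query
    errors = deflected * wrong_answer_rate * escalation_cost
    ai_spend = queries * ai_cost_per_query
    return gross - errors - ai_spend

# Hypothetical inputs: same deployment, 5% vs 20% wrong answers.
print(f"{net_monthly_savings(100_000, 0.5, 0.05, 6.0, 15.0, 0.10):,.0f}")  # 252,500
print(f"{net_monthly_savings(100_000, 0.5, 0.20, 6.0, 15.0, 0.10):,.0f}")  # 140,000
```

With these made-up inputs, raising the wrong-answer rate from 5% to 20% removes nearly half of the net savings, which is why accuracy on the actual workload belongs in the ROI model.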

Employee and Customer Satisfaction (The New Metric)

This is what's starting to gain traction. Enterprises are realizing that productivity metrics don't capture the full picture.

Employee satisfaction with AI tools is becoming important because burnout from poorly implemented AI is real. If you automate the interesting part of a job and leave your employees with mundane oversight tasks, you're not improving anything; you're creating frustration.

Customer satisfaction with AI-assisted support is also being measured more carefully. Some customers prefer human support, and that preference affects retention.

Four in five companies expect to deliver ROI, but an increasing number are expanding their ROI definition beyond pure productivity or cost metrics to include quality and satisfaction measurements.

OpenAI leads the enterprise AI market with a 78% adoption rate among Global 2000 companies, far surpassing its closest competitors. Estimated data.

Reasoning Capabilities: The Next Frontier

One of the most significant themes in enterprise adoption is the focus on model reasoning capabilities rather than raw pattern matching.

Enterprises are discovering that what matters isn't how well a model can predict the next word. It's how well the model can work through a problem logically.

This is why Anthropic is gaining ground. Their models are explicitly trained for reasoning. When you ask Claude to debug a complex system or analyze multi-step processes, the model approaches it like a reasoner rather than a pattern matcher.

This has concrete benefits:

- Faster time to value: Less prompt engineering needed because the model understands what you're trying to accomplish

- Less manual validation: The model's reasoning is closer to correct the first time

- Higher trust: When the model explains its reasoning, you can verify whether it makes sense

OpenAI's models are powerful, but they're optimized for different things (general capability, broad knowledge). That's why enterprises are discovering they need multiple models.

The Integration Complexity Challenge

One thing that's not discussed enough in the enterprise AI conversation is the complexity of running multiple models at scale.

It's not just about choosing which model to use. It's about orchestrating requests, tracking costs across providers, managing authentication, handling failures, and maintaining consistency.

Enterprises managing multiple model deployments typically implement:

- A model abstraction layer: A wrapper API that handles routing decisions without the application knowing which underlying model is being used

- Cost tracking per model: Understanding which models are actually providing value at what cost

- Monitoring and alerting: Detecting when a model's performance degrades or costs spike

- Failover logic: Automatically switching to a backup model if the primary option is unavailable

For companies at scale, this infrastructure becomes increasingly important. The largest enterprises are building specialized teams just to manage their AI model portfolio.
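The abstraction layer, per-model cost tracking, and failover items can be combined in a small class. A minimal sketch, assuming each provider is wrapped as a plain Python callable (real SDK clients would sit behind these wrappers); `ModelGateway`, the provider names, and the per-call costs are hypothetical.

```python
from collections import defaultdict
from typing import Callable

Provider = Callable[[str], str]  # Hypothetical wrapper: prompt in, completion out

class ModelGateway:
    """Applications call complete(); the gateway picks a provider, fails over, and tracks spend."""

    def __init__(self, providers: list[tuple[str, Provider, float]]):
        # (name, callable, estimated cost per call), in order of preference.
        self.providers = providers
        self.spend: dict[str, float] = defaultdict(float)
        self.failures: dict[str, int] = defaultdict(int)

    def complete(self, prompt: str) -> tuple[str, str]:
        errors = []
        for name, call, cost in self.providers:
            try:
                result = call(prompt)
            except Exception as exc:  # Timeouts, rate limits, outages: try the next provider.
                self.failures[name] += 1
                errors.append(f"{name}: {exc}")
                continue
            self.spend[name] += cost
            return name, result
        raise RuntimeError("all providers failed: " + "; ".join(errors))

def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary unavailable")  # Simulated outage

def backup(prompt: str) -> str:
    return f"answer to: {prompt}"

gateway = ModelGateway([("primary", flaky_primary, 0.02), ("backup", backup, 0.01)])
print(gateway.complete("Summarize this ticket"))   # ('backup', 'answer to: Summarize this ticket')
print(dict(gateway.spend), dict(gateway.failures))  # {'backup': 0.01} {'primary': 1}
```

Because the application only sees `complete()`, adding a provider or reordering preferences is a change to the gateway's constructor arguments, and the failure counters double as a crude signal for the monitoring item on the list.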

Enterprises report significant productivity gains from AI, with content creation seeing the highest improvement at an average of 50%. Estimated data based on typical reported ranges.

Security and Compliance: The Unglamorous Reality

When I talk to enterprise security teams about AI adoption, the conversation is usually about data leakage risk, not capabilities.

Every model vendor claims to not train on your data. But enterprises need to verify this at a technical level. They need to audit the systems, verify data handling, and ensure compliance with regulations like HIPAA or GDPR.

Both OpenAI and Anthropic have enterprise data handling options, but the verification process is rigorous. This is where many smaller AI providers lose out: they can't scale compliance operations fast enough.

For regulated industries (healthcare, financial services, legal), this becomes a major factor in model selection. It's not about capabilities anymore. It's about which vendor has the compliance infrastructure your industry requires.

Anthropic has positioned itself well here. Its focus on AI safety and transparency extends to compliance. Healthcare enterprises specifically cite Anthropic's compliance capabilities as a reason for adoption.

The Emerging Model Landscape

While OpenAI and Anthropic dominate enterprise adoption, the landscape is fracturing for specialized use cases.

Enterprises are experimenting with:

- Specialized fine-tuned models: Running custom-trained variants for domain-specific tasks

- Open-source models: Mistral, Llama variants running locally or on dedicated infrastructure

- Niche vendors: Focused players for specific industries (e.g., healthcare, legal)

The multi-model strategy is actually enabling this diversity. Because enterprises aren't locked into a single vendor, they can afford to experiment.

One CTO told me: "We run OpenAI for customer chat, Anthropic for internal engineering tasks, a fine-tuned open model for our proprietary analysis, and we're testing a new vendor's legal document analyzer. No single vendor could win all those evaluations."

This fragmentation is healthy for innovation. It prevents lock-in and forces vendors to compete on actual capabilities rather than network effects.

What's Missing From the Current Adoption Data

The data from enterprise adoption surveys gives us a clear picture of what's happening, but there are important gaps.

Actual task-level performance data is sparse. We know enterprises are choosing different models for different use cases, but detailed benchmarks on real enterprise workloads are rare. Most testing happens on public benchmarks that don't reflect actual production use.

Cost per task is also underreported. Enterprises know their total spend with each vendor, but understanding cost per use case is harder. This matters because it drives real financial decisions.

Satisfaction with vendor support should be measured but often isn't. If Anthropic's enterprise support is better than OpenAI's, that should be a decision factor. But we don't see this in the public data.

Switching costs are real but invisible. How much would it cost to migrate from OpenAI to Anthropic? Enterprises don't talk about this, but it heavily influences their multi-model strategy.

The Competitive Dynamics Ahead

Here's what I think happens next based on the data and the current trajectory.

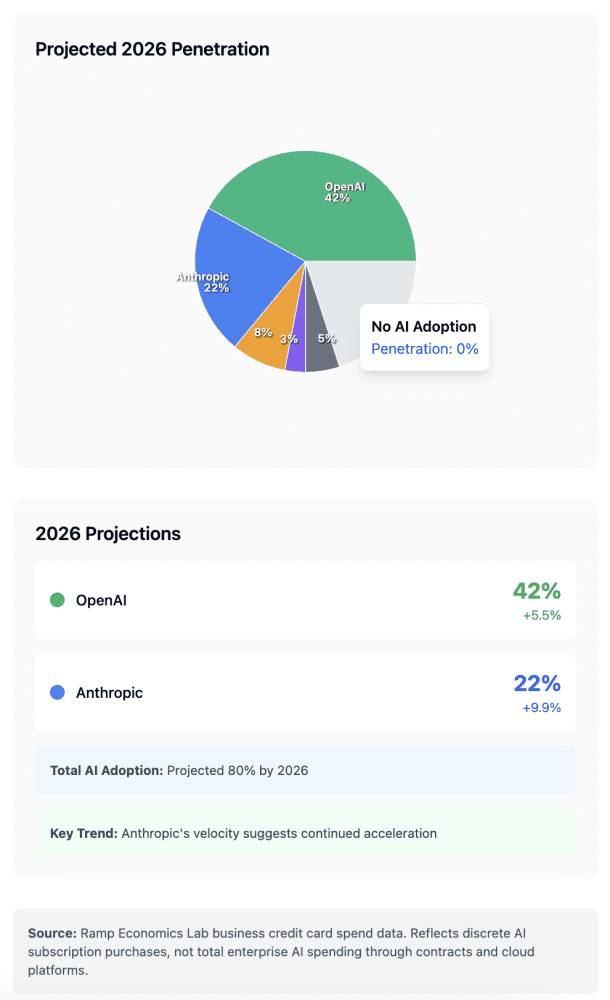

OpenAI maintains leadership in volume but loses percentage market share as Anthropic gains. By 2026, Anthropic might reach 40-50% adoption among Global 2000 companies. Not because OpenAI gets worse, but because Anthropic's specialization becomes increasingly valuable.

Multi-model strategies become standard practice, not exceptional. By 2027, enterprises that don't run multiple models will be the outliers. The infrastructure to manage this will commoditize.

Specialized models emerge as second-class citizens. For most enterprises, it's not worth maintaining a specialized fine-tuned model unless you have truly unique data or requirements. The major vendors will keep innovating faster than custom models can compete.

Cost becomes the primary competitive lever. Capabilities plateau as both OpenAI and Anthropic approach the frontier of what's possible. The differentiation shifts to cost per token and ability to handle large-scale deployments efficiently.

Best Practices for Enterprise Adoption

Based on what enterprises are actually doing successfully, here are concrete practices that work.

Start With a Pilot Program

Don't roll out AI across your entire organization. Start with 2-3 specific use cases and test thoroughly. This lets you understand costs, performance, and integration challenges before scaling.

Measure Baselines First

Before deploying AI, measure your current performance on the task you're automating. Time spent, error rates, cost, customer satisfaction. After deployment, measure the same things. The delta is your actual ROI.
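Computing that delta is simple once both measurements exist. A minimal sketch with hypothetical metric names and made-up values; note that for time, error rate, and cost, a decrease is the improvement.

```python
# Hypothetical measurements for the same support workflow, before and after AI.
baseline = {"minutes_per_ticket": 18.0, "error_rate": 0.040, "cost_per_ticket": 9.50, "csat": 4.1}
after_ai = {"minutes_per_ticket": 12.6, "error_rate": 0.035, "cost_per_ticket": 7.20, "csat": 4.0}

def relative_change(before: dict[str, float], after: dict[str, float]) -> dict[str, float]:
    """Percent change per metric; negative means the metric went down."""
    return {k: (after[k] - before[k]) / before[k] * 100 for k in before}

for metric, pct in relative_change(baseline, after_ai).items():
    print(f"{metric:>20}: {pct:+.1f}%")
```

In this made-up example, handling time drops by 30% while customer satisfaction dips slightly, the kind of trade-off that only shows up when satisfaction is part of the baseline.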

Invest in Abstraction Early

When you're running multiple models, the cost of switching vendors or adding new models rises quickly without a proper abstraction layer, because every application that calls a provider directly has to change. Build this first, before you need it.

Monitor Continuously

AI systems degrade in performance over time as data distributions change. Implement monitoring that alerts you when model quality drops or costs spike unexpectedly.
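A minimal sketch of that alerting logic, assuming you already score a sample of responses as correct or incorrect (through human review or an automated check) and log each request's cost. The `QualityMonitor` class, window size, and thresholds are hypothetical defaults to tune against your own baseline.

```python
from collections import deque

class QualityMonitor:
    """Rolling-window check of response quality and cost against a measured baseline."""

    def __init__(self, baseline_accuracy: float, baseline_cost: float, window: int = 200,
                 max_accuracy_drop: float = 0.05, max_cost_ratio: float = 1.5):
        self.baseline_accuracy = baseline_accuracy
        self.baseline_cost = baseline_cost
        self.max_accuracy_drop = max_accuracy_drop  # Hypothetical: alert if 5+ points below baseline
        self.max_cost_ratio = max_cost_ratio        # Hypothetical: alert if 1.5x baseline cost
        self.results: deque[tuple[bool, float]] = deque(maxlen=window)

    def record(self, correct: bool, cost: float) -> list[str]:
        """Add one scored request and return any alerts for the current window."""
        self.results.append((correct, cost))
        if len(self.results) < self.results.maxlen:
            return []  # Not enough data yet to judge.
        accuracy = sum(ok for ok, _ in self.results) / len(self.results)
        avg_cost = sum(c for _, c in self.results) / len(self.results)
        alerts = []
        if accuracy < self.baseline_accuracy - self.max_accuracy_drop:
            alerts.append(f"quality drop: {accuracy:.1%} vs baseline {self.baseline_accuracy:.1%}")
        if avg_cost > self.baseline_cost * self.max_cost_ratio:
            alerts.append(f"cost spike: ${avg_cost:.4f}/request vs baseline ${self.baseline_cost:.4f}")
        return alerts

# Hypothetical degraded window: 7 of 10 correct, at twice the baseline cost.
monitor = QualityMonitor(baseline_accuracy=0.90, baseline_cost=0.01, window=10)
alerts = []
for i in range(10):
    alerts = monitor.record(correct=i < 7, cost=0.02)
print(alerts)
```

A rolling window reacts to recent drift without being dominated by months of older, healthy traffic; the window size trades detection speed against false alarms.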

Plan for Version Upgrades

When new model versions release, have a process for evaluating them, testing them in production with real users, and rolling them out gradually. Don't upgrade everything at once.
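Gradual rollouts are often implemented by deterministically bucketing users, so each user consistently sees either the current or the candidate model while the percentage ramps up. A minimal sketch with hypothetical model labels and helper names; hashing the user ID, rather than sampling randomly per request, keeps a given user's experience stable between requests.

```python
import hashlib

def bucket(user_id: str, salt: str = "model-upgrade") -> int:
    """Map a user to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % 100

def pick_model(user_id: str, rollout_percent: int,
               old: str = "current-model", new: str = "candidate-model") -> str:
    """Send roughly `rollout_percent`% of users to the new model version (labels are placeholders)."""
    return new if bucket(user_id) < rollout_percent else old

users = [f"user-{i}" for i in range(1000)]
share = sum(pick_model(u, 10) == "candidate-model" for u in users) / len(users)
print(f"{share:.1%} of users on the candidate model")  # should be close to 10%
```

Because buckets are stable, raising the rollout from 10% to 25% keeps the original 10% on the new model and adds more users, rather than reshuffling everyone; a different salt per upgrade avoids always testing on the same users.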

Build Human Oversight Into Critical Paths

For high-stakes decisions (financial, legal, health-related), maintain human review even after AI handles the initial analysis. AI accelerates the process; it shouldn't remove human judgment for risky decisions.

The Broader Enterprise AI Strategy

What's happening in enterprise AI adoption right now isn't about choosing a winner in the OpenAI versus Anthropic competition. It's about enterprises becoming sophisticated consumers of AI.

They're moving away from "what's the best AI model" and toward "what models do we need for our specific business." This is a maturing market. Early adopters picked one vendor and rode with it. Mature enterprises are building portfolios.

This shift creates opportunity for multiple vendors. It also creates complexity. Infrastructure for managing multiple models becomes important. Specialized models for specific industries become valuable. Support and compliance capabilities become competitive advantages.

The winner isn't OpenAI or Anthropic. It's the enterprise that figures out how to orchestrate these tools effectively. And that's a conversation about your strategy, not about the raw capabilities of any single model.

FAQ

What percentage of enterprises use OpenAI models?

According to recent data from Global 2000 company CIOs, 78% of enterprises are using OpenAI models for production workloads. However, this doesn't mean 78% use only OpenAI; most of these enterprises are part of multi-model strategies where they also use competing models from Anthropic and Google for specific use cases.

Why are enterprises using multiple AI models instead of just one?

Enterprises use multiple models because different models excel at different tasks. 81% of Global 2000 companies now deploy three or more AI model families because the cost of optimizing every workload for a single model exceeds the cost of managing multiple models. OpenAI is better for customer support; Anthropic excels at code generation and data analysis; Google Gemini has its own strengths. This multi-model approach gives enterprises flexibility and better performance across their entire portfolio of AI applications.

Is Anthropic gaining market share from Open AI?

Yes, Anthropic is gaining ground, particularly in specific high-value use cases. 75% of Anthropic's enterprise customers are running its newer models (Sonnet 4.5 or Opus 4.5), and CIOs report that Anthropic models outperform OpenAI on software development and data analysis tasks. However, OpenAI still dominates overall adoption. The market is expanding rather than consolidating, so Anthropic's gains don't necessarily come at OpenAI's direct expense; both vendors are growing with the overall market.

What factors drive enterprise model selection?

Use case specificity is the primary driver. CIOs choose models based on specific performance characteristics: OpenAI for general chat and customer support, Anthropic for code generation and reasoning tasks, Google Gemini for integration with existing Google Cloud infrastructure. Secondary factors include cost, compliance capabilities, vendor support, and integration with existing enterprise systems. Brand loyalty plays a much smaller role than capability matching.

Should enterprises upgrade to the latest model versions immediately?

No. Most successful enterprises take a cautious approach to model version upgrades. Only 46% of OpenAI's customers have upgraded to GPT-5.2, its latest model, because migration costs are substantial. You need to re-test prompts, re-validate compliance, update cost calculations, and verify performance on your actual workloads. Enterprises should pilot new models with a subset of workloads first, then roll out gradually if the benefits justify the migration effort.

What's the role of Microsoft 365 Copilot in enterprise AI adoption?

Microsoft 365 Copilot has become the most widely adopted AI tool for workplace productivity in enterprises, far outpacing Google Gemini for Workspace. This adoption happens because the integration is seamless—employees encounter AI within their normal workflow rather than needing to switch to a separate interface. For enterprises already invested in Microsoft 365, Copilot provides immediate value without requiring new tools or processes.

How do enterprises handle compliance and data privacy with multiple AI models?

Both OpenAI and Anthropic offer enterprise data handling options where data isn't used for model training. Enterprises need to verify vendor claims at a technical level, audit data handling procedures, and ensure compliance with regulations like HIPAA or GDPR. Security and compliance teams typically evaluate vendors separately from engineering teams, and compliance capabilities can become a deciding factor for regulated industries. The complexity of managing compliance across multiple vendors is one reason some enterprises prefer consolidating on one vendor despite capability trade-offs.

What's the expected ROI for enterprise AI implementations?

Four in five Global 2000 companies expect to break even or deliver higher ROI on their AI investments. However, ROI metrics are evolving beyond pure productivity or cost savings. Enterprises are increasingly measuring employee satisfaction, customer satisfaction, and quality alongside traditional metrics. A deployment that improves productivity by 20% but decreases customer satisfaction by 10% often delivers lower overall ROI than initially calculated, so modern enterprises track multiple metrics rather than single measures.

The enterprise AI market in 2025 looks fundamentally different from 2023. It's not about picking a winner; it's about building a strategy that matches your business needs with the right tools. OpenAI still leads in volume, Anthropic is winning in specialized domains, and smart enterprises are doing both. That's the real story behind the numbers.

Key Takeaways

- OpenAI maintains market leadership at 78% adoption, but 81% of enterprises use three or more models, indicating a shift toward multi-model strategies

- Anthropic is rapidly gaining ground, particularly for software development and data analysis, with 75% of their customers on newer model versions

- Use case specificity drives model selection more than vendor preference, leading enterprises to optimize different tasks with different providers

- Fewer than half of OpenAI customers have upgraded to GPT-5.2 due to migration costs, while Anthropic customers show higher adoption of new versions

- Microsoft 365 Copilot far outpaces Google Gemini for Workspace, demonstrating the power of seamless integration in enterprise adoption

- Enterprise AI ROI expectations are high (80% expecting ROI), but metrics are evolving beyond productivity to include satisfaction and quality measures


![OpenAI vs Anthropic: Enterprise AI Model Adoption Trends [2025]](https://tryrunable.com/blog/openai-vs-anthropic-enterprise-ai-model-adoption-trends-2025/image-1-1770059409266.jpg)