Why AI PCs Failed (And the RAM Shortage Might Be a Blessing)

Two years ago, tech companies lined up at the podium with a promise: AI PCs were the future. Every manufacturer from Dell to HP to Lenovo was shouting about on-device neural processors, local AI acceleration, and the magic of running ChatGPT on your laptop without cloud connectivity. Marketing teams spent millions positioning these machines as the next must-have upgrade. The pitch was simple and compelling: AI on your device, faster response times, privacy preserved, no internet required.

Then reality happened.

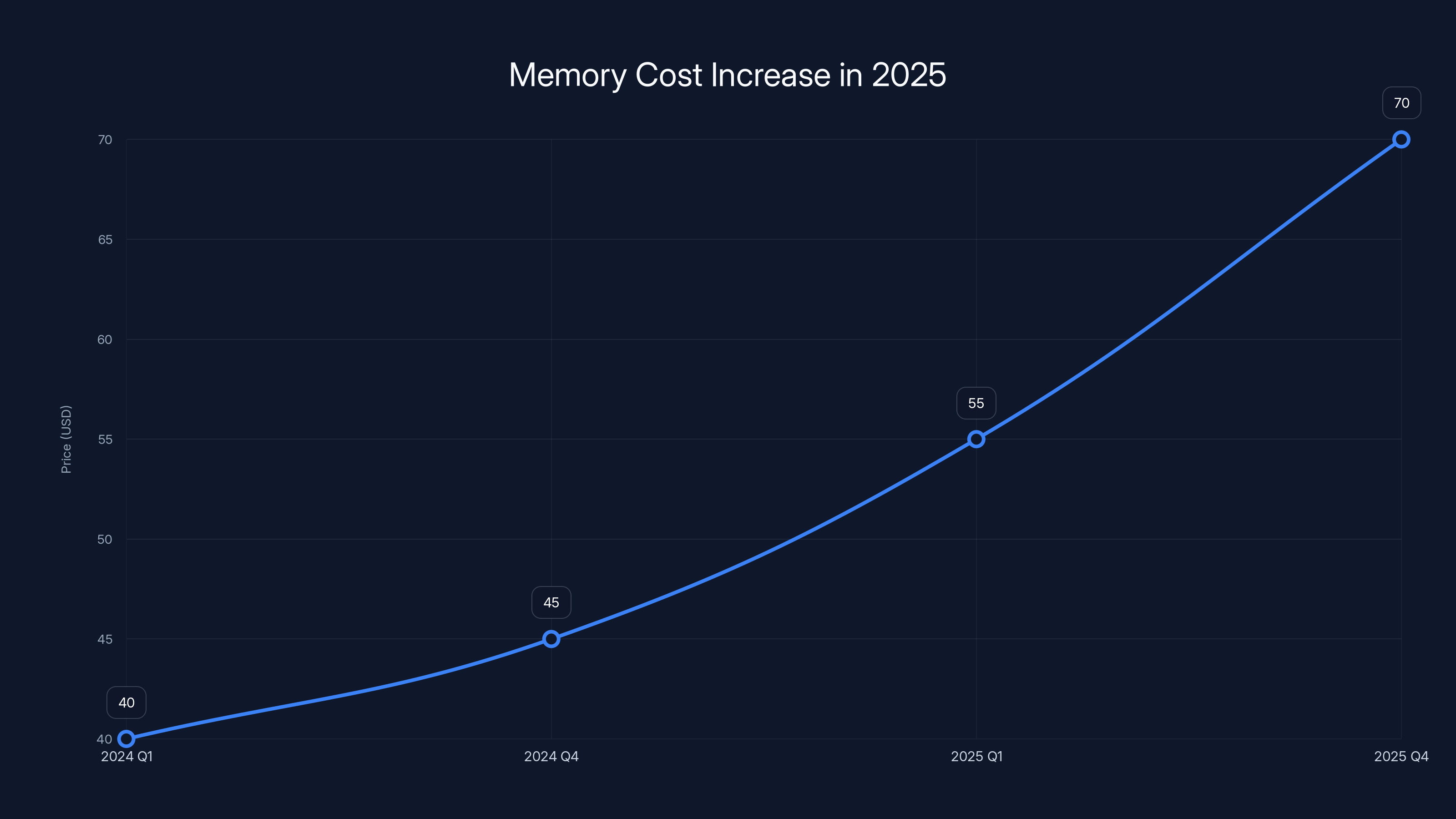

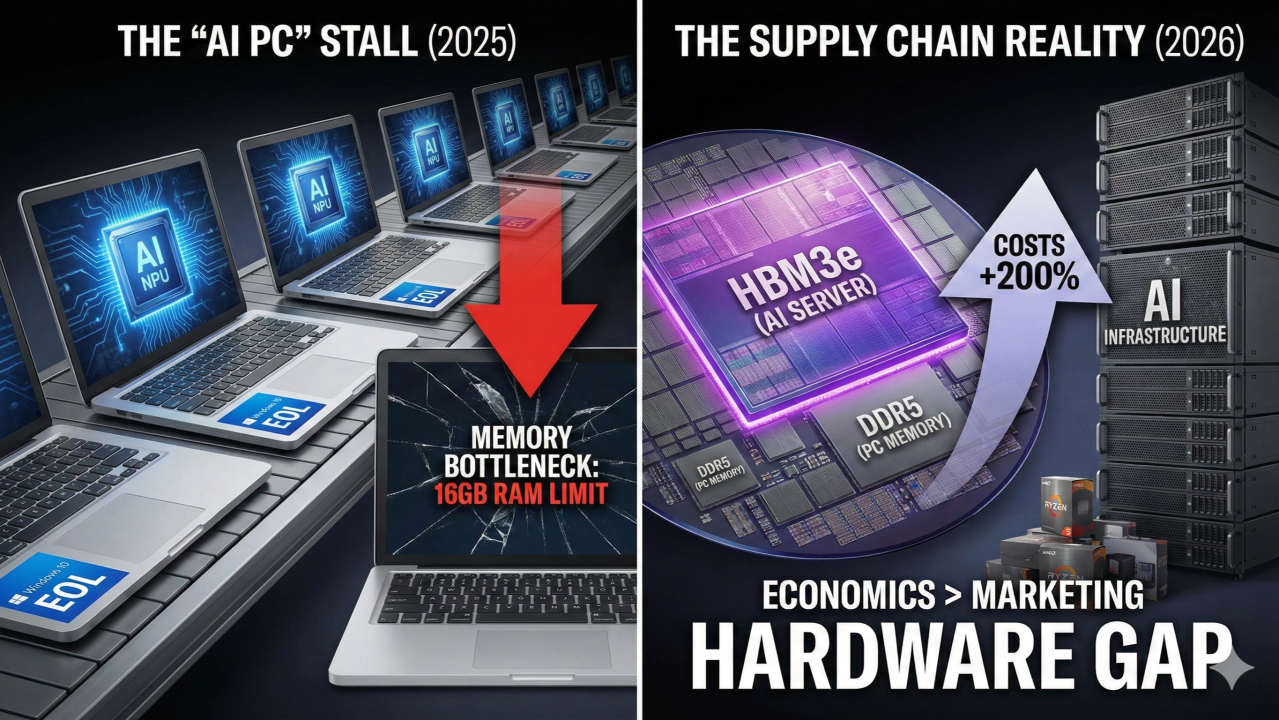

By late 2025, that hype machine sputtered to a halt. It wasn't killed by skeptical reviewers or technical failures, though those existed too. It was strangled by something far more mundane: a memory shortage that sent RAM prices skyrocketing by 40 to 70 percent in a single year. Suddenly, building computers with the 16GB minimum memory requirement for real AI features became economically impractical. PC makers faced a brutal choice: raise prices so high that no one would buy, or abandon the AI PC narrative altogether and go back to the basics.

Here's the thing, though. As counterintuitive as it sounds, this RAM shortage might be the best thing that could've happened to the AI PC concept. Not to the products themselves, but to the idea. Because right now, the only way forward is an honest one.

The AI PC Promise That Never Materialized

When Qualcomm, Intel, and AMD started embedding AI accelerators into their processors around 2023, the vision seemed bulletproof. Imagine running language models directly on your machine. No network latency. No privacy concerns. No reliance on cloud infrastructure. For certain use cases (creative professionals, people working with sensitive data, users in regions with poor internet), it made theoretical sense.

Manufacturers pushed this angle hard. They released processors with names like "AI Engine" and "Neural Processing Unit." Laptops started sporting "Copilot Plus" branding. Tech specs highlighted TOPS (trillion operations per second), implying that local AI meant you'd get lightning-fast responses to any prompt.

But here's what nobody really asked: fast for what? And what does the user actually need?

When you look at actual on-device AI implementations in 2024 and 2025, the answer gets uncomfortable. Most "AI features" running locally weren't genuinely useful versions of ChatGPT or Claude. They were narrower, weaker implementations: image upscaling, background blur on video calls, autocomplete suggestions, basic document summarization. Some worked fine. Many didn't. And virtually all of them had cloud-based equivalents that were either free or cheaper.

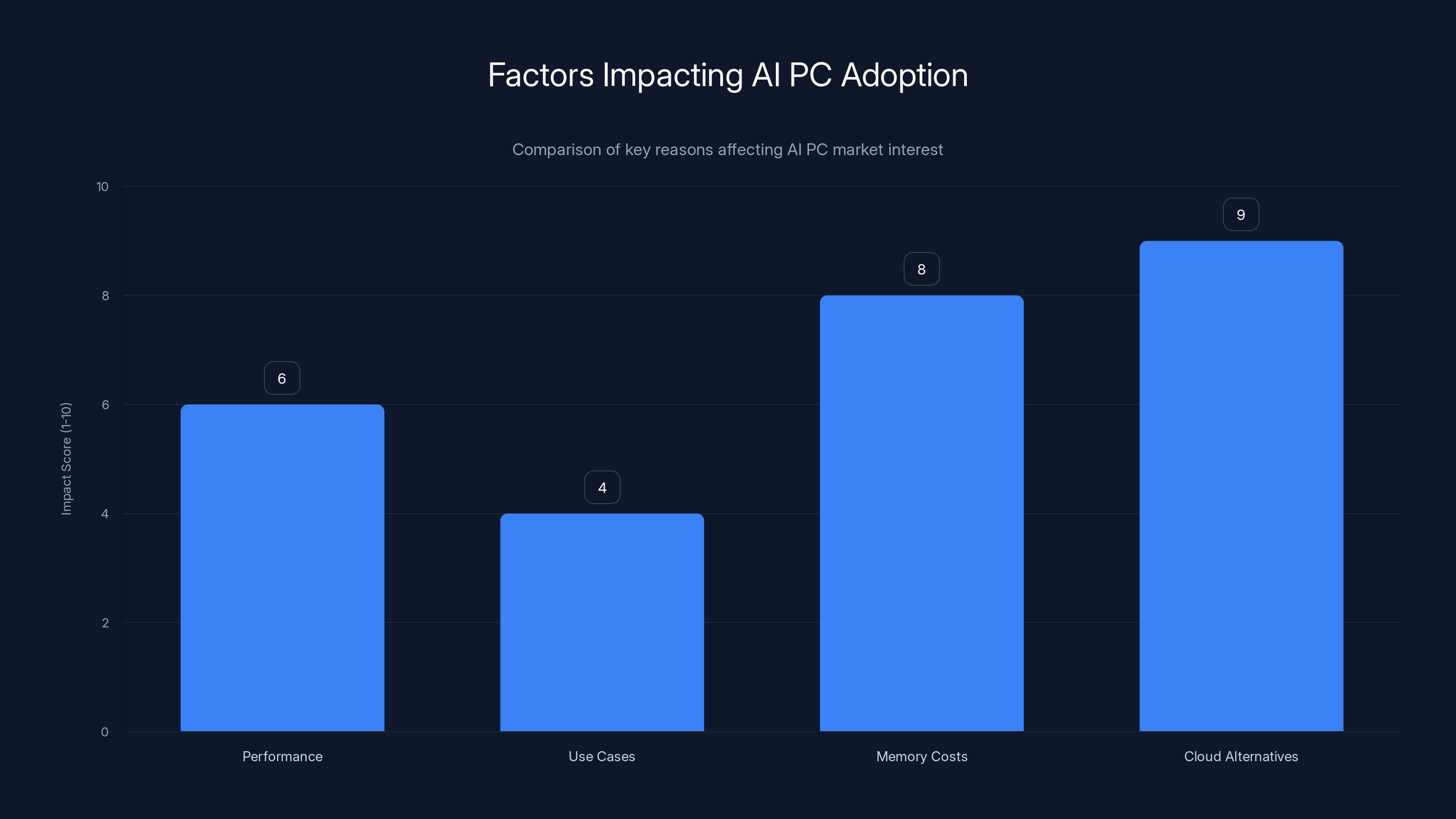

As analyst Jitesh Ubrani from IDC noted, "General interest in AI PCs has been wavering for a while, since cloud-based options are widely available and the use cases for on-device AI have been limited." Translation: people didn't actually want local AI that badly when they had reliable cloud alternatives available through Microsoft Copilot, ChatGPT, or Claude.

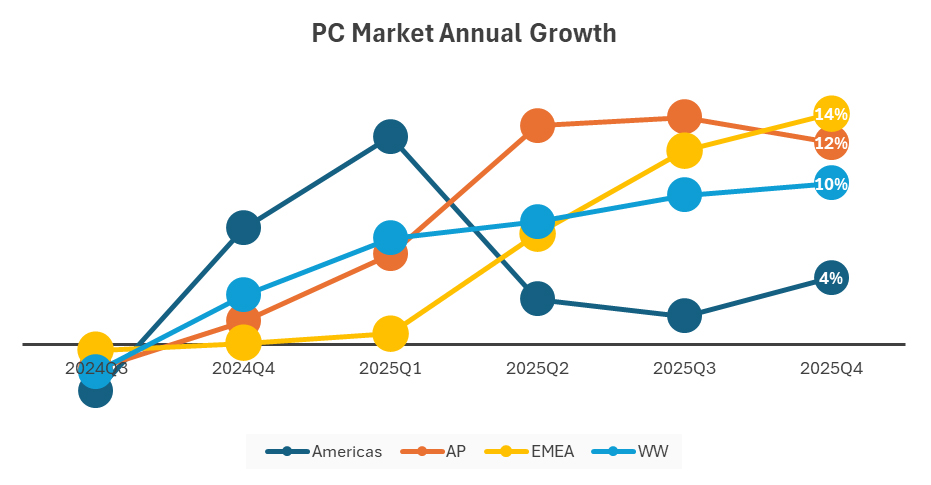

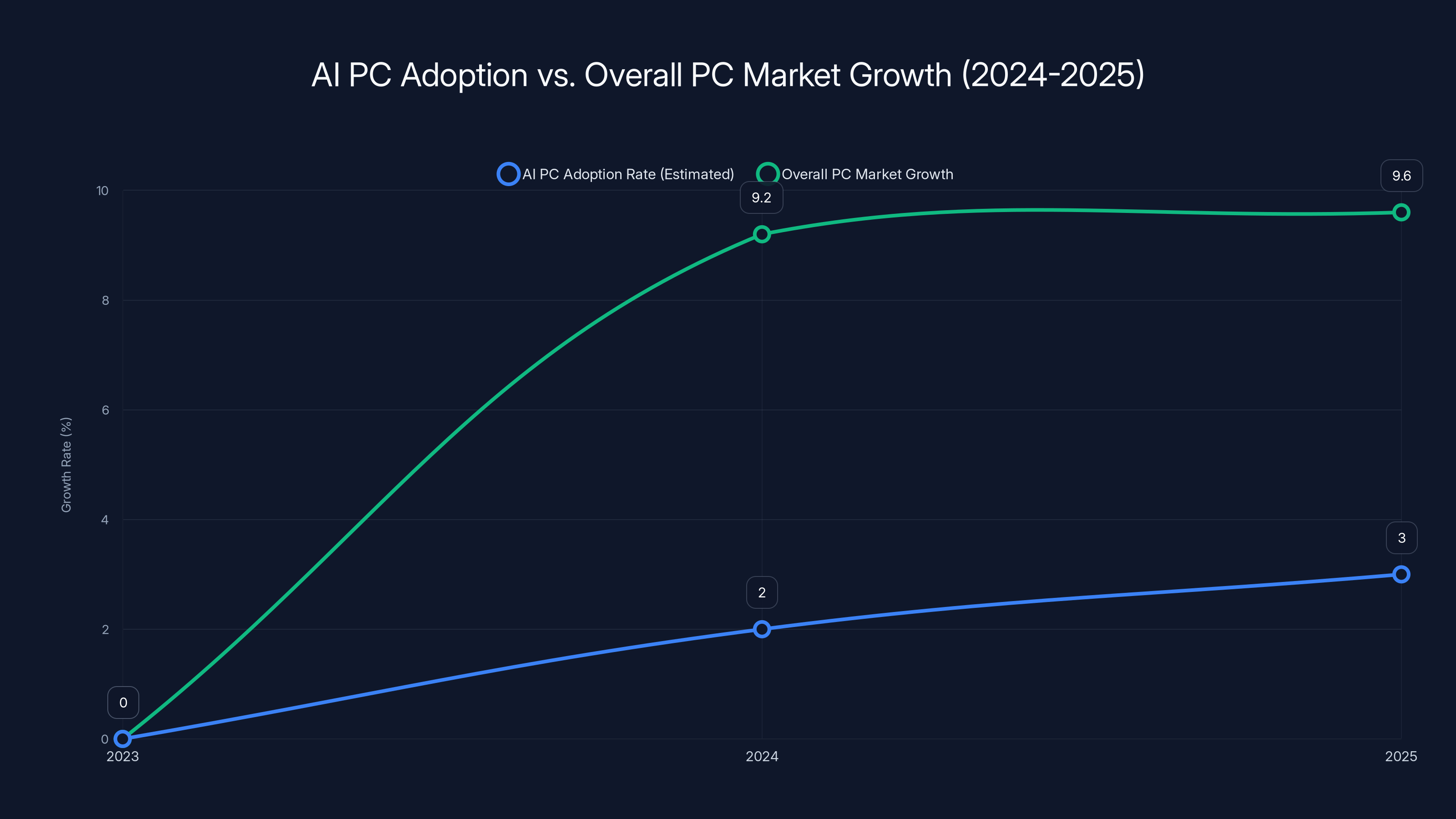

The market spoke clearly. During 2024 and 2025, when AI PC adoption should've accelerated, shipments remained tepid. Sure, the overall PC market grew around 9.2 to 9.6 percent year-over-year, but most of that growth had nothing to do with AI features. People were buying better displays, longer battery life, improved build quality. AI performance? It barely registered in customer purchase decisions.

What manufacturers discovered the hard way is that adding AI silicon to a laptop doesn't automatically make AI compelling to users. It's like putting a Ferrari engine in a golf cart—technically impressive, strategically pointless, and missing the actual problem you're trying to solve.


How Memory Became the Bottleneck

Starting in late 2024 and accelerating through 2025, the semiconductor supply chain got strangled by an unexpected culprit: data center demand. AI training and inference workloads—particularly the massive GPU clusters running large language models—consumed enormous quantities of RAM and NAND flash memory. Companies building AI infrastructure weren't buying consumer quantities of memory chips. They were buying in industrial volumes.

This created a supply crunch that rippled through the entire memory market. Omdia, a technology research firm, reported that mainstream PC memory and storage costs rose between 40 and 70 percent in 2025 alone. Not gradually. In a single year. For comparison, that's roughly three times the normal annual price volatility in the memory market.

The practical impact: a 16GB SODIMM (small outline dual in-line memory module) that cost roughly

PC makers had three theoretical options:

Option one: Accept lower margins and eat the cost increase. This is suicide for publicly traded companies with margin expectations.

Option two: Raise prices by 15 to 20 percent to maintain margins. IDC expected this outcome. But raising laptop prices during a period of sluggish consumer spending? That kills volume.

Option three: Lower the memory configurations on budget and mid-range machines to reduce component costs. This was the consensus move. Ben Yeh from Omdia predicted "leaner mid to low-tier configurations to protect margins."
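To see why Option two implies a double-digit price rise, here is a minimal sketch of the pass-through arithmetic. It assumes a maker wants to hold its gross-margin percentage constant and that only memory and storage costs change. The memory share of unit cost and the 20 percent margin are hypothetical inputs for illustration, not figures from IDC or Omdia.

```python
def required_price_increase(memory_share: float, memory_increase: float,
                            gross_margin: float) -> float:
    """Fractional retail price rise needed to keep gross margin % unchanged.

    memory_share:    hypothetical fraction of unit cost spent on memory/storage
    memory_increase: fractional rise in memory/storage cost (0.40 means +40%)
    gross_margin:    margin as a fraction of price (0.20 means 20%)
    All other component and assembly costs are assumed to stay flat.
    """
    old_cost = 1.0                                    # normalize unit cost to 1
    new_cost = old_cost * (1 + memory_share * memory_increase)
    old_price = old_cost / (1 - gross_margin)         # price = cost / (1 - margin)
    new_price = new_cost / (1 - gross_margin)
    return new_price / old_price - 1                  # margin cancels out


for share in (0.15, 0.25, 0.35):    # hypothetical memory shares of unit cost
    for rise in (0.40, 0.70):       # the 40-70% range Omdia reported
        pct = required_price_increase(share, rise, gross_margin=0.20) * 100
        print(f"memory {share:.0%} of cost, +{rise:.0%} -> price +{pct:.1f}%")
```

Because the margin term cancels, the required price rise reduces to the memory share times the memory cost increase. A 40 to 70 percent jump in one component class can therefore become a double-digit retail increase whenever memory is a sizable slice of the bill.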

But here's where AI PCs get caught in the crossfire. The entire AI PC narrative depended on one thing: widespread adoption of machines with 16GB or more of memory. That's the minimum threshold at which on-device AI models can actually fit in memory and run at acceptable speed. Drop to 8GB, and you're back to cloud-based AI. Drop further, and you're not running meaningful AI locally at all.
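A rough calculation shows why capacity is the gate. A model's weights alone occupy about parameters × bits-per-weight ÷ 8 bytes, and they have to share RAM with the operating system and open apps. The sketch below is a back-of-the-envelope estimate under that assumption. It ignores the KV cache, activations, and runtime overhead, so real requirements are higher. The model sizes are illustrative and not tied to any specific product.

```python
def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes).

    Ignores KV cache, activations, and runtime overhead, so it is a floor.
    """
    bytes_total = params_billion * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


# Illustrative model sizes (billions of parameters) and storage precisions.
for params in (3, 7, 13):
    for bits in (16, 8, 4):
        print(f"{params}B params @ {bits}-bit: ~{weights_gb(params, bits):.1f} GB for weights")
```

For example, a 7-billion-parameter model stored at 4 bits needs roughly 3.5 GB for its weights before anything else loads. That leaves little headroom on an 8GB machine that is also running Windows and a browser.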

When manufacturers started shipping mainstream laptops with 8GB base configurations to control costs, the AI PC narrative became mathematically impossible to sell. You can't credibly market a machine as "AI-powered" when it barely has enough memory for the operating system and a web browser.

The irony is brutal: the memory shortage that powered the AI boom in data centers directly killed the AI PC segment that was supposed to capitalize on consumer interest in AI.

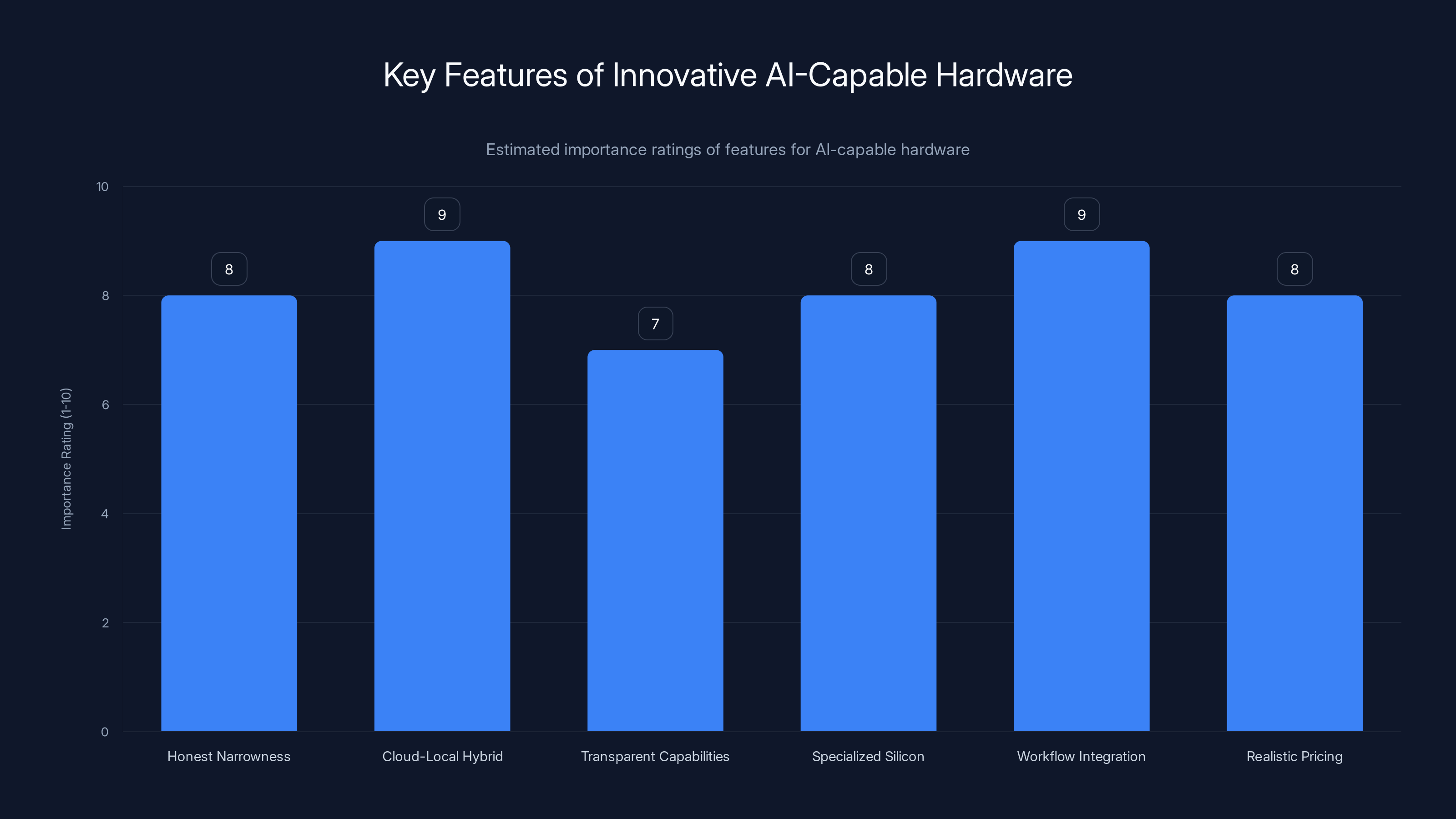

Cloud-local hybrid design and integration with existing workflows are rated highest in importance for innovative AI-capable hardware. Estimated data based on feature discussion.

The Marketing About-Face

Nothing reveals market reality more clearly than watching marketing messages completely reverse in real time.

In early 2025, Dell discontinued its iconic XPS consumer laptop line, a brand that had been central to the company's consumer strategy for nearly a decade. The official reason: "the AI PC market is quickly evolving," according to Kevin Terwilliger, VP and GM of consumer, commercial, and gaming PCs at Dell. The subtext was clear: we're restructuring around AI.

Then something unexpected happened. At CES 2026, roughly a year later, Dell resurrected the XPS brand. But the messaging had changed completely. The new XPS pitch focused on "build quality, battery life, and display quality," the foundational hardware attributes that have always mattered, not flashy AI features.

Terwilliger's tone shifted dramatically. At a press briefing ahead of the show, he stated plainly: "What we've learned over the course of this year, especially from a consumer perspective, is they're not buying based on AI. In fact, I think AI probably confuses them more than it helps them understand a specific outcome."

That's a remarkable admission. The executive in charge of Dell's consumer PC strategy just said, on the record, that AI features confuse customers rather than help them buy laptops. It's the kind of statement that probably generated uncomfortable conversations with the marketing department.

But it's honest. And it reflects what the market had been signaling for months: people care about computers being fast, reliable, and pleasant to use. They care less about computational features they don't fully understand or haven't actually needed.

Other manufacturers followed similar trajectories. HP, Lenovo, and ASUS all maintained AI PC product lines for 2026, but notice what happened to the marketing emphasis in their press materials. AI features moved from the headline to the bullet point. Build quality, battery life, and display specifications moved up. The narrative shifted from "revolutionary on-device AI" to "AI features when you need them."

This isn't cynicism. It's market discipline. When actual customer feedback conflicts with your planned strategy, you adapt or you fail. These companies adapted.

Why Cloud-Based AI Just Works Better (For Now)

There's a reason cloud-based AI became the default and users showed remarkably little interest in local alternatives. The math is straightforward.

Performance: A query to ChatGPT or Claude reaches servers with thousands of GPUs optimized for AI inference. Response times are typically under two seconds. Local AI running on a laptop's NPU is constrained by thermal limits, power budgets, and the system's overall memory bandwidth. For complex tasks, cloud is genuinely faster.
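A common rule of thumb makes the memory-bandwidth point concrete. When a language model generates text one token at a time, each token typically requires streaming all of the weights from memory, so single-stream speed is capped at roughly bandwidth ÷ model size. This is a ceiling, not a prediction. It ignores caching, compute limits, and batching. The bandwidth figures below are hypothetical round numbers, not specs for any particular laptop or GPU.

```python
def max_tokens_per_second(bandwidth_gb_s: float, model_gb: float) -> float:
    """Rough upper bound on single-stream decode speed for a memory-bound model.

    Assumes every generated token streams all weights from memory once and
    ignores caches, KV-cache reads, and compute limits.
    """
    return bandwidth_gb_s / model_gb


model_gb = 3.5  # e.g. a 7B-parameter model quantized to 4 bits (weights only)
# Hypothetical bandwidths: laptop shared memory vs. a data center accelerator.
for label, bw in (("laptop, ~100 GB/s", 100.0), ("accelerator, ~3000 GB/s", 3000.0)):
    print(f"{label}: at most ~{max_tokens_per_second(bw, model_gb):.0f} tokens/s")
```

The same arithmetic explains why a TOPS figure alone says little about local chatbot speed. It also explains why servers can run much larger models at interactive speeds, and they additionally batch many users' requests together.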

Cost efficiency: Running AI inference at scale in data centers benefits from economies of scale and specialized hardware amortized across millions of queries. A cloud AI service can charge $20/month or offer free tiers because the infrastructure is already built. Building equivalent capability into every laptop is economically inefficient.

Model quality: The best-performing language models (GPT-4, Claude 3.5, Gemini 2.0) are too large to run on consumer hardware. Even heavily quantized versions are compromises. Cloud services run full-capability models, delivering better answers to complex questions.
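This minimal sketch shows why quantization is a compromise. It uses symmetric per-tensor int8 quantization, in which each weight is stored as a small integer times one shared scale factor. Production formats typically use lower bit widths and per-group scales, so treat this as a toy illustration of the trade-off, not a description of how any particular model is packaged.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w ~= scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each weight to the nearest step, clamping to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map stored integers back to approximate floating-point weights."""
    return [scale * v for v in q]


weights = [0.8123, -0.0411, 0.0007, -1.2500, 0.3333]  # toy values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q)
print(f"scale={scale:.6f}, max round-trip error={max_error:.6f}")
```

Every restored value can be off by up to half a scale step, and the tiny 0.0007 weight rounds to zero entirely. Fewer bits mean larger steps and more of this kind of loss.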

Continuous improvement: Cloud-based AI models update automatically. Local models on your laptop require manual updates, driver updates, and fresh model downloads if you want them to improve. That's friction most users don't accept.

Simplicity: Cloud AI requires a username and password. Local AI requires you to understand VRAM requirements, quantization formats, model selection, and potential security implications. Most users just want to type a question and get a good answer.

When you enumerate the actual reasons cloud AI dominates, local AI only wins in narrow scenarios: offline environments, extreme privacy requirements, deterministic tasks where you need reproducible results, or use cases where sub-second latency is critical and network connectivity unreliable.

For the 99 percent of users buying consumer laptops? Those scenarios are niche. Very niche. Not "I need to completely restructure my product roadmap" niche.

Cloud AI providers understood this instinctively. OpenAI didn't position ChatGPT as a replacement for local processing. They positioned it as a service. Microsoft embedded Copilot into Windows and Office as a cloud-first feature (despite later adding some local capabilities). Google integrated Gemini into Android, also cloud-first, with local processing as a secondary consideration.

These companies had the resources to build local AI into consumer devices. They didn't, because they correctly assessed that the user experience and competitive advantage lived in the cloud.

Cloud alternatives and high memory costs are major barriers to AI PC adoption, scoring 9 and 8 respectively. Estimated data based on content analysis.

The RAM Shortage as Honest Reset

Now we arrive at the genuinely interesting part: why this shortage might actually be beneficial.

In a functioning market, products should be built to solve real problems. When problem-solution matching breaks down—when you're trying to convince people to buy something they don't actually need—you're in marketing-driven product development. That's not sustainable long-term.

The AI PC hype cycle was beginning to show cracks even before the memory shortage. Reviewers tested on-device AI features and found them underwhelming. Buyers ignored the marketing. Industry analysts noted wavering interest. But the industry had momentum, marketing budgets, and a narrative to defend.

Enter the memory shortage. It imposed a hard constraint: you can't build the thing you were trying to sell. You can't put 16GB of RAM in every mainstream laptop and maintain acceptable prices. You can't promise "AI-powered" experiences when the hardware foundation is too constrained.

This forced a reset. Instead of asking "how do we convince people to buy AI PCs," manufacturers now have to ask "what actual problem does an AI-capable laptop solve, and how do we build that sustainably?"

The answer isn't "everything runs AI locally." It's more nuanced. Maybe it's "certain frequently-used features like autocomplete and image processing happen locally for responsiveness, while complex AI tasks route to the cloud." Maybe it's "AI features are available on premium tiers only, where we can justify the component costs." Maybe it's "we invest more in making cloud AI integration seamless, so the device becomes a beautiful interface to powerful remote processing."

These are all valid strategies. But they start from honesty instead of hype.

Some manufacturers clearly understand this shift. Dell's pivot back to emphasizing build, battery, and display quality signals that they're ready to compete on substantive product attributes rather than feature superlatives. It's a more defensible position. It's also more honest.

What Actually Works in On-Device AI

Here's the counterpoint: on-device AI isn't fundamentally broken. Certain implementations work legitimately well.

Image processing is a perfect example. Real-time background blur, noise reduction, and upscaling all benefit enormously from local processing. The work never leaves the device, the results are immediate, and the experience is noticeably better than cloud-based alternatives. Every phone now has local image processing for computational photography, and it's clearly better than the alternative.

Autocomplete and predictive text work well locally. Phones have been doing this for years with dedicated processors. Local language models for next-word prediction on laptops would be similarly effective: faster response, no network latency, no privacy concerns about sending your typing patterns to a cloud service.

Voice processing for meeting transcription or real-time language translation could be excellent on-device. The latency requirements are real, and the privacy argument is strong.

Accessibility features—text-to-speech, voice recognition, optical character recognition—all benefit from local processing. These are frequently privacy-sensitive, latency-critical tasks where on-device processing has clear advantages.

But notice what these have in common: they're all narrow, specialized tasks. Not "run a general-purpose large language model." Not "let me use AI for any task I can think of." Specific, bounded problems where local processing genuinely offers technical advantages.

That's where the AI PC concept should've started. Not "AI PC" as a marketing category signifying a class of devices with certain processors, but "laptop optimized for AI-accelerated image processing" or "laptop designed for on-device voice AI." Honest categories describing honest value propositions.

Instead, manufacturers tried to sell "AI PC" as a generic category, like "gaming laptop." But gaming laptops have a clear value proposition: high frame rates in demanding games. AI PCs never developed an equivalent clarity. What was the AI PC for, exactly? Everything? Nothing? Kind of maybe some stuff?

The market rejected that non-answer.

Despite the introduction of AI features, AI PC adoption rates remained low compared to the overall PC market growth from 2024 to 2025. Estimated data highlights the limited impact of AI on PC sales.

The Analyst Perspective: 2026 and Beyond

Industry analysts expect the memory shortage to persist into 2026 and possibly 2027. Jitesh Ubrani from IDC provided the gloomiest timeline: "These RAM shortages will last beyond just 2026." Meanwhile, data centers will continue consuming vast quantities of memory for AI infrastructure, preventing rapid normalization.

IDC expects PC makers to respond by prioritizing midrange and premium systems where they can maintain margins despite higher component costs. That means mainstream budget laptops will get leaner memory specifications: the typical entry-level configuration could drop from 8GB to 6GB, or stay at 8GB with slower memory modules.

Ben Yeh from Omdia predicted that PC manufacturers will release "leaner mid to low-tier configurations to protect margins." Translation: if you're buying a budget laptop in 2026, you're getting a device designed around constraints, not optimization.

For AI PC positioning, this creates a paradox. The segment that manufacturers were trying to build the largest volumes in—budget and mid-range mainstream machines—becomes the least capable of running meaningful on-device AI. The devices with sufficient memory will be expensive premium tiers where consumers are already accustomed to paying for innovative features.

So perhaps the 2026-2027 AI PC market becomes genuinely real, but accidentally inverted from the original vision. Instead of "every laptop has AI," it becomes "AI is a premium feature available on high-end models." That's a smaller market, but potentially a more honest one.

Microsoft's Cooling on Consumer Copilot

Another data point suggests the industry is internally acknowledging AI PC weaknesses: Microsoft's reported pivot away from consumer Copilot integration.

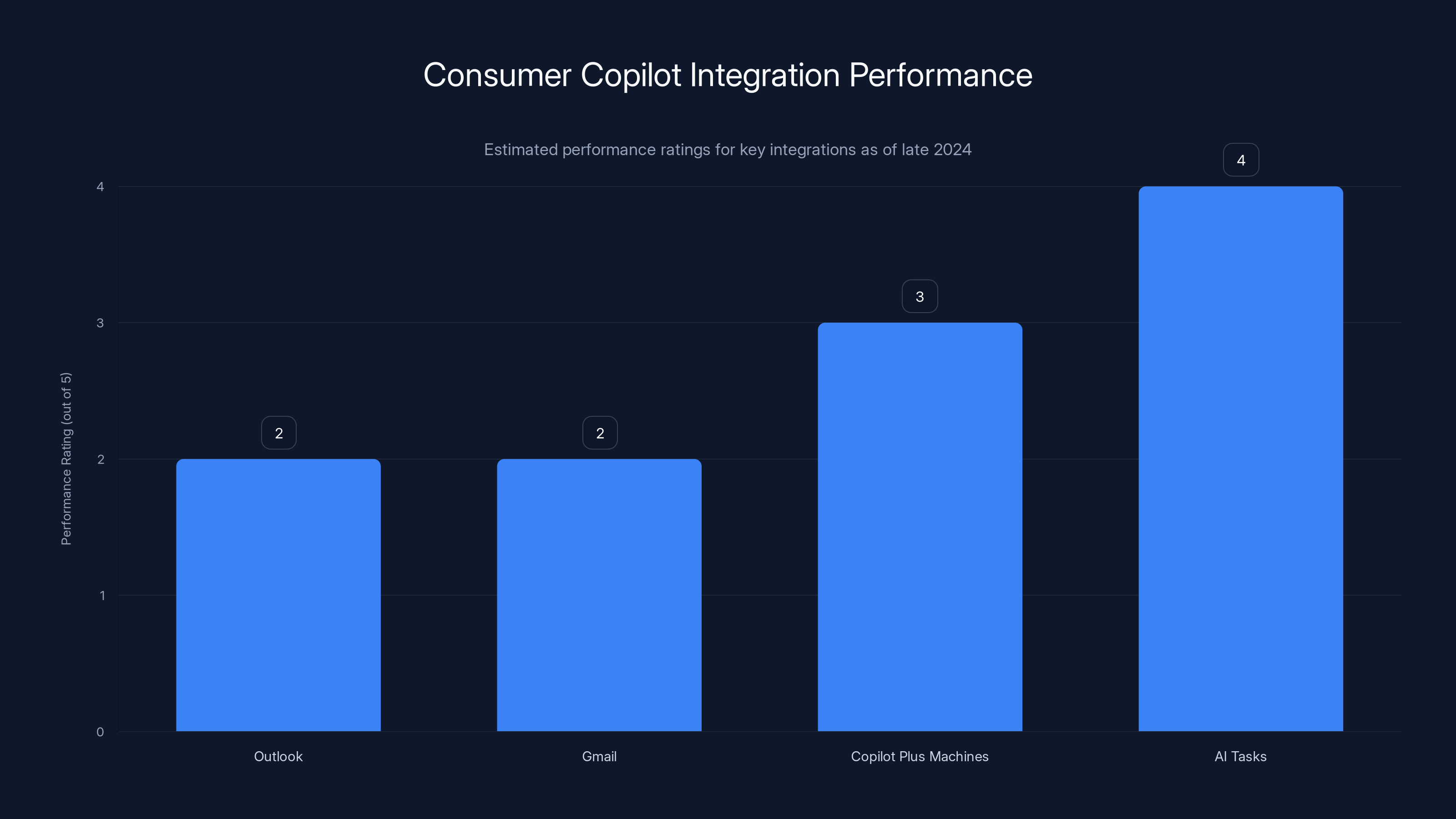

In December 2024, The Information reported that CEO Satya Nadella sent an email to engineering heads expressing significant disappointment with Copilot's consumer performance. Specifically, he noted that integrations with Outlook and Gmail—foundational productivity tools for most users—"for the most part don't really work" and are "not smart."

That's not vague criticism. That's a CEO identifying core functionality that's broken. If the flagship integration for a marquee AI feature isn't working well, that's a serious problem for the entire value proposition.

The report also indicated that Nadella delegated some of his other responsibilities to focus more time on Copilot, suggesting internal recognition that the consumer AI strategy needed executive-level attention and course correction.

Why mention this in the context of AI PCs? Because Microsoft was positioning Copilot as a primary value-add for Copilot Plus machines—the company's official branding for AI-capable laptops. If Copilot isn't working well as integrated software, then one of the main selling points for AI-capable hardware evaporates.

This wasn't a hardware problem. The processors in Copilot Plus machines could theoretically handle various AI tasks. The problem was the software wasn't good enough to deliver the promised experience. That's a cautionary tale: processor capabilities don't determine adoption. User experience does.

Estimated data shows that key integrations like Outlook and Gmail had low performance ratings, indicating significant issues with Copilot's consumer functionality by late 2024.

What Real Innovation Might Look Like

Given all the constraints—the memory shortage, the weak consumer demand, the disappointing real-world implementations—what would genuinely innovative AI-capable hardware look like?

Honest narrowness: Start with specific, well-defined use cases rather than generic "AI capabilities." A laptop optimized for local image editing and composition with real-time AI enhancement. A machine designed for writers with intelligent autocomplete and grammar checking running locally. A device for video editors with on-device rendering assistance. These aren't generic "AI PC" products. They're specific tools for specific workflows.

Cloud-local hybrid design: Stop thinking of local AI and cloud AI as competitors. Design systems where frequently-used tasks run locally for speed and privacy, while complex tasks route to the cloud. This requires good design, not just throwing both technologies at the problem. The integration becomes the innovation.
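The hybrid idea can be sketched as a small routing policy. Everything here is hypothetical and illustrative, not any vendor's actual design: the `Task` fields, the 200 ms latency budget, and the rule that privacy-sensitive work stays on the device whenever a local model can handle it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Task:
    name: str
    est_local_ms: Optional[float]  # estimated on-device time; None = no local model
    privacy_sensitive: bool
    needs_large_model: bool        # e.g. open-ended writing beyond local models


def route(task: Task, online: bool, latency_budget_ms: float = 200.0) -> str:
    """Return 'local', 'cloud', or 'unavailable' under a hypothetical policy."""
    can_run_locally = task.est_local_ms is not None
    # Privacy-sensitive work stays on the device whenever it can.
    if task.privacy_sensitive and can_run_locally:
        return "local"
    # Big or unsupported tasks need the cloud; offline, they simply can't run.
    if task.needs_large_model or not can_run_locally:
        return "cloud" if online else "unavailable"
    # Small tasks run locally if fast enough, or if there's no connection.
    if task.est_local_ms <= latency_budget_ms or not online:
        return "local"
    return "cloud"


tasks = [
    Task("autocomplete", est_local_ms=15, privacy_sensitive=True, needs_large_model=False),
    Task("background blur", est_local_ms=8, privacy_sensitive=False, needs_large_model=False),
    Task("long report draft", est_local_ms=None, privacy_sensitive=False, needs_large_model=True),
]
for t in tasks:
    print(f"{t.name}: online -> {route(t, online=True)}, offline -> {route(t, online=False)}")
```

In this sketch, the routing rules carry the design rather than the silicon. Autocomplete and blur stay local. The long draft goes to the cloud when the device is online. When it is offline, the device reports plainly what it can't do.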

Transparent capabilities and limitations: Tell users honestly what the device can and can't do. "Image upscaling runs locally—roughly 30% faster than cloud processing." "Complex writing assistance routes to the cloud—requires internet connectivity." This is less sexy than generic marketing, but it's usable information.

Specialized silicon for high-volume tasks: Instead of general-purpose AI accelerators, build hardware optimized for specific tasks that benefit from acceleration. Codec processors optimized for video, signal processors optimized for voice, matrix units optimized for specific model architectures users actually run.

Integration with existing workflows: Don't make users learn new software to access AI features. Integrate AI assistance into tools people already use—the text editor they write in, the image software they edit with, the spreadsheet software they analyze with. The hardware becomes invisible; the experience is seamless.

Realistic pricing: If you're adding significant hardware costs for AI features, price accordingly and be explicit about the value. "This model costs

None of these approaches involve magical on-device large language models or running GPT on your laptop. They're all pragmatic, achievable, and actually solve problems users have.

The Silver Lining Is Honestly Just the Reset

So we arrive at the headline's premise: the RAM shortage might be good news for people exasperated by AI PC marketing.

It's not good news because the shortage is good. Component shortages never create prosperity. It's good news because the shortage removed marketing's ability to simply declare something true and move forward.

For two years, companies said "AI PCs are the future" loudly and often enough that it became part of the ambient tech narrative. Marketing worked its magic. Most consumers didn't have strong opinions contradicting the claim because they hadn't actually used meaningful on-device AI features.

But now there's a hard constraint. Memory costs money. Memory shortages mean choices. And manufacturers are choosing to build machines without robust on-device AI, because that's what the economics allow.

This forces a reset to first principles: what problems do we actually solve for users? And for that question, "on-device AI capability" is not the answer nearly as often as manufacturers wanted to believe.

What users actually want: fast devices, beautiful displays, long battery life, responsive keyboards, adequate ports, reasonable prices, and reliability. Boring, fundamental stuff. The tools that help them work, create, communicate, and learn effectively.

AI might help with some of that. Cloud-based AI does help with some of that. But you don't need a specialized chip in your laptop to access cloud AI. You just need good software integration and internet connectivity, both of which are standard.

The next phase of AI in consumer devices won't be "every device has AI silicon." It'll be "AI is integrated thoughtfully into the workflows that benefit from it, and the integration might happen locally, in the cloud, or in a hybrid arrangement depending on what makes sense for that specific task."

That's less exciting as marketing messaging. It's more complex than a simple category. It requires nuance and honesty.

But it's probably where we were headed anyway. The RAM shortage just accelerated the timeline.

Looking Forward: 2026 and the Realistic AI Device Landscape

If you're buying a laptop in 2026, here's what's actually likely to happen:

Manufacturers will continue offering devices with AI accelerators because the hardware exists and the marketing value hasn't completely evaporated. But the emphasis will shift. The messaging will soften from "AI-powered" to "AI-capable," from revolutionary to evolutionary.

Most machines in the

Premium machines at $2,000+ might maintain better specifications and could credibly claim meaningful AI capabilities, but they'll also showcase them more carefully—specific features with demonstrated utility, not vague promises of AI everywhere.

Cloud-based AI integration will improve as Microsoft, Google, Apple, and others refine their implementations. By 2026, the best practical AI experiences will come from tight integration with cloud services, not local processing.

Specialized AI features—image processing, voice recognition, predictive text—will quietly become standard without being labeled "AI features." They'll just be expected components of good software.

The term "AI PC" will probably linger as a category, but it'll mean less as time passes. Like "Internet PC" in the 1990s—once every computer was connected, calling one an "Internet PC" became silly.

This isn't a loss for consumers. It's an honest reassessment. Markets work best when marketing claims reflect genuine capability and real value. We're finally transitioning toward that for AI in consumer devices.

Conclusion: From Hype to Honesty

The RAM shortage won't permanently cripple AI technology or prevent it from becoming important in consumer devices. What it will do—what it's already doing—is puncture the hype cycle and force a reckoning with reality.

For two years, the tech industry tried to convince us that every laptop needed AI capabilities. The market response was essentially "no thanks." Then the memory shortage made that choice physical—you literally couldn't build affordable AI PCs without severe compromises.

Now we get to see what happens when marketing doesn't get to set the terms. What emerges probably won't be less AI-integrated, but it will be more honest about what AI actually does and where it actually helps.

That's not exciting. It doesn't generate the same headlines or marketing budgets. But it's healthy for the market and better for consumers. We'll get devices built around actual needs rather than aspirational technology categories.

The AI PC as it was marketed—a complete reimagining of how we use laptops, powered by revolutionary on-device AI—is dead. And that's probably fine. What replaces it will be less transformative but more real. And in technology, real usually wins eventually.

FAQ

What is an AI PC?

An AI PC is a laptop or desktop computer equipped with specialized silicon (typically called an NPU or Neural Processing Unit) designed to run artificial intelligence models and tasks locally on the device. These processors accelerate AI-related computations, theoretically enabling faster response times and local processing without requiring cloud connectivity.

Why did AI PC adoption disappoint consumers?

Consumers showed limited interest in AI PCs because actual on-device AI features were underwhelming compared to cloud-based alternatives. Cloud services like ChatGPT and Claude offered better performance, more capable models, and simpler user experiences without needing specialized hardware. Most users didn't identify compelling use cases that required local AI processing specifically.

How did the RAM shortage impact AI PCs?

AI PCs require at least 16GB of memory to run meaningful on-device AI models effectively. When memory prices surged 40-70% in 2025 due to data center demand, manufacturers couldn't afford to build AI-capable machines at consumer price points. They responded by lowering memory configurations on budget machines, making those devices unsuitable for local AI processing and undermining the entire AI PC marketing narrative.

Is cloud-based AI actually better than local AI processing?

For most consumer use cases, yes. Cloud-based AI offers superior model quality, faster inference times due to data center optimization, continuous automatic updates, and no requirement for specialized hardware. Local AI only provides advantages in specific scenarios like extreme privacy requirements, offline environments, or when sub-second latency is critical—niche cases that don't justify mainstream adoption.

Will AI PCs become viable when the memory shortage ends?

Possibly, but not in the way originally envisioned. Even if memory prices normalize by 2027, the core problem remains: users don't strongly desire local AI capabilities when cloud alternatives are available and often superior. Any future AI PC success will likely depend on honest positioning around specific, useful features rather than generic "AI-powered" marketing.

What should consumers prioritize when buying laptops in 2026?

Focus on fundamental attributes that actually impact daily use: processor speed, display quality, keyboard feel, battery life, build materials, and total available memory for your specific workflow. If you use cloud-based AI services, those work on standard laptops without specialized hardware. If you want specific local AI features, identify them precisely before purchasing rather than trusting generic "AI-capable" marketing.

How will manufacturers position AI features going forward?

Expect a shift from broad "AI PC" categorization toward honest, specific feature positioning. Instead of "AI-powered," you'll see features like "AI-enhanced image processing" or "local voice transcription with cloud backup." Cloud-local hybrid approaches will become common, automatically routing complex tasks to the cloud while running simple, frequent operations locally for speed.

Will the memory shortage last into 2027?

Analysts expect continued constraints through 2026 and potentially into 2027, driven by ongoing data center demand for AI infrastructure. However, this timeline is speculative. Technology supply chains can shift quickly based on investment decisions and demand patterns. Most forecasts suggest meaningful improvement by late 2027 or early 2028.

Key Takeaways

- AI PC adoption collapsed not due to technology failure but because users didn't want local AI when cloud alternatives existed

- RAM prices surged 40-70% in 2025 due to data center demand, making 16GB-minimum AI laptops economically infeasible to manufacture at reasonable prices

- Manufacturers have reversed marketing messaging—Dell, HP, and others now emphasize display, battery life, and build quality rather than AI features

- Cloud-based AI outperforms local AI in speed, capability, and user experience for most consumer use cases, making specialized hardware unnecessary

- The memory shortage forces honest reset: manufacturers must now build products around real user needs rather than aspirational technology categories
