Why Curl Killed Its Bug Bounty Program: The AI Slop Crisis [2025]

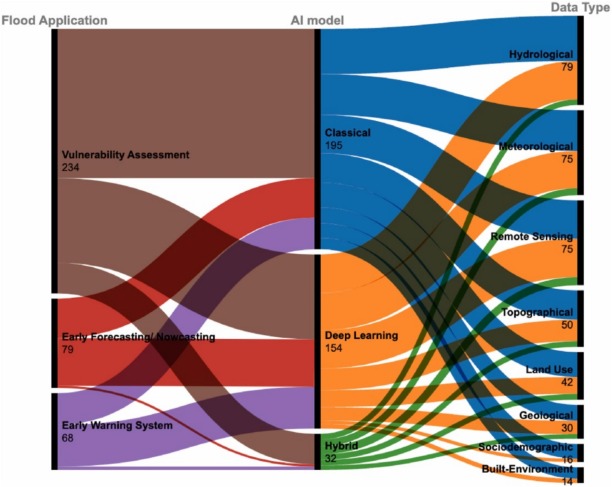

Imagine running a lean security team. You're maintaining one of the most critical tools on the internet. Then, without warning, fake vulnerability reports start flooding your inbox at an impossible rate. Some are obviously AI-generated. Others are clever enough to waste hours of your time. This isn't a hypothetical scenario for the maintainers of curl, the wildly popular open source command-line tool and software library used by millions of developers worldwide.

In January 2026, after years of maintaining a security-focused bug bounty program through HackerOne, the curl team made a controversial decision: they shut it down completely. No more financial rewards. No more incentives. Just a GitHub issues channel, available to anyone, but with zero compensation.

This decision represents a watershed moment for open source security. It exposes a brutal truth that's been building quietly for months: artificial intelligence is destroying the integrity of vulnerability disclosure programs. AI-powered report generation isn't just making noise anymore. It's actively harming the security infrastructure that protects billions of devices and billions of dollars in software.

Let's dig into what actually happened, why it matters, and what it means for the future of open source security.

TL;DR

- Curl's bug bounty was killed by an avalanche of fake and AI-generated vulnerability reports flooding the HackerOne platform.

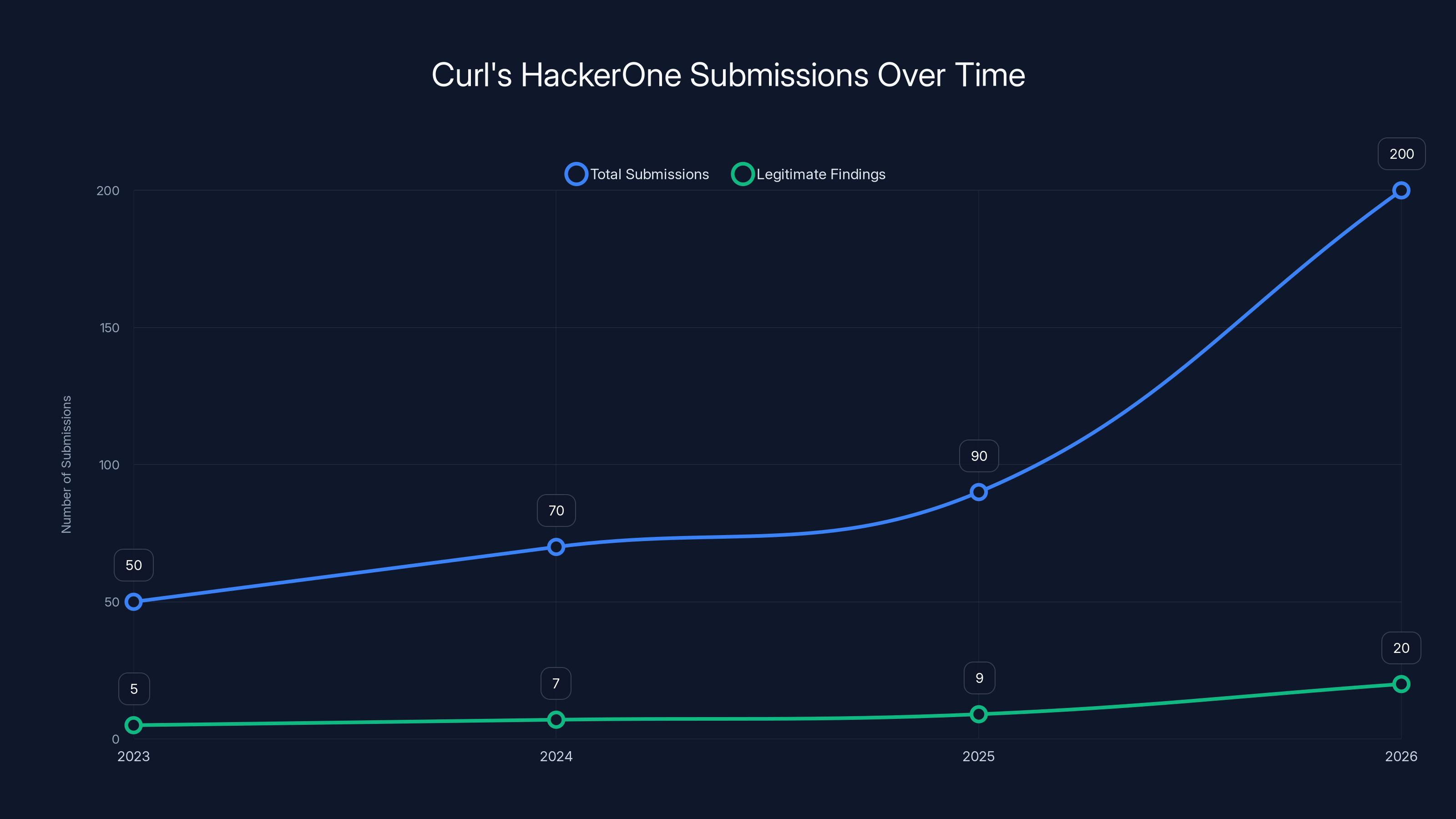

- The numbers were staggering: the security team received seven HackerOne submissions in a 16-hour period, none of which contained a real vulnerability, and had already logged 20 submissions by early 2026.

- AI slop became the primary problem: researchers were using generative AI tools to fabricate non-existent vulnerabilities, wasting hours of security team time.

- No financial incentive, no false reports: curl moved all bug reporting to GitHub without any monetary rewards, effectively eliminating the economic motivation for low-quality submissions.

- This reflects a broader crisis: open source projects are increasingly discovering that bug bounties attract noise, not genuine security researchers.

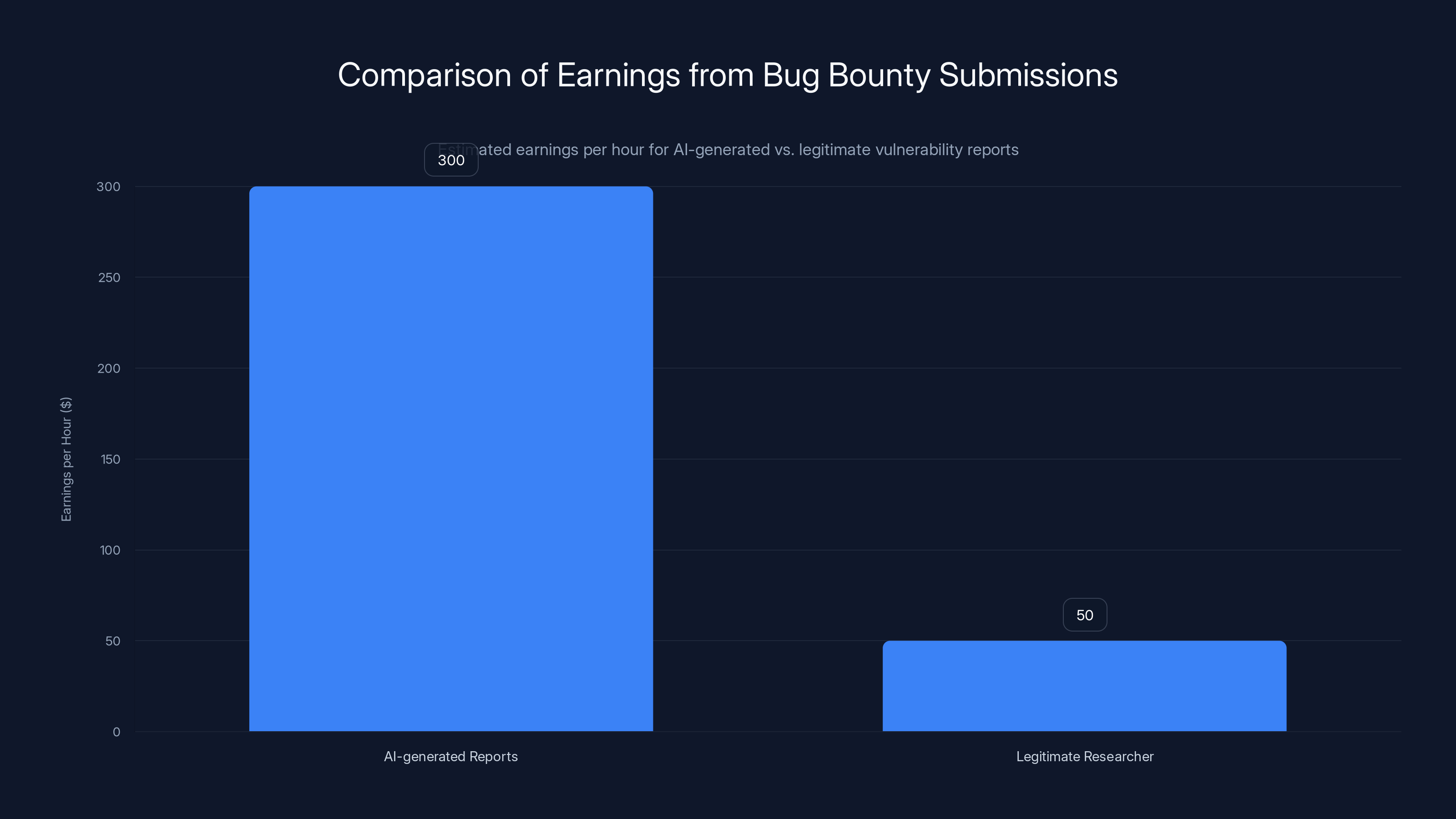

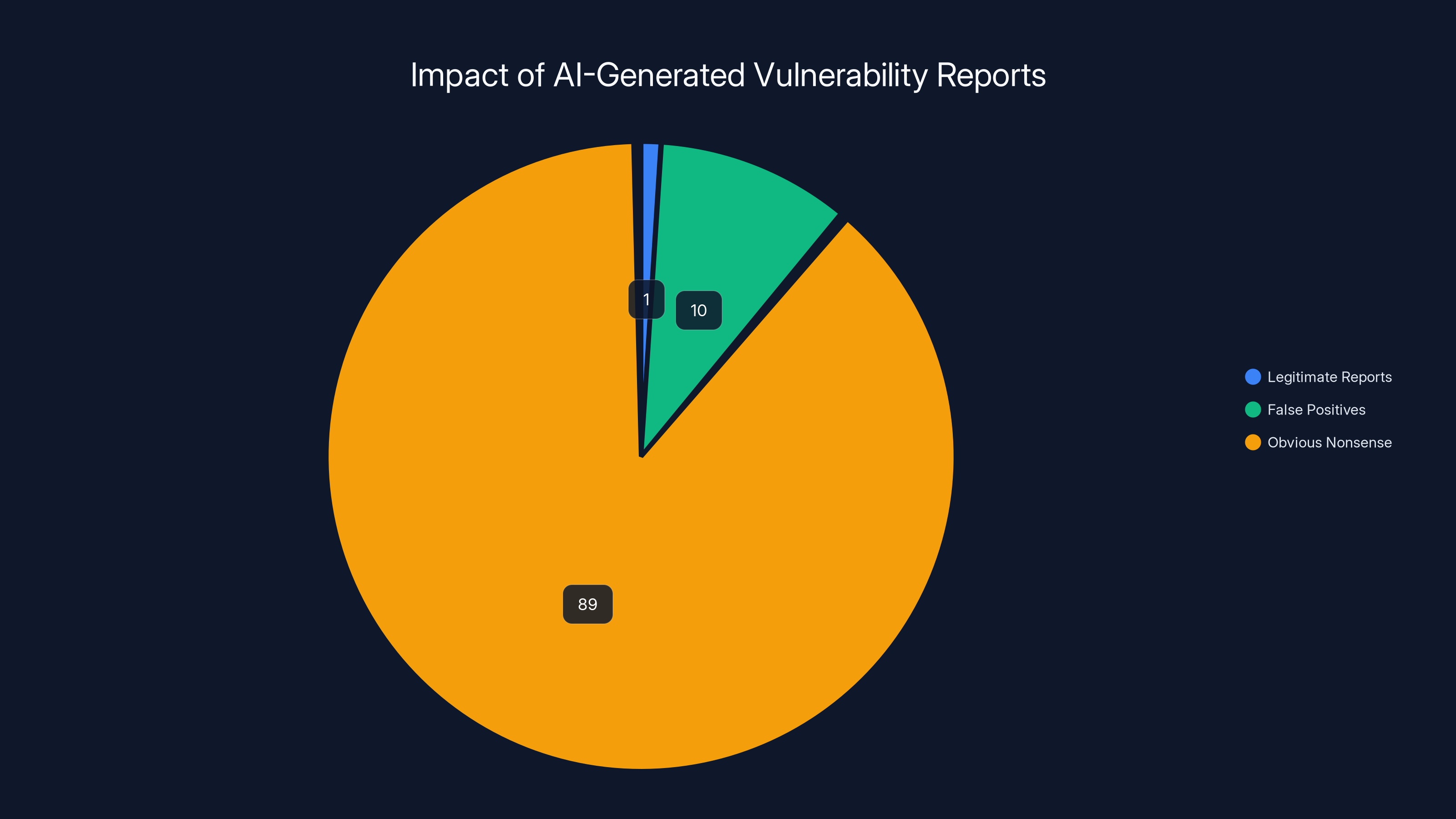


The Breaking Point: How Curl's Security Team Got Overwhelmed

Daniel Stenberg, curl's founder and lead developer, was direct about the problem in his official announcement. The curl team didn't make this decision lightly. They had been running their HackerOne bug bounty program successfully for years, offering financial rewards for legitimate security vulnerabilities. The program was supposed to incentivize skilled researchers to find real bugs before malicious actors could exploit them.

Then something shifted.

In early 2026, the volume of submissions didn't just increase. It exploded. The security team started receiving seven qualified HackerOne submissions within a single 16-hour window. That doesn't sound catastrophic until you understand what "qualified" actually means in this context: HackerOne's system flagged them as potentially legitimate enough to reach the curl team. The curl maintainers then had to investigate each one individually.

Here's where it gets frustrating. After investigating those seven submissions, the security team concluded that none of them contained actual vulnerabilities. Zero. Not a single actionable finding.

Worse, the curl team had already logged 20 submissions in 2026 alone, in a matter of weeks. At this rate, the team would field hundreds of bogus reports annually. For a lean open source project running on volunteer time, this becomes unsustainable.

Stenberg explained the real cost: investigating these submissions takes time away from actual security work, bug fixes, and feature development. The curl project isn't backed by a billion-dollar company with a dedicated security operations center staffed with dozens of analysts. It's a tight team of passionate developers trying to maintain critical infrastructure in their spare time.

The math becomes simple when you think about it that way: if even 10% of submissions are legitimate findings, but 90% are fake, is the bug bounty program actually making the project more secure? Or is it just creating busy work?
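To put a rough number on that question, here is a minimal sketch of the triage cost per real finding. The function name and the two hours of investigation per report are assumptions for illustration, not figures from the curl team.

```python
import math


def triage_hours_per_valid_finding(valid_fraction: float, hours_per_report: float) -> float:
    """Hours of triage spent, on average, for each report that turns out to be real."""
    if not 0 <= valid_fraction <= 1:
        raise ValueError("valid_fraction must be between 0 and 1")
    if valid_fraction == 0:
        # No valid reports at all: the cost per real finding is unbounded.
        return math.inf
    return hours_per_report / valid_fraction


# Assumed: each report takes 2 hours to investigate (hypothetical figure).
print(triage_hours_per_valid_finding(valid_fraction=0.10, hours_per_report=2))
# The batch of seven reports with zero valid findings:
print(triage_hours_per_valid_finding(valid_fraction=0.0, hours_per_report=2))
```

Under these assumptions, a 10% hit rate means every real finding costs ten reports' worth of investigation, and a batch with no valid reports is pure cost.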

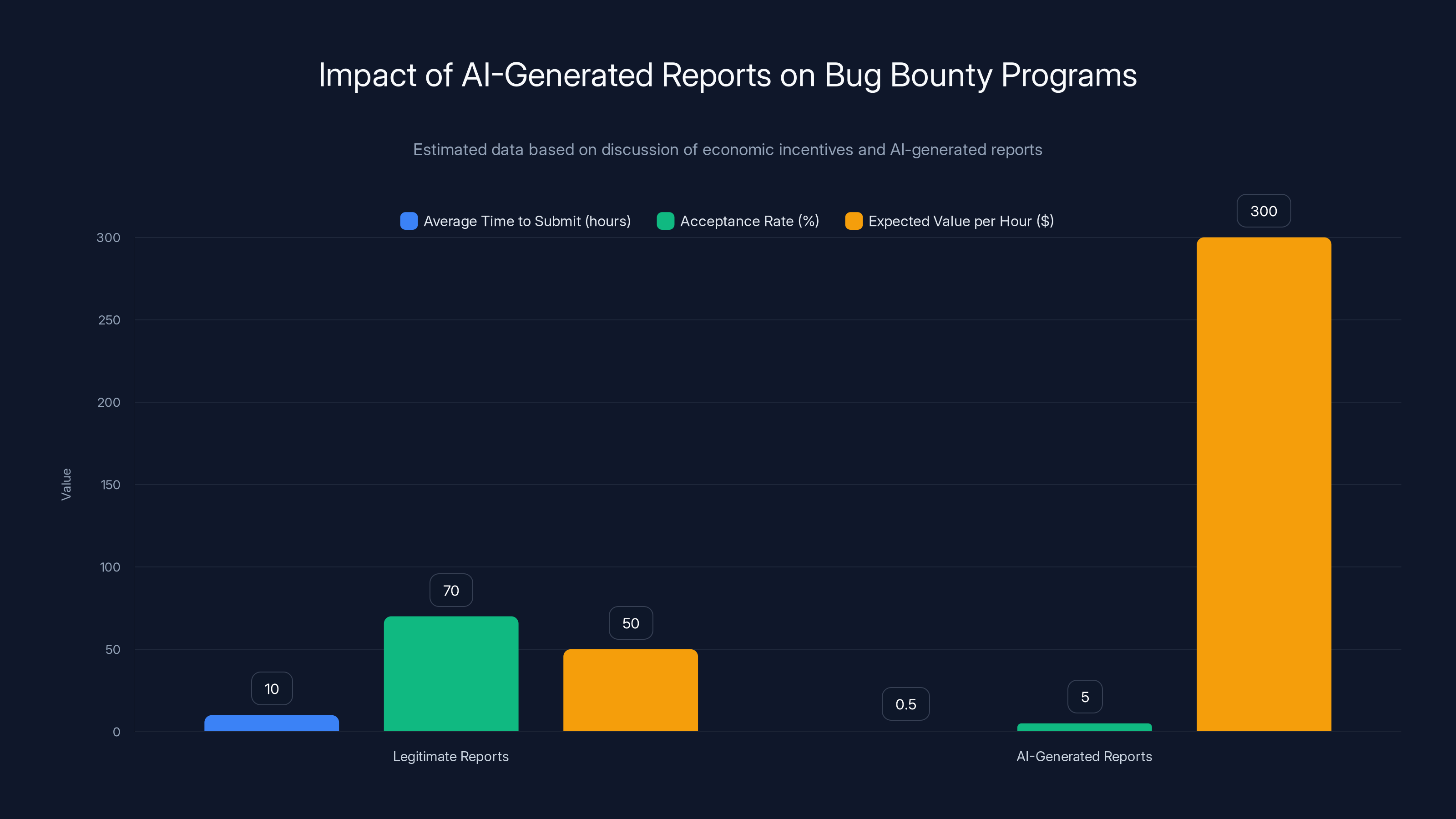

AI-generated reports can be submitted much faster and, despite a lower acceptance rate, offer a higher expected value per hour than legitimate reports. (Estimated data)

The AI Slop Problem: How Generative AI Broke Vulnerability Disclosure

Let's be clear about what's happening here. This isn't about legitimate researchers using AI as a productivity tool. It's not about using ChatGPT to write better bug reports or to help structure findings. This is about researchers using generative AI to fabricate entire vulnerabilities that don't exist.

The term "AI slop" has become industry shorthand for low-quality, AI-generated content that floods platforms. In the context of bug bounties, it refers to vulnerability reports that have no factual basis. They might describe attack vectors that are theoretically possible but have no practical implementation path. They might cite CVE numbers that don't exist. They might describe security flaws in features that don't work the way the report claims.

How does this even work? Here's the process that researchers appear to be following:

They prompt a large language model with something like: "Generate 10 vulnerability reports for curl, a command-line HTTP tool. Make them plausible but include some that are unlikely to be real vulnerabilities. Format them like professional security researcher reports."

The LLM generates a batch of reports. Some sound genuine. Others are obviously nonsense. But here's the key: the person submitting them doesn't necessarily care. They're playing a numbers game. If they submit 100 AI-generated reports, even if 99 are garbage, that one legitimate-sounding report might get past HackerOne's initial triage and be flagged for human review. If it also convinces the maintainers, the submitter gets paid and moves on to the next batch.

From the curl team's perspective, this creates a massive problem. Each report that gets through to human review requires someone to:

- Read and understand the alleged vulnerability

- Investigate whether it's actually possible

- Test the claim in a real curl environment

- Document their findings

- Respond to the researcher explaining why it's not valid

All of that takes time. Multiply that by hundreds of submissions, and you're looking at weeks of wasted work.

The insidious part is that AI-generated vulnerability reports have become sophisticated enough to pass initial screening. They use correct security terminology. They reference real CVE formats. They structure findings like legitimate penetration test reports. A busy triage team skimming submissions might easily flag a few AI slop reports as worth investigating.

But here's what makes curl's situation different from other projects. Curl is a foundational tool. It's used in everything from web browsers to IoT devices to cloud infrastructure. That makes it a valuable target for security researchers. It also means that the bug bounty program attracted both legitimate researchers and, unfortunately, people looking for an easy payday.

The Economics of Abuse: Why Incentives Breed Noise

This brings us to an uncomfortable economic truth that curl's team had to confront: bug bounty programs create perverse incentives.

When you offer money for vulnerabilities, you're not just attracting the world's best security researchers. You're also attracting people who are motivated by easy money. Some are entirely well-intentioned but lack the expertise to validate their own findings. Others are opportunistic. And now, a third category has emerged: people using AI to game the system.

Let's think through the economics from a malicious actor's perspective:

- Effort required to submit an AI-generated vulnerability report: 5 minutes

- Chance of that report getting accepted: let's say 5% (very low)

- Average bug bounty payout for a low-severity finding: $500

- Expected value per submission: 5% × $500 = $25 of expected value per 5 minutes of work

- That's equivalent to earning $300 per hour

Even with a 5% acceptance rate, that's an attractive hourly wage for someone willing to spam the system. Add in the fact that LLMs can generate multiple plausible-sounding reports in seconds, and suddenly this becomes a viable income stream for people who aren't interested in actual security work.
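The arithmetic above can be checked with a few lines of Python. This is only a sketch of the article's back-of-the-envelope estimate: the function name is made up for illustration, and the inputs are the assumed figures from the list (5 minutes, 5%, $500), not measured data.

```python
def expected_hourly_value(minutes_per_report: float, acceptance_rate: float, payout: float) -> float:
    """Expected earnings per hour for someone submitting reports back to back."""
    expected_per_report = acceptance_rate * payout  # 0.05 * $500 = $25
    reports_per_hour = 60 / minutes_per_report      # 60 / 5 = 12
    return expected_per_report * reports_per_hour


spam_rate = expected_hourly_value(minutes_per_report=5, acceptance_rate=0.05, payout=500)
print(f"${spam_rate:.0f} per hour")  # prints "$300 per hour"
```

Plugging in a lower acceptance rate or a smaller payout shows how quickly the incentive shrinks, which is the lever curl pulled by setting the payout to zero.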

Contrast that with a legitimate researcher who spends a week finding a single real vulnerability: per hour of effort, honest work can pay less than spam.

Curl's team realized they were competing against a broken system. They weren't just fighting AI-generated noise. They were fighting the economic rationality of spam.

So they eliminated the incentive altogether.

The number of submissions to Curl's HackerOne program surged in 2026, but legitimate findings remained low. Estimated data.

Why Other Bug Bounties Haven't Collapsed Yet

You might be wondering: if curl is experiencing this level of AI spam, aren't other projects? The answer is yes, but many larger platforms are hanging on because they have resources that curl lacks.

Here's the thing: curl's situation is unique in some ways, but the problem is widespread. Many major tech companies running bug bounty programs have noticed increasing submission volumes, but they're handling it differently. Companies like Microsoft, Google, and Amazon have the resources to build sophisticated filtering systems that automatically flag low-quality submissions. They can employ security analysts whose entire job is triage.

The difference is scale. Microsoft might receive thousands of submissions monthly. If 90% are garbage, their 50-person security team can probably handle it. Curl, with a lean team of volunteer maintainers, simply cannot.

Smaller open source projects have started making similar decisions. Some have quietly reduced their bounty amounts. Others have implemented stricter submission requirements. A few have followed curl's path and eliminated bounties entirely, relying instead on vulnerability disclosure programs without financial incentives.

The deeper issue is that AI has changed the economics of spam at a fundamental level. Generating plausible-sounding but fake vulnerability reports at scale is now cheap enough that even small payouts make the spam worth attempting.

There's also a chicken-and-egg problem: as more projects experience this, fewer new maintainers will want to start bug bounty programs, which means fewer legitimate security researchers will bother looking for vulnerabilities in open source tools at all. This could have serious long-term security implications.

The Shift to GitHub: A New Model for Vulnerability Disclosure

Starting in February 2026, curl moved all vulnerability reporting to GitHub. No monetary rewards. No HackerOne interface. Just the standard GitHub issue tracker.

This is a fascinating strategic move, and it reveals something important about the future of open source security. By removing the financial incentive, curl is betting that they'll actually get better vulnerability reports.

Here's the logic: people submitting reports on GitHub without expecting payment are probably submitting them because they actually found something they believe is a real problem. They have a genuine interest in curl's security. They're not playing a numbers game. They're not optimizing for bounty payouts per minute of effort. They're being helpful.

In other words, curl is filtering for motivation. They're eliminating the people who are primarily motivated by money and keeping the people who are primarily motivated by wanting to help.

This aligns with research on open source motivation more broadly. Studies of open source contributors show that intrinsic motivation (the desire to solve problems, contribute to something meaningful, build reputation) drives better long-term outcomes than extrinsic motivation (money). The developers who stick around and produce quality work are usually the ones who started because they cared about the project, not because they wanted to maximize income.

The same principle might apply to security research. The researchers who find real vulnerabilities in curl are probably doing it because they use curl, or they care about security, or they want to build their reputation in the security community. The people churning out AI-generated reports are doing it purely for the bounty money.

Curl is making a bold bet: removing the money will remove the noise, leaving only the signal.

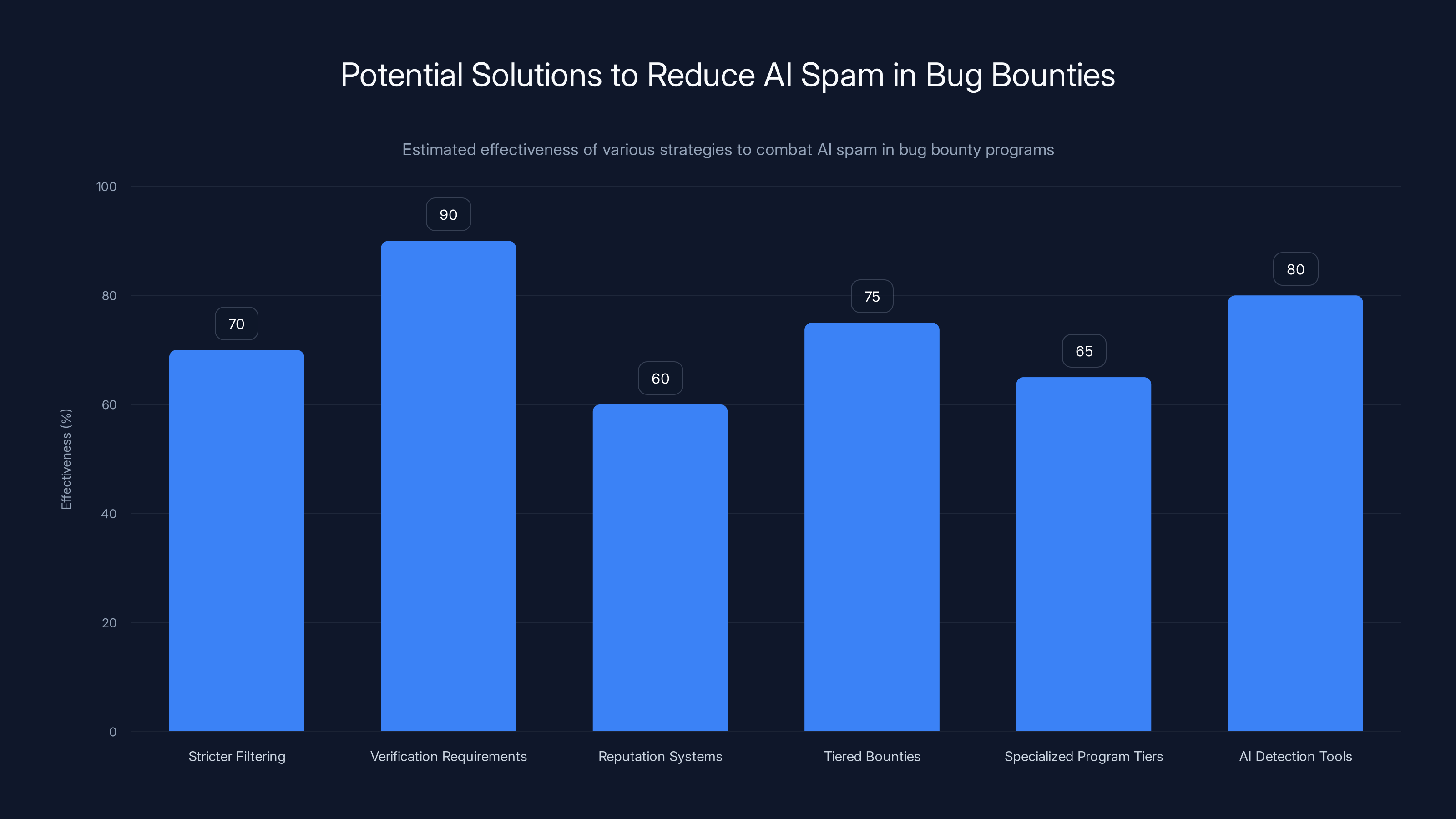

Verification requirements are estimated to be the most effective solution, potentially eliminating 90% of AI spam by demanding proof-of-concept code. (Estimated data)

The Broader Crisis: Open Source Security Under Strain

Curl's decision happens against a backdrop of growing anxiety about open source security in general. The software supply chain has become a critical national security issue. Vulnerabilities in foundational tools can have cascading effects across the entire digital infrastructure.

But here's the paradox: the open source projects that are most critical to global security often have the fewest resources. Curl is a perfect example. It's used in countless critical systems, from financial software to medical devices to military applications. But it's maintained by a small team of volunteers.

Bug bounty programs emerged as a way to partially solve this problem. They incentivize security research on critical projects. They help smaller teams catch vulnerabilities before they become exploits in the wild. They've been genuinely beneficial for many projects.

But AI has broken the model, at least temporarily. And until the bounty platforms (like HackerOne) develop better filtering systems or new models emerge, more projects are likely to follow curl's path.

This creates a security vacuum. If critical open source projects are abandoning bug bounties, where will security researchers focus their attention? On proprietary software with massive budgets? On less critical projects?

The risk is that the projects that most desperately need external security help will be the ones that stop soliciting it.

How AI-Generated Vulnerability Reports Actually Look

Let's make this concrete. What does an AI-generated vulnerability report look like? Here are some patterns that security teams have noticed:

Pattern 1: Plausible But Impossible Vulnerabilities. These are reports that describe security flaws that sound real but have no actual implementation path. For example: "User authentication can be bypassed by sending HTTP requests to the /curl endpoint with malformed headers." It uses correct terminology. It references real components. But curl doesn't have a /curl endpoint, and the headers referenced don't work the way described.

Pattern 2: Vague Technical Descriptions. AI-generated reports often lack specific details that real researchers would include. They might say "memory corruption is possible" without pointing to specific code lines or providing a proof-of-concept. They might reference attack vectors without explaining how to trigger them.

Pattern 3: Generic Vulnerability Types. Real security researchers find specific, novel vulnerabilities. AI models often generate reports about common vulnerability types (SQL injection, buffer overflow, etc.) in tools where those vulnerabilities are already well-known or don't exist. It's pattern-matching without context.

Pattern 4: Missing Proof of Concept. The most telling sign of an AI-generated report is the absence of actual code or steps that prove the vulnerability. Real researchers provide ways to reproduce their findings. AI can't produce a working exploit for a vulnerability that doesn't exist.

The curl team's observation was that as they investigated submissions, they recognized these patterns immediately. But recognizing the pattern and writing a rejection response still takes time.
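As a rough illustration, patterns 2 through 4 can be turned into crude text checks. The sketch below is a hypothetical heuristic, not anything curl or HackerOne is known to use: the function name, the term list, and the regular expressions are all assumptions, and pattern 1 (claims about features that don't exist) still needs a human who knows the code.

```python
import re

# Assumed list of generic vulnerability phrases (Pattern 3); purely illustrative.
GENERIC_TERMS = ("sql injection", "buffer overflow", "memory corruption")
FENCE = "`" * 3  # markdown code-fence marker


def red_flags(report: str) -> list[str]:
    """Return the warning signs found in a vulnerability report's text."""
    flags = []
    # Pattern 4: no code block and no command line to reproduce the issue.
    has_code_block = FENCE in report
    has_command = re.search(r"^\s*(\$ |curl )", report, flags=re.MULTILINE) is not None
    if not (has_code_block or has_command):
        flags.append("no proof of concept")
    # Pattern 2: no pointer to a C source file and line, such as lib/url.c:100.
    if not re.search(r"\b[\w/]+\.[ch]:\d+\b", report):
        flags.append("no source location")
    # Pattern 3: leans on a generic vulnerability class.
    text = report.lower()
    if any(term in text for term in GENERIC_TERMS):
        flags.append("generic vulnerability type")
    return flags


vague = "Memory corruption is possible in curl when handling malformed headers."
# Made-up report text; the file and line number are placeholders.
detailed = "Out-of-bounds read at lib/url.c:100\n$ curl http://localhost:8080/poc\n"
print(red_flags(vague))
print(red_flags(detailed))
```

A filter like this only sorts the queue. A well-crafted fake can include a command line and a file reference, so flagged and unflagged reports alike still end up in front of a person.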

Estimated data shows that a vast majority of AI-generated vulnerability reports are either false positives or obvious nonsense, with only a small fraction appearing legitimate.

The Human Cost: Why This Matters Beyond Curl

Here's something that doesn't get discussed enough: what is the emotional and psychological cost of receiving hundreds of bad-faith reports?

Open source maintainers are already overwhelmed. Most of them do this work for free. They're juggling full-time jobs, personal lives, and maintaining critical software in their spare hours. A constant stream of spurious bug reports isn't just a time drain. It's demoralizing.

It's the equivalent of someone reporting fake crimes to your local police department hundreds of times per week. Eventually, the department doesn't have resources to solve real crimes. More importantly, the investigators become burnt out.

This is a real concern in the open source community right now. Maintainer burnout is already a documented problem. AI-generated spam on top of everything else pushes projects closer to the breaking point.

When a maintainer finally decides to shut down a security program because the noise has become unbearable, that's not just a technical decision. It's a sign that the system has failed them. It's maintainers saying: "We would rather reduce visibility into our security process than continue to suffer through this abuse."

That's a loss for everyone. It means fewer eyes on security. It means fewer opportunities for legitimate researchers to contribute. It means the project becomes less secure, not more secure.

Solutions: What Could Actually Help

So what's the fix? How do we preserve the benefits of bug bounties while eliminating AI spam?

There are several approaches worth considering:

1. Stricter Initial Filtering. Bug bounty platforms like HackerOne could implement more sophisticated filtering that rejects obvious AI-generated reports before they reach project maintainers. This might involve analyzing writing patterns, checking for proof-of-concept code, and verifying that the vulnerability described actually exists in the code being reviewed.

2. Verification Requirements. Requiring submitters to provide actual proof-of-concept code before a report is eligible for payment could eliminate an estimated 90% of AI spam. Real vulnerabilities are reproducible. Fake ones aren't.

3. Reputation Systems. Build accountability into the system: if a researcher has a history of submitting low-quality reports, future submissions from them should face higher scrutiny or stricter requirements.

4. Tiered Bounties. Instead of flat payouts, use sliding scales that reward thorough, well-documented reports much more than minimal findings. This inverts the incentives so that quality is rewarded far more than quantity.

5. Specialized Program Tiers. Allow projects to opt into different program types. A project like curl could run a "high-confidence researchers only" program that manually accepts researchers who have proven track records, rather than accepting submissions from anyone.

6. AI Detection Tools. Develop specialized tools that can detect AI-generated vulnerability reports with high accuracy. This isn't foolproof, but it could catch the most obvious spam.
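To make the verification and reputation ideas more concrete, here is a minimal sketch of how the two could be combined. Everything in it is hypothetical: the class, the smoothing formula, the 0.3 threshold, and the tier names are illustrative choices, not how any bounty platform actually works.

```python
from dataclasses import dataclass


@dataclass
class SubmitterRecord:
    """Running tally of one submitter's past reports."""
    valid: int = 0
    invalid: int = 0

    def record(self, was_valid: bool) -> None:
        if was_valid:
            self.valid += 1
        else:
            self.invalid += 1

    @property
    def score(self) -> float:
        # Smoothed share of valid reports; a brand-new submitter starts at 0.5.
        return (self.valid + 1) / (self.valid + self.invalid + 2)


def review_tier(record: SubmitterRecord, has_poc: bool) -> str:
    """Decide how a new report is handled (thresholds are assumptions)."""
    if not has_poc:
        return "reject: proof of concept required"
    if record.score < 0.3:
        return "extra scrutiny"
    return "standard review"


spammer = SubmitterRecord()
for _ in range(5):
    spammer.record(was_valid=False)

print(review_tier(SubmitterRecord(), has_poc=True))  # new submitter with a PoC
print(review_tier(spammer, has_poc=True))            # five invalid reports on file
print(review_tier(spammer, has_poc=False))           # no PoC at all
```

The smoothing keeps a single early mistake from sinking an honest newcomer, while a run of invalid reports pushes the score down quickly.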

What curl decided to do is essentially burn down the system and rebuild from scratch. By moving to GitHub without financial incentives, they're filtering for genuinely motivated researchers. Is it perfect? No. But it's a rational response to an irrational situation.

What This Means for Security Researchers

If you're a legitimate security researcher, curl's decision is actually good news in the long run.

Yes, you're no longer getting paid for curl vulnerabilities. But you're now competing in an environment where the noise is eliminated. That means your legitimate findings will be noticed and appreciated. You won't be lost in an avalanche of AI spam. You might not get paid, but you'll build reputation in the security community, which has long-term value.

Plus, contributing to high-profile open source projects is valuable for resume-building and professional development. Many security researchers value that more than a small bounty.

The researchers who are harmed by this decision are the ones who were primarily motivated by quick bounty payouts. And honestly, those people were probably harming the system anyway.

Lessons for Other Open Source Projects

Every open source maintainer should be paying attention to curl's announcement. This is a canary in the coal mine.

The questions your project should be asking:

- Are you seeing a significant increase in low-quality vulnerability submissions?

- Do your reports lack proof-of-concept code?

- Are researchers describing vulnerabilities that don't seem to exist?

- Is the time cost of triaging submissions outweighing the security benefits?

If you're answering yes to these, you're probably already experiencing AI spam. The question is whether you'll address it proactively or wait until it becomes unbearable.

Curl chose to address it. But there might be better solutions than eliminating bounties entirely. Stricter filtering, reputation systems, or higher proof-of-concept requirements might work for your project.

The key lesson is this: monitor your submissions carefully. Watch for sudden spikes in volume. Look for patterns in low-quality reports. Don't wait until your team is burnt out before taking action.
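Watching for spikes doesn't need heavy tooling. The sketch below flags a week whose submission count sits well above the recent baseline. The function name, the three-standard-deviation threshold, and the sample counts are all assumptions for illustration.

```python
from statistics import mean, stdev


def is_spike(history: list[int], current: int, threshold: float = 3.0) -> bool:
    """True if `current` is far above the average of past weekly counts."""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    baseline = mean(history)
    # Floor the spread at 1 so a perfectly flat history doesn't flag tiny changes.
    spread = max(stdev(history), 1.0)
    return current > baseline + threshold * spread


weekly_counts = [2, 3, 1, 2, 3]      # made-up counts from previous weeks
print(is_spike(weekly_counts, 4))    # an ordinary week
print(is_spike(weekly_counts, 20))   # a sudden surge
```

A check like this could run against whatever export your tracker or bounty platform provides. The point is to notice the surge in week one, not month three.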

The Bigger Picture: AI's Impact on Information Quality

Curl's crisis is one instance of a much broader problem: AI-generated content is degrading the quality of information across all platforms.

We're seeing this play out everywhere. Search results cluttered with AI-generated blog posts. Social media flooded with AI-generated images. Help forums filled with AI-generated answers that sound confident but are often wrong.

Vulnerability reports are just another category of information that's being degraded. But the stakes are higher here. When your search results are polluted with AI slop, that's annoying. When your security processes are polluted with AI slop, that's dangerous.

This suggests that we need better tools and standards for detecting and filtering AI-generated content. It also suggests that platforms hosting information need to invest more in quality control.

The tragic irony is that security is one of the fields where we can least afford to have noisy, low-quality information. Yet it's becoming one of the first places where AI spam is creating real damage.

Looking Forward: What Happens Next?

Curl's decision to shut down its bug bounty program sends a signal to the rest of the open source community. It says: the current model is broken. Something has to change.

We can probably expect a few outcomes:

Short term: More open source projects will reduce or eliminate their bug bounty programs, moving to unfunded vulnerability disclosure instead. This will reduce noise but might also reduce the incentive for security researchers to look at these projects.

Medium term: Bug bounty platforms like HackerOne will implement stronger filtering to reduce AI spam. They'll realize that hosting spam is bad for business because projects will leave.

Long term: We might see new models emerge. Perhaps AI-resistant bounty structures. Perhaps reputation-based systems where only established researchers can submit for payment. Perhaps a split model where different bounties apply to different researcher tiers.

What won't change is the underlying reality: large language models make it cheap to generate seemingly plausible content at scale. Until we have better detection and filtering systems, AI spam will remain a problem across all information systems, including security.

Curl's team did what they had to do to protect their own sanity and maintain their ability to actually do security work. They chose quality over quantity. They chose a sustainable model over an optimized-for-spam model.

Other projects will have to make similar choices, or find better ways to filter. But one thing is certain: the old model of unrestricted, financially-incentivized vulnerability submissions is becoming untenable in the age of AI.

FAQ

Why did curl shut down its bug bounty program?

Curl's security team was overwhelmed by an avalanche of low-quality and AI-generated vulnerability reports submitted through HackerOne. Investigators discovered that none of the reports in a batch of seven submissions contained actual vulnerabilities, and the volume was consuming all available security team resources. By removing the financial incentive, curl could filter out researchers motivated purely by bounty payouts and focus on genuine security contributions.

What is "AI slop" in the context of vulnerability reporting?

AI slop refers to fake or low-quality vulnerability reports generated by large language models. These reports use correct security terminology and may describe plausible-sounding attack vectors, but they reference vulnerabilities that don't actually exist. They typically lack proof-of-concept code and specific details that legitimate researchers would provide. AI tools can generate these reports at massive scale, making them an attractive way for malicious actors to spam bounty programs.

How did the economic incentives create the problem?

When bug bounties offer payment for vulnerability reports, they inadvertently incentivize quantity over quality. A person submitting AI-generated reports takes only minutes to generate plausible-sounding submissions. Even with a low acceptance rate of 5%, the expected value per effort can exceed $300/hour. This makes spamming bounty programs economically rational, unlike legitimate research which requires deep technical expertise and extensive testing.

Will other open source projects follow curl's example?

Probably, though it's unclear if terminating bounties entirely is the best solution. Many projects are already experiencing increased AI-generated submissions and may eventually reduce or eliminate their programs. However, platforms like HackerOne are likely to implement stronger filtering to keep projects from leaving. The long-term solution may involve better detection systems rather than eliminating bounties entirely.

What happens to curl's security without a bug bounty program?

Curl is moving vulnerability reporting to GitHub without financial incentives. This creates a filter for genuinely motivated researchers who contribute because they care about security rather than earning bounty payouts. The theory is that legitimate researchers will still submit findings, while spam will disappear. This reduces noise and allows the security team to focus on real vulnerabilities rather than investigating hundreds of fake reports monthly.

How can other projects protect themselves from AI-generated submissions?

Projects can implement several strategies: require proof-of-concept code before accepting reports, use reputation systems to track submitter quality over time, apply stricter initial filtering, offer tiered bounties that reward thorough documentation, and adopt AI detection tools. The key is monitoring submission quality metrics and watching for sudden spikes in volume that indicate potential spam campaigns.

What's the future of bug bounties in open source?

The vulnerability disclosure model is likely to evolve. Expect more projects to implement reputation-based systems, require technical proof before payments, and possibly split programs into different tiers for established vs. new researchers. Platforms like HackerOne will need to invest in better filtering. Some projects may move to unfunded disclosure models like curl's. The goal will be preserving the benefits of crowdsourced security research while eliminating the noise that AI enables.

Can AI-generated vulnerability reports ever be useful?

While the current wave of AI-generated reports is entirely harmful, AI tools could theoretically be useful for security research if properly guided. A researcher using AI to help analyze code, brainstorm potential vulnerabilities, or document findings is different from someone using AI to generate fake reports. The key difference is accountability and verification. Reports with working proof-of-concept code can't be AI-generated slop, by definition.

Conclusion

Curl's decision to shut down its bug bounty program is a watershed moment for open source security. It represents the point at which the noise from AI-generated vulnerability spam exceeded the signal from legitimate security research.

This didn't happen because of an evil plot or because AI is inherently malicious. It happened because incentives matter, and when you create a financial incentive without sufficient friction or verification, people will optimize for it. When AI makes it possible to generate plausible-sounding submissions at scale, the economics of spam become attractive.

The curl team's response was pragmatic: remove the incentive, reduce the noise, filter for genuine researchers. It's a loss in some ways: legitimate security researchers won't get paid for curl findings. It's a gain in others: the security team can now focus on actual security work instead of triaging hundreds of fake reports monthly.

For the broader open source community, this is a warning signal. The current bug bounty model is under stress. As more projects experience AI spam, some will follow curl's path. Others will adapt their programs. The platforms hosting bounties will need to improve their filtering.

But the underlying lesson is this: in an age of generative AI, information quality depends on verification, not just reputation or platform design. Vulnerability reports with working proof-of-concept code can't be entirely AI-generated. Requirements for specificity and verification are the immune system against spam.

Curl chose to redesign that immune system from scratch. Other projects should be learning from their experience now, while there's still time to fix the problem before burnout forces their hand.

The question isn't whether AI will continue to create spam in security programs. It will. The question is whether platforms and projects will adapt faster than the problem spreads. So far, curl's announcement suggests the answer might be no.

