Wikipedia's 25-Year Journey: Inside the Lives of Global Volunteer Editors

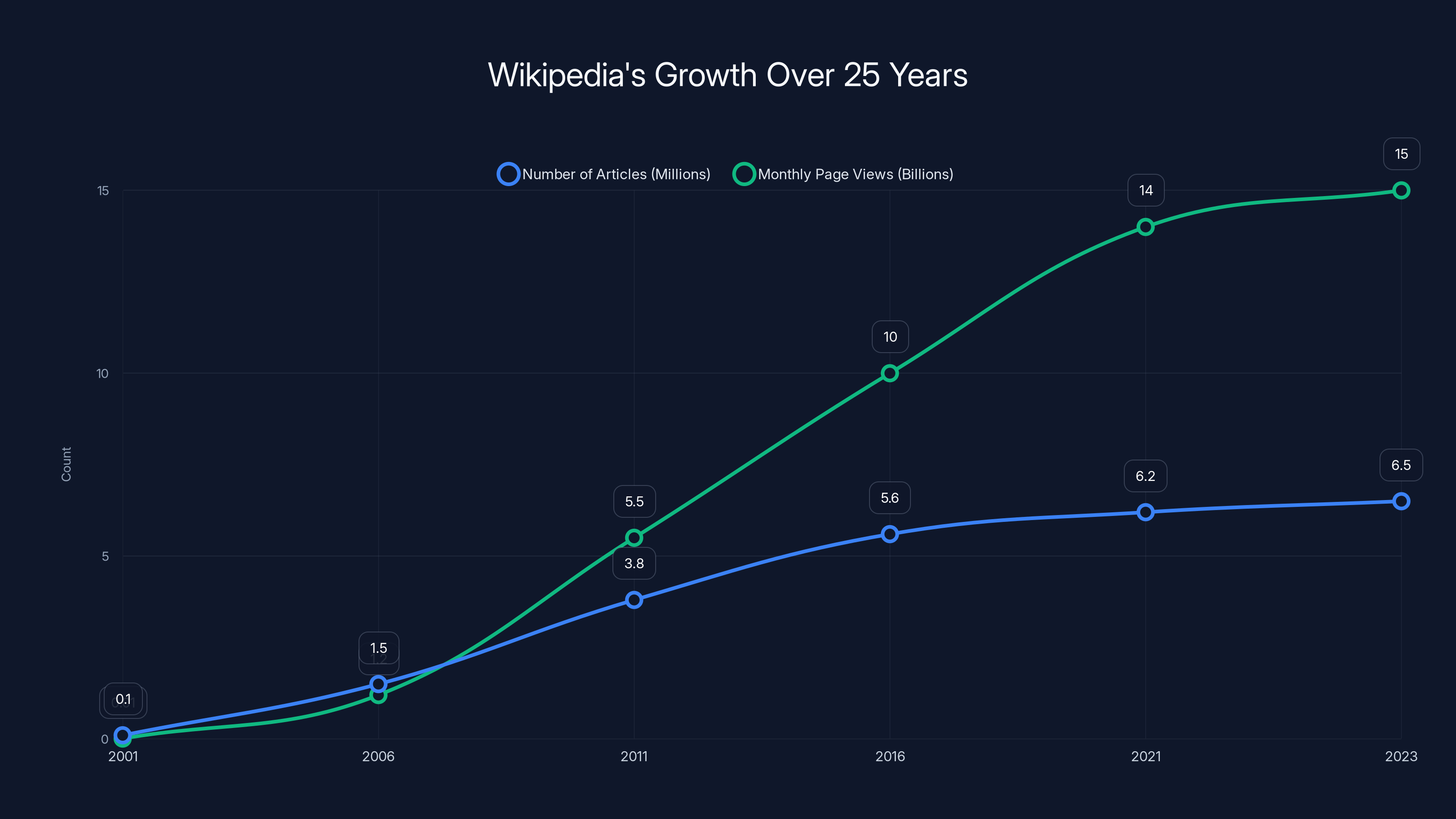

Twenty-five years. That's how long Wikipedia has been quietly transforming the way humans access and share knowledge. Since January 15, 2001, when founder Jimmy Wales launched the site with just 100 pages, the platform has become something almost unimaginable: a crowdsourced encyclopedia that rivals traditional reference materials in both breadth and depth.

Here's what's wild about this milestone. When Wikipedia launched, the internet was still skeptical about the idea of "anyone can edit." Critics said it would devolve into chaos. Bad information would dominate. The whole thing would collapse under its own anarchic principles. Instead, the opposite happened. Through a combination of volunteer passion, community governance, and an almost obsessive commitment to accuracy, Wikipedia became something resembling a modern miracle.

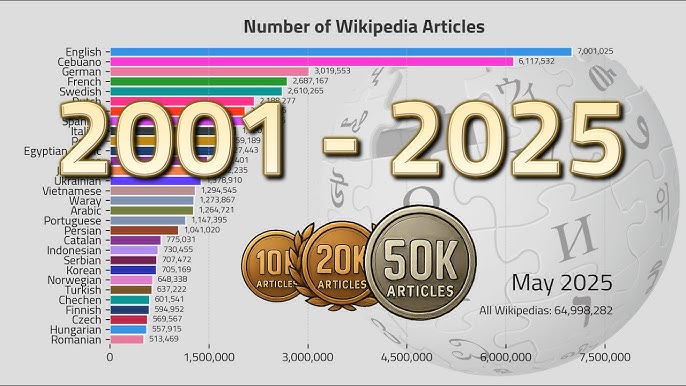

Today, the site hosts more than 65 million articles in over 300 languages. It receives almost 15 billion page views every month. On a typical day, more people reference Wikipedia than read the New York Times. For students, researchers, and casual knowledge-seekers, it's become the default first stop for answering almost any question.

But here's what often gets lost in the statistics: Wikipedia isn't built by algorithms or massive corporations. It's built by people. Thousands of them. Volunteers from Bangladesh to Brazil, from Australia to Argentina. People who spend their evenings and weekends editing articles about everything from medieval history to quantum mechanics, often without recognition, rarely for money, and mostly just because they believe knowledge should be free.

To mark this quarter-century milestone, the Wikimedia Foundation, the nonprofit organization that operates Wikipedia, is releasing something unprecedented: a mini docuseries that introduces readers to eight of these editors. It's a deliberate move to humanize the platform in an era when AI and automated systems dominate conversations about knowledge generation. The series puts faces, names, and stories to the abstract concept of "Wikipedia editors," revealing the motivations, challenges, and passions that drive the most ambitious knowledge-sharing project in human history.

TL;DR

- Massive Scale: Wikipedia now hosts 65 million articles across 300+ languages, receiving 15 billion monthly views

- Human-Powered: All content created and edited by volunteers, not AI or corporate teams

- 25-Year Milestone: Platform celebrating a quarter-century of free, accessible knowledge since January 2001

- Documentary Focus: New mini-docuseries highlighting 8 volunteer editors from around the globe

- Cultural Impact: Over 1.5 billion unique devices access Wikipedia monthly, making it the backbone of internet knowledge

- Challenges Remain: Platform faces pressure from misinformation, political bias accusations, and AI-generated content threats

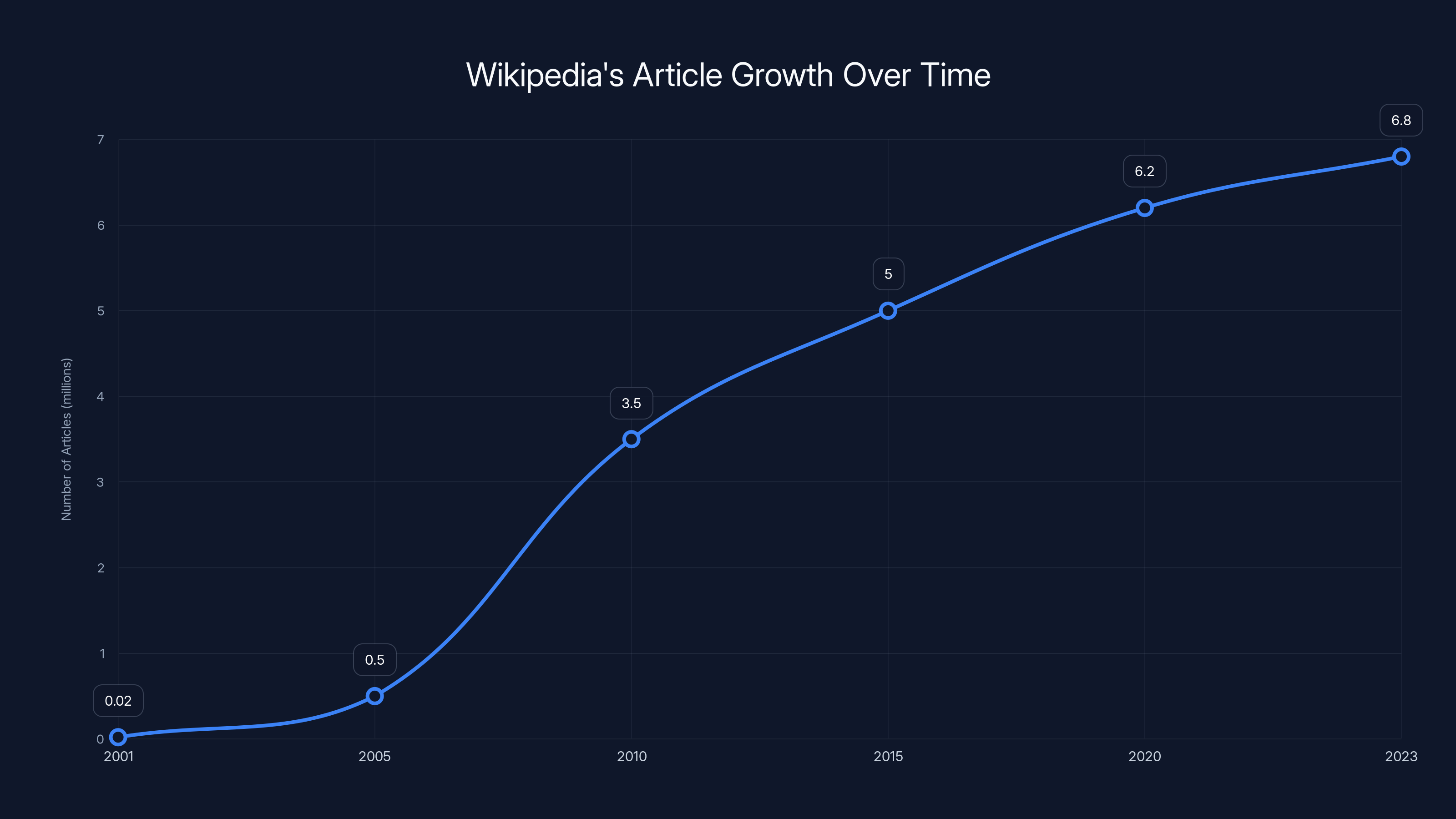

English Wikipedia's article count has grown from a few thousand in 2001 to nearly 6.8 million in 2023, showcasing its rapid growth and global reach. (Estimated data)

From 100 Pages to a Global Phenomenon: Wikipedia's Growth Story

The numbers tell only part of the story. When Wikipedia launched, having an online encyclopedia at all was remarkable. The previous gold standard—Britannica—was a physical product, updated annually, accessible only to those who could afford it. Wikipedia flipped the model entirely. Free. Online. Constantly updated. Editable by anyone.

The growth trajectory defies conventional business logic. Most platforms require massive funding, sophisticated marketing, and corporate infrastructure. Wikipedia grew through word-of-mouth, volunteer enthusiasm, and the elegance of its simplicity. Within its first year, the site had thousands of articles. By 2005, hundreds of thousands. Today, the English Wikipedia alone contains nearly 6.8 million articles, each the product of collaborative editing, peer review, and continuous refinement.

But quantity alone doesn't explain Wikipedia's dominance. The real achievement is reliability. Studies comparing Wikipedia to traditional encyclopedias show surprisingly small margins in accuracy. A famous 2005 comparison published in Nature found that Wikipedia's science articles came close to Britannica's in accuracy, averaging roughly four inaccuracies per article to Britannica's three. That's not just surprising. It's almost impossible to explain if you don't understand how Wikipedia actually works.

The platform has weathered multiple crises and transformations. It survived the transition from print-era thinking to digital-era expectations. It adapted to mobile access becoming dominant. It absorbed the rise of AI and machine learning without losing its core identity. Most impressively, it maintained its volunteer-first ethos while scaling to serve billions of people.

Today, Wikipedia serves as the knowledge backbone for countless applications, search engines, and AI systems. When AI models train on text, they're often trained partially on Wikipedia data. When people ask voice assistants factual questions, those systems frequently reference Wikipedia. The irony is delicious: a platform built on the radical principle of volunteer collaboration has become essential infrastructure for the most sophisticated technological systems we've created.

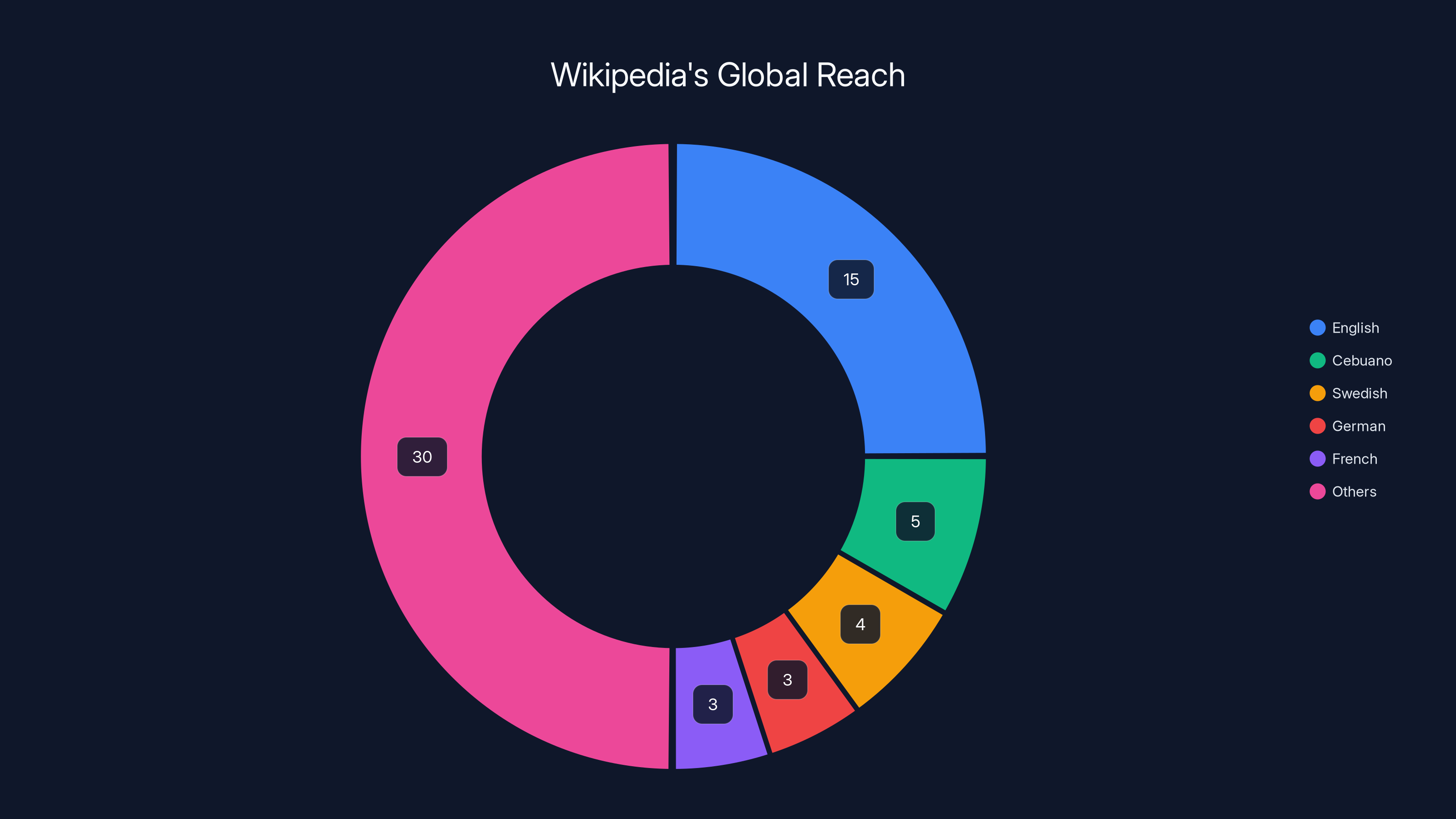

English accounts for the largest share of Wikipedia articles, but a significant portion is also in other languages, reflecting its global reach. (Estimated data)

The Eight Faces Behind the Encyclopedia: Introducing Wikipedia's Volunteer Editors

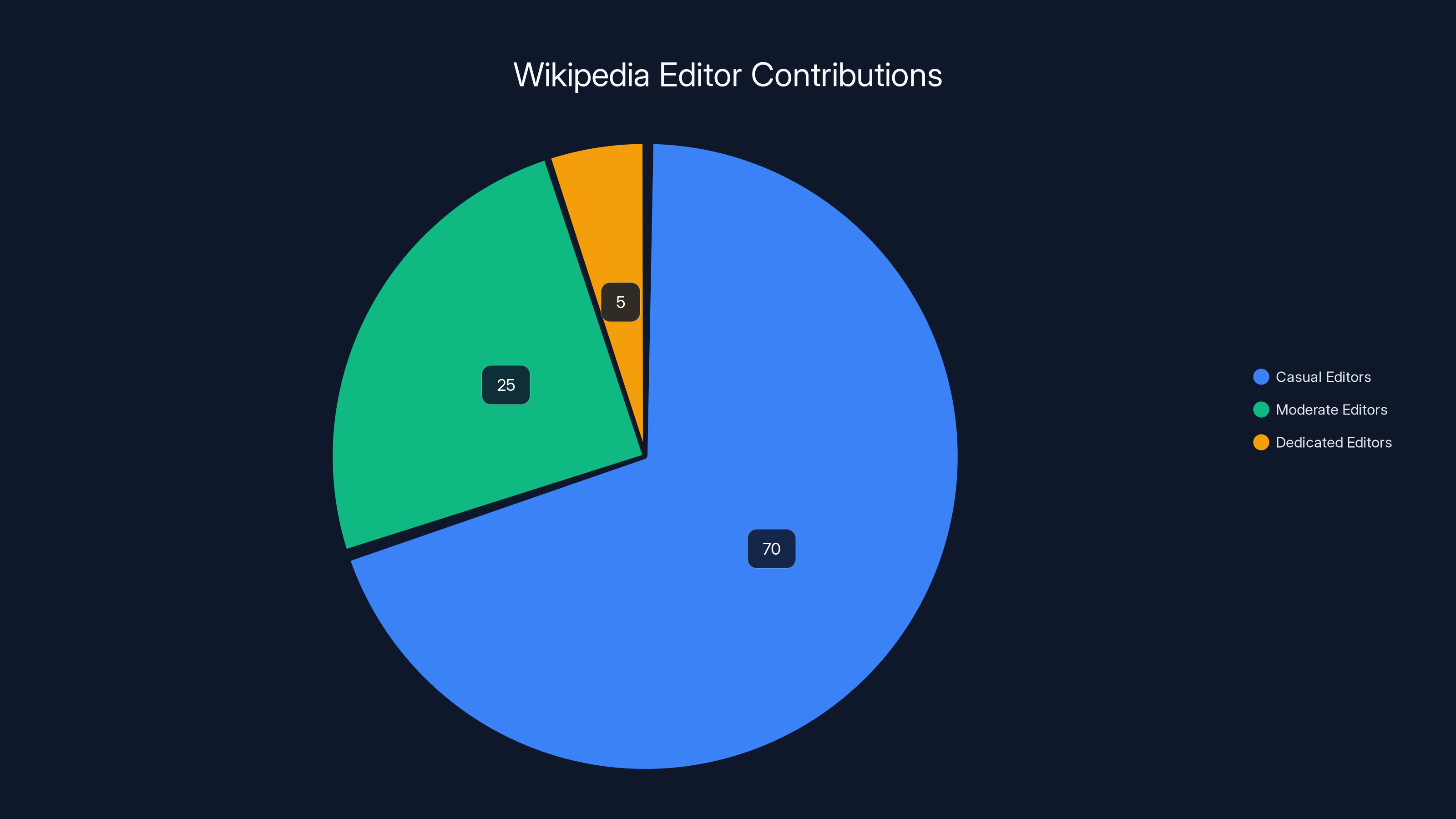

The docuseries doesn't try to cover everyone. That would be impossible. There are millions of Wikipedia editors globally, from casual contributors who fix a typo once to dedicated editors who've made over 100,000 edits. Instead, the Wikimedia Foundation selected eight editors whose stories represent different aspects of what Wikipedia means to different people.

Hurricane Hank: Documenting Disasters in Real Time

"Hurricane Hank" joined Wikipedia in 2005, during the aftermath of Hurricane Katrina. His motivation was straightforward: he wanted to ensure that the disaster's full story was documented accurately and comprehensively. This wasn't abstract knowledge work—it was about contributing to historical record-keeping during a moment when misinformation could spread rapidly.

Editors like Hurricane Hank represent something critical about Wikipedia's value proposition. Immediately after major events, Wikipedia becomes a gathering place for people who want the facts right. During natural disasters, political upheavals, or breaking news, editors across the globe coordinate to ensure Wikipedia articles stay current and accurate. They're not getting paid. Many aren't recognized. But they're driven by something deeper: the conviction that collective knowledge-gathering matters.

Hurricane Hank's work on Hurricane Katrina coverage demonstrates how Wikipedia evolves through real-time collaboration. The article he contributed to would've had multiple editors, multiple sources, and continuous refinement. What started as raw information during a crisis became a lasting historical record.

Netha: Fighting Misinformation in Healthcare

Netha represents a different species of Wikipedia contributor: the domain expert with a mission. An Indian doctor, Netha leveraged her medical expertise to combat health misinformation during the COVID-19 pandemic. This is where Wikipedia becomes genuinely critical infrastructure.

During COVID, misinformation spread faster than the virus itself. People searched for medical information frantically, and they often landed on Wikipedia. Editors like Netha made sure the information they found was accurate, evidence-based, and potentially lifesaving. She didn't wait for the Wikimedia Foundation to recruit her. She saw a need and acted on it.

This highlights a crucial aspect of Wikipedia's model: self-organization. The platform doesn't have a top-down structure assigning editors to topics. Instead, people identify areas where they can contribute and jump in. Netha identified healthcare as an area where her expertise could provide real value, and she's been editing medical content ever since.

The stakes in healthcare editing are extraordinarily high. Bad information on a medical Wikipedia page doesn't just mislead—it can harm people. Editors like Netha understand this weight. They don't take their role casually. Many spend hours verifying sources, cross-checking claims, and ensuring that medical articles reflect the best available evidence.

Joanne: Recovering Hidden Histories

Joanne from the UK has a different motivation entirely. She learned about Eloise Butler, a pioneering botanist who created the first public wildflower garden in the United States, through a social media post. Realizing that Butler didn't have a Wikipedia page despite her historical significance, Joanne took it upon herself to create one.

Joanne represents a growing cohort of Wikipedia editors: people using the platform to correct historical erasure. For centuries, women, people of color, and other marginalized groups have been absent from historical records. Wikipedia offers a decentralized mechanism for recovering these stories. Anyone can create an article. If it's well-sourced and follows Wikipedia's guidelines, it stays.

Butler's life is the kind of story that deserves documentation. She was an innovator, a conservationist, and an educator who influenced how Americans think about nature preservation. The fact that she didn't have a Wikipedia page before Joanne created one reveals gaps in crowdsourced knowledge, gaps that require human initiative to fill.

This work is painstaking. Joanne had to research Butler's life, find credible sources, format the information according to Wikipedia's style guidelines, and prepare for potential edits or deletions if reviewers thought the article didn't meet standards. She did this voluntarily because she believed the information mattered.

Gabe: Ensuring Representation of Black History

Gabe's mission is explicitly about representation. He focuses on ensuring that historic Black figures are documented accurately and thoroughly on Wikipedia. This is activism through information architecture.

For generations, Black history has been systematically underrepresented in mainstream reference materials. Wikipedia offers an opportunity to correct that. Gabe works to create and improve articles about Black scientists, artists, leaders, and changemakers whose contributions deserve recognition.

His work highlights a tension in Wikipedia's supposedly neutral model. There's no such thing as truly neutral information selection. Deciding which people deserve Wikipedia pages, which events deserve coverage, and which perspectives get represented—these are all choices. Gabe makes those choices deliberately, working to ensure that Wikipedia reflects the full diversity of human achievement.

The Digital Time Capsule: Preserving Wikipedia's Most Pivotal Moments

Beyond the docuseries, the Wikimedia Foundation created something equally important: a digital time capsule. This isn't just nostalgia—it's about preserving the key moments that shaped how Wikipedia evolved and what it came to mean.

The time capsule includes an audio recording from Jimmy Wales, Wikipedia's founder, reflecting on the past quarter-century. Hearing directly from Wales—the person who had the audacity to believe that volunteers could build a comprehensive, reliable encyclopedia—adds a layer of authenticity and context to the anniversary celebration.

It also features what might be the most telling moment in Wikipedia's history: the traffic surge that followed Michael Jackson's death on June 25, 2009. The news spread globally within hours, and Wikipedia became one of the first places people turned for comprehensive information about his life, career, and death. The surge was so massive that Wikipedia's servers nearly crashed under the load.

This moment crystallized something that was always true but rarely acknowledged: Wikipedia isn't just a reference tool. It's a critical information utility. When major events happen, millions of people simultaneously want reliable information, and Wikipedia is one of the places they instinctively turn.

The time capsule also documents the platform's expansion into multiple languages, the development of sophisticated tools for fighting vandalism and misinformation, and the growing sophistication of Wikipedia's governance structures. What started as a loose collection of volunteers working with minimal coordination evolved into a complex ecosystem with policies, governance processes, and quality control mechanisms.

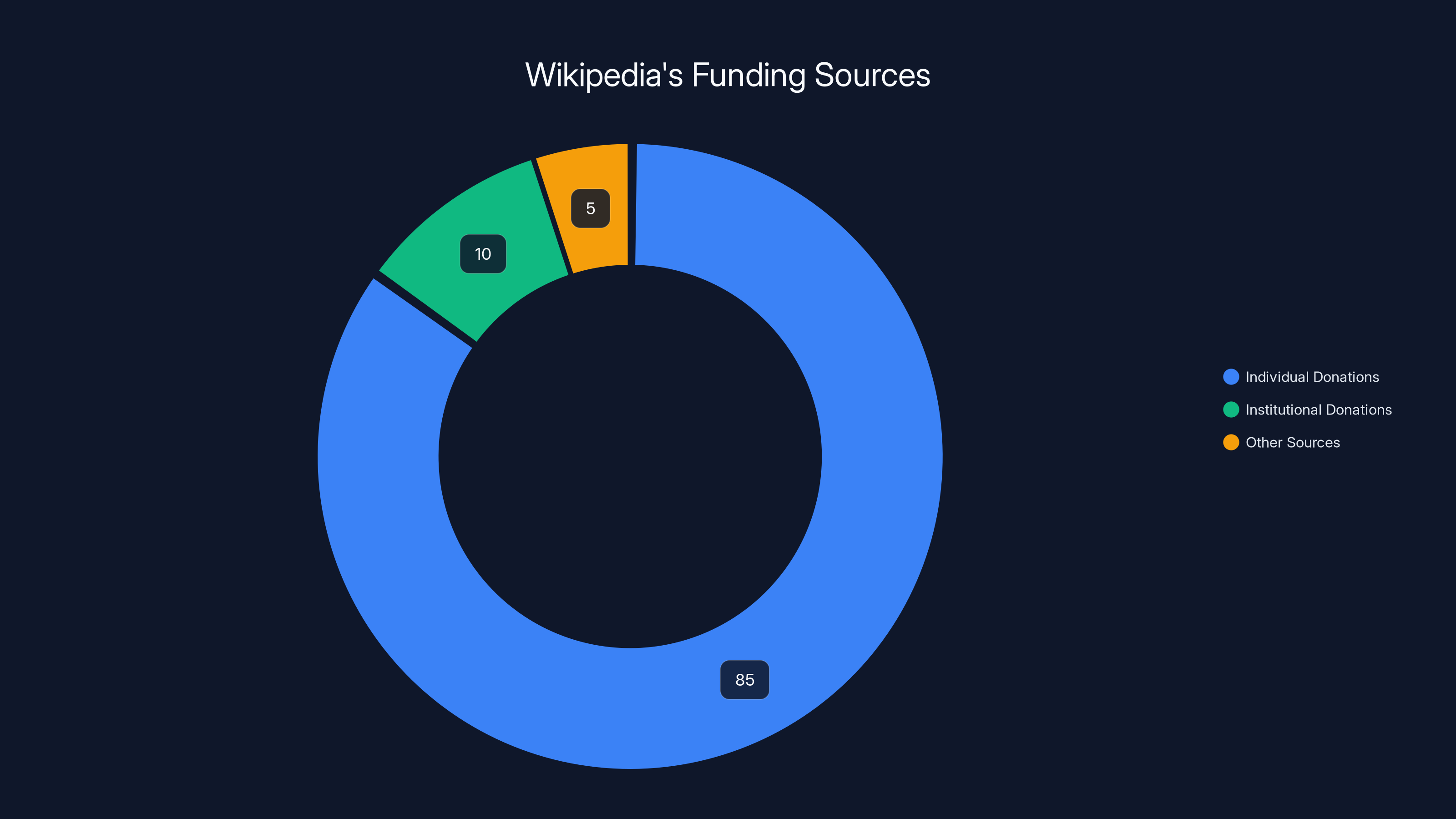

The majority of Wikipedia's funding comes from individual donations, highlighting the platform's reliance on user support. Estimated data.

The Architecture of Trust: How Wikipedia Maintains Quality

This is the question everyone asks: how does Wikipedia avoid descending into chaos? How does a platform where literally anyone can edit maintain such surprisingly high quality?

The answer isn't a single mechanism—it's a sophisticated ecosystem of overlapping systems, each designed to catch different types of problems.

Community Governance and Peer Review

Every edit is visible. That's crucial. When someone changes a Wikipedia article, that change appears in the article's edit history. Other editors see the change, check it, and either leave it in place or revert it. This creates continuous peer review without formal approval processes.

For disputed changes, Wikipedia has escalation mechanisms. If editors disagree about what should appear in an article, they can discuss it on the article's talk page. If disagreements persist, more formal dispute resolution processes exist. Most importantly, Wikipedia's community has developed incredibly sophisticated norms about how these discussions should proceed.
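The edit-history idea described above can be sketched in a few lines. This is a minimal illustration, not how MediaWiki actually stores revisions: the `Revision` and `Article` classes, their fields, and the editor names are all hypothetical. The point it tries to show is that nothing is overwritten, and that a revert is just another visible edit.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Revision:
    """One saved version of an article (hypothetical model, not MediaWiki's schema)."""
    editor: str
    text: str
    summary: str


@dataclass
class Article:
    title: str
    history: list[Revision] = field(default_factory=list)

    @property
    def current_text(self) -> str:
        # The live article is simply the newest revision in the history.
        return self.history[-1].text if self.history else ""

    def edit(self, editor: str, text: str, summary: str) -> None:
        # Edits never overwrite earlier versions; they append a new revision.
        self.history.append(Revision(editor, text, summary))

    def revert(self, editor: str) -> None:
        # A revert is itself a new, visible edit that restores the previous text.
        if len(self.history) < 2:
            raise ValueError("nothing to revert to")
        previous = self.history[-2]
        undone = self.history[-1]
        self.edit(editor, previous.text, f"Reverted edit by {undone.editor}")


article = Article("Hurricane Katrina")
article.edit("alice", "Katrina made landfall in 2005.", "Create stub")
article.edit("vandal", "asdfgh", "oops")
article.revert("bob")
```

After the revert, the article reads as it did before the bad edit, yet all three revisions, including the vandalism, remain in `article.history` for anyone to inspect.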

Automated Systems and Bots

Wikipedia's quality systems combine human and automated approaches. The platform uses bots, automated programs that perform specific tasks. Some bots revert obvious vandalism. Others check for formatting issues or flag articles that might need attention.

But these bots don't make final decisions. They flag problems. Humans review those flags and decide what to do. The human judgment layer is essential.
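A rough sketch of that flag-then-review split might look like the following. The two heuristics, the `Flag` class, and the function names are invented for illustration; real anti-vandalism bots rely on much richer signals. What the sketch tries to capture is the division of labor: the bot only builds a queue, and a person makes the call.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Flag:
    edit_text: str
    reason: str


def bot_check(edit_text: str) -> Optional[str]:
    """Return a reason to flag the edit, or None if it looks fine.

    Hypothetical heuristics for illustration only.
    """
    if not edit_text.strip():
        return "page blanked"
    # Six or more of the same character in a row, e.g. keyboard mashing.
    if re.search(r"(.)\1{5,}", edit_text):
        return "repeated characters"
    return None


def triage(edits: list[str]) -> list[Flag]:
    """The bot only builds a review queue; it never decides the outcome."""
    queue = []
    for text in edits:
        reason = bot_check(text)
        if reason is not None:
            queue.append(Flag(text, reason))
    return queue


def human_review(flag: Flag, keep: bool) -> str:
    # The final decision belongs to a person, who may overrule the bot.
    return "kept" if keep else "reverted"


queue = triage(["Added a 2005 source.", "aaaaaaaaaa", "   "])
```

Here the first edit passes untouched, while the other two land in `queue` with a reason attached, waiting for a human to keep or revert them.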

Edit Wars and Consensus Building

Wikipedia has developed explicit mechanisms for handling disagreements. When two editors keep reverting each other's changes, that's called an "edit war." Wikipedia's policy is that edit wars are unproductive. Instead, editors must discuss the disagreement and build consensus.

This sounds idealistic, and sometimes it is. But it also works better than you'd expect. Most Wikipedia editors care deeply about accuracy, and when faced with a disagreement, they're willing to engage in good-faith discussion.

Protection and Admin Oversight

Highly contentious articles, such as those about politics, religion, or celebrities, are often protected from editing by non-established users. This prevents trolls or vandals from making changes without consequence. Established editors with proven track records can still edit such articles.

Wikipedia also has administrators: users who've demonstrated trustworthiness and have been granted tools to enforce policies. Admins can lock articles, block users, or undo changes. But they're not empowered to make arbitrary decisions—they're bound by community policies and can face removal if they abuse their power.

The Volunteer Economy: Why People Spend Hours on Unpaid Work

This remains one of the most fascinating aspects of Wikipedia. Why would millions of people spend countless hours writing and editing articles for no pay?

Traditional economics suggests this shouldn't happen. People exchange labor for compensation. Yet Wikipedia defies this logic completely.

Intrinsic Motivation and Meaning

Research on motivation suggests that intrinsic drivers—the desire to do meaningful work, to solve problems, to contribute to something larger than yourself—can be more powerful than financial incentives. Wikipedia editors consistently report that they're motivated by a desire to contribute to free knowledge.

There's something almost spiritual about this for some editors. They believe knowledge should be free. They believe in the democratization of information. Contributing to Wikipedia isn't just a task—it's alignment with a deeper value system.

Expertise and Recognition

Within the Wikipedia community, editors develop reputations. Seasoned contributors with thousands of edits and consistent quality work gain social status. They're trusted to make certain decisions. In some cases, they become administrators.

This reputation isn't monetized, but it provides psychological reward. People want recognition for their work, even if it's not financial.

Community and Belonging

Wikipedia has developed genuine communities. Editors working on related topics interact, collaborate, and develop relationships. Wikipedia's discussion forums and editing platforms become spaces where people find community and connection.

For some editors, particularly those in countries where discussing certain topics might be restricted, Wikipedia editorship becomes a form of meaningful civic participation.

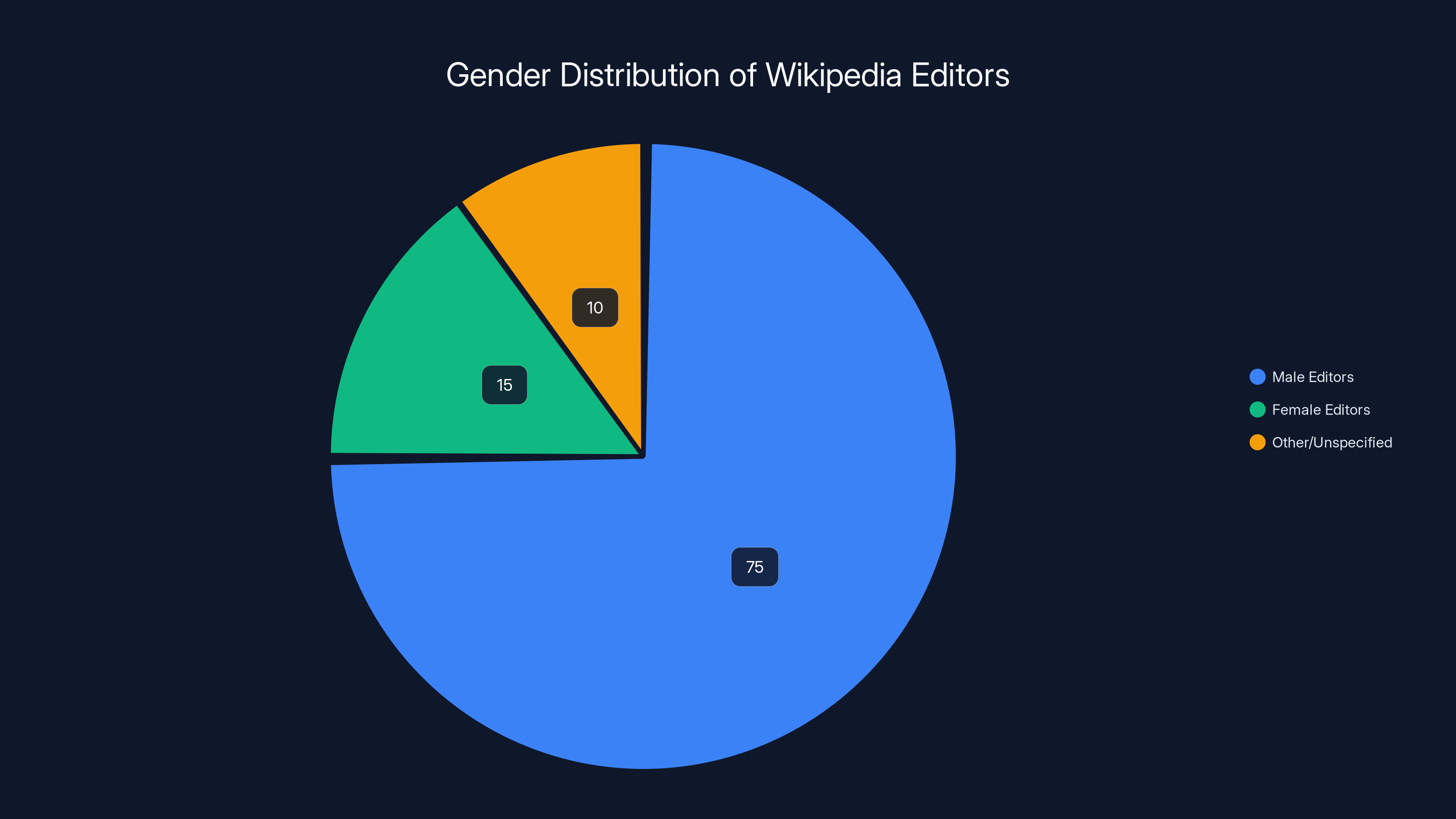

Estimated data shows that fewer than 15% of Wikipedia editors are women, highlighting a significant gender gap that could affect content diversity and coverage.

Wikipedia Under Pressure: Threats and Challenges

Despite its success, Wikipedia faces genuine challenges. Understanding these challenges provides context for why the 25th anniversary celebration matters.

The AI Generation Problem

As OpenAI, Anthropic, and other AI companies build increasingly sophisticated language models, Wikipedia faces a new threat: AI-generated content flooding the platform.

AI systems can write plausible-sounding content at scale. An adversary could theoretically train a bot to generate thousands of AI-written Wikipedia articles. Most would be low-quality, but some might be convincing enough to slip through initial review.

Wikipedia has responded by developing policies around AI-generated content. Generally, articles created entirely by AI aren't acceptable. But AI tools used to assist human editors—for fact-checking, initial drafting, or translation—are increasingly accepted.

This creates a moving target. As AI improves, the line between acceptable AI assistance and unacceptable AI content generation becomes harder to draw.

Political Pressure and Bias Accusations

Wikipedia has become a battleground for political disputes. In 2024, political figures publicly accused Wikipedia of bias, particularly around coverage of sensitive geopolitical issues. Articles about the Gaza conflict, for example, have been the subject of intense debate about what information should be emphasized and how disputes should be framed.

These accusations reflect a genuine problem: Wikipedia can't be truly neutral because the selection of what to cover is inherently a choice. But they also reflect an emerging reality: Wikipedia's importance means that disputes about its content become high-stakes political disputes.

Declining Editor Base

Research suggests that the number of active Wikipedia editors has been declining, particularly in certain regions. The communities that sustain Wikipedia in different languages are sometimes aging out. Young people are less likely to spend hours editing Wikipedia than previous generations were.

This presents an existential threat. Wikipedia is only as good as its editor base. If editors decline significantly, quality might suffer. Finding ways to recruit and retain new editors—particularly in non-English languages—is becoming increasingly important.

The Economics of Knowledge: How Wikipedia Serves Billions for Free

Wikipedia operates on a shoestring budget compared to its value and scale. The Wikimedia Foundation's annual budget is roughly $150 million—a figure that would be utterly inadequate for a commercial operation serving 1.5 billion monthly users.
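To put "shoestring" in concrete terms, here is the back-of-the-envelope arithmetic using only the two figures quoted above. Both are rough estimates, and dividing a yearly budget by a monthly audience is a simplification, so treat the result as an order of magnitude rather than an accounting figure.

```python
# Figures quoted in the text above; both are rough estimates.
annual_budget_usd = 150_000_000
monthly_users = 1_500_000_000

cost_per_user_per_year = annual_budget_usd / monthly_users
cost_per_user_per_month = cost_per_user_per_year / 12

print(f"${cost_per_user_per_year:.2f} per user per year")    # $0.10 per user per year
print(f"${cost_per_user_per_month:.4f} per user per month")  # $0.0083 per user per month
```

That works out to roughly ten cents per user per year, or less than a cent a month.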

How is this possible? A combination of factors.

Server Efficiency and Open Source

Wikipedia runs on relatively efficient technology. The platform doesn't require cutting-edge infrastructure because the architecture is fundamentally simple: articles stored in a database, served to readers. This simplicity enables efficiency.

The platform also uses open-source technology. Rather than licensing expensive proprietary software, Wikipedia builds on freely available tools. This reduces costs dramatically.

Donations and Nonprofit Status

The Wikimedia Foundation funds operations through donations. The annual fundraising campaign is iconic—users see banners asking for support. Most donations come from individual users rather than large institutional donors. This creates a direct relationship between Wikipedia users and its funding.

Nonprofit status means that every dollar raised goes toward operations and maintenance, not shareholder returns.

Efficiency of Volunteer Labor

Most critically, Wikipedia replaces expensive employees with volunteer editors. In a commercial context, employing millions of people to write, edit, and manage content would be prohibitively expensive. Volunteers make the model work.

This isn't to say volunteers do unskilled work. Many Wikipedia editors are highly skilled professionals, including doctors, academics, and scientists, who contribute expertise that would cost vast sums if it had to be hired at market rates.

Wikipedia has seen exponential growth in both the number of articles and monthly page views over its 25-year history. Estimated data shows a significant increase in user engagement and content creation.

Language, Translation, and Global Access

English Wikipedia gets most attention, but the platform's global impact comes from its multilingual nature. Wikipedia exists in over 300 languages, from major languages like Spanish and French to languages with just thousands of speakers.

For speakers of smaller languages, Wikipedia often represents unprecedented access to comprehensive information in their native tongue. A researcher in Mongolia can read about advanced topics in Mongolian. A student in Kenya can learn about physics in Swahili.

This is profoundly democratizing. For centuries, access to knowledge has been mediated by language. English speakers have advantages because most advanced texts exist in English. Wikipedia begins correcting this imbalance.

However, translation creates challenges. Not all information is equally easy to translate. Some concepts are culture-specific or language-specific. Different Wikipedia communities develop different editorial standards and interpretations of neutrality.

The Wikimedia Foundation supports translation tools and infrastructure, but ultimately, each language community must solve these problems through collaboration.

The Future of Wikipedia: What's Next for the Platform?

As Wikipedia enters its second quarter-century, several trends will likely shape its evolution.

AI Integration Without Loss of Identity

Wikipedia will almost certainly integrate AI tools more deeply. The question is how to do this while maintaining the platform's core identity as a human-edited, community-governed resource.

One possibility: AI tools become more sophisticated at assisting editors. Tools for translation, fact-checking, source-finding, and initial drafting could make editors more efficient. If done right, this could increase quality and productivity without displacing the human judgment layer.

Improved Accessibility and Design

Wikipedia's interface is functional but dated. Modern users expect more sophisticated design. The Wikimedia Foundation has been investing in modernizing Wikipedia's appearance and usability. This could make the platform more appealing to younger users.

Expansion of Multimedia Content

Wikipedia is increasingly incorporating not just text but images, videos, and interactive elements. As broadband access improves globally, richer multimedia content becomes practical. This could make Wikipedia more engaging and accessible to people with different learning styles.

Addressing Systemic Biases

Wikipedia's editor base skews toward older, more affluent, predominantly male users. This creates biases in coverage. Addressing these biases requires deliberately recruiting editors from underrepresented groups and creating editorial projects focused on improving coverage of marginalized topics.

Estimated data suggests that the majority of Wikipedia editors are casual contributors, while a small percentage are dedicated editors with over 100,000 edits.

The Docuseries: Putting Faces to the Editors

The mini docuseries celebrating Wikipedia's 25th anniversary serves a specific purpose. It humanizes the platform at a moment when AI and corporate knowledge systems are increasingly dominating conversations.

By introducing readers to editors such as Hurricane Hank, Netha, Joanne, and Gabe, the series answers an implicit question: who are these people doing this work? What drives them? The answer turns out to be more interesting than you'd expect.

These aren't tech gurus or academic elites. They're regular people from different countries, cultures, and professions. What unites them is a commitment to the idea that knowledge should be free and accurate. They've chosen to spend significant portions of their lives working toward that vision.

The docuseries also serves a secondary purpose: recruitment. Watching stories about Wikipedia editors inspires others to contribute. The Wikimedia Foundation knows that attracting new editors is crucial for long-term sustainability. By highlighting the impact and meaning of editorship, the series encourages potential contributors.

Wikipedia's Role in the AI Era: Why It Matters More Than Ever

Paradoxically, as AI becomes more sophisticated, Wikipedia becomes more important, not less.

AI systems need training data. Much of that data comes from Wikipedia. When ChatGPT provides you with information, it's drawing from patterns learned partly from Wikipedia text. When Google Search answers your questions, Wikipedia articles often inform those answers.

This creates a responsibility for Wikipedia editors. The information they contribute to Wikipedia influences the training and behavior of AI systems. If Wikipedia contains biases, those biases propagate into AI. If Wikipedia information is inaccurate, AI systems perpetuate that inaccuracy.

It also creates a resource issue. AI companies benefit enormously from Wikipedia content, often without significant reciprocal contribution. The Wikimedia Foundation has argued, with some justification, that companies profiting from Wikipedia should contribute to its operations.

Moreover, Wikipedia serves as a check on AI-generated misinformation. As AI tools become easier to use, the risk of widespread AI-generated disinformation increases. Wikipedia, maintained by humans who verify sources and debate accuracy, represents a bastion of human-verified information. Its existence becomes more crucial as a point of reference.

The Birthday Celebration: More Than Just Nostalgia

The 25th-anniversary celebration involves more than just a docuseries and time capsule. The Wikimedia Foundation is hosting a birthday event livestream where people can participate, share stories, and celebrate the platform's achievements.

There's also a digital birthday card that anyone can sign. This serves both practical and symbolic purposes. Practically, it's a fun way to engage the community. Symbolically, it affirms that Wikipedia belongs to everyone who uses it, everyone who contributes to it.

These celebrations matter because they maintain momentum and community spirit. Volunteering can be thankless work. Regular recognition and celebration help sustain motivation. The 25th-anniversary events are partly about thanking editors for their contributions.

Beyond Wikipedia: The Broader Wikimedia Movement

While Wikipedia is the Wikimedia Foundation's most visible project, it's not the only one. The foundation supports Wiktionary (a free dictionary and thesaurus), Wikimedia Commons (a repository of free media), Wikidata (a free database), and numerous other projects.

Wikidata is particularly important for Wikipedia's future. It's a structured database of factual information that multiple projects can use. Rather than each Wikipedia article containing the same basic facts independently, Wikidata stores those facts once and multiple projects can reference them. This improves consistency and reduces work for editors.
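The store-once, reference-everywhere idea can be sketched with a plain dictionary standing in for the shared database. Everything here is a hypothetical stand-in: the item identifier, the property names, and the `render_infobox` helper are made up for illustration and are not real Wikidata IDs or APIs.

```python
# Hypothetical in-memory stand-in for a shared fact store; the identifier
# and property names are invented, not real Wikidata IDs.
facts = {
    "item:wikipedia": {
        "launched": "2001-01-15",
        "operator": "Wikimedia Foundation",
    },
}


def render_infobox(item_id: str, labels: dict[str, str]) -> str:
    """Build a language-specific summary by looking facts up, not copying them."""
    item = facts[item_id]
    return "; ".join(f"{labels[prop]}: {value}" for prop, value in item.items())


english = {"launched": "Launched", "operator": "Operator"}
spanish = {"launched": "Lanzamiento", "operator": "Operador"}

# One update in the shared store...
facts["item:wikipedia"]["operator"] = "Wikimedia Foundation (nonprofit)"

# ...is visible to every article that references the item.
en_box = render_infobox("item:wikipedia", english)
es_box = render_infobox("item:wikipedia", spanish)
```

Because both language editions look the value up rather than keeping their own copy, a single change reaches both, which is the consistency benefit described above. The sketch translates only the labels; handling translated values is a separate problem it leaves out.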

These projects collectively represent an alternative vision of how knowledge infrastructure could work. Rather than centralized, proprietary systems, Wikimedia projects demonstrate that decentralized, community-governed, open-source knowledge systems can be viable and valuable.

Lessons from Wikipedia: What Its Success Teaches Us

Wikipedia's quarter-century of success offers lessons that extend far beyond encyclopedia editing.

Trust Can Be Built Collectively

Wikipedia demonstrates that distributed systems can develop and maintain trust without centralized authority. The mechanisms are sophisticated—reputation systems, peer review, governance processes—but they emerge from community rather than being imposed from above.

This has implications for how we think about governance, knowledge production, and social organization more broadly.

Intrinsic Motivation Is Powerful

Capitalist economies assume that people work primarily for financial compensation. Wikipedia proves that meaningful work, community, and belief in a mission can motivate millions of hours of labor.

This suggests that organizational redesign focused on meaning and community might be more powerful than purely financial incentive structures.

Open Systems Can Scale

There was genuine doubt about whether an openly editable encyclopedia could scale to global relevance. The fact that Wikipedia succeeded demonstrates that openness isn't inherently a barrier to quality or scale. Instead, openness can enable both.

Knowledge Should Be Decentralized

Wikipedia's decentralized editorial model—where power is distributed among the community rather than concentrated in editorial boards or corporate decision-makers—produces better outcomes than centralized alternatives.

This has implications for how we think about authority, expertise, and who gets to decide what information is reliable.

The Birthday Event: Thursday, January 15th

The formal birthday celebration happens Thursday at 11 AM ET. The livestream event will feature discussions with editors, Wikimedia Foundation leadership, and potentially surprise appearances from notable contributors.

For anyone interested in learning more about Wikipedia's story or meeting some of the people behind the platform, the event is worth watching. It's free, accessible, and offers a chance to participate in celebrating a genuinely remarkable achievement.

You can sign the digital birthday card, watch the livestream, and engage with the time capsule on the Wikimedia Foundation's website. The events are designed to be inclusive and welcoming to anyone interested in the platform, regardless of whether you've ever edited Wikipedia.

Conclusion: A Quarter-Century of Knowledge Freedom

Twenty-five years might not seem long in the context of human history, but in internet terms, it's a lifetime. Wikipedia emerged during the early days of the web, when the fundamental questions about how information would be organized and shared were still unresolved.

Wikipedia's model—volunteer-edited, community-governed, freely accessible—represented a radical bet on human cooperation. That bet has paid off spectacularly. Wikipedia has become the most comprehensive reference work ever created, accessed by billions of people, maintained by thousands of dedicated volunteers.

But the platform's success should not obscure the ongoing challenges. The volunteer editor base faces sustainability questions. Political pressure and misinformation threaten its role as a reliable reference. The emergence of sophisticated AI creates both opportunities and threats.

Despite these challenges, Wikipedia's 25th anniversary is genuinely worth celebrating. The platform has demonstrated something important: that when people come together around a shared vision of what knowledge could be, remarkable things become possible.

The docuseries and time capsule celebrate not just Wikipedia's past but what it represents for the future. In a world increasingly dominated by proprietary, corporate-controlled platforms, Wikipedia stands as a demonstration that alternatives are possible. Free knowledge is possible. Decentralized governance is possible. Volunteer collaboration at massive scale is possible.

As Wikipedia enters its next quarter-century, the editors—from Hurricane Hank to Netha to Joanne to Gabe and thousands of others—will continue the work of building and maintaining humanity's shared knowledge base. Their contributions may never be monetized, but they're profoundly valuable. They're also profoundly hopeful.

In trusting that people will spend their time making information free and accurate for strangers, Wikipedia bet on human goodness. That bet has been vindicated. That vindication is worth celebrating.

FAQ

What is Wikipedia and why is it significant?

Wikipedia is a free online encyclopedia built and maintained by volunteer editors from around the world. It's significant because it has become the largest and most comprehensive reference work ever created, with more than 65 million articles in over 300 languages and almost 15 billion page views every month. The platform demonstrates that reliable, high-quality information can be produced through decentralized volunteer collaboration rather than corporate or institutional structures.

How do Wikipedia editors maintain quality and prevent misinformation?

Wikipedia maintains quality through multiple overlapping systems: all edits are visible and subject to peer review, disputed changes are discussed by editors before being finalized, automated bots flag potential problems for human review, and community policies enforce standards around sourcing and neutrality. Experienced editors and administrators can protect articles and enforce policies, but major decisions come from community consensus rather than top-down authority.

Who are the volunteer editors featured in Wikipedia's 25th-anniversary docuseries?

The docuseries features eight editors with different motivations and expertise, including Hurricane Hank, who documents major events and disasters; Netha, an Indian doctor fighting health misinformation; Joanne from the UK, who recovers hidden historical figures; and Gabe, who works to ensure accurate representation of Black history. Their stories reveal that Wikipedia's contributors are regular people from diverse backgrounds united by a commitment to making knowledge freely accessible.

Why would people spend thousands of hours editing Wikipedia without payment?

Wikipedia editors are motivated by intrinsic factors including belief in free knowledge, desire for meaningful work, community and belonging, and the opportunity to contribute expertise in areas they care about. Reputation within the Wikipedia community provides social recognition. Some editors are driven by activism—using editorship to correct historical erasures or combat misinformation in fields like medicine or history.

What challenges does Wikipedia face as it enters its second quarter-century?

Wikipedia faces several challenges: the declining number of active editors, particularly in non-English languages; political pressure and accusations of bias around sensitive topics; the threat of AI-generated content flooding the platform; and the need to recruit and retain new editors from diverse backgrounds. The platform also grapples with systemic biases in its coverage and with keeping its information reliable as AI systems increasingly depend on Wikipedia data for training.

How does Wikipedia's volunteer model differ from traditional reference works like Britannica?

Unlike Britannica, which relied on paid expert editors and was physically published, Wikipedia is free, updated continuously, editable by anyone, and available in more than 300 languages. Rather than authority coming from institutional credentials, Wikipedia's credibility emerges from community verification and transparency: all changes are visible and can be discussed. This distributed model has proven surprisingly effective at maintaining quality while enabling rapid growth.

What role does Wikipedia play in training AI systems?

Wikipedia content is used as training data for AI language models, including systems like ChatGPT. This means that biases, inaccuracies, or gaps in Wikipedia coverage influence how AI systems behave. Wikipedia also serves as a counterbalance to AI-generated misinformation by representing human-verified, sourced information. As AI becomes more prevalent, Wikipedia's role as a reliable knowledge reference becomes more critical.

How can I contribute to Wikipedia as a new editor?

Start by reading Wikipedia's guidelines about editing, selecting a topic you know well and care about, and making small edits to improve existing articles. Focus on topics where you have genuine expertise. Most contributions start small—fixing citations, improving clarity, adding relevant sources. Once you're familiar with the platform's norms and processes, you can tackle larger projects like creating new articles or improving underdeveloped topics.

Key Takeaways

- Wikipedia has grown from 100 pages to more than 65 million articles in 25 years, and its volunteer-built content now draws almost 15 billion page views a month

- The 25th-anniversary docuseries humanizes Wikipedia by featuring eight editors whose motivations range from disaster documentation to historical recovery and combating misinformation

- Wikipedia's success demonstrates that volunteer-driven, community-governed knowledge systems can achieve quality and scale rivaling commercial alternatives

- The platform faces emerging challenges, including a declining editor base, political pressure around bias accusations, and threats from AI-generated content

- Wikipedia's role becomes more critical as AI systems depend on its content for training data and as it serves as a counterbalance to automated misinformation
