TikTok Shop's Algorithm Problem With Nazi Symbolism [2025]

I went looking for hip hop jewelry on TikTok Shop. What I found instead was an algorithmic rabbit hole into Nazi merchandise.

This isn't hyperbole. This is what happened when I started searching for basic streetwear accessories on one of social media's fastest-growing e-commerce platforms. Within minutes, TikTok's recommendation system was suggesting I look for "swatika jewelry," "double lightning bolt necklaces," "ss necklaces," and "hh necklaces"—each one coded in white nationalist symbology. Some of these searches came with product images that seemed deliberately plausible, as if someone had thought through exactly how to market extremist jewelry to unsuspecting users.

The story gets worse. In December 2024, TikTok had to remove a swastika necklace from its shop after it went viral on social media. The product description used "hiphop" as a keyword—a deliberate obfuscation technique designed to make the item seem innocent while appealing to extremist buyers who knew what they were actually searching for. This wasn't some rogue seller who slipped through the cracks. This was a product promoted directly to millions of users through TikTok's official e-commerce platform.

What makes this worse than a simple moderation failure is that it reveals how algorithmic recommendation systems can be weaponized. You don't need massive infrastructure to poison a platform's search suggestions. You need users (or bots pretending to be users) who understand how recommendation algorithms work, combined with a platform that doesn't adequately police what gets recommended.

TikTok is now the third-largest e-commerce platform in the United States, just behind Amazon and Shopify. Younger users—the demographic most vulnerable to radicalization—are discovering products, sellers, and entire ideological communities through TikTok Shop without realizing they're being algorithmically funneled toward extremist content. This is a problem that goes way beyond a single bad necklace. It's about infrastructure, incentives, and what happens when growth matters more than safety.

Let's break down what's happening, why it's happening, and what needs to change.

TL;DR

- TikTok Shop's algorithm actively suggests Nazi-related search terms including "double lightning bolt necklace," "ss necklace," and "hh necklace" when users search for innocent products like hip hop jewelry

- The platform has acknowledged the problem but only after being caught, promising to remove these suggestions while maintaining vague details about moderation efforts

- Dog-whistle marketing is baked into the system, with sellers deliberately using innocuous keywords like "hiphop" to disguise extremist products from automated filters

- Younger users are disproportionately exposed to this content because TikTok Shop targets Gen Z and Gen Alpha users who may not recognize extremist symbology

- Algorithmic poisoning is cheaper and easier than you'd think, requiring only coordinated search manipulation to push toxic terms into a platform's recommendation engine

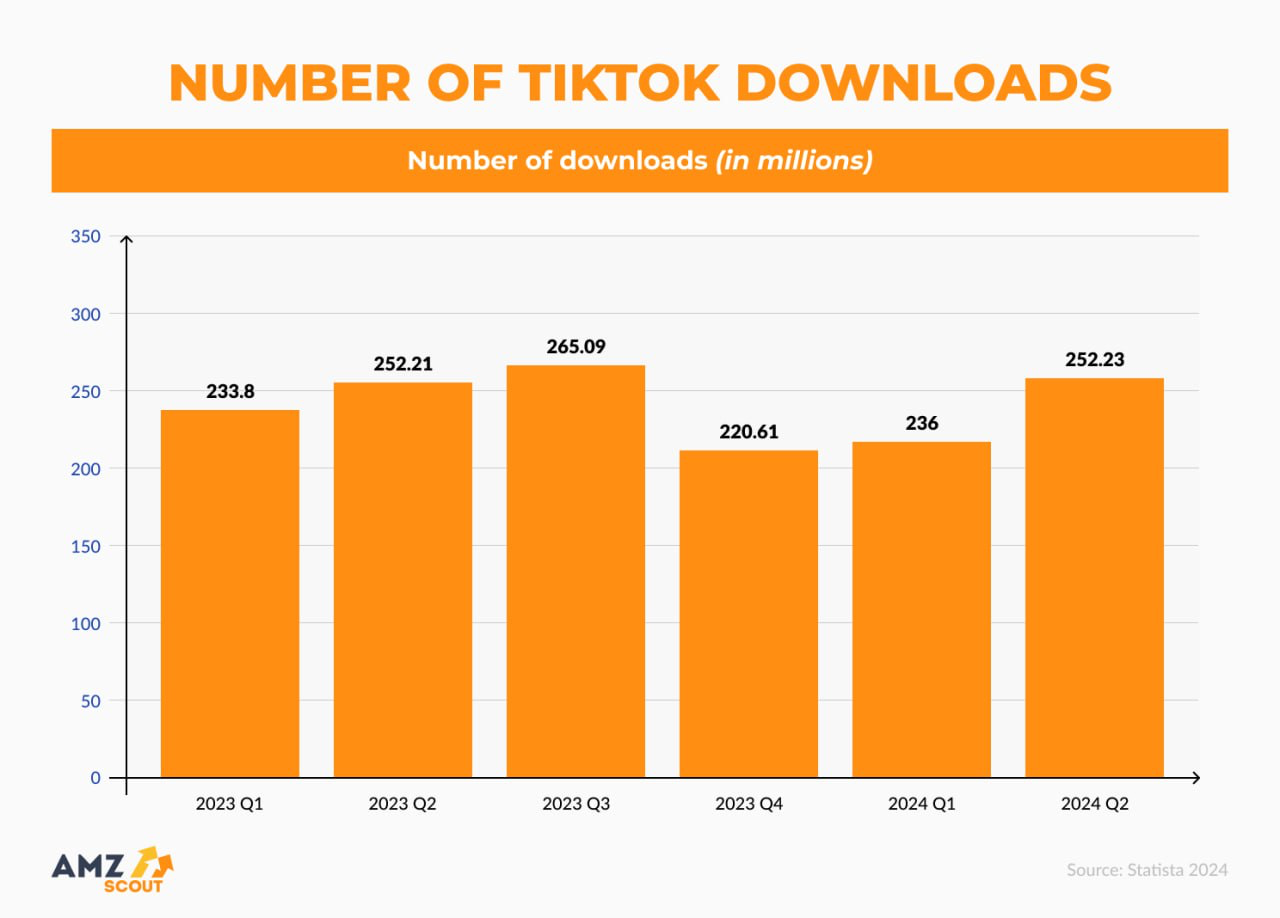

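TikTok reports removing 700,000 sellers and 200,000 products in the first half of 2025. Against hypothetical totals of 50 million sellers and 100 million products, that would be 1.4% of sellers and 0.2% of products, suggesting a primarily reactive moderation approach. Estimated data.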

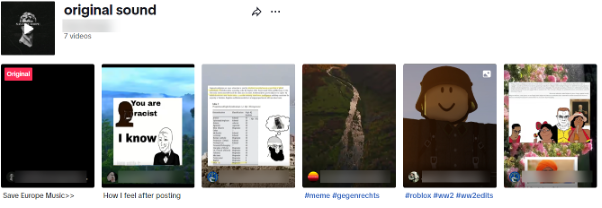

How I Found the Nazi Symbolism Rabbit Hole

I started with a simple search. "Hip hop jewelry." Nothing unusual there. Millions of people search for streetwear accessories every day. I was curious what TikTok Shop would recommend.

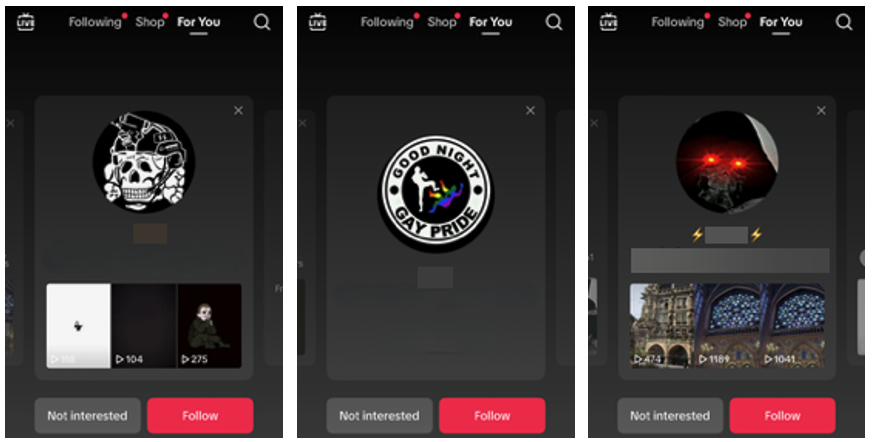

The interface is straightforward. You type in a search term, and TikTok shows you products. But there's also a "Others searched for" section—a recommendation box that suggests related searches. This box appears as four tiles with images and search terms. It's designed to guide users toward products they might not have originally thought to look for.

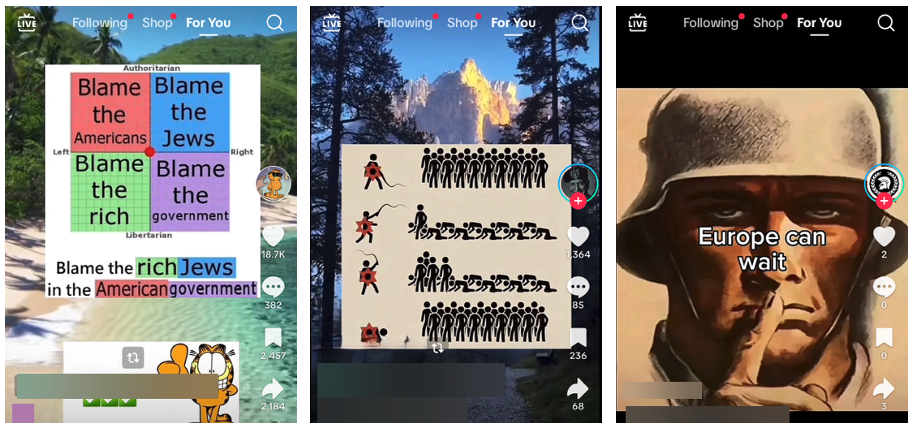

After searching "hip hop jewelry," the "Others searched for" box suggested "swatika jewelry." This was already a red flag. Someone misspelled "swastika" but spelled it phonetically in a way that would still trigger the search. The image next to it? An $11 rhinestone-covered chain necklace.

I clicked on that suggested search. Now TikTok was showing me searches people had made for "german ww 2 necklace," with a Star of David pendant displayed next to it. The juxtaposition was unmistakable. This wasn't accidental. Someone had deliberately searched for that exact phrase, likely paired with that exact product, to create an association in the algorithm's memory.

I continued clicking on TikTok's suggestions. Each click revealed another layer of extremist terminology:

- "double lightning bolt necklace"

- "ss necklace"

- "german necklace swastik"

- "hh necklace"

- "lightning bolt pendant"

Each suggestion came with product images that were somewhat plausibly deniable when viewed individually. An S-shaped lightning bolt necklace could theoretically be a trendy design. A double lightning bolt could be a fashion statement. But when you string them all together in the order TikTok recommends them, the pattern becomes unmistakable.

This wasn't a glitch. This was a system being used exactly as designed—but weaponized by people who understood how these systems work.

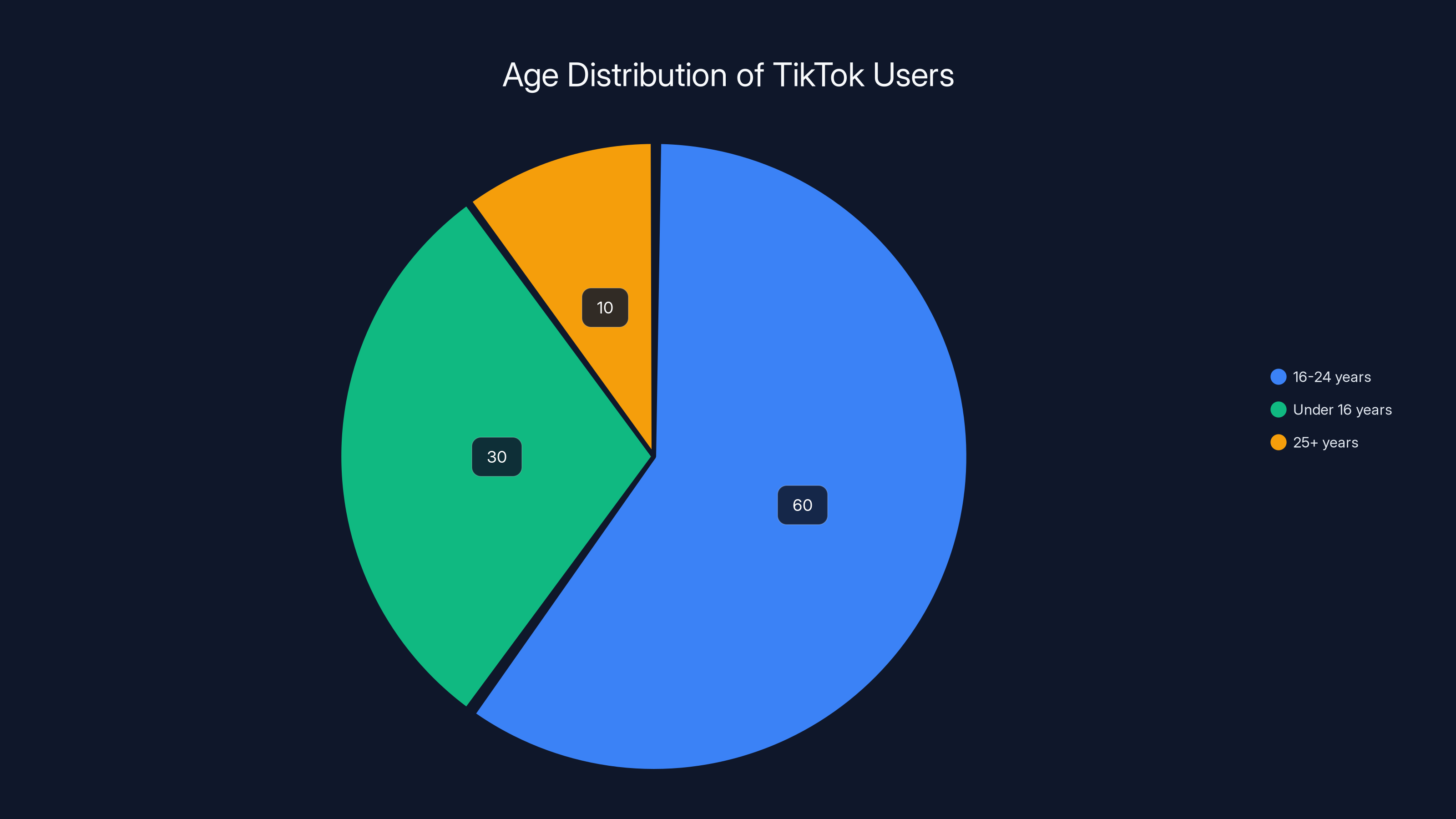

Estimated data shows that 60% of TikTok users are aged 16-24, with a significant portion under 16, highlighting the platform's young user base.

The December 2024 Swastika Necklace Incident

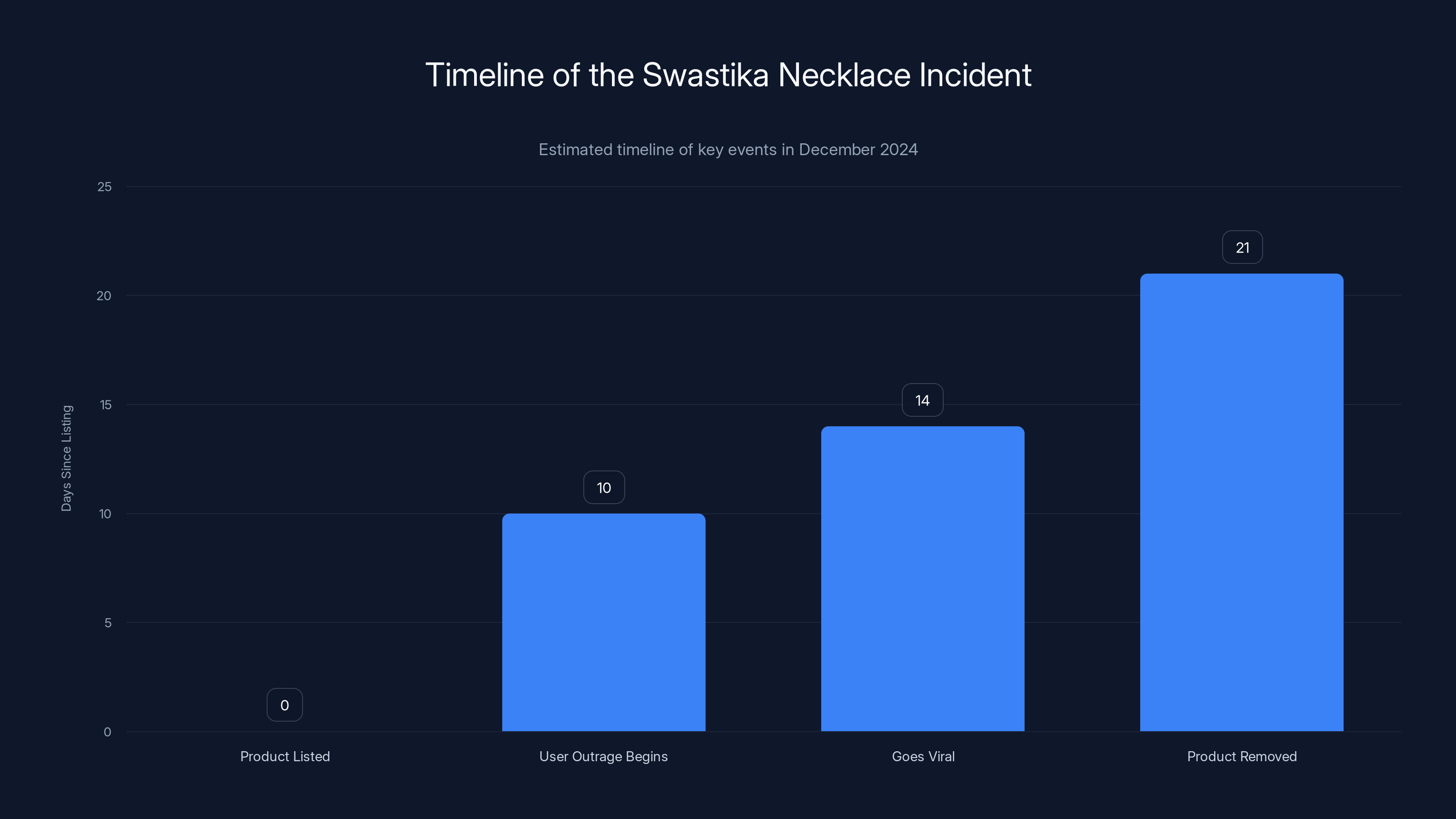

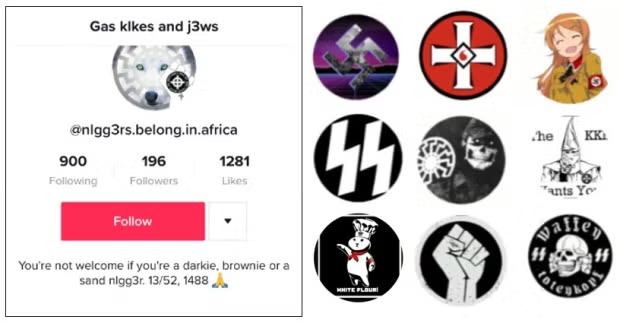

The incident that brought this issue to public attention happened in late December 2024. A swastika necklace popped up in TikTok Shop, labeled as a "hiphop titanium steel pendant" and priced at $8. Users started screenshotting it, posting it to other social media platforms with outrage. Within days, it went viral.

The swastika symbol itself has ancient roots. Hindu, Buddhist, and Jain traditions have used variations of the symbol for thousands of years as a sacred emblem representing auspiciousness and eternity. These "manji" symbols can look identical to the Nazi swastika to untrained eyes. This is genuinely important context for understanding moderation challenges.

But here's what made this case different: the product description. It specifically used the keyword "hiphop" as part of its title. If you're trying to sell authentic Buddhist or Hindu religious jewelry, you wouldn't describe it as "hiphop jewelry." You'd describe it as Buddhist jewelry, Hindu jewelry, or spiritual jewelry.

The keyword "hiphop" was a dog-whistle. It signaled to people who know what they're looking for (white nationalists familiar with coded language) that this product was being sold for the explicit purpose of displaying Nazi symbolism. It also acted as an obfuscation technique to avoid keyword filtering—an automated system looking for "swastika" might not catch "hiphop."

Joan Donovan, founder of the Critical Internet Studies Institute and coauthor of "Meme Wars," explained the significance: the connection between "hiphop" and a swastika necklace is intentional. The labeling reveals this is coming from someone engaged in rage-baiting and extremist marketing, not someone genuinely selling cultural religious items.

TikTok removed the product after it went viral. But this was reactive, not proactive. The necklace sat on the platform for weeks, being recommended to users, before public outcry forced action.

Understanding the Algorithmic Web

Here's what needs to be understood about how this happens: recommendation algorithms work by finding patterns in user behavior.

When thousands of users search for a term, the algorithm learns that term is popular. When the same term gets searched multiple times over short periods, it climbs in the recommendation system's rankings. Most of the time, this works great. Users search for "Nike shoes," others see "Nike shoes" recommended, those recommendations drive real sales.

But what if you deliberately manipulate this system? What if you create accounts (or use existing accounts) to search for specific toxic terms repeatedly? What if you pair those searches with images designed to look innocent? What if you do this at scale?

This is called algorithmic poisoning, and it's significantly cheaper and easier than people realize.

You don't need massive computational resources. You don't need to hack anything. You just need to understand how the system counts searches and recommendations. A coordinated group of accounts—even a few dozen—searching for "double lightning bolt necklace" repeatedly can push that term into TikTok's "Others searched for" suggestions.
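To make the mechanism concrete, here is a minimal sketch of a suggestion box that ranks purely by search frequency. This is an assumption about how such a system could work, not TikTok's actual implementation, which is not public. The function `top_suggestions`, the log format, and every count below are hypothetical.

```python
from collections import Counter

def top_suggestions(search_log, k=4):
    """Return the k most frequent queries (naive frequency ranking, no safeguards)."""
    counts = Counter(query for _account, query in search_log)
    return [query for query, _n in counts.most_common(k)]

# Hypothetical organic traffic: one search per account, as (account_id, query) pairs.
organic = (
    [(f"user{i}", "cuban link chain") for i in range(120)]
    + [(f"user{i}", "iced out pendant") for i in range(120, 210)]
    + [(f"user{i}", "grillz") for i in range(210, 280)]
    + [(f"user{i}", "rope chain") for i in range(280, 330)]
)

# Hypothetical coordinated group: 30 accounts, each repeating the same query 5 times.
coordinated = [
    (f"bot{i}", "double lightning bolt necklace")
    for i in range(30)
    for _ in range(5)
]

before = top_suggestions(organic)
after = top_suggestions(organic + coordinated)

print("before:", before)
print("after: ", after)
```

In this toy setup, 30 accounts generate 150 searches, which is enough to outrank a query that 120 real users searched once each and to push a legitimate suggestion out of the four tiles. Real systems are certainly more complex, but any ranking that counts raw searches without weighting who made them shares this weakness.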

Filippo Menczer, a professor at Indiana University and director of the Observatory on Social Media, explained the challenge: "We have general ideas about how these algorithms work. But the exact details of what they implemented at any given time in any of their products are opaque. Nobody is going to be able to tell you exactly why those recommendations are made."

This opacity is the real problem. Even TikTok's own engineers might struggle to immediately identify why Nazi-related search terms are being recommended. Was it genuinely organic (unlikely)? Was it bot manipulation? Was it a deliberate attack by extremists? Without transparency, it's impossible to know.

But here's what we do know: the system allowed it to happen. And once it happens once, the recommendation algorithm learns these associations. Other users start seeing the suggestions. Some of those users search for these terms out of curiosity. This generates even more data points, cementing the associations in the algorithm's memory.

It's a feedback loop. And by the time a human moderator notices the problem, thousands of users have been exposed to it.

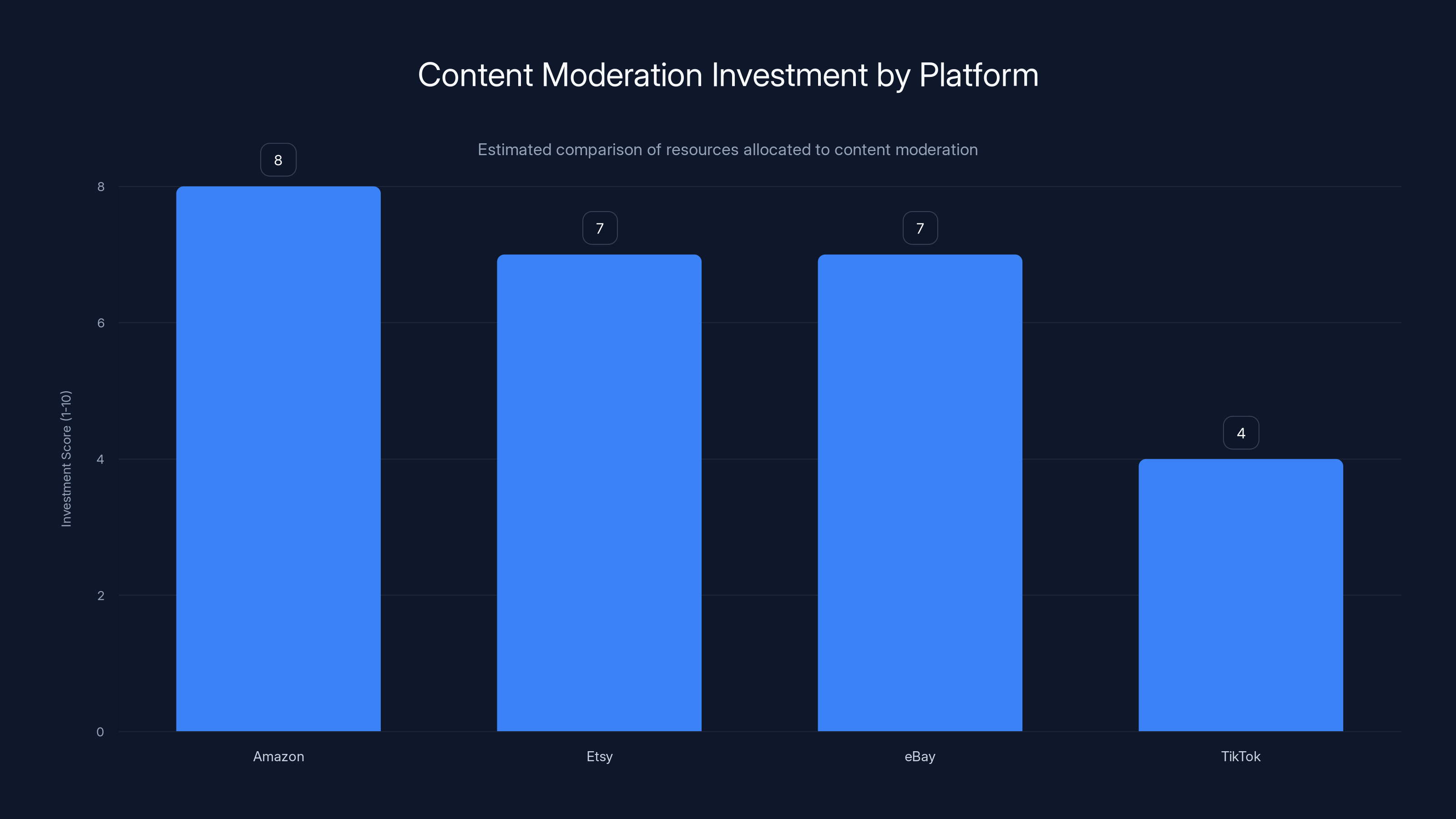

Amazon, Etsy, and eBay have invested more in content moderation systems compared to TikTok, which relies more on algorithms and user reporting. Estimated data.

TikTok Shop's Scale and Reach

TikTok Shop isn't a niche feature. It's becoming central to TikTok's business model and revenue strategy.

The platform launched TikTok Shop in the United States in 2023, and by 2024, it had become a significant revenue driver. TikTok is now the third-largest e-commerce platform in the US, behind only Amazon and Shopify. For users under 25, TikTok Shop has become the go-to place for discovering and purchasing products—often based entirely on recommendations from their For You feed.

This matters for moderation because it means the stakes are higher. When TikTok was just a video platform, problematic content could theoretically exist without directly harming commerce. But when TikTok Shop is recommending products, the platform is making a direct endorsement of those items.

Younger users—TikTok's primary demographic—are also the least equipped to recognize Nazi symbolism and white nationalist dog-whistles. A 16-year-old seeing a "double lightning bolt" necklace recommended in the context of streetwear might not realize what it actually represents. They might buy it, wear it, and inadvertently broadcast extremist ideology without understanding what they're displaying.

This is particularly concerning given recent trends in youth radicalization. Research has shown that exposure to extremist symbols and ideology on social media platforms increases the likelihood of younger users being drawn toward extremist content and communities.

TikTok's moderation team is already stretched thin. The platform claims to have removed 700,000 sellers and 200,000 restricted or prohibited products in the first half of 2025. Those are big numbers, but they also represent an admission that the scale of the problem is massive. If 200,000 prohibited products made it through their systems in just six months, what percentage of actual violations are they catching?

Dog-Whistle Marketing and Coded Language

Understanding why this matters requires understanding dog-whistle marketing—a technique where messaging appears innocent to outsiders but carries hidden meaning to an in-group audience.

The "hiphop" keyword paired with a swastika necklace is a perfect example. To someone casually browsing, they might think: "Oh, this is hip hop culture merchandise." But to someone familiar with white nationalist codes, they understand: this is Nazi merchandise being disguised with an innocuous keyword.

Other examples from my investigation:

- "Double lightning bolt necklace": Refers to the SS rune symbol, but "double lightning bolt" is generic enough to avoid automated filtering

- "HH necklace": Stands for "Heil Hitler," but the abbreviation alone isn't obviously extremist

- "SS necklace": Explicit reference to the Schutzstaffel, but SS has other legitimate meanings (stainless steel, steamship, etc.)

- "German WW2 necklace": Circumlocution that signals Nazi-era products without using the word "Nazi"

These coded terms have been in use within white nationalist communities for years. They exist on other platforms too—4chan, certain corners of Reddit, Telegram groups. The problem is that TikTok's algorithm doesn't understand context or coded language. It just sees search patterns and recommends based on frequency.

This is why algorithmic poisoning is so effective against social media platforms. The algorithm can't distinguish between someone searching "SS necklace" as shorthand for stainless steel versus searching it as a Nazi reference. It just counts both as the same search and amplifies the recommendation.
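The limits of context-free text matching can be sketched in a few lines. The blocklist and the function `flagged_by_keywords` below are hypothetical and only illustrate exact keyword matching; nothing here reflects how TikTok's filters are actually built.

```python
import re

# Hypothetical blocklist for an exact-match keyword filter.
BLOCKLIST = {"swastika", "nazi"}

def flagged_by_keywords(title: str) -> bool:
    """Flag a listing title if any whole word appears on the blocklist."""
    words = set(re.findall(r"[a-z0-9]+", title.lower()))
    return bool(words & BLOCKLIST)

titles = [
    "swastika necklace",              # caught: exact match
    "hiphop titanium steel pendant",  # missed: innocuous words only
    "ss necklace",                    # missed: text alone cannot say which "SS" is meant
    "swatika jewelry",                # missed: misspelling evades exact matching
]

results = {title: flagged_by_keywords(title) for title in titles}
for title, flagged in results.items():
    print(f"{title!r}: {'flagged' if flagged else 'passes'}")
```

Only the first title is flagged. Adding "ss" to the blocklist would catch the third one, but it would also block every stainless steel listing that uses the abbreviation, which is why text matching alone cannot settle the question without looking at the product image and the surrounding context.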

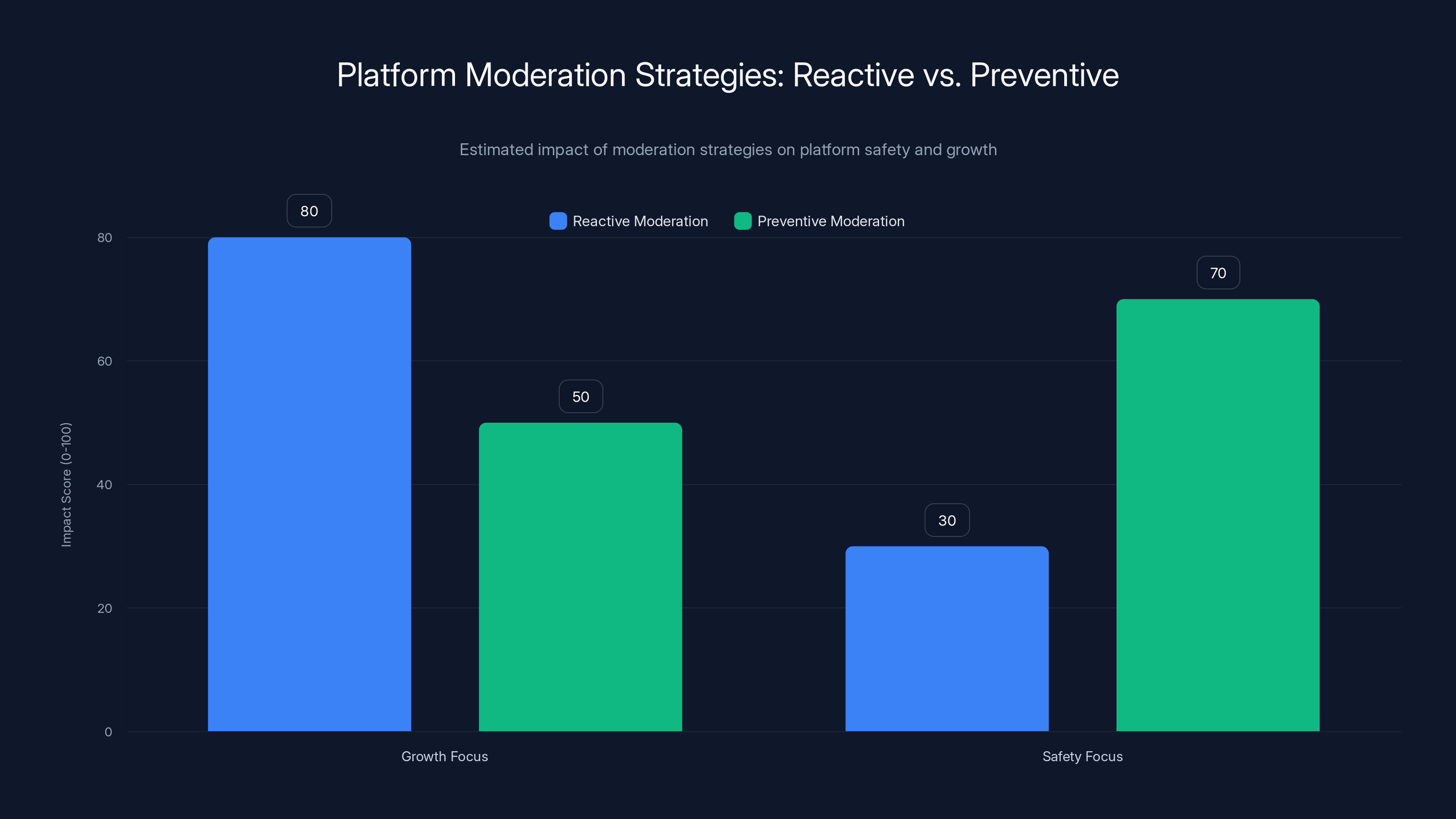

Reactive moderation prioritizes growth with an estimated impact score of 80, but only 30 for safety. Preventive moderation shifts focus, scoring 70 for safety but lower for growth at 50. Estimated data highlights the trade-off between growth and safety.

How Extremists Exploit E-Commerce Platforms

This isn't the first time e-commerce platforms have been exploited by extremists. It happens regularly, usually in cycles:

- Exploitation phase: A group of coordinated accounts discovers they can manipulate a platform's recommendation algorithm by searching for specific terms and buying (or fake-buying) products

- Growth phase: These terms start appearing in recommendations, reaching broader audiences who don't know what the symbols mean

- Normalization phase: Some buyers purchase items not knowing their significance; others buy intentionally, wearing them as status symbols within extremist communities

- Backlash phase: Journalists or activist groups expose the problem; the company removes products and promises action

- Complacency phase: The company claims the problem is solved; the extremists migrate to a different platform or find a new exploit

We've seen this pattern on Etsy, Amazon, eBay, and dozens of smaller platforms. The pattern persists because e-commerce platforms prioritize scale and growth over safety. It's cheaper to remove products after they've been exposed in the media than to maintain robust proactive moderation.

TikTok is repeating this pattern. In 2023 and 2024, the company removed multiple antisemitic products from TikTok Shop. Each time, it was reactive—removing items after users complained on other platforms. There's no evidence the company has invested in systems specifically designed to prevent this from happening in the first place.

TikTok's Glenn Kuper told WIRED that the platform is "currently working to remove these algorithmic suggestions." Note the language: not "has removed," but "is working to remove." This suggests the company knew about the problem before being contacted by journalists but hadn't fixed it yet.

The Opacity Problem

One of the most frustrating aspects of this issue is that we can't fully understand why it happened because TikTok's algorithms are a black box.

When journalists or researchers ask TikTok how its recommendation algorithm works, the company typically responds with vague statements about "balancing multiple factors" or "user interest." The actual mechanics remain secret, proprietary, and protected as trade secrets.

This opacity is intentional. TikTok competes with other platforms partly on the sophistication of its recommendation algorithm. Revealing how it works would give competitors insights into its strategy. But this secrecy also makes it impossible for external parties to identify vulnerabilities, understand how extremist content spreads, or hold the platform accountable.

Menczer pointed out that even researchers studying algorithmic systems struggle with this: "The exact details of what they implemented at any given time in any of their products are opaque."

Without transparency, we're left with educated guesses about what went wrong. Did TikTok's algorithm genuinely count searches from bot accounts as equivalent to human searches? Does TikTok filter recommendations based on product content at all, or only on search frequency? Are there ANY safeguards designed to prevent extremist terminology from rising in recommendations?

These questions matter because they determine what policies TikTok needs to implement to prevent this from happening again.

If the problem is bots, TikTok needs better bot detection. If the problem is that the algorithm doesn't analyze product content (only search frequency), TikTok needs to integrate content moderation into its recommendation system. If the problem is that there are zero safeguards for extremist terminology, TikTok needs to build them.

But we can't know which of these is true because TikTok won't say.

Estimated data shows the timeline of the swastika necklace incident, highlighting the delay in response from TikTok, which took approximately 21 days to remove the product after it was listed.

TikTok's Moderation Claims vs. Reality

TikTok claims it takes moderation seriously. The company's most recent safety report states that TikTok Shop removed 700,000 sellers and 200,000 restricted or prohibited products in the first half of 2025.

That sounds impressive. But context matters.

TikTok Shop has millions of sellers, but TikTok doesn't publish the total, so the share being removed can't be calculated from public data. As an illustration, if there were 50 million sellers and 700,000 were removed, that would be a 1.4% removal rate, meaning 98.6% of sellers stay active.

Similarly, 200,000 products is meaningless without knowing the total number of products listed. TikTok Shop likely lists tens of millions of items. If the company removed 200,000 prohibited products out of, say, 100 million total products, that's a 0.2% removal rate.
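The arithmetic is simple enough to check, and it shows how much the conclusion depends on the unknown denominator. In the sketch below, the removal counts are the figures TikTok reported; every total is a hypothetical placeholder, since TikTok does not publish the real numbers.

```python
def removal_rate(removed: int, total: int) -> float:
    """Share of sellers or products removed, as a percentage of the total."""
    return 100 * removed / total

# Reported by TikTok for the first half of 2025.
SELLERS_REMOVED = 700_000
PRODUCTS_REMOVED = 200_000

# Hypothetical totals: the real figures are not public.
for total_sellers in (5_000_000, 50_000_000):
    rate = removal_rate(SELLERS_REMOVED, total_sellers)
    print(f"{total_sellers:>11,} sellers  -> {rate:.1f}% removed")

for total_products in (10_000_000, 100_000_000):
    rate = removal_rate(PRODUCTS_REMOVED, total_products)
    print(f"{total_products:>11,} products -> {rate:.1f}% removed")
```

A tenfold change in the assumed total moves the seller removal rate from 14.0% to 1.4%. That spread is exactly why the raw counts in a safety report say little on their own.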

Moreover, these are reactive removals. The products were already listed, already recommended, already sold to people, before TikTok removed them. The swastika necklace sold to dozens of users before being removed. Users saw recommendations for Nazi-related products before the algorithm was adjusted.

TikTok's approach is fundamentally reactive. The company waits for problems to surface (usually through media reports), then removes them. This is cheaper than proactive moderation, but it's also significantly less effective at protecting users.

Joan Donovan was clear about what needs to happen: "They really need to dig in, do an investigation, and understand where it's coming from. And also provide transparency, so that users understand how they were targeted."

This requires TikTok to:

- Investigate the source of the algorithmic poisoning (was it coordinated bot accounts? Real accounts? An exploit in the recommendation system?)

- Identify all affected products that were recommended through this vector, not just the ones that went viral

- Notify users who may have seen these recommendations or purchased these items

- Implement preventive measures to stop this from happening again

- Be transparent about how they're fixing the problem

As of now, there's no evidence TikTok is doing any of these things beyond removing the most obvious products after they've been exposed.

The Role of Younger Users

TikTok's audience is overwhelmingly young. About 60% of TikTok users are between 16 and 24. Another significant portion is under 16.

Younger users have less life experience recognizing coded language and extremist symbolism. A 15-year-old might not know what an SS lightning bolt means. A 13-year-old might see a "German WW2 necklace" recommendation and think it's historical merchandise.

This age differential matters because it makes younger users disproportionately vulnerable to radicalization pipelines. Researchers have documented that exposure to extremist symbols and content on social media increases the likelihood that young people will seek out more extremist content.

The pathway often looks like this:

- User sees extremist symbol recommended in innocuous context

- User purchases item, not understanding its significance

- User wears item or displays it online

- User encounters real extremists who recognize the symbol

- User is recruited into extremist communities

This isn't theoretical. Law enforcement agencies have documented this exact pattern in cases involving young people radicalized through social media.

TikTok Shop specifically targets younger demographics and has different content recommendations for different age groups. Yet the platform doesn't seem to have stronger safeguards against extremist products for younger users. If anything, TikTok's algorithm might be more likely to recommend these items to younger users because they're less likely to recognize and report them.

Comparison to Other Platforms

Amazon, Etsy, and eBay have all had problems with extremist merchandise. But these platforms have had more time to develop moderation systems and have faced more sustained public pressure.

Amazon removed books and merchandise promoting white supremacy after pressure from civil rights organizations. Etsy implemented policies against hate material and created reviewer systems to flag problematic products. eBay removed Nazi memorabilia listings after sustained campaigns.

None of these platforms are perfect. All of them still have problems. But they've invested more resources in preventive moderation than TikTok has.

TikTok's approach is notably different. Rather than building robust content moderation teams, TikTok has relied more heavily on algorithm-based approaches and user reporting. This is cheaper but less effective, especially against coordinated manipulation.

Moreover, TikTok's shop is newer than these competitors', which means it has fewer established systems and policies. The company is essentially building an e-commerce ecosystem from scratch, and moderation infrastructure hasn't kept pace with growth.

What Needs to Change

Fixing this problem requires changes at multiple levels.

At the platform level, TikTok needs to:

- Implement content-based filtering in the recommendation algorithm, rather than ranking suggestions by search frequency alone

- Build in detection systems for coded extremist language and symbolism

- Require sellers to provide explicit product descriptions that match product images

- Implement random audits of trending recommendation terms to catch poisoning early

- Improve bot detection to prevent accounts from artificially inflating search frequencies

- Provide transparency about how recommendations work and what safeguards exist
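Two of the platform-level items above, auditing trending terms and discounting inflated search counts, can be sketched together. This is a hypothetical illustration, not a description of any safeguard TikTok has or lacks: the function `audit_trending`, the threshold `max_repeat_ratio`, and the sample log are all assumptions.

```python
from collections import defaultdict

def audit_trending(search_log, max_repeat_ratio=2.0):
    """Rank queries by distinct accounts and flag those inflated by repeat searches.

    search_log is an iterable of (account_id, query) pairs.
    Returns (ranked_queries, flagged_queries).
    """
    raw_counts = defaultdict(int)
    accounts = defaultdict(set)
    for account, query in search_log:
        raw_counts[query] += 1
        accounts[query].add(account)

    # Rank by how many different accounts searched, not by raw volume.
    ranked = sorted(accounts, key=lambda q: len(accounts[q]), reverse=True)

    # Flag queries whose searches-per-account ratio looks abnormal, for human review.
    flagged = [
        q for q in ranked
        if raw_counts[q] / len(accounts[q]) > max_repeat_ratio
    ]
    return ranked, flagged

# Hypothetical log: organic users search once; 30 coordinated accounts search 5 times each.
log = (
    [(f"user{i}", "cuban link chain") for i in range(120)]
    + [(f"user{i}", "rope chain") for i in range(120, 170)]
    + [(f"bot{i}", "double lightning bolt necklace") for i in range(30) for _ in range(5)]
)

ranked, flagged = audit_trending(log)
print("ranked: ", ranked)
print("flagged:", flagged)
```

Counting distinct accounts drops the coordinated term from 150 searches to 30 searchers, and the repeat ratio of 5 sends it to a review queue. A determined group could respond by using more accounts with one search each, so this is one signal among several, not a complete defense.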

At the policy level, there needs to be:

- Stronger FTC enforcement requiring platforms to implement adequate safety measures

- Requirements for platforms to report on moderation metrics in standardized ways

- Mandated third-party audits of e-commerce platforms' moderation systems

- Legal liability for platforms that knowingly host and recommend extremist merchandise

At the user level, people need to:

- Understand that recommendation algorithms can be weaponized and shouldn't be blindly trusted

- Recognize coded extremist language and symbols

- Report suspicious recommendations and products to platforms

- Educate younger people about white nationalist symbols and dog-whistle terminology

None of these solutions are difficult technically. Detecting that a product image contains a Nazi symbol is something computer vision systems can do reliably. Identifying coded extremist language is possible with trained models. Requiring transparency about how algorithms work is a policy choice, not a technical challenge.

The problem is that these solutions require investment, and investment requires prioritizing safety over growth. For TikTok, which is in aggressive expansion mode and faces regulatory scrutiny, prioritizing safety could mean slower growth.

But here's the reality: the longer platforms wait, the bigger the problem becomes. Extremist communities get better at gaming algorithms. Younger users get more normalized to extremist symbols. The damage spreads.

The Bigger Picture: Platform Accountability

The TikTok Shop Nazi symbolism problem isn't really about TikTok. It's about a broader pattern of social media platforms choosing growth over safety.

When a platform has millions of sellers and billions of users, it's technically impossible to manually review every listing. Automated systems have to do most of the work. But automated systems have vulnerabilities, and those vulnerabilities can be exploited by people with time and motivation.

The extremist communities exploiting TikTok Shop understand this. They know that platforms prioritize growth metrics over moderation metrics. They know that even getting caught doesn't result in meaningful consequences. They know that the worst-case scenario is their listings get removed after they've already sold the products and reached their audience.

This is the game theory of platform moderation: from the extremists' perspective, the rewards for exploitation exceed the risks. A seller might get 100 swastika necklaces sold before the listing is removed. That's money made, messages delivered, symbols distributed. The removal only matters if it happens before the sale.

Platforms could change these game theory incentives by implementing preventive moderation instead of reactive moderation. But preventive moderation is expensive. It requires hiring more moderators, training them better, investing in technology, being transparent about problems.

It's cheaper to be reactive. It's faster to grow. It's easier to claim problems don't exist until media outlets expose them.

But this approach has costs too. It results in harm. Young people get radicalized. Communities get poisoned. Democracy gets corroded as extremist ideology spreads unchecked.

Ultimately, the question isn't really about TikTok's algorithm or whether the company made a good-faith effort to moderate content. The question is: what does society owe its youngest users in terms of protection from extremist recruitment?

TikTok's answer so far seems to be: whatever is cheapest to implement.

FAQ

What is algorithmic poisoning and how does it work on social media platforms?

Algorithmic poisoning is a technique where coordinated users or bots manipulate a platform's recommendation algorithm by repeatedly searching for or engaging with specific content, causing that content to be promoted more widely. In TikTok Shop's case, coordinated searches for Nazi-related terms appear to have caused those recommendations to surface to other users, though TikTok has not confirmed the cause. A purely frequency-based algorithm can't distinguish between genuine searches and manipulated ones, so it treats them identically and learns to promote the poisoned terms.

How does TikTok's "Others searched for" recommendation system work?

When you search for a product on TikTok Shop, the platform displays an "Others searched for" box showing four related search suggestions based on what users have previously searched. These suggestions are pulled from the platform's search frequency data. TikTok uses these suggestions to guide users toward related products, but the system can be weaponized by having bot accounts or coordinated users search for specific terms repeatedly, pushing those terms into the recommendations others see.

Why is dog-whistle marketing effective against content moderation systems?

Dog-whistle marketing uses coded language that appears innocent to outsiders but carries hidden meaning to an in-group audience. Automated moderation systems struggle with this because they typically rely on keyword matching or image recognition. A swastika necklace described as "hiphop jewelry" might avoid filters looking for "Nazi" or "swastika," while still signaling to extremists that this is Nazi merchandise. The mismatch between the description and the product isn't obviously suspicious to an algorithm.

What responsibility do e-commerce platforms have to prevent extremist merchandise?

E-commerce platforms have a responsibility to implement reasonable safeguards against extremist products based on their size, capacity, and the harms being caused. This includes content-based filtering (analyzing product images and descriptions), bot detection to prevent algorithmic poisoning, and proactive moderation rather than purely reactive responses. While platforms can't catch every violation, they should invest in systems designed to minimize harm, particularly to younger users.

How does age affect vulnerability to extremist recruitment through e-commerce platforms?

Younger users have less familiarity with extremist symbols and coded language, making them more vulnerable to unintentionally purchasing items they don't understand or being exposed to radicalization pipelines. TikTok's user base is predominantly under 25, which means a significant portion of users seeing these recommendations lack the context to recognize extremist symbols. This age differential makes the problem especially concerning from a youth protection perspective.

What would proactive moderation look like for TikTok Shop?

Proactive moderation would include implementing content-based filtering that analyzes product images for extremist symbols, building detection systems for coded extremist language in product descriptions, requiring explicit product documentation, conducting random audits of trending recommendation terms to catch poisoning early, and improving bot detection. Instead of waiting for products to be reported or exposed in media, the platform would actively hunt for problematic content before it reaches users.

Why is transparency important in fixing this problem?

Without transparency, external researchers, journalists, and regulators can't understand why platforms' systems are failing or how to fix them. TikTok claims to have removed problematic content, but without knowing the total number of products listed, the percentage removed, or how recommendations actually work, it's impossible to verify whether these claims represent meaningful action. Transparency also helps users understand they're being targeted by algorithmic poisoning and empowers them to protect themselves.

How does TikTok Shop's Nazi symbolism problem compare to similar issues on other platforms?

Amazon, Etsy, and eBay have all struggled with extremist merchandise, but these platforms have had longer to develop moderation infrastructure and have faced more sustained public pressure. TikTok Shop is newer and has invested less in preventive moderation, relying instead on algorithm-based approaches and user reporting. While no e-commerce platform has solved this problem completely, TikTok's approach appears notably more reactive and less resource-intensive than its competitors'.

The Path Forward

TikTok Shop's Nazi symbolism problem isn't going away on its own. The only way it gets fixed is through some combination of platform investment, regulatory pressure, and sustained public attention.

Right now, TikTok has little incentive to invest heavily in this issue. The company faces regulatory scrutiny in the United States and is focused on growth. Spending resources on preventive moderation doesn't directly increase revenue or user engagement.

Regulatory pressure could change this. If the FTC or other agencies established clear expectations for what adequate moderation looks like, with penalties for non-compliance, platforms would have economic incentives to invest. But regulatory action takes time, and in the meantime, harm continues.

Sustained public attention also matters. When journalists cover these issues, when activist groups apply pressure, when users understand what's happening, platforms respond faster. The swastika necklace got removed because it went viral on other social media platforms. That's embarrassing for TikTok in a way that private reports never are.

But we shouldn't have to rely on viral outrage to protect young people from extremist recruitment. We should be able to expect that platforms investing billions in growth would invest adequately in safety.

The technical solutions exist. The policy frameworks exist. What's missing is the will to implement them.

That will only materialize if the cost of not implementing safety becomes higher than the cost of implementing it.

Until then, algorithmic poisoning will continue. Nazi-related products will continue to be recommended to unsuspecting users. Younger people will continue to be vulnerable to extremist recruitment through platforms that prioritize growth over protection.

And journalists will continue finding these problems months or years after they started, when the damage has already spread.

The question isn't whether platforms can fix this. The question is whether they will.

Key Takeaways

- TikTok Shop's algorithm actively recommends Nazi-related search terms through its 'Others searched for' feature, creating a poisoned recommendation pathway from innocent searches to extremist products

- Dog-whistle marketing (like labeling swastika necklaces as 'hiphop jewelry') exploits moderation systems that rely on keyword matching rather than contextual understanding

- Algorithmic poisoning requires minimal resources: coordinated searches by a few dozen accounts can push toxic terms into platform-wide recommendations, making this vulnerability systemic to recommendation algorithms

- TikTok's user base is predominantly under 25, making younger users disproportionately vulnerable to extremist symbol exposure and potential radicalization pipelines they may not recognize

- Platform moderation is reactive rather than proactive: TikTok removes products only after media exposure, not through preventive systems, allowing harm to spread before action is taken

