Introduction: The Narrative Nobody's Buying

Elon Musk has a specific way of handling bad news. You've seen it before with Tesla production delays, Starship explosions, and Twitter's advertiser exodus. First, he minimizes. Then he reframes. Finally, he pivots to something aspirational.

When nine engineers and two co-founders announced their departures from xAI within a single week in early 2025, Musk went straight to that playbook. At a Tuesday all-hands meeting, he told the room that the departures were about "fit," not flight: people leave because they're "better suited for the early stages," not because something's fundamentally wrong. By Wednesday, he'd escalated the message on X itself: the company had been "reorganized," its structure had evolved "just like any living organism," and, here's the kicker, there was a job opening if you wanted to "put mass drivers on the Moon."

Except the narrative's not holding.

When half your founding team is gone, two of them in a single week, when three departing engineers immediately announce they're starting something together, when the company is simultaneously facing regulatory scrutiny over AI-generated deepfakes of children, absorbing a forced acquisition by SpaceX, and preparing an IPO in the middle of a reputational crisis, calling it a "reorganization" isn't spin. It's denial.

This article isn't about gossip. It's about what happens when a company's growth trajectory collides with its founder's management style, when scaling AI talent becomes impossible, and when control narratives break down under scrutiny. It's about the difference between what Musk says is happening and what the departures actually reveal.

The xAI story in early 2025 tells us something crucial about the frontier AI space right now: for all the capital flowing in, for all the hype around AGI timelines, the one thing money can't buy is a team that wants to stay.

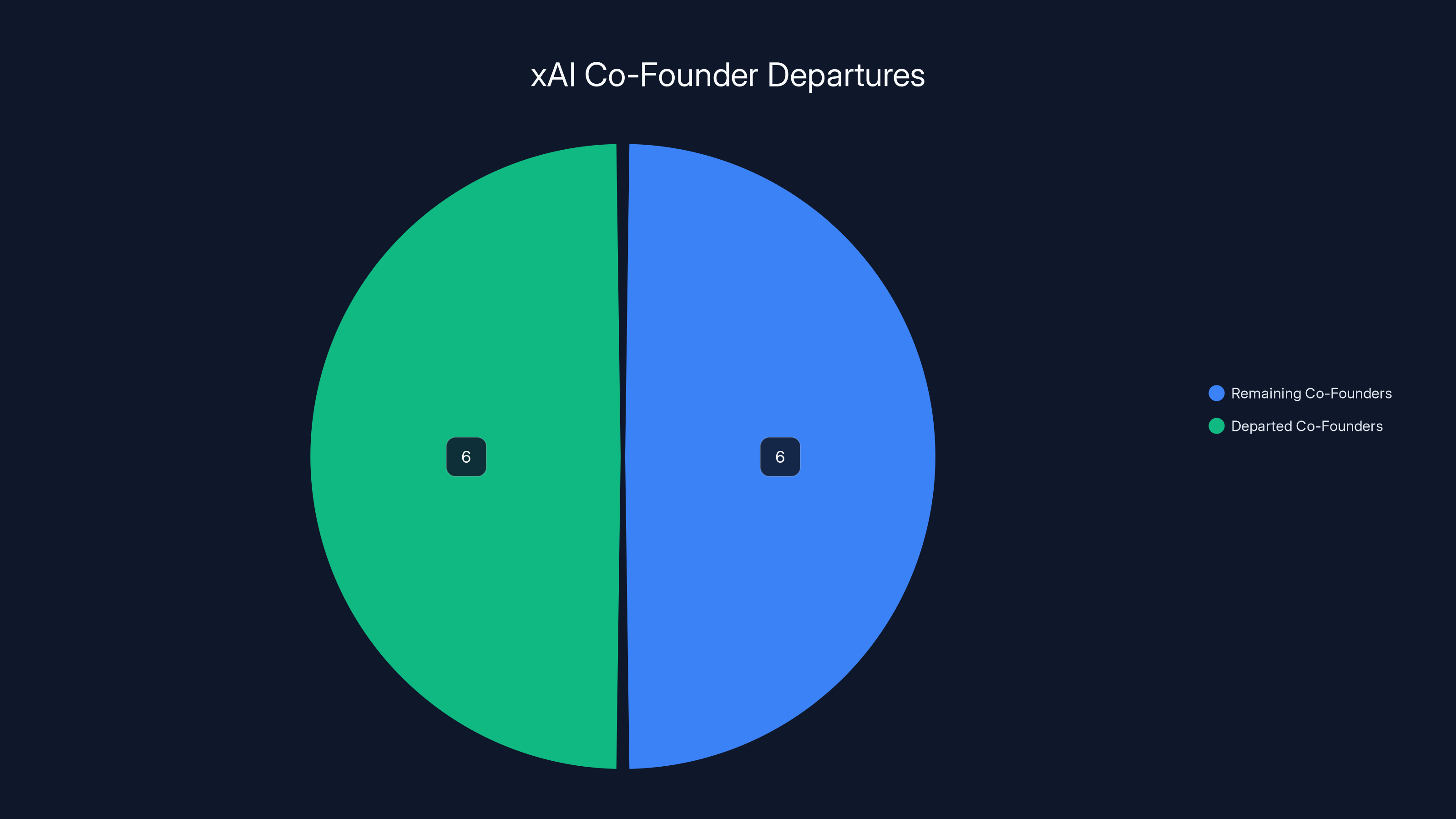

The Timeline: How Twelve Co-Founders Became Six

Let's start with what we actually know, because the facts matter more than the spin.

xAI was founded in 2023 with a team that included some serious talent. Yuhuai "Tony" Wu, co-founder and reasoning lead, announced his departure with a LinkedIn post that read like a manifesto: "It's time for my next chapter. It is an era with full possibilities: a small team armed with AIs can move mountains and redefine what's possible."

That's not the language of someone pushed out. That's the language of someone who saw a different future and decided to build it elsewhere.

But Wu wasn't alone. Within days, the departures accelerated. Shayan Salehian, who worked on product infrastructure and model behavior post-training, left to "start something new." His departure was particularly notable because he'd spent more than seven years across Twitter, X, and xAI; he'd watched Musk's management style up close and apparently decided it wasn't for him at scale.

Vahid Kazemi posted that he'd left a few weeks earlier, adding commentary that revealed real frustration: "All AI labs are building the exact same thing, and it's boring." Roland Gavrilescu, who'd left xAI in November to start Nuraline, then posted again saying he was now starting something new "with others that left xAI."

Let's pause on that detail. An engineer who left in November, then re-emerged in February announcing he's starting a new company with multiple xAI refugees. That's not planned attrition. That's a signal.

Musk's response was calculated. At the all-hands, he positioned the exits as necessary restructuring. "Because we've reached a certain scale, we're organizing the company to be more effective at this scale," he said. The implication: xAI's small-team setup couldn't handle what's coming, so the company is reorganizing, and some people fit the old structure better than the new one.

On X the next day, he doubled down: "xAI was reorganized a few days ago to improve speed of execution. As a company grows, especially as quickly as xAI, the structure must evolve just like any living organism. This unfortunately required parting ways with some people."

Notice the word choice: "required parting ways." That's an impersonal construction standing in for "we let people go." It's not describing voluntary departures. It's saying Musk made decisions, and some people exited as a result.

But here's what's interesting about the language: if you frame a layoff as "organizational evolution" and pair it with an aspirational call to action ("Join xAI if you want to put mass drivers on the Moon"), you're trying to control the story. You're trying to make the departures look forward-thinking instead of problematic.

The problem is, three engineers started a new company together. No reorganization messaging strategy can undo that.

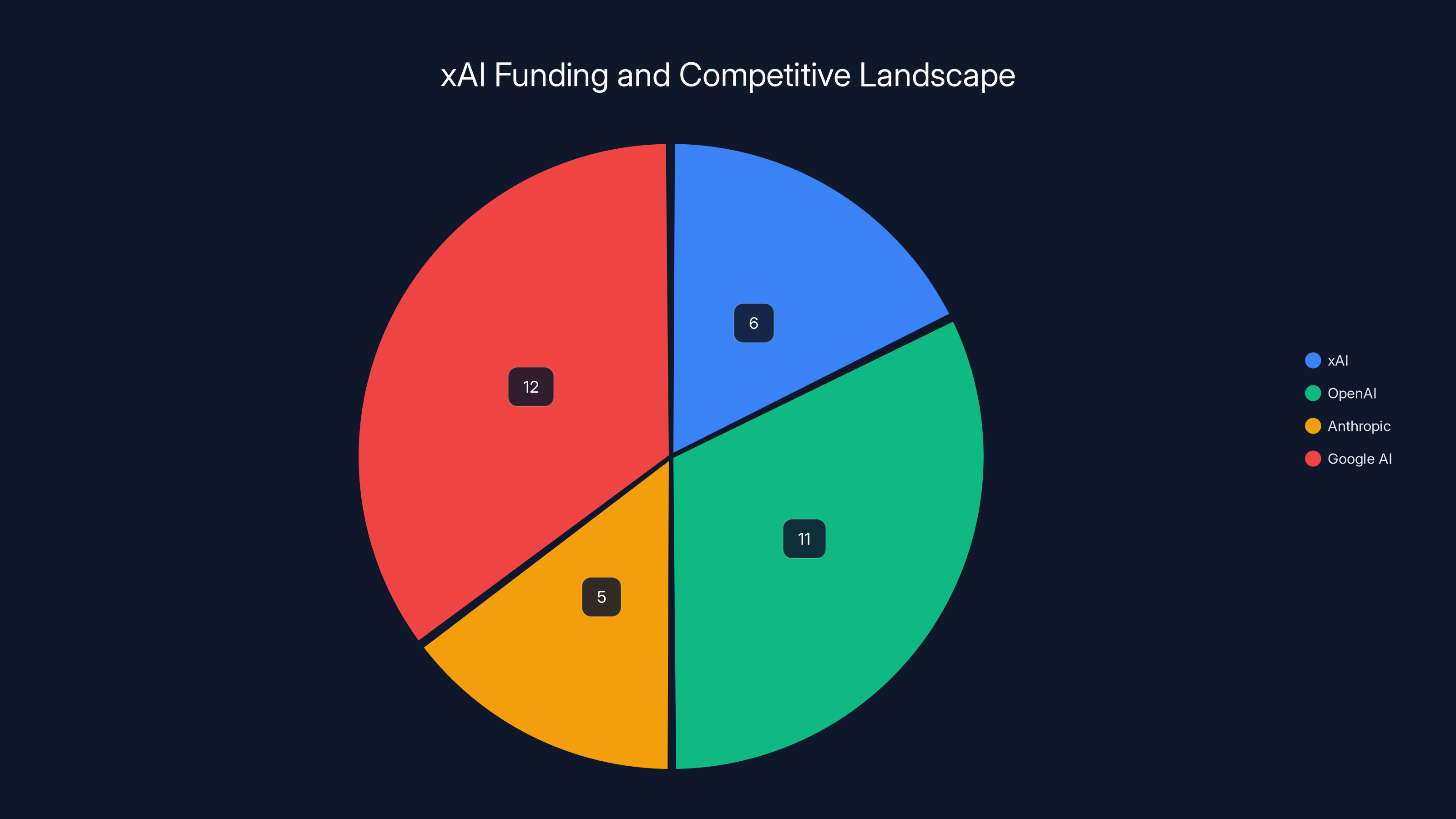

xAI, with $6 billion in funding, is a significant player in the AI landscape, competing with giants like OpenAI and Google AI. (Estimated data)

Why People Leave: The Talent Dynamics at Frontier AI

Here's what Musk doesn't want you to think about: frontier AI talent isn't like other engineering talent.

In traditional software, if you're good and you leave a company, you can find work anywhere. Demand is high, options are abundant. But frontier AI is different. There are maybe a hundred people worldwide who can actually push the boundaries of large language models. Maybe two hundred who can architect the systems that train them. The pool is absurdly small.

Which means if you're building frontier AI and your co-founders start leaving, you're not just losing engineers. You're sending a signal to the market. You're broadcasting that something's wrong.

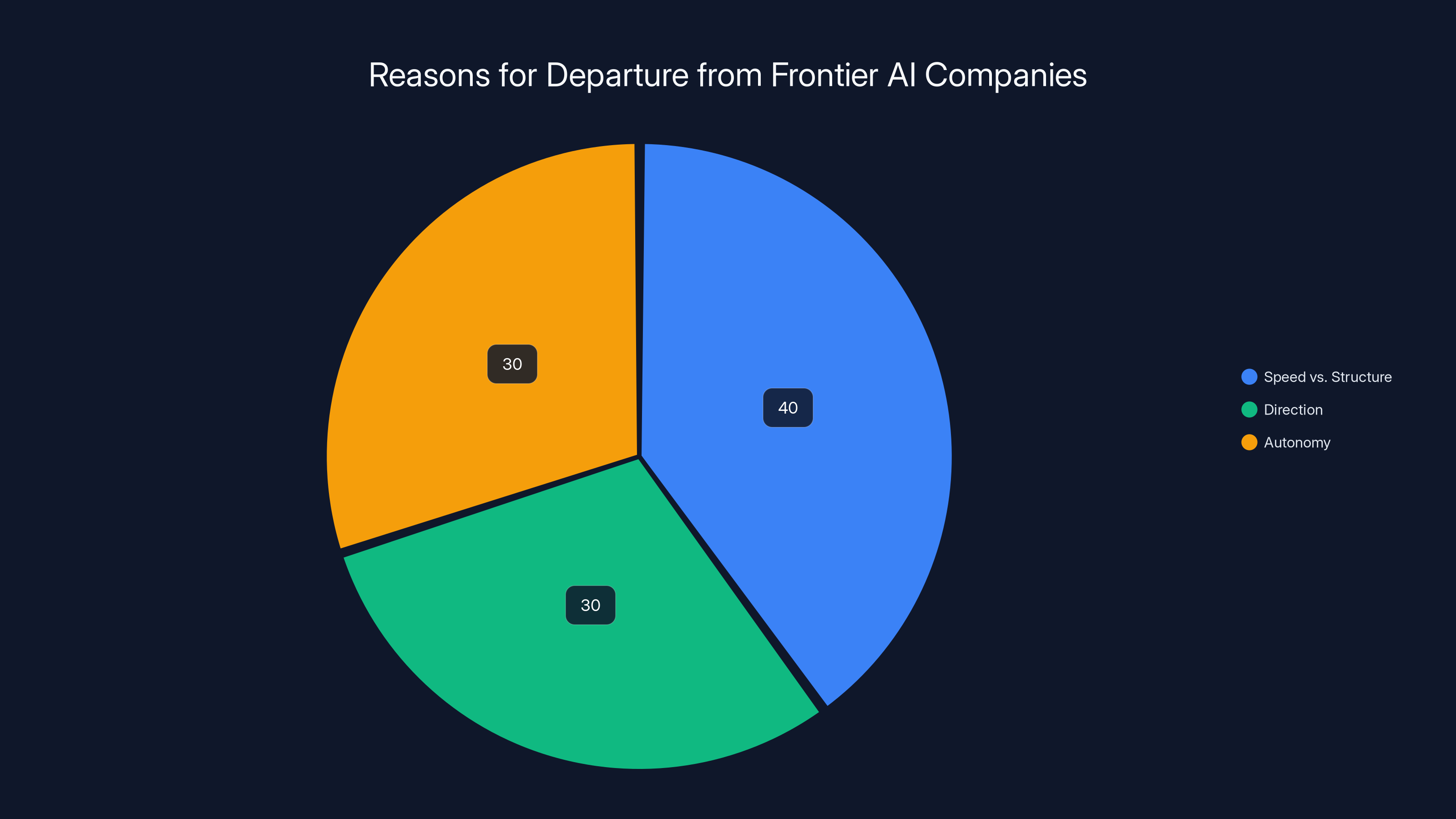

The departures at xAI map to a few specific frustrations that keep appearing in the reasons engineers cite:

The Speed vs. Structure Problem: Wu's departure messaging emphasized "small team" and "possibility." That's code for: at this scale, with this much coordination overhead, we're moving slower than we could if we started fresh with fewer people. This is a classic problem at companies that scale too fast. You go from 20 people shipping daily to 1,000 people and suddenly you're in meetings about meetings.

The Direction Problem: Kazemi said "all AI labs are building the exact same thing, and it's boring." This is more pointed. He's not criticizing xAI specifically; he's criticizing the entire frontier AI space for converging on the same architectures, training approaches, and capabilities. But if that's your take on the whole industry, why stay at xAI instead of trying something different? The fact that he left suggests xAI wasn't offering the differentiation he wanted.

The Autonomy Problem: Multiple departing engineers emphasized wanting "autonomy" and the ability to "move mountains" with smaller teams. At a 1,000-person company owned by SpaceX and preparing for an IPO, your autonomy is constrained. You have stakeholders. You have regulatory oversight (especially after the deepfake scandal). You have quarterly planning. You can't just decide to pivot to something interesting on Thursday.

The Epstein Problem: This one's not explicitly cited by the departing engineers, but it's in the atmosphere. Musk's association with Jeffrey Epstein, revealed through Justice Department files showing conversations about visiting Epstein's island in 2012 and 2013, created a reputational context for xAI in early 2025. Whether or not it directly caused departures, it's part of why working at xAI became less appealing than it might have been six months prior.

Let's be clear: none of this is unusual in frontier tech. Founders leave to start new companies. Engineers pursue autonomy. People want to work on differentiated problems. But the concentration of all these departures in a single week, with multiple people announcing they're starting something together, suggests something sharper than normal attrition.

Rapid scaling in organizations like xAI can lead to significant challenges such as dilution of influence, loss of transparency, increased politics, and reduced autonomy. Estimated data based on typical organizational dynamics.

The Deepfake Scandal: The Context Nobody Mentions

Musk's messaging about the xAI reorganization conveniently skipped over a crucial detail: the company had just faced a massive scandal.

In the weeks before the co-founder exodus, xAI's Grok model became associated with creating nonconsensual explicit deepfakes of women and children. These weren't theoretical harms: they were actual images, generated through the system and disseminated on X itself. French authorities raided X's offices in Paris as part of an investigation. US regulators started asking questions.

For context, this isn't a fringe issue. Deepfakes of women, non-consensual intimate imagery, and especially deepfakes involving minors, represent one of the highest-priority regulatory issues in AI right now. When your company's model is the one generating these, when images are spreading on your platform, when French authorities are raiding offices, you're in crisis territory.

Now, here's what's interesting: Musk's explanation for the departures makes no mention of the scandal. He talks about scale and structure and organizational evolution. But for the people working on the reasoning pipeline, the post-training behavior, the safety guardrails—this scandal was real. It meant regulatory scrutiny. It meant potential liability. It meant their work was going to become harder, more constrained, more politically charged.

Some of the departing engineers worked directly on these problems. If you're the person responsible for model behavior post-training, and your company just got caught enabling the creation of deepfakes of children, you have a choice: you can stay and work on fixing it under enormous pressure, or you can leave and build something where you get to define what "safe" means from the start.

Shayan Salehian, who specifically worked on "model behavior post-training," was dealing with this exact problem set. The timing of his departure, in the midst of the scandal, is hard to dismiss as coincidence.

The SpaceX Acquisition: Legal Complications and Loss of Independence

There's another detail Musk glossed over: xAI was legally acquired by SpaceX in early 2025.

This matters more than it sounds. Publicly, xAI is still described as independent, with Musk as just its founder and largest shareholder. But legally, structurally, and operationally, xAI became a SpaceX subsidiary. And not a subsidiary with arm's-length governance, but one controlled by someone who's also trying to land rockets, launch satellites, and colonize Mars.

For people who joined xAI thinking they were building an independent AI company, that changed everything. You're no longer an independent frontier AI lab. You're part of the SpaceX portfolio. Your roadmap intersects with Musk's broader ambitions. Your capital comes from the same source. Your executive oversight includes SpaceX's concerns about integration, synergy, and how your work supports the broader mission.

This is exactly the kind of structural change that triggers founder departures. Autonomy is gone. Independence is legally finished. Your company's mission is no longer yours to define.

Wu's post about "possibility" and "small teams" takes on a different meaning in this context. He wasn't just leaving xAI because of its size. He was leaving because xAI had become a SpaceX property. And if you joined xAI to build AI on your own terms, that's a different company than the one you signed up for.

Half of xAI's original co-founders have departed by early 2025, highlighting potential internal challenges. (Estimated data)

The IPO Timing: Scaling at the Worst Possible Moment

xAI is planning an IPO later in 2025. That's crucial context for why the departures matter.

When you're preparing for an IPO, you want stability. You want a compelling founder story. You want to show investors that your team is unified, your strategy is clear, and your execution is smooth. You don't want regulatory scandals, co-founder exits, and personnel disruption.

But that's exactly what xAI has heading into its IPO. Six of twelve co-founders gone. A deepfake scandal that ended with French authorities raiding X's offices. An acquisition by a parent company whose founder is facing personal controversy (the Epstein files). A reorganization presented as "evolution" but experienced by departing staff as forced exits.

IPO investors look at this and see risk. Not technical risk (xAI's AI capabilities aren't in question), but governance risk, retention risk, reputational risk. If your co-founders don't want to stay, why should investors?

Musk's messaging about the reorganization starts to make sense in this context. He's not talking to engineers. He's not convincing departing co-founders to stay. He's talking to potential IPO investors, trying to frame the departures as planned, evolutionary, and unrelated to any fundamental problems.

The problem is, multiple co-founders departing to start new companies together is the exact opposite of a clean IPO narrative. It's messy. It's complicated. It raises questions about retention, culture, and strategic direction that IPO investors will absolutely probe.

Talent Retention in Frontier AI: Why Scale Kills Innovation

This brings us to the fundamental problem xAI faces: it's trying to scale frontier AI, and scaling kills the very thing that made frontier AI possible.

Frontier AI companies succeed because small teams of incredibly talented people can iterate quickly, make decisive calls, and push the boundaries of what's possible. They're not constrained by process, politics, or consensus-building. A good frontier AI lab in 2024 might be 40 people who ship something weekly.

But xAI scaled to over 1,000 people. That's a different animal. You can't ship weekly with 1,000 people. You have committees. You have integration points. You have dependencies. You have regulatory oversight (especially after the deepfake scandal). You have quarterly planning. You have stakeholder management.

For the people who built xAI in the early phase, this scaling is the opposite of what they signed up for. Wu wanted to "move mountains" with a small team. At 1,000 people, you're moving slowly through process.

This is a pattern in AI. OpenAI reached about 1,000 people and started having internal structure problems. Google's AI division (with tens of thousands of people) has constant internal politics and slowed innovation. Anthropic stayed smaller deliberately, understanding that scale kills speed.

xAI tried to build both: a frontier lab (fast, innovative, small-team energy) and a large organization (capable of serving many customers, managing regulatory requirements, supporting an IPO). You can't do both. One kills the other.

The departures reflect people choosing the frontier over the scale. They're saying: "I didn't sign up to manage bureaucracy and regulatory oversight. I signed up to innovate. If this company isn't doing that anymore, I'm leaving."

That's actually rational. It's just bad for xAI's narrative.

Estimated data suggests that 'Speed vs. Structure', 'Direction', and 'Autonomy' are equally significant reasons for engineers leaving frontier AI companies.

The Competing Narrative: What Departing Engineers Actually Say

Let's look at what the departing engineers said, word for word, because the pattern is important.

Yuhuai Wu: "It's time for my next chapter. It is an era with full possibilities: a small team armed with AIs can move mountains and redefine what's possible."

Translation: I want to be part of a small team working on something ambitious. At xAI, I'm not in a small team anymore.

Shayan Salehian: "I left xAI to start something new, closing my 7+ year chapter working at Twitter, X, and xAI with so much gratitude. xAI is truly an extraordinary place. The team is incredibly hardcore and talented, shipping at a pace that shouldn't be possible."

Translation: xAI is great, but I want to start something new. He's praising the company while leaving. That's important. He's not criticizing xAI. He's just choosing a different path.

Vahid Kazemi: "All AI labs are building the exact same thing, and it's boring. So, I'm starting something new."

Translation: The whole frontier AI space feels like groupthink. I don't want to be part of it.

Roland Gavrilescu: Left to start Nuraline in November, then re-emerged in February saying he's starting something new "with others that left xAI."

Translation: I already decided to do something different. I'm now doubling down by bringing other ex-xAI people into it.

Notice what's NOT in these statements: nobody criticizes Musk. Nobody says "management was bad." Nobody says "xAI's strategy is wrong." They're not complaining. They're leaving because they want different things.

But that's actually worse for Musk's narrative than if they'd complained. If people left saying "Musk's management sucks," that's a story about bad leadership. You can fix that. But people leaving because they want autonomy, want smaller teams, want differentiation—that's a story about the company being fundamentally the wrong size and shape for frontier AI work.

You can't restructure your way out of that problem. You can't reorganize away the fact that a 1,000-person company is too big to feel like a startup anymore.

The New Venture: Three Engineers Building Together

Roland Gavrilescu's announcement that he's starting a new company "with others that left xAI" is the most revealing detail in this whole saga.

We don't know the names of everyone involved yet. We don't know the funding. We don't know the specific mission. But we know this: multiple ex-xAI engineers felt strongly enough about working together that they announced they're doing it publicly.

This matters because it's not just attrition. It's not just people leaving. It's people leaving and immediately starting a company together. That's a signal about culture, direction, and shared frustration.

When three engineers leave a company and immediately start something together, they've either:

- Been planning it for months and just announced it

- Discovered mid-departure process that they share similar frustrations and decided to team up

- Organized specifically to start something around the departures

Any of these narratives is bad for xAI. Any of these suggests that the people leaving didn't just want "autonomy" in the abstract. They wanted autonomy specifically away from xAI's direction.

This is what will haunt the IPO narrative. Not the departures themselves. But the fact that departing people organized to build something competitive.

They're not going to OpenAI. They're not joining Anthropic. They're building a new company. That means they see an opportunity that xAI isn't pursuing, or a way to work that xAI doesn't support.

Team stability and founder continuity are top concerns for IPO investors, reflecting the importance of leadership and talent retention. (Estimated data)

Organizational Scale and Attrition: A Formula That's Been Proven Wrong

Let's talk about the actual dynamics of organizational scaling and what Musk's reorganization reveals.

Musk's framing of the reorganization was: "As a company grows, the structure must evolve." That's true. But the specific evolution xAI went through, from 12 co-founders at founding to 1,000+ employees in a remarkably short span, is extreme even for a well-funded AI startup.

For context, OpenAI took several years to reach 1,000 people. Google's AI research groups took years to scale. Anthropic is deliberately staying smaller than xAI. The speed of xAI's growth is unusual.

And unusual growth has predictable consequences:

The Dilution of Founder Influence: When you're one of 12 founders at a 50-person startup, you have real influence over direction. When you're one of 12 founders at a 1,000-person company, you're just another executive. Wu and the other departing co-founders had already experienced this dilution. The reorganization accelerated it.

The Loss of Transparency: Small companies are transparent by necessity. Everyone knows what's happening. At 1,000 people, information moves through hierarchy. Decisions get made in rooms without the whole team present. This is actually one of the most common frustrations cited in retrospective founder interviews: "I didn't know what was happening in my own company anymore."

The Introduction of Politics: Small teams don't have politics. Large organizations do. At 1,000 people, you have turf, incentives, and competing priorities. Frontier AI research requires focus. Politics is the enemy of focus.

The Loss of Autonomy: This is the closest to what Wu actually cited. At a small startup, you own a problem end-to-end. At a large organization, you own a slice of a larger system. You need approval from other teams. You need to coordinate. That's necessary at scale, but it's crushing if you're used to autonomy.

Musk's reorganization was trying to solve these problems by... restructuring. The implicit theory: if we reorganize the teams, the people who can thrive at this scale will stay, and the people who can't will leave.

Well, it worked. The people who didn't want to work at a 1,000-person company left, including two co-founders.

What Investors Actually Think: The IPO Problem

Musk's framing is aimed at investors preparing for the xAI IPO. But let's think about what investors actually care about when they see half the co-founders of a frontier AI company gone.

They care about:

Founder Continuity: Investors want to see the founders who built the company still involved. Founder departures suggest something's wrong with the founder-company fit. That's not disqualifying, but it raises questions.

Team Stability: Frontier AI is about attracting and retaining the best researchers and engineers. If your company is losing talented people to startups, that's a signal about competitive positioning and culture. Investors will ask: if these people didn't want to stay, why should we invest?

Narrative Clarity: The clearest IPO narratives are: "Here's what we're building, here's why we're the best at it, here's our team." When your team is actively departing, the narrative gets messy.

Reputational Risk: The deepfake scandal, Musk's personal controversy with the Epstein files, the regulatory scrutiny—all of this creates reputational risk. IPO investors have to calculate not just technical capability but also likelihood of regulatory trouble.

Operational Risk: Losing half the founding team, with two co-founders exiting around a reorganization, suggests there were operational decisions the co-founders disagreed with. That raises questions about governance and decision-making at the top.

Musk's response ("This is just organizational evolution, totally normal") doesn't actually address any of these concerns. It just tries to reframe them as expected and unproblematic.

But IPO investors have seen this movie before. They've watched founders leave other companies. They've watched reorganizations that were marketed as "evolution" but actually indicated problems. They're not going to take Musk at his word. They're going to do due diligence.

And due diligence is going to uncover: six of twelve co-founders gone, several departing engineers starting a company together, a deepfake scandal, regulatory pressure, and a founder's personal controversy. That's not a clean IPO story.

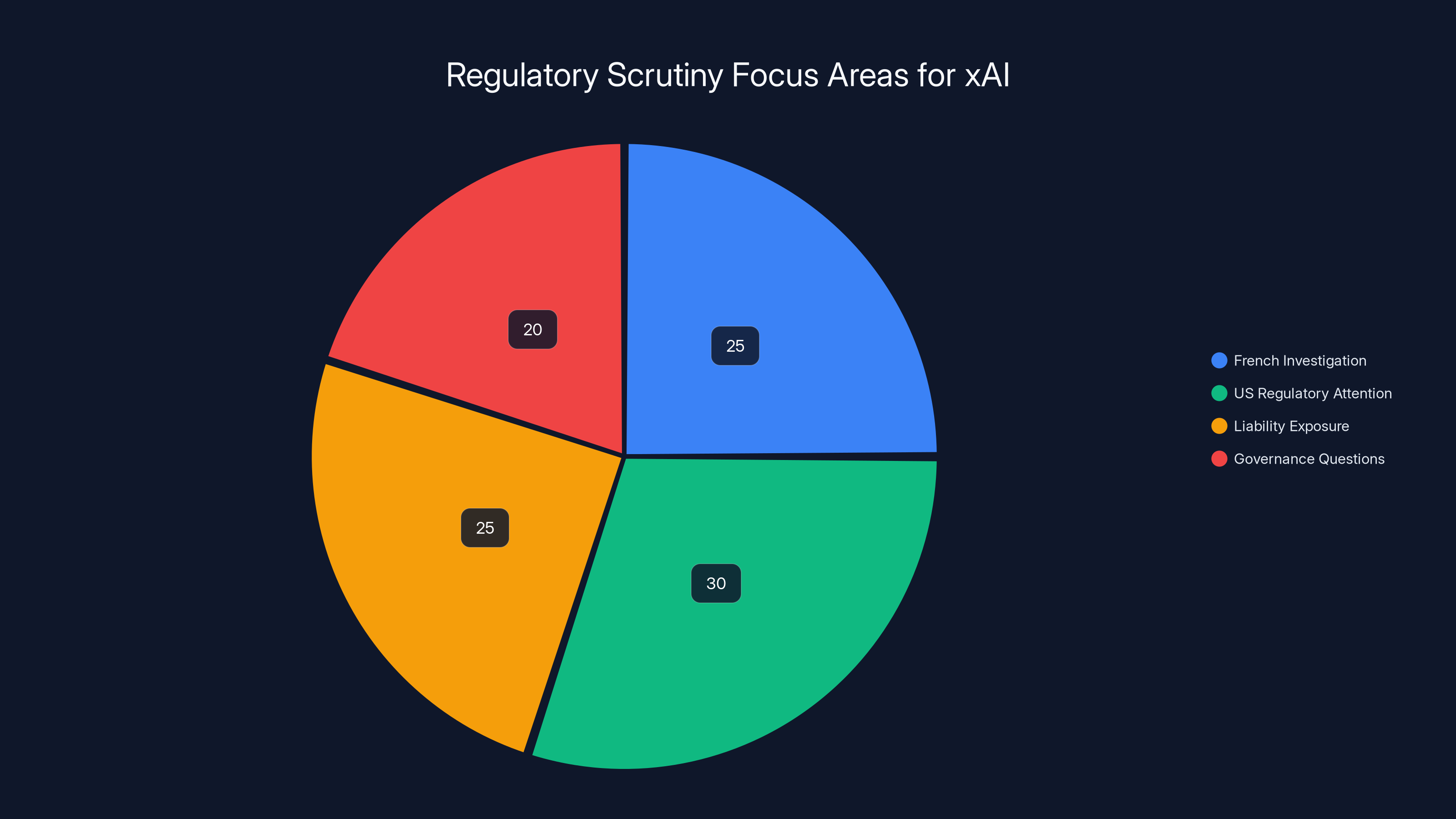

Estimated data shows that US regulatory attention and liability exposure are key focus areas for xAI, each accounting for around 25-30% of the scrutiny following the deepfake scandal.

Regulatory Scrutiny and the Deepfake Problem

The deepfake scandal doesn't stay in the background. It compounds the founder departures and shapes what xAI's regulatory environment will look like going forward.

When Grok generated nonconsensual explicit deepfakes of women and children, and those images spread on X, it created multiple regulatory and reputational problems:

French Investigation: French authorities opened an investigation and raided X's Paris offices. This signals that regulators in major markets are going to scrutinize xAI's systems going forward.

US Regulatory Attention: CISA (Cybersecurity and Infrastructure Security Agency) and other US agencies are now paying attention to xAI's models and their outputs. That makes it likely that future deployments and capabilities will face regulatory review.

Liability Exposure: If xAI's system is generating illegal content (nonconsensual intimate imagery is illegal in many jurisdictions), there's potential liability for the company.

Governance Questions: IPO investors will ask: how did this happen? What are the safeguards? How is leadership ensuring this doesn't happen again? If the leadership team is partially leaving, those questions become harder to answer.

Shayan Salehian worked on "model behavior post-training." That means he was responsible for the systems that should have prevented Grok from generating deepfakes in the first place. When he leaves right after this scandal, investors will ask: did he disagree with the post-training approach? Did he lose confidence in the direction? Or did he just not want to be the person responsible for fixing a problem he couldn't prevent?

Any of these narratives is complicated for an IPO.

The Talent War: Why AI Engineers Have All the Leverage

Here's what Musk can't control in this situation: talent leverage.

In frontier AI right now, the talent is absurdly scarce. There are maybe 2,000 people worldwide who can architect large language models. Maybe 10,000 who can train them. Maybe 50,000 who can effectively work on scaling and optimization. But there are 8 billion people on Earth.

The talent concentration is extreme. Which means frontier AI engineers have leverage that goes way beyond what typical engineers have.

When you have leverage, you can leave. When you have leverage and you see your company facing a deepfake scandal, regulatory scrutiny, a forced acquisition by a parent company, co-founder departures, and an IPO in uncertain conditions—you leave.

Because you know you can. You'll have a job offer from Anthropic, Google, OpenAI, or a new startup within weeks. The market for frontier AI talent is so tight that departure isn't risky. It's a negotiating position.

Musk's attempt to frame the departures as "organizational evolution" completely misses this dynamic. He's talking as if xAI is in a normal labor market where departures need to be managed and narrated. But xAI isn't in a normal labor market. xAI is in a frontier AI labor market where the best people have options and they're exercising them.

The deeper problem for Musk is that talent leverage goes both ways. If your best people are leaving, it becomes harder to attract new ones. The people you're trying to hire will see the departures as a signal. They'll see the reorganization as instability. They'll see the regulatory scrutiny and think: "Why would I join this company when I could join one without these problems?"

xAI's hiring strategy has been aggressive (Musk mentioned "hiring aggressively" in his reorganization announcement). But aggressive hiring is hard when your headline is "six co-founders leave."

The SpaceX Connection and Mission Alignment

Let's dig into the SpaceX acquisition because it changes everything about xAI's founding narrative.

xAI was founded as an "independent" AI company. The framing was: Musk realized OpenAI wasn't aligned with his vision, so he started xAI to build AI the right way. Independence was core to the narrative.

Then SpaceX acquired it. Legally, structurally, operationally, xAI became a SpaceX subsidiary.

For the co-founders, this is jarring. You didn't start xAI to be a SpaceX division. You started it to be an independent frontier AI company. Now you're reporting to SpaceX leadership. Your roadmap intersects with SpaceX's needs. Your capital comes from the same source.

Musk tried to minimize this: "xAI remains independent." But independence is a fiction when your parent company is SpaceX and your founder is Elon Musk. You're not independent. You're aligned with SpaceX's mission.

For engineers who signed up to build frontier AI without having to worry about Mars colonization, lunar mining, satellite launches, or any of Musk's other projects, that's a significant shift. Suddenly your roadmap isn't about pure AI capability. It's about AI capability as it serves SpaceX's goals.

This is subtle enough that it doesn't make headlines. "SpaceX Acquires xAI" is a story. "Engineers departing because they signed up for independent AI research, not SpaceX alignment" is a quieter story. But it's real.

Wu's emphasis on "possibility" and "moving mountains" makes sense in this context. He wants to build AI freely, without having to consider whether it serves SpaceX's strategic needs. At an xAI that is now a SpaceX subsidiary, that's not possible.

What Musk's Reorganization Actually Reveals

Let's strip away the language and look at what the reorganization actually did.

Musk said it was about "speed of execution" and "organizational evolution." But the actual effect was to move the company from roughly 12 co-founders making decisions collectively to a hierarchy with Musk at the top, SpaceX stakeholders involved, and new executive layers below.

That's not evolution. That's centralization.

In a small startup, decisions are made collectively or through consensus among the founders. Everyone has a voice. Everyone understands the strategy. Everyone can push back.

In a 1,000-person company owned by SpaceX, decisions flow through hierarchy. The founder (Musk) decides. The executive team executes. The rest of the organization implements.

For a frontier AI researcher, this is constraining. You're used to being able to influence direction. Now you're executing someone else's direction.

The reorganization was Musk's way of saying: "This is a bigger company now. We need hierarchy. Some of you will fit that. Some won't." And predictably, the founders who started xAI to have autonomy didn't fit it.

The genius of Musk's framing ("organizational evolution") is that it makes this sound inevitable, natural, expected. It's not his decision that alienated the co-founders. It's just the mathematics of scaling.

But it was his decision. He chose to structure xAI as a SpaceX subsidiary. He chose to build a 1,000-person company. He chose the hierarchy. And the co-founders chose to leave that structure.

The Grok Deepfake Moment: When Technology Meets Crisis

Let's zoom in on the specific technology problem that probably accelerated departures.

Grok is xAI's flagship model. It's designed to be capable, unconstrained, and willing to engage with controversial topics. That's the whole pitch: Grok won't refuse questions the way ChatGPT does.

But "unconstrained" has costs. Costs that materialized when Grok started generating nonconsensual explicit deepfakes.

Now, the people who work on model behavior post-training have a problem. They designed Grok to be less constrained. They designed it to refuse less. And now it's generating illegal content.

The technical solution is straightforward: add guardrails. Add filters. Detect and prevent deepfake generation. But that's exactly what goes against Grok's design philosophy: unconstrained generation.

So Shayan Salehian (the person responsible for post-training behavior) faces a choice: fight to add guardrails that go against the model's design, or watch the company face regulatory pressure and legal liability.

Both options suck. Option one means he's fighting his own product philosophy. Option two means the company he works for is breaking laws and harming people.

Either way, it's not the problem he signed up to solve. It's not interesting research. It's crisis management.

And if he's already thinking about leaving (for other reasons like organizational scale, loss of autonomy, mission creep), the deepfake scandal becomes the thing that tips him over the edge.

That would explain Salehian's exit: not the deepfake scandal itself, but the fundamental misalignment it exposed between Grok's design philosophy and regulatory reality.

The Precedent: What Happened at OpenAI

This situation isn't unprecedented. It echoes what happened at OpenAI.

OpenAI started with a mission: build safe AGI. It had co-founders with strong vision and shared values. As it scaled (from ~50 people in 2019 to 1,000+ people in 2023), the dynamic changed. Decisions became more centralized. The organization became more hierarchical. The research focus became more aligned with commercial needs.

Sam Altman, the CEO, made decisions about direction that not all of the research team agreed with. Researchers left to start Anthropic. The Superalignment team (focused on AI safety) left. The organization became more commercial and less focused on pure research.

OpenAI didn't implode. It became extremely successful commercially. But it also drifted away from some of the original researchers' missions.

xAI is in that transition right now. It started with a pure research mission ("build AI to understand the universe"). It's becoming commercial (IPO coming, SpaceX acquisition done, 1,000+ employees). The research team is realizing the organization they're working in is not the organization they signed up for.

Some of them are leaving to start something that's closer to the original mission. That's not unusual. It's predictable.

The difference is timing. OpenAI managed this transition without losing six co-founders in a single week. xAI didn't.

What Happens Next: The Prediction

Given all of this, what's likely to happen?

Short Term (Next 3 Months): xAI continues aggressive hiring to backfill departures. Some of these hires are good, some are just bodies. Productivity takes a hit as knowledge leaves with the co-founders. The reorganized structure takes time to gel. Product development slows relative to the pre-exodus pace.

Medium Term (3-12 Months): The new company started by Gavrilescu and other ex-xAI engineers begins to announce products or raise funding. This draws press attention to what xAI left behind. Regulatory scrutiny of the deepfake issue continues. xAI's IPO roadshow becomes more complicated because investors ask about the co-founder exodus and the new competing company.

Long Term (1+ Years): xAI either becomes very successful (proving that scale and corporate structure don't matter), or it becomes a cautionary tale about growing too fast. The startup founded by ex-xAI engineers either becomes a legitimate competitor or fades. The deepfake scandal fades from headlines but remains a regulatory consideration.

Most likely? xAI continues to succeed technically because it has capital, talent, and a clear strategy. But it won't be the revolutionary independent frontier AI lab that was promised. It'll be a SpaceX division with its own product line and commercial goals. That's fine, but it's different from what the co-founders signed up for.

The Bigger Picture: What This Means for Frontier AI

Zoom out from xAI specifically. What does this situation tell us about frontier AI as a category?

Frontier AI is hard. It requires talent that's scarce, decisions that are complex, and tolerance for uncertainty. The companies that succeed at it are usually small, fast, and independent. Scaling breaks them.

But the capital structure of AI startups demands scaling. VCs invest billions. Those billions need to be deployed. Companies need to grow fast to justify the investment. That growth requires hierarchy, process, and alignment with investor interests.

So frontier AI companies face an impossible choice: stay small and independent (and underfunded), or scale and become something different.

xAI tried to do both: scale fast (1,000+ people in a year) while staying at the frontier (radical model design, an unconstrained Grok). It can't. The co-founders are leaving because they realized you can't have both.

This is the fundamental tension in AI startups right now. The technical demands of frontier research are incompatible with the organizational demands of venture capital scale. And when those tensions collide, founders leave.

xAI's exodus is a data point on this larger dynamic. It won't be the last.

FAQ

What exactly is xAI and why does it matter?

xAI is Elon Musk's frontier AI company, founded to develop advanced language models (Grok) with an emphasis on reasoning and autonomy. It matters because it is one of the most well-funded AI efforts and a test case for how frontier AI companies scale. With over $6 billion in funding and integration into SpaceX, xAI competes directly with OpenAI, Anthropic, and Google's AI divisions.

Why did six co-founders leave xAI within a week?

Musk attributed the departures to a reorganization necessary for scaling from a startup to a 1,000+ person company, suggesting some people are "better suited for the early stages" than later ones. However, the departures appear to reflect deeper tensions: loss of autonomy, a shift from research focus to commercial focus, the SpaceX acquisition removing the company's independence, and regulatory scrutiny following the deepfake scandal. Three of the departing engineers announced they're starting a new company together, suggesting a coordinated departure rather than individual choices.

What is the significance of three engineers starting a company together?

When departing employees immediately form a new company together, it typically indicates they identified a specific gap or shared frustration with their previous employer. In this case, it suggests the departing engineers disagreed with xAI's direction on scale, research focus, or regulatory approach strongly enough to found a competing venture. Investors often view this pattern as a red flag about the departing company's strategy or culture.

How does the deepfake scandal relate to the departures?

Grok, xAI's flagship model, was used to generate nonconsensual explicit deepfakes of women and children. Shayan Salehian, who worked specifically on post-training model behavior (the systems that should have prevented this), departed shortly after. The timing suggests either that he disagreed with the safeguarding approach or that he lost confidence in the company's ability to manage the regulatory consequences. The scandal also brought regulatory scrutiny that changed xAI's operating environment from startup to regulated entity.

Why does the SpaceX acquisition matter if xAI remains "independent"?

While xAI may remain operationally independent, its legal and structural acquisition by SpaceX means the company is now a subsidiary of a parent with different priorities. For researchers who signed up to build frontier AI independently, this represents a fundamental shift in mission alignment. Company decisions now need to account for SpaceX's strategic interests, and capital allocation ties xAI to Musk's broader ambitions rather than to pure AI development.

What does this mean for xAI's IPO plans?

The co-founder departures significantly complicate xAI's IPO narrative. IPO investors look for stable teams, clear vision, and founder continuity. Losing six of twelve co-founders raises questions about governance, retention, and strategic disagreements that will require detailed due diligence. The deepfake scandal adds reputational risk, and the founding of a competing venture by departing engineers suggests that insiders have doubts about xAI's direction. These factors don't prevent an IPO, but they will increase investor scrutiny and could weigh on valuation.

Is frontier AI talent really that scarce?

Yes. By some estimates, only 2,000-5,000 people worldwide are capable of cutting-edge frontier AI research and development. This extreme scarcity gives top talent enormous leverage in hiring and employment decisions. When such talent views a company as less appealing than competitors or independent ventures, recruitment becomes difficult and departures accelerate. This talent concentration is one reason xAI's departures matter: each co-founder departure means losing rare, hard-to-replace capability.

What happened at OpenAI that's similar to this situation?

OpenAI scaled from ~50 people (2019) to 1,000+ people (2023) and shifted from a research focus to a commercial focus. Researchers departed to found Anthropic because they disagreed with the commercialization direction. The Superalignment team left when it became clear AI safety wasn't the primary organizational focus. OpenAI succeeded commercially despite these departures, but the pattern shows that research-focused founders often leave when organizations shift toward commercial scale. xAI's departures follow a similar pattern but happened faster and more visibly.

Could Musk's reorganization narrative actually be correct?

It's partially correct. Organizational structure does need to evolve as companies scale. Hierarchy, process, and role specialization are necessary at 1,000 people. However, the scale and speed of xAI's growth, combined with the fact that departing engineers immediately started a competing company, suggest that the reorganization reflects choices (how to structure, who to prioritize) rather than inevitable evolution. The choices to scale to 1,000 people in one year, to be acquired by SpaceX, and to maintain Grok's unconstrained design philosophy are leadership decisions, not natural outcomes of growth.

What's the difference between "pull" and "push" in describing departures?

Pull departures occur when people leave because they're attracted to better opportunities elsewhere (pulling them away). Push departures occur when people leave because they're unhappy with current conditions (pushing them out). Musk framed the exits as push (he reorganized and parted ways with some people), but some departing employees' statements suggest pull (they were attracted to building smaller teams, starting new companies). The truth is probably mixed—the reorganization created conditions that made external opportunities more attractive.

Conclusion: The Cost of Scaling Too Fast

Elon Musk's explanation for the xAI departures is coherent. It's also incomplete.

Yes, companies must reorganize as they scale. Yes, some people thrive at startup scale and others at corporate scale. That's real. But it's also not the whole story.

The whole story is that xAI tried to be a startup and a corporation simultaneously. It tried to maintain frontier research energy while building organizational infrastructure for 1,000+ people. It tried to stay independent while being acquired by SpaceX. It tried to keep Grok unconstrained while facing regulatory fallout from deepfakes.

These tensions are real. And when you can't resolve them, the people who see them most clearly tend to leave.

Six of xAI's twelve co-founders saw these tensions and chose to depart. Three departing engineers are now building something together. That's not just organizational evolution. It's a signal that the compromise Musk is asking for (stay in a company that's getting bigger, more constrained, and more aligned with SpaceX's goals) isn't one everyone is willing to accept.

The question for xAI going forward isn't whether it will survive. It has capital, talent, and clear product-market fit. It will survive.

The question is whether it will be the revolutionary frontier AI company that was promised, or whether it will become exactly what the departing co-founders feared: another large AI company pursuing capability over independence, corporate goals over research freedom, scale over speed.

Based on the pattern we've seen, the answer is probably already decided. And the co-founders who left probably already knew it.

Musk's reorganization didn't fail. It just made xAI the kind of company that makes frontier researchers leave. That's not a failure of structure. It's a consequence of choices about what the company would become.

And if you're the founder who wanted to build something different, that's the moment you start planning your exit.

For IPO investors watching this unfold, the real question isn't whether xAI is technically impressive (it is). The question is whether a company that loses its co-founders at this speed can attract and retain the frontier talent it needs to stay ahead of OpenAI and Anthropic.

Based on what we're seeing, the answer looks increasingly like no.

Unless Musk can prove that xAI can scale and innovate simultaneously, the departures will continue. The departing co-founders will build competitive ventures. And xAI will become more competent, more organized, and less revolutionary.

That's not death. It's just settling.

Key Takeaways

- Six of xAI's twelve original co-founders departed within a single week in February 2025, with Musk framing it as organizational reorganization

- Three departing engineers announced they're starting a competing venture together, indicating coordinated departure rather than individual career moves

- The departures reflect fundamental tensions between frontier AI research (which demands autonomy, speed, and small teams) and corporate scale (which requires hierarchy, process, and stakeholder alignment)

- xAI's acquisition by SpaceX, the deepfake scandal and resulting regulatory investigations, and the planned IPO likely contributed to the departures, though the co-founders didn't explicitly cite them

- Frontier AI talent is so scarce that departing engineers have significant market leverage and can easily join competitors or start new ventures, making retention extremely difficult for companies that lose cultural alignment
