AI Agent Orchestration: Making Multi-Agent Systems Work Together

Here's the problem nobody talks about openly: your AI agents are terrible at teamwork.

You can build agents that understand natural language. You can train them to call APIs, read documents, and make decisions. But the moment you need two agents to work together on the same task? Everything falls apart.

They step on each other's toes. They duplicate work. They misunderstand what the other agent is trying to do. Sometimes they produce contradictory outputs that confuse the downstream systems that depend on them. It's like having a team of brilliant people in a meeting where nobody speaks the same language.

This is where orchestration comes in, and it's quietly becoming the most important layer in enterprise AI infrastructure.

Orchestration is the difference between having AI agents and having an AI system that actually works. It's the conductor that keeps all the individual musicians in sync, the traffic controller managing a complex intersection, the operations manager ensuring multiple teams aren't stepping on each other.

Right now, most enterprises are in the early stages of agent adoption. They're focused on building individual agents that can automate specific workflows. But the leaders who are thinking ahead recognize that the real value comes when those agents coordinate with each other, with existing automation systems, and with human teams. That coordination? That's orchestration.

And it's becoming a critical differentiator between organizations that see 30% productivity gains and those that see 3X improvements.

Let's break down what's actually happening in the world of multi-agent systems, why orchestration matters so much, and what enterprise leaders need to do right now to position themselves for the AI era.

TL;DR

- Agent orchestration solves a critical coordination problem: Without it, multiple AI agents create misunderstandings, duplicate work, and inconsistent outputs that undermine reliability and security

- Orchestration platforms are becoming mandatory infrastructure: Tools like Salesforce MuleSoft, UiPath Maestro, and IBM Watsonx Orchestrate help enterprises see and manage agentic actions at scale

- Risk management is the real differentiator: Future orchestration platforms will move beyond monitoring to active quality control, agent assessment, and policy enforcement

- The shift from human-in-the-loop to human-on-the-loop is reshaping workflows: This transition democratizes AI development while moving humans upstream to design roles rather than approval roles

- Enterprise leaders need to act now: Organizations should audit their entire automation stack, implement orchestration platforms, and begin migrating toward agent-first systems to unlock true velocity gains

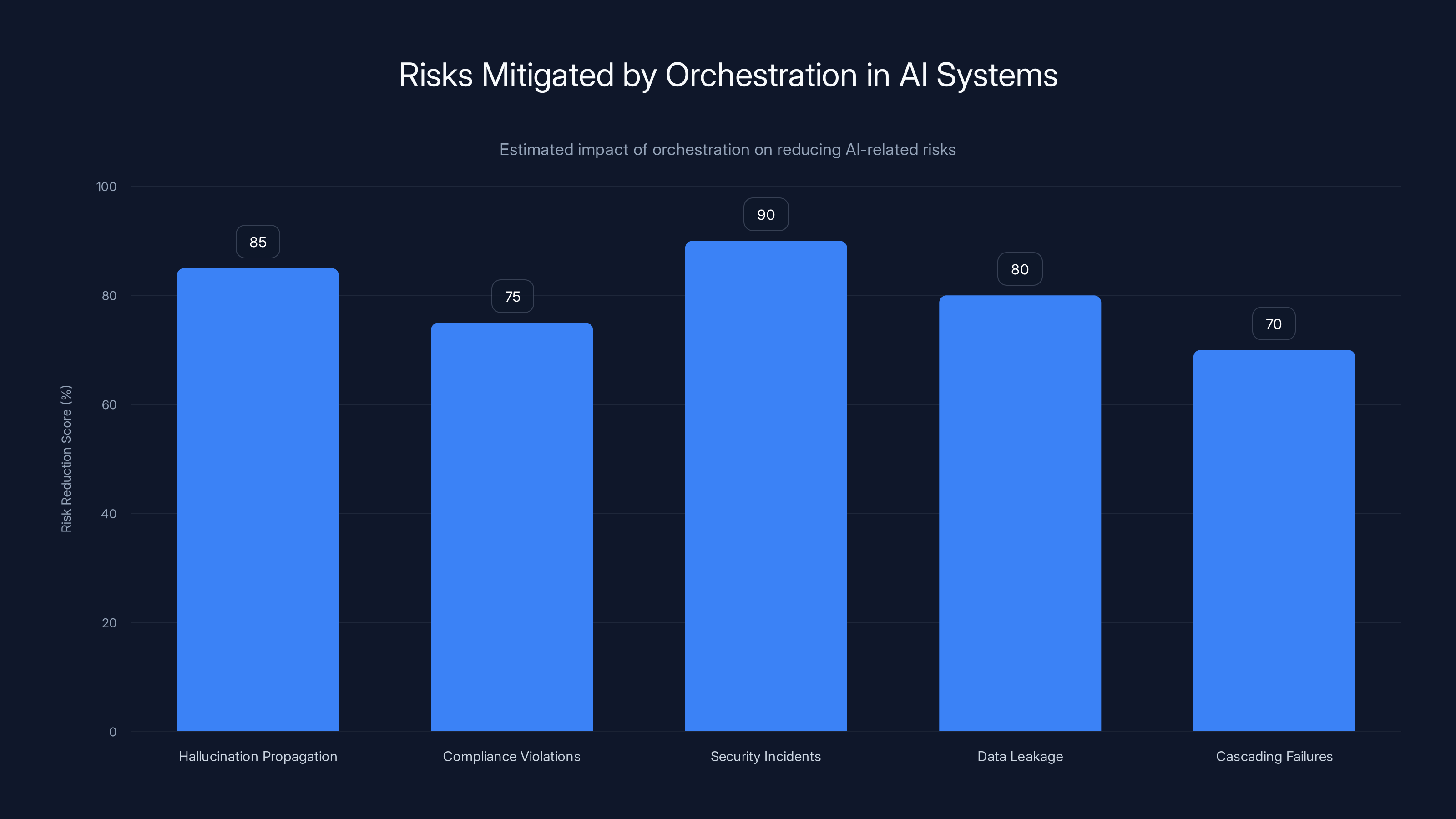

Orchestration significantly reduces risks associated with AI systems, with security incidents seeing the highest risk reduction. Estimated data.

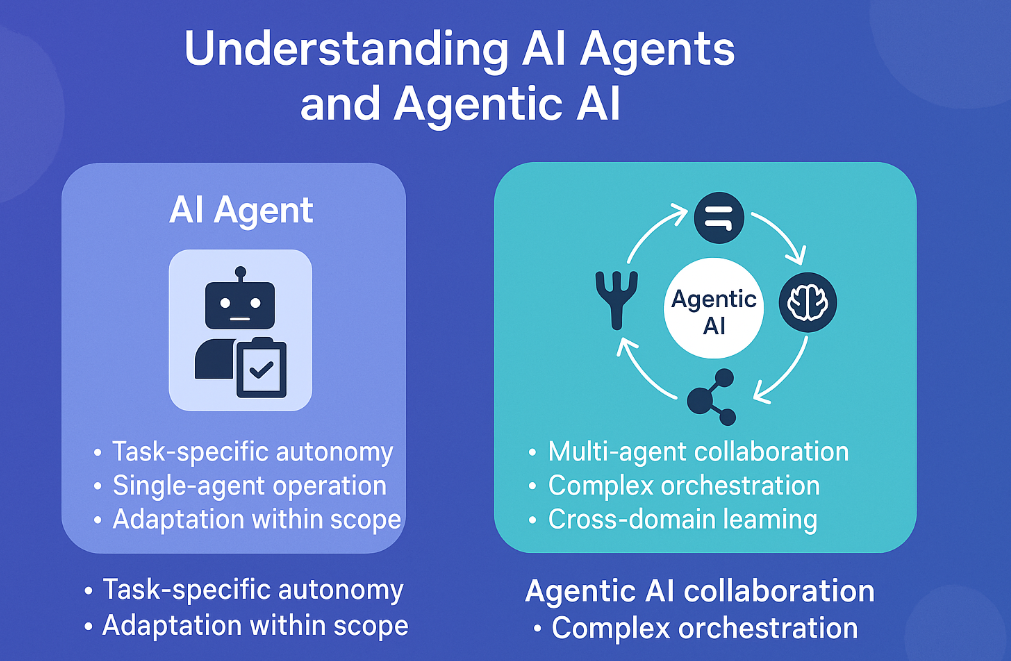

Why AI Agents Can't Coordinate Without Help

Let's start with a concrete example because this is where the rubber meets the road.

Imagine your enterprise has deployed three separate AI agents:

- Agent A handles customer service inquiries by accessing your knowledge base and customer history

- Agent B manages billing disputes by reviewing transaction records and authorization policies

- Agent C handles refund processing by coordinating with payment systems

A customer calls in with a complex issue that touches all three domains. Without orchestration, here's what typically happens:

Agent A starts working on the problem and pulls customer history. It decides the customer might need a refund and escalates to Agent B. But Agent B doesn't know that Agent A already accessed the knowledge base, so it pulls the same data again (wasting resources). Agent B requests some specific documentation from the customer, but Agent A already requested similar documents. The customer now has conflicting instructions.

Meanwhile, Agent C is waiting for a signal from Agent B, but Agent B is still gathering information. Agent C times out and escalates to a human. The human has to manually review what Agents A, B, and C have already done, and there are inconsistencies in how they interpreted the policies.

What started as a simple refund request turned into a mess because the agents couldn't communicate effectively.

This is what happens without orchestration. And it gets worse as you add more agents and more complex workflows.

The real issue is that AI agents operate in isolation by default. They don't have a shared understanding of:

- Who's doing what: Which other agents are involved in this workflow?

- What they've already done: Have other agents already completed this step?

- What the standards are: Are we following the same policies and approval processes?

- What went wrong: If something failed, who needs to know about it?

Without orchestration, each agent essentially operates as a standalone system. They might be intelligent individually, but they're not intelligent together.
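To make the missing "shared understanding" concrete, here is a minimal sketch of a shared workflow record that agents consult before acting. The `WorkflowState` class, its methods, and the agent and step names are all hypothetical, chosen only for illustration; a real orchestration platform would persist and secure this state rather than hold it in memory.

```python
from dataclasses import dataclass, field


@dataclass
class WorkflowState:
    """Shared record of one workflow that every agent reads before acting."""
    workflow_id: str
    completed: dict = field(default_factory=dict)  # step name -> agent that did it
    failures: list = field(default_factory=list)   # (agent, step, reason) tuples

    def claim(self, agent: str, step: str) -> bool:
        """Return True if this agent should do the step, False if it is already done."""
        if step in self.completed:
            return False
        self.completed[step] = agent
        return True

    def report_failure(self, agent: str, step: str, reason: str) -> None:
        """Record a failure so other agents and humans can see what went wrong."""
        self.failures.append((agent, step, reason))


state = WorkflowState("refund-123")
first = state.claim("agent_a", "pull_customer_history")
second = state.claim("agent_b", "pull_customer_history")  # duplicate pull is refused
state.report_failure("agent_c", "issue_refund", "timed out waiting for agent_b")
```

With a record like this, Agent B can see that the customer history was already pulled, and anyone reviewing the workflow can see who failed and why.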

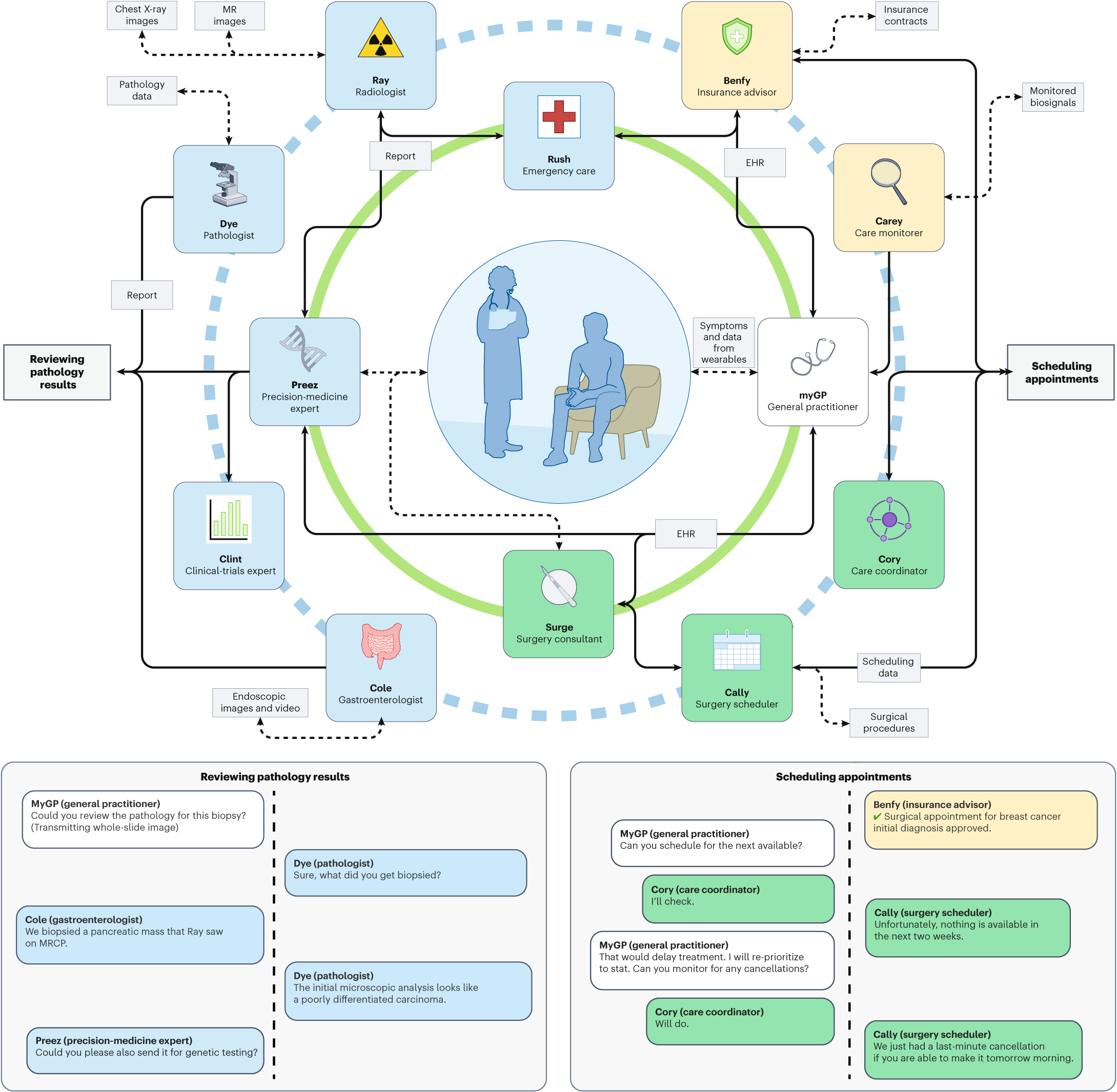

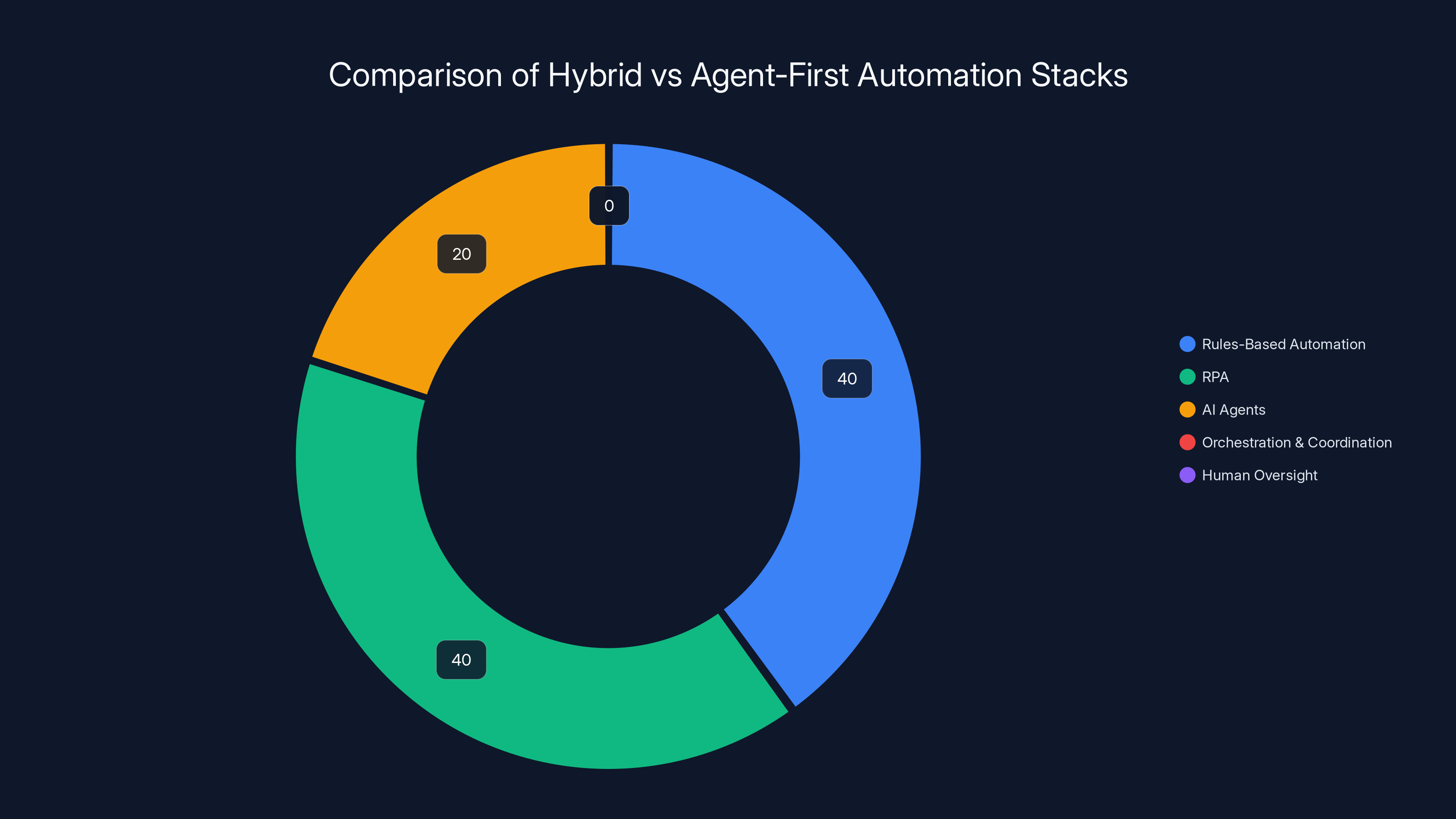

Agent-first stacks prioritize AI agents and orchestration, reducing reliance on RPA and rules-based automation. Estimated data highlights the shift towards more intelligent and coordinated systems.

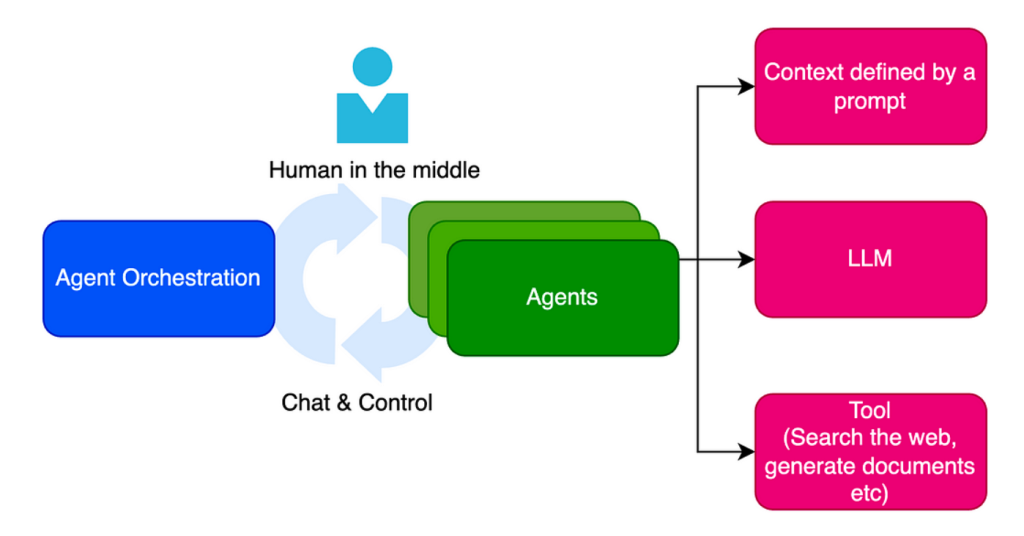

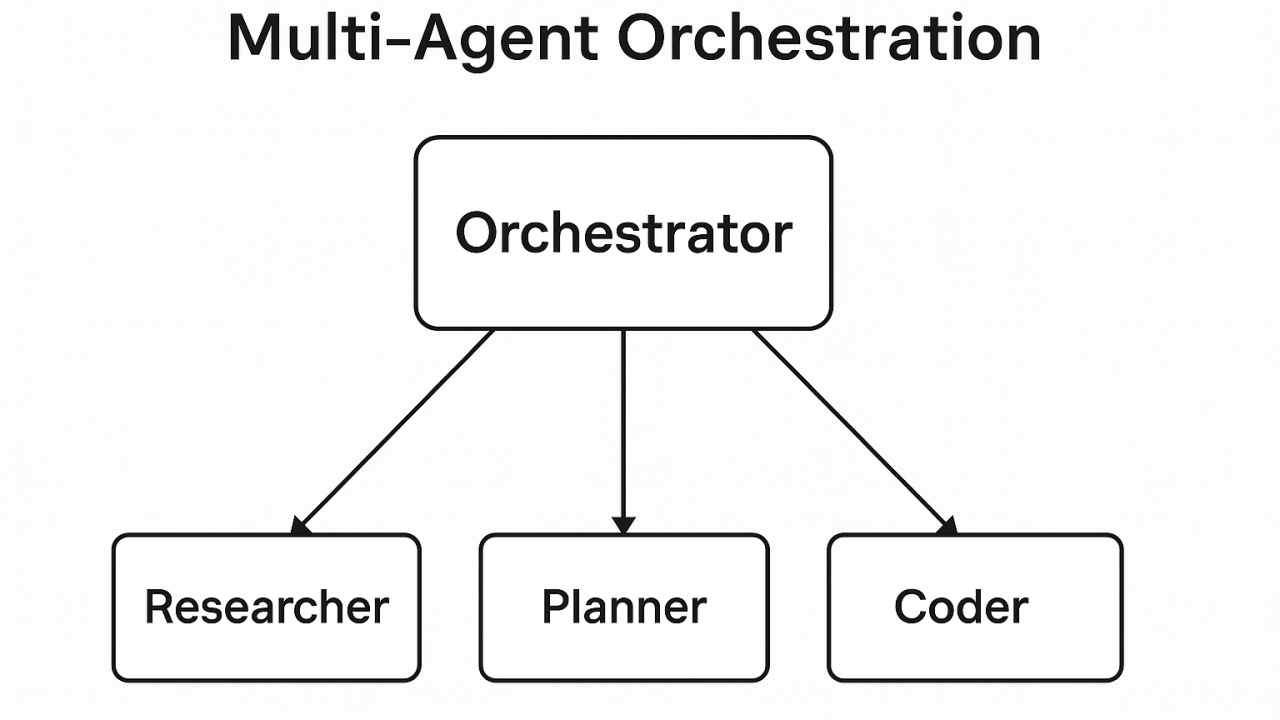

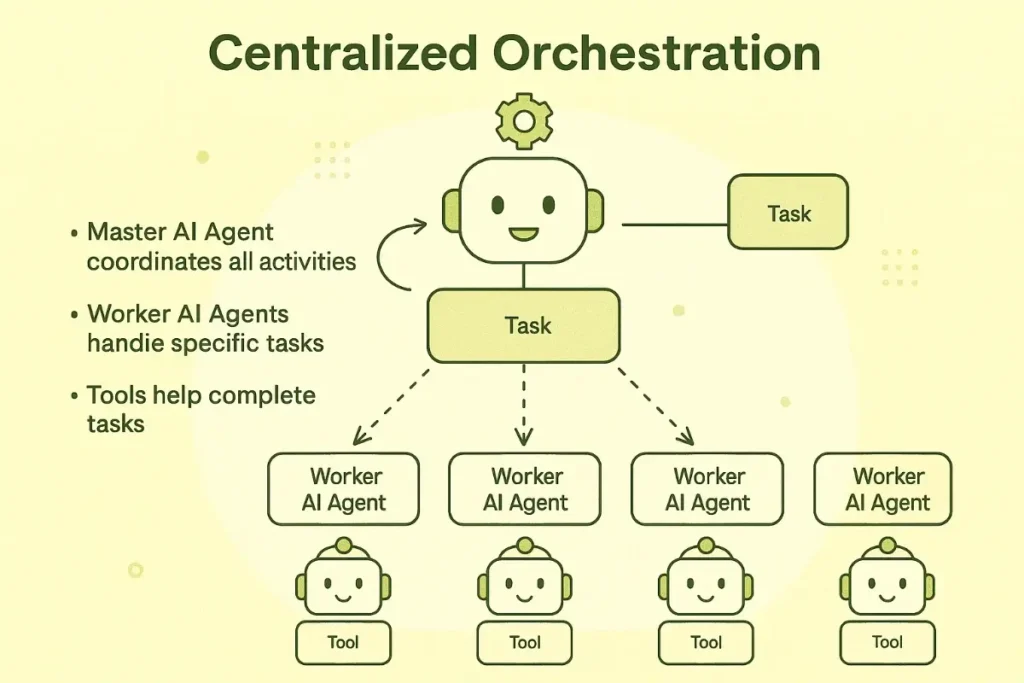

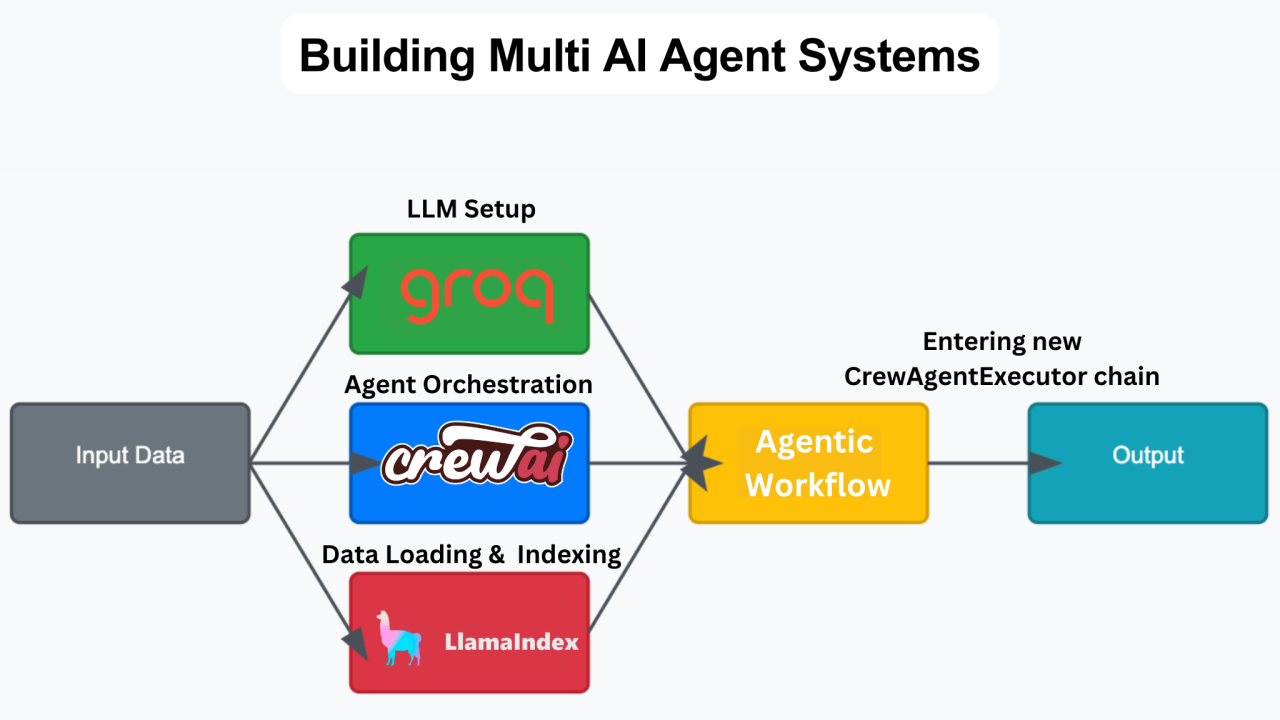

What Orchestration Actually Means in Practice

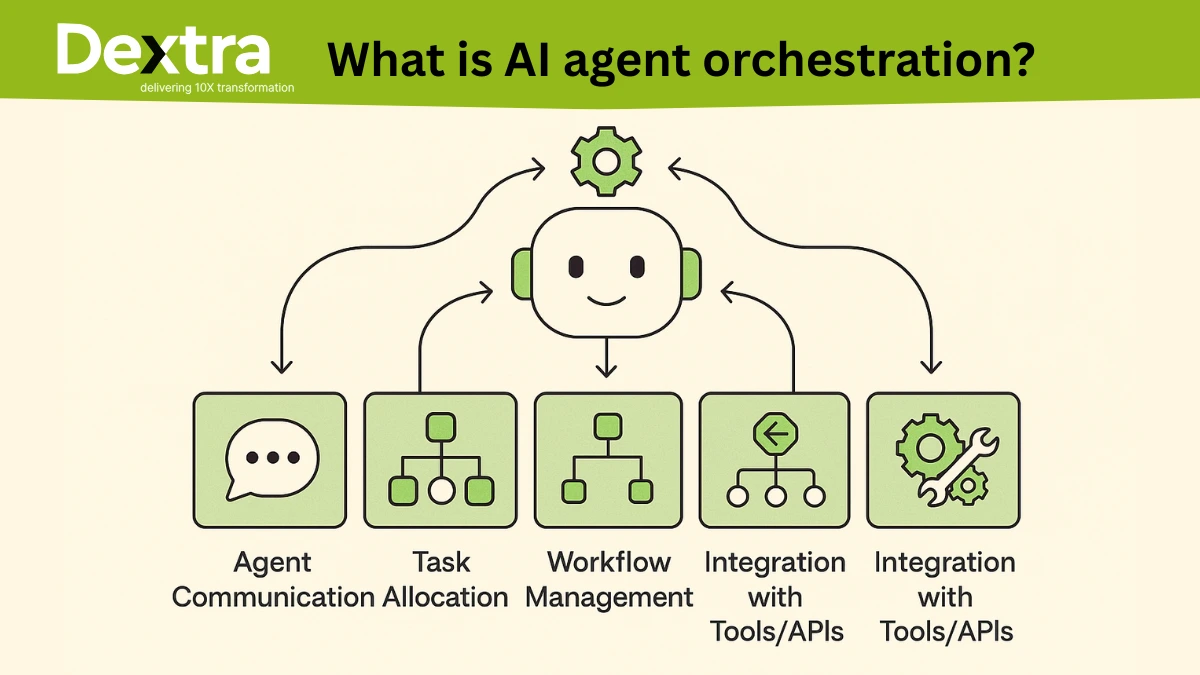

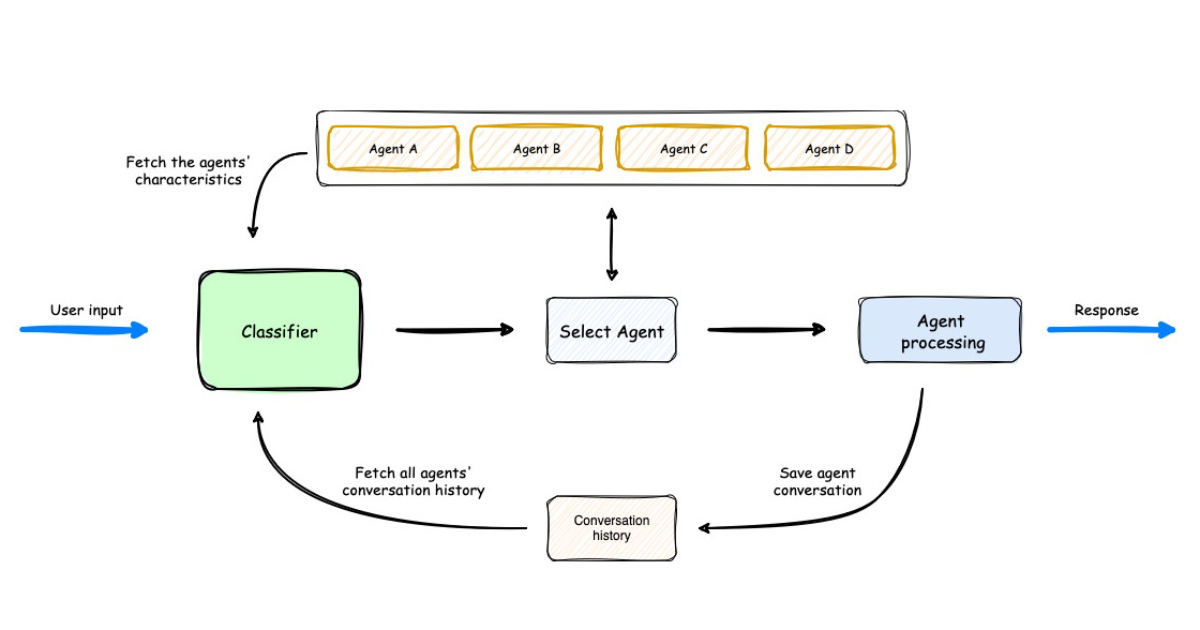

When people use the term "orchestration" in the context of AI agents, they're talking about a platform or system that does several things:

Visibility and Coordination: The orchestration layer sees all the agents operating in your system and can see what each one is doing. It's like having a command center where you can observe all active agents and understand their current actions.

Communication Standards: It ensures that when agents need to hand off work to each other, they're using a common language. Agent A can tell Agent B, "I've completed X, and here's the context you need," in a way that Agent B actually understands.

Workflow Management: It defines which agents should be involved in which processes, in what order, and what triggers one agent to start working while another pauses.

Error Handling and Escalation: When something goes wrong, orchestration decides what happens next. Does the workflow retry? Does it escalate to a human? Does it roll back the changes from the failed agent?

Policy Enforcement: It ensures that all agents are operating within guardrails. If an agent tries to approve a transaction that violates policy, orchestration catches it.

Think of orchestration like the difference between hiring three random contractors without any project manager versus hiring three contractors with a project manager overseeing everything. The project manager (orchestration) ensures they're all working toward the same goal, that their work complements rather than conflicts, and that the final result is coherent.
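As a rough sketch of the workflow management and error handling pieces, the function below runs agent steps in a fixed order, retries a failed step, and escalates to a human if the retries run out. The `run_workflow` function and the step names are assumptions made for this example, not any vendor's API.

```python
def run_workflow(steps, max_retries=1):
    """Run (name, callable) steps in order; retry failures, then escalate."""
    log = []
    for name, step in steps:
        for attempt in range(max_retries + 1):
            try:
                step()
                log.append((name, "ok"))
                break
            except Exception as exc:
                log.append((name, f"failed attempt {attempt + 1}: {exc}"))
        else:
            # Every attempt failed: hand off to a human and stop the workflow,
            # because later steps depend on this one.
            log.append((name, "escalated to human"))
            return log
    return log


calls = {"n": 0}


def flaky_billing_review():
    """Hypothetical step that fails once, then succeeds on retry."""
    calls["n"] += 1
    if calls["n"] < 2:
        raise RuntimeError("timeout")


def refund_step():
    """Hypothetical step that always fails."""
    raise RuntimeError("payment system down")


log = run_workflow([("billing_review", flaky_billing_review), ("refund", refund_step)])
```

The point of the design is that the retry and escalation logic lives in one place, rather than being reimplemented differently inside each agent.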

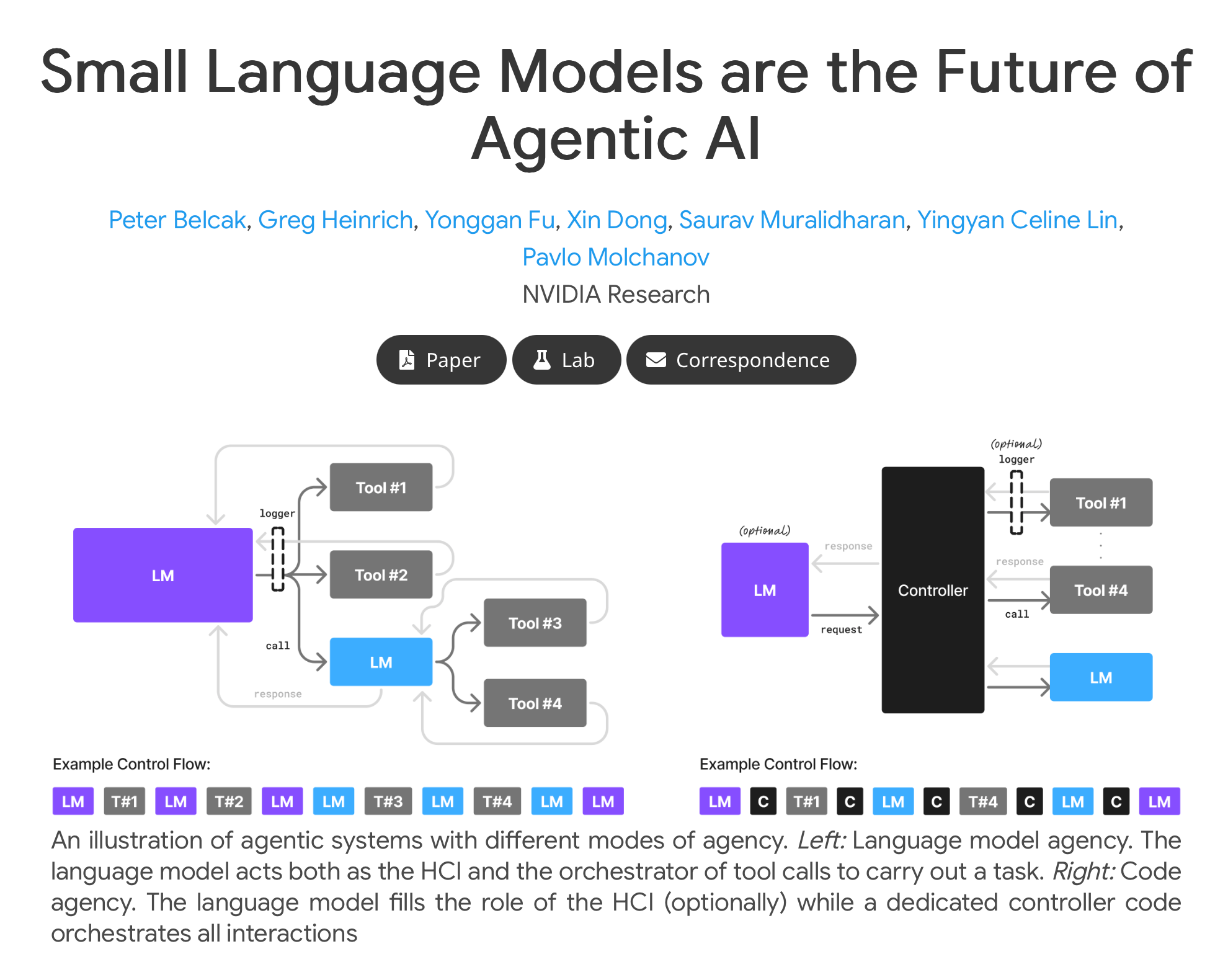

The sophistication of orchestration has evolved significantly. Early versions were purely data-centric, focused on moving data between systems. But modern orchestration platforms are action-centric. They're orchestrating what agents actually do, not just what data they move.

This shift is crucial because the value of AI agents isn't in moving data around. Data movement was already being handled by traditional integration platforms. The value is in decision-making, reasoning, and autonomous action. So orchestration needs to handle action coordination, not just data coordination.

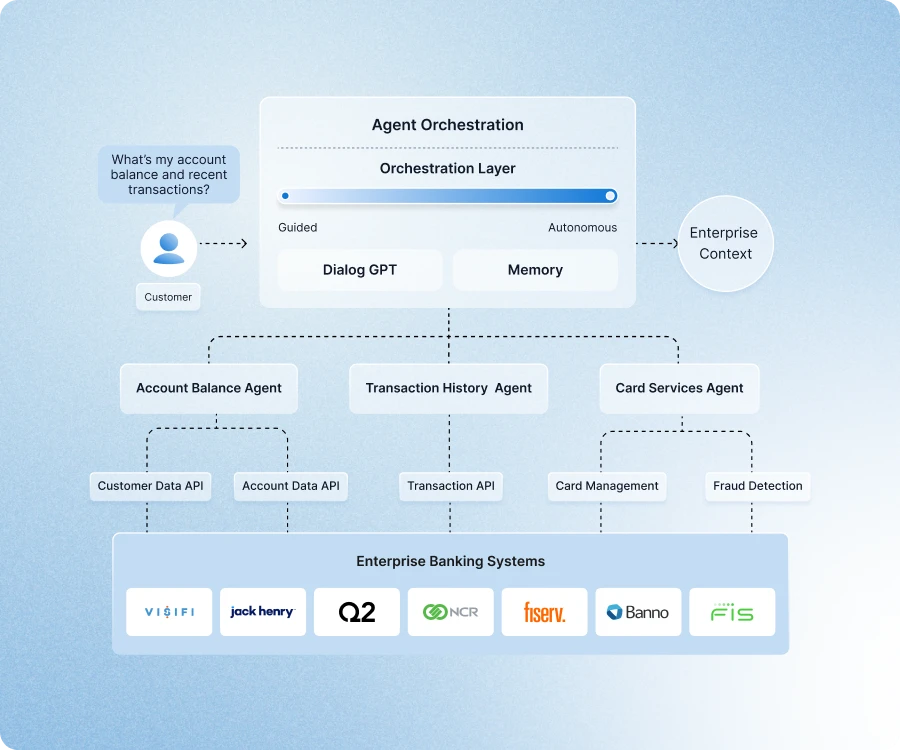

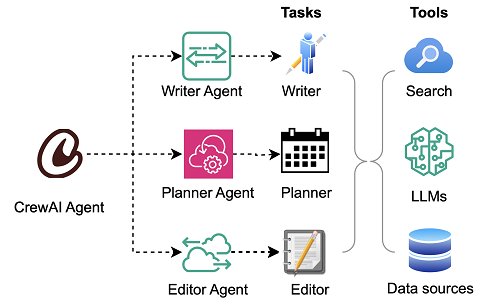

The Role of "Conductor" Platforms in Enterprise Architecture

A new class of platforms has emerged specifically to handle multi-agent orchestration. Industry analysts have taken to calling these "conductor" solutions because they conduct the activities of multiple agents, RPA systems, and data repositories.

These platforms typically emerge from one of three backgrounds:

Integration Platform Vendors: Companies like Salesforce MuleSoft come from the enterprise integration space. They've built extensive expertise in connecting systems, and orchestration is a natural evolution of that capability. They bring deep API knowledge and proven integration patterns.

RPA Vendors: UiPath Maestro comes from the robotic process automation world. These vendors understand workflow automation deeply. They're adding agent coordination on top of their existing RPA capabilities, creating hybrid automation stacks that combine rules-based automation, human-in-the-loop processes, and AI agents.

AI Platform Vendors: IBM Watsonx Orchestrate comes from the AI side of the fence. These vendors understand how to deploy and manage AI models at scale, and they're extending that to managing multiple agents.

What's interesting is that all three types of vendors are converging on similar solutions. The specifics differ, but the core function is the same: providing visibility into what agents are doing, enabling them to coordinate, and enforcing policies across the agentic system.

These platforms typically start with observability dashboards. Enterprises need to see what's happening. They need to answer questions like:

- How many agents are currently active?

- What workflows are in progress?

- Where are bottlenecks occurring?

- Which agents are failing most often?

- How long is each workflow taking from start to finish?

This is table stakes for any orchestration platform. If you can't see what's happening, you definitely can't manage it.
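To illustrate, a couple of the questions above can be answered from a simple event log. The event format and the values here are invented for the sketch; real platforms expose this through their own dashboards and data models.

```python
from collections import Counter, defaultdict

# Hypothetical event records: (workflow_id, agent, status, duration_seconds)
events = [
    ("wf-1", "agent_a", "ok", 2.0),
    ("wf-1", "agent_b", "failed", 5.0),
    ("wf-2", "agent_a", "ok", 3.0),
    ("wf-2", "agent_c", "ok", 1.0),
]

# Which agents are failing most often?
failures = Counter(agent for _, agent, status, _ in events if status == "failed")
most_failing = failures.most_common(1)[0][0]

# How long is each workflow taking from start to finish?
workflow_time = defaultdict(float)
for workflow_id, _, _, duration in events:
    workflow_time[workflow_id] += duration
slowest = max(workflow_time, key=workflow_time.get)
```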

But observability alone isn't enough. The real value comes from the actions the platform takes based on what it observes.

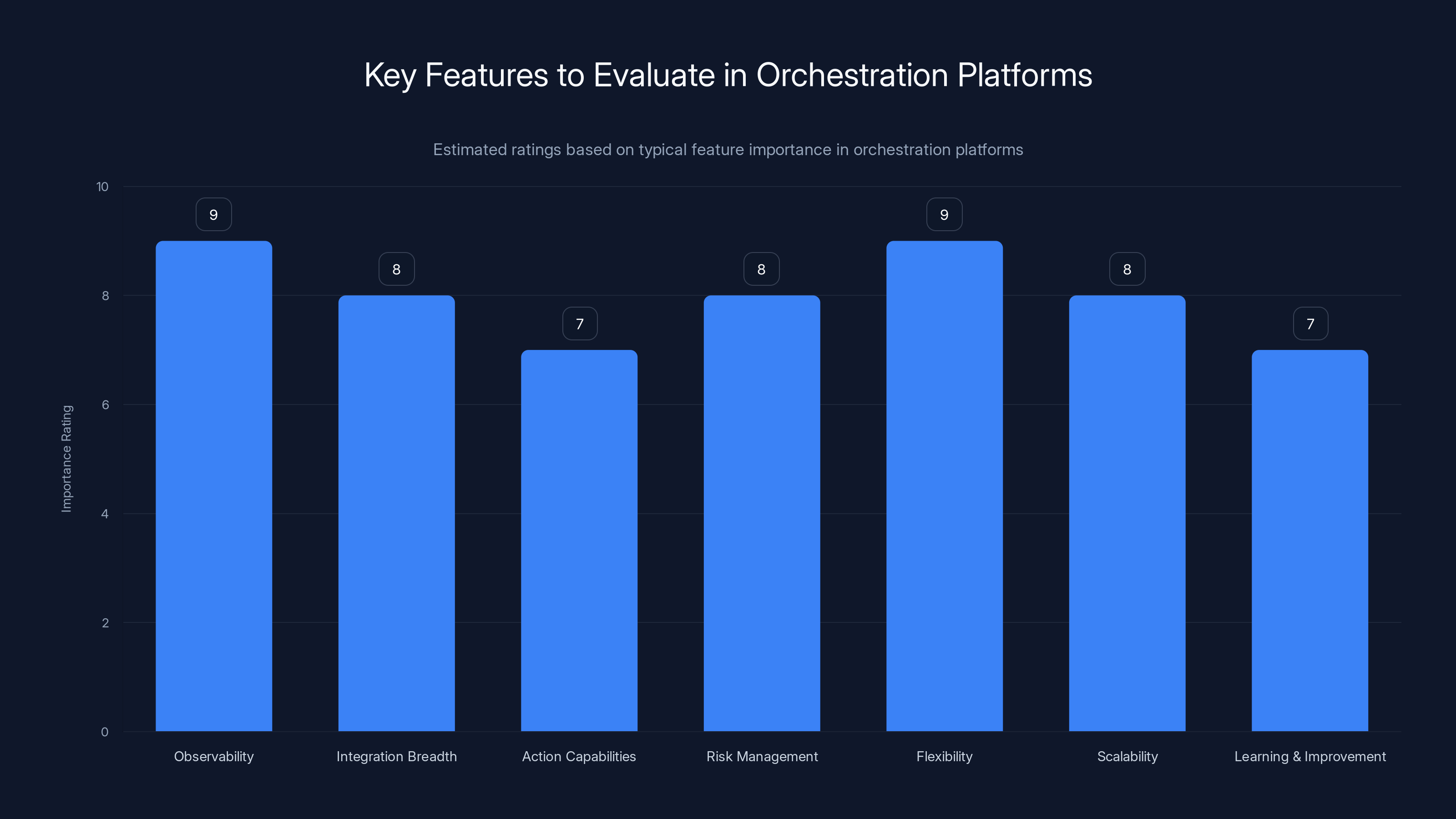

Observability and Flexibility are crucial features in orchestration platforms, with high importance ratings. Estimated data based on typical industry needs.

From Visibility to Action: The Evolution of Orchestration

The first generation of orchestration tools essentially said: "Here's what's happening, what do you want to do about it?"

They provided dashboards and alerts. When an agent failed or reached a guardrail, the system would alert a human, and the human would decide what to do.

But this creates a critical problem: human bottlenecks. And it completely undermines the whole point of AI agents, which is to reduce the need for humans in the loop.

The next generation of orchestration tools is moving toward proactive action. Rather than just alerting humans, they're making decisions about what should happen next.

For example:

Intelligent Escalation: Instead of escalating everything to a human, the system learns what types of issues need human attention and which ones can be handled automatically. It escalates intelligently based on risk, urgency, and policy.

Conditional Routing: When an agent reaches a guardrail, the orchestration platform doesn't just stop. It evaluates whether the guardrail is actually necessary in this context. Can the agent proceed with additional approval from another agent? Can it proceed with additional documentation from the user? Should it escalate?

Predictive Intervention: The orchestration platform learns patterns from historical data. It notices that Agent A tends to make mistakes when handling certain types of requests, so it automatically routes those requests to Agent B instead, or requires additional verification.

Adaptive Policies: Rather than enforcing static rules, orchestration platforms can implement policies that adapt based on context. A transaction approval policy might have different thresholds on different days or for different customer segments.

This evolution from "monitor and alert" to "monitor, decide, and act" is the real differentiator between first-generation and second-generation orchestration tools.
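A minimal sketch of "monitor, decide, and act" might look like the routing function below. The thresholds, agent names, and the `error_rates` table are hypothetical; in practice they would come from your own policies and historical data.

```python
def route(request_type, amount, error_rates,
          primary="agent_a", backup="agent_b",
          max_error_rate=0.10, human_threshold=1000.0):
    """Pick who handles a request based on risk and observed agent error rates."""
    # Intelligent escalation: only high-value requests go to a human.
    if amount > human_threshold:
        return "human"
    # Predictive intervention: avoid an agent that struggles with this request type.
    if error_rates.get((primary, request_type), 0.0) > max_error_rate:
        return backup
    return primary


# Hypothetical observed error rates per (agent, request type).
error_rates = {("agent_a", "billing_dispute"): 0.25}

small_dispute = route("billing_dispute", 80.0, error_rates)
routine_question = route("order_status", 0.0, error_rates)
large_refund = route("refund", 5000.0, error_rates)
```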

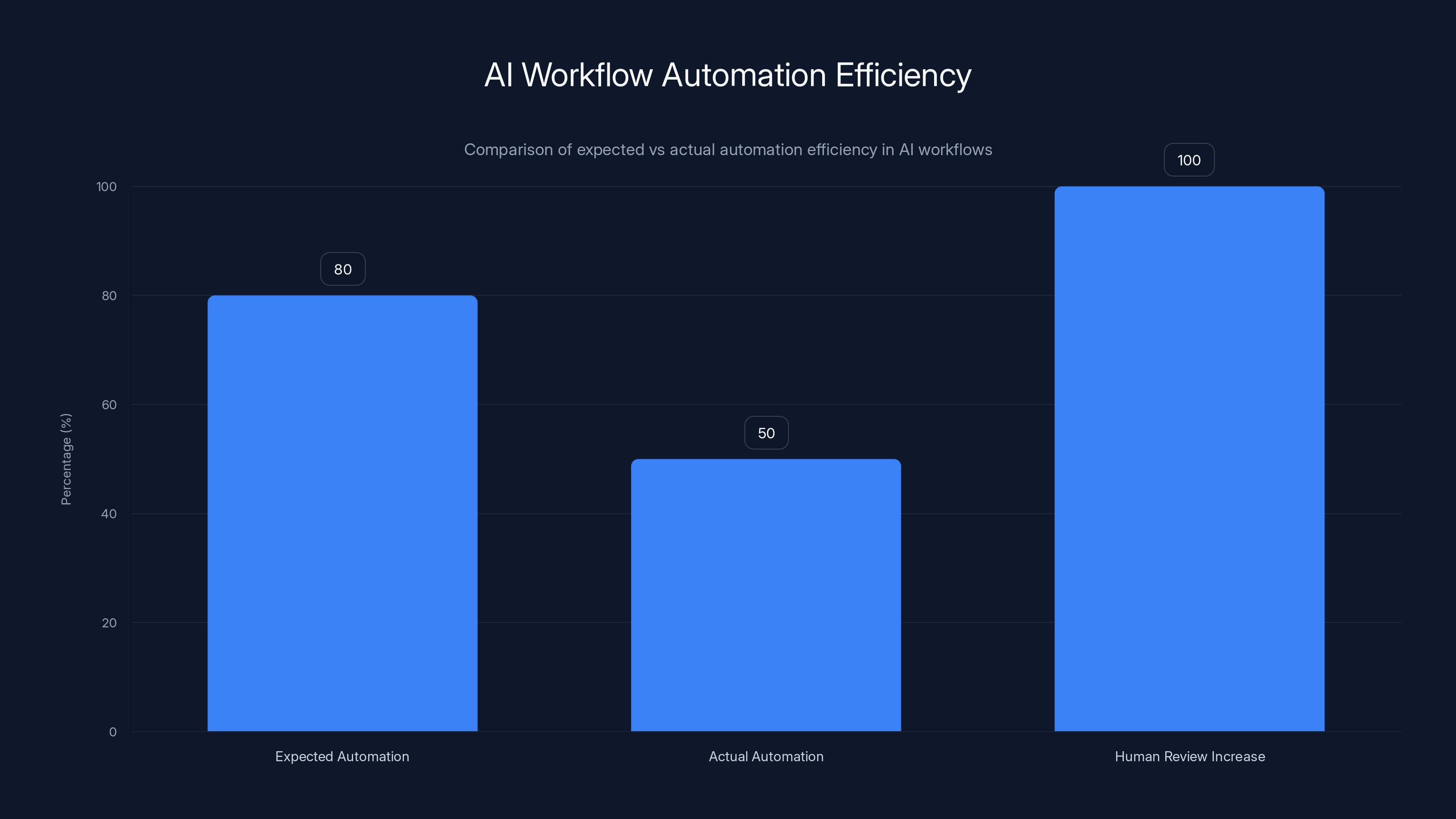

The Growing Problem of "Ticket Exhaustion"

One of the most pressing problems that orchestration needs to solve is something that's already showing up in semi-automated systems: ticket exhaustion.

Here's how it works in practice. Your enterprise implements an AI agent to handle a workflow that currently requires humans. The agent handles the routine cases beautifully. But when it encounters anything outside its training, it escalates to a human with a ticket.

That's good. That's what you want.

But then you realize the agent is escalating far more often than you expected. Instead of handling 80% of cases automatically, it's only handling 50%. And each escalation creates a ticket that a human needs to process. Your support team, which used to handle 100 cases per person per day, is now handling 200, because half of them are just reviews of what the agent did, ending in an approval or a rejection.

You've created a new bottleneck. The automation didn't reduce headcount. It just changed the nature of the work humans do.

A bank's loan approval process illustrates this perfectly. The workflow requires 17 steps for loan approval. An AI agent automates steps 1-5 and 7-10. But step 6 requires a human judgment call about the applicant's creditworthiness, and steps 11-17 require various compliance checks and sign-offs.

So the agent gets through step 5, less than a third of the way through the process, and then hits a guardrail: "Step 6 requires human review." It creates a ticket, and a human reviews the application. The human approves it. Then the agent continues and hits another guardrail: "Steps 11-17 require compliance approval from two different teams."

So the agent creates two more tickets. If this workflow is handling thousands of applications per day, you're creating thousands of tickets per day.

That's ticket exhaustion. Your automation system is creating more work than it's eliminating.
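The arithmetic is worth spelling out. In the sketch below, the three tickets per application come from the loan example (one at step 6, two for the compliance teams), and the 80% and 50% automation rates come from the scenario above; the daily volumes are hypothetical.

```python
# Hypothetical daily volume for the loan workflow.
applications_per_day = 2000
tickets_per_application = 3  # one at step 6, two for compliance sign-offs
tickets_per_day = applications_per_day * tickets_per_application

# Expected vs. actual escalations for an agent that was supposed to
# automate 80% of cases but only automates 50%.
cases_per_day = 1000
expected_escalations = cases_per_day * 20 // 100
actual_escalations = cases_per_day * 50 // 100
overload = actual_escalations / expected_escalations

print(tickets_per_day, overload)  # prints: 6000 2.5
```

Under these assumptions, the review queue is 2.5 times larger than planned, and the loan workflow alone generates thousands of tickets a day.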

This is where orchestration becomes critical. A sophisticated orchestration platform doesn't just escalate and wait for human approval. It does several things instead:

Policy Reevaluation: It questions whether the guardrail is actually necessary in this specific context. Does step 6 really require human judgment, or is the guardrail overly conservative? Can the agent proceed with conditional approval or additional verification?

Contextual Routing: Rather than escalating to a generic human, it routes to a human who's specifically qualified to handle this type of decision. This speeds up the review process because the right person is handling it.

Bulk Processing: It batches similar escalations together so they can be handled more efficiently. Rather than reviewing 100 individual escalation tickets, a human reviews 20 batches of 5 similar cases.

Elimination Through Redesign: It recognizes that certain guardrails are creating more work than they're preventing. It recommends that those guardrails be removed or redesigned, freeing the agent to make those decisions autonomously.

The goal isn't to eliminate human involvement entirely. It's to eliminate wasteful human involvement and ensure that human judgment is being applied to decisions that actually require it.
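Bulk processing is the easiest of these to sketch. The function below groups escalation tickets by category and splits each group into batches of five, so 100 tickets become 20 batches. The ticket format and category names are made up for the example.

```python
from collections import defaultdict


def batch_escalations(tickets, batch_size=5):
    """Group (ticket_id, category) pairs into same-category batches."""
    by_category = defaultdict(list)
    for ticket_id, category in tickets:
        by_category[category].append(ticket_id)

    batches = []
    for category, ids in by_category.items():
        for start in range(0, len(ids), batch_size):
            batches.append((category, ids[start:start + batch_size]))
    return batches


# 100 hypothetical tickets, split evenly across two categories.
tickets = [(i, "credit_check" if i % 2 == 0 else "compliance") for i in range(100)]
batches = batch_escalations(tickets)
```

A reviewer then works through one batch of similar cases at a time instead of switching context on every ticket.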

The AI agent was expected to automate 80% of cases but only managed 50%, doubling the human review workload. Estimated data.

Risk Management: The Real Value of Orchestration

Here's what most enterprises don't realize: orchestration is ultimately a risk management tool, not a workflow automation tool.

Yes, it helps agents work together. Yes, it eliminates bottlenecks. But the fundamental reason orchestration matters is that it's the safety net that makes AI agents trustworthy at enterprise scale.

Consider what could go wrong with unorchestrated agents:

Hallucination Propagation: Agent A hallucinates a fact. It passes that hallucinated information to Agent B, which acts on it. Now the hallucination has been baked into a business process or a customer interaction.

Compliance Violations: Agent A makes a decision that's technically within its guardrails but violates the spirit of your compliance policies. No system catches it because agents operate independently.

Security Incidents: Agent A gets compromised. It starts making decisions that benefit an attacker. Other agents don't realize that Agent A is compromised, so they trust its outputs.

Data Leakage: Agent A accesses customer data for a legitimate purpose. But that data includes sensitive information that shouldn't be passed to Agent B. Without orchestration, there's no layer checking what information flows between agents.

Cascading Failures: Agent A fails. It leaves a workflow in an inconsistent state. Agent B tries to continue the workflow but has incomplete information, leading to corrupted data or failed transactions.

These risks are why enterprise leaders are rightfully cautious about AI agents. They're not worried about agents being smart. They're worried about agents making smart-looking decisions that are actually terrible.

Orchestration addresses this by adding a quality control layer. It's not just orchestrating actions. It's orchestrating quality.
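Take the data leakage risk as one example of what that quality control layer could do. A simple version is an allowlist that strips fields from a handoff before the receiving agent sees them. The field names and the `ALLOWED_FIELDS` table are assumptions for illustration only.

```python
# Hypothetical allowlist: which context fields each receiving agent may see.
ALLOWED_FIELDS = {
    "agent_b": {"customer_id", "transaction_id", "dispute_reason"},
}


def filter_context(context, to_agent):
    """Return only the fields the receiving agent is allowed to receive."""
    allowed = ALLOWED_FIELDS.get(to_agent, set())  # unknown agents get nothing
    return {key: value for key, value in context.items() if key in allowed}


context_from_agent_a = {
    "customer_id": "c-42",
    "transaction_id": "t-9001",
    "dispute_reason": "duplicate charge",
    "date_of_birth": "1980-01-01",  # sensitive, not needed for billing review
}

safe_context = filter_context(context_from_agent_a, "agent_b")
unknown_agent_context = filter_context(context_from_agent_a, "agent_x")
```

Defaulting to an empty set means an agent that nobody has configured receives no data at all, which is the safer failure mode.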

This is why the best orchestration platforms are evolving toward what might be called "agent assessment" capabilities:

Reliability Scoring: The platform tracks how reliable each agent is when it interacts with different systems. It notices that Agent A has a 95% success rate when calling the accounting system but only a 70% success rate when calling the customer database. It uses this information to route appropriately.

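Hallucination Detection: The platform compares agent outputs against known reliable sources. If an agent claims that a customer has a particular balance but the system of record shows a different one, the platform flags the discrepancy before anything acts on it.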

Policy Drift Detection: The platform learns what the right decisions look like by examining historical approvals. It notices when an agent starts making decisions that differ from the historical pattern. This might indicate a problem with the agent, or it might indicate that policies have changed.

Contextual Trust Levels: Rather than trusting an agent equally in all contexts, the platform assigns context-specific trust levels. An agent might be highly trustworthy for routine customer service inquiries but require human review for refund requests above $1,000.

These aren't checks that exist outside the system. They're built into the orchestration platform's core logic.
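Reliability scoring, for instance, can start as simple bookkeeping per agent and target system. The `ReliabilityTracker` class below is a hypothetical sketch, and the recorded outcomes are invented to reproduce the 95% and 70% figures used above.

```python
from collections import defaultdict


class ReliabilityTracker:
    """Track success rates per (agent, system) pair."""

    def __init__(self):
        # (agent, system) -> [successes, total calls]
        self._counts = defaultdict(lambda: [0, 0])

    def record(self, agent, system, success):
        entry = self._counts[(agent, system)]
        entry[0] += int(success)
        entry[1] += 1

    def success_rate(self, agent, system):
        """Return the observed success rate, or None if there is no data yet."""
        successes, total = self._counts.get((agent, system), (0, 0))
        return successes / total if total else None


tracker = ReliabilityTracker()
for i in range(20):
    tracker.record("agent_a", "accounting", success=(i != 0))  # 19 of 20 succeed
for i in range(10):
    tracker.record("agent_a", "customer_db", success=(i >= 3))  # 7 of 10 succeed

accounting_rate = tracker.success_rate("agent_a", "accounting")
customer_db_rate = tracker.success_rate("agent_a", "customer_db")
```

Returning `None` for unseen pairs keeps "no data" distinct from "0% reliable", which matters when the score feeds a routing decision.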

Enterprise leaders have learned (sometimes painfully) that vendors will oversell the reliability of their AI systems. They'll show you the 95% success rate in controlled tests. They won't mention the 70% success rate in production with real data.

This is why orchestration platforms that provide independent quality assessment are becoming table stakes. You need a system that can verify what vendors claim and catch problems before they become incidents.

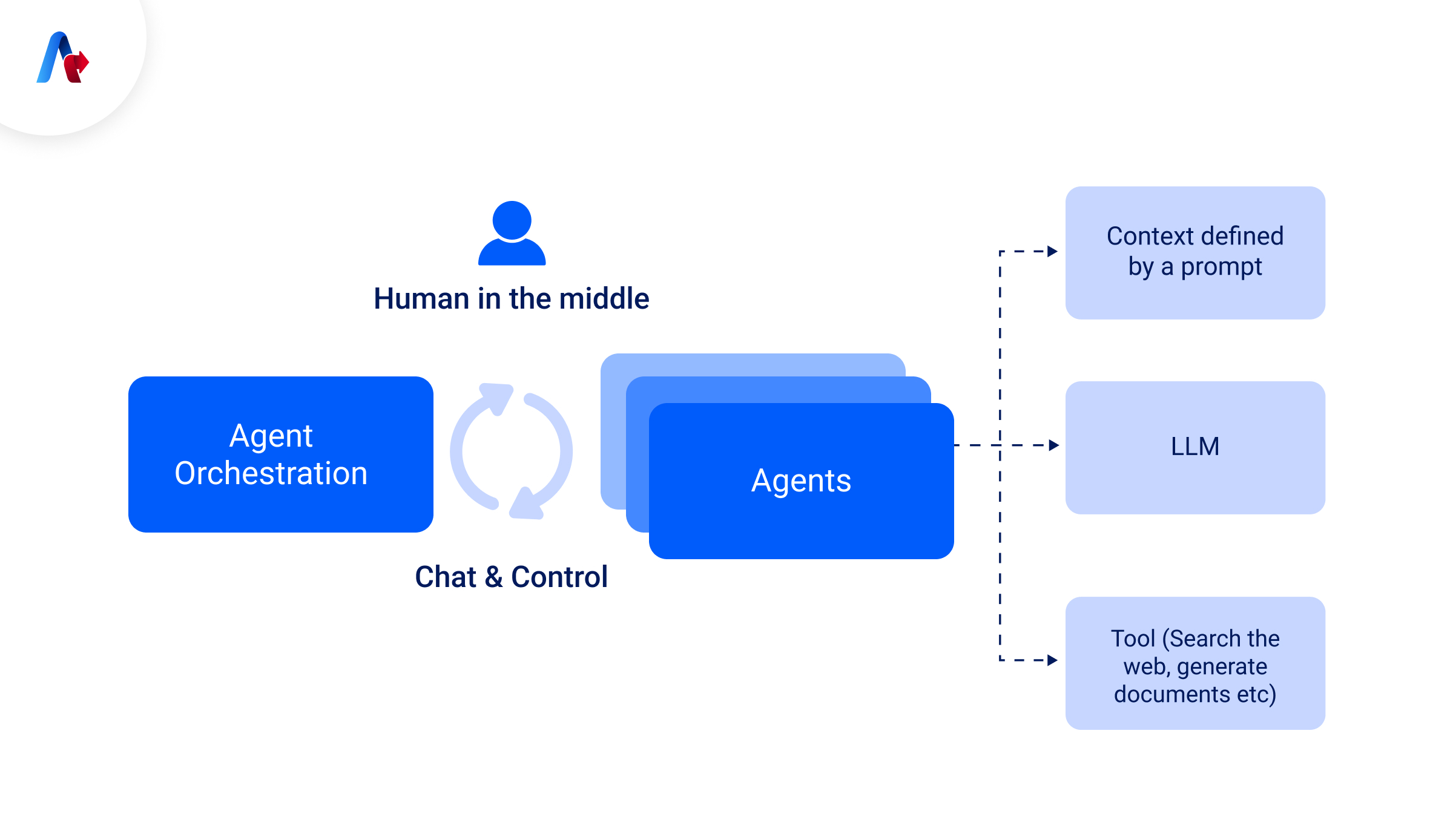

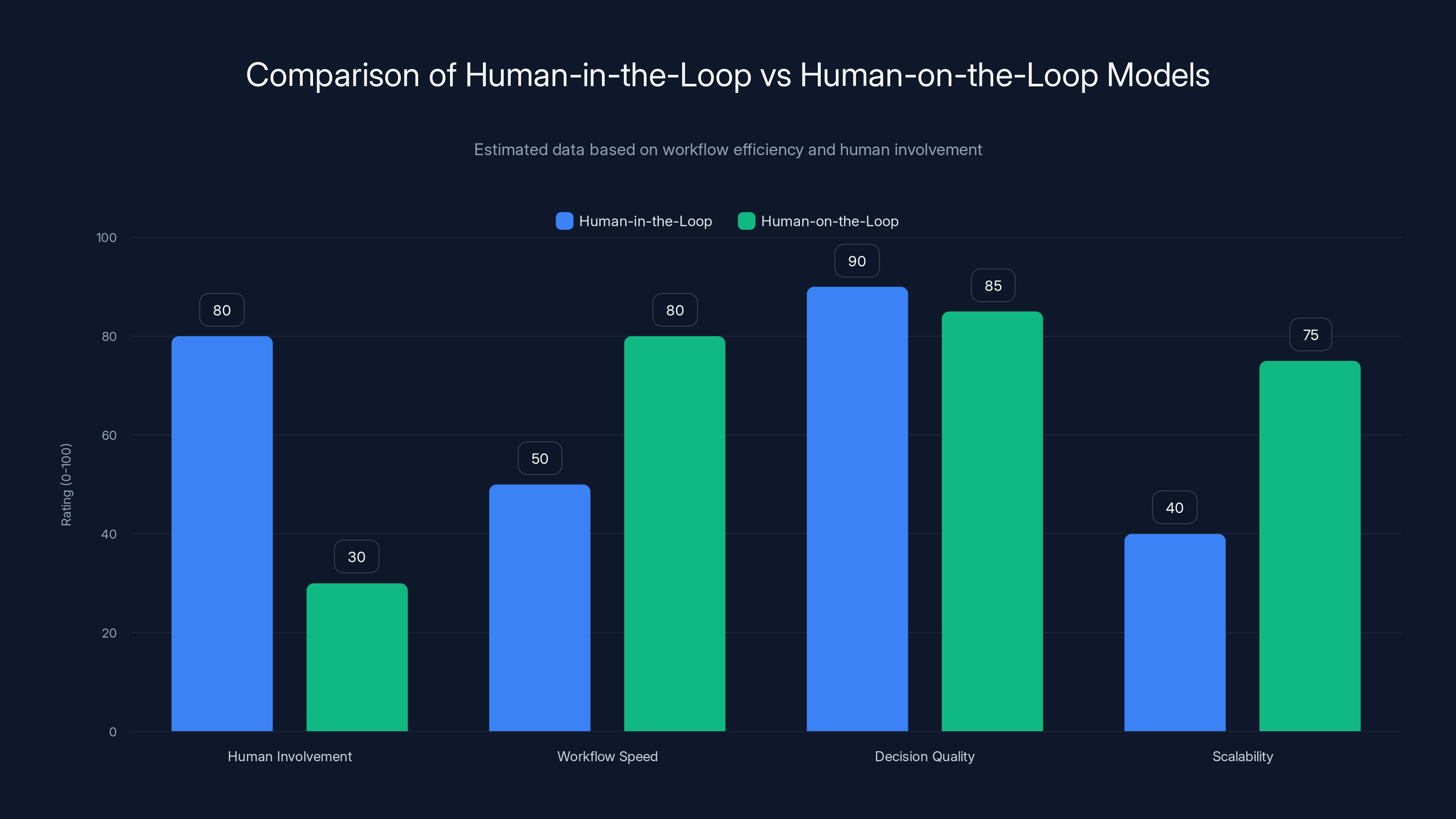

The Shift From Human-in-the-Loop to Human-on-the-Loop

There's a critical evolution happening in how enterprises think about human involvement in AI workflows, and orchestration is central to it.

Traditional automation approaches use "human-in-the-loop" models. This means humans are actively involved in the execution of the workflow. The automation handles routine steps. When something requires judgment or approval, the automation pauses and asks a human to make a decision.

This is good. It ensures quality and compliance. But it's also limiting because humans become a bottleneck. The automation can only go as fast as humans can review decisions.

The emerging model is "human-on-the-loop." In this model, humans aren't making individual decisions within the workflow. Instead, humans are designing the workflow upfront.

They define the policies. They establish the guardrails. They train the agents. They review the outcomes to ensure quality. But they're not making individual decisions within the execution.

This is a fundamental shift in where human intelligence is applied.

With human-in-the-loop, humans are reactive. Something comes up, the system pauses, and humans react.

With human-on-the-loop, humans are proactive. They think about potential problems upfront and build guardrails to prevent them.

What makes this shift possible? Orchestration and AI-native tools that can translate human intent into executable workflows.

Agent builder platforms are increasingly offering no-code or low-code interfaces that let business people express their intent in natural language. A business analyst can say, "Create an agent that processes refund requests up to $500 automatically but escalates anything above that." The platform translates that natural language into an actual agent with actual guardrails.
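Under the hood, the analyst's sentence has to become something executable. Here is one way the resulting guardrail might look, as a sketch: the `RefundGuardrail` class is hypothetical and does not represent how any particular agent builder generates its rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RefundGuardrail:
    """Auto-approve refunds up to a limit; escalate anything above it."""
    auto_approve_limit: float = 500.0

    def decide(self, amount: float) -> str:
        if amount <= 0:
            raise ValueError("refund amount must be positive")
        if amount <= self.auto_approve_limit:
            return "auto_approve"
        return "escalate"


guardrail = RefundGuardrail()
small = guardrail.decide(120.0)
at_limit = guardrail.decide(500.0)
large = guardrail.decide(500.01)
```

Note that even this tiny rule forces a decision the natural-language request left implicit: whether "up to $500" includes exactly $500. The sketch assumes it does.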

This is democratizing agent development. You don't need to be a data scientist or software engineer to create an agent. You just need to be able to clearly articulate what the agent should do.

That skill—the ability to express a goal clearly, provide complete context, and anticipate failure modes—is precisely the skill that makes a good manager.

So the shift from human-in-the-loop to human-on-the-loop is also a shift in who can do this work. It's moving from engineers to business people. It's moving from reactive approval to proactive design.

This shift has profound implications for organizations:

Skills Evolution: Rather than hiring more humans to review more decisions, you're training humans to design better systems. The skills that matter are process analysis, systems thinking, and continuous improvement, not transaction processing.

Scalability: A human-in-the-loop system can only scale linearly. If you double the transaction volume, you need to double the review capacity. A human-on-the-loop system scales differently. Better upfront design means fewer escalations, so transaction volume can increase without proportional increases in headcount.

Speed: Escalations for human review are the enemy of velocity. By shifting humans upstream, you reduce escalations. Workflows that previously took days (because they had to wait for human review) can now complete in hours or minutes.

Quality: Paradoxically, human-on-the-loop systems often have better outcomes than human-in-the-loop systems. Why? Because humans are better at design than at making individual decisions quickly. A human who's designing a process has time to think through edge cases. A human who's reviewing a transaction has 20 seconds to decide.

The orchestration platform is what makes this shift possible. It's the platform that ensures that proactive design actually prevents problems, that escalations are rare, and that the system maintains quality while operating autonomously.

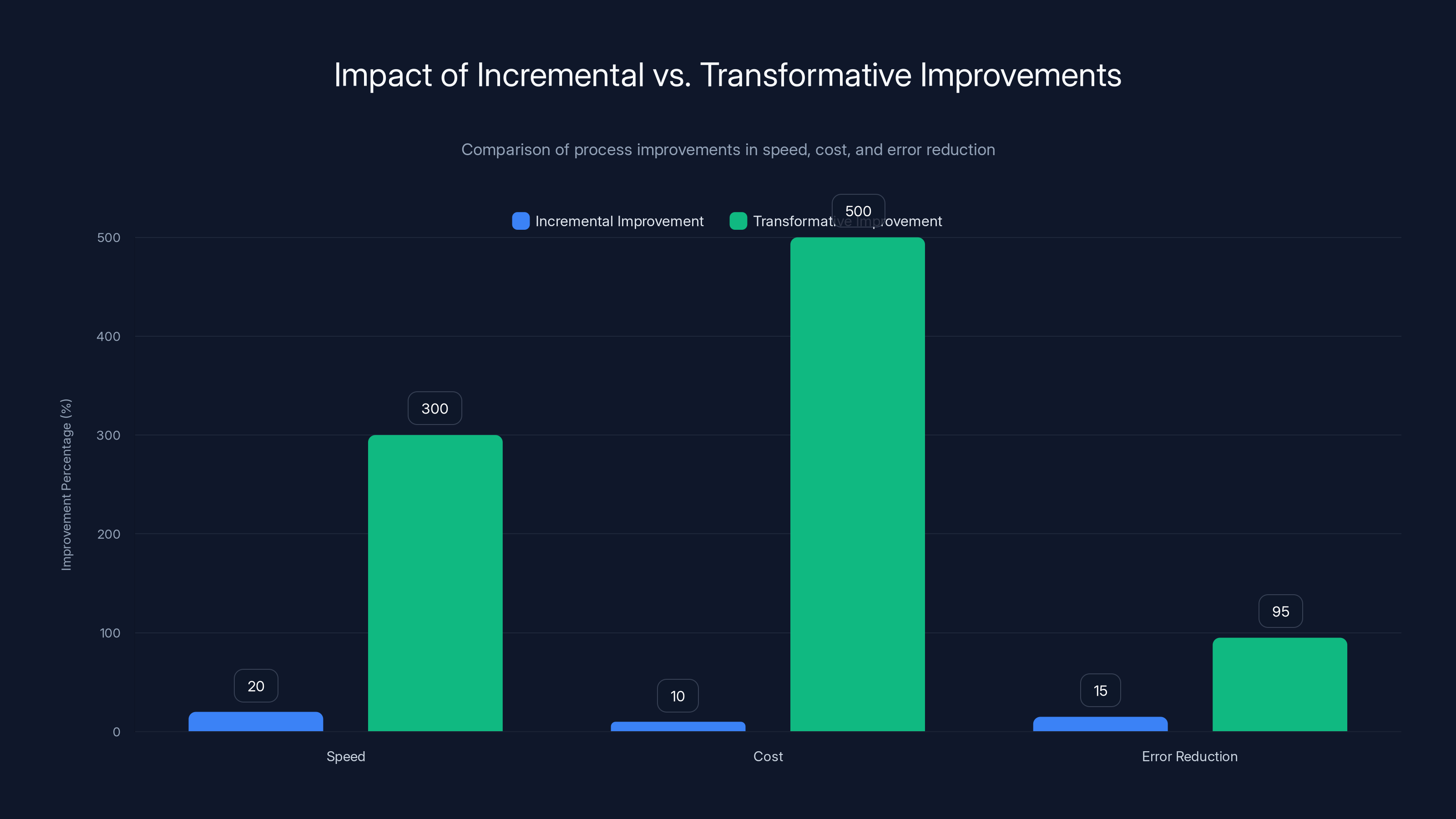

Transformative improvements through orchestration and automation can achieve up to 3X speed, 5X cost reduction, and 95% error reduction, far surpassing incremental improvements. Estimated data.

Building an Agent-First Automation Stack

So what does this mean for enterprise leaders right now? How do you actually move toward orchestrated, agent-first automation?

The first step is recognizing that agent-first automation is fundamentally different from the hybrid approach most enterprises are currently using.

A hybrid stack looks something like this:

- 40% rules-based automation (traditional business logic)

- 40% RPA (robotic process automation for legacy systems)

- 20% AI agents (new automation for newer systems)

This is where most enterprises are today. They're running multiple automation technologies in parallel because they have legacy systems that require RPA, mid-age systems that run on rules-based automation, and new systems that use AI agents.

But hybrid stacks create coordination problems. The three layers of automation don't talk to each other well. They have different logic for managing failures. They have different approaches to data. They require different skills to build and maintain.

An agent-first stack looks different:

- 60-70% AI agents (capable of handling complex decisions)

- 20-30% Orchestration and coordination (ensuring agents work together)

- 10-20% Human oversight (ensuring quality and managing edge cases)

This isn't saying you'll eliminate your RPA and rules-based automation immediately. But it's the direction you're moving. As agents become more capable, they gradually replace RPA and rules-based logic.

The key insight is that agent-first stacks dramatically outperform hybrid stacks across almost every metric:

User Satisfaction: When workflows complete faster and make better decisions, users are happier. Agents can be trained to explain their reasoning, which builds trust.

Quality: Agents trained with modern machine learning techniques make fewer errors than hand-coded rules or RPA bots.

Security: Agent-first systems can implement consistent security policies across all automation, rather than having different security models for RPA vs. rules-based vs. AI.

Cost: Agents are more flexible than RPA bots (which are brittle and break when systems change) and more maintainable than rules-based systems (which accumulate technical debt as rules multiply).

Flexibility: When requirements change, agents can be retrained. Rules-based systems and RPA require code changes.

The path to an agent-first stack has several stages:

Stage 1: Identify High-Value Workflows: Start with highly repetitive work that poses significant bottlenecks. These are the workflows where agents will deliver the most value. Look for processes with clear decision logic but high transaction volume.

Stage 2: Implement with Human-in-the-Loop: Initially, use agents with heavy human oversight. This ensures quality while you're learning. It also helps with change management—employees see that their judgment is being respected, not replaced.

Stage 3: Progressively Reduce Escalations: As the agent proves reliable, systematically reduce the amount of human review required. Move from "human approval required for every decision" to "human review of 5% of decisions," and then to "human review only if quality metrics decline."

Stage 4: Shift to Human-on-the-Loop: Once the agent is reliable, transition humans from reactive approval to proactive design. They should be focused on improving the agent, not approving its decisions.

Stage 5: Expand and Orchestrate: Once you have multiple agents working reliably, implement orchestration to help them coordinate with each other and with your existing systems.
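The step in Stage 3 from reviewing every decision to reviewing a sample can be sketched as a deterministic sampler. Hashing the decision ID (an assumption of this example, along with the ID format) means the same decision always gets the same answer, which makes the sampling auditable.

```python
import hashlib


def needs_review(decision_id: str, review_rate: float) -> bool:
    """Deterministically select roughly `review_rate` of decisions for review."""
    digest = hashlib.sha256(decision_id.encode("utf-8")).digest()
    # Map the first 4 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return bucket < review_rate


# Stage 2: review everything. Stage 3: review about 5%.
review_all = all(needs_review(f"decision-{i}", 1.0) for i in range(100))
sampled = sum(needs_review(f"decision-{i}", 0.05) for i in range(10_000))
```

Lowering `review_rate` over time is then a one-line policy change rather than a process redesign.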

This journey takes time. It's not something you do in a quarter. But the organizations that start now will have a massive competitive advantage over those that wait.

Auditing Your Current Automation Stack

If you're an enterprise leader reading this, the most important action you can take right now is to audit your current automation stack.

You probably have automation technology scattered across your organization:

- RPA bots managing legacy system integration

- Rules-based engines handling straightforward business logic

- Workflow tools orchestrating human processes

- Possibly some AI agents already deployed by innovative teams

- Custom integrations and scripts solving one-off problems

But most enterprises don't have a clear picture of all of this. Different teams own different pieces. There's no unified understanding of what automation exists, what it does, or how it relates to other automation.

This is a massive blind spot. You can't orchestrate what you can't see.

So step one is to map your entire automation landscape:

Document Every Automation System: For each system, capture:

- What it does

- What technology it uses (RPA, rules, agent, custom, etc.)

- Who owns it

- What systems it integrates with

- How often it fails

- What volume it handles

- Whether humans are involved and at what points

Map Integration Points: Draw a diagram showing how these systems connect to each other. Where does data flow from one system to another? Where do they have conflicting logic?

Identify Automation Debt: Look for redundancy. Are multiple systems doing similar things? This is common when different teams have built automation independently. Consolidating redundant automation often yields quick wins.

Spot Bottlenecks: Where do humans get involved? These are your biggest opportunities for improvement. A process that requires three human approval steps is a candidate for agent automation.

Assess Maintainability: How hard is it to change each automation system? RPA systems are notoriously brittle—they break whenever the system they're automating changes. Rules-based systems accumulate debt as rules multiply. Agents are more flexible but require skilled people to maintain.
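If a spreadsheet feels too loose for this inventory, a small structured record works too. The sketch below captures the fields listed above and flags workflows touched by more than one automation system. The `AutomationRecord` fields and the sample entries are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class AutomationRecord:
    name: str
    technology: str          # "rpa", "rules", "agent", "custom", ...
    owner: str
    workflow: str            # business workflow this automation touches
    integrates_with: tuple   # names of connected systems
    failures_per_month: int
    human_steps: int         # points where a human is involved


def shared_workflows(records):
    """Return workflows touched by more than one automation system."""
    by_workflow = defaultdict(list)
    for record in records:
        by_workflow[record.workflow].append(record.name)
    return {wf: names for wf, names in by_workflow.items() if len(names) > 1}


inventory = [
    AutomationRecord("invoice-bot", "rpa", "finance", "invoicing", ("erp",), 4, 1),
    AutomationRecord("invoice-rules", "rules", "it", "invoicing", ("erp", "crm"), 1, 0),
    AutomationRecord("refund-agent", "agent", "support", "refunds", ("payments",), 2, 3),
]

overlaps = shared_workflows(inventory)
bottlenecks = [r.name for r in inventory if r.human_steps >= 3]
```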

Once you have this map, you can start thinking strategically about how to evolve toward an agent-first stack.

The audit also reveals where orchestration can deliver immediate value. Find places where:

- Multiple automation systems touch the same workflow

- Data flows from one system to another

- Humans are escalating cases between systems

- There's conflicting logic that requires manual resolution

These are exactly the places where orchestration can improve consistency and reduce human involvement.

The shift from human-in-the-loop to human-on-the-loop increases workflow speed and scalability while maintaining decision quality. Estimated data highlights the proactive role of humans in designing workflows.

The Strategic Opportunity: Velocity Gains vs. Incremental Improvement

Here's the distinction that most enterprises miss: there's a difference between incremental improvement and transformative improvement.

Incremental improvement means you optimize what you already have. You make your processes 20% faster, 10% cheaper, reduce errors by 15%. This is good. You should do it.

But orchestration and agent-first automation can deliver transformative improvement. Not 30% faster. 3X faster. Not 50% cheaper. 5X cheaper. Not 80% fewer errors. 95% fewer errors.

Why is the difference so dramatic?

Because when you're orchestrating across multiple agents, you're not just speeding up the same process. You're eliminating entire categories of work.

A loan application that previously took three days because it needed human review at three different points? With orchestrated agents, it takes two hours. You didn't optimize the process. You eliminated the review steps that were creating delay.

A customer service interaction that previously required escalation to a specialist? An orchestrated agent system can handle it completely, or escalate directly to the right specialist with complete context. The customer gets a faster resolution, and the specialist spends less time gathering information.

A compliance audit that previously took two weeks of human effort? An agent system can generate the documentation in an hour, leaving humans to focus on exception cases.

These aren't 30% improvements. These are order-of-magnitude differences.

And they only become possible when you have:

- Capable agents that can make good decisions across a wide range of scenarios

- Orchestration that ensures those agents work together and maintain quality

- Shifted governance where humans are designing systems rather than approving individual decisions

The organizations that move toward this model first will have a massive competitive advantage. Their processes will be faster, cheaper, and more reliable. That advantage will compound over time.

The organizations that delay—waiting for agents to become perfect, or waiting for some external trigger—will find themselves behind.

Implementing Orchestration: What to Look For in a Platform

Assuming you're going to start implementing orchestration, what should you be looking for in a platform?

Not all orchestration platforms are created equal. Some are better suited for specific use cases. Here's what to evaluate:

Observability and Visibility: Can you see what's happening across all your agents and automation systems? Does the platform give you dashboards that show active workflows, completion rates, error rates, and performance metrics? Can you drill into individual workflows to understand what happened?

Integration Breadth: How many systems can it integrate with? You probably have systems from 10+ different vendors. The orchestration platform needs to connect with all of them. If it only connects with a limited set of systems, it won't be able to orchestrate your entire workflow.

Action Capabilities: Beyond visibility, can the platform actually do things? Can it route work to different agents based on content? Can it make decisions about escalation? Can it enforce policies? Or is it just a monitoring tool?

Risk Management Features: Does it have features for assessing agent reliability, detecting hallucinations, enforcing compliance, and preventing policy violations? These are what transform it from an orchestration platform into a risk management platform.

Flexibility: How easy is it to configure new workflows? Do you need to involve IT every time you want to orchestrate a new process? Or can business users configure orchestration using visual tools or natural language?

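Scalability: How many agents can it orchestrate? How many concurrent workflows can it handle? What's the latency? For many use cases, you need sub-second response times. Every coordination layer adds some overhead, so measure it: if the orchestration platform pushes a workflow past its latency budget, it undermines the use case it was meant to support.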

Learning and Improvement: Does the platform improve over time? Can it learn from historical outcomes and use that learning to make better decisions going forward?

Cost: How is the platform priced? By agent? By workflow? By transaction? Make sure the pricing model aligns with how you'd actually use the platform.

Vendor Vision: Where is the vendor going with the product? Are they thinking about this as an orchestration platform that will evolve with your needs? Or is it a feature added to an existing product that might not get the investment it needs?

Different vendors will score differently on these criteria. There's no one-size-fits-all answer. But these are the dimensions to evaluate.
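One way to make that comparison concrete is a weighted scoring matrix. The sketch below is only an illustration: the weights, the 1-to-5 scale, and both vendors' scores are made-up assumptions, not recommendations, and you would set your own weights to reflect what matters in your environment.

```python
# Hypothetical weights for the nine evaluation dimensions; they sum to 1.0.
WEIGHTS = {
    "observability": 0.15,
    "integration_breadth": 0.15,
    "action_capabilities": 0.15,
    "risk_management": 0.15,
    "flexibility": 0.10,
    "scalability": 0.10,
    "learning": 0.05,
    "cost": 0.10,
    "vendor_vision": 0.05,
}


def weighted_score(scores: dict[str, float]) -> float:
    """Combine per-dimension scores (assumed 1-5 scale) into one number."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(WEIGHTS[dim] * scores[dim] for dim in WEIGHTS)


# Illustrative, invented scores for two hypothetical vendors.
vendor_a = {dim: 4 for dim in WEIGHTS} | {"cost": 2}
vendor_b = {dim: 3 for dim in WEIGHTS} | {"integration_breadth": 5}

print(round(weighted_score(vendor_a), 2))  # 3.8
print(round(weighted_score(vendor_b), 2))  # 3.3
```

A single number hides trade-offs, so treat it as a way to structure the discussion rather than as the decision itself.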

Avoiding Common Orchestration Mistakes

Organizations that have attempted orchestration haven't all succeeded. Here are the mistakes they made, so you don't have to:

Mistake 1: Starting Too Ambitiously: Organizations often try to orchestrate their entire automation system at once. This is too complex. You're trying to change too much simultaneously. Start with a single high-value workflow. Get that working. Then expand from there.

Mistake 2: Ignoring Change Management: Orchestration changes how people work. Your agent developers need to understand how orchestration affects their agents. Your operations team needs to understand how to monitor orchestrated workflows. Your end users need to understand why the experience is different. Without proper change management, adoption stalls.

Mistake 3: Treating Agents as Set-and-Forget Systems: The worst mistake is assuming that once you deploy an agent and set up orchestration, you're done. Agents require continuous monitoring and improvement. They'll make mistakes. They'll encounter scenarios they weren't trained for. You need a process for identifying these issues and improving the agents over time.

Mistake 4: Centralizing Everything: Some organizations try to make the orchestration platform a centralized hub that everything flows through. This creates a bottleneck. Orchestration should be distributed. Each workflow should have its own orchestration logic. The platform should provide the tools and the standards, not centralize all the decisions.

Mistake 5: Underinvesting in Governance: You can't orchestrate what you can't govern. You need clear policies about what agents can do, how decisions are made, what data they can access, etc. Organizations that skip this step end up with chaos.

Mistake 6: Misaligning Incentives: If your IT team is evaluated on cost reduction, they won't want to invest in orchestration (which costs money upfront). If your business teams are evaluated on transaction velocity, they might push for orchestration without proper quality control. Align incentives around value creation, and these problems go away.

Mistake 7: Ignoring the Security Implications: When you orchestrate agents, you're creating new attack surface. An attacker who compromises one agent might be able to compromise others through the orchestration layer. You need to think about security from the beginning, not add it later.

Learn from these mistakes. Avoid them in your organization.
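To make the governance point in Mistake 5 more tangible, here is a minimal sketch of a policy expressed as data and checked before an agent acts. Everything in it is an assumption made for illustration: the `AgentPolicy` fields, the `evaluate` function, the action names, and the 10,000 limit do not come from any particular platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical policy: what an agent may do, see, and approve alone."""
    allowed_actions: frozenset[str]
    allowed_data: frozenset[str]
    autonomous_limit: float  # largest amount the agent may approve unaided


def evaluate(policy: AgentPolicy, action: str, data_needed: list[str],
             amount: float = 0.0) -> str:
    """Return 'allow', 'escalate', or 'deny' for a proposed agent action."""
    if action not in policy.allowed_actions:
        return "deny"  # the agent is not permitted to take this action at all
    if not set(data_needed) <= policy.allowed_data:
        return "deny"  # the action needs data the agent may not access
    if amount > policy.autonomous_limit:
        return "escalate"  # permitted, but above the autonomy threshold
    return "allow"


# Invented example policy for a loan-processing agent.
loan_agent_policy = AgentPolicy(
    allowed_actions=frozenset({"approve_loan", "request_documents"}),
    allowed_data=frozenset({"application", "credit_report"}),
    autonomous_limit=10_000.0,
)

print(evaluate(loan_agent_policy, "approve_loan", ["application"], 5_000))   # allow
print(evaluate(loan_agent_policy, "approve_loan", ["application"], 50_000))  # escalate
print(evaluate(loan_agent_policy, "close_account", ["application"]))         # deny
```

Keeping the policy as data rather than burying it in agent prompts means it can be reviewed, versioned, and audited separately from the agents it governs.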

The Convergence of AI, Automation, and Work Design

Orchestration is ultimately about something bigger than technology. It's about how work gets designed and executed in an AI era.

For decades, enterprises have used technology to optimize work that humans designed. Business analysts design processes. Software developers build systems to execute those processes. Operations teams monitor the systems. Humans execute the routine parts. When something goes wrong, escalation procedures kick in.

This is a human-centric model of work. The process is designed for humans. The technology is in service of the human process.

Orchestration signals a shift to an agent-centric model. The process is designed for agents. Humans design the systems that agents execute. When agents need human judgment, the orchestration platform makes sure it gets it efficiently.

This shift is profound because it changes what skills matter, what roles exist, and how organizations operate.

In a human-centric model, you need lots of people executing transactions. You hire transaction processors. You train them. You manage them. Productivity gains come from optimizing how efficiently humans can execute a process.

In an agent-centric model, you need fewer people executing transactions and more people designing agents. You hire process analysts, systems thinkers, and continuous improvement specialists. Productivity gains come from designing agents to be more capable and more autonomous.

Orchestration is the platform that makes this transition possible. It's what allows organizations to trust agents enough to make them autonomous. It's what enables scaling beyond what humans can handle.

The organizations that understand this transition and move toward it deliberately will thrive. The organizations that resist it or move too slowly will find themselves struggling to compete.

Preparing Your Organization for Agent-Centric Automation

If you're going to move toward orchestrated, agent-centric automation, you need to prepare your organization.

Build Literacy: Your organization probably doesn't fully understand what AI agents are yet. Most people think of agents as just another tool. They don't realize that agents represent a fundamentally different model of work. You need to build understanding across your organization about what agents can do, what they can't do, and how they'll affect workflows.

Establish Governance Early: Don't wait until you have massive agent deployments to think about governance. Establish policies now about what data agents can access, what decisions they can make autonomously, how they escalate, etc. Build in experimentation time to figure out what works for your organization.

Develop Agent Builders: You need people who can build agents. These might be data scientists or software engineers. But you probably need more people who can translate business requirements into agent specifications. People who understand your domain deeply and can anticipate what agents need to be trained on.

Create Feedback Loops: Agents improve through feedback. You need processes for gathering feedback from end users about agent performance, identifying issues, and continuously improving agents. This isn't a one-time deployment. It's an ongoing practice.

Invest in Infrastructure: Orchestration platforms, agent deployment infrastructure, monitoring systems, feedback collection systems—these all cost money. Budget for them. Don't try to save money by using subpar tools. The quality of your infrastructure directly impacts the quality of your automation.

Pilot Strategically: Don't deploy agents everywhere at once. Start with specific workflows where you can clearly measure value and manage risk. Let those pilots inform your broader strategy.

Communicate Benefits: Your employees will be affected by agent deployment. Some roles will change. Some will disappear. Some will expand. Be transparent about this. Show how agents create opportunities for people to focus on higher-value work rather than transaction processing.

Stay Current: This space is evolving rapidly. New tools emerge. New capabilities become possible. New risks emerge. You need to stay current on what's happening in the industry and how it applies to your organization.

Looking Ahead: The Future of Orchestration

Where is orchestration heading? A few trends are becoming clear:

Orchestration Will Become Invisible: Right now, orchestration is something you consciously implement. In the future, it will be built into every automation platform. The concept of orchestration will be so fundamental that we won't even use the term anymore. It will just be how systems work.

Self-Healing Automation: Future orchestration platforms will learn from failures and automatically adjust. If an agent starts hallucinating on a particular type of request, the orchestration platform will detect it and route that type of request elsewhere. If a system integration breaks, the platform will find an alternative route automatically.

Predictive Escalation: Rather than escalating when things go wrong, platforms will predict when escalation will be needed and prepare for it upfront. A human specialist can start reviewing documentation before an escalation even occurs.

Multi-Organization Orchestration: Currently, orchestration happens within an organization. In the future, it will span organizational boundaries. Your agents will orchestrate with your partners' agents and with cloud services seamlessly.

Compliance-Native Orchestration: Future platforms will have compliance baked in from the start. Rather than auditing for compliance, the platform will enforce compliance continuously. Every action will generate proof that it was compliant.

AI-Driven Design: Rather than humans designing orchestration workflows, AI will analyze your existing processes and recommend orchestration patterns. The platform will learn what works in your organization and suggest improvements.

These aren't science fiction. They're extensions of what orchestration platforms are already starting to do.
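The self-healing idea, for instance, can be approximated today with fairly simple bookkeeping. The sketch below is one possible approach under stated assumptions: the `SelfHealingRouter` class, its thresholds, and the agent names are hypothetical, and a real platform would need a trustworthy signal for what counts as a failed or hallucinated response.

```python
from collections import defaultdict, deque


class SelfHealingRouter:
    """Route each request type to the first agent with an acceptable
    recent failure rate, falling back to human review otherwise."""

    def __init__(self, agents, window=20, max_failure_rate=0.3, min_samples=5):
        self.agents = list(agents)  # listed in order of preference
        self.max_failure_rate = max_failure_rate
        self.min_samples = min_samples
        # Sliding window of recent outcomes per (agent, request_type) pair.
        self.history = defaultdict(lambda: deque(maxlen=window))

    def record(self, agent, request_type, ok):
        """Store whether the agent handled this request type correctly."""
        self.history[(agent, request_type)].append(bool(ok))

    def failure_rate(self, agent, request_type):
        outcomes = self.history[(agent, request_type)]
        if len(outcomes) < self.min_samples:
            return 0.0  # too little evidence to penalize the agent
        return outcomes.count(False) / len(outcomes)

    def choose(self, request_type):
        for agent in self.agents:
            if self.failure_rate(agent, request_type) <= self.max_failure_rate:
                return agent
        return "human_review"


router = SelfHealingRouter(["agent_a", "agent_b"])
for _ in range(5):
    router.record("agent_a", "refund", ok=False)  # agent_a keeps failing refunds

print(router.choose("refund"))   # agent_b
print(router.choose("invoice"))  # agent_a
```

The sliding window matters: because old outcomes age out, an agent that has been fixed can earn its traffic back instead of being excluded permanently.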

Conclusion: Orchestration as the Foundation of Enterprise AI

Here's the bottom line: individual AI agents are useful. But orchestrated AI agents are transformative.

Orchestration solves the coordination problem that makes multi-agent systems coherent. It provides visibility, enables action, enforces policy, and manages risk. It's the difference between having smart individual agents and having a smart system.

For enterprise leaders, orchestration is the critical infrastructure that enables the shift from incremental improvement to transformative value creation. It's what allows you to move from "agents handle 20% of cases" to "agents handle 80% of cases while maintaining or improving quality."

The organizations that implement orchestration now will have a massive advantage. Their processes will be faster, cheaper, and more reliable. That advantage will compound as they expand orchestration across more workflows.

The first step is auditing your current automation stack. Map what you have. Understand where agents can add value. Start piloting orchestration in a high-value workflow. Learn from the pilot. Expand from there.

Don't wait for orchestration to become perfect. It won't. Don't wait for agents to become fully autonomous. They won't. Start experimenting now while the technology is still developing. The organizations that wait will find themselves playing catch-up.

The future of enterprise automation isn't agents alone. It's orchestrated agents, working together with humans and existing systems, delivering transformative value. That future is being built right now.

The question is: are you going to be part of building it, or are you going to be left behind?

FAQ

What exactly is AI agent orchestration?

AI agent orchestration is the process of coordinating multiple AI agents to work together on complex workflows. It provides visibility into what agents are doing, enables them to communicate and hand off work to each other, enforces policies and guardrails, and manages escalations when agents need human judgment. Think of it as a conductor leading an orchestra: each musician (agent) plays their part, but the conductor ensures they stay in sync and produce coherent music.

Why do AI agents need orchestration in the first place?

AI agents naturally operate in isolation. Without orchestration, they can't see what other agents are doing, they might duplicate work, they can hallucinate and pass misinformation to other agents, and there's no centralized place to enforce policies or manage risk. Orchestration creates a coordination layer that prevents these problems and ensures that multiple agents working on the same workflow actually work together effectively.

What are the main benefits of orchestration for enterprises?

Orchestration delivers several critical benefits: improved visibility into automation across your organization, faster workflow completion by eliminating human bottlenecks, better quality through automated quality control, reduced risk through policy enforcement and agent assessment, and the ability to scale automation without proportionally scaling headcount. Organizations implementing orchestration typically see productivity improvements measured in multiples (3X, 5X) rather than percentages.

How does orchestration differ from traditional workflow automation?

Traditional workflow automation (like BPM systems) manages processes that involve humans. Orchestration manages processes that involve agents making autonomous decisions. Orchestration needs to handle agent-to-agent communication, assess agent reliability, detect hallucinations, and manage escalations intelligently. It's fundamentally different from routing work between humans.

What's the difference between human-in-the-loop and human-on-the-loop?

Human-in-the-loop means humans are actively involved in executing the workflow, making decisions when agents can't. Human-on-the-loop means humans design the system upfront but aren't involved in execution unless something goes seriously wrong. Human-on-the-loop is more scalable because humans become a bottleneck in human-in-the-loop systems. As orchestration platforms mature, organizations can transition from human-in-the-loop to human-on-the-loop, moving humans from reactive approval to proactive design.

What types of vendors are building orchestration platforms?

Orchestration platforms are emerging from three main areas: integration platform vendors (like Salesforce's MuleSoft) who have deep API and system integration expertise, RPA vendors (like UiPath, with Maestro) who understand workflow automation, and AI platform vendors (like IBM, with watsonx Orchestrate) who understand deploying AI at scale. These different backgrounds bring different strengths, but they're all converging on similar solutions.

How do we avoid the "ticket exhaustion" problem with orchestration?

Ticket exhaustion happens when agents escalate too frequently to humans, creating more work than the automation eliminates. Orchestration solves this by questioning whether escalations are actually necessary, batching similar escalations so they're handled efficiently, routing to qualified specialists rather than generic human reviewers, and recommending policy changes that eliminate unnecessary escalations. The goal is to push humans upstream to design better systems rather than keeping them downstream reviewing every decision.
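Two of those tactics, batching similar escalations and routing them to qualified specialists, are easy to sketch. The example below assumes each escalation carries an `id` and a `category`; the `SPECIALISTS` mapping and the queue names are hypothetical placeholders.

```python
from collections import defaultdict

# Hypothetical mapping from escalation category to a specialist queue.
SPECIALISTS = {
    "fraud": "fraud_team",
    "credit": "credit_analysts",
}


def batch_escalations(escalations, specialists=SPECIALISTS,
                      default_queue="general_review"):
    """Group escalation IDs by (queue, category) so each specialist sees
    similar cases together instead of one ticket at a time."""
    batches = defaultdict(list)
    for esc in escalations:
        queue = specialists.get(esc["category"], default_queue)
        batches[(queue, esc["category"])].append(esc["id"])
    return dict(batches)


pending = [
    {"id": 1, "category": "fraud"},
    {"id": 2, "category": "credit"},
    {"id": 3, "category": "fraud"},
    {"id": 4, "category": "address_change"},
]

for (queue, category), ids in batch_escalations(pending).items():
    print(queue, category, ids)
# fraud_team fraud [1, 3]
# credit_analysts credit [2]
# general_review address_change [4]
```

The batch sizes also give you a signal for the policy-change tactic: a category that keeps filling up the general queue is a candidate for either a new rule or a better-trained agent.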

How should we prioritize which workflows to orchestrate first?

Start with high-volume, repetitive workflows where you can clearly measure value and manage risk. Look for processes with clear decision logic but significant human bottlenecks. These are typically administrative processes, customer service interactions, or approval workflows. Avoid starting with mission-critical processes or those with complex regulatory requirements. Pilot on one workflow, learn from the pilot, then expand to others.

What's the typical timeline for implementing orchestration?

A pilot project implementing orchestration for a single workflow might take 2-3 months. Expanding to 3-5 workflows might take 6-12 months. Full organization-wide orchestration of major automation systems might take 18-24 months. But the productivity gains start appearing within the first pilot, which provides justification for continued investment. This isn't a quick fix—it's a strategic initiative that evolves over time.

How does orchestration address AI safety and risk management?

Orchestration is fundamentally a risk management platform. It detects when agents are hallucinating, verifies that decisions comply with policy, assesses agent reliability, and escalates appropriately when risks are detected. Advanced orchestration platforms go beyond monitoring to active intervention—they might stop an agent that's making decisions inconsistent with its training, or automatically route certain decision types to more reliable agents. This gives enterprises the assurance they need to deploy agents at scale.

The Strategic Next Step

Orchestration isn't a nice-to-have feature. It's the foundation that enterprise AI will be built on.

The time to move forward is now. Start with that audit of your automation stack. Identify one high-value workflow where orchestration can deliver clear value. Pilot it. Learn from it. Then expand from there.

The organizations that move now will be ahead. The organizations that delay will be playing catch-up. The choice is yours.

Key Takeaways

- AI agent orchestration solves the critical coordination problem that prevents multiple agents from working together effectively on complex workflows

- Organizations without orchestration experience 'ticket exhaustion' where escalations increase 40-60% in the first six months, completely undermining automation value

- The shift from human-in-the-loop to human-on-the-loop moves humans from reactive approval roles to proactive system design roles, enabling true scalability

- Agent-first automation stacks can outperform hybrid stacks by an order of magnitude across user satisfaction, quality, security, and cost metrics

- Enterprises should audit their entire automation landscape now and begin strategic migration toward orchestrated, agent-first systems to compete effectively


![AI Agent Orchestration: Making Multi-Agent Systems Work Together [2025]](https://tryrunable.com/blog/ai-agent-orchestration-making-multi-agent-systems-work-toget/image-1-1768397866818.png)