Introduction: The Memento Problem in Modern AI Development

Imagine you're working with an AI coding assistant on a complex project. You spend two hours teaching it your company's coding standards, architectural patterns, and quality requirements. The agent learns your preferences, understands your codebase deeply, and even catches subtle bugs you missed. Then you close the session.

When you open a new conversation window the next day, it's like meeting a stranger. Everything you taught it is gone. The AI wakes up from scratch, forgetting every pattern, every standard, every decision you made together. It's the digital equivalent of the protagonist in Christopher Nolan's "Memento," who tattoos important information on his body because he can no longer form new memories.

This isn't a minor inconvenience. It's a fundamental architectural flaw in how most AI coding tools operate today.

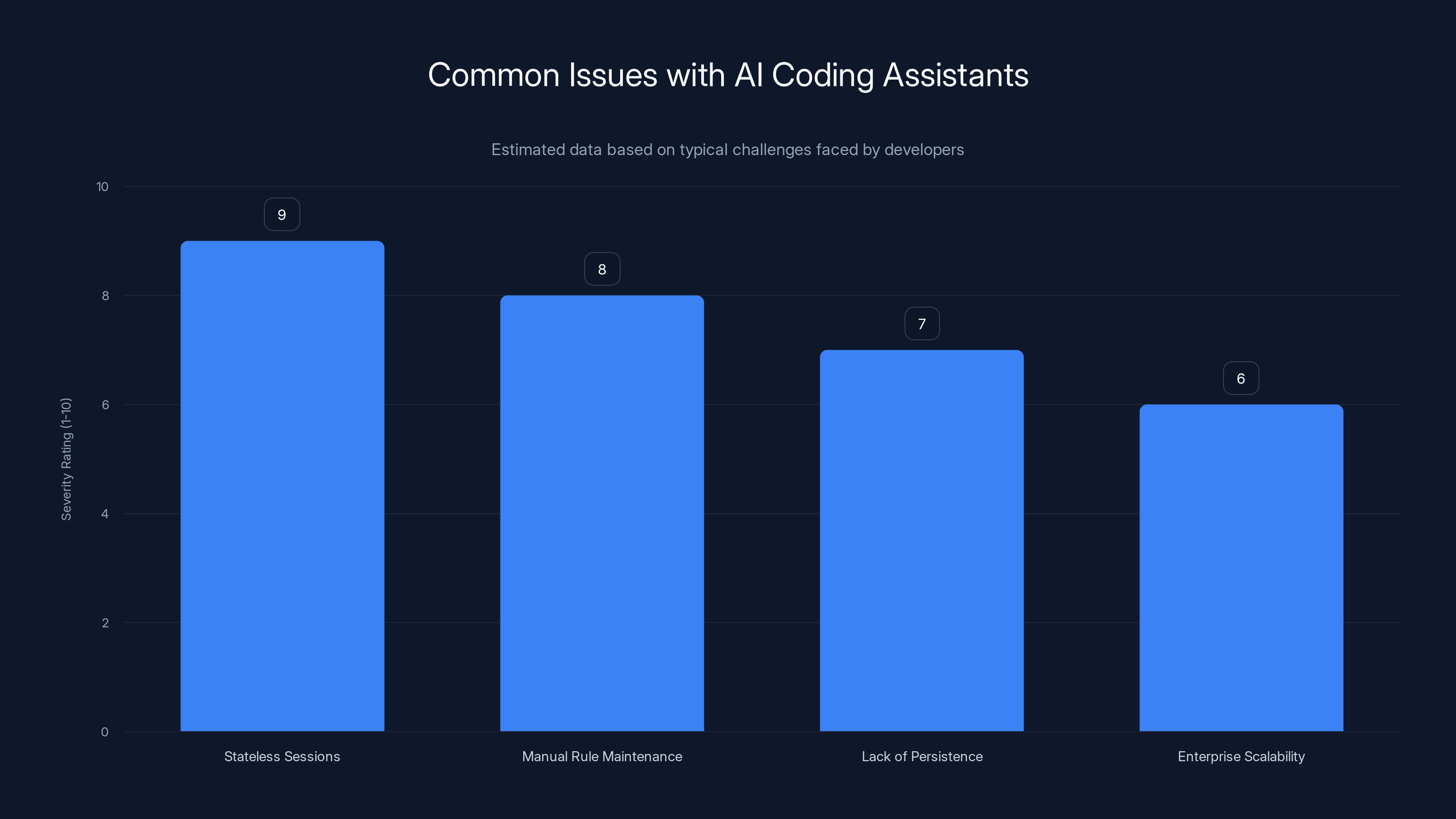

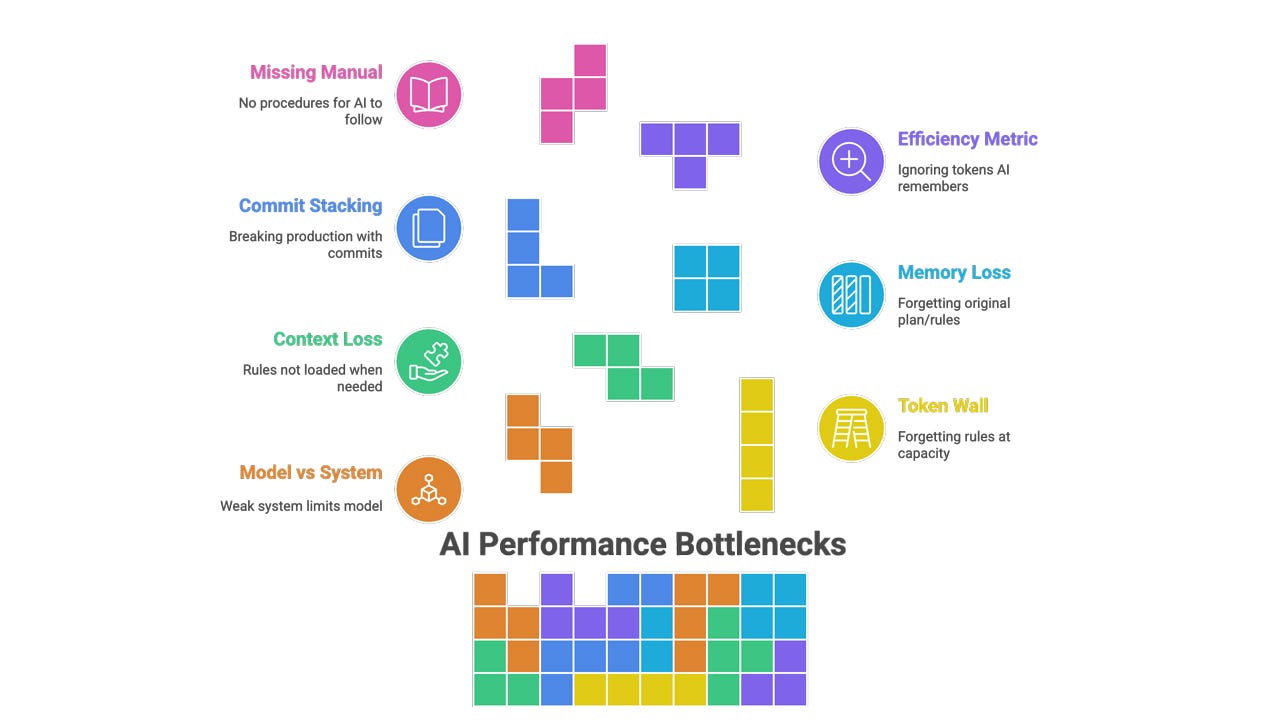

Since the explosion of AI development tools over the past two years, a critical weakness has emerged: by default, as with most large language model chat sessions, they are stateless and temporary. As soon as you close a session and start a new one, the tool forgets everything you were working on. Your custom rules disappear. Your organizational standards vanish. Your team's architectural decisions evaporate. Developers have worked around this by having coding tools and agents save their state to markdown files and text documents, but this solution is hacky at best and breaks down catastrophically at enterprise scale.

Qodo, an AI code review startup founded by Itamar Friedman and his team, believes it has solved this problem with what it calls the industry's first intelligent Rules System for AI governance. The new system, launched as part of Qodo 2.1, replaces static, manually maintained rule files with an intelligent governance layer that gives AI code reviewers persistent, organizational memory.

But here's what makes this genuinely important: this isn't just an incremental feature update. This represents a fundamental shift in how AI development tools can operate. For the first time, an AI code review tool is moving from reactive to proactive. Instead of waiting for developers to ask questions or request reviews, the system automatically learns your organization's coding standards, maintains them intelligently, enforces them continuously, and measures their real-world impact.

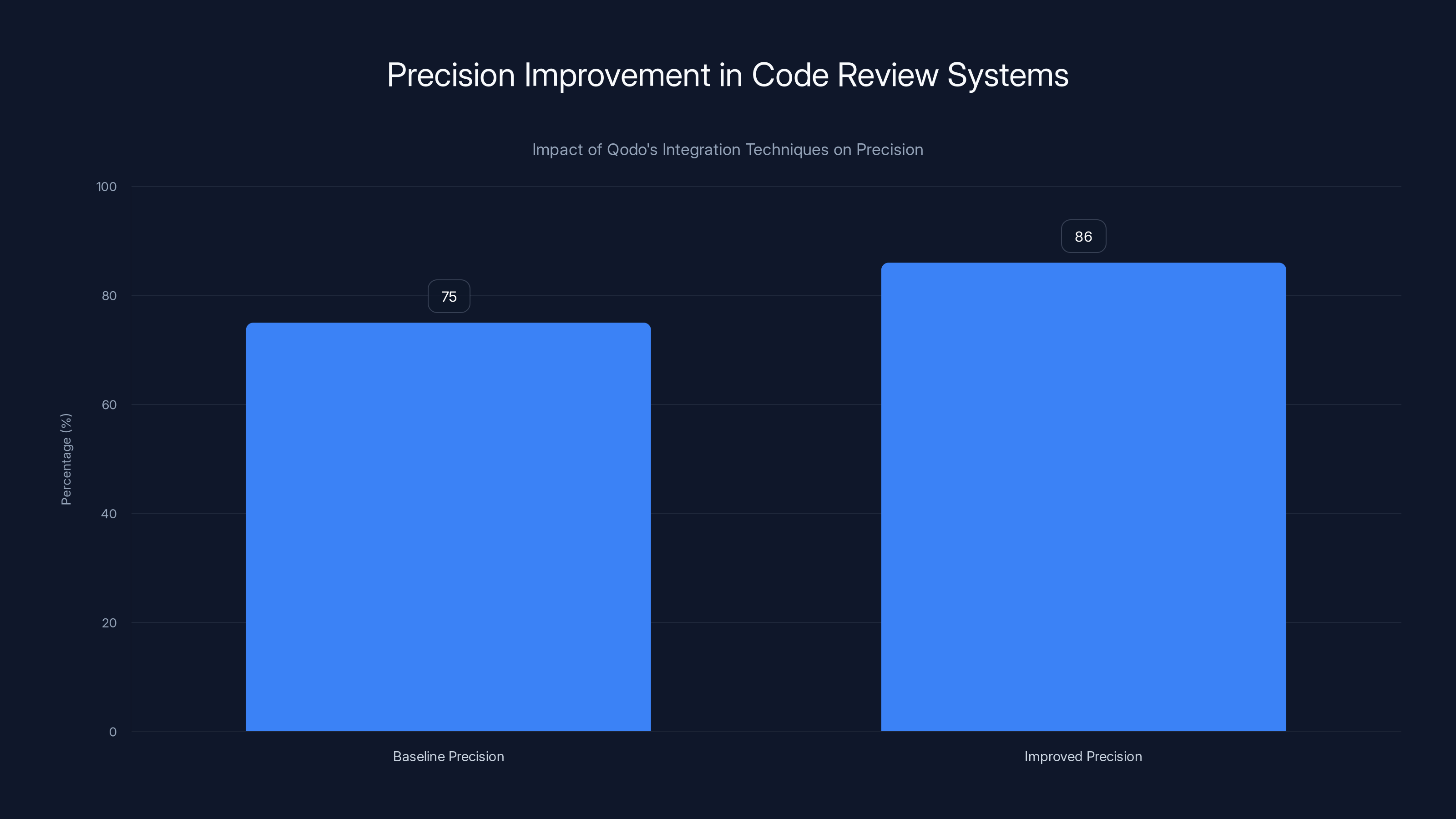

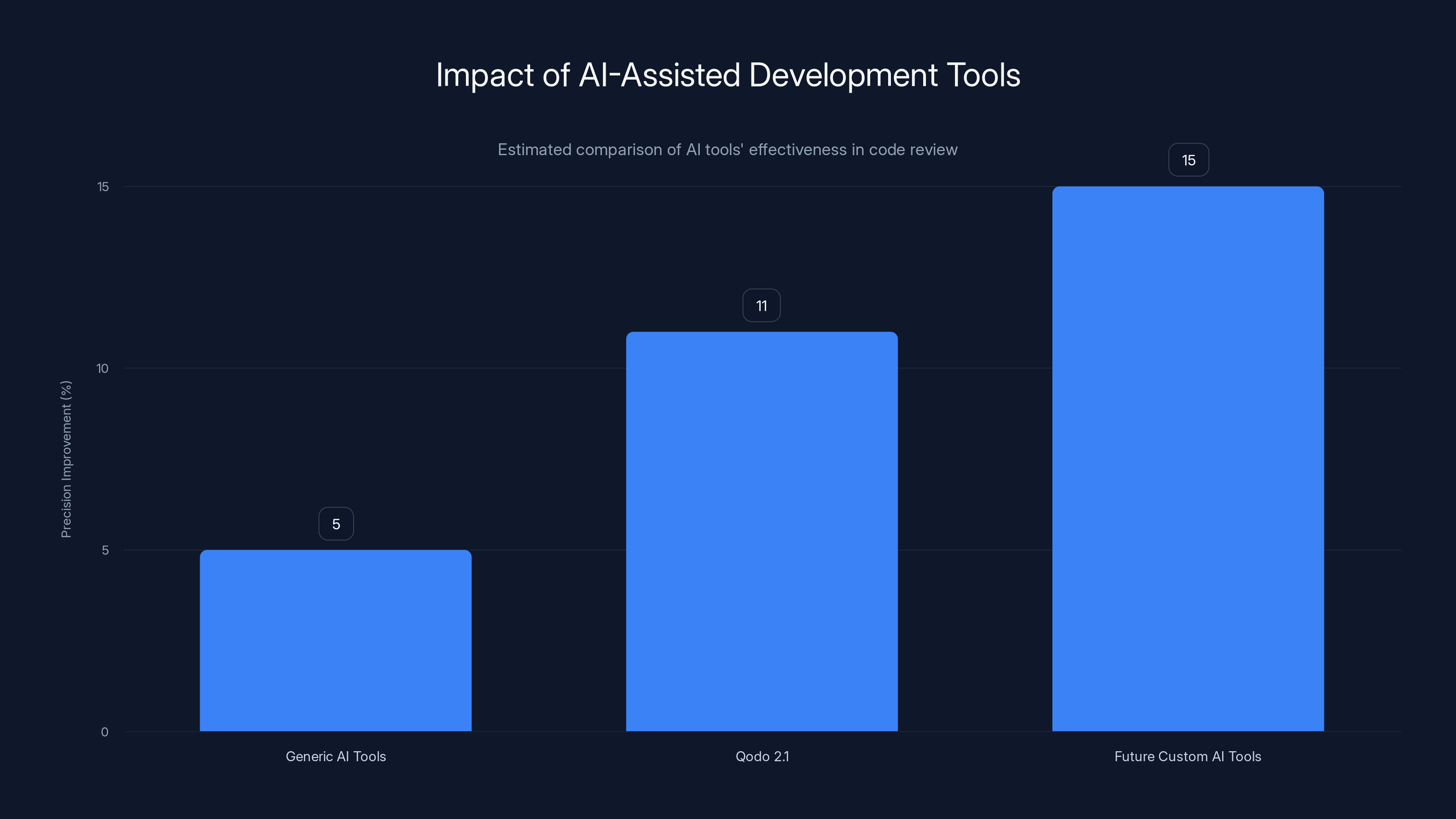

The results? Qodo achieved an 11% improvement in precision by integrating their Rules System with fine-tuning and reinforcement learning techniques. But the real value isn't in that single metric. It's in what that precision improvement represents: AI agents that finally understand your business, remember your standards, and apply them consistently across your entire codebase.

TL;DR

- AI coding agents suffer from stateless memory loss: Current tools reset after each session, forgetting context, standards, and organizational patterns

- Manual workarounds don't scale: Developers save context to markdown files, but managing thousands of files at enterprise scale becomes chaotic and unreliable

- Qodo's Rules System solves organizational amnesia: Automatically discovers rules from code patterns, maintains rule health, enforces standards, and measures real-world impact

- Precision improvements are significant: an 11% precision boost from tight integration between memory systems and AI agents through fine-tuning and reinforcement learning

- The shift is from reactive to proactive: AI agents move from waiting for instructions to automatically understanding and enforcing your organization's unique standards

Chart: Qodo's integration of contextual understanding, fine-tuning, and reinforcement learning led to an 11% increase in precision, enhancing developer trust and code quality. (Estimated data based on typical precision improvements.)

The Architecture Problem: Why Current AI Tools Are Fundamentally Stateless

To understand why this matters, you need to grasp how AI development tools have evolved and where they fall short.

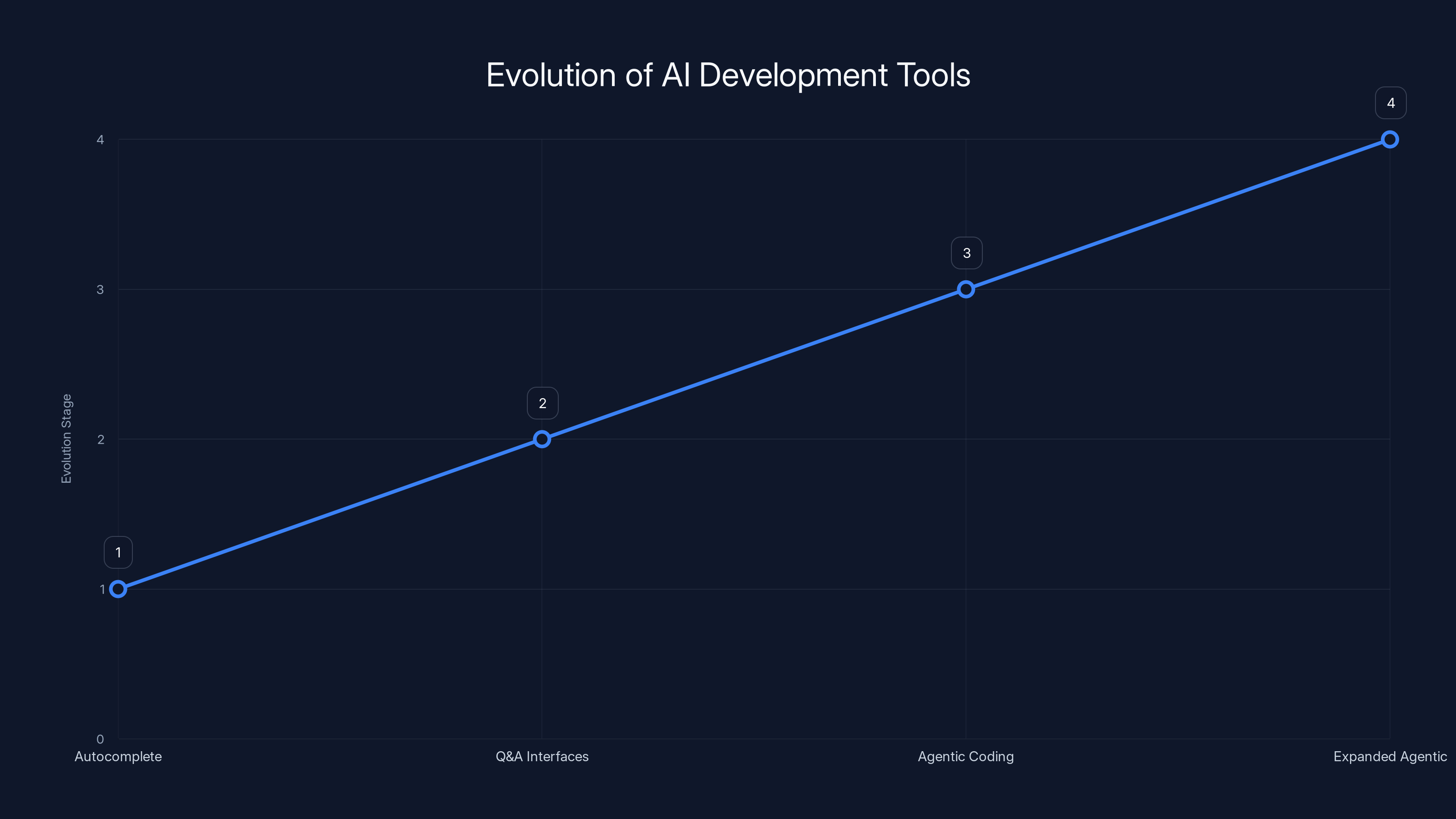

The evolution has followed a predictable trajectory. First came autocomplete tools like GitHub Copilot, which suggested the next line of code based on context. Then came question-and-answer interfaces like ChatGPT, where developers could ask anything and get responses. Next came agentic coding within the IDE, exemplified by tools like Cursor, which could perform multi-step coding tasks autonomously. Most recently, we've seen agentic capabilities expand everywhere, with tools like Claude Code operating across different environments.

But here's the critical insight: all of these remain fundamentally stateless machines.

What does stateless mean in this context? It means the AI has no persistent memory layer. Each conversation, each session, each interaction starts from zero. The model can see whatever is in its current context window, such as your messages and any code loaded into the session, but nothing carries over once the session ends. It cannot learn your organization's specific preferences. It cannot build a coherent understanding of your codebase's architecture over time. It cannot improve by remembering past mistakes and successes.

This creates a cascade of problems. First, there's the cognitive load problem. You end up repeating yourself constantly. You explain your coding standards, and the AI acknowledges them and follows them in the moment. But start a new session, and you're explaining them again. Your architectural decisions, your naming conventions, your error handling patterns, your team's preferred libraries and frameworks—all forgotten.

Second, there's the consistency problem. Without persistent memory, the AI cannot enforce standards consistently across your entire codebase. It might suggest one architectural pattern in one file and a completely different approach in another file, because it has no way to remember what came before. This creates technical debt and inconsistency that compounds over time.

Third, there's the scale problem. At enterprise scale, with hundreds of developers and millions of lines of code, the problem becomes intractable. Different teams have different standards. Subteams within the same organization have different architectural approaches. Some projects prioritize performance, others prioritize readability, still others prioritize maintainability. Without a persistent, intelligent memory system, the AI cannot adapt to these nuanced, organization-specific requirements.

Developers have responded with workarounds. They save context to markdown files: agents.md, napkin.md, standards.md, architecture-decisions.md. They create README files with explicit instructions about code style. They maintain documents describing organizational patterns. This approach has become common among developers using tools like Claude Code and Cursor.

Friedman argues that this method breaks down at enterprise scale. Think about a large software organization with 100,000 lines of code and complex architectural requirements. You create sticky notes, some brief and some extensive, documenting coding standards, architectural patterns, team preferences, and project-specific requirements. Every morning, the AI wakes up and faces a massive pile of these notes. The first thing it does is run a statistical search through them for relevant context. It's better than having nothing, certainly. But it's random. It's inefficient. And it's unreliable.

Moreover, this approach doesn't solve the deeper problem. It's not just about having context available. It's about the AI agent having a coherent understanding of your organization's subjective quality standards. Code quality is inherently subjective. Your definition of "clean code" might be entirely different from another organization's definition. Your performance requirements might be different. Your security standards might be stricter. Your architectural patterns might be unique to your domain.

The Memory and Context Challenge: Why Stateless AI Falls Apart

Let's drill deeper into why this matters specifically for coding agents and AI development tools.

When you're working with code, context is everything. A function that looks perfectly fine in isolation might violate your organization's architectural standards when viewed in the broader system context. A variable naming convention that's appropriate for one type of code might be completely wrong for another. A performance optimization that works in one scenario might create problems in another.

AI agents need to understand these contextual relationships. But that understanding requires memory. It requires learning from past interactions, past code reviews, past decisions. It requires building a model of your specific organization's requirements over time.

Here's a concrete example. Imagine your team uses a specific error handling pattern throughout your codebase. Instead of throwing exceptions, you return error objects. Instead of using null, you use Option types. Instead of broad try-catch blocks, you use specific error types. These are subjective choices, but they're deeply important to your organization's quality standards.
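To make this concrete, here is a minimal Python sketch of such a house convention: fallible functions return a result value instead of raising, and possible absence is declared with `Optional` in the signature. Python has no built-in Result type, so `Ok`, `Err`, `Result`, `parse_port`, and `find_user` are all hypothetical, team-defined names used only for illustration.

```python
from dataclasses import dataclass
from typing import Generic, Optional, TypeVar, Union

T = TypeVar("T")


@dataclass(frozen=True)
class Ok(Generic[T]):
    """Successful result carrying a value."""
    value: T


@dataclass(frozen=True)
class Err:
    """Failed result carrying a readable reason instead of an exception."""
    message: str


# Hypothetical team convention: fallible functions return Result, never raise.
Result = Union[Ok[T], Err]


def parse_port(raw: str) -> Result[int]:
    """Parse a TCP port number, returning Err rather than raising ValueError."""
    if not raw.isdecimal():
        return Err(f"not a number: {raw!r}")
    port = int(raw)
    if not 0 < port < 65536:
        return Err(f"out of range: {port}")
    return Ok(port)


def find_user(users: dict[str, str], user_id: str) -> Optional[str]:
    """Absence is declared in the signature instead of being an unchecked null."""
    return users.get(user_id)


result = parse_port("8080")
if isinstance(result, Ok):
    print("port", result.value)
else:
    print("error:", result.message)
```

An assistant that remembers this convention should flag a new `try`/`except` wrapper around `parse_port` as a deviation, rather than proposing one.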

Without persistent memory, an AI agent will occasionally suggest the wrong pattern. It sees a code block, it tries to be helpful, and it suggests adding a try-catch block—which violates your standards. You correct it. It seems to understand. But start a new session, and it suggests the same pattern again, because it has no memory of what you taught it.

With persistent memory, the agent learns this pattern once. It remembers it. It applies it consistently. It even gets better at recognizing when this pattern applies and when similar patterns should be used instead.

The problem gets worse at scale. In an organization with ten teams, each team might have slightly different standards. One team prioritizes performance and uses aggressive optimization techniques. Another team prioritizes readability and maintainability. Another team is working on legacy code and needs different patterns than new code. Without persistent, organized memory, the AI cannot navigate these nuances.

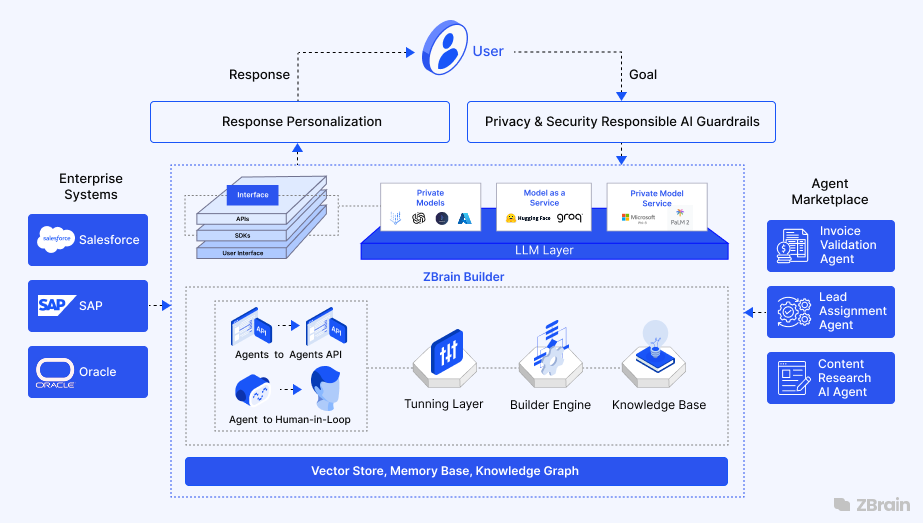

What you need is what Friedman calls "stateful" AI: machines that maintain context, learn from experience, and can be customized to your organization's specific requirements. But building this is genuinely hard. It requires more than just long context windows. It requires intelligent memory systems. It requires learning mechanisms. It requires feedback loops that allow the AI to improve over time based on real-world performance.

Chart: Qodo 2.1 shows an 11% precision improvement, highlighting the shift toward stateful, customized AI systems; future tools could achieve even higher precision. (Estimated data.)

From Reactive to Proactive: The Fundamental Shift

Here's where Qodo's approach becomes genuinely novel: it moves AI code review tools from reactive to proactive.

Traditional code review tools are reactive. You write code. You submit a pull request. The tool reviews it. It finds issues and suggests fixes. The developer reacts to these suggestions. This is better than no review at all, but it's still fundamentally reactive.

A proactive system is different. It doesn't wait for pull requests. It doesn't just review what you write. Instead, it learns your standards, understands what "good code" looks like in your organization, and proactively surfaces rules and standards for your team. It prevents problems before they happen. It guides developers toward correct patterns before they code them wrong.

This distinction matters because it changes the entire dynamic. In a reactive system, the AI is a passenger. In a proactive system, the AI is an active participant in maintaining code quality standards.

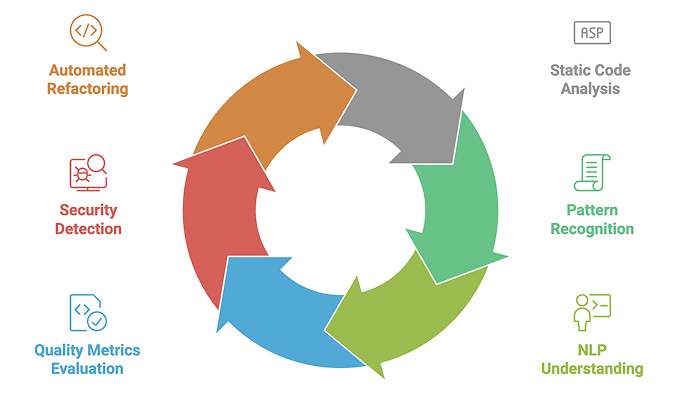

How does this work in practice? Qodo's Rules System automatically discovers standards from your actual codebase and pull request history. It looks at code patterns that your team accepts consistently. It looks at feedback from code reviews. It identifies the implicit rules that guide your organization's decision-making. Then it makes those rules explicit.

This is genuinely powerful because most coding standards exist implicitly. Your senior engineers know them. Your code reflects them. But they're not written down, or they're written down in ways that are hard for AI to parse. By making implicit rules explicit, the system allows the AI to apply them consistently.

Moreover, rules aren't static. Codebases evolve. Standards change. New best practices emerge. A rules system needs to maintain rule health over time. Qodo's approach includes what they call a "Rules Expert Agent" that continuously identifies conflicts, duplicates, and outdated standards. It prevents what the company describes as "rule decay," where rules that made sense five years ago become outdated and create friction rather than clarity.

This represents a genuine paradigm shift in how AI development tools can operate. Instead of being stateless, reactive tools that respond to each request in isolation, they can become stateful, proactive systems that understand your organization deeply and help maintain your standards continuously.

Intelligent Rules Discovery: How AI Learns Your Organization's Standards

Now let's get into the mechanics of how Qodo's Rules System actually works.

The foundation is what they call "Automatic Rule Discovery." The system includes a Rules Discovery Agent that analyzes your codebase and your pull request history to generate coding standards automatically. You don't write the rules manually. The AI learns them by looking at what your organization actually does.

This is a critical distinction. Manual rules systems require you to document your standards explicitly. You sit down and write: "All functions should be under 50 lines." "Variable names should be descriptive." "Error handling should use specific exception types." This creates friction. First, you need to explicitly decide on standards. Second, you need to document them. Third, you need to maintain them as they evolve.

Automatic rule discovery eliminates the first two steps. The AI looks at your codebase and identifies patterns that show what your organization values. It looks at pull request comments and reviews to see what kinds of feedback your senior engineers give most frequently. It synthesizes this into rules.

For example, imagine your senior engineers consistently reject pull requests that have functions over 100 lines of code. They comment: "This function is too long, break it up." They do this again and again. The Rules Discovery Agent sees this pattern and generates a rule: "Functions should be under 100 lines." It doesn't require anyone to explicitly write this rule. It discovers it from behavior.
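The discovery step can be approximated with something far simpler than Qodo's agent: count how often recurring feedback themes show up across review comments, and propose a candidate rule once a theme crosses a threshold. The theme patterns, rule wording, and threshold below are hypothetical, and a production system would presumably use a language model rather than regular expressions.

```python
import re
from collections import Counter

# Hypothetical mapping from a feedback theme (regex) to the rule it suggests.
THEMES = {
    r"\b(too long|break (it|this) up|split (it|this))\b": "Functions should be under 100 lines",
    r"\b(magic number|use a constant)\b": "Avoid unexplained numeric literals",
    r"\b(missing|add) (a )?tests?\b": "New behavior should ship with tests",
}


def propose_rules(comments: list[str], min_occurrences: int = 3) -> list[tuple[str, int]]:
    """Return (candidate_rule, count) pairs for themes that recur often enough."""
    counts = Counter()
    for comment in comments:
        text = comment.lower()
        for pattern, rule in THEMES.items():
            if re.search(pattern, text):
                counts[rule] += 1  # each theme counts at most once per comment
    return [(rule, n) for rule, n in counts.most_common() if n >= min_occurrences]


comments = [
    "This function is too long, break it up.",
    "Too long again, please split this.",
    "Nit: magic number here.",
    "This function is too long.",
]
print(propose_rules(comments))
```

Here the length theme appears in three comments and becomes a candidate, while the one-off "magic number" remark stays below the threshold.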

Or imagine your team consistently prefers certain naming conventions. Most variables are camelCase. Most constants are SCREAMING_SNAKE_CASE. Most classes use PascalCase. The Rules Discovery Agent looks at your entire codebase, identifies these patterns, and generates rules that reflect them. Again, nobody had to explicitly document this. It was discovered automatically.
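A rough sketch of convention discovery: classify each identifier with a regular expression, set aside single-word names that fit several conventions, and propose a rule only when one convention clearly dominates. The regexes, the `"ambiguous"` bucket, and the 80% threshold are assumptions for illustration, not Qodo's method.

```python
import re
from collections import Counter
from typing import Optional

# Checked in order; the underscore-based styles require at least one underscore.
CONVENTIONS = {
    "SCREAMING_SNAKE_CASE": re.compile(r"^[A-Z][A-Z0-9]*(?:_[A-Z0-9]+)+$"),
    "snake_case": re.compile(r"^[a-z][a-z0-9]*(?:_[a-z0-9]+)+$"),
    "camelCase": re.compile(r"^[a-z][a-z0-9]*(?:[A-Z][a-z0-9]*)+$"),
    "PascalCase": re.compile(r"^[A-Z][a-z0-9]+(?:[A-Z][a-z0-9]+)*$"),
}


def classify(name: str) -> str:
    """Return the first matching convention, or 'ambiguous' (e.g. 'tmp')."""
    for convention, pattern in CONVENTIONS.items():
        if pattern.match(name):
            return convention
    return "ambiguous"


def dominant_convention(names: list[str], threshold: float = 0.8) -> Optional[str]:
    """Convention used by at least `threshold` of classifiable names, else None."""
    counts = Counter(classify(n) for n in names)
    counts.pop("ambiguous", None)
    total = sum(counts.values())
    if total == 0:
        return None
    convention, n = counts.most_common(1)[0]
    return convention if n / total >= threshold else None


variables = ["userName", "retryCount", "maxDepth", "isReady", "tmp", "total_sum"]
print(dominant_convention(variables))
```

Returning `None` when no convention clears the threshold is deliberate: a mixed codebase should produce no rule rather than a weak one.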

This approach has several advantages. First, it's pragmatic. The rules reflect what your organization actually does, not what you think you should do or what some style guide says you should do. Second, it's comprehensive. A human could never document every subtle pattern that your organization follows, but an AI analyzing millions of lines of code can identify patterns that might be invisible to humans. Third, it's efficient. You don't spend time writing documentation. The system discovers standards automatically.

But there's a challenge. Rules discovered automatically need to be validated. You don't want the system generating rules based on mistakes or outliers in your codebase. So Qodo's Rules System surfaces discovered rules to technical leads for approval. The leadership team reviews them and decides: yes, this rule reflects our standards, or no, this rule is based on an outlier and we should reject it.

This combination of automatic discovery plus human validation creates a pragmatic balance. The AI does the heavy lifting of analyzing patterns. Humans make the final judgment call about what represents your organization's actual standards.
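The approval gate can be modeled as a small state machine: every discovered rule starts as pending, and only rules a human lead has approved are ever enforced. The `CandidateRule` class, its fields, and the reviewer names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class CandidateRule:
    text: str
    evidence_count: int  # how many comments or files supported the rule
    status: Status = Status.PENDING
    reviewer: Optional[str] = None

    def approve(self, reviewer: str) -> None:
        self.status = Status.APPROVED
        self.reviewer = reviewer

    def reject(self, reviewer: str) -> None:
        self.status = Status.REJECTED
        self.reviewer = reviewer


def active_rules(candidates: list[CandidateRule]) -> list[str]:
    """Pending and rejected rules are never enforced."""
    return [c.text for c in candidates if c.status is Status.APPROVED]


candidates = [
    CandidateRule("Functions should be under 100 lines", evidence_count=42),
    CandidateRule("Use tabs for indentation", evidence_count=2),  # likely an outlier
]
candidates[0].approve("tech-lead")
candidates[1].reject("tech-lead")
print(active_rules(candidates))
```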

Intelligent Maintenance: Keeping Rules Fresh and Conflict-Free

Once rules are in place, they need maintenance. This is where most static rules systems fail.

Imagine you had a rules system five years ago that said: "All logging should use Log4j." But your organization migrated to a modern logging library three years ago. That rule is now outdated and creates friction. Developers see the rule and ignore it because they know the organization switched to a new library. Or they follow it reluctantly, creating inconsistency in your logging approach.

Or imagine you have two separate rules that conflict. One rule says: "Functions should be under 50 lines." Another rule says: "Functions should have between 10 and 30 statements before extracting helper methods." If statements routinely span several lines, a function that satisfies the second rule can still break the first, so the two rules give developers contradictory guidance.

Or imagine you have duplicate rules. Different teams documented similar standards in different ways. One rule says: "Variable names should be descriptive." Another rule says: "Avoid single-letter variable names except for loop counters." These are saying similar things but in different ways.

Statically maintained rules systems accumulate these kinds of problems over time. Rules decay. They conflict. They duplicate. Developers lose trust in the system.

Qodo's approach includes what they call "Intelligent Maintenance" through a Rules Expert Agent. This AI agent continuously monitors your rule set for problems. It identifies rules that conflict with each other. It identifies duplicate rules that are saying the same thing in different ways. It identifies rules that have become outdated based on current best practices or changes in your technology stack.

The system doesn't automatically delete or modify rules—that would be dangerous. Instead, it surfaces problems to your team. It says: "These two rules appear to conflict. You might want to review and consolidate them." Or: "This rule hasn't been referenced in any pull request feedback for six months and appears to be outdated." Or: "These three rules are saying similar things. Consider consolidating them into a single rule."
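Two of these checks can be sketched with simple heuristics: word-overlap (Jaccard) similarity between rule texts to spot likely duplicates, and the date each rule was last cited in review feedback to spot stale ones. Qodo has not published how its Rules Expert Agent works; the `Rule` class, the 0.5 similarity cutoff, and the 180-day window are assumptions. Like the agent described above, the sketch only reports findings and never edits rules.

```python
import re
from dataclasses import dataclass
from datetime import date, timedelta
from itertools import combinations


@dataclass
class Rule:
    rule_id: str
    text: str
    last_referenced: date  # last time review feedback cited this rule


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    return len(a & b) / len(a | b) if a | b else 0.0


def health_report(rules: list[Rule], today: date, similarity: float = 0.5,
                  stale_after: timedelta = timedelta(days=180)) -> list[str]:
    """Surface likely duplicates and stale rules for human review."""
    findings = []
    for r1, r2 in combinations(rules, 2):
        if jaccard(tokens(r1.text), tokens(r2.text)) >= similarity:
            findings.append(f"possible duplicate: {r1.rule_id} / {r2.rule_id}")
    for r in rules:
        if today - r.last_referenced > stale_after:
            findings.append(f"possibly outdated: {r.rule_id}")
    return findings


rules = [
    Rule("R1", "Variable names should be descriptive", date(2024, 5, 1)),
    Rule("R2", "Variable names must be descriptive", date(2024, 4, 20)),
    Rule("R3", "All logging should use Log4j", date(2023, 6, 1)),
]
for finding in health_report(rules, today=date(2024, 6, 1)):
    print(finding)
```

The limits show up quickly. The two naming rules from the earlier example ("descriptive" names versus "avoid single-letter" names) share only two of twelve distinct words and would not be flagged, and a real conflict like the 50-line versus 30-statement pair needs semantic reasoning that word overlap cannot provide.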

This intelligent maintenance prevents rule decay. It keeps your rule set lean, clear, and actually useful to developers. It ensures that rules remain aligned with your organization's actual practices and evolving technology landscape.

This is genuinely important because it addresses a critical failure point of static rules systems. Over time, they accumulate technical debt. They become less useful, not more useful. By maintaining rule health continuously, Qodo's system gets better over time rather than worse.

Chart: Severity of issues faced by AI coding assistants; stateless sessions rank highest, followed by manual rule maintenance and lack of persistence. (Estimated data.)

Scalable Enforcement: Rules That Actually Get Applied

Discovering and maintaining rules is useless if they never get enforced. This is where the scalability challenge becomes real.

Qodo's Rules System automatically enforces rules during pull request code review. When a developer submits code for review, the system checks it against all applicable rules. If code violates a rule, the system doesn't just point out the violation—it provides recommended fixes.

This is important because many code review tools just tell you what's wrong. "This function is too long." "This variable name is unclear." Developers then have to figure out how to fix it. But Qodo's system goes further. It says: "This function is too long. Here's a refactoring that would break it into smaller functions." This dramatically reduces friction and makes it more likely that developers will actually fix issues.
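Here is a minimal sketch of one enforcement check, using Python's standard `ast` module to measure function length and pair each violation with a remediation hint. The threshold and message format are hypothetical, and the "suggested fix" is only a canned hint. Generating the actual refactoring, as the text describes Qodo doing, is beyond a static check like this.

```python
import ast

MAX_FUNCTION_LINES = 100  # hypothetical threshold taken from an approved rule


def check_function_length(source: str, max_lines: int = MAX_FUNCTION_LINES) -> list[str]:
    """Flag functions longer than max_lines and attach a remediation hint."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # end_lineno is available on AST nodes from Python 3.8 onward.
            length = node.end_lineno - node.lineno + 1
            if length > max_lines:
                findings.append(
                    f"{node.name} (line {node.lineno}) is {length} lines; "
                    f"limit is {max_lines}. Suggested fix: extract cohesive "
                    f"blocks into helper functions."
                )
    return findings


long_body = "\n".join(f"    x{i} = {i}" for i in range(5))
source = f"def short():\n    return 1\n\ndef long_one():\n{long_body}\n    return x0\n"
for finding in check_function_length(source, max_lines=4):
    print(finding)
```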

Moreover, enforcement needs to scale. In a large organization, you might have hundreds of developers submitting thousands of pull requests per day. You need rule enforcement that can handle that scale automatically. You can't have humans manually reviewing whether rules are followed. The system needs to be fully automated.

Qodo's approach is designed for this scale. Rules are enforced automatically during the pull request review process. Violations are flagged immediately. Fixes are suggested. Developers get immediate, actionable feedback without needing to wait for a human code review.

What's particularly clever is the integration with AI code review agents. The rules system becomes part of the agent's decision-making process. When an agent is reviewing code, it doesn't just apply generic best practices. It applies your organization's specific rules. It suggests changes that align with your standards. It becomes genuinely customized to your organization.

Precision Improvements Through Integration: The 11% Boost

So how much does this actually matter? Qodo achieved an 11% improvement in precision by integrating their Rules System with fine-tuning and reinforcement learning techniques.

Let's break down what that means. Precision in code review refers to the accuracy of flagged issues. A high-precision review system flags problems that are actually problems and avoids false positives. Low precision means flagging things that aren't actually issues, creating noise and reducing developer trust.
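In standard terms, let TP be the flags that point at real issues and FP the flags that do not. Precision is the fraction of flags that are correct. Rearranging shows that, for a fixed total number of flags F, false positives fall as precision rises:

```latex
\text{Precision} = \frac{TP}{TP + FP},
\qquad
FP = F\,(1 - \text{Precision}),
\quad F = TP + FP
```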

That 11% improvement is significant because it directly impacts developer experience and code quality. Fewer false positives means developers trust the system more. They're more likely to follow recommendations. They have more time to focus on real issues rather than defending against incorrect flagging.

How does the Rules System contribute to this improvement? Through multiple mechanisms:

First, contextual understanding. When the agent understands your organization's specific rules and standards, it makes fewer mistakes. It doesn't suggest changes that violate your standards. It doesn't flag things that your organization considers acceptable. This immediately reduces false positives.

Second, fine-tuning. Qodo applies fine-tuning techniques, using your organization's specific codebase and review history as training data. The model learns what patterns your organization values and replicates them more accurately. Instead of using a generic model, the agent is specifically trained on your code and standards.

Third, reinforcement learning. The system uses feedback loops to improve continuously. When developers accept suggestions, the system learns that those suggestions were valuable. When developers reject suggestions, the system learns to adjust. Over time, the agent gets better and better at making suggestions that your team actually wants.
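Qodo has not published its reinforcement learning setup, and the following is not that. It is a much simpler feedback loop that captures the intuition: treat each accepted or rejected suggestion as a yes/no outcome, keep a Beta-Bernoulli estimate of each rule's acceptance rate, and stop surfacing rules whose estimate drops below a cutoff. The uniform Beta(1, 1) prior, the 0.3 cutoff, and the rule names are assumptions.

```python
from dataclasses import dataclass


@dataclass
class RuleFeedback:
    accepted: int = 0
    rejected: int = 0

    def record(self, was_accepted: bool) -> None:
        if was_accepted:
            self.accepted += 1
        else:
            self.rejected += 1

    def acceptance_estimate(self, prior_a: float = 1.0, prior_b: float = 1.0) -> float:
        """Posterior mean of a Beta(prior_a, prior_b) prior after observed feedback."""
        total = self.accepted + self.rejected
        return (self.accepted + prior_a) / (total + prior_a + prior_b)


def rules_to_surface(feedback: dict[str, RuleFeedback], cutoff: float = 0.3) -> list[str]:
    """Keep only rules developers still tend to accept."""
    return sorted(name for name, fb in feedback.items() if fb.acceptance_estimate() >= cutoff)


feedback = {"max-function-length": RuleFeedback(), "no-magic-numbers": RuleFeedback()}
for outcome in [True, True, False, True]:
    feedback["max-function-length"].record(outcome)
for outcome in [False, False, False, False, True]:
    feedback["no-magic-numbers"].record(outcome)
print(rules_to_surface(feedback))
```

The prior keeps a brand-new rule at an estimate of 0.5 instead of swinging to 0 or 1 after a single piece of feedback.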

The combination of these three approaches—contextual rules integration, fine-tuning on organization-specific data, and reinforcement learning from real feedback—creates a system that improves over time. Unlike static tools that stay the same, this system gets better as it gets more data about your organization's preferences.

That 11% might seem like a modest improvement, but consider what it means in practice. Strictly speaking, an 11% gain in precision is not the same as an 11% cut in false positives: the effect depends on the baseline precision and on how many automated comments each pull request receives. Even so, for a large organization with 500 developers submitting 1,000 pull requests per day, it could plausibly eliminate dozens or even hundreds of incorrect flags daily. Each one is an issue developers don't waste time investigating and a chance for the system to keep their trust. Over a year, for a large enterprise, this could add up to thousands of hours saved.
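A quick back-of-envelope calculation shows how the inputs drive the result. Only the 1,000 pull requests per day comes from the example above. The three flags per pull request and the 60% baseline precision are made-up inputs, and since "11%" can be read as either a relative gain or an absolute gain of 11 percentage points, both readings are computed.

```python
def daily_false_positives(prs_per_day: int, flags_per_pr: float, precision: float) -> float:
    """False positives per day = total flags x (1 - precision)."""
    return prs_per_day * flags_per_pr * (1 - precision)


PRS_PER_DAY = 1_000   # from the example in the text
FLAGS_PER_PR = 3      # hypothetical
BASELINE = 0.60       # hypothetical baseline precision

before = daily_false_positives(PRS_PER_DAY, FLAGS_PER_PR, BASELINE)
relative = daily_false_positives(PRS_PER_DAY, FLAGS_PER_PR, BASELINE * 1.11)
absolute = daily_false_positives(PRS_PER_DAY, FLAGS_PER_PR, BASELINE + 0.11)

print(f"baseline:               {before:.0f} false flags/day")
print(f"11% relative gain:      {relative:.0f} ({before - relative:.0f} fewer)")
print(f"11-point absolute gain: {absolute:.0f} ({before - absolute:.0f} fewer)")
```

With these invented inputs, the two readings give roughly 198 and 330 fewer false flags per day. Fewer flags per pull request or a higher baseline would shrink those numbers.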

Real-World Analytics: Measuring What Actually Matters

Here's another critical piece: measurement. You can implement rules, enforce them, and improve precision, but if you can't measure impact, how do you know if any of this is actually working?

Qodo's Rules System includes what they call "Real-World Analytics." The system tracks adoption rates for different rules. It monitors violation trends over time. It measures how standards compliance affects code quality metrics. It proves, with data, that standards are being followed and that following them has measurable benefits.

This is genuinely important because it creates accountability and visibility. You can answer questions like: "Which rules are most commonly violated?" "Are violations trending up or down over time?" "Which teams have the best standards compliance?" "Does following our naming convention standards actually correlate with fewer bugs in production?"
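The first two questions reduce to simple aggregation over violation records. This is a minimal sketch assuming each record is a hypothetical `(ISO week, rule id)` pair; the data is invented. Weeks in which a rule had no violations still count as zero, so the trend is not distorted.

```python
from collections import Counter

# Hypothetical violation log: one (ISO week, rule id) entry per flagged violation.
violations = [
    ("2024-W01", "max-function-length"), ("2024-W01", "max-function-length"),
    ("2024-W01", "no-magic-numbers"),
    ("2024-W02", "max-function-length"), ("2024-W02", "no-magic-numbers"),
    ("2024-W02", "no-magic-numbers"),
    ("2024-W03", "no-magic-numbers"), ("2024-W03", "no-magic-numbers"),
    ("2024-W03", "no-magic-numbers"),
]


def most_violated(records: list[tuple[str, str]], top_n: int = 3) -> list[tuple[str, int]]:
    """Which rules are most commonly violated?"""
    return Counter(rule for _, rule in records).most_common(top_n)


def weekly_trend(records: list[tuple[str, str]], rule_id: str) -> list[int]:
    """Violations per week for one rule, including weeks with zero."""
    weeks = sorted({week for week, _ in records})  # zero-padded ISO weeks sort correctly
    per_week = Counter(week for week, rule in records if rule == rule_id)
    return [per_week[w] for w in weeks]


def trend_direction(counts: list[int]) -> str:
    """Crude first-versus-last comparison; a real dashboard would smooth this."""
    if counts[-1] < counts[0]:
        return "down"
    if counts[-1] > counts[0]:
        return "up"
    return "flat"


print(most_violated(violations))
for rule_id in ("max-function-length", "no-magic-numbers"):
    trend = weekly_trend(violations, rule_id)
    print(rule_id, trend, trend_direction(trend))
```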

These aren't academic questions. They're practical questions that leadership and engineering teams care about. By providing data that shows correlation between rule compliance and code quality or production issues, the analytics system builds the case for why standards matter.

Moreover, the analytics help you identify which rules are actually valuable and which are just noise. Some rules might look good in theory but don't correlate with actual code quality improvements. Others might be surprisingly predictive of bugs or maintenance issues. By measuring real-world impact, you can focus your team's attention on rules that actually matter.

This measurement capability also supports organizational learning. Over time, you build an evidence base about what coding standards actually improve code quality in your specific domain. You learn that certain naming conventions correlate with fewer bugs. Certain architectural patterns correlate with faster feature delivery. Certain error handling approaches correlate with fewer production incidents. This knowledge becomes valuable intellectual property for your organization.

Chart: AI development tools have evolved from basic autocomplete to advanced agentic capabilities, yet remain stateless. (Estimated data.)

The Integration Challenge: Why Tightly Coupled Memory Matters More Than You Might Think

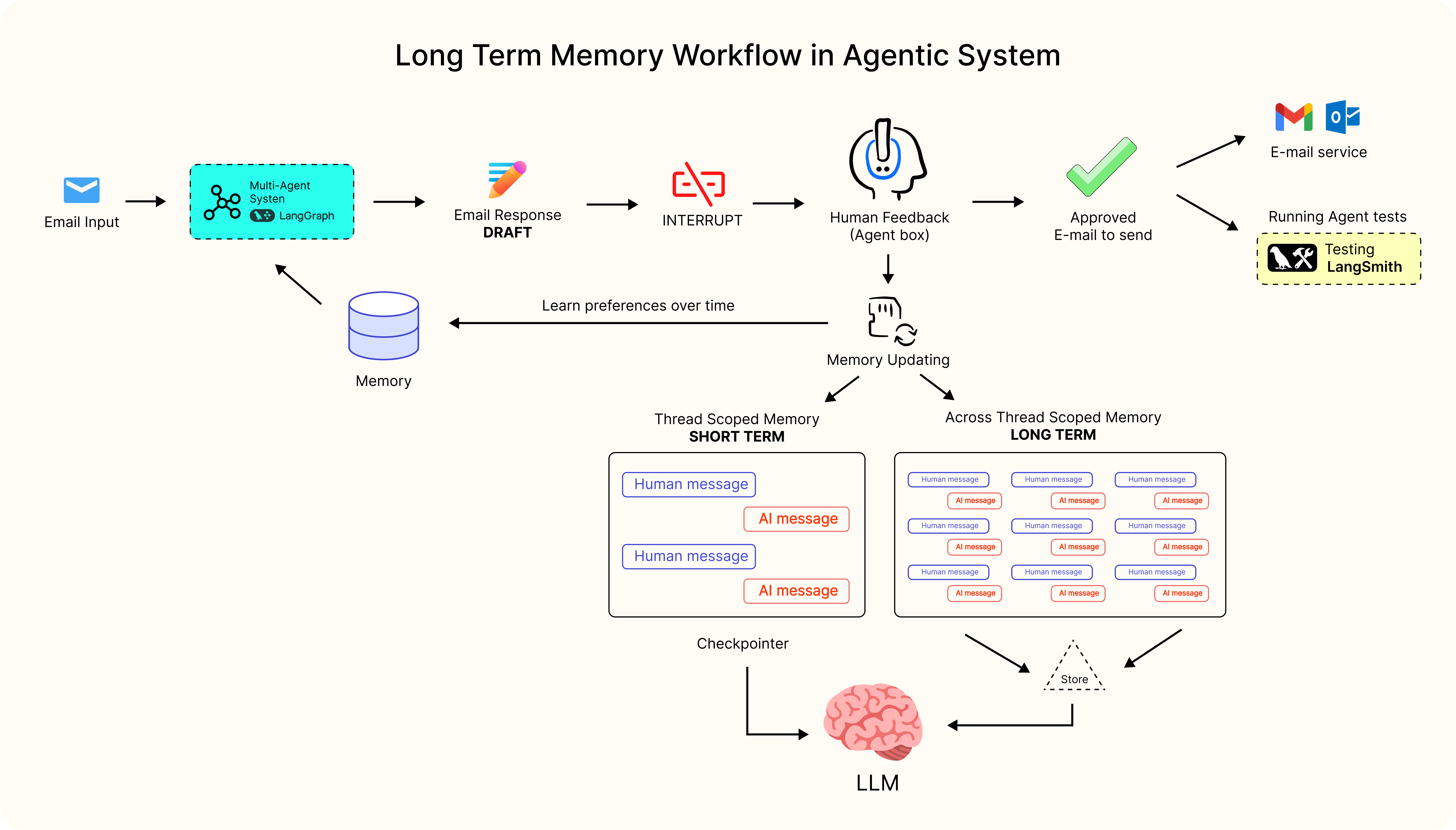

Here's where Friedman's argument gets particularly interesting. Most people think of memory as something external to the agent. The agent has a task, it searches through external memory to find relevant context, and then it proceeds. This is how most current tools work.

But Qodo's approach is different. The memory and the agents are tightly integrated, not loosely coupled.

What does this mean in practice? It means the rules aren't just data that the agent consults. The rules are integrated into the agent's decision-making architecture. The agent doesn't need to search for relevant context and synthesize it. The rules are already part of how the agent thinks.

This distinction matters because it affects both speed and accuracy. When memory is loosely coupled and external, the agent needs to spend time searching for relevant information. This creates latency. Moreover, the agent might not find the most relevant context, or it might find context that's less important than other context. This creates noise.

When memory is tightly integrated, the agent already has access to relevant rules and context as part of its core reasoning process. It doesn't need to search. It doesn't need to decide what's relevant. The rules are already part of how it evaluates code. This makes the agent faster and more accurate.

Think of it like the difference between a person who has to search through a filing cabinet to remember a rule versus a person who has the rule internalized and can apply it instantly. The second person is not just faster—they're more accurate because they don't misremember or misapply the rule.
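A toy contrast, not Qodo's architecture, makes the difference tangible. The loosely coupled reviewer ranks rule descriptions by word overlap with the task and checks only the top `k`. The integrated reviewer evaluates every active rule on every diff. The rule set, the substring checks, and all function names are hypothetical and deliberately crude.

```python
import re

# Hypothetical rule store: (description that retrieval sees, check the reviewer runs).
RULES = {
    "no-bare-except": ("Error handling: never catch everything", lambda code: "except:" in code),
    "no-print": ("Logging: use the logger instead of print", lambda code: "print(" in code),
    "no-todo": ("Track follow-up work as tickets", lambda code: "TODO" in code),
}


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def loosely_coupled_review(code: str, task: str, k: int = 1) -> list[str]:
    """Retrieve the k descriptions that best overlap the task, then check only those."""
    ranked = sorted(RULES, key=lambda rid: len(words(RULES[rid][0]) & words(task)), reverse=True)
    return sorted(rid for rid in ranked[:k] if RULES[rid][1](code))


def integrated_review(code: str) -> list[str]:
    """Every active rule is part of the evaluation; nothing depends on retrieval."""
    return sorted(rid for rid, (_, check) in RULES.items() if check(code))


diff = "try:\n    load()\nexcept:\n    print('failed')\n"
print(loosely_coupled_review(diff, "review error handling in the loader"))
print(integrated_review(diff))
```

Because the task description never mentions logging, retrieval skips the `print` rule and the loosely coupled reviewer misses that violation. The integrated reviewer catches both.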

This tight integration is why Qodo's approach represents a genuine architectural innovation. It's not just adding a memory layer to existing tools. It's redesigning the agent to have memory as a core part of its reasoning process.

The Organizational Memory Problem: Code Quality as a Subjective, Enterprise-Specific Challenge

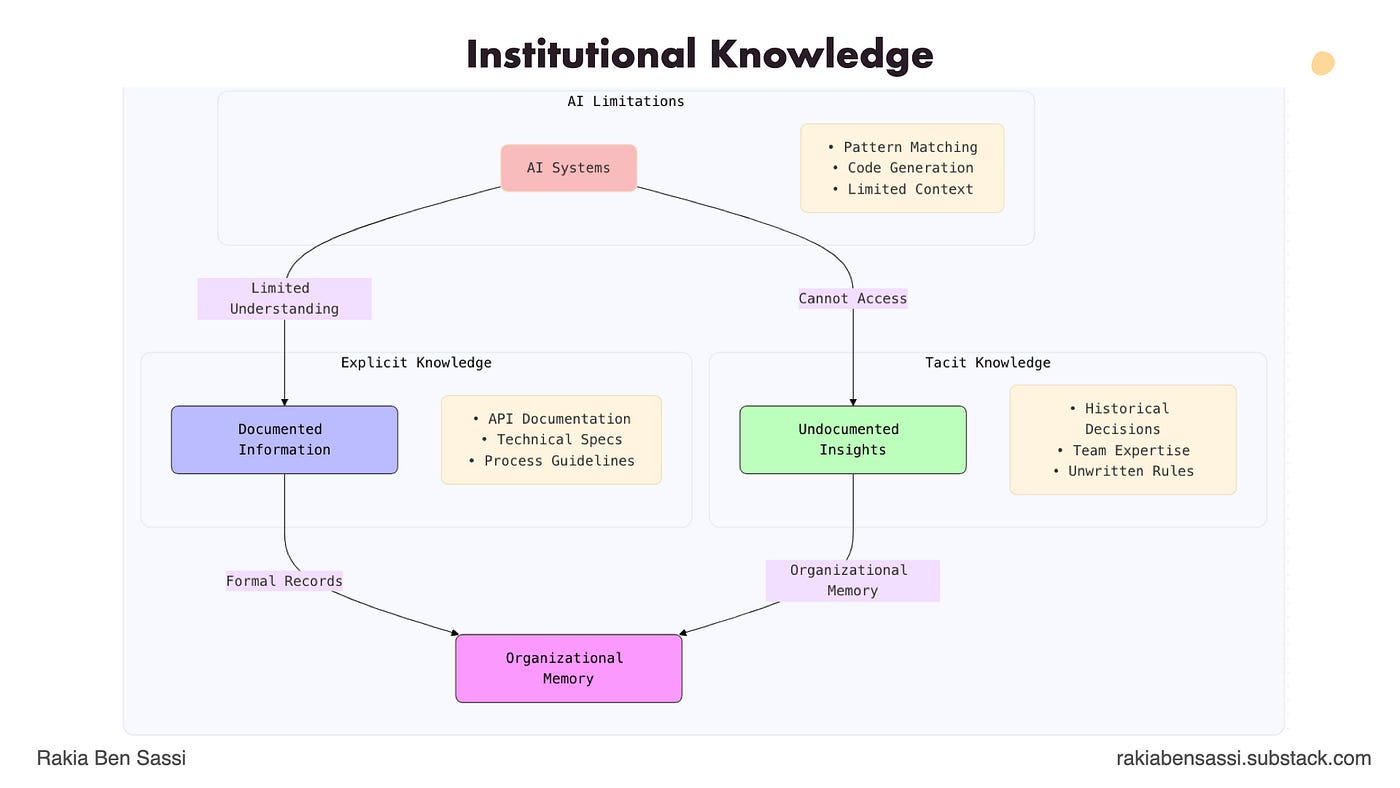

Let's zoom back out and think about why this architectural innovation matters at a deeper level.

Code quality is fundamentally subjective. Your definition of clean code might differ from mine. Your performance requirements might differ from another company's requirements. Your architectural patterns might be unique to your domain. Your technology stack choices might create constraints that other organizations don't have.

This subjectivity creates a challenge for generic AI tools. They're trained on millions of lines of code from thousands of organizations, all with different standards. They learn to recognize patterns that are common across many organizations. But they miss the specific patterns that are unique to your organization.

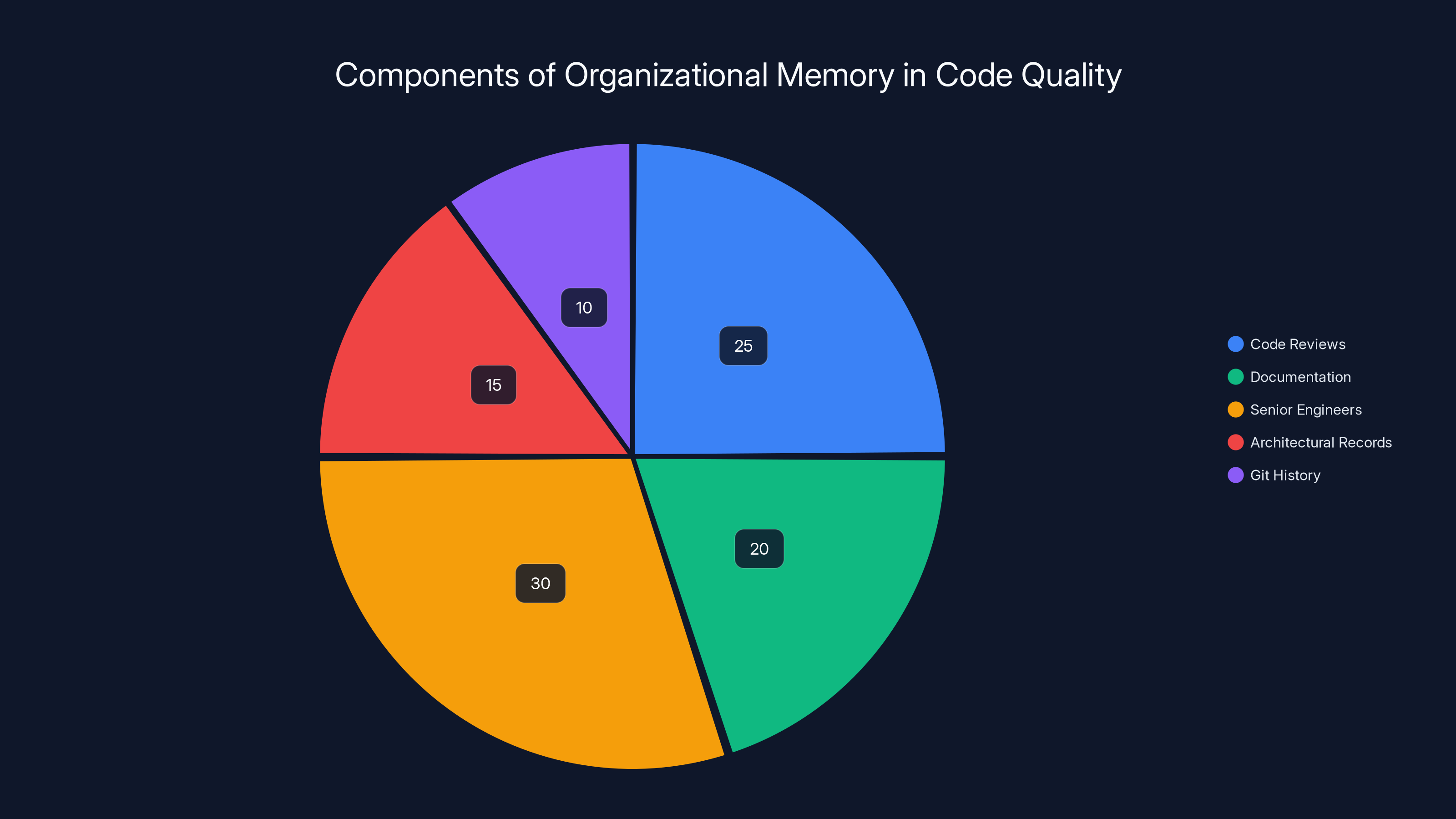

More specifically, they miss what we might call "organizational memory." This is the accumulated knowledge about what works well in your specific context. It includes explicit standards, but also implicit knowledge about architectural decisions, technology choices, team preferences, and hard-won lessons from past projects.

Your organization has this memory. It exists in code reviews, in documentation, in senior engineers' heads, in architectural decision records, in git history. But most AI tools can't access it in a meaningful way. They see individual pieces of this memory, but they don't integrate it into a coherent understanding of your organization.

Qodo's Rules System changes this. It extracts organizational memory from your actual code and decisions. It makes that memory explicit, organized, and actionable. It integrates that memory into the AI agent's decision-making process. Effectively, it gives the AI access to your organization's accumulated knowledge about code quality.

This is genuinely transformative because it allows AI agents to move from being generic tools that apply universal best practices to being organization-specific tools that apply your organization's specific standards and accumulated wisdom.

Conversely, this highlights why stateless tools are so limited. Without organizational memory, and without the ability to learn and apply your specific standards, they're essentially generic tools applying generic best practices. They're helpful, but they're not great. They're like a generic coding consultant who knows industry best practices but doesn't understand your specific business, your specific constraints, and your specific organizational context.

Implementation Patterns: How Organizations Adopt Intelligent Rules Systems

So how does an organization actually implement something like this? What does adoption look like?

Based on how Qodo and similar systems work, there are generally several phases:

Phase 1: Baseline and Discovery (Weeks 1-2) The system analyzes your existing codebase and pull request history. The Rules Discovery Agent identifies patterns and surfaces potential rules to your technical leadership team. You're not implementing any rules yet—you're just discovering what rules exist implicitly in your code and decisions. This phase is about visibility. You're answering: what are our actual standards?

Phase 2: Rule Approval and Configuration (Weeks 2-4) Your technical leadership team reviews the discovered rules and decides which ones to activate. You might approve some, reject others that are based on outliers, and potentially modify some. You're setting up the explicit rule set that will govern your code review process. This phase is about intentionality. You're deciding: which standards do we actually want to enforce?

Phase 3: Enforcement and Feedback (Weeks 4-8) The system starts enforcing rules during pull request review. Developers see rule violations and suggested fixes. Feedback loops start generating data about which rules are actually helping and which are creating noise. This phase is about operationalization. You're seeing: how does this work in practice?

Phase 4: Refinement and Learning (Month 2 and beyond). Based on feedback, you adjust rules. You might tighten some rules that developers consistently violate, loosen others that create too much friction, or add new rules based on patterns you discover. The Rules Expert Agent continuously maintains rule health, and the system uses reinforcement learning to get better at applying rules. This phase is about continuous improvement. You're optimizing: how do we make this work better for our organization?
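The feedback loop in Phases 3 and 4 can be approximated by tracking, per rule, how often developers accept or dismiss its suggestions. This is a minimal sketch that assumes each surfaced suggestion is logged as an accepted/dismissed event; it is simple counting, not the reinforcement learning described above, and the thresholds are illustrative rather than values Qodo publishes.

```python
from collections import defaultdict


def flag_noisy_rules(events, min_events=20, min_acceptance=0.3):
    """Return rule ids whose suggestions developers mostly dismiss.

    events: iterable of (rule_id, accepted) pairs, one per surfaced suggestion.
    A rule needs at least `min_events` observations before it can be flagged,
    so a handful of early dismissals doesn't condemn it.
    """
    shown = defaultdict(int)
    accepted = defaultdict(int)
    for rule_id, was_accepted in events:
        shown[rule_id] += 1
        accepted[rule_id] += int(bool(was_accepted))
    return sorted(
        rule_id
        for rule_id, n in shown.items()
        if n >= min_events and accepted[rule_id] / n < min_acceptance
    )
```

A flagged rule is a prompt for a human to reword, loosen, or retire it, not an automatic deletion.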

This phased approach is important because it doesn't try to do everything at once. It starts with discovery and visibility, moves to decision-making and configuration, then to implementation and feedback, and finally to refinement and learning. Each phase builds on the previous one.

Different organizations move at different speeds through these phases. A startup might move quickly because they have fewer existing standards and can easily agree on new ones. A large enterprise might move slowly because they have many teams with different standards and need more discussion to create consensus.

But the key insight is that this isn't a "flip the switch and now rules are enforced" kind of change. It's a process of discovery, discussion, implementation, and refinement. That process takes time, but it's necessary for adoption to stick.

Figure: Organizational memory in code quality is distributed across various sources, with senior engineers and code reviews contributing the most (estimated data).

Competitive Landscape: Where This Fits in the Market

It's worth understanding where this innovation fits in the broader market for AI development tools.

The market has evolved rapidly over the past two years. You have autocomplete tools like GitHub Copilot. You have chat-based tools like ChatGPT and Claude. You have IDE-integrated agents like Cursor. You have specialized code review tools. You have testing automation tools. You have documentation generation tools.

But none of these have solved the fundamental memory problem in a systematic way. GitHub Copilot doesn't maintain organizational memory. ChatGPT has no idea what your company's standards are. Cursor can work within a session but resets between sessions. Even tools that claim to support "long context" haven't really solved the problem of persistent, organized, actionable organizational memory.

Qodo's Rules System addresses this gap. It's specifically designed for the enterprise code review use case, and it's specifically designed to solve the organizational memory problem. This positions it as a genuine innovation in the AI development tools market.

That said, this innovation will likely spread. Once it proves valuable, other code review tools will try to implement something similar. Eventually, general-purpose coding agents like Cursor and Claude might add similar organizational memory capabilities.

But right now, Qodo appears to be the first mover on this specific innovation: intelligent, organizationally-specific rules systems that integrate tightly with AI agents and provide persistent organizational memory for code quality standards.

Best Practices: Making Organizational Rules Systems Work

If you're considering implementing a rules system for your organization, here are some best practices based on how these systems tend to work well:

Start with leadership alignment. Before implementing rules, make sure your technical leadership team agrees on what standards matter. Rules without leadership support become friction rather than value. Get your CTO, principal engineers, and engineering managers aligned on what you're trying to achieve.

Discover before you prescribe. Don't start by writing rules. Start by discovering what rules already exist implicitly in your code and decisions. This is more likely to surface standards that your team actually follows and values, rather than standards that look good on paper but don't reflect reality.

Separate enforcement from learning. In the early phases, focus on discovery and learning, not enforcement. Discover what standards you have, understand them, validate them, and make sure your team agrees with them. Only after you've done that should you start enforcing rules in pull requests.

Measure real-world impact. Don't just count rule violations. Measure whether following rules actually correlates with code quality, fewer bugs, faster development, or whatever metrics matter to your organization. This gives you data to justify continued investment in the rules system.
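As a starting point for that measurement, the sketch below compares defect rates for merged pull requests that did and did not carry rule violations. How a PR "caused a defect" is defined (for example, a later revert or a linked incident) is an assumption you would need to pin down, and a gap between the two rates shows correlation, not that the rules caused the difference.

```python
def defect_rates(prs):
    """Compare defect rates for merged PRs with and without rule violations.

    prs: iterable of (had_violations, caused_defect) pairs, one per merged PR.
    Returns (rate_with_violations, rate_without); a rate is None if its group is empty.
    """
    counts = {True: [0, 0], False: [0, 0]}  # group -> [defects, total]
    for had_violations, caused_defect in prs:
        group = counts[bool(had_violations)]
        group[0] += int(bool(caused_defect))
        group[1] += 1

    def rate(key):
        defects, total = counts[key]
        return defects / total if total else None

    return rate(True), rate(False)
```

Tracking these two numbers over time, per rule where the data allows, is what turns "we enforce rules" into evidence that the rules are worth enforcing.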

Maintain rule health continuously. Treat your rule set like code. It needs maintenance. You need to review rules periodically, update them as technology evolves, consolidate duplicates, and remove rules that are no longer valuable. A rules system that isn't maintained actively decays and becomes less useful over time.
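Two of those maintenance checks, duplicates and rules that no longer fire, are easy to automate. This sketch uses deliberately crude exact-text matching after normalizing case and whitespace (a real system might compare rules semantically), and the 180-day staleness window is an arbitrary example. A stale rule may be obsolete or may simply reflect good compliance, so a person still makes the call.

```python
from datetime import date, timedelta


def rule_health_report(rules, last_triggered, today, stale_after_days=180):
    """Flag likely duplicate rules and rules that haven't fired recently.

    rules:          mapping of rule id -> rule text
    last_triggered: mapping of rule id -> date the rule last flagged code
    Returns (duplicates, stale): duplicates as (kept_id, duplicate_id) pairs,
    stale as a sorted list of rule ids.
    """
    by_text = {}
    duplicates = []
    for rule_id, text in sorted(rules.items()):
        key = " ".join(text.lower().split())  # ignore case and whitespace differences
        if key in by_text:
            duplicates.append((by_text[key], rule_id))
        else:
            by_text[key] = rule_id

    cutoff = today - timedelta(days=stale_after_days)
    stale = sorted(
        rule_id
        for rule_id in rules
        if last_triggered.get(rule_id) is None or last_triggered[rule_id] < cutoff
    )
    return duplicates, stale
```

Running a report like this on a schedule is one way to keep the rule set from silently decaying.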

Integrate with development workflow. Rules are only valuable if developers see them and can act on them during their regular workflow. Integration with pull requests is important. But also consider integration with local development tools, IDE warnings, and other places developers spend their time.

Make it easy to understand why rules exist. When a rule is violated, developers need to understand why the rule exists and why it matters. Generic rule names like "rule-12" create friction. Descriptive rule names and explanations build understanding and support.
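One way to build that understanding in is to make the rationale a required part of every rule, so it travels with each violation. The field names and message format below are hypothetical, not Qodo's schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    rule_id: str    # descriptive slug rather than "rule-12"
    summary: str    # one-line statement of the standard
    rationale: str  # why the organization adopted it


def violation_message(rule, path, line):
    """Render a review comment that states the rule and the reason behind it."""
    return f"{path}:{line} [{rule.rule_id}] {rule.summary}\nWhy: {rule.rationale}"
```

Because `rationale` has no default, a rule cannot be created without one.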

These practices aren't specific to Qodo. They apply to any rules system in any organization. But they're important to understand if you're considering implementing this kind of system.

The Broader Architectural Implications: Stateful AI Systems

Let's zoom out even further and think about what this means for the future of AI development tools.

Friedman's argument about stateful versus stateless systems is genuinely important. Current AI tools are mostly stateless. They process requests in isolation. They don't maintain context across sessions. They don't learn and improve from interactions. They don't build deep understanding of your organization over time.

This architecture made sense in the early days of AI coding tools. It kept things simple. It made the tools generally applicable across many different use cases. But as AI development tools mature, this architectural limitation becomes more obvious.

What stateful systems enable is genuine customization. Not the superficial kind where you adjust some settings, but deep customization where the AI agent understands your specific organization, learns your specific standards, and applies them consistently across your entire operation.

This is why Qodo's Rules System is architecturally significant. It's one of the first attempts to move AI code review tools from stateless to stateful. But it's unlikely to be the last. As time goes on, you'll probably see:

- More stateful general-purpose coding agents

- Better integration between coding agents and persistent organizational knowledge

- More sophisticated learning mechanisms that allow agents to improve from feedback

- Better tools for managing and maintaining organizational standards

- More integration of organizational rules into different parts of the development workflow

The future of AI development tools probably looks like stateful, learning systems that are deeply integrated with your organization's specific workflows, standards, and goals. Qodo's Rules System is an early example of what this future might look like.

Figure: Leadership alignment and workflow integration are critical for successful organizational rules systems (estimated data based on best practices).

Challenges and Limitations: What Intelligent Rules Systems Can't Do

That said, intelligent rules systems aren't a silver bullet. They have limitations worth understanding.

First, rules can't capture everything. Code quality isn't purely rule-based. Sometimes the right approach depends on context, judgment, and experience in ways that rules can't easily express. A rules system can catch obvious violations, but it can't replace human judgment and experience.

Second, rules can become outdated. Even with intelligent maintenance, rules can lag behind technological change. Your organization might adopt new technologies or new architectural patterns faster than your rules system can adapt. You need processes for staying ahead of this.

Third, rules produce both false positives and false negatives. A rules system might flag code that technically violates a rule but is actually the right approach in context (a false positive). Or it might fail to flag code that violates a rule and should have been flagged (a false negative). An 11% precision improvement is meaningful, but it doesn't make the system perfect.
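These two failure modes map onto the standard precision and recall metrics. The small function below computes both from counts; the numbers in the usage note are made up for illustration.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Precision: share of flags that were real issues.
    Recall: share of real issues that were flagged.
    Returns 0.0 for a metric whose denominator is zero.
    """
    flagged = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / flagged if flagged else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall
```

With 80 correct flags, 20 false alarms, and 40 missed issues, precision is 0.8 but recall is only about 0.67. Precision ignores misses entirely, so a precision gain says nothing on its own about false negatives.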

Fourth, rules require organizational discipline. A rules system is only as valuable as your organization's commitment to maintaining it. If technical leadership doesn't stay engaged, if the rule set isn't maintained actively, the value degrades over time.

Fifth, rules can create friction if not implemented carefully. If rules are too strict or too numerous, developers might start working around them or ignoring them. If rules aren't well explained, developers might not understand why they exist. Rules need to be introduced thoughtfully to avoid creating the opposite of what you intend.

Understanding these limitations helps you implement rules systems in ways that work for your organization rather than creating friction.

The Organizational Culture Angle: Why This Matters Beyond Just Code Quality

Here's something worth thinking about that goes beyond pure technical consideration: implementing a rules system is also an organizational and cultural change.

When you implement a rules system, you're making explicit what was previously implicit. You're formalizing standards that existed in your senior engineers' heads. You're creating accountability and visibility around code quality standards. You're distributing knowledge about organizational standards across your entire team rather than concentrating it in senior people's heads.

This has organizational implications. On the positive side, it creates a more level playing field. Junior developers have access to the same standards and guidance that senior engineers know implicitly. It accelerates learning because standards are explicit and accessible. It reduces bottlenecks because code quality doesn't depend on having senior engineers review everything.

On the challenging side, it creates accountability. If standards are explicit, then everyone is expected to follow them. There's no hiding behind "I didn't know that was expected." It also creates potential tension if people disagree with standards or find them unnecessarily restrictive.

Implementing a rules system successfully requires that your organization is ready for this kind of transparency and accountability. It works best in organizations with good communication, shared values around code quality, and willingness to have conversations about standards.

If your organization has significant communication issues or disagreement about standards, implementing a rules system might expose those issues rather than solve them. This is worth thinking about before you start.

Integration with Broader Development Tools: The Ecosystem Angle

Another practical consideration: a rules system is most valuable when it's integrated with your broader development tools and workflows.

Ideal integration points include:

- IDE integration: Rules and suggestions appear right in your IDE as you code

- Pull request integration: Rules are enforced automatically as part of PR review

- Local development integration: Linters and formatters are aligned with rules

- Metrics integration: Rule violations feed into broader metrics and dashboards

- Documentation integration: Rules and their rationales are documented and searchable

- Learning integration: Rules help train new developers and support onboarding

The more integrated a rules system is with your broader workflows, the more value it creates. Conversely, if a rules system exists in isolation, it becomes just another tool developers need to check periodically, and its value decreases.

Qodo is designed primarily for pull request integration, which is a good place to start because that's where most code review happens in modern development teams. But ideally, you'd want rules integrated across your entire development workflow.
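One way to keep those integration points consistent is to record each finding once as a structured record and render it differently for each destination. The sketch below uses hypothetical names and formats; the article does not describe how Qodo exposes its findings to other tools.

```python
import json
from collections import Counter
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Violation:
    rule_id: str
    path: str
    line: int
    message: str


def to_pr_comment(v):
    """Text for an inline pull request comment."""
    return f"[{v.rule_id}] {v.message}"


def to_metrics(violations):
    """Per-rule violation counts for a dashboard."""
    return dict(Counter(v.rule_id for v in violations))


def to_json_lines(violations):
    """One JSON object per line, a format many log and metrics pipelines ingest."""
    return "\n".join(json.dumps(asdict(v), sort_keys=True) for v in violations)
```

Because every destination reads the same record, a PR comment, a dashboard count, and an IDE hint can't drift apart about what a rule said.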

Future Directions: Where This Technology Is Likely Heading

Looking forward, intelligent rules systems like Qodo's are likely to evolve in several directions:

More sophisticated learning mechanisms. Current systems use relatively simple pattern recognition and reinforcement learning. Future systems will probably use more sophisticated learning approaches that understand not just what rules developers follow, but why they follow them and in what contexts exceptions are appropriate.

Deeper integration with development workflows. Right now, rules systems primarily focus on code review and pull requests. Future systems will probably integrate throughout the development lifecycle, from local development through production.

Cross-organizational learning. Imagine if organizations could share learnings about coding standards and architectural patterns, while maintaining confidentiality about their specific code. This could help new standards emerge and spread faster.

Multi-modal rules. Current rules are mostly about code patterns and style. Future systems might include rules about architecture, performance, security, accessibility, and other dimensions of code quality.

Better conflict and exception handling. Rules fit the large majority of cases, but sometimes exceptions are appropriate. Future systems will probably be better at understanding when to enforce rules strictly and when to allow exceptions.

These directions represent the natural evolution of the space as the technology matures.

Implementation Timeline: Realistic Expectations

If you're considering implementing a rules system, here are realistic expectations for implementation timeline:

- Week 1-2: Discovery phase, understanding your organization's existing standards

- Week 2-4: Leadership discussion and rule approval, deciding which standards to enforce

- Week 4-6: Initial enforcement, dealing with initial false positives and adjustments

- Month 2: Refinement based on early feedback, toning down overly strict rules and clarifying rules that caused confusion

- Month 3+: Continuous maintenance and improvement, measuring impact, and optimizing based on results

Full adoption and realization of benefits typically takes 3-6 months. Don't expect to implement a rules system and have everything perfect from day one. Expect a process of discovery, implementation, feedback, and refinement.

Organizations that try to force adoption too quickly or that don't allow sufficient time for adjustment often see pushback from developers. Organizations that move thoughtfully and allow time for discussion and adjustment tend to see better adoption and results.

ROI and Business Case: Why This Matters Beyond Just Developer Experience

Why should an organization invest in a rules system? What's the business case?

The benefits include:

Reduced bugs. Organizations with consistent coding standards typically have fewer production bugs. Better code quality means fewer customer-facing issues.

Faster development. When standards are clear and consistently enforced, developers spend less time in code review discussions about style and more time on functionality. This can accelerate feature development.

Easier maintenance. Codebases with consistent standards are easier to maintain. New developers can understand code faster when it follows familiar patterns. Technical debt accumulates more slowly.

Better knowledge transfer. When standards are explicit and accessible, new developers learn organizational practices faster. Senior engineers can scale their expertise across more team members.

Compliance and governance. In regulated industries, explicit rules and automated enforcement provide evidence of governance and compliance.

Reduced technical debt. Many development teams accumulate technical debt because quality standards aren't consistently enforced. Consistently enforced rules slow that accumulation.

Quantifying these benefits is imperfect—code quality impact is hard to measure precisely—but most organizations see measurable improvements within 3-6 months of implementing rules systems.

For a large organization with many developers, the benefits compound. An organization with 500 developers might save 40-80 hours per week in code review time alone if rules enforcement is automated. Over a year, that's roughly 2,000-4,000 hours of engineering time, a substantial cost at typical engineering salaries.

Add improvements in code quality, fewer bugs, faster feature development, and better onboarding, and the ROI often becomes clear.
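The hours figure above is simply weekly savings times roughly 52 weeks. The dollar value depends on an hourly cost that the article doesn't state, so this sketch leaves it as a parameter you supply; the 100-per-hour value in the usage note is a placeholder, not a benchmark.

```python
def annual_review_savings(hours_per_week, hourly_cost, weeks_per_year=52):
    """Estimate yearly hours and cost saved from automated review time.

    hourly_cost is an assumed fully loaded cost per engineering hour;
    plug in your own organization's figure.
    """
    hours = hours_per_week * weeks_per_year
    return hours, hours * hourly_cost
```

With 40 hours per week and a placeholder cost of 100 per hour, this returns `(2080, 208000)`. With 80 hours per week it returns `(4160, 416000)`.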

Conclusion: The Path Forward for AI-Assisted Development

Qodo 2.1 and its intelligent Rules System represent an important evolution in how AI development tools can work. They solve a real problem that's been plaguing the industry: how to give AI agents persistent, organization-specific memory.

The 11% precision improvement is meaningful, but the real significance is broader. It's the shift from stateless, generic tools to stateful, customized systems. It's the move from AI agents that apply universal best practices to agents that understand and apply your organization's specific standards.

For developers and engineering teams, this matters because it means AI code review tools can finally become organization-specific. They can understand your codebase deeply. They can apply your standards consistently. They can improve over time based on feedback. They can become genuinely helpful rather than just occasionally useful.

For the broader AI development tools market, this matters because it shows a path forward for solving customization and integration challenges. It demonstrates that stateful systems designed around organizational memory can provide significantly better results than stateless systems.

The future of AI-assisted development isn't generic tools that apply universal best practices. It's customized systems that understand your specific organization, learn your specific standards, and help you maintain them consistently across your entire codebase.

Qodo's Rules System is an early, important step on that path. Whether or not organizations ultimately choose Qodo, the architectural patterns they're pioneering—intelligent rules discovery, enforcement, maintenance, learning, and measurement—are likely to become baseline expectations for AI development tools in the coming years.

The question for organizations isn't whether to adopt these patterns, but when, and with which tools. Organizations that move first will gain advantages in code quality, development speed, and team efficiency. Organizations that wait will eventually feel pressure to adopt similar approaches as they become industry standard.

The age of stateless, generic AI coding tools is ending. The age of stateful, organizationally-intelligent AI coding tools is beginning. That transition is worth paying attention to.

FAQ

What is organizational memory in AI development tools?

Organizational memory refers to the ability of AI systems to retain and apply persistent knowledge about your organization's specific coding standards, architectural patterns, best practices, and quality requirements across multiple sessions and interactions. Unlike stateless systems that reset after each session, systems with organizational memory understand your company's unique context and can apply that understanding consistently throughout the development process.

How do intelligent rules systems differ from traditional code review tools?

Traditional code review tools are reactive—they review code after it's written and flag issues. Intelligent rules systems are proactive—they automatically discover your organization's standards, maintain them over time, continuously enforce them during development, and measure their real-world impact. Rather than relying on manual rule documentation, intelligent systems automatically identify patterns from your actual codebase and decisions, then surface them to leadership for approval.

What is rule decay and why does it matter?

Rule decay is the gradual deterioration of coding standard rules over time as technology evolves and organizational priorities shift. Without active maintenance, rules that made sense five years ago become outdated and create friction rather than clarity. Intelligent rules systems address this by continuously monitoring rules for conflicts, duplicates, and obsolescence, ensuring rules remain aligned with current best practices and technology landscape.

How does automatic rule discovery work?

Automatic rule discovery uses AI agents to analyze your existing codebase, pull request history, and code review feedback to identify patterns that reflect your organization's actual standards. Rather than requiring teams to manually document rules, the system discovers them from behavior—for example, if senior engineers consistently reject functions over 100 lines, the system discovers and surfaces a rule reflecting this pattern.
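Once a rule like that is approved, checking it can be mechanical. Here is a minimal, Python-only sketch using the standard-library `ast` module. The 100-line threshold comes from the example above. Counting from the `def` line through the last line of the body is one reasonable definition among several, and it includes docstrings, comments, and blank lines inside the function.

```python
import ast


def long_functions(source, max_lines=100):
    """Return (name, length) for each function longer than max_lines.

    Length counts source lines from the `def` line through the end of the body
    (requires Python 3.8+ for end_lineno). Nested functions are checked too.
    """
    tree = ast.parse(source)
    results = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            length = node.end_lineno - node.lineno + 1
            if length > max_lines:
                results.append((node.name, length))
    return results
```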

What does the 11% precision improvement mean in practical terms?

Precision in code review measures how many of the system's flags point at real issues rather than false positives. An 11% improvement means a larger share of warnings are worth acting on, so developers see fewer incorrect warnings. The exact reduction in false positives depends on the baseline precision, though, and is not simply 11%. For large organizations reviewing thousands of pull requests daily, this translates to significant time savings, improved developer trust in the system, and greater likelihood that developers will act on suggestions.
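Because precision is true flags divided by all flags, the share of flags that are false alarms is one minus precision. The sketch below shows how a precision gain translates into fewer false alarms. The baseline of 0.60 used in the usage note is an assumption, since the article gives no baseline.

```python
def false_alarm_change(baseline_precision, new_precision):
    """Return (false-alarm share before, after, relative drop).

    The false-alarm share is the fraction of flags that are false positives,
    i.e. 1 - precision.
    """
    if not 0 < baseline_precision < 1 or not 0 <= new_precision <= 1:
        raise ValueError("precision values must be fractions between 0 and 1")
    before = 1.0 - baseline_precision
    after = 1.0 - new_precision
    return before, after, (before - after) / before
```

From an assumed baseline of 0.60, an 11-percentage-point gain (to 0.71) cuts false alarms from 40% to 29% of flags, about a 27% relative reduction. An 11% relative gain (to about 0.67) cuts them to roughly 33% of flags, about a 17% reduction.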

How long does it take to see results from implementing an intelligent rules system?

Organizations typically see initial results within 4-6 weeks, with full adoption and measurable impact by 3-6 months. The implementation follows phases: discovery (weeks 1-2), rule approval and configuration (weeks 2-4), enforcement and feedback (weeks 4-8), and continuous refinement (month 2 and beyond). Organizations that rush these phases tend to see pushback from developers and slower adoption.

Key Takeaways

- Current AI coding tools are stateless, resetting after each session and forgetting organizational context, standards, and decisions

- Manual workarounds like saving context to markdown files fail at enterprise scale with thousands of files and complex standards

- Intelligent rules systems automatically discover standards from codebase patterns and pull request history, making implicit knowledge explicit

- Tight integration between rules and AI agents improves precision by 11% while enabling proactive rather than reactive code review

- Real-world analytics let organizations check whether enforced standards correlate with measurable improvements in code quality, development speed, and team efficiency
