The $300 Million Problem Nobody Expected

Thomas Dohmke wasn't supposed to be starting a company right now. After four years as CEO of GitHub, watching Copilot explode from a neat experiment into a fundamental shift in how developers work, he had options. Comfortable ones. The kind that let you disappear into advisory roles and quarterly board meetings.

Instead, in August 2025, he walked away and announced he was starting something new.

Six months later, we know what it is. Entire has raised a $60M seed round at a $300M valuation, the largest seed round ever for a developer tools company.

That's not a validation round. It's a signal that something fundamental is broken in how developers work right now, and that investors believe Dohmke knows how to fix it.

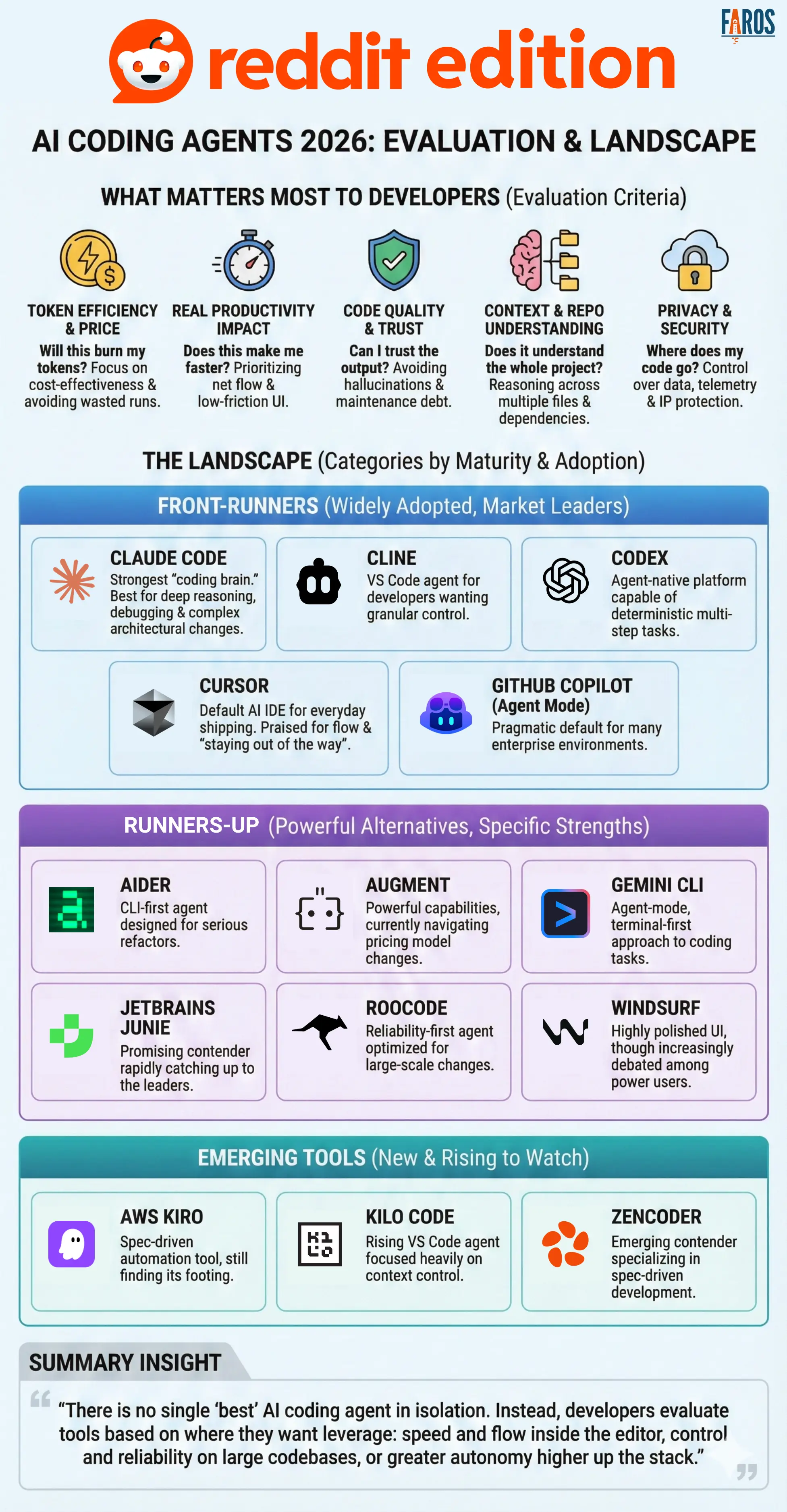

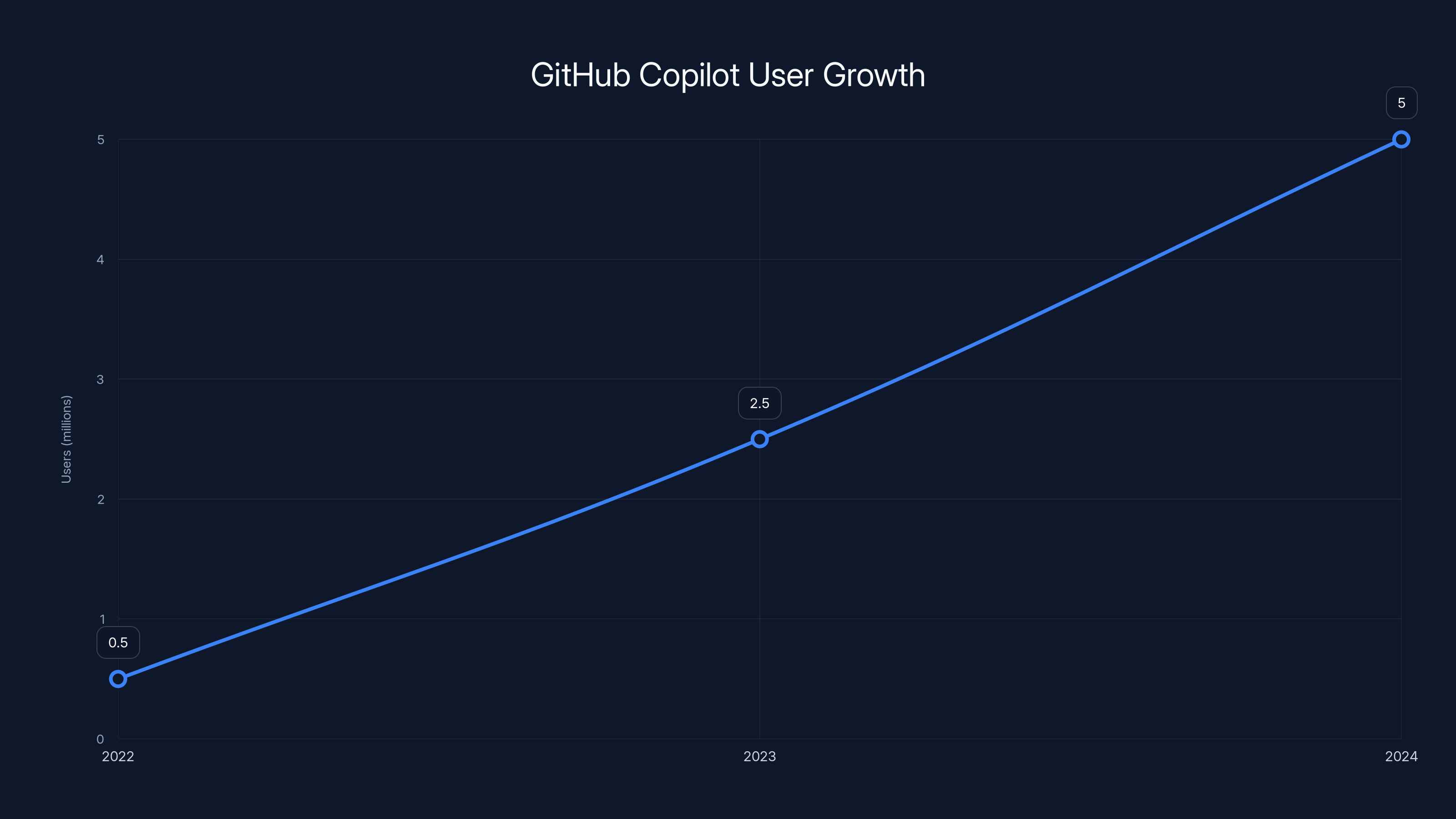

Here's what you need to understand: we're living through an AI agent boom. GitHub Copilot went from "cool demo" to millions of developers using it daily. Claude, ChatGPT, specialized coding models, open-source agents, proprietary agents—they're all generating code faster than human developers can review, understand, or integrate it.

The problem isn't that AI can't write code. It obviously can. The problem is that our entire development workflow was designed for humans writing code. Git. Pull requests. Code reviews. Issue tracking. Deployment pipelines. All of it assumes a human is making the decisions and taking responsibility.

Now give that human an AI agent that multiplies their output by 10, and the system breaks.

Entire isn't trying to replace that system. It's trying to rebuild it from the ground up for an era where code generation happens at machine speed, not human speed.

What Entire Actually Is (And Why It Matters)

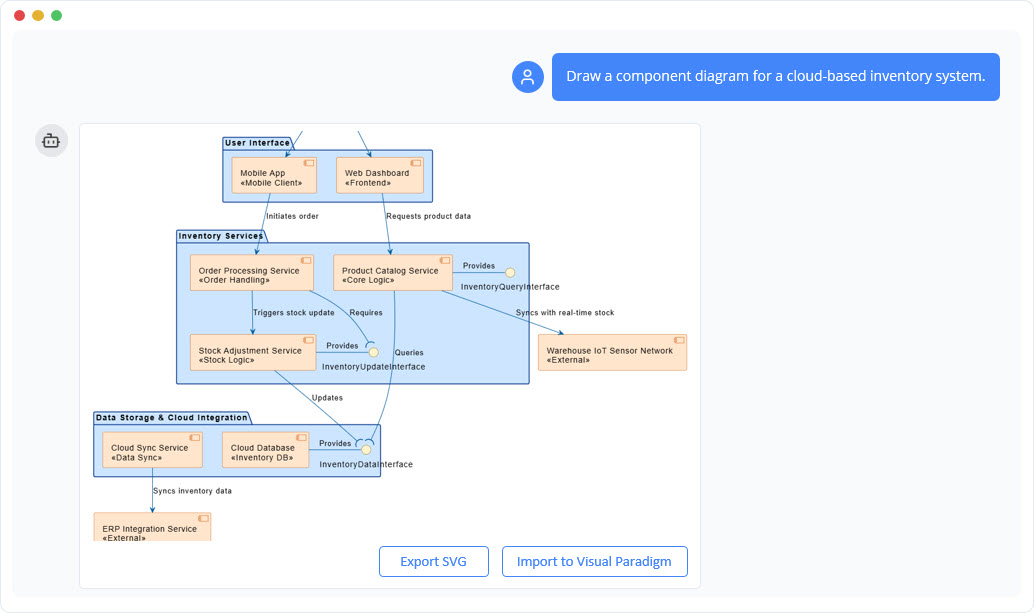

Entire has three core components, and understanding them matters because they represent a completely different mental model for modern development.

The first component is a git-compatible database. This isn't GitHub, GitLab, or Bitbucket. This is a system that understands git natively but can handle the volume and structure of AI-generated code. Think of it as git rebuilt for the reality that code is now generated by multiple agents, humans, and hybrid workflows simultaneously. It's git's distributed model, but with semantic understanding built in.

The problem it solves is real. When a human writes code, they commit maybe 10-50 lines at a time. They're intentional about it. They reason through it. When an AI writes code, it can dump thousands of lines in seconds. Your version control system wasn't built for that. Your workflow can't handle it. Entire is building a foundation that can.
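At a minimum, "git-compatible" implies naming content the way git does, so that existing git clients and tooling agree on object IDs. The sketch below shows git's standard rule for a file's contents (a blob) in the default SHA-1 object format: hash a short header followed by the raw bytes. This is plain git behavior, not anything specific to Entire; the article doesn't describe how Entire's database stores objects internally.

```python
import hashlib


def git_blob_id(content: bytes) -> str:
    """Return the object ID git assigns to a file's contents (SHA-1 format).

    Git hashes the header "blob <size in bytes>" plus a NUL byte,
    followed by the raw content.
    """
    header = f"blob {len(content)}\0".encode("ascii")
    return hashlib.sha1(header + content).hexdigest()


# The empty blob has the same well-known ID in every SHA-1 git repository.
print(git_blob_id(b""))  # e69de29bb2d1d6434b8b29ae775ad8c2e48c5391
```

Because IDs depend only on content, two agents that produce byte-identical files get the same ID, which is one reason git deduplicates content cheaply.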

The second component is a "universal semantic reasoning layer." This is where it gets interesting. Right now, if you want multiple AI agents to work together, they don't really understand each other's output. Agent A generates code, Agent B reads it as text, makes decisions based on that text, generates more code. It's like two people playing telephone through a document.

Entire's semantic layer creates a shared understanding across agents. When Agent A writes a function, Agent B doesn't just see text; it understands the function's purpose, constraints, dependencies, and implications. Agents can reason about what other agents have done. They can collaborate instead of just working in sequence.

For developers, this means AI agents can actually work together on complex projects, not just handle isolated tasks.

The third component is an AI-native user interface. This is the human-in-the-loop part. Most development tools were designed for humans to use them. Then AI came along, and we just... tried to make AI work with human tools. Slack for AI, GitHub for AI, Linear for AI. It's backwards.

Entire's interface is designed with the assumption that agents and humans are collaborating. Humans aren't trying to teach the system; humans are trying to understand and guide the system. That changes everything about what you show, when you show it, and how you interact.

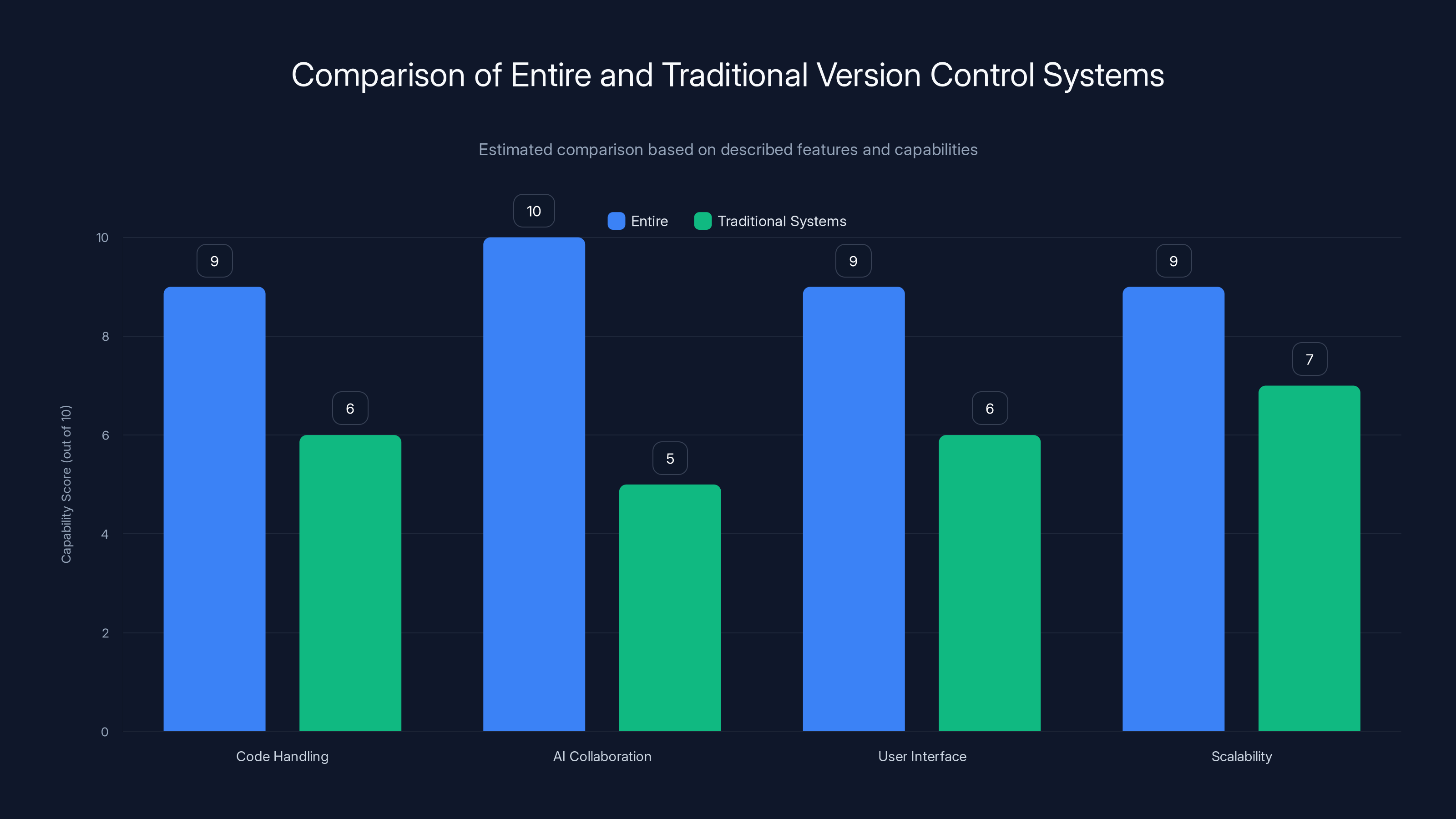

Entire significantly enhances AI collaboration and scalability compared to traditional systems, offering a more robust infrastructure for AI-generated code. Estimated data based on feature descriptions.

The First Product: Checkpoints and the Traceability Problem

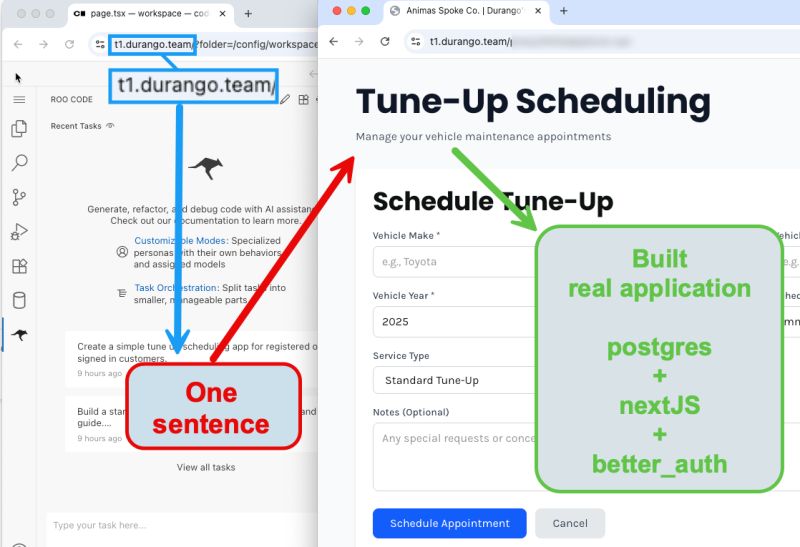

The first product Entire is releasing isn't a full platform. It's a focused tool called Checkpoints, and it solves one specific, painful problem: understanding why an AI did what it did.

Checkpoints automatically pairs every bit of code an AI agent submits with the complete context that created it. Prompts. Transcripts. The chain of reasoning. The constraints. All of it. When you look at a piece of code in Checkpoints, you don't just see code. You see the reasoning that created it.
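One way to picture "pairing code with the context that created it" is a record that travels with every agent-authored change and renders the reasoning next to the diff. The class and its fields (`prompt`, `transcript`, `constraints`, and so on) are hypothetical; the article doesn't describe Checkpoints' actual schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Checkpoint:
    """Hypothetical record pairing an agent's code change with its context."""
    commit: str                     # ID of the commit the agent produced
    agent: str                      # which agent produced it
    prompt: str                     # instruction the agent was given
    transcript: tuple[str, ...]     # agent session, one step per entry
    diff: str                       # the code change itself
    constraints: tuple[str, ...] = ()

    def render(self) -> str:
        """Show the reasoning alongside the code, as a reviewer would read it."""
        lines = [f"commit {self.commit} by {self.agent}", f"prompt: {self.prompt}"]
        lines += [f"  step {i}: {step}" for i, step in enumerate(self.transcript, 1)]
        lines += [f"  constraint: {c}" for c in self.constraints]
        lines += ["diff:", self.diff]
        return "\n".join(lines)


cp = Checkpoint(
    commit="abc123",
    agent="agent-a",
    prompt="Cache session lookups in the auth module",
    transcript=("Profiled login path", "Added LRU cache around get_session"),
    diff="+ @lru_cache(maxsize=1024)",
    constraints=("must not cache revoked sessions",),
)
print(cp.render())
```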

This matters more than you might think. Right now, when an AI generates code, you have a few options:

- Trust it and merge it (dangerous)

- Review it line by line (slow, and you don't understand the intent)

- Ask the AI to explain itself (but the AI is usually overconfident or vague)

- Rewrite it yourself (defeats the purpose of using AI)

None of these work at scale. You can't review 10,000 lines of code per sprint. You can't ask every generated function to explain itself. You can't rewrite everything.
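A rough calculation shows why line-by-line review stops scaling. The review rate (400 lines per hour) and the four hours of focused review per day are assumptions chosen for illustration; plug in your own team's numbers.

```python
def review_hours(lines_of_code: int, lines_per_hour: float = 400.0) -> float:
    """Hours of careful review needed at an assumed steady review rate."""
    if lines_per_hour <= 0:
        raise ValueError("lines_per_hour must be positive")
    return lines_of_code / lines_per_hour


def reviewer_days(lines_of_code: int, lines_per_hour: float = 400.0,
                  focused_hours_per_day: float = 4.0) -> float:
    """Reviewer-days, assuming only part of each day goes to focused review."""
    return review_hours(lines_of_code, lines_per_hour) / focused_hours_per_day


# 10,000 generated lines per sprint, the figure used in the paragraph above
print(review_hours(10_000))   # 25.0
print(reviewer_days(10_000))  # 6.25
```

Under these assumptions, one sprint's worth of generated code consumes more than six reviewer-days of focused attention before any back-and-forth with the author.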

Checkpoints does something different. It lets you search through generated code by intent, by problem, by context. It lets you see patterns in what the agent generated and why. It lets you learn from the agent's reasoning. And when the code is wrong, you understand not just what's wrong, but what the agent was trying to do, so you can fix it and potentially improve future generations.

For open-source projects, this is critical. Right now, maintainers are drowning in AI-generated pull requests. Most of them are low-quality. Some are decent. A few are great. But evaluating them takes time, and without understanding the context and reasoning, it's hard to distinguish good code from code that looks good but has subtle issues.

Checkpoints lets maintainers say, "Show me all PRs generated to solve the performance problem in the authentication module." Not just code diffs—the actual reasoning. That changes the evaluation process entirely.
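A query like that only works if intent is stored as data next to each change. Here is a minimal sketch, with hypothetical types and fields, that filters agent-generated PRs by the files they touched and by words in their stated intent. A real system would presumably use richer matching than substring search; the article doesn't say how Checkpoints does it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentChange:
    """Hypothetical agent-authored PR with its stated intent attached."""
    pr: int
    intent: str                 # the goal the agent was asked to pursue
    paths: tuple[str, ...]      # files the change touched


def find_changes(changes, *, intent_terms, path_prefix):
    """Return changes whose intent mentions every term and that touch path_prefix."""
    terms = [t.lower() for t in intent_terms]
    return [
        c for c in changes
        if all(t in c.intent.lower() for t in terms)
        and any(p.startswith(path_prefix) for p in c.paths)
    ]


changes = [
    AgentChange(101, "Reduce login latency (performance)", ("src/auth/session.py",)),
    AgentChange(102, "Fix typo in README", ("README.md",)),
    AgentChange(103, "Performance: batch token checks", ("src/auth/tokens.py",)),
    AgentChange(104, "Performance tuning for search index", ("src/search/index.py",)),
]

hits = find_changes(changes, intent_terms=["performance"], path_prefix="src/auth/")
print([c.pr for c in hits])  # [101, 103]
```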

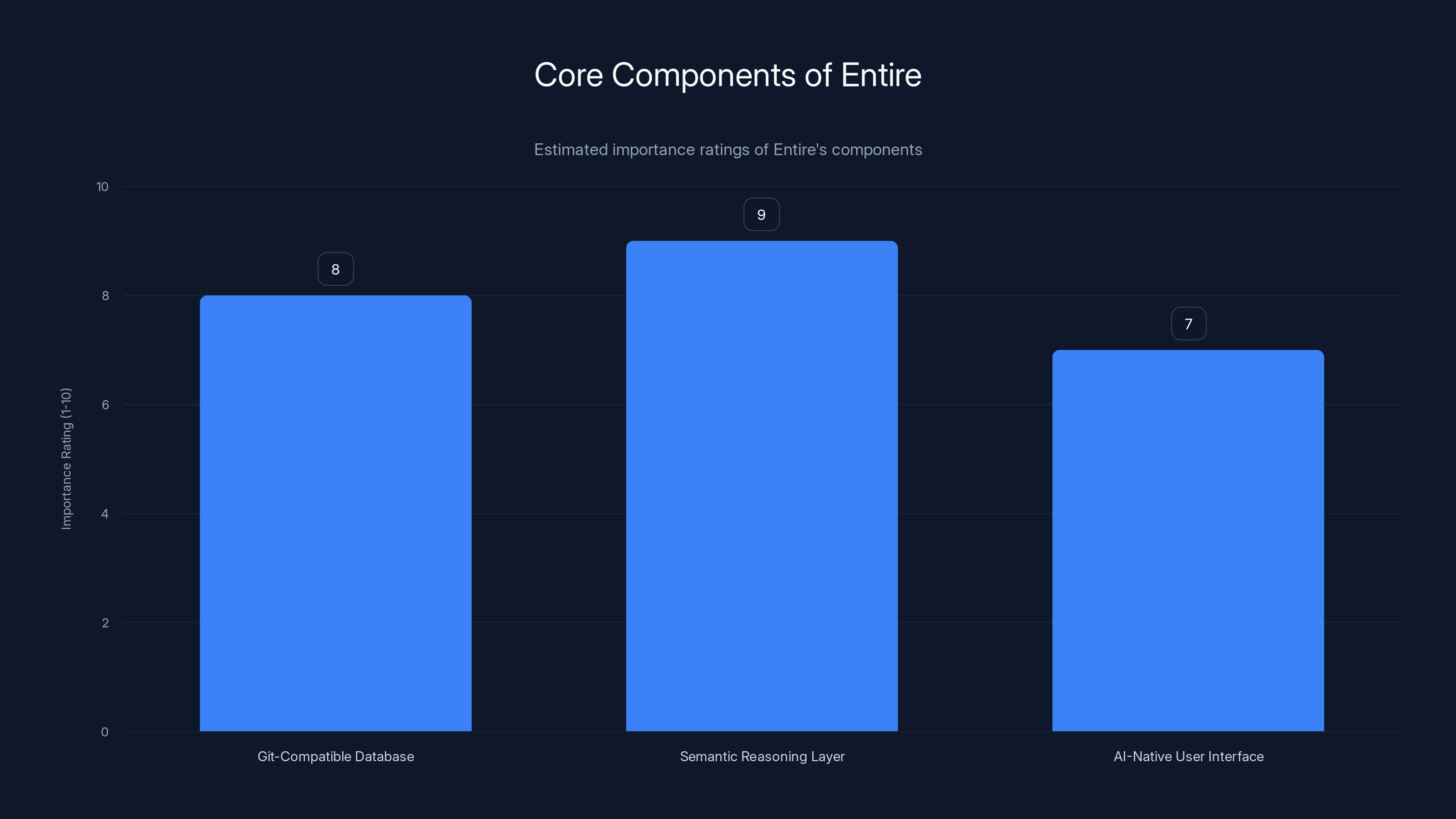

The semantic reasoning layer is estimated to be the most crucial component, facilitating AI collaboration. Estimated data.

The Market Context: Why $60M Isn't Crazy

Let's talk about why this valuation makes sense. And why the investor list matters.

First, the market timing. We're at an inflection point. Copilot has been available for years, but it's only in the last 12-18 months that it's gone mainstream. Most development teams are still figuring out how to integrate it. They're not complaining about missing features; they're complaining about chaos. Too much code. No oversight. No clarity.

Second, the team. Dohmke ran GitHub during its most transformative period. He oversaw the integration of Copilot. He knows the problem intimately because he lived it. He knows what GitHub got right and what it got fundamentally wrong for this new era. That's not hype; that's founder-market fit.

Third, the problem is genuinely massive. Every company writing code is facing this. Every open-source project is facing this. The scale is enormous. And there's no good solution yet. GitHub is trying to add features. But GitHub is also the platform where code lives, so it has conflicting incentives. Entire has no conflicts. Its entire product is solving this one problem.

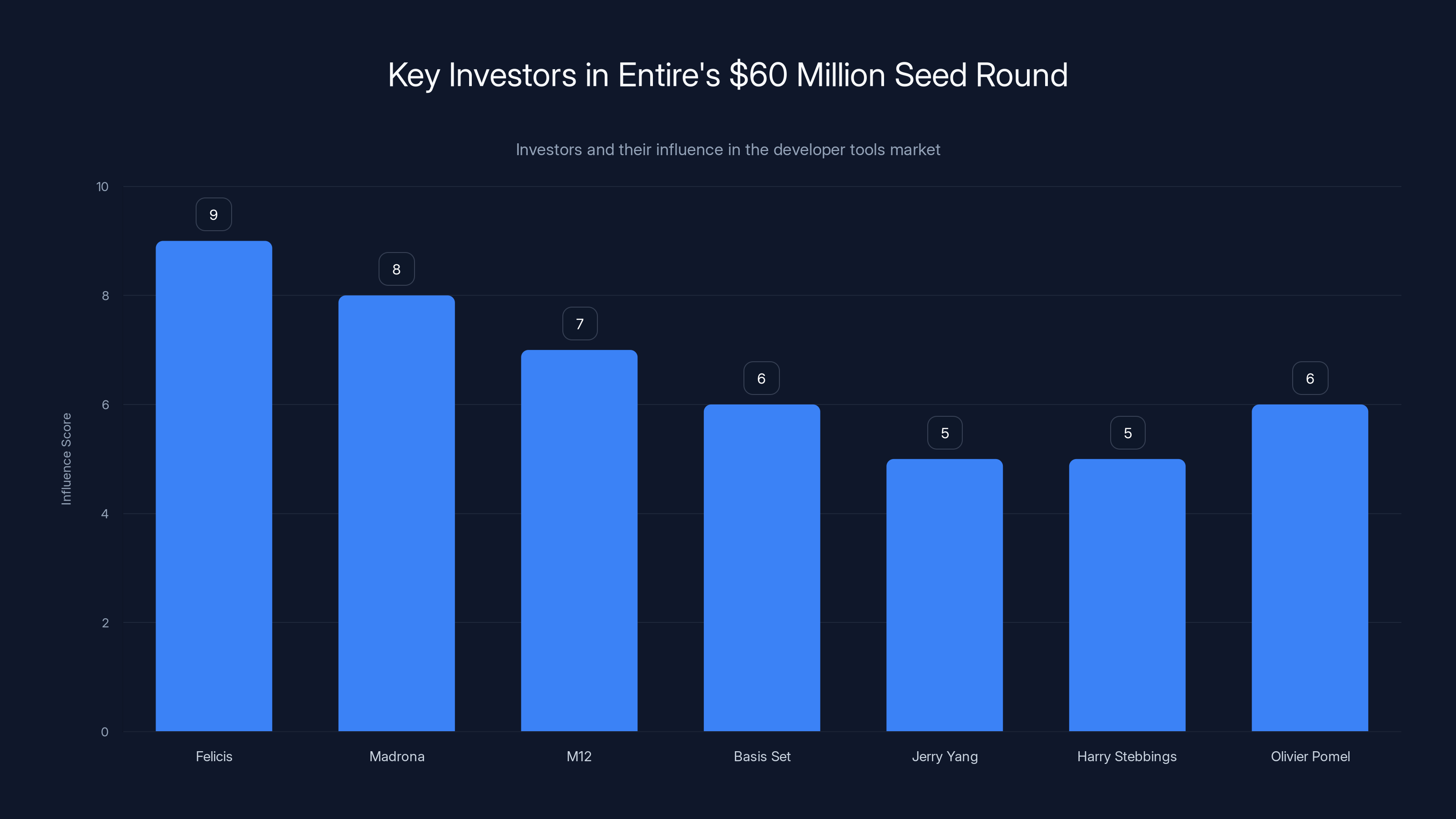

Fourth, look at the investors. Madrona invested early in Slack and Amazon Web Services. M12 is Microsoft's venture arm. Basis Set invested in Databricks when it was early. Olivier Pomel and Datadog understand operational data at scale. Jerry Yang and Harry Stebbings both have pattern-recognition track records.

This isn't money betting on hype. This is money from people who've seen fundamental infrastructure shifts happen. And they're betting that Dohmke understands this shift better than anyone else.

The Broader Shift in Development Infrastructure

Entire isn't an island. It's part of a much larger shift in how development infrastructure works when AI is a first-class participant.

For years, the development stack was stable. Git. GitHub. CI/CD. Monitoring. Most of the innovation was incremental. Faster CI. Better visibility. Better collaboration.

Now the stack is breaking. Not because the old tools are bad. Because the assumption they're built on—that developers are the primary agents of code creation—is wrong.

We're seeing this crack show up everywhere:

Version control is broken for AI. Git works fine when humans commit. It's confusing when agents commit constantly. GitHub is trying to patch this with features. But features are band-aids on a fundamental design mismatch.

Code review is broken for AI. Human code review assumes intent matters. You read code, you understand what the developer was trying to do, you evaluate if it's correct. With AI, the intent is often vague, the code is often right for the wrong reasons, and you can't just email the AI and ask for clarification. You need systems designed for this dynamic.

Testing is broken for AI. When a human writes code, they make mistakes in predictable ways. Static analysis, unit tests, integration tests, manual QA—the testing pyramid evolved for human-generated code. AI generates code that passes the obvious tests but fails in weird edge cases. You need different testing strategies.

Observability is broken for AI. When something breaks in production, you want to know why. You trace through the code, find the bad logic, fix it, prevent future instances. When an AI generated the code, you trace through, find that the logic looks reasonable, and realize the AI was working with incomplete or wrong context. The debugging process is different.

Security is broken for AI. A human who writes a SQL injection vulnerability or hardcodes a password probably does it once or twice. An AI that learns a pattern might reproduce it consistently across your entire codebase. The attack surface changes.

Entire is addressing these problems from the infrastructure level. It's saying, "Let's rebuild the tools to actually work with AI as a fundamental component, not a plugin."

That's a much bigger shift than most people realize.

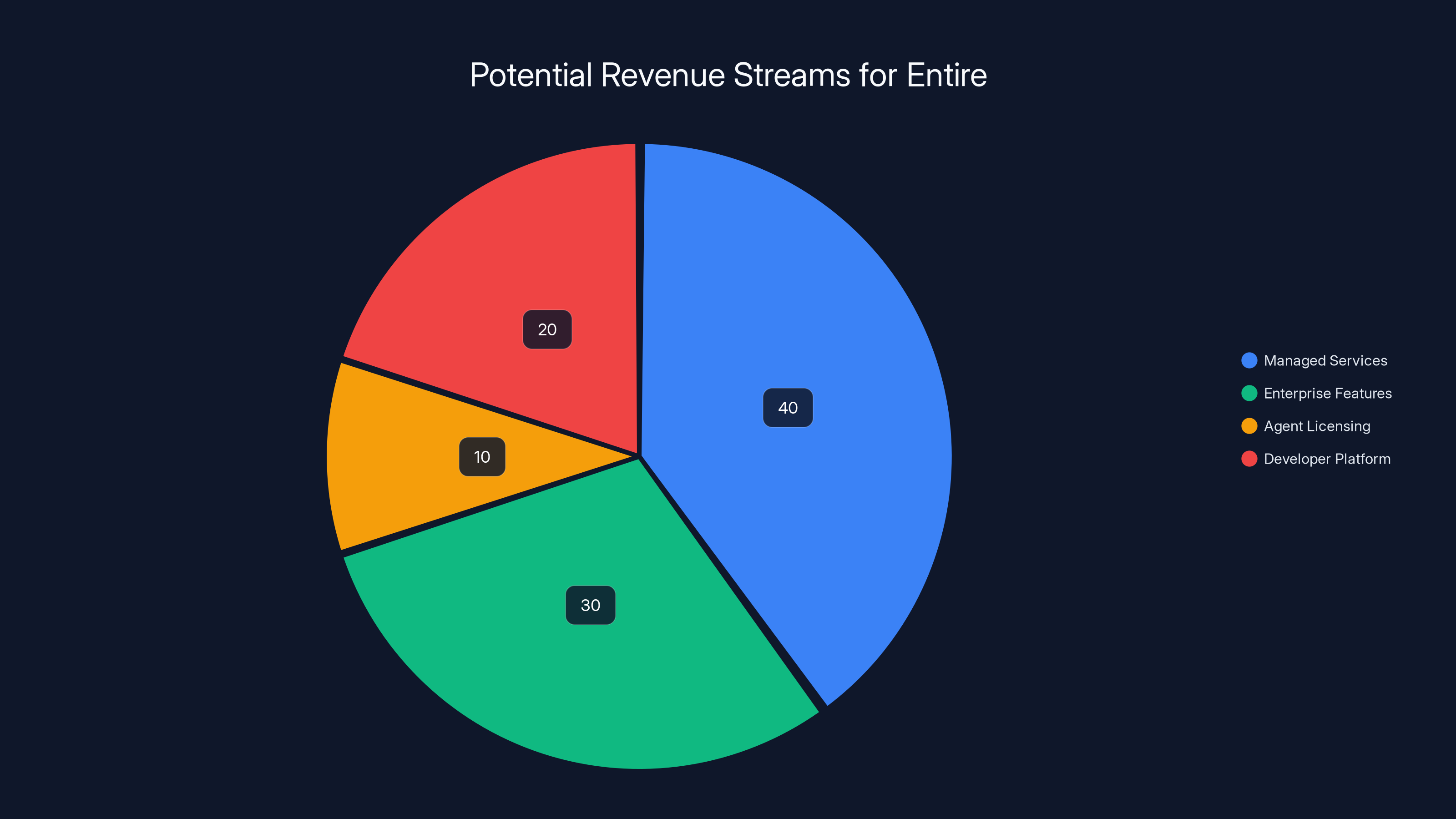

Estimated data suggests that managed services and enterprise features are likely to be the primary revenue streams for Entire, while agent licensing and developer platform revenues will play smaller roles.

How Entire's Architecture Solves Real Problems

Let's dig into how each component of Entire actually solves specific problems that developers are facing right now.

The Git-Compatible Database Problem

When you have 50 developers on a team, each committing 10-20 times a day, git works fine. Your repository gets 500 to 1,000 commits per day. Conflicts are manageable. Code review throughput is reasonable.

Now add one AI agent that generates code for your entire codebase. Suddenly you're looking at 10,000 or 50,000 commits per day. The branching strategy breaks. The conflict resolution becomes impossible. The review process collapses.
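Using the section's own figures (50 developers at 10 to 20 commits each, against 10,000 to 50,000 agent commits per day), the jump works out as follows:

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def commits_per_day(developers: int, commits_each: int) -> int:
    """Total daily commits for a team committing at a uniform rate."""
    return developers * commits_each


human_low = commits_per_day(50, 10)   # 500
human_high = commits_per_day(50, 20)  # 1,000
print(f"humans: {human_low:,}-{human_high:,} commits/day")

for agent_daily in (10_000, 50_000):
    ratio = agent_daily / human_high
    per_second = agent_daily / SECONDS_PER_DAY
    print(f"{agent_daily:,}/day = {ratio:.0f}x the human peak, "
          f"{per_second:.2f} commits/second around the clock")
```

The per-second figures are daily averages; they say nothing about how bursty agent commits are in practice.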

A traditional database doesn't solve this. You could use a time-series database, but then you lose git semantics. You could use a data lake, but then you lose version control. You're stuck.

Entire's git-compatible database is built for this scale. It maintains git semantics (branches, commits, merges) but can handle the throughput and structure of machine-generated code. That means:

- Agents can commit constantly without clogging up the system

- You can query across enormous commit histories instantly

- Branching strategies that make sense for AI workflows actually work

- Conflicts are resolved intelligently, not just by line-based text matching
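Git's default merge is a line-based three-way merge: it compares both sides of a merge against their common ancestor. "Resolving conflicts intelligently" could mean merging at a structural unit instead. The sketch below treats each whole function as the unit of change; it illustrates that idea under a hypothetical name-to-source model of a module and is not Entire's algorithm, which the article doesn't describe. Whether this beats a line-based merge depends on the code.

```python
from typing import Optional

Module = dict[str, str]  # hypothetical model: function name -> function source


def merge_functions(base: Module, ours: Module, theirs: Module):
    """Three-way merge where each whole function is the unit of change.

    Returns (merged, conflicts). A conflict is reported only when both sides
    changed the same function in different ways relative to the base.
    A missing key means the function does not exist (or was deleted).
    """
    merged: Module = {}
    conflicts: list[str] = []
    for name in sorted(base.keys() | ours.keys() | theirs.keys()):
        b: Optional[str] = base.get(name)
        o: Optional[str] = ours.get(name)
        t: Optional[str] = theirs.get(name)
        if o == t:        # both sides agree (including both deleting it)
            result = o
        elif o == b:      # only "theirs" changed this function
            result = t
        elif t == b:      # only "ours" changed this function
            result = o
        else:             # both changed it differently: flag for review
            conflicts.append(name)
            result = o    # keep "ours" provisionally; a human or agent decides
        if result is not None:
            merged[name] = result
    return merged, conflicts


base = {"login": "v1", "logout": "v1"}
agent_a = {"login": "v1-fast", "logout": "v1"}                     # optimized login
agent_b = {"login": "v1", "logout": "v1-secure", "audit": "new"}   # hardened logout
print(merge_functions(base, agent_a, agent_b))
```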

The Semantic Layer Problem

Let's say you have two AI agents working on a project. Agent A specializes in performance optimization. Agent B specializes in security hardening. Ideally, they collaborate. Agent B hardens the authentication system, and Agent A doesn't accidentally add a security vulnerability while optimizing it.

Right now, that doesn't happen. Agent B writes code and commits it. Agent A reads it as text. Agent A doesn't understand the security implications. Agent A optimizes something that shouldn't be optimized. You get a code review comment: "Why did the agent do this?" And you're stuck because neither agent can explain itself well to the other.

With a semantic layer, Agent A understands not just the text of what Agent B wrote, but the intent, the constraints, the security properties. Agent A can reason about whether its optimization respects those properties. Agents can actually collaborate instead of just taking turns.
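A minimal way to picture "understanding the constraints" is for each agent to publish machine-checkable properties next to its code, and for other agents to check their proposals against them before committing. Everything here is hypothetical: the registry, the constraint format, and the checks are stand-ins, and real properties would need far stronger verification than inspecting source text.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Constraint:
    """A property one agent declares about a function (hypothetical format)."""
    function: str
    owner: str                       # agent that declared the property
    description: str
    holds: Callable[[str], bool]     # check run against proposed source code


class ConstraintRegistry:
    """Hypothetical shared store of declared properties."""

    def __init__(self) -> None:
        self._constraints: list[Constraint] = []

    def declare(self, constraint: Constraint) -> None:
        self._constraints.append(constraint)

    def violations(self, function: str, proposed_source: str) -> list[Constraint]:
        """Constraints on `function` that the proposed source would break."""
        return [c for c in self._constraints
                if c.function == function and not c.holds(proposed_source)]


registry = ConstraintRegistry()
# Agent B (security) declares that token comparison must stay constant-time.
registry.declare(Constraint(
    function="check_token",
    owner="agent-b",
    description="compare tokens with hmac.compare_digest",
    holds=lambda src: "hmac.compare_digest" in src,
))

# Agent A (performance) checks a "simpler" version before committing it.
proposal = "def check_token(a, b):\n    return a == b\n"
for c in registry.violations("check_token", proposal):
    print(f"blocked: {c.description} (declared by {c.owner})")
```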

For humans, this means:

- Agents can work on larger, more complex projects

- The code generated is more coherent and less contradictory

- Bugs caused by agent-to-agent miscommunication become much rarer

- The reasoning is traceable (because the semantic layer maintains it)

The AI-Native UI Problem

Most development tools are designed for command-line nerds or designers. The interface assumes a human expert is using it. You have to know what you're looking for. The tool gives you what you ask for, not what you need.

When an AI is using the same tool, that breaks down. The AI doesn't know what it's looking for. The tool shows the AI information in a format optimized for human parsing. The AI misunderstands. The human can't tell what the AI misunderstood because the interface wasn't designed for that conversation.

Entire's AI-native interface assumes the opposite. It assumes agents and humans are both using it, and they need to understand each other. That means:

- Information is structured for machine understanding first, human understanding second

- Interactions are designed for agents to make proposals and humans to evaluate them

- Feedback loops are optimized for teaching agents, not just supporting humans

- The interface adapts based on whether a human or agent is using it

This is a small thing that's actually huge. Right now, people are using Slack, GitHub, Jira, and Linear with AI. But these tools fight the AI at every step. An AI-native tool doesn't fight. It cooperates.
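The interaction pattern described in this section (agents propose, humans evaluate, and the decision flows back to the agent as feedback) can be sketched as a small state machine. The types and function names are hypothetical and only illustrate the loop.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Proposal:
    """An agent's proposed change awaiting human review (hypothetical model)."""
    agent: str
    summary: str
    status: Status = Status.PENDING
    feedback: list[str] = field(default_factory=list)

    def decide(self, approve: bool, note: str) -> None:
        """Record a human decision; only pending proposals can be decided."""
        if self.status is not Status.PENDING:
            raise ValueError(f"proposal already {self.status.value}")
        self.status = Status.APPROVED if approve else Status.REJECTED
        self.feedback.append(note)


def feedback_for(agent: str, proposals: list[Proposal]) -> list[str]:
    """Collect reviewer notes on an agent's rejected proposals for its next run."""
    return [note for p in proposals
            if p.agent == agent and p.status is Status.REJECTED
            for note in p.feedback]


queue = [Proposal("agent-a", "Inline the session cache"),
         Proposal("agent-a", "Drop retry logic in token refresh")]
queue[0].decide(approve=True, note="Looks good")
queue[1].decide(approve=False, note="Retries are required by the SLA")
print(feedback_for("agent-a", queue))  # ['Retries are required by the SLA']
```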

The Open Source Angle and Community Adoption

Entire's first product is open source. This is important strategically and philosophically.

Strategically, open source means rapid adoption. Developers trust open source more. They can audit it. They can fork it. They don't have to trust a corporation. In a space where trust is hard (because you're trusting the system with your code), that's powerful.

Open source also means the community will iterate on the design. Entire has a good foundation, but the community will find use cases the team didn't think of. The community will build integrations. The community will solve problems Entire didn't anticipate. That accelerates the product.

Philosophically, open source signals that Entire isn't trying to lock you in. It's saying, "We're solving this infrastructure problem. We think we have a good approach. Let's see if it works. If you want to use our open-source tools, great. If you want to build on top of them, better. If you want to fork and do something different, that's fine too."

That's the opposite of how most platforms operate. And it builds loyalty.

For adoption in open-source projects specifically, this changes everything. A maintainer of a popular open-source project might get 100 AI-generated PRs per week. Right now, the evaluation process is manual and painful. Checkpoints could make it possible to actually manage that volume without burning out the maintainers.

Entire has a real shot at becoming the infrastructure for open-source AI collaboration. And once you're embedded in open source, adoption in commercial projects follows naturally.

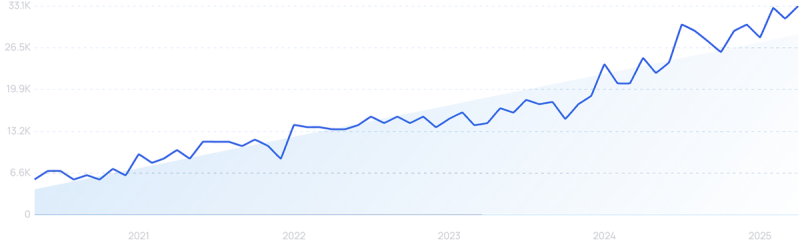

GitHub Copilot experienced a 10x increase in monthly active users from 2022 to 2024, highlighting its rapid adoption and market relevance.

Competitive Positioning and Why GitHub Can't Copy This

Someone's probably already asking: Why doesn't GitHub just build this?

GitHub could. But it probably won't. At least not for a while. And that's Entire's window.

GitHub's incentives are misaligned. GitHub makes money from subscriptions and from GitHub Copilot. If a third-party layer like Entire becomes the place where Copilot's output is reviewed and managed, GitHub loses leverage over that workflow. And because such a layer has to work with every agent, not just Copilot, it makes competing AI agents just as easy to use, which directly threatens Copilot.

GitHub could build a better system for managing AI-generated code. But doing so would undermine the product that's driving most of their growth right now. So they'll add features. They'll improve the UI. But they won't rebuild from first principles.

That's Entire's opportunity.

Other competitors are fragmented. Some are trying to build better code review tools for AI. Some are trying to build better CI/CD. Some are trying to build agent orchestration. Nobody else is building the infrastructure layer that brings it all together.

The other advantage Entire has is credibility. Dohmke isn't some startup founder with a theory about how development should work. He's the person who ran GitHub through the Copilot revolution. He knows exactly what works and what breaks. That credibility matters when you're asking developers and companies to bet on new infrastructure.

The Business Model (And Why It Matters)

We don't know exactly how Entire plans to monetize yet. But we can infer from the funding and strategy.

Checkpoints is open source, so it probably won't generate revenue directly. It's a free entry point that proves the value of the platform.

The money will likely come from one or more of these:

1. Managed services. Entire hosts the git-compatible database, semantic layer, and UI as a service. You pay for compute, storage, and access. This is the most likely model.

2. Enterprise features. The open-source tool is free. Enterprise features (audit trails, access control, custom workflows, integrations) require a paid tier.

3. Agent licensing. Entire embeds AI agents that generate code. You pay per agent or per code generation. This is less likely given their positioning, but possible.

4. Developer platform. Entire becomes the platform on top of which other tools build. Marketplace revenue. Integration revenue.

Most likely, it's a combination. Open-source core, managed hosting, with premium features for enterprises and integrations for ISVs.

The pricing will probably be competitive with GitHub—maybe $20-100 per developer per month depending on tier. The total addressable market is enormous. Every company that uses AI agents needs this infrastructure.

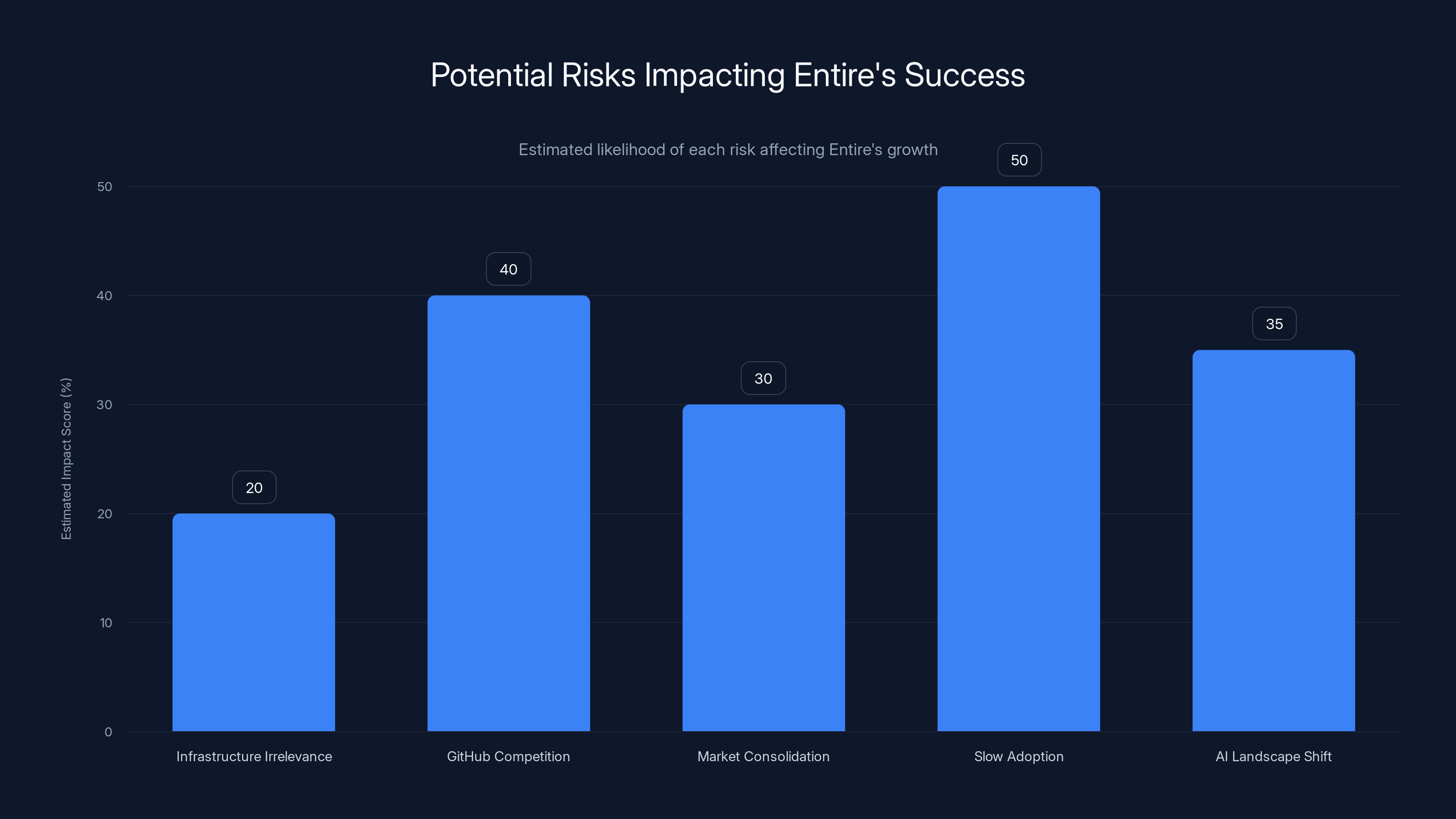

This chart estimates the potential impact of various risks on Entire's success, with 'Slow Adoption' and 'GitHub Competition' posing the highest challenges. Estimated data.

The Broader Implications for the Developer Tools Market

This $60M seed round signals something bigger than Entire's success or failure.

It signals that venture capitalists believe AI-native developer infrastructure is a category, not a feature. That someone will build the underlying tools that make AI agents useful for developers. That there's room for a new platform layer above git and below deployment.

That's different from the last 15 years, when developer tools were mostly about incremental efficiency gains. A better code editor. A faster CI pipeline. Better monitoring. These were optimizations of an existing system.

AI-native developer tools are about rebuilding the system. And venture capitalists are betting billions that the system needs rebuilding.

You'll probably see more funding in this space. You'll probably see more startups building specialized tools (AI testing, AI security, AI documentation) that assume Entire's infrastructure underneath.

You might also see acquisitions. A company that builds AI-native testing tools would be way more valuable if it integrates with Entire's semantic layer. An observability company that understands AI-generated code is way more useful. An IDE that treats agents as first-class citizens is way more powerful.

Entire is aiming to become a foundational building block. If it succeeds, every developer tool startup in the next five years will be built assuming Entire exists.

The Team, The Timing, The Momentum

Funding tells a story, but without context it's easy to misread.

Thomas Dohmke's story is: "I ran the platform where this problem emerged. I know exactly what's broken. I know exactly how to fix it. I've already seen proof that this problem matters at scale. Now I'm building the solution."

That's not a startup founder with a theory. That's someone with proof of concept, domain expertise, and validation.

The timing is also perfect. We're past the phase where people are wondering if AI coding actually works. We're in the phase where people are trying to integrate it and failing. Infrastructure vendors thrive in that phase.

The momentum is building. GitHub Copilot adoption is accelerating. Claude's coding capabilities are getting scary good. Open-source projects are drowning in AI-generated contributions. Enterprises are experimenting with internal agents. The demand for a solution to "how do I manage this" is urgent.

Six months ago, this company didn't exist. Now it's valued at $300M based on an idea and a team. Investors are clearly betting that this is a real wave, not hype.

The chart illustrates the influence of key investors in Entire's record-breaking seed round, indicating strong confidence in the company's potential to address the AI-driven development workflow challenges. (Estimated data)

Why This Matters for Your Development Workflow

If you're a developer or building a development team, this matters.

First, Entire's existence validates something you probably already suspect: AI code generation is part of your future. You can resist it. You can ban it. But it's coming. Infrastructure that makes it manageable is necessary.

Second, the design philosophy matters. Entire is built on the assumption that agents and humans collaborate. That's different from tools that assume AI is a tool for humans to use. Tools built on that collaboration assumption are more powerful and more honest about the future.

Third, you should be thinking about your own AI governance strategy. Checkpoints is designed to help with that. As AI-generated code becomes more prevalent, you need ways to understand it, trace it, learn from it, and control it. That's infrastructure, not policy.

Fourth, if you're building on top of developer tools (as an ISV, as a consultant, as a platform), you should be paying attention to Entire. If it succeeds, it becomes the foundation layer. Building on top of it earlier is better than adapting later.

The Road Ahead: What Comes Next

We don't know what Entire's product roadmap looks like. But we can guess.

First priority is getting Checkpoints into the hands of developers and maintainers. Proving that it solves the traceability problem. Building community around it.

Second is fleshing out the semantic layer. Making it real, useful, powerful. Getting agents to actually collaborate intelligently instead of just taking turns.

Third is the managed platform. Taking the open-source pieces and building a product around them that enterprises and large teams actually want to buy.

Fourth is probably integrations. OpenAI integration, Anthropic integration, open-source agent integration. Making Entire the platform where all agents can work together.

Fifth is probably domain-specific applications. AI-native testing. AI-native security. AI-native documentation. Built on top of Entire's infrastructure.

The massive funding suggests they're thinking about all of this simultaneously. Hiring top engineers to execute on multiple fronts. Building the foundation while proving the market. Moving fast.

If this sounds like it could be the next major developer platform (the way GitHub was for version control, or Stripe was for payments), that's probably intentional.

The Broader Startup Ecosystem Implications

This round doesn't just matter for Entire. It signals something about the venture ecosystem.

First, experienced founders from big tech with real domain expertise can still raise enormous seed rounds. You don't need to be 22 and Stanford-educated. Dohmke is neither; he's a seasoned executive who most recently ran GitHub. What he has is credibility, domain expertise, and a thesis about where the market is going. Venture capitalists bet on that.

Second, infrastructure plays are still hot if they solve real problems at scale. There was this brief period where everyone said infrastructure was dead and everyone should build AI applications. Turns out, AI makes you need better infrastructure, not less.

Third, the AI revolution is remaking the entire stack. From data labeling to data infrastructure to model training to deployment to model management to application building to code generation to code management. Every layer is getting rebuilt for an AI-first world. That means opportunities at every layer.

Fourth, being first with credibility beats being first with a product. Entire doesn't have a finished product yet (Checkpoints is the MVP). But Dohmke has credibility, so capital flows anyway.

If you're thinking about starting something or investing in this space, pay attention to these lessons.

Why This Might Not Work (The Honest Take)

It would be wrong to close this without being honest about the risks.

Risk 1: The infrastructure might not matter as much as it seems. Right now, developers are overwhelmed by AI code. But maybe that's temporary. Maybe in two years, everyone's figured out how to integrate AI agents and the problem disappears. Unlikely, but possible.

Risk 2: GitHub might copy faster than expected. GitHub could decide that the risk of losing developers to Entire is higher than the risk of undercutting Copilot growth. GitHub could acquire Entire. GitHub could fork the ideas. GitHub has distribution and installed base that's hard to compete against.

Risk 3: The market might consolidate to other platforms. Maybe cloud providers (AWS, Azure, Google Cloud) build this as a managed service. Maybe Datadog (an investor) decides to build this directly. Maybe something emerges that's better than Entire's thesis.

Risk 4: Adoption might be slower than expected. Companies are conservative about changing their version control or code management. Even if Entire's solution is better, migration costs are real. Organizations might stick with git and GitHub even if it's suboptimal.

Risk 5: The AI landscape might shift. Maybe open-source agents become dominant and replace GitHub Copilot. Maybe new paradigms emerge that make the semantic layer irrelevant. Maybe the problem Entire is solving gets solved differently.

These risks don't mean Entire won't succeed. They just mean success isn't guaranteed. The market is nascent, the problem is real, and the team is strong. But there's uncertainty.

The Conversation This Raises About AI and Developer Autonomy

Zooming out, there's a philosophical question here that Entire's success or failure will partially depend on.

As AI agents become more capable, developers have to choose between:

1. Deep collaboration with AI. Using agents as teammates. Adopting systems designed for human-agent collaboration. Spending time understanding what agents do and why. This requires new skills but unlocks more velocity.

2. Limited integration with AI. Using agents for specific tasks. Keeping a clear boundary between human and machine code. Maintaining control and understanding. This is slower but safer.

3. Full AI automation. Letting agents write and deploy everything. Becoming managers of AI rather than developers. Trusting the agents. This is the future if it works, but it's risky if it doesn't.

Entire is designed to support approaches 1 and 2. It lets you have deep collaboration (through the semantic layer and traceability) or limited integration (by giving you clear control and visibility).

Developers who choose approach 3 don't need Entire. They need a different kind of system.

Where most developers land will determine whether Entire is infrastructure for a decade, or infrastructure for a five-year transition.

Looking at This From the Developer's Perspective

If you're a developer using Copilot or Claude for coding, here's what matters:

First, Entire makes that experience better if you choose to use it. Right now, you use Copilot in your IDE, you see suggestions, you accept or reject them, they go into your codebase. You don't see why Copilot made that suggestion. You can't easily search for all Copilot-generated code. You can't easily revert just the AI parts if something goes wrong.

Checkpoints changes that. It lets you see the reasoning. It lets you search. It lets you understand what the agent was thinking. That's valuable even if you use the tool exactly the same way.
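Even without new infrastructure, teams can get part of the way by recording attribution in the commit message itself. Git supports trailers (`Key: value` lines at the end of a message, like the standard `Signed-off-by:`). The sketch below assumes a hypothetical `AI-Agent:` trailer convention, picks out agent-authored commits, and lists them newest-first, the order you would typically hand to `git revert` to back them out. The parser is deliberately simpler than `git interpret-trailers`.

```python
import re

# A trailer line: "Key: value", where the key has no spaces.
TRAILER = re.compile(r"^([A-Za-z0-9-]+):\s*(.+)$")


def trailers(message: str) -> dict[str, str]:
    """Parse 'Key: value' lines from the final paragraph of a commit message.

    Simplified: ignores folded continuation lines and keeps the last value
    when a key repeats.
    """
    paragraphs = [p for p in message.strip().split("\n\n") if p.strip()]
    if len(paragraphs) < 2:          # a lone subject line has no trailers
        return {}
    found: dict[str, str] = {}
    for line in paragraphs[-1].splitlines():
        m = TRAILER.match(line.strip())
        if not m:
            return {}                # last paragraph is body text, not trailers
        found[m.group(1)] = m.group(2)
    return found


def ai_commits_newest_first(history: list[tuple[str, str]]) -> list[str]:
    """Given (sha, message) pairs oldest-first, return agent-authored SHAs newest-first."""
    return [sha for sha, msg in reversed(history) if "AI-Agent" in trailers(msg)]


history = [
    ("a1", "Add login form\n\nSigned-off-by: Dana <dana@example.com>"),
    ("b2", "Cache sessions\n\nAI-Agent: agent-a\nPrompt-Id: 42"),
    ("c3", "Fix typo"),
    ("d4", "Harden token check\n\nAI-Agent: agent-b"),
]
print(ai_commits_newest_first(history))  # ['d4', 'b2']
```

With real SHAs, the resulting list could be passed to something like `git revert --no-edit d4 b2`, which reverts each commit in the order given.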

Second, if you're on a team, this becomes about collaboration. Right now, when a teammate commits AI-generated code, you can't easily tell. The code review process doesn't adapt. The questioning process doesn't change.

With infrastructure designed for human-AI collaboration, the review process adapts. You see that something was AI-generated. You can look at the reasoning. You can ask different questions. The collaboration is more efficient.

Third, if you're worried about job security (a fair concern when AI gets better at coding), Entire actually helps. By making it easier to understand and integrate AI-generated code, Entire makes you more valuable as a developer. You become the person who knows how to work with AI effectively. That's worth more than the person who just writes code without AI.

So from a developer perspective, Entire is probably good news, even if it feels threatening at first.

The Enterprise Angle and What It Means for Large Orgs

For large enterprises, this matters differently.

Enterprise version control is often separate from GitHub. Some companies use Perforce. Some use custom systems. Some are on GitHub Enterprise but with extensive customization.

Entire is saying, "Your version control setup is about to break when you integrate AI agents. We've built something new to handle it."

That's compelling for large enterprises. They spend millions on dev tooling. They have complex requirements. They have security and compliance concerns. They have governance needs.

Entire's positioning as git-compatible but AI-native speaks to that. You get the benefits of git semantics (everyone knows how to use it) but with the capabilities to handle AI-generated code at scale.

For enterprises, the managed version will be important. They don't want to operate infrastructure for this. They want Entire to handle it.

The moat for Entire in enterprise might actually be stronger than in startups. Enterprises are sticky. If you become the standard for AI-code management at a large company, you're embedded for years.

Connectivity to the Broader AI Infrastructure Boom

Entire's $60M round is part of a bigger pattern.

Vector databases raised billions. RAG frameworks are getting funded. Fine-tuning tools raised hundreds of millions. Startups working on model compression, evaluation, and deployment have all raised venture capital.

Essentially, everything around AI is getting rebuilt for a world where AI is central rather than peripheral.

Entire is the developer tools version of that. It's saying, "Development infrastructure needs to be rebuilt too."

If you're investing in this space, you're probably seeing that every major category is getting rethought. That's a sign of a fundamental shift, not a bubble.

For individual developers and teams, it means: the tools you use are about to get a lot better, but only if you adopt new ones. The tools that worked for human-only development will become less useful. The tools designed for human-AI collaboration will become standard.

That adoption curve is probably 5-10 years out. But it's coming.

The Bottom Line

Thomas Dohmke's $60M seed round for Entire isn't just a funding announcement. It's a signal that the infrastructure layer for AI-native development is being built right now. It's a signal that developers are overwhelmed with AI-generated code and need systems designed to manage it. It's a signal that venture capitalists believe this is a multi-billion-dollar category, not a feature.

For developers, the implications are: better tools are coming, but you'll need to learn how to use them. For teams, the implications are: your development workflow is about to get a lot more complex, then a lot more efficient. For enterprises, the implications are: new vendor lock-in dynamics are emerging, so move deliberately but move now.

Entire's success or failure will help shape how the next decade of development looks. Given the team, the timing, and the capital, betting on its success is reasonable.

But the most important part of Entire's arrival isn't Entire itself. It's what it signals about where development tools are heading. And if you're building technology for developers, you should care about that.

FAQ

What is Entire and what problem does it solve?

Entire is a development infrastructure platform designed to help teams manage the code generated by AI agents. It combines a git-compatible database, a semantic reasoning layer for multi-agent collaboration, and an AI-native user interface. The core problem it solves is that modern development workflows were built for humans writing code, but AI agents now generate code faster than humans can understand and integrate it. Traditional tools like GitHub aren't designed for this scale and speed of code generation.

How does Entire's architecture work differently from GitHub or other version control systems?

Entire has three core components: first, a git-compatible database that understands git semantics but can handle the high volume and structure of machine-generated code; second, a semantic reasoning layer that allows multiple AI agents to understand each other's output and collaborate intelligently rather than just taking turns; and third, an AI-native user interface designed for human-agent collaboration rather than human-only workflows. This architecture assumes that agents and humans will work together, not that AI is just a tool for humans to use. GitHub, by contrast, was designed when humans were the primary code generators and treats AI as an add-on feature.

What is Checkpoints and why is it being open-sourced?

Checkpoints is Entire's first product, released as open source. It automatically pairs every piece of code generated by an AI agent with the complete context that created it: prompts, transcripts, reasoning chains, and constraints. This lets developers see not just what code was generated, but why the agent generated it. It's open-sourced because open source accelerates adoption, builds community trust, and allows developers to audit the tool. Open source also serves as a freemium product that demonstrates value and drives adoption of Entire's managed platform.
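To make the idea of "code paired with its context" concrete, here is a minimal sketch of what such a record could look like. Everything in it is an assumption for illustration: the `Checkpoint` class, its field names, and the `matches` helper are hypothetical and are not Entire's actual schema or API. The sketch hashes the diff so that a stored prompt and transcript can later be tied to the exact code they explain.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Checkpoint:
    """Hypothetical record pairing agent-generated code with its context.

    Field names are illustrative assumptions, not Entire's actual schema.
    """
    agent: str               # which agent produced the change
    prompt: str              # the instruction the agent was given
    transcript: list[str]    # conversation turns that led to the change
    constraints: list[str]   # rules the agent was asked to respect
    diff: str                # the code change itself
    diff_sha256: str = field(init=False)

    def __post_init__(self) -> None:
        # Fingerprint the diff so the context can be matched to the exact code it explains.
        self.diff_sha256 = hashlib.sha256(self.diff.encode("utf-8")).hexdigest()

    def to_json(self) -> str:
        # Serialize deterministically so the record can be stored next to a commit.
        return json.dumps(asdict(self), sort_keys=True)


def matches(checkpoint: Checkpoint, diff: str) -> bool:
    """Return True if `diff` is exactly the code this checkpoint describes."""
    return checkpoint.diff_sha256 == hashlib.sha256(diff.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    cp = Checkpoint(
        agent="agent-a",
        prompt="Reject negative ages in parse_age",
        transcript=["user: ages can't be negative", "agent: adding a guard clause"],
        constraints=["no new dependencies"],
        diff="+    if age < 0: raise ValueError('age must be >= 0')\n",
    )
    print(matches(cp, cp.diff))
    print(cp.to_json())
```

A reviewer holding only the diff can recompute the hash and look up the matching record to see the prompt and reasoning behind it. Storing the record alongside the commit, rather than inside the code, keeps the code itself unchanged.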

Why is this a $300 million problem when similar developer tools raise much less?

This valuation reflects several factors: the massive scale of the problem (every company using AI agents faces this), the credibility of the founder (Dohmke ran GitHub through the Copilot revolution), the quality of investors (including people who spotted AWS, Slack, and Databricks early), and the timing (we're at an inflection point where AI code generation is mainstream and integration is painful). Additionally, the addressable market is enormous—every development team on Earth will need infrastructure for managing AI-generated code. The valuation assumes Entire becomes a fundamental platform layer, not a point solution, similar to how GitHub became essential for version control or Stripe became essential for payments.

How does Entire compete with GitHub if GitHub is already dominant in version control?

GitHub's incentives are misaligned with solving this problem. GitHub makes money from subscriptions and GitHub Copilot. If Entire makes Copilot easier to use or validate, that benefits GitHub's revenue. But if Entire makes competing AI agents more viable or makes it easier to switch vendors, that threatens GitHub's position. Additionally, GitHub was designed when humans were the primary developers, so rebuilding it from scratch for human-AI collaboration would require admitting the architecture is outdated. Entire has no such conflicts and can build infrastructure purpose-built for the new reality. GitHub will likely add features, but Entire can move faster on core infrastructure redesign.

What does the "semantic reasoning layer" actually do in practical terms?

In practical terms, the semantic layer creates shared understanding between AI agents. Instead of Agent A writing code that Agent B reads as plain text and misunderstands, the semantic layer lets Agent B understand the purpose, constraints, dependencies, and implications of what Agent A wrote. This allows agents to reason about each other's work, spot conflicts, suggest improvements, and collaborate on complex projects rather than just handling isolated tasks sequentially. For developers, this means AI agents working on the same project can actually coordinate and produce more coherent results instead of overwriting or contradicting each other.
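One simple way to picture "shared understanding" is for each agent to publish a structured summary of its intent next to its code, so that overlaps can be detected before changes collide. The sketch below is a hypothetical illustration of that idea, not a description of how Entire's semantic layer works: the `Intent` record and `find_conflicts` function are invented for this example, and a real system would need to derive these summaries from the code rather than rely on agents declaring them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    """Hypothetical structured summary an agent publishes alongside its code."""
    agent: str
    purpose: str
    modifies: frozenset[str]     # symbols the change rewrites
    depends_on: frozenset[str]   # symbols the change assumes stay stable


def find_conflicts(intents: list[Intent]) -> list[tuple[str, str, str]]:
    """Flag pairs of agents whose changes overlap on a symbol.

    A conflict exists when one agent modifies a symbol that the other agent
    also modifies or depends on. Returns (agent_a, agent_b, symbol) tuples.
    """
    conflicts = []
    for i, a in enumerate(intents):
        for b in intents[i + 1:]:
            overlap = (a.modifies & (b.modifies | b.depends_on)) | (b.modifies & a.depends_on)
            for symbol in sorted(overlap):
                conflicts.append((a.agent, b.agent, symbol))
    return conflicts


if __name__ == "__main__":
    rename = Intent("agent-a", "rename the user id field",
                    frozenset({"User.id"}), frozenset())
    audit = Intent("agent-b", "add audit logging",
                   frozenset({"log_event"}), frozenset({"User.id"}))
    print(find_conflicts([rename, audit]))
```

Here agent B's logging code assumes `User.id` is stable while agent A is renaming it, so the pair is flagged before either change lands instead of surfacing later as a broken build.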

Who would benefit most from adopting Entire?

Three groups would benefit most immediately: first, open-source maintainers drowning in AI-generated pull requests who need a way to evaluate them at scale; second, enterprises integrating AI agents into their development workflows who need governance, auditing, and control; and third, development teams actively experimenting with multiple AI agents (Copilot, Claude, in-house agents) who need those agents to work together rather than interfere with each other. Developers and teams still hesitant about AI adoption might not see immediate value until they commit to using agents regularly.

What's the business model and how will Entire make money?

While not officially announced, the most likely model is managed hosting of the git-compatible database, semantic layer, and UI as a service, with pricing per developer or team similar to GitHub Enterprise. Premium features (advanced audit trails, custom workflows, dedicated integrations, compliance certifications) would drive higher-tier pricing. Open-source Checkpoints generates no direct revenue but serves as a lead-generation and freemium product that demonstrates value to potential paying customers. Additionally, a developer platform or marketplace where other tools and integrations build on top of Entire's infrastructure could generate additional revenue.

How does this affect security and code governance?

Entire aims to improve security and governance by making AI-generated code fully traceable and auditable. Every piece of code is paired with the prompt and reasoning that created it, making it possible to audit what code was generated, why, and when. That is a stronger governance trail than human-written code usually has, since it often lacks any recorded reasoning. However, enterprises will need to establish new policies around which prompts are acceptable, which agents are allowed, and how to handle edge cases where AI-generated code breaks security constraints. Entire provides the infrastructure to enforce those policies, but the policies themselves need to be designed by security teams.
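As a rough illustration of the split between infrastructure and policy, here is a minimal sketch of a pre-merge check a security team might write against traceable change records. The `ChangeRecord` and `Policy` types, the allow-list, and the protected-path rule are all hypothetical examples chosen for this sketch; they are not Entire features or APIs.

```python
from dataclasses import dataclass


@dataclass
class ChangeRecord:
    """Hypothetical audit entry for one AI-generated change."""
    agent: str
    prompt: str
    timestamp: str              # ISO 8601, e.g. "2025-08-01T12:00:00Z"
    touched_paths: list[str]


@dataclass
class Policy:
    """Hypothetical rules a security team might define."""
    allowed_agents: set[str]
    protected_prefixes: tuple[str, ...]   # paths agents may not modify


def violations(record: ChangeRecord, policy: Policy) -> list[str]:
    """Return reasons this change should be blocked (an empty list means it passes)."""
    problems = []
    if record.agent not in policy.allowed_agents:
        problems.append(f"agent {record.agent!r} is not on the allow-list")
    if not record.prompt.strip():
        problems.append("no prompt recorded, so the change is not traceable")
    for path in record.touched_paths:
        # str.startswith accepts a tuple, matching any protected prefix.
        if path.startswith(policy.protected_prefixes):
            problems.append(f"{path} is in a protected area")
    return problems


if __name__ == "__main__":
    policy = Policy(allowed_agents={"copilot", "in-house-agent"},
                    protected_prefixes=("secrets/", "infra/iam/"))
    record = ChangeRecord(agent="unknown-agent", prompt="",
                          timestamp="2025-08-01T12:00:00Z",
                          touched_paths=["src/app.py", "secrets/api_key.pem"])
    for reason in violations(record, policy):
        print(reason)
```

The check only works because each change carries its agent and prompt; without that recorded context there would be nothing for the policy to inspect.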

When will Entire be available and how do I get access?

Checkpoints, the open-source tool, should be available now (or very soon after the announcement) as a public repository that anyone can clone and use. The managed platform with full semantic reasoning and UI will come later, with likely beta access for early customers within the next 6-12 months. If you want to try Checkpoints now, you can find it through the Entire website or on public code hosts such as GitHub, though you'll be using the self-hosted version rather than the managed service.

If you're building development tools or managing development infrastructure, this is the time to be thinking carefully about AI integration. The infrastructure layer is being built right now, and decisions you make today about which platforms to bet on will matter for the next decade.


Key Takeaways

- Thomas Dohmke's Entire raised a record $60M seed round at a $300M valuation, signaling that AI-native development infrastructure is a major category

- The core problem: traditional development workflows (git, pull requests, code review) were built for humans writing code, not AI agents generating code at machine speed

- Entire's three-component architecture (git-compatible database, semantic reasoning layer, AI-native UI) represents a fundamental rebuild of development infrastructure

- Checkpoints, the open-source flagship product, gives developers full traceability of AI-generated code including prompts and reasoning—solving the 'black box' problem

- The investor list (Felicis, Madrona, Datadog founder, Jerry Yang) suggests this is positioned as a foundational platform, not a point solution


![Thomas Dohmke's $60M Seed Round: The Future of AI Code Management [2025]](https://tryrunable.com/blog/thomas-dohmke-s-60m-seed-round-the-future-of-ai-code-managem/image-1-1770736281805.jpg)