The AI Energy Crisis: Who Pays When Data Centers Drain the Grid

We've got a problem brewing in America's power infrastructure, and it's growing exponentially.

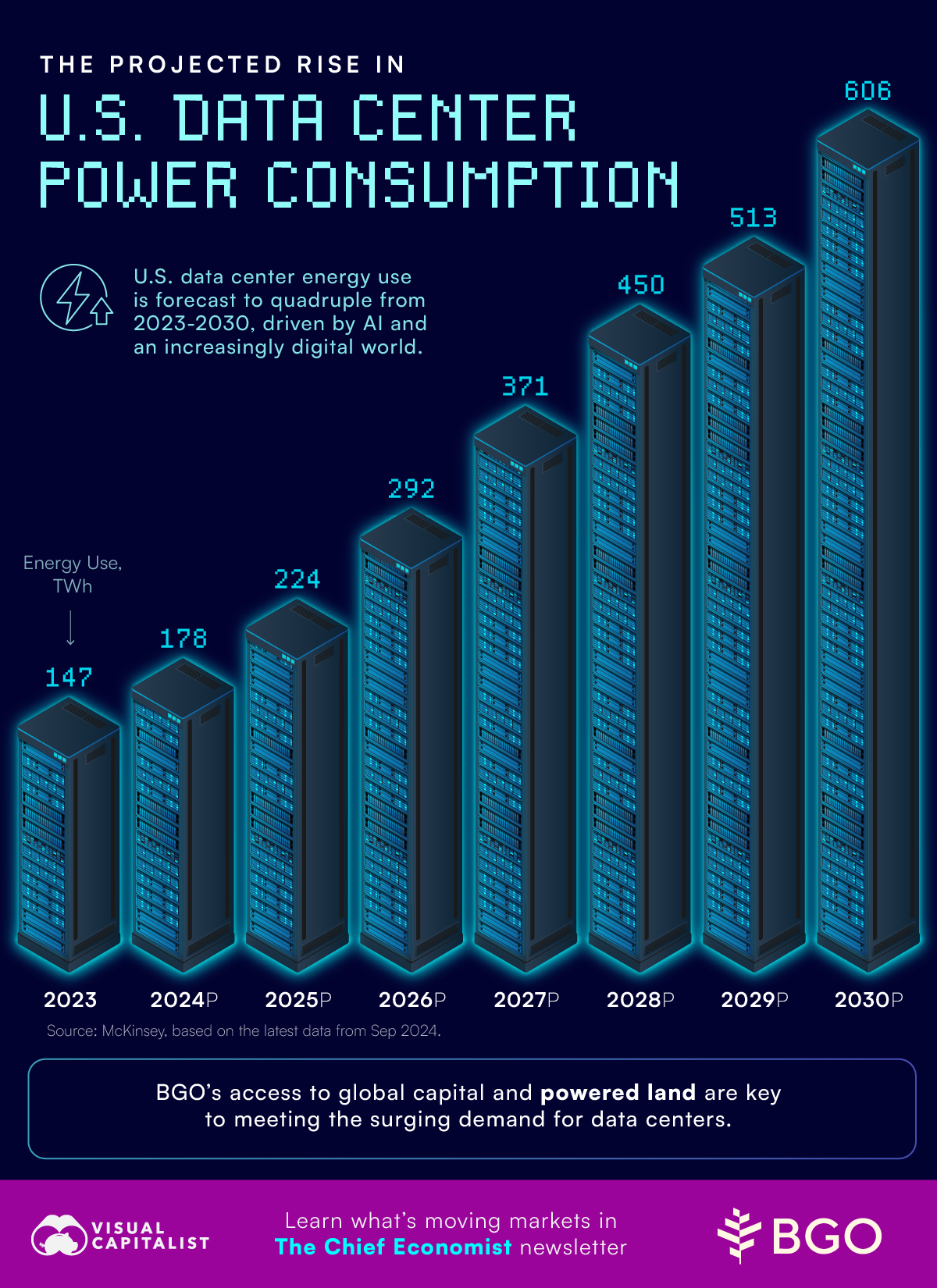

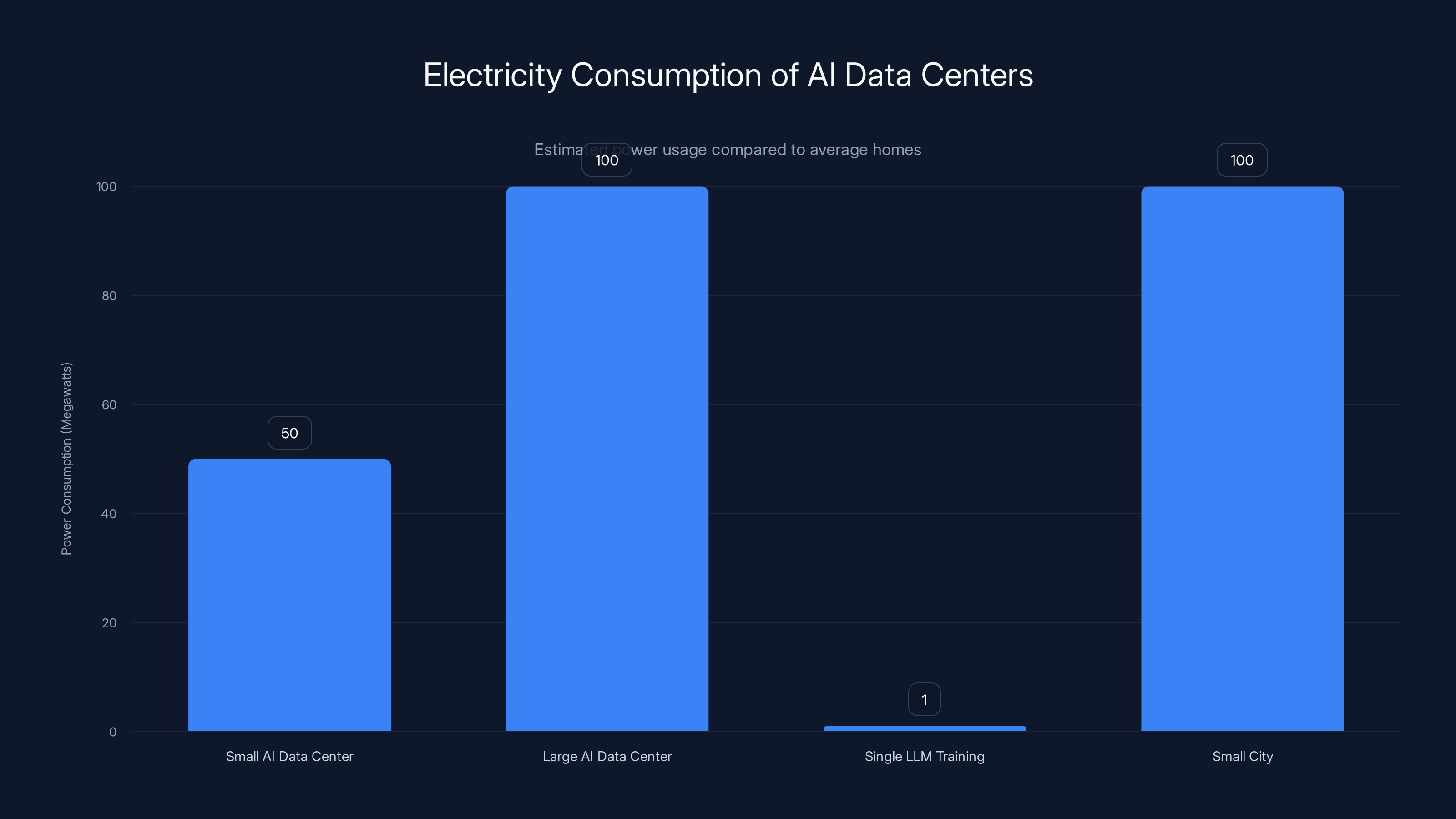

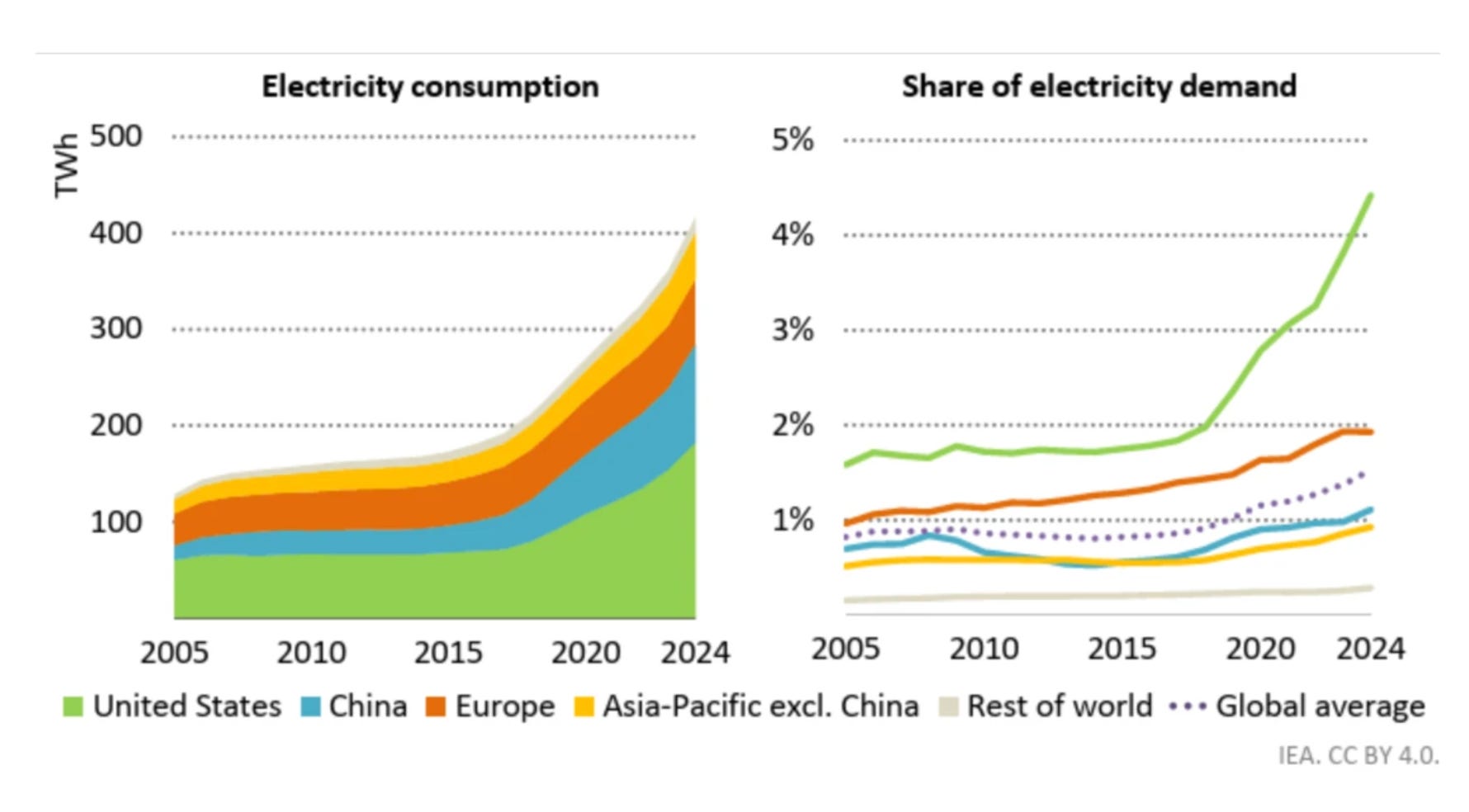

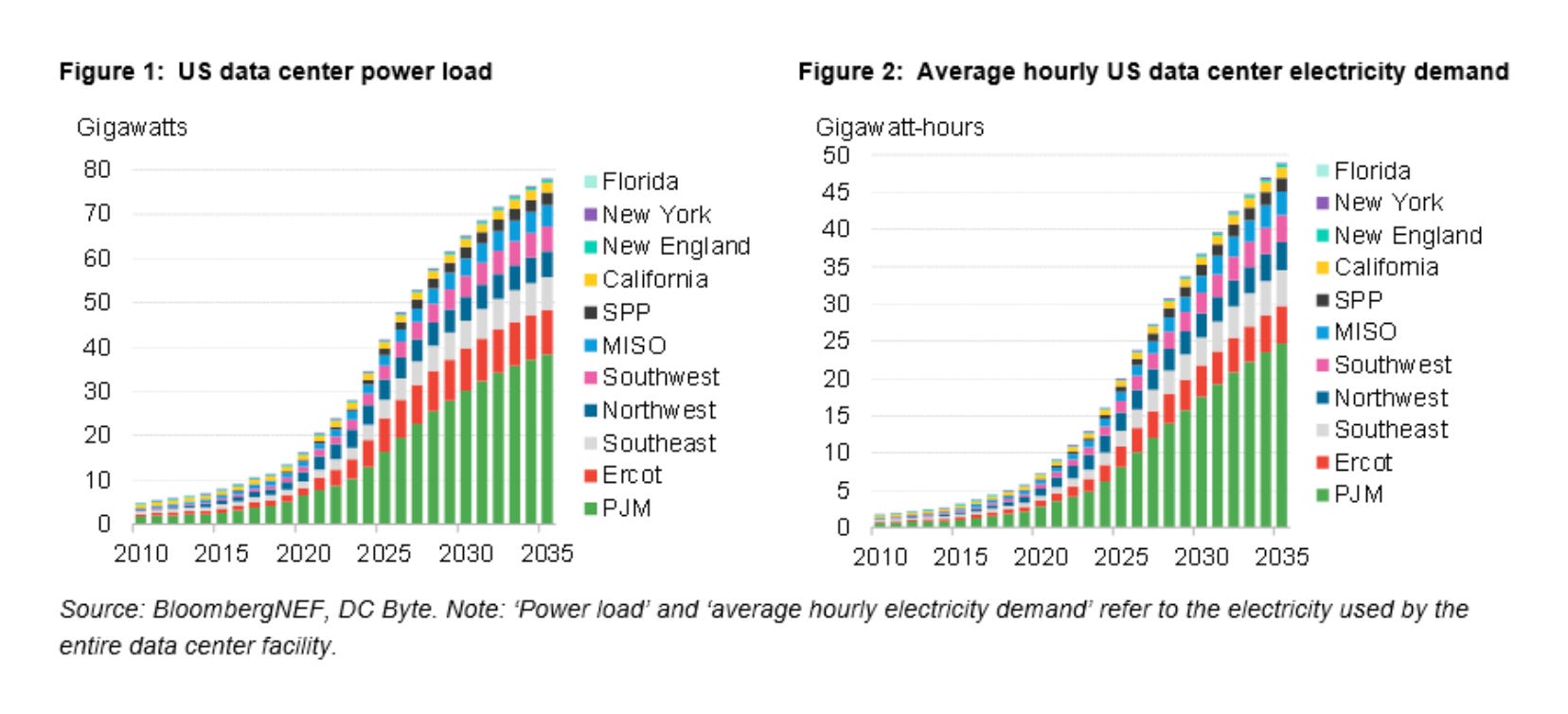

AI data centers consume staggering amounts of electricity. A single large-scale AI training facility can draw as much power as a mid-sized city. Now add thousands of these facilities across the country, all competing for energy resources, and you start to see why utility companies are nervous and why everyday Americans worry about their electricity bills.

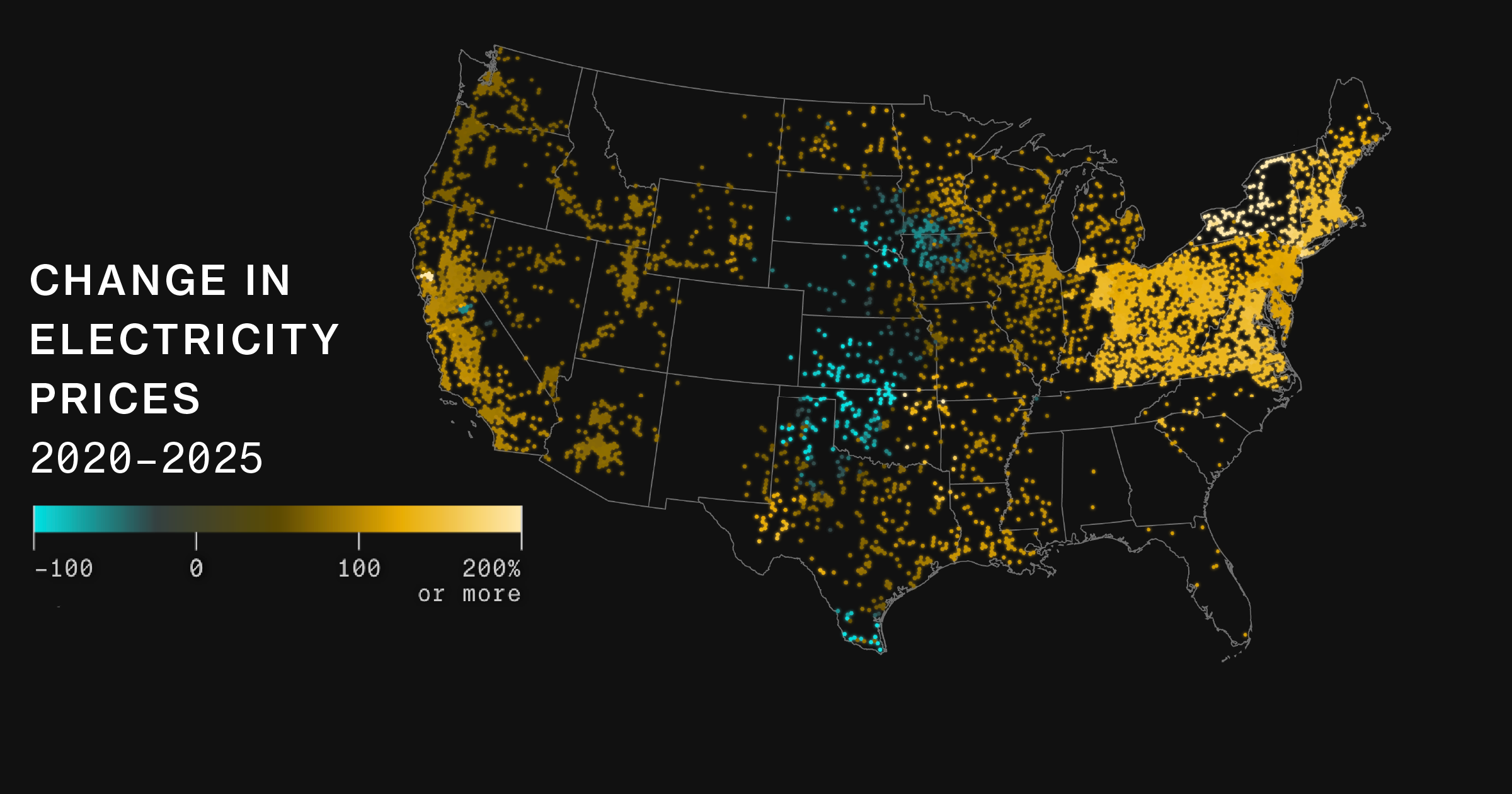

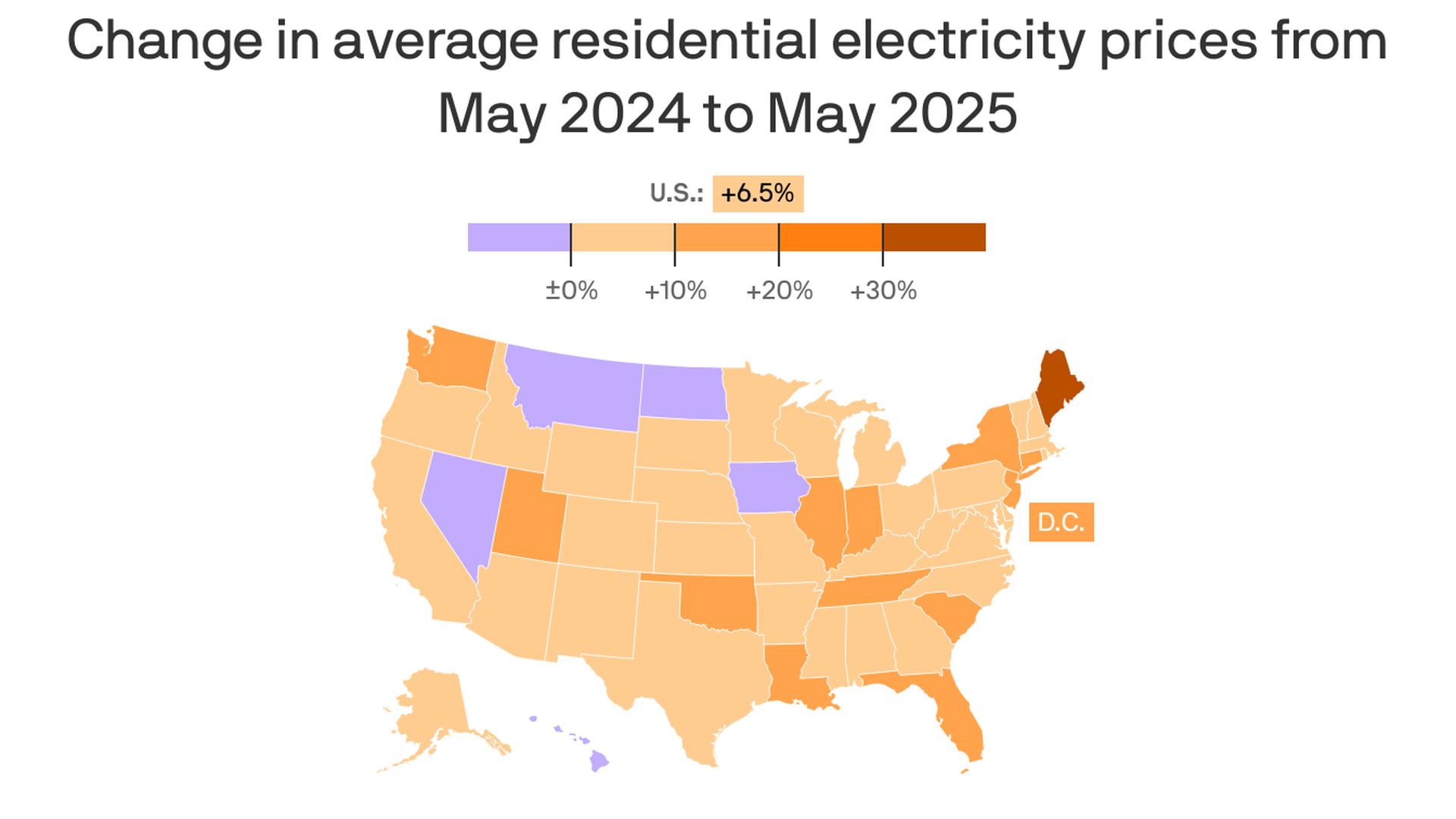

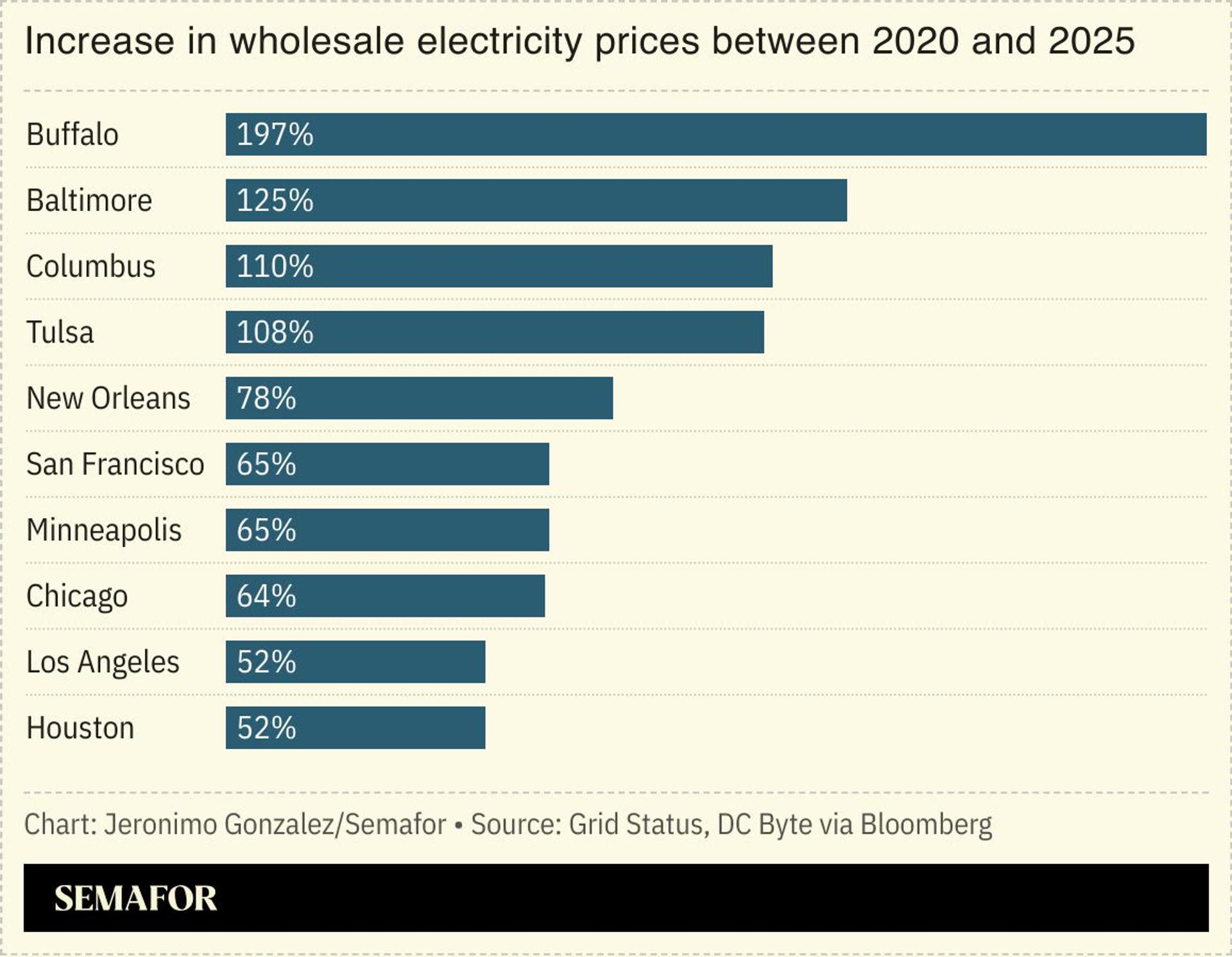

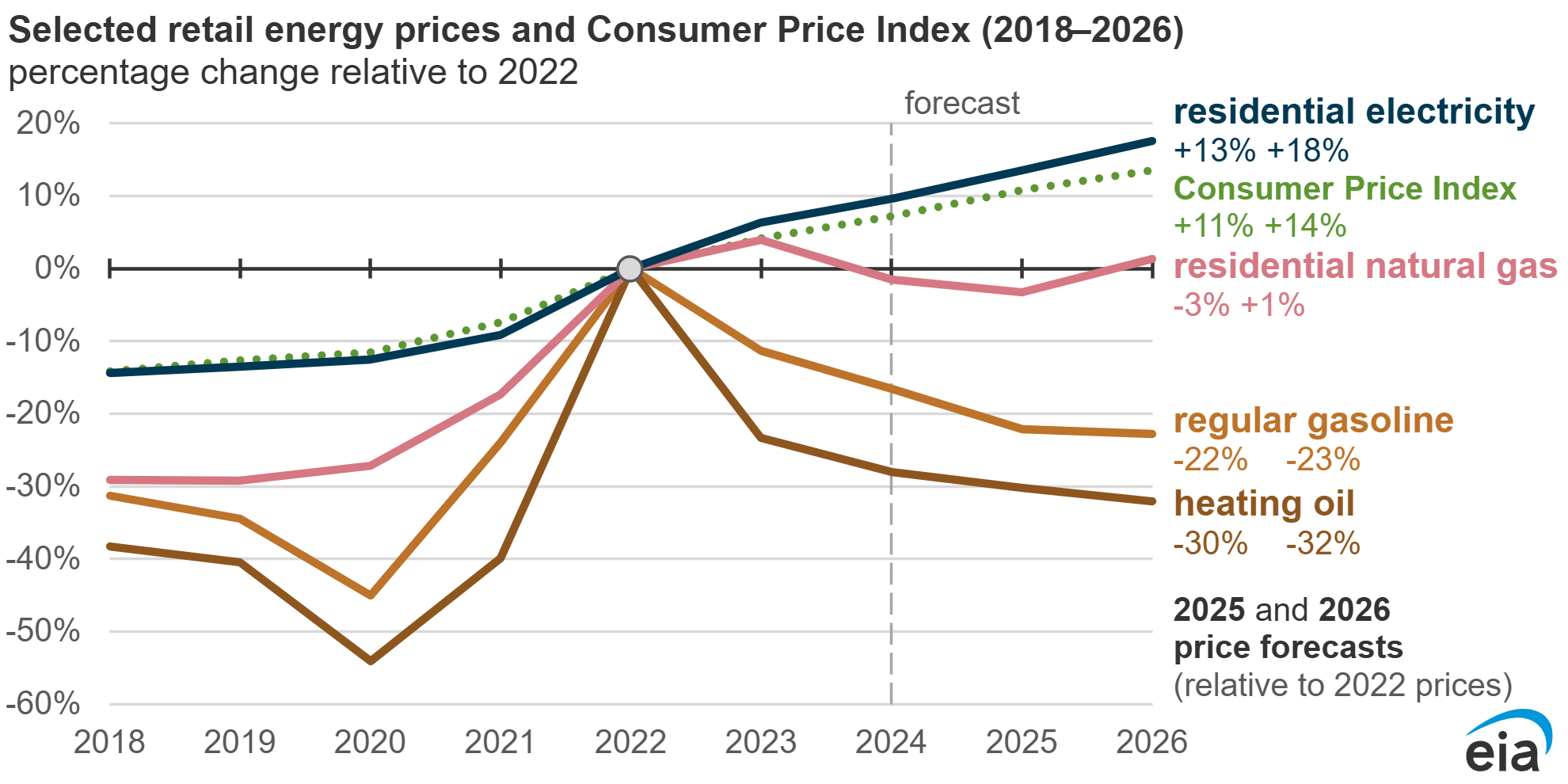

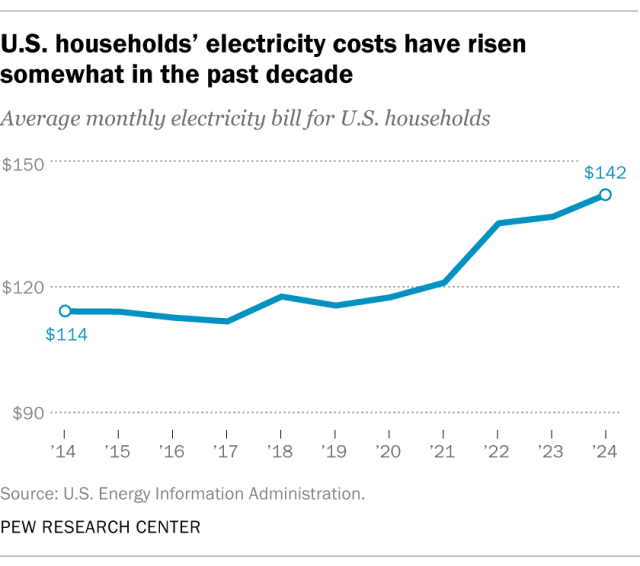

Here's the tension: When a massive tech company builds a data center in a region, it doesn't just tap into unlimited power. It strains the existing grid. Local utilities have to upgrade infrastructure, build new transmission lines, and sometimes even construct entirely new power plants. These costs don't vanish. They get passed along to the people living in those communities through higher electricity bills, as noted by Stateline.

So the question became unavoidable: Should the cost of an AI company's growth fall on consumers who had no say in that choice?

President Trump asked this question directly in January 2025. He posted on Truth Social urging Big Tech companies to "pay their own way" and stop leaving American ratepayers holding the bill for their massive energy demands. It was a blunt statement that reflected growing public concern about AI's environmental and economic footprint, as reported by Governing.

Then something unexpected happened. Major AI companies started responding.

Microsoft pledged to cover energy costs. Anthropic went further, making specific commitments about grid upgrades and power procurement. These weren't vague promises. They were detailed commitments with concrete financial backing.

But here's what most articles miss: This isn't just corporate PR. It's a fundamental shift in how the AI industry is thinking about its infrastructure footprint. And it has massive implications for how AI companies will expand, where they'll build, and how much they'll pay to do it.

Let's dig into what's actually happening, why it matters, and what it means for the future of AI development.

TL;DR

- AI data centers consume enormous amounts of electricity, often drawing as much power as cities of hundreds of thousands of people

- Trump and regulators demanded Big Tech cover their own energy costs, pushing back against grid upgrade expenses being passed to consumers

- Anthropic and Microsoft made specific pledges to pay for grid upgrades, new power generation, and demand-driven price increases

- This fundamentally changes AI expansion economics, making it more expensive to build new facilities in high-demand regions

- Long-term impact: Expect AI companies to seek renewable energy sources, build data centers in less congested areas, and develop more energy-efficient AI models

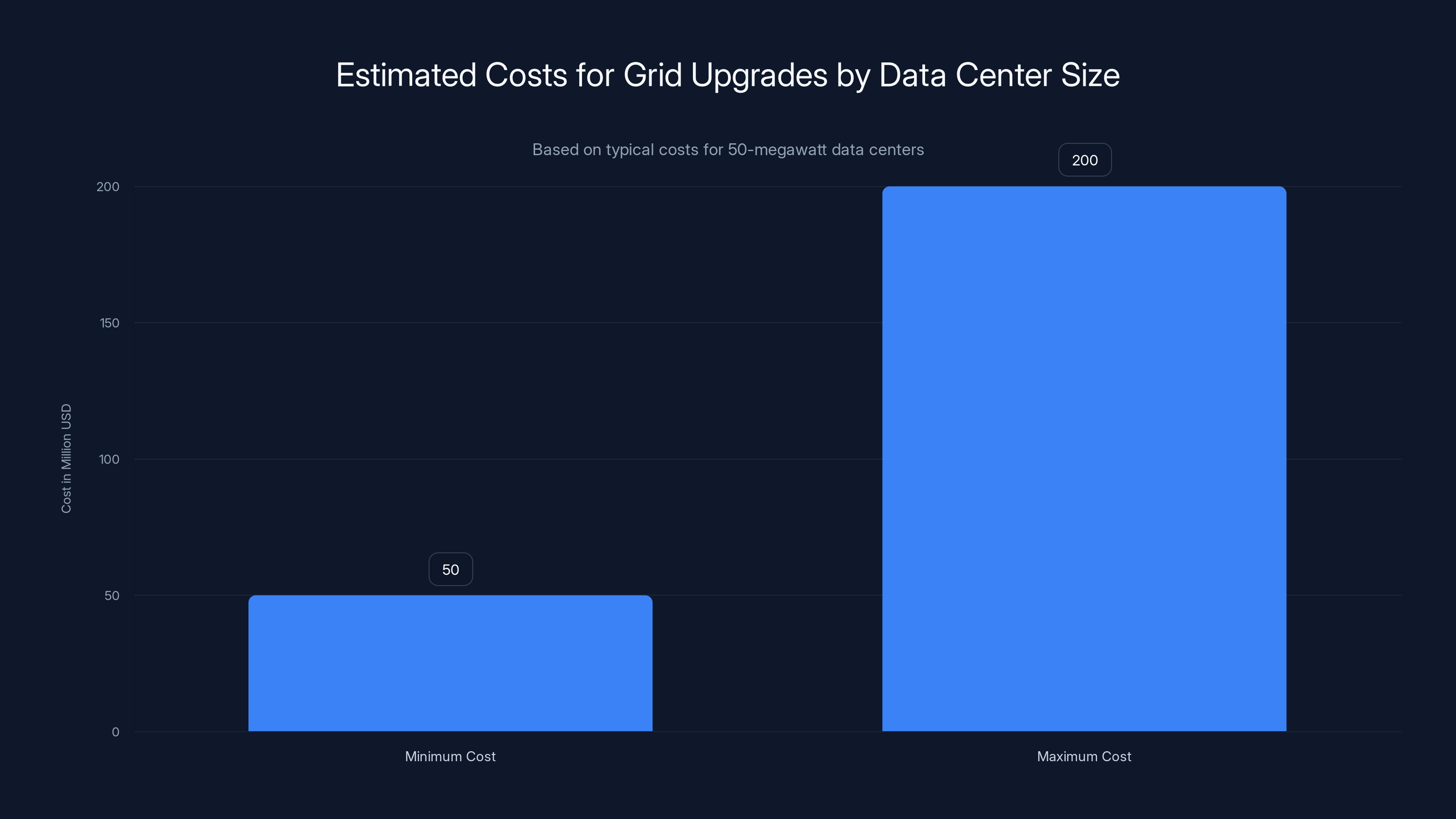

Anthropic's commitment to cover 100% of grid upgrade costs for a 50-megawatt data center ranges from

Understanding AI Data Center Power Consumption

How Much Power Does AI Actually Need?

Let's establish baseline numbers, because the scale is genuinely hard to comprehend.

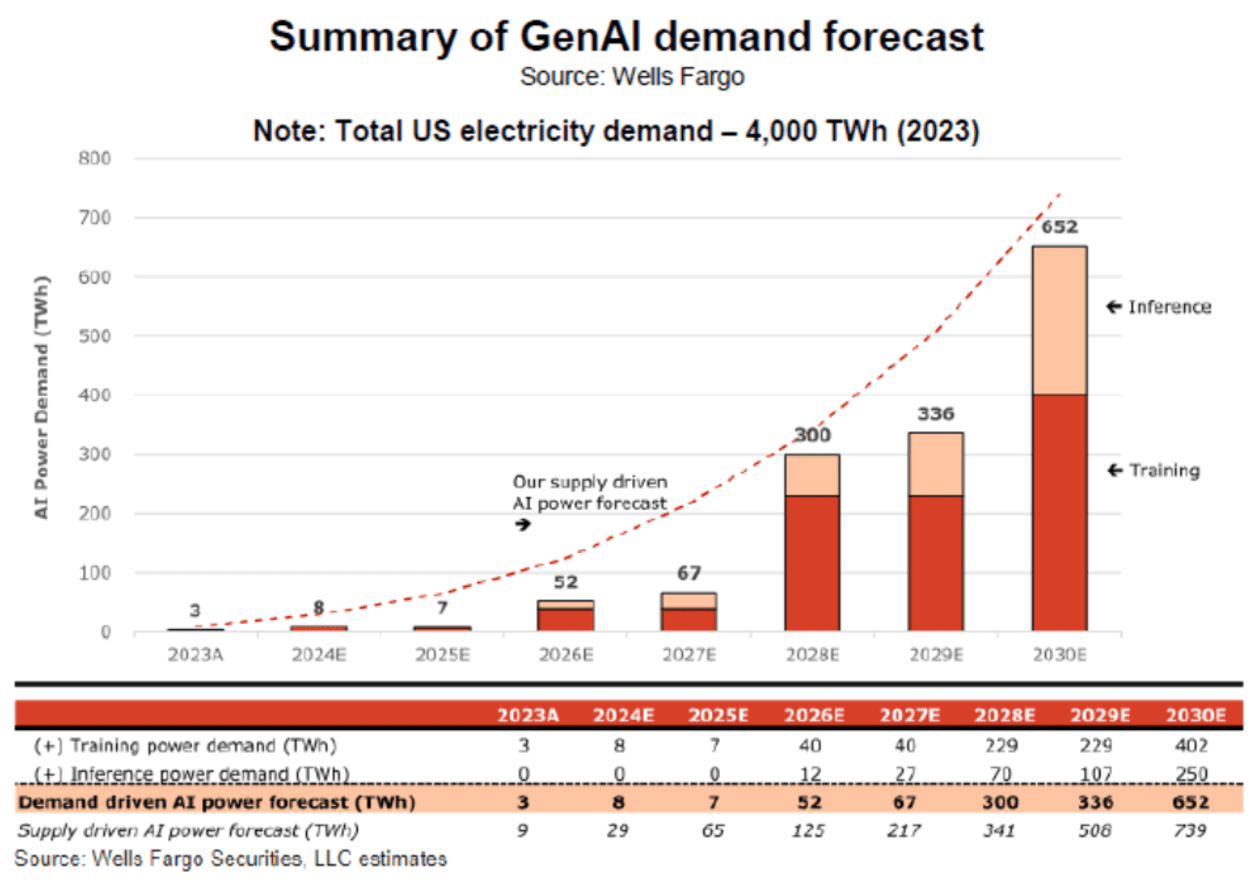

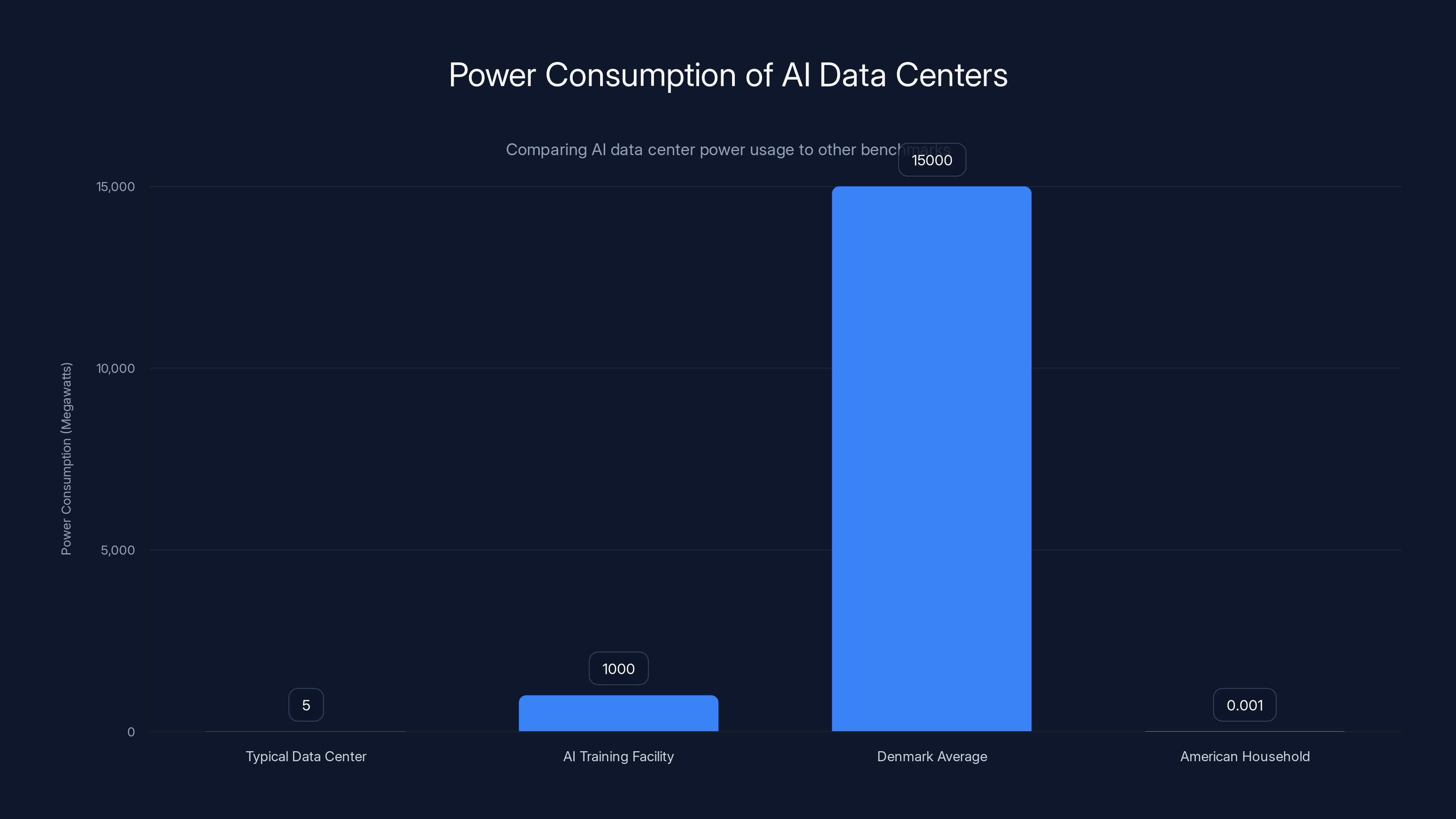

A typical data center might consume between 5 and 50 megawatts of continuous power. But cutting-edge AI training facilities? They're often 10 to 100 times that. Some estimates suggest that training a single large language model can draw over 1,000 megawatts during peak periods. That's comparable to a nuclear power plant's output, as highlighted by Galaxy.

To put this in perspective, the entire country of Denmark consumes roughly 15,000 megawatts on average. A single AI training run can represent 6-8% of that country's total power consumption. For context, the average American household uses about 1 kilowatt continuously.
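The comparisons above are simple ratios. A minimal Python sketch, using only the figures quoted in this section (a 1,000 MW training run, Denmark at roughly 15,000 MW, about 1 kW per household), makes the arithmetic explicit:

```python
# Figures quoted in the text above; all are rough averages.
TRAINING_RUN_MW = 1_000   # peak draw of a large training run
DENMARK_AVG_MW = 15_000   # Denmark's average consumption, as stated above
HOUSEHOLD_AVG_KW = 1.0    # average U.S. household, continuous draw

def share_of(load_mw: float, reference_mw: float) -> float:
    """Return load_mw as a percentage of reference_mw."""
    return 100 * load_mw / reference_mw

def household_equivalents(load_mw: float, household_kw: float) -> float:
    """How many average households draw the same continuous power."""
    return load_mw * 1_000 / household_kw  # 1 MW = 1,000 kW

denmark_share = share_of(TRAINING_RUN_MW, DENMARK_AVG_MW)
households = household_equivalents(TRAINING_RUN_MW, HOUSEHOLD_AVG_KW)
print(f"{denmark_share:.1f}% of Denmark; ~{households:,.0f} households")
```

At about 6.7%, the result sits inside the 6-8% range above, and the same 1,000 MW equals the continuous draw of roughly a million average households.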

Why AI Models Demand So Much Energy

AI models aren't magical. They're massive mathematical operations running across thousands of specialized processors working in parallel. Each operation generates heat. Each processor requires cooling. Cooling systems require even more electricity.

Think of it like this: If a traditional software application is a small bakery oven, an AI training facility is a massive industrial furnace. The heat output doesn't just disappear. It has to be managed, cooled, and dissipated. That's expensive.

The problem gets worse during inference (when the model is actually being used). A single query to OpenAI's GPT-4 runs trillions of calculations. Billions of people using these systems means billions of queries per day, each one consuming measurable energy. The math becomes staggering.
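To see why the math adds up, multiply a per-query energy cost by daily query volume. Both inputs below are hypothetical placeholders (1 billion queries a day at 0.3 Wh each), not measured figures for GPT-4 or any other model; swap in published estimates if you have them.

```python
def daily_inference_energy_mwh(queries_per_day: float, wh_per_query: float) -> float:
    """Total daily inference energy in MWh (1 MWh = 1,000,000 Wh)."""
    return queries_per_day * wh_per_query / 1_000_000

def average_power_mw(daily_mwh: float) -> float:
    """Convert energy per day into an equivalent continuous power draw."""
    return daily_mwh / 24

# Hypothetical inputs, not measurements of any real service.
QUERIES_PER_DAY = 1_000_000_000
WH_PER_QUERY = 0.3

daily = daily_inference_energy_mwh(QUERIES_PER_DAY, WH_PER_QUERY)
print(f"{daily:,.0f} MWh/day ~ {average_power_mw(daily):,.1f} MW continuous")
```

Even at a fraction of a watt-hour per query, this volume works out to a continuous load inside the 5-50 MW range of a typical data center, before counting any training.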

Grid Impact and Infrastructure Strain

When a large AI facility arrives in a region, local power grids aren't prepared. Most infrastructure was built 20, 30, or 40 years ago with different consumption patterns in mind.

Sudden massive power demand forces utilities to make expensive choices: upgrade existing infrastructure, build new transmission lines, construct new power generation capacity, or use expensive peaker plants that only run during peak demand. All of these carry enormous upfront costs, as discussed in Atmos.

These costs get recovered through utility rates. When rates go up, everyone in the region pays more, regardless of whether they use AI services or benefit from the data center's presence.
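A rough sketch of how an upgrade cost might be spread across customers' bills. It assumes simple straight-line recovery with no financing cost; real rate cases add return on equity, depreciation schedules, and allocation between customer classes, and every number below is hypothetical.

```python
def annual_bill_increase(upgrade_cost: float, recovery_years: int, customers: int) -> float:
    """Per-customer annual charge under straight-line recovery (no interest)."""
    if recovery_years <= 0 or customers <= 0:
        raise ValueError("recovery_years and customers must be positive")
    return upgrade_cost / recovery_years / customers

# Hypothetical: a $500M upgrade recovered over 20 years from 1M customers.
per_customer = annual_bill_increase(500_000_000, 20, 1_000_000)
print(f"${per_customer:.2f} per customer per year")
```

Every customer in the rate base pays that charge whether or not they use AI services, which is the cost-shifting this article is about.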

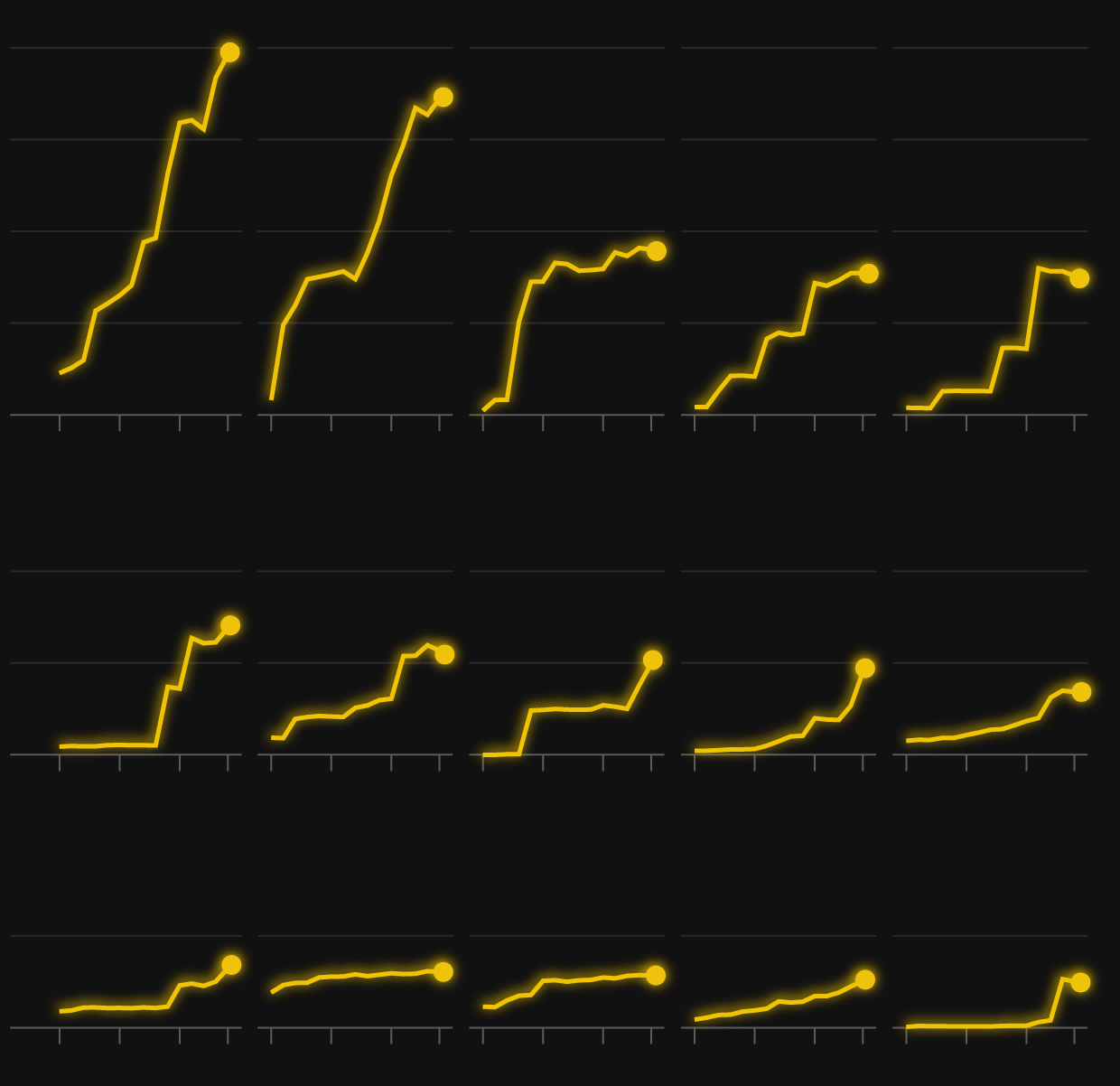

AI data centers consume significant power, with large facilities using 50-100 MW, comparable to the needs of a small city. Estimated data.

The Political Pressure: Trump's January 2025 Statement

The Catalyst

In January 2025, President Trump issued what seemed like a casual statement on Truth Social, but it was anything but casual. He directly called out major tech companies, saying they needed to "pay their own way" for their data center operations and that they shouldn't pass energy costs to American consumers.

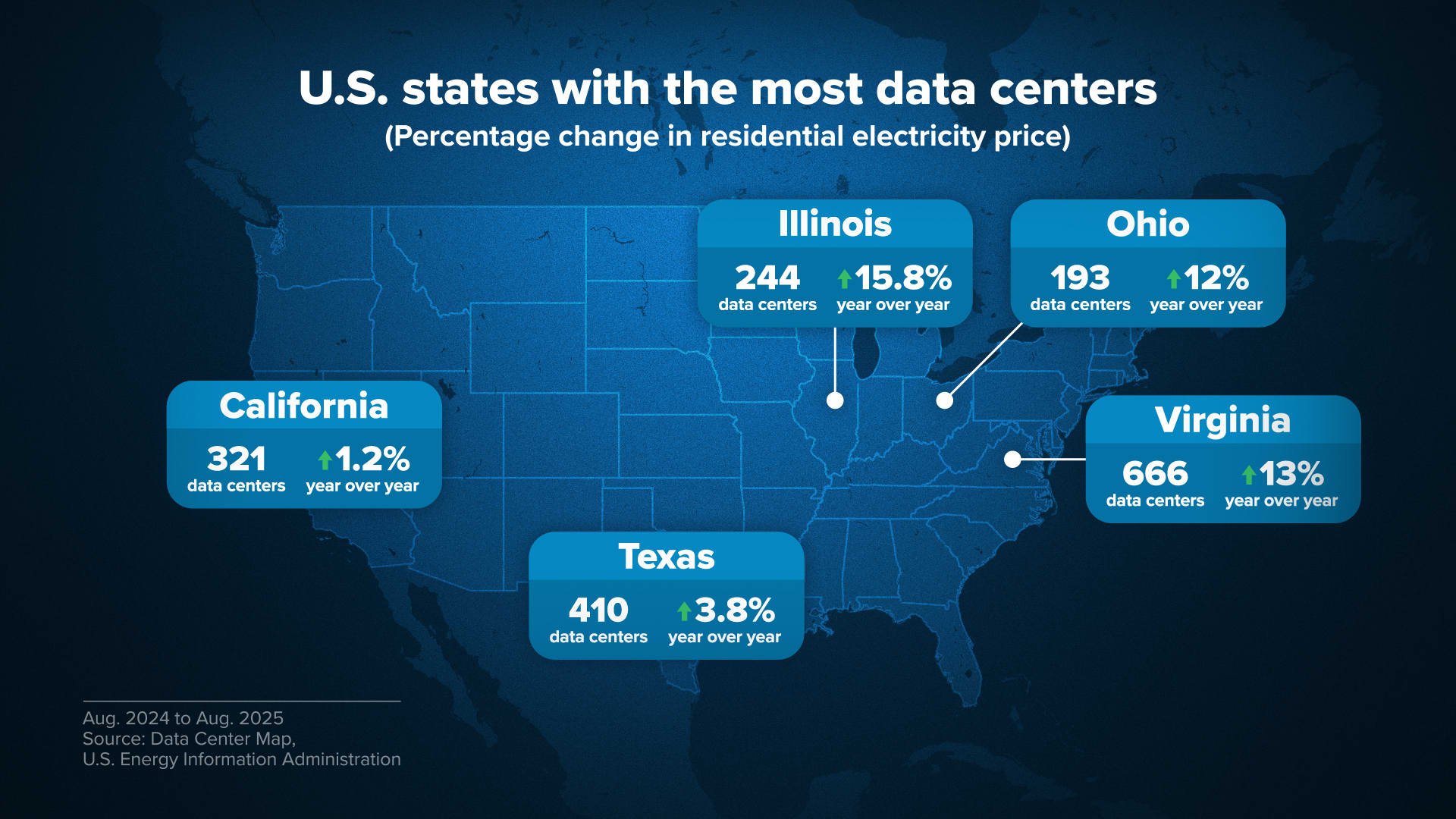

The timing wasn't random. It came after months of reporting about electricity bill increases in regions hosting new AI data centers. Communities in states like Virginia, Ohio, and North Carolina saw utility rate filings that explicitly cited "AI data center demand" as a driver of infrastructure upgrade costs.

Why This Mattered

Trump's statement did something important: It gave political cover to utility regulators who were increasingly skeptical of allowing data center operators to shift costs to consumers. If the federal government was saying companies should pay their own way, state utility commissions felt emboldened to demand the same.

Moreover, Trump's statement created a dilemma for AI companies. They had three options:

- Accept higher operational costs by paying for grid upgrades themselves

- Face regulatory backlash and potential franchise fee increases

- Relocate data centers to other countries (not an option for most, due to national security concerns)

Most chose option one. Paying for upgrades became the path of least resistance.

Market Response

Within weeks of Trump's statement, major tech companies issued their own statements. Microsoft announced plans for grid-aware data center placement and committed to purchasing renewable energy to offset new facilities. Anthropic went further with specific financial commitments.

This wasn't altruism. It was competitive strategy. Companies that could absorb costs more efficiently would win market share against competitors who couldn't. Efficiency became a competitive advantage.

Anthropic's Specific Commitments: The Details

100% Grid Upgrade Cost Coverage

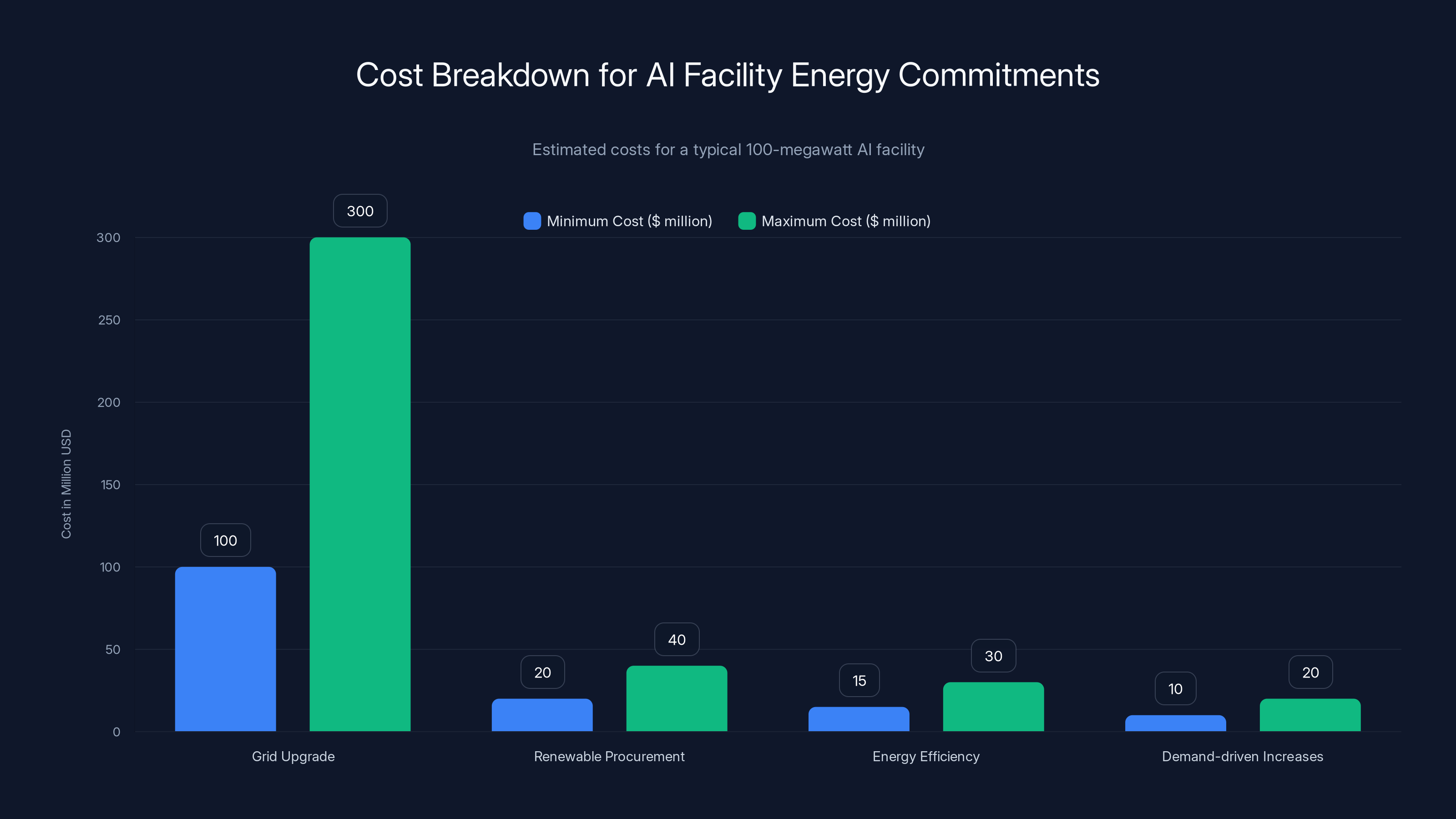

Anthropic CEO Dario Amodei made a specific pledge: The company would pay 100% of grid upgrade costs needed to connect its data centers. This isn't theoretical. Let's break down what this means in actual dollars.

Upgrading power infrastructure to support a new 50-megawatt data center typically costs between

That's a massive financial commitment. It changes the economics of data center placement dramatically. Now, instead of looking at cheap land and existing power capacity, Anthropic has to weigh the cost of grid upgrades against the benefits of that specific location.

Net-New Power Generation Procurement

Anthropic also committed to procuring "net-new power generation where possible to match its consumption." This is the renewable energy piece.

Instead of plugging into the existing grid and increasing demand on fossil fuel plants, Anthropic will build or contract for equivalent amounts of new renewable capacity. This means solar farms, wind installations, or battery storage systems that supply power exclusively for the data center's operations.

This commitment has a hidden benefit: It forces AI companies to accelerate renewable energy development. Instead of waiting for government incentives or market forces to drive solar and wind growth, massive tech companies are now funding it directly as part of their operational requirements.

Demand-Driven Price Increase Coverage

Here's where it gets complex. When you can't procure new power generation (maybe the location doesn't have good solar or wind resources), Anthropic committed to covering "estimated demand-driven price increases."

What does this mean in practice? Let's model it:

Suppose a region's electricity costs

Anthropic would pay the $1.50 per megawatt-hour difference for all electricity consumed in that region, compensating consumers for the cost increase caused by the data center's presence.
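The compensation idea reduces to a simple product: the estimated price increase times the energy consumed. This sketch uses the $1.50 per megawatt-hour difference from the example; the regional consumption figure (40 TWh a year) is a hypothetical placeholder.

```python
def demand_driven_compensation(price_increase_per_mwh: float, regional_mwh: float) -> float:
    """Dollars owed if a company covers an estimated price increase on all regional consumption."""
    return price_increase_per_mwh * regional_mwh

PRICE_INCREASE = 1.50       # $/MWh, from the example above
REGIONAL_MWH = 40_000_000   # hypothetical: 40 TWh consumed in the region per year

owed = demand_driven_compensation(PRICE_INCREASE, REGIONAL_MWH)
print(f"${owed:,.0f} per year")
```

The multiplication is the easy part. The hard part is the first input: isolating how much of a price change the data center actually caused.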

But here's the catch: This only works if you can accurately predict demand-driven price increases. In reality, electricity markets are complex. Prices fluctuate based on weather, other industrial demand, seasonal patterns, and fuel costs. Separating "price increase caused by Anthropic" from "price increase caused by other factors" is genuinely difficult.

Energy Efficiency Improvements

Beyond paying for infrastructure, Anthropic committed to deploying efficiency improvements like liquid cooling systems. This is the overlooked piece of the puzzle.

Traditional data centers use air cooling, which is inefficient and requires enormous fans constantly running. Liquid cooling circulates cool water directly over hot components, removing heat far more effectively. The efficiency gains are substantial—often reducing energy consumption by 20-30%.
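One common way to express cooling overhead is power usage effectiveness (PUE): total facility energy divided by the energy delivered to IT equipment. The PUE values below (1.5 for air cooling, 1.15 for liquid cooling) are illustrative assumptions, not measurements of any specific facility, but they show how a saving in the 20-30% range can arise.

```python
def facility_energy_mwh(it_energy_mwh: float, pue: float) -> float:
    """Total facility energy = IT energy x PUE (PUE is 1.0 or higher by definition)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_mwh * pue

def savings_fraction(pue_before: float, pue_after: float) -> float:
    """Fractional cut in total facility energy for the same IT workload."""
    return 1 - pue_after / pue_before

IT_LOAD_MWH = 500_000  # hypothetical annual IT energy

before = facility_energy_mwh(IT_LOAD_MWH, 1.5)   # assumed air-cooled PUE
after = facility_energy_mwh(IT_LOAD_MWH, 1.15)   # assumed liquid-cooled PUE
print(f"{before - after:,.0f} MWh saved ({savings_fraction(1.5, 1.15):.0%})")
```

PUE only captures overhead such as cooling and power conversion; it says nothing about how efficiently the chips themselves use energy.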

Anthropic's investment in these technologies across its facilities means lower overall power demand, which reduces grid upgrade costs and makes the company's commitment more achievable long-term.

The first-year cost for energy commitments in AI facilities ranges from

Microsoft's Approach: A Different Strategy

Early Mover Advantage

Microsoft was already making energy commitments before Trump's statement, though not for purely altruistic reasons. The company realized that controlling its energy narrative was good business.

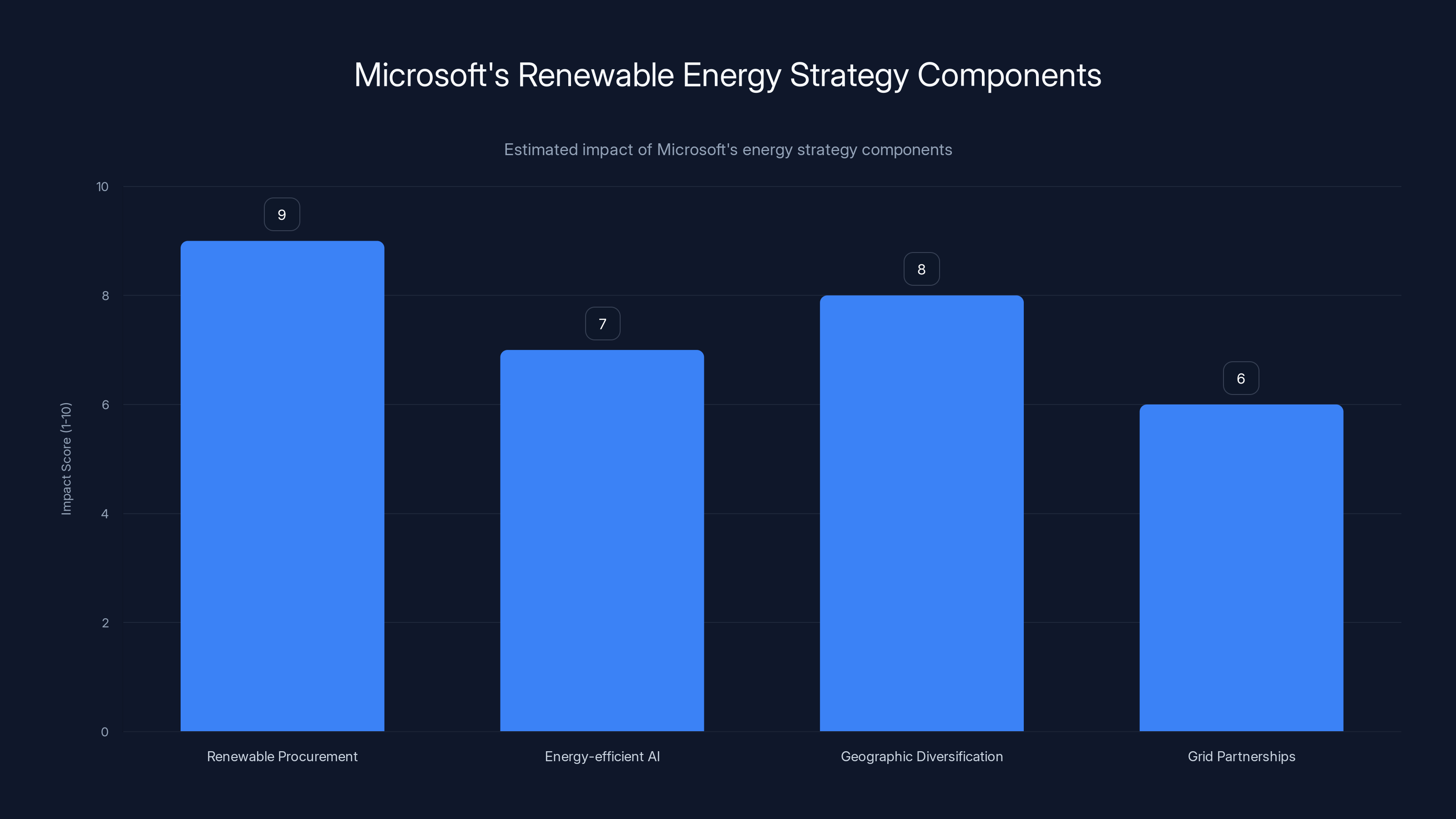

Microsoft announced a comprehensive energy strategy that includes:

- Renewable energy procurement: Signing massive deals for wind and solar capacity

- Energy-efficient AI models: Investing heavily in model optimization

- Geographic diversification: Building data centers in areas with abundant renewable resources

- Grid partnership programs: Working with utilities on demand-response initiatives

Microsoft's approach is broader than Anthropic's. Instead of just covering costs caused by its facilities, Microsoft is attempting to change how AI companies think about energy holistically.

Renewable Energy at Scale

Microsoft has committed to purchasing enough renewable energy to cover 100% of its data center operations by 2025. This is a massive undertaking. The company is contracting for gigawatts of new wind and solar capacity.

But here's what makes this strategically interesting: By committing to huge renewable purchases, Microsoft is accelerating the buildout of renewable infrastructure across the entire grid. This benefits everyone, not just Microsoft customers.

Competitive Positioning

Microsoft's renewable energy strategy also positions the company favorably with customers and regulators. Companies choosing cloud providers increasingly consider environmental impact. By positioning itself as the AI company with the lowest carbon footprint, Microsoft aims to gain competitive advantages in the enterprise market.

The Broader Industry Response

Who Else Made Commitments?

Beyond Microsoft and Anthropic, other AI companies have made various pledges:

- Google committed to carbon-neutral operations and renewable energy procurement

- OpenAI hasn't made public commitments as specific as Anthropic's, but is exploring partnerships with power companies

- NVIDIA is working with partners on energy-efficient chip designs

- Numerous startups are racing to build more efficient AI models specifically to reduce power consumption

The Skeptics

Not everyone believes these commitments solve the problem. Critics argue:

Energy math doesn't work: Even with efficiency improvements, AI demand is growing faster than renewable capacity. Some regions will eventually run out of energy options.

Cost shifting, not elimination: Paying for grid upgrades doesn't eliminate the environmental impact. It just means tech company shareholders absorb costs instead of electricity consumers.

Renewable energy is finite: There's only so much renewable generation capacity in the world. If multiple AI companies are all competing for renewable power, prices will rise, making the commitments harder to maintain.

Timing complications: Building renewable capacity takes time. Some regions simply can't build enough renewable infrastructure fast enough to match AI data center growth.

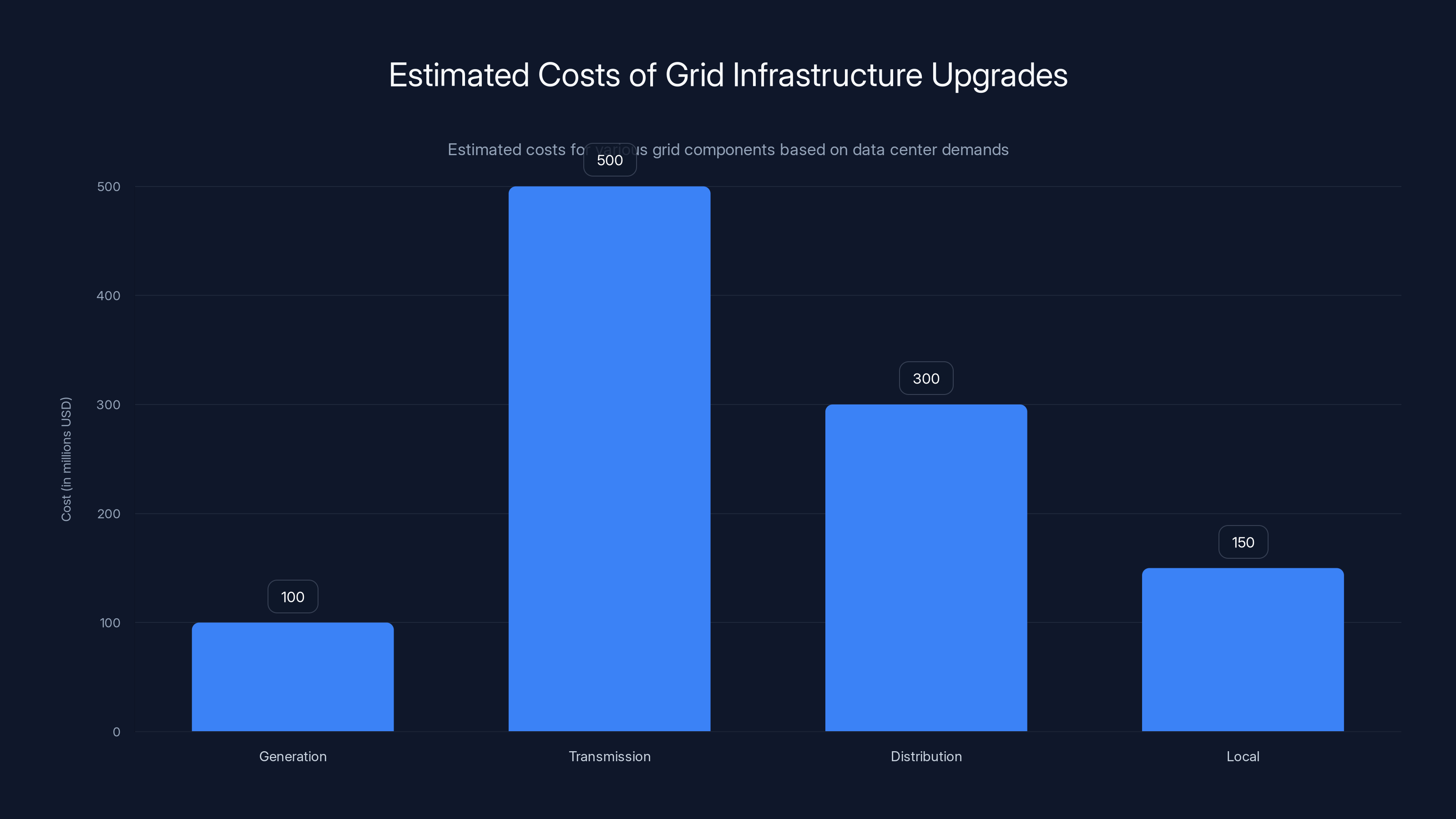

Estimated data shows transmission upgrades are the most costly, requiring significant investment compared to other grid components.

Economic Impact: The Real Numbers

Cost Implications for AI Companies

Let's quantify what these commitments actually mean financially.

For a typical 100-megawatt AI facility:

Grid upgrade costs:

Total first-year cost:

These costs are significant. For context, building a data center traditionally costs $150-500 million. Now you're adding 10-80% to that cost just to handle energy commitments.

Impact on Data Center Economics

These costs fundamentally change where data centers get built. Traditional logic might suggest building near cheap land with cheap electricity. But now the calculation is:

(Land cost) + (Energy cost) + (Renewable procurement cost) + (Grid upgrade cost) + (Efficiency system cost) - (Tax incentives)

Regions with abundant renewable energy, minimal grid upgrades needed, and good tax incentives suddenly become much more attractive. Places like Iowa, Wyoming, and Oregon (with abundant wind and hydro) become preferred locations. Places like New Jersey or California (with expensive land and grid congestion) become less attractive.
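The site-selection formula above translates directly into code. The two sites and every dollar figure below are hypothetical, chosen only to show how a site with more expensive land can still win once grid upgrades enter the sum.

```python
from dataclasses import dataclass

@dataclass
class Site:
    """Cost components from the formula above, in millions of dollars (hypothetical)."""
    name: str
    land: float
    energy: float
    renewable_procurement: float
    grid_upgrade: float
    efficiency_systems: float
    tax_incentives: float

    def total_cost(self) -> float:
        # (Land) + (Energy) + (Renewables) + (Grid upgrade) + (Efficiency) - (Tax incentives)
        return (self.land + self.energy + self.renewable_procurement
                + self.grid_upgrade + self.efficiency_systems - self.tax_incentives)

sites = [
    Site("congested metro", land=20, energy=90, renewable_procurement=40,
         grid_upgrade=250, efficiency_systems=30, tax_incentives=10),
    Site("wind-rich plains", land=60, energy=70, renewable_procurement=15,
         grid_upgrade=40, efficiency_systems=30, tax_incentives=25),
]

best = min(sites, key=Site.total_cost)
for s in sites:
    print(f"{s.name}: ${s.total_cost():.0f}M")
print("cheapest:", best.name)
```

Here the metro site's cheap land is swamped by its grid upgrade bill, which is the shift in placement logic described above.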

This is already happening. AI companies are actively shifting data center placement toward renewable-rich regions, and this trend will accelerate.

Grid Infrastructure: The Upgrade Reality

What Actually Needs Upgrading?

When a utility says "grid upgrades," what does that mean?

Power flows from generation facilities to your home through a network of infrastructure:

- Generation: Power plants, wind farms, solar arrays

- Transmission: High-voltage lines carrying power across regions (345 kV, 765 kV)

- Distribution: Medium-voltage lines in neighborhoods (13 kV, 25 kV)

- Local: Low-voltage service to individual buildings

When a 100-megawatt data center connects to the grid, it might require upgrades at multiple levels. Maybe the transmission line capacity is maxed out (needs new lines). Maybe the local substation transformer is undersized (needs replacement). Maybe there's insufficient reactive power support (needs capacitor banks).
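One way to picture this is as a headroom check at each level of the grid: compare the new load against the spare capacity at every level and flag the ones that fall short. The capacities below are invented for illustration; a real interconnection study also models power flow, contingencies, and reactive power, all of which this sketch ignores.

```python
def levels_needing_upgrade(new_load_mw: float, headroom_mw: dict[str, float]) -> list[str]:
    """Return the grid levels whose spare capacity is smaller than the new load."""
    return [level for level, spare in headroom_mw.items() if spare < new_load_mw]

# Hypothetical spare capacity at each level serving a candidate site, in MW.
headroom = {
    "transmission line": 80,
    "substation transformer": 60,
    "distribution feeder": 150,
}

print(levels_needing_upgrade(100, headroom))
```

For a 100 MW load, both the transmission line and the substation transformer come up short, so the project needs upgrades at two levels at once.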

Each upgrade is expensive and time-consuming. Transmission line construction alone can take 5-10 years just for permitting and environmental review, not counting actual construction.

Real Examples

Virginia's Loudoun County is experiencing this directly. The county now hosts about 30% of the world's data center capacity. New data centers arriving in the region recently triggered utility requests for $2+ billion in infrastructure upgrades over the next five years.

Under traditional models, these costs would be spread across all electricity ratepayers. Under the new model, data center operators pay for upgrades caused by their facilities.

A Dominion Energy filing from 2024 showed that a single 50-megawatt data center required about $250 million in grid upgrades. Under a commitment like Anthropic's, the data center operator would pay that bill, not consumers.

The Renewable Energy Infrastructure Challenge

Here's where things get genuinely difficult. Procuring net-new renewable energy sounds simple until you confront logistics.

Building a utility-scale solar farm requires:

- Land: 5-10 acres per megawatt

- Permitting: 1-3 years

- Construction: 1-2 years

- Grid connection: 6-18 months

- Total timeline: 3-5 years

Building a wind farm requires similar timelines. Battery storage? 4-6 years.
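Matching a data center's consumption with solar takes more nameplate capacity than the data center's own rating, because panels only produce during part of the day. This sketch assumes a 25% solar capacity factor (a hypothetical round number; actual values vary by location) and applies the 5-10 acres per megawatt figure from the list above.

```python
def solar_nameplate_mw(load_mw: float, capacity_factor: float) -> float:
    """Nameplate solar needed to match a continuous load's annual energy."""
    return load_mw / capacity_factor

def land_acres(nameplate_mw: float, acres_per_mw: float) -> float:
    """Land footprint for a given solar nameplate capacity."""
    return nameplate_mw * acres_per_mw

nameplate = solar_nameplate_mw(100, 0.25)  # 100 MW data center, assumed 25% capacity factor
low, high = land_acres(nameplate, 5), land_acres(nameplate, 10)
print(f"{nameplate:.0f} MW of panels on {low:,.0f}-{high:,.0f} acres")
```

Matching annual energy this way still leaves nighttime hours uncovered, so storage or grid power has to fill the gap.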

Meanwhile, AI companies want to expand data centers now. This creates a temporal mismatch. Companies commit to matching their consumption with renewable generation, but they can't build the renewable generation fast enough.

The solution? Buying renewable energy credits (RECs) from existing facilities. But there's a finite supply of RECs. When multiple AI companies are all buying RECs simultaneously, prices rise.

AI training facilities can consume up to 1,000 megawatts, significantly more than typical data centers and comparable to national power usage levels. Estimated data.

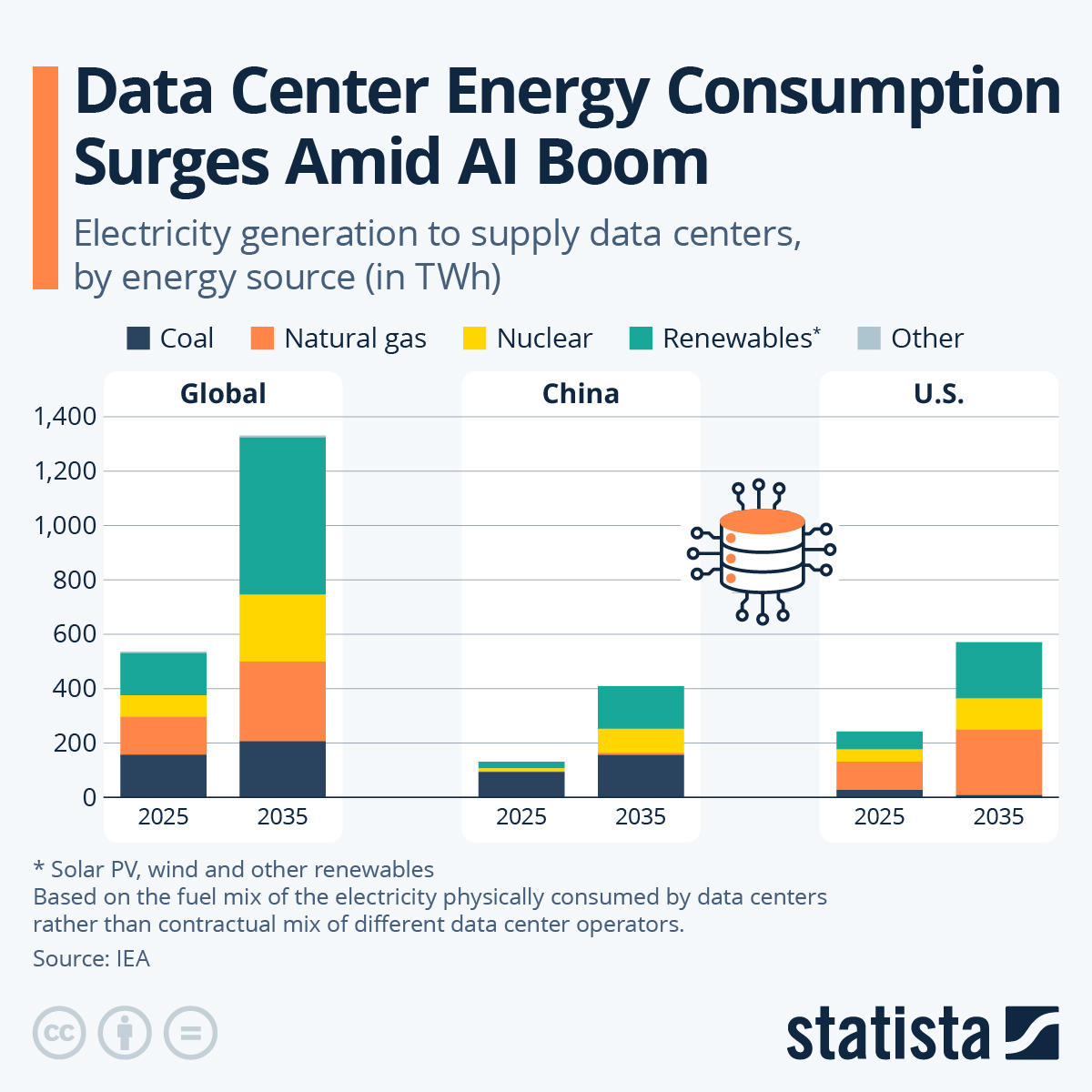

Environmental Impact: Beyond the Energy Bill

Carbon Footprint Reality

Let's be clear: Renewable energy procurement reduces carbon emissions, but it doesn't eliminate them entirely.

A 100-megawatt AI data facility running at full load year-round consumes about 876,000 megawatt-hours annually (100 MW × 8,760 hours) and produces:

- With coal power: 700,000+ metric tons of CO2 annually

- With natural gas power: 450,000+ metric tons of CO2 annually

- With renewable power: 50,000+ metric tons of CO2 (embodied carbon from manufacturing)

Committing to renewable power eliminates 85-95% of emissions. That's significant. But it's not zero.
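The emissions figures follow from annual energy times an emissions factor. The factors below (metric tons of CO2 per MWh) are assumptions back-calculated to roughly reproduce the numbers above, not official values for any grid or plant.

```python
HOURS_PER_YEAR = 8_760

# Assumed emissions factors in metric tons CO2 per MWh, chosen to roughly
# match the figures in the text; real factors vary by plant and grid.
EMISSION_FACTORS = {"coal": 0.80, "natural gas": 0.52, "renewable": 0.06}

def annual_mwh(load_mw: float) -> float:
    """Energy used by a load running at full power all year."""
    return load_mw * HOURS_PER_YEAR

def annual_tons(load_mw: float, source: str) -> float:
    """Annual CO2 for a constant load supplied entirely by one source."""
    return annual_mwh(load_mw) * EMISSION_FACTORS[source]

print(f"annual energy: {annual_mwh(100):,.0f} MWh")
tons = {src: annual_tons(100, src) for src in EMISSION_FACTORS}
for src, t in tons.items():
    print(f"{src}: {t:,.0f} t CO2")

cut_vs_coal = 1 - tons["renewable"] / tons["coal"]
cut_vs_gas = 1 - tons["renewable"] / tons["natural gas"]
print(f"reduction: {cut_vs_gas:.0%} vs gas, {cut_vs_coal:.0%} vs coal")
```

Under these assumed factors, both reductions land inside the 85-95% range quoted above.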

Water Consumption

Here's something often overlooked: AI data centers need water, lots of it.

Cooling systems use water (either through evaporative cooling or water-based cooling systems). A 100-megawatt facility might consume 1-2 million gallons of water daily.
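A common way to normalize water use is water usage effectiveness (WUE): liters of water per kilowatt-hour of IT energy. This sketch converts the 1-2 million gallons per day above into WUE, under the simplifying assumption that the full 100 MW is IT load running around the clock.

```python
LITERS_PER_GALLON = 3.785  # U.S. gallon, rounded

def wue_l_per_kwh(gallons_per_day: float, it_load_mw: float) -> float:
    """Liters of water per kWh of IT energy, for a constant load."""
    kwh_per_day = it_load_mw * 1_000 * 24
    return gallons_per_day * LITERS_PER_GALLON / kwh_per_day

for gallons in (1_000_000, 2_000_000):
    print(f"{gallons:,} gal/day -> {wue_l_per_kwh(gallons, 100):.2f} L/kWh")
```

Expressing water use per unit of energy makes facilities of different sizes comparable, which matters if regulators start setting water limits alongside energy rules.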

In water-stressed regions (California, Texas, Southwest), this becomes a major environmental issue. Anthropic's commitment addresses energy costs but doesn't directly address water consumption. This is becoming a secondary pressure point for regulators.

Ecosystem Impact

Building new renewable energy infrastructure has its own environmental costs:

- Solar farms: Land use impacts, wildlife habitat disruption

- Wind farms: Bird and bat mortality, noise concerns

- Hydroelectric: Ecosystem disruption, fish migration impacts

- Geothermal: Induced seismicity (rare but concerning)

The commitment to renewable energy doesn't eliminate environmental impact. It shifts impact from carbon emissions to other environmental factors.

Regulatory Landscape: What Comes Next

State-Level Regulations

Individual states are already moving beyond Trump's general request. Specific regulatory actions:

Virginia is considering explicit requirements for data center operators to demonstrate renewable energy procurement before approval.

New York is exploring "energy impact fees" that would require companies to reimburse the state for any electricity cost increases caused by their facilities.

Texas is debating whether data centers should be treated like other industrial users or given special treatment.

California is effectively requiring data centers to be carbon-neutral through renewable energy mandates.

These aren't coordinated. Each state is developing its own rules independently, creating a patchwork of requirements that AI companies must navigate.

Federal Possibilities

There's no federal mandate yet, but conversations are happening. Potential federal approaches could include:

- Tax incentives for companies that pay grid upgrade costs voluntarily

- Federal standards for energy efficiency in data centers

- Renewable energy requirements for companies receiving government contracts

- Environmental impact fees that fund renewable infrastructure buildout

International Implications

This is becoming a competitive issue globally. The European Union is considering even stricter requirements for AI infrastructure energy sourcing. Countries competing for AI investment are strategically positioning themselves by offering renewable-rich regions with favorable regulations.

Ireland, for example, is leveraging abundant renewable energy to attract data centers. Singapore is investing heavily in renewable energy specifically to support AI industry growth.

Microsoft's comprehensive energy strategy includes renewable procurement and AI model optimization, with renewable procurement having the highest strategic impact. Estimated data.

The Efficiency Revolution: Building Better AI

Model Optimization

While companies were committing to paying for energy, they were simultaneously investing in making AI models more efficient. These efforts are running parallel and reinforcing each other.

Model compression techniques reduce the size of AI models by 50-70% without significant accuracy loss. Smaller models require less energy to run.

Quantization reduces numerical precision (using 8-bit instead of 32-bit numbers). This saves computation and energy while maintaining accuracy in most cases.
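A minimal sketch of one simple scheme, symmetric per-tensor int8 quantization, in plain Python. Production frameworks use more refined variants (per-channel scales, calibration, quantization-aware training), so treat this as an illustration of the idea rather than how any particular library does it. The weights are made-up values.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to int8 codes with a single scale, so that w ~= q * scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale

def dequantize(codes: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 codes."""
    return [q * scale for q in codes]

weights = [0.42, -1.27, 0.003, 0.88, -0.5]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, f"scale={scale:.4f}", f"max error={max_error:.4f}")
# Stored as int8, each code takes 1 byte versus 4 for float32: a 4x memory cut.
```

The rounding error per weight is at most half the scale, which is why accuracy usually survives when weights don't have extreme outliers.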

Pruning removes unnecessary connections in neural networks. Think of it like trimming dead branches from a tree.
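Magnitude pruning is one of the simplest variants: drop the weights with the smallest absolute values. A plain-Python sketch, assuming a flat list of weights and a target sparsity; real pruning usually works layer by layer and fine-tunes the model afterward to recover accuracy.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitudes."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices of the n_prune smallest-magnitude weights.
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:n_prune]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned

weights = [0.9, -0.05, 0.3, 0.01, -0.7, 0.2]
print(magnitude_prune(weights, 0.5))
```

Zeroed weights only save energy when the hardware or runtime can skip them, which is why structured pruning (removing whole rows, channels, or attention heads) is often preferred in practice.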

Knowledge distillation trains a smaller model to mimic a larger model's outputs. The smaller model runs faster and uses less energy.
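In one common formulation, the student is trained to match the teacher's temperature-softened output probabilities, with the gap measured by KL divergence. A plain-Python sketch of that soft-target loss; the logits and temperature are made-up values, and a full training loop would combine this term with the usual loss on true labels.

```python
import math

def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened outputs, scaled by T^2 as is conventional."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

teacher = [4.0, 1.5, 0.2]
close_student = [3.8, 1.4, 0.3]
far_student = [0.1, 3.0, 2.5]
print(distillation_loss(teacher, close_student), distillation_loss(teacher, far_student))
```

A student whose outputs track the teacher's gets a small loss, so training pushes the smaller, cheaper model toward the larger model's behavior.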

These techniques are advancing rapidly. The efficiency improvements in AI models year-over-year rival the improvements from hardware advancement.

Hardware Evolution

Chip manufacturers are racing to build more efficient AI accelerators.

NVIDIA's new H100 and GB200 chips offer substantially better performance-per-watt than previous generations. But efficiency gains plateau—you can only optimize silicon so much.

New architectures like analog chips, neuromorphic processors, and optical processors are being explored. These could potentially offer 10-100x efficiency improvements over current digital systems, but they're still largely experimental.

The Efficiency Paradox

Here's the catch: Efficiency improvements alone won't solve the problem.

Historically, whenever efficiency improves, usage expands to consume the saved capacity. Jevons Paradox describes this phenomenon.

If AI models need 50% less energy per task, companies don't bank the savings by cutting their energy use in half. They either build models twice as capable or deploy AI in twice as many applications, and total consumption stays roughly where it was.

So while efficiency matters greatly, it likely won't prevent power consumption growth. It will just slow the growth rate.
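The rebound arithmetic is simple: total energy is energy per task times the number of tasks. This sketch treats the 50% efficiency gain from the example as halving energy per task; the demand-growth multipliers are hypothetical.

```python
def total_energy(energy_per_task: float, tasks: float) -> float:
    """Total energy is simply per-task energy times task count."""
    return energy_per_task * tasks

baseline = total_energy(1.0, 1.0)  # normalized starting point
per_task_after = 0.5               # 50% less energy per task

for demand_growth in (1.0, 2.0, 3.0):
    after = total_energy(per_task_after, demand_growth)
    change = (after / baseline - 1) * 100
    print(f"demand x{demand_growth:g}: total energy {change:+.0f}%")
```

Once demand more than doubles, the efficiency gain is fully consumed and total consumption rises again, only more slowly than it would have without the gain.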

Economic Winners and Losers

Who Benefits?

Large AI companies with strong financial positions benefit most. They can absorb grid upgrade costs, making smaller competitors less viable.

Renewable energy companies benefit from accelerated demand for solar, wind, and battery systems. This amounts to a massive, privately funded subsidy for the renewable energy industry.

Regions with abundant renewable resources become more attractive for data center investment, leading to economic growth and job creation.

Electricity consumers in regions without new data centers benefit from the companies' grid upgrade commitments reducing pressure on shared infrastructure.

Who Loses?

Small AI startups lose because they can't afford grid upgrade costs. Data center economics shift toward capital-intensive players.

Incumbent utilities lose because they're no longer funding infrastructure upgrades through rate increases. They have to adapt business models.

Regions without renewable resources lose because they become less attractive for data center investment, missing out on economic benefits and job creation.

Consumers in renewable-poor regions might see higher electricity costs as renewable energy is preferentially exported to data centers.

Long-Term Implications: The Next Decade

Data Center Geography Will Shift

Within five years, expect to see a dramatic reshuffling of where AI data centers get built.

Companies will prioritize:

- Renewable-rich regions: Iowa, Wyoming, Oregon, Iceland, Norway

- Locations with low grid upgrade requirements: Near existing high-capacity transmission

- Climate-favorable areas: Cooler climates reduce cooling costs

- Tax-incentive-friendly jurisdictions: States offering credits for data center investment

This means some regions will see explosive growth while others are locked out of the AI boom. Even states with large wind and solar fleets, like Texas and California, could face competitive disadvantages where grid congestion or water stress offsets those resources, unless they keep aggressively building renewable infrastructure.

The Renewable Energy Buildout

AI companies' commitments will accelerate renewable infrastructure development by 10-15 years. Instead of waiting for fossil fuel plants to naturally age out of the system, renewable energy becomes immediately cost-competitive due to massive corporate demand.

This is actually positive for addressing climate change. Accelerating the transition from fossil fuels to renewable energy is valuable, even if it's motivated by corporate profit rather than environmental concerns.

Consolidation in AI Infrastructure

Companies that can't afford to pay for grid upgrades and renewable energy will be squeezed out of competing on computing infrastructure.

Expect consolidation where large, well-capitalized companies acquire smaller ones. The AI landscape will likely become even more concentrated around Microsoft, Google, Anthropic, and a handful of other mega-players.

This has implications for AI development pace, innovation direction, and competitive dynamics in the industry.

Geopolitical Implications

Countries competing for AI dominance will be competing for renewable energy capacity.

Iceland and Norway, with abundant geothermal and hydroelectric resources, will become strategic assets. Countries without renewable resources will be disadvantaged in the race for AI leadership.

This could reshape global geopolitics. Energy-poor nations might struggle to develop domestic AI capabilities. Energy-rich nations could weaponize energy access as a competitive advantage.

The Role of Automation and AI Tools

Managing Energy Operations

Here's an interesting irony: AI itself is becoming essential for managing AI energy consumption.

Companies are using machine learning to optimize:

- Workload distribution across data centers based on power availability

- Cooling system operation to minimize energy waste

- Predictive maintenance to prevent inefficient hardware operation

- Energy market participation to buy electricity when prices are low
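A toy version of price-aware scheduling: given hourly prices, run a deferrable batch job in the cheapest hours that fit before a deadline. The prices are invented, and picking individual cheapest hours assumes the job can be split across non-contiguous hours; real schedulers also weigh carbon intensity, grid signals, and hardware constraints.

```python
def cheapest_hours(prices: list[float], hours_needed: int, deadline: int) -> list[int]:
    """Pick the cheapest `hours_needed` hour indices before `deadline` (job is splittable)."""
    window = range(min(deadline, len(prices)))
    if hours_needed > len(window):
        raise ValueError("not enough hours before the deadline")
    chosen = sorted(window, key=lambda h: prices[h])[:hours_needed]
    return sorted(chosen)

# Hypothetical day-ahead prices in $/MWh for hours 0-7.
prices = [42.0, 38.5, 35.0, 36.0, 55.0, 80.0, 95.0, 70.0]
hours = cheapest_hours(prices, hours_needed=3, deadline=6)
cost = sum(prices[h] for h in hours)  # cost for each MW of load the job draws
print(hours, f"${cost:.2f} per MW of load")
```

The same idea extends to shifting work between data centers: replace hours with sites and prices with each site's current power cost or availability.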

Runable is one example of a category of AI tools increasingly used to automate energy monitoring and optimization workflows. Tools that generate reports on energy consumption patterns, automate alerts when consumption exceeds thresholds, and create presentations for stakeholder communication are becoming standard infrastructure.

Teams building data center operations are using AI agents to automatically process utility bills, identify anomalies, and suggest efficiency improvements. This meta-use of AI (using AI to optimize AI energy consumption) is becoming increasingly important.
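One simple way to flag anomalies in monthly utility bills is a z-score against the account's own history. This is a deliberately basic statistical sketch, not a description of how Runable or any other product works; the kWh figures and the 3-standard-deviation threshold are assumptions.

```python
import statistics

def flag_anomalies(usage_kwh: list[float], threshold: float = 3.0) -> list[int]:
    """Return indices whose usage is more than `threshold` std devs from the baseline.

    Each month's baseline is the mean and standard deviation of all earlier
    months, so a spike is never part of the statistics it is tested against.
    """
    flagged = []
    for i in range(3, len(usage_kwh)):  # require a few months of history first
        history = usage_kwh[:i]
        mean = statistics.mean(history)
        stdev = statistics.stdev(history)
        if stdev > 0 and abs(usage_kwh[i] - mean) / stdev > threshold:
            flagged.append(i)
    return flagged

monthly = [1020, 990, 1005, 1010, 998, 1500, 1003]
print(flag_anomalies(monthly))
```

Only the 1,500 kWh month is flagged; in practice a team would route such flags to a person who checks for metering errors, billing mistakes, or a real change in load.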

Environmental Impact Reporting

Companies making public commitments about energy need to prove they're meeting them. This has created demand for automated environmental reporting tools.

AI tools that analyze energy consumption data, generate sustainability reports, and track progress against stated goals are increasingly valuable. What used to be manual accounting work is becoming automated.

Corporate Accountability: How We'll Know If Companies Deliver

Transparency Requirements

Anthropic, Microsoft, and other companies have made commitments, but how will we verify they're keeping them?

This requires transparency. Companies should publish:

- Energy consumption data by facility and time period

- Renewable energy procurement documentation with specific contracts

- Grid upgrade project updates and completion timelines

- Carbon emissions calculations and methodology

- Water consumption and conservation efforts

Some companies are embracing transparency. Others are being more opaque. This will become a competitive and reputational issue.

Auditing and Verification

Third-party auditing of corporate energy claims has become an industry. Firms like Carbon Trust, SGS, and Bureau Veritas now offer certification of renewable energy procurement and carbon accounting.

Expect to see companies competing on having the most credible third-party verification of their energy commitments. This adds costs but increases trustworthiness.

Stakeholder Pressure

As commitments age, stakeholder pressure will increase if companies aren't delivering.

Utility commissions, environmental groups, and affected communities will demand accountability. Companies that are clearly meeting their commitments will gain reputation and regulatory advantages. Companies that are dragging feet or making excuses will face increasing pressure.

This creates a virtuous cycle where transparency and honesty become competitive advantages.

Challenges and Criticisms

The Limitations of Corporate Commitments

It's important to acknowledge that these commitments, while significant, have real limitations.

They're voluntary: Unlike regulations, companies can modify or reduce commitments if business circumstances change. There's no enforcement mechanism if Anthropic or Microsoft decide to backtrack.

They're incomplete: Energy costs aren't the only environmental impact of data centers. Water consumption, land use, electronic waste, and manufacturing impacts remain largely unaddressed.

They don't address all stakeholders: Communities hosting data centers might still experience negative impacts (noise, heat, traffic) that energy cost commitments don't address.

They might not be financially sustainable: If AI business models become less profitable, companies might struggle to maintain expensive renewable energy procurement commitments.

The Rebound Effect

Here's the uncomfortable truth: Even if companies perfectly implement their energy commitments, total AI energy consumption will likely continue growing.

Why? Because AI keeps getting cheaper to use, so demand keeps growing. Training new models, running inference at scale, and deploying AI in more applications all expand, potentially negating efficiency gains.

The Renewable Energy Limits

Renewable energy capacity is growing, but not without limit. If multiple AI companies are all competing for renewable power simultaneously, eventually the supply runs out.

At that point, companies face a choice: Stop expanding (not attractive), use fossil fuel power (violates commitments), or compete on renewable energy prices and drive up costs.

This suggests that energy-based solutions alone are insufficient. We might need deeper changes in how AI models are designed or deployed.

The Bigger Picture: Sustainability vs. Capabilities

The Fundamental Tension

There's an unavoidable tension between AI capability and sustainability.

Larger models are more capable but consume more energy. More capable models drive more business value. Business competition incentivizes always building bigger models.

Commitments to pay for energy costs don't resolve this tension. They just mean companies pay more to build bigger models. The underlying incentive to scale remains.

What Real Solutions Look Like

Paying for energy upgrades and procuring renewables is important but probably insufficient long-term. Real solutions might require:

- Rethinking AI architectures to be fundamentally more efficient

- Regulatory frameworks that limit AI power consumption directly

- Pricing mechanisms that make energy costs directly reflected in AI service pricing

- International coordination to prevent energy-poor nations from being locked out of AI development

- Investment in next-generation energy like fusion power or advanced nuclear

None of these is happening at scale yet. For now, companies are making voluntary commitments to cover energy cost increases.

The Role of Innovation

The most important factor might be innovation in energy itself, not just in AI efficiency.

If fusion power becomes viable, it eliminates the scarcity constraint. If next-generation batteries drastically improve storage capacity, renewable energy becomes more viable. If advanced cooling technologies are developed, heat removal becomes less energy-intensive.

These breakthroughs would fundamentally change the energy economics of AI, potentially making current concerns seem quaint.

International Comparisons: How Other Countries Handle AI Energy

European Approach

The European Union is taking a more regulatory approach than the U.S.

The AI Act, adopted in 2024, requires providers of general-purpose AI models to document their models' energy consumption, and the EU's revised Energy Efficiency Directive requires larger data centers to report their energy performance. This is more prescriptive than the U.S. approach of letting companies make voluntary commitments.

Some EU countries are also marketing their abundant renewable energy as a competitive advantage in attracting data centers.

Asian Strategies

China is aggressively building data center infrastructure powered by renewable energy from its western regions. This strategy positions China as a competitive AI powerhouse.

Singapore is similarly positioning itself as a regional AI hub by investing in renewable energy and favorable regulations.

India is trying to attract AI investment through low-cost energy but is constrained by insufficient renewable capacity and grid reliability challenges.

Resource-Rich Nations

Countries with abundant renewable resources (Iceland, Norway, New Zealand) are actively marketing themselves to AI companies. These nations understand that energy resources are increasingly valuable in the AI economy.

FAQ

What is the relationship between AI data centers and electricity prices?

Large AI data centers significantly increase electricity demand in their regions, requiring utilities to upgrade infrastructure. These costs are traditionally passed on to all consumers through rate increases. Companies like Anthropic are committing to pay these costs directly so that existing ratepayers don't absorb them. The connection is direct: more data center demand means higher infrastructure costs that would otherwise appear on utility bills.
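The difference between socializing an upgrade and assigning it to the data center can be sketched with simple arithmetic. This Python example is a deliberately simplified illustration, not how any particular utility sets rates: it spreads the upgrade cost evenly over every kWh sold (straight-line recovery, no financing costs, no return on equity, no customer classes). All names and input values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class UpgradeScenario:
    """Hypothetical inputs for one grid-upgrade project (illustrative only)."""
    upgrade_cost_usd: float      # capital cost of the upgrade
    recovery_years: int          # years over which the utility recovers it
    annual_sales_kwh: float      # total kWh sold to all customers per year
    home_kwh_per_month: float    # one household's monthly usage


def per_kwh_adder(s: UpgradeScenario) -> float:
    """Straight-line recovery spread evenly across every kWh sold.

    Simplified on purpose: real rate cases add financing costs, a return
    on equity, and allocation between customer classes.
    """
    return s.upgrade_cost_usd / s.recovery_years / s.annual_sales_kwh


def household_monthly_impact(s: UpgradeScenario, data_center_pays: bool) -> float:
    """Extra dollars per month on one household's bill from this upgrade."""
    if data_center_pays:
        # Cost is assigned directly to the facility, so nothing is spread
        # across other ratepayers' bills.
        return 0.0
    return per_kwh_adder(s) * s.home_kwh_per_month


if __name__ == "__main__":
    scenario = UpgradeScenario(
        upgrade_cost_usd=300e6,   # hypothetical $300M upgrade
        recovery_years=20,
        annual_sales_kwh=30e9,    # hypothetical utility selling 30 billion kWh/year
        home_kwh_per_month=900,
    )
    for pays in (False, True):
        label = "data center pays" if pays else "socialized"
        print(f"{label}: ${household_monthly_impact(scenario, pays):.2f}/month")
```

With these made-up inputs, the socialized case adds about $0.45 a month to a 900 kWh household bill, and the direct-assignment case adds nothing. Real impacts depend heavily on the size of the upgrade relative to the utility's sales and on how regulators allocate costs.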

How much electricity do AI data centers actually consume?

AI data centers are among the most power-intensive facilities, with major facilities drawing 50-100+ megawatts continuously. For perspective, 50-100 megawatts is roughly the average load of 40,000 to 85,000 U.S. homes. Training a single large language model can consume over 1,000 megawatt-hours of electricity. A data center operating continuously can use as much power as a small city.
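The household comparison is simple arithmetic: divide a facility's continuous draw by the average load of one home. This Python sketch assumes an average U.S. household uses about 10,500 kWh per year (roughly the EIA residential average; the figure is an assumption, not from the article). The function names are hypothetical.

```python
# Rough conversions between a facility's continuous power draw and household usage.
# ASSUMPTION: an average U.S. household uses about 10,500 kWh per year.

HOURS_PER_YEAR = 8760
AVG_HOME_KWH_PER_YEAR = 10_500  # assumption; adjust for your region


def avg_home_load_kw(kwh_per_year: float = AVG_HOME_KWH_PER_YEAR) -> float:
    """Average continuous load of one home, in kilowatts."""
    return kwh_per_year / HOURS_PER_YEAR


def homes_equivalent(facility_mw: float, kwh_per_year: float = AVG_HOME_KWH_PER_YEAR) -> int:
    """Number of average homes whose combined average load equals facility_mw."""
    facility_kw = facility_mw * 1000
    return round(facility_kw / avg_home_load_kw(kwh_per_year))


def annual_mwh(facility_mw: float) -> float:
    """Energy used in one year by a facility drawing facility_mw continuously."""
    return facility_mw * HOURS_PER_YEAR


def hours_to_consume(energy_mwh: float, facility_mw: float) -> float:
    """Hours a facility drawing facility_mw needs to use energy_mwh."""
    return energy_mwh / facility_mw


if __name__ == "__main__":
    for mw in (50, 100):
        print(f"{mw} MW: about {homes_equivalent(mw):,} homes, {annual_mwh(mw):,.0f} MWh/year")
    # A 1,000 MWh training run, expressed as hours of a 100 MW facility's draw.
    print(f"1,000 MWh at 100 MW: {hours_to_consume(1000, 100):.0f} hours")
```

Under this assumption, 50-100 MW works out to roughly 42,000 to 83,000 homes, and a 1,000 MWh training run equals about ten hours of a 100 MW facility running flat out.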

What exactly is Anthropic paying for when it says it will cover electricity price increases?

Anthropic has committed to three specific things: paying 100% of the grid upgrade costs needed to connect its facilities, procuring equivalent new renewable energy generation, and covering demand-driven price increases that result from its operations. Grid upgrades can cost

Why don't other countries face this same issue?

Most other countries are addressing this through different mechanisms. The EU uses regulatory requirements rather than voluntary commitments. Some countries with abundant renewable resources don't face the same infrastructure strain. However, as AI demand grows globally, every country will eventually face these issues, and international competition for energy resources is intensifying.

Is renewable energy really the solution to AI's energy problem?

Renewable energy significantly reduces carbon emissions from AI operations, but it doesn't eliminate the underlying energy consumption issue. Data centers still consume enormous amounts of electricity whether it comes from renewables or fossil fuels. Additionally, renewable energy capacity is finite, so if AI demand grows too fast, renewable resources alone won't suffice. Real solutions likely require both efficiency improvements and next-generation energy technologies.

How will small AI startups afford these energy commitments?

They likely won't, at least not in the same way larger companies can. This economic pressure will push the industry toward consolidation where larger, well-capitalized companies acquire smaller competitors. Startups will increasingly need to either join established companies or find alternative ways to access computing infrastructure that don't require building their own data centers.

When will we see data centers moving to renewable-rich regions?

This is already happening, and the trend will accelerate. States like Iowa, Wyoming, and Oregon are actively attracting data center investment by offering abundant wind and hydroelectric power combined with favorable tax policies. Within 5-10 years, expect a significant geographic shift in where AI infrastructure concentrates, potentially disrupting regions that currently host large data center populations.

What happens if companies don't follow through on their commitments?

There's limited enforcement since these are currently voluntary commitments. However, regulatory pressure, public opinion, and competitive disadvantage will likely force compliance. Companies that fail to deliver will face stakeholder backlash, regulatory scrutiny, and potential restrictions on future facilities. The competitive advantage of being seen as responsible will incentivize most companies to maintain their commitments.

Conclusion: The Future of AI Energy Economics

Anthropic's and Microsoft's commitments represent a significant inflection point. They acknowledge that the companies building AI infrastructure should bear the costs of that infrastructure, not everyday electricity consumers.

This is important because it fundamentally changes the economics of AI expansion. Building a data center just got much more expensive. That's actually good—it forces companies to be more strategic about where they expand, more committed to efficiency improvements, and more accountable for their environmental impact.

But here's what I genuinely believe will matter most: These commitments will accelerate the renewable energy transition by a decade or more. Instead of waiting for government policy or market forces to drive solar and wind adoption, massive tech companies are now funding it directly because they have no choice.

The challenge ahead isn't primarily about energy costs or even carbon emissions. It's about managing expectations. AI companies made specific commitments. They'll need to deliver transparently and completely. If they do, they'll establish trust with regulators and communities. If they don't, regulatory backlash will be swift.

Long-term, I suspect these voluntary commitments will evolve into regulatory requirements. States and countries will formalize what companies like Anthropic pioneered voluntarily. That's how policy usually works—market leaders set the standard, then regulators codify it.

The next question isn't whether AI energy commitments stick. It's whether these commitments are actually sufficient, or if we need even more dramatic changes in how AI infrastructure is built and deployed.

For now, we're watching a genuinely important moment where the most powerful technology companies in the world are being held accountable for their resource consumption. That's rarely happened in tech history. It matters. And it will reshape how AI develops over the next decade.

If you're involved in energy policy, AI infrastructure, utilities, or renewable energy, this shift is the defining issue of the next five years. Understanding how these commitments actually play out—where companies succeed, where they face unexpected challenges, how regulations evolve—will determine the trajectory of both AI and energy infrastructure development globally.

The electricity bill you pay next month might seem disconnected from AI data centers. But the grid that delivers that electricity is being fundamentally reshaped by AI's demands. Anthropic's commitment to cover those costs represents a first step in ensuring that development happens fairly and sustainably.

Key Takeaways

- AI data centers consume enormous amounts of electricity (comparable to small cities), and companies are now committing to cover grid upgrade costs instead of passing them to consumers

- Anthropic committed to paying 100% of grid upgrades, procuring new renewable energy, and covering demand-driven price increases—a major shift in AI infrastructure economics

- These commitments fundamentally change where data centers get built, favoring renewable-rich regions like Iowa, Wyoming, and Oregon while disadvantaging others

- A timeline mismatch between data center construction (18-24 months) and renewable energy development (3-10 years) creates unavoidable infrastructure gaps that money alone can't solve

- Long-term, voluntary corporate commitments will likely evolve into regulatory requirements as other states and countries formalize what companies like Anthropic pioneered

