How AI Turned Fashion Week Into an Interactive Experience

New York Fashion Week just got a serious technology upgrade. Designer Kate Barton didn't just show clothes on Saturday. She reimagined the entire experience by blending runway tradition with cutting-edge AI. The result? Guests could virtually try on pieces, ask questions in any language, and explore the collection through an immersive digital lens.

This isn't your typical "let's add some tech for hype" move. Barton partnered with Fiducia AI and IBM Cloud to build a production-grade activation that actually solves real problems. The multilingual AI agent, powered by IBM Watsonx, could identify any piece in the collection and let visitors virtually try them on. No gimmicks. No fluff. Just genuine innovation.

Why does this matter? Because fashion has been slow to adopt AI in meaningful ways. Most luxury brands treat technology like it's radioactive. They're nervous about the optics. They're worried about authenticity. But Barton saw something different. She saw AI as a storytelling tool, a way to deepen the connection between designer and audience, not replace it.

The collaboration signals a larger shift happening in fashion right now. Brands are experimenting quietly. But someone had to go public with it, and Barton did. The question everyone's asking now: Is this the future, or just a one-off stunt? The answer might surprise you.

TL;DR

- AI Virtual Try-Ons: Fiducia AI built a visual recognition system that detects collection pieces and generates photorealistic virtual try-ons

- Multilingual Agent: The AI assistant responds to questions in any language via voice and text, making fashion accessible globally

- IBM Enterprise Stack: Built on IBM Watsonx, IBM Cloud, and IBM Cloud Object Storage for production-grade reliability

- Craft Enhancement, Not Replacement: Barton's philosophy is clear: AI expands the human experience, it doesn't erase the designers who make it possible

- Industry Inflection Point: This moment represents the shift from "should fashion use AI?" to "how do we deploy AI responsibly in fashion?"

Companies using AI for personalization see an average revenue increase of 6 to 10 percent, highlighting AI's potential as a growth engine.

The Problem Fashion Brands Actually Face

Let's be honest. Fashion is stuck in an interesting paradox. The industry is obsessed with storytelling, immersion, and emotional connection. But the actual experience of buying clothes? It hasn't fundamentally changed in decades.

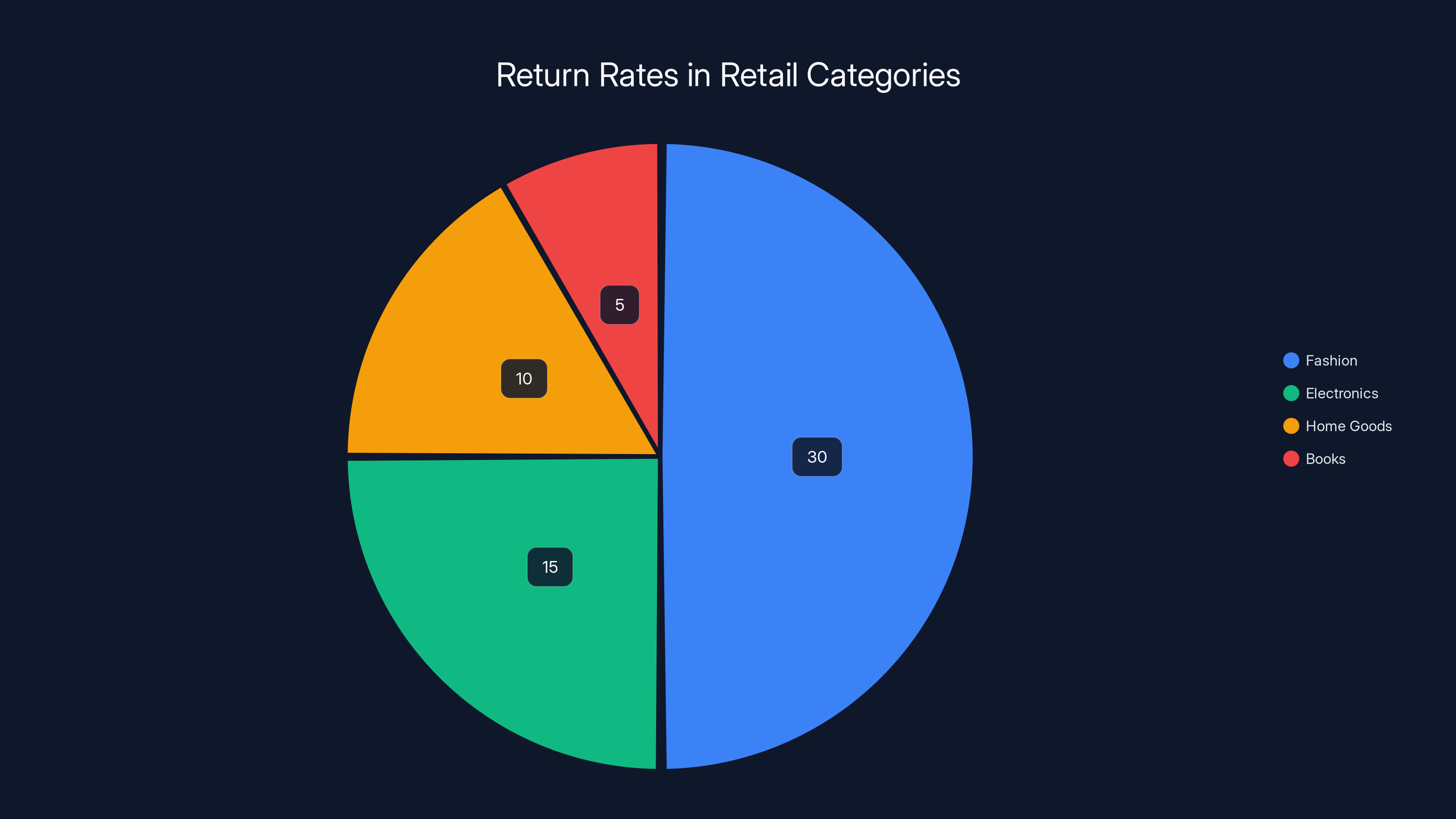

You walk into a store. You see things on hangers. You might try them on. You either like them or you don't. Online, it's even worse. You scroll through photos. You read descriptions. You guess on fit. Return rates in fashion average 30 percent, which is higher than almost any other retail category. That's because seeing something online and wearing it are two completely different experiences.

Virtual try-on technology has existed for years. But most implementations feel janky. The avatars look plastic. The sizing estimates are laughable. Customers don't trust them. So they keep returning clothes, and brands keep eating the cost.

Barton identified something deeper though. It's not just about buying. It's about understanding. When you see a collection in person, you understand the designer's vision, the fabric quality, the way pieces relate to each other. But most people never get that experience. They see photos. They read marketing copy. They miss the story entirely.

That's the gap Fiducia AI and IBM tried to close. Not by replacing the runway experience, but by extending it. Making it accessible. Making it interactive. Making people feel like they're part of the creative process, not just passive consumers.

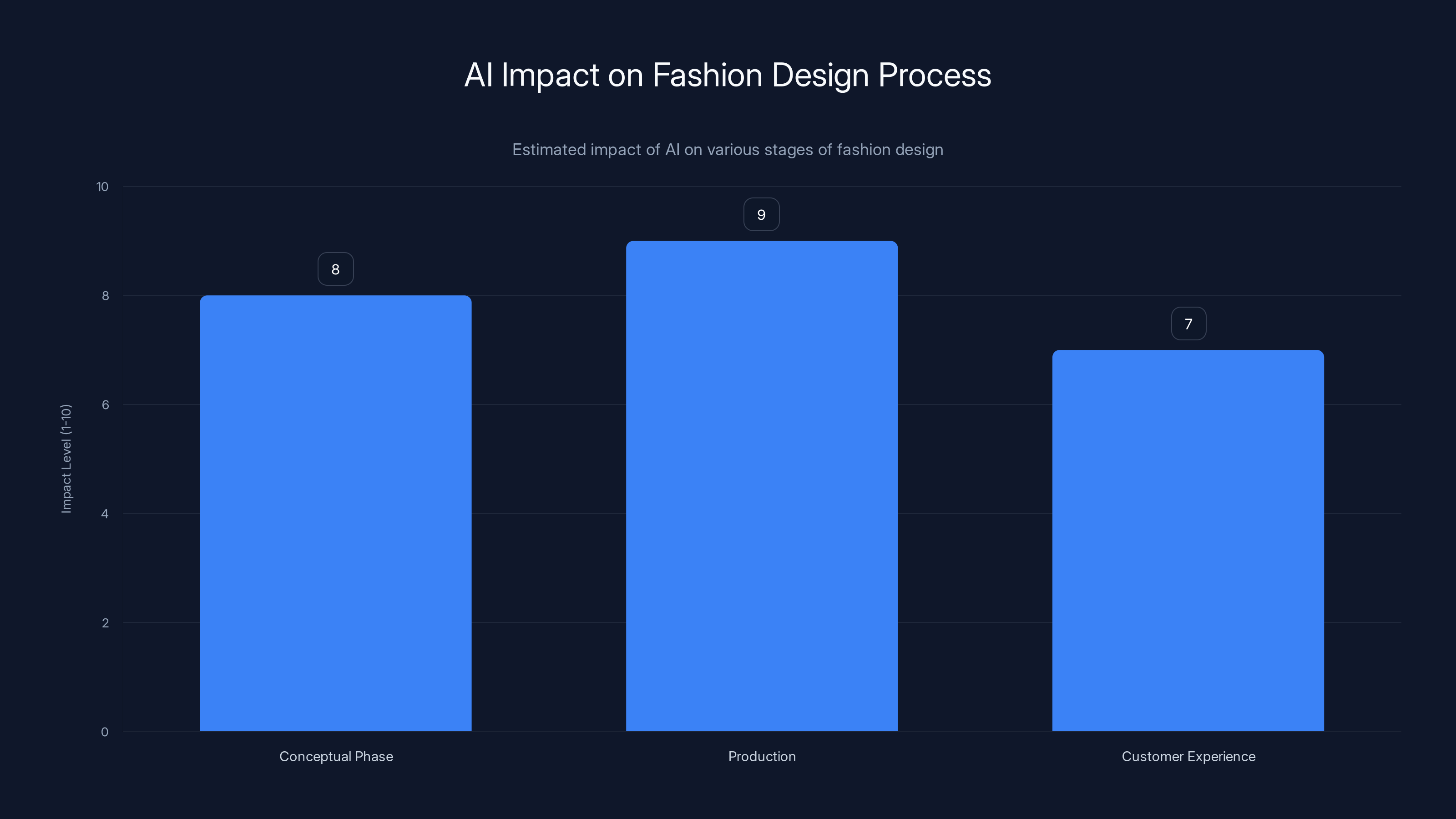

AI significantly enhances the production phase by optimizing supply chains and forecasting demand, with an estimated impact level of 9 out of 10. Estimated data.

Understanding Fiducia AI's Technical Approach

Ganesh Harinath, founder and CEO of Fiducia AI, said the hardest work wasn't model tuning. It was orchestration. That single sentence reveals everything you need to know about this project.

Building a machine learning model that recognizes clothing is table stakes now. Computer vision has matured. The real challenge was creating a system where multiple technologies work together seamlessly. The Visual AI lens needed to run fast enough for real-time interaction. It needed to be accurate enough to identify specific pieces. It needed to handle variables like lighting, angles, and crowd chaos at a major fashion event.

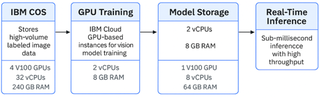

They built the system on IBM Watsonx, IBM's enterprise AI platform. Watsonx provides foundation models that can be customized without training from scratch. That's crucial for a project with a tight timeline. You don't have months to gather training data and iterate. You have weeks.

The visual recognition component needed to identify every piece in Barton's collection accurately. That means training or fine-tuning models on hundreds of photos of each garment, from multiple angles, in different lighting conditions. The team built a dataset, but more importantly, they built a pipeline. If a piece wasn't recognized correctly, they could add more examples and improve the model in hours, not days.

The conversational component added another layer. A visitor could ask "Can I see this in blue?" or "How would this look with the other jacket?" in Spanish, Mandarin, French, or any other language. That requires natural language understanding in multiple languages, plus the ability to retrieve correct images from a massive inventory and generate new visual content on the fly.

They used IBM Cloud Object Storage to manage the massive volume of imagery and video the system generates. Photorealistic virtual try-ons aren't small files. Each one is a rendered 3D representation of a person wearing a specific garment. The system had to generate these in real-time during the event, store them, and make them accessible through mobile apps and web browsers.

It's a far cry from slapping a chatbot on your website. This is serious infrastructure.
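To make the "orchestration" point concrete, here is a minimal sketch of how the four steps described above (recognize the garment, answer the question, render the try-on, store the result) might be chained together. Every function here is a hypothetical stand-in, not a real Fiducia AI or IBM API, and an in-memory dict plays the role of object storage.

```python
from dataclasses import dataclass


@dataclass
class TryOnResult:
    garment_id: str
    answer: str
    render_key: str


# Hypothetical stand-ins for the real services. None of these are actual
# Fiducia AI or IBM APIs; they only show how the steps hand off to each other.
def recognize_garment(frame: bytes) -> str:
    """Pretend vision step: map a camera frame to a garment ID."""
    return "jacket-01"


def answer_question(garment_id: str, question: str, language: str) -> str:
    """Pretend language step: tag the reply with the visitor's language."""
    return f"[{language}] About {garment_id}: {question}"


def render_try_on(garment_id: str, visitor_photo: bytes) -> bytes:
    """Pretend generation step: return placeholder image bytes."""
    return b"rendered:" + garment_id.encode()


def store_render(store: dict, garment_id: str, image: bytes) -> str:
    """Pretend storage step: a dict stands in for an object storage bucket."""
    key = f"renders/{garment_id}/{len(store)}.png"
    store[key] = image
    return key


def handle_interaction(frame, photo, question, language, store) -> TryOnResult:
    """Run the four steps in order and collect what the visitor sees."""
    garment_id = recognize_garment(frame)
    answer = answer_question(garment_id, question, language)
    image = render_try_on(garment_id, photo)
    key = store_render(store, garment_id, image)
    return TryOnResult(garment_id, answer, key)


store = {}
result = handle_interaction(b"frame", b"photo", "Can I see this in blue?", "es", store)
print(result)
```

In a real deployment each stub would be a network call with its own failure modes, which is where most of the orchestration effort would likely go.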

Why Virtual Try-Ons Matter (More Than You Think)

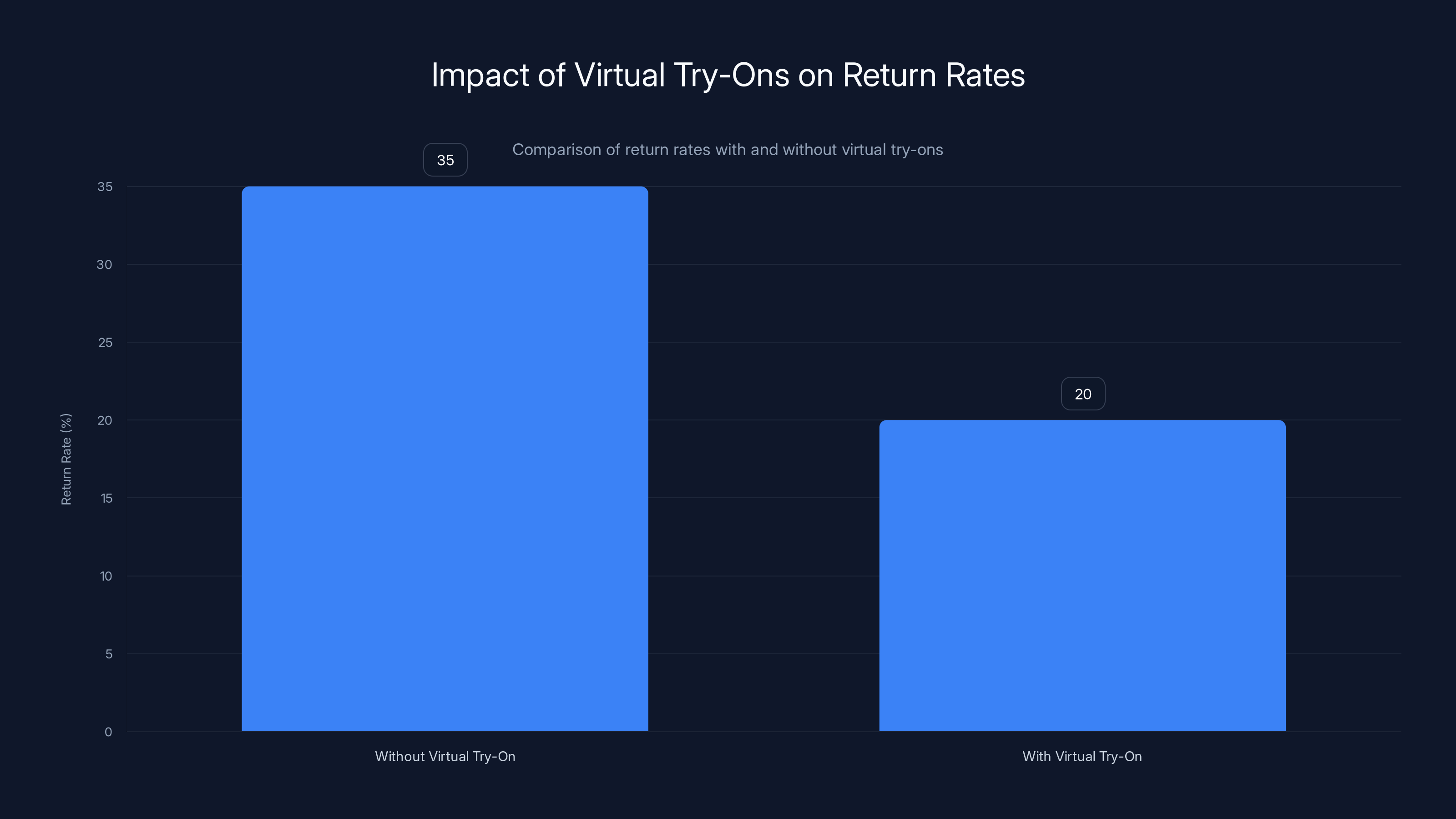

Retail returns are destroying margins across the industry. Fashion e-commerce returns hit 35 percent in 2024, compared to about 7 percent for other retail categories. That's a staggering gap.

Why? Because online, you can't see how something fits. You can't feel the fabric. You can't tell if the color matches your skin tone or clashes with your existing wardrobe. So you order multiple sizes, you order multiple colors, you hope one of them works. Then you return the rest.

Virtual try-ons don't eliminate returns, but they reduce them. A study by McKinsey found that customers who use virtual try-on technology are 40 percent less likely to return items. That's not trivial.

But there's a secondary benefit that Barton cares about more. Virtual try-ons change the shopping experience from transactional to exploratory. Instead of "Do I like this?" the question becomes "What else could I wear with this?" or "How would I style this?" Suddenly customers are thinking about the collection holistically, not just individual pieces.

During Barton's NYFW presentation, visitors could see how a jacket paired with different bottoms. They could experiment with accessories. They could get styling advice from the AI. That's not just reducing returns. That's creating a relationship between the customer and the brand.
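To put the return figures from earlier in this section in concrete terms, here is a rough back-of-the-envelope sketch. It uses the 35 percent return rate and the 40 percent reduction quoted above. The 10,000 orders, the assumption that every shopper uses the try-on feature, and the reading of "40 percent less likely" as a 40 percent relative drop are all illustrative assumptions, not figures from the source.

```python
def returns_after_try_on(orders, base_return_rate, reduction):
    """Return (baseline returns, returns with try-on) as whole orders.

    Assumes every shopper uses try-on and that `reduction` is a relative
    drop in the return rate. Both are simplifications for illustration.
    """
    baseline = round(orders * base_return_rate)
    with_try_on = round(orders * base_return_rate * (1 - reduction))
    return baseline, with_try_on


# Hypothetical brand shipping 10,000 orders.
baseline, with_try_on = returns_after_try_on(10_000, 0.35, 0.40)
avoided = baseline - with_try_on
print(baseline, with_try_on, avoided)  # prints: 3500 2100 1400
```

Under those assumptions the effective return rate lands at 21 percent, which is in the same range as the 35-to-20 percent drop quoted elsewhere in this piece.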

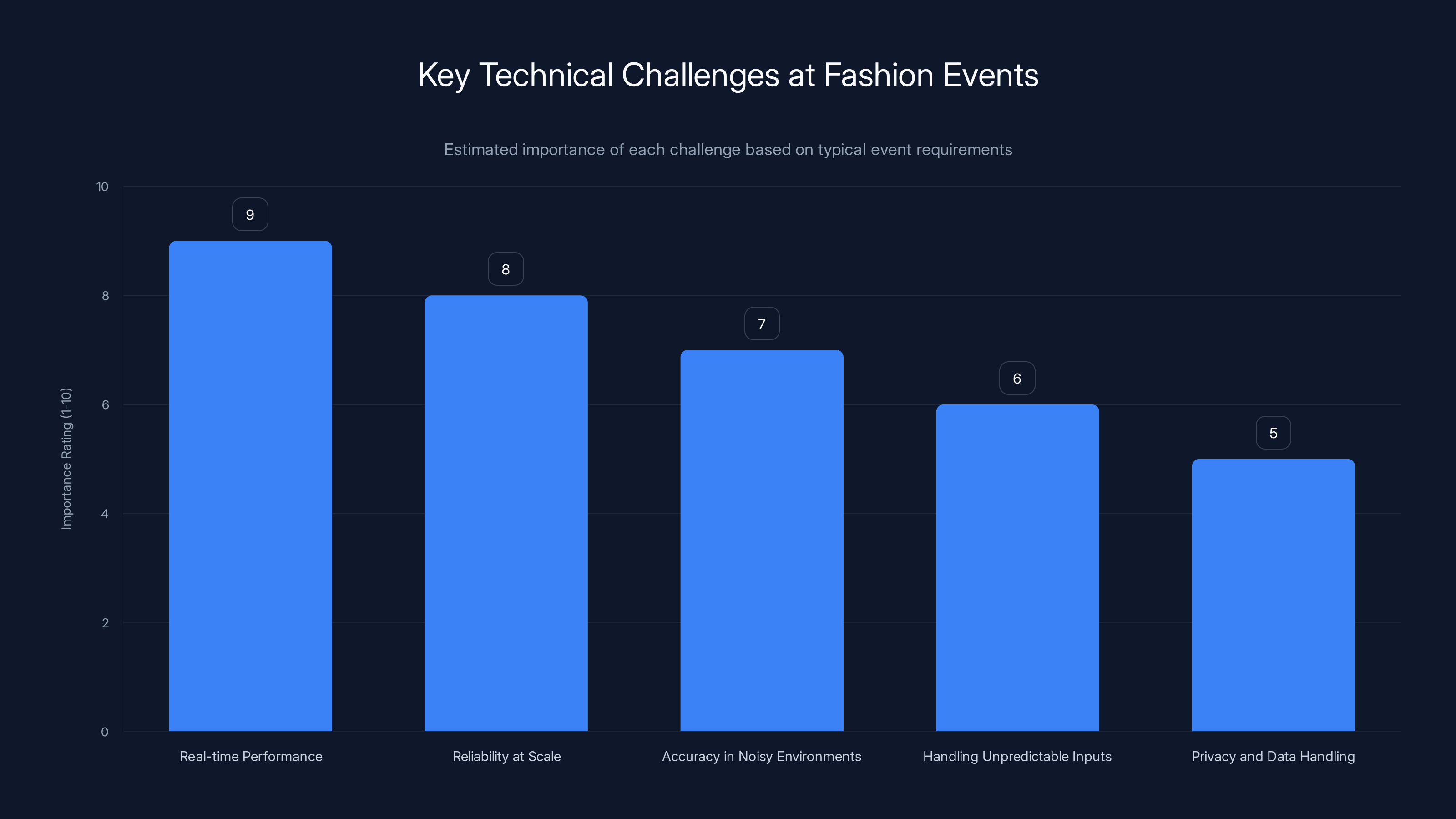

Real-time performance and reliability are the most critical challenges at fashion events, requiring significant focus to ensure a seamless experience. Estimated data based on typical event requirements.

The Multilingual AI Agent: Breaking Language Barriers

Here's something that gets overlooked in most tech coverage. The AI agent didn't just speak English. It was built to answer in whatever language a visitor used.

Fashion is global. A designer in New York has customers in Tokyo, Dubai, London, and São Paulo. But fashion shows are local events. You had to be in New York to experience it in real-time. Now, thanks to the AI agent, you could experience it in your language, wherever you are.

The technical implementation is simple in theory, complex in practice. Large language models like those powered by IBM Watsonx have been trained on text in hundreds of languages. So technically, they can generate responses in those languages. The challenge is context.

When someone asks a question in Mandarin about whether a piece would work for a specific occasion, the system needs to understand the cultural context of that occasion. It needs to know whether formal wear means a tuxedo or a tailored suit. It needs to understand color preferences that vary by culture. Red is lucky in Chinese culture. It's associated with danger in some Western contexts. The AI agent needs to account for these nuances.

Barton's team handled this partly through the model itself, partly through careful prompt engineering. You don't just ask the model "respond in Mandarin." You give it context about the cultural preferences of the person asking. You provide examples of how to describe fabric quality in different languages. You build guardrails to prevent the model from making assumptions.
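A minimal sketch of what that kind of prompt assembly could look like is below. The function name, the wording of the instructions, and the placeholder garment notes are all hypothetical. The source does not describe the actual prompts the team used.

```python
def build_system_prompt(language, garment_notes, example_phrasings):
    """Assemble a system prompt with a language rule, guardrails, and examples.

    Everything here is illustrative; the real prompts are not public.
    """
    lines = [
        f"You are a styling assistant. Reply only in {language}.",
        "Do not assume the visitor's culture, budget, or occasion; ask if unsure.",
        "Use only the garment facts listed below.",
        "",
        "Garment facts:",
    ]
    for name, note in garment_notes.items():
        lines.append(f"- {name}: {note}")
    lines.append("")
    lines.append("Example phrasings:")
    for phrasing in example_phrasings:
        lines.append(f"- {phrasing}")
    return "\n".join(lines)


prompt = build_system_prompt(
    "Mandarin",
    {"jacket-01": "placeholder fabric and construction notes"},
    ["placeholder example of describing fabric quality"],
)
print(prompt)
```

The point of the structure is that the language rule, the guardrails, and the examples travel together with every request, rather than relying on the model to infer them.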

The voice component adds another layer. Voice recognition and text-to-speech have both improved dramatically, but they're still imperfect. An accent, background noise, or someone speaking quickly can confuse the system. During a fashion show, with crowds and music and general chaos, accuracy matters. If the system mishears a question, the entire experience falls apart.

Barton's team solved this partly through hardware. They used quality microphones and spatial audio processing to isolate voices from background noise. They also built redundancy. If the system wasn't confident in a voice command, it would ask for clarification. No guessing.
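The "ask instead of guess" behavior can be sketched as a simple confidence gate. The threshold value, the function name, and the idea that the speech recognizer returns a single confidence score between 0 and 1 are assumptions made for illustration.

```python
CONFIDENCE_THRESHOLD = 0.8  # assumed value; the real cutoff is not stated


def route_voice_command(transcript, confidence):
    """Return ("clarify", prompt) when unsure, else ("answer", transcript)."""
    heard_nothing = not transcript.strip()
    if heard_nothing or confidence < CONFIDENCE_THRESHOLD:
        return ("clarify", "Sorry, could you repeat that?")
    return ("answer", transcript)


print(route_voice_command("How does this look with the black pants?", 0.93))
print(route_voice_command("How does this look with the black pants?", 0.41))
```

A gate like this trades a slightly slower interaction for fewer confidently wrong answers, which matters in a loud venue.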

The result was that someone could walk up to a kiosk, point at a jacket, ask in Korean how it would look with a specific pair of pants, and get an intelligent, contextually appropriate response. That's a level of accessibility that fashion has never offered before.

Kate Barton's Vision: Technology as Storytelling Tool

When Barton talks about her approach to technology, she's thinking about something most fashion designers ignore. She's thinking about narrative arc.

A collection tells a story. There's a theme, a message, an evolution from piece to piece. Traditionally, the runway is how that story gets told. Models walk, music plays, lighting creates mood, and the audience experiences the narrative in real-time. When you buy the collection later, you're trying to recapture that experience.

Barton saw AI as a way to strengthen that storytelling, not replace it. The visual AI lens isn't there so people can skip the show. It's there to deepen their understanding of the show. When you can point at a piece and the system tells you the inspiration, the construction technique, the designer's intent, you're not reading marketing copy. You're hearing the story directly.

She described the AI system as "a portal into the collection's world, rather than 'AI for AI's sake.'" That's the key insight. The technology isn't the story. The technology is the gateway to the story.

This philosophy extends to the virtual try-ons. Most virtual try-on systems just show you how something looks on a body. Barton's system shows you how something looks within the context of the collection. It lets you see styling advice from the designer. It lets you understand the intention behind the pieces, not just the aesthetics.

That's fundamentally different from existing virtual try-on technology. It's not just fashion retail optimization. It's fashion as a medium where technology enhances the human creative experience.

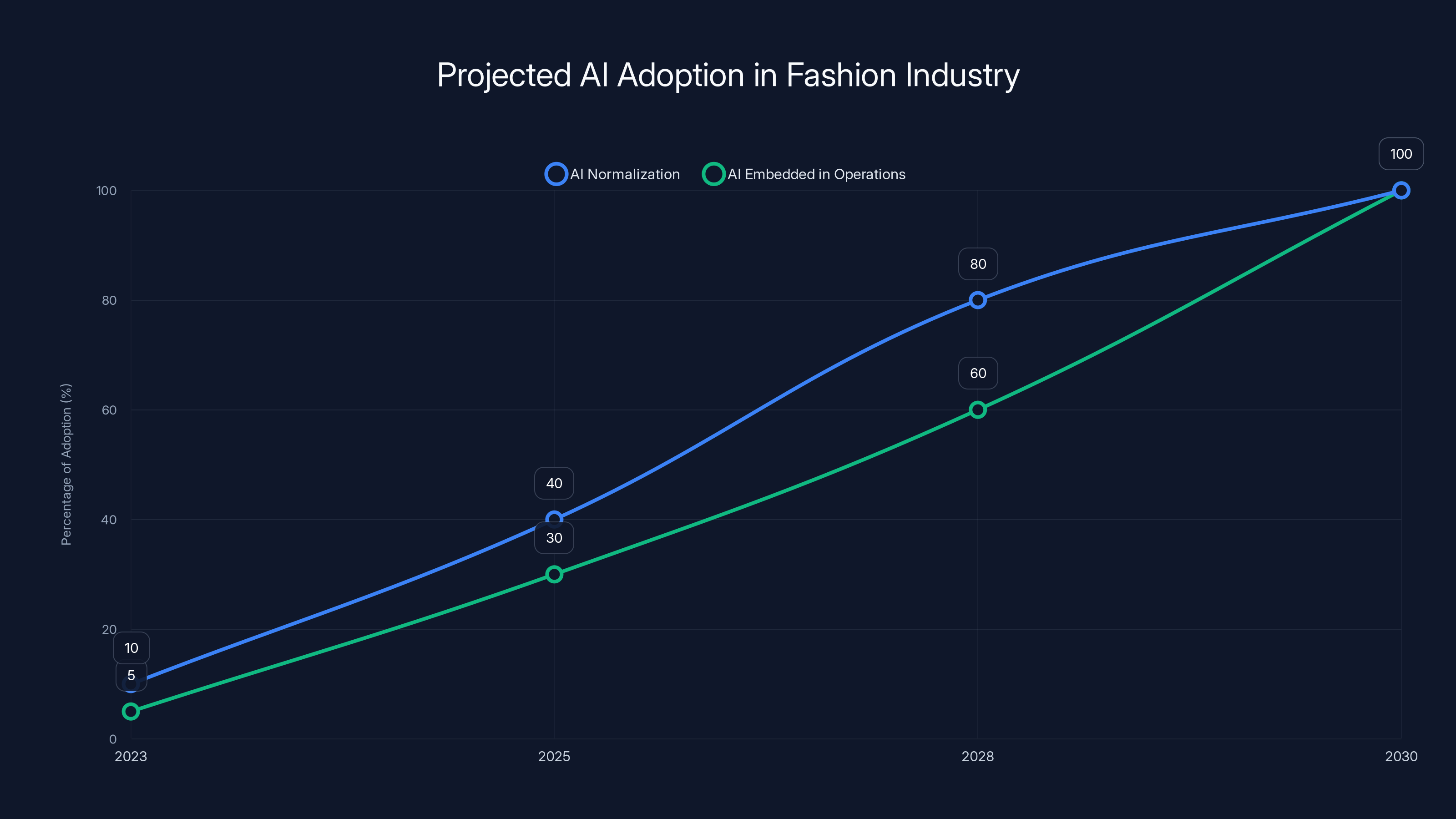

By 2028, AI is expected to be normalized in fashion, with 80% adoption, and by 2030, fully embedded in operations, reaching 100%. Estimated data.

The Fashion Industry's Quiet AI Experimentation

Here's what surprised me about the conversation with Barton and Harinath: The admission that many brands are already using AI, but nobody talks about it.

Luxury fashion is conservative. The brands that built their reputation on heritage and craftsmanship are nervous about looking like they're cutting corners with automation. So they experiment in the shadows. Operations teams use AI to optimize supply chains. Design teams use it to forecast trends. Marketing teams use it to personalize emails. But publicly? Silence.

Barton thinks that's changing, slowly. She drew a parallel to websites in the 1990s. Fashion brands were terrified of e-commerce. It seemed cheap, inauthentic, a betrayal of the luxury experience. Why would someone buy a dress without seeing it in person?

But then it became inevitable. Brands had to go online. The question shifted from "should we?" to "how do we do it well?" Now, a major luxury brand without a sophisticated e-commerce presence is unthinkable.

AI is heading in the same direction. Not tomorrow. Maybe not in 2025. But by 2028, according to Harinath's prediction, AI in fashion will be normalized. It won't be a special presentation at Fashion Week. It'll just be how fashion works.

The brands that are experimenting quietly now, learning what works and what doesn't, will have a massive advantage when that shift happens. They'll have internal expertise. They'll have partnerships established. They'll have learned from failures on a small scale, not on a public stage.

Barton's public approach is riskier, but it establishes her as a leader. She's not following the trend. She's creating it.

The Concerns: Can AI Respect Human Creativity?

There's a tension that nobody in fashion wants to talk about directly. AI, used poorly, can erase the human element. It can turn art into commodities. It can automate away the jobs of designers, pattern makers, and skilled craftspeople.

Barton is aware of this, and she's not interested in participating in that future. She was explicit: "If the technology is used to erase people, I am not into it."

What does erasing people look like? It's when a brand uses AI to generate designs entirely, removing human designers from the process. It's when they use AI-generated models instead of hiring photographers and talent. It's when they use synthetic imagery and pretend it's real photography, misleading customers. It's when they use AI to analyze competitors' designs and copy them algorithmically.

Barton's approach is the opposite. She uses AI to amplify what she does. It lets her tell stories better. It lets her reach more people. It lets customers understand her vision more deeply. But the creative work, the artistic decisions, the human judgment? That's still hers.

She raised a point about licensing and credit that resonates beyond fashion. When you use AI trained on millions of existing images, you're standing on the shoulders of other artists and photographers. If you're not transparent about that, if you're not compensating those artists, you're basically stealing. If you're not crediting the people whose work trained your models, you're erasing them.

That's starting to shift. OpenAI and other AI companies are beginning to address copyright concerns. But we're still in the early stages. The question of how to ethically build AI models trained on human creativity is unresolved.

Barton's solution is clarity. Clear discourse about what AI is doing. Clear licensing agreements. Clear credit for the humans involved. A shared understanding that human creativity isn't overhead, it's the entire point.

Fashion has a significantly higher return rate at 30% compared to other retail categories, highlighting a major challenge for the industry. Estimated data.

Dee Waddell's Perspective: AI as a Growth Engine

Dee Waddell, Global Head of Consumer, Travel and Transportation Industries at IBM Consulting, frames AI differently than Barton does. She's thinking about business results.

She said: "When inspiration, product intelligence, and engagement are connected in real time, AI moves from being a feature to becoming a growth engine that drives measurable competitive advantage."

That's business language, but it points to something real. Most brands think about AI as a cost center. It's an expense you take on to stay competitive. But Waddell is arguing that AI can be a revenue center. It can directly drive sales, increase average order value, and improve customer lifetime value.

How? By making the customer experience better. When inspiration is connected to product intelligence (knowing what's in stock, what's being restocked, what's popular), customers make faster, more confident purchase decisions. When that's connected to engagement (personalized recommendations, styling advice, community features), customers feel a relationship with the brand.

The data backs this up. McKinsey research shows that companies using AI for personalization see an average increase of 6 to 10 percent in their revenue. For fashion, where margins are already thin, that can be the difference between profitability and losses.

Barton's approach and Waddell's approach aren't contradictory. Barton is thinking about artistic integrity. Waddell is thinking about business impact. The success of this NYFW presentation depends on both being true. It has to enhance the creative vision and drive measurable business results.

Timeline: When Does AI Become Normal in Fashion?

Harinath made two predictions. By 2028, AI will be normalized in fashion. By 2030 (he said 2023 in the original quote, but that seems like a typo), it'll be embedded in the operational core of retail.

Let's think about what that means. Normalized by 2028 means we stop being surprised by it. An AI that creates personalized lookbooks? That's normal. An AI that optimizes inventory? That's normal. An AI that helps with design inspiration? That's normal.

Embedded in operational core by 2030 means it's not a special feature. It's infrastructure. It's like how e-commerce is now infrastructure for retail. You don't separate "online sales" from "sales." There's just "sales," and some of it happens to be through the internet. Eventually, there won't be "AI fashion" and "traditional fashion." There'll just be "fashion," and some of it happens to use AI.

The timeline is aggressive, but plausible. Here's why:

Technology maturity: Large language models, computer vision, and generative AI have reached a tipping point. They're good enough to deliver real value. They're not perfect, but they're better than doing things manually.

Competitive pressure: Brands that don't adopt AI will fall behind. Their design cycles will take longer. Their supply chains will be less efficient. Their customer experiences will feel outdated.

Consumer expectations: A 25-year-old customer in 2026 expects personalization. They expect recommendations. They expect their purchases to be remembered. Brands that can't deliver that through AI won't survive.

Talent availability: There are now thousands of AI engineers and designers who understand both AI and fashion. Five years ago, that was rare. Now it's becoming common. As talent availability increases, implementation costs decrease.

The real question isn't whether AI will be normal in fashion. It's how quickly different segments of fashion will adopt it. Luxury brands might lag. Fast fashion will lead. But eventually, everyone catches up.

Virtual try-ons can reduce fashion e-commerce return rates from 35% to 20%, potentially saving brands millions annually. Estimated data based on industry studies.

The Reputational Risk That's Keeping Brands Silent

Barton mentioned something interesting: Many brands are using AI quietly because of the potential reputational risk.

Why is that a problem? Let's think about a luxury brand's perspective. If you announce you're using AI to help design clothes, a portion of your customers will think: "Wait, my $5,000 dress was designed by a computer?" That's bad for the premium positioning. It feels like cost-cutting.

But if you use AI quietly, if you use it for supply chain optimization and trend forecasting and sizing recommendations, nobody questions it. It's just business as usual.

Barton's decision to be public about it is risky. Some customers will judge. Some will think less of the collection. Some will feel like she's selling out.

But Barton is betting that sophisticated customers—the people who actually understand design and fashion—will appreciate the honesty. She's betting that by being transparent about how AI was used, by emphasizing that it enhanced her creative vision rather than replacing it, she'll build trust. And she's right. The NYFW presentation generated significant buzz, and most of it was positive.

The reputational risk is real, but so is the reputational reward. Brands that are bold enough to lead often build stronger emotional connections with customers than brands that follow quietly.

The Differentiator: Having the Right Partners

Harinath emphasized that most AI technology already exists. The differentiator now is assembling the right partners and building teams that can operationalize it responsibly.

That's a crucial insight. You can't build this stuff alone. Barton couldn't do it herself. Neither could Fiducia AI. Neither could IBM. But together, they could.

Barton brought creative vision. She knew exactly what kind of experience she wanted to create. She understood fashion at the highest level. She could articulate the story she wanted to tell.

Fiducia AI brought AI expertise. They understood computer vision, natural language processing, orchestration. They knew how to build systems that work at scale and with reliability. They could translate Barton's vision into technical specifications.

IBM brought enterprise infrastructure. They provided Watsonx for the foundational models. They provided Cloud for the computing resources. They provided Object Storage for managing massive amounts of imagery. They brought credibility and support.

None of them could have done it alone. Barton couldn't have built the AI system herself. Fiducia AI couldn't have created the creative vision. IBM couldn't have understood the fashion context.

This partnership model is how AI gets deployed responsibly. It's not AI engineers in a vacuum, building systems without understanding the human impact. It's humans, AI experts, and infrastructure providers working together, each bringing their expertise.

That's the template for AI adoption across industries. Find the right partners. Build teams with diverse expertise. Create accountability throughout the process.

What Gets Better: Prototyping, Visualization, Production Decisions

Barton sees several concrete improvements that AI brings to her work. She mentioned prototyping, visualization, and smarter production decisions.

Prototyping: Traditionally, creating a prototype of a design is expensive and time-consuming. You sketch it. You source fabrics. You hand-sew or sample versions. You try them on. You iterate. Each iteration might cost hundreds of dollars and take weeks. With AI, you can generate dozens of visual prototypes in hours. You can see how different fabrics look. You can see different proportions. You can experiment wildly without the cost of physical samples.

This doesn't replace hand-sewn prototypes entirely. At some point, you need to feel the fabric, see how it drapes, understand the construction. But AI can accelerate the exploration phase dramatically.

Visualization: Explaining a design concept to a production team is hard. You have sketches, maybe mood boards, reference images. But imagine explaining your vision in 3D, photorealistic detail. The manufacturer sees exactly what you want. There's less room for misinterpretation. Quality improves.

For customers, visualization matters even more. Looking at a flat sketch or a technical drawing doesn't convey how a piece will look and move on a body. AI can generate realistic renderings that show that. Customers understand the product better. They have fewer surprises when items arrive.

Production decisions: Data about which pieces are popular, which styles sell better in different regions, which fabrics are most requested—this information can inform production runs. Instead of guessing and ending up with excess inventory or stock-outs, brands can use AI to forecast demand more accurately. It's not perfect, but it's better than human intuition alone.

All three improvements are about doing what you already do, but better. Not replacing humans, but making humans more effective.

The Real Future of Fashion (It's Not Automated)

Barton's final statement is powerful: "The most exciting future for fashion is not automated fashion. It is fashion that uses new tools to heighten craft, deepen storytelling, and bring more people into the experience, without flattening the people who make it."

That should be the north star for AI adoption in creative industries. Not efficiency for its own sake. Not automation for automation's sake. But tools that make the creative work better, more accessible, and more human.

What would that future look like?

Smaller designers could compete with larger ones because AI levels the playing field. You don't need a massive design team or production infrastructure. You need vision and the ability to partner with the right technology providers.

Customers could have more direct relationships with designers. Instead of buying through retailers and department stores, they could customize pieces directly. AI could help manage that process, but the creative decisions would still be human.

Production would be more efficient and sustainable. Instead of making huge batches of clothing that might not sell, brands could make smaller runs and adjust based on demand. Less waste. Less inventory sitting in warehouses. Less environmental impact.

Craft wouldn't disappear. If anything, it would become more valued. In a world where AI can do a lot of the routine work, the hand-crafted, unique, non-algorithmic creative work becomes more precious. The market for products made with true human skill would expand.

That's the bet Barton is making. That's the vision that makes this NYFW presentation interesting. It's not "AI will take over fashion." It's "AI will help fashion become more creative, more accessible, and more human."

Whether that happens depends on whether enough designers think like Barton.

Technical Challenges That Nobody Talks About

Getting this to work at a major fashion event is harder than it sounds. Here are the hidden technical challenges:

Real-time performance: The system had to respond in under 2 seconds or people would get bored and walk away. That means every component had to be optimized. The vision model had to run locally or with minimal latency. The language model had to have fast inference. The image generation had to happen quickly. That's not just about building a good system. It's about building a fast system.

Reliability at scale: Fashion Week draws thousands of visitors. If the system crashed or became slow when traffic peaked, the entire experience would fall apart. That meant building redundancy, load balancing, and failover systems. It meant testing under load before the event. It meant having support staff ready to troubleshoot in real-time.

Accuracy in noisy environments: A fashion show is loud. There's music, crowds, ambient noise. The voice recognition system had to work despite all that. That meant special microphones, noise cancellation, and fallback mechanisms. The visual system had to work under varying lighting conditions and camera angles.

Handling unpredictable inputs: What happens when someone asks the system something it wasn't trained for? What if they try to break it? What if they ask about a piece from last season that's not in the collection? The system had to handle edge cases gracefully, without crashing or giving nonsensical answers.

Privacy and data handling: Every interaction generates data. The system needs to handle it responsibly, delete it appropriately, and protect customer information. That's not purely a technical problem, but it still requires technical implementation.

Fiducia AI's comment that "the hardest work wasn't model tuning, it was orchestration" makes sense now. Building the ML model is maybe 20% of the work. Building the system that actually works in the real world is the other 80%.
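One way to picture the real-time constraint is as a latency budget shared across the pipeline. The sketch below uses the 2-second target mentioned above. The step names, the fallback behavior, and the injectable clock are assumptions for illustration. Note that it checks the budget after each step finishes, so it detects an overrun rather than interrupting a slow step.

```python
import time

BUDGET_SECONDS = 2.0  # the under-2-seconds target described above


def run_with_budget(steps, budget=BUDGET_SECONDS, clock=time.perf_counter):
    """Run (name, callable) steps in order and flag when the budget is exceeded.

    The check happens after each step completes, so a slow step is detected
    but not cut short. `clock` is injectable so the logic can be tested.
    """
    start = clock()
    completed = []
    for name, step in steps:
        step()
        elapsed = clock() - start
        if elapsed > budget:
            return {"status": "fallback", "completed": completed, "elapsed": elapsed}
        completed.append(name)
    return {"status": "ok", "completed": completed, "elapsed": clock() - start}


# Hypothetical no-op steps standing in for vision, language, and rendering.
steps = [("vision", lambda: None), ("language", lambda: None), ("render", lambda: None)]
outcome = run_with_budget(steps)
print(outcome["status"], outcome["completed"])
```

A production system would more likely enforce per-step timeouts and degrade gracefully, for example by returning a text answer while the render finishes in the background.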

What Brands Should Learn From This

If you're in any kind of creative business and thinking about AI, here's what Barton's approach teaches:

1. Start with the problem, not the technology: Barton didn't say "How do we use AI?" She said "How do we deepen the customer experience?" Then she found the technology that solved that problem. That's the right order.

2. Be honest about what you're doing: Transparency builds trust. If you're using AI, say so. Explain why. Explain how it's making things better. Customers are smarter than you think. They can tell the difference between innovation and BS.

3. Invest in the right partnerships: You can't do this alone. Find partners who understand the technology deeply and who respect your creative vision. It's not a vendor relationship. It's a collaboration.

4. Test extensively before going public: The NYFW presentation looked seamless, but that's because the team tested relentlessly. They found bugs before customers did. They practiced responses. They prepared for failure modes. When you go public with AI, reliability matters.

5. Maintain human judgment at the core: AI should augment human decisions, not replace them. The final creative decisions should come from humans who understand the business and the brand.

The Industry Inflection Point

We're at the moment where fashion is deciding what role AI will play. The moment when brands choose: Are we going to lead, or are we going to follow?

Barton chose to lead. She made a public bet that AI can enhance fashion without erasing the human element. That bet is being validated by the response to her NYFW presentation. People are talking about it. Other designers are taking notes. Brands are reaching out to Fiducia AI and asking how they can do something similar.

This won't be the last AI-powered fashion presentation. In fact, expect to see many more in 2025 and 2026. Some will be better executed. Some will be worse. Some will understand the balance between AI and human creativity. Some will get it wrong.

But Barton got there first, and she got it right. That matters.

The question now is whether the fashion industry learns the lesson. The lesson isn't "use AI because it's cool." The lesson is "think carefully about how to use AI to make your work better and more human."

Harinath summed it up: "Most of this technology already exists. The differentiator now is assembling the right partners and building teams that can operationalize it responsibly."

That should be the standard for AI adoption everywhere. Not just in fashion.

FAQ

What is virtual try-on technology and how does it work?

Virtual try-on technology uses computer vision and 3D rendering to show customers how clothing would look on their body without physically wearing it. The system captures an image of the customer (or uses a preset avatar), identifies the garment being tested, and renders a photorealistic image of that person wearing the item. The technology works by creating 3D models of both the clothing and the body, then simulating how fabric drapes and moves, accounting for factors like fit, proportions, and material properties. Advanced systems like those used in Barton's presentation can handle real-time adjustments, different poses, and even styling combinations.

How does AI improve the fashion design process?

AI accelerates several stages of fashion design by automating repetitive tasks and providing intelligent suggestions. During the conceptual phase, designers can use generative AI to explore visual variations, test different color palettes, and experiment with proportions without creating expensive physical prototypes. During production, AI helps forecast demand more accurately, optimize supply chains, and identify potential quality issues before manufacturing. For customer experience, AI powers personalization, style recommendations, and virtual try-ons. The key is that AI handles the computational heavy lifting, freeing designers to focus on creative decisions and brand vision. It's not replacing the designer's judgment, but rather amplifying their capabilities.

Why would fashion brands hesitate to use AI publicly?

Luxury and fashion brands have built their reputation on heritage, craftsmanship, and authenticity. There's a concern that publicly using AI might damage that positioning by suggesting the creative work is less valuable or that the brand is cutting corners through automation. Customers sometimes equate AI with a loss of human artistry or quality. Additionally, there are legitimate questions about copyright and fair use when AI models are trained on millions of existing images from photographers and other designers. Brands worry about backlash if they're seen as erasing human creators or using generative AI without proper attribution and compensation. This is why many brands experiment with AI in operations quietly, while a few like Kate Barton choose transparency and position AI as a tool that enhances rather than replaces human creativity.

What role did IBM Cloud infrastructure play in Barton's presentation?

IBM Cloud provided the underlying infrastructure and tooling for the AI system. IBM Watsonx supplied the foundation models, including large language models, that could be customized for Barton's specific use case without requiring months of retraining. IBM Cloud provided the computing resources to run the visual recognition models, language models, and image generation systems in real time at scale. IBM Cloud Object Storage managed the large volume of imagery, from product photos to generated virtual try-on renders, that the system needed to access instantly. Essentially, IBM Cloud handled the "boring" but essential parts of infrastructure, allowing Fiducia AI to focus on building the intelligence layer. This partnership shows that modern AI projects require not just smart algorithms but robust, scalable infrastructure from enterprise providers.

How can smaller brands compete with larger ones using AI?

AI democratizes access to capabilities that were previously available only to large, well-resourced brands. A small designer can now partner with an AI service provider to create personalized customer experiences, optimize their supply chain, and enhance their product presentation—things that used to require large teams and massive budgets. The barrier is no longer capital; it's access to the right partnerships and understanding of how to deploy AI responsibly. Emerging platforms and consultancies make it possible for smaller brands to implement AI solutions without building their own infrastructure from scratch. The playing field has shifted from "Who can afford the biggest teams?" to "Who can assemble the best partnerships and deploy technology thoughtfully?" Barton's collaboration with Fiducia AI rather than attempting to build everything internally shows this model in action.

What does "AI orchestration" mean and why is it the hard part?

AI orchestration refers to integrating multiple AI systems so they work together seamlessly to solve a complex problem. In Barton's case, it meant connecting visual recognition (to identify clothing), natural language understanding (to process customer questions in multiple languages), image generation (to create virtual try-ons), and response generation (to provide helpful answers) into one cohesive system that responds in under 2 seconds. The hard part isn't building any single component; those technologies exist and are mature. The hard part is ensuring they work together reliably, handle edge cases, maintain real-time performance under load, and degrade gracefully when something fails. AI projects often fail not because the models are bad, but because the orchestration is poor. This is why partnerships with companies that understand enterprise systems (like IBM) are valuable.
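To make the orchestration idea concrete, here is a minimal Python sketch of a request handler that chains recognition, answering, and rendering under a latency budget, and drops the try-on image rather than failing when the render runs late. It is an illustration only, not Fiducia AI's implementation: the function names, the `Reply` type, the simulated delays, and the use of `asyncio` are all assumptions made for the example. Only the 2-second budget comes from the description above.

```python
import asyncio
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class Reply:
    garment: str
    answer: str
    try_on_image: Optional[str]  # None means the render was skipped (degraded mode)


# Hypothetical stand-ins for the real services; each sleeps to mimic latency.
async def recognize_garment(photo: bytes) -> str:
    await asyncio.sleep(0.01)
    return "sculpted-top"


async def answer_question(garment: str, question: str) -> str:
    await asyncio.sleep(0.01)
    return f"About {garment}: {question}"


async def render_try_on(garment: str, photo: bytes, delay: float) -> str:
    await asyncio.sleep(delay)
    return f"render-of-{garment}.png"


async def handle_request(
    photo: bytes,
    question: str,
    budget_s: float = 2.0,       # overall latency budget for the reply
    render_delay: float = 0.01,  # simulated render time, for demonstration only
) -> Reply:
    deadline = time.monotonic() + budget_s

    # Recognition must finish first: both later steps depend on its result.
    garment = await recognize_garment(photo)

    # The text answer and the image render are independent, so run them concurrently.
    answer_task = asyncio.create_task(answer_question(garment, question))
    render_task = asyncio.create_task(render_try_on(garment, photo, render_delay))

    # For brevity, only the render is bounded by the deadline in this sketch.
    answer = await answer_task

    # Give the render whatever time is left; on timeout it is cancelled
    # and the reply goes out as text only.
    remaining = max(0.0, deadline - time.monotonic())
    try:
        image = await asyncio.wait_for(render_task, timeout=remaining)
    except asyncio.TimeoutError:
        image = None

    return Reply(garment, answer, image)
```

Calling `asyncio.run(handle_request(photo, "What fabric is this?"))` returns a full reply when every stage is fast. With a tight budget and a slow render, the same call still returns the text answer and leaves `try_on_image` as `None`, which is one simple form of graceful degradation.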

Is AI adoption in fashion inevitable?

Yes, according to industry leaders like Ganesh Harinath, who predicts AI will be normalized in fashion by 2028 and embedded in the operational core of retail by 2030. The forces driving this are competitive pressure, consumer expectations for personalization, technology maturity, and the increasing availability of AI talent. Brands that don't adopt AI will find themselves disadvantaged—longer design cycles, less efficient supply chains, less personalized customer experiences. This follows the pattern of e-commerce in the 1990s, which seemed risky and inauthentic to luxury brands at first, but eventually became table stakes. However, the future depends on how brands adopt AI. The most successful approach—like Barton's—will enhance human creativity and maintain transparency about how the technology is being used, rather than attempting to hide it or use it to replace human workers.

What are the ethical concerns with AI in fashion?

The primary ethical concern is that AI, deployed incorrectly, could erase human creators. This could happen through generative AI that produces designs without human input, synthetic imagery that replaces photographers and models, or algorithmic copying of competitors' designs. Secondary concerns include proper attribution and compensation for the artists and photographers whose work was used to train AI models. Intellectual property questions remain unresolved: if your AI model was trained on thousands of existing fashion photographs, do you owe compensation to those photographers? How transparent must you be about using AI in your creative process? Kate Barton's answer is clear: transparency, clear licensing agreements, proper credit, and a commitment that technology amplifies rather than erases human creativity. The industry is still working out the standards, but Barton's ethical framework—"If the technology is used to erase people, I am not into it"—should guide adoption across the industry.

How does multilingual AI support global fashion experiences?

Multilingual AI removes language barriers that traditionally limited global fashion experiences to people who could physically attend events or speak English. With Barton's system, a customer in Seoul can ask a question in Korean about a piece from New York Fashion Week, get an answer in Korean, and see photorealistic virtual try-ons. This requires more than just translation; it requires cultural understanding. The system needs to understand that "formal wear" means something different in different cultures. It needs to account for color preferences that vary by geography. It needs to deliver styling advice that makes sense in different contexts. Large language models trained on diverse global data can handle much of this, but careful prompt engineering and cultural context from human experts are essential. This makes fashion genuinely global in a way it never has been before.
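One way to picture the "prompt engineering plus human cultural context" step is a small prompt builder that pairs the customer's locale with guidance written by people who know that market. The Python sketch below is hypothetical: `STYLING_NOTES`, `DEFAULT_NOTE`, `build_prompt`, and the note texts are placeholders invented for illustration, and no real model API is called.

```python
# Hypothetical, hand-written guidance. Real notes would come from regional stylists.
STYLING_NOTES = {
    "ko": "Explain formality using dress codes familiar to shoppers in Korea.",
    "es": "Explain formality using dress codes familiar to Spanish-speaking shoppers.",
}

# Fallback used when no expert note exists for the customer's language.
DEFAULT_NOTE = "Explain formality in neutral terms and avoid region-specific assumptions."


def build_prompt(question: str, locale: str, garment: str) -> str:
    """Assemble a locale-aware prompt for a language model (the model call is out of scope)."""
    # "ko-KR" -> "ko": look up notes by language, keep the full locale in the prompt.
    language = locale.split("-")[0].lower()
    note = STYLING_NOTES.get(language, DEFAULT_NOTE)
    return "\n".join([
        f"You are a fashion assistant answering a question about: {garment}.",
        f"Reply in the language of locale {locale}.",
        f"Styling guidance from human experts: {note}",
        f"Customer question: {question}",
    ])
```

The design choice worth noting is that the cultural guidance lives in data that human experts can edit, not in the model or the code, so adding a market means adding a note rather than retraining anything.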

What should brands do if they want to adopt AI like Kate Barton did?

Start by clearly defining the problem you're trying to solve, not the technology you want to use. What customer experience are you trying to improve? What creative process do you want to enhance? Then find partners who understand both the technology and your industry. Don't try to build everything in-house. Build relationships with AI service providers, infrastructure companies, and consultancies. Be transparent with customers about what AI is doing and why. Maintain human judgment at the core of creative decisions. Test extensively before going public—reliability matters when you're representing your brand. And finally, commit to using AI responsibly: proper attribution, clear communication, and a firm ethical stance that technology enhances rather than erases human creativity. This isn't about being early to adopt AI; it's about adopting it thoughtfully.

The Bottom Line

Kate Barton's NYFW presentation represents an inflection point in how creative industries think about technology. It's not "AI is here, let's use it." It's "AI is here, and we can use it to make our work better without losing our humanity."

That distinction matters. It's the difference between innovation and hype. It's the difference between building something that lasts and building something that generates buzz for a moment.

Barton, Fiducia AI, and IBM showed that it's possible to deploy sophisticated AI systems that respect human creativity, maintain transparency, and deliver genuine value to customers. That's the template for AI adoption in any creative field.

The question now is whether the fashion industry, and industries like it, will follow this template or take shortcuts. The shortcuts would be tempting: automate design, eliminate human photographers, replace human stylists with algorithms. But that path leads to commodification, not innovation.

Barton chose the harder path. She chose to use AI to amplify human creativity. That choice, and the successful execution of her vision, should inspire others to do the same.

The future of fashion isn't automated. It's human, augmented by intelligent tools. And that future just arrived at New York Fashion Week.

Key Takeaways

- Fiducia AI built a production-grade system combining computer vision, multilingual NLP, and real-time image generation on IBM infrastructure

- Virtual try-on technology reduces fashion return rates by approximately 40%, delivering measurable business value alongside customer experience improvements

- Kate Barton positioned AI as a storytelling and craft-enhancement tool, not as a replacement for human creativity, establishing an ethical framework for AI adoption

- The hardest challenge in AI implementation is orchestration (connecting multiple systems seamlessly), not building individual components

- Industry leaders predict AI will be normalized in fashion by 2028 and embedded in the operational core of retail by 2030, following the pattern of e-commerce adoption

- Successful AI adoption requires partnerships combining creative vision (designers), technical expertise (AI companies), and infrastructure (cloud providers)

- Transparency about AI use builds trust with customers; most AI concerns stem from perceived deception, not from the technology itself

![AI Fashion Week: How Kate Barton Redefined Runway Shows [2025]](https://tryrunable.com/blog/ai-fashion-week-how-kate-barton-redefined-runway-shows-2025/image-1-1771092388881.jpg)