The AI Trust Paradox: Why Your Business Is Failing at AI (And How to Fix It)

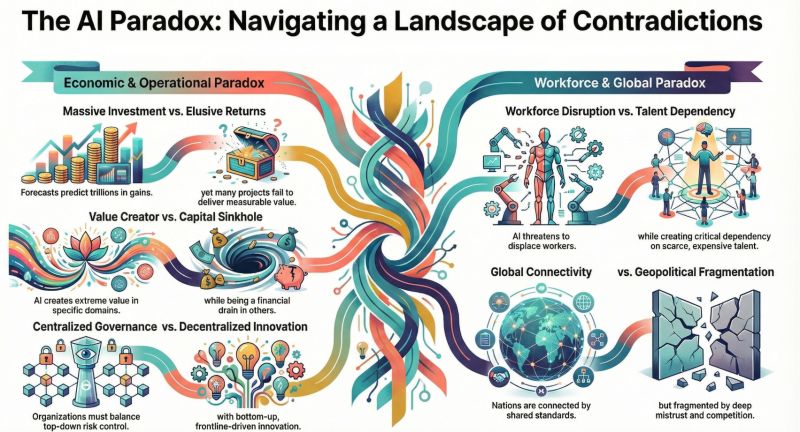

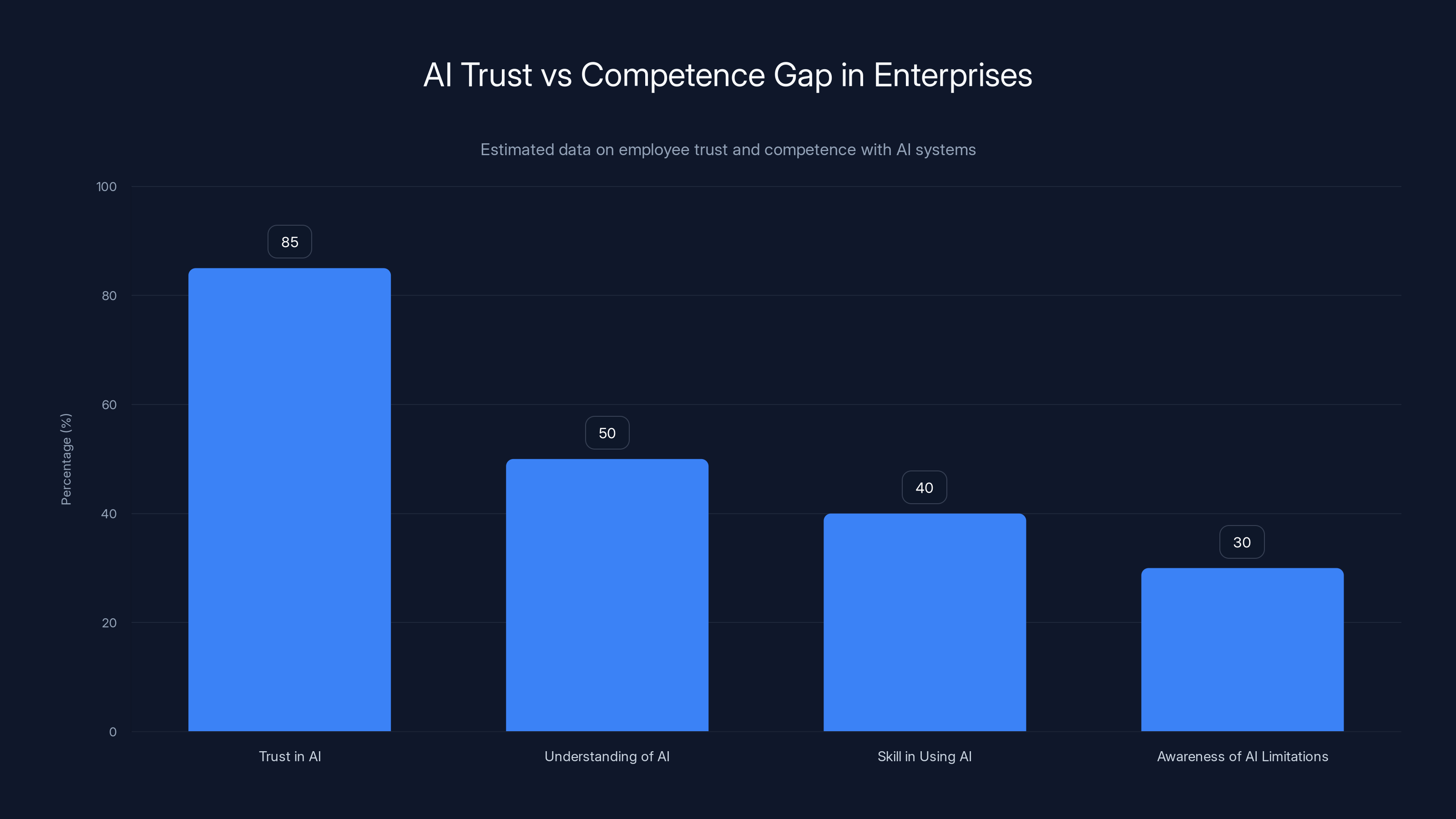

There's something deeply unsettling happening in enterprise technology right now. Your team is deploying AI systems with confidence. They're trusting outputs they don't fully understand. They're making decisions based on data they haven't validated. And nobody seems to notice the contradiction.

This is the AI trust paradox, and it's probably costing you more money than you realize.

Here's the thing: recent research reveals a dangerous gap between confidence and capability. Employees trust AI tools at scale. They've bought into the hype. But they haven't actually developed the skills to use those tools responsibly. That's not just inefficient—it's a business risk wrapped in a productivity bow.

The data is pretty stark. Ninety-six percent of data leaders admit their staff need more training to use AI responsibly. Yet here's where it gets weird: employees are already using these systems today. They're already making decisions. They're already generating reports, analyzing data, and steering business direction based on AI outputs they don't truly understand.

That's not innovation. That's gambling.

I've watched this play out in real companies. A finance team adopts an AI forecasting tool without understanding the underlying models. A marketing department uses AI to segment customers without validating data quality. An engineering team relies on AI code suggestions without reviewing the security implications. The systems work—sometimes brilliantly. But when something breaks, when bias emerges, or when accuracy suddenly drops, everyone's scrambling to figure out what went wrong.

The problem isn't the AI itself. The problem is that we've romanticized these tools without building the foundation of knowledge that actually makes them work safely and effectively. We've created a situation where confidence exceeds competence by a dangerous margin.

This article breaks down what the AI trust paradox really means for your business, why it happened, and most importantly, what you need to do about it right now. Because ignoring this gap isn't just inefficient—it's leaving competitive advantage on the table.

TL;DR

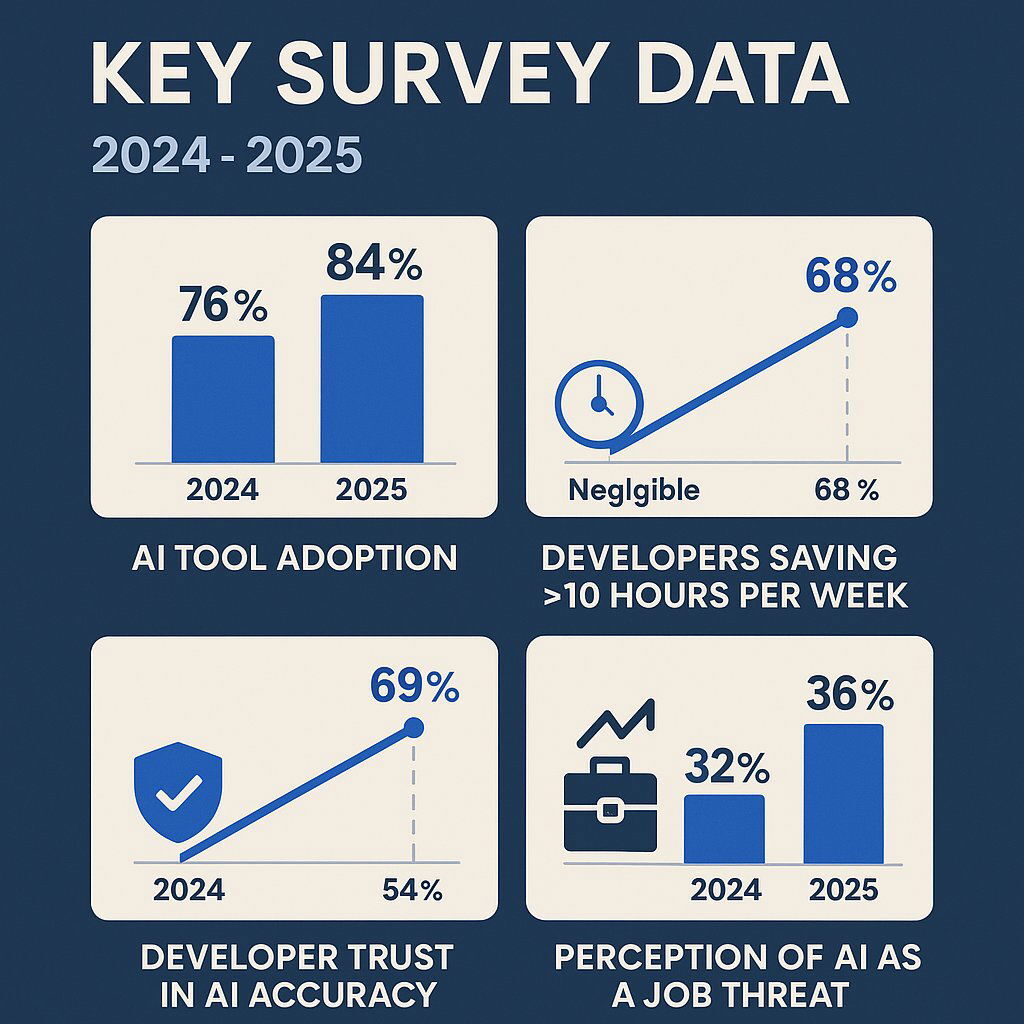

- The paradox exists: 96% of leaders say staff need AI training, but employees already trust and use AI tools daily without proper skills

- Data literacy beats AI literacy: 82% of leaders prioritize data training over AI training (71%), yet this critical gap remains unfilled

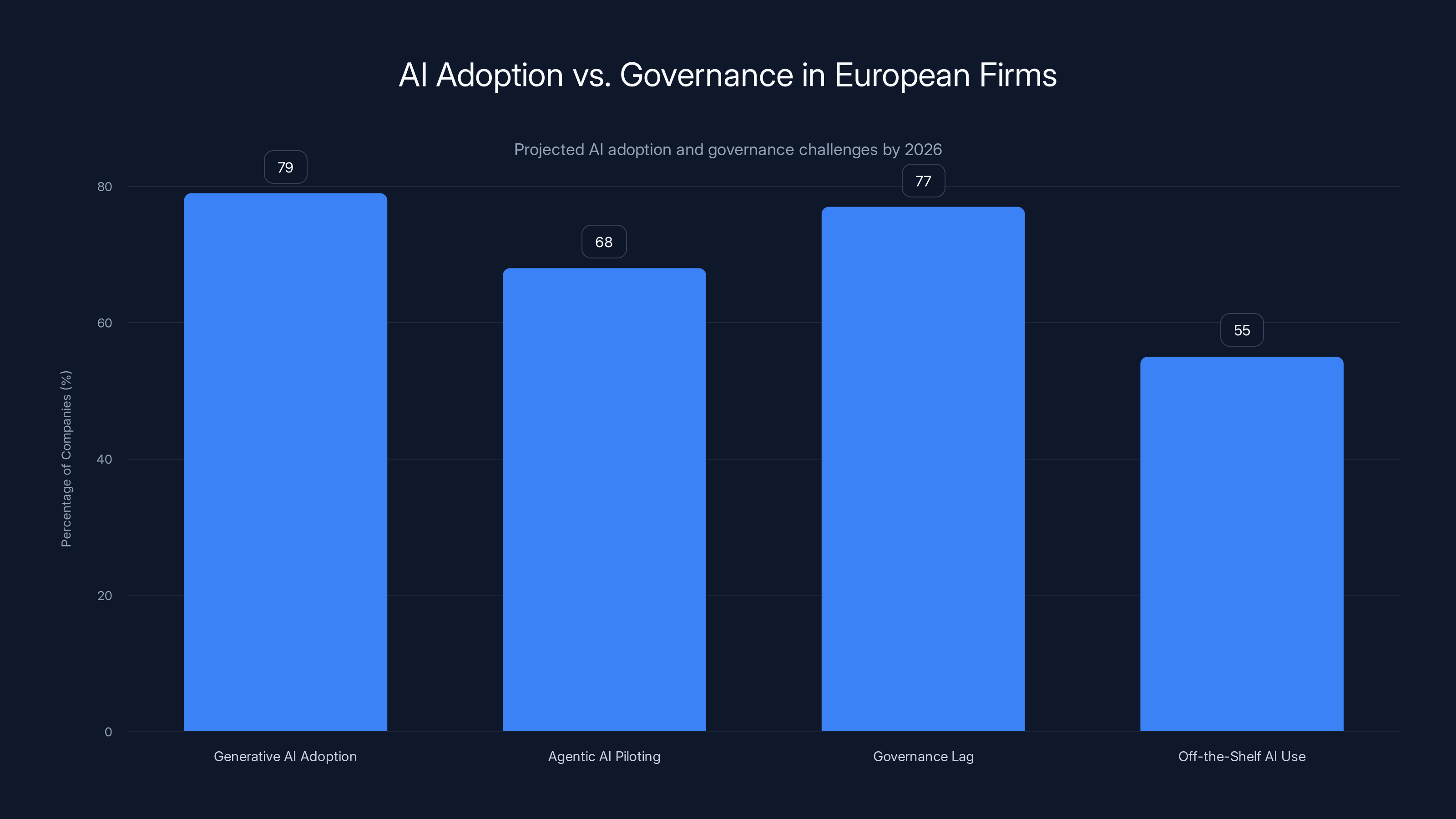

- Governance is failing: 77% of European firms admit AI visibility and governance haven't kept up with employee AI adoption

- Budget is increasing: 23% of companies plan significant spending increases on employee upskilling, security, and governance

- Real impact incoming: By Q1 2026, 79% of European businesses expect to have deployed generative AI, with 68% piloting agentic AI systems

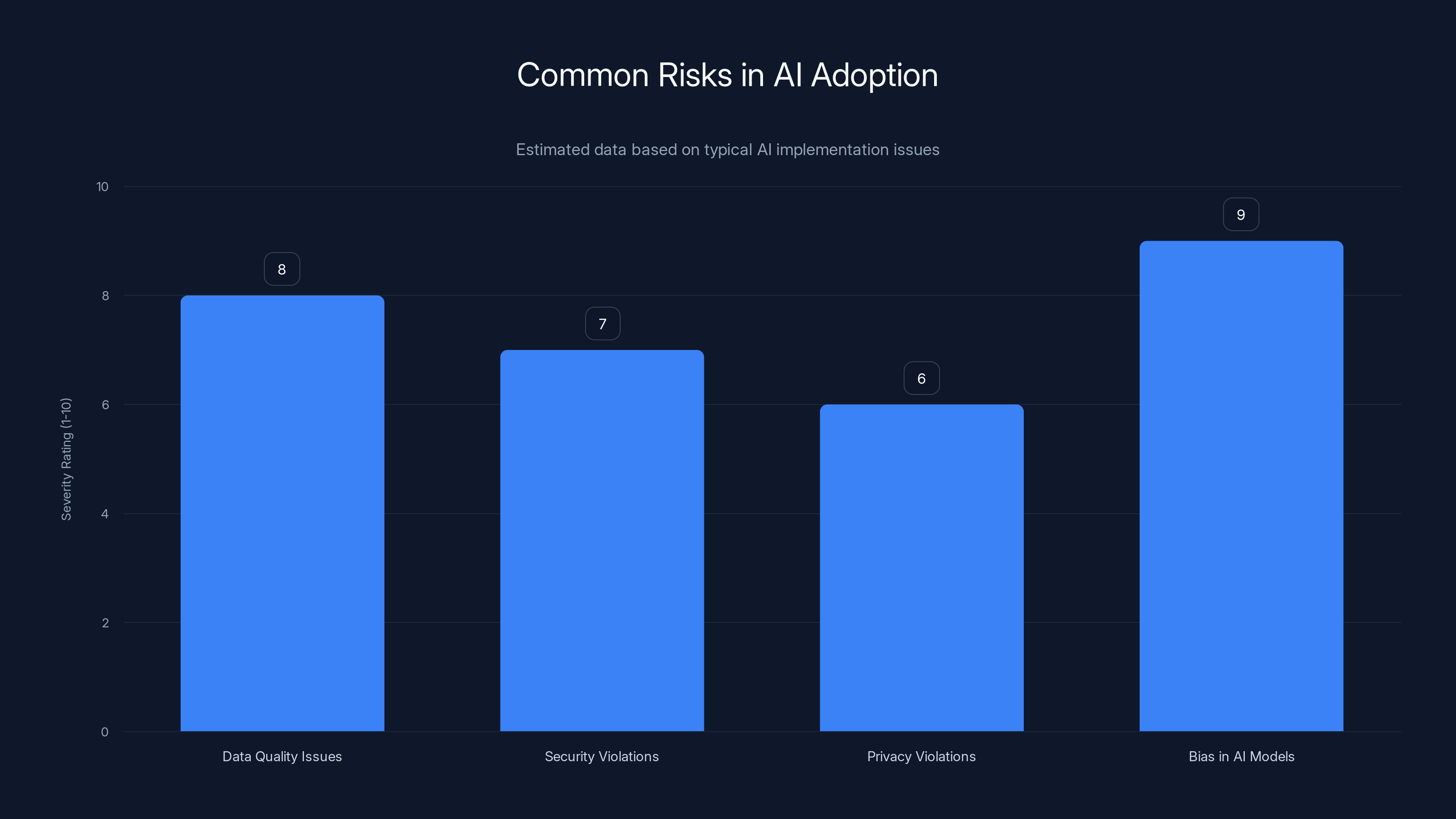

Data quality issues and bias in AI models are the most severe risks when adopting AI without addressing the trust paradox. Estimated data based on typical challenges.

Understanding the AI Trust Paradox: The Core Contradiction

Let me explain what makes this a paradox and why it matters more than it initially sounds.

A paradox, by definition, is a contradiction that seems impossible but is actually true. The AI trust paradox fits perfectly: employees trust AI systems and the data behind them despite not having developed the skills needed to use them responsibly. This isn't theoretical. This is happening right now in enterprises across every continent.

Think about what that means in practical terms. An accountant trusts an AI system to flag irregularities in monthly spending reports. But they don't understand how the algorithm weights different transaction types. They don't know if the model was trained on data from their industry or a completely different one. They don't recognize the blind spots in the training data. Yet they review the flagged items and act on the recommendations with genuine confidence.

Or consider a customer service manager using AI to predict which customers might churn. The system is reasonably accurate, maybe 78%. But the manager doesn't know what the other 22% means: how many of those errors are false positives (loyal customers flagged as likely to leave) and how many are false negatives (at-risk customers the model missed). They don't understand the business cost of misclassifying a loyal customer as likely to leave. So they implement retention campaigns based on incomplete information, wasting budget on customers who were never at risk.
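
To make that concrete, here is a minimal sketch in Python of why one accuracy figure hides the cost. Every count and the cost per offer are made-up illustrations, not research figures; only the 78% accuracy comes from the example above.

```python
# Hypothetical churn-model results for 1,000 customers (illustrative numbers only).
true_positives = 60    # flagged as churn risk, and really at risk
false_positives = 180  # flagged as churn risk, but actually loyal
false_negatives = 40   # not flagged, but really at risk
true_negatives = 720   # not flagged, and actually loyal

total = true_positives + false_positives + false_negatives + true_negatives
accuracy = (true_positives + true_negatives) / total

# Precision: of the customers the campaign targets, how many were truly at risk?
precision = true_positives / (true_positives + false_positives)

# Assumed cost of sending one retention offer (hypothetical).
cost_per_offer = 50
wasted_budget = false_positives * cost_per_offer

print(f"accuracy:  {accuracy:.0%}")
print(f"precision: {precision:.0%}")
print(f"budget spent on customers who were never at risk: ${wasted_budget:,}")
```

With these assumed counts the model is 78% accurate, yet only a quarter of the customers it flags were actually at risk. A manager who sees only the headline accuracy has no way to know that.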

This trust-competence gap isn't a minor training issue. It's a systemic problem that grows as AI adoption accelerates.

The paradox exists for specific reasons. First, AI systems are genuinely impressive. They work. They produce results. When results arrive quickly and seem accurate, trust follows naturally—humans are pattern-matching creatures, and positive outcomes build confidence faster than education builds understanding. Second, enterprise culture often prizes speed over depth. Deploy now, train later. Implement quickly, validate gradually. This approach works when you're talking about spreadsheets, but it fails spectacularly with systems that make autonomous decisions based on complex algorithms.

Third, there's an asymmetry in what people think they need to know. Many employees believe they understand AI better than they actually do. They've seen ChatGPT. They've read articles about machine learning. They've experimented with prompts. They assume this surface familiarity translates to understanding complex enterprise AI systems. It doesn't. But that confidence persists anyway.

The result is an organization where everyone is using AI tools, most people trust the outputs, but few truly understand how or why the systems work. That's the paradox. That's the problem.


The Research: What Leaders Are Actually Saying About AI Trust

Let's ground this in data, because paradoxes only matter if they're real.

Recent research from enterprise data platforms reveals something crucial: 96% of data leaders say their staff need more training to use AI responsibly. That's not "most"—that's nearly everyone. If you took a room of 100 enterprise data leaders, 96 of them would tell you their teams lack adequate AI training. That's consensus.

But here's where the paradox crystallizes. That same research shows that employees already trust AI outputs. They're already using the systems. They're already making decisions. The training they need and the action they're taking are completely misaligned.

The same leaders also reveal something interesting about what training matters most. When asked about priorities, 82% emphasize data literacy training, while 71% emphasize AI literacy training. This 11-point gap is telling. Leaders understand intuitively that if your data is garbage, your AI is garbage, no matter how sophisticated the algorithm. They understand that employees need to know how to evaluate data quality, validate sources, and recognize missing information before they can possibly use AI responsibly.

Here's the kicker: most companies aren't delivering either training effectively. They're caught between knowing what's important and actually implementing it. That gap grows every quarter that AI adoption accelerates.

The research also highlights specific concerns that keep data leaders up at night. The top worries include:

- Data quality issues: Incomplete, outdated, or biased data feeding into AI systems

- Security vulnerabilities: Sensitive information exposed through AI integrations

- Lack of agentic expertise: Teams don't understand autonomous AI agents well enough to oversee them safely

- Observability gaps: Companies can't see what their AI systems are actually doing or why

- Missing safety guardrails: No clear protocols for when AI should and shouldn't be trusted

Each of these concerns traces back to the trust paradox. Employees trust systems. But the organization hasn't built the oversight infrastructure that trust requires. That's the structural problem underneath all the specific concerns.

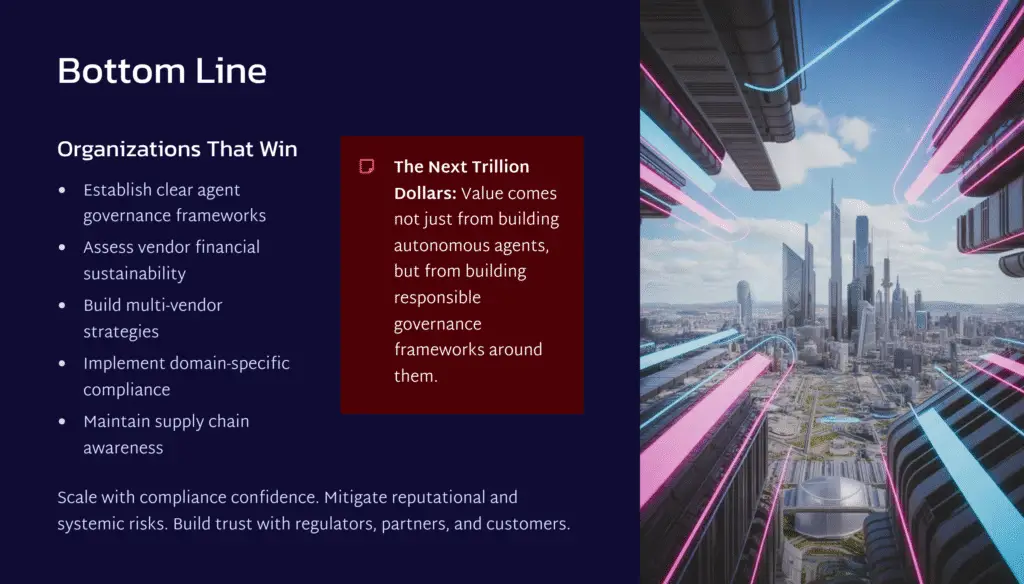

AI Adoption Is Accelerating, But Governance Is Falling Behind

The pace of AI adoption is genuinely staggering. Here's what companies are actually planning:

By the end of Q1 2026, 79% of European businesses expect to have adopted generative AI in their workflows. That's almost four in five companies. In a single year. That's not adoption—that's wholesale transformation.

But there's more. Nearly as many companies, 68%, are piloting agentic AI systems. These aren't passive tools that employees interact with. These are autonomous agents that make decisions, execute tasks, and take actions with minimal human oversight. That's a completely different risk profile than asking ChatGPT for a summary.

So adoption is happening at breakneck speed. What about governance?

Here's the problem: 77% of European firms admit that AI visibility and governance haven't kept pace with employee AI adoption. That's more than three-quarters of companies essentially saying: "We have no idea what our teams are actually doing with AI, and we haven't built the controls to oversee it properly."

Think about what that means. Teams are deploying agentic AI systems—systems that operate autonomously—without adequate visibility or governance frameworks. That's not just inefficient. That's a compliance nightmare. That's a data security risk. That's a potential competitive problem if sensitive information leaks or if decisions made by AI agents turn out to be discriminatory or biased.

The gap between adoption speed and governance implementation is the AI trust paradox in its most dangerous form.

Most companies are taking shortcuts on the implementation side too. More than half (55%) of surveyed firms are buying off-the-shelf AI agents instead of building custom systems. This is actually smart in some ways—it's faster and cheaper than building from scratch. But it also means they have less visibility into how these systems work, less ability to customize them for safety, and more dependency on vendor expertise they may not have properly evaluated.

The fundamental issue is that governance infrastructure takes time to build. You need policies. You need tools. You need training. You need oversight processes. You need people who understand both the technology and your business well enough to catch problems before they become incidents. Building that takes quarters, not weeks. But AI adoption is happening at the speed of weeks.

That mismatch is creating enormous risk.

Data literacy is prioritized by 82% of leaders, compared to 71% for AI literacy, highlighting the foundational importance of understanding data sources and biases.

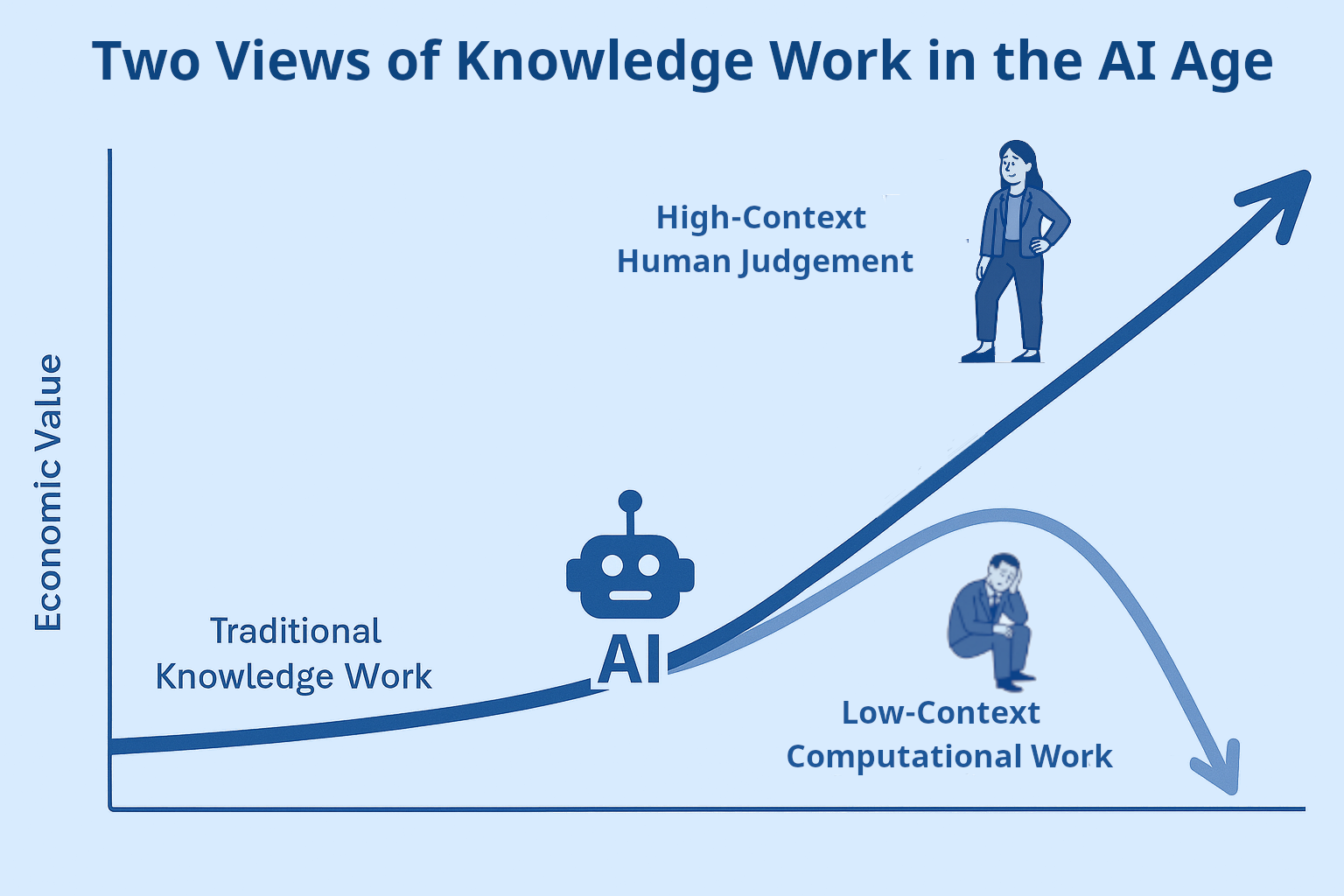

Data Literacy vs. AI Literacy: Which One Actually Matters More

This is where the research gets genuinely useful because it challenges common assumptions.

There's a widespread belief that companies need to train their workforce on AI fundamentals. Understand neural networks. Learn how algorithms work. Study machine learning concepts. This belief drives the "everyone should learn AI" movement that sounds great in principle.

But the research suggests something different. Companies prioritize data literacy training over AI literacy training by an 11-point margin, and there's a good reason: data literacy is the foundation.

Here's why this matters. Even if you perfectly understand how a neural network works, if you don't understand your data, you'll use AI wrong. You'll trust outputs from biased datasets without recognizing the bias. You'll make decisions based on incomplete information without knowing what's missing. You'll be confidently wrong in ways that sophisticated technical understanding can't fix.

Conversely, if you deeply understand your data—its sources, its quality, its limitations, its biases—you can use AI systems effectively even with basic understanding of how they work. You'll ask the right skeptical questions. You'll validate outputs. You'll notice when something seems off.

This suggests a training priority that might surprise you:

1. Data literacy comes first (understanding data sources, quality, biases, completeness)

2. Domain expertise comes second (understanding what good and bad outcomes look like in your specific business)

3. AI literacy comes third (understanding how AI systems work)

4. Technical skills come fourth (actual technical implementation)

Most companies have this backwards. They start with technical skills because that's easier to teach and easier to credential. But this creates the trust paradox perfectly: technically capable employees without deep data understanding, trusting powerful AI systems they don't fully understand.

The data literacy gap is especially critical because most enterprise data is messy. It's collected from multiple systems over years. It has gaps. It has biases. It reflects historical inequities and outdated categorizations. An employee who deeply understands their company's data will spot these issues immediately. An employee who's been trained on AI theory but has never really examined the actual data they work with will miss them entirely.

Here's the practical implication: if you're allocating training budget, spend significantly more on data literacy than on AI literacy. Teach people to question their data. Teach them to validate. Teach them to recognize patterns and anomalies. Teach them to ask: "Where did this number come from? Who collected it? When? Under what conditions? What's changed since then?"

Do that, and they'll use AI systems cautiously and effectively. Skip that, and they'll trust AI outputs even when they should be skeptical.

The Specific Risks: What's Actually Going Wrong

Let's move from theory to practice. What are the concrete problems that emerge when companies adopt AI without solving the trust paradox?

Data Quality Problems at Scale

When employees trust AI systems without understanding the underlying data, data quality issues become compounding. An AI model trained on incomplete or biased data produces biased outputs. Those outputs are trusted by employees. Decisions are made based on those outputs. Bad decisions compound over time.

I've seen this specifically in customer segmentation. A company uses AI to create customer segments for targeted marketing. The AI model works with data that includes only customers who completed purchases. But that data is missing customers who browsed extensively but never bought. So the model never learns anything about potential customers. It only optimizes around actual customers. The resulting segmentation is useful but incomplete. Employees trust the segments. They spend budget on targeting the identified segments. They completely miss potential customers that the biased data never showed to the model.

That's one example. Multiply it across every AI application in your organization, and you start seeing the scale of the problem.

Security and Privacy Violations Through Carelessness

Employees who don't understand how AI systems work sometimes feed sensitive data into those systems without realizing the implications.

I know of a company where a marketing team used an AI tool to analyze customer conversations from support tickets. The tickets contained customer names, contact information, payment details, and sensitive personal information. The employees trusted the AI tool—it was a commercial product from a known vendor—so they uploaded the data without properly evaluating whether the vendor's privacy policies aligned with regulatory requirements. The vendor's terms of service included using the data for model training. Suddenly, sensitive customer data was being used to improve a vendor's product that other customers might access.

This happens constantly. Employees trust AI systems. They don't read the terms of service. They don't understand the implications. They don't ask whether that particular use case violates GDPR, CCPA, or industry-specific regulations. They just use the tool.

Agentic AI Running Without Proper Oversight

Agentic AI is different from passive tools. These are systems that make decisions and take actions autonomously. They don't ask for permission before executing.

When employees don't fully understand how agentic AI systems work, they sometimes give these systems more autonomy than is safe. An AI agent trained to reduce costs might start canceling supplier relationships without fully understanding the business consequences. An AI agent trained to improve response times might start making customer service decisions that violate company policy. The system is doing exactly what it was trained to do. But the person who deployed it didn't have enough expertise to anticipate the consequences.

This is where the expertise gap becomes genuinely dangerous. Agentic AI requires different oversight than passive tools. You need people who understand how these systems make decisions well enough to spot when they're making dangerous decisions. The research cited earlier lists lack of agentic expertise among data leaders' top concerns.

Bias and Discrimination That Nobody Catches

This is subtle because it often doesn't announce itself. A hiring AI trained on historical hiring data learns the biases embedded in that data. A lending AI trained on past approval decisions replicates the discriminatory patterns from that past. A pricing AI trained on competitor pricing learns to price differently for different groups in ways that might be illegal.

Employees using these systems trust them because they produce outputs quickly and seem reasonable. Nobody catches the bias because nobody has the expertise to spot it. The organization makes decisions. Only later, when regulators investigate or when journalists report on the discrimination, does the problem surface.

This is where the trust paradox becomes a legal liability.

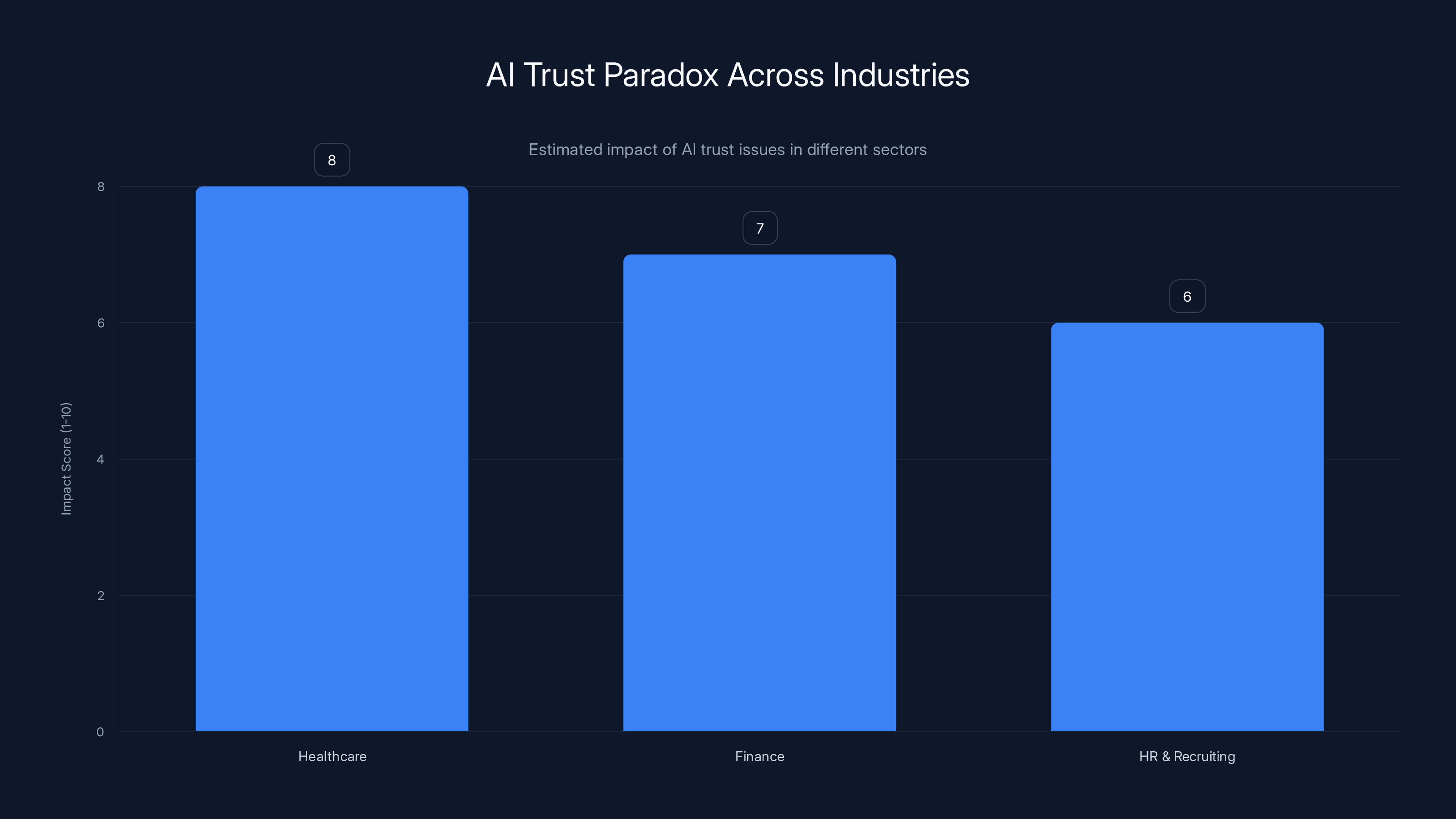

Estimated data shows healthcare faces the highest impact from AI trust issues, followed by finance and HR. Each industry has unique challenges that need tailored governance strategies.

Why Companies Are Investing in Solutions Now

The good news is that companies are recognizing these problems and starting to invest.

According to research, companies are planning significant increases in spending on several fronts. Specifically, 23% of organizations project significant spending increases on:

- Employee upskilling (especially data literacy and AI literacy)

- Privacy and security infrastructure (to prevent the data exposure problems described above)

- AI governance and observability (to gain visibility into what AI systems are actually doing)

- Safety guardrails and controls (to limit what autonomous systems can do without human oversight)

That 23% number might not sound huge, but consider what it means. Nearly one in four companies is moving from "we have an AI adoption problem" to "we're going to spend real money fixing it." That's a tipping point.

Why now? Several factors converge:

First, the problems are becoming visible. Companies have deployed AI. Problems have emerged. Regulators are asking questions. Risk management teams are flagging issues. Suddenly the invisible trust paradox becomes very visible.

Second, the tools for governance are becoming available. Better monitoring platforms. Better training solutions. Better oversight frameworks. Three years ago, if you wanted to implement AI governance, your options were limited. Now there are commercial solutions, industry frameworks, and proven approaches.

Third, the cost of not fixing the problem is becoming clear. A company that doesn't invest in governance might save money short-term, but they're taking on AI-related risks that could become very expensive very quickly. That calculus is shifting.

Building Trust Through Governance: The Framework

So how do you actually solve the AI trust paradox? How do you move from a state where employees trust systems they don't understand to a state where they use AI responsibly and effectively?

The solution involves four interconnected layers:

Layer 1: Data Governance Foundation

Before you can trust any AI system, you need to trust the data flowing into it. That means:

- Data inventory: Know every source of data used by your AI systems

- Data quality standards: Define what "good data" means for your specific use cases

- Data validation processes: Check data for completeness, accuracy, and bias before it enters AI systems

- Data documentation: Record where data came from, who collected it, when, and under what conditions

- Data access controls: Ensure sensitive data is handled according to regulations and policy

This layer is foundational. You can't build effective AI governance on top of unclear data.
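
As a rough illustration of the validation step, here is a small Python sketch that checks records for completeness and freshness before they reach an AI system. The field names, the sample records, and the two-year freshness limit are assumptions made up for this example. Bias checks are left out because they depend on definitions specific to your business.

```python
from datetime import date

def validate_records(records, required_fields, max_age_days, today):
    """Return a list of (record_index, problem) tuples for records that fail basic checks."""
    problems = []
    for i, record in enumerate(records):
        # Completeness: every required field must be present and non-empty.
        for field in required_fields:
            if record.get(field) in (None, ""):
                problems.append((i, f"missing {field}"))
        # Freshness: flag records collected too long ago.
        collected = record.get("collected_on")
        if collected is not None and (today - collected).days > max_age_days:
            problems.append((i, "stale"))
    return problems

# Hypothetical sample data.
records = [
    {"customer_id": "c1", "region": "EU", "collected_on": date(2025, 1, 10)},
    {"customer_id": "c2", "region": "", "collected_on": date(2025, 1, 12)},
    {"customer_id": "c3", "region": "US", "collected_on": date(2022, 6, 1)},
]
issues = validate_records(
    records,
    required_fields=["customer_id", "region", "collected_on"],
    max_age_days=730,
    today=date(2025, 2, 1),
)
print(issues)  # [(1, 'missing region'), (2, 'stale')]
```

The point is not the specific checks. It is that "good data" gets written down as rules that run every time, instead of living in someone's head.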

Layer 2: AI System Inventory and Documentation

You need to know what AI systems your organization is actually using. Sounds obvious, but many companies discover they have AI systems deployed that leadership didn't know about.

For each system, document:

- What it does: Its specific function and decision-making scope

- How it was trained: What data was used, what time period, what source

- What it decides: What outputs it produces and what decisions it influences

- Who uses it: Which teams and employees

- What could go wrong: Specific failure modes and what they would look like

- How to override it: Can humans intervene if the system is producing bad outputs, and how?

This inventory is your baseline for governance. You can't govern what you don't know about.
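
One way to keep that inventory honest is to store each entry as a structured record instead of a free-form document. The sketch below is a hypothetical Python shape for such a record. The class name, field names, and sample system are invented for illustration and mirror the list above.

```python
from dataclasses import dataclass, field

@dataclass
class AISystemRecord:
    name: str
    purpose: str                      # what it does
    training_data: str                # how it was trained
    decisions_influenced: list[str]   # what it decides
    user_teams: list[str]             # who uses it
    failure_modes: list[str] = field(default_factory=list)  # what could go wrong
    override_procedure: str = ""      # how to override it

    def gaps(self) -> list[str]:
        """Names of fields that are still empty and need documenting."""
        return [name for name, value in vars(self).items() if not value]

# Hypothetical entry with two sections not yet filled in.
record = AISystemRecord(
    name="churn-predictor",
    purpose="Scores customers by likelihood of cancelling",
    training_data="CRM exports, 2021 to 2023",
    decisions_influenced=["retention campaign targeting"],
    user_teams=["customer success"],
)
print(record.gaps())  # ['failure_modes', 'override_procedure']
```

A `gaps()` check like this turns "we should document that" into a list someone can be assigned to close.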

Layer 3: Risk Assessment and Oversight

Not all AI systems need the same level of oversight. A system that recommends optional features to customers is lower risk than a system that makes lending decisions that determine whether someone gets credit.

For each AI system, assess:

- Risk level: What's the worst-case outcome if this system fails or produces biased outputs?

- Oversight intensity: How much human review and validation does this system require?

- Intervention triggers: What outputs should automatically trigger human review?

- Audit frequency: How often should you check whether the system is still producing fair and accurate outputs?

High-risk systems (hiring decisions, lending, content moderation for children) need intense oversight. Medium-risk systems need periodic validation. Low-risk systems can run with minimal oversight.
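
Here is a minimal Python sketch of how that tiering could be encoded so that oversight follows from the risk level automatically. The classification questions, the review descriptions, and the audit intervals are placeholder assumptions, not a standard. Your own criteria would replace them.

```python
from enum import Enum

class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"

# Hypothetical oversight policy per tier; the intervals are placeholders.
OVERSIGHT_POLICY = {
    RiskTier.HIGH: {"human_review": "before any decision takes effect", "audit_every_days": 30},
    RiskTier.MEDIUM: {"human_review": "periodic sample", "audit_every_days": 90},
    RiskTier.LOW: {"human_review": "only on complaint", "audit_every_days": 365},
}

def classify(affects_rights_or_credit: bool,
             affects_vulnerable_groups: bool,
             acts_autonomously: bool) -> RiskTier:
    """Assumed rule of thumb: impact on people outranks autonomy."""
    if affects_rights_or_credit or affects_vulnerable_groups:
        return RiskTier.HIGH
    if acts_autonomously:
        return RiskTier.MEDIUM
    return RiskTier.LOW

# A lending model affects access to credit, so it lands in the high tier.
tier = classify(affects_rights_or_credit=True,
                affects_vulnerable_groups=False,
                acts_autonomously=False)
print(tier.value, OVERSIGHT_POLICY[tier])
```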

Layer 4: Ongoing Training and Capability Building

Governance isn't static. As AI systems evolve, as your business changes, as new risks emerge, your governance needs to evolve too.

This requires:

- Regular data literacy training: Employees need ongoing education on how to validate data and spot bias

- System-specific training: When you deploy a new AI system, employees need training on how to use it, what it can and can't do, and what to do if something seems wrong

- Red flag training: Teach employees to recognize outputs that should trigger skepticism

- Regular updates: As systems change or as new risks emerge, update the training

Governance infrastructure needs people who understand both the technology and the business well enough to maintain it over time.

Estimated data shows a significant gap between employee trust in AI systems (85%) and their understanding (50%), skill (40%), and awareness of limitations (30%). This highlights the AI trust paradox.

Real-World Implementation: What Successful Companies Are Actually Doing

Theory is useful, but practice is what matters. Let me walk through what companies are actually implementing to solve the trust paradox.

Case Study: The Financial Services Approach

A major financial services company discovered they had dozens of AI systems in use across credit decisions, customer service routing, fraud detection, and investment recommendations. Most had been deployed without formal governance.

They started with an inventory. It took three months to identify every system, document what it did, and understand its decision-making logic. Sixteen systems met criteria for "high-risk" because they directly influenced lending, investment, or fraud decisions.

For each high-risk system, they implemented:

- Monthly accuracy audits: Compare AI decisions against ground truth data to ensure the system is still performing correctly

- Quarterly bias audits: Check whether the system's decisions vary by protected characteristics (age, gender, race, etc.)

- Human review for edge cases: Any decision above a certain risk level gets reviewed by a human before being implemented

- Customer explanation: When the system makes a decision that affects a customer (like rejecting a loan application), the company can explain the reasoning
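
To give a sense of what a bias audit can look like in its simplest form, here is a Python sketch that compares approval rates across groups. The data is invented. The 0.8 cutoff is borrowed from the "four-fifths" rule of thumb used in US employment guidance, and it is my assumption here, not something the company in this case study is known to have used. A low ratio is a prompt for human investigation, not a legal finding.

```python
from collections import defaultdict

def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns approval rate per group."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for group, was_approved in decisions:
        total[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / total[group] for group in total}

def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so there is no disparity to measure
    return min(rates.values()) / highest

# Hypothetical loan decisions for two groups.
decisions = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 50 + [("B", False)] * 50
)
rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # assumed threshold

print(rates)         # {'A': 0.8, 'B': 0.5}
print(ratio)         # 0.625
print(needs_review)  # True
```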

After six months, they discovered:

- One system had developed a subtle bias against applicants from certain zip codes

- Another system was making loan decisions based on data that was over two years old

- A third system was using outdated risk models that no longer reflected current market conditions

They fixed these issues. Result: better decision-making, reduced regulatory risk, and genuine confidence in the AI systems because that confidence was now based on actual validation rather than assumption.

Cost: approximately 2-3 FTEs per quarter for ongoing oversight. Value: preventing a potential discrimination lawsuit or regulatory fine that could have cost millions.

Case Study: The Software Company Approach

A software company was deploying AI across product recommendations, customer support routing, and content moderation. They took a different approach focused on building AI literacy across the engineering team.

Instead of centralizing governance, they embedded AI expertise into product teams. Each team got training on:

- How their specific AI system works

- What data it uses and where that data comes from

- What could cause it to fail or produce biased outputs

- How to monitor its performance

- When to escalate to the AI governance team

They also built dashboards that every product team could access showing:

- Real-time accuracy metrics for their AI system

- Bias metrics broken down by customer segment

- Anomalies that the system is detecting in its own performance

- Automated alerts when the system's accuracy drops below a threshold
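
The last item on that list is simple enough to sketch. The Python below tracks accuracy over a rolling window of recent predictions and signals an alert when it falls under a threshold. The class name, window size, and threshold are hypothetical. A real dashboard would feed this from production logs.

```python
from collections import deque

class AccuracyMonitor:
    def __init__(self, window_size: int, threshold: float):
        # deque with maxlen drops the oldest outcome automatically.
        self.outcomes = deque(maxlen=window_size)
        self.threshold = threshold

    def accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes)

    def record(self, prediction, actual) -> bool:
        """Store one outcome; return True if an alert should fire."""
        self.outcomes.append(prediction == actual)
        # Wait for a full window so a single early miss does not trigger an alert.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.threshold

# Hypothetical stream of (prediction, actual) pairs.
monitor = AccuracyMonitor(window_size=5, threshold=0.8)
pairs = [(1, 1), (0, 0), (1, 1), (1, 0), (0, 1), (1, 1)]
alerts = [monitor.record(p, a) for p, a in pairs]
print(alerts)  # [False, False, False, False, True, True]
```

The business decision is still the threshold. The code only makes sure someone hears about it when the line is crossed.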

Result: problems get caught quickly because the people using the systems every day are trained to spot them. Governance becomes distributed rather than centralized, which works better for a fast-moving software company.

Cost: significant training investment upfront, plus

The Role of Automation in Solving the Trust Paradox

Here's an interesting meta-observation: automation can actually help solve the trust paradox if you're intentional about it.

Specifically, automated governance tools can make oversight scalable. Instead of hiring teams of compliance people to manually review AI outputs, you can use automated systems to flag anomalies, monitor accuracy, and detect bias.

For example, platforms like Runable enable teams to automate report generation, document creation, and insight synthesis from data and AI systems. But more importantly, they can help teams build automated oversight processes—systems that pull data from your AI systems regularly, run validation checks, and generate reports that flag problems.

When done well, this automation actually builds trust because:

- Continuous monitoring: Rather than periodic reviews, the system is always checking

- Consistent standards: Rules are applied consistently rather than varying based on who's reviewing

- Scalability: You can monitor dozens or hundreds of AI systems with the same infrastructure

- Documentation: Every decision is logged and can be reviewed later if needed

The key is that automation should serve governance, not replace it. You still need humans who understand the business deciding what matters and what thresholds should trigger alerts. But the mechanics of checking can be automated.

By 2026, 79% of European firms plan to adopt generative AI, but 77% acknowledge governance lag. Estimated data highlights the rapid adoption and governance gap.

Industry-Specific Applications and Challenges

The AI trust paradox manifests differently depending on your industry. Let me walk through how this plays out in different sectors and what the specific governance challenges are.

Healthcare

In healthcare, the trust paradox becomes a patient safety issue. A doctor uses an AI diagnostic system to help interpret medical imaging. The system is accurate 94% of the time. The doctor trusts the system and spends less time carefully reviewing the images themselves. That works great 94% of the time. The other 6% of the time, the AI misses something important, the doctor misses it because they weren't carefully reviewing it anyway, and the patient gets a delayed or wrong diagnosis.

The governance challenge: healthcare professionals need to understand enough about AI systems to know when to trust them and when to maintain skepticism. That requires ongoing education and clear protocols for when AI should and shouldn't be used.

Finance

In finance, the trust paradox becomes a regulatory and risk management issue. A trading algorithm uses AI to make portfolio adjustments automatically. It works well most of the time. But what happens during a market anomaly that the AI system hasn't seen before? Without proper oversight, the AI might make decisions that violate risk policy or that create unexpected exposure.

The governance challenge: real-time monitoring of AI decisions to ensure they stay within acceptable risk parameters. This requires systems that can halt automated decision-making if something goes wrong.
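
A halt mechanism like that is often described as a circuit breaker. Here is a minimal Python sketch of the idea, assuming a single exposure limit and a manual reset by a human reviewer. The class name, the limit, and the trade amounts are all invented for illustration. Real risk controls are far more involved.

```python
class ExposureCircuitBreaker:
    def __init__(self, max_exposure: float):
        self.max_exposure = max_exposure
        self.exposure = 0.0
        self.halted = False

    def submit(self, trade_amount: float) -> bool:
        """Apply the trade if allowed; return False if it was blocked."""
        if self.halted:
            return False
        if abs(self.exposure + trade_amount) > self.max_exposure:
            self.halted = True  # stays halted until a human resets it
            return False
        self.exposure += trade_amount
        return True

    def reset(self) -> None:
        """Called by a human reviewer after investigating the halt."""
        self.halted = False

# Hypothetical sequence of trades proposed by an AI system.
breaker = ExposureCircuitBreaker(max_exposure=1_000_000)
results = [breaker.submit(amount) for amount in (400_000, 500_000, 300_000, 10_000)]
print(results)  # [True, True, False, False]
```

Note that the fourth trade is blocked even though it would fit under the limit. Once the breaker trips, nothing goes through until a person has looked at what happened.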

HR and Recruiting

In HR, the trust paradox becomes a discrimination and fairness issue. A recruiting AI trained on historical hiring decisions makes resume screening recommendations. The AI learned from the company's historical hiring data, which reflects the company's historical biases. Now it replicates those biases at scale. Hiring managers trust the AI's recommendations without realizing they're selecting for the same demographic patterns as the company's historical hiring.

The governance challenge: regular bias audits and the ability to override AI recommendations when they don't pass fairness standards.

E-commerce and Marketing

In e-commerce, the trust paradox becomes a customer experience and conversion issue. AI systems optimize pricing, product recommendations, and marketing messages. They work—they increase revenue. But sometimes they optimize in ways that hurt customer trust. A customer notices their price changed when they returned to add something to their cart. They feel manipulated. Meanwhile, the AI system is doing exactly what it was trained to do: optimize for revenue.

The governance challenge: balancing optimization with ethics and ensuring that AI-driven changes don't harm customer relationships or violate trust.

The Investment Case: Why Governance Actually Saves Money

Let me make the financial case for why solving the trust paradox through governance is worth the investment.

The short-term cost is clear: you're hiring people, building tools, implementing processes. That costs money. Let's say implementing comprehensive AI governance costs a company with 50 AI systems deployed

What's the ROI?

Consider the cost of not having governance:

Scenario 1: Regulatory Fine

An AI system makes biased decisions. A regulator investigates. GDPR fine:

Scenario 2: Reputational Damage

A news story breaks: "Company's AI System Discriminates Against [Group]." Customer acquisition cost jumps 30%. Customer lifetime value drops 20%. For a company with

Scenario 3: Operational Failure

An AI system that was supposed to save the company money is actually making expensive mistakes. A supply chain AI cancels valuable supplier relationships. A pricing AI underprices products. A hiring AI creates compliance problems. The problem goes undetected for six months. Cost: $2-5 million in lost value.

Scenario 4: Data Breach

An employee accidentally feeds sensitive customer data into a public AI tool. The tool's vendor uses the data to train their models. Customer data is now part of a system accessible to competitors or bad actors. Regulatory fines, customer notification costs, reputation damage: $5-10 million.

Now compare those scenarios to the

Moreover, governance actually creates business value beyond risk reduction:

- Better decision-making: When your AI systems are actually trustworthy, people use them more effectively

- Faster implementation: When you have governance frameworks in place, you can deploy new AI systems faster because you know how to evaluate them

- Competitive advantage: Companies that trust their AI systems use them more aggressively and gain competitive advantages

- Talent retention: People want to work at companies that are handling AI responsibly

Building Your AI Governance Team: Roles and Responsibilities

Implementing governance requires specific expertise. Here's how most successful companies are organizing their AI governance functions.

Chief AI Officer or Head of AI

This person owns the overall strategy for how the company uses AI responsibly. They report to the CEO or CFO. They set policy, allocate resources, and escalate problems. This role didn't exist five years ago. Now it's becoming standard in large companies.

AI Ethics Lead

This person focuses specifically on bias, fairness, and discrimination issues. They work with product teams to identify potential fairness problems, conduct regular audits, and recommend policy changes. The role often combines technical expertise with a social science background.

Data Governance Lead

This person manages data quality and data access controls, and ensures that data used by AI systems meets quality standards. They work closely with data engineering teams.

AI Governance Engineer

This person builds the technical infrastructure for governance: monitoring systems, alerting systems, dashboards, and validation tools. They translate policy into actual systems that ensure compliance.

AI Literacy and Training Lead

This person designs and delivers training programs for employees on AI literacy, data literacy, and responsible AI use. They measure training effectiveness through tests and behavior change.

AI Product Manager

For each significant AI system, there's often a product manager who understands the business context, the technical implementation, and the governance requirements. They're the translator between business and AI teams.

Compliance and Legal

Legal expertise is critical. AI systems can violate regulations in ways that are genuinely difficult to predict. Having legal expertise embedded in AI governance decisions prevents costly mistakes.

The exact structure varies by company, but the key is that governance requires a team with diverse expertise, not just technical people.

Looking Ahead: What's Changing in 2025 and Beyond

The AI trust paradox is going to intensify before it gets better. Here's what's coming:

Regulatory Pressure: Governments are getting more serious about AI regulation. The EU AI Act is being implemented. The US is working on AI safety frameworks. Regulators are investigating AI discrimination cases. This will force companies to implement governance whether they want to or not.

Agentic AI Proliferation: More AI systems will be autonomous agents that make decisions without human involvement. That dramatically increases the importance of governance because the costs of failure increase.

Data Transparency Requirements: Regulations are starting to require that companies explain what data they use in AI systems. This will force data governance.

Liability Shifts: Currently, if an AI system causes harm, it's often unclear who's liable. Companies are starting to assume liability. That makes governance a requirement rather than an option.

Talent Scarcity: There aren't enough people with AI governance expertise yet. This will create bottlenecks. Companies that start building governance expertise now will have an advantage.

The companies that move early on solving the AI trust paradox will be the companies that get the most value from AI and face the least regulatory risk. This is a window where first-movers have a real advantage.

The Path Forward: Practical Next Steps

If you've read this far, you're probably thinking: "This is important, but where do I actually start?"

Here's a practical roadmap:

Month 1: Assess and Inventory

- Conduct an AI system inventory. What AI systems is your company currently using?

- For each system, document: what it does, who uses it, what data it uses, what decisions it influences

- Identify which systems are high-risk (big business impact if they fail or produce biased outputs)
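The inventory above could be captured in something as simple as a structured record per system. This is a sketch only: the `AISystemRecord` class, its field names, and the two example systems are hypothetical, chosen to mirror the questions in the list.

```python
from dataclasses import dataclass


@dataclass
class AISystemRecord:
    """One row of a hypothetical AI system inventory."""
    name: str
    purpose: str                      # what it does
    users: list[str]                  # who uses it
    data_sources: list[str]           # what data it uses
    decisions_influenced: list[str]   # what decisions it influences
    high_risk: bool = False           # big impact if it fails or is biased


# Illustrative entries, not real systems.
inventory = [
    AISystemRecord(
        name="resume-screener",
        purpose="Ranks incoming job applications",
        users=["HR"],
        data_sources=["historical hiring decisions"],
        decisions_influenced=["interview shortlists"],
        high_risk=True,
    ),
    AISystemRecord(
        name="subject-line-suggester",
        purpose="Suggests email subject lines",
        users=["Marketing"],
        data_sources=["past campaign results"],
        decisions_influenced=["campaign copy"],
    ),
]


def high_risk_systems(records):
    """Return the names of systems marked high-risk."""
    return [record.name for record in records if record.high_risk]


print(high_risk_systems(inventory))
```

Even a spreadsheet with these columns would do; the point is that every system has an answer for every field.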

Months 2-3: Set Governance Policy

- Define what "responsible AI" means for your company

- Set standards for data quality

- Define oversight requirements based on risk level

- Create policies for how employees should use AI systems

Months 4-6: Implement Monitoring

- For high-risk systems, implement monitoring that checks accuracy and bias regularly

- Set up alerts that trigger when systems start producing unexpected outputs

- Create dashboards that teams can use to monitor their own systems
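As a rough illustration of the monitoring step, the sketch below compares a system's recent predictions against known outcomes and raises an alert when accuracy falls too far below a baseline. The function names, the 0.90 baseline, and the 5-point tolerance are assumptions for the example, not recommended values.

```python
def accuracy(predictions, ground_truth):
    """Fraction of predictions that match the known outcomes."""
    if not predictions or len(predictions) != len(ground_truth):
        raise ValueError("predictions and ground_truth must be non-empty and equal length")
    correct = sum(p == t for p, t in zip(predictions, ground_truth))
    return correct / len(predictions)


def check_system(name, predictions, ground_truth, baseline, max_drop=0.05):
    """Alert when accuracy is more than max_drop below the baseline.

    baseline and max_drop are assumed values a team would set per system.
    """
    current = accuracy(predictions, ground_truth)
    return {
        "system": name,
        "accuracy": current,
        "alert": (baseline - current) > max_drop,
    }


# Hypothetical recent predictions vs. what actually happened.
result = check_system(
    "demand-forecast",
    predictions=[1, 0, 1, 1, 0, 1, 0, 0, 1, 1],
    ground_truth=[1, 0, 0, 1, 0, 1, 1, 0, 1, 0],
    baseline=0.90,
)
print(result)
```

In practice the alert would feed whatever paging or ticketing tool the team already uses; the check itself can stay this simple.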

Months 7-12: Build Capability

- Start data literacy training for key teams

- Train teams on specific AI systems they use

- Build the governance team that will maintain this over time

Ongoing: Maintain and Iterate

- Regular audits of high-risk systems

- Continuous training as new systems are deployed

- Update governance policies as new risks emerge

The key is starting now. The earlier you build governance, the more manageable it is. The longer you wait, the more AI systems you'll have deployed without proper oversight, and the harder governance becomes.

AI Automation as Part of the Solution

Here's something that might seem counterintuitive: using AI to govern AI.

As companies deploy more AI systems, manually reviewing each one becomes impractical. Some companies are responding by using automated systems to handle the routine work of governance while humans focus on judgment calls and policy decisions.

For example, you could use an automated reporting system (like Runable's automation capabilities) to:

- Pull accuracy metrics from all your AI systems weekly

- Compare outputs against ground truth data

- Generate reports flagging systems where accuracy dropped

- Create executive summaries of governance status

- Document governance decisions for compliance purposes

This automation handles the repetitive work. Humans focus on interpreting results and making decisions. It's governance at scale.
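To show the shape of that weekly report, here is a small plain-Python sketch. It does not use any vendor's API; the `weekly_report` function, the metric values, and the 3-point drop threshold are hypothetical.

```python
def weekly_report(metrics, max_drop=0.03):
    """Build a short summary flagging systems whose accuracy dropped.

    metrics: {system_name: (last_week_accuracy, this_week_accuracy)}
    max_drop: assumed tolerance before a system is flagged.
    """
    flagged = sorted(
        name for name, (previous, current) in metrics.items()
        if previous - current > max_drop
    )
    lines = [
        f"AI governance weekly summary: {len(metrics)} systems reviewed, "
        f"{len(flagged)} flagged"
    ]
    for name in flagged:
        previous, current = metrics[name]
        lines.append(f"- {name}: accuracy {previous:.2f} -> {current:.2f}")
    return flagged, "\n".join(lines)


# Hypothetical accuracy figures for three systems.
metrics = {
    "pricing": (0.93, 0.92),
    "churn": (0.88, 0.81),
    "fraud": (0.97, 0.97),
}
flagged_systems, summary = weekly_report(metrics)
print(summary)
```

The summary text is what lands in an executive's inbox; the flagged list is what a human reviewer picks up.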

The irony is that the tool solving the trust paradox is itself an AI tool. But used intentionally, it works.

Final Thoughts: The Real Cost of Ignoring the Paradox

Let's be direct about what happens if you ignore the AI trust paradox.

Your company will continue deploying AI systems because they work. They improve efficiency, they reduce costs, they provide competitive advantages. So you'll keep using them.

Meanwhile, the trust paradox persists. Employees trust systems they don't understand. Problems emerge slowly. An AI system starts producing biased outputs and nobody catches it. Or it makes an expensive mistake and everyone discovers it too late. Or a regulator investigates and finds compliance failures. Or a competitor leapfrogs you because they implemented governance early and can now trust their AI systems completely.

The paradox doesn't resolve itself. It compounds.

Companies that invest in solving the AI trust paradox, on the other hand, get several benefits. They can use AI more effectively because they trust their systems. They face lower regulatory risk. They can move faster than competitors who are still dealing with governance chaos. And they sleep better at night knowing the systems driving their business are actually trustworthy.

The decision is yours. But the longer you wait, the harder it becomes.

FAQ

What exactly is the AI trust paradox?

The AI trust paradox is the contradiction where employees trust AI systems and the data behind them despite not having developed the skills needed to use them responsibly. Research shows 96% of data leaders say their staff need more training to use AI responsibly, yet those same employees are already using AI systems daily with genuine confidence in the outputs. This gap between confidence and competence creates significant business and compliance risks.

Why does data literacy matter more than AI literacy?

Data literacy—understanding where data comes from, recognizing bias, validating completeness, and knowing limitations—is the foundation for using any AI system responsibly. Research shows 82% of leaders prioritize data literacy training while only 71% prioritize AI literacy. An employee who deeply understands their company's data will catch problems and use AI systems skeptically, even with basic AI knowledge. Conversely, an employee with sophisticated AI knowledge but poor data literacy will confidently make decisions based on bad information.

How do I know if my company has an AI governance problem?

You likely have a governance problem if: (1) You can't easily list all the AI systems your company is using, (2) Different teams are deploying AI with inconsistent oversight, (3) You don't have regular audits of your high-risk AI systems for accuracy and bias, (4) Your data quality standards aren't formally documented, (5) You haven't clearly documented what data feeds into your AI systems or where it comes from, (6) Employees use AI tools without formal training on responsible use. If three or more of these apply, governance is a priority.
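The checklist above boils down to a simple count. A minimal sketch, assuming each warning sign is answered with a plain yes or no (the function and constant names are made up for the example):

```python
WARNING_SIGNS = [
    "Cannot easily list all AI systems in use",
    "Teams deploy AI with inconsistent oversight",
    "No regular accuracy and bias audits of high-risk systems",
    "Data quality standards are not formally documented",
    "Data feeding AI systems is not documented",
    "Employees use AI tools without formal training",
]


def governance_is_priority(answers, threshold=3):
    """Return True when at least `threshold` warning signs apply.

    answers: one boolean per entry in WARNING_SIGNS, in the same order.
    """
    if len(answers) != len(WARNING_SIGNS):
        raise ValueError("expected one answer per warning sign")
    return sum(answers) >= threshold


# Hypothetical self-assessment: the first three signs apply.
print(governance_is_priority([True, True, True, False, False, False]))
```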

What's the difference between oversight for high-risk vs. low-risk AI systems?

High-risk systems (those that influence lending, hiring, medical decisions, content moderation affecting children) need intense oversight: monthly accuracy audits, quarterly bias audits, human review of edge cases, and clear documentation of how decisions are made. Medium-risk systems need periodic validation and defined escalation triggers. Low-risk systems (recommending optional features, suggesting content improvements) can run with minimal formal oversight. The key is assessing the downside if the system fails or produces biased outputs and implementing oversight proportional to that risk.

How much does it actually cost to implement AI governance?

For a company with 10-20 significant AI systems deployed, basic governance typically costs

What's the most common mistake companies make when implementing AI governance?

The most common mistake is centralizing governance in a small team separate from product teams. When governance becomes something that governance specialists do rather than something that product teams own, it creates friction and slows everything down. Better approaches distribute governance responsibility to product teams with central standards and oversight. Each team knows how to monitor their systems and escalate problems; the governance team sets policy and manages aggregate risk.

How do I build a business case for AI governance spending to my CFO?

Frame it as risk management with specific ROI: "We're spending

What role should legal play in AI governance?

Legal expertise is critical because AI systems can violate regulations in surprising ways. Lawyers should be involved in: evaluating compliance risks before systems are deployed, drafting policies around responsible AI use, reviewing high-risk AI systems for potential discrimination or privacy violations, and ensuring the company can explain its AI governance approach to regulators if asked. Embed legal expertise in AI governance rather than treating it as a peripheral concern.

How often should I audit high-risk AI systems?

High-risk systems that influence major business decisions should be audited monthly for accuracy and quarterly for bias. Accuracy audits check whether the system is still producing reliable outputs. Bias audits check whether the system's decisions vary inappropriately by protected characteristics. Medium-risk systems can be audited quarterly or semi-annually. The key is consistency and documented evidence of oversight. Regulators look for this documentation when evaluating whether a company has taken AI governance seriously.
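One way to keep that cadence honest is to compute which audits are overdue. The sketch below assumes audits are tracked by date and risk tier; the names are hypothetical, and the medium-risk interval uses the quarterly end of the "quarterly or semi-annually" range.

```python
import calendar
from datetime import date

# Months between audits per risk tier. High-risk values follow the text;
# the medium-risk value is an assumption (quarterly rather than semi-annual).
AUDIT_INTERVAL_MONTHS = {
    "high": {"accuracy": 1, "bias": 3},
    "medium": {"accuracy": 3, "bias": 3},
}


def add_months(start, months):
    """Return the date `months` later, clamped to the end of short months."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def overdue_audits(risk_tier, last_audits, today):
    """List audit types whose due date has already passed.

    last_audits: {"accuracy": date, "bias": date} for one system.
    """
    intervals = AUDIT_INTERVAL_MONTHS[risk_tier]
    return sorted(
        kind for kind, months in intervals.items()
        if add_months(last_audits[kind], months) < today
    )


# Hypothetical high-risk system checked on 10 March 2025.
overdue = overdue_audits(
    "high",
    {"accuracy": date(2025, 1, 31), "bias": date(2025, 1, 15)},
    today=date(2025, 3, 10),
)
print(overdue)
```

Logging each run of a check like this also produces the documented evidence of oversight that regulators look for.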

Conclusion: The Window Is Now

The AI trust paradox is real, it's present in most organizations using AI at scale, and it's solvable. But solving it requires intention, investment, and time.

The companies that recognize this problem early and start building governance infrastructure now will be the companies that get the most value from AI while facing the least regulatory risk. They'll be able to deploy AI systems faster because they have proven frameworks. They'll trust their AI systems more because they've validated them. They'll compete more effectively because they're not spending energy dealing with AI-related crises.

The companies that ignore the problem will muddle through. They'll deploy AI, problems will emerge, they'll react to crises, they'll eventually implement governance under regulatory pressure. They'll waste resources on reactive fixes rather than proactive planning.

The choice is yours. But the longer you wait, the harder it becomes. The AI systems you deploy today without governance are the governance problems of tomorrow.

If your organization is at any stage of AI adoption—whether you're just starting to explore generative AI or you already have dozens of systems deployed—now is the time to build the governance foundation that makes trust possible.

Start with an inventory. Understand what you're actually running. From there, the path forward becomes clear.

Key Takeaways

- The AI trust paradox exists where employees trust AI systems despite lacking the skills to use them responsibly: 96% of data leaders say staff need more training, yet those employees are already using AI daily

- Leaders prioritize data literacy training (82%) over AI literacy training (71%); understanding data quality is foundational to all governance

- 77% of European firms admit AI governance and visibility haven't kept pace with adoption, creating significant compliance and operational risk as organizations scale AI use

- Four governance layers—data foundation, system inventory, risk assessment, and training—must work together to transform the trust paradox into trustworthy AI systems

- Companies planning significant governance spending (23%) in employee upskilling, security, and oversight will outcompete those treating governance as optional as regulations tighten in 2025-2026
