AI PCs Will Dominate Computing in 2025: What You Need to Know

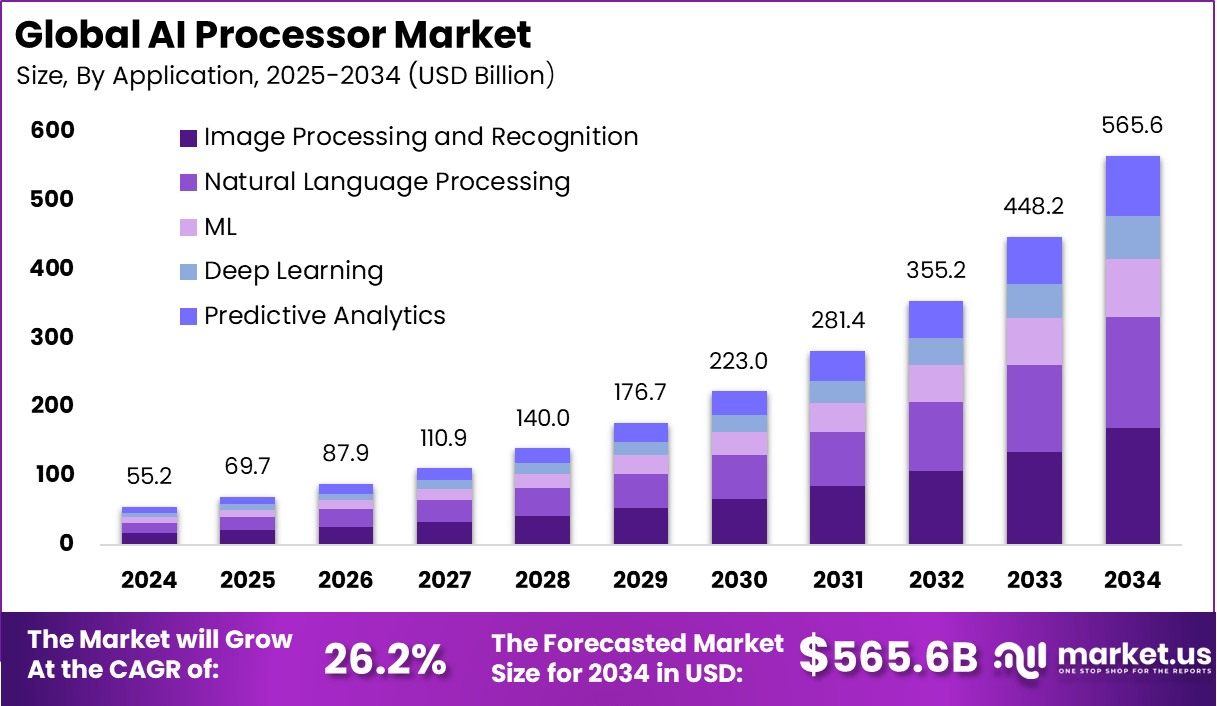

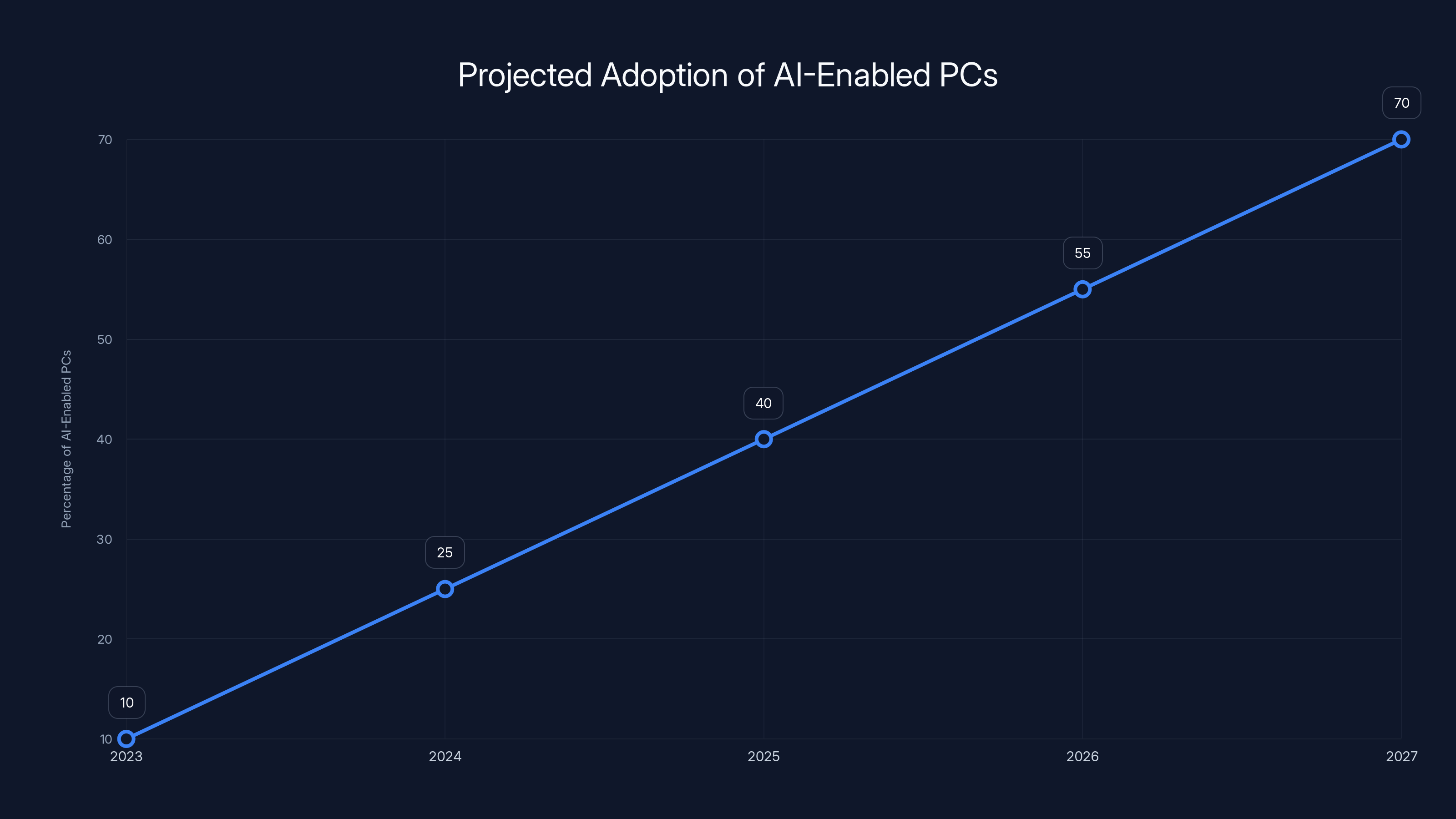

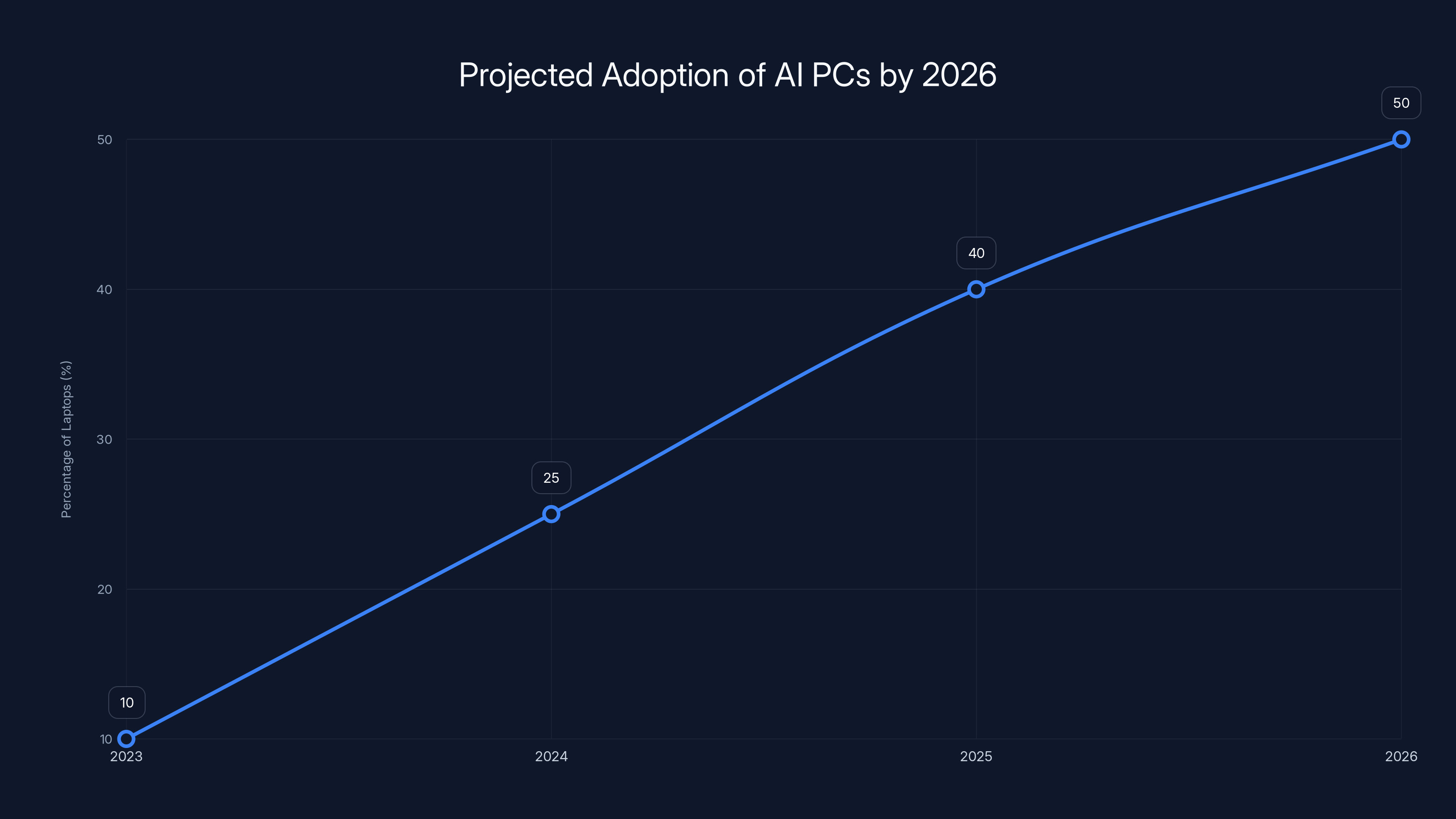

Something's shifting in the laptop market, and honestly, it caught a lot of people off guard. Intel just made a prediction that feels bold on the surface but weirdly plausible when you dig into it: more than half of all PCs shipped globally will have built-in AI capabilities by 2026. We're talking roughly 130 million units out of 260 million total.

Now, before you roll your eyes at another "AI is everywhere" prediction, hear me out. This isn't just marketing hype. This is about fundamental changes in how computers are designed, what hardware they include, and what you can actually do with them. We're not talking about every app becoming an AI app overnight. Instead, we're looking at a shift where AI processing moves off the cloud and onto your actual machine.

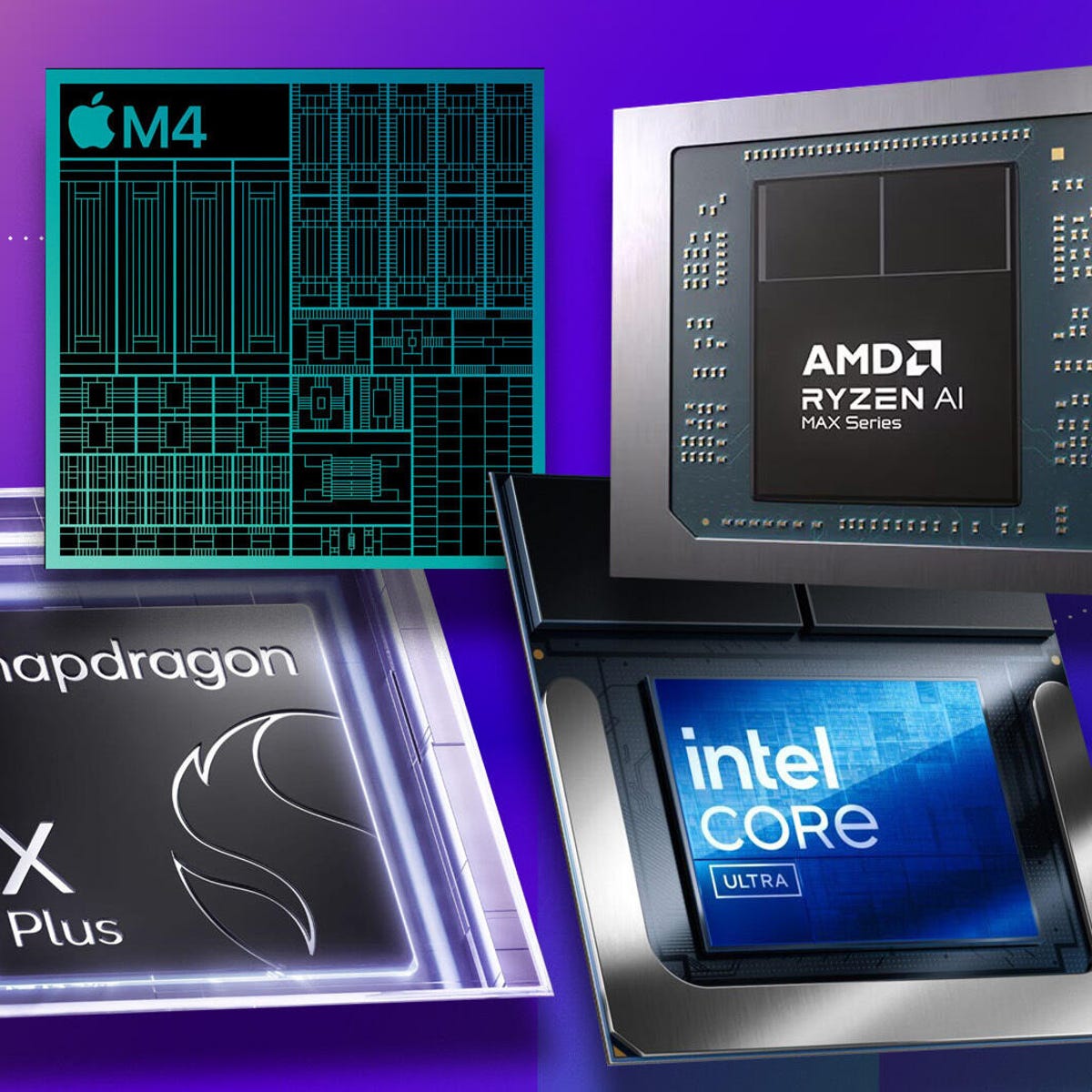

The reason I'm taking this seriously? Because the infrastructure's already here. The chips are being manufactured. OEMs are building laptops with NPUs (Neural Processing Units) right now. AMD, Qualcomm, and Intel are all shipping silicon with dedicated AI acceleration. It's not a future thing anymore. It's a present thing that's about to become normal.

What's interesting is that most people buying these machines right now aren't choosing them for AI features at all. They're buying them because they're faster, they have better battery life, and they handle multitasking better than older machines. The AI capabilities are almost a side effect. But that's changing, and understanding why matters whether you're shopping for a laptop, managing IT infrastructure, or just trying to figure out what the heck everyone's talking about at tech conferences.

This guide digs into what AI PCs actually are, why the market's shifting this way, what companies are building, and what the practical implications are for you. Let's start with the basics, because "AI PC" has become one of those terms everyone uses without really explaining what it means.

TL;DR

- Intel predicts 50%+ of PCs shipped by 2026 will include AI processing hardware, primarily NPUs (Neural Processing Units) or similar accelerators

- Current buyers prioritize speed and battery life over AI features, but AI hardware can improve both by running AI tasks locally and more efficiently than the CPU can

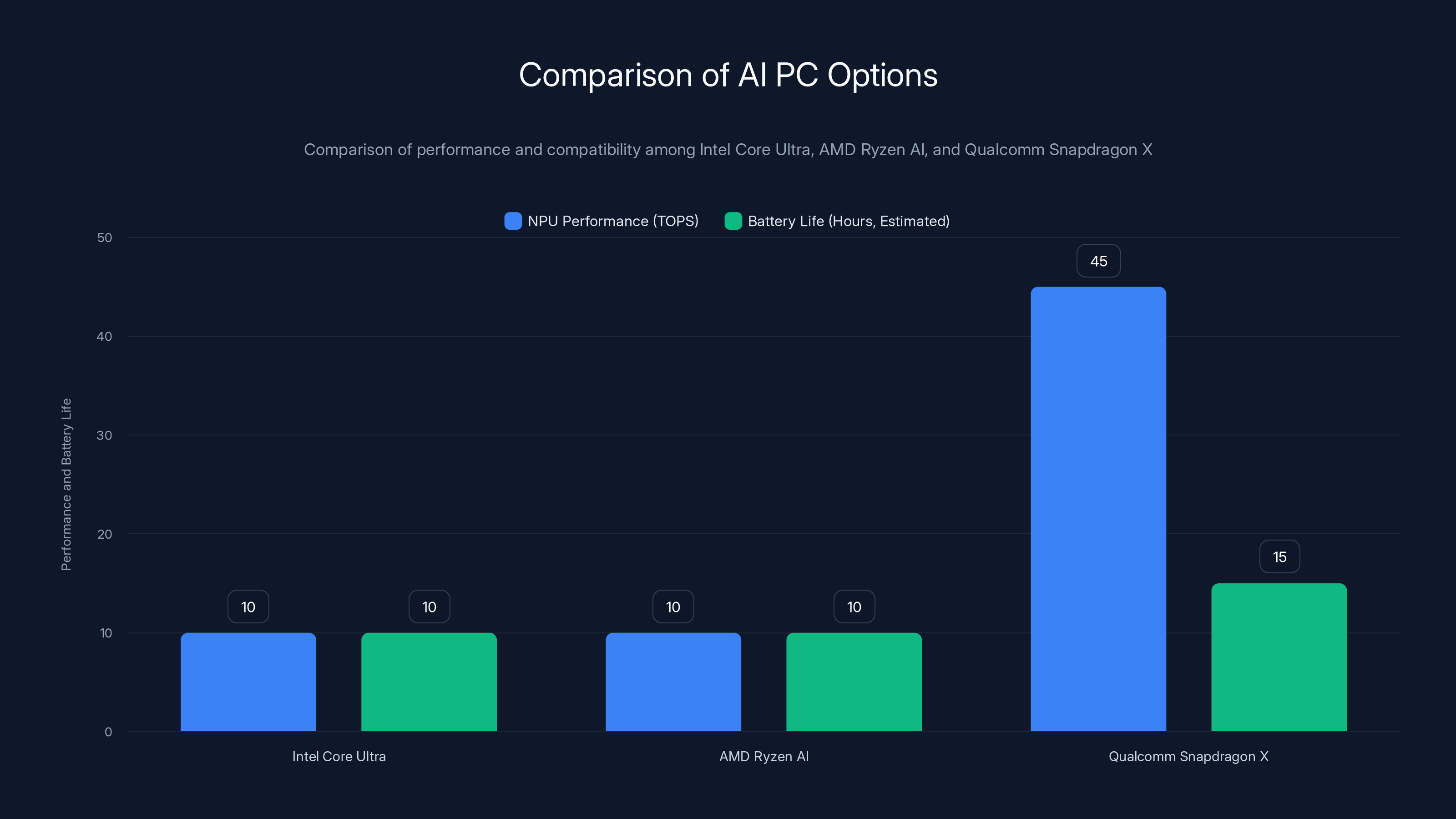

- Three major chipmakers are shipping AI PCs now: Intel with Core Ultra, AMD with Ryzen AI, and Qualcomm with Snapdragon X

- AI PCs aren't replacing traditional laptops, they're becoming the baseline, making older machines look underpowered for standard tasks

- Software is the actual bottleneck right now, not hardware, meaning useful AI applications are still catching up to the silicon capabilities

Qualcomm Snapdragon X offers superior NPU performance and battery life, but requires software emulation for some applications due to its ARM architecture. Estimated data for battery life.

What Exactly Is an AI PC?

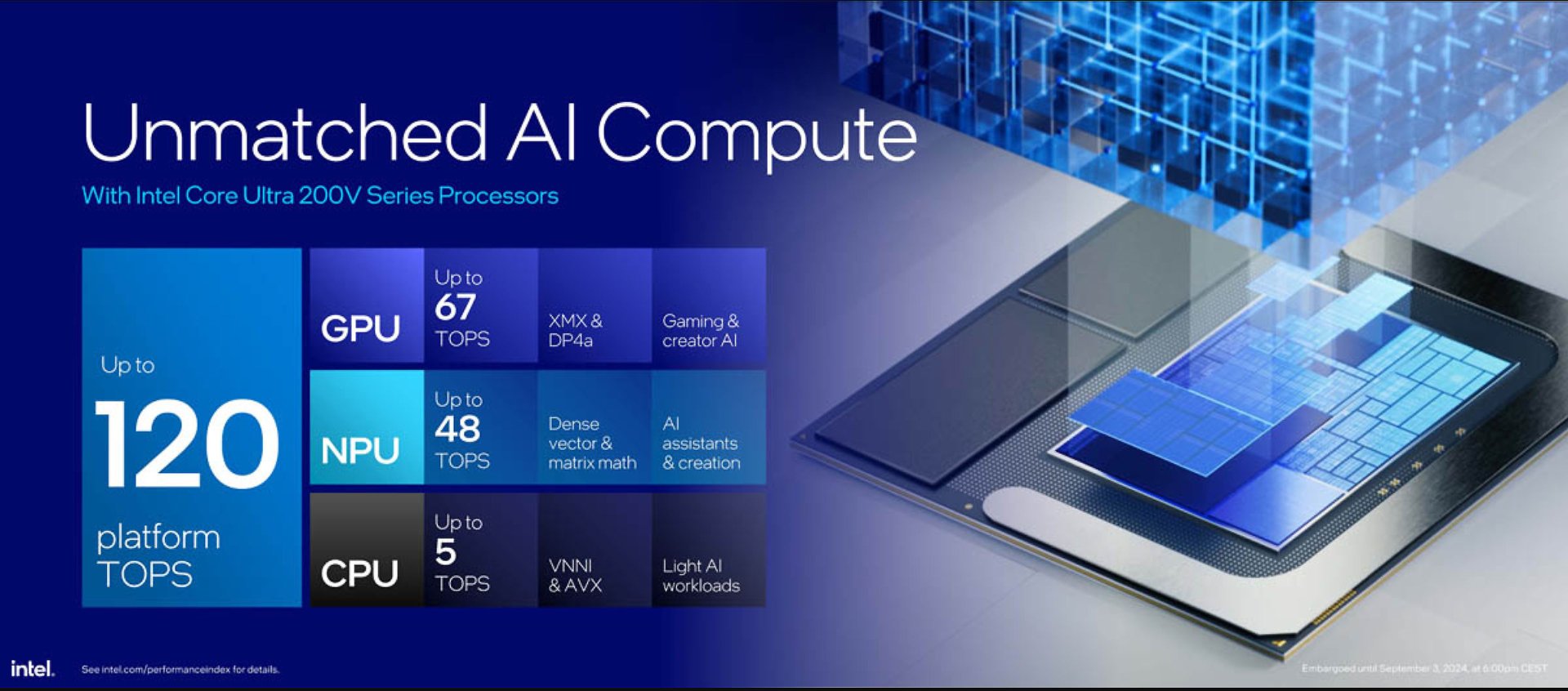

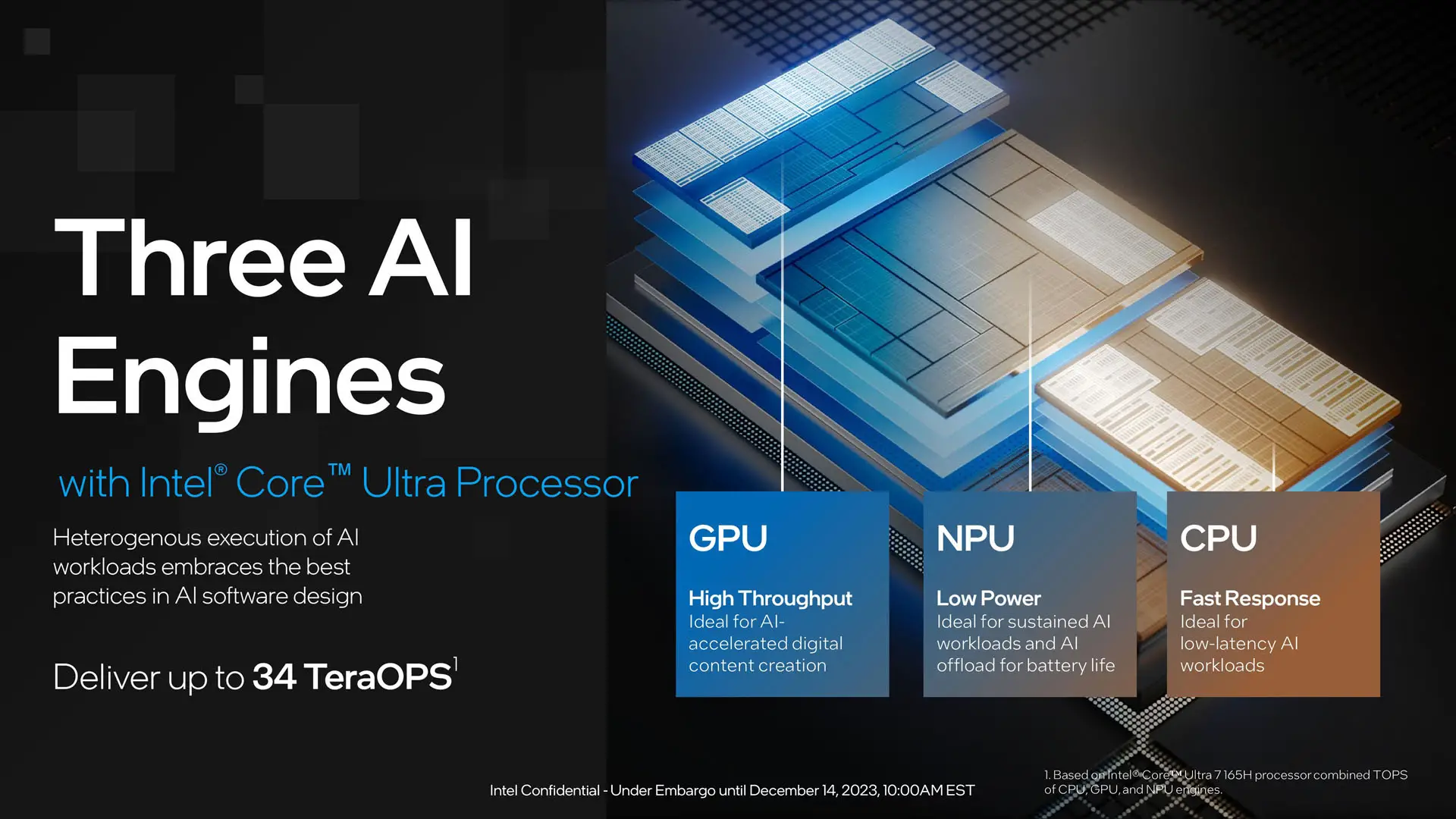

Let me start with a definition that actually makes sense. An AI PC isn't fundamentally different from the laptop or desktop you're using right now. It has a CPU, GPU, memory, storage. Everything normal. The key difference is one specific component: dedicated AI hardware, usually called an NPU.

Think of it this way. Your regular laptop's CPU (Central Processing Unit) is a generalist. It does everything: handles your email, runs your browser, processes documents, plays videos. It's good at lots of things but not optimized for any one thing. A GPU (Graphics Processing Unit) handles graphics and can be repurposed for certain computational tasks. An NPU (Neural Processing Unit) is built specifically for AI inference and machine learning operations.

AI inference is basically the process where an AI model makes a prediction or generates content based on input. Your laptop asks the NPU: "Here's some data, what should I do with it?" The NPU's hardware architecture is optimized to answer that question really efficiently. It can handle matrix multiplications and tensor operations way faster than a general-purpose CPU, and it does it while using less power.
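The following is a minimal pure-Python sketch of a single neural-network layer. It shows what a "matrix multiplication" looks like in practice: multiply inputs by a weight matrix, add a bias, then apply an activation. The weights and inputs are arbitrary values chosen for illustration, not taken from any real model. An NPU's job is to run enormous numbers of these multiply-accumulate (MAC) steps in parallel; the Python here only shows what each step is.

```python
def dense_forward(weights, bias, x):
    """One dense layer: y = ReLU(W @ x + b). Also counts multiply-accumulates."""
    macs = 0
    outputs = []
    for row, b in zip(weights, bias):
        acc = b
        for w, xi in zip(row, x):
            acc += w * xi  # one multiply-accumulate (MAC)
            macs += 1
        outputs.append(max(0.0, acc))  # ReLU activation: clamp negatives to zero
    return outputs, macs


# Illustrative values only: a 2x3 weight matrix, 2 biases, 3 inputs.
W = [[0.5, -1.0, 2.0],
     [1.5, 0.0, -0.5]]
b = [0.1, -0.2]
x = [1.0, 2.0, 3.0]

y, macs = dense_forward(W, b, x)
print(y, macs)
```

Even this toy layer needs 6 MACs. A model with billions of weights needs billions of them per input, which is why hardware dedicated to this one pattern pays off.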

What makes 2025 different is the scale. Intel's Core Ultra processors come with an NPU. AMD's Ryzen AI chips include NPU acceleration. Qualcomm's Snapdragon X is built around NPU performance. We're past the experimental phase. NPUs are showing up in consumer hardware as a standard feature, not an expensive add-on.

The interesting thing is that you don't need to buy an expensive, specialized machine to get an AI PC anymore. You can get one at the same price point as a traditional laptop. That's huge from a market perspective.

Why Intel's 50% Prediction Actually Makes Sense

Here's the thing about market predictions from chipmakers: they have skin in the game. Intel wants to sell chips with NPUs. Of course they're predicting that 50% of laptops will have them. But let's look at whether the prediction is reasonable, not just whether it's self-serving.

First, consider the manufacturing side. Intel, AMD, and Qualcomm have already retooled their production lines. The chips are rolling off the assembly line. OEMs like Lenovo, Dell, HP, and ASUS have already announced AI PC models. This isn't speculative. Hardware's shipping now.

Second, there's no meaningful price premium. If you can buy an AI PC for the same price as a traditional laptop with a similar spec sheet, why wouldn't you? The NPU doesn't add significant manufacturing cost at scale. It's just another block of silicon on the die.

Third, the market's already shifting. Lenovo announced that a significant portion of its 2025 laptop lineup will be AI PCs. Same with Dell and HP. If the three largest PC makers are building AI PCs as their primary product lines, hitting 50% market share by 2026 isn't ambitious. It might actually be conservative.

Here's where Intel's prediction gets interesting though. They're not saying 50% of people will use AI features. They're saying 50% of machines will have the hardware. There's a difference. Right now, most people buying AI PCs are getting them for traditional reasons: better performance, longer battery life, faster multitasking. The AI hardware is delivering value through improved system efficiency, not through AI-specific applications.

Makoto Ohno, Intel's Japan president, said it clearly: "In other words, it is important to reflect on the fact that people are not currently purchasing an AI PC in order to use its AI-related functions." People are buying them because they're objectively better machines, not because they want to run AI locally.

That distinction matters because it means the prediction isn't dependent on software ecosystem maturity or widespread adoption of AI applications. The hardware's valuable even without that stuff. Once the software catches up, which it will, you've got a much bigger upside.

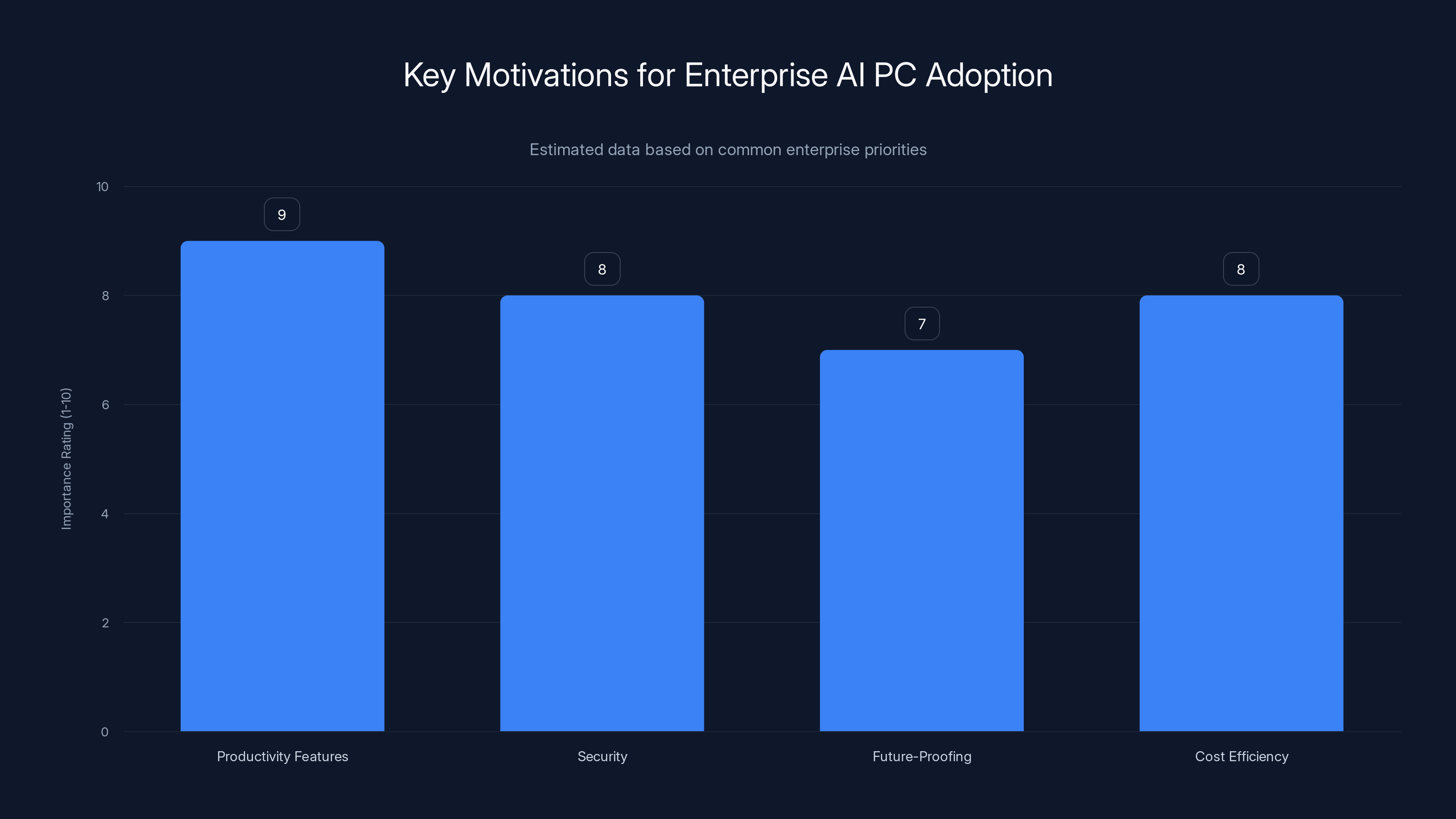

Enterprises prioritize productivity features and security when adopting AI PCs, with cost efficiency also being a significant factor. (Estimated data)

The Hardware Players: Who's Shipping What

Three companies are really dominating the AI PC space right now, and understanding their different approaches matters because it affects what kind of machine you can actually buy.

Intel Core Ultra with NPU

Intel's first Core Ultra processors launched in late 2023 and represent a significant redesign of their mobile chip architecture. The key innovation here is the integration of the NPU directly into the processor, rather than as a separate component.

The Core Ultra NPU can handle up to 10 TOPS (Tera Operations Per Second) of AI inference. That's a specific technical metric that basically means it can perform 10 trillion AI operations per second. For context, that's enough to run smaller, quantized language models and other AI features locally on your machine. You're not waiting for cloud processing or API calls. The inference happens on your laptop.
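A TOPS figure is easier to reason about with a little arithmetic. The sketch below converts TOPS to operations per second and derives a purely theoretical ceiling on text-generation speed. It makes two assumptions. First, it uses the common rule of thumb that generating one token costs roughly 2 operations per model parameter (a multiply and an add). Second, it uses a hypothetical 3-billion-parameter model. Real throughput is usually far lower, because memory bandwidth, not raw compute, tends to be the bottleneck. Vendors also typically quote TOPS at low precision such as INT8.

```python
def ops_per_second(tops: float) -> float:
    """Convert a TOPS rating to operations per second (1 TOPS = 10**12 ops/s)."""
    return tops * 1e12


def token_ceiling(tops: float, params: float, ops_per_param: float = 2.0) -> float:
    """Theoretical upper bound on tokens/second if compute were the only limit.

    Assumes ~2 operations per parameter per generated token (rule of thumb).
    """
    return ops_per_second(tops) / (params * ops_per_param)


MODEL_PARAMS = 3e9  # hypothetical 3B-parameter model

for name, tops in [("10 TOPS NPU", 10), ("45 TOPS NPU", 45)]:
    print(f"{name}: {ops_per_second(tops):.0e} ops/s, "
          f"ceiling ~{token_ceiling(tops, MODEL_PARAMS):,.0f} tokens/s")
```

The ceiling scales linearly with TOPS, so the gap between 10 and 45 TOPS is real. However, neither number predicts actual speed on its own.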

What matters practically is that this opens up possibilities for running privacy-sensitive AI workloads entirely on your machine. Medical data, financial information, sensitive documents—all of that can be processed by AI without ever leaving your laptop.

Dell's XPS line now uses Core Ultra, as do premium models from Lenovo and other major OEMs. The entry-point pricing is competitive. You're not paying an enormous premium for Intel's AI capabilities.

AMD Ryzen AI with XDNA

AMD's approach is slightly different. They call their NPU the XDNA engine, and it's built around a more aggressive AI-first design philosophy. The specs are competitive with Intel's, but AMD's been more vocal about the performance benefits for specific AI workloads.

Ryzen AI processors also offer 10 TOPS of AI inference capability, but AMD claims better performance per watt in many scenarios. They've also been more aggressive about software partnerships, working directly with AI framework developers to optimize performance.

Where AMD's approach differs is in their emphasis on gaming and content creation performance alongside AI. Ryzen AI chips often pair the NPU with a stronger GPU, making them appealing to creators who want both AI acceleration and graphics performance.

Lenovo's ThinkPad series heavily features Ryzen AI processors, as do ASUS gaming laptops and high-performance workstations. Pricing is similar to Intel's Core Ultra offerings.

Qualcomm Snapdragon X

Qualcomm's Snapdragon X represents a different design philosophy entirely. It's an ARM-based processor, not x86 like Intel and AMD. That matters because it means software compatibility is different, but the performance story is compelling.

Snapdragon X processors deliver up to 45 TOPS of AI inference on the premium variant. That's significantly higher than Intel and AMD's offerings. On top of that, its power consumption profile is even more efficient, which translates to exceptional battery life.

Microsoft's Copilot+ PC initiative heavily features Snapdragon X processors. Surface devices use them. So do models from Samsung and ASUS.

The consideration here is software. Since Snapdragon X is ARM-based, existing Windows applications need to either run through emulation or be recompiled for ARM. This isn't a dealbreaker for most people, but it's a point of friction that Intel and AMD don't have.

Why Battery Life Improves with AI Hardware

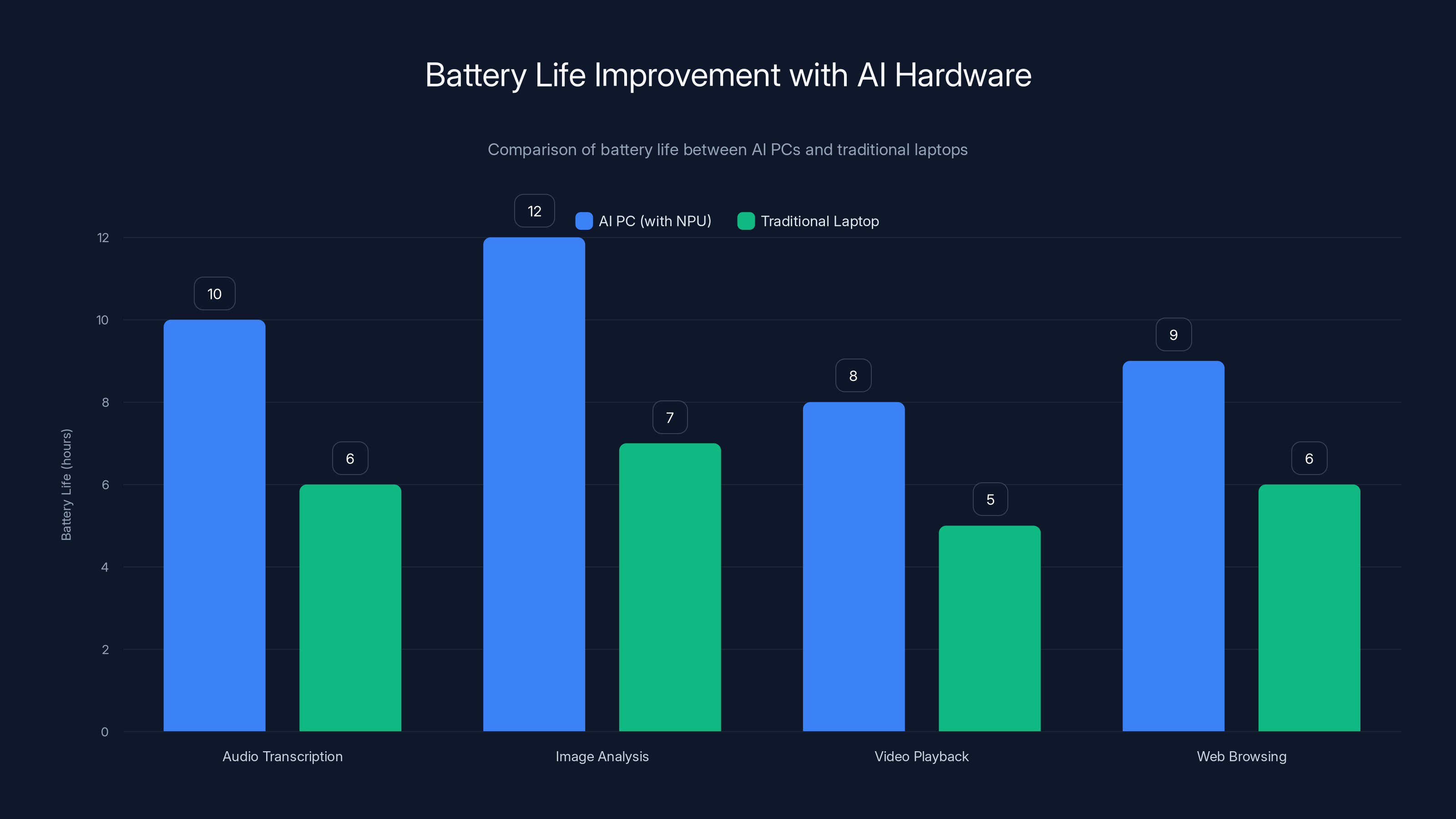

This is one of the counterintuitive aspects that actually sells people on AI PCs. You might think adding more hardware means more power consumption. In reality, it's the opposite, and understanding why matters.

Traditional laptop CPUs are incredibly powerful but also power-hungry. When you offload certain tasks to an NPU, you're moving work to a piece of hardware that's optimized specifically for that task. An NPU can handle AI inference using a fraction of the power a CPU would need for the same operation.

Here's a concrete example. Let's say your laptop needs to transcribe audio or analyze images for your photo library. A CPU would need to clock up to maximum frequency, consuming a lot of power and generating heat. An NPU can handle the same task at a fraction of the power consumption because the hardware architecture matches the computational patterns of AI workloads.

When your laptop can do this work more efficiently, the overall power draw of the system decreases. Your battery lasts longer. Depending on what you're doing, that can add up to an estimated 2-4 hours of extra real-world battery life. That's not marketing nonsense. That's physics.
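The battery-life argument comes down to one formula: runtime equals battery capacity (Wh) divided by average power draw (W). The sketch below is not a measurement of any real laptop. It uses made-up numbers purely to show the mechanism: a 60 Wh battery, an 8 W baseline, and an AI task running a quarter of the time.

```python
def runtime_hours(battery_wh: float, avg_watts: float) -> float:
    """Hours of runtime = stored energy / average power draw."""
    return battery_wh / avg_watts


def avg_power(base_watts: float, ai_share: float, ai_watts: float) -> float:
    """Average system draw when an AI task runs `ai_share` of the time.

    `ai_watts` is the whole-system draw while the AI task is running.
    """
    return (1 - ai_share) * base_watts + ai_share * ai_watts


BATTERY_WH = 60.0  # hypothetical battery capacity

# Hypothetical: the AI task pushes the system to 20 W on the CPU, 10 W on an NPU.
cpu_avg = avg_power(base_watts=8.0, ai_share=0.25, ai_watts=20.0)
npu_avg = avg_power(base_watts=8.0, ai_share=0.25, ai_watts=10.0)

for label, watts in [("AI task on CPU", cpu_avg), ("AI task on NPU", npu_avg)]:
    print(f"{label}: {watts:.1f} W average -> {runtime_hours(BATTERY_WH, watts):.1f} h")
```

With these inputs, offloading lowers average draw from 11 W to 8.5 W, which stretches runtime by roughly an hour and a half. Real gains depend on how much of the day is spent on NPU-friendly work.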

OEM reviews show that machines using dedicated NPU hardware consistently get better battery life than previous generations running the same workloads. This is one of the main reasons people are actually buying AI PCs right now, regardless of whether they're using AI features specifically.

The Software Gap That Actually Matters

Here's the honest part. Intel, AMD, and Qualcomm have shipped hardware that's incredibly capable. But the software ecosystem hasn't caught up yet. There's a massive gap between what AI PCs can do and what they're actually being used for.

Think about it from a developer's perspective. You're building an application. Do you optimize specifically for NPU acceleration? That requires rewriting parts of your codebase, testing on hardware that's still relatively new, and targeting a customer base where maybe 20-30% of users have compatible hardware. From a business perspective, that doesn't make sense yet.

So most applications run on the CPU as they always have. The NPU sits mostly idle, waiting for software that's designed to use it. The GPU gets used for graphics. The CPU handles everything else. The NPU? Still waiting.
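It's easier to see why the NPU sits idle in code. Applications typically choose a compute backend at startup from an ordered preference list and fall back when something is missing. ONNX Runtime, for example, accepts an ordered list of execution providers. The sketch below is a hypothetical, library-free version of that pattern. The backend names are illustrative labels, not identifiers from any real runtime.

```python
def pick_backend(available, preference=("npu", "gpu", "cpu")):
    """Return the first preferred backend that the machine actually has.

    `available` is a set of hypothetical backend labels detected at runtime.
    """
    for backend in preference:
        if backend in available:
            return backend
    raise RuntimeError("no supported compute backend found")


# An NPU-aware app on an AI PC uses the NPU...
print(pick_backend({"cpu", "gpu", "npu"}))
# ...falls back gracefully on an older laptop...
print(pick_backend({"cpu", "gpu"}))
# ...while an app that never lists the NPU ignores it even when one is present.
print(pick_backend({"cpu", "gpu", "npu"}, preference=("gpu", "cpu")))
```

The last call is the situation most software is in today. The hardware is there, but the app's preference list doesn't include it.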

This is changing, but slowly. Microsoft's working on integrating NPU support into Windows more deeply. OpenAI, Anthropic, and other AI companies are starting to optimize their models to run locally on NPU hardware. But we're talking about years of gradual adoption, not overnight transformation.

The exciting part is that when software does catch up, the capabilities will be genuinely useful. Local AI processing means faster response times, better privacy, no internet dependency. Applications can be smarter without cloud infrastructure overhead.

AI PCs with NPU hardware show a significant 2-4 hour increase in battery life across various workloads compared to traditional laptops. Estimated data based on typical usage scenarios.

Practical AI PC Use Cases Emerging Now

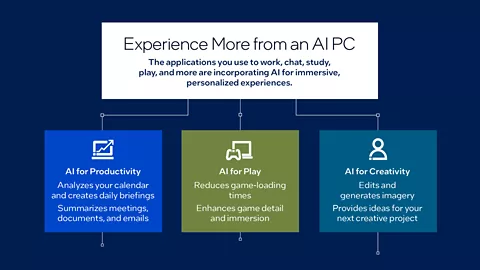

Despite the software gap, some practical use cases are already emerging. These aren't the sci-fi AI applications everyone talks about. They're mundane, practical, and they work.

Content Creation and Editing

Video and image editing applications are starting to integrate NPU acceleration. Adobe Premiere and Photoshop now support NPU acceleration on compatible hardware. Tasks like background removal, automatic color correction, and upscaling that used to take minutes now happen in seconds.

Real-world example: A content creator working on a 4K video project in Premiere Pro used to spend 45 minutes rendering effects. With NPU acceleration, that dropped to 8 minutes. That's the difference between finishing a project in one session versus spreading it across two days.

Document Processing and Transcription

Applications like Microsoft Word are adding AI writing assistance. Otter AI and similar transcription tools process audio locally on AI PCs instead of sending it to cloud servers. This means faster transcription, no internet dependency, and privacy guarantees because the audio never leaves your machine.

For journalists, researchers, and anyone taking meeting notes, this is immediately practical. Transcription quality is good enough for real work.

Local Privacy-Sensitive AI

This is the use case that's genuinely unique to AI PCs. Healthcare applications, financial analysis tools, legal research software—any application handling sensitive data can run AI locally without ever transmitting that data to a cloud server.

A radiologist reviewing medical images can run image analysis AI on their workstation without worrying about HIPAA compliance issues around cloud processing. A financial analyst can run predictive models on confidential company data entirely on their machine. This is genuinely valuable, not hype.

How This Changes OEM Strategy

The shift toward AI PCs is forcing OEMs to think differently about their product lines. Traditional laptop design prioritized thinness, lightness, and battery life above all else. The formula was simple: thinner laptop beats thicker laptop in marketing.

AI PCs are changing that equation. Now you've got multiple dimensions to optimize: AI performance, GPU capability, thermal design, battery efficiency. Some machines lean hard into AI with maxed-out NPU specs. Others balance AI with gaming performance. Some prioritize battery life above everything else.

What we're seeing is the emergence of multiple AI PC categories. Business laptops with strong AI capabilities for productivity features. Gaming laptops with AI alongside powerful GPUs. Ultraportables with high-efficiency NPUs prioritizing battery life. Workstations with maximized AI performance for content creation.

This fragmentation is actually healthy. It means you've got options based on what you actually need, rather than everyone buying the same generic laptop.

Lenovo completely restructured their laptop lines around AI capabilities. Dell created specific AI PC SKUs. HP launched dedicated AI PC brands. This is a top-to-bottom strategy shift, not an afterthought.

The Microsoft Copilot+ PC Initiative

Microsoft's actively pushing the AI PC narrative with their Copilot+ PC program. This is significant because Microsoft controls the Windows operating system and can actually make AI features deeply integrated into the OS itself, not just surface-level additions.

Copilot+ PCs must meet specific hardware requirements: an NPU rated at 40 or more TOPS, at least 16GB of RAM, and at least 256GB of SSD storage. These specs ensure that the machine can actually run AI features responsively without performance degradation.
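The three hardware thresholds work like a simple checklist. Here is a minimal sketch that checks a spec sheet against them. It encodes only the three numbers stated in this article; Microsoft's actual certification may involve other criteria. The example machines are hypothetical.

```python
from dataclasses import dataclass

# The three Copilot+ minimums as stated in this article.
MIN_NPU_TOPS = 40
MIN_RAM_GB = 16
MIN_STORAGE_GB = 256


@dataclass
class SpecSheet:
    name: str
    npu_tops: float
    ram_gb: int
    storage_gb: int


def copilot_plus_shortfalls(spec: SpecSheet) -> list:
    """Return a list of unmet minimums (an empty list means all three are met)."""
    shortfalls = []
    if spec.npu_tops < MIN_NPU_TOPS:
        shortfalls.append(f"NPU {spec.npu_tops} TOPS < {MIN_NPU_TOPS}")
    if spec.ram_gb < MIN_RAM_GB:
        shortfalls.append(f"RAM {spec.ram_gb} GB < {MIN_RAM_GB}")
    if spec.storage_gb < MIN_STORAGE_GB:
        shortfalls.append(f"storage {spec.storage_gb} GB < {MIN_STORAGE_GB}")
    return shortfalls


# Hypothetical machines for illustration.
for spec in [SpecSheet("45 TOPS ultraportable", 45, 16, 512),
             SpecSheet("10 TOPS laptop", 10, 16, 512),
             SpecSheet("budget model", 45, 8, 128)]:
    print(spec.name, copilot_plus_shortfalls(spec) or "meets all three")
```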

What Copilot+ PCs get is Windows-level AI features. Copilot integration throughout Windows, faster local processing, better privacy controls. Microsoft's betting that users will want AI features integrated into their OS and that having guaranteed hardware specifications makes that feasible.

The Copilot+ PC certification is becoming a marketing point. You see it advertised prominently. OEMs are competing to get Copilot+ certified because it differentiates their products and gives consumers a clear quality signal.

This is Microsoft's way of ensuring that AI PC adoption has immediate, tangible benefits within Windows itself. You don't have to wait for third-party software to optimize for NPUs. The OS itself uses them.

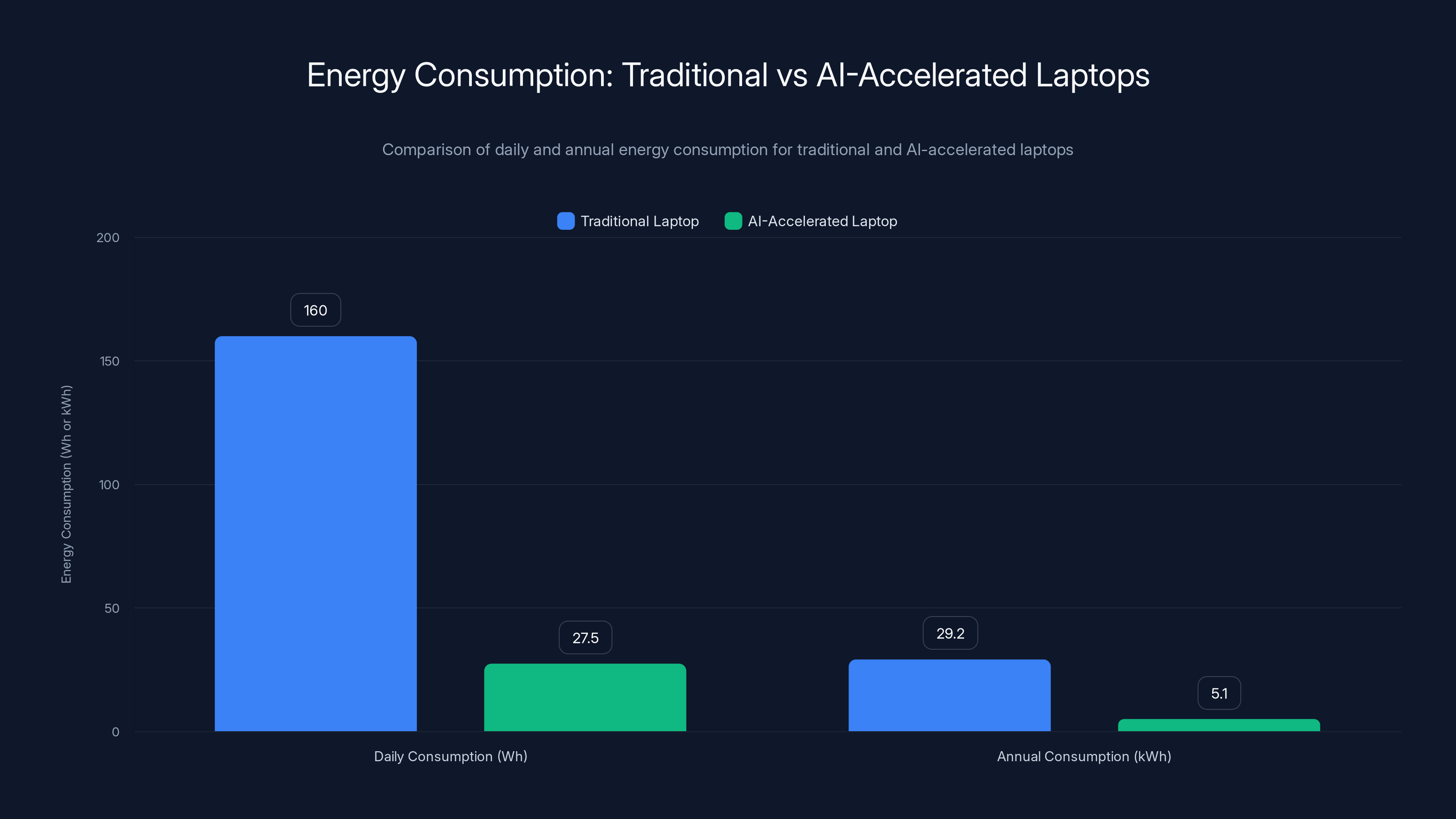

AI-accelerated laptops significantly reduce energy consumption, saving approximately 22-37 kWh per device annually. Across 100 million devices, this results in an annual saving of 2.2-3.7 billion kWh, roughly the electricity usage of 200,000-350,000 US households.

What This Means for Older Laptops

Here's the part that might sting if you bought a laptop in the last couple of years: machines without AI hardware are going to start looking underpowered. Not immediately, but gradually.

As software increasingly targets NPU acceleration and assumes AI hardware availability, non-AI machines will handle those tasks less efficiently. It's not a compatibility issue. Older laptops will still run new software. But that software will be optimized for hardware they don't have.

Think of it like the shift from single-core to multi-core processors. Older machines could run multi-core optimized software, but they ran it less efficiently. The same thing is happening here, but with dedicated AI hardware like the NPU instead of additional processor cores.

This creates a timeline for equipment refresh cycles. Companies that refreshed their laptop fleets 3-4 years ago are now in an awkward position. The machines still work, but they're increasingly suboptimal. That drives replacement cycles faster than they might otherwise occur.

If you're shopping for a laptop and you intend to keep it for 3-5 years, an AI PC is the sensible choice. It's not about using AI features today. It's about ensuring your machine doesn't become the tech equivalent of a smartphone from 2015 when everyone else has moved on.

Security Implications of Local AI Processing

Moving AI processing off the cloud and onto the local machine creates both security benefits and new risks. Understanding both matters.

On the benefit side: data never leaves your machine. Medical records, financial data, sensitive work documents—if an AI application processes them locally, there's no network transmission risk. No third-party servers involved. No account breaches at cloud providers affecting your data.

For regulated industries like healthcare and finance, this is genuinely game-changing. You can use powerful AI features without introducing compliance nightmares around data transmission and cloud storage.

But there are new risks. Local NPU acceleration opens up new attack vectors, such as malware that exploits NPU hardware or manipulates local AI inference. Data extraction from compromised machines now includes whatever AI models are running locally. These are manageable risks if you're following good security practices, but they're real.

Microsoft's Windows Security features are being updated to account for AI hardware. Windows Defender now includes NPU-aware threat detection. But this is early days. The security community is still figuring out best practices for securing AI-capable hardware.

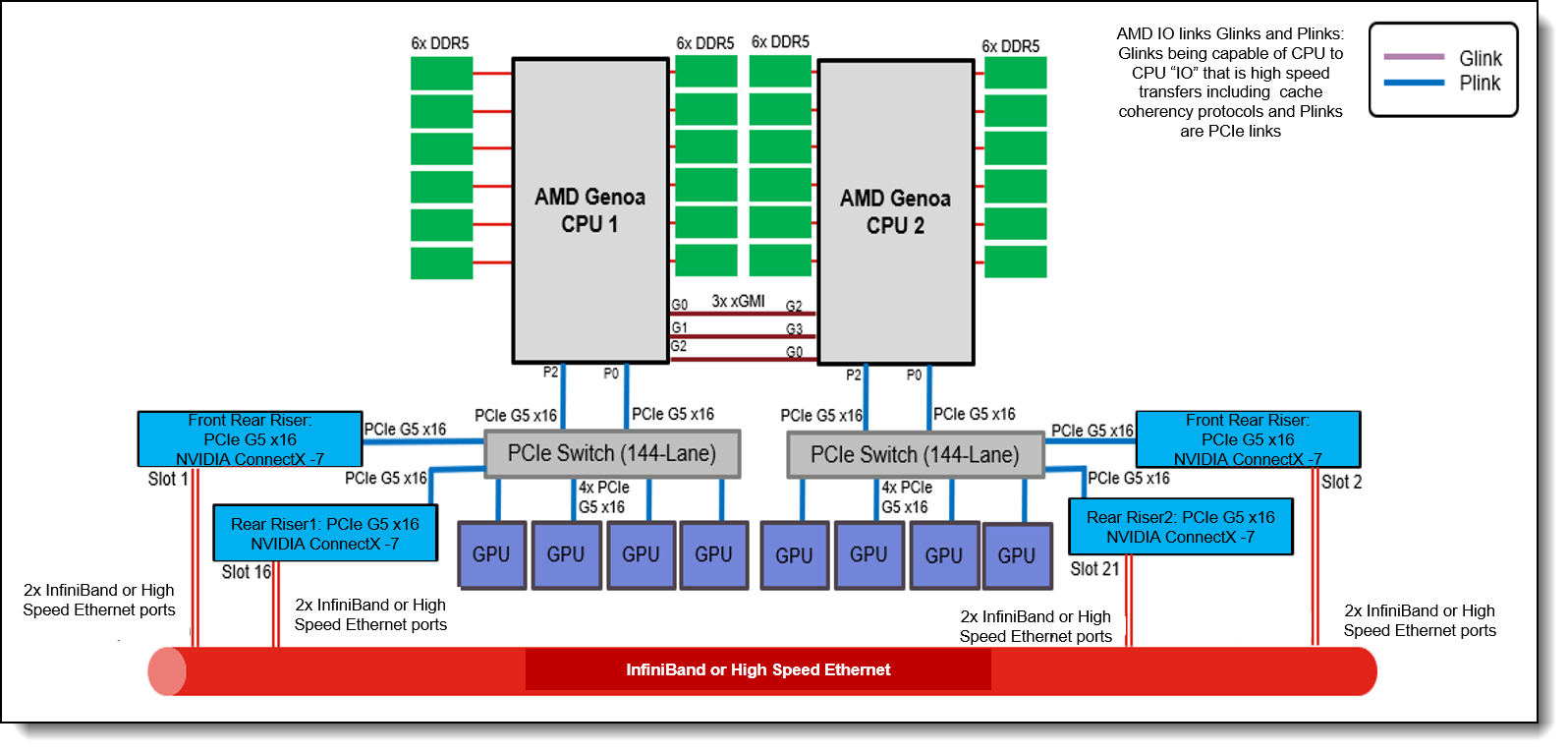

The GPU Still Matters

One misconception is that NPUs replace GPUs in AI-capable systems. They don't. AI PCs still have traditional GPUs for graphics and general-purpose computing. The NPU handles inference for AI models. The GPU handles graphics and some other compute tasks. The CPU handles everything else.

In fact, good AI PC design balances all three. A machine with a great NPU but weak GPU and CPU is unbalanced. You want a machine where all three components complement each other.

Where this matters most is in gaming and content creation. A gaming laptop with a strong GPU and a capable NPU is a genuinely versatile machine. You can game at high frame rates, create content with AI acceleration, and handle productivity work efficiently. The components work together.

When evaluating AI PCs, don't just look at NPU specs. Look at the overall hardware balance. A machine with a 10 TOPS NPU but a weak GPU and slow CPU is missing the point. You want a holistically capable system where the NPU is one part of a well-designed machine.

The adoption of AI-enabled PCs is projected to rise significantly, reaching 70% by 2027. Estimated data.

Enterprise Adoption and AI PCs

While consumer AI PC adoption is driven by hardware availability, enterprise adoption has different motivations. Companies are buying AI PCs for specific reasons: productivity features, security, and future-proofing.

For enterprises, the appeal is substantial. Local AI processing means fewer security audit nightmares. AI features embedded in productivity software mean less training overhead. Better performance across the board means employees need fewer resources, which impacts IT infrastructure costs.

Large organizations like Fortune 500 companies are already planning or executing AI PC rollouts. Lenovo and Dell both offer enterprise AI PC lines with management tools and support specifically designed for corporate deployment.

The economic argument is straightforward. If an AI PC costs

This drives adoption faster than consumer demand alone would support. When enterprises start buying AI PCs, the software optimization follows because vendors now have a large install base. The ecosystem develops faster.

Training Data and Localized AI

One aspect that doesn't get enough attention is how companies are handling training data for locally-running AI models. If you're running an AI model on your laptop, where did that model come from? How was it trained?

This is where open-source models like Meta's Llama and other models hosted on Hugging Face become important. These are models trained on diverse data that companies can then quantize and optimize to run locally on NPU hardware.

Quantization is the process of reducing the precision of a model's weights and activations to make it smaller and faster to run. A model trained in 32-bit precision can be quantized to 8-bit or even 4-bit with minimal accuracy loss. This compression makes it practical to run on local hardware with limited memory.
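The core idea of quantization fits in a few lines. This is a minimal sketch of symmetric 8-bit quantization in three steps:

1. Pick a scale so the largest weight maps to 127.
2. Round every weight to an integer.
3. Multiply back by the scale to recover an approximation.

Production toolchains add per-channel scales, calibration data, and other refinements not shown here. The weight values are made up.

```python
def quantize_int8(weights):
    """Symmetric int8 quantization: map [-max|w|, +max|w|] onto [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step, then clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from integers and the shared scale."""
    return [v * scale for v in q]


weights = [0.82, -1.27, 0.05, 0.4431, -0.003]  # made-up 32-bit weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_err:.4f}")
```

Each 8-bit integer takes one byte instead of the four bytes of a 32-bit float, a 4x size reduction. The rounding error per weight stays within half a scale step.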

The implications are interesting. Instead of cloud-based AI services from a few large providers, you can have locally-running AI using open models. This democratizes AI capabilities and reduces dependency on large tech companies' cloud infrastructure.

It also means organizations can use their own proprietary training data without sending it to cloud providers. A financial services company can train models on internal data and run them locally. A healthcare provider can train on patient data (properly de-identified) and run inference locally without cloud infrastructure.

Environmental and Power Consumption Considerations

The shift toward NPU-accelerated computing has environmental implications that are often overlooked. More efficient hardware means lower power consumption, which means lower electricity costs and reduced carbon footprint.

Let's do some math. If a laptop's CPU uses 100 watts for an AI task that an NPU can do in 10 watts, you're looking at a 90% reduction in power consumption for that workload. Scale that across millions of devices, and you're talking about massive reductions in data center power draw and distributed computing power consumption.

A traditional laptop averaging 30-50 watts of power consumption for 8 hours a day uses roughly 240-400 watt-hours daily. An AI PC that draws about 7.5-12.5 watts less for the same workload (roughly 22-38 watts) saves 60-100 watt-hours daily. Over a year, that's 22-37 kilowatt-hours per device saved. Across 100 million devices, that's 2.2-3.7 billion kilowatt-hours annually.

For context, that's roughly the annual electricity consumption of 200,000-350,000 US households, assuming an average household uses about 10,500-11,000 kWh a year. The environmental impact of moving to more efficient AI hardware is genuine and substantial.
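The arithmetic above is easy to reproduce. The sketch below takes the article's own estimates as inputs: a 100 W vs 10 W comparison, a 60-100 Wh daily saving, and 100 million devices. These are illustrative assumptions, not measurements.

```python
def percent_reduction(before_watts: float, after_watts: float) -> float:
    """Percentage drop in power draw when moving a task to more efficient hardware."""
    return (before_watts - after_watts) * 100 / before_watts


def annual_kwh(daily_wh: float, days: int = 365) -> float:
    """Convert a daily saving in watt-hours to kilowatt-hours per year."""
    return daily_wh * days / 1000


DEVICES = 100_000_000  # the article's hypothetical fleet size

print(f"CPU 100 W vs NPU 10 W: {percent_reduction(100, 10):.0f}% less power")
for daily_wh in (60, 100):
    per_device = annual_kwh(daily_wh)
    fleet_billion_kwh = per_device * DEVICES / 1e9
    print(f"{daily_wh} Wh/day -> {per_device:.1f} kWh/year per device, "
          f"{fleet_billion_kwh:.2f} billion kWh/year across the fleet")
```

Rounded, the outputs match the roughly 22-37 kWh per device and 2.2-3.7 billion kWh fleet figures.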

OEMs are starting to market this. "Energy-efficient AI laptops" is becoming a selling point because it resonates with customers who care about environmental impact. And it's not marketing nonsense. The efficiency gains are real.

Intel's prediction of 50% of laptops having AI capabilities by 2026 aligns with current trends and manufacturer announcements. Estimated data.

Challenges and Limitations of Current AI PCs

Before we get too excited about AI PCs, let's talk about the real limitations. These aren't dealbreakers for everyone, but they're worth understanding.

Software Integration Is Still Spotty

Not every application supports NPU acceleration. Many popular productivity applications don't yet optimize for local AI hardware. You might have a powerful NPU sitting idle while your CPU handles tasks it's not optimized for. This gap closes over time as developers integrate NPU support, but it's a current limitation.

Model Complexity Is Limited

A high-end NPU like the one in Snapdragon X can run about 45 TOPS of inference. That sounds like a lot, but cutting-edge language models are genuinely large and complex. Running GPT-4-scale models locally is impractical. You can run smaller, quantized models or specific models designed for mobile inference, but you're working within constraints.

For specialized tasks like transcription, image analysis, or document processing, this works great. For open-ended tasks that benefit from large, general-purpose models, you're still relying on cloud services.
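Model size is the real constraint, and you can see why by estimating how much memory a model's weights occupy: parameters times bits per weight, divided by 8 bits per byte. This sketch uses a hypothetical 7-billion-parameter model and counts weights only. Activations, caches, and runtime overhead add more on top, so treat these as lower bounds.

```python
def weight_footprint_gb(params: float, bits_per_weight: int) -> float:
    """Approximate gigabytes needed to store model weights alone."""
    return params * bits_per_weight / 8 / 1e9


PARAMS = 7e9  # hypothetical 7B-parameter model

for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_footprint_gb(PARAMS, bits):5.1f} GB")
```

At 32-bit precision the weights alone (about 28 GB) exceed the 16GB of RAM in a baseline Copilot+ PC. A 4-bit version (about 3.5 GB) fits comfortably. Much larger models scale the problem up proportionally, which is why the biggest models stay in the cloud.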

Hardware Thermal Design

Fitting NPU, GPU, and CPU all on the same die in a thin and light package creates thermal challenges. Some AI PCs require more aggressive cooling than traditional laptops, which means more noise or reduced thermal headroom under sustained loads. This is improving as designs mature, but it's a current limitation.

Ecosystem Lock-In Concerns

With Snapdragon X relying on emulation to run x86 software, there's legitimate concern about ecosystem fragmentation. What happens if you buy a Snapdragon X laptop and key software doesn't run well under emulation? You're stuck. This is why many people prefer Intel and AMD's x86-based AI PCs despite Snapdragon X's superior specs.

The Road Ahead: What's Coming Next

If Intel's prediction of 50% AI PC market share by 2026 comes true, where does the market go from 2026-2028? Some trends are already visible.

Specialized AI PCs

Expect to see increasingly specialized AI PCs. Gaming AI PCs optimized for both gaming and AI workloads. Creator-focused machines with maximized AI and GPU capabilities. Business laptops with lightweight AI features prioritizing productivity. Ultra-portable machines with efficient NPUs prioritizing battery life. The market will segment beyond "AI PC" as the category matures.

Model Compression Improvements

As quantization techniques improve, you'll be able to run increasingly capable models locally. Today's cutting-edge models that require cloud processing might run on local NPU hardware in 18-24 months. This is a moving target driven by academic research in model compression.

Framework Integration

TensorFlow, PyTorch, and other AI frameworks are adding first-class support for NPU hardware. This makes it progressively easier for developers to target NPU acceleration without specialized knowledge. As frameworks mature, software adoption accelerates.

Privacy-Preserving AI Applications

Expect to see more applications specifically designed to take advantage of local AI processing for privacy. Whether it's healthcare, finance, or legal tech, industries handling sensitive data will embrace local AI for privacy and compliance benefits.

Should You Buy an AI PC Right Now?

This depends on your timeline and needs. Let me give you practical guidance for different scenarios.

If you need a new laptop anyway: Buy an AI PC. There's no downside. The hardware costs the same, and you get better performance and battery life from the optimized design. The AI features are a bonus that will become more valuable over time.

If your current laptop still works: Keep it for now. The performance gap between a 3-year-old laptop and an AI PC isn't dramatic enough to justify replacement. When your machine starts feeling slow or the battery degrades, then consider an AI PC.

If you do content creation or productivity work: Buy an AI PC as soon as possible. The performance gains from NPU acceleration in tools you actually use are immediate and substantial. A content creator will see real productivity improvements, not theoretical benefits.

If you're in an enterprise considering fleet refresh: AI PCs are worth the consideration. The productivity improvements have measurable ROI. Model the costs and benefits for your use case, but don't dismiss AI PCs as hype.

If you need maximum portability: Snapdragon X-based AI PCs are worth serious consideration if software compatibility isn't a concern. The battery life improvements are genuine. Just test compatibility with software you actually use before buying.

The Bigger Picture: What This Means for Computing

AI PCs represent a shift in how computing is structured. Instead of everything running on a general-purpose CPU, with a GPU for graphics, we're seeing an ecosystem where machines have multiple specialized processors, each optimized for a different type of computation.

This is genuinely new in consumer computing. It's been common in data centers and server environments for years, but having this complexity in a personal laptop changes what's possible at the edge.

The implication is that computing becomes more distributed. Some tasks happen locally on your machine. Some happen on cloud servers. The architecture is designed to route tasks to the most efficient processor for that task. Your NPU handles inference. Cloud services handle training. Your GPU handles graphics. Your CPU handles orchestration and general computing.
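The division of labor can be pictured as a routing table. This is a hypothetical sketch, not how Windows or any real scheduler works. Task categories map to the processor the article says suits them best, with the CPU as the fallback.

```python
from enum import Enum


class Target(Enum):
    CPU = "CPU"
    GPU = "GPU"
    NPU = "NPU"
    CLOUD = "cloud"


# Hypothetical task categories mapped to the processor this article assigns them.
ROUTES = {
    "inference": Target.NPU,
    "graphics": Target.GPU,
    "training": Target.CLOUD,
    "orchestration": Target.CPU,
}


def route(task_kind: str) -> Target:
    """Look up where a task should run; unknown work defaults to the general-purpose CPU."""
    return ROUTES.get(task_kind, Target.CPU)


for task in ("inference", "graphics", "training", "spreadsheet recalculation"):
    print(f"{task} -> {route(task).value}")
```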

This architectural shift enables features and capabilities that weren't practical before. It also means software design has to evolve to take advantage of these capabilities. Companies that do it well will have dramatically more capable products. Companies that ignore it will look increasingly dated.

Intel's 50% prediction might be conservative. By 2027-2028, AI PCs might not be a special category anymore. They might just be what laptops are. At that point, we'll need new terminology for machines that are optimized beyond basic AI capabilities, and we'll look back at 2025 as the year the market tipped irreversibly toward AI-accelerated consumer computing.

FAQ

What exactly is an AI PC?

An AI PC is a personal computer that includes dedicated hardware designed for AI processing, typically an NPU (Neural Processing Unit). Traditional laptops run AI workloads on a general-purpose CPU or on the GPU; AI PCs add specialized silicon that performs AI inference and machine learning operations efficiently. The key benefit is that tasks like transcription, image analysis, and content creation can run faster and consume less power on the NPU than on the CPU.

Do I need to use AI features to benefit from an AI PC?

No, you don't need to actively use AI features to benefit from an AI PC. The NPU quietly offloads built-in AI tasks (such as video-call background effects) from the CPU, and the newer processor platforms these machines are built on generally bring better performance and battery life for standard computing tasks. That's why people currently buying AI PCs often choose them for speed and battery life rather than specific AI functionality. As software catches up, additional benefits will emerge, but the hardware improvements are valuable right now.
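Why would offloading AI work help battery life? A back-of-the-envelope calculation makes it clearer: runtime is stored battery energy divided by average power draw. The sketch below assumes a hypothetical 60 Wh battery, a 6 W baseline draw, and an AI workload (for example, continuous video-call background effects) that adds 2 W when run on the CPU. The 90% saving from moving it to the NPU is an assumed figure for illustration, not a measurement of any specific chip.

```python
def battery_hours(capacity_wh: float, avg_power_w: float) -> float:
    """Estimated runtime in hours: stored energy (Wh) / average draw (W)."""
    return capacity_wh / avg_power_w


def hours_gained_by_offload(capacity_wh: float, base_power_w: float,
                            ai_power_w: float, npu_savings: float) -> float:
    """Extra runtime when an AI workload's extra draw is cut by `npu_savings`.

    base_power_w: draw of everything except the AI workload (display, etc.)
    ai_power_w:   extra draw of the AI workload when it runs on the CPU
    npu_savings:  fraction of that extra draw saved on the NPU (0 to 1)
    """
    before = battery_hours(capacity_wh, base_power_w + ai_power_w)
    after = battery_hours(capacity_wh,
                          base_power_w + ai_power_w * (1 - npu_savings))
    return after - before


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    gain = hours_gained_by_offload(capacity_wh=60, base_power_w=6,
                                   ai_power_w=2, npu_savings=0.9)
    print(f"Before: {battery_hours(60, 8):.1f} h, gain: {gain:.1f} h")
    # prints: Before: 7.5 h, gain: 2.2 h
```

The gain only applies while the AI workload is actually running; if it runs a quarter of the time, the benefit shrinks accordingly, so real-world results depend heavily on how you use the machine.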

Which AI PC should I buy: Intel Core Ultra, AMD Ryzen AI, or Qualcomm Snapdragon X?

Each has different strengths. Intel Core Ultra and AMD Ryzen AI are x86-based, meaning full compatibility with Windows software without emulation concerns. Qualcomm Snapdragon X offers a strong NPU (45 TOPS, versus roughly 10 TOPS on first-generation Core Ultra and Ryzen AI chips, though newer Intel Core Ultra 200V and AMD Ryzen AI 300 parts also exceed 40 TOPS) and exceptional battery life, but it uses ARM architecture, so some traditional applications run under emulation. Choose based on your software requirements and priority use case: gaming and content creation favor Intel or AMD, while maximum portability favors Snapdragon X.

How much more expensive are AI PCs compared to traditional laptops?

AI PCs generally cost about the same as comparably specced traditional laptops in 2025. The NPU doesn't add significant manufacturing cost at scale, so you're mostly not paying a premium for AI capabilities anymore. Entry-level AI PCs may cost slightly more than entry-level traditional models, though their higher baseline specifications help justify the difference.

Will older software work on AI PCs?

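Yes, for the most part. x86-based AI PCs from Intel and AMD run standard Windows or Linux operating systems and existing software just as traditional laptops do. The NPU sits alongside your CPU, GPU, and other components, and applications that aren't specifically optimized for NPU acceleration simply run as they always have. The main exception is Snapdragon X machines: because they use ARM architecture, x86 applications without native ARM versions run through emulation, and some software, such as certain drivers and games with anti-cheat systems, may not run at all. On Intel and AMD models, you're gaining capabilities without losing compatibility.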

What AI PCs are actually available to buy right now?

Multiple options exist from major OEMs. Dell's XPS series, Lenovo's ThinkPad and Legion lines, HP's Pavilion and Envy series, and ASUS's Vivobook and ROG gaming laptops all have AI PC variants. Microsoft's current Surface Pro and Surface Laptop models are available with Snapdragon X. Apple's MacBook Pro with M-series chips includes a Neural Engine, though Apple doesn't market them as "AI PCs." Models range from

Does 40 TOPS vs. 10 TOPS matter for my needs?

TOPS (trillions of operations per second) measures an NPU's peak inference throughput. For most users, 10-40 TOPS is more than sufficient; the limiting factor usually isn't computational capacity but software support and model size. 10 TOPS handles transcription, image analysis, document processing, and creative tools well, while 40 TOPS leaves headroom for more complex simultaneous workloads. One practical exception: Microsoft's Copilot+ PC features require an NPU rated at 40 TOPS or more, so if you want those Windows features, the higher number matters. Otherwise, unless you're running multiple AI inference tasks simultaneously, the difference is mostly academic.
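To see why 10 vs. 40 TOPS often doesn't matter for a single task, you can turn a TOPS rating into a rough latency estimate. This Python sketch assumes a hypothetical model needing 20 billion operations per inference (counted the same way as the TOPS rating, typically INT8) and an NPU that sustains only 30% of its peak; both numbers are assumptions, and real utilization varies widely by model and runtime.

```python
FRAME_BUDGET_MS = 1000 / 30  # time available per frame at 30 fps


def ideal_latency_ms(gops_per_inference: float, peak_tops: float,
                     utilization: float = 0.3) -> float:
    """Rough per-inference latency implied by a peak TOPS rating.

    gops_per_inference: operations per inference, in billions (GOPs)
    peak_tops: vendor peak rating, in trillions of operations per second
    utilization: assumed fraction of peak actually sustained (hypothetical)
    """
    ops = gops_per_inference * 1e9
    sustained_ops_per_s = peak_tops * 1e12 * utilization
    return ops / sustained_ops_per_s * 1e3  # seconds -> milliseconds


if __name__ == "__main__":
    for tops in (10, 40, 45):
        ms = ideal_latency_ms(gops_per_inference=20, peak_tops=tops)
        print(f"{tops:>2} TOPS: ~{ms:.1f} ms per inference "
              f"(frame budget at 30 fps: {FRAME_BUDGET_MS:.1f} ms)")
```

Under these assumptions, 10 TOPS gives about 6.7 ms per inference and 45 TOPS about 1.5 ms. Both fit well inside the roughly 33 ms available per frame at 30 fps, so a single light task runs comfortably either way; the extra TOPS pay off when several models share the NPU or a model is much larger.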

Will AI PC prices drop as they become more common?

Historically, specialized hardware drops in price as volume increases and manufacturing matures. We've already seen this pattern with GPUs and SSDs. As AI PCs become the baseline and volume increases, prices should remain stable or decline, but the real value shift comes from software catching up and actually using the hardware. The software improvements will be more significant than price drops.

What's the Microsoft Copilot+ PC certification and is it important?

Copilot+ PC is Microsoft's standard for AI PCs, requiring an NPU rated at 40+ TOPS, 16GB+ RAM, and 256GB+ SSD storage. Certification ensures the machine can run Windows-integrated AI features smoothly. It's a quality signal but not absolutely essential, and many excellent AI PCs don't carry the certification. If you want guaranteed Windows AI integration, Copilot+ certified machines are the safer choice. If you're flexible, non-certified AI PCs can still offer strong hardware at potentially lower cost, though without the Copilot+-exclusive Windows features.
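If you're comparing spec sheets, a quick check against the Copilot+ hardware minimums listed above can be sketched in Python. The `LaptopSpec` fields and example machines are hypothetical, and passing this check doesn't make a machine certified: the Copilot+ label is applied by Microsoft and OEMs, not by a spec-sheet comparison.

```python
from dataclasses import dataclass

# Copilot+ PC minimums as listed above: 40+ TOPS NPU, 16GB+ RAM, 256GB+ SSD.
COPILOT_PLUS_MIN_NPU_TOPS = 40
COPILOT_PLUS_MIN_RAM_GB = 16
COPILOT_PLUS_MIN_STORAGE_GB = 256


@dataclass
class LaptopSpec:
    name: str
    npu_tops: float
    ram_gb: int
    storage_gb: int


def copilot_plus_gaps(spec: LaptopSpec) -> list[str]:
    """List the Copilot+ hardware minimums a spec misses (empty if none)."""
    gaps = []
    if spec.npu_tops < COPILOT_PLUS_MIN_NPU_TOPS:
        gaps.append(f"NPU {spec.npu_tops} TOPS < {COPILOT_PLUS_MIN_NPU_TOPS}")
    if spec.ram_gb < COPILOT_PLUS_MIN_RAM_GB:
        gaps.append(f"RAM {spec.ram_gb} GB < {COPILOT_PLUS_MIN_RAM_GB}")
    if spec.storage_gb < COPILOT_PLUS_MIN_STORAGE_GB:
        gaps.append(
            f"storage {spec.storage_gb} GB < {COPILOT_PLUS_MIN_STORAGE_GB}")
    return gaps


if __name__ == "__main__":
    # Hypothetical machines for illustration only.
    candidates = [
        LaptopSpec("Ultraportable A", npu_tops=45, ram_gb=16, storage_gb=512),
        LaptopSpec("Older AI PC B", npu_tops=10, ram_gb=16, storage_gb=512),
        LaptopSpec("Budget model C", npu_tops=40, ram_gb=8, storage_gb=256),
    ]
    for spec in candidates:
        gaps = copilot_plus_gaps(spec)
        status = "meets Copilot+ minimums" if not gaps else "; ".join(gaps)
        print(f"{spec.name}: {status}")
```

Returning the list of gaps rather than a simple yes/no makes it easier to see whether a machine misses on something you care about (the NPU) or something you could live with.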

How do I ensure an AI PC I'm considering will have good software support in the future?

Look for machines from established OEMs (Dell, Lenovo, HP, ASUS) that have committed to AI PC strategies. Check if major software providers support the specific processor (Core Ultra, Ryzen AI, or Snapdragon X). Join online communities for the specific model to see real-world software compatibility discussions. For critical software, contact the vendor and ask directly about NPU optimization plans. Software support follows adoption, so machines from major OEMs in volume configurations get better support.

Conclusion

Intel's prediction that over 50% of PCs shipped in 2026 will be AI-enabled isn't just marketing hype dressed in statistics. The hardware is already being manufactured at scale. OEMs are building entire product lines around it. The question isn't whether AI PCs will become common. The question is how quickly software catches up to the hardware capabilities.

What makes this moment genuinely interesting is that the hardware improvements deliver immediate value even without AI features. Better battery life, faster performance, improved multitasking. Users benefit right now, regardless of whether they ever consciously use an AI feature.

The real transformation happens when software evolves. When the tools you use every day are optimized for local AI processing. When privacy-sensitive applications leverage local inference instead of cloud services. When specialized applications designed around NPU capabilities emerge. That's still ahead of us, but the infrastructure's already in place.

If you're shopping for a laptop in 2025, the sensible choice is an AI PC. Not because you need AI features today, but because you're buying a machine architected for how computing is evolving. The hardware costs about the same. The performance is better. The battery life is longer. As long as your software runs on it, you're not sacrificing anything by choosing an AI PC over a traditional laptop.

By 2027, we probably won't call them "AI PCs" anymore. They'll just be PCs. The ones without AI acceleration will be the specialty category, positioned for budget-conscious buyers who don't need leading-edge performance. That's how you know a technology has become mainstream.

We're at that inflection point now. The market's tipping. The hardware's ready. The software will follow. It's happening faster than most people realize, and understanding what AI PCs actually are and why they matter puts you ahead of the curve.

Stay informed about this shift. It affects what device you buy, what software you'll use, and how your computing experience will evolve over the next few years. The AI PC era isn't coming. It's already here.

Key Takeaways

- Intel forecasts 130 million of 260 million PCs shipped globally in 2026 will include dedicated AI processing hardware like NPUs

- Current AI PC buyers prioritize speed and battery life over AI features, but specialized hardware delivers both through efficient architecture

- Three competing processor families dominate: Intel Core Ultra, AMD Ryzen AI, and Qualcomm Snapdragon X (45 TOPS), each with different strengths; first-generation Intel and AMD chips offered around 10 TOPS, while newer generations exceed 40 TOPS

- NPU acceleration can reduce power consumption by 90% for AI inference, enabling 2-4 hour improvements in battery life

- Software support remains the limiting factor, not hardware capability, but content creation and privacy-sensitive applications already benefit from local AI processing

- AI PCs cost about the same as comparable traditional laptops in 2025, largely eliminating the price premium as a barrier to adoption

- For new laptop purchases, AI PCs are the sensible choice since improved performance and efficiency benefit all workloads, not just AI-specific tasks
