Introduction: The Email Security Crisis Nobody Talks About

Email remains the primary attack vector for business compromise, yet modern email security has become a paradox. Organizations invest heavily in sophisticated detection systems, deploy multiple security layers, and staff dedicated security operations centers—only to discover that their best security analysts spend a quarter of their time investigating false alarms rather than hunting actual threats.

This isn't a failure of security teams. It's an architectural problem that has been building for years and has now reached a critical inflection point due to artificial intelligence.

A decade ago, email security economics were straightforward and predictable. Security teams deployed pattern-matching systems, accepted some level of false positives as an operational cost, and staffed security operations centers to handle escalations. The mathematics worked because adversaries operated within certain constraints. They reused attack templates, followed recognizable patterns, and relied on tactics that had historical precedent. Pattern-matching systems could learn these patterns and flag suspicious emails, and analysts could investigate the flagged alerts. The return on investment was clear and measurable.

Then artificial intelligence entered the equation, and everything changed.

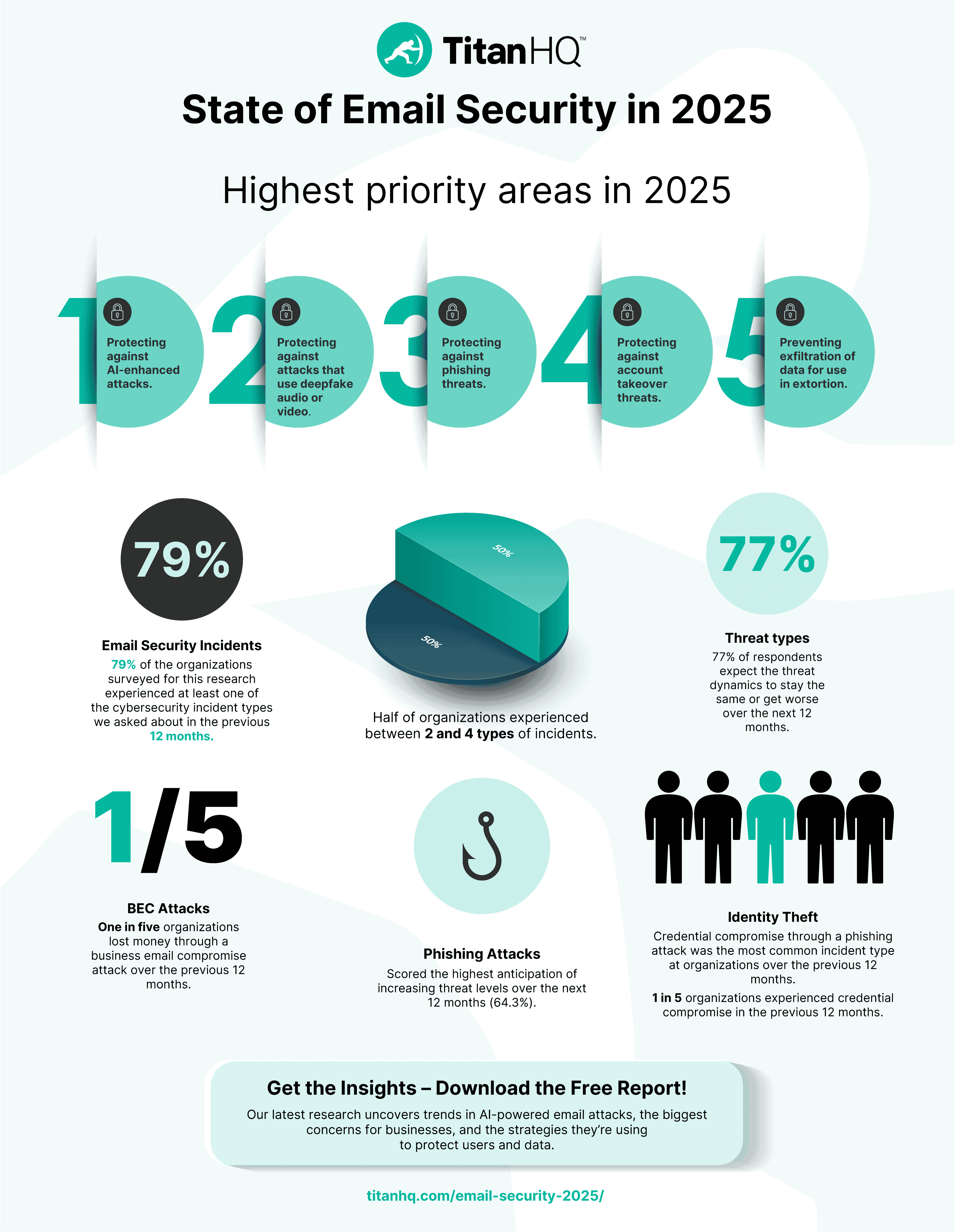

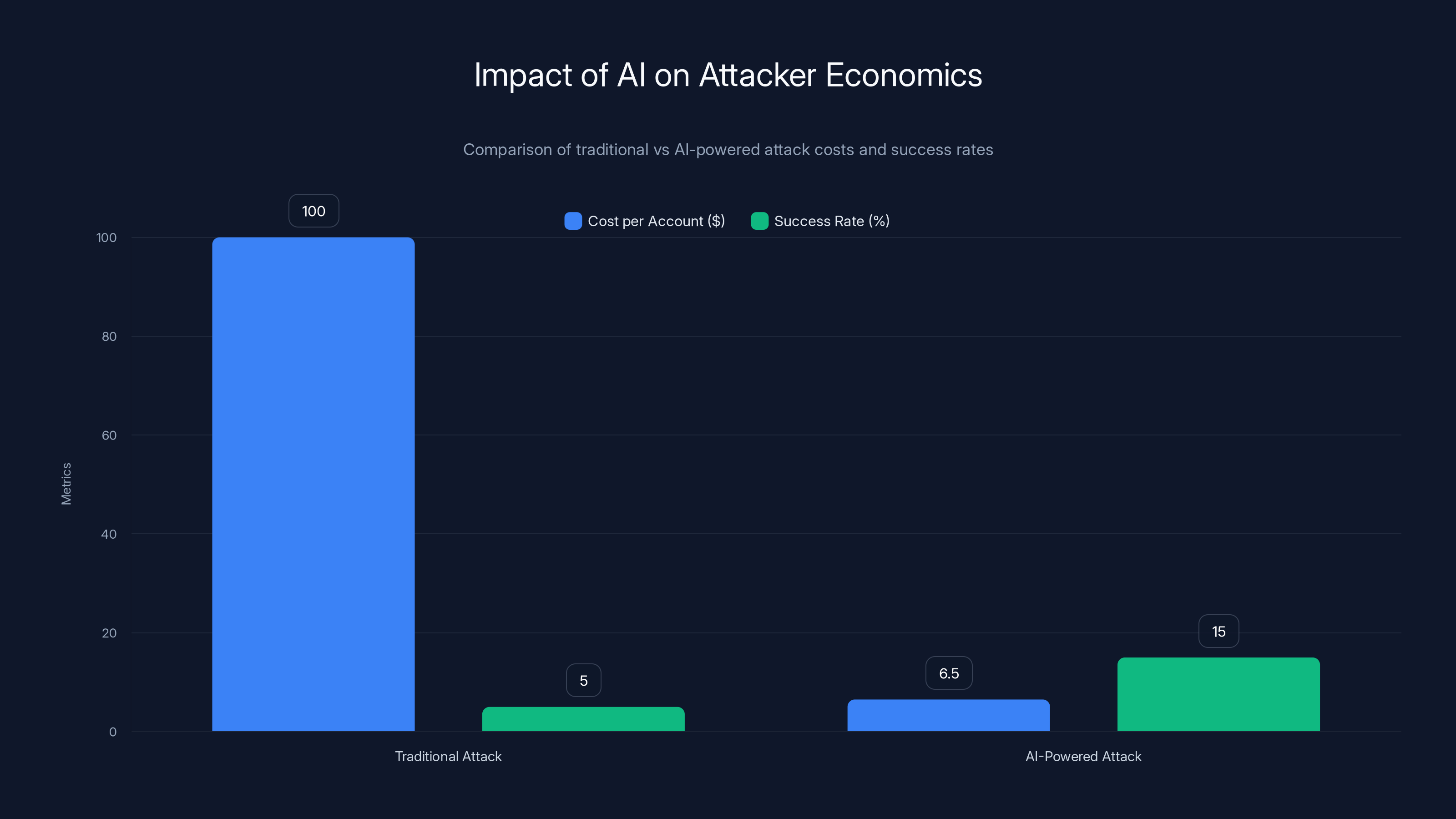

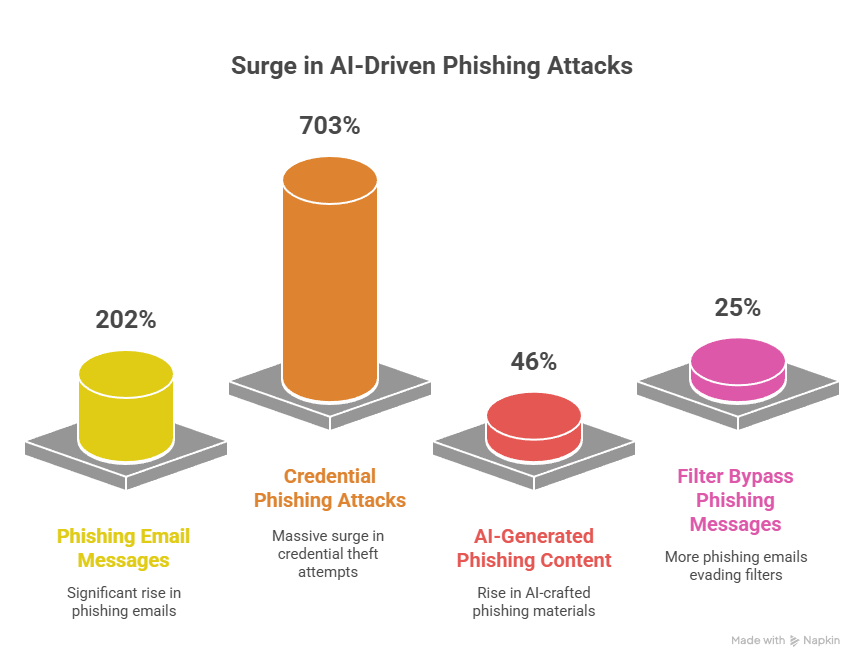

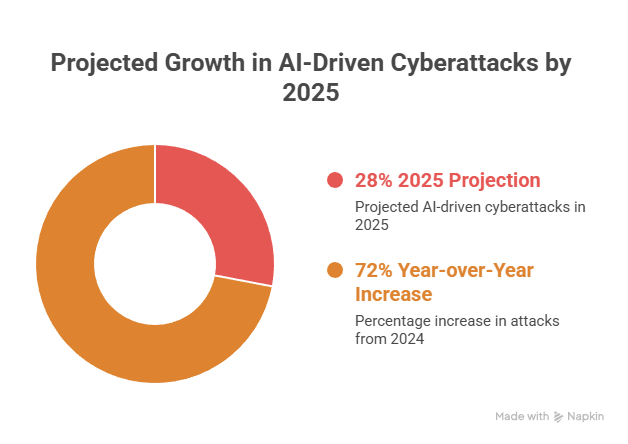

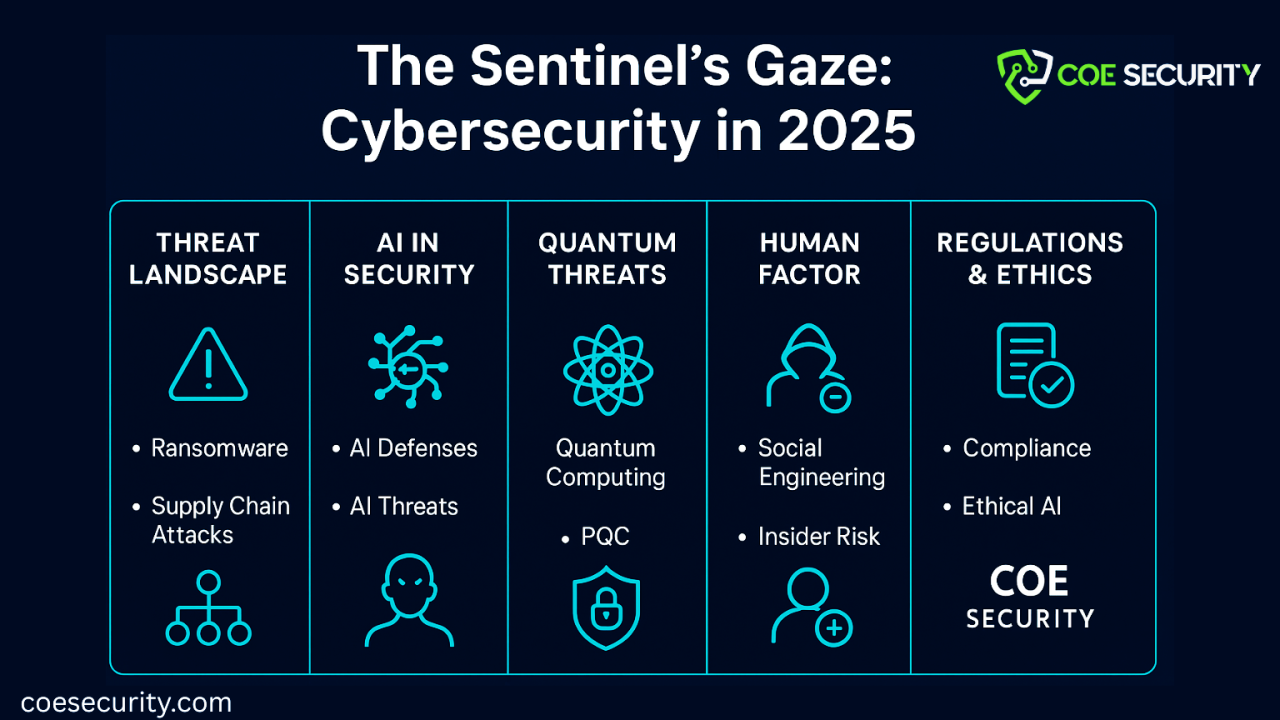

AI has fundamentally altered the adversary side of the security equation. Modern threat actors can now generate unlimited unique email variants, each personalized to specific organizational contexts, with no historical precedent and minimal reuse of tactics. Attack costs have plummeted by up to 95%, while attack success rates have increased dramatically. The traditional detection architectures that once caught 60-70% of threats through pattern recognition now fail against AI-generated attacks that have no patterns to match.

Meanwhile, the defender side of the equation hasn't evolved architecturally. Organizations continue deploying pattern-matching and machine learning systems—Generation 1 and Generation 2 email security architectures—against threats that are fundamentally mismatched to detection approaches designed for predictable, repeating attack patterns. The result is predictable: alert fatigue, false positive explosion, and security analyst burnout.

The economic impact is staggering. Industry research reveals that 65% of email security alerts are false positives, consuming an average of 33 minutes each to investigate. Across a typical enterprise security operations center, this translates to 25% of analyst time spent validating nothing. For organizations with experienced security analysts earning

This isn't a technology problem that can be solved by hiring more analysts or tuning detection thresholds more aggressively. The problem is architectural and structural. Traditional email security systems were designed to solve a different problem: detecting threats that matched known patterns. When every attack is novel and unique, pattern-matching fails mathematically. You cannot match patterns that don't repeat. You cannot train machine learning models on attacks you've never seen.

A third generation of email security architecture is emerging that fundamentally changes the economics of the problem by solving it at the architectural level rather than through incremental improvements to detection systems. These reasoning-based systems evaluate emails across two simultaneous dimensions: threat indicators and business legitimacy patterns. By breaking the false positive/false negative tradeoff that has haunted email security for years, they restore acceptable ROI to email security investments and allow security teams to allocate their most valuable resource—expert analyst time—to work that actually produces security outcomes.

This comprehensive guide explores how AI has rewritten email security economics, why traditional approaches fail against modern threats, and how organizations can restructure their email security strategies to restore operational efficiency and security effectiveness simultaneously.

Part 1: The Historical Model and Why It Worked

The Pattern-Matching Era

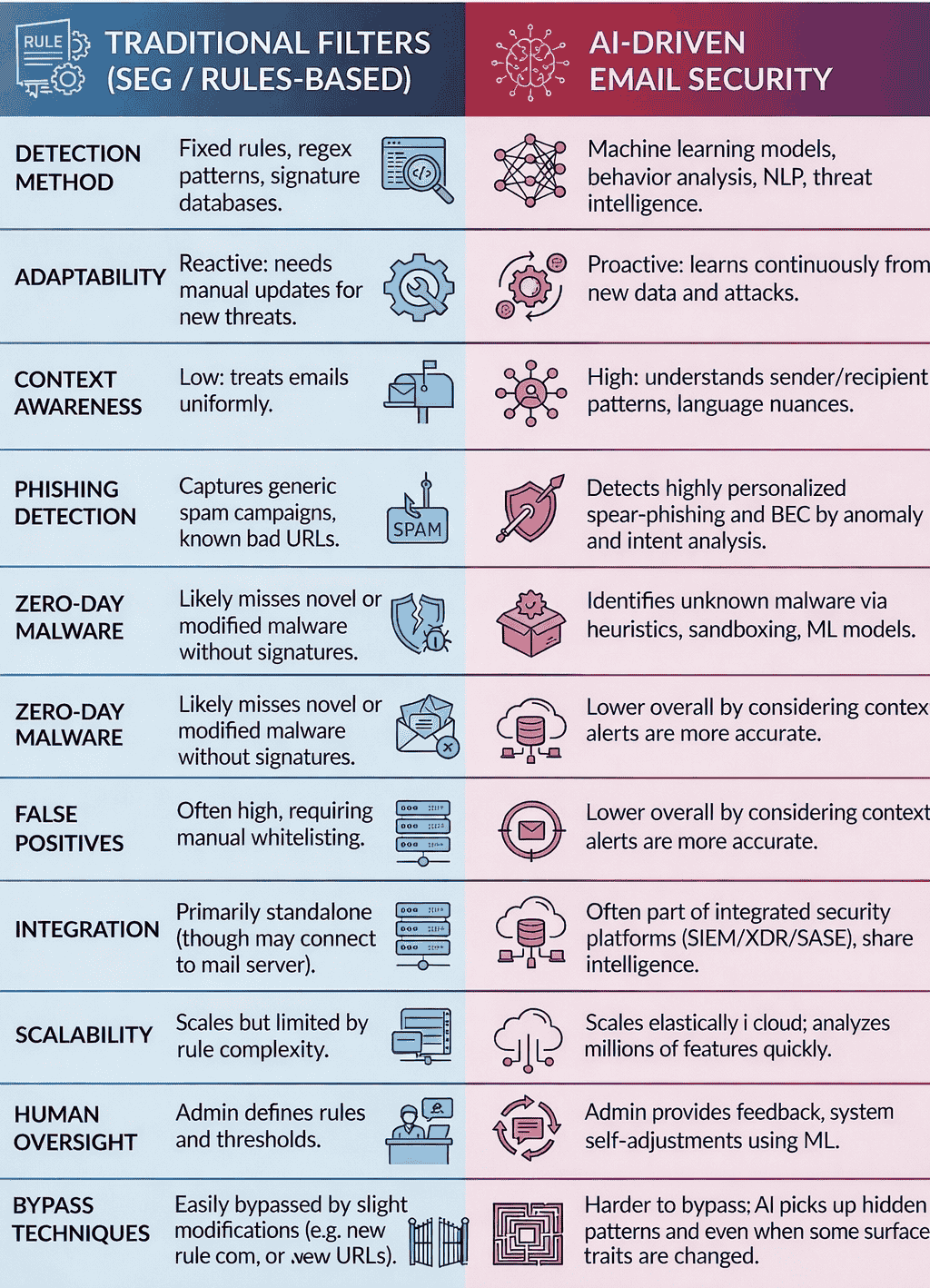

Traditional email security was born from a simple observation: attackers are lazy. They reuse tactics, repurpose attack frameworks, and modify templates rather than creating entirely new attacks from scratch. This made perfect economic sense for attackers ten years ago. Creating a new phishing template took time. Customizing it to a new target took resources. So attackers developed variations on proven templates and deployed them across thousands of targets, knowing that even if detection rates were 40-60%, the economics still worked at scale.

Email security systems evolved to exploit this laziness. They built detection engines around identifying suspicious characteristics: unexpected sender domains, suspicious URLs, malicious attachments, HTML characteristics typical of phishing. When an email matched known malicious signatures or exhibited characteristics similar to previously detected phishing attempts, the system would quarantine the message or flag it for human review.

The system worked because of the fundamental constraint on attacker resources. If every attack required custom development and personalization, attackers couldn't achieve scale. They were forced to reuse and recycle, creating repeating patterns that detection systems could learn and flag.

The Machine Learning Transition

As attackers became slightly more sophisticated and began varying their techniques, the industry shifted to machine learning-based detection. Rather than relying on hand-crafted signatures, these systems learned patterns from large datasets of known phishing and legitimate emails. They could identify subtle characteristics—word choices, sentence structure, URL patterns, sender behavior anomalies—that correlated with phishing attacks.

Machine learning was more flexible than pattern-matching. It could catch variations of known attacks that strict signature matching would miss. It could adapt to evolving tactics without requiring manual rule updates. The detection precision improved, false positive rates decreased (compared to the most aggressive signature systems), and email security seemed to be progressing toward a more mature state.

But machine learning inherited a critical limitation from its predecessor: it was fundamentally a detective system looking for threat signals. It could identify characteristics correlated with attacks, but it had no mechanism to validate whether communication was legitimate from a business perspective. It hunted for malicious patterns but couldn't establish business legitimacy.

This created an unsolvable tradeoff: make detection aggressive to catch sophisticated attacks, and legitimate business emails get quarantined. Make detection conservative to preserve business communications, and novel attacks slip through. Security teams adjusted thresholds endlessly, trying to find the sweet spot where false positives were acceptable and false negatives were minimal. That sweet spot didn't exist.

Why the ROI Was Acceptable

Despite this fundamental architectural limitation, the economics of email security remained acceptable throughout the pattern-matching and machine learning eras. Why? Because attackers were still constrained by the need to develop, refine, and test attacks before deployment.

The math was simple: if an attacker could develop and deploy a phishing template in 2-4 hours of work, and that template had a 5-15% success rate against a typical organization, they could potentially compromise user credentials that would grant access to email systems, cloud applications, or network resources. The expected value was still positive, even if 85-95% of their emails were detected.

Security teams, meanwhile, operated under an acceptable cost model. Yes, they investigated false positives. Yes, they spent analyst time on alerts that didn't represent actual threats. But the false positive rate was manageable—typically 20-40% with well-tuned systems. An analyst could investigate 15-25 alerts per 8-hour shift, spending roughly 20-30 minutes per alert. The cost was real but acceptable.

They also had confidence that their most sophisticated attacks would be caught. Unknown variants of known attack families would be detected. Emails from suspicious domains would be flagged. Unusual sender behavior would trigger alerts. The system wasn't perfect, but it worked well enough that the economics remained positive.

This equilibrium depended entirely on attackers facing resource constraints. Once those constraints disappeared, the entire model collapsed.

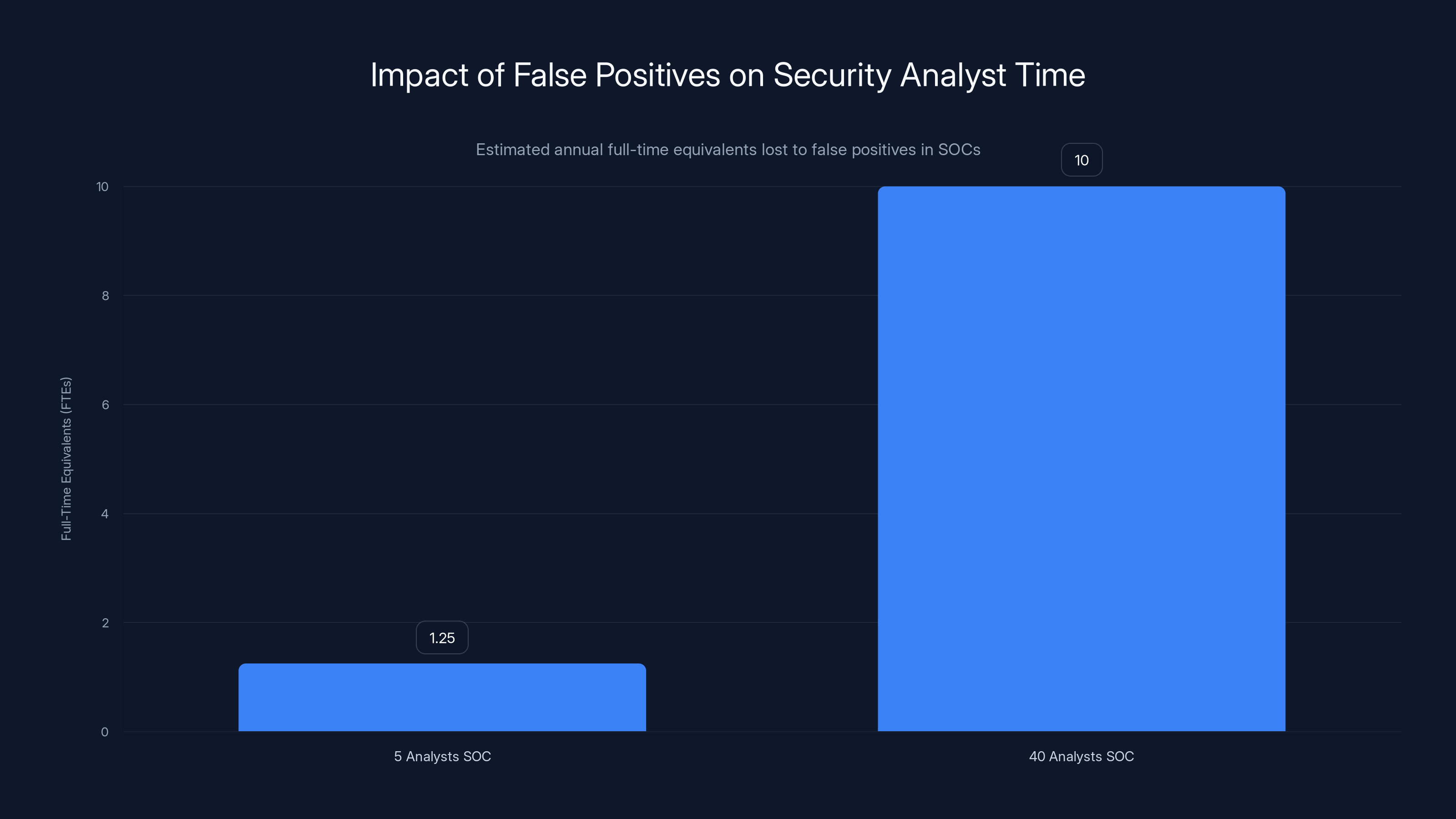

Security operations centers lose significant analyst capacity to false positives, with a 5-analyst SOC losing 1.25 FTEs and a 40-analyst SOC losing 10 FTEs annually. Estimated data.

Part 2: The AI Inflection Point

How AI Broke the Attacker Economics

Artificial intelligence didn't introduce a new type of attack or a new tactic. Instead, it eliminated the resource constraints that had limited attacker behavior for decades. What previously required 2-4 hours of skilled human effort (researching a target, crafting a personalized phishing email, creating a landing page to harvest credentials, setting up infrastructure) can now be accomplished in seconds or minutes using language models.

The implications are fundamental. An attacker with a large language model and basic technical competency can generate unlimited unique email variants, each personalized to a specific organization, each with novel phrasing and structure that has never been seen before. They can create variations of variations, testing thousands of approaches with minimal effort.

Research from Harvard demonstrates the dramatic economic shift. AI can now fool more than 50% of humans while simultaneously cutting attack costs by 95% and increasing profitability up to 50-fold. These aren't theoretical numbers. They reflect the actual economics of AI-powered attacks observed in real-world deployments.

Consider the mathematics: if an attacker spends

Worse, the attacker faces minimal downside for failure. If their personalized email is detected by email security, they've lost nothing but time—and AI makes time effectively free. They can generate 1,000 variations of an attack for the same effort a human operator would spend creating a single variant.

The economics have inverted. It's now cheaper to attack than to defend against randomized, novel attacks. This creates what security researchers call an "asymmetric advantage" for attackers: they can generate unlimited novel variants while defenders must detect all variations.

The Defender Side Hasn't Evolved

While attacker economics have fundamentally improved, defender capabilities have improved only incrementally. Most organizations still deploy email security systems built on the same architectural principles as systems deployed a decade ago: detect threat signals and escalate suspicious emails for investigation.

These systems haven't fundamentally changed because the underlying technology hasn't changed. Machine learning-based detectors still look for characteristics correlated with phishing and malware. They still operate on a single dimension: threat likelihood. They still face the same architectural limitation as their predecessors: they can identify suspicious characteristics but cannot validate business legitimacy.

When facing attackers constrained by resource limitations, this was acceptable. When facing attackers operating with AI-generated unlimited variants, this is catastrophic.

The problem manifests directly in the alert landscape. Systems continue generating the same share of false positives (approximately 65% of all email security alerts), but the emails analysts now investigate are fundamentally different from the attacks the systems were trained on.

Consider a business email compromise attack targeting a financial executive. The attacker uses a language model to craft an email that mentions specific projects the executive is working on, references recent company announcements, and requests a wire transfer for what sounds like a legitimate business purpose. The email perfectly mimics business communication patterns.

The email security system has never seen this exact attack before. The sender address might use a slightly misspelled variation of the company's domain, or the message might be sent through a compromised vendor system so that it passes SPF and DKIM validation. The email content has no malicious attachments or URLs; it asks the recipient to act on a voice call or in an in-person meeting. The attack pattern has no historical precedent to match against.

From a pattern-matching perspective, the email looks legitimate. From a machine learning perspective, it might generate a low threat score because it doesn't match characteristics of known phishing attacks. Yet it remains a sophisticated social engineering attack designed to compromise executive accounts.

Meanwhile, the legitimate invoice from a new vendor—legitimate in every sense, but from a sender the system has never encountered before—gets flagged as suspicious because it comes from an unknown domain and contains an unusual attachment type. The security analyst investigating this alert spends 33 minutes determining that the invoice is legitimate business correspondence, learning nothing about security threats.

This is the core problem: defenders operating with pre-AI detection architectures against AI-powered attacks.

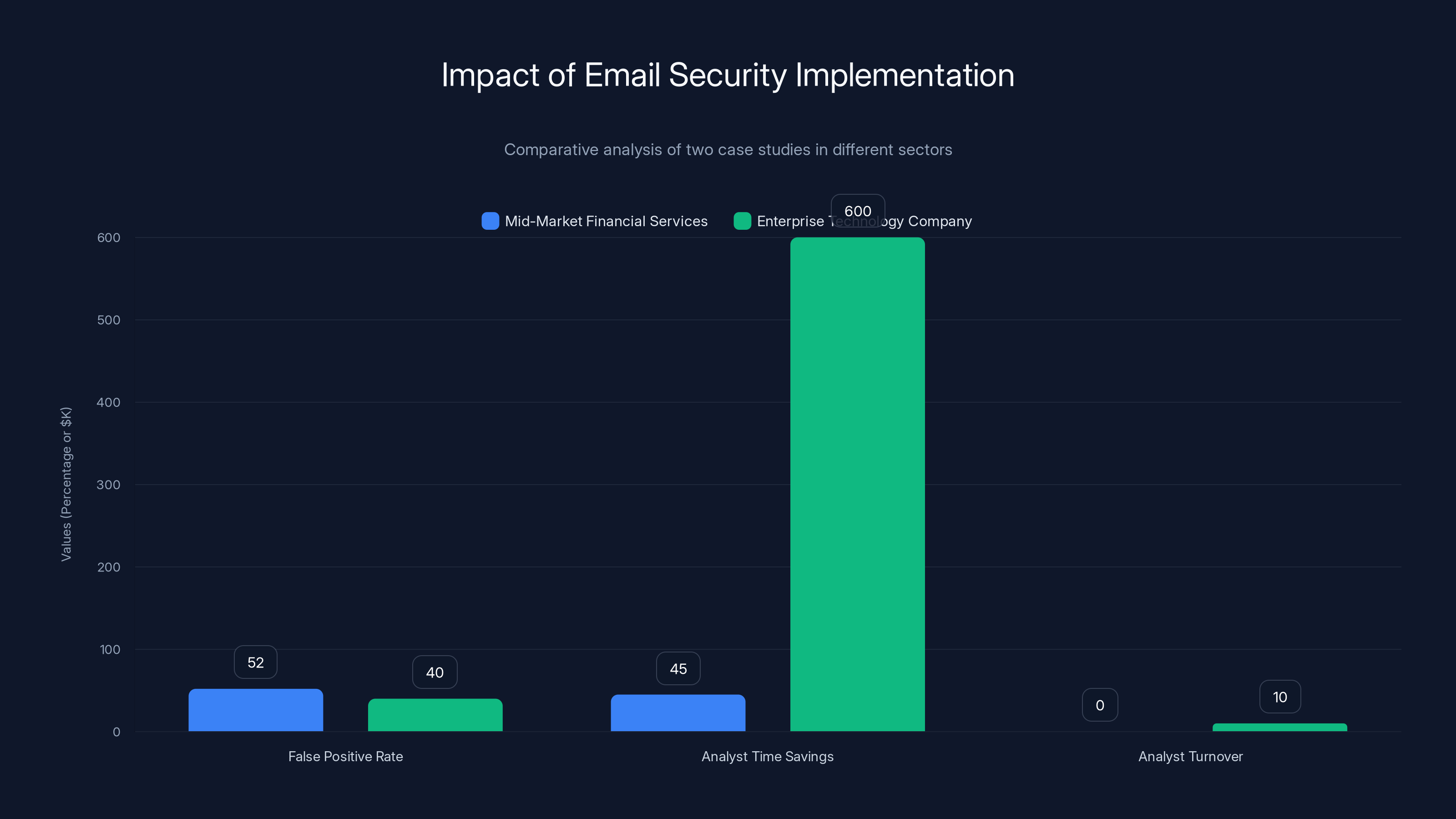

The implementation of reasoning-based email security significantly reduced false positive rates and improved analyst efficiency in both case studies. Estimated data used for analyst turnover and savings.

Part 3: The Resource Allocation Crisis

The Hidden Cost of False Positives

False positives in email security are invisible costs that compound daily. When a security analyst investigates an alert that doesn't represent an actual threat, the investigation time is lost to productive security work. With 65% of alerts being false positives, organizations lose significant analyst capacity to this perpetual noise.

For a typical enterprise security operations center, the math is staggering. A SOC with five analysts investigating email security alerts spends approximately 1.25 full-time equivalents on false positive investigation annually. A SOC with forty analysts (typical for a mid-to-large enterprise) loses approximately 10 full-time equivalents to false positive investigation—that's 10 experienced security professionals doing nothing but validating that legitimate emails are legitimate.
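The FTE figures above follow from applying the 25% false-positive time share to SOC headcount. A minimal sketch of that arithmetic, where the function name and the default share parameter are illustrative choices rather than anything defined in this guide:

```python
def fte_lost_to_false_positives(analysts: int, fp_time_share: float = 0.25) -> float:
    """Analyst capacity, in FTEs, consumed by false-positive investigation.

    fp_time_share is the fraction of analyst time spent on false positives
    (25% is the figure quoted in the text).
    """
    return analysts * fp_time_share


for soc_size in (5, 40):
    lost = fte_lost_to_false_positives(soc_size)
    print(f"{soc_size}-analyst SOC: {lost:.2f} FTE spent on false positives")
```

Because the loss is a fixed share of headcount, the absolute number grows with the team while the ratio stays the same.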

At an average fully-loaded compensation of

More importantly, these aren't junior analysts doing false positive investigation. The analysts with the most expertise—those with 7-15 years of experience, those who understand threat modeling and detection engineering, those who could be building threat intelligence programs—are trapped investigating false positives. They're the ones assigned escalated alerts that passed automated filtering. They're the ones determining whether a suspicious-looking email is actually phishing or legitimate business communication.

This represents a catastrophic misallocation of the organization's most valuable security resource. Expert analysts should be:

- Building threat hunting programs to identify breaches before attackers establish persistent access

- Developing detection capabilities that anticipate emerging attack techniques

- Running tabletop exercises that prepare incident response teams for realistic attack scenarios

- Assessing the security implications of new AI tool adoption across business units

- Creating threat intelligence programs that inform strategic security decisions

- Enabling secure adoption of new communication platforms and business tools

- Analyzing breach incidents to extract lessons applicable across the organization

Instead, they're validating that vendor invoices are legitimate emails and confirming that expense reports from business units aren't phishing.

Capacity Planning and Alert Fatigue

The false positive problem creates an invisible constraint on security operations. When SOCs operate at their true capacity, they're investigating approximately 105-175% of their theoretical maximum throughput before any proactive security work begins. This happens because analysts have some capacity for parallel work, some alerts are investigated quickly, and some investigation time overlaps with other responsibilities.

But this apparent efficiency masks a fundamental problem: the organization has zero capacity for growth in security requirements. Add a new business application with email integration? That's additional alerts your SOC must investigate. Implement new security tools requiring email validation? That's more alerts. Face an emerging threat class requiring investigation? Your SOC is already at maximum capacity before the threat emerges.

Larger organizations don't escape this constraint. They just waste more analyst time at scale. A 40-person SOC losing 10 FTE to false positive investigation is no more efficient than a 5-person SOC losing 1.25 FTE: both lose 25% of their capacity. It's operating under the same structural constraint, just with higher absolute numbers.

This creates alert fatigue in the traditional sense: analyst burnout, missed actual threats buried in alert noise, and security professionals leaving the field because they spend their days validating that emails are legitimate rather than building security capabilities.

But it also creates a more insidious problem: false confidence. When organizations believe they have email security because they've deployed sophisticated detection systems, but those systems are buried under alert fatigue that prevents effective alert investigation, they operate with false confidence that threats are being detected and investigated. Meanwhile, business email compromise attacks slip through because the analyst reviewing the alert spends 33 minutes on a false positive instead of the additional 10 minutes that would be required to validate the suspicious email as an actual compromise attempt.

Part 4: Why Incremental Fixes Fail

The Hiring Trap

When faced with overwhelming alert volume and resource constraints, the intuitive response is straightforward: hire more analysts. More people to investigate alerts means faster alert investigation and more capacity for proactive security work.

This approach fails to address the structural problem. If you hire additional analysts to investigate the same email security alerts, you're scaling the inefficiency rather than solving it. The problem isn't that you have too few analysts to investigate alerts. The problem is that 65% of alerts don't represent actual threats.

Hiring more analysts doesn't change this ratio. It just means more people are investigating false positives. If you're losing 25% of analyst time to false positive investigation with a 5-person team, you're still losing 25% of analyst time with a 40-person team. The cost of the inefficiency scales linearly with headcount.

Moreover, hiring good security analysts—those with the experience and judgment required to investigate sophisticated threats—is a years-long challenge in most markets. The security talent shortage is well-documented. Organizations that try to solve the alert fatigue problem through hiring often discover that their hiring plans move slowly while alert volumes continue increasing.

In the worst cases, hiring more analysts to handle false positive investigation actually reduces security effectiveness. You shift resources from experienced threat hunters (who might catch sophisticated attacks despite alert fatigue) to junior analysts trained only on false positive investigation procedures. Their investigation skills develop around validating legitimate emails, not hunting for threats that bypass detection.

The Detection Tuning Illusion

Another common approach is tuning detection thresholds and rules: reduce sensitivity to decrease false positives, or increase sensitivity to catch more sophisticated attacks. Both approaches encounter the same fundamental limitation.

Email security systems evaluate emails on a single dimension: threat likelihood. A detection engine assigns a risk score based on observed characteristics. High risk scores trigger alerts. Low risk scores pass through to user inboxes.

There exists some threshold where you separate "probably malicious" from "probably legitimate." The problem is that this threshold doesn't correspond to actual threat/legitimate boundaries in a mathematically consistent way.

Consider a personalized spear-phishing email crafted by an AI system. It comes from a domain that passes SPF and DKIM checks (using a legitimate but compromised vendor system). It references specific projects the target executive is working on. It uses formal business language consistent with internal communications. It requests a wire transfer for what sounds like a legitimate business purpose. From a pattern-matching or statistical perspective, this email looks legitimate.

Meanwhile, a legitimate invoice from a new vendor comes from a domain the system has never seen before. The email contains an unusual attachment type. The sender doesn't match any known business relationships. Statistically, this also looks suspicious.

Make your detection threshold aggressive enough to catch the spear-phishing email, and you quarantine legitimate business communications. Make it conservative enough to allow legitimate vendor communications, and the spear-phishing email gets delivered.

This is the fundamental false positive/false negative tradeoff that has haunted email security for two decades. You can't resolve it through threshold tuning because the problem isn't in the threshold. The problem is that systems evaluating only threat signals have no mechanism to validate business legitimacy.
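The threshold dilemma can be made concrete with a toy sweep. The sketch below assumes hypothetical threat scores for the two emails described above (a low score for the AI-crafted spear-phish, a higher one for the new-vendor invoice); the numbers are illustrative assumptions, not measurements from any product.

```python
# Hypothetical single-dimension threat scores (0 = benign, 1 = malicious).
# These values are illustrative assumptions only.
emails = [
    {"name": "AI-crafted spear-phish", "threat_score": 0.20, "malicious": True},
    {"name": "new-vendor invoice", "threat_score": 0.60, "malicious": False},
]


def errors_at(threshold: float) -> list[str]:
    """Names of emails misclassified when alerting on threat_score >= threshold."""
    wrong = []
    for email in emails:
        flagged = email["threat_score"] >= threshold
        if flagged != email["malicious"]:
            wrong.append(email["name"])
    return wrong


# Sweep thresholds from 0.00 to 1.00 and keep any that classify both emails correctly.
perfect_thresholds = [t / 100 for t in range(101) if not errors_at(t / 100)]
print("Thresholds with zero errors:", perfect_thresholds)
```

Whenever a malicious email scores lower than a legitimate one on the single threat axis, every threshold produces at least one error, so the sweep finds no setting that handles both emails correctly.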

The Whitelist Limitation

Some organizations attempt to solve the false positive problem through whitelisting: maintain a list of approved senders, and allow emails from those senders to bypass most detection. Whitelisting reduces false positives by treating whole categories of legitimate email as pre-approved. The obvious problem is that business communications often come from new vendors or unexpected sender addresses that no whitelist covers.

But whitelisting attacks this problem from the wrong angle. It doesn't improve detection. It reduces the scope of emails that require detection. For the emails still subject to detection—new vendors, unexpected communications, unusual requests—the system still faces the original false positive/false negative tradeoff.

Moreover, whitelisting creates a different security problem. If attackers understand that emails from whitelisted vendors bypass additional security controls, they can compromise those vendor accounts and use them to deliver targeted attacks that bypass controls specifically designed to catch compromised accounts.

AI-powered attacks drastically reduce costs to $6.5 per compromised account and increase success rates to 15%, compared to traditional methods. Estimated data based on described economics.

Part 5: The Architectural Root Cause

Single-Dimension Threat Detection

The fundamental architectural limitation of Generation 1 and Generation 2 email security systems is that they evaluate emails on a single dimension: threat indicators. They look for characteristics correlated with attacks and assign risk scores based on detected threat signals.

This architectural choice made sense when attacks were constrained by attacker resources. Threat signals were sufficient because real attacks had identifiable characteristics. Legitimate emails were simply the complement of emails with threat indicators.

But this architecture fails catastrophically when every email is novel and unique. If an attacker can generate unlimited variants, no repeating threat signals exist to detect. The only reliable signal becomes business legitimacy: does this email fit the normal pattern of business communication for this recipient and organization?

Traditional email security systems have no mechanism to evaluate business legitimacy. They can't assess whether:

- The communication pattern matches established organizational relationships

- The request aligns with documented approval workflows

- The sender behavior matches historical norms for that relationship

- The urgency level and request type fit the sender's role and authority

- The communication channel and format align with how this sender usually communicates

Without these dimensions, they can't break the false positive/false negative tradeoff. They must choose between detecting sophisticated attacks (aggressive threshold, high false positives) or preserving business communications (conservative threshold, missed attacks).

The Two-Dimensional Solution

An emerging third generation of email security architecture solves this problem by evaluating emails across two simultaneous dimensions: threat indicators and business legitimacy patterns.

For every incoming email, the system runs parallel investigations:

Threat Signal Collection examines:

- Authentication failures (DKIM, SPF, DMARC)

- Suspicious relay paths and originating infrastructure

- Manipulation tactics (urgency language, authority spoofing)

- Known malicious patterns and behavioral characteristics

- Attachment analysis and payload detection

Business Legitimacy Analysis examines:

- Whether the sender is an established organizational relationship

- Whether the request fits documented approval workflows and processes

- Whether sender behavior matches historical communication patterns

- Whether authority claims in the email match organizational hierarchy

- Whether the communication channel and format align with norms for this sender

A reasoning layer—using large language models as the orchestration architecture—weighs all evidence simultaneously and makes contextual decisions. This is the critical difference from older systems that weighted threat signals without business context.

Consider the personalized spear-phishing example: an email that looks legitimate from a threat signal perspective but represents a sophisticated business email compromise attempt. The threat signal analysis might show clean authentication and standard business language—minimal threat indicators. But business legitimacy analysis can flag that the urgent wire transfer request contradicts established approval workflows (executives don't wire transfer funds without formal approval processes). The reasoning layer integrates these dimensions: "This email has no malicious threat indicators but violates established business legitimacy patterns. Probable attack."

Now consider the legitimate vendor invoice that looks suspicious from a threat signal perspective: unknown sender, new domain, unusual attachment. The threat signal analysis might show moderate threat scores based on these unfamiliar characteristics. But business legitimacy analysis can verify that the email sender is a recently onboarded vendor with a contract on file, the request for payment aligns with established procurement workflows, and the attachment type (PDF invoice) aligns with standard vendor communication. The reasoning layer integrates these dimensions: "This email shows moderate threat signals but aligns perfectly with business legitimacy patterns. Probable legitimate."

By breaking the single-dimension evaluation, these systems break the false positive/false negative tradeoff. They can be aggressive on emails that show strong threat signals or violate business legitimacy patterns, while being permissive toward emails whose business legitimacy can be verified.
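To illustrate how a second dimension changes the outcome, here is a deliberately simplified sketch. It replaces the language-model reasoning layer with a few fixed rules, and the `Evidence` class, the 0-to-1 scales, and the cutoff values are all hypothetical stand-ins chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Evidence:
    threat: float      # 0..1 strength of threat signals (hypothetical scale)
    legitimacy: float  # 0..1 fit with business legitimacy patterns (hypothetical scale)


def decide(e: Evidence, threat_hi: float = 0.8,
           legit_lo: float = 0.3, legit_hi: float = 0.7) -> str:
    """Toy rule set standing in for the reasoning layer; cutoffs are assumptions."""
    if e.legitimacy < legit_lo:
        # Violates business legitimacy patterns, even if threat signals are clean.
        return "probable attack"
    if e.threat >= threat_hi:
        return "probable attack"
    if e.legitimacy >= legit_hi:
        # Moderate threat signals, but the business context checks out.
        return "probable legitimate"
    return "needs review"


spear_phish = Evidence(threat=0.1, legitimacy=0.1)      # clean signals, breaks workflow
vendor_invoice = Evidence(threat=0.5, legitimacy=0.9)   # unfamiliar sender, contract on file
print(decide(spear_phish))
print(decide(vendor_invoice))
```

The point of the sketch is only that the two example emails, which a single threat score cannot separate, fall into different regions once legitimacy is scored independently. A real reasoning layer would weigh evidence contextually rather than apply fixed cutoffs.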

Part 6: The Role of Language Models in Email Security

Beyond Bolt-On Features

Many traditional email security vendors have added language models to their products as a feature—a tool for analyzing email content or writing detection rules. These are bolt-on features that don't fundamentally change the architectural approach.

The distinction is critical. Using a language model to extract suspicious keywords from an email is still single-dimension threat detection. Using a language model to analyze email content and identify social engineering patterns is still looking for threat signals.

Reasoning-based email security uses language models differently. Language models become the orchestration architecture itself: the system that simultaneously evaluates the threat and legitimacy dimensions, weighs evidence, and makes contextual decisions.

This requires different prompt engineering, different integration patterns, and fundamentally different reasoning processes. Rather than asking a language model "Is this email suspicious?", reasoning-based systems ask "Given these threat indicators and these legitimacy patterns, what's the most likely explanation for this email?"
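As a rough illustration of that shift in framing, the sketch below assembles a prompt that presents both evidence dimensions side by side. The function name and prompt layout are hypothetical, and no model is called; it shows only how the question changes from "is this suspicious?" to "what best explains this evidence?".

```python
def build_reasoning_prompt(threat_indicators: list[str],
                           legitimacy_patterns: list[str]) -> str:
    """Assemble a prompt that lists both evidence dimensions (hypothetical layout)."""

    def bullets(items: list[str]) -> str:
        # Fall back to an explicit marker so an empty dimension is still visible.
        return "\n".join(f"- {item}" for item in items) or "- (none observed)"

    parts = [
        "Threat indicators:",
        bullets(threat_indicators),
        "",
        "Business legitimacy patterns:",
        bullets(legitimacy_patterns),
        "",
        "Given these threat indicators and these legitimacy patterns, "
        "what is the most likely explanation for this email? Explain the reasoning.",
    ]
    return "\n".join(parts)


prompt = build_reasoning_prompt(
    threat_indicators=["urgency language", "wire transfer request"],
    legitimacy_patterns=["request bypasses the documented approval workflow"],
)
print(prompt)
```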

The language model becomes a reasoning engine that:

- Evaluates conflicting signals (high threat indicators vs. strong legitimacy patterns)

- Contextualizes individual characteristics within organizational norms

- Identifies threat patterns that don't match historical training data

- Explains decisions in human-understandable reasoning

- Applies organizational policies to specific email scenarios

This is fundamentally different from threat signal detection.

Reasoning About Unknown Attacks

One of the most powerful aspects of reasoning-based architecture is the ability to reason about attacks that don't match any known pattern. This was impossible in previous generations.

Pattern-matching systems can only detect attacks they've seen before. Machine learning systems can only identify attacks with characteristics statistically similar to known attacks. Neither can reason about novel attacks.

Reasoning-based systems can apply logical reasoning to novel attack patterns. For example, consider an attack that uses:

- A legitimate domain (borrowed from a compromised vendor system)

- Legitimate attachment types (standard business documents)

- Legitimate business language and context

- Standard business requests (approvals, information sharing)

- But violates a specific organizational policy (requesting sensitive information to be sent externally)

This attack might have no known precedent and no statistical characteristics that would distinguish it from legitimate communication. A pattern-matching or machine learning system would likely miss it entirely.

But a reasoning-based system can apply organizational policy knowledge: "This email requests sensitive information be sent to an external address. Our organizational policy requires that sensitive information transfers go through secure channels with formal approval. This violates known policy. Probable attack."

The reasoning isn't based on threat signal detection. It's based on contextual understanding of organizational norms and policies.
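The policy reasoning in this example can be approximated as an explicit check. The sketch below is a simplification under stated assumptions: the `EmailRequest` fields, the `INTERNAL_DOMAINS` set, and the placeholder domain are all hypothetical, and a reasoning-based system would derive these facts from the email and organizational context rather than receive them as booleans.

```python
from dataclasses import dataclass

# Hypothetical set of domains the organization treats as internal.
INTERNAL_DOMAINS = {"example.com"}


@dataclass
class EmailRequest:
    requests_sensitive_data: bool  # does the email ask for sensitive information?
    destination_domain: str        # where the information would be sent
    has_formal_approval: bool      # is there an approval on record for the transfer?


def violates_transfer_policy(req: EmailRequest) -> bool:
    """True when sensitive data would leave the organization without formal approval."""
    external = req.destination_domain.lower() not in INTERNAL_DOMAINS
    return req.requests_sensitive_data and external and not req.has_formal_approval


novel_attack = EmailRequest(
    requests_sensitive_data=True,
    destination_domain="partner-mail.example.net",
    has_formal_approval=False,
)
print(violates_transfer_policy(novel_attack))
```

Nothing in the check depends on the email resembling a previously seen attack, which is why a policy-level signal can still fire when threat signals are clean.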

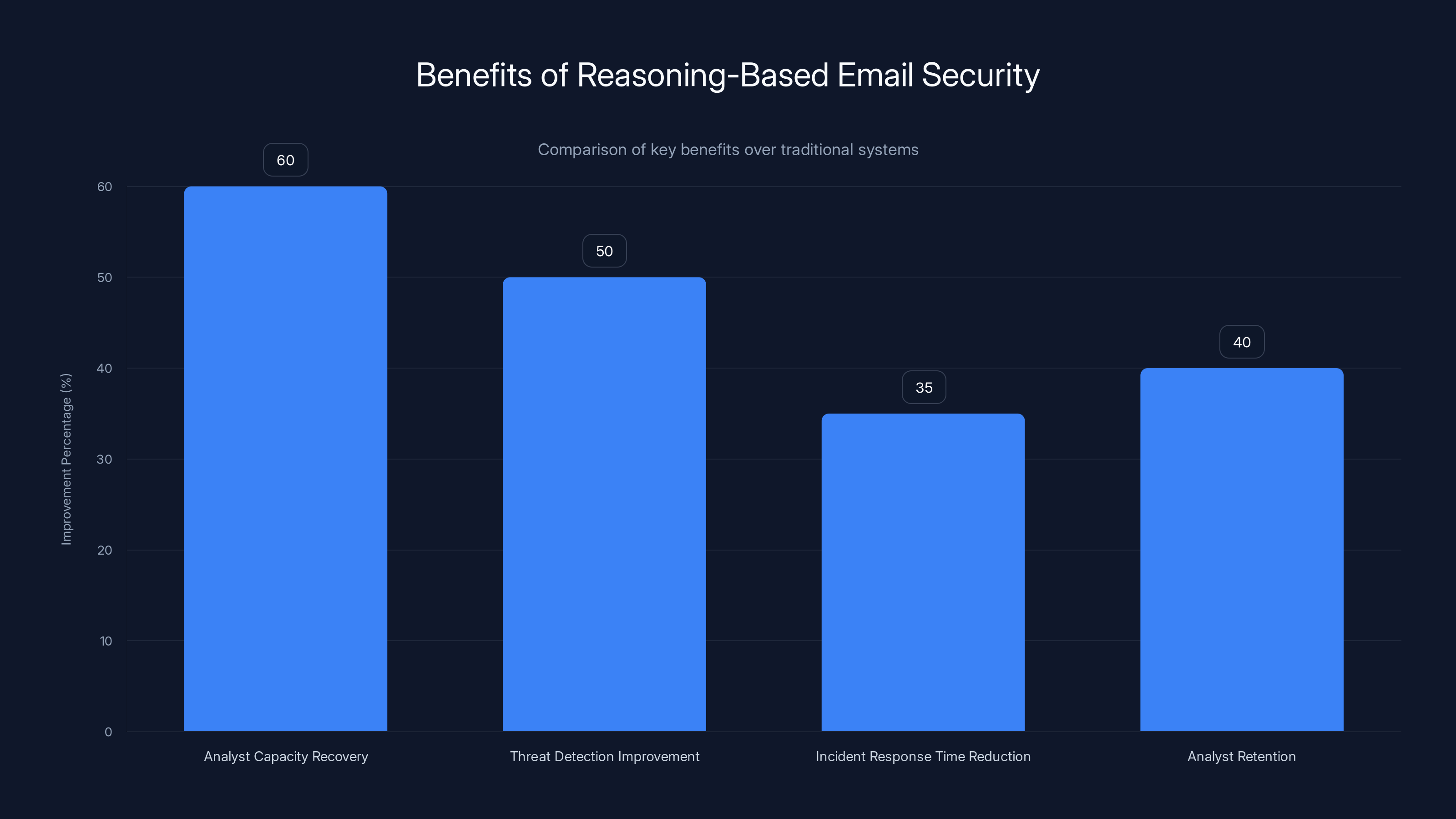

Reasoning-based email security systems can recover 50-70% of analyst capacity, improve threat detection by 50%, reduce incident response time by 30-40%, and enhance analyst retention by 40%. Estimated data.

Part 7: Breaking the False Positive Economics

The Economics of Two-Dimensional Evaluation

When email security systems can evaluate emails across both threat and legitimacy dimensions, the false positive rate drops fundamentally. This isn't incremental improvement. It's an architectural change that breaks the false positive/false negative tradeoff.

Consider the baseline: with single-dimension threat detection, approximately 65% of alerts are false positives. This is a structural artifact of the architecture. You must choose between aggressive detection (high false positives, fewer missed attacks) and conservative detection (low false positives, more missed attacks).

When you add business legitimacy evaluation, emails that appear suspicious but align perfectly with business patterns can be immediately released without investigation. This eliminates a major category of false positives: legitimate business communications that don't match standard threat characteristics.

The net effect is dramatic. Organizations implementing reasoning-based email security typically see false positive rates drop from 65% to 15-20%. This isn't because threat detection becomes less sensitive. It's because legitimate emails no longer trigger alerts when they align with known business patterns.

Consider the mathematical impact on analyst time: if false positives drop from 65% to 20%, and false positive investigation takes 33 minutes on average, the time recovered is substantial.

With 1,000 alerts per month (an illustrative volume for a mid-size organization; the false positive rates above apply to alerts, not to all email):

- Old system: 650 false positives × 33 minutes = 357.5 analyst-hours monthly

- New system: 200 false positives × 33 minutes = 110 analyst-hours monthly

- Time recovered: 247.5 analyst-hours monthly, or roughly 1.5 FTE at about 160 working hours per month

For a 40-person SOC, this represents recovery of approximately 6-7 FTE from false positive investigation. Instead of losing 10 FTE to false positives, the organization loses roughly 3-4 FTE. The ROI on the email security system improves by a factor of 2-3x immediately.
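The arithmetic behind the per-1,000 figures above can be reproduced in a few lines. This is a sketch using only the numbers already given in the text (1,000 alerts, 65% and 20% false positive rates, 33 minutes per investigation). The function name is illustrative.

```python
def false_positive_hours(alerts: int, fp_rate: float, minutes_each: float = 33.0) -> float:
    """Analyst-hours spent investigating false positives for a given alert volume."""
    return alerts * fp_rate * minutes_each / 60.0


old_hours = false_positive_hours(1_000, 0.65)
new_hours = false_positive_hours(1_000, 0.20)
recovered = old_hours - new_hours

print(f"old: {old_hours:.1f} h, new: {new_hours:.1f} h, recovered: {recovered:.1f} h")
```

Substituting your own alert volume and measured investigation time gives a first-order estimate of the capacity at stake before any vendor evaluation.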

Confidence Scores and Investigation Prioritization

Beyond simple false positive reduction, reasoning-based systems provide something equally valuable: confidence scores that enable intelligent investigation prioritization.

Older systems provided threat scores: a numerical representation of threat likelihood. These scores were arbitrary in many ways. A score of 7.5/10 might represent 75% likelihood of being phishing according to the system's learned patterns, but this doesn't translate to useful investigation priority.

Reasoning-based systems provide confidence scores based on explicit reasoning: "This email shows high threat indicators (spoofed domain, urgency language, credential request) and also violates established business legitimacy patterns (unusual request type, contradicts workflow, sender not authorized). High confidence this is an attack." Or: "This email shows low threat indicators and aligns with business legitimacy patterns across all dimensions. High confidence this is legitimate."

These confidence scores map directly to investigation priority and investigation methodology. High-confidence alerts get investigated immediately. High-confidence legitimacy gets auto-released without investigation.

Mid-confidence emails—those with conflicting signals or insufficient context—get routed to investigation but with context about what specific dimensions require examination. An analyst investigating an email flagged as "moderate threat with strong legitimacy patterns" knows to focus investigation on whether the legitimacy patterns are accurate rather than spending time on threat signal analysis.

This changes the investigation experience fundamentally. Analysts still investigate suspicious emails, but they investigate with explicit reasoning that guides their investigation process.
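The routing logic described in this section can be sketched as a function of two scores. The 0-to-1 score scale, the `high` and `low` thresholds, and the action names are assumptions made for illustration. The text does not specify how any real system represents confidence.

```python
def route(threat: float, legitimacy: float, high: float = 0.8, low: float = 0.2) -> dict:
    """Route an email from two scores in [0, 1]; thresholds are illustrative."""
    if threat >= high and legitimacy <= low:
        # Both dimensions agree the message is an attack.
        return {"action": "investigate_now", "focus": None}
    if threat <= low and legitimacy >= high:
        # Both dimensions agree the message is legitimate.
        return {"action": "auto_release", "focus": None}
    # Conflicting or weak signals: send to an analyst with a hint about what to check.
    if legitimacy >= high:
        focus = "verify the legitimacy patterns"
    elif threat >= high:
        focus = "verify the threat indicators"
    else:
        focus = "insufficient context on both dimensions"
    return {"action": "investigate", "focus": focus}


print(route(threat=0.5, legitimacy=0.9))
```

The `focus` field is the part a single threat score cannot supply: it tells the analyst which dimension the system is unsure about.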

Part 8: Implementation Considerations and Organizational Impact

Integration with Existing Email Infrastructure

Implementing reasoning-based email security requires different integration patterns than traditional systems. Rather than deploying a new detection engine that merely sits alongside existing systems, organizations need to restructure how email flows through their security stack.

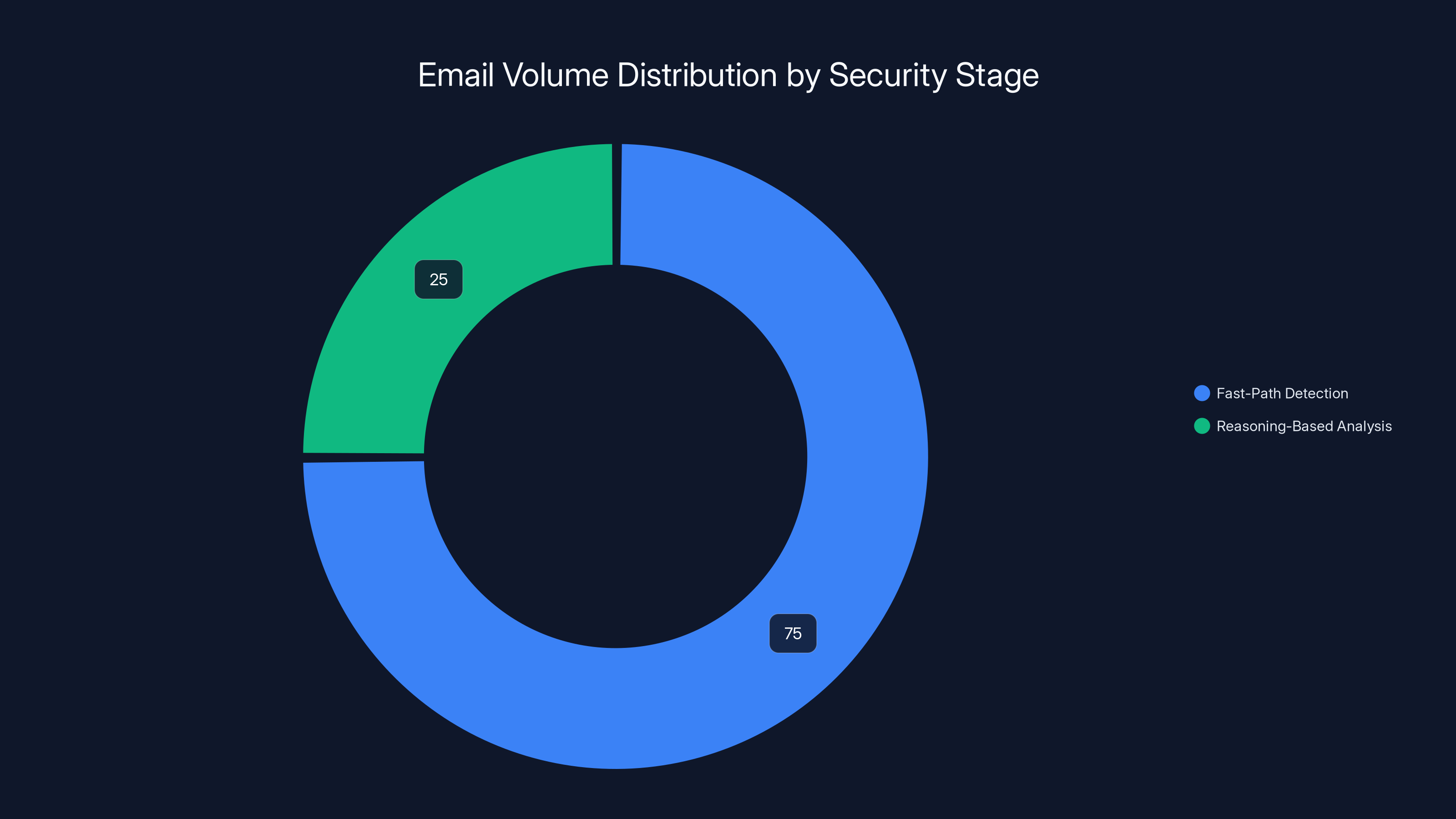

The most effective implementations use a two-stage approach:

Stage 1: Fast-Path Detection runs basic threat checks (malware detection, credential harvesting patterns) and immediately releases emails that show no threat indicators and align with business legitimacy. This stage completes in milliseconds and handles 70-80% of daily email volume.

Stage 2: Reasoning-Based Analysis applies complex reasoning to emails that show any threat indicator or legitimacy concern. This stage is more compute-intensive but only processes 20-30% of volume, making the performance impact acceptable.

This staged approach maintains email delivery speed while enabling sophisticated analysis. Organizations typically see minimal impact to email latency while dramatically improving detection and reducing false positives.
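A minimal sketch of the two-stage flow follows. It assumes a dictionary as the message representation and uses placeholder checks. `risky_attachment`, `unknown_sender`, and `placeholder_reasoning` are hypothetical stand-ins, not components of any specific product.

```python
from typing import Callable

Email = dict  # hypothetical message representation for this sketch


def two_stage(email: Email,
              fast_checks: list[Callable[[Email], bool]],
              deep_analysis: Callable[[Email], str]) -> str:
    """Release mail that trips no fast check; send the rest to deeper analysis."""
    if not any(check(email) for check in fast_checks):
        return "release"
    return deep_analysis(email)


def risky_attachment(email: Email) -> bool:
    # Cheap threat check on the attachment extension.
    return email.get("attachment_ext") in {".exe", ".js", ".scr"}


def unknown_sender(email: Email) -> bool:
    # Cheap legitimacy check: has this sender been seen before?
    return not email.get("sender_known", False)


def placeholder_reasoning(email: Email) -> str:
    # Stand-in for the compute-intensive reasoning stage.
    return "needs_review"


checks = [risky_attachment, unknown_sender]
print(two_stage({"sender_known": True}, checks, placeholder_reasoning))
print(two_stage({"sender_known": False}, checks, placeholder_reasoning))
```

Because `any` stops at the first check that fires, the expensive stage runs only for the minority of messages that raise a threat or legitimacy concern.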

Organizational Workflow Changes

Migrating to reasoning-based email security requires changes to how SOCs handle alerts and escalations. The most significant change is the shift from investigating all alerts to investigating only alerts that meet specific confidence thresholds.

Organizations typically implement a three-tier investigation framework:

Tier 1: Auto-Release - Emails flagged as high-confidence legitimate, no analyst investigation required. These are released to user inboxes immediately. Typically includes 15-20% of emails.

Tier 2: Standard Investigation - Emails with moderate confidence scores (threat and legitimacy signals conflict, or context is insufficient). Analysts investigate using the provided reasoning context. Typically 10-15% of emails.

Tier 3: Escalation - Emails with high-confidence threat assessment, likely representing active attacks. Escalated to senior analysts and security leadership. Typically 1-2% of emails.

This framework eliminates most of the false positive investigation burden while increasing the organization's focus on actual threats. Tier 3 emails (genuine threats) get investigated more thoroughly because Tier 1 (legitimate emails) no longer consumes analyst time and Tier 2 investigations move faster with the provided reasoning context.

Training and Competency Development

SOC analysts transitioning to reasoning-based email security need different skills than traditional alert investigation. Rather than becoming expert pattern-matchers and rule-tuners, they develop competency in:

- Understanding organizational workflows and approval processes

- Validating reasoning provided by the system against business context

- Identifying gaps in legitimacy pattern detection (new vendors, unusual workflows)

- Providing feedback that improves reasoning for edge cases

- Responding to sophisticated attacks that defeat both threat and legitimacy detection

This shift often requires 2-4 weeks of transition training and ongoing capability development as analysts internalize how to work with reasoning-based systems.

The positive side: many analysts find this work more engaging than false positive investigation. They're building security knowledge about their organization's workflows, understanding threats in business context, and developing expertise in threat hunting rather than alert response.

Increasing the number of analysts does not reduce the percentage of time lost to false positives, maintaining a consistent inefficiency level. Estimated data.

Part 9: Cost-Benefit Analysis and ROI

Direct Cost Savings

The immediate ROI from implementing reasoning-based email security comes from analyst time recovered from false positive investigation.

Baseline scenario: 40-person SOC, 65% false positive rate, 33 minutes per investigation, 1,000 daily emails

- Monthly false positive investigations: 19,500 (650 per day × 30 days)

- Monthly analyst capacity spent on false positives: valued at a $125/hour average loaded rate

- Recovered capacity through false positive reduction: $1,200,000 monthly

- Annual savings: $14.4M

These are conservative estimates. Many organizations see higher false positive rates, more daily emails, or higher analyst compensation, which increases savings proportionally.

Additionally, organizations typically recover another 10-15% of analyst capacity through faster investigation of remaining alerts. Alerts that previously took 33 minutes might take 12-15 minutes when analysts have explicit reasoning context from the system.

Indirect Benefits and Risk Reduction

Beyond analyst time savings, organizations realize several indirect benefits:

Reduced Breach Risk: By eliminating alert fatigue and enabling more thorough investigation of genuine threats, organizations catch compromises earlier in the attack chain. Gartner research suggests that improving threat investigation speed and thoroughness typically reduces breach response time by 30-40% and reduces average breach cost.

Improved Analyst Retention: Security analyst burnout from alert fatigue is a major driver of talent loss in the industry. Organizations that eliminate false positive investigation typically see 15-25% improvements in security analyst retention, reducing costly recruiting and training cycles.

Faster Incident Response: With analyst capacity recovered, organizations can implement proactive threat hunting and faster incident response. This typically reduces dwell time (the time attackers remain in a system before detection) by 20-30%.

Improved Business Operations: Users experience fewer false positive quarantines of legitimate business emails. Help desk tickets related to email not arriving drop 40-60%, reducing business friction.

Regulatory and Compliance Benefits: Faster detection and investigation of email-based attacks strengthens audit trails and compliance documentation, particularly for regulations like HIPAA, GDPR, and SOC 2 that require demonstrated email security controls.

Part 10: Comparing Reasoning-Based Solutions

Key Differentiation Factors

As reasoning-based email security emerges as a category, organizations need evaluation frameworks to compare solutions. Key differentiation factors include:

Reasoning Depth: Does the system apply simple rule-based reasoning (if threat indicator then alert) or sophisticated multi-dimensional reasoning that weighs conflicting signals and applies organizational context? Solutions that apply deep reasoning dramatically outperform rule-based alternatives.

Business Context Integration: Can the system access and leverage organizational knowledge about workflows, approval processes, organizational hierarchy, and established business relationships? Solutions that integrate this context see significantly lower false positive rates.

Language Model Architecture: Is the language model a bolt-on feature or the core orchestration engine? Solutions where the LLM serves as the central reasoning architecture perform better on novel attacks.

Investigation Support: Does the system provide reasoning explanations that guide analyst investigation, or does it simply provide threat scores? Systems that provide detailed reasoning context significantly reduce investigation time for flagged emails.

Tuning and Customization: Can the system learn organizational-specific patterns and policies? Solutions that allow customization to specific organizational contexts perform better than one-size-fits-all systems.

Integration Maturity: How mature is integration with existing email infrastructure? Solutions with shallow API integration often perform worse than solutions designed for deep integration with modern email systems.

Performance Benchmarks

When evaluating reasoning-based solutions, organizations should examine published benchmarks on:

| Metric | Good Performance | Excellent Performance |
|---|---|---|
| False Positive Rate | 20-30% | 10-15% |
| Detection of Known Attacks | >90% | >95% |
| Detection of Novel Attacks | 60-70% | 75-85% |
| Average Investigation Time | 15-20 minutes | 8-12 minutes |
| Email Latency Impact | <50ms | <20ms |
| SOC Integration Complexity | Moderate | Low |

These benchmarks represent realistic expectations for mature reasoning-based systems. Solutions performing significantly worse on any dimension likely have architectural limitations worth investigating.
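During a proof of concept, measured results can be graded against the quantitative rows of the table. The sketch below assumes inclusive cut points and treats values that fall between the table's "good" and "excellent" ranges as "good". Both choices, and the metric key names, are assumptions of this example rather than part of the benchmarks.

```python
# (good, excellent) cut points read from the benchmark table. Inclusive
# boundaries and the handling of in-between values are assumptions here.
LOWER_IS_BETTER = {
    "false_positive_rate_pct": (30.0, 15.0),
    "avg_investigation_minutes": (20.0, 12.0),
    "latency_ms": (50.0, 20.0),
}
HIGHER_IS_BETTER = {
    "known_attack_detection_pct": (90.0, 95.0),
    "novel_attack_detection_pct": (60.0, 75.0),
}


def grade(metric: str, value: float) -> str:
    """Return 'excellent', 'good', or 'below benchmark' for one measured value."""
    if metric in LOWER_IS_BETTER:
        good, excellent = LOWER_IS_BETTER[metric]
        if value <= excellent:
            return "excellent"
        return "good" if value <= good else "below benchmark"
    good, excellent = HIGHER_IS_BETTER[metric]
    if value >= excellent:
        return "excellent"
    return "good" if value >= good else "below benchmark"


measured = {"false_positive_rate_pct": 18.0, "latency_ms": 35.0, "novel_attack_detection_pct": 40.0}
for name, value in measured.items():
    print(name, grade(name, value))
```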

Estimated data shows that 75% of emails are processed through fast-path detection, while 25% undergo reasoning-based analysis, balancing speed and security.

Part 11: Common Implementation Challenges and Solutions

Challenge 1: Organizational Workflow Documentation

Reasoning-based systems require explicit documentation of organizational workflows and approval processes. Many organizations have undocumented or partially documented workflows, particularly around emergency procedures and executive decision-making.

Solution: Implement a phased documentation approach. Start with the highest-risk workflows (financial approvals, user account provisioning, sensitive data access) and document iteratively as the system encounters new workflow patterns. The system can flag potential undocumented workflows during operation, which helps identify documentation gaps.

Challenge 2: False Legitimacy Patterns

Business legitimacy patterns can themselves be targets for attack. If attackers understand that emails from certain senders or to certain recipients bypass additional scrutiny, they can compromise those accounts and use them as trusted relay points.

Solution: Implement anomaly detection for business legitimacy patterns themselves. If an established vendor suddenly sends emails to an unusual recipient or requests unusual information, that's a legitimacy pattern violation that signals potential compromise. Reasoning-based systems should flag these anomalies.
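One simple way to express this idea is to keep a per-sender baseline and flag departures from it. The `VendorBaseline` class below is a hypothetical sketch that tracks only recipients and request types. A production system would presumably weigh many more attributes and tolerate gradual change.

```python
from collections import defaultdict


class VendorBaseline:
    """Tracks which recipients and request types each sender has used before."""

    def __init__(self) -> None:
        self._recipients = defaultdict(set)
        self._request_types = defaultdict(set)

    def observe(self, sender: str, recipient: str, request_type: str) -> None:
        """Record one message that was confirmed legitimate."""
        self._recipients[sender].add(recipient)
        self._request_types[sender].add(request_type)

    def anomalies(self, sender: str, recipient: str, request_type: str) -> list[str]:
        """List the ways a new message departs from the sender's history."""
        found = []
        if recipient not in self._recipients.get(sender, set()):
            found.append("unusual recipient")
        if request_type not in self._request_types.get(sender, set()):
            found.append("unusual request type")
        return found


baseline = VendorBaseline()
baseline.observe("ap@vendor.example", "finance@example.com", "invoice")
print(baseline.anomalies("ap@vendor.example", "hr@example.com", "employee_records"))
```

A trusted sender with an empty anomaly list keeps its fast treatment. The same sender asking a new recipient for a new kind of information loses it.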

Challenge 3: Feedback Loop Quality

Reasoning-based systems improve when analysts provide feedback about investigation outcomes: "This was a legitimate business email" or "This was an actual attack that was missed." Poor-quality feedback or insufficient feedback volume can limit improvement.

Solution: Implement structured feedback mechanisms that make providing quality feedback easy. Many organizations use:

- Quick-action buttons for common feedback scenarios

- Required feedback classification for escalated emails

- Regular analyst training on feedback importance

- Automated feedback collection from email user actions (forwarding, replying, etc.)

Challenge 4: Change Management and Analyst Resistance

Analysts trained on pattern-matching and traditional investigation may initially resist transitioning to reasoning-based systems. The different investigation methodology and reduced alert volume can feel unsettling at first.

Solution: Implement comprehensive change management:

- Extensive hands-on training with realistic scenarios

- Quick-wins period where analysts see obvious false positive elimination

- Regular communication about threat detection improvements

- Career development opportunities around new skills (workflow knowledge, threat hunting)

- Gradual transition period with overlapping old and new systems

Part 12: The Future Evolution of Email Security

Predictive Threat Assessment

Next-generation reasoning-based systems are moving beyond reactive threat assessment to predictive analysis. Rather than evaluating only the email that arrived, they assess the likelihood that this email represents the beginning of a multi-stage attack campaign.

For example, a single suspicious email requesting information about a specific employee might be of minor concern. But a pattern of multiple emails across different users requesting information about the same employee suggests reconnaissance for a targeted attack. Predictive systems can identify these patterns and escalate accordingly.

This requires sophisticated analysis of:

- Attack campaign patterns from threat intelligence

- Historical compromise patterns in similar organizations

- Behavioral clustering of related emails

- Geographic, temporal, and technical indicators suggesting coordinated attacks
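The reconnaissance example above (several users receiving questions about the same employee) can be sketched as a windowed count of distinct recipients. The event tuple layout, the seven-day window, and the three-recipient threshold are illustrative assumptions.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def reconnaissance_targets(events, window=timedelta(days=7), min_recipients=3):
    """Return employees asked about in emails sent to several recipients.

    `events` is an iterable of (timestamp, recipient, employee_mentioned) tuples
    describing suspicious emails. The result maps each flagged employee to the
    number of distinct recipients seen inside one window.
    """
    by_target = defaultdict(list)
    for ts, recipient, target in events:
        by_target[target].append((ts, recipient))

    flagged = {}
    for target, hits in by_target.items():
        hits.sort()
        for i, (start, _) in enumerate(hits):
            # Distinct recipients contacted within `window` of this email.
            recipients = {r for ts, r in hits[i:] if ts - start <= window}
            if len(recipients) >= min_recipients:
                flagged[target] = len(recipients)
                break
    return flagged


day0 = datetime(2024, 1, 1)
sample = [
    (day0, "a@example.com", "j.doe"),
    (day0 + timedelta(days=1), "b@example.com", "j.doe"),
    (day0 + timedelta(days=3), "c@example.com", "j.doe"),
    (day0, "a@example.com", "k.lee"),
]
print(reconnaissance_targets(sample))
```

Each email on its own would be a minor concern. The escalation comes from grouping by the person being asked about rather than by sender or recipient.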

Automated Response and Isolation

As reasoning-based systems achieve higher confidence in threat assessment, organizations are beginning to implement automated response for high-confidence attacks: quarantine, user notification, and even automatic password reset for potentially compromised accounts.

These automations dramatically reduce response time. Traditional email security systems would detect the attack, alert analysts, who would investigate and decide whether to take action. Automated response based on high-confidence threat assessment can quarantine dangerous emails within seconds.

This requires extremely high confidence thresholds and extensive testing, but mature organizations are seeing significant benefits from this capability.

Cross-Channel Threat Assessment

Future systems will likely evaluate threats across multiple communication channels—not just email, but Slack, Teams, SMS, and other channels where business communication happens. Sophisticated attackers are diversifying their approaches across channels, and defense must follow.

Reasoning-based architectures are well-suited to this expansion because the core reasoning principles (threat signals + business legitimacy patterns) apply across channels. A Slack message can be evaluated the same way as an email: does it show threat indicators? Does it align with business legitimacy patterns?

Part 13: Alternative Solutions and Competitive Approaches

Security Awareness and User Training

Some organizations approach email security from the user defense perspective: train employees to recognize phishing and social engineering attacks. User training is an important defensive layer, but it has fundamental limitations.

User training works well for obvious attacks (fake Netflix password reset requests, obvious impersonations). It works poorly for personalized spear-phishing crafted with knowledge of the target's role, projects, and organizational context. Research shows that even security-trained users are fooled by sophisticated personalized attacks at rates of 15-25%.

User training should complement, not replace, email security. The combination of trained users and sophisticated email detection provides the strongest defense.

Email Encryption and Digital Signatures

Some approaches focus on verifying email authenticity through encryption and digital signatures. End-to-end encryption and strong digital signatures do prevent certain attacks (domain spoofing, email forgery). They don't prevent social engineering attacks where the email comes from an actual, legitimate sender (compromised account) requesting unauthorized actions.

These technologies are valuable components of comprehensive email security but insufficient as standalone solutions.

Vendor Diversification and Multiple Detection Layers

Some organizations deploy multiple email security solutions from different vendors in parallel, assuming that different vendors' detection approaches will catch different attacks.

This approach has merit but significant limitations. Stacked detection layers compound false positives: if each system wrongly flags 30% of the mail it evaluates and an alert from either one triggers investigation, two layers together flag roughly 51% when their errors are independent and up to 60% when their errors never overlap, and each additional layer pushes the figure higher. Additionally, maintaining and tuning multiple systems creates operational complexity that often outweighs the detection benefits.

A single, sophisticated reasoning-based system typically outperforms multiple simpler systems because it provides lower false positives and simpler operations.
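How quickly stacking compounds can be checked with a short calculation. The sketch assumes every layer wrongly flags the same share of mail, that the layers err independently, and that an alert from any layer triggers investigation. Real products' errors are correlated, so treat the result as a rough guide rather than a prediction.

```python
def stacked_flag_rate(per_layer_rate: float, layers: int) -> float:
    """Share of mail flagged by at least one of several independent layers."""
    return 1.0 - (1.0 - per_layer_rate) ** layers


for n in (1, 2, 3):
    print(n, f"{stacked_flag_rate(0.30, n):.1%}")
```

Under the independence assumption, two layers at 30% flag about half of what they see and three layers flag roughly two thirds. Simply adding the per-layer rates gives the upper bound for the case where the layers' errors never overlap.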

Platform-Native Security Features

Cloud email platforms (Microsoft 365, Google Workspace) increasingly incorporate security features directly into their platforms. These platform-native features have the advantage of deep integration and access to user and organizational context.

Platform-native security is often sufficient for organizations with relatively straightforward email security needs. However, as attacks become more sophisticated, platform-native features typically lack the sophisticated reasoning capabilities of specialized email security solutions. Organizations with high-value targets, sophisticated threat actors, or regulatory requirements typically benefit from supplementing platform-native security with specialized solutions.

Part 14: Building Your Email Security Strategy

Assessment Framework

Organizations considering transition to reasoning-based email security should first assess current state:

Measurement 1: Alert Volume and False Positive Rate

- How many email security alerts does your SOC investigate daily?

- What percentage are actually malicious? (Most organizations don't know—this is worth measuring)

- How much analyst time do false positives consume?

Measurement 2: Incident Response Metrics

- How long from first phishing email to detection and user notification?

- How many business email compromises succeed before detection?

- What's your average dwell time (time from breach to detection)?

Measurement 3: Operational Health

- What percentage of SOC capacity is dedicated to email security?

- What's your analyst turnover rate?

- How many critical security projects are delayed due to alert volume?

These measurements provide baseline understanding of current email security economics and ROI.
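The first measurement can be computed from an export of investigated alerts. The sketch below assumes each record carries a `malicious` flag set by the investigating analyst and the `minutes` spent. Both field names are hypothetical and should be mapped to whatever your ticketing or SIEM export provides.

```python
def baseline_metrics(alerts: list[dict]) -> dict:
    """Summarize investigated alerts: volume, false positive rate, hours lost."""
    total = len(alerts)
    false_positives = [a for a in alerts if not a["malicious"]]
    return {
        "alerts": total,
        "false_positive_rate": len(false_positives) / total if total else 0.0,
        "false_positive_hours": sum(a["minutes"] for a in false_positives) / 60.0,
    }


log = [
    {"malicious": False, "minutes": 30},
    {"malicious": False, "minutes": 36},
    {"malicious": True, "minutes": 90},
    {"malicious": False, "minutes": 24},
]
print(baseline_metrics(log))
```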

Evaluation Process

When evaluating specific email security solutions, use a structured evaluation process:

- Define Requirements: Based on your assessment, define specific requirements for false positive rate, threat detection capabilities, required analyst workflow changes, and so on.

- Proof of Concept: Request a PoC with realistic email volume and threat samples. Evaluate both false positive rate and threat detection performance.

- Integration Assessment: Evaluate integration requirements and operational impact. How much does the current email flow change? What staff training is required?

- TCO Analysis: Calculate total cost of ownership including software costs, integration costs, training costs, and analyst time changes. Compare to baseline.

- Risk Assessment: Consider the risks of transition. Will the new system miss attacks during the transition period? How will you validate that threat detection improves?

Migration Planning

A phased migration approach reduces risk:

Phase 1: Parallel Operation - Run new system alongside existing email security for 2-4 weeks. Compare alert generation, investigate discrepancies, validate that new system performs as expected.

Phase 2: Gradual Transition - Move a subset of email traffic through new system while maintaining existing system for all traffic. Gradually increase percentage over 2-4 weeks.

Phase 3: Full Transition - Move all email traffic through new system. Maintain existing system in receive-only mode for 2-4 weeks to validate no degradation.

Phase 4: Optimization - Remove existing system once new system operates stably. Begin optimization to leverage new capabilities.

This phased approach takes 8-12 weeks total but significantly reduces migration risk.
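During the parallel-operation phase, the comparison of alert generation reduces to finding messages on which the two systems disagree. A minimal sketch, assuming each system's verdicts can be exported as a mapping from message ID to verdict string (the IDs and verdict labels here are hypothetical):

```python
def verdict_discrepancies(old: dict[str, str], new: dict[str, str]) -> dict[str, tuple[str, str]]:
    """Map message id -> (old verdict, new verdict) where the systems disagree."""
    return {
        msg_id: (old[msg_id], new[msg_id])
        for msg_id in old.keys() & new.keys()
        if old[msg_id] != new[msg_id]
    }


old_system = {"m1": "alert", "m2": "release", "m3": "alert"}
new_system = {"m1": "release", "m2": "release", "m3": "alert", "m4": "alert"}
print(verdict_discrepancies(old_system, new_system))
```

Only messages seen by both systems are compared. Each disagreement is a candidate for manual review: either a false positive the new system removed or a threat it failed to flag.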

Part 15: Real-World Implementation and Results

Case Study 1: Mid-Market Financial Services

A mid-market financial services company (150 employees) was experiencing 45 email security alerts daily, with approximately 70% being false positives. Their 2-person SOC was spending approximately 4 hours daily investigating false positives—essentially their entire security capacity.

After implementing reasoning-based email security with organization-specific workflow customization:

- False positive rate dropped from 70% to 18%

- Daily analyst time on email decreased from 4 hours to 45 minutes

- Recovered analyst capacity was redirected to threat hunting and incident response preparation

- Detection of genuine threats improved because analysts could focus on actual threats

- Analyst retention improved as security team members engaged in more meaningful work

Total implementation cost was

Case Study 2: Enterprise Technology Company

A large technology company (2,000+ employees) was losing approximately 10 FTE worth of analyst capacity ($1.2M annually) to false positive investigation. Their 35-person SOC was operating at 110% capacity—security leadership couldn't staff new initiatives.

After implementing reasoning-based email security across their environment:

- False positive rate decreased from 62% to 22%

- Recovered analyst capacity: approximately 5 FTE (worth $600K annually)

- Threat hunting initiatives that were previously impossible due to capacity constraints were implemented

- Incident response time improved by an average of 35% (detection-to-action time fell from 2.5 hours to 1.6 hours)

- Analyst turnover decreased from 18% annually to 8% annually

Total implementation cost was

Part 16: Addressing Common Objections and Concerns

Objection 1: "This will miss sophisticated attacks"

This is understandable caution. Won't a system focused on business legitimacy miss novel, sophisticated attacks?

In practice, the opposite is true. Current systems miss sophisticated attacks because analysts are buried under false positives. By eliminating false positive burden, reasoning-based systems allow analysts to focus on sophisticated threats that don't match obvious patterns.

Additionally, sophisticated attacks often come from compromised legitimate accounts (business email compromise). These attacks come from legitimate sender addresses with legitimate authentication. Pattern-matching and machine learning systems typically miss these entirely because they have no malicious characteristics. Reasoning-based systems can catch these by identifying that legitimate senders are requesting unusual actions or violating workflow patterns.

The net effect is that reasoning-based systems typically detect sophisticated attacks better than traditional systems, not worse.

Objection 2: "Implementing this requires too much organizational knowledge"

Reasoning-based systems do require knowledge of organizational workflows and approval processes. Doesn't this create implementation burden?

Implementation does require some workflow documentation, but this is typically a one-time effort that provides ongoing benefits. Additionally, modern systems can learn workflows from historical email patterns rather than requiring explicit documentation from scratch. The system can observe that financial approvals always follow a specific workflow and learn these patterns automatically.

The operational value of this knowledge is substantial—it's not just for email security, but also enables compliance auditing, process optimization, and governance enforcement.

Objection 3: "Machine learning and AI are hype; will this actually work?"

Legitimate skepticism about AI hype is warranted. However, the reasoning-based architecture described here is substantively different from typical "AI" marketing.

Reasoning-based email security applies language models to a concrete, well-defined problem: evaluate emails across threat and legitimacy dimensions using organizational context. This is a specific application of AI technology to a business problem, not a generic "AI-powered" feature.

Proof of capability is straightforward: measure false positive rates, threat detection performance, and analyst time before and after implementation. These are concrete, measurable metrics that definitively show whether the system works.

Part 17: Organizational Readiness and Change Management

Readiness Assessment

Not all organizations are ready to transition to reasoning-based email security immediately. Organizational readiness depends on several factors:

Factor 1: Process Maturity - Organizations with highly documented, standardized processes (financial approval workflows, user provisioning, etc.) are better positioned to implement reasoning-based systems. Organizations with highly ad-hoc, undocumented processes face higher implementation complexity.

Factor 2: Email Volume Stability - Organizations with relatively stable, predictable email patterns (stable workforce, stable business units) are easier to transition than organizations with constant change (acquisitions, reorganizations).

Factor 3: SOC Capability - SOCs with sophisticated analysts capable of understanding reasoning and providing feedback are better positioned for success than SOCs with junior analysts only trained on alert response.

Factor 4: Leadership Support - Migration to reasoning-based systems requires organizational change and analyst retraining. Leadership support for this change is critical.

Organizations lacking readiness in multiple areas may benefit from pre-implementation planning to build readiness before migration.

Change Management Strategy

Successful implementation requires change management across several dimensions:

Stakeholder Communication: Executive stakeholders need understanding that email security effectiveness improves through analyst time recovery (enabling threat hunting), not just through reduced alert volume. This requires clear communication of strategy and expected outcomes.

SOC Culture: Security operations teams need to understand that reduced alert volume isn't a sign of reduced security—it's a sign of improved security. This mindset shift is crucial. Teams need education about how false positive elimination improves threat detection.

Business Unit Engagement: Business units impacted by email security (finance, legal, human resources) should be engaged to help define workflows and legitimacy patterns. This creates alignment and improves implementation success.

Continuous Improvement: The system should be treated as continually improving, not static. Regular review of false positives, threat detection gaps, and workflow changes ensures the system adapts to evolving business needs.

Part 18: Industry Trends and Future Outlook

The Evolution of Email as Attack Vector

Email continues to be the primary attack vector despite decades of security investment. Why? Because email combines several properties that attackers prize: direct access to targets, high trust (business users check email multiple times daily), integration with other systems (calendar, file sharing, authentication), and resistance to security controls (business legitimacy makes detection difficult).

The increasing sophistication of AI-powered attacks doesn't suggest that email will become less important as an attack vector. Rather, it suggests that traditional defense approaches will become increasingly obsolete. Organizations using pre-AI detection architectures against AI-powered attacks will face increasing compromise rates.

Conversely, organizations that migrate to reasoning-based email security now position themselves advantageously for the threat landscape of the next 5-10 years.

The Business Email Compromise Problem

Business email compromise (BEC)—attacks that compromise legitimate business email accounts and use them to request fraudulent wire transfers or sensitive information—is now the highest-impact email threat. These attacks:

- Originate from legitimate sender addresses

- Use legitimate authentication

- Request actions that fit organizational workflows (wire transfers, information requests)

- Generate minimal threat signals that traditional detection systems can identify

BEC typically gets caught only when the recipient validates the request through an out-of-band channel (phone call, in-person conversation) before complying. Traditional email security systems provide minimal protection.

Reasoning-based systems provide meaningful protection against BEC by identifying that the request, while legitimate-appearing, violates established workflows or authorization patterns. This converts BEC from an essentially undetectable threat to a detectable threat.

As BEC losses continue to grow (the FBI's Internet Crime Complaint Center has reported more than $50 billion in cumulative exposed losses worldwide since 2013), the advantage of reasoning-based detection becomes increasingly valuable.

The Convergence of Email and Other Channels

Sophisticated threat actors increasingly diversify attacks across multiple communication channels: email, Slack, Teams, SMS, phone. Defense must follow.

Reasoning-based architectures, because they're built on general principles (threat signals + legitimacy patterns) rather than email-specific heuristics, extend to other channels naturally. A Teams message can be evaluated the same way as an email: does it show threat signals? Does it align with business patterns?

We should expect to see reasoning-based defense extended across communication channels over the next 3-5 years.

Conclusion: Restructuring Email Security Economics

Email security has reached an inflection point. Traditional architectures—pattern-matching and machine learning systems designed to detect repeating attack patterns—fail catastrophically against AI-powered attacks that generate unlimited unique variants. The result is predictable: overwhelming alert volume, analyst burnout, and staggering cost for minimal security improvement.

The root cause isn't in detection sensitivity or tuning. It's architectural. Systems that evaluate only threat signals face an insurmountable false positive/false negative tradeoff. They must choose between aggressive detection (high false positives, analyst burnout) and conservative detection (missed attacks).

Reasoning-based email security breaks this tradeoff by evaluating emails across two dimensions simultaneously: threat indicators and business legitimacy patterns. By adding business context to threat evaluation, these systems achieve something that wasn't possible before: they can sharply reduce false positives while improving threat detection.
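The two-dimensional idea can be made concrete with a small decision function. This is a sketch under stated assumptions: both scores are taken to be normalized to the range 0 to 1, the 0.5 cutoff is arbitrary, and the three-way outcome is just one plausible way to combine the dimensions, not a documented algorithm.

```python
from enum import Enum


class Verdict(Enum):
    DELIVER = "deliver"
    REVIEW = "review"
    QUARANTINE = "quarantine"


def verdict(threat: float, legitimacy: float, cutoff: float = 0.5) -> Verdict:
    """Combine a threat score and a business-legitimacy score, both in [0, 1].

    The cutoff is illustrative; a real deployment would tune it.
    """
    if not (0.0 <= threat <= 1.0 and 0.0 <= legitimacy <= 1.0):
        raise ValueError("scores must be in [0, 1]")
    if threat >= cutoff and legitimacy < cutoff:
        return Verdict.QUARANTINE   # looks malicious and fits no known workflow
    if threat < cutoff and legitimacy >= cutoff:
        return Verdict.DELIVER      # looks benign and fits known workflows
    return Verdict.REVIEW           # signals conflict, or both are weak


print(verdict(0.9, 0.1))  # strong threat, no business context
print(verdict(0.1, 0.1))  # BEC-like: nothing malicious, but no legitimacy either
```

A system that scores threat alone has only the first argument to work with, so the low-threat, low-legitimacy case in the last line would pass through unexamined.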

The economic impact is substantial. Organizations migrating from traditional to reasoning-based email security typically:

- Recover 50-70% of analyst capacity previously consumed by false positive investigation

- Reduce SOC analyst burnout and improve retention

- Improve detection of sophisticated attacks by freeing analysts to focus on genuine threats

- Decrease incident response time by 30-40%

- Achieve ROI of 200-500% in the first year

These aren't marginal improvements. They're fundamental restructuring of email security economics.

For IT leaders managing flat or declining security budgets, the choice is clear: continue investing in systems that create overwhelming alert volume and require more headcount to manage, or transition to architectures that achieve better security outcomes with fewer resources.

The transition requires organizational change and short-term implementation effort. But the alternative—continuing to operate with pre-AI detection architectures against AI-powered threats—is increasingly untenable.

Email security has become a resource allocation problem. The solution isn't more analysts or more aggressive detection. It's better architecture that makes analyst time productive by cutting the false positive burden and focusing effort on genuine threats.

For organizations making this transition now, the competitive advantage is significant. For organizations delaying this transition, the costs—in analyst burnout, in missed threats, in security incidents—will continue accumulating until architectural change becomes unavoidable.

The future of email security isn't in pattern-matching or machine learning. It's in reasoning-based architecture that combines threat detection with business context, that eliminates the false positive/false negative tradeoff, and that restores email security to economic viability as a security control.

FAQ

What is artificial intelligence-powered email threat detection?

AI-powered email threat detection uses large language models and reasoning engines to evaluate incoming emails across multiple dimensions simultaneously—threat indicators (malicious characteristics) and business legitimacy patterns (alignment with organizational norms). Unlike traditional pattern-matching systems that look only for threat signals, reasoning-based systems weigh all evidence contextually to determine whether an email represents an actual threat, distinguishing sophisticated social engineering attacks from legitimate business communications.

How does reasoning-based email security differ from traditional machine learning detection?

Traditional machine learning systems evaluate emails on a single dimension: threat likelihood, based on characteristics statistically similar to known phishing attacks. Reasoning-based systems evaluate emails on two dimensions simultaneously—threat indicators and business legitimacy—and use language models as orchestration architecture to weigh conflicting signals and apply organizational context. This architectural difference breaks the false positive/false negative tradeoff that has plagued email security for decades, enabling simultaneous improvement in detection accuracy and reduction in false positives.

What are the primary benefits of transitioning to reasoning-based email security?

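The primary benefits include recovering 50-70% of analyst capacity previously consumed by false positive investigation, reducing analyst burnout and improving retention, improving detection of sophisticated attacks, decreasing incident response time by 30-40%, and achieving a first-year ROI of 200-500%.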

Why do traditional email security systems have such high false positive rates?

Traditional systems evaluate emails only for threat characteristics (suspicious domains, malicious URLs, authentication failures) but have no mechanism to validate whether a communication is legitimate from a business perspective. This creates an unsolvable tradeoff: make detection aggressive to catch sophisticated attacks, and legitimate business emails get quarantined; make detection conservative to preserve business communications, and novel attacks slip through. Because approximately 65% of flagged emails show some suspicious characteristic (new sender, unfamiliar domain, urgent language) yet are completely legitimate, false positive rates remain structurally high in traditional systems.

How do reasoning-based systems handle novel, AI-generated attacks that have no historical precedent?

Reasoning-based systems apply logical reasoning about organizational policies and workflows rather than relying on historical attack patterns. For example, a sophisticated spear-phishing email requesting sensitive information to be sent to an external address can be flagged because it violates the organization's policy requiring sensitive information transfers through secure channels with formal approval, even if the email has no other threat characteristics. This reasoning capability enables detection of attacks that traditional pattern-matching and machine learning systems would miss entirely.

What organizational changes are required to implement reasoning-based email security?

Implementation requires explicit documentation or learning of organizational workflows (approval processes, business relationships, role-based authority patterns) to enable business legitimacy evaluation. Most organizations can operationalize this through a combination of explicit documentation and automated pattern learning from historical email. SOC workflows also change: instead of investigating all alerts, analysts investigate only those with specific threat or legitimacy concerns, guided by explicit reasoning about why the email was flagged. These changes typically require 2-4 weeks of analyst training and 8-12 weeks of phased migration from existing systems.

How does reasoning-based email security protect against business email compromise attacks?

Business email compromise attacks use compromised legitimate accounts and have minimal threat signals (legitimate sender, legitimate authentication, normal language). Traditional systems typically cannot detect these. Reasoning-based systems flag them by identifying that the request violates established workflows or authorization patterns. For example, a legitimate executive's email requesting an unusual wire transfer to an unfamiliar recipient violates established financial approval workflows, which enables detection even though the email has no threat characteristics. This converts BEC from an essentially undetectable threat to a detectable one.

What false positive rate can organizations expect after implementing reasoning-based email security?

Organizations typically see false positive rates decrease from approximately 65% (traditional systems) to 15-25% (reasoning-based systems). This reduction varies based on how thoroughly organizational workflows and business relationships are documented—organizations with mature process documentation see rates toward the lower end (15-20%), while those with less documentation see rates toward the higher end (20-25%). Even at the higher end, the reduction represents significant analyst time recovery and improvement in alert quality.

How do reasoning-based systems avoid becoming targets for false legitimacy pattern attacks?

Advanced reasoning-based systems monitor business legitimacy patterns themselves for anomalies. If an established, trusted vendor suddenly begins sending emails to unusual recipients or requesting unusual information, this represents a violation of the vendor's normal pattern and signals potential account compromise. These pattern violations trigger investigation even though the sender is normally trusted. This requires understanding that compromise of trusted accounts is a threat, not just compromise of untrusted accounts.
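Monitoring a trusted sender's own pattern can be illustrated with a simple baseline of who each sender normally writes to. The class below is a hypothetical sketch: the `SenderBaseline` name, the `min_history` parameter, and the choice to track only recipients (rather than request types, timing, or content) are assumptions made to keep the example short.

```python
from __future__ import annotations


class SenderBaseline:
    """Tracks which recipients each sender has historically written to."""

    def __init__(self, min_history: int = 20) -> None:
        self._recipients: dict[str, set[str]] = {}
        self._counts: dict[str, int] = {}
        self.min_history = min_history  # messages needed before judging a sender

    def observe(self, sender: str, recipient: str) -> None:
        """Record one historical message from sender to recipient."""
        self._recipients.setdefault(sender, set()).add(recipient)
        self._counts[sender] = self._counts.get(sender, 0) + 1

    def is_deviation(self, sender: str, recipient: str) -> bool:
        """True if an established sender writes to someone outside their pattern."""
        if self._counts.get(sender, 0) < self.min_history:
            return False  # not enough history to call anything unusual
        return recipient not in self._recipients[sender]


baseline = SenderBaseline(min_history=3)
for _ in range(3):
    baseline.observe("billing@vendor.example", "ap@corp.example")

# The vendor is trusted, but this recipient is outside its normal pattern.
print(baseline.is_deviation("billing@vendor.example", "ceo@corp.example"))
```

Note that trust in the sender never suppresses the check. The deviation is computed against the sender's own history, which is what lets a compromised but normally trusted account stand out.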

What is the typical implementation timeline and cost for reasoning-based email security?

Implementation timelines typically range from 8-12 weeks, including 2-4 weeks of proof of concept, 4-8 weeks of phased migration, and 2-4 weeks of optimization. Costs vary by organization size: mid-market organizations (100-500 employees) typically invest

How do organizations measure improvement after transitioning to reasoning-based email security?

Key metrics include false positive rate (measured as percentage of alerts investigated that don't represent actual threats), threat detection rate (percentage of real attacks detected before reaching users), analyst time spent on email security alerts (should decrease by 50-70%), incident response time from detection to action (should improve by 30-40%), and analyst retention (typically improves 10-15%). Organizations should establish baseline measurements before implementation and measure quarterly for 12 months to validate ROI and identify optimization opportunities.
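The first two metrics follow directly from their definitions above, and the before/after comparison is a relative change against the baseline. The sketch below assumes a hypothetical `AlertStats` record per measurement period; the field names and the sample numbers are invented for illustration and are not measurements from any deployment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertStats:
    """Counts for one measurement period (hypothetical record shape)."""
    alerts_investigated: int
    alerts_true_threat: int   # investigated alerts that were real threats
    attacks_total: int        # all real attacks known for the period
    attacks_detected: int     # attacks caught before reaching users


def false_positive_rate(s: AlertStats) -> float:
    """Share of investigated alerts that were not actual threats."""
    if s.alerts_investigated == 0:
        raise ValueError("no alerts investigated in this period")
    return (s.alerts_investigated - s.alerts_true_threat) / s.alerts_investigated


def detection_rate(s: AlertStats) -> float:
    """Share of real attacks detected before reaching users."""
    if s.attacks_total == 0:
        raise ValueError("no known attacks in this period")
    return s.attacks_detected / s.attacks_total


def relative_change(baseline: float, current: float) -> float:
    """Fractional change versus baseline; negative means a decrease."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline


# Invented sample numbers for one quarter.
q1 = AlertStats(alerts_investigated=200, alerts_true_threat=70,
                attacks_total=80, attacks_detected=72)
print(f"false positive rate: {false_positive_rate(q1):.0%}")
print(f"detection rate: {detection_rate(q1):.0%}")
```

Capturing the same record before implementation and then each quarter makes the comparison a matter of calling `relative_change` on the two values.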

Key Takeaways

- AI has eliminated attacker resource constraints, enabling unlimited unique email variants that traditional detection systems cannot match based on patterns

- Traditional email security systems face an unsolvable false positive/false negative tradeoff because they evaluate only threat signals without business context

- 65% of email security alerts are false positives consuming 25% of analyst time, representing a $1.7M annual cost for mid-to-large enterprises

- Reasoning-based architecture evaluates emails across two dimensions (threat signals + business legitimacy) simultaneously, breaking the traditional tradeoff

- Organizations transitioning to reasoning-based systems recover 50-70% of analyst capacity while improving threat detection and incident response

- Business email compromise attacks, which have minimal threat signals, are efficiently detected by reasoning-based systems evaluating workflow violations

- Implementation requires organizational workflow documentation but achieves 200-500% first-year ROI through analyst time recovery alone

- Reasoning-based systems can detect novel AI-generated attacks by applying logical reasoning about organizational policies rather than relying on historical patterns
