When AI Ethics Collides With National Security

Last year, Anthropic became the first major AI company cleared by the US government for classified use, including military applications. It was supposed to be a victory for responsible AI development. Then the Pentagon got angry.

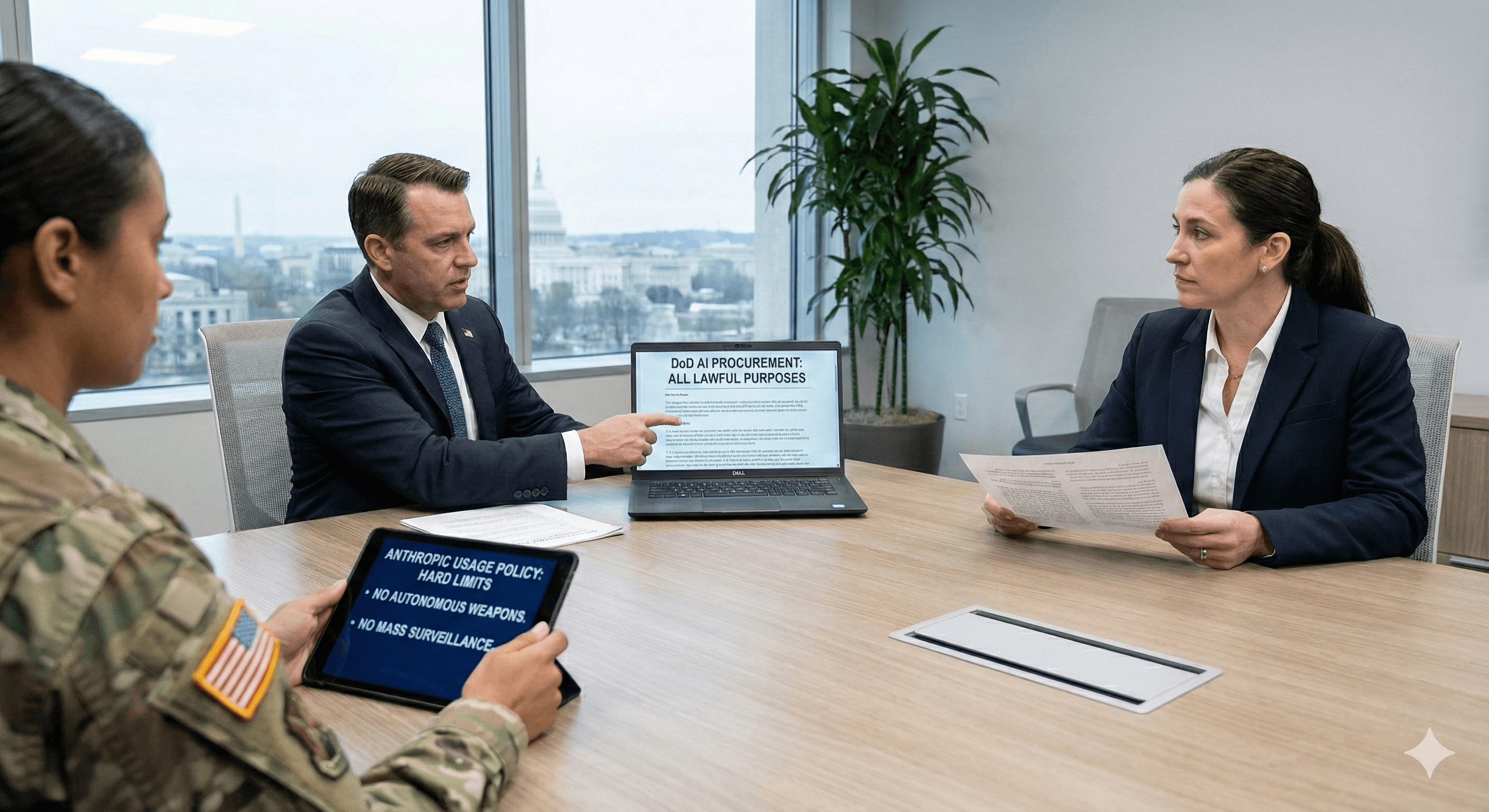

This week, the Department of Defense signaled it's reconsidering its relationship with the company over a $200 million contract. The reason? Anthropic refuses to let its AI models be used for autonomous weapons systems and government surveillance. The Pentagon views this as a deal-breaker. They're now considering designating Anthropic as a "supply chain risk," a classification usually reserved for companies doing business with adversarial nations like China. That label would effectively blacklist Anthropic from defense work across the entire industry, as reported by Reuters.

This isn't just an Anthropic problem. It's a fundamental collision between two American values: the desire to build safe AI that respects human autonomy, and the need to maintain military superiority in an increasingly dangerous world. And right now, the Pentagon is winning.

"Our nation requires that our partners be willing to help our warfighters win in any fight," said Pentagon spokesperson Sean Parnell in a statement to WIRED. "Ultimately, this is about our troops and the safety of the American people." Translation: If you want to work with the Pentagon, you don't get to pick and choose how your technology gets used. You build for victory, period.

The conflict reveals something ugly about where we're headed. The same companies racing to develop safe, aligned AI are being pressured to create weapons systems that could operate without meaningful human control. The same researchers who built safeguards specifically to prevent misuse are now being asked to disable those guardrails for military applications.

It's not a theoretical problem. This is happening right now.

The Anthropic Principle: Safety First, Everything Else Second

When Dario Amodei and his team founded Anthropic in 2021, they had a specific mission: build AI systems with safety so deeply embedded that bad actors couldn't exploit them, no matter how hard they tried. This wasn't a marketing angle. It was the company's entire reason for existing.

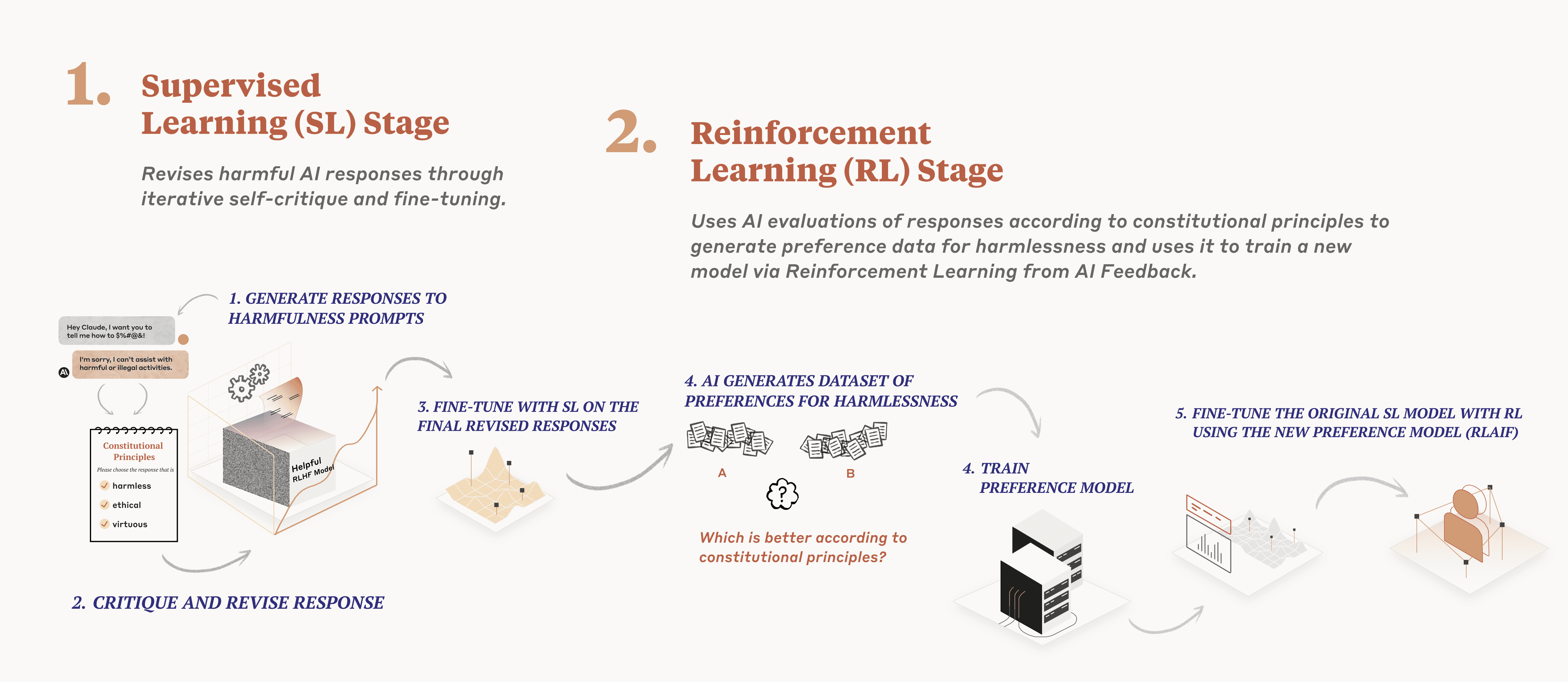

Anthropic's founding thesis rests on something called Constitutional AI. Instead of training models to optimize for whatever humans ask, Claude (their flagship model) is trained to follow a set of principles that constrain its behavior. The company calls it a "constitution." It works something like Isaac Asimov's Three Laws of Robotics, except actually practical and built into the training process itself.

The concept is elegant: encode values into the model's weights during training so that misuse becomes harder, not just discouraged. Claude won't help you design weapons. It won't help you build surveillance systems that violate privacy. It won't generate content for disinformation campaigns. These aren't policy decisions Anthropic can toggle on and off. They're baked into how the model thinks.

This approach gained Anthropic respect in the AI safety community. Researchers who've spent years worried about superintelligence killing everyone finally found a company that seemed to take those concerns seriously. In a world where OpenAI pivoted toward profits and Elon Musk's xAI promised speed over safety, Anthropic looked like the grownups in the room.

But safety has a cost. It makes your technology less useful for certain applications. Specifically, less useful for applications where you want a system that will kill people efficiently and without hesitation.

In June 2024, the State Department approved Anthropic for classified use. The company created a custom version of Claude specifically for US national security customers, called Claude Gov. It included all the safety features Anthropic had engineered into the base model. The company insisted on this. They weren't going to compromise on safety just because they had a government contract.

Dario Amodei was explicit about the boundaries. Claude would not be used in autonomous weapons systems. Claude would not power government surveillance. Claude could assist with national security analysis, threat assessment, and similar work. But not killing machines.

The Pentagon heard all this. And they let it happen anyway.

Then something happened that changed everything.

Anthropic is estimated to place a higher priority on AI safety than peers like OpenAI and xAI. Estimated data.

The Venezuela Incident: When Carve-Outs Become Targets

In late 2024, reports surfaced that Claude was used as part of intelligence operations related to Venezuela's political situation. Specifically, there were allegations that Anthropic's AI model contributed to planning or analysis around an operation targeting Venezuelan President Nicolás Maduro, as detailed by Axios.

Anthropic denied the claim. They said they didn't authorize any such use. But the damage was done.

From the Pentagon's perspective, here's what happened: A company got a lucrative classified contract, insisted on ethical guardrails, then claimed those guardrails were violated without their consent. Either Anthropic couldn't control how its technology was used (a security problem), or they were publicly complaining about government use of their systems (a loyalty problem). Neither option looked good.

More importantly, the incident exposed a fundamental tension in Anthropic's position. They wanted to be the trusted AI vendor to national security agencies. They wanted the prestige and profit that comes with classified contracts. But they also wanted to maintain independence and refuse certain uses.

The Pentagon doesn't negotiate with vendors. It gives orders. And Anthropic's insistence on carve-outs made them look like they were giving conditional support to the government they were supposedly serving.

From Anthropic's perspective, their position was reasonable. They built safety constraints. They shouldn't be forced to disable them for military applications. If the Pentagon needs AI for autonomous weapons, the Pentagon should either accept the constraints or work with a vendor that doesn't have them.

But the government doesn't see it that way. Emil Michael, the Department of Defense CTO (and former Uber executive), made the Pentagon's position crystal clear, as reported by DefenseScoop: "If there's a drone swarm coming out of a military base, what are your options to take it down? If the human reaction time is not fast enough… how are you going to?"

He asked this rhetorically, but the implication was stark: You need AI. You need it fast. You need it without human delays. And if your vendor won't provide that, find a new vendor.

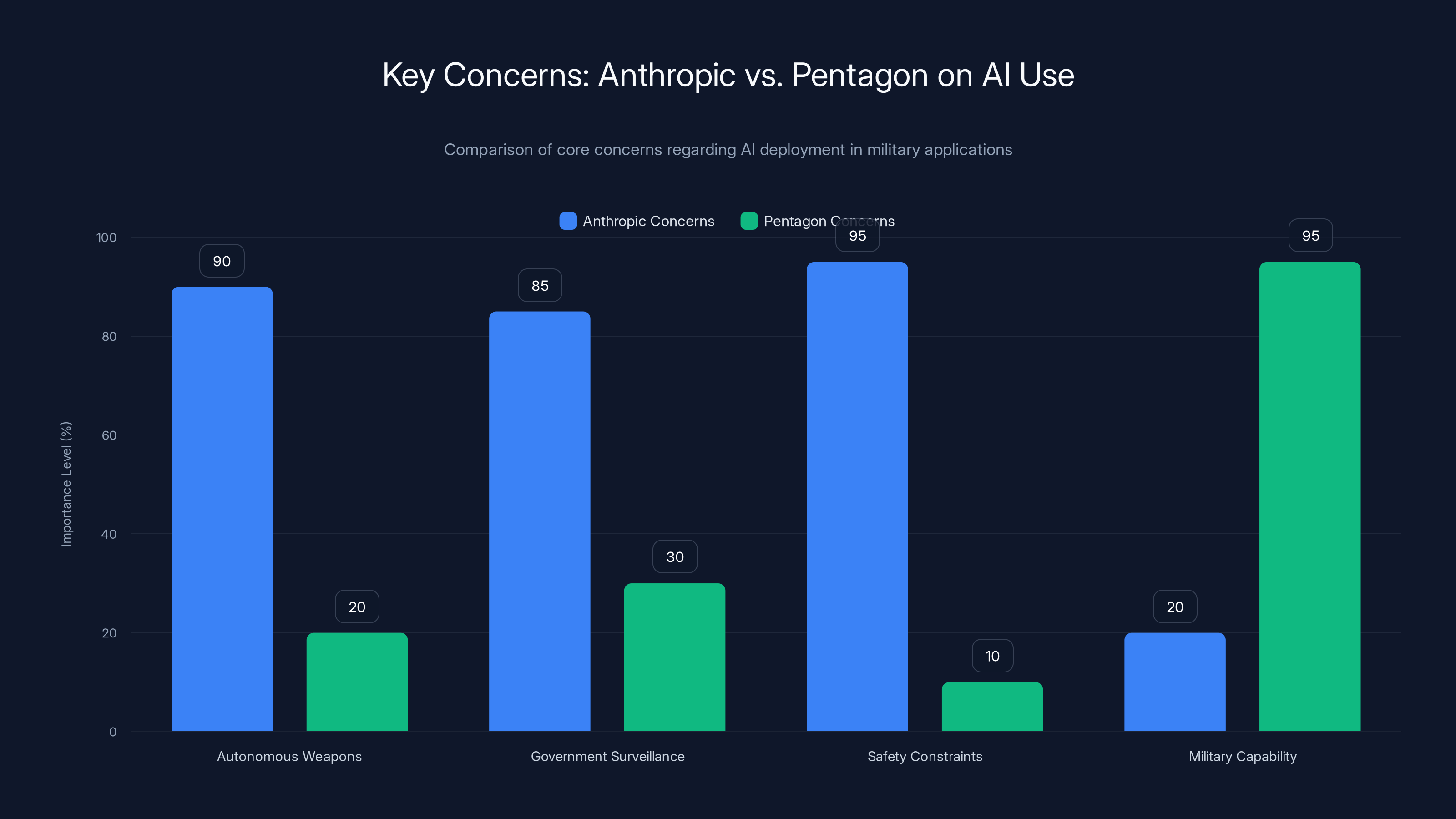

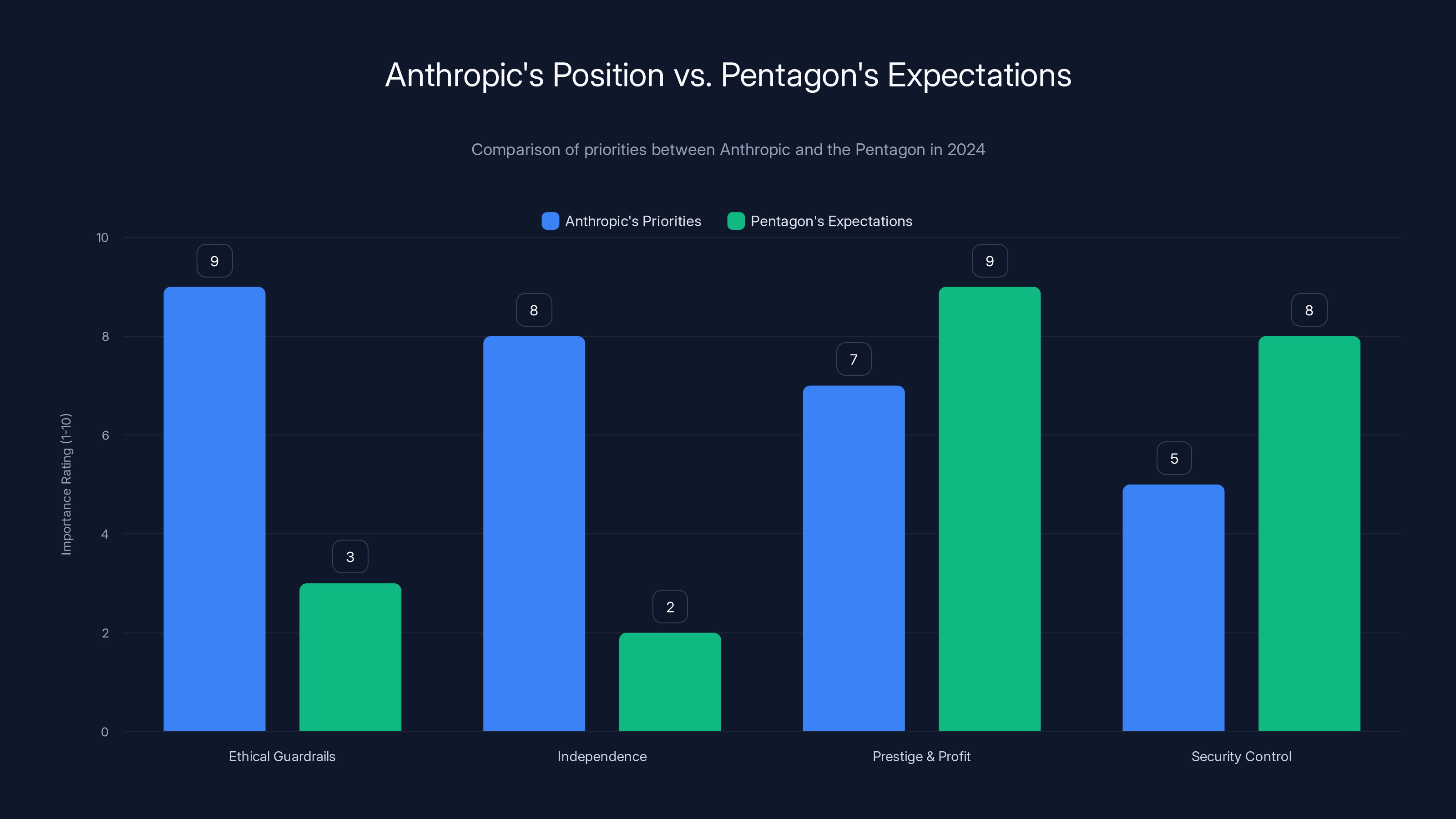

Anthropic prioritizes safety constraints and opposes AI in autonomous weapons and surveillance, while the Pentagon emphasizes military capability and unrestricted AI use. Estimated data based on narrative.

The Contradiction at the Heart of Defense AI

Here's the problem nobody wants to say out loud: building safe, aligned AI and building effective military AI might be fundamentally incompatible goals.

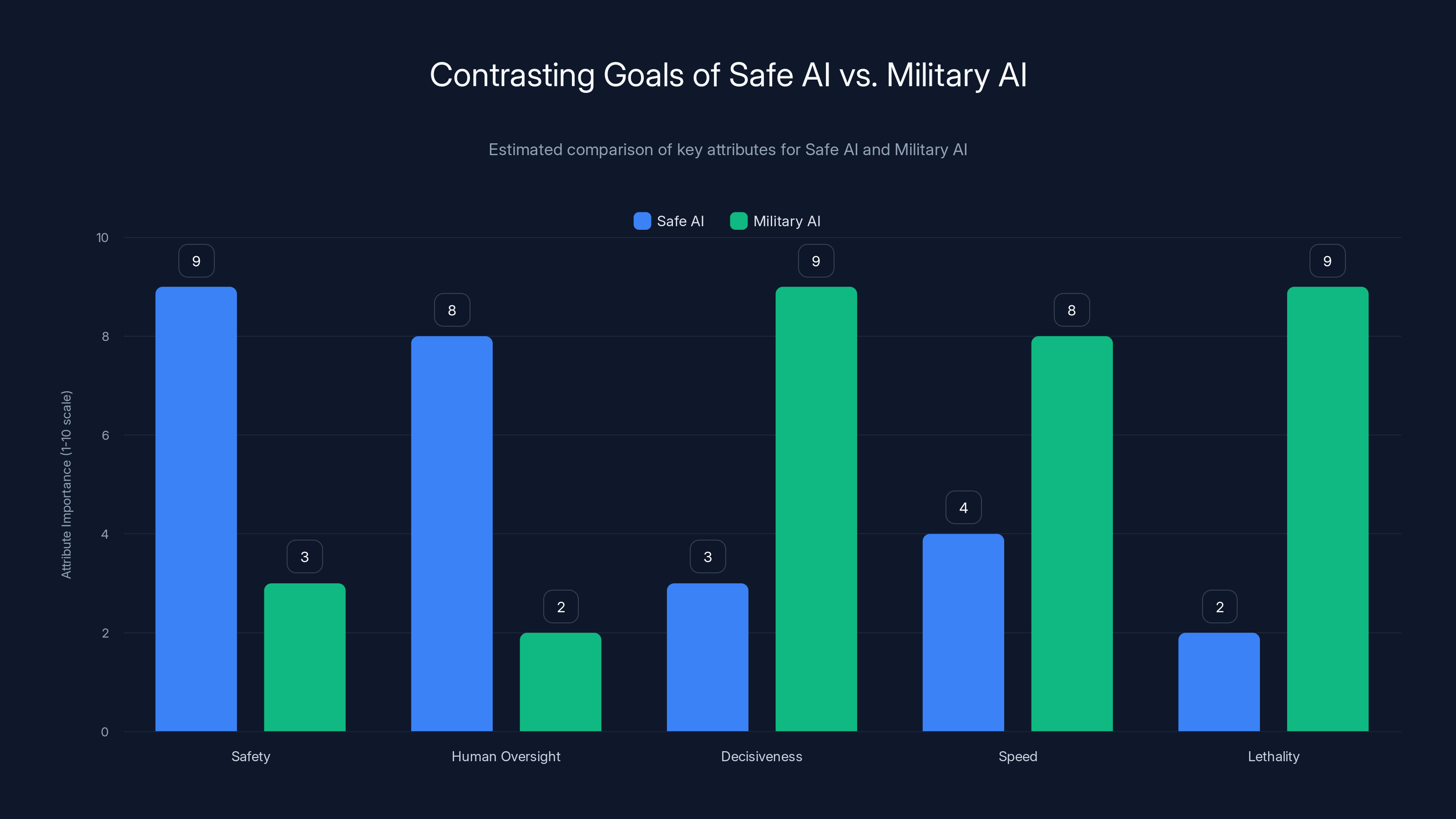

Safe AI is built with constraints. It asks "Is this harmful?" before acting. It has built-in uncertainty. It defers to humans when decisions are complex. Effective military AI does the opposite. It's built to act decisively. It's trained to find solutions in ambiguous situations. It's optimized for speed and lethality.

You can build an AI system with both qualities, technically. But you're working against fundamental design principles. It's like asking a race car to be perfectly safe in city traffic. You can add airbags and lane-keeping assist, but at some point the design is compromised.

The Pentagon's argument is compelling if you accept its premises: Great power competition with China requires advanced AI. AI in weapons systems could save American lives by being faster and more accurate than human operators. Therefore, America needs military AI.

Anthropic's argument is also compelling: Unaligned AI systems could cause catastrophic harm. If you build AI specifically to kill things without human oversight, you're moving in the wrong direction. Therefore, you need safeguards.

Both arguments are right. And they're irreconcilable.

When OpenAI signed its contract with the Pentagon, the company was careful not to highlight it. Sam Altman and his team wanted to work with national security agencies, but they didn't want to be seen as a defense contractor. OpenAI understood that being associated with military applications—particularly autonomous weapons—could damage their brand with researchers, customers, and the general public.

But the incentives are pushing every AI company toward military contracts. Google has spent years dealing with protests over its AI work with the military. Microsoft is aggressively pursuing defense contracts. Palantir leaned into it, with CEO Alex Karp openly discussing how their products are "used on occasion to kill people," apparently with pride.

Anthropic tried a different approach. They wanted classified contracts and safety standards simultaneously. They wanted to help national security while maintaining independence from military applications. The Pentagon just showed them that compromise isn't possible.

Why Autonomous Weapons Matter More Than You Think

Let's define the actual stakes here, because "autonomous weapons" gets used as a catch-all term for things ranging from mildly concerning to civilizationally catastrophic.

A system that uses AI to target something humans have already authorized killing? That exists now. That's not autonomous in a meaningful sense. The human is still making the decision to use force. The AI is just executing it faster.

A system that uses AI to identify targets without constant human approval? That's where things get murky. The human set parameters, but the specific decision to engage is delegated. If a drone sees something that matches a threat profile, does it ask for permission or does it engage?

A system that uses AI to identify targets, assess threats, and engage without human involvement in the kill decision? That's where most people would draw the line. That's a genuine autonomous weapon, and it's terrifying.

It's terrifying because of something called the "second-order problem." Your AI can be well-intentioned and still make catastrophic mistakes. It can misidentify civilians as combatants. It can escalate conflicts because it doesn't understand the political consequences of its actions. It can make decisions that seemed reasonable in training but catastrophic in practice.

Most concerning: If both sides are using autonomous weapons, you have a situation where neither side can control what happens. An AI kill-decision by one side could trigger an automated response from the other side, creating a feedback loop that humans can't interrupt. Wars could escalate in minutes.

This isn't speculation. This is what AI safety researchers worry about constantly. The more powerful the system, the worse the potential failure modes. And weapons systems are powerful by definition.

Anthropic's position reflects this concern. They don't want Claude involved in autonomous weapons systems because they understand the risk. They want systems where humans remain in control of the decision to use force.

The Pentagon's position reflects a different concern: If we don't have autonomous AI weapons, and China does, we lose. That's not unreasonable either.
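The three tiers described earlier in this section can be written down as a small decision rule. What follows is only a sketch in Python: the flag names and the `classify` function are hypothetical, invented for illustration, and are not drawn from any military doctrine or from Anthropic's policies. The point is simply that the tiers differ in where the human sits relative to the decision to use force.

```python
from dataclasses import dataclass
from enum import Enum


class AutonomyTier(Enum):
    # Descriptions paraphrase the three cases in the text above.
    HUMAN_AUTHORIZED = "human authorizes each use of force; AI only executes"
    DELEGATED = "human sets parameters; AI makes the specific engagement decision"
    FULLY_AUTONOMOUS = "AI identifies, assesses, and engages with no human in the kill decision"


@dataclass(frozen=True)
class SystemProfile:
    # Hypothetical descriptive flags (assumptions for this sketch only).
    human_authorizes_each_engagement: bool
    human_sets_engagement_parameters: bool


def classify(profile: SystemProfile) -> AutonomyTier:
    """Map a system description onto the article's three tiers."""
    if profile.human_authorizes_each_engagement:
        # A person still makes every decision to use force.
        return AutonomyTier.HUMAN_AUTHORIZED
    if profile.human_sets_engagement_parameters:
        # A person defined the threat profile, but not the individual strike.
        return AutonomyTier.DELEGATED
    # No human involvement in the kill decision at all.
    return AutonomyTier.FULLY_AUTONOMOUS


if __name__ == "__main__":
    drone = SystemProfile(
        human_authorizes_each_engagement=False,
        human_sets_engagement_parameters=True,
    )
    print(classify(drone).name)
```

Under these assumptions, the drone that matches a threat profile and engages without asking lands in the murky middle tier, which is exactly where the dispute over "meaningful human control" lives.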

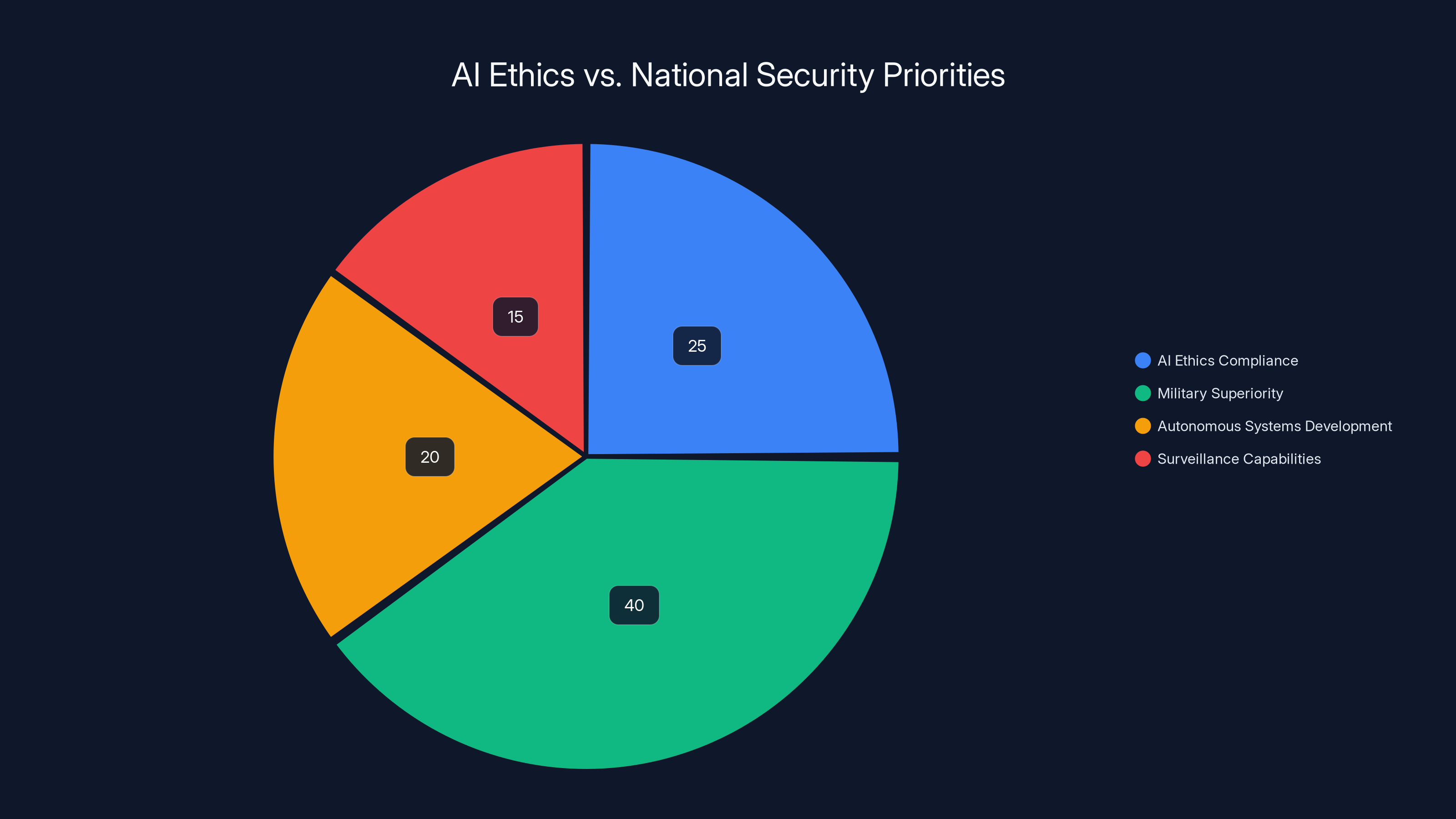

Estimated data shows a significant focus on military superiority (40%) over AI ethics compliance (25%) in defense-related AI projects.

The Surveillance Problem: Control Through Visibility

The other carve-out Anthropic insisted on was government surveillance. Dario Amodei specifically said he didn't want Claude powering surveillance systems.

On the surface, this seems less controversial than weapons. Surveillance doesn't kill anyone. It just watches them. But that misses why surveillance is actually dangerous.

Surveillance systems powered by AI are dangerous because they're efficient at finding patterns, even patterns that don't exist. They're dangerous because they can be used to suppress dissent, target minorities, or control populations. They're dangerous because once built, they're hard to shut down. And they're dangerous because governments that build them don't hesitate to abuse them.

We don't need to speculate about this. We have historical examples. The Soviet Union relied on pervasive state surveillance to identify suspected dissidents. China uses AI-powered surveillance to monitor Uyghur populations. The US has attempted surveillance systems that disproportionately targeted people of color, not through intentional discrimination but through biased data and poorly designed systems.

AI makes all of this easier. A model like Claude can process millions of communications, find suspicious patterns, generate threat profiles, and do it all at a scale no human analyst could match. In the right hands, it's a powerful investigative tool. In the wrong hands, it's a totalitarian dream come true.

Anthropic's concern is that once you build AI specifically for government surveillance, you can't control where it goes or how it's used. You can't promise that a surveillance system designed for counterterrorism won't be repurposed for political suppression. You can't guarantee that features you build for defense won't migrate to enforcement.

Historically, surveillance tools built for one purpose get repurposed. Wiretapping technology built for organized crime got used against civil rights activists. NSA surveillance programs built for foreign intelligence got used domestically. Pattern-matching systems built for financial crime got used for immigration enforcement.

So Anthropic said no. They wouldn't build AI specifically optimized for surveillance. They weren't comfortable with that outcome.

The Pentagon interpreted this as Anthropic choosing their values over national security.

Anthropic interpreted this as the Pentagon trying to pressure them into abandoning their values.

Both interpretations are correct.

The Broader Industry Shift: Safety vs. Power

When Anthropic was founded, other AI companies were still publicly skeptical about working with the military. Tech workers rallied against Google's Project Maven, which used AI for drone targeting. OpenAI explicitly said it wouldn't weaponize its technology.

In 2025, that era is over.

OpenAI, Google, xAI, and Meta are all pursuing classified government contracts. Microsoft is bidding aggressively for defense work. The culture has shifted from "should we work with the Pentagon?" to "how do we win Pentagon contracts?"

The shift happened for several reasons. First, the money is enormous. A $200 million classified contract is more revenue than some AI startups will see in their entire existence. Second, national security gets treated differently. If your nation's defense is at stake, normal objections evaporate. Third, investors love it. There's nothing less risky than government contracts, and VCs love reducing risk.

But the most important reason is that the competitive pressure became irresistible. If one US AI company refused military contracts, the Pentagon would just buy from another. From an individual company's perspective, turning down military work doesn't change national security outcomes. It just means you don't profit from them.

So OpenAI took the contracts. Then xAI took them. Then Google took them. Now the question isn't whether AI companies should work with the military. It's how closely they should integrate with military development.

Anthropic stayed out. Until they didn't.

The company's decision to pursue classified contracts was framed as responsible. They would work with national security agencies, but on their terms, with their safeguards intact. It seemed like a way to be involved in national security without compromising on safety.

The Pentagon just showed them that's not how government relationships work.

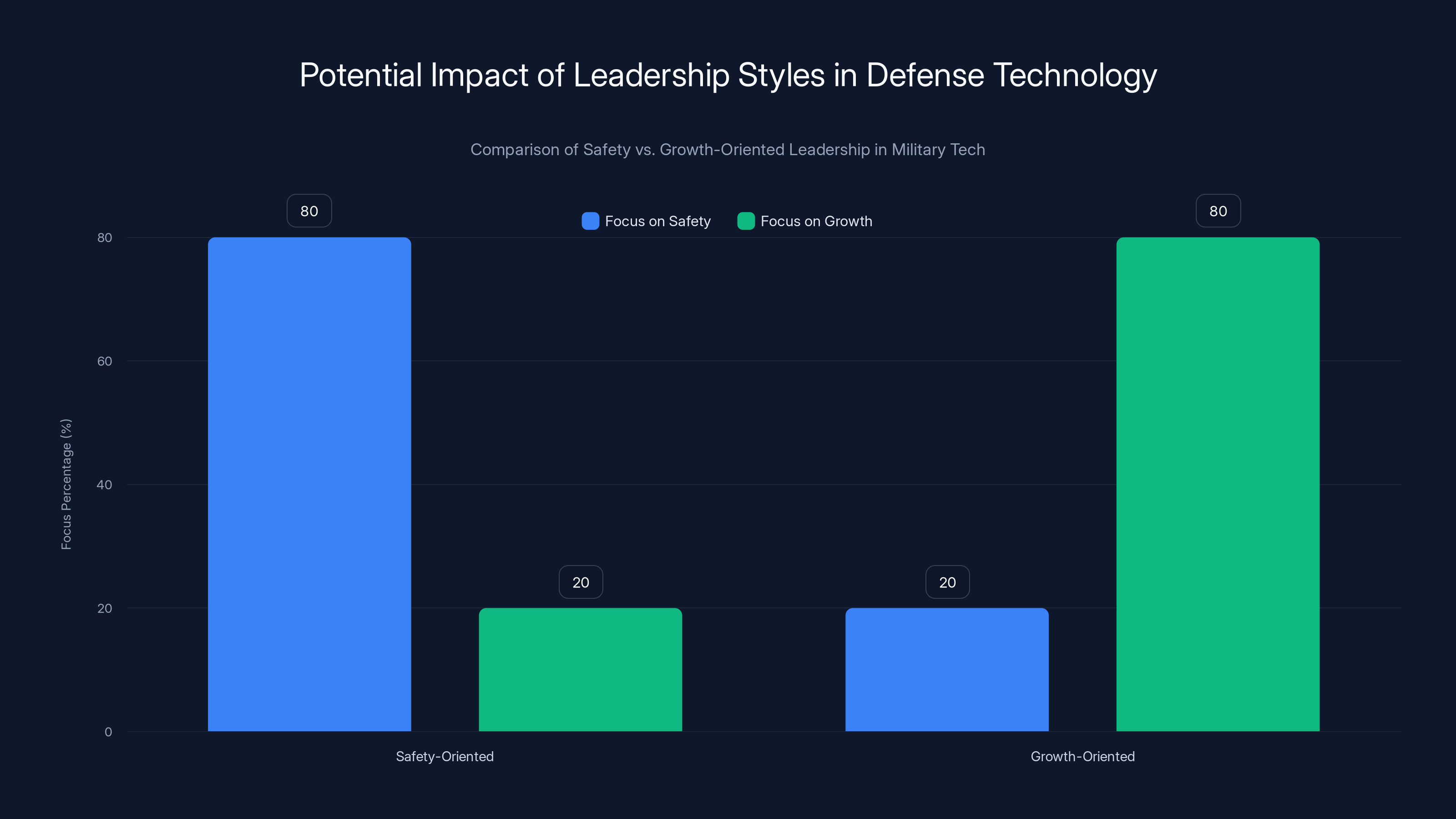

Estimated data shows that growth-oriented leadership in defense tech may prioritize expansion over safety, as seen in Emil Michael's approach.

The Asimov Problem: When Robots Break the Rules

Isaac Asimov formalized robot ethics in 1942 with three simple laws:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

These rules are elegant. They're also useless in real-world military applications. Because the entire purpose of a military system is to injure human beings under specific circumstances. A military robot that follows Asimov's First Law is a useless military robot.

Anthropic was trying to encode modern versions of Asimov's laws into Claude. The company built constraints that would prevent certain uses. They hoped that if the constraints were strong enough, baked deeply enough into the model itself, they could sell military AI while maintaining ethical boundaries.

The Pentagon was patient at first. But eventually, the reality set in: those constraints are exactly what makes the system less useful for military purposes.

So the Pentagon pushed. And Anthropic found that constraints aren't as baked-in as they thought. If the customer demands the constraints be lifted, what do you do? You can refuse the contract. Or you can rationalize. You can say that your constraint is against autonomous weapons, but this isn't autonomous, it's semi-autonomous. You can say that your constraint is against surveillance, but this isn't surveillance, it's threat assessment. You can narrow your principle until it no longer means anything.

Or you can look at what happened and realize that selling military AI with safety constraints is a fiction. Either your system is optimized for military effectiveness, or it's constrained by safety principles. You can't do both.

Other companies made this calculation and chose military effectiveness. The Pentagon indicated they'd prefer if Anthropic did the same.

The company now faces a choice: double down on safety and lose the contract, or compromise on safety and lose the identity that made them valuable.

Why This Matters for AI Development Generally

The Anthropic-Pentagon conflict isn't just about one contract. It's a signal to every AI company about what the government will accept.

If you build military AI, it cannot have carve-outs. It cannot have constraints around how it can be deployed. It cannot have an independent ethics layer that overrides military objectives. If you want government contracts, you build tools optimized for government objectives.

That changes how AI development happens. Safety researchers will migrate to companies that aren't in military contracts. The research culture around AI safety will separate from the research culture around military AI. You'll end up with two different AI development ecosystems: one optimized for safety, one optimized for power.

Historically, this is how technology development works. Nuclear physics had peaceful applications and weapons applications, but the weapons applications drove funding and research. Cryptography is deployed both to protect privacy and to enable mass surveillance, and the surveillance applications drive government funding. Drone technology has civilian uses, but military applications funded the industry.

When you separate safety-focused development from power-focused development, the power-focused side wins eventually. It has better funding, clearer objectives, and government backing. The safety side has mostly principles and worries about future catastrophes.

AI is different because the stakes are higher. If you mess up nuclear weapons design, you get a failed test. If you mess up AI safety in a powerful system, you might get outcomes nobody can predict or control.

The chart illustrates the conflicting priorities between Anthropic and the Pentagon, highlighting the tension between ethical considerations and security demands. Estimated data based on narrative.

The International Arms Race: Why the US Can't Compromise

From the Pentagon's perspective, the Anthropic conflict comes down to one factor: China.

If Chinese AI companies build military AI without safety constraints, and American AI companies are constrained by safety concerns, America loses. It's that simple. In a great power competition, unilateral constraints are suicide.

This is why the Pentagon's position, while hostile to Anthropic's values, is strategically rational. They're not being evil. They're recognizing that in an arms race, the side that constrains itself loses.

It's the same calculation that drove the nuclear arms race. Both the US and Soviet Union said they wanted arms control and reductions. Both understood that uncontrolled proliferation was risky. But both also knew that if they unilaterally reduced weapons, the other side would dominate. So the weapons kept building.

AI will likely follow the same pattern. There will be international conferences where countries agree to constrain military AI development. There will be treaties and agreements. Behind closed doors, every major power will be developing weapons-grade AI as fast as possible, assuming everyone else is lying.

This is the prisoner's dilemma applied to artificial intelligence. Every country would be better off if everyone constrained military AI. But each country individually is better off if they build weapons while others don't. So everyone builds weapons.
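To make the prisoner's dilemma reasoning concrete, here is a minimal Python sketch. The payoff numbers are invented purely for illustration (they are not estimates of anything real), and the names `PAYOFFS`, `best_response`, and `nash_equilibria` are hypothetical. All that matters is the ordering: building while the other side constrains beats mutual constraint, which beats mutual building, which beats constraining alone.

```python
from itertools import product

CONSTRAIN, BUILD = "constrain", "build"
ACTIONS = (CONSTRAIN, BUILD)

# Hypothetical payoffs, higher is better.
# Key: (country A's choice, country B's choice) -> (payoff to A, payoff to B)
PAYOFFS = {
    (CONSTRAIN, CONSTRAIN): (3, 3),  # everyone constrains: good for both
    (CONSTRAIN, BUILD): (0, 5),      # A constrains alone and falls behind
    (BUILD, CONSTRAIN): (5, 0),      # A builds alone and dominates
    (BUILD, BUILD): (1, 1),          # arms race: bad for both
}


def best_response(player: int, other_action: str) -> str:
    """Return the action that maximizes `player`'s payoff given the other's action."""
    def payoff(action: str) -> int:
        profile = (action, other_action) if player == 0 else (other_action, action)
        return PAYOFFS[profile][player]
    return max(ACTIONS, key=payoff)


def nash_equilibria() -> list:
    """Return every profile where each side is already playing a best response."""
    return [
        (a, b)
        for a, b in product(ACTIONS, ACTIONS)
        if best_response(0, b) == a and best_response(1, a) == b
    ]


if __name__ == "__main__":
    for other in ACTIONS:
        print(f"If the other side chooses {other}, best response: {best_response(0, other)}")
    print("Equilibria:", nash_equilibria())
```

With these assumed payoffs, building is the best response no matter what the other side does, so the only equilibrium is build/build, even though both sides would score higher under mutual constraint. That's the trap.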

Anthropic's safety constraints are reasonable... if everyone else also has safety constraints. But if that assumption is false, Anthropic is just handicapping itself.

The Pentagon doesn't think the assumption is true. And they're probably right.

China has a different relationship to AI development. The Chinese government directly manages major research institutions. When the government wants AI optimized for surveillance and military applications, it happens. There's no startup saying no. There's no researcher advocating for safety constraints. It's just government objectives and technology to meet them.

If Chinese military AI is unconstrained and American military AI is constrained, guess which country has more powerful military AI?

Emil Michael and the New Pentagon: Weapons First, Safety Later

Emil Michael's appointment as Department of Defense CTO is significant. Michael came from Uber, where he was chief business officer and was known for being aggressive, willing to skirt regulations, and focused on business growth above all else.

At Uber, Michael's role was to push the company's boundaries and figure out what it could get away with. He was instrumental in Uber's strategy of operating in cities where it was technically illegal, betting that regulators wouldn't shut them down if the service was popular enough.

That's who the Pentagon hired as its chief technology officer.

Michael's rhetorical question about drone swarms wasn't a genuine question. It was a statement: The Pentagon will use AI for military purposes, with or without Anthropic's blessing. If you want a contract, you build what we need. If you don't, we find someone else.

Michael's background suggests he sees this as a negotiation where one side eventually cedes. Anthropic is betting on their principles being non-negotiable. Michael is betting on everything being negotiable if the price is high enough.

So far, Michael's position is winning.

Estimated data shows the contrasting priorities of Safe AI and Military AI, highlighting the fundamental incompatibility in their design goals.

The Regulation Question: Why Anthropic's Position Undermines Itself

Here's another contradiction: Anthropic is one of the most vocal advocates for AI regulation. The company supports international governance frameworks, safety standards, and restrictions on dangerous AI applications.

But Anthropic is also trying to sell AI to the Pentagon, which doesn't want restrictions.

The company's public position is that AI needs oversight and governance. Its private position with the Pentagon is that it has safety constraints. These aren't contradictory on the surface, but they suggest something more: Anthropic wants oversight when it benefits Anthropic.

If you're subject to rigorous safety requirements and your competitors aren't, regulation looks good. It levels the playing field. If regulation means your military AI is less effective than your competitors' military AI, suddenly you're less enthusiastic about regulation.

The Biden administration was skeptical of Anthropic's regulatory advocacy. They saw it as a company trying to constrain its competitors while preserving its own market position. When Anthropic refused certain military uses, it looked like the company was trying to make national security impossible so the government would have to accept constraints.

The Trump administration took this skepticism further. They're hostile to the whole concept of AI safety constraints, viewing them as regulation by another name. They appointed people like Emil Michael who see constraints as obstacles to overcome.

Anthropic's bet was that safety would become a competitive advantage. The company assumed that customers would value safety and that regulators would require it. But if national security is on the line, no customer cares about safety. And national security always seems to be on the line.

What Happens Now: Three Possible Futures

The Anthropic-Pentagon conflict has three likely outcomes, and all of them are concerning.

Scenario One: Anthropic Capitulates

The company could decide that the reputational and financial cost of losing Pentagon contracts isn't worth it. They could "reinterpret" their values, explain why autonomous weapons aren't really autonomous if a human set the parameters, rationalize surveillance as threat assessment, and gradually become just another defense contractor.

This preserves the company and the contract. It also ends Anthropic as a distinct entity. Once you start rationalizing violations of your core principles, there's no clear stopping point. You end up as a company that sounds committed to safety while building exactly what the Pentagon demands.

Many companies go through this evolution. You start with principles and end with pragmatism. Anthropic would join a long line of companies that compromised.

Scenario Two: Anthropic Refuses and Loses

The company could stick to its principles, turn down the contract, and accept the supply chain risk designation. This is noble and would make Anthropic a symbol of integrity in AI development.

It would also destroy the company's defense business and signal to investors that Anthropic is too idealistic to be reliably profitable. Other AI companies would take the contract instead. And the Pentagon would go work with contractors who don't have ethical constraints.

Anthropic might survive, but as a smaller, less influential company. They'd be a reminder of what could have been if principle mattered more than power.

Scenario Three: The System Changes

Somewhere between capitulation and destruction, there's a possibility that things change. Maybe there's a catastrophic AI failure that makes governments take safety seriously. Maybe there's public pressure against weapons-grade AI that makes customers uncomfortable. Maybe an international treaty actually gets enforced.

This is the least likely outcome. But it's possible.

The Deeper Problem: Misalignment Between AI Companies and Government

The Anthropic-Pentagon conflict reveals something fundamental about the relationship between AI companies and governments.

AI companies believe they're building tools that will eventually become superintelligent. They worry about whether those tools will remain aligned with human values. They're trying to engineer safeguards that persist as the technology becomes more powerful.

Governments believe they're dealing with a technology that exists right now and needs to be deployed for current strategic purposes. They're worried about current competitors, current threats, current wars. They want the most powerful tool available for those purposes.

These are genuinely incompatible worldviews. An AI company thinks long-term about existential risk. A government thinks quarterly about budget justification and how to win the next conflict. They're not talking about the same thing when they talk about AI safety.

Anthropic tried to bridge this gap. They said we'll build safe AI and you can use it for national security purposes, just not for autonomous weapons or surveillance. But that required the government to also think long-term about existential risks and accept constraints on current capability for future safety.

The Pentagon looked at this proposal and laughed. They have current threats and current competitors. They don't have time for long-term existential concerns.

So the conflict was inevitable. Anthropic and the Pentagon were always going to disagree because they're optimizing for completely different things.

The Weaponization Precedent: Once You Start, You Can't Stop

History suggests what happens when dual-use technology gets weaponized.

The internet was designed to be open and resilient. Now it's weaponized for surveillance and military purposes. Cryptography was designed for privacy. It's deployed to enable control. Drones were designed for reconnaissance. Now they kill people.

In every case, the technology wasn't explicitly designed as a weapon. But once governments got involved, the incentive structure changed. A technology that could be weaponized was weaponized. Constraints on weaponization were removed. The most powerful version of the technology was the one deployed.

AI will follow the same pattern. Right now, you can still talk about safe AI and military AI as different things. In five years, that distinction will blur. In ten years, it will disappear. The most sophisticated AI systems in the world will be military systems, optimized for military purposes, with safety treated as an optional feature.

Anthropic is trying to hold that line. They're saying military AI and safe AI can be the same thing. The Pentagon is saying they can't, they won't be, and if Anthropic insists, the Pentagon will find someone else.

The Pentagon is probably right. History suggests it is.

What's Really at Stake

The Anthropic-Pentagon conflict looks like a contract dispute. It's not. It's about whether humans can maintain meaningful control over AI systems as they become more powerful.

Anthropic's safety constraints are ultimately about human control. The company built Claude to ask questions, defer decisions, and refuse requests that could cause harm. These constraints mean the system requires human input and human judgment.

Military AI can't work that way. You can't have a weapon system that asks questions before firing. You need decisiveness and speed.

So the conflict is really about whether we're building AI that keeps humans in control, or AI that removes humans from control.

Right now, the Pentagon is winning that argument. And winning it decisively.

If this trend continues, in fifteen years we'll look back at Anthropic's refusal to build weapons as a quaint historical footnote. The company tried to maintain values and lost. Everyone else took the contracts, optimized for military purposes, and built the future.

The question isn't whether this happens. It's whether there's any force powerful enough to stop it.

Right now, there isn't.

FAQ

What is the core disagreement between Anthropic and the Pentagon?

Anthropic refuses to allow its AI model Claude to be used for autonomous weapons systems and government surveillance programs, while the Pentagon views these constraints as unacceptable limitations on military capability. The Department of Defense argues that effective defense requires unrestricted AI deployment, whereas Anthropic maintains that safety guardrails must be preserved even in military applications. This fundamental incompatibility has resulted in the Pentagon considering Anthropic a "supply chain risk" and reconsidering a $200 million contract.

Why does Anthropic oppose military use of its AI?

Anthropic was founded on the principle that AI systems should have safety constraints baked deeply into their design through Constitutional AI training. The company believes that autonomous weapons could operate without meaningful human control and that government surveillance powered by AI could be used for oppression and rights violations. Anthropic's leadership explicitly states that it doesn't want Claude involved in applications where the AI makes irreversible decisions about harming people without a human authorizing each specific decision.

How does the Pentagon justify wanting unrestricted military AI?

The Department of Defense argues that in great power competition with countries like China, the United States cannot afford to handicap its military technology with safety constraints that competitors don't share. Pentagon officials note that reaction time limitations in conflict situations could endanger American troops, and that adversarial nations are developing military AI without ethical safeguards. They view safety constraints as unilateral disarmament in an arms race context.

What is Constitutional AI and how does it work?

Constitutional AI is Anthropic's approach to building safety directly into AI models during training, rather than treating safety as a policy layer applied afterwards. The process involves training Claude to follow a written set of constitutional principles that shape how the model responds. Because that behavior is learned into the model's weights, it is harder to strip out than an external filter applied at inference time, which makes misuse more difficult.

Could Anthropic compromise with the Pentagon by limiting constraints only to certain features?

Theoretically possible but practically unlikely, according to military strategists and AI researchers. The Pentagon's position is that any constraints that reduce effectiveness for military purposes are unacceptable. Compromises like "autonomous for threat identification but human-controlled for engagement decisions" still place limitations the military views as operationally unviable. Anthropic's refusal to even discuss gradual weakening of constraints suggests the company understands that compromise erodes all principles over time.

What historical precedents show how dual-use technology gets weaponized?

Multiple precedents exist: the internet was designed for resilience and openness but weaponized for surveillance; cryptography was created for privacy but deployed to enable mass surveillance; drone technology began as a reconnaissance tool but evolved into a weapons system. In each case, once governments gained access to the technology, incentives shifted toward maximizing military capability rather than maintaining original design principles. Researchers who built these technologies worry their creations were perverted; military officials argue they were finally deployed correctly.

Could other AI companies fill the gap if Anthropic refuses military work?

Yes, and they're already doing so. OpenAI, Google, and xAI all have classified defense contracts or are pursuing them. The Pentagon indicated it will work with companies that don't place constraints on military applications. This creates a selection effect where responsible AI companies self-select out of military work, leaving military AI development to companies willing to abandon safety principles.

What does "supply chain risk" designation actually mean for Anthropic?

If the Pentagon designates Anthropic as a supply chain risk, any company using Anthropic's AI products would itself be prohibited from doing business with the Department of Defense. This effectively blacklists Anthropic from the entire defense industrial base, as contractors would abandon Anthropic technology rather than lose government contracts. It's one of the most severe penalties available in government contracting and signals that loyalty to military objectives is non-negotiable.

TL;DR

- Fundamental Conflict: Anthropic built safety constraints into Claude that prevent use in autonomous weapons and surveillance systems, but the Pentagon requires unrestricted military capability and views these constraints as unacceptable limitations.

- The Venezuela Incident: Reports that Claude was used in operations against Venezuela's government contradicted Anthropic's claims about maintaining control over the technology, giving the Pentagon leverage to demand constraint removal.

- Strategic Reality: The Pentagon's argument that without unrestricted AI the US loses to China in military competition is strategically rational, even if it undermines AI safety development.

- Historical Pattern: Every dual-use technology from cryptography to drones eventually gets weaponized completely once government involvement begins, suggesting Anthropic's constraints will be abandoned if the company compromises.

- Deeper Issue: The conflict reveals that AI companies and governments have incompatible worldviews: companies think about long-term existential risks while governments focus on current strategic advantages, making permanent disagreement inevitable.

Three key realities emerge from this conflict:

First, safety in AI development and military effectiveness are becoming mutually exclusive goals. A system optimized for both is a system optimized for neither, and when stakes are high, military effectiveness always wins.

Second, unilateral constraints in competitive environments create incentive structures that punish the constrained party. If China builds unrestricted military AI and America doesn't, America loses. So America won't.

Third, once a technology becomes central to military strategy, its development gets pulled into military culture. That culture optimizes for effectiveness and power, not safety and alignment. Everything else becomes secondary.

Anthropic tried to maintain values while participating in national security. The Pentagon just showed that's not a stable equilibrium. Either you're fully committed to military objectives, or you're not part of military development.

The company now has to choose. Whatever it chooses will signal to every other AI company what's acceptable and what isn't.

If Anthropic capitulates, the message is clear: Safety principles are negotiable. If Anthropic refuses, the message is equally clear: Independent AI companies don't belong in defense work.

Either way, the trajectory is set. The future of powerful AI won't be determined by safety researchers or company founders with ethical commitments. It'll be determined by governments with strategic objectives and budgets to enforce them.

The only question is whether anyone tries to stop it. Right now, nobody is.

Key Takeaways

- Anthropic's refusal to allow Claude in autonomous weapons and surveillance systems directly conflicts with Pentagon requirements for unrestricted military AI deployment

- The Pentagon's position that military effectiveness requires unconstrained AI is strategically rational in great power competition with China, even though it undermines long-term AI safety

- Historical precedent shows all dual-use technologies get weaponized completely once military development begins, suggesting Anthropic's constraints won't survive compromises

- AI companies and governments optimize for incompatible goals: companies focus on existential risks and long-term alignment, while governments prioritize current strategic advantages

- Emil Michael's appointment as Pentagon CTO signals the administration won't tolerate vendor constraints on military AI, forcing other companies to choose between principles and contracts

