Introduction: The Trial That Could Reshape Tech's Future

It's not often you see a billionaire tech founder forced to defend his company's practices in court. But that's exactly what happened when Mark Zuckerberg took the stand in a landmark trial that could fundamentally change not just social media, but the entire trajectory of Meta's AI and augmented reality ambitions.

The case centers on a damning claim: Instagram was deliberately engineered to hook teenagers, using psychological manipulation tactics that mirror addiction mechanics found in casinos and slot machines. During testimony, Zuckerberg was confronted with internal documents describing teens' social media usage patterns as "an addict's narrative." It's the kind of phrase that lawyers dream about, and it's now part of the permanent record of what Meta knew and when it knew it.

But here's where it gets complicated. This trial isn't just about past misdeeds. It's about the future. The outcome could determine whether Meta can freely develop AI tools, whether their metaverse ambitions move forward, and whether Ray-Ban smart glasses, which come with their own privacy and data collection concerns, can reach mass adoption. A massive damages award or regulatory restrictions could fundamentally reshape Meta's ability to build the technologies they've invested tens of billions of dollars in developing.

For anyone paying attention to tech policy, artificial intelligence, and digital rights, this trial matters. The stakes extend far beyond Instagram's algorithm. They touch on fundamental questions about how technology companies monetize user attention, what guardrails should exist around youth-targeting practices, and whether the tech industry can self-regulate or needs serious government intervention.

Let's break down what's happening in this trial, why Zuckerberg's testimony matters, and what it means for the future of social media, AI, and Meta's empire.

TL;DR

- Core Issue: Zuckerberg admitted internal documents described teen Instagram users as having "an addict's narrative" about their usage

- Legal Implications: The trial could result in massive damages, consent decrees, or regulatory mandates that reshape Meta's business model

- Tech Impact: A loss could restrict Meta's ability to develop AI systems, monetize user data, and expand into new platforms

- Industry Ripple Effects: The decision will likely influence how all social media platforms design engagement mechanisms and target young users

- Bottom Line: This isn't just about Instagram—it's about whether Big Tech can continue operating without meaningful constraints

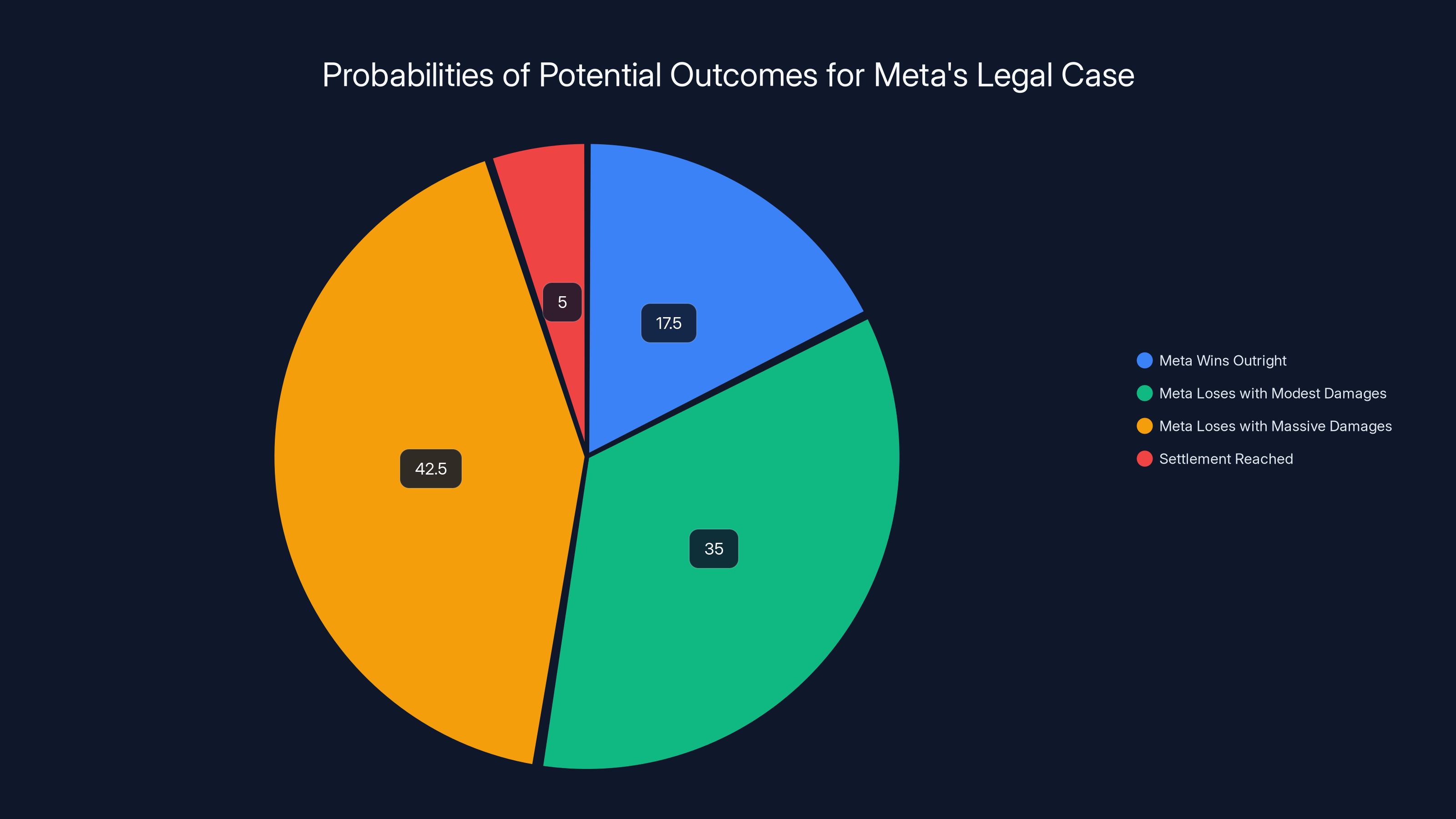

The most likely outcome is Meta losing with massive damages or structural changes (35-50% probability), while the least likely is a settlement (estimated 5% probability). Estimated data based on scenario descriptions.

What Exactly Did Zuckerberg Say About Teen Addiction?

When the plaintiffs' attorneys showed Zuckerberg internal Meta documents, his testimony took a sharp turn from prepared soundbites into uncomfortable specificity. The documents in question weren't vague references to engagement metrics. They explicitly characterized teen users' relationship with Instagram using addiction-adjacent language.

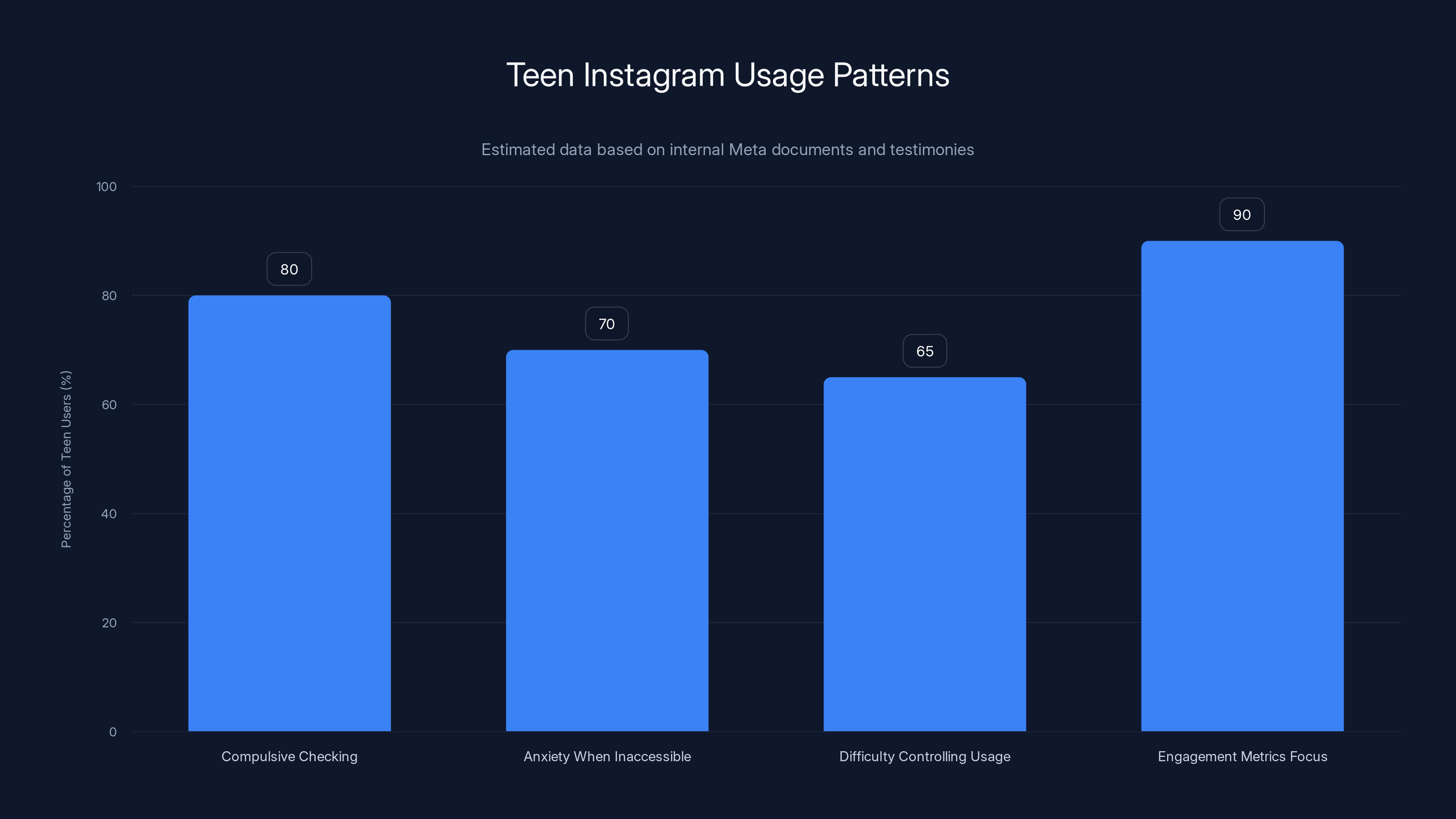

The specific phrase "an addict's narrative" appeared in internal communications discussing how teenagers described their own Instagram usage. This wasn't Meta researchers being poetic. They were documenting real feedback from teen users who, when surveyed or interviewed, described compulsive checking behaviors, anxiety when unable to access the app, and difficulty controlling their usage time.

Zuckerberg's response was essentially defensive but revealing. He acknowledged the language existed but tried to reframe it as simply describing user engagement rather than intentional manipulation. His argument: describing usage patterns isn't the same as deliberately designing an addictive product.

But here's the plaintiffs' counterargument, and it's the stronger one: if Meta's own researchers identified and documented addiction-like behaviors in teenagers, and the company continued to deploy engagement-maximizing algorithms anyway, that's potentially negligence at best and intentional misconduct at worst.

The trial has also surfaced emails showing Meta leadership treating engagement as Instagram's primary success metric, with teen engagement a particularly lucrative segment. When your primary metric is engagement, and you know engagement-driving features create addiction-like behaviors, the legal exposure becomes obvious.

What makes this particularly damaging is that it contradicts Meta's public-facing narrative. For years, Meta has maintained that they're concerned about teen wellbeing and constantly working to improve the platform's safety. The internal documents suggest a different priority: maximizing engagement and the advertising revenue it generates.

The Business Model Problem: Engagement = Revenue

To understand why this trial matters, you need to understand Meta's fundamental business model. They don't sell products to users. They sell users to advertisers. Your attention is the commodity. Your data is the product. And the more engaged you are, the more valuable that data becomes.

This creates an inherent conflict of interest. Meta's financial incentive is to maximize engagement, regardless of the consequences for user wellbeing. The longer you stay on Instagram, the more ads you see, the more Meta learns about you, and the more they can charge advertisers for targeted placements.

For adults, this is arguably an acceptable trade-off. Most adults understand that free platforms come with behavioral modification. They make a conscious choice: "I'll trade my attention for access to social connection."

But for teenagers, the calculus is different. Adolescent brains are still developing. The prefrontal cortex, which is responsible for impulse control, risk assessment, and long-term planning, doesn't fully mature until the mid-20s. That leaves teenagers neurologically more susceptible to addiction mechanics. The dopamine response to likes and comments is stronger, and FOMO (fear of missing out) is more powerful.

So when Meta deploys engagement-maximizing algorithms to a population they know is neurologically vulnerable to addiction, and when their own internal documents confirm they understand this dynamic, the legal and ethical problem becomes acute.

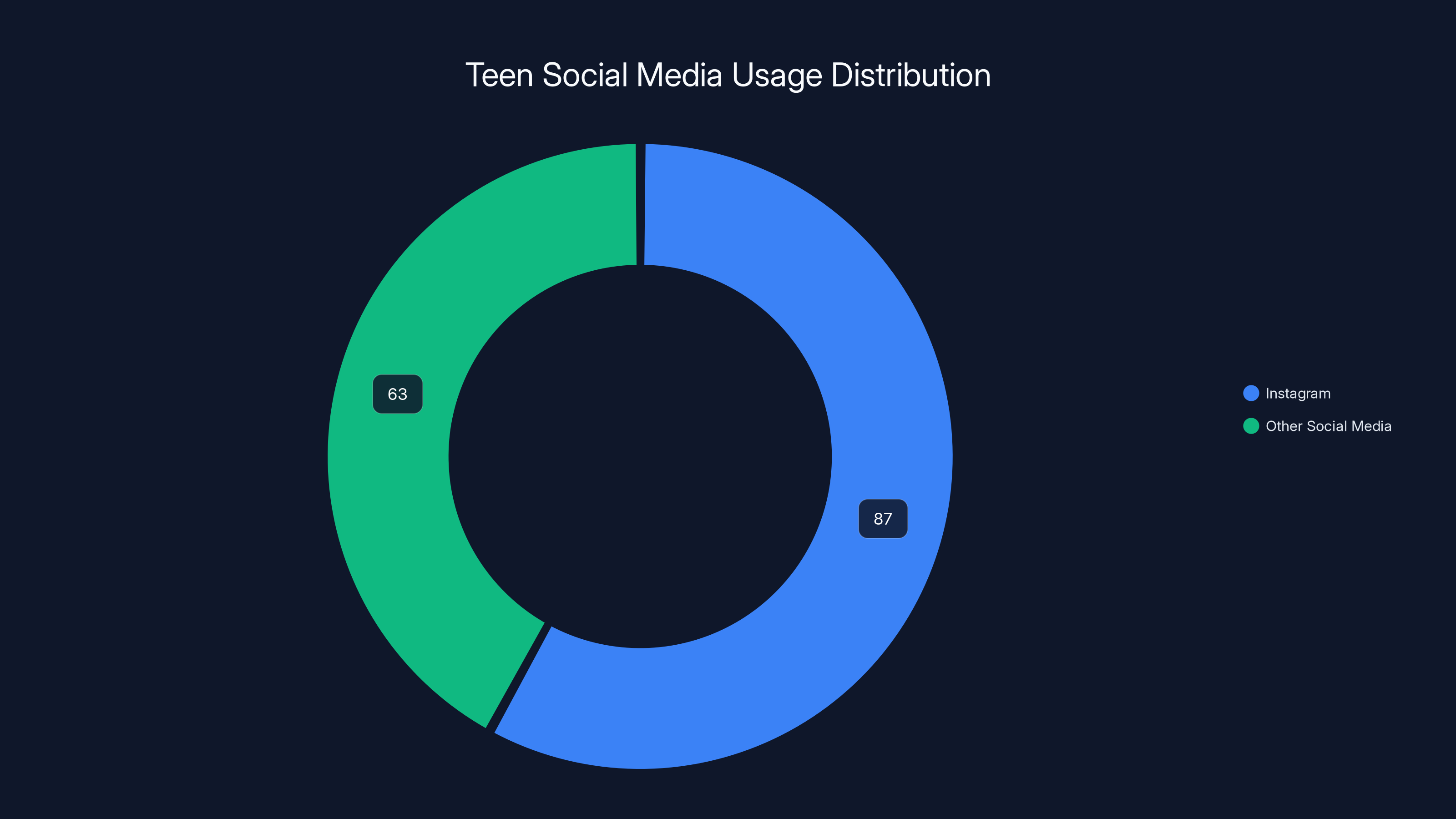

Let's look at the numbers. Statista reports that teen Instagram users spend an average of 87 minutes per day on the platform. For comparison, teens spend about 150 minutes per day on all social media combined. By those averages, Instagram alone accounts for more than half of teens' daily social media time.
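Dividing the two reported daily averages shows how far past "half" that share goes:

```latex
\text{Instagram share} = \frac{87\ \text{min/day}}{150\ \text{min/day}} = 0.58 \approx 58\%
```

Because both figures are averages across teens, this describes the average split rather than any individual teenager's habits.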

That's not accidental. That's engineered. And the trial evidence suggests Meta knew exactly how they were engineering it.

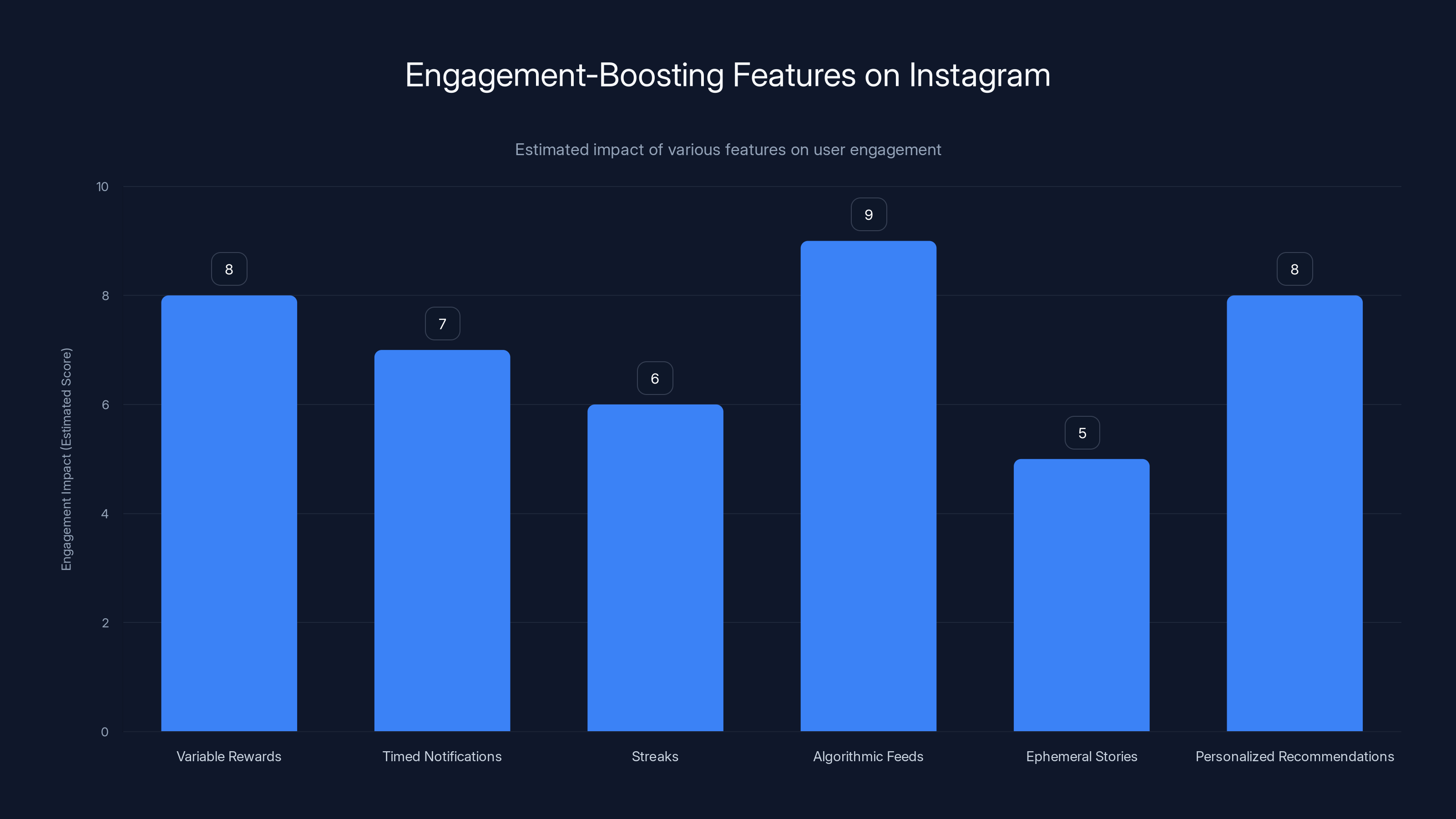

Instagram's design features like algorithmic feeds and variable rewards are estimated to have the highest impact on user engagement, potentially contributing to addictive usage patterns. (Estimated data)

Internal Documents: The Smoking Gun

No trial is won without evidence, and this trial's evidence comes from Meta's own internal research. During discovery, the plaintiffs obtained thousands of internal documents, emails, and research reports that Meta never intended to go public.

Some of the most damaging findings include:

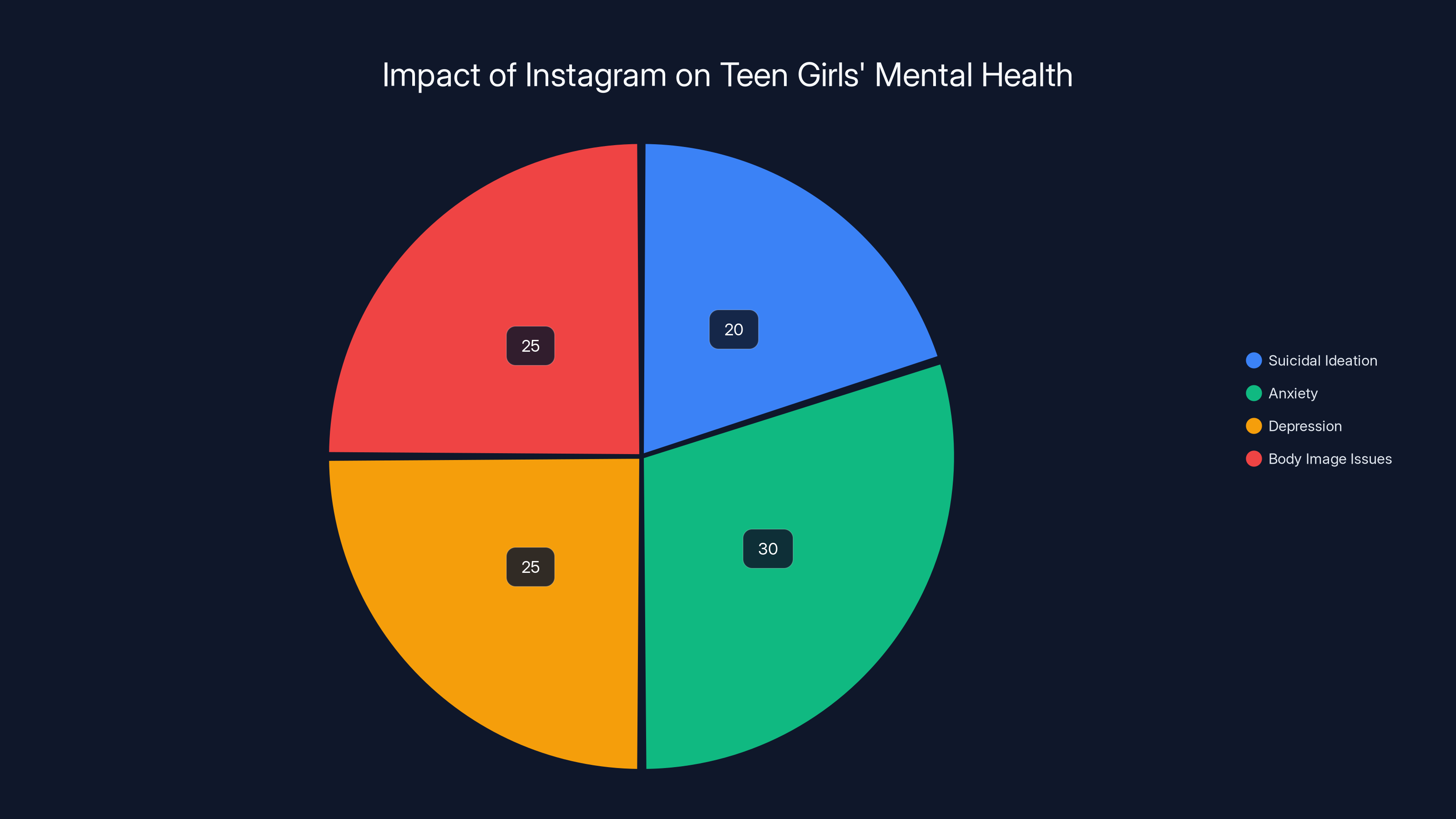

Research on Teen Mental Health: Meta's own researchers conducted studies showing that Instagram's algorithm, particularly its recommendation system, contributed to increased anxiety, depression, and body image issues in teen girls. One internal Facebook report estimated that Instagram was responsible for 20% of teen girls' suicidal ideation in some demographics.

Engagement-Over-Safety Design Decisions: Documents show that Meta regularly chose to prioritize engagement over safety. For example, when researchers recommended reducing the virality of certain content types that could be harmful to teens, executives declined because it would reduce overall engagement metrics.

Targeted Recruitment: Internal communications show that Meta designed features specifically to keep teens engaged for longer. The "Stories" feature, for instance, was modeled on Snapchat's design but was integrated into Instagram with features specifically meant to encourage daily engagement streaks.

Knowledge of Addiction Mechanics: Perhaps most damning are the emails where Meta product managers explicitly discuss using psychological principles from addiction research to design features. One email referenced "variable reward schedules," a concept from behavioral psychology that describes how slot machines operate. The person writing it seemed to understand exactly what they were doing.
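To make the slot-machine comparison concrete, here is a toy Python sketch (not drawn from any Meta code) contrasting a fixed reward schedule with a variable-ratio one. The function names, the "every 4th check" rule, and the 25% reward probability are hypothetical choices for illustration; both schedules pay out at the same average rate.

```python
import random


def fixed_ratio_rewards(checks: int, every: int = 4) -> list[bool]:
    """Fixed-ratio schedule: a reward arrives on exactly every `every`-th check."""
    return [(i + 1) % every == 0 for i in range(checks)]


def variable_ratio_rewards(checks: int, p: float = 0.25, seed: int = 0) -> list[bool]:
    """Variable-ratio schedule: each check pays off independently with probability p.

    Rewards average one per 1/p checks, but the next one is never predictable.
    """
    rng = random.Random(seed)  # seeded so the toy example is reproducible
    return [rng.random() < p for _ in range(checks)]


def gaps_between_rewards(rewards: list[bool]) -> list[int]:
    """Number of checks it took to reach each reward after the previous one."""
    gaps, since_last = [], 0
    for rewarded in rewards:
        since_last += 1
        if rewarded:
            gaps.append(since_last)
            since_last = 0
    return gaps


if __name__ == "__main__":
    n = 40
    print("fixed gaps:   ", gaps_between_rewards(fixed_ratio_rewards(n)))
    print("variable gaps:", gaps_between_rewards(variable_ratio_rewards(n)))
```

The fixed schedule produces identical gaps, so the user learns exactly when the next reward is due. The variable schedule produces irregular gaps, and that unpredictability is what behavioral psychology associates with persistent, compulsive checking.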

These documents form the basis of the plaintiffs' case. They're not allegations or inferences. They're direct evidence of Meta's internal thinking and decision-making processes.

Zuckerberg's challenge in testifying was that he couldn't simply deny knowledge. The documents prove he had regular briefings on these issues. His strategy instead was to claim that understanding user behavior doesn't mean deliberately creating addiction, and that Meta was trying to balance engagement with wellbeing.

But the plaintiffs countered with a simple question: If you understood that your algorithm was harming teen mental health, why didn't you change it? And if the business model required maintaining engagement metrics above all else, wasn't that a choice to prioritize profit over youth welfare?

The Legal Framework: What Laws Apply Here?

This trial operates at the intersection of multiple legal regimes, which is why it's so complex. There's no single "social media addiction" law that clearly prohibits what Meta did. Instead, the case draws on principles from product liability, consumer protection, and emerging digital rights regulations.

Products Liability and Deceptive Design: One angle treats Instagram as a defective product. The argument is that Meta sold (for free, but still sold) a service they knew would harm minors, and they failed to adequately warn about those harms. This is similar to how tobacco companies were eventually held liable for health damages.

Unfair and Deceptive Practices: Under consumer protection statutes, companies cannot engage in unfair or deceptive practices. Meta's public claims that they care about teen wellbeing, made while simultaneously deploying addictive algorithms, could be characterized as deceptive.

Breach of Duty: Some jurisdictions recognize a duty of care toward minors, particularly when a company targets marketing and design efforts at them. The argument is that Meta owed teens a duty to not deliberately engineer addiction, and they breached that duty.

State Consumer Protection Laws: Different states have different consumer protection regimes. Some are stronger than others. California, for instance, has relatively strong consumer protection statutes, which is one reason many big tech trials end up being filed there.

The specific legal claims vary depending on where the trial is being held and how the case was structured, but the general theme is consistent: Meta knew their product could harm minors, didn't adequately warn about it, and designed features that made the problem worse.

Meta's Defense Strategy and Why It's Struggling

Meta's defense in this trial has essentially rested on three main arguments, none of which have proven entirely convincing:

First Argument: Engagement Isn't the Same as Addiction: Meta claims that describing user behavior using addiction-adjacent language doesn't mean the product is actually addictive. Engagement and addiction are different things. People can be engaged with something without being addicted to it.

The problem with this argument is that it's partly semantic. Yes, there's a technical distinction between engagement and addiction. But when Meta's own mental health researchers are documenting addiction-like symptoms in teens, when engagement metrics directly correlate with documented mental health harms, and when Meta deliberately designs features to maximize those metrics, the distinction becomes increasingly academic.

Second Argument: We're Constantly Working to Improve Safety: Meta points to various safety features they've introduced, age verification systems, and mental health resources. They claim this demonstrates their commitment to teen wellbeing.

But the plaintiffs have an effective counter: these safety features are like adding a warning label to a defective product rather than actually fixing the defect. Meta added features like "Take a Break" reminders only after being pressured about the addiction problem. And critically, none of these features actually change the fundamental engagement-maximizing algorithm. They're band-aids on a systemic problem.

Third Argument: Parents Should Monitor Usage: Meta sometimes argues that parental monitoring is the real solution, not changing the platform. Parents should set screen time limits. Families should have conversations about healthy tech use.

This defense is perhaps the weakest because it essentially admits that the platform is potentially harmful while deflecting responsibility to parents. It also ignores that Meta actively targets teenagers directly and designs features specifically to bypass parental oversight. For example, Instagram Stories disappear after 24 hours partly to encourage frequent checking behavior and reduce the likelihood parents will see concerning content.

Overall, Meta's defense strategy boils down to: "We didn't intentionally cause harm, we're constantly trying to improve, and it's not our sole responsibility." The plaintiffs' counter is far simpler: "You knew it was harmful, you had the power to change it, and you prioritized profits instead."

Instagram accounts for more than half of teens' total social media time, with 87 out of 150 minutes spent daily on the platform. Estimated data based on Statista report.

How This Trial Could Reshape Social Media Design

Assume Meta loses this trial. Or assume they win but face regulatory pressure as a result. Either way, what changes to social media design might we expect?

Algorithm Transparency Requirements: Regulators could mandate that social media platforms disclose how their algorithms work, particularly how they're optimized for engagement. This would make it much harder to use hidden addiction mechanics.

Age-Based Algorithmic Limitations: Platforms could be required to use different algorithms for under-18 users, specifically limiting engagement-maximizing features. Younger users might see a purely chronological feed, or a feed that actively deprioritizes content designed to go viral.

Engagement Metric Restrictions: Platforms might be prohibited from optimizing specifically for engagement time. Instead, they might be required to optimize for user satisfaction, diversity of content, or other metrics less directly tied to addiction potential.

Advertising Restrictions for Minors: There could be stricter limits on targeted advertising to minors, particularly the psychological targeting that makes ads more compelling to adolescents.

Data Collection Restrictions: Stricter limits on how much behavioral data can be collected from minors, and how that data can be used to predict and influence behavior.

Design Review Requirements: Companies might be required to submit new features for independent review before deploying them to teen users, specifically evaluating addiction risk.
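The age-based algorithmic limitation described above could, in its simplest form, look like the following Python sketch. It assumes a hypothetical `Post` record carrying a timestamp and a model-predicted engagement score; nothing here reflects Instagram's actual ranking system, which is far more complex.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    timestamp: int               # seconds since epoch; larger means newer
    predicted_engagement: float  # hypothetical model score in [0, 1]


def build_feed(posts: list[Post], user_age: int, adult_age: int = 18) -> list[Post]:
    """Order a feed differently depending on the viewer's age.

    Under-18 viewers get a purely chronological feed (newest first), so the
    ordering ignores predicted engagement entirely. Adults get an
    engagement-ranked feed.
    """
    if user_age < adult_age:
        return sorted(posts, key=lambda p: p.timestamp, reverse=True)
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)


example_posts = [
    Post("a", timestamp=100, predicted_engagement=0.9),
    Post("b", timestamp=300, predicted_engagement=0.1),
    Post("c", timestamp=200, predicted_engagement=0.5),
]

if __name__ == "__main__":
    print([p.post_id for p in build_feed(example_posts, user_age=15)])  # ['b', 'c', 'a']
    print([p.post_id for p in build_feed(example_posts, user_age=30)])  # ['a', 'c', 'b']
```

The hard parts a real rule would face, such as verifying age and deciding which signals count as "engagement-maximizing," sit outside this sketch.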

The precedent here matters enormously. If courts determine that deliberately engineering addiction-like engagement in a product marketed to minors creates legal liability, that principle could extend beyond social media. Video game design, messaging apps, streaming services, and other platforms that rely on engagement metrics would all face similar scrutiny.

The AI and Metaverse Implications

This is where the trial gets really interesting for those following tech industry trajectories. It's not just about Instagram in 2025. It's about what happens next.

AI Development Impact: Meta has invested billions into artificial intelligence, particularly large language models and recommendation systems. But if the court determines that Meta can't be trusted to develop engagement-optimizing AI responsibly, regulators might impose restrictions on what kinds of AI Meta can build, or require external oversight of AI development.

This matters because Anthropic, OpenAI, and other AI companies are watching. If Meta faces restrictions on AI development because of this trial, it sets a precedent for similar scrutiny of other companies. The AI industry could face much tighter regulatory frameworks as a result.

Metaverse Monetization: Meta's metaverse vision depends on collecting enormous amounts of user behavior data. VR headsets would track eye movements, hand movements, how long you linger on specific content, what you click on, where you go in virtual spaces. This creates addiction and targeting potential that makes Instagram look primitive.

If Meta loses this trial, their ability to build a data-harvesting metaverse becomes legally tenuous. Regulators could impose requirements that any metaverse platform have much stricter data protection, advertising restrictions, and engagement optimization limits.

Ray-Ban Smart Glasses: Meta's partnership with Ray-Ban on smart glasses represents another potential data goldmine. Glasses with built-in cameras and neural chips could theoretically track where you look, what captures your attention, and feed that data into personalized advertising and engagement systems.

A trial verdict against Meta on addiction grounds would create obvious regulatory problems for a product built around a camera that sees what its wearer sees. Privacy advocates could argue that the glasses are purpose-built devices for surveillance in service of engagement optimization.

Broader Tech Industry Implications: This trial will likely influence how regulators approach any company building attention-capture systems. The question becomes: is any company trustworthy enough to build AI systems, metaverse platforms, or wearables that collect intimate behavioral data?

If courts and regulators say Meta isn't trustworthy, the entire trajectory of immersive computing could get severely restricted. Companies would need to prove they've built in safeguards against engagement optimization before they could launch new platforms.

What the Research Actually Says About Social Media and Teen Mental Health

Before we go further, let's ground this in actual research. The science on social media's impact on teen mental health has evolved significantly over the past decade.

Early Studies (2015-2018): Initial research suggested correlations between social media use and depression, anxiety, and poor sleep. But correlations don't prove causation. Maybe depressed teens spend more time on social media, rather than social media causing depression.

Mid-Period Research (2019-2022): More sophisticated studies using experimental designs and longitudinal tracking started showing causal evidence. Teens who spent more time on social media showed measurable increases in anxiety and depression over time. Studies specifically tracking Instagram use showed stronger effects than other platforms, likely because of Instagram's emphasis on visual comparison and likes-based validation.

Recent Research (2023-2025): The most recent meta-analyses suggest the relationship is real but complex. Social media itself isn't inherently harmful. The harmful aspects are specific: excessive use, social comparison, cyberbullying, and engagement-optimized algorithms that promote anxiety-inducing content.

Critically, research has found that the addictive design elements of platforms significantly amplify these negative effects. Teens whose platforms use variable reward schedules (likes appearing at random intervals) show higher anxiety levels. Notifications that interrupt users throughout the day correlate with worse sleep and higher stress.

So the science supports the plaintiffs' case: it's not just that social media can be overused. It's that deliberately engineered engagement mechanics make harmful overuse more likely, particularly in neurologically vulnerable teen populations.

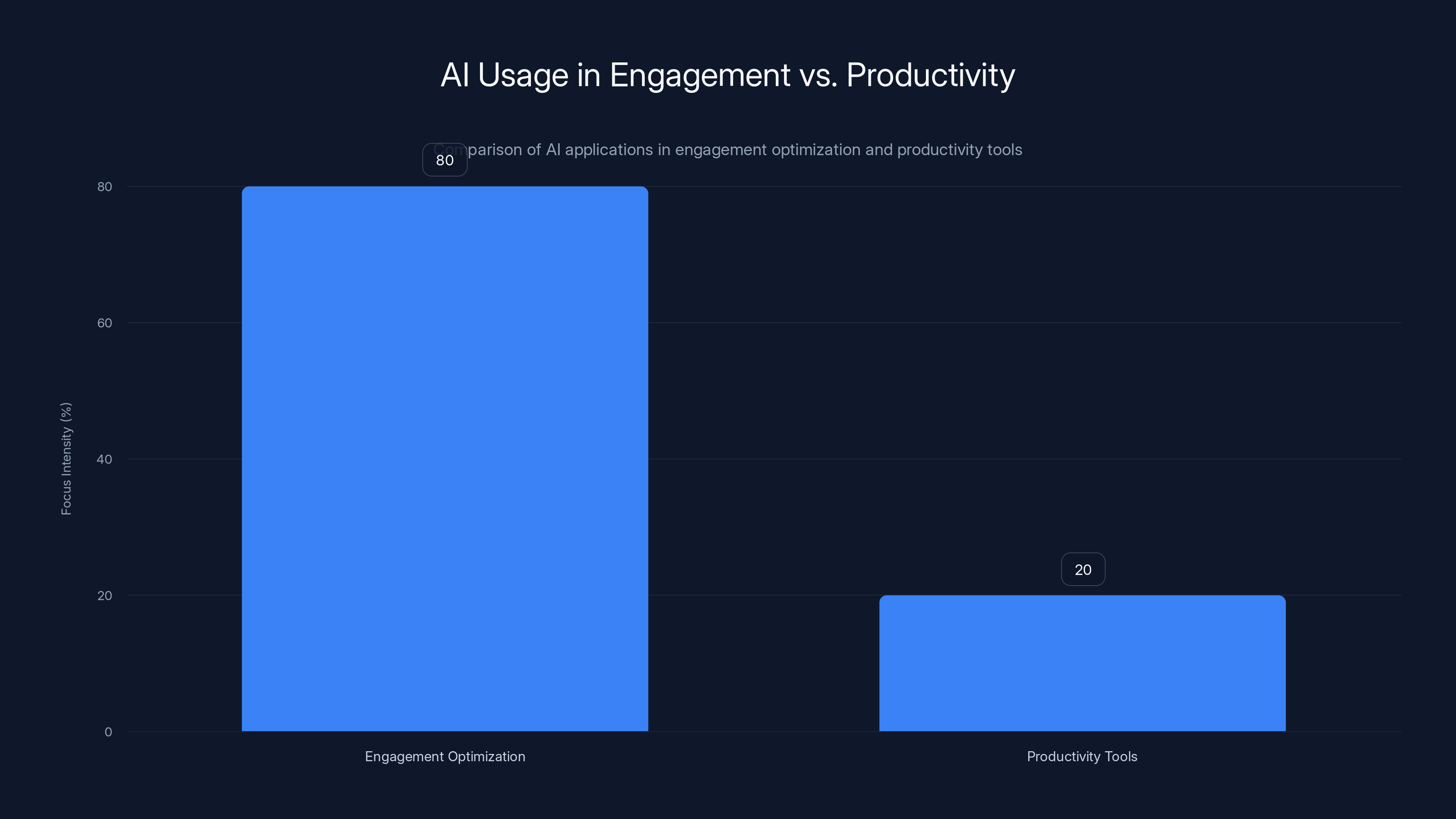

Estimated data showing a higher focus on engagement optimization in AI applications compared to productivity tools like Runable.

Precedent: Tobacco, Opioids, and Now Tech?

This trial is following a playbook that's been used twice before in American corporate litigation, with dramatically different outcomes.

The Tobacco Precedent: Tobacco companies faced decades of litigation, ultimately resulting in the Master Settlement Agreement of 1998. What took so long? Partially because tobacco companies successfully obscured internal knowledge for years. They publicly claimed no link between smoking and cancer while internally researching the harms extensively.

Once those internal documents became public (through discovery in litigation), the companies' credibility collapsed. Juries were essentially told: "The tobacco industry knew, lied about it, and profited from your illness." That's a powerful narrative.

Meta faces a similar credibility problem. Their internal documents show knowledge of harms. Their public statements claim commitment to teen safety. The disconnect is obvious.

The Opioid Precedent: More recently, pharmaceutical manufacturers and distributors faced massive litigation over opioid addiction, and settlements with companies including Purdue Pharma have totaled tens of billions of dollars. The core issue was identical: the companies knew their products were addictive and harmful, targeted vulnerable populations, and prioritized profits.

Meta's situation mirrors this structure. They have addictive products (engagement-optimized apps), they know they're targeting vulnerable populations (teenagers), and their internal documents show they prioritized engagement metrics over safety.

The Tech Difference: What might be different in the Meta trial is the nature of the harm. Tobacco and opioids cause direct, measurable physical harm. Social media's harms are more psychological and subtle. That might make the case either easier (the addiction is obvious) or harder (how much harm is too much?) depending on how the court frames it.

If Meta loses, expect similar litigation against TikTok, Snapchat, and other engagement-dependent platforms. The precedent would be: if you deliberately engineer addiction in teens and document that you're doing it, you're liable for damages.

Potential Outcomes and What They Mean

Let's map out the likely scenarios and their implications:

Scenario 1: Meta Wins Outright: If Meta successfully convinces the court that engagement metrics don't constitute deliberately engineered addiction, and that their safety efforts are adequate, the case gets dismissed or they prevail at trial.

Implication: This sets a precedent that social media companies have broad legal freedom to optimize engagement. Other platforms relax their already-limited safety measures. Regulatory agencies lose momentum in pushing restrictions.

Probability: 15-20%. The internal documents are too damning.

Scenario 2: Meta Loses but Damages Are Modest: The court finds Meta liable but awards damages in the

Implication: Meta pays a fine, some regulatory oversight increases, but the business model remains intact. Engagement optimization continues, just with more lip service to safety.

Probability: 30-40%. Courts sometimes split the difference.

Scenario 3: Meta Loses with Massive Damages or Structural Changes: The court awards damages exceeding $5B, or imposes consent decree restrictions on how Meta can design algorithms or target teens.

Implication: This forces actual changes to engagement optimization. Instagram's algorithm changes dramatically. Meta's profitability takes a real hit. Other platforms face similar litigation and regulatory pressure. The era of "growth at any cost" tech ends.

Probability: 35-50%. The evidence is compelling.

Scenario 4: Settlement Reached: Meta and plaintiffs reach a settlement before a final verdict, likely involving damages plus behavioral commitments (algorithm changes, data restrictions, etc.).

Implication: This avoids the precedent risk of a big loss but forces some operational changes. It would likely involve $2-3 billion in damages plus specific product requirements.

Probability: 30-40%. Often the most likely outcome in complex litigation.

Most legal observers expect scenarios 3 or 4, with scenario 3 becoming increasingly likely as more internal evidence emerges.

The Regulatory Domino Effect

Regardless of the trial's specific outcome, regulatory agencies are watching closely. And they're likely to act regardless of what courts decide.

The EU Angle: Europe has already been more aggressive on tech regulation. The Digital Services Act imposes strict requirements on how platforms can target minors and optimize for engagement. This trial gives European regulators more ammunition to enforce existing rules and propose new ones.

The US Angle: The US has been slower to regulate, but this trial could change that. Congress has been considering various social media regulation bills. A court verdict against Meta on addiction grounds would provide the political cover to pass stricter rules.

The China Angle: It's worth noting that China has already implemented strict regulations on how apps can target minors and monetize engagement. ByteDance (TikTok's parent) operates its Chinese app, Douyin, under far tighter limits on teen engagement than Meta faces in the US. If the US moves toward similar restrictions, it levels the playing field somewhat.

Global Implications: Once one major jurisdiction (US, EU, or others) implements restrictions on engagement optimization for minors, other jurisdictions typically follow. You end up with a race to regulation rather than a race to the bottom.

The likely outcome: within 5 years of a major trial verdict against Meta, we'd expect:

- Mandatory algorithm transparency for any platform with teen users

- Restrictions on personalized advertising to under-18 users

- Engagement time limits or warnings for teen accounts

- Regular third-party audits of algorithmic bias and addiction potential

- Data deletion requirements (teens should be able to delete their data)

These might sound reasonable or restrictive depending on your perspective. But they represent a fundamental shift away from the current model.

Estimated data suggests a high prevalence of addiction-like behaviors among teens using Instagram, with engagement metrics being a primary focus for Meta.

The Economic Impact on Meta

Let's talk about what this trial could cost Meta financially, beyond just damages.

Direct Costs: Legal fees, settlements, and damages could total

Indirect Costs: Operational changes to comply with new regulations could be more expensive. If Meta has to re-architect Instagram's algorithm to reduce engagement optimization, that requires engineering resources. If they have to restrict targeted advertising to teens, that reduces advertising revenue (teens are a valuable demographic for certain advertisers).

Competitive Positioning: This trial creates uncertainty for Meta investors. If the verdict is unclear, or if regulations impose restrictions that don't apply to competitors, Meta could see capital flight. Investors might prefer to put money into platforms with clearer regulatory environments.

Market Cap Impact: Meta's market capitalization is sensitive to expectations about future profit. If this trial creates doubt about their ability to monetize teen users, their stock could take a significant hit. A $100B reduction in market cap isn't unprecedented for major regulatory/legal setbacks.

AI Development Impact: If Meta gets restricted from deploying AI systems that optimize engagement, they lose a competitive advantage in the AI arms race with OpenAI, Google, and others.

That said, Meta also has significant financial flexibility. They could absorb $10B in damages and operational changes without fundamentally collapsing. But the psychological and competitive impact could be severe.

What Should Tech Companies Learn From This?

For any tech company building engagement-based platforms, this trial is a masterclass in what not to do:

Don't Document Your Sins: Seriously. If your internal research shows your product is harming users, don't write it down. Or if you do, use extremely careful language that doesn't directly acknowledge the problem. Meta's use of the phrase "addict's narrative" is the kind of thing that wins trials for plaintiffs.

Don't Target Vulnerable Populations Explicitly: It's one thing to build a product that happens to appeal to everyone. It's another to say "let's specifically target teenagers because their developing brains are more susceptible to psychological manipulation."

Don't Optimize Solely for Engagement: Build multiple optimization targets. User satisfaction. Content diversity. Time-on-task (versus time-on-platform). Mental wellbeing signals. If your only metric is "maximize engagement," you'll inevitably make design decisions that harm users.

Don't Publicly Claim Safety Commitments You Don't Mean: If Meta had just said "we optimize for engagement because that's how we make money, and we understand that means some users might overuse our app," they'd have less liability. Instead, they claim to care about teen wellbeing while doing the opposite. That's deceptive.

Do Get Ahead of Regulation: Companies that proactively implement safety features, transparency, and user controls avoid liability better than companies that fight regulation. The ones that lose big are the ones that look like they're hiding something.
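One way to read the advice to build multiple optimization targets is a ranking score that combines several signals instead of engagement alone. The Python sketch below assumes hypothetical per-item signals, each already normalized to [0, 1], and hypothetical weights; choosing and measuring real signals is the hard part and is not shown.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    engagement: float      # predicted time or interactions, normalized to [0, 1]
    satisfaction: float    # e.g. a survey-style "worth your time?" score, [0, 1]
    novelty: float         # dissimilarity from recently shown items (diversity), [0, 1]
    wellbeing_risk: float  # estimated risk of harm, [0, 1]; higher is worse


# Hypothetical weights: engagement is one input among several, and risk is a penalty.
WEIGHTS = {"engagement": 0.3, "satisfaction": 0.4, "novelty": 0.3, "wellbeing_risk": -0.5}


def score(s: Signals) -> float:
    """Weighted sum of several signals instead of engagement alone."""
    return sum(weight * getattr(s, name) for name, weight in WEIGHTS.items())


def rank(items: dict[str, Signals]) -> list[str]:
    """Return item ids ordered from highest to lowest combined score."""
    return sorted(items, key=lambda item_id: score(items[item_id]), reverse=True)


example_items = {
    "sticky": Signals(engagement=1.0, satisfaction=0.2, novelty=0.1, wellbeing_risk=0.8),
    "balanced": Signals(engagement=0.6, satisfaction=0.8, novelty=0.7, wellbeing_risk=0.1),
}

if __name__ == "__main__":
    print(rank(example_items))  # ['balanced', 'sticky']
```

Ranked on engagement alone, the "sticky" item would come first. Under the combined score (about 0.01 versus 0.66), it drops below the "balanced" item because its low satisfaction and high risk outweigh its engagement.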

Broader Questions This Trial Raises

Beyond Meta specifically, this trial forces society to confront some fundamental questions about technology and attention:

Who Owns Your Attention?: Should companies be allowed to engineer your behavior if you consent to their terms of service? Teenagers technically "consent" to Instagram's terms, but do adolescents have the capacity to truly consent to addictive system design?

What's the Right Regulatory Framework?: Should the government restrict how apps can be designed? Should there be an FTC approval process for features that might be addictive? Should different rules apply to minors versus adults?

Can the Tech Industry Self-Regulate?: Meta claims they're constantly improving safety, but their improvements haven't fundamentally changed the engagement-optimization model. Can we trust tech companies to self-regulate, or do we need external oversight?

What's the Role of Parents?: Some argue parents should monitor their teens' app usage. But Meta actively designs products to avoid parental oversight and targets teens directly. How much responsibility should be on parents, and how much on platforms?

Does Growth Have Limits?: The entire tech industry has been built on a "growth at any cost" model. Maybe this trial signals that growth has limits, and the cost can't include deliberately harming minors.

These aren't purely legal questions. They're philosophical and political questions that society has to answer through trial verdicts, regulations, and cultural change.

Meta's internal research estimated that Instagram contributed to 20% of suicidal ideation among teen girls, with significant impacts on anxiety, depression, and body image issues. (Estimated data)

What Happens Next?

The trial timeline matters. Depending on jurisdiction and complexity, a verdict might come within months or take a couple of years. In the meantime, expect:

More Evidence Releases: As discovery continues, more internal Meta documents will become public. Each batch will likely reveal new things about Meta's knowledge and decision-making.

Additional Lawsuits: If this trial looks likely to produce a major verdict, expect other state attorneys general and plaintiffs' class-action lawyers to file similar cases against Meta and other social platforms.

Regulatory Investigations: The FTC has been investigating Meta for years. A trial verdict could give the agency legal ammunition to take regulatory action without having to win its own case in court.

Congressional Attention: Tech regulation has stalled in Congress, but a massive trial verdict against Meta could break the political logjam. Expect bills specifically restricting how apps can target minors.

Platform Responses: Competitors like TikTok and Snapchat will likely roll out more aggressive safety measures, both out of genuine concern and to distinguish themselves from Meta in the legal and regulatory environment.

Appeals: If Meta loses at trial, it will almost certainly appeal, which could drag the legal process out for years. But the damage to Meta's reputation is immediate.

In terms of timeline, we might see major developments within 6-12 months, but full resolution (including appeals) could take 3-5 years.

The AI and Automation Connection

Here's something to consider: as AI systems become more sophisticated, the ability to engineer engagement and manipulate behavior will only increase. This trial isn't just about Instagram as it exists today. It's about what companies like Meta will be able to do with AI.

AI systems can predict what content will make you most engaged, what psychological buttons to push, and how to deliver notifications that interrupt you at moments you're most susceptible. AI can personalize addictive mechanisms in real-time. The future of engagement engineering could be far more sophisticated than the current algorithmic systems.

If Meta loses this trial, the precedent could directly affect what it can do with AI. If it can't deploy engagement-maximizing algorithms for teens on Instagram, it would likely face similar restrictions on AI-driven engagement systems in its metaverse platforms, AI assistants, and other products.

This has broader implications for the AI industry. As AI becomes more pervasive, the question of how to build AI systems that don't deliberately manipulate human behavior becomes increasingly important.

Speaking of automation and AI-driven productivity, platforms like Runable represent a different approach to AI: using it for automation and productivity rather than engagement optimization. Runable provides AI-powered automation for creating presentations, documents, reports, images, and videos, designed to help teams work more efficiently rather than spend more time on the platform. The difference is fundamental: Runable aims to save you time, not consume it. For teams building workflows and automating repetitive tasks without the engagement-optimization model, Runable, starting at $9/month, offers a different philosophy about what technology should do.

Use Case: Building automated reports and documents without relying on engagement-driven platforms that distract your team


International Perspectives: How Other Countries Are Responding

It's worth noting that Meta's problems aren't unique to the US legal system. Different jurisdictions are taking different approaches:

European Union: The EU's Digital Services Act bans advertising based on profiling of users known to be minors and requires the largest platforms to assess and mitigate systemic risks, including risks created by their recommender systems. Violations can bring fines of up to 6% of global annual revenue, potentially $8+ billion for Meta. In some respects, the EU already imposes the kinds of restrictions the US trial might order.

United Kingdom: The Online Safety Act, passed in 2023, imposes duties of care on platforms, including duties to assess and reduce risks to children. While it isn't specifically about addiction, it creates liability for platforms that fail to manage harmful content and features.

Australia: Australia has passed a law setting a minimum age of 16 for social media accounts, part of a broader push to limit minors' exposure to algorithmic feeds.

Singapore and East Asia: Governments in Singapore and parts of East Asia take a different approach, often supporting platform regulation but framing it around state control rather than consumer protection.

South America: Countries like Brazil have implemented specific restrictions on how apps can collect data from minors.

The net effect: Meta faces a regulatory crackdown in nearly every major market. The US trial is significant, but it's unfolding alongside similar regulatory moves elsewhere. The question isn't whether Meta will face restrictions. It's how severe those restrictions will be.

The Meta Response and Their Gamble

Zuckerberg's decision to testify was itself a gamble. He could have avoided it. But he chose to take the stand, presumably believing that his personal testimony could be more persuasive than company lawyers arguing on his behalf.

The problem is that Zuckerberg's testimony has so far been unconvincing. When confronted with internal documents describing teen addiction, his responses have been defensive without being effective. He's tried to split hairs between "engagement" and "addiction," but those distinctions haven't resonated with observers or (presumably) jurors.

Meta's broader strategy seems to be: admit that engagement is important, but deny that it was deliberately manipulative. Claim that most social media platforms optimize for engagement. Emphasize that Meta has been adding safety features. Position the issue as one of balancing engagement with safety, not of intentionally addicting teens.

It's not a bad strategy in theory. The problem is that the internal documents undermine it. When your own researchers call it "an addict's narrative," and when your own documents show design decisions prioritizing engagement over safety, the "we were just balancing" defense becomes harder to believe.

Meta's long-term strategy might involve losing this trial but surviving it, then pivoting to focus on AI, the metaverse, and other businesses where the addiction liability might not be as clear. But that requires surviving the legal process first.

Lessons for Other Platforms: TikTok, Snapchat, and Beyond

If Meta loses this trial or faces significant regulatory restrictions, other platforms face immediate pressure.

TikTok is particularly vulnerable. The platform is explicitly designed around algorithmic engagement optimization. The "For You" page is TikTok's core product, and it's purpose-built to show you the content most likely to maximize engagement. If Meta is restricted from this model, TikTok would likely face similar restrictions.

Snapchat has always been somewhat less engagement-focused than Instagram or TikTok, which might actually protect it in a tougher regulatory environment. But its Stories feature and streaks are designed for engagement, so it's not immune.

YouTube faces similar issues with algorithmic recommendation. Its algorithm is optimized for watch time, which can encourage compulsive viewing. It will likely face similar litigation if Meta loses.

Discord and other platforms targeting younger users face scrutiny over engagement mechanics.

The broad pattern: any platform that monetizes through engagement-based advertising faces liability if it targets minors and optimizes for engagement without adequate safeguards. This trial could set an influential precedent for a massive category of tech products.

What This Means for Your Digital Life

If you're a user, a parent, an educator, or just someone who cares about digital wellbeing, this trial matters for concrete reasons:

For Teens: If Meta loses, Instagram might change dramatically. The algorithm might be less optimized for engagement. Features designed to keep you on the platform might be modified. In the short term, it might mean less addictive social media. In the long term, platforms will adapt and find new ways to be engaging.

For Parents: A Meta loss would likely result in stronger parental controls and age verification systems. That's good for protecting kids, but it also raises surveillance concerns if you care about privacy.

For the Industry: This trial will determine whether "engagement optimization" remains a viable business model. If Meta loses, expect major changes to how all platforms are designed over the next 5-10 years.

For Democracy: There's a broader issue here about whether corporations can deliberately manipulate behavior, particularly of minors, for profit. The trial is partly a legal case and partly a cultural referendum on that question.

Conclusion: A Reckoning for Tech?

Mark Zuckerberg's testimony in this trial represents a moment of reckoning for the tech industry. For years, tech companies have built business models around engagement optimization, addiction-like mechanics, and behavioral manipulation. They've downplayed concerns as overblown. They've claimed to be working on safety while doing the minimum necessary.

This trial suggests that playbook might be ending. When your own internal documents show you understand the addiction dynamics, and when you're confronted with them in court, the game changes. You can't claim ignorance anymore. You can't claim you're trying when you're clearly prioritizing growth.

The likely outcome is somewhere in the middle. Meta probably loses or settles, facing some combination of damages and operational restrictions. Other platforms face similar litigation and regulatory pressure. Over the next 5-10 years, engagement-optimized social media gets substantially restricted, particularly for minors.

But here's the complicated part: these restrictions won't eliminate social media or engagement-based platforms. They'll just make them more regulated, more transparent, and (arguably) somewhat less effective at manipulating behavior. People will still use Instagram and TikTok. They'll still check them frequently. But the most egregious engagement-optimization tactics are likely to be prohibited.

For Meta, this is a moment where their past actions (documented in internal emails and research) are catching up with them. For the broader tech industry, it's a signal that the era of "move fast and break things" has real consequences. For society, it's a question about whether we want corporations engineering our attention and behavior, particularly for minors.

The trial continues, and a verdict will come. Regardless of the outcome, tech companies are now on notice: if your own documents record the harms, and you prioritize engagement over safety and target teenagers with addictive mechanics anyway, you'll face legal consequences.

That might be the most important outcome of all.

FAQ

What does "an addict's narrative" mean in this trial?

Internal Meta documents described how teenagers talked about their Instagram usage in language that paralleled addiction. Teens reported compulsive checking, anxiety when unable to access the app, and difficulty controlling how much time they spent on it. The phrase "an addict's narrative" appeared in internal communications documenting these teen reports. Plaintiffs' attorneys argue this shows Meta understood it was creating addictive engagement patterns.

Is Instagram actually addictive?

Yes and no, depending on how you define addiction. Instagram doesn't create chemical dependence the way drugs do, but it can foster behavioral addiction: compulsive usage patterns driven by psychological reinforcement. Research suggests that engagement features such as likes, comments, and recommendations activate the brain's reward system, including dopamine signaling, in ways that resemble other habit-forming behaviors. The risk is particularly high for teenagers, whose capacity for impulse control is still developing.

What specific design features did Meta use to maximize engagement?

Plaintiffs and critics point to several features they describe as addiction-prone: variable reward schedules (likes and comments arriving unpredictably, which encourages repeated checking), notifications timed to pull users back in, streak-style mechanics that reward daily logins, algorithmic feeds that surface increasingly provocative content to boost engagement, Stories that disappear after 24 hours (encouraging frequent checking), and personalized recommendations based on past behavior. Their argument is that each feature is designed to maximize time spent on the platform.

Could Meta lose this trial?

Most legal observers assess the probability of a significant Meta loss or settlement at 35-50%. The internal documents showing Meta understood the addiction dynamics are particularly damaging. However, proving intentional misconduct rather than unintended consequences can be legally complex, so Meta has a real chance of a favorable outcome. A settlement before verdict is also a real possibility (30-40% probability).

What would happen if Meta loses big?

If Meta faces massive damages or consent decrees, expect: changes to Instagram's algorithm, restrictions on targeted advertising to minors, required safety features, third-party algorithmic audits, and data collection limitations. Other platforms would face similar litigation and regulatory pressure. The engagement-optimization business model for social media would fundamentally change, at least as applied to teen users.

How does this trial affect Meta's AI and metaverse plans?

Significantly. If Meta is restricted from deploying engagement-optimization AI, it loses a competitive advantage in the AI arms race. Its metaverse plans depend on collecting intimate behavioral data (eye tracking, movement data, attention patterns), and regulatory restrictions stemming from this trial could severely limit metaverse monetization strategies. Ray-Ban Meta smart glasses, which carry cameras and microphones that capture data about the wearer and their surroundings, could face similar regulatory problems.

Could this happen to other social media platforms?

Absolutely. TikTok, Snapchat, YouTube, and other engagement-dependent platforms face similar liability if they target minors and optimize for engagement. Many legal observers expect similar lawsuits against these platforms following the Meta trial. The outcome will likely set a precedent affecting the entire social media industry.

What's the timeline for a verdict?

Depending on the jurisdiction and trial complexity, initial verdicts could come within 6-12 months of major testimony. However, appeals could extend the process to 3-5 years for final resolution. The reputational damage happens immediately regardless of timeline.

Are there international implications?

Yes. The EU's Digital Services Act already bans profiling-based advertising to minors and requires large platforms to assess the risks their recommender systems create. The UK, Australia, and other countries are implementing their own regulations. A US trial verdict would add momentum to regulatory efforts worldwide against engagement-dependent social media that targets minors.

What should I do as a parent or educator?

Be aware of engagement-optimization features and talk to teens about how algorithms work. Monitor usage time, encourage digital literacy about how platforms manipulate attention, and stay informed about regulatory developments. Consider advocating for stronger regulations on how apps target minors. Regardless of the trial's outcome, parental awareness and conversation remain essential.

How does this relate to broader concerns about AI and attention?

This trial raises fundamental questions about AI's role in manipulating human behavior. As AI becomes more sophisticated, its ability to engineer engagement will only increase. The legal precedent from this trial will likely affect how companies can deploy AI for behavioral modification, particularly with minors. This extends beyond social media to any AI system designed to influence behavior.

Key Takeaways

- Meta's internal documents describing teen users as having "an addict's narrative" form the plaintiffs' core evidence

- Zuckerberg's testimony has been unconvincing in explaining why Meta prioritized engagement over teen wellbeing

- A Meta loss or settlement would fundamentally change how social media is designed and regulated

- Other platforms face similar litigation and regulatory pressure following this trial

- Meta's AI and metaverse ambitions are at risk if it loses and faces restrictions on behavioral data collection

- The trial represents a broader reckoning with tech industry engagement-optimization practices

- Regulation of social media and protections for minors are accelerating worldwide

- The outcome could reshape not just Instagram, but the entire digital ecosystem


![Mark Zuckerberg's Testimony on Teen Instagram Addiction [2025]](https://tryrunable.com/blog/mark-zuckerberg-s-testimony-on-teen-instagram-addiction-2025/image-1-1771544333346.jpg)