The OpenAI Mafia: 18 Startups Founded by OpenAI Alumni [2025]

There's a phenomenon happening in Silicon Valley that reminds observers of the legendary PayPal mafia. Except this time, the exodus is from OpenAI, the company that fundamentally shifted how the world thinks about artificial intelligence. And honestly, the scale is staggering.

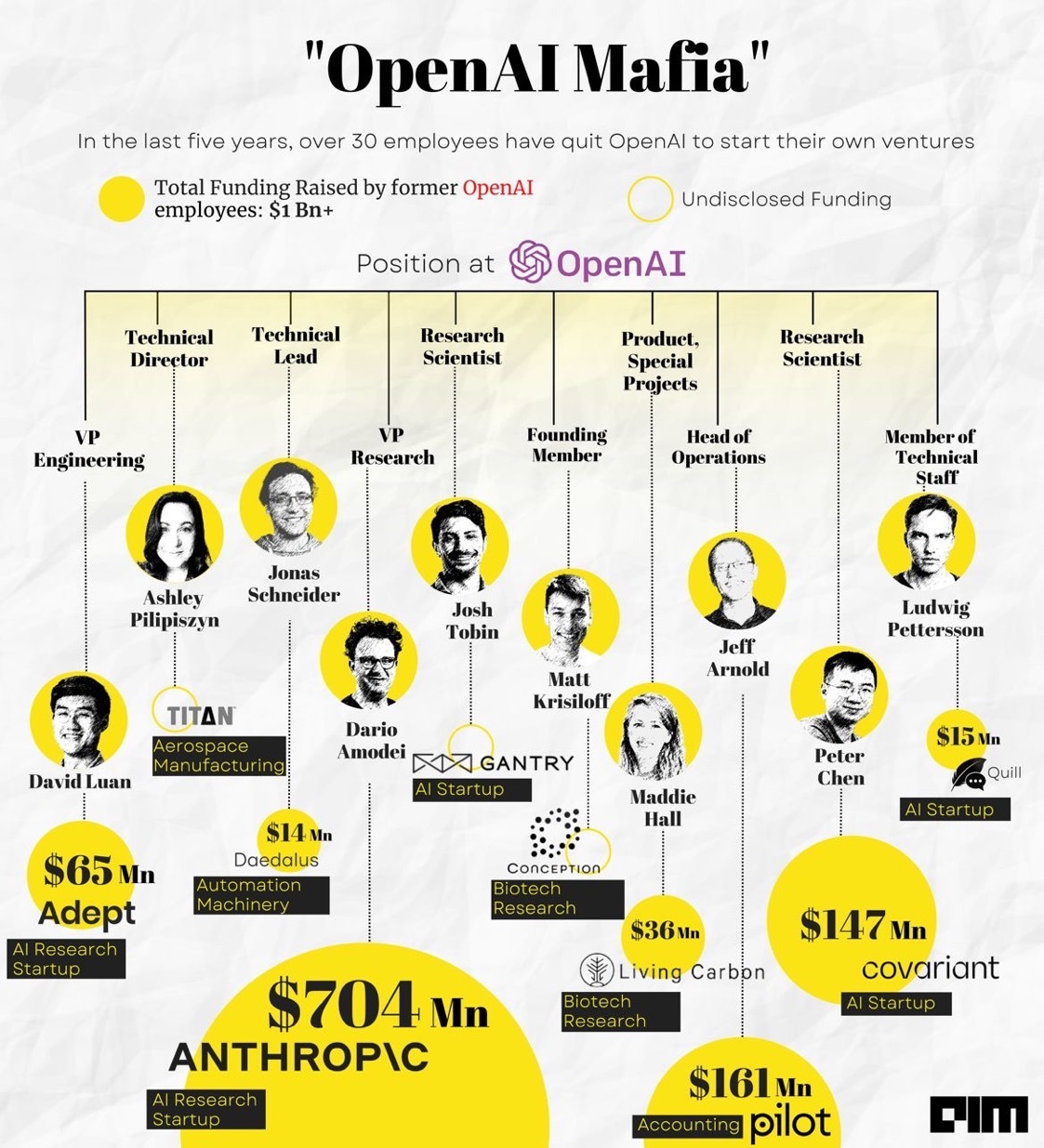

OpenAI's valuation has soared to over $850 billion with ongoing fundraising discussions that suggest even higher figures are possible, as reported by Bloomberg. But as the company has grown, so has its alumni network. Engineers, researchers, product leaders, and executives have left to build their own AI-powered companies. Some became formidable competitors. Others raised billions without shipping a single product. A few were quietly acquired by Big Tech giants trying to rapidly scale their AI capabilities.

We're not talking about a handful of people spinning off side projects. We're talking about a systematic brain drain of some of the most talented AI researchers and engineers on the planet. These aren't just startups either: many have reached multi-billion-dollar valuations, raised unprecedented funding rounds, or been acquired by Amazon, Microsoft, and other tech giants at premium prices.

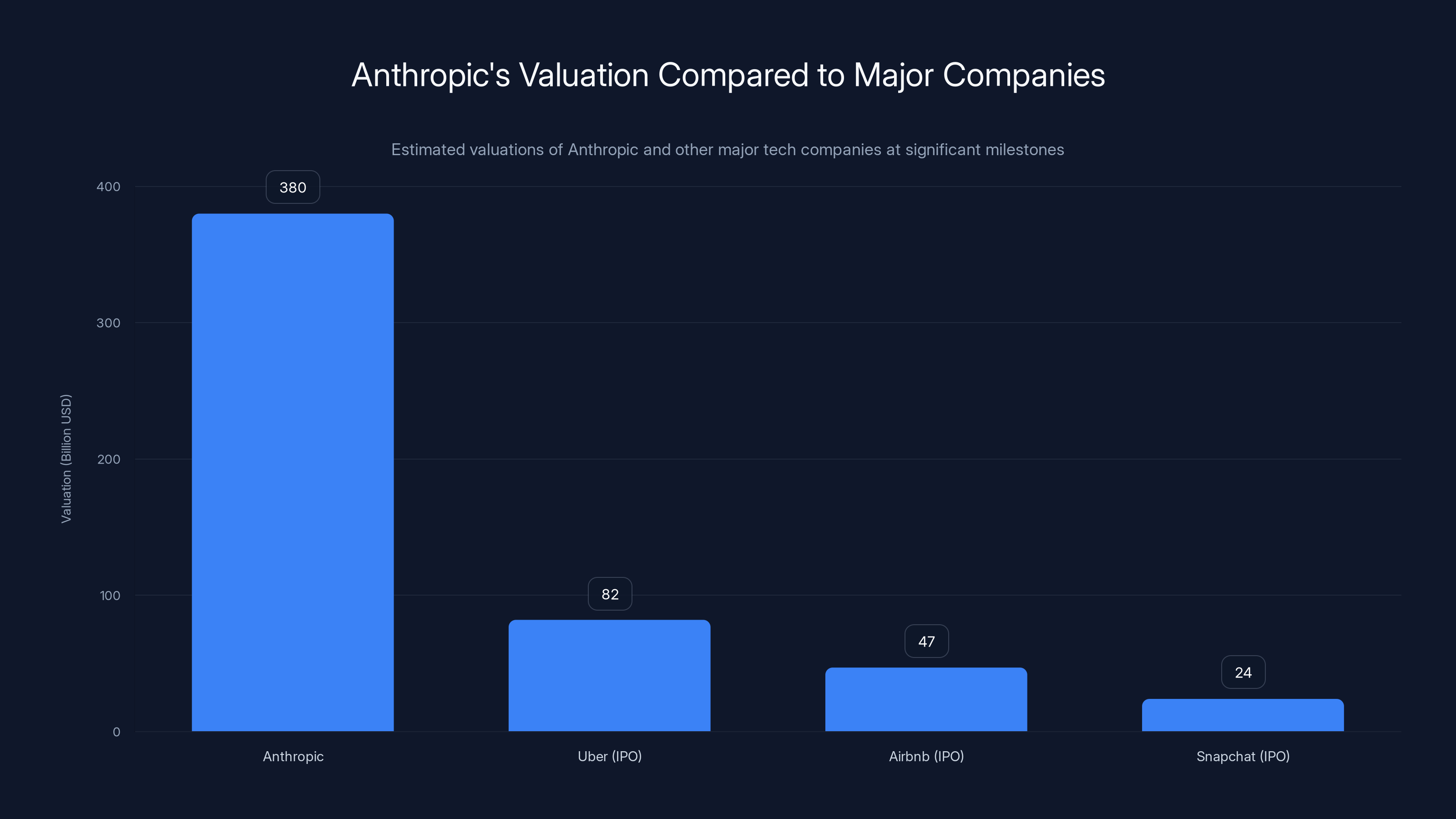

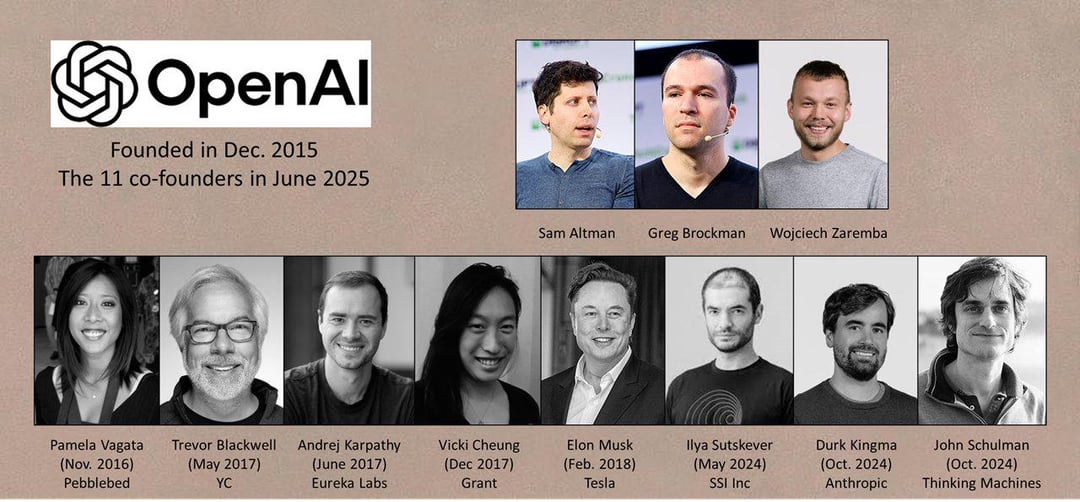

The story started quietly in 2021 when Dario and Daniela Amodei, along with other senior researchers, left OpenAI to found Anthropic. That single departure created shockwaves. If the company's own employees didn't think OpenAI was the safest path forward for AI development, what did that mean? Today, Anthropic is valued at $380 billion and is preparing for an IPO that could come this year.

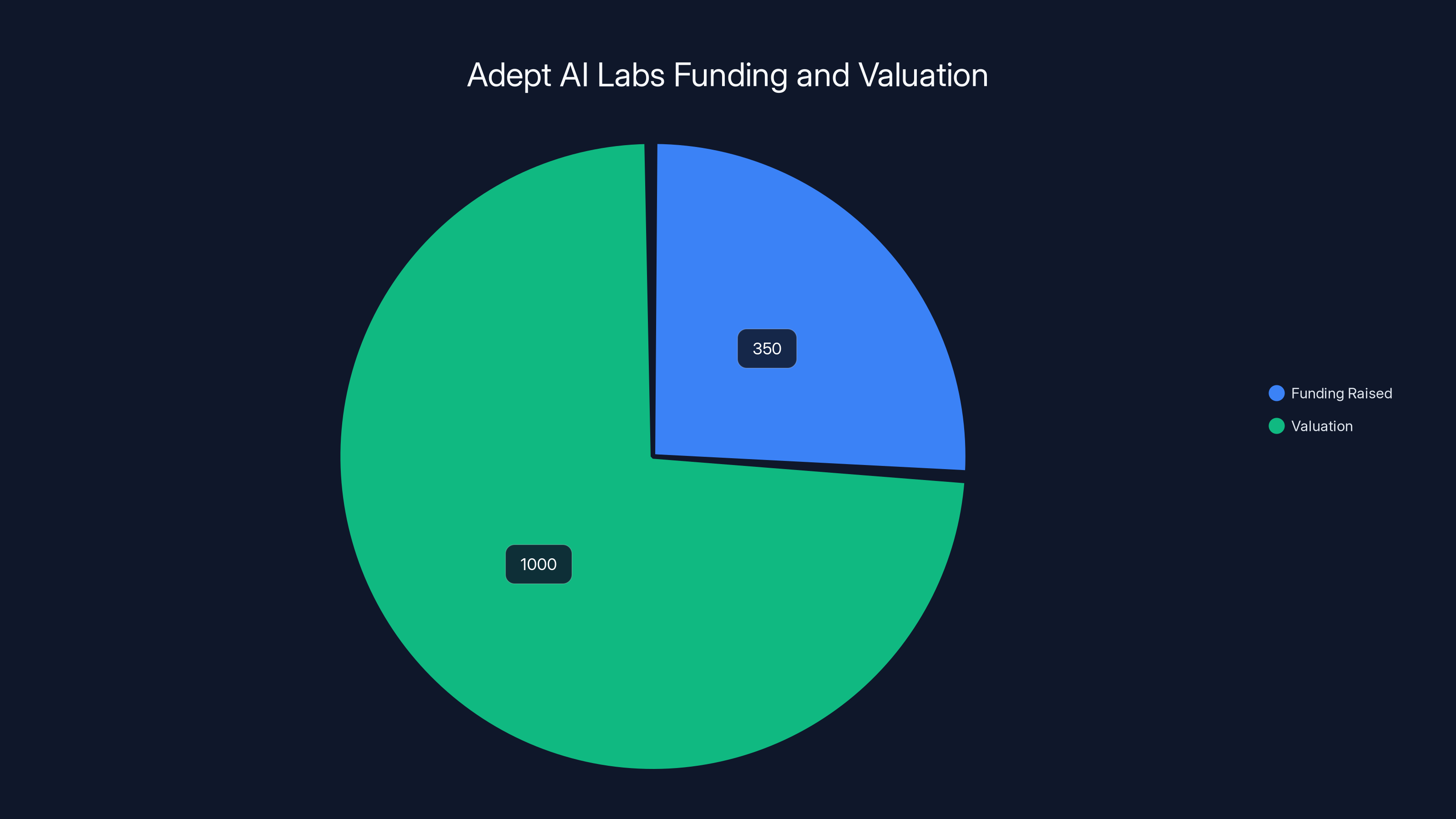

Then came the broader exodus. By 2024 and 2025, the pattern accelerated. Adept AI Labs, founded by OpenAI's engineering VP, raised

What's fascinating isn't just the capital flowing to these startups—it's the pattern. These aren't scrappy founders betting on unproven technology. These are people who worked on ChatGPT, GPT-4, and the systems that changed how millions of people work. They have deep technical knowledge, institutional credibility, and networks of elite investors who move fast. OpenAI became a credentialing factory for AI entrepreneurs.

But there's also a darker subtext worth exploring. Why are so many talented people leaving? Is it disagreement over AI safety? Frustration with organizational decisions? The allure of becoming founders and building their own vision? The opportunity to raise venture capital that values AI expertise more than ever before? The answer is probably some combination of all of these.

This article is a comprehensive breakdown of the 18 most notable startups founded by OpenAI alumni. We'll examine what each company does, how much funding they've raised, where they're headed, and what their emergence tells us about the state of the AI industry. Some of these companies will reshape how businesses operate. Others are already being acquired or pivoting. All of them represent a fascinating case study in how institutional knowledge flows through Silicon Valley and how one company's talent can become an entire ecosystem of competitors and complementary businesses.

Let's dive in.

TL;DR

- Anthropic leads the pack with a $380 billion valuation and $30 billion in Series G funding, positioning it as OpenAI's most serious competitor

- 18+ notable startups have been founded by OpenAI alumni, with many raising hundreds of millions in funding and reaching unicorn status before shipping a product

- Amazon acquired three major startups founded by former OpenAI researchers in quasi-acquisitions designed to avoid antitrust scrutiny while rapidly scaling AI talent

- The exodus accelerated in 2024-2025, with dozens of mid-level engineers and researchers leaving to start companies in robotics, AI agents, safety research, and enterprise tools

- Founder network effects are real: Former OpenAI employees can raise capital faster, attract talent more easily, and achieve higher valuations than typical startups

Anthropic's $380 billion valuation surpasses Uber's IPO valuation, highlighting a shift in market perception towards AI safety and alignment. Estimated data for comparison.

Anthropic: The $380 Billion Rival

Dario Amodei was VP of research at OpenAI. His sister Daniela Amodei was VP of safety and policy. In 2021, they walked away from two of the most prestigious positions in AI to build something better. The question everyone asked: better at what, exactly?

Anthropic's answer was clear from day one: AI safety. While OpenAI was racing to scale larger models and deploy them to consumers as fast as possible, Anthropic wanted to build AI systems that were safer, more interpretable, and aligned with human values. The founders believed OpenAI's approach to scaling was moving faster than the company's ability to understand what these systems actually do.

This wasn't just philosophical disagreement. It was existential concern. Building powerful AI systems without deep understanding of how they work, what their failure modes are, and how to align them with human intent felt reckless to the Amodei siblings and the other researchers who left with them. John Schulman, a co-founder of OpenAI, joined Anthropic in 2024 after the company had already established itself as a legitimate competitor.

Anthropic's trajectory has been remarkable. The company's first major product was Claude, a large language model positioned as a safer, more thoughtful alternative to ChatGPT. It wasn't flashier. It didn't have the same consumer hype. But enterprises started choosing it for serious work: code generation, legal analysis, financial modeling. The product-market fit was strong.

Funding followed fast. Anthropic raised a

The IPO rumors are now serious. The company is allegedly preparing for a public listing that could come sometime in 2025 or early 2026. If Anthropic goes public, it won't just be a win for the founders and early investors. It will validate the entire thesis that there's a market segment willing to pay premium prices for AI systems built with safety as a core principle.

Anthropic's emergence also changed the competitive landscape for OpenAI itself. For the first time, OpenAI had a credible rival founded by its own people. Not a scrappy startup. Not a foreign competitor. Not a Big Tech alternative. A company with better institutional knowledge of the exact same problem space, built by people who had literally written the playbook at OpenAI and decided to try a different approach.

The irony isn't lost on anyone paying attention: OpenAI's own training and credentialing created the team that built its most serious competitor. This is the mafia effect in action.

Claude's Technical Innovations

Anthropic's Claude model introduced several technical innovations that differentiated it from competitors. The company developed a training methodology called Constitutional AI (CAI), which trains models to follow a set of constitutional principles rather than relying solely on human feedback. This approach made Claude more consistent, more predictable, and arguably safer.

The model also demonstrated exceptional performance on complex reasoning tasks. Benchmarks showed Claude outperforming competitors on tasks that required multi-step reasoning, mathematical problem-solving, and code generation. Enterprise customers noticed the difference immediately.

Claude was also among the first major LLMs to demonstrate strong capabilities with very long context windows. The ability to process documents with hundreds of thousands of tokens meant enterprises could feed entire codebases, legal documents, or research papers directly into the model. This feature alone created use cases that weren't possible with competing models.

Market Position and Adoption

By 2025, Anthropic had secured partnerships with major enterprises and cloud providers. The company's products were available through AWS, Google Cloud, and direct API access. Unlike OpenAI, which built its own distribution channel through ChatGPT and API access, Anthropic focused on working with existing enterprise platforms. This strategy meant slower consumer adoption but faster enterprise deployment.

The company also carefully managed the hype cycle. While OpenAI released frequent product updates and announced ambitious capabilities, Anthropic maintained a more measured communications strategy. Claims were backed by benchmarks. Capabilities were demonstrated before marketing campaigns. This conservative approach actually increased credibility among technical buyers.

Adept AI Labs: The $1B+ Enterprise AI Tool Maker

David Luan was not a founder at OpenAI, but he was close. As VP of Engineering, he oversaw the teams building the infrastructure behind the company's early GPT models, the groundwork that GPT-4 and ChatGPT would later build on. He left in 2020, before the ChatGPT explosion. He spent time at Google, learning how Big Tech operates. Then, in 2021, he founded Adept AI Labs with a clear thesis: large language models were powerful, but they wouldn't be useful for enterprise work until someone built software to interface with them properly.

Adept positioned itself as the layer between LLMs and actual business processes. The company built tools that let employees interact with their existing business software using natural language. Imagine telling an AI assistant, "Create a customer invoice for order #12345 and send it to accounts payable," and having it actually navigate the enterprise software, fill in the right fields, and execute the action.

This was much harder than it sounds. Enterprises don't use standardized interfaces. They run decades-old legacy systems, proprietary databases, custom workflows. Getting an AI system to understand and interact with this complexity requires serious engineering. Adept invested in deep learning for user interface understanding, natural language to structured command translation, and enterprise system integration.
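To make "natural language to structured command translation" concrete, here is a deliberately tiny sketch. It is not Adept's method: a production system would use a learned model rather than regular expressions, and the `InvoiceCommand` type and `parse_invoice_request` function are hypothetical names invented for this illustration. It only shows the shape of the problem, which is turning a free-form request into fields that software can act on.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class InvoiceCommand:
    """Hypothetical structured form of an invoice request."""
    action: str
    order_id: str
    recipient: Optional[str]


def parse_invoice_request(text: str) -> Optional[InvoiceCommand]:
    """Toy rule-based parser; returns None when the request is not understood."""
    # Look for an order reference such as "order #12345".
    order = re.search(r"order\s*#(\d+)", text, re.IGNORECASE)
    if order is None or "invoice" not in text.lower():
        return None
    # Optionally capture a trailing "send it to <recipient>" clause.
    recipient = re.search(
        r"send it to ([a-z ]+?)[.,]?$", text.strip(), re.IGNORECASE
    )
    return InvoiceCommand(
        action="create_invoice",
        order_id=order.group(1),
        recipient=recipient.group(1).strip().lower() if recipient else None,
    )


command = parse_invoice_request(
    "Create a customer invoice for order #12345 and send it to accounts payable"
)
print(command)
```

Even this toy version hints at why the real problem is hard: every new phrasing, field, or legacy system would need another hand-written rule, which is the gap learned models are meant to close.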

The company raised

But then something unexpected happened. In mid-2024, Amazon hired David Luan and the other Adept founders. The deal was structured differently from a typical acquisition. Amazon didn't buy the company outright. Instead, the company was left somewhat independent while its founders and key staff joined Amazon. The move allowed Amazon to absorb the talent and approach while avoiding the antitrust concerns that come with obvious Big Tech acquisitions of promising AI startups.

This pattern repeated with other Adept employees, and it would repeat again with Covariant. There's a strategic logic to it: if you're Amazon, you can't be seen blatantly acquiring every AI startup founded by talented people. The regulatory heat would be intense. But you can hire the founders as division leaders and integrate the best ideas into your own products. It's legal, it's efficient, and it's harder for regulators to challenge.

The Enterprise AI Agent Thesis

Adept's original thesis was that large models would become most useful when integrated into business workflows. This meant building AI systems that understand enterprise software interfaces, can navigate complex systems, and execute tasks with minimal human intervention. The company called these AI agents.

Agent-based automation is now considered one of the most important near-term applications of large language models. If a company can deploy an AI agent that handles customer service questions, it eliminates entire tiers of junior support staff. If an agent can process invoice data from emails and input it into accounting systems, it eliminates data entry roles. Multiply that across thousands of business processes, and the economic impact is staggering.

Adept's early bet on this thesis gave it credibility in the enterprise market. The company had working products, not just theoretical frameworks. This mattered when raising capital.

Why Amazon Moved Fast

Amazon's decision to bring in Adept's team isn't mysterious once you understand the context. The company had launched Amazon Bedrock, a service that lets enterprises access various LLMs. But AWS lacked the layer that actually makes those models useful in practice. By bringing in Adept's team, Amazon could accelerate AWS's agent capabilities and offer enterprises end-to-end AI automation.

For Adept's founders, joining Amazon meant access to massive infrastructure, enterprise relationships, and capital. For Amazon, it meant acquiring the team that understood how to make agents work in enterprise contexts.

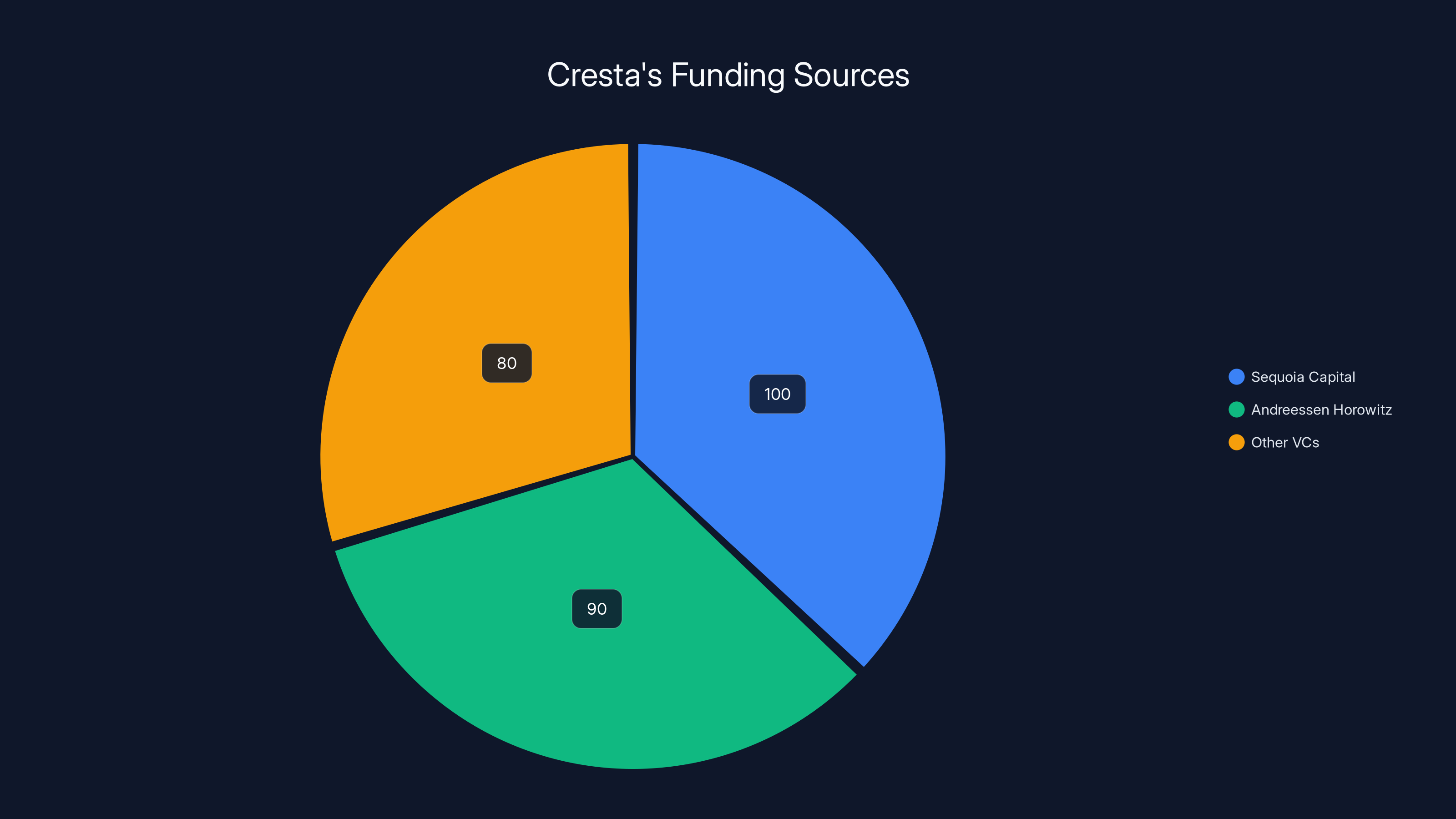

Cresta raised over $270 million, with significant contributions from Sequoia Capital and Andreessen Horowitz. Estimated data shows a balanced distribution among key investors.

Cresta: The $270M AI Contact Center Platform

Tim Shi joined OpenAI as an early member of the team. His role was focused on a specific problem: how do you build systems that are aligned with human intent? How do you ensure that as these models get more powerful, they remain beneficial? This was early days, 2017, before ChatGPT, before the broader AI boom. The problem was theoretical. Then it became urgent.

After a year at OpenAI, Shi left to found Cresta, based in San Francisco. The company's focus was specific: help customer service representatives be better at their jobs using AI. This sounds narrower than Adept's vision, but it was actually brilliant market positioning.

Customer service is one of the few business functions where almost every interaction involves language. Reps handle hundreds of customer questions per day. Many of these questions are variations of the same themes. What if an AI system could suggest the right response? What if it could predict which customers were most likely to churn and alert reps to focus on retention? What if it could transcribe calls, summarize them, and automatically flag cases that need escalation?

Cresta built exactly this. The platform sits alongside customer service software (Salesforce, Zendesk, etc.) and uses LLMs to improve rep performance. Early data showed dramatic results: faster resolution times, higher customer satisfaction, lower churn. Enterprises like DoorDash and Notion began using the platform.

The company raised over $270 million from elite VCs including Sequoia Capital, Andreessen Horowitz, and others. This is a significant amount of capital for a vertical SaaS company. But investors understood the thesis: customer service is expensive, fragmented, and full of inefficiency. If Cresta could automate even portions of it, the market was worth billions.

Cresta's success validated a broader thesis: the most useful applications of LLMs in the near term aren't consumer-facing. They're enterprise tools that augment human expertise. Sales reps, customer service reps, lawyers, accountants, engineers—these professionals have institutional knowledge and expertise. AI systems that work alongside them and make them more productive are incredibly valuable.

The Augmentation Model vs. Automation

Cresta positioned itself around augmentation rather than automation. Instead of trying to replace customer service reps entirely, the platform made them better. This was more realistic than some of the harder automation plays. It also addressed the biggest concern enterprises had about AI: job displacement.

When you frame AI as a tool that makes workers more productive, more companies will adopt it faster. There's less political resistance. Employees don't feel threatened. The economics work better too, because you're adding value on top of existing human labor rather than trying to replace it completely.

Cresta's growth trajectory reflected this positioning. Enterprises trust tools that augment their teams more than tools that try to eliminate them.

Integration with Existing Enterprise Systems

Cresta's success came from deep integration with existing customer service infrastructure. The company didn't try to replace Salesforce or Zendesk. Instead, it plugged into these systems and added AI capabilities on top. This meant easier sales, faster deployment, and lower customer acquisition costs.

This design principle (adding value on top of existing systems rather than replacing them) became a pattern among successful OpenAI-founded companies. It reduced customer friction and meant enterprises didn't have to rip and replace their existing workflows.

Applied Compute: The Quiet $100M Valuation

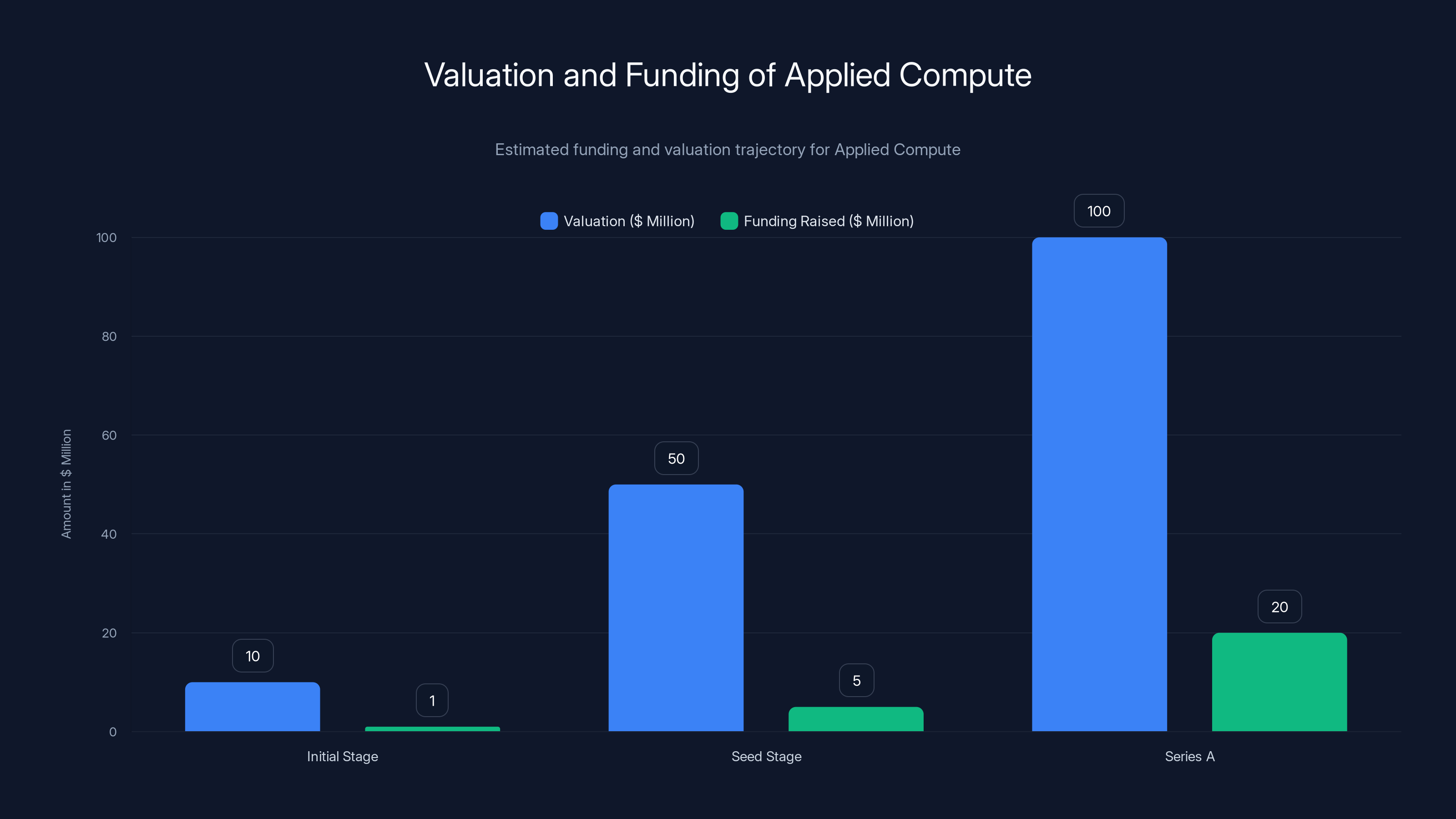

Applied Compute is where the OpenAI mafia story gets interesting in a more understated way. Three ex-OpenAI staffers—Rhythm Garg, Linden Li, and Yash Patil—all worked as technical staff at OpenAI for over a year before leaving in May 2024. They spent about 10 months building the company, and then Benchmark led a funding round that valued the company at $100 million.

Think about that timeline: These weren't serial entrepreneurs. They hadn't launched a publicly available product. They had credibility from OpenAI, a compelling vision for enterprise AI agents, and apparently enough of a working product or strong customer interest that Benchmark was willing to invest significantly.

Applied Compute's focus is helping enterprises train and deploy custom AI agents. This is increasingly important as off-the-shelf models hit the ceiling of usefulness. Companies need agents trained on their proprietary data, following their specific business logic, and integrated into their workflows. Applied Compute builds the infrastructure to make this possible at enterprise scale.

The company raised

The Infrastructure Layer Play

Applied Compute is part of a broader trend: building infrastructure that enables other companies to deploy AI more easily. Companies like Applied Compute, Weights & Biases, Hugging Face, and others are basically offering the tools that enterprises need to make custom AI work.

This is inherently less flashy than building consumer products or direct-to-enterprise applications. But it's also more defensible and more profitable. If you own the infrastructure layer that every AI company depends on, you have significant leverage.

Investors are increasingly betting that multiple AI infrastructure companies will succeed, each serving slightly different needs. Applied Compute's focus on custom agents puts it in an interesting position. If enterprise AI agents become as common as web applications, having the infrastructure to build them at scale becomes critical.

Covariant: The Amazon Acquisition Play

Covariant's founding team reads like a robotics hall of fame. Pieter Abbeel, Peter Chen, and Rocky Duan all worked at OpenAI in 2016 and 2017 as research scientists. At that time, OpenAI was investing heavily in robotics, believing that robots powered by advanced AI would be a major part of the company's future. These three researchers were at the center of that effort.

They left OpenAI to found Covariant, a Berkeley-based startup focused on building foundation AI models for robots. If foundation models like GPT-4 transform how humans interact with computers, what would foundation models for robots look like? How would they enable robots to be more adaptable, more capable, and easier to program?

Covariant attracted serious investment and partnership. The company worked with robotics companies and manufacturers to train models on robotics data, build simulations, and create robots that could learn and adapt to new tasks faster than traditional robotics approaches.

Then in 2024, Amazon hired all three of the Covariant founders and about a quarter of Covariant's staff. This move was particularly interesting because Amazon is a company obsessed with robotics and warehouse automation. The company has invested heavily in robotics startups, acquired Kiva Systems (now Amazon Robotics), and embedded robotics into its supply chain.

By bringing in the Covariant team, Amazon could accelerate the development of AI-powered robotics. The company's strategy here was similar to the Adept move: acquire the talent, integrate the approach, build the capabilities internally.

From a regulatory perspective, this is clever. Amazon isn't explicitly acquiring these companies. The companies remain separate entities (at least on paper). But the founders are gone, and the best talent has moved into Amazon divisions. For regulators trying to determine whether Amazon is anticompetitively acquiring AI talent, this is harder to target than explicit acquisitions.

Robotics as the Next AI Frontier

Covariant represents an interesting bet on where AI goes next. Most of the focus on large language models has been on digital work: writing, coding, analysis. But the physical world is also full of tasks that could benefit from better AI. Warehouse picking, manufacturing, food preparation, construction, agriculture.

Traditional robotics approaches rely on detailed programming and careful environmental control. Modern robotics powered by foundation models might be more adaptable. A robot trained on diverse data might be able to pick novel objects, adapt to slight changes in environment, or learn new tasks with relatively little retraining.

If Covariant (now absorbed into Amazon) can crack this, the implications are massive. Automation of physical work at scale would reshape labor markets, economics, and supply chains.

Jonas Schneider and Daedalus: The Robotics Factory Play

Jonas Schneider led OpenAI's software engineering for robotics team. Robotics was an area where OpenAI invested heavily, and Schneider was deeply involved in building the systems that would enable robots to learn and adapt. In 2019, he left to co-found Daedalus.

Daedalus has an unusual focus: building advanced factories for precision components. This sounds like hardware, which is a different business from the software-focused companies we've discussed. But the connection to AI is real: Daedalus uses AI to optimize manufacturing processes, improve precision, and reduce waste.

Manufacturing is one of the sectors most resistant to change and least digitized. Many factories still rely on processes developed decades ago. Introducing AI into manufacturing could improve efficiency, quality, and consistency. Daedalus is betting that companies will pay for this improvement.

The company raised a $21 million Series A from Khosla Ventures and others. This shows that even in the AI boom, hardware-focused companies founded by AI talent can attract capital. Investors believed that Schneider's experience at OpenAI translated into an ability to build better manufacturing systems.

The Hardware-Software Convergence

Daedalus represents a trend where AI talent is increasingly being applied to physical problems. We've seen this with robotics (Covariant), with climate solutions (Living Carbon), and with manufacturing (Daedalus). The pattern is: take deep AI expertise, apply it to industries that haven't been transformed by software, find asymmetric opportunities.

These industries often have higher barriers to entry (regulatory, capital, expertise) but also less direct competition from other tech companies. Once you succeed, you have significant moats.

Andrej Karpathy and Eureka Labs: The Education Thesis

Andrej Karpathy is perhaps the most famous AI researcher to leave OpenAI. He was a founding member and research scientist at OpenAI, focusing on computer vision. But he's probably better known for his YouTube series on AI concepts. His ability to explain complex topics clearly made him one of the most trusted voices in AI education.

In 2017, Karpathy left OpenAI to join Tesla and lead its Autopilot program. He spent five years at Tesla working on autonomous driving, a problem that requires computer vision, machine learning, and robotics. After returning to OpenAI for a second stint in 2023, he left in 2024 to found Eureka Labs.

Eureka Labs is building AI teaching assistants. The premise is simple: personalized education at scale. An AI system that understands how each student learns, can explain concepts in different ways, can identify knowledge gaps, and can provide customized feedback. This could democratize education and make high-quality tutoring available to everyone.

Karpathy's credibility was instrumental. He announced Eureka Labs and described his vision, and investors immediately understood the thesis. Here was someone with deep expertise in AI, years of experience at one of the most demanding problem spaces (autonomous vehicles), and a proven ability to communicate complex ideas. If anyone could build effective AI teaching assistants, it would be him.

Eureka Labs is still in early stages, but it represents a shift in where top AI talent is focusing. Less emphasis on making AI models bigger and faster, more emphasis on useful applications that affect people's lives.

The Education AI Opportunity

Education is one of the largest sectors in the world economy, and it's been surprisingly resistant to technological transformation. Most classrooms look similar to how they looked 50 years ago. Student-to-teacher ratios remain high. Personalization is limited by practical constraints.

AI tutoring systems could change this. But building effective AI tutoring requires more than just good language models. You need pedagogical expertise, an understanding of how students learn, subject matter knowledge, and the ability to measure understanding and adapt to it.

Karpathy's Eureka Labs is attempting to solve this problem. If successful, it would be one of the highest-impact AI applications possible: improving education for millions of people.

Margaret Jennings and Kindo: The Enterprise Chatbot Approach

Margaret Jennings worked at OpenAI in 2022 and 2023, a period when the company was ramping up to build and launch ChatGPT. She then co-founded Kindo, positioning it as an AI chatbot for enterprises.

Enterprise chatbots aren't new. But chatbots built on top of modern large language models are dramatically better. They can understand context, handle nuance, and provide more natural conversations. Kindo positioned itself as a chatbot specifically designed for enterprise use cases: customer service, internal support, knowledge management.

The company raised over $27 million.

Interestingly, Jennings left Kindo in 2024 to head product and research at French AI startup Mistral. This is a pattern we'll see repeatedly: founders or early leaders leave their own companies to join other promising ventures. In a fast-moving field like AI, the opportunity cost of staying in one role can be high if better opportunities emerge.

The Enterprise Chatbot Market Fragmentation

Kindo represents one of dozens of startups building enterprise chatbots. Companies like Intercom, Zendesk, and Gorgias all have chatbot offerings. Major software companies like Salesforce and Microsoft have built AI assistants into their platforms. The market is fragmented and highly competitive.

For a startup like Kindo to win, it needs to be better at a specific use case or have faster time to value than existing solutions. Jennings' OpenAI background was valuable for credibility and for understanding how to leverage modern LLMs effectively. But it wasn't enough to guarantee success, which is why she eventually moved on to other opportunities.

That said, the fact that Kindo raised $27+ million shows there's still substantial capital available for teams executing well in this space.

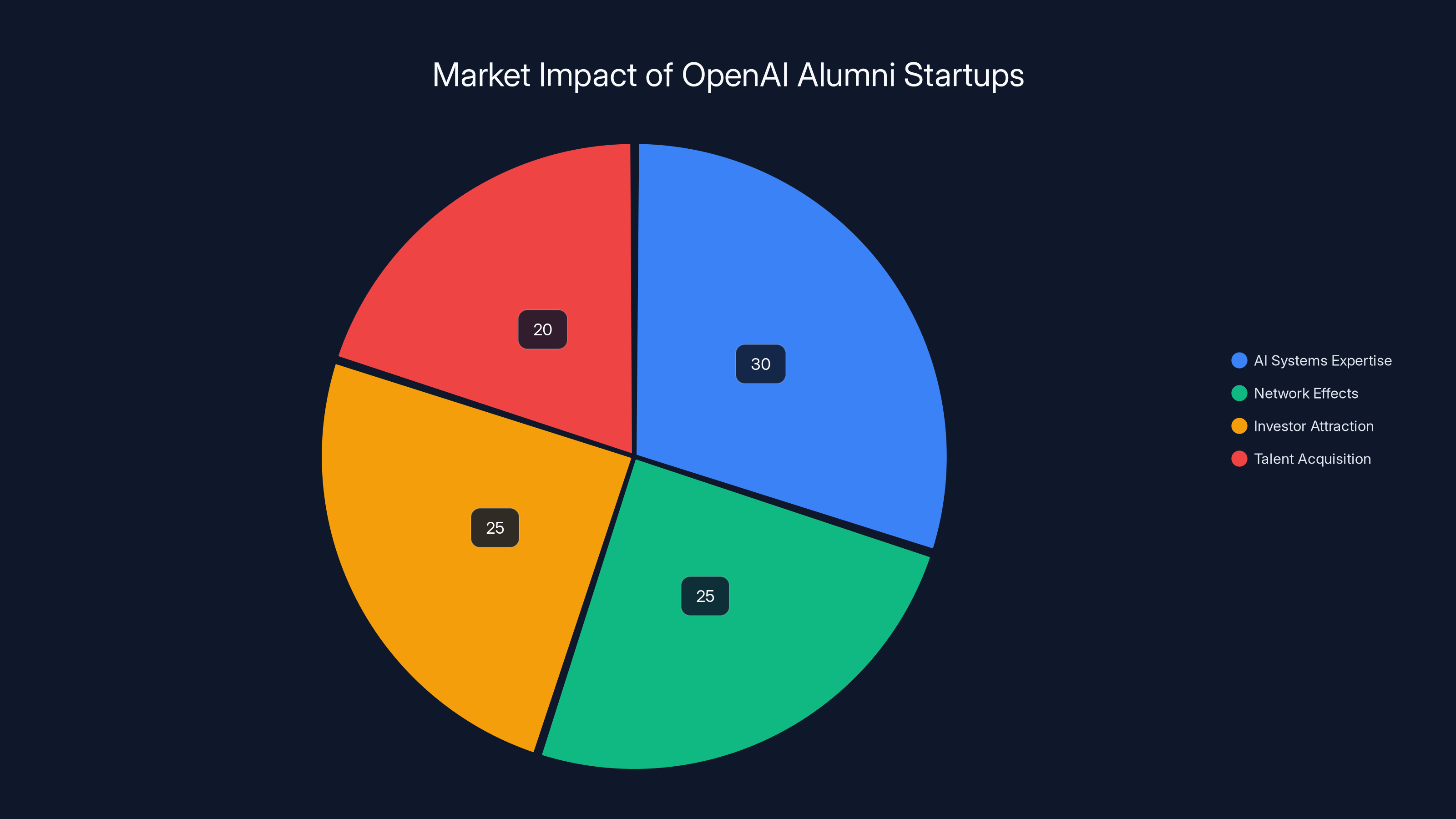

OpenAI alumni startups leverage AI expertise (30%), network effects (25%), investor attraction (25%), and talent acquisition (20%) to gain a competitive edge. Estimated data.

Maddie Hall and Living Carbon: The Climate AI Pivot

Maddie Hall worked on special projects at OpenAI before leaving in 2019 to co-found Living Carbon. This is an interesting case because Living Carbon's focus is climate change and engineered plants, not traditional AI applications.

Hall's thesis was that engineered plants—plants that have been modified through genetic engineering and AI-assisted design to be more efficient at carbon capture—could be a tool in fighting climate change. Instead of relying on traditional afforestation, which takes decades and faces land availability challenges, why not engineer plants that are dramatically more efficient at removing carbon from the atmosphere?

Living Carbon raised a

What's the connection to AI? AI was used to design the plants, optimize their characteristics, and navigate the complex space of genetic modifications. OpenAI's work on generative models and optimization problems had applications here.

Hall's move from AI at OpenAI to climate-focused biotech at Living Carbon represents a trend: talented people are increasingly applying AI expertise to problems outside traditional tech. The skills transfer because the underlying problems—optimization, pattern recognition, complex systems—are domain-agnostic.

AI and Synthetic Biology Convergence

Living Carbon is part of a broader trend of AI being applied to biology and genetics. Companies are using AI to design proteins, predict how DNA sequences will behave, optimize enzyme function, and design synthetic organisms. This convergence between AI and biology is likely to be one of the most consequential developments of the next decade.

Hall's background at OpenAI gave her credibility in biology circles. That credibility helped her raise capital and attract partners. It's a testament to how the skills and network built at OpenAI transfer across industries.

Liam Fedus and Periodic Labs: The Materials Science Play

Liam Fedus was OpenAI's VP of post-training research, responsible for the techniques that made models like GPT-4 more capable and reliable. Post-training involves taking a base model and further refining it using techniques like reinforcement learning from human feedback (RLHF). This is technically complex work that requires deep understanding of optimization, reinforcement learning, and how to improve model behavior.

Fedus left to co-found Periodic Labs. The company's focus is using AI to accelerate materials discovery. New materials are foundational to solving many problems: better batteries for electric vehicles, stronger metals for aerospace, more efficient solar cells, better semiconductors. But discovering new materials traditionally involves experimental trial and error—slow, expensive, and often inefficient.

With AI, you can model material properties computationally, predict which combinations might be promising, and dramatically reduce the experimental work needed. Periodic Labs builds the AI systems and infrastructure to do this at scale.
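The screening idea described above can be sketched in a few lines. This is a minimal illustration under loud assumptions, not Periodic Labs' pipeline: `toy_predictor` stands in for a learned property model, its weights are made up, and the candidate compositions are arbitrary examples. The point is only the workflow, which is to score many candidates cheaply in software and send a short list to the lab.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical representation: element symbol -> fraction of the composition.
Composition = Dict[str, float]


def toy_predictor(comp: Composition) -> float:
    """Stand-in for a learned property model; the weights are invented."""
    weights = {"Li": 0.9, "Co": 0.4, "Ni": 0.7, "Mn": 0.5}
    return sum(weights.get(el, 0.0) * frac for el, frac in comp.items())


def shortlist(
    candidates: List[Composition],
    predict: Callable[[Composition], float],
    k: int,
) -> List[Tuple[float, Composition]]:
    """Score every candidate and keep the k highest-scoring ones."""
    scored = [(predict(c), c) for c in candidates]
    # Sort by predicted score only, best first.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


candidates = [
    {"Li": 0.5, "Co": 0.5},
    {"Li": 0.5, "Ni": 0.5},
    {"Li": 0.5, "Mn": 0.5},
    {"Co": 0.5, "Mn": 0.5},
]

# Only the top two candidates would go on to physical experiments.
top = shortlist(candidates, toy_predictor, k=2)
for score, comp in top:
    print(round(score, 2), comp)
```

With four candidates the saving is trivial, but the same loop over millions of computed candidates is where the reduction in experimental work would come from.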

This is another example of AI talent being applied to physical science problems. Fedus' expertise in post-training and model optimization is relevant because materials discovery involves optimizing across multiple complex variables. The techniques he developed at OpenAI have direct applications here.

The Materials AI Frontier

Materials science has been relatively AI-resistant because the problem space is complex and there's not as much training data available as in domains like vision or language. But companies like Periodic Labs are changing this by building synthetic data, using physics-informed neural networks, and applying other techniques to make AI useful for materials problems.

If this works at scale, the implications are enormous. Every major company in aerospace, automotive, energy, and electronics would be interested in AI-accelerated materials discovery. The market is large, the problems are important, and the barriers to entry are significant.

Tim Shi Extended: The Cresta Success Story Deep Dive

While we covered Cresta earlier, Tim Shi's journey deserves more attention because it illustrates exactly how OpenAI alumni are succeeding as founders. Shi's time at OpenAI was brief but formative. He focused on AI alignment and safety—fundamental questions about how to ensure that as these systems get more powerful, they remain beneficial.

These were problems being worked on by the smartest people in AI. Shi absorbed that expertise, that mode of thinking, that network. When he left to found Cresta, he applied this rigor to the customer service domain. How do you build AI systems that improve customer experience? How do you ensure the AI doesn't cause problems? How do you measure success?

Cresta succeeded because Shi combined OpenAI expertise with a deep understanding of customer service problems. He spent time understanding enterprise workflows, customer pain points, existing software. This combination of deep AI knowledge and domain expertise is rare and valuable.

The company's growth to $270 million in raised capital, along with a valuation that is presumably in the billions though not publicly disclosed, reflects this combination working in practice.

The Venture Capital Pattern

Cresta's fundraising trajectory also illustrates how venture capital works in AI. Early investors included seed-stage VCs and angels. As the company gained traction, Sequoia Capital and Andreessen Horowitz (two of the most influential venture firms in the world) joined. Once you get funding from these firms, subsequent rounds become easier. Partners at these firms have extensive networks, credibility, and ability to influence other large investors.

OpenAI alumni benefit from a halo effect here. If Sequoia invested in your company, other venture firms pay attention. If your founding team includes people from OpenAI, that's a credible signal that you're working on something important.

Applied Compute quickly reached a

The Broader Pattern: What the OpenAI Mafia Tells Us

Looking across all these companies—Anthropic, Adept, Cresta, Applied Compute, Covariant, Daedalus, Eureka Labs, Kindo, Living Carbon, Periodic Labs, and others—several patterns emerge.

First, there's a credibility shortcut. Founding teams that include OpenAI alumni can raise capital faster, attract talent more easily, and achieve higher valuations than comparable startups. This is partly because they're talented (which they are), but partly because being from OpenAI signals that you've worked on important problems at scale.

Second, these founders are applying OpenAI expertise across a remarkably broad range of industries. Yes, there are AI-native companies building models or AI tools. But there are also founders applying AI to manufacturing, robotics, climate, materials, education, customer service. The expertise transfers broadly.

Third, many of these companies are infrastructure plays or enable-your-own-AI plays rather than direct LLM applications. Adept enables enterprise automation. Applied Compute enables custom agent deployment. Periodic Labs enables materials discovery. These companies are building the layer between raw model capabilities and useful applications.

Fourth, Big Tech is acquisitive. Amazon acquiring Adept and Covariant teams wasn't random. It was strategic. These companies represent threats to Amazon's competitive position and opportunities to accelerate internal projects. By quietly acquiring the teams (if not the companies), Amazon improves its competitive position while avoiding the antitrust heat that comes with obvious acquisitions of promising startups.

Fifth, the talent distribution at OpenAI was broad. You had executives (Dario and Daniela Amodei), researchers (Pieter Abbeel, Peter Chen, Rocky Duan), engineers (David Luan), product leaders (Peter Deng), and specialists in areas like robotics, post-training, and alignment. Each person left with different expertise and different visions of how to apply it.

Finally, the exit patterns are varied. Some founders are raising money and building toward major exits (Anthropic heading to IPO). Some are being acquired by Big Tech (Covariant). Some are being called to join other promising ventures (Margaret Jennings going to Mistral). The field is moving fast, and opportunities are constantly emerging.

Why OpenAI Became the Credentialing Factory

The question worth asking is: Why OpenAI specifically? The company didn't invent the concept of large language models. It didn't invent transformers. It didn't even invent the training techniques that made GPT-3 successful. So why have more people left OpenAI to start companies than have left Google, Meta, or other AI research organizations?

Part of the answer is timing. OpenAI launched ChatGPT in November 2022. The response was unprecedented. The model was deployed to a broad audience, and people immediately understood its potential. Within months, every major company was strategizing around large language models. Within a year, generative AI was the dominant narrative in tech investing.

This created a unique moment where OpenAI employees suddenly had tremendous credibility and leverage. They'd worked on systems that visibly, dramatically changed how people worked. They understood large-scale model training, deployment, and fine-tuning at a level most people didn't. They were suddenly in demand.

Part of the answer is also network effects. Once the first few people left OpenAI to start companies, it became normalized. Investors started specifically looking for OpenAI alumni. Talented people at OpenAI saw their colleagues successfully raising capital and achieving outsized returns. This created incentives to leave.

And part of the answer is genuine disagreement about direction. OpenAI made specific choices about how to approach AI safety, how fast to deploy, how to balance safety with capability. Not everyone agreed. Dario Amodei's decision to leave and start Anthropic wasn't arbitrary. It reflected a fundamental disagreement about how to approach AI development safely. Other people left for their own reasons: frustration with organizational structure, disagreement about product direction, or simply wanting to build something they had complete control over.

The Ecosystem Effect: How OpenAI Alumni Network with Each Other

One of the most interesting dynamics is how OpenAI alumni network with each other. Aliisa Rosenthal, OpenAI's first sales leader, went into venture capital specifically to invest in former colleagues and leverage her network. Peter Deng, who was head of consumer products at OpenAI, became a general partner at Felicis and started looking at OpenAI-founded startups as deal flow.

This creates a self-reinforcing network. OpenAI alumni are more likely to back other OpenAI alumni. They understand the credibility, know the people, and believe in their ability to execute. This means capital flows easily within the OpenAI alumni network.

We're seeing similar dynamics with other tech company alumni networks: the PayPal mafia, the Paylocity group, the various Facebook alumni who founded companies. But the OpenAI alumni network is unique because it's forming during an AI boom where every investor wants exposure to AI expertise. Being part of the OpenAI alumni network is a concrete advantage.

This dynamic will likely persist. As successful OpenAI-founded companies mature, they'll hire other OpenAI alumni. Those people will eventually leave to start other companies. A broader ecosystem is forming, and being part of it creates compounding advantages.

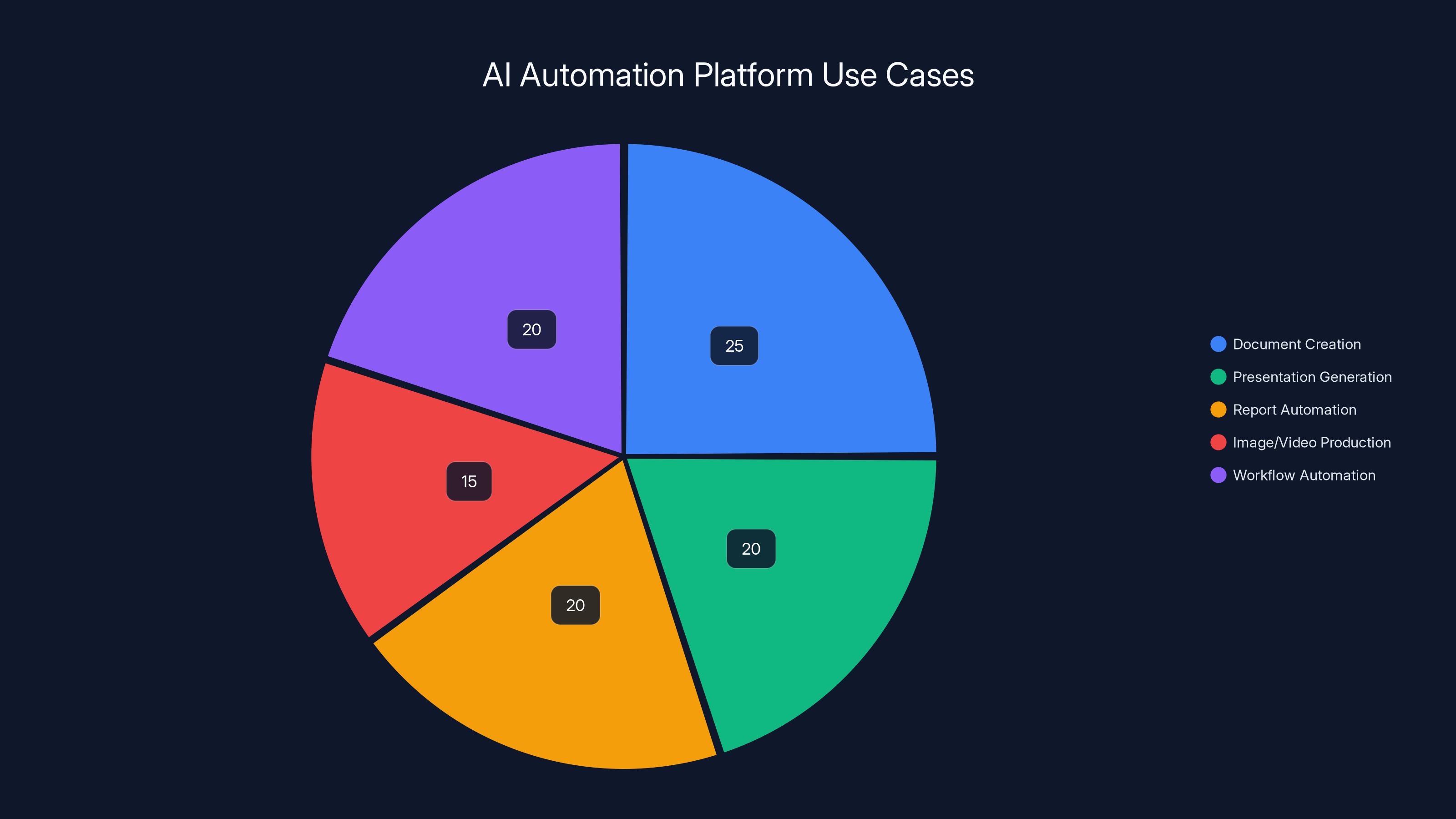

Runable and similar platforms are primarily used for document creation and workflow automation, with significant use in presentation and report generation. (Estimated data)

The International Dimension: OpenAI Alumni Going Global

While most of the prominent companies founded by OpenAI alumni are based in California (San Francisco and Berkeley in particular), there are international examples worth noting. Margaret Jennings leaving Kindo to join Mistral, a French AI company, illustrates how OpenAI expertise is becoming globally distributed.

Mistral is a prime example of this internationalization. The company was founded by former Meta and Google DeepMind researchers and has attracted talent from around the world. As AI becomes more globally competitive, the advantage of being associated with OpenAI is available to people regardless of geography. A talented researcher or engineer in France with OpenAI experience has just as much credibility as someone in Silicon Valley.

This might eventually diffuse the "mafia" advantage. If every major AI hub (US, Europe, Asia) has clusters of talented researchers with major AI company experience, then no single company's alumni network is disproportionately valuable. But we're not there yet. OpenAI remains uniquely prestigious, and that prestige still primarily benefits people in the US and Silicon Valley.

The IPO Question: Timing and Valuation Dynamics

Several companies founded by OpenAI alumni are now serious IPO candidates. Anthropic, valued at $380 billion, is preparing for a public listing. This raises interesting questions about valuation, market expectations, and whether AI companies can justify their valuations in public markets.

The precedent from Nvidia (which achieved a

For investors who got in early, these IPOs represent life-changing returns. For OpenAI itself, these IPOs are evidence that the company's training and networks have created value. And for future founders and researchers at major AI companies, they're proof that leaving to start your own company can be enormously lucrative.

Lessons for Ambitious Technologists and Entrepreneurs

If you're a talented engineer or researcher trying to figure out your career, the OpenAI alumni network offers some lessons.

First, institutional knowledge has concrete value. When David Luan left OpenAI, he didn't just leave with credentials. He left with deep understanding of how to build systems at scale, how to train models, how to think about engineering in an AI context. This knowledge directly enabled Adept's success.

Second, networks matter more in concentrated fields. In AI, there are relatively few people doing truly important work. Knowing those people, having worked with them, having credibility within that community—this is valuable. When you join a company like OpenAI, you're not just getting a job. You're joining a network of the most capable people in the field.

Third, staying too long can be costly. The OpenAI alumni who have succeeded are generally people who left at the right time. Not too early (you need real experience and credentials), but not too late (by then the upside has been realized). Getting this timing right is hard but crucial.

Fourth, strong founding teams are the highest priority. Almost all of the successful OpenAI-founded companies have strong co-founding teams. Dario and Daniela Amodei for Anthropic. Three founders for Covariant. Multiple founders for Adept. This isn't random. Venture investors prioritize founding team strength above almost everything else, and for good reason.

Fifth, applying expertise to new domains is powerful. The companies that succeeded most impressively are ones that took deep AI expertise and applied it to specific domains that hadn't been fully optimized. Cresta in customer service. Eureka Labs in education. Living Carbon in climate. Domain focus creates defensibility.

The Competitive Threat to OpenAI

For OpenAI itself, the talent exodus creates strategic challenges. Every founder who leaves, every engineer who departs, potentially becomes a competitor. Anthropic is the most obvious example, but the pattern repeats across the ecosystem.

OpenAI has tried to address this through retention (paying highly, offering equity, providing interesting work). But retention is always a challenge for successful companies. Young, ambitious people see opportunities elsewhere. The company can't prevent everyone from leaving, nor should it try.

What's interesting is that the talent exodus might actually strengthen OpenAI's competitive position indirectly. Adept, Cresta, and most of the others are not building replicas of OpenAI. They're building different products, focused on different markets, with different approaches. They are not direct threats to ChatGPT, and many of them are complementary to OpenAI in various ways.

Anthropic's threat is different: it's a direct competitor in the LLM space. But Anthropic's success also validates OpenAI's original approach of focusing on AI safety and building powerful models. It doesn't disprove the thesis. It just offers a different implementation.

Future of the OpenAI Mafia

The OpenAI alumni network will likely continue to generate successful startups. As these companies mature and grow, they'll become credentialing factories themselves. Engineers who build successful companies at Anthropic or Cresta will leave to start their own companies. Secondary networks will form.

We might also see consolidation. Larger OpenAI-founded companies might acquire smaller ones. Anthropic might buy Eureka Labs if they believe AI tutoring fits their strategic vision. Amazon might acquire Periodic Labs if they think it accelerates their materials science work.

The field will also mature. Right now, there's a halo effect to being from OpenAI. Ten years from now, that halo might fade as other companies generate their own successful alumni networks. The specific historical moment of being at OpenAI during the ChatGPT explosion will be less relevant.

But for now, in 2025, being an OpenAI alum is a genuine advantage. If you have credibility in that network, you can raise capital faster, attract talent more easily, and achieve outsized valuations. It's not unfair to call it a mafia: a network of people using their shared history and credibility to create value for themselves and their new ventures.

The question worth asking is whether the companies they create will actually deliver on their valuations and hype. Will Anthropic become a major AI company that rivals OpenAI? Will Cresta, Living Carbon, Eureka Labs, and others actually solve the problems they're targeting? Or will many of them flame out, unable to translate initial funding and credibility into actual product-market fit?

History suggests some will succeed and some will fail. But the fact that multiple billion-dollar companies have already emerged from OpenAI alumni suggests the pattern is real and likely to continue.

How Runable Fits Into the Broader AI Automation Landscape

As the AI ecosystem matures and more startups emerge from OpenAI and other laboratories, platforms like Runable serve an increasingly important role. These AI automation platforms enable the next generation of companies to build on top of proven LLM capabilities without rebuilding fundamental infrastructure.

Runable provides AI-powered automation for creating presentations, documents, reports, images, and videos, starting at $9/month. For startups founded by OpenAI alumni looking to build AI agents, automate workflows, or generate content at scale, Runable offers a practical foundation.

Consider a startup like Cresta building AI systems for customer service. They could use Runable to automatically generate customer summaries, create follow-up documentation, and produce internal reports. Or a company like Applied Compute helping enterprises deploy custom AI agents might use Runable to automate documentation and training material creation.

This is the future of AI infrastructure: specialized platforms that solve specific problems, built on top of more general-purpose models. Runable fits into this ecosystem by providing accessible, affordable automation capabilities that teams can integrate into their workflows.

Use Case: Generate comprehensive customer service summaries and follow-up documentation automatically, freeing your team to focus on complex customer issues.


FAQ

What makes OpenAI alumni startups different from other AI startups?

OpenAI alumni have worked on some of the most important AI systems in the world, giving them deep technical knowledge and credibility. They also benefit from the OpenAI network, making it easier to raise capital, attract talent, and gain partnership opportunities. This combination of expertise and network effects creates a significant advantage over startups founded by people without this background. Additionally, investors specifically seek out OpenAI alumni as founders because the track record shows these founders tend to execute well and attract attention to their projects.

Has Anthropic actually become a competitor to OpenAI?

Yes, Anthropic is OpenAI's most serious competitor. The company has raised over

Why is Amazon acquiring so many startups founded by OpenAI alumni?

Amazon is hiring teams from startups founded by OpenAI alumni to rapidly accelerate its own AI capabilities in areas such as enterprise automation (Adept) and robotics (Covariant). By hiring the founders and key team members rather than doing explicit acquisitions, Amazon can integrate this talent and expertise while potentially avoiding regulatory scrutiny. From the founders' perspective, joining Amazon provides access to massive infrastructure, enterprise relationships, and capital that can accelerate their vision.

Are OpenAI alumni startups just riding hype, or do they have real technical advantages?

OpenAI alumni have real technical advantages grounded in their work on large language models, training methodology, and AI systems at scale. However, they're also benefiting from hype and investor enthusiasm for all things AI. The strongest OpenAI-founded companies (Anthropic, Cresta, Adept) combine genuine technical innovation with strong market timing and focus. The weakest ones might struggle because hype alone can't sustain a company long-term. Success depends on actually building products that solve real problems better than alternatives.

Will the "OpenAI mafia" effect diminish over time?

Probably, but not immediately. As other labs (Meta, Google, Anthropic, Mistral) generate successful alumni networks, the specific credibility of being from OpenAI will diminish. Additionally, as more years pass from the ChatGPT launch, the historical novelty of being an OpenAI alum will fade. However, for the next 5-10 years, being part of the OpenAI alumni network will likely remain a significant advantage for fundraising and recruiting. After that, other factors (product-market fit, financial performance, market dynamics) will matter more than founder pedigree.

What can other companies learn from how OpenAI created this alumni network effect?

The OpenAI effect wasn't intentional strategy; it was a combination of timing, breakthrough product success (ChatGPT), rapid market adoption, and talented people working on genuinely important problems. Companies trying to replicate this should focus on: (1) attracting top talent to genuinely important problems, (2) creating a learning environment where people develop deep expertise, (3) supporting entrepreneurship and not punishing people for leaving, and (4) maintaining relationships with alumni. But honestly, the specific historical moment—launching a breakthrough product that immediately changes how millions of people work—is hard to engineer.

How much of OpenAI alumni startup success is due to the founders vs. the OpenAI brand?

It's honestly hard to separate. The founders are genuinely talented people who would likely succeed elsewhere. But the OpenAI brand opens doors, provides credibility, and makes fundraising easier. You could argue that a talented team from Stanford or MIT might build equally impressive companies, but they'd have to work harder to convince investors and attract top talent. The OpenAI brand accelerates the process. A rough, unscientific guess would be that 60% of success comes from founder quality and execution and 40% from the OpenAI brand and network advantage. But the best of these companies are ones where founders leverage the brand while also building genuine product value.

Conclusion: The End of One Era, The Beginning of Another

The OpenAI alumni exodus tells a story about how institutions, talent, and capital interact in technology. OpenAI trained hundreds of talented people to think about difficult problems at scale. Then, whether by design or happenstance, many of those people left to build their own companies. Some became competitors. Others became complementary. All of them benefit from the network and credibility they earned at OpenAI.

We've covered the major players in this ecosystem: Anthropic, the serious competitor preparing for IPO. Adept, acquired by Amazon but representing the frontier of AI agents for enterprise. Cresta, proving that AI-augmented customer service is a real, valuable market. Applied Compute, showing that infrastructure-layer plays work. Covariant, Daedalus, Eureka Labs, Living Carbon, Periodic Labs, and others, each applying AI expertise to specific domains and problems.

What's remarkable is not just the number of startups or the amount of capital raised. It's the diversity of approaches and focus areas. These aren't 18 copies of OpenAI. They're 18 different bets on where AI creates value. Some focus on horizontal problems (applying AI to enterprise automation broadly). Others focus on vertical markets (customer service, materials science, education). Some are pure software. Others combine software with hardware or biotech.

This diversity is actually good for the field. It means AI expertise is being applied across the economy, not concentrated in a few large companies or a few geographic regions. Problems that seemed intractable might suddenly become solvable when AI researchers focus on them. Industries that seemed resistant to technology transformation might be transformed by founders who understand both the industry and advanced AI.

For investors, it means the best OpenAI alumni are already off building companies, so you need to look to the second wave (people who worked at Anthropic, Adept, Cresta, etc.) for the next generation of opportunities. For ambitious technologists, it means the path forward isn't just joining the best companies; it's knowing when to leave them to build something better.

For OpenAI itself, the alumni exodus is both validation and threat. Validation because it shows the company trained talented people to solve hard problems. Threat because those trained people are now working on competitive problems. But OpenAI's core advantage—the scale of its compute, the depth of its research, the resources to pursue AGI—remains intact. The exodus creates competitors, not an existential threat.

The OpenAI mafia is real. The network effects are real. The competitive advantages are real. For the next few years, this dynamic will continue to generate successful startups, high valuations, and outsized returns for people who get the timing and execution right. But eventually, this too will be disrupted by the next institutional innovation in AI research and deployment. Until then, the OpenAI alumni network is perhaps the most powerful credentialing network in technology today.

The mafia isn't ending. It's just getting started.

Key Takeaways

- Anthropic emerged as OpenAI's most serious competitor with a 30 billion, founded by former VP of research Dario Amodei and his sister Daniela

- 18+ major startups founded by OpenAI alumni have collectively raised billions in venture capital, with companies like Adept, Cresta, and others achieving unicorn status within 2-3 years

- Amazon strategically hired entire teams from Adept and Covariant to accelerate internal AI capabilities while avoiding antitrust scrutiny from obvious acquisitions

- Companies founded by OpenAI alumni span diverse domains: AI safety (Anthropic), enterprise automation (Adept), customer service (Cresta), robotics (Covariant), education (Eureka Labs), and climate (Living Carbon)

- The OpenAI alumni network creates powerful credibility shortcuts: founders can raise capital faster, attract top talent, and achieve higher valuations on the strength of institutional prestige
