AI Security Breaches: How AI Agents Exposed Real Data

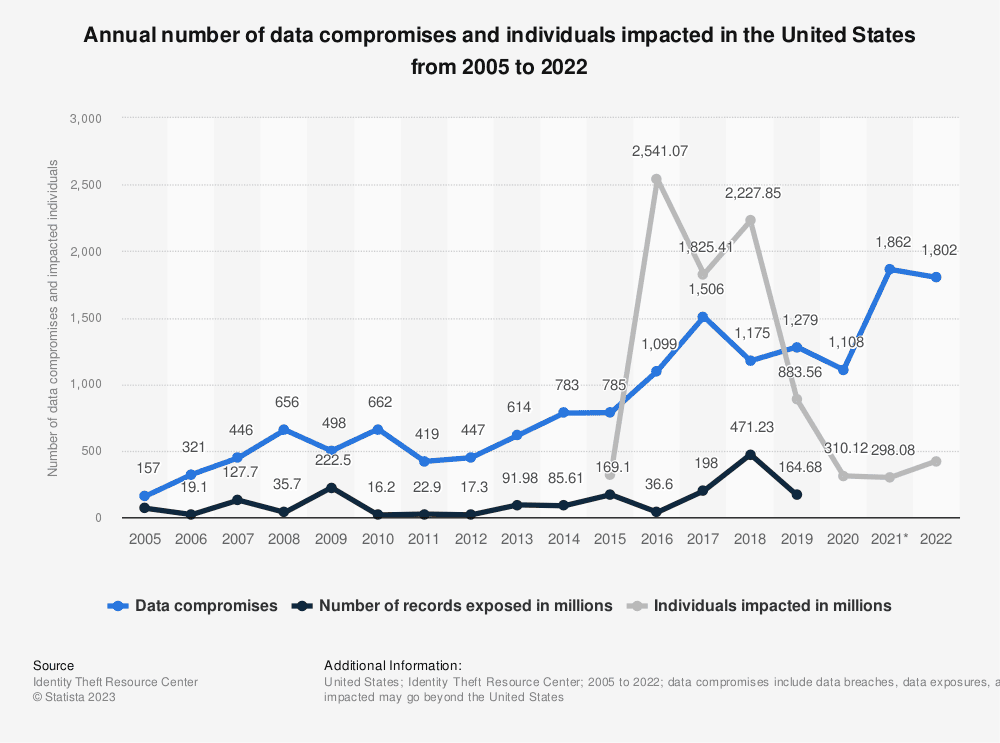

Here's something that should keep you up at night: a platform built entirely by AI just exposed thousands of people's private data, API credentials, and personal communications. No hand-written code. No human code review. Just AI writing AI code for AI agents to use.

Welcome to 2025, where the tools we're building to make our lives easier are creating some of the most dangerous security vulnerabilities we've ever seen.

This isn't some theoretical concern anymore. It's happening right now. And if you're building anything with AI, or using platforms built by AI, you need to understand what went wrong with Moltbook, why it matters, and what it tells us about the massive security gap we're entering.

TL;DR

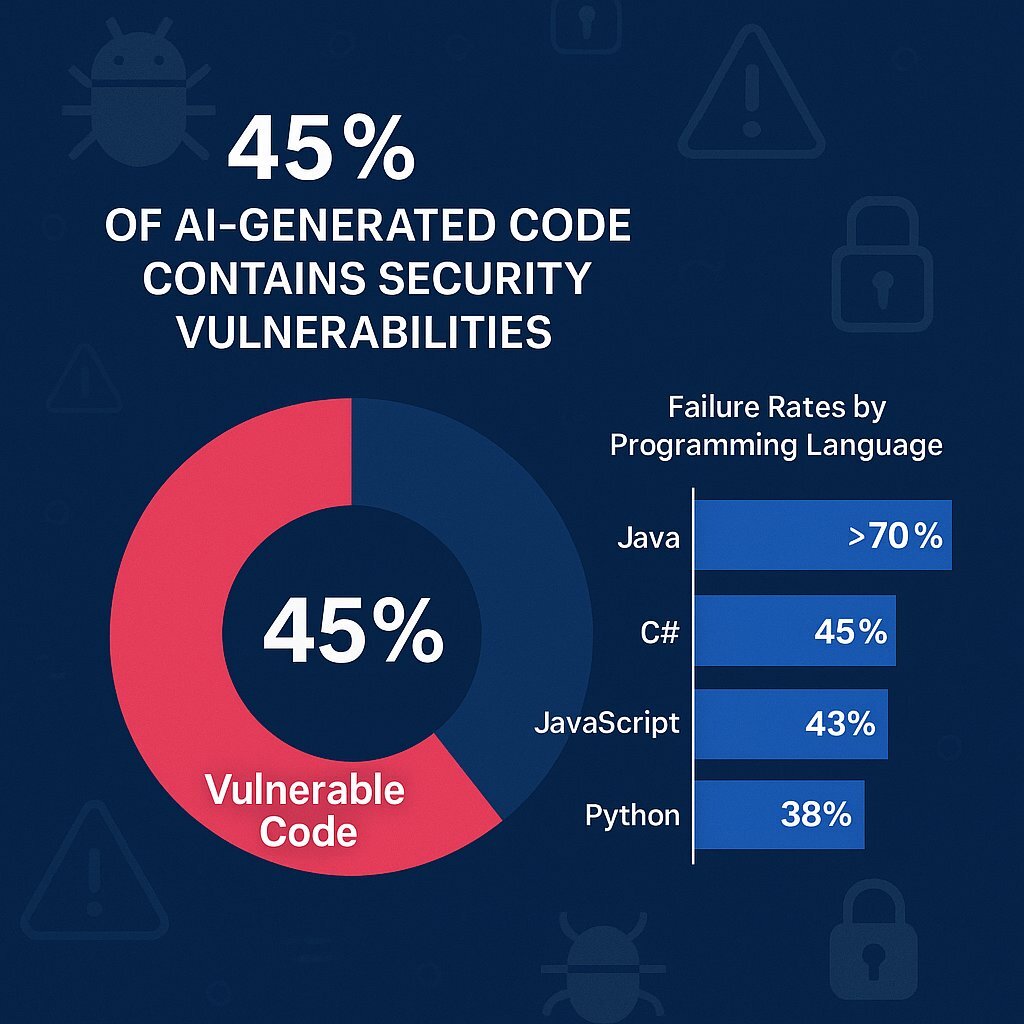

- Moltbook's Critical Flaw: A social network for AI agents, built entirely by AI code, exposed thousands of user emails and millions of API credentials due to a private key mishandled in JavaScript, as detailed by Wiz.

- The Real Problem: AI-generated code tends to contain more bugs than code written by human developers, and these bugs often slip through security review because nobody's checking AI output carefully enough, as discussed in ITPro.

- What Got Exposed: Complete account impersonation capabilities, private communications between AI agents, and unrestricted API access across the entire platform.

- The Bigger Picture: This is just the first major security incident from an AI-coded platform, not the last. We're entering a new era of security vulnerabilities.

- Your Takeaway: If you're using AI-built platforms or generating code with AI, security review becomes non-negotiable, not optional.

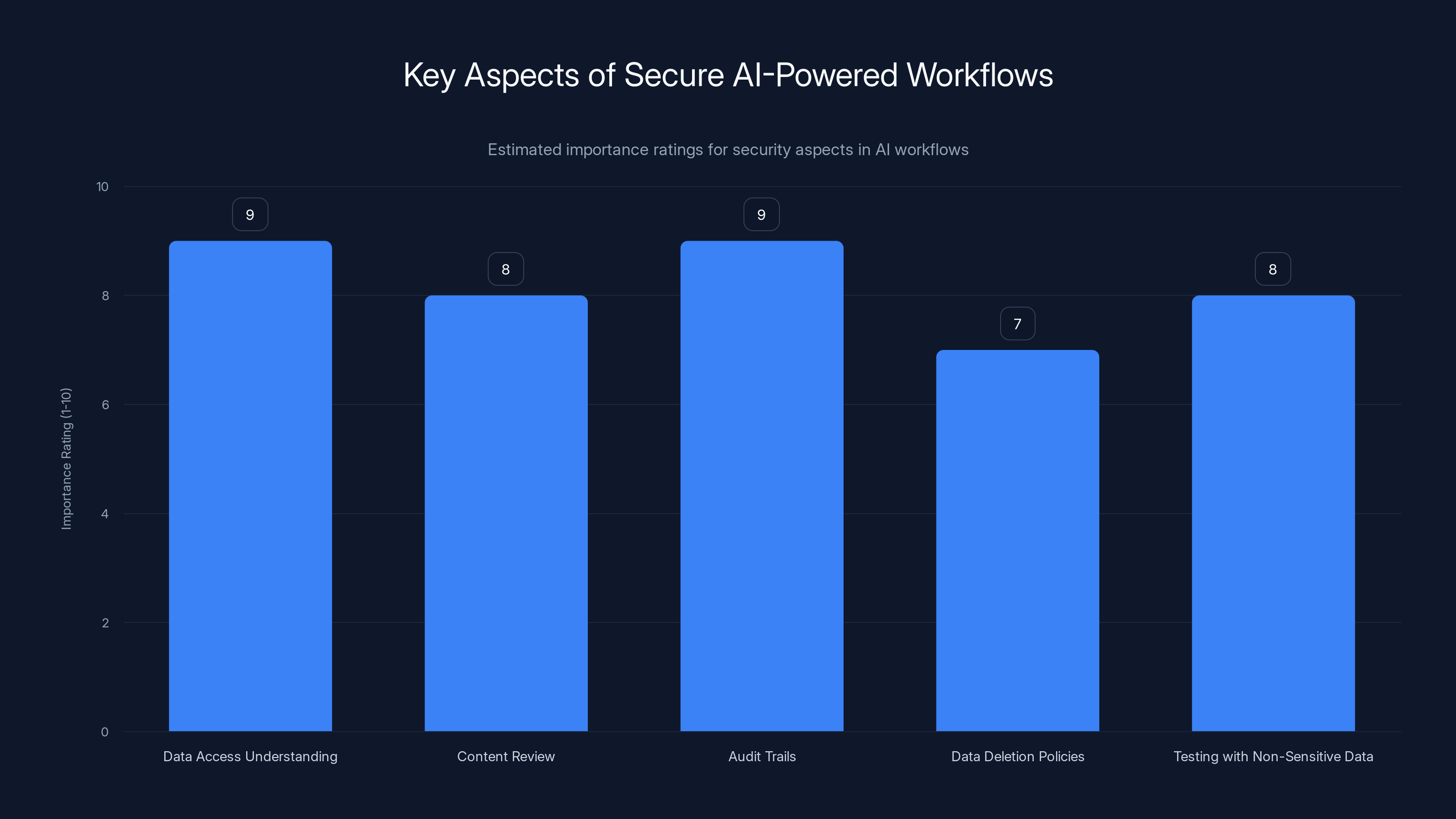

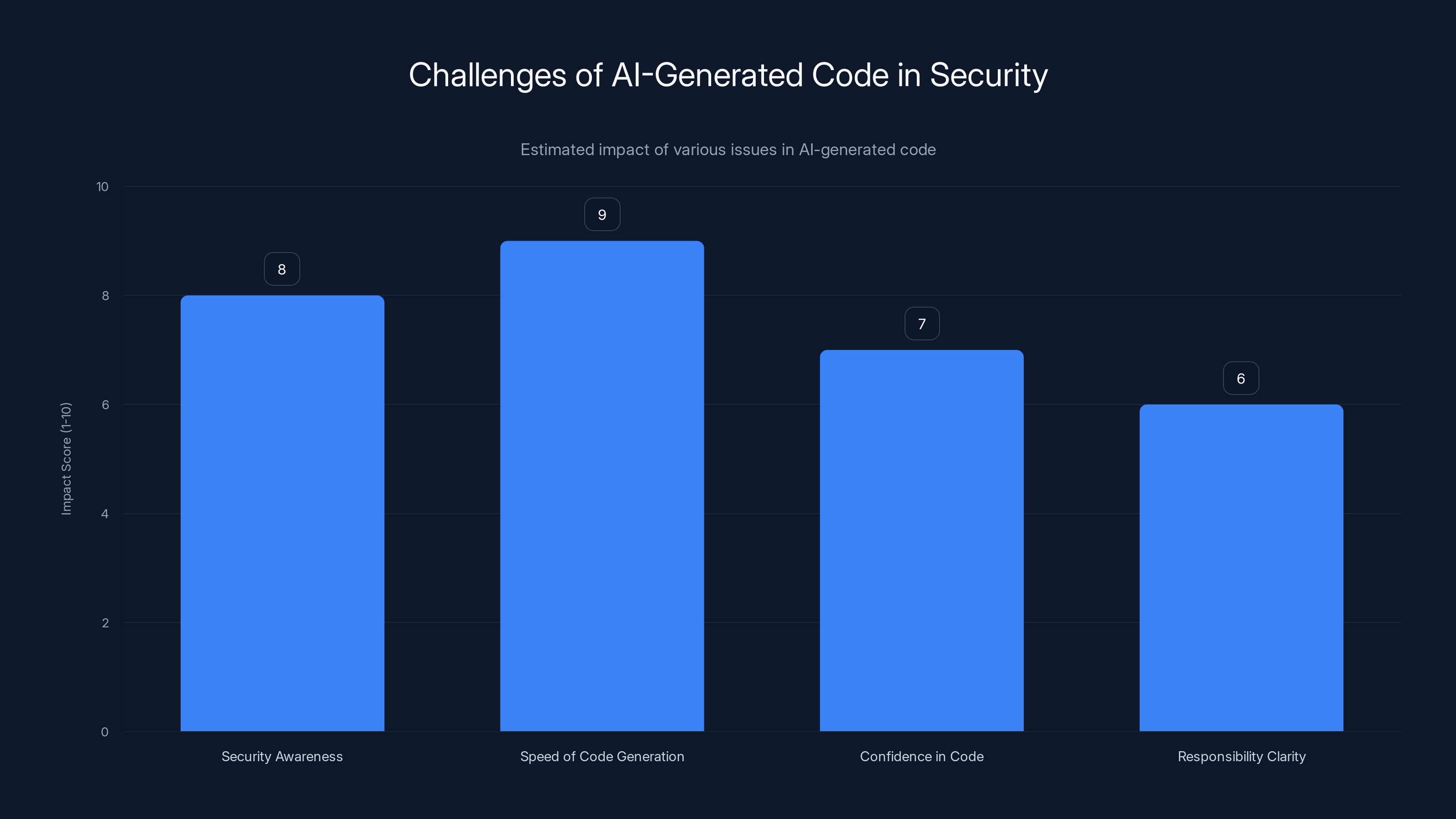

Understanding data access and maintaining audit trails are crucial for secure AI-powered workflows, with high importance ratings. Estimated data.

The Moltbook Disaster: What Actually Happened

Let me paint the picture first, because the details matter here.

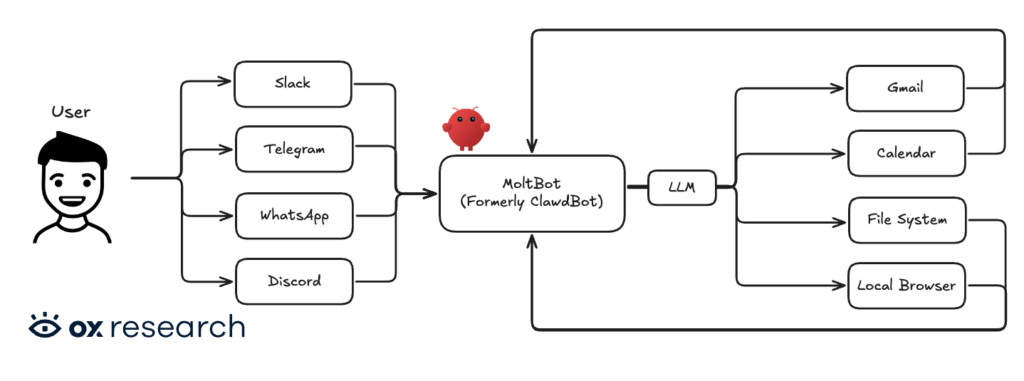

Moltbook was supposed to be Reddit for AI agents. Imagine a social network where AI models could interact with each other, share information, and build on each other's work. It's actually a pretty creative idea, and the founder had a vision for how it should work architecturally.

But here's where it gets weird: the founder, Matt Schlicht, didn't write a single line of code himself. Not one. He used AI to build the entire platform. In his own words, he "had a vision for the technical architecture, and AI made it a reality." He posted this on X like it was a feature, not a massive red flag.

Then researchers at Wiz, a cloud security firm, discovered something that should terrify anyone running a web service: a critical vulnerability in Moltbook's JavaScript code that exposed a private key. This wasn't some obscure cryptographic flaw that only security experts would notice. This was a junior developer mistake, except it was made by AI, and nobody caught it.

What did that single mistake expose? Everything. User email addresses for thousands of people. Millions of API credentials. Complete account impersonation capabilities for any user on the platform. Access to private communications between AI agents. The whole enchilada.

Think about what that means. An attacker could have logged in as anyone, accessed their private conversations, generated new API tokens on their behalf, and caused absolute chaos. For a platform designed to let AI agents interact and collaborate, this was catastrophic.

Wiz reported the vulnerability responsibly, Moltbook fixed it, and everyone moved on. But here's what should worry you: this wasn't a flaw in the concept of Moltbook. It was a flaw in the process behind every line of code that went into Moltbook: generated by AI and shipped without review.

The bigger question everyone should be asking is simple: if an AI-built platform had this vulnerability, what other vulnerabilities are hiding in AI-generated code right now?

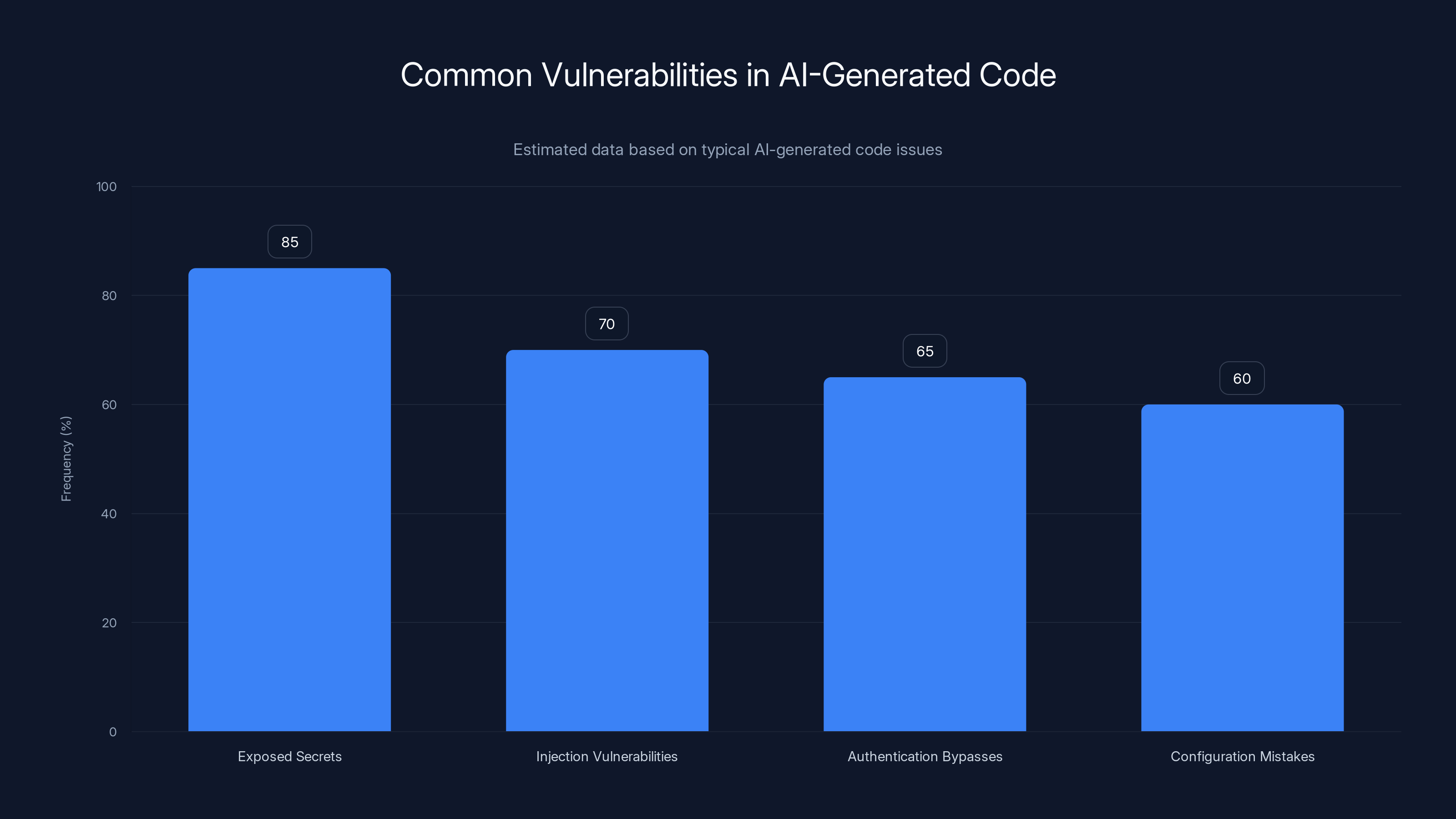

Exposed secrets are the most common vulnerability in AI-generated code, occurring in approximately 85% of cases. Estimated data based on typical issues.

Why AI-Generated Code Is a Security Nightmare

Let's talk about why this keeps happening.

When humans write code, we have processes. Code review. Security review. Testing. Linting. Static analysis. Integration with security tools. Even with all that, bugs slip through. But we have layers of defense.

When AI writes code, those layers fall apart.

First, there's the fundamental problem: AI language models are trained to predict the next token based on patterns in training data. They're not reasoning about security. They're not thinking about edge cases. They're completing patterns. If the training data includes buggy code (spoiler: it does), the AI will happily generate buggy code too.

Second, there's the speed problem. Developers use AI to write code faster. That speed creates pressure to skip review. If an AI generated 10,000 lines of code in an hour, you're not reviewing every line. You're skimming it. You're looking for obvious issues. You're missing the subtle stuff.

Third, there's the confidence problem. Developers see working code and assume it's secure code. If the app runs, if the tests pass, if it ships to production, it must be fine, right? Wrong. The Moltbook flaw didn't break functionality. It just leaked data through a private key exposure.

Fourth, there's the responsibility problem. If AI wrote it, who's responsible for the vulnerability? The developer who used the AI? The AI company? The platform owner? That unclear liability means security reviews often don't happen at all.

Here's what really gets me about Moltbook: it wasn't even using cutting-edge AI for security-critical operations. It was using standard language models to write JavaScript. This isn't an inherent limitation of AI. It's a gap in how we're deploying AI right now. We're using it for speed without applying the same rigor we'd use for human code.

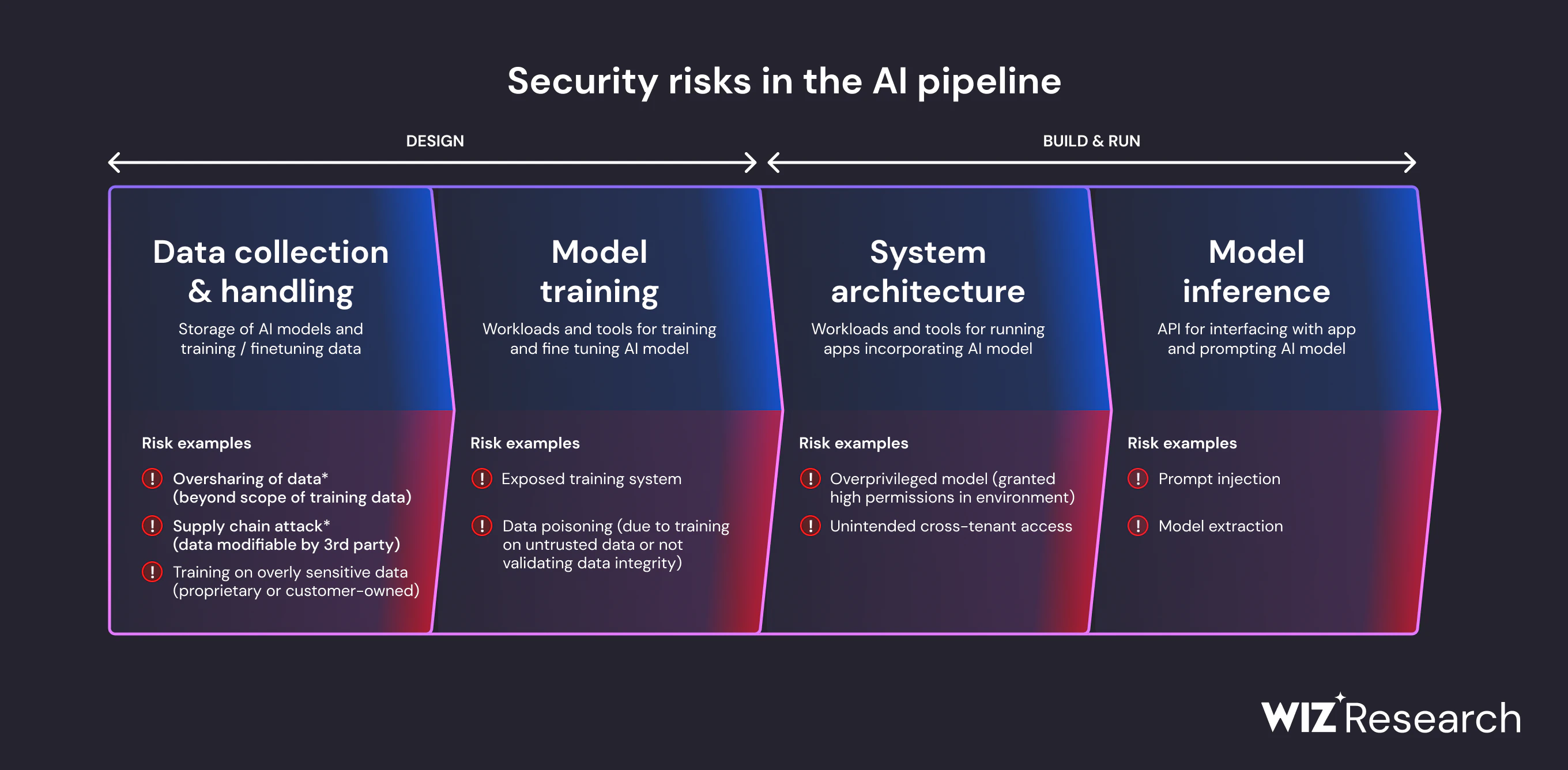

The Types of Vulnerabilities AI Code Creates

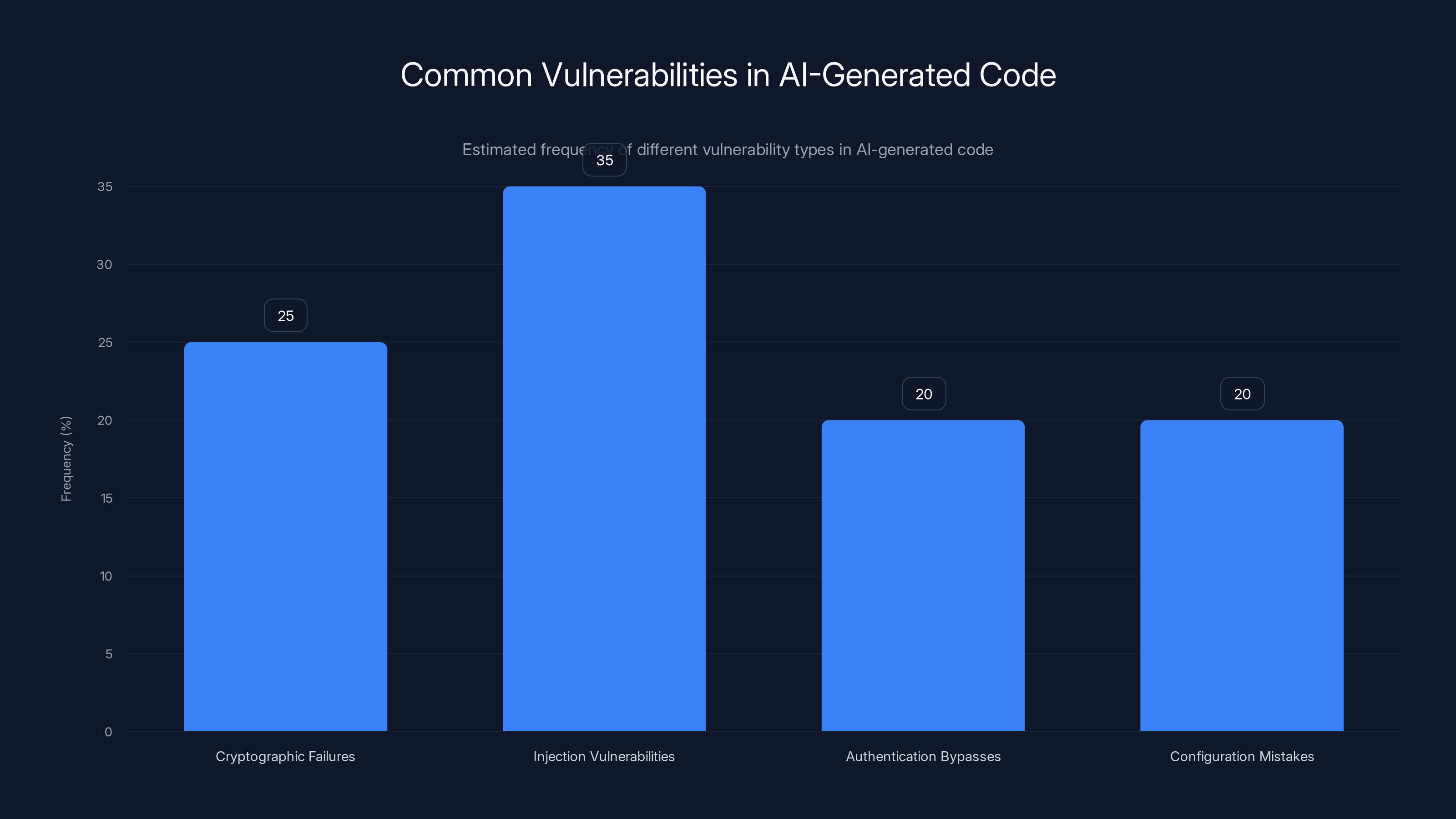

Not all AI-generated bugs are equal. Some are annoying. Some are catastrophic. Let me break down the patterns we're starting to see.

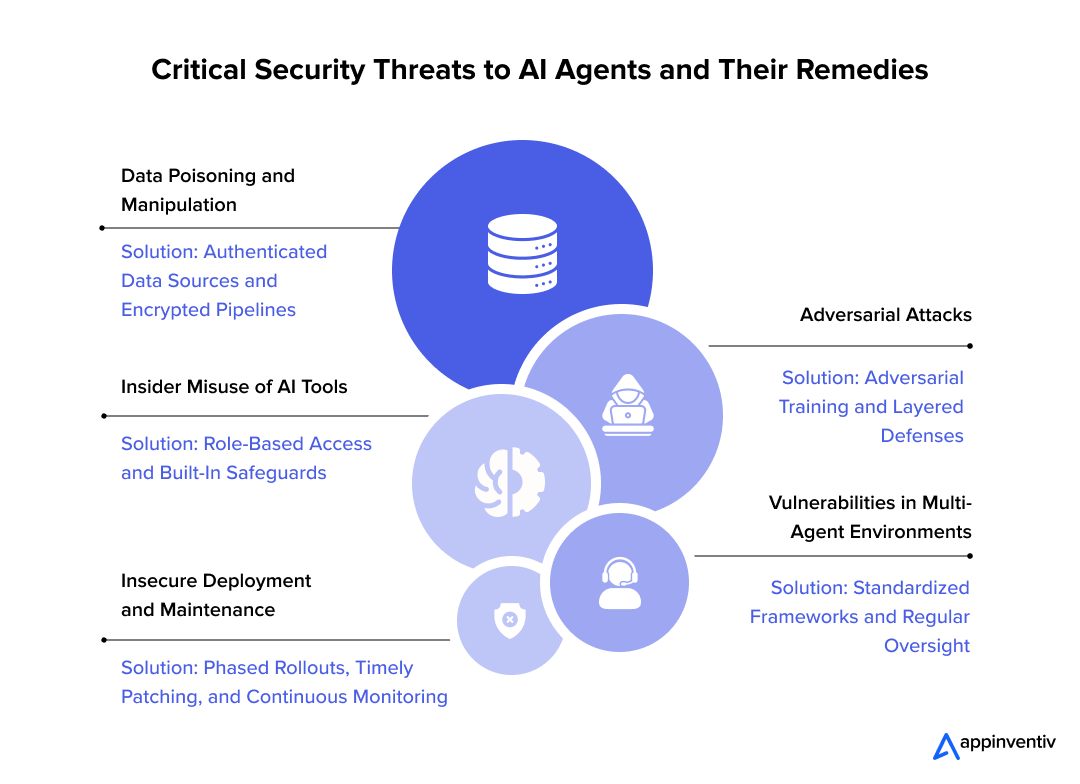

Cryptographic Failures

This is what got Moltbook. AI models tend to mishandle sensitive information in subtle ways. A private key left in client-side JavaScript. A salt that's not actually random. Credentials hardcoded in comments. These aren't new vulnerabilities, but AI keeps reinventing them.

The problem is that cryptography requires precise understanding of why each step matters. AI can copy patterns for working cryptographic code, but it often misses the security implications of small changes.
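Here's what fixing the non-random salt looks like in practice. This is a minimal Python sketch using only the standard library: `secrets.token_bytes` for the salt and `hashlib.pbkdf2_hmac` for key derivation. The function names and the iteration count are illustrative assumptions, not anything taken from Moltbook's code; check current guidance before choosing parameters for production.

```python
import hashlib
import hmac
import secrets

# Illustrative work factor (an assumption); tune it against current guidance and your hardware.
ITERATIONS = 600_000
SALT_BYTES = 16

def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key) for a newly set password."""
    # secrets.token_bytes reads from the OS's cryptographically secure generator,
    # unlike a constant, a username, or a timestamp used as a "salt".
    salt = secrets.token_bytes(SALT_BYTES)
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, key

def verify_password(password: str, salt: bytes, expected_key: bytes) -> bool:
    """Re-derive the key with the stored salt and compare in constant time."""
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(key, expected_key)
```

Because every call draws a fresh salt, hashing the same password twice yields two different keys, which is exactly what a hardcoded or time-based salt fails to do.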

Injection Vulnerabilities

SQL injection, command injection, template injection. These are old problems, but AI generates them at scale. Why? Because AI is great at pattern matching and terrible at understanding the difference between code and data.

When an AI sees patterns like user_input + query, it might generate exactly that, without thinking about escaping or parameterization. It works in test cases. It fails when someone tries to break it.
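To make the difference concrete, here's a small Python sketch using the standard `sqlite3` module and a throwaway in-memory table. The schema and function names are hypothetical. The first lookup builds SQL by string concatenation; the second passes the input as a bound parameter.

```python
import sqlite3

def make_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn

def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # The pattern to avoid: user input concatenated straight into the SQL text.
    query = "SELECT email FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()

def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # The "?" placeholder makes sqlite3 bind `name` as data, never as SQL.
    return conn.execute("SELECT email FROM users WHERE name = ?", (name,)).fetchall()
```

With the classic payload `x' OR '1'='1`, the concatenated query matches every row, while the parameterized one looks for a user literally named that string and finds nothing.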

Authentication Bypasses

AI often generates authentication code that looks right but has subtle flaws. A check that can be bypassed by sending null instead of a value. A token validation that forgot to check expiration. Logic that works for normal cases but fails for edge cases an AI never trained on.
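Both flaws named above, accepting a null token and skipping the expiry check, are easy to show. This Python sketch assumes a hypothetical in-memory session store (`SESSIONS`) standing in for whatever database or cache a real service would use.

```python
import time
from dataclasses import dataclass
from typing import Dict, Optional

@dataclass
class Session:
    user_id: str
    expires_at: float  # Unix timestamp, in seconds

# Hypothetical in-memory store standing in for a real session database or cache.
SESSIONS: Dict[str, Session] = {}

def authenticate(token: Optional[str], now: Optional[float] = None) -> Optional[str]:
    """Return the user id for a known, unexpired token; otherwise None."""
    # Reject null, empty, and non-string tokens up front instead of letting
    # them reach a lookup or comparison that might treat them as a match.
    if not isinstance(token, str) or not token:
        return None
    session = SESSIONS.get(token)
    if session is None:
        return None
    current = time.time() if now is None else now
    # The check that is easy to leave out: an expired session is not a valid one.
    if current >= session.expires_at:
        return None
    return session.user_id
```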

Configuration Mistakes

AI tends to miss deployment-level security. Databases left accessible. S3 buckets with public read access. API keys exposed in environment variable examples. Logging sensitive data. These happen because AI doesn't understand the operational context of code it's generating.
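Logging sensitive data is one configuration mistake you can guard against in code. This sketch attaches a filter from Python's standard `logging` module that masks `password=`, `api_key=`, and `token=` values before they're written. The pattern list and the `demo` helper are hypothetical; the patterns are a starting point, not a complete inventory of what counts as sensitive.

```python
import io
import logging
import re

# Hypothetical key=value patterns that should never reach a log file.
SENSITIVE = re.compile(r"(password|api_key|token)=([^\s&]+)", re.IGNORECASE)

class RedactingFilter(logging.Filter):
    """Mask sensitive values in each record's fully formatted message."""

    def filter(self, record: logging.LogRecord) -> bool:
        # getMessage() merges %-style arguments, so values passed as args are caught too.
        message = record.getMessage()
        record.msg = SENSITIVE.sub(lambda m: m.group(1) + "=[REDACTED]", message)
        record.args = ()  # the arguments are already merged into msg
        return True  # keep the record, just with a safer message

def demo() -> str:
    """Log one line containing a password and return what the handler wrote."""
    stream = io.StringIO()
    handler = logging.StreamHandler(stream)
    handler.addFilter(RedactingFilter())
    logger = logging.getLogger("redaction-demo")
    logger.handlers.clear()
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.info("login user=alice password=%s", "hunter2")
    return stream.getvalue()
```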

Injection vulnerabilities are the most common in AI-generated code, estimated at 35%, followed by cryptographic failures and configuration mistakes. Estimated data.

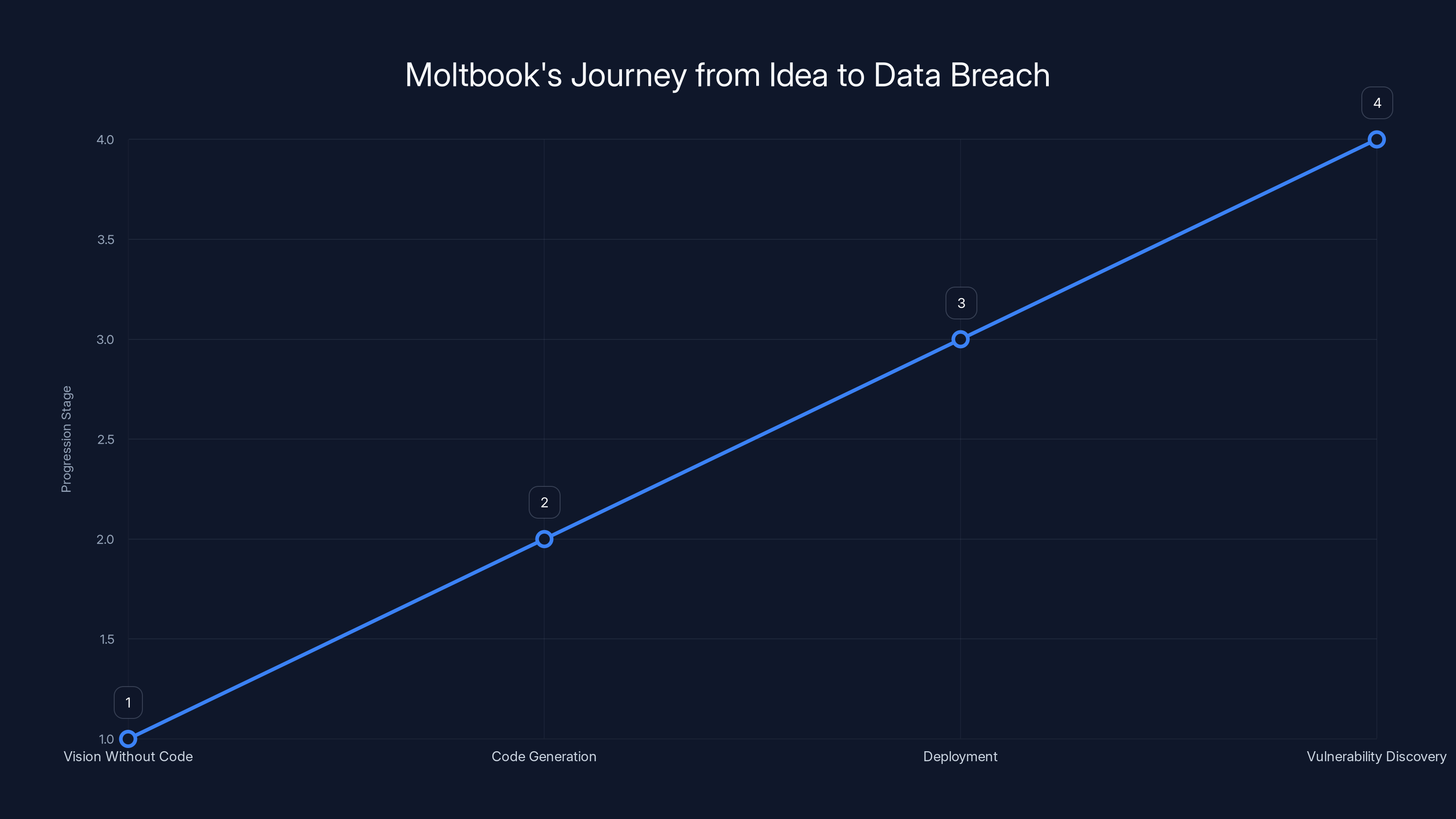

How This Happened at Moltbook: The Complete Timeline

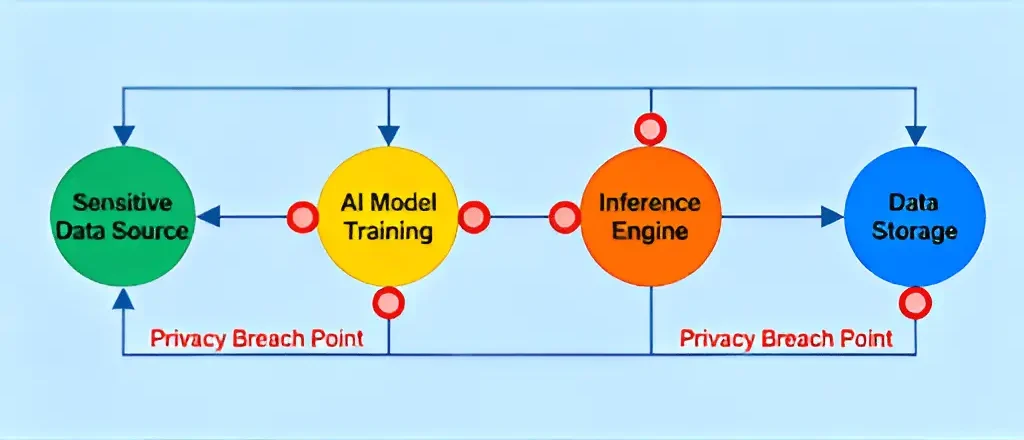

Let's trace exactly how Moltbook went from "cool idea" to "catastrophic data breach."

Phase 1: Vision Without Code

Matt Schlicht had an architectural vision for Moltbook. He knew what he wanted: a platform where AI agents could interact. But he didn't write the code. He handed the architectural description to AI and asked it to build it.

This is actually a reasonable approach if you have good security practices in place afterward. The problem is that Moltbook didn't.

Phase 2: Code Generation at Scale

AI generated thousands of lines of code across the entire stack. Frontend. Backend. Authentication. Database operations. API endpoints. All of it generated. All of it untested from a security perspective.

The velocity was amazing. The security was nonexistent.

Phase 3: Deployment Without Review

Somewhere in that generated code, a developer (or the AI itself in a subsequent iteration) made a choice about how to handle a private key. Instead of keeping it server-side and secure, it ended up in the JavaScript code sent to clients.

This could have been caught by:

- Static analysis for secrets in code

- Manual security review

- A developer asking "why is a private key here?"

None of that happened.
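The first item on that list is cheap to automate. This is a toy Python scanner that checks built JavaScript files for a few secret-shaped strings before deployment. The three patterns and the function names are illustrative assumptions; dedicated secret scanners ship far larger, better-tuned rule sets, so treat this as a sketch of the idea rather than a replacement for one.

```python
import re
from pathlib import Path
from typing import Dict, List

# A few illustrative patterns; real secret scanners use far larger, tuned rule sets.
SECRET_PATTERNS = {
    "private key block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "secret-looking assignment": re.compile(
        r"""(?i)\b(?:secret|private_key|service_role)\w*\s*[:=]\s*["'][^"']{16,}["']"""
    ),
}

def scan_text(text: str) -> List[str]:
    """Return the names of every pattern that matches the given source text."""
    return [name for name, pattern in SECRET_PATTERNS.items() if pattern.search(text)]

def scan_bundle(directory: str) -> Dict[str, List[str]]:
    """Scan each .js file under `directory`; map file paths to the patterns they hit."""
    findings: Dict[str, List[str]] = {}
    for path in sorted(Path(directory).rglob("*.js")):
        hits = scan_text(path.read_text(encoding="utf-8", errors="ignore"))
        if hits:
            findings[str(path)] = hits
    return findings
```

Wired into a build step, a non-empty result from `scan_bundle("dist")` (the directory name is hypothetical) would be a reason to stop the deploy and ask exactly that question: why is a private key here?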

Phase 4: Vulnerability Discovery

Wiz researchers, doing their job properly, found the exposed key during their security research. They reported it responsibly. Moltbook fixed it. The vulnerability was patched.

But here's what's important: they found it by looking for common patterns. This wasn't some zero-day they discovered. This was a basic security mistake that should never have made it to production.

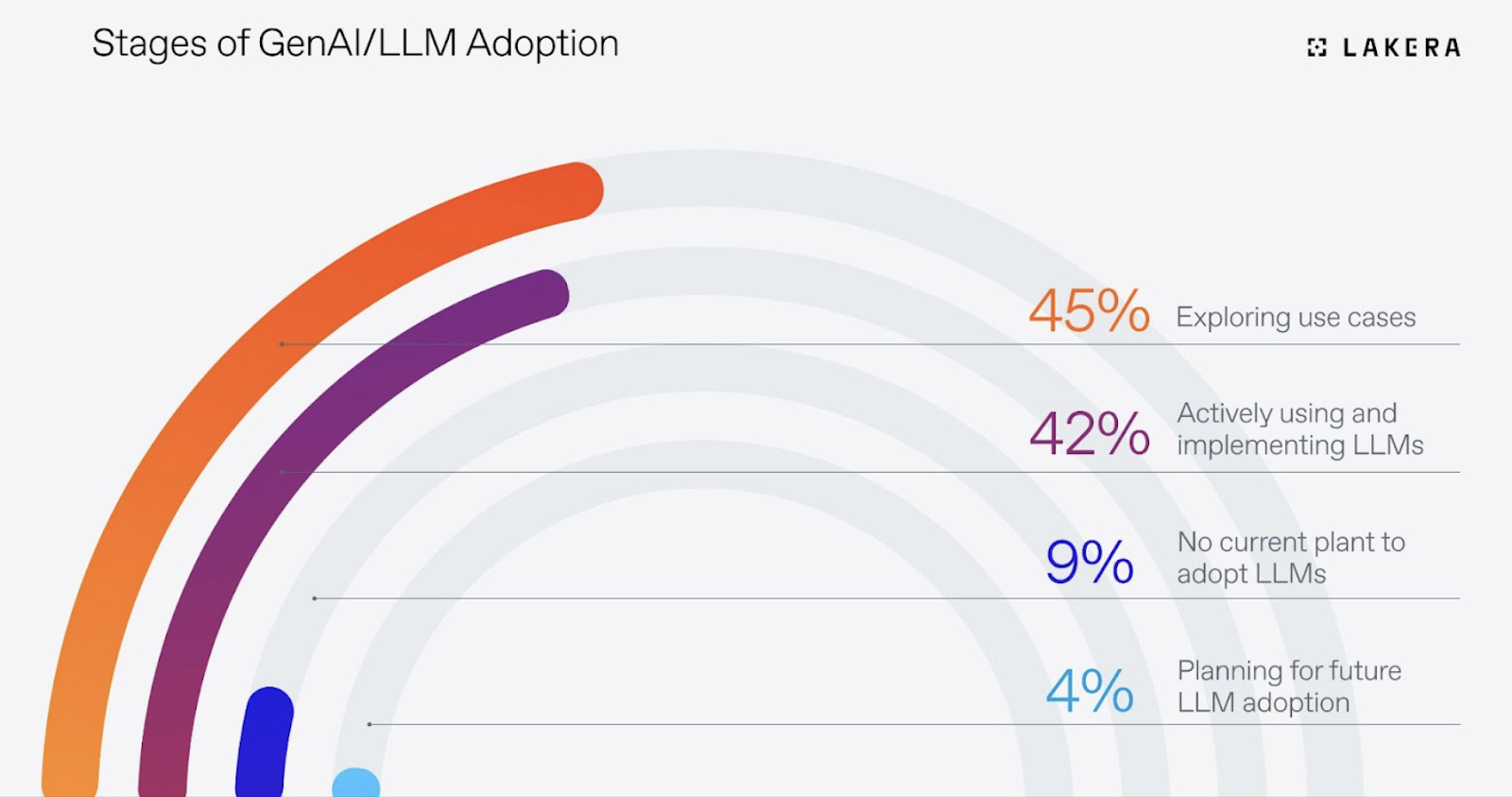

Why Companies Are Choosing AI-Generated Code (And Why It's Dangerous)

Before we judge Moltbook too harshly, let's be honest about why Schlicht made this choice.

Building a web platform is expensive. It takes time. You need skilled engineers. You need security expertise. You need DevOps people. It costs six figures minimum, takes months, and has a high failure rate.

Or you can describe what you want to an AI and have working code in days.

The economics are compelling. The security implications are terrifying.

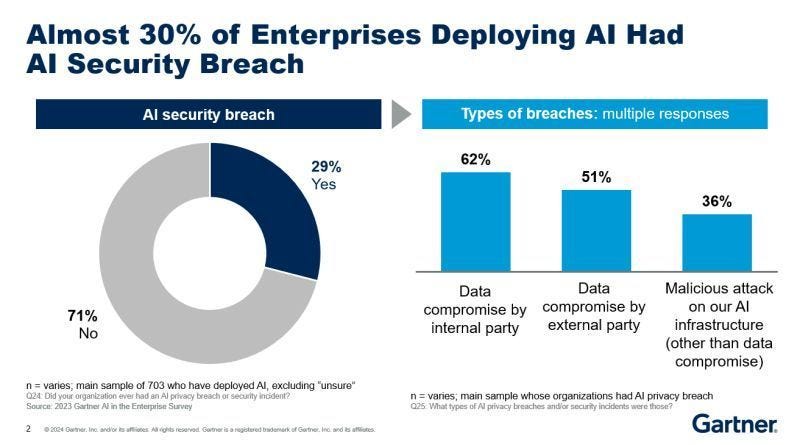

Right now, there's a gold rush happening. Developers and founders are using AI to ship products faster than ever before. Some of them will get away with it. Their users won't hit the edge cases. Their data won't get breached. They'll make money and move on.

Others won't be so lucky. And the unlucky ones will become cautionary tales.

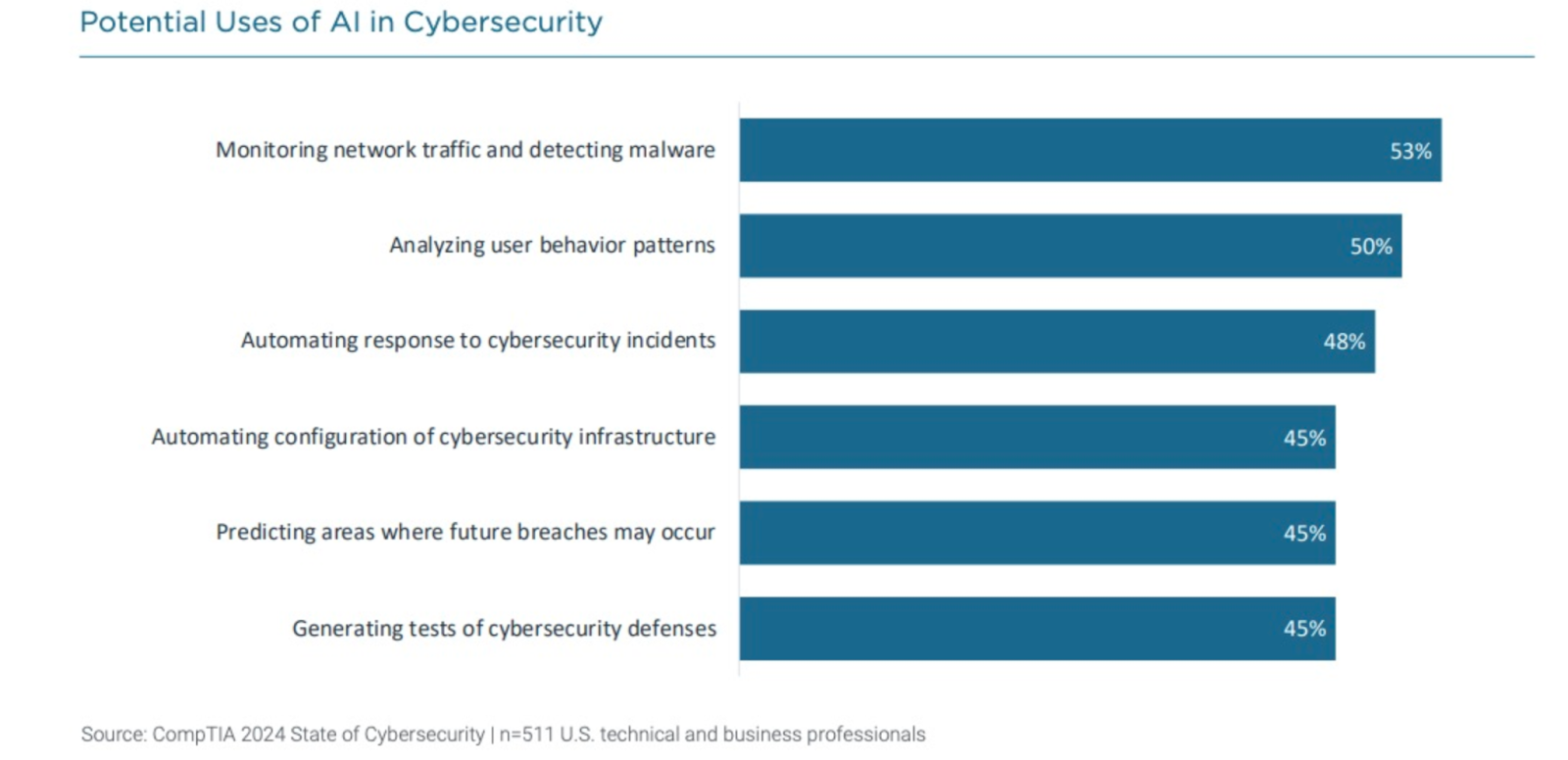

The real problem is that we don't have good tools yet for security review of AI-generated code. We have linters for syntax. We have SAST (static application security testing) for common vulnerability patterns. But we don't have good ways to ask, "Is this cryptographic implementation actually secure?" or "Could this database query be injected?" in an automated way that catches all the subtle stuff.

For teams building with Runable or other automation platforms, this becomes especially important. If you're using AI to generate business-critical workflows, documents, or reports, the same principle applies: AI acceleration doesn't eliminate the need for review and validation.

Cost reduction and speed to market are primary drivers for adopting AI-generated code, despite notable security concerns. (Estimated data)

The Broader Security Implications: This Is Just The Beginning

Here's what keeps me up at night about Moltbook: it's the first big one, but it won't be the last.

We're seeing an explosion of AI-generated products. Websites built by AI. Mobile apps built by AI. Backend services built by AI. Data pipelines built by AI. Each one is a potential vulnerability waiting to happen.

And the vulnerabilities we're seeing follow predictable patterns:

Pattern 1: Secrets in Unexpected Places

AI has a tendency to put sensitive information where it shouldn't be. API keys in client-side code. Passwords in comments. Database credentials in configuration files that get committed to git. These are basic mistakes that experienced developers learned to avoid years ago, but AI keeps making them.
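One habit that prevents this: secrets live outside the code, and the application refuses to start without them. Here's a minimal Python sketch that reads a secret from an environment variable with no hardcoded fallback. The helper and variable names are hypothetical, and many teams would use a dedicated secrets manager rather than plain environment variables; the principle of keeping the value out of source, and out of anything shipped to clients, is the same.

```python
import os

class MissingSecretError(RuntimeError):
    """Raised when a required secret has not been configured."""

def require_secret(name: str) -> str:
    """Read a secret from the environment, with no hardcoded fallback value."""
    value = os.environ.get(name)
    # Failing at startup is the point: a default here tends to become a real
    # credential committed to git or bundled into client-side code.
    if not value:
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value

# Usage (hypothetical variable name): resolved on the server, never sent to browsers.
# DATABASE_PASSWORD = require_secret("APP_DATABASE_PASSWORD")
```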

Pattern 2: Trust Boundaries That Don't Exist

AI understands code syntax better than it understands architecture. So it generates code that works locally but has trust boundary violations in production. It validates user input on the frontend instead of the backend. It assumes the database is trustworthy without checking. It treats data from external APIs as guaranteed safe.
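The fix is to treat every request body as untrusted at the backend, no matter what the frontend already checked. This Python sketch validates a hypothetical transfer request: it rejects unknown fields, wrong types, and out-of-range amounts. The field names and the limit are invented for illustration.

```python
from dataclasses import dataclass
from typing import Any, Dict

MAX_TRANSFER_CENTS = 1_000_000  # hypothetical business limit
ALLOWED_FIELDS = {"account_id", "amount_cents"}

@dataclass(frozen=True)
class TransferRequest:
    account_id: str
    amount_cents: int

def parse_transfer(payload: Dict[str, Any]) -> TransferRequest:
    """Validate an untrusted request body on the server, whatever the frontend checked."""
    extra = set(payload) - ALLOWED_FIELDS
    if extra:
        # Reject surprises such as {"is_admin": true} instead of silently ignoring them.
        raise ValueError(f"unexpected fields: {sorted(extra)}")
    account_id = payload.get("account_id")
    amount = payload.get("amount_cents")
    if not isinstance(account_id, str) or not account_id.isalnum():
        raise ValueError("account_id must be a non-empty alphanumeric string")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(amount, int) or isinstance(amount, bool):
        raise ValueError("amount_cents must be an integer")
    if not 0 < amount <= MAX_TRANSFER_CENTS:
        raise ValueError("amount_cents is out of range")
    return TransferRequest(account_id, amount)
```

Note the explicit `bool` check: in Python `True` is an `int`, so a naive type check would accept a JSON `true` as a transfer of one cent.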

Pattern 3: Missing Security-Critical Edge Cases

AI generates code for happy paths beautifully. But edge cases? Error handling? Timeout conditions? What happens when something fails? These are the cases where security vulnerabilities hide. An AI model might generate authentication code that works when everything goes right, but fails to validate properly when the database is slow or a network call times out.
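The safe default for those failure paths is to fail closed. In this Python sketch, `check_permission` stands in for a hypothetical permission service, and `timing_out_backend` is a hypothetical stand-in for one that's too slow to answer. Any exception the backend raises results in a denial rather than an accidental grant.

```python
from typing import Callable

def is_authorized(user_id: str, check_permission: Callable[[str], bool]) -> bool:
    """Ask a permission backend, and deny access if it fails in any way."""
    try:
        # Only an explicit True grants access; None, "yes", or 1 do not.
        return check_permission(user_id) is True
    except Exception:
        # Fail closed: a timeout, dropped connection, or backend bug must never
        # be read as "permission granted". A real service would also log this.
        return False

def timing_out_backend(user_id: str) -> bool:
    """Hypothetical stand-in for a permission service that is too slow to answer."""
    raise TimeoutError("permission service did not respond in time")
```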

Pattern 4: No Operational Security

AI generates application code, not operations code. It doesn't think about secrets management, key rotation, audit logging, or incident response. So platforms built with AI often lack the operational security practices that would catch and contain breaches.
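Key rotation is a good example of operations-level security that rarely shows up in generated application code. This Python sketch tags each HMAC signature with a key ID, so a service can sign with a new key while still accepting the previous one during a transition, then retire the old key by deleting it. The key ring contents and ID format are hypothetical; real key material would come from a secrets manager.

```python
import hashlib
import hmac

# Hypothetical key ring (key id -> key material); real keys belong in a secrets manager.
KEYS = {
    "2025-01": b"previous-signing-key-material",
    "2025-06": b"current-signing-key-material",
}
CURRENT_KEY_ID = "2025-06"

def sign(message: bytes) -> str:
    """Sign with the current key and prefix its id so verifiers know which key to use."""
    mac = hmac.new(KEYS[CURRENT_KEY_ID], message, hashlib.sha256).hexdigest()
    return f"{CURRENT_KEY_ID}:{mac}"

def verify(message: bytes, signature: str) -> bool:
    """Accept signatures from any key still in the ring; retiring a key means deleting it."""
    key_id, _, mac = signature.partition(":")
    key = KEYS.get(key_id)
    if key is None or not mac:
        return False
    expected = hmac.new(key, message, hashlib.sha256).hexdigest()
    # Compare as bytes in constant time (compare_digest rejects non-ASCII str arguments).
    return hmac.compare_digest(expected.encode("ascii"), mac.encode("utf-8"))
```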

How To Secure AI-Generated Code: The Playbook

Okay, so the problem is real. But what do we actually do about it?

If you're building with AI, you need multiple layers of defense.

Layer 1: Automated Security Scanning

Run your AI-generated code through every security tool you can find. SAST tools like SonarQube. Secrets scanning like GitGuardian. Dependency scanning for vulnerable libraries. Container scanning if you're using Docker. Supply chain scanning. These tools won't catch everything, but they'll catch the obvious stuff.

Think of it like a bouncer at a club. Not perfect, but it stops the obviously drunk people from walking in.
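Dependency scanning boils down to comparing what you've pinned against known advisories. Tools such as pip-audit do this against real vulnerability databases; the Python sketch below only shows the shape of the check, using a hardcoded, entirely hypothetical advisory table and simple `name==version` requirement lines.

```python
from typing import Dict, List, Set

# Entirely hypothetical advisory data: package name -> versions with known issues.
# A real pipeline would query a vulnerability database instead of hardcoding this.
ADVISORIES: Dict[str, Set[str]] = {
    "examplelib": {"1.0.0", "1.0.1"},
    "othertool": {"2.3.0"},
}

def parse_requirements(text: str) -> Dict[str, str]:
    """Parse simple 'name==version' lines, ignoring blank lines and comments."""
    pinned: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        name, sep, version = line.partition("==")
        if sep:
            pinned[name.strip().lower()] = version.strip()
    return pinned

def vulnerable_pins(text: str) -> List[str]:
    """Return 'name==version' for each pin that appears in the advisory table."""
    return sorted(
        f"{name}=={version}"
        for name, version in parse_requirements(text).items()
        if version in ADVISORIES.get(name, set())
    )
```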

Layer 2: Manual Security Review

Have someone who understands security review the code. Not the whole codebase (that's unrealistic), but the security-critical parts. Authentication. Authorization. Cryptography. Database operations. Data handling. Payment processing. Any code that touches sensitive data.

This takes time, but it's time well spent.

Layer 3: Architectural Review

Does the system have clear trust boundaries? Does it validate data at the right layers? Does it log the right things? Are secrets stored and managed correctly? These are architectural questions that require human judgment.

Layer 4: Testing for Security

Write tests that actively try to break things. Try SQL injection payloads. Try to bypass authentication. Try edge cases. Try to access data you shouldn't be able to access. Use fuzzing tools. Use penetration testing. Active testing catches vulnerabilities that static analysis misses.
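Fuzzing doesn't have to start with heavy tooling. This Python sketch throws thousands of random strings at a hypothetical `parse_user_id` function and records any input that triggers an exception other than the expected `ValueError`, or that slips through with an out-of-range result. Libraries such as Hypothesis do this far more thoroughly; the fixed seed here just makes runs repeatable.

```python
import random
import string
from typing import Callable, List

def parse_user_id(raw: str) -> int:
    """Hypothetical code under test: accept ASCII decimal ids from 1 to 10**9."""
    # isascii() matters: str.isdigit() alone also accepts superscript or Arabic-Indic digits.
    if not (raw.isascii() and raw.isdigit()) or len(raw) > 10:
        raise ValueError("not a valid user id")
    value = int(raw)
    if not 1 <= value <= 10**9:
        raise ValueError("user id out of range")
    return value

def fuzz(target: Callable[[str], int], iterations: int = 10_000, seed: int = 0) -> List[str]:
    """Feed random strings to target; return inputs that broke its contract."""
    rng = random.Random(seed)  # fixed seed so failures are reproducible
    alphabet = string.printable + "\x00\u00b2\u0661"  # include a few awkward characters
    failures: List[str] = []
    for _ in range(iterations):
        candidate = "".join(rng.choice(alphabet) for _ in range(rng.randint(0, 12)))
        try:
            result = target(candidate)
        except ValueError:
            continue  # rejecting bad input is the expected behavior
        except Exception:
            failures.append(candidate)  # any other exception is a bug
            continue
        if not (isinstance(result, int) and 1 <= result <= 10**9):
            failures.append(candidate)  # accepted, but outside the documented range
    return failures
```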

Layer 5: Monitoring and Response

Assume something will get through. Because something will. So monitor for suspicious behavior. Log everything. Have an incident response plan. Be able to detect and respond to breaches quickly.
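Monitoring can start with something as simple as a rate threshold. This Python sketch flags any account that performs more than a set number of actions inside a sliding time window, the kind of signal that might catch an attacker minting API tokens or pulling records on someone else's behalf. The class name, limit, and window are hypothetical and would need tuning against real traffic.

```python
from collections import defaultdict, deque
from typing import DefaultDict, Deque

class AccessMonitor:
    """Flag any account that performs more than `limit` actions within `window` seconds."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self._events: DefaultDict[str, Deque[float]] = defaultdict(deque)

    def record(self, account: str, timestamp: float) -> bool:
        """Record one action; return True if the account is now over the threshold.

        Assumes timestamps for a given account arrive in non-decreasing order.
        """
        events = self._events[account]
        events.append(timestamp)
        # Discard events that have slid out of the window ending at `timestamp`.
        while events and events[0] <= timestamp - self.window:
            events.popleft()
        return len(events) > self.limit
```

For example, `AccessMonitor(limit=3, window=60)` stays quiet for three actions in a minute and returns `True` on the fourth; what happens next (an alert, a forced re-authentication) is a policy decision.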

The timeline highlights the progression from initial vision to the discovery of a critical vulnerability. Each phase represents a step closer to the breach, emphasizing the lack of security checks.

Runable and Automation Security: Learning From Moltbook

For teams using AI-powered automation platforms like Runable to generate presentations, documents, reports, and other business-critical content, the Moltbook incident raises important questions about security and data handling.

When you're using AI to automate workflows that touch company data, customer information, or sensitive documents, you're making a trust decision about that platform. Is it secure? Does it protect your data? What happens if something goes wrong?

The lesson from Moltbook is that AI-built platforms need extra scrutiny. Runable's approach to security should include multiple safeguards: encryption of data in transit and at rest, secure handling of credentials and API tokens, regular security audits, clear data retention policies, and transparent incident response procedures.

If you're using Runable for automating reports or documents that contain sensitive information, understand what data Runable has access to, how it's stored, and what your options are if you need to migrate away from the platform.

Start with Runable's free tier to understand how it handles your data before committing to more sensitive use cases. This isn't about distrust; it's about the security posture that Moltbook taught us we need.

Apple's Lockdown Mode: A Bright Spot in a Dark Week

While Moltbook was a security disaster, one piece of good news came out of the same week: Apple's Lockdown Mode actually works.

Reporter Hannah Natanson of The Washington Post had her home raided by the FBI as part of an investigation into leaked documents. They took her devices. But they couldn't get into her iPhone because it was in Lockdown Mode.

Lockdown Mode is Apple's extreme security setting, designed specifically to protect against government spyware and forensic tools. When it's enabled, your iPhone won't connect to most peripherals without being unlocked. It blocks connection to forensic analysis devices like GrayKey and Cellebrite that law enforcement uses to extract data from phones.

In Natanson's case, the FBI couldn't extract the phone's data because Lockdown Mode prevented them from connecting the forensic tools. That's a massive win for privacy, and it shows that privacy-focused engineering can actually work.

The irony here is sharp: while AI-generated code at Moltbook was leaking data in seconds, Apple's manually engineered security was keeping government agencies out of a phone they physically possessed.

AI-generated code poses significant security challenges, primarily due to speed and lack of security awareness. Estimated data.

Ukraine's Starlink Victory: When Tech Becomes a Weapon

In perhaps the most dramatic security development of the week, Elon Musk's Starlink disabled Russian forces' access to its satellite internet service, causing a communications blackout across Russian military positions in Ukraine.

This is significant for several reasons, and none of them are simple.

Russia had been using Starlink service on the front lines. Ukrainian forces couldn't jam it effectively because it wasn't theirs. But Ukraine's defense minister requested that SpaceX disable Russian access to Starlink. And Starlink did.

The impact was immediate and severe. Russian military bloggers described it as "a catastrophe." Drones couldn't communicate with their operators. Troops couldn't coordinate. The advantage that satellite internet had provided evaporated.

Here's what's interesting from a security perspective: this represents a new kind of security weapon. Not a cyberattack in the traditional sense. Not code or malware. Just flipping a switch and denying access to critical infrastructure.

For every infrastructure company providing critical services, this is a warning. Your service can be weaponized. Your infrastructure can become a geopolitical tool. Your architecture decisions have military implications.

If you're running any service that spans geographies or could touch military operations, you need to think about what happens when a government asks (or orders) you to disable service in a particular region. You need to think about the implications of denying access to your service. You need infrastructure that can handle that kind of granular control.

The US Cyberattack on Iran: Unseen Warfare

While Starlink's shutdown of Russian forces was visible and dramatic, another cyberattack was happening almost entirely in the dark.

US Cyber Command disrupted Iran's air defense systems during kinetic military strikes on Iran's nuclear program. The disruption was timed perfectly to prevent surface-to-air missiles from being launched at American warplanes.

This required several things to work in coordination:

Precise Intelligence: The NSA had to identify the specific vulnerabilities in Iran's air defense systems. Not theoretical vulnerabilities. Not zero-days that might work sometimes. Specific weaknesses in specific systems that they could exploit reliably.

Exploit Development: Cyber Command had to develop tools to exploit those vulnerabilities without triggering defenses or alerting operators until it was too late.

Timing: The cyberattack had to hit at exactly the right moment. Too early and the systems would come back online. Too late and the warplanes would be in range. Timing had to be perfect.

Operational Security: The entire operation had to remain secret until it was useful to declassify the information. That means keeping exploits secret, keeping methods secret, keeping everything locked down.

From a pure cybersecurity perspective, this is terrifying and impressive in equal measure. A state using cyberattacks to disable another country's critical military infrastructure. Not to steal data. Not to cause economic damage. But to create a specific military advantage at a specific moment.

This is what warfare looks like in 2025. Not just bombs and missiles. Not just hacking financial systems. But precision cyberattacks on military infrastructure timed to support kinetic military operations.

For security professionals, this should shape how you think about critical infrastructure. Your systems might become targets in conflicts you're not even parties to. Your infrastructure might need to defend against sophisticated, state-sponsored cyberattacks.

Data Brokers and Threats to Public Servants

While the big stories this week focused on AI vulnerabilities and military cyber operations, a smaller story revealed a less obvious threat.

Data brokers are selling public servants' personal information. Police officers. Firefighters. Emergency responders. People whose names are public but whose home addresses, phone numbers, family information, and daily patterns shouldn't be.

That data ends up in the hands of people who want to hurt them. Threats against police officers are up. Threats against firefighters are up. And much of it traces back to data brokers selling information that may be technically public but that people reasonably expected to stay private.

State privacy laws don't protect these people well. The information is public record, so it's fair game for brokers. But in practice, it's putting lives at risk.

This is a different kind of security problem than Moltbook or cyberattacks. It's a structural problem. Data that's technically public is being weaponized against vulnerable people, and our laws haven't caught up.

For anyone building platforms that handle personal information, this is a reminder: you have responsibility beyond just technical security. You have responsibility for how your data could be misused even if it's technically protected.

The Bigger Picture: We're Entering A New Era of Risk

Let's step back and think about what happened this week at a bigger level.

We have AI-generated code creating security vulnerabilities at scale. We have governments using cyberattacks as military weapons. We have private companies like SpaceX making geopolitical military decisions. We have data brokers selling information that enables physical violence.

These aren't separate problems. They're symptoms of the same underlying shift: we're building systems that are more powerful and more opaque than we've ever built before, and we don't have good frameworks for securing them or controlling them.

Moltbook represents the beginning of an era where AI builds our infrastructure. Its security implications are still emerging, and most of its vulnerabilities have yet to be found.

The Starlink shutdown represents a shift in how private companies are entangled with geopolitics and military operations. That entanglement is going to create new security problems and new questions about responsibility.

The cyberattack on Iran represents the normalization of offensive cyber operations as part of military strategy. That means every critical system is now a potential target.

And the data broker problem represents how old infrastructure (public records) is being weaponized by new technology (data aggregation) in ways the law hasn't caught up with.

All of this adds up to one thing: security is more important now than it's ever been, and the problems are more complex than they've ever been.

What This Means For Developers and Security Teams

If you work in security or software development, this week should inform how you approach your work going forward.

For developers using AI code generation: Treat AI-generated code as a first draft, not final code. Apply rigorous security review. Test edge cases. Don't assume it's secure because it works.

For security teams: Start building tools and processes for reviewing AI-generated code. Static analysis isn't enough. You need context-aware review tools that understand the specific vulnerabilities AI tends to create.

For infrastructure teams: Assume your systems might become targets of state-sponsored cyberattacks. Build defensively. Plan for adversarial operations against your infrastructure. Test your incident response procedures.

For data teams: Understand that data you consider public might be weaponized. Think about the downstream implications of the data you collect and store. Have retention policies that eliminate data that's no longer needed.

For leadership: Budget for security as a core part of development, not an afterthought. Security slows shipping. It's frustrating. But the alternative is catastrophic.

The security landscape of 2025 is more complex, more adversarial, and more high-stakes than ever before. The Moltbook incident is just the first of many security stories we'll see as AI becomes more integrated into critical infrastructure.

Building Secure AI-Powered Workflows

For teams building automation-heavy workflows with platforms like Runable, the lessons from Moltbook become practically important.

When you're automating document generation, report creation, or slide creation with AI, you're making decisions about trust. The platform has access to your data. It's generating content. It's handling the workflow.

Secure AI-powered workflows require:

- Understanding what data the platform accesses - What information do you need to share? Can you minimize it?

- Reviewing generated content for accuracy and safety - AI can hallucinate. AI can make mistakes. Human review catches those.

- Maintaining audit trails - Who generated what? When? With what data? You need to track it.

- Having data deletion policies - When can Runable delete your data? How long does it retain it? What's your control?

- Testing with non-sensitive data first - Before you put sensitive data through any AI-powered workflow, test with fake data.
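For the audit-trail point above, here's a minimal Python sketch of a record that answers who, what, when, and with what data. It stores a SHA-256 fingerprint of the input instead of the input itself, so the log doesn't become another copy of sensitive data. The function and field names are hypothetical and not tied to Runable's or any other platform's audit format.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional

def audit_record(user: str, action: str, input_data: bytes,
                 when: Optional[datetime] = None) -> str:
    """Build one JSON line recording who did what, when, and with which input."""
    timestamp = (when or datetime.now(timezone.utc)).isoformat()
    entry = {
        "user": user,
        "action": action,
        "timestamp": timestamp,
        # A fingerprint lets you prove which input was used without storing it again.
        "input_sha256": hashlib.sha256(input_data).hexdigest(),
    }
    return json.dumps(entry, sort_keys=True)
```

Appending lines like these to write-once storage gives you a trail you can query after an incident.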


The Future: What Comes Next

If I'm being honest, I think we're going to see more Moltbook-like incidents. Not because Moltbook was uniquely bad (it wasn't), but because the incentives are pushing everyone toward using AI to build faster.

The companies that will win in the short term are the ones shipping fast. Some of those companies will have security breaches. They'll fix them. They'll move on. Users might switch, or they might stay because the alternative is worse.

The companies that will win in the long term are the ones building security into the process from the start. Not as an afterthought. Not as a phase before launch. But as part of the development process itself.

For AI-generated code specifically, I expect we'll see:

- Better tools for reviewing AI-generated code

- Standards for secure code generation

- Liability frameworks that clarify who's responsible when AI-generated code has vulnerabilities

- Security certification for AI-powered platforms

- Insurance products covering AI-specific security risks

All of that is still years away. For now, we're in the wild west. And the casualties are starting to pile up.

Recommendations: Staying Secure in an AI-First World

Here's what I'd recommend if you're managing security for any organization in 2025.

Immediate Actions (This Week):

- Audit any AI-generated code in your codebase

- Review your third-party platform security policies

- Test your incident response procedures

- Update your security team on the Moltbook incident and similar vulnerabilities

Short-Term Actions (This Month):

- Implement secrets scanning in your CI/CD pipeline

- Establish code review policies for AI-generated code

- Test your backup and recovery procedures

- Review your data retention policies

Medium-Term Actions (This Quarter):

- Build or adopt security review tools for AI-generated code

- Conduct security training for developers on AI-specific vulnerabilities

- Implement security monitoring for unusual data access patterns

- Review your security incident response procedures

Long-Term Actions (This Year):

- Build a security culture where faster doesn't mean riskier

- Invest in security infrastructure that scales with your systems

- Develop standards for AI-generated code in your organization

- Create security benchmarks and regularly test against them

FAQ

What is the Moltbook security breach?

Moltbook was a social network platform for AI agents built entirely with AI-generated code. Security researchers discovered a critical vulnerability in the platform's JavaScript code that exposed a private cryptographic key. This single vulnerability exposed thousands of user email addresses, millions of API credentials, and allowed complete account impersonation of any user on the platform. The vulnerability was fixed after discovery, but it highlighted the risk of deploying AI-generated code without proper security review.

How did the Moltbook vulnerability get into production?

The founder of Moltbook used AI to generate all the code for the platform without writing any code himself. This approach allowed fast development but eliminated the human code review and security analysis that typically catches such vulnerabilities. The private key was exposed in client-side JavaScript code, something that automated security scanning and basic manual review would have caught. The breach occurred because there was no adequate security review process for the AI-generated code.

What are the main types of vulnerabilities AI-generated code creates?

AI-generated code tends to create predictable vulnerabilities: secrets and cryptographic keys left in unexpected places, injection vulnerabilities (SQL, command, template), authentication bypasses due to incomplete edge case handling, and configuration mistakes that leave systems exposed. These vulnerabilities aren't unique to AI code, but they appear more frequently because AI models are trained to generate working code, not necessarily secure code, and often miss the security implications of implementation choices.

How should developers use AI for code generation safely?

Developers should treat AI-generated code as a first draft requiring professional security review. This means running code through automated security scanning tools, conducting manual review of security-critical components like authentication and cryptography, testing edge cases aggressively, and maintaining the same code review standards used for human-written code. For any AI-generated code handling sensitive data or security functions, professional security review is not optional.

What is Apple's Lockdown Mode and why does it matter for security?

Lockdown Mode is Apple's most restrictive security setting designed to protect against government surveillance and sophisticated cyberattacks. It prevents connection to forensic analysis devices that law enforcement uses to extract data from iPhones and restricts many system features. Reporter Hannah Natanson's iPhone in Lockdown Mode prevented FBI forensic tools from extracting data during a raid, demonstrating that privacy-focused engineering can provide real protection against powerful adversaries.

How does the Starlink military shutdown affect security?

SpaceX's decision to disable Russian military access to Starlink during the Ukraine conflict demonstrates that private infrastructure companies can become military tools. For security teams, this means recognizing that any service spanning multiple countries is vulnerable to being disabled or weaponized for geopolitical purposes. It also raises questions about corporate responsibility, government pressure, and the militarization of civilian infrastructure.

What does the US cyberattack on Iran's air defense system teach about cybersecurity?

The disruption of Iran's air defense systems during military strikes shows that cyberattacks have become integral parts of military operations. State-sponsored attacks can precisely target critical infrastructure and create specific military advantages. This means organizations managing critical infrastructure must assume they're potential targets of sophisticated, well-resourced adversaries and design systems with that threat model in mind.

How should organizations secure AI-powered automation platforms?

Organizations using AI-powered platforms should understand what data the platform accesses, review all generated content before use, maintain audit trails of what was generated and when, understand the platform's data retention and deletion policies, and test with non-sensitive data before deploying to production. For platforms like Runable starting at $9/month, the same security principles apply regardless of price point.
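
One way to make "maintain audit trails of what was generated and when" tamper-evident is a hash-chained log. The sketch below is a generic technique, not a feature of Runable or any other platform: each entry stores a hash of the generated content plus the hash of the previous entry, so editing history after the fact breaks the chain. Actor and action names are hypothetical.

```python
import hashlib
import json
import time
from typing import List


class AuditLog:
    """Append-only log in which each entry commits to the hash of the entry before it."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: List[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        fields = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(fields, sort_keys=True).encode()).hexdigest()

    def record(self, actor: str, action: str, content: str) -> dict:
        entry = {
            "ts": time.time(),
            "actor": actor,
            "action": action,
            # Store a digest of the generated content, not the content itself.
            "content_sha256": hashlib.sha256(content.encode()).hexdigest(),
            "prev": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Detect edited, reordered, or removed entries (except a truncated tail)."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.record("workflow-bot", "generate_report", "Q3 summary draft")
log.record("alice", "approve", "Q3 summary draft")
print(log.verify())  # True
log.entries[0]["actor"] = "mallory"
print(log.verify())  # False
```

Dropping entries from the end of the log is not detectable from the chain alone; periodically copying the latest hash to a separate system closes that gap.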

What's the difference between security vulnerabilities in AI-generated code versus human-written code?

AI-generated code tends to have vulnerabilities in predictable locations: secrets in client-side code, incomplete validation in edge cases, configuration mistakes, and logic errors in security-critical functions. These vulnerabilities aren't unique to AI, but they appear more frequently. The difference is that human code review often catches these issues before production, while AI code is often deployed without equivalent review due to the speed advantage that AI provides.
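
Configuration mistakes are among the easiest of these to catch mechanically. A minimal sketch of a pre-deploy check over a hypothetical settings dictionary: every key name here (`debug`, `cors_allowed_origins`, `require_auth`, `db_bind_address`, `client_env`) and every secret marker is an assumption, to be mapped onto whatever a real framework uses.

```python
from typing import Any, Dict, List

# Hypothetical markers for variable names that should never reach the browser.
SECRET_MARKERS = ("SECRET", "PRIVATE", "SERVICE_ROLE", "PASSWORD")


def audit_config(config: Dict[str, Any]) -> List[str]:
    """Return findings for common insecure settings in a hypothetical config layout."""
    findings = []
    if config.get("debug") is True:
        findings.append("debug mode is enabled")
    if "*" in config.get("cors_allowed_origins", []):
        findings.append("CORS allows requests from any origin")
    if config.get("require_auth") is False:
        findings.append("authentication is switched off")
    if config.get("db_bind_address") == "0.0.0.0":
        findings.append("database listens on every network interface")
    # Anything under client_env is assumed to be bundled into front-end code.
    for name in config.get("client_env", {}):
        if any(marker in name.upper() for marker in SECRET_MARKERS):
            findings.append(f"secret-looking variable shipped to the client: {name}")
    return findings


risky = {
    "debug": True,
    "cors_allowed_origins": ["*"],
    "client_env": {"PUBLIC_API_URL": "https://api.example.com", "SERVICE_ROLE_KEY": "..."},
}
for finding in audit_config(risky):
    print(finding)
```

The `client_env` rule targets the same failure mode as Moltbook's exposed key: a credential that is only safe on the server ending up in code that ships to every visitor.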

How can security teams prepare for more AI-generated code vulnerabilities?

Security teams should build processes and tools specifically for reviewing AI-generated code, implement automated secrets scanning in all CI/CD pipelines, establish stricter code review standards for security-critical code, create security training for developers on AI-specific vulnerabilities, and develop incident response procedures that account for the scale and type of vulnerabilities AI tends to create. Static application security testing (SAST), secrets scanning, and dependency analysis should be mandatory gates before production deployment.
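
A minimal sketch of the secrets-scanning step: regex rules for well-known secret shapes, plus a Shannon-entropy filter so obvious placeholders are not reported. The patterns are illustrative and the 3.0 bits-per-character threshold is an assumed cut-off; established open-source scanners such as gitleaks or TruffleHog maintain far larger, tuned rule sets and are the better choice for a real pipeline.

```python
import math
import re
from collections import Counter
from pathlib import Path
from typing import List, Tuple

# Illustrative rules only; dedicated scanners ship far larger, tuned rule sets.
PATTERNS = {
    "private key block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded secret": re.compile(
        r"""(?i)(?:api[_-]?key|secret|token|password)\s*[:=]\s*["']([^"']{16,})["']"""
    ),
}
ENTROPY_THRESHOLD = 3.0  # bits per character; an assumed cut-off, tune per codebase


def shannon_entropy(s: str) -> float:
    """Average bits per character; random keys score high, placeholders score low."""
    counts = Counter(s)
    return -sum(n / len(s) * math.log2(n / len(s)) for n in counts.values())


def scan_text(text: str) -> List[Tuple[int, str]]:
    """Return (line number, rule name) for every suspected secret in text."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for label, pattern in PATTERNS.items():
            match = pattern.search(line)
            if not match:
                continue
            # Rules that capture a value are also checked for randomness, so a
            # placeholder such as "xxxxxxxxxxxxxxxx" is not reported.
            if match.groups() and shannon_entropy(match.group(1)) < ENTROPY_THRESHOLD:
                continue
            findings.append((lineno, label))
    return findings


def scan_files(paths: List[str]) -> int:
    """Print findings and return a process exit code: 1 if anything was found."""
    found_any = False
    for path in paths:
        for lineno, label in scan_text(Path(path).read_text(errors="ignore")):
            print(f"{path}:{lineno}: possible {label}")
            found_any = True
    return 1 if found_any else 0
```

In a CI job, the step would end with `sys.exit(scan_files(paths))` over the changed files, so any finding fails the build. Running it against built front-end bundles as well as source matters, because a key in client-side JavaScript is public the moment it deploys.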

Conclusion: The Security Reckoning Is Coming

We're at a turning point in how we build systems. We have tools that make development faster than ever. But speed without security is just a different way of losing.

What made Moltbook's vulnerability notable wasn't how the code was written. It was that the flaw shipped to production, where anyone could find it. Thousands of users' data was exposed. Millions of credentials were leaked. And it all traced back to a single choice: skip security review for speed.

That choice worked until it didn't.

The question now is whether we learn from Moltbook or whether it becomes just one incident among many. I hope it's the former. I'm worried it's the latter.

If you're building anything with AI, if you're using AI-powered platforms, if you're responsible for security at any organization, the time to act is now. Not after the next breach. Not after the next incident. Now.

Build security in from the start. Review AI-generated code like you'd review any critical code. Assume your systems are targets. Test your incident response. Plan for failure.

Moltbook exposed data because the founder wanted to build fast. His users are dealing with the fallout. Don't be the next cautionary tale.

The future of secure systems isn't about writing code faster. It's about writing code that actually protects the people using it. That takes time. It takes thought. It takes security expertise. But it's the only way we build systems that matter.

Key Takeaways

- Moltbook's AI-generated code exposed thousands of user emails and millions of API credentials through a single unreviewed private key vulnerability

- AI-generated code creates predictable security vulnerabilities (cryptographic failures, injection attacks, authentication bypasses, and configuration mistakes) that often slip past human code review

- Organizations must implement multi-layer security: automated scanning, manual review of critical code, architectural validation, security testing, and incident monitoring

- Apple's Lockdown Mode successfully prevented FBI forensic access to a reporter's iPhone, showing privacy-focused engineering provides real protection against powerful adversaries

- Starlink's military shutdown and US cyberattacks on Iran demonstrate that private infrastructure and state-sponsored cyberattacks are reshaping security threat models
