Bandcamp Just Showed the Music Industry How Simple AI Accountability Can Be

It's a weird moment in music. You can get an AI to write a decent song in about five minutes. It'll clone Drake's voice, mimic The Weeknd, sound vaguely like Taylor Swift. It'll do it for free or nearly free, and the result can be uploaded everywhere.

Most streaming platforms have... basically shrugged.

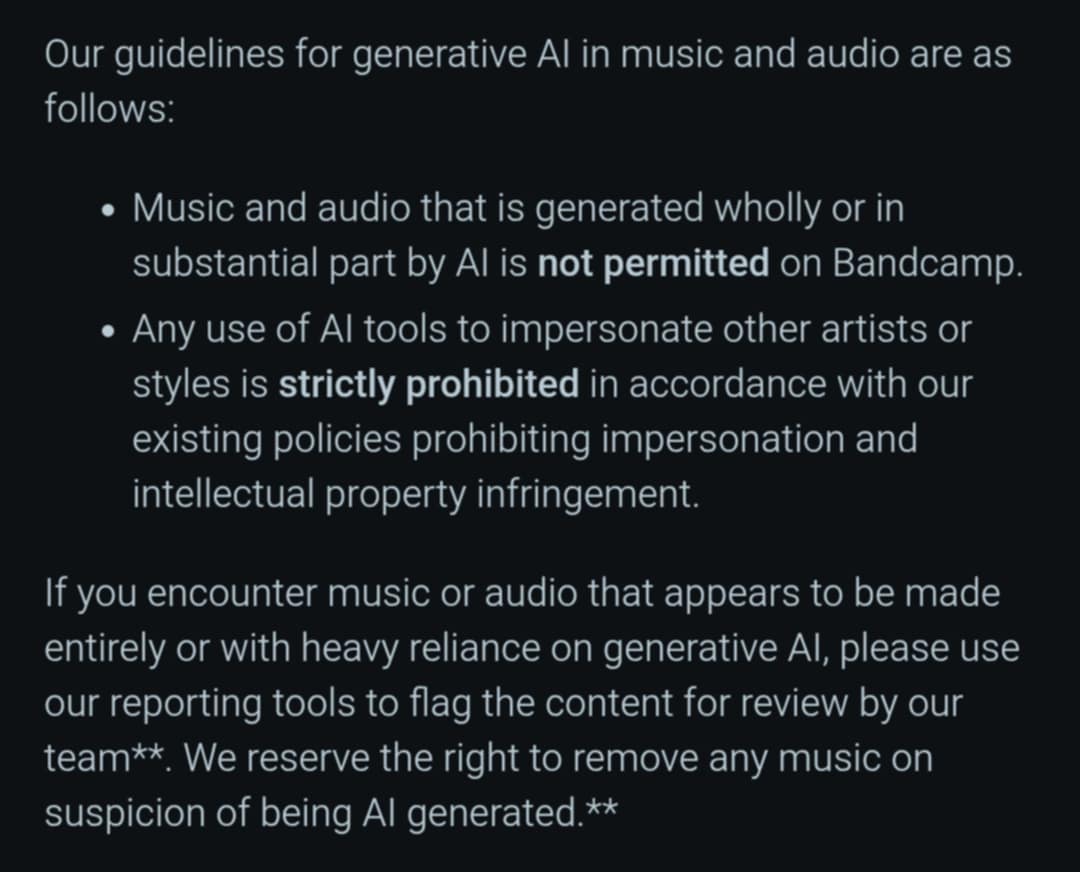

Then Bandcamp released a two-point policy that banned AI impersonation and music generated primarily by AI. No conditions. No exceptions. No "we'll study this." Just: don't upload it, or we'll remove it.

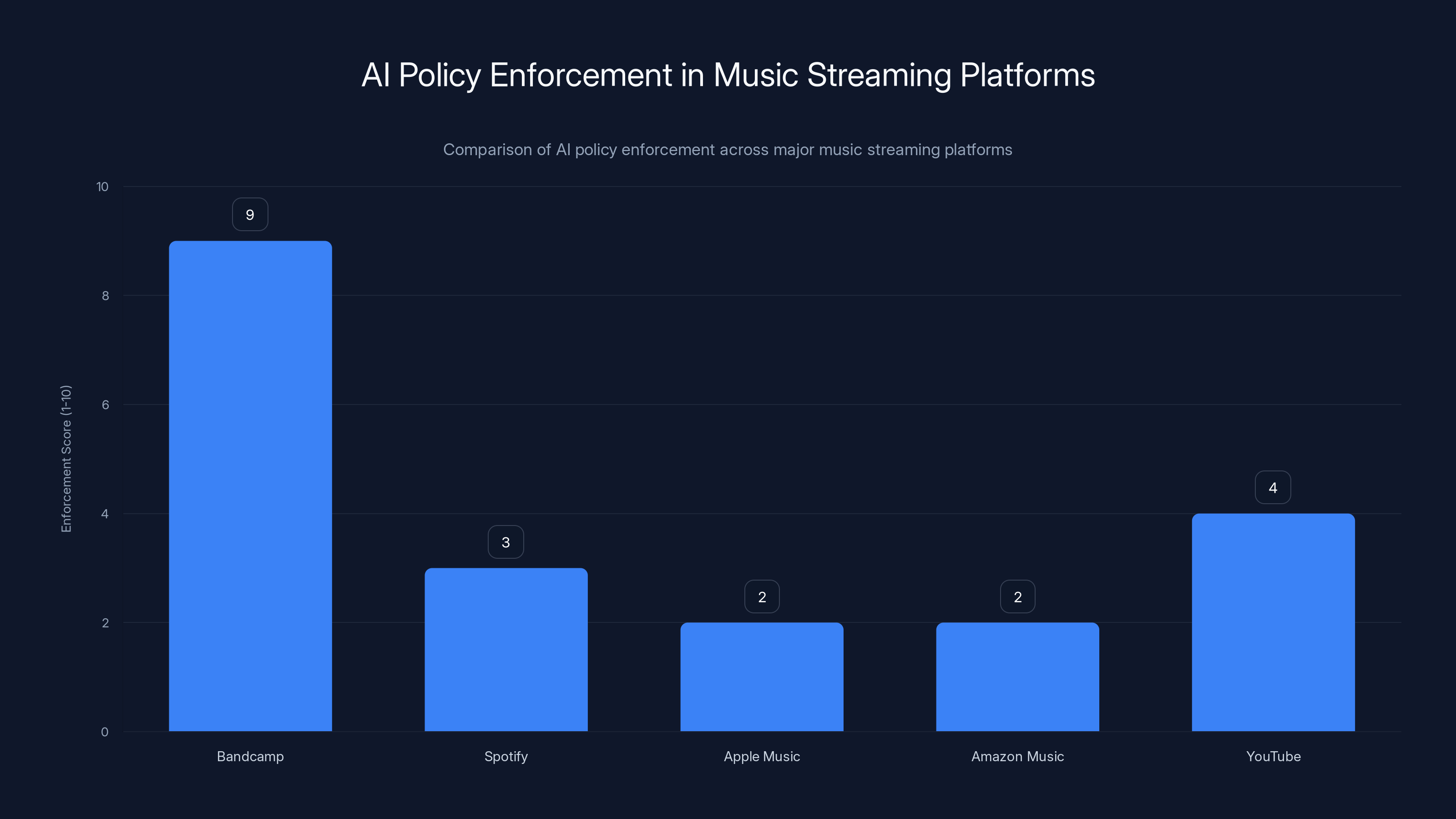

Spotify has spent years talking about AI safety while doing almost nothing. Apple Music stays quiet. Amazon Music keeps selling subscriptions. YouTube dances around the issue. Meanwhile Bandcamp, a smaller independent platform, became the first major music platform to actually draw a line in the sand.

The irony is almost too obvious: the bigger the platform, the less willing it is to take a stand. But Bandcamp just proved that enforcing these standards isn't some impossible technical problem. It's a policy problem. And if a platform with a fraction of Spotify's resources can do it, why can't Spotify?

The Policy Itself Is Almost Embarrassingly Simple

Let's break down what Bandcamp actually said. The policy has two main components:

- Artists cannot use AI tools to impersonate other artists or replicate established artistic styles.

- Artists cannot upload music created primarily through AI generation without human artistic input.

That's it. Two sentences. No loopholes, no "with permission," no "in the next two years." The policy is absolute. Upload AI impersonation music and it gets removed. Do it again and your account gets flagged.

What makes this stunning is the contrast. Look at Spotify's approach. They've announced partnerships with AI music companies. They've hired executives specifically to "explore AI opportunities." They've created frameworks for detecting fake artists and artificial streams (which suggests they already have detection technology; they just don't point it at AI-generated music). They've written white papers about responsible AI use.

And yet the platform is flooded with AI-generated tracks designed to sound like legitimate artists.

Bandcamp didn't hire consultants or form committees. They just decided: this behavior violates our community standards, and we're enforcing it. The policy went live. Music flagged for violation gets removed. The process takes hours, not years.

Why Spotify's Silence Is Deafening

Spotify has 600+ million users and counting. It's the largest music streaming platform on the planet. If Spotify implemented Bandcamp's policy tomorrow, it would represent a massive shift in how AI-generated content moves through the internet.

Yet Spotify hasn't done this. Why?

The honest answer is probably financial. AI-generated tracks cost almost nothing to produce and upload. Spam uploaders can generate thousands of songs for the cost of a coffee. Some tracks are designed to game the algorithm, trick playlist curators, or earn fractions of a cent per play through streaming manipulation. Spotify's business runs on listening volume, so more music (even bad music) theoretically means more potential revenue if even a tiny percentage of it gets played.

But there's a secondary reason: Spotify wants to be seen as "pro-AI innovation." The company invests in AI music companies. It uses AI for playlist generation, algorithm optimization, and personalization. If Spotify cracks down too hard on AI-generated music, it sends a contradictory message. The company would rather position itself as forward-thinking on AI than take a hard line against low-quality AI spam.

Bandcamp doesn't have this problem. It has never positioned itself as an AI-first company. It markets itself as the place where independent artists stay in control. The platform makes money by taking a cut of direct artist-to-fan sales, not through ad-tech or algorithmic engagement. An artist on Bandcamp succeeds by building genuine fans, not by gaming the system.

That difference matters. It changes the incentives. Bandcamp has no financial reason to allow AI slop, but every reason to keep the platform trustworthy for real creators.

The Detection Problem That Isn't Actually a Problem

You'll hear this argument from big streamers: "We can't detect AI music reliably. It's too hard. The technology isn't there yet."

Bandcamp's policy suggests otherwise.

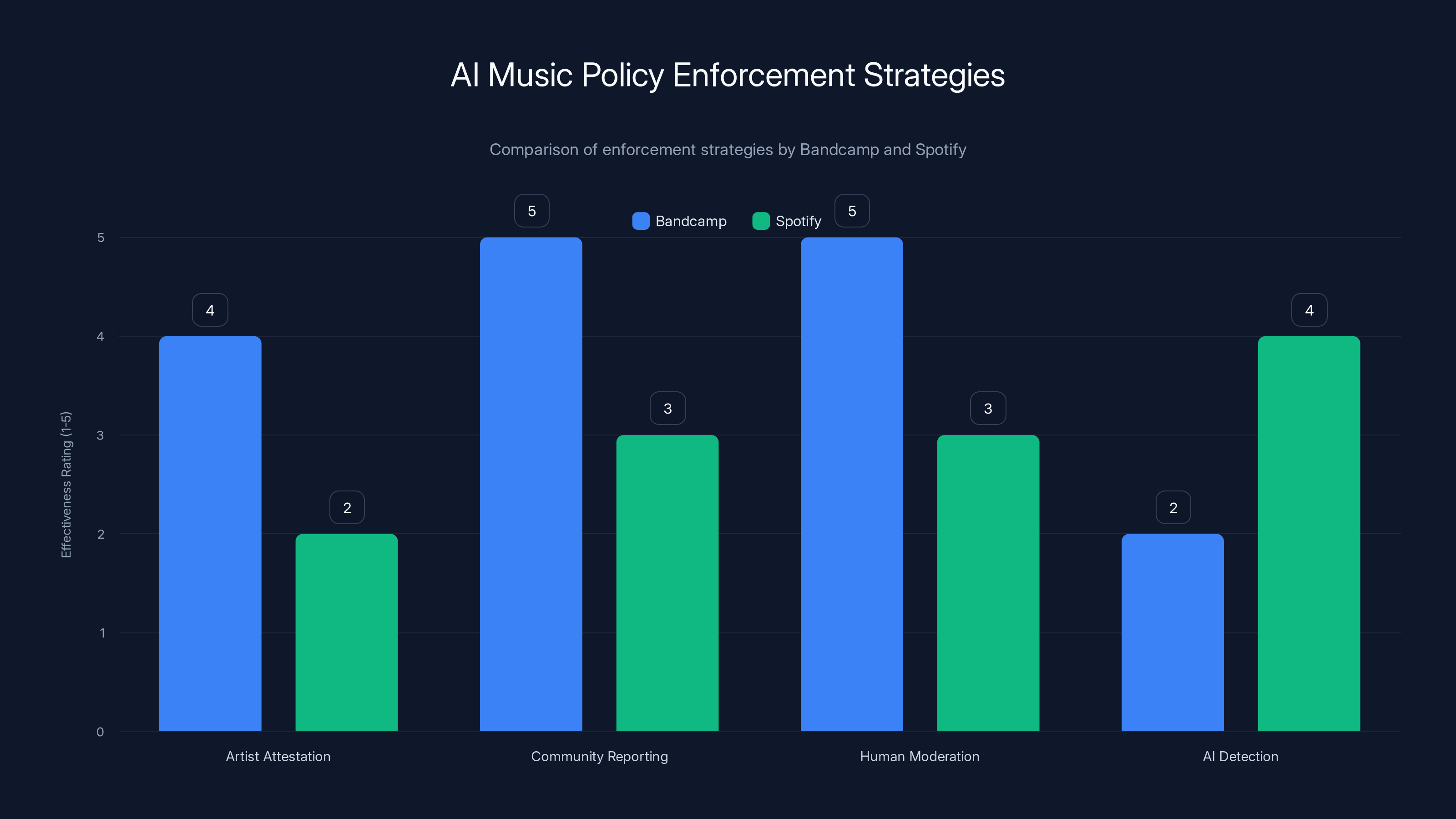

Bandcamp isn't using some proprietary AI detector that somehow works perfectly. They're relying on community reporting, manual review, and clear terms of service enforcement. Artists self-report when uploading (and Bandcamp's terms require them to confirm their music was created with genuine human artistic intent). When violations are reported, human reviewers assess them.

Yes, some AI-generated music will slip through. Some human-created music might be mistakenly flagged. But the point is that Bandcamp chose to enforce standards rather than claim enforcement is impossible.

Spotify absolutely has the resources to do the same. It could require artists to verify their identity and confirm the music's origin. It could implement human review processes for flagged content (which it already does for other policy violations). It could even use AI detection tools as a supplementary screening method, not as the primary enforcement mechanism.

What Spotify can't do is claim it's technically infeasible when Bandcamp just demonstrated it's actually pretty feasible.

The real limitation is political will and business incentives, not technology. Bandcamp found the will. Spotify hasn't.

What Bandcamp's Policy Actually Catches (and Doesn't)

Let's be precise about what this policy does and doesn't achieve.

What it catches: Any music explicitly designed to impersonate another artist. AI-generated "The Weeknd Type Beat" tracks. AI Drake vocals on lo-fi instrumentals. Voice clones of established singers. AI remixes of famous songs. Music generated primarily by AI with minimal human creative input.

What it doesn't catch: Artists who use AI as one tool within a larger artistic vision. A producer who uses AI to generate chord progressions and then completely restructures them into something original. An artist who uses AI voice synthesis as a deliberate, clearly disclosed artistic choice. Electronic music made with AI that's genuinely novel and doesn't impersonate anyone.

The distinction matters. Bandcamp's policy isn't anti-AI. It's anti-impersonation and anti-low-effort-slop. The platform allows artists to use AI if it's part of their genuine creative process. What's forbidden is lazily uploading AI-generated music and pretending it's something it isn't.

This is actually more nuanced than "AI bad." It's "deceptive AI bad." It's "art-by-copy-paste bad." It's "using AI to game the system bad."

That distinction is important because it shows the policy isn't technophobic. It's just holding artists accountable for what they claim to have created.

The Real Problem: Every Major Platform Except Bandcamp Is Failing

Let's look at where the major streamers actually stand.

Spotify: Has experimented with AI music detection. Has built AI tools of its own. Has not implemented meaningful restrictions on AI-generated content. Continues to host thousands of impersonation tracks and AI-spam albums.

Apple Music: Has been almost entirely silent on the issue. No clear policy. No public statements. Just... lets it happen.

Amazon Music: Similar silence. The platform exists, music flows through it, no active stance on AI-generated content.

YouTube Music: Has some limited copyright detection systems but doesn't specifically target AI impersonation. Millions of AI-generated videos live on YouTube with no restriction.

SoundCloud: Has experimented with AI tools but hasn't implemented artist-impersonation restrictions either.

Tidal: Minimal public policy on AI-generated music.

Bandcamp stands nearly alone. One platform decided that artist impersonation is unacceptable and enforced that decision. Everyone else decided that enforcement is someone else's problem.

This creates a perverse incentive structure. If you're an AI music spammer, you upload to Spotify, Apple Music, and YouTube, and you probably avoid Bandcamp now. The platforms that most need quality control are the ones doing the least about it.

Why This Matters for Human Artists (Not Just Ethics)

Let's talk about real consequences.

If Spotify hosts 100,000 AI-generated "Drake" tracks, it doesn't just make the platform worse. It directly harms the real Drake by burying his discography among fake versions. Fans searching for his music have to wade through garbage. The algorithm gets confused. The streams get diluted.

More importantly, emerging artists get completely buried. A new indie artist trying to build an audience on Spotify faces algorithmic competition from AI-spam. They're competing for playlist placement against thousands of "[Artist Name] Type Beat" tracks that cost nothing to produce. The economics get completely distorted.

On Bandcamp, this doesn't happen. Real artists compete on the merits of their actual creativity. The algorithm isn't gamed by spam accounts. Playlists feature genuine human creativity.

This matters because it fundamentally affects where real musicians can build sustainable careers. Bandcamp becomes more attractive to serious artists because it won't host impersonation music. That's not anti-technology. It's pro-artist. The platform is saying: "If you're a real creator, you'll have a better experience here because we won't let spam artists destroy the ecosystem."

Spotify could have done the same. It chose not to.

The Compliance Question: How Hard Is It Really?

Let's estimate the actual operational burden of enforcing a Bandcamp-style policy on a platform like Spotify.

Spotify already has:

- Artist verification systems (used for verified badges)

- Content moderation teams (for explicit content, copyright claims, etc.)

- Automated detection systems (for payola, manipulation, suspicious patterns)

- Manual review processes (for appeals, disputes, special cases)

- Termination infrastructure (for banning accounts that violate policies)

Implementing Bandcamp's policy would require:

- Updated terms of service (maybe 2 hours of legal work)

- Clear artist attestation at upload (infrastructure already exists for metadata)

- Community reporting system (already exists for other violations)

- Human review process (already exists for other violations)

- Training for moderation teams on AI-music indicators (maybe 8 hours per team member)

- Automated flags for obvious violations (AI music companies actually offer tools for this)

The total implementation cost is probably in the low six figures, or perhaps a million dollars once training and oversight are included.

Spotify's market capitalization runs to tens of billions of dollars.

The company spends far more than a million dollars on marketing every year. The idea that they can't afford to enforce this policy is objectively false. The idea that it's technically infeasible is objectively false. The idea that they don't have the expertise is objectively false.

They just haven't prioritized it. And Bandcamp just proved that if you do prioritize it, it actually works.

What Happens Next: The Pressure Will Build

Bandcamp's policy is going to create pressure on other platforms. Not immediately, but inevitably.

Lawsuits from impersonated artists are already starting. If a major artist sues Spotify for "failing to remove impersonation tracks that damaged my reputation," suddenly the legal risk becomes real. A precedent that says "streaming platforms have a responsibility to address AI impersonation" changes the game.

Artist advocacy groups will point to Bandcamp. "If Bandcamp can do it, why can't Spotify?" That question gets harder to answer the longer Spotify waits.

Regulatory bodies are starting to pay attention. The EU's AI Act has implications for music platforms. The US is considering legislation around AI-generated content. If governments start requiring platforms to verify human creation or remove impersonation, suddenly Bandcamp's voluntary policy looks like a competitive advantage rather than a limitation.

Meanwhile, artists have a choice. They can upload to Bandcamp (where there's no AI spam) or to Spotify (where their work gets buried under thousands of fake versions). As more artists figure this out, Bandcamp gets more attractive, and Spotify's ecosystem gets worse.

The irony is that enforcing these standards doesn't actually cost Spotify anything in revenue. AI-spam tracks barely make any money anyway. The platform would lose almost nothing from removing them. But it would gain trust, artist confidence, and regulatory insulation.

Instead, Spotify chose to do nothing, and Bandcamp is going to benefit.

The Broader Implication: Standards Matter

Here's the thing that's actually important: Bandcamp proved that you don't need some complicated technical solution to handle this problem. You just need standards, enforcement, and the will to stick with it.

For too long, tech companies have hidden behind "the problem is too complex" or "we're working on it" when what they really mean is "enforcing standards is less profitable than ignoring the problem."

Bandcamp showed that's not true. Enforcing standards is actually straightforward. The problem isn't the technology. The problem is the priorities.

That matters for AI regulation, content moderation, and platform accountability across every tech platform. If a smaller, less-resourced company can enforce clear standards, then bigger companies claiming they can't are just lying.

Spotify, Apple, Amazon, Google: they all have the ability to implement Bandcamp's policy tomorrow. They're just choosing not to. That choice has consequences. It tells artists they're not valued. It tells users the platform isn't worth trusting. It tells regulators that voluntary compliance isn't happening and enforcement is necessary.

Bandcamp, meanwhile, is building the kind of platform that artists want to use and that users can trust.

That's the real story here. It's not about AI. It's about who's willing to put creator interests first, and who's willing to let the ecosystem rot for marginal profit.

The Artist Perspective: Why This Policy Feels Like a Win

Talk to musicians and producers right now, and you'll hear consistent frustration with Spotify.

They're tired of searching for their own music and finding AI remixes. They're tired of algorithmic placement competing against spam. They're tired of feeling like the platform doesn't care about protecting their work or their reputation.

Bandcamp's policy is a direct response to this frustration. It's saying: "We know you worked hard on your art. We know AI spam is polluting the ecosystem. We're going to protect your interests."

That's a powerful message. It changes the value proposition of the platform. Bandcamp isn't the biggest platform. It's not the most convenient. But it's increasingly the one where real artists can actually thrive.

For emerging artists, this is especially important. On Spotify, competing against AI-spam is almost impossible. You're fighting algorithmic luck against infinite low-cost competition. On Bandcamp, you're competing against other real artists who invested real effort.

That's a game beginners can actually win.

How Other Platforms Could Actually Implement This

If you're a product manager at Spotify or Apple Music reading this, here's what Bandcamp's policy would actually look like in practice on your platform:

Step 1: Update terms of service to explicitly ban AI impersonation and AI-generated music without human creative input.

Step 2: Add a checkbox at upload asking artists to confirm they created the music with genuine human artistic intent. Make the attestation binding under your terms of service, with account termination as the penalty for a false one.

Step 3: Implement automated flags for content that matches known impersonation patterns or has suspicious metadata (uploading 1,000 songs in 24 hours, artist name is "Drake AI," etc.).

Step 4: Train your existing moderation team to review flagged content. Give them clear criteria: Is this impersonation? Is this obviously AI spam? Does it violate the policy?

Step 5: Remove violating content and warn/ban accounts.

Step 6: Let the music industry know you're doing this. Get some good PR out of actually protecting artists.

That's it. That's literally the playbook. Bandcamp proved it works. The only reason other platforms aren't doing it is because they haven't decided it's a priority.
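Step 3 is where automation comes in, and the rules can start out simple. Below is a minimal Python sketch of metadata red flags. It assumes a hypothetical `Upload` record and illustrative thresholds: 1,000 uploads in 24 hours, the standalone word "AI" in an artist name or title, and the phrase "type beat". None of this reflects Bandcamp's or Spotify's actual rules. The flags only route content to a human reviewer; they don't remove anything on their own.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical rules for illustration only; not any platform's real criteria.
BULK_UPLOAD_THRESHOLD = 1000      # uploads within a rolling 24-hour window
FLAG_WORDS = {"ai"}               # standalone words, as in "Drake AI"
FLAG_PHRASES = ("type beat",)     # phrases matched anywhere in the text


@dataclass
class Upload:
    artist_name: str
    title: str
    uploaded_at: datetime


def red_flags(uploads: list[Upload], now: datetime) -> set[str]:
    """Return reasons an account's last 24 hours of uploads should go to human review."""
    reasons: set[str] = set()
    recent = [u for u in uploads if now - u.uploaded_at <= timedelta(hours=24)]
    if len(recent) >= BULK_UPLOAD_THRESHOLD:
        reasons.add("bulk upload")
    for u in recent:
        text = f"{u.artist_name} {u.title}".lower()
        words = set(re.findall(r"[a-z0-9]+", text))   # whole words only
        if words & FLAG_WORDS:
            reasons.add("AI keyword in metadata")
        if any(phrase in text for phrase in FLAG_PHRASES):
            reasons.add("impersonation-style title")
    return reasons


# Usage: 1,000 tracks credited to "Drake AI" within one day trip two flags.
now = datetime(2025, 1, 15, 12, 0)
spam = [Upload("Drake AI", f"Track {i}", now - timedelta(minutes=i)) for i in range(1000)]
print(sorted(red_flags(spam, now)))                            # ['AI keyword in metadata', 'bulk upload']
print(red_flags([Upload("Jane Doe", "Rain Song", now)], now))  # set()
```

Matching whole words rather than substrings keeps a title like "Rain Song" from tripping the "ai" rule. Anything flagged still goes to a person, as in Step 4.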

The Economic Reality: Bandcamp's Move Is Actually Smart Business

There's an assumption that platforms like Spotify avoid enforcing these standards because enforcement is costly. That's wrong. Bandcamp's move is actually economically rational.

Here's why: AI-spam music doesn't generate meaningful revenue. A thousand AI-generated "Drake" tracks might get 100 plays total. At fractions of a cent per play, that comes to well under a dollar. But those thousand tracks dilute the value of the platform for serious artists and users.
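For a sense of scale, here is that arithmetic in Python. It assumes a payout of $0.004 per stream, which is a hypothetical round number. Real per-stream payouts vary by platform, market, and subscription type.

```python
# Hypothetical payout rate for illustration; real rates vary widely.
PAYOUT_PER_STREAM_USD = 0.004

tracks = 1_000      # AI-generated "Drake" uploads from the example
total_plays = 100   # plays across all of them combined

revenue = total_plays * PAYOUT_PER_STREAM_USD
revenue_per_track = revenue / tracks

print(f"total: ${revenue:.2f}")                  # total: $0.40
print(f"per track: ${revenue_per_track:.4f}")    # per track: $0.0004
```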

Serious artists, meanwhile, generate real money. They build fan bases. They drive subscriptions. They create genuine engagement. If a platform that attracts serious artists gives them a cleaner, more trustworthy ecosystem, those artists will invest more and perform better.

Bandcamp's decision to ban AI impersonation is actually a bet on quality over quantity. It's saying: "We'd rather host 100,000 real artists in a trustworthy ecosystem than 1,000,000 artists where 90% are spam."

That's good business. Spotify's strategy is the opposite: host as much content as possible and let quality control be someone else's problem.

One approach builds a platform artists trust and want to use. The other builds a platform that's increasingly hostile to both artists and users. Guess which one has better long-term economics?

What This Means for AI Music Tools Generally

One thing Bandcamp's policy doesn't do: ban AI music tools themselves. Landr, Splash, and other legitimate AI music creation tools can still be used to make music that Bandcamp artists upload.

The policy just bans specific uses: impersonation and low-effort generation.

This is actually the smart regulatory approach. It's not anti-innovation. It's anti-abuse. It acknowledges that AI music tools have legitimate creative uses while preventing the worst applications.

A producer who uses AI to generate chord progressions and then creates an entire original composition around them? That's fine. That's AI as a tool.

Someone who uploads 10,000 AI-generated tracks with titles like "Weeknd AI Type Beat" hoping they'll game the algorithm? That gets removed.

The distinction matters because it means Bandcamp isn't blocking innovation. It's just preventing deception.

The Precedent: What Happens When One Company Sets a Standard

Historically, when one company sets a clear standard for content moderation, others eventually have to follow or accept being seen as the less responsible option.

Facebook didn't want to moderate hate speech until it became a reputational liability. Then suddenly they invested billions in moderation. Twitter didn't care about misinformation until regulators started asking questions. Then they reluctantly implemented fact-checking.

Bandcamp's policy might work the same way. Once the industry norm becomes "serious platforms ban AI impersonation," platforms that don't will increasingly look irresponsible.

Artists will point to Bandcamp. Regulatory bodies will cite it. Lawsuits will reference it. Pretty soon, not having a clear AI policy won't be "the efficient choice." It'll be "the negligent choice."

Bandcamp just moved the Overton window. What was widely treated as impossible (enforcing an AI policy) is now proven possible. What was previously acceptable (allowing massive AI spam) is now openly questionable.

That shift takes time to propagate, but it's happening. In 2-3 years, expect most major platforms to have some version of Bandcamp's policy. They'll claim they developed it independently. Bandcamp won't get credit. But they'll have set the standard nonetheless.

Implementation Reality: It's Actually Easier Than You Think

One last point: Bandcamp didn't use some fancy technical system to enforce this. They used policy enforcement, community reporting, and human review.

That's not because technology doesn't exist. It's because policy is cheaper and faster to implement than technology.

Yes, AI-music detection tools exist, and companies like Spotify have access to them. But detection is never perfect, and perfect detection isn't necessary. You need "good enough" detection (maybe 85-90% accuracy) plus human review to catch the rest.
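To make "good enough detection plus human review" concrete, the sketch below estimates a daily review queue. Every input is a hypothetical assumption, not platform data. It also treats the 90% figure as the share of violating uploads the detector flags (its recall), which is only one of several ways to read "accuracy."

```python
def review_load(daily_uploads: int, violation_rate: float,
                hit_rate: float, false_positive_rate: float) -> dict[str, float]:
    """Estimate how much work an imperfect detector hands to human reviewers.

    hit_rate: share of violating uploads the detector flags (recall).
    false_positive_rate: share of legitimate uploads it flags by mistake.
    """
    violations = daily_uploads * violation_rate
    legitimate = daily_uploads - violations
    caught = violations * hit_rate
    false_alarms = legitimate * false_positive_rate
    return {
        "review_queue": caught + false_alarms,   # everything flagged goes to a human
        "caught": caught,
        "false_alarms": false_alarms,            # reviewers clear these before removal
        "missed": violations - caught,           # left for later community reports
    }


# Hypothetical inputs: 100,000 uploads a day, 10% violating,
# a 90% hit rate, and a 2% false-positive rate.
print(review_load(100_000, 0.10, 0.90, 0.02))
# → {'review_queue': 10800.0, 'caught': 9000.0, 'false_alarms': 1800.0, 'missed': 1000.0}
```

The detector only decides what humans look at, so false positives cost reviewer time rather than wrongly removed music. The missed share is what community reports are meant to pick up over time.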

Bandcamp's system probably works like this:

- Artist uploads music and checks a box confirming they created it

- Automated system flags obvious red flags (metadata indicating AI, suspicious patterns, etc.)

- Human reviewer listens to ~2 minutes of flagged music and makes a judgment call

- If it seems like AI impersonation, it gets removed

- Appeal process for false positives

That's it. That system probably catches 80-90% of actual violations immediately, and the rest over time as community reports come in.
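The workflow in that list can be written as a small decision function. This is a hypothetical Python sketch of the steps as described above, not Bandcamp's actual system. Here `flagged` stands for either an automated flag or a community report. `reviewer_finds_violation` stands in for the human listening pass, so it is only consulted when something has been flagged.

```python
from enum import Enum
from typing import Callable


class Status(Enum):
    BLOCKED = "upload blocked: no attestation"
    PUBLISHED = "published"
    REMOVED = "removed"
    REINSTATED = "reinstated on appeal"


def moderate(attested: bool, flagged: bool,
             reviewer_finds_violation: Callable[[], bool]) -> Status:
    """Route one upload through attestation, flagging, and human review."""
    if not attested:
        return Status.BLOCKED            # the checkbox is mandatory at upload
    if not flagged:
        return Status.PUBLISHED          # unflagged uploads never reach a human
    # Only flagged tracks cost reviewer time.
    return Status.REMOVED if reviewer_finds_violation() else Status.PUBLISHED


def appeal(status: Status, appeal_upheld: bool) -> Status:
    """A removed track comes back if the appeal shows it was a false positive."""
    if status is Status.REMOVED and appeal_upheld:
        return Status.REINSTATED
    return status


# Usage: the reviewer judges a flagged track a violation, then the artist wins an appeal.
status = moderate(attested=True, flagged=True, reviewer_finds_violation=lambda: True)
print(status.value)                               # removed
print(appeal(status, appeal_upheld=True).value)   # reinstated on appeal
```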

Spotify could implement this system in 2-3 months with resources to spare. The reason they haven't isn't technical. It's political and financial.

Bandcamp just proved you don't need to wait for perfect technology. You just need to care enough to enforce standards.

FAQ

What exactly is Bandcamp's AI music policy?

Bandcamp's policy prohibits two things: using AI tools to impersonate other artists or replicate established artistic styles, and uploading music created primarily through AI generation without genuine human artistic input. The policy is enforced through content removal and account warnings/termination for repeat violations. Artists can still use AI as a creative tool as part of their artistic process, but they cannot create deceptive impersonation content or low-effort AI spam.

Why doesn't Spotify have a similar policy?

Spotify hasn't implemented strict AI music bans because of financial incentives (AI-spam still generates some revenue through ad targeting), strategic positioning (the company wants to be seen as pro-AI innovation), and lack of competitive pressure (until now, no major platform had set a clear standard). Additionally, Spotify benefits from hosting large volumes of content, even low-quality content, which inflates metrics and advertising potential. Bandcamp's economics are different because the platform makes money primarily through artist-to-fan direct sales, not through ad-based algorithmic engagement.

How does Bandcamp actually enforce this policy without sophisticated AI detection?

Bandcamp relies on a combination of artist attestation (artists confirm they created the music with genuine human input), community reporting (users flag suspicious content), and human moderation (reviewers assess flagged content against clear policy criteria). The platform also looks for suspicious patterns like uploads of thousands of tracks with impersonation names. This approach is less technologically sophisticated than some alternatives, but it's surprisingly effective because most AI-spam violators are careless and obvious about it.

Will other streaming platforms adopt similar policies?

It's likely, though the timeline is uncertain. Regulatory pressure, artist advocacy, and potential lawsuits from impersonated artists will eventually make refusing to address AI impersonation reputationally and legally risky. Spotify and Apple Music will probably announce their own AI policies within 12-24 months, likely modeled on Bandcamp's approach but with different enforcement mechanisms.

How does Bandcamp's policy affect legitimate AI music creation tools?

The policy doesn't ban AI music tools themselves. It only bans specific uses: impersonation and low-effort spam. Artists can still use AI to generate chord progressions, beats, or other elements as part of their creative process as long as the final work represents genuine human artistic intent and doesn't impersonate another artist. The distinction is between AI as a creative tool versus AI as a replacement for creativity.

What are the consequences for artists who violate Bandcamp's policy?

First violations result in content removal and a warning. Repeated violations result in account suspension or permanent termination. Artists who have their content removed can appeal through Bandcamp's support system, but the burden is on them to demonstrate that the removed content met policy requirements. This is stricter enforcement than most platforms currently apply to similar violations.
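The escalation described here amounts to a strike ladder. The answer above doesn't say exactly where suspension ends and termination begins. The thresholds in this Python sketch are therefore hypothetical: one prior violation means suspension, and two or more means termination.

```python
def enforcement_action(prior_violations: int) -> str:
    """Return the action for a new violation, given how many the account already has.

    Thresholds are illustrative assumptions, not Bandcamp's published rules.
    """
    if prior_violations < 0:
        raise ValueError("prior_violations cannot be negative")
    if prior_violations == 0:
        return "remove content and warn"
    if prior_violations == 1:
        return "remove content and suspend account"
    return "remove content and terminate account"


for history in range(3):
    print(history, "->", enforcement_action(history))
```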

Could AI detection technology help platforms enforce these policies better?

Yes, but it isn't necessary for basic enforcement. AI-music detection tools exist and can flag suspicious content with perhaps 80-90% accuracy, but a working policy doesn't depend on them. Most AI spam is obvious enough that human moderation catches it quickly. The real bottleneck isn't technology; it's political will and enforcement resources, which most major platforms choose not to invest in.

What does this mean for the future of AI in music?

Bandcamp's policy doesn't ban AI music. It bans deceptive AI music. This suggests the future of AI in music involves clear standards about disclosure, artistic intent, and avoiding impersonation. Legitimate AI-assisted music creation will likely continue and expand, but it will increasingly come with transparency requirements and accountability standards that weren't necessary before.

How much would it cost Spotify to implement a similar policy?

The actual implementation cost is probably under $1 million, including policy development, system updates, team training, and initial enforcement. The operational costs of ongoing moderation would be absorbed into existing moderation budgets. The reason Spotify hasn't done this isn't the cost of implementation; it's strategy. The company would rather avoid the regulatory precedent of aggressively moderating user-generated content, and it benefits financially from the current ecosystem of high content volume.

What pressure might force platforms like Spotify to act?

Artist lawsuits against platforms for failing to remove impersonation content will eventually force action. So will regulatory bodies like the EU treating AI impersonation as a violation of the AI Act, and advertiser pressure (brands don't want their ads running next to impersonation tracks). Competitive disadvantage will add to it as more artists migrate to platforms with clearer protections. The question isn't whether it will happen; it's how long other platforms can delay before the pressure becomes irresistible.

Bandcamp leads with a clear AI policy, scoring 9/10, while larger platforms like Spotify and Apple Music lag behind with minimal enforcement. (Estimated data)

The Bottom Line

Bandcamp's AI policy is significant not because it's technologically revolutionary (it isn't), but because it proves that enforcing these standards is actually possible and practical. A platform with a fraction of Spotify's resources managed to create and enforce a clear policy in a matter of weeks.

The policy itself is straightforward: no impersonation, no deceptive low-effort AI spam. The enforcement is straightforward: remove violating content and warn/ban repeat violators. The results are straightforward: a cleaner, more trustworthy platform for real artists.

Spotify, Apple Music, Amazon Music, and other major platforms have no excuse for not doing the same. They have more resources, more expertise, and more to gain from a trustworthy ecosystem. Yet they haven't.

That's a choice. And increasingly, artists and users are going to notice that choice and act accordingly.

Bandcamp just showed that the bar for AI accountability in music isn't impossibly high. It's actually pretty low. Which means every other platform that hasn't cleared it has to own the decision not to.

The pressure will build from here. Regulatory bodies, artist advocates, impersonated musicians, and users who care about platform integrity will all push for action. The only question is whether major platforms will act voluntarily or wait until they're forced to.

Based on historical precedent, they'll probably wait. They usually do. But Bandcamp has now made waiting more costly.

That's worth paying attention to.

Bandcamp relies heavily on artist attestation, community reporting, and human moderation, while Spotify leans more on AI detection. Estimated data based on policy descriptions.

Key Takeaways

- Bandcamp implemented a clear two-point AI policy banning impersonation and low-effort AI generation, while major platforms like Spotify haven't taken similar action

- The policy isn't technologically complex: it relies on artist attestation, community reporting, and human moderation rather than sophisticated AI detection

- Spotify and other major platforms have the resources to implement similar policies but choose not to due to financial incentives and strategic positioning around AI

- Different platform economics drive different choices: Bandcamp profits when artists succeed (artists keep roughly 85% of digital sales), while Spotify profits from content volume regardless of quality

- Enforcement of AI policy standards is likely inevitable as regulatory pressure, artist advocacy, and lawsuits from impersonated artists build over the next 12-24 months

![Bandcamp's AI Music Ban Sets the Bar for Streaming Platforms [2025]](https://tryrunable.com/blog/bandcamp-s-ai-music-ban-sets-the-bar-for-streaming-platforms/image-1-1768492060888.png)