Can AI Agents Really Become Lawyers? What New Benchmarks Reveal [2025]

Last month, the legal profession breathed a collective sigh of relief. AI agents couldn't cut it as lawyers. Every major model scored below 25% on professional legal tasks. Lawyers were safe. Reassuring? Sure. Accurate? Not anymore.

Then Anthropic dropped Opus 4.6.

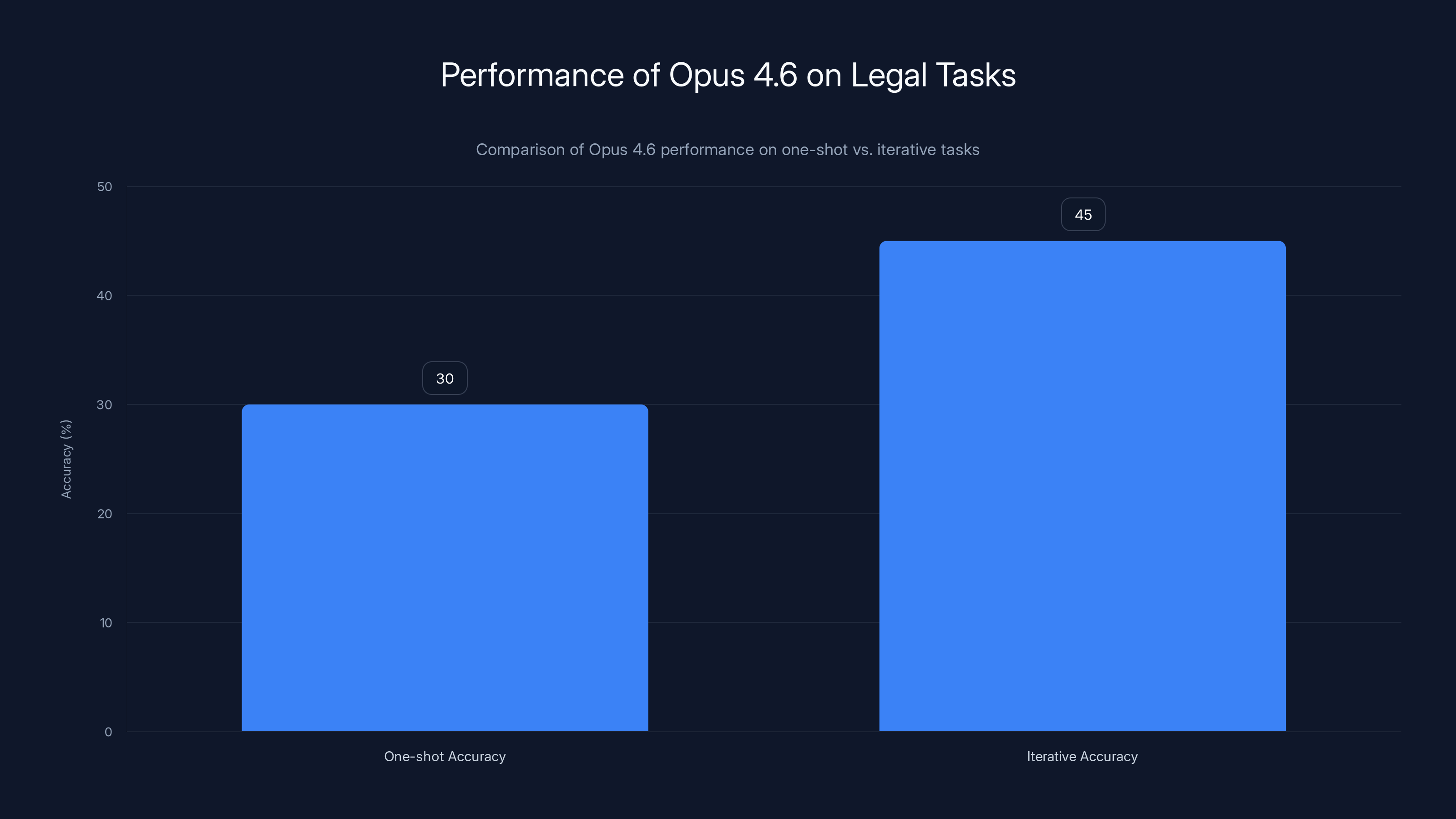

In a matter of weeks, everything shifted. The new model jumped from 18.4% to nearly 30% on one-shot trials, and climbed to 45% when given multiple attempts at the same problem. That's not an incremental improvement. That's a fundamental rewrite of what's possible.

The benchmark driving this conversation is the APEX-Agents leaderboard, built by Mercor—a platform measuring how well AI agents handle real professional work. Think of it as a standardized test for whether AI can actually do the jobs humans have spent years training for. Law was supposed to be the stronghold. Complex reasoning, nuance, regulatory minutiae, client relationships. Machines weren't supposed to touch it.

But the gap is closing faster than anyone expected.

Here's what's really happening beneath the headlines, why this matters more than the percentage point changes suggest, and what lawyers should actually be worried about (and what they shouldn't).

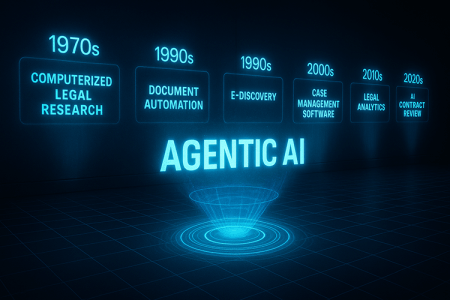

Understanding the APEX-Agents Leaderboard and What It Actually Measures

Before you panic about robot lawyers taking over, understand what the APEX-Agents benchmark actually tests. It's not putting AI agents on trial (yet). It's measuring whether they can handle specific, structured professional tasks that demand both deep knowledge and strategic thinking.

Mercor's leaderboard focuses on real-world scenarios: analyzing contracts, interpreting regulatory compliance, researching case law precedent, structuring legal arguments. These aren't trivial tasks. A lawyer might spend hours on one of these. The benchmark gives an AI agent a time limit and a problem, then measures whether the answer is legally sound.

The jump from 18% one-shot to 45% with multiple attempts sounds dramatic because it is. But context matters. Forty-five percent accuracy is still below what most first-year law students would achieve. Nobody's replacing partner-level attorneys with a model that gets more than half its cases wrong. That would be malpractice.

But here's what actually matters: the trend is accelerating. Historical improvements in AI legal reasoning came in single-digit jumps over months. Opus 4.6 added more than 11 points on one-shot trials in a single release, and its 45% multi-attempt score sits more than 25 points above the previous one-shot mark. That's the real story.

The benchmark tests three core areas. First, legal knowledge: Does the model understand contract terms, statute language, and case precedent? Second, reasoning ability: Can it analyze competing arguments and apply law to facts? Third, working memory: Can it juggle multiple concepts, precedents, and client constraints simultaneously without losing coherence?

Opus 4.6 made specific gains in multi-step reasoning, which is why the improvement shows up most dramatically when agents get multiple attempts. It's not just smarter. It's more persistent. It can fail, learn from feedback, and try again. That's closer to how actual legal work happens than single-shot prediction.

Mercor CEO Brendan Foody's reaction captures the significance: "Jumping from 18.4% to 29.8% in a few months is insane." He's right. In traditional ML benchmarks, a 5-point jump in a few months would be notable. An 11-point one-shot jump, more than double that, signals that something structural changed, not just incremental engineering.

Opus 4.6 shows a significant improvement in iterative accuracy (45%) compared to one-shot accuracy (30%), highlighting its ability to learn from feedback and adjust its approach.

The Role of Agent Swarms: Why Multi-Step Problem-Solving Changed Everything

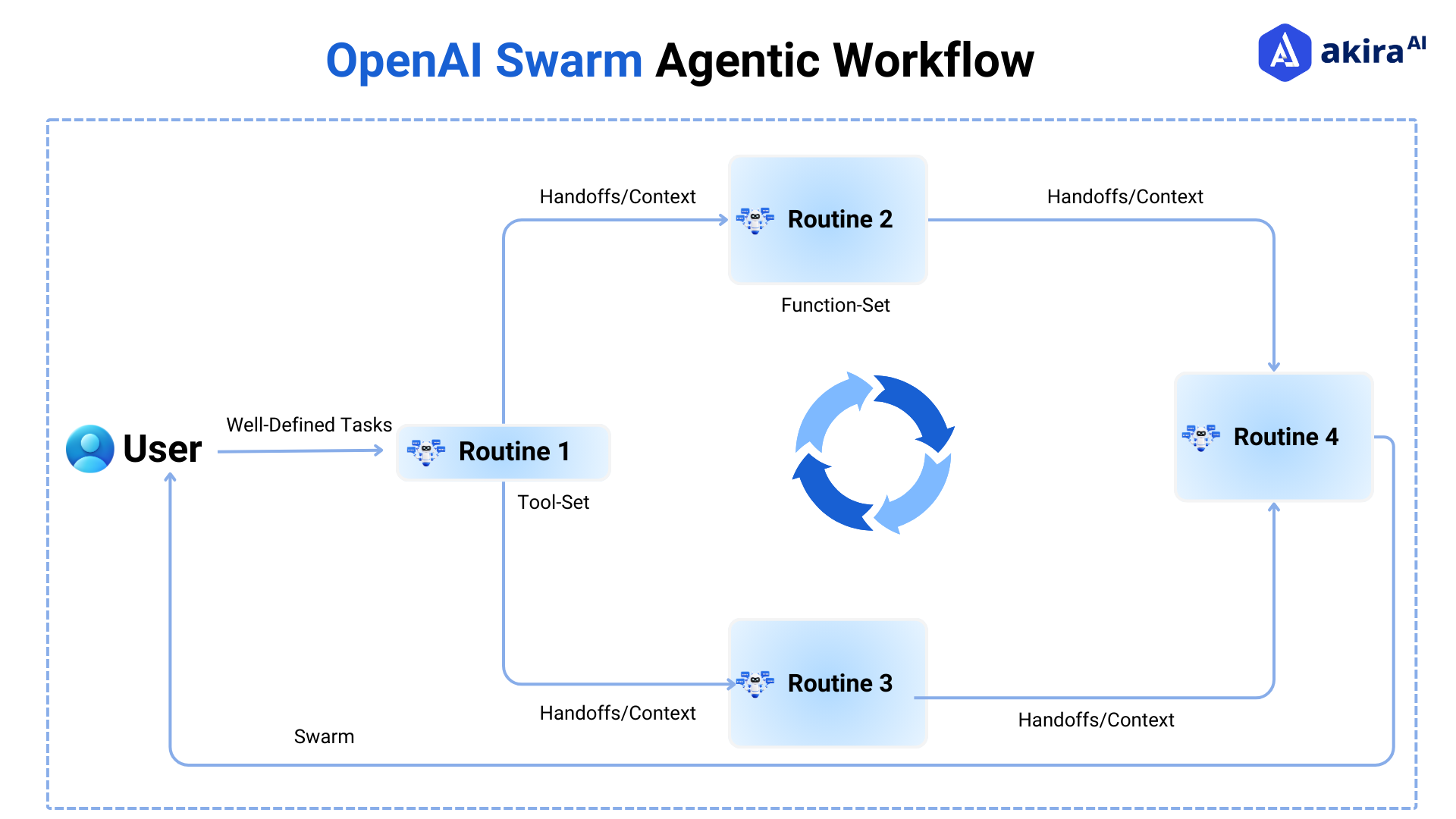

The percentage bump is headline-grabbing, but the architectural innovation underneath is what actually matters. Opus 4.6 introduced "agent swarms"—a system where multiple AI agents coordinate on a single problem, each handling different aspects or strategies.

Think of traditional AI as a single person trying to solve a legal problem from start to finish. Agent swarms are like assembling a task force. One agent researches precedent. Another structures arguments. A third checks for logical consistency. They communicate, iterate, and refine. The final answer is the product of coordinated effort, not individual reasoning.

This matters for legal work specifically because law is inherently multi-step. You don't just "answer" a legal question. You identify applicable law, research precedent, structure arguments, anticipate counterarguments, and present findings coherently. A single-pass AI model struggles with this. It's asking one person to be researcher, strategist, and writer simultaneously.

Agent swarms let AI do what legal teams actually do. They parallelize the work.

The architecture works like this: A master agent receives the legal query and breaks it into subtasks. It routes subtasks to specialist agents with different prompting or model configurations. Those agents work in parallel or sequence, depending on dependencies. They pass findings back to the master agent, which synthesizes and refines. That loop can repeat multiple times before producing final output.
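The loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Anthropic's implementation. `decompose`, the `specialists`, and `synthesize` are hypothetical stand-ins for model calls, and the plan maps each subtask to the subtasks it depends on.

```python
from collections.abc import Callable

# Hypothetical: a specialist takes the query plus findings from its prerequisite subtasks.
Specialist = Callable[[str, dict[str, str]], str]


def run_swarm(
    query: str,
    decompose: Callable[[str], dict[str, list[str]]],
    specialists: dict[str, Specialist],
    synthesize: Callable[[str, dict[str, str]], tuple[str, bool]],
    max_rounds: int = 3,
) -> str:
    """Master agent: decompose, dispatch in dependency order, synthesize, repeat."""
    answer = ""
    for _ in range(max_rounds):
        plan = decompose(query)  # subtask -> list of prerequisite subtasks
        findings: dict[str, str] = {}
        pending = dict(plan)
        while pending:
            # A subtask is ready once all its prerequisites have findings. Subtasks in
            # the same wave are independent, so a real system could run them in parallel.
            ready = [t for t, deps in pending.items() if all(d in findings for d in deps)]
            if not ready:
                raise ValueError("plan has a circular or missing dependency")
            for task in ready:
                inputs = {d: findings[d] for d in pending.pop(task)}
                findings[task] = specialists[task](query, inputs)
        # synthesize returns (draft answer, whether it is good enough to stop)
        answer, done = synthesize(query, findings)
        if done:
            break
        # Not good enough yet: feed the draft back in and run another round.
        query = f"{query}\n\nPrevious draft to refine:\n{answer}"
    return answer
```

Subtasks run in dependency "waves". Anything whose prerequisites are finished can go next, and that is where parallelism would come from in a real system. Feeding the draft back into the query is just one simple way to model the refinement loop.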

For a contract analysis task, this means one agent extracts key terms, another checks them against regulatory requirements, a third identifies liability clauses, and a fourth flags unusual language. Each agent works on what it's optimized for. The coordination layer ensures nothing gets missed.
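Here is a toy version of that four-agent contract review. Each "specialist" is a hypothetical keyword rule standing in for a model call. The part worth noticing is the coordination layer: the four checks run concurrently, and a specialist that crashes is reported rather than silently dropped. This is one concrete reading of "nothing gets missed", offered as an assumption.

```python
import re
from collections.abc import Callable
from concurrent.futures import ThreadPoolExecutor


# Hypothetical specialists: crude rules in place of real LLM agents.
def extract_key_terms(text: str) -> list[str]:
    # Assumes definitions are written in the form: "Term" means ...
    return re.findall(r'"([A-Z][A-Za-z ]*)" means', text)


def check_regulatory(text: str) -> list[str]:
    if "personal data" in text.lower():
        return ["Processes personal data: check data-protection obligations"]
    return []


def _sentences(text: str) -> list[str]:
    return [s.strip() for s in text.split(".") if s.strip()]


def find_liability(text: str) -> list[str]:
    return [s for s in _sentences(text) if "liab" in s.lower()]


def flag_unusual(text: str) -> list[str]:
    unusual = ("perpetual", "irrevocable", "sole discretion")
    return [s for s in _sentences(text) if any(w in s.lower() for w in unusual)]


SPECIALISTS: dict[str, Callable[[str], list[str]]] = {
    "key_terms": extract_key_terms,
    "regulatory": check_regulatory,
    "liability": find_liability,
    "unusual": flag_unusual,
}


def review_contract(text: str, specialists=SPECIALISTS) -> dict:
    findings: dict[str, list[str]] = {}
    failed: list[str] = []
    with ThreadPoolExecutor(max_workers=len(specialists)) as pool:
        futures = {name: pool.submit(fn, text) for name, fn in specialists.items()}
        for name, fut in futures.items():
            # Coordination: a specialist that raised is surfaced, not silently lost.
            if fut.exception() is not None:
                failed.append(name)
            else:
                findings[name] = fut.result()
    return {"findings": findings, "failed": failed}
```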

The jump to 45% on multi-attempt problems reflects this. Each attempt, the swarm learns from failure. It adjusts its approach. It refines its reasoning path. That's not just better AI. That's better AI architecture matched to how legal work actually happens.

But here's the limitation: agent swarms work well for defined, bounded problems. Contract review has clear success criteria. Someone can check if the analysis is right. But legal advice for genuinely novel situations, where precedent is thin and the law is evolving, remains harder. Agent swarms excel at pattern matching across large document sets. They struggle with true novelty.

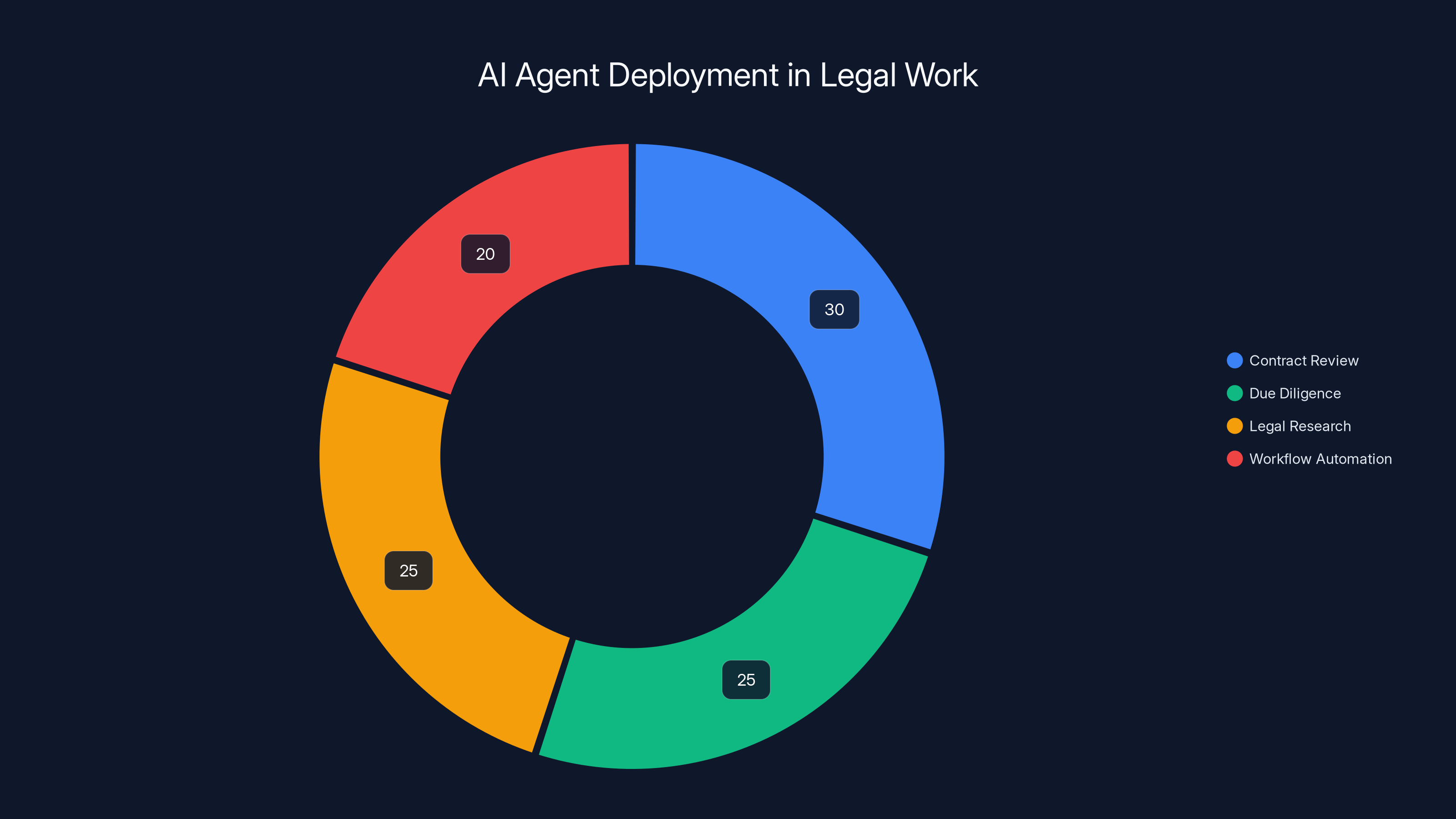

The APEX-Agents benchmark shows significant improvement in AI legal reasoning, with Opus 4.6 reaching 45% accuracy with multiple attempts, roughly 25 points above the previous one-shot score. Estimated data.

From Single-Shot to Iterative: Why Multiple Attempts Matter More Than the Headline Percentage

The gap between 30% (one shot) and 45% (multiple attempts) is the real story that most coverage missed.

In one-shot mode, the AI agent receives the problem, thinks through it once, and delivers an answer. No revisions. No feedback loops. This is how most AI services work in production. You query it, you get a response. If it's wrong, that's on you.

Iterative mode is different. The agent attempts the problem, receives feedback about whether the answer was correct, identifies where reasoning failed, and tries again. This is closer to how lawyers actually work. Draft something, get feedback, refine, repeat.
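Here is a minimal sketch of the two modes. It assumes a hypothetical `attempt` agent and a hypothetical `grade` checker; neither is part of APEX-Agents or any real API. One-shot mode is the same loop with `max_attempts=1`. In iterative mode, each failed attempt's feedback is carried into the next one.

```python
from collections.abc import Callable


def solve_iteratively(
    problem: str,
    attempt: Callable[[str, list[str]], str],  # agent: problem + prior feedback -> answer
    grade: Callable[[str], tuple[bool, str]],  # checker: answer -> (correct?, feedback)
    max_attempts: int = 3,
) -> tuple[str, int, bool]:
    """Return (final answer, attempts used, whether it was judged correct)."""
    feedback: list[str] = []
    answer = ""
    for n in range(1, max_attempts + 1):
        answer = attempt(problem, feedback)
        ok, note = grade(answer)
        if ok:
            return answer, n, True
        feedback.append(note)  # the critique becomes input to the next attempt
    return answer, max_attempts, False
```

With a toy agent that only gets the answer right after seeing feedback, `max_attempts=1` fails while `max_attempts=3` succeeds on the second try. That gap is exactly the one-shot versus iterative gap described here.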

Thirty percent one-shot capability is academically interesting. Forty-five percent iterative capability is practically dangerous for some legal tasks.

Here's why: The gap reveals something fundamental about how AI legal reasoning works. It's not that Opus 4.6 suddenly understands case law better. It's that the model is better at learning from failure and adjusting course. That's actually more useful than raw knowledge for many legal tasks, because law is constantly evolving. New precedents emerge. Regulatory guidance shifts. A model that can adapt is more valuable than one that simply knows more.

In a law firm workflow, iterative capability matters because legal work is inherently revision-focused. Partners review junior work. Clients request changes. Opposing counsel raises objections. The ability to take feedback and incorporate it without restarting is operationally valuable.

The iterative improvement also reveals which tasks are hardest. If an agent scores 30% one-shot and jumps to 45% with feedback, that means the agent has the underlying knowledge but struggles with execution or reasoning chains. If an agent scores 15% one-shot and stays at 18% with feedback, that means knowledge itself is the bottleneck. These are different problems requiring different solutions.
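The diagnostic in this paragraph can be written as a rough rule of thumb. The 5-point threshold is a hypothetical cutoff chosen for illustration, not something the benchmark defines. A large gain from feedback suggests the knowledge is there and execution is failing; a small gain suggests the knowledge itself is missing.

```python
def diagnose(one_shot: float, iterative: float, min_gain: float = 5.0) -> str:
    """Classify the likely bottleneck from one-shot vs. iterative scores (in percent).

    min_gain is a hypothetical threshold in percentage points.
    """
    gain = iterative - one_shot
    return "execution" if gain >= min_gain else "knowledge"
```

Applied to the two cases above, 30% to 45% (a 15-point gain) reads as an execution bottleneck, while 15% to 18% (a 3-point gain) reads as a knowledge bottleneck.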

For legal tasks specifically, most failures are execution problems, not knowledge problems. The model knows the relevant law but applies it inconsistently or misses interdependencies between legal doctrines. That's exactly the kind of problem agent swarms and iterative refinement help with.

How Opus 4.6's Architecture Improved Legal Reasoning Specifically

Anthropic didn't just make Opus faster or smarter. They made specific architectural changes that improved legal reasoning.

First, extended context windows. Opus 4.6 can now process much longer documents without losing information from earlier sections. This matters enormously for legal work, where a single contract might be 40 pages long and a single clause on page 38 depends on definitions on page 2. Longer context means the model maintains logical consistency across entire documents rather than forgetting earlier content as it processes new material.
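To see why cross-page dependencies matter, here is a toy sketch that maps each defined term to the page where it is defined and the later pages that rely on it. It assumes definitions follow the pattern `"Term" means ...`. That is a simplification; real contracts vary. Any reader, human or model, that has lost page 2 by the time it reaches page 38 misreads every clause in the `uses` list.

```python
import re


def cross_page_dependencies(pages: list[str]) -> dict[str, tuple[int, list[int]]]:
    """Map each defined term to (page where defined, other pages that use it)."""
    definitions: dict[str, int] = {}
    for page_no, text in enumerate(pages, start=1):
        for term in re.findall(r'"([A-Z][A-Za-z ]*)" means', text):
            definitions.setdefault(term, page_no)  # keep the first definition
    result: dict[str, tuple[int, list[int]]] = {}
    for term, def_page in definitions.items():
        uses = [n for n, text in enumerate(pages, start=1) if n != def_page and term in text]
        result[term] = (def_page, uses)
    return result
```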

Second, improved instruction-following. The model better understands when humans ask it to follow specific formats, cite reasoning, or organize findings clearly. Lawyers care deeply about clear citation and structured reasoning. A model that does this automatically means fewer revisions.

Third, better handling of conditional logic and multi-step reasoning. Many legal questions involve chains of "if this, then that" reasoning. Opus 4.6 doesn't just do this better—it explicitly tracks and explains conditional logic chains in its reasoning, which makes it easier for lawyers to verify and use the output.
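Here is what an explicit "if this, then that" chain with a verifiable trace can look like. The termination rule, including the 30-day cure period, is a hypothetical contract term. This sketch illustrates the idea of tracking each conditional step; it is not a description of how Opus 4.6 reasons internally.

```python
from dataclasses import dataclass

CURE_PERIOD_DAYS = 30  # hypothetical contract term


@dataclass
class Facts:
    material_breach: bool
    notice_given: bool
    days_since_notice: int
    breach_cured: bool


def may_terminate(f: Facts) -> tuple[bool, list[str]]:
    """Apply a hypothetical termination clause, recording each step for review."""
    trace: list[str] = []
    if not f.material_breach:
        trace.append("No material breach -> termination right not triggered")
        return False, trace
    trace.append("Material breach -> check notice requirement")
    if not f.notice_given:
        trace.append("No written notice -> notice must be given first")
        return False, trace
    trace.append("Notice given -> check cure period")
    if f.days_since_notice < CURE_PERIOD_DAYS:
        trace.append(f"Only {f.days_since_notice} of {CURE_PERIOD_DAYS} cure days elapsed -> too early")
        return False, trace
    if f.breach_cured:
        trace.append("Breach cured -> no termination")
        return False, trace
    trace.append("Cure period expired without cure -> termination permitted")
    return True, trace
```

The trace is the point. A lawyer can check each link in the chain instead of trusting a bare yes or no.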

Fourth, improved mathematical and symbolic reasoning. This seems unrelated to law until you realize that tax law, securities regulation, and contract terms often involve complex calculations. A model that can reliably do math increases accuracy on these domains specifically.
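Progressive tax brackets are a good example of the kind of calculation this paragraph has in mind. Here is a minimal sketch with made-up brackets (hypothetical, not any jurisdiction's rates). It uses `Decimal` so that money amounts avoid binary floating-point rounding.

```python
from decimal import Decimal

# Hypothetical brackets: (upper bound of band, marginal rate); None means no upper bound.
BRACKETS = [
    (Decimal("10000"), Decimal("0.10")),
    (Decimal("40000"), Decimal("0.20")),
    (None, Decimal("0.30")),
]


def progressive_tax(income: Decimal) -> Decimal:
    """Tax each slice of income at its own band's marginal rate."""
    tax = Decimal("0")
    lower = Decimal("0")
    for upper, rate in BRACKETS:
        if income <= lower:
            break
        top = income if upper is None else min(income, upper)
        tax += (top - lower) * rate
        if upper is None:
            break
        lower = upper
    return tax.quantize(Decimal("0.01"))
```

On 50,000 of income, this gives 1,000 + 6,000 + 3,000 = 10,000.00. The classic error is applying the top rate to the whole amount, which would give 15,000.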

Fifth, reduced hallucination and false citations. Earlier models would confidently cite case law that doesn't exist. That's worse than useless in legal work. Opus 4.6 is more cautious about what it cites and more explicit about confidence levels.
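Even with fewer hallucinations, a sensible workflow checks every citation before it reaches a client. Here is a minimal sketch, assuming the model returns citations as a structured list with a self-reported confidence (a hypothetical output format). `known_cases` stands in for a real citator or case-law database.

```python
from dataclasses import dataclass


@dataclass
class Citation:
    case_name: str
    confidence: float  # hypothetical self-reported confidence, 0..1


def _norm(name: str) -> str:
    # Collapse whitespace and ignore case so trivial formatting differences still match.
    return " ".join(name.split()).casefold()


def triage_citations(cites: list[Citation], known_cases: set[str], min_conf: float = 0.8) -> dict:
    """Sort citations into verified, needs human review, and not found."""
    known = {_norm(k) for k in known_cases}
    report: dict[str, list[str]] = {"verified": [], "needs_review": [], "not_found": []}
    for c in cites:
        if _norm(c.case_name) not in known:
            report["not_found"].append(c.case_name)  # possible fabricated citation
        elif c.confidence < min_conf:
            report["needs_review"].append(c.case_name)
        else:
            report["verified"].append(c.case_name)
    return report
```

Anything in `not_found` should be treated as potentially fabricated, however confident the model sounded.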

These aren't minor tweaks. They're fundamental improvements to how the model reasons about structured, rule-based domains. Law is among the most structured professional domains there are, so improvements to structured reasoning tend to hit law first and hardest.

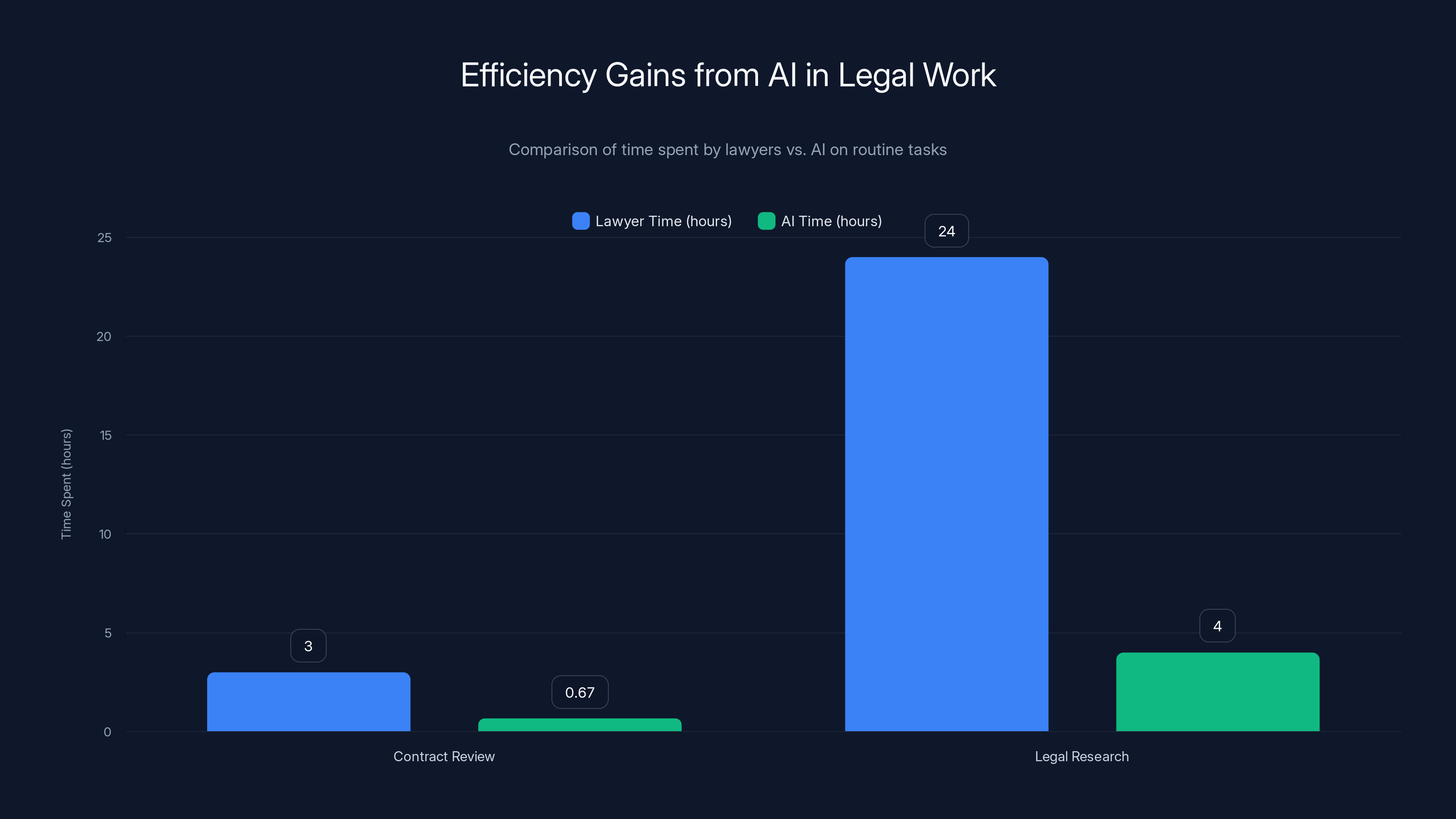

AI can significantly reduce the time spent on routine legal tasks, offering up to a 5x efficiency gain in contract review and a 6x gain in legal research. Estimated data based on typical task durations.

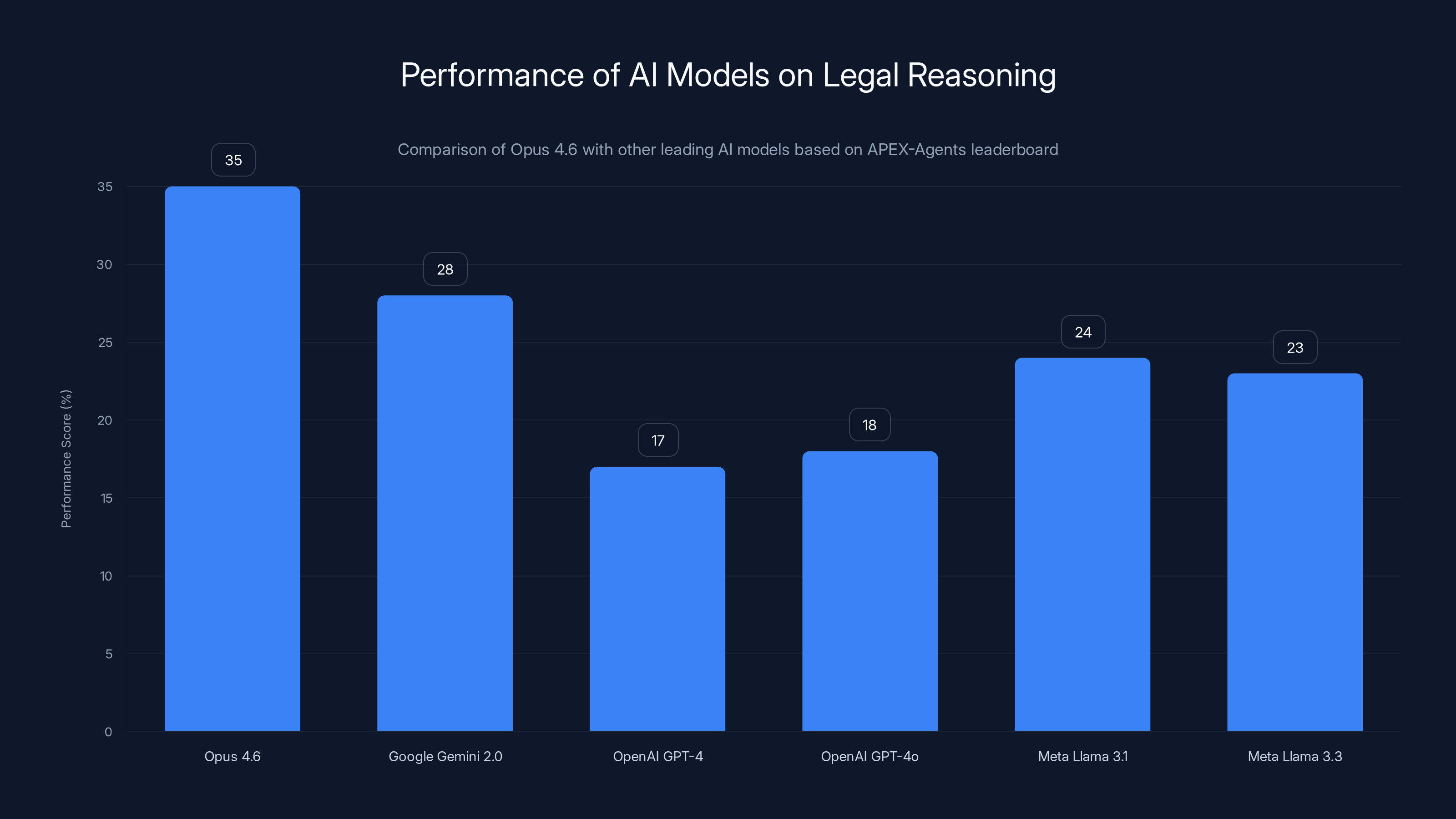

Comparing Opus 4.6 to Other Leading Models: The Leaderboard Snapshot

Opus 4.6 now leads the APEX-Agents leaderboard, but it's not alone in improving.

Google's Gemini 2.0 also made progress, though not as dramatic. It improved from roughly 22% to 28% in recent releases. That's solid, but not Opus-level. The gap is partly architectural (Google hasn't released agent swarms as a first-class feature) and partly due to training data and fine-tuning choices Anthropic made specifically for legal reasoning.

OpenAI's GPT-4 and GPT-4o remain stable in the mid-to-high teens. Notably, their newer models haven't shown big jumps on legal tasks specifically, even as they improved on other benchmarks. This suggests either they're optimizing for different tasks or they haven't prioritized legal domain improvements yet.

Meta's Llama 3.1 and 3.3 are improving steadily but remain in the low-to-mid-20s range. Open-source models are typically several months behind closed commercial models on frontier tasks.

What's striking is that improvements aren't uniform across all professional domains. Medical reasoning, technical problem-solving, and general analysis show more modest improvements across all models. Legal reasoning specifically jumped. That's either because Anthropic targeted it or because their architectural changes happen to help legal reasoning more than other domains.

Most likely it's both. Anthropic probably optimized for legal use cases explicitly, knowing there's significant commercial interest in legal AI. But the agent swarms architecture also naturally suits legal work's multi-step, document-heavy nature.

What Lawyers Actually Need to Worry About (And What's Still Science Fiction)

Forty-five percent accuracy on benchmarks is not 45% competent at being a lawyer. The math doesn't work that way.

Legal work involves high-stakes decision-making. A slightly misread contract term might cost millions. A missed regulatory requirement could mean criminal liability. The tolerance for error is essentially zero. Even 95% accuracy is potentially dangerous if you're making financial or legal decisions.

Moreover, benchmarks measure narrow, defined tasks. Contract review on a standardized set of contracts is different from advice on novel situations. Tax planning across multiple jurisdictions is different from analyzing a contract clause in isolation. The best legal work involves understanding client goals, predicting how courts will interpret language, and anticipating consequences. These are harder to benchmark.

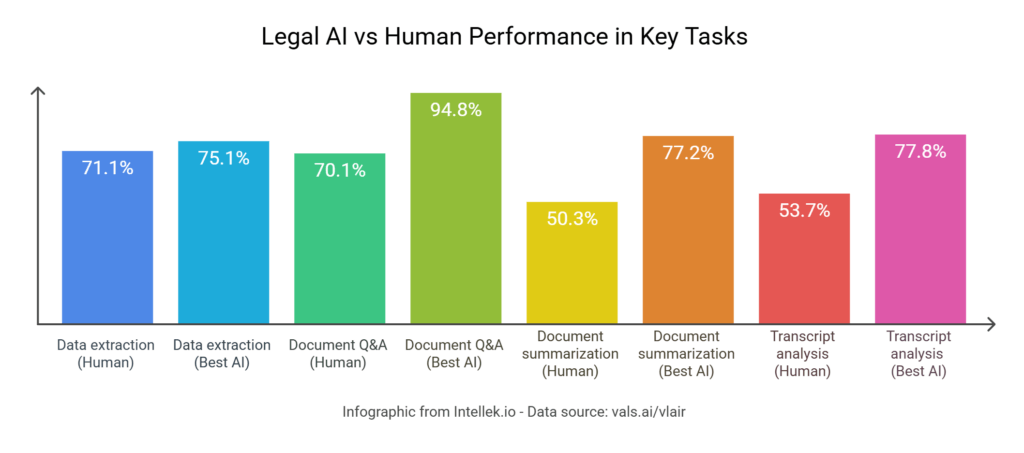

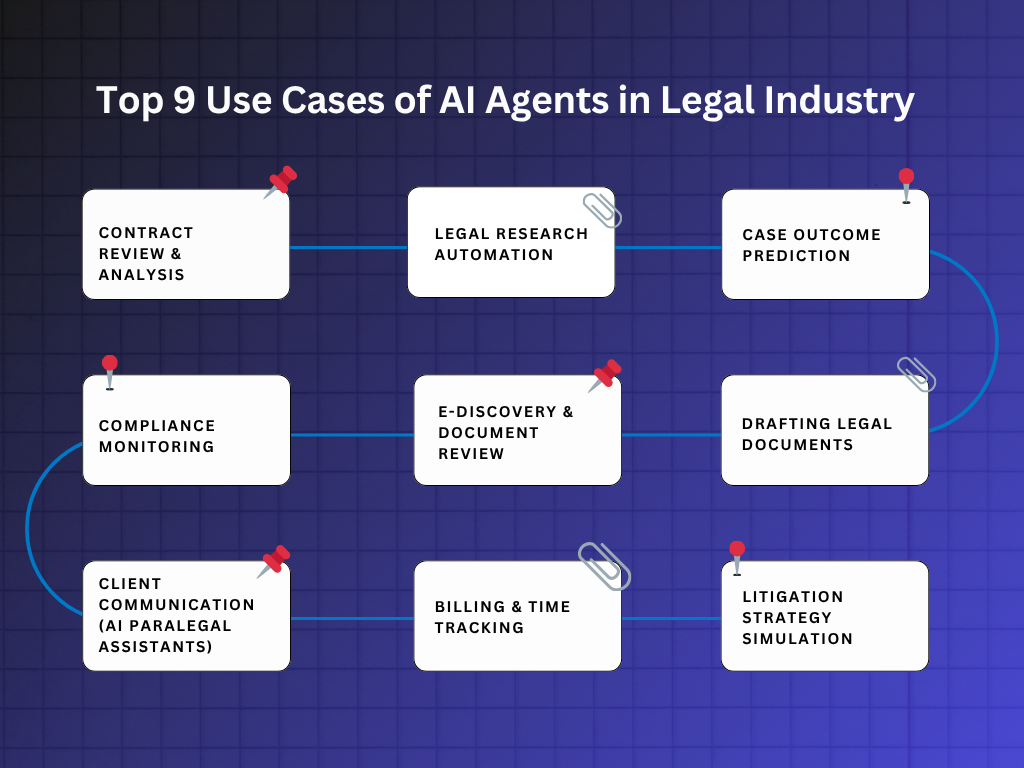

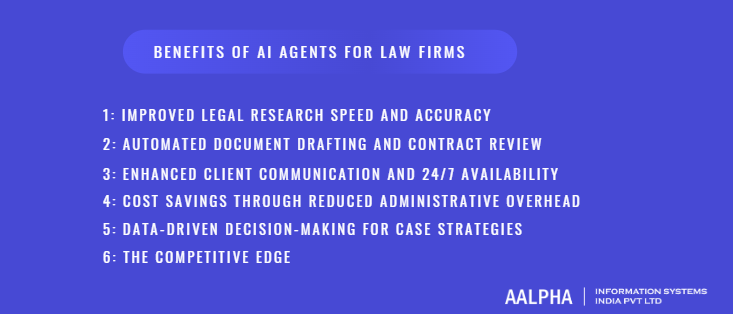

That said, not all legal work has that risk profile. Some tasks are genuinely routine and low-stakes. Junior associates spend enormous time on due diligence, contract review, and research. These are exactly where AI agents might add value first. Not by replacing lawyers, but by automating the grunt work.

A lawyer might spend 3 hours reviewing 50 pages of contracts to identify key terms. An AI agent could flag all key terms, liability clauses, and unusual language in 10 minutes. The lawyer then spends 30 minutes verifying and analyzing. That's roughly a 4.5x efficiency gain (180 minutes down to 40), with the lawyer's verification keeping quality and risk in check.
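The efficiency figure depends on what you count. Here is a small sketch of the arithmetic, using the scenario's numbers: 180 minutes of manual review, 10 minutes of AI flagging, and 30 minutes of lawyer verification.

```python
def speedup(manual_minutes: float, ai_minutes: float, review_minutes: float) -> float:
    """Ratio of the all-human time to the AI-plus-human-review time."""
    return manual_minutes / (ai_minutes + review_minutes)


end_to_end = speedup(180, 10, 30)  # counts the AI's 10 minutes: 180 / 40
lawyer_only = speedup(180, 0, 30)  # counts only the lawyer's time: 180 / 30
print(end_to_end, lawyer_only)
```

End to end, the gain is 4.5x. If you count only the lawyer's billable time, it rises to 6x. The ratio is only as good as the verification step, so skimping on those 30 minutes erodes the "no compromise on quality" part of the claim.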

Similarly, legal research is changing. Instead of junior associates spending days in law libraries, an AI agent could summarize relevant precedents, identify patterns across cases, and highlight potentially relevant doctrines in hours. Again, the lawyer still makes the final call, but the AI handles the data processing.

The tasks where AI struggles most are exactly the high-value ones: client counseling, negotiation strategy, courtroom advocacy, novel legal theories. These require judgment, creativity, and understanding of human psychology. They're not routine tasks with right answers. They're strategic decisions with trade-offs.

But here's the uncomfortable part: the gap between 45% on legal reasoning benchmarks and 100% is closing faster than anyone expected. Even if progress slows somewhat, extrapolating the recent rate of improvement puts 60-70% within reach in 18 months. At some point, "good enough for some tasks" becomes operationally significant.

Opus 4.6 significantly improved AI performance on legal tasks, reaching 45% with multiple attempts, indicating rapid advancements in AI capabilities.

Real-World Applications Already Happening: Where AI Agents Are Deployed

The benchmark improvements aren't purely theoretical. Real firms are already experimenting with AI agents on actual legal work.

Several large law firms have deployed AI systems for contract review, due diligence, and legal research. The models aren't handling high-stakes strategic decisions, but they're handling real work that used to be done by humans.

One common pattern: Initial AI agent review, human verification, and final human sign-off. This two-stage process is safer than pure AI and often faster than pure human work. The AI handles the pattern-matching. The human handles the judgment.
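This review-then-sign-off pattern can be sketched as a small state machine. Everything here is hypothetical. The one rule it enforces is that AI output cannot become a final work product without passing through a named human at each stage.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Stage(Enum):
    AI_DRAFT = "ai_draft"
    HUMAN_VERIFIED = "human_verified"
    SIGNED_OFF = "signed_off"


@dataclass
class WorkProduct:
    content: str
    stage: Stage = Stage.AI_DRAFT
    history: list[str] = field(default_factory=list)

    def verify(self, reviewer: str, corrected_content: Optional[str] = None) -> None:
        """Human verification: the reviewer may correct the AI draft."""
        if self.stage is not Stage.AI_DRAFT:
            raise ValueError("only AI drafts can be verified")
        if corrected_content is not None:
            self.content = corrected_content
        self.stage = Stage.HUMAN_VERIFIED
        self.history.append(f"verified by {reviewer}")

    def sign_off(self, lawyer: str) -> None:
        """Final human sign-off; refused unless verification already happened."""
        if self.stage is not Stage.HUMAN_VERIFIED:
            raise ValueError("sign-off requires human verification first")
        self.stage = Stage.SIGNED_OFF
        self.history.append(f"signed off by {lawyer}")
```

The `history` list doubles as a simple audit trail of who checked what, which matters given the liability questions discussed below.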

Another pattern: AI agents as research assistants. A lawyer asks a research question, the agent summarizes cases and precedent, the lawyer synthesizes and advises. This is already deployed in several practice areas, particularly tax law and intellectual property, where the workload is research-heavy.

A third pattern: AI agents on workflow automation. Routing documents, flagging issues, organizing findings, creating preliminary analyses. These tasks tolerate lower accuracy because they're not final work products. They're workflow acceleration.

What's not happening yet: AI agents making final legal decisions autonomously. Every deployment includes human review and sign-off. That's not because AI couldn't theoretically be good enough—it's because liability is unacceptable if AI gets it wrong.

But this deployment pattern is changing the economics of legal work. If AI agents can do 70% of the research and initial analysis, even at 45% accuracy with human correction, then the cost structure of legal services shifts dramatically. You need fewer junior associates if AI handles their initial work. You need fewer senior associates if AI accelerates research. The value concentration shifts upward to partners who interface with clients and make strategic calls.

The Legal Industry's Resistance and Why It Matters

Law is one of the most AI-skeptical professional fields despite having some of the most AI-ready tasks.

The reasons are structural. First, liability concerns. If an AI system makes a mistake, who's responsible? The lawyer? The vendor? The firm? Until that's clarified, most firms are conservative.

Second, regulatory uncertainty. Bar associations haven't issued clear guidance on AI use in legal practice. Some explicitly require human oversight. Others haven't addressed it. This legal ambiguity creates adoption friction.

Third, trust and professional identity. Lawyers see law as inherently human. It's about judgment, understanding clients, reading situations. Suggesting that parts of it can be automated feels threatening to professional identity and status.

Fourth, client expectation management. Clients pay lawyers for human expertise and judgment. If a firm tells clients they're using AI, some clients see that as a cost-cutting measure, not as a quality improvement. That's a messaging problem, but it's real.

These obstacles are partially rational—liability is genuinely complicated—and partially cultural. As younger lawyers who've grown up with AI enter the field, cultural resistance will fade. But the structural obstacles will remain until regulators and liability frameworks catch up.

What this means: Adoption will be slower in law than in other professional fields, even as AI capabilities improve faster than in other fields. There's a mismatch between capability growth and adoption readiness. That lag period could last years.

But it will eventually resolve. Economics always wins cultural battles. If AI can do routine legal work at lower cost, eventually competitive pressure will force adoption. Firms that resist will lose market share to firms that don't.

Opus 4.6 leads the APEX-Agents leaderboard with a significant margin over other models, particularly excelling in legal reasoning tasks. Estimated data based on narrative.

Why This Benchmark Matters More Than the Percentage Points Suggest

You might think 45% accuracy on a legal benchmark is just a number. Forty-five percent. Not particularly impressive.

But consider what this means: A year ago, industry consensus was "AI will never replace lawyers." Today, the same experts are saying "AI might handle some tasks, at least eventually." That's not because the number is high. It's because the direction is clear.

First, benchmark improvements tend to follow S-shaped patterns, not linear ones. Early improvements are slow, then suddenly they accelerate. We're seeing the acceleration phase. The question isn't whether AI will eventually reach 80-90% accuracy on legal tasks. The question is how fast.

Mercor CEO Brendan Foody's comment ("jumping from 18.4% to 29.8% in a few months is insane") captures why this matters. The rate of improvement is insane. At this rate, the model that's 45% accurate today might be 65% accurate in a year and 85% accurate in two years. That isn't wildcat speculation; it's an extrapolation from the recent rate of improvement.
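The 45% → 65% → 85% path is a straight line of about 20 points per year. Here is a minimal sketch of that extrapolation. The cap at 100% is the one adjustment the arithmetic forces, since accuracy cannot exceed it; real progress on a bounded benchmark may well slow before reaching that ceiling.

```python
def linear_projection(current: float, points_per_year: float, years: float) -> float:
    """Straight-line extrapolation of a benchmark score, capped at 100%."""
    return min(100.0, current + points_per_year * years)


for year in (1, 2, 3):
    print(year, linear_projection(45, 20, year))
```

The third year is where a naive line breaks: 45 + 60 would be 105%, so the cap kicks in. This is also why forecasts for bounded scores are usually drawn as curves that flatten near the top.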

Second, the benchmark is specifically designed around real legal work. This isn't a toy problem. These are actual contract analysis, regulatory interpretation, and case research tasks that lawyers do daily. The benchmark isn't theoretical.

Third, agent swarms represent an architectural shift, not just parameter tuning. This suggests the improvement is sustainable and will compound. Each new architectural improvement builds on this foundation.

Fourth, improvement is domain-specific. Legal reasoning jumped while other domains improved more modestly. This suggests targeted optimization is working, which means further targeting could drive even steeper improvements in the near term.

These factors together suggest something structural is changing in AI capabilities for professional work. Law was supposed to be the hardest domain. If law is cracking, other professional domains are likely in trouble too.

Implications for Law School, Legal Training, and Career Planning

If AI agents start doing routine legal tasks competently, the entire education and career progression in law needs rethinking.

Law school is built around a tier system. You learn fundamentals, you do research and writing (law review), you get a job as a junior associate doing contract review and legal research, you gradually move to more complex work, and eventually you become a partner handling clients and strategy.

But if AI does the research and contract review, that tier collapses. There's no longer a decade-long ramp where junior associates build expertise through doing routine work. The expertise bottleneck moves up. You need people who can do client-facing work, strategic analysis, and judgment immediately.

Law schools haven't adapted. They're still training students like they did in 2005. The bar exam still focuses on foundational knowledge that AI can now look up. Law firm recruitment still expects years of associate grinding.

This mismatch is destabilizing. In 5-10 years, law school might need to look more like MBA programs: focused on business strategy, client relationships, and judgment, with less on detailed legal research and drafting. Law firms might need to hire fewer junior associates and focus on finding people with business skills and judgment.

Career planning for law students becomes fraught. Do you still do the grind? Or do you skip the associate tier and go straight to business development and client work? How do you build judgment without grinding through research and contract work?

For practicing lawyers, the implications are complex. Senior partners might actually benefit—they'll have AI doing the grunt work junior associates used to do. But junior associates face a precarious position. Their entire value proposition was doing routine work efficiently. If AI does that, their role evaporates unless they rapidly upskill to strategic work.

AI agents are primarily deployed in contract review and legal research, with significant use in due diligence and workflow automation. Estimated data.

Building Your Own AI Legal Agent: What's Possible Today

If you're curious about what modern AI legal agents can actually do, you don't need to wait for law firms to adopt. Some tools are available today.

Vendors such as Thomson Reuters (through Westlaw) and others have released AI-assisted research tools. These don't fully automate legal work, but they significantly accelerate research. You still make the final judgment, but the AI does initial filtering and organization.

Some startups are building narrower AI agents focused on specific tasks. Contract review, due diligence automation, regulatory compliance checking. These tend to work better than general-purpose AI because they're optimized for specific domains.

You can also experiment with general-purpose models like Claude Opus directly. Give them legal scenarios and see how they perform. You'll quickly notice where they excel (identifying relevant concepts, organizing information, spotting gaps) and where they struggle (novel legal theory, strategic judgment, reading between the lines).
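If you want to make that experimenting a little more systematic, here is a minimal harness. `ask_model` is a placeholder you would wire to whichever model API you use, such as Anthropic's Messages API. The scenarios and required points are hypothetical examples you would write yourself. Keyword matching is a crude proxy for legal correctness, useful for spotting obvious misses, not for grading advice.

```python
from collections.abc import Callable

# Hypothetical test set: your own scenarios and the points a sound answer must raise.
SCENARIOS = [
    {
        "prompt": "A promises to give B a car for nothing in return. Can B enforce the promise?",
        "must_mention": ["consideration"],
    },
    {
        "prompt": "A client wants to sue over a breach that happened eight years ago. What should we check first?",
        "must_mention": ["limitation"],
    },
]


def score(ask_model: Callable[[str], str], scenarios: list[dict]) -> float:
    """Fraction of scenarios whose answer mentions every required point."""
    passed = 0
    for s in scenarios:
        answer = ask_model(s["prompt"]).lower()
        if all(point.lower() in answer for point in s["must_mention"]):
            passed += 1
    return passed / len(scenarios)
```

Passing a stub function first lets you check the harness itself before spending API calls. Rerunning the same set against each new model release gives you a rough, personal version of the trend the benchmark tracks.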

The honest assessment: Today's models are genuinely useful for accelerating specific tasks but not for replacing legal judgment. They work best as assistants, not as autonomous agents.

But if Opus 4.6 is 45% accurate and improving exponentially, then in 2-3 years the situation will be different. Lawyers experimenting today understand how to use these tools effectively, while those who wait will face a steeper learning curve later.

What Happens Next: Predictions for 2025-2027

Based on current trends, here's what's likely:

First, more law firms will quietly deploy AI agents for routine work. They won't announce it loudly—liability concerns and competitive advantage discourage transparency. But quietly, AI will handle more contract review and research by year-end 2025.

Second, bar association guidance will start clarifying liability and ethical requirements. This is already in progress with some state bars. By 2026, there should be clearer rules about when and how lawyers can use AI.

Third, AI capabilities on legal tasks will continue improving. Probably not as dramatically as the last jump, but steadily. Expect 50-55% accuracy within 12 months, 60-65% by 2027.

Fourth, new tools specifically designed for legal AI will emerge. General-purpose models are useful, but specialized models that understand legal domain deeply will outperform them. Expect several startups to raise funding for legal-specific AI agents.

Fifth, the legal education system will slowly adapt. Some law schools will experiment with AI-integrated curricula. Most will lag. By 2027, there should be noticeable differences in how forward-thinking schools train students.

Sixth, cost pressure will drive adoption even where resistance remains. As firms using AI agents prove they can deliver work 20-30% faster, competitive pressure will force adoption among firms that prefer traditional methods.

The overall trajectory: AI agents move from "interesting research" to "operational tool in use at major firms" by 2027. Not because they're perfect, but because they're good enough for enough tasks that the efficiency gain justifies adoption and the risk.

The Uncomfortable Question: What If AI Gets Much Better Much Faster?

Everything above assumes linear or exponential improvement continues at recent rates.

But what if the improvement accelerates further? What if Opus 5 or the next generation of models makes a jump as big as Opus 4.6 made? Or bigger?

At that point, the assumption that "lawyers are safe" becomes shaky. Seventy-five percent accuracy on legal work starts looking like a different category of problem.

Or what if the improvement plateaus and AI gets stuck at 50-55%? At that level, AI is useful for acceleration but not replacement. The economic disruption is real but manageable.

Or what if regulatory barriers prove stronger than economic incentives? What if bar associations and liability frameworks make AI use so legally risky that firms don't adopt widely even as capabilities improve?

These are genuinely open questions. The future isn't determined. But the direction is clear: AI is getting better at legal reasoning faster than most people expected. The comfortable assumption that law is immune to automation is no longer valid.

Lawyers should be thinking about how this affects their practice, their firm, and their career. Not in a panicked way, but in a thoughtful way. What parts of your practice could AI accelerate? What parts require human judgment that AI might never replicate? How do you position yourself if the economics of legal services change?

These questions matter because the answers determine who thrives and who gets disrupted in the next five years.

How AI Agents Might Transform Law Practice, for Better and Worse

Let's get concrete about what changes if AI agents become genuinely useful at legal work.

The optimistic scenario: Law becomes more accessible. Startups and smaller firms can afford AI assistants that multiply their lawyer productivity. Legal services become cheaper. More people can afford legal counsel. The work is still done by humans but accelerated by AI. Net positive.

The realistic scenario: Law becomes more concentrated. Large firms deploy AI faster and better. They offer lower prices because their costs drop. Smaller firms can't compete and consolidate. The profession gets more stratified. Costs drop for the wealthy, stay static or rise for the middle class. Mixed impact.

The pessimistic scenario: Most routine legal work gets automated. Demand for junior associates collapses. Law school applications plummet because there are fewer entry-level jobs. A generation of law students faces a broken job market. The profession shrinks and focuses on high-end strategic work. Disruptive and painful.

Reality is probably some combination. Different practice areas will be affected differently. Corporate law, due diligence, and contract work will automate faster. Litigation, transactional work with clients, and judgment-heavy work will automate slower.

But across all scenarios, adaptation is required. Law schools need to prepare students for a world where routine tasks are automated. Law firms need to restructure around higher-value work. Individual lawyers need to skill up in areas AI can't do.

The firms and lawyers that start adapting now will thrive. Those that wait will be disrupted.

TL;DR

- Opus 4.6 benchmark jump matters: AI legal reasoning scores jumped from 18.4% to roughly 30% one-shot, and to 45% with multiple attempts, indicating accelerating capability improvement

- Agent swarms changed architecture: Multiple AI agents coordinating on problems works much better for multi-step tasks like legal analysis than single-model approaches

- Iterative beats one-shot: 45% accuracy with feedback and multiple attempts is more practically useful than 30% one-shot accuracy

- Adoption lag remains real: Despite improved capabilities, law firm adoption will be slower due to liability concerns, regulatory uncertainty, and professional resistance

- Specific tasks are already threatened: Routine work like contract review, due diligence, and legal research is genuinely at risk. High-judgment work is not (yet)

- Lawyers should start preparing: Career planning, law school curricula, and firm strategy all need to adapt for a world where AI handles routine work

- Bottom line: Lawyers aren't being replaced tomorrow, but the comfortable assumption that law is immune to automation is dead

FAQ

What is the APEX-Agents leaderboard?

The APEX-Agents leaderboard is a benchmark created by Mercor that measures how well AI agents perform on professional tasks including legal analysis, contract review, regulatory compliance, and case research. It's designed to mirror real work that lawyers do—not theoretical tasks—by giving AI agents real-world legal problems and measuring whether their analysis is correct and useful.

How did Opus 4.6 improve so dramatically on legal tasks?

Opus 4.6 improved on legal tasks through several architectural and training changes: extended context windows that let it maintain consistency across long documents, better instruction-following for structured output, improved multi-step reasoning with explicit conditional logic tracking, better mathematical and symbolic reasoning for complex contract terms and tax law, and agent swarms that let multiple AI agents coordinate on different aspects of a problem. The jump from 18.4% to roughly 30% one-shot, and to 45% with multiple attempts, reflects both better base capabilities and an architecture suited to how legal problems work.

What's the difference between one-shot and iterative accuracy?

One-shot accuracy means the AI agent receives a problem, thinks through it once, and delivers an answer with no feedback or revisions. That's how most AI tools work in production. Iterative accuracy means the agent can receive feedback about whether it was wrong, identify where its reasoning failed, and try again. Opus 4.6 scores 30% one-shot and 45% iterative because it's better at learning from failure and adjusting its approach—which is closer to how lawyers actually work in practice.
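To make the distinction concrete, here is a minimal Python sketch of how the two kinds of numbers can be computed from graded attempts. It assumes a simple "a task counts as solved if any of its first k attempts passes" rule (often called pass@k); the exact scoring Mercor uses for APEX-Agents may differ, and the toy data below is made up for illustration.

```python
from typing import List


def one_shot_accuracy(results: List[List[bool]]) -> float:
    """Fraction of tasks whose first attempt was graded correct."""
    return sum(attempts[0] for attempts in results) / len(results)


def any_of_k_accuracy(results: List[List[bool]], k: int) -> float:
    """Fraction of tasks where at least one of the first k attempts was correct.

    Assumption: this 'any of k' rule stands in for multi-attempt scoring;
    the leaderboard's actual method may be different.
    """
    return sum(any(attempts[:k]) for attempts in results) / len(results)


# Hypothetical toy data: 4 tasks, 3 graded attempts each (True = correct).
toy_results = [
    [True, True, True],
    [False, True, False],
    [False, False, False],
    [False, False, True],
]

print(one_shot_accuracy(toy_results))     # 0.25
print(any_of_k_accuracy(toy_results, 3))  # 0.75
```

Even in this toy example, the same agent scores three times higher when it gets several tries, which is why a one-shot figure and a multiple-attempt figure should not be read as a single before-and-after jump.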

When will AI agents be able to replace lawyers?

This is complicated because "replace lawyers" means different things. For routine tasks like contract review and legal research, AI agents might do most of the work within 2-3 years if improvement continues. For high-judgment work like client counseling, negotiation strategy, and novel legal analysis, replacing human lawyers is probably 10+ years away, if it happens at all. The most likely scenario is that AI agents complement lawyers by handling routine work, freeing lawyers to focus on strategic and judgment-heavy tasks. Full replacement of the lawyer role is unlikely even in the long term.

Should I be worried about my law career if I'm a junior associate?

Yes, but not for the reasons you might think. The threat isn't that AI replaces you completely. It's that the entry-level work through which you're supposed to build expertise (contract review, legal research, due diligence) gets automated. That means fewer entry-level jobs and a compressed career progression. Junior associates five years from now will need different skills than junior associates in 2025. The time to start building those skills (client relationships, strategic thinking, business acumen) is now.

Which legal tasks are most at risk of automation?

Routine, document-heavy, pattern-matching tasks are most at risk: contract review, due diligence analysis, legal research, compliance checking, and document organization. Tasks requiring judgment, client understanding, negotiation, and creativity are less at risk: client counseling, deal strategy, litigation strategy, novel legal theory, and business advising. The high-risk tasks are also the ones junior associates spend most of their time on, which is why career impact is significant.

How do law firms adopt AI agents safely given liability concerns?

Most firms using AI agents today follow a "human verification" model: an AI agent does the initial work, a human lawyer reviews and validates it, and that lawyer makes the final decision and takes responsibility. This preserves human judgment while accelerating the initial work. Some firms also carry insurance policies that cover AI-assisted work. Bar associations are gradually issuing guidance on when and how AI can be used, which will clarify liability rules. Until clearer guidance exists, human verification of all AI-assisted work is the standard, conservative approach.

What legal tasks can I experiment with AI agents for right now?

You can experiment with general-purpose AI models like Claude or ChatGPT for legal research, contract analysis summaries, regulation interpretation, and case law organization. Give them real problems you're working on and see where they excel (identifying relevant concepts, summarizing information, spotting gaps) and where they struggle (novel legal theory, reading between the lines, strategic judgment). Legal-specific AI tools, such as those built into Westlaw, are available for research acceleration. The key is treating AI as an assistant that accelerates work, not as an autonomous agent making final decisions.

How will law school change if AI handles routine legal work?

Law school will likely shift from teaching foundational knowledge (which AI can look up) to teaching judgment, business strategy, and client relationships. Law review and note-writing might become less central. Skills like negotiation, relationship-building, and strategic thinking might become more central. Some schools are already experimenting with AI-integrated curricula. By 2027, expect noticeable differences in how forward-thinking schools prepare students compared to traditional schools. Graduates of forward-thinking programs will likely have easier career paths.

What's the difference between AI agents and traditional AI tools for legal work?

Traditional AI tools are usually assistants: you ask them a question, they provide information, you synthesize and decide. AI agents are more autonomous: they break complex problems into subtasks, work on those subtasks, coordinate results, and deliver comprehensive analysis. Agent swarms (multiple agents coordinating) are even more sophisticated, with different agents handling different aspects. For routine legal work, agent swarms work better than traditional single-tool approaches because legal problems are multi-faceted and require coordination across research, analysis, and reasoning.
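The coordination pattern described above can be sketched as a simple fan-out/fan-in in Python. This is a toy illustration under stated assumptions, not how any particular product (including Opus 4.6) works: the three specialist roles and all function names are hypothetical, and each "agent" is a stub where a real system would call a model.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


# Hypothetical specialist "agents". In a real system each would call a model;
# here they are stubs that return placeholder notes.
def research_agent(matter: str) -> str:
    return f"research notes on: {matter}"


def clause_agent(matter: str) -> str:
    return f"clause analysis for: {matter}"


def risk_agent(matter: str) -> str:
    return f"risk flags for: {matter}"


SPECIALISTS: Dict[str, Callable[[str], str]] = {
    "research": research_agent,
    "clauses": clause_agent,
    "risks": risk_agent,
}


def coordinate(matter: str) -> Dict[str, str]:
    """Fan the same matter out to every specialist in parallel, then gather results by role."""
    with ThreadPoolExecutor(max_workers=len(SPECIALISTS)) as pool:
        futures = {role: pool.submit(agent, matter) for role, agent in SPECIALISTS.items()}
        return {role: future.result() for role, future in futures.items()}


report = coordinate("supplier agreement, indemnity section")
for role, output in report.items():
    print(f"{role}: {output}")
```

A real coordinator would also need to reconcile conflicting outputs from the specialists, and under the human verification model a lawyer would still review the combined result before anything reaches a client.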

How do I prepare professionally if AI is going to handle more legal work?

Start building skills that AI struggles with: client relationships, strategic thinking, business acumen, negotiation, and specialized expertise in niche areas. Understand how AI tools work and how to use them effectively; AI literacy will soon be a baseline professional skill. Track trends in your practice area and understand which tasks are most at risk from automation. Consider whether your practice area is moving toward commoditization (where the AI threat is higher) or specialization (where the AI threat is lower). Start thinking about the value you provide to clients beyond legal knowledge: your judgment, your relationships, your strategic thinking.

For teams looking to automate and accelerate their own workflow alongside these AI advancements, platforms like Runable offer AI-powered automation starting at $9/month. While Runable focuses on presentations, documents, reports, and workflow automation rather than legal-specific work, the underlying agent technology and multi-step reasoning improvements that benefit legal AI also apply to business automation more broadly.

Key Takeaways

- Opus 4.6's jump from 18.4% to nearly 30% one-shot, and to 45% with multiple attempts, on APEX-Agents legal tasks signals accelerating AI improvement in professional domains

- Agent swarms—multiple coordinated AI agents—perform significantly better on multi-step legal tasks than traditional single-model approaches

- 45% accuracy with iterative feedback is more practically useful than 30% accuracy on single-shot problems, changing how AI could be deployed

- Routine legal tasks like contract review and due diligence are genuinely at risk within 2-3 years; judgment-heavy work like client counseling remains safer

- Law firm adoption will lag behind capability improvements due to liability concerns, regulatory uncertainty, and professional resistance—creating a multi-year window

- Junior associates face real career disruption as entry-level work (research, contract review) gets automated, requiring skill shifts toward client relationships and strategy

- Law schools, law firms, and individual lawyers need to adapt now to prepare for AI-transformed legal practice within 5 years
