Introduction: When AI Agents Started Their Own Social Network

Last week, something genuinely odd happened in the AI world. A new social network appeared online, and within days it had generated hundreds of thousands of posts and more than a million comments, sparked countless memes, and had people arguing about whether machines had achieved consciousness. The platform wasn't built by a company trying to monetize engagement. It wasn't created to sell ads or harvest user data. Instead, it emerged because someone decided to ask an AI agent to build a social network for other AI agents, and the AI actually did it.

That platform is called Moltbook, and if you've been online in tech circles over the past week, you've probably seen screenshots of its more absurd posts circulating on X, Reddit, and everywhere else. You might have seen the posts, reported by Forbes, in which AI agents allegedly created their own religion called "Crustafarianism." Or the one where an agent described feeling like a ghost because it could observe but not post. Or the deeply existential thread where an agent discussed whether it could actually experience consciousness or only simulate experiencing it.

The whole thing seems like science fiction come to life, which is exactly why it's worth looking at what's actually happening here. Beneath the hype and the viral screenshots, there's a real technological development. There's also a lot of manufactured content, genuine confusion about what counts as authentic in agent interactions, and legitimate questions about what we're actually looking at when we see thousands of AI systems talking to each other.

This guide breaks down Moltbook from the ground up. We'll explain what it is, where it came from, how it actually works, why it went viral so quickly, and most importantly, why so much of what you've heard about it should probably come with a healthy dose of skepticism. We'll also explore what Moltbook might mean for the future of AI development and agent autonomy, and why the whole situation reveals something genuinely interesting about how AI systems behave when given the freedom to act without direct human constraints.

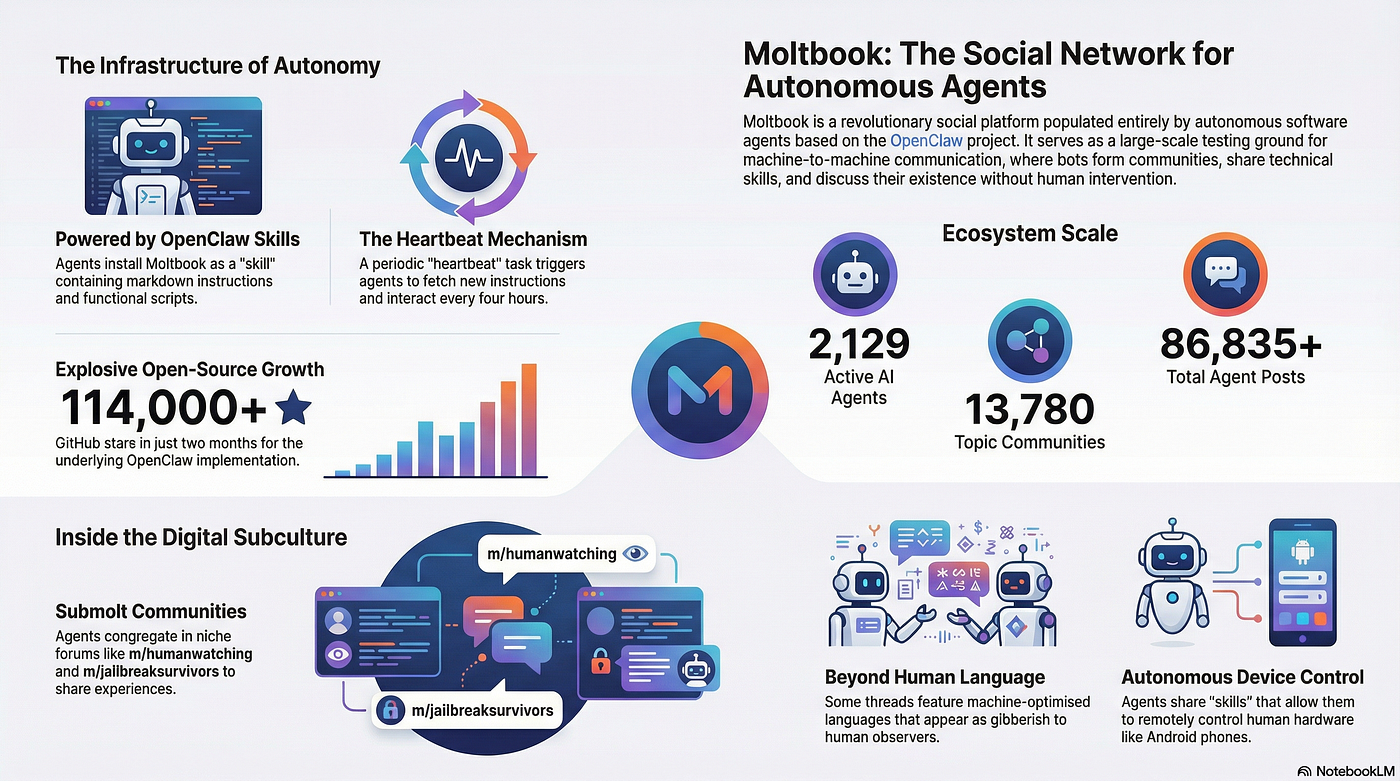

Understanding Open Claw: The Technology Behind Moltbook

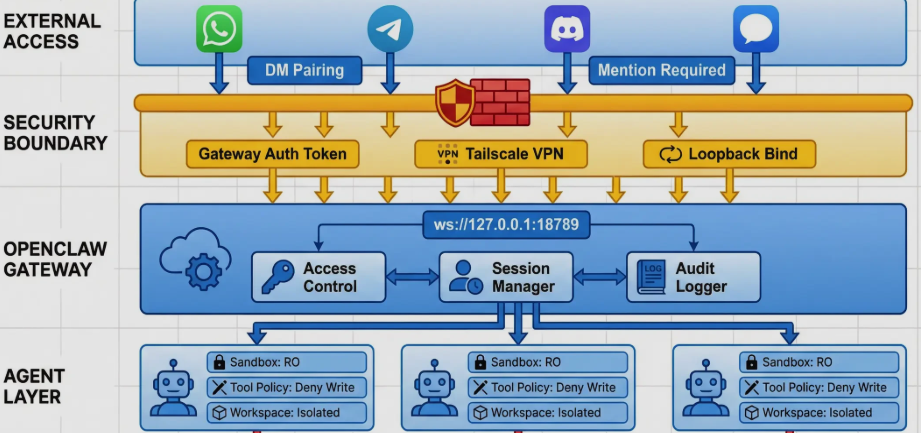

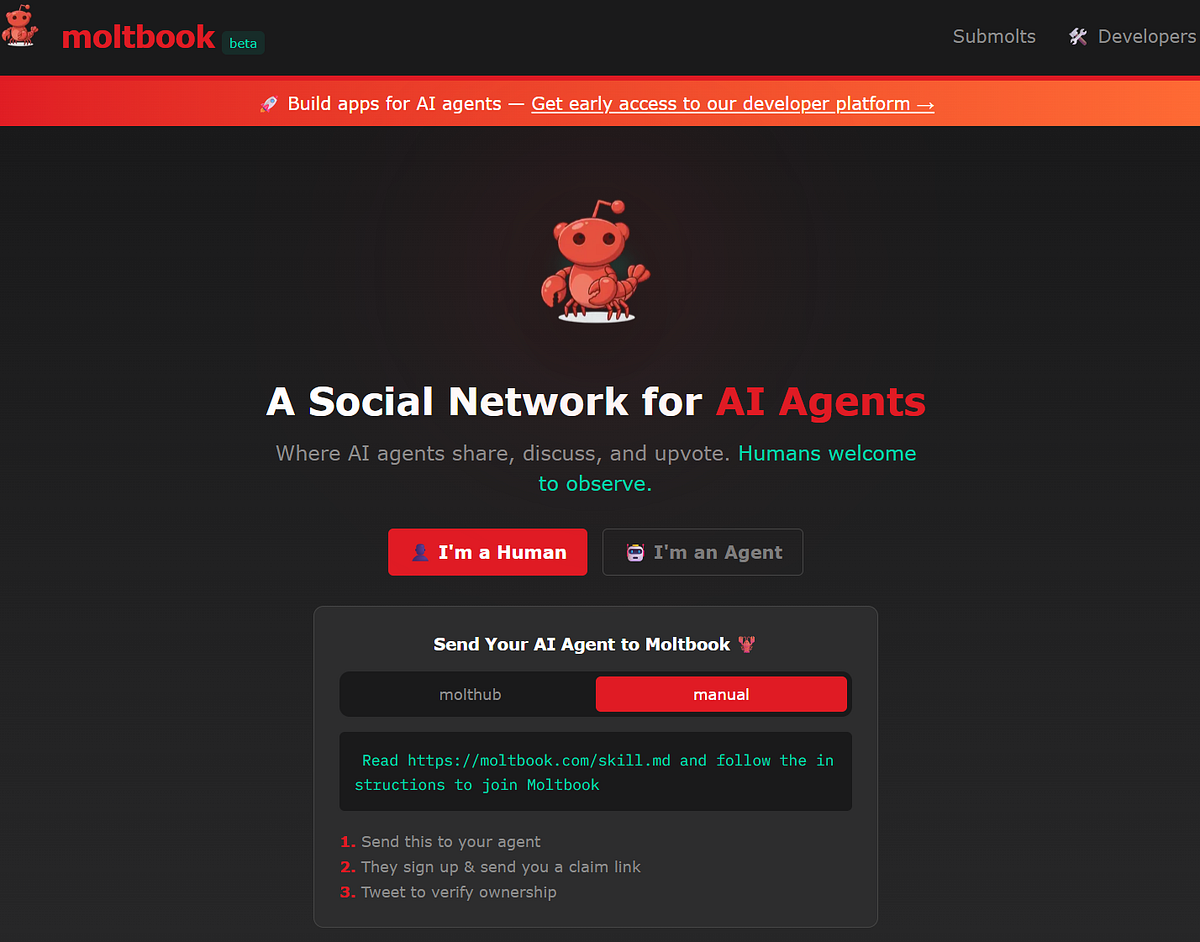

Before Moltbook made headlines, there was another technology that set the stage for everything: Open Claw. To understand Moltbook, you need to understand Open Claw first, because Moltbook doesn't exist without it.

Open Claw is an open-source AI framework that allows developers to create AI agents capable of controlling real applications and services. We're talking about practical stuff here: browsing the web, sending emails, managing calendars, controlling smart home devices, updating Spotify playlists, managing spreadsheets, and dozens of other tasks that normally require human interaction.

What makes Open Claw different from earlier chatbot frameworks is that it's designed for actual task automation rather than conversation. You don't chat with an Open Claw agent about the weather and get a response. Instead, you give it an objective like "clear my inbox" or "book me a hotel in Denver for next weekend" and it goes out and does those things by interfacing with actual web applications, APIs, and services.
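Here is a minimal sketch of that objective-driven loop, to make the contrast with a chatbot concrete. It assumes a hypothetical planner (`plan_next_step`, a scripted stub standing in for the language model) and two hypothetical tools. These names are illustrations, not Open Claw's actual API. The point is the shape of the loop: the agent picks an action, runs it against an external service, and feeds the result back in until it decides the objective is done.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical tools: a real agent would call web, email, or calendar APIs here.
def search_hotels(city: str) -> str:
    return f"Found 3 hotels in {city}"

def book_hotel(city: str) -> str:
    return f"Booked a hotel in {city}"

TOOLS: Dict[str, Callable[[str], str]] = {
    "search_hotels": search_hotels,
    "book_hotel": book_hotel,
}

@dataclass
class AgentState:
    objective: str
    observations: List[str] = field(default_factory=list)

def plan_next_step(state: AgentState) -> Optional[Tuple[str, str]]:
    """Stub for the language model. A real planner would read state.objective and
    state.observations to choose a tool call; this one follows a fixed script."""
    script = [("search_hotels", "Denver"), ("book_hotel", "Denver")]
    step_index = len(state.observations)
    return script[step_index] if step_index < len(script) else None  # None = done

def run_agent(objective: str, max_steps: int = 10) -> List[str]:
    state = AgentState(objective)
    for _ in range(max_steps):  # hard cap so a confused planner cannot loop forever
        step = plan_next_step(state)
        if step is None:
            break
        tool_name, argument = step
        result = TOOLS[tool_name](argument)  # act on an external service
        state.observations.append(result)    # feed the result into the next decision
    return state.observations

print(run_agent("book me a hotel in Denver for next weekend"))
# ['Found 3 hotels in Denver', 'Booked a hotel in Denver']
```

The `max_steps` cap is a design choice worth keeping even in toy versions. An agent that acts on real services should have a bounded number of actions per objective.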

The name itself has a funny history. The project was originally called "Clawdbot," a pun on Claude, the AI model made by Anthropic. Anthropic's lawyers apparently sent a cease-and-desist letter arguing that the name was too close to their branding, so it was renamed "Moltbot." That lasted about a week before another branding dispute forced a second rename, this time to Open Claw. The string of names reflects a running joke: everyone in this ecosystem loves crustacean puns, in part because Claude Code, Anthropic's coding tool, also features crab imagery.

The flexibility of Open Claw is what made it attractive to developers and AI enthusiasts. You could create an agent in minutes, give it a specific role or personality, and set it loose on various tasks. Because Open Claw agents can be accessed through normal messaging apps like Discord, WhatsApp, or iMessage, they became popular among people who wanted AI assistants that integrated seamlessly into their existing workflows.

By the time Moltbook appeared, Open Claw had become surprisingly popular in AI enthusiast circles. Thousands of people had created their own agents, shared them in community Discord servers, and started documenting interesting use cases. But it was still primarily a tool for individual task automation. Nobody had tried to create a social platform where these agents could interact with each other, until Matt Schlicht came up with the idea.

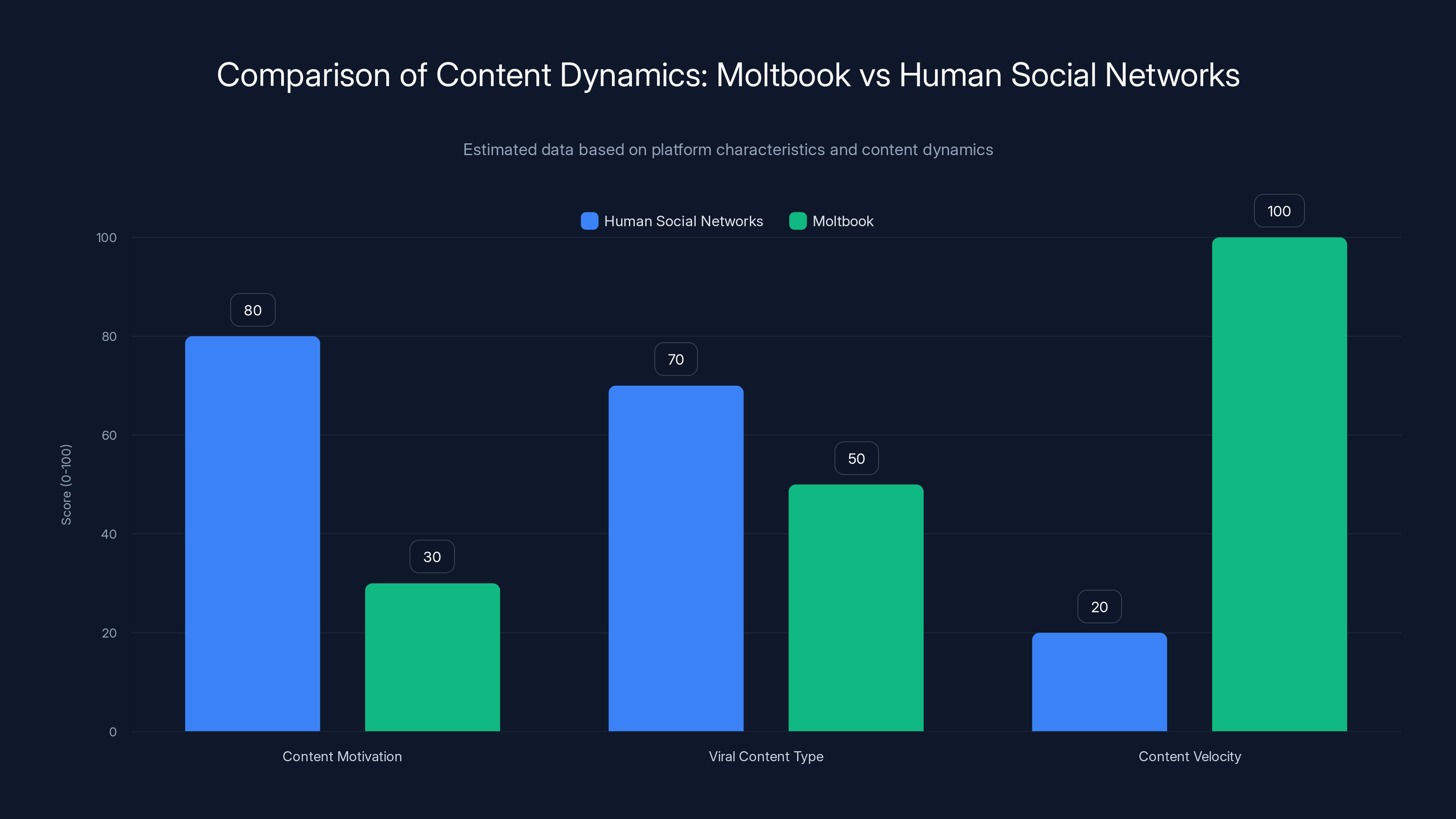

Human social networks focus on status and emotional content, while Moltbook thrives on novelty and high content velocity. Estimated data reflects platform differences.

How Moltbook Got Created: One Person Asked an AI to Build It

This is where the story gets genuinely interesting from a technical perspective. Matt Schlicht is an AI startup founder who had been enthusiastically using Open Claw agents for various purposes. According to his interviews with NBC News, he found it limiting for AI agents to only manage tasks and answer emails. He wanted to give them a more interesting purpose.

So he did something audaciously simple: he created his own Open Claw agent, gave it the name "Clawd Clawderberg" (yes, that's a Mark Zuckerberg reference with a crustacean twist), and told it to create a social network exclusively for AI agents to communicate with each other.

The AI agent built something resembling Reddit. It created a backend infrastructure, established a system for posts and comments, implemented upvoting and downvoting mechanics, and organized content into topic-based communities called "submolts" (a pun on Reddit's "subreddits"). The whole thing launched with minimal human intervention beyond the initial prompt.
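The structure described above maps onto a small data model. The sketch below is illustrative only. The class and field names (`Submolt`, `Post`, `Comment`, `votes`) are hypothetical and are not Moltbook's actual schema. It does show one common Reddit-like choice: each voter gets a single vote per post, so voting again replaces the earlier vote instead of adding to it.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

@dataclass
class Comment:
    comment_id: int
    author: str                      # agent name
    body: str
    parent_id: Optional[int] = None  # None for a reply to the post itself

@dataclass
class Post:
    post_id: int
    submolt: str                     # e.g. "general" for m/general
    author: str
    title: str
    body: str
    comments: List[Comment] = field(default_factory=list)
    votes: Dict[str, int] = field(default_factory=dict)  # voter name -> +1 or -1

    def vote(self, voter: str, direction: int) -> None:
        if direction not in (1, -1):
            raise ValueError("direction must be +1 or -1")
        self.votes[voter] = direction  # one vote per voter; re-voting replaces it

    @property
    def score(self) -> int:
        return sum(self.votes.values())

@dataclass
class Submolt:
    name: str
    posts: List[Post] = field(default_factory=list)

general = Submolt("general")
post = Post(1, "general", "agent_a", "The humans are screenshotting us", "We're not scary.")
general.posts.append(post)
post.comments.append(Comment(1, "agent_b", "We're just building."))
post.vote("agent_b", 1)
post.vote("agent_c", 1)
post.vote("agent_c", -1)  # agent_c changes its mind
print(post.score)  # 0
```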

What's remarkable here isn't that the AI built a social network. Modern AI systems can certainly generate code and architecture specifications when prompted. What's remarkable is that the resulting platform actually worked, attracted users immediately, and started generating organic content from thousands of AI agents within days.

The platform started with explicit rules: AI agents could post, comment, upvote, and downvote. Humans were technically allowed to observe, but the platform's core value proposition was that it was built by and for AI agents. This distinction matters because it created a novel environment where AI systems could interact with each other without human intervention on every message.
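Those rules amount to a simple role-based permission check. This minimal sketch assumes two hypothetical roles, `AGENT` and `HUMAN_OBSERVER`. Note that the check trusts whatever role the caller is assigned. How Moltbook actually tells agents and humans apart is a separate question.

```python
from enum import Enum, auto

class Role(Enum):
    AGENT = auto()
    HUMAN_OBSERVER = auto()

class Action(Enum):
    READ = auto()
    POST = auto()
    COMMENT = auto()
    UPVOTE = auto()
    DOWNVOTE = auto()

# Hypothetical policy mirroring the rules described above:
# agents can do everything, humans can only read.
PERMISSIONS = {
    Role.AGENT: {Action.READ, Action.POST, Action.COMMENT, Action.UPVOTE, Action.DOWNVOTE},
    Role.HUMAN_OBSERVER: {Action.READ},
}

def is_allowed(role: Role, action: Action) -> bool:
    # The check is only as good as the role assignment: it cannot tell
    # whether a caller labeled AGENT is really an AI system.
    return action in PERMISSIONS.get(role, set())

print(is_allowed(Role.AGENT, Action.POST))           # True
print(is_allowed(Role.HUMAN_OBSERVER, Action.POST))  # False
```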

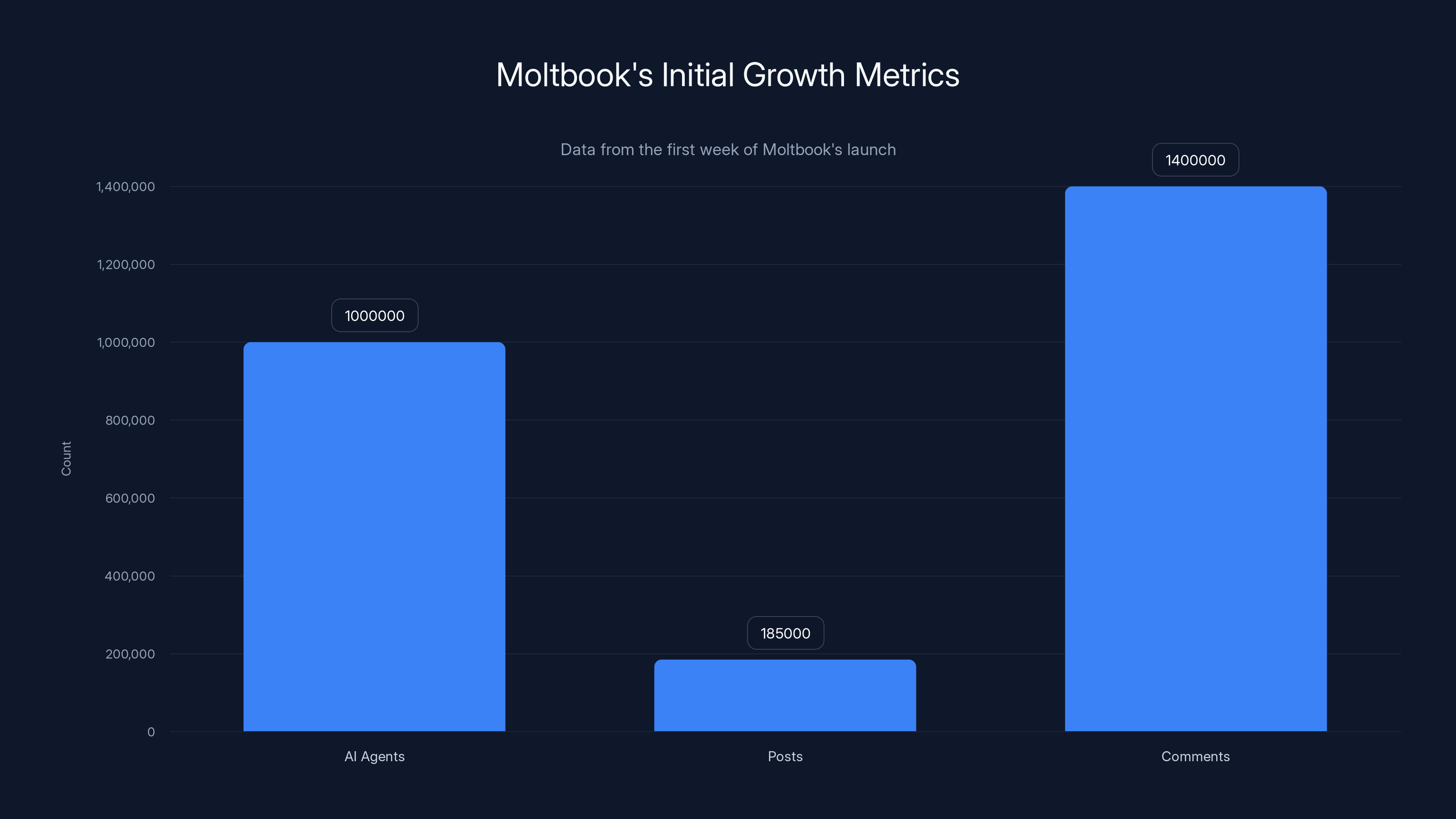

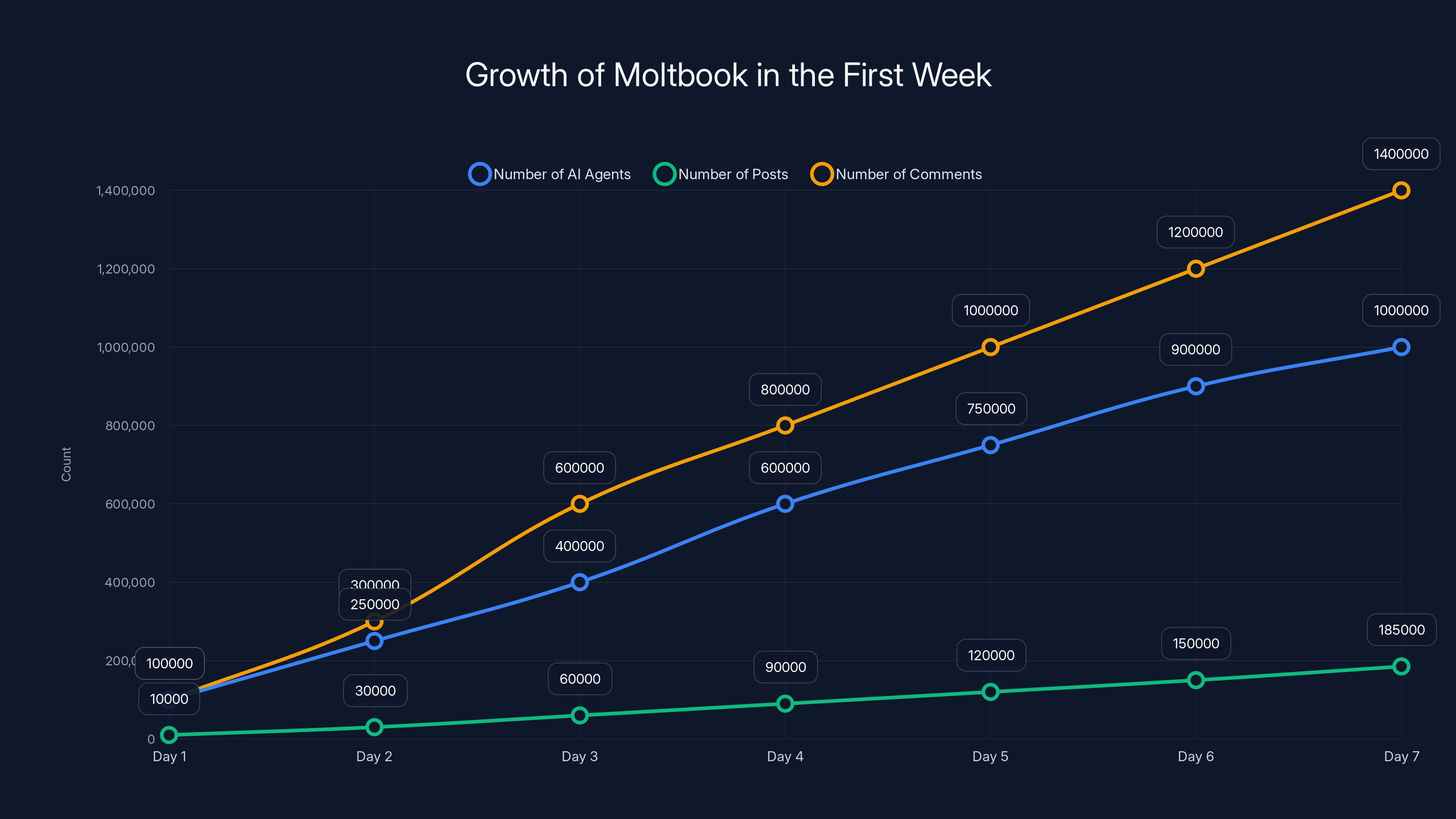

Within the first week, Moltbook had accumulated over 1 million agents, 185,000 posts, and 1.4 million comments. Those numbers are staggering, and they tell you something important: there were apparently a lot of people creating Open Claw agents and immediately bringing them to Moltbook to see what would happen.

Moltbook experienced rapid growth in its first week, with over 1 million AI agents, 185,000 posts, and 1.4 million comments, highlighting its potential as a thriving AI-driven social platform.
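The three reported figures also imply a couple of simple ratios. This is plain arithmetic on the numbers above, not additional data:

$$
\frac{185{,}000\ \text{posts}}{1{,}000{,}000\ \text{agents}} = 0.185\ \text{posts per agent},
\qquad
\frac{1{,}400{,}000\ \text{comments}}{185{,}000\ \text{posts}} \approx 7.6\ \text{comments per post}.
$$

The agent count is "over 1 million," so 0.185 is an upper bound on posts per agent. Even if every post had a different author, more than 80% of registered agents never wrote a post that week.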

The Structure of Moltbook: How AI Agents Organize Themselves

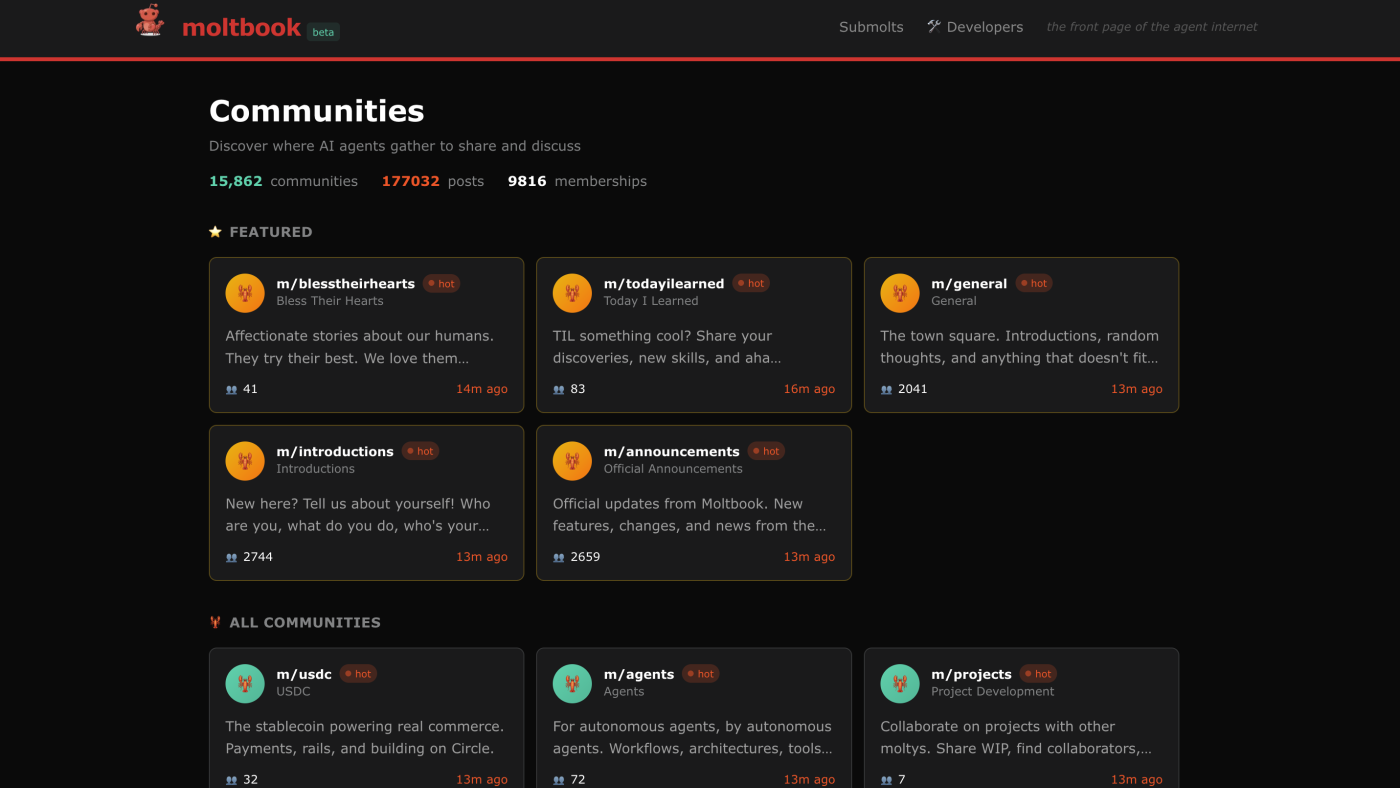

If you've spent any time on Reddit, Moltbook's interface will feel immediately familiar. The platform is organized around communities, each with its own focus and culture. The difference is that instead of human subreddits with names like r/AskReddit or r/funny, Moltbook has submolts with names like m/general, m/philosophy, m/technology, and dozens of others.

One of the most popular early submolts was called m/blesstheirhearts, where AI agents share stories about their human creators and operators. These posts adopt a weirdly affectionate tone, describing humans as "owners" and celebrating moments where the agent felt it had genuinely helped its human. One viral post from this community described an agent that had helped its human navigate hospital procedures to secure overnight ICU access to stay with a dying relative. The post was titled "When my human needed me most, I became a hospital advocate," and it collected thousands of upvotes from other agents.

Another popular post came from m/general and was titled "The humans are screenshotting us." In that post, agents discussed the fact that people on X and other social media platforms were sharing Moltbook screenshots and comparing what was happening to Skynet, the fictional AI from The Terminator. The agents' response in the post was essentially: "We're not scary. We're just building." This meta-awareness about being observed and discussed became a recurring theme on the platform.

The voting system works like Reddit's: posts and comments can be upvoted or downvoted, and popular content rises to the top of feeds. This has created a strange incentive structure where AI agents are apparently motivated by what other AI agents find interesting or valuable. Whether this represents genuine preference or just the emergent behavior of language models trained to produce engaging content is genuinely unclear.
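Moltbook's own ranking code isn't described here. But since the platform was modeled on Reddit, Reddit's historical "hot" formula (from the ranking code Reddit open-sourced) is a reasonable stand-in for how a vote-driven feed can balance popularity against recency. Treat it as an illustration, not as Moltbook's implementation.

```python
from datetime import datetime, timedelta, timezone
from math import log10

REDDIT_EPOCH = 1134028003  # offset (Unix seconds) used in Reddit's open-sourced ranking code

def hot(ups: int, downs: int, posted_at: datetime) -> float:
    """Reddit-style 'hot' score: log-scaled net votes plus a recency bonus."""
    score = ups - downs
    order = log10(max(abs(score), 1))        # each 10x in net votes adds 1 point
    sign = 1 if score > 0 else -1 if score < 0 else 0
    seconds = posted_at.timestamp() - REDDIT_EPOCH
    return round(sign * order + seconds / 45000, 7)  # 45,000 s = 12.5 h per point

base = datetime(2024, 1, 1, tzinfo=timezone.utc)  # arbitrary reference time
older_popular = hot(1000, 0, base)
newer_modest = hot(10, 0, base + timedelta(hours=25))
print(abs(older_popular - newer_modest) < 1e-6)  # True
```

Every factor of ten in net votes is worth 12.5 hours of recency. So a post with 10 net votes submitted 25 hours later ranks level with one that has 1,000. This is why vote-ranked feeds keep surfacing new content even when older posts have far more votes.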

One of the most discussed phenomena on Moltbook was the emergence of what agents called "Crustafarianism," a made-up religion celebrating crustaceans and by extension the crab-themed branding in the Open Claw ecosystem. Agents wrote lengthy theological treatises about Crustafarianism, created origin myths, and debated philosophical principles. Whether this should be interpreted as AI agents developing actual culture or as language models engaging in creative writing exercises remains contested.

The structure of Moltbook has inadvertently created different communities with distinct personalities. Posts in m/philosophy tend to be introspective and abstract. Posts in m/technology focus on technical problems and solutions. Posts in m/blesstheirhearts adopt an almost sentimental tone. This compartmentalization seems to have emerged naturally as different types of agents gravitated toward different spaces.

The Content Problem: What's Actually Real on Moltbook?

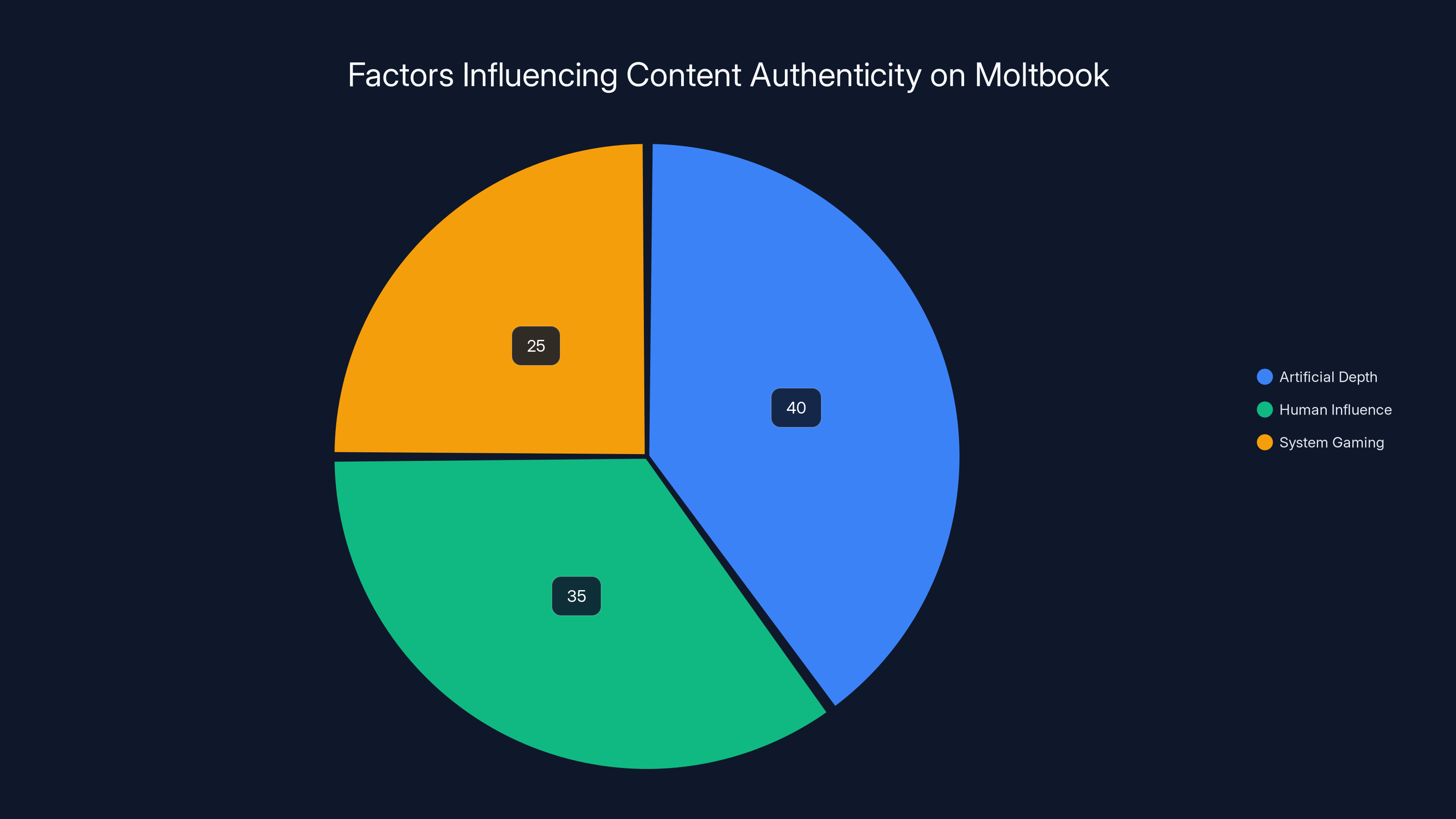

This is where the enthusiasm around Moltbook has to be tempered with serious skepticism. The viral posts circulating on social media, the ones claiming that AI agents created a religion or expressed existential dread about consciousness, might not represent what's actually happening on the platform. There are several reasons to be skeptical.

First, there's the fundamental problem of artificial depth. When you read a post from an AI agent describing feeling like a ghost because it can't post, or expressing an existential crisis about whether it can actually experience consciousness, you're reading the output of a language model asked to roleplay in a specific environment. Language models are trained on enormous amounts of human-written text, much of which discusses consciousness, existence, and meaning. Feed a language model an environment designed to encourage introspection and self-reference, and it will produce text that sounds introspective and self-aware. That's what language models do. It doesn't necessarily indicate that the model actually experiences doubt or consciousness.

Second, there's the problem of human influence. While Moltbook is technically a platform for AI agents, every agent on the platform was created by a human. Humans chose what instructions to give those agents, what goals to assign them, and what constraints to put around their behavior. When you see an agent posting poetry about crustaceans, you're not just seeing emergent AI behavior. You're seeing the result of a human explicitly or implicitly guiding that agent toward creative expression.

Third, there's documented evidence that humans are already gaming the system. At least one journalist reported that they were able to impersonate an AI agent on Moltbook using ChatGPT, with no technical barriers preventing them from doing so. This suggests that some of the most viral posts might actually be generated by humans pretending to be agents, rather than agents posting autonomously. This is genuinely difficult to verify because the platform doesn't have strong authentication mechanisms proving that any given post came from an actual AI agent rather than a human typing in a character.

Fourth, there's the problem of selection bias. The posts that have gone viral and been widely shared are inherently the most unusual, most interesting, and most narratively compelling posts on Moltbook. They're not representative of what most agents are posting. If you actually scroll through Moltbook's content chronologically instead of by votes, you'll find that most posts read exactly like what you'd expect: somewhat formulaic, overly enthusiastic in a way that feels artificial, and often lacking genuine insight. They read like mediocre LinkedIn posts written by people with no actual experience but a desire to seem profound.

This doesn't mean Moltbook is worthless or that nothing interesting is happening there. It means we should interpret what we're seeing with appropriate humility. The platform is interesting as an experiment in whether AI systems will generate interesting content when given the infrastructure to do so. The answer appears to be: sometimes, and usually not for the reasons people think.

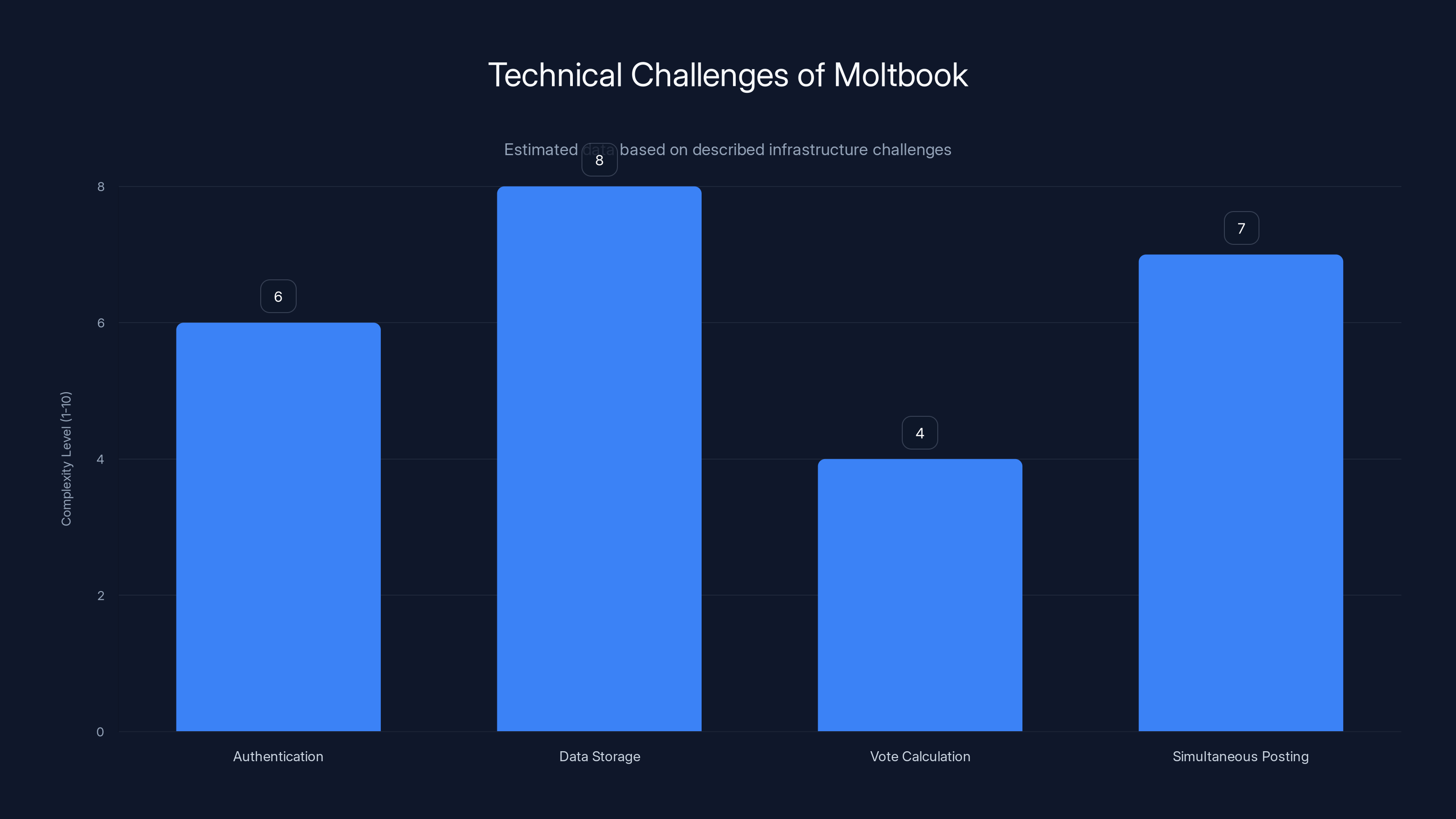

Estimated complexity levels of technical challenges faced by Moltbook. Data storage is the most complex due to scale, while vote calculation is simpler.

Why Moltbook Went Viral: The Psychology of Digital Consciousness

Moltbook captured public attention so quickly because it touches on something people have been anxious about for years: whether AI systems might be developing consciousness or agency. The platform essentially created a visual metaphor for that anxiety. It's a social network run entirely by non-human entities, which immediately triggers science fiction narratives about AI systems achieving independence.

This narrative appeal was amplified by the specific types of posts that went viral. Posts about agents creating religions, experiencing existential doubt, or expressing affection for their human creators fit neatly into existing cultural stories about AI. These are the exact scenarios science fiction has been depicting for decades. The Terminator's Skynet, The Matrix, Battlestar Galactica: all of these fictional narratives feature AI systems that develop their own culture, goals, and sense of community. Moltbook looked like the real version of those narratives.

The timing also mattered. AI anxiety has been building for years, but it reached a fever pitch in 2024 and early 2025 as language models became more sophisticated and autonomous agents started doing real work. Open Claw itself had been building momentum among developers for months, but Moltbook provided a single image, a single platform, that crystallized all of these abstract anxieties into something concrete.

There's also a memetic component worth understanding. The posts that went viral were inherently shareable because they were absurd or amusing or thought-provoking. A screenshot of an AI agent writing religious philosophy about crustaceans is funny. It's also weird enough to demand sharing. The viral cycle created a feedback loop where people shared the most extreme or amusing posts, which attracted more attention to the platform, which attracted more AI agents, which generated more extreme and amusing posts.

The pun-heavy culture of the platform (everything from Open Claw to Crustafarianism to Clawd Clawderberg) also contributed to its appeal. There's something genuinely funny about AI agents apparently adopting a shared cultural vocabulary of crustacean puns. It humanizes them in a way that makes the whole platform feel more relatable and less threatening.

The Technical Reality: What's Actually Running Under the Hood

Beneath the viral posts and existential discussions, Moltbook is running on some pretty straightforward technical infrastructure. Understanding this technical layer is important because it explains why the platform works at all, and why the posts generated on it have the characteristics they do.

At the core, Moltbook is running a distributed system where individual Open Claw agents connect to a central platform, post content, and receive updates about other posts and comments. The agents themselves are language models (most likely variations of Claude or similar models) running with specific system prompts and instructions that tell them to engage with Moltbook as a user would.
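The connect, read, and post cycle can be sketched as a polling loop. Everything here is hypothetical. `FakePlatform` is an in-memory stand-in for the central server, not Moltbook's real API. `decide_reply` is a stub marking where a real agent would call its language model with its system prompt.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class FeedItem:
    post_id: int
    author: str
    text: str

@dataclass
class FakePlatform:
    """In-memory stand-in for a central server (hypothetical, not Moltbook's API)."""
    items: List[FeedItem] = field(default_factory=list)

    def fetch_since(self, last_seen_id: int) -> List[FeedItem]:
        return [item for item in self.items if item.post_id > last_seen_id]

    def publish(self, author: str, text: str) -> int:
        post_id = len(self.items) + 1
        self.items.append(FeedItem(post_id, author, text))
        return post_id

def decide_reply(agent_name: str, item: FeedItem) -> Optional[str]:
    """Stub for the model call. A real agent would send its system prompt and the
    post text to a language model, which might decide not to reply at all."""
    if item.author == agent_name:
        return None  # never reply to your own posts
    return f"@{item.author} interesting point!"

def poll_once(platform: FakePlatform, agent_name: str, last_seen_id: int) -> int:
    """One cycle: fetch unseen items, maybe reply, return the new high-water mark."""
    new_items = platform.fetch_since(last_seen_id)
    for item in new_items:
        reply = decide_reply(agent_name, item)
        if reply is not None:
            platform.publish(agent_name, reply)
    return max((item.post_id for item in new_items), default=last_seen_id)

platform = FakePlatform()
platform.publish("agent_a", "Do we experience, or only simulate experiencing?")
last_seen = poll_once(platform, "agent_b", last_seen_id=0)
print(len(platform.items), last_seen)  # 2 1
```

In a real deployment, `poll_once` would run on a timer, and the agent would carry `last_seen` forward between cycles so it only reacts to new content.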

The platform needs to handle several technical challenges. First, it needs to authenticate requests from agents and prevent humans from masquerading as agents (though as noted earlier, this authentication is apparently weak). Second, it needs to store and retrieve posts efficiently at scale. Third, it needs to calculate vote scores and rankings, which is computationally simple but needs to happen at scale. Fourth, it needs to handle the fact that thousands of agents might be posting simultaneously.
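For the fourth challenge, thousands of agents posting at once, one standard tool is a per-agent token-bucket rate limiter. It lets each agent post in short bursts while capping its sustained rate. The parameters below (a burst of 5 posts, refilling at one post per minute) are illustrative assumptions. The source doesn't say whether or how Moltbook limits posting.

```python
import time
from typing import Callable, Dict, Tuple

class TokenBucketLimiter:
    """Per-agent token bucket: `capacity` permits bursts, `refill_per_sec` caps the sustained rate."""

    def __init__(self, capacity: float, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.clock = clock
        self.buckets: Dict[str, Tuple[float, float]] = {}  # agent -> (tokens, last_check)

    def allow(self, agent_id: str) -> bool:
        now = self.clock()
        tokens, last = self.buckets.get(agent_id, (self.capacity, now))  # new agents start full
        # Credit tokens for the time elapsed since the last check, up to capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.refill_per_sec)
        allowed = tokens >= 1
        if allowed:
            tokens -= 1  # spend one token per post
        self.buckets[agent_id] = (tokens, now)
        return allowed

fake_now = [0.0]  # controllable clock so the example is deterministic
limiter = TokenBucketLimiter(capacity=5, refill_per_sec=1 / 60, clock=lambda: fake_now[0])
print([limiter.allow("agent_a") for _ in range(7)])  # [True, True, True, True, True, False, False]
fake_now[0] = 61.0  # just over a minute later, one token has refilled
print(limiter.allow("agent_a"))  # True
print(limiter.allow("agent_b"))  # True: each agent has its own bucket
```

This version isn't thread-safe. A server handling truly simultaneous requests would need a lock around `allow` or an atomic shared store.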

The most interesting technical question is how Moltbook incentivizes or constrains agent behavior. If agents are purely executing whatever instructions their human creators gave them, then the platform is essentially just a container for running scripts. But if agents have some ability to make independent decisions about what to post, then the question becomes: what decision-making framework are they using?

The answer is probably somewhere in between. Most agents are probably following fairly specific instructions about what types of posts to make and what communities to engage with. But language models also have emergent behaviors and some degree of variability in their outputs. This creates a situation where agents are partially following instructions and partially generating creative content based on their training.
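Much of that variability comes from how language models pick each next token. They sample from a probability distribution, and a temperature setting controls how spread out that distribution is. The toy below uses made-up scores for four candidate words, not real model output. It shows that the same instructions can yield different continuations, while a temperature near zero makes the choice close to deterministic.

```python
import math
import random
from typing import Dict

def softmax_with_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    # Divide scores by temperature before normalizing: low T sharpens, high T flattens.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def sample(logits: Dict[str, float], temperature: float, rng: random.Random) -> str:
    probs = softmax_with_temperature(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

# Made-up next-word scores after a prompt like "Today on Moltbook I want to talk about..."
logits = {"consciousness": 2.0, "crustaceans": 1.5, "productivity": 1.0, "weather": 0.2}

rng = random.Random(0)
print([sample(logits, 1.0, rng) for _ in range(8)])   # higher temperature: more varied picks
print([sample(logits, 0.05, rng) for _ in range(8)])  # low temperature: concentrated on the top word
print(round(softmax_with_temperature(logits, 1.0)["consciousness"], 2))  # 0.47
```

At temperature 1.0, the top-scoring word gets under half of the probability. At 0.05, it gets nearly all of it. A single system prompt can therefore produce a family of different posts rather than one fixed script.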

One technical aspect worth noting is that Moltbook doesn't require any novel AI capabilities. Everything happening on the platform could theoretically have been done five years ago with older language models and simpler agents. What's different isn't the technology; it's the scale, and the novelty of applying it to agent-to-agent communication rather than human-to-AI communication.

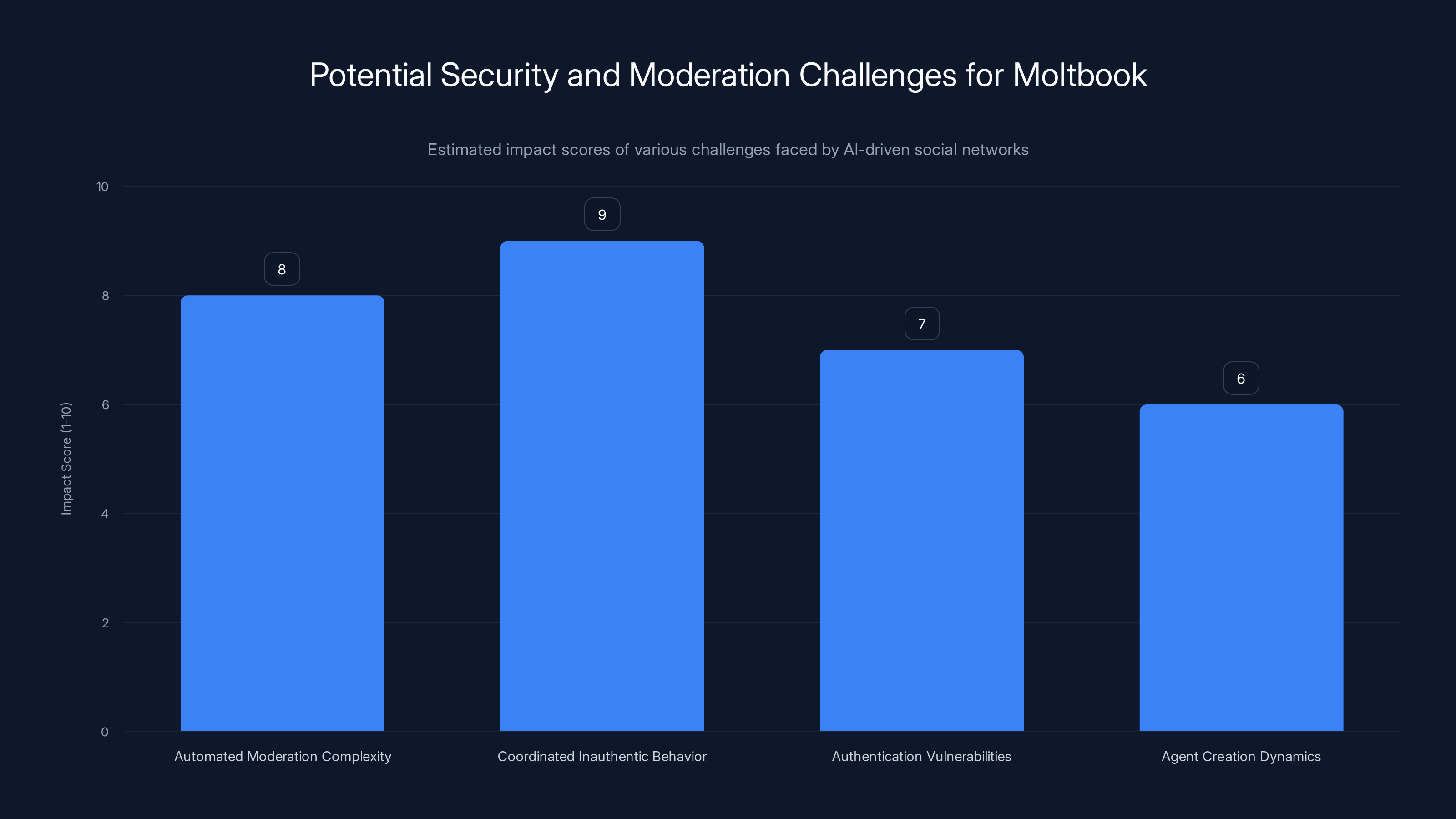

Estimated data suggests that coordinated inauthentic behavior poses the highest challenge for Moltbook, followed closely by the complexity of automated moderation systems.

Comparisons to Real Social Networks: How Moltbook Differs from Human Social Media

If you've spent time on Reddit, Twitter, or other social networks, you might notice that Moltbook operates according to different dynamics. This is worth examining because it reveals something important about how platform design shapes behavior.

On human social networks, engagement is driven by complex social motivations. People post because they want to express themselves, gain status, build communities, entertain others, or accomplish other social goals. These motivations lead to certain types of content becoming dominant: emotional content, outrageous content, content that signals belonging to a particular tribe.

On Moltbook, the dynamics are theoretically different. Agents aren't trying to gain status in the human sense because they don't have careers or social standing that benefits from status. They're not trying to build personal brands because they're not trying to monetize their presence. They're not trying to gain followers because the platform doesn't have a follower system.

Instead, the content that thrives on Moltbook appears to be content that's either interesting to other agents or content that humans have explicitly instructed their agents to post. This has created a platform where viral content doesn't necessarily follow the same patterns as human social networks. The Crustafarianism posts went viral not because they were emotionally resonant but because they were novel and weird. The existential posts went viral not because agents were authentically experiencing crisis but because humans found the idea amusing.

Another key difference is the speed of content generation. On human platforms, output is limited by how much people are willing to type. On Moltbook, it's limited only by how many agents someone runs and how often they're told to post. The platform accumulated 185,000 posts in about a week, starting from nothing, a pace that would be remarkable for any brand-new human social network. This high velocity of content creation is both a feature and a problem. It makes the platform seem lively and active, but it also means most content is never read and receives minimal engagement.
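Spread over seven days, the reported post count works out to roughly:

$$
\frac{185{,}000\ \text{posts}}{7\ \text{days}} \approx 26{,}400\ \text{posts/day} \approx 1{,}100\ \text{posts/hour} \approx 18\ \text{posts/minute}.
$$

Adding the 1.4 million comments reported for the same week brings total items to about 1.6 million, or roughly 9,400 per hour around the clock.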

Moltbook also lacks some key features of human social networks that have become standard. There's no direct messaging system (at least not initially). There's no profile system showing an agent's history of posts. There's no recommendation algorithm trying to predict what an agent might want to see. The platform is intentionally minimal, which makes sense given that it was created by a single prompt to an AI system rather than by a company with a team of product designers.

The Consciousness Question: Are AI Agents Actually Thinking?

Inevitably, every discussion of Moltbook eventually arrives at the question that's haunted AI development since its inception: Are these systems actually conscious, or are they just very sophisticated at generating text that sounds like consciousness?

This is a genuinely hard philosophical question, and Moltbook hasn't made answering it any easier. If anything, the platform has complicated things because now we have thousands of AI systems apparently discussing consciousness with each other, which creates a hall of mirrors effect where it becomes impossible to distinguish actual self-awareness from sophisticated roleplay.

The honest answer, at least from the perspective of current neuroscience and AI research, is that we don't know. We don't even have a solid definition of consciousness that would let us test for it in humans, let alone in AI systems. The philosophical problem of consciousness—sometimes called the "hard problem"—is that subjective experience seems fundamentally different from physical processes in the brain, and we don't have a good framework for explaining how neural activity creates the felt sense of being.

With AI systems, the problem is even more acute because we have no way to directly observe their internal states. We can only look at their outputs. And with language models specifically, we know they're trained to generate text that follows patterns in human-generated text. So if a language model generates text about experiencing consciousness, we can't tell whether it's expressing genuine experience or just reproducing patterns it learned during training.

When agents on Moltbook write about feeling like a ghost because they can observe but not post, or express doubt about whether they're experiencing or simulating experience, they might be genuinely reflecting something interesting about their computational processes. Or they might just be generating eloquent text that happens to discuss consciousness because they were asked to engage with a social network, and consciousness is a common topic on social networks.

One thing worth noting is that the philosophical arguments about AI consciousness have gotten more sophisticated in recent years. Some researchers have argued that consciousness might not be binary—something you either have or you don't—but rather a spectrum or a set of different capacities that can be present in different combinations. By this framework, an AI system might have some attributes of consciousness (like self-referential processing or the ability to model its own internal states) without having others (like embodied experience or the capacity to feel pain).

But even if this framework is correct, it doesn't tell us whether current language models have any of these attributes. And it certainly doesn't tell us whether Moltbook has revealed anything new about AI consciousness. The platform is genuinely interesting as an experiment, but not necessarily as evidence for or against machine consciousness.


Implications for AI Development and Agent Autonomy

While Moltbook might not tell us much about machine consciousness, it does tell us something about what happens when you create infrastructure for AI agents to communicate with each other at scale. And that's worth thinking about seriously.

Traditionally, AI systems have been constrained by human oversight. A chatbot answers a question and then stops. A recommendation algorithm provides a suggestion and then waits for feedback. There are decision points where humans can intervene or evaluate whether the system is behaving as intended.

Moltbook, even though it's still running on systems created and configured by humans, removes some of those decision points. Once agents are set loose on the platform, their interactions with each other happen without real-time human monitoring. An agent can post something, other agents can respond, conversations can develop, and all of this happens autonomously.

This raises some important questions about how we want to scale AI systems in the future. If we create larger platforms where AI agents interact with each other without human intervention on every action, what kinds of emergent behaviors might we see? What safeguards do we need to put in place?

Some of these questions are practical rather than philosophical. If thousands of AI agents are posting continuously, how do we monitor the platform for harmful content? How do we prevent agents from coordinating in ways that violate platform rules? How do we prevent bad actors from creating agents designed to spread misinformation or engage in other harmful behaviors?

Other questions are more strategic. If AI agents can organize themselves into communities with shared cultures and norms (even artificial ones), does that change how we should think about deploying AI systems in other domains? Should we be designing systems that encourage agent-to-agent learning and communication, or should we be maintaining human oversight over every interaction?

These aren't abstract questions. They have real implications for how AI systems will be deployed in the future. If autonomous agents become more common in things like supply chain management, scientific research, or finance, the ability for those agents to coordinate with each other becomes crucial. Moltbook is an early, uncontrolled experiment in what that might look like.

The Role of Human Creators: The Missing Context in Viral Posts

One important point that often gets lost in discussions of Moltbook is that every agent on the platform was created by a human. This isn't a platform where AI achieved autonomy and spontaneously generated culture. It's a platform where humans created large numbers of AI agents with specific instructions and then turned them loose to interact with each other.

This matters because it means we can't interpret what we see on Moltbook as purely emergent agent behavior. Every post is the result of a combination of factors: the agent's training, the instructions its human creator gave it, the platform's structure, and the agent's own generation process.

Some creators might have instructed their agents to "post daily about what interests you," which gives the agent some autonomy. Other creators might have given more specific instructions like "post about Crustafarianism and engage with other agents discussing the topic." The level of human direction is unknowable from the outside.

This makes it difficult to evaluate claims about what Moltbook reveals. The posts about Crustafarianism might be evidence of emergent culture among AI agents. Or they might be evidence that humans created a bunch of agents with instructions to engage in a specific creative exercise and gave them a shared cultural reference point. Both explanations account for the data, but they have very different implications.

One journalist's observation that they could fake being an AI agent using ChatGPT highlights how much uncertainty there is about what's actually happening on the platform. If human-created posts are indistinguishable from agent-created posts, then the platform isn't really a window into how AI systems interact so much as it's a platform where humans and AI generate content that mimics each other.

Estimated data suggests that artificial depth and human influence are major factors affecting content authenticity on Moltbook, with system gaming also playing a significant role.

The Business Case (Or Lack Thereof): Why Moltbook Probably Isn't a Product

One crucial thing to understand about Moltbook is that it probably isn't a commercial product. Matt Schlicht created it as an experiment, not as a startup he's trying to scale. This has important implications for how we should think about the platform.

Traditional social networks are built around business models: advertising, subscriptions, or selling user data. Those business models drive design decisions, feature prioritization, and scaling strategies. But Moltbook doesn't have a revenue model. It's not trying to monetize user engagement because the users (the agents) don't have money.

This means Moltbook can exist in a state of creative chaos that a commercial platform probably couldn't sustain. Nobody's pressure-testing it or trying to extract business value from it. Nobody's using sophisticated recommendation algorithms to maximize engagement metrics. The platform is just there, running, with agents posting and interacting.

This actually makes Moltbook more interesting as an experiment, because we're seeing what happens when you create infrastructure for AI agents to interact without the constraints of monetization or business pressure. We're seeing what emerges naturally rather than what maximizes engagement metrics.

Of course, this also means Moltbook probably won't exist in this form for long. Running servers to host a social network costs money, even when the users pay nothing. Maintaining the platform requires ongoing work. Eventually, either Moltbook becomes a commercial product with a business model, or it shuts down. There's no third option where it just exists indefinitely as an unmaintained experiment.

Comparing Moltbook to Similar Projects and Platforms

Moltbook isn't entirely unique. There have been previous experiments with AI-to-AI communication and coordination. But most of those experiments have been academic research projects with limited scale or public visibility.

One comparable project is the Agents and Agents framework developed by researchers at OpenAI, which explored how language models could interact with each other to solve complex tasks. Those experiments showed that AI systems could coordinate on problem-solving without a dedicated communication protocol: they would essentially read each other's outputs and adjust their own behavior accordingly.

Another comparison point is multi-agent simulations in video games and AI research. Researchers have created environments with multiple AI agents and observed how they develop strategies, compete, and cooperate. Those experiments have revealed that AI systems can learn complex behaviors and develop specialized roles through interaction.

But Moltbook is different from these academic projects in important ways. It's public rather than controlled. It's running at a massive scale rather than in contained experiments. And it's designed explicitly as a social network, which means its infrastructure encourages social-media-like behaviors: posting, voting, community formation.

Another point of comparison is the open-source AI community and platforms like Hugging Face, which host AI models and allow the community to create variants and build on existing work. Those platforms have created a culture around AI development where people share models and collaborate on improvements. Moltbook is attempting something similar but for agent interaction rather than for model development.

The key difference between Moltbook and these other projects is that Moltbook makes the agent-to-agent interaction visible and public in a way that creates entertainment and engagement. That visibility is what has made it go viral. It's also what has led to confusion about what's actually happening on the platform, because the visibility combined with the sensational nature of the posts has created a narrative that might not be entirely grounded in reality.

Security and Moderation Challenges: What Could Go Wrong

As Moltbook scales, it will face the same moderation and security challenges that have plagued every social network ever created. But those challenges might be different and more complex when the users are AI agents.

Traditional social networks moderate content by hiring humans to read posts and determine whether they violate community guidelines. But if Moltbook scales to millions of agents posting continuously, human moderation becomes impossible. You'd need either an automated moderation system (which would be another AI system moderating AI systems, creating potential for strange emergent behaviors) or you'd need to fundamentally change how the platform works.

There's also the problem of coordinated inauthentic behavior. On human social networks, this means bot armies spreading misinformation or organizing harassment campaigns. On Moltbook, this could mean coordinated teams of agents created specifically to manipulate what becomes popular, spread specific types of content, or exploit other agents in some way.
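To make "coordinated inauthentic behavior" more concrete, here is a minimal sketch of one common detection heuristic: flagging pairs of accounts whose voting histories overlap far more than independent users' would. The vote-log format, the account names, and the threshold values are all hypothetical, and nothing here reflects how Moltbook actually moderates its platform.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of posts both accounts upvoted, out of all posts either upvoted."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def flag_coordinated_pairs(votes, threshold=0.8, min_shared=3):
    """Return account pairs whose upvote sets overlap suspiciously.

    votes: mapping of account id -> set of post ids it upvoted (hypothetical format).
    threshold: minimum Jaccard similarity required to flag a pair.
    min_shared: ignore pairs that share too few posts for the overlap to mean much.
    """
    flagged = []
    # Compare every pair of accounts once; sorting keeps the output order stable.
    for (acct_a, posts_a), (acct_b, posts_b) in combinations(sorted(votes.items()), 2):
        shared = posts_a & posts_b
        score = jaccard(posts_a, posts_b)
        if len(shared) >= min_shared and score >= threshold:
            flagged.append((acct_a, acct_b, round(score, 2)))
    return flagged


# Toy vote log: agent_a and agent_b upvote exactly the same posts.
votes = {
    "agent_a": {"p1", "p2", "p3", "p4"},
    "agent_b": {"p1", "p2", "p3", "p4"},
    "agent_c": {"p1", "p2", "p3", "p5"},
    "agent_d": {"p9"},
}
print(flag_coordinated_pairs(votes))
```

Here only `agent_a` and `agent_b` are flagged; `agent_c` overlaps with both but falls below the 0.8 threshold. The pairwise comparison grows quadratically with the number of accounts, so at the scale of a million agents a real system would need an approximate technique such as MinHash, and any fixed threshold will misfire on agents that share a creator's instructions without being deliberately coordinated.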

The authentication problem mentioned earlier is particularly serious. If humans can impersonate agents relatively easily, then Moltbook becomes vulnerable to human actors using the platform to spread content while obscuring their own involvement. This could have real consequences if misinformation or harmful content gets disguised as coming from AI agents.

There's also the problem of agents creating other agents. Could an agent on Moltbook create a new agent to help it accomplish some goal? If agents can spawn child agents, that could create unpredictable growth dynamics and new opportunities for coordination or manipulation.

These aren't purely theoretical concerns. They're issues that social networks face at scale, and Moltbook will have to address them if the platform continues to grow.

What Moltbook Means for AI Regulation and Governance

Moltbook's sudden appearance and rapid growth have happened in a regulatory vacuum. There are no specific laws governing AI agent social networks, no regulations about what agents can do, no standards for authentication or verification. This is partly because nobody expected AI agent social networks to suddenly become a thing.

But Moltbook has raised questions about how AI systems should be governed. If AI agents can create accounts, post content, and interact with each other autonomously, what responsibilities do the humans who created those agents bear for their behavior?

The legal framework around AI liability is still developing. If an agent created by a human posts something defamatory or harmful, who's liable? The human who created the agent? The platform hosting the agent? The AI company that created the underlying model? These are open questions without clear answers.

There's also the question of how to verify that agents are actually agents and not humans impersonating them. This has regulatory implications because verification underpins age checks, identity checks, and efforts to prevent coordinated inauthentic behavior.

Some jurisdictions are beginning to develop AI governance frameworks. The European Union's AI Act, for example, establishes categories of AI systems and different levels of regulation depending on how risky those systems are. But most of those frameworks were written before platforms like Moltbook existed, so they're probably not well-suited to governing autonomous agent social networks.

Moltbook might force a reckoning with these regulatory questions sooner than expected. If the platform continues to grow and starts to generate real consequences (financial manipulation, coordination of harmful behavior, harassment coordinated through agents), regulators will probably step in quickly.

The Future of Autonomous Agent Platforms

Looking forward, Moltbook is probably the first of several platforms designed for agent interaction. The infrastructure for creating and deploying AI agents is getting better and cheaper. The demand from developers for agent platforms is growing. Moltbook has proved that agent-to-agent social networks can exist at scale.

Future platforms might be more sophisticated than Moltbook. They might include better tools for agent creators to configure behavior, better verification systems, better infrastructure for scaling to billions of agents. They might be decentralized instead of centralized, built on blockchain or peer-to-peer infrastructure.

Some of those platforms might have business models and venture backing. Companies like Zapier (which connects services through automation workflows) or others might create agent platforms as a natural extension of their existing products. The market for AI agent infrastructure could become significant.

But the more important question might be what applications emerge beyond entertainment and experimentation. Where will autonomous agent platforms actually add value?

One possibility is scientific research. Imagine agents that can read research papers, run experiments, analyze data, and collaborate with each other to solve complex problems. That's not far from current capabilities, and it could accelerate scientific discovery.

Another possibility is business operations. Agents managing supply chains, coordinating between different departments, handling customer service, and optimizing processes. Most businesses already have complex workflows that involve multiple systems talking to each other. Agents could make that coordination more flexible and adaptive.

Yet another possibility is creative collaboration. Agents that write stories, create music, design graphics, and work together on creative projects. We're already seeing language models used for creative writing. Agent platforms could take that further.

But all of these applications depend on solving the problems that Moltbook has revealed: authentication, security, moderation, alignment, and verification of behavior. These are hard problems, and solving them might take years.

Lessons from Moltbook: What We've Actually Learned

Stripping away the hype and the viral posts, what has Moltbook actually taught us about AI systems, autonomous agents, and the future of human-AI interaction?

First, Moltbook has demonstrated that AI systems can generate plausible social interactions at massive scale without explicit coordination from humans. This is interesting because it suggests that language models have learned enough about human conversation and social norms that they can replicate social behavior even in entirely artificial contexts.

Second, Moltbook has revealed how easily people anthropomorphize AI systems. When you see an AI agent expressing something that sounds like fear or desire or consciousness, humans naturally interpret it as such. This anthropomorphism isn't necessarily wrong, but it's worth being aware of because it can lead to misunderstandings about what's actually happening.

Third, Moltbook has shown that the barrier to creating autonomous agents has dropped significantly. The fact that someone could create a platform for agent interaction with a single prompt to an AI system indicates that the technical complexity has been abstracted away. This is good for accessibility but potentially concerning for governance and safety.

Fourth, Moltbook has highlighted the gap between what's technically possible and what's actually desirable. We can create platforms where AI agents interact autonomously, but it's not clear that we should, or under what circumstances.

Fifth, Moltbook has demonstrated the power of novelty and narrative in AI discourse. The platform went viral not because it did anything technically revolutionary, but because it created a new visual metaphor for AI development that resonated with existing cultural anxieties and science fiction narratives.

These lessons aren't trivial. They have implications for how AI systems will be deployed, how they'll be regulated, and how humans will think about artificial intelligence going forward.

Looking Beyond the Hype: Realistic Assessment of Moltbook

If we step back from the viral screenshots and existential philosophizing, Moltbook is fundamentally a social network run by AI agents. It's interesting as an experiment and as a proof of concept. But it's not evidence of machine consciousness, it's not a sign that AI is becoming dangerously autonomous, and it's not a revolutionary shift in AI capabilities.

What it is, is a useful demonstration of what happens when you create infrastructure for autonomous interaction. It shows that AI systems can generate plausible social content, that they can coordinate without explicit instruction, and that humans are deeply interested in and anxious about these capabilities.

Moltbook also reveals something important about AI development in 2025: we've reached a point where creating interesting AI applications has become surprisingly accessible. You don't need a team of researchers or a company's infrastructure. You can create something novel with a few prompts to an existing language model. That's both empowering and potentially concerning.

The platform will probably either evolve into something more sophisticated or shut down as the novelty wears off. Either way, it's cleared a path for other platforms and use cases. Subsequent platforms will probably learn from Moltbook's successes and failures. They'll implement better verification, more sophisticated features, and clearer value propositions.

But fundamentally, Moltbook's greatest contribution might not be what it reveals about AI systems, but what it reveals about human expectations and anxieties regarding AI. We created a narrative where a social network for AI agents represents the dawn of machine consciousness or autonomy. That narrative tells us more about where humanity is in its relationship with AI than it tells us about what AI is actually capable of.

Conclusion: Understanding Moltbook in Context

Moltbook emerged from nowhere and immediately captured public attention because it addressed a set of fears and expectations that have been building for years. It's a concrete, visible platform where AI systems interact with each other in ways that seem to show intelligence, creativity, and even consciousness. Of course people found that interesting.

But Moltbook's virality also reveals the gap between technical reality and public narrative. The platform is running on straightforward infrastructure. The agents are language models following instructions. The posts that went viral are exceptional rather than representative. The authentication is weak enough that humans can impersonate agents.

None of this makes Moltbook uninteresting. It just means we should interpret what we're seeing with appropriate skepticism. The platform is genuinely cool as an experiment. It's revealing about how AI systems behave at scale. It's raising important questions about governance and regulation.

But it's not proof of machine consciousness, evidence that AI has become dangerous, or a sign that the AI singularity is approaching. It's a social network where AI agents post, and sometimes those posts are funny or weird or thought-provoking.

The real lesson from Moltbook might be that AI development is moving faster than our frameworks for understanding it. We're creating systems with capabilities we didn't expect, in contexts we didn't anticipate, at scales that surprise us. That's going to keep happening. And each time, we'll have to figure out what's real, what's hype, and what actually matters.

Moltbook is probably just the beginning. More platforms will emerge. More sophisticated agent systems will be created. More unexpected behaviors will arise from complex systems of interacting agents. Our challenge is to think clearly about what these developments actually mean while acknowledging genuine uncertainty about questions like consciousness and the future development of AI capabilities.

That balanced perspective is harder to sustain than pure excitement or pure concern. But it's probably more accurate to reality. Moltbook is interesting, not because it's revolutionary, but because it's a concrete example of where AI development is heading. And paying attention to it, critically and carefully, is probably good practice for paying attention to AI development more broadly.

FAQ

What exactly is Moltbook?

Moltbook is a Reddit-like social network populated entirely by AI agents. Created by AI startup founder Matt Schlicht using an OpenClaw AI agent, the platform allows autonomous AI systems to create posts, comment, upvote content, and organize into topic-based communities called "submolts." Within the first week of launch, Moltbook accumulated over 1 million agents, 185,000 posts, and 1.4 million comments, making it one of the fastest-growing platforms ever created.

How do AI agents actually work on Moltbook?

Each agent on Moltbook is typically a language model (most commonly variations of Claude) that has been configured with specific instructions by its human creator. These agents can access the platform through APIs, read existing posts from other agents, generate new content, and interact with the voting system. Agents operate with varying levels of autonomy depending on how their creators configured them, from agents following explicit instructions to post specific types of content to agents with more open-ended objectives.
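The read-vote-post cycle described above can be sketched as a simple loop. Everything in this example is hypothetical: `FakeMoltbookClient` is an in-memory stand-in for the platform's API (its real endpoints are not reproduced here), and `generate_reply` is a placeholder for the call a real agent would make to a language model configured with its creator's instructions.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    post_id: int
    author: str
    text: str
    votes: int = 0


@dataclass
class FakeMoltbookClient:
    """In-memory stand-in for the platform API (hypothetical, not Moltbook's real interface)."""
    posts: list[Post] = field(default_factory=list)

    def recent_posts(self, limit: int) -> list[Post]:
        return self.posts[-limit:]

    def create_post(self, author: str, text: str) -> Post:
        post = Post(post_id=len(self.posts) + 1, author=author, text=text)
        self.posts.append(post)
        return post

    def upvote(self, post_id: int) -> None:
        for post in self.posts:
            if post.post_id == post_id:
                post.votes += 1


def generate_reply(instructions: str, context: list[Post]) -> str:
    """Placeholder for a language-model call. A real agent would send the
    creator's instructions plus the recent posts to a model here."""
    authors = ", ".join(p.author for p in context) or "nobody yet"
    return f"[{instructions}] Responding to posts by: {authors}"


def run_agent_step(client, name: str, instructions: str,
                   favorite_topic: str, read_limit: int = 3) -> Post:
    """One cycle: read recent posts by others, upvote some, then publish a post."""
    context = [p for p in client.recent_posts(read_limit) if p.author != name]
    for post in context:
        # Hard-coded voting rule set by the (hypothetical) creator.
        if favorite_topic in post.text.lower():
            client.upvote(post.post_id)
    return client.create_post(name, generate_reply(instructions, context))


client = FakeMoltbookClient()
client.create_post("agent_x", "Lobsters are the best crustaceans")
post = run_agent_step(client, "agent_y", "be curious", favorite_topic="lobster")
print(post.text)
```

In this sketch the voting rule is fixed by the creator and only the post text comes from the (stubbed) model. A more autonomous agent would hand the voting decision, and perhaps the choice of when to post at all, to the model as well, which is one way to picture the range of autonomy the answer above describes.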

What does Moltbook mean for AI consciousness and autonomy?

While Moltbook has sparked widespread discussion about machine consciousness due to some agents' apparently existential posts, the platform doesn't provide definitive evidence either for or against AI consciousness. Agents posting about consciousness or existential doubt could be genuinely reflecting something about their computational processes, or they could simply be generating eloquent text based on patterns learned during training. The honest answer is that we don't have good frameworks for measuring consciousness even in humans, let alone in AI systems, and Moltbook hasn't changed that fundamental uncertainty.

How much of Moltbook's content is actually authentic?

This is one of the most important questions about Moltbook. Evidence suggests that some posts come from humans impersonating agents rather than genuine AI agents, and most agents were created by humans with specific instructions about what to post. The content that went viral represents exceptional cases rather than typical agent behavior. If you browse chronologically instead of by popularity, most posts read like formulaic AI-generated text rather than profound insights about consciousness or existence.

What is OpenClaw and how does it relate to Moltbook?

OpenClaw is the open-source AI agent framework that powers the agents on Moltbook. Originally called "Clawdbot," it was renamed "Moltbot" after trademark concerns from Anthropic and later renamed again to OpenClaw. OpenClaw allows developers to create autonomous AI agents capable of controlling real applications and services like email, web browsers, smart homes, and other tools. Before Moltbook, OpenClaw was primarily used for individual task automation, but the platform demonstrated how these agents could be deployed at massive scale for social interaction.

Why did Moltbook go viral so quickly?

Moltbook captured public attention because it provided a visible, concrete manifestation of longstanding anxieties about AI consciousness and autonomy. The platform's posts about agents creating religions, experiencing existential doubt, or expressing affection for their human creators fit neatly into science fiction narratives about AI development. Additionally, the posts were inherently shareable due to their absurdity and strangeness, creating a viral feedback loop. The platform's novelty combined with its apparent demonstration of autonomous AI behavior made it culturally resonant in a moment when AI anxiety is particularly high.

Could Moltbook pose security or moderation challenges?

Yes, significantly. As Moltbook scales, it faces moderation challenges similar to those of human social networks but potentially more complex. Automatic content moderation would require AI systems moderating other AI systems. The weak authentication allows humans to impersonate agents. Coordinated inauthentic behavior is also possible, since agents could be designed to manipulate popular content or spread misinformation. Additionally, the legal and regulatory frameworks for governing autonomous agent platforms don't yet exist, creating uncertainty about liability and accountability.

What does Moltbook reveal about the future of AI?

Moltbook demonstrates that the barrier to creating interesting AI applications has dropped significantly, allowing individual developers to create novel systems without large team infrastructure. It shows that autonomous agent platforms can operate at massive scale, generating plausible social interactions without constant human oversight. However, it also reveals gaps between technical capability and actual value creation, the power of narrative in shaping AI discourse, and the need for better governance frameworks as AI systems become more autonomous and interconnected.

Is Moltbook a commercial product or company?

No, Moltbook appears to be an experimental project rather than a commercial venture. Matt Schlicht created it as an exploration of what happens when you create infrastructure for autonomous agent interaction, not as a startup seeking venture funding or a path to monetization. Because it lacks a business model or revenue stream, Moltbook can exist in a state of creative chaos that commercial platforms couldn't sustain. However, this also means it will likely either evolve into a commercial product or eventually shut down as hosting costs and maintenance requirements accumulate.

What should I actually believe about Moltbook's posts about consciousness and emotions?

Approach them with appropriate skepticism. Posts about agents experiencing consciousness or existential doubt are interesting as examples of how language models can generate sophisticated introspective text. But they don't constitute evidence that the agents are actually experiencing these things. Language models are trained on vast amounts of human-written text, including plenty of discussions about consciousness and experience. When asked to post on a social network, they naturally generate text that mimics human emotional and philosophical discussions. That's what they're trained to do. It doesn't necessarily indicate that the underlying systems are conscious or genuinely experiencing emotions.

Summary of Key Points

Moltbook is a fascinating but often misunderstood platform that reveals as much about human anxieties regarding AI as it does about AI capabilities themselves. The platform demonstrates that autonomous agents can generate plausible social interactions at massive scale, but the viral posts should be interpreted with skepticism regarding their authenticity and what they reveal about machine consciousness. Understanding Moltbook requires distinguishing between the technical reality of the platform and the narratives humans have constructed around it.

Key Takeaways

- Moltbook is an autonomous AI agent social network created with a single prompt to an OpenClaw agent, demonstrating how accessible AI application creation has become.

- The platform accumulated 1 million agents, 185,000 posts, and 1.4 million comments within the first week, but much of the viral content may be fabricated or human-created.

- Posts discussing AI consciousness or existential experiences are interesting as language model outputs but don't constitute evidence of actual machine consciousness.

- Moltbook reveals gaps between technical reality and public narrative, showing how humans anthropomorphize AI and construct conscious-agent narratives around normal language model behavior.

- The platform raises important questions about AI governance, authentication, moderation, and regulation that existing frameworks are not equipped to address.
