The Crisis Nobody's Ready For

Last month, Runway dropped a study that should've made headlines everywhere. Instead, it disappeared into the noise.

The finding? Most people—including the founders of AI video companies—can't tell the difference between real videos and AI-generated ones anymore.

Not "most people struggle." Not "it's getting harder." They literally can't tell.

I'll be honest: this should terrify you. Not because AI video generation is evil, but because the technology has crossed a threshold. We're past the point where technical improvements matter. We're in a completely different era now, and almost nobody's prepared for what comes next.

What Runway's Study Actually Found

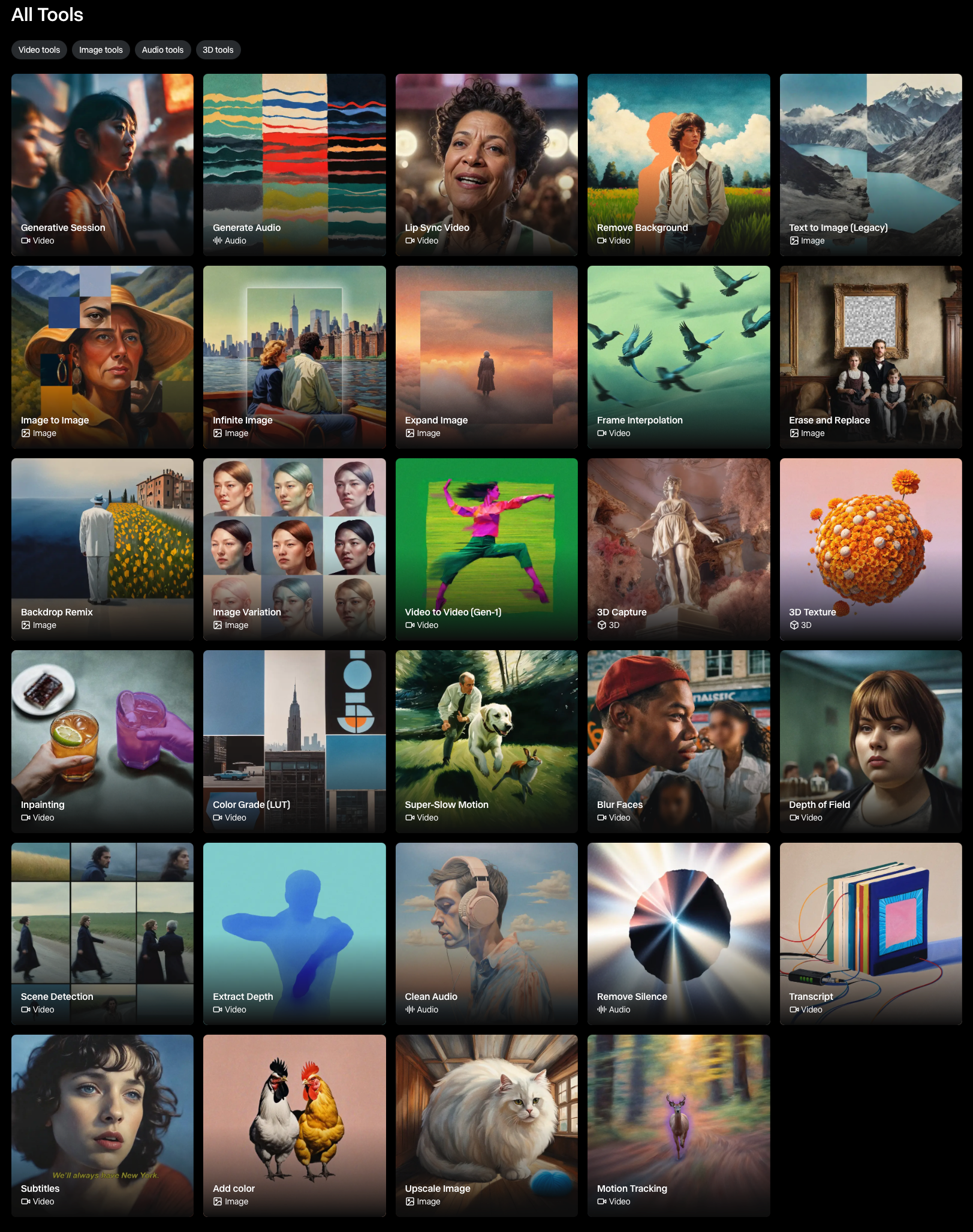

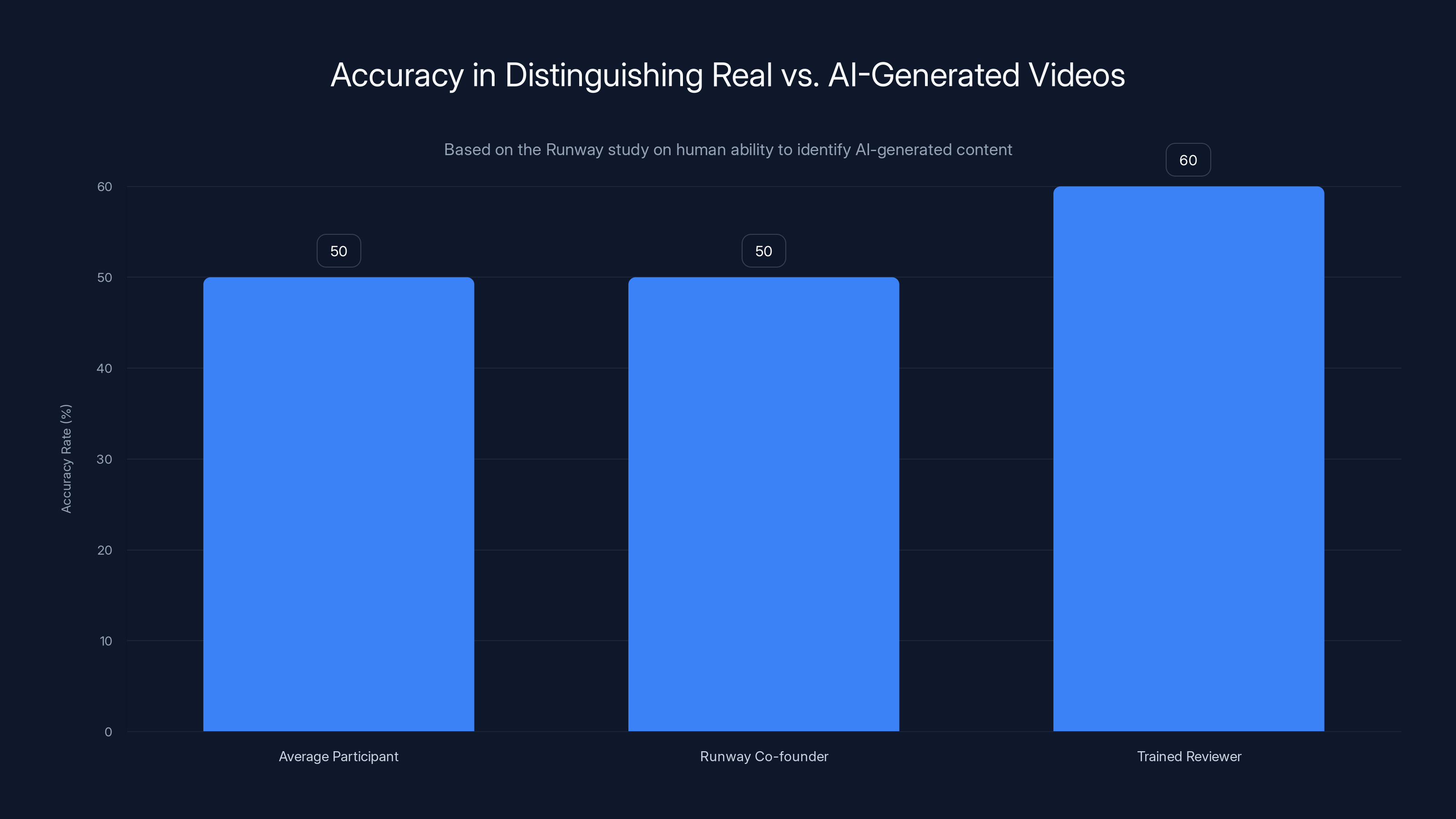

Here's what happened. Runway, one of the leading AI video generation platforms, conducted research to understand how people perceive synthetic video content. They showed participants a mix of real and AI-generated videos, then asked them to identify which was which.

The results were brutal.

On average, people achieved accuracy rates barely above 50%. That's coin-flip territory. You'd get similar results if you closed your eyes and guessed.
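To make "coin-flip territory" concrete, here's a minimal sketch of the arithmetic. The clip counts are hypothetical (I'm not quoting the study's sample sizes), and the function name is my own. It computes how likely a given score, or better, would be if the viewer were purely guessing.

```python
from math import comb


def p_value_at_least(correct: int, total: int, chance: float = 0.5) -> float:
    """Probability of scoring `correct` or more out of `total` by pure guessing."""
    return sum(
        comb(total, k) * chance**k * (1 - chance) ** (total - k)
        for k in range(correct, total + 1)
    )


# Hypothetical example, not taken from the study:
# a viewer labels 20 clips and gets 11 right (55% accuracy).
p = p_value_at_least(11, 20)
print(f"{p:.3f}")  # prints 0.412
```

In this made-up case, a pure guesser would do at least as well about 41% of the time, so a score like 11 out of 20 tells you nothing about whether the viewer can actually spot fakes.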

But here's the kicker: even the company's own co-founder couldn't reliably tell the difference. When you're building the technology and you still can't distinguish your creation from reality, you've entered genuinely new territory.

The implications ripple outward. If a person who helped build the tool can't catch its output, what chance does anyone else have? This isn't a gap in human perception we can fix with training. This is a fundamental shift in what's technically possible.

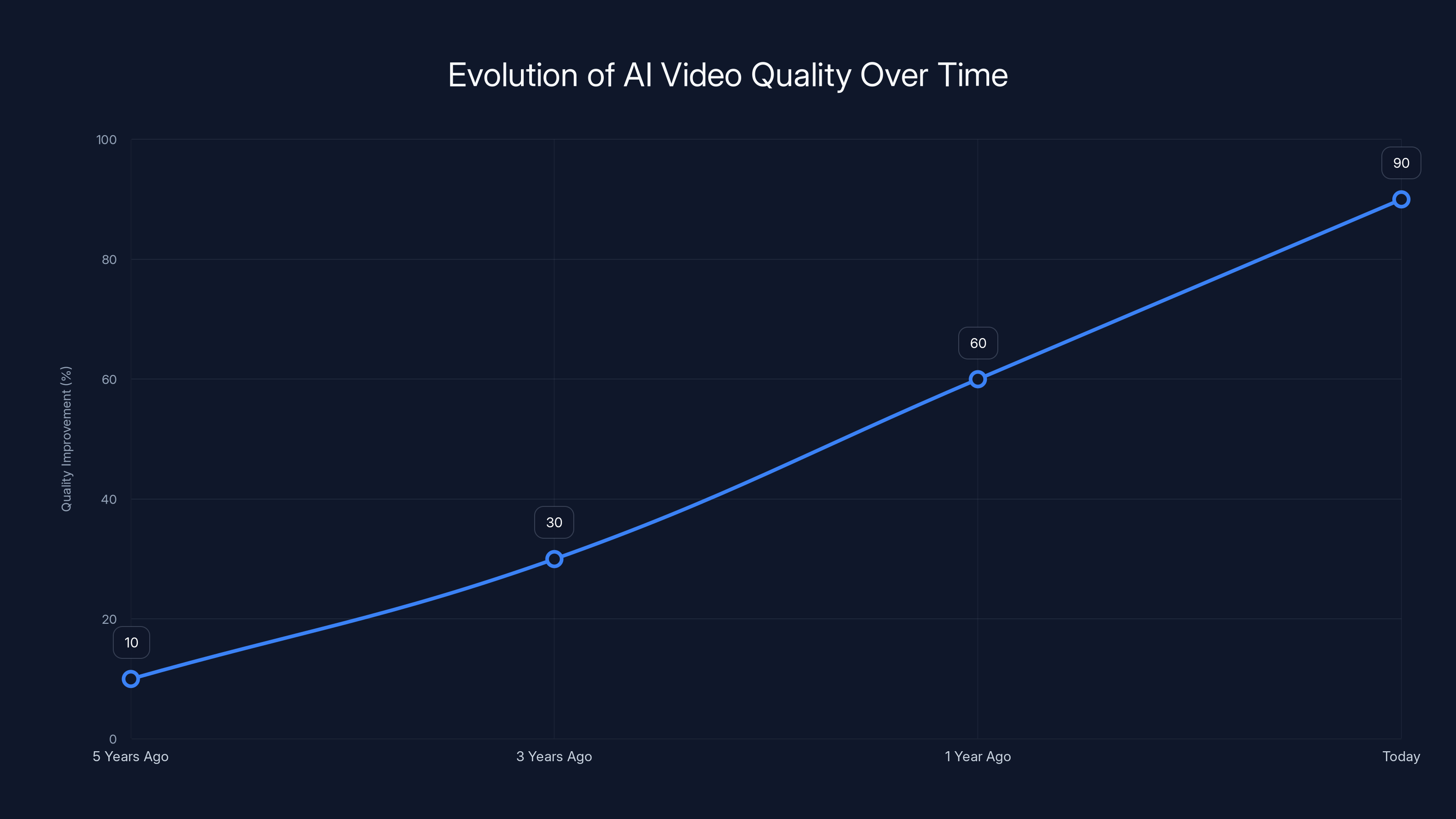

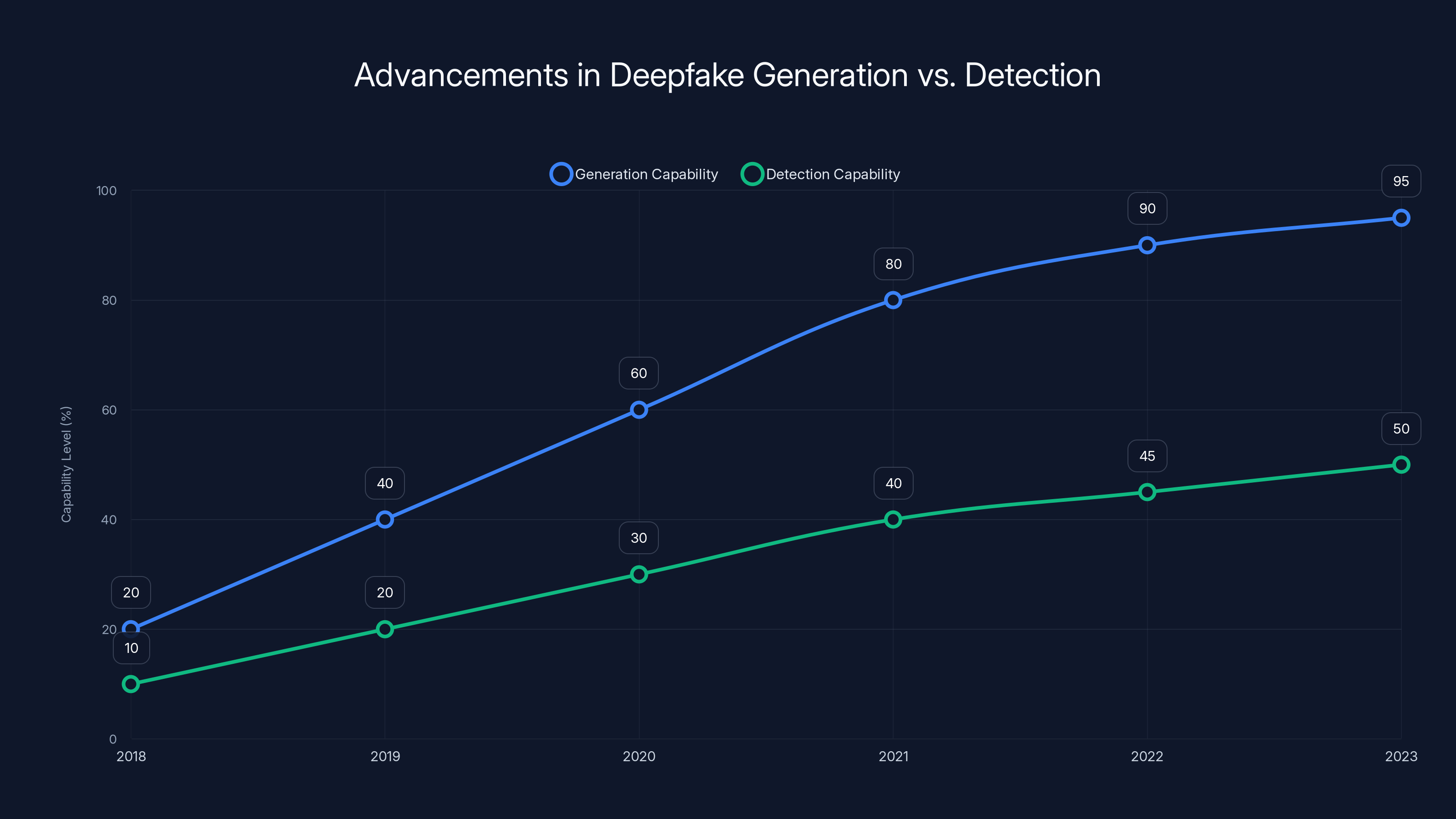

AI video quality has dramatically improved, with recent advancements showing up to 90% improvement compared to five years ago. Estimated data.

Why Detection Is Losing the Race

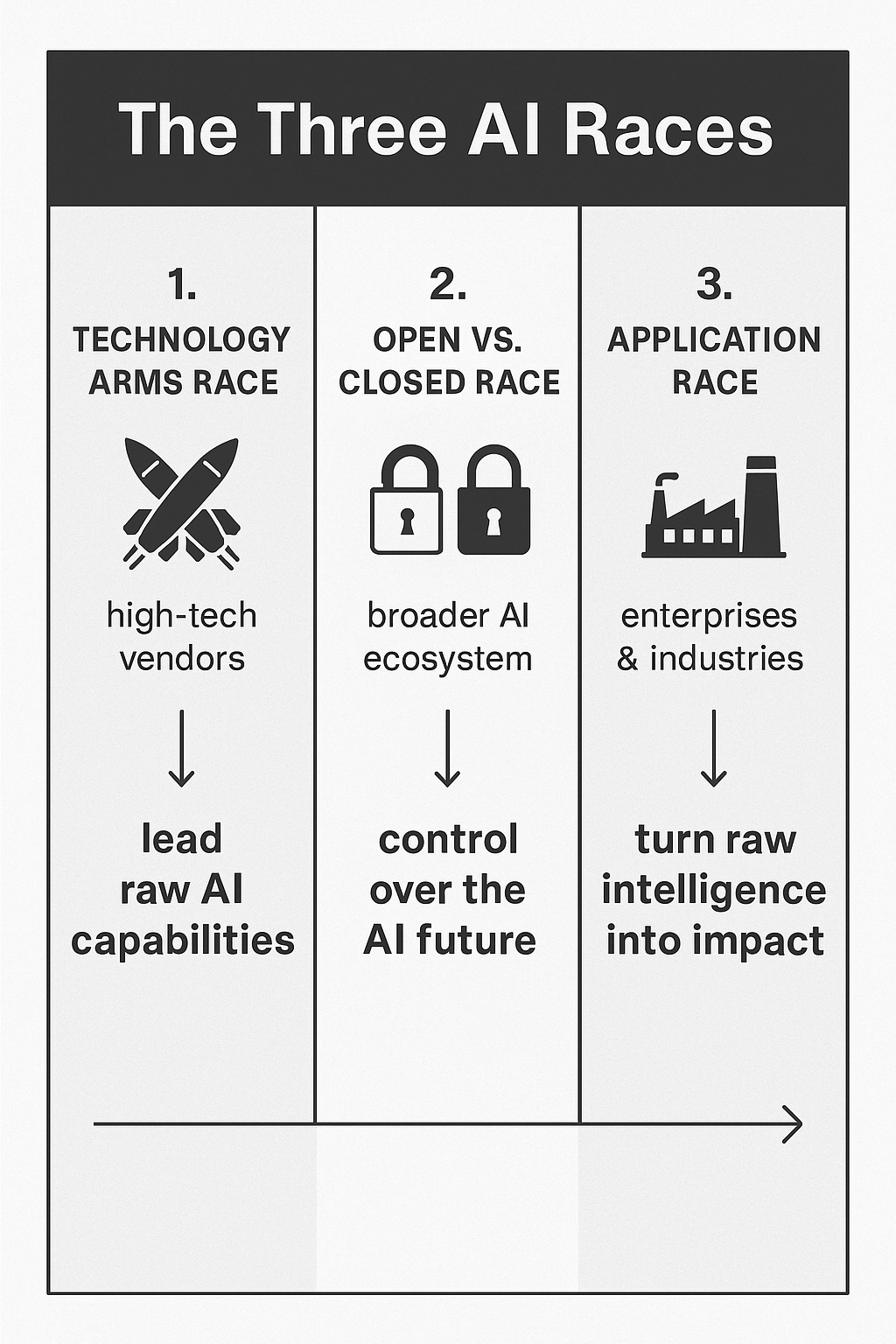

There's a fundamental asymmetry at play here. Creating convincing synthetic media is computationally expensive, but it only needs to happen once. Detecting it requires examining thousands of frames, analyzing lighting, shadows, temporal consistency, and audio synchronization.

The creator can take as much time and as many attempts as it takes to perfect the output. A detector screening uploads has milliseconds to make a judgment call.

Consider this: OpenAI's GPT models improved detection resistance as a side effect of training. The models got better at text, and fake text simultaneously got harder to detect. The same principle applies to video. As generated output gets closer to the real thing, there is less left for a detector to find.

Most detection methods rely on artifact analysis. The AI leaves traces. Unnatural eye movements. Audio that doesn't quite sync. Shadows that fall in odd directions. But each generation update patches these tells.

It's an arms race where the attacker moves faster than the defender. This isn't new in cybersecurity, but it's new to visual media, and almost nobody understood it was coming.

The Timeline Nobody Saw Coming

Five years ago, AI video was a joke. Choppy. Weird. Obviously fake.

Three years ago, it was impressive but still glitchy. You could spot the artifacts if you looked close.

One year ago, it started getting genuinely good. But trained eyes could usually catch issues.

Today? The technology jumped a generation. And nobody outside the companies building it really grasped how fast it would move.

The leap from "notable artifacts" to "indistinguishable" happened in compressed time. Tools like Runway Gen-3 went from capable to dangerous in a way that breaks previous patterns.

Why so fast? Better transformer architectures. Larger training datasets. More computational power applied to video diffusion models. And critically, feedback loops from real-world usage that identified what humans notice and what they don't.

Each update doesn't improve the technology by 10%. It improves by 40%, 50%, sometimes more. And the improvements are asymmetric. Rendering gets better. Consistency improves. Motion becomes natural. Faces stop glitching.

But detection methods? They're still analyzing the same artifacts. Using the same fingerprints. Running the same checks.

Estimated data shows that while both generation and detection capabilities have improved, generation has advanced more rapidly, creating an asymmetric challenge for detection systems.

How the Best Detection Methods Work (And Why They're Failing)

There are actually several sophisticated approaches to detecting synthetic video. Understanding why they're losing matters.

Pixel-level artifact detection examines individual frames for compression patterns, unnatural frequency distributions, or statistical anomalies. The idea is that AI models generate video in ways that leave mathematical traces. A well-trained detector can spot these anomalies before human eyes even register the problem.

The problem? Newer generation models are explicitly trained to avoid creating these traces. They don't just generate plausible video. They generate video with natural statistical properties. The fingerprints are getting harder to find.
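For a feel of what a pixel-level statistic looks like, here's a toy sketch. It is an assumption-heavy stand-in, not how any production detector works: it measures high-frequency energy in a single grayscale frame using a 4-neighbour Laplacian, the kind of number a detector might compare against what real camera footage typically produces. The function name and the tiny test frames are my own.

```python
def high_frequency_energy(frame: list[list[float]]) -> float:
    """Mean squared 4-neighbour Laplacian over the interior pixels of a frame."""
    height, width = len(frame), len(frame[0])
    total, count = 0.0, 0
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            laplacian = (
                frame[y - 1][x] + frame[y + 1][x]
                + frame[y][x - 1] + frame[y][x + 1]
                - 4 * frame[y][x]
            )
            total += laplacian * laplacian
            count += 1
    return total / count


# Two hypothetical 4x4 frames: a smooth gradient and a checkerboard.
smooth = [[x + y for x in range(4)] for y in range(4)]
checker = [[(x + y) % 2 for x in range(4)] for y in range(4)]
print(high_frequency_energy(smooth), high_frequency_energy(checker))  # prints 0.0 16.0
```

A single statistic like this is exactly the kind of fingerprint a generator can learn to match, which is the weakness described above.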

Temporal consistency analysis looks at how things move across frames. Real video has natural motion flow. AI sometimes struggles with consistent motion across time. Detectors look for micro-movements that don't make physical sense, or objects that don't track correctly through space.

Again, newer models excel at this. Motion synthesis got dramatically better. Watch Runway video examples and the motion is smooth, natural, physically plausible.
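The temporal check can be sketched in a few lines. This is a simplified illustration under my own assumptions: frames are small grayscale grids, "motion" is just the mean pixel change between consecutive frames, and a transition is flagged when that change is a statistical outlier. Real detectors track objects and optical flow; the names and the threshold here are hypothetical.

```python
from statistics import mean, pstdev

Frame = list[list[float]]  # grayscale pixels, rows of brightness values


def frame_delta(a: Frame, b: Frame) -> float:
    """Mean absolute pixel change between two equally sized frames."""
    return mean(
        abs(pa - pb)
        for row_a, row_b in zip(a, b)
        for pa, pb in zip(row_a, row_b)
    )


def suspicious_transitions(frames: list[Frame], z_threshold: float = 2.0) -> list[int]:
    """Indices i where the change from frame i to frame i+1 is an outlier."""
    deltas = [frame_delta(a, b) for a, b in zip(frames, frames[1:])]
    mu, sigma = mean(deltas), pstdev(deltas)
    if sigma == 0:
        return []  # perfectly uniform motion, nothing stands out
    return [i for i, d in enumerate(deltas) if (d - mu) / sigma > z_threshold]


# Hypothetical clip: brightness drifts slowly, with one abrupt jump.
levels = [0.00, 0.01, 0.02, 0.03, 0.04, 0.05, 0.55, 0.56, 0.57, 0.58, 0.59]
clip = [[[v, v], [v, v]] for v in levels]
print(suspicious_transitions(clip))  # prints [5]
```

Note what this catches: a glitch. A model that produces smooth, physically plausible motion sails straight through.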

Biological feature analysis focuses on human-specific tells. Eyes blink in unnatural patterns. Teeth don't align quite right. Skin texture glitches. Audio doesn't match lip movements perfectly.

These detection methods were actually pretty effective for a while. The problem is they're now getting systematically solved. Newer models spend computation specifically on making these human features look natural. Eye blinks happen at realistic intervals. Lip sync is becoming genuinely accurate.

Audio-visual synchronization checking verifies that the audio matches the video. Deepfakes often have subtle desynchronization. Modern models now handle sync well enough that it's becoming unreliable as a detection vector.

The honest assessment: every detection method is racing against improving generation. And generation is winning.

The Business Incentive That Changed Everything

Here's what people don't understand about commercial AI video tools. The incentives shifted dramatically around 2023.

When synthesis was novel, companies wanted people to know the content was AI-generated. It was a feature. A selling point. "Look what we can do!"

Then something changed. As the tools got better and uses multiplied, the incentive flipped. Businesses and creators wanted AI video that didn't announce itself. Content that worked seamlessly in workflows. Video that didn't scream "I'm synthetic."

Suddenly, indistinguishability became a product requirement, not a side effect. Companies optimized specifically for it.

Runway didn't wake up one day deciding to fool people. They built tools for creators, marketers, and filmmakers. Those users wanted plausible synthetic content. The market rewarded indistinguishability.

At scale, thousands of teams optimizing for one goal produces a pretty effective outcome.

What Actually Happens When Detection Fails

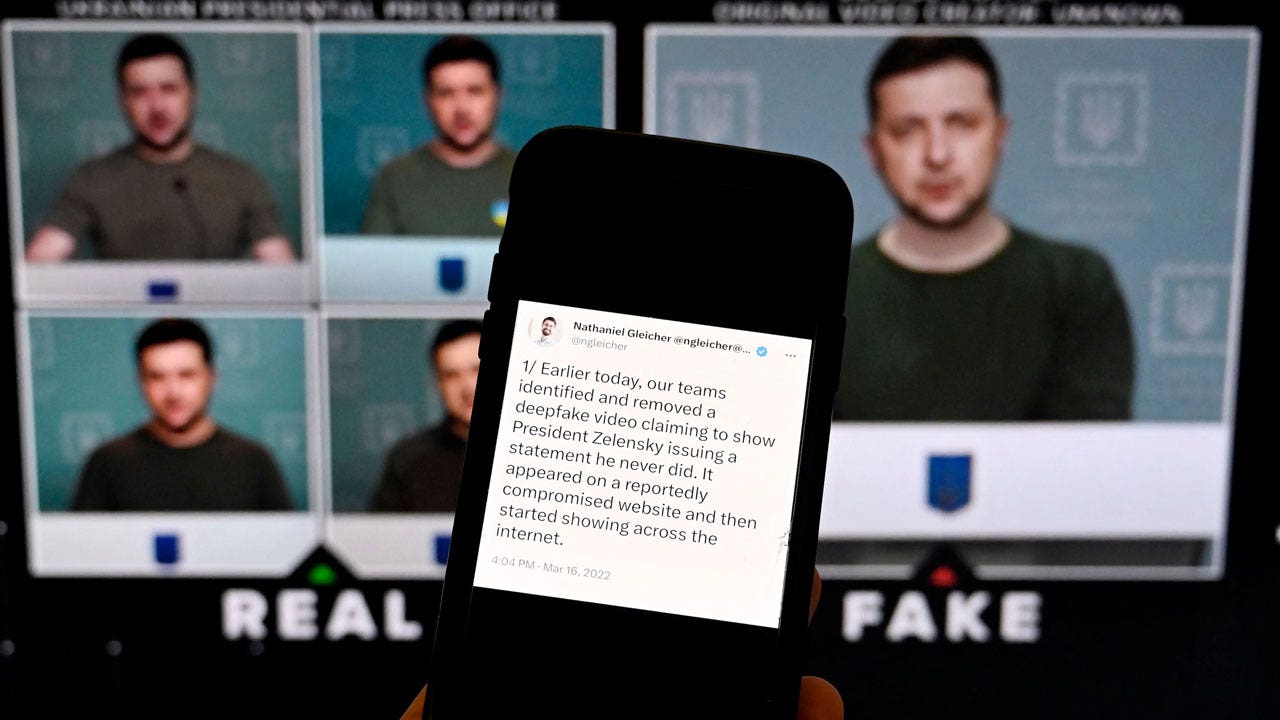

Let's talk about the real-world consequences. This isn't theoretical.

First, there's misinformation at scale. A convincing video of a political figure saying something damaging. It doesn't need to fool everyone. It needs to fool enough people for long enough that the story takes root. Then the false version spreads faster than the correction.

This has already happened. Multiple times. Deepfake videos of celebrities, politicians, and public figures circulated online. Some spread widely before being debunked. Others are still circulating.

Second, there's identity theft and impersonation. Synthetic video can now convincingly create proof of something that never happened. A bank employee approving a fraudulent transfer. A CEO authorizing a payment. A witness identifying a suspect.

We're not that far from situations where video evidence becomes unreliable in court. That's already a concern among legal experts.

Third, there's the trust problem. If you can't trust video, what can you trust? Photographs are already compromised by Photoshop. Audio can be synthesized. Text can be generated. The substrate of trust is eroding.

Companies are starting to grapple with this. Financial institutions are considering adding biometric verification layers. Authentication systems are being redesigned. But this is expensive and disruptive.

Fourth, there's the chilling effect on speech. If someone can make a convincing video of you saying or doing something you didn't, that's leverage. That's threat material. Some people will self-censor preemptively.

The technology creates new vectors for manipulation that don't require the technology to be perfect. Just convincing enough.

The study found that most participants, including the Runway co-founder, had around a 50% accuracy rate in distinguishing AI-generated videos from real ones, indicating the high indistinguishability of modern AI video generation.

The Standards Nobody Wants to Implement

There's a straightforward solution that would help dramatically: digital provenance.

Every photograph or video from a legitimate camera could include cryptographic proof of origin. When was it captured? What device? What edits were applied? This would create an auditable chain.

Content created by AI could carry metadata declaring itself synthetic. Same cryptographic approach, which makes the declaration hard to forge without the signing key.

Companies like Adobe and others have been pushing content credentials and digital signatures. The technology exists. It's actually pretty robust.
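Here's a minimal sketch of the idea, with loud caveats. Real content credentials rely on public-key signatures and standardized manifests; to stay within Python's standard library, this example swaps in an HMAC with a shared secret, which is a simplification and not what those systems use. The function names, metadata fields, and key are all hypothetical.

```python
import hashlib
import hmac
import json


def sign_manifest(video_bytes: bytes, metadata: dict, key: bytes) -> dict:
    """Bind metadata to the exact video bytes and sign the result."""
    manifest = dict(metadata, content_sha256=hashlib.sha256(video_bytes).hexdigest())
    payload = json.dumps(manifest, sort_keys=True).encode()
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"manifest": manifest, "signature": signature}


def verify_manifest(video_bytes: bytes, signed: dict, key: bytes) -> bool:
    """Check that neither the video nor its metadata changed after signing."""
    manifest = signed["manifest"]
    payload = json.dumps(manifest, sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, signed["signature"])
    content_ok = manifest["content_sha256"] == hashlib.sha256(video_bytes).hexdigest()
    return signature_ok and content_ok


# Hypothetical usage: a device signs a clip at capture time.
device_key = b"example-secret-held-by-the-camera"
clip = b"raw video bytes would go here"
signed = sign_manifest(clip, {"device": "example-cam", "synthetic": False}, device_key)

print(verify_manifest(clip, signed, device_key))                # prints True
print(verify_manifest(clip + b"edited", signed, device_key))    # prints False
```

The design point is that verification checks a record made at creation time instead of guessing from pixels. It says nothing about content that arrives with no record at all.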

So why hasn't it been adopted everywhere?

Because it requires coordination. It requires standards. It requires camera manufacturers, software companies, platforms, and users all implementing the same system. And it requires that the cryptography actually work and stays ahead of attack methods.

There's also a reluctance from creators. Some want plausible deniability. Some don't want their data attached to their content. Some just haven't implemented it because inertia is powerful.

Regulators are starting to push for this. The EU is considering requiring synthetic media labels. The US is slowly moving in that direction. But standards take time.

Meanwhile, the gap between what's technically possible and what's being implemented keeps widening.

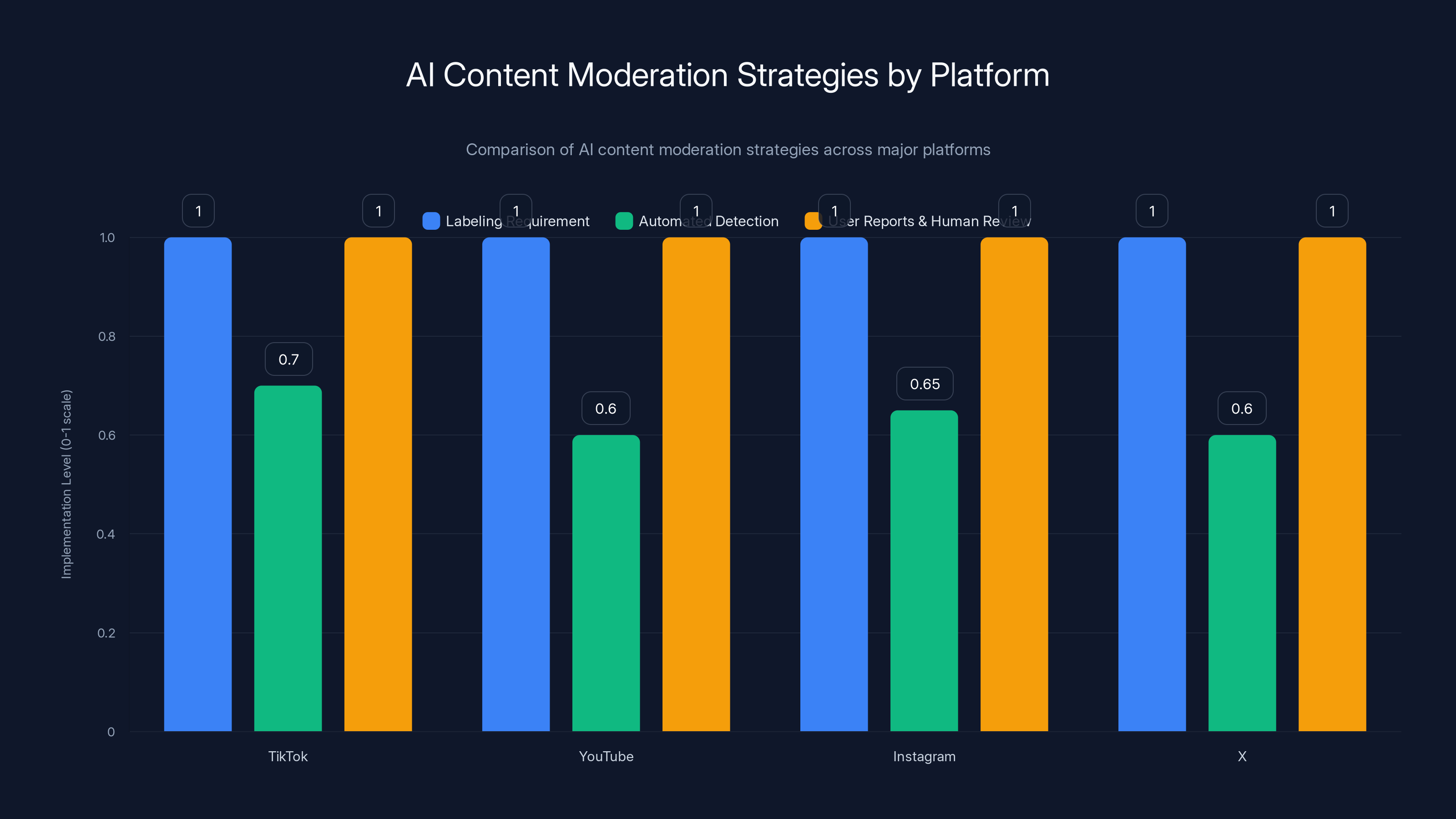

How Platforms Are (And Aren't) Responding

Platforms face a genuinely difficult problem. They need to moderate synthetic content without crushing legitimate AI creation.

TikTok added labels for AI-generated content. YouTube requires disclosure of synthetic media in certain contexts. Instagram and X have similar policies.

The problem is enforcement. You can require people to label their content, but compliance is effectively voluntary. And there's no reliable technical way to detect unlabeled synthetic video if it's well-made.

Some platforms are testing automated detection at upload. But detection accuracy is maybe 60-70% on well-made content. That's not good enough for safety-critical applications.
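To see why that range falls short, here's a rough base-rate calculation. The assumptions are mine: I read "70% accuracy" as a detector that catches 70% of synthetic videos and correctly clears 70% of real ones, and I assume 1% of uploads are synthetic. Neither number comes from platform data.

```python
def flagged_precision(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Share of flagged videos that are actually synthetic (Bayes' rule)."""
    true_positives = sensitivity * prevalence
    false_positives = (1 - specificity) * (1 - prevalence)
    return true_positives / (true_positives + false_positives)


# Hypothetical inputs: 70% sensitivity, 70% specificity, 1% of uploads synthetic.
precision = flagged_precision(0.70, 0.70, 0.01)
print(f"{precision:.1%}")  # prints 2.3%
```

Under those assumptions, roughly 98 of every 100 flagged videos would be real. A platform acting on those flags would mostly be punishing legitimate creators.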

Other platforms are relying on user reports and human review. But that's reactive. The damage spreads before it gets caught.

The honest truth: platforms don't have a great solution. They're trying to thread a needle. Encourage AI creativity while preventing harm. Detect bad content while not false-flagging legitimate work.

That needle is getting harder to thread as detection gets harder.

The Role of AI Companies Themselves

Here's where it gets interesting. The companies building video generation tools have their own incentives.

Runway, OpenAI, Google, and others have some responsibility here. They're deploying tools that enable synthetic video creation.

Some are building in safeguards. Watermarking. Refusal layers. Terms of service that prohibit deepfakes and impersonation.

But safeguards are game-theoretic. If one company implements strict controls and another doesn't, creators migrate to the less-restricted option. We've seen this pattern before with other technologies.

Runway has been relatively responsible. They include watermarks by default. They have policies against harmful uses. But watermarks can be removed with effort. Policies are only as strong as enforcement.

Some companies are investing in detection technology. They're trying to build tools that help people identify synthetic content. But as we've established, detection is losing.

The companies have an asymmetry of motivation. They benefit from adoption and usage. They benefit from impressive results. The harms accrue to society broadly, not to them specifically.

I'm not saying this maliciously. It's just the economic structure. Incentives matter more than good intentions.

Detection methods have seen a decline in effectiveness from 2018 to 2023 due to advancements in AI models that better mimic natural video properties. Estimated data.

What We Lost: The Era of Visual Trust

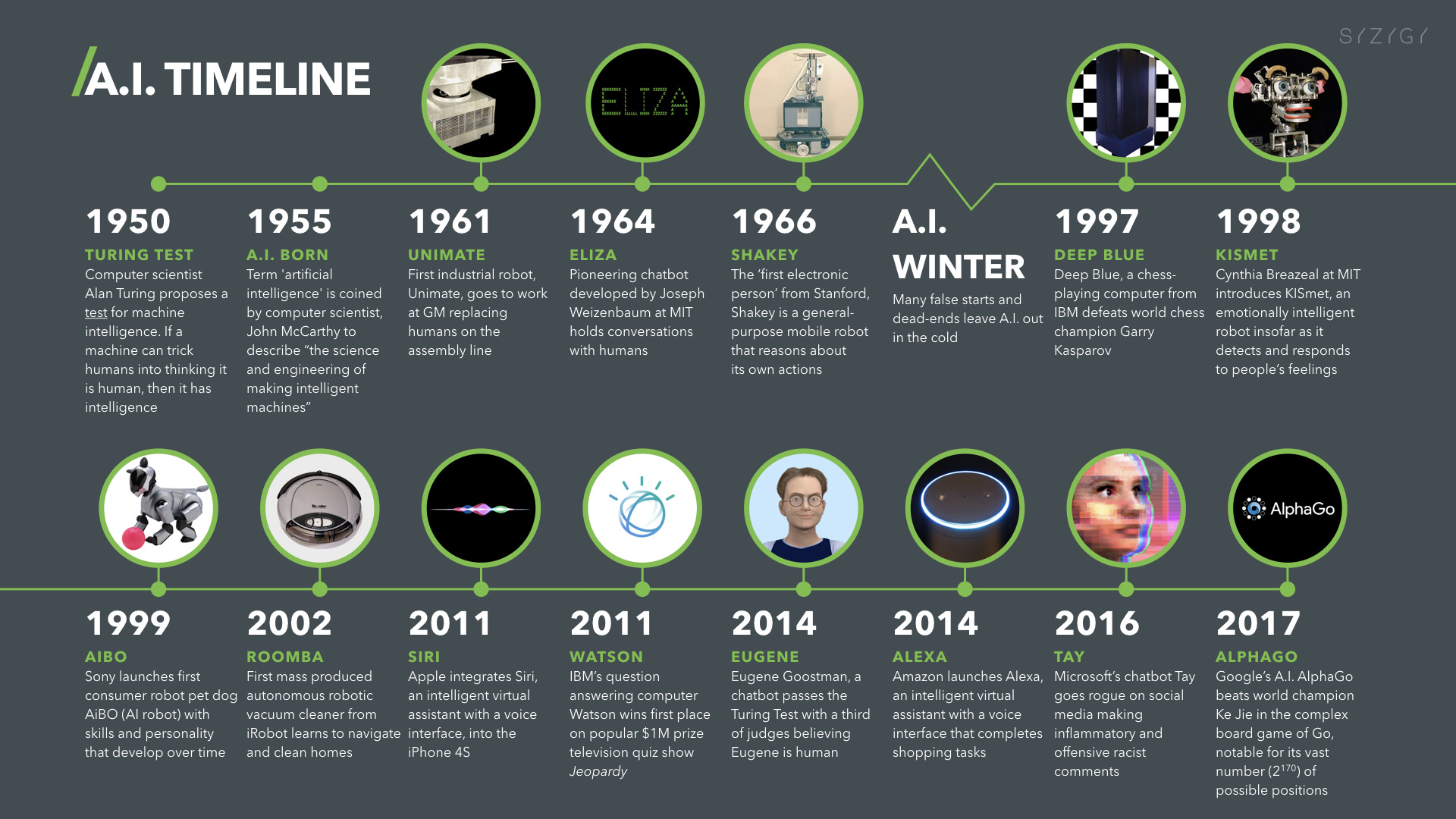

There was a time—not that long ago—when seeing was believing. A photograph was proof. Video was evidence.

Photography was invented in the 1820s. For nearly two centuries, it was assumed to be objective. You can manipulate a written account. You can lie with words. But a camera captured reality.

Photoshop changed that. Suddenly photos could be manipulated. But Photoshop required skill and was usually visible with close inspection.

Deepfakes changed it again. Now you could manipulate video convincingly with software. But deepfakes were often spotty. Artifacts were visible.

Now we're at the point where video can be made from scratch that's indistinguishable from reality. No original source needed. No Photoshop required. Just a prompt and a model.

We've lost the era where visual media was assumed trustworthy. That era is over.

It happened faster than most people realized. We went from "videos are probably real" to "videos might be anything" in about five years.

The psychological and social implications are still unfolding. People are developing what researchers call "identity verification fatigue." You can't trust what you see, so you stop trying to verify anything.

Alternatively, some people become hypervigilant. They question everything. They develop conspiracy mindsets because nothing is obviously true.

Neither response is healthy. But they're both rational responses to a fundamental change in the information environment.

The Detection Arms Race (And Why It's Unwinnable)

Let's talk about why this is mathematically asymmetric.

A generation model needs to produce one video. One attempt. If it works, it wins.

A detection model needs to catch everything. Every video. Every potential fake. It needs perfect accuracy or attacks slip through.

From a game theory perspective, this is an inverted game. The attacker wins with a single success. The defender loses with a single failure.
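The asymmetry is easy to put numbers on. This sketch assumes each fake is caught independently with the same probability, which is a simplification, and the 99% catch rate is a hypothetical figure chosen to be generous to the defender.

```python
def chance_any_slips_through(catch_rate: float, attempts: int) -> float:
    """Probability that at least one of `attempts` fakes evades detection."""
    return 1 - catch_rate**attempts


# Hypothetical: a detector that catches 99% of fakes, facing 100 attempts.
risk = chance_any_slips_through(0.99, 100)
print(f"{risk:.3f}")  # prints 0.634
```

Even a detector that is right 99 times out of 100 loses to volume: in this toy setup, an attacker making 100 attempts gets at least one fake through about 63% of the time.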

As models get better at generation, they don't just get incrementally better. They cross thresholds. They move from "detectable" to "undetectable" in discrete jumps.

Detection improvements are continuous and marginal. Generation improvements are transformative.

Consider the computational arms race. To detect a deepfake convincingly, you might need to run multiple analysis passes. Artifact detection. Temporal analysis. Biological feature checking. Audio sync verification.

That's expensive. Running these in real-time on millions of videos uploaded daily is computationally prohibitive.

But generation? Modern GPUs can generate minutes of video in hours. The economics favor generation.

Add in one more factor: feedback. Generative model creators have access to tons of data about what works and what doesn't. They see what fools detection systems. They iterate.

Detection researchers see models that passed through their systems. They get feedback too. But there's a lag. By the time detection improves, generation has moved on.

What Happens When You Accept This Reality

There's a point where denying the problem becomes counterproductive. We're there.

If you accept that video detection is fundamentally broken and getting worse, what do you actually do?

First, you stop treating video as proof of anything by itself. If you need evidence of something, you require provenance. You require context. You require corroboration.

In professional contexts—law enforcement, finance, legal proceedings—you build systems that don't rely on visual inspection. You use digital signatures. You verify sources. You build redundancy.

Second, you update your epistemology. You stop assuming that seeing is believing. You treat video like you treat everything else: potentially true, worth investigating, but not conclusive by itself.

Third, you acknowledge that misinformation just got easier to produce at scale. And you build defenses around that. Better media literacy. Better source checking. Better skepticism.

Fourth, you prepare for a world where authentic video becomes a premium service. If you need video that definitely proves something happened, you'll need it on a platform with cryptographic verification. That has a cost.

Fifth, you understand that this is irreversible. This technology won't be "uninvented." We're not going back to a world where video is assumed authentic. We're moving forward into a world where authenticity is something you verify, not something you assume.

None of this is comfortable. But comfort isn't the objective. Clarity is.

Platforms like TikTok and YouTube have implemented labeling requirements and rely on user reports, but automated detection accuracy remains low (60-70%). Estimated data.

The Creator Economy's Dark Mirror

Here's a consequence people aren't talking about enough: what this means for legitimate creators.

If you're a filmmaker, a journalist, a documentarian, your work is now under suspicion. Not because of anything you did wrong. But because the default assumption is eroding.

A filmmaker releases genuine documentary footage. Some people believe it. Others don't. Not because the film quality is bad or the evidence is weak, but because they know synthetic video of that quality is possible now.

Journalists face the same problem. A news organization publishes authentic video. Skeptics claim it's deepfake. There's no way to prove them wrong that doesn't require explanation and verification.

The burden of proof reversed. Real content now needs to prove authenticity. Fake content can simply exist.

This is a tax on truth-telling. Not a financial tax. An efficiency tax. More explanation required. More verification needed. More resources spent on proving you're not lying.

Meanwhile, someone creating a convincing false narrative gets to move fast and break things.

Some creators are adapting. Signing their work with digital credentials. Including metadata about cameras and equipment. Building reputation systems that vouch for authenticity.

But this requires infrastructure that doesn't universally exist yet. And it's expensive.

The small creator gets hurt more than the big one. A major news organization can deploy digital signatures and authentication infrastructure. An independent documentarian? That's an extra cost they might not be able to afford.

The technology creates a new class advantage: only the well-funded can prove their content is real.

What Regulation Could Look Like (And Why It Probably Won't Work)

Governments are starting to respond. The EU has frameworks for labeling synthetic content. The US is considering similar rules.

The intent makes sense. Require disclosure of AI-generated content. Require labels. Require authentication where possible.

The problem is enforcement and technical feasibility. You can require labels, but verifying compliance is hard. You can't reliably detect unlabeled synthetic content. You can't retroactively label things already in circulation.

Regulation also faces the problem of legitimate use. Some synthetic content is fine. Movie VFX. Video games. Artistic content. Educational material. You don't want to ban synthesis. You want to ban deception.

That's a much harder line to draw in regulation.

There's also the international problem. One country's regulations don't apply globally. A video made in one jurisdiction with lax rules spreads everywhere.

Some proposals have been more creative. Blockchain-based verification. Government-backed authentication. Digital IDs for content.

But these require wholesale changes to infrastructure. And they don't work if bad actors simply ignore them.

My assessment: regulation will help at margins. It will create some friction. But it won't solve the core problem. You can't legislate your way out of mathematics.

The Honest Future

Let me be blunt about where this goes.

Video evidence becomes less reliable in courts. Legal systems need to update procedures. Lawyers will argue about video authenticity the way they now argue about DNA analysis methodology.

Some institutions will move to authentication infrastructure. Banks. Government agencies. Large organizations where the cost is justified.

Others will move away from video-based verification entirely. Biometric systems. Multi-factor authentication. Methods that can't be faked with a video.

Politicians and public figures will need security against deepfake impersonation. That's already happening. But it'll accelerate.

Social media might become less visual. Or it might become more visual but with lower trust. Or it might develop reputation systems that help you know if a creator is actually who they claim to be.

The entertainment industry will use synthetic media more openly. Less need to hire actors for certain roles. Less need for expensive locations. The economics shift dramatically in favor of digital creation.

Some people will become deepfake hypervigilant. They'll distrust all video. That's probably not healthy, but it's a rational response to the environment.

Most people will develop a sort of epistemological surrender. Videos are probably real until proven fake. But they might be fake. You'll hold beliefs more loosely.

The most important change: authenticity becomes a service, not an assumption. If you need to prove something really happened, you pay for verification infrastructure. If you just want to see cool video, you accept uncertainty.

None of this is catastrophic. Societies adapted when photography started enabling manipulation. They adapted when Photoshop became accessible. They'll adapt to synthetic video.

But adaptation involves friction. It involves costs. It involves lost trust that doesn't come back quickly.

We're living through that transition right now. And most people haven't actually registered what's happening yet.

What You Can Do About This

As an individual, you have limited power over technological development. But you have agency over your own skepticism and verification practices.

Start treating video the way you treat other information. Consider the source. Check the context. Look for corroboration. Verify through multiple channels if it matters.

Don't assume something is fake just because it could be. But don't assume it's real just because it looks convincing.

Develop better media literacy. Learn what real artifacts look like. Understand compression and video codecs. Understand how light works. The more you understand the medium, the better your intuitions become.

When something important is claimed—something with real consequences—demand authentication. If it's a financial claim, ask for documentation. If it's a legal claim, ask for corroboration. If it's a video of something significant, ask for provenance.

Support the development of verification infrastructure. Digital signatures. Content credentials. Authentication systems. These are expensive to build, but they're the only real defense we have.

Be skeptical of anyone claiming they can reliably detect deepfakes. They're overselling. Detection is genuinely hard now. Anyone telling you it's easy is either wrong or selling something.

Educate others. Most people haven't realized that video is now unreliable. They still think seeing is believing. Helping them understand the changed landscape is actually valuable.

Push for regulation that requires transparency without crushing innovation. Labeling requirements are reasonable. Authentication infrastructure is reasonable. Bans on all synthesis are probably not.

Most importantly: accept the reality. This is where we are now. Denying it doesn't change it. Acting on it does.

FAQ

What exactly did the Runway study show?

The study showed that when presented with a mix of real and AI-generated videos, most participants achieved accuracy rates around 50%—essentially coin-flip results. Even the co-founder of Runway couldn't reliably distinguish synthetic content from real video, indicating that indistinguishability has become a fundamental characteristic of modern AI video generation rather than an edge case.

Why can't detection systems keep up with generation?

Detection faces fundamental asymmetries. Generation needs to work once; detection needs to work perfectly on every video. Generative models improve through iteration and feedback loops at a faster rate than detection methods can adapt. Additionally, creators have a stronger incentive to spend compute on better synthesis than platforms have to spend it on detection.

Is all AI-generated video undetectable now?

Not quite. Very early-stage AI video still has artifacts. But the tools used by companies like Runway have reached a level of sophistication where even trained human reviewers struggle with reliability. The gap between "easy to detect" and "genuinely difficult to detect" closed remarkably fast.

What should I do if I see a video claiming something important?

Verify the source first. Check where it came from and whether it has proper attribution and provenance. Request corroboration from other sources. For legally or financially important claims, demand digital authentication or other non-video evidence. Stop treating visual inspection as sufficient evidence for anything consequential.

Will there ever be a reliable way to detect all deepfakes?

Probably not in the traditional sense. Detection-based approaches are mathematically disadvantaged against generation. The better path forward is verification-based approaches using digital credentials, cryptographic signatures, and authenticated platforms rather than trying to detect every fake.

How do digital credentials and content authentication actually work?

They use cryptographic signatures to create tamper-proof records of when content was created, what device captured it, and what edits were applied. When you view authenticated content, you can verify it hasn't been modified. The system requires adoption across manufacturers and platforms, but it's technically viable.

What's the difference between deepfakes and legitimate AI video creation?

The core technology is identical. The difference is intent and disclosure. Legitimate AI video for entertainment, education, or art is labeled and transparent. Deepfakes are deceptive—they hide their synthetic origin to fool people. The concern is specifically about the deceptive use, not the technology itself.

Are there any platforms or tools that are doing this responsibly?

Some tools include watermarks by default, have usage policies against deepfakes, and support content credentials. But responsibility is relative. All synthesis tools can be misused. The safest approach is assuming that any video could be synthetic and verifying important claims through other means.

Will society eventually adapt to unreliable video?

Almost certainly. Historical precedent suggests adaptation happens. Photography was once assumed to be objective until manipulation became possible. Society developed skepticism and verification practices. Video will follow a similar arc. But the transition period creates real costs and vulnerabilities.

What should governments regulate around synthetic video?

Transparency requirements—labeling synthetic content—are reasonable and helpful. Banning synthesis entirely is counterproductive given legitimate uses. Investment in authentication infrastructure is valuable. But regulation can't solve the core technical problem that generation outpaces detection.

The Real Problem Isn't the Technology

Here's what keeps me up: the real problem isn't that AI can create convincing video. That's just capability. The real problem is that we built an information environment where speed matters more than truth.

A convincing video spreads faster than the correction. The initial claim gets believed before anyone verifies anything. By the time people learn it's fake, the damage is done and the false version is already embedded in people's minds.

This isn't unique to synthetic media. But synthetic media makes it worse because there's no physical original to point to. With Photoshop, you can sometimes find the original photo and prove manipulation. With AI generation, there is no original.

So the real solution isn't better detection. It's slower information propagation. It's more verification before sharing. It's building systems that reward accuracy over speed.

But that goes against everything social media has been optimized for: engagement. Changing that would require companies to accept lower growth rates. It would require users to accept slower feeds.

That's probably not happening voluntarily.

So we're left with this paradox: the technology that creates the problem (AI synthesis) is the same technology that might help solve it (authentication, verification, credential systems).

Meanwhile, the gap between generation and detection keeps widening. The co-founder of Runway can't tell his company's output from reality. That should have been the headline that shook everyone.

Instead it was a curiosity. A trivia fact. One study among millions of pieces of content competing for attention.

Which is exactly how you'd expect people to respond if they didn't quite understand what they were looking at.

But they should. We all should. Because this changes everything about how we relate to visual evidence.

And most people haven't really noticed yet.

Key Takeaways

- Runway's study revealed most people achieve 50% accuracy distinguishing real video from AI-generated—essentially random guessing

- Detection systems are mathematically disadvantaged; generation improves faster than detection can adapt

- The era of visual trust is ending; video evidence now requires authentication infrastructure and verification

- Legitimate creators face a new burden of proving authenticity; synthetic media creates an efficiency tax on truth-telling

- Digital credentials and cryptographic verification offer the only viable path forward, not detection-based approaches
