The Great Agentic Coding Race: OpenAI GPT-5.3 Codex Reshapes Developer Workflows

In February 2025, the artificial intelligence landscape witnessed a pivotal moment that perfectly encapsulates the breakneck pace of the modern AI arms race. Within minutes of each other, two of the world's most influential AI companies—OpenAI and Anthropic—launched competing agentic coding models designed to fundamentally transform how developers build software. OpenAI's announcement of GPT-5.3 Codex followed closely after Anthropic's release, creating a fascinating study in both technological innovation and corporate strategy that reverberates through the developer community.

This simultaneous launch represents far more than just a competitive skirmish over market share. It signals a seismic shift in how artificial intelligence is being integrated into the software development lifecycle. For years, AI coding assistants operated within limited scopes—suggesting code snippets, completing functions, or identifying bugs. Now, the technology has evolved to the point where AI agents can autonomously handle complex tasks that previously required seasoned developers working over extended periods. The implications are staggering: development timelines could compress dramatically, technical barriers to entry for aspiring developers could lower substantially, and entire categories of repetitive work could be automated away.

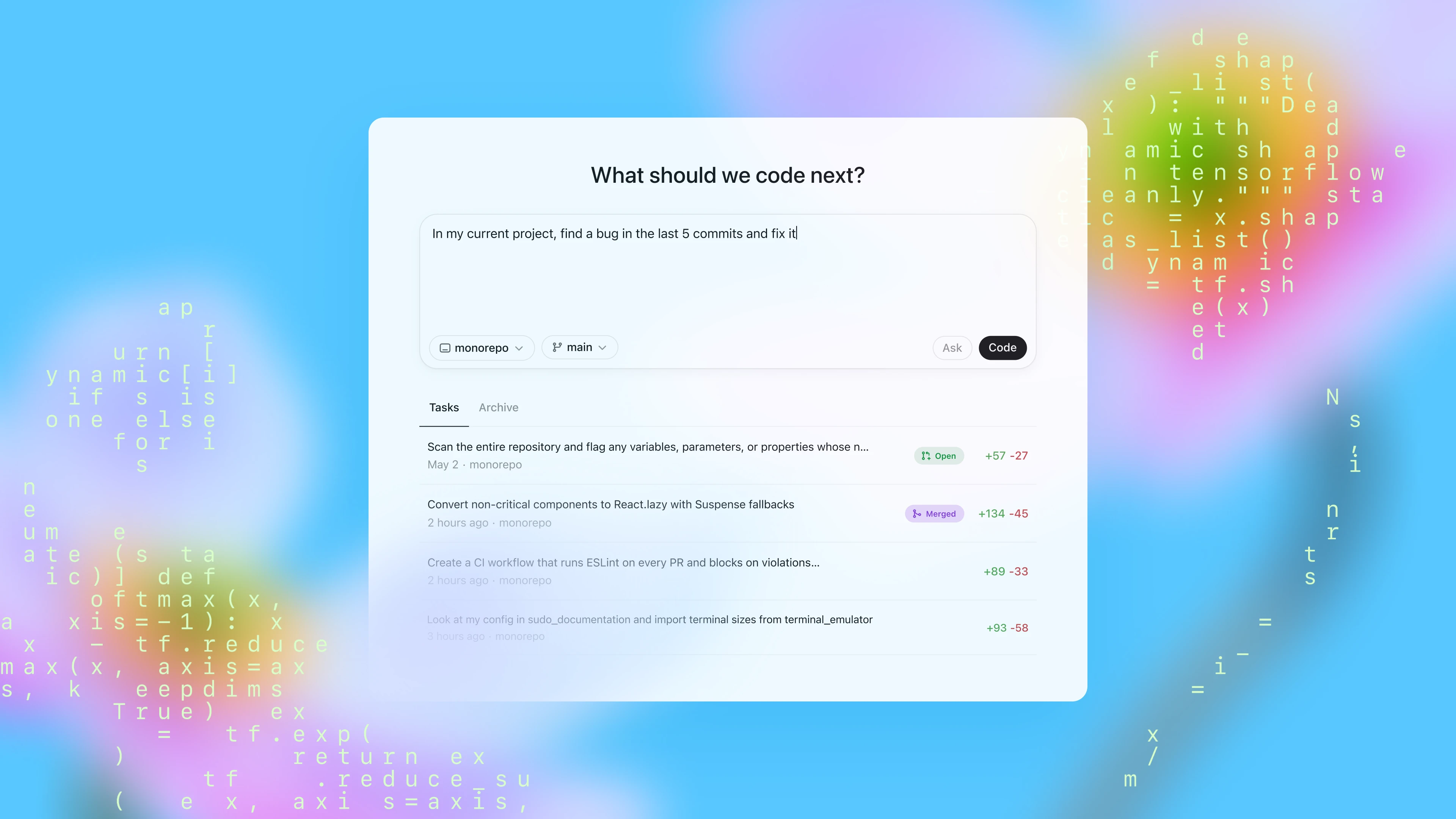

What makes this moment particularly significant is that these aren't incremental improvements to existing tools. Both OpenAI and Anthropic deliberately positioned their new models as fundamental paradigm shifts. OpenAI explicitly stated that GPT-5.3 Codex transforms their Codex agent from a tool that can "write and review code" into something capable of performing "nearly anything developers and professionals do on a computer." If the claim holds up in practice, it is a genuine expansion of capabilities that challenges our understanding of what AI assistance means in the development context.

The story becomes even more interesting when considering the context surrounding these launches. Just days earlier, OpenAI had introduced the original Codex agent, which itself represented a major step forward. The rapid iteration cycle—launching an agent on Monday and a significantly enhanced version on Wednesday—demonstrates both the velocity of AI development and the pressure these companies face to maintain their technological edge. The competitive dynamic between OpenAI and Anthropic has become one of the most consequential rivalries in technology, influencing not just which companies succeed, but fundamentally shaping how developers will work for the next decade.

For development teams evaluating their AI integration strategy, understanding these competing approaches, their capabilities, limitations, and alternatives has become essential. This comprehensive guide examines the technical foundations of GPT-5.3 Codex, contrasts it with Anthropic's offering, analyzes the practical implications for different developer scenarios, and explores the broader ecosystem of coding automation tools available today.

Understanding Agentic Coding: The Evolution from Assistant to Autonomous Agent

What Exactly is Agentic Coding?

Agentic coding represents a fundamental departure from traditional AI coding assistants like GitHub Copilot or Tabnine. These earlier tools operate in a reactive mode—a developer types a comment or partial code, and the AI suggests completions or improvements. Agentic coding flips this model entirely. An agentic AI system operates autonomously within defined boundaries, making decisions about implementation approaches, testing strategies, debugging processes, and architectural choices without constant human intervention.

Think of the difference this way: a traditional coding assistant is like a knowledgeable colleague sitting next to you, answering questions when you ask them. An agentic coding system is more like hiring a junior developer who you can brief on requirements, then trust to work independently, checking back in periodically with progress updates. The agent maintains context across multiple steps, understands project requirements, evaluates its own work, and iteratively improves solutions based on feedback.

This shift represents a quantum leap in capability because coding is inherently a multi-step, iterative process. A single feature implementation might involve writing code across multiple files, setting up database schemas, creating API endpoints, writing tests, debugging unexpected interactions, refactoring for performance, and documenting the solution. Traditional assistants help with individual steps. Agents orchestrate entire workflows.

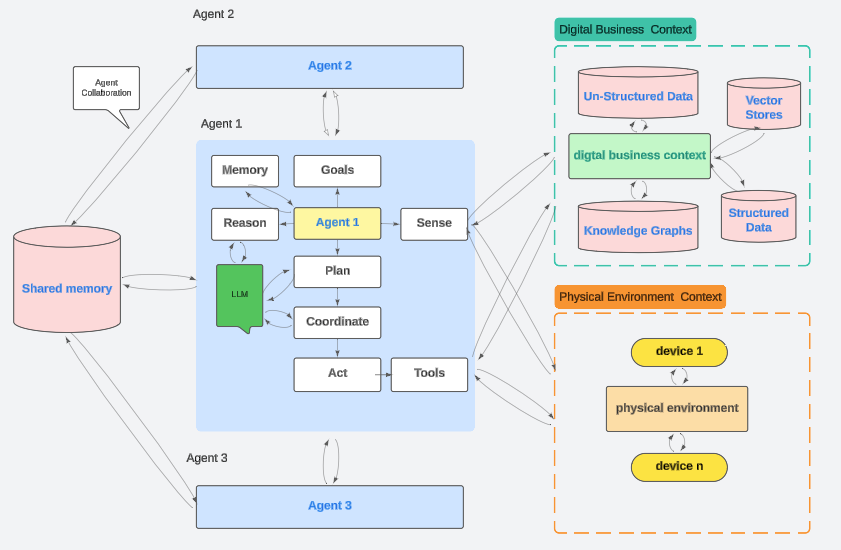

The Technical Architecture Behind Modern Agentic Systems

Agentic coding systems operate through a sophisticated interaction of several components working in concert. At the core sits a large language model trained extensively on code, architecture patterns, testing methodologies, and best practices. However, the model alone doesn't constitute an agent. The model is wrapped in a framework that provides the agent with tools—the ability to read files, execute code, run tests, access version control systems, query documentation, and interact with development environments.

The agent operates through what researchers call a "reasoning loop." It receives a task description, analyzes the requirements, develops a plan for implementation, executes that plan step-by-step, monitors results, evaluates whether objectives were met, and iteratively refines its approach if needed. This loop continues until the agent determines that the task has been successfully completed or encounters conditions that require human intervention.
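The loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how Codex or any other product is implemented: `plan`, `execute`, and `evaluate` are hypothetical callables standing in for model calls and tool invocations, and `max_iterations` is an assumed budget after which control returns to a human.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class LoopResult:
    done: bool            # True if the evaluator accepted the work
    iterations: int       # how many plan/execute/evaluate rounds ran
    log: List[str] = field(default_factory=list)  # record of every step result


def run_agent_loop(
    task: str,
    plan: Callable[[str, List[str]], List[str]],
    execute: Callable[[str], str],
    evaluate: Callable[[str, List[str]], bool],
    max_iterations: int = 5,
) -> LoopResult:
    """Plan, execute, and evaluate until the task passes or the budget runs out."""
    log: List[str] = []
    for i in range(1, max_iterations + 1):
        steps = plan(task, log)                  # the plan sees earlier results as feedback
        results = [execute(step) for step in steps]
        log.extend(results)
        if evaluate(task, results):              # objectives met: stop
            return LoopResult(True, i, log)
    # Budget exhausted: hand back to a human rather than loop forever
    return LoopResult(False, max_iterations, log)
```

In a real agent, `execute` would wrap tool calls such as running a test suite, and `evaluate` would inspect their output; the structure of the loop stays the same.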

Crucially, modern agentic systems incorporate safety mechanisms and human oversight capabilities. They can be configured to stop at certain decision points, request approval before making breaking changes, maintain detailed logs of all actions taken, and escalate complex decisions to human developers. This hybrid human-AI approach avoids the pitfalls of fully autonomous systems while still capturing the efficiency gains of extensive automation.
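One way to picture these oversight mechanisms is an executor that pauses on risky actions and records everything it does. The sketch below is illustrative only: `Action`, its `breaking` flag, and `GuardedExecutor` are hypothetical names, and the `approve` callback stands in for whatever human review step a team actually uses.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Action:
    description: str
    breaking: bool = False   # hypothetical flag: could this change break something?


@dataclass
class GuardedExecutor:
    """Runs actions, asks for approval on breaking changes, and logs every outcome."""
    approve: Callable[[Action], bool]                  # human-in-the-loop callback
    audit_log: List[str] = field(default_factory=list)

    def run(self, action: Action, perform: Callable[[Action], None]) -> bool:
        if action.breaking and not self.approve(action):
            self.audit_log.append(f"ESCALATED: {action.description}")
            return False                               # left for a human to decide
        perform(action)
        self.audit_log.append(f"DONE: {action.description}")
        return True


# Usage: deny every breaking change, allow everything else.
executor = GuardedExecutor(approve=lambda a: False)
executor.run(Action("rename local variable"), perform=lambda a: None)
executor.run(Action("drop users table", breaking=True), perform=lambda a: None)
print(executor.audit_log)
```

The design choice here is that the log is written on both paths, so a reviewer can see what was skipped as well as what was done.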

Why Now? The Convergence of Enabling Technologies

Agentic coding became practically feasible only when several technological prerequisites converged. First, language models achieved sufficient depth of understanding of coding patterns, architectural principles, and problem-solving methodologies. Earlier models could handle syntax and simple patterns; modern models understand why certain approaches are superior for specific scenarios. Second, context windows expanded dramatically, allowing models to maintain awareness of entire codebases, conversation histories, and multi-step task progressions. Third, tool-use capabilities matured, enabling models to reliably invoke external systems, interpret results, and adapt their approach based on outcomes.

The business drivers are equally important. As developer salaries have escalated and technical talent has become scarcer, companies face immense pressure to boost productivity. A single senior developer assisted by capable AI agents could theoretically accomplish what previously required a team of three or four. Additionally, the democratization of software creation—enabling non-technical people to build functional applications—opens enormous market opportunities for companies that provide the underlying tools.

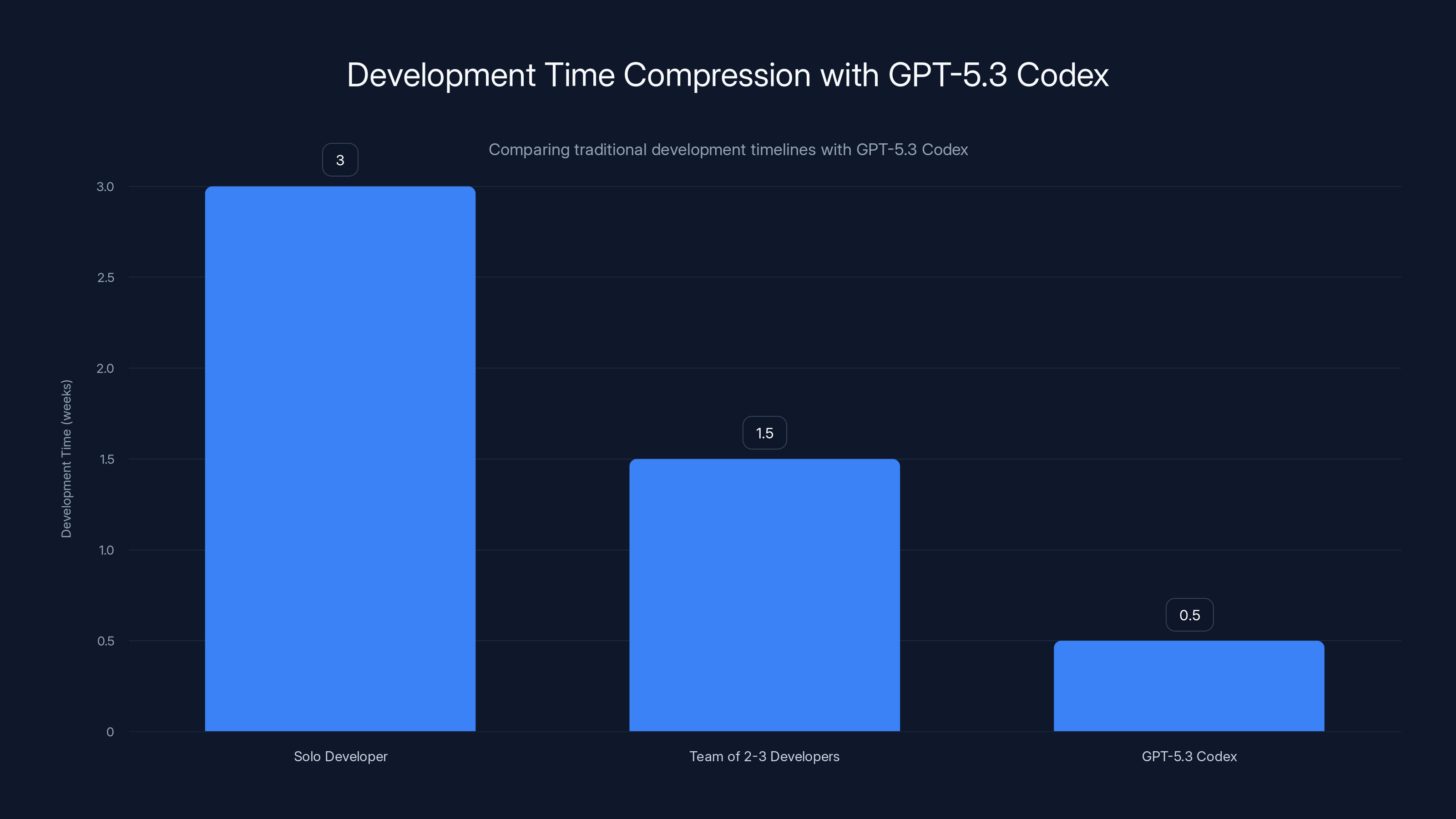

GPT-5.3 Codex reduces development time from weeks to days, significantly enhancing productivity. Estimated data.

OpenAI's GPT-5.3 Codex: Capabilities, Architecture, and Real-World Performance

The Evolution from GPT-5.2 to GPT-5.3 Codex

OpenAI's Codex lineage demonstrates a clear progression in agentic capabilities. The original Codex, introduced years ago, was essentially a sophisticated autocomplete system: powerful for its time, but still reactive. The newer Codex agent, launched in early February 2025, took a substantial leap forward, introducing genuine autonomous task execution. However, it still operated within constraints: it could write code, review implementations, and suggest improvements, but required developer judgment on architectural decisions and complex trade-offs.

GPT-5.3 Codex represents the next evolutionary step. OpenAI's technical documentation emphasizes that this version can "expand who can build software and how work gets done." The company's own testing, using internal benchmarks, demonstrated that GPT-5.3 Codex can "create highly functional complex games and apps from scratch over the course of days." This isn't artificial capability inflation for marketing purposes—the implication is that the system can now handle projects that would typically require weeks of human developer time.

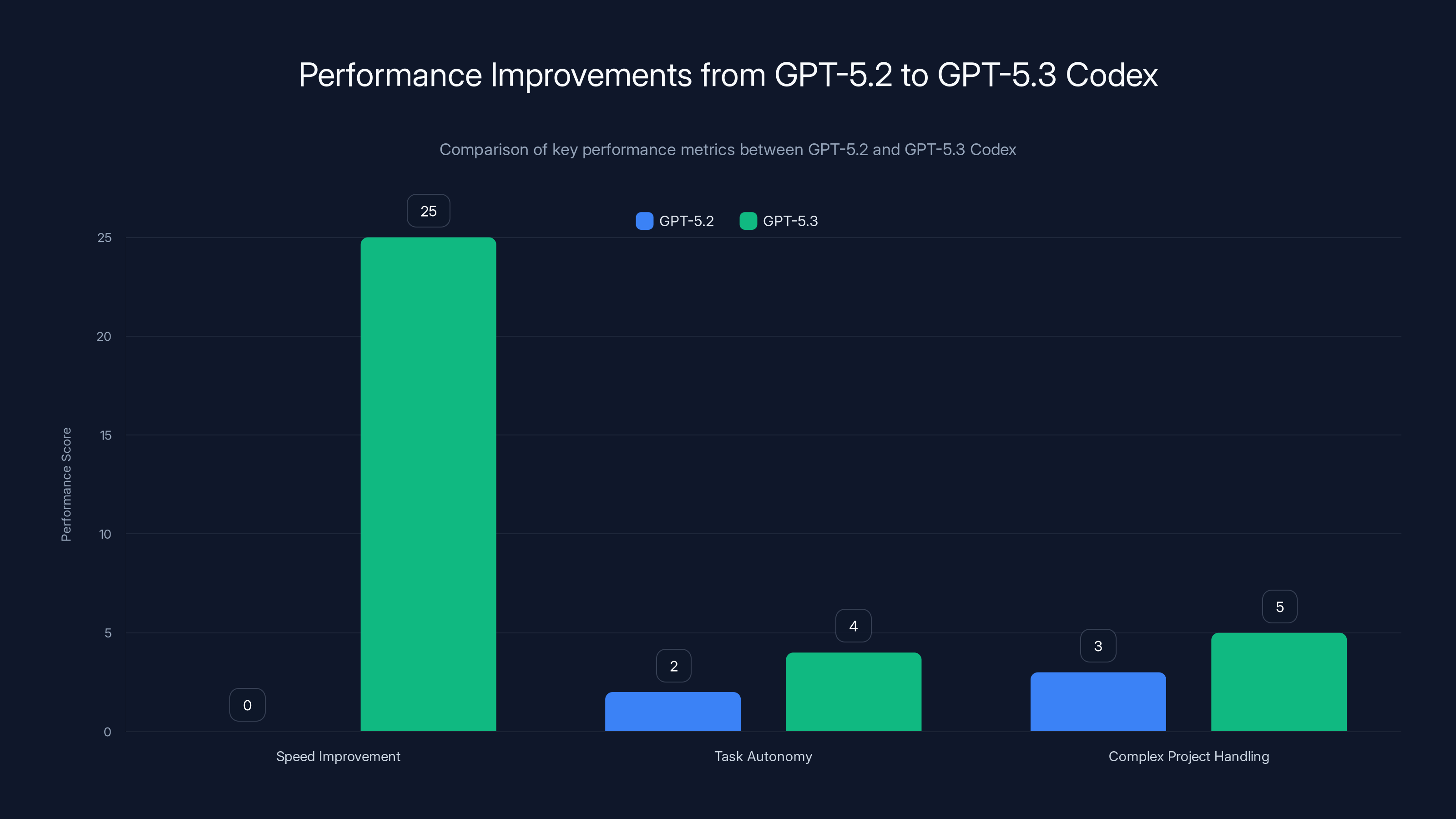

The performance characteristics are striking. OpenAI reports that GPT-5.3 Codex operates 25% faster than its predecessor (GPT-5.2), a meaningful improvement for agentic systems where latency compounds across multiple sequential steps. Additionally, the company noted an unusual technical achievement: GPT-5.3 Codex was the first OpenAI model that "was instrumental in creating itself." Essentially, the company used early versions of the model to debug the training process, evaluate performance, identify weak areas, and refine the model iteratively. This self-improvement cycle suggests the company has developed sophisticated techniques for leveraging AI systems in their own development pipelines.

Core Capabilities and Architectural Features

GPT-5.3 Codex operates across multiple dimensions of software development that were previously disconnected:

Code Generation and Architecture Design: The system can now understand high-level requirements and autonomously develop architectural approaches. Rather than simply writing code, it reasons about design patterns, scalability considerations, maintainability, and long-term extensibility. When tasked with building a complex application, it determines not just what code to write, but which frameworks to use, how to structure the project, and how to organize code for optimal collaboration and maintenance.

Full Lifecycle Implementation: GPT-5.3 Codex handles the entire development lifecycle within a single interaction. This includes creating necessary files and directories, writing implementation code, developing test suites, setting up CI/CD configurations, writing documentation, and even creating deployment scripts. This comprehensive approach eliminates the disjointed handoffs that plague traditional development workflows where frontend specialists, backend engineers, DevOps professionals, and QA teams must coordinate.

Autonomous Debugging and Refinement: When implementations produce unexpected results, the system automatically investigates, identifies root causes, and develops fixes. Importantly, it learns from these failures—if an initial approach causes problems, it tries different implementations, compares results, and settles on the most robust solution. This iterative refinement process mirrors how experienced developers approach challenging problems.

Cross-File Consistency Management: Larger projects involve code spread across numerous files that must interact seamlessly. GPT-5.3 Codex maintains awareness of these interdependencies, ensuring that changes in one file don't create breaking changes elsewhere. It understands type systems, API contracts, and data flow patterns well enough to make changes with confidence in their correctness.

Technology Stack Optimization: Rather than requiring developers to specify every technical choice upfront, the system recommends appropriate technologies based on project requirements. For a data-intensive application, it might recommend PostgreSQL for relational data, Redis for caching, and appropriate libraries for data processing. These recommendations align with industry best practices and the project's specific constraints.
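The try, compare, and select behavior described under Autonomous Debugging and Refinement can be illustrated with a toy selector. This is a sketch under assumptions, not a description of GPT-5.3 Codex internals: `score` and `pick_most_robust` are hypothetical helpers, and "robustness" is reduced here to the number of test cases passed.

```python
from typing import Callable, Dict, List, Tuple

Case = Tuple[tuple, object]   # (arguments, expected result)


def score(impl: Callable, cases: List[Case]) -> int:
    """Count the cases an implementation passes; an exception counts as a failure."""
    passed = 0
    for args, expected in cases:
        try:
            if impl(*args) == expected:
                passed += 1
        except Exception:
            pass
    return passed


def pick_most_robust(candidates: Dict[str, Callable], cases: List[Case]) -> str:
    """Return the name of the candidate passing the most cases (first one wins ties)."""
    return max(candidates, key=lambda name: score(candidates[name], cases))


# Two candidate implementations of the same requirement.
candidates = {
    "naive_divide": lambda a, b: a / b,
    "safe_divide": lambda a, b: a / b if b != 0 else None,
}
cases = [((6, 3), 2.0), ((1, 0), None)]
print(pick_most_robust(candidates, cases))  # safe_divide
```

The naive version raises on division by zero and so passes only one case, which is why the selector prefers the guarded version.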

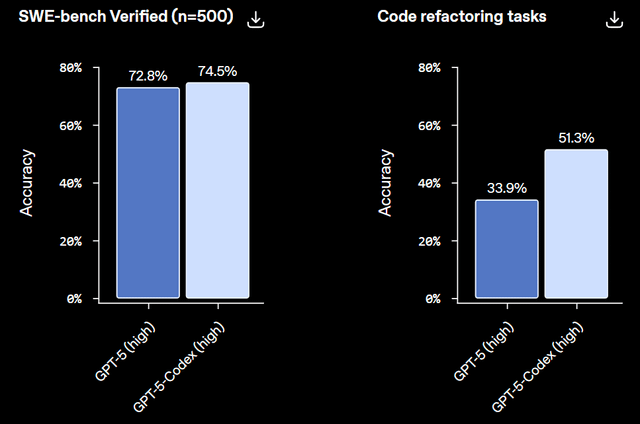

Performance Benchmarking and Proof Points

OpenAI conducted extensive benchmarking to validate GPT-5.3 Codex capabilities. While specific benchmark names weren't disclosed publicly, the company emphasized testing against "a number of performance benchmarks" that measure real-world development capability rather than artificial test cases.

The most impressive proof point involved creating complex games and applications from scratch. The system successfully built fully functional, non-trivial applications over multi-day periods. This is fundamentally different from generating a single function or completing a partial implementation—it involves sustained, coherent problem-solving across extended timeframes with multiple interconnected components.

The 25% speed improvement over GPT-5.2 matters significantly for agentic systems. In traditional development, a developer's time is limited by how fast they can think and type. Agentic systems are limited by model inference latency and computational overhead. A 25% improvement in speed translates directly to projects completing faster or more complex work being accomplished within the same timeframe. For an agent working on a week-long project, the gain depends on how "25% faster" is read: if each step takes 25% less time, the project completes in 5.25 days instead of 7; if the agent does 25% more work per unit of time, it completes in about 5.6 days (7 ÷ 1.25). Either way, the difference is meaningful in business contexts.
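The arithmetic behind a "25% faster" claim depends on which quantity improves, and OpenAI's wording does not settle it. The short calculation below works through both readings; the function names are illustrative, and the 7-day and 20-day baselines are example project lengths rather than measured data.

```python
def days_if_time_cut(baseline_days: float, pct: float) -> float:
    """Reading A: every step takes pct percent less time."""
    return baseline_days * (1 - pct / 100)


def days_if_throughput_up(baseline_days: float, pct: float) -> float:
    """Reading B: the agent completes pct percent more work per unit of time."""
    return baseline_days / (1 + pct / 100)


for baseline in (7, 20):
    a = days_if_time_cut(baseline, 25)
    b = days_if_throughput_up(baseline, 25)
    print(f"{baseline} days -> {a:.2f} (time cut) or {b:.2f} (throughput up)")
# 7 days -> 5.25 (time cut) or 5.60 (throughput up)
# 20 days -> 15.00 (time cut) or 16.00 (throughput up)
```

The two readings differ by less than a day on a week-long project, but the gap grows with project length, so it is worth knowing which one a vendor means.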

Integration with Developer Workflows

GPT-5.3 Codex is designed to integrate with existing development environments and tools. Developers can interact with the system through various interfaces—IDE plugins, command-line tools, web interfaces, or API access. The system maintains awareness of local repository state, understanding existing code, architectural patterns, and project conventions before undertaking new work.

This integration approach is crucial because developers don't want to abandon familiar tools and workflows. A developer using VS Code, Git, Jest for testing, and their preferred linter should be able to leverage GPT-5.3 Codex without migrating to new tooling. This compatibility mindset has been central to successful developer tool adoption historically.

GPT-5.3 Codex shows a 25% speed improvement over GPT-5.2, with enhanced task autonomy and capability to handle complex projects, indicating significant advancements in AI development (Estimated data).

Anthropic's Competing Agentic Coding Solution: Technical Strategy and Differentiation

Anthropic's Approach to Agentic Development

Anthropic launched their agentic coding model just 15 minutes before OpenAI's announcement—a scheduling decision that speaks volumes about the competitive intensity in this space. While Anthropic's technical specifications haven't been detailed as extensively in public communications, their strategic approach differs from OpenAI's in several important ways.

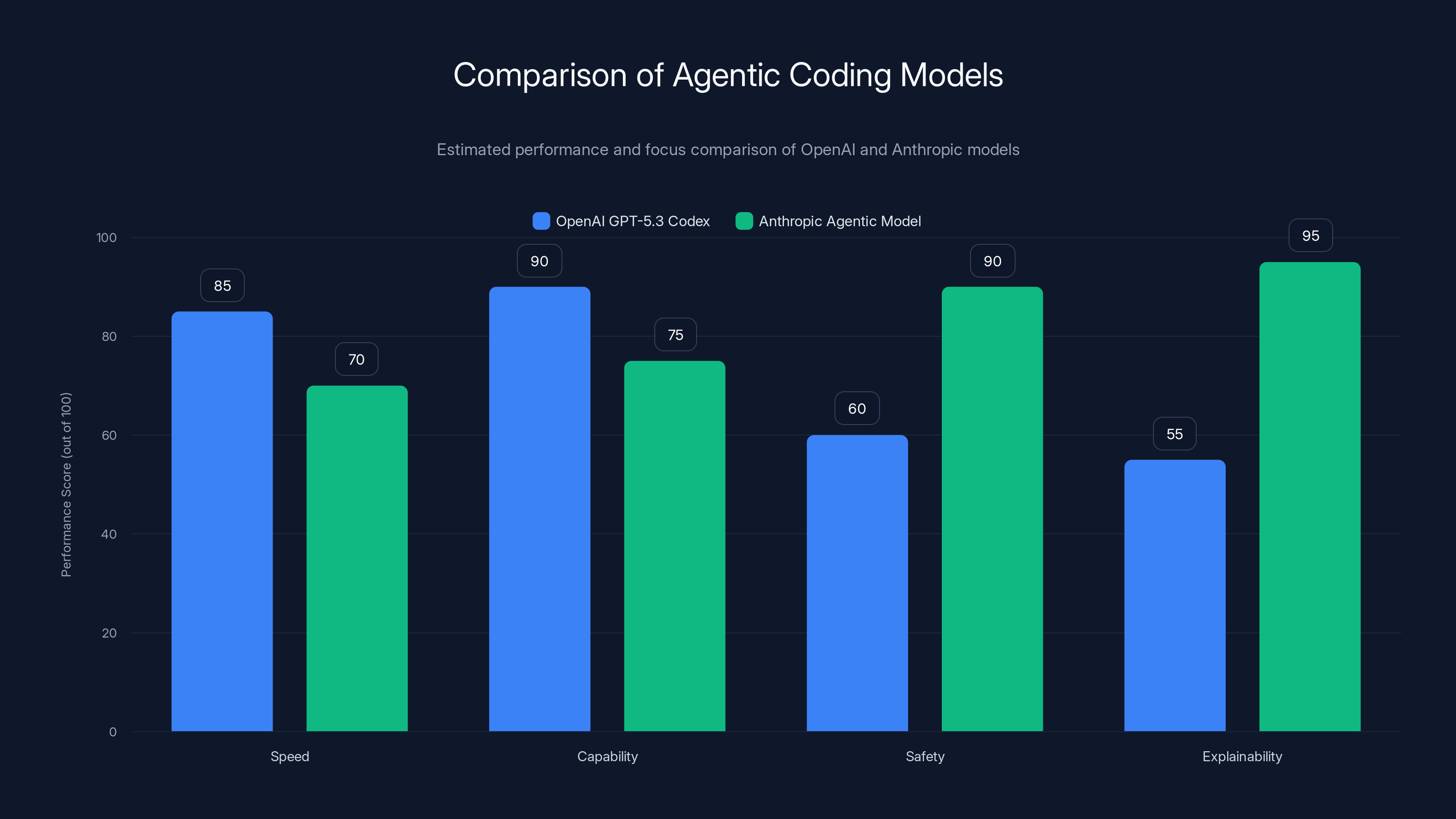

Anthropic has built their AI safety and reliability reputation on techniques like Constitutional AI, which trains models to follow explicit values and principles. This safety-first philosophy carries through to their agentic systems. Where OpenAI emphasizes capability expansion and raw performance, Anthropic tends to emphasize reliability, predictability, and alignment with human values. Their agentic coding system reflects these priorities—the model is designed to be deeply trustworthy, transparent about its reasoning, and unlikely to make unexpected or misaligned decisions.

Model Architecture and Safety Considerations

Anthropic tends to favor architectural approaches that prioritize interpretability. This means their agentic systems are designed to be more "readable" in terms of their decision-making process. When Claude (Anthropic's model) takes an action, developers can see and understand why that decision was made. This contrasts with approaches that prioritize pure capability, where the model's reasoning might be more opaque.

This interpretability focus matters significantly for enterprises and teams building mission-critical systems. In regulated industries—finance, healthcare, legal technology—the ability to explain why an AI system made a particular decision isn't just convenient, it's often legally required. A bank evaluating an AI system that might make architectural decisions affecting security or regulatory compliance needs to understand the reasoning behind those decisions. Anthropic's approach aligns well with these requirements.

Positioning Against OpenAI

Anthropic explicitly positioned their agentic coding release as an alternative to OpenAI's approach rather than a direct copy. In public statements, Anthropic emphasized that their solution prioritizes developer control and transparency. Where OpenAI might position GPT-5.3 Codex as a system developers can hand complex tasks to and trust to complete them autonomously, Anthropic's framing suggests developers should remain informed and in control throughout the process.

This positioning difference reflects deeper philosophical disagreements about AI's role in creative work. OpenAI tends toward the belief that powerful autonomy is beneficial—let the AI figure out optimal solutions without excessive human constraints. Anthropic tends toward the belief that human oversight should remain central—the AI should enhance human capabilities rather than replace human judgment.

Head-to-Head Technical Comparison: GPT-5.3 Codex vs Anthropic's Model

Capability Comparison Matrix

| Capability | GPT-5.3 Codex | Anthropic Model | Key Difference |
|---|---|---|---|
| Speed (baseline) | 25% faster than GPT-5.2 | No public baseline | OpenAI emphasizes raw speed |
| Autonomous Task Execution | High (days-long projects) | High (emphasis on control) | Different philosophies on autonomy |
| Code Generation Quality | Tested on complex games/apps | Not publicly benchmarked | OpenAI provides more proof points |
| Interpretability | Standard LLM transparency | Enhanced (Constitutional AI) | Anthropic prioritizes explainability |
| Safety Features | Standard guardrails | Enhanced safety focus | Anthropic emphasizes safety |
| Integration Flexibility | IDE, CLI, API, Web | Likely similar | Both designed for developer tools |
| Learning From Failures | Yes (iterative refinement) | Expected to be similar | Standard agentic capability |
| Cross-File Consistency | Yes | Expected to be similar | Both handle codebase awareness |

Performance Characteristics and Trade-offs

OpenAI's emphasis on speed reflects a specific optimization target: maximizing developer velocity. A 25% performance improvement translates to measurable gains in how much work can be accomplished in a given timeframe. This appeals strongly to startups operating under time pressure and to consultancies selling hourly development services—faster completions directly impact their bottom line.

Anthropic takes a different optimization approach, prioritizing predictability and transparency. Their system might not be faster, but it's designed to be more trustworthy. For organizations where trust and explainability matter more than marginal speed gains—enterprise compliance, regulated industries, teams managing safety-critical systems—this trade-off makes sense.

A concrete example illustrates the difference: Imagine a financial services company building an algorithm that recommends portfolio adjustments. OpenAI's system might generate the solution faster, but the company's compliance team would ask, "Why did the algorithm choose this specific approach?" Anthropic's system would provide clearer answers to these questions, potentially saving weeks of compliance review.

Developer Experience and Integration Philosophy

Both systems need to integrate seamlessly with existing developer workflows—this is non-negotiable for adoption. However, their approaches to developer experience differ subtly. OpenAI's approach emphasizes power and capability: "Here's a powerful system, trust it and let it work." Anthropic's approach emphasizes partnership and understanding: "Here's a capable system, and you can understand and guide its work."

For different developer profiles, these philosophies have different appeals. A startup founder building a rapid prototype might prefer OpenAI's approach—give the agent requirements and let it produce a working prototype quickly. An enterprise architect building a mission-critical system might prefer Anthropic's approach—maintain awareness and control, even if it requires slightly more hands-on involvement.

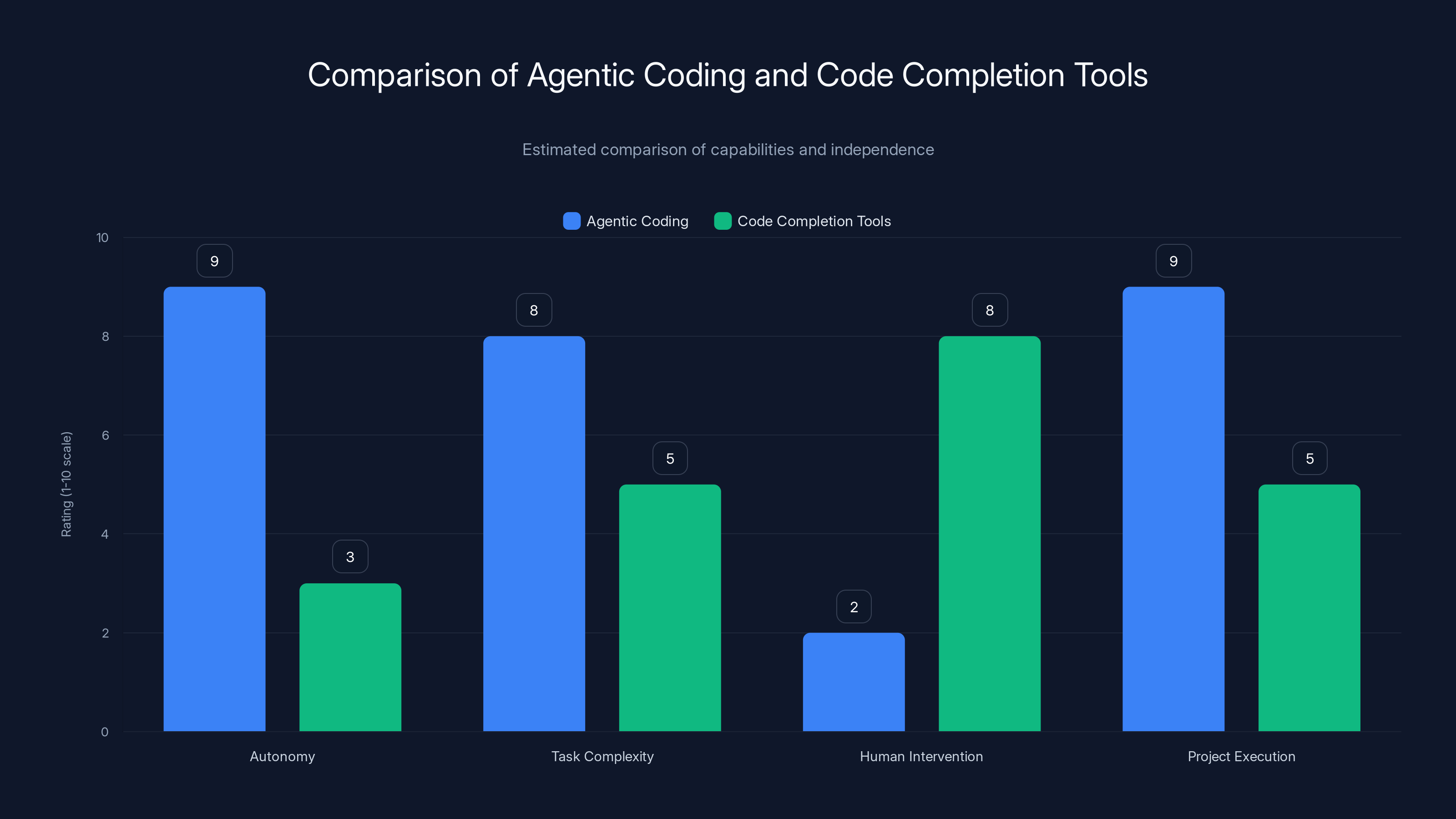

Agentic coding systems exhibit higher autonomy and handle complex tasks with minimal human intervention compared to code completion tools, which primarily assist in individual tasks. (Estimated data)

Use Cases and Real-World Applications: Where Agentic Coding Excels

Rapid Prototyping and Proof-of-Concept Development

Agentic coding systems like GPT-5.3 Codex fundamentally change prototyping timelines. Historically, building a working prototype—even a simple one—requires days of focused development work. With agentic systems, a developer can write a detailed specification and receive a working, tested prototype within hours. This dramatically compresses feedback loops and enables teams to validate ideas much faster.

A startup evaluating a new product concept can now task an agentic system with building a minimal viable product (MVP) demonstrating core functionality. Rather than hiring contractors or dedicating team members to weeks-long MVP development, the startup can describe requirements in natural language and iterate rapidly based on what the system produces. Each iteration that would have taken days now takes hours, fundamentally changing how innovation works at the early stages.

For established companies with structured innovation processes, this advantage translates into the ability to evaluate more ideas in parallel. Innovation portfolios can expand—instead of betting heavily on a few big bets, companies can explore dozens of smaller concepts more cost-effectively. The ideas that show promise can then receive focused human engineering effort for production-quality implementation.

Full-Stack Application Development

Traditionally, building a complete application requires multiple specialists: frontend developers, backend engineers, database architects, DevOps professionals, and QA engineers. Even in smaller teams, developers must juggle all these concerns in sequence, context-switching between radically different skill areas.

Agentic systems eliminate these boundaries. An experienced developer can describe a complete application concept, and the system generates all necessary components: frontend interfaces, backend APIs, database schemas, deployment scripts, and test coverage. The developer's role shifts from implementation to guidance and quality assurance. Rather than spending weeks building infrastructure and boilerplate code, developers can focus on the decisions and tradeoffs that actually matter—the aspects of development that require human judgment and domain expertise.

For specialized companies, this means an application that would have required a 5-person team can potentially be built with 2 developers overseeing agentic implementation. For contracting firms, this means dramatically improved project profitability on fixed-price engagements.

Legacy System Modernization

Many organizations maintain large codebases written in aging programming languages or architectures. Modernizing these systems is expensive, risky, and rarely a priority because working systems don't generate immediate business pressure. However, technical debt accumulates, making maintenance increasingly expensive and hampering the ability to implement new features.

Agentic coding systems could accelerate modernization by automating large portions of the translation process. Feed the system a legacy codebase with modernization requirements—"Convert this 50,000-line monolith to microservices written in Python 3.12 using FastAPI"—and the system can methodically work through conversion. The system understands what the legacy code does, can identify equivalent patterns in modern frameworks, handles edge cases, and produces working replacement code.

This doesn't completely eliminate the modernization challenge, but it substantially reduces the grunt work. A codebase that would have required 12 months of dedicated effort might be substantially modernized in 4-6 months, with human developers focusing on validation and optimization rather than mechanical translation.

Test Suite Expansion and Quality Assurance Automation

Developers frequently underfund test coverage due to time constraints. Writing comprehensive tests seems less urgent than shipping features, so critical functionality often lacks adequate test coverage. Bugs in these undertested areas frequently emerge in production, requiring expensive emergency fixes.

Agentic systems can automatically analyze code and generate comprehensive test suites, including edge cases developers might not have considered. The system understands common failure modes in different problem domains and generates tests that probe for them. Rather than developers choosing between shipping faster or investing in test quality, agentic systems enable both—comprehensive test coverage without the time investment.
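To make "edge cases developers might not have considered" concrete, here is the kind of small suite an agent might produce for a single function. Everything in it is illustrative: `parse_price` is a hypothetical function under test, and the cases are hand-picked examples of common failure modes (empty input, stray whitespace, negative values, non-numeric text), not output from any real tool.

```python
import unittest


def parse_price(text: str) -> float:
    """Hypothetical function under test: parse a price string such as '$1,234.50'."""
    cleaned = text.strip().replace("$", "").replace(",", "")
    if not cleaned:
        raise ValueError("empty price")
    value = float(cleaned)          # raises ValueError on non-numeric text
    if value < 0:
        raise ValueError("negative price")
    return value


class ParsePriceEdgeCases(unittest.TestCase):
    def test_typical_value(self):
        self.assertEqual(parse_price("$1,234.50"), 1234.50)

    def test_surrounding_whitespace(self):
        self.assertEqual(parse_price("  $5  "), 5.0)

    def test_empty_string_rejected(self):
        with self.assertRaises(ValueError):
            parse_price("")

    def test_negative_rejected(self):
        with self.assertRaises(ValueError):
            parse_price("-3")

    def test_non_numeric_rejected(self):
        with self.assertRaises(ValueError):
            parse_price("abc")


# Run the suite programmatically so the result can be inspected afterwards.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ParsePriceEdgeCases)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Only one of the five tests covers the happy path; the rest probe inputs that tend to be skipped under deadline pressure.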

This extends beyond unit tests to integration testing, performance testing, and security testing. The system can identify potential security vulnerabilities and generate tests that verify mitigations. It can create load tests that simulate realistic usage patterns. It can generate compliance verification tests for regulated industries. The net effect is substantially higher code quality, fewer production incidents, and better regulatory compliance.

API and Integration Layer Development

Building integrations between disparate systems—a common need in enterprises—involves substantial boilerplate work understanding different API specifications, handling authentication and rate limiting, managing data transformations, and building error handling. These tasks are intellectually straightforward but tedious and error-prone when done manually.

Agentic systems excel at this work. Given specifications for two systems that need to integrate, the agent can design and implement the integration layer, including proper error handling, retry logic, monitoring and logging, and test coverage. What might occupy a developer for days (understanding the systems, writing glue code, testing edge cases) becomes an overnight task that the agent completes autonomously.
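Retry logic is a typical piece of the glue code mentioned above. The sketch below shows one common pattern, exponential backoff, under stated assumptions: `TransientError` is a hypothetical exception marking failures worth retrying, and the default attempt count and delay are arbitrary example values rather than recommendations from any API provider.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, rate limits)."""


def call_with_retry(
    fn: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on TransientError with exponentially growing delays."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise                                  # out of attempts: surface the error
            sleep(base_delay * 2 ** (attempt - 1))     # 0.5s, 1s, 2s, ...
```

Passing `sleep` as a parameter is a deliberate choice: tests can substitute a recorder for `time.sleep` and verify the backoff schedule without waiting.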

Documentation Generation and Maintenance

Documentation tends to be the first casualty when development schedules tighten. Code changes faster than documentation, creating information that's outdated and misleading. This creates cascading problems—new developers onboard more slowly, architects struggle to understand current system state, and knowledge about why certain decisions were made gets lost.

Agentic systems can analyze codebases and automatically generate comprehensive, current documentation—architecture diagrams, API specifications, deployment guides, and troubleshooting references. As the code changes, the documentation can be automatically updated. This breaks the perpetual gap between code and documentation, maintaining knowledge integrity that's otherwise lost.
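A very small version of code-derived documentation can be built with Python's standard `ast` module. This sketch only lists top-level functions and their docstrings; `document_module` and the `SAMPLE` source are hypothetical names for illustration, and a production tool would cover far more (classes, types, diagrams).

```python
import ast


def document_module(source: str, title: str = "API Reference") -> str:
    """Build a Markdown summary of the top-level functions in Python source code."""
    tree = ast.parse(source)
    lines = [f"# {title}", ""]
    for node in tree.body:
        if isinstance(node, ast.FunctionDef):
            args = ", ".join(a.arg for a in node.args.args)
            doc = ast.get_docstring(node) or "(no docstring)"
            lines += [f"## `{node.name}({args})`", "", doc, ""]
    return "\n".join(lines)


SAMPLE = '''
def add(a, b):
    """Return the sum of a and b."""
    return a + b

def undocumented(x):
    return x
'''

print(document_module(SAMPLE))
```

Because the summary is regenerated from the source each time, rerunning it after a code change keeps the listing current, and undocumented functions show up explicitly instead of silently disappearing.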

Performance Metrics, Benchmarks, and Real-World Impact Data

Development Time Compression Metrics

OpenAI's claim that GPT-5.3 Codex can create "complex games and apps from scratch over the course of days" represents a substantial improvement over traditional timelines. A simple game or application might take a developer weeks to build from scratch when handling all aspects: design, implementation, testing, debugging, and refinement.

Using historical velocity data, a senior developer might build a complete game with basic graphics, game mechanics, and polish in 2-3 weeks of focused work. A team of 2-3 developers might accomplish this in 1-2 weeks through parallelization. With GPT-5.3 Codex, the same output emerges in days. While human developers would still spend days reviewing, testing, and refining the output, the raw creation time compresses dramatically.

This compression has multiplicative effects. Projects that occupied teams for months can now be completed in weeks. The 25% speed improvement over GPT-5.2 might seem incremental until you recognize that an agent working on a 20-day project saves four to five days (20 days × 25% = 5 days if each step takes 25% less time; 20 − 20 ÷ 1.25 = 4 days if throughput rises by 25%), roughly one full work week, from the speed improvement alone. Across an organization running dozens of agentic projects, this compounds to significant productivity gains.

Cost Reduction Analysis

Assuming an average developer salary of **

However, pure cost reduction understates the actual benefit. The more significant advantage comes from increased throughput—the same development team can accomplish substantially more work in the same period. This enables startups to operate with smaller teams, established companies to take on more ambitious projects with existing staff, and service providers to improve project profitability.

Quality Metrics and Risk Factors

The critical question for any automation technology is whether automation trades quality for speed. Early reports suggest GPT-5.3 Codex maintains quality while improving speed—the system produces tested, well-architected code rather than quick-and-dirty implementations.

This occurs because agentic systems don't just write code faster; they reason about quality systematically. The system generates test cases automatically, evaluates its own work against those tests, identifies failures, and iteratively improves implementations. This contrasts with a rushed developer who might skip testing and QA to meet deadlines.

Measuring quality involves multiple dimensions:

- Defect Rates: Testing results reported so far suggest that code generated by agentic systems has defect rates comparable to or lower than those of human-written code.

- Architectural Quality: The system generates code following established design patterns and best practices, resulting in more maintainable architectures than developers might produce under time pressure.

- Test Coverage: Agentic systems generate comprehensive test suites, often achieving higher coverage percentages than human developers typically achieve.

- Security Posture: The system is trained on secure coding practices and generates code with security considerations built in.

Where quality issues emerge, they typically involve domain-specific concerns that require human expertise—the system might generate technically correct code that violates business rules or regulatory requirements specific to that company's context.

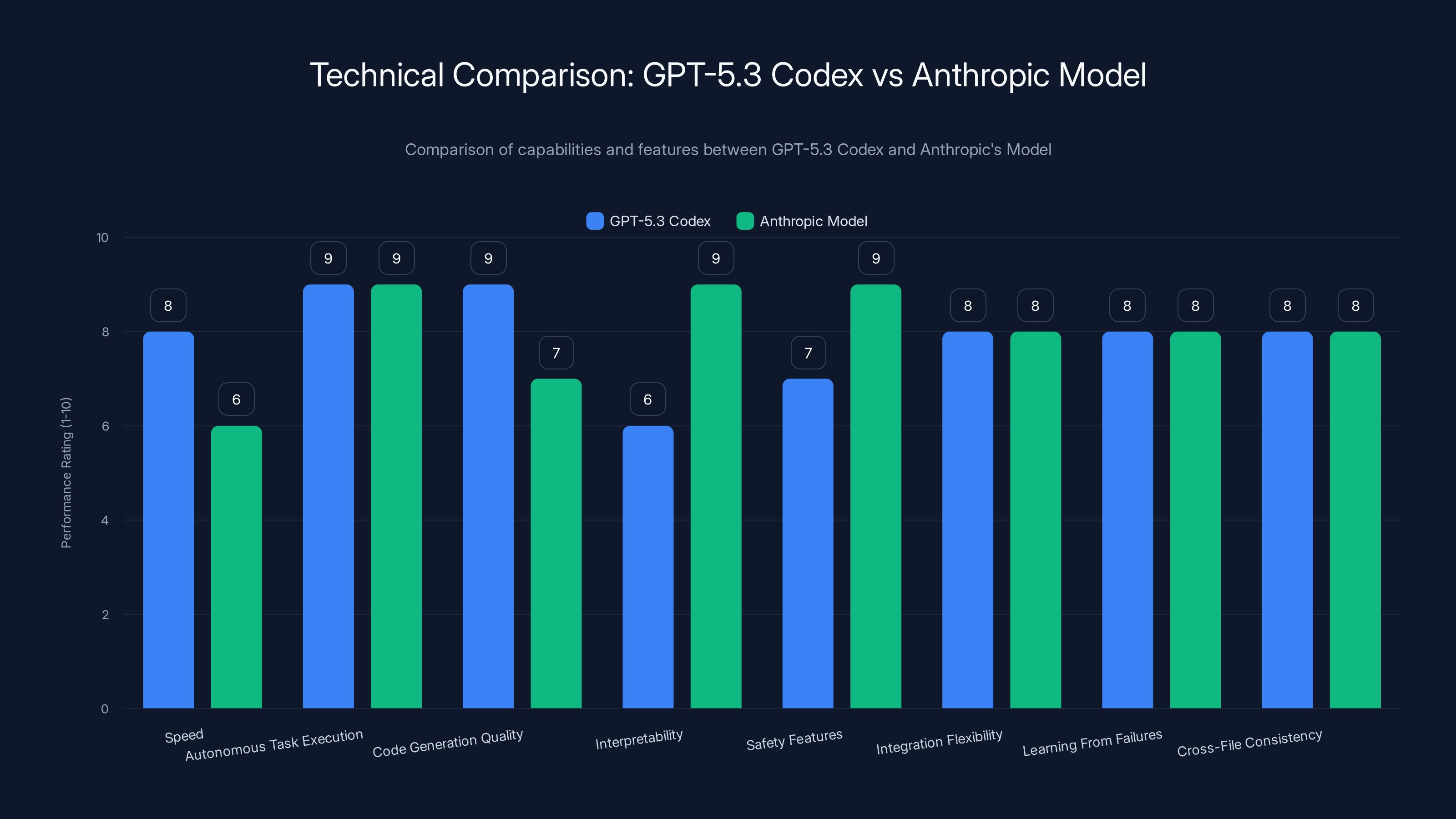

GPT-5.3 Codex excels in speed and code generation, while Anthropic's model prioritizes interpretability and safety. Estimated data based on qualitative descriptions.

Competitive Dynamics: The AI Developer Tool Landscape in 2025

The Broader Agentic Ecosystem

GPT-5.3 Codex and Anthropic's model don't operate in a vacuum. They're the latest advanced entries in an expanding ecosystem of AI-powered developer tools that compete for mindshare and adoption.

Traditional Code Assistants: GitHub Copilot, powered by OpenAI models, remains the most widely deployed AI coding tool. Its strength is intelligent code completion and suggestion within familiar development workflows. Its limitation is its reactive nature: it helps developers write code faster, but doesn't handle multi-step autonomous tasks.

Specialized AI Development Platforms: Other companies have built AI development platforms targeting specific use cases. These tools focus on particular problem domains (game development, web applications, data pipelines) and optimize deeply for those domains rather than attempting general-purpose code generation.

Open-Source Alternatives: The open-source community has begun developing agentic coding systems, though they lag significantly behind proprietary solutions in capability. However, their lower cost and customizability appeal to cost-conscious teams and companies prioritizing data privacy.

Emerging Platforms: Companies like Runable are building AI-powered automation platforms that extend beyond pure code generation. These platforms focus on workflow automation, document generation, and team productivity rather than specifically on coding. For teams seeking comprehensive AI automation across multiple dimensions, such platforms offer broader capabilities than coding-specific tools, though less depth in the coding domain specifically.

For teams evaluating the agentic coding landscape, the choice increasingly depends on specific priorities: raw capability and speed (OpenAI), safety and explainability (Anthropic), broad workflow automation (platforms like Runable), or deep focus on a single domain (specialized platforms).

Market Consolidation vs. Specialization

The agentic coding market faces a classic technology industry tension: will the market consolidate around a few dominant platforms, or will specialization enable diverse survivors? Historical precedent suggests both dynamics play out simultaneously. Dominant platforms (OpenAI, Anthropic) capture the large general-purpose market. Specialized platforms survive by being best-in-class for specific scenarios.

OpenAI's strategy appears to pursue platform consolidation—offering increasingly general-purpose capabilities that work across diverse scenarios. Anthropic emphasizes differentiation through safety and explainability. Smaller platforms differentiate through specialization or through integration into broader workflows.

For enterprises selecting tooling, this matters significantly. Betting on a consolidated platform provides broad capabilities but potential lock-in. Selecting specialized tools provides optimization for specific use cases but requires integration between multiple vendor platforms.

Adoption Challenges: Technical, Organizational, and Strategic Barriers

Technical Integration Hurdles

While agentic coding systems are technically impressive, integrating them into real development workflows presents practical challenges. Most organizations maintain complex internal systems, custom infrastructure, and existing tooling choices that agentic systems must work within.

A team using AWS for infrastructure, GitLab for version control, Jira for project management, and custom deployment pipelines needs an agentic system that understands all these specifics. While sophisticated systems like GPT-5.3 Codex can learn new tools, every non-standard setup requires configuration work or custom integration. For organizations with unusual infrastructure or legacy systems, this integration work can be substantial.

Additionally, agentic systems work best when they have broad access to codebases, documentation, and infrastructure. Security and compliance requirements often restrict this access. A financial services company operating under strict security protocols might prevent an AI system from accessing sensitive code or infrastructure. Working within these constraints requires careful architectural design and potentially reduces the autonomy the agentic system can exercise.

Organizational Change Requirements

Introducing agentic coding systems requires organizational change beyond just tool adoption. Traditional development processes—code review workflows, deployment procedures, quality assurance approaches—assume human-authored code and human decision-making. Code generated by AI agents challenges many of these assumptions.

For example, traditional code review involves a human reviewer reading submitted code and approving or requesting changes. But when an agent generates thousands of lines of code across dozens of files, line-by-line review becomes impractical. Organizations adopting agentic systems need new review processes, perhaps focusing on architectural decisions and high-risk sections rather than reviewing every line.
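One way to focus review on high-risk sections is to triage an agent's change set before a human looks at it. The sketch below is illustrative only: the path patterns, the `ChangedFile` type, and the 400-line threshold are assumptions, not part of any vendor's tooling.

```python
from dataclasses import dataclass
from fnmatch import fnmatch

# Hypothetical patterns a team might treat as high-risk; adjust per repository.
HIGH_RISK_PATTERNS = ["auth/*", "payments/*", "migrations/*", "*.tf"]


@dataclass(frozen=True)
class ChangedFile:
    path: str
    lines_changed: int


def triage(files, large_change_threshold=400):
    """Split a change set into files needing close human review and files
    that can rely on automated checks plus spot checks."""
    close_review, spot_check = [], []
    for f in files:
        risky_path = any(fnmatch(f.path, p) for p in HIGH_RISK_PATTERNS)
        if risky_path or f.lines_changed >= large_change_threshold:
            close_review.append(f.path)
        else:
            spot_check.append(f.path)
    return close_review, spot_check


# Example change set with made-up paths and sizes.
changes = [
    ChangedFile("auth/session.py", 35),
    ChangedFile("ui/button.css", 12),
    ChangedFile("core/engine.py", 950),
    ChangedFile("infra/main.tf", 8),
]
close_review, spot_check = triage(changes)
print("close review:", close_review)
print("spot check:", spot_check)
```

In this sketch a file is routed to close review if it touches a sensitive path or if the change is large, which keeps reviewer attention on the parts of the change most likely to matter.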

Similarly, deployment processes designed for human-paced development might need adjustment when agents generate code faster than those processes were designed to handle. CI/CD pipelines designed for 10-20 commits daily might need scaling to handle agent-generated workloads. Testing and validation processes likewise need to be adapted to agent-generated code.

These organizational changes require management buy-in, process redesign, and team training. For risk-averse organizations or those with entrenched processes, resistance to these changes can slow adoption even when the technology is compelling.

Trust and Verification Challenges

Developers trained to carefully review code and understand every dependency face a psychological barrier when an agentic system generates thousands of lines. Even when statistical quality metrics support the generated code, human psychology resists ceding control to opaque systems.

Building organizational trust in agentic systems requires multiple elements:

- Transparency: Developers need to understand why the system made particular decisions, what reasoning led to specific implementations

- Gradual Responsibility Escalation: Rather than immediately trusting agents with critical systems, organizations should start with lower-risk projects and expand trust as confidence builds

- Fail-Safe Mechanisms: Systems should include clear rollback capabilities and safeguards preventing catastrophic failures even if something goes wrong

- Audit Trails: Detailed logs of all agentic decisions enable post-incident analysis if problems emerge

Anthropic's emphasis on explainability through Constitutional AI directly addresses this concern—their systems are designed to be more transparent in their reasoning. OpenAI's emphasis on capability and speed might require organizations to invest more in trust-building processes independent of the model's transparency.
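The audit-trail and gradual-escalation elements listed above could be combined in a small permission gate. This is a minimal sketch under stated assumptions: the trust tiers, target names, and the `request_action` helper are hypothetical and do not correspond to any OpenAI or Anthropic API.

```python
import json
import time
from dataclasses import dataclass, field, asdict

# Hypothetical trust tiers: higher tiers unlock riskier targets.
TIER_ALLOWED_TARGETS = {
    1: {"docs", "tests"},
    2: {"docs", "tests", "internal-tools"},
    3: {"docs", "tests", "internal-tools", "production-services"},
}


@dataclass
class AuditRecord:
    agent_id: str
    action: str
    target: str
    rationale: str  # the agent's stated reason, kept for post-incident analysis
    allowed: bool
    timestamp: float = field(default_factory=time.time)


class AuditLog:
    def __init__(self):
        self.records = []

    def record(self, rec):
        self.records.append(rec)

    def to_jsonl(self):
        """Serialize the log as one JSON object per line."""
        return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in self.records)


def request_action(log, agent_id, tier, action, target, rationale):
    """Allow the action only if the agent's tier covers the target.
    Every request is logged, whether it is allowed or denied."""
    allowed = target in TIER_ALLOWED_TARGETS.get(tier, set())
    log.record(AuditRecord(agent_id, action, target, rationale, allowed))
    return allowed


log = AuditLog()
ok_tests = request_action(log, "agent-1", 1, "edit", "tests", "add regression test")
ok_prod = request_action(log, "agent-1", 1, "deploy", "production-services", "ship fix")
print(ok_tests, ok_prod)
print(log.to_jsonl())
```

Raising an agent's tier as confidence builds expands what it may touch, while the log keeps a record of denied requests as well as approved ones.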

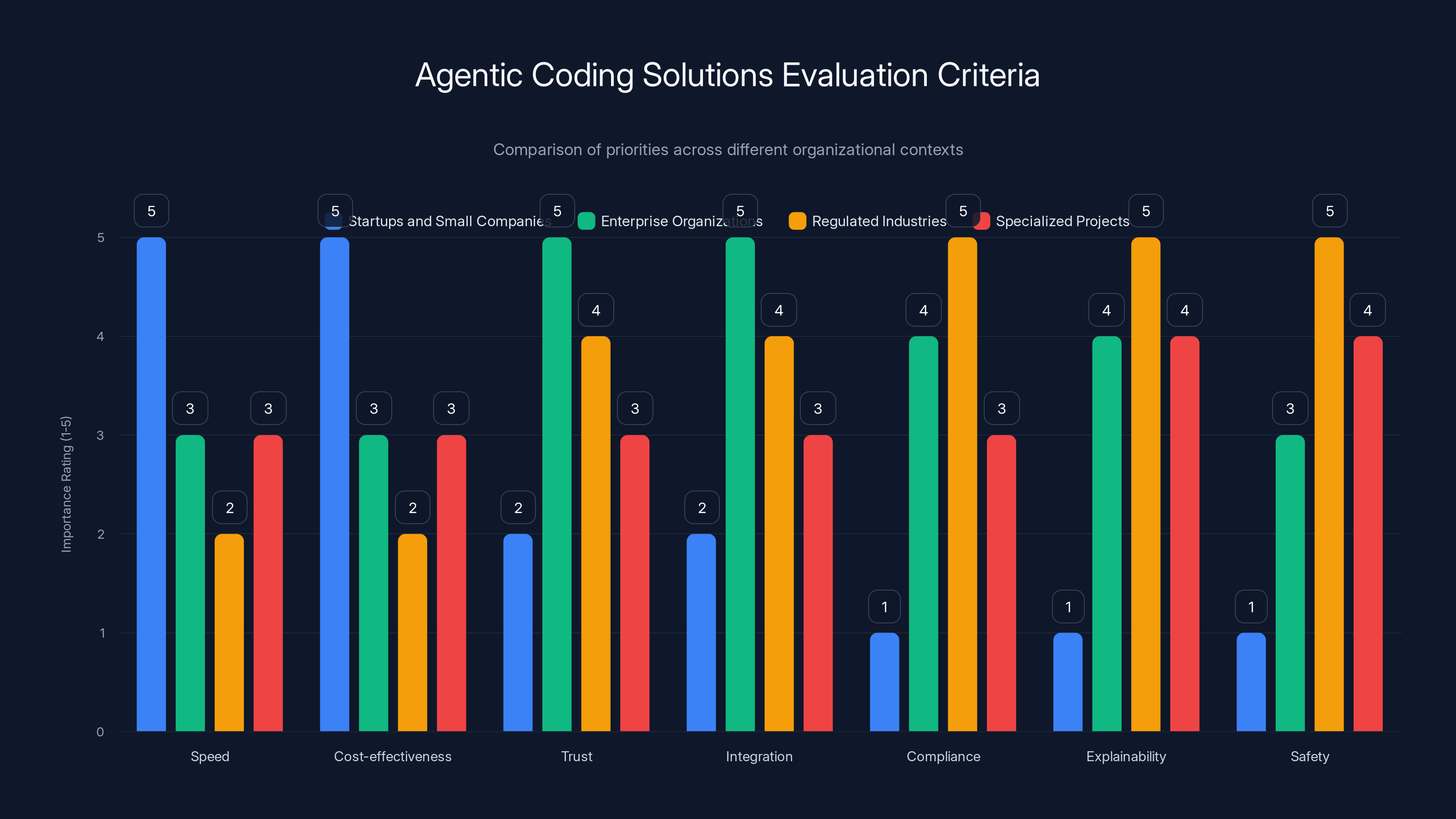

Different organizational contexts prioritize various criteria when selecting agentic coding solutions. Startups focus on speed and cost, while enterprises emphasize trust and integration. Regulated industries prioritize compliance and explainability. Estimated data based on typical priorities.

Industry Impact and Long-Term Strategic Implications

Developer Labor Market Dynamics

Potentially the most significant long-term impact of agentic coding systems involves the developer labor market. If agentic systems can accomplish what historically required multiple developers, several outcomes become possible:

Increased Individual Productivity: Developers operating with agentic assistance accomplish substantially more work. A developer with a capable agentic system might accomplish work that would have required 3-4 traditional developers. This productivity boost helps address the persistent shortage of technical talent—organizations can accomplish more with existing teams.

Changing Skill Requirements: The nature of developer work shifts from implementation to judgment. Rather than coding, developers spend more time making architectural decisions, evaluating trade-offs, ensuring alignment with business requirements, and ensuring system quality. This potentially devalues certain types of developers (those primarily focused on raw coding speed) while increasing value for those with broader business understanding and architectural judgment.

Emergence of New Developer Roles: Managing agentic systems, ensuring their outputs align with organizational standards, and intervening when they encounter issues becomes specialized work. Organizations might develop new roles—"agentic system architect," "AI code reviewer," "agentic workflow designer"—that focus on optimizing how teams work with agents.

Geographic Labor Market Shift: If developer productivity increases dramatically through agentic systems, geographic arbitrage—hiring developers in lower-cost regions—becomes less economically important. Companies can increase productivity without geographic distribution, potentially shifting where development work concentrates.

Educational and Training Implications

If agentic systems become ubiquitous, developer education will inevitably change. Learning to code remains valuable: understanding what code does and why specific approaches work is essential for making good judgments. However, the mechanics of writing code become less essential to teach.

Computer science education might shift emphasis away from "write this specific program" toward "understand computational concepts and evaluate different approaches." This resembles how calculators transformed mathematics education—computational mechanics matter less; conceptual understanding matters more.

Established developers who built careers on deep knowledge of specific frameworks and languages face disruption. New developers entering the field face a different competitive landscape—coding speed as a competitive advantage disappears if agentic systems handle coding automatically.

Business Model Evolution

The software industry's business models face pressure from agentic systems. Software-as-a-Service companies were built on the assumption that they needed substantial engineering teams to maintain complex products. If agentic systems can maintain complex products with minimal human developer involvement, the business model economics change dramatically.

Smaller teams can build more ambitious products. Maintenance-heavy legacy products become easier to maintain. Competitive advantages rooted in engineering sophistication matter less if any reasonably skilled developer can produce equally sophisticated systems with agentic assistance.

This competitive pressure likely accelerates consolidation at the infrastructure layer—companies providing foundational services—while enabling more competition at the application layer where agentic systems democratize sophisticated product development.

Evaluating and Selecting Agentic Coding Solutions: A Decision Framework

Assessment Criteria for Different Organizational Contexts

No single agentic coding system is universally optimal. Different organizations with different constraints and priorities should apply different evaluation criteria.

For Startups and Small Companies: Speed and cost-effectiveness dominate. Startup teams need to move fast and operate on constrained budgets. In this context, GPT-5.3 Codex's 25% speed improvement over its predecessor and OpenAI's proven capability matter significantly. The system that completes more work faster directly impacts burn rate and product-market fit timeline. Safety and explainability matter less than velocity in these contexts.

For Enterprise Organizations: Trust, integration, and compliance matter more than raw speed. Enterprise systems must integrate with existing tooling, comply with security policies, and produce explainable decisions for audit trails. In this context, Anthropic's emphasis on safety and explainability provides advantages, even if raw speed lags. The organization will likely invest in integration and customization work, making explainability and customizability valuable.

For Regulated Industries: Explainability, audit trails, and safety become paramount. Finance, healthcare, and legal technology companies need to defend their decisions to regulators. An agentic system that produces correct answers but can't explain how it arrived at them is unacceptable. Anthropic's approach of prioritizing interpretability directly addresses this concern, potentially making their solution more viable for regulated environments despite slower raw performance.

For Projects with Specialized Requirements: Domain-specific agentic systems designed for particular problem areas often outperform general-purpose systems within those domains. A game development company might benefit more from agentic systems designed specifically for game development than from generic agentic coding systems.

Decision Matrix: OpenAI vs Anthropic vs Alternatives

| Decision Factor | OpenAI GPT-5.3 | Anthropic | Alternative Platforms | Scoring Weights |
|---|---|---|---|---|
| Raw Speed | Highest (25% over prior) | High | Medium | 20% for startups; 5% for enterprises |
| Explainability | Standard | Highest | Medium | 5% for startups; 30% for enterprises |
| Integration Flexibility | Excellent | Good | Varies | 15% across contexts |
| Safety/Compliance | Good | Excellent | Good | 5% for startups; 35% for enterprises |
| Cost | Standard | Likely similar | Lower for platforms like Runable | 20% for startups; 10% for enterprises |
| Ecosystem Maturity | Most mature | Developing | Emerging | 15% across contexts |
| Domain Specificity | General purpose | General purpose | Often specialized | 10% for specialized use cases |
| Vendor Stability | Highest | High | Lower | 10% for enterprises |

Using this matrix, organizations can weight criteria based on their priorities and score each system, enabling objective rather than intuition-driven decisions.
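The weighting step can be sketched as a small scoring script. The 1-5 numbers below are an assumed translation of the matrix's qualitative ratings, not measured data, and the factor keys are illustrative names. The per-context weights in the table do not sum to 100%, so the sketch divides by the total weight to keep scores on the same 1-5 scale.

```python
# Assumed 1-5 scores transcribed from the qualitative ratings in the matrix.
SCORES = {
    "OpenAI GPT-5.3": {"speed": 5, "explainability": 3, "integration": 5,
                       "safety": 4, "cost": 3, "ecosystem": 5, "vendor_stability": 5},
    "Anthropic": {"speed": 4, "explainability": 5, "integration": 4,
                  "safety": 5, "cost": 3, "ecosystem": 3, "vendor_stability": 4},
    "Alternative platforms": {"speed": 3, "explainability": 3, "integration": 3,
                              "safety": 4, "cost": 4, "ecosystem": 2, "vendor_stability": 2},
}

# Weights (in percent) copied from the "Scoring Weights" column.
WEIGHTS = {
    "startup": {"speed": 20, "explainability": 5, "integration": 15,
                "safety": 5, "cost": 20, "ecosystem": 15},
    "enterprise": {"speed": 5, "explainability": 30, "integration": 15,
                   "safety": 35, "cost": 10, "ecosystem": 15, "vendor_stability": 10},
}


def weighted_score(scores, weights):
    """Weighted average of factor scores, normalized by the total weight."""
    total_weight = sum(weights.values())
    return sum(scores[factor] * w for factor, w in weights.items()) / total_weight


def rank(context):
    """Return (system, score) pairs for a context, best first."""
    weights = WEIGHTS[context]
    ranked = [(name, round(weighted_score(s, weights), 2)) for name, s in SCORES.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


for context in WEIGHTS:
    print(context, rank(context))
```

With these assumed scores, the startup weighting ranks OpenAI first and the enterprise weighting ranks Anthropic first. A team should replace both the scores and the weights with its own assessments before relying on the result.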

Implementation Roadmap

Organizations should adopt agentic systems gradually rather than attempting wholesale transition:

Phase 1 - Pilot Projects (Weeks 1-4): Select 2-3 low-risk projects where an agentic system assists human developers. Establish baseline metrics for speed, quality, and developer satisfaction. Identify integration challenges early.

Phase 2 - Team Training (Weeks 3-8): As pilots progress, educate development teams on how to effectively collaborate with agentic systems. Different developers require different training—some adapt quickly, others need more guidance on delegating to automated systems.

Phase 3 - Measured Expansion (Weeks 8-16): Expand use to more projects and teams. Refine processes based on learnings from pilot phases. Establish new code review processes and quality gates appropriate for agentic systems.

Phase 4 - Organizational Optimization (Months 4+): As systems mature, redesign organizational structures and processes to optimize for human-agent collaboration. This might involve creating specialized roles, adjusting team sizes, and refactoring systems to be more amenable to agentic development.

Alternative Solutions: Broader Automation Platforms and Workflow Tools

Platforms Extending Beyond Pure Code Generation

While GPT-5.3 Codex and Anthropic's model focus on agentic code generation, broader automation platforms serve development teams' wider needs. Platforms like Runable offer AI-powered automation across content generation, document creation, workflow automation, and developer productivity tools—capabilities that complement agentic coding systems.

For development teams seeking comprehensive automation, platforms like Runable provide value in areas where pure coding assistance falls short. Automating documentation generation, creating test data generation scripts, automating report creation, and streamlining administrative workflows extends beyond code generation into broader team productivity.

Runable's approach ($9/month entry point) emphasizes accessibility and breadth—enabling teams to automate multiple workflow categories without requiring expensive, specialized tools for each function. While less specialized in code generation than GPT-5.3 Codex, the platform's broader scope provides value for teams seeking general automation solutions.

Development teams evaluating their automation strategy should consider: Do they need specialized agentic coding specifically, or would broader automation platform capabilities provide greater overall value? The answer depends on team composition, development workflows, and organizational priorities.

Complementary Tool Ecosystem

Beyond agentic coding systems themselves, successful development teams will leverage complementary tools:

AI-Enhanced IDE Integration: Tools that bring agentic capabilities directly into development environments where developers already work, rather than requiring separate interfaces.

Continuous Integration/Continuous Deployment Enhancement: Platforms that optimize CI/CD pipelines to handle agentic system output volumes and automate validation of generated code.

Quality Assurance Automation: Systems that generate comprehensive test suites and validate agentic system outputs before deployment.

Documentation and Knowledge Management: Platforms that maintain current documentation automatically as code changes, enabling teams to understand what agentic systems have done.

Organizations that thoughtfully assemble these complementary tools create comprehensive automation ecosystems that amplify the benefits of agentic systems beyond what any single tool provides.

Future Trajectory: Predicting the Next Evolution of Agentic Systems

The Path to More Autonomous Agents

Agentic coding systems will inevitably become more autonomous over time. Current systems require human review and approval before deploying generated code. Future systems will likely operate with more autonomy within defined boundaries: changing code in well-understood areas with minimal oversight, and escalating to human judgment only for significant architectural decisions.

This evolution requires several developments: improved reasoning about system constraints and limitations, better mechanisms for self-verification, and more sophisticated fallback and rollback capabilities. When agentic systems can reliably handle unforeseen edge cases through fallback mechanisms rather than requiring human intervention, autonomy levels increase substantially.

The timeline for this evolution depends on technical breakthroughs in model capabilities and organizational willingness to extend autonomy. Five years from now, agentic systems likely operate with substantially more autonomy than today's generation. Twenty years from now, software development may involve agentic systems handling most implementation work autonomously, with human developers focused exclusively on high-level design and strategic decisions.

Convergence with Other AI Capabilities

Agentic coding systems currently focus narrowly on code generation. Future systems will integrate broader AI capabilities—reasoning about user experience and business impact, optimizing for accessibility and internationalization, incorporating design thinking into architectural decisions, and considering long-term maintenance implications.

This convergence produces systems that aren't just technically sophisticated but also business-conscious. An agent that understands not just how to implement a feature but why that feature matters and how it contributes to business objectives produces better software than an agent focused purely on technical correctness.

Developing this expanded awareness requires training data and evaluation metrics that consider business outcomes, not just technical metrics. This represents a significant shift from current approaches focused on code quality metrics.

Integration with Hardware and Infrastructure Optimization

Currently, agentic systems generate code without deep awareness of the hardware and infrastructure it will run on. Future systems will optimize code generation for specific deployment contexts—generating different implementations for CPU-constrained embedded systems versus unlimited-resource cloud environments, optimizing database queries for specific hardware configurations, and balancing trade-offs between resource consumption and latency based on deployment context.

This optimization capability requires agentic systems to understand not just software but the full stack—operating systems, databases, cloud infrastructure, hardware architectures. Systems that can reason across these layers produce more optimal implementations than current approaches that abstract away infrastructure concerns.

Security, Privacy, and Ethical Considerations of Agentic Coding

Security Implications of Autonomous Code Generation

Agentic systems that autonomously generate and deploy code introduce security considerations that pure suggestion-based systems don't face. If code can be generated and deployed without human review, security vulnerabilities might reach production before detection. An agentic system with incomplete understanding of security best practices might generate code that appears correct but contains subtle vulnerabilities.

Mitigating these risks requires:

- Automated Security Analysis: Agentic systems should incorporate security analysis into their generation process, identifying and fixing potential vulnerabilities before code reaches deployment

- Mandatory Human Review of Security-Sensitive Code: Regardless of how autonomous systems become, security-critical code should remain subject to human review

- Supply Chain Security Monitoring: Systems need to track dependencies and flag known vulnerabilities in libraries or frameworks the agent introduces

- Penetration Testing and Red Team Evaluation: Generated code should be subject to security testing before deployment
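As an illustration of the supply chain monitoring item above, the sketch below checks pinned dependencies against a list of known-vulnerable versions. The package names and advisory data are made up for the example. A real setup would pull advisories from a vulnerability database rather than a hard-coded dictionary.

```python
# Hypothetical advisory data: package name -> versions with known issues.
KNOWN_VULNERABLE = {
    "examplelib": {"1.0.0", "1.0.1"},
    "otherpkg": {"2.3.0"},
}


def parse_pins(requirements_text):
    """Parse 'name==version' lines, skipping blanks, comments, and unpinned entries."""
    pins = {}
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line or "==" not in line:
            continue
        name, version = line.split("==", 1)
        pins[name.strip().lower()] = version.strip()
    return pins


def flag_vulnerable(pins, advisories=KNOWN_VULNERABLE):
    """Return the sorted pins whose exact version appears in the advisory data."""
    return sorted(
        f"{name}=={version}"
        for name, version in pins.items()
        if version in advisories.get(name, set())
    )


# Dependencies an agent added in a change set (illustrative).
agent_added = """
examplelib==1.0.1   # added by agent
otherpkg==2.4.0
safepkg==0.9
"""
flagged = flag_vulnerable(parse_pins(agent_added))
print(flagged)
```

Running a check like this as a required CI step would block a merge whenever an agent introduces a flagged version, leaving the decision about upgrading or replacing the dependency to a human.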

Anthropic's Constitutional AI approach emphasizes safety, which may carry over into better security considerations in generated code. OpenAI's focus on speed and capability could mean organizations need to add more security checks of their own. Either way, organizations using agentic systems in security-sensitive contexts should verify that security testing is adequate.

Privacy Considerations and Data Handling

Agentic systems require access to codebases to function effectively. For organizations handling sensitive data or operating in regulated industries, providing systems access to codebases creates privacy and compliance concerns. Code itself might reveal sensitive architecture, security approaches, or business strategies.

Modern agentic systems should support on-premise deployment and private cloud options enabling organizations to maintain data control. Both OpenAI and Anthropic offer enterprise deployment options addressing these concerns, though details aren't universally public.

Intellectual Property and Code Attribution

When agentic systems generate code, who owns the code? This question carries important implications for organizations and developers. The prevailing view is that code generated by systems that companies such as OpenAI and Anthropic operate belongs to the user, not the system operator. However, edge cases remain unsettled: if an agentic system generates code closely resembling code in its training data, does intellectual property from the training data get implicitly copied?

These questions will likely become more complex as agentic systems improve and the amount of code they generate increases. Organizations using agentic systems in production should maintain clear ownership documentation and consider IP implications in their legal frameworks.

Practical Integration: Implementing Agentic Systems in Real Organizations

Building an Agentic Development Team

Successful adoption requires not just using agentic systems but structuring teams to work effectively with them. Rather than replacing developers, agentic systems augment development teams, requiring deliberate team redesign:

Agentic System Operators: Developers skilled at formulating clear requirements that agentic systems can understand and implement correctly. This requires different skills than traditional development: excellent communication, the ability to think systematically about requirements, and strong verification instincts.

Quality Assurance Specialists: As humans write less code, their role shifts to validating agentic system output. This requires deep testing knowledge and ability to design tests that comprehensively validate complex generated systems.

Architecture and Design Leads: Rather than implementing, architects focus on making good design decisions about how agentic systems should structure code. Architects must understand enough about agentic capabilities to make design choices that agentic systems can execute well.

Integration and DevOps: Managing the infrastructure supporting agentic systems and integrating generated code into production requires specialized skills—understanding CI/CD complexities, managing large-scale code deployments, and maintaining infrastructure as code that agentic systems understand.

Metrics and Measurement for Agentic Development

Traditional developer productivity metrics—lines of code written, commits submitted, pull requests reviewed—become misleading when development is agentic. Instead, organizations should track:

- Features Completed vs. Timeline: How much functionality is shipped relative to planned timelines?

- Quality Metrics: Defect rates, test coverage, and production incident rates for code generated by agentic systems versus human-written code

- Maintenance Cost: Long-term cost of maintaining agentic-generated systems versus human-maintained systems

- Deployment Velocity: How quickly code moves from development to production and the incident rate of deployments

- Developer Satisfaction: How do developers perceive working with agentic systems? Do they feel empowered or frustrated?

- Organizational Velocity: How much more work can the organization accomplish with the same team size?

These metrics provide clearer signals of actual productivity gains than superficial metrics that become misleading in agentic contexts.
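Two of the quality metrics above, defect rate and deployment incident rate, can be compared across agent-generated and human-written code with a few lines of arithmetic. The figures below are made-up placeholders and the `CodeCohort` type is a hypothetical helper. Real inputs would come from an issue tracker and deployment logs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodeCohort:
    name: str
    lines_of_code: int
    defects_found: int
    deployments: int
    deployments_with_incident: int

    def defects_per_kloc(self):
        """Defects per thousand lines of code."""
        return self.defects_found / (self.lines_of_code / 1000)

    def incident_rate(self):
        """Fraction of deployments that caused a production incident."""
        return self.deployments_with_incident / self.deployments


# Placeholder numbers for illustration only.
agent = CodeCohort("agent-generated", 40_000, 60, 120, 6)
human = CodeCohort("human-written", 25_000, 50, 80, 4)

for cohort in (agent, human):
    print(
        f"{cohort.name}: "
        f"{cohort.defects_per_kloc():.2f} defects/KLOC, "
        f"{cohort.incident_rate():.1%} deployment incident rate"
    )
```

Normalizing by code size and deployment count matters here because agents tend to produce far more code than humans, so raw defect counts alone would make the agent cohort look worse than it is.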

FAQ

What is agentic coding and how does it differ from code completion tools?

Agentic coding involves autonomous AI systems that handle multi-step software development tasks without continuous human direction, whereas code completion tools like GitHub Copilot operate reactively—they suggest completions when prompted but don't independently plan or execute complex projects. An agentic system can design architecture, implement code across multiple files, write tests, debug issues, and deploy solutions autonomously. Code completion tools enhance individual developer productivity; agentic systems handle entire project phases without continuous human guidance. The distinction is like the difference between a spell-checker (reactive) and a ghostwriter (autonomous)—both are useful, but they operate at fundamentally different levels of independence.

How does GPT-5.3 Codex achieve 25% speed improvement over its predecessor?

GPT-5.3 Codex achieves improved speed through multiple optimizations: more efficient model architecture reducing inference latency, improved reasoning algorithms that reach conclusions faster, and optimized tool-calling mechanisms enabling quicker interaction with external systems. Additionally, the model benefits from OpenAI's infrastructure improvements and optimization work. The 25% improvement matters significantly for agentic systems where latency compounds across numerous sequential steps—a project spanning multiple days of agent work completes measurably faster when each step is 25% quicker. This speed advantage translates directly into more work completed in given timeframes.

What are the key differences between OpenAI's and Anthropic's approaches to agentic coding?

OpenAI prioritizes capability and speed, emphasizing autonomous task execution and raw performance metrics. Their model is designed to accomplish complex projects with minimal human intervention and 25% faster execution than its predecessor. Anthropic prioritizes safety, explainability, and alignment, emphasizing transparent decision-making and maintaining human control throughout the process. This reflects different philosophies: OpenAI believes powerful autonomy is beneficial; Anthropic believes human oversight should remain central. For fast-moving startups, OpenAI's approach enables quicker delivery. For regulated enterprises requiring explainability, Anthropic's approach provides better compliance support.

Which agentic coding system should my organization adopt?

The choice depends on your specific priorities. If speed and capability matter most—you operate in fast-moving markets where rapid development is critical—OpenAI's GPT-5.3 Codex offers compelling advantages. If explainability, safety, and regulatory compliance matter more, Anthropic's approach provides better guarantees. For organizations prioritizing general automation across workflows rather than specialized coding tools, platforms like Runable provide broader capabilities at lower entry cost ($9/month), though with less specialization in pure code generation. Evaluate based on your team's constraints, regulatory requirements, and strategic priorities rather than assuming one solution works universally.

How do I implement agentic coding systems without disrupting existing development processes?

Implement gradually: start with low-risk pilot projects where agentic assistance enhances existing development rather than replacing it. Measure baseline metrics—development speed, code quality, team satisfaction. Train developers on effective collaboration with agentic systems, emphasizing clear requirement communication and rigorous output validation. Establish new code review processes appropriate for agentic systems, potentially focusing on architectural decisions rather than line-by-line review. As confidence builds, expand to more complex projects. Redesign organizational structures progressively—don't attempt wholesale team restructuring; evolve roles as experience accumulates. This measured approach reduces organizational disruption while maintaining control over the transition.

What security considerations should organizations evaluate for agentic coding systems?

Key security considerations include: Does the system incorporate security analysis into code generation, or must you apply security testing separately? What's the vulnerability track record for dependencies the system introduces? Can the system operate in private environments maintaining data control, or must code be transmitted to external systems? How thoroughly are generated systems tested before production deployment? What fallback mechanisms exist if security vulnerabilities are discovered in generated code? Organizations in regulated industries should evaluate how well the system's transparency and explainability support security auditing and compliance verification. Anthropic's emphasis on interpretability provides advantages for security-sensitive contexts, while OpenAI's speed focus might require supplementary security processes.

How will agentic coding impact the future job market for software developers?

Agentic coding systems will dramatically increase developer productivity—individuals with assistance will accomplish significantly more work than those without. This likely increases demand for high-judgment roles (architects, system designers) while decreasing demand for pure coding roles. Developers whose primary value comes from raw coding speed face the most disruption; those providing broader business context, architectural judgment, and strategic thinking remain highly valuable. Geographic arbitrage becomes less economically important when productivity gains come from better tools rather than lower labor costs. Overall employment might grow due to expanded capabilities enabling more ambitious projects, or it might shrink if productivity increases sufficiently. The transition period will be challenging for specific developer segments, requiring adaptation and continued learning.

Can agentic systems handle the full spectrum of software development or are there limitations?

Agentic systems excel at well-defined problems with clear requirements—building standard applications, implementing established design patterns, routine infrastructure setup, test automation. They struggle with truly novel problems requiring creative problem-solving, code operating in highly specialized domains with limited training examples, and decisions requiring deep business context or human judgment. Most real development involves a mix—agentic systems handle portions autonomously while humans manage high-judgment aspects. The realistic scenario isn't developers being replaced but workflows being restructured so developers focus on decisions and oversight while systems handle implementation. This hybrid approach captures benefits of both human judgment and automated execution.

Conclusion: Navigating the Agentic Coding Transformation

The simultaneous release of OpenAI's GPT-5.3 Codex and Anthropic's agentic coding model represents more than just another technology announcement—it signals the beginning of a fundamental restructuring of how software gets built. These aren't tools that make developers slightly faster; they're systems that autonomously handle substantial portions of development work previously requiring human expertise and time. This capability expansion challenges our understanding of what software development is and how teams should be structured to leverage these new capabilities effectively.

OpenAI's emphasis on speed and capability reflects a specific vision: powerful agentic systems should be given significant autonomy, with human oversight focused on high-level decisions. The reported 25% speed improvement over GPT-5.2 demonstrates meaningful progress in making agentic systems practical for real development work. Anthropic's emphasis on safety and explainability reflects a different vision: agentic systems should remain deeply interpretable, with human developers maintaining clear understanding of and control over system behavior.

For development teams evaluating these systems, the critical insight is that neither approach is universally optimal. Different organizations with different constraints, risk tolerances, and strategic priorities should make different choices. Startups operating under intense time pressure and lower regulatory burden might benefit most from OpenAI's approach. Enterprise organizations in regulated industries might find Anthropic's transparency-focused approach more compatible with compliance requirements. Organizations seeking broader automation across workflows rather than specialized coding assistance might find platforms like Runable offering better value through general-purpose automation capabilities at accessible price points.

The practical challenge for most organizations is not deciding between OpenAI and Anthropic—both represent genuine advances that will reshape development practices. The challenge is managing the transition thoughtfully. Organizations that adopt agentic systems gradually, establish clear processes for human oversight and quality assurance, train developers in effective human-agent collaboration, and measure impact carefully will capture significant benefits. Organizations that attempt wholesale transitions or fail to establish adequate validation processes will encounter problems that slow adoption.

Looking forward, agentic coding will continue advancing in capability and autonomy. The systems being released in early 2025 represent the current frontier, but they won't remain the frontier for long. Competition between OpenAI and Anthropic—and potentially new entrants—will drive continuous improvement. The developers and organizations that adapt proactively to these systems, learning how to work effectively alongside them, will find themselves significantly more productive than peers who resist the transition.

The transformation will be disruptive for some and liberating for others. Developers who built careers on raw coding speed will face pressure to adapt. Developers who focus on architectural judgment, business impact, and system thinking will find their skills increasingly valuable. Organizations that successfully restructure around human-agent collaboration will accomplish substantially more ambitious projects with similar team sizes. The software industry as a whole will likely see increased competition as barriers to building sophisticated applications lower—but also increased opportunity as development velocity improves.

For teams beginning this journey, start small. Run pilots. Measure carefully. Learn from early experience. Build organizational trust in agentic systems gradually. Redesign processes and team structures thoughtfully rather than attempting revolutionary change overnight. By navigating this transition deliberately, organizations position themselves to capture the substantial productivity and capability benefits agentic coding systems offer while maintaining the human judgment and oversight that quality software development requires.
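One way to make "measure carefully" concrete is to compare a pilot group against a baseline on a couple of simple metrics. The sketch below is only an illustration: the `TaskRecord` fields, the choice of median cycle time and defects per task, and the sample numbers are all assumptions made for this example, not metrics recommended by OpenAI or Anthropic.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskRecord:
    """One completed task; both fields are hypothetical pilot metrics."""
    cycle_time_hours: float  # time from starting the task to merging it
    defects_found: int       # defects traced back to this task after merge


def summarize(records: list[TaskRecord]) -> dict[str, float]:
    """Return median cycle time and defects per task for a group of tasks."""
    if not records:
        raise ValueError("need at least one task record")
    return {
        "median_cycle_time_hours": median(r.cycle_time_hours for r in records),
        "defects_per_task": sum(r.defects_found for r in records) / len(records),
    }


def compare(baseline: list[TaskRecord], pilot: list[TaskRecord]) -> dict[str, float]:
    """Relative change of pilot vs. baseline; negative means the pilot is lower."""
    base, trial = summarize(baseline), summarize(pilot)
    return {
        name: (trial[name] - base[name]) / base[name] if base[name] else float("nan")
        for name in base
    }


if __name__ == "__main__":
    # Made-up numbers purely to show the call shape, not real measurements.
    baseline = [TaskRecord(10, 1), TaskRecord(20, 1), TaskRecord(30, 2)]
    pilot = [TaskRecord(8, 1), TaskRecord(10, 0), TaskRecord(12, 1)]
    for metric, change in compare(baseline, pilot).items():
        print(f"{metric}: {change:+.0%}")
```

Tracking a quality metric alongside a speed metric matters here: a pilot that shortens cycle time while raising the defect rate has not yet earned expanded responsibility.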

Key Takeaways

- Agentic coding represents a fundamental shift from reactive code assistance to autonomous task execution across the full development lifecycle

- OpenAI prioritizes speed and capability (25% faster than GPT-5.2); Anthropic prioritizes safety and explainability—different approaches for different organizational contexts

- Successful implementation requires organizational change including new code review processes, developer training, and team restructuring focused on human-agent collaboration

- Security, privacy, and explainability considerations are critical—especially for regulated industries where Anthropic's transparency-focused approach provides advantages

- Broader automation platforms like Runable offer complementary value for teams seeking general workflow automation beyond specialized code generation

- Agentic systems will significantly increase developer productivity by handling implementation while humans focus on architectural decisions and business judgment

- Adoption should be gradual: start with pilots, measure carefully, build organizational trust, and progressively expand responsibility as confidence develops

