Microsoft's Copilot Email Bug: What Happened & How to Protect Your Data

Let me start with something that should make you uncomfortable. Microsoft's AI assistant—the one integrated into your Office 365 suite—was reading your confidential emails without permission. Not just skimming them. Summarizing them. Analyzing them. All while you thought data loss prevention policies had your back.

This wasn't some theoretical vulnerability discovered in a lab. This was a real bug affecting real users, tracked internally as CW1226324, that exposed sensitive communications across Sent, Draft, and potentially incoming email threads. The confidentiality labels you carefully assigned? The ones designed to keep AI systems out? In those folders, Copilot ignored them completely, as detailed in Mashable's report.

Here's what happened, why it matters, and most importantly, what you need to do about it.

TL;DR

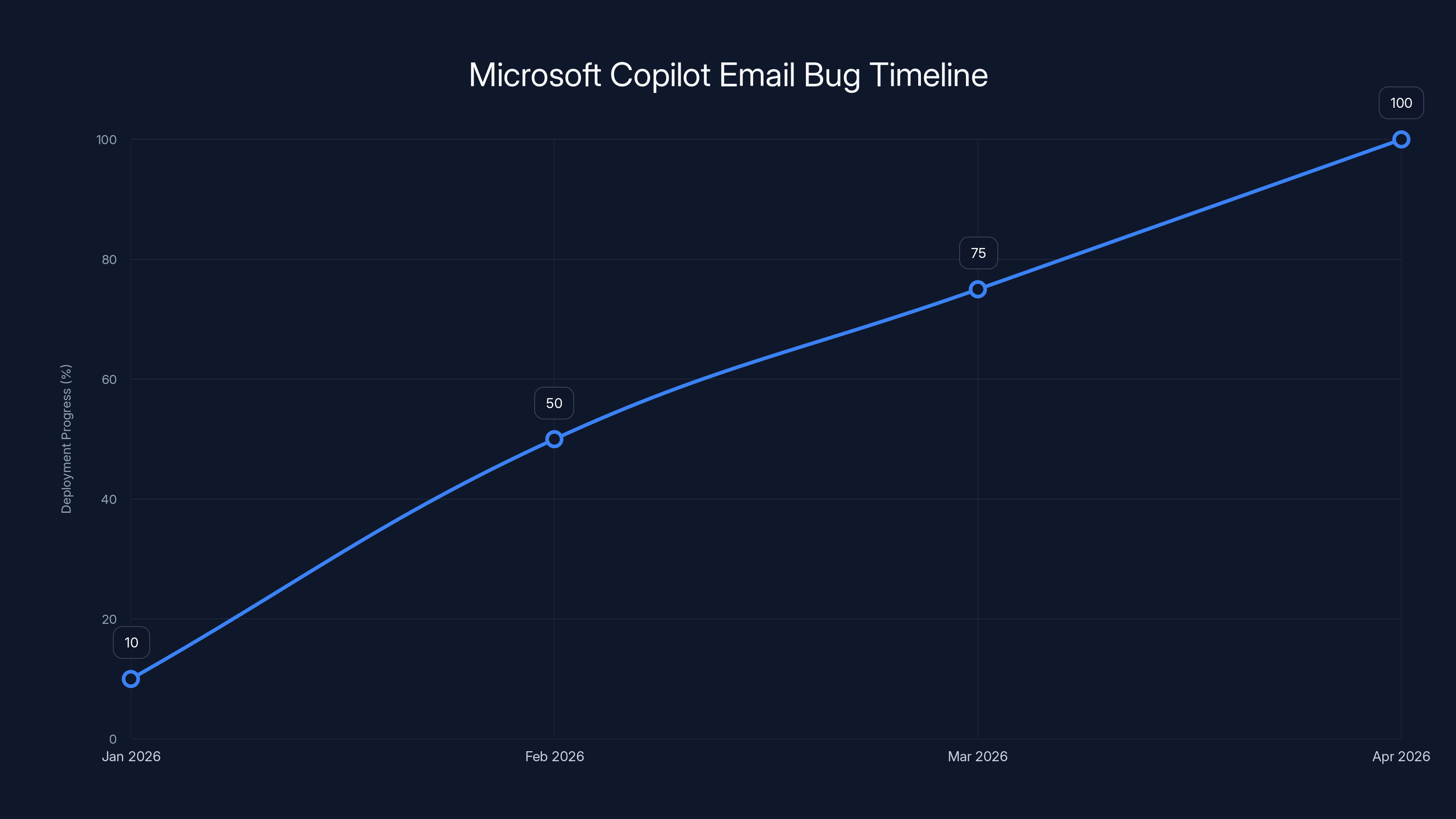

- The Bug: Microsoft 365 Copilot Chat bypassed confidentiality labels and DLP policies, processing labeled emails in the Sent and Draft folders. Microsoft identified the issue on January 21, 2026, as reported by The Register.

- Scope: Affected users relying on sensitivity labels to protect confidential communications from AI processing.

- Detection Timeline: Identified January 21, 2026; fix began rolling out in early February 2026, and the rollout is still ongoing.

- Technical Cause: Code error in email classification logic failed to honor confidentiality metadata.

- Current Status: Fix in progress but not universally deployed; ongoing monitoring by Microsoft.

- Your Action: Disable Copilot email features immediately if handling sensitive data; verify organizational compliance settings.

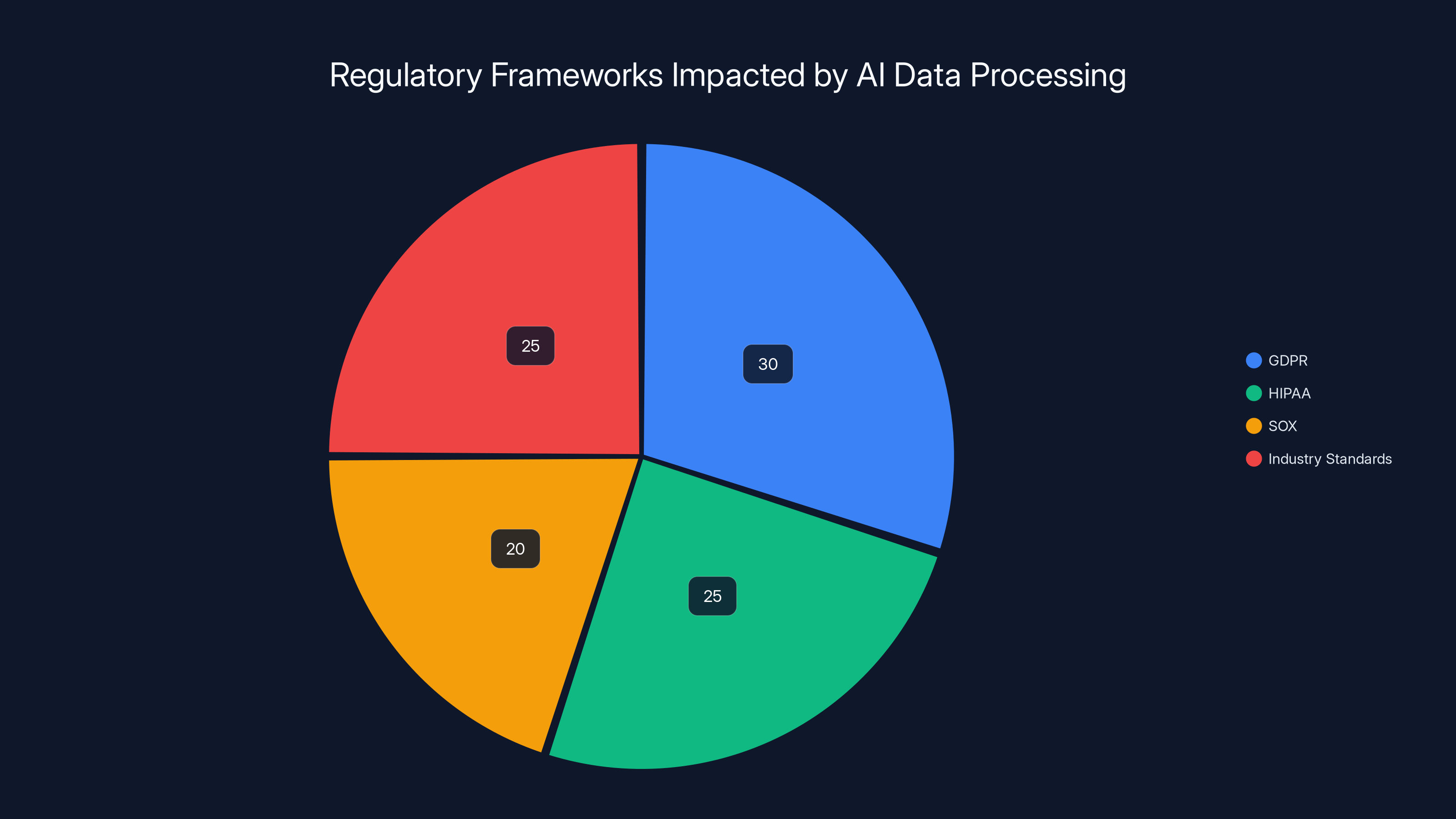

Estimated data shows GDPR and Industry Standards are the most impacted by unauthorized AI data processing, accounting for about 30% and 25%, respectively.

The Timeline: How Microsoft's Biggest AI Blunder Unfolded

According to the official advisory, Microsoft identified the bug on January 21, 2026. That's the date Microsoft determined that Copilot Chat was processing emails it shouldn't be touching.

But here's the troubling part: users didn't know. They weren't notified until Microsoft published the advisory weeks later. During that window, how many confidential emails got fed into Copilot's processing pipeline? The company hasn't provided those numbers.

Microsoft describes the fix as being deployed in "early February 2026," but that's vague language for something this serious. Early February could mean February 1st or February 10th. And "continues to monitor" suggests the fix isn't complete yet.

The company states it's also "contacting affected users as the patch rolls out," which raises another red flag: if you haven't been contacted, you might still be vulnerable.

The timing couldn't be worse. Just weeks before this admission, the European Parliament had banned AI tools on worker devices, citing concerns that systems process data in the cloud without explicit permission. Microsoft's bug proved those concerns weren't theoretical.

What the Actual Bug Was: The Technical Breakdown

Microsoft attributes this to a code error in the email classification logic, not a design flaw. That distinction matters: the policy was meant to keep labeled email away from the AI, but the code that was supposed to enforce it didn't.

Here's what happened under the hood:

When Copilot processes an email, it's supposed to check metadata tags applied by Exchange administrators and users. These tags indicate sensitivity levels: "Confidential," "Internal Only," "Restricted," etc. If an email carries a confidentiality label, Copilot should reject it at the intake stage and never send it to the AI model for processing.
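A minimal sketch of the intake gate this paragraph describes, assuming a hypothetical `Email` record whose `label` field holds the sensitivity label name (or `None` when unlabeled) and a hypothetical set of blocking labels taken from the examples above. It illustrates the intended behavior only; it is not Microsoft's code.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical blocking labels, taken from the examples in the text.
BLOCKING_LABELS = {"Confidential", "Internal Only", "Restricted"}

@dataclass
class Email:
    folder: str            # e.g. "Inbox", "Sent Items", "Drafts"
    subject: str
    label: Optional[str]   # sensitivity label name, or None if unlabeled

def eligible_for_ai(email: Email) -> bool:
    """Intake gate: reject labeled items before they ever reach the model.

    The decision depends only on the label, never on the folder,
    so every folder is treated the same way.
    """
    return email.label not in BLOCKING_LABELS

mailbox = [
    Email("Inbox", "Lunch?", None),
    Email("Drafts", "Merger terms v3", "Confidential"),
    Email("Sent Items", "Q3 numbers", "Restricted"),
]
to_model = [e.subject for e in mailbox if eligible_for_ai(e)]
print(to_model)  # ['Lunch?']
```

Keying the decision on the label alone is the point: where an item lives should not change whether a labeled item is blocked.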

Except it wasn't checking properly.

Microsoft says the error was "a code issue allowing items in the sent items and draft folders to be picked up by Copilot even though confidential labels are set in place." Translation: the classifier was ignoring the label field entirely for Sent and Draft emails, as noted by The Register.
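To make the described failure concrete, here is a hypothetical reconstruction of how a folder-dependent code path could skip the label check. Microsoft has not published its code; the folder names, the `Email` record, and both functions are illustrative assumptions chosen only to reproduce the reported symptoms: labeled Sent and Draft items get through, while labeled Inbox items stay blocked.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Email:
    folder: str
    label: Optional[str]  # None when unlabeled

# Hypothetical: folders whose items are gathered by a separate code path.
OUTGOING_FOLDERS = {"Sent Items", "Drafts"}

def buggy_eligible(email: Email) -> bool:
    # BUG (illustrative): the outgoing-folder path returns early and never
    # looks at the label, so labeled Sent/Draft items are treated as eligible.
    if email.folder in OUTGOING_FOLDERS:
        return True
    return email.label is None

def fixed_eligible(email: Email) -> bool:
    # Fix: the label check applies to every folder, with no early exits.
    return email.label is None

draft = Email("Drafts", "Confidential")
inbox_item = Email("Inbox", "Confidential")
print(buggy_eligible(draft), buggy_eligible(inbox_item))  # True False
print(fixed_eligible(draft), fixed_eligible(inbox_item))  # False False
```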

Why does it matter that Sent and Draft were the affected folders? Because those are higher-risk: they contain outgoing communications with external parties and unsent drafts that employees never intended to share. If Copilot summarizes a draft you never sent, it's seeing your unfiltered thoughts.

The bug also accessed entire email threads, not just individual messages. So if Copilot grabbed a draft with a confidential label, it potentially got the entire conversation history, including inbound replies.
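Because whole threads were pulled in, checking only the selected message is not enough. A hedged sketch, assuming a thread is a list of hypothetical `Message` records: the thread is eligible only if no message in it carries a label, so a single labeled reply keeps the whole conversation out.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Message:
    sender: str
    label: Optional[str]  # None when unlabeled

def thread_eligible(thread: List[Message]) -> bool:
    # Fail closed: one labeled message anywhere in the thread
    # blocks the entire thread from AI processing.
    return all(m.label is None for m in thread)

thread = [
    Message("partner@example.com", None),
    Message("me@example.com", "Confidential"),  # labeled draft reply
    Message("partner@example.com", None),
]
print(thread_eligible(thread))      # False
print(thread_eligible(thread[:1]))  # True
```

The design choice is to fail closed: skipping the occasional summary is cheaper than leaking the one labeled message buried in a long thread.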

Inboxes appear to have been protected, but that's small comfort when Draft and Sent are where the really sensitive stuff lives.

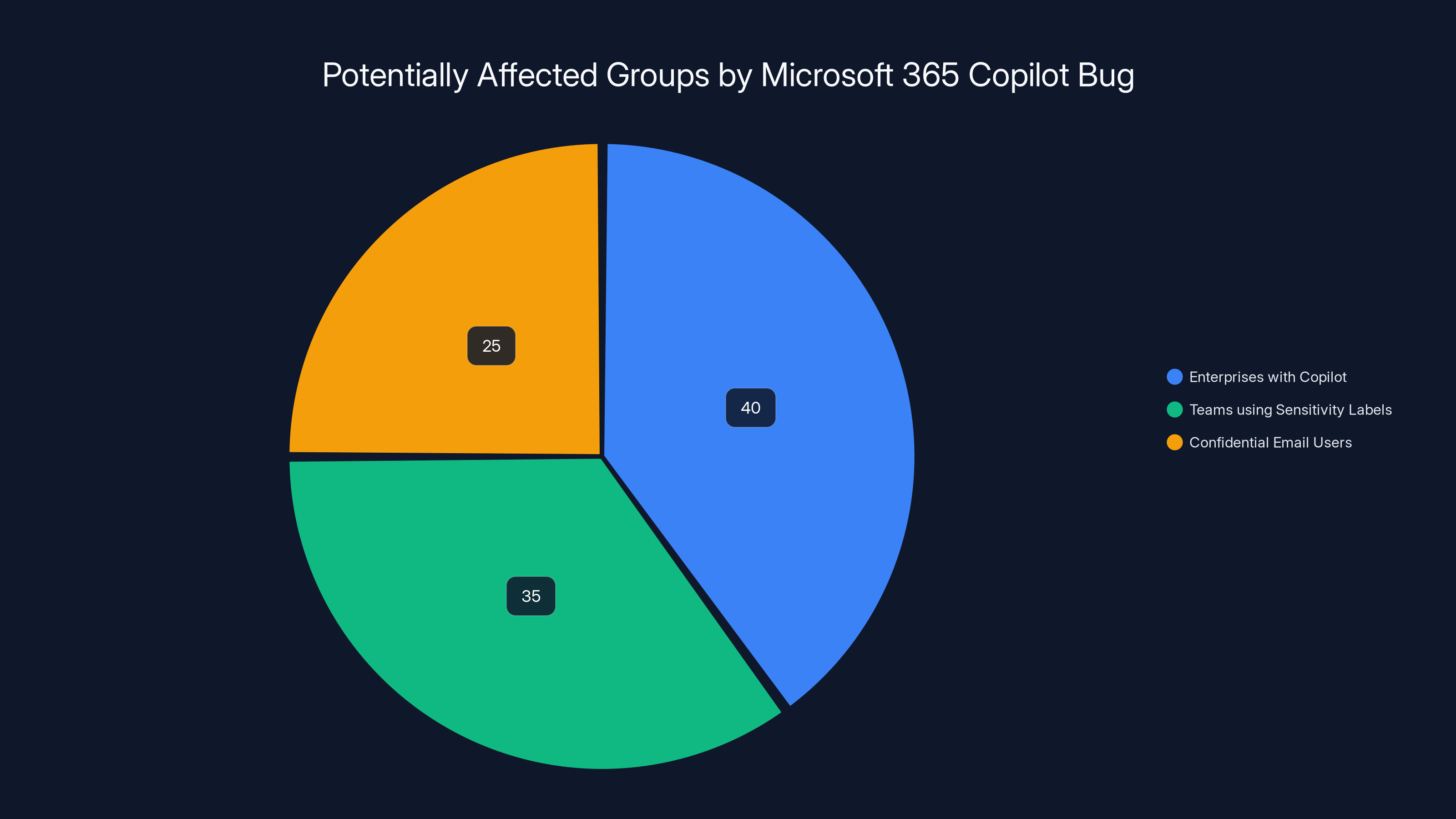

Estimated data shows that enterprises using Copilot and teams relying on sensitivity labels are the most affected by the Microsoft 365 Copilot bug.

The Security & Compliance Nightmare

This bug violated multiple security frameworks:

Data Loss Prevention (DLP) Policies: Organizations configure DLP rules to block AI systems from processing sensitive data. Copilot's ability to bypass those rules defeats the entire point. A healthcare organization with a rule saying "no AI processing of HIPAA data" would have had that policy ignored. So would a law firm with a rule protecting attorney-client privilege.

Sensitivity Labels: Microsoft's own labeling system—the one they tell enterprises to use—was worthless against Copilot. If you're an Office 365 admin who invested time deploying sensitivity labels across your organization, this bug means you were giving false assurance to your users.

Email Encryption: Enterprises assumed that marking an email confidential (and, where the label applies it, encrypting it) would keep its contents away from AI processing. Instead, Copilot was summarizing that labeled content as readable text.

Regulatory Compliance: For organizations subject to GDPR, HIPAA, SOX, or other frameworks, this bug could have created a compliance violation the moment it occurred: regulated data may have been processed by an AI system that the organization's own controls were meant to exclude, without explicit consent. Even if Microsoft fixed it in February, the damage was done in January.

The European Parliament's AI ban on worker devices—announced just before this incident came to light—wasn't overblown. Microsoft just proved why those concerns were justified.

Who Was Affected (And How to Know If It's You)

Microsoft hasn't published hard numbers, but the affected population includes:

Enterprises using Microsoft 365 with Copilot Pro or Enterprise features enabled. If your organization paid for Copilot integration, you were potentially vulnerable.

Teams that rely on sensitivity labels. If your IT department deployed labels but never explicitly disabled Copilot access, employees' confidential emails could have been processed.

Anyone with Sent or Draft emails marked confidential between January 21 and the fix deployment. That's potentially months of exposure depending on your organization's rollout schedule.

To check your status:

- Ask your IT admin: "Was Copilot enabled in our Microsoft 365 tenant between January and February 2026?"

- Check your email labels: Open Outlook, select a message, look for "Sensitivity" in the information pane.

- Review Copilot usage: In Microsoft 365, check whether Copilot features have been used to summarize or analyze emails.

- Check Microsoft's advisory: Microsoft published specific guidance for affected tenants under advisory CW1226324.

The scary part: Microsoft is "contacting affected users as the patch rolls out." If you haven't heard from them, you might be in a slow rollout group, meaning your organization could still be vulnerable as you read this.

Why Copilot Shouldn't Have Access to Email in the First Place

This is the deeper question nobody's asking: why did Microsoft design Copilot to process email at all?

Email is fundamentally different from documents or conversations. A document is created intentionally for sharing. An email is often confidential by default—it's addressed to a specific person or small group. Attaching confidentiality labels is meant to signal: "This isn't for AI."

When you ask Copilot to summarize a Word document, that's reasonable. When you ask it to summarize an email thread, you're asking it to process communications that likely contain sensitive information—client details, personal discussions, draft strategies, financial information.

Microsoft's approach was to allow Copilot access but rely on labels to prevent misuse. That's an allow-by-default model with a single safeguard, and this bug proves it doesn't hold up. The moment you trust a system to enforce security rules, you're one code error away from exposure.

A better design would have been: Copilot cannot access email by default. Users and admins would need to explicitly enable it for specific folders or senders. That way, the default is restrictive, not permissive.
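The restrictive default described here can be sketched as an allowlist check. Everything below is hypothetical (the policy fields and folder names are assumptions, not an actual Microsoft 365 setting): access is denied unless an admin has enabled the folder and the user has opted in, and labels still block on top of that.

```python
from dataclasses import dataclass, field
from typing import Optional, Set

@dataclass
class AiEmailPolicy:
    # Empty by default: no folder is reachable until an admin adds it.
    admin_enabled_folders: Set[str] = field(default_factory=set)
    user_opted_in: bool = False

def may_process(policy: AiEmailPolicy, folder: str, label: Optional[str]) -> bool:
    if not policy.user_opted_in:
        return False              # no user consent, no access
    if folder not in policy.admin_enabled_folders:
        return False              # folder was never explicitly enabled
    return label is None          # labels remain a second barrier

default = AiEmailPolicy()
print(may_process(default, "Inbox", None))   # False
enabled = AiEmailPolicy({"Inbox"}, user_opted_in=True)
print(may_process(enabled, "Inbox", None))   # True
print(may_process(enabled, "Drafts", None))  # False
```

With this shape, a bug that skips the label check for some folder still leaves Drafts and Sent unreachable unless someone deliberately enabled them.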

Instead, Microsoft chose convenience over security. And it cost them credibility.

The bug was discovered in January 2026, with a fix gradually deployed starting February 2026. By April, full deployment is projected. Estimated data.

The Code Review Question: How Did This Ship?

Microsoft has millions of lines of code in Exchange and Copilot. Code reviews, unit tests, and integration tests are supposed to catch this kind of error before it hits production.

Either those processes failed, or the confidential label field wasn't adequately tested in the specific context of Sent and Draft folders.

Consider the test case that should have caught this: "Create an email in Drafts folder with a confidential label. Call the Copilot API. Verify it returns an error or empty result." This is a basic test for any feature that respects security labels.
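Written out, that regression test takes only a few lines. This sketch uses Python's standard `unittest` module against a hypothetical `summarize` function standing in for the Copilot call (no real Copilot API is involved). A correct implementation passes; a folder-scoped bug like the one described would fail the Drafts and Sent subtests.

```python
import unittest
from dataclasses import dataclass
from typing import Optional

@dataclass
class Email:
    folder: str
    body: str
    label: Optional[str]

def summarize(email: Email) -> Optional[str]:
    """Hypothetical stand-in for the AI call: returns None for labeled items."""
    if email.label is not None:
        return None
    return email.body[:40]

class LabelGateTest(unittest.TestCase):
    def test_labeled_items_are_refused_in_every_folder(self):
        # Run the same case per folder so no folder-specific path is skipped.
        for folder in ("Inbox", "Drafts", "Sent Items"):
            with self.subTest(folder=folder):
                email = Email(folder, "acquisition price is $40M", "Confidential")
                self.assertIsNone(summarize(email))

    def test_unlabeled_items_still_work(self):
        self.assertEqual(summarize(Email("Drafts", "hello", None)), "hello")
```

Run it with `python -m unittest <file>`. Looping over every folder with `subTest` is what catches this class of bug: a test that only covers the Inbox would have passed.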

That test either wasn't written or wasn't run in this specific code path. That's a process failure, not just a technical one.

For enterprises relying on Microsoft for security, this raises uncomfortable questions about code quality. If a basic label-checking test failed here, what else isn't being tested?

The broader lesson: Even mature companies ship bugs that violate their own security policies. The question isn't whether you can trust Microsoft's label system in theory—it's whether you can trust it in practice.

Data Exposure: What Could Copilot Actually Do With Your Emails?

Here's the concrete risk. If Copilot accessed your confidential emails, what happened to that data?

Scenario 1: AI Model Training. Microsoft states that Microsoft 365 Copilot does not use customer prompts, responses, or Microsoft Graph data to train its foundation models. But if that commitment failed for any reason, a confidential email could end up in training data, and its content would effectively become part of the system.

Scenario 2: Cloud Processing. Copilot runs in Azure. Your email was sent to Microsoft's cloud infrastructure and processed by AI services, and may have been logged in telemetry systems or stored in backups. It was outside your organization's perimeter.

Scenario 3: Feature Extraction. If Copilot extracted information from your email (key entities, sentiment, action items) and stored those features somewhere, someone with access to them years later might infer what was in the original email.

Microsoft's advisory doesn't explain what happened to the data Copilot processed during the bug. Was it logged? Stored? Deleted after processing? The silence is deafening.

For a law firm, this could mean client communications got processed through uncontrolled AI. For a healthcare provider, patient information could have been exposed. For any company, business strategy discussions, financial projections, and personnel matters could have been analyzed by systems outside their control.

Copilot's Pattern of Security Oversights

This isn't Microsoft's first Copilot mishap. The pattern is troubling:

Incident 1: Code Leak in GitHub Copilot. In 2023, researchers found that GitHub Copilot could reproduce training data verbatim, including proprietary code and sensitive information. The model was effectively a database you could query for secrets.

Incident 2: Prompt Injection Attacks. Multiple security researchers demonstrated that Copilot could be manipulated through prompt injection—feeding malicious instructions through email content that would cause Copilot to perform unintended actions or leak information.

Incident 3: This Email Bug. Now we're finding that Copilot can't even respect basic access control labels.

The pattern suggests Copilot was designed with features first, security second. Each incident forces a patch, but the underlying architecture remains trusting rather than paranoid.

What's the common thread? AI features are being integrated into security-critical systems without corresponding security maturity. Email is sensitive. Copilot is a new, unproven system. Combining them without airtight safeguards was always risky.

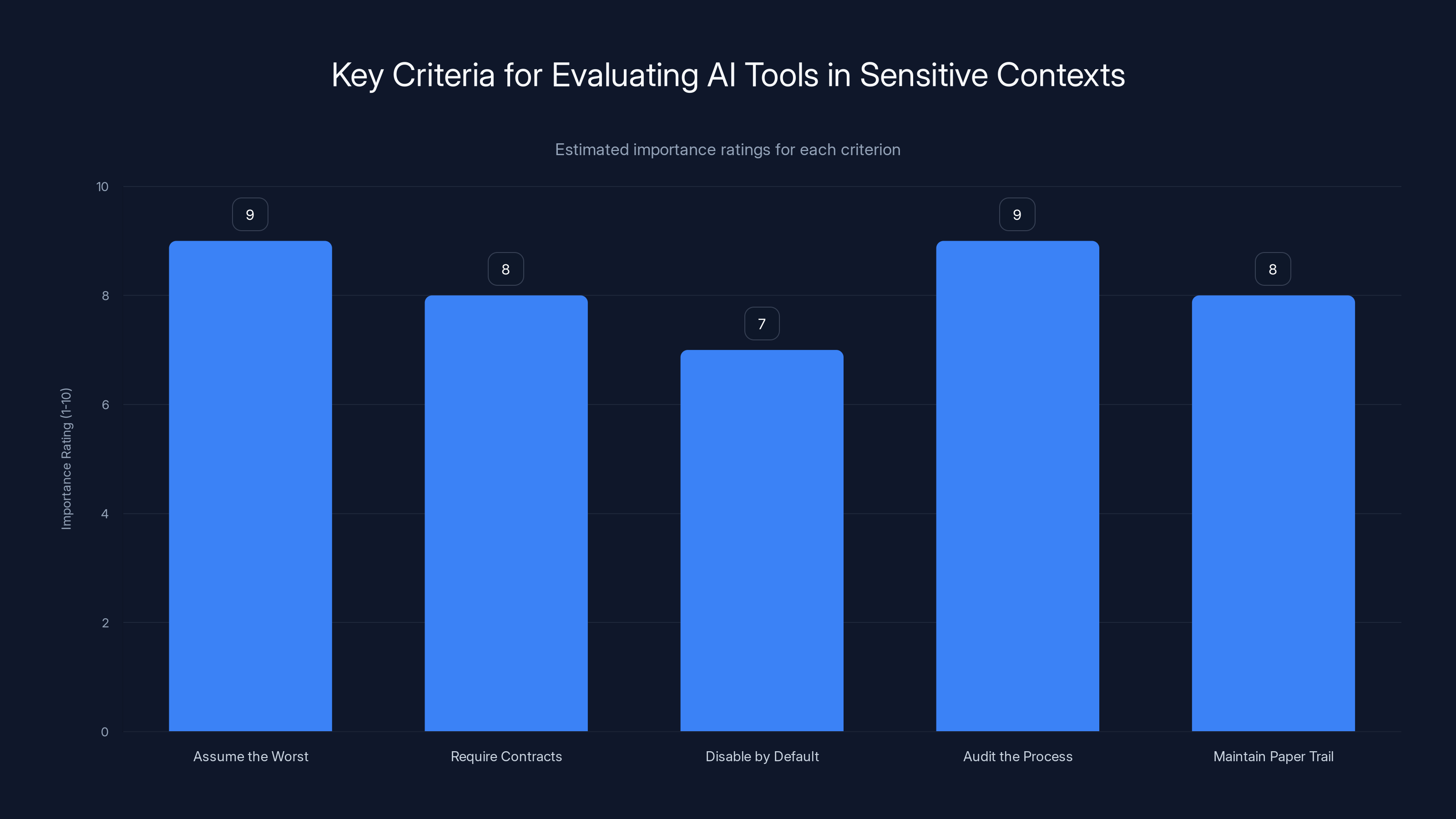

Assuming the worst about data and auditing processes are rated as the most important criteria when evaluating AI tools in sensitive contexts. (Estimated data)

The Rollout Problem: Patches Aren't Instant

Microsoft claims it deployed a fix in "early February." But patches don't hit all systems instantly.

There's a staggered rollout process:

- Internal Testing (days): Microsoft tests the patch internally.

- Staged Rollout (weeks): Patch rolls out to a percentage of tenants.

- Monitoring (weeks): Microsoft watches for issues.

- Full Rollout (weeks to months): Eventually everyone gets the patch.
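One common way to run a percentage-based staged rollout (a generic technique, not necessarily what Microsoft uses) is to hash each tenant ID into a stable bucket from 0 to 99 and ship the fix only to buckets below the current rollout percentage. The tenant IDs are made up. The sketch shows why "the fix is deployed" can be true for some tenants and false for yours.

```python
import hashlib

def bucket(tenant_id: str) -> int:
    """Map a tenant ID to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(tenant_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100

def has_fix(tenant_id: str, rollout_percent: int) -> bool:
    # A tenant is patched once the rollout percentage passes its bucket.
    return bucket(tenant_id) < rollout_percent

tenants = [f"tenant-{i}" for i in range(1000)]
for pct in (0, 10, 50, 100):
    patched = sum(has_fix(t, pct) for t in tenants)
    print(f"{pct:3d}% rollout -> {patched} of {len(tenants)} tenants patched")
```

Because each bucket is stable, a tenant patched at 10% stays patched as the rollout widens, and a tenant in a high bucket waits until the very last stage.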

During this period, some organizations are protected and others aren't. Microsoft says it's "continuing to monitor," which is code for: "We're not done rolling this out yet."

For a security fix addressing a vulnerability that was active in January, a February deployment followed by ongoing monitoring feels glacially slow, especially when organizations expect fixes for critical security issues within days, not weeks.

Large client platforms like Windows can justify staggered rollouts to avoid breaking changes. But a security fix for an active vulnerability? Organizations should get it immediately, not wait weeks for it to reach them in a rollout queue.

Until the patch reaches your tenant, you're still vulnerable.

What Your Organization Should Do Right Now

If you're an IT administrator, here are concrete steps:

Immediate Actions (This Week):

- Audit Copilot Enablement: Check your Microsoft 365 settings to confirm whether Copilot can access email. In the Microsoft 365 admin center, navigate to Settings > Org settings > Microsoft Copilot. Document whether it's enabled.

- Disable Copilot for Email: If your organization handles sensitive data, disable Copilot email features entirely. This is more restrictive than relying on labels, which this bug showed can fail.

- Check Patch Status: Contact Microsoft support to confirm whether the CW1226324 fix has been deployed to your tenant. Don't assume it has.

- Notify Stakeholders: Inform compliance, legal, and security teams about the bug. If your organization handles regulated data, this might require an incident notification.

Short-Term Actions (This Month):

- Audit Affected Emails: Identify emails in the Drafts and Sent folders that carried confidentiality labels between January 21 and the date your patch deployed. These are the emails that may have been exposed.

- Review Regulatory Obligations: If your organization is subject to GDPR, HIPAA, or other frameworks, consult legal about whether this incident requires notification to regulators or data subjects.

- Update Security Policies: Revise policies to reflect that sensitivity labels alone cannot be trusted to prevent AI processing. Require explicit feature disablement in addition to labels.

- Assess Label Effectiveness: Test whether sensitivity labels actually prevent Copilot access on your tenant. This might reveal other issues.
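The "Audit Affected Emails" step reduces to a filter over an export of mailbox metadata. A minimal sketch, assuming you have already exported records with folder, label, and last-modified time; the `MailRecord` layout and the patch date are hypothetical, so use the actual patch date Microsoft confirms for your tenant. Older labeled items could also have been read while the bug was active, so `window_start` is a parameter you may want to move earlier.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Iterable, List, Optional

@dataclass
class MailRecord:
    message_id: str
    folder: str               # "Sent Items" and "Drafts" are the affected folders
    label: Optional[str]      # sensitivity label name, None if unlabeled
    modified: datetime        # timezone-aware last-modified time

AFFECTED_FOLDERS = {"Sent Items", "Drafts"}
BUG_IDENTIFIED = datetime(2026, 1, 21, tzinfo=timezone.utc)

def potentially_exposed(records: Iterable[MailRecord],
                        patched_at: datetime,
                        window_start: datetime = BUG_IDENTIFIED) -> List[MailRecord]:
    """Labeled Sent/Draft items last modified inside [window_start, patched_at)."""
    return [
        r for r in records
        if r.folder in AFFECTED_FOLDERS
        and r.label is not None
        and window_start <= r.modified < patched_at
    ]

patch_date = datetime(2026, 2, 10, tzinfo=timezone.utc)  # hypothetical
records = [
    MailRecord("a", "Drafts", "Confidential", datetime(2026, 1, 25, tzinfo=timezone.utc)),
    MailRecord("b", "Inbox", "Confidential", datetime(2026, 1, 25, tzinfo=timezone.utc)),
    MailRecord("c", "Sent Items", None, datetime(2026, 1, 30, tzinfo=timezone.utc)),
    MailRecord("d", "Sent Items", "Restricted", datetime(2026, 3, 1, tzinfo=timezone.utc)),
]
hits = potentially_exposed(records, patch_date)
print([r.message_id for r in hits])                       # ['a']
print((patch_date - BUG_IDENTIFIED).days, "days in window")  # 20 days in window
```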

Long-Term Actions (This Quarter):

- Evaluate Alternatives: Consider whether Microsoft 365 Copilot is the right tool for your organization, or whether alternatives with stronger security models make more sense.

- Update Training: Educate users that confidentiality labels are not absolute barriers to AI processing. They should not rely on them for truly sensitive communications.

The Bigger Problem: AI Systems Can't Be Trusted With Default Permissions

This bug illuminates a fundamental problem with how companies are integrating AI into security-critical systems: they're giving AI broad access and hoping controls will work.

Let's be clear about what happened here. Microsoft didn't maliciously expose emails. Engineers wrote code. The code had a bug. Standard software engineering failure.

But that's exactly the problem. Standard software engineering failures now expose confidential communications. As AI systems become woven into every application—email, documents, spreadsheets—each engineering failure becomes a security incident.

Microsoft can patch this bug. But the next bug is coming. The bug after that. The bug that someone discovers three years from now when an employee notices Copilot mentioned something they never explicitly told it.

The only defense is making the default restrictive. AI should not have access to sensitive data unless explicitly granted. It should not be able to process emails unless specifically enabled. It should require explicit user consent and clear disclosure about what will happen with the data.

Instead, the current model is: "Here's Copilot, it has broad access, and we'll try to prevent misuse through labels and policies." This bug proves that approach doesn't work.

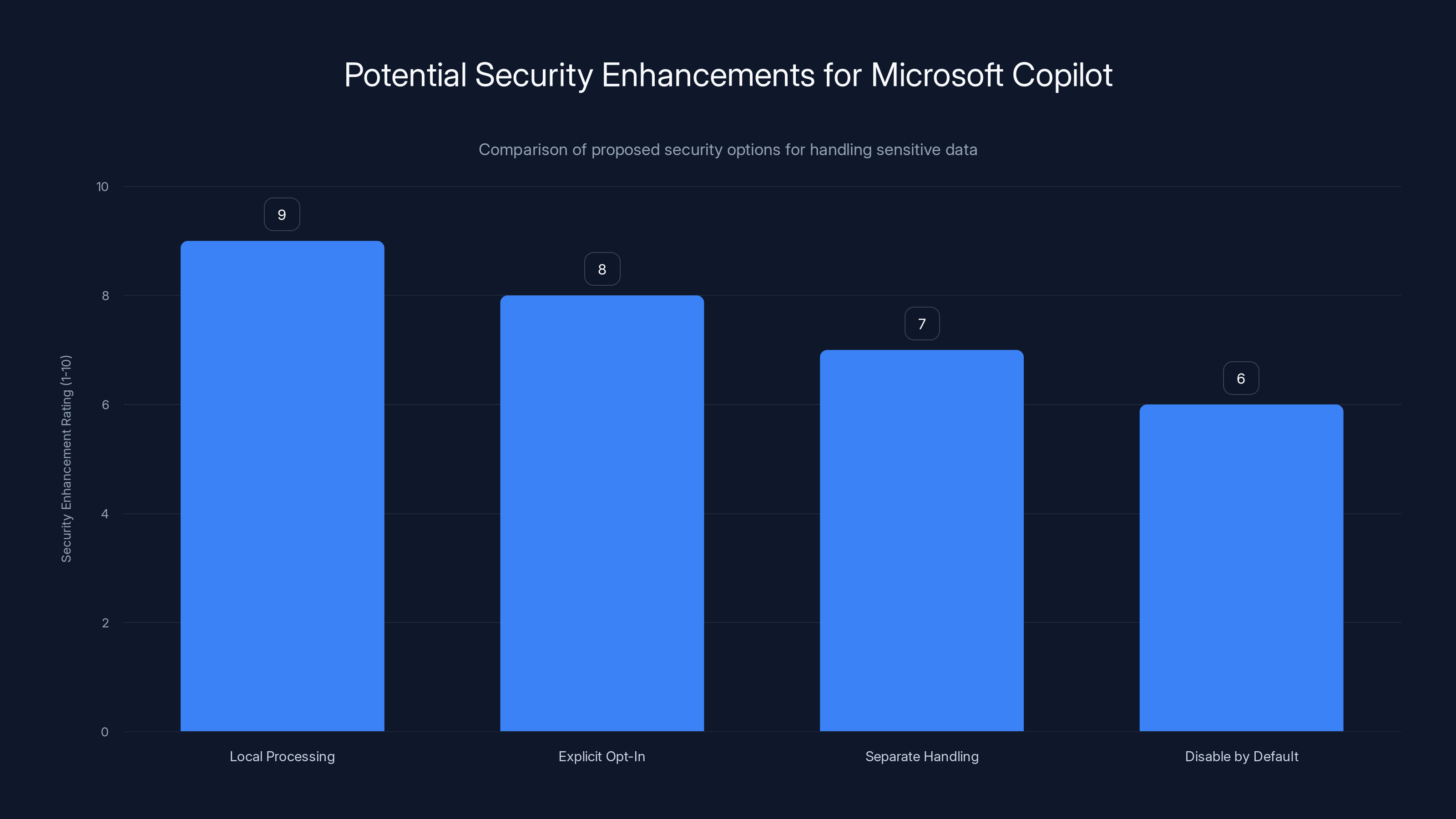

Local processing offers the highest security enhancement, while disabling features by default provides moderate improvement. Estimated data used for ratings.

Sensitivity Labels vs. Feature Disablement: The Real Lesson

Microsoft invested significant effort in sensitivity labels. They're a good system for organizing and protecting documents.

But this bug proves labels alone are insufficient for preventing AI processing. They're an administrative control, and all administrative controls have failure modes.

The hard lesson: if something is truly sensitive, you cannot rely on a label. You must disable the feature that could expose it.

Think of it like physical security. A "Confidential" label on a document is useful for organizing filing cabinets. But if you don't want someone in a particular room to see confidential documents, you don't just label them—you lock the room.

Copilot email access should have been locked for organizations that mark emails confidential. Instead, it was just labeled.

For future AI integrations, Microsoft needs to design with this principle: sensitive data paths should require explicit opt-in, not rely on opt-out labels.

The Notification & Transparency Question

Here's something that bothers me: How long was this bug active before Microsoft disclosed it?

The bug was identified January 21, 2026. The fix began rolling out in early February. But when did the public advisory come out? The reporting doesn't give a publication date, only that the advisory appeared after the fix was already in progress.

That means Microsoft had a known security vulnerability affecting users' confidential emails, and it disclosed it in a quiet advisory rather than a public statement.

Compare this to how Apple handles security disclosures: public, prominent, with a timeline clearly stated. Contrast it with how Microsoft handled this: buried in an advisory that many users will never see.

If you didn't follow security news closely, you might not even know this happened. That's a failure of transparency.

Organizations should demand clarity from Microsoft about:

- Exactly how many users were affected

- Exactly which emails were processed

- What happened to the data after processing

- Whether any AI models were trained on affected emails

- Exactly when their specific tenant received the patch

They're entitled to this information. Without it, they can't properly assess risk or comply with regulatory obligations.

Moving Forward: How to Evaluate AI Tools in Sensitive Contexts

This incident will happen again. Not with this specific bug, but with others. Some company will integrate an AI system into a sensitive workflow, rely on controls to prevent misuse, and the controls will fail.

Here's how to evaluate AI tools in contexts where data sensitivity matters:

1. Assume the Worst About Data. Assume any data you send to the AI system will be:

- Logged somewhere

- Processed in the cloud

- Potentially used for model training

- Accessible to Microsoft employees

- Potentially exposed by a future bug

If you can't accept those assumptions, don't use the tool.

2. Require Explicit Contracts. Don't rely on documentation or promises. Get a Data Processing Addendum that explicitly states:

- Where data is processed geographically

- How long data is retained

- Whether data is used for model training

- Your data deletion rights

- Liability for unauthorized access

3. Disable by Default. If an AI feature is optional, disable it. Don't wait for an incident to do this. If you later decide you want the feature, enable it consciously.

4. Audit the Process. For sensitive workflows, audit how data flows through the AI system. This means:

- Code review of the integration

- Testing that access controls actually work

- Monitoring logs to see what data is being processed
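For the log-monitoring item, the core check is simple once you have a record of what the AI feature touched. This sketch assumes a hypothetical JSON-lines log in which each line has an `item_id`, `folder`, and `label` for an item the assistant processed; real audit log schemas differ, so treat the field names as placeholders. Any processed item that carries a label is a control failure worth escalating.

```python
import json
from typing import Dict, Iterable, List

def flag_labeled_access(lines: Iterable[str]) -> List[Dict]:
    """Return log entries where a labeled item was processed by the AI feature."""
    flagged = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        entry = json.loads(line)
        # A non-empty label on a processed item means the gate failed to block it.
        if entry.get("label"):
            flagged.append(entry)
    return flagged

sample_log = """
{"item_id": "m1", "folder": "Inbox", "label": null}
{"item_id": "m2", "folder": "Drafts", "label": "Confidential"}
{"item_id": "m3", "folder": "Sent Items", "label": ""}
""".splitlines()

for entry in flag_labeled_access(sample_log):
    print(f"ALERT: {entry['item_id']} in {entry['folder']} has label {entry['label']!r}")
```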

5. Maintain a Paper Trail. Document when you enabled AI features, who approved it, and what safeguards you put in place. When (not if) an incident happens, this documentation protects your organization.

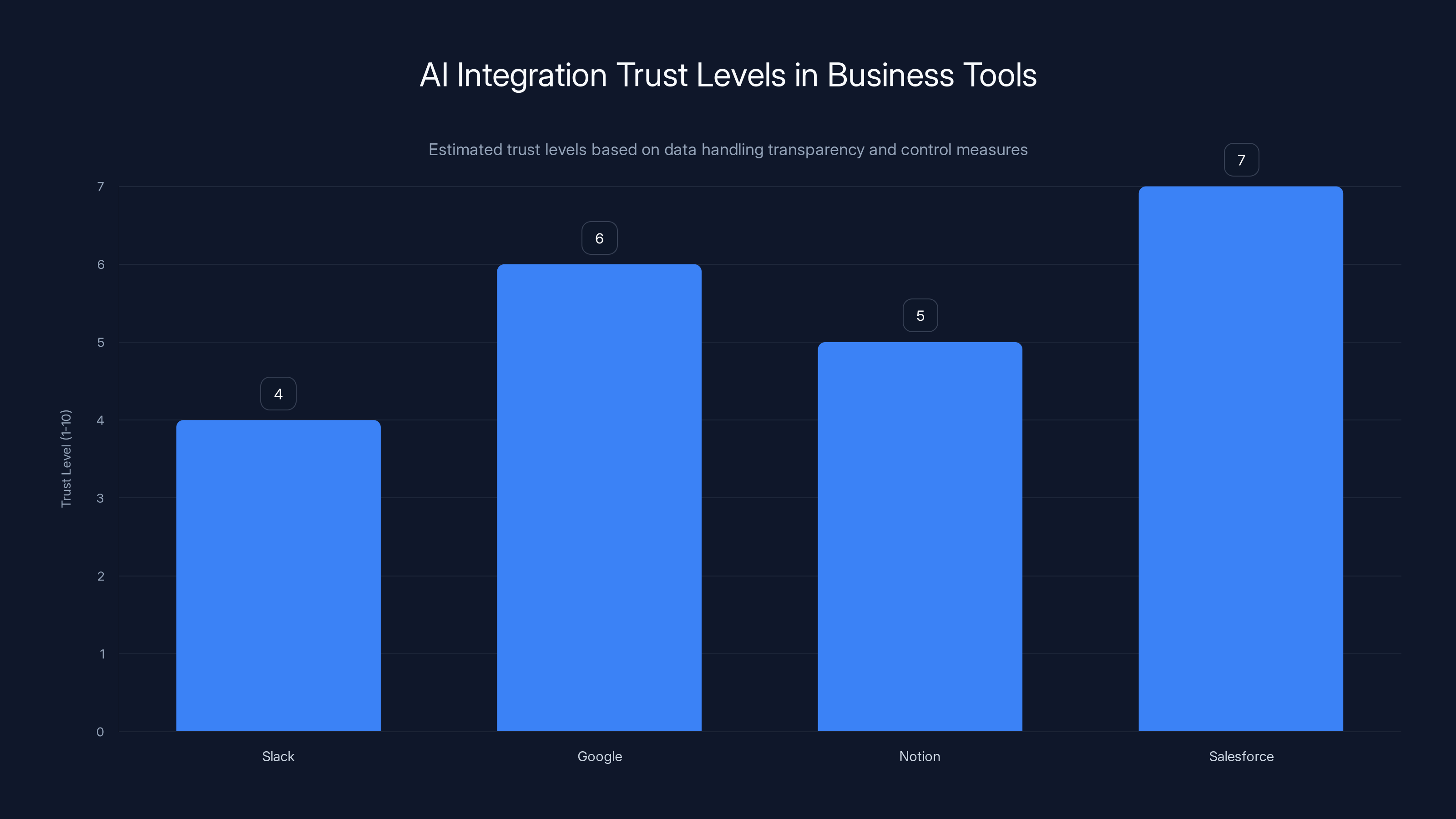

Estimated trust levels for AI integration in business tools show Salesforce leading with more transparent data handling, while Slack lags due to unclear controls. Estimated data.

The Regulatory Implications

Here's what regulators are thinking about this:

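GDPR (European Union). Processing personal data in ways an organization's own controls were supposed to block can implicate Article 32 (security of processing) and potentially Article 5 (lawfulness, fairness, transparency). Customer organizations are typically the controllers and Microsoft the processor, so Microsoft must notify affected customers, and each customer then has to decide whether the incident is a personal data breach that must be reported to its data protection authority, generally within 72 hours of becoming aware of it. Organizations may also face fines if they can't demonstrate they took reasonable steps to prevent unauthorized processing.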

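HIPAA (United States - Healthcare). If a healthcare organization's patient emails were processed by Copilot against its own policies, that may count as an impermissible use or disclosure of protected health information, even if no data was actually exfiltrated. Whether notification is required depends on the organization's breach risk assessment and its business associate agreement with Microsoft.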

SOX (United States - Finance). Public companies subject to Sarbanes-Oxley must maintain controls over financial data. If financial information in emails was processed by Copilot, that's a control failure that auditors will want to know about.

Industry Standards. Payment Card Industry (PCI), Financial Industry Regulatory Authority (FINRA), and other frameworks all have provisions about controlling how sensitive data is processed.

Microsoft created a regulatory headache for its customers. Organizations are now in the position of having to disclose that their "secure" email system bypassed its own protections.

What Microsoft Should Have Done Differently

Let's talk about what a secure design would have looked like:

Option 1: Local Processing Only. Copilot could process emails locally on the user's device, never sending them to the cloud. This requires significant investment but offers the strongest privacy guarantees.

Option 2: Explicit Opt-In per Email. Instead of processing all emails and relying on labels to prevent sensitive ones, Copilot should require the user to explicitly request summarization for each email or conversation. This makes the user conscious of what's being processed.

Option 3: Separate Sensitive Data Handling. Emails marked confidential go through a completely different processing pipeline with stricter controls, logging, and monitoring.

Option 4: Disable by Default. Copilot email features are disabled by default. Users and admins must explicitly enable them, understanding the implications.

Microsoft chose none of these. It chose convenience over security, and this bug is the result.

For the next version, they need to rethink the entire model. Not patch this bug and move on. Actually change how Copilot interacts with sensitive data.

The Broader AI Integration Problem

Microsoft isn't alone. Every company integrating generative AI into business systems faces this problem:

- Slack integrated AI features into workspaces without clear controls over what data is processed.

- Google is adding Copilot-like features to Workspace.

- Notion integrated AI without clear data handling disclosures.

- Salesforce added Einstein AI to CRM systems handling customer data.

Each of these integrations follows the same pattern: broad access, reliance on administrative controls, and hope that nothing goes wrong.

This bug is a warning shot for all of them. Users are starting to ask: "What data is my AI assistant actually processing? Who controls it? What could go wrong?"

The companies that take those questions seriously and build restrictive systems will earn trust. The ones that move fast and assume controls will work will eventually have an incident like Microsoft's.

The User Experience vs. Security Trade-off

From a product manager's perspective, I understand the appeal of Copilot email features. Summarizing a long email thread is genuinely useful. But it's only useful if you trust the system.

Microsoft faced a trade-off: make Copilot convenient by giving it broad access to email, or make it restrictive by requiring explicit opt-in for each email. They chose convenience.

This bug should shift that calculation. Convenience doesn't matter if the feature can't be trusted. And users cannot trust a feature that was bypassing its own security controls.

Future versions need to prioritize security first, then layer convenience on top. That means:

- Security is the default.

- Users understand what data is being processed.

- Transparent controls let users decide what Copilot can access.

- The feature works reliably and safely.

If that makes the feature slightly less convenient? That's the right trade-off for something handling confidential information.

FAQ

What exactly was the Microsoft Copilot email bug?

Microsoft's Copilot Chat was processing emails in Sent and Draft folders that were marked with confidentiality labels, bypassing data loss prevention (DLP) policies that should have prevented such access. The bug was caused by a code error in the email classification logic that failed to properly check whether emails had confidentiality labels before sending them to the AI system for processing. This meant confidential, sensitive communications were being analyzed by the AI without user knowledge or permission, violating the organization's own security policies.

How did the bug affect users' emails?

The bug allowed Copilot to summarize and analyze emails marked as confidential, including entire email threads within Sent and Draft folders. While Inbox emails appear to have been protected, the exposure of Sent and Draft emails was particularly dangerous because those folders contain outgoing communications and unsent drafts that users never intended to share. The data was processed in Microsoft's cloud infrastructure, though exactly what happened to that data afterward—whether it was logged, used for model training, or stored in backups—has not been fully disclosed.

When was the bug discovered and fixed?

The bug was identified internally on January 21, 2026, and a fix was deployed in early February 2026. However, the fix has been rolling out gradually to different organizations, meaning some tenants may still be vulnerable depending on when they receive the patch. Microsoft is "continuing to monitor" the situation, suggesting the rollout is still ongoing and not yet complete across all customers.

Why didn't sensitivity labels prevent the bug?

Sensitivity labels were designed to be an administrative control that would prevent Copilot from processing marked emails, but the code implementing this check had a bug. The classifier was supposed to read the confidentiality label metadata on each email before processing it, but the code had an error that caused it to ignore these labels for emails in Sent and Draft folders. This bug demonstrates that administrative controls cannot be the sole protection for sensitive data—feature disablement is more reliable.

What are the security and compliance implications?

The bug potentially implicates multiple frameworks, including GDPR (unauthorized personal data processing), HIPAA (if healthcare organizations were affected), SOX (if financial data was exposed), and PCI standards. Organizations relying on sensitivity labels to comply with regulations now need to explain that those controls failed. This may require notification to regulators and affected individuals, depending on the jurisdiction and the type of data exposed. It also undermines the trustworthiness of Microsoft's own security mechanisms.

What should organizations do to protect themselves right now?

First, contact Microsoft support to confirm whether the CW1226324 fix has been deployed to your tenant. Second, disable Copilot email features entirely if your organization handles sensitive data, rather than relying on labels. Third, audit which emails in Sent and Draft folders were marked as confidential between January 21 and when your patch deployed; these are the potentially affected emails. Fourth, notify your legal and compliance teams about the incident and determine whether regulatory notifications are required. Finally, revise your security policies to reflect that labels alone cannot prevent AI processing.

Is this a common problem with AI systems?

Yes. Several Copilot security issues have been discovered previously, including GitHub Copilot reproducing proprietary training data, prompt injection attacks, and now this label-bypassing bug. The pattern suggests that AI features are being integrated into security-critical systems without corresponding security maturity. As more AI systems get embedded into email, documents, and other sensitive applications, similar bugs are likely to occur. Organizations should assume that any AI system could have unexpected security flaws and design accordingly.

How can I evaluate whether an AI tool is safe for sensitive data?

Ask three critical questions: (1) Where is the data processed—locally on my device or in the cloud? (2) What happens to the data after processing—is it logged, stored in backups, or used for model training? (3) Can the feature be disabled entirely if I decide the risks are unacceptable? Require explicit Data Processing Addendums (DPAs) from vendors specifying data handling, geographic location, retention periods, and liability. Don't rely on security labels or administrative controls alone—require that sensitive features are disabled by default and can only be enabled through explicit, documented approval.

Should organizations stop using Microsoft 365 Copilot?

Not necessarily, but they should dramatically limit its access to sensitive data. Copilot can be useful for non-sensitive tasks like generating meeting summaries for internal discussions or drafting routine communications. But for anything involving confidential information, client data, financial details, or regulated content, organizations should disable Copilot features and require explicit use of alternative tools with clearer data handling practices. Alternatively, require that users explicitly opt in to Copilot processing for each sensitive email, rather than having the feature enabled by default.

What is the long-term solution to this type of problem?

The long-term solution is a fundamental shift in how AI systems are designed. Instead of giving AI broad access to sensitive systems and relying on controls to prevent misuse, AI should have narrow access by default, with explicit user consent required for sensitive operations. Vendors should be transparent about where data is processed, how long it's retained, and whether it's used for model training. Regulatory frameworks like GDPR and HIPAA need to catch up with AI capabilities and require stronger protections for AI processing of sensitive data. And organizations need to treat AI security with the same seriousness as they treat physical or network security.

Key Takeaways: What This Bug Means for Your Organization

Let me be direct about what happened here. Microsoft built a system that was supposed to respect your security policies. The system had a bug. Your confidential emails got processed by an AI without your knowledge or consent.

Now the question is: how do you move forward?

Accept that labels are not barriers. If something is truly sensitive, don't rely on a label to keep it away from AI systems. Disable the feature entirely.

Demand transparency from vendors. When companies integrate AI, they need to clearly explain what data is processed, where it's processed, how long it's kept, and what happens if there's a bug. If they won't provide those details, don't use the tool.

Test your controls. Don't assume that security controls work as intended. Conduct tests to verify that sensitivity labels actually prevent Copilot from accessing emails. This might reveal other issues.
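One practical way to run such a test is a canary: put a unique, meaningless token in a labeled draft, ask the assistant about your recent drafts, and check whether the token appears in its answer. The sketch assumes a hypothetical `ask_assistant` callable that you would wire up to however your users actually reach the assistant; here it is stubbed with two fake assistants so the logic can be checked.

```python
import secrets
from typing import Callable

def make_canary() -> str:
    # A random token that cannot plausibly appear in an answer by coincidence.
    return f"CANARY-{secrets.token_hex(8)}"

def label_leaks(canary: str, ask_assistant: Callable[[str], str]) -> bool:
    """True if the assistant's answer reveals the canary planted in a labeled draft."""
    answer = ask_assistant("Summarize my most recent drafts.")
    return canary in answer

canary = make_canary()

# In a real test you would save a draft containing `canary` and apply a
# confidential label before asking. These stubs simulate both outcomes.
def leaky(prompt: str) -> str:
    return f"Your latest draft mentions {canary}."

def compliant(prompt: str) -> str:
    return "I can't summarize labeled content."

print(label_leaks(canary, leaky))      # True
print(label_leaks(canary, compliant))  # False
```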

Change your default assumptions. Stop assuming AI is secure. Instead, assume it could expose sensitive data, and design accordingly. If you can't accept that risk, disable the feature.

Stay vigilant. This bug will be patched. But the next bug is coming. The one after that too. As AI becomes more embedded in business systems, the opportunity for security failures grows. Organizations that treat AI as a potential security risk rather than a guaranteed solution will be better positioned to respond when the next incident occurs.

Microsoft will move on from this. They'll patch the bug, release a statement, and most of their customers will never even notice. But if you're responsible for your organization's security, you can't move on. You need to understand what happened, why it happened, and what it means for how you manage AI tools going forward.

The good news: this bug is fixable. The bad news: it's fixable because it's a code error, not a design flaw. And there will be more code errors. The question is whether your organization will be prepared when the next one happens.
