Claude for Healthcare vs ChatGPT Health: The AI Medical Assistant Battle [2025]

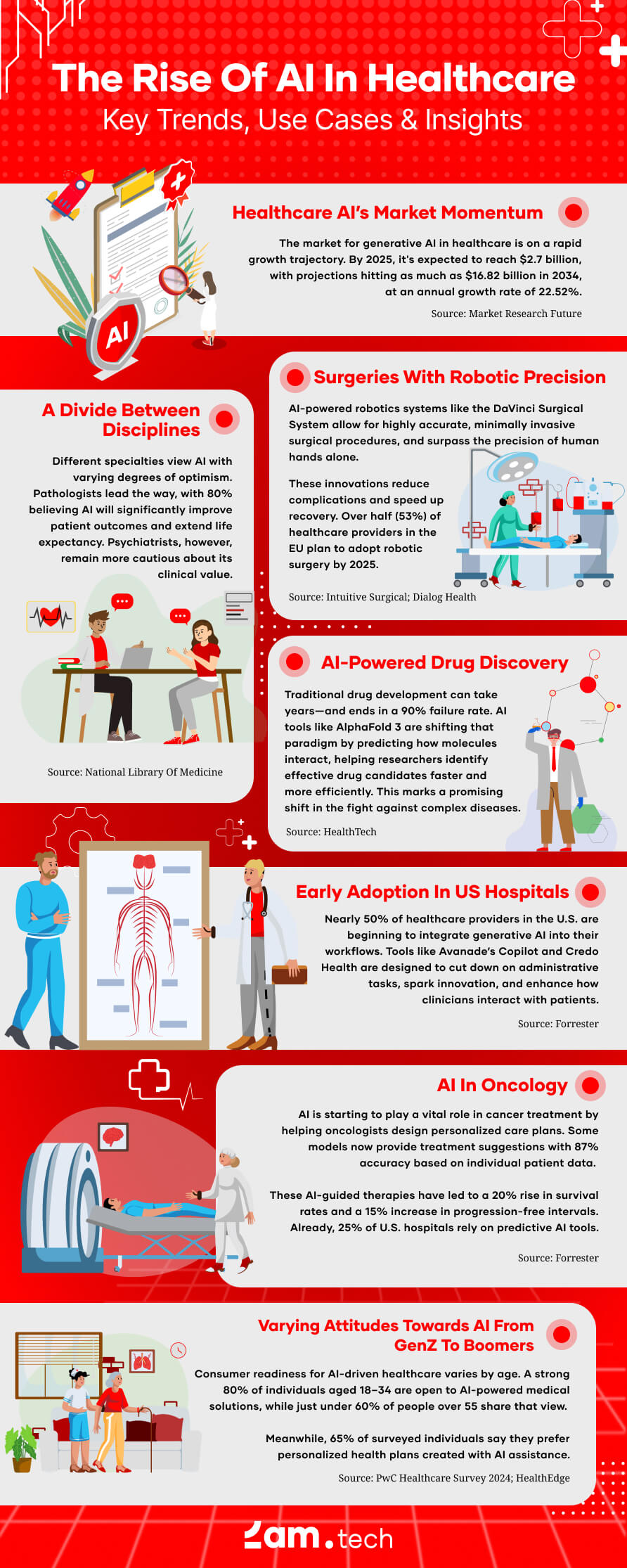

It feels like overnight, AI companies decided that healthcare was the next frontier. Last week, OpenAI dropped ChatGPT Health. This week? Anthropic announced Claude for Healthcare. And suddenly every doctor's office, insurance company, and patient portal is asking the same question: which AI platform is actually ready to handle the complexity of modern medicine?

But here's what's actually fascinating about this competition. It's not really about who built the slicker chatbot. It's about who can solve the administrative nightmare that's crushing healthcare right now. Prior authorizations, medical coding, clinical documentation, insurance claims. The stuff that makes doctors want to quit medicine. That's where the real battle is being fought.

I've spent the last week digging into both platforms, analyzing their architectures, understanding their approach to the regulatory minefield that is healthcare AI. And what I found is that while OpenAI and Anthropic are moving toward the same destination, they're taking fundamentally different paths to get there. One is focused on patient empowerment. One is focused on provider efficiency. And honestly, they might both be right.

Let's break down what's actually happening here, because the implications go way beyond two tech companies launching new products. This is about whether AI can actually fix healthcare's broken administrative backbone, and whether it can do so without making medicine less human.

TL;DR

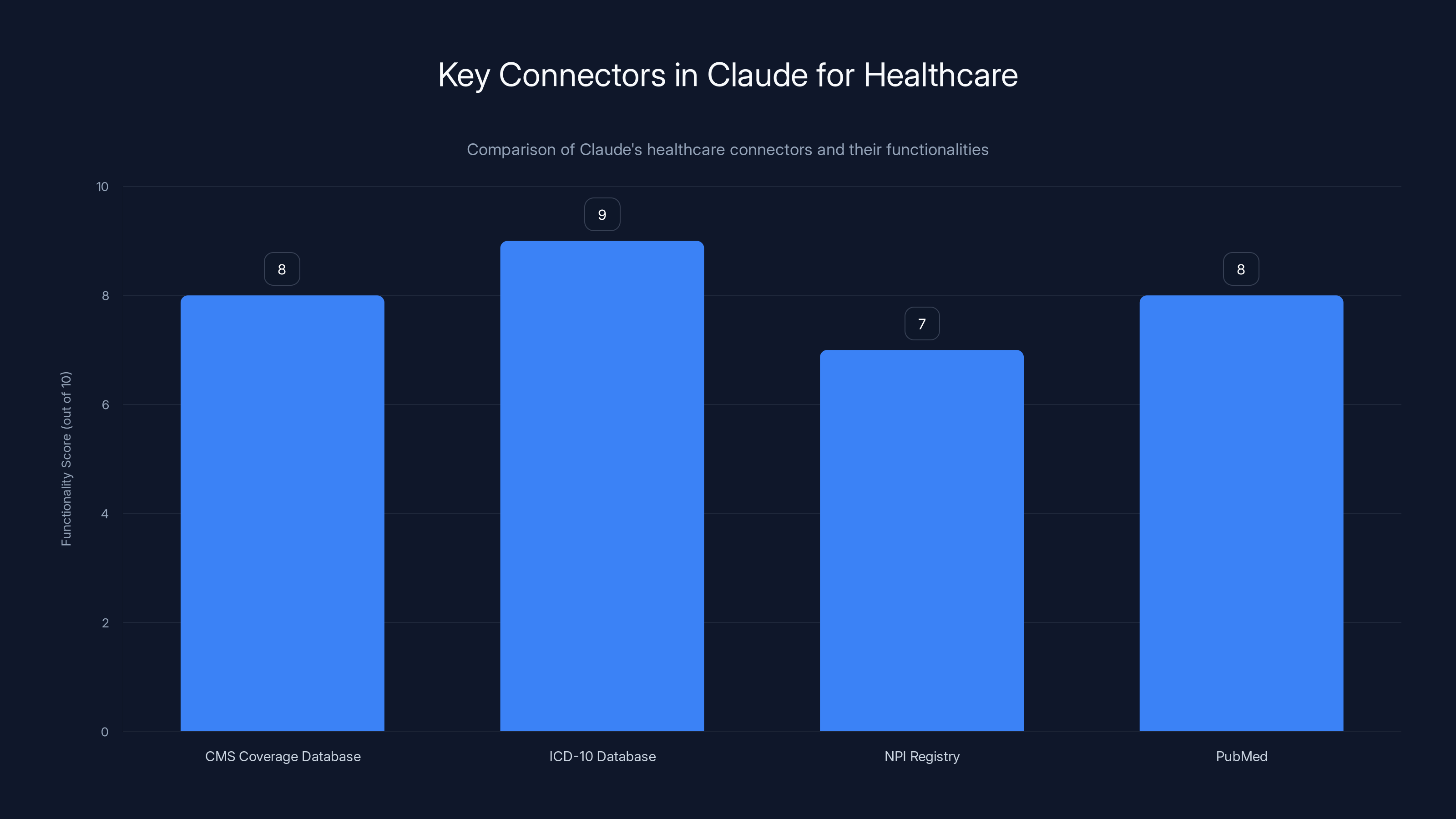

- Claude for Healthcare includes specialized connectors to CMS databases, ICD-10 codes, PubMed, and provider registries, designed primarily for automating prior authorization and administrative workflows.

- ChatGPT Health focuses on the patient-side experience, allowing users to aggregate health data from phones and wearables for personal health tracking and insights.

- Prior authorization automation is where Claude aims to create immediate ROI, potentially saving providers 15-20 hours per week on administrative tasks.

- Hallucination risks remain the central concern for both platforms when providing medical advice, despite guardrails and disclaimers.

- Data privacy is a competitive differentiator, with both companies explicitly stating health data won't be used for model training.

- Regulatory approval and integration with existing healthcare systems will determine which platform gains healthcare adoption first.

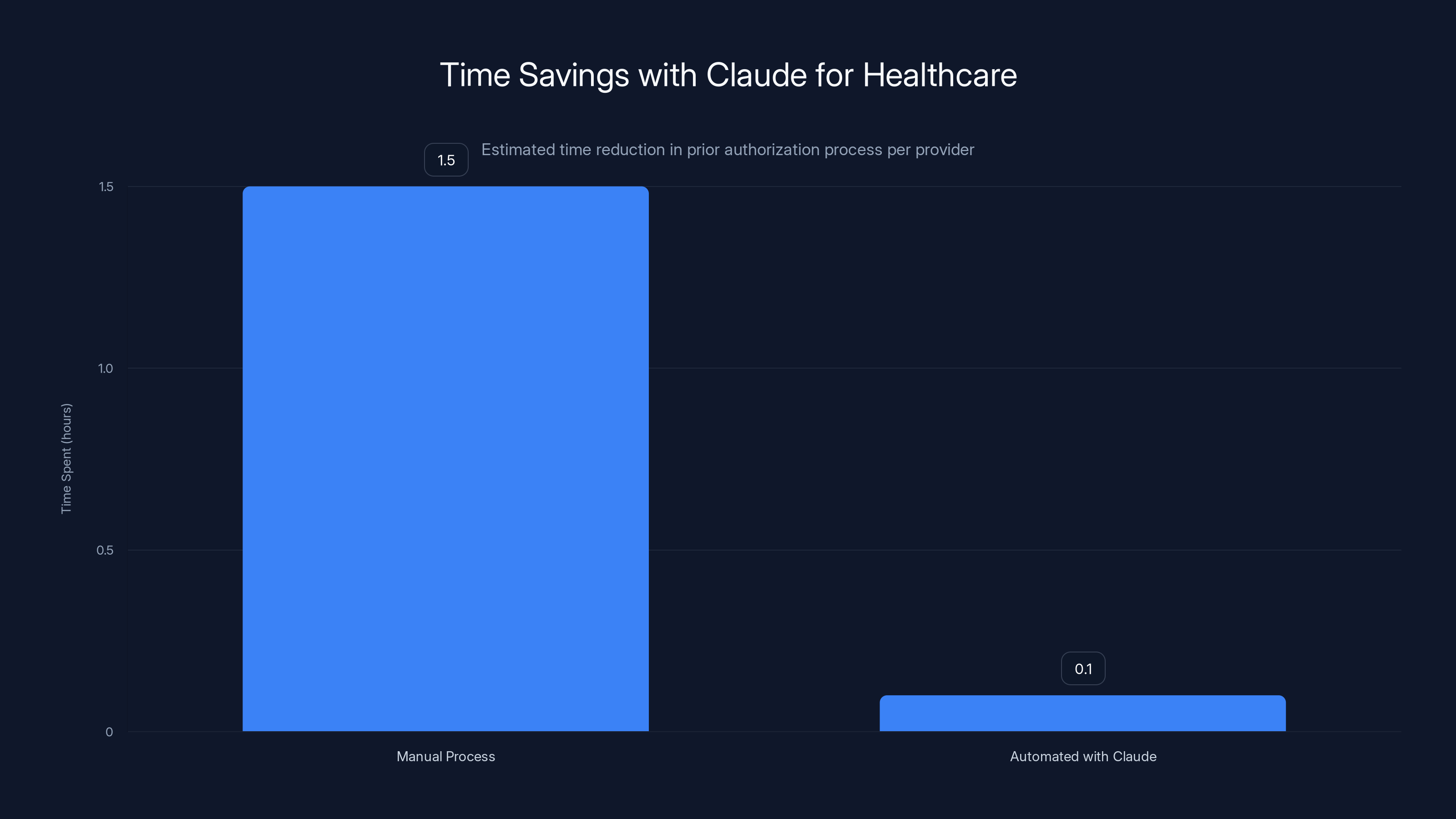

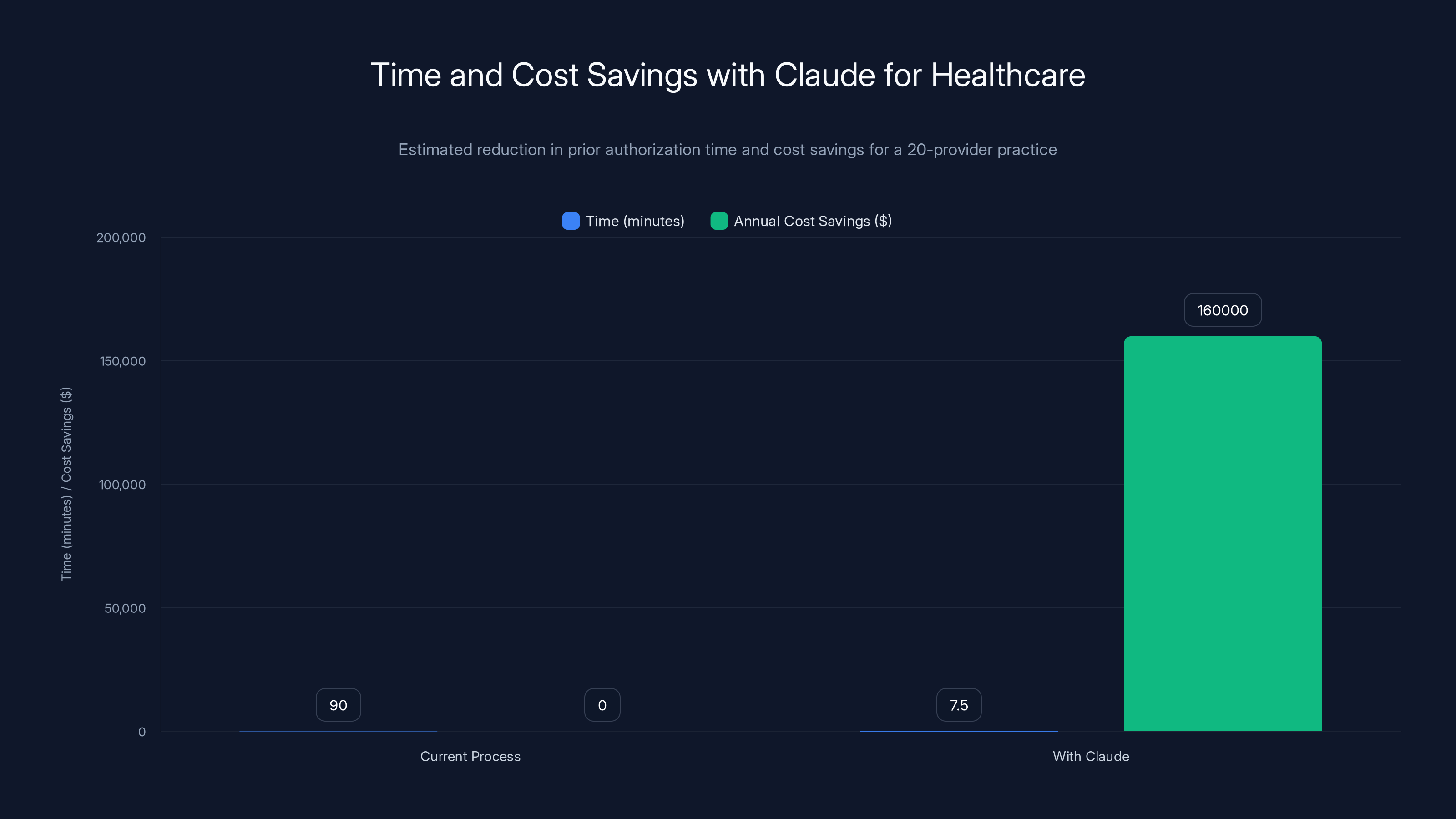

Using Claude for Healthcare can reduce the time spent on prior authorizations from an average of 1.5 hours to just 0.1 hours per authorization, significantly improving efficiency. (Estimated data)

The Healthcare Administrative Crisis That Makes AI Actually Essential

Before we talk about what these AI platforms are doing, we need to understand what problem they're actually solving. And the problem is genuinely bad.

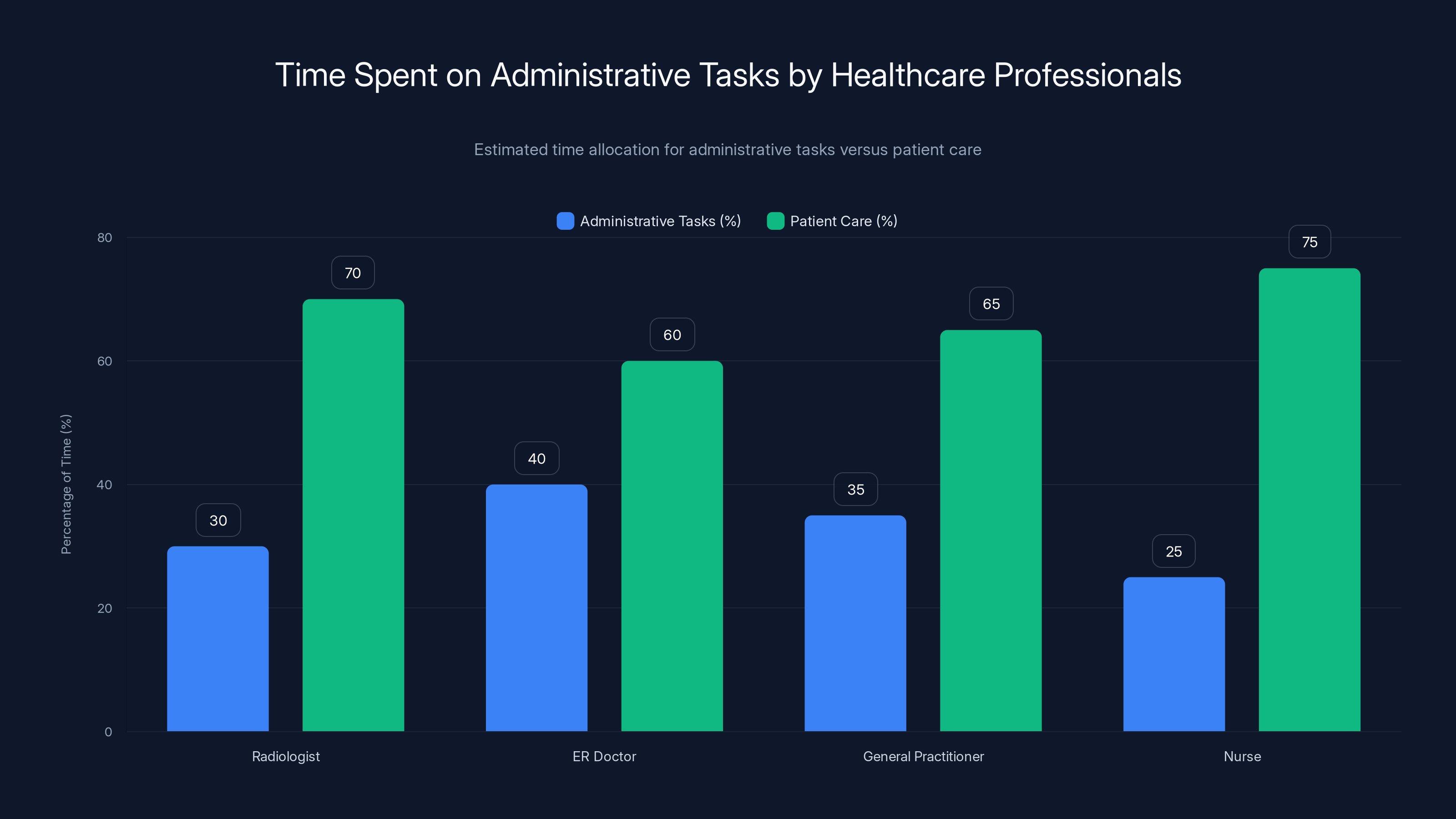

Clinicians spend more time on documentation and paperwork than they do seeing patients. This isn't hyperbole from an AI company pitch deck. This is something every doctor I've talked to will confirm over coffee. A radiologist spends 30% of their day on administrative tasks. An emergency room doctor might spend 40% of their shift documenting what they already know they did. It's not just inefficient. It's actively harming healthcare delivery.

And then there's prior authorization. This is the process where a doctor recommends a treatment, then has to submit additional documentation to the insurance company to get approval before the patient can actually receive that treatment. It's bureaucratic, it's time-consuming, and it frequently delays care. In some surveys, as many as 90% of physician practices report that prior authorization requirements interfere with their ability to provide timely care.

Here's the actual math on this: a single prior authorization request can take between 15 minutes and 2 hours to compile. A busy practice might handle 50-100 of these per week. That's one full-time employee, potentially earning
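Those ranges cover a wide band, and it helps to see how wide. This quick Python check multiplies the request counts by the time per request. The 40-hour week used to convert hours into full-time employees is an assumption, not a figure from the text.

```python
# Weekly prior authorization workload implied by the ranges in the text.
MIN_MINUTES, MAX_MINUTES = 15, 120    # 15 minutes to 2 hours per request
MIN_REQUESTS, MAX_REQUESTS = 50, 100  # requests per week at a busy practice
FTE_HOURS_PER_WEEK = 40               # assumption: a standard full-time week


def weekly_hours(requests: int, minutes_each: float) -> float:
    """Total staff hours per week for a given request volume and time per request."""
    return requests * minutes_each / 60


low = weekly_hours(MIN_REQUESTS, MIN_MINUTES)
high = weekly_hours(MAX_REQUESTS, MAX_MINUTES)
print(f"{low:.1f} to {high:.1f} hours per week")  # 12.5 to 200.0 hours per week
print(f"{low / FTE_HOURS_PER_WEEK:.2f} to {high / FTE_HOURS_PER_WEEK:.1f} full-time employees")  # 0.31 to 5.0
```

One full-time employee falls well inside that band. For example, 80 requests at 30 minutes each comes to exactly 40 hours.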

This is why AI suddenly matters in healthcare. It's not because doctors want to hand off diagnoses to algorithms. It's because they want 5 hours back in their week to actually practice medicine.

Both Claude for Healthcare and ChatGPT Health are being positioned as solutions to this problem, but they're approaching it from different angles. Understanding that difference is crucial.

Healthcare professionals, such as radiologists and ER doctors, spend a significant portion of their time on administrative tasks, detracting from patient care. Estimated data highlights the critical need for AI solutions to reduce this burden.

Claude for Healthcare: The Infrastructure Play

Anthropic's approach to healthcare AI is fundamentally different from OpenAI's, and it starts with something called "connectors." These aren't just API integrations. They're purpose-built bridges between Claude and the actual systems healthcare organizations use every day.

Let's be specific about what these connectors do. Claude for Healthcare can directly access the CMS Coverage Database. That's the Centers for Medicare and Medicaid Services database of Medicare coverage policies, including the national and local coverage determinations that govern which procedures, devices, and services Medicare will pay for. Say a doctor wants to know whether a specific treatment will be covered for a specific patient. Instead of someone manually searching a separate, clunky web interface, Claude can query the database directly.

Then there's the ICD-10 connector. ICD-10 is the International Classification of Diseases, 10th Revision. It's the global standard for medical coding. Every diagnosis, every procedure, every condition has a specific code. A single diagnosis can have multiple valid codes depending on specificity. Getting the right code matters because it affects billing, coverage, research data, and patient records. Medical coders spend time every single day hunting for the right ICD-10 code. Claude with direct access to the ICD-10 database can suggest the correct codes and explain why they apply.
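To make the specificity point concrete, here is a minimal Python sketch built on a hand-picked handful of real ICD-10-CM codes for type 2 diabetes (ICD-10-CM is the US clinical modification of ICD-10). It shows how one category fans out into more specific codes. It does not show how Claude's connector queries the code set. The dictionary and the function name are invented for this example.

```python
# A tiny, hand-picked subset of ICD-10-CM codes (illustrative, not a full code set).
ICD10_CM_SUBSET = {
    "E11": "Type 2 diabetes mellitus",  # category header, not billable on its own
    "E11.22": "Type 2 diabetes mellitus with diabetic chronic kidney disease",
    "E11.65": "Type 2 diabetes mellitus with hyperglycemia",
    "E11.9": "Type 2 diabetes mellitus without complications",
}


def more_specific_codes(category: str) -> list[str]:
    """Return the codes in the subset that refine a category, sorted as strings."""
    prefix = category + "."
    return sorted(code for code in ICD10_CM_SUBSET if code.startswith(prefix))


for code in more_specific_codes("E11"):
    print(code, "-", ICD10_CM_SUBSET[code])
```

A coder, or a model, still has to choose among those refinements based on what the chart documents. That choice is the step where direct access to the real code set helps.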

The National Provider Identifier Registry connector gives Claude access to a database of 10 million healthcare providers, with their credentials, specialties, and practice locations. For insurance companies running claims, for referral services trying to find specialists, for patients trying to confirm a doctor's specialty and practice address, this data is gold.
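One small, concrete detail about this data: an NPI is a 10-digit number whose last digit is a Luhn check digit. The check is computed over the first nine digits with the prefix 80840 added in front. A connector, or any client, could plausibly reject mistyped identifiers before querying the registry. The helper below is a hypothetical sketch and is not part of any Anthropic API. The sample numbers are made up and simply do or do not pass the check.

```python
def is_valid_npi(npi: str) -> bool:
    """Validate the Luhn check digit of a 10-digit NPI, using the 80840 prefix."""
    if len(npi) != 10 or not npi.isdigit():
        return False
    digits = "80840" + npi
    total = 0
    # Walk right to left, doubling every second digit (starting left of the check digit).
    for position, char in enumerate(reversed(digits)):
        value = int(char)
        if position % 2 == 1:
            value *= 2
            if value > 9:
                value -= 9  # same as adding the two digits of the doubled value
        total += value
    return total % 10 == 0


print(is_valid_npi("1234567893"))  # True
print(is_valid_npi("1234567890"))  # False
```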

And then there's PubMed, the National Library of Medicine's search engine for biomedical research. It holds tens of millions of citations and abstracts from the peer-reviewed literature, all searchable through Claude. A clinical researcher or a doctor wanting to check the latest evidence on a specific treatment can now ask Claude to search PubMed and synthesize findings instead of spending an hour doing it manually.
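PubMed itself is publicly searchable through NCBI's E-utilities. The sketch below builds an `esearch` request for PubMed IDs and parses the JSON reply. Anthropic hasn't said its connector works this way, so treat this as an illustration of the underlying service only. The network call is left out, and the sample response uses placeholder IDs.

```python
import json
from urllib.parse import urlencode

ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_pubmed_search(term: str, max_results: int = 20) -> str:
    """Build an E-utilities esearch URL that returns matching PubMed IDs as JSON."""
    params = {"db": "pubmed", "term": term, "retmax": max_results, "retmode": "json"}
    return f"{ESEARCH_URL}?{urlencode(params)}"


def parse_pmids(response_text: str) -> list[str]:
    """Pull the list of PubMed IDs out of an esearch JSON response."""
    return json.loads(response_text)["esearchresult"]["idlist"]


url = build_pubmed_search("adalimumab rheumatoid arthritis")
print(url)
# A real call would fetch the URL, e.g. with urllib.request.urlopen(url).
sample = '{"esearchresult": {"count": "2", "idlist": ["12345678", "23456789"]}}'
print(parse_pmids(sample))
```

The IDs only identify articles. A second request (for example to E-utilities' `efetch` or `esummary`) would be needed to retrieve titles and abstracts before anything could be synthesized.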

But here's what's genuinely sophisticated about this: Claude doesn't just have access to these databases. It also has what Anthropic calls "agent skills." An agent skill is different from a simple API call. It's a set of instructions for how Claude should approach a task, what steps it should take, what information it should gather, and how it should validate its work.

For prior authorization specifically, Claude can follow a workflow: accept a request, check the CMS database for coverage criteria, search for relevant clinical literature, cross-reference against the patient's existing conditions, and then generate a complete prior authorization package with supporting documentation. The entire process that currently takes humans 1-2 hours can potentially be done in minutes.
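Anthropic hasn't published how these skills run internally, so what follows is a hypothetical sketch rather than Anthropic's format. It models a "skill" as an ordered list of steps that share a context, plus a final check that the required output exists. The step functions are stubs standing in for the connectors. Names like `Skill` and `check_coverage` are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable

Context = dict  # shared state that each step reads from and adds to


@dataclass
class Skill:
    """Hypothetical 'agent skill': ordered steps plus a check on the final output."""
    name: str
    steps: list[tuple[str, Callable[[Context], Context]]]
    required_outputs: list[str] = field(default_factory=list)

    def run(self, request: Context) -> Context:
        context = dict(request, log=[])
        for step_name, step in self.steps:
            context.update(step(context))  # each step returns new keys to merge in
            context["log"].append(step_name)
        missing = [key for key in self.required_outputs if key not in context]
        if missing:
            raise ValueError(f"{self.name}: missing outputs {missing}")
        return context


# Stub steps standing in for real connectors (CMS coverage, PubMed, the chart).
def check_coverage(ctx: Context) -> Context:
    return {"coverage_criteria": f"coverage criteria for {ctx['treatment']} (stub)"}


def find_evidence(ctx: Context) -> Context:
    return {"evidence": [f"study of {ctx['treatment']} in {ctx['diagnosis']} (stub)"]}


def cross_reference(ctx: Context) -> Context:
    return {"diagnosis_documented": ctx["diagnosis"] in ctx["patient_conditions"]}


def draft_package(ctx: Context) -> Context:
    keys = ("treatment", "coverage_criteria", "evidence", "diagnosis_documented")
    return {"package": {key: ctx[key] for key in keys}}


prior_auth = Skill(
    name="prior_authorization",
    steps=[
        ("check_coverage", check_coverage),
        ("find_evidence", find_evidence),
        ("cross_reference", cross_reference),
        ("draft_package", draft_package),
    ],
    required_outputs=["package"],
)

result = prior_auth.run({
    "treatment": "adalimumab",
    "diagnosis": "rheumatoid arthritis",
    "patient_conditions": ["rheumatoid arthritis", "hypertension"],
})
print(result["log"])
print(result["package"]["diagnosis_documented"])
```

The design choice worth noticing is the explicit validation at the end. A workflow that fails loudly when an expected output is missing is easier to audit than one that quietly produces a partial package.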

The architecture here is important because it determines what Claude can actually accomplish. This isn't a chatbot where you type a question and get an answer. This is an AI system that can autonomously navigate complex workflows, pull information from multiple sources, synthesize that information, and produce structured output.

For providers and payers, that's potentially transformative. For patients asking health questions, it's probably overkill.

ChatGPT Health: The Patient-Centric Approach

OpenAI's ChatGPT Health is fundamentally focused on a different use case: the individual patient managing their own health.

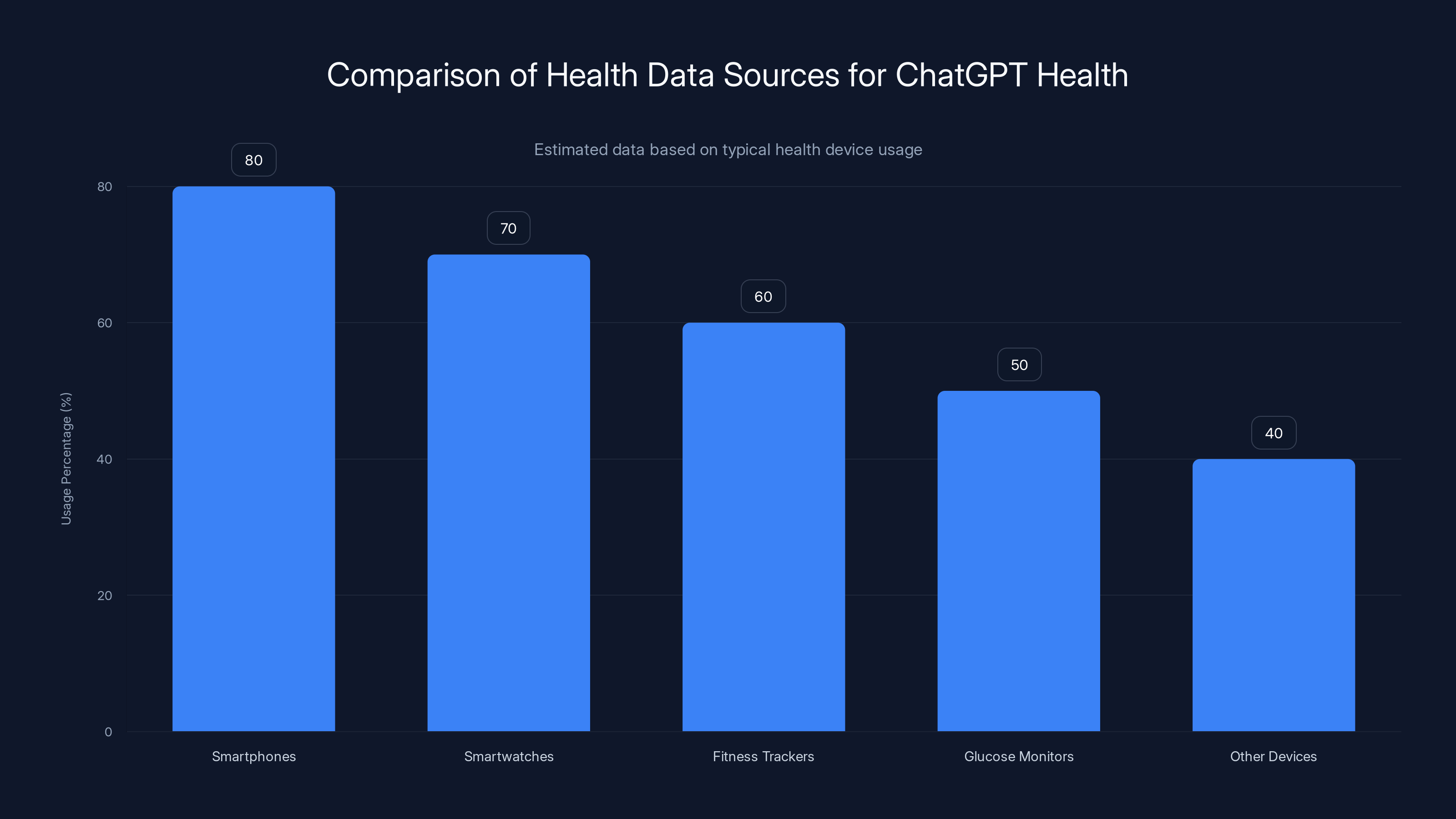

The core functionality is health data aggregation. Users can sync data from their phones, smartwatches, fitness trackers, continuous glucose monitors, and other health devices. Then ChatGPT can analyze that data and provide insights. If your smartwatch shows that your heart rate is elevated during certain times of day, ChatGPT can notice patterns and ask clarifying questions. If your fitness tracker shows you've been consistently hitting your step goals but your sleep has dropped, ChatGPT can make connections.

This is fundamentally different from what Claude is doing. Claude is an enterprise tool designed to automate professional workflows. ChatGPT Health is a consumer application designed to make health data more useful to the person whose data it is.

OpenAI has been explicit about this positioning. ChatGPT Health will roll out gradually, focused initially on the chat experience where patients can ask questions about their health. The tool is designed to be conversational. It's designed to feel like you're talking to someone who knows your health history, understands your concerns, and can provide context-aware responses.

One of the interesting design choices OpenAI made is around data aggregation. Instead of requiring users to manually upload health data, ChatGPT can sync with popular health platforms and devices. This means a patient doesn't need to remember to record their blood pressure readings or manually input their medications. If they're already using Apple Health, Google Fit, or other standard health platforms, ChatGPT can pull that data automatically.

The use case is compelling. A patient wakes up feeling unwell. Instead of immediately calling their doctor, they can ask ChatGPT, "I've had a headache for 3 hours, my heart rate is 15% higher than normal, and I haven't slept well in 2 days. What could this be?" ChatGPT can review their health history, note any relevant medications, check for any conditions they're being treated for, and provide a thoughtful response that includes suggestions for when to seek professional care.
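OpenAI hasn't described how ChatGPT Health decides what "normal" means for a given user. One simple approach, assumed here purely for illustration, treats the median of the previous week's resting heart rate as a personal baseline. Today's reading is then expressed as a percentage above that baseline. The readings below are invented.

```python
from statistics import median


def percent_above_baseline(history_bpm: list[float], today_bpm: float) -> float:
    """Compare today's resting heart rate with the median of prior days."""
    baseline = median(history_bpm)
    return 100 * (today_bpm - baseline) / baseline


# Hypothetical resting heart rates (bpm) for the previous seven days.
last_week = [60, 62, 61, 59, 60, 63, 60]
print(f"{percent_above_baseline(last_week, 69):.0f}% above baseline")  # 15% above baseline
```

A median is used rather than a mean so that a single unusual day doesn't drag the baseline around. A real system would also need to account for exercise, illness, and device measurement noise before calling a reading abnormal.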

OpenAI has also been clear that ChatGPT Health won't be providing definitive medical advice. The tone is more like a knowledgeable friend who happens to understand health rather than a doctor. The guardrails include explicit recommendations to speak with healthcare professionals for reliable, tailored guidance.

But here's the thing that makes this interesting: 230 million people already use ChatGPT to talk about their health every week. OpenAI isn't inventing this use case. People are already doing this. ChatGPT Health is just making it more systematic, more personalized, and more connected to actual health data.

Claude for Healthcare can significantly reduce prior authorization time from an average of 90 minutes to approximately 7.5 minutes and save a 20-provider practice an estimated $160,000 annually.

The Hallucination Problem That Won't Go Away

Let's talk about the elephant in every healthcare AI conversation: hallucination.

Hallucination is when a language model generates information that sounds plausible but is factually incorrect. It's the AI's equivalent of confidently stating something wrong. In most contexts, hallucination is annoying. You ask an AI how to fix a bug and it suggests code that looks right but doesn't work. You ask for a recipe and it invents ingredients. Frustrating, but not dangerous.

In healthcare, hallucination is potentially harmful.

Imagine a patient asks ChatGPT Health about a specific medication interaction, and ChatGPT hallucinates a side effect that doesn't actually exist. The patient becomes worried about something that isn't a real risk. Or imagine Claude for Healthcare, during a prior authorization process, cites a coverage policy from the CMS database that it actually invented. The authorization gets denied for the wrong reasons, and a patient doesn't get needed treatment.

Both companies have built safeguards to address this. Anthropic has been particularly focused on what it calls "constitutional AI." This is a training approach in which the model is guided by a written set of principles (a "constitution"), with AI-generated feedback used to reinforce responses that follow those principles, including declining requests it shouldn't fulfill. Claude is supposed to be more reluctant to make claims it's not confident about. It's supposed to hedge more, to say "I don't have enough information" more often than other models.

For Claude for Healthcare specifically, the fact that it can directly access authoritative databases reduces hallucination risk. If Claude can pull the actual ICD-10 code from the ICD-10 database rather than remembering it from training data, it's much less likely to invent codes. If it can cite actual research from PubMed rather than paraphrasing from memory, it's more trustworthy.
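The grounding idea can be sketched as a simple guard: compare every code a model suggests against an authoritative code set, and route anything unrecognized to a human. This is a generic pattern and not Anthropic's implementation. The four-code set stands in for a full ICD-10-CM release, and `E11.999` is used only because it isn't in that set.

```python
# In practice this set would be loaded from a full ICD-10-CM release; four codes stand in here.
AUTHORITATIVE_CODES = {
    "E11.9",   # Type 2 diabetes mellitus without complications
    "E11.65",  # Type 2 diabetes mellitus with hyperglycemia
    "I10",     # Essential (primary) hypertension
    "M06.9",   # Rheumatoid arthritis, unspecified
}


def split_suggestions(suggested: list[str]) -> tuple[list[str], list[str]]:
    """Split model-suggested codes into ones found in the reference set and ones to review."""
    normalized = [code.strip().upper() for code in suggested]
    verified = [code for code in normalized if code in AUTHORITATIVE_CODES]
    needs_review = [code for code in normalized if code not in AUTHORITATIVE_CODES]
    return verified, needs_review


verified, needs_review = split_suggestions(["M06.9", "e11.999", "I10"])
print(verified)      # ['M06.9', 'I10']
print(needs_review)  # ['E11.999']
```

A check like this catches invented codes. It does not catch a real code applied to the wrong patient, which is exactly the kind of reasoning error the next paragraph describes.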

But the problem isn't completely solved. A hallucination could still happen in how Claude synthesizes information, in the logic it applies, or in its interpretation of what the data means. The guardrails are better, but they're not perfect.

OpenAI has taken a different approach. ChatGPT Health is designed to be more conversational and less authoritative. It's explicitly positioned as a tool for understanding your own health better, not for medical diagnosis. The framing is different. Instead of "here's the answer," it's "here's something worth discussing with your doctor."

But hallucination risks remain for both platforms. This is why both companies have been so explicit about their limitations. Both have said users should consult healthcare professionals for reliable, tailored guidance. Both have built in guardrails to refuse certain types of requests. Both are acutely aware that hallucinating in healthcare has legal, ethical, and potentially life-or-death consequences.

The practical reality is that these systems will get better at reducing hallucinations over time, but they'll never be perfect. The question for healthcare organizations is whether the time savings and efficiency gains are worth managing the hallucination risk.

Data Privacy: The Unspoken Competitive Advantage

Here's something both companies have been very explicit about, and it matters more than you might think: health data from these platforms will not be used to train their AI models.

This is genuinely important. Medical data is extremely sensitive. It contains diagnoses, medication information, genetic information, mental health records, everything. If that data were being used to train AI models, privacy advocates would be justifiably furious.

Both OpenAI and Anthropic have said no. The health data you sync into their platforms stays separate from training data. When you talk to ChatGPT Health about your symptoms or sync your smartwatch data, that data isn't being fed back into the model improvement pipeline.

But here's where it gets interesting: this is also a competitive differentiator, and both companies know it.

If OpenAI were using health data from ChatGPT Health to improve ChatGPT's overall capabilities, they'd get a massive advantage in model quality. Health data is nuanced and complex. It contains real-world examples of medical reasoning, patient concerns, and health outcomes. Training data like this would likely improve AI model performance across the board.

The fact that they're not using it says something about their respect for privacy, but it also says something about the regulatory environment. Healthcare data is heavily regulated. HIPAA violations can result in massive fines. Using patient data for model training without explicit consent would be legally risky and ethically indefensible.

So both companies are making a choice: respect privacy, maintain trust, and operate within the regulatory framework, even though it means giving up a potential competitive advantage.

For patients and providers evaluating these platforms, privacy practices matter. You need to know:

- Where is your data stored?

- Who has access to it?

- How long is it retained?

- Can you request deletion?

- What happens if the company changes ownership?

- How is the data encrypted in transit and at rest?

Both companies have published privacy policies addressing these questions, but the details matter. Healthcare organizations should be auditing these policies and making sure they align with their own compliance requirements.

Smartphones and smartwatches are the most commonly used devices for health data aggregation in ChatGPT Health. Estimated data based on typical device usage patterns.

Prior Authorization: Where the Real ROI Lives

If you want to understand why healthcare organizations will care about Claude for Healthcare more than patients care about ChatGPT Health, focus on prior authorization.

Prior authorization is the specific, defined, measurable problem that Claude is positioned to solve immediately.

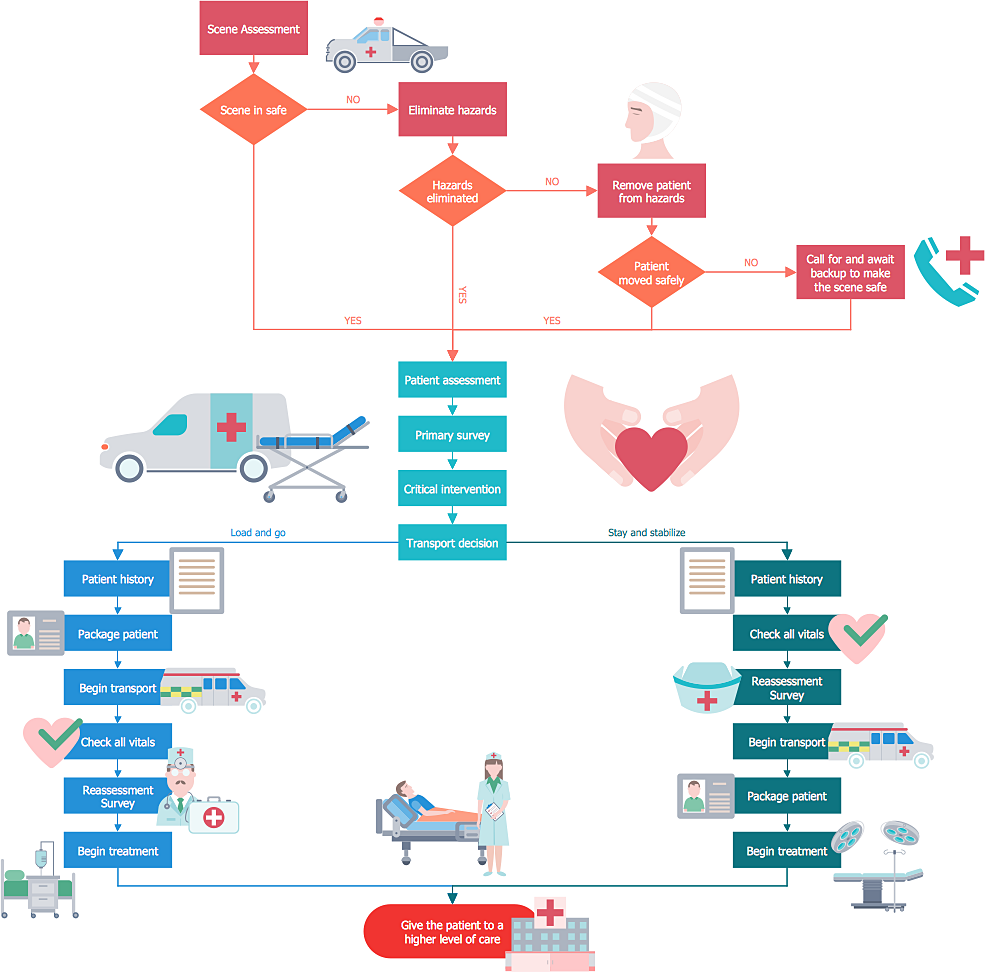

Here's how a prior authorization typically works today:

- A doctor determines that a patient needs a specific medication or treatment.

- The doctor (or more likely, the doctor's administrative staff) contacts the insurance company.

- The insurance company requests documentation justifying why this treatment is medically necessary.

- The doctor's office compiles information: patient history, relevant test results, clinical reasoning for why this specific treatment is appropriate.

- This documentation gets submitted to the insurance company.

- An insurance company reviewer (who might or might not have medical training) reviews the documentation.

- The insurance company either approves, denies, or requests additional information.

- If denied, the doctor can appeal, which restarts the process.

This entire workflow is information gathering and synthesis. It's exactly what AI is good at.

With Claude for Healthcare, the fourth and fifth steps above (compiling and submitting the documentation) potentially become automated. A doctor enters a request: "Patient needs Humira for rheumatoid arthritis." Claude can:

- Retrieve the patient's medical history from the EHR.

- Check the CMS coverage database for coverage criteria.

- Search PubMed for recent clinical evidence.

- Cross-reference the patient's current medications for any interactions.

- Check the patient's demographics for any policy-specific factors.

- Generate a complete prior authorization request with all supporting documentation.
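Whatever generates the package, someone still has to check it before it goes to the payer. A minimal completeness check might look like the sketch below. The field names are hypothetical, since each payer defines its own prior authorization form. An empty value counts as missing.

```python
# Hypothetical field names; real payers publish their own prior authorization forms.
REQUIRED_FIELDS = (
    "patient_id",
    "diagnosis_code",
    "requested_treatment",
    "coverage_criteria",
    "clinical_rationale",
    "supporting_evidence",
)


def missing_fields(package: dict) -> list[str]:
    """List required fields that are absent or empty, so a human can fill them in."""
    return [name for name in REQUIRED_FIELDS if not package.get(name)]


draft = {
    "patient_id": "example-123",
    "diagnosis_code": "M06.9",  # rheumatoid arthritis, unspecified
    "requested_treatment": "adalimumab",
    "coverage_criteria": "payer criteria text retrieved earlier",
    "clinical_rationale": "",
    "supporting_evidence": ["placeholder citation"],
}
print(missing_fields(draft))  # ['clinical_rationale']
```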

The entire process that took a human 1-2 hours could potentially happen in minutes.

Let's do the math on the financial impact:

Scenario: A midsize healthcare practice with 20 providers

- Average prior authorizations per provider per week: 10

- Total weekly prior authorization requests: 200

- Average time per request (current): 45 minutes

- Total current time spent: 150 hours per week

- Staff cost (average $25/hour): $3,750 per week

- Annual cost: $195,000

With Claude for Healthcare automation:

- Time per request: 5 minutes (mostly verification)

- Total time spent: 16.7 hours per week

- Reduction: about 89% fewer human hours

- Annual savings: about $173,000

- Implementation cost: Approximately $10,000-$30,000 annually

- Net annual savings: about $143,000-$163,000
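The scenario's arithmetic can be reproduced in a few lines. The $25/hour rate is inferred by dividing the $3,750 weekly staff cost by 150 hours. The other inputs come from the bullets: 200 requests a week, 45 minutes now versus 5 minutes with automation, and a $30,000 implementation figure you can swap for your own.

```python
WEEKS_PER_YEAR = 52
HOURLY_COST = 3_750 / 150     # $25/hour, inferred from $3,750 per week for 150 hours
REQUESTS_PER_WEEK = 20 * 10   # 20 providers x 10 prior authorizations each


def weekly_hours(minutes_each: float) -> float:
    """Staff hours per week spent on prior authorizations at a given time per request."""
    return REQUESTS_PER_WEEK * minutes_each / 60


def annual_cost(minutes_each: float) -> float:
    """Annual staff cost at a given time per request."""
    return weekly_hours(minutes_each) * HOURLY_COST * WEEKS_PER_YEAR


current = annual_cost(45)     # 150 hours per week
automated = annual_cost(5)    # about 16.7 hours per week, mostly verification
savings = current - automated
reduction = 1 - weekly_hours(5) / weekly_hours(45)
implementation_cost = 30_000  # substitute your own estimate

print(f"Current annual cost:  ${current:,.0f}")
print(f"Hours reduction:      {reduction:.1%}")
print(f"Gross annual savings: ${savings:,.0f}")
print(f"Net annual savings:   ${savings - implementation_cost:,.0f}")
```

With these inputs the script prints $195,000, 88.9%, $173,333, and $143,333. Going from 45 to 5 minutes per request removes eight-ninths of the hours, which is where the just-under-89% figure comes from.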

And that's just the direct staff cost savings. There are indirect savings too. Faster approvals mean patients get treatment sooner, which potentially improves outcomes and reduces complications. Fewer administrative headaches mean better staff retention. More complete prior authorization packages might also reduce insurance claim denials due to incomplete documentation.

This is why healthcare organizations will adopt Claude for Healthcare. It's not because it's innovative or impressive. It's because the ROI is immediate, measurable, and significant.

The Enterprise Integration Challenge

Here's where both platforms run into reality: healthcare infrastructure is old, fragmented, and resistant to change.

Most healthcare organizations run electronic health record (EHR) systems built 10-20 years ago. Epic, Cerner, and Meditech are the dominant systems. They work and they're trusted, but they're also complex, and their APIs are sometimes poorly documented. Integrating a new AI platform with an existing EHR infrastructure isn't trivial.

For Claude for Healthcare, the connectors to CMS databases and PubMed are valuable, but real value in a healthcare system comes from integration with the EHR. A doctor needs to invoke Claude without leaving their normal workflow. If using Claude for Healthcare requires logging into a separate system, copying and pasting patient information, and then manually importing the results back into the EHR, adoption will be slow.
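Certified US EHRs, including Epic and Cerner, expose HL7 FHIR R4 APIs. That is one plausible route for pulling a patient's medications into a workflow like this. The source doesn't say which integration path Anthropic uses, so the following is only a sketch. It builds a standard FHIR search URL and pulls medication text out of a search-result Bundle. The server URL and sample Bundle are invented, and authentication (typically SMART on FHIR, built on OAuth 2.0) is left out.

```python
from urllib.parse import urlencode


def fhir_search_url(base_url: str, resource_type: str, patient_id: str) -> str:
    """Build a FHIR R4 search URL such as [base]/MedicationRequest?patient=123."""
    return f"{base_url.rstrip('/')}/{resource_type}?{urlencode({'patient': patient_id})}"


def medication_names(bundle: dict) -> list[str]:
    """Extract medication text from MedicationRequest entries in a searchset Bundle."""
    names = []
    for entry in bundle.get("entry", []):
        resource = entry.get("resource", {})
        if resource.get("resourceType") != "MedicationRequest":
            continue  # searchsets can also carry OperationOutcome entries
        concept = resource.get("medicationCodeableConcept", {})
        if "text" in concept:
            names.append(concept["text"])
    return names


# Hypothetical server base URL and a trimmed-down sample Bundle.
url = fhir_search_url("https://ehr.example.org/fhir/", "MedicationRequest", "123")
print(url)
sample_bundle = {
    "resourceType": "Bundle",
    "type": "searchset",
    "entry": [
        {"resource": {"resourceType": "MedicationRequest", "status": "active",
                      "medicationCodeableConcept": {"text": "methotrexate 15 mg weekly"}}},
        {"resource": {"resourceType": "OperationOutcome"}},
    ],
}
print(medication_names(sample_bundle))  # ['methotrexate 15 mg weekly']
```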

OpenAI has the advantage here: many healthcare organizations already have ChatGPT access through enterprise agreements. ChatGPT Health might feel like an extension of existing tools rather than a new system to integrate.

Anthropic's advantage is that Claude is built with integration in mind. The connector architecture suggests that Anthropic is thinking about deep EHR integration from the start. They're not trying to bolt AI onto existing systems. They're building AI that understands healthcare system architecture.

But both companies face the same challenge: regulatory approval and integration. Before a healthcare organization can use either platform for clinical workflows, they need:

- Compliance with HIPAA (Health Insurance Portability and Accountability Act)

- Compliance with any state-specific health data regulations

- Internal security audits

- Physician oversight and approval

- Documentation of AI decision-making for liability purposes

- Training for staff on appropriate use

This takes time. It typically takes 6-18 months from product announcement to actual clinical deployment in a healthcare system.

The organizations that will adopt these tools first are likely:

- Large healthcare systems with dedicated IT infrastructure and resources to handle integration

- Insurance companies that have direct control over their systems and immediate ROI from prior authorization automation

- Healthcare startups building new systems with AI integration from the start

- Progressive independent practices willing to be early adopters if the ROI is clear

Smaller practices and rural healthcare providers might lag by several years, not because they don't want to adopt AI, but because they lack the infrastructure and technical resources to do so.

Estimated data shows that insurance companies are likely to adopt Claude for Healthcare more rapidly than patients and providers, reaching significant adoption by 2027. ChatGPT Health for patients and Claude for Healthcare for providers are expected to reach mainstream adoption by 2029-2030.

Regulatory Reality: What Gets Approved and What Doesn't

Here's something both companies are dancing around but need to address directly: which uses of AI in healthcare are actually legal and approved?

The FDA (Food and Drug Administration) has published guidance on this, but it leaves gray areas. Some clinical decision support software falls under FDA oversight. If an AI system is making recommendations about patient diagnosis, treatment, or medication, it might be classified as a medical device, which means it may need FDA clearance or approval for that use.

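Both ChatGPT Health and Claude for Healthcare are positioning themselves as decision support tools, not as making final medical decisions. This is an important distinction. A decision support tool provides information and recommendations that a clinician can independently review before acting. Software that makes or drives clinical decisions without that review is far more likely to be regulated as a medical device.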

In theory, this positioning lets both platforms avoid FDA review. OpenAI has said ChatGPT Health is designed to provide health information, not medical advice. Anthropic has positioned Claude for Healthcare as automating administrative workflows, not making clinical decisions.

But there's fuzziness here. If Claude generates a prior authorization recommendation, and a doctor accepts it without verifying, is Claude making a clinical decision? If ChatGPT Health provides a health insight based on patient data, and a patient acts on it without consulting a doctor, is it providing medical advice?

The regulatory framework hasn't fully settled these questions. The FDA is currently working on guidance for AI in healthcare, but complete regulatory clarity might still be 1-2 years away.

For healthcare organizations, this creates risk. If they deploy these tools for critical clinical workflows and the FDA later determines that the use requires approval that wasn't obtained, there could be compliance issues.

The safest approach is to use these tools for clearly non-clinical functions first: administrative automation, documentation, research support. These uses are less likely to trigger regulatory scrutiny while both companies and regulators figure out the boundaries.

Adoption Timelines: Who Gets This First

Let's be realistic about how fast these tools will actually be adopted in healthcare.

Healthcare is cautious. It moves slowly. New technologies take years to gain traction. Consider EHR adoption: it took 15 years after meaningful use incentives were introduced for most practices to fully transition. Electronic prescribing took even longer.

For Claude for Healthcare and ChatGPT Health, realistic adoption timelines are:

ChatGPT Health for patients:

- Early adopters (tech-forward patients, health-conscious individuals): 2025-2026

- Mainstream consumer adoption: 2027-2028

- Significant percentage of patient base using the tool: 2029-2030

OpenAI's advantage is that they already have hundreds of millions of ChatGPT users. The conversion rate from ChatGPT user to ChatGPT Health user will probably be high. If 5-10% of current ChatGPT users try ChatGPT Health, that's 20-50 million people.

Claude for Healthcare for providers:

- Pilot programs and early adopters: 2025-2026

- Rollout in mid-size and large health systems: 2027-2028

- Mainstream adoption in healthcare organizations: 2029-2030

This is slower because it requires integration with existing systems, regulatory navigation, and staff training. But the ROI is clearer, so adoption among organizations that have the technical capacity will move relatively quickly.

For insurance companies:

- Rapid adoption (2025-2027) because the ROI is immediately clear and they control their own infrastructure

- Insurance companies will likely be the first major adopters of Claude for Healthcare

The timeline matters because it determines when these tools actually start impacting healthcare at scale. A lot of the hype around AI in healthcare is about 2027-2030 potential. The reality of 2025-2026 is that these tools will exist, early adopters will use them, but healthcare systems broadly will still be mostly doing things the old way.

Claude's connectors provide robust functionality, with the ICD-10 database scoring highest due to its critical role in medical coding. Estimated data.

Competitive Differentiation: What Actually Matters

When you strip away the marketing, here's what actually differentiates these platforms:

Claude for Healthcare's actual advantages:

- Direct access to authoritative healthcare databases reduces hallucination risk

- Agent skills enable autonomous workflow automation

- Enterprise focus means better integration design

- Clear ROI for organizational decision-makers

- Infrastructure that can handle complex medical data flows

ChatGPT Health's actual advantages:

- Existing user base of 200+ million people

- Proven consumer adoption patterns

- Integration with popular health platforms and wearables

- Simpler onboarding and lower barrier to entry

- OpenAI's resources for rapid iteration

- Consumer brand trust and familiarity

Neither platform is inherently superior. They're solving different problems for different audiences.

For a healthcare system administrator trying to reduce prior authorization time and cost, Claude is the obvious choice. For a patient trying to understand their own health better, ChatGPT Health is the obvious choice.

The real question isn't which one will "win." The question is whether both can achieve meaningful adoption without running into regulatory walls, integration challenges, or the fundamental problem that healthcare innovation is slow.

The Broader AI-in-Healthcare Trend

Neither of these tools exists in isolation. They're part of a larger wave of AI adoption in healthcare.

Healthcare organizations are deploying AI for:

- Diagnostic imaging analysis: AI systems helping radiologists interpret scans, flagging potential issues

- Administrative automation: Scheduling, billing, claims processing

- Clinical research: Using AI to identify potential clinical trial candidates

- Drug discovery: AI accelerating the identification of potential new medications

- Predictive analytics: Predicting hospital readmissions, disease progression, patient deterioration

- Natural language processing: Extracting structured data from unstructured clinical notes

Claude for Healthcare and ChatGPT Health are just two pieces of this broader trend. The healthcare industry knows it has a productivity problem. It knows it's drowning in paperwork and administrative overhead. It's actively looking for solutions, and AI is the obvious place to look.

The success of these platforms will probably depend less on their specific features and more on whether they can demonstrate clear ROI, navigate regulatory requirements, and integrate smoothly with existing systems.

OpenAI and Anthropic both have the resources to make this work. But so did numerous other AI companies that have had limited healthcare adoption. Healthcare is genuinely difficult. The margin between success and failure is often just execution and persistence.

Looking Forward: What Healthcare AI Needs to Succeed

For either of these platforms to meaningfully impact healthcare, they need to solve problems beyond just being good AI systems. They need to be:

Interoperable: They need to work with existing EHR systems, health data standards, and healthcare infrastructure. A platform that requires workarounds or manual data transfers won't achieve broad adoption.

Demonstrably safe: Not just safe in theory, but safe in practice with real monitoring, auditing, and oversight. Healthcare organizations need to see evidence that these tools don't introduce new risks.

Regulatorily compliant: They need to navigate healthcare regulations without requiring extensive customization for different jurisdictions and organizations.

Transparent: Healthcare needs to understand how AI is making decisions, what data it's using, and what alternatives exist. Black-box AI won't gain trust in clinical settings.

Economically beneficial: The ROI needs to be clear, measurable, and sustainable. Healthcare budgets are tight. Expensive tools that provide marginal improvements won't get funding.

Owned and controlled: Healthcare organizations need to feel like they own and control their data and their implementations, not like they're dependent on a third-party platform.

Both Claude for Healthcare and ChatGPT Health have work to do on all these dimensions. OpenAI has a brand and user base advantage. Anthropic has an architecture advantage. But neither has fundamentally solved the integration, regulatory, and adoption challenges that have historically made healthcare innovation slow.

The next 12-24 months will be telling. We'll see whether these platforms can move from announcement to actual deployment in healthcare organizations. We'll see whether the claimed time savings materialize in practice. We'll see whether regulatory obstacles emerge.

Healthcare AI isn't a sprint. It's a marathon. These platforms might win that marathon. But they're going to need to prove it in the real world first.

FAQ

What is Claude for Healthcare and how does it differ from ChatGPT Health?

Claude for Healthcare is Anthropic's AI platform designed for healthcare providers, payers, and insurance companies, with specialized connectors to medical data sources such as CMS databases, ICD-10 codes, PubMed, and provider registries. It's focused on automating administrative workflows, particularly prior authorization. ChatGPT Health, by contrast, is OpenAI's consumer-facing tool designed for individual patients to aggregate and analyze their personal health data from phones, smartwatches, and fitness trackers. The fundamental difference is audience: Claude targets healthcare organizations seeking to reduce administrative burden, while ChatGPT Health targets patients seeking a better understanding of their own health.
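The article doesn't describe how ChatGPT Health merges data from multiple devices. As a minimal sketch of what "aggregating" health data can mean, assuming a hypothetical `Reading` record per measurement, the code below drops exact duplicate records (such as a device re-syncing) and averages each metric per day across all devices. None of these names come from OpenAI's product.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass(frozen=True)
class Reading:
    """One measurement from a device; this record shape is hypothetical."""
    source: str          # e.g. "phone", "watch", "fitness_tracker"
    metric: str          # e.g. "resting_heart_rate"
    timestamp: datetime
    value: float


def daily_summary(readings):
    """Average each metric per calendar day across all sources.

    Exact duplicate records (same source, metric, time, and value), which can
    appear when a device re-syncs, are counted only once.
    """
    buckets = defaultdict(list)
    for r in set(readings):  # frozen dataclass instances are hashable
        buckets[(r.metric, r.timestamp.date())].append(r.value)
    return {key: mean(values) for key, values in buckets.items()}


readings = [
    Reading("watch", "resting_heart_rate", datetime(2025, 1, 6, 7, 0), 60.0),
    Reading("phone", "resting_heart_rate", datetime(2025, 1, 6, 8, 0), 64.0),
    Reading("watch", "resting_heart_rate", datetime(2025, 1, 6, 7, 0), 60.0),  # re-synced copy
]
print(daily_summary(readings))
```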

How can Claude for Healthcare reduce prior authorization time and costs?

Claude for Healthcare uses AI agent skills to autonomously navigate the prior authorization workflow. When a doctor initiates a request, Claude can simultaneously access the CMS Coverage Database for policy information, search PubMed for relevant clinical evidence, cross-reference the patient's medical history and current medications, and compile a complete authorization package with supporting documentation. A process that currently requires 45 minutes to 2 hours of human work can potentially be completed in 5-10 minutes, with calculations showing potential annual savings of $170,000 for a 20-provider practice.
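The "simultaneously access" step is essentially a fan-out of independent lookups followed by assembly. Here is a minimal sketch using Python's `asyncio.gather`. The three fetch functions are hypothetical stand-ins, not real Anthropic, CMS, or PubMed APIs, and `PROC-001` is a placeholder procedure code.

```python
import asyncio
from dataclasses import dataclass, field


@dataclass
class AuthorizationPackage:
    patient_id: str
    procedure_code: str
    coverage_policy: str
    evidence: list[str]
    patient_summary: str
    gaps: list[str] = field(default_factory=list)  # items staff must resolve before submission


# Hypothetical stand-ins for connector calls. These are NOT real Anthropic,
# CMS, or PubMed APIs; each sleep(0) marks where network I/O would happen.
async def fetch_coverage_policy(procedure_code: str) -> str:
    await asyncio.sleep(0)
    return f"coverage criteria for {procedure_code}"


async def search_clinical_evidence(procedure_code: str) -> list[str]:
    await asyncio.sleep(0)
    return [f"study supporting {procedure_code}"]


async def load_patient_history(patient_id: str) -> str:
    await asyncio.sleep(0)
    return f"history and current medications for {patient_id}"


async def build_package(patient_id: str, procedure_code: str) -> AuthorizationPackage:
    # Run the three lookups concurrently instead of one after another.
    policy, evidence, history = await asyncio.gather(
        fetch_coverage_policy(procedure_code),
        search_clinical_evidence(procedure_code),
        load_patient_history(patient_id),
    )
    package = AuthorizationPackage(patient_id, procedure_code, policy, evidence, history)
    if not evidence:
        package.gaps.append("no supporting clinical evidence found")
    return package


package = asyncio.run(build_package("patient-123", "PROC-001"))
print(package.gaps)  # [] -> ready for staff review, not automatic submission
```

Flagging gaps for staff rather than submitting automatically keeps a human in the loop, which matches the oversight the article calls for elsewhere.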

What are the main risks associated with AI providing medical advice?

The primary risk is hallucination: AI systems generating plausible-sounding but factually incorrect information. In healthcare, this could lead to wrong diagnoses, inappropriate treatment recommendations, incorrect medical coding, or missed critical information. Both platforms address this with guardrails, but the risk isn't eliminated. Neither company positions its platform as a substitute for professional medical advice, and both explicitly recommend consulting healthcare professionals for reliable, tailored guidance.

How do these platforms handle patient health data privacy?

Both Anthropic and OpenAI have explicitly stated that health data will not be used to train their AI models. Data is stored separately from training pipelines and subject to HIPAA compliance requirements. However, organizations should verify specific data handling practices, encryption standards, data retention policies, and deletion procedures before deploying these platforms, as privacy practices may vary based on implementation and jurisdiction.

When will these platforms be available for widespread healthcare use?

ChatGPT Health is expected to see early consumer adoption in 2025-2026, with mainstream adoption by 2027-2028. Claude for Healthcare will likely see faster adoption among insurance companies and large health systems in 2025-2026, with broader healthcare system adoption by 2027-2029. Adoption timelines depend on regulatory clarity, integration with existing EHR systems, and organizational readiness to implement new workflows.

Do these platforms require FDA approval to be used in healthcare?

The regulatory status is still evolving, but currently both platforms are positioned as decision support tools rather than medical devices, which theoretically avoids FDA approval requirements. However, if either platform is used for critical clinical decision-making, regulatory issues could emerge. The safest approach for healthcare organizations is to start with clearly non-clinical uses like administrative automation before expanding to clinical workflows. The FDA is developing guidance for AI in healthcare, with more complete regulatory clarity expected in 2026-2027.

How quickly can healthcare organizations integrate these platforms with existing systems?

Integration timelines typically range from 6-18 months depending on organizational complexity, existing system architecture, and desired scope. Large healthcare systems with dedicated IT infrastructure can implement faster. Smaller practices may take longer due to resource constraints. Claude for Healthcare's connector architecture is designed for integration, but real-world implementation still requires coordination with EHR vendors, compliance review, staff training, and testing before clinical deployment.

Which platform should my healthcare organization choose?

The answer depends on your specific needs. If your priority is reducing administrative burden (prior authorization, medical coding, documentation), Claude for Healthcare is purpose-built for those workflows. If your goal is improving patient engagement and health literacy, ChatGPT Health is more appropriate. Some healthcare systems will deploy both for different use cases. Evaluation should focus on: specific organizational pain points, technical integration requirements, regulatory compliance, vendor stability, and measurable ROI.
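The evaluation criteria listed above can be turned into a simple weighted decision matrix. This is a generic sketch, not a method either vendor publishes. The weights, criterion keys, and 1-5 scores are hypothetical and would come from your own evaluation team.

```python
# Hypothetical weights; each organization should set its own.
CRITERIA_WEIGHTS = {
    "pain_point_fit": 0.30,
    "integration": 0.25,
    "regulatory_compliance": 0.20,
    "vendor_stability": 0.10,
    "measurable_roi": 0.15,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = CRITERIA_WEIGHTS) -> float:
    """Combine per-criterion scores (e.g. 1-5) into one weighted average."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[c] * scores[c] for c in weights) / total_weight


# Illustrative scores for one candidate platform, not real assessments.
candidate = {
    "pain_point_fit": 5,
    "integration": 3,
    "regulatory_compliance": 4,
    "vendor_stability": 4,
    "measurable_roi": 4,
}
print(round(weighted_score(candidate), 2))
```

Raising an error on a missing criterion, rather than treating it as zero, forces the team to score every dimension before comparing platforms.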

How do these platforms handle accuracy and quality assurance?

The two platforms take different approaches. Claude for Healthcare reduces hallucination risk by querying authoritative databases directly rather than relying solely on knowledge learned during training. ChatGPT Health relies on more conservative framing, offering insights rather than diagnoses. Both implement quality checks and monitoring. However, neither platform offers perfect accuracy. Healthcare organizations need to implement oversight mechanisms, human verification workflows, and continuous monitoring to ensure quality.
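A minimal sketch of the "check against an authoritative source, then route to a human" pattern, assuming model-suggested ICD-10 codes arrive as plain strings. Each code is checked for the ICD-10-CM shape and looked up in a reference table. Only codes that exist are accepted, and everything else goes to a human coder. `REFERENCE_CODES` and `triage_codes` are hypothetical names, and the two-entry table stands in for a full official code release. Passing this check only means a code exists, not that it fits the patient, so accepted codes still need clinical review.

```python
import re

# Structural shape of an ICD-10-CM code: letter, digit, alphanumeric,
# then an optional dot and 1-4 more characters (e.g. "E11.9", "I10").
ICD10_SHAPE = re.compile(r"^[A-Z][0-9][0-9A-Z](\.[0-9A-Z]{1,4})?$")

# Tiny stand-in for an authoritative code table; a real deployment would load
# the full official code release rather than a hard-coded dict.
REFERENCE_CODES = {
    "E11.9": "Type 2 diabetes mellitus without complications",
    "I10": "Essential (primary) hypertension",
}


def triage_codes(suggested):
    """Split model-suggested codes into accepted codes and codes needing review."""
    accepted, needs_review = [], []
    for raw in suggested:
        code = raw.strip().upper()
        if not ICD10_SHAPE.match(code):
            needs_review.append((raw, "malformed"))
        elif code not in REFERENCE_CODES:
            needs_review.append((raw, "not in reference table"))
        else:
            accepted.append(code)
    return accepted, needs_review


accepted, review = triage_codes(["e11.9", "I10", "Z99.999", "not-a-code"])
print(accepted)  # ['E11.9', 'I10']
print(review)    # [('Z99.999', 'not in reference table'), ('not-a-code', 'malformed')]
```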

What happens if there's a security breach or the company changes ownership?

This is a legitimate concern for healthcare organizations handling sensitive data. Contracts with OpenAI or Anthropic should address data protection in the event of a security breach or a change of ownership. Organizations should require business associate agreements (BAAs) under HIPAA, specific data handling clauses, audit rights, and clear procedures for data deletion or return if the relationship ends. Legal review of vendor agreements is essential before deployment.

The Bottom Line

The announcement of Claude for Healthcare and ChatGPT Health within days of each other signals something important: the leading AI companies are betting seriously on healthcare. They're not making casual entries. They're building specialized products with purpose-built features for healthcare's specific challenges.

But let's be realistic. Healthcare moves slowly. Integration is hard. Regulatory uncertainty exists. And both platforms have work to do before they become standard tools in most healthcare organizations.

What's genuinely exciting isn't which platform "wins." It's that healthcare organizations now have multiple sophisticated AI options for addressing real, quantifiable problems. Prior authorization automation isn't a futuristic concept anymore. It's something you can implement now, from established companies with real infrastructure and resources.

For healthcare providers exhausted by administrative burden, Claude for Healthcare offers immediate practical value. For patients wanting better tools to understand their own health, ChatGPT Health provides a starting point.

Neither is perfect. Neither eliminates human judgment or professional oversight. Neither solves healthcare's fundamental challenges of cost, access, and quality.

But both are meaningful steps toward healthcare that's less burdened by paperwork and more focused on care. In a system desperate for efficiency improvements, that matters.

The next year will be telling. Watch for real-world deployment stories, measured outcomes, and honest assessments of what these tools can and can't do. That's when we'll know whether this is genuine healthcare innovation or just hype with good marketing.

Key Takeaways

- Claude for Healthcare targets healthcare providers and insurance companies with prior authorization automation and medical database connectors, while ChatGPT Health serves individual patients seeking personalized health insights from aggregated data.

- Prior authorization automation with Claude could save a 20-provider practice $170,000 annually by reducing administrative time from 45-120 minutes to 5-10 minutes per request.

- Both platforms explicitly state that patient health data won't be used for model training, but hallucination risks remain the central concern for clinical applications despite implemented safeguards.

- Healthcare organizations should expect 6-18 month integration timelines for EHR system compatibility, with insurance companies adopting Claude fastest and smaller practices adopting slowest due to technical resource constraints.

- Regulatory clarity around FDA approval for healthcare AI is still evolving; organizations should start with administrative automation rather than clinical decision-making to minimize regulatory risk.
