The AI Music Crisis That Nobody Saw Coming (But Should Have)

Here's the thing about the music streaming industry right now: it's quietly dealing with an existential problem that makes most tech scandals look quaint.

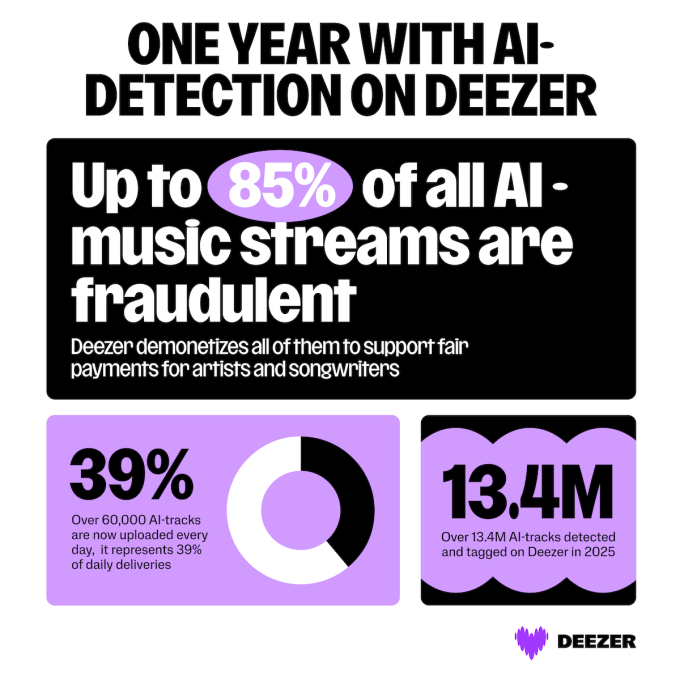

Every single day, platforms like Spotify, Apple Music, and YouTube Music are getting absolutely flooded with AI-generated tracks. We're not talking about a few hundred songs. We're talking about thousands upon thousands of pieces of music created by algorithms, uploaded by bad actors trying to game the system, and polluting the listening experience for millions of people.

The problem got so severe that in 2024, Spotify started taking aggressive action. The company began removing songs generated by AI tools used to manipulate streams and artificially inflate artist earnings. But here's where it gets interesting: Spotify didn't have its own detection system. That technology came from somewhere else entirely.

Enter Deezer, a French streaming platform that's been quietly operating in Spotify's shadow for years. In a move that shocked industry observers, Deezer decided to open-source its AI music detection technology and make it available to competing platforms, including Spotify itself. This isn't just a corporate goodwill gesture. It's a strategic masterstroke that reveals how desperate the music industry has become to solve this problem.

Why would Deezer, a company fighting for market share against global giants, voluntarily share technology that could level the playing field? The answer is complicated, and it gets at the heart of what happens when the music industry realizes that the real enemy isn't each other—it's the technology that threatens to destroy the entire ecosystem.

This article digs deep into what Deezer's move means for the future of music streaming, why Spotify is suddenly vulnerable, and what this says about the industry's broader struggle with artificial intelligence. Because this isn't just about fake music. It's about control, authenticity, and whether platforms can actually police themselves before the system collapses entirely.

TL;DR

- Deezer developed proprietary AI detection technology that identifies artificially generated music with high accuracy, and made it publicly available to other platforms (Deezer Newsroom)

- Spotify had no native AI detection system and was forced to rely on third-party solutions, making the platform vulnerable to AI-generated music exploitation (TechBuzz)

- AI-generated music flooding represents a multi-billion-dollar problem for streaming platforms, affecting artist earnings, algorithm integrity, and user experience (The Guardian)

- The move benefits everyone except bad actors, which is why industry players are quietly celebrating despite competitive tensions (TechRadar)

- This signals a broader industry shift toward collaborative problem-solving when faced with existential technological threats (RouteNote)

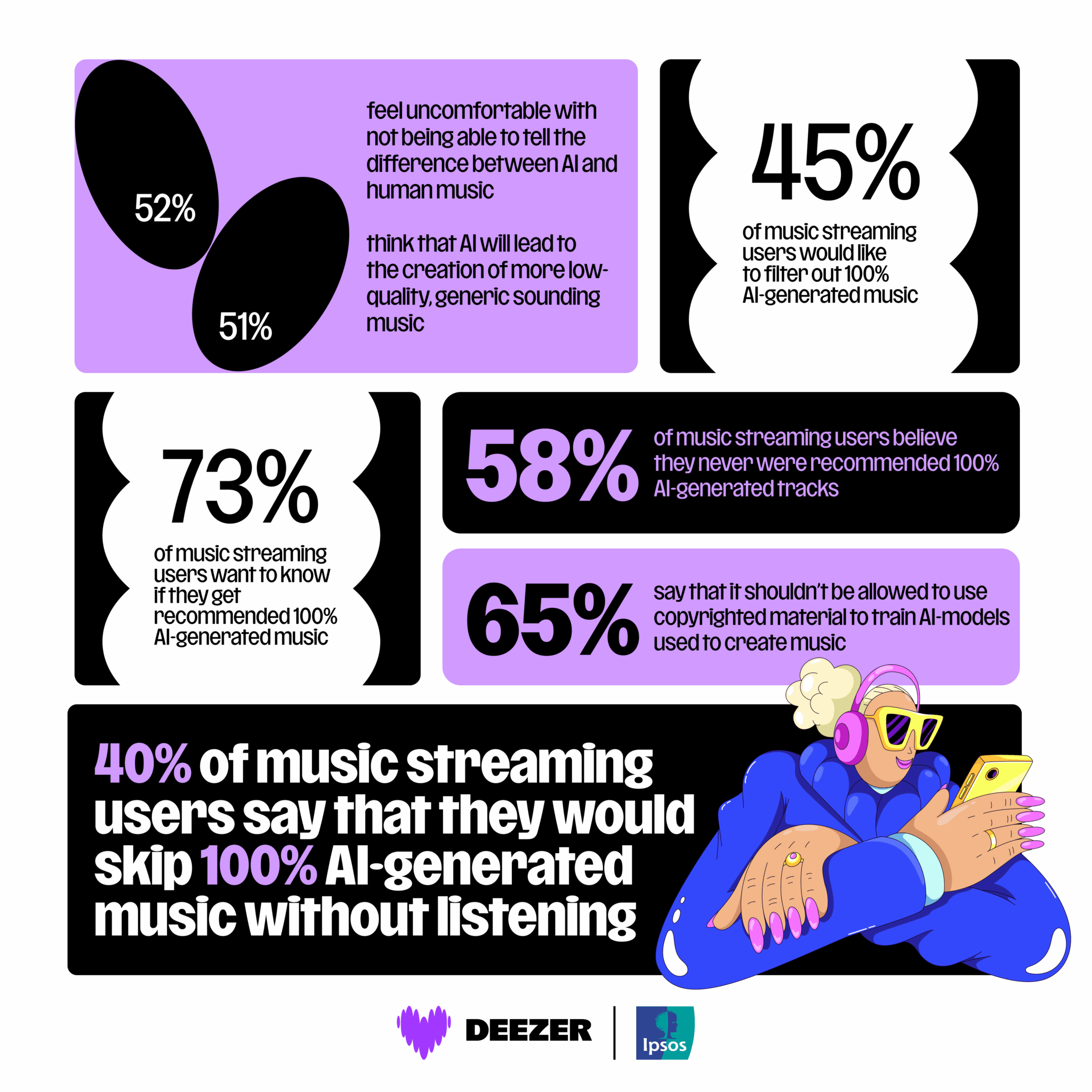

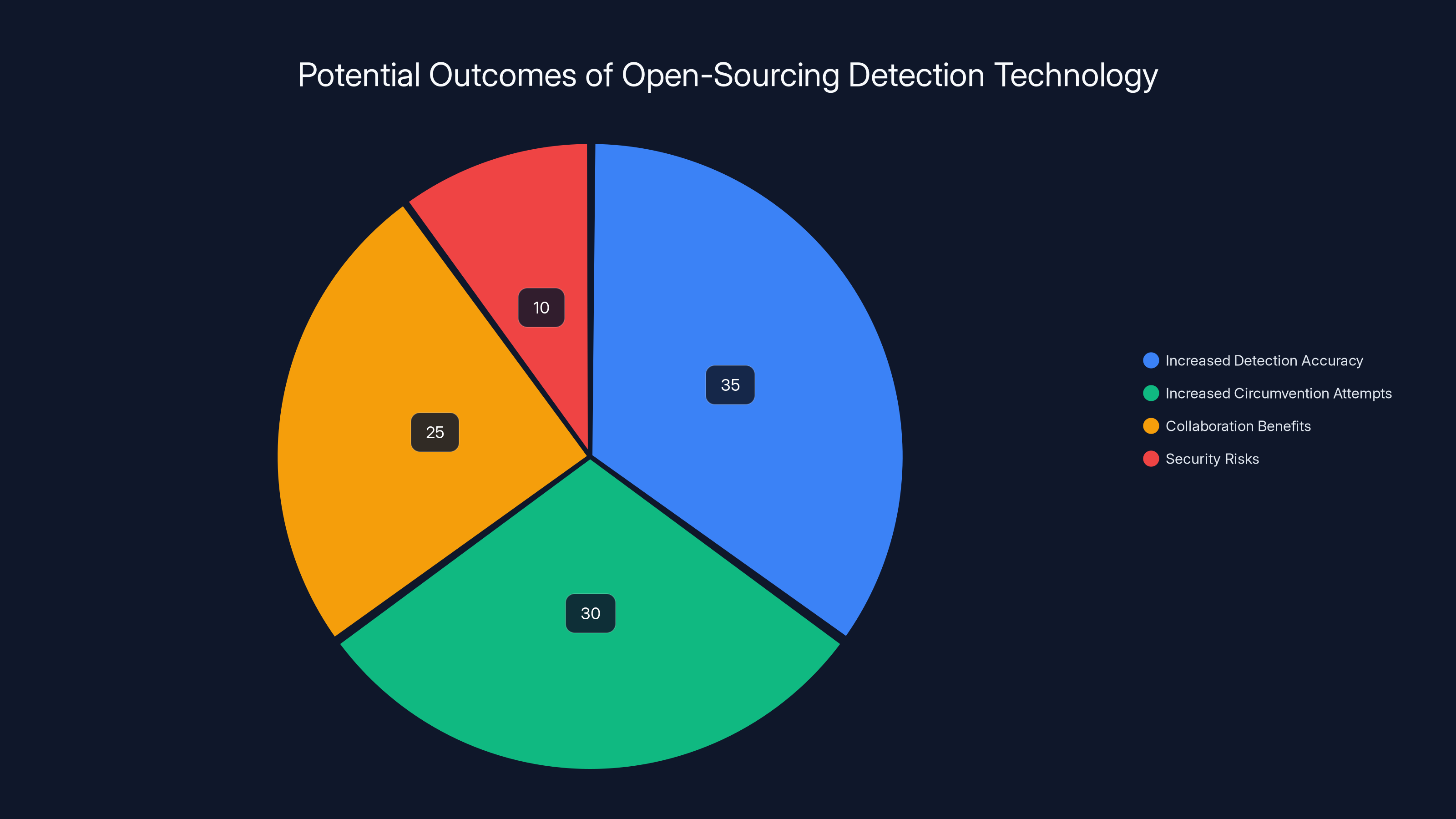

Estimated data shows that open-sourcing detection technology may increase detection accuracy and collaboration benefits, but also poses risks of circumvention and security challenges.

Why AI-Generated Music Became Streaming's Worst Nightmare

Take a step back and picture the incentive structure that created this mess. Music streaming platforms pay artists based on streams. Artists with millions of streams earn significantly more than artists with thousands. So what happens when someone figures out how to generate unlimited music cheaply and upload it to every platform simultaneously?

They get paid.

That's exactly what started happening around 2023. Bad actors discovered they could use AI music generation tools like AIVA, Amper, and others to create thousands of songs in days, upload them with different artist names, and start collecting royalties. Some of these generated tracks would get zero listens. Others, through manipulation tactics and algorithmic gaming, would land on playlists and earn actual money.

For Spotify specifically, this became a PR nightmare. The company had built its entire value proposition around discovery: helping listeners find new artists and helping artists reach new audiences. But if the system was flooded with AI garbage, that discovery mechanism broke down. Users would dig through playlists looking for actual human artists and find computer-generated filler instead. Meanwhile, real artists—people with families and mortgages who depended on streaming income—were watching their earnings get diluted by algorithm-generated competitors.

The economic impact was staggering. Deezer estimated that AI-generated music was responsible for millions of streams that should have gone to legitimate artists (SentinelOne). More importantly, it degraded the quality of the platform itself. When your recommendation algorithm learns from listening data polluted by thousands of low-quality AI tracks and the manipulated streams behind them, the entire machine learning model deteriorates. It's like training a cat classifier on a dataset padded with mislabeled pictures: the more junk goes in, the worse the model gets.

Spotify's response was brutal and swift. The company began aggressively removing AI-generated tracks and blocking artists who were engaging in stream manipulation. But there was a catch: they were doing this without a native detection system of their own. They were relying on external signals, user reports, and manual review. That's not scalable when you're dealing with thousands of uploads daily.

This is where the entire narrative shifts. Spotify, the undisputed king of music streaming with over 600 million users, suddenly found itself at a significant disadvantage. It needed detection technology, and it needed it yesterday.

Deezer, meanwhile, had spent years developing exactly what Spotify needed. The company had invested heavily in machine learning models trained to identify AI-generated music specifically. The technology analyzes audio characteristics that are difficult to mask—harmonic structures, temporal patterns, artifact signatures—and determines whether a track was created by humans or machines.

Here's where the story gets genuinely interesting: instead of hoarding this technology and using it as a competitive advantage, Deezer opened it up. The company made its detection tools available to other platforms, including Spotify (Hollywood Reporter).

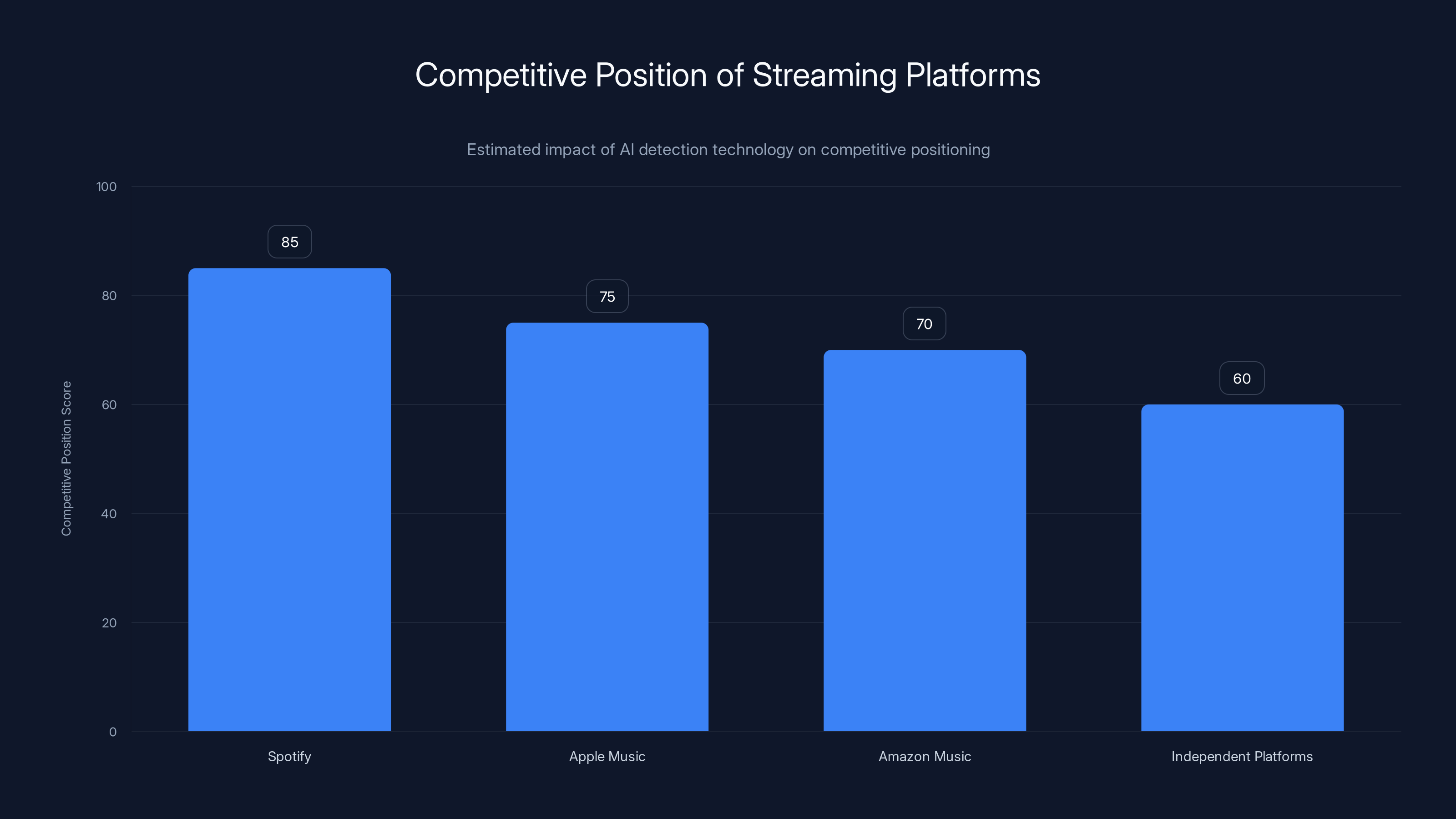

Spotify's adoption of AI detection technology significantly boosts its competitive position, surpassing Apple Music and Amazon Music. (Estimated data)

Understanding Deezer's AI Detection Technology: How It Actually Works

Before we can understand why Deezer's move is so significant, we need to understand what the company actually built.

Deezer's AI detection system works on a principle called "digital fingerprinting with behavioral analysis." Essentially, the system doesn't just listen to music and make a yes-or-no decision. It builds a multi-dimensional profile of the audio and compares it against known patterns of both human-created and machine-generated music.

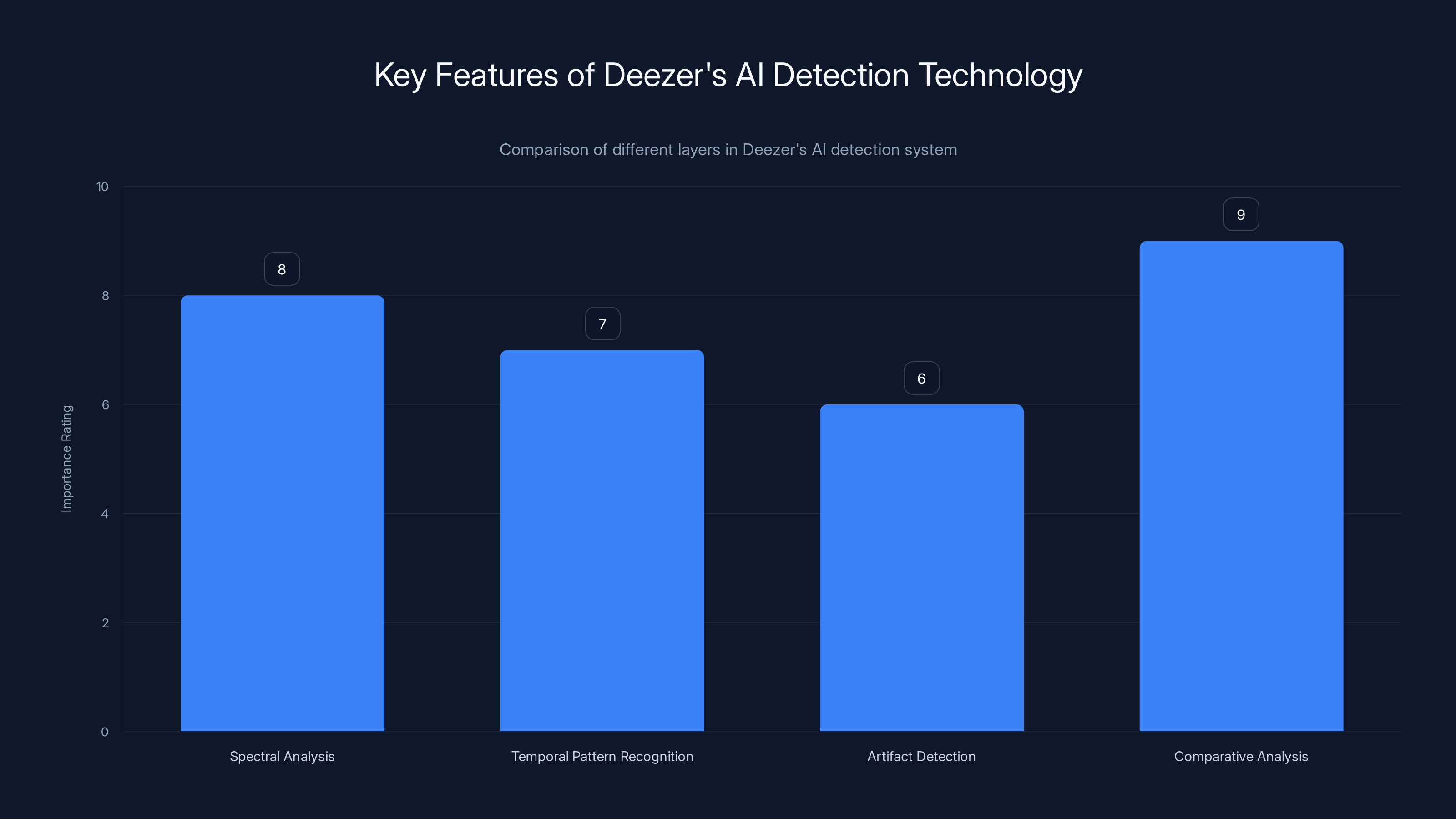

The technical architecture involves several layers. The first layer performs spectral analysis—breaking down the audio into frequency components and examining how those frequencies behave over time. AI-generated music, especially from older tools, tends to have certain statistical properties that differ from human-created music. For instance, AI models often struggle with subtle variations in timbre and dynamics that come naturally to human musicians.
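To make the spectral layer a little more concrete, here is a minimal sketch of one classic hand-crafted spectral statistic, spectral flatness, computed frame by frame with NumPy. This is not Deezer's model: Deezer's system uses trained machine learning models, and the function names, frame sizes, and the idea of summarizing flatness by its mean and spread over time are assumptions made purely for illustration.

```python
import numpy as np


def frame_spectral_flatness(signal: np.ndarray, frame_size: int = 2048, hop: int = 512) -> np.ndarray:
    """Spectral flatness per frame: geometric mean / arithmetic mean of the power spectrum.

    Values near 1.0 indicate noise-like frames; values near 0.0 indicate tonal, peaky frames.
    """
    window = np.hanning(frame_size)
    values = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = signal[start:start + frame_size] * window
        power = np.abs(np.fft.rfft(frame)) ** 2 + 1e-12  # small floor avoids log(0)
        geometric_mean = np.exp(np.mean(np.log(power)))
        values.append(geometric_mean / np.mean(power))
    return np.array(values)


def flatness_profile(signal: np.ndarray) -> dict:
    """Summarize how flatness behaves over time (a hypothetical feature pair for a classifier)."""
    flatness = frame_spectral_flatness(signal)
    if flatness.size == 0:
        raise ValueError("signal is shorter than one frame")
    return {"mean": float(flatness.mean()), "std": float(flatness.std())}


sr = 16_000
t = np.arange(sr) / sr
tone = np.sin(2 * np.pi * 440 * t)
noise = np.random.default_rng(0).standard_normal(sr)
print(flatness_profile(tone), flatness_profile(noise))
```

The usage lines compare a pure tone with white noise only to show that the statistic separates tonal frames from noisy ones. Whether a feature like this separates human recordings from AI-generated ones is an empirical question that a real system would answer with labeled training data.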

The second layer involves temporal pattern recognition. Human musicians, even when playing the same note or phrase multiple times, introduce micro-variations. A pianist strikes a key slightly differently each time. A singer's voice has natural oscillations. These variations are so small that listeners don't consciously notice them, but they're detectable by machine learning models. AI-generated music, particularly from tools that synthesize from scratch rather than manipulating human recordings, tends to have more consistent temporal patterns.
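A toy version of the micro-variation idea: given onset times for a passage meant to be played to a steady pulse, measure how much the gaps between notes wander. The onset-extraction step is assumed to have happened already, and the 1 ms threshold and function names are hypothetical. Plenty of human-made music (quantized electronic production, for one) is deliberately grid-perfect, which is why a signal like this can only ever be one input among many.

```python
import random
import statistics


def timing_jitter_ms(onsets_s: list[float]) -> float:
    """Standard deviation of inter-onset intervals, in milliseconds.

    Assumes onset times (in seconds) were already extracted by some onset detector.
    """
    if len(onsets_s) < 3:
        raise ValueError("need at least three onsets to measure variation")
    intervals = [later - earlier for earlier, later in zip(onsets_s, onsets_s[1:])]
    return statistics.pstdev(intervals) * 1000.0


def looks_machine_timed(onsets_s: list[float], threshold_ms: float = 1.0) -> bool:
    """Hypothetical rule: near-zero timing variation is one weak hint of synthesis."""
    return timing_jitter_ms(onsets_s) < threshold_ms


# Eighth notes at 120 BPM (0.25 s apart): one grid-perfect, one with ~10 ms human-like jitter.
grid = [i * 0.25 for i in range(32)]
rng = random.Random(42)
played = [t + rng.gauss(0.0, 0.010) for t in grid]
print(timing_jitter_ms(grid), looks_machine_timed(grid), looks_machine_timed(played))
```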

The third layer is what Deezer calls "artifact detection." This involves looking for specific signatures that suggest an audio file was processed through an AI system. These might be clicking artifacts, frequency discontinuities, or other anomalies that result from how neural networks process audio.
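Artifact checks look for fingerprints of the generation pipeline rather than of the musicianship. As one simple, hypothetical example of a "frequency discontinuity" check, the sketch below estimates where a track's spectrum falls off a cliff. The 40 dB drop, the 90%-of-Nyquist margin, and the smoothing width are illustrative choices, not Deezer's. Lossy codecs such as MP3 also apply low-pass filters, so a hard cutoff on its own proves nothing.

```python
import numpy as np


def spectral_cutoff_hz(signal: np.ndarray, sample_rate: int, drop_db: float = 40.0) -> float:
    """Highest frequency whose smoothed power is within `drop_db` of the spectral peak."""
    power = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    # Moving-average smoothing so a few stray bins don't decide the answer.
    smoothed = np.convolve(power, np.ones(32) / 32, mode="same")
    level_db = 10 * np.log10(smoothed / smoothed.max() + 1e-20)
    audible = np.nonzero(level_db > -drop_db)[0]
    return float(freqs[audible[-1]])


def has_hard_cutoff(signal: np.ndarray, sample_rate: int, margin: float = 0.9) -> bool:
    """Flag spectra that stop well short of the Nyquist frequency (sample_rate / 2)."""
    return spectral_cutoff_hz(signal, sample_rate) < margin * sample_rate / 2


rng = np.random.default_rng(0)
sr = 32_000
noise = rng.standard_normal(sr)
spectrum = np.fft.rfft(noise)
spectrum[np.fft.rfftfreq(sr, d=1.0 / sr) > 8_000] = 0  # brick-wall low-pass at 8 kHz
band_limited = np.fft.irfft(spectrum, n=sr)
print(spectral_cutoff_hz(band_limited, sr), has_hard_cutoff(band_limited, sr), has_hard_cutoff(noise, sr))
```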

The fourth and perhaps most important layer is comparative analysis. The system doesn't just analyze a single track in isolation. It compares metadata, upload patterns, artist history, and network relationships. If you're uploading 500 songs in a week, all credited to different artists with no social media presence, all with suspiciously similar audio signatures—the system flags it. If you're uploading batches of tracks that use the same underlying AI model (which is detectable), the system catches that too.
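The comparative layer is where plain bookkeeping does a lot of work. Below is a minimal sketch of one such rule, modeled on the pattern described above: hundreds of uploads credited to different artists within a week. The `Upload` record, the thresholds, and the sliding-window rule are all assumptions. A real system would also weigh audio similarity across the batch and links between accounts.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Upload:
    account_id: str
    artist_name: str
    uploaded_at: datetime


def flag_bulk_uploaders(uploads, window=timedelta(days=7), max_uploads=100, max_artists=20):
    """Return account IDs that, within any `window`, exceeded both the upload cap and the
    distinct-artist cap. All thresholds are hypothetical placeholders."""
    by_account = defaultdict(list)
    for upload in uploads:
        by_account[upload.account_id].append(upload)

    flagged = set()
    for account_id, items in by_account.items():
        items.sort(key=lambda u: u.uploaded_at)
        start = 0
        for end in range(len(items)):
            # Slide the window start forward until it spans at most `window`.
            while items[end].uploaded_at - items[start].uploaded_at > window:
                start += 1
            in_window = items[start:end + 1]
            artists = {u.artist_name for u in in_window}
            if len(in_window) > max_uploads and len(artists) > max_artists:
                flagged.add(account_id)
                break
    return flagged


t0 = datetime(2024, 1, 1)
spam = [Upload("acct_a", f"Artist {i}", t0 + timedelta(minutes=20 * i)) for i in range(500)]
band = [Upload("acct_b", "The Real Band", t0 + timedelta(days=30 * i)) for i in range(12)]
print(flag_bulk_uploaders(spam + band))  # {'acct_a'}
```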

Deezer claims its system achieves detection accuracy rates above 90% for music generated by common AI tools. But—and this is crucial—accuracy varies significantly depending on the AI tool used to generate the music and how sophisticated the generation process was.
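A headline figure like "90% accuracy" also leaves out the number that matters most to legitimate artists: how often human-made tracks get flagged. The arithmetic below treats 90% as the share of AI tracks caught (recall), which is an interpretation rather than Deezer's stated definition. The upload volume, the AI share, and the 1% false-positive rate are made up purely to show how the two rates interact.

```python
def flag_outcomes(total_uploads: int, ai_share: float, recall: float, false_positive_rate: float) -> dict:
    """Expected flag counts for one batch of uploads, given detector rates."""
    ai_tracks = total_uploads * ai_share
    human_tracks = total_uploads - ai_tracks
    caught = ai_tracks * recall
    wrongly_flagged = human_tracks * false_positive_rate
    flagged = caught + wrongly_flagged
    return {
        "caught_ai": caught,
        "missed_ai": ai_tracks - caught,
        "wrongly_flagged_human": wrongly_flagged,
        # Of everything flagged, what fraction really was AI-generated?
        "precision": caught / flagged if flagged else float("nan"),
    }


# Hypothetical day: 100,000 uploads, 10% fully AI-generated, 90% recall, 1% false positives.
print(flag_outcomes(100_000, 0.10, 0.90, 0.01))
```

Under these assumptions about 9,000 AI tracks are caught, 1,000 slip through, and about 900 human-made tracks are wrongly flagged, so roughly one flag in eleven lands on a real artist.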

Older AI music generation tools like early versions of AIVA were relatively easy to detect because they had distinctive artifacts. Newer tools, particularly those trained on massive datasets of human music and using advanced transformer architectures, are harder to distinguish. Some of the most recent models can generate music that's challenging to distinguish from human-created music without additional context.

This is why the behavioral analysis component matters so much. Even if a track sounds convincingly human, the uploading patterns, metadata inconsistencies, and network analysis can reveal that something is off. If you're uploading 1,000 "unique" songs to Spotify with 1,000 different artist names, all from the same account, all within 48 hours, no amount of acoustic sophistication will save you.
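One simple way to let behavior override a convincing-sounding track is a noisy-OR combination: treat each layer's score as an independent probability that something is wrong, and combine them so that either strong signal pushes the total up. Independence is an assumption (the layers are not really independent), the score names are hypothetical, and this is one common combination rule rather than a description of how Deezer fuses its layers.

```python
import math


def noisy_or(*scores: float) -> float:
    """Combine per-layer risk scores in [0, 1]: 1 - product of (1 - score)."""
    for score in scores:
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score out of range: {score}")
    return 1.0 - math.prod(1.0 - s for s in scores)


acoustic = 0.20    # the track sounds convincingly human
behavioral = 0.95  # 1,000 "different" artists from one account in 48 hours
print(round(noisy_or(acoustic, behavioral), 2))  # 0.96
```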

When Deezer decided to share this technology, the company was essentially handing Spotify the keys to a security system that Spotify desperately needed. The move had massive implications for how Spotify could police its platform going forward.

Why Spotify Was Vulnerable (And Why It Matters)

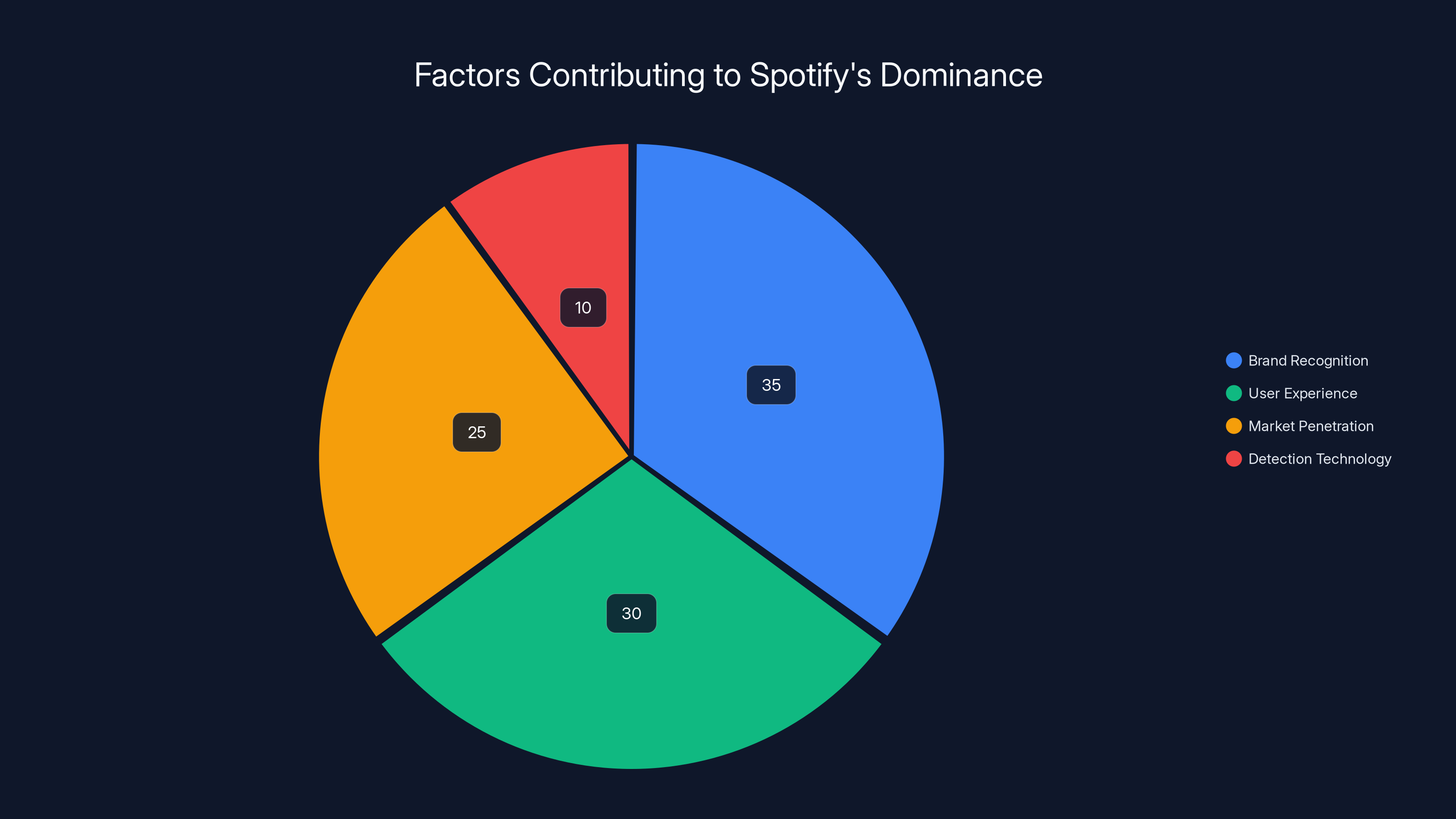

Let's be direct about this: Spotify is the dominant player in music streaming, but it's not dominant because it has the best detection technology. It's dominant because of brand recognition, user experience, and market penetration. When it comes to identifying AI-generated music, Spotify was actually falling behind.

This wasn't incompetence. It was a strategic choice. Building proprietary AI detection systems requires significant investment in data collection, machine learning expertise, and continuous training as new AI music generation tools emerge. For a company focused on growth, user acquisition, and profitability, that's not where the resources were flowing.

Instead, Spotify relied on a combination of approaches: user reports, algorithmic anomaly detection (looking for suspicious upload patterns), and partnerships with distributors who, in theory, were supposed to filter out obvious spam. This approach works okay for the most egregious violations but misses sophisticated attempts at fraud.

The real vulnerability wasn't technical; it was systemic. Spotify's algorithm is trained to recommend music based on what people listen to. If AI-generated music is landing on playlists and getting streams (through manipulation), then Spotify's algorithm learns to recommend more of it. The platform becomes progressively worse at its core function: helping people discover good music.
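The feedback loop is visible even in the crudest possible recommender, one that ranks tracks by raw stream count. In the toy log below (all listener and track IDs are made up), 100 streams from ten bot accounts push an AI filler track to the top; discarding streams from accounts already flagged as bots restores the ranking. Real recommendation systems are far more elaborate, but they still learn from engagement signals that manipulated streams contaminate.

```python
from collections import Counter


def rank_by_streams(stream_log, excluded_listeners=frozenset()):
    """Rank track IDs by stream count, ignoring streams from excluded listener IDs."""
    counts = Counter(track for listener, track in stream_log if listener not in excluded_listeners)
    return [track for track, _ in counts.most_common()]


humans = [(f"fan_{i}", "real_song") for i in range(30)] + [(f"fan_{i}", "indie_song") for i in range(20)]
bots = [(f"bot_{i % 10}", "ai_filler") for i in range(100)]
flagged_bots = frozenset(f"bot_{i}" for i in range(10))

print(rank_by_streams(humans + bots))                # ['ai_filler', 'real_song', 'indie_song']
print(rank_by_streams(humans + bots, flagged_bots))  # ['real_song', 'indie_song']
```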

There's also the financial angle. Spotify pays out billions in royalties annually. If a significant portion of those payouts is going to fraudulent accounts running AI music operations, that's money not going to legitimate artists. It's also money Spotify might eventually have to recoup or explain to investors.

Major labels had been complaining about this for years. Universal Music Group, Sony Music, and Warner Music Group all publicly expressed concern about AI-generated music flooding the platform and degrading the ecosystem. Spotify needed to act, and it needed tools to act quickly and at scale (Saving Country Music).

Enter Deezer's detection technology. By adopting Deezer's system, Spotify could suddenly identify AI-generated music at scale, in real-time, without building the entire system from scratch. This wasn't just convenient—it was strategically essential.

But here's the catch: sharing detection technology creates a new set of problems that nobody really wants to talk about.

Spotify's dominance is primarily driven by brand recognition, user experience, and market penetration, with less emphasis on detection technology. (Estimated data)

The Catch: Why Open-Sourcing Detection Technology Is More Complicated Than It Seems

On the surface, Deezer's decision to open-source its AI music detection tool sounds noble. The company is fighting for the integrity of the entire ecosystem. It's working collaboratively to solve an industry-wide problem. It's putting principle above profit.

But technology, especially detection technology, is inherently adversarial. The moment you publish how your detection system works, bad actors get a roadmap for how to circumvent it.

A common intuition in security is that detection systems only work as long as the people trying to evade them don't know exactly how they work. Cryptography takes the opposite position with Kerckhoffs's principle: assume the attacker knows your system, and design accordingly. But even with this principle in mind, there's a difference between an attacker understanding your general approach and having access to your exact codebase.

When Deezer made its detection tool available, it essentially gave the bad actors a test suite. They could now run AI-generated music through the system, see what got flagged, adjust their generation techniques, and try again. It's like handing a burglar a copy of your front-door lock so they can practice picking it at home.
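The "test suite" problem has a standard name in adversarial machine learning: a black-box, or oracle, attack. The sketch below shows the simplest version, greedy random search against a detector the attacker can query but not inspect. Everything in it is hypothetical: the three-number feature vector, the logistic `toy_detector`, and the perturbation `budget` standing in for "still sounds the same". It illustrates why query access alone can erode a detector, and why defenders commonly consider rate-limiting queries or exposing only coarse yes/no answers.

```python
import math
import random


def toy_detector(features: list[float]) -> float:
    """Stand-in black box: probability that a track is AI-generated (made-up weights)."""
    weights, bias = [2.0, -1.5, 3.0], -0.5
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def oracle_evasion(features, detector, threshold=0.5, step=0.05, budget=0.5, max_queries=2_000, seed=0):
    """Greedy random search: keep any small perturbation that lowers the detector's score,
    never straying more than `budget` from the original features."""
    rng = random.Random(seed)
    original = list(features)
    current, best = list(features), detector(features)
    queries = 1
    while best >= threshold and queries < max_queries:
        candidate = [x + rng.uniform(-step, step) for x in current]
        candidate = [min(max(c, o - budget), o + budget) for c, o in zip(candidate, original)]
        score = detector(candidate)
        queries += 1
        if score < best:  # keep the perturbation only if the detector is less suspicious
            current, best = candidate, score
    return current, best, queries


start = [0.6, 0.2, 0.4]
evaded, score, used = oracle_evasion(start, toy_detector)
print(f"score {toy_detector(start):.2f} -> {score:.2f} after {used} queries")
```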

Deezer probably anticipated this. The company likely knew that making the technology public would prompt attempts to defeat it. But the calculation was that the benefits of catching 90% of AI music fraud outweighed the costs of bad actors gradually learning to circumvent the system.

There's also the question of who has access. Deezer made the detection tool available to platforms, but did it also make it available to the people generating the AI music? If independent researchers could access it, then certainly the bad actors could too. Once a tool is public, anyone can test against it.

Another complexity: different platforms have different tolerance levels for false positives. Spotify might be aggressive and remove any song flagged as AI-generated, accepting some false positives in exchange for safety. Apple Music might be more conservative, requiring additional manual review before removing anything. This creates inconsistency across the ecosystem.
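The difference between an aggressive platform and a conservative one can come down to two numbers applied to the same detector scores. In this hypothetical sketch, the track IDs, scores, and thresholds are all invented: the "aggressive" policy removes anything above a low bar, while the "conservative" one removes only near-certain cases and routes the middle band to human reviewers.

```python
from typing import Optional


def triage(scores: dict[str, float], remove_at: float, review_at: Optional[float] = None) -> dict[str, str]:
    """Map each track's AI-likelihood score to remove / manual_review / keep."""
    decisions = {}
    for track_id, score in scores.items():
        if score >= remove_at:
            decisions[track_id] = "remove"
        elif review_at is not None and score >= review_at:
            decisions[track_id] = "manual_review"
        else:
            decisions[track_id] = "keep"
    return decisions


scores = {"track_a": 0.97, "track_b": 0.82, "track_c": 0.64, "track_d": 0.30}
aggressive = triage(scores, remove_at=0.60)
conservative = triage(scores, remove_at=0.95, review_at=0.60)
print(aggressive)
print(conservative)
```

Under the aggressive setting, three of the four tracks come down immediately. Under the conservative one, only `track_a` does and two wait for a person, which is slower and costlier but catches more mistakes before they reach artists.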

There's also the intellectual property angle. Deezer was essentially giving away something it had invested significant resources to build. While the company probably benefits from being seen as an industry leader and good actor, there's an opportunity cost. If Deezer had kept this technology proprietary, it could have charged other platforms to use it, creating a revenue stream.

Instead, Deezer chose collaboration. This tells us something important about how the company views the industry's future. Deezer likely calculated that a flooded, degraded streaming ecosystem was worse for everyone, including Deezer itself, than a slightly less competitive but healthier market.

However, there remains a significant risk that making this technology public will accelerate an arms race between detection and evasion. As soon as bad actors understand how the detection system works, they'll refine their methods. This could lead to an escalating cycle where detection tools get more sophisticated, AI generation tools get more sophisticated, detection tools get more sophisticated again, and so on.

How This Changes Spotify's Competitive Position

Let's think strategically about what Deezer's move actually does for Spotify.

Before this, Spotify was vulnerable. The platform had no native AI detection and was relying on external approaches that weren't nearly sufficient. This created a window of opportunity for competitors to position themselves as more trustworthy, more artist-friendly, or more focused on authenticity.

Apple Music, for instance, could have invested in detection technology and marketed itself as "the AI-spam-free alternative to Spotify." Amazon Music could have done the same. Independent streaming platforms or new entrants could have leveraged superior AI detection as a competitive advantage.

By adopting Deezer's technology, Spotify eliminates that vulnerability. Suddenly, Spotify can claim to have industry-leading AI music detection. The platform can be aggressive in removing fraudulent content while maintaining the trust of legitimate artists and listeners.

But there's a bigger strategic implication here. Spotify can now use this detection technology to market itself more effectively to artists and labels. Universal, Sony, and Warner have all been concerned about AI fraud on streaming platforms. With Deezer's detection tool, Spotify can demonstrate concrete action on that concern.

This is particularly important because those major labels have influence over what music gets promoted, what artists get resources, and where label-backed promotion dollars flow. If Spotify can convince major labels that it's serious about protecting them from AI fraud, that's significant bargaining power.

Moreover, Spotify can use this technology to improve its algorithms. By filtering out fraudulent music, Spotify's recommendation system becomes more accurate. The platform's discovery features improve. Users get better recommendations. This creates a virtuous cycle where improved recommendations lead to higher engagement, which leads to more subscription revenue.

However, Spotify also faces some challenges with adopting Deezer's technology. First, there's the implementation challenge. Integrating a detection system into your infrastructure requires engineering resources, testing, and careful rollout. You don't want false positives that remove legitimate music from the platform.

Second, there's the perception challenge. If Spotify becomes too aggressive with AI music removal, independent artists who use AI tools as part of their creative process might feel unfairly targeted. Spotify needs to be able to distinguish between "music generated entirely by AI and uploaded by fraudsters" and "music created by humans with AI assistance as part of the production process."

Third, there's the ongoing maintenance challenge. As AI music generation tools evolve and improve, the detection system needs to evolve as well. This isn't a one-time implementation. It's an ongoing arms race.

Deezer's AI detection system uses multiple layers, with Comparative Analysis being the most critical, rated at 9 out of 10 for its role in identifying AI-generated music.

The Broader Music Industry Implications: Who Benefits, Who Loses

Deezer's decision to open-source its AI detection tool ripples far beyond Spotify. It affects the entire music streaming ecosystem.

Obviously, the major beneficiaries are legitimate artists. Musicians who create music through traditional methods—performing instruments, singing, recording—now have better protection against fraudsters diluting their earnings. When Spotify and other platforms aggressively remove AI-generated spam, the remaining streams are more concentrated among actual artists.

Major record labels benefit significantly. Universal, Sony, and Warner have been pushing platforms to deal with AI fraud for years. With Deezer's detection technology, platforms can now demonstrate concrete action. This gives labels more confidence that platforms are serious about protecting their catalogs and their artists' interests.

Independent distributors benefit too, assuming they integrate the detection technology. Distributors like DistroKid, TuneCore, and CD Baby can now position themselves as gatekeepers that filter out fraudulent uploads, providing value to their legitimate artist customers.

Strangely, some aspects of the AI music generation industry benefit as well. Companies building consumer-focused AI music tools—products designed to help independent artists create music—can now position themselves as legitimate alternatives to spam operations. If the detection system specifically targets obvious fraud while being more lenient with human-created music that uses AI assistance, then legitimate AI music tools become more valuable, not less.

But the clear losers are the bad actors. Fraudsters who were making money by uploading thousands of AI-generated songs and gaming the system can no longer operate as easily. The barriers to entry for that business model just increased dramatically.

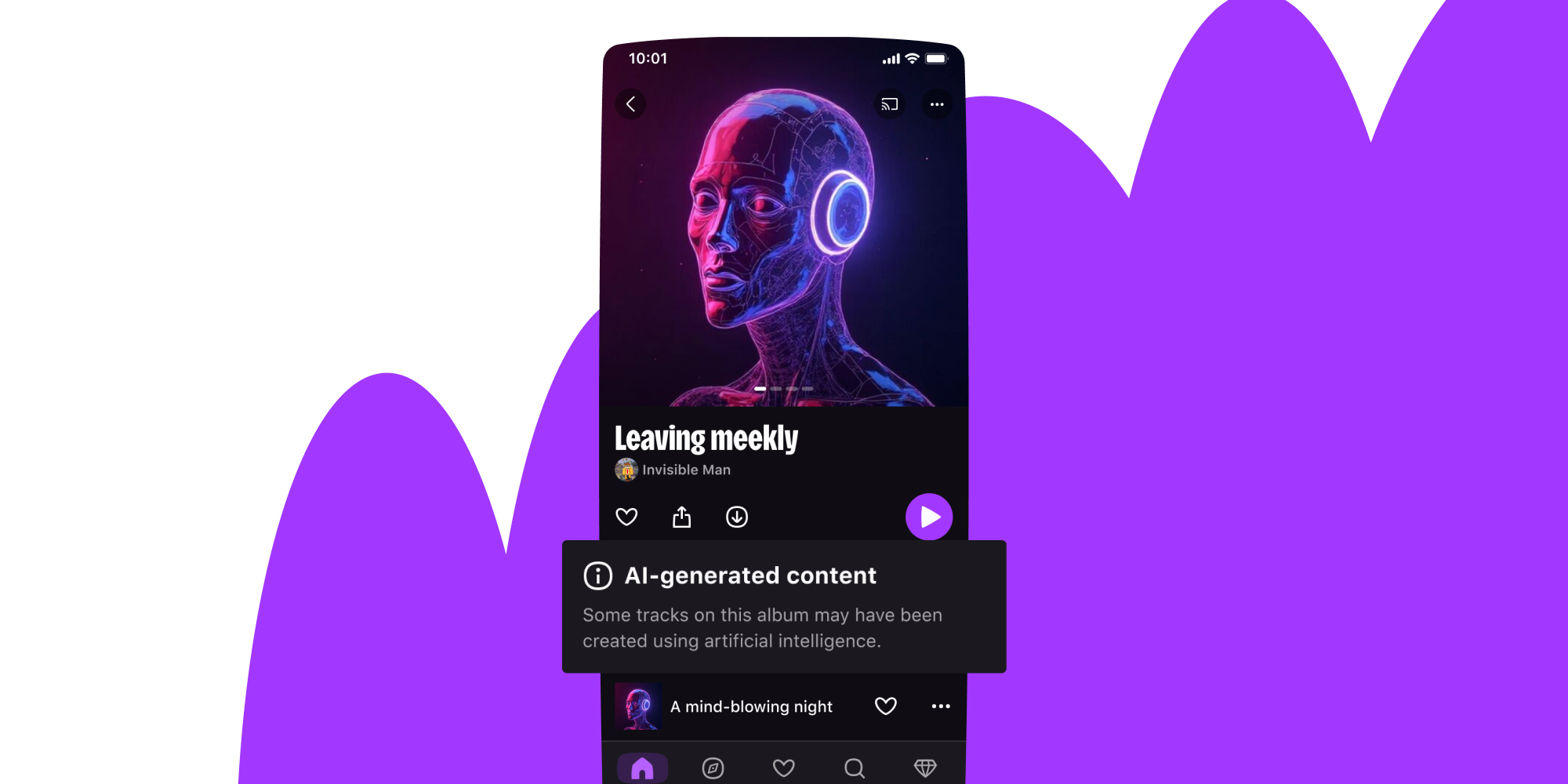

There's also a potential secondary impact on the music industry's relationship with AI in general. By solving the fraud problem through detection rather than prohibition, the music industry is essentially saying: "We don't want to ban AI-generated music. We just want to ensure it's legitimate and properly credited."

This is important because it opens a door for AI-generated music to become a legitimate category on streaming platforms. Instead of being spam, it could become a genre or category that users can choose to explore or avoid. Artists could legitimately publish AI-generated music (with proper labeling) without fear of automatic removal.

This is actually closer to how the music industry has handled other controversial technologies historically. When electronic music first emerged, it was controversial. Synthesizers were seen as inauthentic. Now, electronic music is a legitimate and respected genre. The same evolution could happen with AI-generated music if the detection systems allow the industry to separate legitimate creative use from fraud.

The Technical Arms Race: Detection vs. Evasion

Here's where the story gets genuinely complicated.

The moment Deezer's detection technology became public, the bad actors started thinking about how to evade it. This is inevitable. It's the nature of adversarial technology. You create a lock, and someone figures out how to pick it.

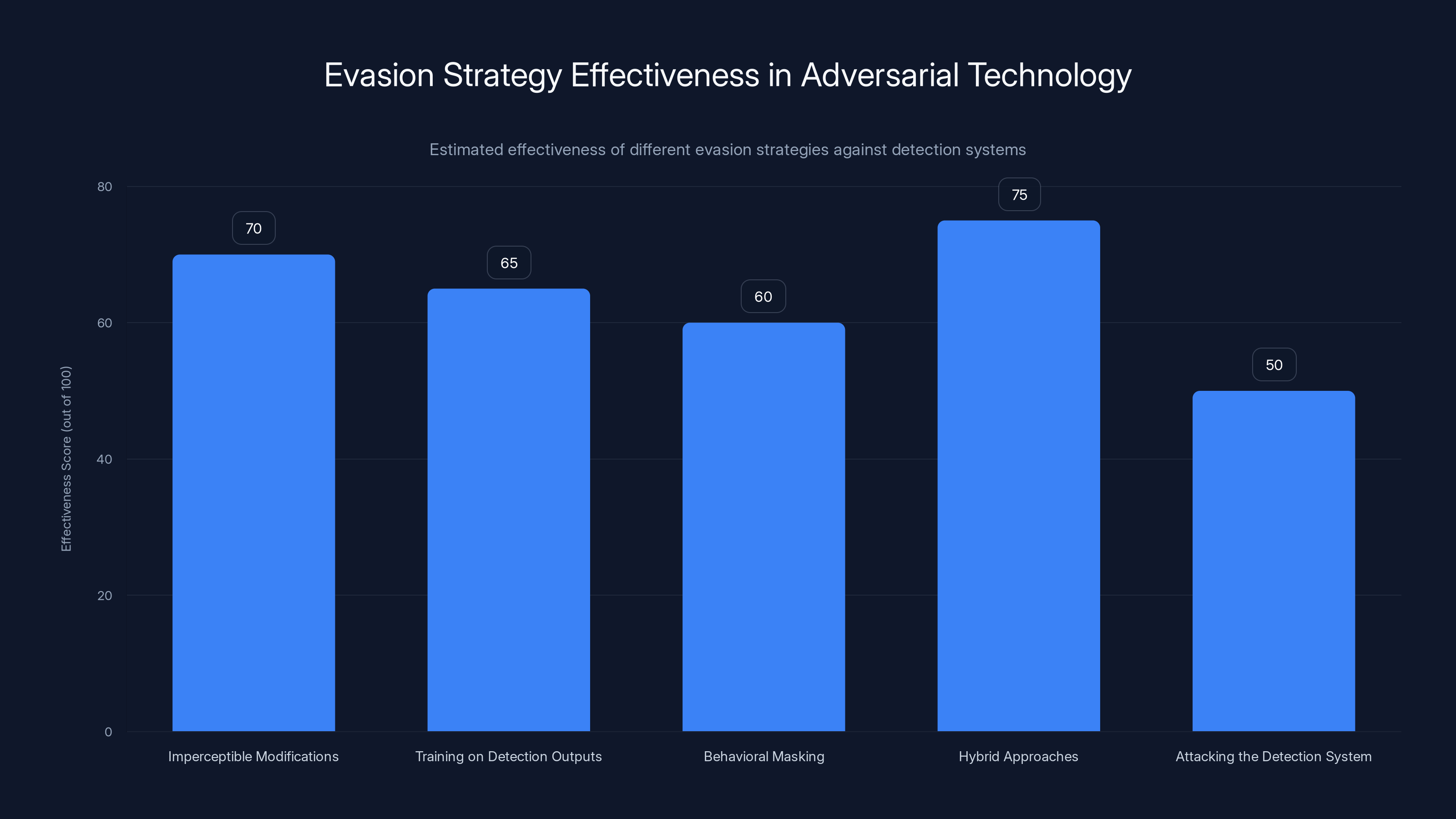

So what are the evasion strategies that bad actors are likely to try?

Strategy 1: Imperceptible Modifications. Take AI-generated music and apply subtle modifications that don't change how it sounds to human ears but do change its audio signature. This could include adding slight random noise, altering timing microscopically, or applying imperceptible frequency shifts. The goal is to make the audio "look" more like human-created music to the detection system.
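How little it takes to change an audio signature is easy to quantify. This sketch adds white noise at a chosen signal-to-noise ratio and then measures the result; at 60 dB the added noise carries one millionth of the music's power. Whether a given level is actually inaudible depends on the material and the playback volume, so treat 60 dB as illustrative. Defenders apply the same kind of perturbation as training-time augmentation, so that a detector learns not to rely on features a little noise can erase. The function names are hypothetical.

```python
import numpy as np


def add_noise_at_snr(signal: np.ndarray, snr_db: float, rng: np.random.Generator) -> np.ndarray:
    """Return signal plus white Gaussian noise scaled to the requested SNR (in dB)."""
    signal_power = np.mean(signal ** 2)
    noise_power = signal_power / (10 ** (snr_db / 10))
    noise = rng.standard_normal(signal.shape) * np.sqrt(noise_power)
    return signal + noise


def measured_snr_db(clean: np.ndarray, perturbed: np.ndarray) -> float:
    """SNR between an original signal and its perturbed copy, in dB."""
    residual = perturbed - clean
    return float(10 * np.log10(np.mean(clean ** 2) / np.mean(residual ** 2)))


sr = 16_000
t = np.arange(sr) / sr
clip = 0.5 * np.sin(2 * np.pi * 220 * t)
perturbed = add_noise_at_snr(clip, snr_db=60.0, rng=np.random.default_rng(0))
print(round(measured_snr_db(clip, perturbed), 1), float(np.max(np.abs(perturbed - clip))))
```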

Strategy 2: Training on Detection Outputs. Bad actors could run AI-generated music through the detection system, see what specifically makes it detectable, and then use that information to train new AI music generation models. Essentially, using the detection system as a training signal to create undetectable AI music.

Strategy 3: Behavioral Masking. Instead of uploading 1,000 songs at once, upload them slowly over weeks. Instead of uploading under one account, use distributed accounts with realistic metadata. Instead of uploading to all platforms simultaneously, stagger uploads across platforms and regions. The goal is to evade the behavioral analysis component of the detection system.
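Behavioral masking works against checks that only look at one time scale. In the sketch below, a 48-hour rate limit catches a burst of 1,000 uploads but misses the same 1,000 uploads spread over 100 days, while a 30-day limit catches both. The windows and caps are hypothetical, and spreading uploads across many accounts would defeat any per-account check like this one, which is why linking related accounts matters.

```python
from datetime import datetime, timedelta


def max_in_window(timestamps: list[datetime], window: timedelta) -> int:
    """Largest number of uploads falling inside any span of length `window`."""
    ordered = sorted(timestamps)
    best, start = 0, 0
    for end in range(len(ordered)):
        while ordered[end] - ordered[start] > window:
            start += 1
        best = max(best, end - start + 1)
    return best


def window_flags(timestamps: list[datetime], limits: dict[str, tuple[timedelta, int]]) -> dict[str, bool]:
    """For each named (window, cap) limit, report whether the cap was exceeded."""
    return {name: max_in_window(timestamps, window) > cap for name, (window, cap) in limits.items()}


limits = {"48h": (timedelta(hours=48), 100), "30d": (timedelta(days=30), 250)}
t0 = datetime(2024, 1, 1)
burst = [t0 + timedelta(minutes=2 * i) for i in range(1_000)]        # all within ~33 hours
staggered = [t0 + timedelta(minutes=144 * i) for i in range(1_000)]  # 10 per day for 100 days
print(window_flags(burst, limits))      # {'48h': True, '30d': True}
print(window_flags(staggered, limits))  # {'48h': False, '30d': True}
```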

Strategy 4: Hybrid Approaches. Use AI to generate the core structure of a song, then have humans manually edit and modify it. If enough human editing is involved, the modified version might be too different from the original AI generation for detection systems to catch it.

Strategy 5: Attacking the Detection System. Directly attack the detection system itself. If the system is deployed on cloud infrastructure, find vulnerabilities and exploit them. If the system relies on specific machine learning models, attempt to reverse-engineer or mislead those models with adversarial inputs.

Of these strategies, the most likely to work in the near term is probably behavioral masking combined with imperceptible modifications. These are relatively easy to implement and require less sophisticated understanding of the underlying technology.

Deezer and the platforms using its detection technology are probably anticipating these evasion strategies. That's why the detection system has multiple layers—audio analysis, behavioral analysis, metadata analysis. Defeating all of them simultaneously is much harder than defeating just one.
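The value of stacking layers can be put in rough numbers. If each layer independently misses a fraudulent upload with some probability, the chance of slipping past all of them is the product of those miss rates. The detection rates below are invented, and independence is the big assumption: an evasion trick that fools the audio layers and the behavioral layer at once makes the real figure worse than this product suggests.

```python
import math


def evasion_probability(detection_rates: list[float]) -> float:
    """Chance an upload evades every layer, assuming layers fail independently."""
    return math.prod(1.0 - rate for rate in detection_rates)


single_strong_layer = [0.90]
three_weaker_layers = [0.70, 0.60, 0.50]  # e.g. audio, metadata, behavior (hypothetical)
print(round(evasion_probability(single_strong_layer), 3))  # 0.1
print(round(evasion_probability(three_weaker_layers), 3))  # 0.06
```

Three individually weaker layers, each easier to fool than the single strong one, still let fewer fraudulent uploads through together (6% versus 10%), provided their failures really are independent.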

But here's the thing: this arms race isn't static. It's dynamic. As detection systems improve, evasion techniques improve. As evasion techniques improve, detection systems need to adapt. This is similar to the historical arms race between spam filters and spammers, between antivirus software and malware creators, between credit card fraud detection and fraudsters.

The key difference is that in this case, the detection technology is public, which accelerates the evasion innovation cycle. Bad actors don't have to guess how the system works—they can study it directly.

Deezer probably knew this would happen. The company made a strategic choice that the immediate benefit of improved detection outweighed the long-term cost of accelerated evasion innovation. In other words, removing 90% of AI music fraud now is worth it, even if improving evasion techniques push that catch rate down to 80% within six months.

Hybrid approaches are estimated to be the most effective evasion strategy against detection systems, with a score of 75 out of 100. Estimated data.

What This Means for Independent Artists and Creators

If you're an independent musician, Deezer's move has several immediate implications for you.

First, the good news: the playing field just got somewhat leveled. If you're creating legitimate music and uploading it to Spotify, Apple Music, and other platforms, you're now competing against less AI spam. Your music has a better chance of being discovered because the platforms are more aggressively removing fraudulent uploads.

Second, if you're using AI tools as part of your creative process—which an increasing number of musicians do—you need to be more transparent about it. Make sure your metadata clearly indicates that you're the artist, even if AI was involved in production. Document your creative process. Be able to explain why your production techniques resulted in specific audio characteristics.

Third, be aware that detection systems can have false positives. If your music happens to have audio characteristics that resemble AI-generated music (even though you created it yourself), there's a non-zero risk that it could be flagged. If this happens, you'll have a process to appeal and get your music restored, but you need to be aware of the possibility.

Fourth, understand that how you upload your music matters. If you're uploading music as an independent artist, avoid patterns that look like fraud. Don't batch-upload hundreds of songs simultaneously. Don't create multiple artist accounts in a short timeframe. Don't engage in obvious stream manipulation tactics. Even if your music is legitimate, suspicious uploading patterns can trigger flags.

Fifth, there's a longer-term opportunity. As detection systems improve and fraudulent AI music gets filtered out, platforms might actually create dedicated AI music categories where AI-generated music can exist legitimately and transparently. This could be a new market category for artists interested in exploring AI-assisted creativity.

The broader message for independent artists is that the platforms are now taking fraud seriously. This is good for the ecosystem. But it also means being more deliberate and transparent about how you operate as a creator.

The International Dimension: How This Differs Across Regions

Here's something that doesn't get discussed enough: Deezer's AI detection move has very different implications depending on where you are in the world.

Deezer is a French company, and it has strong positions in Europe, particularly in France, Germany, and the Benelux countries. The company is much smaller than Spotify globally, but it's a major player in specific regions.

In Europe, regulation around AI is much stricter than in the United States. The EU's AI Act, which entered into force in August 2024 and phases in its obligations over the following years, imposes specific requirements around AI systems, transparency, and accountability. Deezer's decision to share its detection technology aligns well with this regulatory environment. The company is positioning itself as a responsible actor in AI deployment.

In the United States, the regulatory environment is less prescriptive but more market-driven. American platforms tend to compete on technical capabilities and feature sets. Deezer's detection tool gives Spotify a technical advantage that the company can market directly to users and investors.

In Asia and emerging markets, the situation is more fragmented. Some countries have homegrown streaming platforms that compete with Spotify and Apple Music. How these platforms adopt or develop their own AI detection capabilities will significantly influence the regional music ecosystem.

There's also the question of cultural differences in how fraud is viewed. In some regions, the line between "aggressive copyright enforcement" and "censorship" is more contentious. Deploying aggressive AI detection systems could face different cultural and legal resistance in different parts of the world.

For Deezer specifically, making its detection technology available to competitors is a smart strategy in a fragmented global market. By positioning itself as an industry collaborator, Deezer gains credibility and influence even in regions where Spotify is dominant. The company becomes the good actor that helped solve an industry problem, which can help with regulatory relationships, partnership opportunities, and brand positioning.

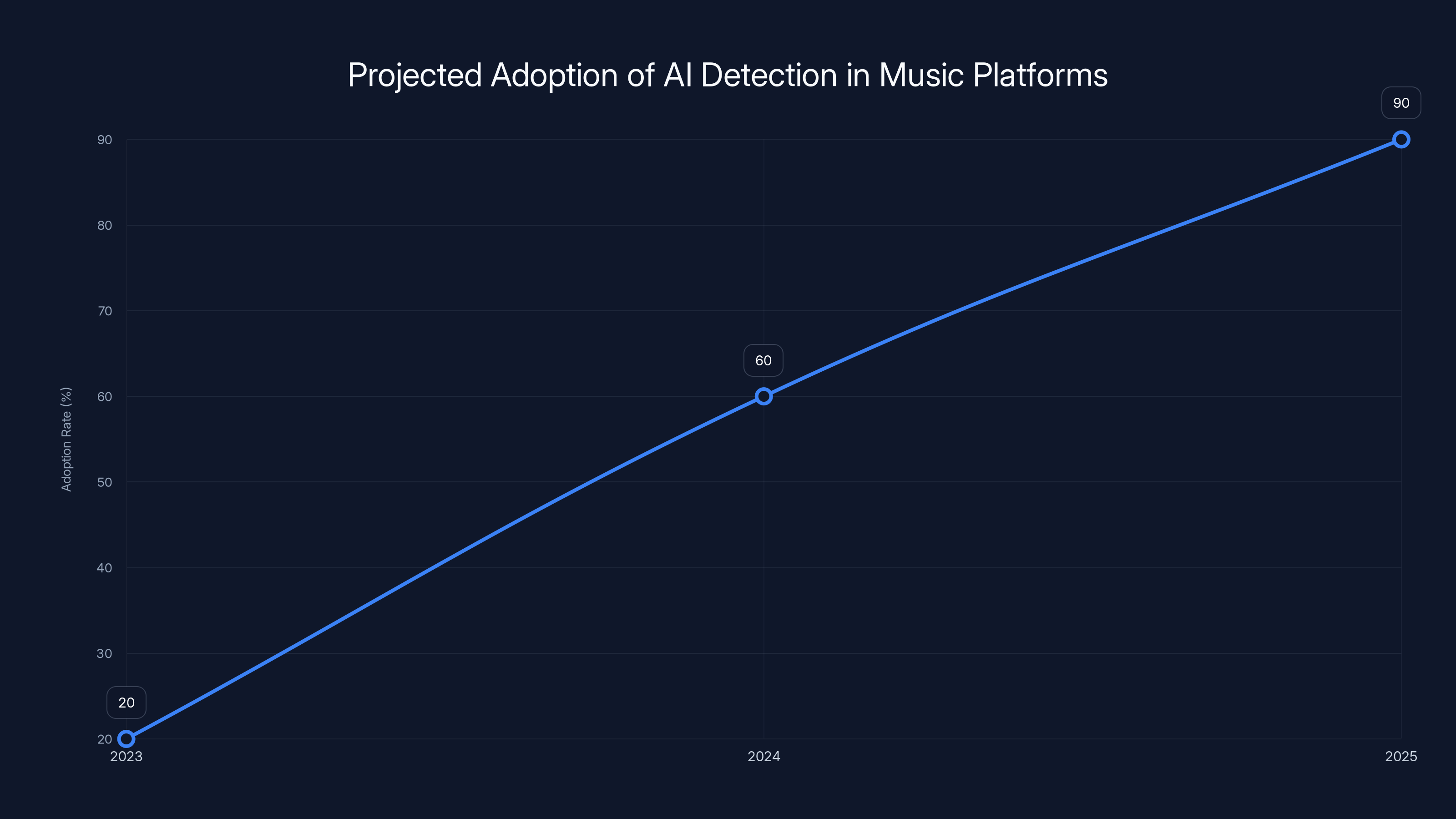

Estimated data suggests that by 2025, 90% of major music streaming platforms will have implemented AI music detection technology.

Looking Ahead: The Future of Music Platform Integrity

Deezer's move is a snapshot of where the music streaming industry is heading. Let's think about what comes next.

In the immediate term, we should expect to see other streaming platforms adopting similar detection capabilities. Apple Music will likely implement or develop its own AI detection. Amazon Music will do the same. Tidal, YouTube Music, and other smaller platforms will follow. Within 12-18 months, aggressive AI music detection will be standard across all major platforms.

The second wave will be improvements to detection accuracy and reduction of false positives. Platforms will realize that incorrectly flagging legitimate music creates problems: artist complaints, potential legal issues, public relations challenges. So they'll invest in making detection systems more accurate and implementing better appeal processes.

The third wave will be transparency and labeling. Rather than simply removing AI-generated music, platforms might create categories, tags, or labels that indicate when music was created or significantly assisted by AI. This creates a path for AI-generated music to exist legitimately on platforms while maintaining user choice and transparency.

The fourth wave will be regulation. Governments will probably start imposing requirements around AI music detection, disclosure, and artist protection. We've already seen hints of this in some European discussions about AI and creativity rights.

Longer-term, detection technology might become so sophisticated that uploading fraudulent AI music to platforms is simply no longer worth the effort. The barrier to entry for this type of fraud becomes prohibitively high. But as that happens, bad actors will probably pivot to entirely different fraud schemes on streaming platforms.

For independent artists, the long-term implication is clear: authenticity becomes more valuable. As platforms make it harder to succeed through fraud and manipulation, success increasingly depends on actually creating music that people want to listen to. This is probably good for the ecosystem overall, and bad news mainly for those who relied on gaming the system.

For platforms like Spotify, this evolution requires ongoing investment in technology, policy, and enforcement. But the alternative, allowing AI-generated spam to proliferate and degrade platform quality, isn't viable. So platforms will keep investing in detection, keep refining it, and keep adapting as fraudsters change tactics.

For the music industry more broadly, this evolution suggests that AI isn't going away—it's being integrated. The future probably isn't "AI-free music" or "AI-generated music replaces human musicians." It's more likely a hybrid world where AI tools assist human creativity, where AI-generated music exists as a transparent category, and where the industry distinguishes carefully between assisted creativity and fraudulent spam.

Why Deezer's Generosity Isn't Actually That Surprising

When you step back and look at Deezer's position in the market, the company's decision to open-source its detection technology starts to make strategic sense.

Deezer is the David to Spotify's Goliath. The French streaming platform operates primarily in Europe and has a market share that's a fraction of Spotify's. In this situation, Deezer actually benefits from collaborating with larger platforms rather than competing head-to-head.

By sharing detection technology, Deezer becomes a technology leader that's contributing to industry standards. This improves Deezer's brand and positioning, particularly in Europe where the company has institutional relationships and regulatory influence.

It also creates reciprocal value. When Spotify adopts Deezer's technology, Spotify implicitly endorses Deezer's technical approach. If that approach works well (which it seems to), it reflects well on Deezer. It positions the company as a serious technical player in the streaming industry.

From a competitive standpoint, Deezer is playing a longer game. The company probably knows it won't outcompete Spotify on scale or brand. But Deezer can compete on values, transparency, and technical responsibility. By positioning itself as the company that solved the AI fraud problem, Deezer strengthens its competitive position in a niche where it can win: users who care about artist integrity and platform ethics.

There's also a defensive component. If Spotify had developed superior AI detection technology independently, Spotify could have used that as a competitive advantage. By sharing knowledge now, Deezer prevents Spotify from gaining that advantage. In the language of strategic competition, Deezer is preventing Spotify from creating a moat around superior content quality.

Finally, there's the regulatory angle. In Europe, regulators are increasingly concerned about platform responsibility, AI governance, and how platforms police themselves. By voluntarily sharing detection technology with competitors, Deezer is demonstrating responsibility and collaboration. This builds political capital with regulators and policymakers.

From this perspective, Deezer's move isn't altruism—it's smart strategy.

The Minor Setback Nobody Wants to Fully Discuss

The original news coverage of Deezer's move hinted at "one minor setback," so let's address what that actually is.

The setback is that once detection technology is public, bad actors can study it and adapt. We've already discussed this in the adversarial machine learning section, but it's worth underlining: this is a real limitation of the approach.

Deezer's detection system is probably quite good at catching obvious AI-generated music. But as bad actors learn how the system works, they'll find ways to evade it. Its accuracy might slip from 90% to 85%, then to 75%, as evasion techniques improve.

Platforms will respond by continuing to improve detection, but there's an inherent tension: the more public the detection system, the easier it is for bad actors to evade. The more aggressive the detection, the higher the risk of false positives that incorrectly flag legitimate human-created music.
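To make the aggressiveness versus false-positive tension concrete, here is a minimal sketch. It assumes a detector that outputs a score between 0 and 1 and a platform that flags any track at or above a chosen threshold. The scores and labels below are invented for illustration and are not Deezer data; `confusion_at` and `LABELED_SCORES` are hypothetical names.

```python
from typing import List, Tuple

# Hypothetical detector scores (0.0 = surely human, 1.0 = surely AI).
# Invented numbers for illustration only, not real detection results.
# Each item: (detector score, True if the track really is AI-generated)
LABELED_SCORES: List[Tuple[float, bool]] = [
    (0.95, True), (0.88, True), (0.72, True), (0.55, True),
    (0.65, False), (0.40, False), (0.20, False), (0.10, False),
]


def confusion_at(threshold: float, data: List[Tuple[float, bool]]) -> Tuple[int, int]:
    """Return (false_positives, missed_ai_tracks) when flagging every score >= threshold."""
    # Human-made tracks that get flagged anyway
    false_positives = sum(1 for score, is_ai in data if score >= threshold and not is_ai)
    # AI-generated tracks that slip through unflagged
    missed = sum(1 for score, is_ai in data if score < threshold and is_ai)
    return false_positives, missed


if __name__ == "__main__":
    for t in (0.5, 0.7, 0.9):
        fp, missed = confusion_at(t, LABELED_SCORES)
        print(f"threshold={t}: false positives={fp}, missed AI tracks={missed}")
```

On this toy data, a low threshold of 0.5 wrongly flags one human track, while raising it to 0.9 removes that false positive but lets three AI tracks through. No single threshold eliminates both kinds of error.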

Another minor setback is that sharing detection technology might actually slow down Deezer's own competitive progress. If Deezer had kept this technology proprietary, the company could have used it as a differentiator. Smaller independent platforms could have chosen Deezer as a partner because of superior detection capabilities. By open-sourcing it, Deezer loses that potential competitive advantage.

There's also the question of liability. If Deezer's detection system is available to everyone but produces false positives that incorrectly flag legitimate music, does Deezer bear any responsibility? This could become legally complex, especially in Europe where AI liability frameworks are developing.

But the framing of these as "setbacks" is probably too strong. They're more like "trade-offs" that Deezer consciously accepted. The company chose the benefits of being seen as an industry collaborator and solving an ecosystem problem over the benefits of maintaining a proprietary competitive advantage.

FAQ

What is AI-generated music and why is it a problem for streaming platforms?

AI-generated music is music created entirely or partially by artificial intelligence systems, typically using neural networks trained on existing music. The problem for streaming platforms is that bad actors use AI music generation tools to create thousands of songs cheaply, upload them with fake artist names, and manipulate streams to generate fraudulent royalty payments. This floods platforms with low-quality content, degrades recommendation algorithms, and dilutes earnings for legitimate artists.

How does Deezer's AI detection technology work?

Deezer's detection system uses a multi-layered approach combining audio analysis, behavioral analysis, and metadata examination. It analyzes spectral characteristics, temporal patterns, and artifact signatures in audio to identify machine-generated music, then compares this against known AI generation patterns. The system also examines upload patterns, account history, and network relationships to flag suspicious behavior. The combination of these layers achieves detection accuracy above 90% for most common AI music generation tools.
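One simple way to combine several layers into a single decision is a weighted average of per-layer suspicion scores. The sketch below assumes that approach purely for illustration. The weights, the 0.7 threshold, and every name in it (`LayerScores`, `combined_score`, `is_suspicious`) are hypothetical and are not taken from Deezer's system.

```python
from dataclasses import dataclass


@dataclass
class LayerScores:
    """Per-layer suspicion scores in [0, 1], mirroring the three layers described above."""
    audio: float     # e.g. spectral, temporal, and artifact analysis of the audio
    behavior: float  # e.g. upload patterns, account history, network relationships
    metadata: float  # e.g. templated or inconsistent track metadata


# Hypothetical weights; a real system would learn or tune these from labeled data.
WEIGHTS = {"audio": 0.6, "behavior": 0.3, "metadata": 0.1}


def combined_score(s: LayerScores) -> float:
    """Weighted average of the layer scores, clamped to [0, 1]."""
    total = (WEIGHTS["audio"] * s.audio
             + WEIGHTS["behavior"] * s.behavior
             + WEIGHTS["metadata"] * s.metadata)
    return max(0.0, min(1.0, total))


def is_suspicious(s: LayerScores, threshold: float = 0.7) -> bool:
    """Flag a track when its combined score reaches the (hypothetical) threshold."""
    return combined_score(s) >= threshold


if __name__ == "__main__":
    print(is_suspicious(LayerScores(audio=0.9, behavior=0.8, metadata=0.5)))
    print(is_suspicious(LayerScores(audio=0.2, behavior=0.9, metadata=0.9)))
```

With these example weights, a track with strong audio artifacts and suspicious uploads scores about 0.83 and is flagged. A track with odd upload behavior but human-sounding audio scores about 0.48 and is not, which shows how layering can keep one noisy signal from triggering a removal on its own.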

Why did Deezer make its AI detection tool available to competitors like Spotify?

Deezer made this strategic choice because solving the AI fraud problem benefits the entire ecosystem, including smaller players like Deezer itself. By positioning itself as a technical leader contributing to industry standards, Deezer strengthens its brand and competitive position, particularly in Europe. The company also prevents larger competitors from gaining exclusive access to superior detection capabilities. Additionally, this aligns with European regulatory expectations around platform responsibility and AI governance.

What are the drawbacks of open-sourcing detection technology?

The primary drawback is that bad actors can study the public detection system and develop evasion techniques to circumvent it. This accelerates the arms race between detection and evasion, potentially reducing the system's accuracy over time as adversaries refine their methods. There's also the question of false positives—legitimate human-created music might occasionally be incorrectly flagged as AI-generated. Additionally, Deezer sacrificed a potential competitive advantage by sharing proprietary technology.

How does AI music detection differ across streaming platforms?

Each platform implementing AI detection makes different choices about accuracy tolerance, false positive handling, and user communication. Spotify might be more aggressive in removing flagged music, while Apple Music might require manual review. Different platforms also have different integration timelines and technical capabilities. This creates inconsistency across the ecosystem, but also allows each platform to tune detection according to its specific user base and risk tolerance.
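The difference between aggressive removal and mandatory manual review can be expressed as a small routing policy on top of the same detector score. The sketch below assumes each platform sets two thresholds of its own. `DetectionPolicy`, `route`, and both example policies are hypothetical illustrations, not any platform's actual configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectionPolicy:
    """Hypothetical per-platform policy; names and numbers are illustrative only."""
    name: str
    auto_remove_at: float  # scores at or above this are removed without human review
    review_at: float       # scores at or above this (but below auto_remove_at) go to a human


def route(score: float, policy: DetectionPolicy) -> str:
    """Map a detector score to an action under the given policy."""
    if score >= policy.auto_remove_at:
        return "remove"
    if score >= policy.review_at:
        return "manual_review"
    return "keep"


# An aggressive policy removes high-scoring tracks automatically.
AGGRESSIVE = DetectionPolicy("aggressive", auto_remove_at=0.80, review_at=0.60)
# A cautious policy never auto-removes: every flagged track goes to a reviewer.
CAUTIOUS = DetectionPolicy("cautious", auto_remove_at=float("inf"), review_at=0.60)

if __name__ == "__main__":
    for policy in (AGGRESSIVE, CAUTIOUS):
        print(policy.name, [route(s, policy) for s in (0.30, 0.70, 0.85)])
```

The same score of 0.85 leads to removal under one policy and a human review under the other, which is exactly the kind of inconsistency across platforms described above.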

What should independent artists do to protect themselves from false positives?

Independent artists should maintain clear documentation of their creative process, especially if they use AI tools as part of production. Use transparent metadata that clearly identifies you as the artist. Avoid uploading patterns that look like fraud, such as batch-uploading hundreds of songs simultaneously or creating multiple artist accounts in a short timeframe. Most platforms have appeal processes if your legitimate music is incorrectly flagged, so stay informed about those processes and be prepared to use them if necessary.
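Why does batch-uploading look like fraud? A platform might check how many tracks an account uploads within any short time window. The sketch below assumes a sliding one-hour window and a limit of 50 tracks. Both numbers and the function names are hypothetical, chosen only to show the idea, not any platform's real rule.

```python
from datetime import datetime, timedelta
from typing import List


def max_uploads_in_window(upload_times: List[datetime], window: timedelta) -> int:
    """Largest number of uploads that fall within any sliding window of the given length."""
    times = sorted(upload_times)
    best = 0
    start = 0
    for end in range(len(times)):
        # Advance the left edge until the window spans at most `window`.
        while times[end] - times[start] > window:
            start += 1
        best = max(best, end - start + 1)
    return best


def looks_like_batch_upload(upload_times: List[datetime],
                            window: timedelta = timedelta(hours=1),
                            limit: int = 50) -> bool:
    """Hypothetical rule: flag an account that uploads more than `limit` tracks in one window."""
    return max_uploads_in_window(upload_times, window) > limit


if __name__ == "__main__":
    base = datetime(2025, 1, 1)
    burst = [base + timedelta(seconds=30 * i) for i in range(100)]  # 100 tracks in under an hour
    steady = [base + timedelta(days=i) for i in range(100)]         # one track per day
    print(looks_like_batch_upload(burst), looks_like_batch_upload(steady))
```

An artist releasing the same 100 tracks one per day never trips this rule, while dumping them all within an hour does. Pacing releases naturally therefore keeps legitimate catalogs out of the suspicious pattern.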

Is AI-generated music going to be banned from streaming platforms entirely?

No, platforms are not banning AI-generated music outright. Instead, they're using detection systems to identify fraudulent uploads (spam created by bad actors) and separate them from legitimate creative uses of AI. In the longer term, platforms might create dedicated AI music categories or labels that allow AI-generated or AI-assisted music to exist transparently. The goal is not prohibition but rather integrity and transparency.

How will AI music detection technology continue to evolve?

Detection technology will likely become more sophisticated, with improved accuracy and reduced false positives. Platforms will invest in better appeal processes and transparency mechanisms. Regulation will probably require certain standards around AI music detection and disclosure. Simultaneously, AI generation tools will improve, potentially making AI-generated music harder to distinguish from human-created music. This creates an ongoing arms race where both detection and evasion techniques continuously improve.

The Bottom Line: An Industry at an Inflection Point

Deezer's decision to open-source its AI music detection technology represents something larger than just one company solving one problem. It's a signal that the music streaming industry has reached an inflection point where collaboration matters more than competition when facing existential threats.

Spotify didn't need Deezer to make its music platform better. Spotify could have built detection technology independently. But it would have taken longer, cost more, and delayed critical anti-fraud measures. By adopting Deezer's approach, Spotify immediately improves platform integrity while maintaining focus on other priorities.

For the music industry broadly, this moment clarifies where things are heading. AI isn't going away. AI music generation tools will continue to improve. But platforms are committing to detecting and filtering out fraudulent use while maintaining legitimate uses of AI-assisted creativity.

For independent artists, this should be encouraging. The platforms are investing in their protection. But it should also be motivating—the era of easy manipulation and low-quality content succeeding through fraud is ending. Your music needs to be good, authentic, and legitimately created to succeed long-term.

For anyone interested in the adversarial technology angle, this is just the beginning of what promises to be a fascinating arms race between detection and evasion, innovation and fraud prevention, platform integrity and bad actor adaptation.

In the end, Deezer gave Spotify more than just a detection tool. The French streaming platform gave the entire industry permission to collaborate on solving problems, and a blueprint for how to contribute value even when you're not the largest player in the market. That's something Spotify should acknowledge, and that the entire industry should learn from.

Because in a world where AI can generate anything, authenticity becomes the rarest resource. And that benefits everyone who creates legitimately.

Key Takeaways

- Deezer developed and open-sourced AI detection technology that identifies machine-generated music with 90%+ accuracy by analyzing audio characteristics, temporal patterns, and anomalies in upload behavior

- Spotify, despite being the dominant streaming platform, lacked native AI detection capabilities and was vulnerable to fraudulent AI-generated music flooding the platform and degrading recommendations

- Deezer's decision to share proprietary technology was strategic—the company benefited from being positioned as an industry collaborator while preventing larger competitors from gaining exclusive detection advantages

- The move created an immediate improvement in platform integrity across the industry but also accelerated the arms race between detection and evasion techniques, as bad actors study the public system to develop circumvention methods

- Independent artists benefit from better fraud filtering but need to maintain transparent documentation of their creative process, especially if using AI tools, to avoid false positive detection flags

