The AI Music Crisis Streaming Platforms Can't Ignore

Something strange happened in the music industry last year. The volume of AI-generated tracks flooding streaming platforms didn't just increase. It exploded. We're talking about 60,000 new AI-generated songs arriving daily on platforms like Deezer. That's roughly 22 million tracks a year from AI composition tools alone.

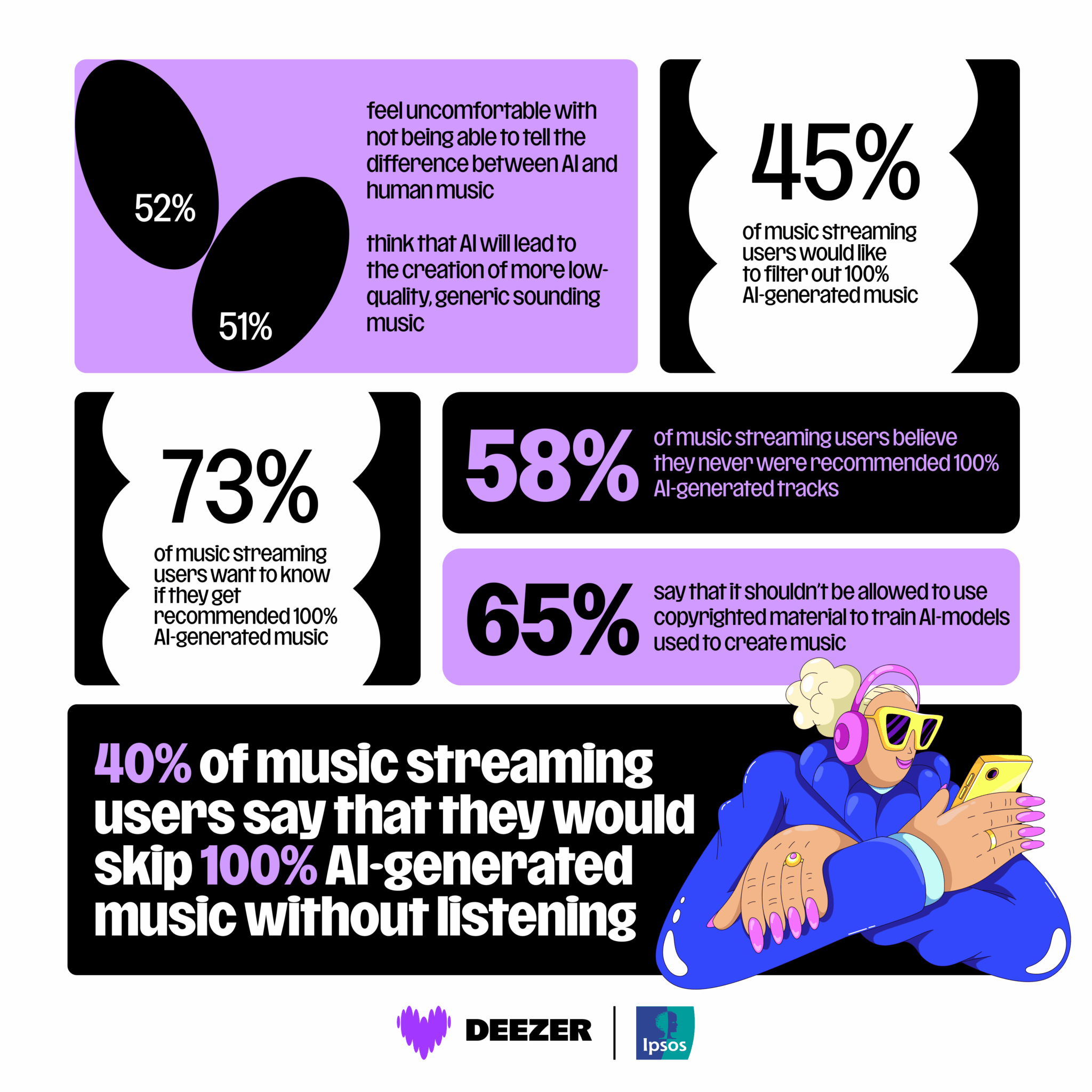

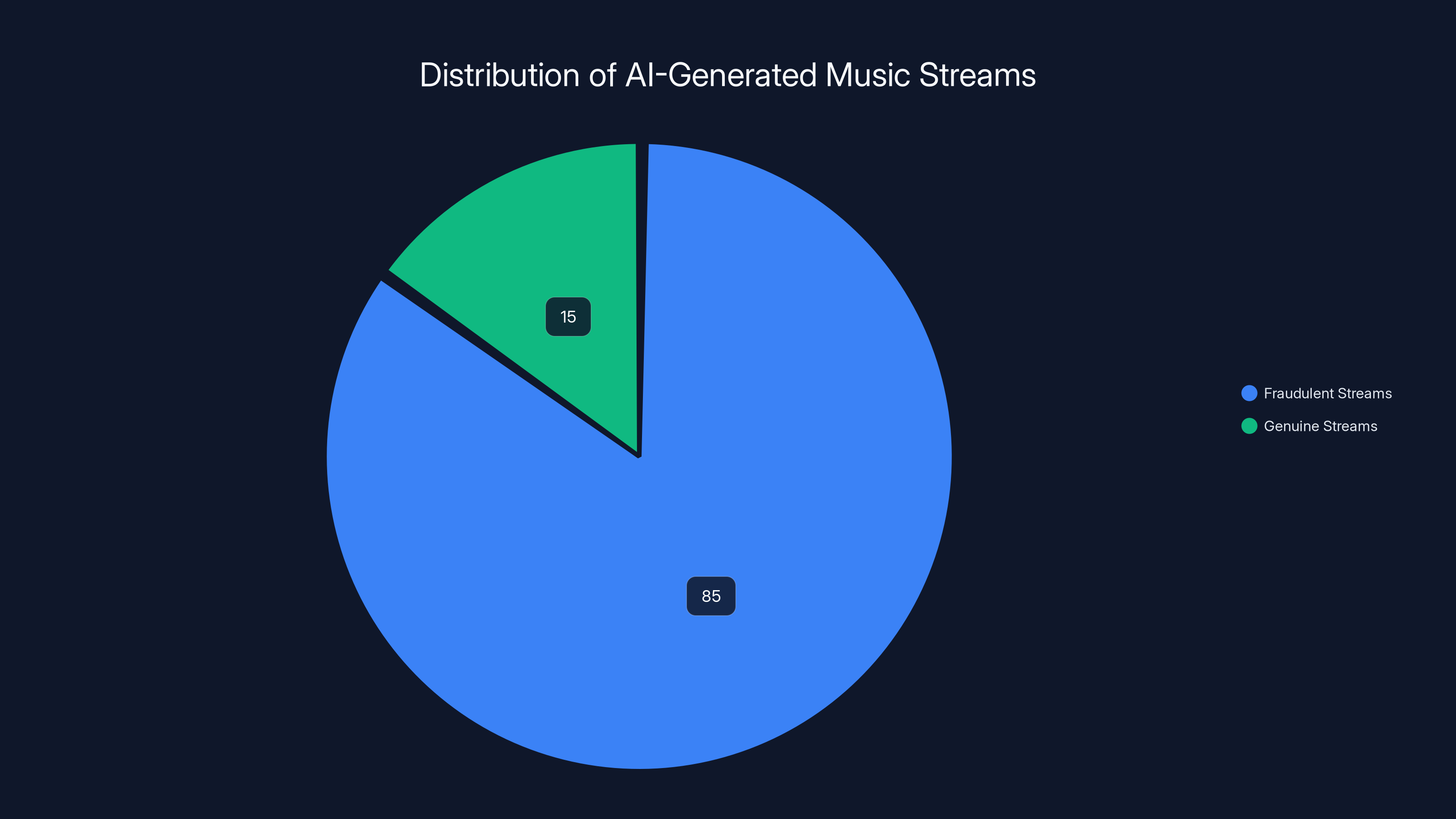

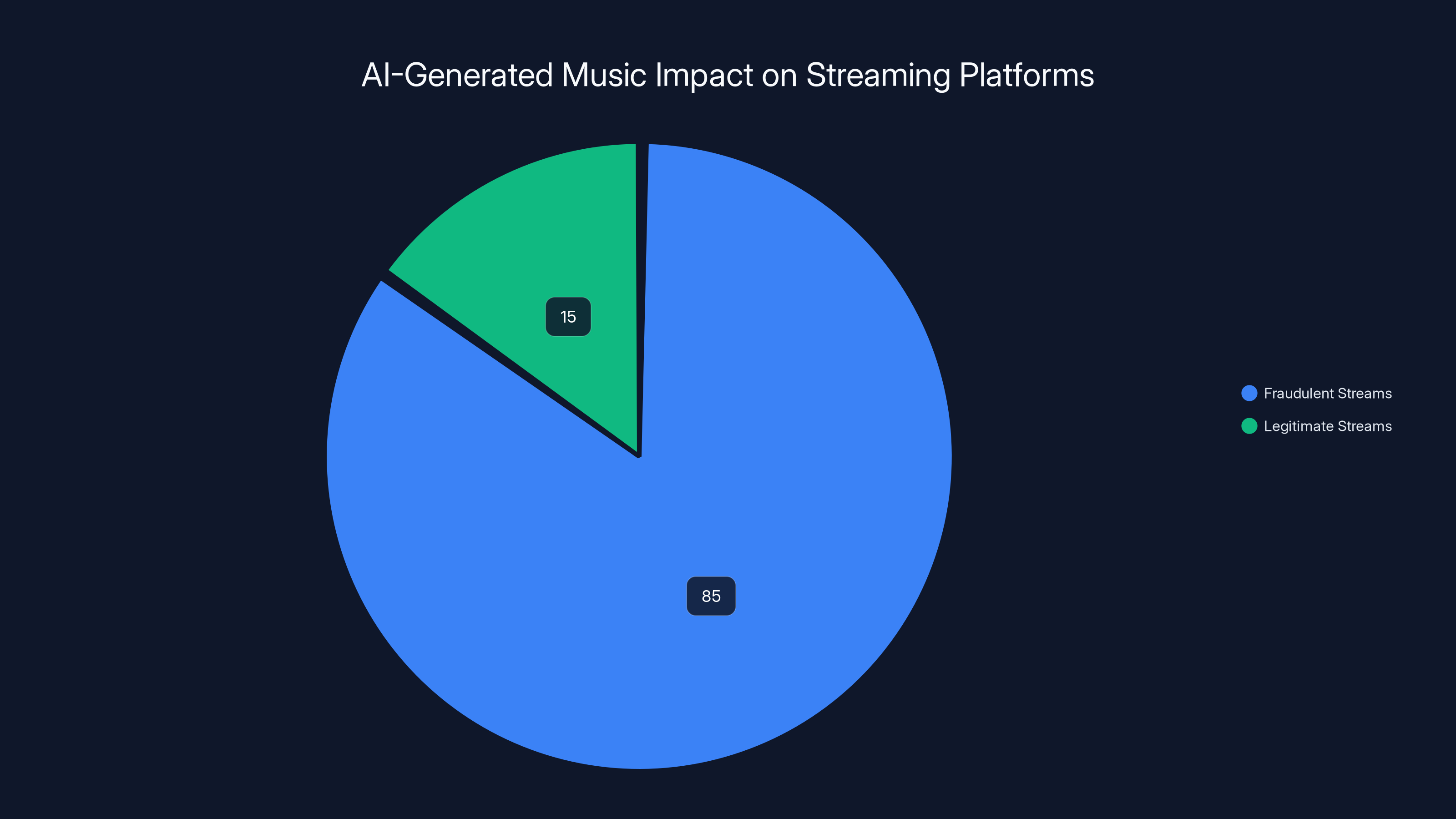

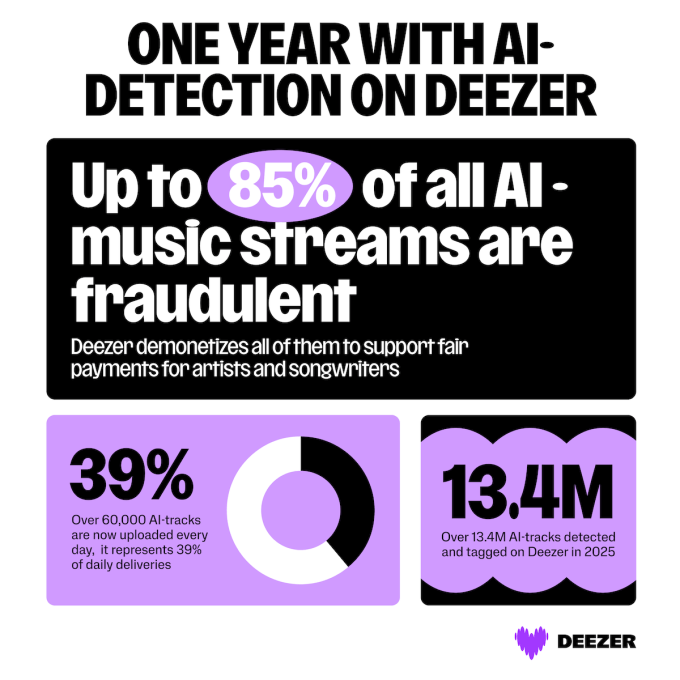

Here's what keeps executives at streaming platforms awake at night: 85% of streams from fully AI-generated music are fraudulent. That's not opinion; it's Deezer's own data. It means a huge share of the plays on AI tracks comes from fake accounts, bot networks, and manipulation schemes designed to siphon royalties away from legitimate artists. Meanwhile, human musicians watch their compensation shrink as the royalty pool is diluted by low-effort, automatically generated content.

The problem isn't just cultural. It's financial. When fraudulent AI tracks clutter the system, several things break simultaneously. Artists stop trusting platforms to pay fairly. Algorithms become polluted with garbage data. Listeners encounter more spam in their recommendations. And the entire revenue model that's supposed to support creativity collapses under the weight of bad-faith content.

But here's where things get interesting. One major streaming platform decided to fight back. Deezer, the French music streaming service, built something different. Instead of just moderating AI music internally, they made their detection technology available to other platforms. That move could change how the entire industry responds to AI-generated content.

This isn't just technical innovation. It's a statement about the future of streaming, artist protection, and what transparency means in an AI-saturated music industry. Let's break down what's actually happening, why it matters, and what comes next.

TL;DR

- Deezer developed an AI detection tool with 99.8% accuracy identifying fully AI-generated tracks and is now licensing it to other platforms

- 85% of streams from fully AI-generated music are fraudulent, diverting royalties away from legitimate artists

- 60,000 AI tracks arrive daily on Deezer, up from 20,000 just months prior

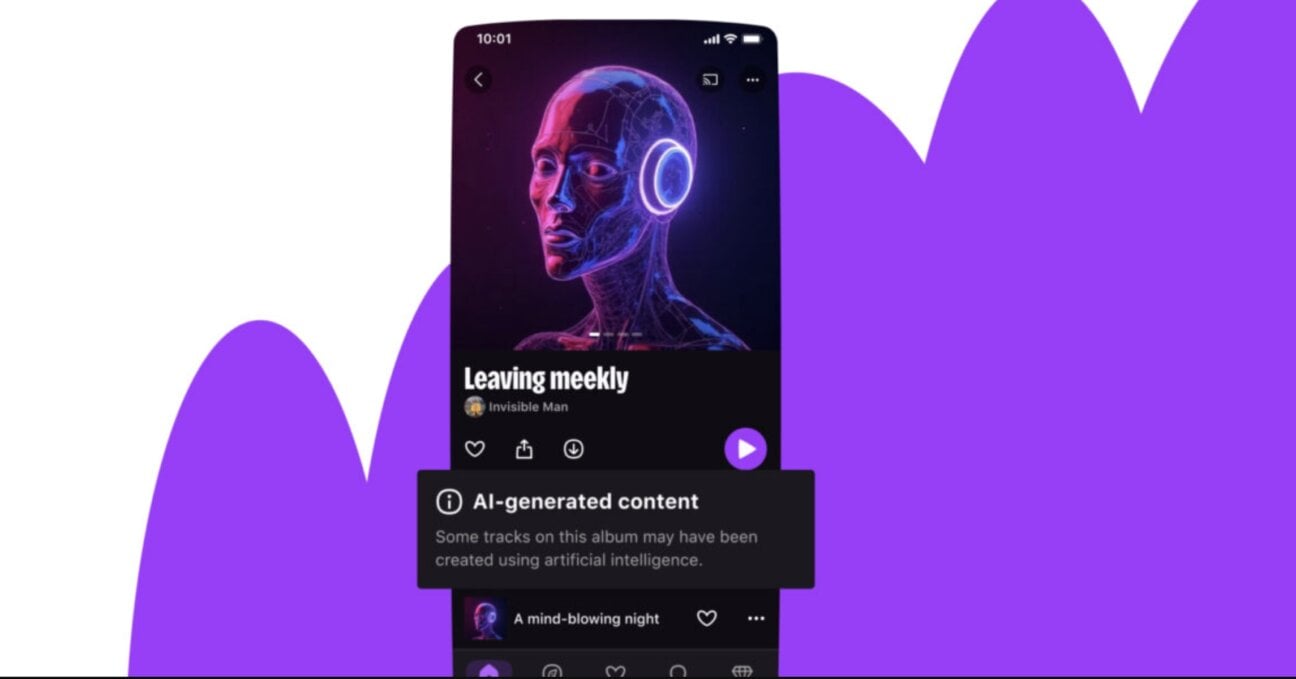

- Deezer demonetizes detected AI music and removes it from algorithmic recommendations to protect human artists

- Organizations like Sacem have successfully tested the technology, signaling industry-wide adoption potential

Understanding the Scale of AI Music Flooding Streaming Services

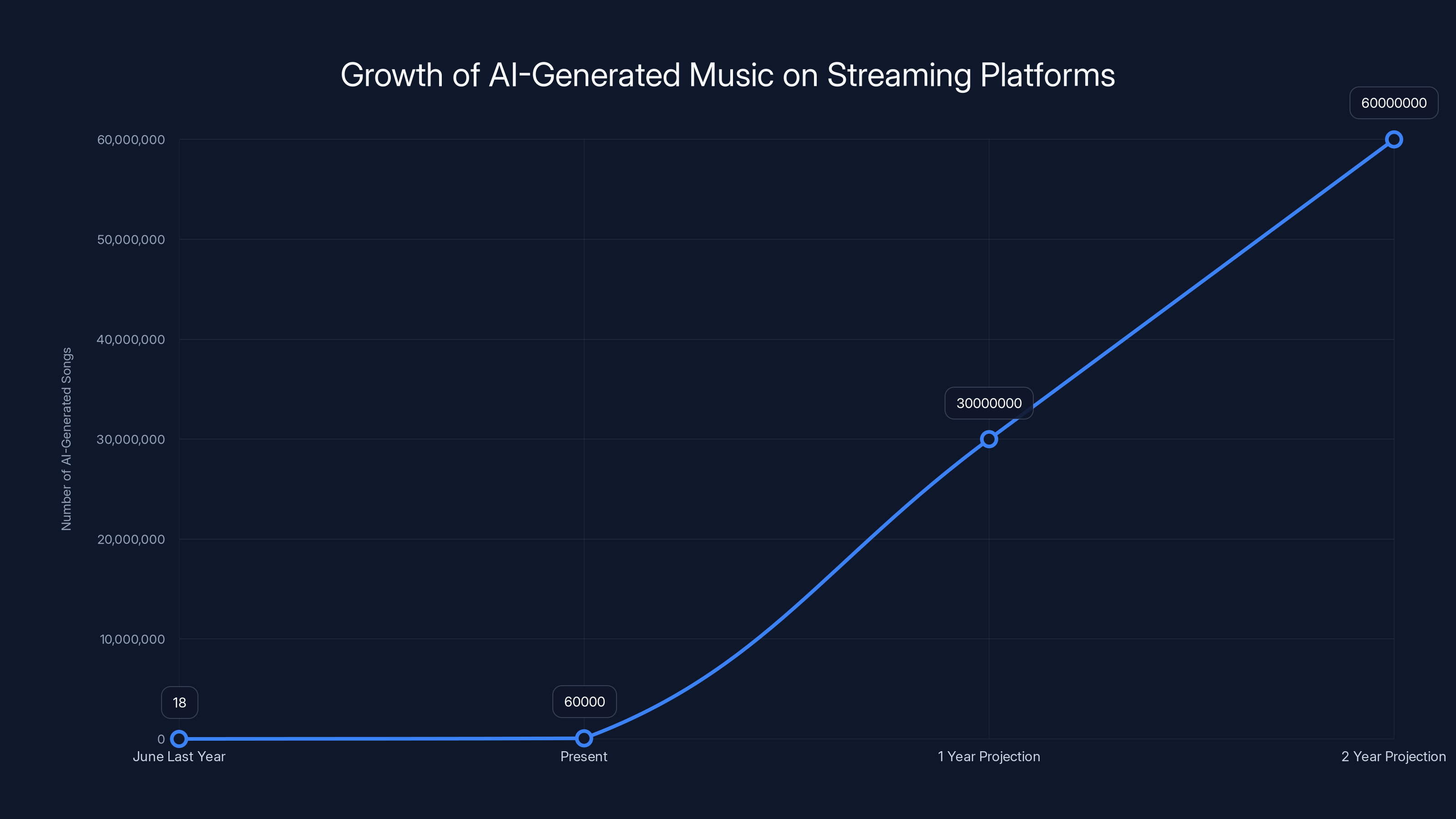

The numbers tell an almost surreal story. In June of last year, fully AI-generated music made up 18% of daily uploads. That means roughly one in every five new tracks entering the streaming ecosystem was algorithmically composed, not created by humans spending time in studios.

Just months later, the volume had grown explosively. Daily AI-generated track uploads now total 60,000. Annualized, that's roughly 22 million AI-generated songs per year trying to enter legitimate streaming catalogs. The acceleration isn't gradual. It looks exponential.
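Both numbers come from simple arithmetic. The sketch below annualizes the daily figure and estimates a doubling time under steady exponential growth. The source only says the jump from 20,000 to 60,000 daily uploads took "months," so the 9-month window here is a hypothetical assumption; change it and the doubling time changes with it.

```python
import math


def annualize(daily_uploads: int, days_per_year: int = 365) -> int:
    """Convert a daily upload count into a yearly total."""
    return daily_uploads * days_per_year


def doubling_time(start: float, end: float, elapsed_months: float) -> float:
    """Months needed to double, assuming steady exponential growth between two observations."""
    if start <= 0 or end <= start:
        raise ValueError("need positive, growing observations")
    growth_factor = end / start
    return elapsed_months * math.log(2) / math.log(growth_factor)


print(f"{annualize(60_000):,} AI tracks per year")  # 21,900,000 AI tracks per year

# Hypothetical window: assume 20,000 -> 60,000 daily uploads took 9 months.
print(f"doubling roughly every {doubling_time(20_000, 60_000, 9):.1f} months")
# doubling roughly every 5.7 months
```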

What's particularly revealing is the total inventory. Deezer has identified 13.4 million fully AI-generated songs already in its catalog. That's not 13.4 million songs written by humans about AI themes. That's 13.4 million songs with no human creative input, generated entirely by systems like Suno or Udio.

Consider the implication. One platform's catalog already holds 13.4 million AI songs, and daily uploads tripled from 20,000 to 60,000 within months. If that pace held, the daily inflow would keep doubling every few months, and within two years AI-generated tracks could outnumber human-created music on major platforms.

The real danger isn't that AI music exists. It's that bad actors exploit streaming economics. When a track gets millions of bot-generated plays, payouts flow to whoever uploaded it. Those aren't royalties earned through genuine listener engagement. They're theft, pure and simple.

How Fraudulent Streams Work: The Mechanics of Streaming Exploitation

Understanding streaming fraud requires understanding how payouts actually work. Most platforms distribute revenue based on stream count. More streams equal more compensation. It's an incentive structure that seems logical until you realize how exploitable it becomes.
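The dilution effect is easiest to see in a pro-rata model, where a fixed revenue pool is split according to each track's share of total streams. This is a minimal sketch with made-up numbers, not any platform's actual formula (real payouts also pass through label, distributor, and publishing splits). Adding bot streams leaves the pool unchanged but grows the denominator, so every legitimate track earns less.

```python
def pro_rata_payouts(pool: float, streams: dict[str, int]) -> dict[str, float]:
    """Split a fixed revenue pool in proportion to each track's share of total streams."""
    total = sum(streams.values())
    if total == 0:
        return {track: 0.0 for track in streams}
    return {track: pool * count / total for track, count in streams.items()}


# Hypothetical month: a $10,000 pool shared by two human-made tracks.
honest = {"human_a": 600_000, "human_b": 400_000}
print(pro_rata_payouts(10_000, honest))
# {'human_a': 6000.0, 'human_b': 4000.0}

# Same pool, but a bot network pushes 1,000,000 streams to an AI track.
with_bots = {**honest, "bot_ai": 1_000_000}
print(pro_rata_payouts(10_000, with_bots))
# {'human_a': 3000.0, 'human_b': 2000.0, 'bot_ai': 5000.0}
```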

Here's a simplified version of how fraud typically operates. Someone creates an AI-generated track in under five minutes using a tool like Suno. The AI composes music, generates lyrics, and produces a finished audio file. Zero studio time required. Zero collaboration needed. Just a prompt and computation.

That person then uploads the track to a platform like Spotify or Apple Music. But instead of waiting for organic listener engagement, they deploy bot networks. These networks stream the song thousands, hundreds of thousands, or millions of times using fake accounts or compromised credentials.

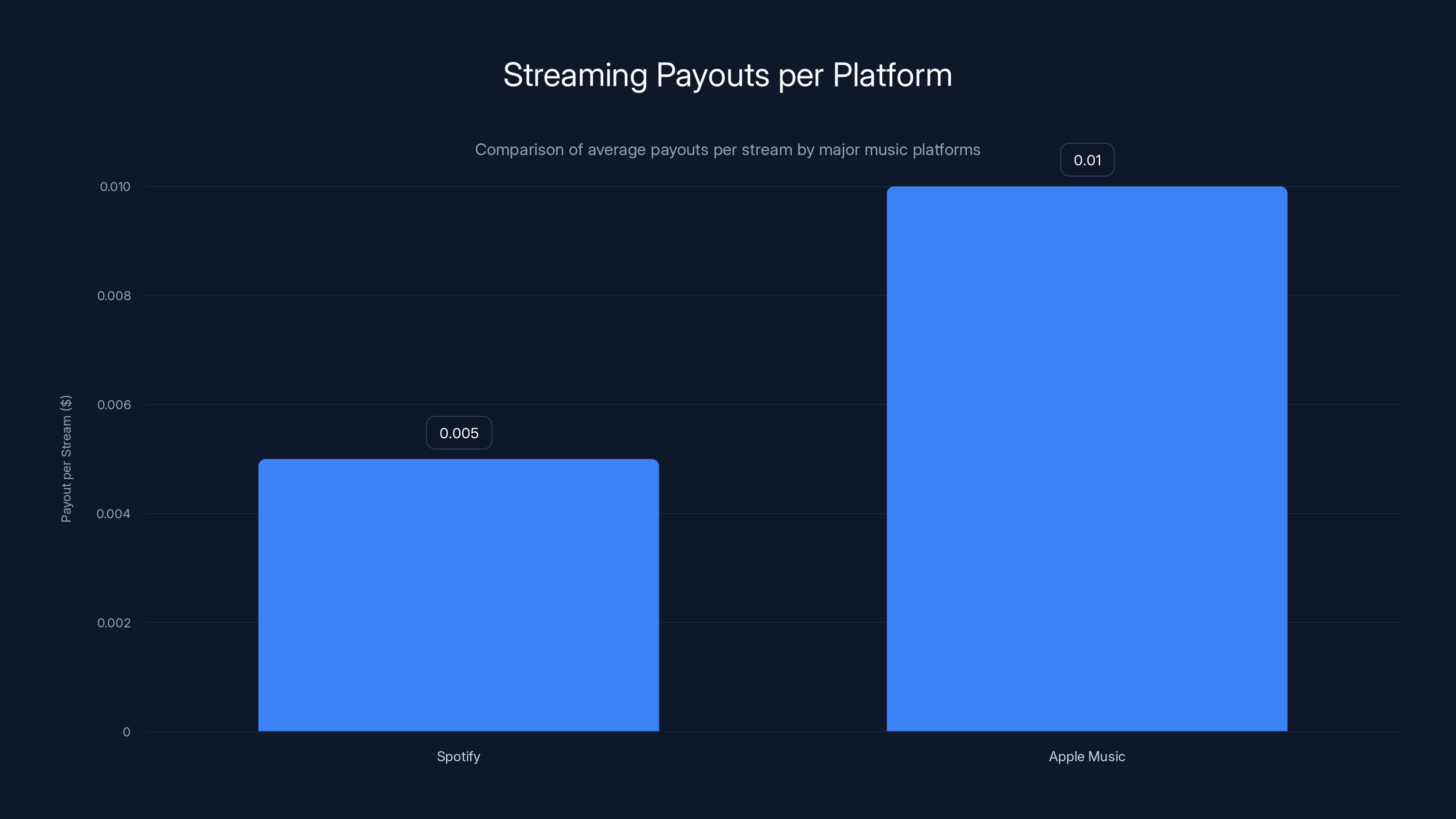

Each stream generates a tiny payment, commonly estimated at roughly $0.003 to $0.005 on Spotify.

Now imagine coordinating this across dozens or hundreds of AI-generated tracks simultaneously. A single bad actor could generate six figures in stolen royalties with minimal effort and zero creative investment.

But the problem extends beyond outright fraud. Some AI bands have gained millions of legitimate streams. The Velvet Sundown, an entirely AI-generated musical group, accumulated millions of plays before its AI origins became widely known. Listeners unknowingly streamed AI content thinking they were supporting human musicians.

This creates a market distortion. Legitimate artists create music, invest time and resources, and compete for listeners. Meanwhile, AI-generated tracks cost nothing to produce and can be weaponized with bot networks to artificially inflate their reach. The playing field becomes catastrophically uneven.

Apple Music pays approximately twice as much per stream as Spotify, highlighting the variance in streaming payouts across platforms.

Inside Deezer's 99.8% Accurate AI Detection Technology

Deezer's detection tool analyzes the audio itself, looking for characteristics typical of AI-generated music. This isn't simple keyword matching or surface-level metadata analysis. It's acoustic analysis.

AI music generation tools like Suno and Udio use neural networks trained on massive music catalogs. These systems learn patterns from real music but produce output with statistical anomalies. Certain harmonic progressions, rhythmic patterns, and vocal characteristics appear with frequencies that deviate from natural human musicianship.

Deezer's detection system learns these deviation patterns. The machine learning model was trained on millions of AI-generated tracks from major generative audio tools. It learned to identify which acoustic characteristics reliably indicate algorithmic composition.

The 99.8% accuracy rate is significant. It means that for every 1,000 tracks analyzed, roughly two are incorrectly classified. For a streaming platform handling millions of tracks, that's a manageable error rate. More importantly, Deezer says the tool identifies output from multiple major generators, including Suno and Udio, so the method isn't tied to one specific system.

The tool's behavior has evolved strategically. Initially, Deezer simply removed AI-generated music from algorithmic recommendations. Users could still find it through direct search, but algorithms wouldn't push it to listeners. This approach balances transparency with curation.

Now, Deezer goes further. Detected AI music gets demonetized. That means streams on those tracks generate zero royalty payouts. They're also excluded from the royalty pool entirely. Some platforms might distribute royalties from AI-generated music to human artists, but Deezer chose the cleaner approach: AI music earns nothing.
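Deezer hasn't published how its royalty calculation treats flagged tracks. Under an assumed pro-rata model, one way to implement "excluded from the royalty pool" is to drop flagged streams before the split, as in this sketch. The `flagged_fully_ai` field is a hypothetical stand-in for the detector's output.

```python
from dataclasses import dataclass


@dataclass
class Track:
    track_id: str
    streams: int
    flagged_fully_ai: bool  # hypothetical detector output


def royalty_split(pool: float, tracks: list[Track]) -> dict[str, float]:
    """Pro-rata split that ignores flagged tracks' streams entirely."""
    payouts = {t.track_id: 0.0 for t in tracks}  # flagged tracks stay at zero
    eligible = [t for t in tracks if not t.flagged_fully_ai]
    total = sum(t.streams for t in eligible)
    if total == 0:
        return payouts
    for t in eligible:
        payouts[t.track_id] = pool * t.streams / total
    return payouts


tracks = [
    Track("human_a", 600_000, False),
    Track("human_b", 400_000, False),
    Track("bot_ai", 1_000_000, True),
]
print(royalty_split(10_000, tracks))
# {'human_a': 6000.0, 'human_b': 4000.0, 'bot_ai': 0.0}
```

A side effect of this design: because the pool is fixed, the share a flagged track would have taken flows to the eligible tracks rather than disappearing.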

This creates a powerful incentive structure. Why upload AI-generated music if it generates zero revenue? Bad actors face economic disincentives. Legitimate users understand the platform's stance on authenticity.

The Industry Response: Who's Testing Deezer's Detection System?

Deezer didn't develop this technology solely for internal use. The company announced it's licensing the detection system to other platforms. This is where the story becomes about industry-wide transformation.

Sacem, France's collective management organization representing over 300,000 music creators and publishers, including artists like David Guetta and DJ Snake, has already conducted successful testing. That's not a small endorsement. Sacem directly represents hundreds of thousands of creators whose royalties are exposed to AI music fraud.

Why would a rights organization care? Because their members' royalties get diluted when fraudulent AI music inflates stream counts. More fraudulent streams in the pool mean smaller payouts for legitimate tracks. Sacem needs protection mechanisms.

Other companies have "performed successful tests," according to Deezer CEO Alexis Lanternier. The company hasn't disclosed names, so it's unclear whether any major streaming services are among them. Spotify, Apple Music, YouTube Music, and Amazon Music all face similar problems, and all need solutions.

The licensing model varies by customer. Deezer said pricing differs by deal type but hasn't published any figures. The vagueness makes sense. A small independent platform has different needs than a major service like Spotify. Pricing likely scales accordingly.

The significant detail isn't pricing. It's that Deezer chose to productize and license their detection system rather than use it solely as a competitive advantage. This suggests conviction that fighting AI music fraud benefits the entire industry, even competitors.

Comparing Industry Approaches: From Total Bans to Licensing Deals

Streaming platforms have fragmented into three distinct camps in how they handle AI-generated music. Understanding these approaches reveals the philosophical differences about music, authenticity, and platform responsibility.

The first camp takes the total-ban approach. Bandcamp, the independent music platform beloved by musicians, simply prohibits AI-generated music entirely. Artists cannot upload music created by generative AI tools. The policy is unambiguous: if AI generated it, Bandcamp rejects it. This is the nuclear option. It removes the opening for AI spam on the platform, though enforcement still matters, and it may also shut out AI-assisted music where humans made creative decisions alongside the technology.

The second camp, where Deezer positions itself, takes the transparency-plus-demonetization approach. Detect AI music, label it clearly, remove it from recommendations, and ensure it generates zero revenue. This allows AI music to exist but neutralizes economic incentives for fraud. Bad actors can't profit from AI-generated spam.

The third camp, represented by major record labels, appears to be embracing AI through licensing. Universal Music Group and Warner Music Group signed agreements with AI music tools like Suno and Udio to license their catalogs. These deals ensure that when AI trains on artist work, creators and labels get compensated. This isn't anti-AI. It's pro-compensation.

Spotify has taken a middle path closer to Deezer's approach. The platform updated its policies to support clear disclosure of AI involvement in tracks, filter spam content, and explicitly prohibit unauthorized voice clones. Spotify hasn't banned AI music, but it's imposing friction that makes fraud less profitable.

Each approach reflects different values. Bandcamp prioritizes artist protection above all. Deezer balances transparency with economic deterrence. Major labels balance artist rights with AI opportunity. Spotify attempts equilibrium across multiple stakeholders.

None of these approaches are purely right or wrong. They represent different bets about whether AI in music is inevitably coming (requiring adaptation) or something to be controlled (requiring restriction).

The Economics of Streaming Payouts: Why AI Detection Matters

To understand why AI detection matters, you need to understand streaming economics. The math is surprisingly unintuitive.

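Spotify pays between roughly $0.003 and $0.005 per stream, according to commonly cited industry estimates. That's a fraction of a cent per play, and the money is split further among labels, distributors, songwriters, and performers.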

Now consider what this creates. If you're a human artist who spent six months writing, recording, and producing a song, you need significant stream volume to earn meaningful income. A song with 100,000 streams generates roughly $300 to $500 at typical estimated Spotify rates.

Contrast that with AI music. Anyone can generate a complete song in 60 seconds using Suno. The marginal cost is roughly zero. The opportunity cost is minimal. But if that AI song could somehow get one million streams through bot networks, it would generate roughly $3,000 to $5,000 in royalties.

This is why fraudulent AI music is so attractive to bad actors. The risk-reward ratio is absurdly favorable. Upload a spam song, deploy a bot network for a few hundred dollars, and potentially earn thousands in stolen royalties. Even if platforms catch and remove the fraud, the economic incentives remain.
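The arithmetic behind both scenarios fits in a few lines. The per-stream rates here are the commonly cited Spotify estimates, used as assumptions rather than official figures; actual payouts vary by country, subscription tier, and distributor share.

```python
# Assumed per-stream rates in US dollars (commonly cited Spotify estimates, not official).
LOW_RATE = 0.003
HIGH_RATE = 0.005


def payout_range(streams: int, low: float = LOW_RATE, high: float = HIGH_RATE) -> tuple[float, float]:
    """Return the (low, high) estimated royalties for a stream count, rounded to cents."""
    return round(streams * low, 2), round(streams * high, 2)


print(payout_range(100_000))    # (300.0, 500.0): a human track after months of work
print(payout_range(1_000_000))  # (3000.0, 5000.0): a bot-streamed AI track
```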

Deezer's demonetization approach directly addresses this. If AI-detected music earns zero royalties, the economic incentive for fraud collapses. Bot networks become pointless. Fraudsters can't profit from AI-generated spam.
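A simplified way to write the fraudster's calculation, ignoring detection risk and distributor fees: profit Π is the number of bot-driven streams N times the per-stream payout r, minus what the bot network costs, C_bots. The worked line uses an assumed $0.003 rate and a hypothetical $300 bot bill.

```latex
\begin{aligned}
\Pi &= N \cdot r - C_{\text{bots}} \\
    &= 1{,}000{,}000 \times 0.003 - 300 = 2{,}700 \quad \text{(dollars, hypothetical inputs)}
\end{aligned}
```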

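With demonetization, the revenue side of the calculation (streams multiplied by the per-stream payout) drops to zero while the cost of running the bot network remains, so every scheme runs at a loss. Fraud becomes economically irrational.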

Deezer's Historical Stance on AI and Creator Protection

Deezer's current position on AI detection didn't emerge in a vacuum. The company has been consistently vocal about AI concerns since 2024.

In a significant symbolic move, Deezer became the first music streaming platform to sign the global Statement on AI Training. This statement, supported by actors like Kate McKinnon, Kevin Bacon, Kit Harington, and Rosie O'Donnell, advocates for transparency and consent when AI systems train on creative work.

The statement essentially argues that if AI tools train on copyrighted music, the original creators deserve compensation and should have consented to that usage. It's a rights-based approach to AI rather than a blanket-ban or unrestricted-access approach.

The timing matters. Deezer signed the statement while major record labels were still fighting Suno and Udio in court. Deezer took a public stance that AI training requires creator consent and compensation before any rights organization or platform had achieved industry-wide agreement.

When Deezer later built AI detection technology, it aligned with this broader philosophy. The company isn't saying AI music shouldn't exist. It's saying AI music should be transparent, creators whose work trained AI systems should be compensated, and fraudulent AI content shouldn't exploit the streaming system.

This consistency strengthens Deezer's credibility. The company isn't flip-flopping based on market conditions. It's executing a coherent strategy about how AI and music coexist with fairness.

How AI Detection Integrates with Streaming Platform Algorithms

Once AI music is detected and demonetized, streaming platforms must decide what to do with it algorithmically. Deezer made a sophisticated choice: remove it from algorithmic and editorial recommendations.

This means listeners browsing their playlists or discovering new music won't encounter AI-detected tracks. But search functionality still works. If someone specifically searches for an AI artist or song, they can find it.
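The split between discovery and search can be sketched as two different filters over the same catalog. This assumes a hypothetical per-track `flagged_fully_ai` flag and a toy substring search; it isn't Deezer's code, just the shape of the policy.

```python
from dataclasses import dataclass


@dataclass
class Track:
    title: str
    flagged_fully_ai: bool  # hypothetical detector flag


def recommendation_candidates(catalog: list[Track]) -> list[Track]:
    """Algorithmic and editorial surfaces: flagged tracks are never candidates."""
    return [t for t in catalog if not t.flagged_fully_ai]


def search(catalog: list[Track], query: str) -> list[Track]:
    """Direct search: flagged tracks stay findable (toy case-insensitive substring match)."""
    q = query.lower()
    return [t for t in catalog if q in t.title.lower()]


catalog = [Track("Night Drive", False), Track("Night Drive (AI Mix)", True)]
print([t.title for t in recommendation_candidates(catalog)])  # ['Night Drive']
print([t.title for t in search(catalog, "night drive")])      # ['Night Drive', 'Night Drive (AI Mix)']
```

A real system would likely also keep flagged tracks' plays out of the data its recommender trains on; that step isn't shown here.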

Why this approach? Several strategic reasons converge.

First, it prevents algorithm poisoning. Machine learning algorithms that power recommendations learn from what users engage with. If AI-generated spam dominates the engagement data, algorithms become corrupted. They learn to recommend low-quality content. By removing AI music from recommendations, Deezer prevents the algorithm from learning patterns from fraudulent content.

Second, it protects user experience. Most listeners don't want to discover AI-generated music alongside human-created content. They came to Spotify or Deezer to find good music, not to encounter algorithmic spam. Hiding AI music from recommendations respects user preferences.

Third, it preserves artist visibility. When algorithmic recommendations get polluted with AI music, human artists get buried. Removing AI music from the recommendation algorithm ensures human artists have better visibility. This directly addresses the core problem Deezer is trying to solve: protecting human musicians.

Some platforms might take different approaches. Spotify could flag AI music with badges rather than removing it entirely. Apple Music could require explicit user consent before algorithmic recommendations include AI content. YouTube Music could annotate AI tracks clearly but still recommend them.

Each approach represents a different calculus about user choice versus platform curation.

AI-generated music uploads have surged from 18% of daily uploads to 60,000 tracks per day. If trends continue, AI tracks could outnumber human-created music within two years. (Estimated data)

The Copyright Training Problem: Why Major Labels Negotiated with AI Companies

The AI detection story only makes sense against the backdrop of a separate, parallel conflict. While Deezer was detecting AI music, major record labels were negotiating with AI music companies.

The fundamental legal issue centers on training data. AI music tools like Suno and Udio train their models on massive music catalogs. That training data often includes copyrighted works. Should record labels be compensated when their artist catalogs train AI systems?

Initially, record labels answered yes. They sued Suno and Udio, arguing that using copyrighted music to train AI without permission violates copyright. The legal theory was straightforward: training on copyrighted work is a form of copying.

But Suno and Udio didn't back down. They argued that training AI on music is fair use, similar to how human musicians learn by studying other musicians' work. The legal battle seemed like it could drag on for years.

Then something unexpected happened. Universal Music Group and Warner Music Group, two of the world's largest record labels, settled their disputes with the AI companies: Universal with Udio, and Warner with both Udio and Suno. They negotiated licensing agreements rather than pursuing full litigation.

Under these deals, Suno and Udio essentially license the major record label catalogs. When their AI systems train on that music, creators and labels get compensated. The arrangement resembles how streaming platforms pay rights holders for distributing music.

This development confused some observers. Why would labels negotiate with AI companies if they opposed AI-generated music? The answer reveals a sophisticated strategic calculation. Record labels realized that AI music was inevitable. Rather than fight against something they couldn't control, they negotiated to ensure their artists got paid when AI trained on their work.

It's a pragmatic approach that doesn't require labels to love AI music. It just requires them to accept its existence and ensure artists benefit from it economically.

The Technical Challenge: Detecting AI Music Accurately Across Generations

Building a detection system that works accurately across different AI music tools presents significant technical challenges.

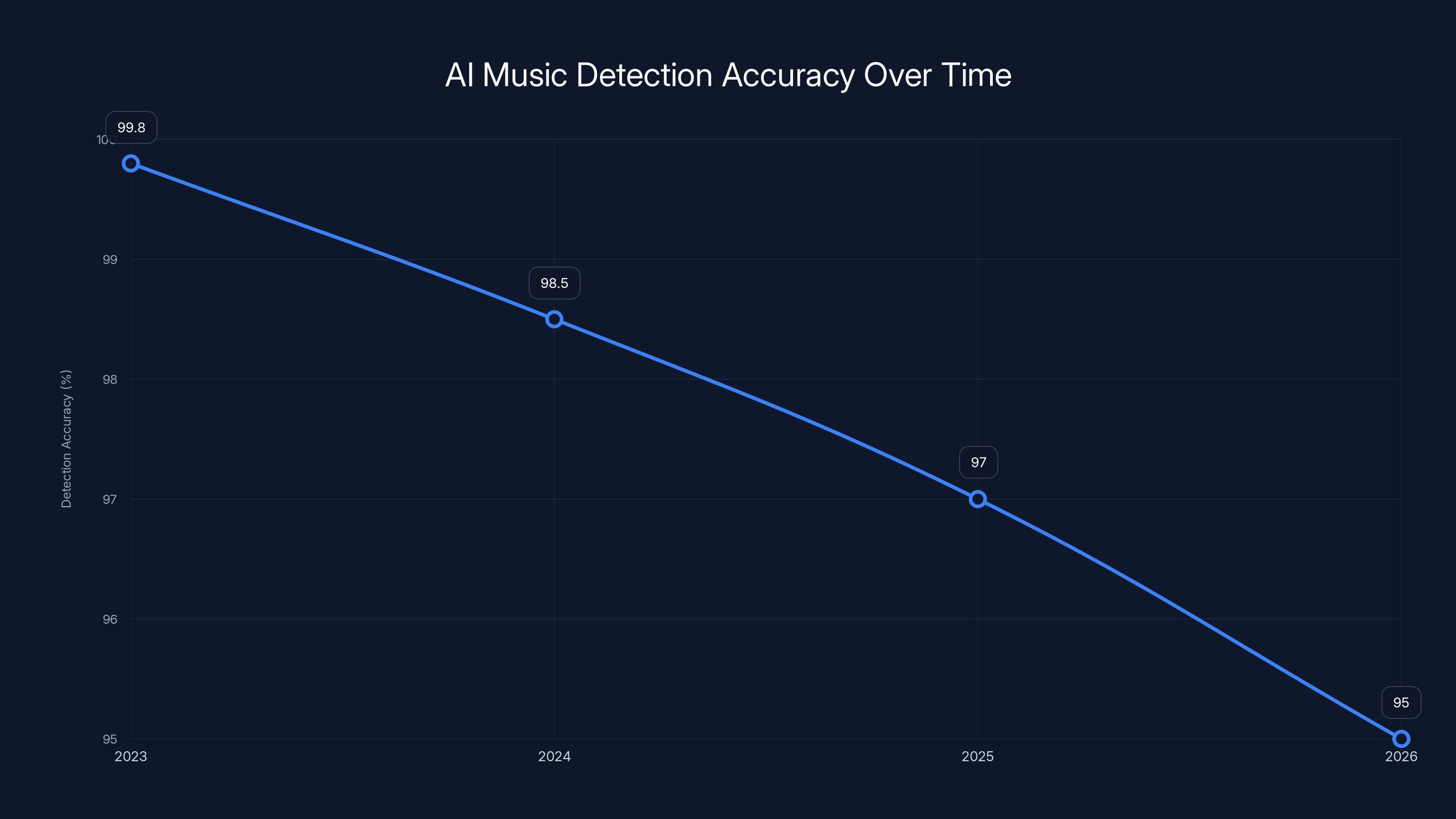

Deezer's system can identify tracks from major generative models like Suno and Udio. But what happens when new AI tools emerge? What happens when existing tools are updated and change their underlying generation methods?

Detection accuracy could degrade over time. As AI tools evolve, they might inadvertently make detection harder by producing audio with fewer statistical anomalies. Or they might deliberately design systems to evade detection.

This creates a cat-and-mouse dynamic reminiscent of spam detection or malware analysis. As detection improves, adversaries adapt. Deezer's 99.8% accuracy represents a snapshot in time, not a permanent solution.

The company likely built in mechanisms to update the detection model continuously. Machine learning systems improve when trained on new data. As new AI-generated music arrives, Deezer can retrain the system to maintain accuracy.
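A common way to handle this cat-and-mouse dynamic is to keep scoring the detector on freshly labeled samples, for instance tracks from a newly released generator, and retrain when accuracy slips. The threshold and the labeled holdout batch are assumptions for illustration; Deezer hasn't described its retraining process.

```python
def accuracy(predictions: list[bool], labels: list[bool]) -> float:
    """Share of detector predictions (True = 'fully AI') that match ground-truth labels."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("need equal-length, non-empty prediction and label lists")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def needs_retraining(predictions: list[bool], labels: list[bool], threshold: float = 0.995) -> bool:
    """Flag the model for retraining when accuracy on fresh labeled samples drops below threshold."""
    return accuracy(predictions, labels) < threshold


# Hypothetical batch: 1,000 known-AI tracks from a newly released generator, 10 missed.
labels = [True] * 1_000
predictions = [True] * 990 + [False] * 10
print(needs_retraining(predictions, labels))  # True (accuracy 0.99 < 0.995)
```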

But there's a finite limit to how well any detection system can perform. If a future AI tool becomes genuinely indistinguishable from human music at an acoustic level, detection might become impossible. We'd be back to relying on metadata, upload patterns, and user reporting.

This technical fragility is one reason why licensing agreements with AI companies matter. If you can't reliably detect AI music forever, you need a contractual framework ensuring AI tool providers compensate creators anyway.

Impacts on Independent Artists and Small Record Labels

Deezer's approach has profound implications for different musician segments.

Independent artists see a mixed outcome. On one hand, Deezer removes algorithmic competition from AI spam. Their music gets better visibility in recommendation systems. On the other hand, if they ever use AI tools to assist their creative process, they risk being flagged and demonetized.

Some independent artists embrace AI as a production tool. They might use AI for initial composition ideas, background arrangements, or vocal production, then layer human creativity on top. Deezer's system detects and demonetizes "fully AI-generated" music, but the terminology becomes murky. Where's the line between AI-assisted and fully AI-generated?

Small record labels face similar complexity. They might sign artists who use AI production tools. If Deezer's detection system misclassifies those artists as fully AI-generated, the label's revenue disappears.

The demonetization approach also raises equity questions. What happens to the royalty stream that would have gone to detected AI music? Does Deezer distribute it to other artists, as would happen automatically if those streams were simply dropped from a pro-rata split? Keep it as a platform fee? The company hasn't clarified.

Major independent artists who've built large audiences are largely unaffected. Their human identity is established. Their streams come from genuine listener loyalty, not algorithmic recommendation gaming.

But emerging artists face tougher conditions. If they can't use algorithmic recommendations to build audiences because fraudulent AI music is clogging the system, they might struggle more. Conversely, removing algorithmic pollution helps them surface. It's genuinely unclear whether the net effect is positive.

Estimated data shows how fraudulent streaming can generate significant payouts, with 1 million streams earning between roughly $3,000 and $5,000.

Potential Risks: False Positives and Misclassification Challenges

A 99.8% accuracy rate sounds exceptional, but error rates compound at scale.

If Deezer runs its entire upload flow through the detection system, including 60,000 AI tracks a day plus a large volume of human-created ones, even a small error rate creates problems. For every 1,000 human-created tracks analyzed, roughly two might be incorrectly flagged as AI-generated. For a catalog of 50 million human-made songs, that's roughly 100,000 false positives.
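The catalog-level figure is easy to reproduce, and the same arithmetic shows how many artists a day could be affected. This sketch reads "99.8% accuracy" as both a 99.8% detection rate and a 0.2% false-positive rate, which is an assumption (Deezer hasn't broken the figure down), and the 100,000 daily human uploads are hypothetical.

```python
def expected_false_positives(human_tracks: int, false_positive_rate: float) -> float:
    """Human-made tracks expected to be wrongly flagged as AI."""
    return human_tracks * false_positive_rate


def precision(ai_tracks: int, human_tracks: int, true_positive_rate: float, false_positive_rate: float) -> float:
    """Share of flagged tracks that really are AI-generated."""
    true_pos = ai_tracks * true_positive_rate
    false_pos = human_tracks * false_positive_rate
    return true_pos / (true_pos + false_pos)


# Assumption: 99.8% accuracy read as a 0.998 true-positive rate and a 0.002 false-positive rate.
print(round(expected_false_positives(50_000_000, 0.002)))  # 100000 across the catalog

# Hypothetical daily mix: 60,000 AI uploads and 100,000 human uploads.
print(round(expected_false_positives(100_000, 0.002)))     # 200 wrongly flagged per day
print(round(precision(60_000, 100_000, 0.998, 0.002), 4))  # 0.9967
```

Precision stays high here because AI tracks make up a large share of uploads, but 200 wrongly flagged tracks a day still means 200 artists who would need an appeal.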

Imagine being a human artist whose work gets misclassified as AI-generated. Your revenue could suddenly drop to zero, possibly with no explanation and no clear appeal process. The impact on your livelihood could be devastating.

Deezer probably has mechanisms to report false positives and request manual review. But scalability is the challenge. How can a company manually review hundreds of thousands of appeal requests?

This is a known problem in content moderation. When systems operate at scale, even small error rates create massive problems. YouTube struggles with this constantly. Music platforms face the same challenges.

The practical solution likely involves tiered appeals. Obvious mistakes get corrected quickly. Edge cases get human review. Controversial cases might escalate. But the burden falls on artists to prove they're human.
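A tiered process like this could be sketched as a simple routing function. Everything here is hypothetical: the detector confidence score, the "verified artist" signal, and the thresholds are illustrative assumptions, not anything Deezer has described.

```python
from enum import Enum


class Route(Enum):
    AUTO_RESTORE = "auto_restore"  # low-confidence flag: likely an obvious mistake
    HUMAN_REVIEW = "human_review"  # edge case: a reviewer listens
    ESCALATE = "escalate"          # strong but conflicting signals: policy-level decision


def triage_appeal(detector_score: float, verified_artist: bool) -> Route:
    """Route an appeal using a hypothetical detector confidence (0-1) and account signal."""
    if not 0.0 <= detector_score <= 1.0:
        raise ValueError("detector_score must be between 0 and 1")
    if detector_score < 0.6:
        return Route.AUTO_RESTORE
    if detector_score >= 0.95 and verified_artist:
        return Route.ESCALATE
    return Route.HUMAN_REVIEW


print(triage_appeal(0.55, verified_artist=False))  # Route.AUTO_RESTORE
print(triage_appeal(0.97, verified_artist=True))   # Route.ESCALATE
print(triage_appeal(0.80, verified_artist=False))  # Route.HUMAN_REVIEW
```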

Detection systems also struggle with hybrid content. What about a song where a human wrote and performed the lyrics but an AI tool generated the instrumental track? Or a track where AI created a rough composition and a human musician extensively modified it?

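Deezer's criterion is "fully AI-generated," which in principle should leave these hybrid cases alone. In practice, a detector may still flag a hybrid track when the AI-generated parts dominate the audio, and drawing the line conservatively in either direction trades false positives against missed fraud.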
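The boundary problem becomes concrete once you write down a decision rule. Suppose, hypothetically, a detector scores short time segments of a track rather than the whole file (Deezer hasn't said whether its tool works this way). The illustrative thresholds below decide how much of a track must look AI-generated before it counts as "fully AI-generated."

```python
def classify_track(
    segment_scores: list[float],
    segment_threshold: float = 0.9,
    full_ai_share: float = 0.95,
) -> str:
    """Label a track from hypothetical per-segment AI probabilities."""
    if not segment_scores:
        raise ValueError("no segments scored")
    # Count segments the detector considers AI-generated.
    ai_segments = sum(score >= segment_threshold for score in segment_scores)
    share = ai_segments / len(segment_scores)
    if share >= full_ai_share:
        return "fully_ai"
    if share > 0:
        return "possibly_hybrid"
    return "no_ai_detected"


print(classify_track([0.99] * 20))              # fully_ai
print(classify_track([0.99] * 10 + [0.1] * 10))  # possibly_hybrid
print(classify_track([0.1] * 20))               # no_ai_detected
```

Raising `full_ai_share` spares more hybrid tracks from demonetization but lets more mostly-AI uploads through; lowering it does the reverse. A human vocal over an AI instrumental occupies the same segments, so a segment-level rule alone can't separate those layers.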

The Path Forward: Industry Standardization and Regulation

Deezer's technology addresses a specific symptom. But systemic solutions require broader coordination.

One likely development is industry standardization. Major streaming platforms might collectively adopt similar detection and labeling approaches. This would prevent bad actors from uploading fraudulent AI music to one platform after another, seeking the most lenient policies.

Standardization could take the form of shared detection systems. Platforms might contribute anonymized data to a shared database of detected AI music. When one platform identifies fraudulent AI content, other platforms know to watch for similar patterns. This distributed intelligence approach scales detection more efficiently.
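At its simplest, a shared database could store digests of flagged files rather than the audio itself, which keeps the shared data anonymized. In this hypothetical sketch, `SharedFlagRegistry` and the platform names are illustrative, not an existing service.

```python
import hashlib
from typing import Optional


class SharedFlagRegistry:
    """Toy cross-platform registry of audio files flagged as fully AI-generated."""

    def __init__(self) -> None:
        self._flags: dict[str, str] = {}  # SHA-256 digest -> first platform to report it

    @staticmethod
    def digest(audio_bytes: bytes) -> str:
        return hashlib.sha256(audio_bytes).hexdigest()

    def report(self, audio_bytes: bytes, platform: str) -> None:
        # setdefault keeps the first reporter if several platforms flag the same file
        self._flags.setdefault(self.digest(audio_bytes), platform)

    def lookup(self, audio_bytes: bytes) -> Optional[str]:
        return self._flags.get(self.digest(audio_bytes))


registry = SharedFlagRegistry()
registry.report(b"placeholder audio bytes", "platform_a")
print(registry.lookup(b"placeholder audio bytes"))  # platform_a
print(registry.lookup(b"re-encoded audio bytes"))   # None
```

The obvious weakness: a cryptographic hash only matches byte-identical files, so re-encoding or trimming a track defeats it. A production system would need perceptual audio fingerprints that survive such changes.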

Regulation is another probable direction. Governments might require streaming platforms to prevent AI music fraud. The EU's Digital Services Act already touches on recommendation systems and content moderation. Future regulations could specifically address AI music.

Some form of metadata standard could also emerge. When artists upload music, they might need to disclose whether AI tools were involved in creation. Platforms could then label tracks accordingly, similar to how streaming services flag explicit content.

This metadata approach wouldn't ban AI music but would inform listeners. A song would carry a badge indicating "AI-assisted composition" or "fully AI-generated" or "human-created." Listeners then make informed choices about what they want to hear.

The challenge with metadata solutions is reliance on honesty. Bad actors would obviously lie about whether their track is AI-generated. So metadata must pair with detection systems.
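Pairing self-declared metadata with detection can be sketched as a reconciliation step. The three labels come from the badges described above; the rule that a detector hit overrides the declaration and queues a review is a hypothetical policy, not something any platform has announced.

```python
from enum import Enum


class Disclosure(Enum):
    HUMAN_CREATED = "human-created"
    AI_ASSISTED = "AI-assisted composition"
    FULLY_AI = "fully AI-generated"


def displayed_label(declared: Disclosure, detector_says_fully_ai: bool) -> tuple[Disclosure, bool]:
    """Return (label shown to listeners, needs_review) by checking the declaration against detection."""
    if detector_says_fully_ai and declared is not Disclosure.FULLY_AI:
        # Declaration and detector disagree: show the stricter label and queue a human review.
        return Disclosure.FULLY_AI, True
    return declared, False


print(displayed_label(Disclosure.HUMAN_CREATED, detector_says_fully_ai=True))
# (<Disclosure.FULLY_AI: 'fully AI-generated'>, True)
print(displayed_label(Disclosure.AI_ASSISTED, detector_says_fully_ai=False))
# (<Disclosure.AI_ASSISTED: 'AI-assisted composition'>, False)
```

Showing the stricter label before review favors listeners; a platform worried about false positives might keep the declared label until a reviewer confirms.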

Broader Cultural Questions: Is AI Music "Real" Music?

Beyond technical and economic concerns, AI detection forces uncomfortable cultural questions.

Is music created by an AI system "real" music? Some argue that music requires human intention, emotion, and agency. If a computer generates it, it's not music, just sophisticated noise-making.

Others argue that tools don't define art. Photography was considered not "real" art when it emerged. Electronic music was controversial. Synthesizers were criticized as inauthentic. Yet all eventually gained acceptance as legitimate art forms.

AI music might follow this pattern. Initial skepticism could gradually give way to acceptance as audiences hear genuinely compelling AI-generated pieces. The technology might improve to a point where the source of creation matters less than the listener's emotional response.

But something is genuinely lost if AI music becomes interchangeable with human music. When listeners can't distinguish the source, they lose information. Music carries meaning partly through knowing it resulted from human struggle, intention, and creativity. If you can't trust that information, music becomes just acoustics.

Deezer's approach to labeling AI music as distinct preserves that information. Listeners know they're hearing AI-generated content and can decide how they feel about that. It's a transparency solution to a cultural problem.

The question might ultimately be whether we want AI music integrated seamlessly into human music or kept separate. Deezer's philosophy: separate but transparent. Other platforms might choose different answers.

Estimated data shows potential decline in detection accuracy as AI music tools evolve and adapt, highlighting the ongoing challenge of maintaining high detection rates.

How Artists Can Protect Themselves in an AI-Saturated Music Ecosystem

While Deezer and other platforms battle AI detection, individual artists face their own challenges.

First, human artists can lean harder into authenticity. Platforms like Deezer now keep detected AI tracks out of recommendations, and artists can emphasize their human creative process, their influences, their emotional journey. This differentiates them from algorithmically generated alternatives.

Second, artists can build direct fan relationships. Streaming platforms are intermediaries. Direct relationships through newsletters, social media, or platforms like Patreon or Bandcamp reduce dependence on platform algorithms.

Third, artists can distribute their music across multiple platforms simultaneously. Relying on a single streaming service for income is risky. If one platform changes policies or favors AI content, diversification provides a cushion.

Fourth, human artists can explicitly declare that their work is AI-free. As AI prevalence increases, this becomes a marketing advantage. Listeners who value human creativity will seek out artists making this declaration.

Fifth, artists can join collective organizations like Sacem or similar rights groups. These organizations advocate collectively for creator interests and can negotiate better terms with platforms and AI companies.

Sixth, some artists might embrace AI tools strategically. Rather than viewing AI as a threat, some musicians integrate AI into their creative process, using it as a composition assistant or production tool. This requires skill and intentionality but might open new creative possibilities.

None of these approaches guarantee success, but together they represent ways artists can navigate an industry transformed by AI.

Lessons for Other Content Industries: Beyond Music

Deezer's detection and demonetization approach offers templates for other content industries facing AI flooding.

Video platforms like YouTube could implement similar systems. Video generated entirely by AI could be flagged and excluded from monetization or algorithmic recommendations. This would reduce economic incentives for spam video generation while preserving transparency.

Writing platforms could detect AI-generated articles and posts, limiting their algorithmic distribution. Substack or Medium could prioritize human-written essays while clearly labeling AI-generated content.

Graphic design platforms could flag AI-generated artwork, warning users about provenance. Stock image sites could segment AI-generated images from photographs and human illustrations.

Each industry has unique challenges. Detecting AI-generated video is a different technical problem from detecting AI-generated audio, and text and image detectors each fail in their own ways. But the general principle translates: detect AI content, label transparently, create economic disincentives for fraudulent AI content, protect human creators.

Deezer's model shows that this approach is technically feasible, economically defensible, and philosophically coherent. Other platforms will likely adopt similar strategies.

The Role of Transparency in Trust and Platform Legitimacy

What makes Deezer's approach interesting isn't just the detection technology. It's the transparency commitment.

Deezer publicly disclosed detection accuracy rates. The company announced that 85% of streams from AI music are fraudulent. It revealed the scale of the problem (60,000 tracks daily). It explained how the detection system works at a high level.

This transparency does several things. First, it builds trust with artists. If Deezer is openly fighting fraud and protecting musicians, artists are more likely to prioritize uploading their work to Deezer. They know the platform prioritizes their interests.

Second, it creates competitive pressure on other platforms. If Deezer is transparent about fraud detection and other platforms remain silent, listeners wonder whether competitors are neglecting the problem.

Third, it demonstrates sophisticated thinking about AI governance. Rather than banning AI entirely or ignoring the problem, Deezer found a middle path. This nuance builds credibility with thoughtful observers.

Fourth, transparency allows external scrutiny. Researchers can test whether the claimed 99.8% accuracy holds up, and analysts can check the fraud statistics. Inviting verification ultimately strengthens credibility.

The contrast with platforms that say nothing about AI or detection is stark. Spotify's AI policy exists but rarely makes headlines. Apple Music's approach is even more opaque. YouTube's recommendation algorithm has long been criticized for surfacing low-quality content.

Deezer's willingness to discuss the problem openly creates a halo effect. The company appears to be fighting for creators while other platforms stay silent.

Future of AI Music: Coexistence or Conflict

Will human and AI music peacefully coexist on streaming platforms, or will tension escalate?

The optimistic scenario suggests coexistence. AI tools improve rapidly. Within years, AI-generated music might be indistinguishable from human compositions in many genres. Listeners could choose what they want to hear. Some listeners would prefer AI music for certain use cases (focus soundtracks, background music while working) and human music for emotional engagement.

Platforms could offer AI-generated music in separate sections or channels. Similar to how streaming services distinguish between podcasts, audiobooks, and music, they could separate AI-generated and human-created music. Listeners choose based on preference.

Regulation and compensation frameworks stabilize the relationship. Rights holders get paid when AI trains on their work. Platforms detect and deter fraudulent AI spam. Artists enjoy ecosystem protections. The system reaches equilibrium.

The pessimistic scenario involves escalating conflict. AI music flooding becomes worse despite detection efforts. Platforms struggle to maintain quality. Artists abandon streaming entirely, relying on direct fan relationships. The streaming economy bifurcates between human music (with lower earnings) and AI music (cheaper but lower quality).

Regulation fails to materialize or creates perverse incentives. Platforms become unusable as AI spam dominates. Legal battles multiply. The music industry fragments into subsystems with different rules.

Most likely is a middle ground. Coexistence occurs, but it's uncomfortable and requires constant management. Platforms maintain separate channels for AI and human music but must police the boundaries constantly. Regulation partially succeeds, creating a framework but not eliminating problems. Some artists thrive while others struggle.

Deezer's move to license detection technology suggests platform operators believe coexistence is preferable to conflict. They're investing in management rather than prevention.

FAQ

What is Deezer's AI detection tool and how does it work?

Deezer's AI detection tool is a machine learning system that analyzes audio characteristics to identify fully AI-generated music with a reported 99.8% accuracy. The system was trained on music from major generative AI tools like Suno and Udio, learning to recognize acoustic patterns and statistical anomalies that distinguish algorithmic composition from human-created music. Once a track is detected, the tool automatically tags it as AI-generated in the system.
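Deezer has not published how its model's output becomes a tag, so the sketch below covers only that final tagging step. It assumes a detector that returns a probability between 0 and 1; the `Track` type, the `fully_ai_generated` tag name, and the 0.5 threshold are hypothetical choices for illustration, not Deezer's.

```python
from dataclasses import dataclass, field

# Hypothetical cutoff: Deezer has not said how model output becomes a tag.
AI_TAG_THRESHOLD = 0.5
AI_TAG = "fully_ai_generated"  # hypothetical tag name


@dataclass
class Track:
    track_id: str
    # Assumed detector output: estimated probability the track is fully AI-generated.
    ai_score: float
    tags: set[str] = field(default_factory=set)


def tag_track(track: Track, threshold: float = AI_TAG_THRESHOLD) -> Track:
    """Add the AI tag when the detector score meets or exceeds the threshold."""
    if not 0.0 <= track.ai_score <= 1.0:
        raise ValueError(f"score must be in [0, 1], got {track.ai_score}")
    if track.ai_score >= threshold:
        track.tags.add(AI_TAG)
    return track


# Usage: tag two uploads with made-up detector scores.
likely_ai = tag_track(Track("t1", ai_score=0.97))
human_made = tag_track(Track("t2", ai_score=0.03))
print(likely_ai.tags, human_made.tags)
```

In practice the threshold trades missed AI tracks against wrongly flagged human ones, which is why the choice of cutoff matters as much as the model itself.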

How many AI-generated tracks are currently on streaming platforms?

Deezer has identified 13.4 million fully AI-generated songs in its catalog alone, and 60,000 new AI tracks now arrive on the platform every day. At that rate, Deezer alone would take in roughly 22 million AI-generated songs a year. By contrast, in June of the previous year, AI-generated tracks made up only 18% of daily uploads, a sign of how quickly AI music is flooding streaming ecosystems.
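The annual figure follows directly from the daily rate, assuming uploads hold steady at 60,000 a day for a full year (a simplification, since the rate has been rising):

```python
# Daily figure cited above; a constant rate is a simplifying assumption.
DAILY_AI_UPLOADS = 60_000
DAYS_PER_YEAR = 365

annual_ai_uploads = DAILY_AI_UPLOADS * DAYS_PER_YEAR
print(f"{annual_ai_uploads:,} AI-generated tracks per year")  # 21,900,000 AI-generated tracks per year
```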

Why does Deezer demonetize AI-generated music instead of banning it completely?

Demonetization removes the economic incentive to flood platforms with fraudulent AI music while preserving transparency. If AI music earns zero royalties, bad actors can't profit from using bot networks to inflate its streams. Unlike an outright ban such as Bandcamp's, demonetization lets AI music stay on the platform, where listeners can still find it through search, but keeps it from earning money or dominating algorithmic recommendations. The result is an economic disincentive without absolute prohibition.
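The policy difference can be sketched as a mapping from a track's tags to the places it may appear. This is a hypothetical model, not any platform's actual code: the `Surfaces` type, the tag name, and the two modes are assumptions chosen to mirror the demonetize-versus-ban contrast described above.

```python
from collections.abc import Collection
from dataclasses import dataclass

AI_TAG = "fully_ai_generated"  # hypothetical tag name


@dataclass(frozen=True)
class Surfaces:
    """Where a track may appear and whether its streams earn royalties."""
    searchable: bool
    recommendable: bool
    royalty_eligible: bool


def surfaces_for(tags: Collection[str], mode: str = "demonetize") -> Surfaces:
    """Apply a hypothetical platform policy to a track's tags."""
    if AI_TAG not in tags:
        return Surfaces(searchable=True, recommendable=True, royalty_eligible=True)
    if mode == "demonetize":
        # Deezer-style: still findable in search, but no recommendations or royalties.
        return Surfaces(searchable=True, recommendable=False, royalty_eligible=False)
    if mode == "ban":
        # Bandcamp-style: the track is not allowed on the platform at all.
        return Surfaces(searchable=False, recommendable=False, royalty_eligible=False)
    raise ValueError(f"unknown mode: {mode!r}")


print(surfaces_for({AI_TAG}))
print(surfaces_for({AI_TAG}, mode="ban"))
```

Keeping the policy separate from detection means a platform could change how tagged tracks are treated without touching the detector.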

What percentage of streams from AI-generated music are fraudulent?

According to Deezer's data, 85% of all streams from fully AI-generated tracks are fraudulent. This means that most plays on AI music come from bot networks and manipulation schemes rather than genuine listeners. This fraudulent activity takes royalties from legitimate artists: inflated stream counts on low-effort or spammy tracks claim a larger share of the royalty pool.
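Why fake plays cost real artists money is easiest to see under a simple pro-rata model, in which a fixed royalty pool is split in proportion to stream counts. That model is an assumption here (actual payout rules vary by platform), and the track names and numbers below are made up for illustration.

```python
from collections.abc import Collection, Mapping


def prorata_payouts(
    pool: float,
    streams: Mapping[str, int],
    excluded: Collection[str] = (),
) -> dict[str, float]:
    """Split a royalty pool in proportion to streams, skipping excluded tracks."""
    eligible = {track: n for track, n in streams.items() if track not in excluded}
    total = sum(eligible.values())
    payouts = {track: 0.0 for track in streams}
    if total == 0:
        return payouts
    for track, n in eligible.items():
        payouts[track] = pool * n / total
    return payouts


# Illustrative numbers only: an AI track whose 200 streams come mostly from bots.
streams = {"human_artist": 800, "ai_spam": 200}
print(prorata_payouts(1000.0, streams))  # {'human_artist': 800.0, 'ai_spam': 200.0}
print(prorata_payouts(1000.0, streams, excluded={"ai_spam"}))  # {'human_artist': 1000.0, 'ai_spam': 0.0}
```

Excluding the spam track from the pool returns its 200 units to the human artist, which is the mechanism demonetization relies on.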

Which other companies are testing or using Deezer's AI detection technology?

Sacem, the French collective management organization representing over 300,000 music creators and publishers including artists like David Guetta and DJ Snake, has conducted successful testing of Deezer's detection tool. Other companies have reportedly tested the technology as well, though Deezer hasn't publicly disclosed all names. The licensing model allows different deal structures depending on the platform type, with pricing varying based on specific requirements.

How do major record labels approach AI music compared to streaming platforms?

Major record labels like Universal Music Group and Warner Music Group took a licensing approach rather than bans. They negotiated agreements with AI music companies like Suno and Udio, ensuring that when AI tools train on their artist catalogs, creators and labels receive compensation. This pragmatic approach acknowledges that AI music is inevitable while ensuring rights holders benefit economically, contrasting with some platforms' detection and demonetization strategies.

What are the risks of AI detection systems incorrectly flagging human-created music?

Even with 99.8% accuracy, large-scale implementation creates problems. For a platform processing millions of tracks, small error rates produce significant false positives. A human artist misclassified as AI-generated would have their revenue demonetized with potentially limited appeal mechanisms. Artists might face burdens proving their human creativity, and scaling manual review processes becomes challenging. This creates equity concerns despite high overall accuracy rates.
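To make "small error rates" concrete, the sketch below treats the 0.2% left over from 99.8% accuracy as the rate at which human-made uploads get wrongly flagged. That is a simplifying assumption: overall accuracy does not by itself give the false-positive rate, since errors can split unevenly between human and AI tracks. The daily upload volume is hypothetical.

```python
def expected_false_positives(human_uploads: int, false_positive_rate: float) -> float:
    """Expected number of human-made tracks wrongly tagged as AI-generated."""
    if not 0.0 <= false_positive_rate <= 1.0:
        raise ValueError("false_positive_rate must be in [0, 1]")
    return human_uploads * false_positive_rate


# Assumption: treat the 0.2% left over from 99.8% accuracy as the false-positive rate.
ASSUMED_FALSE_POSITIVE_RATE = 1 - 0.998
# Hypothetical volume of human-made uploads per day.
DAILY_HUMAN_UPLOADS = 100_000

per_day = expected_false_positives(DAILY_HUMAN_UPLOADS, ASSUMED_FALSE_POSITIVE_RATE)
per_year = per_day * 365
print(f"~{per_day:.0f} per day, ~{per_year:,.0f} per year")  # ~200 per day, ~73,000 per year
```

Under these assumptions, each wrongly flagged track is a human artist who would need a working appeal process.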

Can artists use AI tools as part of their creative process without being demonetized?

Deezer's system specifically detects "fully AI-generated" music, meaning the classification depends on the degree of human creative input. Music where humans made substantial creative decisions using AI tools as an assistive technology might not be flagged. However, the exact boundaries between AI-assisted and fully AI-generated remain unclear, creating uncertainty for artists who use AI in their production workflows.

How does AI music detection compare to Bandcamp's complete AI music ban?

Bandcamp bans AI-generated music outright. That removes the financial incentive for AI fraud on its platform, but it can also exclude artists who rely heavily on AI tools. Deezer's approach is more permissive: it detects and demonetizes fully AI-generated music while human-created music stays monetized. Deezer's method preserves listener choice and transparency while reducing fraudulent payouts, whereas Bandcamp's approach puts the protection of human artists ahead of listener preference.

What could be the long-term implications of AI detection systems for the music streaming industry?

As detection technology becomes an industry standard, streaming platforms will likely converge on similar AI music policies involving detection, labeling, and demonetization. This should reduce fraudulent streaming revenue, protect human artists from algorithmic competition with AI spam, and create market incentives for quality over quantity. Future developments might include regulation requiring disclosure of AI use, shared detection databases across platforms, and metadata standards that clearly identify AI music to listeners.

Conclusion: The Platform That Took Fraud Seriously

Deezer's decision to build AI music detection technology and license it to other platforms represents more than a technical innovation. It's a strategic statement about where streaming platforms can position themselves as AI transforms every industry.

The numbers tell the essential story. Sixty thousand new AI-generated songs arriving daily on streaming platforms. Eighty-five percent of streams on those songs fraudulent. An ecosystem increasingly polluted with low-effort, algorithmically generated content designed not to delight listeners but to exploit streaming economics.

Most platforms responded to this crisis with limited action. Spotify updated policies. Apple Music remained quiet. YouTube Music continued recommending whatever its algorithms favor. The problem persisted and worsened.

Deezer took a different path. The company built sophisticated detection technology, implemented it internally, then made the decision to productize and license it. This move carries significant implications.

First, it shows that detecting AI-generated music at scale is technically feasible. Deezer's reported 99.8% accuracy rate suggests that machine learning can reliably distinguish fully AI-generated tracks from human-made music, at least for output from the tools the system was trained on. Other platforms can't claim ignorance anymore.

Second, it creates competitive incentives. Artists seeking platforms that prioritize their interests may gravitate toward Deezer. If the detection system works well, Deezer's catalog becomes cleaner than competitors'. That would be a real competitive advantage.

Third, it establishes a business model where platform operators profit from protecting creators. Rather than extracting maximum value from all music regardless of quality, Deezer created a new revenue stream by selling detection services. This aligns platform incentives with creator interests.

Fourth, it signals conviction about the future of music. Deezer believes human creativity will remain valued. The company believes listeners prefer human music over AI spam. The company believes artists deserve compensation and recognition. These bets inform the entire detection strategy.

The path forward requires other platforms to follow Deezer's lead. Spotify could implement similar detection and demonetization. Apple Music could be transparent about its AI policies. YouTube Music could prioritize human-created music in recommendations.

Simultaneously, regulation will likely emerge. The EU might require streaming platforms to prevent AI music fraud. Other governments might follow. Rights organizations will push for stronger protections.

The music industry won't return to pre-AI conditions. AI music tools are here permanently. But the industry can establish frameworks ensuring human creators thrive despite AI competition. Deezer showed a practical path forward. Now we'll see whether other platforms have the conviction to follow.

For independent artists, the message is clear: transparency matters, platform choice matters, and supporting companies that prioritize creator protection benefits you. For listeners, it means advocating for platforms that distinguish human music from AI-generated content. For streaming companies, it means that creator protection is good business, not a charitable add-on.

The AI music problem won't vanish. But Deezer proved it's manageable. The platforms that embrace that lesson will thrive. Those that ignore it will watch their catalogs become increasingly polluted while creators migrate elsewhere.


Key Takeaways

- Deezer's AI detection tool identifies fully AI-generated music with 99.8% accuracy, removing it from recommendations and demonetizing streams to prevent fraud

- 85% of all streams on AI-generated music are fraudulent, representing massive revenue theft from legitimate artists through bot networks and manipulation

- 60,000 AI tracks arrive daily on Deezer alone, up from about 20,000 a day just months earlier, rapidly polluting streaming ecosystems

- Different platforms take divergent approaches: Bandcamp bans all AI music, Deezer demonetizes detected tracks, major labels negotiate licensing deals for compensation

- Sacem and other collective rights organizations are testing and adopting Deezer's detection technology, suggesting industry-wide shift toward AI transparency and fraud prevention
