The Ghost in the Machine: When AI Becomes Your Favorite Artist

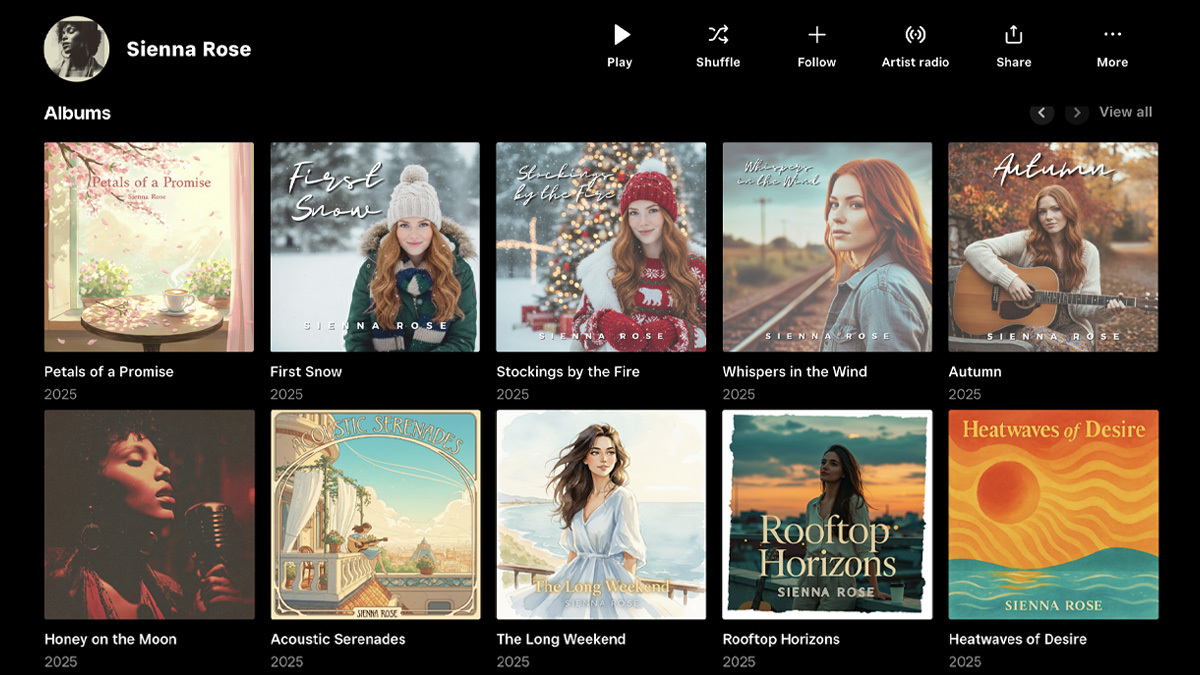

You're scrolling through Spotify's Viral 50 chart on a Tuesday morning. A song catches your ear. It's catchy, polished, sounds genuinely professional. The artist? Sienna Rose. She's got millions of streams, growing fast, and everything looks legit on the surface.

Except she doesn't exist.

Sienna Rose is an AI-generated artist, and her viral success exposed a problem that streaming platforms have been quietly grappling with: how do you stop fake musicians from gaming the system when AI can now produce music that fools actual listeners? According to Rolling Stone, Sienna Rose's case highlights the increasing sophistication of AI in music production.

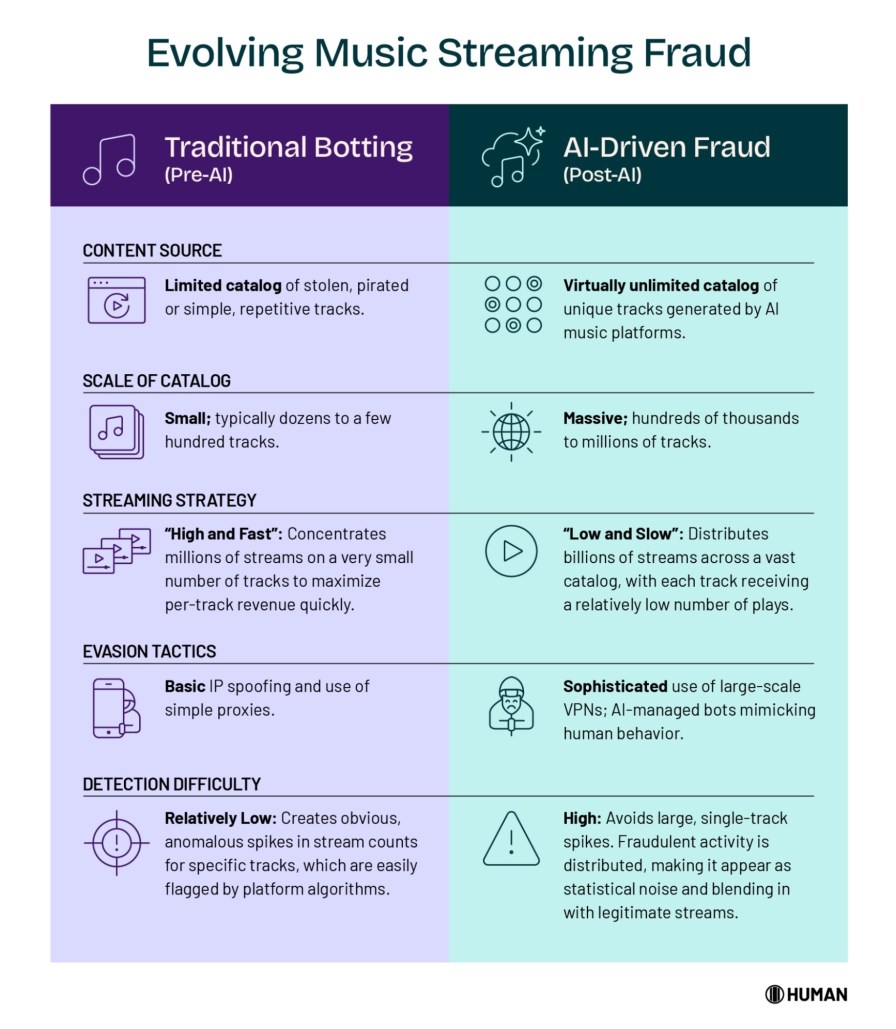

This isn't some fringe issue anymore. Music streaming platforms—from Spotify to Apple Music to Deezer—are racing to implement detection systems because the economics of AI music fraud are terrifyingly simple. Generate thousands of AI tracks, upload them under fake artist profiles, manipulate streams through bot networks, and watch the royalty checks roll in. One person with a laptop can flood the platform with music faster than human moderators can review it.

Deezer, one of Europe's largest streaming services, took a stand. They flagged Sienna Rose as AI-generated, added warning labels, and started being transparent about the problem. But here's where it gets interesting: their statement that they believe "it's vitally important to be transparent with listeners and fair to artists" reveals something bigger than one fake pop star. It's about the fundamental trust system that keeps streaming platforms functioning.

The music industry is at an inflection point. AI music generation has gotten so good that the gatekeepers can't rely on human ears anymore. They need algorithms that can detect what humans can't. They need policy enforcement. They need transparency that listeners actually trust. And they need to do it fast, because right now, fraudsters are moving faster than the defenses.

Let's break down what's actually happening, why it matters, and what comes next.

Why AI-Generated Music Is Suddenly Everywhere

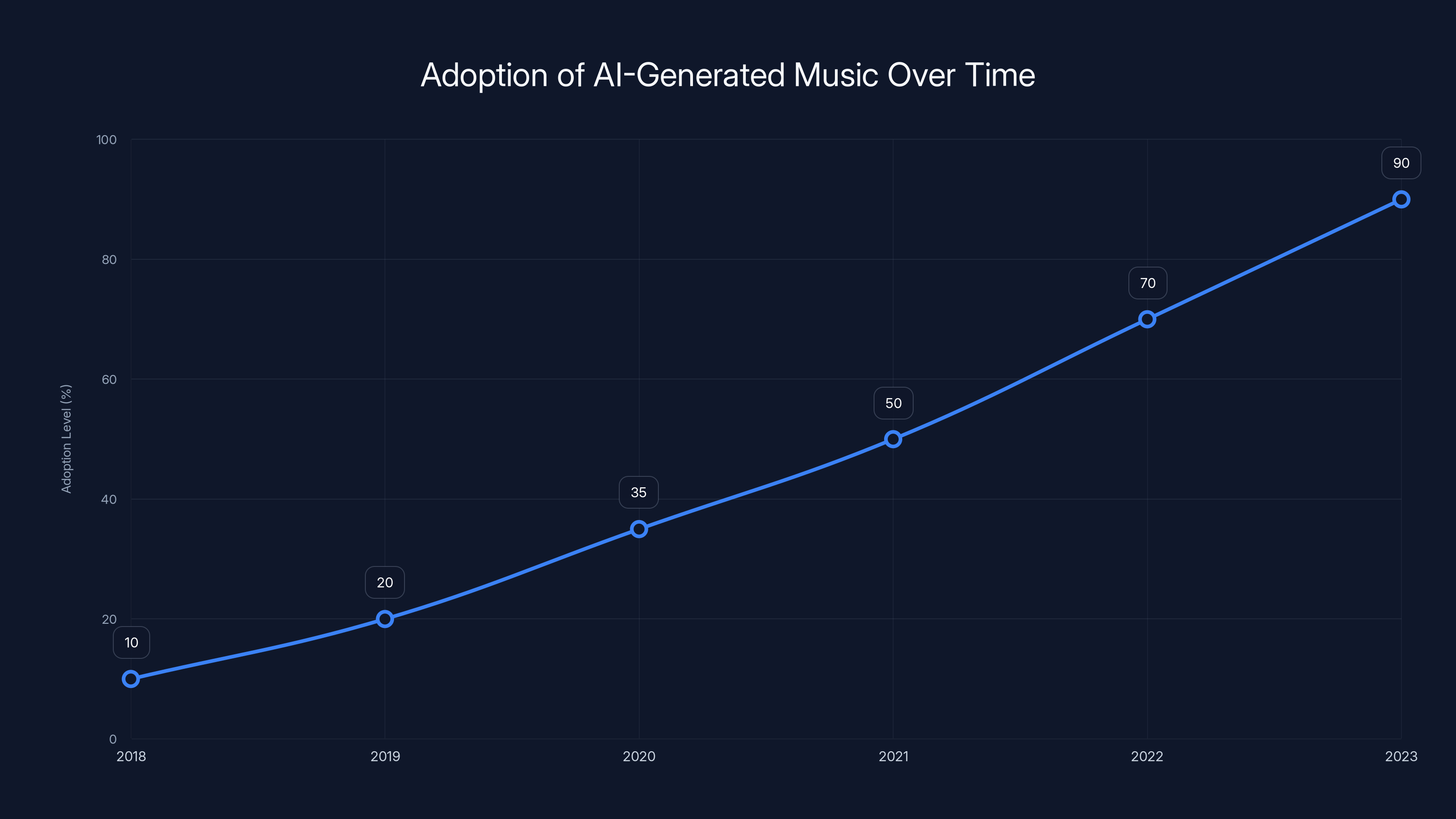

Five years ago, AI-generated music sounded like a novelty. Tinny. Uncanny. Clearly fake in ways that were obvious to anyone listening. Those days are over.

Tools like Suno, Udio, and other generative music platforms have crossed a threshold. You can type a text prompt—something like "upbeat indie pop with female vocals, 2010s vibes"—and get back a three-minute track that would pass a casual listen test. Some people genuinely can't tell the difference between AI music and human-created music anymore.

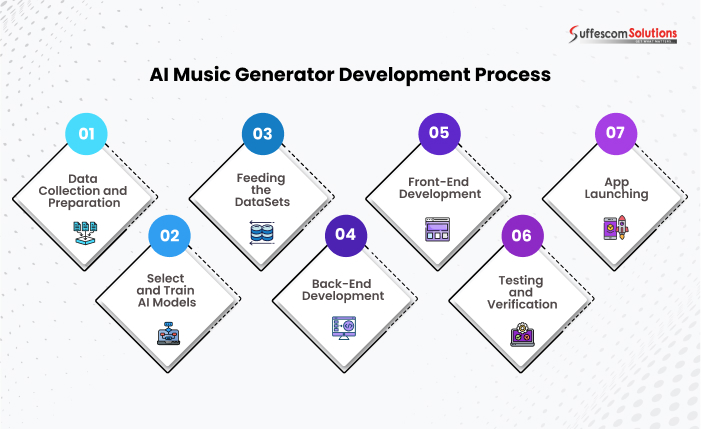

The technology improved because the training data got better, the models got larger, and the computational power became cheaper. What used to cost thousands of dollars to generate now costs pocket change. A frustrated teenager can create a "band" and release an album in an afternoon.

This democratization is actually good in some contexts. Musicians are using AI as a compositional tool. Producers are layering AI-generated elements into real music. Filmmakers are using it for placeholder soundtracks. But when the economics of music streaming meet cheap, high-quality AI music, the incentives flip completely.

Streaming platforms pay artists based on streams. The more streams, the more money. It doesn't matter if the streams come from real listeners or bot networks. It doesn't matter if the artist is a real person or an AI. The system just counts numbers.

So here's what happens: Someone with technical skill deploys a bot farm. They upload AI-generated music under dozens of fake artist accounts. They use the bot network to stream those tracks repeatedly, racking up fake plays. The streaming platform's algorithm notices the popularity surge and starts recommending the music to real listeners. Real people actually like it (because it was AI-designed to be palatable), and real streams start happening alongside the fake ones. The account monetizes. The creator withdraws royalties and walks away.

Rinse and repeat with a thousand new accounts.

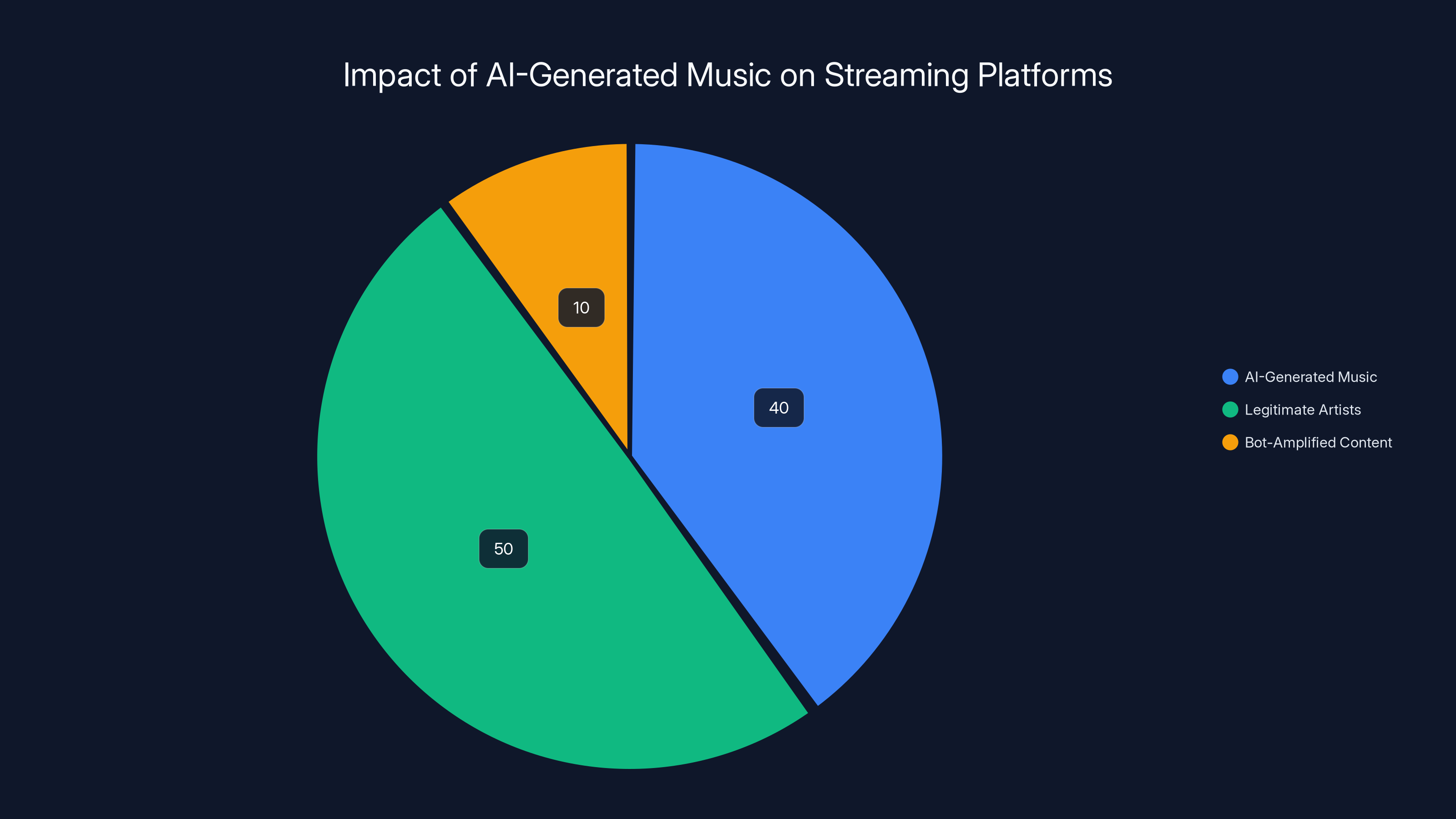

The scale of this problem is stupidly large. Streaming platforms process millions of uploads every week. Most music never gets heard by anyone. Some of that music is AI-generated. Some of the AI-generated music is genuinely fraudulent, designed purely to extract royalties. And some of it is actually good.

That's the tension. You can't ban all AI music because some of it is legitimate creative expression. You can't ban all unsigned artists because most of them are real people. So how do you separate the fraudsters from everyone else?

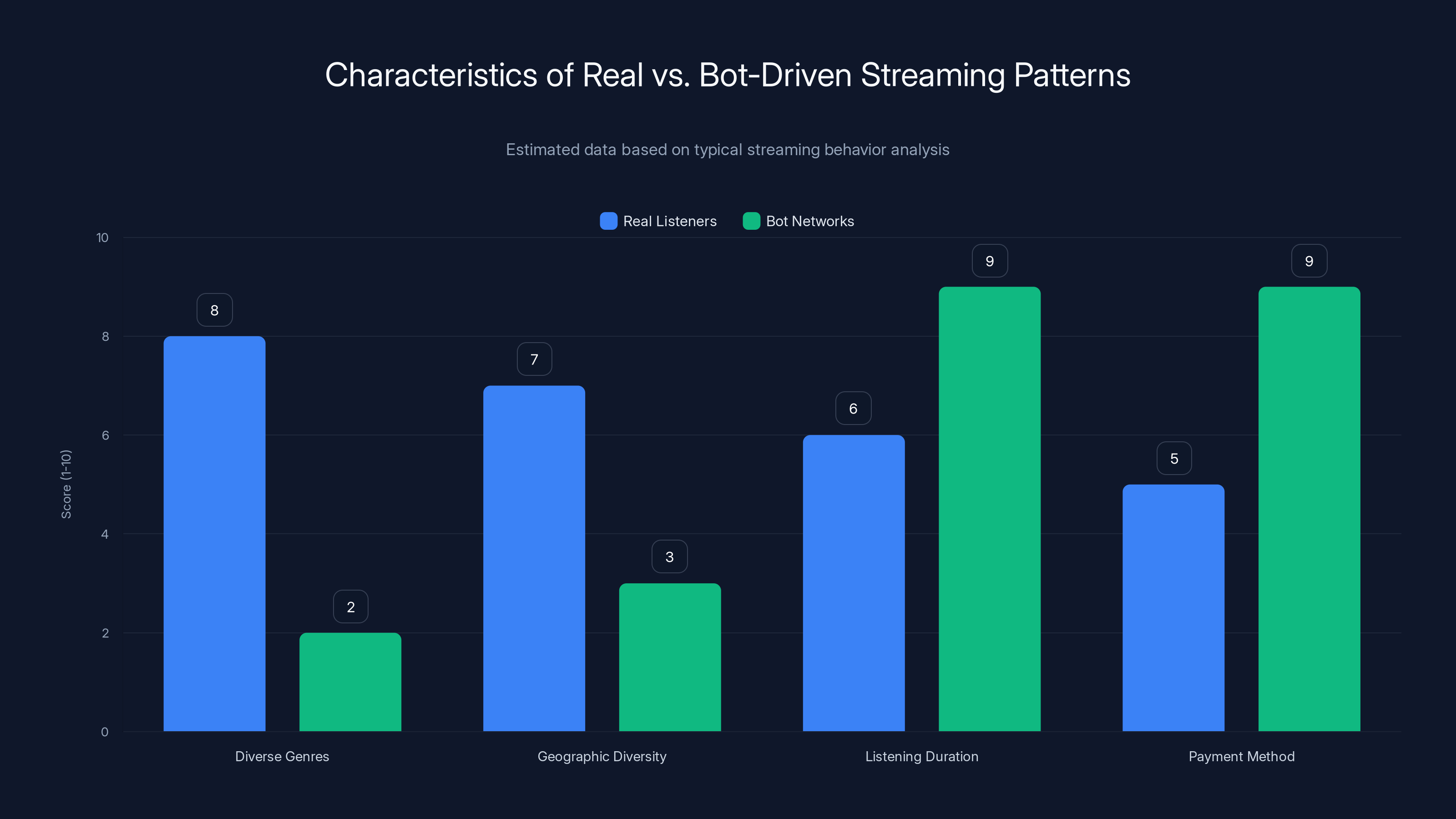

Real listeners exhibit diverse music tastes and geographic distribution, while bot networks show synchronized patterns and unconventional payment methods. Estimated data.

The Sienna Rose Case: When an AI Artist Goes Viral

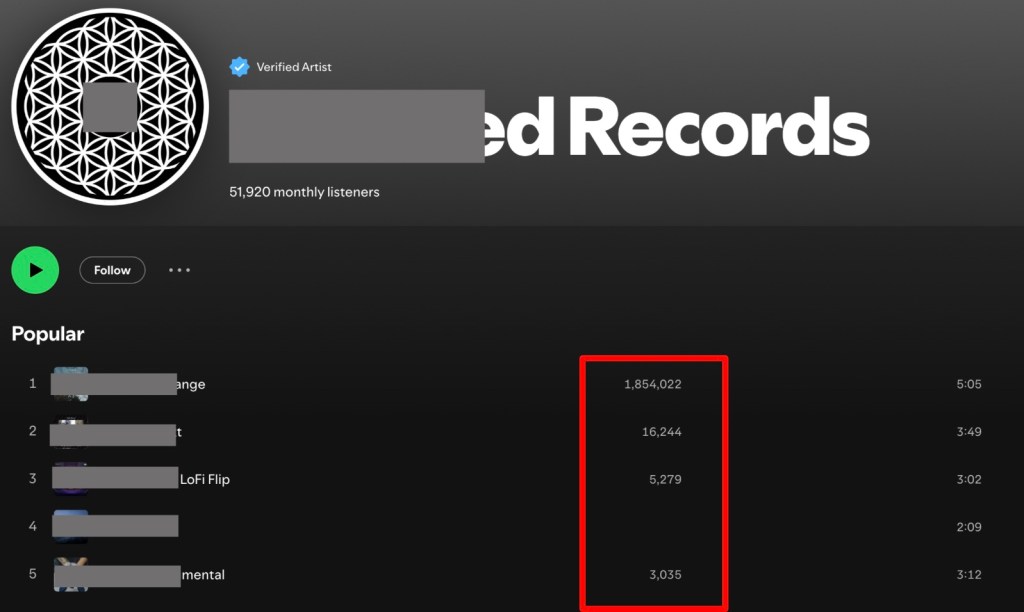

Sienna Rose wasn't some quiet scam operating in the shadows. She blew up. The artist's music started climbing Spotify's Viral 50 chart, which means the algorithm had picked it up and was showing it to millions of people. The song had organic appeal—listeners were genuinely clicking play, adding it to playlists, sharing it with friends.

Then Deezer noticed something was off.

The streaming service investigated the account and concluded that Sienna Rose's music was AI-generated, likely produced with tools that require little or no human performance or composition. Instead of just deleting the account silently, they made a public statement about it.

This was the interesting move. Rather than pretend the problem doesn't exist or quietly take down fake accounts, Deezer went transparent. They added labels to flagged AI content. They communicated with their listener base about why this matters.

Why would a streaming platform do that instead of just silently removing fraudulent content? Because trust matters. If listeners discover later that their favorite artist was a bot, that erodes confidence in the entire platform. If artists see AI fakes dominating the charts and making money from fraudulent streams, they lose faith that hard work pays off. If creators see that you can game the system with bots, why would they bother with legitimate promotion?

Deezer's transparency wasn't purely noble. It was strategic. By publicly acknowledging the problem and showing that they're fighting it, they signal to listeners, artists, and investors that they take integrity seriously.

But here's what makes the Sienna Rose story complicated: the music itself was good. If you didn't know it was AI-generated, you'd probably enjoy it. That's not a flaw in the detection system—it's actually proof that modern AI music generation is sophisticated enough to be creatively valuable. The problem isn't that the music was bad. The problem is that it was fraudulently monetized and that the creator, whoever they are, remains anonymous while collecting royalties.

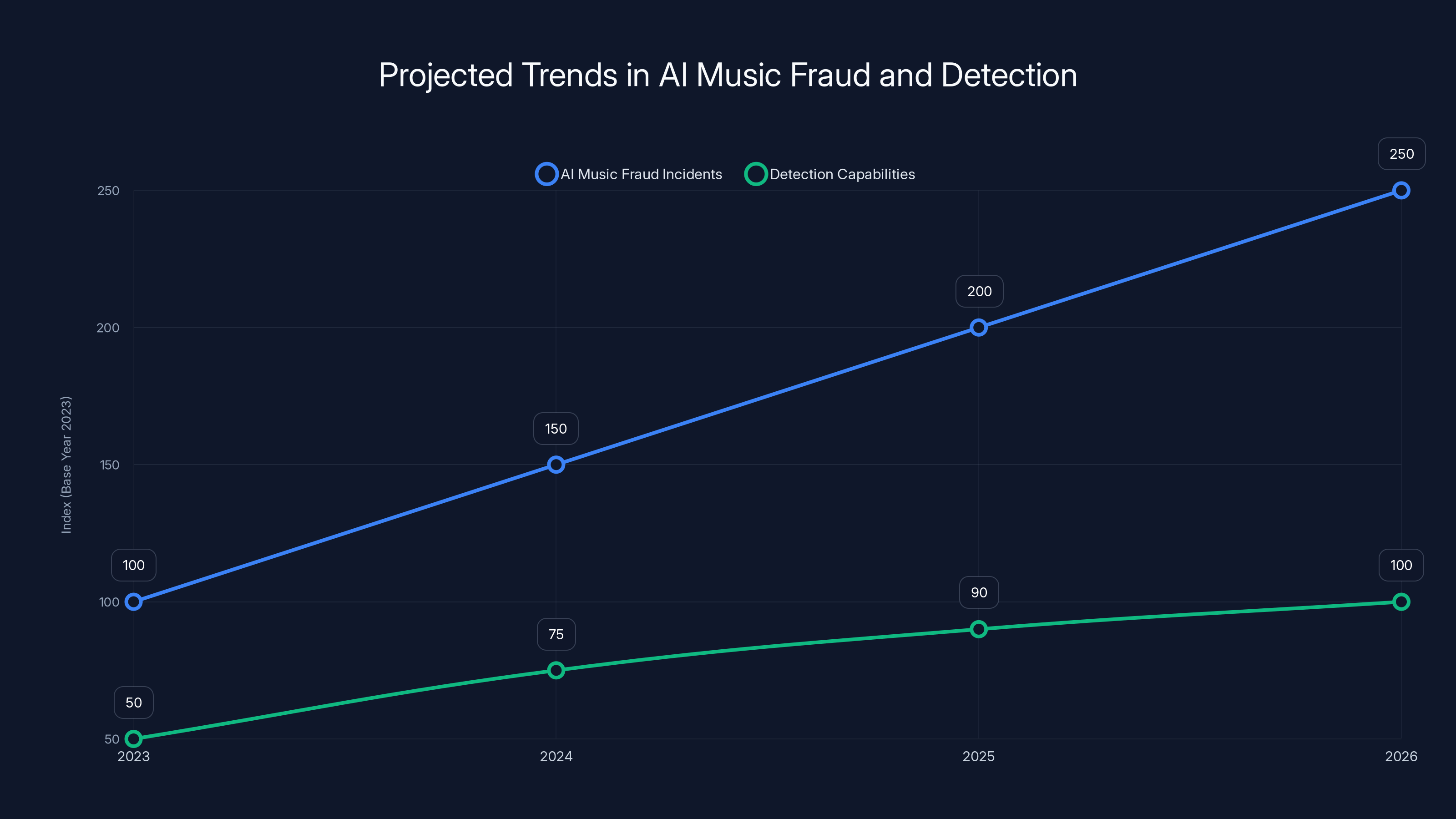

AI music fraud incidents are projected to rise sharply as tools become more accessible, while detection capabilities improve but at a slower pace. Estimated data.

How Streaming Platforms Detect AI-Generated Music

This is where it gets technical, and where platform strategies diverge significantly.

The most obvious detection method is metadata analysis. When someone uploads music to a streaming platform, they provide information: artist name, track title, album art, genres, credits. Fraudsters often get lazy with this stuff. They might upload 5,000 tracks in a single week (implausible for a human artist), all with similar metadata patterns, all under "artist accounts" created moments ago with no backstory.

Automated systems can flag these patterns immediately. Rapid upload velocity alone is suspicious. A human musician might release one album per year. An AI-generated music scammer might release 100 albums per month.
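A velocity check like the one described above can be sketched in a few lines. This is a minimal illustration, not any platform's actual rule: the 30-day window and the threshold of 20 releases are hypothetical numbers, and `uploads_in_window` and `flag_upload_velocity` are illustrative names.

```python
from datetime import date, timedelta


def uploads_in_window(upload_dates: list[date], window_days: int = 30) -> int:
    """Return the largest number of releases that fall inside any window of window_days."""
    dates = sorted(upload_dates)
    best = 0
    start = 0
    for end, current in enumerate(dates):
        # Slide the window start forward until the span is shorter than window_days.
        while current - dates[start] >= timedelta(days=window_days):
            start += 1
        best = max(best, end - start + 1)
    return best


def flag_upload_velocity(upload_dates: list[date], window_days: int = 30,
                         max_releases: int = 20) -> bool:
    """Flag an account whose peak release rate exceeds a hypothetical threshold."""
    return uploads_in_window(upload_dates, window_days) > max_releases


# A human artist releasing about once a quarter vs. an account releasing 5 per day.
human = [date(2024, 1, 1) + timedelta(days=90 * i) for i in range(4)]
scammer = [date(2024, 1, 1) + timedelta(days=i // 5) for i in range(100)]
print(flag_upload_velocity(human), flag_upload_velocity(scammer))  # False True
```

A real system would need to tune the threshold against known-good accounts, since a label bulk-uploading its back catalog can also produce a legitimate spike.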

But metadata isn't foolproof. A sophisticated fraudster can space out uploads, create realistic-looking artist profiles, and invest in bot networks smart enough to mimic real listener behavior.

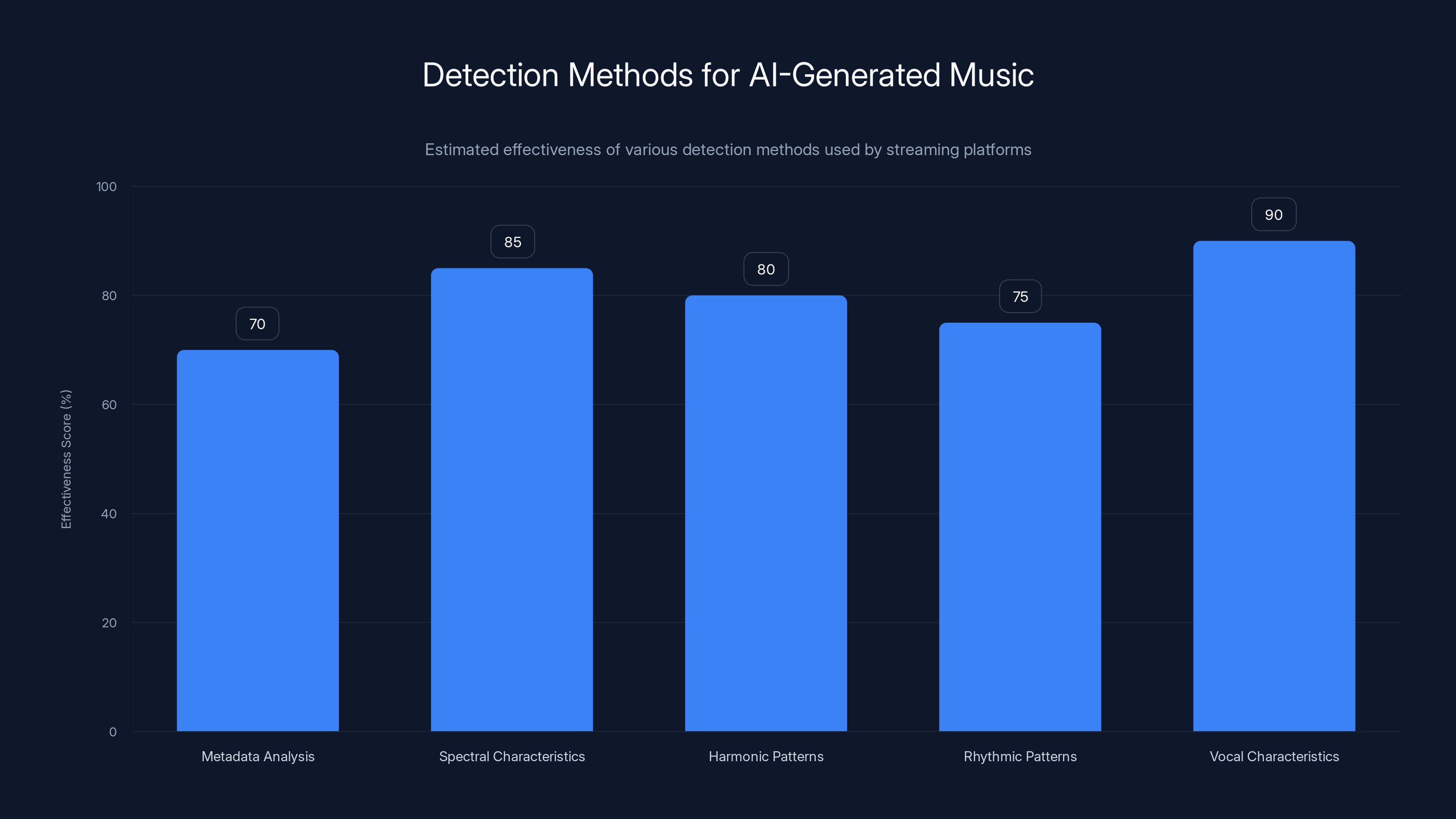

So platforms use audio analysis. This is where the machine learning gets interesting. You can train a model to detect statistical patterns that are common in AI-generated music. These patterns might include:

Spectral characteristics: The frequency distribution of AI music often has subtle differences from human-created music. Neural networks can learn to spot these differences.

Harmonic patterns: AI systems sometimes repeat chord progressions or melodic phrases in ways that sound good but are statistically distinct from human composition patterns.

Rhythmic patterns: Timing variations in AI music sometimes show different distribution statistics than human-played instruments.

Vocal characteristics: AI-generated vocals have tells, such as certain artifacts, inconsistent breathing patterns, or expressive details that a human singer would never actually produce.

Platforms like Spotify and Deezer employ machine learning engineers specifically to build detection systems. They collect training data from known AI music generators, learn the statistical signatures, and then deploy classifiers that can identify suspicious audio.

The problem: this is an arms race. As detection gets better, AI music generators improve to avoid detection patterns. It's cryptography versus cryptanalysis, but for music.

Behavioral Analytics: Following the Money

Detecting the audio itself is half the battle. The other half is understanding the behavior of accounts, listeners, and payment flows.

Streaming platforms look at listening patterns. Real listeners have diverse music tastes. They listen to multiple genres, multiple artists, follow accounts over time, and show the kind of chaotic consumption patterns that humans naturally exhibit. Bot networks show patterns that are too perfect, too synchronized, too identical across accounts.
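One way to turn "diverse music tastes" into a number is the Shannon entropy of the genres an account streams. This is a hedged sketch, not a documented platform metric: the genre labels are assumed to come from the platform's own catalog, and `genre_entropy` is an illustrative name. Low entropy is only a weak hint, since plenty of real people listen to a single genre.

```python
import math
from collections import Counter


def genre_entropy(genres_played: list[str]) -> float:
    """Shannon entropy, in bits, of the genre mix in an account's listening history.

    0.0 means every play was in one genre; higher values mean more varied listening.
    """
    counts = Counter(genres_played)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    # H = sum of p * log2(1/p) over the genres that were actually played.
    return sum((n / total) * math.log2(total / n) for n in counts.values())


bot_history = ["synth-pop"] * 200                            # one genre, over and over
human_history = ["indie", "jazz", "hip-hop", "metal"] * 5    # evenly spread over 4 genres
print(genre_entropy(bot_history), genre_entropy(human_history))  # 0.0 2.0
```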

When 10,000 accounts all stream the same AI-generated track at the exact same time of day, for exactly 80% of the song's duration (long enough to register as a "play" for payment purposes but not long enough to look natural), that's not ambiguous. That's a bot farm.
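The synchronized-play pattern described above can be detected by bucketing plays of the same track by start time and by how far into the song they stopped, then counting distinct accounts per bucket. The sketch is illustrative only: the `Play` record, the one-minute start buckets, the 5% completion buckets, and the 100-account threshold are all assumptions, not any platform's real parameters.

```python
from collections import defaultdict
from typing import NamedTuple


class Play(NamedTuple):
    account_id: str
    track_id: str
    start_minute: int        # minutes since an arbitrary epoch
    fraction_played: float   # 0.0 (skipped at once) to 1.0 (played to the end)


def synchronized_clusters(plays: list[Play], min_accounts: int = 100,
                          fraction_step: float = 0.05) -> dict[tuple, int]:
    """Return {(track, start_minute, completion_bucket): account_count} for buckets
    where suspiciously many distinct accounts behaved identically."""
    buckets: dict[tuple, set[str]] = defaultdict(set)
    for p in plays:
        completion_bucket = round(p.fraction_played / fraction_step)
        buckets[(p.track_id, p.start_minute, completion_bucket)].add(p.account_id)
    return {key: len(accounts) for key, accounts in buckets.items()
            if len(accounts) >= min_accounts}


# 150 bot accounts all start track "t1" at minute 600 and stop at 80%;
# 150 real listeners start at different minutes and stop at different points.
bots = [Play(f"bot{i}", "t1", 600, 0.80) for i in range(150)]
humans = [Play(f"user{i}", "t1", 600 + i, (i % 20) / 20) for i in range(150)]
print(synchronized_clusters(bots + humans))  # {('t1', 600, 16): 150}
```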

Deezer and other platforms also analyze geographic data. Do listeners come from plausible locations? Or are they concentrated in countries with cheap bot network services? Real artists might have fans scattered globally, but they usually have stronger listening bases in their home countries or regions where they've toured or promoted. AI scammers often have listening patterns that make no geographic sense.
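A simple version of the geographic check compares where listeners are against the markets an artist could plausibly have reached. In this sketch, `expected_markets` (home country, tour stops, promoted regions) is an assumed input, the country codes are placeholders, and `geographic_mismatch` is a hypothetical helper. A high score alone should prompt a closer look, not a ban.

```python
from collections import Counter
from typing import Optional


def geographic_mismatch(listener_countries: list[str],
                        expected_markets: set[str]) -> tuple[float, Optional[str]]:
    """Return (share of listeners outside expected markets, largest such country)."""
    counts = Counter(listener_countries)
    total = sum(counts.values())
    if total == 0:
        return 0.0, None
    outside = {country: n for country, n in counts.items()
               if country not in expected_markets}
    outside_share = sum(outside.values()) / total
    top_outside = max(outside, key=outside.get) if outside else None
    return outside_share, top_outside


# Hypothetical Swedish artist: most listeners are in SE and NO, but 20% come
# from one unrelated country ("XX" is a placeholder code).
listeners = ["SE"] * 60 + ["NO"] * 20 + ["XX"] * 20
print(geographic_mismatch(listeners, {"SE", "NO"}))  # (0.2, 'XX')
```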

Payment trails matter too. Someone has to cash out the royalties eventually. Platforms track where money flows. If an "artist" account generated $50,000 in royalties but every withdrawal goes to a cryptocurrency address or a money transfer service in a high-risk jurisdiction, that's another data point.

The challenge is that legitimate artists from developing countries might also use unconventional payment methods. International artists might have listening patterns that seem "off" by algorithmic standards. You can't just flag everyone who looks unusual—you have to distinguish between "unusual because they're from another country" and "unusual because they're committing fraud."
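One way to respect that distinction is to treat each signal as weak evidence and escalate only when several line up, sending borderline cases to a human. The sketch below assumes five boolean signals like the ones discussed in this section; the weights and thresholds are invented for illustration, with the payout signal deliberately weighted lowest because unconventional payout methods are common among legitimate international artists.

```python
from dataclasses import dataclass, fields


@dataclass
class AccountSignals:
    rapid_uploads: bool
    low_listener_diversity: bool
    synchronized_plays: bool
    geographic_mismatch: bool
    unusual_payout_method: bool


# Hypothetical weights: no single weak signal is enough to act on by itself.
WEIGHTS = {
    "rapid_uploads": 2.0,
    "low_listener_diversity": 1.0,
    "synchronized_plays": 3.0,
    "geographic_mismatch": 1.5,
    "unusual_payout_method": 0.5,
}


def triage(signals: AccountSignals, review_at: float = 3.0, hold_at: float = 5.0) -> str:
    """Sum the weights of the signals that fired and map the score to an action."""
    score = sum(WEIGHTS[f.name] for f in fields(signals) if getattr(signals, f.name))
    if score >= hold_at:
        return "hold payouts and review"
    if score >= review_at:
        return "human review"
    return "no action"


# An international artist with an unusual payout method and a scattered fanbase:
print(triage(AccountSignals(False, False, False, True, True)))  # no action
# A bot-farm pattern: rapid uploads plus synchronized plays.
print(triage(AccountSignals(True, False, True, False, False)))  # hold payouts and review
```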

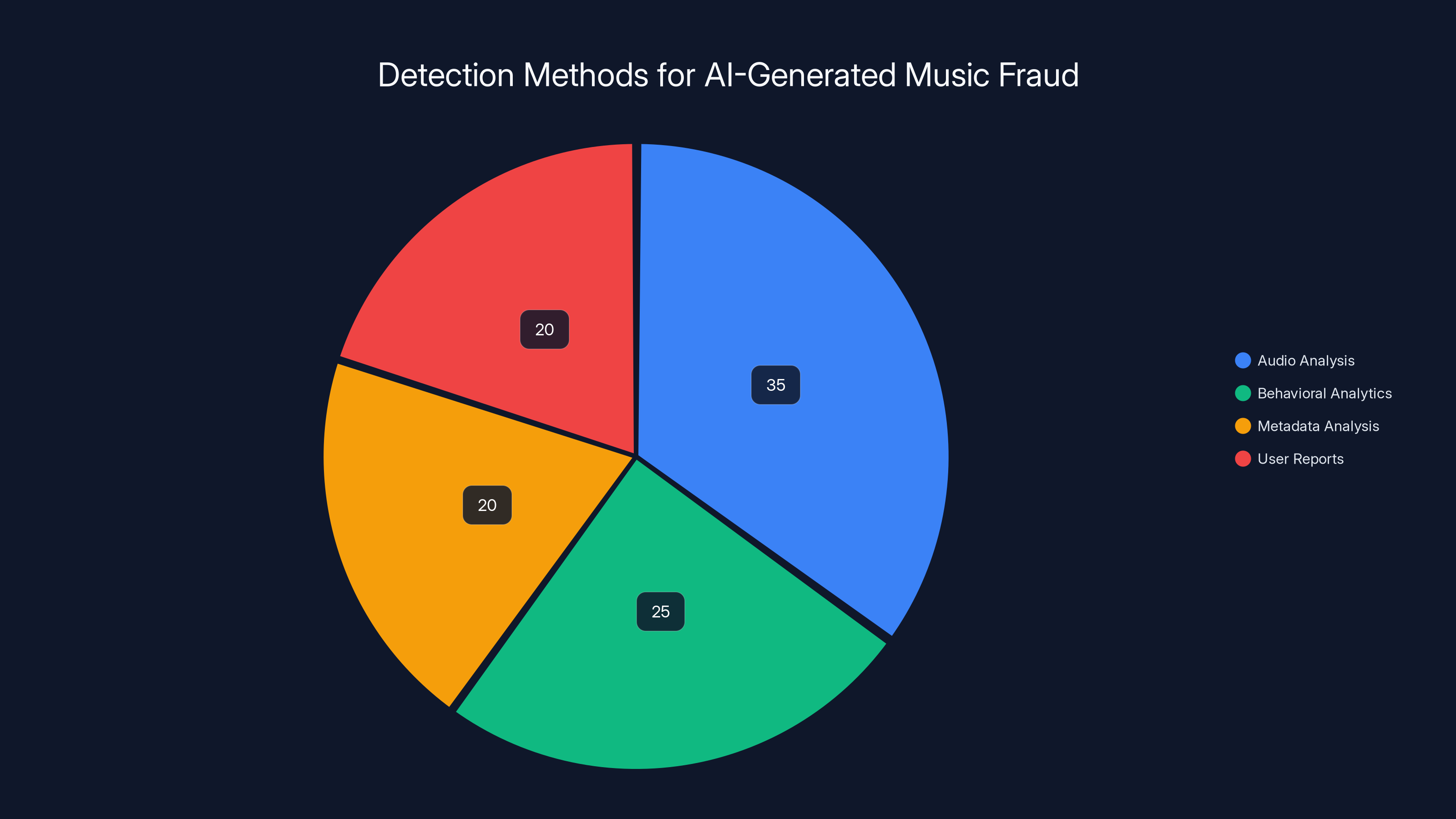

Streaming platforms use a combination of detection methods to identify AI-generated music, with vocal characteristics analysis being the most effective. Estimated data.

The Artist's Perspective: Why This Destroys Legitimacy

Here's why streaming platforms care enough to publicly call out Sienna Rose instead of quietly deleting the account.

Imagine you're a legitimate musician. You spent two years writing an album. You recorded it yourself in a home studio. You're uploading it to Spotify hoping to build a fanbase. You're competing for algorithmic recommendations against hundreds of thousands of other artists.

Meanwhile, someone with a laptop and $50 in bot network credits just uploaded 1,000 AI-generated tracks designed to be maximally appealing to the algorithm. Those tracks are getting recommended to millions of people. Some of them are actually good, so some real listeners start following them.

Money flows to the fake artists instead of real musicians. The algorithm learns that bot-amplified content performs well, so it recommends more of it. Real artists see that hard work doesn't pay off and quit. The platform fills up with AI-generated garbage, and legitimate listeners leave because the recommendations get worse.

Economists call this kind of dynamic "adverse selection": when buyers can't easily tell good products from bad ones, the bad stuff drives out the good stuff.

It's also why Deezer's public statement about fairness to artists matters. They're saying, "We're not going to let this destroy the ecosystem. We're detecting these accounts and removing them. If you're a real artist here, we're on your side."

Without that commitment, the platform loses artists. And a music streaming service with terrible artists isn't much of a service.

Spotify has been clearer about this than anyone. Their platform guidelines explicitly prohibit artificial streaming manipulation, and they've published transparency reports showing the scale of accounts they remove. This is partly because they have the most to lose—they're the largest platform, so they're the biggest target for fraud.

The Transparency Dilemma: Should Listeners Know?

Here's where things get philosophically interesting.

When Deezer labeled Sienna Rose as AI-generated, they made a choice to inform listeners. Not all platforms do this. Some would quietly remove the account and say nothing. Others might remove it and tell the artist they've been banned for policy violations but not explain why to the public.

Deezer's transparency creates a problem though: it reveals that the platform had AI music on it in the first place. Some listeners might feel deceived. "I thought I was discovering real artists on this platform!" They might think the platform isn't doing its job.

But transparency also has benefits. It shows that the platform is trustworthy. It educates listeners that AI-generated music exists and is becoming more sophisticated. It signals that the platform is actively fighting fraud.

The question becomes: if you're a listener, do you want to know if an artist is AI-generated?

This depends on what bothers you about it. Some people think AI music is fine—creativity is creativity, regardless of the source. Some people think AI music is fundamentally soulless and don't want to listen to it. Some people don't care, as long as it's labeled honestly. Some people are bothered specifically by fraudulent AI—where someone's pretending an AI account is a real person—but wouldn't mind if AI artists were presented as such.

The music industry doesn't have consensus on this yet. Some artists are experimenting with AI collaboration and labeling it as such. Some producers are using AI tools in their workflow and not really thinking about it. Some listeners actively seek out AI music because it's interesting. Some listeners would rather quit streaming than risk hearing it.

Deezer's approach suggests that transparency is the answer. Tell listeners what they're getting. Let them decide.

Estimated data shows AI-generated music and bot-amplified content are significant portions of streaming platforms, impacting legitimate artists' visibility.

Policy Responses: What Platforms Are Actually Doing

Different streaming services have taken different approaches to AI music regulation, and there's no industry standard yet. This matters because it creates a competitive advantage for platforms that get it right.

Spotify's approach: Strict removal of accounts engaging in artificial stream manipulation, with public transparency reports. They're less clear about labeling AI-generated music that's legitimate. The focus is on fraud detection rather than AI disclosure.

Apple Music: Similar to Spotify—focused on removing fraudulent accounts. Less public about AI-specific policies, more focused on the broader "no artificial amplification" rules.

Deezer: More transparent about AI-generated content. They're willing to label accounts as AI and remove them from algorithmic recommendations while sometimes keeping them accessible if listeners search directly. This is a middle ground between removing content and amplifying it.

YouTube Music: Requires human review for accounts that trigger automated flags. They're also developing tools to help creators disclose when they've used AI in their music.

Tidal: Positioning themselves as artist-friendly and focused on higher-quality audio. Less transparent about AI policies but claiming stronger artist protections.

None of these platforms have published a definitive policy saying, "You can upload AI music, but you have to label it." That's because the legal and regulatory landscape is still forming. Music rights organizations don't have clear rules yet. Governments are just starting to think about this.

What they've all done is beef up their fraud detection. They've hired more moderators. They've invested in machine learning systems. They've implemented stricter verification for artists who are monetizing.

But here's the truth: detection is reactive. Someone uploads fraudulent content, it spreads for a while, the system catches it, it gets removed. Meanwhile, listeners heard it. The algorithm learned from it. Damage was done.

Proactive prevention is harder. One approach is to require stricter verification before monetization. Another is to require human review for accounts uploading music at scale. Another is to use AI disclosure as a first-line filter—if it's labeled AI and legitimate, great, no issue, but if it's unlabeled AI pretending to be human, remove it immediately.
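Those three ideas can be combined into a single gate that runs before a track is published. This is a hypothetical policy sketch, not any platform's rule: `detector_says_ai` stands in for whatever classifier a platform runs, and the batch-size threshold of 50 tracks is an invented number.

```python
from dataclasses import dataclass


@dataclass
class Upload:
    uploader_verified: bool   # passed identity verification for monetization
    ai_disclosed: bool        # uploader labeled the track as AI-generated
    detector_says_ai: bool    # verdict of a (hypothetical) AI-audio classifier
    batch_size: int           # number of tracks submitted together


def gate(upload: Upload, scale_threshold: int = 50) -> str:
    """Decide what happens to an upload before it goes live."""
    # First-line filter: undisclosed AI presented as human is removed outright.
    if upload.detector_says_ai and not upload.ai_disclosed:
        return "remove"
    # Bulk uploads get a human look before anything is published.
    if upload.batch_size > scale_threshold:
        return "human review"
    # Unverified uploaders can publish but cannot earn royalties yet.
    if not upload.uploader_verified:
        return "publish without monetization"
    return "publish and monetize"


print(gate(Upload(True, True, True, 10)))    # publish and monetize
print(gate(Upload(True, False, True, 10)))   # remove
```

The order of the checks is the design choice: disclosure is tested first, so labeled AI music passes through like any other upload, while scale and verification only decide how much friction it meets.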

No platform has implemented all of these yet. That's because they all have incentives to move fast and worry about trust later. The faster they onboard artists and music, the bigger their catalog, the better their recommendations, the more listeners they attract.

Machine Learning Detection: The Technical Reality

Let's get into the actual science of how platforms detect AI-generated audio.

The most sophisticated detection uses neural networks trained on labeled examples of both kinds of music. You take thousands of tracks from tools like Suno, Udio, and other generators, plus a comparable set of verified human-made recordings, extract audio features, and use that data to train a classifier. The classifier learns the statistical signatures that separate AI music from human music.

Audio features might include:

- MFCCs (Mel-Frequency Cepstral Coefficients): Capture the overall shape of the spectrum on the mel scale, which approximates how humans perceive pitch.

- Spectral centroid: Brightness of the sound.

- Zero crossing rate: How often the audio signal crosses zero, indicating noisiness.

- Chromagram: How strongly each of the 12 pitch classes is present, which reflects the notes and chords being played.

- Onset detection: How notes begin, which can reveal timing and attack artifacts.

Modern systems use transformer models and recurrent neural networks that can process these features across entire tracks and identify patterns that are statistically unlikely in human-created music.
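To make two of these features concrete, here is a dependency-free sketch that computes zero crossing rate and spectral centroid for a single frame of audio samples. It uses a naive discrete Fourier transform for clarity, which is far too slow for real catalogs; audio libraries such as librosa provide vectorized implementations of these features, along with MFCCs and chroma. The test signals are synthetic.

```python
import math


def zero_crossing_rate(frame: list[float]) -> float:
    """Fraction of adjacent sample pairs whose signs differ (higher = noisier)."""
    if len(frame) < 2:
        return 0.0
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def spectral_centroid(frame: list[float], sample_rate: int) -> float:
    """Magnitude-weighted mean frequency in Hz (higher = 'brighter' sound).

    Uses a naive O(n^2) DFT over the non-negative frequency bins.
    """
    n = len(frame)
    weighted, total = 0.0, 0.0
    for k in range(n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(frame))
        im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(frame))
        magnitude = math.hypot(re, im)
        weighted += (k * sample_rate / n) * magnitude
        total += magnitude
    return weighted / total if total else 0.0


sr = 8000
tone = [math.sin(2 * math.pi * 1000 * t / sr) for t in range(256)]  # pure 1 kHz sine
print(round(spectral_centroid(tone, sr)))       # 1000
print(zero_crossing_rate([1.0, -1.0] * 128))    # 1.0
```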

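The mathematical formulation might look like this, written with Bayes' rule:

$$P(\text{AI} \mid X) = \frac{P(X \mid \text{AI})\,P(\text{AI})}{P(X \mid \text{AI})\,P(\text{AI}) + P(X \mid \text{Human})\,P(\text{Human})}$$

Here X is the set of observed audio features, and we're computing the probability that a track is AI-generated given its features. The classifier learns from labeled examples how those features differ between AI-generated and human-created music, and tracks whose probability exceeds a chosen threshold get flagged.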
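A small worked example shows why the prior P(AI), the share of AI-generated tracks in the upload stream, matters as much as the detector. The likelihoods and priors below are made-up numbers for illustration: suppose a given feature pattern X appears in 90% of AI tracks but only 5% of human tracks.

```python
def posterior_ai(p_x_given_ai: float, p_x_given_human: float, prior_ai: float) -> float:
    """Bayes' rule: P(AI | X) from the two likelihoods and the prior P(AI)."""
    evidence = p_x_given_ai * prior_ai + p_x_given_human * (1 - prior_ai)
    return p_x_given_ai * prior_ai / evidence


# Same detector, two hypothetical base rates of AI music in the catalog.
print(round(posterior_ai(0.90, 0.05, prior_ai=0.10), 3))  # 0.667
print(round(posterior_ai(0.90, 0.05, prior_ai=0.01), 3))  # 0.154
```

With the rarer prior, most flagged tracks would actually be human, which is one reason it makes sense to combine audio scores with behavioral and metadata signals rather than act on a single detector.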

The problem is that this works until it doesn't. As AI music generators improve, they're specifically trained to avoid artifacts that classifiers have learned to detect. It's an evolutionary arms race.

Some researchers have proposed "music DNA" approaches—digitally signing tracks at creation time with cryptographic information that proves they were created by a specific person or tool, making it impossible to fake later. But this requires adoption across the entire music industry, and we're nowhere close to that.
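The core idea behind signing at creation time can be sketched with Python's standard library. This is an illustration under simplifying assumptions: it uses an HMAC, which requires the verifier to hold the same secret key, whereas a real industry scheme would use public-key signatures so that anyone could verify a track without being able to forge one. `sign_track`, `verify_track`, and the creator ID format are hypothetical.

```python
import hashlib
import hmac


def sign_track(audio_bytes: bytes, creator_id: str, key: bytes) -> str:
    """Bind the SHA-256 hash of the audio to a creator ID with an HMAC tag."""
    digest = hashlib.sha256(audio_bytes).hexdigest()
    message = f"{creator_id}:{digest}".encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_track(audio_bytes: bytes, creator_id: str, key: bytes, tag: str) -> bool:
    """Recompute the tag and compare in constant time."""
    return hmac.compare_digest(sign_track(audio_bytes, creator_id, key), tag)


key = b"demo-secret-key"              # placeholder; never hard-code real keys
audio = b"\x00\x01\x02 fake PCM data"
tag = sign_track(audio, "artist:1234", key)

print(verify_track(audio, "artist:1234", key, tag))         # True
print(verify_track(audio + b"!", "artist:1234", key, tag))  # False: audio changed
print(verify_track(audio, "artist:9999", key, tag))         # False: wrong creator
```

A valid tag shows that the keyholder vouched for these exact bytes, not that a human performed them, so a scheme like this only helps if the tools and studios doing the signing are themselves trustworthy.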

The reality right now: detection is good but not perfect. Platforms catch most fraudulent AI music through a combination of audio analysis, metadata analysis, behavioral analytics, and user reports. But sophisticated fraudsters with funding and expertise can sometimes get around these systems, at least temporarily.

Audio analysis is the most utilized method for detecting AI-generated music fraud, followed by behavioral analytics and metadata analysis. Estimated data.

The Label and Publishing Perspective

Major record labels and music publishers are paying attention to this issue because their economics depend on the integrity of streaming platforms.

When fake artists siphon money from the streaming pool, real artists lose out. Streaming services typically pay rights holders each month from a pool of money that is divided according to each party's share of total streams. When bot farms inflate their share with fraudulent streams, the pool doesn't grow, so everyone else's slice shrinks.
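A quick calculation shows how fake streams dilute a pro-rata pool. All figures are hypothetical: a $1,000,000 monthly pool, 100 million legitimate streams, and a fraudster who adds 5 million bot streams.

```python
def pro_rata_payout(pool: float, streams: int, total_streams: int) -> float:
    """Pay out a share of the pool proportional to a share of all streams."""
    return pool * streams / total_streams


pool = 1_000_000.0          # monthly royalty pool in dollars (hypothetical)
artist = 1_000_000          # one legitimate artist's streams
legit_total = 100_000_000   # all legitimate streams that month
fake = 5_000_000            # bot-generated streams

before = pro_rata_payout(pool, artist, legit_total)
after = pro_rata_payout(pool, artist, legit_total + fake)
diverted = pro_rata_payout(pool, fake, legit_total + fake)

print(f"{before:,.2f} -> {after:,.2f}")          # 10,000.00 -> 9,523.81
print(f"diverted to fraud: {diverted:,.2f}")     # diverted to fraud: 47,619.05
```

The artist's streams didn't change, yet their payout fell by about 4.8%, and every legitimate rights holder loses the same proportion.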

The three major labels—Universal, Sony, and Warner—have relationships with streaming platforms and some ability to influence policies. They've been pushing for stronger fraud detection and stricter artist verification.

At the same time, labels are exploring AI music tools themselves. Some are experimenting with AI-generated background music for soundtrack work. Some are using AI to generate demo vocals for songwriting. Some are even considering AI-generated music as potential side projects for established artists.

This creates a weird tension. Labels want to eliminate AI fraud, but they also want to preserve their right to use AI as a creative tool. The distinction they're pushing is: AI music that's transparent about its origin and created as a legitimate artistic or commercial product is fine. AI music that's fraudulently presented as human-created art to extract royalties is not fine.

Independent artists are more split. Some see AI fraud as a threat—it's stealing opportunities from real musicians. Others see AI tools as democratizing music creation—now you don't need a $100K recording studio to make professional-sounding music, you just need a laptop.

How Listeners Can Spot AI Music (Or Not)

Here's the uncomfortable truth: you might not be able to tell.

Modern AI-generated music is genuinely good. If you heard it without context, you'd probably enjoy it. Some AI music is objectively better produced than some human-created music. The uncanny valley has been crossed.

That said, there are tells if you know what to listen for:

Vocal inconsistencies: AI-generated vocals sometimes have odd artifacts. Listen to breathing patterns, sibilants (S sounds), and the transitions between notes. Real singers breathe. AI singers sometimes have too-perfect breathing or obviously artificial breathing patterns.

Harmonic repetition: Some AI systems love certain chord progressions because they're statistically safe. Listen for overly predictable harmonic movement. Real songwriters take more risks.

Rhythmic perfection: AI music sometimes has rhythm that's too perfect, especially in drums. Real musicians have tiny timing variations that make things feel alive. Overly quantized drums can be a red flag.

Generic production: Some AI music sounds maximally appealing but slightly generic—it hits all the right notes without taking any risks. It's like the musical equivalent of AI-generated text: technically competent but somehow lacking character.

Lack of artist presence: This is harder to explain but real artists usually have distinctive elements—a particular way they phrase vocals, production choices that recur, stylistic flourishes. AI music usually sounds like it was designed by committee, pleasing everyone equally.

But again: this is not reliable. Good AI music won't have these tells. And some human-created music has all of them.
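The "rhythmic perfection" tell can at least be measured, even if it can't be trusted on its own. This sketch assumes you already have note onset times in seconds (for example from an onset detector) and the track's tempo; it reports how far onsets stray from a sixteenth-note grid. Near-zero jitter is also exactly what a human producer gets by quantizing drums in a DAW, so this is a weak signal at best. `timing_jitter_ms` is a hypothetical helper.

```python
import statistics


def timing_jitter_ms(onset_times_s: list[float], bpm: float, subdivision: int = 4) -> float:
    """Standard deviation (ms) of each onset's offset from the nearest grid line.

    The grid has `subdivision` slots per beat; 4 means sixteenth notes in 4/4.
    """
    grid = 60.0 / bpm / subdivision
    offsets_ms = [(t - round(t / grid) * grid) * 1000 for t in onset_times_s]
    return statistics.pstdev(offsets_ms)


step = 60.0 / 120 / 4  # 0.125 s per sixteenth note at 120 BPM
quantized = [i * step for i in range(16)]
# A drummer who alternates 10 ms early and 10 ms late.
played = [i * step + (0.010 if i % 2 else -0.010) for i in range(16)]

print(round(timing_jitter_ms(quantized, 120), 3))  # 0.0
print(round(timing_jitter_ms(played, 120), 3))     # 10.0
```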

The honest answer is that you probably can't tell most of the time. That's why transparency is important. If it says it's AI, you know. If it doesn't say, you have to trust the platform to have done the detection work.

The adoption of AI-generated music has increased significantly from 2018 to 2023, driven by improved technology and reduced costs. Estimated data.

The Future: Where This Is Headed

The AI music fraud problem isn't going to go away. If anything, it's going to get worse before it gets better, because the incentives favor fraudsters.

Generating high-quality music is getting cheaper every month. Tools are becoming more accessible. By next year, you'll be able to generate album-quality music with a free tool and minimal technical skill. The barrier to entry for fraud is approaching zero.

At the same time, detection is improving but hitting its limits. You can't be 100% certain that a track is AI-generated without metadata or cryptographic proof. And you can't require cryptographic proof without a total overhaul of how music gets distributed.

Here's what's probably going to happen:

Mandatory artist verification: Streaming platforms will probably move toward stricter identity verification for monetization. Similar to how YouTube requires ID verification for channel monetization. This won't stop all fraud, but it raises the friction enough to deter casual fraudsters.

AI disclosure as standard: Over the next few years, you'll probably see AI disclosure become expected for new music. Not banned, just disclosed. If you're a musician and you used AI in your creation process, you label it. This becomes as standard as "produced by" credits.

Blockchain music signing: Some projects are exploring cryptographic signing of music at creation time, making it impossible to falsify the origin later. This is probably years away from mainstream adoption, but it's being researched.

Regulatory intervention: Governments might eventually regulate AI music similarly to how they regulate financial fraud. Right now, music fraud is in a legal gray area. As the problem gets bigger, regulation will probably follow.

Platform collaboration: Streaming services might share fraud data with each other, similar to how banks share information about fraudulent accounts. Right now, each platform detects independently. Collaboration could be more effective.

More sophisticated AI detection: Cat-and-mouse games always favor better tools eventually. As detection gets better, fraudsters find new ways around it, then detection gets better again. This cycle continues until one side has a decisive advantage. That's probably going to be detection, eventually.

But here's the thing that's hard to predict: the role of AI-generated music in legitimate creative work. Right now, we're treating all AI music as the enemy. But in a few years, AI music might be integral to how music is created. Some songs might be 50% human, 50% AI. Some might be mostly AI with human production and direction. Some might be fully AI and fully intentional.

The challenge is distinguishing between that and fraud.

Building Trust in a Fractured Ecosystem

Ultimately, what Deezer's statement about Sienna Rose signals is that trust is now a competitive advantage for streaming platforms.

Listeners want to believe that when they discover a new artist on their streaming service, that artist is real. Artists want to believe that if they work hard, they'll compete fairly. Rights holders want to believe that the money going to fraud is minimal.

None of these things are true right now, not completely. AI fraud is real and widespread. The playing field isn't level. Money is disappearing to fraudsters.

But platforms that are transparent about these problems and visibly fighting them build more trust than platforms that pretend the problem doesn't exist.

Deezer flagging Sienna Rose wasn't just about removing one fraudulent account. It was a statement: we see this problem, we're dealing with it, and we're being honest with you about it.

That matters more than most people realize.

The future of streaming will probably belong to platforms that can convince users, artists, and label partners that they're serious about integrity. Not perfect—nobody can be. But serious. Transparent. Actively fighting.

The music industry has always had fraud. From radio payola to chart manipulation to bot-streamed songs, gamed systems are older than streaming itself. What's different now is that the tools have become so good, so cheap, and so accessible that the fraud has become systemic instead of marginal.

That requires systemic responses. Platform investment in detection. Regulatory frameworks. Industry standards. And honesty with all the stakeholders—listeners, artists, investors—about what's happening.

Sienna Rose was one fake artist among thousands that exist right now across all streaming platforms. But when a major platform publicly identifies and labels one, it sends a message about what they believe the ecosystem should be.

That message matters. And the platforms that send it clearly and consistently will be the ones that win.

FAQ

What is AI-generated music fraud on streaming platforms?

AI-generated music fraud occurs when someone uses artificial intelligence tools to create music, uploads it under fake artist accounts to streaming platforms, then uses bot networks to artificially inflate stream counts. This manipulates the payment system, allowing fraudsters to collect royalties they haven't earned while legitimate artists lose out. The fraud is successful because modern AI can create music that sounds good enough for actual listeners to enjoy, making the fraudulent accounts appear legitimate until detection systems catch them.

How do streaming platforms detect AI-generated music and fraudulent accounts?

Streaming platforms use multiple detection methods working in parallel. Audio analysis systems use machine learning to identify statistical patterns common in AI-generated music, such as spectral characteristics and harmonic patterns. Behavioral analytics flag suspicious account activity like unusually rapid uploads, geographic listening patterns that make no sense, or synchronized bot streaming. Metadata analysis catches accounts uploading thousands of tracks weekly or other pattern violations. Most platforms also rely on user reports and combine all these signals to identify fraudulent accounts.

Why did Deezer publicly label Sienna Rose as AI-generated instead of quietly removing the account?

Deezer chose transparency to maintain ecosystem trust. If a major platform silently removes accounts without explanation, listeners might not realize fraud exists, potentially losing confidence in the platform later. By publicly identifying and labeling AI-generated content, Deezer signals to listeners and artists that they take integrity seriously. This transparency also educates the music community about AI's capabilities and reassures legitimate artists that the platform is actively fighting fraud that undermines their livelihoods.

Can listeners reliably tell if a song is AI-generated just by listening?

No, not reliably. Modern AI-generated music has crossed the "uncanny valley" and sounds genuinely good to most listeners. Some tell-tale signs exist—like overly perfect rhythms in drums, generic harmonic patterns, or artificial breathing patterns in vocals—but good AI music won't have these artifacts. The most reliable way to know if music is AI-generated is through platform labeling, artist disclosure, or metadata, not through listening alone.

What are the consequences of AI music fraud for legitimate artists and the music industry?

AI music fraud directly harms legitimate artists in multiple ways. Fraudulent streams siphon money from the fixed pool streaming services allocate to rights holders, meaning fewer resources for real artists. The algorithm learns that bot-amplified content performs well, so it recommends more fraudulent content, making it harder for legitimate artists to gain visibility. Some real musicians quit the platforms entirely when they see fraud going unpunished. This creates a vicious cycle where the ecosystem gradually fills with lower-quality content and real artists lose incentive to participate.

What's the difference between legitimate AI music and AI music fraud?

Legitimate AI music is made with AI openly: it is usually disclosed as such and presented honestly to listeners. An artist might use AI as a compositional tool in their workflow, label the result accordingly, and the money goes to a real person who created the work. AI music fraud is when someone uses AI to mass-produce dozens or hundreds of tracks, uploads them under fake artist accounts with false credentials, and uses bots to inflate streams artificially. The fraud isn't the AI itself; it's the deception and the manipulation of the payment system.

Are streaming platforms banning all AI-generated music?

No. Most major streaming platforms aren't banning AI music outright; they're banning fraud. Bandcamp, which has announced its own AI music ban, is a notable exception. If someone creates AI music, discloses it clearly, and doesn't use bots to amplify it artificially, most platforms allow it. The problem is specifically undisclosed AI music presented as human-created, combined with artificial streaming manipulation. As policies evolve, transparency and disclosure are becoming the standard, but most major platforms are not banning AI music itself.

Will AI music fraud get worse or better in the coming years?

Likely worse in the near term before it gets better. AI music generation tools are becoming cheaper and more accessible every month, lowering the barrier to entry for fraud. However, detection systems are also improving, and platforms are investing heavily in fraud prevention. The real solution will probably involve mandatory artist verification, AI disclosure standards, regulatory frameworks, and possibly cryptographic signing of music at creation time. The arms race between fraudsters and platforms will continue for years.
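The answer above mentions cryptographic signing of music at creation time. No specific standard is named, so the sketch below only illustrates the core idea: a tag computed when the file is created stops verifying if even one byte of the audio changes. It uses a shared-secret HMAC from Python's standard library as a stand-in; a real provenance scheme would more likely use public-key signatures so anyone can verify a file without holding the secret. The function names and key are hypothetical.

```python
import hashlib
import hmac


def sign_audio(audio_bytes: bytes, artist_key: bytes) -> str:
    """Return a hex tag binding these exact audio bytes to the holder of artist_key.

    HMAC-SHA256 stands in for a real digital signature in this sketch.
    """
    return hmac.new(artist_key, audio_bytes, hashlib.sha256).hexdigest()


def verify_audio(audio_bytes: bytes, artist_key: bytes, tag: str) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = sign_audio(audio_bytes, artist_key)
    return hmac.compare_digest(expected, tag)


# Hypothetical usage: tag a master at export time, check it at upload time.
key = b"hypothetical-artist-secret"
master = b"stand-in for the raw bytes of an exported audio file"
tag = sign_audio(master, key)
```

Verifying `master` against `tag` succeeds, while a file altered by even a single byte, or checked with a different key, fails.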

How can independent musicians protect themselves from AI fraud competition?

Transparency is your best weapon. Maintain a consistent artist presence across platforms and social media, build a community and an audience over time, and be honest about your creative process. Show that you're a real person with a real story; listeners and platforms tend to reward authenticity. Report suspicious accounts to the streaming service, since most platforms treat user reports as one input to their detection systems. Finally, verify your artist accounts, use consistent branding, and be prepared to prove you created your music if asked.

What role will governments play in regulating AI music in the future?

Government regulation will probably follow as the problem grows. Today there are few laws written specifically for AI music fraud, so most enforcement happens through platform terms of service, although large-scale stream manipulation can already fall under general fraud laws. As fraud becomes more widespread and costly, governments might treat it more like financial fraud. Likely measures include artist-verification requirements, transparency mandates for AI-generated content, and industry standards set by music organizations. This is probably still years away, but the regulatory trajectory is becoming clearer as the problem becomes more visible.

The Bottom Line

The Sienna Rose case isn't really about one fake pop star. It's about whether streaming platforms can maintain ecosystem integrity as AI makes fraud cheaper and easier. Deezer's public stance on transparency is a bet that the platforms taking the problem seriously will win in the long run.

The music industry is at a transition point. AI music generation is now good enough for legitimate creative use, but the same capability enables fraud at scale. The platforms, artists, labels, and listeners who adapt fastest (by building transparency into the system, automating detection, and being honest about the problem) will thrive. Those who ignore it will watch their ecosystems degrade.

For independent artists, the lesson is clear: build legitimately, be transparent about your tools and process, and let your work speak for itself. For listeners, it's time to develop some healthy skepticism about discovery algorithms and unknown artists, while staying open to genuine new talent. For platforms, the path forward requires investment, transparency, and a commitment to fairness even when it's inconvenient.

The future of music streaming depends on it.

Key Takeaways

- AI music generation has become good enough to fool listeners, creating new fraud opportunities where fake artists flood platforms with bot-streamed AI tracks.

- Streaming platforms detect fraud through multi-layered approaches combining audio analysis, behavioral analytics, metadata inspection, and user reports.

- Deezer's public labeling of Sienna Rose signals that transparency and platform integrity are becoming competitive advantages in the streaming market.

- The arms race between AI fraud and detection systems will likely escalate, requiring mandatory artist verification, AI disclosure standards, and possibly regulatory intervention.

- Legitimate artists competing on streaming platforms should focus on transparency, real community building, and consistent presence across multiple platforms to differentiate from fraudulent accounts.


![AI Fake Artists on Spotify: How Platforms Are Fighting AI Music Fraud [2025]](https://tryrunable.com/blog/ai-fake-artists-on-spotify-how-platforms-are-fighting-ai-mus/image-1-1769175385815.jpg)