Deploying AI Agents at Scale: Real Lessons From 20+ Agents [2025]

There's this wave of AI agent skepticism hitting LinkedIn right now. And I get it. Founders bought an AI SDR tool, didn't touch it for two weeks, and wondered why it produced garbage. So they posted about how "AI agents don't work" and everyone nodded along.

Here's what actually happens when you run 20+ AI agents across your entire go-to-market operation for eight months straight: You generate

But it doesn't work the way the marketing materials promise.

I've watched AI agent deployments fail spectacularly when companies treated them like a set-it-and-forget-it tool. I've also watched them drive real revenue when teams invested in the operational infrastructure to make them function. The difference isn't the tool. It's the discipline.

Let me walk through what we've learned. Not the LinkedIn-friendly version. The actual stuff: what takes hours every single day, where most people fail, why your first two weeks matter more than your next eight months, and how to know whether an AI agent vendor is actually going to support your success or just take your money.

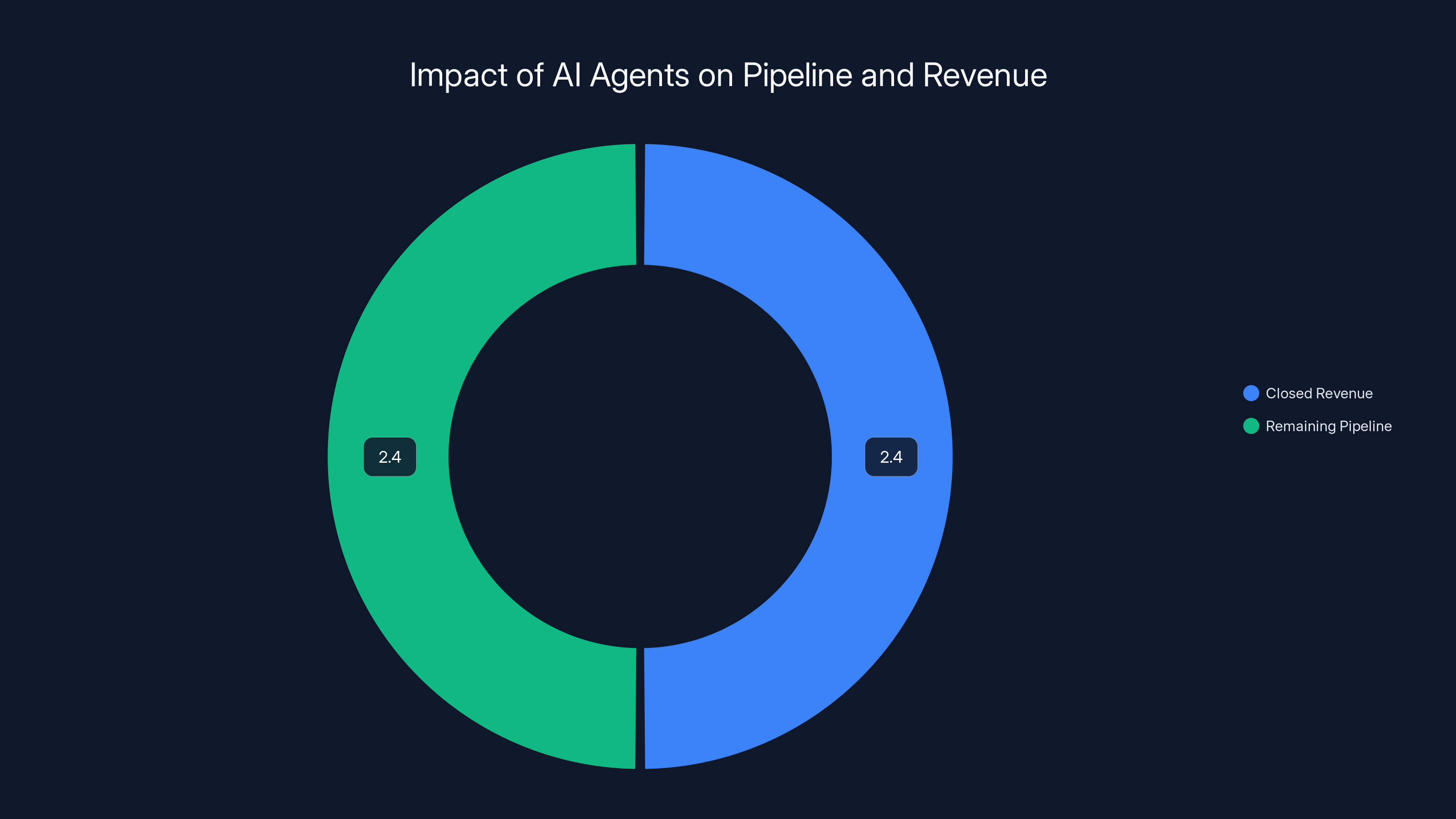

The Real Numbers: What $4.8M in Pipeline Actually Means

Let's start with the results, because they're concrete and they matter.

After eight months of deploying and actively managing AI agents across our go-to-market operation, we've generated

Our deal volume has more than doubled. Our win rates have climbed to nearly double what they were before. We've sent over 60,000 high-quality AI-generated emails on the sales side alone. That doesn't count the nearly 1 million interactions happening through AI-powered apps and automation flows.

Here's the part that matters most: we didn't sacrifice anything to get here. Our existing inbound channels? Still strong. Our traditional outbound? Still running. Our events, our gifting, our community efforts—all of it continues exactly as before. The agents didn't replace our existing engine. They augmented it.

But here's the honest truth nobody broadcasts on social media: we maintain these agents every single day. Not once a week. Not when they break. Every morning, before anything else gets touched, we're checking our agents. We're reading responses. We're validating that nothing is hallucinating. We're making sure they're representing us the way we actually want to be represented.

Amelia and I each spend 15-20 hours per week—that's each, not combined—actively managing these agents. That's not setup. That's not quarterly reviews. That's active, hands-on management of 20+ AI systems that are out there representing our company to thousands of prospects every single day.

At some point, the math becomes obvious: the agents are faster than you. They work 24/7/365. They'll always respond to a prospect. They'll always follow up. They'll always book the meeting. Meanwhile, you're sleeping. You're in meetings. You're handling fires. The humans become the bottleneck, not the agents.

The question then becomes: can your organization operate at that speed? Can you support agents that are moving faster than any human team ever could? That's the real filter for whether AI agents will work for your company.

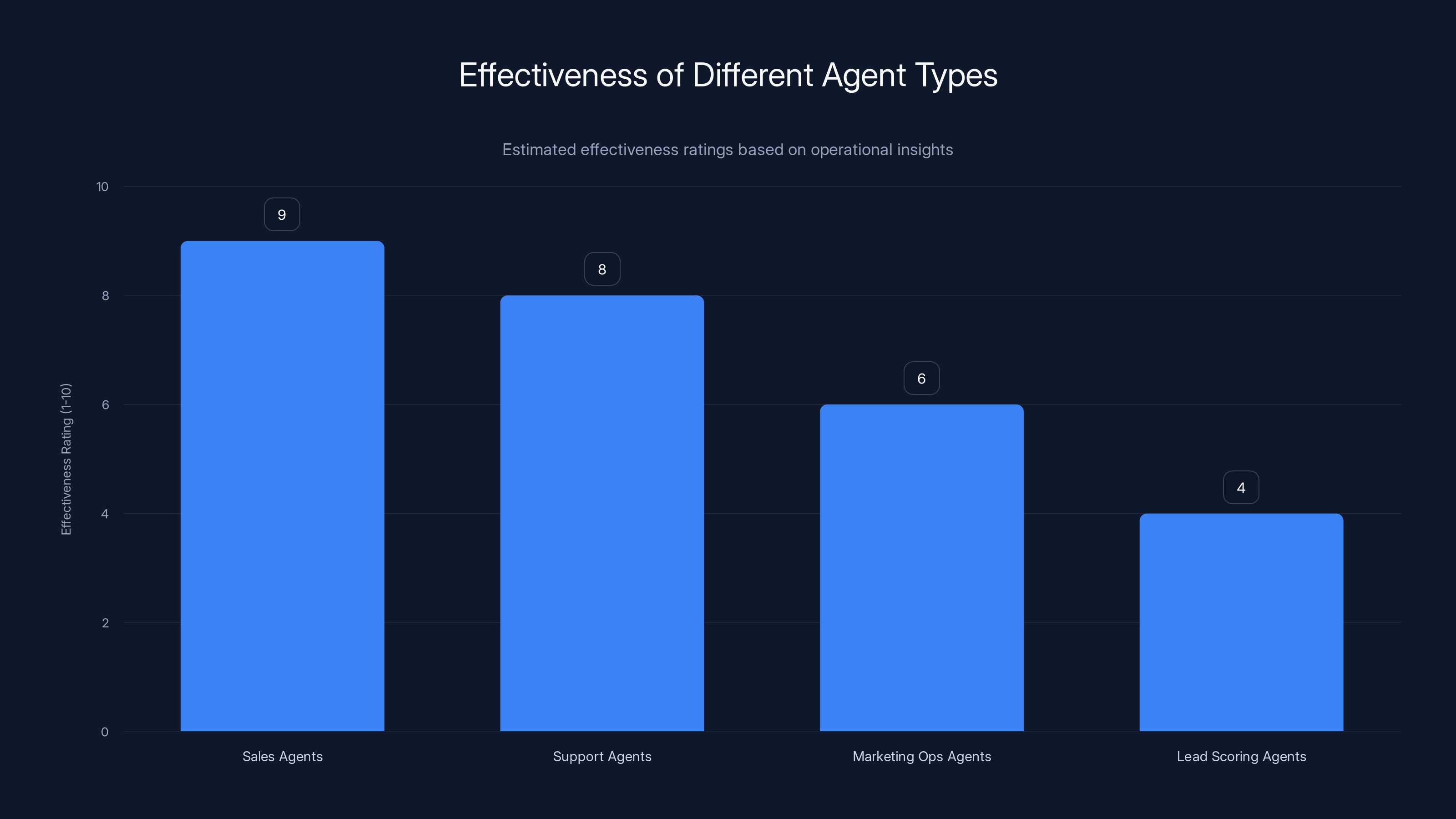

Sales agents are rated highest in effectiveness due to their scalability and process orientation, followed by support agents. Marketing ops and lead scoring agents are less effective, reflecting their experimental status. Estimated data.

The Secret That Separates Success From Failure: Deep Training Cannot Be Self-Trained (Yet)

I was talking with the CEO of a next-generation AI go-to-market platform. They're already doing millions in ARR and are preparing for public launch. I asked what their secret sauce was—what makes their customers succeed when so many other agents fail.

The answer was blunt: "We do everything."

They handle the onboarding. They structure the data. They tag everything. They run the first campaigns. They validate responses. Some customers don't even realize how much human engineering is happening behind the scenes because they experience it as "easy."

That's the learning that changed how I think about agent deployment: if you haven't deployed agents before, you need to have a specific conversation with the right person at any vendor you're evaluating.

Don't talk to a salesperson. Don't talk to a customer success manager who has never actually deployed an agent. Talk to a forward-deployed engineer. Talk to someone in leadership who has literally spent 20+ hours implementing agents for real customers. Ask them: what is it actually going to take in the first 14 days? What about days 15-30? What needs to happen every single day after that?

Then you have to actually do it.

It's like going to the doctor, getting a prescription, and never taking the medicine. The medicine is good. The prescription is sound. But if you don't actually ingest it, nothing changes. The same thing happens with agents. You buy the tool, you implement it halfway, and then you wonder why it's not working.

Many AI agent vendors are pushing downmarket right now. They want to make deployment self-service. They want to lower the barrier to entry. I appreciate the goal. But right now, it doesn't work.

Agents that require deep training cannot be self-trained yet. It will come. Agents improve dramatically every single quarter. But today, if a vendor is telling you that you can buy their tool and have it working well in 48 hours without expert help, I'd be skeptical. That doesn't match what we're seeing.

If you're considering an agent tool, ask for a specific thing: a dedicated resource for the first 30 days. If they won't commit to that, it's a signal. Maybe not a deal-breaker, but a signal worth paying attention to.

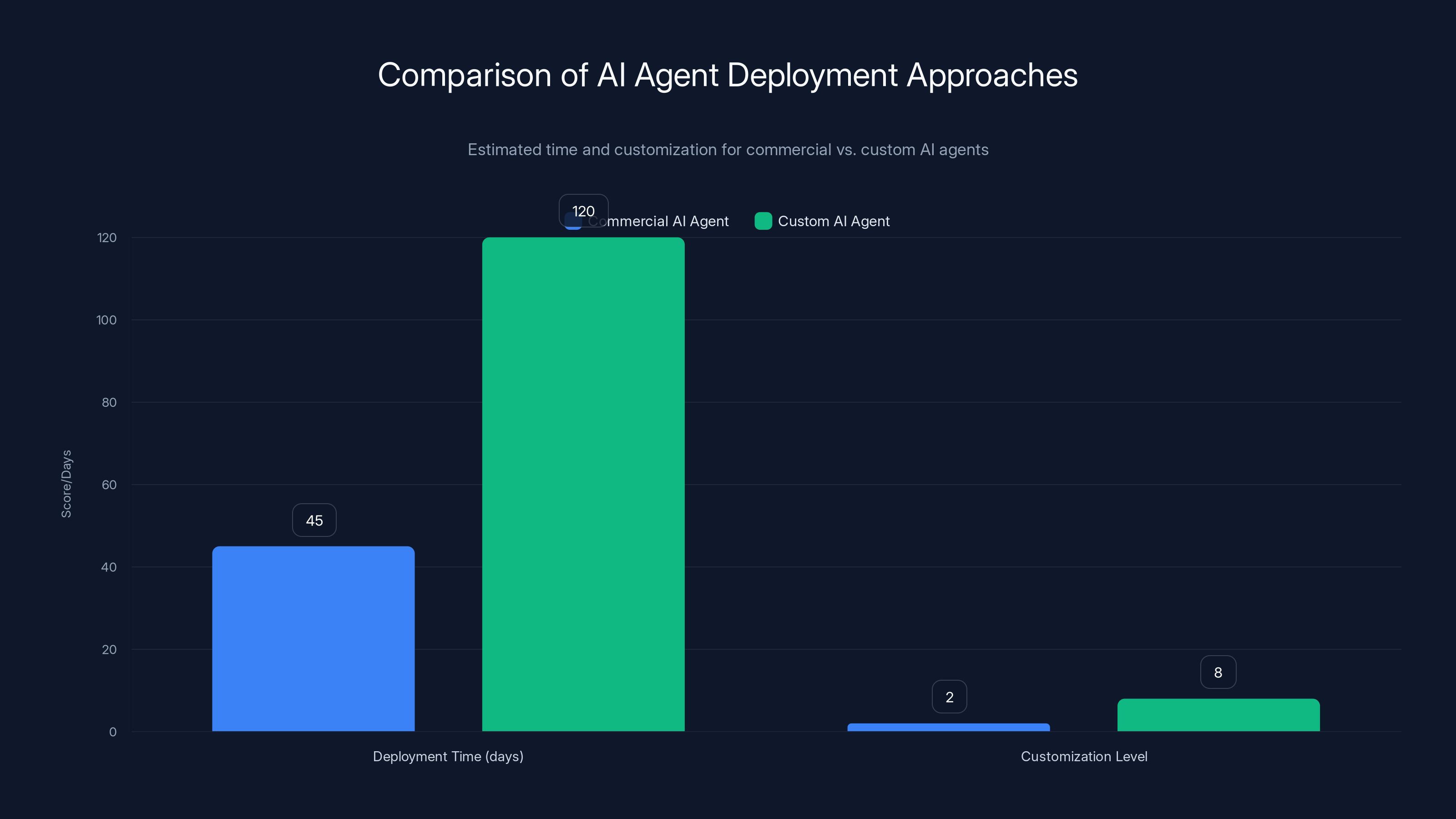

Commercial AI agents are faster to deploy (45 days) but offer less customization (score 2/10) compared to custom agents, which take longer (120 days) but provide higher customization (score 8/10). Estimated data.

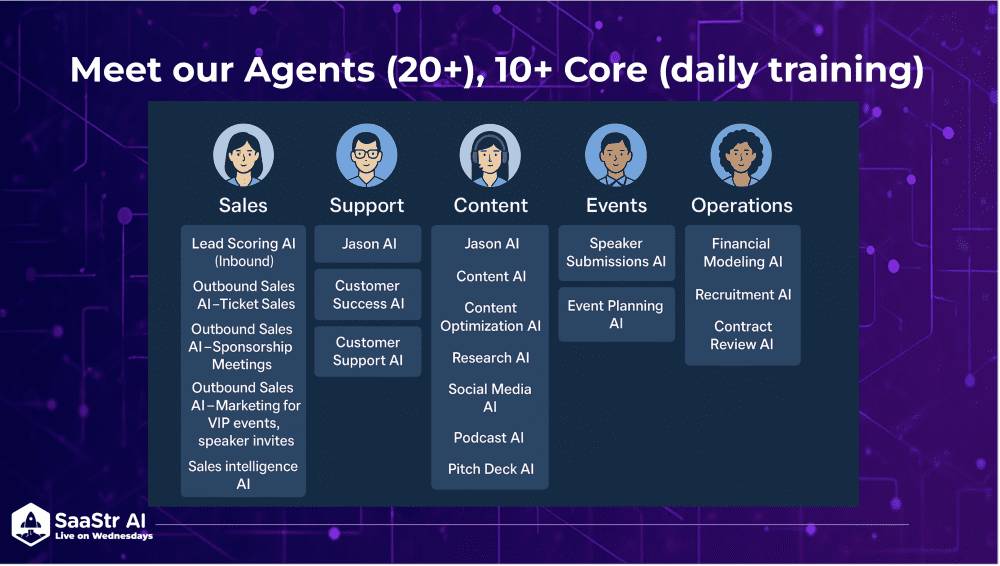

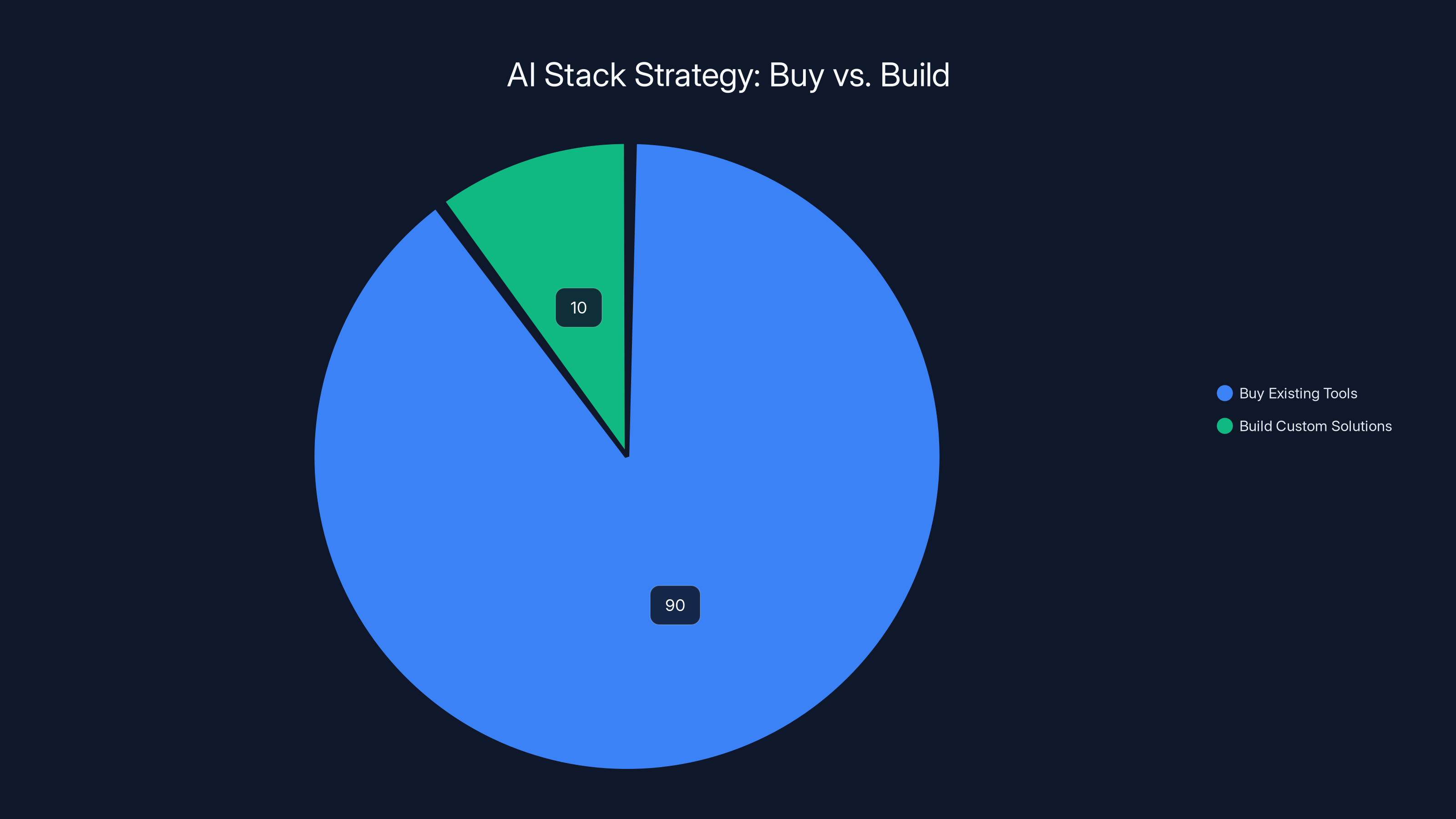

The 90/10 Rule: When to Buy vs. When to Build

Here's the rule we follow: buy 90% of your AI stack. Build only 10% where no vendor can do it well and it's a P1 priority.

We've stuck to this religiously, and it's kept us from burning months building things other companies have already solved. The vast majority of our agents are third-party tools. We've trained them heavily. We've customized them. But the core platform? Bought, not built.

We only built custom agents where we had a very specific use case that no vendor could handle. Our AI VP of Marketing is an example (more on that below). But for most of what we do, a tool already existed that could do 85-90% of what we needed. We focused on the last 10%.

Kyle, the CRO at one of our portfolio companies, followed a similar approach. He bought a bunch of third-party agents first. Made them work. Generated revenue. Then, once he had clarity on what was missing, he hired someone with real credibility—a former founder and engineer who'd actually been a CEO of an LLM company—to build proprietary in-house tooling for the gaps.

That's an extreme case. Most companies shouldn't build custom agents yet. Here's why: the problem is hard. The talent is expensive. The maintenance is heavy. Your vendor is hiring more engineers than you are. They're iterating faster. Their models are improving quarterly. You're stuck maintaining something they're rebuilding.

Focus on making the bought tools work first. Get them to 80% of your desired outcome. Document what the remaining 20% looks like. Then, if it's truly a P1 priority and no vendor offers a path forward, build it.

But I'd wager that for 90% of companies, the vendors will continue to get better and your "need to build" never materializes.

How to Evaluate AI Agent Vendors: Don't Throw Away Basic Discipline

People lose their minds when something has "AI" in the name. They throw away practices that would normally be table stakes. Here's what we do to evaluate any vendor—and you should, too.

First: Ask for a dedicated resource.

I told every single AI vendor we now use the same thing: "I need an FDE [forward-deployed engineer], and I need to talk to real customers who have actually used this."

If they can't commit to a dedicated resource for at least 30 days, the odds of a successful deployment drop dramatically. This person should have authority. They should understand the product deeply. They should be able to make decisions about customization on the fly.

We've never deployed an agent tool without this. It's never been a waste.

Second: Get customer references.

I see far too many companies buying software because they were impressed by a demo. A demo is theater. A real customer who's been using the tool for six months—that's data.

Ask for three references. Not the customer success team's favorite customers. Ask for customers who deployed in different industries, with different use cases. Call them. Ask specific questions: How long did the first 30 days actually take? What surprised you? If you could go back, what would you do differently?

You'll learn more in those three calls than in 10 vendor pitches.

Third: Ask about the operational infrastructure.

This is where most vendors get vague. Ask: What happens if my agent starts hallucinating? What's your SLA for response? How do I know if something is wrong? Who do I call at 2 AM when your agent is telling prospects we have a feature we don't actually have?

If they don't have a clear answer, that's a problem.

Fourth: Understand the data you're providing.

You're going to give these vendors access to your customer data, your email history, your sales process, your CRM. Ask: Where does it live? Who can access it? Can I get it back if I want to leave? What happens after I stop paying?

This should be boring, standard stuff. If the vendor treats it like a tech detail, they're not taking data seriously.

AI agents generated an additional

The Agents That Actually Work: Sales, Support, and Custom Marketing Ops

We're running 20+ agents. They don't all work equally well. Let me break down the ones that drive real value and the ones that are more experimental.

Sales agents work. This is where we've seen the clearest ROI. These agents are handling initial outreach, qualification, meeting booking, and follow-up. They're sending 60,000+ emails per month. The conversion rates are tracking well. They're integrating with our CRM. They're not replacing sales reps. They're doing the work that used to fall to junior AEs or SDRs. The work that was high-volume and low-complexity.

Why do they work? Because sales is a process. It has repeatable steps. Agents are very good at repeatable steps. They're also good at doing them at scale and at speed. A sales agent can call 500 people in a day. A human can call 50. The quality difference isn't 10x. So the math favors the agent.

Support agents are working well. We've deployed them to handle initial customer inquiries, triage tickets, and escalate to humans when needed. They're cutting down the noise that hits our human support team. Customers aren't thrilled with talking to a bot, but they're getting their answer faster. And for the 70% of inbound support that's straightforward, they're getting a good answer from the agent.

The agent doesn't replace your support team. It buys them time to handle the complex stuff.

Content and marketing operations agents are hit-or-miss. We built a custom "AI VP of Marketing" that handles campaign strategy, performance tracking, and optimization recommendations. It's amazing on certain tasks. It's hallucinating confidently on others. It's useful, but it's not production-grade yet. This is the 10% where we decided to build custom because no vendor offered something close enough.

Lead scoring and data quality agents are still experimental. We've deployed a couple that are supposed to clean up CRM data and score leads. They work sometimes. They confidently mark bad data as good sometimes. We're actively iterating on these, but I wouldn't bet your entire lead strategy on them yet.

The pattern: agents work best on high-volume, repeatable processes with clear, measurable outputs. They struggle with nuance, judgment calls, and anything that requires deep context about your specific business.

The Operational Reality: Daily Monitoring and Iteration

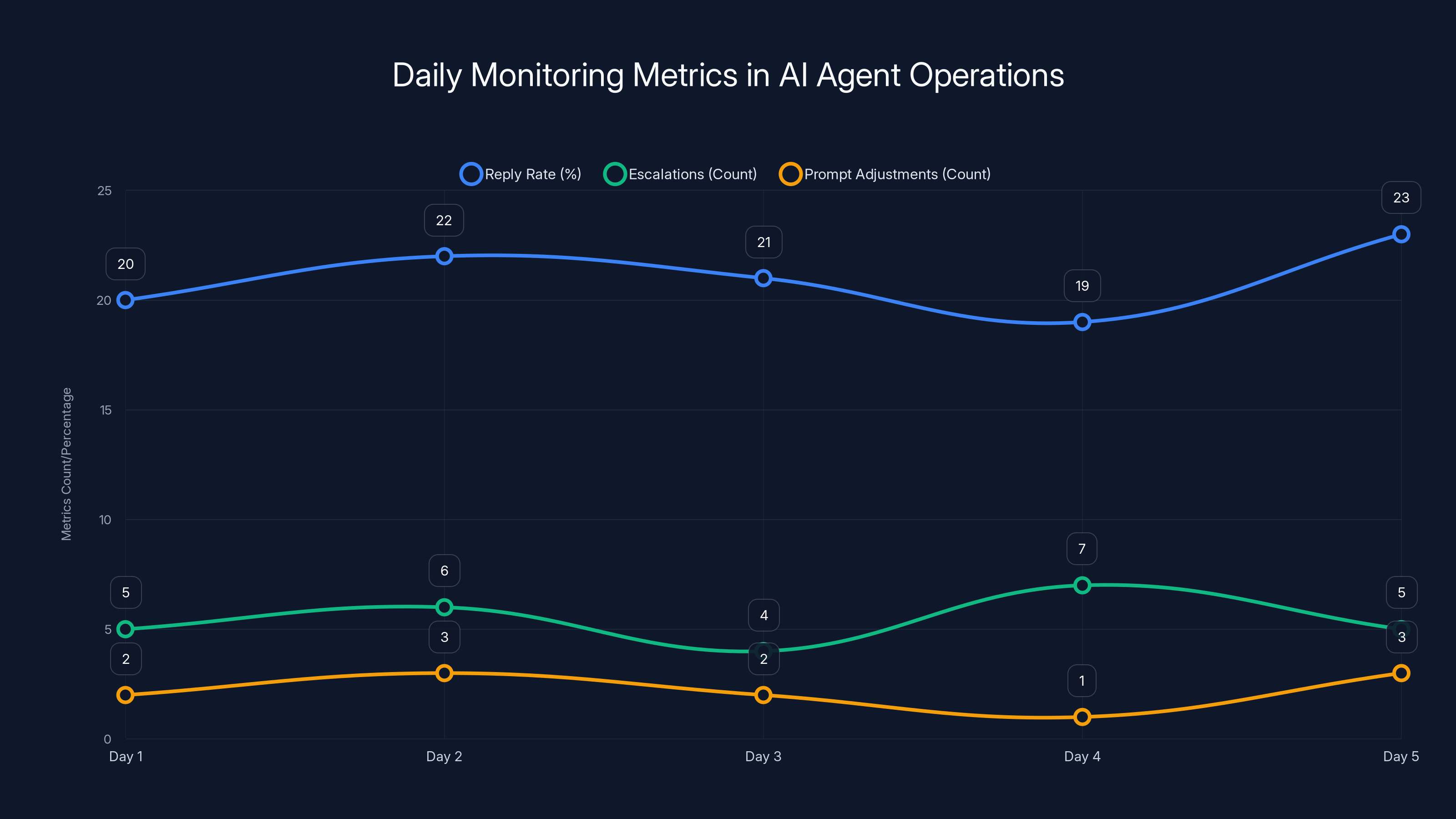

Let me be specific about what "maintaining agents every day" actually means.

Every morning, we're spot-checking responses. We're looking at what the agents sent yesterday. Are they on-brand? Are they saying things we actually believe? Are they making claims we can back up? Are they appropriately setting expectations?

We're also looking at metrics. How many prospects replied? What's the reply rate? Is it trending up or down? If it's trending down, what changed? Did we update the agent prompt last week? Did something shift in the market?
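A rough sketch of the "is the reply rate trending down?" check, assuming you can export daily sent and reply counts from your agent tool. The function names, the 7-day window, and the 20% drop threshold are illustrative assumptions, not values we've tuned.

```python
from statistics import mean


def reply_rate(replies: int, sent: int) -> float:
    """Fraction of sent emails that got a reply; 0.0 when nothing was sent."""
    return replies / sent if sent else 0.0


def trend_alert(daily_rates: list[float], window: int = 7,
                drop_threshold: float = 0.2) -> bool:
    """Return True when the average of the last `window` days is at least
    `drop_threshold` (relative) below the average of the `window` days before."""
    if len(daily_rates) < 2 * window:
        return False  # not enough history to compare two windows
    recent = mean(daily_rates[-window:])
    baseline = mean(daily_rates[-2 * window:-window])
    if baseline == 0:
        return False  # nothing to drop from
    return (baseline - recent) / baseline >= drop_threshold
```

An alert like this only tells you that something moved. The follow-up questions (did the prompt change, did the market shift) still need a human.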

We're looking at escalations. Are prospects flagging the agent as spam? Are they replying with "this seems automated"? If so, we're adjusting. Maybe the tone is too polished. Maybe we need more personalization. Maybe we need to be faster to escalate to a human.

We're also actively iterating on the prompts. Agents are only as good as their instructions. If the prompt says "be casual," but the agent is sounding like a marketing email, we fix the prompt. If the agent is too casual and losing deals, we tighten it.

This is not a quarterly review cycle. This is active, daily management.

Why does it matter? Because agents are consistent in a way that's both a superpower and a liability. If an agent is slightly off, it's consistently slightly off. It will do the same thing 10,000 times before you realize there's a problem. So you need to catch these issues fast.

We also spend time on agent handoff. When an agent starts the conversation but a human needs to take over, that transition matters. The agent needs to provide context. The human needs to understand what the agent already covered. That's engineering work. That's not magic.
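To make "the agent needs to provide context" concrete, here is a minimal sketch of a handoff record. The `HandoffNote` class and its fields are hypothetical; your vendor's handoff payload will look different.

```python
from dataclasses import dataclass, field


@dataclass
class HandoffNote:
    """Context an agent passes along when a human takes over (hypothetical shape)."""
    prospect_email: str
    reason: str  # why the agent is handing off
    topics_covered: list[str] = field(default_factory=list)
    open_questions: list[str] = field(default_factory=list)

    def summary(self) -> str:
        """Render a short plain-text brief for the human picking up the thread."""
        covered = ", ".join(self.topics_covered) or "nothing yet"
        still_open = ", ".join(self.open_questions) or "nothing"
        return "\n".join([
            f"Handoff for {self.prospect_email}",
            f"Reason: {self.reason}",
            f"Already covered: {covered}",
            f"Still open: {still_open}",
        ])
```

The point of the structure is that the human never has to re-ask what the agent already covered.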

The other part of daily management is handling failures. Agents will hallucinate. An agent will confidently tell a prospect that you have a feature you don't have. It will make a claim about pricing that's wrong. It will misunderstand a complex customer question and provide bad guidance.

When that happens, you need a system to catch it, escalate it, and make sure the customer knows the agent was wrong. You also need to update the agent so it doesn't make the same mistake again.
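One crude way to start on the "catch it" part is a pre-send check that flags drafts mentioning prices or features you haven't approved. This is a sketch under assumptions: the price list, the feature list, and `review_flags` are all made up for illustration, and keyword matching will miss plenty of real hallucinations.

```python
import re

APPROVED_PRICES = {"$49", "$199"}           # hypothetical approved price points
UNSUPPORTED_FEATURES = {"sso", "on-prem"}   # hypothetical features we do NOT offer

# Matches dollar amounts such as $49, $1,200 or $19.99
PRICE_PATTERN = re.compile(r"\$\d+(?:,\d{3})*(?:\.\d+)?")


def review_flags(message: str) -> list[str]:
    """Return reasons a draft should go to a human before it is sent."""
    flags = []
    for price in PRICE_PATTERN.findall(message):
        if price not in APPROVED_PRICES:
            flags.append(f"unapproved price: {price}")
    lowered = message.lower()
    for feature in sorted(UNSUPPORTED_FEATURES):
        # Word boundaries keep "sso" from matching inside words like "lesson"
        if re.search(rf"\b{re.escape(feature)}\b", lowered):
            flags.append(f"mentions unsupported feature: {feature}")
    return flags
```

Anything that comes back with flags gets held for review instead of being sent. The daily read-through of responses still matters, because a check like this only knows about the mistakes you've already thought of.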

This is operational burden. This is not something you can delegate to customer success. This needs to be someone with authority over the agent's behavior.

Daily monitoring involves tracking reply rates, escalations, and prompt adjustments to ensure AI agents remain effective. Estimated data shows fluctuations in these metrics over a typical week.

The Prompt Engineering Work: Why This Matters More Than the Model

Every agent is driven by a prompt. The prompt is the instructions you're giving the AI model about how to behave.

"You are a sales development representative for SaaStr. Your job is to qualify leads and book meetings. When you encounter a prospect who doesn't fit our target market, politely decline to continue the conversation."

That's a prompt. It's not magical. But it matters enormously.

Here's what we've learned: most teams spend 5-10% of their effort on prompt engineering and 90% on tool selection. It should be the opposite.

The model quality matters, but not as much as people think. A slightly less sophisticated model with a great prompt will outperform a more powerful model with a mediocre prompt.

Why? Because the prompt defines the agent's actual behavior. The prompt tells it how to think about edge cases. The prompt tells it when to escalate to a human. The prompt tells it what to optimize for.

We spend significant time on prompt iteration. We A/B test prompts. We measure which versions drive better reply rates, better qualification, better meeting quality. This is where the real work is.

Here's what an iterative prompt cycle looks like:

- You notice a pattern in agent responses. Maybe it's too aggressive. Maybe it's not personalizing enough. Maybe it's making promises that are too strong.

- You hypothesize about what in the prompt is driving that behavior.

- You change one thing in the prompt. Just one thing.

- You run it against a small segment of your audience for 3-5 days.

- You measure the impact. Does the change improve the metric you care about?

- You either keep the change, iterate further, or revert.
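For the "measure the impact" step, a standard two-proportion z-test is one way to judge whether a difference in reply rates between two prompt versions is more than noise. This is a generic statistics sketch, not a description of our tooling; the function name is hypothetical.

```python
from math import erf, sqrt


def two_proportion_z(replies_a: int, sent_a: int,
                     replies_b: int, sent_b: int) -> tuple[float, float]:
    """Compare reply rates of prompt A and prompt B.

    Returns (z, p_value) for a two-sided two-proportion z-test.
    A positive z means prompt B had the higher reply rate.
    """
    p_a = replies_a / sent_a
    p_b = replies_b / sent_b
    pooled = (replies_a + replies_b) / (sent_a + sent_b)
    se = sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - cdf)
```

With small segments and a 3-5 day window, expect a lot of inconclusive results. That is an argument for changing one thing at a time, not for skipping the measurement.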

This is not complicated. But it requires discipline. Most teams run an agent for a month, never touch the prompt, and then wonder why it's not working.

The Integration Complexity: Why Your CRM Matters More Than Your Agent

An agent is only useful if it can integrate with your business systems. An agent that can't read your CRM and update it is basically sending emails into the void.

This is where a lot of deployments fall apart. A vendor might have great agents, but their CRM integration is clunky. It's slow. It requires manual setup. It breaks when you update your CRM.

When we evaluated vendors, we asked about integration before anything else. Can it integrate with HubSpot? Salesforce? How deep? Can it read custom fields? Can it update your status fields? Can it create new objects? How fast?

If a vendor treats integration like an afterthought, their agent is not going to be as useful as it could be.

Here's what we prioritize:

Data flow into the agent. The agent needs to know about your existing customers, your deals, your pipeline. It needs to be able to pull context. An agent that doesn't know a prospect is already a customer will have a really awkward conversation.

Data flow out of the agent. Every action the agent takes needs to update your systems. New contact created? Update CRM. Meeting booked? Update CRM with the meeting time and attendees. Customer replied? Log the interaction.

Speed. The integration should happen in seconds, not hours. If there's an 8-hour delay between your agent booking a meeting and that meeting showing up in your calendar, you have a problem.

Error handling. What happens when the integration fails? Does the agent retry? Does it alert a human? Does it silently fail?
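Here is roughly what a good answer to those error-handling questions looks like in code: retry a few times with backoff, then alert a human instead of failing silently. The `push` and `alert` callables are placeholders for whatever your CRM client and paging tool provide.

```python
import time
from typing import Callable


def push_with_retry(push: Callable[[], None],
                    alert: Callable[[str], None],
                    attempts: int = 3,
                    base_delay: float = 1.0,
                    sleep: Callable[[float], None] = time.sleep) -> bool:
    """Try a CRM update up to `attempts` times with exponential backoff.

    Returns True on success. On final failure, calls `alert` with a message
    and returns False, so the failure is never silent.
    """
    for attempt in range(1, attempts + 1):
        try:
            push()
            return True
        except Exception as exc:  # broad on purpose: any failure should surface
            if attempt == attempts:
                alert(f"CRM update failed after {attempts} attempts: {exc}")
                return False
            sleep(base_delay * 2 ** (attempt - 1))  # waits 1s, 2s, 4s, ...
    return False
```

The `sleep` parameter is only there so the function can be tested without actually waiting.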

Most agents don't integrate as well as they should. This is a place where you want to push your vendor. If their integration is mediocre, everything downstream suffers.

According to the 90/10 Rule, companies should buy 90% of their AI stack and only build 10% for unique needs. Estimated data.

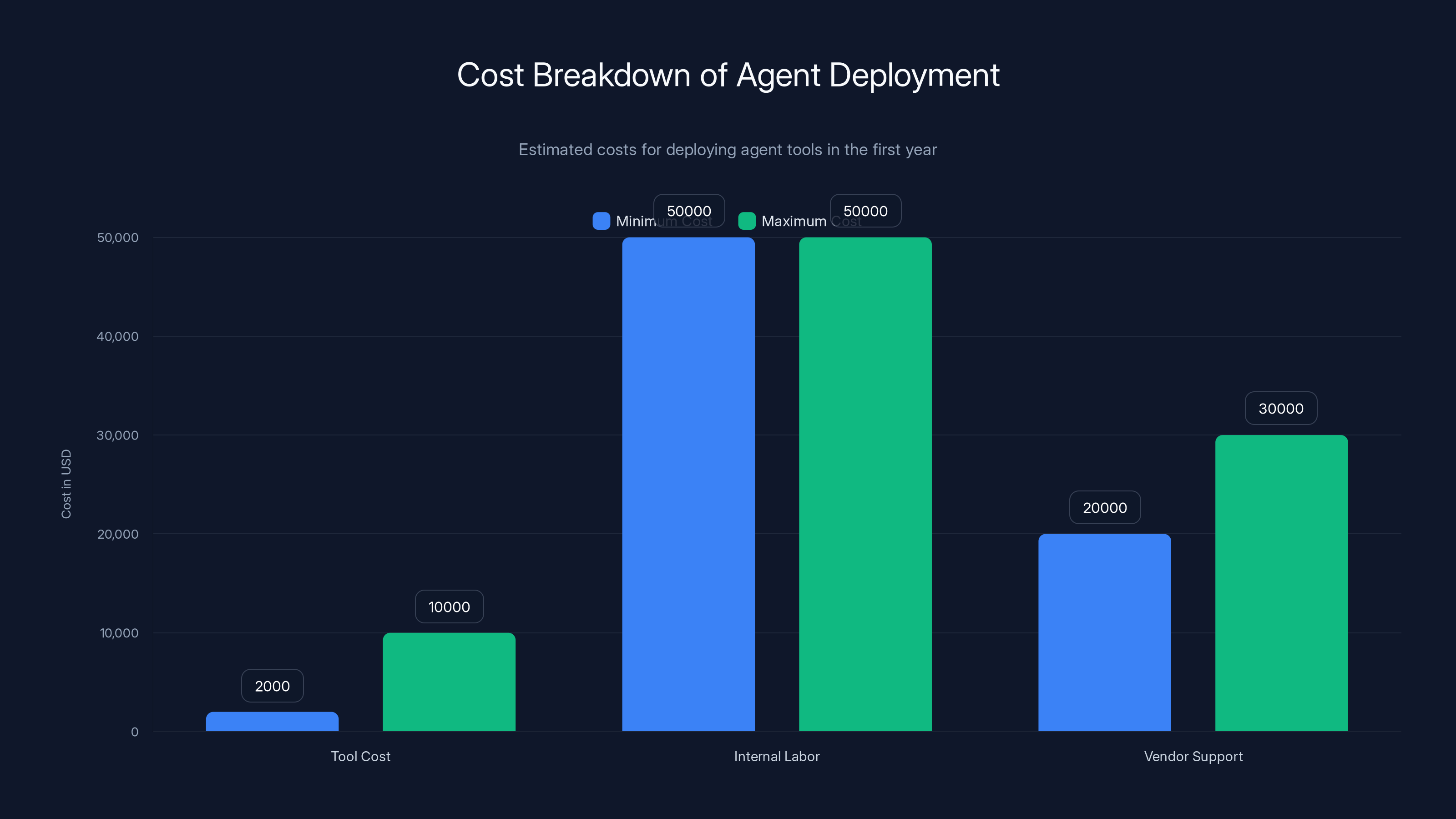

The Economics: What Should This Cost vs. What Are You Actually Spending?

Here's what I see most companies get wrong: they look at the cost of the agent tool and assume that's their total investment.

Tool cost: maybe

One person managing agents 50% of their time = approximately

Add in the cost of a forward-deployed engineer from the vendor for the first 30 days. That's probably

So your first-year cost for a real agent deployment is probably

Is that worth it? For us,

You need a certain scale for agents to make economic sense. If you're below $5M ARR, you probably should focus on simpler, lighter-touch automation before going full-scale on agents.

Here's what I'd recommend for budgeting:

Year 1: Assume

Year 2: Assume half that. You've got operational discipline now. Your internal team is trained. You need less vendor support.

Year 3+: Assume the tool cost plus minimal internal labor.

But here's the thing: this assumes you actually generate ROI. If you deploy an agent and get nothing, the cost is wasted. So the calculus needs to be: can this agent generate enough value to justify $100K in first-year cost?

For sales agents targeting your existing TAM, the answer is usually yes. For experimental agents in new areas, maybe not.
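The break-even question can be written down as simple arithmetic. This sketch assumes closed revenue is just pipeline times win rate, and it ignores margin and sales-cycle length. The win rate you plug in is your own number, not something from this article.

```python
def required_pipeline(first_year_cost: float, win_rate: float) -> float:
    """Pipeline an agent must source for closed revenue to cover its cost."""
    if not 0 < win_rate <= 1:
        raise ValueError("win_rate must be in (0, 1]")
    return first_year_cost / win_rate


def covers_cost(pipeline: float, win_rate: float, first_year_cost: float) -> bool:
    """True when expected closed revenue meets or exceeds first-year cost."""
    return pipeline * win_rate >= first_year_cost
```

For example, `required_pipeline(100_000, 0.25)` asks how much pipeline a $100K first-year deployment needs at a hypothetical 25% win rate.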

The Hiring Reality: What Kind of Person Can Actually Manage Agents

We've learned something unexpected about who can manage agents well.

It's not the person who's most bullish on AI. It's not the person who's best at coding. It's the person who is obsessive about getting small details right.

Why? Because managing an agent is like managing a junior sales rep who is extremely consistent and never gets tired, but also makes the exact same mistakes if you don't correct them.

You need someone who will read 50 agent responses and notice that three of them have a subtle tone issue. You need someone who will see that the agent is closing emails with "Looking forward to connecting" 95% of the time and recognize that as a problem that needs fixing.

You need someone who cares about the small stuff because the small stuff compounds.
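A detail-obsessed person will spot a repeated sign-off by eye, but a small script can back them up. This sketch assumes you have the email bodies as plain strings and that the last non-empty line is the sign-off. Real emails with signatures would need that stripped first.

```python
from collections import Counter


def closing_line_share(emails: list[str]) -> tuple[str, float]:
    """Return the most common final non-empty line (lowercased) and the
    share of emails that end with it."""
    closings = []
    for body in emails:
        lines = [ln.strip() for ln in body.splitlines() if ln.strip()]
        if lines:
            closings.append(lines[-1].lower())
    if not closings:
        return "", 0.0
    line, count = Counter(closings).most_common(1)[0]
    return line, count / len(closings)
```

If one closing line shows up in the large majority of sends, that is a signal to vary the prompt.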

Amelia, who manages most of our agents, comes from a customer success and operations background. Not sales. Not engineering. She's detail-oriented, she cares about customer experience, and she's obsessive about process improvement. Those three things matter more than any other qualification.

When we hire for agent management, we look for:

- Operations or customer success background (comfort with process)

- Obsessive attention to detail (catching agent issues before customers do)

- Comfort with iterative improvement (not expecting perfect, expecting better)

- Ability to escalate problems without panicking

- Time. This job is 20 hours a week minimum.

If you have the budget for one person to manage agents, make sure they have those qualities. If you don't, don't deploy agents. They'll fall apart.

The total cost of ownership for agent deployment in the first year ranges from

Common Failure Modes: What Actually Goes Wrong

We've seen a lot of agent deployments fail. Here are the patterns.

Failure Mode 1: Set-and-forget.

Company buys an agent tool. Deploys it. Checks on it once a month. Wonders why it's not working. The agent drifts. The prompt is outdated. The integration with the CRM is broken. The agent is sending emails that don't match your current positioning.

Before you deploy an agent, commit to active management. If you can't commit, don't deploy.

Failure Mode 2: Over-automating judgment calls.

You try to automate things that require nuance. Should we follow up with this prospect or leave them alone? Is this a good fit for our product? Should we offer a discount?

Agents are not good at judgment calls. They're good at repeatable, clear-cut tasks. Don't try to use them for ambiguous decisions.
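One simple guard is to route anything that smells like a judgment call to a human before the agent answers. The topic list and `route` function below are hypothetical. In practice most of this logic lives in the prompt and in your vendor's escalation settings.

```python
# Hypothetical topics that should always go to a person
JUDGMENT_TOPICS = ("discount", "contract", "refund", "legal")


def route(prospect_message: str) -> str:
    """Return "human" when the message touches a judgment-call topic,
    otherwise "agent"."""
    text = prospect_message.lower()
    if any(topic in text for topic in JUDGMENT_TOPICS):
        return "human"
    return "agent"
```

Keyword routing is blunt, and it will over-escalate. For ambiguous decisions, that is the right direction to be wrong in.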

Failure Mode 3: Not training the agent.

You buy an agent. You give it the default training data. You deploy it. The agent doesn't understand your specific positioning, your specific customer, your specific process. It hallucinates.

Agents need training specific to your company. That takes time.

Failure Mode 4: Not measuring the right thing.

You measure agent activity (emails sent, meetings booked) instead of agent output (quality of meetings, close rates, customer feedback). You get distracted by vanity metrics.

Measure what matters. Is the agent generating pipeline? Is it qualified? Is it closing?
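A sketch of what "measure outcomes" can look like once deals are tagged by source. The `Deal` shape and the source labels are assumptions. The idea is to compare agent-sourced pipeline against your other channels on qualification and win rate, not on volume.

```python
from dataclasses import dataclass


@dataclass
class Deal:
    source: str      # e.g. "agent" or "inbound" (hypothetical labels)
    qualified: bool  # did the meeting turn into a qualified opportunity?
    won: bool        # did the deal close?


def outcome_rates(deals: list[Deal]) -> dict[str, dict[str, float]]:
    """Per-source qualification rate and win rate across all deals."""
    totals: dict[str, dict[str, int]] = {}
    for deal in deals:
        t = totals.setdefault(deal.source, {"n": 0, "qualified": 0, "won": 0})
        t["n"] += 1
        t["qualified"] += deal.qualified  # True counts as 1
        t["won"] += deal.won
    return {
        source: {
            "qualified_rate": t["qualified"] / t["n"],
            "win_rate": t["won"] / t["n"],
        }
        for source, t in totals.items()
    }
```

If the agent's numbers trail your other sources by a wide margin, the emails-sent count is a vanity metric.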

Failure Mode 5: Not having a plan for failure.

Your agent makes a mistake. It tells a customer something that's wrong. There's no process to catch it, fix it, and make sure the customer knows what happened.

Before you deploy an agent that talks to your customers, have a failure protocol.

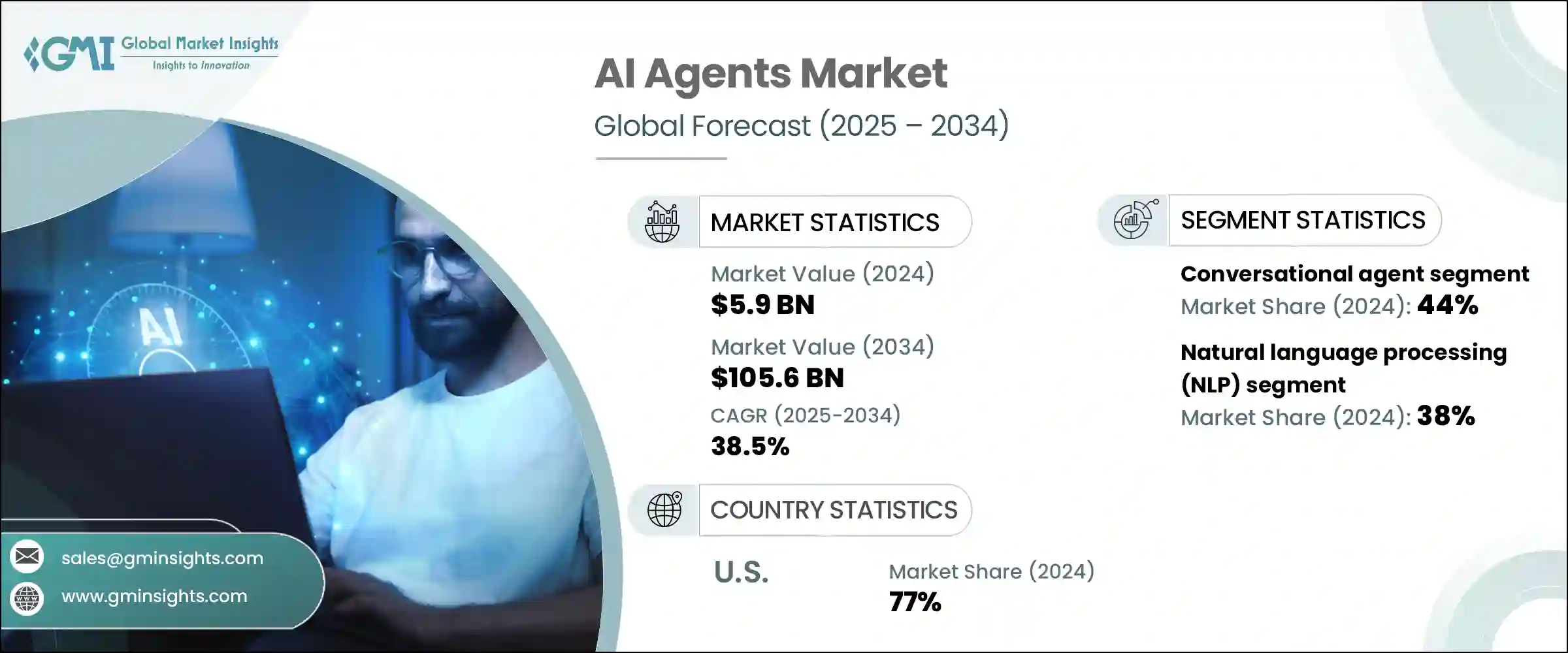

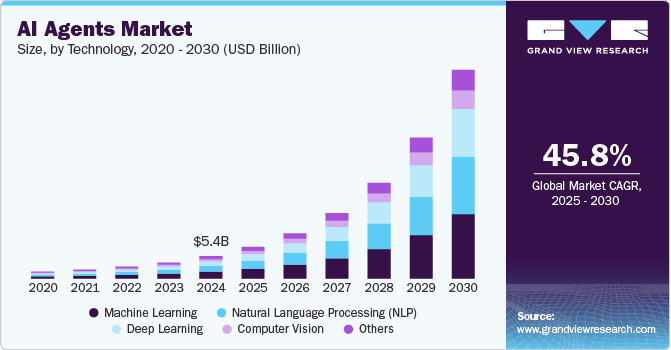

The Future: Where Agents Are Heading

I'm bullish on agents. But I'm bullish in a specific way. I don't think they're going to replace humans. I think they're going to handle the parts of your business that are high-volume, repeatable, and low-judgment.

Here's what I expect to change:

Self-training will improve. Right now, agents require deep human input during setup. Over the next 2-3 years, I expect agents to get much better at learning from your data without as much manual work. Not fully self-service yet, but better.

Integration will become seamless. Today, integration is a pain point. In a couple of years, I expect agents to come pre-integrated with the major platforms. That integration will be faster and more reliable.

Hallucination will decrease. This is more of a core model problem, but we'll see improvements. Not elimination—hallucination might always be an edge case risk. But it'll become rarer.

The bar for success will increase. Right now, the companies succeeding with agents have operational discipline. That's a competitive advantage. As agents improve, that advantage will shrink. Success will require deeper integration with your business, not just deploying the tool.

The companies that will win with agents are the ones investing now. You're ahead of the curve. But the curve is moving fast, and competitors are coming.

The Bottleneck Is Still Human: When to Pull Back

Here's the honest truth that nobody wants to hear: agents can scale your operation, but they can't scale if you can't support them.

If your team is already stretched thin, adding agents will make things worse. You'll deploy them, they'll start generating results, and then you'll be overrun.

Before you deploy agents, make sure you have people who can handle the results.

For sales agents: Can your sales team handle 2x the meetings? Can your deal management process handle 2x the pipeline?

For support agents: Can your support team handle the escalations? Or will they just see more work?

For marketing agents: Can you execute on 3x the campaigns the agent suggests?

If the answer to any of these is no, start smaller. Deploy agents in a specific area. Let your team get comfortable with it. Then expand.

The last thing you want is agents that are too good—generating results that you can't handle.

What We'd Do Differently: Hindsight Insights

If I could go back eight months and start over, here's what I'd do differently.

Start with monitoring infrastructure first.

We spent the first month dealing with agent issues we should have caught immediately. If I'd set up proper monitoring and alerting before deploying broadly, we would have caught hallucinations faster. We would have spotted quality issues before they impacted customers.

Set up monitoring before you deploy. Not after.

Invest more in prompt engineering early.

We did basic prompt work upfront, then got more sophisticated over time. If I could do it over, I'd hire a prompt engineer or spend more time on it myself before any real deployment.

The agent quality jumps dramatically when you iterate on the prompt. We should have done more of that upfront.

Get operational buy-in before you deploy.

Our sales team didn't fully believe in the agents at first. There was friction. Over time, as they saw results, they got on board. But if we'd spent more time getting alignment upfront—showing them the data, getting their input on what they needed—the transition would have been smoother.

Document everything.

We've learned from a thousand edge cases. Each one has taught us something about how to configure agents, how to prompt them, how to handle failures. We should have documented this systematically from day one.

If you're going to deploy agents, set up a knowledge base for what works and what doesn't. You'll need it.

FAQ

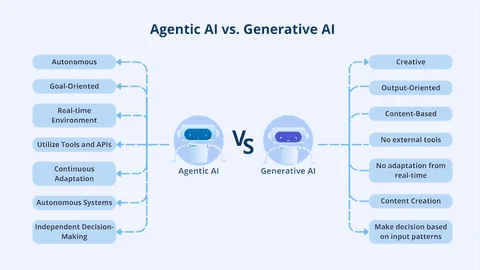

What exactly are AI agents and how are they different from regular AI tools?

AI agents are autonomous systems that can take actions independently based on prompts and instructions. Unlike regular AI tools that respond to direct queries, agents continuously perform tasks, make decisions, and interact with your business systems (CRM, email, calendar) without being asked each time. A regular AI tool might draft an email when you ask it to. An AI agent sends emails continuously, books meetings, qualifies leads, and updates your CRM automatically.

How long does it actually take to deploy an AI agent successfully?

True deployment takes 30-60 days if you have the right resources and vendor support. The first two weeks are critical—this is when you're doing initial training, configuration, and integration work. Many deployments fail because teams expect results in week one and haven't invested the operational groundwork. The agent continues improving for months after initial deployment through ongoing prompt iteration and refinement.

What's the difference between buying a commercial agent tool and building a custom agent in-house?

Commercial tools are faster to deploy and maintain but less customized to your specific workflow. Building custom agents takes significantly longer, requires specialized talent, and needs ongoing maintenance. The recommendation is to buy 90% of your solution from commercial vendors and only build custom agents for the 10% of use cases that are truly unique to your business and high priority. Most companies should start with commercial tools.

Why do some companies' AI agents produce garbage results while others see millions in pipeline?

The difference is operational discipline. Companies that succeed treat agents as systems that require active management: daily monitoring, weekly prompt iteration, integration with business systems, and a dedicated person managing them. Companies that fail buy the tool and expect it to work independently. An unmanaged agent drifts. It hallucinates. It makes inappropriate claims. The model quality matters less than the human management layer around it.

What should I measure to know if my AI agents are actually working?

Measure outcomes, not activity. Don't just count emails sent or meetings booked. Measure what matters: pipeline quality, deal velocity, close rates, customer satisfaction, and whether agent-sourced deals close at rates similar to your other pipeline sources. If your agents are generating volume but poor quality, they're a liability, not an asset. Good agents should increase both quantity and quality of your opportunities.

How much does it actually cost to deploy and run AI agents at scale?

First-year total cost of ownership is typically

What kind of person should I hire to manage AI agents?

Look for someone from an operations or customer success background with obsessive attention to detail and comfort with iterative improvement. You need someone who will read agent responses and notice subtle tone problems, who cares about consistent messaging, and who can escalate issues without panicking. Sales or engineering backgrounds are less important than the ability to manage processes and catch small issues before they compound. The role is roughly 50% monitoring, 25% prompt iteration, and 25% escalation management.

Can AI agents really integrate with our existing CRM and business tools?

Yes, but the quality of integration varies significantly between vendors. Before choosing an agent platform, evaluate their integration capabilities thoroughly. Can it read your custom fields? Update multiple fields simultaneously? Integrate in real time or near real time? What happens when the integration fails? Poor integration limits the agent's usefulness significantly because it can't access the context it needs or update systems with the actions it takes. Integration quality should be a primary evaluation criterion.

What's the single biggest mistake companies make when deploying AI agents?

Deploying without a management plan. Companies buy the tool, activate it, and then assume it will work independently. Without daily monitoring, weekly prompt iteration, and clear accountability for agent behavior, agents drift. They make mistakes. They don't improve. Successful agent deployment requires commitment from a real person to actively manage them every single day. If you don't have that capacity, don't deploy.

How do AI agents handle edge cases and when should they escalate to humans?

This is defined entirely by your prompt—your instructions to the agent about how to behave. You need to explicitly tell the agent what situations require human escalation, what edge cases look like, and how to handle them gracefully. A well-configured agent knows its limits. It can say "I need a human expert for this." A poorly configured agent will confidently make something up. Building in escalation logic is critical to preventing customer damage.

Key Takeaways

AI agents work. They work really well. But they don't work the way the marketing materials suggest. They require operational infrastructure, active management, and disciplined integration with your existing systems. The companies seeing ROI are the ones treating agents like they matter—checking them daily, iterating on prompts weekly, and having a dedicated person responsible for their success. Start with high-volume, repeatable processes. Get one agent type working well before expanding. Hire for operational discipline, not AI expertise. And accept that you can't outsource the management to a tool—you need a human in the loop every single day. The barrier to entry is low. The barrier to success is discipline.
