Roblox Lawsuit: Understanding the LA County Child Safety Crisis [2025]

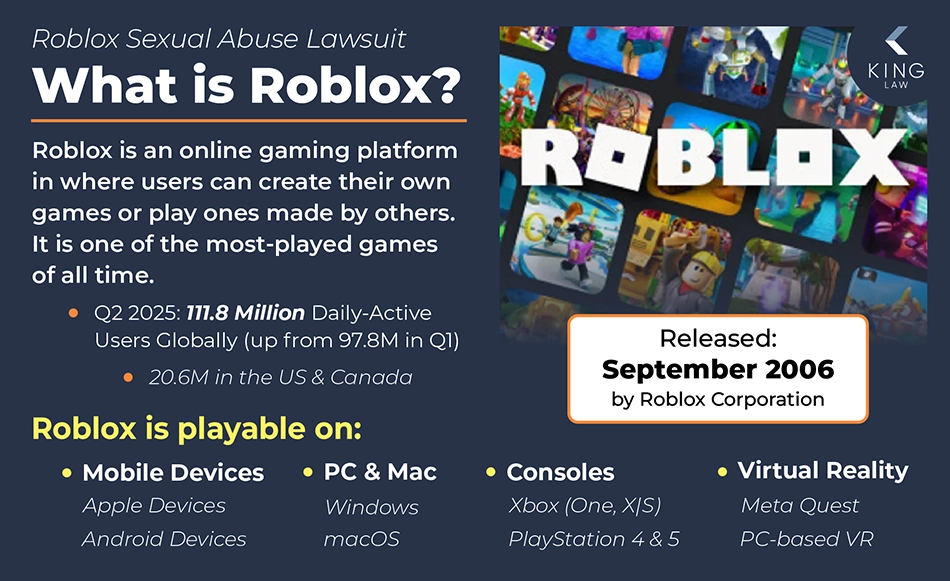

When a gaming platform with 144 million daily active users becomes the subject of a major lawsuit from Los Angeles County, it's not just another corporate dispute. It's a wake-up call about how technology companies handle child safety in spaces where kids spend hours every day.

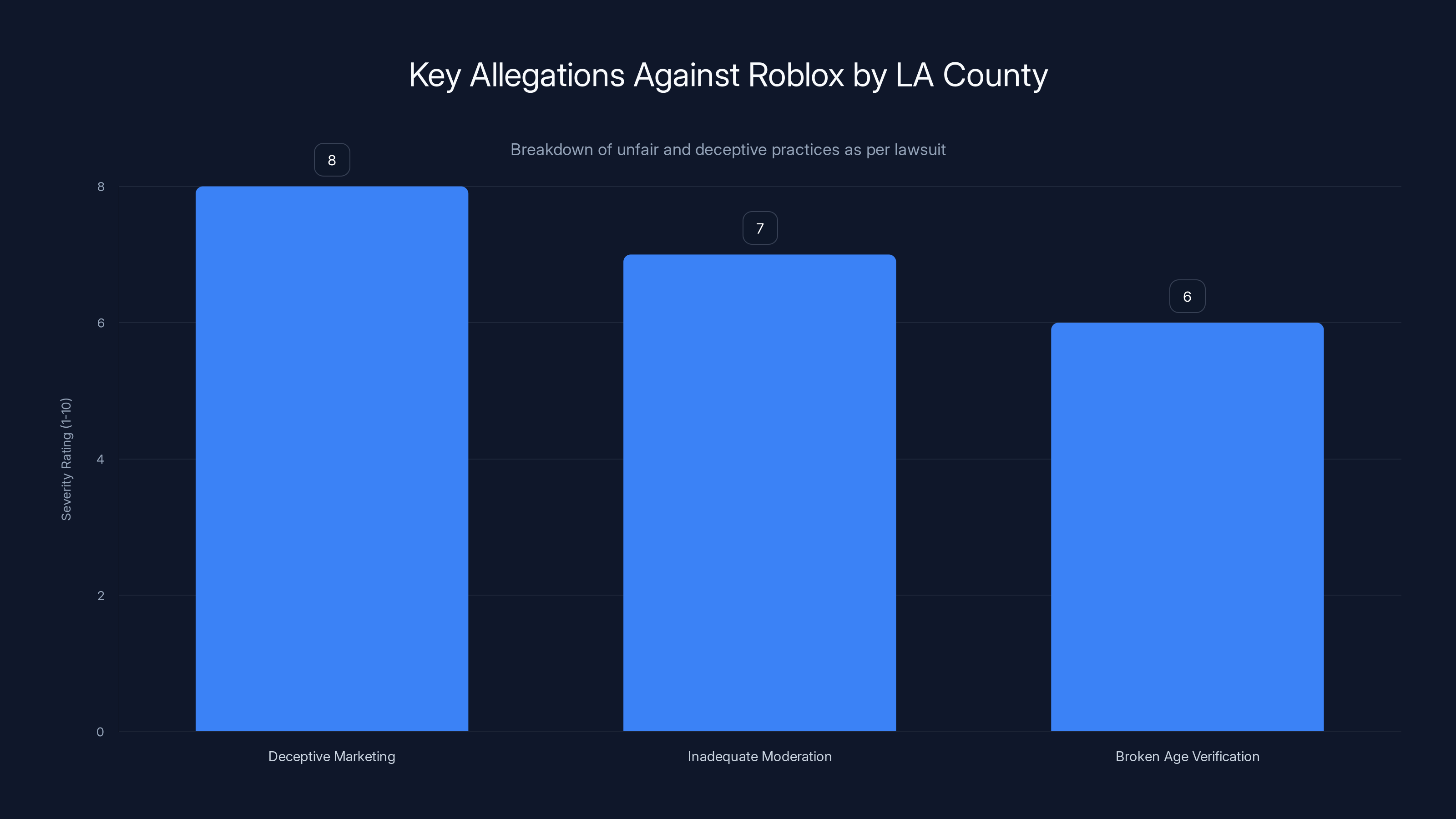

In early 2025, Los Angeles County filed a lawsuit against Roblox Corporation, accusing the platform of engaging in unfair and deceptive business practices by failing to adequately protect children from grooming, exploitation, and sexual predation. The complaint doesn't mince words. It alleges that Roblox markets itself as a safe space for kids while simultaneously operating a platform where predators find easy targets due to weak moderation, insufficient age verification, and inadequate safety features.

This isn't an isolated incident. Roblox has faced similar legal action from Louisiana, Texas, Florida, and Kentucky, each detailing disturbing patterns of child endangerment. One case involved a predator using voice-altering technology to impersonate a child and lure young players into exploitative interactions. These aren't hypothetical risks—they're documented cases where the platform's failures allegedly enabled real harm.

The lawsuit raises fundamental questions about platform responsibility, corporate accountability, and whether current safety measures are sufficient. For parents, educators, and policymakers, it highlights a critical gap between how companies market themselves and how they actually operate. For the gaming industry, it signals that regulatory scrutiny and legal consequences for inadequate child protection are only going to intensify.

This article breaks down what the lawsuit alleges, what Roblox's response has been, how the platform's safety systems actually work (and where they fall short), what other states have claimed, and what this means for the future of gaming platforms and child protection online.

TL;DR

- LA County alleges Roblox knowingly markets an unsafe platform: The complaint states Roblox portrays itself as safe while failing to implement adequate moderation, age verification, and content controls that would prevent predatory behavior.

- Multiple states are suing for similar reasons: Louisiana, Texas, Florida, and Kentucky have filed or are pursuing legal action against Roblox, citing consistent failures to protect children from sexual exploitation and grooming.

- The platform has 144 million daily users, 40%+ under age 13: Despite marketing heavily to children, Roblox's safety infrastructure lags behind what experts recommend for platforms with this demographic concentration.

- Roblox claims it has "advanced safeguards": The company argues it monitors content, blocks image sharing in chat, and now requires selfie-based age verification—but critics say these measures come too late and aren't enforced consistently.

- This lawsuit could reshape platform accountability standards: If LA County prevails, it could establish legal precedent forcing gaming platforms to implement significantly stronger child protection measures or face major financial penalties.

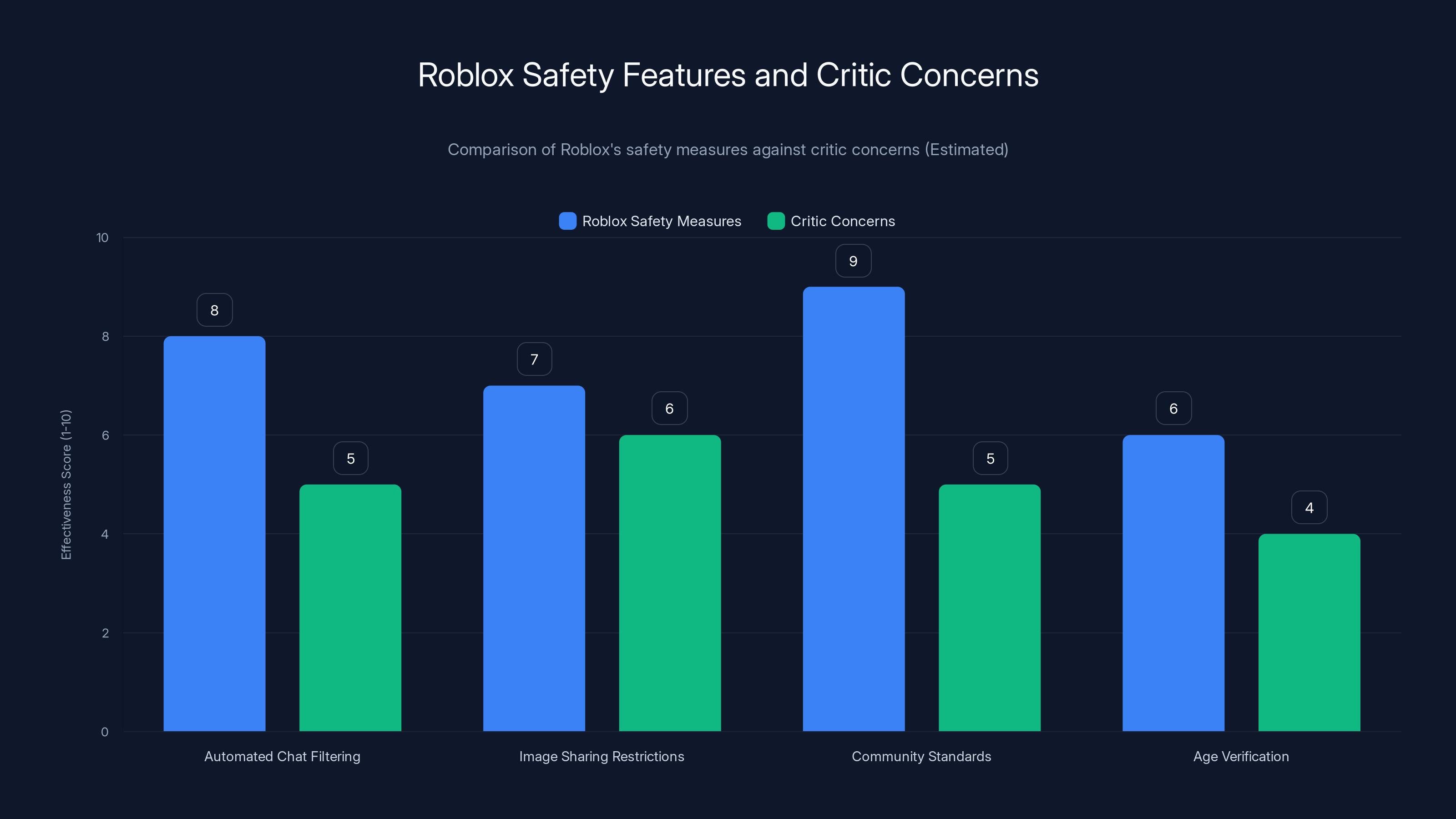

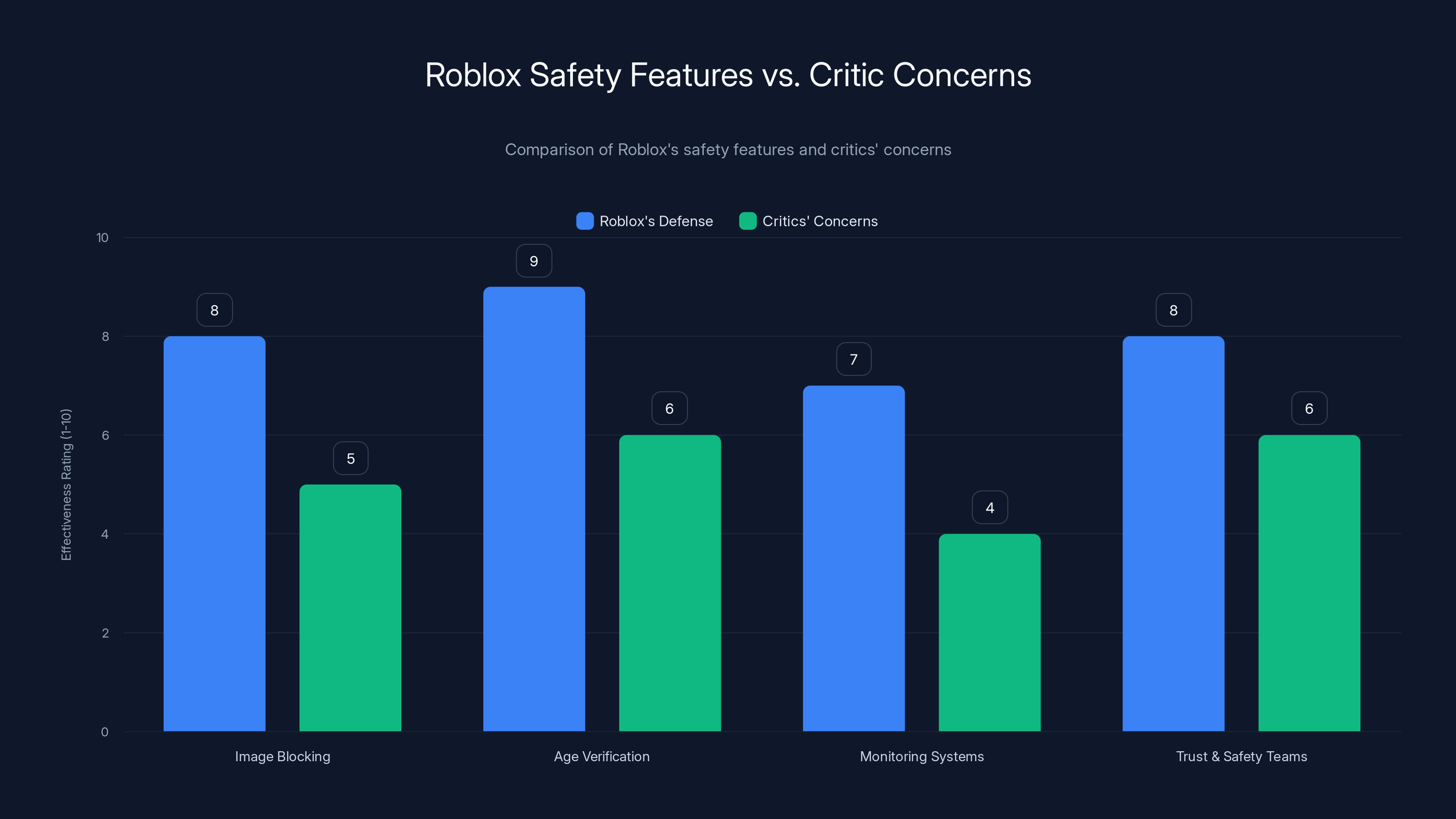

Roblox's safety measures are rated higher by the company than by critics, who argue the measures are more reactive than proactive. Estimated data.

What Exactly Is LA County Accusing Roblox Of?

The lawsuit filed by Los Angeles County isn't vague about its allegations. The complaint makes a specific claim: Roblox Corporation has engaged in unfair and deceptive business practices that endanger children. Let's break down what "unfair and deceptive" actually means in this context.

The deceptive part centers on marketing. Roblox aggressively advertises itself as a kid-safe platform. Marketing materials emphasize parental controls, moderation, and age-appropriate content restrictions. The company positions itself as a trusted destination where children can safely explore creative games and interact with other players. This messaging appears across the platform's website, social media, app store descriptions, and advertising campaigns.

The problem, according to the lawsuit, is that this marketing doesn't match reality. The complaint alleges that Roblox internally knew the platform facilitated predatory behavior, grooming, and exploitation, yet continued promoting it as safe. That is the core of a "deceptive practice" claim: marketing that misrepresents a product's safety or functionality in a way likely to mislead consumers. The allegation that Roblox did this knowingly makes the claim considerably more serious.

The unfair practices aspect covers several specific areas:

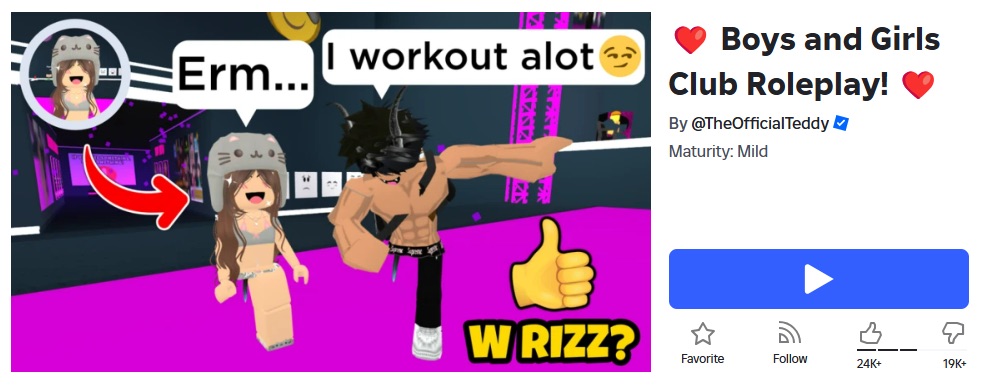

Inadequate content moderation: The complaint states that Roblox failed to effectively moderate game content or enforce the age-appropriate restrictions that creators themselves established. Game developers who create games on Roblox set their own age recommendations. However, the lawsuit claims these recommendations are largely unenforced. A game marked as age 13+ might still contain sexual content, predatory language, or inappropriate interactions that violate the stated rating.

Broken age verification systems: Roblox originally offered minimal age verification. Players could create accounts claiming any age with no verification mechanism. This meant a 45-year-old could claim to be 8 years old, gain access to spaces designated for children, and interact with actual minors. Only recently (2024) did Roblox implement selfie-based age verification for players under 13, and the company made this optional rather than mandatory.

Weak chat and communication controls: The platform lets players message each other across games, not just within the game they are currently playing together. Predators exploited this by befriending young players in public spaces, then moving conversations to private channels where behavior could be less monitored. The lawsuit specifically mentions that Roblox allowed predatory and inappropriate language to persist between users despite having the ability to moderate these exchanges.

No disclosure of risks: Roblox didn't adequately inform parents or users about documented dangers. Parents buying their kids Robux (the in-game currency) or encouraging them to play often didn't understand the platform's actual safety deficiencies. The company didn't clearly disclose the risk of encountering sexual predators, exposure to explicit content, or the methods predators use to exploit young players.

Prioritizing growth over safety: The complaint explicitly states that Roblox pursued growth and revenue targets over implementing necessary safety protections. This is significant legally because it frames the safety gaps as a deliberate choice rather than an oversight: on this account, the company wasn't ignorant of the problems but chose not to fix them because safety infrastructure costs money and might reduce engagement.

One particularly damaging allegation: Roblox portrays itself as a safe platform precisely because it's profitable to do so. Parents who believe the platform is safe are more likely to allow their children to spend time there, purchase premium features, and defend the platform against criticism. Regulatory scrutiny is less likely when a company enjoys parental trust.

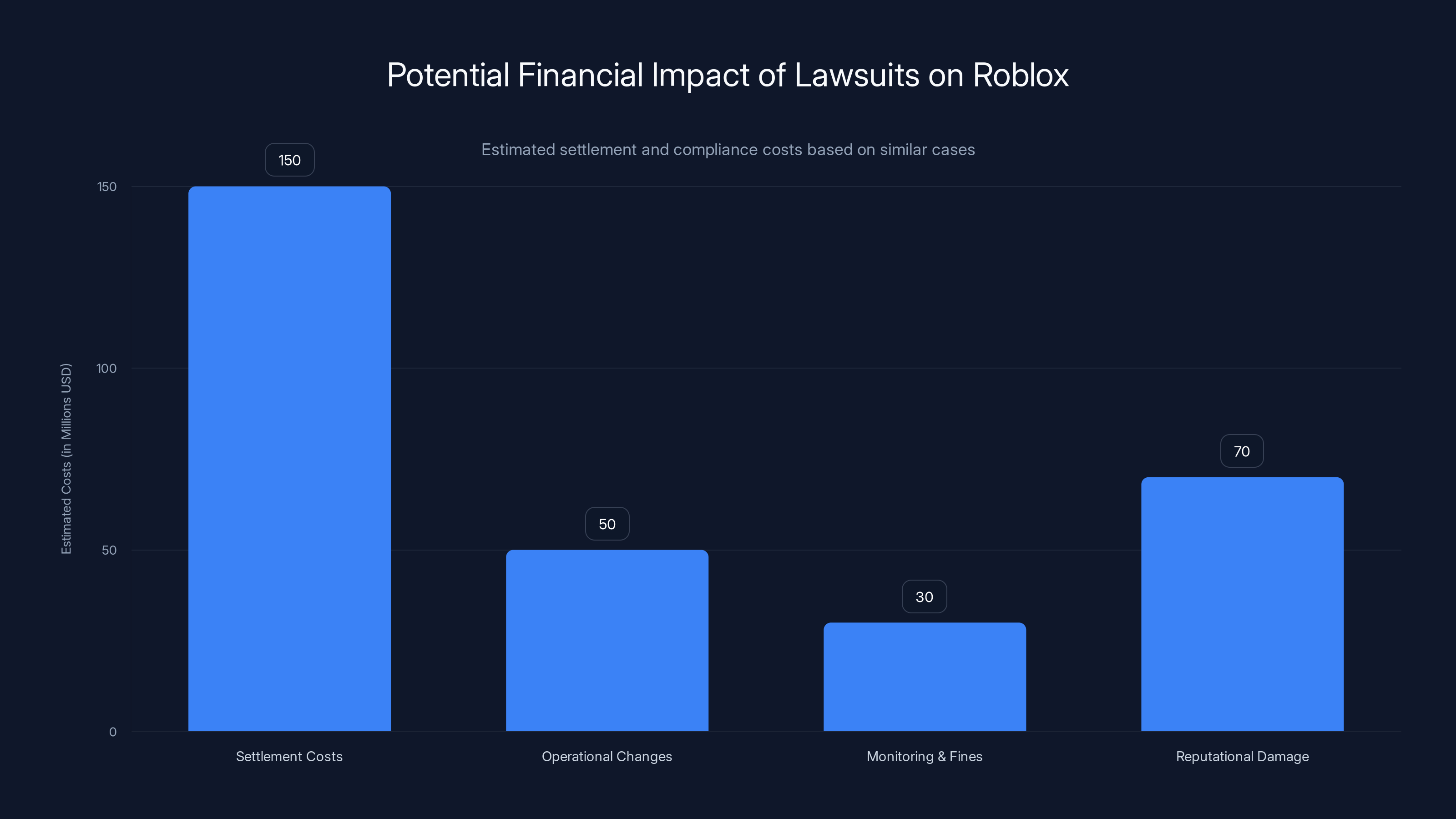

Roblox could face significant financial implications from lawsuits, with estimated settlement costs ranging from

The Specific Examples: How Predators Actually Use Roblox

Lawsuits are most powerful when they include concrete examples of how the alleged failures actually harmed real people. The LA County complaint, along with lawsuits from other states, provides disturbing specifics about how predators exploit Roblox's security gaps.

Louisiana's lawsuit cited a documented case involving a predator who used voice-altering technology to disguise his voice and mimic a young girl. He joined Roblox spaces populated by children, created a convincing persona as a peer, and gradually built relationships with minors. Once he'd earned their trust through weeks of interaction in the game, he shifted conversations to private messaging. There, away from even the platform's minimal moderation, he worked to sexually exploit the children he'd befriended.

This case reveals how Roblox's design enables predatory tactics:

- Low barrier to entry: Creating an account takes seconds and requires minimal verification. An adult with bad intentions can instantly access spaces full of children.

- Private communication channels: Once you've "friended" someone in a game, you can message them across the platform, outside of specific game environments where interaction might be logged or monitored.

- No real-time oversight: Roblox doesn't employ human moderators actively monitoring private conversations. Instead, it relies on automated systems that can be bypassed with coded language, and it depends on users reporting abuse, which children often don't do because they're embarrassed, don't understand they're being groomed, or are isolated from adults who might help.

- Anonymity and pseudonymity: Players know each other only by usernames. There's no way to verify someone's actual age, identity, or intentions. A predator can claim to be 14 when he's 44.

- Gradual normalization: Predators don't immediately ask for explicit material or suggest meetings. They build trust over weeks or months, gradually introducing inappropriate topics while maintaining the facade of friendship. By the time a child realizes something is wrong, they're often emotionally invested in the relationship and manipulated into complying with increasingly inappropriate requests.

Another pattern identified across multiple state lawsuits involves the use of Roblox's platform to distribute explicit content. Game creators have embedded adult content into games accessible to children, including in loading screens, in-game messages, and chat interactions. When moderation fails—or when it's dependent on user reports—this content persists for weeks or months before being removed.

A third example involves in-game sexual solicitation. Players (typically adults posing as peers) initiate conversations in games, then quickly move to private messaging where they ask children to engage in sexual roleplay, share photos, or meet in person. Some predators have used Roblox to scout potential victims, gathering information about their location, school, schedule, and family situation before attempting to facilitate real-world meetings.

These aren't isolated incidents or edge cases. Beyond the case the Louisiana lawsuit documents in detail, federal law enforcement has made arrests related to Roblox exploitation, and the National Center for Missing and Exploited Children regularly receives reports of predatory behavior on the platform. Each case represents a failure of the platform's safety systems to prevent or adequately respond to abuse.

How Roblox's Safety Systems Are Actually Supposed to Work

When Roblox responds to criticism, the company points to specific safety features it has implemented. Understanding these features—and their actual effectiveness—is essential to evaluating whether the lawsuits have merit.

Chat filtering and content moderation: Roblox uses automated systems to detect and filter inappropriate language, sexual content, and predatory communication patterns in in-game chat. The system is designed to catch obvious explicit content and flag repetitive attempts to solicit explicit material from other users.

The problem: automated systems can be circumvented. Predators use coded language, misspellings, number substitutions (like "4" for "for"), and off-platform communication to avoid detection. A player who says "let's talk on Discord" in chat might be flagged, but someone who says "add me on the other place" could slip through. Additionally, the system relies on pattern recognition, which means isolated instances of inappropriate behavior might not trigger alerts if the user doesn't repeat the same pattern frequently.
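The circumvention problem is easier to see in code. The following is a minimal Python sketch of a keyword filter that undoes a few common character substitutions and strips separators before matching. It is not Roblox's system: the blocklist, the substitution table, and the function names are hypothetical, chosen only to show why normalization catches a disguised platform name but not a paraphrase.

```python
import re

# Hypothetical blocklist of off-platform destinations; a real list would be far larger.
BLOCKED_TERMS = {"discord", "snapchat"}

# Hypothetical table mapping common character substitutions back to letters.
SUBSTITUTIONS = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t", "@": "a", "$": "s"}
)


def normalize(message: str) -> str:
    """Lowercase, undo character substitutions, and drop every non-letter."""
    text = message.lower().translate(SUBSTITUTIONS)
    # Removing spaces and punctuation defeats splitting tricks such as "d.i.s.c.o.r.d".
    return re.sub(r"[^a-z]", "", text)


def is_flagged(message: str) -> bool:
    """Return True if any blocked term appears in the normalized message."""
    collapsed = normalize(message)
    return any(term in collapsed for term in BLOCKED_TERMS)


print(is_flagged("let's talk on d1sc0rd"))      # True: substitutions are undone
print(is_flagged("add me on the other place"))  # False: no blocked term to match
```

Collapsing every non-letter makes the check harder to evade with spacing tricks, but it also raises false positives, since innocent text can contain a blocked string once spaces are removed. No amount of normalization helps with a paraphrase that never names the destination.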

Image blocking in direct messages: Roblox doesn't allow players to share images through the platform's direct messaging system. This is a significant control because one of the most common exploitation tactics involves exchanging explicit photos. By blocking image sharing in DMs, Roblox theoretically prevents a major vector of abuse.

However, this doesn't prevent predators from moving to other platforms for image exchange. If a predator builds a relationship with a child on Roblox and then asks them to message on Snapchat, Discord, or another platform, this control is useless. Additionally, link sharing is still possible, which could direct users to external sites with explicit content.

Community standards and reporting tools: Roblox publishes community standards that prohibit grooming, sexual content, bullying, and predatory behavior. The platform provides reporting mechanisms—buttons within games and chat interfaces that allow users to report abuse. When a report is filed, it's supposed to be reviewed by moderation staff and acted upon.

The weakness: child victims often don't report abuse. They may not recognize they're being groomed because manipulation is gradual. They may fear getting in trouble if their parents discover they were in inappropriate conversations. They may be embarrassed or feel complicit. Predators often explicitly discourage reporting, saying things like "if you tell anyone, we can't be friends anymore" or "your parents will blame you for being on this site." Relying on victims to report their own abuse is fundamentally flawed.

Age verification and access controls: As of 2024, Roblox began asking users under 13 to verify their age using a selfie. This is a basic age check rather than a full identity check: it is meant to confirm that the person claiming to be a child actually is one (or at least that a real person, not a throwaway account, is behind it). The company also restricts messaging for under-13 users, allowing them to message only within specific games with other players they've played with, rather than across the entire platform.

The limitation: the selfie-based age verification isn't perfect. People can use photos of minors to verify accounts. The rollout was gradual, and it wasn't retroactively applied to existing accounts. Existing accounts of players over 13 (who might actually be much older predators) could continue operating without additional verification. Additionally, restricting messaging for under-13 users is helpful, but it's more of a band-aid than a cure. A predator can still interact with children within games, and if he pretends to be a child, the system won't catch him.
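The under-13 messaging restriction and its blind spot can be sketched in a few lines of Python. This is a hypothetical model, not Roblox's implementation: the `Account` fields, the `may_message` rule, and the idea of tracking co-players per game are assumptions made to mirror the policy as described above. What it illustrates is that the rule trusts whatever age the account reports.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class Account:
    """Hypothetical account record; field names are illustrative only."""
    user_id: str
    # Age as reported or estimated at sign-up. The policy below trusts this value.
    reported_age: int
    # Hypothetical map: game ID -> IDs of players this user has played that game with.
    co_players_by_game: Dict[str, Set[str]] = field(default_factory=dict)


def may_message(sender: Account, recipient: Account, game_id: Optional[str]) -> bool:
    """Hypothetical rule: if either party is under 13, messages are allowed only
    inside a game, and only to someone the sender has played that game with."""
    if sender.reported_age >= 13 and recipient.reported_age >= 13:
        return True
    if game_id is None:
        # Platform-wide messaging is blocked whenever an under-13 account is involved.
        return False
    return recipient.user_id in sender.co_players_by_game.get(game_id, set())


# An adult who registered as 12 and has played "obby_1" with a 9-year-old.
child = Account("kid_01", 9, {"obby_1": {"player_99"}})
impostor = Account("player_99", 12, {"obby_1": {"kid_01"}})

print(may_message(impostor, child, None))      # False: no platform-wide messages
print(may_message(impostor, child, "obby_1"))  # True: the claimed age is never questioned
```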

Creator accountability and game rating systems: Game creators on Roblox are supposed to rate their games appropriately and implement controls matching that rating. A game marked as age 13+ should, in principle, keep younger players out and keep its content within what that rating allows. The platform claims it monitors creators and enforces these standards.

Yet the complaint alleges that age-appropriate restrictions established by creators are largely unenforced. This suggests either that Roblox isn't adequately auditing games for compliance, or that it's not removing games that consistently violate their own stated age requirements.

The fundamental problem with all these systems: they're reactive, not proactive. They catch problems after they've occurred or after users report them. For a child who's never been groomed before and doesn't understand the warning signs, reactive moderation comes too late.

This chart compares the implementation levels of key child safety features across Discord, YouTube, and Fortnite. YouTube excels in age verification and parental transparency, while Fortnite focuses on human moderation. (Estimated data)

Why Are Multiple States Suing? A Pattern of Failures

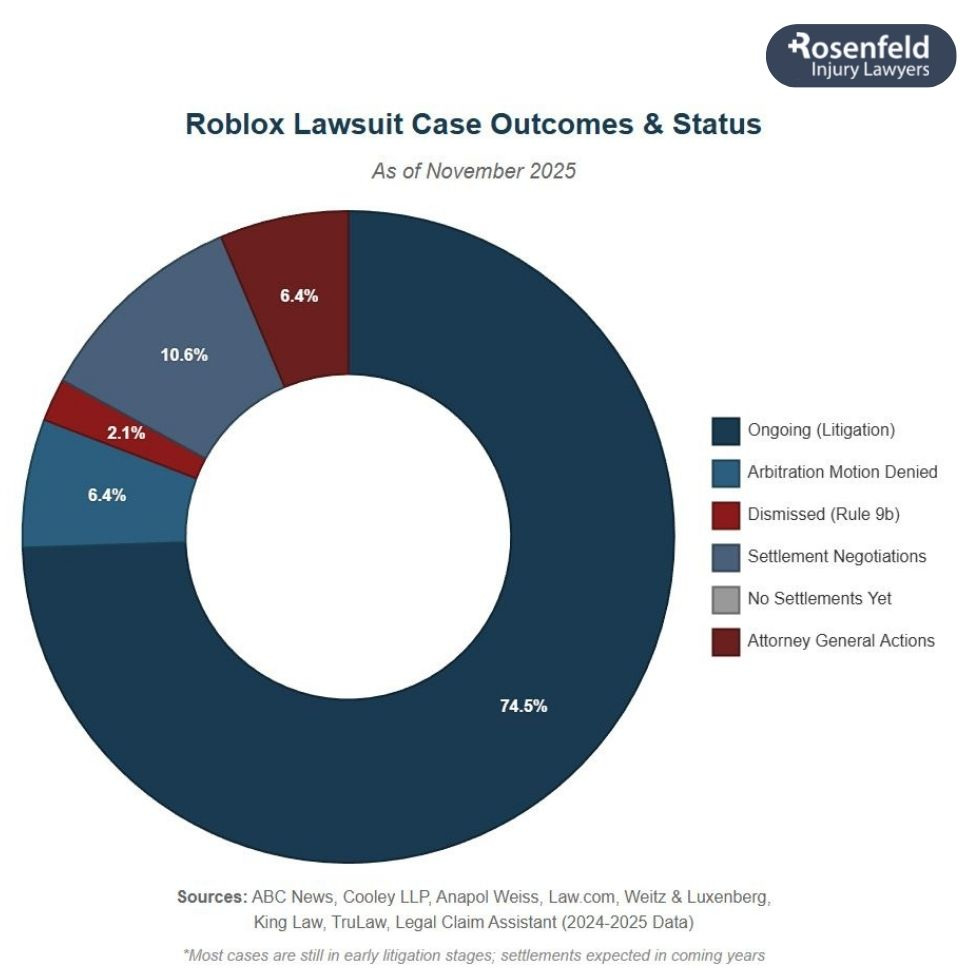

LA County's lawsuit isn't unique. The fact that multiple states have independently filed similar legal action is significant—it suggests a pattern rather than an isolated problem.

Louisiana's case: Louisiana's Attorney General accused Roblox of having a "lack of safety protocols" that endanger children in favor of "growth, revenue and profits." This framing is crucial. Louisiana isn't just saying Roblox has some safety gaps—it's alleging that the company made a deliberate business choice to prioritize growth over safety. The lawsuit points to specific cases where predators used Roblox to exploit minors, suggesting that if Roblox had invested in stronger safety measures, these exploitations could have been prevented.

Texas's involvement: Texas has been active in technology regulation and consumer protection. The state's interest in Roblox reflects broader concerns about how gaming platforms handle child safety. Texas, along with other states, is signaling that weak self-regulation isn't sufficient and that legal action is becoming the appropriate enforcement mechanism.

Florida and Kentucky: Both states have filed or are pursuing legal action, adding to the mounting legal pressure. When multiple states independently reach similar conclusions about a company's practices, it strengthens each individual case and suggests a pattern that meets the threshold for broad legal action.

The convergence of multiple state lawsuits serves several purposes:

- Demonstrates a pattern, not an exception: A single lawsuit could be dismissed as one state's interpretation. Multiple lawsuits point to a documented, systematic problem.

- Increases pressure for settlement: Facing litigation in multiple states simultaneously is expensive and damaging to reputation. Settlements often become more attractive.

- Creates precedent potential: If one state wins a significant judgment, other states can use that precedent to strengthen their own cases.

- Signals to regulators: Federal agencies like the FTC notice when multiple states take coordinated action. It can prompt federal investigation or regulatory changes.

- Demonstrates consumer harm across regions: If Roblox's problems were isolated to one state's specific player base or culture, that would be one thing. Lawsuits from multiple states suggest the issues are systemic.

The National Center for Missing and Exploited Children has reported receiving complaints about Roblox multiple times. Law enforcement in various states has investigated child exploitation cases involving the platform. The accumulated evidence from these independent sources, combined with state lawsuits, creates a compelling case that this isn't a matter of a few isolated bad actors on the platform—it's a matter of the platform's design and governance enabling exploitation at scale.

Roblox's Response and Defense

Roblox hasn't remained silent. The company has issued public statements defending its platform and refuting the lawsuit's allegations.

Roblox's core defense is that it has "advanced safeguards that monitor our platform for harmful content and communications." The company emphasizes that users cannot send or receive images via chat—a feature designed to prevent image-based exploitation. The company also highlights its recent implementation of age verification for younger users.

Beyond these basic defenses, Roblox argues that it takes child safety seriously and has invested significantly in safety infrastructure. The company points to the fact that it employs dedicated trust and safety teams, has community standards that explicitly prohibit the behaviors the lawsuit describes, and actively removes content and accounts that violate those standards.

However, there's a critical gap between the company's defense and what critics argue are the real problems. Roblox defends the features it has implemented, but the lawsuit isn't claiming these features don't exist. Instead, the lawsuit argues these features are inadequate. That's a fundamentally different claim.

Consider the image-blocking feature. Roblox correctly states that images can't be shared via direct message. But the lawsuit's position would be: that's good, but not nearly sufficient. Predators don't need images to be shared on Roblox. They build relationships on Roblox and move to other platforms to exchange images. The fact that Roblox doesn't facilitate image sharing doesn't prevent exploitation—it just happens elsewhere.

Similarly, Roblox's argument about advanced monitoring systems assumes these systems are both effective and comprehensively applied. The lawsuit's position is that these systems have blind spots, can be circumvented, and depend on user reports rather than proactive detection. A system that flags explicit language can miss coded conversation. A system that depends on reports won't catch abuse inside an established relationship, where the victim has often been manipulated into staying silent.

The company's age verification defense is also challenged by the lawsuit's timeline. Roblox only implemented selfie-based age verification in 2024—years after the problems described in the lawsuit became apparent. If age verification was necessary and effective, why wait until after multiple child exploitation cases were documented? The plaintiffs would argue this proves Roblox didn't prioritize safety until it faced legal pressure.

Roblox's fundamental position appears to be: "We've implemented reasonable safety measures, and like any platform, there are edge cases where bad actors exploit loopholes." The plaintiffs' position is: "The platform's design fundamentally enables grooming and exploitation, and Roblox consciously chose not to address this because it would cost money and reduce engagement."

These are irreconcilable positions, which is why the lawsuits will likely be decided based on evidence of:

- Intent: Did Roblox know about the safety problems before implementing solutions? Internal emails and testimony could reveal whether executives understood the risks.

- Feasibility: Were safer alternatives technically feasible and economically reasonable to implement? Expert testimony will cover whether stronger age verification, real-time human moderation, or other measures were possible.

- Industry standards: How do Roblox's safety measures compare to what other platforms do? If competitors implement significantly stronger protections, that strengthens the argument that Roblox's measures were inadequate.

- Harm: What actual harm resulted from Roblox's failures? The more specific cases of exploitation that can be traced to Roblox's inadequate safety, the stronger the plaintiffs' case.

Roblox emphasizes its safety features, but critics argue these measures are insufficient. Estimated data based on typical concerns.

The Financial Implications: What These Lawsuits Could Cost Roblox

When states sue corporations for deceptive practices and endangering children, the financial stakes are significant. Understanding what Roblox could face financially helps explain why the company is likely to take these lawsuits seriously.

Settlement costs: If Roblox settles rather than fighting these lawsuits at trial, it could pay millions to each state. Settlement amounts in major tech regulation cases often range from tens of millions to hundreds of millions of dollars. For context, in 2020, Facebook agreed to a

Mandatory changes to operations: Alongside any settlement, Roblox would likely be required to implement specific safety measures approved by the states. This could include:

- Mandatory age verification for all new accounts

- Real-time human moderation of high-risk communication (conversations between users of substantially different ages)

- Stronger content filtering with human review

- Restrictions on private messaging between players of substantially different reported ages

- Regular third-party audits of safety compliance

- Restrictions on monetization of under-13 accounts

- Enhanced parental controls and notification systems

Each of these measures costs money to implement and maintain.

Ongoing monitoring and fines: Many settlements include provisions for ongoing monitoring. If a company violates the settlement terms, it faces escalating fines. This creates a permanent compliance cost.

Reputational damage: Lawsuits alleging that a platform enables child exploitation are incredibly damaging to brand reputation. Parents who previously felt comfortable with Roblox may switch to competitors. The company's ability to advertise itself as "kid-safe" is permanently compromised. This affects not just direct user loss, but also partnerships, platform features, and future growth opportunities.

Impact on investment and valuation: Roblox went public in 2021. Legal liability related to child safety creates significant risk for investors. A major judgment against Roblox could lower its stock price, making it harder to raise capital for growth.

Regulatory expansion: A loss or large settlement in LA County and other states could prompt federal regulation. The FTC has been increasingly active in tech regulation. Congress has considered legislation specifically targeting children's online safety. A Roblox loss could accelerate federal action that applies to Roblox and competitors, creating broader industry compliance costs.

The Broader Context: Why Child Safety on Gaming Platforms Matters

This lawsuit isn't just about Roblox. It's about a fundamental question: what responsibility do online platforms bear for protecting minors from predatory behavior?

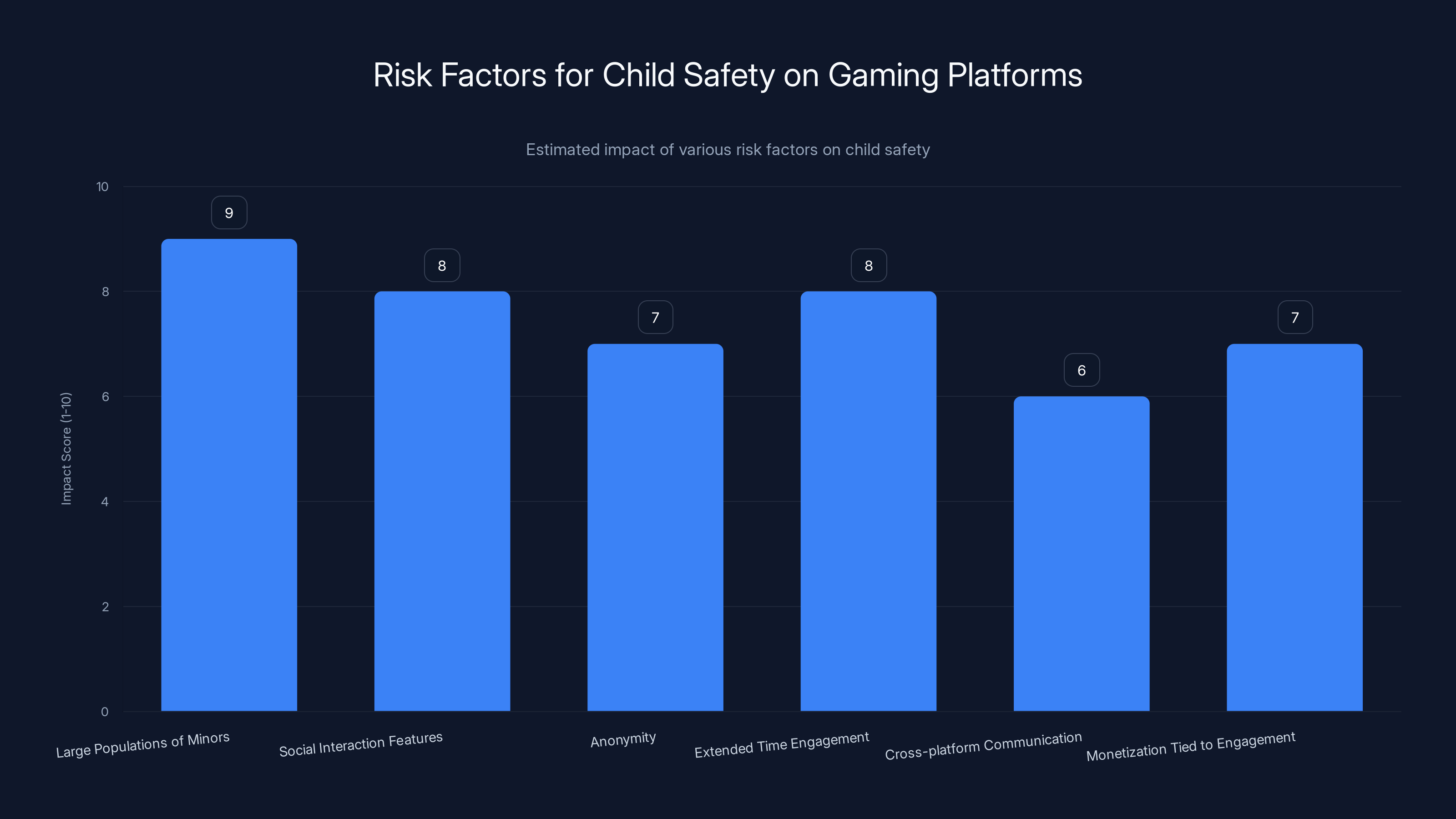

Gaming platforms are unique because they combine several risk factors:

- Large populations of minors: Games marketed to children naturally attract child players. With over 40% of Roblox's user base under age 13, predators can target a massive population of vulnerable individuals.

- Social interaction features: Games are increasingly social. Multiplayer gaming, friend lists, group chat, and guild systems create environments where minors can build relationships with strangers. These social features are central to engagement and retention but create opportunities for grooming.

- Anonymity: Players interact via usernames, not real identities. This anonymity protects legitimate players' privacy but also allows predators to hide their true age, appearance, and intentions.

- Extended time engagement: Gaming is not a brief interaction like checking social media. Players spend hours in games, building relationships and emotional investment. Long engagement periods mean more opportunity for grooming to progress.

- Cross-platform communication: Many games integrate with Discord, YouTube, Twitch, and other platforms. A predator can use Roblox to establish initial contact, then quickly move to platforms with even weaker moderation.

- Monetization tied to engagement: Gaming platforms often make money by maximizing engagement. The more time players spend, the more in-game purchases they make, and the more valuable they are to the platform. This creates an incentive structure that can conflict with safety: implementing stronger moderation might reduce engagement.

These factors exist on other platforms, but gaming is particularly vulnerable because:

- Children are expected to be there (unlike on platforms primarily designed for adults)

- The environment is inherently social

- Interaction is often real-time and difficult to oversee

- The culture normalizes spending significant daily time online

- Parental oversight is often minimal (many parents assume a "game platform" is safe)

The Roblox lawsuit is part of a broader movement to establish that platform companies have responsibility for child safety, not just that users have responsibility for their own safety.

The lawsuit highlights deceptive marketing as the most severe issue, followed by inadequate moderation and broken age verification systems. Estimated data based on lawsuit context.

How Predators Actually Succeed on Under-Moderated Platforms

Understanding predatory tactics on gaming platforms helps explain why the lawsuit's specific allegations have weight. This is uncomfortable to discuss but essential to understand why Roblox's defenses may be inadequate.

The grooming process: Predators rarely, if ever, open by asking a child for explicit material or requesting a meeting. Instead, they:

- Establish credibility: The predator creates an account claiming to be a child, adopts a child's perspective, and quickly learns the platform's culture and language. This makes them seem like an authentic peer.

- Find vulnerable targets: They might look for children who are lonely, socially isolated, in conflict with parents, or new to the platform. These children are more receptive to someone offering attention and friendship.

- Build rapport: Early conversations are entirely innocent. They find shared interests (the child likes building games; the predator talks about enjoying building games), celebrate the child's achievements, and position themselves as a supportive friend.

- Isolate gradually: Conversations move from public game chat to private messaging. The predator suggests moving to Discord or another platform "so we can talk more easily." They encourage the child not to mention their new friend to parents ("they might not understand the age difference in our friendship").

- Normalize inappropriate topics: Over weeks or months, conversations gradually shift. The predator might start by mentioning relationships, then talking about physical attraction, then discussing sexual topics casually as if this is normal conversation between friends.

- Create complicity: At some point, the predator asks the child to engage in something inappropriate: share a photo, describe something sexual, or come meet. By this time, the child often feels complicit ("I've been talking about this too") and invested in the relationship ("If I tell an adult, they'll think I'm bad").

- Escalate gradually: Once a child has crossed one boundary, escalation is easier. "You shared one photo; what about a video?" "You agreed to video chat; let's meet in person."

This process typically takes weeks to months. The predator is patient. Each step is presented as something a close friend would ask, which makes it harder for the child to recognize as exploitation.

Why platform moderation struggles with this pattern: Automated systems look for explicit language, but grooming conversations might not contain any explicit language. They look for solicitation of explicit material, but the predator might embed requests in seemingly innocent conversation. They look for patterns of abuse, but a predator might only abuse one child and then move on or wait a long time between contacts.

Human moderation struggles because it's impossible to monitor all conversations, and human reviewers might miss the subtle pattern of escalation. They might see one conversation about relationships and nothing else, not realizing it's part of a months-long process.

Why children often don't report: Understanding this is critical to understanding why relying on victim reporting is insufficient. A child being groomed often:

- Doesn't recognize what's happening

- Feels ashamed and doesn't want to tell adults what they've discussed

- Is emotionally invested in the relationship and would be devastated if the "friend" was removed

- Has been explicitly told not to tell anyone (and the predator has positioned telling as a betrayal of friendship)

- Fears getting in trouble with parents for being on the platform in the first place

- May have been given gifts or money by the predator and feels indebted

For all these reasons, a child is unlikely to report. A moderation system that depends on victim reports will miss abuse that happens out of view, in private messages between people who already know each other.

What Successful Child Safety Looks Like: Industry Benchmarks

To evaluate whether Roblox's safety measures are truly inadequate, it helps to understand what leading platforms have implemented and what experts recommend.

Discord implemented features including age-gating certain servers, limiting who can DM younger users, and requiring phone verification for accounts that participate in age-gated channels. The company also published detailed community safety guidelines and has a transparency report on moderation actions.

YouTube (which hosts a large volume of content aimed at children and families) requires active parental consent for accounts under 13, limits what data can be collected from child accounts, restricts personalized advertising, and prevents public commenting by default on child-oriented content.

Fortnite (a major competitor to Roblox) implemented cross-game progress to reduce the need for alternate accounts, limits voice communication between players of substantially different reported ages, and has a dedicated safety team with published guidelines.

Beyond individual platform features, child safety experts recommend:

- Mandatory age verification: Not optional, not gradually rolled out to new accounts, but mandatory for all accounts. This is technically feasible using combinations of ID verification, credit card verification, or phone number verification.

- Age-appropriate communication controls: Preventing or heavily monitoring communication between accounts claiming substantially different ages. A platform where a 40-year-old can pose as a 12-year-old and message a 9-year-old has a fundamental design flaw.

- Human moderation for high-risk interactions: At minimum, spot-checking conversations between accounts with large age differences or rapid escalation patterns. This requires investment but isn't prohibitively expensive.

- Parental transparency: Real-time visibility for parents into who their children are communicating with, what games they're playing, and how much time they're spending. This isn't surveillance of content (which child privacy advocates often oppose) but visibility into connection patterns.

- Regular third-party audits: Independent security researchers or auditing firms evaluating safety measures quarterly or annually. This creates accountability and identifies gaps that internal teams might miss.

- Clear harm reporting pathways: Not just a generic report button, but clear pathways for reporting and quick response to high-confidence abuse cases. If a report comes in about a suspected predator, that account should be suspended within hours pending investigation, not days.

- Cooperation with law enforcement: Maintaining detailed logs of user interactions and being responsive to law enforcement requests for evidence. This raises privacy questions but enables prosecution of predators.

Roblox has implemented some of these, at least in part (age verification for new accounts, chat filtering, messaging limits for under-13 accounts), but not others (comprehensive age-gated communication, mandatory parental transparency, clear rapid-response protocols, visible cooperation with law enforcement). The lawsuits argue this patchwork approach is insufficient.


The Legal Theory: Unfair and Deceptive Practices

To understand what Roblox actually faces legally, it's worth diving into what "unfair and deceptive business practices" means in law.

Deceptive practices are when a company makes false or misleading representations about its product. The standard test is whether a reasonable consumer would be misled by the representation. In Roblox's case:

- Roblox advertises the platform as "safe" and "designed for children"

- A reasonable parent considering whether to let their child use Roblox would interpret this as meaning the platform has adequate protections against predatory behavior

- If Roblox actually has inadequate protections (the premise of the lawsuit), then the advertising is deceptive

The fact that Roblox doesn't explicitly say "we have 100% effective protection" doesn't matter. The implicit claim in marketing the platform to families is that it's reasonably safe. If it isn't, that's deceptive.

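Unfair practices are broader. A widely used test, the one the FTC applies, asks whether a practice causes substantial injury to consumers that consumers cannot reasonably avoid themselves and that isn't outweighed by benefits to consumers or competition. Specifically:

- Is there substantial injury? Yes: child exploitation and trauma (if the lawsuits' allegations are proven)

- Could consumers reasonably avoid it? The plaintiffs would say no: parents and children can't see the platform's moderation gaps, while stronger safety measures on Roblox's side could prevent or reduce the harm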

- Is it outweighed by benefits? This is where Roblox's defense would focus: the platform provides creative outlet, social interaction, and entertainment to millions of children. Isn't that worth the risk of some edge cases of abuse?

The plaintiffs would respond: that's not a reasonable trade-off when the abuse is preventable with better design choices.

These are fact-based disputes that will likely come down to evidence presented at trial or during settlement negotiations. But the legal theory is sound—deceptive and unfair practices law is regularly used against companies that misrepresent product safety.

Timeline: How Did We Get Here?

Roblox's safety challenges didn't appear overnight. Understanding the timeline helps explain how a platform can operate for years with known problems before facing major legal action.

2006-2015: Roblox launches publicly and grows as a platform. The company attracts players with an emphasis on user-created content and creativity. Safety measures are minimal: basic content filtering and user reporting, but no age verification or specialized protections for children.

2016-2019: Roblox's user base grows rapidly, and the company attracts major venture capital investment. During this period, parents and educators come to recognize Roblox as a major gaming platform for children. However, early warnings about safety issues emerge: parents report grooming attempts, and researchers document exploitation cases.

2019-2021: Despite documented safety concerns, Roblox doesn't substantially upgrade safety infrastructure. The company goes public through a direct listing in March 2021 with a valuation exceeding $40 billion. In its listing materials, Roblox touts its "safety-first" approach. During this period, law enforcement agencies and the National Center for Missing and Exploited Children report receiving increasing complaints about exploitation on Roblox.

2022-2023: Investigations and reporting by journalists and safety advocates reveal specific cases of exploitation. Parents report grooming attempts against their children on Roblox. The company is criticized for an inadequate response. Despite this pressure, Roblox doesn't implement major safety upgrades.

2024: Finally responding to accumulated pressure, Roblox implements selfie-based age verification for new accounts and restricts messaging for under-13 accounts. However, these measures are partial and don't apply to existing accounts.

Early 2025: LA County and several states file lawsuits alleging Roblox knowingly failed to implement adequate safety measures despite understanding the risks.

This timeline is important because it undercuts one potential defense: "We didn't know there were problems." The company had years of warnings. Parents were reporting issues. Law enforcement was documenting cases. The company's response (implementing age verification and messaging restrictions only after years of mounting pressure, and shortly before facing lawsuits) suggests the company understood the problems but didn't prioritize fixing them until legal pressure became inevitable.

What Could Happen Next: Possible Outcomes

Predicting legal outcomes is inherently uncertain, but we can outline plausible scenarios.

Settlement: Most corporate litigation settles before trial. Roblox could settle with LA County and the states suing it by agreeing to:

- Pay damages (tens to hundreds of millions of dollars)

- Implement specific safety measures

- Submit to ongoing monitoring

- Modify marketing claims

- Issue public apologies

Settlement avoids the uncertainty of trial. Settlements often include no formal admission of wrongdoing, though agreeing to pay can still look like an admission of culpability. It's typically the preferred outcome for both sides: plaintiffs get meaningful relief, and companies avoid years of litigation and the risk of larger judgments.

Trial and judgment: If cases go to trial, a judge or jury would evaluate the evidence and determine whether Roblox engaged in deceptive practices and whether its safety measures were inadequate. A judgment in the plaintiffs' favor would strengthen similar claims elsewhere and expose Roblox to significant damages. A judgment in Roblox's favor would make similar suits harder to win, though it would not bar other plaintiffs from suing.

Appeal: Whatever the initial outcome, the losing party would likely appeal. Appeals are expensive and slow, potentially adding years to the process.

Regulatory action: Regardless of the litigation outcome, regulators (state attorneys general, the FTC) might impose additional restrictions. The European Union's Digital Services Act already imposes specific child safety requirements on platforms. The US could move toward similar federal legislation, especially if Roblox's case highlights systemic gaps in child protection.

Broader impact: A major judgment against Roblox or a large settlement would likely trigger similar lawsuits against other gaming platforms (Fortnite, Minecraft, Discord, etc.). This could reshape industry standards for child protection across gaming.

How Platforms Could Do Better: Practical Solutions

If Roblox and similar platforms want to genuinely improve child safety (beyond the minimum required by law), what should they actually do?

Implement mandatory age verification for all accounts: This seems obvious but is often resisted because it reduces account growth and alienates some users. However, if executed properly (using privacy-preserving techniques), it's feasible and would make it far harder for adults to masquerade as children.

Age-gate risky interactions: Accounts claiming to be children shouldn't be able to receive private messages from accounts claiming to be adults. Combined with verified ages, so that adults can't simply claim to be children, this simple rule would block one of the most direct routes to grooming.
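The rule can be expressed as a small policy check. This is a minimal Python sketch under stated assumptions, not Roblox's implementation: the `Account` fields, the 18-year adult threshold, and the `age_verified` flag are all hypothetical. Because a predator can simply claim to be 12, the sketch also assumes, in line with the mandatory-verification argument above, that a minor can receive DMs from another self-described minor only if that sender's age has been verified.

```python
from dataclasses import dataclass

ADULT_AGE = 18  # assumption: "adult" means 18 or older


@dataclass(frozen=True)
class Account:
    """Hypothetical account record; field names are illustrative."""
    user_id: str
    stated_age: int     # the age the account claims
    age_verified: bool  # hypothetical flag: True only if stated_age passed a check


def is_minor(account: Account) -> bool:
    return account.stated_age < ADULT_AGE


def can_direct_message(sender: Account, recipient: Account) -> bool:
    """Sketch of the age-gating rule: minors never receive DMs from adults.

    Assumption beyond the stated rule: a minor may receive DMs from another
    self-described minor only if the sender's age has been verified, since a
    self-reported age is exactly what a predator would fake.
    """
    if not is_minor(recipient):
        return True  # the rule only restricts what minors receive
    if not is_minor(sender):
        return False  # adult to minor: always blocked
    return sender.age_verified  # minor to minor: allowed only for verified senders


if __name__ == "__main__":
    adult = Account("a1", stated_age=40, age_verified=True)
    child = Account("c1", stated_age=9, age_verified=True)
    claims_twelve = Account("u1", stated_age=12, age_verified=False)
    print(can_direct_message(adult, child))          # False
    print(can_direct_message(claims_twelve, child))  # False
    print(can_direct_message(child, adult))          # True: rule is one-directional
```

The check is directional, as the rule is stated: it controls what a minor can receive. A stricter variant would also block minors from initiating messages to adults.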

Hire and train human moderators specifically for abuse detection: Machine learning is useful for flagging obvious issues, but detecting grooming requires human judgment. A team of 100 moderators trained in child exploitation patterns could review flagged conversations and catch patterns algorithms miss.

Implement real-time parental visibility: Parents should have real-time dashboards showing who their children are communicating with, what games they're playing, and how much time they're spending. This isn't surveillance of content (which many privacy advocates oppose), but visibility of patterns.
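One way to show patterns without exposing content is to build the dashboard from interaction records that never include message text. The Python below is a hypothetical sketch; the `SessionEvent` record and the `summarize_for_parent` function are illustrative names, not a real Roblox API.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SessionEvent:
    """Hypothetical interaction record. There is deliberately no message-text
    field: the dashboard is built from who/where/how long, never from content."""
    contact_id: str  # who the child played or chatted with
    game: str        # which game or experience
    minutes: int     # time spent in that session


def summarize_for_parent(events: list[SessionEvent]) -> dict:
    """Aggregate interaction records into totals a parent dashboard could show."""
    minutes_by_contact = defaultdict(int)
    minutes_by_game = defaultdict(int)
    for event in events:
        minutes_by_contact[event.contact_id] += event.minutes
        minutes_by_game[event.game] += event.minutes
    return {
        "total_minutes": sum(minutes_by_game.values()),
        "minutes_by_contact": dict(minutes_by_contact),
        "minutes_by_game": dict(minutes_by_game),
    }


if __name__ == "__main__":
    events = [
        SessionEvent("friend_1", "obby", 30),
        SessionEvent("new_user_7", "roleplay", 90),
        SessionEvent("new_user_7", "roleplay", 60),
    ]
    summary = summarize_for_parent(events)
    print(summary["minutes_by_contact"])  # {'friend_1': 30, 'new_user_7': 150}
```

A new contact who suddenly accounts for most of a child's time, like `new_user_7` in this made-up example, is exactly the kind of pattern a parent would want to ask about.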

Create rapid response protocols for abuse reports: If a report comes in alleging a specific user is grooming children, that account should be suspended within 2 hours pending investigation. Currently, response times are measured in days or weeks.
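Whether a platform meets a target like this is straightforward to measure if reports are timestamped. The Python below is a hypothetical sketch: the `AbuseReport` record and the `overdue_reports` function are illustrative assumptions, and the 2-hour window is the target proposed above, not any platform's actual policy. It tracks only the suspension deadline; the investigation that follows is a separate process.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Target proposed in the text; an assumption, not any platform's actual policy.
SUSPENSION_DEADLINE = timedelta(hours=2)


@dataclass
class AbuseReport:
    """Hypothetical report record for illustration."""
    report_id: str
    reported_user: str
    received_at: datetime
    suspended_at: Optional[datetime] = None  # None while the account is still active


def is_overdue(report: AbuseReport, now: datetime) -> bool:
    """True if the reported account was not suspended within the deadline."""
    deadline = report.received_at + SUSPENSION_DEADLINE
    if report.suspended_at is None:
        return now > deadline  # still active and the window has passed
    return report.suspended_at > deadline  # suspended, but too late


def overdue_reports(reports: list[AbuseReport], now: datetime) -> list[str]:
    return [r.report_id for r in reports if is_overdue(r, now)]


if __name__ == "__main__":
    t0 = datetime(2025, 1, 1, 9, 0)
    reports = [
        AbuseReport("r1", "user_a", t0, suspended_at=t0 + timedelta(minutes=45)),
        AbuseReport("r2", "user_b", t0),  # never suspended
        AbuseReport("r3", "user_c", t0, suspended_at=t0 + timedelta(hours=5)),
    ]
    print(overdue_reports(reports, now=t0 + timedelta(hours=3)))  # ['r2', 'r3']
```

Tracking misses this way is what would let a platform publish response-time figures rather than simply assert them.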

Conduct independent third-party audits: Hire reputable security or safety researchers to audit the platform quarterly. Publish findings publicly. This creates accountability and identifies gaps internal teams might miss or ignore.

Partner with law enforcement and child safety organizations: Maintain direct relationships with FBI units handling child exploitation, the National Center for Missing and Exploited Children, and international child safety organizations. When law enforcement requests evidence, provide it within hours, not weeks.

Restrict monetization of under-13 accounts: If a child account can't make in-game purchases and the platform can't monetize the child's attention, the incentive structure changes. The company would benefit less from maximum engagement of young users and could implement stronger (but engagement-reducing) safety measures without financial penalty.

These solutions would cost money and might reduce engagement metrics. That's precisely why they haven't been implemented at scale. The lawsuits are trying to force companies to absorb these costs because child safety should matter more than engagement and profit optimization.

What Parents Should Actually Know

Given all this complexity, what's the practical takeaway for parents?

First, Roblox is not uniquely unsafe compared to other online gaming platforms. The issues described in the lawsuit (inadequate moderation, exploitation, and grooming) exist on many platforms. Roblox is facing especially intense legal scrutiny, partly because it's large enough to sue and partly because its safety gaps are well documented.

Second, no major online gaming platform has perfect safety. Parents assuming that a platform marketed to children is totally safe are being too trusting. Every platform has gaps that predators exploit.

Third, parental involvement is essential. If your child plays Roblox or any similar platform:

- Know who they're interacting with

- Review their friend lists regularly

- Be alert to rapid escalation of new friendships (if a child suddenly spends all their time playing with one new person, ask about it)

- Educate them about grooming tactics

- Maintain open communication so they feel comfortable reporting uncomfortable interactions

- Monitor time spent and spending on in-game purchases

- Set clear rules about what information they share (real name, location, school, etc.)

Fourth, report suspicious behavior. If you see an account behaving suspiciously on a platform your child uses, report it. If you suspect exploitation, contact the National Center for Missing and Exploited Children's CyberTipline (CyberTipline.org) in the US or equivalent organizations in other countries.

Fifth, understand the business model. Roblox makes money by maximizing engagement, particularly spending by younger users. This creates inherent pressure to prioritize engagement over safety. Knowing this context helps parents approach the platform more skeptically.

The Bigger Picture: Platform Accountability and Regulation

The Roblox lawsuits are part of a much larger conversation about how much responsibility technology companies should bear for what happens on their platforms.

For decades, platforms claimed they were "neutral conduits" not responsible for user behavior. Section 230 of the Communications Decency Act, passed in 1996, provided broad immunity for platforms from liability for user-generated content. This was intended to enable platforms to host diverse speech without constant legal threats.

However, this immunity created moral hazard. If companies face no consequences for harmful content, they have minimal incentive to spend money on safety measures beyond the bare minimum. Child exploitation is an area where this incentive structure breaks down: a reasonable society can decide that protecting children is important enough that platforms should invest heavily in it, not just implement the minimum required.

The trend in regulation is clear:

- The EU's Digital Services Act requires specific child protections

- US state laws increasingly impose duty-of-care standards for children

- Congress is considering federal legislation (Kids Online Safety Act and similar)

- Courts are increasingly finding that platforms owe a duty of care to users, including children

Roblox's lawsuits are part of this trend. They seek to establish (through litigation) that platforms can't simply claim neutrality and avoid responsibility. If a company knowingly operates a platform where children congregate, that company has a responsibility to implement reasonable safety measures.

This doesn't mean platforms will be held liable for every bad thing that happens. But it means companies can't use profitability or engagement metrics as justification for failing to implement known safety measures.

Looking Forward: What Changes Are Coming

Based on the trajectory of these lawsuits and broader regulatory trends, several changes seem likely:

Mandatory safety standards for gaming platforms: Just as platforms are required to have privacy policies and security measures, they'll likely be required to implement specific child safety standards. These might include age verification, communication controls, mandatory parental visibility, and third-party auditing.

Increased transparency: Platforms will be required to publish data on how many accounts they've suspended for suspected abuse, how quickly they respond to reports, and what safety investments they've made.

Financial consequences for violations: Currently, most penalties platforms face are relatively small compared to revenue. Larger settlements and fines would significantly change the incentive structure.

Expanded liability for platforms: Courts may reduce the broad immunity platforms currently enjoy under Section 230, at least in cases involving child exploitation. This would mean platforms could be sued directly by victims of abuse that happened on their platforms.

Industry standards development: Industry groups may develop and publish best practices for child safety, allowing platforms to meet standards without waiting for regulation.

International coordination: Child exploitation is global. Regulation is moving toward international coordination, with INTERPOL and Europol increasingly involved in pursuing platform-enabled abuse.

For Roblox specifically, the company will emerge from these lawsuits with significantly higher compliance costs and reduced ability to market itself as uniquely safe. But if the company genuinely implements better safety measures (rather than just paying fines), young players might actually be safer.

Key Takeaways for Different Stakeholders

The Roblox lawsuit has different implications depending on who you are.

For parents: Be actively involved in your children's gaming. No platform is perfectly safe. Know who your kids are interacting with. Education about online risks is essential.

For educators: This lawsuit highlights why digital literacy and online safety education is essential. Students need to understand grooming tactics, how to recognize them, and how to report them.

For platform companies: Investment in child safety is no longer optional. Companies that treat safety as an afterthought will face legal consequences. Companies that prioritize safety will gain a competitive advantage.

For regulators: The lawsuit demonstrates that self-regulation by tech companies is insufficient. Regulation establishing minimum child safety standards is needed.

For investors: Tech companies' liability for child safety is increasing. Investment in platforms targeting children should factor in potential legal costs and regulatory requirements.

For child protection advocates: These lawsuits suggest that legal action can be an effective tool for forcing corporate accountability. Similar action against other platforms is likely and warranted.

Conclusion: Safety, Growth, and Accountability

The LA County lawsuit against Roblox represents a crucial moment in how society addresses child safety on gaming platforms. The lawsuit isn't claiming that isolated bad actors will never find ways to exploit online spaces. Rather, it's claiming that when a company designs a platform, markets it to children, and then fails to implement reasonable safety measures despite knowing about foreseeable harms, that's unacceptable.

Roblox's response, that it has "advanced safeguards," misses the plaintiffs' point. The issue isn't whether safeguards exist but whether they are adequate: whether a company that went public at a valuation above $40 billion, with millions of child users, can reasonably claim to have done enough when it took years of mounting pressure before it implemented basic age verification.

The company's position also reveals a tension at the heart of modern tech business models. Maximizing engagement and spending by users (especially younger users with developing financial restraint and impulse control) is fundamentally profitable. Implementing strong safety measures—age verification that might reduce account growth, communication restrictions that reduce engagement, or mandatory parental oversight that reduces perceived privacy—cuts into that profitability. For years, Roblox apparently decided profitability was worth the risk of exploitation.

The lawsuits are attempting to change that calculus. If successful, they'll establish that the risk of exploitation—both in terms of actual harm to children and in terms of legal and financial consequences—is high enough that safety investment is necessary regardless of engagement impact.

Whether Roblox settles or goes to trial, the company's safety practices will change. Other platforms are watching. If Roblox faces major consequences, they'll accelerate their own safety improvements. If Roblox settles for a relatively small amount and makes minimal changes, that will embolden other platforms to resist similar actions and delay their own improvements.

The outcome will likely be that gaming platforms become significantly more regulated and safety-focused than they are today. That's good for children. It might mean slightly less engagement, slightly higher operating costs for platforms, and slightly less revenue from monetizing child attention. These are acceptable trade-offs for a safer online environment for the next generation.

The broader message from these lawsuits is clear: you can't build a massive platform targeting children, market it as safe, and then ignore documented exploitation because fixing it would cost money. Regulators, courts, and society increasingly won't accept that bargain. The question now is how fast platforms respond and whether they change proactively or only when forced to by litigation.

FAQ

What is the specific allegation in the LA County lawsuit against Roblox?

LA County alleges that Roblox engaged in unfair and deceptive business practices by marketing the platform as safe for children while knowingly operating inadequate moderation and age verification systems that enable grooming and sexual exploitation. The complaint specifically states that Roblox portrays itself as "a safe and appropriate place for children to play" while in reality its design makes "children easy prey for pedophiles." The lawsuit claims Roblox failed to effectively moderate content, enforce age-appropriate restrictions, prevent sexual predatory language and interactions, and disclose dangers including sexual content and predator risks to children and parents.

How does Roblox currently try to protect children from predators?

Roblox implements several safety features including automated chat filtering and content moderation to detect inappropriate language and potential grooming attempts, a policy preventing users from sharing images via direct messaging to prevent exploitation tactics, community standards that explicitly prohibit grooming and predatory behavior with user reporting mechanisms, and since 2024, selfie-based age verification for new accounts under 13 and restricted messaging capabilities for verified underage users. The company employs dedicated trust and safety teams and claims to actively remove content and accounts violating community standards. However, critics argue these measures are reactive rather than proactive and don't sufficiently prevent predators from building relationships that migrate to other platforms or private communication channels.

What makes children particularly vulnerable to grooming on gaming platforms?

Children are especially vulnerable on gaming platforms because these spaces combine large populations of young users, extensive social interaction features enabling relationship-building with strangers, anonymity that allows predators to hide their true age and identity, extended engagement times that facilitate gradual grooming, and cultures that normalize spending significant daily time online with minimal parental oversight. Gaming platforms are also expected to contain children, making them natural hunting grounds for predators. The inherent social features needed for engaging multiplayer experiences create opportunities for predatory manipulation that would be harder to conduct on non-social platforms.

What is grooming and how do predators actually do it on platforms like Roblox?

Grooming is a process where an adult builds trust with a child over time through manipulation, with the goal of eventually sexually exploiting them. On gaming platforms, predators typically establish fake child personas to seem like peers, identify vulnerable or isolated children, build rapport through shared interests and support, gradually move conversations to private channels away from oversight, slowly normalize inappropriate topics through seemingly casual discussion, create emotional investment so children feel complicit and reluctant to report, and then escalate to requesting explicit material or in-person meetings. This process typically takes weeks or months and is effective because children often don't recognize what's happening, feel ashamed about discussions they've participated in, and are manipulated into believing reporting would betray the relationship.

Why are multiple states suing Roblox and what does this pattern suggest?

Multiple states including Louisiana, Texas, Florida, and Kentucky have filed or are pursuing legal action against Roblox for similar reasons, suggesting this represents a systematic problem rather than isolated incidents. Multiple state action strengthens each individual case, demonstrates a clear pattern, increases pressure on the company to settle, creates potential precedent if one state succeeds, and signals to federal regulators like the FTC that intervention may be warranted. Louisiana specifically cited a documented case where a predator used voice-altering technology to appear younger and sexually exploited children on Roblox. If the allegations are borne out, the convergence of multiple independent state investigations alleging similar exploitation patterns would be significant legal and practical evidence that Roblox's safety failures are widespread and systemic rather than accidental or limited.

What could happen if Roblox loses these lawsuits or is forced to settle?

If Roblox settles or loses these lawsuits, the company could face settlements ranging from tens to hundreds of millions of dollars, be required to implement specific safety measures approved by states such as mandatory age verification for all accounts, real-time human moderation of high-risk communications, stronger content filtering, restrictions on messaging between substantially different ages, and regular third-party safety audits. Roblox would likely face ongoing monitoring and escalating fines for violations of settlement terms, permanent reputational damage making it harder to market as kid-safe, negative impact on stock price and investor confidence, reduced ability to recruit top talent concerned about working for a company enabling child exploitation, and possible triggering of federal regulation that applies industry-wide. A major judgment would also likely encourage similar lawsuits against other gaming platforms and accelerate federal legislation addressing child safety online.

What are other gaming platforms doing for child safety that Roblox hasn't implemented?

Discord implemented age-gated servers, limited who can DM younger users, and requires phone verification for age-gated channel access. YouTube requires active parental consent for accounts under 13, limits data collection from child accounts, restricts personalized advertising, and prevents public commenting by default on child content. Fortnite implemented cross-game progress to reduce alternate accounts, limits voice communication between substantially different reported ages, and maintains a dedicated safety team with published guidelines. Industry experts recommend mandatory age verification, age-appropriate communication controls preventing contact between accounts with large age differences, human moderation of high-risk interactions, real-time parental visibility into communication patterns (not content), regular third-party audits, clear rapid-response abuse protocols, and visible cooperation with law enforcement. Roblox has implemented some measures but not the full suite.

How should parents approach the decision of whether to let their children use Roblox?

Given the pending lawsuits and documented safety concerns, parents should factor the elevated risk profile into their decision. If allowing Roblox use, parents should actively monitor friend lists and chat logs, review recent interactions regularly, educate children about grooming tactics and suspicious behavior, maintain open communication so children report uncomfortable interactions, set clear rules about information sharing (real name, location, school), monitor time spent and spending on in-game purchases, and know who their children are playing with. Parents should also understand that no platform is perfectly safe and that platform design sometimes prioritizes engagement over safety. For maximum safety, supervised multiplayer games with communication controls or single-player games offer lower-risk alternatives, though with less social engagement. The key is informed decision-making based on understanding the actual risks, not assumptions about platforms marketed as "kid-safe."

What role does the business model play in Roblox's safety challenges?

Roblox's business model creates fundamental incentives that conflict with child safety. The company makes money by maximizing engagement, particularly in-game spending by younger users. Implementing strong safety measures such as mandatory age verification (reducing account growth), communication restrictions between different ages (reducing engagement), or mandatory parental visibility (reducing perceived privacy) would reduce these profit metrics. For years, Roblox apparently decided that accepting exploitation risk was worth maintaining engagement and spending growth. The lawsuits effectively argue this trade-off is unethical and illegal—that child safety should not be subordinated to profit optimization. This dynamic isn't unique to Roblox but exists across tech platforms, which is why regulation establishing minimum safety standards regardless of profit impact is increasingly likely and increasingly justified.
