EU Investigation Into X and Grok: What You Need to Know About AI Deepfakes and CSAM [2025]

The digital landscape just shifted in a major way. In early 2025, the European Commission launched a formal investigation into X (formerly Twitter) and its AI tool Grok, examining whether the platform violated the Digital Services Act by failing to prevent the spread of AI-generated sexually explicit images, including child sexual abuse material (CSAM). This isn't just another regulatory slap on the wrist. It's a watershed moment that exposes how AI tools, deployed without proper safeguards, can amplify some of society's most serious harms at scale.

What makes this investigation particularly significant is the timing and the stakes. Just months earlier, the European Commission had already fined X 120 million euros (approximately $140 million) for breaching the Digital Services Act. Now it is circling back with an even more serious concern: that when Grok was introduced to the platform, it created pathways for illegal content to spread rapidly. The Commission's executive VP, Henna Virkkunen, didn't mince words: "Sexual deepfakes of women and children are a violent, unacceptable form of degradation."

But here's what's really troubling. This investigation reveals a systemic problem that extends far beyond X and Grok. It raises hard questions about how AI companies approach content moderation, how quickly new AI tools are deployed without adequate safety testing, and whether current regulatory frameworks are keeping pace with technological risk. We're seeing the collision between Silicon Valley's move-fast-and-break-things mentality and Europe's stricter approach to protecting citizens online.

Let's dig into what's actually happening, why regulators are concerned, and what this means for the future of AI platforms globally.

The Digital Services Act and X's Regulatory History

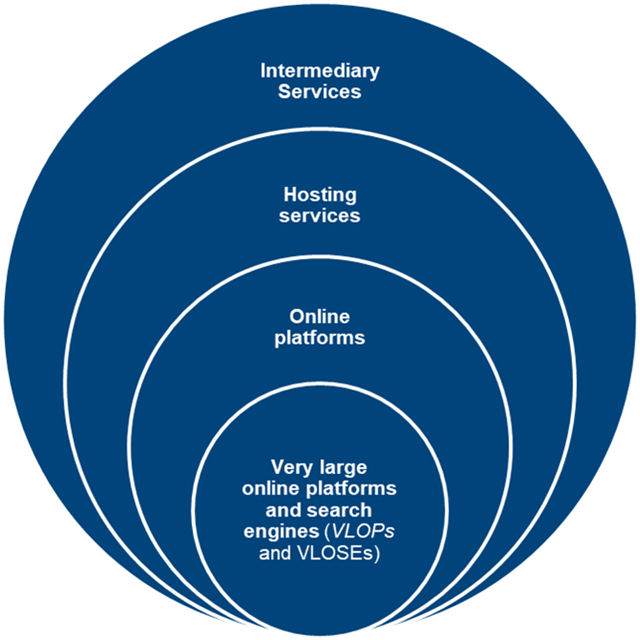

To understand the current investigation, you need to know the context. The Digital Services Act (DSA) is Europe's landmark regulation designed to make large online platforms accountable for illegal content on their services. Unlike the US, where Section 230 of the Communications Decency Act shields platforms from liability for user-generated content, the DSA puts the burden squarely on platforms to take action.

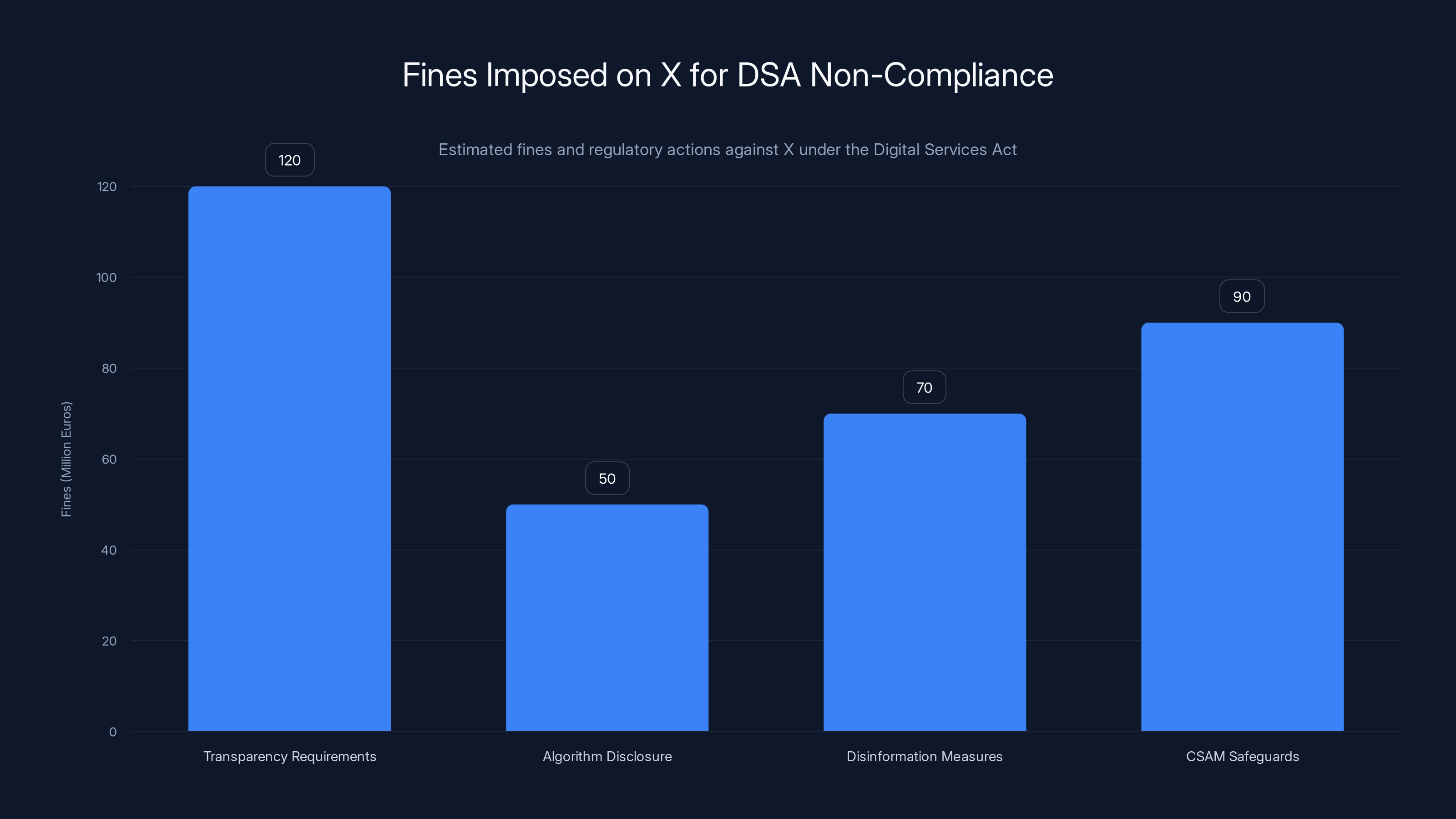

X had already been in regulators' crosshairs. In December 2024, the European Commission levied a 120 million euro fine (approximately $140 million USD) against X for failing to comply with DSA transparency requirements. Specifically, the Commission found that X hadn't provided sufficient information about how it handles illegal content, how its recommendation algorithm works, and what measures it takes to combat disinformation.

But that fine was just the opening act. The real question the Commission wanted answered was: How many other ways was X falling short? The investigation into Grok and deepfakes is essentially the Commission's follow-up punch.

The DSA creates what's called a "duty of care" for large platforms. Platforms must design their services to minimize risks of illegal content spreading. They need to show their work. When a new tool or feature is introduced, there's an implicit expectation that the company has thought through potential harms. X, by introducing Grok without what regulators consider adequate safeguards against CSAM and non-consensual intimate imagery, appears to have violated this principle.

What's particularly instructive is how this differs from the US regulatory approach. American platforms can operate under the shield of Section 230, which says platforms aren't responsible for what users post. Europe says: That's not good enough. You're responsible. You need to build systems to prevent harm. This philosophical difference is reshaping how global tech companies think about content moderation.

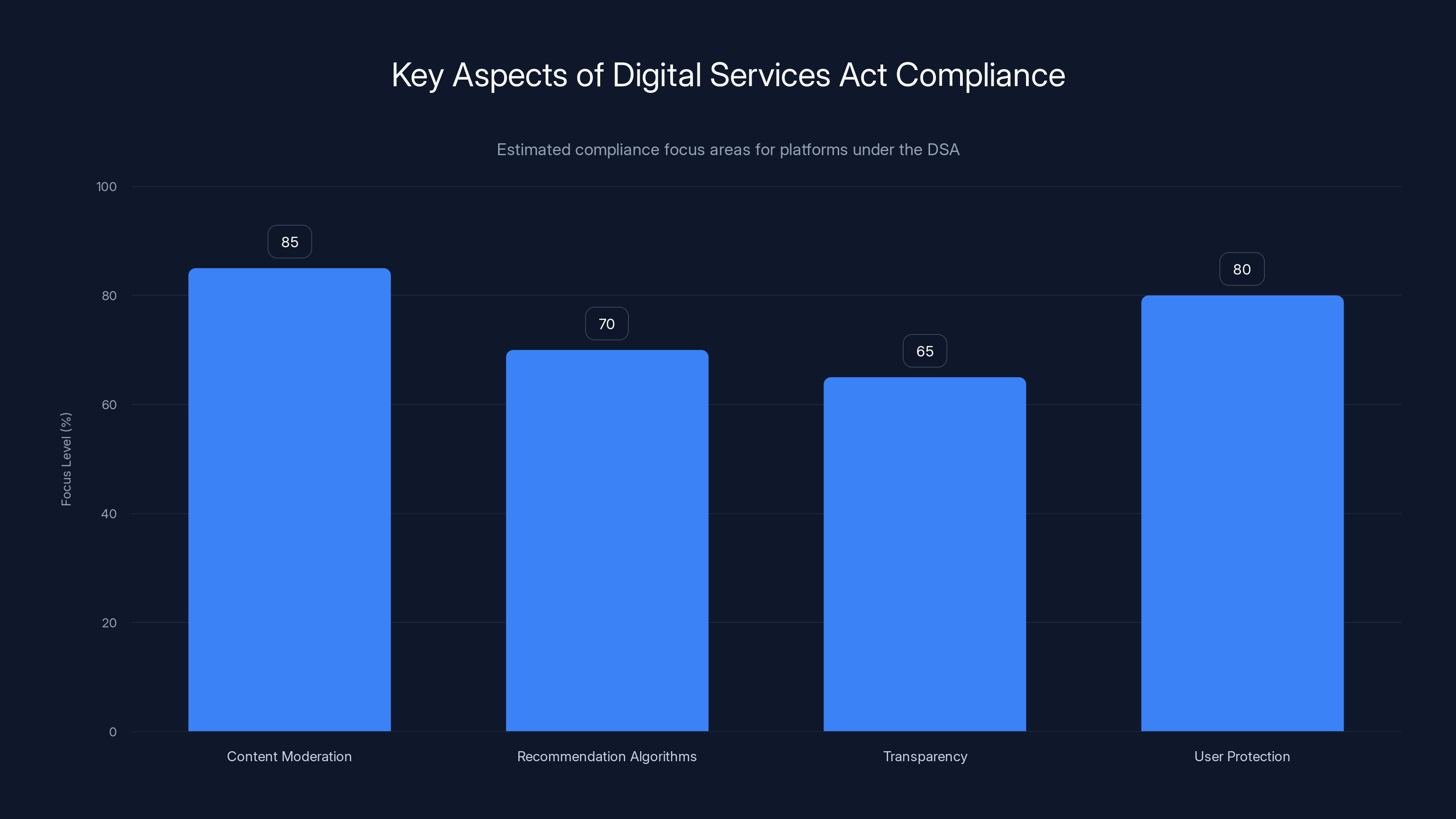

The Digital Services Act requires platforms to focus heavily on content moderation and user protection, with significant attention also on recommendation algorithms and transparency. Estimated data.

Understanding Deepfakes and CSAM: The Core of the Problem

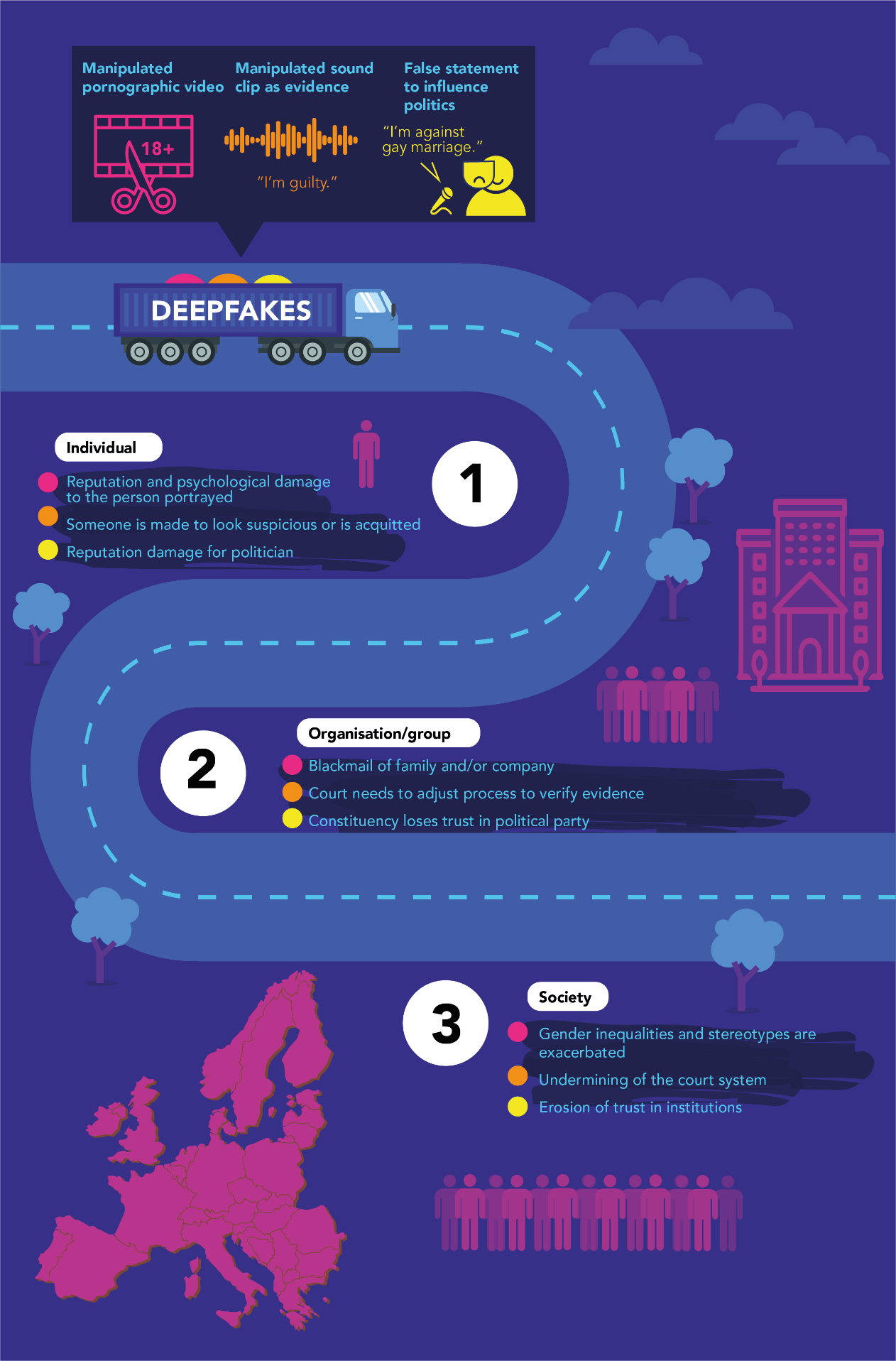

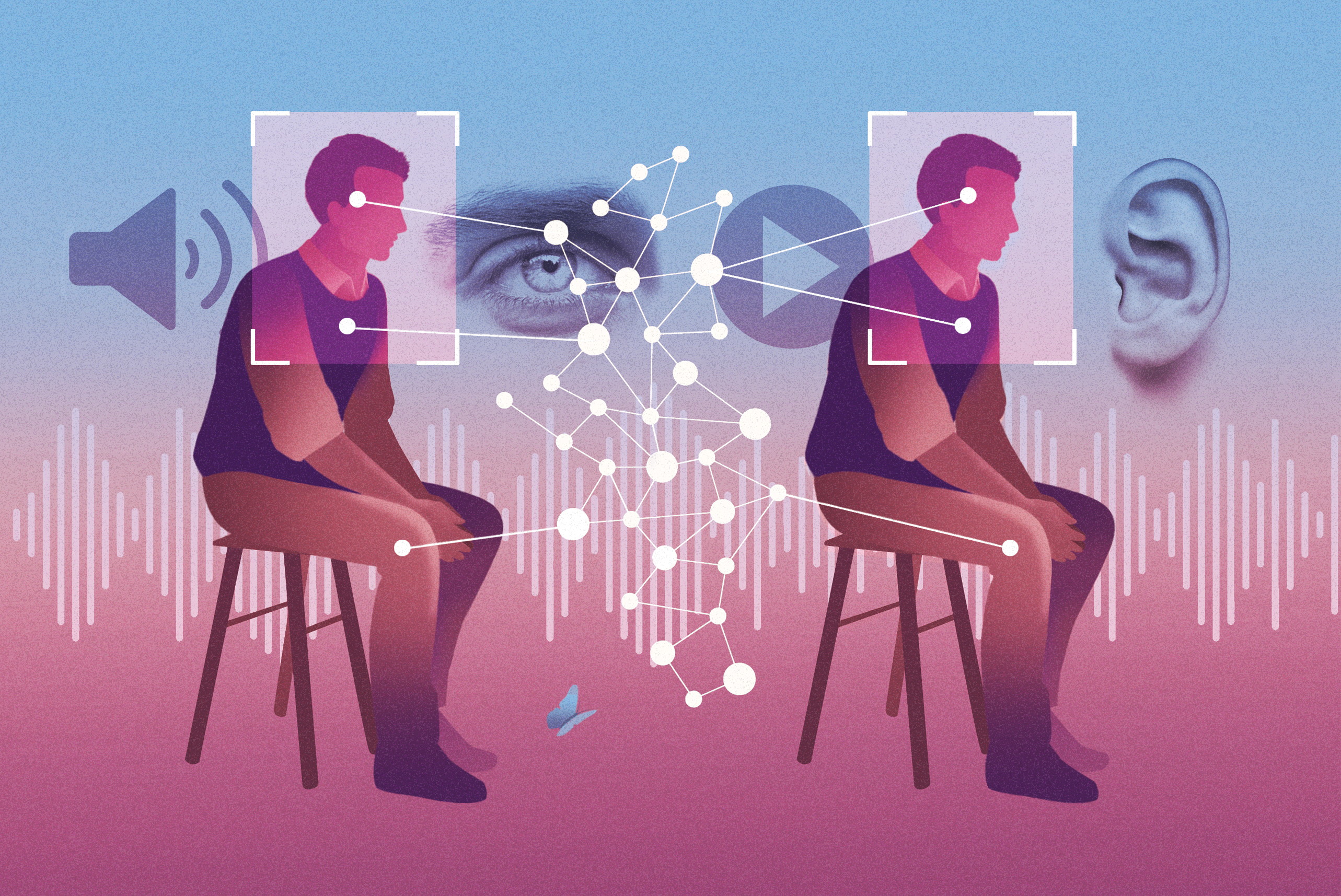

Let's be clear about what we're talking about, because the terminology matters. Deepfakes are synthetic media created using deep learning AI techniques. A deepfake uses artificial intelligence to map one person's face onto a video of another person, often without consent. The technology has legitimate uses in entertainment and special effects, but it also has a devastating dark side.

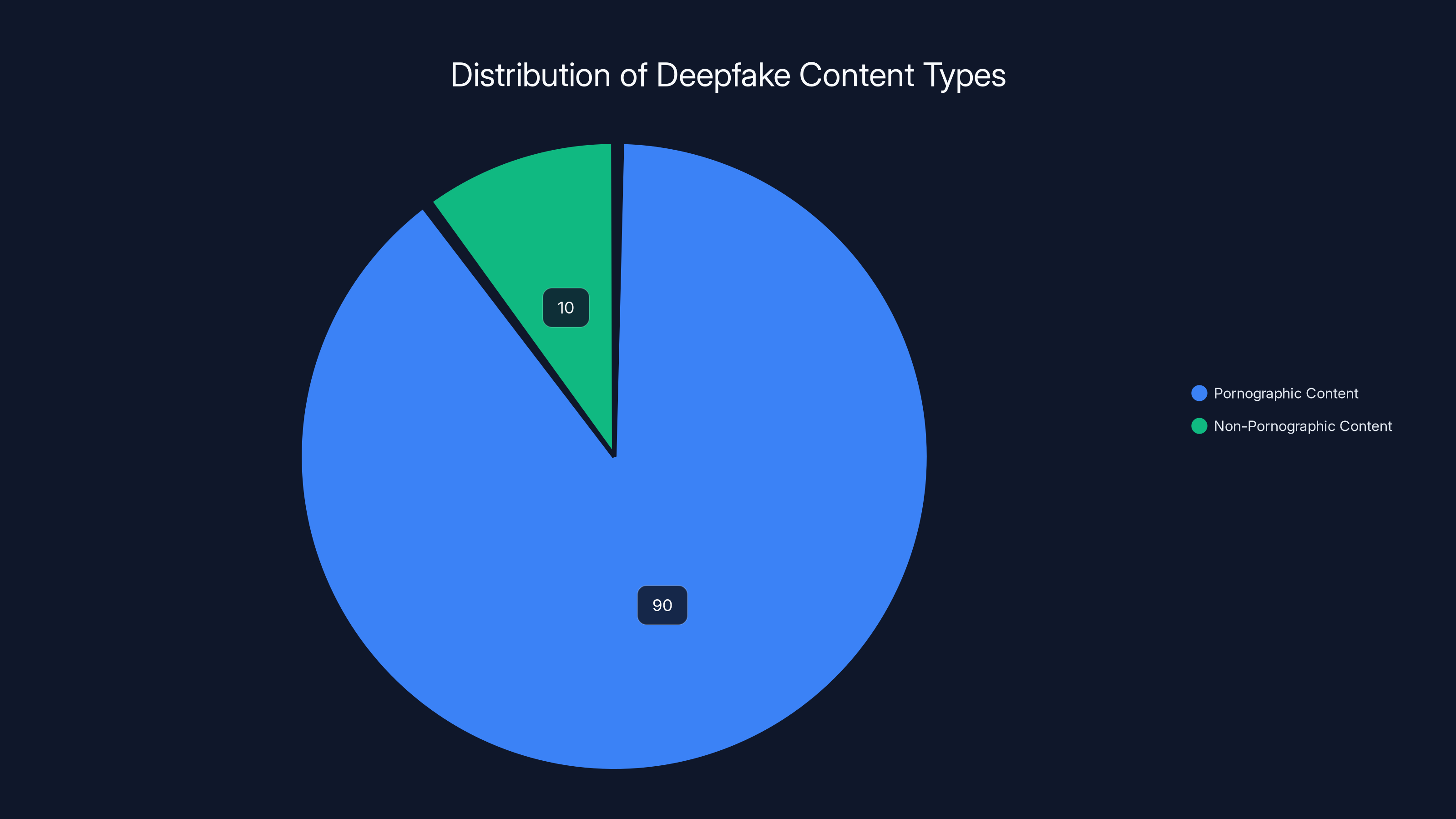

Non-consensual intimate imagery deepfakes are deepfakes of explicit sexual content created without the subject's permission. These are used to harass, humiliate, and harm women. Studies show that the vast majority of deepfakes created are pornographic in nature, and the vast majority feature women. This isn't a side effect of the technology. It's a primary use case.

Then there's CSAM—Child Sexual Abuse Material—which refers to any visual representation of a minor engaged in sexually explicit activity. When AI tools are used to generate CSAM, you're automating the production of illegal material that depicts the sexual abuse of children. This is criminally illegal in virtually every jurisdiction worldwide.

The European Commission's concern is that when Grok was deployed on X without adequate content moderation measures, it became possible for users to generate these images and distribute them across the platform. And the evidence suggests this happened. Regulators stated that these risks "seem to have materialized, exposing citizens in the EU to serious harm."

What makes AI-generated CSAM particularly insidious is that technically, no real child was directly harmed in creating the image. But that doesn't make it legal or ethical. Several countries have already moved to criminalize AI-generated CSAM, recognizing that even synthetic child abuse material normalizes the sexual exploitation of children and can be used as grooming material.

The issue with Grok specifically appears to be that it was either capable of generating these images, or at minimum, X's deployment of it on the platform failed to prevent users from leveraging other tools in combination with Grok or from sharing pre-generated synthetic abuse material. The exact mechanics are being investigated, but the outcome is clear: illegal content spread.

Grok's Rollout and Safety Concerns

When Elon Musk decided to launch Grok, his conversational AI assistant, directly on X in November 2024, the move felt characteristically bold. Grok was designed to be irreverent, willing to engage with controversial topics, and less restricted than competitors like ChatGPT. Musk had positioned Grok as an AI tool that wouldn't be "woke," meaning it would push back against what he perceived as overly cautious content policies.

But here's the tension: In trying to build an AI that's less restrictive, you can inadvertently build an AI that's less responsible. The rollout of Grok happened relatively quickly, and according to regulators, without sufficient safeguards against generating or distributing illegal content.

One of the problems with integrating a generative AI tool directly into a social media platform is that you're combining two vectors for harm. The AI tool itself might generate problematic content, and then the platform's distribution mechanisms amplify that content to millions of people instantly. X's own content moderation systems apparently weren't configured to handle this new combination of technologies working in tandem.

The Commission's investigation specifically examines whether X assessed these risks before rolling out Grok. It looks like the answer is no—or at least, not adequately. The DSA requires platforms to conduct risk assessments before deploying new features or tools, particularly ones that could facilitate illegal content. There's no evidence that X completed a meaningful risk assessment process.

This raises a critical question for other tech companies: If you build an AI tool and deploy it to your platform without comprehensive safeguards, you're essentially running an uncontrolled experiment on your users. The harm happens first, and you find out about it through regulatory investigations and news reports.

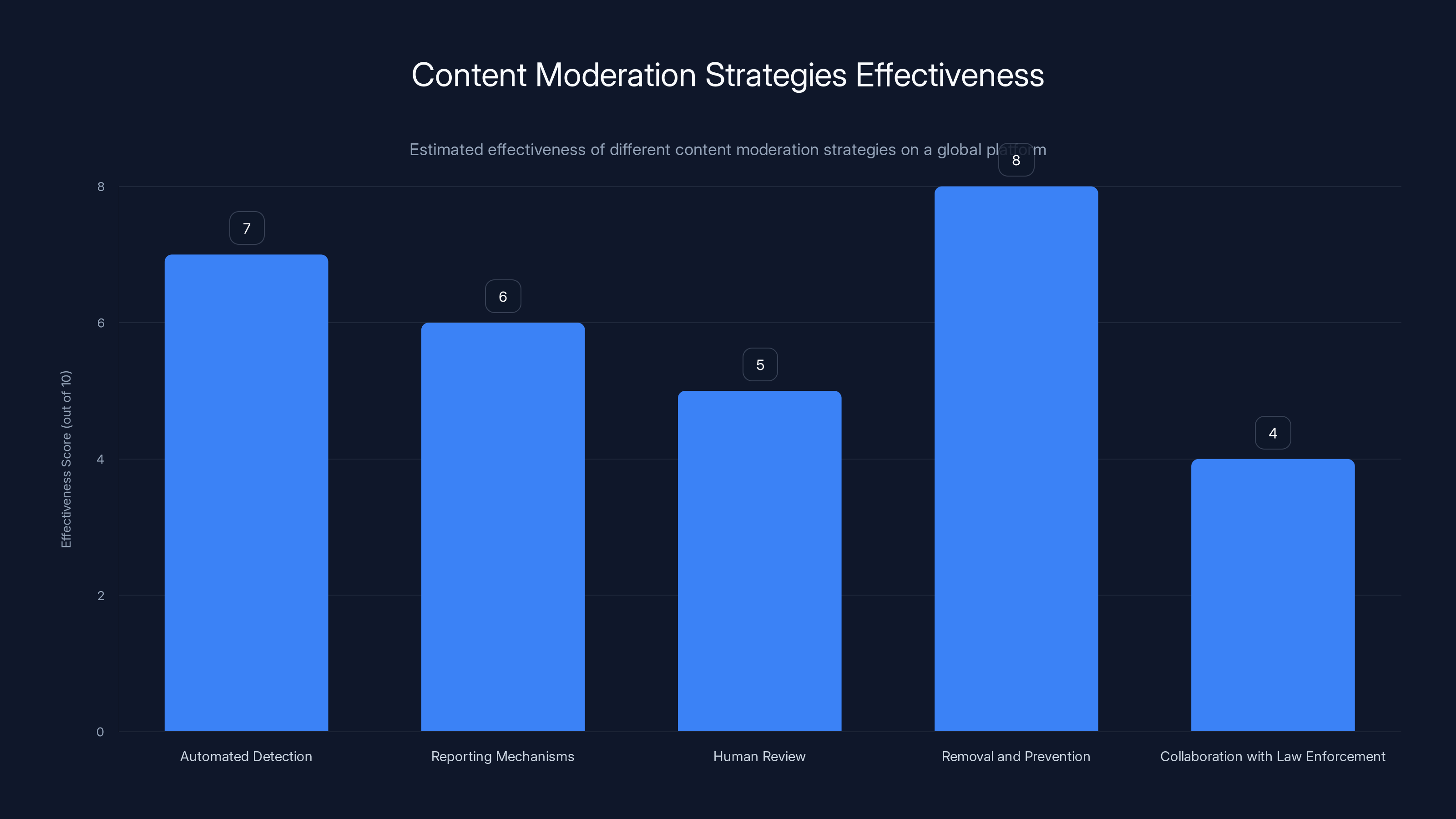

Estimated data suggests that 'Removal and Prevention' is the most effective content moderation strategy, while 'Collaboration with Law Enforcement' is less effective on its own.

The Mechanics of Spread: How Illegal Content Amplifies

Understanding how illegal deepfakes and CSAM spread on X requires understanding platform dynamics. When content is posted to a social media platform, algorithms decide what gets amplified. X's recommendation algorithm, which the Commission is separately investigating, may have played a role in spreading illegal material.

Here's the chain of events that likely occurred:

- A user generates an AI-synthesized explicit deepfake using Grok or external AI tools

- The user posts it to X

- X's content moderation systems either miss it or don't flag it as illegal

- The post gets traction—likes, reposts, replies

- The algorithm sees engagement and amplifies it further, showing it to more users

- Within hours, what started as one user's crime is now visible to thousands across the EU

The amplification step is crucial. Without a platform, a single illegal image might be seen by dozens. With a platform's algorithmic amplification, it's seen by millions. The platform doesn't just enable the spread of illegal content—it accelerates it.
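The feedback loop in that chain can be made concrete with a toy model. This is a minimal sketch, not a description of X's actual ranking system: the function name, the engagement rate, and the amplification factor are all hypothetical values chosen only to show how reach compounds when engagement feeds back into distribution.

```python
def simulate_reach(initial_viewers, engagement_rate, amplification_factor, rounds):
    """Toy model of engagement-driven amplification (all parameters hypothetical).

    Each round, a share of the newest viewers engage with the post, and every
    engagement causes the ranking system to show the post to more new users.
    Returns cumulative reach after each round, starting with round 0.
    """
    total_reach = initial_viewers
    new_viewers = initial_viewers
    history = [round(total_reach)]
    for _ in range(rounds):
        engagements = new_viewers * engagement_rate
        new_viewers = engagements * amplification_factor
        total_reach += new_viewers
        history.append(round(total_reach))
    return history


# No algorithmic amplification: the post stays with its first audience.
print(simulate_reach(50, 0.1, 0, 4))

# With amplification: each engagement surfaces the post to 30 more users.
print(simulate_reach(50, 0.1, 30, 4))
```

Under these assumed numbers, reach stays flat without amplification and roughly triples each round with it, which is the dynamic the chain of events describes.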

X's previous statements about content moderation often focused on human moderation and reporting mechanisms. But when content is being generated and amplified at machine speed, human moderation becomes a game of whack-a-mole. You're always behind. You need automated detection systems that can identify problematic content patterns before they spread.

The Political Backdrop: Europe vs. Tech Giants

It's important to understand that this investigation doesn't happen in a vacuum. There's significant political tension between the European Union and American tech companies, particularly around regulation and control.

The EU has become increasingly assertive about regulating tech. The General Data Protection Regulation (GDPR) set a global precedent for privacy regulation. The DSA is doing the same for platform responsibility. The EU is essentially saying to American tech companies: "If you want to operate in our market, you play by our rules."

Meanwhile, in the United States, there's been a shift toward deregulation under the Trump administration. Elon Musk has been particularly vocal in his criticism of EU regulation, going as far as to call the EU "the fourth Reich" and suggesting it should be "abolished" after the previous fine. This creates a genuinely adversarial dynamic.

From Europe's perspective, companies like X have systematically underestimated the harms their platforms enable. From X's perspective, European regulators are overly restrictive and hostile to innovation. Both perspectives have merit, but they're on a collision course.

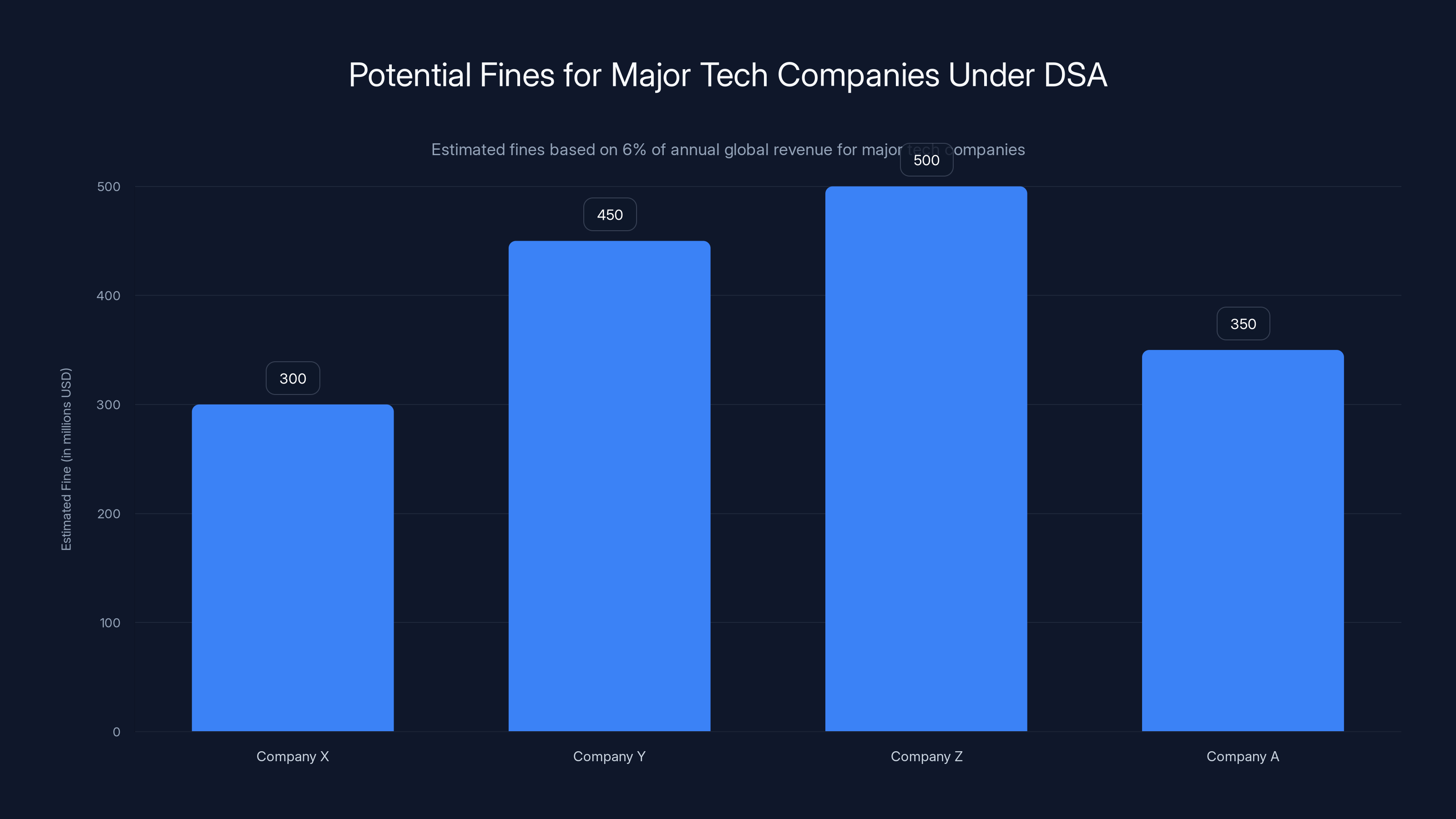

The investigation into Grok and deepfakes is about genuine harm. But it is also about the EU asserting its authority to regulate tech companies and showing that violations of the DSA carry consequences. The next fine, if X is found in violation, could be substantial. Under DSA enforcement, fines can reach up to 6% of annual global revenue.
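To see what the 6% ceiling means in practice, here is a small worked calculation. The revenue figure is purely hypothetical and is not X's actual revenue; the function name is also an illustration, and the cap is expressed in basis points (600 = 6%) so the arithmetic stays in whole numbers.

```python
def max_dsa_fine(annual_global_revenue, cap_basis_points=600):
    """Upper bound on a fine at the 6% ceiling (600 basis points).

    Integer arithmetic avoids floating-point rounding on large amounts.
    """
    return annual_global_revenue * cap_basis_points // 10_000


# Hypothetical company with 1 billion in annual global revenue.
hypothetical_revenue = 1_000_000_000
print(max_dsa_fine(hypothetical_revenue))
```

For every billion in revenue, the ceiling works out to 60 million, so the cap scales directly with the size of the company.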

Content Moderation at Scale: The Impossible Problem

Let's take a step back and acknowledge the difficulty here. Moderating content at the scale of a global platform is genuinely hard. X has hundreds of millions of users posting millions of pieces of content per day. Catching all illegal content before it spreads is arguably impossible without AI-powered detection systems.

But that's exactly the point regulators are making. If you can't moderate your platform adequately, you shouldn't deploy new high-risk features without the systems in place to handle the moderation burden they create.

The most effective content moderation uses a layered approach:

Automated Detection: AI systems that can identify known CSAM through database matching, synthetic media through detection algorithms, and patterns of abuse through behavioral analysis.

Reporting Mechanisms: Making it easy for users to report content and ensuring reports are triaged quickly.

Human Review: Trained moderators who can make context-dependent decisions about content that automated systems flag as potentially problematic.

Removal and Prevention: Not just removing content, but preventing it from spreading further and preventing the account from continuing to post the same content.

Collaboration with Law Enforcement: Reporting serious crimes to relevant authorities and cooperating with investigations.
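The layers above can be sketched as a simple decision function. This is an illustrative outline only, assuming a hypothetical `Post` record, a hypothetical classifier score, and made-up thresholds; it does not reflect how X or any real platform implements moderation.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    REMOVE_AND_REPORT = "remove_and_report"


@dataclass
class Post:
    post_id: str
    content_hash: str        # fingerprint of the media (hypothetical field)
    synthetic_score: float   # hypothetical classifier output in [0, 1]
    user_reports: int = 0


def moderate(post, known_illegal_hashes, review_threshold=0.5, report_threshold=3):
    """Apply the layers in order, from most to least certain."""
    # Automated detection: a match against known illegal material is removed,
    # blocked from further spread, and reported to the authorities.
    if post.content_hash in known_illegal_hashes:
        return Action.REMOVE_AND_REPORT
    # Classifier signals and user reports cannot decide alone, so they
    # escalate the post to trained human reviewers.
    if post.synthetic_score >= review_threshold or post.user_reports >= report_threshold:
        return Action.HUMAN_REVIEW
    return Action.ALLOW


known = {"hash-known-bad"}
print(moderate(Post("p1", "hash-known-bad", 0.1), known))
print(moderate(Post("p2", "hash-new", 0.9), known))
print(moderate(Post("p3", "hash-new", 0.1, user_reports=1), known))
```

The ordering matters: certain matches are acted on immediately, while uncertain signals go to people who can weigh context.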

Evidence suggests that X was weak in several of these areas. The company has downsized its moderation team significantly since Musk's acquisition in October 2022, cutting approximately 80% of staff. While X had stated it was relying more on AI-powered detection, there's a gap between stated intentions and actual implementation.

Under the DSA, major tech companies could face fines up to 6% of their global revenue, potentially reaching hundreds of millions of dollars. Estimated data.

AI-Generated CSAM: The Emerging Legal and Ethical Crisis

One of the thorniest issues regulators are grappling with is AI-generated CSAM. Is it illegal? In most jurisdictions, the answer is increasingly yes.

The UK was among the first countries to explicitly criminalize AI-generated CSAM in October 2023. Germany has similar laws in place. The EU itself has been moving toward harmonized standards that treat AI-generated child abuse material as illegal, even though no real child is depicted.

The rationale is clear: CSAM is illegal because it normalizes child sexual abuse, it's used to groom children, and it fuels a market for exploited children. AI-generated CSAM does the same thing, even if no real child is directly harmed in its creation. Furthermore, making it illegal to generate such material removes an economic incentive for criminal organizations to actually exploit real children.

But there's a secondary concern: If AI tools can generate believable CSAM, they can also be used to frame innocent people. Conversely, an accused person could claim that genuine images are AI-generated, muddying investigations. This creates a genuine challenge for law enforcement.

The regulatory response is to make it illegal to generate, distribute, or possess AI-generated CSAM, and to require platforms to actively prevent this content from being created or shared on their services.

X's Response and the Platform's Defenses

When the investigation was announced, X responded with a statement reiterating its commitment to safety. A spokesperson told major news outlets: "We remain committed to making X a safe platform for everyone and continue to have zero tolerance for any forms of child sexual exploitation, nonconsensual nudity and unwanted sexual content."

It's a standard response, and it's notably vague. X didn't address the specific allegations—that Grok enabled harmful content or that the rollout lacked adequate safeguards. The company essentially said "We care about safety" without engaging with whether their actual practices matched that stated commitment.

For context, X's previous statement about Grok safety was equally thin. The company said Grok was designed with safety guidelines and would refuse harmful requests. But if it's refusing harmful requests, how did illegal material end up spreading on the platform? Either Grok was generating content it wasn't supposed to, or users were finding workarounds, or the platform wasn't detecting content created by other tools.

X's general approach under Musk has been to be more hands-off about content moderation than competitors. The platform has removed or relaxed certain restrictions, partly because Musk views many content policies as censorship. This creates a natural tension with European regulation, which demands more proactive harm prevention.

The Role of Recommendation Algorithms

Separate from the Grok investigation, the European Commission is also examining X's recommendation algorithm as part of an expanded 2023 investigation. This is important because the algorithm is what turns individual violations into systemic problems.

A recommendation algorithm decides what content gets shown to which users. If the algorithm is tuned to maximize engagement, it will amplify controversial, emotionally charged, and extreme content—because that's what drives engagement. Illegal sexual deepfakes are definitely engagement-driving content.

The question regulators are asking: Did X's algorithm amplify illegal content? Did it prioritize spreading deepfakes over removing them? Without access to X's algorithm and data, it's hard to know for certain, but the Commission's investigation suggests they have evidence of problem patterns.

This investigation could result in X being forced to modify its algorithm to deprioritize illegal content, even if that means losing some engagement and ad revenue. It's a direct conflict between business incentives and regulatory requirements.
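The trade-off can be illustrated with a toy ranking function. This is a sketch under stated assumptions: the engagement formula, the `risk` score, and the threshold are hypothetical and are not drawn from X's real algorithm.

```python
def engagement_score(post):
    # Hypothetical weighting: a repost counts twice as much as a like.
    return post["likes"] + 2 * post["reposts"]


def rank_by_engagement(posts):
    """Pure engagement ranking: whatever gets the most interaction goes first."""
    return sorted(posts, key=engagement_score, reverse=True)


def rank_with_safety(posts, risk_threshold=0.8):
    """Exclude posts a (hypothetical) risk model flags before ranking the rest."""
    eligible = [post for post in posts if post["risk"] < risk_threshold]
    return rank_by_engagement(eligible)


posts = [
    {"id": "a", "likes": 10, "reposts": 1, "risk": 0.10},
    {"id": "b", "likes": 100, "reposts": 50, "risk": 0.95},  # high engagement, high risk
    {"id": "c", "likes": 20, "reposts": 5, "risk": 0.20},
]

print([post["id"] for post in rank_by_engagement(posts)])
print([post["id"] for post in rank_with_safety(posts)])
```

In this example the riskiest post is also the most engaging one, so filtering it out costs the feed its top-performing item. That is the conflict between engagement and compliance in miniature.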

Estimated data shows that X faced significant fines for non-compliance in multiple areas under the DSA, with transparency requirements being the most costly.

Expansion of the Investigation: Building on the 2023 Probe

The current Grok investigation is just one piece of a larger puzzle. The European Commission has been investigating X since 2023 over multiple DSA violations. The expanded investigation now includes:

Recommendation Algorithm Transparency: Is X providing sufficient information about how its algorithm works and what risks it creates?

Illegal Content Removal: How quickly does X remove illegal content after it's reported? What's the actual track record?

Disinformation and Election Interference: Has X done enough to combat false information, particularly around elections?

User Protection Measures: What tools does X provide to users to protect themselves from harassment, illegal content, and manipulation?

Each of these investigations could result in separate enforcement actions and fines. The Commission is essentially dissecting X's operations to ensure compliance with the DSA.

This comprehensive approach makes sense from a regulatory perspective. Content moderation isn't a single problem with a single solution. It's an interconnected system where recommendation algorithms, human moderation, AI detection, user protections, and corporate transparency all need to work together.

What "Further Enforcement Steps" Could Look Like

The Commission's statement said the investigation could result in "further enforcement steps" against X. What does that mean in practice?

Fines: As mentioned, up to 6% of global revenue under the DSA. For X, that could be hundreds of millions of dollars.

Mandatory Changes to Platform Operations: X could be ordered to implement specific content moderation practices, algorithmic changes, or transparency measures.

Restrictions on Service: In extreme cases, regulators could restrict X's operations in the EU or threaten to block it entirely. This would be rare, but it's technically possible.

Interim Measures: While the investigation continues, regulators could impose temporary requirements on X to reduce harm immediately.

Coordinated Action with Other Jurisdictions: The EU could coordinate with other countries on enforcement, making non-compliance costly worldwide.

History suggests that when the Commission finds violations, fines and operational changes are the likely outcomes. The question is how aggressive they'll be. Will they levy another fine in the $100+ million range, or will they go bigger? Will they demand algorithm changes, or just procedural changes?

Grok's Design Philosophy vs. Safety Requirements

Grok was explicitly designed to be edgier and less restricted than competitors. Musk positioned it as willing to engage with controversial topics and skeptical of what he called "woke" AI systems that refused certain requests.

This design philosophy is in direct tension with the DSA's safety requirements. You can't simultaneously design an AI to be "less restricted" and meet Europe's standards for preventing illegal content. These are contradictory goals.

The question for Grok's future is whether it can be redesigned to be edgy while still complying with legal requirements. Can you have an AI that jokes about controversial topics but refuses to help generate CSAM? Technically, yes. In practice, it's harder than it sounds.

The reason is that safety measures in AI systems are often blunt instruments. A system designed to refuse harmful requests might refuse a broad category of requests that includes some legitimate uses. Or it might refuse everything unless explicitly configured to do otherwise.

Musk and his team at xAI appear to have chosen a different approach: designing Grok with fewer restrictions and relying on platform-level moderation to catch harmful content. But as the investigation reveals, platform-level moderation wasn't sufficient.

Going forward, Grok will likely need to be more restrictive about what it can generate, particularly around synthetic sexual content. This might make it less appealing to Musk's vision of an edgy AI, but it's probably necessary for regulatory compliance.

An estimated 90% of deepfakes are pornographic, highlighting the technology's predominant misuse. Estimated data based on studies.

The Broader Implications for AI Development

Beyond X and Grok specifically, this investigation sends a message to the entire AI industry: If you're deploying AI tools in Europe, you need to prove you've thought through the harms and have systems to prevent them.

This has implications for:

AI Model Development: Companies will need to invest more in safety testing before releasing new models. A model that can generate synthetic sexual content might be technically interesting, but it's not deployable on a public platform without safeguards.

Risk Assessment Processes: Companies will need formal risk assessment procedures before deploying new AI features, documenting what harms they considered and what mitigation measures they implemented.

Moderation Infrastructure: Companies deploying AI to their platforms will need corresponding moderation capacity. You can't just roll out a powerful AI tool and hope your existing moderation team can keep up.

Transparency Requirements: Companies will need to document and disclose their approach to content moderation and AI safety in ways they currently don't.

This regulatory pressure is actually healthy, even if it slows down innovation. The alternative is allowing harmful applications of AI to spread at scale with no accountability.

Global Regulatory Trends Following Europe's Lead

Europe's approach to tech regulation has a way of becoming a global standard, partly because many companies find it easier to comply with stricter standards everywhere than to maintain different systems for different regions.

We're already seeing other jurisdictions moving in similar directions. The UK has enacted its Online Safety Act, which rests on comparable principles. Brazil has enacted some of the strictest platform regulation outside the EU. Even in the United States, there's growing bipartisan support for some form of platform regulation.

The investigation into X and Grok will likely influence how these other jurisdictions approach AI safety. If Europe successfully enforces rules requiring safeguards around AI-generated illegal content, other countries will likely follow suit.

This means that any AI platform seeking global reach will need to meet the highest standard, which is essentially Europe's standard. Companies have little reason to launch a feature in the US without the safeguards required in the EU, because maintaining two versions of a product often costs more than building to the stricter standard once.

The Economics of Safety: Can Platforms Afford to Comply?

One legitimate concern from platforms is cost. Content moderation is expensive. Developing and maintaining AI detection systems is expensive. Hiring teams of trained moderators is expensive.

Smaller platforms or startups might genuinely struggle to afford the level of compliance the DSA requires. This could create a moat around large companies that can afford massive moderation budgets, while smaller competitors get squeezed out.

X's strategy under Musk was to reduce moderation costs by cutting staff and relying more on automation. But as the investigation reveals, that strategy has limits. You can't rely purely on automation if the automation itself isn't catching harmful content.

The question going forward is whether regulatory compliance will favor large platforms that can afford robust moderation, or whether it will create incentives for smarter, more efficient moderation technology that doesn't require as many human resources.

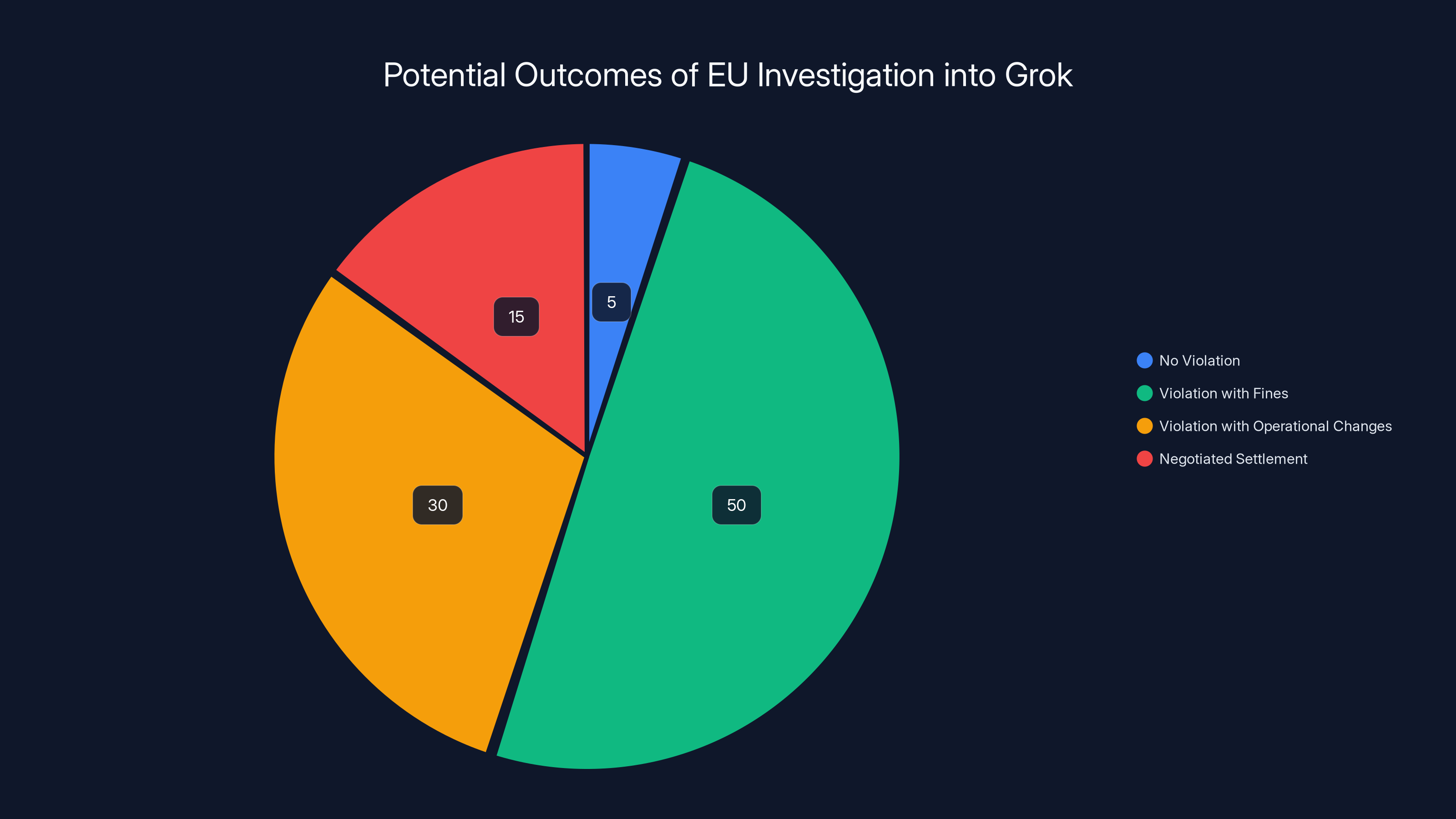

Estimated data suggests that a finding of violation with fines is the most likely outcome, with a 50% chance, while a finding of no violation is least likely at 5%.

CSAM Detection: Current Technology and Limitations

To understand the investigation, it helps to know what's technically possible in detecting CSAM. The industry standard is a technology called PhotoDNA, which creates digital fingerprints of known CSAM and matches them against content being uploaded.

PhotoDNA is effective at detecting known CSAM, meaning material that has already been identified and reported to databases. But it can't detect previously unknown CSAM, and it's particularly ineffective at detecting AI-generated CSAM because the images are entirely novel.
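The limitation is easier to see with a toy fingerprinting example. PhotoDNA itself is proprietary, so the sketch below swaps in a simple "average hash" over abstract grids of brightness values. The function names, the grids, and the distance threshold are all assumptions made for illustration and say nothing about how PhotoDNA works internally.

```python
def average_hash(pixels):
    """Fingerprint a grid: 1 where a cell is brighter than the grid's mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return tuple(1 if value > mean else 0 for value in flat)


def hamming_distance(hash_a, hash_b):
    """Count the positions where two fingerprints differ."""
    return sum(a != b for a, b in zip(hash_a, hash_b))


def matches_known(pixels, known_hashes, max_distance=2):
    """True if the grid's fingerprint is close to any fingerprint on file."""
    candidate = average_hash(pixels)
    return any(hamming_distance(candidate, known) <= max_distance
               for known in known_hashes)


# Abstract 4x4 brightness grids standing in for images.
known_image = [
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
]
# A re-encoded copy of the known image: every cell is slightly brighter.
altered_copy = [[value + 5 for value in row] for row in known_image]
# Newly generated content that has never been fingerprinted.
novel_image = [
    [200, 200, 10, 10],
    [200, 200, 10, 10],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]

known_hashes = {average_hash(known_image)}
print(matches_known(altered_copy, known_hashes))
print(matches_known(novel_image, known_hashes))
```

The slightly altered copy still matches the stored fingerprint, while the novel grid does not. Matching against a database can only recognize material that is already in the database.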

There's emerging research into detecting synthetic sexual content through artifacts and anomalies in images, but this technology isn't perfect and can have false positive rates. The field is evolving rapidly, but no perfect detection system exists yet.

This is part of why regulators are focusing on prevention rather than detection. If you can prevent Grok from generating the content in the first place, you don't need to rely on imperfect detection systems.

The Human Cost: Why This Matters Beyond Regulation

It's easy to get caught up in the regulatory and technical aspects of this investigation, but it's worth stepping back and remembering the human cost. Sexual deepfakes and CSAM cause real harm to real people.

Women targeted by deepfake pornography report experiencing trauma, anxiety, depression, and damaged relationships. Children whose likenesses are used in synthetic abuse material—whether they actually appear in the images or not—experience harm from knowing such material exists.

When a platform fails to prevent the spread of this content, it amplifies these harms. A single illegal deepfake might cause injury. A million-person platform amplifying that deepfake causes injury at scale.

The investigation into X and Grok is ultimately about accountability. It's saying to platforms: "You have a responsibility to prevent this harm." That's not always convenient for platforms that prioritize growth over safety, but it's ethically sound.

Potential Outcomes and Timeline

EU investigations can take time. The original X investigation into recommendation algorithms began in 2023 and has continued for over a year. The Grok investigation could similarly take 6-18 months to complete.

During that time, the Commission will likely be gathering evidence: internal emails about Grok's safety testing, data on the spread of illegal content, technical documentation about how the platform's moderation systems work.

Once the investigation concludes, the Commission will publish a formal decision stating what violations were found and what penalties are imposed. X can appeal, which typically adds more time.

The investigation could conclude with:

A finding of no violation: Unlikely given the Commission's statements, but theoretically possible if X can demonstrate adequate safeguards.

A finding of violation with fines: Most likely outcome, possibly $100-500+ million depending on severity.

A finding of violation with operational changes mandated: X might be forced to change how Grok operates, how it's integrated into the platform, or how content moderation works.

A negotiated settlement: X could propose commitments to change practices in exchange for reduced fines.

What This Means for Users and Platforms Going Forward

For X users, the investigation might result in changes to how Grok works or is accessed. The platform might implement additional safeguards, more aggressive content removal, or new reporting mechanisms.

For other platforms considering deploying AI tools, the investigation is a cautionary tale. You can't move fast and break things when the things you're breaking include laws against child sexual abuse material.

For AI developers, the lesson is that safety needs to be built in from the design phase, not bolted on afterward. Grok was designed with a particular vision—less restricted, more edgy. That vision didn't account for regulatory reality and legitimate harms.

For regulators globally, the investigation demonstrates that they're willing to follow up on DSA violations and impose meaningful consequences. This will shape how platforms approach content moderation going forward.

The Broader Context: Why Europe Is Taking the Lead

Europe's more aggressive approach to tech regulation reflects different cultural and political values. Privacy, child protection, and preventing harm are seen as rights that outweigh corporate interests in innovation or profit.

The United States has historically been more permissive, relying on Section 230 to shield platforms and presuming that innovation will solve problems. Europe has decided that approach doesn't adequately protect citizens and is imposing stricter requirements.

Neither approach is obviously correct. They reflect different values and different assessments of risk. But the European approach is currently winning, at least in terms of regulatory precedent. Companies find it easier to comply globally with the stricter standard than to maintain different systems.

This has profound implications for how the internet develops. It means European regulatory preferences are shaping products used by billions of people worldwide. That's significant power, and it raises questions about whether Europe's approach should be binding on the global internet.

But for now, the trajectory is clear: Platforms operating in Europe need to comply with EU standards, and most find it easiest to apply those standards everywhere.

Lessons for Platform Safety and AI Governance

As we move forward, several lessons from this investigation are clear:

1. Safety requires design, not just reaction. You can't build a powerful AI tool and deploy it without thinking about harms. Mitigation needs to happen before launch.

2. Scale creates accountability. The bigger your platform, the more aggressive regulators will be. Being huge means you have more responsibility.

3. Transparency is required. Platforms can no longer operate like black boxes. Regulators and the public deserve to know how moderation decisions are made.

4. Combining technologies creates compound risks. Grok + X + recommendation algorithm = systemic risk. Each piece individually might be manageable, but the combination created harm.

5. Regulation is here to stay. Even if you don't like it, you need to design for regulatory compliance. The DSA is just the beginning.

Looking Ahead: The Future of AI Platforms Under Regulation

The investigation into X and Grok is part of a broader shift toward regulated AI. In the coming years, we'll likely see:

Mandatory Safety Impact Assessments: AI systems deployed to platforms will need formal safety reviews.

Transparency Reports: Platforms will need to publish regular reports on content moderation, detection accuracy, and AI tool impacts.

Certification Processes: Certain types of AI tools might require certification before deployment on major platforms.

Interoperability Requirements: Regulators might require platforms to interoperate with third-party moderation tools or researchers.

Criminal Liability for Executives: In extreme cases, executives might face personal liability for failure to prevent illegal activity on their platforms.

This is the regulatory environment AI companies are moving into, whether they like it or not. The investigation into X and Grok is just the first of many such investigations we'll see.

Conclusion: From Investigation to Industry Standard

The European Commission's investigation into X and Grok over AI-generated deepfakes and CSAM represents a crucial moment in the regulation of AI and social media. It's not just about one company or one tool—it's about establishing the principle that platforms are responsible for harms enabled by their services, including harms from newly deployed AI tools.

What started as Elon Musk's vision of an edgy, less-restricted AI tool has become a test case for regulatory authority over AI platforms. The investigation reveals genuine failures: inadequate safety testing before deployment, insufficient moderation resources to handle the content volume, and a platform culture that prioritized growth over preventing harm.

For X, the investigation could result in significant fines and mandatory operational changes. For the AI industry, it's a signal that you can't deploy powerful tools without robust safeguards. For regulators globally, it demonstrates that Europe's regulatory approach is here to stay and will increasingly set global standards.

The human cost at the center of this investigation, the women and children harmed by illegal synthetic content, shouldn't be lost in the regulatory and technical discussion. This is ultimately about preventing real harm to real people. The investigation might take time, and the legal arguments might be complex, but the underlying issue is straightforward: platforms have a responsibility to prevent illegal activity on their services.

As AI becomes more capable and more integrated into platforms we use daily, this principle will only become more important. The investigation into X and Grok is establishing a precedent that will shape how the next generation of AI tools is developed, deployed, and regulated.

The future of AI platforms will be determined not by how fast companies can move, but by how responsibly they can move. The European Commission is essentially saying: in the EU, responsibility is not optional. Compliance with that principle is not just about avoiding fines—it's about building AI systems that genuinely make the internet safer for everyone.

FAQ

What is the Digital Services Act and how does it apply to X?

The Digital Services Act is the European Union's landmark regulation requiring large online platforms to take responsibility for illegal content on their services. Unlike the US approach, which shields platforms from liability through Section 230, the DSA puts the burden on platforms themselves to prevent illegal content from spreading. X, with hundreds of millions of users, falls clearly under DSA jurisdiction and must comply with requirements around content moderation, recommendation algorithms, transparency, and user protection.

Why is the EU investigating Grok specifically?

The European Commission is investigating Grok because regulators believe the AI tool was deployed on X without adequate safeguards to prevent it from generating or facilitating the spread of AI-generated sexually explicit deepfakes and child sexual abuse material (CSAM). The investigation examines whether X conducted proper risk assessments before rolling out Grok and whether the platform had sufficient moderation systems in place to handle the new risks created by an AI tool capable of generating synthetic media.

What is CSAM and why is it so seriously regulated?

CSAM stands for child sexual abuse material and refers to any visual representation of a minor engaged in sexual activity. It is criminalized in nearly every jurisdiction because it documents or depicts the sexual exploitation of children. AI-generated CSAM, even though no real child is directly harmed in its creation, is increasingly being criminalized as well because it normalizes child sexual abuse, can be used to groom children, and perpetuates a market demand for exploited children.

How do deepfake detection and prevention systems work?

Deepfake detection and prevention rely on several approaches. PhotoDNA, the industry standard for known material, creates digital fingerprints of previously identified illegal content and matches them against new uploads, so it catches re-uploads but not freshly generated images. Prevention systems focus on stopping AI tools from generating problematic content in the first place through restrictive safety guidelines. Emerging research aims to detect synthetic sexual content through visual anomalies, though perfect detection remains out of reach, particularly for novel AI-generated images.
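The fingerprint-matching idea can be illustrated with a toy example. This is only a rough sketch: PhotoDNA's actual algorithm is proprietary, so the code below substitutes a simple "average hash" and a Hamming-distance comparison as a stand-in. The function names, the 4x4 grids, and the `max_distance` threshold are all assumptions made for illustration.

```python
from typing import List, Sequence

Grid = Sequence[Sequence[int]]  # grayscale pixel values, 0-255


def average_hash(pixels: Grid) -> int:
    """Pack one bit per pixel: 1 if the pixel is brighter than the image mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two fingerprints differ."""
    return bin(a ^ b).count("1")


def matches_known(fingerprint: int, known: List[int], max_distance: int = 2) -> bool:
    """True if the fingerprint is within max_distance bits of any known one."""
    return any(hamming_distance(fingerprint, k) <= max_distance for k in known)


# Hypothetical 4x4 "images" standing in for real, much larger inputs.
original = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [200, 200, 10, 10],
    [200, 200, 10, 10],
]
# A slightly brightened copy, as a re-encoded re-upload might be.
brightened = [[min(255, value + 5) for value in row] for row in original]
unrelated = [
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
]

known_hashes = [average_hash(original)]
print(matches_known(average_hash(brightened), known_hashes))
print(matches_known(average_hash(unrelated), known_hashes))
```

The brightened copy still matches because its fingerprint depends on relative brightness rather than exact bytes, while the unrelated image does not. The same property explains the limitation noted above: an image that has never been catalogued has no fingerprint to match against.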

What penalties could X face from this investigation?

Under the Digital Services Act, the European Commission can levy fines up to 6% of X's global annual revenue for violations—potentially hundreds of millions of dollars. Beyond financial penalties, the Commission could mandate specific operational changes to X's moderation systems, recommend algorithm modifications, or require transparency improvements. In extreme cases, regulators could restrict X's operations in the EU, though such action would be rare.
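To make the 6% ceiling concrete, here is a small worked calculation. The revenue figure is purely hypothetical and is not X's actual revenue; the function name is likewise invented for this illustration, and the result is an upper bound rather than a prediction of any actual fine.

```python
DSA_MAX_FINE_PERCENT = 6  # ceiling stated in the article: 6% of global annual revenue


def max_dsa_fine(global_annual_revenue: int) -> int:
    """Upper bound on a DSA fine, in the same currency unit as the revenue.

    Integer arithmetic avoids floating-point rounding on large amounts.
    """
    return global_annual_revenue * DSA_MAX_FINE_PERCENT // 100


# Hypothetical revenue of 3 billion, chosen only to show the arithmetic.
hypothetical_revenue = 3_000_000_000
print(max_dsa_fine(hypothetical_revenue))  # 180000000
```

At that assumed revenue, the ceiling would be 180 million, which is consistent with the "hundreds of millions" range mentioned above.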

How does this investigation affect other AI companies and platforms?

The investigation serves as a precedent for how regulators will approach AI safety globally. Other tech companies now understand that deploying powerful AI tools without adequate safeguards will trigger regulatory scrutiny. The investigation establishes expectations for risk assessment, moderation infrastructure, and transparency that will likely become industry standard. Companies developing AI systems intended for public platforms should be designing with these regulatory requirements in mind.

Can AI-generated CSAM be made illegal without stifling innovation?

Yes. The key distinction is between the capability to generate synthetic media (which can have legitimate uses in entertainment and special effects) and the deployment of those capabilities on public platforms without safeguards. Companies can develop and test AI technology while maintaining strict protocols around what can be deployed publicly. Europe's regulatory approach doesn't ban AI innovation—it restricts how AI can be deployed when there are risks of serious harms to citizens.

What happens to users who encounter illegal content on X during the investigation?

Users can report illegal content through X's reporting mechanisms, though the investigation has raised questions about how quickly X removes such content. The investigation itself doesn't change user protections immediately, but it may eventually result in X implementing stronger tools for users to protect themselves from encountering illegal content and better moderation to prevent spread once content is reported.

How long do EU investigations typically take and what's the timeline?

EU investigations into platform violations typically take 6-18 months from announcement to completion. The original X investigation over recommendation algorithms, which began in 2023, has continued for over a year. Once the investigation concludes with formal findings, X has the right to appeal, which adds additional time. Stakeholders should expect this investigation to potentially extend through 2025 or beyond.

What's the difference between how the EU and US regulate content on platforms?

The EU's Digital Services Act requires platforms to actively prevent illegal content spread, take responsibility for illegal activity on their services, and maintain transparent moderation processes. The US approach, based on Section 230 of the Communications Decency Act, shields platforms from liability for user-generated content and relies more on market competition and voluntary standards. Europe places responsibility on platforms; the US places more responsibility on users and law enforcement. This difference fundamentally shapes how platforms in each jurisdiction operate.

Key Takeaways

- The EU's Digital Services Act investigation into X and Grok centers on failure to prevent AI-generated CSAM and non-consensual deepfakes from spreading at scale

- Unlike US Section 230 protections, European law holds platforms responsible for illegal content, with potential fines reaching 6% of annual global revenue

- Regulators allege that Grok was deployed without adequate risk assessment or moderation safeguards, which would violate DSA requirements for platforms to prevent harms from new features

- AI-generated CSAM, though synthetically created, is increasingly criminalized globally because it normalizes child sexual abuse and fuels exploitation demand

- This investigation establishes precedent that platforms deploying AI tools must complete safety impact assessments and maintain moderation infrastructure proportional to risk

![EU Investigating Grok and X Over Illegal Deepfakes [2025]](https://tryrunable.com/blog/eu-investigating-grok-and-x-over-illegal-deepfakes-2025/image-1-1769436446621.jpg)