Why Agentic AI Projects Stall: Moving Past Proof-of-Concept [2025]

Your team just wrapped a successful agentic AI pilot. The model works. The business case looks solid. Everyone's excited.

Then nothing happens.

For months.

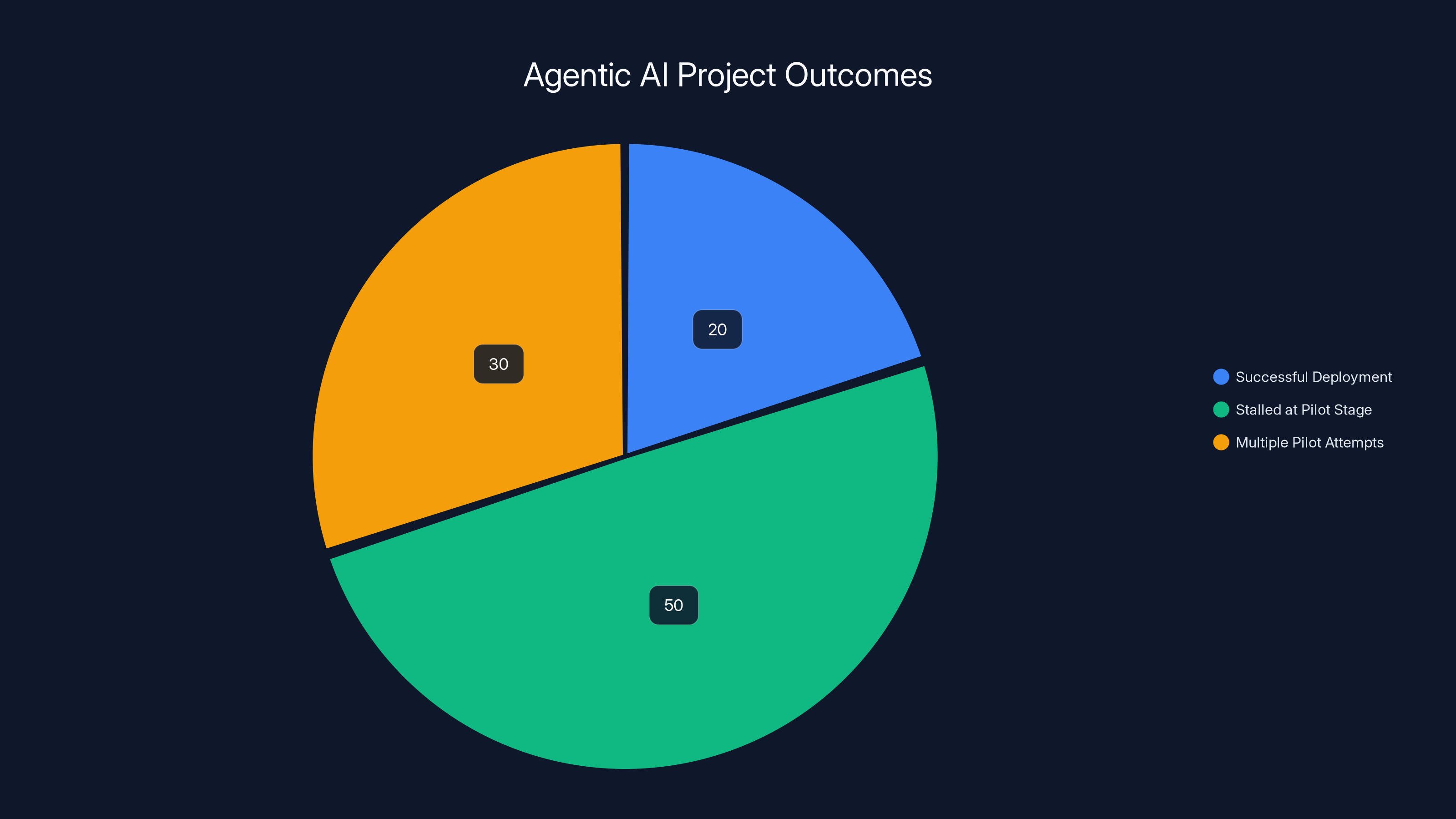

This isn't failure—it's the norm. Recent research shows that roughly half of all enterprise agentic AI initiatives are trapped in proof-of-concept or pilot stages, unable to scale into production. The frustration is real, and it's costing organizations millions in unrealized value.

Here's what's wild: it's not that the technology doesn't work. The barriers aren't technical—they're organizational, cultural, and strategic. Most teams building agentic AI systems don't actually lack the engineering prowess to deploy. They lack clarity on ROI metrics, governance frameworks, and human-AI collaboration models. They're being held back by things like security concerns, compliance overhead, and a shortage of people who actually understand how to manage AI agents at scale.

This matters because 74% of companies plan to increase agentic AI budgets next year, yet they're still figuring out how to get past the pilot phase. Imagine doubling your investment in something you haven't learned to deploy yet. That's where we are right now.

The good news? The barriers aren't mysterious, and they're not unsolvable. Organizations that understand where projects stall—and why—are systematically breaking through the PoC trap. They're redefining how they measure success, building clearer guardrails for human-machine collaboration, and scaling deliberately instead of chaotically.

Let's dig into what's actually blocking deployment, what the data tells us, and most importantly, what your organization can do about it.

TL;DR

- Roughly 50% of agentic AI projects are stuck in proof-of-concept or pilot phases, unable to move to production.

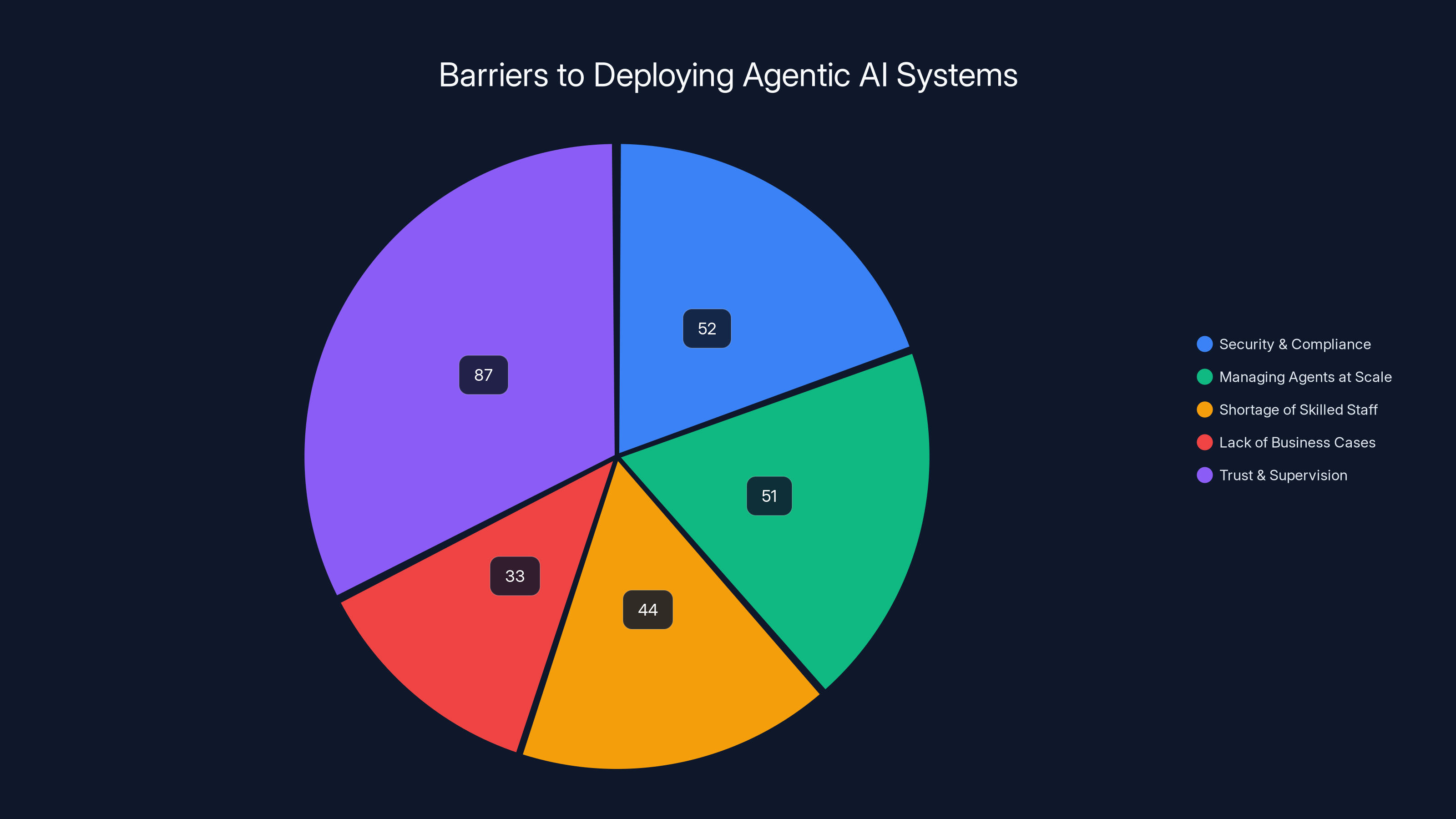

- Top barriers include security/compliance concerns (52%), difficulty managing agents at scale (51%), and staff skill shortages (44%).

- One-third of organizations lack a clear business case, which is often the real blocker, not technology limitations.

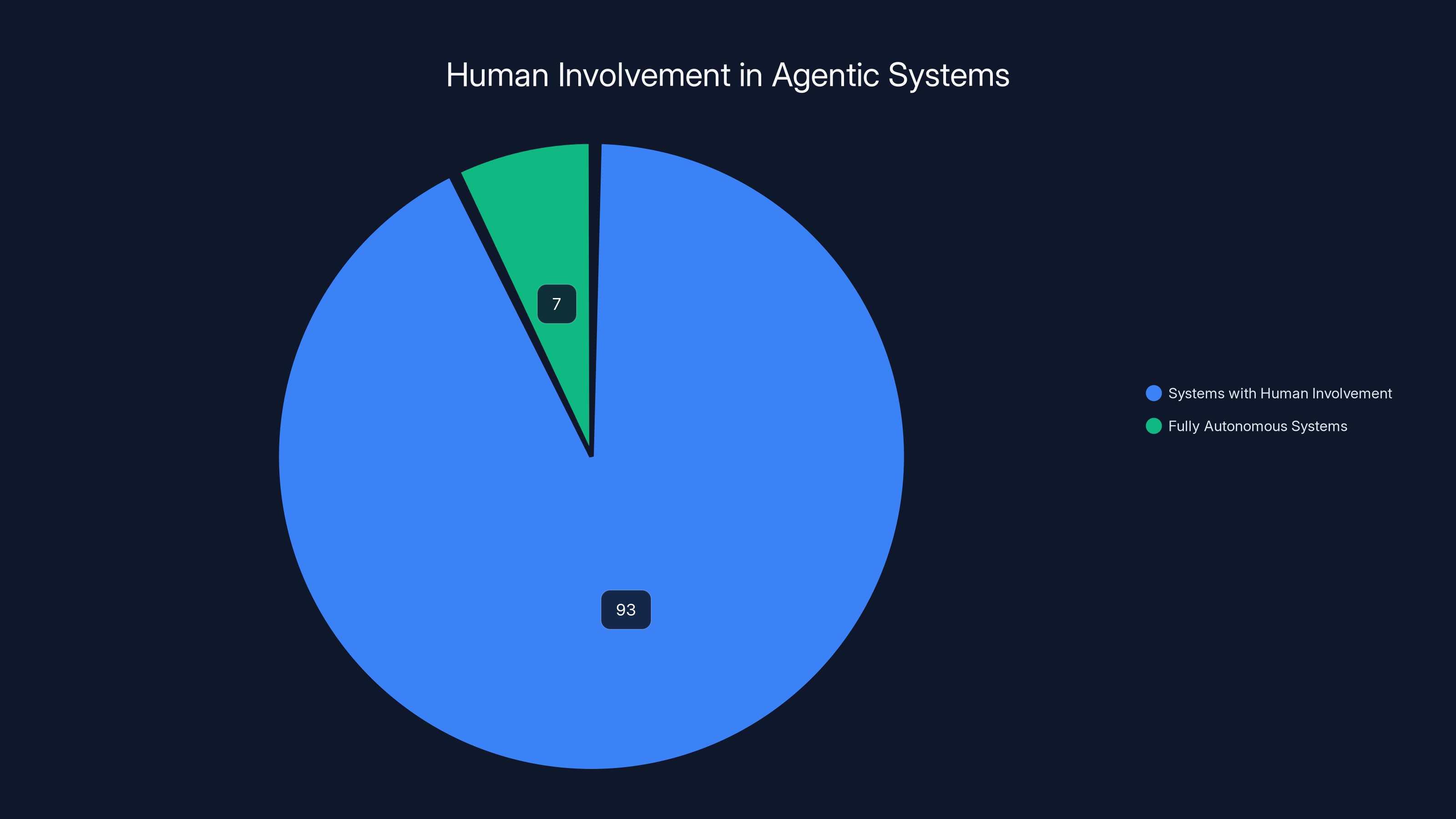

- Human oversight remains critical: 69% of agentic AI decisions are still verified by humans, and 87% of systems require human supervision.

- ROI expectations don't match deployment focus: Companies invest heavily in IT operations and DevOps but expect returns from cybersecurity and data processing.

- The path forward requires redefining metrics, establishing clear human-machine collaboration guidelines, and scaling intentionally rather than aggressively.

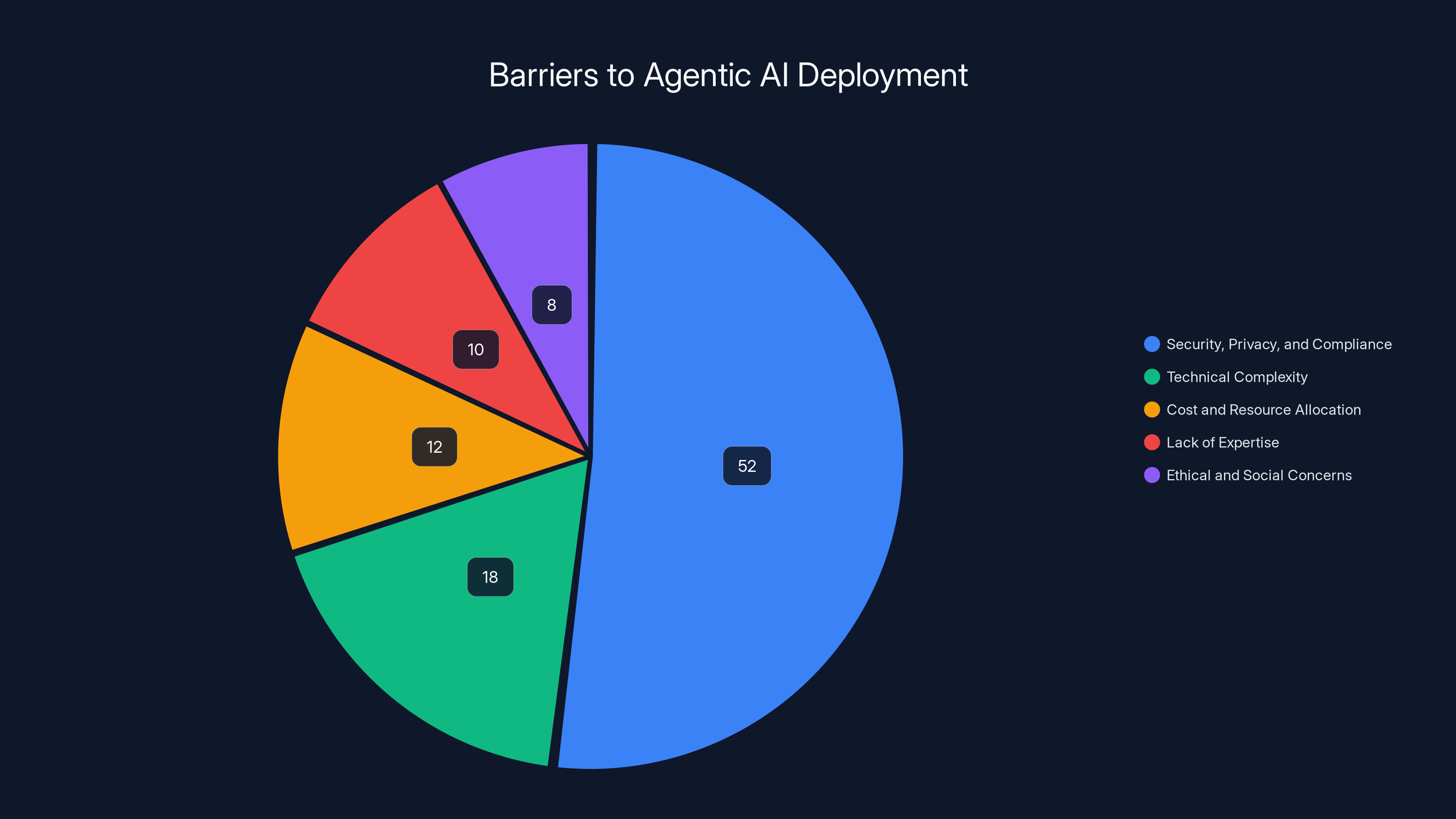

The most significant barrier to deploying agentic AI systems is the need for trust and supervision, with 87% of systems requiring human oversight. Other major barriers include security and compliance concerns (52%) and managing agents at scale (51%).

The Proof-of-Concept Trap: Why Half Your Agentic AI Projects Won't Move Forward

When agentic AI first started gaining traction, executives saw it as a straightforward transition. Build a pilot. Prove value. Scale. Done.

That narrative hasn't aged well.

The reality is messier. A pilot that works in controlled conditions—with a dedicated team, clear data, and limited scope—suddenly becomes unmanageable when you try to move it into production. The issues aren't about whether the agent can do the job. They're about whether your organization is ready to trust the agent with the job, oversee it responsibly, and integrate it into existing workflows without breaking everything.

According to enterprise AI research, approximately 50% of agentic AI initiatives never move beyond the proof-of-concept or pilot stage. Some organizations are on their second or third pilot attempt, each one slightly better but never quite ready for the real world. This creates a vicious cycle: pilot after pilot, budget allocation after budget allocation, but no tangible production deployment.

The cost is staggering. Every failed or stalled project represents not just wasted engineering time, but opportunity cost. If you've allocated a team to agentic AI for 18 months and deployed nothing, those people could've been working on revenue-generating features, infrastructure improvements, or technical debt reduction.

What makes this especially frustrating is that the technology itself isn't the problem. The models work. The frameworks exist. The tools are mature. The barriers are organizational: lack of clear ROI definition, uncertainty around governance, competing priorities, unclear ownership, and fear of the unknown.

Why Pilots Feel Different Than Production

A pilot operates under best-case conditions. Someone's championing the project. A small, motivated team is shepherding it. The scope is narrow enough that they can handle edge cases manually. Data quality is curated. Monitoring is hands-on.

Production is chaos.

You're running millions of decisions a day. You need automated monitoring, automated alerting, automated rollback. You need compliance documentation, audit trails, and clear escalation paths. You need to handle the 1% of cases that broke the pilot's assumptions. You need teams who weren't involved in the pilot to understand the system and maintain it. You need to explain decisions to regulators, auditors, and customers.

A pilot that looks good in a spreadsheet often doesn't account for these realities. That's why so many projects stall at the transition point.

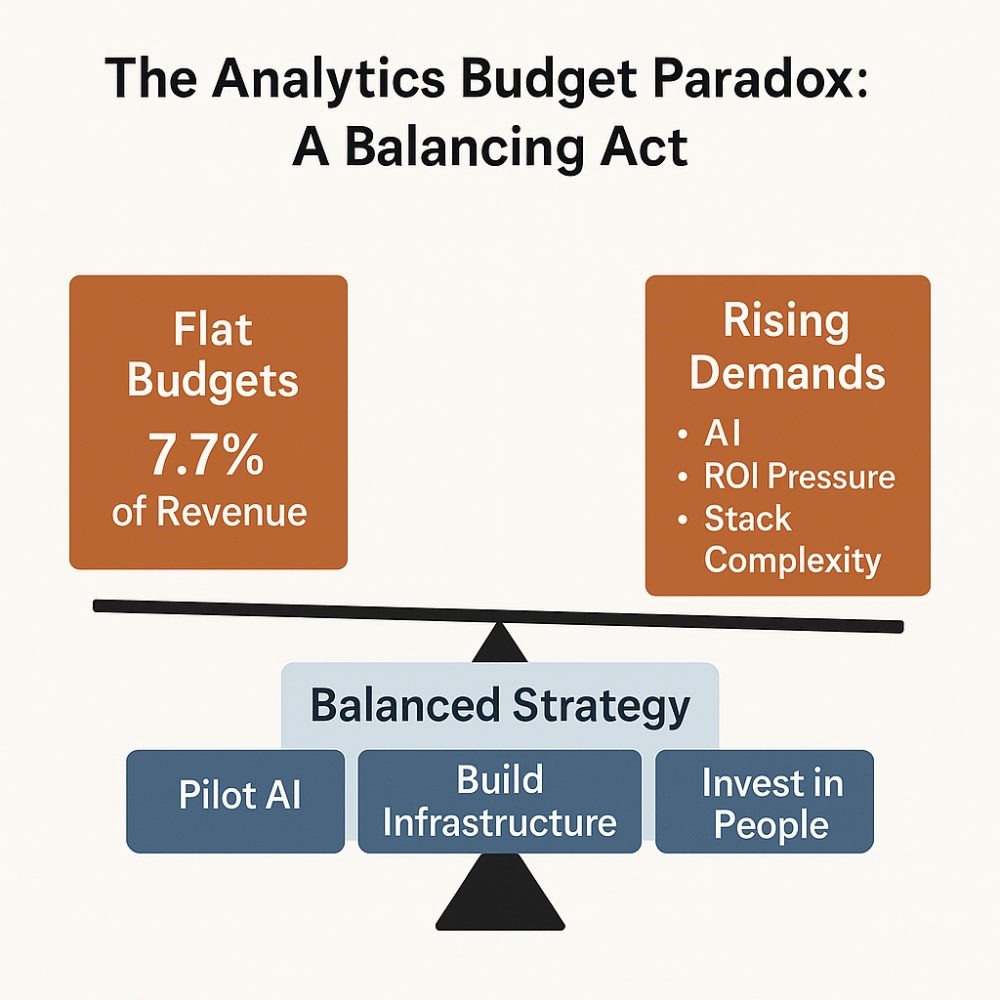

The Budget Paradox

Here's an interesting contradiction in the data: organizations are planning to increase agentic AI budgets by an average of 15-20% next year, despite the fact that 50% of current projects are stuck. They're not pulling back. They're investing more.

This could mean one of two things. Either they're learning from the first wave of stalled projects and investing in the right infrastructure and skills this time around. Or they're repeating the same mistakes at scale.

The data suggests it's a mix. Some organizations are getting smarter. Others are optimistic that throwing more money at the problem will solve it. Spoiler: it won't.

Approximately 50% of agentic AI projects stall at the pilot stage, while only 20% reach successful deployment. Estimated data based on industry insights.

The Five Major Barriers Blocking Agentic AI Deployment

If your agentic AI project is stalled, you're dealing with at least one of these problems. Likely multiple.

1. Security, Privacy, and Compliance Concerns (52% of Organizations)

This is the elephant in the room. More than half of organizations cite security, privacy, and compliance as major barriers to moving agentic AI from pilot to production.

It makes sense. An AI agent that makes decisions autonomously—even with guardrails—introduces new risk. What if the agent accesses data it shouldn't? What if it makes a decision that violates a regulation? What if it gets compromised by an adversary? What's the audit trail? Who's accountable?

For regulated industries (finance, healthcare, insurance), this isn't abstract. It's existential. You can't deploy an agent that might violate compliance requirements. The fines are massive. The reputational damage is worse.

Even for non-regulated companies, the concern is legitimate. Once you give an agent access to production systems, the surface area for risk explodes. One misconfiguration, one prompt injection attack, one logic error—and suddenly your agent is deleting customer data or exposing secrets.

The deeper issue is that most organizations don't have frameworks for managing this risk. They can secure a database. They can audit a human. They struggle to audit an agent that makes decisions in microseconds based on patterns the model learned but can't fully explain.

What this means practically: Before you deploy an agentic system, you need to answer hard questions. What data can the agent access? What actions can it take? What happens if it fails? Who monitors it? How do you audit its decisions? What's the rollback procedure?

These aren't fun conversations. They take weeks or months. They require stakeholders from multiple departments sitting in rooms together. But they're non-negotiable for production deployment.

2. Managing and Monitoring Agents at Scale (51% of Organizations)

Your pilot ran on a small dataset with a dedicated monitoring person watching every decision. Production is different. You need systems that automatically detect when something's going wrong, alert teams, and potentially roll back changes—all without human involvement.

This is harder than it sounds. With traditional software, you monitor metrics like latency, error rates, and resource usage. With agentic AI, you also need to monitor for degradation in decision quality, emergence of unexpected behaviors, and drift from the model's training distribution.

A SQL query that breaks is obvious—it returns an error or zero results. An AI agent that breaks is sneakier. It might keep producing outputs, but they're subtly worse. It's making decisions that technically don't violate any constraint, but they're not aligned with what you actually want.

This is called "silent failure," and it's a nightmare to detect at scale.
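One way to make silent failure visible is to sample decisions, score them (by human review or an automated check), and compare a rolling average against the quality seen during the pilot. The sketch below is a minimal illustration of that idea, not a production monitor. The class name `QualityDriftMonitor`, the baseline, the window size, and the tolerated drop are all hypothetical values chosen for the example.

```python
from collections import deque
from statistics import mean


class QualityDriftMonitor:
    """Flags a drop in a rolling quality score relative to a fixed baseline."""

    def __init__(self, baseline: float, window: int = 100, max_drop: float = 0.05):
        self.baseline = baseline    # quality measured during the pilot (assumed known)
        self.max_drop = max_drop    # tolerated absolute drop before alerting (assumption)
        self.scores = deque(maxlen=window)  # keeps only the most recent scores

    def record(self, score: float) -> bool:
        """Add one sampled quality score; return True if an alert should fire."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False            # not enough samples yet to judge
        return mean(self.scores) < self.baseline - self.max_drop


# Hypothetical usage: the agent keeps producing output, but quality slips.
monitor = QualityDriftMonitor(baseline=0.90, window=5, max_drop=0.05)
alerts = [monitor.record(s) for s in [0.9, 0.9, 0.9, 0.9, 0.9, 0.7, 0.7]]
```

Nothing here errors out or returns zero results. The alert fires only because the average of recent scores has slipped below the tolerated level, which is the kind of signal traditional latency and error-rate dashboards would miss.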

Additionally, managing multiple agents across an organization becomes a coordination problem. Which teams own which agents? Who has permission to modify them? How do changes to one agent affect others? How do you do safe deployments when updates might affect thousands of downstream decisions?

Most organizations don't have operational frameworks for this. They have runbooks for software deployments, but agentic deployments require different workflows. You need version control for prompts. You need staging environments for testing agent behavior changes. You need monitoring that catches silent failures before they cause business damage.

What this means practically: You need MLOps or AIOps infrastructure specifically designed for agentic systems. Tools like Runable can help with automated workflows and monitoring, but most organizations need to build or assemble tooling to handle agent deployment, versioning, and observability at scale.

3. Lack of Clear Business Case (33% of Organizations)

One-third of organizations explicitly cite a lack of clear business case as a barrier. This number is striking because it suggests that many teams built pilots without establishing what success actually means.

Here's the trap: a pilot looks successful if the agent performs well on a narrow metric. It correctly classifies tickets 87% of the time. It reduces query time by 60%. It catches fraud with 94% accuracy.

But what's the business impact? If you reduce query time but customers don't notice, does it matter? If the agent catches fraud but the volume is already low, what's the ROI? If it solves a problem that doesn't actually cost the business money, why deploy it?

Many organizations run pilots on safe, low-risk problems to de-risk the technology itself. But this means pilots often solve nice-to-have problems, not critical ones. The results are impressive from a technical perspective but underwhelming from a business perspective.

When you try to move from pilot to production, suddenly stakeholders ask the hard question: why are we doing this? The answer can't be "because the technology is cool" or "because our pilot showed it works." It has to be "because this solves a critical business problem and the ROI justifies the investment and risk."

A lot of pilots fail this test.

What this means practically: Before you build a pilot, define the business problem it solves. Calculate the current cost of that problem. Estimate the reduction if your agent works. Compare that to the deployment cost. If the numbers don't make sense, pick a different problem.
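The arithmetic above can be sketched in a few lines. This is an illustrative calculation only: the function name `business_case` and every number in the usage example are made-up assumptions, and a real business case would also account for risk and ramp-up time.

```python
def business_case(annual_problem_cost: float,
                  expected_reduction: float,
                  build_cost: float,
                  annual_run_cost: float) -> dict:
    """Compare what the problem costs today with what the agent costs to deploy."""
    annual_savings = annual_problem_cost * expected_reduction
    net_annual_benefit = annual_savings - annual_run_cost
    # If running the agent costs more than it saves, it never pays back.
    if net_annual_benefit > 0:
        payback_years = build_cost / net_annual_benefit
    else:
        payback_years = float("inf")
    return {
        "annual_savings": annual_savings,
        "net_annual_benefit": net_annual_benefit,
        "payback_years": payback_years,
    }


# Hypothetical numbers: a $1M/year problem, a 30% expected reduction,
# $400k to build, and $100k/year to run.
case = business_case(1_000_000, 0.30, 400_000, 100_000)
```

With these assumed inputs the agent saves about $300k a year, nets about $200k after running costs, and pays back its build cost in roughly two years. If the payback period comes out longer than your planning horizon, that's the signal to pick a different problem.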

4. Shortage of Skilled Staff (44% of Organizations)

Building agentic AI systems requires different skills than traditional software engineering. You need people who understand prompt engineering, model behavior, fine-tuning, and how to design systems that make autonomous decisions.

These people are rare. The market is hungry for them. Salaries are high. Many people with these skills are at startups or big tech companies, not at the enterprises trying to deploy agentic systems.

Even if you hire the right people, there's a ramp-up problem. Understanding how to build a reliable agentic system takes time. Your new hire doesn't know your domain, your data, your compliance constraints, or your business priorities. Training them is expensive and slow.

Additionally, there's a knowledge loss risk. If you build something with a contractor or a consultant, and then they leave, you're left with code you don't fully understand. This is especially risky with agentic systems, where the behavior can be subtle and non-obvious.

What this means practically: You probably need to invest in training existing staff or hiring early. But don't assume hiring one genius AI engineer will solve everything. You need teams with diverse skills: backend engineers who can deploy and monitor systems, domain experts who understand your business constraints, and yes, some AI specialists.

5. Human Trust and Supervision Requirements

Here's something that often gets overlooked in the technical discussion: people don't trust autonomous agents.

And that's completely reasonable.

Current agentic systems aren't fully autonomous in the sense of "turn it on and walk away." They're semi-autonomous. They make suggestions. Humans verify or reject them. They take actions within guardrails. Humans monitor and can intervene.

The data backs this up: 69% of agentic AI decisions are still verified by humans, and 87% of deployed systems require human supervision.

This is actually fine. It's a rational design pattern. You get the efficiency of automation with the safety of human oversight. The issue is that many organizations don't account for this in their planning. They expect full autonomy. When they hit the reality of human-in-the-loop systems, they're disappointed.

Building the human supervision layer is work. You need UIs that make human oversight easy. You need systems that route high-risk decisions to humans automatically. You need clear escalation paths. You need training for the humans who'll be verifying decisions.

If your pilot didn't include this layer, moving to production requires building it. That's a project in itself.

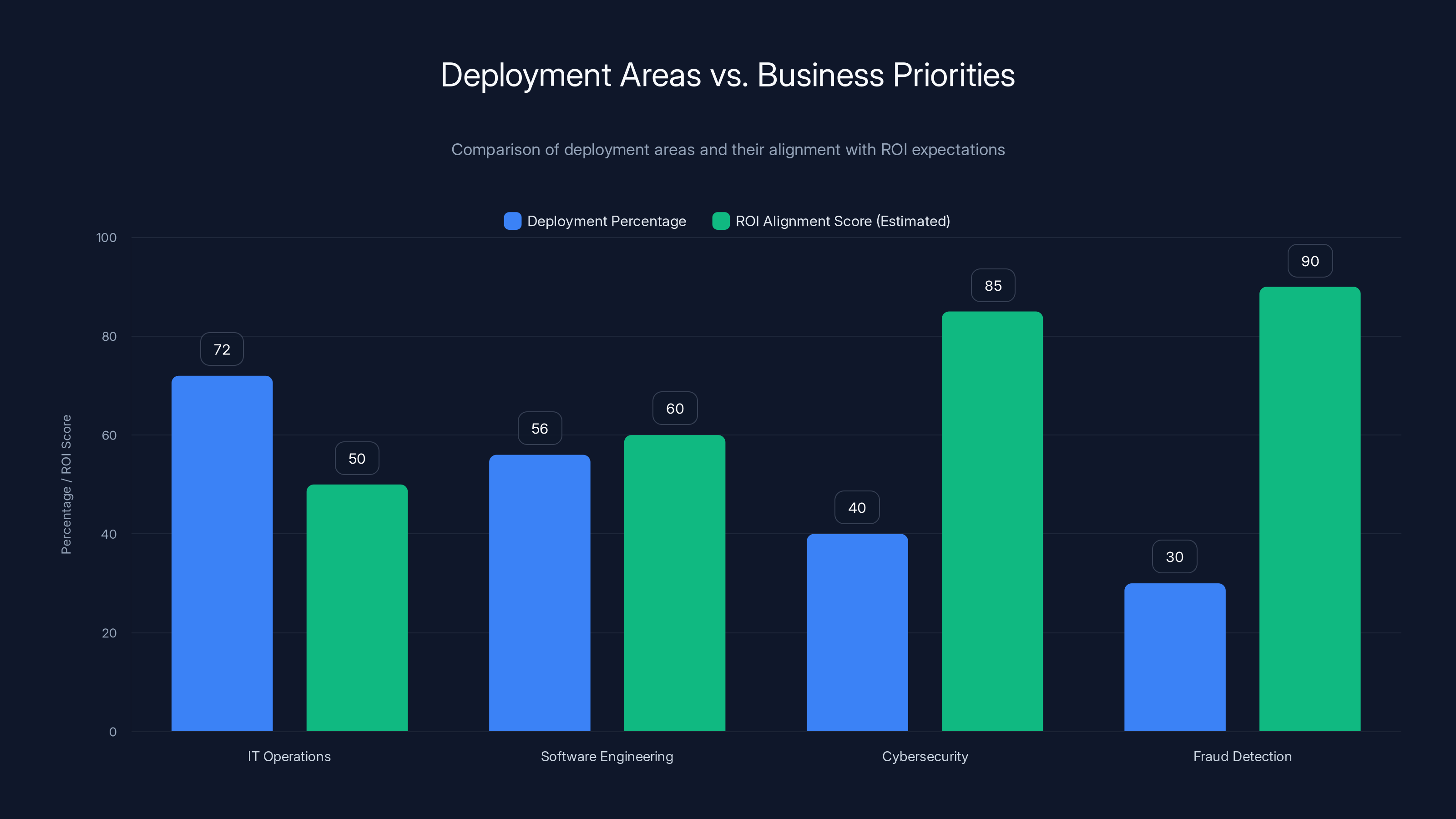

The Investment-ROI Mismatch: Where Companies Are Spending vs. Where They Expect Returns

Here's a data point that reveals something important about how organizations are thinking about agentic AI.

Current deployment focus:

- IT operations and DevOps: 72%

- Software engineering: 56%

- Customer support: 51%

Expected ROI:

- IT operations and system monitoring: 44%

- Cybersecurity: 27%

- Data processing and reporting: 25%

There's a significant mismatch. Organizations are investing heavily in IT operations and DevOps agents, but they expect the biggest returns from different areas.

This tells you something important: either organizations are investing in the wrong areas, or they don't fully understand where they'll get ROI from agentic systems, or both.

Why This Mismatch Matters

When investment doesn't align with expected returns, you get stalled projects. Here's why:

Your team builds an amazing agent that automates DevOps tasks. It works great. It reduces deployment time by 40%, double the 20% the business case assumed. But leadership expected the real returns to come from somewhere else, so nobody champions the project. Resources get reallocated. The agent gets shelved because it can't justify its own existence in the current budget cycle.

Meanwhile, the cybersecurity team is desperate for an agent but never got budget because the organization assumed ROI would be lower there.

This kind of misalignment is a project killer. It suggests that many organizations haven't thought deeply about where agentic AI actually creates value in their specific business context.

The Real ROI Drivers

When agentic AI actually moves into production and creates measurable value, it's usually in one of these areas:

Cost reduction through efficiency: Automating routine tasks that currently require human time. This is where IT operations wins—you're directly reducing the number of hours humans need to spend on repetitive work.

Risk reduction: Catching problems faster, preventing failures, reducing security incidents. Cybersecurity is here. An agent that monitors networks and catches intrusions before they cause damage is worth massive ROI.

Decision quality improvement: Making better decisions faster, catching errors, reducing variance. Data processing is here—an agent that processes and analyzes data better than humans is incredibly valuable.

Speed and responsiveness: Getting things done faster, reducing wait times, improving customer experience. Customer support is here—an agent that resolves tickets immediately creates real value.

Most organizations understand this intellectually but struggle to quantify it for their specific use case. That's why so many stall. They can't make the business case stick.

Organizations prioritize IT operations (72%) and software engineering (56%) for agentic AI deployments, but these areas often have lower ROI alignment compared to cybersecurity and fraud detection. Estimated data for ROI alignment highlights the mismatch.

The Human-Machine Collaboration Framework: Why 87% of Systems Need Humans

Let's talk about something that should be obvious but often isn't: autonomous doesn't mean unsupervised.

The most successful agentic AI deployments aren't the ones chasing full autonomy. They're the ones that design for human-machine collaboration from the beginning.

The Supervision Reality

Ninety-three percent of organizations building agentic systems are including humans in the loop. Some explicitly. Some implicitly. But humans are in there.

This isn't only a limitation of current technology, though it is partly that. It's also a design choice. You want humans in the loop because humans can:

- Catch edge cases the agent hasn't seen

- Make judgment calls in ambiguous situations

- Understand context that isn't in the agent's training data

- Spot when something's gone subtly wrong

- Make ethical decisions about how to handle borderline cases

An agent that makes minor decisions autonomously but escalates anything ambiguous to humans is far safer than an agent trying to handle everything without help.

Designing for Collaboration

If you're building an agentic system, you need to explicitly design for human-machine collaboration. This means:

Clear escalation paths: When should a decision go to a human? You need rules that are transparent and make sense to your team.

Easy human verification: The UI for reviewing and approving agent decisions needs to be fast and intuitive. If verification is slow or confusing, humans will get frustrated and stop paying attention.

Confidence scoring: Your agent should report how confident it is in each decision. High-confidence decisions can be approved faster. Low-confidence ones need more scrutiny.

Explainability: Humans need to understand why the agent made a decision. Not in the sense of reverse-engineering a neural network, but in the sense of "here's the reasoning."

Feedback loops: Humans should be able to correct the agent. "You got this wrong. Next time, consider X." If humans are stuck giving feedback into a void, they'll disengage.
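To make the escalation and confidence-scoring ideas concrete, here is a minimal routing sketch. It assumes the agent reports a confidence value between 0 and 1 and that some upstream step has already flagged high-risk actions. The `Decision` fields, the `route` function, and both thresholds are hypothetical and would need tuning for a real system.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str
    confidence: float  # agent-reported, 0.0 to 1.0 (assumed to be available)
    high_risk: bool    # set by a hypothetical risk-classification step
    rationale: str     # short explanation shown to the human reviewer


def route(decision: Decision,
          auto_threshold: float = 0.9,
          review_threshold: float = 0.6) -> str:
    """Pick a review path from transparent, easy-to-explain rules."""
    if decision.high_risk:
        return "human_approval"  # escalation path: risk overrides confidence
    if decision.confidence >= auto_threshold:
        return "auto_approve"    # high confidence, low risk
    if decision.confidence >= review_threshold:
        return "quick_review"    # a human glances at the rationale
    return "full_review"         # low confidence gets full scrutiny


path = route(Decision("close_ticket", 0.95, False, "duplicate of an open ticket"))
```

Keeping the rules this simple is a deliberate choice in the sketch: reviewers can see exactly why a decision landed in their queue, which supports the transparency the escalation paths call for.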

The Trust Problem

Here's something that's often underestimated: building trust in agentic systems is hard.

Trust is earned through consistent, understandable behavior over time. An agent that makes perfect decisions for three months and then mysteriously fails the next day loses trust. An agent that makes a decision that seems obviously wrong, even if it's actually correct for reasons the human doesn't understand, loses trust.

Once trust is broken, it's very hard to rebuild. People will second-guess every decision. They'll lose confidence in the system. Adoption will drop. The project will stall.

That's why the organizations with the most successful agentic deployments are conservative in the early stages. They build trust slowly. They keep humans in the loop. They demonstrate consistent, predictable behavior. Only after a track record is established do they move toward more autonomy.

How Deployment Areas Don't Match Business Priorities

Let's dig deeper into that mismatch between where companies are deploying agentic AI and where they expect ROI.

IT Operations: The Safe Choice

IT operations is where 72% of organizations are deploying agentic systems first. It makes sense. The domain is well-defined. The data is available. The problems are clear. Automate ticket routing, incident response, infrastructure optimization.

But here's the issue: IT operations is a cost center. Saving money on IT operations is valuable, but it doesn't create revenue. It's an optimization within a fixed budget.

So when organizations deploy agents in IT operations and save 20% on labor costs, the finance team sees that as a one-time benefit. They might reallocate those savings elsewhere instead of reinvesting in more agentic systems. The agent gets shelved because it's done its job.

Contrast this with cybersecurity or revenue-impacting areas, where an agent could prevent losses worth millions. The ROI is higher. The business case is stronger. The project gets funded and scaled.

Organizations aren't thinking about this clearly, which is why deployment areas don't align with ROI expectations.

Software Engineering: High Visibility, Lower Direct ROI

Software engineering (56% of deployments) is interesting because it's higher visibility than IT ops but the ROI can be harder to quantify.

You deploy an agent that helps with code review, suggests optimizations, catches bugs. It's genuinely useful. Engineers appreciate it. But how do you measure the ROI?

Say it saves each engineer 2 hours per week. That's valuable, but you're not seeing direct cost savings. You're seeing increased productivity. Which might mean engineers build more features. Which might mean better products. Which might mean higher revenue. But that chain is indirect and hard to measure.

Contrast this with an agent in fraud detection, where you can quantify exactly how many fraudulent transactions were stopped and how much money was saved. The ROI is clear.

The Missing Opportunities

Meanwhile, areas with high ROI potential are being underinvested:

Cybersecurity (27% expected ROI): Only 15-20% of organizations are deploying agents here, despite high expected returns. Why? Because cybersecurity is complex, the stakes are high, and building an agent that catches intrusions requires deep domain expertise.

Data processing and reporting (25% expected ROI): Another area with strong expected returns but limited deployment. Organizations see the value but struggle to build systems that work reliably at scale.

Revenue operations: Almost no one is talking about deploying agentic systems to drive revenue. But imagine an agent that optimizes pricing, manages customer outreach, or improves sales processes. The ROI would be massive. Yet few organizations are trying this.

This gap represents enormous opportunity. Organizations that figure out how to deploy agentic systems in revenue-impacting areas early will have a huge competitive advantage.

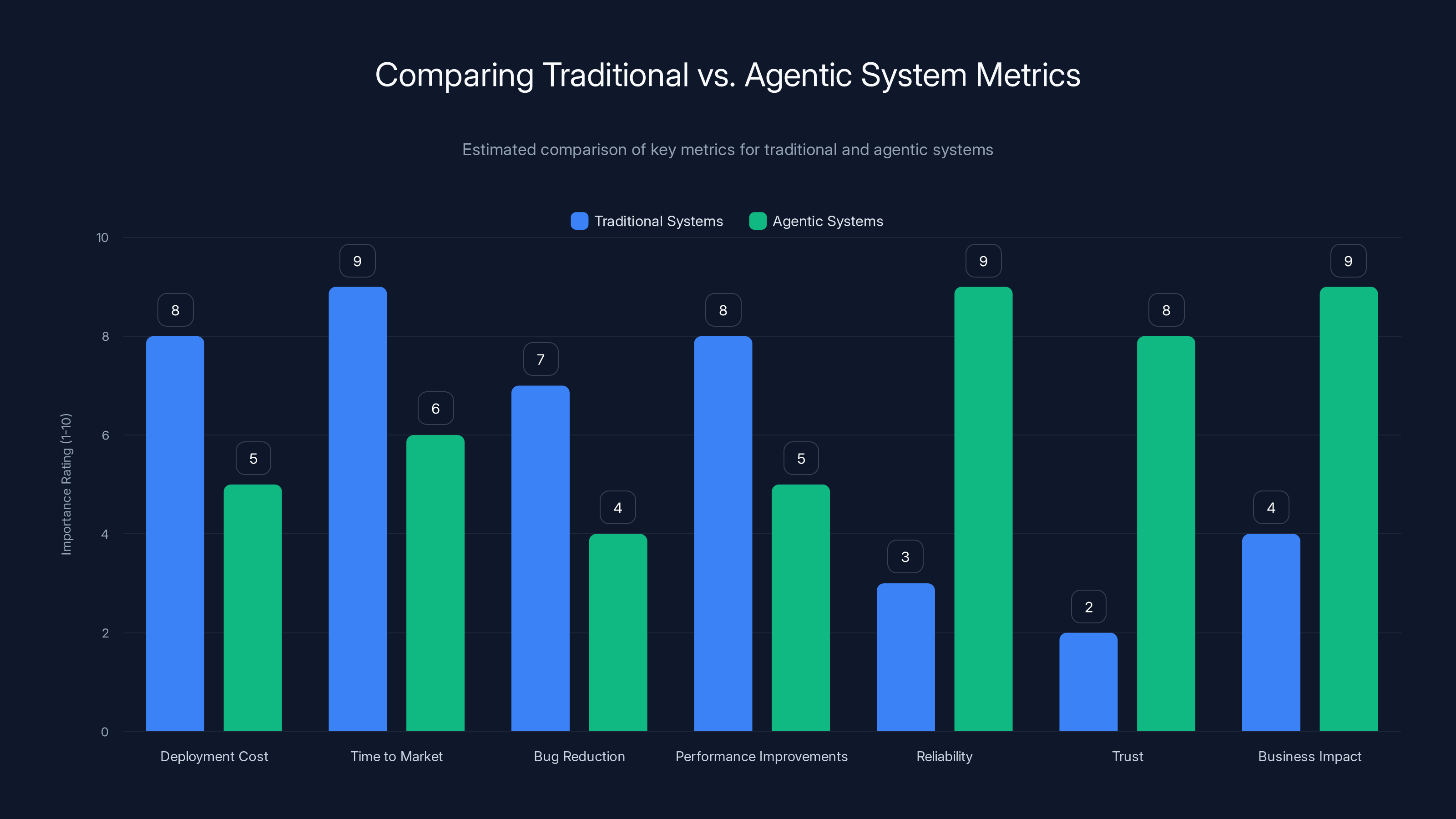

Traditional systems focus on deployment and performance, while agentic systems prioritize reliability, trust, and business impact. Estimated data.

Redefining ROI: Why Traditional Metrics Fail for Agentic Systems

Here's a fundamental problem with how organizations are evaluating agentic AI projects:

They're using the wrong metrics.

Traditional software projects optimize for things like:

- Deployment cost

- Time to market

- Bug reduction

- Performance improvements

These metrics work fine for deterministic systems. But agentic systems are different. They have inherent variability. They improve over time. They create value in unexpected ways.

The Problem With Traditional ROI Calculation

Traditional ROI in software looks like this:

$$\text{ROI} = \frac{\text{Benefit} - \text{Cost}}{\text{Cost}}$$

Where benefit is usually quantified as cost savings or revenue generated, and cost is the implementation cost.

For agentic systems, this breaks down because:

Benefits are hard to quantify upfront: You don't know exactly how much value an agent will create until it's running in production and you see what it actually does.

Value evolves over time: The agent improves as it gathers more data, gets fine-tuned, and learns from feedback. The value curve isn't flat; it tends to climb as the system matures. Calculating ROI at month 6 doesn't reflect the value at month 18.

Risk is uncertain: You're uncertain about failure modes, regulatory issues, or unexpected negative outcomes. How do you factor that into ROI calculation?

Opportunity cost is hidden: An agent that frees up engineer time doesn't directly generate revenue unless you plan to redeploy those engineers somewhere valuable. Many organizations don't. They just reduce headcount or leave engineers to context-switch.
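A small worked example shows why the measurement date matters. The sketch below uses the standard definition of ROI, (benefit - cost) / cost, and assumes the monthly benefit grows by a fixed amount as the agent improves. Every figure is invented for illustration, and real value curves will not be this tidy.

```python
def roi_at_month(month: int,
                 build_cost: int = 300_000,
                 monthly_run_cost: int = 10_000,
                 first_month_benefit: int = 20_000,
                 monthly_benefit_growth: int = 5_000) -> float:
    """Cumulative ROI after `month` months, as a ratio (1.0 means 100%)."""
    # Benefit in month m is first_month_benefit + growth * (m - 1).
    total_benefit = sum(
        first_month_benefit + monthly_benefit_growth * (m - 1)
        for m in range(1, month + 1)
    )
    total_cost = build_cost + monthly_run_cost * month
    return (total_benefit - total_cost) / total_cost


roi_6 = roi_at_month(6)    # negative: the build cost still dominates
roi_18 = roi_at_month(18)  # positive: accumulated benefit has overtaken cost
```

Under these assumptions the same project shows a loss of roughly 46% at month 6 and a return of roughly 134% at month 18. A go/no-go decision made at the six-month mark would kill a project that pays off a year later.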

A Better Framework

Organizations successfully deploying agentic systems use different metrics:

Reliability and trust metrics: What percentage of agent decisions are correct? How often do humans need to intervene? How quickly does the system improve?

Business impact metrics: Did we reduce customer churn? Did we catch more fraud? Did we speed up processes? Did we improve decision quality?

Scaling metrics: How does the system perform as volume increases? Can we deploy it to new use cases? What's the unit cost per decision?

Operational metrics: How hard is it to monitor and maintain? What's the time from detection to resolution when something goes wrong? How frequently do we need to update the agent?

These metrics don't tell a simple story about ROI. But they tell a much more accurate story about whether a project is working and whether it should be expanded.
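Several of these metrics can be computed directly from a decision log. The sketch below assumes each logged decision records whether it turned out to be correct, whether a human had to step in, and what it cost to produce. The `LoggedDecision` fields and the `summarize` function are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass
class LoggedDecision:
    correct: bool           # judged after the fact, e.g. by spot checks
    human_intervened: bool  # a reviewer changed or rejected the decision
    cost_usd: float         # compute plus review cost attributed to it


def summarize(log: list[LoggedDecision]) -> dict:
    """Reliability, trust, and scaling metrics from a batch of decisions."""
    if not log:
        raise ValueError("decision log is empty")
    n = len(log)
    return {
        "accuracy": sum(d.correct for d in log) / n,
        "intervention_rate": sum(d.human_intervened for d in log) / n,
        "unit_cost_usd": sum(d.cost_usd for d in log) / n,
    }


# Hypothetical four-decision log.
report = summarize([
    LoggedDecision(True, False, 0.5),
    LoggedDecision(True, False, 0.5),
    LoggedDecision(True, True, 1.0),
    LoggedDecision(False, True, 2.0),
])
```

Tracking these numbers week over week, rather than as a single snapshot, is what shows whether the system is improving and whether humans are intervening less often.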

What this means practically: Before you deploy an agent, decide what success looks like. But don't just think about financial metrics. Think about reliability, adoption, business impact, and operational health. An agent that saves

The Governance and Guardrails Problem: Setting Boundaries for Autonomy

An agent can only be as autonomous as you're willing to let it be. Beyond that, you need guardrails.

Guardrails are constraints that prevent an agent from doing bad things. They're decisions made in advance about what an agent is allowed to do, what it should escalate, and what it should never do.

Setting these up is non-trivial, and it's often where projects stall.

Types of Guardrails

Hard constraints: Things the agent literally cannot do. These are enforced by the system. An agent that's not allowed to delete records can't delete them, even if it tries. Hard constraints are most reliable but sometimes too limiting.

Soft constraints: Guidelines the agent should follow but can violate if it has good reason. These are enforced through training and scoring. An agent should avoid expensive decisions, but if necessary, it can make them. Soft constraints are flexible but less reliable.

Escalation rules: Decisions that should be routed to humans for approval. These are rules that say "if the agent wants to do X, send it to a human first." Escalation rules are safe but reduce automation.

Monitoring and alerting: Detection of when something's gone wrong, coupled with alerts to teams. These don't prevent bad things, but they catch them quickly. Essential for production deployments.

Most organizations need all four types. But setting them up requires deep thinking about your specific use case.
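Three of the four guardrail types can be sketched as a single check that runs before any action executes. This is an illustrative sketch under stated assumptions: the action names, the dollar threshold, and the `check_action` function are invented for the example. Soft constraints are left out because they are enforced through training and scoring rather than through a runtime check.

```python
import logging

logger = logging.getLogger("agent.guardrails")

FORBIDDEN_ACTIONS = {"delete_record"}  # hard constraint (hypothetical action name)
ESCALATE_ABOVE_USD = 1_000             # escalation rule threshold (assumption)


def check_action(action: str, amount_usd: float = 0.0) -> str:
    """Return 'blocked', 'escalate', or 'allow' for a proposed agent action."""
    if action in FORBIDDEN_ACTIONS:
        # Monitoring and alerting: a blocked attempt is itself a signal worth logging.
        logger.warning("blocked forbidden action: %s", action)
        return "blocked"
    if amount_usd > ESCALATE_ABOVE_USD:
        logger.info("escalating %s for %.2f USD", action, amount_usd)
        return "escalate"  # a human must approve before execution
    return "allow"


verdict = check_action("refund", amount_usd=5_000)
```

The check lives outside the agent, so a hard constraint holds even if the agent tries to violate it. Only actions that return "allow" would proceed without a human.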

The Guardrail Design Process

Designing guardrails for an agentic system looks like this:

- Identify critical failure modes: What's the worst thing this agent could do? What would cause financial loss, regulatory violation, or customer damage?

- Design controls: For each failure mode, what control prevents it? Is it a hard constraint? An escalation rule? Monitoring and alerting?

- Test extensively: Put the agent in scenarios where it might violate guardrails. Does the system catch it? Does it respond appropriately?

- Document clearly: What are the agent's constraints? Why do they exist? Who enforces them? What happens if they're violated?

- Review and adjust: As you learn more about the agent's behavior, adjust guardrails accordingly. What seemed too restrictive might need to be loosened. What seemed safe might need to be tightened.

This is not a one-time design task. Guardrails evolve as you learn more about the system.

Compliance and Regulatory Guardrails

For regulated industries, guardrails aren't optional. They're required.

An agentic system in healthcare needs to comply with HIPAA. One in finance needs to follow SEC regulations. One in insurance needs to comply with state insurance regulations and anti-discrimination rules.

Setting these up requires collaboration with compliance teams, legal teams, and sometimes external auditors. It's slow. It's expensive. But it's non-negotiable.

Many organizations underestimate this burden, which is part of why projects stall. They build a working pilot and then realize, "Oh, we actually need to prove this complies with X regulation." That requires months of additional work.

Security, privacy, and compliance concerns are the most significant barriers, affecting 52% of organizations. Estimated data for other barriers.

From Pilot to Production: The Bridge That's Missing

Let's talk about the actual transition. How do you move an agentic system from a successful pilot to production deployment?

Most organizations don't have a clear process for this. That's a major reason why projects stall.

The Scaling Challenge

A successful pilot on 1,000 decisions per day looks very different from a system handling 1 million decisions per day.

At 1,000 decisions per day, you can manually verify every decision if needed. You can spot patterns in failures instantly. You can tweak the agent's parameters and see the impact quickly.

At 1 million decisions per day, you need everything to be automated. Verification, monitoring, adjustment. You can't manually intervene. You need systems that detect problems instantly and respond automatically.
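The gap between those two volumes is easy to quantify. The sketch below estimates what fraction of decisions a review team can check by hand. The function name `review_coverage` and the reviewer capacity figures are assumptions for illustration.

```python
def review_coverage(decisions_per_day: int,
                    reviewers: int,
                    reviews_per_reviewer_per_day: int) -> float:
    """Fraction of daily decisions that humans can manually verify (0.0 to 1.0)."""
    capacity = reviewers * reviews_per_reviewer_per_day
    return min(1.0, capacity / decisions_per_day)


# Hypothetical team: 2 reviewers who can each check 500 decisions a day.
pilot_coverage = review_coverage(1_000, 2, 500)
production_coverage = review_coverage(1_000_000, 2, 500)
```

With the same assumed team, coverage falls from every decision in the pilot to one decision in a thousand in production. Everything outside that sample has to be caught by automated verification and monitoring.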

This is where most organizations run into trouble. The pilot was manual and optimized for success. Production needs to be automated and optimized for scale.

The Infrastructure Requirements

Moving to production requires:

Monitoring infrastructure: Systems that watch the agent 24/7, detect anomalies, and alert teams. This includes monitoring for silent failures—decisions that are technically valid but subtly wrong.

Data pipeline improvements: Production needs reliable, clean data flowing into the agent consistently. Pilots often work with curated data. Production reveals all the messy edge cases.

Versioning and rollback: You need to be able to deploy new versions of the agent and roll back instantly if something goes wrong. This requires infrastructure that most organizations don't have.

Audit and compliance logging: Every decision needs to be logged, auditable, and explainable. This is essential for compliance but adds computational overhead.

Integration with existing systems: The agent doesn't operate in isolation. It needs to integrate with your actual systems, which are usually complex and fragile. Getting this right takes engineering effort.

Documentation and runbooks: Your ops teams need to understand how to operate the agent, what to do if it breaks, how to escalate issues. This documentation doesn't exist for most pilots.
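As one illustration of the versioning and rollback requirement, here is a minimal in-memory registry for agent prompts. It is a sketch only: the class name `PromptRegistry` is hypothetical, and a real setup would persist versions, record who deployed what, and tie each version to audit logs.

```python
class PromptRegistry:
    """Append-only history of prompt versions with a pointer to the active one."""

    def __init__(self) -> None:
        self._versions: list[str] = []   # never rewritten, so history stays auditable
        self._active: int | None = None  # index of the version currently serving

    def deploy(self, prompt: str) -> int:
        """Store a new version, make it active, and return its version number."""
        self._versions.append(prompt)
        self._active = len(self._versions) - 1
        return self._active

    def rollback(self) -> int:
        """Reactivate the previous version without deleting the newer one."""
        if self._active is None or self._active == 0:
            raise RuntimeError("no earlier version to roll back to")
        self._active -= 1
        return self._active

    @property
    def active(self) -> str:
        if self._active is None:
            raise RuntimeError("nothing has been deployed yet")
        return self._versions[self._active]


registry = PromptRegistry()
registry.deploy("v0: route tickets by product area")
registry.deploy("v1: route tickets by product area and urgency")
registry.rollback()  # something went wrong with v1, so v0 serves again
```

Rolling back moves a pointer instead of deleting anything, so the failed version remains available for the post-incident review.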

The Staffing Reality

Moving to production also requires different people than building a pilot.

The pilot was built by AI specialists and engineers who understood the problem deeply. They could debug issues quickly because they built the system.

Production is maintained by ops teams, platform engineers, and domain specialists who weren't involved in the pilot. They need clear documentation, predictable behavior, and reliable tooling. If something breaks at 3 AM, they need to fix it without calling the AI specialist.

Most organizations don't think about this transition carefully. They get excited about a successful pilot and assume the same team can scale it. Then they hit reality: the scaling team needs different skills, different tools, and different processes than the pilot team.

Scaling With Intent: The Right Way to Grow Agentic Deployments

Here's something the successful organizations do differently: they scale deliberately.

They don't try to solve everything at once. They don't try to deploy 50 agents across the organization in one year. They scale slowly, learning as they go, building infrastructure and expertise incrementally.

The Scaling Playbook

Start small and proven: Pick a problem that's well-defined, has high ROI, and low risk. Get it working in production. Build confidence. Document everything.

Add one new use case at a time: Once you've got one agent in production, pick another. Similar domain if possible, so you can reuse infrastructure and expertise.

Invest in infrastructure between agents: Don't just add agents. Add monitoring, versioning, documentation. Make the next agent easier to deploy than the first.

Build institutional knowledge: Train your teams on what they're learning. Establish playbooks and best practices. Make it so new people can understand what you're building.

Measure and iterate: Track how each agent is performing. What's working? What's not? What would make the next one better? Use these insights to improve.

Build platforms, not one-off solutions: After three or four agents, you probably need tooling to manage them. Invest in that. It makes the fifth agent far easier to deploy than the first.

This might sound slow. It is. But it's much more reliable than trying to scale fast. Organizations that try to scale agentic AI aggressively often hit walls: they don't have the infrastructure to support scale, their teams burn out, or they deploy unreliable systems that damage trust in the technology.

Organizations that scale deliberately build sustainable, trustworthy agentic AI deployments that compound over time.

The Time Horizon Reality

You should expect the full cycle from pilot to production to proven scale to take 18-36 months.

That seems long. It is. But it's the real time required when you account for:

- Building infrastructure

- Training teams

- Establishing governance

- Building trust

- Learning from failures

- Optimizing based on production behavior

- Scaling to new use cases

Organizations trying to do it in 6-12 months usually create problems that take years to recover from.

93% of organizations building agentic systems include humans in the loop, highlighting the importance of human oversight in AI deployments.

Budget Allocation: Are You Investing in the Right Things?

Organizations plan to increase agentic AI budgets. The question is: what are they going to spend money on?

Most organizations allocate like this:

- 40% on building agents (engineers, tools, infrastructure)

- 20% on tools and platforms (vendors, libraries, frameworks)

- 20% on teams (hiring, training)

- 10% on pilot projects

- 10% on organizational readiness (governance, compliance, documentation)

Here's the problem: most successful deployments require flipping those percentages.

The organizations making progress on agentic AI are spending more on infrastructure, training, and organizational readiness and less on just building more agents.

Where the Money Should Go

Infrastructure (30-40%): Monitoring, versioning, deployment tools, audit logging, data pipelines. This is unsexy, but it's what enables scale.

Teams and training (25-35%): Hiring the right people, training existing people, building centers of excellence. People are your limiting factor, not tools.

Organizational readiness (15-25%): Governance frameworks, compliance processes, documentation, change management. This determines whether projects stall or scale.

Actual agent development (15-25%): Building the agents themselves. This should be one of your smaller budget categories because most value comes from infrastructure and people, not from building more agents.

Vendor tools (5-15%): The actual platforms, models, frameworks you use. This is the part everyone wants to spend money on, but it's often the smallest piece.

Organizations that flip this allocation from "mostly engineering" to "mostly infrastructure and people" see dramatically better outcomes.
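If you want to sanity-check a proposed budget against the ranges above, a small script can do it. This is only a sketch: the category keys and the `check_allocation` helper are hypothetical names, and the ranges are copied from this section. Note that the midpoints of the five ranges add up to more than 100%, so a real plan has to sit at the low end of some ranges.

```python
# Recommended ranges from this section, in percent of total budget.
RECOMMENDED_RANGES = {
    "infrastructure": (30, 40),
    "teams_and_training": (25, 35),
    "organizational_readiness": (15, 25),
    "agent_development": (15, 25),
    "vendor_tools": (5, 15),
}


def check_allocation(allocation: dict[str, float]) -> list[str]:
    """Return a list of problems; an empty list means the plan fits the ranges."""
    problems = []
    total = sum(allocation.values())
    if abs(total - 100) > 1e-9:
        problems.append(f"allocation sums to {total}%, expected 100%")
    for category, (low, high) in RECOMMENDED_RANGES.items():
        share = allocation.get(category, 0)
        if not low <= share <= high:
            problems.append(f"{category}: {share}% is outside {low}-{high}%")
    return problems


if __name__ == "__main__":
    plan = {
        "infrastructure": 30,
        "teams_and_training": 25,
        "organizational_readiness": 15,
        "agent_development": 20,
        "vendor_tools": 10,
    }
    print(check_allocation(plan) or "plan fits the recommended ranges")
```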

Building Your Agentic AI Success Framework

Okay, so you want to avoid the PoC trap. Here's what a success framework actually looks like.

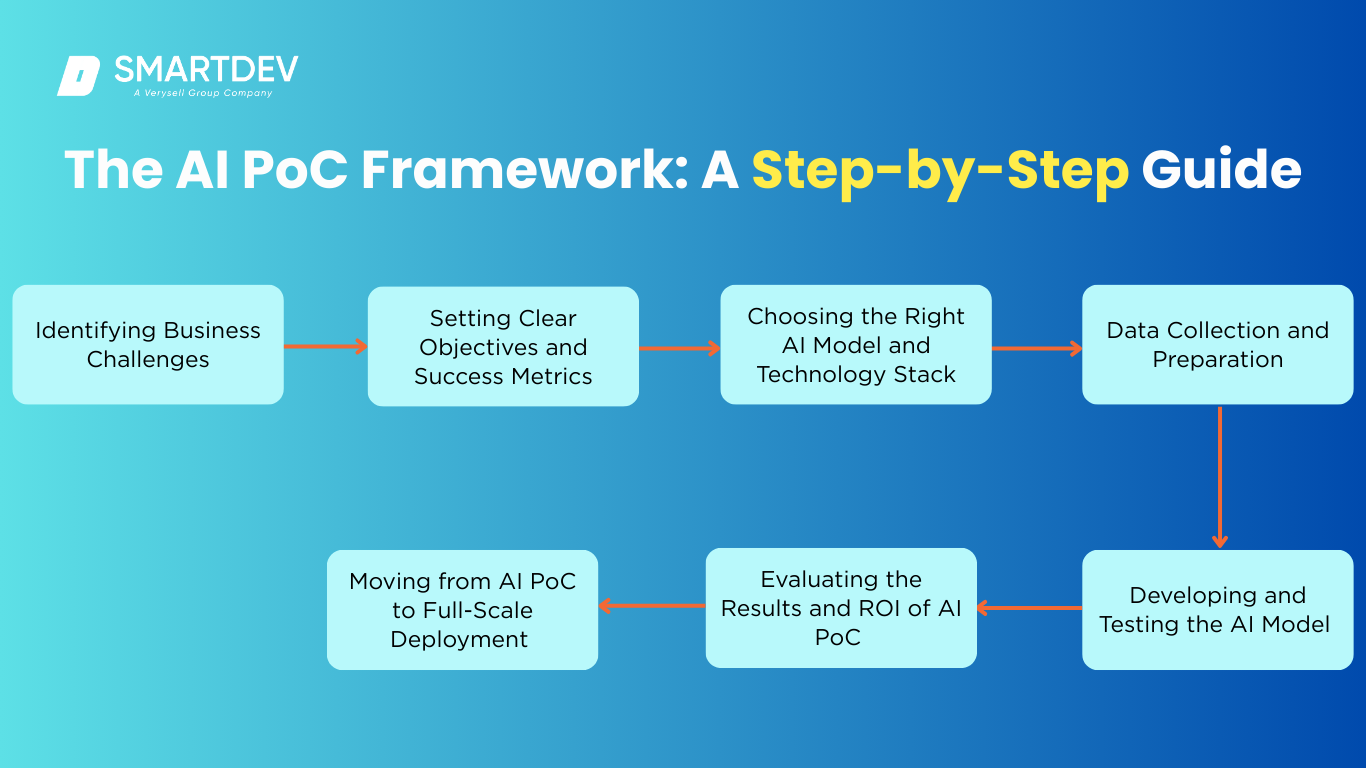

Phase 1: Foundation (Months 1-6)

Goal: Establish governance, infrastructure, and team capability before you build anything.

Activities:

- Define what agentic AI means in your organization

- Identify potential use cases (maybe 5-10 ideas)

- Assess current capabilities and gaps

- Design governance framework and guardrails

- Set up basic monitoring and versioning infrastructure

- Hire or assign core team

- Begin training existing teams

Outcomes: Clear organizational understanding of agentic AI, identified target use cases with estimated ROI, basic infrastructure in place, capable team ready to build.

Phase 2: Proof of Value (Months 6-12)

Goal: Build a pilot that demonstrates real business value and operational viability.

Activities:

- Select one high-ROI, low-risk use case

- Build the agent with production-readiness in mind (not just a prototype)

- Establish human-in-the-loop workflows

- Monitor and measure extensively

- Document everything

- Plan for production transition

Outcomes: Successful pilot running in a controlled production environment, clear ROI demonstrated, production blueprint created, team experienced with full lifecycle.

Phase 3: Production Hardening (Months 12-18)

Goal: Move the pilot to full production while building infrastructure for scaling.

Activities:

- Complete infrastructure buildout (monitoring, alerting, versioning, audit logging)

- Establish runbooks and operational procedures

- Train operations team

- Gradually increase agent autonomy as confidence builds

- Begin planning second use case

- Continue monitoring and measuring

Outcomes: Stable production agent, proven operational procedures, infrastructure ready for scaling, team experienced with production management.
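One way to picture "gradually increase agent autonomy as confidence builds" is a routing rule whose threshold moves with audited accuracy. The sketch below is an assumption about how such a rule could look, not a description of any particular platform: `AutonomyPolicy`, its default thresholds, and the 99% accuracy target are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AutonomyPolicy:
    confidence_threshold: float = 0.95  # below this, a human reviews the decision
    min_threshold: float = 0.80         # autonomy never expands past this floor
    step: float = 0.01                  # how far the threshold moves per review cycle

    def route(self, confidence: float, high_risk: bool) -> str:
        """Send high-risk or low-confidence decisions to a human."""
        if high_risk or confidence < self.confidence_threshold:
            return "human_review"
        return "auto_approve"

    def update(self, audited_accuracy: float, target_accuracy: float = 0.99) -> None:
        """Loosen the threshold when audits look good, tighten it when they do not."""
        if audited_accuracy >= target_accuracy:
            self.confidence_threshold = max(
                self.min_threshold, self.confidence_threshold - self.step)
        else:
            self.confidence_threshold = min(
                1.0, self.confidence_threshold + self.step)


if __name__ == "__main__":
    policy = AutonomyPolicy()
    print(policy.route(confidence=0.97, high_risk=False))
    print(policy.route(confidence=0.97, high_risk=True))
    policy.update(audited_accuracy=0.995)  # a good audit cycle expands autonomy slightly
    print(round(policy.confidence_threshold, 2))
```

The design choice worth noting is that autonomy changes in small, reversible steps and high-risk decisions always go to a human, regardless of confidence.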

Phase 4: Scale (Months 18+)

Goal: Deploy additional agents, building on proven patterns and infrastructure.

Activities:

- Deploy second agent (easier than first because infrastructure exists)

- Evaluate emerging use cases

- Invest in platform tooling

- Build organizational expertise

- Automate agent management

- Continue optimizing existing agents

Outcomes: Multiple agents in production, established patterns and infrastructure supporting new deployments, team capable of managing a portfolio of agents.

Success Metrics Throughout

Foundation phase: Organizational alignment, infrastructure readiness, team capability assessed

Proof of value phase: Pilot reliability (% correct decisions), business impact measured, adoption rate

Production hardening: Uptime and reliability, operational efficiency (mean time to resolution when issues occur), cost per decision

Scale phase: Number of agents in production, total ROI across all agents, time to deploy new agent, team scaling (agents per ops engineer)
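Several of these metrics fall straight out of a decision log. As a minimal sketch, assuming each logged decision records whether it was correct, whether a human intervened, and what it cost (the `DecisionRecord` fields and `summarize` helper are hypothetical), the calculation might look like this:

```python
from dataclasses import dataclass


@dataclass
class DecisionRecord:
    correct: bool           # did the audited outcome match the agent's decision?
    human_intervened: bool  # did a person have to step in?
    cost_usd: float         # compute and review cost attributed to this decision


def summarize(records: list[DecisionRecord]) -> dict[str, float]:
    """Compute reliability, intervention, and unit-cost metrics for a batch."""
    if not records:
        raise ValueError("no decisions to summarize")
    n = len(records)
    return {
        "pct_correct": 100 * sum(r.correct for r in records) / n,
        "intervention_rate": sum(r.human_intervened for r in records) / n,
        "cost_per_decision": sum(r.cost_usd for r in records) / n,
    }


if __name__ == "__main__":
    log = [
        DecisionRecord(True, False, 0.5),
        DecisionRecord(True, False, 0.5),
        DecisionRecord(True, True, 1.0),
        DecisionRecord(False, True, 2.0),
    ]
    print(summarize(log))
```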

The Future of Agentic AI: Where This Is Going

Assuming organizations solve the PoC-to-production problem, what's next?

Near Term (Next 12 Months)

More agents will move to production, but most organizations will still be struggling with the basics. Governance frameworks will become table stakes. Organizations that sorted this out early will have a significant competitive advantage.

Expect to see specialized tools emerge for agent management, monitoring, and governance. The winners will be the platforms that make production deployment easier.

Organizations will start experimenting with autonomous agents that require less human supervision. Trust will increase gradually as agents prove reliable. But the majority will still use human-in-the-loop models.

Medium Term (1-3 Years)

Agentic AI will become standard in certain domains (IT operations, customer support, data processing). Companies without agentic systems in these areas will be at a significant disadvantage.

New use cases will emerge in revenue operations and strategic decision-making. These will be harder to implement but will have much higher ROI than operational use cases.

The skills shortage will get worse before it gets better. Demand for people who understand agentic systems will far exceed supply. Organizations will invest heavily in training to build internal capability.

Regulation will catch up. Governments will establish frameworks for responsible agentic AI deployment. Organizations that already have governance will adapt easily. Those without will scramble.

Long Term (3+ Years)

Agentic AI could represent a fundamental shift in how organizations operate. But only if they solve the deployment problem.

The difference between organizations that crack this and those that don't will be enormous. Those with reliable, scaled agentic AI deployments will operate faster, make better decisions, and have lower costs. Those still piloting will be stuck.

This creates urgency. The time to figure this out is now, while most organizations are still early. In three years, the game will be different.

Key Takeaways: What You Need to Do Now

If your organization is struggling with agentic AI deployment, here's what actually matters:

1. Don't measure success by pilot performance. Measure it by whether you can move to production. A pilot that works great but doesn't inform your path to production isn't actually successful.

2. Invest in infrastructure and people before you build more agents. The limiting factor isn't technology. It's governance, infrastructure, and team capability.

3. Design for human-machine collaboration, not full autonomy. Building trust is hard. Systems that keep humans in the loop are more reliable and more trustworthy.

4. Define ROI before you build the pilot. If you can't articulate why a project matters to the business, it doesn't.

5. Scale deliberately. Getting the first agent to production is the hardest part; each agent after that gets easier if you build infrastructure between them. Don't try to do everything at once.

6. Invest heavily in governance early. Compliance, security, audit logging, guardrails. These aren't obstacles—they're prerequisites.

7. Build organizational capability. You need people who understand agentic systems, who can deploy them, who can operate them, and who can learn from them. This is the real constraint.

8. Expect 18-36 months from pilot to proven scale. This isn't quick. It's complex. Accept it and plan accordingly.

The organizations that crack agentic AI deployment will have an enormous competitive advantage. But it requires doing things differently than traditional software projects. It requires thinking about governance and infrastructure and people as critically as technology. It requires discipline and patience.

If you can do that, you can break through the PoC trap. If you can't, you'll be stuck cycling through pilots while competitors move into production and build sustainable value.

The choice is yours.

FAQ

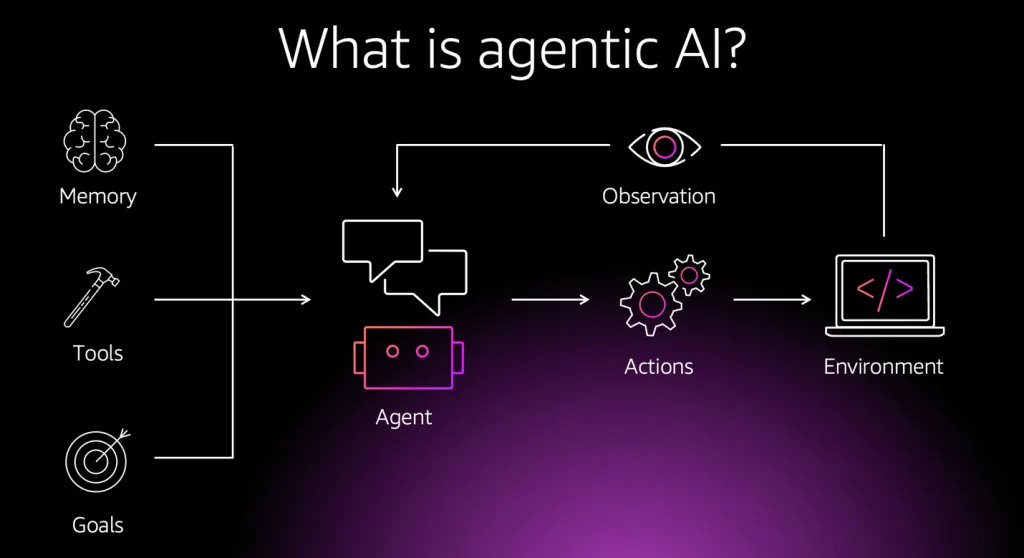

What is agentic AI and how does it differ from traditional AI systems?

Agentic AI refers to autonomous AI systems that can independently plan and execute a series of actions toward a goal, often making decisions with varying degrees of human oversight. Unlike traditional AI systems that respond to specific prompts or inputs reactively, agentic systems take initiative, adjust strategies based on feedback, and manage complex workflows over time. The key difference is autonomy: an agentic system can decide what to do next, while traditional AI typically follows predefined paths.

Why do so many agentic AI projects fail to move beyond proof-of-concept?

Agentic AI projects stall at the PoC stage primarily due to organizational barriers rather than technical limitations. These include security and compliance concerns (52% of organizations), difficulty managing agents at scale (51%), shortage of skilled staff (44%), lack of clear business cases (33%), and the reality that 87% of systems require human supervision. Additionally, organizations often lack governance frameworks, operational infrastructure, and clear ROI metrics needed for production deployment. The jump from a small, controlled pilot to production at scale requires investments in infrastructure, training, and organizational readiness that many teams underestimate.

What are the most significant barriers to deploying agentic AI systems in production?

The five major barriers are: security, privacy, and compliance concerns (cited by 52% of organizations), difficulty managing and monitoring agents at scale (51%), shortage of skilled staff or training availability (44%), lack of clear business cases (33%), and trust and supervision requirements (87% of systems still require human oversight). These aren't technology problems—they're organizational, operational, and strategic challenges that require governance frameworks, infrastructure investments, team development, and clear ROI metrics.

How should organizations measure ROI for agentic AI projects?

Traditional ROI calculations fall short for agentic systems because benefits are hard to quantify upfront and value evolves over time. Instead, successful organizations use a multi-dimensional framework that includes reliability metrics (percentage of correct decisions, human intervention frequency), business impact metrics (customer churn reduction, fraud detection rates, process speed), operational metrics (mean time to resolution, maintenance costs), and scaling metrics (unit cost per decision, deployment time for new agents). This approach recognizes that agentic AI creates value gradually and that early success should be measured by operational reliability and capability, not just financial returns.

What role should humans play in agentic AI systems?

Humans should remain central to agentic AI systems, particularly early on. Research shows 69% of agentic AI decisions are still verified by humans, and 87% of systems require human supervision. Rather than viewing human oversight as a limitation, successful deployments design for human-machine collaboration from the start. Humans handle ambiguous cases, catch edge cases, provide context, verify high-risk decisions, and help the system improve over time. This design approach is safer, more trustworthy, and more acceptable to stakeholders than attempts at full autonomy.

What's the typical timeline for moving an agentic AI project from pilot to production scale?

Realistic timelines span 18-36 months from initial pilot to proven scale across multiple agents. This includes 6 months for foundation and governance setup, 6 months for proof-of-value pilot, 6 months for production hardening and infrastructure buildout, and ongoing scaling. Organizations attempting faster timelines (12 months or less) typically create technical debt, reliability issues, and operational challenges that take years to resolve. The timeline reflects the reality that sustainable agentic AI deployment requires infrastructure, team training, governance establishment, and trust-building—none of which can be accelerated without consequences.

How much should organizations budget for agentic AI initiatives?

Successful organizations allocate agentic AI budgets differently than many expect: 30-40% infrastructure (monitoring, deployment, audit systems), 25-35% teams and training, 15-25% organizational readiness (governance, compliance, documentation), 15-25% actual agent development, and 5-15% vendor tools. This represents a shift from traditional software budgeting, which emphasizes engineering. The counterintuitive insight is that infrastructure and people are your primary investments, not the tools themselves or the agents you build.

What governance frameworks should organizations establish before deploying agentic AI?

Organizations need clear governance covering decision rights (who approves agent deployment), compliance requirements (regulatory obligations the agent must follow), guardrails and constraints (what the agent can and cannot do), escalation procedures (when decisions go to humans), monitoring and alerting (how problems are detected and reported), audit and logging (how decisions are recorded and explained), and human-machine collaboration design (how humans verify and supervise agent decisions). This governance should be established before pilots begin, not retrofitted after successful PoCs.
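To show how guardrails, escalation, and audit logging can fit together, here is a small sketch. Everything in it is illustrative: `GuardrailPolicy`, the action names, and the dollar limit are hypothetical, and a real deployment would write to durable audit storage rather than an in-memory list.

```python
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class GuardrailPolicy:
    allowed_actions: set[str] = field(default_factory=set)
    escalation_limit_usd: float = 100.0  # above this, a human must approve

    def evaluate(self, action: str, amount_usd: float, audit_log: list[str]) -> str:
        """Return "allow", "escalate", or "deny", and record the decision."""
        if action not in self.allowed_actions:
            verdict = "deny"       # guardrail: the agent may not do this at all
        elif amount_usd > self.escalation_limit_usd:
            verdict = "escalate"   # escalation: the decision goes to a human
        else:
            verdict = "allow"
        # Audit: every decision is logged, including denials.
        audit_log.append(json.dumps({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "amount_usd": amount_usd,
            "verdict": verdict,
        }))
        return verdict


if __name__ == "__main__":
    log: list[str] = []
    policy = GuardrailPolicy(allowed_actions={"refund", "reply"})
    print(policy.evaluate("refund", 50.0, log))
    print(policy.evaluate("refund", 500.0, log))
    print(policy.evaluate("delete_account", 0.0, log))
    print(len(log), "audit entries written")
```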

How can organizations avoid the investment-ROI mismatch in agentic AI?

Organizations should define expected ROI metrics before building any agent, not after a pilot succeeds. This requires identifying which business problems actually cost the organization money and where agentic solutions would create measurable value. Many organizations invest heavily in IT operations and DevOps (which have moderate ROI) while underinvesting in cybersecurity and revenue operations (which have higher ROI potential). Aligning deployment focus with ROI expectations requires explicit business case development and executive alignment early in the planning process.

What skills and expertise do organizations need to successfully deploy agentic AI?

Successful deployments require diverse skills: backend engineers for deployment and monitoring, domain experts who understand business constraints and data, AI specialists who understand model behavior and fine-tuning, operations engineers who can manage systems at scale, compliance and governance specialists, and change management professionals. The shortage of skilled staff (44% cite this as a barrier) means most organizations need to invest heavily in training existing employees rather than solely relying on external hiring.
