ExpressAI: The Privacy-First AI Platform Built to Keep Your Data Safe [2025]

Artificial intelligence has become woven into how we work and live. We ask AI tools about our health concerns, financial decisions, and deeply personal matters. Yet something doesn't sit right about it, does it? You're trading privacy for convenience, sending sensitive information into systems designed primarily for company profit, not your protection.

ExpressVPN, the company that has spent more than 15 years building VPN infrastructure around a single idea (that your data shouldn't be accessible to anyone), has now extended that philosophy directly into AI. It announced ExpressAI, a platform built on a radical premise: the best way to protect user data is not to collect it in the first place.

This isn't marketing speak. It's a technical solution to a real problem that most AI platforms ignore. ChatGPT, Claude, and Gemini may use user conversations for training, depending on your settings and plan. ExpressAI instead uses confidential computing enclaves, so your prompts, files, and interactions are processed in hardware-isolated, encrypted environments that even ExpressVPN's own staff cannot access.

We're going to walk through exactly how this works, why it matters, what it actually protects (and what it doesn't), and whether this represents the future of private AI or a niche solution for security-obsessed users.

TL;DR

- What it is: A privacy-focused AI platform using confidential computing enclaves to isolate user data during processing

- Core promise: User data cannot be collected, accessed, or used for training by ExpressVPN, infrastructure operators, or cloud providers

- How it differs: Most AI platforms collect conversation data; ExpressAI keeps it isolated and ephemeral

- Key feature: Run the same prompt across multiple AI models simultaneously to compare outputs without data merging

- Pricing: Available through ExpressVPN subscriptions, with premium features on higher tiers

- Bottom line: One of the most technically rigorous approaches to AI privacy available today, but with practical trade-offs in speed and feature availability

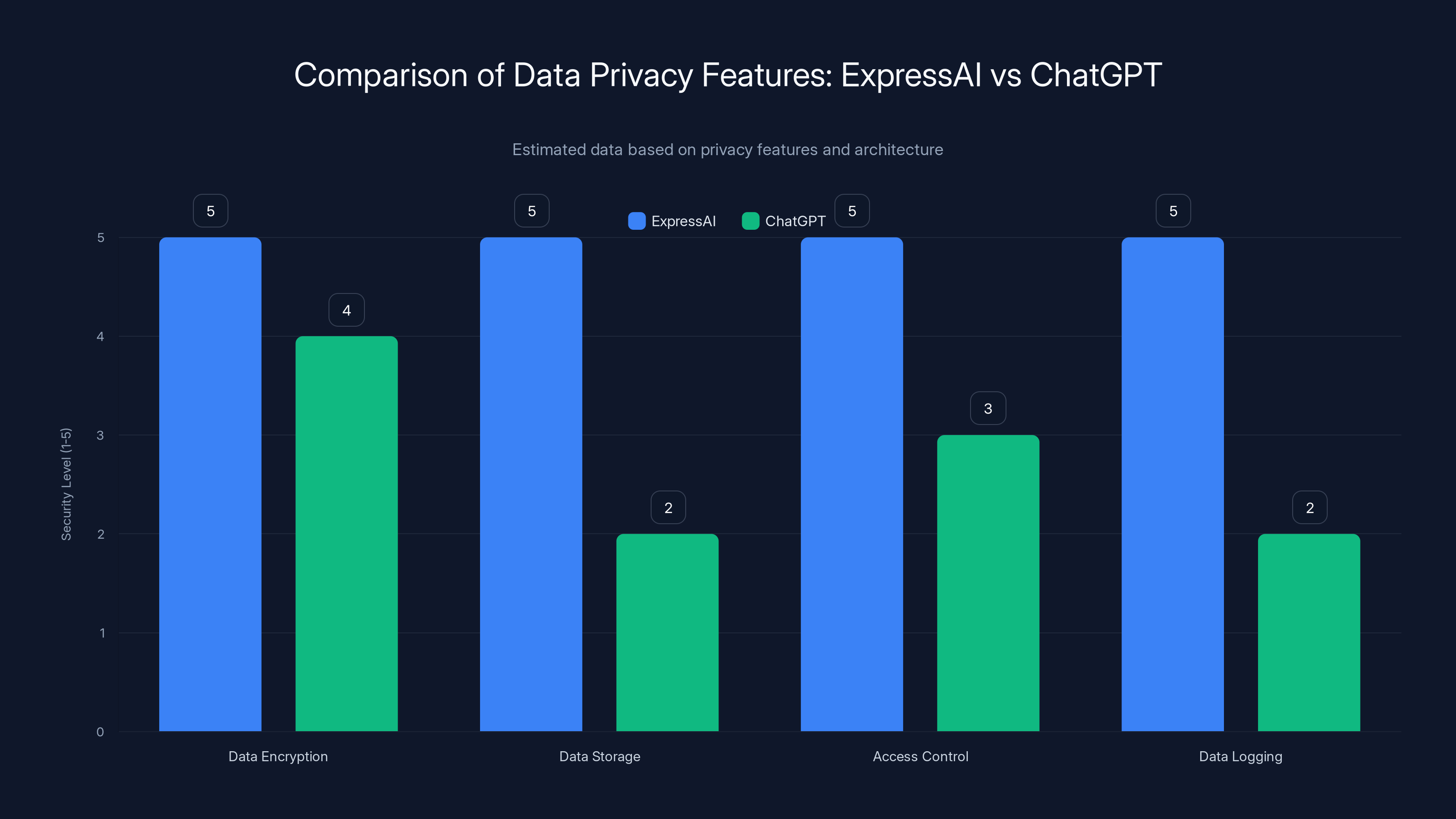

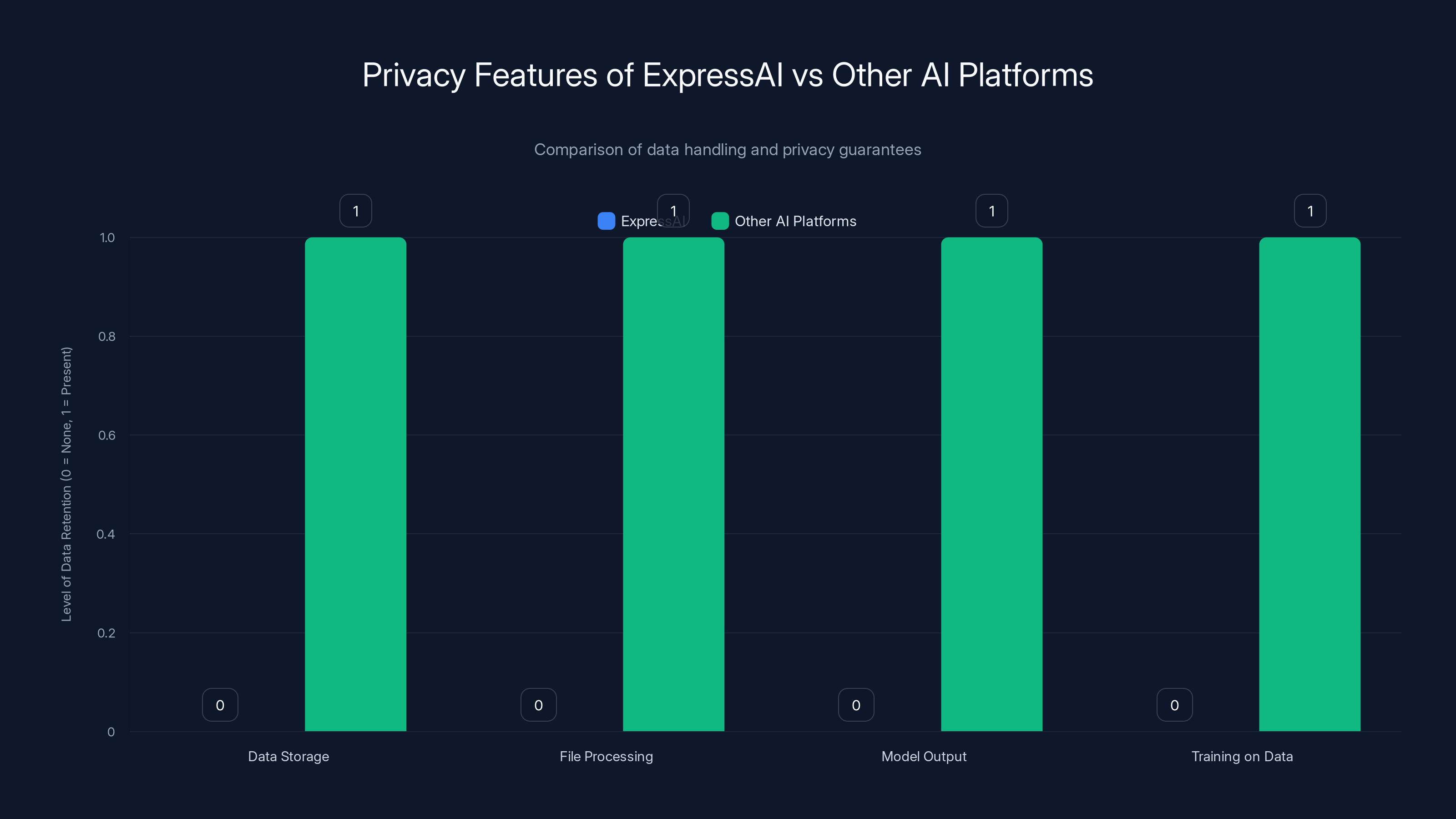

ExpressAI offers superior data privacy with maximum scores in encryption, storage, access control, and logging due to its use of confidential computing enclaves. Estimated data.

The Privacy Crisis in Modern AI: Why ExpressAI Exists

Let's be direct: most AI platforms are not privacy-first. They're convenience-first, with privacy bolted on as an afterthought.

When you open ChatGPT and type "I think I have depression and my marriage is falling apart, what should I do?", that prompt enters OpenAI's servers. OpenAI's terms allow it to use conversation data for training and improvement, though it provides an opt-out toggle. Even with opt-out enabled, your data still moves through its infrastructure. OpenAI knows what you asked, when you asked it, and from where.

The same applies to Claude, Gemini, Copilot, and virtually every consumer AI tool. These platforms need data—lots of it—to build products that investors will fund. User conversations are a goldmine of behavioral data, preferences, and decision-making patterns.

But here's what companies don't talk about: data being processed is still data at risk. Even if a platform promises not to train on your data, that data has to exist somewhere during processing. It gets logged in servers, cached in memory, backed up to disaster recovery systems, and potentially accessed by engineers debugging issues. The more people and systems that touch your data, the more ways it can be compromised.

ExpressVPN's insight is simple but powerful: if the data never enters your infrastructure in a readable form, you can't train on it, you can't access it, and bad actors can't steal it. This requires a completely different technical approach from the one most AI platforms are built on.

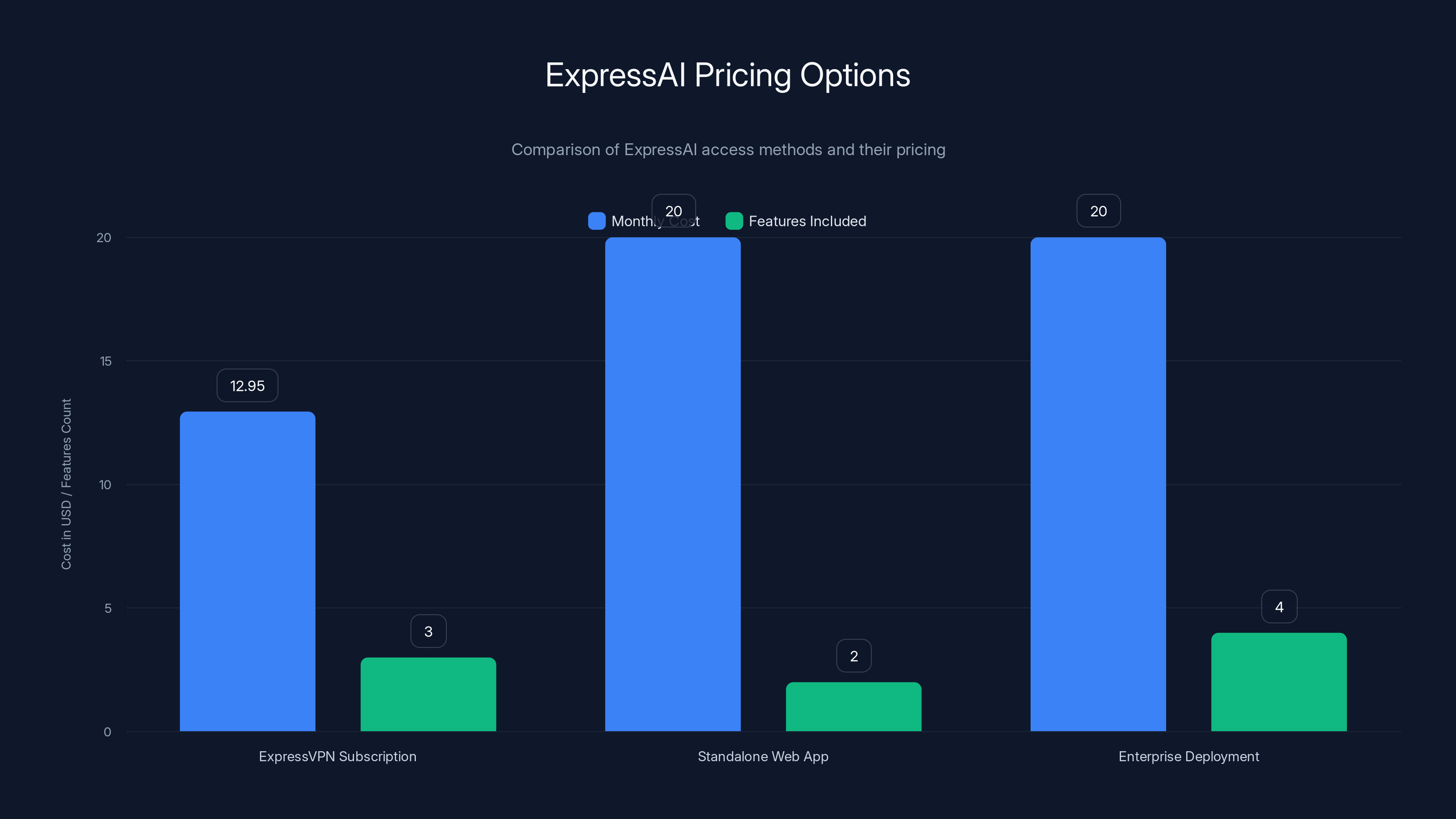

ExpressAI can be accessed via ExpressVPN subscriptions, standalone web app, or enterprise deployments. The ExpressVPN subscription offers the most features included, while the standalone app and enterprise options are priced similarly to other AI platforms. Estimated data.

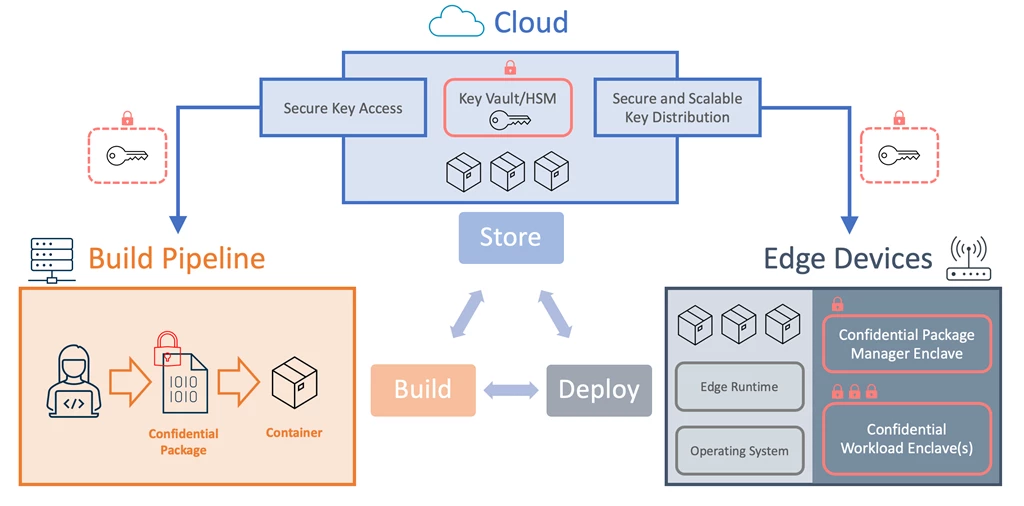

How Confidential Computing Enclaves Actually Work

Confidential computing is the technical foundation that makes ExpressAI's privacy claims possible. It's worth understanding, because it's not magic. It's isolation and encryption enforced at the hardware level.

Traditional cloud computing works like this: your data travels from your device to a server, gets processed, and the results come back. Throughout this journey, the cloud provider (AWS, Google Cloud, Azure) can theoretically access your data. They have administrative privileges. Security is enforced by policy and access controls, which are only as strong as the humans enforcing them.

Confidential computing flips this model. The processing environment itself becomes encrypted and isolated using specialized CPU features (Intel SGX, AMD SEV, or ARM CCA). Think of it like a locked safe inside a locked room inside a locked building. The key is held only by cryptographic code running inside the enclave, not by the cloud provider or anyone else.

Here's how it works in practice:

- Your prompt arrives encrypted: You send "How do I set up an S-corp?" to ExpressAI, but it's sealed in encryption that only the enclave can decrypt.

- The enclave decrypts in isolation: Inside the protected hardware environment, the prompt is decrypted. At this moment the data is readable, but only inside the enclave; no system administrator can peek at it.

- Processing happens in isolation: The AI model you selected runs inside the enclave on your decrypted prompt and generates a response.

- The response is re-encrypted and the inputs are deleted: The response is encrypted again, and the original prompt and temporary data are wiped from the enclave's memory. No logs of the content remain.

- The cloud provider sees nothing readable: Even though all of this happened on its hardware, the provider only saw encrypted data moving in and encrypted data moving out. It never saw what you actually asked.
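To make the flow above concrete, here is a minimal Python sketch. It assumes the client and the enclave already share a session key. In a real deployment that key would be agreed only after the enclave proves its identity through attestation. The cipher is a toy built from the standard library (a SHA-256 keystream plus an HMAC tag) so the example runs without third-party packages. A real system would use a vetted AEAD such as AES-GCM. The names `seal`, `open_sealed`, `enclave_handle`, and `demo_model` are hypothetical and do not describe ExpressVPN's actual code.

```python
import hashlib
import hmac
import secrets


# Toy authenticated encryption from the standard library: a SHA-256
# counter-mode keystream plus an HMAC-SHA256 tag. Illustration only; a real
# system would use a vetted AEAD such as AES-GCM with separate, derived keys.
def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def seal(key: bytes, plaintext: bytes) -> bytes:
    nonce = secrets.token_bytes(16)
    stream = _keystream(key, nonce, len(plaintext))
    ciphertext = bytes(p ^ s for p, s in zip(plaintext, stream))
    tag = hmac.new(key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def open_sealed(key: bytes, blob: bytes) -> bytes:
    nonce, ciphertext, tag = blob[:16], blob[16:-32], blob[-32:]
    expected = hmac.new(key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("ciphertext was modified or the key is wrong")
    stream = _keystream(key, nonce, len(ciphertext))
    return bytes(c ^ s for c, s in zip(ciphertext, stream))


def enclave_handle(session_key: bytes, sealed_prompt: bytes, model) -> bytes:
    prompt = open_sealed(session_key, sealed_prompt)  # decrypts in isolation
    response = model(prompt)                          # processing in isolation
    return seal(session_key, response)                # re-encrypted before leaving


def demo_model(prompt: bytes) -> bytes:
    # Hypothetical stand-in for the selected AI model.
    return b"Answer to: " + prompt


session_key = secrets.token_bytes(32)  # assumed: agreed after attestation
sealed_prompt = seal(session_key, b"How do I set up an S-corp?")  # arrives encrypted
sealed_reply = enclave_handle(session_key, sealed_prompt, demo_model)
# The host only ever handled sealed_prompt and sealed_reply (ciphertext).
print(open_sealed(session_key, sealed_reply).decode())  # Answer to: How do I set up an S-corp?
```

Flipping a single byte of `sealed_prompt` makes `open_sealed` raise `ValueError`. That authentication check is what stops a host from silently altering a request it cannot read.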

The elegance here is that you're not trusting ExpressVPN or cloud providers to keep secrets. You're relying on hardware-enforced isolation and encryption, which is designed to keep them out of your data even if they wanted in and had the passwords to everything.

The Architecture Behind ExpressAI: Technical Breakdown

Understanding the architecture helps you grasp both the power and the limitations of ExpressAI.

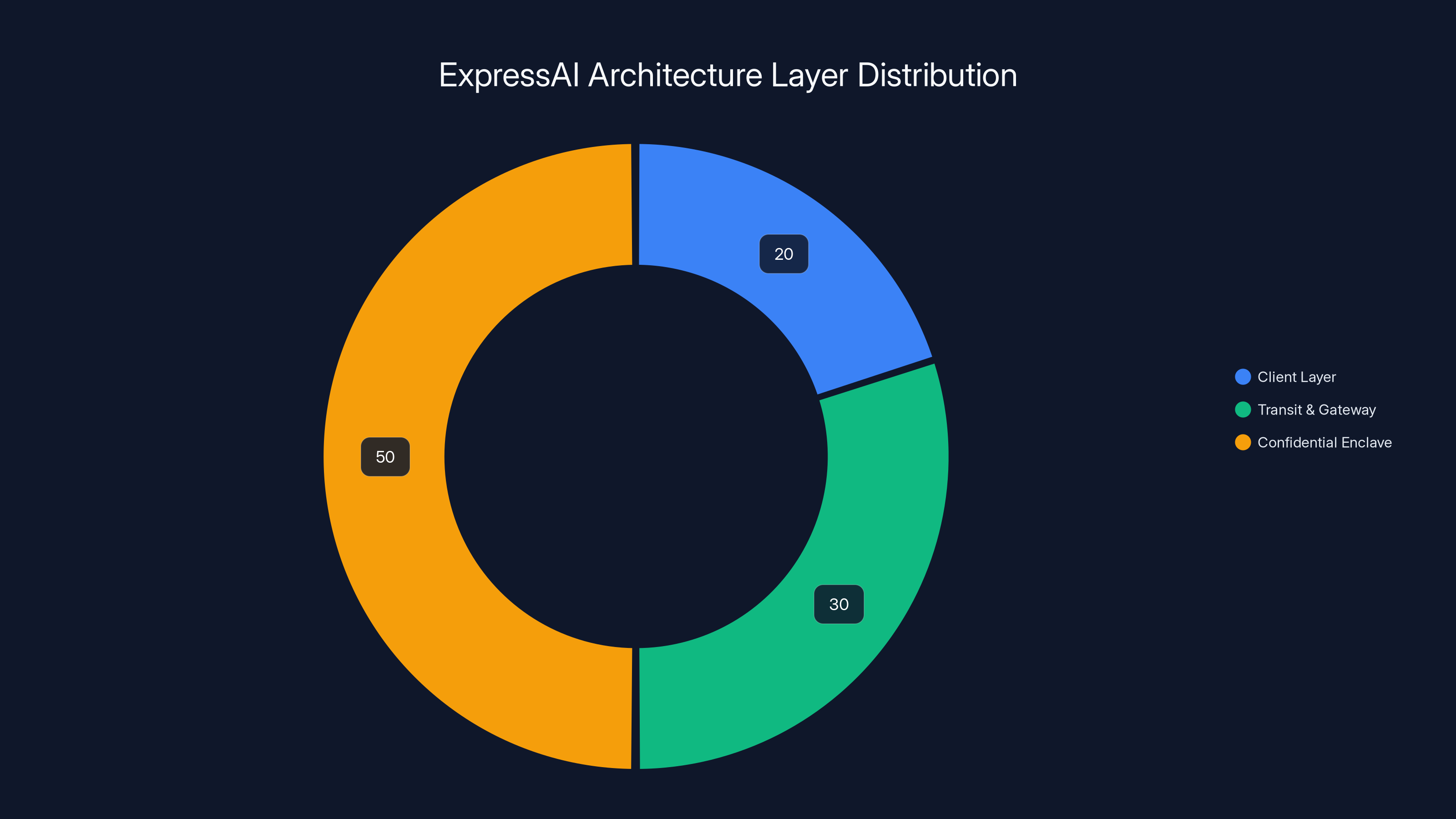

ExpressAI is built on a three-layer architecture:

Layer 1: The Client (Your Browser)

When you access ExpressAI, either through the standalone web application or through the ExpressVPN app, encryption starts on your device. Your prompt gets encrypted client-side before it leaves your browser. This means even if someone intercepted your traffic, they'd only see ciphertext, not your actual question.

The client also handles model selection. You choose which AI model(s) you want to use: ChatGPT, Claude, Gemini, or others. This choice is made locally on your device.

Layer 2: The Transit and Gateway

Your encrypted prompt travels through the internet to ExpressVPN's infrastructure. Here's where the VPN architecture matters. Because ExpressVPN routes the data through its own network (rather than directly to cloud providers), it can apply additional privacy protections during transit, such as hiding your IP address from the cloud provider. The data never travels in the clear, and ExpressVPN's routers see only encrypted packets, not content.

This is a significant difference from platforms where your prompt is encrypted only in transit (standard HTTPS) and then decrypted on OpenAI's or Google's servers. Those servers can also see your IP address, metadata, and timing information.
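The transit layer can be pictured as a relay that holds no decryption key. In the hypothetical model below, the gateway can log only metadata (your IP address, a timestamp, and the payload size), and the cloud host sees the gateway's address instead of yours. The `VpnGateway` class, the upstream function, and the addresses (taken from the IP ranges reserved for documentation) are illustrative assumptions, not ExpressVPN's design. Note that the VPN operator itself still sees your real IP: routing through it moves that trust rather than removing it.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class LogEntry:
    client_ip: str
    timestamp: float
    payload_size: int


@dataclass
class VpnGateway:
    """Hypothetical relay that forwards sealed payloads it has no key for."""

    public_ip: str
    log: List[LogEntry] = field(default_factory=list)

    def forward(self, client_ip: str, timestamp: float, sealed: bytes,
                upstream: Callable[[str, bytes], bytes]) -> bytes:
        # Without a key, metadata is all the gateway can record.
        self.log.append(LogEntry(client_ip, timestamp, len(sealed)))
        # The cloud host sees the gateway's address, not the client's.
        return upstream(self.public_ip, sealed)


seen_by_cloud: List[str] = []


def cloud_host(source_ip: str, sealed: bytes) -> bytes:
    # Stand-in for the confidential computing host receiving the request.
    seen_by_cloud.append(source_ip)
    return b"<sealed response>"


gateway = VpnGateway(public_ip="203.0.113.10")
gateway.forward("198.51.100.7", 1736218800.0, b"\x8f\x02\x9c\x41", cloud_host)
print(seen_by_cloud)  # ['203.0.113.10']
print(gateway.log)    # one entry: client IP, timestamp, and a size of 4 bytes
```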

Layer 3: The Confidential Enclave

Once your encrypted prompt arrives at the cloud infrastructure (ExpressVPN uses multiple cloud providers to avoid lock-in), it enters the confidential computing enclave. This is where the heavy cryptography happens.

The enclave is running specialized code that:

- Decrypts your prompt using a key that only the enclave possesses

- Loads the AI model you selected into memory

- Runs the model against your prompt

- Encrypts the response

- Overwrites all temporary data from memory

- Sends only the encrypted response back to your client

The entire process happens in hardware-isolated memory. The cloud provider's operating system cannot access it. System administrators cannot dump memory to see what was processed. Law enforcement could theoretically seize the hardware, but the data would already be gone, overwritten as soon as the request finished.
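The "overwrites all temporary data from memory" step can be sketched as a request handler that keeps plaintext in mutable buffers and zeroes them in a `finally` block, so the wipe happens even if the model fails. This is a hypothetical illustration. `handle_request`, `wipe`, and `xor_placeholder` are invented names, and the XOR function is a placeholder, not encryption. Python also offers no guarantee that the runtime hasn't made other copies of the data. A production enclave runtime would manage memory at a lower level.

```python
from typing import Callable, List, Optional


def wipe(buf: bytearray) -> None:
    """Overwrite a buffer in place with zeros."""
    for i in range(len(buf)):
        buf[i] = 0


def handle_request(
    sealed_prompt: bytes,
    decrypt: Callable[[bytes], bytes],
    model: Callable[[bytes], bytes],
    encrypt: Callable[[bytes], bytes],
    scratch: Optional[List[bytearray]] = None,
) -> bytes:
    """Decrypt, run the model, encrypt, then overwrite every plaintext buffer."""
    scratch = [] if scratch is None else scratch
    try:
        prompt = bytearray(decrypt(sealed_prompt))
        scratch.append(prompt)
        output = bytearray(model(bytes(prompt)))
        scratch.append(output)
        return encrypt(bytes(output))  # only ciphertext leaves the handler
    finally:
        # Runs even if the model raises. Caveat: Python may keep other copies
        # (such as the temporary bytes objects above) that this cannot reach;
        # an enclave runtime written in a lower-level language controls that.
        for buf in scratch:
            wipe(buf)


def xor_placeholder(data: bytes) -> bytes:
    # NOT encryption: a reversible placeholder so the sketch runs without keys.
    return bytes(b ^ 0x5A for b in data)


buffers: List[bytearray] = []
reply = handle_request(xor_placeholder(b"draft contract"), xor_placeholder,
                       lambda p: p.upper(), xor_placeholder, buffers)
print(xor_placeholder(reply))  # b'DRAFT CONTRACT'
print(buffers)                 # both buffers now hold only zero bytes
```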

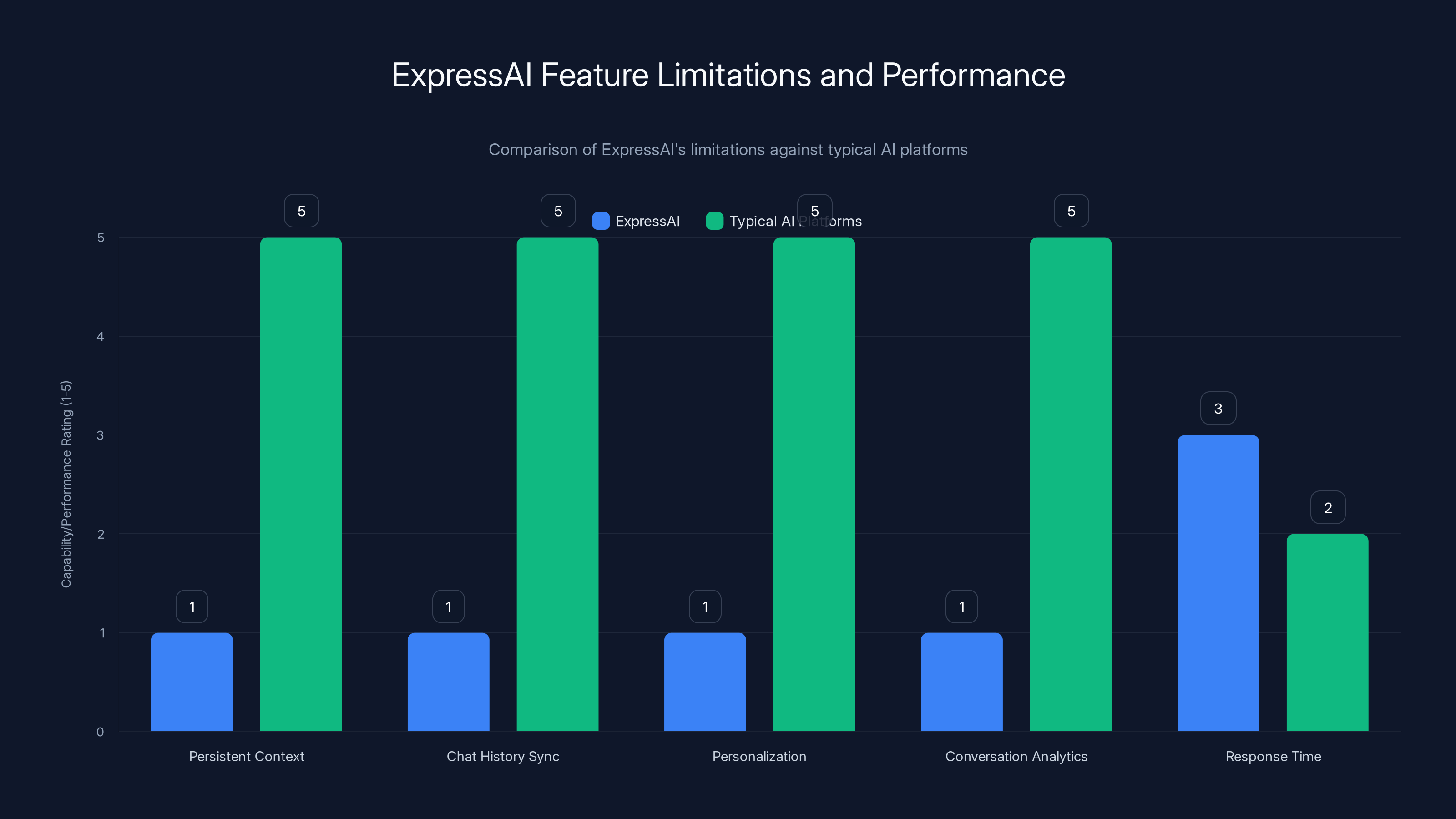

ExpressAI trades off some features and performance for privacy, with lower scores in persistent context, chat history sync, personalization, and conversation analytics compared to typical AI platforms. Response time is slightly slower due to encryption overhead.

Data Handling: What Actually Happens to Your Information

This is where the rubber meets the road. What exactly happens to your data in Express AI?

Your prompts are not stored: Unlike ChatGPT or Claude, which maintain conversation history, ExpressAI processes your prompt and then deletes it. The data never sits in a database. This is the core privacy guarantee.

You can view your conversation history within your session, but that history is stored only locally on your device and never syncs to the cloud. This is a trade-off: you lose conversation continuity across devices, but no copy of your history sits on anyone's server.

Your files are processed in memory, not stored: If you upload a document, say a confidential financial report or a draft legal agreement, that file is processed entirely within the enclave's memory. It's never written to disk, never moved to object storage, never backed up. It's decrypted, processed by the AI model, and then overwritten in memory.

This is radically different from Google Docs with AI features, where document content is stored and indexed on Google's servers.

Model outputs are only sent to you: When the AI generates a response, that response is encrypted and sent directly to your client. ExpressVPN doesn't retain a copy. There's no logging of questions and answers for product improvement. This is the hard constraint that most AI companies won't accept, because they want to improve their products based on real usage.

No training on your data: The most important guarantee. Your prompts never enter the training pipelines for any model. OpenAI won't use your prompt to improve GPT-5. Anthropic won't use it for Claude 4. Google won't use it for Gemini 2. This is enforced architecturally: the data never reaches the training systems because it never leaves the isolated enclave.
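The sketch below illustrates two of the data-handling rules above: history kept only on your device, and uploads handled purely in memory. `LocalSession` and `process_upload` are hypothetical names. The lambda stands in for a model call, and the word count stands in for the model's analysis of an uploaded file.

```python
import io
from typing import Callable, List, Tuple


class LocalSession:
    """Hypothetical client-side session: history lives only in this object's memory."""

    def __init__(self) -> None:
        self.history: List[Tuple[str, str]] = []

    def ask(self, prompt: str, model: Callable[[str], str]) -> str:
        answer = model(prompt)
        self.history.append((prompt, answer))  # stored on the device, never uploaded
        return answer

    def close(self) -> None:
        self.history.clear()  # nothing was synced, so nothing remains elsewhere


def process_upload(data: bytes) -> int:
    """Handle an uploaded file entirely in memory; no file is opened on disk."""
    with io.BytesIO(data) as buffer:
        text = buffer.read().decode("utf-8")
    return len(text.split())  # stand-in for the model's analysis


session = LocalSession()
print(session.ask("Summarize my notes", lambda p: f"Summary of: {p}"))
print(process_upload(b"Draft agreement between two parties"))  # 5
session.close()
print(session.history)  # []
```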

The Multi-Model Comparison Feature: How It Works

One of ExpressAI's most useful features is the ability to run the same prompt across multiple AI models simultaneously and compare outputs side by side.

Why is this valuable? Different models have different strengths. ChatGPT is often favored for writing and creative tasks, Claude for reasoning through complex problems, and Gemini for code generation. By comparing outputs from all three on the same prompt, you get a richer perspective and can choose the best response for your use case.

But here's the privacy twist: running the same prompt through multiple models means sending it to multiple providers. Normally, this would mean OpenAI, Anthropic, and Google each see your prompt: three separate data collection points.

ExpressAI's architecture is designed to prevent this. The prompt is decrypted once, inside the enclave. The same decrypted prompt is sent to all three models while they're all running inside the same confidential enclave. Critically, the models don't merge their outputs: you see three separate responses, not a blended answer.

Each model runs independently. None of them knows that its output was compared to another model's output. The comparison happens on your device (the client), not in the infrastructure.

This is a genuine architectural innovation. It lets you compare models without multiplying the number of companies that see your data.
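The fan-out and side-by-side comparison can be sketched with Python's `concurrent.futures`. The prompt goes to each model once, and each answer is stored under its model's name. Nothing merges the answers; the comparison is left to the client. The model functions here are hypothetical stand-ins for real model calls.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

Model = Callable[[str], str]


def compare_models(prompt: str, models: Dict[str, Model]) -> Dict[str, str]:
    """Run one prompt through several models and return each answer separately."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        # Submit the same prompt to every model concurrently.
        futures = {name: pool.submit(model, prompt) for name, model in models.items()}
        # Keep the answers keyed by model name; nothing is blended together.
        return {name: future.result() for name, future in futures.items()}


# Hypothetical stand-ins for real model calls.
models = {
    "model_a": lambda p: f"A says: {p.upper()}",
    "model_b": lambda p: f"B says: {p[::-1]}",
}

for name, answer in compare_models("compare me", models).items():
    print(f"{name:>8} | {answer}")
```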

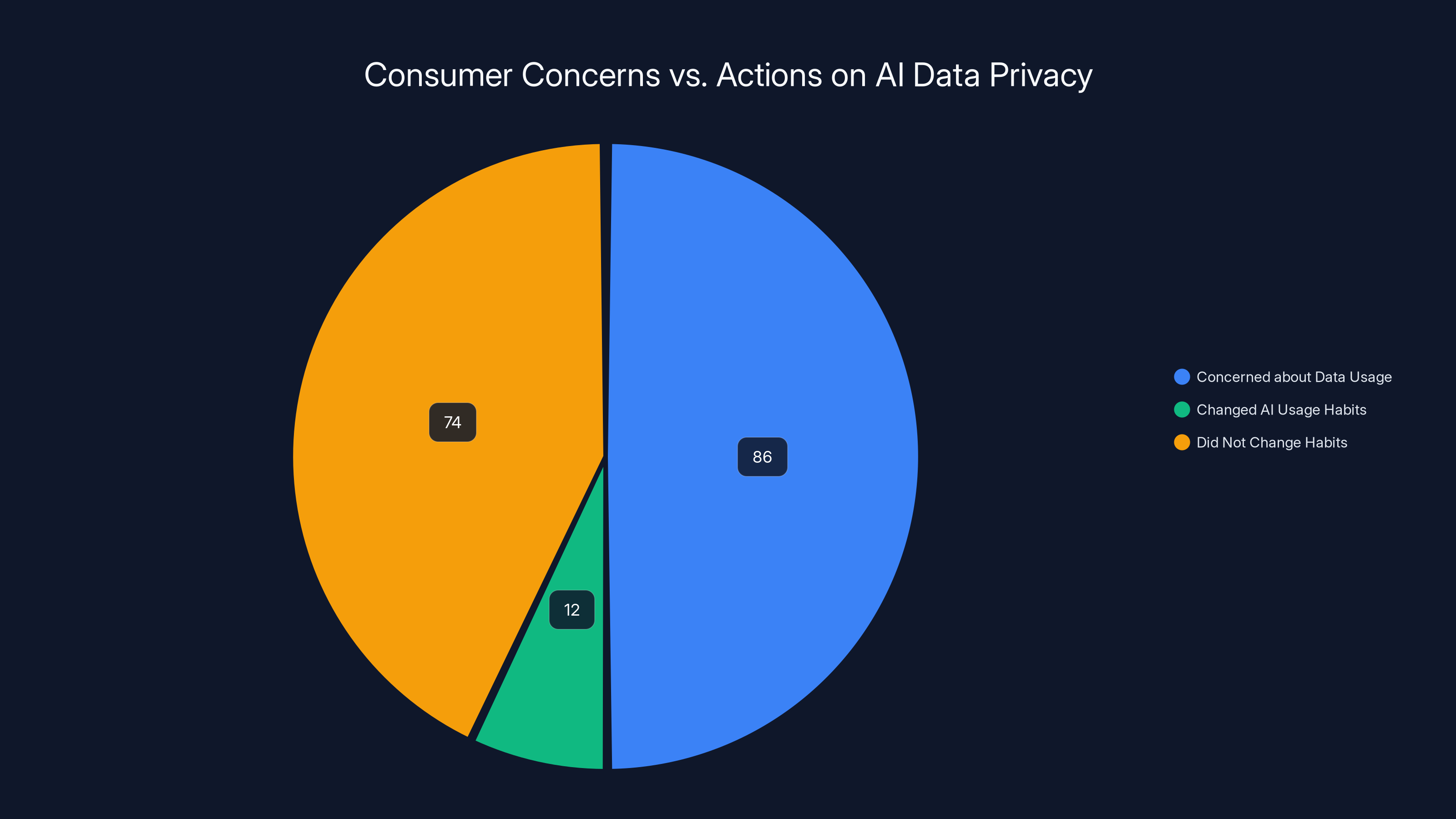

While 86% of consumers are concerned about AI data usage, only 12% alter their usage habits, indicating a gap between concern and action.

What ExpressAI Doesn't Protect Against

Before we get too excited about ExpressAI, let's be clear about its limitations. Privacy technology is always about trade-offs, and understanding what isn't protected is just as important as understanding what is.

Your IP address may still be visible: If you use ExpressAI without the VPN connected (for example, through the standalone web app), the connection to the confidential computing infrastructure reveals your IP address to the cloud provider. If you care about hiding your location, you need to connect through ExpressVPN first. The two are integrated, but it's an additional step and configuration.

Metadata reveals patterns: ExpressAI can hide the content of your prompts, but metadata like "this user submitted a prompt at 3 AM on Tuesday" or "this user runs 50 prompts per day, mostly late at night" is harder to hide. If an attacker has access to metadata, they can infer information about you even without seeing the actual prompts.

Model vulnerabilities still exist: Confidential computing protects data while it is being processed, but it doesn't protect against the AI model itself being fooled. Someone might craft input that hijacks the model's instructions (a technique called "prompt injection"), or try to coax a model into revealing its training data. Those vulnerabilities exist in ExpressAI just as they do in any AI platform. The difference is that ExpressAI won't use your data to fix the vulnerability; it can't, because it doesn't retain it.

Responses are slower: Confidential computing has overhead. Encryption and decryption add latency. For real-time use cases where speed is critical, you might notice ExpressAI responses are slightly slower than direct ChatGPT access. This isn't a deal-breaker for most use cases, but it's real.

Government access can't be fully excluded: Some jurisdictions let the government compel cloud providers or ExpressVPN to hand over data. Confidential computing doesn't protect you from every form of government surveillance there: account records, IP addresses, and other metadata held outside the enclave can still be demanded. What it does protect against is the cloud provider voluntarily selling or exposing your prompts. The threat model shifts from "many companies could potentially access this" to "only governments with legal authority can compel access, and mainly to data outside the enclave."
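The metadata limitation above is easy to underestimate. This small sketch profiles a user from request timestamps alone, reproducing the "3 AM on Tuesday" kind of inference without touching any prompt content. The log format and the `activity_profile` and `utc` helpers are hypothetical.

```python
from collections import Counter
from datetime import datetime, timezone
from typing import Iterable

DAYS = ("Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday")


def activity_profile(timestamps: Iterable[float]) -> Counter:
    """Count requests per (weekday, UTC hour) using nothing but timestamps."""
    profile: Counter = Counter()
    for ts in timestamps:
        moment = datetime.fromtimestamp(ts, tz=timezone.utc)
        profile[(DAYS[moment.weekday()], moment.hour)] += 1
    return profile


def utc(*parts: int) -> float:
    """Build a UTC timestamp from year, month, day, hour, minute."""
    return datetime(*parts, tzinfo=timezone.utc).timestamp()


# Hypothetical metadata log: no prompt content, only when requests arrived.
request_times = [utc(2025, 1, 7, 3, 2), utc(2025, 1, 7, 3, 40), utc(2025, 1, 8, 14, 0)]
print(activity_profile(request_times).most_common(1))  # [(('Tuesday', 3), 2)]
```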

Comparing ExpressAI to Other Privacy-Focused AI Solutions

ExpressAI isn't the only privacy-focused AI platform out there. Let's see how it stacks up against alternatives.

OpenAI with "Chat History Disabled"

OpenAI lets you toggle off chat history. When disabled, conversations aren't stored in your account. Sounds similar to ExpressAI, right? Not quite.

With chat history disabled, your prompt still travels to OpenAI's servers, still gets processed by its infrastructure, and still enters its logging systems. Staff may still be able to access it, for example during debugging or abuse reviews. It just won't be attached to your account for review later.

The difference: OpenAI still receives and can retain a readable copy of a disabled-history conversation for a period, even though it says such chats aren't used for training. ExpressAI can't, because the data never enters readable storage in the first place.

Claude with a VPN

Anthropic's Claude has a reputation for being more privacy-respecting than ChatGPT, and Anthropic gives users control over whether their conversations are used for training. But its architecture is still conventional: your data goes to Anthropic's servers, gets processed, and is stored until you delete the conversation.

Adding a VPN on top doesn't help much. Claude still sees your unencrypted prompt. You're just hiding your IP address, not the content of your conversation.

Signal's Disappearing AI Messages

Signal (the private messaging app) launched an experimental AI chatbot with disappearing messages. The model deletes conversations after a set time. This is better than permanent storage, but it's still not as strong as confidential computing because the data still exists in readable form during the time window.

Also, Signal is running this on their own infrastructure with a single model. They can't offer model comparison like Express AI does.

PrivateGPT (Local-Only AI)

Some open-source projects let you run AI models locally on your device without ever sending data to the internet. This is the ultimate privacy guarantee: if your data never leaves your device, it can't be stolen online.

But this requires powerful hardware, inference is slow, and you're limited to smaller models with less capability. For complex reasoning tasks, or for proprietary models like GPT-4 that can't be downloaded at all, local-only AI isn't practical.

ExpressAI splits the difference: your data still goes to cloud infrastructure, but that infrastructure is configured so it can't be accessed or retained.

DuckDuckGo's AI Chat

DuckDuckGo (the privacy-focused search engine) launched an AI chat feature. It promises not to log your data, which is similar to ExpressAI's promise. However, DuckDuckGo doesn't use confidential computing to enforce it; it relies on policy, not architecture. If DuckDuckGo gets breached or changes its terms of service, the guarantee evaporates.

ExpressAI's advantage: the architecture itself enforces privacy. Policy is secondary.

The Confidential Enclave handles the majority of processing tasks, emphasizing security and privacy. Estimated data.

ExpressAI's Use Cases: Where It Actually Matters

Privacy in AI is a spectrum. Some use cases need ExpressAI's level of protection. Others don't. Let's be realistic about where ExpressAI is genuinely useful.

Financial and Legal Planning

If you're drafting a business proposal, asking AI for advice on tax strategies, or discussing a sensitive acquisition, you probably don't want that data sitting in a server somewhere that could be breached, sold, or discovered in litigation.

ExpressAI shines here. A lawyer could ask "What are the weaknesses in this contract clause?" without that question being discoverable later. An accountant could discuss tax strategies without leaving a data trail.

Health and Mental Health Discussions

This is where ExpressVPN's pitch makes the most sense. People are increasingly using AI for health questions and mental health support. These conversations are sensitive: you might ask about symptoms, medications, or personal struggles.

With ExpressAI, that data genuinely isn't stored. With ChatGPT, even with history disabled, it's processed in readable form on OpenAI's systems. For health conversations, many people would choose ExpressAI's stronger guarantee.

Enterprise and Confidential Business Use

Companies have compliance requirements. If you're processing customer data, employee information, or trade secrets, you need to ensure that third parties aren't storing it. ExpressAI's architecture makes this provable: you can show auditors and compliance teams that the architecture itself prevents data retention.

This is where you'd likely switch from consumer ExpressAI to enterprise versions with additional controls.
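In confidential computing, this kind of auditor-facing proof usually rests on remote attestation. The hardware signs a measurement (a hash) of the code loaded into the enclave, and a verifier checks both the signature and that the measurement matches an audited build. The sketch below assumes ExpressAI exposes something similar; that is an assumption about how its claim could be checked, not a description of its API. An HMAC with a shared demo key stands in for the CPU vendor's certificate chain and public-key signatures. `AttestationReport`, `issue_report`, and `verify_report` are hypothetical names.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Set


@dataclass(frozen=True)
class AttestationReport:
    measurement: str  # hash of the code loaded into the enclave
    signature: bytes  # vouches that real hardware produced the measurement


def issue_report(hardware_key: bytes, enclave_code: bytes) -> AttestationReport:
    # Stand-in for the CPU's signing step. Real schemes use vendor certificate
    # chains and public-key signatures, not a shared HMAC key.
    measurement = hashlib.sha256(enclave_code).hexdigest()
    signature = hmac.new(hardware_key, measurement.encode(), hashlib.sha256).digest()
    return AttestationReport(measurement, signature)


def verify_report(report: AttestationReport, hardware_key: bytes,
                  approved: Set[str]) -> bool:
    expected = hmac.new(hardware_key, report.measurement.encode(), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, report.signature):
        return False                       # not produced by genuine hardware
    return report.measurement in approved  # code matches an audited build


hardware_key = b"demo-key-not-for-production"
audited_build = b"enclave build 1.0: decrypt, infer, encrypt, wipe"
approved = {hashlib.sha256(audited_build).hexdigest()}

print(verify_report(issue_report(hardware_key, audited_build), hardware_key, approved))  # True
print(verify_report(issue_report(hardware_key, b"build that logs prompts"),
                    hardware_key, approved))  # False
```

The design point is that an auditor reviews the build, not the operator's promises. If someone swaps in code that logs prompts, its measurement no longer matches the approved set.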

Research on Sensitive Topics

Researchers studying privacy, security, crime, or controversial subjects might not want their research queries showing up in AI training data. ExpressAI ensures that researchers can explore topics without that exploration becoming public through training data leaks.

Where ExpressAI is Overkill

On the flip side, not every AI use case needs confidential computing. If you're asking ChatGPT "What's a good recipe for chocolate cake?", the privacy provided by a locked-down enclave is unnecessary.

For casual use, the overhead (slower speed, more limited features) isn't worth the privacy gain. You're probably fine with a standard AI platform's privacy controls.

Similarly, if you're using public information that you don't mind being public (brainstorming blog post ideas, learning programming concepts), ExpressAI is overkill.

The sweet spot: you're processing sensitive information, want the strongest possible guarantee, and are willing to accept slightly slower performance and limited feature set.

The Broader Context: AI Privacy as a Competitive Advantage

ExpressAI isn't just about paranoia or edge cases. It's part of a larger shift in how companies are thinking about AI and privacy.

Why did ExpressVPN build this? Because it saw a gap. It has spent more than 15 years as a VPN company proving that there's a market for privacy-first infrastructure. People will pay for it. People will recommend it. People will trust you if your entire business model depends on privacy being real.

ExpressVPN's core business is selling VPN subscriptions. ExpressAI is a feature that extends that trust into AI. It's a logical product extension for a company built on privacy as a first principle.

But here's the interesting part: if ExpressVPN can do this, why can't OpenAI or Google? They certainly have the engineering talent and cloud infrastructure.

The answer is the business model. OpenAI and Google benefit from collecting data. They use it to improve existing models and train new ones. Privacy-first architecture cuts into that value proposition.

ExpressVPN has no such incentive. It doesn't train AI models. It doesn't sell user insights. It makes money by providing a privacy-first service. Building privacy-first AI directly supports its business model.

This reveals something important about AI privacy: it will only become standard if the business incentives align with privacy. As long as companies profit from data collection, they'll minimize privacy even if they add privacy features.

ExpressAI suggests that there's room for specialized vendors who compete on privacy rather than on feature richness or raw capability.

ExpressAI offers superior privacy by not storing data, processing files only in memory, not retaining model outputs, and not using data for training, unlike other AI platforms. Estimated data based on typical practices.

Pricing and Availability: How to Access ExpressAI

ExpressAI is available in three ways:

Through ExpressVPN Subscriptions

If you're already an ExpressVPN subscriber, you have access to ExpressAI. The platform is integrated into the ExpressVPN app and is also available as a standalone web application.

Pricing for ExpressVPN ranges from roughly

Standalone Web Application

You don't necessarily need an ExpressVPN subscription to try ExpressAI. It's also available as a standalone web application, meaning you can access it directly without being an existing VPN customer.

The freemium model offers limited access to test the service. Like most AI platforms, higher-tier subscriptions unlock faster response times, higher rate limits, and access to premium models.

Enterprise Deployments

For enterprise customers with specific compliance requirements, ExpressVPN likely offers custom deployments and dedicated infrastructure. These are typically discussed directly with its enterprise sales team and priced based on usage and requirements.

The pricing is competitive with other AI platforms. You're paying roughly what you'd pay for ChatGPT Plus (

The Limitations and Honest Assessment

Let's be candid: ExpressAI has real limitations that matter.

Feature Limitations

Because data is processed in isolation and deleted immediately, ExpressAI can't offer some features that conventional AI platforms provide:

- Persistent conversation context: You can't reference previous conversations across sessions. Each conversation starts fresh. This makes it harder to work on long-term projects.

- Chat history across devices: Your conversation on your laptop doesn't sync to your phone because conversations aren't stored centrally.

- Personalization: AI platforms typically improve by learning your preferences over time. ExpressAI can't do this without retaining data.

- Conversation analytics: You can't ask "How many times did I ask about React this month?" because that data isn't stored.

These aren't bugs—they're consequences of the privacy guarantee. You're trading convenience for privacy.

Performance Overhead

Confidential computing adds latency. Encryption, decryption, and isolation have computational costs. Response times in ExpressAI are measurably slower than direct access to ChatGPT or Claude.

For interactive use cases where you're iterating quickly, you notice the difference. For one-off questions, it's acceptable.

Model Limitations

Not every AI model works in confidential computing environments. Some models are too large. Others have licensing restrictions. ExpressAI supports major models like GPT-4, Claude 3, and Gemini, but the selection is more limited than what you get with direct access to OpenAI's latest models.

You're always getting models from the previous generation or current release, not cutting-edge preview access.

Cost

If you need both VPN and AI, ExpressAI is cost-effective. If you only need AI, it's more expensive than ChatGPT Plus because you're essentially paying for VPN features you might not use.

For personal use, this is a trade-off. For enterprise use, the privacy guarantee might justify the cost.

The Honest Reality

ExpressAI is a privacy-first product for people who genuinely care about privacy. It's not designed to be better than ChatGPT at generating answers. It's designed to be better at protecting you while you generate answers.

If you don't care about privacy, use ChatGPT: it's faster and cheaper. If you care about privacy but don't need bank-vault-level protection, OpenAI's privacy controls are probably sufficient.

ExpressAI is for people who believe that data is inherently risky and want the strongest technical guarantees that their data can't be misused, even if it means sacrificing convenience and speed.

The Future of Private AI: What ExpressAI Signals

ExpressAI doesn't exist in a vacuum. It's part of a broader reckoning with how AI intersects with privacy.

Regulatory Pressure

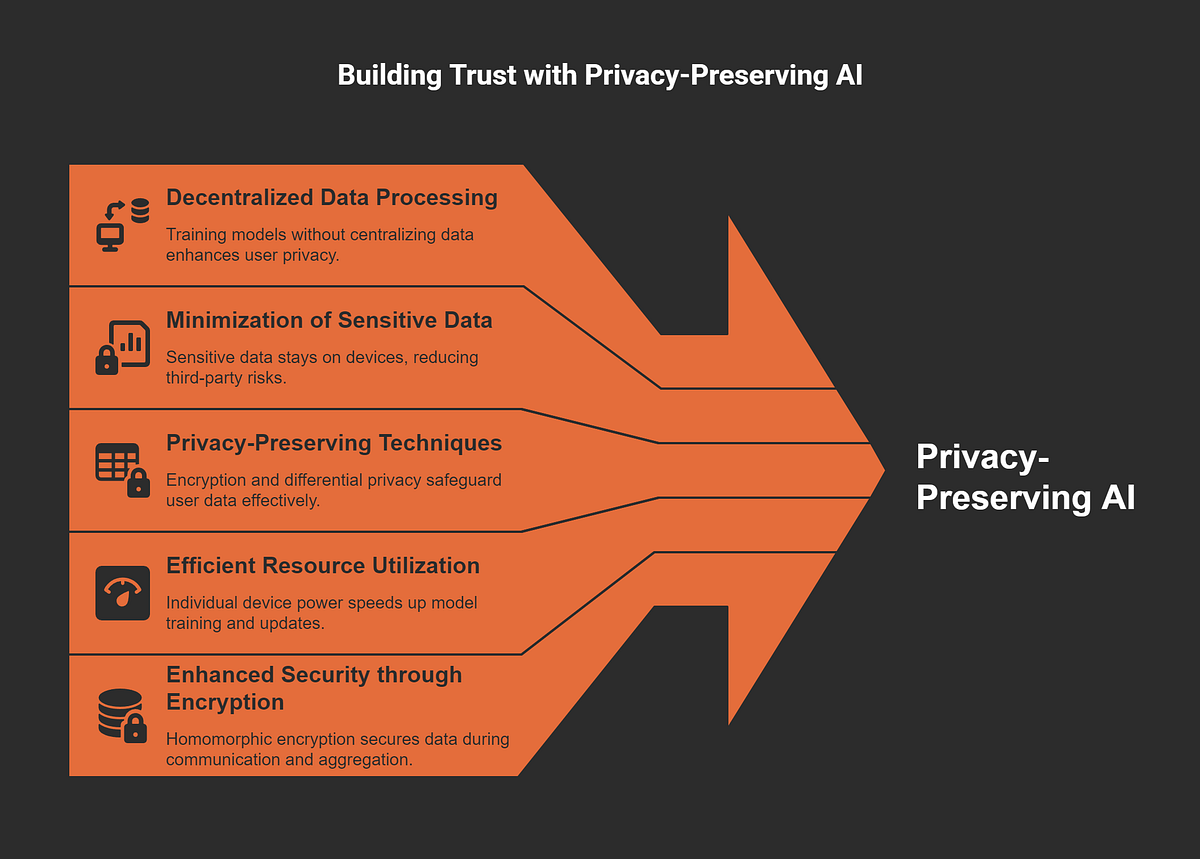

The EU's General Data Protection Regulation (GDPR), with its data-minimization principle, and the newer AI Act are pushing AI companies to think more carefully about data collection and training. Regulations are moving in the direction of "minimize data collection." ExpressAI is ahead of this wave: it's built on the principle that the best way to comply with data protection laws is to not collect data in the first place.

As regulations tighten globally, we'll likely see more AI platforms adopt similar architecture, not because they want to, but because they'll be legally required to offer privacy-first options.

Enterprise Demand

Enterprises processing sensitive data are increasingly demanding privacy-first AI. Banks can't put customer data into consumer ChatGPT without legal risk. Healthcare companies can't put patient data into standard Gemini. ExpressAI's architecture is designed to reduce that legal risk, because no third party holds readable copies of the data.

This creates a market opportunity. ExpressVPN is betting that there's genuine demand for privacy-first AI from enterprises willing to pay for it.

Technical Maturity of Confidential Computing

Confidential computing is still young (Intel SGX launched in 2015, but commercial services only became viable in the last 5 years). As the technology matures and becomes faster, we'll see more AI platforms offering similar privacy guarantees.

In 5-10 years, confidential computing might be table stakes rather than a differentiator. The way HTTPS became standard for all websites, privacy-preserving AI might become standard.

The Bigger Question: Should AI Companies Collect Data?

ExpressAI raises a philosophical question that the AI industry has mostly avoided: Do AI companies actually need to collect conversation data?

OpenAI says it needs that data to improve models. Maybe that's true, but it's also convenient for business purposes. Anthropic has shown that you can build competitive AI models without training on consumer conversations by default. Claude became arguably better than ChatGPT for many tasks during years when Anthropic did not train on consumer chats by default.

This suggests the data collection argument is partially justified, partially convenient. ExpressAI shows you can offer AI functionality without collection. The question is whether the industry will choose to.

Implementation Considerations: If You're Evaluating ExpressAI

If you're seriously considering adopting ExpressAI for personal or professional use, here are practical questions to answer:

Do you actually need persistent data?

Before you dismiss ExpressAI for lacking conversation history, honestly ask: do you need it? For many use cases (brainstorming, one-off questions, quick coding help), you don't. You might be overestimating how much you rely on conversation persistence.

What's your actual threat model?

ExpressAI protects against data collection by ExpressVPN and cloud providers. It doesn't protect against:

- Government surveillance with warrants

- Your own device being compromised

- Phishing attacks

- Network-level attacks at your ISP

If your threat model is "I'm worried about commercial data collection," ExpressAI is a strong fit. If it's "I'm worried about advanced persistent threats," you need different tools.

Can you afford the performance trade-off?

If you're using AI for interactive work where latency matters, the slower response times in ExpressAI might be frustrating. Test it before committing.

Do you need advanced features?

If your workflow depends on features like persistent files, advanced browsing, image generation, or custom models, ExpressAI's limitations might be dealbreakers.

Is privacy aligned with your values?

This is the real question. If you believe that data minimization is good policy and you're willing to accept trade-offs for it, ExpressAI aligns with your values. If you prefer maximum features and assume privacy will be fine, a standard AI platform is a better fit.

Conclusion: The Privacy-First AI Movement Starts Here

ExpressAI represents something rare in AI: a product that prioritizes privacy as a first principle rather than an afterthought. Not because privacy is trendy, but because the company's entire business model depends on privacy being real and trusted.

The privacy guarantee is genuine. Confidential computing enclaves are real technology. The data architecture is sound. ExpressVPN has been transparent about the limitations. This isn't marketing theater.

But it's also not a silver bullet. ExpressAI won't replace ChatGPT for most people. It's slower, less convenient, and more limited. What it does offer is a technical solution to a real problem: how do you use AI for sensitive conversations without creating data trails that can be exploited, regulated, or discovered?

For enterprise users processing sensitive data, the answer is clear: ExpressAI or similar privacy-first platforms become necessary. For individuals with genuine privacy concerns, it's a reasonable choice. For casual users, it's overkill.

The bigger significance is what ExpressAI signals: that privacy-first AI is architecturally possible and commercially viable. If ExpressVPN can do it, others will. As regulations tighten and enterprise demand increases, privacy-first AI will shift from niche to mainstream.

We're at an inflection point. For years, AI companies have collected data because they could and because it benefited them. ExpressAI proves they don't have to. The question moving forward isn't whether privacy-preserving AI is possible; it's why more companies aren't doing it.

If you're processing sensitive information, care about data minimization, and are willing to accept trade-offs for privacy, ExpressAI is worth trying. If you're building enterprise AI applications that must be compliant, it's worth evaluating.

For everyone else, it's a sign that the privacy landscape is shifting. The expectation that companies will minimize data collection is becoming more reasonable. ExpressAI is the proof.

FAQ

What exactly is Express AI and how does it differ from ChatGPT?

Express AI is a privacy-focused AI platform built by ExpressVPN that uses confidential computing enclaves to process your prompts in isolation. With ChatGPT, OpenAI stores your conversations and, unless you opt out, may use them to train and improve its models; even with chat history turned off, conversations can be retained for a period for abuse monitoring. Express AI's architecture is designed so that no one, including ExpressVPN staff, can access or retain your prompts. Your data is encrypted before it leaves your device, decrypted only inside hardware-isolated enclaves, processed, and then permanently deleted. The fundamental difference is architectural: ChatGPT's data enters storage the provider can read; Express AI's data never enters readable storage at all.
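To make the "encrypted on your device, decrypted only inside the enclave" step more concrete, here is a minimal Python sketch of the general pattern: public-key encryption to a key whose private half never leaves the enclave. It is not ExpressVPN's implementation, which isn't described here. The function names are hypothetical, and the toy Diffie-Hellman group and SHAKE-256 keystream are stdlib-only stand-ins for the vetted primitives (such as X25519 and AES-GCM, combined as in HPKE, RFC 9180) a real system would use.

```python
import hashlib
import hmac
import secrets

# TOY PARAMETERS, for illustration only: a Mersenne prime modulus and a small
# generator. The key-agreement math is correct, but this is not a vetted group
# and nothing here is constant-time. Real systems use X25519 and AES-GCM,
# for example combined via HPKE (RFC 9180).
P = 2**521 - 1
G = 3


def keypair() -> tuple[int, int]:
    """Return a (private, public) pair for toy finite-field Diffie-Hellman."""
    private = secrets.randbelow(P - 3) + 2
    return private, pow(G, private, P)


def derive_keys(shared: int) -> tuple[bytes, bytes]:
    """Hash the shared secret and split it into an encryption key and a MAC key."""
    material = hashlib.sha512(shared.to_bytes(66, "big")).digest()
    return material[:32], material[32:]


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """SHAKE-256 output used as a stream cipher (stand-in for AES-GCM)."""
    return hashlib.shake_256(key + nonce).digest(length)


def seal(enclave_public: int, plaintext: bytes) -> tuple[int, bytes, bytes, bytes]:
    """Device side: encrypt so only the holder of the enclave's private key can read it."""
    ephemeral_private, ephemeral_public = keypair()
    enc_key, mac_key = derive_keys(pow(enclave_public, ephemeral_private, P))
    nonce = secrets.token_bytes(16)
    stream = keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(a ^ b for a, b in zip(plaintext, stream))
    # Encrypt-then-MAC: the tag lets the enclave detect tampering in transit.
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return ephemeral_public, nonce, ciphertext, tag


def open_sealed(enclave_private: int, ephemeral_public: int,
                nonce: bytes, ciphertext: bytes, tag: bytes) -> bytes:
    """Enclave side: recompute the shared secret, check the tag, then decrypt."""
    enc_key, mac_key = derive_keys(pow(ephemeral_public, enclave_private, P))
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, tag):
        raise ValueError("tag mismatch: wrong key or tampered ciphertext")
    stream = keystream(enc_key, nonce, len(ciphertext))
    return bytes(a ^ b for a, b in zip(ciphertext, stream))


if __name__ == "__main__":
    enclave_private, enclave_public = keypair()   # generated inside the enclave
    sealed = seal(enclave_public, b"my prompt")    # done on your device
    print(open_sealed(enclave_private, *sealed))   # b'my prompt'
```

The design point is that the device only ever handles `enclave_public`. Anyone relaying the sealed message, including the cloud provider, sees ciphertext and cannot derive the keys without `enclave_private`.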

How do confidential computing enclaves actually protect my data?

Confidential computing enclaves are secure, hardware-isolated regions of a processor whose memory is encrypted and walled off from the rest of the system. When you send a prompt to Express AI, it arrives encrypted, and only the enclave can decrypt it, using keys that neither the cloud provider nor system administrators can access. Your prompt is processed inside this isolated environment, where the host operating system and other software cannot read it. Once processing completes, the data is re-encrypted for the return trip and wiped from enclave memory. Because the isolation is enforced by the hardware itself, even someone with full administrator privileges on the server cannot read your unencrypted data. That protection is only as strong as the processor's implementation, so it is a hardware guarantee rather than a mathematical one.
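One step this answer leaves implicit is how your device can tell it is talking to a genuine enclave before handing over a prompt. In confidential computing this is usually done with remote attestation: the hardware signs a "quote" containing a hash (the measurement) of the code loaded into the enclave, plus the enclave's public key, and the client checks both before trusting that key. Whether and how Express AI exposes attestation to users isn't stated here, so treat the Python sketch below as an illustration of the general technique. `Quote`, `hardware_sign`, `verify_quote`, and `VENDOR_KEY` are hypothetical. Real quotes (for example from Intel TDX/SGX or AMD SEV-SNP) are signed with asymmetric keys and verified against the vendor's certificate chain; HMAC is used here only to keep the example stdlib-only.

```python
import hashlib
import hmac
from dataclasses import dataclass

# HYPOTHETICAL stand-in for the CPU vendor's signing key. With real hardware
# this is an asymmetric key the client can verify but never hold; sharing an
# HMAC key like this would let the client forge quotes, so it is illustration only.
VENDOR_KEY = b"hypothetical-vendor-root-key"


@dataclass(frozen=True)
class Quote:
    measurement: bytes     # SHA-256 hash of the code loaded into the enclave
    enclave_pubkey: bytes  # key the enclave will use to decrypt prompts
    signature: bytes       # produced by the hardware, not by the operator


def _sign(measurement: bytes, enclave_pubkey: bytes) -> bytes:
    return hmac.new(VENDOR_KEY, measurement + enclave_pubkey, hashlib.sha256).digest()


def hardware_sign(measurement: bytes, enclave_pubkey: bytes) -> Quote:
    """Simulates the processor producing a signed attestation quote."""
    return Quote(measurement, enclave_pubkey, _sign(measurement, enclave_pubkey))


def verify_quote(quote: Quote, expected_measurement: bytes) -> bytes:
    """Client side: return the enclave's key only if the quote checks out."""
    if not hmac.compare_digest(_sign(quote.measurement, quote.enclave_pubkey), quote.signature):
        raise ValueError("quote was not signed by trusted hardware")
    if not hmac.compare_digest(quote.measurement, expected_measurement):
        raise ValueError("enclave is running unexpected code")
    return quote.enclave_pubkey
```

Binding the public key into the signed quote is what matters: an operator who swaps in their own key, or loads modified code, produces a quote the client rejects.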

Can I still use AI tools for sensitive conversations if I use Express AI?

Yes. Express AI is specifically designed for sensitive conversations about health, finances, legal matters, personal relationships, and confidential business topics. Your prompts are never stored, never logged, and never used for training. That makes it suitable for discussing medical symptoms, mental health concerns, tax planning, business strategy, or any other topic you wouldn't want sitting in a searchable database somewhere. The guarantee is enforced by the architecture, not just by company policy, which makes it more trustworthy than the policy-based privacy promises of conventional AI platforms.

What are the main limitations of Express AI compared to ChatGPT or Claude?

Express AI trades features for privacy. The key limitations:

- Conversations don't persist across sessions or devices, so you lose conversation history

- You can't rely on the AI remembering context from previous chats

- Personalization is minimal, since the platform doesn't track your preferences over time

- Some advanced features, such as custom instructions or fine-tuning, aren't available

- Response times are slower because of encryption overhead

- The selection of AI models is more limited than direct access to OpenAI or Anthropic

These aren't bugs; they're intentional consequences of the privacy-first architecture. You're choosing privacy over convenience.

Is Express AI faster or slower than ChatGPT?

Express AI is noticeably slower than direct ChatGPT access because of the encryption, decryption, and isolation overhead. Response times are typically 20-40% slower depending on prompt complexity, so a reply that would take 5 seconds elsewhere takes roughly 6 to 7 seconds. For one-off questions or thoughtful analysis where you don't need instant responses, the difference is acceptable. For interactive use where you're iterating quickly through multiple prompts, you'll notice the latency. This is a real trade-off: you gain privacy, but you sacrifice speed.

How much does Express AI cost and how do I access it?

Express AI is included with ExpressVPN subscriptions, which range from about

Can Express AI be used for enterprise applications with compliance requirements?

Yes, this is one of Express AI's strongest use cases. Organizations subject to data protection rules (HIPAA for healthcare, GDPR for EU customers) or audited against frameworks such as SOC 2 can point to Express AI's no-retention architecture as evidence that their AI usage isn't creating new stores of sensitive data. Because the architecture itself prevents retention, that property can be audited rather than taken on trust. Using the tool doesn't make an organization compliant on its own, though; HIPAA, for instance, typically still requires a business associate agreement with any vendor that handles protected health information. The platform can be configured for enterprise deployments with dedicated infrastructure and custom controls. If your organization processes sensitive customer or employee data and needs a legally defensible way to use AI, Express AI is worth evaluating with your compliance and legal teams.

What happens to my data if I close my browser or stop using Express AI?

Nothing is kept on Express AI's servers in the first place: conversations aren't synced to the cloud or stored in any account database, and each prompt is deleted once it has been processed. Any conversation history you still see is held temporarily in your browser's local storage, on your device rather than on Express AI's servers, and closing the browser ends the session. If you want to preserve a conversation, you need to save it yourself (for example, by copying it into a local document). This is part of the privacy guarantee: nothing is retained that could later be discovered or subpoenaed.

Is Express AI good for personal use, or is it only for enterprises?

It's useful for both, but serves different purposes. For personal use, it's best if you regularly have sensitive conversations with AI about health, finances, relationships, or personal decisions where you genuinely don't want that data existing in corporate databases. For casual use (recipes, brainstorming, learning), standard AI platforms are probably sufficient and faster. For enterprises, it's essential if you're processing customer or employee data and need compliance. The personal use case is: "I value privacy enough to accept slower performance and limited features." That's a real segment of users, but not everyone.

How does Express AI's multi-model comparison feature work without violating privacy?

Instead of sending your prompt separately to ChatGPT, Claude, and Gemini (which would mean three separate companies seeing your data), Express AI decrypts your prompt once inside the confidential enclave, runs each of the models you've selected within that same isolated environment, and sends only the outputs back to you. The models don't see each other's responses, and none of them know they were compared. This keeps your prompt within a single privacy boundary while giving you the benefit of comparing multiple models. It's a clever architectural solution that wouldn't work without confidential computing.
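As a rough illustration of that fan-out, here is a Python sketch of the comparison step. It assumes the prompt has already been decrypted inside the enclave and that each model is a function hosted in that same environment; `compare_models` and the `Model` type are hypothetical names, not part of any published Express AI interface.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical: each "model" is a function hosted inside the same enclave that
# maps a prompt to a reply. Nothing here calls out to an external provider.
Model = Callable[[str], str]


def compare_models(prompt: str, models: dict[str, Model]) -> dict[str, str]:
    """Send one decrypted prompt to several models in parallel.

    Each model receives only the prompt, never another model's output,
    and the replies are collected under each model's name.
    """
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(model, prompt) for name, model in models.items()}
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    replies = compare_models("hello", {"model_a": str.upper, "model_b": lambda p: p[::-1]})
    print(replies)  # {'model_a': 'HELLO', 'model_b': 'olleh'}
```

Threads fit here because each call mostly waits on inference rather than on Python code, and the result dictionary keeps the order the models were listed in, regardless of which one finishes first.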

Final Thoughts

Express AI isn't going to be everyone's choice for AI, and that's okay. It's a specialized tool for a specific need: using AI for genuinely sensitive conversations while maintaining the strongest possible privacy guarantees.

What matters is that it exists and that it works. Privacy-first AI is no longer theoretical. It's architecturally sound, commercially available, and technically proven. The question for the rest of the AI industry isn't whether they can do this—they can. The question is whether they will.

For now, if you care about privacy more than convenience, Express AI deserves a look.

Key Takeaways

- ExpressAI uses confidential computing enclaves to isolate your AI prompts in hardware-encrypted environments that even ExpressVPN and cloud providers cannot access

- Unlike ChatGPT, where conversations can be logged and used for training, ExpressAI deletes all data immediately after processing with zero retention

- The platform trades convenience for privacy: slower response times, no persistent conversation history, and limited features compared to mainstream AI platforms

- Confidential computing is architecturally proven but expensive in computational overhead, making it most valuable for sensitive conversations about health, finance, or legal matters

- As AI regulation tightens globally, privacy-first architectures like ExpressAI will likely become table stakes rather than differentiators in the enterprise AI market

