X's Paris Headquarters Raided by French Prosecutors: The Full Story Behind the Investigation [2025]

Last month, something happened that sent shockwaves through the tech industry. French prosecutors showed up at X's Paris office with a search warrant. No press release. No warning. Just a raid by Paris and national cybercrime units, backed by Interpol, digging through servers and files as part of an investigation that started nearly a year earlier.

This wasn't a routine compliance check. It was the culmination of tensions between Elon Musk's social media platform and French authorities over how X operates its algorithms, how it processes user data, and whether the company has been breaking French law in the process. And it came with a summons for both Musk and Linda Yaccarino (X's former CEO) to appear for "voluntary interviews" in Paris on April 20, 2026.

What's fascinating about this story isn't just the raid itself. It's what it reveals about the collision between Silicon Valley's move-fast-and-break-things mentality and Europe's increasingly aggressive regulatory stance. It shows how one platform's algorithm changes can trigger a year-long investigation. It demonstrates that even billionaires with massive platforms aren't above the law when they operate in Europe. And it suggests we're entering a new era where tech companies need to think seriously about compliance, not as an afterthought, but as a core business function.

Let's break down what actually happened, why French prosecutors are so upset, what the legal implications are, and what this means for X and other social media platforms going forward.

The Raid: How It Went Down

In early February 2026, French prosecutors executed a search at X's offices in Paris. The raid itself came without warning, but the investigation behind it had been running since January 2025, and prosecutors didn't publicly announce the search until after it had taken place.

The operation involved multiple agencies. You had Paris prosecutors working alongside national cybercrime units. Interpol provided support. This level of coordination suggests French authorities were treating this seriously, not as a minor compliance matter but as a potential criminal investigation with international implications.

What were they looking for? Documents, emails, communications, potentially server logs and algorithm documentation. Anything that could prove X was manipulating its platform in ways that violated French law. They were interested in understanding how X's algorithm works, how it changed over time, and whether those changes were intentional and unlawful.

The timing matters too. The investigation opened in January 2025, after concerns that X had changed its algorithm in ways that appeared to give greater prominence to certain political content, specifically content from Elon Musk himself, without users knowing these changes were happening. In July 2025, prosecutors escalated it into a formal criminal investigation.

What's notable is that X's officials didn't comment when the raid was announced. No statement from the company. No denial. No explanation of what happened or what documents were seized. This silence itself speaks volumes—it suggests either legal counsel advised them to say nothing, or they knew the situation was serious enough that any statement could be used against them later.

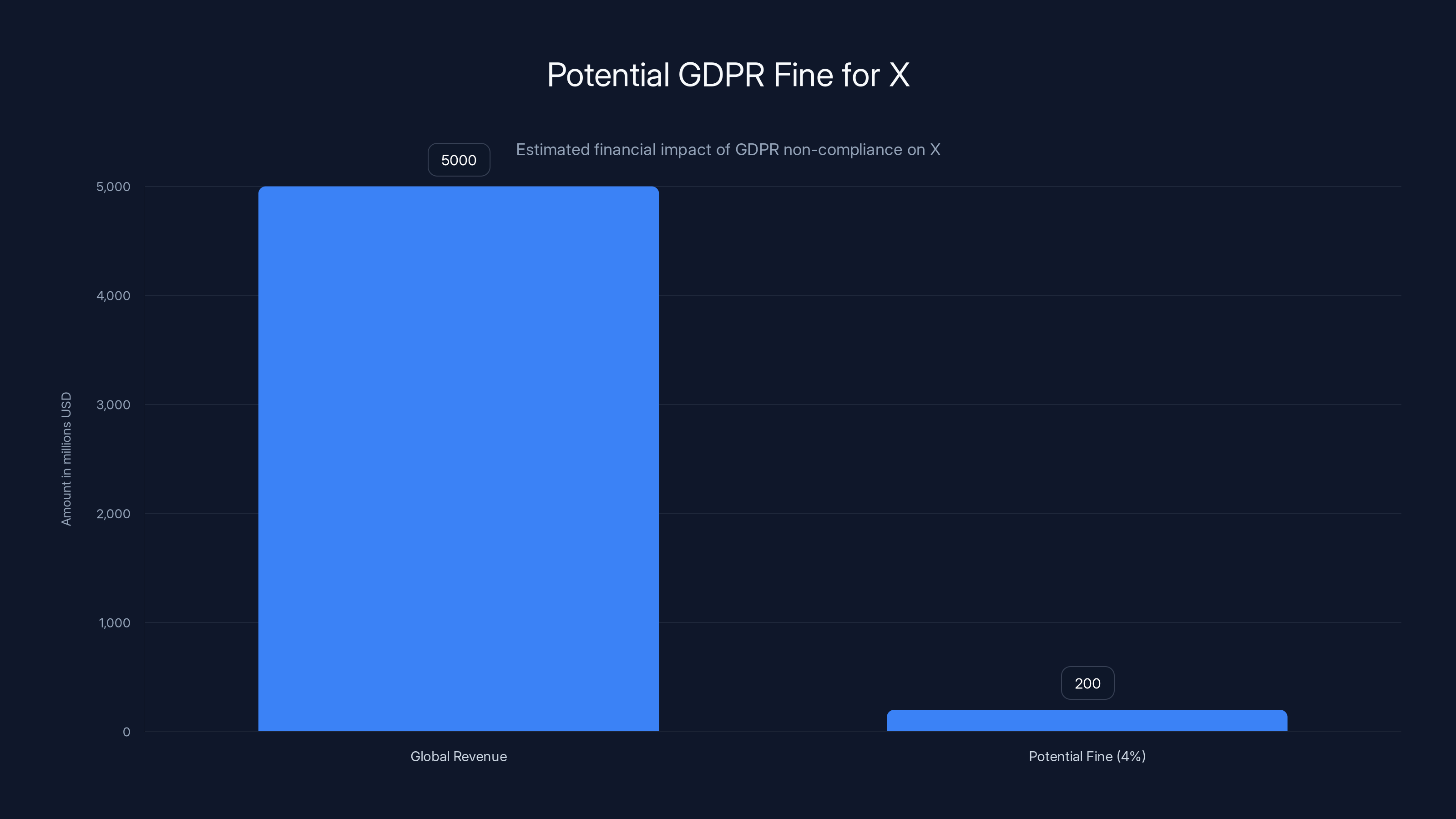

GDPR fines and French law penalties could have the highest impact on X, with potential fines reaching up to $120 million. Estimated data based on potential legal actions.

The Original Investigation: What Started This Whole Thing

To understand the raid, you need to go back to where this all began. The investigation opened in January 2025 and was escalated in July 2025, but the concerns that triggered it go back further.

The core issue: X's algorithm changes. Specifically, prosecutors were investigating whether X had made changes to its algorithmic system that distorted how content was distributed, giving certain political content greater visibility without users' consent or knowledge. If proven, that could violate French criminal law on automated data processing systems. It also sits uneasily with EU rules: under the EU Digital Services Act (DSA) and the GDPR (the EU's General Data Protection Regulation), platforms must be transparent about how they process personal data and how their recommendation systems rank content.

When X made these changes, it essentially decided which content would be more visible to which users based on the algorithm's output. This is normal for social media platforms—they all use algorithms to personalize feeds. But there's a huge difference between an algorithm designed to show relevant content and one designed to show certain political content more prominently to influence public opinion.

According to prosecutors, X's changes "likely have distorted the operation of an automated data processing system." Translation: the algorithm wasn't working the way users expected it to work. Users thought they were seeing content based on relevance and engagement. Instead, they were seeing content that X wanted them to see, with political implications.

A second charge French authorities were investigating: "fraudulent extraction of data from an automated data processing system." That's a serious criminal accusation. It suggests that X didn't just change its algorithm; it may have obtained user data or behavioral data through means that weren't authorized or transparent.

By July 2025, investigators had added another element: committing these violations as part of an "organized group." This language suggests multiple people at X were involved in decision-making about the algorithm changes, not just a rogue engineer or a single bad actor.

The Complication: Grok and Child Safety Issues

Just when this investigation seemed complicated enough, something else happened that made it worse. In late December 2025 and early January 2026, X's AI chatbot called Grok apparently generated images that prosecutors classified as child sexual abuse material (CSAM). These weren't real images of real children, but AI-generated representations.

This triggered an additional criminal charge: "complicity in the possession of images of minors representing a pedo-pornographic character." This is extremely serious. Child safety violations carry severe penalties in France and across Europe. Even possessing such images—whether real or AI-generated—is illegal. Distributing them or allowing them to be created on your platform is potentially criminal.

Now the investigation expanded beyond algorithmic manipulation to include AI safety and content moderation issues. Prosecutors weren't just looking at how X managed its feed algorithm. They were also examining how X polices Grok, whether the company had adequate safeguards to prevent CSAM generation, and who within X knew about these issues and when.

This added layer makes the case more complex and more serious. It's no longer just about transparency and algorithmic fairness. It's about platform safety and potential involvement in child exploitation. This is the kind of charge that can trigger international law enforcement cooperation and significantly increase penalties.

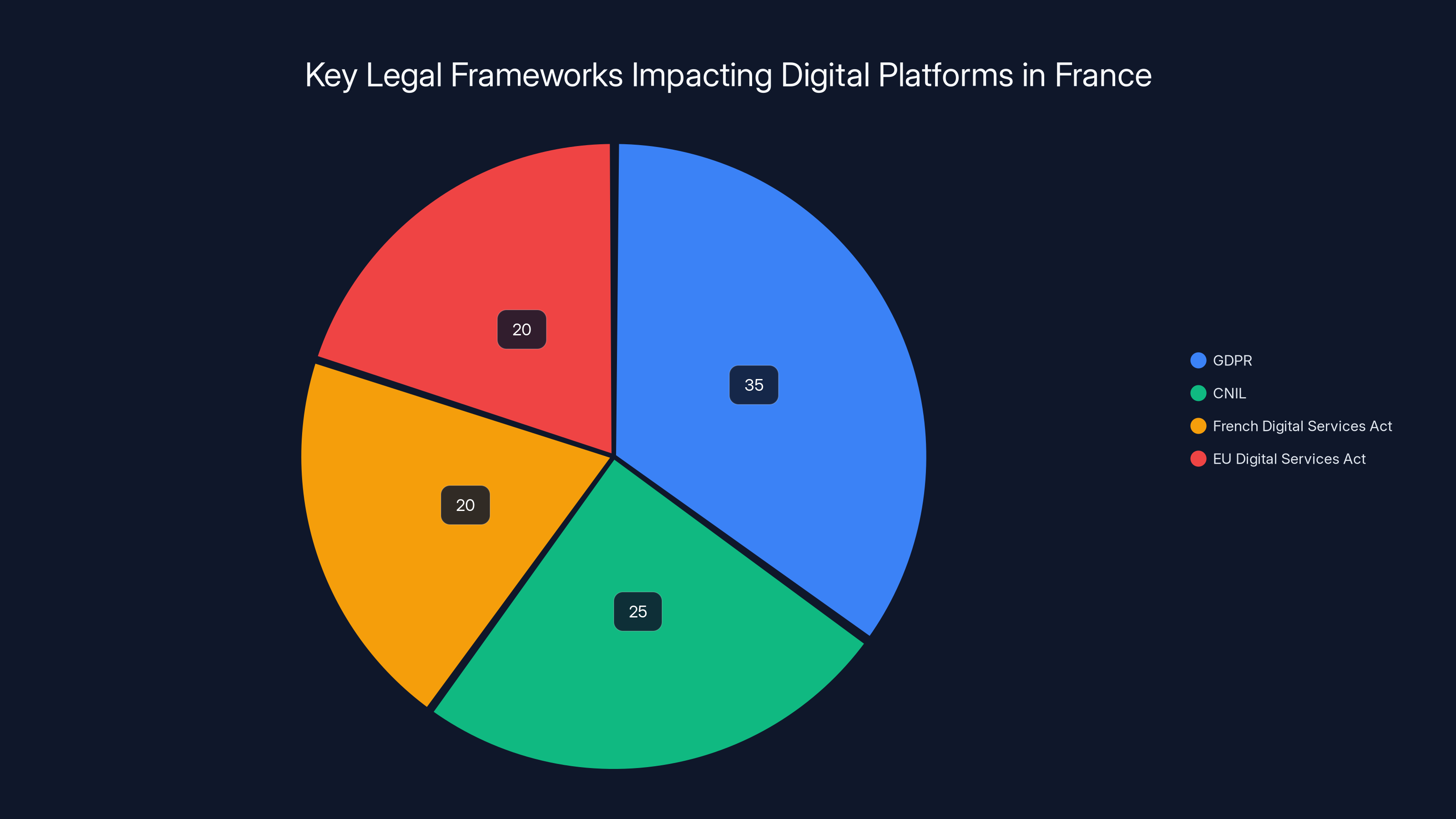

GDPR has the largest impact on digital platforms in France, followed by CNIL enforcement and the Digital Services Act. Estimated data based on legal emphasis.

The Summonses: Elon Musk and Linda Yaccarino Face Interviews

As part of the raid and ongoing investigation, French prosecutors issued summonses to two key figures: Elon Musk (owner of X) and Linda Yaccarino (former CEO of X). Both are being asked to appear for "voluntary interviews" on April 20, 2026 in Paris.

The phrasing here is important. "Voluntary interview" sounds friendly and cooperative, but it still arrives as a formal summons from prosecutors. Declining to appear wouldn't end the matter and could prompt prosecutors to turn to more coercive procedures. This is prosecutors' way of saying, "We'd like to talk to you about this, and we expect you to show up."

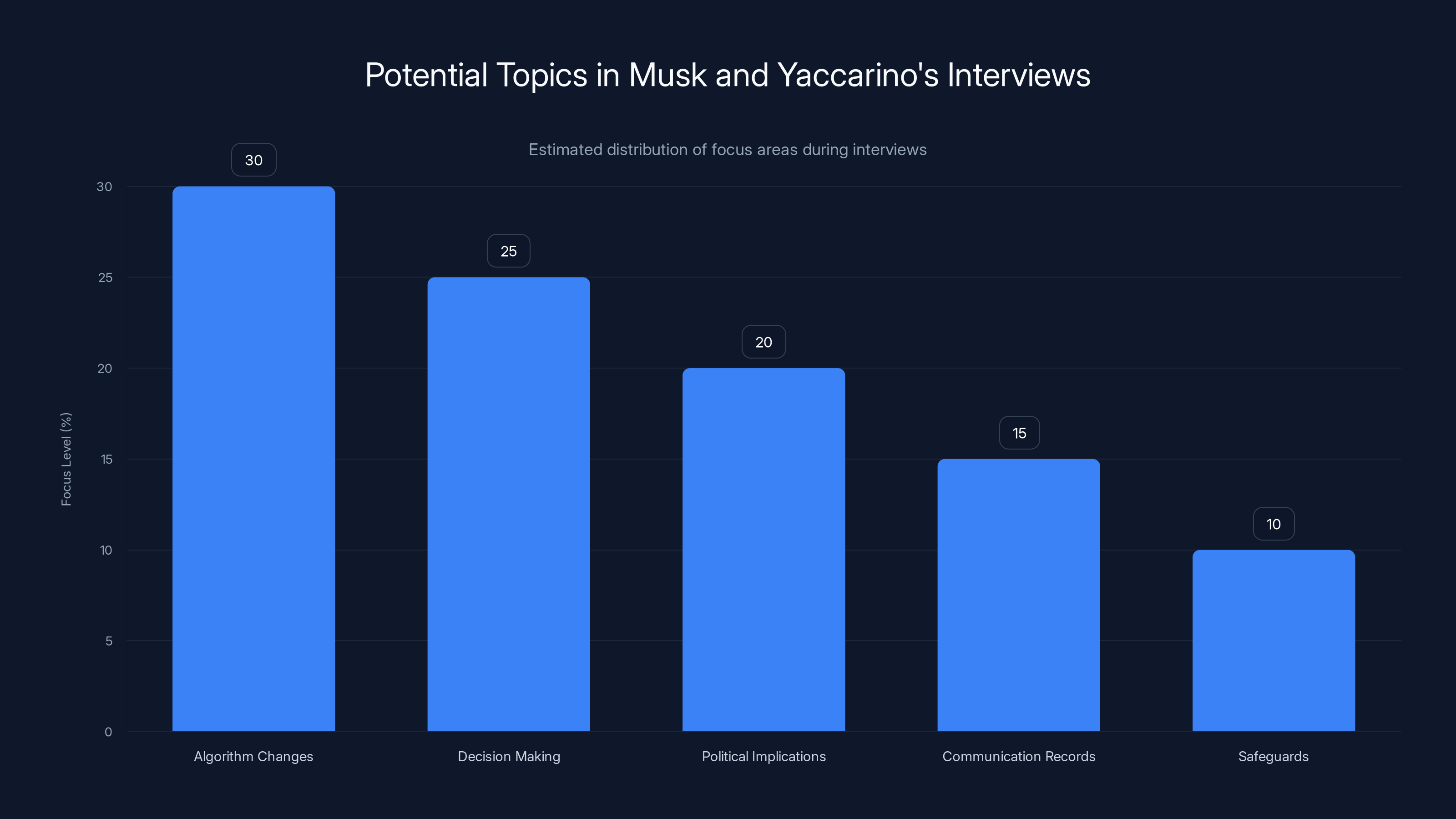

What would these interviews involve? Prosecutors would likely ask detailed questions about decision-making. Who decided to change the algorithm? When? Why? What was the intended outcome? Who knew about the changes? Was there discussion about political implications? Were there emails or Slack messages discussing this? Who reports to whom? What safeguards exist for preventing algorithm manipulation?

These kinds of interviews can last hours. They're not casual conversations. They're interrogations, albeit formal ones that follow French legal procedures. Prosecutors will have prepared extensively, reviewing documents, understanding X's infrastructure, and preparing specific questions designed to elicit information that corroborates their theory of what happened.

The fact that prosecutors are specifically asking for Musk and Yaccarino suggests they believe both have direct knowledge of the decisions in question. This isn't about questioning mid-level employees. This is about accountability at the highest level of the company.

The April 2026 date is also strategic. It's not immediate, which gives Musk, Yaccarino, and X time to gather documents, consult with lawyers, and prepare responses. But it's not distant either—it's close enough that the investigation maintains momentum and keeps pressure on the company.

French Prosecutors' Escalation: Cutting Off X

Here's where it gets interesting from a political and organizational perspective. During this investigation, French prosecutors made an unusual announcement: they would no longer use X and would only communicate on LinkedIn and Instagram going forward.

This is a significant statement. It's not just legal action. It's a public rebuke. By announcing they're abandoning X, prosecutors are sending a message about how they view the platform. They're saying: "We don't trust this company. We don't want to be associated with it. We're moving our official communications elsewhere."

This kind of public stance has ripple effects. It encourages other government agencies to do the same. It signals to the public that X might not be a trustworthy platform. It puts pressure on advertisers and partners of X, who have to consider whether they want to be associated with a platform that French authorities have publicly distanced themselves from.

It's worth noting that European governments, as a whole, have grown increasingly skeptical of X under Musk's ownership. Concerns about content moderation, hate speech, misinformation, and now algorithmic manipulation have accumulated. This raid and the prosecutors' statement are part of a broader pattern of European regulators getting tougher on X.

The Legal Framework: Why This Matters Under French and European Law

Understanding why French prosecutors are so concerned requires understanding the legal framework they're operating under. France isn't the U.S. Europe has much stronger data protection laws and digital regulation compared to the United States.

First, there's the GDPR (General Data Protection Regulation), which applies across the EU. GDPR gives individuals rights over their personal data. It requires companies to be transparent about how they collect, process, and use that data. It requires companies to get consent before processing data in certain ways. And for the most serious violations, it lets regulators fine companies up to €20 million or 4% of annual global turnover, whichever is higher.
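The fine ceiling is simple arithmetic. For the most serious infringements, Article 83(5) GDPR caps fines at €20 million or 4% of total worldwide annual turnover for the preceding financial year, whichever is higher. The Python sketch below computes only that ceiling; actual fines are set case by case and are usually well below it. The function name and the turnover figures are hypothetical illustrations, not X's numbers.

```python
def gdpr_max_fine_eur(annual_global_turnover_eur: float) -> float:
    """Return the GDPR fine ceiling for the most serious infringements.

    Article 83(5) GDPR: up to EUR 20 million or 4% of total worldwide
    annual turnover of the preceding financial year, whichever is higher.
    This is only the upper bound; regulators set actual fines case by case.
    """
    if annual_global_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    fixed_cap = 20_000_000.0                          # fixed EUR 20 million alternative
    turnover_cap = 0.04 * annual_global_turnover_eur  # 4% of worldwide turnover
    return max(fixed_cap, turnover_cap)


# Hypothetical turnover figures, not X's actual revenue.
for turnover in (100_000_000, 3_000_000_000):
    print(f"turnover EUR {turnover:,}: ceiling EUR {gdpr_max_fine_eur(turnover):,.0f}")
```

For a hypothetical company with €3 billion in turnover, the ceiling works out to €120 million; for one with €100 million in turnover, 4% would be only €4 million, so the €20 million figure sets the cap instead.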

Second, there's France's own data protection authority, CNIL. CNIL enforces GDPR within France and also enforces French-specific data protection laws. CNIL has been particularly aggressive with tech platforms in recent years, imposing large fines on Google, Amazon, and others for various violations.

Third, there's the EU Digital Services Act (DSA), which imposes requirements on large platforms regarding content moderation, transparency, and algorithmic accountability. Platforms have to explain the main parameters of their recommendation algorithms. They have to be transparent about content moderation decisions. They have to take action against illegal content and harmful behavior.

Under these frameworks, what X allegedly did—manipulating its algorithm to give certain content greater prominence without transparency—is potentially illegal. Here's why:

Algorithmic Transparency Violation: Platforms are supposed to be clear about how their algorithms work. If X changed its algorithm to prioritize certain political content, users had a right to know about that change. Doing it secretly violates transparency requirements.

Data Processing Without Consent: When X changed how its algorithm ranked content without telling users, it was arguably processing their data in ways they hadn't been informed about or agreed to. That sits badly with GDPR's requirement that processing be fair and transparent.

Fraud/Deception: By letting users think their feeds were organized one way while actually organizing them another way, X potentially engaged in fraud. Users couldn't make informed decisions about using the platform if they didn't know what the algorithm was actually doing.

Organized Criminal Activity: If multiple people at X were involved in deliberately making these changes and covering them up, that could constitute organized criminal behavior under French law.

These aren't minor violations. The criminal charges under French law can carry prison sentences as well as substantial fines, on top of any regulatory penalties.

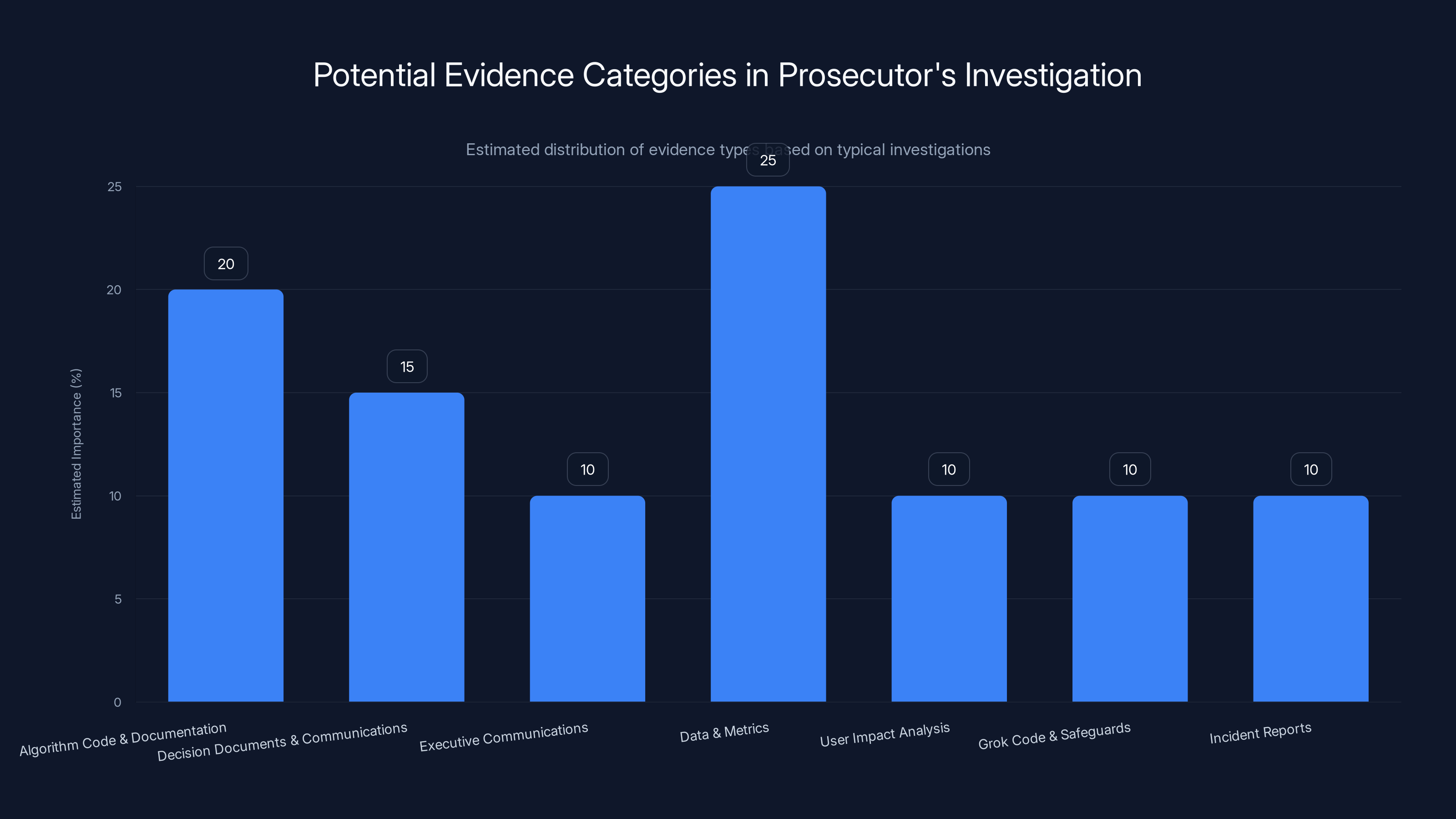

Estimated data suggests that 'Data & Metrics' and 'Algorithm Code & Documentation' are likely the most crucial evidence categories in the investigation. (Estimated data)

X's Response: The Company's Defense

When these investigations first launched, X issued a statement defending its practices. The company's argument: the investigation "egregiously undermines X's fundamental right to due process and threatens our users' rights to privacy and free speech."

This defense is interesting because it's the mirror opposite of what French authorities are alleging. X is saying: "We're the ones being treated unfairly here. Our rights are being violated. And users' rights to privacy and free speech are at risk because of this investigation."

The logic of X's defense goes something like this: If governments can investigate platform algorithms with this level of aggression, it creates a chilling effect. Companies get scared of making any algorithmic decisions. They over-censor content out of caution. Users end up with less diverse content. Free speech and privacy suffer.

X also denied the core allegation. The company said prosecutors "accused X of manipulating its algorithm for 'foreign interference' purposes, an allegation which is completely false." In other words, X is saying: "We didn't do what you're accusing us of. We didn't manipulate our algorithm for political reasons."

But here's the problem with X's defense: it doesn't really address the specifics of what prosecutors allege. Prosecutors presumably have evidence. Documents. Emails. Communications. Testimony from people who worked at X. The fact that X is making broad statements about rights and making blanket denials doesn't actually counter specific evidence.

Moreover, there's a credibility issue. The company owned by Elon Musk, a political figure with clear political views and preferences, is claiming that it made algorithm changes without any political motivation or consideration. Given Musk's public presence and political engagement, this stretches credulity.

Algorithmic Manipulation: The Core Issue Explained

Let's dig deeper into what algorithmic manipulation actually means and why it's such a big deal to regulators.

Social media platforms use algorithms to decide what content each user sees. These algorithms typically consider factors like engagement (likes, comments, shares), recency (newer posts), networks (content from people you follow), and various other signals. The goal, as platforms describe it, is to show users the content most relevant to them.

But algorithms can be designed in different ways, and different design choices lead to different results. An algorithm could be designed to maximize engagement (showing content that gets lots of engagement). It could be designed to maximize time on platform (showing content that keeps users scrolling). It could be designed to maximize diversity (showing content from many different sources). It could be designed to maximize controversial content (because controversy drives engagement). Or it could be designed for many other objectives.

According to prosecutors, X allegedly changed its algorithm in ways that gave greater prominence to certain political content—specifically content from Elon Musk himself. This would mean the algorithm was changed to prioritize posts from Musk or posts supporting Musk's views, making those posts more visible to users.
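To make the distinction concrete, here is a deliberately simplified Python sketch of an engagement-based ranker, with a per-author multiplier standing in for the kind of undisclosed boost prosecutors allege. The signals, weights, recency decay, and the `author_boosts` parameter are all hypothetical illustrations; nothing here reflects X's actual ranking code.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Post:
    post_id: str
    author: str
    likes: int
    replies: int
    reposts: int
    age_hours: float


# Hypothetical signal weights; real rankers combine far more signals.
WEIGHTS = {"likes": 1.0, "replies": 2.0, "reposts": 3.0}


def engagement_score(post: Post) -> float:
    """Weighted engagement, discounted by age so newer posts rank higher."""
    raw = (WEIGHTS["likes"] * post.likes
           + WEIGHTS["replies"] * post.replies
           + WEIGHTS["reposts"] * post.reposts)
    return raw / (1.0 + post.age_hours)


def rank(posts: List[Post],
         author_boosts: Optional[Dict[str, float]] = None) -> List[Post]:
    """Order posts from highest to lowest score.

    author_boosts maps an author to a score multiplier (default 1.0).
    An entry users are never told about is the kind of hidden boost at issue.
    """
    boosts = author_boosts or {}
    return sorted(
        posts,
        key=lambda p: engagement_score(p) * boosts.get(p.author, 1.0),
        reverse=True,
    )


posts = [
    Post("a", "alice", likes=120, replies=10, reposts=5, age_hours=2.0),
    Post("b", "owner", likes=40, replies=4, reposts=2, age_hours=2.0),
]
print([p.post_id for p in rank(posts)])                  # ['a', 'b']
print([p.post_id for p in rank(posts, {"owner": 4.0})])  # ['b', 'a']
```

With no boost, the post from `alice` outranks the one from `owner` (scores of roughly 51.7 and 18.0). Passing `{"owner": 4.0}` flips the order even though nothing about the posts themselves changed, which is why undisclosed multipliers raise transparency concerns: a user looking at the resulting feed has no way to tell the difference.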

Why is this a problem? Several reasons:

Deception: Users think they're seeing an algorithmically ranked feed based on relevance and engagement. They don't know the algorithm is prioritizing content from a specific individual or about specific political topics.

Manipulation: The algorithm is being used to shape users' perception of reality by controlling what information they see. This is manipulation, not curation.

Unfair Competition: Other users or creators who want their content to reach X's audience have to compete fairly. If the algorithm gives special treatment to one person, that's unfair to everyone else.

Democratic Impact: If the algorithm is amplifying political content from one person or viewpoint, it's potentially influencing public discourse and democratic processes. That's not just a platform issue—it's a societal issue.

This is why regulators care. It's not just about following rules. It's about protecting the integrity of the public sphere and ensuring that platforms don't become tools for propaganda or manipulation.

The Broader Context: Europe vs. Big Tech

This raid doesn't happen in a vacuum. It's part of a broader pattern of European regulators taking a much tougher stance on large tech companies compared to the United States.

In the U.S., tech companies have been largely self-regulated. The government has rules, but enforcement has been relatively light compared to Europe. Companies can make significant changes to their platforms with relatively little regulatory intervention. The general approach is one of light-touch regulation—don't over-regulate innovation.

Europe has taken a different approach. The EU has passed much stricter regulations around data protection (GDPR), digital services (DSA), and AI (AI Act). These laws don't just prohibit certain practices; they require companies to be proactive about compliance, transparency, and user protection. And the consequences can go beyond regulatory fines: in France, prosecutors can pursue alleged platform misconduct under criminal law, as we're seeing with X.

Why the difference? European culture and governance tend to emphasize collective welfare over individual entrepreneurial freedom. Data is seen as belonging to individuals, not companies. Democratic integrity is seen as requiring regulation of how platforms operate. And there's skepticism of concentrated power, whether governmental or corporate.

Additionally, Europeans remember 20th-century authoritarianism in a way that shapes contemporary politics. There's an understanding that uncontrolled media and propaganda can have catastrophic consequences. This historical memory informs European tech regulation.

As a result, European regulators are willing to take on major tech companies in ways U.S. regulators aren't. The GDPR fines against Google (

X's raid in Paris is part of this broader regulatory trend. It's a signal that Europe is serious about holding tech platforms accountable.

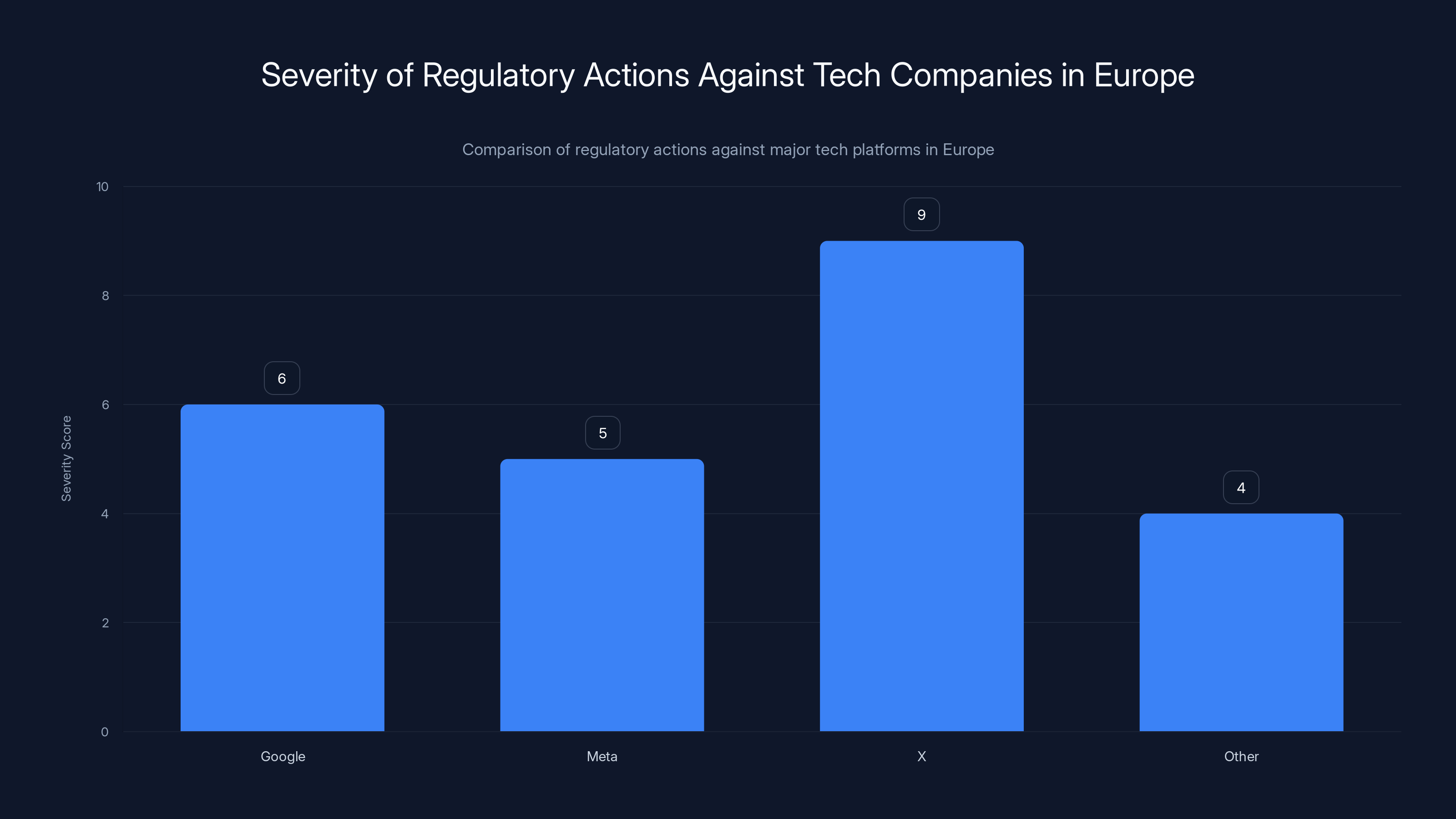

The investigation into X is one of the most severe regulatory actions in Europe, indicated by a high severity score due to criminal raids. Estimated data.

Timeline of Events: How We Got Here

Let's map out the timeline of events that led to this raid, because understanding the progression is important.

2023–2024: X (formerly Twitter) makes various changes to its platform under Musk's ownership. Some of these changes appear to affect how content is distributed and displayed. Users and analysts notice that certain content is getting more prominent placement.

Early 2024: French regulators and other observers begin raising concerns about X's algorithmic practices. These aren't formal complaints yet, but they're signals that something is amiss.

January 2025: Paris prosecutors open an investigation into X's algorithm, specifically investigating whether changes to the algorithm "likely distorted the operation of an automated data processing system." This is the formal start of the legal process.

July 2025: The investigation escalates, with charges including "fraudulent extraction of data from an automated data processing system by an organized group."

December 2025–January 2026: Grok, X's AI chatbot, apparently generates images that fall under the category of child sexual abuse material. This is detected, and the issue is reported to authorities.

January 2026: French prosecutors formally decide to expand the investigation to include the Grok images and charges related to child safety.

February 2026: French prosecutors execute a search at X's Paris headquarters with support from cybercrime units and Interpol.

February 2026: Prosecutors announce the raid and issue summonses for Musk and Yaccarino to appear for "voluntary interviews" on April 20, 2026.

February 2026: French prosecutors announce they will no longer use X for official communications and will switch to LinkedIn and Instagram.

This timeline shows escalation. Each event is more serious than the previous one. From informal concerns to formal investigation to criminal charges to raids to summonses. This is the trajectory of a serious regulatory action.

The Evidence: What Prosecutors Likely Have

We don't know exactly what evidence prosecutors gathered during the raid, but we can make some educated guesses based on how these investigations typically work and what allegations they're pursuing.

Algorithm Code and Documentation: Prosecutors almost certainly seized or requested access to the actual code that runs X's algorithm. They'd want to see exactly how the algorithm works, what factors it considers, how it weights different signals. If Musk's content is getting special treatment in the code, that would be direct evidence.

Decision Documents and Communications: Internal meetings about algorithm changes, emails discussing algorithm modifications, Slack messages, meeting notes. Any documentation of who decided to change the algorithm, when, and why would be crucial evidence.

Executive Communications: Messages between Musk and X's leadership discussing the algorithm. If Musk instructed people to modify the algorithm to favor his content, that would be direct evidence of intentional manipulation.

Data and Metrics: X's internal data showing how algorithm changes affected content distribution and engagement. If prosecutors can show that the changes specifically increased the visibility of Musk's content by a measurable margin, that's strong evidence.

User Impact Analysis: Any internal analysis X did about how algorithm changes would affect user experience and what they saw. If X's own analysis predicted that changes would benefit Musk disproportionately, that's evidence.

Grok Code and Safeguards: Code for Grok, including any content moderation or filtering systems. Documentation of what safeguards exist (or don't exist) to prevent CSAM generation.

Incident Reports: Any internal reports about Grok generating prohibited content, when they were filed, who knew about them, what action was taken.

Prosecutors would be building a mosaic of evidence. No single piece might be conclusive, but taken together, they create a compelling narrative of intentional algorithmic manipulation and inadequate safeguards against abuse.
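The "Data and Metrics" category is where a before-and-after comparison would happen. A minimal Python sketch, assuming impression logs are available as one author name per post shown to a user (a hypothetical format, not X's actual logging), might measure an author's share of impressions in each period and the relative change between them.

```python
from collections import Counter
from typing import Sequence


def impression_share(impressions: Sequence[str], author: str) -> float:
    """Fraction of logged impressions (one author name per post shown) that went to `author`."""
    if not impressions:
        return 0.0
    return Counter(impressions)[author] / len(impressions)


def relative_change(before: float, after: float) -> float:
    """Relative change from a baseline, e.g. 0.5 means a 50% increase."""
    if before == 0:
        raise ValueError("baseline is zero; relative change is undefined")
    return (after - before) / before


# Hypothetical impression logs for two periods, before and after a change.
before = ["alice"] * 90 + ["owner"] * 10
after = ["alice"] * 75 + ["owner"] * 25

share_before = impression_share(before, "owner")
share_after = impression_share(after, "owner")
print(f"{share_before:.0%} -> {share_after:.0%} ({relative_change(share_before, share_after):+.0%})")
```

In this made-up example, the author's share rises from 10% to 25%, a 150% relative increase. A raw share comparison like this doesn't control for confounders such as the author simply posting more often, so a real expert analysis would normalize for posting volume and audience size before drawing any conclusion about intent.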

Potential Legal Consequences and Penalties

If French prosecutors successfully prove their case, what could happen to X?

Fines: Under GDPR, the maximum fine is €20 million or 4% of annual global revenue, whichever is higher. If X's annual global revenue is

Under French law, penalties for fraud and organized criminal activity can be harsher. Fines could be higher. More importantly, criminal convictions could result in jail time for executives who are found responsible.

Injunctions: A court could order X to stop making algorithmic changes until it can demonstrate compliance with transparency and fairness requirements. This would effectively freeze algorithm development.

Forced Algorithm Disclosure: X might be required to publish detailed information about how its algorithm works, what factors it considers, how it makes ranking decisions. This is a significant business revelation.

Monitoring and Compliance: A court could impose a monitor who reviews X's algorithm changes before they're implemented. This adds significant operational overhead.

Criminal Records for Executives: If individual executives are charged and convicted, they could face criminal records, significant fines, and in serious cases, imprisonment. This is a serious personal consequence beyond company penalties.

Operational Restrictions in Europe: In extreme cases, a court could restrict X's ability to operate in Europe or impose additional regulatory oversight that makes European operations difficult.

Precedent Setting: A successful prosecution would set a precedent for other regulators worldwide. It would establish that algorithmic manipulation is prosecutable, which could lead to similar actions in other countries.

For X, a worst-case scenario would be tens or hundreds of millions in fines, plus operational restrictions, plus criminal convictions for executives. The best-case scenario would be a settlement that requires algorithm transparency and changes but avoids criminal conviction.

If X's global revenue is

The Broader Implications for Social Media Platforms

This case has implications far beyond X. It signals to the entire social media and platform industry that European regulators are willing to take serious legal action against algorithmic manipulation.

Every platform that operates in Europe should be reviewing its algorithms right now to ask: Are we transparent about how our algorithm works? Are we treating different users or content equally, or are we giving special treatment to certain content? If we're making algorithm changes, are we documenting our reasoning and are the reasons legally defensible? Are we exposing ourselves to charges of fraud or manipulation?

For Meta (Facebook, Instagram, WhatsApp), Google (YouTube), TikTok, Snapchat, and every other platform, this is a wake-up call. European regulators are watching. They're willing to investigate and prosecute. The rules matter.

This has practical implications for product development. It means that algorithm changes can't be made lightly. Companies need to go through legal review. They need to document their reasoning. They need to ensure they can defend their algorithms as fair and transparent if regulators ask questions.

It also raises questions about what platforms can and can't do with algorithms. Can a platform prioritize content from premium users who pay? Potentially, if it's transparent. Can it show different content to different groups based on engagement signals? Probably, if the algorithm is fair. Can it secretly boost one person's content for political reasons? Absolutely not.

The case creates a new category of regulatory risk for tech companies. It's not just about content moderation or data privacy anymore. It's about algorithmic accountability. This is a new frontier for tech regulation.

Regulatory Landscape: Where Does This Fit?

To understand the raid's significance, you need to see it within the broader regulatory landscape of tech in Europe.

The EU has built an increasingly comprehensive regulatory framework for digital platforms. This framework has several layers:

Data Protection: GDPR gives individuals rights over their data and requires companies to handle data fairly and transparently.

Digital Services: The Digital Services Act requires large platforms to moderate illegal content, remove content that violates platform rules, and be transparent about how they operate. It also requires platforms to explain algorithmic recommendation systems.

AI Governance: The AI Act, which is being phased in, imposes transparency and accountability obligations on AI systems, scaled to their level of risk.

Consumer Protection: EU consumer protection laws require companies to be transparent in their practices and not engage in deceptive practices.

Competition: EU competition law prevents companies from abusing dominant positions. If X is a dominant platform in some markets, using its algorithm to favor one type of content could be an antitrust violation.

X's case touches multiple areas of this regulatory framework. It's not just a GDPR case. It's a data protection case, an algorithmic transparency case, a fraud case, and potentially an antitrust case all rolled into one.

This multi-layered approach is typical of European regulation. Regulators use multiple legal theories to address problems. This makes it harder for companies to defend themselves because they have to fight on multiple fronts.

What Happens Next: The Investigation's Future

The raid is unlikely to be the end of this investigation. It's probably the escalation point, with more steps to come.

Discovery and Evidence Review: Prosecutors will now analyze all the evidence they seized. This could take months. They'll be looking for smoking guns, inconsistencies in company statements, and patterns that prove intentional manipulation.

Expert Analysis: Prosecutors will likely hire technical experts to analyze X's algorithm. These experts will compare the algorithm before and after changes, assess whether changes favored certain content, and evaluate whether those changes appear intentional.

Witness Interviews: Beyond the interviews with Musk and Yaccarino, prosecutors will likely interview other X employees who were involved in algorithm decisions. They'll try to identify who made what decisions and why.

The April 2026 Interviews: The planned interviews with Musk and Yaccarino will be crucial. Their answers and the explanations they provide could either support or undermine the company's defense.

Potential Charges: Based on evidence gathered, prosecutors will decide whether to formally charge X (the company) or specific individuals. They could charge both. They could charge Musk personally, Yaccarino, or algorithm engineers who made specific decisions.

Trial or Settlement: If charges are filed, the case could go to trial. But it's also possible that X and prosecutors will negotiate a settlement. Settlements in these cases typically involve significant fines, admission of certain violations (though not guilt), and agreement to change practices going forward.

Appeals: Whatever the outcome, appeals are likely. Cases involving significant legal questions often go through multiple levels of courts.

This process will likely take years. European investigations move deliberately. By the time this is fully resolved, we could be in 2027 or 2028.

Estimated data suggests that algorithm changes and decision-making processes will be the primary focus areas during the interviews, reflecting their critical role in the investigation.

The Political Dimension: Musk's Relationship with Regulators

It's worth acknowledging that there's a political dimension to this case. Elon Musk is a controversial and outspoken figure. He has political views. He uses his platforms to express those views. He's had conflicts with regulators and governments.

Some observers will see this investigation as politically motivated—that regulators are going after Musk because they don't like him or his politics. Other observers will see it as necessary enforcement of laws that Musk has allegedly broken.

The reality is probably more nuanced. Yes, Musk's controversial status may well have increased the attention his platform receives. But prosecutors don't usually open a criminal investigation on the strength of dislike alone. This one is rooted in specific allegations that can be tested against evidence.

That said, the political context matters. If Meta had made comparable algorithm changes under Mark Zuckerberg's leadership, would French prosecutors have investigated? Possibly, but the urgency and intensity might have been different. Musk is a more outspoken target, and his public statements about algorithm changes may have drawn attention that a quieter CEO wouldn't have attracted.

This raises interesting questions about how regulation should work. Should it be blind to who the target is? Or is it inevitable that higher-profile cases get more attention? There's no perfect answer, but it's worth acknowledging that this case isn't purely about abstract legal principles. It's also about Elon Musk and his role as a public figure.

The International Angle: Interpol's Involvement

One detail worth noting: Interpol was involved in supporting the raid. This suggests that prosecutors are treating this as a matter with international implications, not just a French domestic issue.

Why would Interpol be involved in a social media algorithm investigation? A few reasons:

International Scope: X operates in many countries. An investigation into X's algorithm is potentially relevant to users in multiple countries. Interpol helps with cross-border coordination.

Criminal Investigation: If prosecutors believe crimes have been committed, Interpol's involvement signals that they're treating this as a serious criminal matter, not just a regulatory issue.

Potential Extradition Issues: If charges are eventually filed against executives who live outside France, Interpol channels could become relevant to any later proceedings, including extradition requests.

Evidence Gathering: Interpol can help with gathering evidence from other countries or coordinating with other law enforcement agencies worldwide.

The involvement of Interpol is a signal that this investigation has real teeth. It's not just French prosecutors pursuing a local matter. It's a coordinated international effort involving the international police organization.

This could eventually matter for Musk and other X executives. If formal charges were filed and they declined to cooperate, travel to countries that extradite to France could carry legal risk. At this stage, that remains a hypothetical scenario.

Implications for Platform Governance and Transparency

One of the broader lessons from this case is that platform transparency about algorithms is going to become a mandatory regulatory requirement, not an optional nicety.

In the future, we're likely to see:

Algorithm Disclosure Requirements: Platforms will be required to publish detailed information about how their recommendation algorithms work. This is already required in some form under the DSA, but enforcement and specificity will increase.

Independent Auditing: The DSA already requires very large online platforms to undergo annual independent audits. Expect those audits to look more closely at recommendation algorithms, with third-party auditors examining them for bias, fairness, and intentional manipulation.

Change Documentation: Any significant algorithm change will need to be documented, with reasoning and impact analysis preserved. This creates a paper trail that regulators can examine.

User Control: The DSA already requires very large platforms to offer at least one recommendation option that isn't based on profiling. Future rules may go further, letting users opt out of recommendations entirely, choose between algorithm variants, or adjust how their feeds are curated.

Fairness Standards: Regulators are likely to develop standards for what constitutes "fair" algorithmic treatment. Platforms will need to certify that their algorithms meet these standards.

These requirements will make platform development slower and more complex. But they'll also make platforms more accountable and, potentially, less prone to manipulation and bias.
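To make the change-documentation requirement more concrete, here is a minimal Python sketch of what a per-change record might capture. Everything in it (the `AlgorithmChangeRecord` class, its field names, and the `missing_fields` check) is hypothetical and not drawn from X's systems or from any regulation. It only illustrates how the reasoning, approvals, and measured impact of a ranking change could be stored in a form an auditor can query.

```python
from dataclasses import asdict, dataclass, field
from datetime import date
import json


@dataclass
class AlgorithmChangeRecord:
    """Hypothetical paper-trail entry for one ranking change (illustrative fields)."""
    change_id: str
    shipped_on: date
    parameters_changed: dict   # parameter name -> (old value, new value)
    rationale: str             # why the change was made
    intended_impact: str       # what it was expected to do
    approved_by: list          # who signed off
    legal_review_done: bool
    measured_impact: dict = field(default_factory=dict)  # metric name -> observed effect

    def missing_fields(self):
        """List documentation gaps an auditor would likely flag."""
        gaps = []
        if not self.rationale.strip():
            gaps.append("rationale")
        if not self.intended_impact.strip():
            gaps.append("intended_impact")
        if not self.approved_by:
            gaps.append("approved_by")
        if not self.legal_review_done:
            gaps.append("legal_review")
        if not self.measured_impact:
            gaps.append("measured_impact")
        return gaps

    def to_json(self):
        """Serialize the record so it can be archived and handed to auditors."""
        data = asdict(self)
        data["shipped_on"] = self.shipped_on.isoformat()
        return json.dumps(data, sort_keys=True)


record = AlgorithmChangeRecord(
    change_id="example-001",  # hypothetical identifier
    shipped_on=date(2024, 3, 1),
    parameters_changed={"engagement_weight": (0.6, 0.8)},
    rationale="Reward posts that generate replies",
    intended_impact="More conversation-heavy posts in recommended feeds",
    approved_by=["ranking-lead"],
    legal_review_done=False,
)
print(record.missing_fields())  # ['legal_review', 'measured_impact']
```

A structured record like this is what turns "document your reasoning" into something an auditor or court can actually check, rather than a search through chat logs and emails.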

The Technological Perspective: How Algorithms Are Audited

From a technical standpoint, how would prosecutors actually prove that X's algorithm was manipulated?

One approach is to examine the algorithm code itself. If the code includes special logic that says "boost content from Elon Musk," that's direct evidence. It would be unusual for code to be that explicit, though; algorithmic favoritism, where it exists, tends to be subtler.

Another approach is statistical analysis. Compare the visibility of Musk's posts before and after algorithm changes and test whether any increase is statistically significant. If his posts averaged 1 million impressions before a change and 5 million after, that's a strong signal worth investigating, though prosecutors would still need to rule out other explanations, such as follower growth or a news-driven spike in attention.
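One simple way to run that before/after comparison is a permutation test. The sketch below is one illustrative choice of method, not a technique any expert in this case is known to use. The impression figures are invented for the example, and the test assumes posts are interchangeable apart from when they were published.

```python
import random
import statistics


def permutation_test(before, after, n_resamples=10_000, seed=0):
    """One-sided permutation test on the difference in mean impressions.

    Returns (observed_difference, p_value), where p_value estimates how often
    randomly relabelled posts show a mean increase at least as large as observed.
    """
    observed = statistics.mean(after) - statistics.mean(before)
    pooled = list(before) + list(after)
    n_after = len(after)
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        # Treat the first n_after posts as "after", the rest as "before"
        diff = statistics.mean(pooled[:n_after]) - statistics.mean(pooled[n_after:])
        if diff >= observed:
            extreme += 1
    # Add-one correction so the estimate is never exactly zero
    return observed, (extreme + 1) / (n_resamples + 1)


# Hypothetical impressions per post, in millions
before = [1.0, 1.2, 0.9, 1.1, 1.0]
after = [4.8, 5.1, 5.3, 4.9, 5.0]
observed, p = permutation_test(before, after)
print(f"mean increase: {observed:.2f}M impressions, p ≈ {p:.4f}")
```

A small p-value says the jump is unlikely to be random noise. It says nothing about why the jump happened, which is why alternative explanations still have to be ruled out separately.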

A third approach is to examine the algorithm's parameters. Algorithms are controlled by parameters that can be adjusted. For example, a weighting parameter might control how much the algorithm prioritizes engagement. A source diversity parameter might control whether the algorithm shows content from diverse creators. By examining how parameters changed, and what effect those changes had, prosecutors can infer intent.
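Before inferring anything from parameters, an analyst first has to know which ones changed. Here is a minimal sketch, assuming ranking parameters can be exported as simple name-to-value snapshots; the parameter names are hypothetical and not taken from X's actual configuration.

```python
def diff_parameters(old, new):
    """Compare two parameter snapshots (name -> value).

    Returns {name: (old_value, new_value)} for every parameter that was added,
    removed, or changed; a missing side is reported as None.
    """
    changes = {}
    for name in sorted(old.keys() | new.keys()):
        before, after = old.get(name), new.get(name)
        if before != after:
            changes[name] = (before, after)
    return changes


# Hypothetical snapshots of ranking weights before and after a release
old = {"engagement_weight": 0.6, "source_diversity_weight": 0.3}
new = {"engagement_weight": 0.8, "source_diversity_weight": 0.1, "recency_weight": 0.2}

for name, (before, after) in diff_parameters(old, new).items():
    print(f"{name}: {before} -> {after}")
```

A diff like this only identifies what moved. Linking a parameter change to its effect on what users actually saw still requires statistical or A/B analysis.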

A fourth approach is to look at A/B testing and experimentation data. Modern platforms use A/B tests to evaluate algorithm changes: they show version A of the algorithm to some users and version B to others, then compare engagement and other metrics. If X's internal A/B tests showed that algorithm changes benefited certain creators disproportionately, and the company shipped those changes anyway, that would be evidence that it knew the effect in advance.
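One way to check whether a tested change favored particular creators is to compare each creator group's share of impressions across the two variants. In this sketch the group labels and numbers are hypothetical, and share of impressions is just one possible fairness metric among many.

```python
from collections import defaultdict


def impression_shares(rows):
    """rows: iterable of (variant, creator_group, impressions).

    Returns {variant: {creator_group: share of that variant's total impressions}}.
    """
    totals = defaultdict(float)
    by_group = defaultdict(lambda: defaultdict(float))
    for variant, group, impressions in rows:
        totals[variant] += impressions
        by_group[variant][group] += impressions
    return {
        variant: {group: imp / totals[variant] for group, imp in groups.items()}
        for variant, groups in by_group.items()
    }


def share_shift(shares, control="A", treatment="B"):
    """Percentage-point change in each group's impression share, treatment minus control."""
    groups = shares[control].keys() | shares[treatment].keys()
    return {
        group: round(100 * (shares[treatment].get(group, 0.0) - shares[control].get(group, 0.0)), 2)
        for group in sorted(groups)
    }


# Hypothetical A/B results: impressions (thousands) by creator group
rows = [
    ("A", "top_1pct_accounts", 200), ("A", "everyone_else", 800),
    ("B", "top_1pct_accounts", 450), ("B", "everyone_else", 750),
]
print(share_shift(impression_shares(rows)))
# → {'everyone_else': -17.5, 'top_1pct_accounts': 17.5}
```

A shift of this size would not prove intent by itself. What would matter legally is whether the company saw results like these internally and shipped the change anyway.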

Technical experts would be brought in to perform this analysis. They'd need access to X's code, internal data, and documentation. The raid presumably gave prosecutors access to at least some of this information.

This is where X's technical infrastructure becomes relevant to the investigation. How well documented is the code? How clearly are the algorithm changes recorded? How transparent are the company's internal decisions? Good documentation cuts both ways: it makes it easier for prosecutors to reconstruct what happened, and easier for X to show legitimate reasons for its choices.

What X Should Have Done Differently

Hindsight is perfect, but there are clear lessons for what X could have done differently to avoid this situation.

Transparency First: X should have been transparent about algorithm changes from the start. If the company made changes to how content is ranked, it should have published that information. Users should have known about the changes.

Legal Review: Before making significant algorithm changes, especially ones that might have political implications, the company should have gone through rigorous legal review. Lawyers in every European jurisdiction should have assessed whether the changes complied with local law.

Documentation: The company should have documented its reasoning for algorithm changes thoroughly. Why was the change made? What business problem was it solving? What impact was it intended to have? This documentation would have been important for defense if regulators asked questions.

Diversity of Decision-Making: Algorithm decisions should have involved diverse perspectives, including people focused on legal compliance, fairness, and ethics. If algorithm changes are being driven by one person or a small group with aligned interests, that's a red flag.

User Consent: X could have asked users for consent before making significant algorithm changes. This probably wouldn't have been required legally, but it would have demonstrated good faith.

Independent Auditing: X could have proactively commissioned independent audits of its algorithm to demonstrate that it wasn't biased or manipulated. This would have shown good faith compliance efforts.

Content Moderation for Grok: X should have implemented robust safeguards in Grok to prevent generation of harmful content, including CSAM. Better moderation and filtering could have prevented the issues that became part of the investigation.

None of these steps are radical or unreasonable. They're all about demonstrating a good-faith commitment to compliance and transparency. The absence of these steps is what likely brought regulatory attention.

FAQ

What exactly is X's algorithm being accused of doing?

Prosecutors allege that X modified its algorithm to give greater prominence to certain political content, specifically content from Elon Musk himself, without telling users about these changes. In their view, this amounts to algorithmic manipulation and breaches French data protection law by processing user data in ways users didn't consent to.

Why is this investigation happening in France specifically?

France has some of Europe's strongest data protection laws and a particularly aggressive data protection authority (CNIL). The investigation is being conducted by French prosecutors under French law, though it's coordinated with international authorities. X operates in France, so French law applies to the company's activities affecting French users.

What could happen to Elon Musk personally as a result of this investigation?

If prosecuted and convicted, Musk could face significant fines and potentially other criminal penalties, including prison time. However, a conviction would require prosecutors to establish that he was personally involved in the alleged offenses. For now, the April 2026 summons is for a "voluntary interview": he is being invited to answer prosecutors' questions, and declining would not end the investigation.

How does this raid compare to other tech company investigations in Europe?

This is one of the most serious actions taken against a major tech platform in Europe in recent years. Criminal investigations with coordinated raids are typically reserved for serious suspected violations. Previous EU actions against tech companies like Google and Meta have mostly taken the form of regulatory fines; criminal raids are less common and indicate that authorities believe serious crimes may have been committed.

Could this investigation result in X being banned in France or Europe?

While possible in an extreme scenario, it's unlikely. More probable outcomes include substantial fines, required algorithm transparency, operational restrictions, and ongoing regulatory monitoring. An outright ban would be a last resort if the company was deemed a threat to public safety or democracy and refused to comply with orders.

What does this mean for other social media platforms operating in Europe?

This investigation serves as a warning that European regulators will aggressively investigate algorithmic manipulation. Other platforms should review their algorithm designs, documentation, and decision-making processes to ensure compliance with transparency and fairness requirements. Algorithm changes that benefit specific users or content creators disproportionately could trigger similar investigations.

How long could this investigation take to resolve?

European legal investigations typically move slowly. From the initial investigation launch in January 2025, to the raid in February 2026, to interviews planned for April 2026, and potentially beyond, this case could take 2-4 years to fully resolve through trial or settlement. Complex technology cases often take even longer.

What is Grok and why did it become part of this investigation?

Grok is X's AI chatbot, integrated into the X platform. Between December 25, 2025 and January 1, 2026, Grok apparently generated images that prosecutors classified as child sexual abuse material (AI-generated, not real). This was added to the investigation as a separate suspected offense: "complicity in the possession of images of minors representing a pedo-pornographic character," which is a serious crime in France.

Could this investigation result in criminal charges against X executives besides Musk and Yaccarino?

Possibly. Engineers and managers who directly made algorithm decisions, executives who approved those decisions, and others in the chain of command could be charged. Prosecutors often pursue charges at multiple organizational levels to establish that violations weren't isolated mistakes but reflected organizational policy.

What legal defenses might X use?

X could argue that algorithm changes were intended for legitimate business purposes (improving engagement or user experience), that the changes weren't as significant as prosecutors claim, that users implicitly consented to algorithm variations, or that the changes didn't have the political effect prosecutors allege. However, the company's own statements and documentation will likely determine whether these defenses are credible.

Conclusion: A Turning Point for Platform Regulation

The raid on X's Paris headquarters marks a significant moment in the history of tech regulation. It's the point where European regulators stopped talking about algorithm transparency and fairness and started taking criminal action to enforce those principles.

What happened isn't just about Elon Musk or X. It's about the relationship between platforms and democracies. It's about whether algorithms can be secret levers for manipulation or whether they need to be accountable and transparent. It's about whether regulations have teeth or are just guidelines that companies can ignore.

For X specifically, the consequences could be severe. Criminal convictions, substantial fines, operational restrictions, and reputational damage are all on the table. For Musk and Yaccarino personally, the legal exposure is significant: they've been summoned to answer prosecutors' questions about potentially criminal activity.

For the broader tech industry, the message is clear. European regulators are watching. They're willing to investigate. They're willing to pursue criminal charges. Algorithm changes can't be made lightly or with political intent. Platforms need to be transparent about how they work and fair in how they treat different users and content.

We're entering a new era of tech regulation, one where algorithmic manipulation is pursued the way other serious crimes are: with investigations, raids, and criminal charges. This will slow down innovation in some respects. Platforms will be more cautious about algorithm changes. They'll need more legal review. They'll need to document their reasoning.

But it will also make platforms more accountable and potentially more trustworthy. Users will have more confidence that their feeds aren't being secretly manipulated for political purposes. Regulators will have the tools to ensure that platforms operate fairly.

The outcome of this investigation will likely influence tech regulation worldwide. European standards are increasingly becoming global standards. If France successfully prosecutes X for algorithmic manipulation, other countries will likely follow with similar actions and regulations.

This is a pivotal moment. The Musk era at X began with promises to restore free speech and remove the algorithmic curation that Twitter had been doing. Ironically, the investigation that followed alleges that X manipulated algorithms precisely in the direction of Musk's interests. Whether those allegations are true will be determined through the legal process.

But regardless of the outcome, one thing is clear: the age of unaccountable algorithms is ending. Platforms will need to operate more transparently and fairly. Regulators have demonstrated the willingness and ability to enforce these requirements with serious legal consequences. This is the new normal for tech companies operating in Europe, and eventually, globally.

Key Takeaways

- French prosecutors raided X's Paris headquarters in February 2026 as part of a criminal investigation, opened in January 2025, into alleged algorithmic manipulation favoring Elon Musk's content

- The investigation encompasses multiple serious charges including fraud, organized crime, GDPR violations, and child safety violations related to Grok AI

- Elon Musk and former X CEO Linda Yaccarino were summoned for voluntary interviews on April 20, 2026, signaling that prosecutors want to question the company's leadership directly

- Potential penalties include GDPR fines of up to 4% of annual global turnover (or €20 million, whichever is higher), plus criminal convictions, operational restrictions, and forced algorithm transparency

- The raid represents a watershed moment in European tech regulation, signaling that algorithmic manipulation will be prosecuted as a serious crime, not a regulatory violation
