Google's Hume AI Acquisition: The Future of Emotionally Intelligent Voice Assistants [2025]

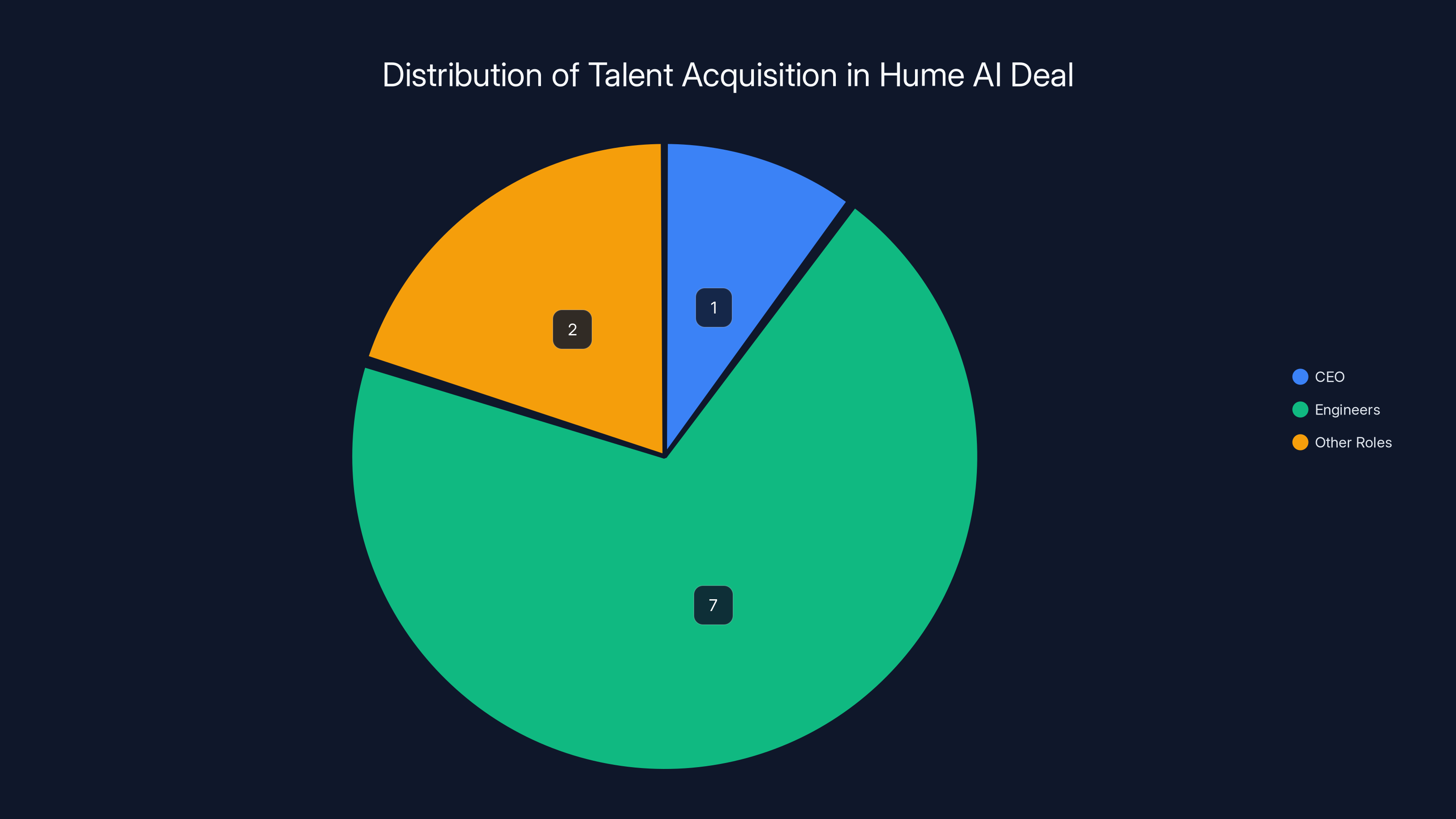

Google just pulled off a move that tells you everything about where AI is heading. Not with a traditional acquisition announcement or press release, but something quieter, more strategic. The search giant is bringing in the CEO and roughly seven engineers from Hume AI, a startup that's spent years perfecting something most AI companies barely understand: how to make voice assistants actually understand how you're feeling.

This isn't just another talent grab. It's Google placing a massive bet that the future of AI interaction won't be text prompts or images. It'll be your voice, your emotions, your actual intent coming through in how you speak. And the company that nails that first? It owns the next decade of consumer AI.

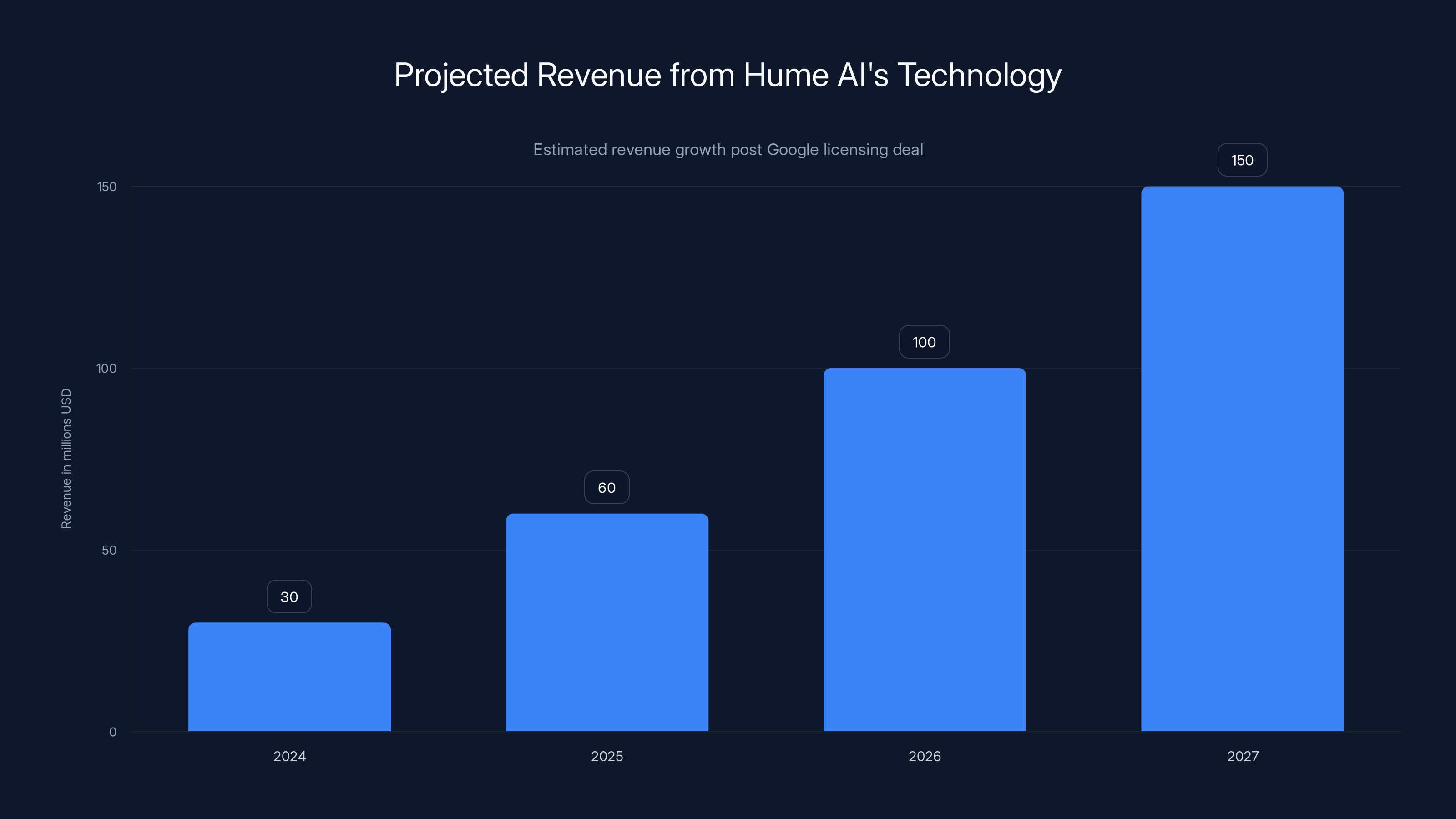

Here's what makes this move different from the typical acquisition play. Google didn't just buy Hume AI outright. They structured it as a licensing deal where both parties benefit. Google gets the talent and technology integration rights. Hume AI gets to keep operating, continue selling to other AI labs, and bring in an estimated $100 million in revenue by 2026. The Federal Trade Commission is watching these arrangements more closely now, but the reality is they're becoming the preferred way big tech extracts valuable IP without the overhead of a traditional acquisition.

The deeper story here reveals something fundamental about AI's next chapter. Text-based interfaces got us here. But voice, emotion, and genuine human understanding? That's the frontier. And Google knows OpenAI's ChatGPT already has a voice mode that millions of users love. Apple's Siri is about to get smarter with Google Gemini integration. The race isn't just about being smart anymore. It's about being human.

In this comprehensive guide, we're breaking down what the Hume AI deal means for Google, why emotional AI matters more than you think, and what it signals about where the entire industry is headed.

TL;DR

- Google acquires Hume AI talent as part of a licensing deal, bringing CEO Alan Cowen and seven engineers into Google DeepMind

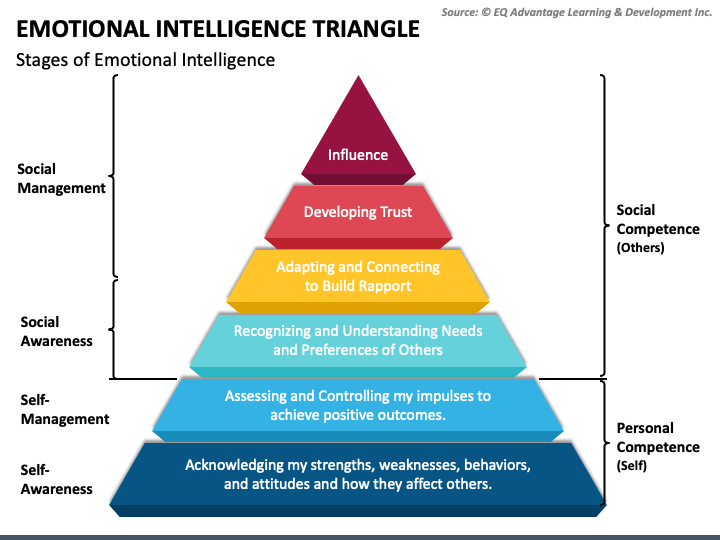

- Emotional voice AI is critical for the next generation of voice assistants that understand user mood and adapt responses accordingly

- Hume AI will continue operating independently while supplying technology to other AI labs, expecting $100 million in revenue by 2026

- Voice interfaces are becoming the primary way people interact with AI, and the deal positions Google against OpenAI and ChatGPT's voice mode

- The acqui-hire model lets both parties avoid much of the regulatory scrutiny a full acquisition would draw, while giving tech companies access to high-value talent and IP

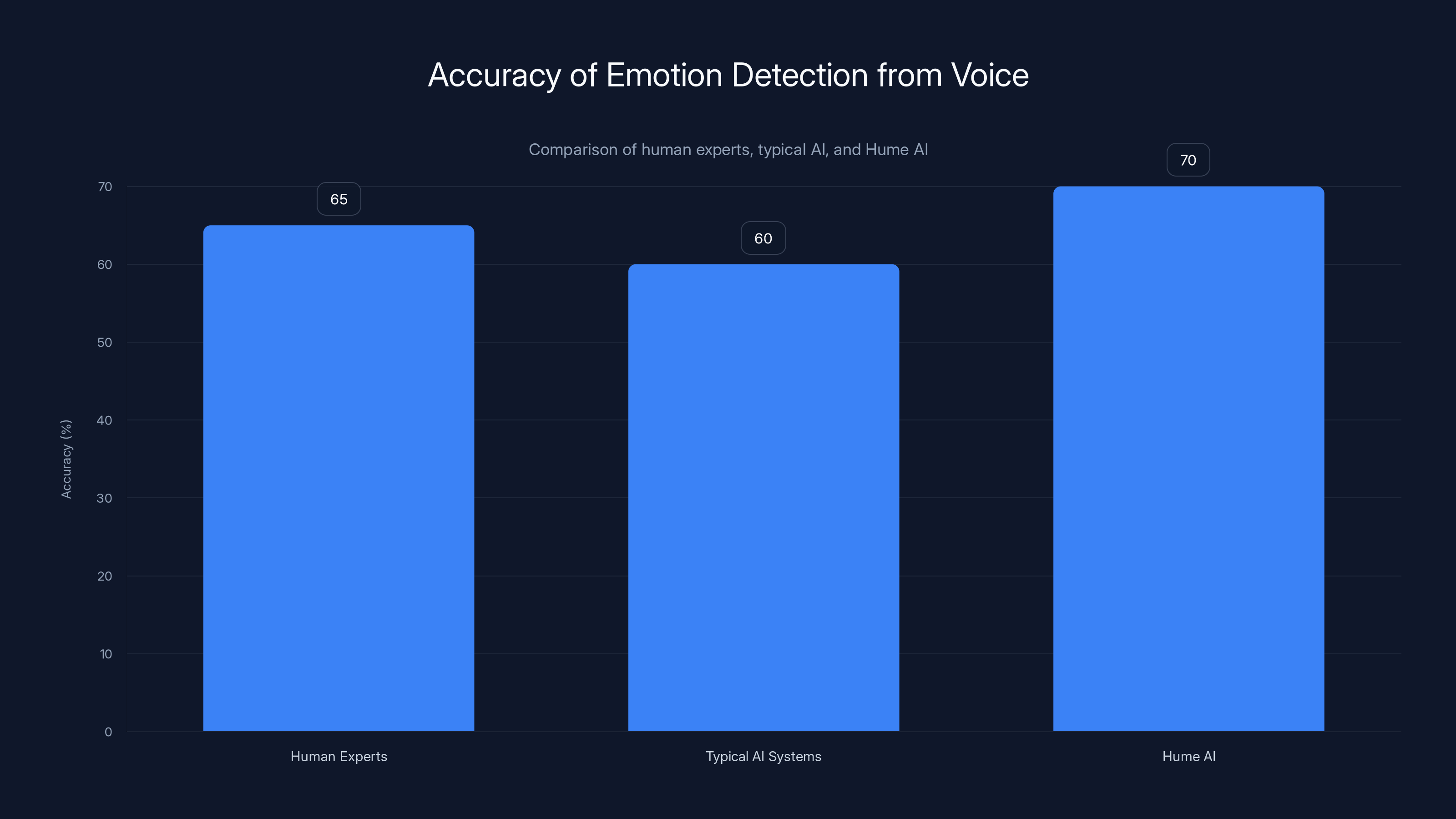

Hume AI's models are closing the gap in emotion detection accuracy, reaching 70% compared to typical AI systems at 60%.

What Hume AI Actually Does (And Why It Matters)

Hume AI isn't building another chatbot. The company has spent four years developing technology that does something remarkably difficult: it listens to how you speak, not just what you say, and figures out what you're actually feeling beneath the words.

Think about the last time you talked to a customer service bot. Frustrated, right? You wanted help, but the system responded with the same cheerful script whether you were angry, confused, or just having a bad day. Hume AI changes that equation.

The technology works by training models on millions of real conversations where human experts annotate emotional cues. A rising tone at the end of a sentence. The pace of your speech accelerating. The slight hesitation before answering. Humans read these micro-signals instantly; most AI systems struggle to pick them up, if they pick them up at all.
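To make those three cues concrete, here is a minimal Python sketch of how each might be measured as a number. It assumes a pitch contour (one Hz value per audio frame, with 0 marking unvoiced frames) and word-level timestamps are already available from upstream speech tools. The `Word` type and all three functions are hypothetical illustrations, not Hume AI's feature set; production systems generally learn such patterns from annotated data rather than hand-coding them.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Word:
    """A hypothetical word-level timestamp from a speech recognizer."""
    text: str
    start: float  # seconds
    end: float  # seconds


def final_pitch_slope(pitch_hz: list[float], tail: int = 5) -> float:
    """Mean frame-to-frame pitch change (Hz) over the last `tail` voiced frames.

    A positive value suggests a rising tone at the end of the sentence.
    """
    voiced = [p for p in pitch_hz if p > 0]  # 0 marks unvoiced frames
    tail_frames = voiced[-tail:]
    if len(tail_frames) < 2:
        return 0.0
    return mean(b - a for a, b in zip(tail_frames, tail_frames[1:]))


def speech_rate_change(words: list[Word]) -> float:
    """Words per second in the second half of an answer minus the first half.

    A positive value suggests the speaker is accelerating.
    """
    if len(words) < 4:
        return 0.0
    half = len(words) // 2

    def rate(ws: list[Word]) -> float:
        duration = ws[-1].end - ws[0].start
        return len(ws) / duration if duration > 0 else 0.0

    return rate(words[half:]) - rate(words[:half])


def response_hesitation(words: list[Word], question_end: float) -> float:
    """Seconds of silence between the end of the question and the first word."""
    if not words:
        return 0.0
    return max(0.0, words[0].start - question_end)


words = [Word("I", 1.0, 1.2), Word("think", 1.3, 2.0),
         Word("so", 2.0, 2.2), Word("yes", 2.3, 2.5)]
print(final_pitch_slope([0, 180.0, 182.0, 185.0, 190.0, 198.0, 210.0]))  # 7.0
print(speech_rate_change(words))  # 2.0
print(response_hesitation(words, question_end=0.2))
```

Each function yields a single number. A real model would combine many such features, in context, rather than reading any one of them as an emotion on its own.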

Alan Cowen, Hume AI's CEO, brought something unusual to this problem. He has a PhD in psychology. Not machine learning. Not computer science. Psychology. That background shaped everything Hume AI built. The company treats emotions as data. Complex, nuanced, measurable data that AI models can be trained to recognize and respond to appropriately.

The practical implications are massive. In customer support, emotional AI can escalate frustrated customers to human agents before they hit a breaking point. In healthcare, voice analysis could flag depression or anxiety in a patient's tone during routine check-ins. In education, adaptive tutoring systems could slow down when a student sounds confused and speed up when confidence emerges in their voice.

Hume AI has already built real products. Their voice interface platform integrates with major AI models. They offer emotion detection APIs that other companies can license. The tech is mature enough that they're projecting $100 million in revenue within a year. That's not theoretical. That's market validation.

What's interesting is how the company approached this problem differently than bigger tech firms. Most major AI labs built voice interfaces as an afterthought. Chat works? Add text-to-speech and speech-to-text and you've got voice. Hume AI started from the opposite direction. They asked: what would voice interfaces look like if emotion understanding was fundamental, not an add-on?

The answer: completely different. Better. More human.

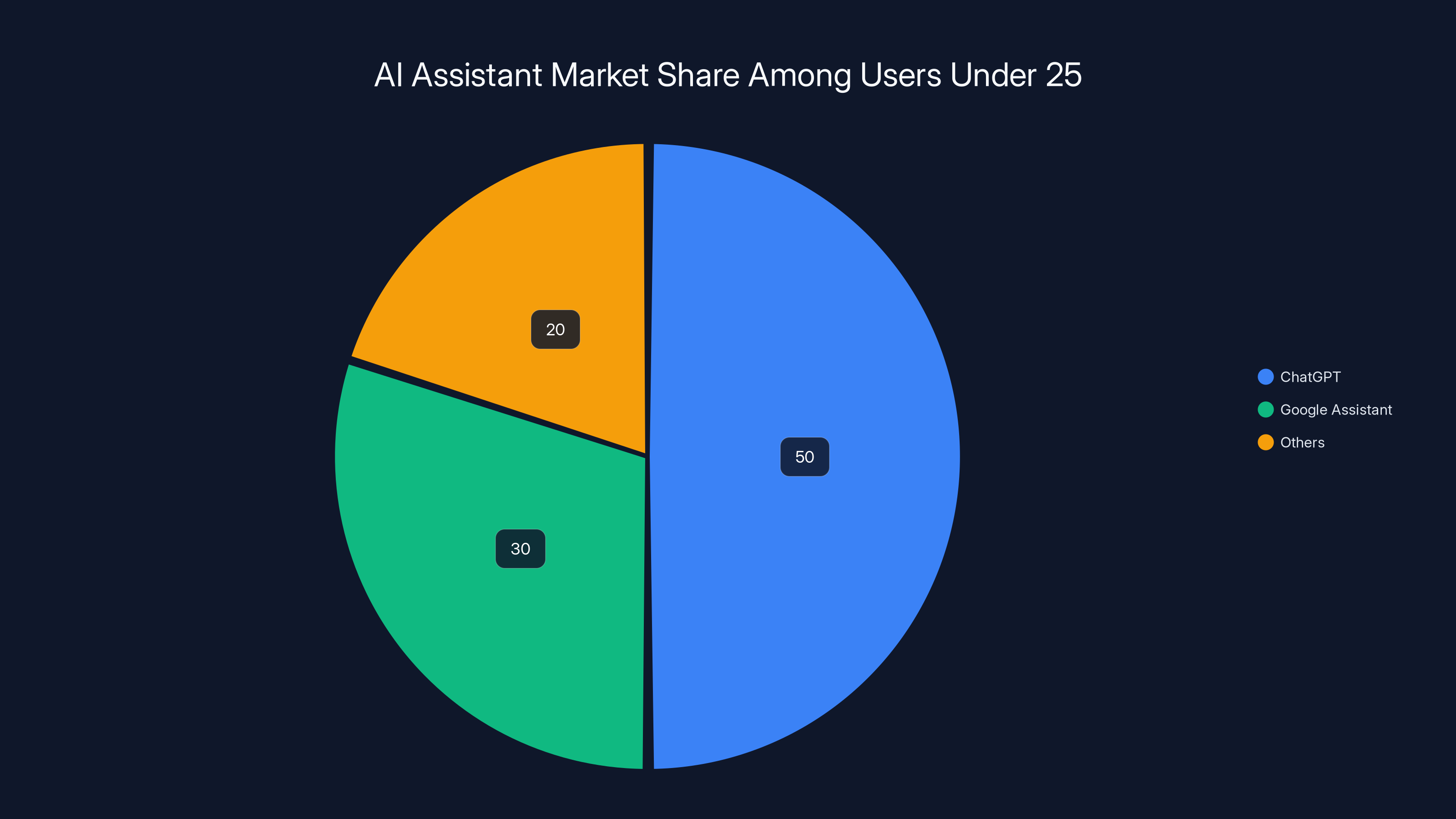

ChatGPT leads the AI assistant market among users under 25 with an estimated 50% share, highlighting its growing popularity over Google Assistant.

Why Google Needs This (And Why Now)

Google's position in consumer AI is complicated. Gemini is impressive from a technical standpoint. But it's not ChatGPT. ChatGPT has momentum. It has millions of paying users. It has a voice mode that people actually like using. And it's eating Google's lunch in certain demographics.

When you ask someone under 25 what AI assistant they use, they're increasingly likely to say ChatGPT, not Google Assistant. That's a problem for a company built on search dominance. Search is where Google makes its money. AI is where the future is. And right now, OpenAI is winning the narrative about that future.

The Hume AI deal is Google's move to leapfrog some of that competition. Here's the strategic thinking: if voice is going to be the primary interface for AI (and every major tech company now agrees it will be), then the company that makes voice actually understand people will have enormous leverage.

Google already has the infrastructure. Billions of Android devices. Integration with smart homes. Deep relationships with telecom carriers and hardware manufacturers. What they didn't have was the emotional intelligence layer. That's what Hume AI provides.

Consider the timing. Apple just announced a multi-year partnership with Google to power the next generation of Siri. That's a huge win for Google on the hardware side. But Siri also needs to be smart enough to understand that you're frustrated when you ask for directions three times, and maybe offer alternatives instead of repeating the same response.

Hume AI's technology makes that possible.

Google's also watching what happened with the Character.AI deal. In 2024, Google paid a reported $2.7 billion to license technology from Character.AI, another company working on conversational AI with personality, and to bring its founders into Google DeepMind. That deal worked. It accelerated Google's timeline on emotional and personality modeling. The Hume AI deal follows that same playbook but focuses specifically on voice and emotion detection.

The deeper calculation: what percentage of AI interaction will happen through voice in 2026? In 2028? Conservative estimates say 40-50% of all AI interactions. Aggressive estimates say 70%+. But everyone agrees the direction is toward voice as the dominant interface. The company that controls emotional understanding in that space has significant power over user experience, trust, and retention.

Google can't afford to let OpenAI own that entirely. This deal is insurance and acceleration all at once.

The Acqui-Hire Model: Why Big Tech Loves This Deal Structure

Informally, what Google did is called an acqui-hire, short for "acquisition-hire." A larger company takes on a smaller startup's talent and technology primarily to bring the team on board, rather than for the startup's existing products or customer base.

The model has exploded over the last five years. Microsoft did it with Inflection. Amazon did it with Adept. Meta did it with Scale AI's CEO. Big tech companies have figured out it's often better than a full acquisition.

Here's why: full acquisitions come with regulatory scrutiny. The Federal Trade Commission now actively investigates major tech M&A deals. They're looking for evidence of market consolidation or anti-competitive behavior. If Google directly acquired Hume AI, the FTC would probably take a closer look, ask questions, maybe delay the deal.

With an acqui-hire and licensing arrangement, it looks different to regulators. Hume AI isn't disappearing. They're a partner now. They'll continue serving other AI labs. They're not being absorbed into Google's monopoly. The company stays independent (sort of) and Google gets the talent and IP rights.

The FTC has started cracking down on these arrangements, saying they'll scrutinize them more carefully. But the structure is still far more attractive than a full acquisition from a business and legal standpoint.

For Hume AI, the deal is different from being acquired. They get Google's distribution, resources, and credibility. But they keep their independence and their other customer relationships. Andrew Ettinger, an experienced investor and executive, is taking over as CEO. That signals Hume AI will keep operating as its own entity while being deeply integrated with Google.

This model is becoming standard in the AI industry. It lets companies move fast without government delays. It protects smaller companies from being completely absorbed into bigger ones. And it lets investors and founders make money from their companies without actually selling the whole thing.

That said, the relationship is inherently unequal. Google is the 800-pound gorilla here. They set the terms. They decide how much of the technology to share with other AI labs. They determine how Hume AI's technology is used and developed inside Google. Hume AI gets to keep operating, but in Google's shadow.

Google's licensing deal with Hume AI primarily focused on acquiring engineering talent, with the CEO and a few other roles also included. Estimated data.

Voice as the New Interface: The Bigger Strategic Shift

Text was the interface that got us here. Type a prompt, get an answer. That worked, and ChatGPT proved it could work at massive scale. But text has real limitations.

You can't express emotion in text the way you can in voice. Nuance gets lost. Tone vanishes. Sarcasm breaks everything. And for accessibility, text is terrible. Not everyone can type easily. Not everyone has their hands free to type.

Voice solves all of that. You can talk to AI while driving, cooking, doing dishes, or just living your life. Your emotional state comes through naturally. Your actual intent is easier to understand because it includes tone, pace, hesitation, and emphasis.

Every major AI company now agrees: voice will be the primary interface by the end of this decade. OpenAI is betting on it with ChatGPT's voice mode. Google is betting on it with Gemini. Apple is betting on it with Siri. Even smaller companies building specialized AI applications are prioritizing voice.

But there's a problem. Voice interfaces created before emotional understanding was built in tend to feel robotic. They respond to what you say, but they don't respond to how you're feeling. Ask Siri for directions three times and you get the same response three times, even though it's increasingly obvious you're not satisfied with that route.

That's where Hume AI's technology changes things. A voice assistant that understands you're frustrated can offer alternatives. One that detects uncertainty can ask clarifying questions. One that hears fatigue in your voice can suggest taking a break or offer to handle something for you.

This isn't sci-fi. It's applied psychology: training models to recognize patterns the human ear already picks up instantly.

The business implications are significant. User satisfaction with voice assistants has plateaued. Most people still prefer text for complex queries because they don't trust voice to understand what they mean. But emotionally intelligent voice could flip that dynamic. If the system actually seems to understand your frustration and responds appropriately, usage patterns change. Retention improves. Monetization becomes easier because users are more satisfied.

Google's betting that whoever cracks this first has significant competitive advantage. And with Hume AI's team, they think they have the best shot.

Alan Cowen: The Psychology PhD Who Brought Science to Emotion AI

Understanding the Hume AI deal requires understanding Alan Cowen. He's not a typical AI startup founder. He didn't come from a machine learning background. He studied psychology.

That background shaped Hume AI's entire approach. Most machine learning engineers approach emotion detection as an engineering problem. How do we build a system that classifies emotions from acoustic features? Cowen approached it as a psychology problem. What emotions actually exist? How do they manifest in voice? How do people subjectively experience them?

That difference matters enormously. It's why Hume AI's models work better than competitors' models. They're not just fitting acoustic data to emotion categories. They're modeling emotions the way psychologists understand them. Complex. Dimensional. Context-dependent.

Cowen brought something else valuable to the company: credibility. He has a PhD. He published research. He understood both the academic rigor needed to build good models and the commercial opportunity to deploy them. That combination is rare.

At Google DeepMind, Cowen's role will be integrating emotional intelligence into Gemini and other frontier models. DeepMind has some of the best AI researchers in the world. Adding a psychologist to that mix changes how they think about voice and emotional understanding.

The question for Hume AI is who leads the company now that Cowen is gone. The answer is Andrew Ettinger, an experienced investor and executive who's taking over as CEO. Ettinger's background is in scaling tech companies and managing investor relations. He's not a researcher. That signals that Hume AI's immediate focus will be on commercialization and revenue, not on cutting-edge research. The research side moves to Google.

That's the acqui-hire model in action. The founder and researchers go to the big tech company. The business operators and salespeople stay to keep the company running.

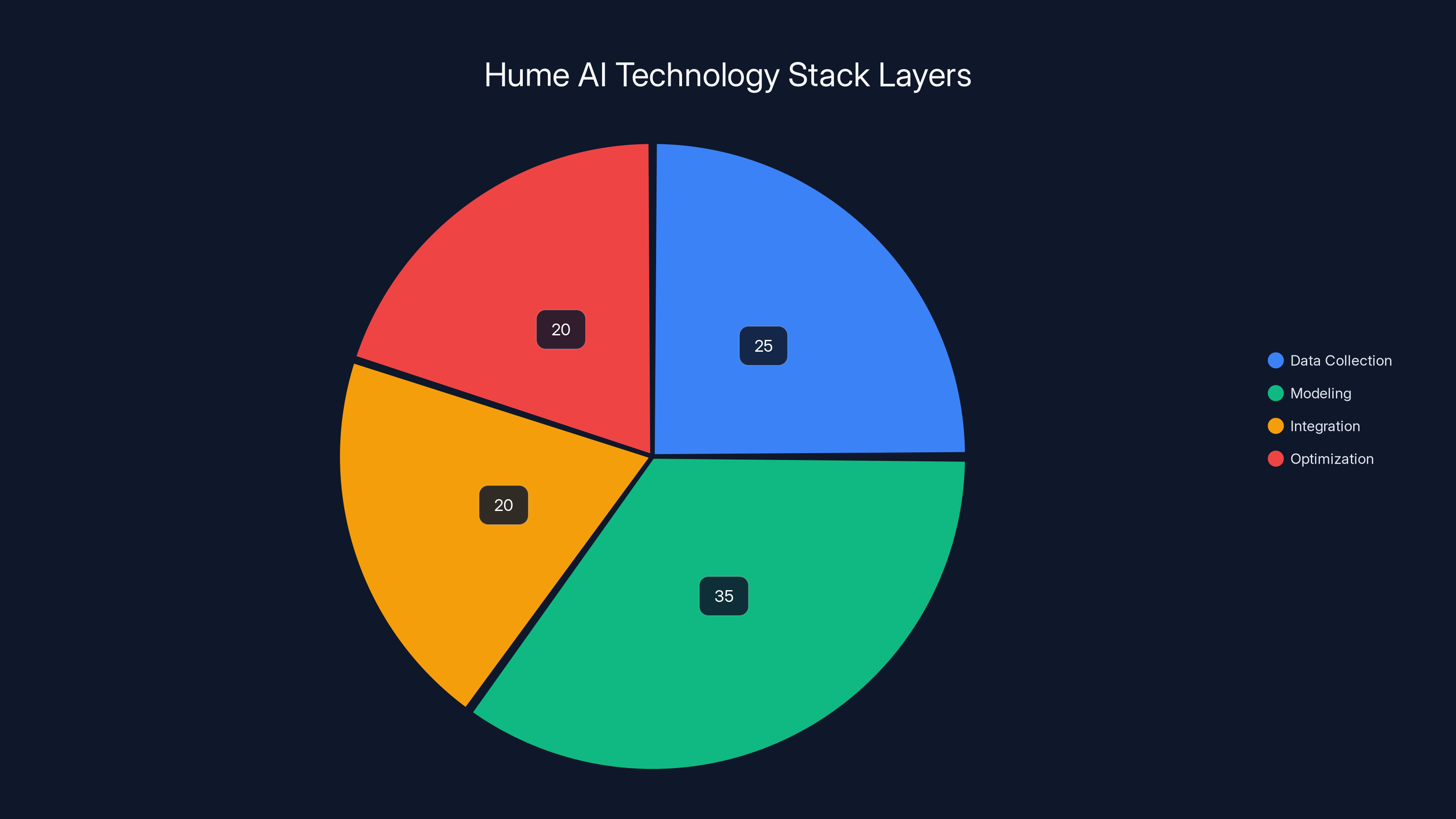

Estimated distribution shows a significant focus on modeling (35%) and data collection (25%), highlighting the complexity and foundational role of these layers in Hume AI's emotional AI technology.

Hume AI's Technology Stack: How Emotional AI Actually Works

Hume AI's technology is built in layers. At the bottom is data: recordings of real conversations in which human experts annotate emotional cues. That data becomes the foundation for everything else.

The second layer is modeling. Machine learning models trained on that data learn to recognize acoustic patterns associated with specific emotions. This is where the complexity lives. Emotions don't map neatly to specific sounds. Anger can sound like loud speech, but so can excitement. Sadness can show up as slow, quiet speech, but so can calm. The models have to learn the contextual patterns.
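One common way to handle that ambiguity is to treat emotion as a position along a few dimensions, such as arousal (how energized the voice sounds) and valence (how positive or negative the words and context are). The Python sketch below is a deliberately simplified, hypothetical two-dimensional version; `label_emotion` and its cut-off values are illustrative assumptions, not Hume AI's model. It shows why loudness alone can't separate anger from excitement, or sadness from calm, while adding a context signal can.

```python
def label_emotion(arousal: float, valence: float) -> str:
    """Map a point on a simplified arousal/valence plane to a coarse label.

    arousal: 0.0 (quiet, slow) to 1.0 (loud, fast), e.g. from acoustic energy.
    valence: -1.0 (negative) to 1.0 (positive), e.g. from words and context.
    The 0.5 and +/-0.2 cut-offs are arbitrary values chosen for illustration.
    """
    energetic = arousal >= 0.5
    if valence >= 0.2:
        return "excited" if energetic else "content"
    if valence <= -0.2:
        return "angry" if energetic else "sad"
    return "alert" if energetic else "calm"


# Same loud delivery, opposite contexts:
print(label_emotion(0.9, 0.8))   # excited
print(label_emotion(0.9, -0.7))  # angry
# Same quiet delivery, different contexts:
print(label_emotion(0.1, -0.6))  # sad
print(label_emotion(0.1, 0.0))   # calm
```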

The third layer is integration. Hume AI has built APIs and platforms that let other companies use these models. They work with foundation models from OpenAI, Anthropic, Google, and others. The emotion detection runs alongside the language model. The system understands what you're saying and how you're feeling while you say it.

The result is an AI system that adjusts its response based on detected emotion. Frustrated user? Offer alternatives. Confused user? Slow down and explain. Tired user? Be concise. Excited user? Match that energy.
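A minimal Python sketch of that adjustment step, assuming an upstream detector already returns an emotion label with a confidence score. The `Detection` type, the `STRATEGIES` table, and the 0.6 threshold are hypothetical; the labels simply mirror the examples above.

```python
from dataclasses import dataclass

# Hypothetical mapping from detected emotion to response strategy,
# mirroring the examples in the text.
STRATEGIES = {
    "frustrated": "offer_alternatives",
    "confused": "slow_down_and_explain",
    "tired": "be_concise",
    "excited": "match_energy",
}


@dataclass
class Detection:
    emotion: str
    confidence: float  # 0.0 to 1.0, as reported by a (hypothetical) detector


def choose_strategy(detection: Detection, min_confidence: float = 0.6) -> str:
    """Pick a response strategy, falling back to 'default' on weak or unknown signals."""
    if detection.confidence < min_confidence:
        return "default"
    return STRATEGIES.get(detection.emotion, "default")


print(choose_strategy(Detection("frustrated", 0.85)))  # offer_alternatives
print(choose_strategy(Detection("confused", 0.40)))    # default (too uncertain)
```

Falling back to default behavior on low-confidence detections is a design choice here: responding to a misread emotion (treating a calm user as frustrated, say) can feel worse than not adapting at all.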

Implementing this at scale is harder than it sounds. There's latency to manage. Processing voice, analyzing emotion, and generating response all happen in real-time. Delay too long and the conversation feels unnatural. Process too fast and accuracy suffers.

Hume AI solved this by focusing on efficient models. They run emotion detection in parallel with language processing, not sequentially. They've optimized for inference speed without sacrificing too much accuracy.
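The difference between sequential and parallel processing can be sketched with Python's `asyncio`. The two coroutines below are hypothetical stand-ins that simulate model latency with `asyncio.sleep`; they are not real Hume AI or Google calls. With `asyncio.gather`, a conversational turn waits roughly as long as the slower analysis rather than the sum of both.

```python
import asyncio
import time


async def detect_emotion(audio: bytes) -> str:
    """Hypothetical stand-in for an emotion model; the sleep simulates inference time."""
    await asyncio.sleep(0.10)
    return "frustrated"


async def understand_request(audio: bytes) -> str:
    """Hypothetical stand-in for speech recognition plus language understanding."""
    await asyncio.sleep(0.15)
    return "wants a different route"


async def process_turn(audio: bytes) -> tuple[str, str]:
    # Start both analyses at once and wait until both results are ready.
    emotion, intent = await asyncio.gather(
        detect_emotion(audio), understand_request(audio)
    )
    return emotion, intent


start = time.perf_counter()
emotion, intent = asyncio.run(process_turn(b"audio"))
elapsed = time.perf_counter() - start
# elapsed tracks the slower call, not the sum of both sleeps
print(emotion, intent, round(elapsed, 2))
```

In a real deployment the two analyses would typically be network calls or run on separate accelerators; `asyncio` overlaps waiting time, not CPU-bound work inside a single process.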

The models are also continuously improving. As more people use Hume AI's technology, more data flows in. More annotations happen. Better models emerge. This creates a reinforcing cycle that makes emotional AI better over time.
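One widely used way to make that cycle efficient is uncertainty sampling: send the clips the model is least sure about to human annotators first, since those labels tend to teach the model the most. Whether Hume AI uses this exact technique is an assumption; the Python function below is a generic sketch with hypothetical clip IDs and emotion probabilities.

```python
def select_for_annotation(
    predictions: dict[str, dict[str, float]], budget: int
) -> list[str]:
    """Least-confidence sampling.

    predictions maps a clip ID to the model's probability for each emotion label.
    Returns up to `budget` clip IDs whose most likely label has the lowest
    probability, i.e. the clips the model is least sure about.
    """
    ranked = sorted(predictions, key=lambda clip: max(predictions[clip].values()))
    return ranked[:budget]


predictions = {
    "clip_a": {"angry": 0.90, "calm": 0.10},
    "clip_b": {"angry": 0.40, "excited": 0.35, "calm": 0.25},
    "clip_c": {"sad": 0.55, "calm": 0.45},
}
print(select_for_annotation(predictions, budget=2))  # ['clip_b', 'clip_c']
```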

The Competitive Landscape: Google vs OpenAI in Voice AI

OpenAI has a significant head start with ChatGPT's voice mode. Millions of users are already familiar with it. It works well. It's integrated into the product people already use.

But OpenAI's voice mode is relatively basic on emotion detection. It understands what you're saying. It doesn't understand how you're feeling while saying it. That's the gap Google is moving to close.

Google has something OpenAI doesn't: Hume AI's team and technology. That's a meaningful advantage. But advantage isn't certainty. OpenAI could license similar technology from other startups. They could build emotion detection models in-house. They have the resources and the talent.

The real competition isn't Google vs OpenAI. It's whoever builds emotionally intelligent voice assistants that people actually prefer to use. That could be Google. Could be OpenAI. Could be Apple with Siri. Could be someone unexpected.

What's clear is that the bar just moved. A voice assistant without emotion detection is going to feel outdated by 2026. That's going to drive massive investment in this space. Startups will pop up specifically to solve this problem. Existing startups will pivot toward it. The talent market for psychologists and voice specialists will get competitive.

Google moving first with Hume AI sends a signal: this matters. This is core. This is worth paying for and bringing in-house.

OpenAI will likely respond. They might license technology from a different voice startup. They might announce a voice enhancement to ChatGPT. They might hire competing talent. The story of AI voice in 2025-2026 will be partly defined by how aggressively both companies compete on emotion understanding.

Hume AI is projected to significantly increase its revenue post-Google licensing deal, reaching an estimated $100 million by 2026. (Estimated data)

Customer Support and Enterprise Applications: Where This Gets Real

John Beadle of AEGIS Ventures, a Hume AI investor, highlighted an important use case: customer support. That's where the business value becomes immediately obvious.

When a customer calls support frustrated, they're going to have a bad experience if the system doesn't detect that frustration. They'll be transferred to automated systems. They'll explain their problem again. Their frustration will grow. Eventually they'll either give up or escalate.

With emotional AI, the experience changes. A support system that detects frustration on the first call can escalate immediately to a human. Or it can offer premium support instead of standard support. Or it can prioritize solving the problem instead of following a script.
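A simple version of that escalation rule can be sketched in Python, assuming an upstream model scores frustration from 0.0 to 1.0 on every turn of a call. The `SupportSession` class, the 0.8 threshold, and the three-consecutive-increases rule are hypothetical choices for illustration: escalate immediately on a strong signal, or when frustration keeps climbing even though no single turn crosses the threshold.

```python
from dataclasses import dataclass, field


@dataclass
class SupportSession:
    """Tracks per-turn frustration scores (0.0 to 1.0) for one support call."""

    threshold: float = 0.8  # escalate at once at or above this score
    rising_turns: int = 3  # ...or after this many consecutive increases
    scores: list[float] = field(default_factory=list)

    def add_turn(self, frustration: float) -> bool:
        """Record a turn's score; return True if the call should go to a human agent."""
        self.scores.append(frustration)
        if frustration >= self.threshold:
            return True
        recent = self.scores[-(self.rising_turns + 1):]
        return len(recent) == self.rising_turns + 1 and all(
            later > earlier for earlier, later in zip(recent, recent[1:])
        )


session = SupportSession()
for score in [0.2, 0.3, 0.4, 0.5]:
    print(session.add_turn(score))  # False, False, False, True
```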

That sounds simple. It's worth hundreds of millions of dollars when you're managing millions of support interactions annually.

In healthcare, emotional AI has different applications. Doctors spend time analyzing tone and emotion to understand patients better. An AI system that flags when a patient sounds depressed or anxious during a routine call could enable preventive care. That's not sci-fi. That's already being tested.

In education, adaptive tutoring systems could adjust teaching style based on student emotional state. Confused students get more explanation. Frustrated students get breaks. Engaged students move faster. It's personalization based on emotional intelligence.

Most of these applications don't require perfect emotion detection. They require good enough detection, deployed at scale, integrated into existing systems. That's exactly where Hume AI's technology sits. It's past the research phase. It's production-ready.

Google bringing Hume AI's team in-house signals that they see enterprise applications as a major revenue driver. Gemini API customers could license emotion detection as an add-on. Enterprises building custom voice applications could use it. The play is to make emotional AI a standard part of the Google Cloud ecosystem.

That's how platform winners are made. Control the base layer. Everything built on top of it depends on you.

The Broader Trend: Acquiring Talent vs Acquiring Products

The Hume AI deal is part of a larger trend in tech. Big companies are increasingly valuing talent and technology over existing customer bases and products.

This makes sense from a strategic perspective. If you're Google with billions in revenue and distribution, customer bases aren't that valuable. What's valuable is the team that knows how to build the next generation of technology. And talent becomes even more valuable when combined with Google's resources.

The math is interesting here. Hume AI raised $74 million from investors. They're building technology that Google thinks is strategic. Rather than acquire the whole company, Google brings in the team and licenses the technology. Hume AI keeps operating, keeps the rest of the team, keeps selling to others.

From Google's perspective: they get what they need (talent and IP) without the overhead (existing customers, product support, organizational structure that might not fit).

From Hume AI's perspective: they get Google's backing, distribution, resources. They keep their independence. They keep revenue from other customers.

It's a clever arrangement that works better for both parties than a traditional acquisition would.

The FTC is right to start scrutinizing these arrangements more closely. They're a way for big tech to acquire strategic assets and talent without triggering formal merger review. But from a business perspective, they're becoming the preferred model.

Expect more of this. Microsoft, Amazon, Meta, Apple are all doing it. Every major AI lab now has acquisition-hire deals in flight. It's how the industry moves fast while managing regulatory risk.

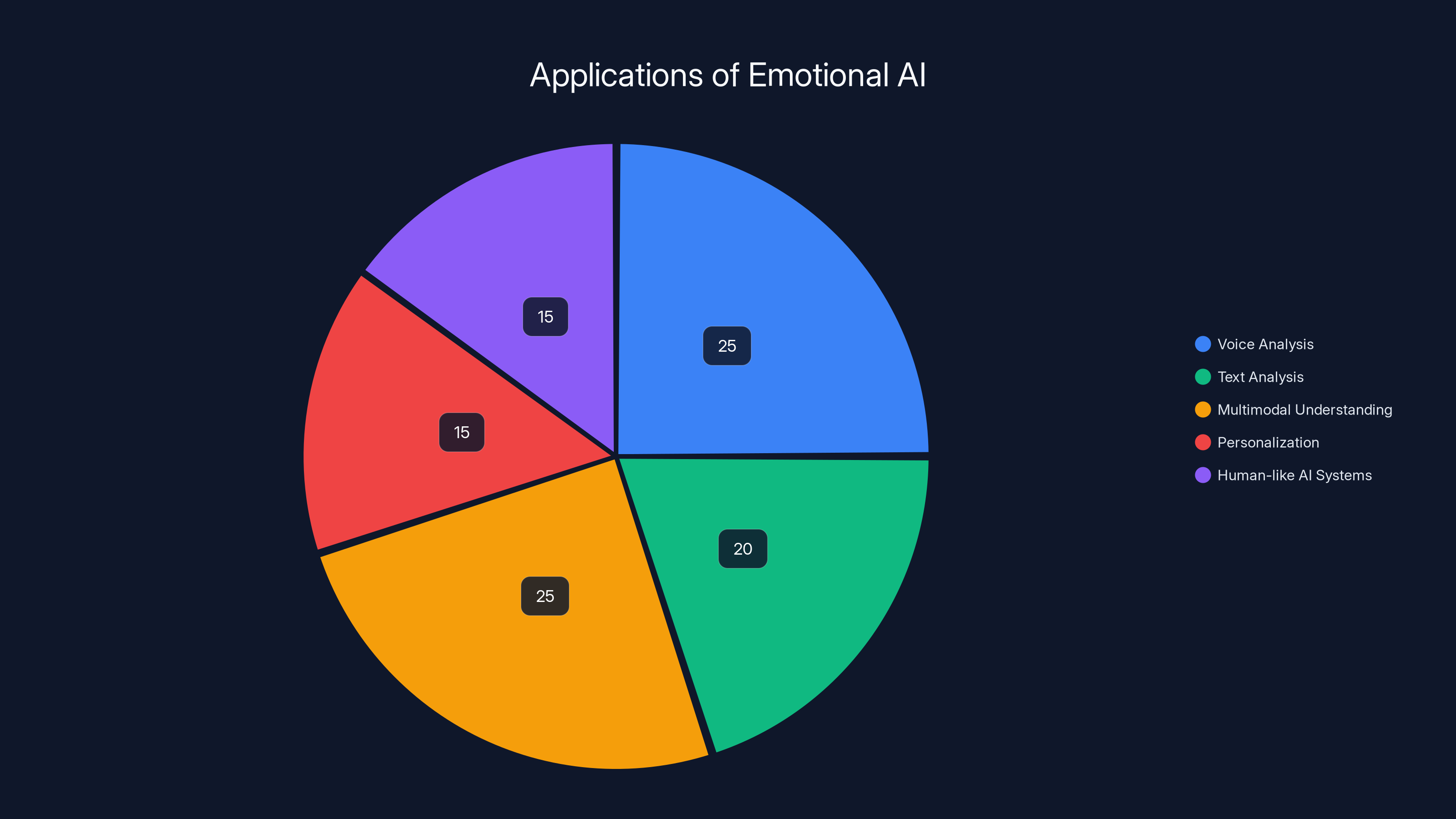

Estimated data shows that voice and multimodal understanding are leading applications of emotional AI, each representing 25% of the focus. Text analysis follows closely, highlighting its importance in content moderation and feedback analysis.

What This Means for the Voice AI Industry

Hume AI's deal with Google has immediate ripple effects across the voice AI space.

First, it validates the market. Hume AI is young but already significant enough that Google wants what it's built. That sends a signal to investors: voice and emotion detection is a real business, not speculative.

Second, it changes the competitive dynamic. Startups working on similar technology now know that the big players are moving aggressively. If you're building voice AI, you need to move faster and differentiate better. The window to build something defensible is shrinking.

Third, it raises the bar for voice assistants. If Google/Hume AI is building emotion detection into Gemini, then users will expect that from other assistants. It becomes table stakes, not a differentiator.

Fourth, it highlights the importance of talent. Hume AI wasn't acquired for its customer list or its revenue. It was acquired for its team. That's a signal to top researchers and engineers that there's significant value in being part of a team building cutting-edge AI. It attracts more talent to the space.

The broader impact is consolidation. When big tech moves this aggressively into an emerging area, it tends to consolidate power. Hume AI isn't disappearing, but it's losing some independence. The research team is at Google now. The product vision will be influenced by Google's priorities.

Startups that want to compete will need to build something better, faster, and more differentiated than what Google offers, or they'll eventually get acquired or out-competed. That's the natural progression of tech markets.

For founders and investors, the lesson is clear: if you're working on emerging AI, move fast and position yourself as either essential enough to acquire or differentiated enough to survive alongside the incumbents. There's no middle ground anymore.

Timeline and Integration: What Happens Next

The deal is already in motion. Alan Cowen and the engineering team are moving to Google DeepMind. That transition typically takes weeks, not months. Key people have probably already started in their new roles.

Andrew Ettinger is taking over as Hume AI CEO. His first priority will be stabilizing the company and keeping other customers from panicking. The message will be: we're independent, we're focused on growth, Google is a partner and customer, not a parent company. That's the narrative that keeps other AI labs comfortable licensing from Hume AI.

Hume AI is releasing new models in the coming months. Those will likely benefit from input from the Google team, but they'll be released under the Hume AI brand. The technology flow will be bidirectional. Hume AI gives Google emotional AI capabilities. Google gives Hume AI access to larger, more capable foundation models to build emotion detection on top of.

Integration into Gemini will happen over quarters, not months. Google will likely start with Gemini Advanced or a new tier of service. Voice-based Gemini with emotion detection will be a new product offering. They'll position it as more natural, more human, more helpful than competitors.

The customer support and enterprise applications will probably come next. Google Cloud teams will build integrations. API documentation will go live. Beta customers will start experimenting. By mid-2026, emotion detection will be a standard feature in Google's voice AI offerings.

That timeline is aggressive but achievable. Google has the resources to move fast, and Hume AI's team knows their tech deeply. The integration is complicated but not unprecedented.

Implications for Apple and Siri

Apple is in an interesting position here. They just partnered with Google to power the next version of Siri. That's a smart move for Apple. They don't have to build their own foundation model. They get Gemini's capabilities plus their own specializations.

But the emotion detection piece wasn't part of that announcement. Siri would get smarter at understanding intent and language. But would it understand emotion?

That's now a question. If Google's own Gemini voice assistant includes emotion detection but Siri doesn't, Apple is at a disadvantage. Users will notice the difference. They'll expect all voice assistants to work the same way.

Apple could respond in a few ways. They could license emotion detection from another startup. They could build it in-house. They could ask Google to include it in Siri's integration.

Most likely, Apple will want proprietary emotion detection for Siri, even if powered by Gemini under the hood. That means licensing from someone other than Google. Or building quickly in-house.

This is a competitive dynamic that will play out over the next year. Apple doesn't like being dependent on Google for core capabilities. But they're betting that the Gemini foundation is so good, the dependency is worth it. Emotion detection could test that calculation.

The Future of Emotional AI: Beyond Voice

The Hume AI deal focuses on voice, but emotional AI has broader applications.

Text analysis could detect emotional undertones in written communication. Important for content moderation, customer feedback analysis, and mental health applications.

Multimodal emotional understanding could combine voice, text, and eventually video or sensor data. More information about emotional state means more accurate detection and better responses.

Personalization at scale becomes possible when systems understand not just what users want, but how they're feeling while trying to get it. That's where the real business value emerges.

Longer term, emotional AI could be one component of more human-like AI systems. Systems that don't just process information but actually relate to users as distinct individuals with emotional lives. That's not AI becoming conscious or sentient. It's AI becoming more useful and more trusted because it demonstrates understanding of human emotional reality.

The research is ongoing. Universities are still studying emotion recognition. Startups are applying it in vertical markets. Big tech is integrating it into platforms. The field is accelerating.

Hume AI's deal with Google is an inflection point. It signals that emotional AI has moved from research to production. That it's strategic enough to fight for. That it's central to the future of AI interfaces.

FAQ

What is Hume AI and why did Google acquire it?

Hume AI is a startup specializing in emotionally intelligent voice interfaces and emotion detection technology. Google acquired top talent from the company, including CEO Alan Cowen and approximately seven engineers, through a licensing deal rather than a traditional acquisition. The deal allows Google to integrate emotional AI capabilities into its Gemini models while Hume AI remains independently operational and continues serving other AI labs.

How does emotional AI in voice interfaces work?

Emotional AI analyzes acoustic patterns in speech such as tone, pace, hesitation, and emphasis to determine a user's emotional state. Hume AI trained its models on millions of real conversations annotated by human experts to recognize emotional cues. When integrated into voice assistants, the system can adjust responses based on detected emotion, offering alternatives to frustrated users, slowing down for confused users, or matching energy with excited users.

What makes this an "acqui-hire" rather than a traditional acquisition?

An acqui-hire (acquisition-hire) is a deal structure where a larger company brings on a smaller company's team and licenses their technology, while the startup remains operationally independent. This approach attracts less regulatory scrutiny than traditional acquisitions, allows founders to maintain some independence, and lets big tech companies access critical talent and IP without the overhead of fully integrating an acquired company. The Federal Trade Commission has begun scrutinizing these arrangements more closely.

Why is voice becoming the primary interface for AI?

Voice offers significant advantages over text: it's more accessible, allows hands-free interaction, naturally conveys emotional tone, and works in situations where typing isn't practical. Every major AI company now recognizes that voice will be the dominant interface for AI by the end of the decade. Adding emotion detection makes voice interfaces more effective by enabling systems to understand not just what users say but how they're feeling while they say it.

What are the real-world applications of emotion detection in AI?

Emotion detection has practical applications across multiple industries. In customer support, it enables systems to escalate frustrated customers to human agents immediately. In healthcare, voice analysis could flag depression or anxiety during routine check-ins. In education, adaptive tutoring systems can adjust teaching style based on student emotional state. In enterprise applications, emotion detection improves user satisfaction and retention by making interactions feel more personalized and human.

How does this deal affect OpenAI's ChatGPT and voice mode?

OpenAI has a head start with ChatGPT's voice mode, which millions of people already use. However, ChatGPT's voice mode offers relatively little explicit emotion detection. Integrating Hume AI's technology could give Google a meaningful competitive advantage in understanding a user's emotional state. OpenAI could respond by licensing similar technology, building emotion detection in-house, or forming strategic partnerships, pushing the voice AI race further toward emotional understanding.

What happens to Hume AI now that Google has hired its team?

Hume AI continues operating as an independent company under new CEO Andrew Ettinger, an experienced investor and executive. The company expects to generate $100 million in revenue by 2026 by supplying emotion detection technology to other AI labs and enterprises. Google is both a partner and a customer, an arrangement that lets Hume AI maintain other business relationships while benefiting from Google's resources and distribution.

Why does Google's partnership with Apple on Siri matter in this context?

Apple recently partnered with Google to power the next generation of Siri with Gemini. That deal raises the question of whether Siri will include emotion detection. If Hume AI's technology makes its way into the Gemini models behind Siri, Apple's voice assistant could gain emotional intelligence. If not, Apple may need to license similar technology from another provider or develop it in-house to keep Siri competitive with other emotionally aware voice assistants.

What does this deal signal for the broader AI startup ecosystem?

The Hume AI deal validates emotional AI as a real, strategic business opportunity. It accelerates the consolidation of talent and capability around major tech companies. Startups working on similar technology face increased pressure to differentiate and move faster. The market signal is clear: big tech is moving aggressively into voice and emotion AI, raising the bar for what counts as competitive in the voice assistant space.

What does this tell us about the future of AI interfaces?

The deal signals that the future of AI interaction will prioritize emotional understanding and voice interfaces over text-based interaction. Systems that can detect a user's emotional state and adapt their responses accordingly will become table stakes, not differentiators. Multimodal emotional understanding that combines voice, text, and potentially other data will become increasingly sophisticated. The companies that master emotional AI will have a significant competitive advantage in building trusted, effective AI systems that users actually prefer to use.

Conclusion: The Emotional Intelligence Era of AI Has Begun

The Hume AI deal represents more than a talent acquisition. It's Google placing a massive strategic bet on how the future of AI will work. Not through text prompts. Not through perfect accuracy on arbitrary benchmarks. But through systems that truly understand people: what they mean, what they need, and how they feel.

That sounds obvious. Humans read each other's emotions constantly; it's the baseline of human interaction. But for AI, it's revolutionary. Most current AI systems process information. Hume AI's technology helps systems understand intention and emotion. Those are different things.

The business implications are significant. User satisfaction with voice assistants has plateaued because current systems feel robotic. They don't adapt. They don't recognize frustration. They don't respond to emotional context. Emotional AI is aimed squarely at that fundamental problem.

The competitive implications are equally significant. OpenAI owns the narrative right now with ChatGPT's dominance. Google is trying to shift that narrative toward voice and emotional understanding, where it believes it can compete more effectively. The next 18 months will show whether that strategy works.

The industry implications are broader. Big tech is consolidating power around voice and emotion AI. Startups will continue to innovate in verticals and specializations. But the core platform that matters will likely be controlled by Google, OpenAI, or maybe one or two other players.

For anyone building applications involving voice or voice assistants, the message is clear. Emotion detection isn't a nice-to-have anymore. It's becoming essential. Users will expect it. Competitors will ship it. Your products need to include it or you'll lose retention and satisfaction battles.

Alan Cowen brought something unusual to the AI industry: a psychology PhD focused on emotional intelligence in voice. That perspective shaped Hume AI, and it is now at Google DeepMind. It will influence how Gemini evolves, how Google's voice assistants work, and how the company thinks about human-AI interaction.

That's the real story. Not a talent-and-licensing deal, though it is that, but a paradigm shift in how AI companies think about building systems that humans actually want to use. Systems that understand us. Systems that feel less like algorithms and more like something closer to genuine comprehension.

The future of AI is voice. And the future of voice AI is emotional understanding. This deal confirms both of those things and accelerates a shift that was always coming. Google is just making sure they're not left behind when it arrives.

For anyone working in AI, voice technology, customer support, or human-computer interaction, pay attention. The next few years will be defined by companies that get emotional intelligence right. Hume AI just moved that timeline forward significantly by joining forces with Google's massive resources and reach.

The era of emotionally intelligent AI interfaces isn't coming. It's here. And it's going to change how people interact with technology in ways that are hard to overstate.

Key Takeaways

- Google DeepMind hired Hume AI CEO Alan Cowen and roughly seven engineers and licensed the startup's emotional AI technology

- Hume AI specializes in emotion detection from voice, allowing AI systems to understand user emotional state and adapt responses appropriately

- Voice interfaces are becoming the primary way users interact with AI, making emotional understanding a critical competitive differentiator

- The acqui-hire deal structure gives Google access to strategic talent and IP while attracting less regulatory scrutiny than a full acquisition

- Enterprise applications in customer support, healthcare, and education demonstrate immediate business value for emotionally intelligent AI systems

- OpenAI's ChatGPT voice mode has momentum but offers little explicit emotion detection, which Google is now building in through the Hume AI partnership

- This deal signals that emotion detection is moving from research to production, becoming table stakes for voice AI systems by 2026
