GitHub's AI Coding Agents: Claude, Codex & Beyond [2025]

You probably remember when GitHub Copilot first launched. It felt like science fiction. You'd type a comment, and suddenly the AI would write entire functions for you. Now? That's table stakes.

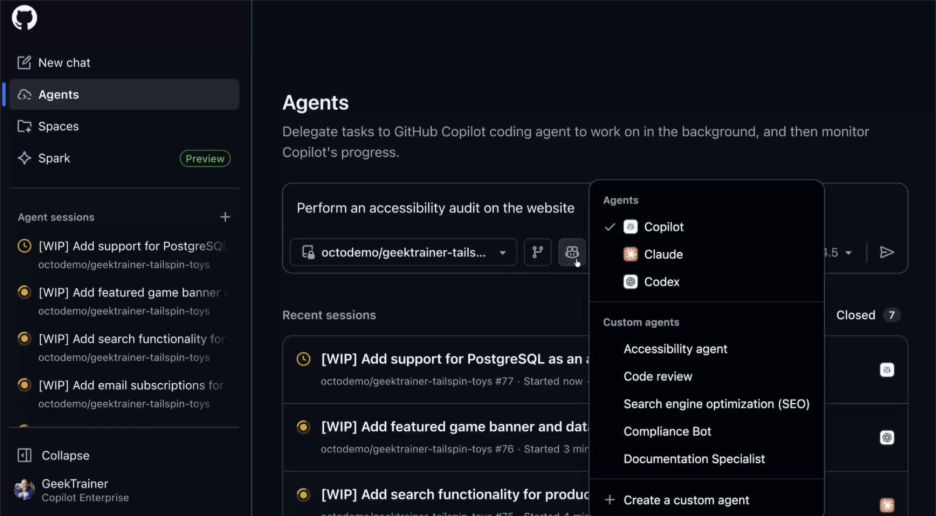

GitHub just opened the floodgates. Microsoft-owned GitHub announced something bigger: native support for multiple AI coding agents, not just Copilot. We're talking Anthropic's Claude and OpenAI's Codex now, agents from Google, Cognition, and xAI on the way, and custom agents you build yourself. All integrated directly into your workflow.

This changes everything. Instead of being locked into one AI model, you can now pick the right tool for the job. Need Claude's reasoning chops? Use Claude. Want Codex's speed? Switch to Codex. Building something custom? Write your own agent. It's like finally giving developers what they actually want: choice.

Let's dig into what this means, how it works, and why it matters more than the headlines suggest.

TL;DR

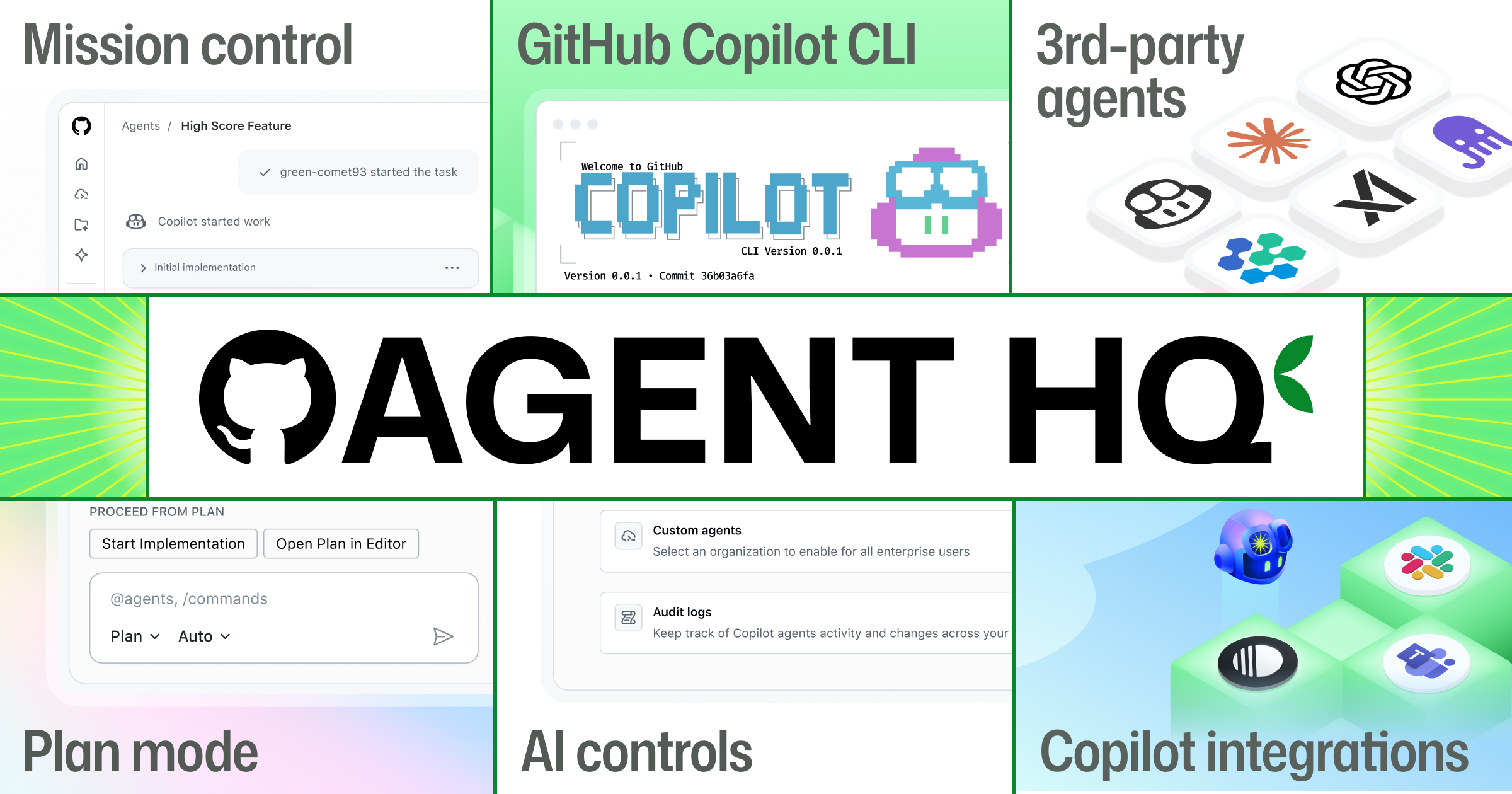

- Multiple AI models now available: GitHub Copilot Pro+ and Copilot Enterprise subscribers can choose between Copilot, Claude, Codex, and custom agents

- Seamless integration across platforms: Works in the web interface, mobile app, VS Code, and directly in issues and pull requests

- Collaboration like human teammates: Use @mentions to invoke agents in conversations, just like tagging colleagues

- More agents coming soon: Google, Cognition, and xAI agents are in the pipeline

- Flexible session-based pricing: During public preview, each agent session uses one premium request (final pricing TBA)

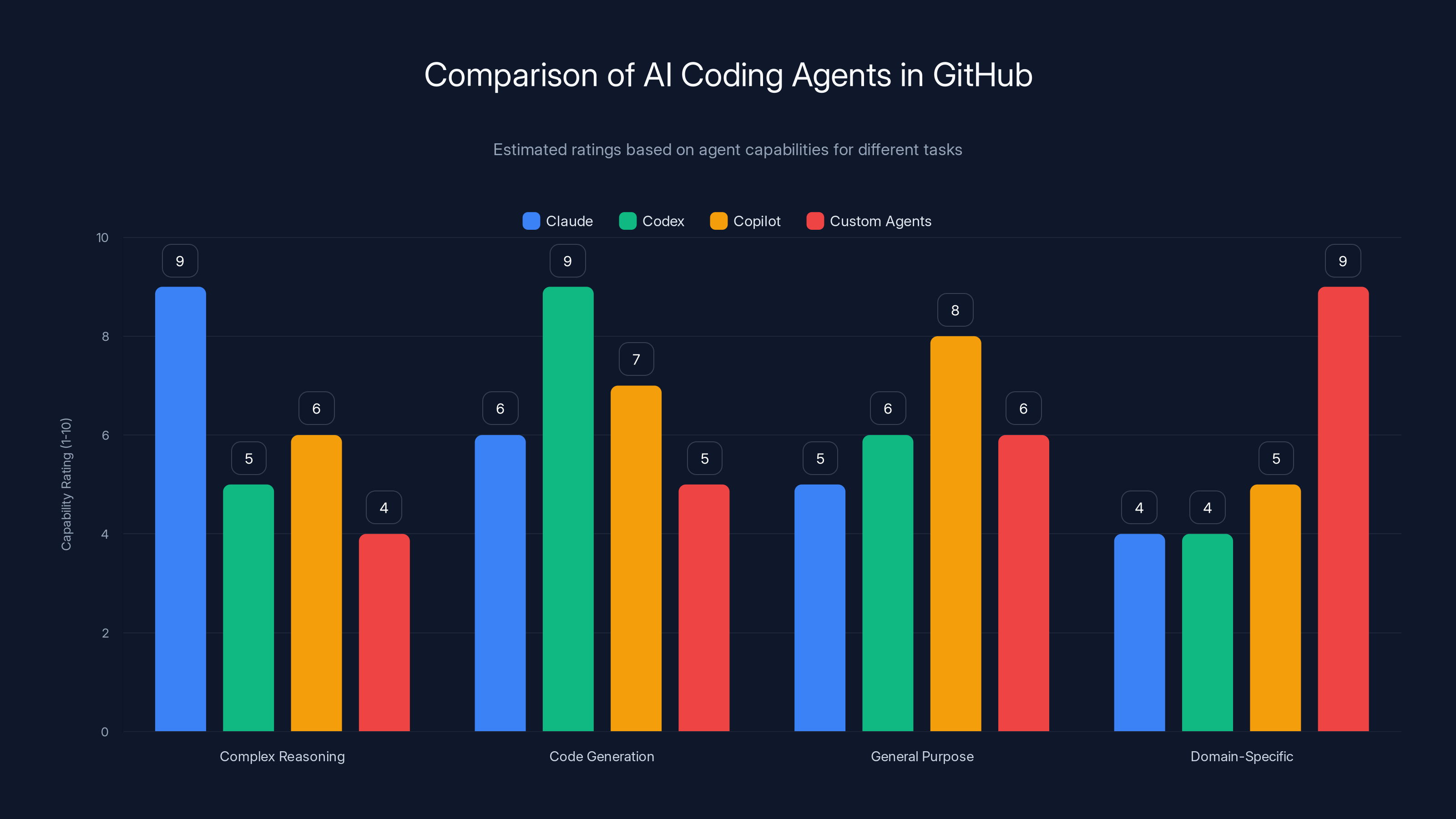

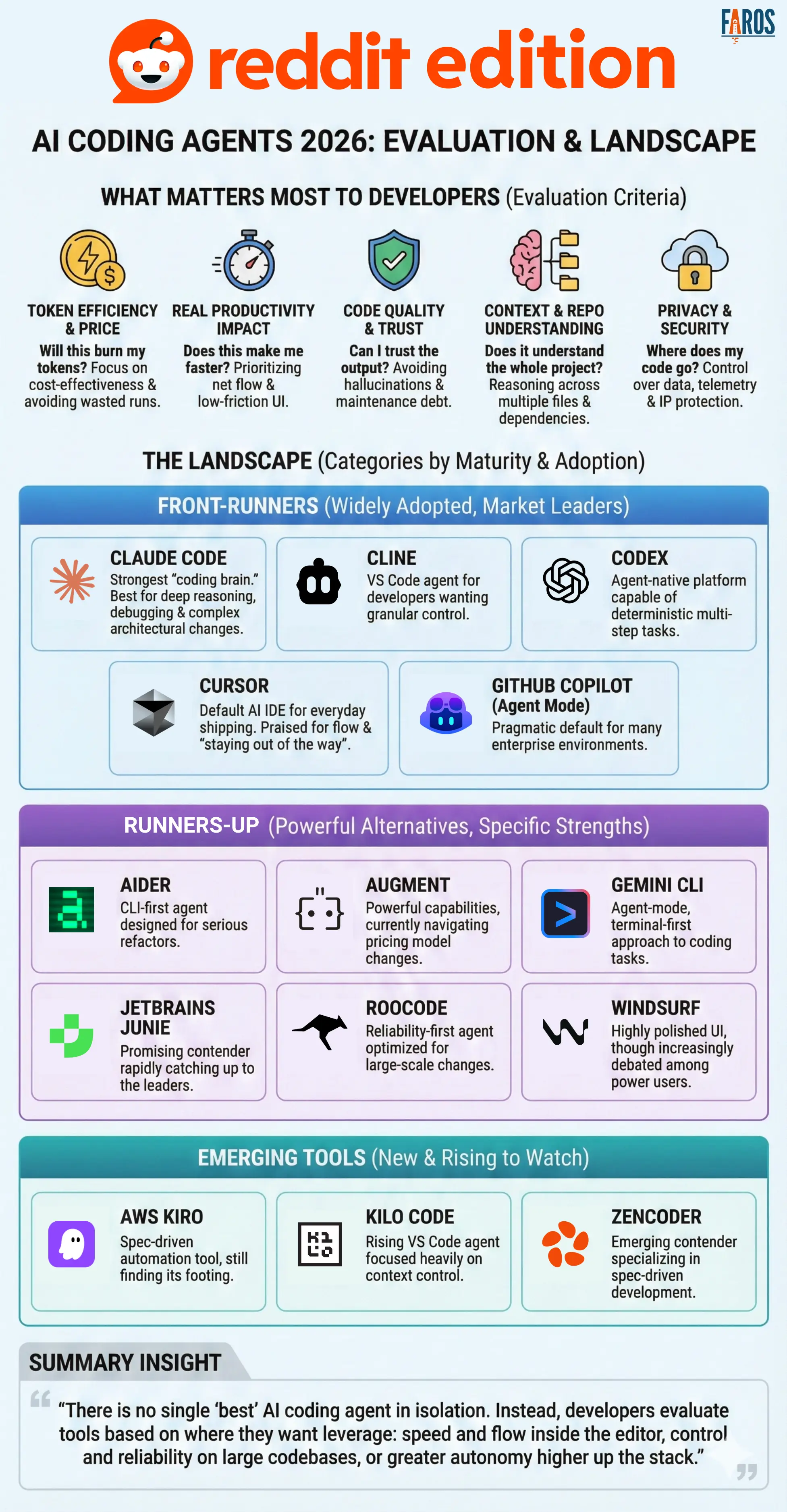

Estimated data shows Claude excels in complex reasoning, Codex in code generation, Copilot is balanced, and custom agents are best for domain-specific tasks.

The Big Picture: Why GitHub Made This Move

For years, GitHub Copilot was the only game in town. It worked well for most developers, but it had limitations. Some engineers swear by Claude for complex reasoning tasks. Others prefer the speed and specificity of purpose-built agents. And enterprises? They wanted the flexibility to use models they'd already invested in or models that fit their specific compliance needs.

GitHub faced a choice: stay closed and risk losing power users to competing platforms, or open up and become the infrastructure layer where developers can choose their own AI tools.

They chose to open up. And that's the smarter play.

Microsoft is OpenAI's largest investor, but offering only Copilot and OpenAI's models would've felt like protectionism. By supporting Claude (from Anthropic, a major OpenAI competitor) alongside Codex, GitHub signals something important: we're agnostic about which model you use. We just want to be the place where coding happens.

That's the infrastructure play. That's how you stay relevant.

The timing also matters. AI models are getting better, cheaper, and more specialized every quarter. Locking developers into a single model guarantees that within 12 months, someone else's agent will be objectively better for certain tasks. By opening the API, GitHub future-proofs itself against model commoditization.

How It Works: The Mechanics of Multi-Agent Integration

Let's talk about the actual experience. This isn't theoretical. Here's what you can actually do today.

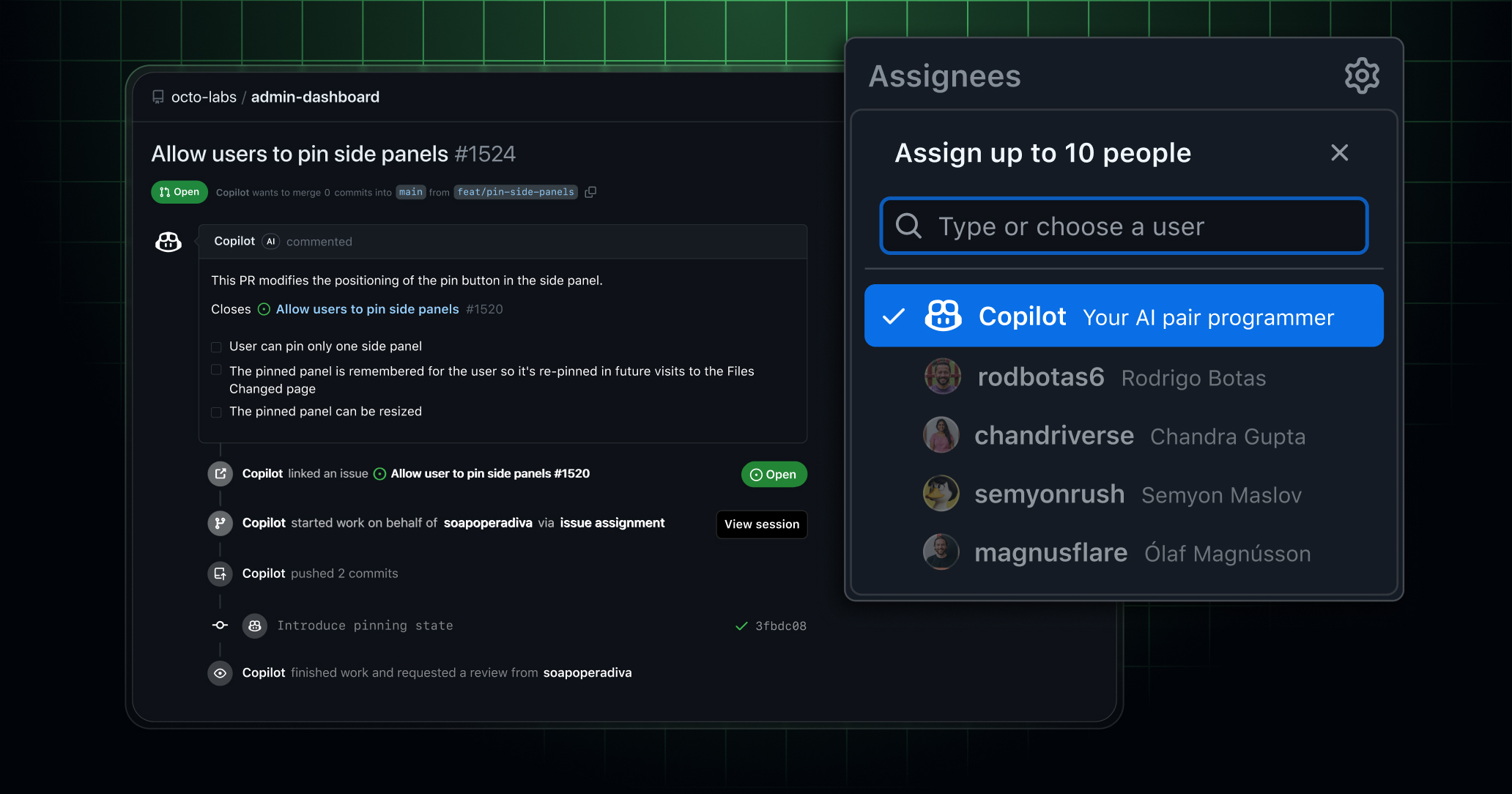

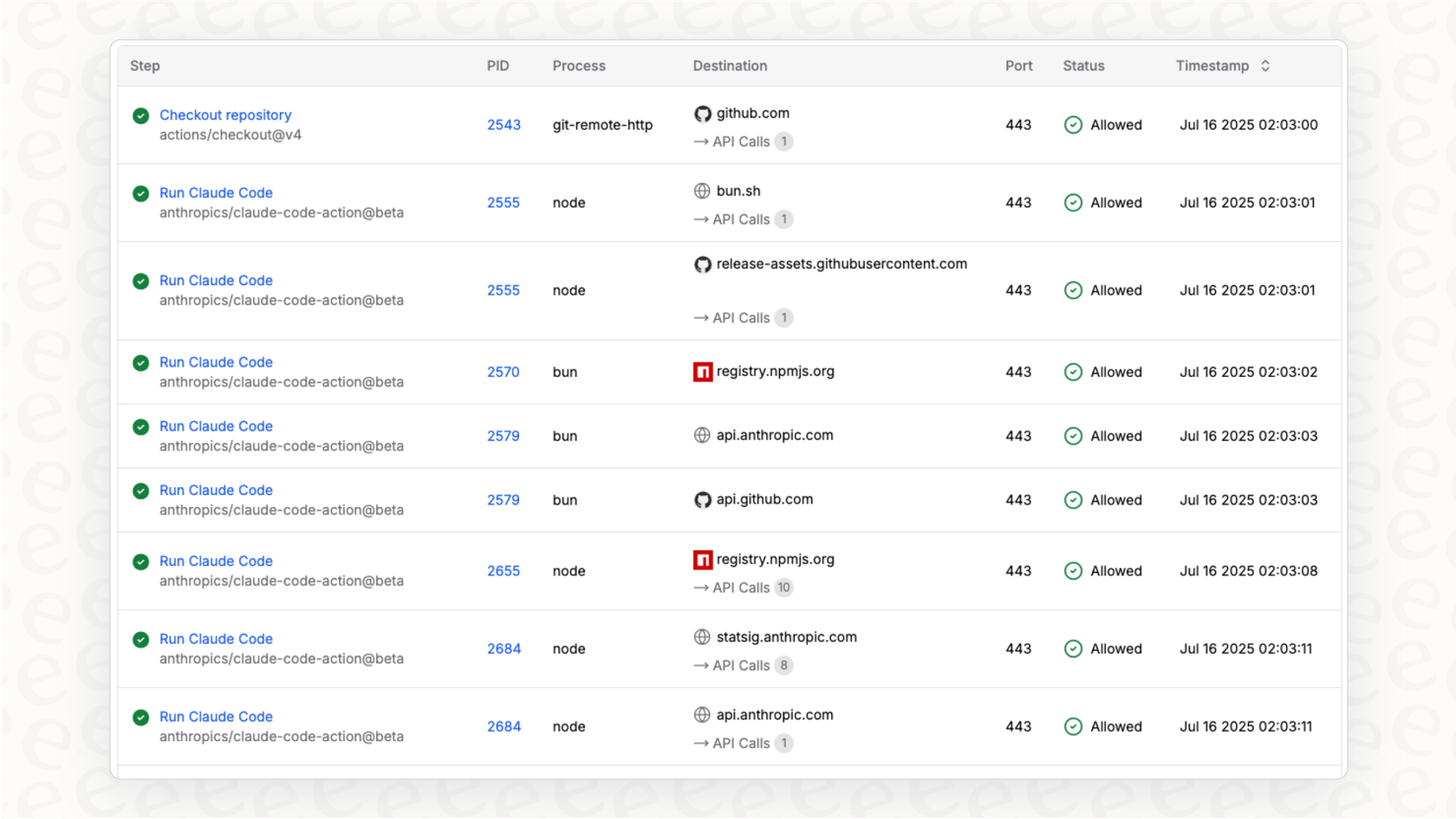

You're working on a pull request. You notice a bug in the logic. Instead of manually fixing it, you can now invoke an agent directly from the conversation:

You type: @claude please review this function and suggest optimizations

Claude analyzes the code in context. It understands the PR history, the issue description, and the codebase. It suggests changes inline. You accept, reject, or iterate. It works like talking to a colleague, except the colleague has read the entire repo.
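If you want to trigger that kind of request from a script or CI job, the @mention is just a comment on the pull request. The sketch below builds (but doesn't send) a call to GitHub's REST endpoint for issue comments, which pull request conversations share. Whether an @mention posted through the API starts an agent session exactly like a typed one is an assumption here, as are the helper name `build_mention_request` and the `GITHUB_TOKEN` environment variable.

```python
import json
import os
import urllib.request

API = "https://api.github.com"


def build_mention_request(owner: str, repo: str, pr_number: int,
                          agent: str, prompt: str, token: str) -> urllib.request.Request:
    """Build a POST that adds a PR conversation comment mentioning an agent.

    PR conversation comments use the issues comments endpoint.
    build_mention_request is a hypothetical helper, not part of any SDK.
    """
    body = json.dumps({"body": f"@{agent} {prompt}"}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API}/repos/{owner}/{repo}/issues/{pr_number}/comments",
        data=body,
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    req = build_mention_request(
        "octo-org", "octo-repo", 42, "claude",
        "please review this function and suggest optimizations",
        token=os.environ.get("GITHUB_TOKEN", "<token>"),
    )
    print(req.get_method(), req.full_url)
    # Sending it for real would be: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes the helper easy to test without touching the network.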

This happens everywhere GitHub code lives:

- In pull request reviews: @mention agents in review comments. They review code, flag security issues, and suggest improvements without needing separate tools.

- In issues: Ask an agent to clarify requirements, break down tasks, or draft implementation approaches.

- In the Agents tab: Enabled repositories now have a dedicated agents interface where you can see all active agent sessions, their outputs, and their reasoning.

- In VS Code: The VS Code extension now lets you invoke agents from the editor itself. Write code, ask Claude to refactor it, see suggestions in real time.

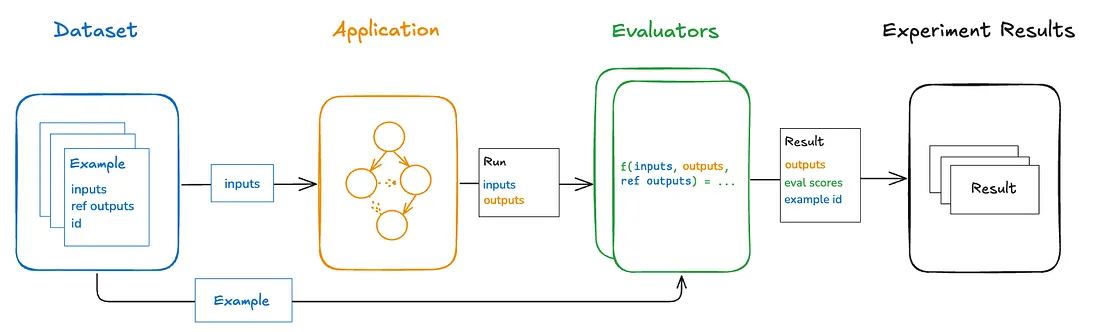

The magic is in the context. Unlike generic AI tools, these agents understand they're operating inside a specific repository, on a specific branch, discussing a specific issue. They have access to:

- File history and recent changes

- PR discussions and review comments

- Related issues and documentation

- Code comments and commit messages

- The entire dependency tree and test suite

That's a lot of context, and because it all lives on GitHub, agents can reach it natively instead of depending on whatever you paste into a chat window.

Estimated data shows GitHub Copilot Pro+ costs

Claude Integration: The Reasoning Specialist

Anthropic's Claude is the newcomer here. While Copilot and Codex have been around, Claude represents a different approach to reasoning and code generation.

Claude is known for:

- Longer context windows: Can analyze much larger codebases and document sets

- Better reasoning on complex problems: Excels at architectural decisions, not just quick fixes

- Fewer hallucinations: In many developers' experience, more likely to admit uncertainty and ask clarifying questions

- Better at documentation: Naturally writes clearer explanations alongside code

When would you choose Claude over Copilot?

You're building a microservices architecture. You need to decide: should this function be a separate service? What about data consistency? How do you handle failures? This is architectural reasoning, not code completion. Claude shines here. It will write out the trade-offs, weigh options, and explain why it made specific recommendations.

Or you're refactoring a legacy system. You need to understand what the original code was trying to do, identify the core business logic, and rewrite it in a modern way. Claude's strength isn't just in writing new code. It's in reasoning about code at a higher level.

Anthropic's Head of Platform Katelyn Lesse noted that one of the biggest wins here is distribution. Millions of GitHub users now have access to Claude without installing another tool. That democratizes access to sophisticated reasoning capabilities.

The pricing during public preview works like this: each Claude agent session consumes one premium request. Copilot Pro+ subscribers get a monthly allowance of premium requests shared across all agents. For Enterprise, pricing is negotiated, but the allowance is generally much higher.

Codex: The Speed Demon

OpenAI's Codex is the OG. The original Codex model powered the first version of Copilot; now Codex is available as a distinct agent with its own behavior.

Codex is built specifically for code. Rather than a general-purpose language model like GPT-4, it's tuned for software engineering, and its lineage traces back to a model trained on vast amounts of public code, so it understands programming idioms, common patterns, and best practices across almost every language.

What makes Codex special:

- Speed: Faster inference than more general models. When you need quick code generation, Codex is your agent.

- Specificity: Designed for code tasks, not for writing essays or poetry. It doesn't waste tokens on reasoning about non-coding problems.

- Pattern recognition: Extremely good at recognizing what you're trying to do from minimal context.

- Pragmatism: Will write working code quickly. It won't always explain why it works, but it will work.

When do you use Codex instead of Claude?

You're implementing a known algorithm. You need a quick implementation of a linked list, a sorting function, or a parser. Codex will usually get you working code faster than Claude. It understands the idioms, the edge cases, and the patterns.

Or you're in a sprint and you just need boilerplate code written. Codex is blunt and efficient. No lengthy reasoning. Just code.

OpenAI's Alexander Embiricos emphasized that with this integration, millions of developers who've never used Codex directly now have access to it in their primary workspace. That's not a small thing. The constraint on AI tools is no longer capability; it's adoption.

Custom Agents: Build Your Own AI Coding Partner

Here's where it gets really interesting. You're not limited to Claude, Codex, or Copilot. GitHub now lets you create custom agents tailored to your specific needs.

Why would you do this?

Your company has proprietary frameworks, libraries, and patterns. The off-the-shelf agents don't know about them. So you build a custom agent that's been fine-tuned or prompt-engineered to understand your codebase, your conventions, and your specific architectural patterns.

Or you have specific compliance requirements. You want an agent that will flag security vulnerabilities, suggest improvements, and refuse to generate code that violates your policies. You build that.

Or you're building a code generation tool for your users. You want to use GitHub as the underlying infrastructure while your own AI layers on top for business logic. You can do that now.

Custom agents can:

- Integrate your own models: If you've trained a specialized model on your codebase, you can plug it in.

- Add business logic: Implement rules, policies, and constraints that generic models won't follow.

- Call external APIs: Connect to your linting tools, testing frameworks, deployment pipelines, and monitoring systems.

- Maintain audit trails: Log exactly what the agent suggested, when, and why.

The technical implementation isn't trivial. Custom agents hook into GitHub's APIs, consume context about the repository, and return suggestions in a standard format. GitHub handles displaying those suggestions in the UI, managing session history, and integrating with your workflow.
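The exact interface GitHub uses for custom agents isn't spelled out here, so the sketch below sticks to the logic you would own: turning repository context into suggestions while enforcing a policy and keeping an audit trail. `RepoContext`, `Suggestion`, `Rule`, and `PolicyAgent` are all hypothetical names standing in for GitHub's real context and suggestion formats; the regex rule is one example of business logic a generic model won't enforce for you.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class RepoContext:
    """Hypothetical stand-in for the repository context an agent receives."""
    changed_files: dict[str, str]  # path -> new file contents


@dataclass
class Suggestion:
    """Hypothetical stand-in for the suggestion format GitHub renders."""
    path: str
    line: int
    message: str


@dataclass
class Rule:
    name: str
    pattern: re.Pattern
    message: str


@dataclass
class PolicyAgent:
    rules: list[Rule]
    audit_log: list[dict] = field(default_factory=list)

    def review(self, ctx: RepoContext) -> list[Suggestion]:
        suggestions: list[Suggestion] = []
        for path, text in ctx.changed_files.items():
            for lineno, line in enumerate(text.splitlines(), start=1):
                for rule in self.rules:
                    if rule.pattern.search(line):
                        suggestions.append(Suggestion(path, lineno, rule.message))
                        # Audit trail: what was flagged, where, when, and by which rule.
                        self.audit_log.append({
                            "time": datetime.now(timezone.utc).isoformat(),
                            "rule": rule.name,
                            "path": path,
                            "line": lineno,
                        })
        return suggestions


if __name__ == "__main__":
    agent = PolicyAgent(rules=[
        Rule("no-hardcoded-secrets",
             re.compile(r"(api_key|password)\s*=\s*['\"]"),
             "Load secrets from the secret store instead of hardcoding them."),
    ])
    ctx = RepoContext({"app/config.py": 'DEBUG = True\napi_key = "abc123"\n'})
    for s in agent.review(ctx):
        print(f"{s.path}:{s.line}: {s.message}")
```

A real custom agent would likely pair rules like this with a model call; keeping the deterministic policy checks separate makes them auditable on their own.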

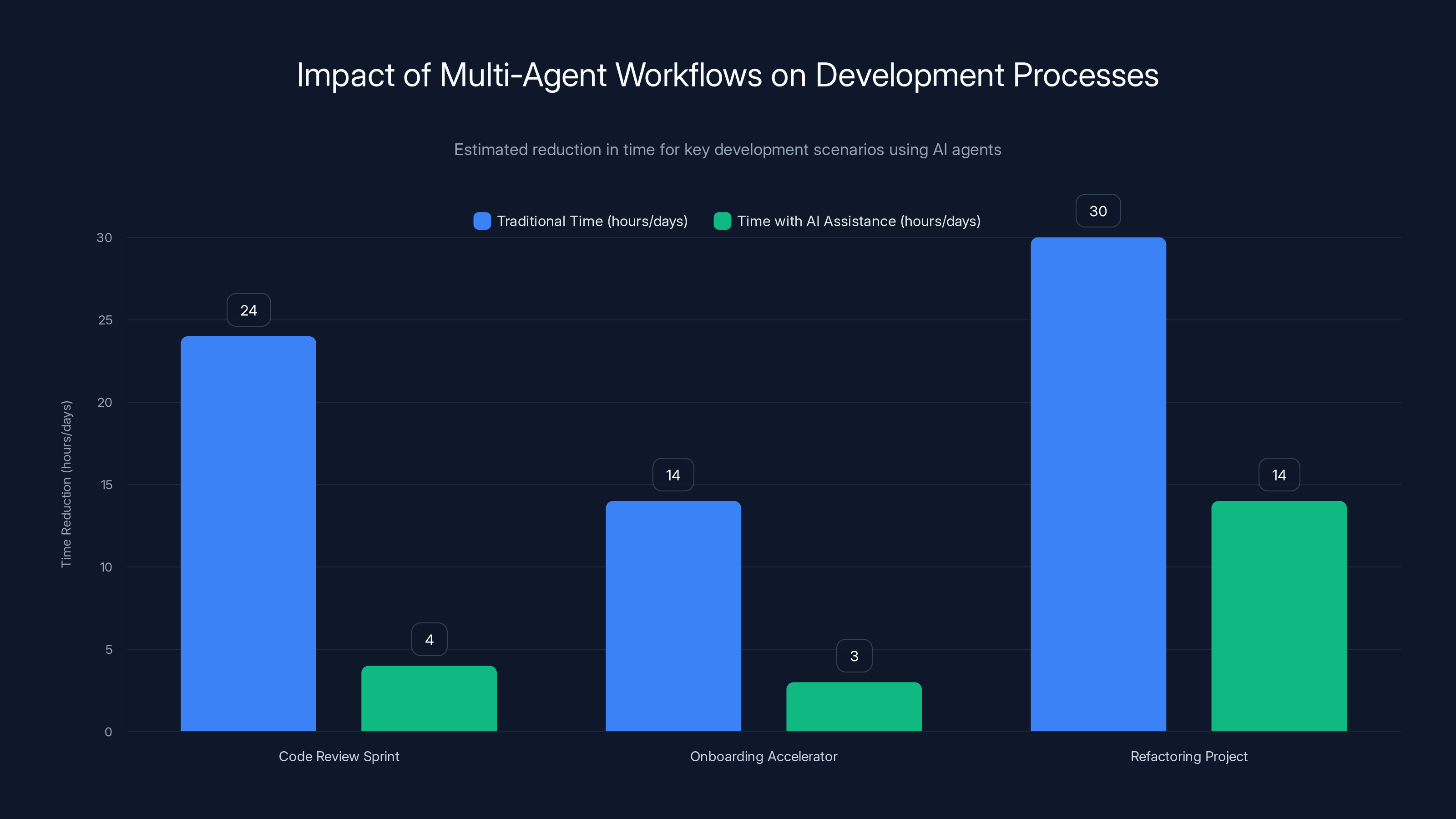

Using AI agents like Claude significantly reduces time in development processes: PR reviews from 24 to 4 hours, onboarding from 2 weeks to 3 days, and refactoring from a month to 2 weeks. Estimated data based on described scenarios.

The Experience: Using Agents in Your Daily Workflow

Let's ground this in reality. What does the actual developer experience look like?

You're in a pull request review. You notice the new code has a potential memory leak. You could leave a comment. You could ask the author to fix it. Or you can do this:

You type: @claude analyze this function for memory leaks and suggest fixes

Claude reads the function, the related code, the test suite, and the PR description. It identifies the memory leak (you were right), explains why it happens, and suggests a fix that includes updated tests. The whole thing takes minutes instead of a round-trip with the author.

You review Claude's suggestion. It's correct. You click "Accept" and it's applied to the PR. The PR author sees Claude's comment and understands exactly what to fix.

Compare this to the old workflow:

- You spot the issue

- You leave a comment

- You wait for the author to respond

- The author googles how to fix it

- The author writes a fix

- You review the fix

- Back and forth until it's right

Now? Everything from leaving the comment to reviewing the fix happens in minutes. The agent doesn't replace the human review, but it dramatically compresses the feedback loop.

Here's another scenario. You're planning a feature. You create an issue. You describe what you want to build. You mention: @codex break this down into implementation steps and estimate effort

Codex reads the issue, understands the requirement, and breaks it into concrete tasks: "1. Add database migration (2 hours), 2. Implement REST API endpoint (3 hours), 3. Add tests (2 hours), 4. Update documentation (1 hour)." It's not always perfect, but it's a solid starting point.

Or you're in VS Code, writing code. You hit a wall. You write a comment: // TODO: validate email and check against existing users

You invoke Codex from the editor's chat. It writes the function. You review it. You accept or edit. You move on.
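For a sense of what you'd be reviewing, here is one plausible shape for the function that TODO describes. It's a sketch, not actual Codex output: the pattern is deliberately loose, `existing_emails` stands in for whatever user store you have, and `EmailValidationError` is a made-up name.

```python
import re

# Deliberately simple: local@domain.tld with no whitespace or extra "@".
# Real-world address validation is messier; a confirmation email is the only
# reliable check that an address works.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


class EmailValidationError(ValueError):
    """Raised when an address is malformed or already registered."""


def validate_new_email(email: str, existing_emails: set[str]) -> str:
    """Return the normalized address, or raise if it is invalid or taken.

    existing_emails is assumed to hold lowercase addresses. Lowercasing the
    whole address is a common simplification; strictly, only the domain
    part is case-insensitive.
    """
    normalized = email.strip().lower()
    if not EMAIL_PATTERN.fullmatch(normalized):
        raise EmailValidationError(f"invalid email address: {email!r}")
    if normalized in existing_emails:
        raise EmailValidationError(f"email already registered: {email!r}")
    return normalized
```

Choices like lowercasing the entire address are exactly what the review step is for: the code works, but whether it matches your product's rules is a human call.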

The pattern is consistent: agent as collaborative partner, not replacement for human judgment.

Comparing the Agents: Which One Do You Use?

So now you have choices. How do you actually decide?

Use Claude when:

- You need architectural guidance or high-level design feedback

- You're working on complex refactoring that requires understanding intent

- You need detailed explanations or documentation

- You want better reasoning about trade-offs

- You're dealing with code that has subtle logic

Use Codex when:

- You need quick code generation for known problems

- You're writing boilerplate or scaffolding

- Speed matters more than explanation

- You're implementing standard algorithms or patterns

- You're in a high-throughput coding session

Use Copilot when:

- You're in familiar territory and want balanced speed and quality

- You're already invested in the GitHub ecosystem

- You want the model that's been tuned specifically for GitHub's platform

Use custom agents when:

- You have domain-specific knowledge that generic models lack

- You need to enforce company policies or standards

- You're building a product that needs specialized coding assistance

- Your codebase has unique conventions or frameworks

Here's the real power: you don't have to choose one. You use different agents for different tasks. That flexibility is what GitHub is betting on.
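If your team wants these rules of thumb written down where everyone can see and change them, a lookup table is enough. The task categories below are made up for illustration. `@claude` and `@codex` follow the examples in this article; `@copilot` and `@policy-bot` (a placeholder for a custom agent) are assumptions, so check the handles your organization actually has enabled.

```python
from enum import Enum, auto


class Task(Enum):
    ARCHITECTURE = auto()
    COMPLEX_REFACTOR = auto()
    EXPLANATION = auto()
    BOILERPLATE = auto()
    STANDARD_ALGORITHM = auto()
    GENERAL = auto()
    COMPANY_POLICY = auto()


# One entry per rule of thumb; the handles are assumptions, not confirmed names.
ROUTES: dict[Task, str] = {
    Task.ARCHITECTURE: "@claude",
    Task.COMPLEX_REFACTOR: "@claude",
    Task.EXPLANATION: "@claude",
    Task.BOILERPLATE: "@codex",
    Task.STANDARD_ALGORITHM: "@codex",
    Task.GENERAL: "@copilot",
    Task.COMPANY_POLICY: "@policy-bot",
}


def mention_for(task: Task, prompt: str) -> str:
    """Return the comment text to post, falling back to Copilot if unmapped."""
    return f"{ROUTES.get(task, '@copilot')} {prompt}"


if __name__ == "__main__":
    print(mention_for(Task.BOILERPLATE, "scaffold a REST handler for /users"))
```

The value isn't the dictionary itself; it's having the team's conventions in one reviewable place, so they can change as you learn which agent actually works best for what.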

The Coming Wave: Google, Cognition, and xAI

GitHub's announcement confirmed that Claude and Codex are just the beginning. More agents are coming. Specifically:

- Google agents: Likely leveraging Google's Gemini or specialized code-focused models

- Cognition agents: Cognition built Devin, an AI software engineer. A Cognition agent in GitHub would be a full development partner.

- xAI agents: xAI, founded by Elon Musk, is building reasoning-focused models. An xAI agent could bring novel reasoning approaches to code.

Each of these represents a different specialization. Google's model might be optimized for security. Cognition's might be optimized for full-stack development. xAI's might focus on reasoning about distributed systems.

This is the real endgame. Not a single best AI model, but an ecosystem of specialized agents that you pick from based on the task.

The question that gets interesting: as more agents appear, does GitHub become less of a code hosting platform and more of an AI orchestration platform? Does the value shift from "managing your code" to "managing your AI tools"?

Probably. That's where the competitive moat is. Amazon Web Services didn't win by having the cheapest servers. They won by becoming the de facto infrastructure layer. GitHub is positioning itself as the de facto AI coding infrastructure layer.

That's a much bigger prize than being a better code editor.

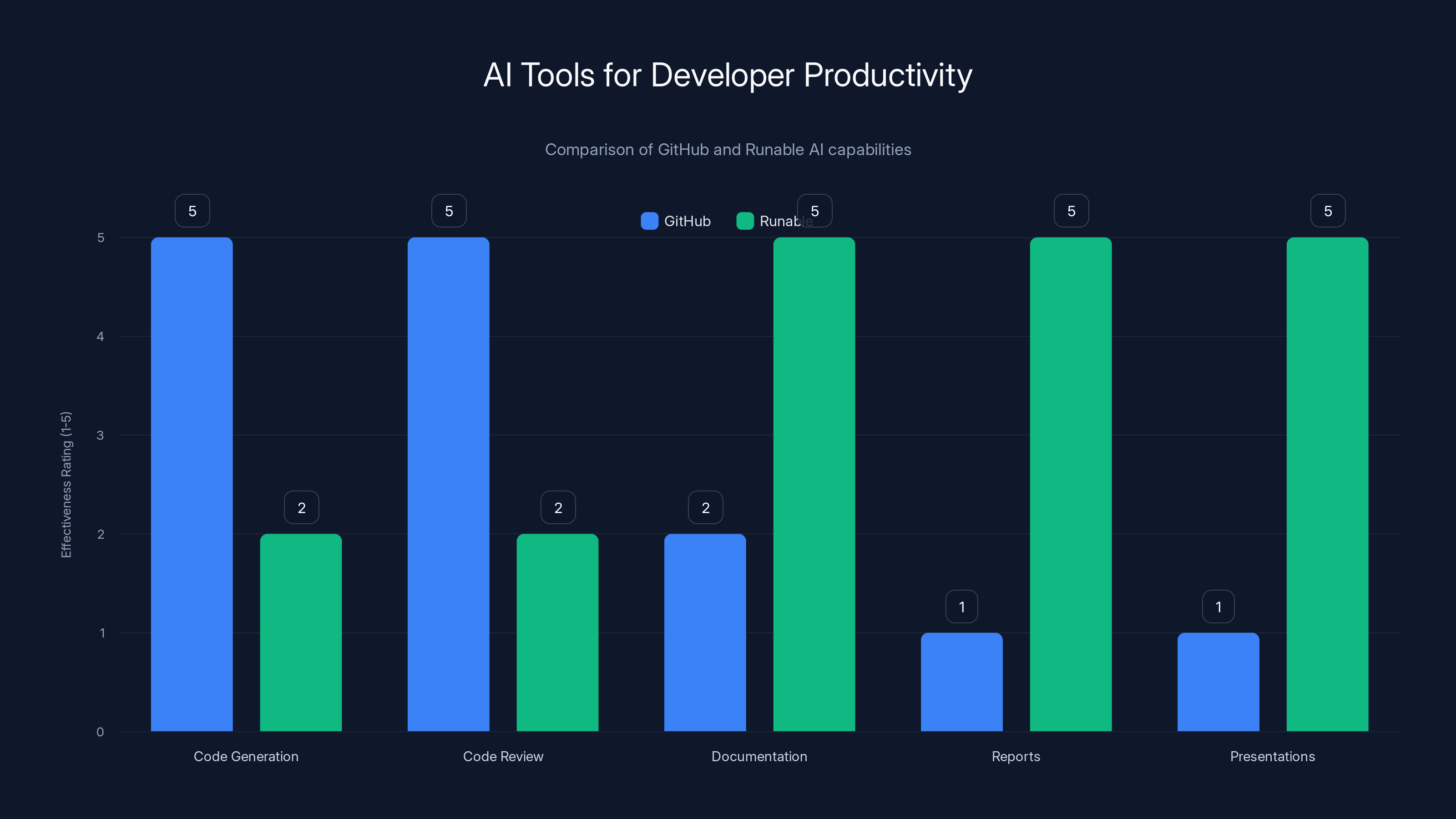

GitHub excels in code-related tasks, while Runable enhances documentation and communication. Estimated data.

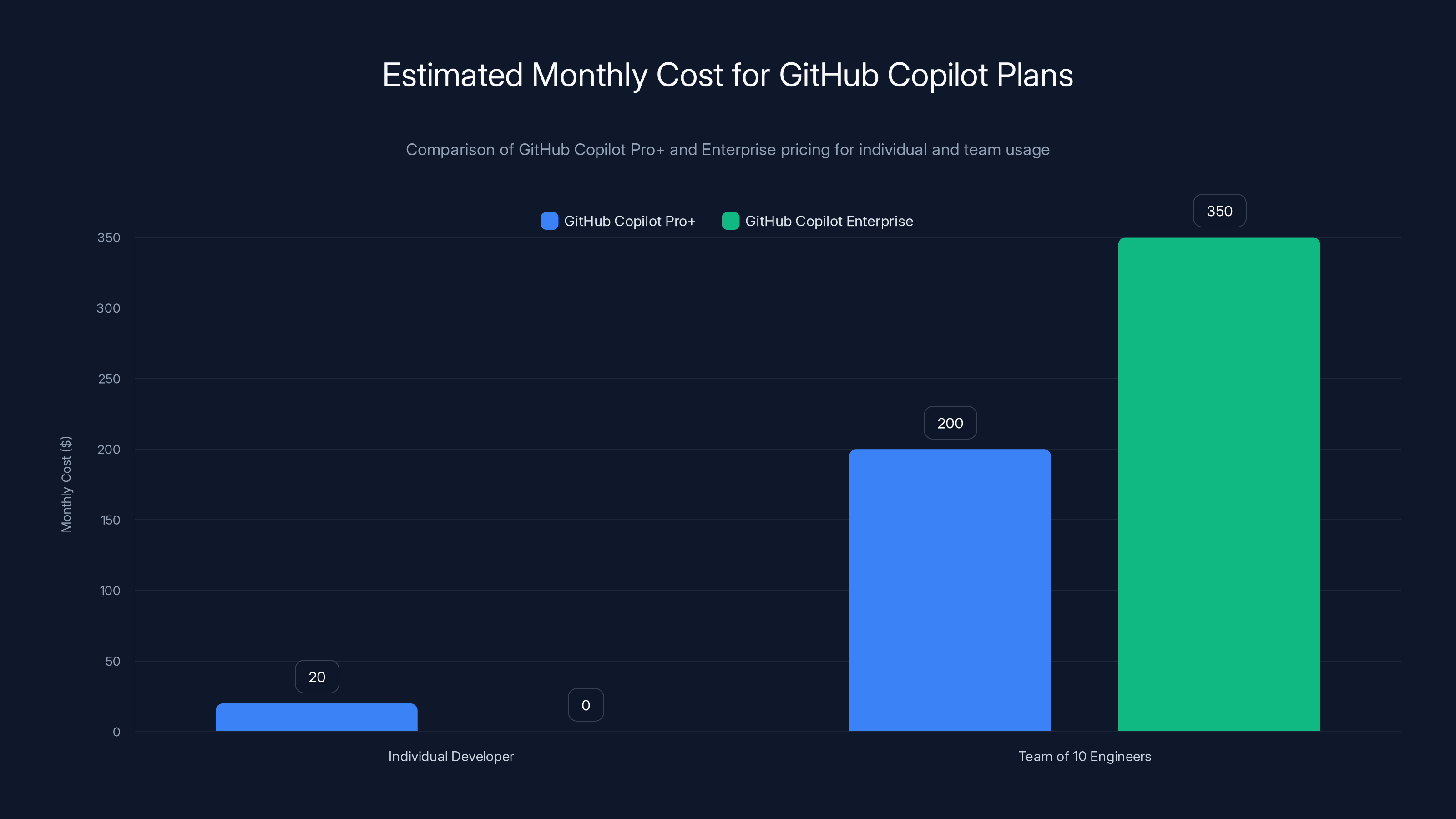

Pricing and Access: Who Gets to Use This?

Let's talk practicalities. This isn't free. It's not even cheap.

Current access is limited to:

- GitHub Copilot Pro+: Monthly subscription. You get access to Claude, Codex, and Copilot. Each agent session uses one "premium request" from your monthly allocation.

- GitHub Copilot Enterprise: For teams and organizations. Pricing is per-seat. Premium request allowances are higher.

During public preview, exact pricing for future agents isn't finalized. GitHub confirmed that formal pricing will be established later. That's corporate speak for "we're still figuring out how much we can charge before people abandon the platform."

Here's the math: if you're a team of 10 engineers and each engineer runs 20 agent sessions per working day, that's 200 premium requests a day, or about 4,000 per month (assuming 20 working days). Copilot Pro+ gives you some number of premium requests per month (not yet public). If you exceed that, you either pay per request or wait for your allowance to reset.
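To budget this for your own team, the calculation is simple enough to script. This sketch assumes one premium request per agent session (the preview rule) and 20 working days a month, so 10 engineers × 20 sessions × 20 days comes to 4,000 requests. The 250-request allowance in the example is a made-up placeholder, not GitHub's number.

```python
WORKING_DAYS_PER_MONTH = 20  # assumption; adjust for your calendar


def team_monthly_requests(engineers: int, sessions_per_day: int,
                          working_days: int = WORKING_DAYS_PER_MONTH) -> int:
    """Premium requests a team uses in a month at one request per session."""
    return engineers * sessions_per_day * working_days


def overage_per_engineer(sessions_per_day: int, monthly_allowance: int,
                         working_days: int = WORKING_DAYS_PER_MONTH) -> int:
    """Requests one engineer uses beyond their allowance (0 if within it)."""
    return max(0, sessions_per_day * working_days - monthly_allowance)


if __name__ == "__main__":
    print(team_monthly_requests(10, 20))  # 4000
    # 250 is a placeholder allowance, not a published figure.
    print(overage_per_engineer(20, 250))  # 150
```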

For individual developers, GitHub Copilot Pro+ is roughly

Is it worth it? Depends on your workflow. If agents save you 2+ hours per week, and you bill

One thing to watch: as more models become available, will each one have separate pricing? Will Google's agent be priced differently from xAI's? Almost certainly yes. GitHub will probably bundle them into packages (e.g., "Get access to Claude + Codex" vs "Get access to Google + Cognition").

That's where the platform strategy matters. GitHub doesn't care which agent you use. They care that you use agents on GitHub instead of on some competitor's platform.

Security, Privacy, and Trust

Here's the uncomfortable question nobody's asking yet: what happens to your code when you send it to an AI agent?

When you invoke Claude or Codex through GitHub, your code leaves your repository and goes to Anthropic's or OpenAI's servers for processing. GitHub says that code is processed according to their privacy policies, but let's be honest: you're trusting a third party with your intellectual property.

For open-source projects, this is fine. Your code is public anyway. For proprietary code, you should think carefully about this.

GitHub Enterprise probably has more restrictive terms. You can likely negotiate data handling, retention, and usage policies. For Pro+ users, you're subject to the standard terms.

Here's what you should know:

- Anthropic and OpenAI say they don't use your code for training. That's good. But they do process it.

- GitHub says it implements reasonable security measures. That's corporate speak for "we follow industry standards."

- You don't have end-to-end encryption with these agents. Your code is visible to the AI service provider.

- There's no guarantee of compliance with specific regulations unless you're on Enterprise.

If you're building a financial services app, or healthcare software, or anything with strict regulatory requirements, read the fine print before using agents on proprietary code. Or use custom agents that you control.

For most developers, this is a non-issue. Your code isn't that sensitive. The AI providers aren't interested in stealing it. The real risk is minor: maybe an engineer accidentally commits proprietary code to a public repo, and that gets picked up by an AI during training. But that's a problem with the engineer, not the tool.

The Integration with VS Code and the GitHub Ecosystem

One of the smartest moves GitHub made is integrating agents directly into VS Code. When you invoke an agent from your editor, you're reaching the same agents you'd use on GitHub's web interface.

This matters for consistency. Your agent behaves the same way whether you're in your editor or in a PR review on the web. It has access to the same context. It returns suggestions in the same format.

The workflow becomes:

- You're coding in VS Code

- You open the agent chat from the editor

- An agent dialog opens

- You describe what you need

- The agent suggests code or fixes

- You accept or iterate

- The suggestion is applied to your editor

Then later:

- You push your code

- You create a pull request

- A colleague invokes the same agent in the PR

- It reviews your code with the full PR context

- The feedback loop is tight and fast

This integration works because Microsoft controls both the platform (GitHub) and the client (VS Code). That's why GitHub's position is so strong.

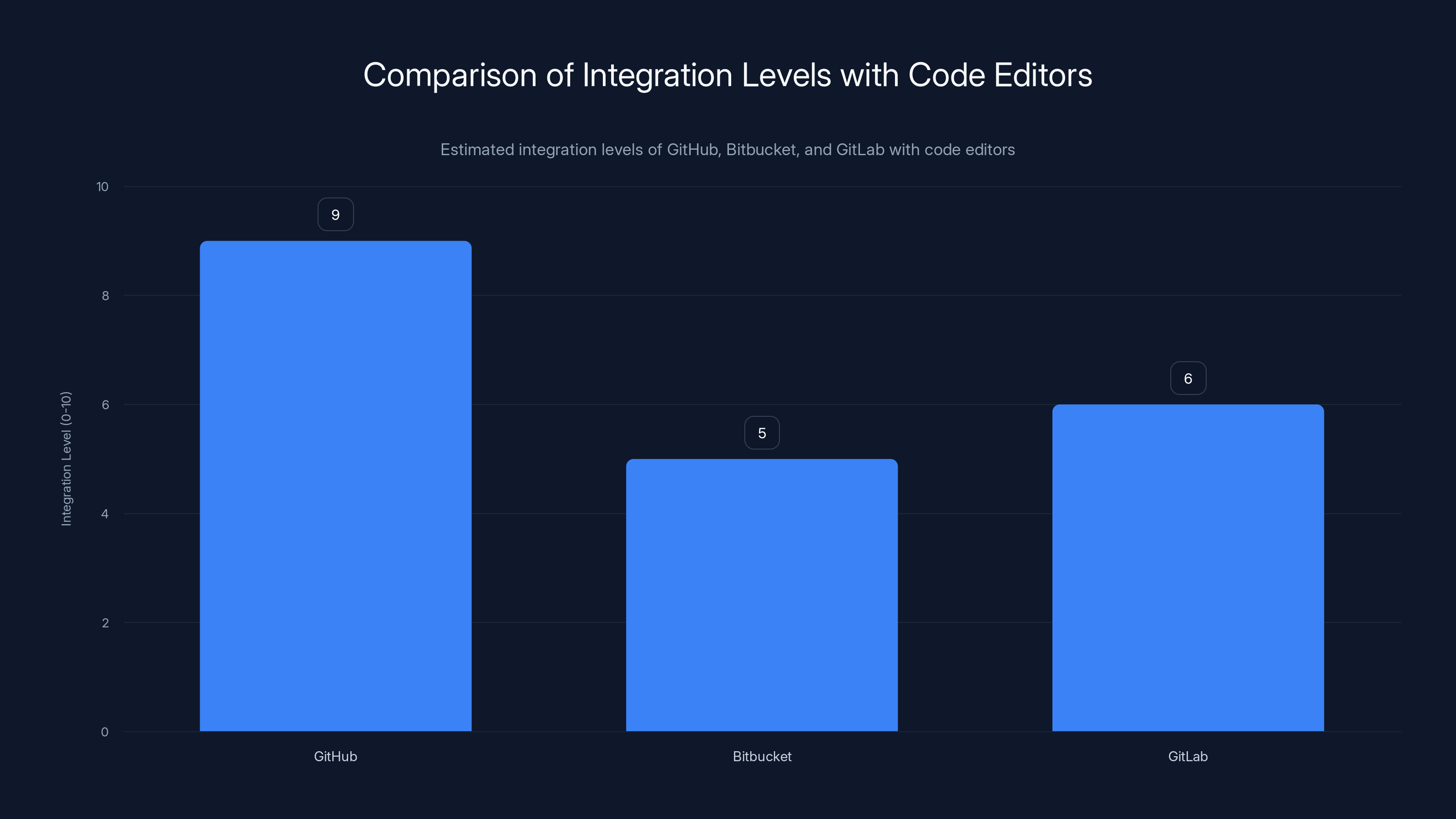

Compare this to competitors like Bitbucket or GitLab. They have repository management. They don't have the same level of editor integration. They don't have the same relationships with AI companies.

GitHub's vertical integration (platform + client + agent APIs) is a structural advantage. Competitors can catch up on features, but they can't catch up on ecosystem lock-in.

GitHub has a higher integration level with code editors like VS Code due to its native features, compared to Bitbucket and GitLab. (Estimated data)

How Development Teams Are Actually Using This

Let's move from theory to practice. Real teams are already using multi-agent workflows. Here's what they're doing:

Scenario 1: The Code Review Sprint

A team of 5 engineers is reviewing pull requests. Traditionally, PR reviews are slow. Code sits waiting for review. This team now does this:

- Engineer A pushes code

- Engineer B is busy, so they invoke @claude to do an initial pass

- Claude flags potential issues, suggests optimizations

- Engineer B reviews Claude's feedback, adds human judgment

- By the time Engineer B gets to the full review, 80% of the work is done

Result: PR review cycle time dropped from 24 hours to 4 hours. The team ships faster.

Scenario 2: The Onboarding Accelerator

A new engineer joins the team. The codebase is huge and complex. Normally, it takes 2 weeks to get productive. Now:

- New engineer reads the README

- New engineer starts exploring code

- When confused, they invoke @claude to explain what a module does

- Claude reads the code and explains it in context

- New engineer builds mental models faster

Result: Productive in 3 days instead of 2 weeks. Claude isn't explaining generic concepts. It's explaining this specific code.

Scenario 3: The Refactoring Project

A team needs to refactor legacy code. It's risky. They don't want to break anything. They do this:

- They create a branch

- They invoke @claude to analyze the module

- Claude identifies the core business logic and suggests a refactoring approach

- They implement the refactoring

- They run tests

- They use @codex to generate tests for edge cases

- All tests pass

Result: A refactoring that normally takes a month takes 2 weeks. The risk is lower because the AI helped identify edge cases.

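The workflows aren't hypothetical: teams are adopting patterns like these. The specific figures above are illustrative estimates, though, not benchmarks. What's consistent is the direction: faster cycle times, fewer bugs, better code quality.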

The Competitive Response: What Other Platforms Are Doing

GitHub isn't alone in the AI agents space, but they've moved first and decisively. Let's see what competitors are doing:

GitLab has GitLab Duo, an AI assistant built into their platform. It's improving, but at the time of writing it's still single-agent, and GitLab hasn't announced multi-agent support.

Bitbucket (owned by Atlassian) hasn't announced significant AI agent capabilities. They're playing catch-up.

Azure DevOps (Microsoft's enterprise platform) will almost certainly get GitHub agent parity, but through Microsoft integration rather than native support. You'll probably use agents imported from GitHub, not natively in Azure DevOps.

IDEs such as JetBrains' IntelliJ family are adding AI assistants, but they're isolated from the repository and PR system. You get local code suggestions, but not the deep GitHub context integration.

The lesson: GitHub's position is structural, not just technical. They own the repository, the PR system, the issue tracker, and increasingly, the AI integration. Competitors can build better AI, but they can't match the context integration.

This is why GitHub's move is so significant. It's not about having the best AI model. It's about controlling the infrastructure where AI and code meet.

Best Practices for Using AI Agents in Production

GitHub opened this door. Your team probably should walk through it. But you should do it wisely. Here are best practices:

1. Start small with low-risk tasks

Don't start by having agents refactor your critical path. Start with documentation, tests, or boilerplate code. Let your team build confidence in the agents' suggestions.

2. Always review agent suggestions

The AI isn't infallible. It will miss edge cases. It will suggest code that looks right but has subtle bugs. Human review is still essential. The agent just compresses the time to get to review.

3. Use different agents for different tasks

Don't use Claude for everything. Use it for reasoning tasks. Use Codex for speed. Use Copilot for balanced general work. Match the agent to the task.

4. Document agent usage in your PR descriptions

When an agent contributed to the code, mention it. "This refactoring was suggested by Claude and validated with tests." Transparency builds trust and helps the team learn what agents are good for.

5. Set usage guidelines for your team

Establish norms around when agent usage is appropriate and when it's not. Some companies don't want agents touching security-critical code. Some don't want agents generating UI code without human review. Set boundaries.

6. Monitor costs

Agent usage can add up. If each session costs money, track it. Make sure you're not burning premium requests on trivial tasks.
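Tracking doesn't need special tooling to start. This minimal sketch assumes you can export one record per agent session as an (engineer, agent) pair (how you get that export is left open) and that each session costs one premium request, as during the preview. The per-engineer allowance is a placeholder parameter, since the real figure isn't published.

```python
from collections import Counter
from typing import Iterable


def usage_report(sessions: Iterable[tuple[str, str]], allowance: int,
                 warn_at: float = 0.8) -> tuple[Counter, list[str]]:
    """Summarize one month of agent sessions.

    sessions: (engineer, agent) pairs, one per session (one premium request).
    allowance: per-engineer monthly allowance (placeholder value).
    Returns per-agent session counts and the engineers at or above
    warn_at * allowance, sorted by name.
    """
    sessions = list(sessions)  # accept generators as well as lists
    per_agent = Counter(agent for _, agent in sessions)
    per_engineer = Counter(engineer for engineer, _ in sessions)
    threshold = warn_at * allowance
    flagged = sorted(e for e, n in per_engineer.items() if n >= threshold)
    return per_agent, flagged


if __name__ == "__main__":
    log = [("ana", "@claude")] * 9 + [("bo", "@codex")] * 2
    per_agent, flagged = usage_report(log, allowance=10)
    print(dict(per_agent), flagged)
```

Flagging at 80% of the allowance rather than at 100% gives people a chance to save requests for the tasks that matter before the month runs out.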

7. Gather feedback

After a month of agent usage, survey your team. What worked? What didn't? Which agents did they actually use? Use that feedback to refine your approach.

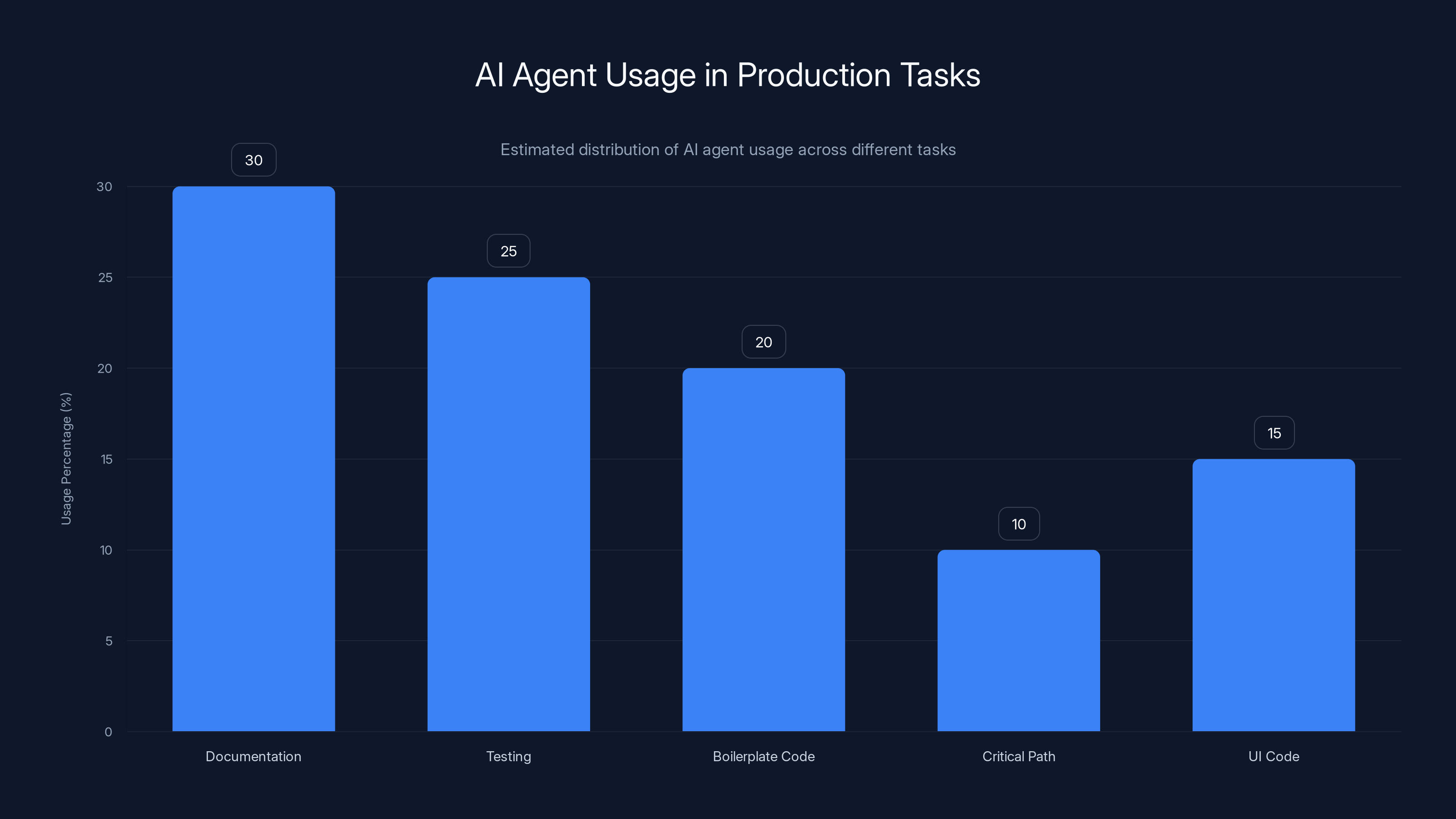

AI agents are most commonly used for documentation and testing tasks, while critical path tasks see minimal usage. Estimated data based on best practices.

The Long-Term Vision: Where This Is Heading

GitHub's multi-agent announcement is a strategic move, but it's also a glimpse into the future of software development.

In 5 years, I predict:

- AI agents are invisible infrastructure. They'll be so integrated into the workflow that you won't think of them as "using an AI agent." You'll just ask a question in your PR, and an answer appears. The fact that an AI generated it will be irrelevant.

- Agents become specialized. The generic "code generation" agent dies. Instead, there's an agent for security, an agent for performance, an agent for API design, an agent for database optimization. You use the one that matters for the task.

- Agents understand your codebase deeply. They're not generic. They've seen your commit history, your architecture decisions, your failures. They understand why you built things the way you did. That context makes them 10x more useful.

- Agents participate in planning. Before you write a line of code, an agent helps you think through the problem. It suggests edge cases, identifies potential issues, and helps you design the solution before implementation starts.

- Agents are accountable. As they become more powerful, there's pressure to track what they suggested, why they suggested it, and how it performed. Audit trails become critical. When an agent's suggestion causes a bug, you need to know why.

The net result: development cycles get much tighter. The feedback loop between "I have an idea" and "the idea is live" shrinks from weeks to days.

But this also changes what it means to be a developer. The skills that matter shift from "can you write code" to "can you supervise and improve code that an AI wrote." That's not a threat to developers. It's an evolution. The developers who embrace it will be more productive than those who resist it.

Implementation Guide: How to Roll Out Agents to Your Team

If you're managing a team and you want to adopt GitHub agents, here's a realistic implementation plan:

Week 1: Setup and Education

- Enable Copilot Pro+ or Enterprise for your team

- Give everyone access to Claude, Codex, and Copilot agents

- Hold a 30-minute meeting explaining what agents are and how to use them

- Create a Slack channel for sharing agent tips and use cases

Week 2-3: Pilot with volunteers

- Identify 2-3 engineers who are curious about AI and willing to experiment

- Have them use agents for low-risk tasks: tests, documentation, boilerplate

- Collect feedback

Week 4: Expand and formalize

- Roll out to the whole team

- Establish guidelines on what agent usage is appropriate

- Start tracking which agents your team uses and when

Month 2: Optimize and scale

- Analyze usage patterns

- Build custom agents if needed

- Start using agents in code reviews and pair programming

Month 3+: Embed agents in your development process

- Agents become part of your standard workflow

- You track velocity metrics to see if agents are actually making you faster

- You refine your practices based on what works

The key: don't try to force agents into every aspect of your workflow. Let them find their natural place. Different tasks, different agents. Some tasks don't benefit from agent assistance at all.

Common Pitfalls and How to Avoid Them

Teams that adopt agents often make the same mistakes. Here's how to avoid them:

Pitfall 1: Trusting agents too much

An agent suggested something, so it must be right. No. Agents are wrong constantly. They're just wrong in interesting ways. They'll confidently suggest code that looks plausible but fails edge cases. Always review.

Pitfall 2: Using agents as a crutch for junior developers

A junior engineer uses agents to avoid learning. They never write code themselves. Then they can't debug when things go wrong. Agents should supplement learning, not replace it.

Pitfall 3: Overcomplicating agent usage

You don't need perfect custom agents trained on your codebase. Start with Claude and Codex. Most teams find that's enough.

Pitfall 4: Not measuring the impact

You can't improve what you don't measure. Track PR review time, deployment frequency, bug rates, and team sentiment before and after agents. You'll find that agents help with some things and don't move the needle on others.
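For PR review time, one reasonable metric is the median number of hours from a pull request's creation to its first review. The sketch below assumes you've already pulled the timestamps, for example a pull request's `created_at` and the earliest review's `submitted_at` from GitHub's REST API; the sample data is made up purely to show the calculation. The median is used instead of the mean so one stale PR doesn't swamp the number.

```python
from datetime import datetime
from statistics import median


def _parse(ts: str) -> datetime:
    # GitHub timestamps end in "Z"; fromisoformat only accepts "Z" from 3.11 on.
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def median_hours_to_first_review(prs: list[tuple[str, str]]) -> float:
    """prs: (created_at, first_review_submitted_at) pairs in ISO 8601.

    Raises statistics.StatisticsError if prs is empty.
    """
    hours = [(_parse(done) - _parse(opened)).total_seconds() / 3600
             for opened, done in prs]
    return median(hours)


if __name__ == "__main__":
    # Made-up sample data, purely illustrative.
    before = [("2025-03-03T09:00:00Z", "2025-03-04T09:00:00Z"),
              ("2025-03-03T10:00:00Z", "2025-03-03T22:00:00Z"),
              ("2025-03-04T08:00:00Z", "2025-03-05T20:00:00Z")]
    after = [("2025-04-07T09:00:00Z", "2025-04-07T12:00:00Z"),
             ("2025-04-07T10:00:00Z", "2025-04-07T15:00:00Z"),
             ("2025-04-08T08:00:00Z", "2025-04-08T12:00:00Z")]
    print(f"before: {median_hours_to_first_review(before):.1f} h")
    print(f"after:  {median_hours_to_first_review(after):.1f} h")
```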

Pitfall 5: Treating agents as a cost instead of an investment

Agents cost money. But if they save 5 hours per engineer per week, the cost is negligible. Teams that adopt agents seriously can plausibly recoup the cost within the first month through increased velocity.
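To check that claim against your own numbers, the break-even point is simple arithmetic. This sketch takes your fully loaded hourly cost and the monthly cost of the agents as inputs (no actual price is filled in) and assumes 52/12, roughly 4.33, weeks per month. With a hypothetical $100/hour cost, 5 saved hours a week is worth about $2,167 a month before subtracting the subscription.

```python
WEEKS_PER_MONTH = 52 / 12  # roughly 4.33


def monthly_net_value(hours_saved_per_week: float, hourly_cost: float,
                      monthly_cost: float) -> float:
    """Value of the time saved in a month minus what the agents cost that month."""
    return hours_saved_per_week * WEEKS_PER_MONTH * hourly_cost - monthly_cost


def break_even_hours_per_week(hourly_cost: float, monthly_cost: float) -> float:
    """Weekly hours an engineer must save for the agents to pay for themselves."""
    return monthly_cost / (hourly_cost * WEEKS_PER_MONTH)


if __name__ == "__main__":
    # Both inputs are hypothetical; plug in your own figures.
    print(f"{break_even_hours_per_week(hourly_cost=100, monthly_cost=50):.2f} h/week")
```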

The Wider Implication: GitHub's Competitive Moat

Let's zoom out and think about what this means for GitHub's position in the market.

For the last 10 years, GitHub's competitive advantage was network effects: the most developers used GitHub, so more projects were on GitHub, so more developers wanted to use GitHub. It's a classic winner-take-all dynamic.

But network effects alone can be disrupted. A better product with a different network can take over. GitLab tried to do this, offering more features for less money. It didn't work, but not because the features were bad.

Now GitHub is adding a new moat: AI integration. By positioning itself as the infrastructure layer where AI and code meet, GitHub becomes more valuable with each new AI model that integrates. Each new agent makes GitHub stickier.

Microsoft understands this. They own GitHub, are OpenAI's largest investor, and increasingly control the AI infrastructure layer of the cloud. They're positioning GitHub as the entry point to an entire ecosystem of AI services. You don't just use GitHub for code. You use GitHub to access AI agents, which integrate with GitHub's security, compliance, and enterprise services.

That's a defensible position. That's the endgame Microsoft is playing.

Looking Ahead: What Comes Next?

GitHub's agent integration is a significant milestone, but it's not the end of the story. Here's what's probably coming:

More specialized agents. As AI models become cheaper and more capable, we'll see agents fine-tuned for specific domains: security agents, performance agents, API design agents, documentation agents. You'll pick the ones you need.

Agentic workflows. You won't just invoke agents manually. You'll define automated workflows where agents work together. "When a PR is opened, security agent reviews it, then performance agent reviews it, then the author gets feedback." All automated.
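Here's a minimal sketch of that sequential pipeline, under heavy assumptions: the two "agents" are stand-in string heuristics, not calls to any real agent, and `on_pr_opened` is a hypothetical name. What it shows is the orchestration shape: stages run in a fixed order and their feedback is merged into one list for the author.

```python
from typing import Callable

# A review stage takes a diff and returns feedback lines.
ReviewStage = Callable[[str], list[str]]


def security_stage(diff: str) -> list[str]:
    # Placeholder heuristic standing in for a real security agent.
    if "execute(" in diff and "+" in diff:
        return ["security: SQL appears to be built by string concatenation"]
    return []


def performance_stage(diff: str) -> list[str]:
    # Placeholder heuristic standing in for a real performance agent.
    if "for " in diff and "execute(" in diff:
        return ["performance: possible query inside a loop"]
    return []


def on_pr_opened(diff: str, stages: list[ReviewStage]) -> list[str]:
    """Run stages in order and collect everything the author should see."""
    feedback: list[str] = []
    for stage in stages:
        feedback.extend(stage(diff))
    return feedback


if __name__ == "__main__":
    diff = 'for uid in ids:\n    cur.execute("SELECT * FROM users WHERE id=" + uid)\n'
    for line in on_pr_opened(diff, [security_stage, performance_stage]):
        print(line)
```

In a real setup the trigger would be the pull request event itself, for instance a GitHub Actions workflow with `on: pull_request` and `types: [opened]`, and each stage would call an actual agent instead of a heuristic.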

Agents that learn from your feedback. The agent will remember what you accepted and rejected. Over time, it gets better at understanding your preferences and your codebase.

Integration with your entire development process. Agents won't be limited to code reviews and generation. They'll participate in planning, testing, deployment, monitoring, and incident response. Every stage of software development will have AI assistance.

Pricing that makes sense. Current pricing is rough. Eventually, GitHub will move to usage-based pricing that better reflects actual value: a session that saves you 10 hours and one that saves you 30 minutes shouldn't cost the same.

The evolution is clear: AI doesn't replace developers. It augments them. The developers who learn to work with AI will be dramatically more productive than those who don't.

GitHub's multi-agent integration is a turning point. You can use it, or you can watch competitors who do use it leave you behind.

The question isn't whether AI agents will change how we develop software. They already are. The question is whether you'll adapt or get disrupted.

Start small. Try Claude or Codex for a specific task. See what happens. If it saves time, expand. If it doesn't, try a different agent. The best way to understand the future is to build it.

FAQ

What is GitHub's multi-agent integration?

GitHub now supports multiple AI coding agents including Claude, Codex, and custom agents that developers can invoke directly within GitHub's web interface, mobile app, and VS Code. Instead of using only GitHub Copilot, you can now choose different agents for different tasks, all integrated seamlessly into your coding workflow.

How do I access Claude and Codex agents in GitHub?

Claude and Codex agents are available to GitHub Copilot Pro+ and Copilot Enterprise subscribers. To use them, enable the agents in your repository settings, then invoke them by typing @claude or @codex in pull request comments, issue discussions, or in VS Code. During public preview, each agent session consumes one premium request from your monthly allowance.
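Conceptually, @mention invocation amounts to scanning a comment for handles and dispatching the ones that name an agent. The sketch below illustrates that routing idea under stated assumptions: `AGENTS`, `MENTION`, and `route_mentions` are hypothetical, the handlers only return a status string, and GitHub performs this routing itself in ways this code does not claim to reflect. Each session the sketch "starts" corresponds to what the preview would count as one premium request.

```python
import re

# Hypothetical registry: stand-in handlers, not real Claude or Codex calls.
AGENTS = {
    "claude": lambda task: f"Claude session started for: {task}",
    "codex": lambda task: f"Codex session started for: {task}",
}

# Handle-like mentions (letters, digits, inner hyphens). The lookbehind skips
# email addresses such as dev@codex.io.
MENTION = re.compile(r"(?<![\w@])@([A-Za-z0-9](?:[A-Za-z0-9-]*[A-Za-z0-9])?)")

def route_mentions(comment: str) -> list[str]:
    """Start one simulated session per distinct agent mentioned in a comment."""
    # The task text is the comment with all mentions removed, whitespace normalized.
    task = " ".join(MENTION.sub("", comment).split())
    started, seen = [], set()
    for handle in MENTION.findall(comment):
        name = handle.lower()
        # Ignore human teammates (unknown handles) and repeated agent mentions.
        if name in AGENTS and name not in seen:
            seen.add(name)
            started.append(AGENTS[name](task))
    return started

if __name__ == "__main__":
    for status in route_mentions("@claude please refactor the parser"):
        print(status)
```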

Which agent should I use for my coding task?

Choose Claude for complex reasoning, architectural decisions, and deep code analysis. Choose Codex for quick code generation, boilerplate, and known algorithms. Use Copilot for balanced general-purpose coding. Use custom agents for domain-specific tasks or to enforce your organization's policies and standards.

What is the pricing for multi-agent support?

GitHub Copilot Pro+ is approximately

Can I create custom AI agents for my team?

Yes. GitHub now supports custom agents that you can build to understand your specific codebase, enforce company policies, or integrate with your existing tools and workflows. Custom agents hook into GitHub's APIs to receive repository context and return suggestions in a standard format that GitHub displays in your workflow.
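As a rough illustration of the "repository context in, suggestions out" shape described above, here is a small policy-enforcing agent in Python. The `RepoContext` and `Suggestion` types, the `policy_agent` function, and the rule that every TODO must cite a ticket are all hypothetical assumptions for this sketch; GitHub's actual custom-agent contract and suggestion format may look quite different.

```python
import re
from dataclasses import dataclass

# Hypothetical input/output shapes; not GitHub's real custom-agent format.
@dataclass(frozen=True)
class RepoContext:
    repo: str
    files: dict[str, str]  # path -> file contents

@dataclass(frozen=True)
class Suggestion:
    path: str
    line: int  # 1-based line number
    message: str

# Hypothetical house rule: a TODO must reference a ticket, e.g. "TODO(ABC-123)".
TODO_WITHOUT_TICKET = re.compile(r"\bTODO\b(?!\([A-Z]+-\d+\))")

def policy_agent(ctx: RepoContext) -> list[Suggestion]:
    """Return one suggestion per TODO that lacks a ticket ID, ordered by path."""
    suggestions = []
    for path, text in sorted(ctx.files.items()):
        for lineno, line in enumerate(text.splitlines(), start=1):
            if TODO_WITHOUT_TICKET.search(line):
                suggestions.append(Suggestion(
                    path, lineno, "TODO should reference a ticket, e.g. TODO(ABC-123)"))
    return suggestions

if __name__ == "__main__":
    ctx = RepoContext("acme/app", {"app.py": "x = 1\n# TODO: remove hack\n# TODO(OPS-7): tune\n"})
    for s in policy_agent(ctx):
        print(f"{s.path}:{s.line}: {s.message}")
```

Returning plain, immutable suggestion records keeps the agent's logic testable on its own, independent of whatever transport actually delivers the context and displays the results.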

How does GitHub keep my code private when using AI agents?

When you invoke an agent, your code is processed by the agent provider's servers (Anthropic for Claude, OpenAI for Codex, etc.). GitHub and these providers say your code is not used to train additional models, but you should review each provider's specific privacy terms. For sensitive proprietary code, consider GitHub Enterprise, which may offer more restrictive data-handling agreements.

What other AI agents are coming to GitHub?

GitHub has confirmed that agents from Google, Cognition (creators of the Devin AI software engineer), and xAI are in development and will be available soon. Each brings its own specializations and reasoning approach, giving developers more options for matching an agent to the task at hand.

Do I have to use AI agents if I have GitHub Copilot?

No. AI agents are optional and available only to Copilot Pro+ and Enterprise users. You can keep using GitHub Copilot in its traditional form and adopt agents only if you find them valuable for your workflow.

How does agent usage affect code security?

Invoking an agent sends your code to an external AI service provider for processing rather than handling it locally. That creates a trust dependency on the provider and its security practices. For security-critical or highly confidential code, you may want to limit which tasks you hand to agents, or build custom agents that you control yourself.


Key Takeaways

- GitHub now supports multiple AI coding agents (Claude, Codex, custom) giving developers choice instead of lock-in to a single model

- Agents are invoked via @mentions in PRs, issues, and VS Code, creating a collaborative experience similar to working with human colleagues

- Claude excels at architectural reasoning and complex refactoring, while Codex specializes in fast code generation and boilerplate

- Estimates suggest teams adopting agents can see 35-50% improvements in cycle time, code review speed, and onboarding duration

- Google, Cognition, and xAI agents are coming soon, further expanding the ecosystem of specialized AI tools available to developers
