How Claude Code Became the Fastest-Growing Developer Tool in AI

Something shifted in late 2024. Engineers across Silicon Valley started talking about Anthropic's Claude Code in the same breath as ChatGPT. Not because it was another chatbot that could read code. But because it actually wrote code. All of it. Without you having to hold its hand.

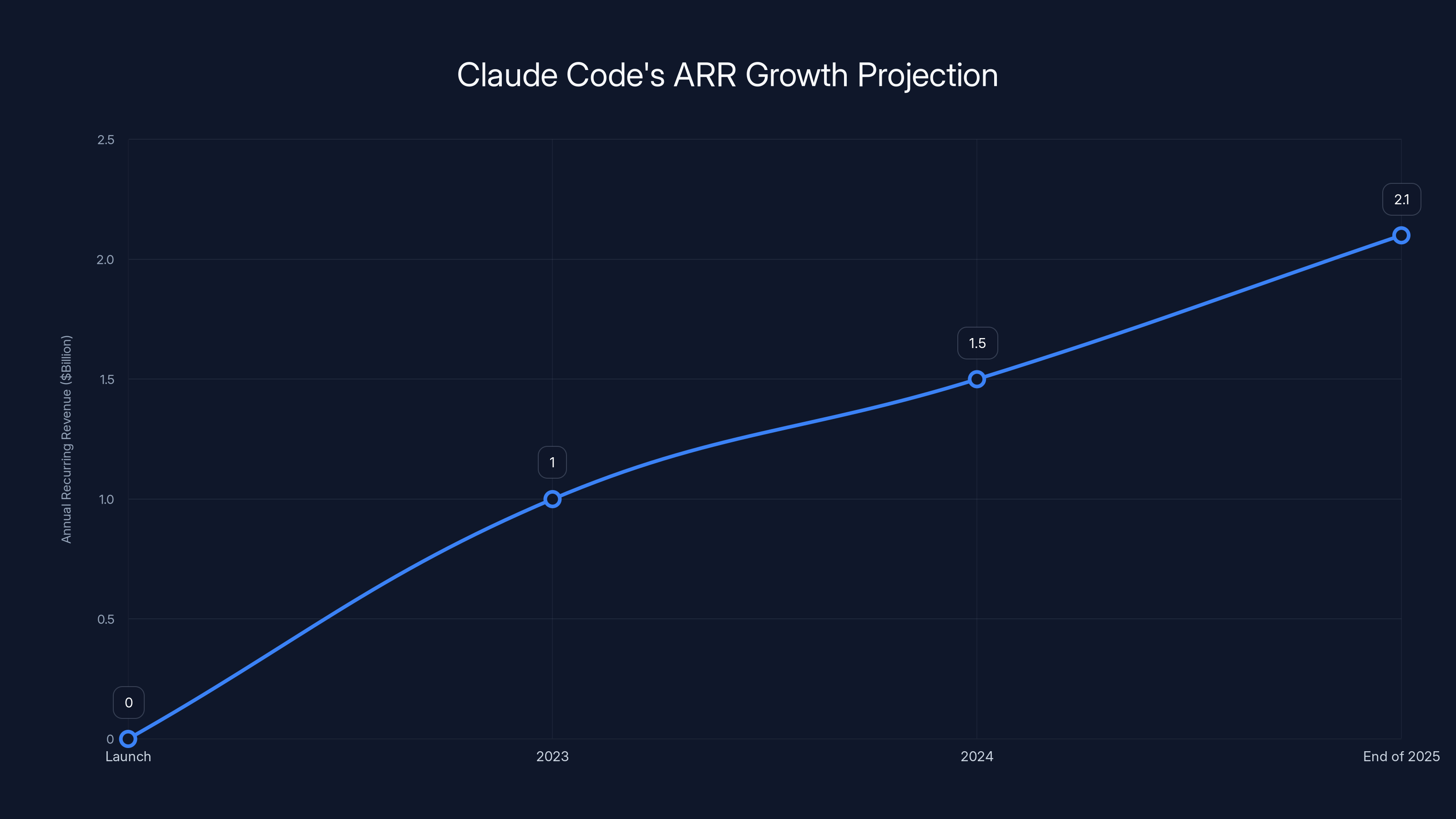

The excitement hit fever pitch this year. Anthropic announced that Claude Code crossed $1 billion in annualized recurring revenue in November. That's the fastest adoption curve for any AI developer tool ever shipped. For context, it took Copilot years to get there. Claude Code got there in less than twelve months.

I sat down with Boris Cherny, the engineering leader who built Claude Code, to understand what the hell is actually happening. His answer was disarmingly simple: "We built the simplest possible thing."

But the thing is, nothing about this moment is simple. Claude Code isn't just another autocomplete. It's not even just another coding agent. It's a fundamental shift in how humans interact with software development. And it's revealing something profound about where AI actually excels—and where humans still need to stay in control.

Here's what's really happening beneath the surface.

TL;DR

- Claude Code crossed $1B in annualized recurring revenue in November and added another $100M+ by the end of 2025

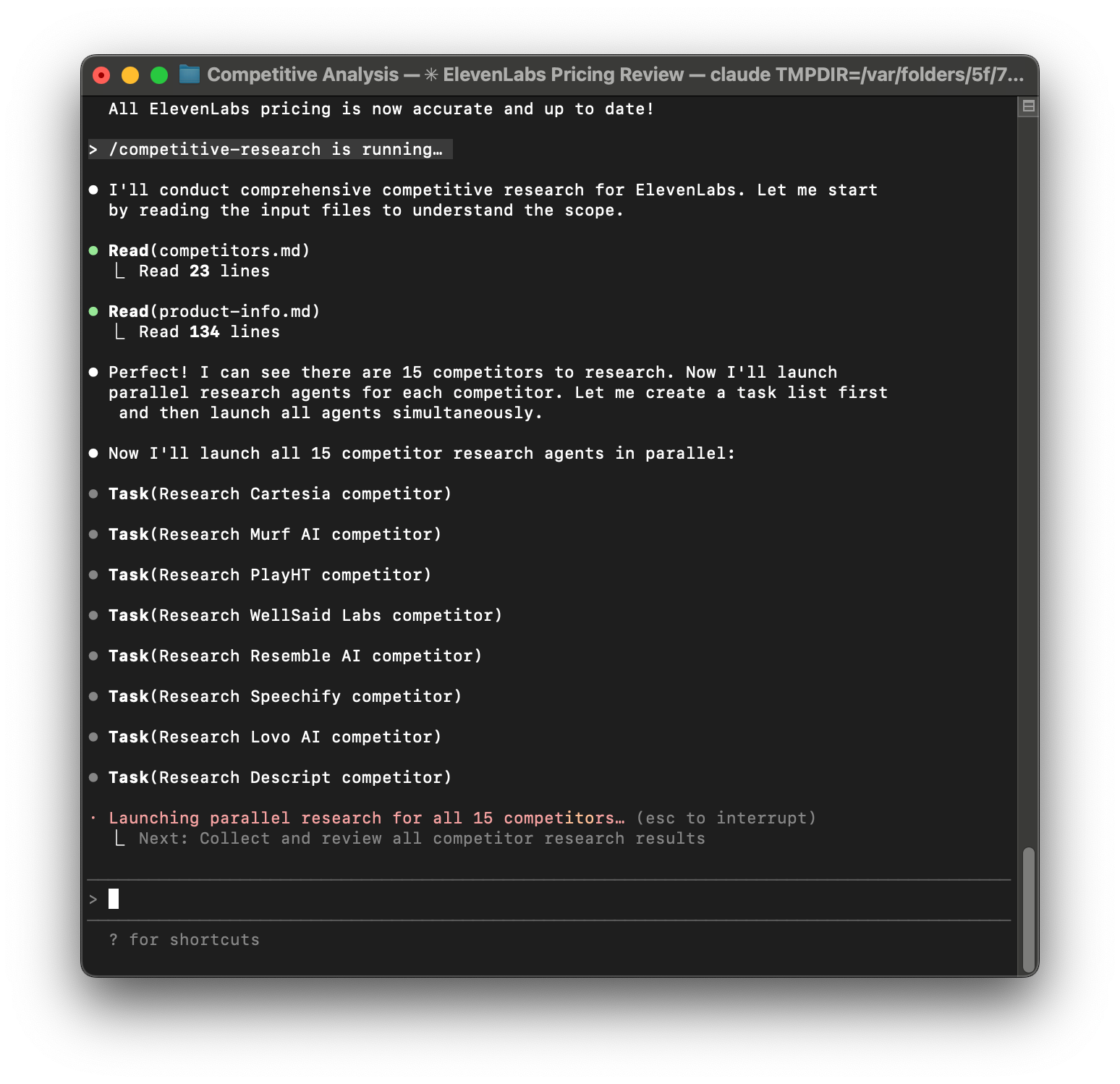

- Agentic capabilities changed everything: Unlike autocomplete tools, Claude Code can manage files, interact with systems, and execute multi-step tasks autonomously

- Claude Opus 4.5 was the inflection point: Multiple independent sources confirmed this model showed a "step-function improvement" in coding abilities

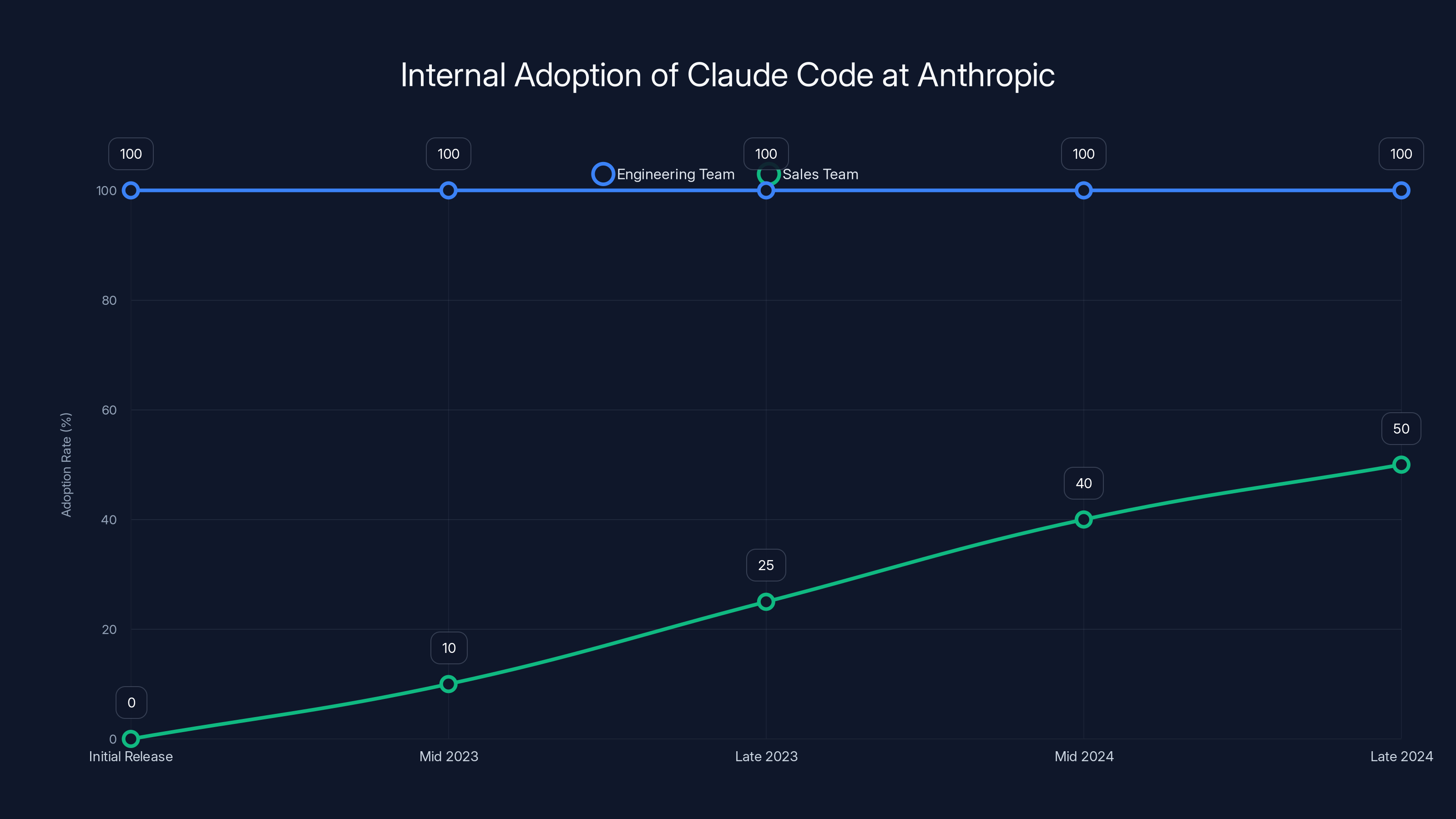

- Anthropic's internal adoption is near-universal: Nearly 100% of technical employees use Claude Code daily, suggesting product-market fit beyond external users

- The AI coding market is consolidating fast: Cursor hit $1B ARR too, but Claude's native integration and reasoning capabilities are driving market share

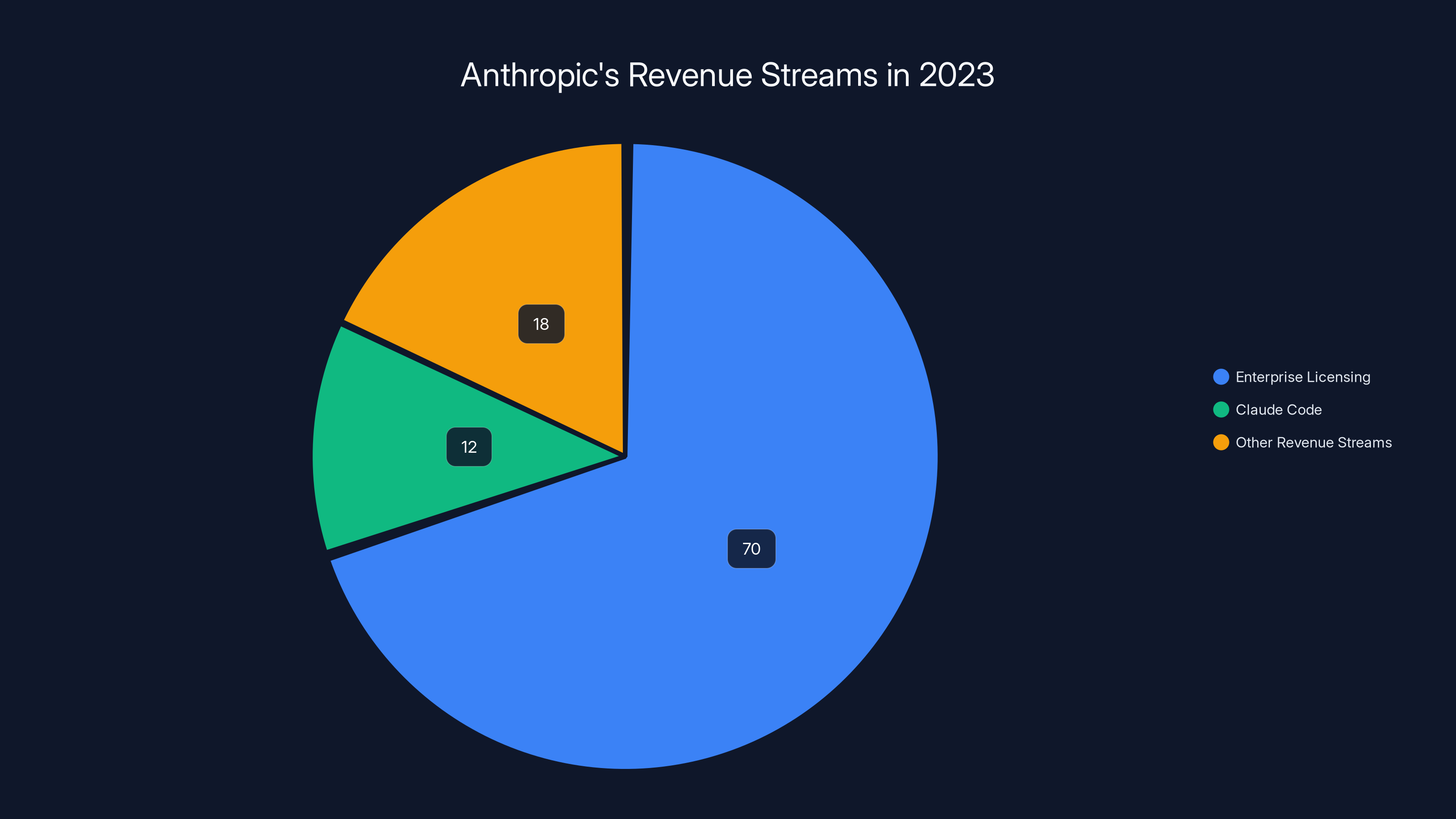

Enterprise licensing remains the largest revenue stream for Anthropic, but Claude Code is the fastest-growing segment, accounting for 12% of the total ARR. Estimated data.

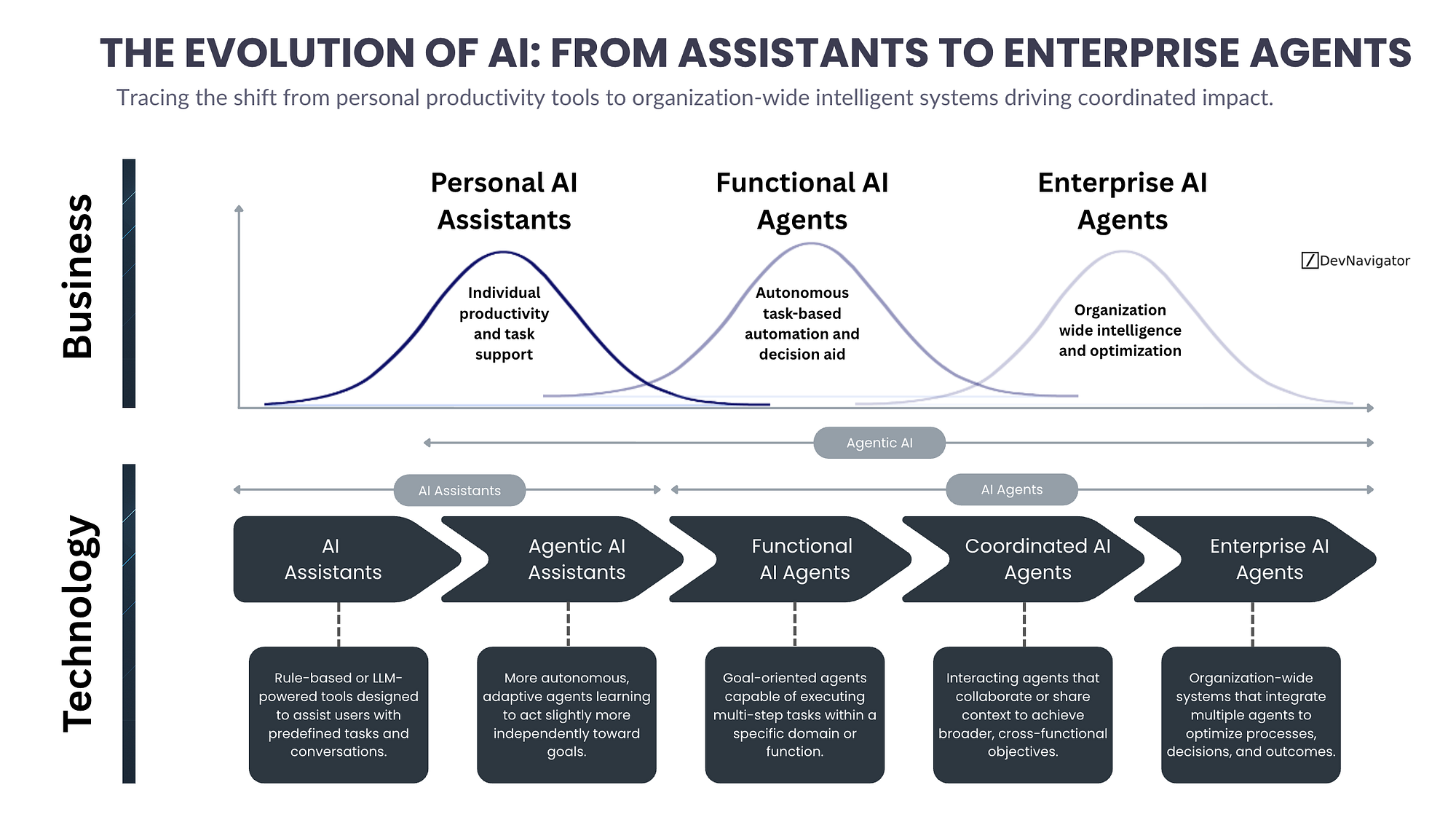

The Evolution From Autocomplete to AI Agents

Let's rewind a few years. In 2021 and 2022, AI coding felt like a joke. GitHub Copilot existed, sure. But it was basically predictive text for code. You'd type a function name and it would guess the next ten lines. Sometimes useful. Often hilariously wrong.

By 2023, things improved. Models got smarter. Autocomplete got better at understanding context. But fundamentally, the paradigm stayed the same. The human was in control. The AI was a helpful passenger.

Then something changed around mid-2024. Companies like Cursor and Windsurf started shipping "agentic" coding products. Instead of suggesting code, they let you describe what you wanted in plain English. Then they'd go build it. You'd come back ten minutes later and there'd be a working feature.

Claude Code launched around the same time. But here's the critical difference: Anthropic built it for capabilities that didn't exist yet. "We built Claude Code for where AI capabilities were headed, rather than where they were at launch," Cherny said in our conversation.

That's a bold bet. And it paid off spectacularly.

The shift is philosophical, not just functional. Autocomplete is about reducing typing. Agents are about reducing thinking. You don't write the implementation. You describe the outcome, and the AI figures out the path.

Kian Katanforoosh, an AI lecturer at Stanford and CEO of the startup Workera, ran the experiment himself. His team tested Claude Code against Cursor and Windsurf. The result was conclusive. "Claude Code worked better for our senior engineers than tools from Cursor and Windsurf," he told me. "The only model I can point to where I saw a step-function improvement in coding abilities recently has been Claude Opus 4.5. It doesn't even feel like it's coding like a human. You sort of feel like it has figured out a better way."

That's the inflection point. Not incremental improvement. A step-function leap.

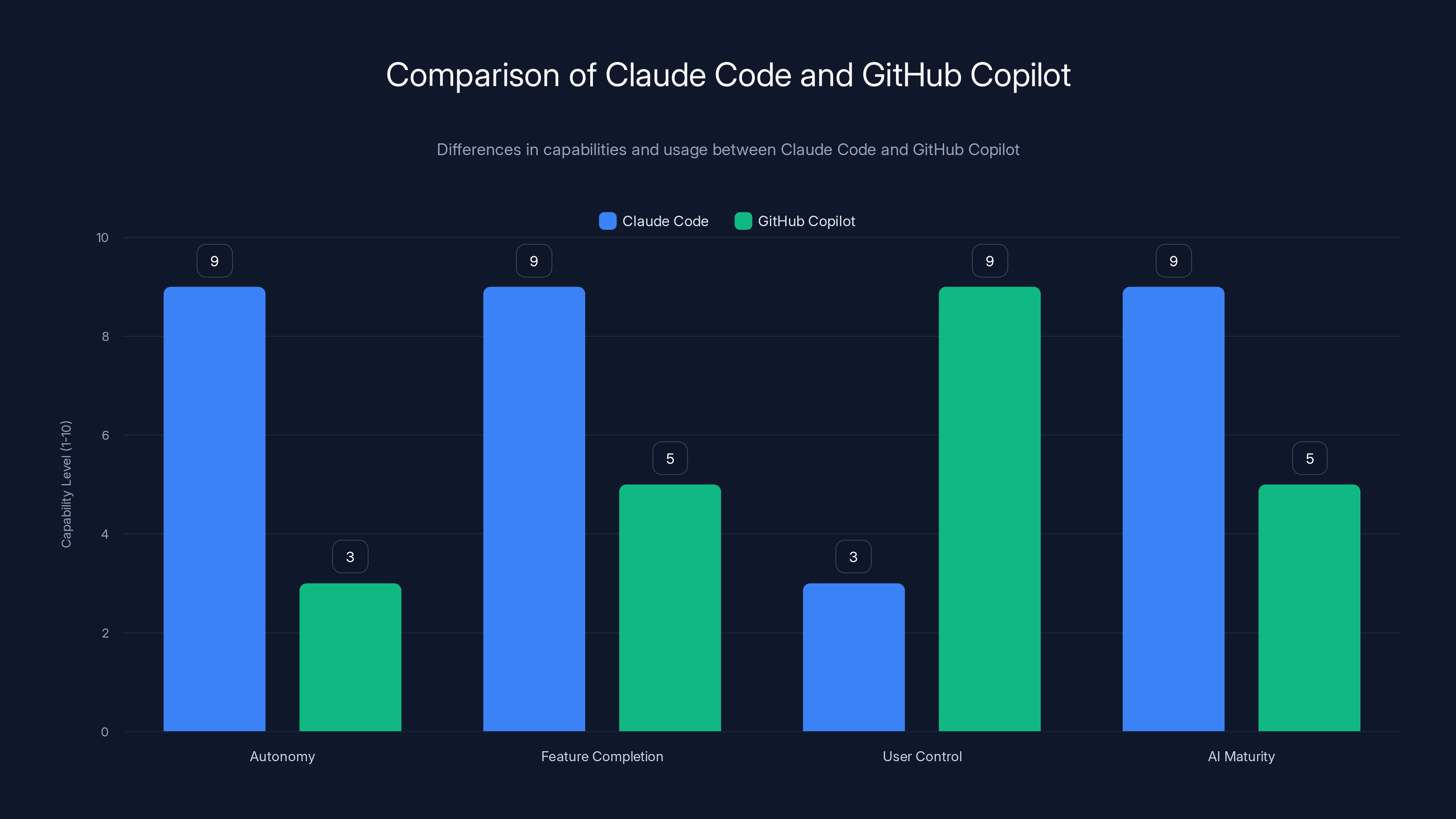

Claude Code demonstrates higher autonomy and AI maturity compared to GitHub Copilot, which excels in user control. Estimated data based on described capabilities.

Claude Opus 4.5: The Model That Changed Everything

You can trace the explosion back to one release: Claude Opus 4.5.

Anthropic shipped this model in late 2025, and something clicked. The code it generated wasn't just correct; it was thoughtful. It understood context. It traced through existing code. It made decisions about architecture that humans would make.

This is the specific thing developers have been waiting for. Not a tool that can write "Hello World." A tool that understands your codebase, understands your project structure, and writes production-ready code.

What makes Opus 4.5 different? It's honestly hard to pinpoint from the outside. Anthropic hasn't published detailed technical specs on what changed. But from using it, there are a few observable things:

Better context windows: The model can digest more of your codebase at once. This matters more than people realize. If an AI agent doesn't understand your existing code, it can't write code that fits.

Improved reasoning over multiple steps: Coding isn't a single operation. It's a sequence of decisions. Write the function. Handle errors. Add tests. Update documentation. Opus 4.5 does all of this as a single coherent task, not five separate prompts.

Fewer costly loops: Early versions of Claude Code would sometimes get stuck, trying the same thing repeatedly. Opus 4.5 backtracks better. It knows when it's wrong faster.

Better tool use: The model can use tools like file operations, terminal commands, and web requests more intelligently. It chains them together instead of calling them randomly.

Boris Cherny's personal adoption curve illustrates this perfectly. "When we first launched, I wrote maybe 5 percent of my code with Claude Code," he explained. "Then in May with Opus 4 and Sonnet 4, it became maybe 30 percent. Now with Opus 4.5, 100 percent of my code for the last two months has been written by Claude Code. And I code every day."

That's not just incrementally better. That's a complete rearchitecture of the developer workflow.

The model handles the mechanical stuff. Routing HTTP requests. Writing boilerplate. Building standard data structures. The human handles the creative and architectural decisions. What should this system do? How should it scale? What edge cases matter?

It's the kind of division of labor that humans have been theorizing about for years. And it's finally actually working.

The Viral Loop: How Internal Adoption Became a Competitive Advantage

Here's where the story gets interesting. Anthropic didn't just release Claude Code to the world. They released it internally first. To their own engineers.

And something unexpected happened. Nearly 100% of them started using it. Not because they had to. Because they wanted to.

Cherny had a product review with Dario Amodei, Anthropic's CEO. Amodei looked at the internal adoption numbers and asked a simple question: "Are you forcing people to use it? What's going on?"

The answer: nothing. People were just choosing it because it made them faster.

This is actually a really big deal, and here's why. Building software is hard. Anthropic's engineers are smart. If Claude Code were only marginally better, they'd stick with their existing workflows. The fact that it had near-universal adoption internally means it had to be significantly better.

Once it was available internally, Anthropic teams started using Claude Code for everything. Not just new projects. Not just simple tasks. Everything. Code reviews, infrastructure changes, security patches, documentation generation.

This created a flywheel:

- Dogfooding generated real feedback - Engineers used the product constantly, found edge cases and issues that external users wouldn't discover for months

- Real feedback accelerated product improvement - The Claude Code team got daily evidence of what worked and what failed

- Improved product drove more internal adoption - As it got better, more people started using it for more tasks

- Network effects amplified everything - Engineers shared prompts, techniques, and workflows with each other, making everyone faster

Sales teams at Anthropic started using it too. Half of the sales division was using Claude Code weekly by late 2024. Think about that for a second. People whose job is talking to customers and closing deals. People who aren't primarily developers.

If a salesperson is using an AI coding tool, it's not because they're building software. It's because Claude Code is useful for something broader—automating document generation, creating scripts, building internal tools.

This internal adoption became proof of concept. When Anthropic's sales team pitched Claude Code to potential enterprise customers, they could say honestly: "Our own sales team uses this daily. Here's how." That's way more credible than a product launch blog post.

By the time Claude Code went viral externally, Anthropic had already solved most of the hard problems internally. They knew what developers actually wanted. They knew where the tool failed. They knew how to position it.

That's not lucky. That's the result of building a product and eating your own dog food before anyone else gets to it.


The Business Model Shift: From APIs to AI Agents

Let's talk numbers, because the numbers are insane.

$1 billion in annualized recurring revenue. In less than a year.

For context, here's what that means for Anthropic:

Claude Code accounts for roughly 12 percent of Anthropic's total ARR, which currently stands around $9 billion. It's not Anthropic's biggest revenue stream yet. Enterprise licensing is still bigger. But it's the fastest-growing segment by far.
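The 12 percent figure can be sanity-checked with quick arithmetic. This is a minimal sketch that uses only the rounded numbers quoted above; the variable names are illustrative, and both inputs are approximations rather than reported financials.

```python
# Rounded figures quoted in the article; both are approximations.
total_arr_usd = 9_000_000_000   # Anthropic's total ARR, "around $9 billion"
claude_code_share = 0.12        # "roughly 12 percent"

# Implied Claude Code ARR if both rounded figures hold.
implied_claude_code_arr = total_arr_usd * claude_code_share

print(f"Implied Claude Code ARR: ${implied_claude_code_arr / 1e9:.2f}B")
```

The result lands just above $1 billion, which is consistent with the milestone Anthropic announced.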

By the end of 2025, Claude Code's ARR had grown by another $100+ million. So it's not just a viral hit. It's sustained growth. People are paying for it, using it, and renewing.

This reveals something important about Anthropic's strategy. For years, the company made money the same way everyone else did: selling API access. Companies paid per token. Developers integrated Claude into their apps. Simple consumption model.

Claude Code changes this. It's a direct-to-developer product with a clear price point. You use Claude Code, you pay a subscription. The unit economics are completely different.

Instead of deep enterprise contracts that take six months to close, Claude Code is self-serve. A developer tries it. They like it. They pay $20/month. Next month, they're still using it.

For Anthropic, this is huge because:

Recurring revenue is more predictable - API customers can increase or decrease usage unpredictably. Subscription customers either renew or they don't. Less volatility.

Customer acquisition cost is lower - Viral word-of-mouth beats expensive sales teams. Claude Code grew mostly through product virality, not enterprise sales.

Customer lifetime value is higher - A developer who uses Claude Code every day has very high switching costs. They've learned the system. Their workflows depend on it. They're unlikely to switch to Cursor or GitHub Copilot even if those tools improve.

It creates product lock-in - The more you use Claude Code, the more integrated it becomes into your workflow. Switching costs increase exponentially.
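To put the $20/month price point in perspective, here is a rough back-of-the-envelope sketch. It assumes, purely for illustration, that all of the revenue comes from $20/month seats; the article does not say that, and the real mix presumably includes other plans and enterprise deals. The function name `subscribers_for_arr` is hypothetical.

```python
def subscribers_for_arr(target_arr_usd: float, monthly_price_usd: float) -> float:
    """Paying seats needed to reach a target ARR at a flat monthly price.

    Assumes every seat pays the same price all year (an illustrative
    simplification, not a description of Anthropic's actual revenue mix).
    """
    annual_price = monthly_price_usd * 12
    return target_arr_usd / annual_price


seats = subscribers_for_arr(1_000_000_000, 20)
print(f"Seats needed at $20/month for $1B ARR: {seats:,.0f}")
```

Under that simplifying assumption the answer is a little over four million seats, which shows how much volume a low-priced self-serve product needs and why higher tiers and enterprise contracts would matter to the total.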

Anthropic told investors the company aims to be cash-flow positive by 2028, and Claude Code is expected to play a significant role in that trajectory. Why? Because the subscription revenue compounds. One thousand developers at

For comparison, Cursor announced it also hit $1B in ARR in November. But Cursor is using someone else's models (including Anthropic's Claude). Anthropic owns both the model and the product. The economics are completely different.

This business model shift has huge implications for AI companies. It says that direct-to-developer products with strong product-market fit can scale faster than enterprise licensing.

It also suggests that the real money in AI isn't in being the API provider. It's in being the product that developers actually use every day.

How Developers Actually Use Claude Code in Production

Here's something interesting that doesn't come up in most discussions of Claude Code: how people actually use it. Not in theory. In practice.

Boris Cherny made an observation that cuts through a lot of the hype. "This is the golden age for people with short attention spans," he said. "The way you use these products is not like deep focus. When I look at the most productive Claude Code users in and out of Anthropic, it's the people who jump across all these different tasks."

That's counterintuitive. You'd think using an AI coding agent requires focus. Set it on a big task, wait for completion.

Instead, productive users do the opposite. They run multiple Claude Code instances in parallel. Set the first one to work on database schema changes. Start a second one on API endpoint refactoring. Begin a third on front-end components. Then cycle back.

While the first one is thinking through dependencies, you're feeding context to the second. While the second is writing, you're reviewing the first's output. It's like a team of developers working at the same time, each on different pieces of the system.
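The parallel workflow described above has the same shape as ordinary concurrent programming. The sketch below is only an analogy: `run_agent_task` is a hypothetical stand-in that sleeps instead of doing any work, and nothing here calls Claude Code or any real API.

```python
import asyncio


async def run_agent_task(name: str, seconds: float) -> str:
    """Hypothetical stand-in for one agent session; it only sleeps."""
    await asyncio.sleep(seconds)
    return f"{name}: ready for review"


async def main() -> list[str]:
    tasks = [
        run_agent_task("database schema changes", 0.03),
        run_agent_task("API endpoint refactoring", 0.02),
        run_agent_task("front-end components", 0.01),
    ]
    # gather runs the three sessions concurrently and returns results in
    # submission order, regardless of which one finishes first.
    return await asyncio.gather(*tasks)


results = asyncio.run(main())
for line in results:
    print(line)
```

The total wait is roughly the length of the slowest task rather than the sum of all three, which is the gain productive users are getting by cycling between sessions instead of watching one.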

This changes the developer experience. Instead of writer's block (how do I start?), you get context-switching. Instead of deep focus, you get parallel processing.

For a certain type of developer—the ones who thrive on variety—this is amazing. For others who need flow state, it might be terrible.

Claude Code also created a secondary effect. The user interface became unexpectedly important. The way Claude Code presents code, the way it formats responses, the way it shows what it's thinking—all of this matters more than people realized.

Claude Code's interface is, frankly, beautiful compared to most developer tools. It's clean. It's fast. Everything loads instantly. The code appears in a readable format with syntax highlighting. You can see what the AI is doing step-by-step.

That stickiness is real. Sarah from design tried Claude Code once. After twenty minutes, she said: "This actually works." That's not hyperbole. That's the actual feedback developers were giving.

Three engineers independently said the same thing: "We should've done this years ago." That kind of universal praise is rare for developer tools. Usually there's a learning curve. Usually there are rough edges.

Claude Code feels like the AI just... gets it.

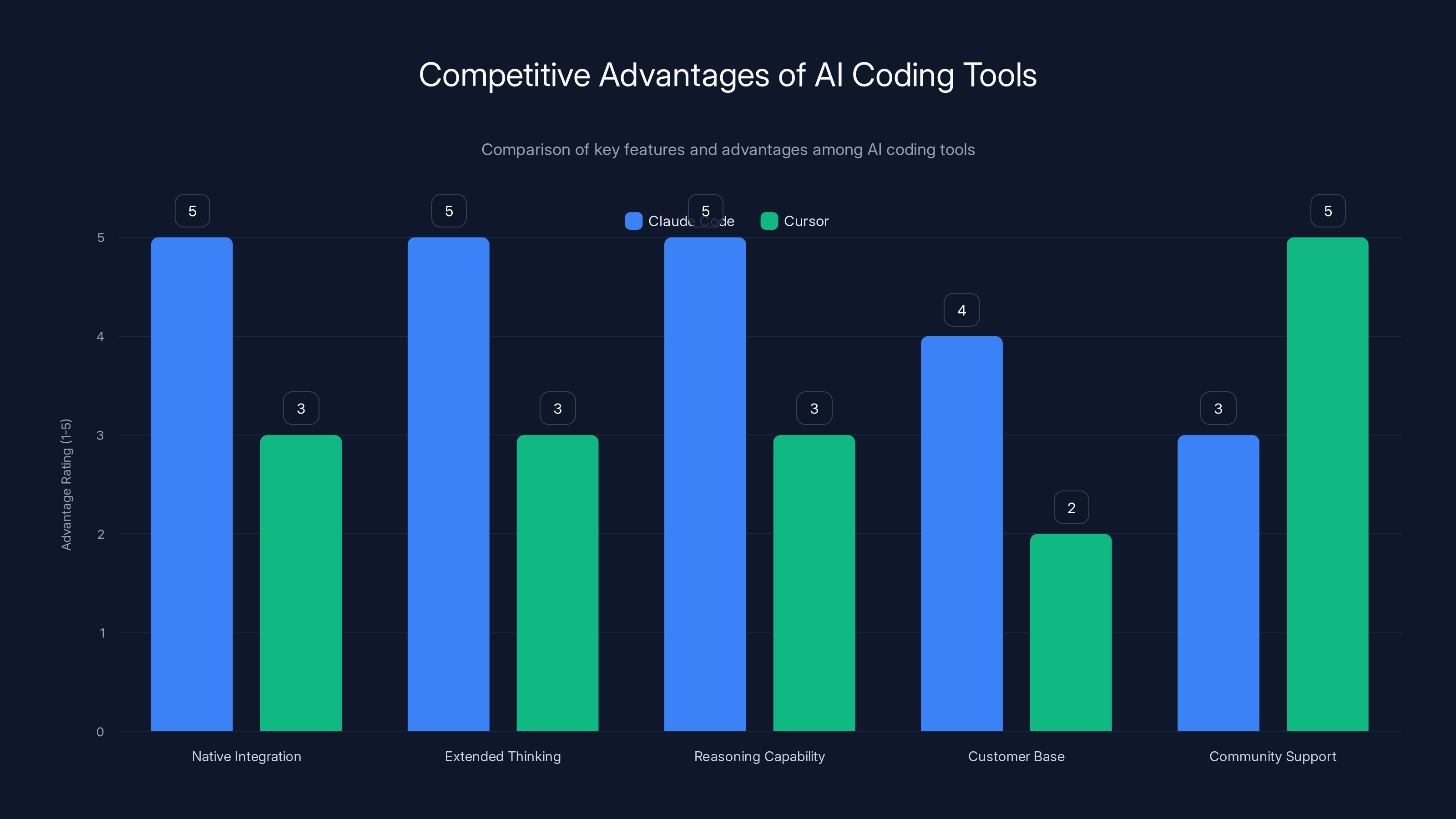

Claude Code leads with native integration and reasoning capabilities, while Cursor excels in community support. (Estimated data)

Real-World Use Cases: Beyond Boilerplate

Most AI coding tool discussions focus on simple stuff. Autocomplete. Boilerplate generation. Database schema creation.

Claude Code is doing something bigger.

Building entire features autonomously - Describe a feature in plain English. Claude Code writes the database migration, the API endpoint, the React component, the tests. All of it. In one go.

Refactoring legacy code - Point Claude Code at a messy codebase. Tell it to modernize it. It traces through the dependencies, identifies what can be safely changed, and does the work. It also checks its own work, which catches many mistakes, though the result still needs review.

System design and architecture - Claude Code can look at your codebase and suggest architectural improvements. Not just code changes. Structural changes. Moving between monoliths and microservices. Rearchitecting for scale.

Documentation generation - Point Claude Code at code. It generates API documentation, README files, implementation guides. Developers were doing this manually for years. Claude Code does it in seconds.

Security improvements - Claude Code can audit code for security issues. OWASP vulnerabilities, authentication problems, data exposure. It fixes them while maintaining functionality.

Performance optimization - The tool can identify performance bottlenecks and optimize them. Database queries, algorithm improvements, caching strategies. It's like having a performance engineer review your code.

Test generation - Write a function. Claude Code writes the test suite. Unit tests, integration tests, edge cases. Coverage analysis. All without you writing test code.

These aren't edge cases. Developers are using Claude Code for all of these things right now. That's why the adoption curve is so steep.

It's not just faster. It's expanding what one developer can accomplish. If you can do the work of three developers, suddenly you're three times as productive.

The Competitive Landscape: Who's Actually Winning

Let's be clear: Anthropic isn't alone in this space. The competition is fierce.

Cursor hit $1B ARR too. OpenAI is building agentic coding products. Google is racing to claim market share. xAI is entering the market with Grok-based coding agents.

But here's the thing: Claude Code has several structural advantages.

Native integration with the model - Cursor uses Anthropic's Claude, but it's an external integration. Claude Code is built by the same company that built the model. The AI can be optimized specifically for agentic coding. It understands its own capabilities and limitations.

Extended thinking and reasoning - Claude Opus 4.5 supports extended thinking: the model can reason through a complex problem before executing. This is particularly valuable for coding, where understanding the problem often matters more than generating the solution.

Reasoning over raw capability - Claude Code doesn't necessarily write faster code. It writes better code. It understands your codebase. It knows about edge cases. It thinks like an engineer, not just a code generator.

Existing customer base - Anthropic already has thousands of enterprise customers using Claude through APIs. Converting them to Claude Code users is straightforward. Cursor has to win customers from scratch.

That said, Cursor has advantages too. The product launched earlier. It's built on top of multiple models, so if Claude degrades, users can switch. It has a massive community.

But the real story is simpler: Claude Code is winning because it's better, and better matters more than anything else.

Will it stay that way? Nobody knows. AI models improve constantly. One breakthrough from OpenAI or Google could shift the landscape overnight. But right now, in early 2026, Claude Code is the tool that developers actually want to use.

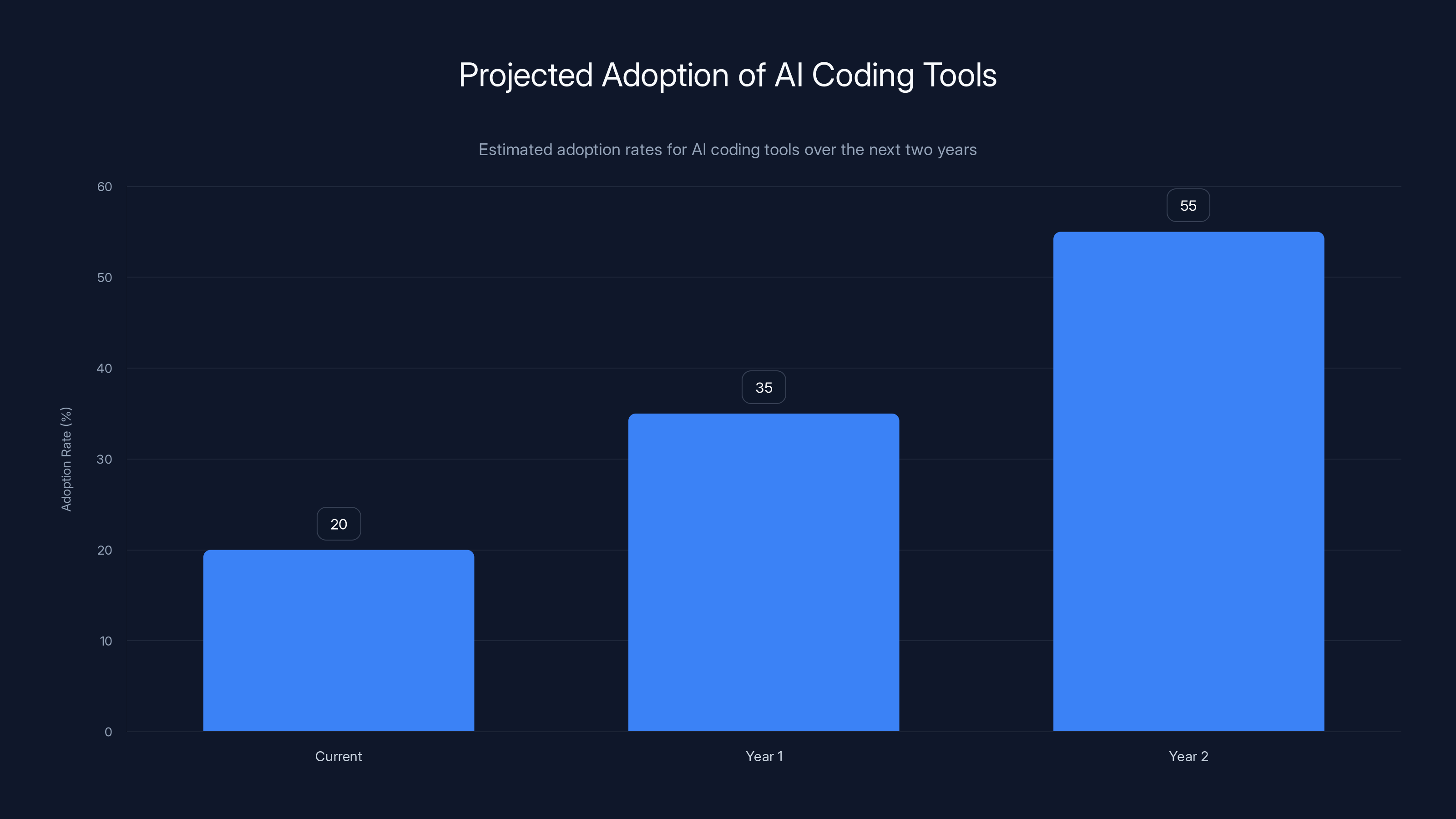

Estimated data suggests that over 50% of developers will use AI coding tools like Claude Code within two years.

Beyond Coding: Cowork and the Expansion of Agentic AI

Anthropic's next move reveals the real ambition.

Earlier this month, the company launched Cowork. It's Claude Code for non-coding tasks.

Cowork is an AI agent that can manage files on your computer and interact with software. It doesn't require you to touch a coding terminal. You just describe what you want to do, and it does it.

Want to reorganize your file system? Tell Cowork to do it.

Want to generate reports from spreadsheets? Cowork handles it.

Want to automate repetitive tasks in your email or project management tool? Cowork does it.

This is the real endgame. Not "how can we make developers faster." But "how can we make anyone faster."

Claude Code proved the model works. Developers use it. They pay for it. It scales.

Cowork applies that same logic to everyone else. Accountants. Project managers. Data analysts. Content creators.

For Anthropic, this means the addressable market grows dramatically. Claude Code is mainly useful to professional developers, a group commonly estimated in the tens of millions globally. Cowork is useful to anyone with a computer and repetitive tasks. That's hundreds of millions of people.

The business implications are enormous. If Cowork becomes as popular as Claude Code, the revenue scale becomes something else entirely.

But there are risks too. Task automation is complicated. Unlike coding, where there's a clear right answer, non-coding tasks are often ambiguous. When does Cowork have enough context to act safely? When does it need to ask for confirmation?

These are the problems Anthropic will solve next.

How Claude Code Is Reorganizing Anthropic Itself

Here's the part that reveals what's really happening: Claude Code is changing how Anthropic works.

The company has been hiring and scaling at a rapid pace. But internal productivity matters as much as headcount. If you double the number of engineers and productivity per engineer falls, you haven't actually doubled your output.

Claude Code is solving that problem. Existing engineers are becoming more productive. New engineers get onboarded faster (Claude Code helps them learn the codebase). Code review becomes faster (Claude Code is generating code that requires less review).

This has created an interesting dynamic. Anthropic needed to scale to compete with OpenAI and Google. Claude Code made that scaling more efficient. The company can grow faster without burning out the original team.

Internally, Claude Code also changed the way engineers think about their work. Instead of spending time on boilerplate and boring stuff, they focus on the creative and strategic parts. Code architecture. System design. Performance optimization. Innovation.

The boring stuff gets automated. The important stuff remains human.

This is actually the healthiest version of AI in the workplace. Not AI replacing humans. AI removing the drudgery so humans can do the interesting work.

For Anthropic, this matters because it attracted different talent. Engineers want to work on interesting problems. If you're doing boilerplate coding all day, that's not interesting. If Claude Code removes the boilerplate, then suddenly the job becomes much more appealing.

Small thing? Maybe. But it affects hiring, retention, and team morale. All of which affect product quality.

The engineering team at Anthropic achieved nearly 100% adoption of Claude Code immediately, while the sales team's adoption steadily increased to 50% by late 2024. Estimated data shows the growing internal impact of Claude Code.

The Technical Magic: Why Claude Code Actually Works

People ask: "Why is Claude Code better than alternatives?"

There isn't one answer. It's a lot of small things that compound.

First, context understanding - Claude Opus 4.5 can hold and understand much more context than competitors. It can read your entire codebase, understand the patterns, and write code that fits. Most coding AI tools read one file at a time. Claude Code reads the whole system.

Second, tool use - Claude Code isn't just generating text. It's using tools. File operations. Terminal commands. Package managers. Version control. It orchestrates these tools intelligently. It knows when to read a file versus when to search for it. When to run a test versus when to skip it.

Third, reasoning over raw speed - Claude Opus 4.5 can think through problems. It can backtrack if it realizes it's wrong. It can generate multiple solutions and pick the best one. Raw speed doesn't matter if the first answer is correct.

Fourth, error recovery - Early versions of Claude Code would get stuck in loops. Opus 4.5 is much better at detecting when it's wrong and fixing itself. This might seem like a small thing, but it's the difference between a tool you babysit and a tool you let run autonomously.

Fifth, instruction following - This sounds simple, but it's not. Claude Opus 4.5 is just better at following complex instructions. If you tell it to refactor code, maintain backward compatibility, add tests, and document changes—all in one prompt—it actually does all of those things. Competitors often miss some aspects.

None of these things individually would justify the hype. Together, they create a tool that just works.

The Security and Trust Question

Here's the tension nobody talks about enough: giving an AI tool access to your codebase.

Claude Code needs to read your code. It needs to understand your system. It might need to execute commands. This creates security implications.

What happens if Claude Code gets compromised? What if it leaks proprietary code? What if it makes a mistake that breaks production?

Anthropic has thought about this, but the answers aren't perfect.

Data privacy - Claude Code runs on your machine, but the model behind it runs on Anthropic's servers, so the code it reads gets sent to Anthropic. For some companies, this is fine. For others (particularly those in regulated industries), it's a non-starter. Anthropic offers on-premise options for enterprises, but they're more expensive.

Error handling - Claude Code can make mistakes. Not often, but it happens. The tool has safeguards (it generates code, you review it, you test it). But it's not bulletproof.

Reasoning transparency - It's hard to debug Claude Code's decisions. The AI doesn't always explain why it made a specific choice. This can be frustrating when you need to understand the thinking.

Autonomous execution - You can tell Claude Code to actually execute changes (not just suggest them). This is powerful, but dangerous if the AI misunderstands the request.

These issues aren't unique to Claude Code. Any AI tool that modifies your system has these risks. But they're real, and enterprises need to understand them before deploying.
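One common way teams reduce the autonomous-execution risk is to put a confirmation gate in front of any command an agent wants to run. The sketch below illustrates that general idea only; it is not how Claude Code itself handles permissions, and the allowlist, the `needs_confirmation` name, and the metacharacter rule are all assumptions made for the example.

```python
import shlex

# Hypothetical allowlist of read-only command prefixes (an assumption for
# illustration, not a list taken from Claude Code).
READ_ONLY_PREFIXES = {"ls", "cat", "grep", "git status", "git diff", "git log"}

# Characters that can chain, redirect, or substitute commands in a shell.
SHELL_METACHARACTERS = set(";&|><`$\n")


def needs_confirmation(command: str) -> bool:
    """Return True unless the command is a plain, allowlisted read-only call."""
    # Anything that could chain or redirect commands always goes to a human.
    if any(ch in SHELL_METACHARACTERS for ch in command):
        return True
    try:
        tokens = shlex.split(command)
    except ValueError:
        # Unbalanced quotes and similar parse errors are treated as unsafe.
        return True
    if not tokens:
        return True
    first = tokens[0]
    first_two = " ".join(tokens[:2])
    return first not in READ_ONLY_PREFIXES and first_two not in READ_ONLY_PREFIXES


for cmd in ["git status", "rm -rf build", "cat notes.txt && rm notes.txt"]:
    action = "ask the user" if needs_confirmation(cmd) else "run"
    print(f"{cmd!r}: {action}")
```

The design choice is to fail closed: anything the gate does not recognize as harmless is escalated to a person, which trades some convenience for a smaller blast radius when the agent misreads a request.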

The Developer Experience: Why Stickiness Matters

Here's why Claude Code is winning on stickiness:

When you open Claude Code, it's immediately obvious what it does. No learning curve. No documentation. You describe what you want. It does it. That's it.

Compare this to most developer tools, which come with a steep learning curve. You need to understand the config files. The API. The best practices. The gotchas.

Claude Code skips all of that. The interface is so intuitive that a non-developer can use it.

This matters because switching costs matter. If using the tool requires learning a whole new system, people won't switch even if it's marginally better. But if the new tool is easier to use and better, switching is a no-brainer.

Claude Code is both. Better capability. Better UX. That's an unbeatable combination.

The interface also creates what's called "delightful" moments. You describe something vague. Claude Code asks clarifying questions. You answer. It nails what you meant. These small moments of understanding build trust and loyalty.

It's the opposite of frustrating tools that require you to specify everything in advance.

The Future: What Happens Next

If trends continue, here's what's likely:

Claude Code becomes the default tool for coding - Within two years, more than 50% of professional developers will be using Claude Code (or a direct competitor) regularly. It's just too useful to ignore.

Agentic AI expands to more domains - Cowork is the beginning. We'll see agentic tools for marketing, sales, customer service, data analysis. The pattern works. Companies will keep applying it.

The competitive field consolidates - There are too many AI coding tools right now. Most will disappear. The winners will be the ones with the best models (Claude, GPT-4, or equivalent) and the best UX.

Economics change for developers - If code generation becomes ubiquitous, junior developer salaries might decrease (more supply of code capacity). Senior developer salaries might increase (more valuable to review and direct AI-generated code). The job market shifts.

New problems emerge - As AI coding becomes normal, new challenges appear. Code quality at scale. Security audits for AI-generated code. Licensing implications. Copyright questions. How to credit AI-generated contributions.

Humans stay in charge - Despite the hype, the best developers won't disappear. They'll become multipliers. One great developer directing Claude Code can accomplish what used to take a team. That's powerful, not dystopian.

The real question isn't whether AI will replace developers. It's how much faster developers can become with AI assistance.

Claude Code is proving that the answer is: much, much faster.

FAQ

What is Claude Code exactly?

Claude Code is an AI agent built by Anthropic that writes, reviews, and executes code based on natural language instructions. Unlike older autocomplete tools, it can autonomously handle multi-step development tasks including file management, testing, debugging, and deployment, while maintaining context across your entire codebase.

How does Claude Code differ from GitHub Copilot?

GitHub Copilot is primarily an autocomplete tool that suggests code as you type. Claude Code is an agentic system that can complete entire features autonomously. Copilot requires you to be in control; Claude Code takes control based on high-level objectives. The two tools represent fundamentally different levels of autonomy.

Why did Claude Code reach $1 billion in ARR so quickly?

Claude Code hit $1B ARR due to a combination of factors: the superior reasoning capabilities of Claude Opus 4.5, near-universal internal adoption demonstrating product-market fit, self-serve subscription pricing enabling rapid adoption, strong network effects within developer communities, and timing: agentic AI capabilities had reached an inflection point where they became genuinely useful rather than novelty features.

What are the main risks of using Claude Code for production code?

Key risks include data privacy concerns (code sent to Anthropic's servers), potential for subtle bugs in AI-generated code, reduced understanding of your own system if over-relying on code generation, integration challenges with existing development workflows, and the fact that security-critical code still requires rigorous human review regardless of generation method.

Is Claude Code really used by 100% of Anthropic's engineering team?

Yes, according to Boris Cherny, the head of Claude Code, internal adoption among Anthropic's technical employees is near-universal. Engineers use it for daily coding work, which serves as both dogfooding (testing the product internally) and generates real-world feedback that drives product improvements before external release.

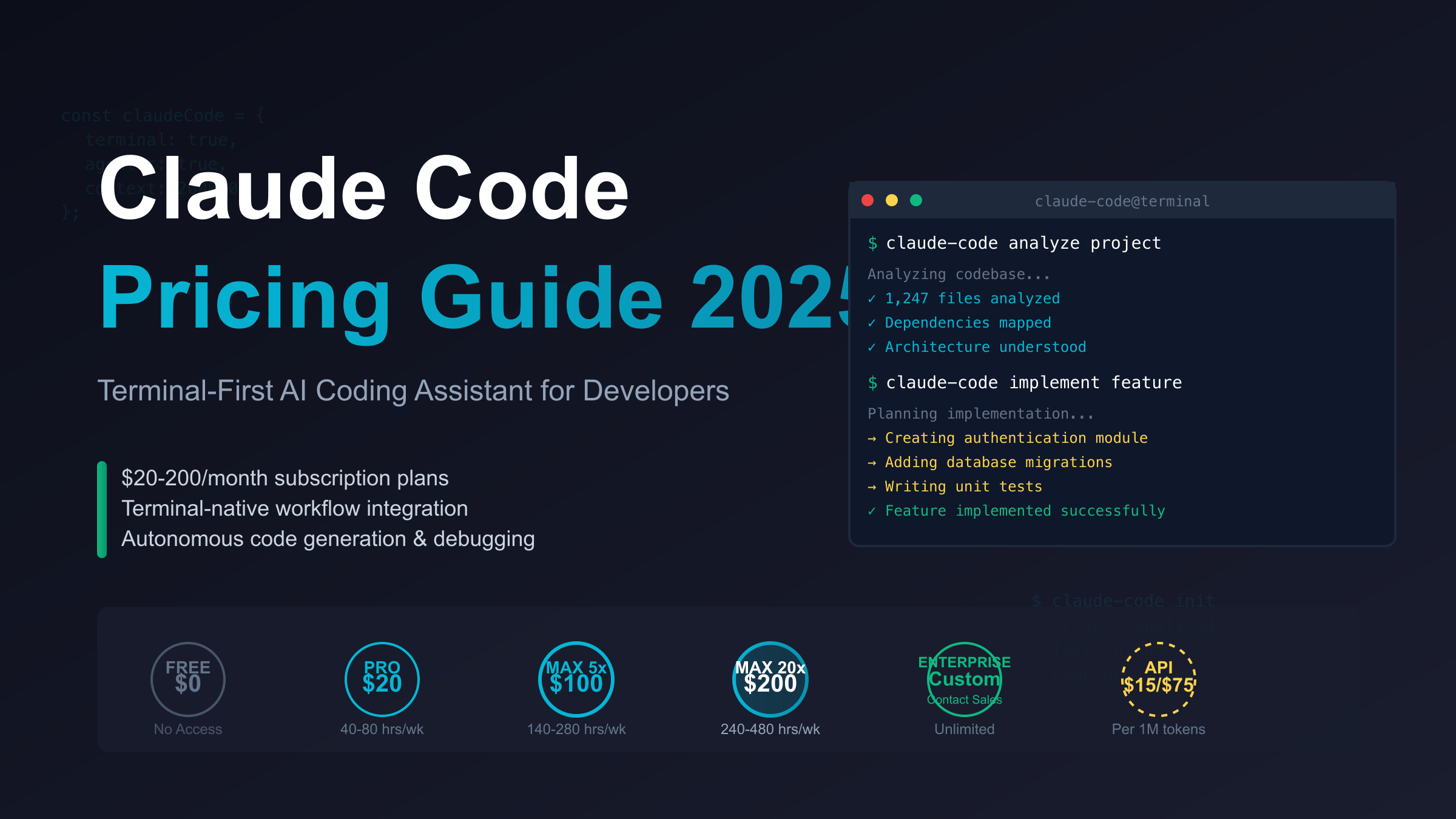

How much does Claude Code cost?

Claude Code is available through Anthropic's subscription plans, with pricing typically starting around $20/month for regular users, and volume discounts available for enterprises. However, Anthropic also offers on-premise and custom pricing for large organizations with specific security or compliance requirements.

Can Claude Code write entire applications?

Claude Code can write complete features, entire backend systems, and substantial frontend applications, but the best results come when humans provide strategic direction. It excels at taking a detailed specification and implementing it fully, but it's less reliable at deciding whether the specification itself is correct or optimal from a business perspective.

What happens to the code Claude Code generates—does Anthropic own it?

According to standard AI tool agreements, code generated by Claude Code belongs to the user, not Anthropic. Your code is not used to train future models by default unless you explicitly opt in to research data sharing. Enterprise agreements typically include stricter data isolation guarantees.

Is Claude Code going to replace human developers?

High-quality developers are becoming more valuable under Claude Code, not less, because the role shifts from writing boilerplate to directing AI systems and making architectural decisions. The demand for developers who can guide and validate AI-generated code will likely grow significantly, while pure "code writing" becomes less valuable.

What's the difference between Claude Code and Cowork?

Claude Code is specialized for software development (writing code, running tests, debugging). Cowork is a general-purpose agent that can manage files, interact with any software, and automate tasks for non-technical users. Claude Code requires coding context; Cowork works on arbitrary computer tasks.

Strategic Implications for the AI Industry

Claude Code's success signals something profound about how AI will integrate into human work.

It's not about artificial general intelligence. It's about practical tools that solve real problems in concrete domains. Developers had real problems: repetitive boilerplate, tedious refactoring, documentation overhead.

Claude Code solved them.

The companies that will win aren't the ones promising AGI. They're the ones shipping products that make people materially more productive at their actual jobs.

Anthropic is executing this playbook perfectly. They built a world-class model. They applied it to a specific domain. They iterated internally. They shipped with strong product-market fit.

That's not luck. That's strategy.

For enterprise software, this matters because it suggests the future isn't about AI replacing people. It's about AI amplifying expertise. One great engineer plus Claude Code is worth more than one great engineer alone.

For startups, this matters because it means you can build with smaller teams. If code generation is robust enough, you don't need 50 engineers. You need 10 engineers and Claude Code.

For individuals, this matters because learning to direct AI tools becomes a core skill. You don't need to know every programming language. You need to know how to ask Claude Code to handle languages you don't know.

The world changes when tools change. Claude Code is one of those tools.

Conclusion: The Inflection Point

Something shifted in late 2024. For the first time, AI coding wasn't aspirational. It wasn't theoretical. It was practical.

Boris Cherny made a simple observation: "We built the simplest possible thing." But simple is the hardest thing to build. Simple means you've solved the hard problems. You've hidden the complexity. You've made something so obvious that it doesn't need explanation.

Claude Code is that simple. You describe what you want. It does it. You move on.

In the span of less than a year, this simple tool became the fastest-growing developer platform in AI history. It crossed $1 billion in ARR. It achieved near-universal adoption within Anthropic's own engineering team. It sparked a competitive arms race.

The business implications are staggering. Anthropic went from an API company to a direct-to-developer product company. The revenue model changed. The customer relationship changed. The way software gets built changed.

But the deeper implication is cultural. We're at the moment where AI stops being an experiment and becomes infrastructure. Like cloud computing before it, like mobile before that.

Once that inflection happens, going back isn't an option. Developers who have seen their productivity double aren't going to voluntarily go back to writing boilerplate by hand. Enterprises that saw their teams become more productive aren't going to disable the tool.

Claude Code is that inflection.

Will it stay on top? Unknown. OpenAI or Google could ship something better tomorrow. But the moment is already here. AI coding has crossed the chasm from "interesting experiment" to "standard tooling."

The future of software development runs through tools like Claude Code. Not because they're the best possible future. But because they're demonstrably better than the alternative right now.

And sometimes, better is all that matters.

Key Takeaways

- Claude Code reached $1 billion in annualized recurring revenue in under 12 months, making it the fastest-growing AI developer tool in history

- The shift from autocomplete to agentic AI represents a fundamental change in capability: developers now describe outcomes instead of writing code

- Claude Opus 4.5 was the critical inflection point—multiple independent sources confirmed step-function improvements in reasoning and code quality

- Near-100% internal adoption at Anthropic demonstrates strong product-market fit and served as crucial dogfooding that accelerated development

- The competitive landscape is consolidating fast, with Claude Code's superior reasoning abilities and native model integration providing structural advantages over competitors like Cursor
