What Google I/O 2026 Means for the Future of AI and Android

Google just made it official: I/O 2026 is happening. And if the pattern of the past few years tells us anything, this isn't just another developer conference with incremental updates and minor feature announcements.

This is Google's moment to reset expectations for what AI can actually do when it's built into the operating system itself, integrated into search, and woven through every product the company touches. We're not talking about ChatGPT integration or slapping AI onto existing features as an afterthought. We're talking about fundamental architectural changes.

Last year, Google showed us what's possible when you commit to AI-first thinking. But 2026 is shaping up to be the year where that vision stops being a pitch and becomes a shipping reality. The company has been quietly building toward this moment for 18 months, and the convergence of advances in language models, on-device processing, and system integration is about to hit hard.

Why should you care? Because if you use Android, Gmail, Docs, Search, or any of the other Google products that 2 billion people use every day, this conference is literally defining what your digital life looks like in 12 months. We're talking about your phone understanding context the way your best friend does. We're talking about your productivity tools knowing what you're trying to accomplish before you fully articulate it. We're talking about Android becoming genuinely harder to live without.

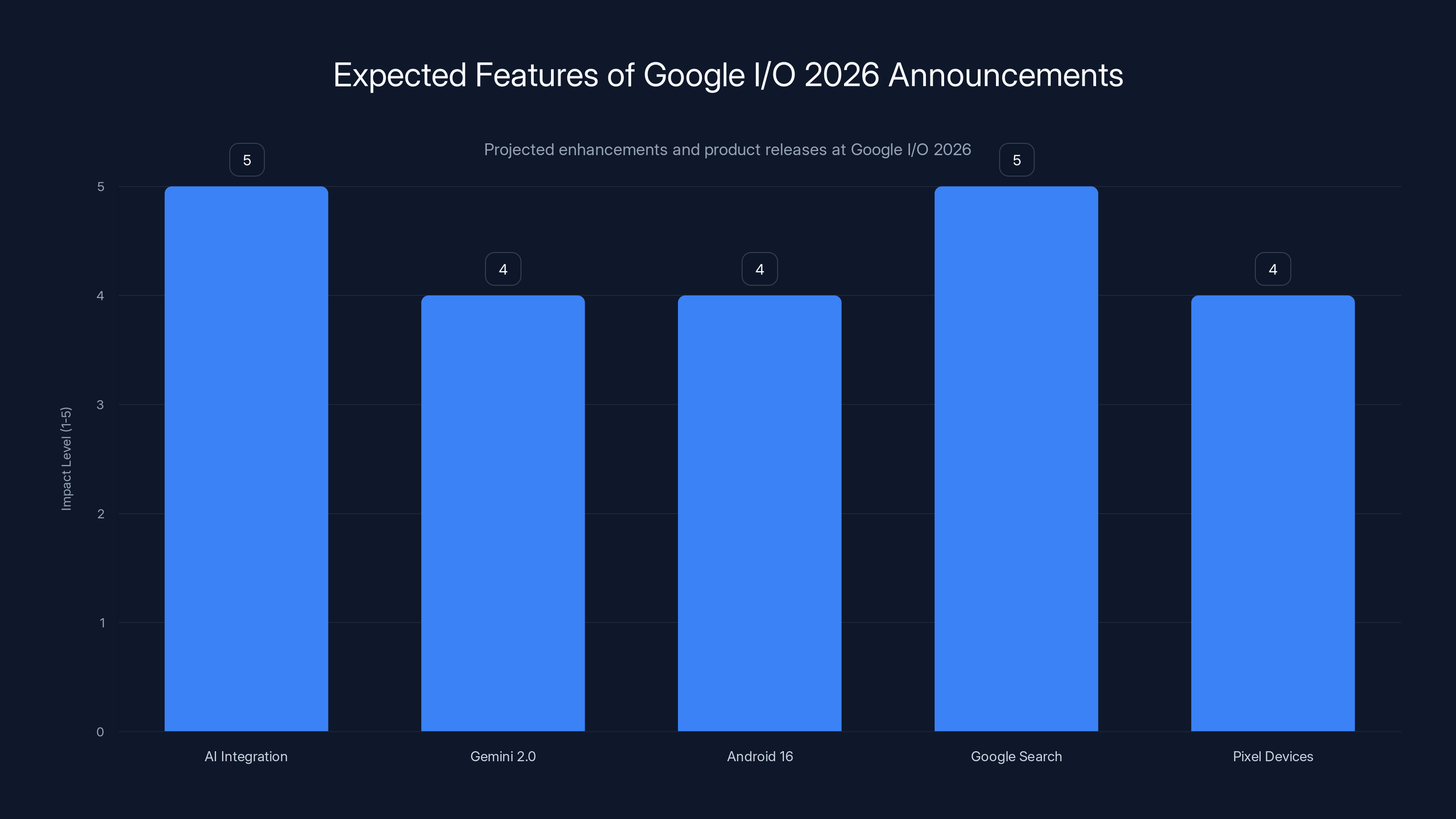

Let's dig into what's actually coming. These aren't wild guesses or wishful thinking. These are the natural next steps in the roadmap Google has been following for the last three years, based on their research publications, patent filings, and strategic hires.

TL;DR

- Gemini 2.0 and Beyond: Expect major improvements in reasoning, multimodal capabilities, and real-time integration across Google products

- Android 16 Integration: AI features baked directly into the OS, not just as add-ons, with on-device processing for privacy

- Search Evolution: Google Search will move beyond keyword matching to understanding intent, context, and real-world needs

- Hardware Announcements: New Pixel devices, Pixel Fold improvements, and possibly new form factors leveraging AI capabilities

- Developer Tools: Major updates to Gemini API, improved developer experience, and new frameworks for building AI-powered apps

- Bottom Line: Google I/O 2026 will establish AI as the foundation of consumer tech, not a feature bolted on top

Gemini 2.0 is projected to significantly enhance multimodal understanding and on-device processing, offering improved privacy and speed over Gemini 1.5. Estimated data based on feature descriptions.

1. Gemini 2.0: The Next Leap in Large Language Models

Gemini 1.5 was good. Gemini 2.0 is going to redefine what a general-purpose AI model looks like. Here's what's coming.

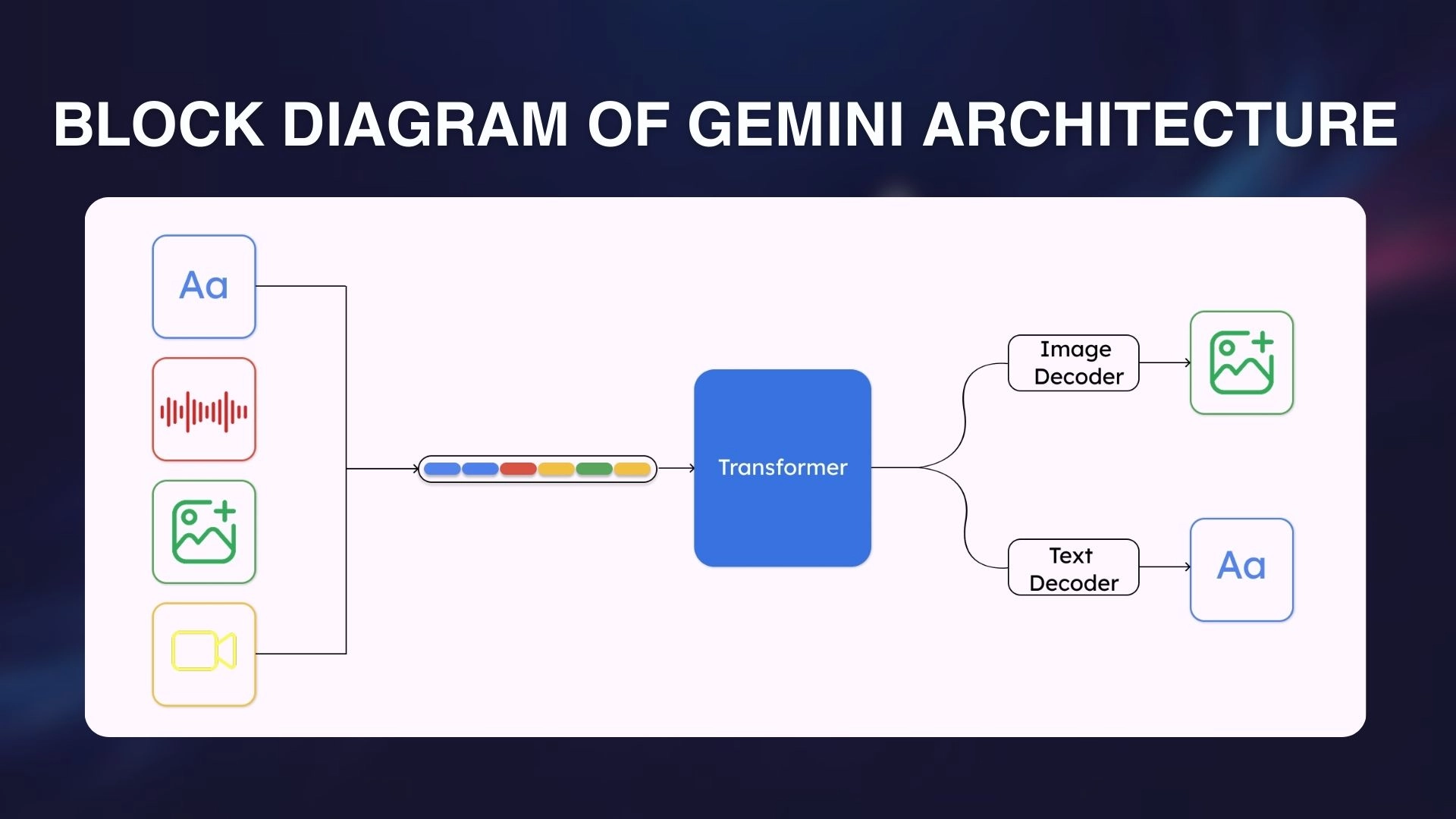

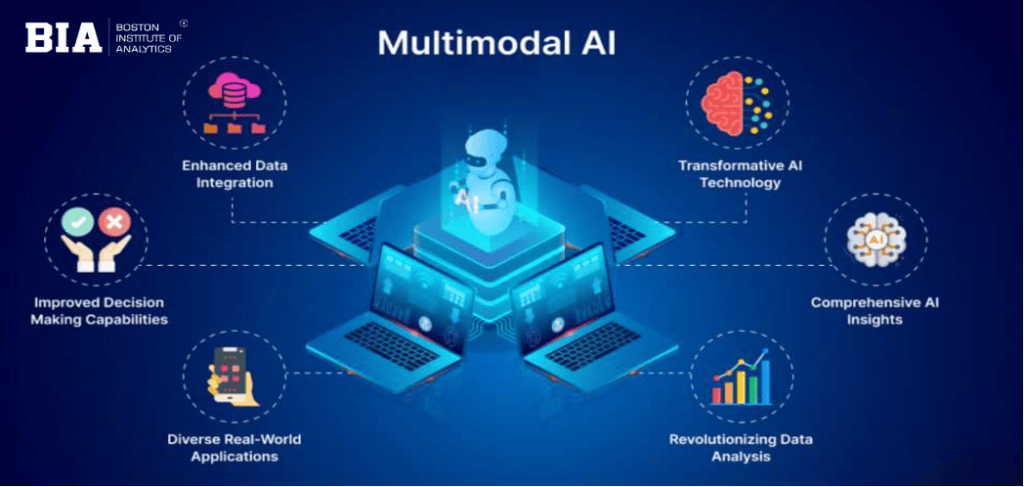

Multimodal Mastery That Actually Works

Current multimodal models can technically process images, videos, and audio alongside text. But there's a huge difference between being able to process something and actually understanding it at a level that feels human.

Gemini 2.0 is going to take a meaningful step forward here. Expect the model to understand spatial relationships, temporal context, and semantic nuance across different media types in ways that current models simply can't match. This isn't just about showing the model a picture and getting a caption back. This is about understanding a 30-minute video conference, extracting the key decisions made, identifying who disagreed about what, and summarizing it with the context that matters to you specifically.

Why? Because Google has access to massive amounts of multimodal training data—YouTube content, image search, video metadata, engagement signals—that most competitors don't have. They've been investing heavily in machine learning infrastructure, and that advantage compounds over time.

On-Device Processing Goes Serious

One of the biggest shifts we'll see at I/O 2026 is Google putting real muscle behind on-device AI. This doesn't mean replacing Gemini in the cloud. It means having smaller, specialized versions of Gemini running directly on your phone, with no internet required.

Why should you care? Privacy, obviously. But also speed, reliability, and the ability to use AI features when you're on a flight, in a tunnel, or in a region with poor connectivity. On-device models won't be as capable as cloud versions, but they'll likely be good enough for the majority of everyday use cases (call it 70% as a rough guess), and those use cases will all get dramatically faster.

Google's been shipping on-device ML for years (voice recognition, gesture detection, translation), but the scale and sophistication of what they're bringing to I/O 2026 will be fundamentally different. Expect Gemini to run locally on high-end Pixels, with graceful fallback to cloud processing for complex queries.
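To make the fallback idea concrete, here's a minimal Python sketch of how routing between a local model and a cloud model could work. Everything in it is an assumption for illustration: the `route` function, the word-count complexity heuristic, and the `max_local_words` threshold are hypothetical, not anything Google has announced.

```python
from dataclasses import dataclass
from typing import Callable

# A "model" here is just any function from prompt text to answer text.
Model = Callable[[str], str]


@dataclass
class RouteResult:
    backend: str  # "on_device" or "cloud"
    answer: str


def estimate_complexity(prompt: str) -> int:
    """Crude stand-in for a real complexity estimate: the word count."""
    return len(prompt.split())


def route(
    prompt: str,
    local_model: Model,
    cloud_model: Model,
    *,
    online: bool,
    max_local_words: int = 50,  # hypothetical cutoff, chosen arbitrarily
) -> RouteResult:
    """Answer locally when the query is simple or the device is offline;
    otherwise fall back to the cloud model."""
    is_simple = estimate_complexity(prompt) <= max_local_words
    if is_simple or not online:
        return RouteResult("on_device", local_model(prompt))
    return RouteResult("cloud", cloud_model(prompt))
```

A real system would presumably use something smarter than word count, but the shape of the decision (local first, cloud only when needed and available) is the part that matters.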

Real-Time Integration and Context Awareness

Here's what separates a useful AI from a game-changing one: context. Not just what you're asking right now, but what you've been working on, what you care about, what you're trying to accomplish.

Gemini 2.0 will have significantly better access to your personal context without being creepy about it. It'll know you're an engineer working on a specific project, so when you ask a question, it'll give you answers tailored to that context. It'll understand the difference between you asking about Python for a production system versus a learning exercise. It'll remember what you decided last week and use that to inform what you're doing today.

This happens through better integration with Google Workspace (Docs, Sheets, Gmail, Meet), not because Google is storing more data about you, but because the model is getting better at understanding the signal that already exists in your digital life.

Reasoning and Planning Improvements

One of the biggest weaknesses of current LLMs is reasoning. They're phenomenal at pattern matching and generating plausible text, but they struggle with complex, multi-step logical problems that require backtracking, reconsidering premises, and actually thinking through a problem rather than pattern-matching to similar problems they've seen.

Gemini 2.0 is expected to show marked improvements here, partly through better training approaches and partly through having access to Google's computational power for inference-time reasoning. When you ask Gemini 2.0 a complex question, it'll be able to break it down into sub-problems, solve those, and synthesize the answer in ways that feel more like how a smart human would approach it.

The practical upshot? Better code suggestions, better analytical output, better help with genuinely difficult problems. Not perfect, but noticeably better.

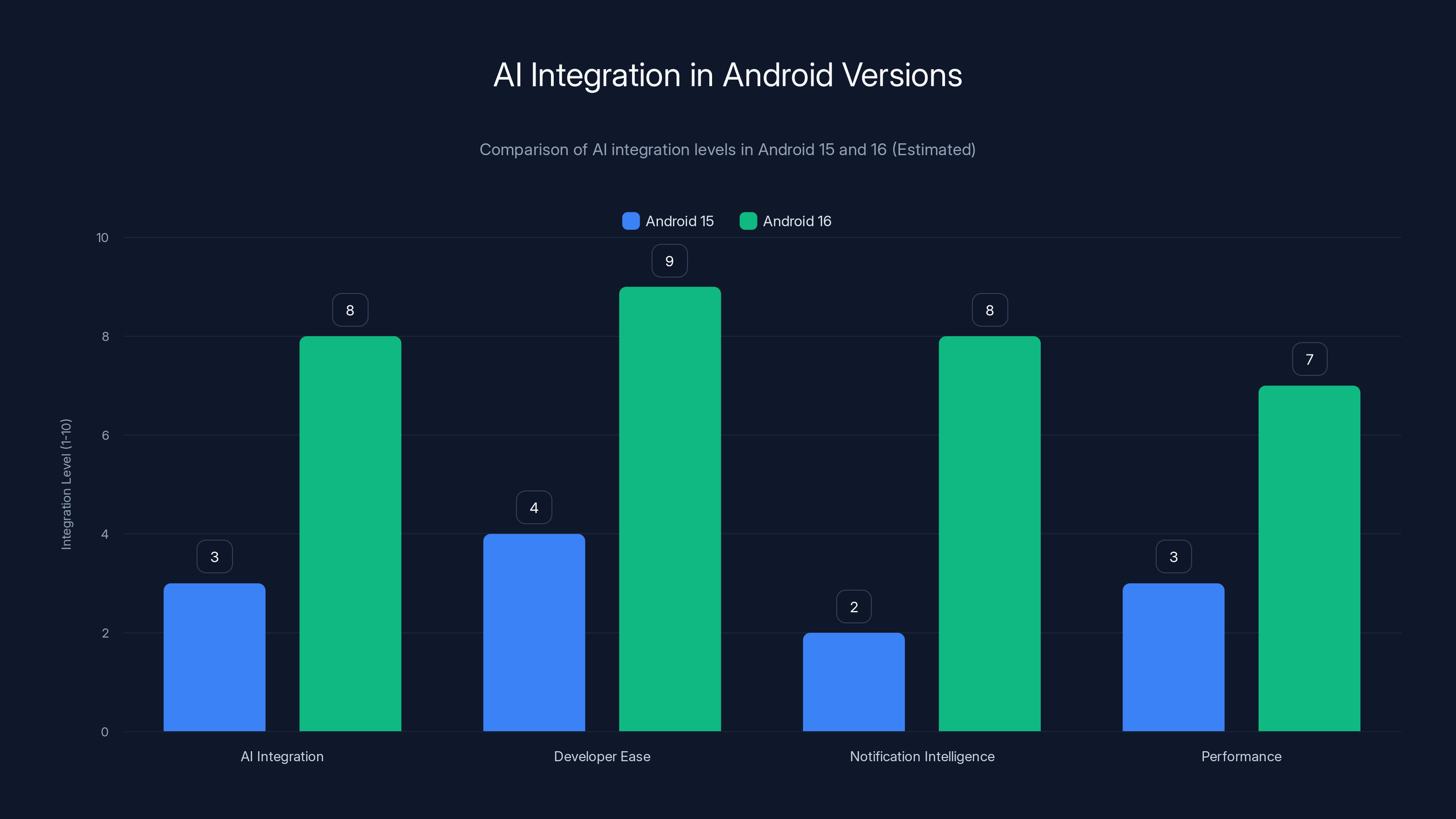

2. Android 16: Operating System Meets AI Foundation

Android 15 was decent. Android 16 is going to be fundamentally different because it's being built with AI as a core layer, not an afterthought.

AI as a First-Class System Component

Right now, AI features on Android feel like they're bolted on top of the OS. Android 16 will flip that. AI will be a first-class component, like the kernel, the file system, or the permission system. Apps will be able to request AI capabilities the same way they request location or camera access.

What does this mean in practice? It means developers can build AI features into their apps without worrying about the heavy lifting of model management, inference optimization, or figuring out when to run things on-device versus in the cloud. Google will handle all of that through a standardized API.

This is huge because it removes the friction that's currently keeping most developers from building AI-powered features. Right now, if you want to add AI to your app, you either need to make API calls to cloud services (expensive, slow, privacy concerns) or bundle models in your APK (bloated, outdated). Android 16 gives a third option that's lean, fast, and keeps improving over time.
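Here's a rough Python sketch of what "request AI the way you request the camera" might look like from an app's point of view. The `AiBroker` class, the `Capability` names, and every method on them are hypothetical; no such Android API has been confirmed. The point is only to show the abstraction: the app asks for a capability, and the system decides which model serves it.

```python
from enum import Enum
from typing import Callable, Dict, Set


class Capability(Enum):
    SUMMARIZE = "summarize"
    TRANSLATE = "translate"


class CapabilityDenied(Exception):
    """Raised when an app uses a capability the user has not granted."""


class AiBroker:
    """Hypothetical system service that sits between apps and models."""

    def __init__(self) -> None:
        self._granted: Set[Capability] = set()
        self._providers: Dict[Capability, Callable[[str], str]] = {}

    def install_provider(self, cap: Capability, fn: Callable[[str], str]) -> None:
        # The OS (not the app) chooses and updates the backing model.
        self._providers[cap] = fn

    def grant(self, cap: Capability) -> None:
        # Stands in for the user approving a permission prompt.
        self._granted.add(cap)

    def request(self, cap: Capability, text: str) -> str:
        if cap not in self._granted:
            raise CapabilityDenied(cap.value)
        if cap not in self._providers:
            raise LookupError(f"no provider installed for {cap.value}")
        return self._providers[cap](text)
```

Because the app only ever calls `request`, the system could swap in a better provider later without the app shipping an update, which is the "keeps improving over time" part of the argument.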

Notification and Context Intelligence

Android notifications are broken right now. Your phone buzzes with dozens of low-value interruptions, and you have no good way to filter them at the OS level. You end up manually muting apps or living in a constant state of notification chaos.

Android 16 will use AI to understand which notifications actually matter to you, based on context. The system will learn that you care about notifications from your calendar around 8 AM (to know when to leave), but don't want them from shopping apps at 9 PM. It'll understand that a Slack message from your boss matters more than a Slack message in a low-priority channel. It'll know when you're in a meeting and batch notifications instead of interrupting you.

This isn't possible with simple rules or ML models that can't see your full context. But with Gemini integrated at the OS level, with access to your calendar, location, and usage patterns, the system can make genuinely smart decisions about what to surface and when.
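As a toy illustration of that decision, here's a small Python sketch. It uses hand-written scoring in place of a learned model, and all the names, weights, and thresholds (`sender_priority`, the 0.5 bonus, the 0.8 and 1.2 cutoffs) are made up for the example.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Notification:
    app: str
    sender_priority: float  # 0..1, assumed to be learned elsewhere
    hour: int               # local hour of day, 0..23


@dataclass
class Context:
    in_meeting: bool
    # app name -> hours of the day when the user tends to act on it
    preferred_hours: Dict[str, range] = field(default_factory=dict)


def score(n: Notification, ctx: Context) -> float:
    """Sender priority plus a bonus when the app is relevant right now."""
    s = n.sender_priority
    hours = ctx.preferred_hours.get(n.app)
    if hours is not None and n.hour in hours:
        s += 0.5
    return s


def decide(n: Notification, ctx: Context,
           deliver_threshold: float = 0.8,
           urgent_threshold: float = 1.2) -> str:
    """Return "deliver", "batch", or "silence" for one notification."""
    s = score(n, ctx)
    if s < deliver_threshold:
        return "silence"
    if ctx.in_meeting and s < urgent_threshold:
        return "batch"  # hold until the meeting ends
    return "deliver"
```

With these made-up numbers, a calendar alert at 8 AM gets delivered, a shopping promo at 9 PM gets silenced, and during a meeting only the highest-scoring messages break through.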

Adaptive Performance and Battery Optimization

Google has been shipping adaptive battery for years, but it's a fairly blunt instrument. It looks at your usage patterns and tries to predict what you'll use next. Android 16 will be dramatically smarter about this.

The system will understand not just what apps you use, but what you use them for, in what contexts, and how important different tasks are. If it learns that you always open the weather app first thing in the morning, it'll pre-load that data at 6:50 AM. If it knows you're about to enter a battery-intensive video call, it'll shed background workloads preemptively.

Battery life improvements could be meaningful—maybe 20-30% better on typical usage, more on light usage. But the bigger story is that your phone becomes genuinely responsive and optimized for your actual behavior, not generic usage patterns.

Keyboard and Input Prediction

Your phone's keyboard is currently dumb relative to what it could be. It predicts the next word based on language patterns and maybe a little context from what you've typed. Android 16 will make it genuinely smart.

Imagine a keyboard that understands you're writing an email to your boss versus a text to your friend, and adjusts tone and formality. Imagine one that knows you're answering a question someone asked, so it can suggest relevant information without you having to type it out. Imagine one that catches your mistakes not just at the character level but at the meaning level—flagging when you say something that contradicts what you meant to say.

This is the kind of feature that sounds like a gimmick until you use it, at which point you realize it saves you a meaningful amount of time every day. Over a year, that could add up to hours.

Android 16 shows a significant improvement in AI integration, developer ease, and notification intelligence compared to Android 15. Estimated data.

3. Google Search Gets Genuinely Smart

Google Search is the product that made Google valuable in the first place. And it's the product that needs to change the most to survive the AI era. I/O 2026 will show us how Google plans to stay relevant as search behavior itself transforms.

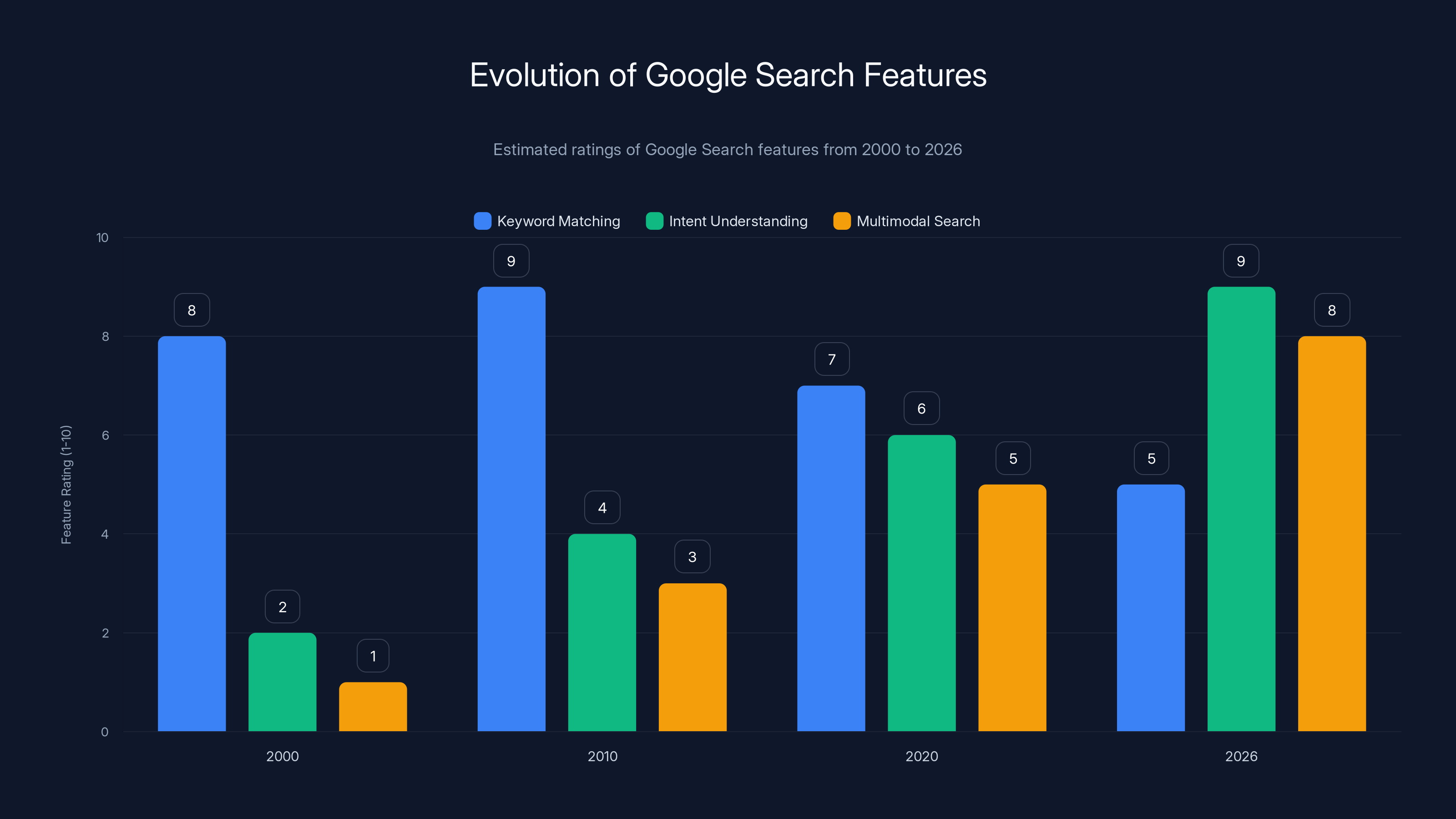

From Keywords to Intent Understanding

For 25 years, Google Search has been fundamentally about matching keywords to documents. You type "best coffee in Portland," Google returns pages that contain those words. Ranking algorithms are sophisticated, but the basic mechanic is keyword matching plus authority signals.

Google Search in 2026 will move decisively toward intent understanding. The system will genuinely understand what you're trying to accomplish, not just the words you used. You could ask "coffee nearby that's less crowded than Starbucks" and the system would understand location, preference, and implicit quality signals without you spelling it out.

How does this work? Partly through better natural language understanding (thanks to Gemini). Partly through having models process your request in a way that's more like human reasoning and less like keyword matching. Partly through having access to richer data about what places are actually like, not just what they're called.

The practical result is that search becomes more helpful even when you're not great at articulating what you want. Especially useful for complex queries, comparison shopping, and exploration.
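The gap between keyword matching and intent matching is easier to see with a toy example. The Python sketch below assumes a language model has already turned the coffee query into a structured `Intent`; that parsing step is not shown, and the `Place` records are invented sample data, not real search results.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Place:
    name: str
    category: str
    distance_km: float
    crowd_level: float  # 0 (empty) .. 1 (packed); invented sample values


@dataclass
class Intent:
    category: str
    max_distance_km: float
    max_crowd_level: float  # results must be strictly less crowded


# Invented sample data for illustration only.
PLACES = [
    Place("Starbucks", "coffee", 0.2, 0.9),
    Place("Quiet Bean", "coffee", 0.5, 0.3),
    Place("Corner Coffee Roasters", "coffee", 3.0, 0.2),
    Place("Book Nook", "bookstore", 0.1, 0.1),
]


def keyword_match(query: str, places: List[Place]) -> List[Place]:
    """Classic approach: keep places whose name shares a word with the query."""
    terms = set(query.lower().split())
    return [p for p in places if terms & set(p.name.lower().split())]


def intent_match(intent: Intent, places: List[Place]) -> List[Place]:
    """Intent approach: filter on what the user meant, then sort by distance."""
    hits = [
        p for p in places
        if p.category == intent.category
        and p.distance_km <= intent.max_distance_km
        and p.crowd_level < intent.max_crowd_level
    ]
    return sorted(hits, key=lambda p: p.distance_km)
```

For "coffee nearby that's less crowded than Starbucks", the keyword version returns Starbucks itself plus a roaster 3 km away, because those names share words with the query. The intent version, given `Intent("coffee", 1.0, 0.9)`, returns only Quiet Bean.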

Multimodal Search That's Useful

Google's been pushing image and video search for years, but it still feels somewhat disconnected from text search. I/O 2026 will show a unified search experience where text, image, video, and audio input are all first-class citizens.

You'll be able to take a picture of something (a plant, a piece of furniture, an outfit) and get useful information about it. You'll be able to ask questions while pointing your camera at something. You'll be able to hum a song and find it, or record a voice clip and get relevant results. These features technically exist now, but they'll be dramatically more integrated and more useful.

What makes this work is computational power (Google has it), good ML models (Gemini handles this), and user data to learn from (Google has lots). The convergence of these factors in 2026 means multimodal search finally becomes genuinely useful rather than a gimmick.

Real-Time Information and Dynamic Results

One area where current search struggles: things that change. Stock prices, sports scores, weather, traffic, event listings. Google handles these through specialized data pipelines and knowledge panels, but it's not elegant.

With Gemini 2.0 and better real-time data integration, Google Search will handle dynamic information the same way it handles static information. You ask about something time-sensitive, the system pulls current data, understands what specifically you're looking for, and gives you an answer that's both comprehensive and current.

The difference between current search and 2026 search: current search excels at answering "what is X?" or "tell me about X." 2026 search will excel at answering "what's happening with X right now?" and "should I do X, Y, or Z?" (which requires understanding nuance and trade-offs).

Conversational Search That Doesn't Suck

Google's been shipping conversational search (where you ask follow-up questions and the system maintains context). It's technically there, but it doesn't feel natural. It feels like the system is pretending to be conversational when it's really just doing keyword matching at each turn.

I/O 2026 will show conversational search that actually feels like talking to a knowledgeable person. You can ask a complex question, get an answer, ask a follow-up that references part of the answer, ask another follow-up that combines information from multiple previous answers. The conversation flows naturally because the system genuinely understands what you've talked about, not just what your last query contained.

This is less about keywords and more about semantic understanding. Gemini 2.0 makes this possible in ways that older language models couldn't quite pull off.

4. Hardware Innovations: Pixel Meets AI

Google's going to announce new hardware at I/O 2026. The question isn't whether, it's what form it takes and how AI changes the value proposition.

Pixel 10 and the AI Phone

Pixel 9 is a good phone. Pixel 10 will be the phone where AI actually justifies the price. Not through gimmicks or feature checkboxes, but through genuine capabilities that competitors can't match because they don't have the integration.

Expect better on-device processing hardware (maybe a dedicated AI accelerator, or significant improvement to the existing Tensor chip). Expect Gemini to be so integrated into the experience that you don't think of it as a feature—you think of it as just how the phone works. Expect capabilities that don't exist on other phones because they rely on tight Pixel-specific integration.

Pricing will likely be aggressive, possibly trying to undercut competitors while justifying the premium through software. Google's learned (partially) that people are okay paying for phones if the software actually does things that matter to them.

Pixel Fold 2: The Form Factor Question

Pixel Fold (first generation) was a proof-of-concept. Pixel Fold 2 will be the first foldable that actually has a compelling reason to exist beyond novelty. That reason? The larger interior screen makes AI-powered multitasking and information density genuinely more useful than on a phone.

Think about using Gemini to research something while writing a document on the interior screen. Or looking at a large map while Gemini provides turn-by-turn navigation and context about what you're driving through. Or having a video call on one panel while having reference materials on the other, with Gemini helping you find specific information.

Foldables don't make sense for the same reasons regular phones do. They make sense when the form factor enables things that flat screens can't do. I/O 2026 will show that argument finally being made convincingly.

Wearable Ecosystem Expansion

Google's wearable strategy has been somewhat disjointed (Wear OS, limited smartwatch options). I/O 2026 should show a more cohesive vision where Pixel Watch and other wearables become first-class members of the Google AI ecosystem.

Expect better voice control (with Gemini running partially on the watch itself). Expect smarter health monitoring that actually learns from your data and suggests interventions. Expect better integration with your phone, tablet, and home setup.

None of this requires groundbreaking new hardware. It's mostly about better software and tighter integration. But the overall effect is that Google's wearables become more useful because they're connected to an AI system that understands your health, schedule, and context.

Smart Home Integration That's Actually Smart

Google Home devices currently rely on a mix of routine-based automation and voice commands. They're capable, but not intelligent. They don't adapt, don't learn, don't anticipate.

I/O 2026 will likely show Google Home devices with local Gemini processing. Your smart home becomes actually smart in the sense that it learns your patterns, adapts to your needs, and anticipates what you want before you ask. Your lights adjust not just based on time of day but based on whether you're actually home and what you're doing. Your thermostat manages temperature not based on a schedule but based on your actual presence and preferences.

The privacy implications here matter—this only works if the processing is local—and Google will probably spend significant time on that messaging.

5. Developer Tools and the AI Developer Experience

All of these consumer features are only possible if developers can build them. I/O 2026 will show major improvements to Google's developer tools and APIs.

Gemini API Evolution

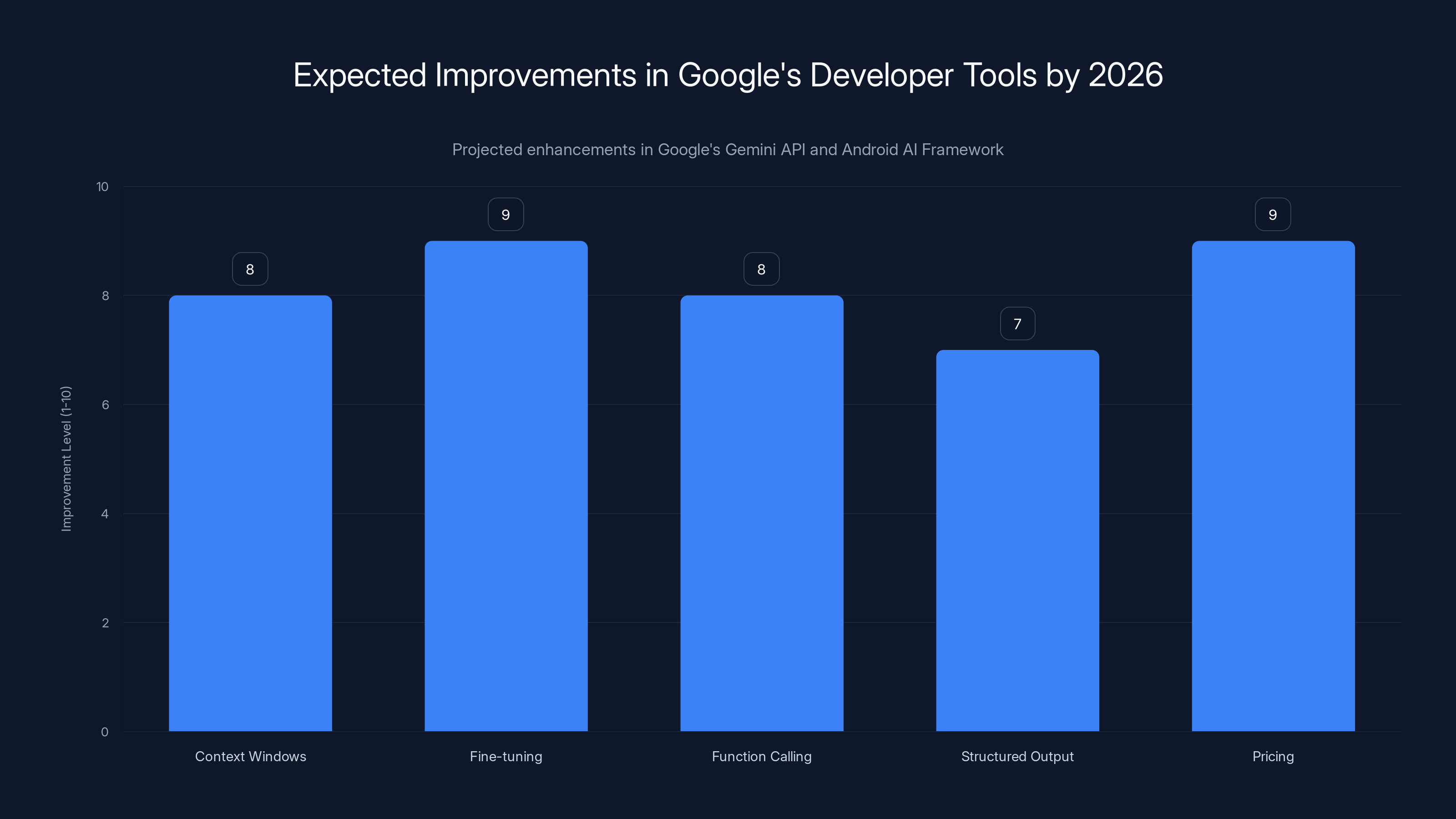

Google's Gemini API right now works, but it's not as seamless or developer-friendly as it should be. I/O 2026 will introduce significant improvements.

Expect better context windows (being able to feed more data into the model for processing). Expect better fine-tuning capabilities (training specialized versions of Gemini for specific tasks without needing millions of examples). Expect better function calling (having the model understand your API schema and call your functions appropriately). Expect better structured output (asking the model for JSON in a specific format and getting reliable results).
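Function calling and structured output mostly come down to one thing on the developer's side: treating the model's reply as untrusted JSON and validating it before acting on it. Here's a minimal Python sketch of that pattern. The `{"name": ..., "args": ...}` reply shape and the `get_weather` tool are assumptions made up for this example; they are not the Gemini API's actual wire format.

```python
import json
from typing import Any, Callable, Dict

# Registry of functions the model is allowed to call.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> str:
    # Stub: a real implementation would query a weather service.
    return f"Forecast for {city}: (stub)"


def dispatch(model_reply: str) -> Any:
    """Parse a model reply, validate its shape, and call the named tool."""
    try:
        call = json.loads(model_reply)
    except json.JSONDecodeError as exc:
        raise ValueError("model reply is not valid JSON") from exc
    if not isinstance(call, dict) or "name" not in call or "args" not in call:
        raise ValueError("reply must be an object with 'name' and 'args'")
    if call["name"] not in TOOLS:
        raise ValueError(f"unknown tool: {call['name']}")
    if not isinstance(call["args"], dict):
        raise ValueError("'args' must be a JSON object")
    return TOOLS[call["name"]](**call["args"])
```

"Better structured output" in practice would mean the validation branches here fire less often, since the model would return well-formed calls more reliably.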

Expect pricing that's more competitive, with better options for high-volume users. Google wants more developers building on Gemini, and they're willing to make pricing attractive to encourage that.

Android AI Framework and Libraries

Right now, if you want to add AI to an Android app, you either use Google's ML Kit (limited), call cloud APIs (expensive and slow), or bundle ML models yourself (bloated). Android 16 will introduce a better option.

Google will likely announce a unified framework for on-device AI in Android, with standard APIs for common tasks (text generation, image generation, translation, object detection, etc.). Developers can request capabilities without caring about the underlying model or implementation. Google handles updates over time.

This is similar to how Android handles permissions or hardware access. It's a clean abstraction that shields developers from implementation details and lets Google improve things over time.

Improved Training and Documentation

Google's AI documentation is scattered across multiple sites and often assumes more ML expertise than most developers have. I/O 2026 will see improvements here.

Expect a unified developer documentation site for AI/ML. Expect clearer guides for common tasks (building an AI chatbot, adding AI to an e-commerce app, etc.). Expect better code samples and libraries. Expect better support for non-LLM use cases (vision, speech, classification, etc.).

This stuff sounds boring compared to new products, but it's actually critical. Documentation and developer experience are often what determines whether a platform becomes popular or not.

Workspace Extensibility

Google Workspace (Docs, Sheets, Slides, Gmail, Meet) will be significantly more extensible for AI. You'll be able to build custom Gemini-powered features that integrate deeply into these products.

Imagine building an extension that understands your company's internal terminology and context, then surfaces that information when you're writing documents or answering emails. Imagine building tools that process documents and spreadsheets in ways specific to your workflow. These are possible now, but I/O 2026 will make them much easier.

Google I/O 2026 is anticipated to significantly enhance AI integration across products, introduce Gemini 2.0, and evolve Android 16 and Google Search. Estimated data based on trends.

6. Gemini Integration Across Google Products

Gemini isn't a separate product that you open and use. It's becoming the underlying intelligence layer for everything Google makes.

Gmail Gets Genuinely Helpful

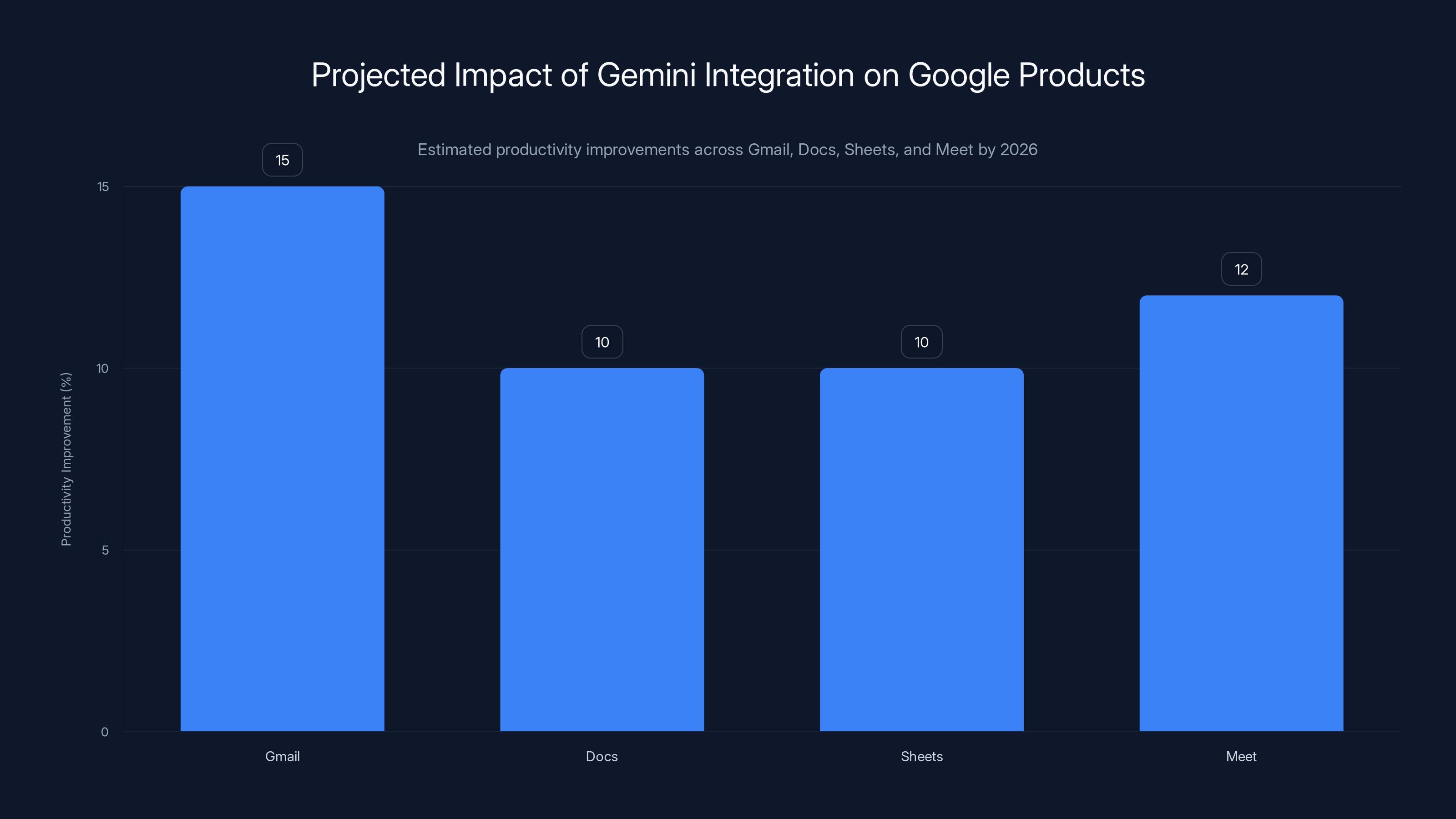

Gmail's AI features right now are pretty basic (Smart Compose, email summaries). I/O 2026 will introduce deeper integration. Gemini will understand the context of your inbox, the patterns in how you handle emails, and your implicit preferences.

It'll help you prioritize what matters. It'll suggest responses that match your tone and style. It'll summarize long email threads in ways that highlight what you need to know. It'll help you draft complex emails by understanding what you're trying to accomplish even when you haven't fully articulated it.

None of these are complicated AI tasks, but they all benefit from having deep contextual understanding. And they all make using email less like work and more like having a helpful assistant.

Docs and Sheets Get Smart Collaboration

Google Docs and Sheets will have Gemini deeply integrated for collaboration. When multiple people are working on a document, Gemini will understand what you're collectively trying to do. It'll suggest ways to structure information. It'll highlight potential conflicts or inconsistencies. It'll help synthesize information when everyone's edited things differently.

In Sheets, Gemini will help you structure data, suggest formulas, explain what other people's formulas do, and help you spot errors or inconsistencies in data.

These features sound incremental, but they compound. A 10% improvement in how productive you are in Docs and Sheets over the course of a year is massive.

Meet Gets AI Meeting Intelligence

Google Meet transcription exists now but is fairly basic. I/O 2026 will introduce real meeting intelligence. Gemini will watch your video calls, extract key decisions, identify action items, note who said what, and surface that in ways that actually matter.

It'll generate meeting summaries that are actually useful, not just transcripts. It'll help you prepare for meetings by reviewing what's relevant from previous conversations. It'll help you follow up by automatically creating tasks based on action items mentioned.

For distributed teams and people who spend a lot of time in meetings, this is genuinely valuable.

YouTube Gets Smarter Discovery and Engagement

YouTube's recommendation system is already sophisticated (the company has 15 years of data and ML investment). But I/O 2026 will show it getting smarter through Gemini integration.

Expect better understanding of what you're actually looking for (not just pattern matching to other users who watched similar videos). Expect better summaries and highlights of videos. Expect better tools for creators to optimize their content based on understanding user intent rather than just optimizing for watch time.

7. Privacy and On-Device Processing Strategy

Google's been taking privacy criticism for a long time. I/O 2026 will show a deliberate strategy shift toward on-device processing and data minimization.

Federated Learning Expansion

Federated learning is a technique where models are trained by having millions of devices contribute updates without sending raw data to servers. Google's been using this for years (for keyboard prediction, voice recognition, etc.). I/O 2026 will expand this significantly.

Expect more features to be trained using federated learning. Expect the benefits to be both technical (better models because they're trained on real data) and privacy-focused (you control what data leaves your device).
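For a feel of how this works, here's a minimal Python sketch of federated averaging on a toy problem: several "devices" each fit a line y = w*x + b to their own private points, and only the updated weights (never the points) are sent back and averaged. The data, learning rate, and function names are illustrative assumptions, and real deployments add protections such as secure aggregation that this sketch leaves out.

```python
from typing import List, Sequence, Tuple

Weights = Tuple[float, float]           # (w, b) for the model y = w*x + b
Dataset = Sequence[Tuple[float, float]]  # private (x, y) pairs on one device


def local_update(weights: Weights, data: Dataset, lr: float) -> Weights:
    """One gradient-descent step on mean squared error, run on-device."""
    w, b = weights
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return (w - lr * grad_w, b - lr * grad_b)


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Server step: average device weights, weighted by dataset size."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return (w, b)


def train(devices: List[Dataset], rounds: int, lr: float) -> Weights:
    """Repeat: broadcast weights, update locally, average on the server."""
    weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(weights, data, lr) for data in devices]
        weights = federated_average(updates, [len(d) for d in devices])
    return weights


# Two devices holding points from the same underlying line y = 2x + 1.
DEVICES: List[Dataset] = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
]
```

Calling `train(DEVICES, rounds=500, lr=0.05)` converges to weights very close to (2, 1), even though the server never sees a single raw data point.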

Explicit Privacy Choices

Google will likely introduce more explicit, granular privacy choices for AI features. Not the vague privacy policies and privacy dashboards that currently exist, but actual choices where you can see what data Gemini is accessing and make specific decisions about what's allowed.

This is partly for regulatory reasons (EU, California, etc.) and partly because Google's learned that privacy-conscious users will accept AI features if they have clear control and transparency.

Transparency About Data Usage

When Gemini sends data to cloud servers (because the query is too complex for local processing), Google will be more transparent about it. You'll see (or at least have the ability to see) what data is being sent and why.

Again, this is partly regulatory, partly because transparency is becoming table stakes for consumer AI products.

8. AI for Enterprise and Workspace

Google Workspace is huge for enterprise. I/O 2026 will show Gemini becoming core to the enterprise value proposition.

Gemini for Workspace Integration Deepens

Gemini for Google Workspace (the enterprise offering formerly branded as Duet AI) will be more integrated, more capable, and more specialized for enterprise use cases. You'll be able to use it to process confidential documents, integrate with enterprise data sources, and get answers that consider your company's specific context.

Expect better security, better compliance, and better integrations with enterprise systems (Salesforce, SAP, etc.).

Custom AI Models for Enterprise

Large enterprises will be able to fine-tune Gemini on their own data, creating models tailored to their specific needs without sharing data with Google. This is technically possible now but will be much more accessible in 2026.

Workflow Automation at Scale

Google will show how Gemini can automate entire business workflows. Not just individual tasks, but multi-step, cross-system processes. Process contracts, manage customer service, handle expense reports, whatever. Gemini understands the workflow and can execute it with human oversight.

Google Search is evolving from keyword matching to intent understanding and multimodal search, with significant improvements expected by 2026. (Estimated data)

9. Search Generative Experience Goes Mainstream

Google's Search Generative Experience (SGE) started out as an opt-in experiment and has since begun rolling out more widely under the AI Overviews name. By I/O 2026, it will likely be mainstream.

Integration Into Default Search

SGE (generative summaries for search queries) will be the default experience, not an opt-in experiment. When you search for something, you'll get an AI-generated summary alongside traditional search results.

This is a big change because it fundamentally shifts how search works. You don't click through to a website. You read an AI summary on Google. Websites lose traffic. Publishers have to adapt.

Google will have worked out the kinks—ensuring summaries are accurate, that citations are clear, that the feature actually helps users rather than just replacing search results.

Multimodal SGE

SGE using just text is useful. SGE using images, videos, and other media is more useful. Expect I/O 2026 to show SGE evolved to handle all input types and generate multimodal summaries.

Impact on Publishing and SEO

For content creators and publishers, I/O 2026 will be a moment of reckoning. Google's openly shifting from a model where they send traffic to your site, to a model where they give users answers directly. The publishing industry will have adapted somewhat by 2026, but the shift will still be significant.

Expect Google to announce features that help publishers benefit from SGE (better attribution, ways to opt in or opt out, ways to make content more summary-friendly). But the fundamental shift will be real.

10. AI Safety, Alignment, and Responsible AI

As Google ships more powerful AI, they'll need to discuss how they're thinking about safety and alignment.

Bias Mitigation and Fairness

Large language models can perpetuate biases from training data. Google will announce improvements to Gemini's fairness and bias detection. Probably some metrics showing improvement over previous versions.

Explainability and Interpretability

Gemini will be better at explaining its reasoning. When it gives you an answer, it'll be better at showing why. This matters for enterprise (you need to understand the recommendation) and for consumer use (you need to trust the output).

Content Moderation and Harmful Content Prevention

Google will talk about how Gemini prevents generating harmful content. This is an ongoing arms race (people keep finding ways to make models do things they're not supposed to), but there will be genuine improvements by I/O 2026.

11. Cross-Platform Ecosystem Integration

Google's products don't exist in isolation. I/O 2026 will show deeper integration across the entire ecosystem.

Phone, Watch, Tablet, Home Integration

Your Pixel phone will work seamlessly with your Pixel Watch, tablet, and Home devices. AI context flows across devices. You start something on your phone, continue on your tablet, get updates on your watch. The system understands what you're doing and adapts accordingly.

Cloud-to-Edge Computation

Work that's too complex for your phone automatically moves to more powerful devices. A complex analysis might run on your tablet. Real-time feedback happens on the watch. All orchestrated seamlessly through the cloud.

Device Switching Without Friction

Right now, switching between devices is annoying. By I/O 2026, it'll be seamless. Your context flows from one device to another. Applications understand the screen size and adapt appropriately. You don't think about which device you're using, you just use the one that's convenient.

Projected improvements in Google's developer tools include enhanced context windows, fine-tuning, and competitive pricing. Estimated data based on anticipated updates.

12. Competition and Industry Response

I/O 2026 is partly about Google's innovations and partly about Google responding to competition.

OpenAI and ChatGPT

OpenAI's been ahead of Google in consumer AI mindshare. By I/O 2026, Google will have narrowed the gap. Gemini, integrated across Google's products, will offer capabilities that ChatGPT can't match simply because ChatGPT isn't integrated into billions of people's daily tools.

Microsoft and Copilot

Microsoft's been aggressively adding AI to Office products. Google will argue that its approach is better because the AI is integrated from the ground up rather than bolted on.

Apple and Private Intelligence

Apple's been emphasizing privacy. Google will probably concede the point while arguing that they've made privacy-respecting AI possible at scale.

Open Source and Llama

Meta's open-source Llama models have been gaining traction. Google will likely announce more open-source models alongside their closed commercial offerings.

13. Pricing and Business Model Evolution

How does Google monetize all this AI?

Gemini Subscription Expansion

Google One (the subscription service) will likely get new AI-powered tiers. Gemini Advanced currently costs about $20/month as part of a Google One plan. I/O 2026 will likely show tiered options with different Gemini capabilities at different price points.

API Pricing Changes

As Gemini becomes more capable and more widely used, Google will need to adjust API pricing. It will probably move toward more sophisticated pricing models, charging more for complex requests and less for simple ones.
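Here is a rough sketch of what complexity-based pricing looks like as arithmetic. The tier names and per-token rates are invented for illustration and are not real Gemini prices; the only assumption carried over from how LLM APIs are commonly billed is that cost scales with input and output token counts.

```python
from decimal import Decimal

# Hypothetical rates in US dollars per 1,000,000 tokens; not real Gemini prices.
RATES = {
    "simple": {"input": Decimal("0.10"), "output": Decimal("0.40")},
    "complex": {"input": Decimal("1.00"), "output": Decimal("4.00")},
}
TOKENS_PER_RATE_UNIT = Decimal(1_000_000)


def request_cost(tier: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Cost in dollars for one request at the given (hypothetical) tier."""
    rate = RATES[tier]
    total = rate["input"] * input_tokens + rate["output"] * output_tokens
    return total / TOKENS_PER_RATE_UNIT


# Same request size, different tiers.
simple = request_cost("simple", input_tokens=2000, output_tokens=500)
complex_ = request_cost("complex", input_tokens=2000, output_tokens=500)
print(simple, complex_)
```

Under these made-up rates the same 2,000-token prompt with a 500-token reply costs ten times more on the complex tier, which is the kind of spread a tiered model would create.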

Enterprise Licensing

For businesses, Google will likely introduce enterprise licensing for Gemini, with custom features, better support, and higher reliability guarantees.

14. Research and Academic Announcements

Google always uses I/O to announce research breakthroughs.

New ML Techniques and Architectures

Expect announcements about improvements to transformer architectures, new training techniques, or breakthroughs in specific domains (vision, reasoning, etc.). These will be framed around how they power the consumer products, not just academic achievement.

Responsible AI Research

Google has a team dedicated to responsible AI. I/O 2026 will probably feature announcements about bias detection, fairness improvements, and other responsible AI research.

Open-Source Research Models

Google will likely release more research models (smaller, specific-use versions of Gemini) as open-source. This helps the research community and builds goodwill.

Chart caption: Estimated data suggests that Gemini integration could improve productivity by up to 15% in Gmail and 10-12% in Docs, Sheets, and Meet by 2026.

15. Future-Looking Announcements

I/O conferences always include some future-looking stuff: research that won't ship for years but gets people excited.

Longer Context Windows

One limitation of current models is the maximum context window: the number of tokens they can process at once. This caps how much of your data they can see in a single request. By I/O 2026, expect announcements about significantly longer context windows, even if they don't quite ship with consumer products yet.
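To see why the window size matters in practice, here is a minimal sketch of what applications have to do when a document doesn't fit: split it into pieces and process each piece separately. The `split_into_windows` helper is hypothetical, and it counts whitespace-separated words as a stand-in for tokens; real tokenizers split text differently, so treat the counts as approximate.

```python
def split_into_windows(text: str, max_tokens: int) -> list[str]:
    """Split text into chunks of at most max_tokens words.

    Words are used as a rough proxy for tokens (an assumption for
    illustration; real models use subword tokenizers).
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    words = text.split()
    return [
        " ".join(words[i:i + max_tokens])
        for i in range(0, len(words), max_tokens)
    ]


chunks = split_into_windows("a b c d e", max_tokens=2)
print(chunks)  # ['a b', 'c d', 'e']
```

Each chunk is handled without knowledge of the others, which is exactly the context a longer window would preserve.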

Better Multimodal Understanding

Current models can process images and video, but they're not great at understanding complex scenes or reasoning about spatial relationships. I/O 2026 will likely show research toward dramatically better multimodal understanding.

More Efficient Models

Larger isn't always better. Google's been researching ways to get more capability with smaller, more efficient models. By I/O 2026, expect announcements about models that are 10x more efficient while being just as capable.

Real-Time Processing

Currently, AI processing takes time. Google's researching ways to make inference nearly instantaneous. This would enable new use cases (real-time translation of conversations, real-time transcription and understanding, etc.).

16. Developer Conference Experience and Format

I/O itself will probably change.

Keynote and Announcements

Expect a keynote that's significantly more AI-focused than previous years. Google will probably spend 60-70% of the main keynote talking about AI (vs. the roughly 40-50% in previous years).

Sessions and Workshops

Hundreds of sessions will be available, with heavy focus on AI/ML development. If you're a developer, almost everything will have an AI angle.

Demo Areas and Hands-On

Google will have massive demo areas where attendees can try out new features. The Pixel 10, new Workspace features, Android 16, new developer tools. Expect long lines and high interest.

Virtual Attendance

I/O is huge in-person, but Google will expand virtual attendance options. For people who can't travel, there will be meaningful ways to participate.

17. Competitive Positioning and Market Message

Underneath all the announcements, Google's trying to send a message to the market.

AI at Scale, Not Just Research

Google wants everyone to know: we're not just researching AI, we're shipping it to billions of people. Our AI isn't a chatbot or a demo. It's integrated into the tools you use every day.

Privacy-First AI

Google will emphasize that you don't have to choose between AI and privacy. With on-device processing and federated learning, you can have both.
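For readers unfamiliar with federated learning, the core idea can be sketched in a few lines: each device trains on its own data and sends back only model weights, and a server combines those weights, typically as an average weighted by how much data each device had. The sketch below assumes that simple weighted-averaging scheme; the `federated_average` function is illustrative and says nothing about how Google's production systems are built.

```python
def federated_average(
    client_updates: list[tuple[list[float], int]],
) -> list[float]:
    """Combine per-device model weights into one global model.

    client_updates holds (weights, num_examples) pairs. Raw user data
    never appears here; only the locally trained weights do.
    """
    total_examples = sum(n for _, n in client_updates)
    if total_examples == 0:
        raise ValueError("no training examples reported")
    dim = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_examples
        for i in range(dim)
    ]


# Two hypothetical devices; the second trained on three times more data.
global_weights = federated_average([
    ([1.0, 2.0], 1),
    ([3.0, 4.0], 3),
])
print(global_weights)  # [2.5, 3.5]
```

The device with more examples pulls the result closer to its own weights, and the server never sees the examples themselves.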

Open Ecosystem

While Google's flagship commercial products are closed, they'll announce more open-source tools and models. The message: we're advancing AI broadly, not just for Google products.

Accessibility and Inclusivity

Google will talk about how AI makes their products more accessible to people with disabilities. Better voice control, better translation, better understanding of context. Real, tangible improvements.

18. The Broader Implications

I/O 2026 isn't just about products. It's about what these announcements mean for the future of computing.

The Death of the App as We Know It

If AI is genuinely intelligent and integrated, you don't need separate apps for everything. You could have a unified interface that understands what you're trying to do and helps you do it. This is speculative, but it's the direction things are heading.

Computing Becomes More Conversational

Right now you interact with computers by navigating interfaces, clicking buttons, entering text. As AI improves, computing becomes more conversational. You just tell your device what you want. This is a fundamental shift.

Privacy Becomes a Competitive Advantage

Everyone's offering AI now. The differentiation increasingly comes down to privacy, transparency, and control. Google understands this and will be pushing it.

Interdependence Between Hardware and Software

Good AI on devices requires hardware optimization. Google's tight integration of hardware and software (Pixel, Tensor chip, Android) becomes increasingly valuable.

19. Realistic Limitations and Potential Disappointments

Not everything will be perfect.

AI Still Has Fundamental Limitations

Gemini 2.0 will be better than 1.5, but it'll still hallucinate, make mistakes, and fail at tasks that seem simple to humans. Google won't claim otherwise, but the messaging will emphasize progress.

Privacy Features Might Not Be Perfect

Google will talk a big game about on-device processing and privacy, but there will likely still be data collection that people don't feel comfortable with. The bar for privacy is constantly rising.

Integration Challenges

Integrating AI across dozens of products is hard. Some integrations will feel clunky. Some features will ship and then get pulled. This is normal for cutting-edge tech.

Regulatory Headwinds

By I/O 2026, various countries will likely have more AI regulation. Google might announce some limitations or restrictions based on compliance requirements.

Job Displacement Concerns

Google will probably address this explicitly. AI is automating jobs. The company will likely talk about retraining programs, working with policymakers, etc. But it won't change the fundamental reality that some jobs will be automated.

20. The Practical Takeaway: What Changes for Users

All of this abstract stuff boils down to some concrete changes for people actually using Google products.

Your Android Phone Gets Smarter

Not incrementally smarter. Dramatically smarter. Things you couldn't do before become possible. Things that were annoying become smooth.

Your Productivity Tools Become More Productive

Google Workspace users will see real time savings. Not from gimmicks, but from genuine improvements in how these tools help you work.

Search Becomes Less About Finding Links, More About Getting Answers

This is a big shift, and it has implications for how you find information online. Google's moving from "here are the links you need" to "here's what you need to know."

Your Data Is Safer (Probably)

On-device processing and privacy improvements mean more of your data stays on your devices. Not all of it, but more than before.

AI Is Unavoidable

If you use Google products, you're using AI whether you explicitly choose to or not. This has benefits (smarter tools) and drawbacks (less control, potential for abuse). I/O 2026 will show Google trying to address the drawbacks.

FAQ

What is Google I/O 2026?

Google I/O is Google's annual developer conference where they announce new products, features, and research. In 2026, it's expected to heavily focus on AI integration across their entire product suite, from search and Android to Workspace and consumer devices.

When and where will Google I/O 2026 take place?

Google typically holds I/O in May at venues in California, though they haven't officially announced specific dates or locations for 2026 yet. Expect announcements in early 2026 about timing and ticketing details once Google makes the event formal.

What is Gemini 2.0 and how will it differ from Gemini 1.5?

Gemini 2.0 is expected to be the next major iteration of Google's large language model with improvements in reasoning capabilities, multimodal understanding, on-device processing, and real-time integration across Google products. It'll handle complex analytical tasks better than current versions and provide context-aware assistance that feels more natural and helpful.

Will Android 16 have AI built into the operating system?

Yes, Android 16 is expected to have AI as a first-class system component, similar to permissions or hardware access. Developers will be able to request AI capabilities through standardized APIs, and Google will handle the underlying models and optimization. This should make it much easier to add AI features to apps without separate development effort.

How will Google Search change at I/O 2026?

Google Search is expected to move from keyword matching to true intent understanding, offering conversational, multimodal search with real-time information. Instead of just returning links, Search Generative Experience will provide direct answers synthesized from multiple sources, fundamentally changing how people find information online.

What new Pixel devices will be announced at I/O 2026?

Google will almost certainly announce the Pixel 10 flagship phone and likely new versions of the Pixel Fold, Pixel Watch, and possibly new form factors. These will feature tighter AI integration, improved on-device processing, and capabilities uniquely enabled by Google's integration of hardware and software.

How does Google plan to handle privacy with all these AI features?

Google is expected to emphasize on-device processing for sensitive operations, expanded federated learning, and more transparent data collection practices. While not perfect, the strategy is to give users more control over what data leaves their devices and how AI features use their personal information.

Will Gemini API be more accessible to developers after I/O 2026?

Yes, Google is expected to announce significant improvements to the Gemini API including better context windows, improved fine-tuning capabilities, more competitive pricing, and better function calling and structured output. These changes will make it much easier for developers to integrate Gemini into their applications.

How will Google Workspace change with Gemini integration?

Google Workspace tools (Docs, Sheets, Slides, Gmail, Meet) will have deeper Gemini integration including smarter collaboration features, better summarization, improved meeting intelligence, and more helpful suggestions. For enterprise users, there will be custom model training on company-specific data while maintaining privacy.

What impact will I/O 2026 announcements have on content creators and publishers?

Publishers will face continued challenges as Google Search Generative Experience shifts from sending users to websites to providing direct answers. However, Google is expected to announce new features helping publishers benefit from SGE including better attribution, improved summarization-friendly content recommendations, and tools to help creators optimize for AI-driven search.

Conclusion: Why I/O 2026 Matters More Than Previous Years

Google I/O has always been significant for developers and tech enthusiasts. But I/O 2026 marks something different. It's the moment when AI stops being a separate feature bolted onto products and becomes the foundation of how Google's entire ecosystem works.

This isn't just about new products. It's about a fundamental shift in how computing works. For the past 30 years, computers have been tools you command. You tell them what to do (click here, type this, navigate there). Starting with I/O 2026, that's changing. Computers become genuinely assistive. They understand what you're trying to accomplish and help you accomplish it.

The specifics matter—Gemini 2.0's capabilities, Android 16's architecture, new hardware. But the bigger story is that Google's organizing its entire company around a simple idea: AI isn't a product, it's the operating principle.

This has profound implications. For consumers, it means tools that are dramatically more helpful and more personalized. For developers, it means opportunities to build things that were impossible before. For the publishing industry, it means adaptation or irrelevance. For policymakers, it means hard decisions about AI regulation, labor displacement, and societal impact.

I/O 2026 won't answer all these questions. But it'll establish the direction for the next several years of computing. If you care about technology, AI, productivity, or the future of the internet, I/O 2026 will be worth paying attention to.

The conference is likely to produce significant announcements across search, Android, consumer products, developer tools, and enterprise software. Whether you're a developer trying to stay current, a business leader evaluating technology strategy, or someone who just uses Google products, I/O 2026 will be a reset moment.

Google's been working toward this for years. In May 2026 (or whenever the conference happens), you'll see the results. And they'll probably be more significant than most people expect.

The future of computing isn't about bigger screens or faster processors or new form factors. It's about tools that actually understand what you're trying to do and help you do it better. I/O 2026 will show that future becoming real.

Key Takeaways

- Gemini 2.0 will feature multimodal understanding, on-device processing, and deeper real-time integration across all Google products

- Android 16 treats AI as a core system component, making it dramatically easier for developers to build AI-powered features

- Google Search is shifting from keyword matching to intent understanding, with SGE becoming the primary search interface

- Pixel 10 and Pixel Fold 2 will showcase hardware-software integration specifically optimized for AI capabilities

- Google Workspace gains significant AI-powered productivity improvements in Docs, Sheets, Slides, and Meet

- Privacy-first approaches with on-device processing will become a competitive differentiator for Google's AI strategy
