EU Parliament Bans AI on Government Devices: What This Means for Enterprise Security [2025]

Last spring, the European Parliament made a quiet but significant decision that sent shockwaves through the tech industry. They turned off AI tools on government-issued devices. Not as a pilot. Not as a test. An indefinite ban, driven by cybersecurity and data protection concerns that, frankly, most organizations should be losing sleep over.

Here's what happened: an internal memo revealed that the Parliament's IT department couldn't guarantee the security of AI tools that rely on cloud processing. These aren't niche, experimental systems. They're mainstream AI features built into productivity software that millions of workers use daily. The decision forced a reckoning that extends far beyond Brussels.

This isn't just about one European institution being cautious. This is about the fundamental tension between AI adoption and data security that every organization with sensitive information needs to address right now. If the EU Parliament—an institution literally writing AI regulations—is saying no to AI on work devices, shouldn't you be asking why?

Let's break down what happened, why it matters, and what your organization should actually do about it.

TL;DR

- The Ban: The European Parliament disabled AI features on government devices citing security risks from cloud-based processing.

- The Core Issue: AI tools sending data off-device to cloud servers create exposure risks that on-device processing wouldn't create.

- The Broader Impact: This signals regulatory skepticism toward cloud-dependent AI, affecting enterprise adoption globally.

- The Real Risk: Most organizations lack visibility into where their data goes when employees use AI tools.

- Bottom Line: You need data governance policies for AI use before regulators force your hand.

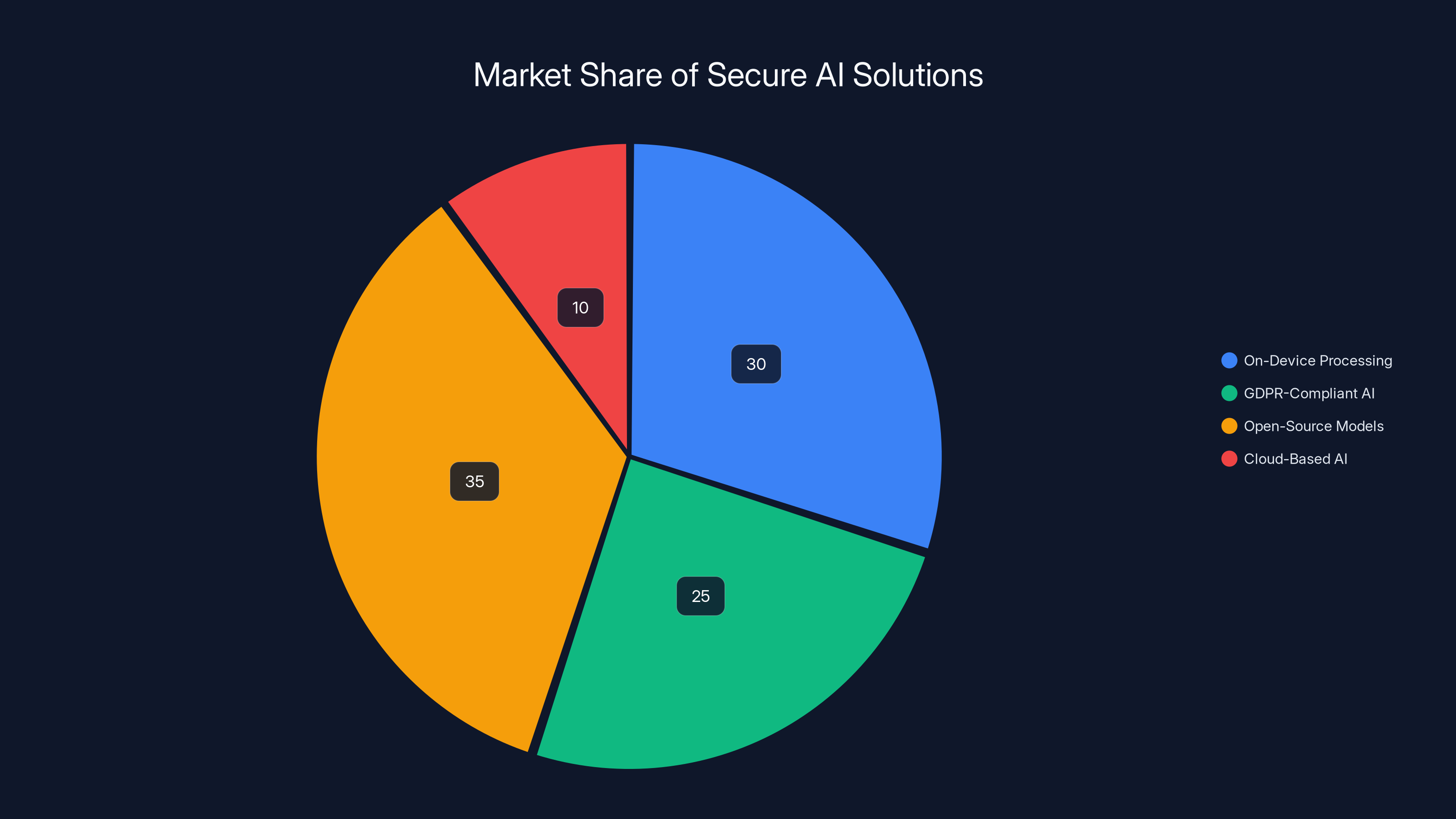

Open-source models lead the market with an estimated 35% share, reflecting their growing popularity as secure alternatives. Estimated data.

What the European Parliament Actually Did (And Why)

On the surface, the decision seems straightforward: disable AI. But the reasoning reveals something much more nuanced about how governments think about data security in the age of AI.

The Parliament's IT department issued guidance stating that "some of these features use cloud services to carry out tasks that could be handled locally, sending data off the device." That's the critical phrase. Cloud services. Off-device. Data transmission.

In practical terms, here's what was happening: employees would use built-in AI features to summarize documents, draft emails, or process information. Behind the scenes, the data would be transmitted to cloud servers operated by third-party vendors—often located outside the EU. The AI would process it there and send results back.

For government institutions handling classified information, legislative documents, and sensitive policy discussions, this represented an unacceptable risk. You can't guarantee data security when information is leaving your network, transiting through foreign infrastructure, and sitting in someone else's servers.

The memo was clear about one thing: this wasn't a blanket rejection of AI. It was specifically about AI tools that require cloud processing. Tools that handle tasks locally, on the device itself, without external transmission, remained acceptable in principle.

But here's where it gets complicated. The memo also stated something interesting: the Parliament "constantly monitors cybersecurity threats and quickly deploys the necessary measures." Translation: it might turn AI back on once it can verify that data stays secure.

That hasn't happened yet. And it reveals a critical insight: even institutions with massive IT budgets and security expertise can't quickly determine if cloud-based AI is secure enough. What does that say about organizations with smaller security teams?

The most critical steps for organizations are inventorying AI usage and developing explicit governance, both rated at 5. Estimated data based on typical organizational priorities.

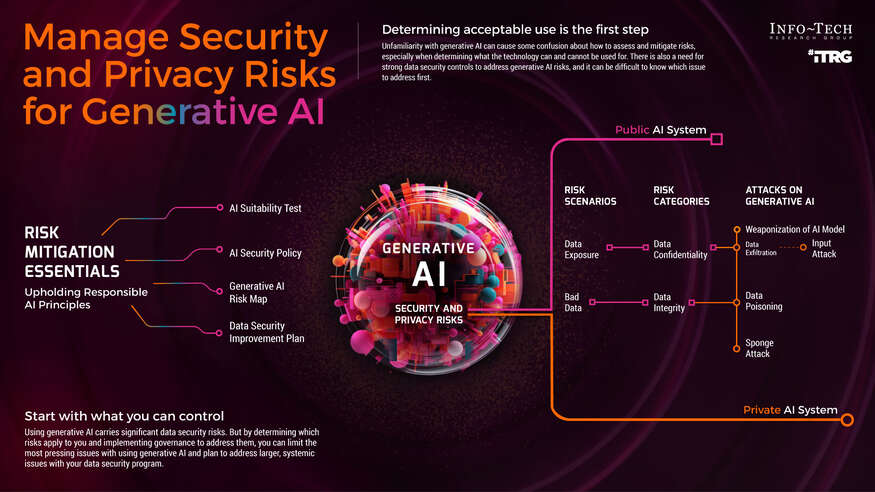

The Data Security Problem Nobody Talks About

When you use an AI tool on a cloud service, your data doesn't disappear into a black box. It goes through a predictable journey that most users never think about.

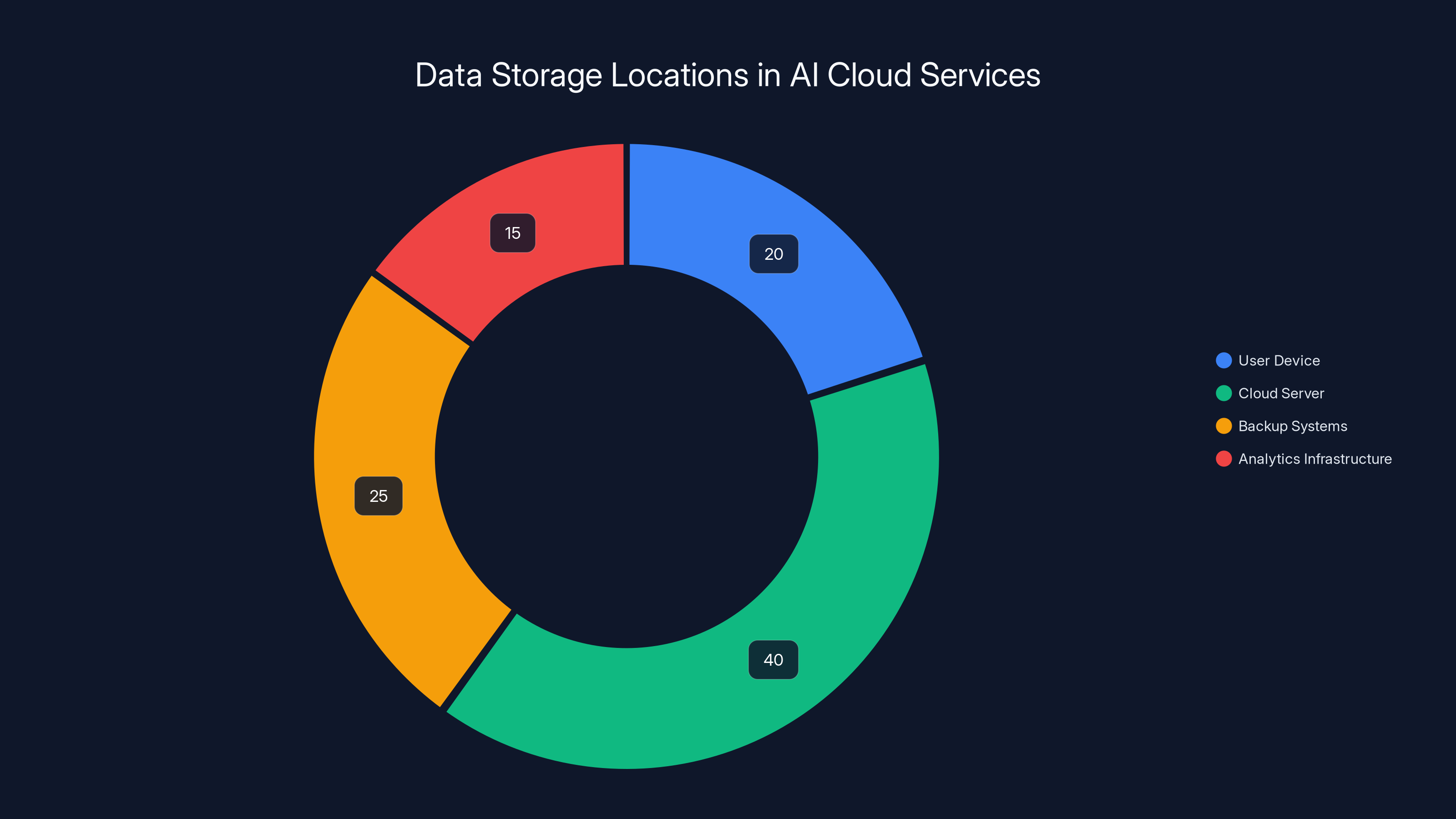

You type a prompt. That prompt gets transmitted over the internet to a cloud server. The server processes it, sometimes storing copies for training, logging, or analytics purposes. Results come back. Your data now exists in multiple places: your device, the cloud provider's servers, potentially their backup systems, and sometimes their analytics infrastructure.

Each of those handoffs is a potential vulnerability. Each location where your data sits is another target for attackers. Each third-party vendor in that chain is another organization with access to your sensitive information.

For a government, the consequences can be catastrophic. For enterprises handling customer data, intellectual property, or proprietary business information, the risk is still serious.

Consider a realistic scenario: a financial services employee uses an AI chatbot to summarize a client account or draft a response to a sensitive inquiry. That data (client names, account details, transaction history) now exists on the AI vendor's servers. The vendor might use it for model improvement. It might be stored for compliance reasons. It might be accessible to researchers or contractors with vendor access. If the vendor is breached, your client data is exposed.

The Parliament's concern wasn't paranoid. It was practical. European data protection regulations, particularly GDPR, create legal liability when personal data leaves controlled environments without adequate safeguards. If you transmit customer data to a cloud AI service and that service gets breached, you remain accountable for that data. The fine isn't a slap on the wrist: it can reach €20 million or 4% of worldwide annual turnover, whichever is higher.
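The 4% figure is a ceiling, not a fixed rate. Under GDPR Article 83(5), the maximum fine for the most serious infringements is €20 million or 4% of total worldwide annual turnover for the preceding financial year, whichever is higher. A minimal sketch of that arithmetic, using hypothetical turnover figures; real fines are set case by case and are usually well below the cap:

```python
# GDPR Art. 83(5) ceiling: the higher of EUR 20 million or 4% of worldwide annual turnover.
FIXED_CAP_EUR = 20_000_000


def gdpr_max_fine(annual_turnover_eur: int) -> int:
    """Return the statutory upper bound of an Art. 83(5) fine, in whole euros.

    This is only the ceiling; regulators set the actual amount based on the
    circumstances of each case.
    """
    if annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    # 4% of turnover, rounded down to whole euros.
    turnover_based_cap = annual_turnover_eur * 4 // 100
    return max(FIXED_CAP_EUR, turnover_based_cap)


# Hypothetical companies of different sizes.
for turnover in (50_000_000, 500_000_000, 2_000_000_000):
    print(f"turnover {turnover:,} EUR -> max fine {gdpr_max_fine(turnover):,} EUR")
```

Below €500 million in turnover, the fixed €20 million cap is the binding ceiling; above it, the 4% rule takes over and the ceiling grows with the company.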

That regulatory framework shaped the Parliament's decision. But it's not unique to Europe. Organizations worldwide are starting to ask the same questions: where does our data go when we use AI? Who has access? What's our liability if something goes wrong?

Why Cloud-Based AI Created This Problem

Understanding the security issue requires understanding why cloud-based AI exists in the first place.

Most modern AI models are enormous. Training a large language model requires millions of dollars in compute resources, specialized hardware (GPUs, TPUs), and infrastructure that only major cloud providers can afford. Even running a trained frontier model takes far more memory and compute than a typical laptop has. OpenAI can't ship GPT-4 to run on your laptop. Anthropic can't run Claude locally on your desktop. The models are too large, too complex, too resource-intensive.

So vendors solved this with cloud architecture: centralized servers that process requests from millions of users. Your laptop doesn't need to run the AI. It just needs internet access to send a request and receive results.

This architecture solved an engineering problem. It created a security problem.

When you run software locally, your data stays local. When you run software in the cloud, your data travels to external infrastructure, where it's processed by systems you don't control, governed by terms of service you probably haven't read, and stored in databases managed by vendors with different security standards than you might have.

For years, most organizations accepted this tradeoff. The convenience of cloud services outweighed the security concerns. Cloud vendors promised security. They had compliance certifications. They issued detailed security whitepapers.

Then, reality happened. Cloud breaches, data exfiltration, unauthorized access, compliance violations. Not rare edge cases. Regular occurrences.

The Parliament looked at this landscape and said: not on our infrastructure.

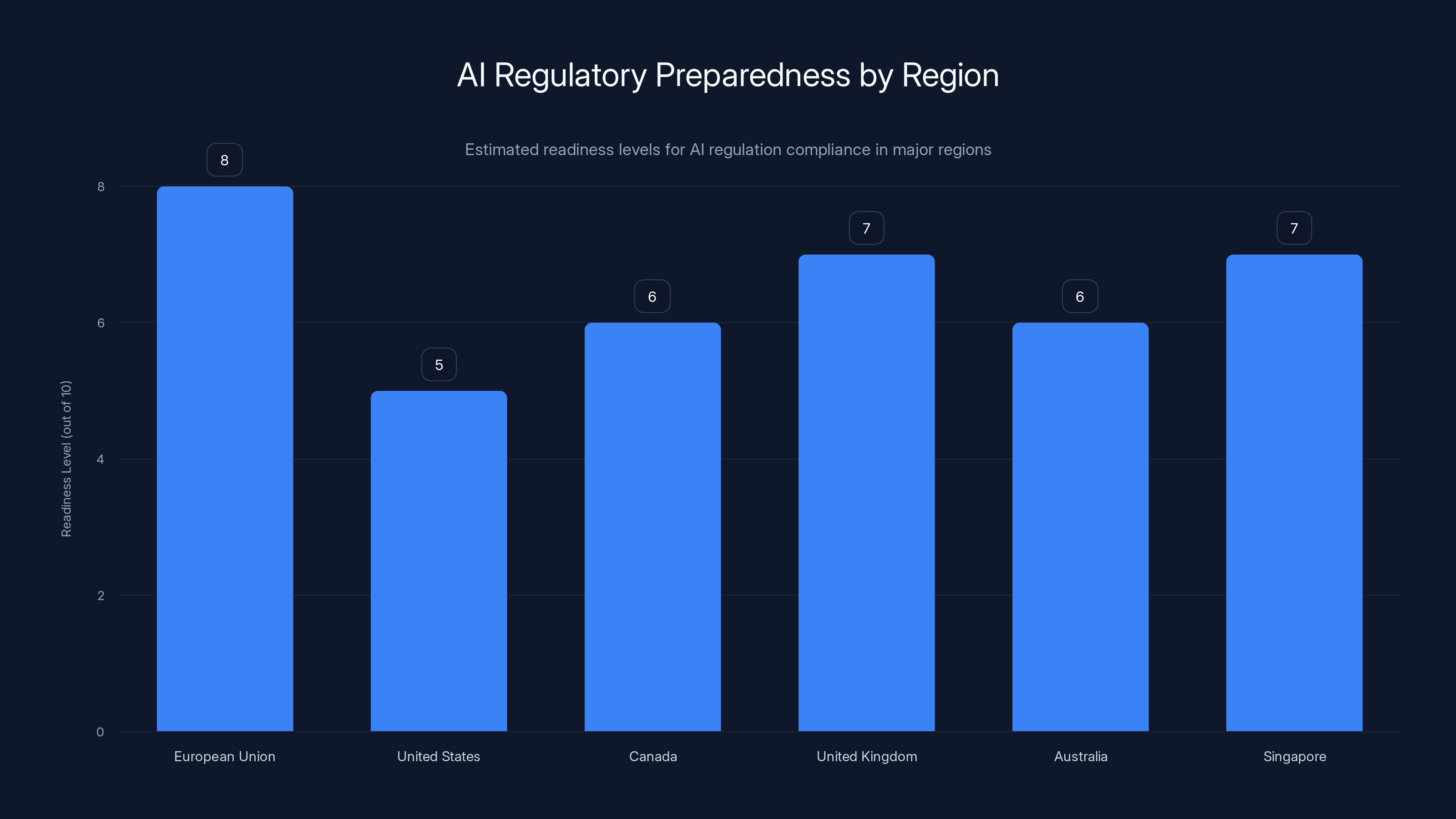

Estimated readiness levels show the EU leading in AI regulatory preparedness, with other regions catching up. Estimated data.

The Global Regulatory Pressure Mounting Against Cloud-Based AI

The European Parliament's decision didn't happen in isolation. It's part of a broader regulatory movement questioning cloud-dependent AI architecture.

The European Union is simultaneously rolling out the AI Act, a comprehensive regulatory framework covering everything from AI development to deployment. One theme runs throughout: data protection. The Act imposes strict requirements on how organizations use data for AI purposes, particularly for high-risk applications.

Meanwhile, individual EU member states are tightening their own rules. France's CNIL has issued guidance on AI and data protection. Germany's federal data protection authority has raised concerns about commercial AI tools in government. The UK's Information Commissioner's Office has started investigating AI vendors' data handling practices.

Outside Europe, the regulatory pressure is more fragmented but moving in similar directions. California's privacy laws create liability for unauthorized data transmission. Canada's PIPEDA imposes strict consent requirements for data processing. Australia's Privacy Act is being strengthened with explicit AI requirements.

For organizations operating in multiple jurisdictions, this creates a genuine problem: you can't just adopt whatever AI tools are convenient. You need to verify compliance across different regulatory regimes. If the data handling practices work in Europe (the toughest region), they might work elsewhere. But if they don't meet European standards, you're already limited.

The Parliament's ban accelerates this regulatory convergence. When a major government institution publicly disables AI due to security concerns, other governments notice. They ask their own IT departments the same questions. They run their own risk assessments. Some reach the same conclusion.

Which AI Tools Were Actually Affected?

The Parliament's memo didn't specifically name which AI features were disabled. This creates some ambiguity, but it's worth examining what was likely in scope.

Microsoft's Copilot integration into Microsoft 365 (Word, Excel, PowerPoint, Outlook) was almost certainly affected. These are mainstream tools that government staff use daily, and the Copilot features rely on cloud processing: Copilot sends your document content to Microsoft's cloud, processes it there, and returns AI-generated suggestions.

Google's Gemini integration into Google Workspace (Docs, Sheets, Gmail) would face the same scrutiny. Again, cloud-dependent processing, external data transmission, regulatory concerns.

OpenAI's ChatGPT, whether accessed directly through the web interface or integrated into other tools, would likely be affected too. ChatGPT requires sending your prompt to OpenAI's servers.

But here's the interesting part: the memo didn't mention core productivity tools themselves. Office applications, email systems, file storage, calendars—these remained available. The Parliament didn't abandon Microsoft 365 entirely. It just disabled the AI features within it.

This distinction matters. It shows the decision was surgical, not sweeping. It was about protecting data when it moves into AI processing systems, not about rejecting technology broadly.

Some organizations might have interpreted the memo as removing AI entirely. That wasn't the case. It was removing AI options that send data off-device without strong security guarantees.

Data is distributed across multiple locations, with the cloud server storing the largest portion, increasing vulnerability points. (Estimated data)

The Shadow AI Problem Exposed by This Decision

The Parliament's ban highlighted something security teams have been quietly worried about for months: shadow AI.

Shadow AI is the informal, unsanctioned use of AI tools by employees working outside IT governance frameworks. A financial analyst uses ChatGPT to analyze spreadsheets. A legal reviewer uses Claude to summarize contracts. A developer uses GitHub Copilot to write code. None of these uses were formally approved. None of them went through security review. They just happened because the tools were available and convenient.

Before the ban, this was already risky. Employees were transmitting sensitive company information to cloud AI services with no oversight, no contracts, and no compliance verification. A single employee pasting customer data into ChatGPT could create massive legal liability.

The Parliament's decision forced a conversation organizations were avoiding: what's actually happening with AI right now, and is it acceptable?

Surprisingly, many organizations still haven't done this audit. They have no visibility into which cloud AI tools employees are using, what data is being transmitted, or what the security implications are. They have policies prohibiting personal cloud services but no enforcement mechanism for AI.

The gap between what security teams think is happening and what's actually happening is enormous. This gap is what the Parliament's decision illuminates.

On-Device AI as the Alternative Path Forward

If cloud-based AI created the security problem, on-device AI represents the potential solution. But it's not a perfect solution, and understanding the tradeoffs is crucial.

On-device AI means running the entire AI model locally, on your device, without any data transmission to external services. Your laptop becomes the processing center. All computation happens locally. Data never leaves your device.

For security-conscious organizations, this is incredibly attractive. No data transmission means no exposure to cloud breaches. No external processing means no compliance risks from data leaving your jurisdiction. No third-party involvement means you maintain complete control.

But on-device AI has significant constraints. Most powerful AI models are too large to run on local hardware. A standard laptop can't run a full-scale language model with the same capabilities as cloud-based options. You're trading capability for security.
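A back-of-the-envelope calculation shows why. Just storing a model's weights takes roughly the number of parameters times the bytes used per parameter. The sketch below uses that approximation only (it ignores activations, the KV cache, and runtime overhead, so real requirements are higher). The sizes are illustrative: 3.8 billion parameters matches Microsoft's Phi-3-mini, and 70 billion stands in for a large open-weight model.

```python
# Weights-only estimate: parameters x bytes per parameter.
# Ignores activations, KV cache, and runtime overhead, so real usage is higher.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_gb(params_billions: float, precision: str) -> float:
    """Approximate decimal gigabytes needed just to hold the model weights.

    Billions of parameters times bytes per parameter gives gigabytes directly
    (1e9 params * N bytes / 1e9 bytes per GB).
    """
    return params_billions * BYTES_PER_PARAM[precision]


# Illustrative sizes: a small on-device-class model and a large model.
for label, size in [("3.8B params", 3.8), ("70B params", 70.0)]:
    for precision in ("fp16", "int4"):
        print(f"{label} @ {precision}: ~{weights_gb(size, precision):.1f} GB")
```

Even quantized to 4 bits, the 70B model needs roughly 35 GB for its weights alone, more memory than most standard laptops have, while the 3.8B model fits in about 2 GB. That gap is the capability-for-security trade described above.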

However, this is changing. Smaller, specialized models designed to run locally are improving rapidly. Microsoft's Phi models, Meta's Llama running locally, Apple's on-device machine learning capabilities—these are getting better at handling real-world tasks without requiring cloud infrastructure.

The European Parliament is essentially betting that this trajectory continues. They're saying: we'd rather use capable local models than powerful cloud models with security concerns.

For organizations with similar risk profiles, this might be the right calculation. You might accept slightly less capable AI if it means significantly better security.

But implementing on-device AI at scale requires investment. You need to identify which models work for your use cases. You need to provision sufficient local compute resources. You need to handle updates and maintenance locally rather than centrally.

For government institutions, this complexity is acceptable if it means stronger security. For enterprises, the calculation depends on your risk profile and compliance requirements.

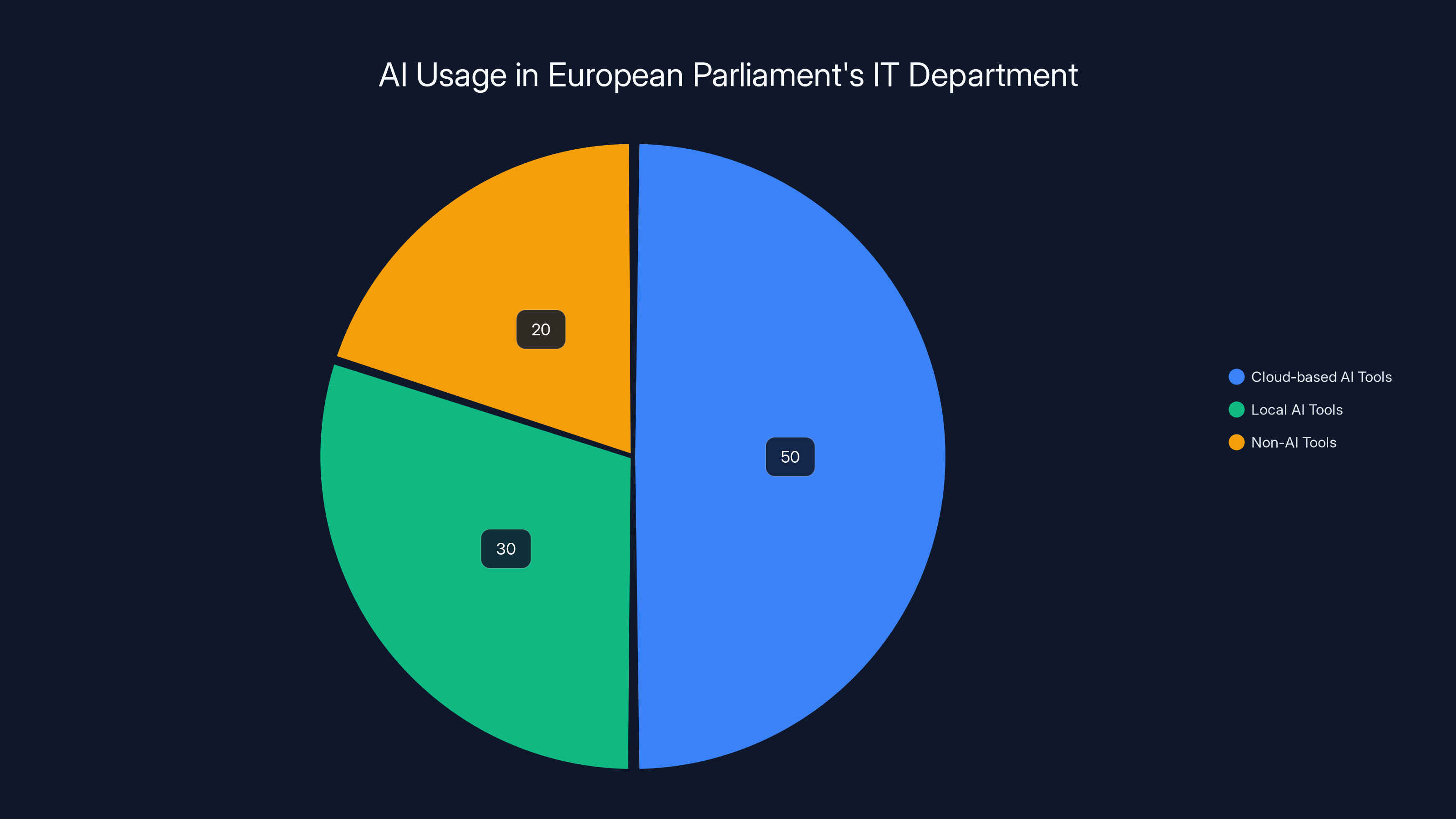

Estimated data shows that 50% of AI tools used by the European Parliament rely on cloud processing, posing potential data security risks. Local AI tools and non-AI tools make up the remainder.

What This Means for Enterprises Globally

The European Parliament's decision has ripple effects far beyond Brussels. It signals something fundamental: major institutions are reassessing the security premise underlying cloud-based AI adoption.

For enterprises, this creates several immediate implications.

First, regulatory scrutiny of cloud AI is increasing. If you're a vendor providing cloud-based AI services and major government institutions are disabling your tools, that's a credibility problem. Regulators will ask why. They'll demand security explanations. They'll question whether your architecture meets their standards.

Second, enterprise customers will start asking harder questions. If government institutions don't trust cloud AI with sensitive data, why should private companies? The Parliament's decision provides a legitimacy framework for internal discussions organizations have been avoiding.

Third, compliance requirements around AI data handling will tighten. The GDPR was already strict about data transmission. The EU AI Act adds its own obligations on top. Other jurisdictions will follow. If your current AI deployment doesn't meet EU standards, you'll eventually face regulatory pressure to change it.

Fourth, the market for on-device and locally-run AI solutions will accelerate. Vendors who can deliver capable AI without cloud transmission will have significant competitive advantages in government and regulated industries.

Fifth, data governance policies around AI need to exist now, not later. You can't wait for regulations to force your hand. You need internal frameworks classifying which data can go to cloud AI, which requires on-device processing, and which shouldn't go to AI at all.
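The three-way split described above (data that may go to cloud AI, data that requires on-device processing, and data that shouldn't go to AI at all) can be written down as an explicit table that tooling can check. A minimal sketch, assuming four hypothetical sensitivity labels; both the labels and the mapping are illustrative, and each organization would set its own:

```python
from enum import Enum, IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


class Processing(Enum):
    CLOUD = "cloud AI"
    ON_DEVICE = "on-device AI"


# Hypothetical policy: which kinds of AI processing each data class may use.
# An empty set means the data should not go to AI at all.
POLICY: dict[Sensitivity, frozenset[Processing]] = {
    Sensitivity.PUBLIC: frozenset({Processing.CLOUD, Processing.ON_DEVICE}),
    Sensitivity.INTERNAL: frozenset({Processing.CLOUD, Processing.ON_DEVICE}),
    Sensitivity.CONFIDENTIAL: frozenset({Processing.ON_DEVICE}),
    Sensitivity.RESTRICTED: frozenset(),
}


def is_permitted(level: Sensitivity, processing: Processing) -> bool:
    """Default-deny lookup: a level missing from the table gets no AI processing."""
    return processing in POLICY.get(level, frozenset())


for level in Sensitivity:
    allowed = sorted(p.value for p in POLICY[level]) or ["no AI"]
    print(f"{level.name:<12} -> {', '.join(allowed)}")
```

Keeping the policy as data rather than prose means the same table can drive documentation, browser or proxy controls, and audits, and a change to it is a reviewable diff.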

Organizations that wait for regulations to force these changes will be scrambling to implement new architectures under deadline pressure. Organizations that proactively implement now will have time to test, learn, and optimize.

The Role of Tech Sovereignty in This Decision

There's a second narrative running parallel to the security story, and it's important to acknowledge it directly.

The European Union has been increasingly vocal about "tech sovereignty"—the idea that Europe should reduce dependence on American technology companies for critical infrastructure. This isn't abstract policy. It shapes real decisions about which technologies European governments will permit.

Microsoft is an American company. OpenAI is an American company. Google is an American company. Cloud infrastructure handling sensitive EU government data may be physically located in the United States. Even when the data stays in Europe, it can remain within reach of US law if the provider's parent company is American, even when the European operation is a technically separate subsidiary.

For EU regulators concerned about digital autonomy, this isn't comfortable. They've been pushing for European alternatives: European cloud providers, European AI models, European computing infrastructure.

The Parliament's AI ban might seem like purely a security decision. But it also advances tech sovereignty objectives. By disabling American AI tools, the Parliament is signaling demand for European alternatives. This creates market incentives for European vendors to develop competing solutions.

We mention this not to suggest the security concerns are pretextual. They're genuine. But understanding the full context matters. The decision serves multiple objectives simultaneously.

For enterprises, this has implications. If you're primarily relying on American cloud AI vendors, European regulations might increasingly pressure you toward European alternatives. This doesn't mean American tools will become unavailable—GDPR doesn't ban US companies. But it does mean compliance friction increases, restrictions tighten, and the regulatory environment becomes more hostile.

Planning for regulatory diversity—having European vendor options alongside American ones—becomes strategically valuable.

How Other Governments Are Likely to Respond

The European Parliament's decision creates a precedent that other governments are watching carefully.

We're not seeing immediate replicas elsewhere. But we are seeing parallel concerns being raised. Several US government agencies have been conducting their own assessments of AI tool security. The UK government's NCSC is working on AI security guidance. The Australian government is reviewing AI policies.

Governments move slowly, but they typically move in similar directions when faced with similar risks. The questions the Parliament asked—where does data go? who has access? what's the breach scenario?—are questions other governments are asking internally.

The most likely path forward is fragmented but coordinated. Different governments might not issue identical bans, but they'll implement increasingly strict controls. Some might ban cloud AI entirely. Others might permit it only with specific certifications. Some might require data localization, demanding that AI processing happen within national borders.

The common thread: cloud-based AI without strong data security frameworks is becoming less acceptable to governments with real security concerns.

This creates a practical problem for vendors: serving government customers with cloud-based AI becomes harder when governments increasingly say no. Vendors need solutions that work within this constraint.

What Organizations Should Actually Do Right Now

The European Parliament's decision provides a useful framework for any organization reassessing its AI strategy. Here's what matters:

First: Inventory existing AI usage. Most organizations don't know which employees are using which AI tools, or what data is being transmitted where. This knowledge gap is unacceptable. Conduct an audit. Which cloud AI tools are being used? How are they being used? What data is being transmitted? Where is that data being processed?
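A first pass at this inventory can often come from logs you already collect. A minimal sketch, assuming a hypothetical CSV export from a web proxy with `timestamp`, `user`, `host`, and `bytes_sent` columns, and an illustrative, deliberately incomplete list of AI service hostnames that you would maintain yourself:

```python
import csv
import io
from collections import Counter, defaultdict

# Illustrative, incomplete list of AI service hostnames; maintain your own.
AI_HOSTS = {
    "chatgpt.com", "chat.openai.com", "api.openai.com",
    "claude.ai", "api.anthropic.com",
    "gemini.google.com", "copilot.microsoft.com",
}


def is_ai_host(host: str) -> bool:
    """Match a listed host or any subdomain of it."""
    host = host.lower().rstrip(".")
    return any(host == h or host.endswith("." + h) for h in AI_HOSTS)


def inventory(log_text: str) -> tuple[Counter, dict[str, int]]:
    """Summarize AI traffic from a proxy log export.

    Assumes a hypothetical CSV with columns: timestamp,user,host,bytes_sent.
    Returns (requests per AI host, bytes sent to AI hosts per user).
    """
    requests_per_host: Counter = Counter()
    bytes_per_user: defaultdict[str, int] = defaultdict(int)
    for row in csv.DictReader(io.StringIO(log_text)):
        if is_ai_host(row["host"]):
            requests_per_host[row["host"].lower()] += 1
            bytes_per_user[row["user"]] += int(row["bytes_sent"])
    return requests_per_host, dict(bytes_per_user)


# Hypothetical sample export.
SAMPLE_LOG = """timestamp,user,host,bytes_sent
2025-03-01T09:12:00Z,alice,chatgpt.com,18234
2025-03-01T09:15:00Z,bob,intranet.example.com,512
2025-03-01T10:02:00Z,alice,api.openai.com,40960
2025-03-01T11:30:00Z,carol,claude.ai,7300
"""

hosts, users = inventory(SAMPLE_LOG)
print(hosts.most_common())
print(users)
```

This answers "which tools, and who uses them" and gives a rough sense of volume. It cannot tell you what data was sent, and it misses AI features embedded in domains you don't list, so treat it as a starting point for the audit rather than the audit itself.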

Second: Classify your data. Not all data needs the same security treatment. Customer information requires different safeguards than marketing copy. Financial records require different handling than internal communication. Classify your data by sensitivity. Then determine which cloud AI tools (if any) are acceptable for each data class.
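Automated checks can help apply the classification before text reaches an AI tool. A minimal sketch, assuming four hypothetical sensitivity labels and a handful of illustrative pattern rules (explicit markings, IBAN-like account numbers, email addresses); dedicated data-loss-prevention tools use far richer detection:

```python
import re
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical detection rules; each maps a pattern to the level it implies.
RULES = [
    # Explicit document markings (case-sensitive, as typically stamped).
    (re.compile(r"\b(?:STRICTLY CONFIDENTIAL|RESTRICTED)\b"), Sensitivity.RESTRICTED),
    # IBAN-like: country code, check digits, then 11-30 alphanumerics, optionally space-grouped.
    (re.compile(r"\b[A-Z]{2}\d{2}(?: ?[A-Z0-9]){11,30}\b"), Sensitivity.RESTRICTED),
    # Email addresses indicate personal data.
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), Sensitivity.CONFIDENTIAL),
]


def classify(text: str, default: Sensitivity = Sensitivity.INTERNAL) -> Sensitivity:
    """Return the highest level any rule matches, or `default` if none match.

    Unlabeled text defaults to INTERNAL rather than PUBLIC, so content is only
    treated as public when the caller explicitly says so.
    """
    level = default
    for pattern, rule_level in RULES:
        if pattern.search(text):
            level = max(level, rule_level)
    return level


print(classify("Draft tagline ideas for the spring campaign").name)
print(classify("Contact jane.doe@example.com about the renewal").name)
print(classify("Refund to DE89 3704 0044 0532 0130 00").name)
```

Pattern rules like these produce both false positives and misses, so they work best as a safety net that flags or blocks obvious cases while people still apply the labels.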

Third: Develop explicit governance. "Employees shouldn't use cloud AI" is too vague. "Employees may use ChatGPT for brainstorming marketing concepts but not for customer data analysis" is clear guidance. Your policies need to be specific enough that employees understand what's permitted and IT can enforce it.
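A policy written at that level of specificity translates directly into rules a tool can enforce. A minimal sketch, encoding the ChatGPT example from the text as a default-deny rule list; the tool names, purpose labels, and 0 to 3 sensitivity scale are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    tool: str             # sanctioned tool identifier, e.g. "chatgpt"
    purpose: str          # approved business purpose
    max_sensitivity: int  # highest data level allowed (0 = public ... 3 = restricted)


# Hypothetical policy: ChatGPT is approved for marketing brainstorming on
# public or internal data only. Anything not covered by a rule is denied.
POLICY = [
    Rule(tool="chatgpt", purpose="marketing-brainstorm", max_sensitivity=1),
]


def is_allowed(tool: str, purpose: str, sensitivity: int) -> bool:
    """Allow only when an explicit rule covers the tool, purpose, and data level."""
    return any(
        rule.tool == tool
        and rule.purpose == purpose
        and sensitivity <= rule.max_sensitivity
        for rule in POLICY
    )


print(is_allowed("chatgpt", "marketing-brainstorm", 1))  # approved use
print(is_allowed("chatgpt", "customer-analysis", 1))     # purpose not approved
print(is_allowed("chatgpt", "marketing-brainstorm", 2))  # data too sensitive
```

Default-deny keeps the policy honest: every new use case has to be added as a rule that someone reviewed, instead of being allowed because nobody thought to forbid it.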

Fourth: Evaluate on-device alternatives. For sensitive use cases, experiment with on-device models. You might find they're acceptable even if they're slightly less capable. If they work for 80% of your use cases, that's a significant security improvement without forcing you to abandon AI entirely.
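Experimenting with a local model doesn't require new infrastructure to get started. As one option among several, the open-source Ollama runtime serves models such as Meta's Llama on the workstation itself through an HTTP API on localhost. A minimal sketch, assuming Ollama is installed and running on its default port (11434) and that the `llama3` model has already been pulled; because the only endpoint contacted is localhost, the prompt stays on the machine:

```python
import json
import urllib.request

# Assumes a local Ollama server on its default port. The model name is an
# example and must already be available locally (e.g. `ollama pull llama3`).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming generate request addressed to the local server."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate_locally(prompt: str, model: str = "llama3", timeout: float = 120.0) -> str:
    """Send the prompt to the localhost server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

Running `generate_locally(...)` on a handful of representative tasks from your inventory, and comparing the results with what your cloud tool produces, is an inexpensive way to find out which use cases a local model already covers.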

Fifth: Plan for regulatory compliance. Whatever jurisdiction you operate in, assume AI rules will tighten. If GDPR-level compliance is reasonable for your primary market, design your AI infrastructure to meet those standards now. You'll avoid scrambling for compliance later.

Sixth: Document everything. When you do use cloud AI services, document what data was used, which service processed it, what the business justification was. This creates an audit trail that satisfies regulatory requirements and helps you manage risk proactively.
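An audit trail can be as simple as one structured record per cloud AI request. A minimal sketch, assuming a JSON Lines log and hypothetical field names; it stores a SHA-256 digest of the prompt rather than the prompt itself, so the log doesn't become yet another copy of the sensitive data:

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(user: str, service: str, data_class: str,
                 justification: str, prompt: str) -> str:
    """Return one JSON Lines audit entry describing a cloud AI request."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "service": service,
        "data_class": data_class,
        "justification": justification,
        # Digest only: lets you match a prompt you still hold, without logging its text.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
    }
    return json.dumps(entry, sort_keys=True)


line = audit_record(
    user="alice",
    service="chatgpt",
    data_class="internal",
    justification="marketing brainstorm for spring campaign",
    prompt="Suggest five taglines for a spring product launch.",
)
print(line)
```

Appending each `line` plus a newline to a `.jsonl` file gives an append-only log that is easy to search and export for an auditor. A plain hash of a short or predictable prompt can be reversed by guessing, so treat the digest as a way to match records, not as anonymization.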

The Parliament's decision doesn't mean AI is bad. It means cloud-based AI without security verification is increasingly unacceptable to risk-conscious organizations. Planning for that reality now puts you ahead of organizations that will be forced to change later.

The Emerging Market for Secure AI Solutions

Where regulations create constraints, vendors see opportunities. The Parliament's ban is already spurring development of secure AI alternatives.

Microsoft is investing heavily in on-device processing for Copilot. Instead of processing everything in the cloud, newer versions are moving computation to local devices where possible. This addresses the exact concern the Parliament raised.

Apple's approach has always been on-device first. Features that could run locally do run locally. This architecture is appealing to governments and security-conscious enterprises now in ways it wasn't before.

European tech companies are positioning themselves as GDPR-compliant AI alternatives that keep data within EU borders. Mistral, a Paris-based AI company, is building models that can run locally or in European cloud infrastructure.

Open-source models are gaining traction precisely because they can run locally without dependence on cloud vendors. Meta's Llama, Hugging Face's offerings, and other community projects are becoming viable alternatives for organizations willing to manage their own infrastructure.

For vendors willing to invest, this represents a significant market opportunity. Organizations that currently use cloud AI are increasingly open to alternatives if those alternatives address security concerns. The addressable market for secure, compliant AI solutions is expanding.

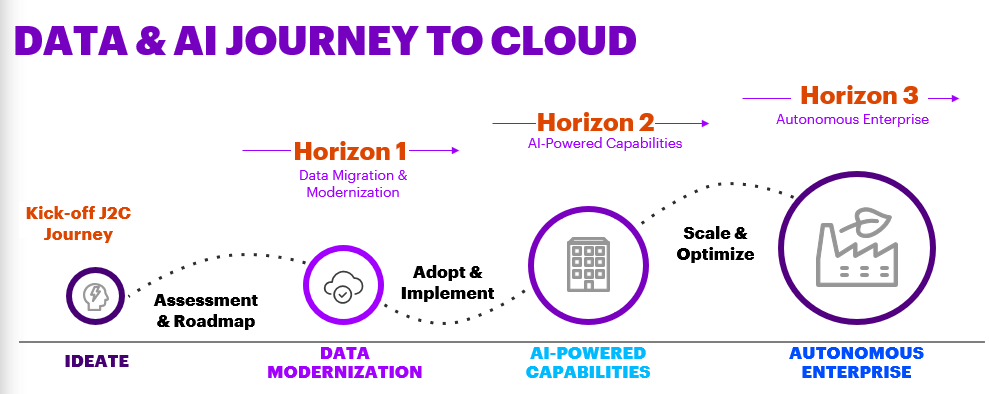

Preparing Your Organization for the Coming AI Regulation Wave

The European Parliament's decision is one data point in a larger trend: AI governance is tightening globally.

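The EU AI Act entered into force in August 2024, and its obligations phase in over several years: bans on certain prohibited practices from February 2025, rules for general-purpose AI models from August 2025, and most remaining requirements from August 2026. Different risk categories face different compliance requirements. High-risk AI systems (such as those used in critical infrastructure, by public authorities, or in ways that affect fundamental rights) face the strictest rules.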

US regulation is less comprehensive but moving. Executive orders on AI, industry-specific guidance, sector-based requirements—the pattern is regulation increasing from baseline near-zero.

Canada, UK, Australia, Singapore—all developing AI regulatory frameworks. The details differ, but the direction is consistent: more requirements, more documentation, more compliance burden.

For organizations operating across multiple jurisdictions, this means designing AI systems that meet the toughest standards. If your system works for EU compliance, it likely works elsewhere. If it only works under US regulatory assumptions, you'll need rework when you enter other markets.

The time to get ahead of this curve is now. Organizations that are already compliant with EU standards, that already have data governance policies, that already understand their regulatory obligations—these will outcompete organizations scrambling to retrofit compliance later.

The Parliament's decision is partly about security. It's also partly about establishing the baseline expectation: cloud-based AI without strong data governance is not acceptable for serious organizations.

FAQ

Why did the European Parliament ban AI tools specifically?

The Parliament disabled AI tools due to concerns that cloud-based AI processes data off-device, transmitting it to external servers without strong security guarantees. For government institutions handling sensitive legislative data and policy information, this represented unacceptable data exposure risk. The concern wasn't about AI technology itself, but about cloud architecture that sends data outside the institution's control.

Which AI tools were affected by the Parliament's ban?

The memo didn't name specific tools, but cloud-dependent AI features were most likely affected—particularly Microsoft Copilot integration in Microsoft 365 and similar cloud-based AI assistants in productivity software. The ban targeted AI systems requiring external cloud processing, not core productivity tools themselves (email, documents, spreadsheets remained available).

Could the Parliament turn AI tools back on at some point?

Yes. The memo indicated ongoing assessment of data security practices. If vendors or alternative solutions demonstrate that data stays secure without leaving the institution, AI tools could theoretically be re-enabled. However, this requires vendors to fundamentally change their architecture away from cloud-dependent processing, which most major vendors haven't done.

Why does this matter for non-government organizations?

The Parliament's decision signals to other governments and regulators that cloud-based AI without strong data governance is becoming unacceptable. Enterprises face similar security and compliance risks. If government institutions with sophisticated IT teams can't confidently secure cloud AI, the security risk is real. Additionally, regulatory scrutiny of cloud AI is increasing globally, so organizations should prepare for tightening rules.

What's the difference between cloud-based AI and on-device AI?

Cloud-based AI processes data on external servers, requiring data transmission, external storage, and dependence on vendor security. On-device AI runs the entire model locally without external transmission, keeping data completely under the organization's control. The tradeoff: on-device AI uses smaller models with less capability, while cloud AI offers more power but more security exposure.

Should our organization ban cloud AI entirely?

Not necessarily. Instead, implement data classification and context-specific policies. Some data (brainstorming, general research) may be safe for cloud AI. Sensitive data (customer information, proprietary research, financial records) shouldn't go to cloud AI. Many organizations find that a graduated approach—permitting cloud AI for low-risk tasks but restricting high-risk use—balances capability with security.

How does GDPR relate to the Parliament's AI concerns?

GDPR creates legal liability when personal data is transmitted to external processors without proper safeguards. Transmitting customer data to cloud AI services creates potential GDPR violations if there's inadequate security or unauthorized use. The Parliament's concern reflects GDPR's framework: organizations are liable for data security even when using third-party services.

What about AI services that use European data centers or claim GDPR compliance?

Data localization (keeping data in European servers) addresses some concerns but not all. Even European cloud providers can face breaches, unauthorized access, or regulatory noncompliance. The Parliament's skepticism suggests that physical location alone isn't sufficient—actual architectural guarantees that data doesn't go to cloud services at all are preferred.

Is this about rejecting American technology specifically?

Partially. Tech sovereignty concerns (Europe's desire for digital independence from American companies) do influence policy. But the core concern is genuinely about security. That most cloud AI providers are American is a correlation, not the cause: European vendors using cloud architecture would face similar scrutiny.

What should enterprises do to prepare for similar AI restrictions?

Start with a data governance audit: identify sensitive data, determine safe and unsafe uses for cloud AI, develop explicit policies, implement monitoring, and experiment with on-device alternatives where practical. Organizations implementing governance now avoid the scramble when regulators tighten rules later.

Conclusion: The AI Governance Inflection Point

The European Parliament's decision to disable cloud-based AI on government devices represents more than a single institution's security policy. It's a signal that AI governance is inflecting from permissive to restrictive.

For years, organizations adopted cloud AI with minimal friction. The tools were available, convenient, and vendors promised they were secure. Questions about data transmission, cloud storage, and regulatory compliance got deferred or delegated to legal and IT specialists who often lacked visibility into actual usage.

The Parliament's ban interrupts that trajectory. It says: we've looked at cloud AI architecture, and we're not comfortable with it. Not yet. Not without stronger guarantees about security.

Other governments and enterprises will eventually reach the same conclusion. Some through regulatory mandates. Others through risk assessment. But the direction is clear: cloud-based AI without strong data protection frameworks is becoming less acceptable to organizations with serious security requirements.

This doesn't mean AI adoption stops. It means the terms of AI adoption change. Organizations will use AI more carefully, with better governance, and with stronger preferences for solutions that don't require transmitting sensitive data to external vendors.

The vendors and organizations that prepare for this shift now will be ahead of those forced to adapt later. Implementing data governance policies, exploring on-device AI alternatives, and building security-first evaluation processes into technology decisions—these aren't future concerns. They're present necessities.

The Parliament didn't reject AI. It rejected AI architecture that doesn't meet acceptable security standards. That's a distinction that matters enormously. Organizations should learn from it.

Start your own assessment. Inventory your current AI usage. Classify your data. Develop explicit policies about what cloud AI is acceptable for and what requires alternative approaches. Document your reasoning so you can explain your AI security practices to regulators, customers, and your board.

The regulatory environment is tightening. The Parliament just showed how much tighter it can get. Plan accordingly.

Key Takeaways

- European Parliament disabled cloud-based AI due to security risks from off-device data transmission and lack of guaranteed data protection.

- Cloud AI architecture sends sensitive government data to external vendors without strong security guarantees, creating unacceptable exposure for institutions handling classified information.

- The decision signals that major institutions globally are reassessing cloud-dependent AI adoption, with regulators increasingly demanding data governance and security verification.

- On-device AI offers enhanced security but requires smaller models and local infrastructure investment, representing the emerging preferred approach for high-security environments.

- Organizations should immediately implement data classification frameworks, explicit AI governance policies, and explore locally-runnable alternatives before regulatory requirements force costly changes.

- Regulatory pressure on cloud AI is accelerating across EU (AI Act), US (executive orders), and other jurisdictions, making security-first AI architecture a strategic necessity rather than optional optimization.
