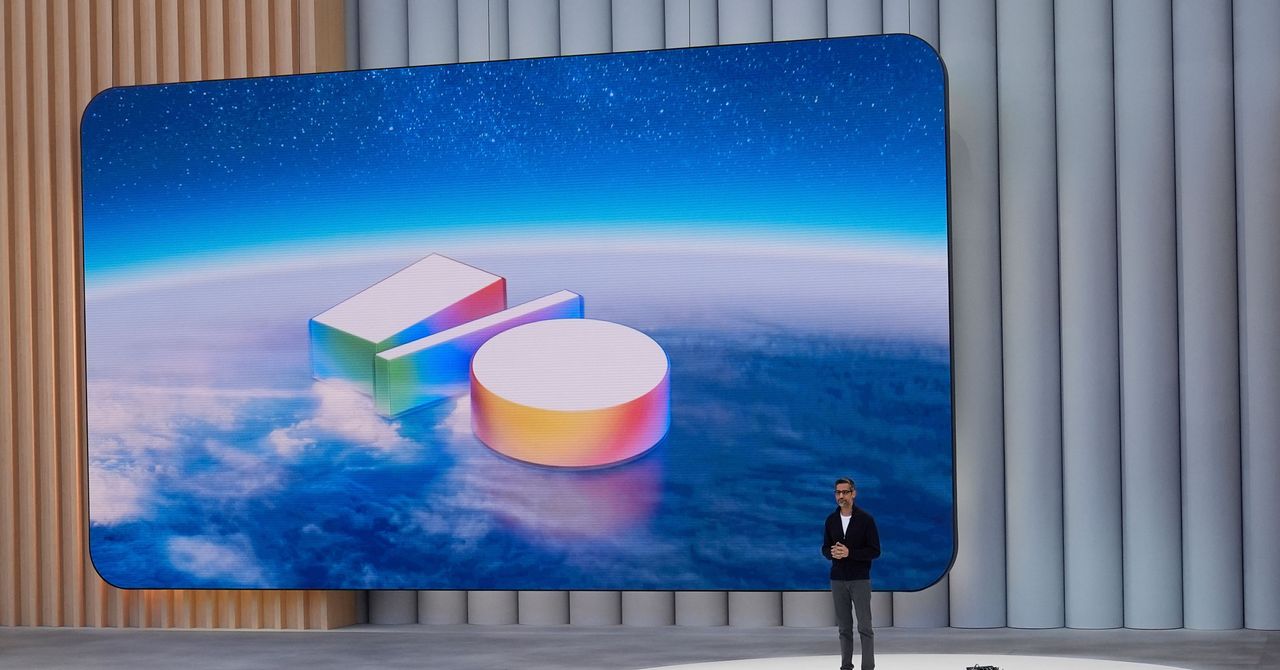

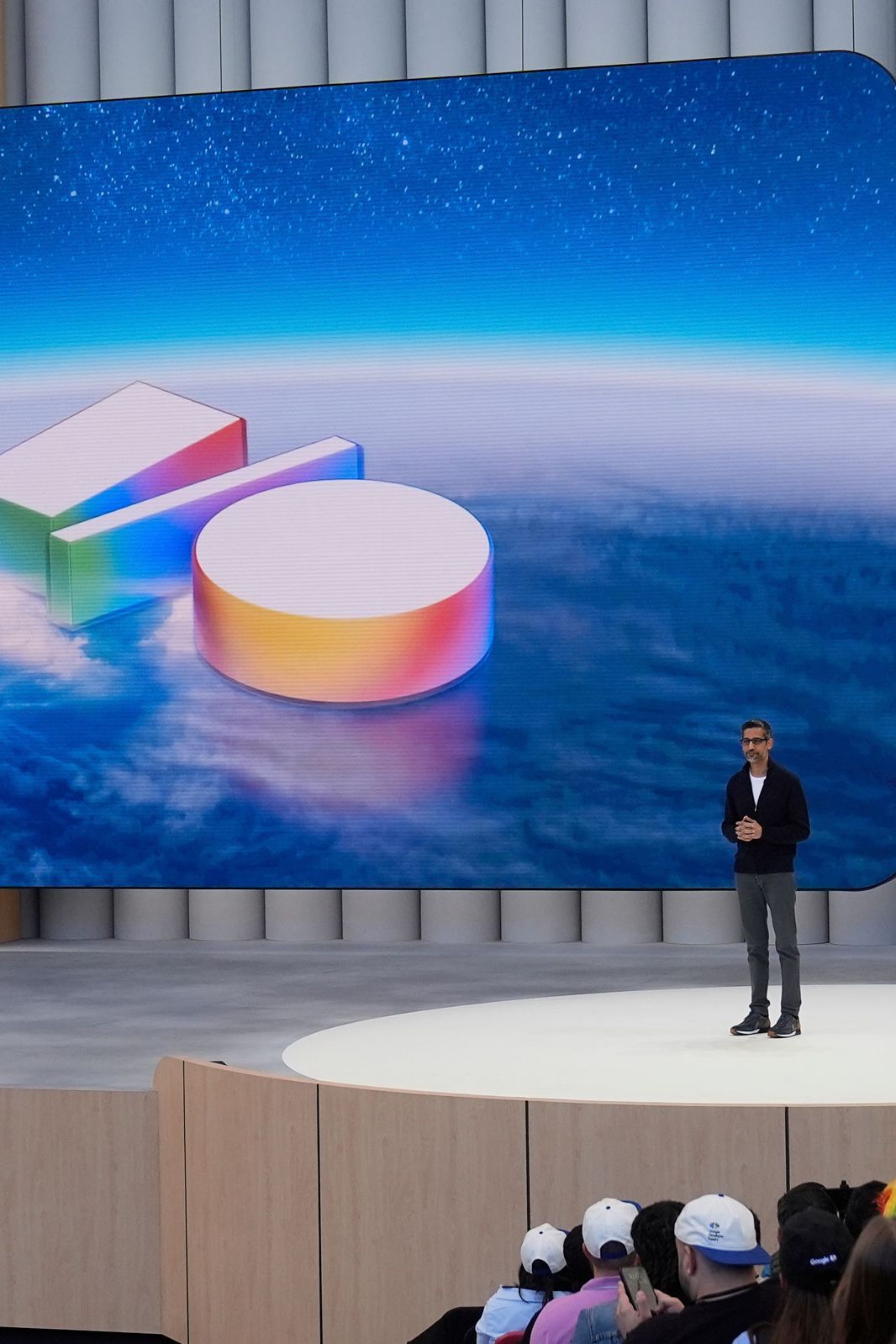

Google I/O 2026: The Ultimate Guide to Google's Biggest AI and Product Event

Google just confirmed it. May 19th and 20th, 2026. Mountain View, California. The Shoreline Amphitheatre, plus online access for everyone else watching from home.

It's official. Google I/O 2026 is locked in, and honestly, this year feels different. We're not just getting another developer conference where someone announces a new logo refresh or a marginally faster API. This is shaping up to be the AI event of the year, and I'm here to break down everything we know, what's probably coming, and why you should actually care about it.

Look, Google's been on an AI tear for the last couple of years. They launched Gemini. They integrated AI into Search. They shipped AI Mode (yes, that's the actual name). They built an AI filmmaking app called Flow. And now? They're doubling down. Hard.

The company's official announcement says Google I/O 2026 will feature the "latest AI breakthroughs and updates in products across the company, from Gemini to Android and more." That's corporate speak for "we're about to blow your mind with a bunch of stuff you didn't expect." And based on what's been brewing in Mountain View, that's no exaggeration.

Here's what's actually happening at Google I/O 2026, what you need to know about attending, and what I'm predicting Google will announce (some of which might surprise you).

TL;DR

- Event Dates: Google I/O 2026 runs May 19-20, 2026 at Shoreline Amphitheatre in Mountain View, California

- Format: Hybrid event with in-person attendance and worldwide online streaming

- Main Focus: AI-heavy, featuring Gemini breakthroughs, Android updates, Chrome innovations, and Workspace features

- Keynote: Kicks off the morning of May 19th with company leadership

- Registration: Developers can register now at Google's I/O website

- Interactive Experience: "Save the date" experience on Google's site includes Gemini AI minigames

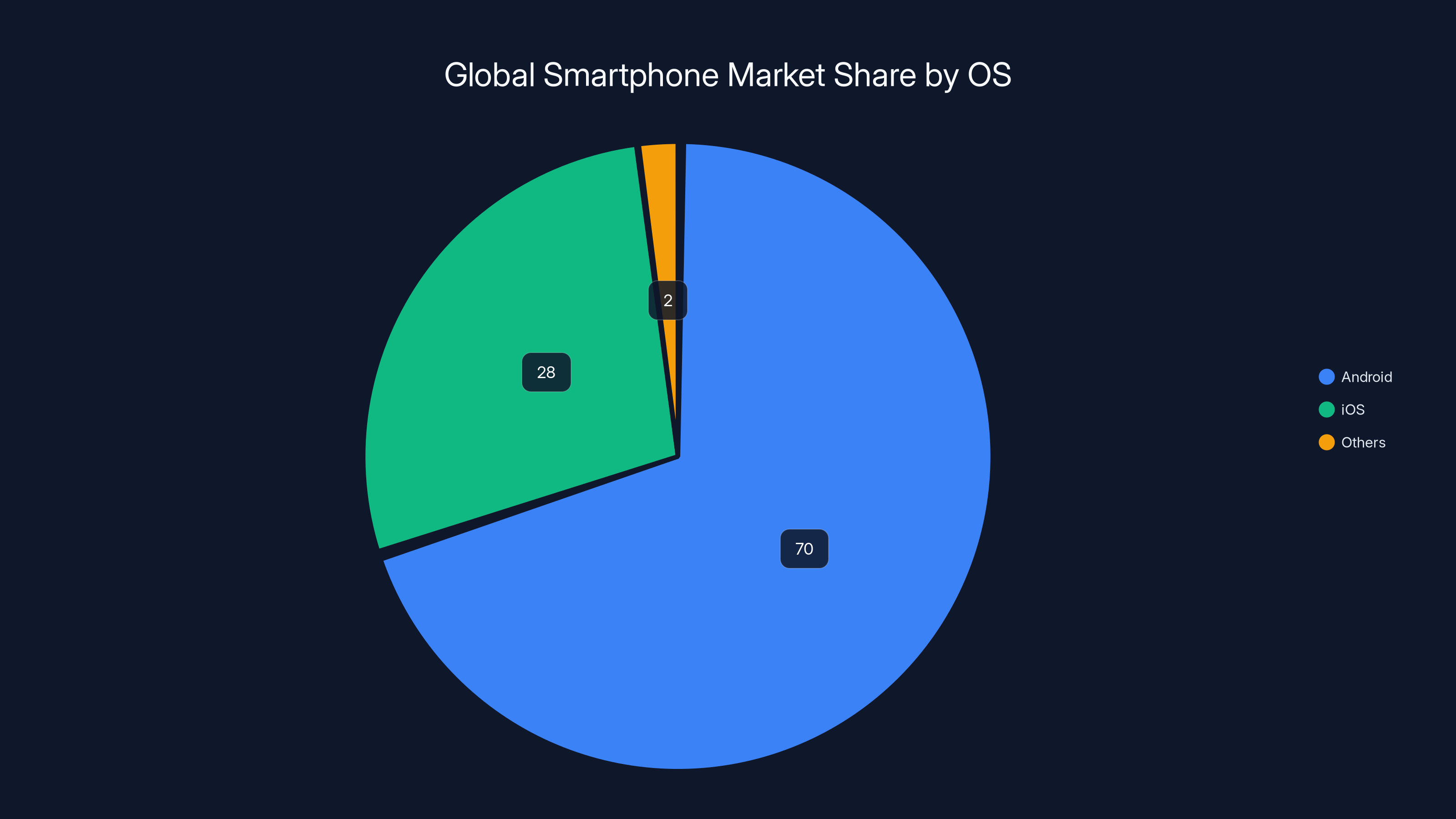

Android dominates the global smartphone market with an estimated 70% share, highlighting its potential impact if AI is integrated into its core.

What We Know About Google I/O 2026

Google announced the dates on Tuesday, which means we now have our calendar marked and our expectations set. But let's break down what we actually know versus what we're speculating about.

The official details are sparse, but telling. Google says the event will showcase "the latest AI breakthroughs and updates in products across the company, from Gemini to Android and more." That "and more" is doing a lot of heavy lifting. Translation: they're definitely announcing something major, and they're probably not mentioning it yet.

The event structure follows the traditional Google I/O format. Keynotes from leadership (we can expect the CEO and maybe some senior VPs). Fireside chats where executives pretend they're having natural conversations (they're not, but it's fun). Product demos where engineers show off what they've been working on for the last twelve months. Breakout sessions for developers where you can nerd out about specific technologies. Hands-on labs where you actually get to test new APIs and tools.

Google also won't release the full session schedule until closer to May, which is standard practice. But the morning keynote is locked in for May 19th. That's always the show-stealer, where Google announces the big stuff that makes headlines.

One interesting detail: Google built a "save the date" experience on its website using Gemini AI. It's a series of minigames. Nothing groundbreaking, but it's a signal about where Google's head is at. They're not just talking about AI being important; they're using AI-powered experiences to get people interested in the event. Very meta.

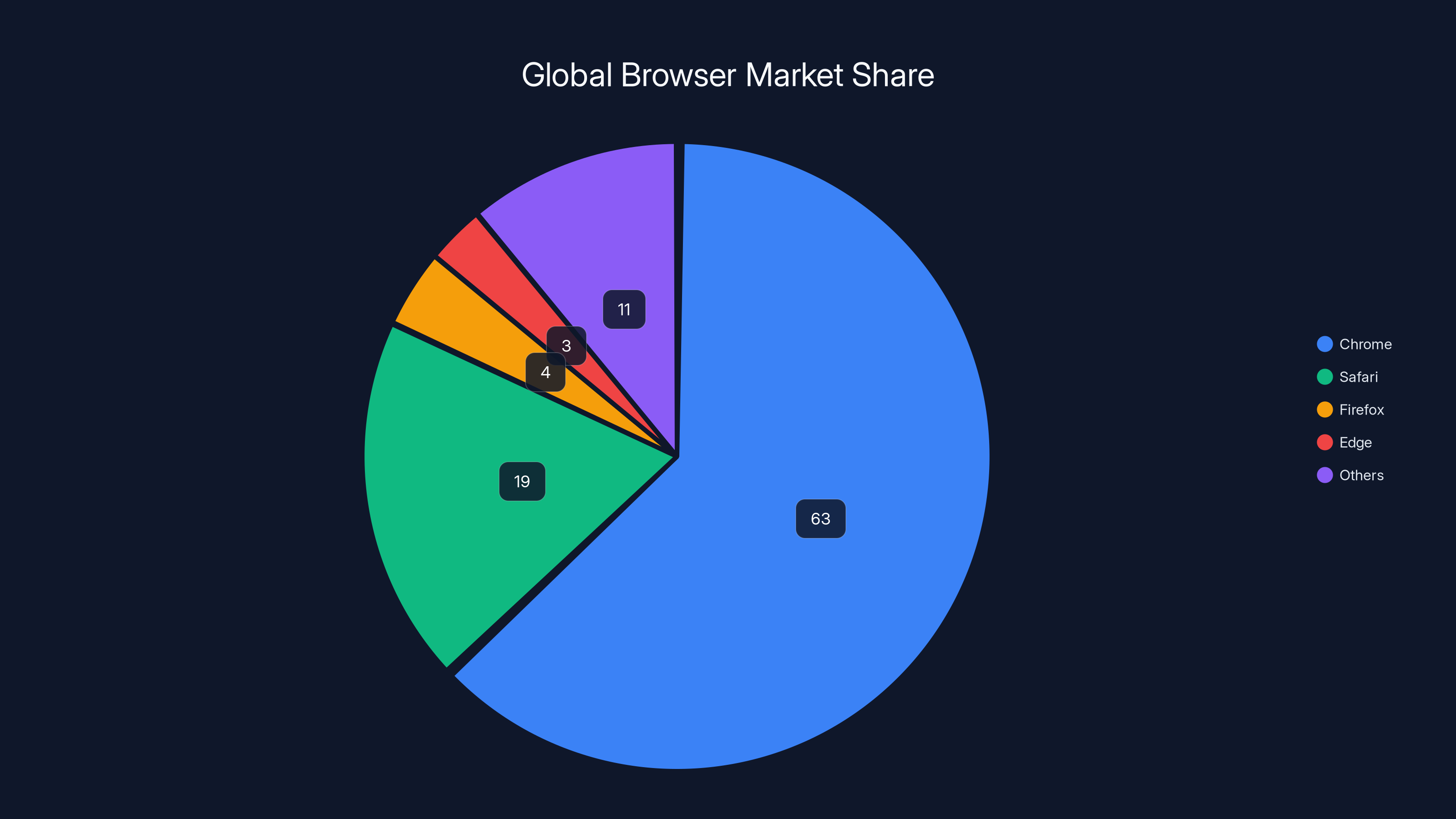

Chrome dominates the global browser market with a 63% share, significantly ahead of competitors like Safari and Firefox. Estimated data.

Why 2026 Is Different From Previous Google I/O Events

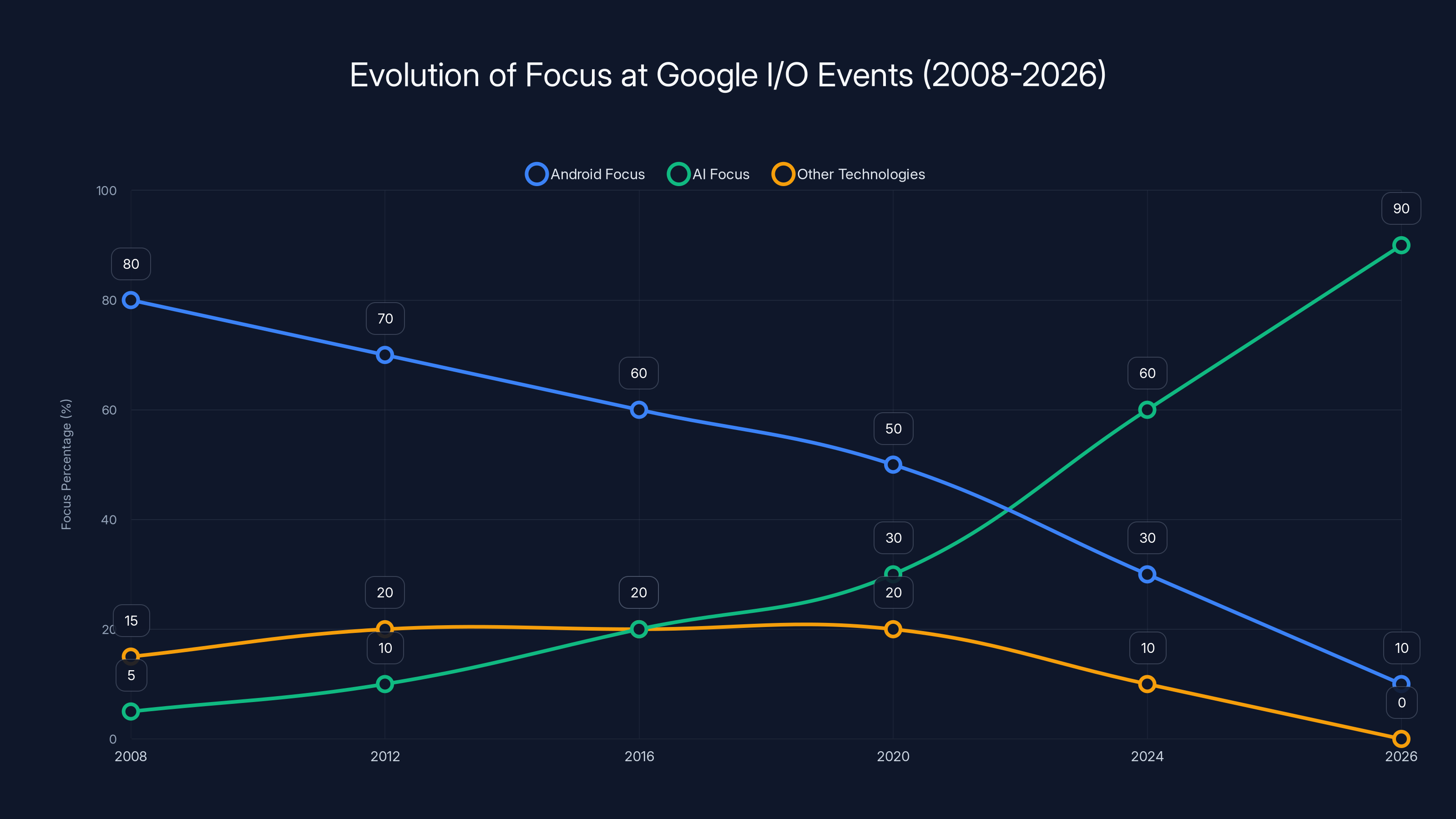

Google I/O has been happening since 2008. That's nearly two decades of the company unveiling new stuff. But 2026 feels like a pivot point. Here's why.

For years, Google I/O was about Android updates. New version, new features, new design language. Important for developers, kind of boring for regular people. Then it was about wearables and Wear OS. Then it was about tablets and Pixel devices. The event moved around based on whatever was shiny that year.

But starting with Google I/O 2024, something shifted. AI became the focus. And I mean the actual focus, not just a side attraction. Every product announcement had an AI angle. Search got AI Overviews (and, a year later, AI Mode). Android got Gemini integration. Workspace got AI features. Even the Pixel phone got AI-powered photo editing.

Now, entering 2026, Google's not even pretending. The announcement specifically calls out "latest AI breakthroughs." Not "updates to Android that happen to include AI." Not "Gemini features we forgot to mention." They're saying the whole event is about AI. Everything else is secondary.

This matters because it tells us something about the tech industry's trajectory. Microsoft went all-in on AI with Copilot. OpenAI launched ChatGPT and changed everything. Google was late to react, which was wild given that Google researchers invented the Transformer architecture behind today's chatbots. But now they're making up for lost time.

Google I/O 2026 is going to be the event where Google convinces everyone that they're not the company that almost missed the AI revolution. They're the company that's now leading it.

Gemini: The Star of the Show

Let's be real: Gemini is going to be the centerpiece of Google I/O 2026. And honestly, that makes sense. Gemini is Google's bet on the future of AI. It's their answer to ChatGPT. It's how they're going to compete with OpenAI and Microsoft.

Gemini's evolution has been fascinating to watch. It started as "Bard," which was... fine. Decent chatbot, nothing special. Then Google renamed it Gemini, after the family of models that had already been powering it since late 2023: Gemini 1.0, which came in Ultra, Pro, and Nano sizes, soon followed by Gemini 1.5.

Later releases (Gemini 2.0, 2.5, and most recently Gemini 3) added some serious new capabilities. Better reasoning. Much longer context windows, meaning it can take in far more text, code, or video at once. Richer multimodal features (text, images, video, audio). The thing's legitimately impressive now.

But here's what we're expecting for Google I/O 2026: next-level Gemini features. We're talking about:

Deeper integration into everything. Right now, Gemini feels like a separate thing you can access. Google's going to make it so fundamental to how Google products work that you forget it's even AI. Search queries answered by Gemini before you even click. Gmail automatically suggesting responses. Android doing stuff proactively based on understanding what you're trying to accomplish.

More capable reasoning models. Each Gemini generation has handled complex problems better than the last. Expect Google to announce an even more advanced version that can handle abstract reasoning, multi-step problem solving, and creative tasks better than anything Google's shipped before.

Better real-time capabilities. One of ChatGPT's big advantages is that you can have a natural conversation and it responds instantly. Gemini's still catching up on that. Expect Google to announce infrastructure improvements that make Gemini feel snappier and more interactive.

Customization and enterprise features. Gemini's business offerings already exist, but they're still early. Google's going to announce major upgrades for companies that want to use Gemini internally, build custom models, and fine-tune them for their specific use cases.

Gemini is the engine that powers everything Google's about to announce. If they nail it, they win the AI race. If they stumble, they're playing catch-up for another two years.

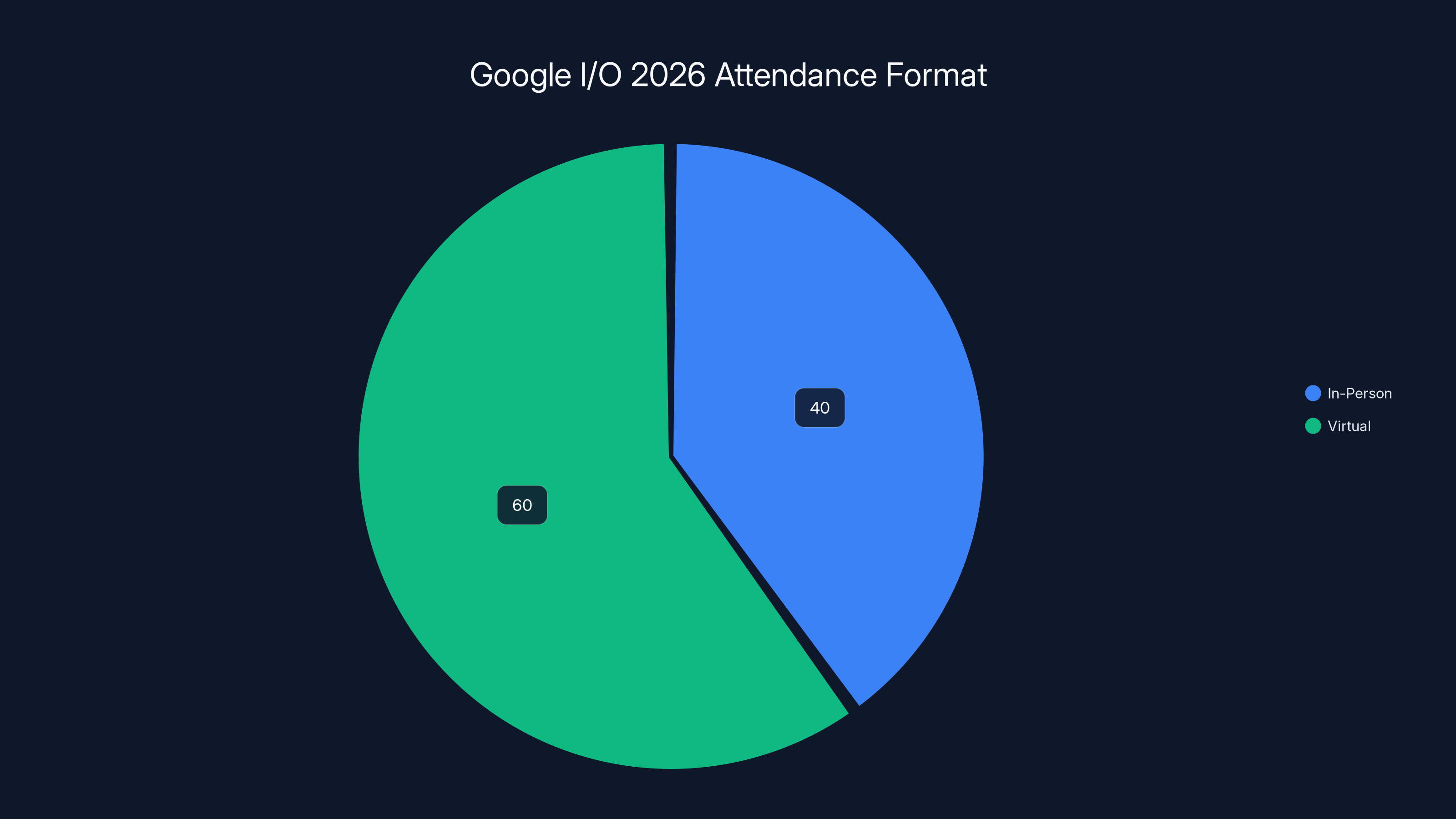

Estimated data suggests that 60% of attendees will participate virtually, while 40% will attend in person, reflecting the hybrid nature of Google I/O 2026.

Android Updates and the Future of Mobile AI

Android 16 shipped in June 2025, so the release to watch at I/O 2026 is the next one, expected to be Android 17. Google ships a new Android version every year, so that's not exactly surprising. But the question is: how deeply will AI be integrated into it?

Right now, AI features on Android are scattered. Your Pixel phone has Magic Eraser in Google Photos (AI). It has Call Screen (AI). It has voice typing improvements (AI). But it's not cohesive. You don't think "I'm using AI" when you use those features. They just work.

Google I/O 2026 is probably where they announce a unified AI layer for Android. Something that makes AI features accessible to developers across the entire platform. Imagine if third-party apps could tap into Gemini capabilities natively. If any app could use on-device AI for image processing, text understanding, or task automation.

That's the play. Google owns Android (about 70% of smartphones worldwide run Android). If they build AI capabilities into the core of Android, every Android phone becomes an AI device. Automatically. Without users having to opt-in or download anything special.

Expect announcements about:

On-device AI processing. Not everything needs to go to Google's servers. Some AI tasks can run right on your phone. That's faster, it's more private, and it works offline. Google's going to announce improvements to its on-device models (like Gemini Nano) and to the frameworks developers use to build with them.

AI-powered search within apps. You should be able to search your photos, your emails, your notes the same way you search Google. Using natural language. Google's going to make that native to Android so app developers can build it in without reinventing the wheel.

Smarter notifications and app predictions. Android already does this a bit, but it's surface-level. Google's going to show off a more sophisticated version where your phone actually understands context. It knows when you're driving and surfaces the right app. It knows you're about to need your boarding pass. It knows what you're probably going to do next.

Better privacy controls for AI. People are rightfully nervous about AI analyzing their personal data. Google's going to announce clearer controls and transparency about what AI features do with your information.

The next Android release is going to be the most AI-native version of Android ever. That's the whole story.

Chrome: Making the Browser Smarter

Chrome is the world's most popular browser. It has about 63% market share globally. That means when Google makes Chrome smarter, they're making the web smarter for billions of people.

Chrome already has some AI features. Side panel with AI assistance. Writing tools that suggest tone and length improvements. Image generation with Imagen. But these feel like experiments. Experiments on billions of people, sure, but still—they're optional features that most users don't know exist.

Google I/O 2026 is where Chrome becomes an AI-first browser. Not in a "Chrome is now powered by AI" marketing way. In a practical "every feature in Chrome is smarter because of AI" way.

Here's what I'm expecting:

Smarter tab management using AI. You have 47 tabs open. Chrome knows. It's not a bug, it's a feature. Expect Google to announce AI that actually understands what you're researching and groups tabs intelligently. Or automatically closes tabs you probably don't need anymore (with recovery, so they're not gone forever).

AI-powered password and security features. Password management is already in Chrome. But AI can make it smarter. Better threat detection. Automatic alerts if your password appears in a breach. Biometric authentication improvements. Chrome will become more secure using AI.

Better web page summaries and search. You're on a webpage. You want the gist. AI summarizes it for you. You want to search a site's content without using its built-in search (which usually sucks). You just ask Chrome and it AI-searches for you.

Developer tools powered by AI. Developers use Chrome DevTools, which already includes a Gemini-powered AI assistance panel. Imagine if those tools understood your whole codebase, spotted bugs before they ship, and suggested optimizations. Expect Google to push much further in that direction.

Smarter suggestions and autocomplete everywhere. Not just in search. In forms. In text fields. In every place where you type, Chrome's AI learns your patterns and suggests what you probably want to say next.

Chrome's transformation into an AI-first browser is going to be subtle. You probably won't notice it until you do, and then you'll wonder how you ever lived without it.

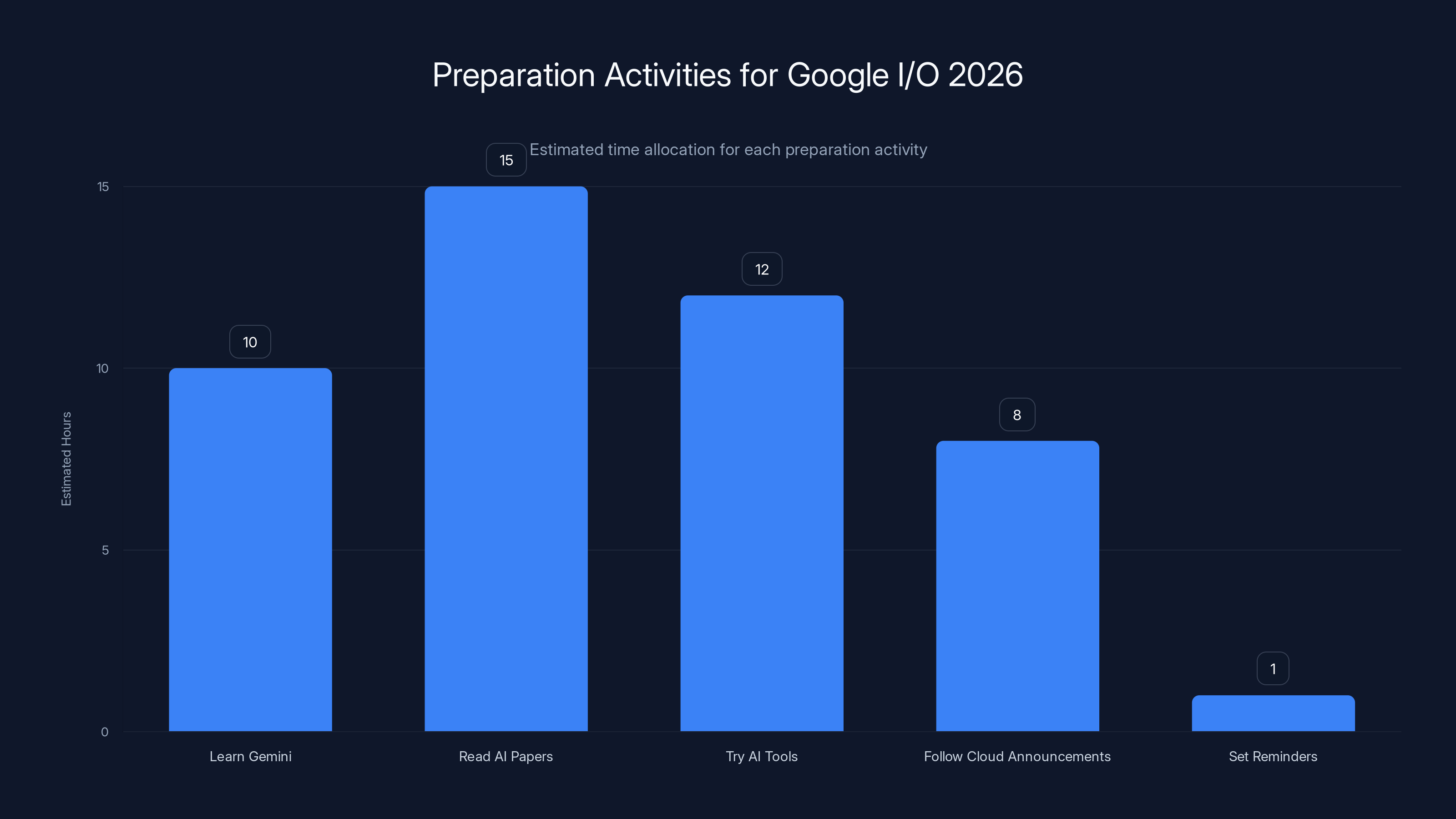

Estimated data suggests allocating more time to reading AI papers and trying AI tools, as these are crucial for understanding Google's advancements.

Google Workspace: AI for Productivity

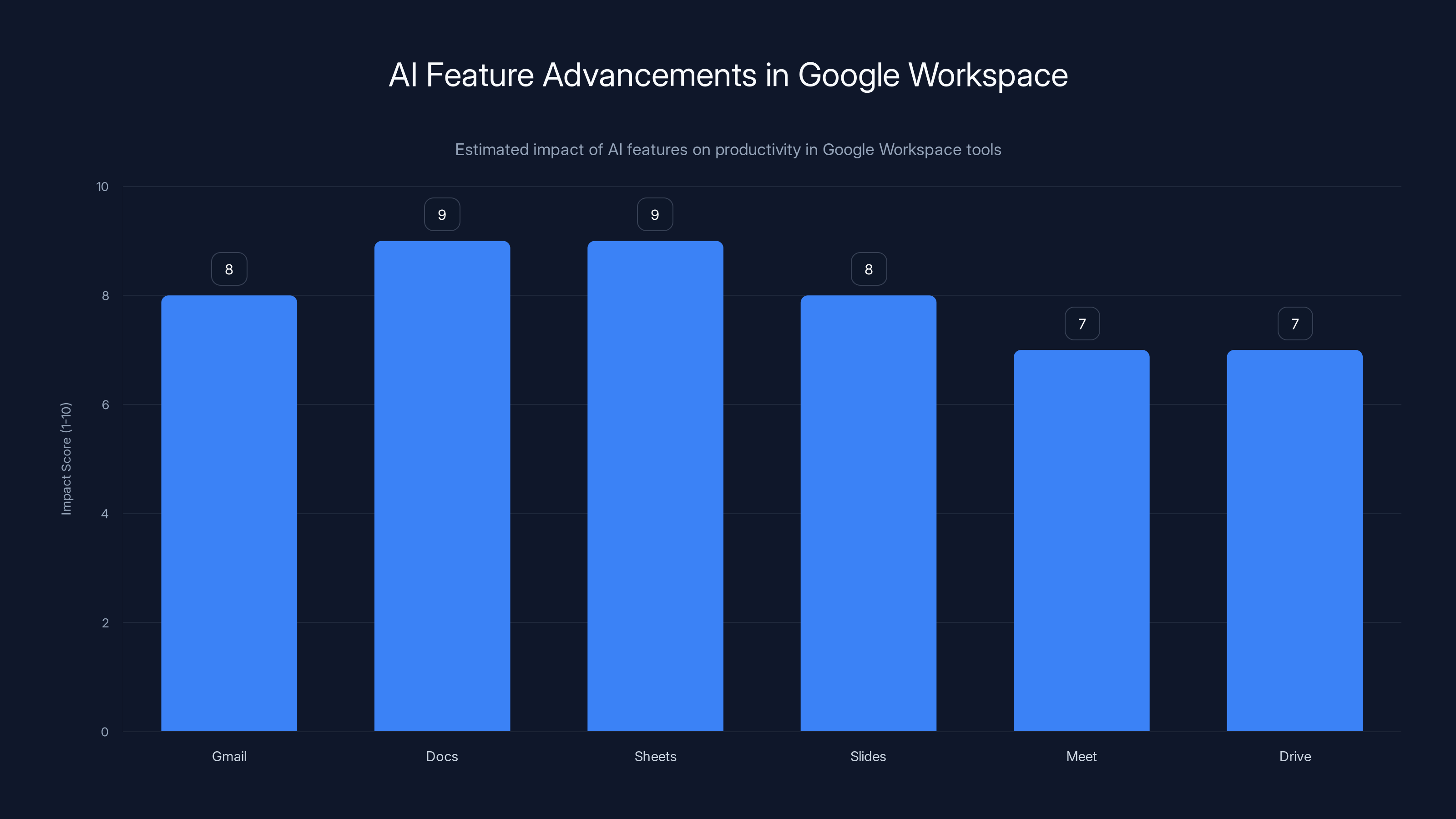

Google Workspace (Gmail, Docs, Sheets, Slides, Meet, and Drive) already has AI features. Gmail and Docs have "Help me write." Sheets has "Help me organize." But they're basic. They're helpful, but they're not transformative.

Google I/O 2026 is probably where Workspace becomes legitimately AI-first. Like, you're not using AI features in Workspace. You're using Workspace, which happens to be powered by AI.

Gmail is about to get seriously smart. Imagine if Gmail could read your emails, understand what you're working on, and draft responses that sound like you. Not robotic suggestions. Actual drafts that capture your voice and handle the routine stuff. Expect Gmail to ship that, probably alongside better spam filtering that actually understands context (not just keywords).

Docs becomes a writing assistant. You open a blank Google Doc. You tell Gemini what you're trying to write (a proposal, a blog post, meeting notes). It gives you a draft. You refine it. It learns your style. Next document, the suggestions are closer to how you'd actually write.

Sheets gets AI-powered analysis. You import data. Google Sheets analyzes it automatically. Spots trends. Suggests charts. Flags anomalies. Creates pivot tables for you. You're not manually doing analysis anymore. You're guiding the AI's analysis.

Slides generates entire presentations from prompts or data. You say "create a presentation about Q4 results using these numbers" and Gemini generates it. Layouts. Charts. Talking points. You just refine and present.

Meet becomes smarter about... meeting things. Better transcription. Automatic summaries. Real-time translation. Meeting scheduling that actually works. Gemini transcribes, summarizes, and even suggests action items.

Drive becomes intelligent storage. You upload a file. Drive automatically categorizes it, OCRs it if it's a PDF, makes it searchable, suggests where it should go, and alerts you if it's a duplicate of something you already have.

The theme here is automation. Workspace 2026 is about handling the repetitive, mechanical parts of office work so you actually have time to think.

Pixel and Hardware: AI at Your Fingertips

Google's Pixel phones are where Google's software and hardware meet. And right now, they're Google's proving ground for new AI features.

Recent Pixel phones already have some wild AI stuff. Magic Eraser (remove unwanted objects from photos). Best Take (combine the best faces from multiple shots automatically). Audio Magic Eraser (reduce background noise in videos). Call Screen (screen spam and unknown callers). These aren't just nice-to-haves. They're actually useful.

But Pixel devices in 2026 are going to lean even harder into on-device AI. The idea is that your phone is smart enough to do a lot without talking to Google's servers. That's faster, more private, and more reliable.

Expect announcements about:

Better on-device Gemini integration. Your Pixel phone runs a version of Gemini locally. Not connected to the internet for every task. It's there, always ready, always private. You can ask it to help you with a task right on your device.

AI-powered camera that's actually smart. Real-time scene understanding. Better night mode using AI. Smarter focus and exposure. Video stabilization using AI instead of just optical/digital tricks. Photos that know what you were trying to photograph and automatically optimize for it.

Voice assistant that finally understands context. Google has already started replacing Google Assistant with Gemini on phones, but the experience still has gaps. Expect a Gemini-powered assistant that understands nuance, remembers context, and actually helps you instead of just executing commands.

Predictive features that feel magical. Your phone predicts what you're about to need and surfaces it. You're driving toward work, it pops up navigation before you ask. It's your birthday, your phone reminds the people you'd probably want to hear from. It's not creepy, it's helpful.

Accessibility features powered by AI. Live transcription. Live relay (AI speaking for you). Captions for videos even when they don't have them. Accessibility is going to be a big part of the AI story.

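Don't expect the next flagship Pixel itself: Google typically launches those in August (the Pixel 10 line arrived in August 2025). But a mid-range A-series phone or a teaser for the next flagship is possible, and if Pixel hardware shows up, the AI capabilities will be the whole story.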

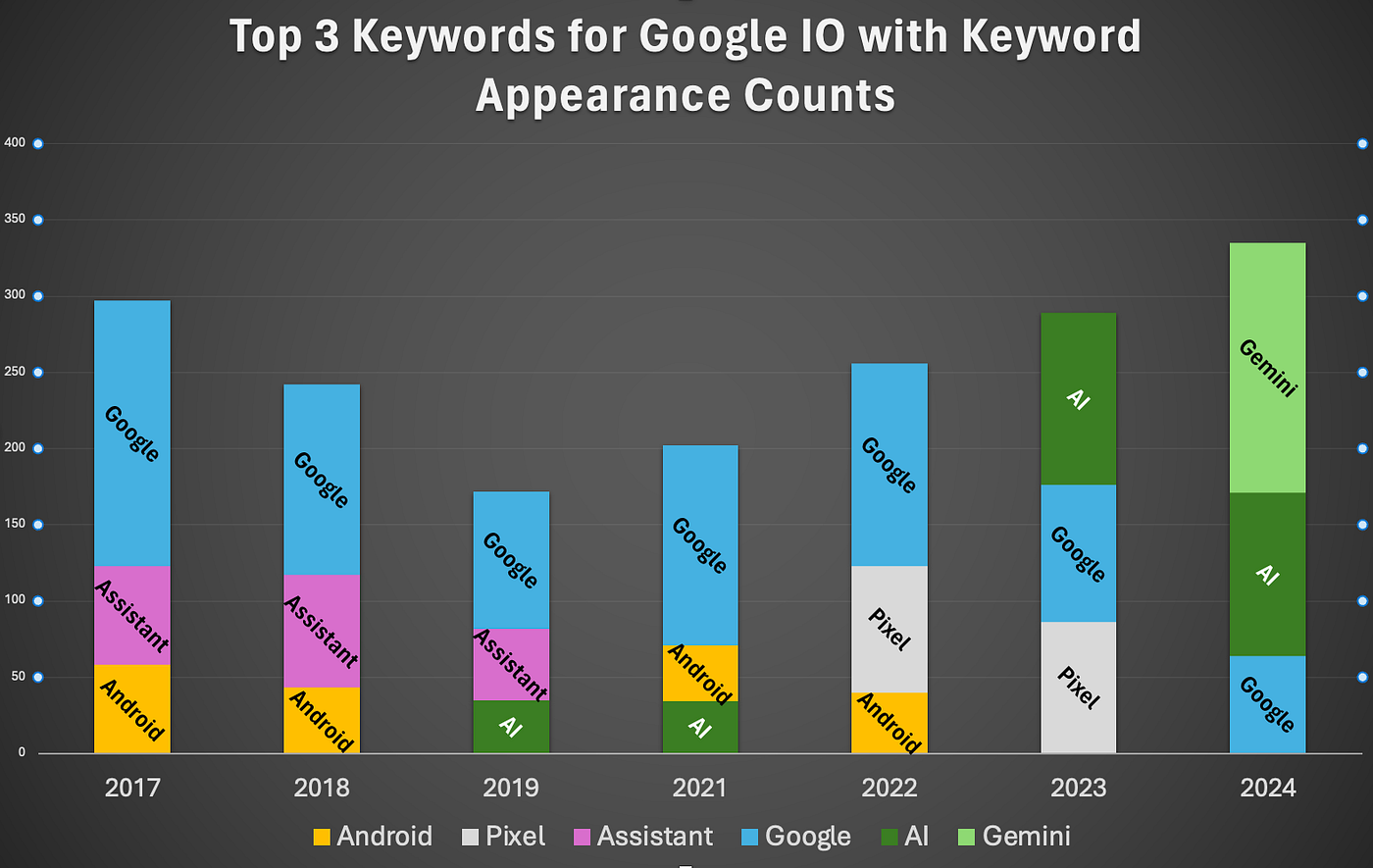

Estimated data shows a significant shift in focus from Android to AI at Google I/O events, especially from 2024 onwards, highlighting Google's strategic pivot towards AI.

Search, Reimagined Again

Google Search is already different from what it was a year ago. AI Mode lets you ask questions in natural language. Results show AI-generated summaries. Google's answering questions, not just showing links.

But there's a limit to how much you can change before people get confused and go use something else. Google I/O 2026 is where they push that boundary.

Multimodal search that understands everything. You point your camera at something and ask a question about it. You describe something you heard and ask "what song is this." You paste a photo and ask "where can I buy something like this." Search becomes this universal query interface that understands text, images, video, audio—everything.

Search that's actually conversational. Right now, AI Mode is better than regular search, but the conversation still feels a bit rigid. Google's going to make it feel like you're actually talking to someone knowledgeable. Follow-up questions. Clarifications. Context-awareness that spans multiple questions.

Search that understands intent better than ever. You search for "restaurants near me." Google doesn't just show restaurants. It understands you probably want somewhere to eat soon, at a reasonable price, that matches your dietary preferences and has good reviews. Results are ranked by relevance to what you actually want, not just proximity.

Search with real-time information that's actually real-time. Stock prices as you search them. Sports scores seconds after they happen. Breaking news properly contextualized. Live search results that feel less like a static index and more like a live wire to what's happening right now.

Search that goes beyond Google's own index. Google's been playing with Reddit integration and other sources. Expect more aggressive moves to make search feel less like "Google's database" and more like "the entire internet, curated for you."

Search is the product Google's most protective of. They're not going to kill the golden goose. But they're going to make search so much smarter that not using Google Search feels like using an old flip phone in 2026.

What About Competition?

It's worth noting that Google's not operating in a vacuum. OpenAI has ChatGPT. Microsoft has Copilot. Apple has been rolling out Apple Intelligence across its devices. Meta's releasing open-source AI models. The AI race is real.

Google I/O 2026 is partially about keeping up, but also about proving Google's been leading all along. Gemini is good. The integrations are smart. The approach of embedding AI throughout products (rather than bolting it on top) is probably smarter than the competition's approach.

But announcements are announcements. Execution is what matters. We'll see if Google can actually deliver all this.

Estimated data suggests that AI features in Docs and Sheets will have the highest impact on productivity, with scores of 9 out of 10, due to their advanced writing assistance and data analysis capabilities.

Attending Google I/O 2026: Logistics and Tips

Okay, practical stuff. How do you actually attend or participate in Google I/O 2026?

In-person attendance: Google I/O takes place at the Shoreline Amphitheatre in Mountain View, an outdoor venue that seats thousands. But in-person spots are limited, and Google typically allocates them by lottery or invitation, or charges for tickets (though some developers get in free). You'll need to register through Google's official I/O site.

In-person gets you access to keynotes (front and center), hands-on labs where you play with new APIs, networking with other developers, and the energy of being in the room when something big gets announced.

Virtual attendance: Not everyone can fly to California. Google streams everything online. You get access to keynotes, most sessions, some hands-on content (though that's better in person). It's free. It's good. You can pause, rewind, watch on your own schedule.

Registration: Opens immediately after the announcement. If you want in-person access, register fast. Spots fill up. Virtual registration stays open and usually stays free for most developers.

After the event: Google publishes session videos, code samples, documentation, and libraries related to all announcements. You don't need to attend to get the knowledge. But the energy and direct access to teams is worth something.

The Deeper Trend: AI Is Now Table Stakes

Here's the thing that strikes me about Google I/O 2026: the fact that it's going to be AI-focused isn't surprising anymore. That's the new normal. AI at a tech event isn't a novelty. It's expected.

Back in late 2022, when ChatGPT launched, it felt like a plot twist. AI was suddenly something consumers had to care about. Companies scrambled to figure out how to use it. Conferences became about AI strategy.

Now, we're past that. Every company has an AI strategy. Every event has AI announcements. The question isn't whether AI is important. It's how good you are at actually executing it.

Google I/O 2026 is where we see Google's execution. And based on the products Google already has, it's going to be impressive. Gemini is legitimately good (arguably better than ChatGPT at some tasks). The integrations are thoughtful. The approach of making AI invisible by embedding it in products you use every day is smarter than making AI a separate thing.

But execution matters more than marketing. We'll find out in May whether Google can deliver on all this.

What's Missing From Google I/O 2026 Announcements

Here's my honest take: some things won't be announced, and they should be.

Privacy and data handling. Google's going to talk about AI being private and secure. But the truth is more nuanced. On-device processing is genuinely more private. But not everything can run on-device. Some things need to talk to servers. Google hasn't been transparent about what data gets sent where, how long it's kept, and what it's used for. They should be.

Environmental impact. AI models are compute-intensive. Training Gemini used a lot of electricity. Running it at scale uses a lot of power. Google talks about clean energy, but they should be more explicit about the environmental cost of AI and what they're actually doing about it.

Safety and misuse. AI can be misused. Deepfakes, automated disinformation, automated spam, impersonation. Google's building safeguards, but they're not going to put up a giant slide saying "here's how people are going to abuse our AI and here's what we're doing about it." They should.

Economic impact. AI is going to displace jobs. Google benefits from that (fewer people needed to do work). But that's a big deal and most announcements gloss over it. Google should be clear about the societal impacts and what they're doing to address them.

Google's not alone in this. Every AI company is kind of doing the same thing—announcing capabilities while being vague about downsides. But I/O 2026 is a moment where Google could differentiate by being more honest about the tradeoffs.

Why Google I/O 2026 Matters Beyond Just Announcements

I/O isn't just about products. It's about direction. It signals where Google thinks the tech industry is headed. And it influences where the rest of the industry goes.

When Google announces something major at I/O, other companies take notice. If Gemini gets deep integration into Android, other companies think about how their AI can integrate into their platforms. If Chrome becomes AI-first, other browser makers accelerate their AI roadmaps. If Workspace gets serious AI features, Microsoft pays attention (and it clearly already does).

Google I/O 2026 is going to set the tone for AI in consumer products for the next two years. It's going to influence what developers build. It's going to shape what users expect from their devices.

So even if you don't care about Google specifically, you should care about this announcement. Because Google's about to tell you what the future looks like, and everyone else is going to follow.

Predictions: What I Actually Expect Google to Announce

Let me put my neck on the line and predict some specific things.

A new flagship Gemini model beyond the current Gemini 3 generation. Not just iteration, but a meaningful jump in capabilities. Better reasoning. Faster inference. Better handling of edge cases. They'll probably show benchmarks where Gemini beats OpenAI's latest GPT models on specific tasks.

A new Pixel device with significant AI hardware improvements. Not just a faster chip, but specific hardware designed for AI inference. Google's going to show they're serious about on-device AI by shipping the silicon to do it.

Major upgrades to Google Search that make AI Mode the default. By May 2026, Google's probably shipping a version of Search where AI-assisted summaries are the primary result type (not optional). This is a big deal.

A new AI feature in Workspace that automates a significant part of knowledge work. My guess is something around email management or document creation. Something that saves people hours every week.

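New open models for developers. Google already releases Gemma, a family of smaller open-weight models built from the same research and technology as Gemini. Expect new, more capable versions that developers can run and fine-tune themselves. This is how Google distributes the AI revolution beyond its own products.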

A clearer Google AI strategy narrative that addresses concerns about competition, regulation, and safety. This is the speech from the CEO. Less about new products, more about Google's vision for AI and why Google's bet is the right one.

These aren't wild guesses. These are extrapolations based on what Google's been working on and what makes sense as the next steps.

Preparing for Google I/O 2026

If you care about this stuff, here's what you should do now (before May).

Learn the basics of Gemini. If you haven't played with Gemini yet, do it. Understand what it's good at and what it's not. That context will make the announcements make more sense.

Read Google's AI research papers. Google publishes their research. It's dense, but it's where they hint at what's coming. Papers on new architectures, new training methods, new applications. This is the blueprint.

Try Google's AI tools. Google Search's AI Mode. Gmail's writing tools. Gemini directly. Pixel's AI features if you have a Pixel phone. Get hands-on. You'll understand better what Google's iterating on and where they might go next.

Follow Google Cloud's announcements. Google Cloud publishes AI tools for developers, and new capabilities often show up there before they reach consumer products. It's an early preview of where things are headed.

Set a reminder. May 19-20, 2026. Mark your calendar. Follow along live if you can. These announcements might seem incremental in retrospect, but they're the announcements that shape the next two years of technology.

Beyond the Hype: What Actually Matters

Look, here's the thing about tech announcements. They sound impressive in the moment. But what matters is whether the stuff actually ships, whether it actually works, and whether regular people actually use it.

Google's been good at shipping things. That's their advantage. They're not like some companies that announce vaporware. When Google announces something at I/O, it usually shows up within months.

But execution matters. The AI features need to actually be useful, not just technically impressive. They need to handle edge cases. They need to be fast. They need to respect privacy. They need to be accessible to everyone, not just power users in San Francisco.

Google I/O 2026 is when Google tells us the roadmap. The weeks and months after the event, when the actual rollout happens, are when we'll see whether they delivered.

The Bigger Picture: AI Is Becoming Like Electricity

I think we're at an inflection point. AI is going from "new technology" to "fundamental infrastructure." Like electricity or the internet. Just part of how everything works.

Google I/O 2026 is probably going to feel like the announcement of that shift. It's not going to be "here's Google's new AI product." It's going to be "AI is now the foundation of everything we do, and here's what that looks like."

That's bigger than any individual announcement. That's the actual story.

So mark your calendar. May 19-20, 2026. Something big's coming.

FAQ

What are the official dates for Google I/O 2026?

Google I/O 2026 takes place on May 19-20, 2026 at the Shoreline Amphitheatre in Mountain View, California. The keynote kicks off the morning of May 19th and covers Google's latest AI breakthroughs and product updates across the company. The event also streams online globally for remote attendees.

Where is Google I/O 2026 being held?

Google I/O 2026 is held at the Shoreline Amphitheatre in Mountain View, California, an outdoor venue with seating for thousands of attendees. The event is also available as a virtual experience, allowing developers and tech enthusiasts worldwide to watch the keynotes and sessions online without attending in person.

What is the format of Google I/O 2026?

Google I/O 2026 combines in-person and virtual attendance options. The event features keynote presentations from company leaders, fireside chats with executives, product demonstrations, hands-on developer labs, and breakout sessions focused on specific technologies. In-person attendees get direct access to Google engineers and interactive workshops, while virtual attendees can watch livestreams of keynotes and select sessions.

Why is Google I/O 2026 focused on AI?

Google I/O 2026 is heavily focused on AI because Gemini and artificial intelligence have become fundamental to how Google develops products. The company has been integrating Gemini capabilities into Search, Android, Chrome, Workspace, and Pixel devices throughout 2025, and the May 2026 event will showcase the next evolution of these AI-powered integrations and entirely new Gemini capabilities.

What can I expect Google to announce about Gemini at I/O 2026?

Expect Google to announce significant Gemini advances including deeper integrations with all Google products, improved reasoning capabilities, faster inference speeds, enhanced multimodal features (text, images, video, audio), and possibly a new model generation beyond Gemini 3 or a higher-end "Ultra" tier. Google will likely showcase how Gemini makes products smarter while remaining seamlessly integrated into the user experience.

How do I register for Google I/O 2026?

Registration for Google I/O 2026 opened immediately following the announcement in early 2026. Registration happens through Google's official I/O website. In-person registration is limited and may use a lottery or registration fee system, while virtual registration is typically free and remains available throughout the registration period.

What Android announcements should I expect at Google I/O 2026?

Expect announcements about the next Android release (expected to be Android 17, since Android 16 shipped in June 2025), which will likely feature deeper Gemini integration, on-device AI processing capabilities, improved AI-powered search within apps, smarter notifications and predictive features, and enhanced privacy controls. Google will likely showcase how Android becomes an AI-first operating system with native support for developers to integrate Gemini capabilities.

What about Google Search updates at I/O 2026?

Google I/O 2026 will probably feature significant Search announcements including more conversational AI search capabilities, multimodal search (searching with camera, audio, and images), better real-time information, improved understanding of user intent, and expanded integration of third-party content sources. AI Mode may become the default search experience rather than an optional feature.

Will Google announce new Pixel phones at I/O 2026?

Probably not a flagship. Google usually unveils its flagship Pixel phones in August rather than at I/O (the Pixel 10 line arrived in August 2025), though a mid-range A-series phone or a teaser is possible. Any Pixel hardware Google shows will likely feature significant AI hardware improvements, better on-device Gemini integration, more advanced AI-powered camera features, and Gemini in place of Google Assistant.

How should I prepare for Google I/O 2026 announcements?

Start by exploring Gemini directly, trying Google Search's AI Mode, testing Gmail's writing tools, and reading Google's published AI research papers. Follow Google Cloud announcements (they test new features there first), and keep up with tech news about AI developments. Set a reminder for May 19-20, 2026, and plan to watch keynotes live or review them immediately after to catch what Google announces.

Key Takeaways

- Google I/O 2026 is scheduled for May 19-20, 2026 at Shoreline Amphitheatre in Mountain View with hybrid in-person and online attendance

- Gemini AI will be the centerpiece, with announcements about deeper integration, improved reasoning, faster inference, and new capabilities across all Google products

- The next Android release (likely Android 17) will be AI-first with on-device processing, native Gemini integration, smarter notifications, and developer-friendly AI APIs built in

- Chrome browser is transforming into an AI-first platform with smarter tab management, password security, page summarization, and developer tools powered by AI

- Google Workspace applications (Gmail, Docs, Sheets, Slides, Meet) are getting AI-powered automation that handles routine tasks and assists with content creation

- Google Search will likely shift toward AI-powered summaries and conversational queries as the default, with multimodal search capabilities

- Pixel devices will feature advanced on-device AI, improved camera using AI, and a Gemini-powered voice assistant replacing Google Assistant
