Introduction: AI Music Generation Enters the Mainstream

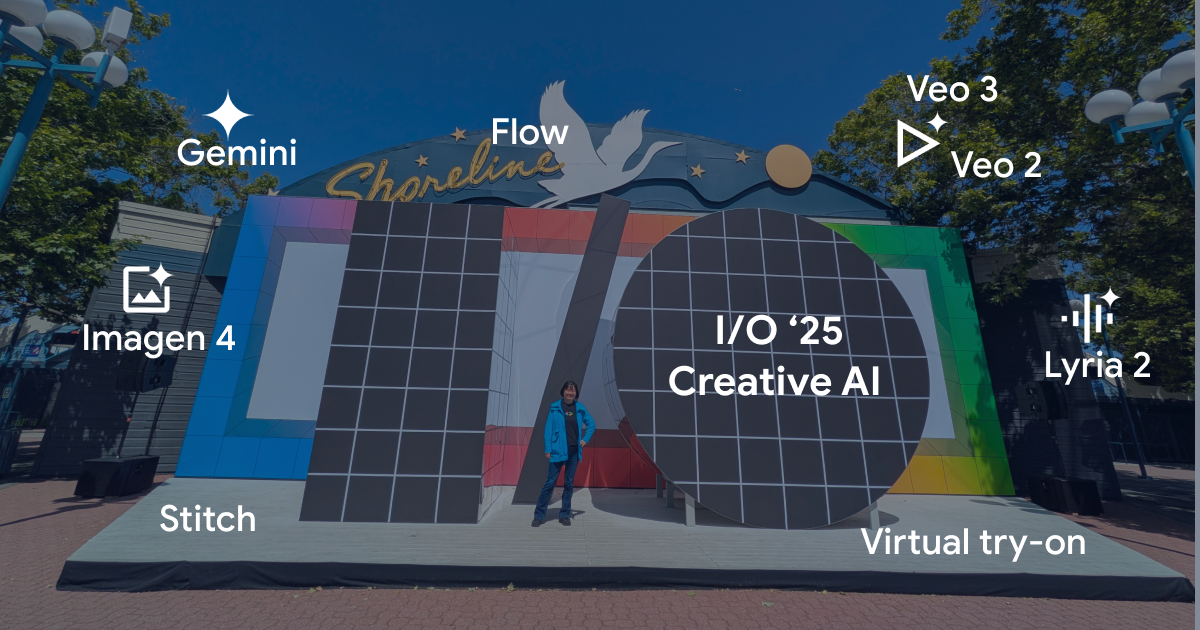

Google just changed the game. On Wednesday, the tech giant rolled out music-generation capabilities directly into the Gemini app, powered by DeepMind's Lyria 3 model. This isn't a buried feature in a developer console or a beta program only for enterprise users. This is live, available globally to everyone 18 and older, and it works.

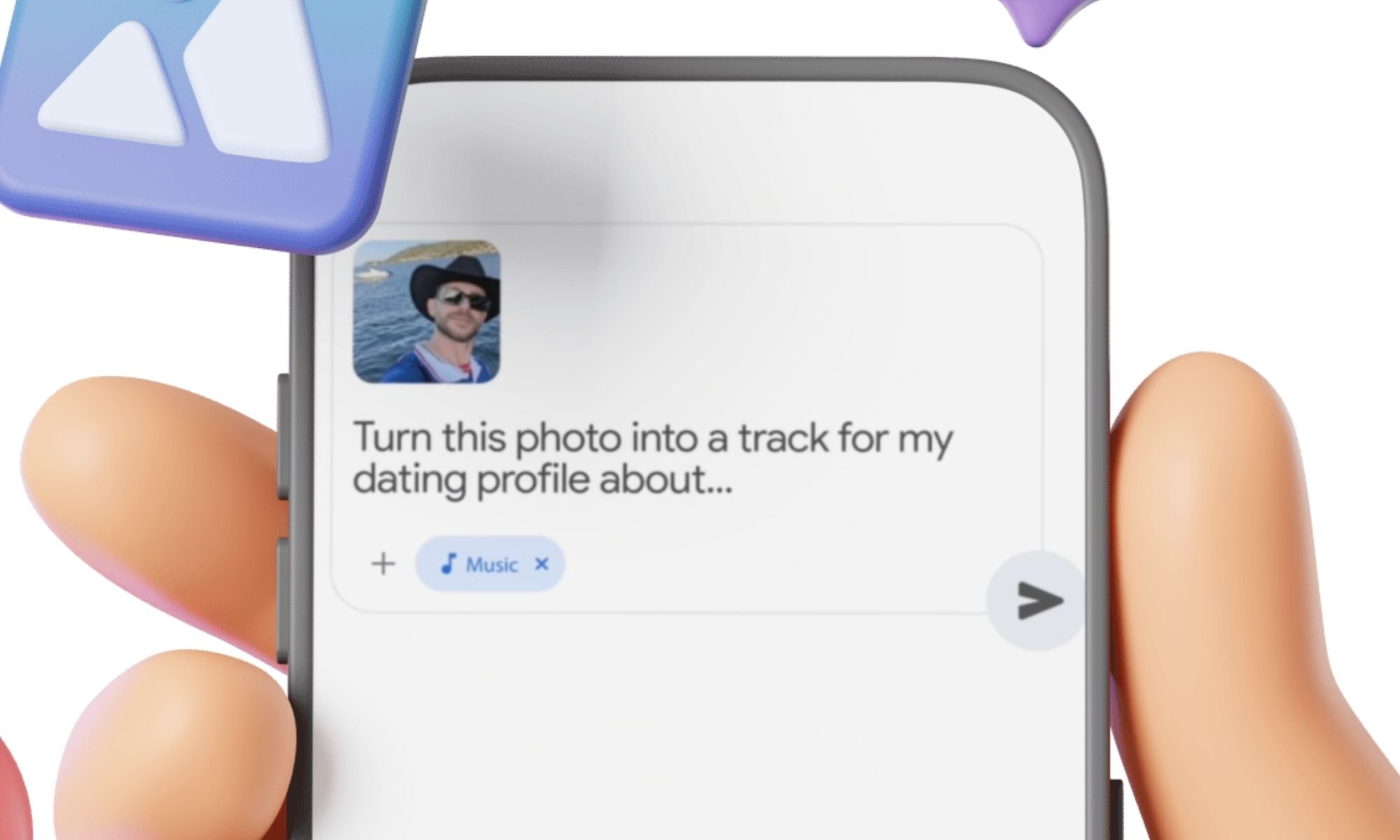

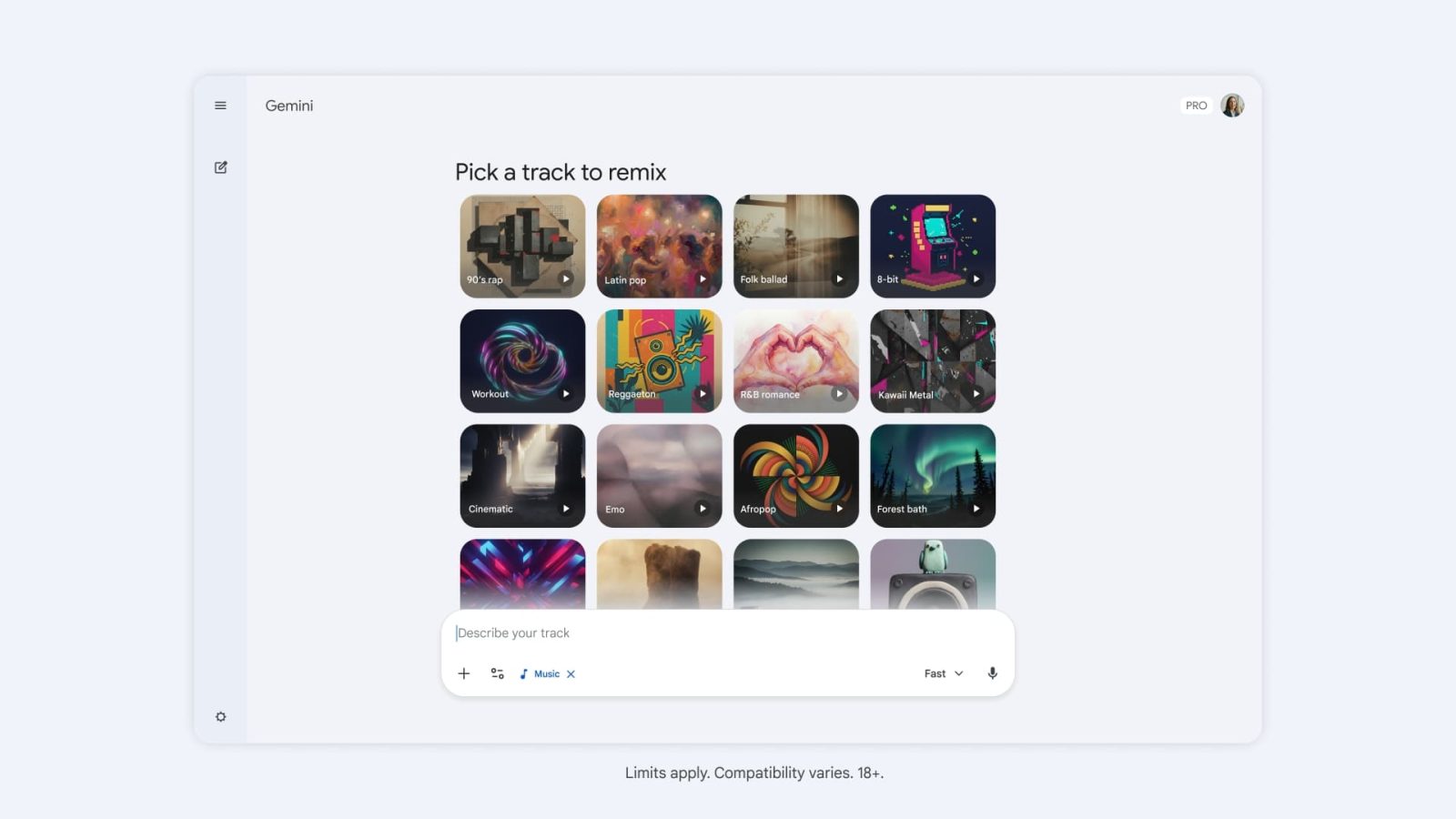

Here's what makes this significant: for the first time, you can open an app you already use daily, type "create a comical R&B slow jam about a sock finding its match," and within seconds, get back a fully generated 30-second track complete with original lyrics and AI-generated cover art. The model even creates the right mood, matches the vocal style, and controls tempo based on your instructions.

But here's what nobody's talking about yet. This isn't just about making music sound good anymore. It's about accessibility, copyright concerns, artist displacement, and the speed at which AI is eating entire industries. The music industry saw this coming. They've been fighting it in courts and boardrooms for two years. And now it's here, built into an app with over 2 billion potential users.

I've been following AI music generation since the first tools dropped in 2023. What started as novelty "beatboxing neural networks" has evolved into something genuinely sophisticated. Lyria 3 represents a technical leap. The outputs sound less robotic, more nuanced, and most importantly, more creative. You're no longer limited to generic background music. You can generate intentional music with specific vibes, moods, and artistic direction.

This article breaks down everything you need to know about Google's music-generation feature: how it technically works, what it means for musicians and creators, the safeguards Google built in, and where this technology is headed. Whether you're a content creator looking for royalty-free background music, a musician worried about your career, or just curious about what AI can actually do in 2025, you're in the right place.

TL;DR

- Lyria 3 powers Gemini's new music feature: Text, images, or video prompts generate 30-second original tracks with lyrics and cover art

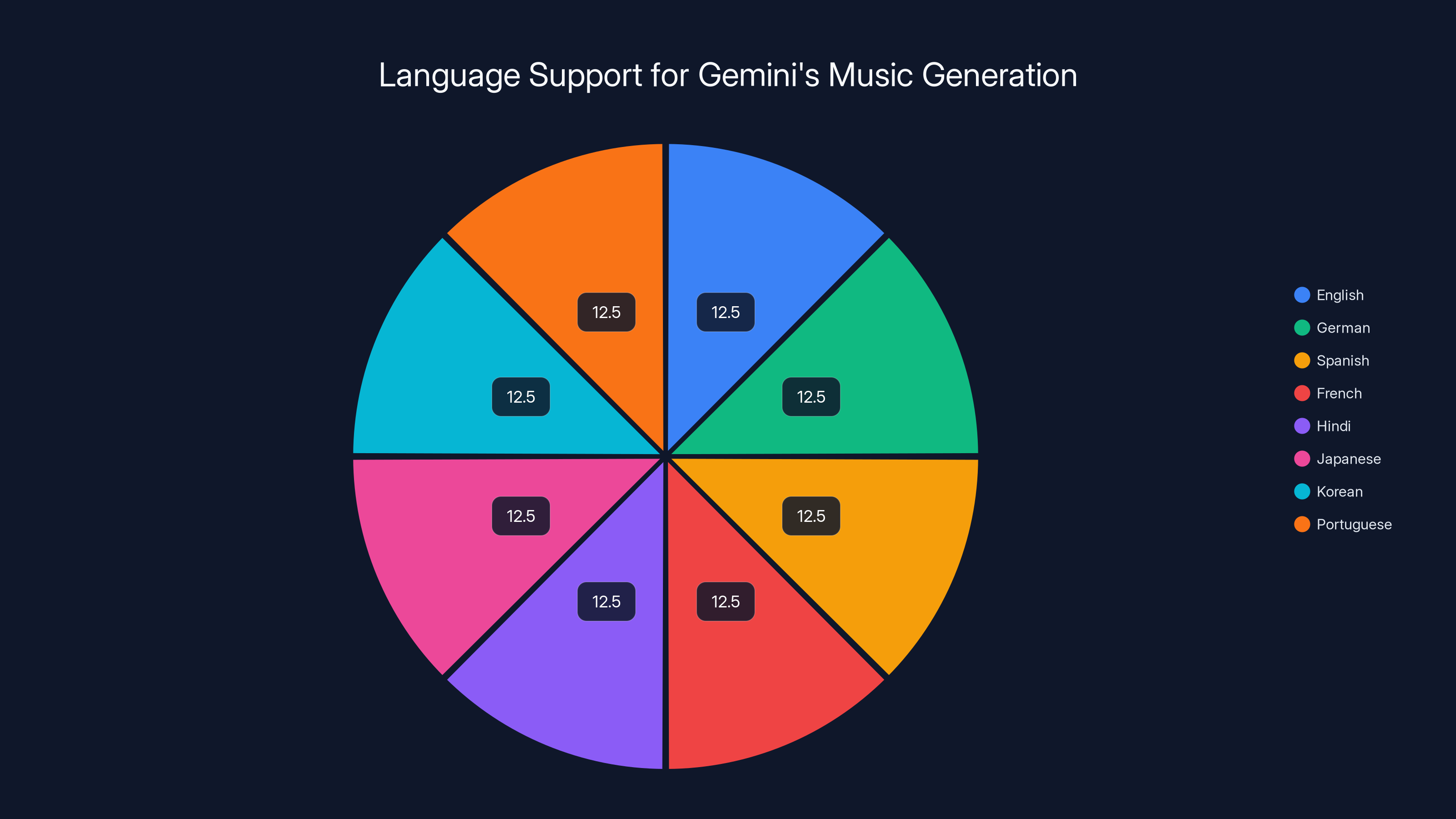

- Global rollout happening now: Available in English, German, Spanish, French, Hindi, Japanese, Korean, and Portuguese for all 18+ users

- Artist protection via SynthID watermarks: All generated music is watermarked to identify AI creation; Gemini can detect AI-generated music when you upload it

- YouTube creators get global access: Dream Track feature (previously US-only) now available worldwide for channel monetization

- Mixed industry response: Major platforms (Spotify, YouTube) are integrating AI music with label contracts, while others face lawsuits over training data copyright

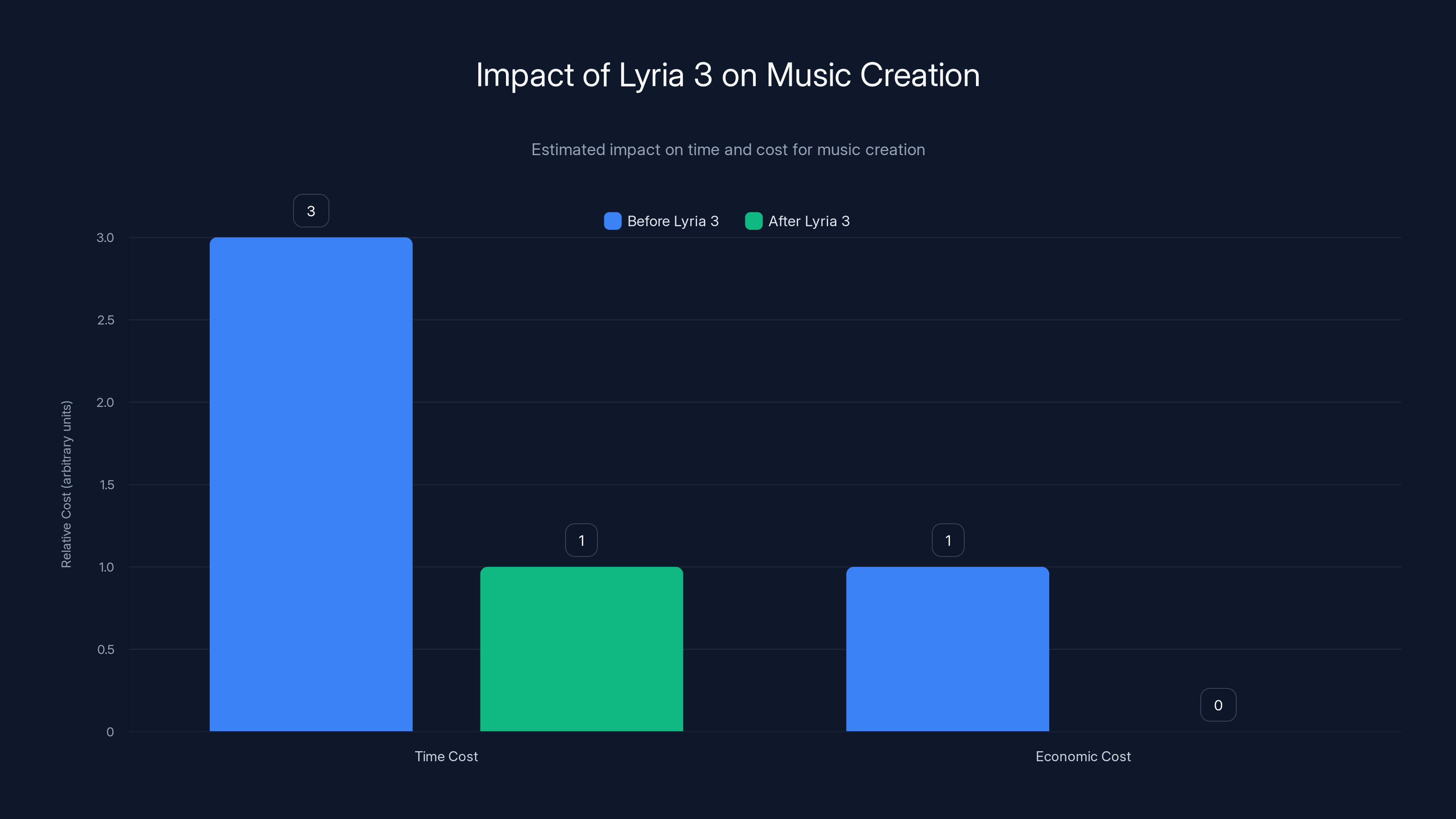

Lyria 3 significantly reduces both time and economic costs associated with creating background music, making it more accessible to content creators. (Estimated data)

What Is Lyria 3? Understanding DeepMind's Latest Music Model

Lyria 3 isn't Google's first attempt at AI music generation. The company has been experimenting with music models for years. But this version represents a genuine technical advancement over previous iterations.

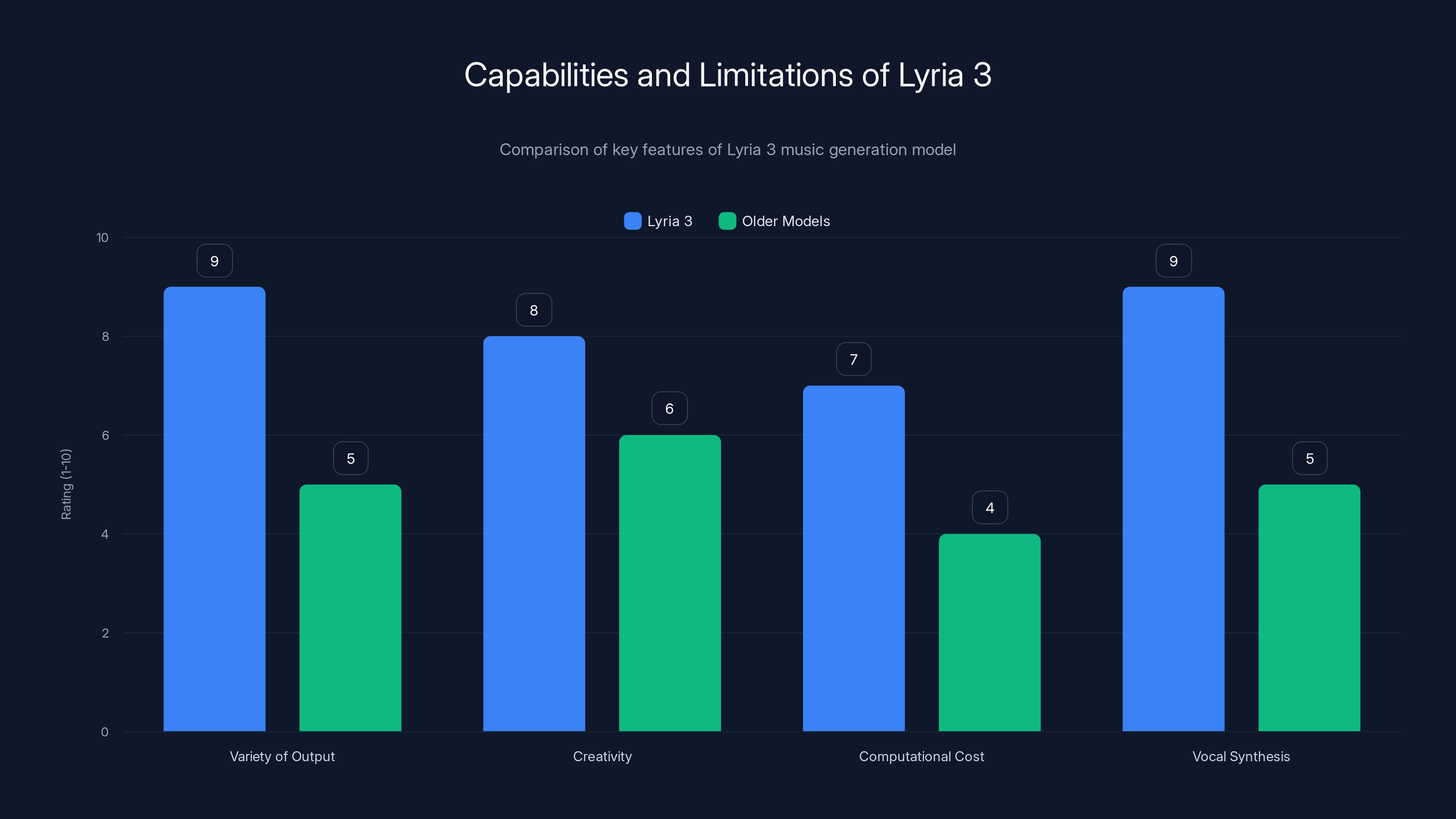

DeepMind built Lyria 3 to understand music at a deeper level than earlier models. Here's the difference: older music-generation systems often produced output that sounded technically correct but emotionally flat. A generated jazz track might have the right chord progression but lack the subtle timing variations that make real jazz feel alive. Lyria 3 changed this.

The model was trained on a massive dataset of licensed music across multiple genres. This is important because it means Google paid for access to training data rather than scraping copyrighted material without permission (though the industry still disputes some aspects of AI training datasets). The training dataset includes everything from classical symphonies to trap beats, folk songs to EDM, and everything in between.

What makes Lyria 3 different technically comes down to a few key improvements. First, it generates more realistic and complex music tracks than previous versions. This means better instrument interactions, more natural transitions between sections, and music that actually sounds intentional rather than algorithmically stitched together.

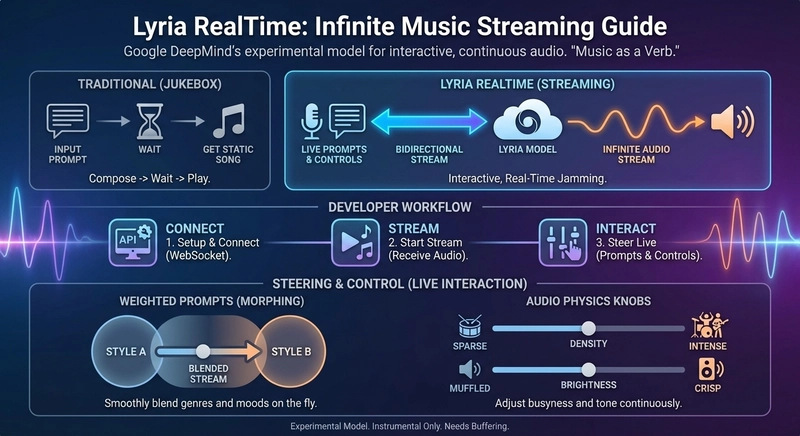

Second, the model has better control parameters. You're not just asking the AI to "make music." You're specifying style, tempo, vocal characteristics, instrumentation, and mood. Lyria 3 responds to these specifications. Tell it you want an "'80s synth-pop track with female vocals in a major key," and that's what you get. Tell it you want the same song "but make it melancholic," and it adjusts.

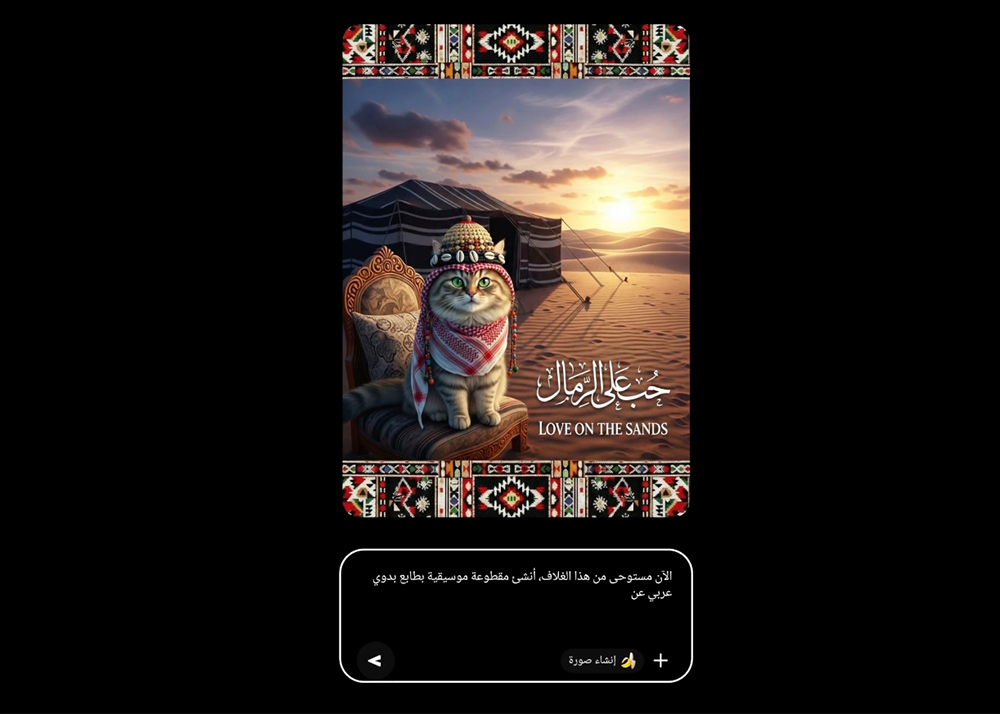

Third, Lyria 3 understands context from reference materials. Upload a photo of a sunset and describe the mood you want, and the model generates music that matches both the visual aesthetic and your description. This multimodal understanding is harder than it sounds. The model has to understand visual composition, emotional tone, and musical language simultaneously.

Google is also being transparent about what Lyria 3 can't do. The model specifically cannot mimic individual artists, even if you name them. If you prompt "create a track in the style of Taylor Swift," the system won't try to replicate her voice or signature songwriting patterns. Instead, it interprets that as "create something with similar emotional themes and production style." This is a deliberate design choice to avoid the major copyright and artistic-identity issues that have plagued AI music for the past year.

Lyria 3 excels in generating varied and creative music outputs with advanced vocal synthesis, though it is computationally expensive compared to older models. Estimated data.

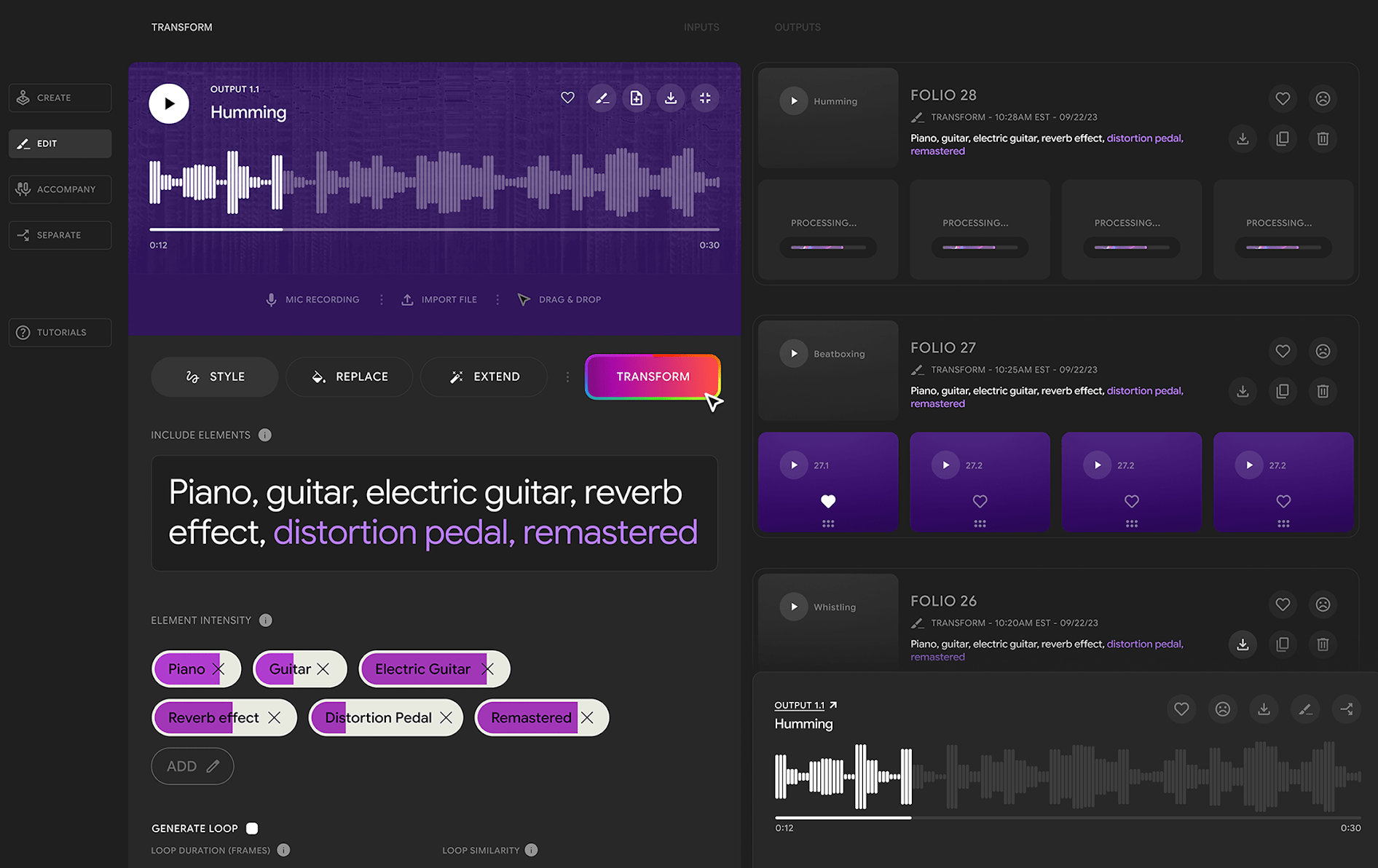

How to Use Gemini's Music Generation: A Step-by-Step Guide

Using Gemini's music generation is almost embarrassingly simple. But the simplicity hides surprising depth once you start experimenting.

Step 1: Open Gemini and Check Your Region/Language

First, confirm you're eligible. The feature is available to all users 18 and older globally, but support currently covers eight languages: English, German, Spanish, French, Hindi, Japanese, Korean, and Portuguese. If you're in a supported region and language, you'll see the music-generation option in the Gemini interface.

Step 2: Describe Your Song

In the Gemini chat, describe the song you want. Be specific. Vague prompts like "make me happy music" produce generic results. Specific prompts like "create an upbeat indie folk track with acoustic guitar and female vocals, inspired by a rainy autumn morning" work much better.

Here's what works best: include the genre, mood, instrumentation, vocal type (if applicable), and reference material or context. For example: "Make a lo-fi hip-hop beat for studying, around 90 BPM, with vintage samples and a chill vibe."
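The checklist above (genre, mood, instrumentation, vocal type, context) can be kept honest with a tiny prompt builder. This is a minimal sketch: `SongBrief` and `build_prompt` are hypothetical helper names, not part of any Gemini API, and the output is simply text to paste into the chat.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SongBrief:
    """Hypothetical container for the prompt elements listed above."""
    genre: str
    mood: str
    instrumentation: str
    vocals: Optional[str] = None    # e.g. "female vocals"; None means instrumental
    bpm: Optional[int] = None       # approximate tempo, if it matters to you
    context: Optional[str] = None   # what the track is for


def build_prompt(brief: SongBrief) -> str:
    """Assemble a plain-English prompt from the brief."""
    parts = [f"Create a {brief.mood} {brief.genre} track with {brief.instrumentation}"]
    parts.append(f"and {brief.vocals}" if brief.vocals else "and no vocals")
    if brief.bpm is not None:
        parts.append(f"at around {brief.bpm} BPM")
    if brief.context:
        parts.append(f"for {brief.context}")
    return " ".join(parts) + "."


brief = SongBrief(
    genre="lo-fi hip-hop",
    mood="chill",
    instrumentation="vintage samples",
    bpm=90,
    context="studying",
)
print(build_prompt(brief))
# Create a chill lo-fi hip-hop track with vintage samples and no vocals at around 90 BPM for studying.
```

Filling in every field forces you to decide on the details that vague prompts leave out.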

Step 3: Use Reference Materials (Optional)

You can upload a photo or video. Maybe you have a sunset photo and want music that matches its mood. Upload it and tell Gemini what you want the music to evoke. The model will analyze the visual aesthetic and generate music that complements it.

Step 4: Specify Musical Parameters (Optional)

Adjust the details. Want the vocals higher? The tempo faster? The instrumentation sparser? You can request changes without regenerating the whole track. "Make that last version but 20% faster and remove the vocals" works just fine.

Step 5: Review the Output

Gemini generates a 30-second track with original lyrics (if applicable) and cover art created by Nano Banana (Google's AI image model). Download it, export it, or share it directly. Every generated track includes a SynthID watermark (inaudible to listeners) that identifies it as AI-generated.

Step 6: Detect AI Music in Your Own Tracks

The process also works in reverse: upload any audio file and ask Gemini if it's AI-generated. The system uses SynthID to identify watermarked content and can detect whether music was generated by Lyria 3. This is useful if you're receiving music from creators and want to verify authenticity.

The entire process takes two to three minutes from idea to finished track. That's the real value proposition here. It's not that the output is better than a human composer. It's that the speed and accessibility eliminate friction for creators who need quick, original background music.

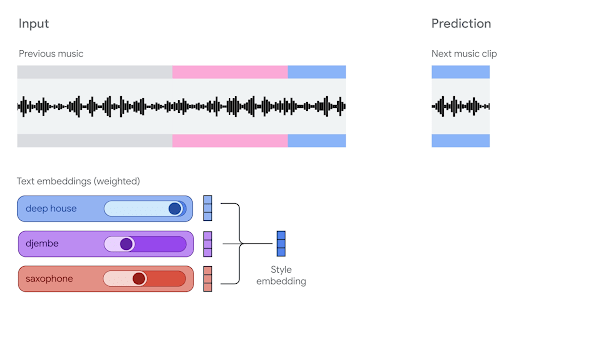

The Technical Magic Behind Music Generation

Understanding how Lyria 3 actually generates music helps explain both its capabilities and limitations.

At the core, Lyria 3 is what's called a diffusion model. Think of it this way: imagine starting with pure static noise, then gradually removing that noise while adding structure based on your prompt. After hundreds of steps, static becomes a coherent audio waveform that matches your description. This is fundamentally different from older generative models that tried to predict the next note in a sequence.

The advantage of diffusion models is they produce more varied, creative output. The disadvantage is they're computationally expensive. Generating a 30-second music clip requires significant processing power, which is why Google serves this from cloud infrastructure rather than running it locally on your device.

Lyria 3 also uses something called cross-modal attention. Basically, the model learns to pay attention to different parts of your text prompt, any uploaded images, and temporal information simultaneously. When you say "generate music matching this sunset photo," the model's attention mechanisms focus on the visual features (warm colors, horizontal composition, sense of scale) and translate those into musical characteristics (warm tonality, flowing progression, sense of space).
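To make "paying attention" less abstract, here is a minimal sketch of scaled dot-product cross-attention in plain Python. It assumes nothing about Lyria 3's real architecture: the three conditioning "tokens" and their three-number embeddings are hand-written placeholders, whereas a real model learns embeddings with hundreds of dimensions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(query, keys, values):
    """One audio-side query attends over several conditioning tokens."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    mixed = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, mixed


# Toy, hand-written embeddings (hypothetical; real models learn these).
conditioning = {
    "text: melancholic": [1.0, 0.0, 0.2],
    "image: warm colors": [0.1, 1.0, 0.0],
    "image: wide horizon": [0.0, 0.3, 1.0],
}
audio_query = [0.2, 2.0, 0.1]  # an audio position that mostly "asks about" warmth

keys = list(conditioning.values())
weights, mixed = cross_attention(audio_query, keys, keys)
for name, weight in zip(conditioning, weights):
    print(f"{name}: {weight:.2f}")
```

The weights sum to one, and the token whose embedding best matches the query (here, the warm-colors feature) receives the largest share. That is the sense in which visual features can steer musical ones.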

The vocal synthesis component deserves separate mention. When your prompt requests vocals, Lyria 3 isn't using pre-recorded vocals. It's synthesizing them from scratch. The model learns how humans sing, how phonemes sound, how emotions affect vocal timbre, and generates audio that sounds like a real singer performing lyrics that the model also generated.

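Here's a formula that roughly describes the generation process. It is the standard denoising-diffusion update; Google has not published Lyria 3's exact formulation:

$$x_{t-1} = \frac{1}{\sqrt{\alpha_t}}\left(x_t - \frac{1-\alpha_t}{\sqrt{1-\bar{\alpha}_t}}\,\epsilon_\theta(x_t, t, c)\right) + \sigma_t z$$

Where $x_t$ is the noisy audio at step $t$, $c$ is your prompt (text, image, or video), $\epsilon_\theta$ is the network's estimate of the noise in $x_t$, $\alpha_t$ and $\bar{\alpha}_t = \prod_{s \le t} \alpha_s$ come from the noise schedule, and $z \sim \mathcal{N}(0, I)$ is fresh noise scaled by $\sigma_t$.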

In human terms: the model starts with noise, iteratively removes it based on what you asked for, and injects controlled randomness so you get different results each time.
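The same loop can be sketched in a few lines of Python. This is a toy under loud assumptions: `target_waveform` is a hypothetical stand-in for the trained network (it simply "knows" the clean signal for a prompt), the update rule is a simplified annealed denoising step rather than Lyria 3's actual sampler, and the "audio" is 64 samples of a sine wave.

```python
import math
import random
from typing import List


def target_waveform(prompt: str, n: int = 64) -> List[float]:
    """Stand-in for a trained network's prediction: a sine wave whose
    pitch depends on the prompt. Purely illustrative."""
    cycles = 2 if "slow" in prompt else 6
    return [math.sin(2 * math.pi * cycles * i / n) for i in range(n)]


def generate(prompt: str, steps: int = 200, seed: int = 0) -> List[float]:
    rng = random.Random(seed)
    clean = target_waveform(prompt)
    x = [rng.gauss(0.0, 1.0) for _ in clean]   # start from pure noise
    for step in range(steps):
        remaining = 1.0 - (step + 1) / steps   # fraction of steps still to run
        noise_scale = 0.1 * remaining          # injected randomness shrinks to zero
        x = [
            xi + 0.05 * (ci - xi) + noise_scale * rng.gauss(0.0, 1.0)
            for xi, ci in zip(x, clean)
        ]
    return x


def rms_error(a: List[float], b: List[float]) -> float:
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)) / len(a))


prompt = "slow ambient pad"
sample = generate(prompt)
print(f"distance from clean signal: {rms_error(sample, target_waveform(prompt)):.3f}")
```

Changing `seed` gives a slightly different waveform for the same prompt, which mirrors why two generations from identical instructions never sound exactly alike.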

The training process took months on Google's TPU clusters (specialized AI accelerators). The model learned from patterns in music: how genres structure themselves, how melodies typically develop, how production techniques add emotional weight. But it also learned how text descriptions map onto music. The model understands that "melancholic" means certain chord progressions, slower tempos, and minor keys. That understanding comes from supervised learning where humans tagged music with emotional descriptors.

Gemini's music generation supports eight languages equally, each representing 12.5% of the total language support.

SynthID Watermarking: Google's Approach to AI Identification

Given the growing concerns about AI-generated content flooding platforms, Google built identification directly into Lyria 3.

Every track generated through Gemini gets a SynthID watermark. This is different from traditional audio watermarks you might add to a track. SynthID is imperceptible to listeners. You won't hear it or notice any effect on audio quality. But the watermark encodes information that identifies the track as AI-generated and, more specifically, as generated by Lyria 3.

How does invisible watermarking work? DeepMind has described SynthID for audio as converting the waveform into a spectrogram, embedding the watermark there, and converting the result back into audio. The changes are kept too subtle for human hearing to pick up. The mark can't simply hide above 20 kHz, the upper limit of human hearing, because lossy codecs such as MP3 throw most of that range away. Instead, the signature is designed to survive compression, light audio processing, and even some re-encoding. If someone downloads your generated track, converts it to MP3, uploads it somewhere else, and then someone else analyzes it, SynthID can still identify it as AI-generated.
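To make the idea of a quiet but detectable signature concrete, here is a toy spread-spectrum watermark in plain Python. It is a textbook technique used for illustration only, not SynthID's method (which is a learned watermark whose details are not public). The key, the `STRENGTH` value, and the function names are all assumptions made for this sketch.

```python
import math
import random

SAMPLE_RATE = 16_000
STRENGTH = 0.02  # watermark amplitude, about 28 dB below the 0.5-amplitude tone


def keyed_pattern(key, n):
    """Pseudo-random +/-1 sequence that only the key holder can regenerate."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]


def embed(audio, key):
    """Add the keyed pattern to the audio at low amplitude."""
    pattern = keyed_pattern(key, len(audio))
    return [a + STRENGTH * p for a, p in zip(audio, pattern)]


def detect_score(audio, key):
    """Correlate with the keyed pattern: near 1 if marked, near 0 if not."""
    pattern = keyed_pattern(key, len(audio))
    correlation = sum(a * p for a, p in zip(audio, pattern)) / len(audio)
    return correlation / STRENGTH


def is_watermarked(audio, key, threshold=0.5):
    return detect_score(audio, key) > threshold


# Five seconds of a 440 Hz tone stands in for a generated track.
n = 5 * SAMPLE_RATE
clean = [0.5 * math.sin(2 * math.pi * 440 * i / SAMPLE_RATE) for i in range(n)]
marked = embed(clean, key=1234)

# "Light processing": turn the gain down and add a little unrelated noise.
noise = random.Random(99)
processed = [0.9 * s + noise.gauss(0.0, 0.005) for s in marked]

for label, track in [("clean", clean), ("marked", marked), ("processed", processed)]:
    print(label, is_watermarked(track, key=1234))
```

Detection works because the pattern is spread across the whole track: a gain change or a bit of added noise shifts the correlation score slightly but does not erase it, while a track without the mark (or checked with the wrong key) scores near zero.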

This addresses a major concern from the music industry: fraudulent streams. Imagine someone generates a thousand songs using Lyria 3, uploads them to Spotify with fake artist names, and streams them to themselves repeatedly to generate payments. Without watermarking, it's hard to detect. With SynthID, the watermark identifies these tracks immediately.

But there's a catch. SynthID watermarks are detectable by other AI tools, and anything that can be detected can be targeted. If someone runs a sufficiently sophisticated attack against SynthID watermarking, theoretically they could remove it. This has already happened with image watermarks in other domains. Google and the security community are aware of this arms race and are actively working on more robust watermarking approaches.

The reverse detection is equally interesting. Gemini can identify whether music uploaded to it contains SynthID watermarks, even if the audio has been slightly modified. You can take a generated track, apply a little reverb, adjust the EQ, and Gemini will still recognize it as AI-generated. This is useful for creators who want to verify whether incoming tracks are authentic.

Google's transparency here is worth acknowledging. The company isn't hiding the watermark feature or making it optional. It's baked in by default. This sets a precedent for responsible AI deployment, even if other companies don't follow suit.

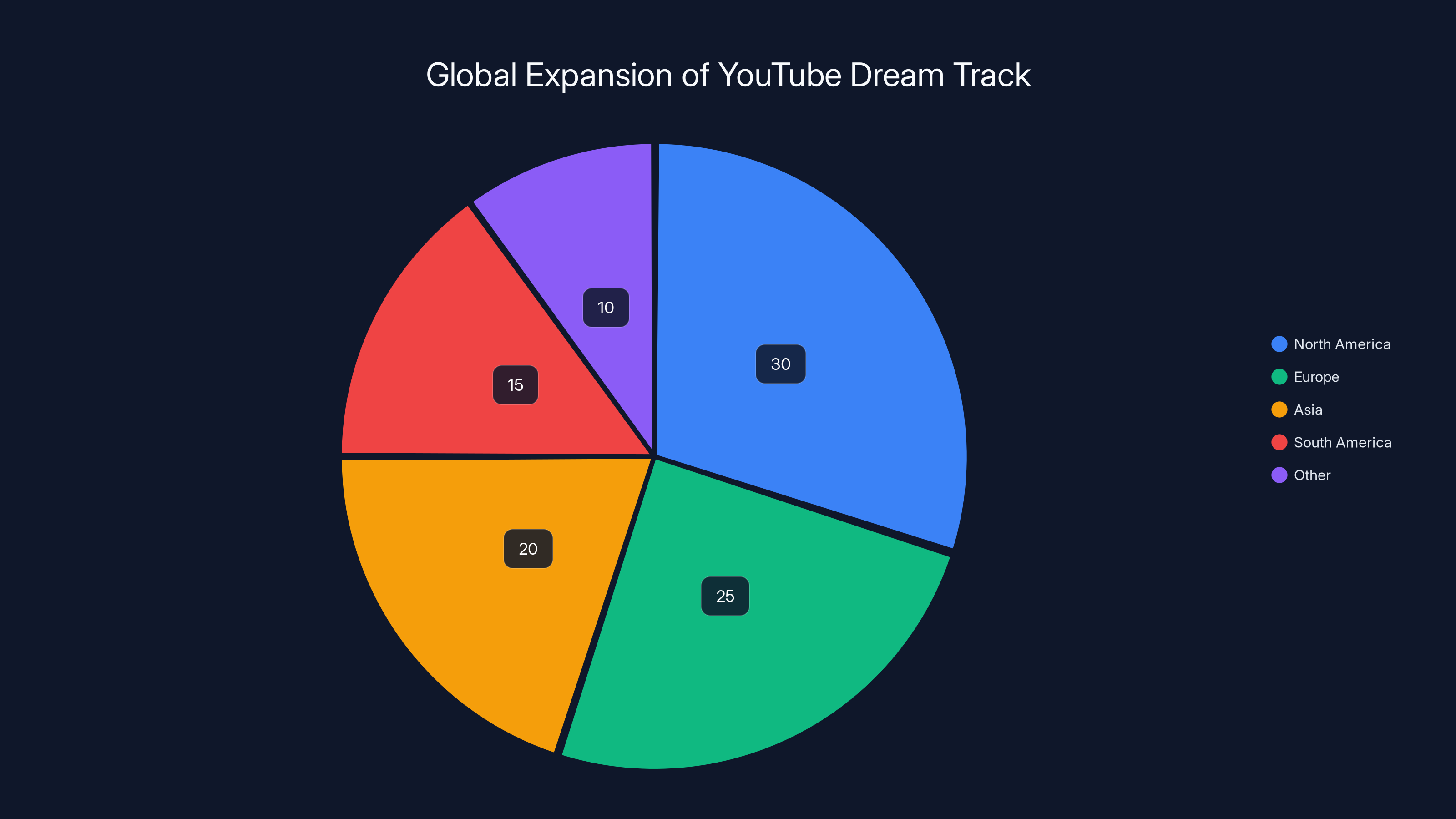

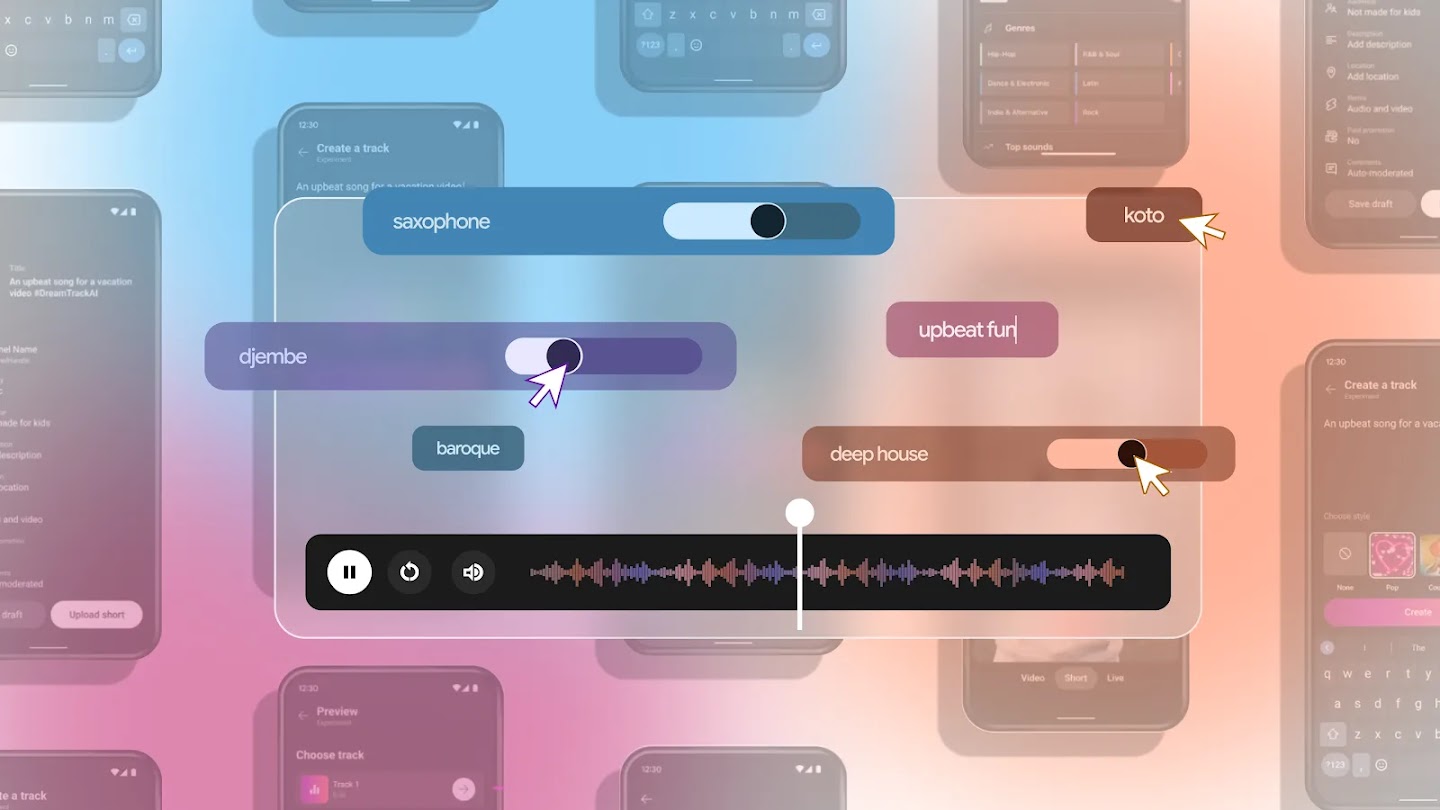

YouTube Dream Track: Global Expansion for Creators

Gemini's music generation is one application of Lyria 3. But Google is also rolling out these capabilities to YouTube creators through Dream Track, bringing it to a global audience.

Before this week, Dream Track was US-exclusive. YouTube creators could generate music for their videos, but only if they were in the United States. That created a weird artificial limitation. A creator in London couldn't use the feature. A creator in Tokyo couldn't use it. A creator in São Paulo couldn't use it. With this expansion, that changes.

Dream Track allows YouTube creators to generate original music specifically for their videos. This is huge for independent creators who can't afford licensing music from traditional composers or royalty-free libraries. A YouTube creator can describe what they need ("upbeat background music for a cooking tutorial"), generate it, and immediately use it in their video.

The generated music is also immediately monetizable. YouTube's creator program shares revenue from ads and sponsorships with creators. If you use Dream Track music in your videos, you're monetizing content that uses music you generated, eliminating middleman royalty payments. This is a meaningful shift in economics for small creators.

Google has also signed licensing deals with major music labels. This means the training data used to build Lyria 3 includes compensation flowing back to rights holders, at least in theory. The label deals provide legitimacy that's missing from other AI music startups that trained on unlicensed material.

But here's the uncomfortable truth: the creator expansion of Dream Track is also about consolidation. By making music generation accessible through YouTube, Google increases creator dependence on Google infrastructure. A creator using Dream Track is less likely to leave YouTube because they've invested in that ecosystem.

YouTube is positioning Dream Track as part of its Creator Fund, a broader push to give independent creators tools that used to require enterprise budgets. It's smart strategy from a platform perspective: make the platform more valuable for creators, and creators stay on your platform.

The global rollout matters because YouTube's growth is increasingly international. The US creator market is saturated. Growth is happening in India, Brazil, Southeast Asia, and Africa. By expanding Dream Track globally now, Google locks in that next generation of creators before competitors do.

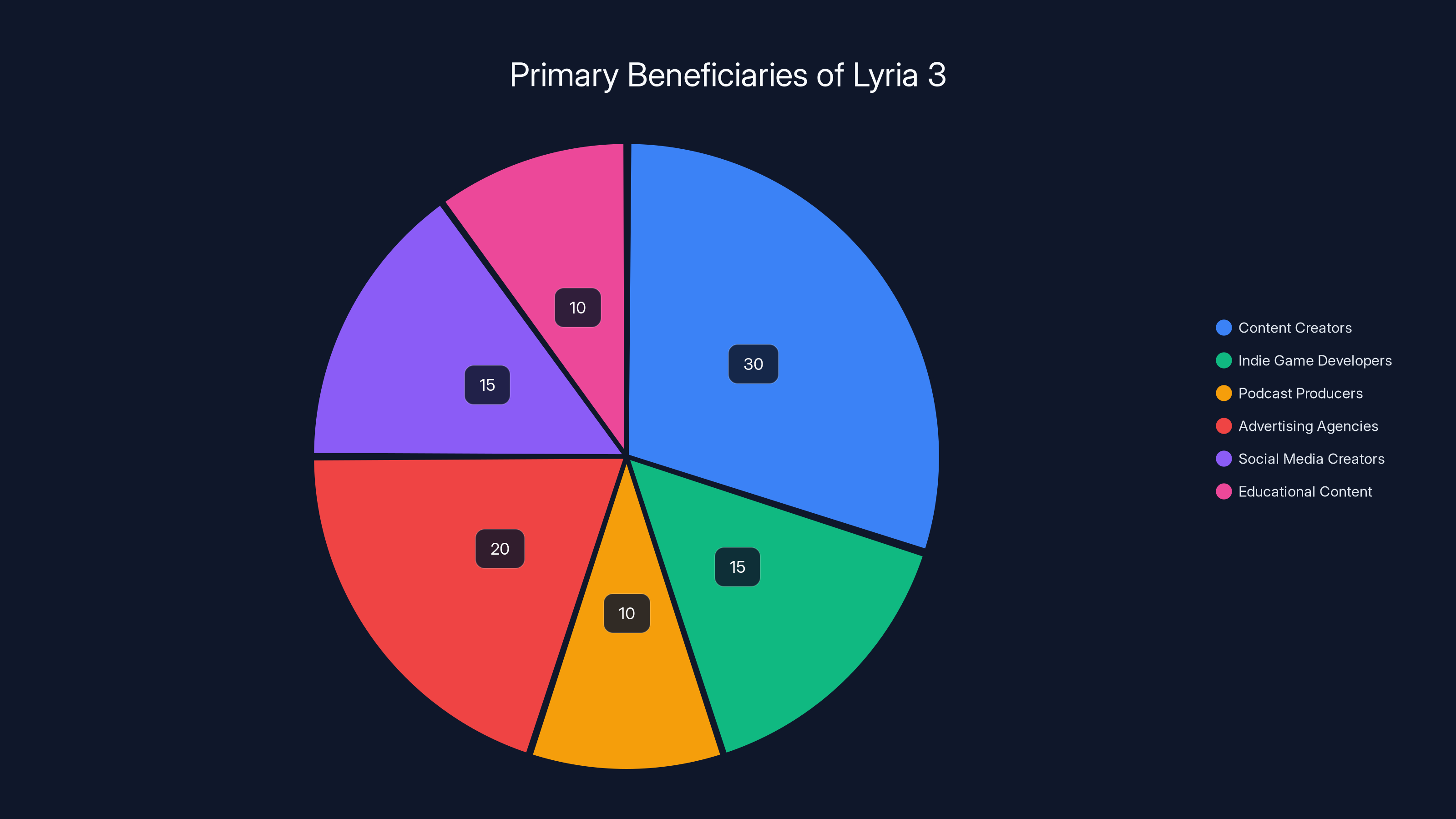

Content creators and advertising agencies are the largest beneficiaries of Lyria 3, making up 50% of its user base. Estimated data based on typical usage scenarios.

The Artist Perspective: Concerns and Displacement

Here's where things get complicated.

Musicians have every reason to be concerned about what Lyria 3 represents. The feature doesn't directly put musicians out of work, but it erodes demand for their labor in specific segments.

Consider a YouTube creator who needs background music. Historically, they'd either license a track from a service like Epidemic Sound (paid subscription), license from a composer (paid per-track), or commission original music (expensive). Now they generate it for free through Dream Track.

Multiply that by millions of creators, and you're looking at a significant reduction in demand for background music composers. These are working musicians who made careers out of creating stock music, corporate audio branding, and content creation soundtracks. Lyria 3 targets exactly that market segment.

The music industry saw this coming. That's why they've been in legal battles with AI companies for the past two years. Artists and composers filed lawsuits arguing that training AI on their music without permission violates copyright. These lawsuits are still ongoing, and they matter.

Google's response is that their training was licensed. But the music industry argues the licensing deals are unfair and don't provide adequate compensation. There's also the broader principle: does training an AI on your work without your explicit permission feel like theft, even if technically licensed?

There's another dimension too. Lyria 3 can't perfectly mimic a specific artist, which is what Google wants. But it can generate music "in the style of" artists. Some artists view this as a violation of their artistic identity. If your entire sonic signature can be replicated by an AI trained on your work, what does that mean for your uniqueness?

That said, there are opportunities for musicians too. Some companies are paying musicians for AI training. Others are using Lyria 3 as a starting point and hiring musicians to refine and personalize the output. Some genres (experimental music, jazz) are harder for AI to generate well, so demand there remains strong.

The big question is whether the music industry can collectively push back on AI music adoption, or whether the technology is too useful for companies and creators to resist. History suggests the latter. Painting didn't disappear when cameras were invented, but it fundamentally changed. Music might be similar.

Copyright, Training Data, and Industry Lawsuits

Lyria 3's training data is at the center of ongoing legal battles.

Google says it licensed music for training. The music industry says the licensing was inadequate and doesn't address fundamental copyright concerns. Here's the actual situation:

Google partnered with music labels and publishers to access licensed music for training Lyria 3. These licensing deals meant money flowed to rights holders for the use of their catalogs. This is different from some other AI music companies that trained on datasets scraped from YouTube or other public sources without explicit permission.

But here's where it gets murky. Even with licensing, training an AI on music raises questions. Does licensing for streaming also implicitly license for AI training? Most artists and labels say no. They argue AI training is a different use case that should require separate licensing and compensation.

The lawsuits filed against AI companies include claims that training violated the copyright of specific works. Major record labels sued the AI music generators Suno and Udio in 2024, alleging that they trained on copyrighted recordings without permission and used that music to build tools that compete with the original artists. Music publishers have separately sued Anthropic over the use of song lyrics.

Google's position is stronger than some competitors because it did license music. But the music industry still argues the licensing terms were unfair, the compensation inadequate, and the use case not properly addressed in original copyright agreements.

There's also a question about precedent. If Google's approach to licensing AI training becomes the industry standard, what does that mean for artists? Do they have to opt into AI training, or is it automatic? Who controls their decision?

The legal situation is genuinely unresolved. Courts haven't clearly established what AI companies owe to rights holders. Are they liable for copyright infringement? Do they need explicit permission? Is licensing sufficient? These questions will be answered in courts over the next two to three years.

In the meantime, platforms like Spotify and YouTube are trying to move forward cautiously. They're signing deals with labels that explicitly allow AI music generation and monetization. These deals acknowledge that AI music is happening and try to ensure rights holders get compensated.

But smaller artists and independent composers often aren't part of these label agreements. They don't get a seat at the table. Their music might be in training data, and they have no control over how their work is used.

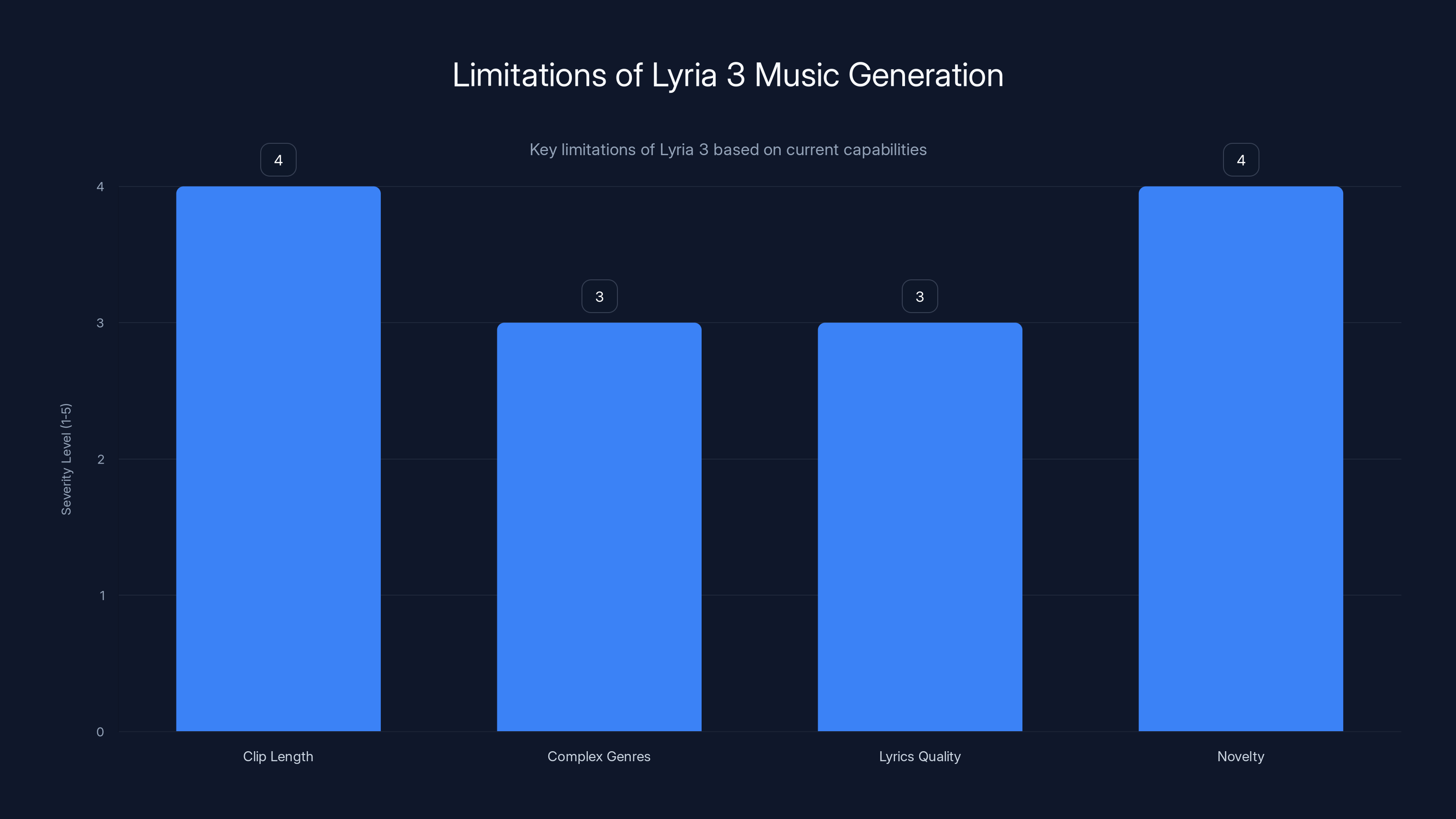

Lyria 3 currently has notable limitations in generating full-length tracks, handling complex genres, producing high-quality lyrics, and creating novel compositions. Estimated data based on described limitations.

Platform Integration: Spotify, YouTube, and the Music Streaming Wars

Where do streaming platforms stand on AI music?

Spotify's approach is cautious but accepting. The company has publicly stated that AI-generated music is valid and can be monetized on the platform, but it's implementing some safeguards. Artists can't upload unlimited AI-generated tracks to spam the platform. Each account has submission limits. Spotify is also using content-identification technology to find fraudulent content and remove it.

YouTube is more aggressive. Dream Track integration means YouTube is actively helping creators generate music. YouTube also shares revenue from monetized content with creators, which means YouTube benefits financially when creators use AI music in their videos.

Both companies have signed licensing deals with major music labels that explicitly address AI music. These deals provide some legitimacy and ensure traditional artists get compensated as the AI music market grows.

But here's the competitive dynamic: if Spotify doesn't embrace AI music features, YouTube might gain a platform advantage for creators. If YouTube moves faster on AI music adoption, it attracts creators who want quick, free music generation. Platform competition is actually accelerating AI music adoption.

Smaller streaming platforms and independent music services have taken harder positions against AI music. Deezer, a streaming service popular in Europe, developed tools to mark and identify AI-generated music, partly to protect its artist relationships but also to market itself as an "artist-friendly" alternative to Spotify and YouTube.

This fragmentation is important. You're seeing a split where major platforms embrace AI music (because it helps creators and reduces licensing costs) and smaller platforms position themselves as artist advocates (because they need to differentiate somehow).

Real-World Applications: Who Benefits Most?

Let's get concrete. Who actually uses this, and why?

Content Creators and YouTubers: This is the obvious use case. Creators need background music for videos. Lyria 3 eliminates the friction of finding, licensing, and integrating music. A creator supplies a description of their video idea or a reference image and gets custom music almost instantly. The economic benefit for creators is massive. Instead of paying for music licensing ($5-50 per track), they generate it for free.

Indie Game Developers: Small game studios need music for their games. Hiring a composer is expensive. Licensing existing music is restrictive. With Lyria 3, a developer can generate unique music that fits their game's mood and style. The barrier to creating professional-sounding games just dropped significantly.

Podcast Producers: Intro and outro music, background ambiance between segments, thematic music for specific show sections. Podcasters have been using generic royalty-free music forever. Now they can generate custom music that's actually unique to their show.

Advertising Agencies: Creating custom music for ads has historically meant hiring composers or licensing expensive tracks. Agencies can now generate custom background music for ad production internally, saving time and cost.

Social Media Creators: TikTok, Instagram Reels, and YouTube Shorts all need snappy background music. Creators on these platforms can generate unique music that makes their content stand out.

Educational Content: Teachers, online course creators, and educational platforms can now generate background music for instructional videos without copyright concerns.

The common thread: anyone who needs custom, royalty-free, original music benefits from Lyria 3. That's actually a huge segment of content creators.
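The $5-50 per-track licensing range quoted for content creators above turns into a yearly figure with simple arithmetic. In this sketch the price range comes from the article, while the upload volume of eight tracks a month is a hypothetical assumption; substitute your own numbers.

```python
def yearly_licensing_cost(tracks_per_month: int, price_per_track: float) -> float:
    """Total licensing spend over twelve months."""
    return tracks_per_month * 12 * price_per_track


LOW, HIGH = 5.0, 50.0    # per-track range quoted in the article
TRACKS_PER_MONTH = 8     # hypothetical: two videos a week, one track each

low = yearly_licensing_cost(TRACKS_PER_MONTH, LOW)
high = yearly_licensing_cost(TRACKS_PER_MONTH, HIGH)
print(f"Licensing {TRACKS_PER_MONTH} tracks/month costs ${low:,.0f} to ${high:,.0f} per year")
# Licensing 8 tracks/month costs $480 to $4,800 per year
```

For a small channel, the upper end of that range is real money, which is why free generation changes the calculation.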

Estimated data shows North America leading in Dream Track usage, followed by Europe and Asia. The expansion aims to balance this distribution.

What Lyria 3 Still Struggles With

Despite the hype, Lyria 3 has real limitations.

30-Second Generation Limit: You can't generate five-minute songs. The feature generates 30-second clips, which is fine for background music but useless if you want to create full compositions. This is partly technical (longer generation requires substantially more compute) and partly intentional (it discourages creating full albums of AI music).

Genre Limitations: Lyria 3 works great for electronic music, lo-fi, ambient, and pop. It struggles with jazz, classical, and genres that require nuanced human interpretation. Ask it to generate jazz and you get something that technically sounds like jazz but lacks the subtle timing and feel that makes real jazz interesting.

Lyrical Quality: When you ask for lyrics, they're often generic and sometimes awkward. "Love is in the air tonight" level generic. Great music pairs great lyrics with great melodies. Lyria 3 does both at a competent but not exceptional level.

Originality: The model can't truly create something novel. It generates based on patterns in training data. This means generated music sounds like a blend of existing music rather than something genuinely new. It's interpolation, not invention.

Instrumental Complexity: The more complex the instrumentation request, the more likely the output has issues. Ask for "folk metal with traditional instruments" and the instrumental transitions get weird. Simpler requests work better.

These limitations aren't permanent. As the model improves, some of these constraints will disappear. But they're worth understanding now.

Comparing Lyria 3 to Competitor AI Music Tools

Google isn't alone in AI music generation. Other companies have built competing tools.

Suno is probably the closest competitor. Suno's AI can generate full-length songs (not just 30 seconds) with lyrics. Suno produces genuinely impressive output, sometimes better than Lyria 3. The catch is Suno is less integrated into platforms creators already use. You have to go to Suno's website, generate there, then import elsewhere.

AIVA (Artificial Intelligence Virtual Artist) focuses on longer compositions and actually lets you edit the generated music at a granular level. AIVA is better for serious composers who want AI as a starting point. But it requires more musical knowledge to use effectively.

Mubert is designed specifically for creators and streamers who need quick background music. It's more lightweight than Lyria 3 and faster to generate, but the output quality is generally lower.

Soundraw lets users generate music but gives more control over structure and instrumentation before generation. It's more customizable but slower and less intuitive than Gemini's integration.

The key advantage Google has is distribution. Lyria 3 is built into Gemini, an app with a potential audience in the billions. Some competitors have arguably stronger tools but far smaller audiences.

The Future of AI Music: Where This Technology Heads

Lyria 3 is not the endpoint. It's a checkpoint.

In the next two years, expect several trends:

Longer Generation Lengths: 30-second clips will expand to full-length songs. Compute costs will drop, making longer generation economically viable. Models will improve, handling longer context windows.

Better Control Mechanisms: Rather than describing music in text, you'll be able to draw melodies, specify chord progressions graphically, or adjust parameters after generation in real-time. Musicians will gain more agency.

Better Genre Coverage: Classical, jazz, and complex genres will improve. Right now these are hard because they require more subtle understanding of musical rules. Future versions will handle them better.

Real-Time Generation: Imagine streaming music that's generated on-the-fly based on your activity, mood, or environment. Real-time personalized music. This is further away but technically feasible.

Voice Cloning Controversy: As AI voice synthesis improves, concerns about artists' voices being replicated without permission will become acute. Expect significant legal battles over vocal synthesis rights.

Musician Adaptation: Musicians will increasingly use AI as a tool rather than compete against it. A producer might use Lyria 3 to generate a rough track, then refine it with real musicians. AI becomes collaborative rather than replacement.

Regulatory Intervention: Governments will likely require metadata tagging and identification of AI music. The EU is already moving in this direction. Regulations will establish what AI companies can do with training data and what compensation creators deserve.

Copyright Clarification: Court decisions will establish clearer rules about AI music copyright, training data rights, and compensation models. Today's legal ambiguity will resolve into actual law.

Privacy and Data: What Google Does With Your Generated Music

When you generate music through Gemini, Google has a record of what you asked for, what was generated, and potentially what you did with it.

Google's privacy policy states that Gemini conversations are used to improve the AI model (unless you opt out). This means your music generation prompts and potentially the resulting audio could be used in future model training. This raises obvious privacy concerns, especially if you're generating sensitive or proprietary content.

For commercial use, this is important. If you generate music for a business project, you probably don't want that prompt and the resulting music being seen by Google researchers improving future models.

Google's approach is that you can disable data storage for Gemini conversations in your account settings. If you do that, your music generation prompts aren't used for model improvement. But this is an opt-out setting that most users don't know about or understand.

Compare this to Suno, which explicitly states that user-generated content isn't used for model training without permission. Different companies handle this differently.

For public content creators who don't care about privacy, this doesn't matter. For businesses and professionals, you should understand that your prompts might be used to train future AI models. Set your privacy settings accordingly.

Best Practices for Using AI-Generated Music Legally and Ethically

If you're going to use Lyria 3 music, here's how to do it properly.

Understand Your Rights: Music generated through Gemini is owned by you. You can use it for commercial purposes. You can monetize it on YouTube or sell it. There's no royalty owed to Google. This is genuinely permissive.

Disclose AI Origin: If you're generating music for professional or editorial purposes, consider disclosing that it's AI-generated. Transparency builds trust with audiences. This doesn't apply to entertainment (nobody discloses that their visual effects are CGI), but it does apply to journalism, educational content, or anything where authenticity matters.

Don't Claim Authorship: Don't generate music and then claim you composed it. That's dishonest. If you're uploading it to YouTube, you can credit yourself as the prompt writer or curator, but be honest about the role of AI.

Don't Use It to Impersonate: Don't generate music that imitates a specific artist's style and then release it under their name or in a way designed to deceive people. That violates multiple laws.

Get Explicit Permission for Commercial Use: If you're generating music for a client or commercial project, confirm that AI-generated music is acceptable to them. Some contracts explicitly forbid AI-generated content. Know before you generate.

Keep Generated Content Organized: Save the SynthID-watermarked original along with metadata about what you generated and when. This helps document ownership and origin if questions arise later.

Respect Artist Rights: Even though Lyria 3 won't perfectly imitate artists, it will generate music "inspired by" them. Use that responsibly. Don't try to game the system or create near-replicas and claim they're original.
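The record-keeping advice above can be sketched in a few lines of Python. This is a minimal illustration, not an official Google workflow: the function name `write_provenance_record`, the sidecar file naming, and the record fields are all hypothetical choices. It does not read or verify the SynthID watermark. It only stores the prompt, a timestamp, and a SHA-256 hash of the downloaded file, so you can later show that the file has not changed since you recorded it.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def write_provenance_record(audio_path, prompt, tool="Gemini (Lyria 3)"):
    """Write a JSON sidecar next to the audio file and return its path.

    Hypothetical helper: the field names and file layout are assumptions
    made for illustration, not a format defined by Google.
    """
    audio_path = Path(audio_path)

    # Hash the exact bytes of the downloaded (watermarked) original.
    digest = hashlib.sha256(audio_path.read_bytes()).hexdigest()

    record = {
        "file": audio_path.name,
        "sha256": digest,
        "prompt": prompt,
        "tool": tool,
        # UTC timestamp of when this record was written.
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }

    # e.g. track.mp3 -> track.mp3.provenance.json
    sidecar = audio_path.with_name(audio_path.name + ".provenance.json")
    sidecar.write_text(json.dumps(record, indent=2), encoding="utf-8")
    return sidecar
```

Calling `write_provenance_record("sock-slow-jam.mp3", "a comical R&B slow jam about a sock finding its match")` right after download would leave a small JSON file beside the track. Keep the unedited original alongside it, since editing or re-encoding the audio changes the hash.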

The Bigger Picture: AI Democratization vs. Professional Displacement

Zoom out for a second.

What's really happening with Lyria 3 is democratization. Tools that used to require expertise, expensive equipment, and significant time investment are now accessible to anyone. A teenager with a smartphone can generate music that would have cost thousands of dollars to hire a composer for, five years ago.

This democratization is genuinely good in many ways. It lowers barriers to creative expression. It enables independent creators to compete. It makes professional-quality content creation accessible globally.

But democratization has a flip side. For professionals who made careers out of providing the service being democratized, it's devastating. A composer who specialized in stock music just watched their market evaporate. A background music library owner just saw demand for their service drop.

History shows this pattern repeating. Photography democratized image creation and destroyed the professional portrait studio industry (at least the mass market version). Digital audio destroyed the tape and vinyl manufacturing industry. Video streaming destroyed the rental video store industry.

Each time this happens, professionals have two choices: adapt or decline. Some photographers pivoted to fine art and specialized services. Some went out of business. The industry overall shrank.

Music will be similar. The market for generic background music will compress significantly. The market for specialized, emotional, custom music will remain strong. Artists willing to adapt and use AI as a tool will thrive. Artists insisting that AI shouldn't exist will struggle.

FAQ

What exactly is Lyria 3 and how does it work?

Lyria 3 is DeepMind's advanced music generation model that creates original music based on text descriptions, images, or videos. It uses diffusion-based learning to iteratively refine noise into coherent audio, guided by your prompt and parameter selections. The model was trained on licensed music across multiple genres and can generate 30-second tracks with lyrics and cover art in seconds.

Who can use Google's Gemini music generation feature?

Any user 18 or older globally can use the feature, provided they're in a supported region and language. Currently supported languages include English, German, Spanish, French, Hindi, Japanese, Korean, and Portuguese. The feature rolls out to all eligible users over the coming weeks, with priority given to Gemini subscribers.

What are the main limitations of Lyria 3's music generation?

Lyria 3 currently generates 30-second clips rather than full-length songs, struggles with complex genres like jazz and classical, sometimes produces generic lyrics, and creates music that feels like a blend of existing patterns rather than truly novel compositions. These limitations will likely ease in future model versions as the technology matures and compute costs decrease.

How does Google ensure AI-generated music doesn't infringe on artists' rights?

Google integrated SynthID watermarking to invisibly tag all generated tracks as AI-created, and it licensed the music used to train Lyria 3. The model also refuses to perfectly mimic specific artists, though it can generate music "in the style of" artists. Google can detect SynthID-marked AI music when users upload tracks, helping identify fraudulent content. However, the music industry still disputes whether licensing terms are adequate.

Can I use Lyria 3-generated music commercially?

Yes. You own the music you generate through Gemini and can use it commercially, monetize it on YouTube, or sell it without owing royalties to Google. However, confirm with any clients or platform partners that AI-generated music is acceptable for their specific use case, as some contracts or platforms explicitly prohibit it.

How does Dream Track on YouTube differ from Gemini's music generation?

Dream Track is YouTube's integration of Lyria 3 specifically for video creators. It's designed for generating background music for YouTube content with immediate monetization rights. Dream Track was previously US-exclusive but is now globally available. The core technology is the same, but the integration is optimized for video creators rather than general music creation.

What happens to independent musicians who rely on background music composition?

The market for generic background music will likely compress as AI generation becomes ubiquitous and free. Musicians can adapt by focusing on custom composition, specialized genres where AI still struggles, emotional depth, or collaborating with AI as a tool to refine and personalize generated music. Some opportunities will remain for musicians willing to pivot their business models.

Is the music industry suing Google over Lyria 3?

No direct lawsuits target Lyria 3 specifically, but the music industry has filed lawsuits against other AI music companies alleging copyright infringement during training. Google's approach is stronger because it licensed music for training, but the industry argues licensing terms are inadequate and that AI training represents a different use case requiring separate permission and compensation. Legal clarification is expected over the next two years.

How is privacy handled when I generate music through Gemini?

Your music generation prompts are recorded in your Gemini conversation history. By default, Google may use these conversations to improve the AI model, meaning your prompts could potentially be seen by researchers. You can disable this by adjusting your privacy settings to prevent Gemini conversations from being stored for model improvement. Professional and commercial users should review these settings carefully.

Will Lyria 3 eventually generate full-length songs instead of 30-second clips?

Likely yes, though Google hasn't announced a timeline. Longer generation requires substantially more computational resources. As compute costs drop and model architecture improves, full-length song generation will become economically viable. This is probably 12-24 months away, but that is an estimate, not a confirmed roadmap.

Conclusion: The Inflection Point for Music Creation

Google's rollout of Lyria 3 through Gemini marks an inflection point. This isn't an experimental feature for early adopters. It's a mainstream tool available to billions of users. The barrier to entry for music creation just evaporated.

For content creators, this is genuinely exciting. Background music used to cost either a licensing fee or, if you used free content, exposure to copyright risk. Now it is free. The time cost dropped from hours of searching and adapting someone else's music to minutes of describing what you want and generating it. That's meaningful.

For musicians, it's complicated. The market for certain types of music (generic background compositions) is about to compress. Demand for other types of music (emotionally sophisticated, culturally specific, artistically unique work) will remain strong or grow. The profession will change, but it won't disappear.

For the music industry broadly, legal questions about copyright, training data, and compensation remain unresolved. Those questions will be answered in courts and regulatory bodies over the next few years. Whatever those answers are, Lyria 3 and similar tools are already deployed. The industry can't uninvent this technology.

The pragmatic path forward is clear: platforms and creators should adopt responsible practices now, before regulation forces them. Disclose AI music when authenticity matters. Compensate artists appropriately. Watermark AI-generated content. Build trust through transparency.

Lyria 3 is powerful, accessible, and here. How we collectively decide to use it—and who benefits from that use—will define music creation for the next decade. The technology doesn't make that decision for us. We do.

Key Takeaways

- Lyria 3 generates 30-second original music from text, images, or video prompts with lyrics and AI-created cover art, now available globally in Gemini

- Every generated track includes SynthID watermarks to identify AI creation; Gemini can detect whether uploaded music is AI-generated

- Dream Track music generation expands to YouTube creators globally, providing free music generation and immediate monetization opportunities

- The technology targets background music composers and stock music library demand, likely compressing that market segment over the next 12-24 months

- Unresolved copyright questions remain about AI training data and artist compensation, with lawsuits and regulatory changes expected through 2026

