Google Gemini Hits 750 Million Users: How It Competes with ChatGPT [2025]

Introduction: The AI Race Just Got Real

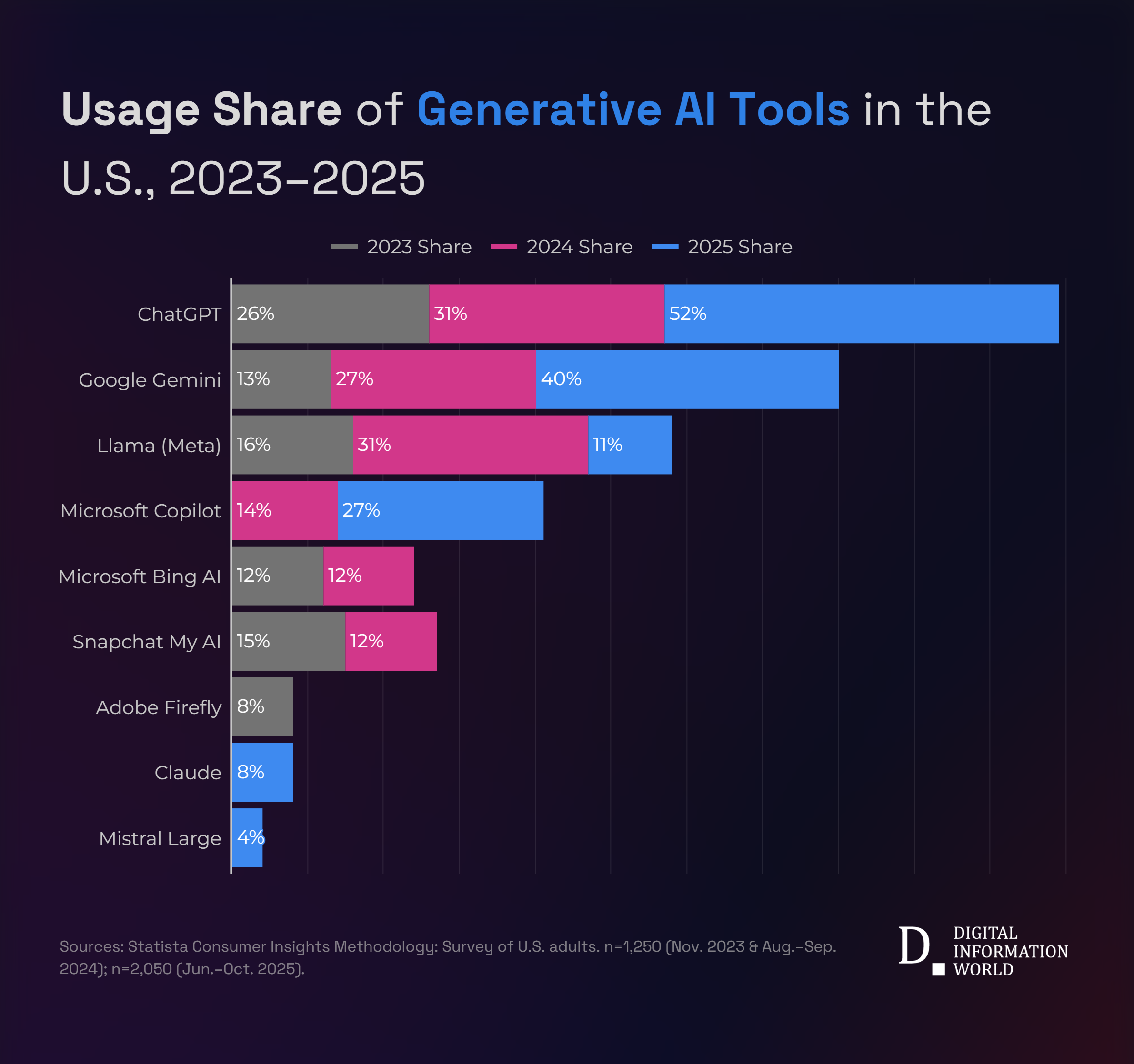

Back in early 2024, when Google launched Gemini, plenty of people asked the same question: "Can Google actually compete with ChatGPT?" At the time, it felt like a reasonable doubt. ChatGPT had already grabbed headlines, mindshare, and millions of users. Google, whose researchers introduced the transformer architecture that powers modern AI, seemed to be playing catch-up.

Fast forward to early 2026, and the answer is crystal clear: yes, they can.

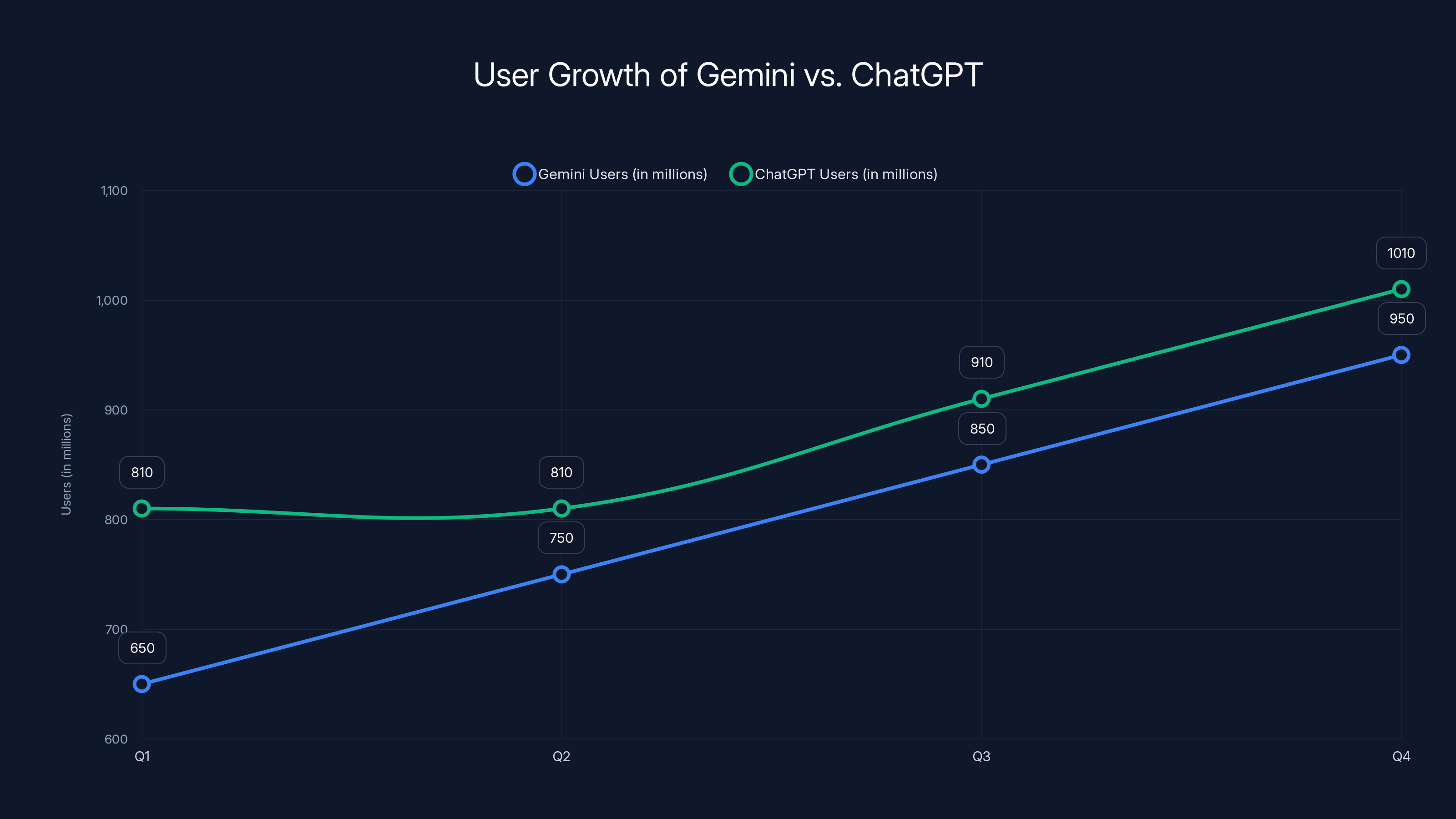

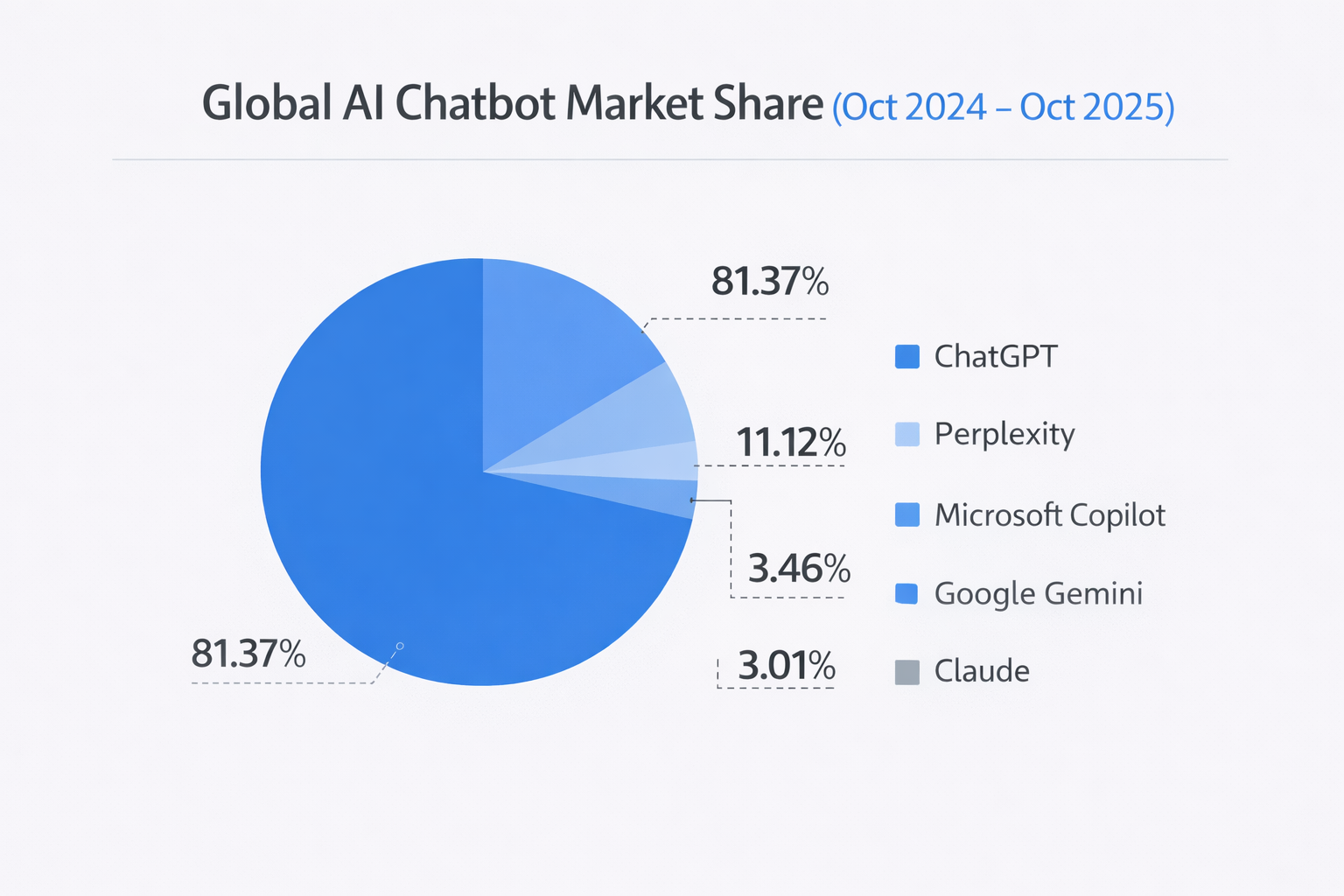

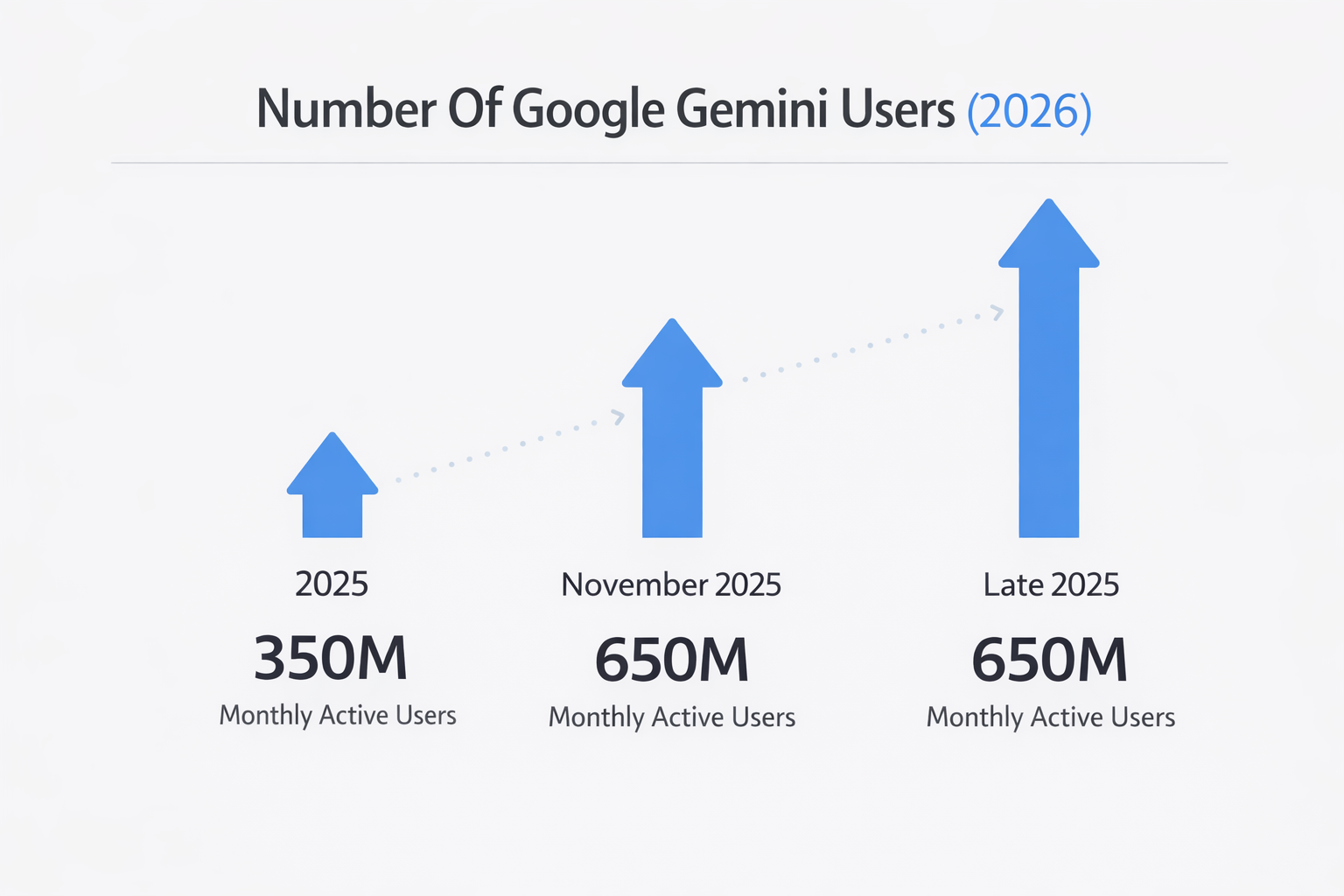

Google just announced that Gemini has surpassed 750 million monthly active users. That's not a typo. In a single quarter, the app grew from 650 million to 750 million users. Meanwhile, ChatGPT sits at around 810 million MAUs. Meta AI trails at roughly 500 million. The competitive landscape has fundamentally shifted.

But here's what matters: this isn't just about bragging rights. The 750 million number tells us something much bigger about how AI is being adopted, what consumers actually want from AI tools, and where the real competitive advantages lie. It shows that search companies can leverage their existing distribution to build dominant AI products. It shows that integration matters more than innovation in many cases. And it shows that the AI arms race is no longer theoretical.

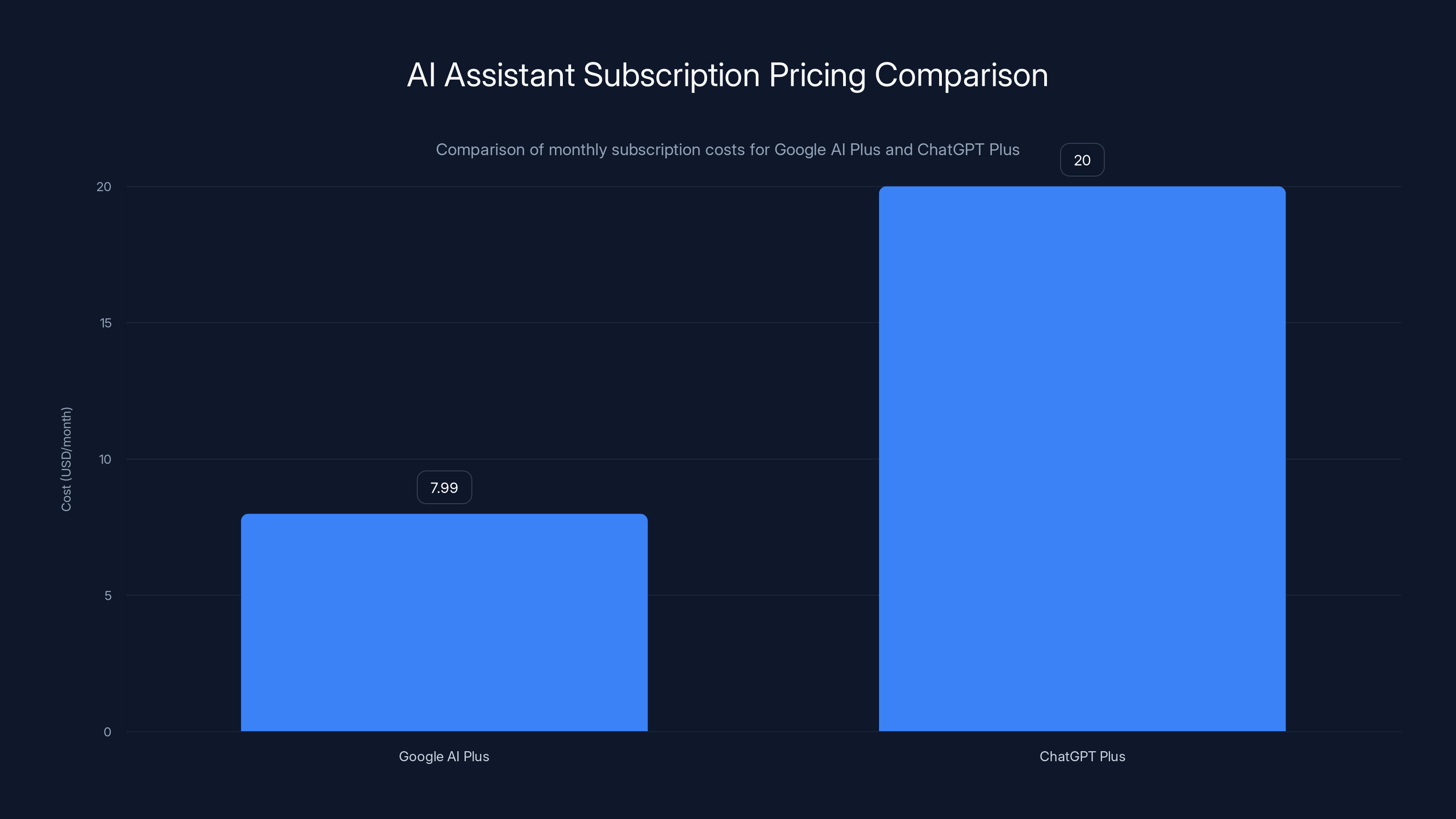

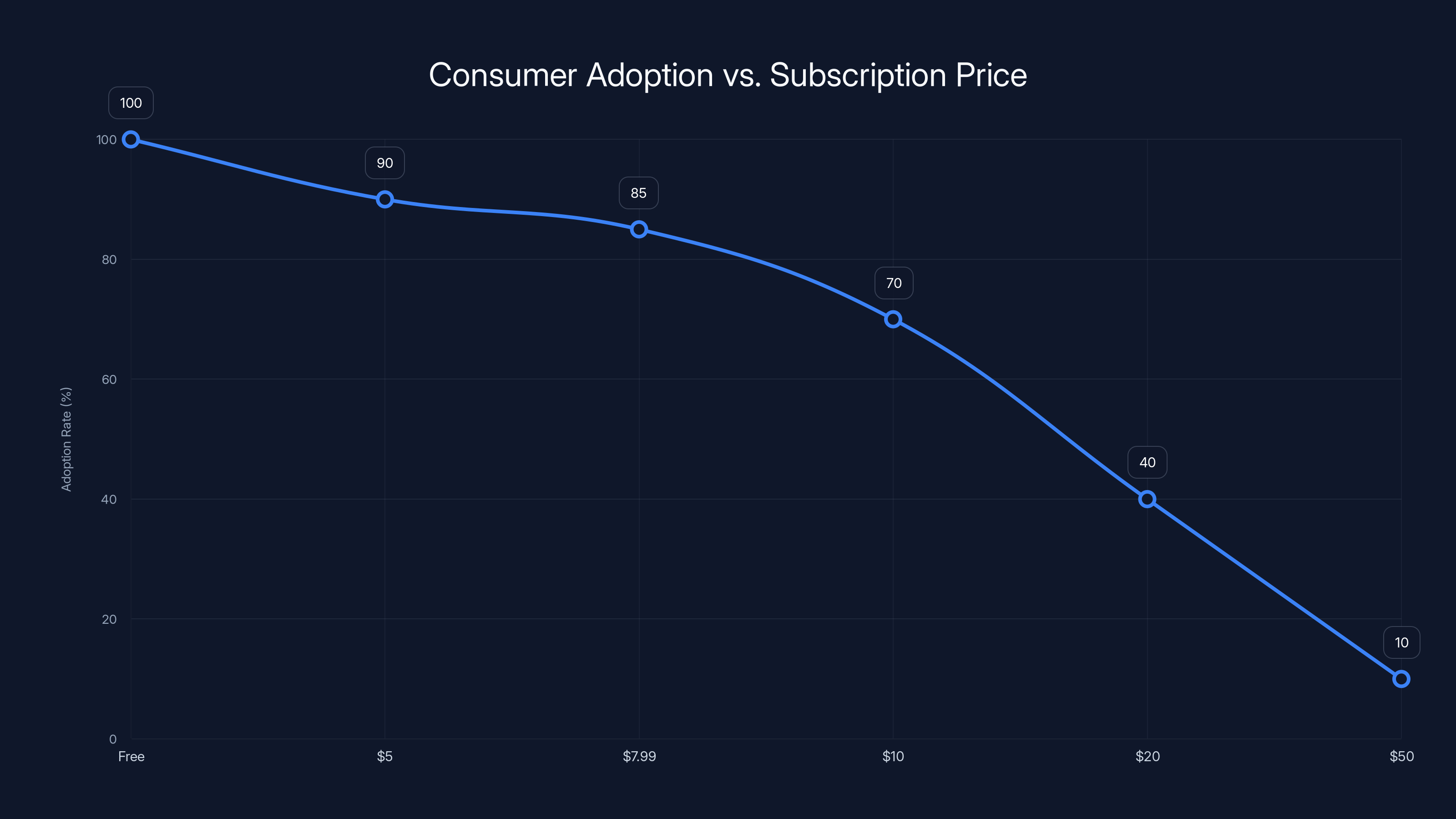

This milestone also arrives at a critical inflection point. Google's rolling out more affordable pricing ($7.99/month for AI Plus). Competitors are fighting harder for market share. The tools themselves are getting exponentially better. And enterprise adoption is just beginning to accelerate.

So what does 750 million actually mean? How did Gemini get there so fast? And what does it mean for the future of AI products? Let's dig into the numbers, the strategy, and the broader implications.

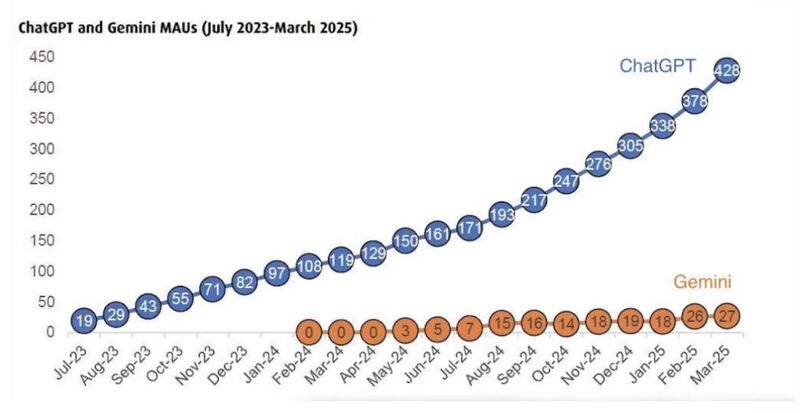

Gemini is rapidly closing the gap with ChatGPT, growing by 100 million users per quarter. Estimated data.

TL;DR

- 750 million monthly active users: Gemini grew from 650M to 750M in a single quarter, closing the gap with ChatGPT's 810M MAUs

- Search integration is everything: Google's ability to funnel users from its search product gave Gemini unprecedented distribution

- Price competition intensifying: New $7.99/month AI Plus plan targets budget-conscious users across all income levels

- Gemini 3 changes the calculus: New advanced model with claims of greater depth and nuance driving organic adoption

- Enterprise adoption accelerating: Direct API usage exceeds 10 billion tokens per minute from customer integrations

The 750 Million Milestone: Understanding the Scale

Let's put 750 million in perspective. That's roughly one out of every ten people on Earth using Gemini at least once a month. If Gemini were a country, it'd be the third most populous on the planet, behind only India and China.

But the number itself is less interesting than what it represents: consumer adoption velocity that would've seemed impossible just a few years ago. Remember when launching a new consumer app meant grinding for months to hit one million users? Gemini reached 750 million in roughly two years. ChatGPT famously hit 100 million users within about two months of launch, then took years more to reach where it is now. Meta had Facebook as a distribution channel and still trails.

The growth trajectory matters here. Gemini went from 650 million to 750 million in one quarter. That's 100 million new monthly active users in roughly three months. Extrapolating that (yes, growth will slow), you're looking at roughly 400 million per year at current velocity. Whether it sustains or not, the trajectory is steep.

What's crucial to understand: these are monthly active users, not total registered accounts. That distinction matters because MAU counts actual engagement, not ghost accounts. Someone logged in once three months ago doesn't count. This means 750 million people used Gemini at least once in the last 30 days. Some used it once. Others use it daily. The variability is huge, but the baseline engagement is undeniable.
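To make the MAU distinction concrete, here is a minimal Python sketch that counts distinct users active in a trailing 30-day window. The function name, the event-log format, and the exact 30-day window are illustrative assumptions, not Google's actual methodology; companies define "monthly" in slightly different ways.

```python
from datetime import date, timedelta


def monthly_active_users(events, as_of, window_days=30):
    """Count distinct users with at least one event in the trailing window.

    `events` is an iterable of (user_id, activity_date) pairs. The window covers
    `window_days` calendar days ending on `as_of`, inclusive.
    """
    start = as_of - timedelta(days=window_days - 1)
    return len({user for user, day in events if start <= day <= as_of})


# Hypothetical toy data: four registered accounts, not all of them active.
registered_accounts = {"ana", "ben", "cy", "dee"}
events = [
    ("ana", date(2026, 1, 28)),
    ("ana", date(2026, 1, 30)),  # repeat visits by the same user count once
    ("ben", date(2026, 1, 5)),
    ("cy", date(2025, 10, 15)),  # last active about three months ago: excluded
    # "dee" registered but never used the product: excluded
]
as_of = date(2026, 1, 31)

print("Registered accounts:", len(registered_accounts))
print("Monthly active users:", monthly_active_users(events, as_of))
```

Four accounts exist, but only two count toward MAU: the gap between registrations and monthly activity is exactly what the metric is designed to expose.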

Compare this to ChatGPT's 810 million. The gap is closing. At 100 million new users per quarter against a 60 million gap, Gemini would pass ChatGPT in under a quarter if ChatGPT's growth plateaued completely, and within six to nine months even if ChatGPT kept growing at a somewhat slower pace. Of course, ChatGPT won't plateau. OpenAI is fighting hard with GPT-4.5 improvements, more integrations, and enterprise focus. But the trajectory conversation has changed from "will Google catch up" to "when will Google catch up."
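The catch-up arithmetic can be sketched as a simple linear projection. This assumes both products add a constant number of users each quarter, which real growth curves do not do; the ChatGPT growth rates in the loop are hypothetical scenarios, not reported figures.

```python
import math


def quarters_to_overtake(leader, challenger, leader_growth, challenger_growth):
    """Quarters until the challenger's MAU passes the leader's, assuming constant linear growth.

    All arguments are in the same unit (here, millions of MAU and millions per quarter).
    Returns math.inf if the challenger never catches up.
    """
    closing_speed = challenger_growth - leader_growth  # gap closed per quarter
    if closing_speed <= 0:
        return math.inf
    return (leader - challenger) / closing_speed


# ChatGPT at 810M, Gemini at 750M growing 100M per quarter (figures from the text).
for chatgpt_growth in (0, 50, 70, 80):
    q = quarters_to_overtake(810, 750, chatgpt_growth, 100)
    print(f"ChatGPT +{chatgpt_growth}M/quarter -> {q:.1f} quarters (~{q * 3:.0f} months)")
```

Under these assumptions, a fully plateaued ChatGPT is overtaken in well under a quarter, while ChatGPT adding 70 to 80 million users a quarter stretches the crossover to roughly six to nine months.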


Why Google's Distribution Advantage Is Unbeatable

Here's the unfair advantage Google has that nobody else does: roughly 8.5 billion searches per day. That's the daily query volume flowing through Google Search. Every single person using Google can be shown a Gemini prompt without downloading anything, creating an account, or switching contexts. They're already there.

OpenAI had to build ChatGPT's distribution from scratch. No email service with hundreds of millions of daily active users. No browser with 60%+ market share. No Android phone OS on 3 billion devices. No calendar, maps, or email integration. ChatGPT's success despite this disadvantage is remarkable. But it's still playing without the infrastructure advantage.

Google's strategy is simple: make Gemini available everywhere Google products live. Search results include Gemini responses. Gmail can draft emails with Gemini. Google Docs generates content. Android has a Gemini app. The Chrome browser surfaces Gemini. YouTube Shorts could integrate Gemini. Every single integration removes friction and exposes more people to the tool.

This doesn't guarantee success (Microsoft tried this with Bing and still lost market share). But it creates an asymmetric advantage that's difficult to compete against. When you can reach 100 million new users by flipping a switch in the search algorithm, you move at a different velocity than everyone else.

What's fascinating is that Google didn't rely purely on distribution. The product had to work. Gemini had to be useful enough that people chose to use it repeatedly. The tool had to be fast (crucial for search integration). It had to be reliable (major brand reputation risk). And it had to be different enough to justify switching from ChatGPT. Google executed on all of these.

But the real moat isn't the product quality. It's the distribution leverage. A startup building a better AI tool faces the same adoption problem every new consumer app faces: user acquisition is expensive, growth is slow, and alternatives are entrenched. Google faces none of these constraints. This is why Google's position in AI is fundamentally different from OpenAI's, Meta's, or any startup's.

Gemini 3: The Model That Changed the Conversation

Right before hitting the 750 million milestone, Google released Gemini 3. This wasn't a minor update. According to CEO Sundar Pichai, it was a "positive driver" for growth, and it marked the introduction of Gemini 3 in AI Mode, with improvements in depth and nuance.

What does Gemini 3 actually do differently? Real talk: we don't have the full technical details from Google yet. The company has been cagey about the specifics. But based on available information and testing, Gemini 3 appears to focus on three core improvements.

First, reasoning. Gemini 3 seems to handle multi-step reasoning tasks more reliably. If you ask it to solve a complex problem that requires breaking down steps, considering dependencies, and synthesizing a conclusion, it performs better than earlier versions. This matters for professionals using AI for actual work, not just entertainment.

Second, instruction following. Earlier Gemini versions sometimes missed nuances in complex prompts. You'd give detailed instructions and the model would nail the core task but miss edge cases or specific formatting requirements. Gemini 3 appears more attentive to these details.

Third, coherence at longer outputs. Previous versions sometimes drifted or repeated themselves when generating long-form content. Gemini 3 maintains quality and coherence across extended outputs. For writers, researchers, and anyone generating substantial text, this is a meaningful improvement.

These improvements are incremental, not revolutionary. They're the kind of polish that top products implement between major versions. But incremental improvements at Gemini's scale matter enormously. When you're competing for mainstream adoption against ChatGPT, being slightly better at the tasks most people care about (writing, research, brainstorming, coding) is enough to move the needle.

What's strategic about Gemini 3's timing is that Google released it while growth was already accelerating. The PR is great. But more importantly, the product improvements give existing users a reason to return and new users a reason to try it instead of ChatGPT. In competitive markets, that's often the tipping point.

Google also claims Gemini processes over 10 billion tokens per minute via direct API use by customers. That's an astonishing number that suggests enterprise adoption is real and accelerating. Tokens are the basic units of text that LLMs read and generate. 10 billion per minute means millions of requests every minute from paying customers. That's enterprise traction that consumer adoption alone doesn't explain.

The Pricing Strategy: Accessibility Over Margins

Google's not trying to maximize revenue per user. Not yet, anyway. The company just launched Google AI Plus at $7.99/month.

Why? Market penetration over profit. Google's playing a long game. The company wants to be the default AI assistant for everyone, whether they're broke college students or Fortune 500 CEOs. At $7.99, the barrier to entry is negligible. A coffee and a muffin can cost more. When users weigh paying for Gemini against paying for ChatGPT, the choice tilts heavily toward Gemini.

This pricing also serves multiple strategic purposes. First, it crowds out the middle. There's no sustainable business for a third AI assistant at

Second, it accelerates the path to profitability through scale. Google doesn't need high margins on each subscription. The company makes money through data insights, improved products, and better integration across its ecosystem. The Gemini subscription is a distribution play, not a revenue play. That's different from how OpenAI thinks about it.

Third, it's a defensive move against enterprise customers threatening to leave. If ChatGPT Plus costs

Philipp Schindler, Google's chief business officer, emphasized during earnings that "We are focused on a free tier and subscriptions and seeing great growth." Translation: the freemium model is working. People are paying for the subscription tier, but the free tier is driving adoption. Once you have 750 million monthly active users, converting even a few percent of them into subscribers becomes a multi-billion-dollar business.
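A quick way to sanity-check that claim is to multiply MAUs by a conversion rate and the $7.99 monthly price. The sketch below assumes every subscriber pays the AI Plus list price and ignores churn, regional pricing, taxes, and bundles; the conversion rates are hypothetical.

```python
def annual_subscription_revenue(mau, conversion_rate, monthly_price):
    """Annual revenue if a fraction of MAUs pay a flat monthly price (no churn, taxes, or discounts)."""
    return mau * conversion_rate * monthly_price * 12


MAU = 750_000_000
PRICE = 7.99  # AI Plus list price in USD; assumes every subscriber pays it

for rate in (0.01, 0.03, 0.05):
    revenue = annual_subscription_revenue(MAU, rate, PRICE)
    print(f"{rate:.0%} conversion -> ${revenue / 1e9:.2f}B per year")
```

Under these assumptions, 1% conversion yields roughly $0.7 billion a year, and it takes around 3% to clear $2 billion.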

The $7.99 price point for Google's Gemini is strategically placed to optimize adoption, balancing affordability and perceived value. Estimated data.

Search Integration: The Killer Feature Everyone Overlooked

Here's something people don't talk about enough: Google's ability to integrate Gemini directly into search results is game-changing. When you search for something on Google, instead of clicking through to websites, you can now get a Gemini-generated summary with sources cited.

This changes search behavior fundamentally. Traditional search: you query, you get 10 blue links, you click through and read. New search: you query, you get an instant answer, you click through if you want more depth. Google's cutting out the middle step for a huge percentage of queries.

Is this good for the internet? That's a separate conversation (spoiler: publishers hate it). But it's undeniably good for Google's adoption metrics. If Gemini answers your query instantly, you don't need to leave Google. You don't need ChatGPT. You don't need Perplexity. You get what you need without context switching.

This integration also creates a feedback loop. More people use Gemini through search. Gemini's search results get better because they're trained on more data. Better search results mean more people use it. The loop reinforces Google's position.

OpenAI tried to compete here with SearchGPT, but it's always going to be at a disadvantage because ChatGPT isn't most people's default search engine. Users have to actively choose to use ChatGPT's search feature. With Google, using Gemini is the default path of least resistance.

Enterprise Adoption: Where The Real Money Is

The consumer metrics are impressive. But the real story is happening quietly in enterprise. Google mentioned that Gemini processes over 10 billion tokens per minute through direct API usage by customers. Let's unpack what that means.

Each token is roughly 4 characters of text. 10 billion tokens per minute means approximately 40 billion characters of text processed per minute. That's 57.6 trillion characters per day. To put that in context, that's roughly equivalent to processing the entire text of Wikipedia thousands of times per day, every single day.
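The token-to-character conversion above is simple enough to check in a few lines. The four-characters-per-token figure is a rule of thumb for English text that varies by tokenizer and language, so treat the output as an order-of-magnitude estimate.

```python
TOKENS_PER_MINUTE = 10e9  # Google's reported API throughput ("over 10 billion")
CHARS_PER_TOKEN = 4       # rough rule of thumb for English; varies by tokenizer and language
MINUTES_PER_DAY = 60 * 24

chars_per_minute = TOKENS_PER_MINUTE * CHARS_PER_TOKEN
chars_per_day = chars_per_minute * MINUTES_PER_DAY

print(f"{chars_per_minute / 1e9:.0f} billion characters per minute")
print(f"{chars_per_day / 1e12:.1f} trillion characters per day")
```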

This isn't consumer usage. Consumers don't generate anywhere near this volume. This is enterprises integrating Gemini into their products and workflows. A customer service platform using Gemini for response generation. A software company using it for code suggestions. A media company using it for content synthesis. These are all counted in that 10 billion token figure.

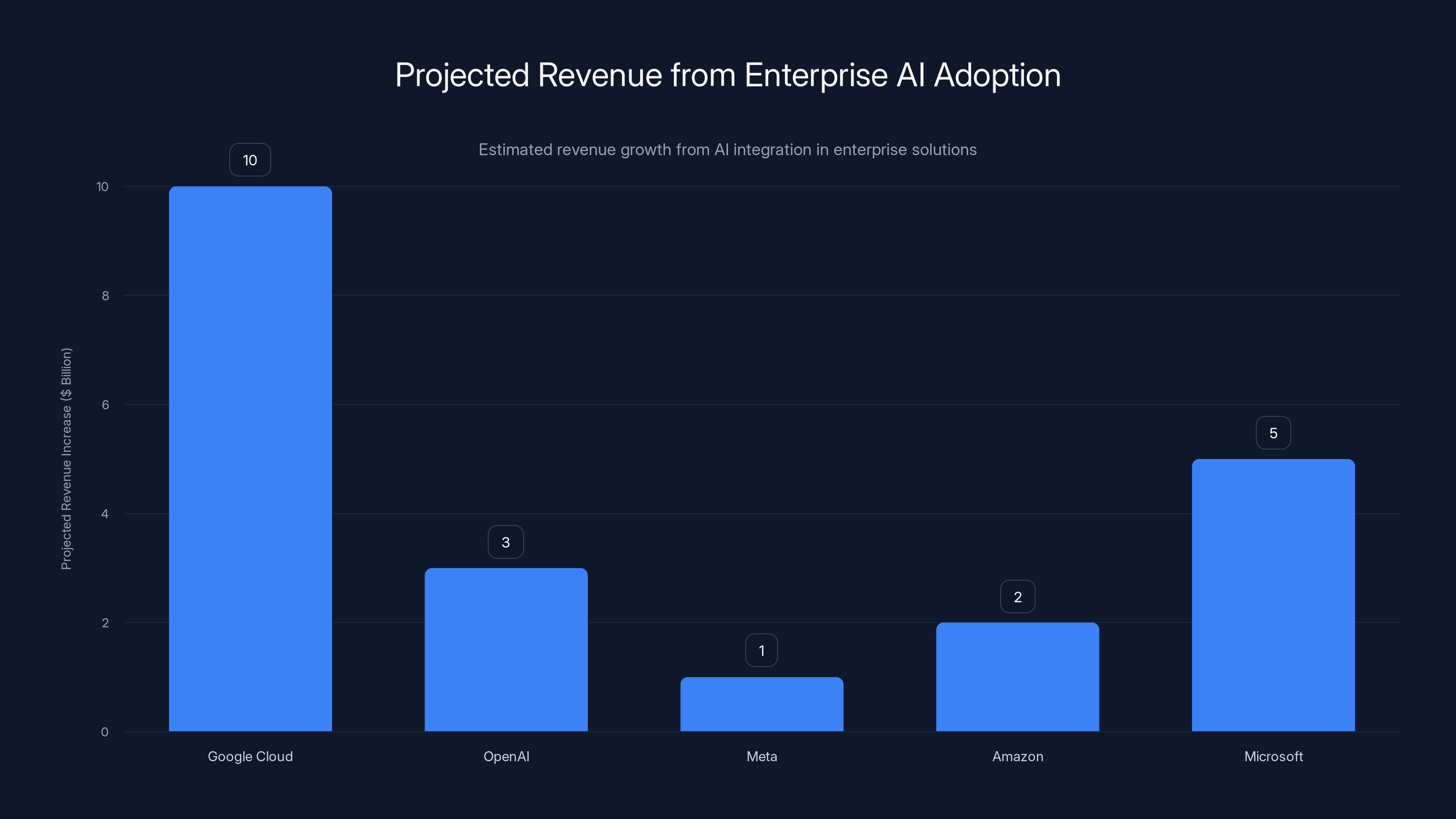

Why does this matter? Because enterprise adoption is where software companies make serious money. Google Cloud is already a

Meanwhile, OpenAI is still figuring out enterprise go-to-market. The company has enterprise features and APIs, but it doesn't have the same infrastructure play. Google can offer Gemini as part of a complete data and analytics stack. Buy Google Cloud, get Gemini. It's integrated. It works. It scales.

Meta's situation is different. Meta has enormous reach but no enterprise software business to leverage. Amazon has enterprise infrastructure but has been slow to build meaningful AI tools. Microsoft has enterprise software but has chosen to partner with OpenAI rather than build its own (though Copilot, which uses OpenAI's models, is now in thousands of enterprises).

Google's position in enterprise AI is underrated. The company's not the flashiest. But it has the distribution, the infrastructure, and the incentives to dominate. The 750 million consumer number is impressive. The 10 billion tokens per minute is where the real competitive advantage lives.

The ChatGPT Comparison: Close, But Not Quite

Every headline comparing Gemini to ChatGPT comes with a number: Gemini has 750 million MAUs, ChatGPT has 810 million. That's a 60 million user gap. It sounds small when you're talking about hundreds of millions. It's actually enormous.

But context matters. ChatGPT launched in November 2022. Gemini launched in December 2023. ChatGPT got a full year head start and had the benefit of being the "first" consumer AI product that felt genuinely magical. People lined up to use it. ChatGPT built mindshare, brand recognition, and habit.

Gemini, by contrast, benefited from infrastructure. It didn't have to convince people to switch products or download an app. It was already there. The adoption strategy was completely different.

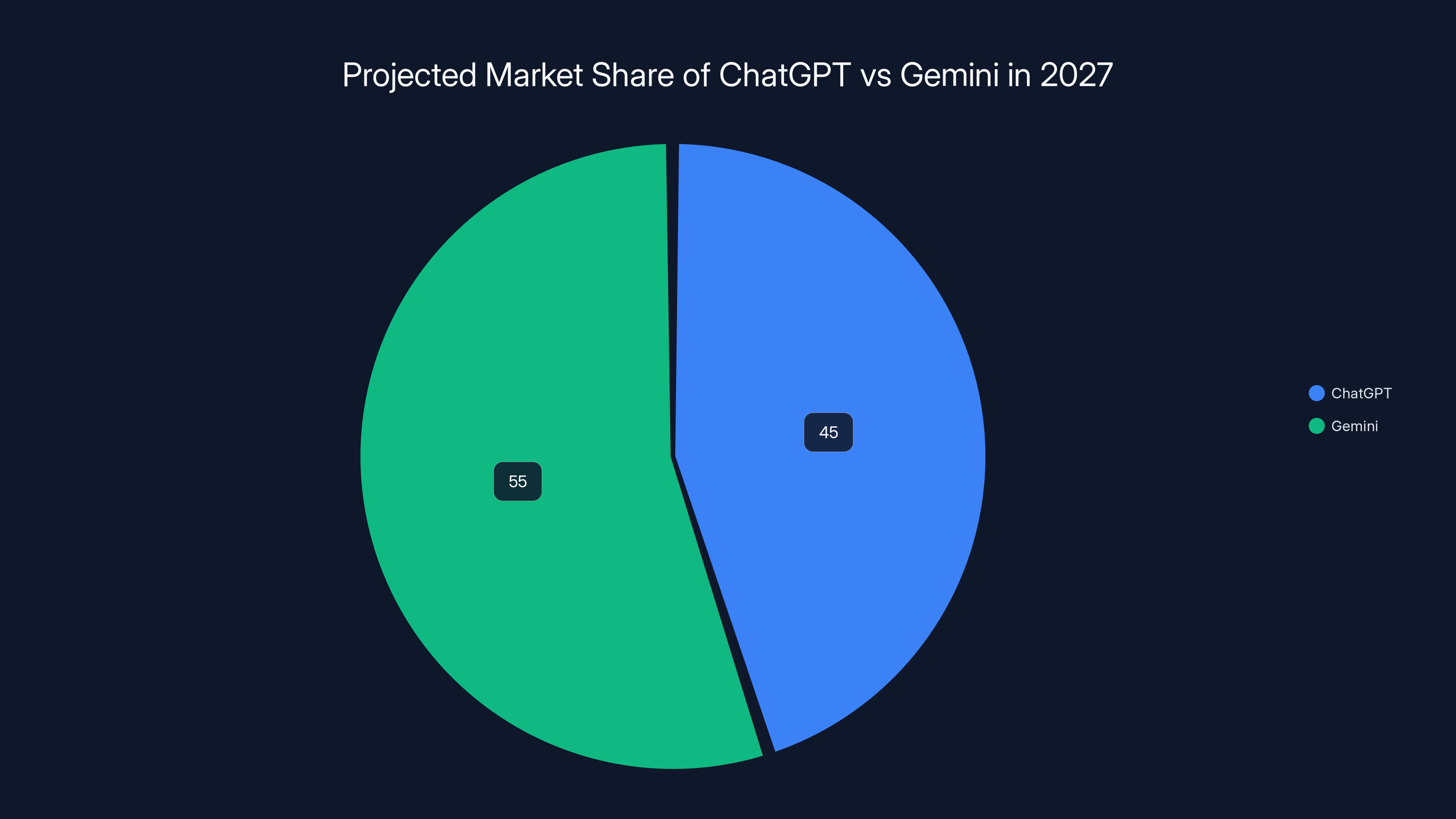

If you had to place bets on which product would be bigger in 2027, you'd probably bet on Gemini. Not because it's better (it's competitive, but "better" is subjective), but because Google's distribution moat is wider than OpenAI's product moat. Over time, distribution wins.

That said, ChatGPT has advantages Gemini doesn't. OpenAI has built a genuine community around the product. People love ChatGPT. They use it for work, creative projects, learning. The brand is strong. Switching costs are real (people have thousands of conversations saved, plugins set up, workflows built). OpenAI has also been more aggressive on the pricing front with enterprise customers, using lower prices to win deals that would normally go to Google.

The realistic scenario isn't that one completely dominates. It's that both thrive, maybe splitting the market 45-55 or 40-60. Microsoft's stake in OpenAI and its massive enterprise relationships through Office 365, Teams, and Azure mean ChatGPT will remain a significant player regardless of consumer adoption numbers.

But Gemini's arrival at 750 million ends the "OpenAI is winning" narrative. Now the narrative is "it's competitive, and Google's closing the gap fast."

Google Cloud could see a $10 billion increase in annual revenue from enterprise AI adoption, significantly outpacing competitors. Estimated data.

Meta AI's Struggle: Why 500 Million Isn't Enough

Meta AI sits at roughly 500 million monthly active users. On the surface, that looks strong. It's bigger than most consumer products. But in the context of Gemini (750M) and ChatGPT (810M), it looks like third place.

Meta's in an awkward position. The company has massive reach through Facebook and Instagram. If Meta could convert even a fraction of its 3+ billion monthly active users to Meta AI users, the platform would be unbeatable. But conversion isn't happening at scale. Here's why.

First, Meta's AI tools don't feel like first-class products. They feel bolted on. You go to Facebook to see what your friends are up to, not to use AI. Yes, Meta has integrated AI into feeds, story suggestions, and recommendations. But these are background tools, not destination products. Gemini and ChatGPT are destinations. People go there specifically to use AI.

Second, Meta's execution on AI tools has been spotty. The early versions were clearly worse than ChatGPT and Gemini. Meta has worked on this, but the reputation stuck. Network effects matter enormously in consumer products. If your friends aren't using something, you're less likely to use it. If experts aren't talking about it, you're less likely to try it. Meta never had the early momentum that ChatGPT had.

Third, Meta's incentive structure is different from Google's and OpenAI's. For Google, AI is a lever to improve search, cloud, and advertising. For OpenAI, AI is the business. For Meta, AI is... peripheral. It's useful for content recommendations and advertising. But it's not core to the business model in the way it is for Google and OpenAI.

This doesn't mean Meta AI is failing. 500 million MAUs is genuinely impressive. But it's a distant third, and the gap is widening, not narrowing. Meta would need a fundamental strategic shift (like making AI a core product offering rather than a feature) to compete with Gemini and ChatGPT at scale.

Alphabet's Bigger Picture: AI as a Strategic Imperative

The 750 million number is significant, but it sits within a larger context: Alphabet just surpassed $400 billion in annual revenue for the first time. The company attributes a significant portion of this growth to AI across its businesses.

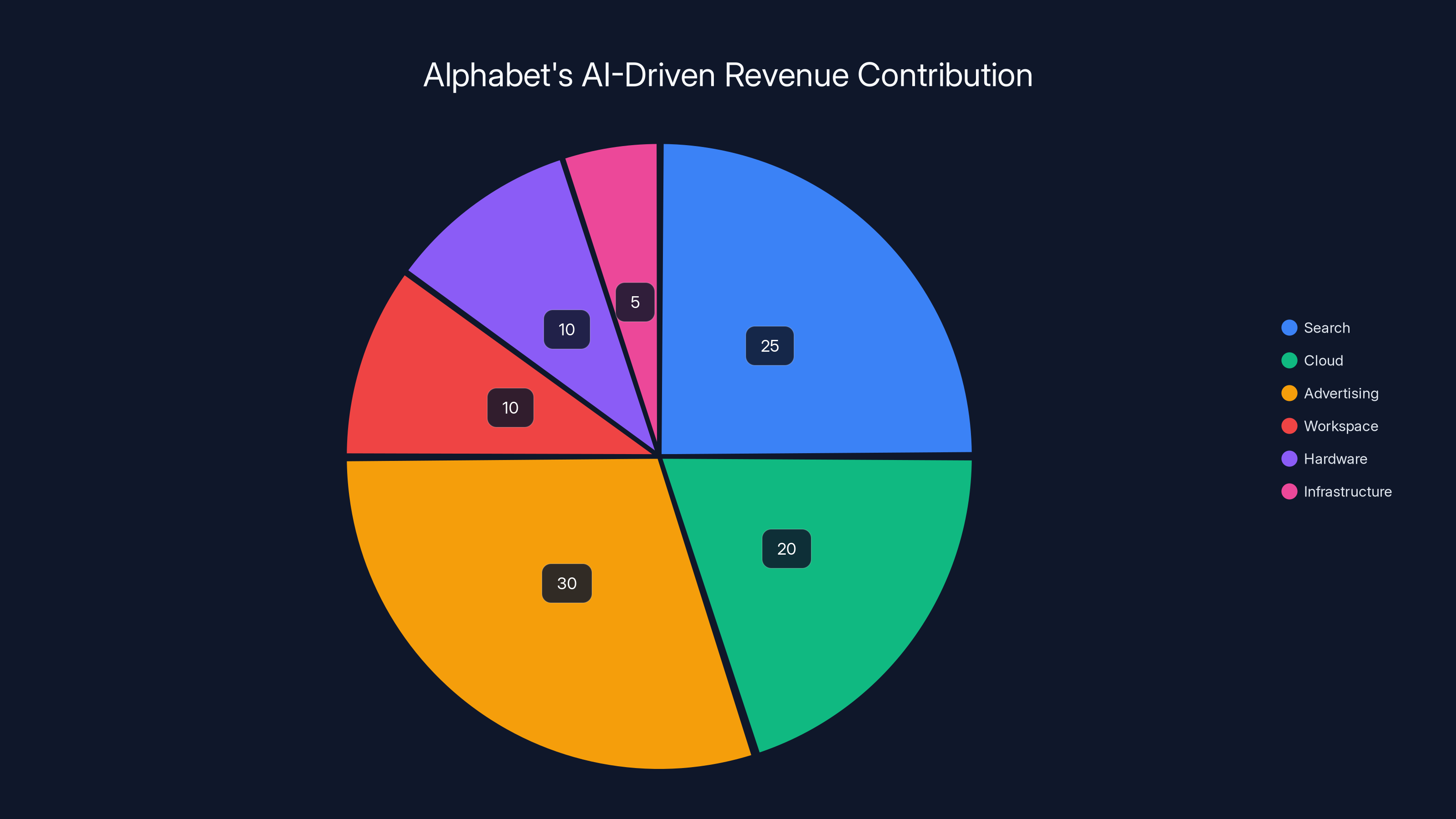

Let's zoom out. When Alphabet CEO Sundar Pichai talks about AI, he's not just talking about the Gemini app. He's talking about integrating AI across search, cloud, advertising, hardware, and internal tools. Gemini is the consumer-facing avatar of a much larger AI strategy.

Here's what Google's actually doing:

Search: Integrating Gemini answers into search results, increasing engagement and reducing click-through to external sites.

Cloud: Offering Gemini APIs and AI-powered analytics to enterprises, embedding AI into the entire Google Cloud infrastructure.

Advertising: Using AI to optimize ad targeting, bidding, and creative. AI's already making Google's advertising products more efficient.

Workspace: Integrating Gemini into Gmail, Docs, Sheets, and Slides so that work can be done faster.

Hardware: Running AI models on Pixel phones, Nest devices, and other hardware to deliver features that competitors can't match.

Infrastructure: Building specialized chips (like the new Ironwood TPU) to run AI models at scale more efficiently than competitors can.

The 750 million Gemini users is the consumer headline. But the real strategy is AI-powered everything. Gemini is the marketing vehicle for a wholesale transformation of Google's product line.

This explains why Google's willing to price Gemini at $7.99/month. The subscription isn't the money. The money is in making search stickier, making Cloud more valuable, making Workspace more indispensable, and making Android more capable. Gemini is a means to those ends.

Tokens Per Minute: The Real Enterprise Metric

Google mentioned something in passing that deserves more attention: Gemini processes over 10 billion tokens per minute through direct API usage by customers.

This number arguably matters more than the 750 million MAU figure. Here's why. Monthly active users tells you about reach. Tokens per minute tells you about engagement intensity and revenue potential. Someone using Gemini once a month is counted in the 750 million. A company calling the Gemini API from production applications contributes to the 10 billion tokens per minute.

Let's do some quick math. Assume an average API call uses 500 tokens (input and output combined). That's 20 million API calls per minute. Multiply by 60 minutes, and you get 1.2 billion API calls per hour, or roughly 28.8 billion per day. Even at

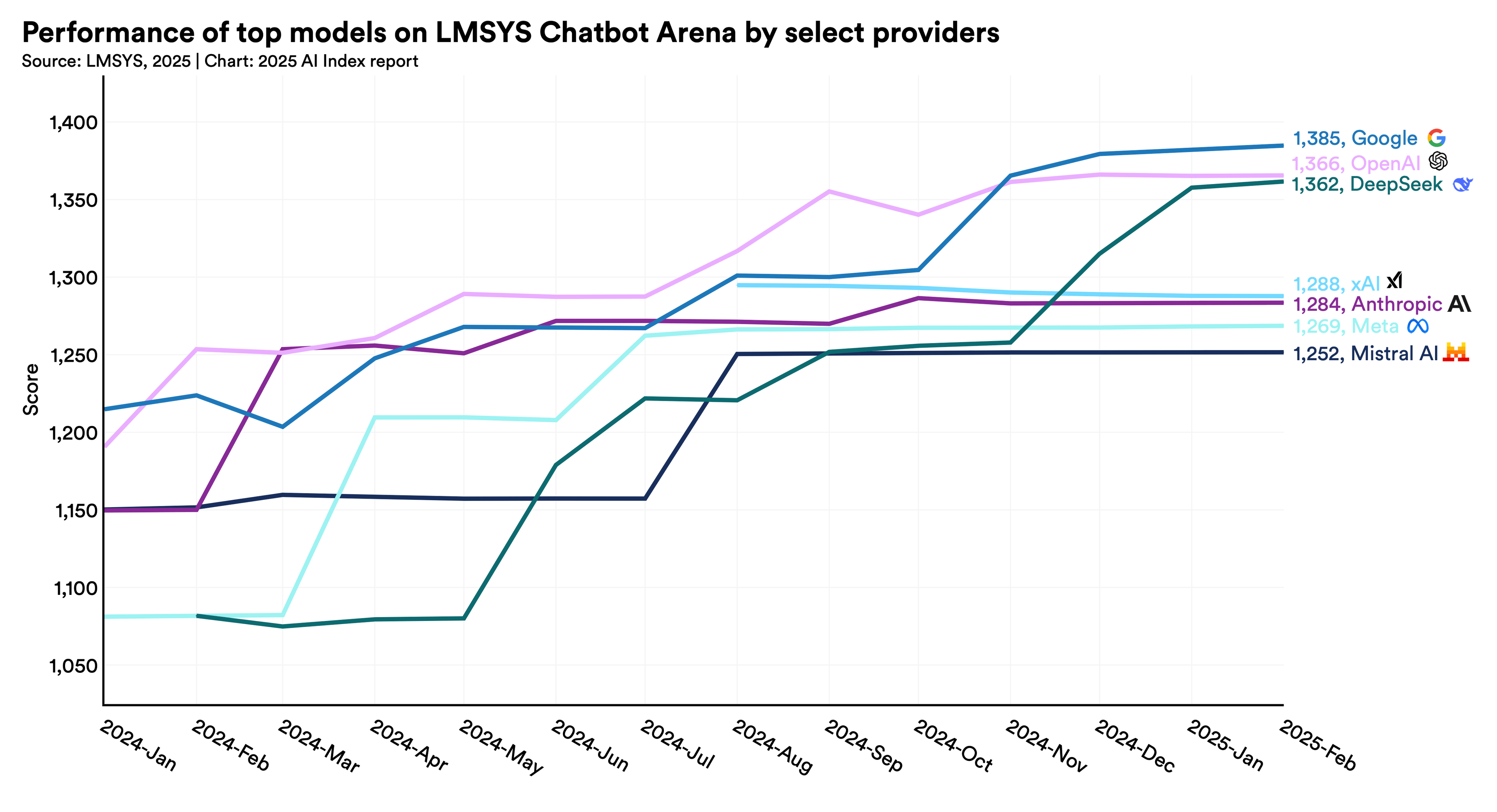
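The unit conversion in the paragraph above can be checked directly. The 500-tokens-per-call average is the text's own assumption rather than a published figure; changing it scales every result proportionally.

```python
TOKENS_PER_MINUTE = 10e9  # reported Gemini API throughput
TOKENS_PER_CALL = 500     # assumed average per call (input + output), as in the text

calls_per_minute = TOKENS_PER_MINUTE / TOKENS_PER_CALL
calls_per_hour = calls_per_minute * 60
calls_per_day = calls_per_hour * 24

print(f"{calls_per_minute / 1e6:.0f} million calls per minute")
print(f"{calls_per_hour / 1e9:.1f} billion calls per hour")
print(f"{calls_per_day / 1e9:.1f} billion calls per day")
```

Note that multiplying by 60 gets you to an hourly figure; the daily total needs the further factor of 24.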

OpenAI hasn't revealed its token throughput, but based on publicly available data, the company processes somewhere in the 4-8 billion tokens per minute range. Google's at 10 billion. That's a significant enterprise advantage.

What does this tell us? Enterprise customers are heavily integrating Gemini into their workflows. This is happening at scale. This means Google's AI strategy isn't just consumer facing. It's deeply embedded in enterprise infrastructure, which is where long-term competitive advantages live.

Estimated data suggests that by 2027, Gemini may capture a slightly larger market share due to its distribution advantages, despite ChatGPT's strong brand and community.

The Competitive Dynamics: Where We Go From Here

The AI assistant market is consolidating. You're looking at a world with five serious players:

Google (Gemini): 750M MAU, massive distribution advantage, enterprise traction, pricing advantage

OpenAI (ChatGPT): 810M MAU, best brand, most engaged users, advanced models, enterprise go-to-market through partnerships

Microsoft (Copilot + integration with OpenAI): Leveraging Office 365 distribution, enterprise relationships, Azure infrastructure

Meta (Meta AI): 500M MAU, integration with social platforms, improving product quality, but facing structural adoption challenges

Specialists (Anthropic Claude, Perplexity, others): Differentiated models, niche use cases, but no distribution advantage

In the next two years, expect:

Further consolidation: Smaller AI products will struggle against the Big Three (Google, OpenAI, Microsoft). Having a "good" product isn't enough when you're competing against distribution moats.

Pricing compression: All three will continue pushing prices down to maximize penetration. $20/month for ChatGPT Plus is already under pressure. You'll see more bundling and tiering.

Feature parity: The core models will get increasingly similar in capability. Gemini 3, GPT-4, and Claude will all be "good enough" for most use cases. Competitive advantage will shift from model quality to distribution, integrations, and user experience.

Enterprise lock-in: Companies that integrate AI deeply into their workflows will face high switching costs. First-mover advantage in enterprise accounts matters enormously.

Regulatory pressure: AI models, especially in sensitive domains (finance, healthcare, government), will face more scrutiny. Companies that build compliance into their products early will have advantages.

The Ironwood Chip: Infrastructure Competitive Advantage

Almost overlooked in the earnings announcement was Google's introduction of the Ironwood TPU (Tensor Processing Unit). This is a big deal that deserves more attention than it got.

Nvidia's GPUs have dominated AI infrastructure for years. Companies building AI services need GPUs, and they're expensive. Nvidia's leveraging this to charge premium prices and control the market. But Nvidia doesn't design its chips specifically for training and running its own large language models the way Google does.

Google's custom silicon strategy flips the incentive structure. Google designs chips optimized for running Gemini and other Google AI services. This means Google can:

Reduce costs: Specialized silicon is more efficient than general-purpose GPUs for specific workloads. Lower costs mean higher margins or lower prices.

Reduce latency: Chips designed for specific inference patterns can deliver responses faster. Speed matters for user experience.

Increase throughput: Custom chips can process more requests simultaneously. This is crucial at 750 million user scale.

Maintain proprietary advantage: Google controls the silicon. Competitors can't easily replicate Google's infrastructure advantages.

OpenAI doesn't control its hardware. The company buys from cloud providers or Nvidia. This gives OpenAI flexibility but also makes it dependent on suppliers. Microsoft is making a strategic bet on Azure infrastructure and custom chips (Maia, Cobalt) but is still in earlier stages.

Google's Ironwood chip is a reminder that the AI competitive advantage isn't just about the model. It's about the entire stack: software, hardware, infrastructure, distribution, and integration. Google's advantages compound across all of these layers.

Alphabet's $400B Revenue Milestone: What It Means

Alphabet just hit $400 billion in annual revenue, a historic milestone for a company that started as a search engine. Google Search is still the core business, generating roughly 60% of revenue. But what's important is how AI is expanding that pie.

Google Search is a mature business. Revenue growth in search comes primarily from:

- Volume growth (more searches per person, more people online globally)

- Price growth (higher CPM, better ads through AI targeting)

- Mix shift (higher-value queries, more commerce searches)

AI enables all three. More people are using Google Search because Gemini integration makes search better. CPM is increasing because AI-powered advertising is more effective. Mix is shifting because AI can now handle complex queries that previously would've gone to competitors.
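One way to see why volume, price, and mix matter together: if search revenue is modeled as the product of those three factors (a simplifying assumption, not how Alphabet reports it), their growth rates compound rather than add. The rates below are hypothetical placeholders, not Alphabet figures.

```python
def compound_growth(*rates):
    """Combine growth rates of multiplicative factors: (1 + a)(1 + b)... - 1."""
    total = 1.0
    for rate in rates:
        total *= 1 + rate
    return total - 1


# Hypothetical year-over-year changes in each lever.
volume, price, mix = 0.04, 0.05, 0.02
print(f"Combined revenue growth: {compound_growth(volume, price, mix):.1%}")
print(f"Simple sum of the rates: {volume + price + mix:.1%}")
```

With 4%, 5%, and 2% gains, the combined growth comes to about 11.4%, slightly more than the 11% simple sum, because each lever multiplies the others.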

Google Cloud is growing faster than core Search. The company's cloud division has already passed a $50 billion annual revenue run rate. AI is a key driver. Customers are adopting Google's AI APIs, embedding Gemini into their products, and paying more for intelligent services.

Alphabet's also benefiting from YouTube, Android, and Workspace growth, all of which are being accelerated by AI. The company's diversified across multiple high-growth businesses, all of which have AI as a tailwind.

The $400 billion number isn't just about scale. It's about sustainable competitive advantage. Companies with massive revenue bases and strong profit margins can invest more in R&D than competitors. Google's putting billions of dollars into AI research. This compounds advantages over time.

AI significantly contributes to Alphabet's revenue, with advertising and search being the largest segments. Estimated data based on strategic focus areas.

The Role of GPT-5 and Gemini 4: What's Coming Next

Google announced Gemini 3. OpenAI is rumored to be working on GPT-5. What happens next is crucial for understanding competitive dynamics.

If GPT-5 represents a meaningful leap over Gemini 3 in capabilities, OpenAI regains ground. Users would switch back to ChatGPT. Enterprise customers would reconsider. Pricing power shifts back to OpenAI.

If Gemini 3 holds its own against GPT-5, Google's distribution advantage becomes unbeatable. Switching from Gemini to ChatGPT for marginally better performance isn't worth the friction.

The reality is probably somewhere in between. GPT-5 will be better at some things (maybe reasoning or specific domains). Gemini will be better at others (integration, price, availability). Users will use both, keeping tabs open for both ChatGPT and Gemini.

But the trajectory matters. If Gemini closes the capability gap (which it has) and maintains the distribution advantage (which it will), OpenAI's path to dominance becomes harder. OpenAI would need either a breakthrough that makes GPT meaningfully superior to Gemini, or a different distribution strategy (like partnerships with device makers, deeper Microsoft integration, enterprise account expansion).

What's underrated is that this is a marathon, not a sprint. AI models are improving roughly every 3-6 months. In three years, models that seem revolutionary today will seem obvious. What matters is who's distributing the best model to the most people. Today, that's Google.

What 750 Million Users Means for the Future of Search

Here's a thought experiment: what happens to search if Gemini becomes the primary way people find information?

Traditionally, Google Search returns links. You click through, read, learn, and make decisions based on what you found. This model works, but it's inefficient. You have to do the research yourself.

If Gemini becomes mainstream for search, the model inverts. You ask a question. Gemini synthesizes information from across the web and gives you an answer. No clicking required. No research required. You get what you need instantly.

This is better for some use cases (quick facts, definitions, simple how-tos). It's worse for others (deep research, understanding nuance, learning about complex topics). But Google's betting that for most searches, synthesis is better than link clicking.

What does this mean for publishers and content creators? This is where it gets controversial. If Google's Gemini answers your query directly, you don't click through to a publisher's website. That means less traffic, less ad revenue, less opportunity to monetize. Publishers are rightfully concerned.

Google's solution is "cite sources." Gemini responses include links to the websites it pulled information from. But this doesn't solve the core problem. If a user gets their answer from the search results, fewer people click through. Publishers still lose revenue.

This is why some publishers have threatened legal action against Google and other AI companies for using their content in training data without permission. The tension between what's good for users (instant answers) and what's good for publishers (traffic and monetization) is real and unresolved.

Long term, expect regulatory pressure on how AI companies can use publisher content. But in the near term, Google's going to continue pushing Gemini into search because it benefits Google's core business (longer search sessions, higher engagement) and users (faster answers).

Pricing Strategy Deep Dive: Why $7.99 Wins

Let's talk more specifically about why Google's pricing strategy is working.

Price sensitivity curve: Consumers' willingness to pay for AI follows a curve. At free, adoption is unlimited. At

Google placed Gemini at

Payback period: At

Bundle potential: Google's set up Gemini AI Plus to bundle with other services. Future bundling with Google One (cloud storage), YouTube Premium, or other services could happen. Once bundled, the price becomes invisible, and adoption accelerates.

Competitive positioning: At

OpenAI could drop ChatGPT Plus to $14.99/month and probably wouldn't lose much revenue (lower price × higher volume = similar revenue). But OpenAI's playing a different game. The company wants to establish premium positioning and maximize per-user revenue. Google wants to maximize penetration and lock in users.
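The "lower price × higher volume" trade-off reduces to a break-even calculation: how many more subscribers a cheaper plan needs to hold revenue flat. The sketch below uses the $20 and $14.99 prices from the text and ignores costs, churn, and cannibalization.

```python
def breakeven_volume_increase(old_price, new_price):
    """Fractional increase in subscribers needed to keep revenue flat after a price cut."""
    return old_price / new_price - 1


increase = breakeven_volume_increase(20.00, 14.99)
print(f"Needs about {increase:.0%} more subscribers to hold revenue flat")
```

Cutting from $20 to $14.99 requires roughly a third more subscribers just to stay even; whether that happens depends on price elasticity, which this sketch does not model.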

Both strategies can work. But in consumer markets with high switching costs (AI isn't one yet, since you can use both), penetration usually wins.

Integrations and Ecosystem Lock-In

One driver of Gemini adoption that gets too little attention is integration. Google is building Gemini into every product it owns.

Imagine this workflow:

- You're in Google Docs writing a report

- You ask Gemini to generate a section on market trends

- You review it, edit it, keep what works

- You move to Google Sheets and ask Gemini to create visualizations from your data

- You export to Google Slides and ask Gemini to design a presentation

- You email it to colleagues using Gmail, which Gemini helped draft

- You get feedback in comments, which Gemini summarizes for you

This workflow is seamless if you're in Google's ecosystem. It's friction-filled if you're using ChatGPT with Microsoft Office or other tools. Each additional integration creates a small amount of lock-in; across dozens of integrations, lock-in becomes significant.

Microsoft's doing the same thing with Copilot across Office, Teams, Windows, and Azure. The company's leveraging its enterprise distribution to create the same lock-in for businesses that Google's creating for consumers.

OpenAI offers custom GPTs and API access, but doesn't own the entire stack the way Google or Microsoft does. This is a structural disadvantage that's harder to overcome than it initially seemed.

Real-World Impact: How Gemini 750M Changes Things

Let's ground this in reality. What does 750 million Gemini users actually mean for people living their lives?

For students: Gemini is now the default AI assistant for homework help, research, and writing. At 750 million users, it's likely your classmates are using it too. The default has shifted from "maybe I'll try ChatGPT" to "I'll use Gemini since it's integrated into Google."

For professionals: If your company uses Google Workspace, Gemini is likely being baked into your workflow. Email drafting, document generation, data analysis, presentation creation. You're using Gemini whether you realize it or not.

For businesses: Integrating Gemini APIs into your product becomes more justifiable. If 750 million consumers are familiar with Gemini, integration is less risky. You're building on something people already trust and use.

For publishers: More pressure. If search results increasingly feature AI-generated summaries instead of links, traffic drops. You need to figure out how to remain relevant in an AI-first world.

For startups: The competitive landscape gets harder. If Gemini is integrated everywhere and costs $7.99/month, building a specialized AI tool is a tougher pitch. You need a genuinely differentiated use case.

The Competitive Implications for OpenAI

Let's be direct: Gemini hitting 750 million users is a strategic win for Google and a wake-up call for OpenAI.

OpenAI's advantages:

- Brand strength: ChatGPT is still the most recognized AI product

- User loyalty: Early adopters have workflows built around ChatGPT

- Enterprise traction: OpenAI has strong relationships with large companies

- Advanced models: GPT-5 and its successors remain competitive

- Partnerships: Microsoft relationship ensures distribution through Azure, Copilot, and Office 365

Google's advantages:

- Distribution scale: roughly 8.5 billion daily searches

- Price: $7.99/month versus ChatGPT Plus at $20/month changes the calculus

- Integration: Gemini in Search, Workspace, Cloud, Android

- Hardware: Custom silicon (Ironwood) creates infrastructure advantage

- Enterprise: 10 billion tokens/minute processed

If I had to bet on the market in 2027, I'd bet on Google being the larger player by MAU, ChatGPT remaining strong in enterprise and with power users, and both thriving because the market is large enough for multiple winners.

The days when OpenAI could be "the" AI company are over. The market's too big, competitors are too well-capitalized, and distribution advantages are too powerful. OpenAI's future is as the leading AI research company and one of two or three major AI products, not as "the" dominant player.

Looking Ahead: What We Should Watch

As the AI race heats up, here are the key metrics and developments to monitor:

MAU growth rates: Is Gemini continuing to grow at 100M per quarter? Is ChatGPT plateauing? These trend lines matter.

Enterprise API usage: Which AI platform is processing more tokens? This tells you about real commercial traction.

Product releases: How big are the next model updates? What new capabilities do they add?

Regulatory developments: How will governments regulate AI? This could reshape the competitive landscape.

International expansion: Which AI assistant is winning in Japan, Europe, India? Geography matters.

Pricing changes: Who lowers prices and why? This signals strategy shifts.

Integration depth: Which ecosystem is more locked-in for consumers and enterprises?

The story of AI in 2026 isn't about who's winning. It's about the competitive dynamics shifting from "Will Google catch up?" to "Can anyone compete with Google's distribution?" It's about ChatGPT remaining strong while its lead narrows. It's about Meta struggling to find AI's value proposition. It's about Microsoft quietly building enterprise dominance through integration rather than product excellence.

750 million Gemini users is a milestone. But the real inflection point is that Google's AI strategy is working. The bet on integrating AI across all of Google's products and leveraging existing distribution is paying off faster than most people expected.

For the next three years, watch Gemini's growth rate, the quality of Gemini's models relative to competitors, and Google's success in converting free users to paid subscribers. Those three things will determine whether Google becomes the default AI provider for most of humanity.

Conclusion: The Shift We're All Missing

The 750 million number is important, but it's a symptom, not the cause. What's actually happening is a fundamental shift in how AI products get built and distributed.

The old model: Build an amazing product, acquire users through marketing and network effects, monetize through subscriptions. This worked for ChatGPT.

The new model: Leverage existing distribution (Google Search, Microsoft Office, Meta's social graph), integrate AI into the existing product, convert users through ubiquity. This is what Google's doing.

The second model is more efficient. Why spend billions on user acquisition when you already have billions of users? Why convince people to switch products when you can improve their existing product?

This is why Gemini's growth has been so fast and why ChatGPT's path to dominance has become harder. Distribution advantages compound over time. Google's getting stronger at AI every quarter, and each improvement reaches more people automatically.

The implication is clear: the future of AI isn't about the best product winning. It's about which strong product also has a distribution advantage. On that dimension, Google has no peer.

OpenAI will remain a leader in AI research and a significant product in its own right. Microsoft will dominate enterprise. But Google's going to be the default for most people. The 750 million number is just the beginning.

FAQ

What does 750 million monthly active users actually mean for Gemini?

Monthly active users (MAU) means 750 million people used Gemini at least once in the past 30 days. This doesn't indicate how frequently they used it (some used it once, others daily) but confirms genuine engagement. The figure is significant because it shows Gemini is mainstream, not niche. For context, this exceeds the entire population of Europe and is roughly equivalent to one out of every ten people on Earth.
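The MAU definition can be made concrete with a minimal Python sketch, assuming a hypothetical event log of `(user_id, day)` pairs; the sample events and function names are illustrative and are not Google's measurement methodology. It shows why MAU says nothing about frequency (a user with many sessions counts once), and it also computes the DAU/MAU "stickiness" ratio, a common engagement measure:

```python
from datetime import date, timedelta


def active_users(events, as_of: date, window_days: int) -> int:
    """Distinct users with at least one event in the window_days ending on as_of (inclusive)."""
    start = as_of - timedelta(days=window_days - 1)
    return len({user for user, day in events if start <= day <= as_of})


def monthly_active_users(events, as_of: date) -> int:
    # Trailing 30-day window, matching the "past 30 days" definition.
    return active_users(events, as_of, window_days=30)


def daily_active_users(events, as_of: date) -> int:
    return active_users(events, as_of, window_days=1)


# Hypothetical sample log: (user_id, day of an interaction).
events = [
    ("alice", date(2025, 12, 20)),
    ("alice", date(2025, 12, 21)),  # repeat visits still count alice once
    ("bob", date(2025, 12, 31)),
    ("carol", date(2025, 11, 1)),   # outside the 30-day window
]

as_of = date(2025, 12, 31)
mau = monthly_active_users(events, as_of)
dau = daily_active_users(events, as_of)
print(mau, dau, dau / mau)  # 2 1 0.5
```

Two users with very different habits contribute equally to MAU, which is why the engagement metrics discussed later (daily actives, time spent) carry information MAU alone cannot.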

How did Gemini grow from 650 million to 750 million users in just one quarter?

Gemini's rapid growth is driven by three factors: first, integration into Google Search results means every Google search user can access Gemini without switching products; second, the rollout of Gemini to Gmail, Docs, Sheets, and Slides adds touchpoints across Google's ecosystem; third, the roughly 8.5 billion searches Google handles each day provide an unparalleled foundation for adoption. No other AI product has access to this scale of existing users. Additionally, Gemini 3's release and aggressive $7.99/month pricing accelerated both consumer and enterprise adoption.

Can ChatGPT maintain its lead over Gemini given Gemini's growth trajectory?

ChatGPT's 810 million users still edge out Gemini's 750 million, but the gap is narrowing: based on recent trends, Gemini has been adding roughly 100 million users per quarter. Whether ChatGPT maintains its lead depends on three variables: first, whether OpenAI's newer models, GPT-5 and its successors, deliver a substantial capability leap over Gemini 3; second, whether OpenAI can maintain brand loyalty among power users despite Gemini's lower price; third, whether Microsoft's enterprise partnerships create enough switching friction to prevent customer defection. Realistically, both products will likely coexist as leaders rather than one completely dominating, similar to how Google and Microsoft both thrive in productivity software despite competing products.

What does the $7.99 monthly pricing mean for the AI market?

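Google's AI Plus pricing at $7.99/month undercuts ChatGPT Plus ($20/month) by roughly 60%, favoring volume adoption over per-user revenue.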

How does Gemini's 10 billion tokens per minute enterprise metric compare to ChatGPT's?

The 10 billion tokens per minute figure represents enterprise API usage and indicates that customers integrating Gemini into their products are running massive workloads through it. This isn't individual consumer chat usage. It suggests Fortune 500 companies, SaaS platforms, and large-scale applications are using Gemini at scale. OpenAI hasn't publicly disclosed an equivalent number; unofficial industry estimates put ChatGPT in the 4-8 billion tokens per minute range. If those estimates hold, Google's higher number suggests Gemini has achieved faster enterprise adoption than initially expected. This matters because enterprise API revenue scales faster than consumer subscription revenue and has higher margins.
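To put the per-minute figure in perspective, here is a simple unit conversion in Python. It assumes a constant rate around the clock, which real traffic does not follow, so treat the daily and monthly totals as rough orders of magnitude rather than reported numbers:

```python
TOKENS_PER_MINUTE = 10_000_000_000  # Google's reported Gemini API throughput


def scale_throughput(tokens_per_minute: int) -> dict[str, float]:
    """Convert a per-minute token rate into other time units, assuming a constant rate."""
    per_day = tokens_per_minute * 60 * 24
    return {
        "per_second": tokens_per_minute / 60,
        "per_hour": tokens_per_minute * 60,
        "per_day": per_day,
        "per_30_day_month": per_day * 30,
    }


for unit, tokens in scale_throughput(TOKENS_PER_MINUTE).items():
    print(f"{unit:>16}: {tokens:,.0f} tokens")
```

That works out to roughly 14.4 trillion tokens a day and about 432 trillion over a 30-day month.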

What's the strategic importance of Google's Ironwood TPU chip for AI competition?

Google's custom silicon (Ironwood TPU) is a long-term competitive moat that most people underestimate. While Nvidia GPUs dominate AI hardware currently, Google designs Ironwood specifically for running Gemini and Google AI services. This means Google can optimize every layer of the hardware-software stack, reducing latency, improving throughput, and decreasing cost per inference. Over three to five years, this compounds into significant advantages: lower operating costs that enable aggressive pricing, faster response times that improve user experience, and higher throughput that enables serving more users. OpenAI and others buy from Nvidia and cloud providers, making them dependent on suppliers. Google controls its own destiny. This is similar to how Apple's vertical integration of hardware and software created advantages over competitors that used standard components.

Will Gemini eventually surpass ChatGPT in monthly active users?

Based on current growth trajectories and assuming linear scaling, Gemini could theoretically surpass ChatGPT within 6-12 months. However, linear growth assumptions rarely hold. As Gemini approaches ChatGPT's user base, growth likely slows due to market saturation, increased competition, and declining acquisition efficiency. More realistically, both products will converge to similar user bases and stabilize within the next 18-24 months. The companies will then compete on engagement (daily active users, time spent), retention, and monetization rather than raw monthly user counts. Think of it like how Gmail and Outlook both have hundreds of millions of users and compete on features and integration rather than trying to eliminate each other.
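The linear-scaling projection can be checked with a small Python sketch. It uses the figures given in this article (Gemini at 750 million, ChatGPT at 810 million, Gemini adding about 100 million per quarter); ChatGPT's quarterly growth is not given, so the sketch treats it as a hypothetical parameter and tries several values:

```python
import math
from typing import Optional


def quarters_until_crossover(
    leader_mau: int, challenger_mau: int, leader_growth: int, challenger_growth: int
) -> Optional[int]:
    """Whole quarters until the challenger's MAU reaches the leader's, under constant linear growth.

    Returns 0 if the challenger already leads, or None if the gap never closes.
    """
    gap = leader_mau - challenger_mau
    if gap <= 0:
        return 0
    closing_rate = challenger_growth - leader_growth  # how much the gap shrinks per quarter
    if closing_rate <= 0:
        return None
    return math.ceil(gap / closing_rate)


CHATGPT_MAU, GEMINI_MAU, GEMINI_GROWTH = 810, 750, 100  # millions; millions per quarter

# Hypothetical ChatGPT growth rates, in millions of users per quarter.
for chatgpt_growth in (0, 50, 90, 100):
    q = quarters_until_crossover(CHATGPT_MAU, GEMINI_MAU, chatgpt_growth, GEMINI_GROWTH)
    print(chatgpt_growth, q)
```

If ChatGPT stopped growing entirely, the 60 million gap would close within a single quarter, so a 6-12 month estimate implicitly assumes ChatGPT keeps adding users; a ChatGPT growing as fast as Gemini never gets caught. That sensitivity is why the trend lines for both products matter.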

How does Google's distribution advantage translate to long-term competitive dominance in AI?

Google's distribution advantage compounds over time through network effects and switching costs. As more users integrate Gemini into their workflows through Google Search, Workspace, and other products, switching to a competitor becomes increasingly costly. Learning new interfaces, rebuilding workflows, and losing conversation history all create friction. Distribution advantages also enable faster iteration: Google can test Gemini features with millions of users immediately and scale successful ones quickly. Competitors like OpenAI need to convince users to opt in to features. Over five years, Google's lead in technical AI capabilities and product distribution will likely widen, not narrow, unless something disrupts Google's business model or a competitor leapfrogs on both product and distribution dimensions simultaneously.

What implications does Gemini's 750M user milestone have for the broader internet and content creators?

If Gemini becomes the primary way people search for information, traffic to content creators' websites decreases because users get answers directly from search results rather than clicking through. This threatens publisher business models that depend on ad revenue. For content creators, this means developing strategies to benefit from AI integration: optimizing content for AI training, creating AI-native content formats, building communities outside search, or licensing content to AI companies. The tension between what's good for users (instant answers) and what's good for content creators (traffic, monetization) will drive regulatory scrutiny. Expect government investigations into how AI companies use content in training and whether that use qualifies as fair use. Long-term, the internet likely fragments into AI-friendly and AI-hostile zones, with creators choosing which future they want to support.

Key Takeaways

- Gemini reached 750M MAU in Q4 2025, growing 100M users per quarter and closing on ChatGPT's 810M through superior distribution integration

- Google's roughly 8.5B daily searches provide a distribution advantage that competitors like OpenAI cannot replicate without an existing monopoly position

- Enterprise adoption is accelerating with 10B tokens/minute API throughput, indicating massive integration by Fortune 500 companies and SaaS platforms

- Aggressive $7.99/month AI Plus pricing undercuts ChatGPT Plus at $20/month, driving volume adoption

- Gemini 3 and custom Ironwood TPU chips demonstrate Google's commitment to remaining competitive on both product quality and infrastructure efficiency

