Hardware Compression in PCIe Gen 5 SSDs: How DapuStor's Roealsen 6 Challenges Conventional Storage Limits

Speed records keep falling in the SSD world. Every quarter, somebody breaks the last quarter's benchmark. But what happens when you get honest about how those records actually work?

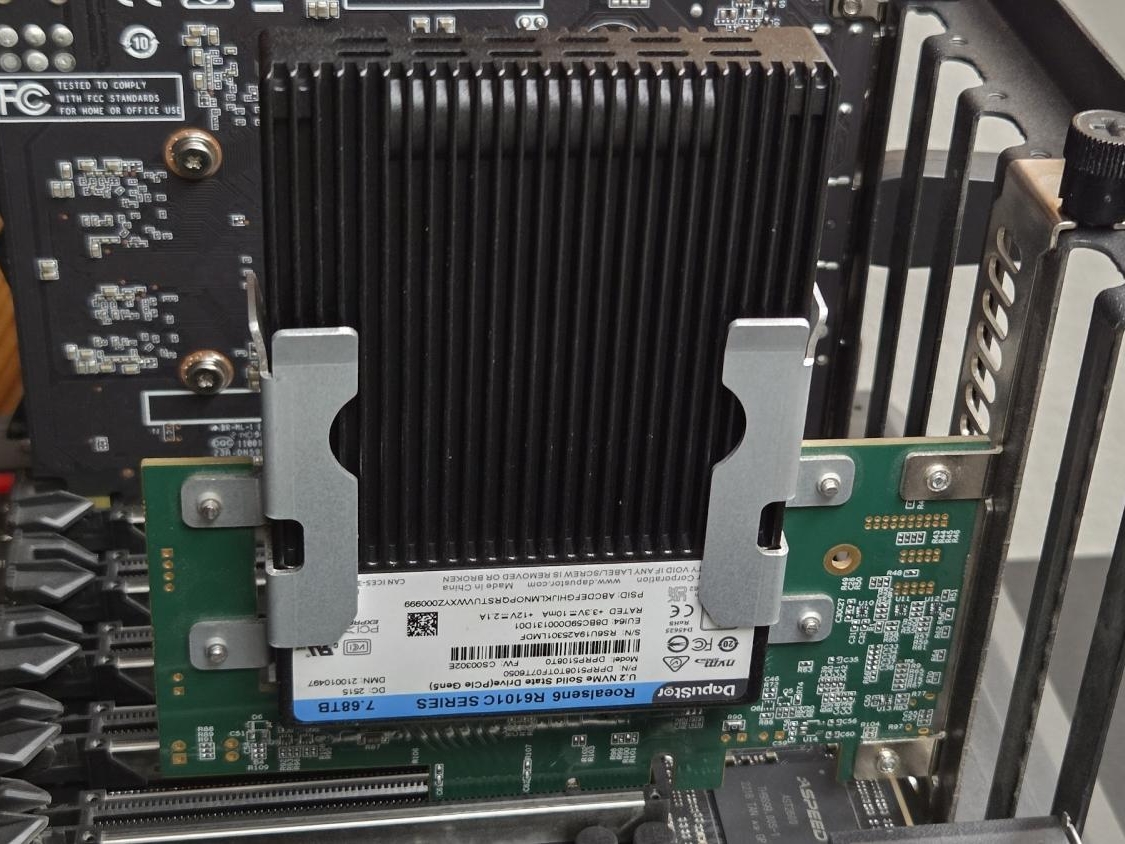

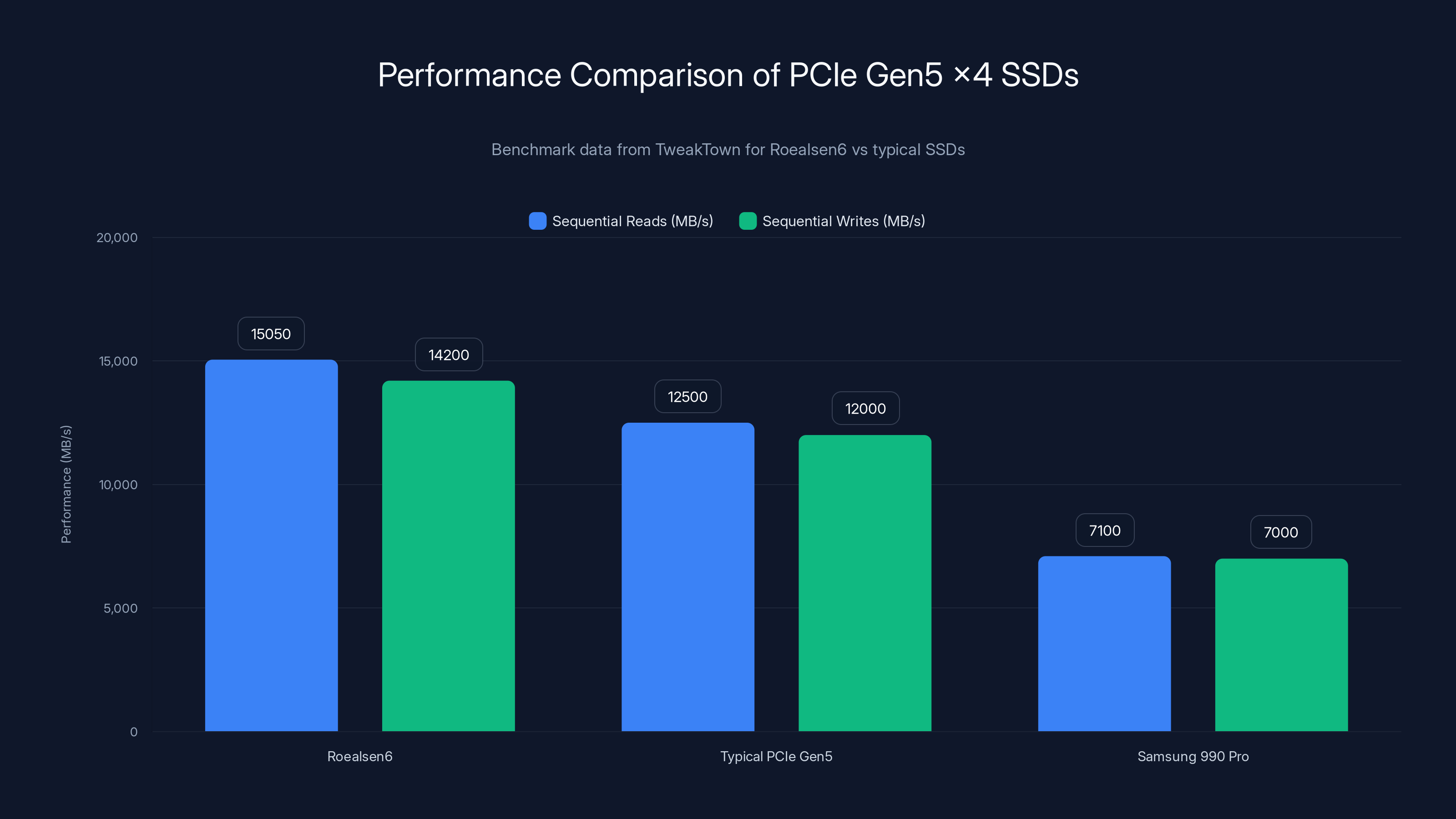

That's where the Roealsen 6 R6101C from DapuStor comes in. This Chinese startup's drive hits speeds that sound almost fictional: sequential reads of 15,050 MB/s, sequential writes of 14,200 MB/s, and 4K random writes touching 1.27 million IOPS. For context, that's not just fast. Independent reviewers at TweakTown called it the fastest result they had encountered from any single PCIe Gen 5 x4 SSD.

But here's the catch that everyone glosses over: those numbers depend entirely on how well your data compresses.

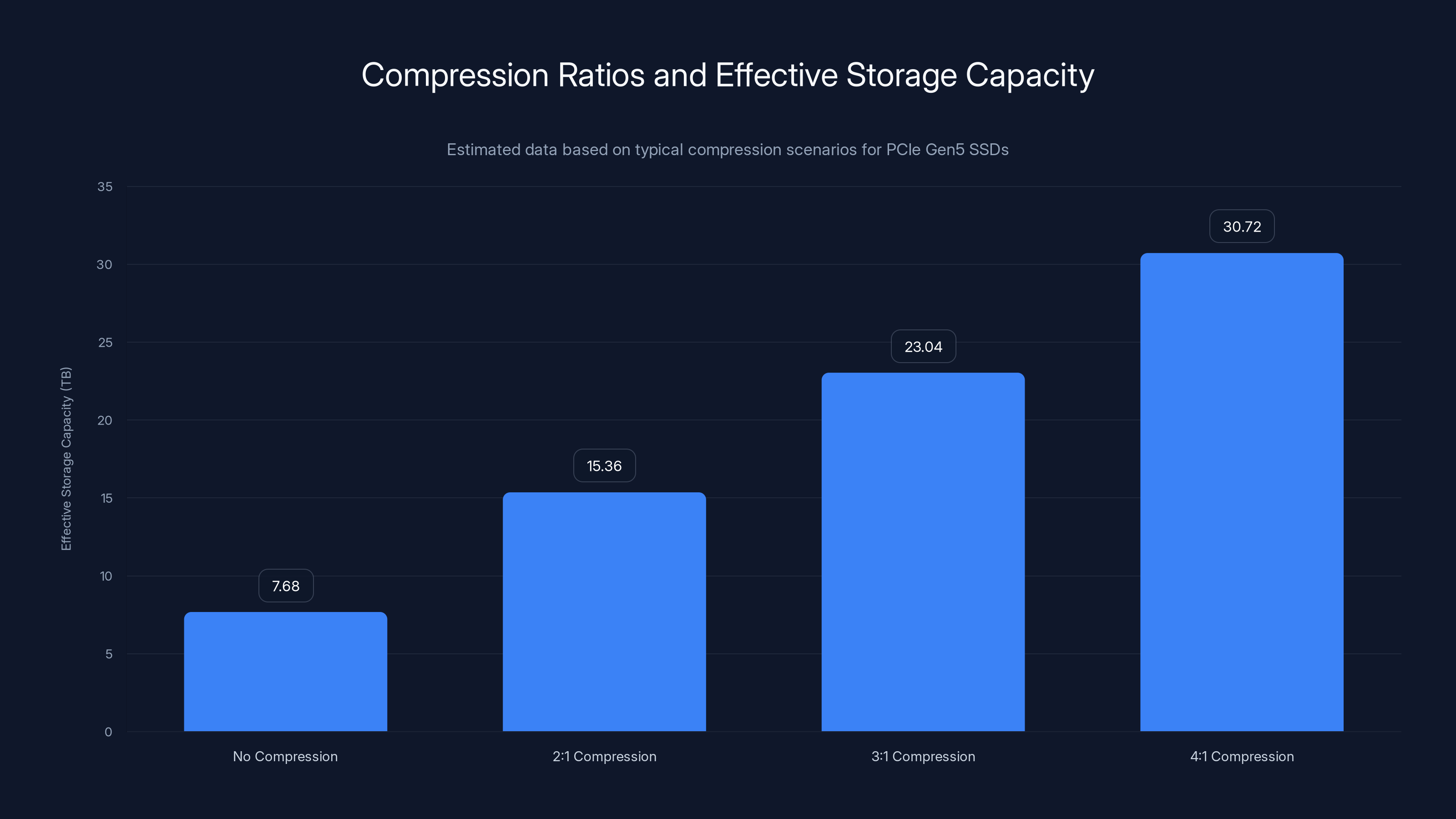

The Roealsen 6 doesn't break the laws of physics, and it isn't just marketing fluff either. It uses transparent hardware compression, a technique that's actually ancient in storage history but controversial in modern SSDs. Compression happens automatically in hardware, before data ever touches the NAND flash. Under ideal conditions the drive can achieve compression ratios of up to 4:1, which means a 7.68TB physical drive can present itself to the host as over 30TB.

When compression works beautifully, you get those record-breaking numbers. When your data doesn't compress well—and plenty of modern data doesn't—you get normal SSD speeds. Maybe even slower than conventional drives, depending on how hard the compression engine works.

This isn't a new trick. Tape storage has used this approach for decades. Enterprise storage arrays compress data routinely. But in NVMe SSDs, compression remains polarizing. Some argue it's essential for the next generation of storage economics. Others treat it like a benchmark hack that shouldn't exist.

Let's dig into what's actually happening, why it matters, and whether this approach represents the future of enterprise storage or a clever marketing workaround.

TL;DR

- Record-Breaking Performance: DapuStor's Roealsen 6 R6101C achieves 15,050 MB/s sequential reads and 1.27 million random write IOPS through transparent hardware compression

- Compression-Dependent Results: Performance depends entirely on workload compressibility, with potential 4:1 compression ratios under optimal conditions

- Ancient Technique, Modern Application: Hardware compression has been standard in tape storage for decades but remains controversial in enterprise SSDs

- Real-World Trade-offs: Effective capacity increases dramatically with compression, but uncompressible data (video, encrypted content) gets no benefit

- Enterprise-Only Solution: The R6101C targets hyperscaler environments with predictable, compressible workloads, not consumer systems

The Roealsen6 significantly outperforms typical PCIe Gen5 x4 SSDs and the Samsung 990 Pro in both sequential read and write speeds, showcasing its superior performance capabilities under compressible data conditions.

What Is Hardware Compression in SSDs and Why Does It Matter?

Hardware compression in SSDs is a data processing technique that compresses files automatically before they're written to NAND flash memory. The compression happens transparently—your operating system doesn't know it's happening, and your applications don't need to be modified. Everything looks normal from the user's perspective.

This is fundamentally different from software compression, where an operating system or application compresses files before storing them. Software compression adds CPU overhead and typically compresses files individually. Hardware compression works at the storage controller level, processing data streams in real-time as they flow toward the flash.

Inside the Roealsen 6, this process uses an application processor paired with what DapuStor calls a "transparent hardware compression engine." The drive also uses DapuStor's in-house DP800 controller and custom firmware. Data arriving over the PCIe interface passes through this compression engine before it is written to the 3D eTLC NAND flash.

The benefits stack up quickly if you understand the math. Compressed data takes up less physical space on the flash, which means:

- Faster writes: Less data needs to move through the flash interface

- Faster reads: Less data needs to be retrieved from the same physical storage medium

- Higher effective capacity: A 7.68TB drive can present as 30TB or more if compression works well

- Better efficiency: The same physical storage delivers more usable capacity

But compression also introduces complexity. Your drive needs extra processing power to compress and decompress data. If compression ratios fall below a certain threshold, the overhead might actually slow things down compared to writing raw data.

The Roealsen 6 handles this through intelligent tiering. The drive can favor raw speed or effective capacity, but both are directly tied to what kind of data you're actually storing. TweakTown's testing showed that at a 2:1 compression ratio, the drive hit those record numbers. At 4:1, the NAND back end would theoretically have even more headroom, but at roughly 15 GB/s the PCIe Gen 5 x4 link leaves little room for higher sequential throughput, and real-world testing at that ratio remains limited.

The controversial part: these are exactly the kind of benchmarks that provoke arguments in enterprise forums. Are these realistic numbers for real workloads, or are benchmarks cherry-picked around highly compressible test data? The answer is both. The numbers are real for compressible workloads. They're irrelevant for uncompressible workloads.

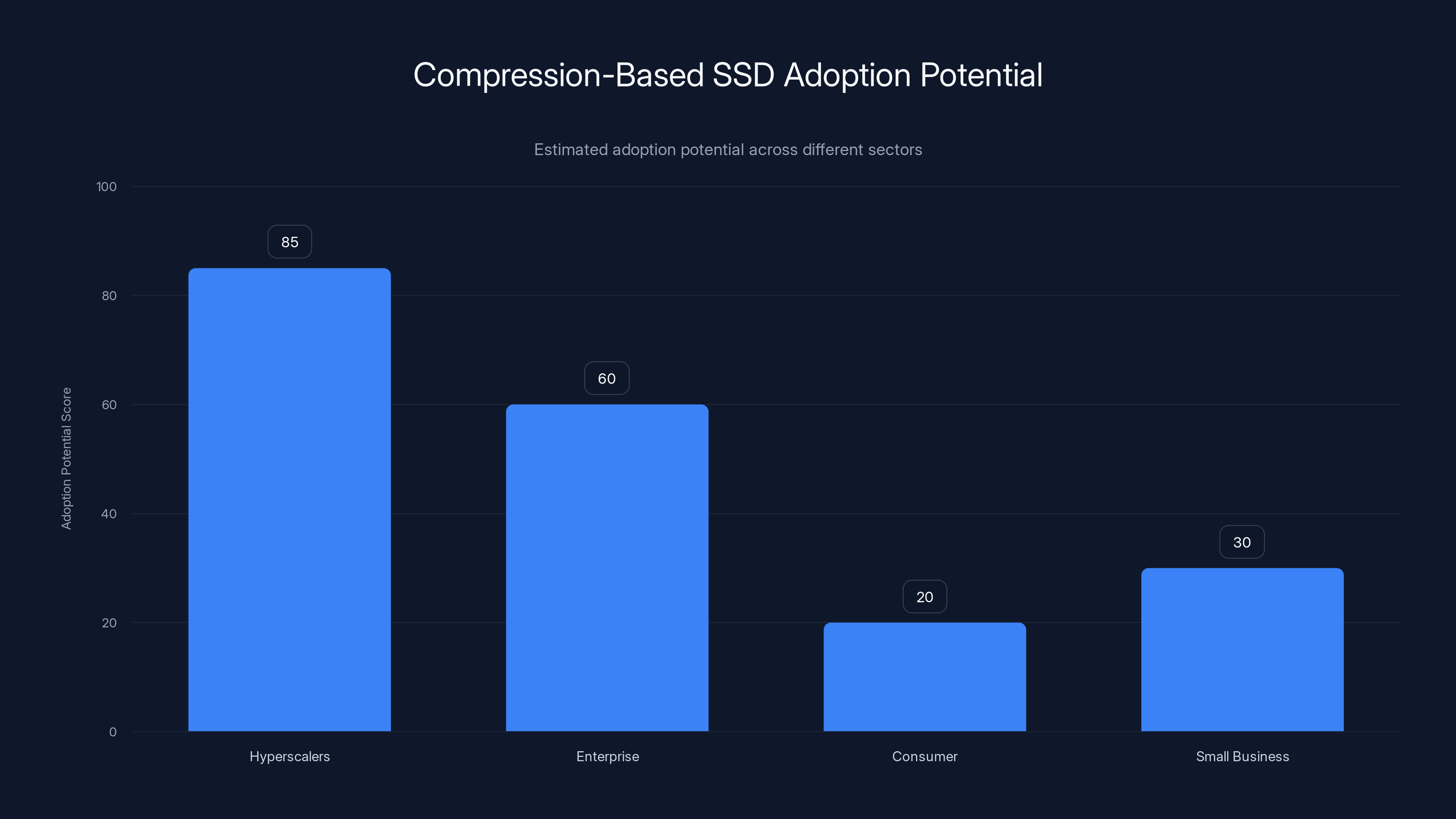

Compression-based SSDs have high adoption potential for hyperscalers due to cost benefits, moderate for enterprises, and low for consumer and small-business sectors. Estimated data based on typical workload characteristics.

The Historical Precedent: Why Tape Storage Never Abandoned Compression

Compression in storage is old news. Tape storage never abandoned it because tape solved a problem that kept compression relevant: scale economics.

Back in the 1990s and 2000s, tape was the most cost-effective way to store gigantic datasets, and compression stretched every cartridge further. LTO technology built compression into the standard from the beginning: every generation has listed native and compressed capacity side by side, from LTO-1's 100GB native (200GB compressed) to LTO-9's 18TB native (45TB compressed). Nobody questions these numbers. Enterprise storage teams just understand that compression ratios vary by workload.

Why did SSDs never adopt this approach broadly? Partly because SSDs arrived in the consumer market first. Consumer data is often already compressed (video, images, music). Partly because SSD manufacturers wanted to avoid the marketing confusion. And partly because nobody wanted to build the complexity into the controller when flash was expensive and raw capacity was the primary selling point.

But as flash densities increase and costs fall, the economics change. Compression can add effective density without waiting for denser NAND cells, and it's purely a firmware and controller problem, not a manufacturing problem.

Tape storage also never faced the raw-speed obsession that SSDs do. A tape drive that's slightly slower but holds 3x more data is clearly the right choice. An SSD that achieves record benchmarks but only on compressible data creates confusion. The number is technically true, but the context matters.

DapuStor's approach mirrors what ScaleFlux already brought to market with its compression-enabled SSDs, but the Roealsen 6 brings more aggressive specifications and enterprise-grade reliability. The drive supports the NVMe 2.0 protocol, uses the U.2 form factor for enterprise deployments, is rated for 1 DWPD (Drive Writes Per Day), and integrates with standard enterprise platforms.

The timing makes sense. Cloud storage companies, hyperscalers, and AI infrastructure teams are accumulating enormous datasets. These workloads are often highly compressible: training data, logs, checkpoints, model weights. The cost difference between storing data raw versus compressed becomes enormous at scale.

How the Roealsen 6's Hardware Compression Engine Actually Works

The mechanics of the Roealsen 6's compression system involve several layers working in concert. At the highest level, the drive includes an application processor running DapuStor's custom firmware, a transparent compression engine, a PCIe 5.0 interface controller, and the underlying 3D eTLC NAND flash array.

DapuStor doesn't document the data path in detail, but in a design like this, data arriving over PCIe would reach the application processor first, which decides whether compression is worth attempting. For highly incompressible data, the processor might skip compression entirely to save overhead. For highly compressible data, it applies full compression.
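
The compress-or-skip decision can be sketched in a few lines. This is a hypothetical illustration, not DapuStor's firmware: it assumes a per-block rule that keeps the compressed copy only if it saves at least 12.5% of the space, and it uses Python's `zlib` (DEFLATE) as a stand-in for the drive's unpublished algorithm. `encode_block` and `MIN_SAVINGS` are illustrative names.

```python
import os
import zlib

# Hypothetical policy: keep the compressed form only if it saves at least
# this fraction of the block. The real drive's threshold is not published.
MIN_SAVINGS = 0.125


def encode_block(block: bytes) -> tuple[bool, bytes]:
    """Return (is_compressed, payload) for one logical block.

    zlib (DEFLATE) stands in for the drive's unpublished algorithm.
    """
    compressed = zlib.compress(block, 1)  # level 1: favor speed over ratio
    if len(compressed) <= len(block) * (1 - MIN_SAVINGS):
        return True, compressed
    # Not worth it: store raw and skip the decompression cost on every read.
    return False, block


text_block = b"timestamp=2024-01-01 level=INFO msg=ok\n" * 100
random_block = os.urandom(4096)  # behaves like encrypted or pre-compressed data

print(encode_block(text_block)[0], encode_block(random_block)[0])
```

A real controller makes this call in hardware at line rate, but the trade-off is the same: when the savings are too small, storing the raw block avoids paying for decompression later.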

The compression algorithm itself matters significantly. Different algorithms have different speed and compression-ratio trade-offs. Faster algorithms like LZSS compress quickly but achieve lower ratios. Slower algorithms like LZMA achieve better compression but require more CPU. Enterprise compression engines typically use approaches optimized for the data types they expect: variants of LZ77, DEFLATE, or proprietary algorithms tuned for specific workloads.
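
Python's standard library doesn't include LZSS, but it does ship DEFLATE (`zlib`) and LZMA (`lzma`), which is enough to see the speed-versus-ratio trade-off on a sample. This is a desktop illustration under stated assumptions, not a model of the Roealsen 6's engine; the sample is synthetic and the timings depend entirely on the machine.

```python
import lzma
import time
import zlib


def measure(name: str, compress, data: bytes) -> tuple[float, float]:
    """Compress data once and return (ratio, seconds)."""
    start = time.perf_counter()
    out = compress(data)
    elapsed = time.perf_counter() - start
    ratio = len(data) / len(out)
    print(f"{name:>8}: {ratio:6.1f}:1 in {elapsed * 1000:8.2f} ms")
    return ratio, elapsed


# Synthetic log-like sample; replace with a slice of your own data.
sample = b"".join(
    f"2024-01-01T00:00:{i % 60:02d} user={i % 97} action=read status=200\n".encode()
    for i in range(10_000)
)

results = {
    "zlib -1": measure("zlib -1", lambda d: zlib.compress(d, 1), sample),
    "zlib -9": measure("zlib -9", lambda d: zlib.compress(d, 9), sample),
    "lzma -6": measure("lzma -6", lambda d: lzma.compress(d, preset=6), sample),
}
```

On typical hardware LZMA wins on ratio and takes far longer, which is why inline storage engines tend to favor lighter LZ-family schemes. Treat the printed timings as relative, not absolute.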

DapuStor doesn't publicly specify which algorithm the Roealsen 6 uses, but the company quotes compression ratios of up to 4:1 under ideal conditions. For reference, typical compression ratios by data type look like this:

- Database records: 2:1 to 3:1

- Log files: 4:1 to 6:1

- Machine learning training data: 3:1 to 5:1

- Already-compressed media: 1.01:1 to 1.1:1

- Encrypted data: 1.0:1 (no compression)
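
The gap between compressible and incompressible data is easy to reproduce. The sketch below compares synthetic log lines with random bytes, which behave like encrypted or already-compressed content, using `zlib` as an assumed stand-in for the drive's algorithm. The synthetic logs are far more repetitive than real ones, so their absolute ratio overstates what production logs achieve; the contrast with random data is the point.

```python
import os
import zlib


def deflate_ratio(data: bytes) -> float:
    """Original size divided by DEFLATE-compressed size (zlib level 6)."""
    return len(data) / len(zlib.compress(data, 6))


users = ["alice", "bob", "carol"]
log_like = b"".join(
    f"Jan 01 00:00:00 host sshd[123]: Accepted publickey for {users[i % 3]}\n".encode()
    for i in range(20_000)
)
random_like = os.urandom(len(log_like))  # stands in for encrypted or pre-compressed data

print(f"log-like:         {deflate_ratio(log_like):8.2f}:1")
print(f"random/encrypted: {deflate_ratio(random_like):8.2f}:1")
```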

Once compressed, the data is written to the 3D eTLC NAND. The drive maintains metadata about which blocks are compressed and at what ratios. When data needs to be read back, the compression engine decompresses it transparently. The host system receives the uncompressed data and has no idea compression ever happened.
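
The metadata idea can be illustrated with a toy logical-to-physical map. Everything here is hypothetical (`ToyCompressedDrive`, `Extent`, and the append-only `bytearray` standing in for NAND); a real drive works at page granularity and also handles garbage collection, wear leveling, and power-loss protection. The sketch only shows why the drive must record, per logical block, where the payload lives, how long it is, and whether it needs decompression.

```python
import os
import zlib
from dataclasses import dataclass


@dataclass
class Extent:
    offset: int        # where the payload starts in the toy "flash" log
    length: int        # bytes physically stored
    compressed: bool   # whether reads must decompress the payload


class ToyCompressedDrive:
    """Hypothetical logical-to-physical map for a compressing drive."""

    def __init__(self) -> None:
        self.flash = bytearray()            # append-only stand-in for NAND pages
        self.table: dict[int, Extent] = {}  # logical block address -> extent

    def write(self, lba: int, block: bytes) -> None:
        payload = zlib.compress(block)
        compressed = len(payload) < len(block)
        if not compressed:
            payload = block                 # incompressible: store raw
        self.table[lba] = Extent(len(self.flash), len(payload), compressed)
        self.flash += payload               # any older copy becomes garbage

    def read(self, lba: int) -> bytes:
        ext = self.table[lba]
        payload = bytes(self.flash[ext.offset:ext.offset + ext.length])
        return zlib.decompress(payload) if ext.compressed else payload

    def live_physical_bytes(self) -> int:
        """Bytes of flash holding current (non-garbage) data."""
        return sum(ext.length for ext in self.table.values())


drive = ToyCompressedDrive()
log_block = b"INFO request ok\n" * 256    # 4 KiB, compresses well
noise_block = os.urandom(4096)            # 4 KiB, does not
drive.write(0, log_block)
drive.write(1, noise_block)
print(drive.live_physical_bytes(), "physical bytes for 8192 logical bytes")
```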

TweakTown's testing revealed the real-world implications. At a 2:1 compression ratio, sequential reads hit 15,050 MB/s and writes hit 14,200 MB/s. The drive consumed roughly 18W under load. Random write testing reached 1.27 million IOPS in 4K workloads.

These numbers exceed DapuStor's published specifications. That's typically what happens when compression works better than conservative engineering estimates. Specifications usually assume lower-than-maximum compression, adding safety margins for worst-case scenarios.

The catch: at lower compression ratios, or with incompressible data, performance drops. This isn't a flaw in the design—it's fundamental to how compression works. The question becomes: what's your typical compression ratio?

If your workload achieves 2:1 compression and the drive's NAND back end sustains about 7,500 MB/s of physical (compressed) throughput, the host sees roughly 15,000 MB/s. If your workload achieves only 1.1:1 compression, the host sees about 8,250 MB/s.
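
That arithmetic fits in a one-line model. The sketch assumes the 7,500 MB/s figure above is the NAND back end's throughput (it's an illustrative number, not a DapuStor specification) and adds one more constraint: the PCIe link carries uncompressed data, so it caps whatever compression gains. It ignores controller limits, latency, and mixed read/write traffic.

```python
# PCIe 5.0 x4: 32 GT/s per lane, 4 lanes, 128b/130b encoding, 8 bits per byte.
PCIE5_X4_GB_S = 32 * 4 * (128 / 130) / 8   # ~15.75 GB/s per direction


def effective_throughput(nand_mb_s: float, ratio: float,
                         link_mb_s: float = PCIE5_X4_GB_S * 1000) -> float:
    """Host-visible MB/s for a compressing drive (simplified model).

    The NAND back end moves compressed bytes, so host throughput scales
    with the ratio; the PCIe link carries uncompressed bytes, so it caps it.
    """
    return min(nand_mb_s * ratio, link_mb_s)


for r in (1.0, 1.1, 1.5, 2.0, 4.0):
    print(f"{r:.1f}:1 -> {effective_throughput(7_500, r):>9,.0f} MB/s")
```

The 4:1 row shows the cap at work: in this model, doubling the ratio from 2:1 adds only a few percent of sequential throughput, because the link, not the flash, becomes the limit.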

With hardware compression, a 7.68TB SSD can effectively store up to 30.72TB of data at a 4:1 compression ratio. Estimated data.

Record-Breaking Benchmarks: What TweakTown Found and What It Means

Independent testing by TweakTown provides the most concrete performance data available on the Roealsen 6. Their conclusion was unambiguous: "This is by far the fastest rate we've encountered from any single PCIe Gen 5 x4 SSD."

The specific numbers:

- Sequential reads: 15,050 MB/s

- Sequential writes: 14,200 MB/s

- Random write IOPS: 1.27 million 4K operations per second

- Power consumption: ~18W under load

- Form factor: U.2 (2.5-inch enterprise standard)

- Test compression ratio: 2:1

For perspective, typical PCIe Gen 5 x4 SSDs peak around 12,000-13,000 MB/s sequential performance. Samsung's 990 Pro, one of the fastest PCIe Gen 4 consumer drives, is rated for up to 7,450 MB/s sequential reads. Even specialized enterprise drives rarely exceed 13,000 MB/s without compression tricks.

The Roealsen 6 breaks those numbers by a significant margin. But the "with compression" caveat matters enormously. TweakTown's testing used test data that compresses cleanly and predictably. Real-world results depend on whether your actual production data compresses at similar ratios.

TweakTown gave the drive a 99/100 rating, which is high praise. But they included the critical disclaimer: "Real world results will depend on achieving compression levels that won't apply to all data types." That's the honest assessment most reviewers gloss over.

Here's what the benchmarks actually tell you:

- The hardware is capable: The DP800 controller and compression engine can sustain these speeds

- PCIe Gen 5 is close to its ceiling: At 15,000+ MB/s, the x4 link is running near its theoretical limit of roughly 15.75 GB/s, so there's little interface headroom left

- Compression math checks out: The speed improvements align mathematically with the compression ratios achieved

- Enterprise reliability works: The drive maintained stable performance across test cycles, no thermal throttling reported

What the benchmarks don't tell you:

- Whether your data compresses this well: Different workloads have wildly different compression characteristics

- What happens at lower compression ratios: Performance degrades predictably, but testing at 1.1:1 or 1.3:1 ratios would be more realistic for many workloads

- Real-world deduplication effectiveness: Some workloads benefit from deduplication more than compression, but deduplication wasn't tested

- Latency characteristics: The benchmarks focused on throughput, not latency distribution or tail latencies

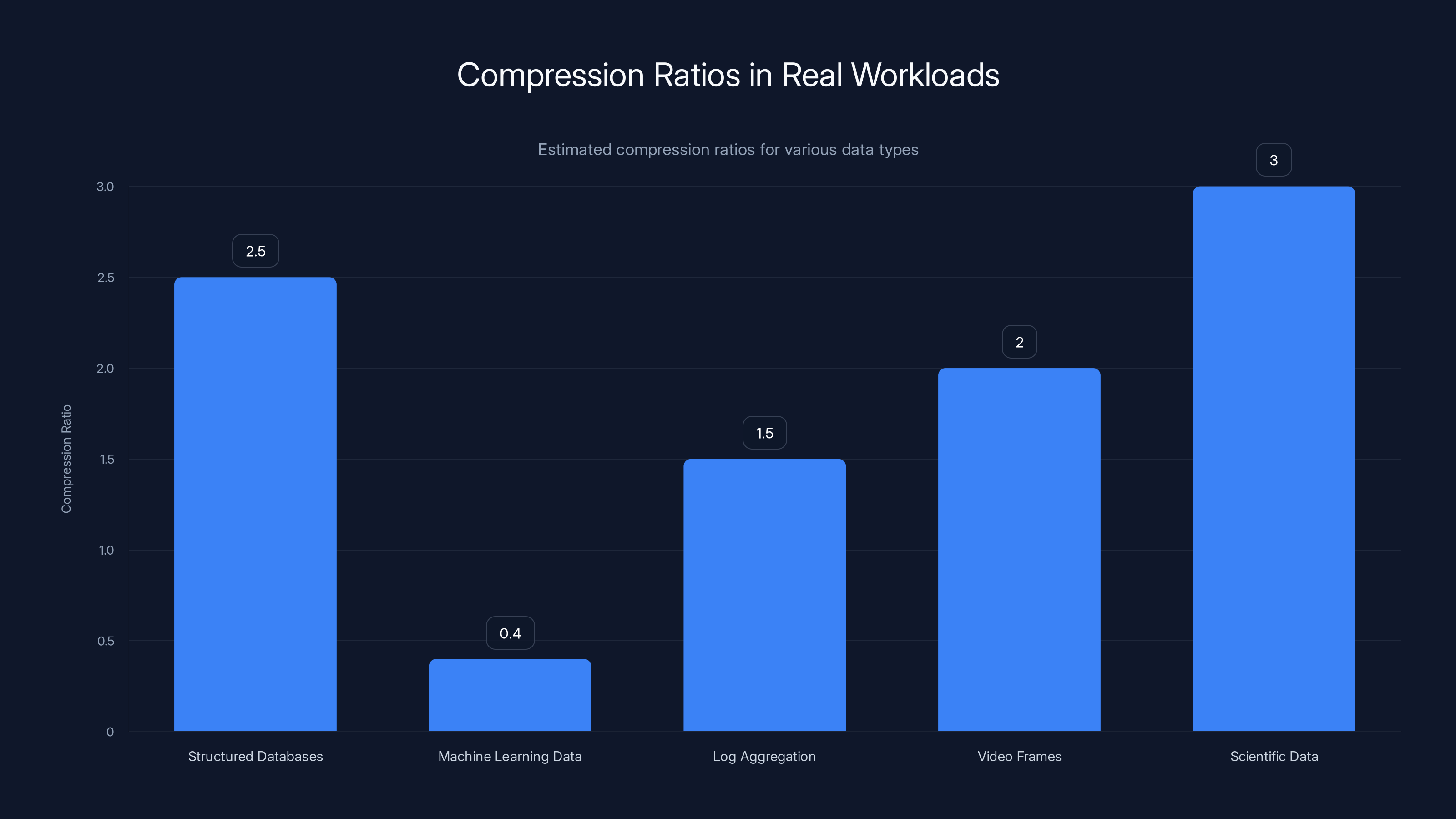

Compression Ratios in Real Workloads: The Reality Check

Benchmarks tell one story. Reality tells another. Understanding what compression ratios you'll actually achieve is crucial for making this architecture decision.

Database workloads compress exceptionally well. If you're storing structured data—user records, transaction logs, analytics tables—you're looking at consistent 2:1 to 3:1 compression. Databases have lots of repeated patterns, lots of NULL values, and lots of integer sequences that compress efficiently.

Machine learning training data often compresses well too. Training datasets are typically numeric arrays with repetitive structure and patterns that algorithms can exploit. PyTorch tensors, NumPy arrays, and TensorFlow checkpoints often compress to 30-50% of original size, although dense floating-point weights usually compress far less than sparse or integer-heavy data.

But modern enterprise workloads are mixed. Your database exports might be stored as gzip-compressed CSV files already. Your machine learning checkpoints might be in HDF5 format with internal compression. Your log files are often already rolled through gzip nightly. Your backups might use incremental snapshots with their own compression.

Here's the uncomfortable truth: a lot of enterprise data is already compressed. Adding compression at the storage layer on top of existing compression doesn't help much. You end up with 1.05:1 or 1.1:1 compression ratios, barely worth the compression overhead.

Compression works best in these scenarios:

- Structured databases without internal compression: Raw SQL Server databases, PostgreSQL data files, Oracle tablespaces

- Machine learning workloads with raw numeric data: Uncompressed training datasets, model checkpoints stored as raw tensors

- Log aggregation systems: Raw syslog, application logs, audit trails before any log rotation happens

- Uncompressed video frame sequences: Individual raw frames stored as separate files (uncommon, but it does happen in media production)

- Scientific data: Climate models, genomics data, particle simulation outputs

Compression fails in these scenarios:

- Media files: MP4, JPEG, PNG, MP3, and AAC are already compressed

- Encrypted data: Encrypted volumes, encrypted backups, and anything encrypted before it reaches the drive

- Compressed archives: ZIP files, tar.gz, 7z files

- Already-compressed databases: Databases using internal compression like InnoDB compression or Oracle OLTP Table Compression

- Deduplicated backups: Backup systems typically compress unique chunks after deduplication, so little redundancy is left for the drive to remove

For the Roealsen 6 to make economic sense, you need sustained workloads that compress at 2:1 or better. At 1.5:1, effective throughput drops 25% compared with 2:1, and the gain over uncompressed writes is cut in half. At 1.1:1 compression, you might as well use a standard PCIe Gen 5 drive.

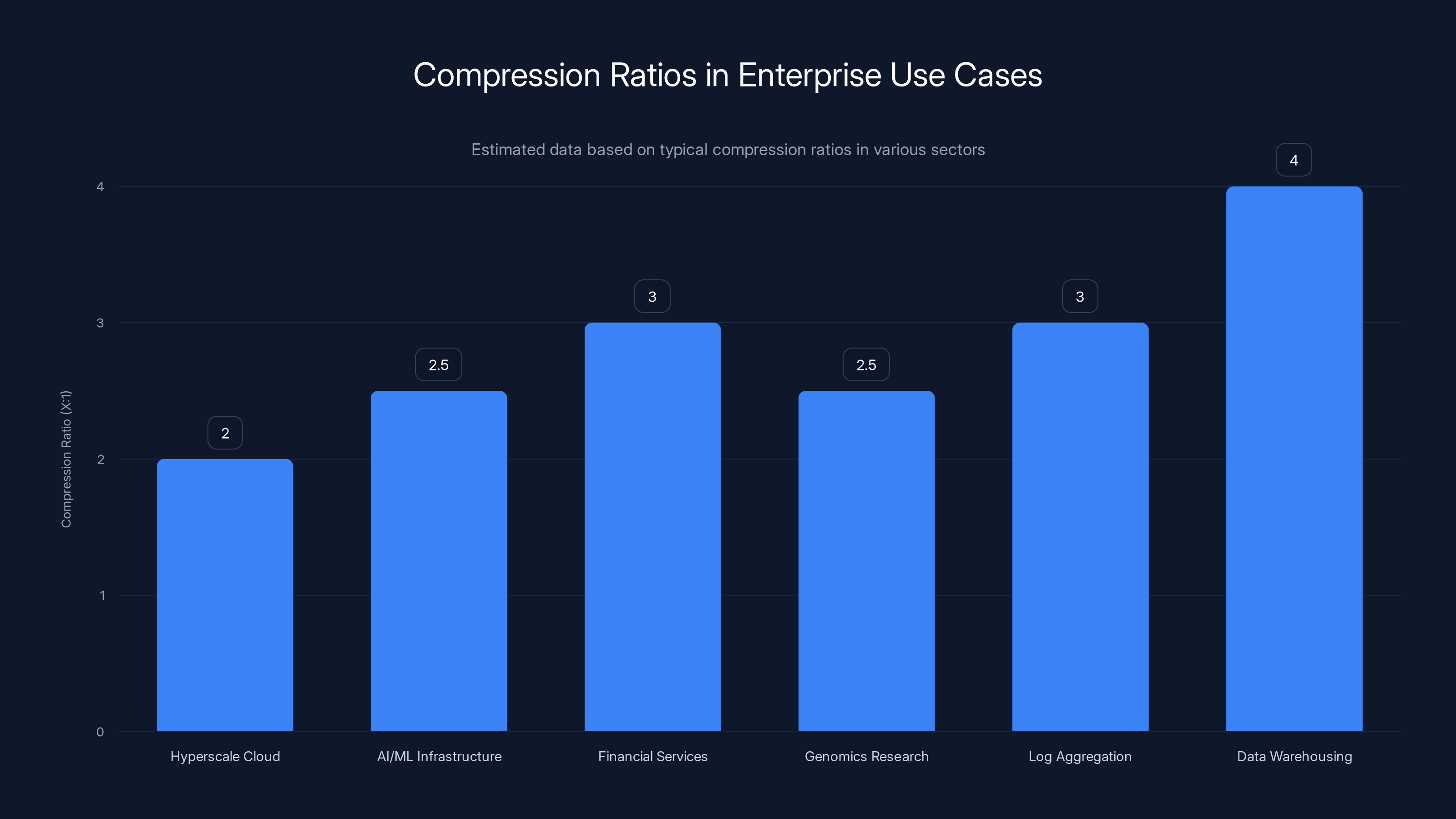

Compression ratios vary across enterprise use cases, with data warehousing achieving the highest compression at 4:1. Estimated data highlights potential cost savings.

Enterprise Use Cases Where Compression Makes Economic Sense

Compression-based SSDs aren't for everyone. They're specifically designed for enterprise environments where the economics work out.

Hyperscale cloud providers are the obvious target. When you're managing petabytes of storage across thousands of servers, compression ratios compound into enormous cost savings. A 2:1 compression ratio translates directly to a 50% reduction in NAND costs. At scale, that's millions of dollars per data center.
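
The NAND savings follow directly from the ratio. A rough sizing sketch, assuming a hypothetical 1 PB of logical data stored on 7.68TB drives; it deliberately ignores spare capacity, RAID or erasure-coding overhead, and growth headroom, all of which a real plan must add back.

```python
import math


def drives_needed(logical_tb: float, physical_tb_per_drive: float, ratio: float) -> int:
    """Drives needed to hold logical_tb of data at an average compression ratio.

    Ignores spare capacity, RAID/erasure-coding overhead, and growth headroom.
    """
    return math.ceil(logical_tb / ratio / physical_tb_per_drive)


for ratio in (1.0, 1.5, 2.0, 3.0):
    print(f"{ratio:.1f}:1 -> {drives_needed(1_000, 7.68, ratio)} drives for 1 PB")
```

At 2:1 the drive count, and therefore the NAND bill, roughly halves compared with storing the data raw.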

AI and machine learning infrastructure providers have a strong case for compression. Training datasets for large language models often compress to 40-50% of original size. Model checkpoints compress well too. If you're storing hundreds of terabytes of training data, compression represents meaningful cost reduction.

Financial services and analytics companies often run highly compressible workloads. Stock market tick data, financial transactions, and analytical databases compress excellently. Banks and trading firms accumulate enormous historical datasets; compression can make cold storage economical where it otherwise wouldn't be.

Genomics research generates massive sequencing datasets that compress well. A single genome sequencing run produces gigabytes of data; compressing to 2:1 or 3:1 ratios is realistic. Genomics companies and research institutions benefit significantly.

Log aggregation and monitoring infrastructure accumulates huge volumes of logs. Splunk, ELK stack, Datadog—these platforms consume terabytes of log data daily. Compression on the storage layer behind these systems can reduce infrastructure costs significantly.

Data warehouse and analytics platforms like Snowflake, BigQuery, and Redshift work with columnar data that compresses exceptionally well. These platforms already apply their own columnar compression, though, so a second layer of compression at the storage level adds less than the raw numbers suggest.

But here's what matters: all these use cases have one thing in common. They're exclusively enterprise workloads with predictable, homogeneous data. Nobody buys a compression-based SSD for their gaming PC or home NAS. The economics only work when you have massive scale and consistent compression characteristics.

The Roealsen 6 targets this market explicitly. It's a 7.68TB enterprise-class drive with U.2 form factor designed for data centers, not a consumer product. The 1 DWPD rating indicates enterprise-class workload expectations. The NVMe 2.0 support aligns with enterprise standards.

The Controversy: Are Compression-Based Benchmarks Fair?

There's legitimate debate in storage engineering forums about whether compression-based benchmarks are fair or misleading.

On one side, the argument goes like this: the Roealsen 6's numbers are achieved using compression. The benchmarks don't represent raw SSD performance; they represent SSD performance with a specific data transformation applied. It's like benchmarking a video encoder and claiming those are the player's speeds. It's technically true but contextually misleading.

The other side argues: compression happens transparently at the hardware level. The drive presents itself as a storage device that delivers these speeds. If your application is storing compressible data, these are the speeds you actually get. From the system's perspective, it doesn't matter whether the speed comes from raw flash or from compression assistance. The application sees the speed.

Both perspectives have merit. The problem is that benchmarks need context, and context gets lost in marketing materials.

Here's what's indisputably true:

- The benchmarks are real: The drive genuinely achieves these speeds with compressible test data

- The benchmarks are conditional: Performance depends entirely on data compressibility

- The benchmarks don't match typical workloads: Standard benchmarks assume less-compressible data

- Marketing oversells the story: Ads show the big numbers without adequate context

- Enterprise buyers understand the trade-offs: Hyperscaler teams know how to evaluate compression characteristics

ScaleFlux faced the same criticism years ago with its compression-enabled SSDs. The technology is legitimate; the marketing challenge is explaining why the benchmarks are simultaneously true and not universally applicable.

For the storage industry, this debate matters. If compression-based SSDs become standard, benchmarking standards need to evolve. Industry bodies like SNIA and JEDEC would need to establish compression-aware benchmarking methodologies that account for different compression characteristics.

Until then, any compression-based SSD benchmark requires detailed analysis:

- What data was used in testing?

- What compression ratios were achieved?

- What's the minimum viable compression ratio for the benchmark to hold up?

- How does performance degrade at lower compression ratios?

- What percentage of enterprise workloads achieve similar compression characteristics?
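
Answering the degradation question requires test data with a known, controllable compressibility. Tools such as fio expose a knob for this (`buffer_compress_percentage`); the sketch below shows the underlying idea in plain Python under a simple assumption: fill each chunk with a `1/target_ratio` share of random bytes and pad the rest with zeros. `make_buffer` is a hypothetical helper, and DEFLATE stands in for whatever algorithm a given drive uses, so a real drive may land at a somewhat different ratio.

```python
import os
import zlib


def make_buffer(size: int, target_ratio: float, chunk: int = 4096) -> bytes:
    """Build test data that DEFLATE compresses to roughly target_ratio.

    Each chunk is 1/target_ratio random bytes (incompressible) followed by
    zeros (nearly free to compress), so the achieved ratio lands slightly
    below the target because of format overhead.
    """
    random_len = int(chunk / target_ratio)
    out = bytearray()
    while len(out) < size:
        out += os.urandom(random_len)
        out += bytes(chunk - random_len)  # zero-filled remainder of the chunk
    return bytes(out[:size])


for target in (1.1, 1.5, 2.0, 4.0):
    buf = make_buffer(1 << 20, target)
    achieved = len(buf) / len(zlib.compress(buf))
    print(f"target {target:.1f}:1 -> achieved {achieved:.2f}:1")
```

Running the same benchmark against buffers at 1.1:1, 1.5:1, and 2:1 gives the degradation curve that a single 2:1 headline number hides.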

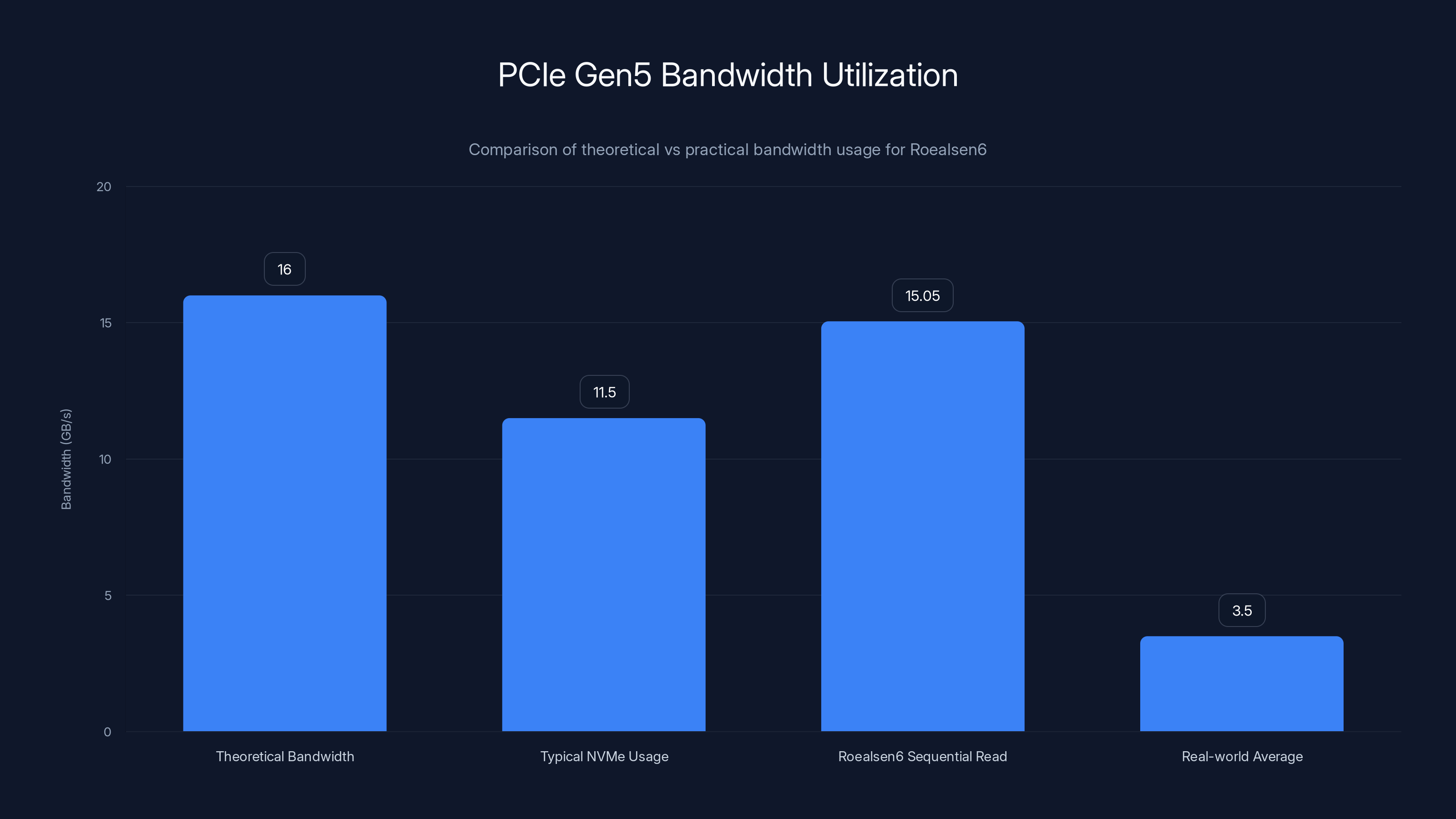

The Roealsen6 drive approaches the PCIe Gen5 interface's theoretical bandwidth with sequential reads, but real-world usage averages much lower. Estimated data for real-world average.

DapuStor's DP800 Controller: The Brains Behind the Operation

The Roealsen 6's performance depends on the DP800 controller, DapuStor's in-house controller and firmware stack. This is where the compression magic actually lives.

A storage controller is essentially a specialized computer. It manages communication between the host system and the NAND flash. It handles wear leveling, error correction, garbage collection, and all the low-level flash management that users never see. In typical SSDs, the controller also manages queuing, power management, and thermal throttling.

The DP800 adds compression as a core function. This isn't bolted on; it's baked into the firmware architecture. The controller includes either dedicated compression circuitry or a compression-optimized execution path, depending on what DapuStor actually implemented (the company doesn't publish detailed specs).

Building a custom controller is expensive, so many SSD makers buy controllers from Marvell, Phison, or Silicon Motion instead. DapuStor invested in a proprietary design specifically to integrate compression at the controller level rather than trying to retrofit it into existing controller architectures.

This approach has advantages:

- Optimized hardware path: Compression isn't fighting for CPU resources; it's a first-class operation

- Transparent to the host: No driver changes needed; compression is completely invisible

- Integrated power management: Compression workload factors into power and thermal management

- Firmware flexibility: DapuStor can tune compression behavior through firmware updates, without hardware changes, to the extent the engine is firmware-controlled

It also has disadvantages:

- No software fallback: If compression hardware fails, the entire drive is affected

- Locked into DapuStor's choices: You can't swap compression algorithms; you get what DapuStor implemented

- Longer time-to-market: Custom controllers take years to develop, so DapuStor can't pivot quickly

- Firmware dependence: Much of the drive's intelligence lives in firmware, so drives running older firmware may lack later optimizations

Competitors like ScaleFlux have made their own architectural trade-offs. DapuStor's approach prioritizes transparency and sustained performance.

The application processor mentioned in the Roealsen 6 specs is worth examining. It's a separate CPU running firmware above the raw controller layer, and it likely makes the higher-level decisions: whether to compress, which algorithm to use, and when to apply acceleration features.

This two-tier architecture is sophisticated. The application processor handles policy decisions; the controller handles execution. It's the difference between a human deciding to compress a file and the compression algorithm actually doing it.

PCIe Gen 5 and the Interface Bottleneck Question

The Roealsen 6 uses a PCIe 5.0 x4 interface. At 32 GT/s per lane with 128b/130b encoding, that works out to about 15.75 GB/s in each direction, often rounded to 16 GB/s. Packet headers and flow-control traffic consume part of that, so real transfers always land somewhat below the theoretical figure.

The question becomes: does the Roealsen 6 saturate the PCIe interface?

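At 15,050 MB/s sequential read speed, the drive is moving 15.05 GB/s across the link, roughly 95% of the theoretical figure. That's above typical saturation rates but not impossible; PCIe efficiency varies with workload patterns and payload sizes, and a single continuous stream might achieve 14+ GB/s even though typical random workloads only hit 10 GB/s. Compression can't help here: it happens inside the drive, so the link still carries the full uncompressed data.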
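
The link math is simple enough to check directly. This sketch counts only line encoding (32 GT/s per lane and 128b/130b for PCIe 5.0) and treats GB as 10^9 bytes; it leaves out packet and flow-control overhead, so the real ceiling sits a little lower than the number it prints.

```python
def pcie_link_gb_s(gt_per_s: float, lanes: int, encoding_efficiency: float) -> float:
    """Theoretical per-direction bandwidth in GB/s (10^9 bytes/s).

    Counts line encoding only; packet headers and flow-control traffic
    reduce what real transfers can reach.
    """
    return gt_per_s * lanes * encoding_efficiency / 8  # 8 bits per byte


gen5_x4 = pcie_link_gb_s(32, 4, 128 / 130)   # PCIe 5.0: 32 GT/s, 128b/130b
print(f"PCIe 5.0 x4 ceiling: {gen5_x4:.2f} GB/s")
print(f"15.05 GB/s is {15.05 / gen5_x4:.1%} of that ceiling")
```

It reports a ceiling of about 15.75 GB/s and a utilization of about 95.5%, which is why the benchmark result sits so close to the interface limit.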

For benchmark testing with sustained sequential operations, the drive probably comes close to saturating the interface. This is fine—it means compression and the controller aren't leaving performance on the table.

For real-world workloads, most systems don't maintain continuous sequential reads. You get bursty access patterns, random operations, and idle periods. The average throughput utilization is much lower.

This matters for capacity planning. A system might see 15 GB/s peak throughput but only 3-4 GB/s sustained average throughput. The distinction determines whether you're bottlenecked by storage, network, or compute.

PCIe Gen 6 is coming, which will double bandwidth again. Eventually, even with compression, storage controllers will need more bandwidth. This cycle has always happened: new interface standards arrive, devices learn to use the bandwidth, then the next standard arrives.

Structured databases and scientific data achieve the highest compression ratios, often between 2:1 and 3:1, while machine learning data typically compresses to 30-50% of its original size. Estimated data.

The Power Consumption Trade-off

The Roealsen 6 draws approximately 18W under load. That's higher than typical PCIe Gen 5 consumer SSDs, which often draw 8-12W. Higher power consumption is expected because the drive is doing more work: compression, higher sustained speeds, and continuous processing.

For enterprise data centers, power consumption matters enormously. Data center power budgets are constrained. Every watt of additional storage consumption reduces watts available for compute or network.

The question becomes: does the power cost justify the benefits?

If compression allows you to replace two standard SSDs drawing 12W each with one Roealsen 6, you're trading 24W for 18W, which is a power win. If compression allows you to reduce NAND inventory by 50%, overall system power consumption drops because you have fewer drives drawing power.
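
A useful way to frame the trade-off is watts per terabyte of data actually stored. The sketch uses the reported 18W load figure and a hypothetical conventional drive of the same raw capacity drawing 12W, the top of the consumer range cited above; substitute your own drives' figures. With those numbers the break-even point is simply the ratio of the two power draws.

```python
def watts_per_logical_tb(watts: float, physical_tb: float, ratio: float = 1.0) -> float:
    """Power per terabyte of user data actually stored."""
    return watts / (physical_tb * ratio)


# 18W is the reported load figure; the 12W conventional drive of the same
# raw capacity is a hypothetical comparison point.
compressing = watts_per_logical_tb(18, 7.68, ratio=2.0)
conventional = watts_per_logical_tb(12, 7.68)
break_even_ratio = 18 / 12   # below this ratio the compressing drive costs more W/TB

print(f"compressing @2:1: {compressing:.3f} W/TB")
print(f"conventional:     {conventional:.3f} W/TB")
print(f"break-even ratio: {break_even_ratio:.2f}:1")
```

Under these assumptions, a workload that compresses at less than 1.5:1 stores each terabyte less power-efficiently on the 18W drive than on the 12W one.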

For some workloads, the trade-off is worth it. For others, it's not. This is context-specific.

Heat generation is a secondary concern. 18W is manageable in enterprise U.2 slots with adequate airflow, and the Roealsen 6 isn't pushing thermal limits the way some consumer Gen 5 M.2 drives do when they depend on large heatsinks or active cooling.

Capacity Reporting and Storage Efficiency

One of the most unusual aspects of compression-based SSDs is how they report capacity to the operating system.

A standard 7.68TB SSD reports exactly 7.68TB to the host. The drive presents itself as 7.68TB, the OS allocates 7.68TB, and users can store 7.68TB of data (minus filesystem overhead).

The Roealsen 6 can present a logical capacity several times larger than its physical capacity, depending on compression characteristics. If compression achieves 4:1 ratios, the drive could report as 30.72TB to the host.

This creates interesting scenarios:

- Optimistic capacity reporting: If the drive reports 30TB but only achieves 2:1 compression, the OS thinks it's half-full when it's actually full

- Space management challenges: Traditional capacity monitoring doesn't work well; you need compression-aware monitoring

- Allocation accuracy: The drive needs to be intelligent about when to report out-of-space errors

This is solvable but non-trivial. The drive needs to track compression ratios in real-time and adjust reported capacity accordingly. If actual compression ratios drop below expected values, the drive must either compress harder (using more CPU) or gracefully handle write failures.

Tape storage solved this problem decades ago. LTO drives report both native and compressed capacity; administrators know to plan for the native capacity and celebrate if compression does better. SSD storage could adopt similar models.

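From an enterprise perspective, this can actually be elegant, as long as you do the math with the real compression ratio. Suppose a 7.68TB drive reports about 30TB, assuming 4:1, and your monitoring shows 10TB used and 20TB free. If your workload really compresses at 2:1, those 10TB occupy 5TB of flash, leaving 2.68TB of physical space: room for only about 5.4TB more data at 2:1, not 20TB. The math works if you understand the context.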
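
A short calculation makes the gap between the OS view and the physical reality concrete. The sketch reuses the 7.68TB and 4:1 figures from the text and assumes, for simplicity, that both stored and future data compress at one uniform ratio; `logical_free_tb` is an illustrative helper, not a vendor tool.

```python
def logical_free_tb(physical_tb: float, logical_used_tb: float, ratio: float) -> float:
    """Logical TB that still fits, assuming stored and future data compress at `ratio`."""
    physical_used = logical_used_tb / ratio
    return max(physical_tb - physical_used, 0.0) * ratio


physical = 7.68
reported = physical * 4      # capacity advertised on a 4:1 assumption
used = 10.0                  # logical TB the OS says are in use

print(f"OS view:     {reported - used:.2f} TB free")
print(f"Real at 2:1: {logical_free_tb(physical, used, 2.0):.2f} TB free")
print(f"At 2:1 the drive is full once {physical * 2:.2f} TB are stored")
```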

Comparing Compression-Based SSDs to Standard Gen 5 Drives

How does the Roealsen 6 actually compare to alternatives?

Compression-based approach (Roealsen 6):

- Advantages: Higher speeds with compressible data, higher effective capacity, better cost per gigabyte

- Disadvantages: Workload dependent, requires understanding of compression characteristics, higher power consumption, more complex implementation

Standard PCIe SSDs (conventional Gen 5 drives, or Gen 4 consumer drives like the Samsung 990 Pro and SK hynix Platinum P41):

- Advantages: Predictable performance, simple capacity model, lower power consumption, well-understood characteristics

- Disadvantages: Lower raw speeds, lower effective capacity, higher cost per byte

The choice depends on your workload:

Choose compression-based SSDs if:

- Your data is highly compressible (databases, logs, ML training data)

- You have massive scale where compression cost savings matter

- Your infrastructure team can manage compression-aware capacity planning

- You're willing to accept workload-dependent performance

Choose standard Gen 5 SSDs if:

- Your data is mixed or already compressed

- You need predictable, guaranteed performance

- Simplicity matters more than peak efficiency

- You're building consumer or small-business systems

The Future of Compression in Storage

Compression in SSDs isn't going away. The economics are too good, especially at hyperscale. But adoption will likely remain concentrated in enterprise for several years.

Consumer adoption depends on two things:

- Standardization: Storage industry standards need to define compression benchmarking and capacity reporting

- Simplification: Tools need to evolve so non-experts can understand compression characteristics

Industry trajectory suggests this is inevitable. As SSDs become cheaper and capacity becomes less of a constraint, efficiency becomes the differentiator. Compression is an efficiency multiplier.

Future developments might include:

- Compression algorithm options: Drives that let users choose compression algorithms for different workloads

- Deduplication integration: Combining compression with deduplication for even higher effective capacity

- Workload-aware compression: Machine learning that adjusts compression strategies based on observed workload patterns

- Better capacity reporting standards: Industry agreement on how to communicate compression characteristics

Microsoft, Google, and Amazon are probably already experimenting with compression-heavy architectures in their data centers. Once they standardize on it internally, the technology will trickle down to enterprise and eventually consumer markets.

Practical Implementation Considerations

If you're evaluating compression-based SSDs for your infrastructure, here's what you need to assess:

Data Characterization: Take representative samples of your actual production data. Run them through compression tools. Measure actual compression ratios for your specific workload. Don't use industry averages; use your data.
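One way to do this survey is sketched below. It assumes the drive compresses independent 4 KiB blocks, and it uses zlib at level 1 as a software stand-in for the drive's hardware codec. Both are assumptions, so treat the result as a rough estimate rather than a prediction of what the drive will report. Compressing whole files would usually overstate what a block-level engine achieves, which is why the sketch compresses each block on its own.

```python
import os
import zlib

# Assumption: the drive compresses independent 4 KiB blocks. Compressing
# whole files instead would overstate the ratio a block-level engine sees.
BLOCK_SIZE = 4096


def block_sizes(data: bytes, block_size: int = BLOCK_SIZE) -> tuple:
    """Return (raw_bytes, stored_bytes) after compressing each block independently.

    zlib level 1 is a software stand-in for the drive's (unknown) hardware codec.
    Blocks that don't shrink are counted at their raw size, as if stored uncompressed.
    """
    raw = stored = 0
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        raw += len(block)
        stored += min(len(zlib.compress(block, 1)), len(block))
    return raw, stored


def sample_directory(path: str, max_bytes_per_file: int = 256 * 1024) -> float:
    """Estimate the block-level compression ratio of files under `path`."""
    total_raw = total_stored = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            try:
                with open(os.path.join(root, name), "rb") as f:
                    data = f.read(max_bytes_per_file)  # sample the start of each file
            except OSError:
                continue  # skip unreadable files rather than abort the survey
            raw, stored = block_sizes(data)
            total_raw += raw
            total_stored += stored
    return total_raw / total_stored if total_stored else 1.0


if __name__ == "__main__":
    logs = b"GET /api/v1/orders 200 12ms user=alice\n" * 5000
    for label, data in (("log-like", logs), ("random", os.urandom(len(logs)))):
        raw, stored = block_sizes(data)
        print(f"{label}: {raw / stored:.2f}:1")
```

Point `sample_directory` at a copy of real production data. The random-bytes case in the demo behaves like encrypted data and should come out at essentially 1:1.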

Monitoring and Alerting: Build compression-aware monitoring. Standard capacity tools assume every logical byte consumes a physical byte, so they can't see what compression is doing. You need to track:

- Average compression ratios across the drive

- Compression ratio distribution (minimum, maximum, percentiles)

- Sustained throughput with realistic compression ratios

- Write amplification from compression overhead
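One subtlety in the first two items is that the "average" compression ratio depends on how you average. The sketch below summarizes per-block ratios from paired raw and stored byte counts, however you collect them. It reports both the aggregate ratio (total raw divided by total stored), which determines how much data actually fits, and the plain mean of per-block ratios, which can overstate it. The sample numbers are hypothetical.

```python
import statistics


def ratio_summary(raw_sizes: list, stored_sizes: list) -> dict:
    """Summarize per-block compression ratios from paired raw/stored byte counts.

    'aggregate' (total raw / total stored) is what determines how much data
    fits on the drive; the mean of per-block ratios can overstate it.
    """
    if len(raw_sizes) != len(stored_sizes) or len(raw_sizes) < 2:
        raise ValueError("need at least two paired samples")
    if any(s <= 0 for s in stored_sizes):
        raise ValueError("stored sizes must be positive")
    ratios = [r / s for r, s in zip(raw_sizes, stored_sizes)]
    cuts = statistics.quantiles(ratios, n=100, method="inclusive")  # 99 percentile cut points
    return {
        "aggregate": sum(raw_sizes) / sum(stored_sizes),
        "mean_of_ratios": statistics.fmean(ratios),
        "min": min(ratios),
        "p5": cuts[4],
        "p50": cuts[49],
        "p95": cuts[94],
        "max": max(ratios),
    }


if __name__ == "__main__":
    # Hypothetical sample: two blocks compress 4:1, two don't compress at all.
    summary = ratio_summary([4096] * 4, [1024, 1024, 4096, 4096])
    for key, value in summary.items():
        print(f"{key:>14}: {value:.2f}")
```

In that example, the mean of the per-block ratios is 2.5:1, but the aggregate is only 1.6:1. Capacity alerts should key off the aggregate figure.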

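Capacity Planning: Plan from physical capacity multiplied by the compression ratio you actually measure, not from the advertised logical capacity. If a 7.68TB drive reports 30TB (which assumes roughly 4:1) but your data achieves 2:1, plan around 15TB. Planning against native capacity alone is the most conservative option.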
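The arithmetic behind that guideline is sketched below using the article's 7.68TB native and roughly 30TB advertised figures. The `safety_margin` parameter is a hypothetical policy knob, not a vendor recommendation. The sketch rejects ratios below 1:1 on the assumption, consistent with the skip-compression behavior described for the Roealsen 6, that incompressible data is stored raw.

```python
def plannable_tb(native_tb: float, measured_ratio: float, safety_margin: float = 1.0) -> float:
    """Logical capacity to plan against: native NAND x measured ratio x margin.

    safety_margin is a hypothetical policy knob (e.g. 0.8 keeps 20% headroom).
    measured_ratio should come from your own data, not from a datasheet.
    """
    if native_tb <= 0 or measured_ratio < 1.0 or not 0 < safety_margin <= 1:
        raise ValueError("invalid capacity inputs")
    return native_tb * measured_ratio * safety_margin


def unreachable_tb(advertised_tb: float, native_tb: float, measured_ratio: float) -> float:
    """Advertised logical capacity that your data can never actually fill."""
    return max(advertised_tb - plannable_tb(native_tb, measured_ratio), 0.0)


if __name__ == "__main__":
    native, advertised, measured = 7.68, 30.72, 2.0  # TB, TB, ratio
    print(f"plan around:       {plannable_tb(native, measured):.2f} TB")
    print(f"with 20% headroom: {plannable_tb(native, measured, 0.8):.2f} TB")
    print(f"unreachable:       {unreachable_tb(advertised, native, measured):.2f} TB")
```

At 2:1, half of the advertised 30.72TB (15.36TB) is space your data can never use.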

Firmware Updates: Stay current with firmware. Compression algorithms and efficiency improvements often come through firmware updates. Unlike consumer SSDs, enterprise drives need active firmware management.

Failover and Redundancy: Understand failure modes. What happens if compression fails? Can the drive fall back to uncompressed operation? Is data integrity preserved? Enterprise systems need redundancy regardless, but understanding these failure modes matters.

Workload Testing: Don't deploy at scale without testing. Run your actual workloads against compression-based SSDs in a lab environment first. Measure real performance. Compare to standard SSDs. Make sure the benefits justify the complexity.

Conclusion: Are Compression-Based SSDs the Future?

The Roealsen 6 represents something genuinely interesting: a transparent, mathematically sound approach to breaking through conventional SSD speed barriers using hardware compression.

The 15,050 MB/s sequential reads aren't fake. They're real. They're achieved using a legitimate technique. The benchmarks are true. They're also conditional on achieving 2:1 compression ratios, which matters enormously for real-world deployment.

This is actually better than most enterprise marketing, which would show the number without any caveats. Dapu Stor was relatively transparent about compression dependence, even though some reviewers glossed over it.

For hyperscalers dealing with petabytes of compressible data, compression-based SSDs are obvious cost reductions. The economics work out. A 2:1 compression ratio means 50% less NAND for the same logical data, which can translate into a large cut in storage-tier capex, although controllers, servers, and power don't shrink in proportion.
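The NAND side of that claim follows from a simple relationship: the fraction saved is 1 - 1/ratio. The sketch below applies it to a hypothetical 1PB logical dataset. It covers NAND only and does not model controller, server, or power costs.

```python
def nand_needed_tb(logical_tb: float, ratio: float) -> float:
    """Physical NAND required to hold `logical_tb` of data at a given compression ratio."""
    if logical_tb < 0 or ratio < 1.0:
        raise ValueError("logical_tb must be >= 0 and ratio >= 1")
    return logical_tb / ratio


def nand_savings(ratio: float) -> float:
    """Fraction of NAND saved versus storing the same data uncompressed: 1 - 1/ratio."""
    return 1.0 - nand_needed_tb(1.0, ratio)


if __name__ == "__main__":
    logical = 1000.0  # hypothetical 1 PB logical dataset, in TB
    for ratio in (1.1, 1.5, 2.0, 3.0):
        print(f"{ratio}:1 -> {nand_needed_tb(logical, ratio):7.1f} TB NAND, "
              f"{nand_savings(ratio):.0%} saved")
```

The returns diminish. Going from 1:1 to 2:1 saves 50%, but going from 2:1 to 3:1 saves only about another 17 points. At the 1.1:1 typical of pre-compressed data, savings fall to roughly 9%.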

For enterprise buyers with mixed workloads, the decision is more complex. You need to understand your actual compression characteristics. You need monitoring and capacity planning approaches that account for compression. You need to be okay with workload-dependent performance.

For consumer and small-business applications, compression-based SSDs don't make sense. Consumer data is often already compressed. The complexity outweighs the benefits.

Dapu Stor's Roealsen 6 isn't going to replace all SSDs. But for the specific use cases where compression works, it's a legitimate performance and cost breakthrough. That matters. That's worth attention.

The broader lesson is that storage engineering isn't only about squeezing more performance out of the same architecture. Sometimes it's about choosing different architectures entirely. Compression is an architecture choice that works for some workloads and fails for others.

Understanding which category your workload falls into is the crucial skill. The technology is interesting. The application of that technology is what determines whether it's valuable.

FAQ

What is hardware compression in PCIe Gen 5 SSDs?

Hardware compression in SSDs is an automatic data compression process that occurs at the storage controller level before data is written to NAND flash memory. The Roealsen 6 uses a transparent compression engine that compresses data in real-time as it flows through the storage interface. This compression happens invisibly to the operating system and applications, with data being decompressed automatically when read back. Under ideal conditions, compression ratios can reach 4:1, allowing a physical 7.68TB drive to present as 30TB or more to the host system.

How does the Roealsen 6's transparent compression engine work?

The Roealsen 6 includes an application processor running custom Dapu Stor firmware paired with a dedicated compression engine. When data arrives through the PCIe 5.0 interface, the application processor evaluates whether compression is beneficial for that data. For highly compressible data like databases or logs, the processor sends the data to the hardware compression engine, which compresses it before writing to the 3D eTLC NAND. For incompressible data like encrypted content or already-compressed files, the processor may skip compression entirely to avoid overhead. Compression metadata is maintained so the drive knows which blocks are compressed and at what ratios, enabling transparent decompression during reads.
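To make that write/read flow concrete, here is a toy software model of per-block "compress or skip" with metadata. It is not Dapu Stor's firmware. The class name, the 4 KiB block size, the 12.5% minimum-savings threshold, and the use of trial compression with zlib to decide are all hypothetical choices made for illustration. Real controllers may use cheaper heuristics to make that decision in hardware.

```python
import os
import zlib
from dataclasses import dataclass

BLOCK_SIZE = 4096
# Hypothetical policy: keep the compressed copy only if it saves at least 12.5%.
MIN_SAVINGS = 0.125


@dataclass
class BlockMeta:
    compressed: bool  # whether the stored bytes must be decompressed on read
    stored_len: int   # physical bytes consumed on the simulated media


class ToyCompressingStore:
    """Toy model of transparent, per-block compression. Not Dapu Stor's firmware."""

    def __init__(self) -> None:
        self._media = {}  # lba -> stored bytes (stands in for NAND)
        self._meta = {}   # lba -> BlockMeta (the compression metadata)

    def write(self, lba: int, block: bytes) -> None:
        if len(block) != BLOCK_SIZE:
            raise ValueError("writes must be exactly one block")
        # Trial compression is one way to "evaluate" a block; zlib stands in
        # for the hardware engine.
        candidate = zlib.compress(block, 1)
        if len(candidate) <= BLOCK_SIZE * (1 - MIN_SAVINGS):
            self._media[lba] = candidate
            self._meta[lba] = BlockMeta(True, len(candidate))
        else:
            # Incompressible (e.g. encrypted) block: store raw so reads skip decompression.
            self._media[lba] = block
            self._meta[lba] = BlockMeta(False, BLOCK_SIZE)

    def read(self, lba: int) -> bytes:
        data = self._media[lba]
        return zlib.decompress(data) if self._meta[lba].compressed else data

    def is_compressed(self, lba: int) -> bool:
        return self._meta[lba].compressed

    def physical_bytes(self) -> int:
        return sum(meta.stored_len for meta in self._meta.values())


if __name__ == "__main__":
    store = ToyCompressingStore()
    store.write(0, b"INFO request ok\n" * (BLOCK_SIZE // 16))  # log-like, compressible
    store.write(1, os.urandom(BLOCK_SIZE))                   # random, like encrypted data
    print(store.is_compressed(0), store.is_compressed(1), store.physical_bytes())
```

The host only ever calls `write` and `read` with full, uncompressed blocks. The metadata map is what keeps the compression invisible to it.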

Why do the Roealsen 6 benchmarks achieve such high speeds?

The benchmark speeds reflect the mathematical advantages of compression combined with high-speed NAND. At a 2:1 compression ratio, the drive physically moves half as much data to and from the flash, which can roughly double host-visible throughput until another limit caps it. The most important of these limits is the PCIe 5.0 x4 link, at about 15.75 GB/s of raw bandwidth before protocol overhead. That ceiling is why 15,050 MB/s is already close to the practical maximum for a Gen 5 x4 drive. The measured 15,050 MB/s sequential reads and 14,200 MB/s sequential writes were achieved with highly compressible test data that compressed at 2:1 ratios. However, real-world performance depends entirely on whether your actual production data compresses at similar ratios. Data that doesn't compress well will achieve lower speeds, potentially approaching standard PCIe Gen 5 performance of 12,000-13,000 MB/s.
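A rough model of that interaction is sketched below. Host throughput is taken as NAND bandwidth times the compression ratio, capped by the PCIe 5.0 x4 link and, optionally, by the compression engine's own limit. The 7.5 GB/s NAND figure is hypothetical, not a Roealsen 6 specification. The model also ignores protocol overhead, so real numbers will be lower.

```python
# PCIe 5.0: 32 GT/s per lane with 128b/130b encoding. For an x4 link that is
# about 15.75 GB/s per direction before packet/protocol overhead.
PCIE5_X4_GBPS = 32 * 4 * (128 / 130) / 8


def host_throughput_gbps(nand_gbps: float, ratio: float,
                         link_gbps: float = PCIE5_X4_GBPS,
                         engine_gbps: float = float("inf")) -> float:
    """Idealized host-visible throughput for a compressing drive.

    Each byte of NAND bandwidth carries `ratio` bytes of host data, but the
    result can't exceed the link or the compression engine's own limit.
    """
    if nand_gbps <= 0 or ratio < 1.0:
        raise ValueError("nand_gbps must be > 0 and ratio >= 1")
    return min(nand_gbps * ratio, link_gbps, engine_gbps)


if __name__ == "__main__":
    nand = 7.5  # hypothetical NAND-side bandwidth in GB/s, not a Roealsen 6 spec
    print(f"link ceiling: {PCIE5_X4_GBPS:.2f} GB/s")
    for ratio in (1.0, 1.5, 2.0, 4.0):
        print(f"{ratio}:1 -> {host_throughput_gbps(nand, ratio):.2f} GB/s")
```

Under these assumptions, going from 2:1 to 4:1 buys almost nothing because the link is already nearly saturated. Higher ratios mainly help capacity, not speed.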

What types of data compress well on the Roealsen 6?

Structured databases typically compress to 2:1 to 3:1 ratios, making them excellent candidates for compression-based SSDs. Machine learning training datasets, logs, and analytics workloads also compress well, often achieving 3:1 to 5:1 ratios. Log files before rotation are particularly compressible at 4:1 to 6:1 ratios. Conversely, media files like MP4, JPEG, and PNG are already compressed and see minimal compression benefit. Encrypted data doesn't compress at all. The key is understanding your specific workload's compression characteristics before deploying.

Is the 2:1 compression ratio realistic for typical enterprise workloads?

It depends on the specific workload. For pure, uncompressed database storage, log aggregation, and raw machine learning training data, 2:1 compression is realistic and often conservative. However, much enterprise data is already pre-compressed. Databases using internal compression, backup systems using existing compression, and systems storing already-compressed media files will see minimal compression benefit, often below 1.1:1 ratios. Hyperscalers with carefully curated datasets often achieve 2:1 to 3:1 compression, which is why they benefit most from compression-based SSDs. You need to audit your actual data to determine realistic compression ratios.

How does compression-based SSD performance compare to standard PCIe Gen 5 drives?

At optimal compression ratios (2:1 or better), compression-based SSDs significantly outperform standard PCIe Gen 5 drives, which typically max out around 12,000-13,000 MB/s. The Roealsen 6 achieves 15,050 MB/s through compression assistance. However, at lower compression ratios (1.1:1 to 1.5:1), performance approaches standard Gen 5 speeds or can even fall below them due to compression overhead. For incompressible data, compression-based SSDs may perform worse than standard drives because the compression engine consumes resources without benefit. Standard PCIe Gen 5 drives offer predictable, consistent performance regardless of data characteristics, making them simpler for mixed-workload environments.

What is the power consumption impact of compression-based SSDs?

The Roealsen 6 draws approximately 18W under load, compared to 8-12W for typical consumer PCIe Gen 5 drives. The higher power consumption reflects the additional computational work involved in compression and the drive's sustained high-speed operation. In enterprise data centers, this matters because power budgets are constrained. However, if compression allows you to replace two standard 12W SSDs with one Roealsen 6, you're trading 24W for 18W, which is a net power saving. At scale, with many compression-based drives, the infrastructure-level power trade-offs can be favorable despite higher per-drive consumption.
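A fairer comparison is watts per terabyte of data actually stored, which depends on the achieved ratio. The sketch below uses the article's 18W and 12W figures. Giving the standard drive the same 7.68TB native capacity is a hypothetical like-for-like assumption.

```python
def total_watts(drive_count: int, watts_per_drive: float) -> float:
    """Aggregate draw for a group of identical drives."""
    return drive_count * watts_per_drive


def watts_per_effective_tb(watts: float, native_tb: float, ratio: float) -> float:
    """Power per TB of host data actually stored (native capacity x achieved ratio)."""
    if native_tb <= 0 or ratio < 1.0:
        raise ValueError("native_tb must be > 0 and ratio >= 1")
    return watts / (native_tb * ratio)


if __name__ == "__main__":
    # Article figures: ~18 W for the Roealsen 6, up to ~12 W for a standard drive.
    # Equal 7.68 TB native capacity for both is a hypothetical assumption.
    print(f"two standard drives: {total_watts(2, 12.0):.0f} W")
    print(f"one Roealsen 6:      {total_watts(1, 18.0):.0f} W")
    print(f"standard @1.0:1:     {watts_per_effective_tb(12.0, 7.68, 1.0):.2f} W/TB")
    for ratio in (1.0, 1.5, 2.0):
        print(f"Roealsen 6 @{ratio}:1:  {watts_per_effective_tb(18.0, 7.68, ratio):.2f} W/TB")
```

Under these assumptions, the break-even point is 18/12 = 1.5:1. Below that ratio, the compressing drive costs more power per stored terabyte. Above it, the compressing drive comes out ahead.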

How does capacity reporting work on compression-based SSDs?

Compression-based SSDs expose a logical capacity that assumes a particular compression ratio. A physical 7.68TB drive might present 30.72TB if it assumes 4:1 compression, or 15.36TB if it assumes 2:1. This creates capacity management challenges because traditional storage monitoring tools assume one logical byte consumes one physical byte. You need compression-aware monitoring that tracks the compression ratios actually achieved and how much physical NAND remains. Plan capacity conservatively around native physical capacity rather than reported logical capacity. The drive also needs to handle situations where compression ratios drop and the reported capacity becomes unreachable, either by compressing more aggressively or by failing writes gracefully once physical capacity is exhausted.
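A minimal sketch of such a check is below. How you obtain the four counters (vendor tools or telemetry) is drive-specific, so the field names are hypothetical. The projection also makes a simplifying assumption: new data is expected to compress like the data already on the drive.

```python
from dataclasses import dataclass


@dataclass
class DriveUsage:
    """Capacity counters in TB. Where these come from is drive-specific
    (vendor tools or telemetry); the field names here are hypothetical."""
    native_tb: float           # physical NAND capacity
    logical_exposed_tb: float  # capacity the drive advertises to the host
    logical_written_tb: float  # host data currently stored
    physical_used_tb: float    # NAND actually consumed by that data


def capacity_alerts(u: DriveUsage, physical_warn: float = 0.8) -> list:
    """Return human-readable alerts; an empty list means no action needed."""
    alerts = []
    physical_free = u.native_tb - u.physical_used_tb
    if u.physical_used_tb > 0:
        ratio = u.logical_written_tb / u.physical_used_tb  # achieved, not assumed
        # Simplifying assumption: new data compresses like existing data.
        projected = u.logical_written_tb + physical_free * ratio
        if projected < u.logical_exposed_tb:
            alerts.append(
                f"at {ratio:.2f}:1 only ~{projected:.2f} TB of the advertised "
                f"{u.logical_exposed_tb:.2f} TB will fit"
            )
    if u.physical_used_tb >= physical_warn * u.native_tb:
        alerts.append(f"physical NAND {u.physical_used_tb / u.native_tb:.0%} used")
    return alerts


if __name__ == "__main__":
    usage = DriveUsage(native_tb=7.68, logical_exposed_tb=30.72,
                       logical_written_tb=6.0, physical_used_tb=3.0)
    for alert in capacity_alerts(usage) or ["ok"]:
        print(alert)
```

In the demo, the drive looks only 20% full by logical capacity (6 of 30.72TB). At the achieved 2:1 ratio, however, just 15.36TB will ever fit, which is exactly the gap a 1:1 monitoring tool would miss.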

Is compression at the SSD level different from application-level compression?

Fundamentally yes. Application-level compression typically compresses files individually, requiring CPU resources and adding latency to every read. Hardware compression at the SSD controller level processes data streams in real-time without application awareness or intervention. Hardware compression is transparent and operates at line rate as data flows through the storage interface. Application compression requires intentional activation by software. Hardware compression also has different overhead characteristics and can optimize for the specific workload patterns the SSD observes, whereas application compression uses generic algorithms. Hardware compression is more efficient for sustained, high-throughput workloads.

When should you choose a compression-based SSD over a standard Gen 5 drive?

Choose compression-based SSDs when your data is known to be highly compressible (databases, logs, ML training data) and you operate at scale where compression's cost savings matter significantly. Also choose compression-based SSDs when your infrastructure team has the expertise to manage compression-aware capacity planning and monitoring. Standard PCIe Gen 5 drives are better for mixed or pre-compressed workloads, consumer systems, situations requiring predictable performance, or environments where simplicity is paramount. The decision ultimately comes down to understanding your specific workload's compression characteristics and whether the complexity is justified by the efficiency gains.

The Bottom Line

The Roealsen 6 R6101C represents a legitimate breakthrough in PCIe Gen 5 SSD performance, but one that requires careful evaluation of your specific workload characteristics. If you're running highly compressible enterprise data at hyperscale, this drive is genuinely interesting. If your data is mixed or already compressed, standard PCIe Gen 5 drives remain the simpler, more predictable choice.

Storage engineering isn't just about maximizing a single metric. It's about total system efficiency across cost, performance, power, and reliability. Compression-based SSDs shift that balance in specific directions that work brilliantly for some use cases and don't make sense for others.

Understanding which category you fall into is what separates good infrastructure decisions from expensive mistakes.

Key Takeaways

- Hardware compression in the Roealsen 6 R6101C achieves 15,050 MB/s sequential reads by reducing the physical data moved to and from NAND flash

- Performance is workload-dependent: compression works excellently for databases and logs (2:1-5:1 ratios) but provides no benefit for encrypted or already-compressed data

- Benchmark speeds are real but conditional—they depend on achieving 2:1+ compression ratios that don't apply to all data types

- Enterprise hyperscalers benefit most from compression-based SSDs due to scale, while consumer and mixed-workload systems should stick with standard PCIe Gen 5 drives

- Compression-based SSDs require new approaches to capacity planning, monitoring, and infrastructure management that traditional tools don't support
