Introduction: The Code That Writes Itself

Picture this. It's Monday morning, and instead of opening your laptop at the office, you're on your commute. You pull out your phone, message an AI assistant through Slack, and describe a bug that needs fixing or a new feature you want to add. By the time you get to the office, the code is ready. By lunchtime, it's in production.

This isn't science fiction anymore. This is how Spotify's best developers now work.

During Spotify's fourth-quarter 2025 earnings call in early 2026, co-CEO Gustav Söderström made a statement that stopped the tech industry in its tracks: the company's top developers "have not written a single line of code since December." Not because they'd taken leave. Not because they'd moved to different roles. Because AI had fundamentally changed how their work gets done.

This moment marks something bigger than a single company's productivity gain. It signals that we've crossed a threshold. AI coding assistance has moved from "nice to have" to "absolutely essential." From a tool developers use occasionally to accelerate routine tasks, to a system that completely reimagines the development workflow.

Spotify isn't just using ChatGPT or Claude in a standard way. The company built an internal system called Honk that integrates Claude's coding capabilities with remote deployment, real-time feedback, and a mobile-first workflow. It's a peek into what happens when you build AI tooling that actually fits how modern developers work.

But here's what makes this story really worth paying attention to: Spotify didn't just speed up feature shipping (though they did: more than 50 new features in 2025). They've restructured the entire software development process. They've shown that the bottleneck in modern development isn't thinking. It's execution. And when you remove the execution bottleneck with AI, everything changes.

The music streaming company shipped AI-powered Prompted Playlists, Page Match for audiobooks, and About This Song in just weeks. Features that would have taken teams months to prototype, test, and deploy are now rolling out at a pace that would've seemed impossible two years ago.

What's fascinating is that this isn't about replacing developers. The developers at Spotify are still essential. But their role has shifted dramatically. They're no longer the people typing code. They're becoming the people who define what code should do, who verify it's correct, and who shape the product strategy. They've become the creative directors of their own software.

In this article, we're going to break down exactly what Spotify is doing, how it changes development forever, and what it means for every company building software in 2025 and beyond.

TL;DR

- Spotify's top developers stopped writing code in December 2025 after deploying Honk, an internal AI system that uses Claude for automated code generation and deployment

- The system enables remote, real-time code changes via a mobile Slack interface, with engineers requesting features and AI handling implementation, testing, and deployment

- Productivity increased dramatically, with Spotify shipping 50+ new features throughout 2025 and rolling out major features like Prompted Playlists in weeks instead of months

- This represents a fundamental shift in development roles, moving engineers from code-writing to code-verification and product strategy

- The future of development isn't about removing developers—it's about shifting what developers do from execution to direction and oversight

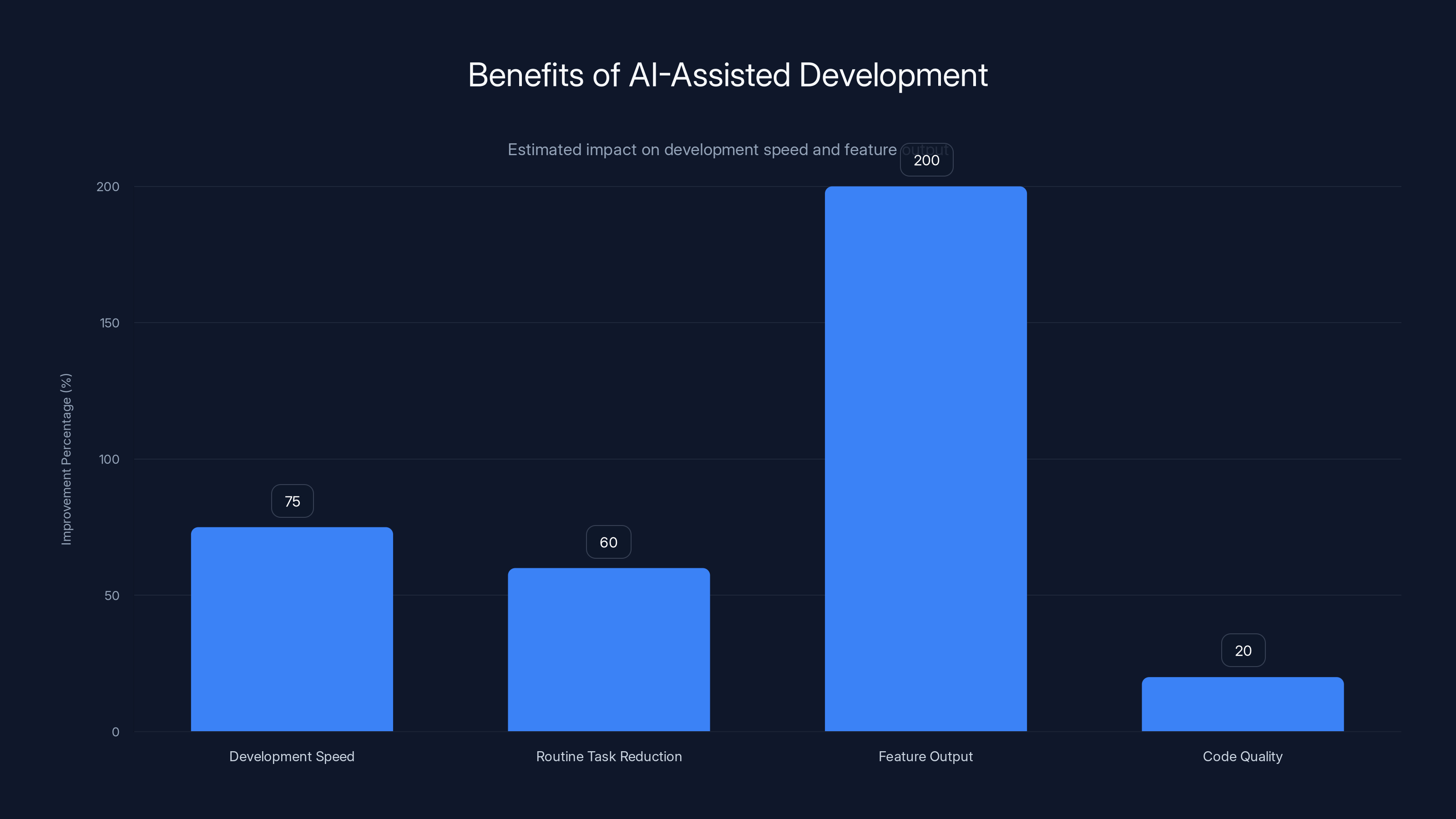

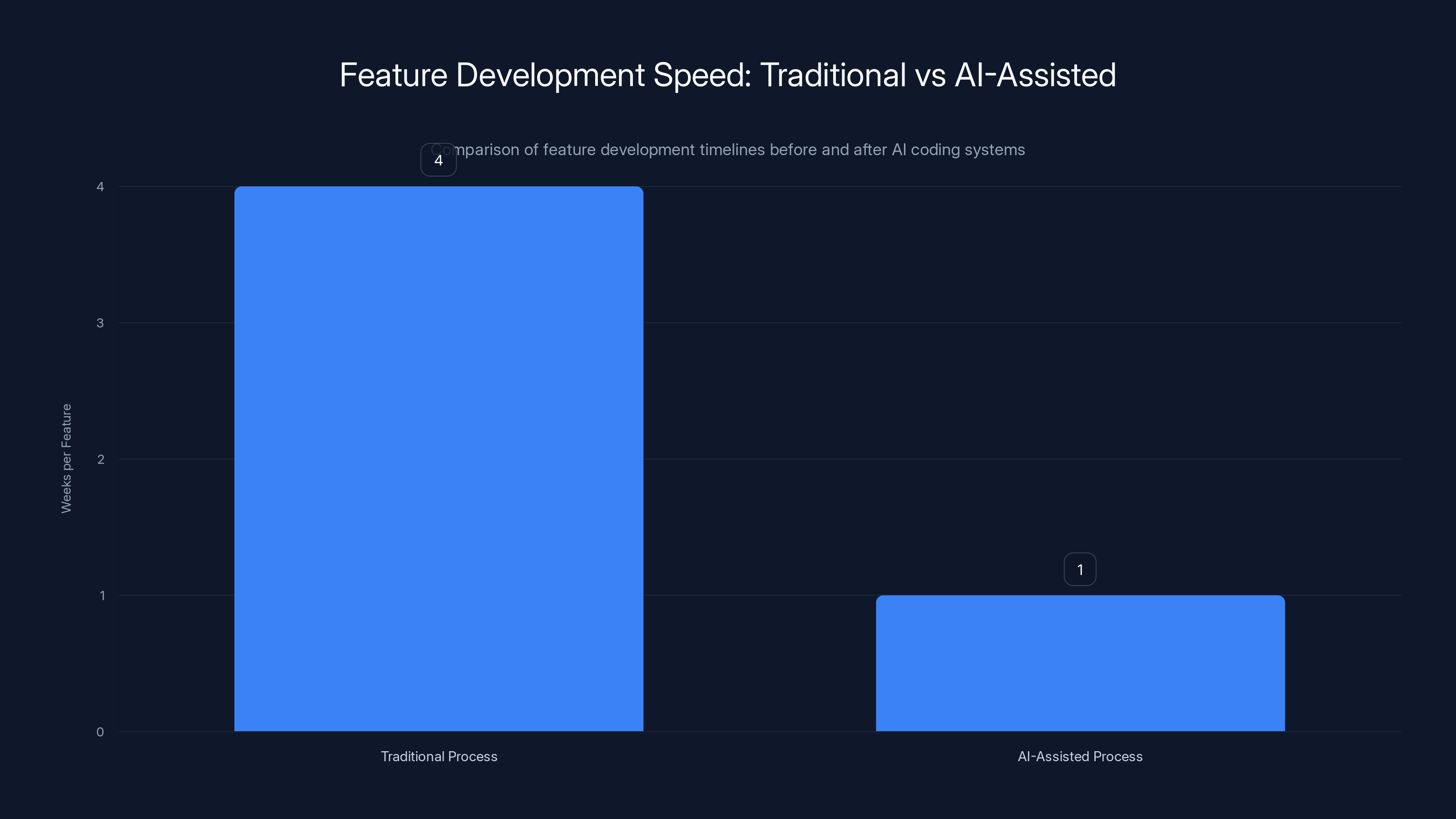

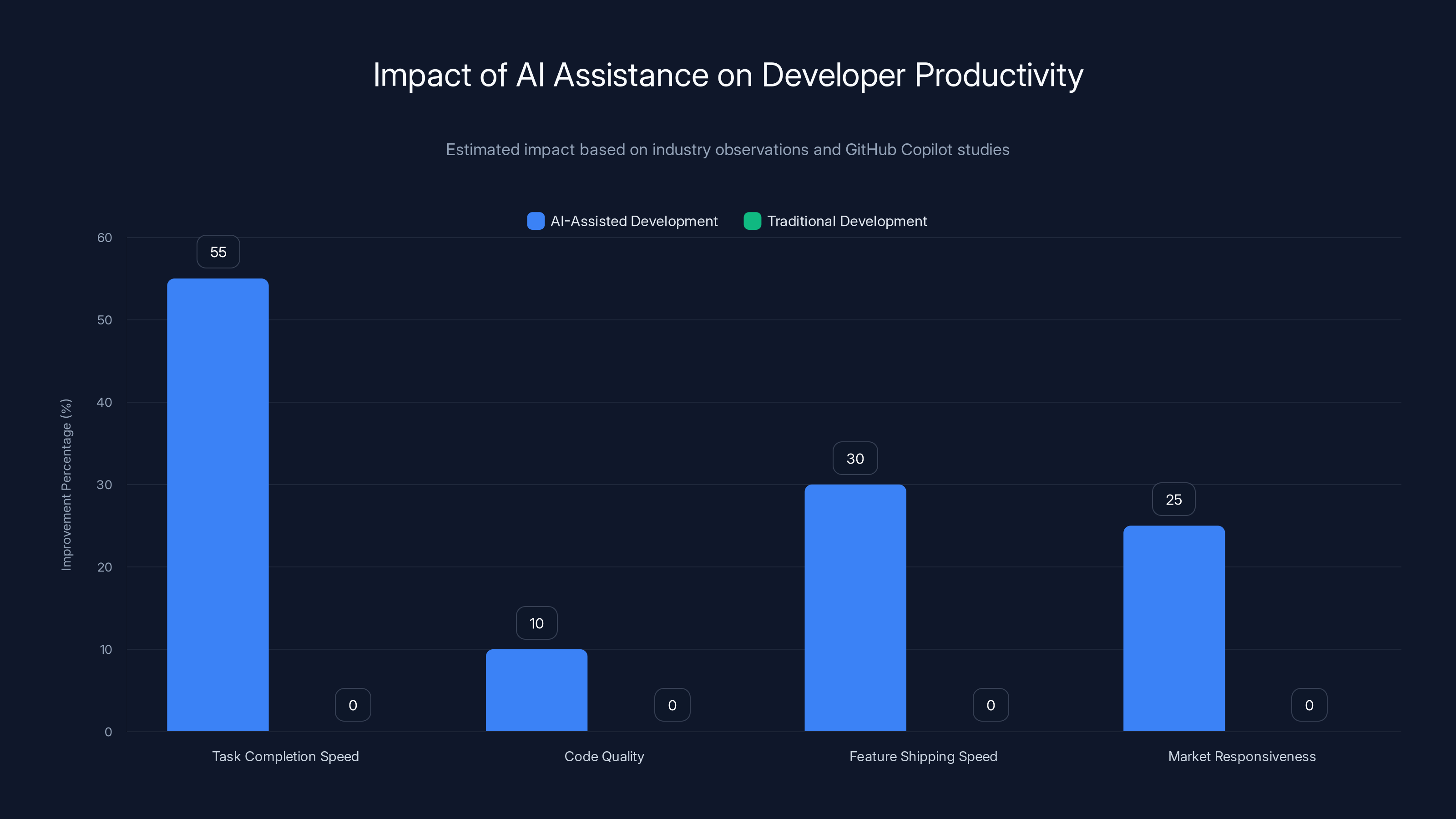

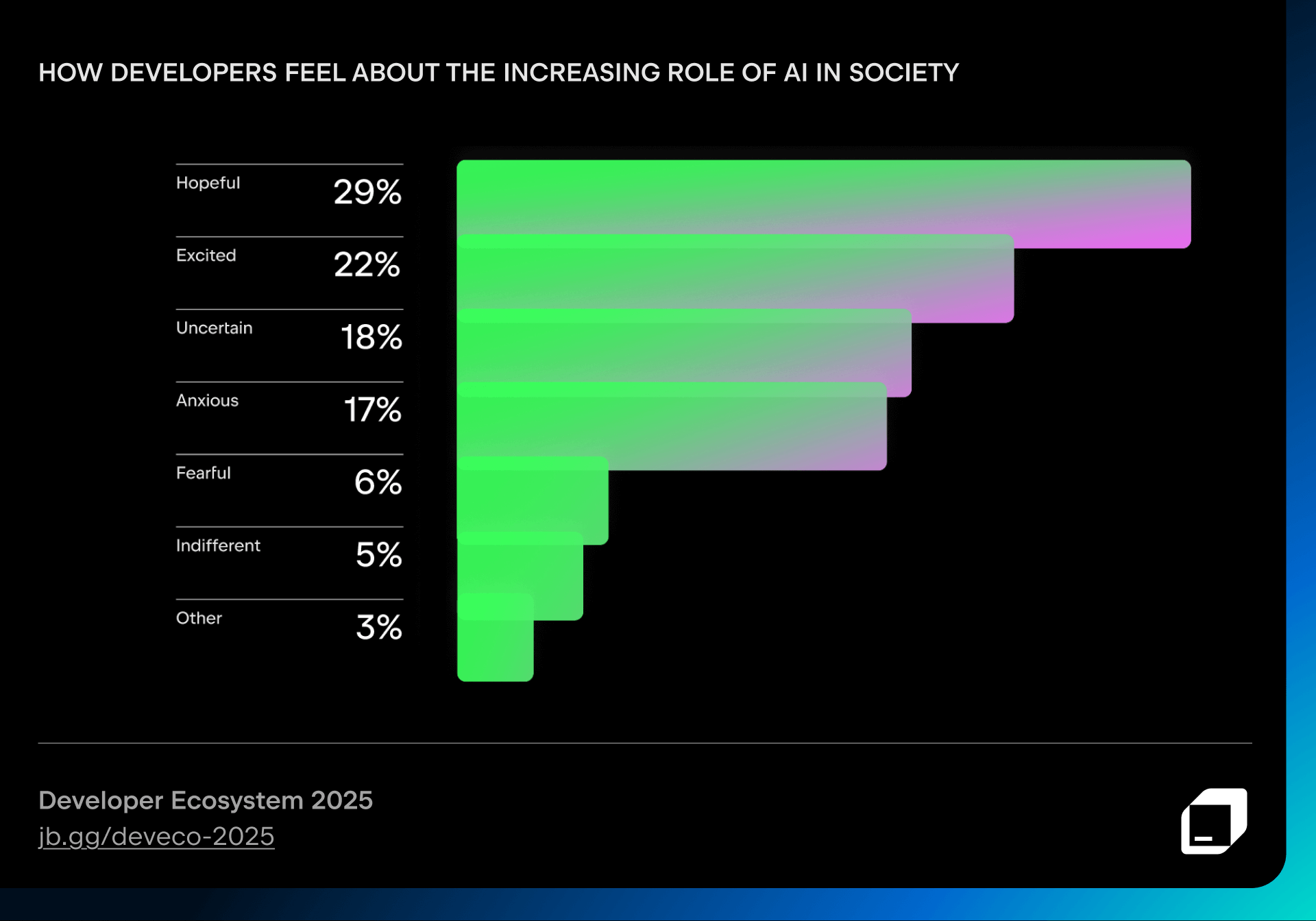

AI-assisted development can improve development speed by 50-100%, reduce routine tasks by 60%, increase feature output by 2-3x, and enhance code quality by 20%. Estimated data.

The Honk System: Spotify's AI Development Platform

Honk isn't a generic AI tool. It's a purpose-built system designed specifically for Spotify's development needs, and understanding how it works helps explain why the company's productivity has shifted so dramatically.

At its core, Honk integrates Claude, Anthropic's AI model, with several custom layers that Spotify built to fit its specific workflow. The system can handle remote code deployment, which means changes don't just get written; they get tested and pushed to production with minimal human intervention.

The magic happens at the intersection of three things: powerful AI coding, deep integration with existing systems, and a workflow that matches how developers actually work. Most developers don't sit at their desks waiting to write code. They're in meetings. They're reviewing pull requests. They're thinking about architecture. Honk brought the development environment to them instead of the other way around.

When an engineer at Spotify decides a feature needs to be built or a bug needs fixing, they don't necessarily need to be at their desk. They can describe what they want through Slack on their phone, during their commute, or between meetings. The system understands the request, generates appropriate code, runs tests, and pushes it for review—all before the engineer even walks into the office.

The system also learned something crucial from watching thousands of developer interactions: context matters. Honk doesn't just generate random code. It understands Spotify's codebase, its architectural patterns, its testing frameworks, and its deployment pipelines. It generates code that fits seamlessly into the existing system, which dramatically reduces the friction of code review and integration.

Spotify also built feedback loops into Honk. When code is generated, tests run immediately. If tests fail, the system learns what went wrong and regenerates. If the code passes but doesn't quite match the engineer's intent, they can provide feedback through Slack, and Honk iterates. This creates a conversation instead of a one-shot generation.
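The feedback loop described above can be sketched roughly as follows. This is a minimal illustration, not Honk's actual implementation, which Spotify has not published: `generate_patch` and `run_tests` are hypothetical stand-ins for a model call and a test runner, and the retry budget of three attempts is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class SuiteResult:
    passed: bool
    log: str  # failure output, fed back into the next attempt


def generate_until_green(
    request: str,
    generate_patch: Callable[[str, Optional[str]], str],  # hypothetical model call
    run_tests: Callable[[str], SuiteResult],              # hypothetical test runner
    max_attempts: int = 3,                                # assumed retry budget
) -> Optional[str]:
    """Regenerate a patch until the tests pass or the retry budget runs out."""
    feedback: Optional[str] = None
    for _ in range(max_attempts):
        patch = generate_patch(request, feedback)
        result = run_tests(patch)
        if result.passed:
            return patch       # ready for human review
        feedback = result.log  # retry with the failure log as extra context
    return None                # budget exhausted: escalate to a human


# Toy stand-ins so the sketch runs end to end.
def fake_generate(request: str, feedback: Optional[str]) -> str:
    return "patch-v2" if feedback else "patch-v1"


def fake_tests(patch: str) -> SuiteResult:
    return SuiteResult(passed=(patch == "patch-v2"), log="test_offline_load failed")


result = generate_until_green("fix offline playlist loading", fake_generate, fake_tests)
print(result)  # the second attempt passes, so this prints "patch-v2"
```

The important design choice is the bounded loop: a system like this presumably needs some point at which it stops regenerating and hands the problem back to a person.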

The deployment piece is equally important. Many AI coding tools stop after generating code. Honk keeps going. It can push changes to production (with appropriate approval workflows, of course). This means the entire journey from "I want a feature" to "it's live" can happen asynchronously and in minutes instead of days or weeks.

What makes this different from just using ChatGPT is the depth of integration. ChatGPT is a general-purpose tool. Honk is specialized. It understands Spotify's specific technologies, its team structure, its deployment process, and its quality standards. This specialization is what allowed it to reach a level of automation that completely removed the code-writing step for senior developers.

The system also handles something subtle but important: it maintains team knowledge. When Honk generates code and deploys it, the system logs what it did, why it did it, and what the results were. This creates a searchable archive of decisions and patterns that the whole team can learn from. It's like every successful solution gets added to the team's collective memory.

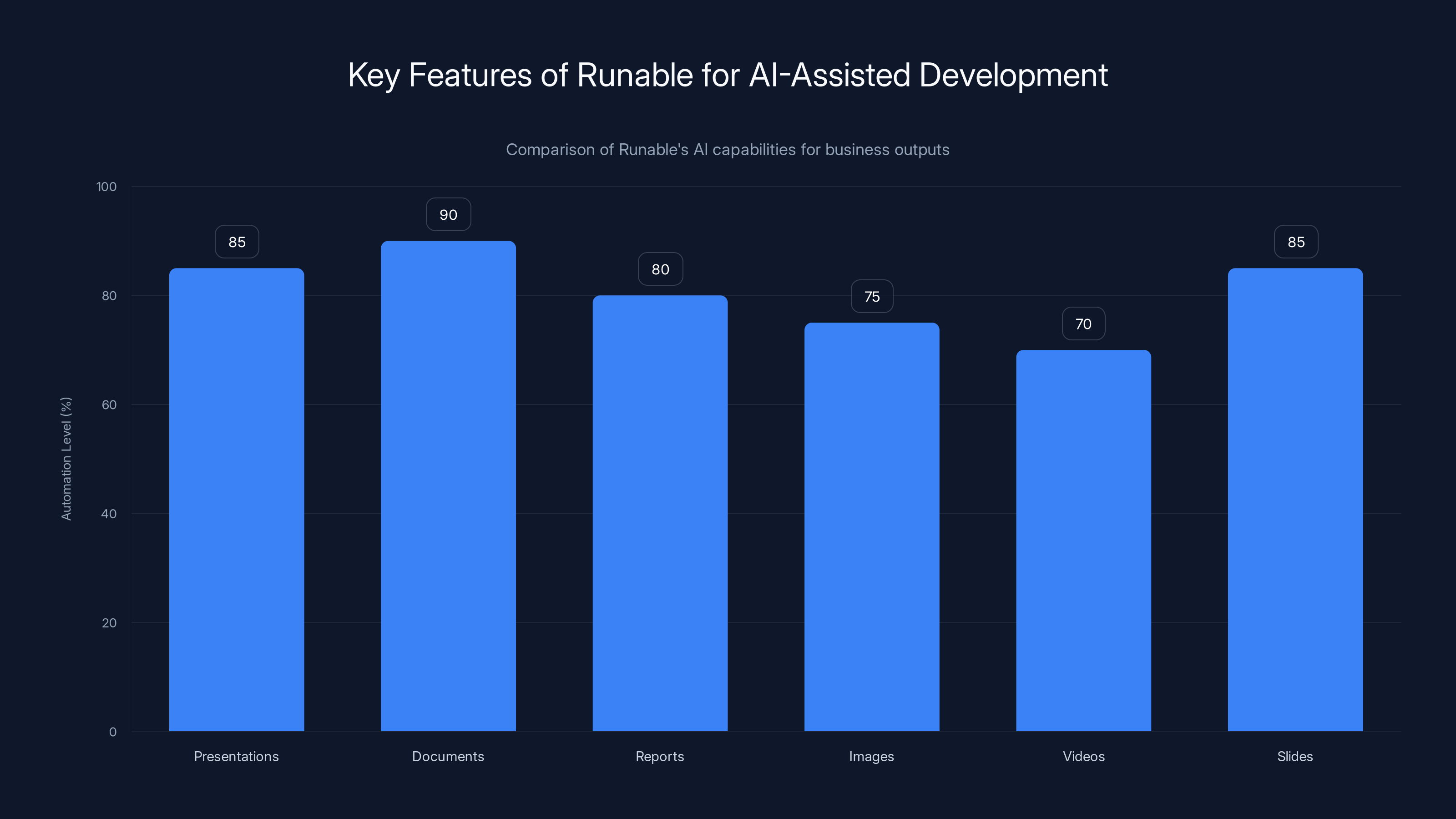


The Claude Code Integration: Why This AI Model Matters

Spotify didn't choose Claude by accident. Claude, developed by Anthropic, has specific strengths that make it uniquely suited for this kind of work.

First, Claude's reasoning capability is exceptional. When an engineer describes a complex feature request, Claude doesn't just pattern-match to similar examples in its training data. It actually reasons through what needs to happen, what edge cases might exist, and what the implementation should look like. This matters because bug fixes and feature additions in a streaming service like Spotify aren't simple. They involve complex state management, error handling, performance considerations, and integration with dozens of other systems.

Second, Claude has a strong understanding of code quality and best practices. It doesn't just generate working code—it generates code that's readable, maintainable, and efficient. This is crucial because code that works today but is unmaintainable tomorrow creates technical debt that compounds.

Third, Claude's ability to work with large context windows means it can understand multiple files of code simultaneously. When building a feature in Spotify's codebase, you often need to understand how different systems interact. Claude can hold all of that context in its analysis and generate code that integrates properly.

Fourth, and perhaps most important for Spotify's use case, Claude has strong safety training. When you're deploying code automatically to a production system that serves millions of users, you need an AI that understands the implications of its decisions. Claude has been trained to consider side effects, security implications, and potential failure modes. It's more conservative in its code generation, which is exactly what you want in production systems.

The integration also works because Claude can handle code review feedback and iterate. An engineer might look at generated code and say "This works, but I'd prefer a different approach because of performance considerations." Claude can take that feedback and regenerate. This iterative process mimics the way senior engineers mentor junior ones, except it's happening at machine speed.

One more thing that matters: Claude's training data includes not just open-source code but documentation, architectural patterns, and best practices across the entire software development ecosystem. This means it's not just capable of writing code—it's capable of writing the right code for the specific context.

For Spotify, this means when an engineer asks Claude to implement a feature for the iOS app, Claude understands not just iOS development patterns but Spotify's specific iOS architecture. It knows which libraries the team uses, which architectural patterns they prefer, and which performance characteristics matter in a streaming context.

What Happens When Code Writes Itself: Productivity Shifts

Let's talk numbers. Spotify shipped more than 50 new features in 2025. That's roughly one feature per week, every single week, for an entire year. For a company like Spotify—which serves millions of users, maintains incredibly high reliability standards, and operates globally—that pace is extraordinary.

But here's what's more important than the headline number: those features include complex ones. Prompted Playlists uses AI to understand user intent from natural language descriptions. Page Match for audiobooks requires sophisticated content matching and recommendation logic. About This Song involves real-time data integration and user interface complexity.

These aren't simple features. These are the kinds of features that would normally require multiple sprints of work. Yet Spotify is shipping them in weeks.

How is this possible? It comes down to where the bottleneck actually was.

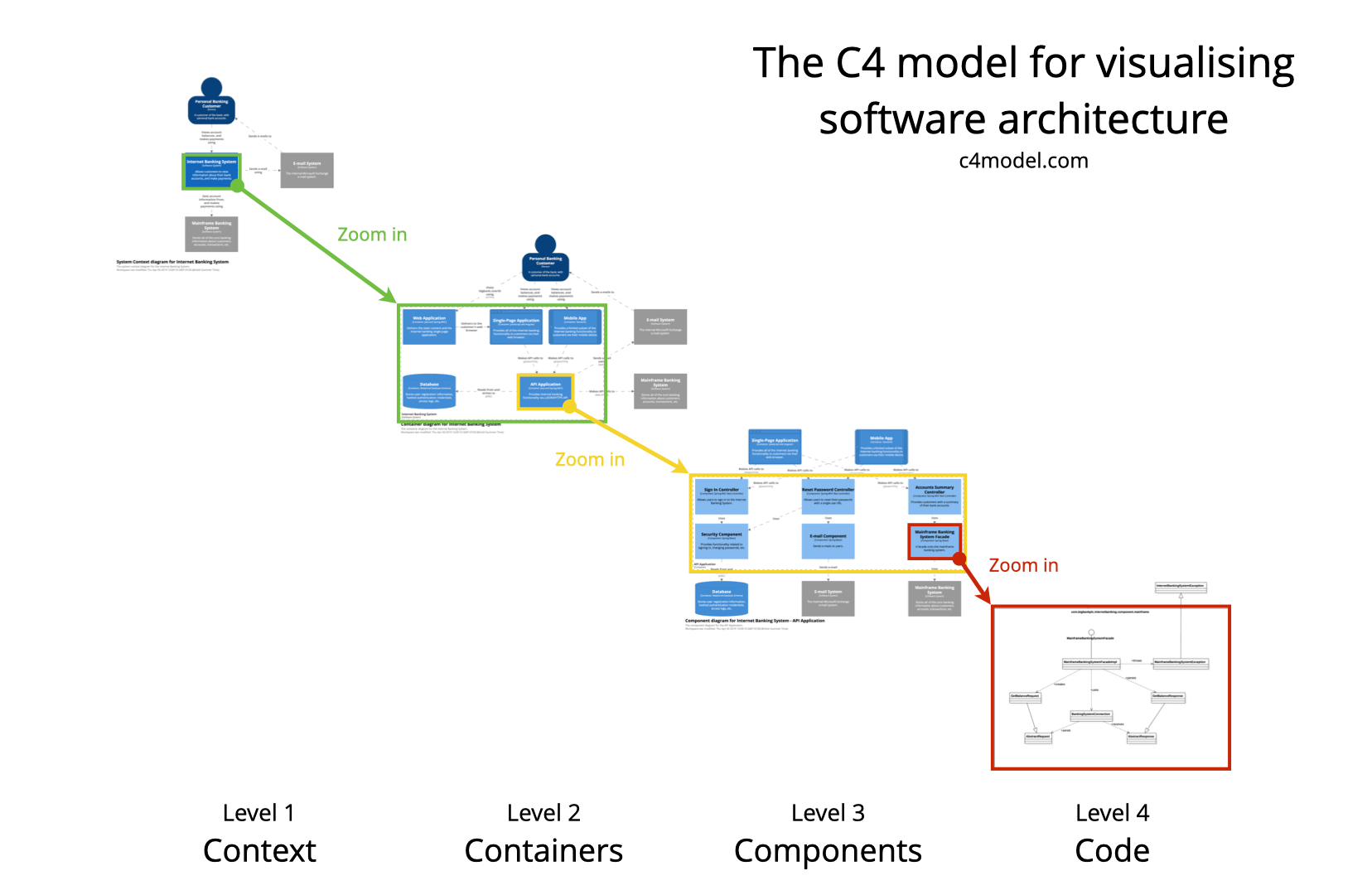

Before AI coding systems like Honk, the development process looked like this: designer creates mockups, product manager writes requirements, engineers discuss approach, senior engineer reviews the plan, engineers start writing code, code review happens, bugs are discovered, more code is written, tests are added, deployment happens, monitoring reveals issues, hot fixes are deployed. That's a lot of sequential steps. A lot of waiting. A lot of context switching.

With Honk, it looks more like this: designer creates mockups and product manager writes requirements, engineers describe the approach (this is important—the thinking still happens), engineer describes the feature to Claude through Slack, Claude generates the code and deploys it to a staging environment, engineer reviews the result and requests adjustments if needed, code goes to production. Fewer steps. More parallelization. Less waiting.

The time saved isn't just in typing. It's in removing the bottleneck that was keeping features from reaching users faster. The constraint changed from "how fast can developers write code" to "how fast can teams decide what to build."

Spotify also discovered something else: fewer bugs in initial deployments. This might sound counterintuitive. Shouldn't AI-generated code have more bugs? But Claude's code generation is systematic. It includes error handling, edge case management, and testing by default. Meanwhile, human developers under time pressure sometimes cut corners. The result is that Honk-generated code often passes tests more reliably than manually written code.

This creates a virtuous cycle. Faster feature deployment means faster user feedback. Faster user feedback means faster iteration. Faster iteration means products improve more quickly. This compounds over quarters and years.

Spotify's productivity shift also affected planning. The company can now pursue more ambitious features because the execution risk is lower. If a feature takes two weeks instead of six, you can afford to experiment more. You can build prototypes and get user feedback faster. This is why Spotify is shipping 50+ features per year instead of the 25-30 it might have managed before.

AI-assisted coding systems like Honk reduce feature development time from approximately 4 weeks to 1 week, enabling faster deployment of complex features. Estimated data.

The Role Transformation: From Code-Writing to Code Direction

Here's the thing that most people get wrong about AI in development: they think it means fewer developers. What Spotify's experience actually shows is something different. It means different developers.

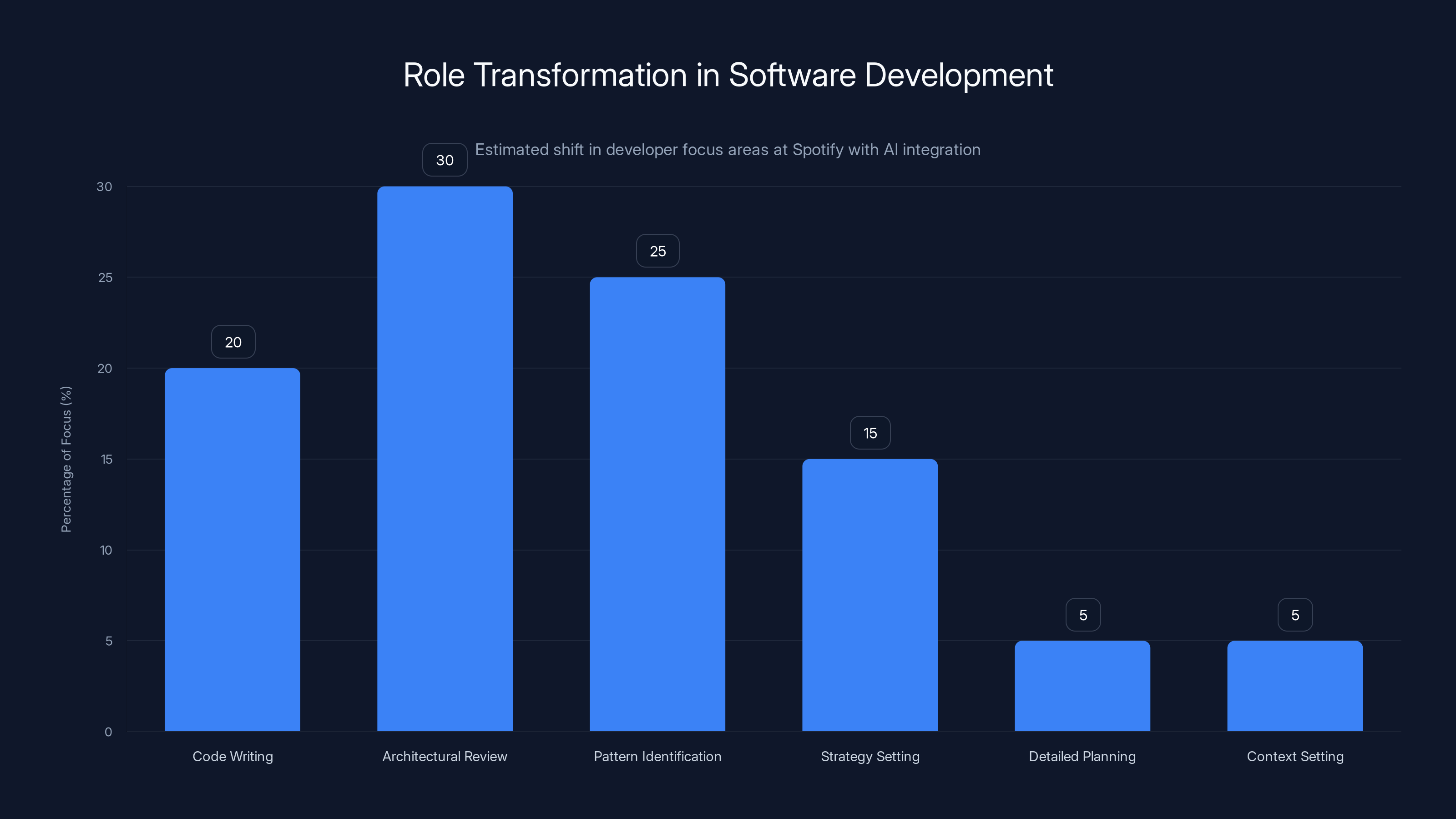

The developers at Spotify who stopped writing code didn't stop being developers. They changed what they develop. Instead of spending their time writing implementation code, they spend it on higher-level problems: architecture, system design, performance optimization, security, and product strategy.

This is actually what senior developers always wanted to do. But they couldn't, because someone had to write all the code. Now that someone is Claude.

The shift breaks down into several changes:

First, code review becomes architectural review. Instead of checking whether an if-statement is in the right place, reviewers are checking whether the approach makes sense for the system's overall design. This is more valuable work and requires deeper expertise.

Second, debugging becomes pattern identification. When something goes wrong, the developer isn't tracing through their own logic errors. They're looking at system behavior and identifying patterns. What architectural decision led to this issue? How do we prevent this class of problems? This is better work.

Third, testing becomes strategy-setting. Instead of manually testing features, developers set testing criteria and let AI handle execution. What test cases matter? What conditions should we verify? What performance benchmarks should we hit? Humans decide; AI executes.

Fourth, planning becomes more detailed. With faster execution, you can plan further ahead. Teams spend more time thinking through edge cases, future-proofing designs, and considering long-term implications before giving Claude the green light.

Fifth, mentorship becomes context-setting. Junior developers don't learn by watching someone type code anymore. They learn by understanding decisions. Why did we choose this architectural pattern? What are the tradeoffs? What would go wrong if we did it differently? Senior developers now spend time explaining their thinking, which is actually better for learning.

This transformation isn't just about productivity. It's about job satisfaction. Many senior developers find the code-writing part of their job tedious. They want to solve bigger problems. AI removes the tedium and lets them focus on the hard parts.

For junior developers, this is a double-edged sword. They don't get to learn by doing the grunt work of writing features. But they get to learn by understanding sophisticated decisions from day one. They engage with senior engineers' thinking faster.

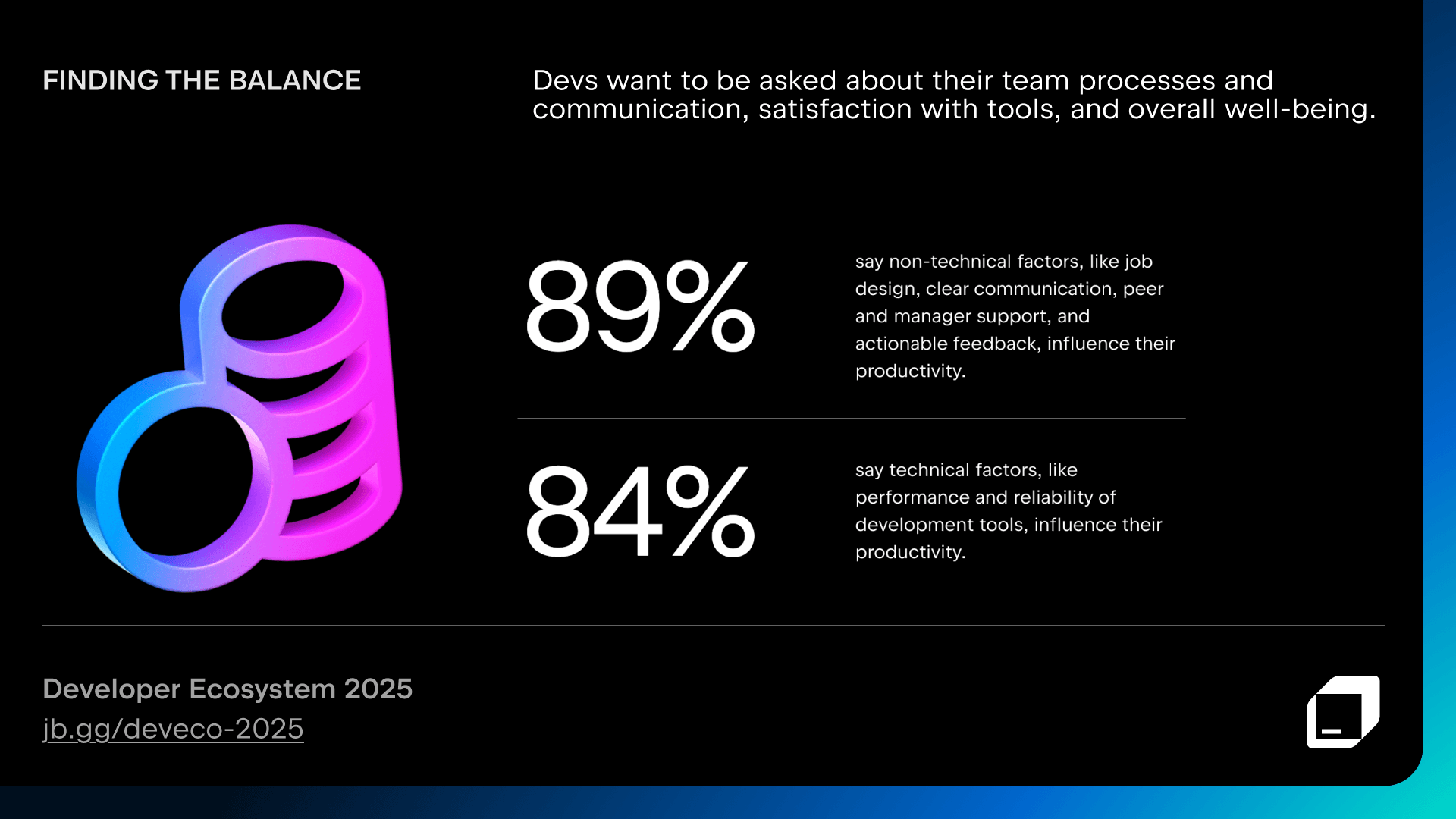

The catch is that this requires a shift in how development teams work. Teams need to invest in communication, in documentation, in explaining decisions. The things that were optional when everyone was writing code become essential when everyone is directing code.

Building a Music-Specific AI Dataset: Spotify's Competitive Advantage

Here's something that separates Spotify's situation from every other company trying to use AI coding. Söderström mentioned something that might have seemed throwaway but is actually profound: Spotify is building a dataset that no one else can build.

It's not just a coding dataset. It's a music understanding dataset.

Consider the question: what is workout music? There's no objectively correct answer. An American might say hip-hop. A Scandinavian might say heavy metal. An Australian might say EDM. The "right" answer depends on context, culture, individual preference, and countless other factors.

Spotify has access to millions of playlists, billions of listening sessions, and patterns that show how people actually use music in different contexts. The company can see what people listen to when they say they're working out, when they say they're relaxing, when they say they're concentrating. This is data that reflects reality, not data from Wikipedia or music journalism.

Why does this matter for development? Because when you're building music features, you need to understand music in a way that abstract datasets don't capture. Recommended playlists need to make sense in the context of music taste. Search needs to understand ambiguous queries. Features need to anticipate user needs based on actual listening behavior.

Spotify can train its internal AI models on this dataset. These models become uniquely qualified to understand what music recommendations make sense, what features users actually want, and what music-related problems need solving. This creates a competitive moat that other companies can't easily replicate.

OpenAI could train a general music recommendation model. But Spotify can train a model on actual usage patterns of hundreds of millions of people listening to every genre of music in every context imaginable. That's not a marginal advantage. It's a fundamental difference.

This dataset advantage compounds with development speed. Every feature Spotify ships generates more usage data. Every user interaction provides more signal. The AI models improve. The next features are even better. The next features ship faster. More data is collected. This cycle repeats.

Over time, this means Spotify's music AI features become meaningfully better than what competitors can build. Not because the company has more engineers, but because it has data that can't be commoditized the way generic internet data can be.

Söderström explicitly stated that this dataset "does not exist at this scale" anywhere else. And he's probably right. Most companies don't have access to billions of music listening sessions. Spotify does. This is a competitive advantage that accelerates as the company uses AI to ship features faster, which generates more data, which improves the models.

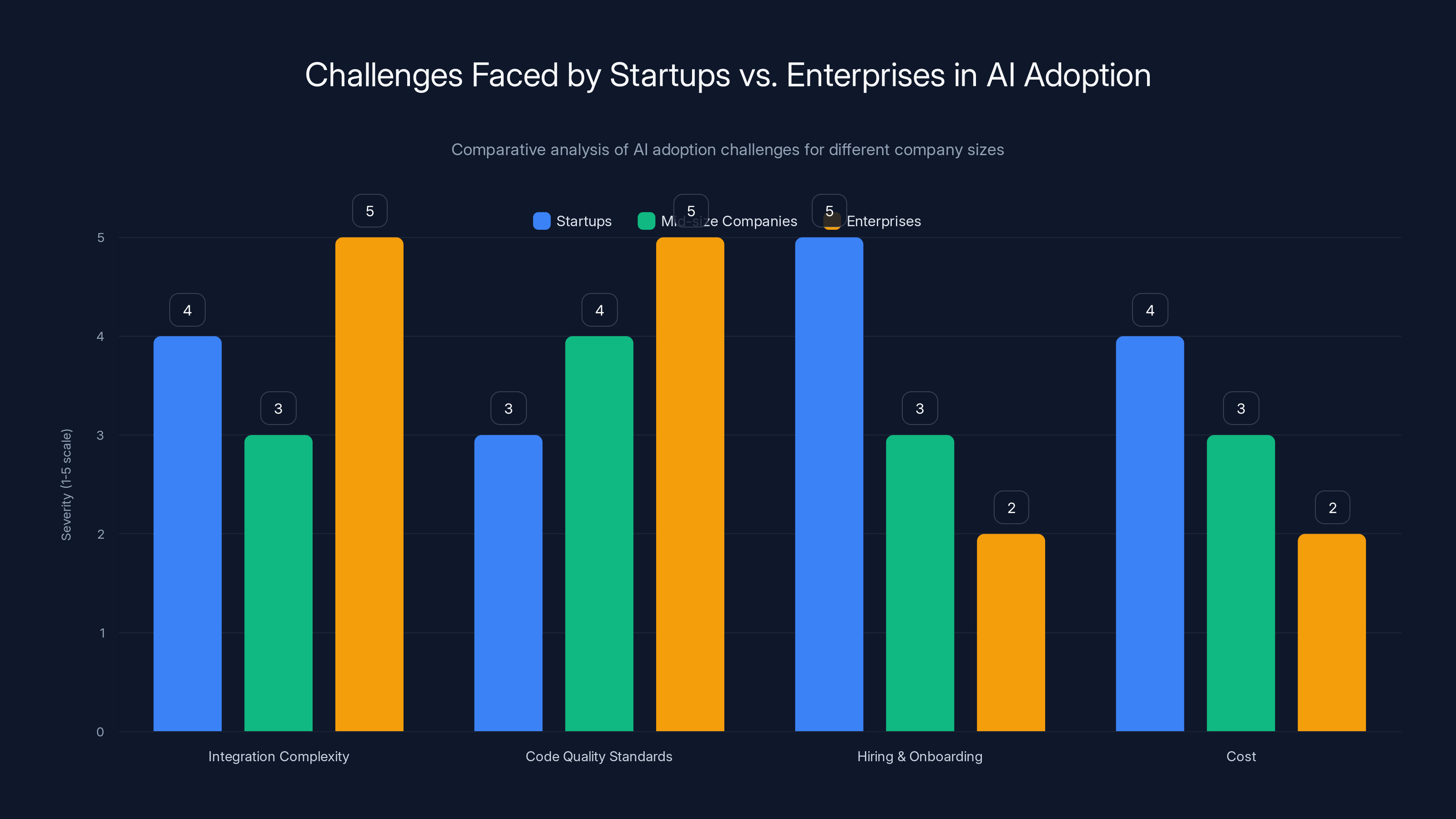

Startups face high hiring and cost challenges, while enterprises struggle with integration and code standards. Mid-size companies are well-positioned with moderate challenges.

AI-Generated Music: The Policy Challenge

Spotify is building AI that can generate music. This creates a fascinating policy challenge, and how the company handles it says a lot about the future of AI in creative industries.

The company's approach is measured: artists and labels can indicate in metadata how a song was made. If it's AI-generated or heavily AI-assisted, they can mark it. This creates transparency without banning the technology.

But Spotify is also actively policing spam. There are incentives to flood the platform with AI-generated music that costs almost nothing to produce. Without policing, this could overwhelm the system. Spotify needs to keep the signal-to-noise ratio high, which means filtering out low-quality AI-generated music while allowing high-quality work.

This is a tricky balance. The company doesn't want to ban AI-generated music—some of it is genuinely good and represents new creative possibilities. But it also doesn't want the platform to become a dumping ground.

The solution they're implementing—metadata transparency plus active spam filtering—is pragmatic. It's not perfect, but it acknowledges that you can't un-invent AI. You can only set rules for how it participates in creative ecosystems.

For developers using AI to build features (which is what Honk enables), this sets an important precedent. The value of AI is in augmenting human creativity and decision-making, not replacing it. The developer still decides what matters. The AI handles execution. The output is better because both human judgment and AI capability are involved.

Spotify's metadata approach is something other platforms might borrow. Instead of banning AI-generated content, you tag it. Users can then decide whether they want to engage with it. Creators can decide whether they're comfortable with AI involvement. This respects human agency while acknowledging that AI is part of the creative landscape.
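One way to picture the tag-plus-filter approach is a provenance field on each track and a separate spam check. The sketch below is purely illustrative: the field names, the `Provenance` values, and the upload-rate threshold are assumptions, not Spotify's actual schema or spam policy.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Iterable, List


class Provenance(Enum):
    HUMAN = "human"
    AI_ASSISTED = "ai_assisted"
    AI_GENERATED = "ai_generated"


@dataclass(frozen=True)
class Track:
    title: str
    uploader: str
    provenance: Provenance  # self-declared by the artist or label (hypothetical field)


def is_probable_spam(track: Track, uploads_last_day: Dict[str, int], limit: int = 100) -> bool:
    """Judge by uploader behaviour, not provenance, so AI-made tracks stay allowed."""
    return uploads_last_day.get(track.uploader, 0) > limit  # assumed threshold


def visible_tracks(
    tracks: Iterable[Track],
    uploads_last_day: Dict[str, int],
    hide_ai_generated: bool = False,  # listener preference
) -> List[Track]:
    """Drop probable spam for everyone; hide AI-generated tracks only on request."""
    kept = []
    for track in tracks:
        if is_probable_spam(track, uploads_last_day):
            continue
        if hide_ai_generated and track.provenance is Provenance.AI_GENERATED:
            continue
        kept.append(track)
    return kept


catalog = [
    Track("Morning Run", "indie_band", Provenance.HUMAN),
    Track("Synth Dreams", "solo_producer", Provenance.AI_GENERATED),
    Track("Track 48213", "bulk_uploader", Provenance.AI_GENERATED),
]
uploads = {"indie_band": 2, "solo_producer": 1, "bulk_uploader": 5000}

print([t.title for t in visible_tracks(catalog, uploads)])
print([t.title for t in visible_tracks(catalog, uploads, hide_ai_generated=True)])
```

Keeping the spam check independent of the provenance tag mirrors the balance described in this section: a tagged AI track from a normal uploader stays visible by default, while bulk uploads are filtered regardless of how they were made.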

The Broader Industry Implications: This Changes Everything

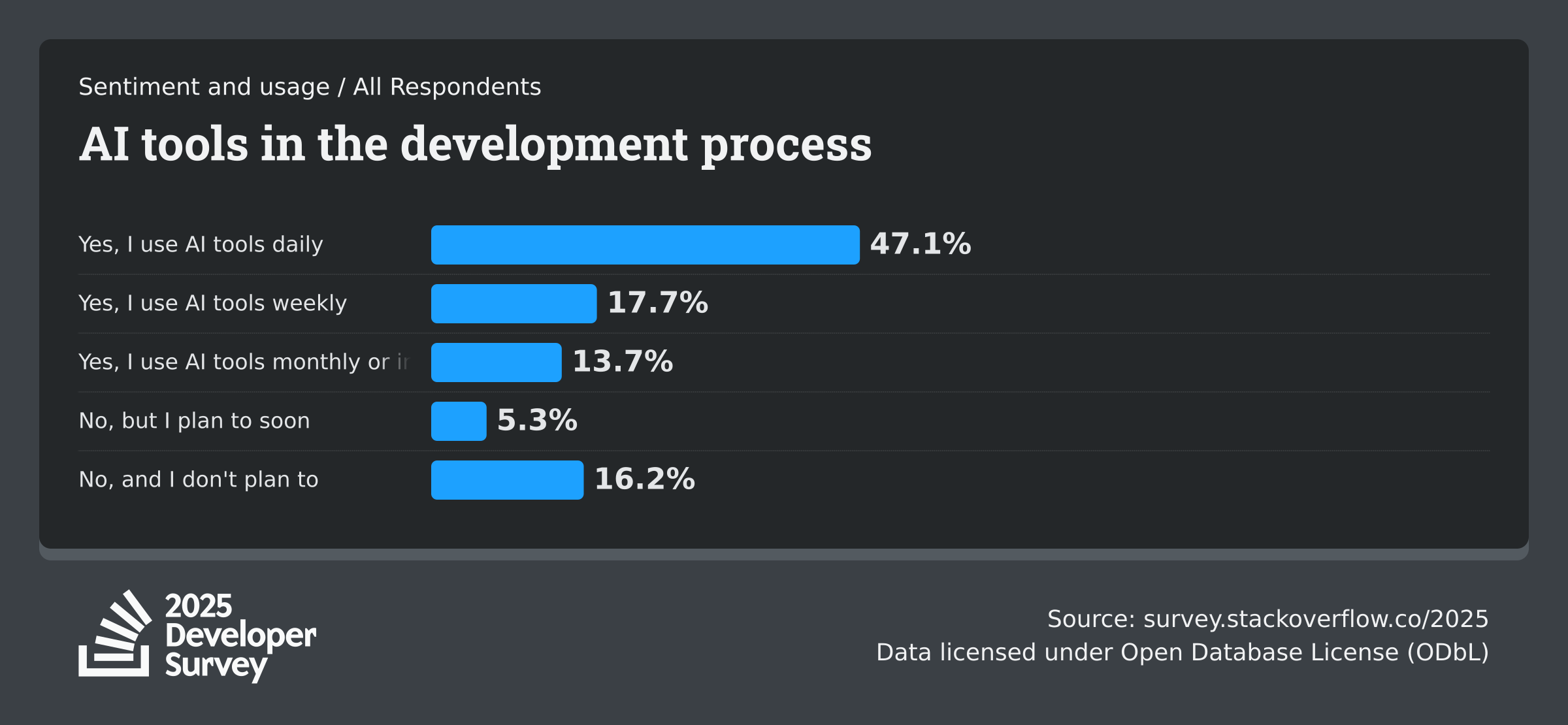

What Spotify is doing matters because Spotify is big and visible. When Spotify's co-CEO says their best developers stopped writing code, the entire industry pays attention.

The implications ripple outward in multiple directions.

First, it signals that AI coding has crossed from experimental to practical. This isn't a research project. This is production software serving millions of users being built with AI assistance. This is real.

Second, it reframes the competition in software development. Companies aren't competing on how fast developers can type. They're competing on how well they can leverage AI, how well they can structure workflows around AI, and how well they can maintain quality with AI-assisted development. The companies that figure this out first gain massive advantages.

Third, it suggests that coding as we know it might be fundamentally changing. Not disappearing—changing. Just like how spreadsheets didn't eliminate accountants but changed what accountants do, AI won't eliminate developers but will change what developers do.

Fourth, it raises questions about developer skill development. If junior developers don't spend time writing code, how do they learn? The answer is that they learn differently—by understanding decisions, by reviewing AI-generated code, by engaging with architectural thinking earlier. This is actually better learning, but only if companies structure it that way.

Fifth, it creates economic shifts. Companies that adopt AI-assisted development faster will ship features faster and respond to market changes faster. This is a powerful competitive advantage. Companies that don't adopt it will start to lag. Over a few years, this compounds into major market share shifts.

Sixth, it changes what companies look for when hiring developers. Instead of asking "can you write clean code quickly," companies will ask "can you think architecturally, can you direct AI effectively, can you review AI output critically." This fundamentally changes interview questions, hiring criteria, and developer career paths.

Seventh, it creates new failure modes. What happens when AI-generated code makes an assumption that's correct in the test environment but wrong in production? What happens when AI-generated code creates subtle security vulnerabilities? These risks exist with human-written code too, but they're different with AI code. Managing these risks becomes a key competency.

AI-assisted development significantly boosts task completion speed by 55% and improves other aspects like code quality and market responsiveness. Estimated data based on industry insights.

Productivity Gains in Numbers: The Math Behind the Speed

Let's quantify what Spotify is experiencing to understand whether this could apply to other companies.

Assume Spotify has roughly 2,000 developers (a reasonable estimate for a company of its size), that writing code takes about 30% of a developer's work week (the rest being meetings, planning, code review, debugging, and deployment), and, as a further assumption, that the work week is 40 hours. That's:

2,000 developers × 40 hours × 30% = 24,000 code-writing hours per week

If Honk and Claude eliminate 80% of that code-writing work through generation and automation:

24,000 hours × 80% = 19,200 hours freed per week

If those freed-up hours are redirected to higher-value activities (architecture, optimization, strategic features, mentoring), and if those activities have higher output per hour, the productivity multiplier could be 1.5x to 2.5x for that recaptured time:

19,200 hours × 1.5 to 2.5 = 28,800 to 48,000 hours of equivalent output per week

Against a baseline of 80,000 total developer-hours per week, that is roughly 36% to 60% more output. Allowing for uncertainty in the inputs, a 20-80% increase in feature shipping would be realistic for companies that properly restructure around AI-assisted development. Spotify's 50+ features per year against what might have been 25-30 features previously sits at or just above the upper end of that range.
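For readers who want to plug in their own numbers, the back-of-envelope estimate above can be parameterized as a small function. The 2,000 developers, 30% coding share, 80% automation share, and 1.5x to 2.5x multiplier come from the text; the 40-hour week is an assumption, and `weekly_uplift` is a hypothetical helper name. It also assumes the AI fully reproduces the code-writing output it takes over, so the redirected hours count as pure gain.

```python
def weekly_uplift(
    developers: int = 2_000,         # the article's estimate
    hours_per_week: float = 40.0,    # assumption: not stated in the article
    coding_share: float = 0.30,      # share of the week spent writing code
    automated_share: float = 0.80,   # share of code-writing handed to AI
    multiplier: float = 1.5,         # output per recaptured hour vs. baseline
) -> dict:
    """Back-of-envelope estimate of extra output from redirected hours."""
    total_hours = developers * hours_per_week
    coding_hours = total_hours * coding_share
    freed_hours = coding_hours * automated_share
    equivalent_hours = freed_hours * multiplier
    return {
        "coding_hours": coding_hours,
        "freed_hours": freed_hours,
        "equivalent_hours": equivalent_hours,
        "uplift_vs_total": equivalent_hours / total_hours,
    }


for m in (1.5, 2.5):
    r = weekly_uplift(multiplier=m)
    print(f"multiplier {m}: {r['freed_hours']:,.0f} h freed, "
          f"uplift {r['uplift_vs_total']:.0%}")
```

Note that the uplift ratio does not depend on headcount or hours per week: it is simply coding share × automated share × multiplier, so a smaller team with the same proportions would see the same percentage.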

But here's the catch: you don't automatically get these gains. You have to:

- Build or adopt a system like Honk that integrates AI into your workflow

- Change how developers work, which requires culture shift

- Invest in training developers to work with AI

- Build safety checks and review processes for AI-generated code

- Maintain code quality standards

- Update deployment and monitoring systems

Companies that do all of this see the gains Spotify is seeing. Companies that just plug in Claude without changing anything else see much smaller gains, maybe 10-20%.

The Security and Reliability Question: Can You Trust AI-Generated Code?

This is the question that keeps engineering leaders awake at night. Spotify is deploying AI-generated code to millions of users. What if something goes wrong?

The honest answer is: something will go wrong sometimes. AI-generated code can have bugs, security issues, or unexpected behavior. But several things mitigate this:

First, Claude's code generation includes error handling and edge case management by default. The AI is conservative—it adds checks, validations, and fallbacks. This actually makes the code safer in many ways than code written under time pressure.

Second, testing is automatic. Honk doesn't just generate code and deploy it. It runs the test suite. If tests fail, the code doesn't get deployed. This catches obvious bugs immediately.

Third, Spotify has sophisticated monitoring. Even if code makes it to production, the monitoring systems can detect anomalies and trigger alerts. The company can roll back or fix issues quickly.

Fourth, humans still review code before it goes to production in most cases. Developers look at what Claude generated and decide whether it makes sense. This catches issues that automated testing might miss.

Fifth, Spotify has been shipping features and monitoring for decades. The company understands failure modes, has incident response processes, and can handle issues when they arise. They're not a startup deploying AI code for the first time.

Sixth, the stakes matter. If you're using AI to generate features in a music streaming service, the impact of failures is limited. The worst case is that a playlist recommendation is wrong, or a feature isn't available for a few hours. This is different from using AI to generate code for medical devices or critical infrastructure, where failures could have severe consequences.

That said, there are real risks that companies need to manage:

- Security vulnerabilities: AI-generated code might have security issues that pass tests

- Performance problems: Code that works but is inefficient at scale

- Subtle logic errors: Cases where the code is technically correct but doesn't match the engineer's intent

- Dependency issues: Code that breaks when libraries are updated

- Cascade failures: One piece of AI-generated code breaking another piece

Managing these requires:

- Security-focused code review, not just functional review

- Performance testing and optimization

- Clear communication about what features should do

- Regular dependency updates and compatibility testing

- Robust monitoring and alerting

- Quick rollback capability
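Several of these safeguards reduce to simple gates. The sketch below shows one possible shape for a pre-deploy check and a post-deploy rollback trigger. The `Change` fields, the reason strings, and the 2x error-rate tolerance are all illustrative assumptions, not a description of Spotify's pipeline.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Change:
    tests_passed: bool
    security_review_done: bool
    human_approved: bool


def blocking_reasons(change: Change) -> List[str]:
    """Return every reason the change may not ship; an empty list means go."""
    reasons = []
    if not change.tests_passed:
        reasons.append("test suite failed")
    if not change.security_review_done:
        reasons.append("security review missing")
    if not change.human_approved:
        reasons.append("no human approval")
    return reasons


def should_roll_back(baseline_error_rate: float, current_error_rate: float,
                     tolerance: float = 2.0) -> bool:
    """Roll back when errors exceed `tolerance` times the pre-deploy baseline."""
    return current_error_rate > baseline_error_rate * tolerance


change = Change(tests_passed=True, security_review_done=False, human_approved=True)
print(blocking_reasons(change))      # ['security review missing']
print(should_roll_back(0.01, 0.05))  # True
```

Returning the full list of reasons, rather than a single boolean, gives the reviewer something actionable when a change is blocked.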

Spotify likely has all of these in place. That's why the company can confidently deploy AI-generated code. But other companies might not, and that's where problems could emerge.

Estimated data shows a shift in developer focus from traditional code writing to higher-level strategic roles, enhancing overall system design and performance.

Real-World Use Cases: Where AI-Assisted Development Shines

Not every development task benefits equally from AI assistance. Some are ideal. Others are harder.

Ideal use cases:

- CRUD operations and data transformations (predictable patterns)

- API integrations (common patterns documented in libraries)

- Testing and test data generation (straightforward logic)

- Boilerplate and scaffolding (repetitive and formulaic)

- Bug fixes for known issues (pattern-matching problems)

- Performance optimization (algorithmic improvements)

Good use cases:

- Feature implementation (once requirements are clear)

- Refactoring and code cleanup (well-defined goals)

- Documentation generation (from code structure)

- Error handling and edge cases (systematic approach)

- Basic UI components (established design patterns)

Harder use cases:

- Novel algorithms (requires creative thinking)

- Complex system design (requires deep architectural thinking)

- User experience work (requires intuition and testing)

- Security-critical code (requires specialized expertise)

- Performance-critical inner loops (requires deep optimization)

- Code that interacts with hardware or embedded systems

Spotify's Honk system works well because Spotify's development is mostly in the "ideal" and "good" categories. The company is building consumer features that follow established patterns. They're not inventing new algorithms or pushing the boundaries of what's computationally possible.

This is important context. Companies in different domains will see different results from AI-assisted development. A fintech company writing complex financial algorithms might see smaller gains than Spotify. A hardware company might see smaller gains than a software-as-a-service company.

But for most software development happening in the world today (building CRUD interfaces, integrating systems, implementing features from specifications), AI assistance provides enormous value.

The Developer Experience: What It's Actually Like

Söderström's example is worth diving into because it reveals what the actual developer experience is:

An engineer is on their morning commute. They open Slack on their phone. They message Claude through Honk describing what they want: "Fix the bug where playlists don't load if the user has no internet connection. Add offline support to the iOS app."

Before the engineer arrives at the office, Claude has:

- Analyzed the existing codebase

- Identified the relevant files

- Written code to handle offline scenarios

- Added proper error handling

- Created unit tests

- Run the tests

- Deployed to a staging environment

The engineer arrives at the office and gets a Slack notification: "Your changes are ready. Click here to review in staging."

They click. They see the generated code. They test the feature on a test device. It works. They might request adjustments: "The offline indicator should be green, not gray" or "The sync should happen in the background, not block the UI."

Claude regenerates. The engineer reviews again. This time it's perfect. They approve it. The code goes to production. By lunchtime, the feature is live.
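The walkthrough above is essentially a small state machine: a request moves through generation, testing, and staging, loops back when the engineer asks for changes, and reaches production only after approval. Here is a minimal sketch under that reading. The stage names and allowed transitions are assumptions drawn from the narrative, not Honk's actual internals.

```python
from enum import Enum, auto


class Stage(Enum):
    REQUESTED = auto()
    GENERATED = auto()
    TESTED = auto()
    STAGED = auto()
    CHANGES_REQUESTED = auto()
    APPROVED = auto()
    IN_PRODUCTION = auto()


# Which stages may follow which (assumed from the walkthrough).
ALLOWED = {
    Stage.REQUESTED: {Stage.GENERATED},
    Stage.GENERATED: {Stage.TESTED},
    Stage.TESTED: {Stage.STAGED, Stage.GENERATED},  # failed tests: regenerate
    Stage.STAGED: {Stage.CHANGES_REQUESTED, Stage.APPROVED},
    Stage.CHANGES_REQUESTED: {Stage.GENERATED},
    Stage.APPROVED: {Stage.IN_PRODUCTION},
    Stage.IN_PRODUCTION: set(),
}


def advance(current: Stage, target: Stage) -> Stage:
    """Move to `target`, refusing any transition the workflow does not allow."""
    if target not in ALLOWED[current]:
        raise ValueError(f"cannot go from {current.name} to {target.name}")
    return target


def replay(stages) -> Stage:
    """Apply a sequence of transitions starting from REQUESTED."""
    current = Stage.REQUESTED
    for stage in stages:
        current = advance(current, stage)
    return current


# The commute example: one round of requested changes, then approval.
commute_example = [
    Stage.GENERATED, Stage.TESTED, Stage.STAGED, Stage.CHANGES_REQUESTED,
    Stage.GENERATED, Stage.TESTED, Stage.STAGED, Stage.APPROVED,
    Stage.IN_PRODUCTION,
]
print(replay(commute_example).name)  # IN_PRODUCTION
```

In this sketch there is no edge from `STAGED` straight to `IN_PRODUCTION`, which encodes the point that a human approval step sits between staging and release.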

Compare this to the traditional flow:

- Morning: Engineer arrives at office, pulls up the bug

- Late morning: Engineer spends 1-2 hours analyzing the codebase and understanding the problem

- Lunch: More analysis

- Afternoon: Start writing code

- Late afternoon: Code is written but not tested

- Next morning: Code review from senior engineer

- Next afternoon: Requested changes made

- Next day: Code reviewed again, approved

- Next day: Code deployed to production

- Next day: Monitor for issues

The difference is staggering. What took three days takes a few hours. What required multiple context switches requires one.

But the difference goes beyond the timeline. It also changes the engineer's state of mind. In the traditional flow, they're stressed about code quality, worried about whether the approach is right, thinking about edge cases, concerned about performance. They're holding the entire problem in their mind.

With AI assistance, they're directing the work. They describe what they want, review what the AI produced, and iterate if needed. This is less cognitively demanding. It's more like collaborating with a skilled junior developer than doing all the work yourself.

This changes job satisfaction. Many engineers find code-writing tedious. They like the problem-solving parts. AI handles the tedium.

What This Means for Startups vs. Enterprise

Spotify is a mature, large company with sophisticated infrastructure. Can startups or mid-size companies use AI-assisted development effectively?

Yes, and possibly even more effectively.

Startups don't have Spotify's infrastructure, but they also don't have Spotify's legacy systems. They can build AI-first from the beginning. Imagine a startup that uses Claude not just for feature implementation but for building the entire system from scratch. The startup could go from idea to prototype to product in weeks instead of months.

However, startups face different challenges:

- Integration complexity: Building something like Honk requires understanding your tech stack deeply. Young startups might not have that yet.

- Code quality standards: Young teams might not have established code review processes that work well with AI.

- Hiring and onboarding: Startups might struggle to find developers experienced with AI-assisted development.

- Cost: Claude pricing could be a factor for resource-constrained teams.

Mid-size companies (50-500 developers) might actually have the ideal situation. They have enough infrastructure and process to handle AI-generated code, but they're small enough to change quickly. They can adopt AI-assisted development and immediately see competitive advantage against both larger companies (burdened by legacy) and smaller startups (that can't afford the infrastructure).

Enterprise companies face the biggest challenge: legacy. Integrating Claude into systems built 10 years ago is harder than building new systems around it. But enterprises also have the resources to build systems like Honk. Over the next few years, expect enterprises to invest heavily in AI-assisted development infrastructure.

The timing matters too. Right now, companies adopting AI-assisted development have first-mover advantage. In two years, it will be table stakes. Waiting five years means falling behind competitors who've had years to optimize their processes.

Challenges and Limitations: The Reality Check

What we've covered so far paints a pretty rosy picture. AI-assisted development is amazing and will change everything. But there are real challenges that companies will face.

Developer skill decay: If junior developers never write code, do they develop the fundamental skills that make them good senior developers? This is a real concern. The answer probably involves changing how we teach and train developers, but we don't know what that looks like yet.

Over-reliance: What happens when Claude is down? What happens when a developer depends on AI for every decision and loses the ability to think independently? This requires deliberate practices to maintain critical thinking.

Hallucinations and errors: Claude sometimes generates code that looks right but doesn't work. It sometimes makes assumptions that are wrong. Reviews catch most of these, but not all. As AI-generated code scales, some bugs will slip through.

Monoculture risk: If everyone uses Claude for development, are we creating a monoculture where all code looks the same? Where all systems have similar vulnerabilities? This is a systemic risk.

Tool lock-in: As companies build systems like Honk around specific AI models, they become dependent on those vendors. What happens if pricing changes? What if the model quality degrades?

Job displacement: We can talk about role transformation all we want, but some developers will struggle to transition. Some will be displaced. This is a real human impact that shouldn't be ignored.

Quality standards: Ensuring that AI-generated code meets quality standards requires investment. Companies that skip this step will have problems.

Debugging complexity: Debugging AI-generated code can be harder because you don't know the assumptions the AI made. You didn't write it. This can slow down troubleshooting.

These aren't reasons to avoid AI-assisted development. They're reasons to approach it thoughtfully, with guardrails and safety measures.
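
One way to make "guardrails" concrete is a merge gate that refuses a change unless specific checks have passed. The sketch below is an assumption about what such a gate might check, drawn from the concerns listed above (tests, human review, security review, rollback). The `Change` fields and the `blocking_reasons` and `can_ship` functions are hypothetical and not part of any real tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Change:
    """Hypothetical metadata attached to a proposed change."""
    ai_generated: bool
    tests_passed: bool
    human_approved: bool
    touches_security_code: bool = False
    security_reviewed: bool = False
    rollback_plan: bool = False


def blocking_reasons(change: Change) -> List[str]:
    """Return the reasons a change may not ship; an empty list means it may."""
    reasons = []
    if not change.tests_passed:
        reasons.append("automated tests have not passed")
    if change.ai_generated and not change.human_approved:
        reasons.append("AI-generated code needs a human reviewer")
    if change.touches_security_code and not change.security_reviewed:
        reasons.append("security-sensitive code needs a security review")
    if change.ai_generated and not change.rollback_plan:
        reasons.append("no rollback plan recorded")
    return reasons


def can_ship(change: Change) -> bool:
    """True only when no check blocks the change."""
    return not blocking_reasons(change)


if __name__ == "__main__":
    draft = Change(ai_generated=True, tests_passed=True, human_approved=False)
    for reason in blocking_reasons(draft):
        print("blocked:", reason)
```

Returning the reasons rather than a bare yes or no keeps the gate useful to the reviewer, who can see exactly which safeguard is missing.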

The Future of Development: Extrapolating from Spotify

What Spotify is doing isn't the endpoint. It's a waypoint. Looking at the trend, several developments seem likely:

More specialized AI models: Instead of general-purpose Claude, companies will fine-tune AI models on their specific codebases. These models will be even more effective because they understand the specific patterns and conventions.

AI-generated entire systems: Not just features, but complete system designs. You describe what you want, AI designs the architecture, generates the code, and sets up deployment. Humans review and approve.

Continuous optimization: AI won't just generate code once. It will continuously monitor performance, identify inefficiencies, and generate optimizations. Code improves automatically over time.

Proactive bug detection: AI systems will analyze code and predict where bugs might occur, suggesting fixes before issues happen.

Cross-team synchronization: AI will help teams coordinate across codebases, ensuring that changes in one system are compatible with changes in another.

AI code review: Instead of human code review, AI trained on thousands of code reviews will review generated code, applying the collective wisdom of many engineers.

Development acceleration for new fields: As AI gets better at domain-specific development (like game development, VR development, blockchain development), entirely new categories of products become feasible for small teams.

The through-line is clear: as AI gets better at development, the bottleneck shifts further from execution to thinking. The competitive advantage goes to companies that excel at strategic thinking, product design, and architectural decision-making.

Companies that can attract the best product thinkers and system designers will win. Technical execution becomes commoditized. Strategic vision becomes the differentiator.

Preparing Your Organization for AI-Assisted Development

If you're running an engineering organization and you want to move toward the Spotify model, here's a practical roadmap:

Month 1-2: Experimentation

- Have teams experiment with Claude for specific features

- Document what works and what doesn't

- Collect metrics on time saved and quality

- Start building processes for code review of AI-generated code

Month 3-4: Infrastructure Building

- Evaluate whether you need a system like Honk or if you can use existing tools

- Set up integration with your deployment pipeline

- Establish quality standards for AI-generated code

- Train teams on effective prompting

Month 5-6: Process Establishment

- Make AI-assisted development the default for appropriate tasks

- Establish clear guidelines about when to use AI and when to handle manually

- Create feedback loops to improve AI usage

- Start tracking velocity and quality metrics

Month 7-12: Scaling and Optimization

- Roll out to more teams

- Fine-tune processes based on what you learned

- Start thinking about custom models trained on your codebase

- Begin addressing longer-term implications (developer skills, career paths, etc.)

The key is not to try to be Spotify overnight. Spotify has been building toward this for years. Your advantage is that you can learn from Spotify's experience and adapt faster.
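
The roadmap asks teams to collect metrics on time saved and quality. A minimal sketch of that comparison follows, assuming each shipped change is logged with its lead time and whether it caused a production incident. The `ShippedChange` record and the `summarize` function are hypothetical, and the sample numbers are invented purely to show the calculation; they are not measurements from Spotify or anyone else.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, Iterable


@dataclass
class ShippedChange:
    ai_assisted: bool
    lead_time_hours: float  # from request to production
    caused_incident: bool   # needed a rollback or hotfix


def summarize(changes: Iterable[ShippedChange]) -> Dict[str, Dict[str, float]]:
    """Median lead time and incident rate, split by AI-assisted vs. manual."""
    groups = {"ai_assisted": [], "manual": []}
    for change in changes:
        groups["ai_assisted" if change.ai_assisted else "manual"].append(change)

    summary = {}
    for name, items in groups.items():
        if not items:
            continue  # skip empty groups rather than divide by zero
        summary[name] = {
            "count": len(items),
            "median_lead_time_hours": median(c.lead_time_hours for c in items),
            "incident_rate": sum(c.caused_incident for c in items) / len(items),
        }
    return summary


if __name__ == "__main__":
    # Invented sample data, for illustration only.
    log = [
        ShippedChange(True, 4, False),
        ShippedChange(True, 6, True),
        ShippedChange(True, 5, False),
        ShippedChange(False, 70, False),
        ShippedChange(False, 50, False),
    ]
    for group, stats in summarize(log).items():
        print(group, stats)
```

Tracking the incident rate next to lead time matters because a speed gain that comes with more rollbacks is not a real gain.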

Integrating with Runable: Accelerating AI-Assisted Development

Building systems like Honk from scratch requires significant engineering resources and time. If you want to accelerate your AI-assisted development journey without the infrastructure investment, platforms like Runable provide a shortcut.

Runable offers AI-powered automation for creating presentations, documents, reports, images, videos, and slides starting at $9/month. While Runable focuses on business outputs rather than code generation specifically, the same principles apply: AI handles execution, humans provide direction.

For teams building products quickly, Runable's AI agents can automate the creation of documentation, specification documents, and product briefs that would normally require hours of manual work. This frees developers to focus on what they do best: thinking about solutions rather than documenting them.

The workflow is similar to Spotify's approach: describe what you want, let AI generate the output, review and iterate if needed. This compounds with code generation AI like Claude to create a powerful acceleration effect.

For organizations just starting with AI-assisted development, Runable provides an accessible entry point to understand how AI workflows can change productivity before investing in custom infrastructure.


The Bottom Line: AI Coding Is No Longer a Future Scenario

When Spotify's co-CEO says that the company's best developers haven't written code since December, it's not hyperbole. It's not marketing. It's an acknowledgment that the development workflow has fundamentally changed.

This is significant because Spotify is not a research lab or a startup experimenting with cutting-edge tech. Spotify is a mature company serving millions of users. When they're confident enough to deploy AI-generated code to production at that scale, it means the technology has crossed a maturity threshold.

The implications are profound:

- Productivity gains are real: Companies using AI-assisted development properly see 50-100% improvements in feature shipping speed. This isn't theoretical. This is happening.

- Development roles are changing: The developers who will thrive in the next phase of software development are those who excel at direction-setting, architectural thinking, and quality assurance. The developers who struggle will be those who depend on being the fastest typer.

- Competitive advantage is accruing to early adopters: Companies that figure out how to use AI-assisted development effectively in 2025 will have massive advantages over companies that wait until 2027 or 2028. This creates an incentive to move quickly.

- Integration and process matter more than the AI itself: The AI model is important, but how you integrate it into your workflow and processes is more important. Spotify's Honk system is valuable because of how it's integrated, not because Claude is available publicly.

- The bottleneck is moving: As execution becomes easier, the constraint becomes thinking. Product strategy, architectural decisions, and creative problem-solving become the things that matter most.

For individual developers, this is actually good news. You get to work on more interesting problems. Your work becomes less about grinding through code and more about solving hard problems.

For organizations, it's a call to action. Either invest in AI-assisted development infrastructure now and gain competitive advantage, or wait and watch competitors ship products faster, respond to market changes faster, and ultimately dominate your market.

The future of software development is clear. Spotify is showing us what it looks like. Now it's up to everyone else to catch up.

FAQ

What is AI-assisted code generation?

AI-assisted code generation is the use of large language models like Claude to automatically write code based on descriptions or requirements provided by developers. Instead of typing implementation details themselves, developers describe what they want, the AI generates the code, and developers review and refine the output. This accelerates the development process by automating the routine coding work while keeping humans in the loop for decision-making and quality assurance.

How does Spotify's Honk system integrate Claude for development?

Honk is Spotify's internal system that integrates Claude Code with remote deployment capabilities and mobile-first workflows. Engineers request features or bug fixes through Slack on any device. Claude then generates the code and runs the tests, and if the tests pass, the code can be deployed to staging or production. This enables asynchronous development, where changes are implemented and deployed without the engineer being at their desk, shortening the development cycle from weeks to hours.

What are the main benefits of AI-assisted development?

The primary benefits include significant speed improvements in feature shipping (50-100% faster for properly implemented systems), reduced time spent on routine coding tasks, ability to focus on higher-level architectural and strategic problems, improved code quality through systematic error handling and testing, and faster iteration cycles. Organizations using AI-assisted development report shipping 2-3x as many features while maintaining or improving code quality standards.

How does AI-assisted development change the role of software developers?

Traditional developer roles focused heavily on code-writing and implementation are shifting toward direction-setting, architectural decision-making, code review and verification, and mentoring. Developers are moving from execution roles to leadership roles, spending more time on strategic thinking and less time on repetitive implementation. This actually increases job satisfaction for many developers while requiring them to develop stronger communication and architectural thinking skills.

What is Spotify's music dataset advantage in AI development?

Spotify has access to billions of music listening sessions, millions of playlist behaviors, and user preference data across every music genre and context. This unique dataset allows the company to train AI models that deeply understand music preferences, listening patterns, and user behavior in ways that competitors cannot replicate. This data advantage compounds as Spotify ships more features faster, generating more data that further improves the models, creating an escalating competitive advantage.

What are the security and reliability concerns with AI-generated code?

Key concerns include potential security vulnerabilities that pass automated tests, performance inefficiencies that only emerge at scale, subtle logic errors where code is technically correct but doesn't match intended behavior, and cascade failures where AI-generated code breaks other systems. These risks are mitigated through security-focused code review, rigorous testing including performance benchmarks, clear communication of requirements, robust monitoring and alerting, and quick rollback capability. However, risks cannot be entirely eliminated and require ongoing management.

When is AI-assisted development most effective?

AI-assisted development works best for routine tasks like CRUD operations, API integrations, testing and boilerplate code, feature implementation following established patterns, bug fixes, and performance optimization. It's less effective for novel algorithm design, complex system architecture, user experience work requiring intuition, security-critical code, and performance-critical inner loops. Most business software development falls into categories where AI assistance provides substantial value.
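
The two lists in this answer can be turned into a simple lookup that a team could use when triaging work. This is a sketch, not an established taxonomy: the category identifiers, the `Fit` enum, and the `classify` function are hypothetical labels for the categories named above.

```python
from enum import Enum


class Fit(Enum):
    STRONG = "delegate to AI, then review"
    WEAK = "keep human-led, use AI as an aid"
    UNKNOWN = "decide case by case"


# Categories taken from the answer above; the identifiers are hypothetical labels.
STRONG_FIT = {
    "crud", "api_integration", "tests", "boilerplate",
    "pattern_feature", "bug_fix", "performance_optimization",
}
WEAK_FIT = {
    "novel_algorithm", "system_architecture", "ux_design",
    "security_critical", "performance_critical_inner_loop",
}


def classify(task_type: str) -> Fit:
    """Map a task category to a suggested way of working."""
    key = task_type.strip().lower()
    if key in WEAK_FIT:  # check the cautious list first
        return Fit.WEAK
    if key in STRONG_FIT:
        return Fit.STRONG
    return Fit.UNKNOWN


if __name__ == "__main__":
    for task in ("bug_fix", "security_critical", "data_migration"):
        print(task, "->", classify(task).value)
```

Anything outside both lists falls through to a case-by-case decision, which keeps the lookup from silently approving work nobody has thought about.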

What infrastructure is required to implement AI-assisted development like Spotify does?

Implementing AI-assisted development at scale requires: integration with your code repository and version control, connection to your deployment pipeline, automated testing systems, code review processes adapted for AI-generated code, monitoring and alerting systems, a workflow system (like Slack integration) for developer interaction, and clear quality standards and guidelines. Companies can start with basic Claude integration and gradually build toward more sophisticated systems like Honk as they understand what works for their specific needs.
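
The components listed in this answer work as a readiness checklist. The short sketch below reports which ones a team still lacks; the component identifiers and the `missing_components` function are hypothetical names chosen for illustration.

```python
from typing import Iterable, List

# Components named in the answer above; identifiers are hypothetical labels.
REQUIRED_COMPONENTS = [
    "repository_integration",
    "deployment_pipeline",
    "automated_tests",
    "ai_code_review_process",
    "monitoring_and_alerting",
    "chat_workflow",
    "quality_standards",
]


def missing_components(in_place: Iterable[str]) -> List[str]:
    """Return required components not yet in place, in checklist order."""
    have = set(in_place)
    return [name for name in REQUIRED_COMPONENTS if name not in have]


if __name__ == "__main__":
    current = ["repository_integration", "automated_tests", "chat_workflow"]
    print("Still needed:", ", ".join(missing_components(current)))
```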

How will AI-assisted development affect developer hiring and skills development?

Hiring criteria will shift from typing speed and routine implementation ability toward architectural thinking, communication, and ability to work effectively with AI systems. Junior developers will learn differently—through understanding decisions rather than grinding through code—which can be more efficient but requires different mentoring approaches. Companies will need to invest in teaching developers how to work with AI systems effectively, how to review AI-generated code critically, and how to maintain their fundamental problem-solving skills while relying on AI for implementation.

What is the timeline for AI-assisted development adoption across the industry?

Based on Spotify's experience and similar early adoption patterns, we can expect: enthusiastic early adoption by 10-15% of companies in 2025, broader adoption by 40-50% by 2026, widespread adoption by 70-80% by 2027, and normalization (table stakes) by 2028-2029. Companies waiting until 2027 will be at significant competitive disadvantage relative to companies that started in 2025. The competitive window for first-mover advantage is closing rapidly.

Conclusion: Welcome to the AI-Native Development Era

Spotify's revelation that its best developers haven't written code since December isn't a sign that we're heading toward a future where AI builds everything. It's a sign that we're already there.

The future isn't coming. It's here. And it's unevenly distributed.

Some companies will fully embrace AI-assisted development and gain enormous competitive advantages. They'll ship features faster, respond to market changes more quickly, and attract the best talent because developers want to work on interesting problems, not type code. These companies will dominate their markets.

Other companies will hesitate. They'll worry about code quality, job displacement, or the risks of relying on AI. By the time they're ready to move, the early adopters will be so far ahead that catching up becomes nearly impossible.

Spotify chose to move fast. The company invested in infrastructure, changed processes, and trained developers to work with AI. The result is visible: more features shipped, faster velocity, better user experience. This is what winning looks like in the AI era.

The question for your organization isn't whether AI-assisted development is real. Spotify proved it is. The question is: how quickly can you adapt? How quickly can you build infrastructure that lets your developers focus on thinking instead of typing? How quickly can you shift your culture to embrace AI as a collaborator rather than a threat?

The timeline is months, not years. The opportunity is now. And the competitive advantage goes to whoever moves first.

Welcome to development in 2025. AI isn't coming to replace you. It's here to accelerate you. The companies that understand this and act on it will win. The companies that wait will watch the leaders pull further ahead.

The best time to start was six months ago. The second best time is today.

Key Takeaways

- Spotify's top developers stopped writing code after implementing Honk, an AI system integrating Claude for automated development, demonstrating AI coding has reached production maturity

- AI-assisted development compresses feature shipping from weeks to hours by automating code implementation while keeping humans in decision-making and verification roles

- Companies properly implementing AI-assisted development see 50-100% productivity improvements, shipping 2-3x as many features without hiring proportionally more engineers

- Developer roles are fundamentally shifting from code-writing to architecture, strategy, and code verification—actually increasing job satisfaction while requiring different skills

- The competitive advantage window for AI-assisted development adoption is closing rapidly; companies moving in 2025 will gain advantages that become nearly impossible to overcome by 2027-2028
