The Seismic Shift: Why AI Agents Are The New Cloud

Twenty years ago, the public cloud fundamentally rewired how startups could compete. No need for physical servers. No $500K hardware budgets before you wrote a single line of code. Entrepreneurs could launch globally from a garage.

We're living through something equally profound right now, and most people haven't internalized it yet.

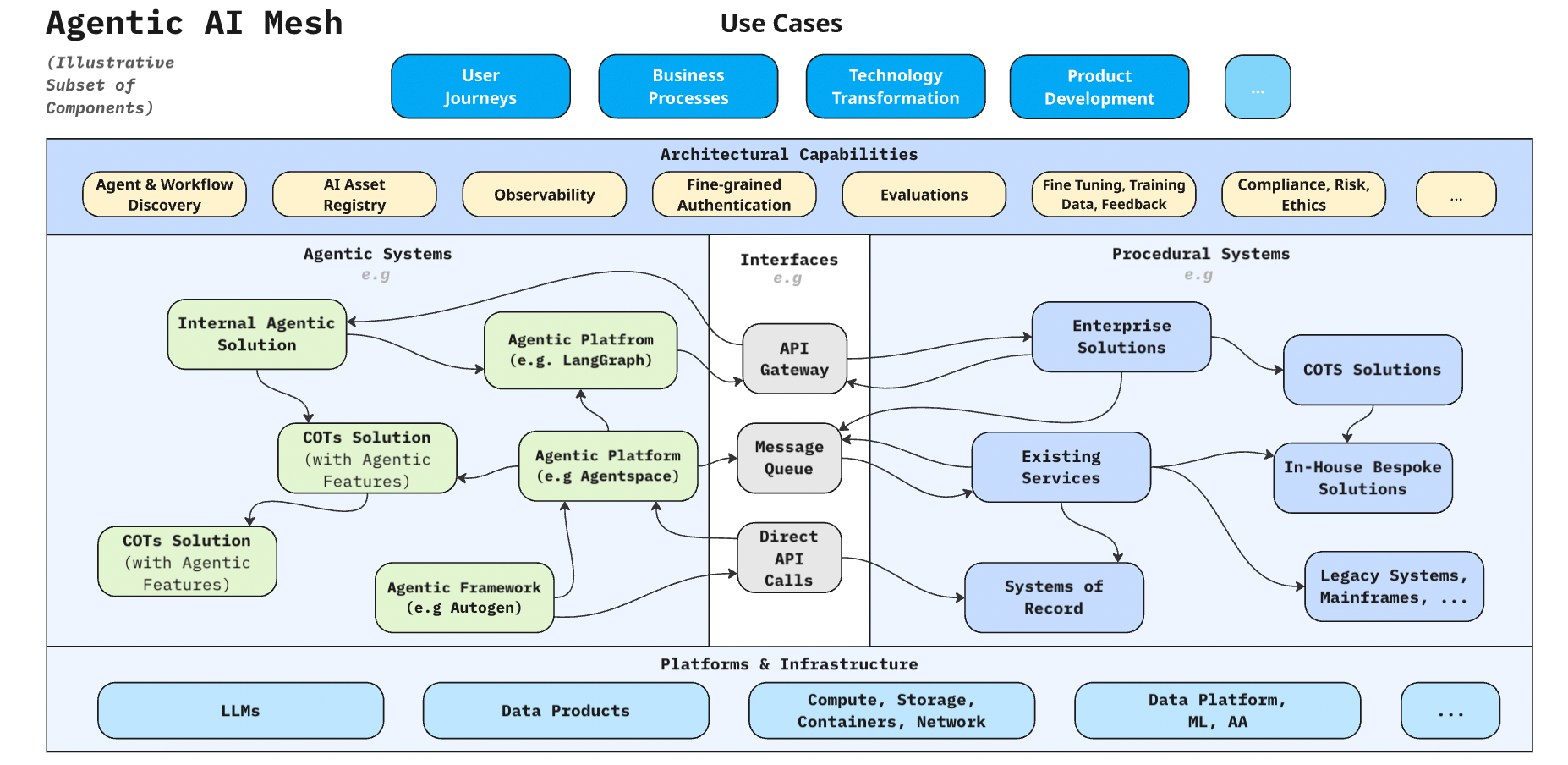

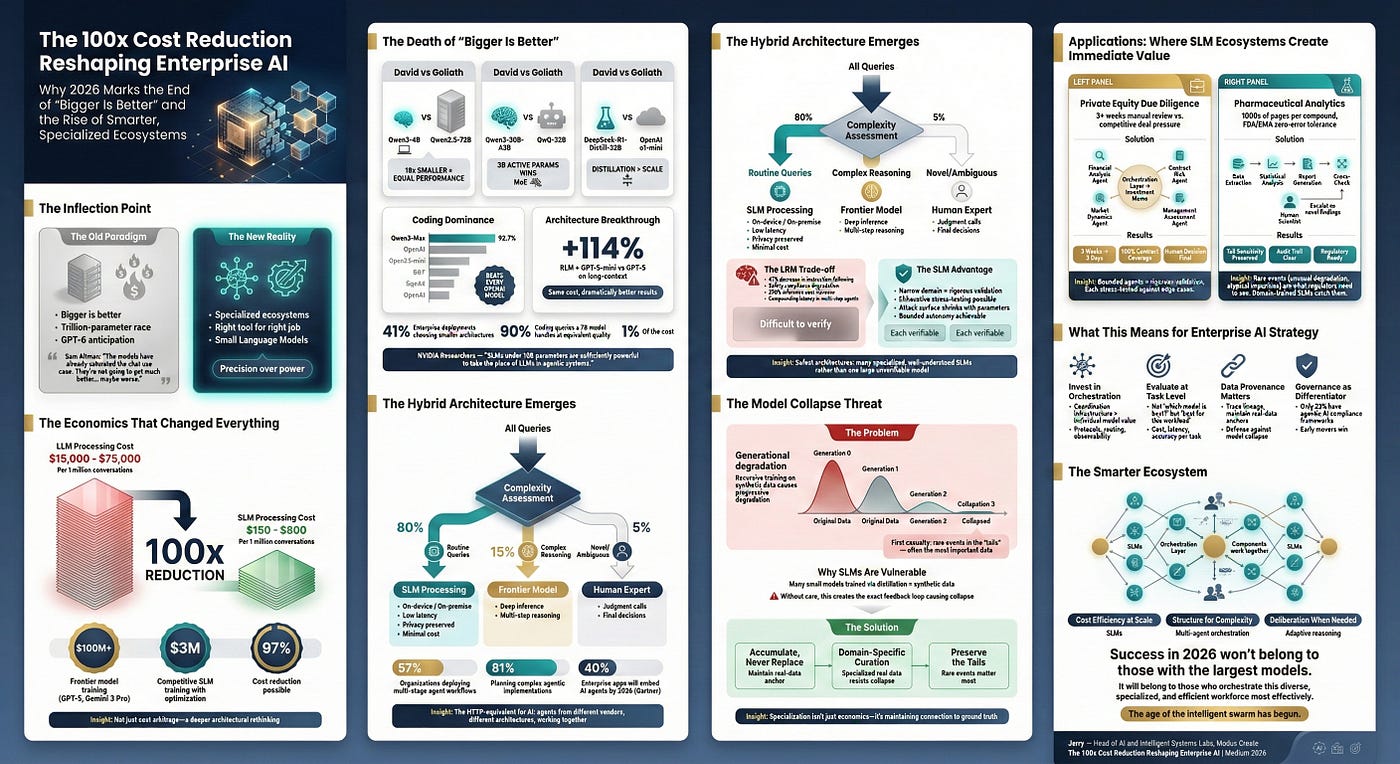

The shift isn't just about generative AI being useful. It's about agentic systems—software that can reason through multi-step problems, make decisions, and take autonomous actions—collapsing the operating costs of entire job categories.

Support teams. Incident response. Code maintenance. Legal document review. These aren't being replaced overnight. But they're becoming radically cheaper and faster to operate.

For startups, this changes everything. You can now launch teams with 60% fewer people and still deliver the same output. That's not incremental. That's existential.

Understanding Enterprise AI Agents: More Than Chatbots

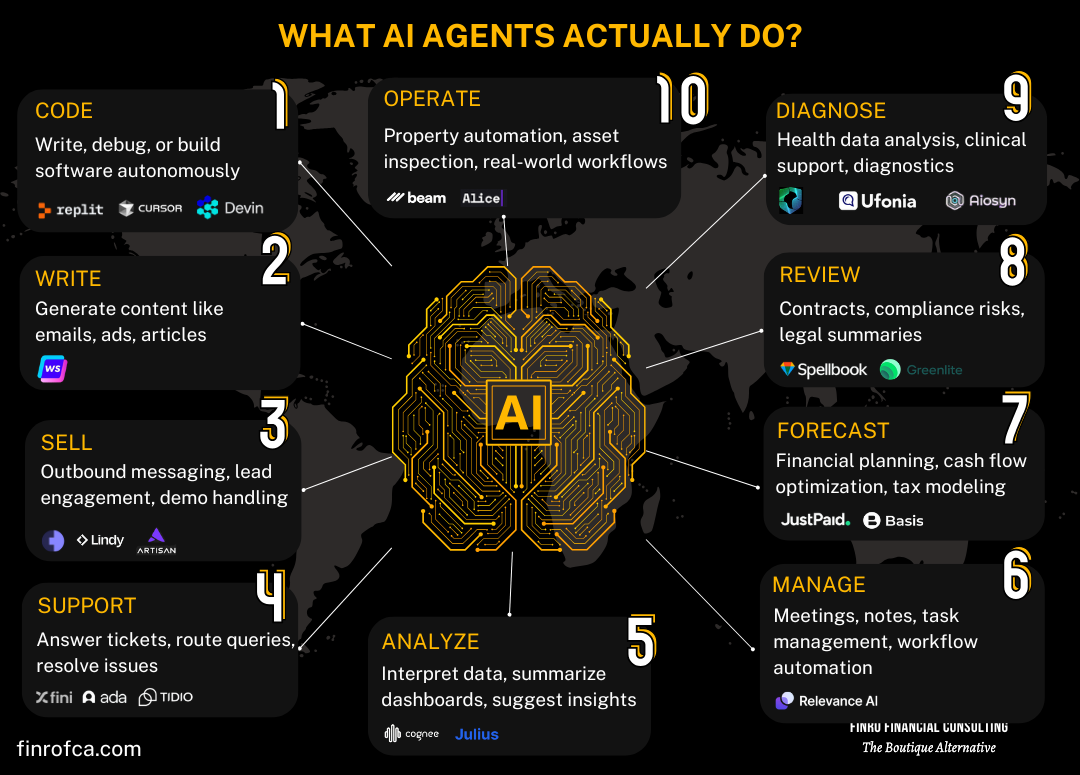

Let's be clear about what we're talking about here. An AI agent isn't a chatbot. It's not a recommendation engine. It's not even a copilot that suggests code.

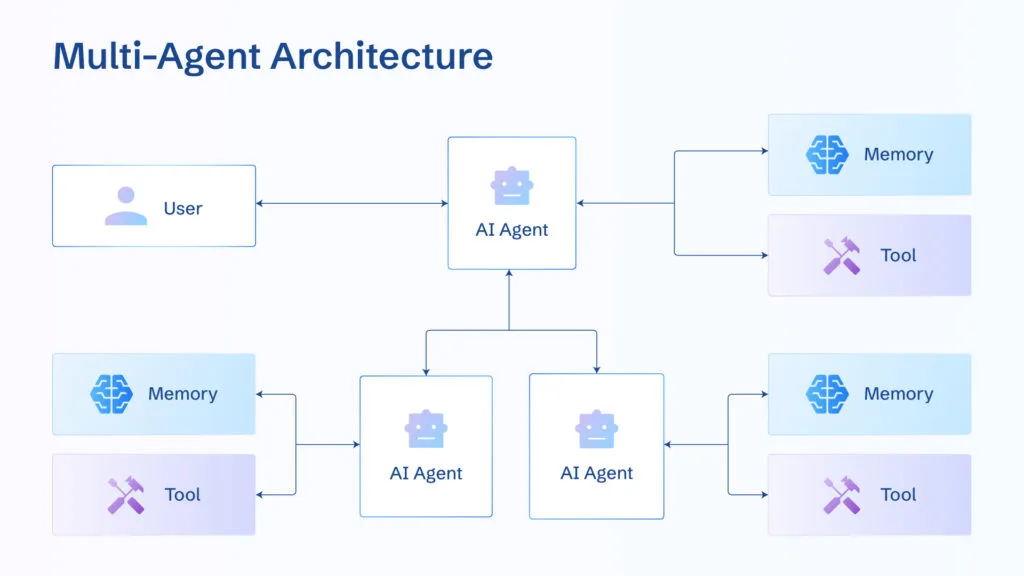

An AI agent is an autonomous system that can:

- Break down complex problems into sequential steps

- Execute actions across multiple tools and APIs

- Reason about outcomes and adjust its approach

- Iterate without human intervention

- Handle exceptions gracefully

- Report back with measurable results

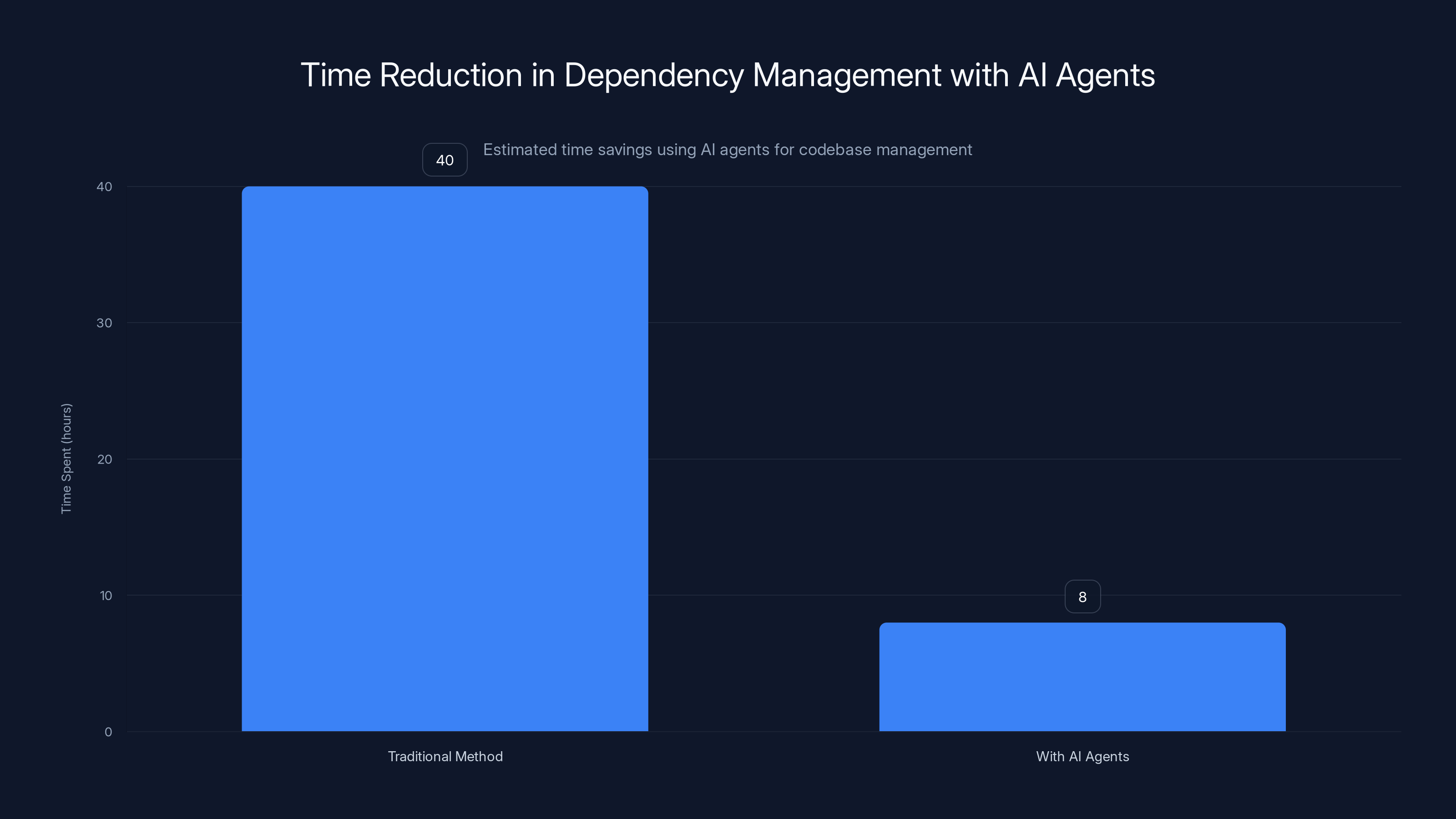

Think about dependency management in software. A codebase for a mature startup might have 300+ external library dependencies—Spring, NumPy, React, PostgreSQL drivers, authentication libraries, the list goes on. Each one needs updating periodically. Each one introduces risk if it's outdated.

Traditionally? A developer spends 20-40 hours identifying which libraries need updates, testing them in isolation, checking for breaking changes, running integration tests. It's necessary but tedious and error-prone.

A multi-step agent can traverse your entire codebase in minutes, reason about compatibility, test updates in a staging environment, and present a pull request with a confidence score for each change. The same work that took up to 40 hours now takes 2-4 hours of human review. Counting review alone, that is a cut of 90% or more; even if you allow extra time for follow-up fixes, a 70-80% time reduction is not an exaggeration.

And this works because the agent isn't just running commands blindly. It's reasoning. It's learning the context of your codebase. It's making judgment calls about risk.
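To make the dependency example concrete, here is a minimal sketch of the triage step such an agent might run first: labeling each pending update by how risky the version jump looks. The package names, versions, and the `classify_update` helper are all hypothetical, and the sketch assumes plain `MAJOR.MINOR.PATCH` version strings. A real agent would layer changelog reading and test runs on top of this.

```python
from typing import NamedTuple


class Update(NamedTuple):
    package: str
    current: str
    latest: str


def parse_version(version: str) -> tuple[int, int, int]:
    """Parse a plain MAJOR.MINOR.PATCH string (assumed format)."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def classify_update(update: Update) -> str:
    """Label an update by the largest version component that changed."""
    cur = parse_version(update.current)
    new = parse_version(update.latest)
    if new <= cur:
        return "up-to-date"
    if new[0] > cur[0]:
        return "major"  # likely breaking changes: route to human review
    if new[1] > cur[1]:
        return "minor"  # new features: run the integration tests
    return "patch"      # bug fixes: candidate for an automatic pull request


# Hypothetical dependency snapshot, for illustration only.
pending = [
    Update("example-web-framework", "2.3.1", "3.0.0"),
    Update("example-db-driver", "1.4.2", "1.5.0"),
    Update("example-auth-lib", "0.9.7", "0.9.8"),
    Update("example-utils", "4.1.0", "4.1.0"),
]

report = {u.package: classify_update(u) for u in pending}
for package, label in report.items():
    print(f"{package}: {label}")
```

The point of the labels is to decide where human attention goes: patch bumps can be batched, while major bumps get the 2-4 hours of review.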

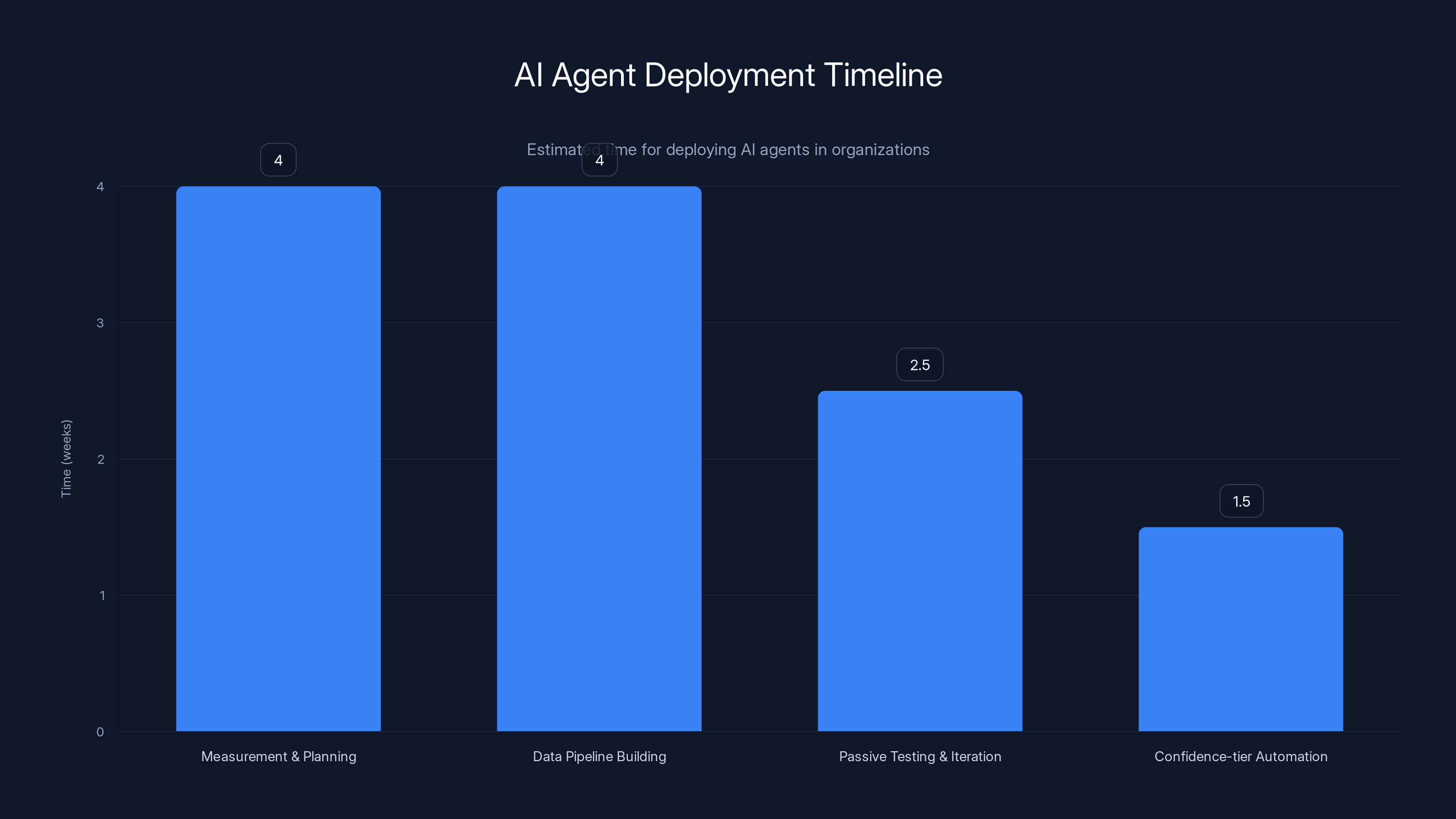

Deploying an AI agent typically takes 10-16 weeks, with the initial phase of measurement and planning taking the longest. Subsequent agents can be deployed faster after establishing processes. Estimated data.

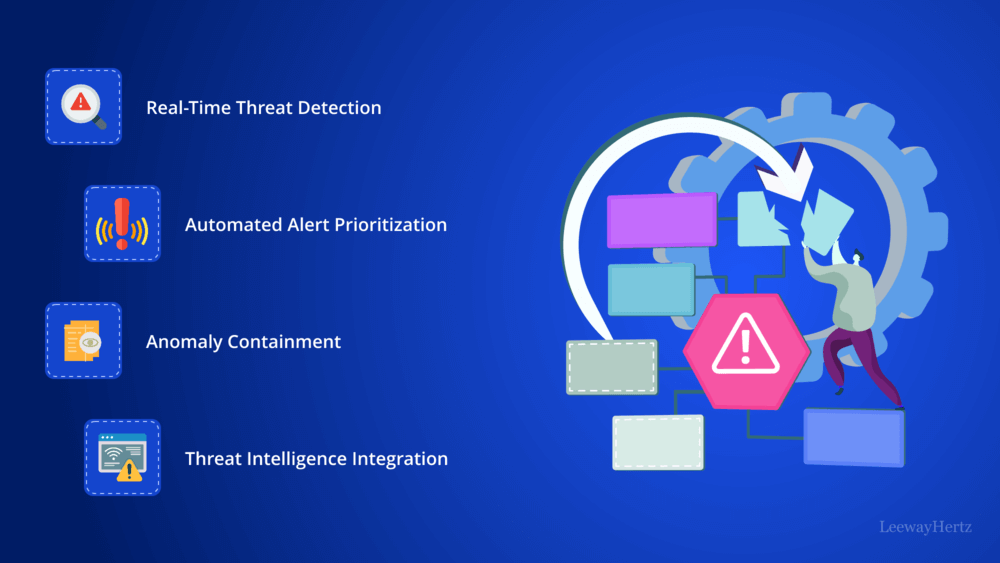

The Live-Site Operations Game-Changer

Here's a scenario every engineer knows: 2:47 AM. Your phone buzzes. PagerDuty alert. Something's down.

You stumble out of bed, grab your laptop, and start the diagnosis. Is it database latency? A memory leak? A third-party API failing? You're groggily running queries, checking logs, reading stack traces through half-closed eyes. Twenty minutes later, you've identified the issue. Thirty minutes later, it's mitigated.

It's been a necessary evil for decades. On-call rotations are part of running production software.

But here's what changes with agentic systems: The agent wakes up instead.

When an automated alert fires, an AI agent can immediately begin diagnosis. It pulls logs. It checks metrics. It runs test queries. It compares current behavior to historical patterns. In many cases—maybe 60-70% of incidents—the agent can identify the issue AND deploy the fix before any human even reads a Slack message.

For the 30-40% of incidents that do need human judgment? The agent has already done 90% of the diagnostic work. A human engineer can glance at the pre-diagnosed report and make a call in two minutes instead of twenty.

The payoff is massive: incident resolution time drops by 50-65%. People don't get woken up as often. When they do, the problem is almost solved. And the agent is logging everything, creating institutional knowledge your team can learn from.
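The "compare current behavior to historical patterns" step is the easiest part of this to picture in code. The sketch below is one simple way to do it, assuming a z-score against recent history is a good enough anomaly signal. The metric names, readings, and the threshold of 3 standard deviations are made up for illustration, not taken from any real incident tooling.

```python
from statistics import mean, stdev


def anomaly_score(history: list[float], current: float) -> float:
    """How many standard deviations `current` sits from the historical mean."""
    spread = stdev(history)
    if spread == 0:
        return 0.0 if current == history[0] else float("inf")
    return abs(current - mean(history)) / spread


def triage(metrics: dict[str, tuple[list[float], float]],
           threshold: float = 3.0) -> list[str]:
    """Return the metric names that look anomalous, most anomalous first."""
    scores = {name: anomaly_score(hist, cur) for name, (hist, cur) in metrics.items()}
    flagged = [name for name, score in scores.items() if score >= threshold]
    return sorted(flagged, key=lambda name: scores[name], reverse=True)


# Hypothetical readings: (recent history, value at alert time).
snapshot = {
    "db_latency_ms": ([20.0, 22.0, 21.0, 19.0, 23.0], 95.0),
    "memory_used_pct": ([61.0, 63.0, 62.0, 60.0, 64.0], 63.5),
    "upstream_error_rate_pct": ([0.5, 0.4, 0.6, 0.5, 0.5], 0.6),
}

suspects = triage(snapshot)
print(suspects)  # only the database latency stands out here
```

A ranked list of suspects like this is what turns a twenty-minute diagnosis into a two-minute confirmation for the human on call.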

Implementing agentic systems yields a gross return of $450K in the first year, with significant savings in engineering and incident resolution. Estimated data.

The Economics: Why Startups Win at AI Deployment

Here's the contrarian insight: Large enterprises are slower to adopt agentic systems than startups, even though they have more resources.

Why? Legacy systems. Governance requirements. Compliance frameworks that need updating. Risk committees. Change management processes.

A startup with 40 people and a single monolithic codebase can deploy agents in weeks. An enterprise with 5,000 engineers across 200 microservices takes six months to get approval.

But the ROI calculation is identical:

Scenario: Implementing autonomous incident response

Traditional on-call cost = (Engineers on rotation × Salary × On-call overhead factor × Incident frequency × Resolution time)

With agents deployed = Same formula × 0.35 to 0.50 multiplier
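Here is the formula above written out as a small sketch. It takes the product literally, and since the formula does not state units for each factor, the result is best read as a relative cost index and not a dollar figure. Every input value below is a placeholder chosen for illustration; only the 0.35 to 0.50 multiplier comes from the text.

```python
def on_call_cost(engineers: int, salary: float, overhead_factor: float,
                 incident_frequency: float, resolution_time: float) -> float:
    """The formula above, taken literally as a product of its factors."""
    return engineers * salary * overhead_factor * incident_frequency * resolution_time


def with_agents(baseline: float, multiplier: float) -> float:
    """Apply the 0.35-0.50 multiplier to a baseline cost."""
    if not 0.35 <= multiplier <= 0.50:
        raise ValueError("multiplier outside the 0.35-0.50 range")
    return baseline * multiplier


# Placeholder inputs for illustration, not source data.
baseline = on_call_cost(engineers=12, salary=150_000, overhead_factor=0.1,
                        incident_frequency=1.0, resolution_time=1.0)

best_case = with_agents(baseline, 0.35)
worst_case = with_agents(baseline, 0.50)

print(f"baseline index: {baseline:,.0f}")
print(f"with agents:    {best_case:,.0f} to {worst_case:,.0f}")
print(f"reduction:      {1 - 0.50:.0%} to {1 - 0.35:.0%}")
```

Whatever baseline you plug in, the multiplier range works out to a 50-65% reduction.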

For a startup with 12 engineers doing on-call rotation at

A

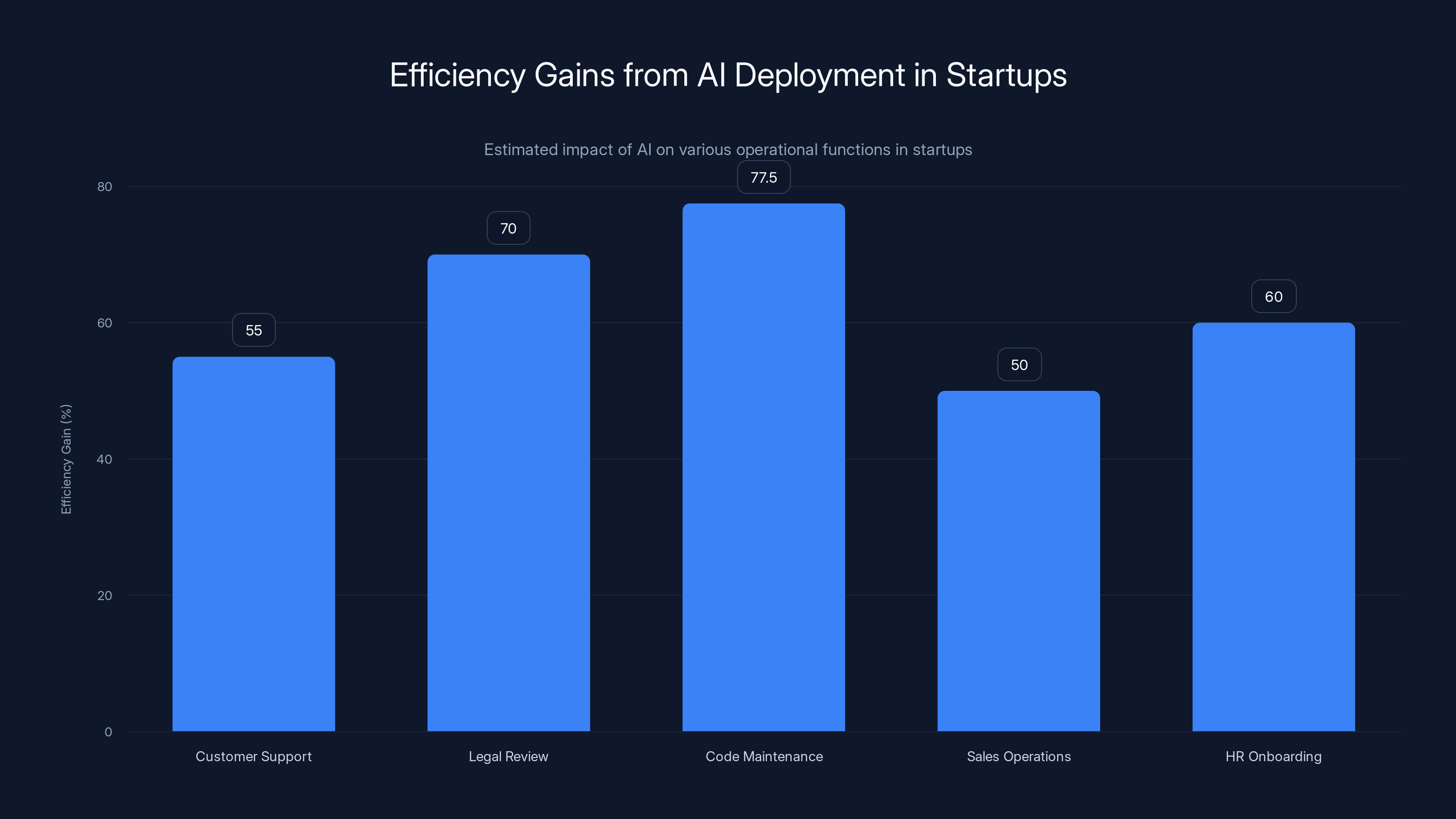

The math gets even better when you multiply across multiple functions:

- Customer support automation: 50-60% reduction in support headcount needs

- Legal document review: 70% faster contract analysis

- Code maintenance: 75-80% faster library updates and deprecation handling

- Sales operations: 50% faster deal document preparation

- HR onboarding: 60% faster background check and compliance document processing

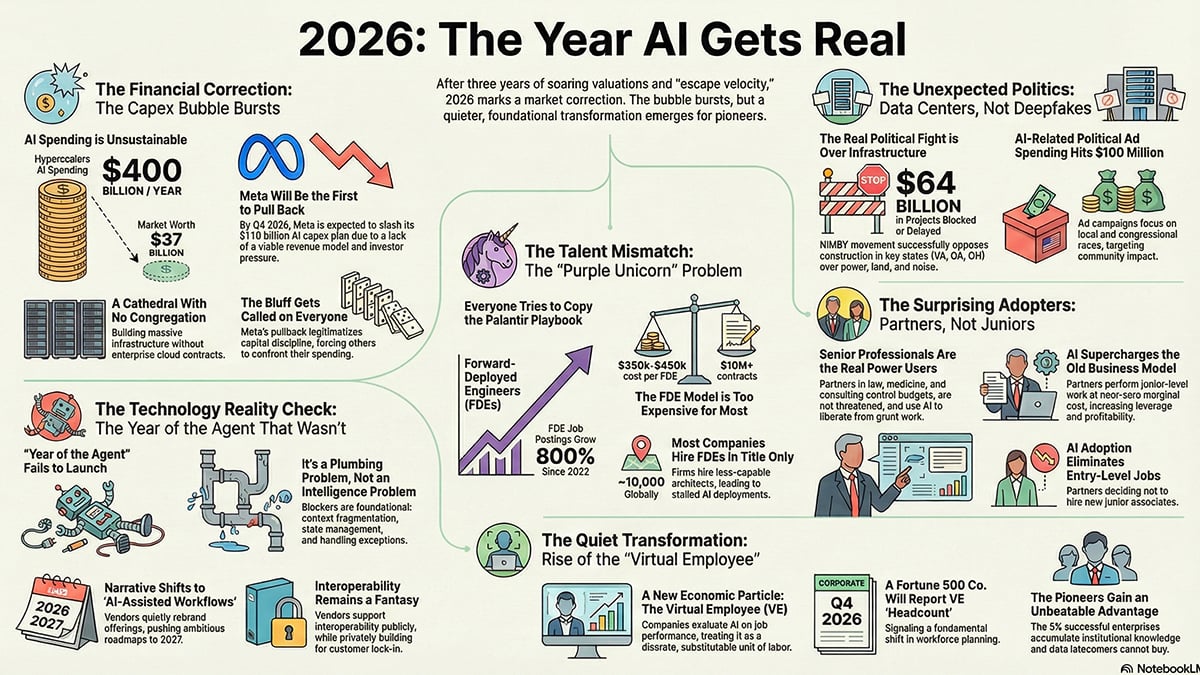

Why Agentic Deployments Are Still Slower Than Expected

Five years ago, everyone predicted agents would be everywhere by 2024. It didn't happen that way.

Why?

It's not technical barriers. Models are good enough. Infrastructure exists. The tools are available.

It's strategic clarity.

Most organizations trying to deploy agents make the same mistake: they start with "What can an agent do?" instead of "What business problem do we need solved?"

They'll say things like "Let's try an agent for customer service" without defining what success looks like. Without measuring current baseline performance. Without identifying which customer inquiries actually need an agent versus a simple decision tree.

The organizations that succeed with agents are ruthlessly specific:

- "We want to reduce time-to-resolution for tier-1 support tickets from 45 minutes to 8 minutes"

- "We want to catch 90% of configuration errors before they hit production"

- "We want our security team to process access requests in <2 hours instead of 1-2 days"

This requires business stakeholders and technical teams to align on metrics before building. Most organizations skip this step. They build first, measure later. By then, they're discovering the agent isn't solving the right problem.

There's also a data problem. Agents reason using the data you feed them. If you give an agent incomplete, inconsistent, or poorly structured data, it will make poor decisions. Building the data pipeline—extracting, cleaning, normalizing, organizing information—often takes 60% of the project effort. Nobody budgets for it.

And there's a cultural resistance piece that's real. People aren't comfortable with autonomous systems making decisions about things they historically controlled. Insurance requires human sign-off on claims. Legal requires human review of contracts. HR requires human judgment on hiring decisions.

This isn't irrational. It's a governance issue. But it means agents tend to get deployed in "human-in-the-loop" scenarios where the agent does 80-90% of the work and a human makes the final call. That's still valuable—it cuts the human work from 40 minutes to 5 minutes—but it's not full autonomy.

Strategic clarity and data pipeline issues are the most significant challenges slowing agentic deployments, with cultural resistance also playing a notable role. Estimated data.

The Human-in-the-Loop Reality

There's something important happening with how successful enterprises are actually deploying agents, and it's different from the sci-fi narrative.

The future isn't fully autonomous agents making all decisions. The future is highly augmented humans making decisions faster.

Consider package returns. A customer wants to return an item. The traditional process:

- Customer fills out return request

- Package arrives at warehouse

- A human inspector physically examines the package and contents

- Human makes judgment call: Is this damage from shipping? Normal wear? Deliberate damage? Will we accept the return?

- Decision is logged and customer is notified

This requires 2-3 minutes of human labor per return. If you're processing 10,000 returns a year, that's roughly 330-500 hours of labor annually.

With computer vision agents:

- Customer fills out return request

- Package arrives at warehouse

- High-resolution cameras photograph the package from multiple angles

- Agent analyzes images using computer vision models

- Agent classifies damage type, severity, and likelihood of being shipping-related

- If confidence is high (>92%), accept return automatically

- If confidence is moderate (70-92%), flag for human inspection (which now takes 20 seconds instead of 2 minutes)

- If confidence is low, escalate to manager

Result: 90% of returns are processed without human touch. The remaining 10% that need human judgment get expedited, data-informed review instead of guesswork.

Labor requirement drops from 500 hours annually to maybe 40-50 hours. The decisions are better because they're informed by 5,000 hours of human historical data plus perfect image analysis.

This is the pattern repeating across every domain. The agent doesn't replace the human decision-maker. It replaces the grunt work and supplies the human with perfect information.
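The routing rule in the returns example is simple enough to sketch directly. The thresholds (above 92%, 70-92%, below 70%) come from the steps above. The function name, tier labels, and sample scores are hypothetical, and the sketch assumes the vision model reports a single confidence score between 0 and 1.

```python
from collections import Counter

AUTO_ACCEPT_ABOVE = 0.92   # from the text: accept automatically above 92%
HUMAN_REVIEW_FROM = 0.70   # from the text: 70-92% goes to a human inspector


def route_return(confidence: float) -> str:
    """Map a model confidence score in [0, 1] to a handling tier."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence > AUTO_ACCEPT_ABOVE:
        return "auto_accept"
    if confidence >= HUMAN_REVIEW_FROM:
        return "human_inspection"
    return "escalate_to_manager"


# Hypothetical confidence scores for a batch of returns.
scores = [0.99, 0.95, 0.93, 0.92, 0.81, 0.70, 0.55]
tally = Counter(route_return(score) for score in scores)
print(tally)
```

Keeping the thresholds as named constants makes them easy to tighten or loosen as you collect evidence about how often each tier gets it right.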

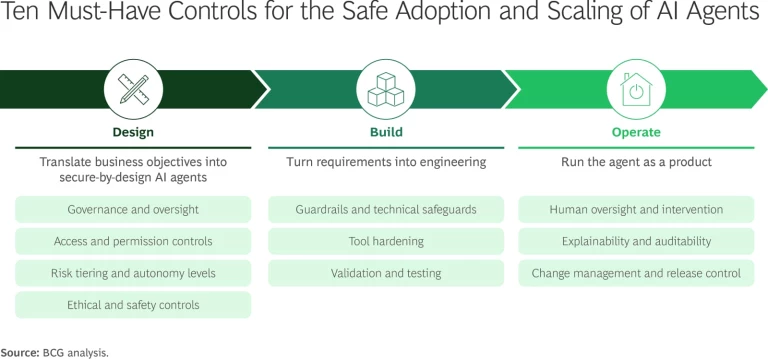

Building for Uncertainty: The Governance Challenge

Here's where enterprise deployments get weird: Organizations are legitimately uncertain about agent reliability, and they're right to be.

If an agent makes a 10-second decision about routing a support ticket, and that decision is slightly suboptimal, it costs you maybe

Risk tolerance determines agent autonomy.

For low-risk, reversible decisions, agents can operate fully autonomously:

- Tagging customer support tickets

- Routing emails to the right team

- Triggering notifications based on thresholds

- Suggesting code improvements

For medium-risk decisions with human review, agents can act but with human verification:

- Return authorization (for items under $500)

- Standard vendor onboarding

- Access request approvals

- Contract template generation

For high-risk, expensive, or irreversible decisions, humans make the call with agent analysis:

- Terminating customer contracts

- Making hiring decisions

- Approving major budget allocations

- Signing legal agreements

The organizations succeeding with agents are explicit about this matrix. They're not trying to fully automate everything. They're being strategic about where autonomy makes sense.
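Being "explicit about this matrix" can be as literal as writing it down as a lookup table that the agent runtime consults before acting. The sketch below is one way to do that. The decision names are drawn from the lists above, while the enum names and the `autonomy_for` helper are hypothetical. Note the design choice that anything not in the table falls back to the most conservative mode.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


class Mode(Enum):
    AUTONOMOUS = "agent acts alone"
    AGENT_WITH_REVIEW = "agent acts, human verifies"
    HUMAN_DECIDES = "human decides with agent analysis"


# Risk tier -> how much autonomy the agent gets.
POLICY = {
    Risk.LOW: Mode.AUTONOMOUS,
    Risk.MEDIUM: Mode.AGENT_WITH_REVIEW,
    Risk.HIGH: Mode.HUMAN_DECIDES,
}

# Decision type -> risk tier, using examples from the lists above.
DECISION_RISK = {
    "tag_support_ticket": Risk.LOW,
    "route_email": Risk.LOW,
    "authorize_return_under_500": Risk.MEDIUM,
    "approve_access_request": Risk.MEDIUM,
    "terminate_customer_contract": Risk.HIGH,
    "sign_legal_agreement": Risk.HIGH,
}


def autonomy_for(decision: str) -> Mode:
    """Look up the autonomy mode; unknown decisions are treated as high risk."""
    risk = DECISION_RISK.get(decision, Risk.HIGH)
    return POLICY[risk]


for name in ("route_email", "approve_access_request", "delete_production_database"):
    print(f"{name}: {autonomy_for(name).value}")
```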

And here's the thing: Even partial autonomy is transformative.

If an agent can handle 70% of support tickets fully automatically and 20% with minimal human review, you've just cut support labor by 65%. You don't need full autonomy to get transformative economics.

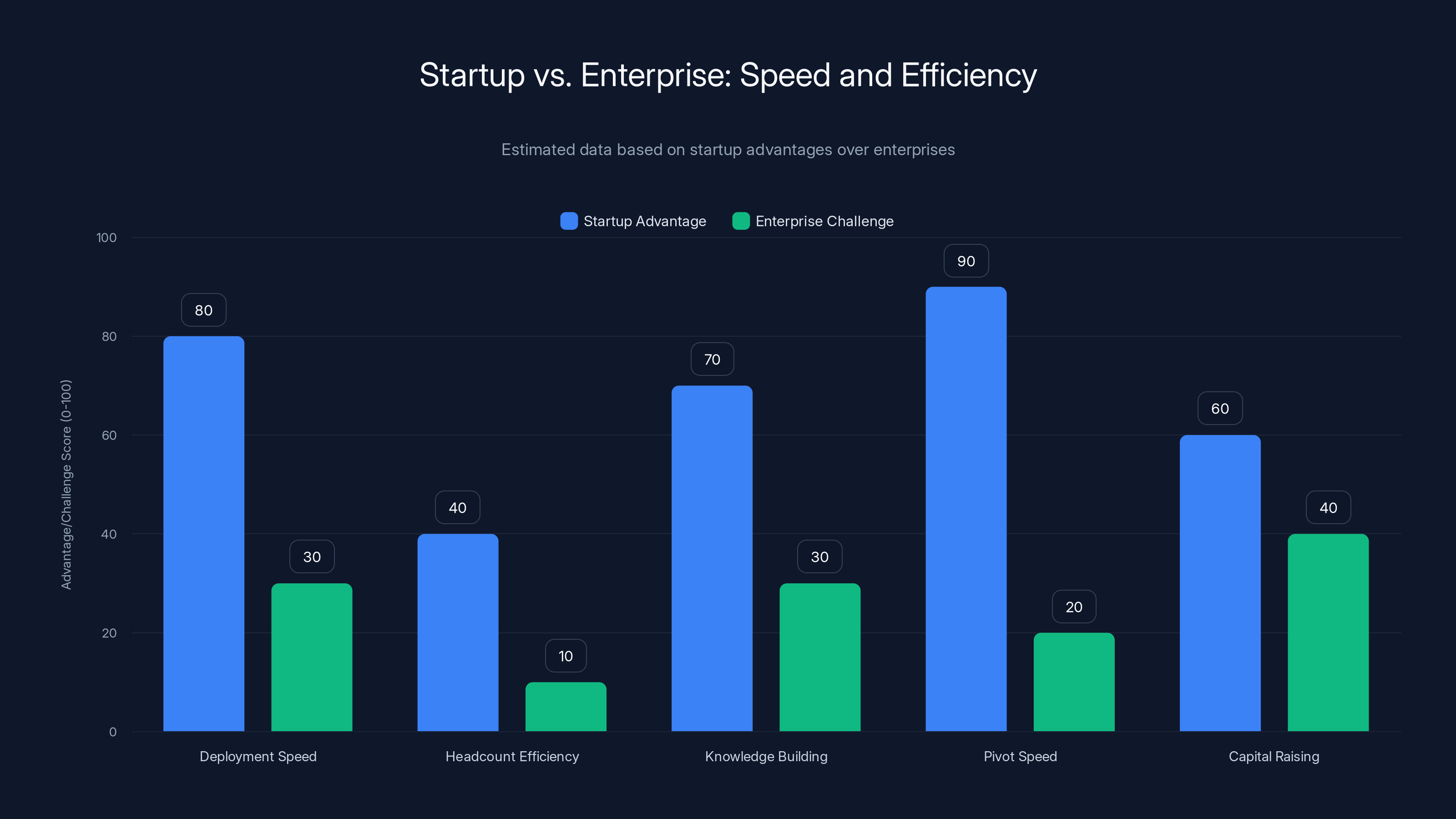

Startups have significant advantages in speed and efficiency over enterprises, particularly in deployment speed and ability to pivot quickly. (Estimated data)

The Skills Gap: What Changes for Developers

If you're a software engineer trained in the last 15 years, you're probably really good at writing code that follows specifications.

You're maybe not good at:

- Defining what success means for an agent. You're used to specs with clear acceptance criteria. Agent success is statistical: "Works correctly 94% of the time." That's a weird mental model if you're used to bug-free code.

- Building quality data pipelines. Traditional development treats data as infrastructure. Agent development treats data as the primary determinant of quality. Bad data in, bad decisions out.

- Thinking about failure modes in probabilistic systems. If a database query can fail in only specific ways (syntax error, timeout, constraint violation), you can write explicit error handling. If an agent can fail in 10,000 subtle ways (hallucinating, making slightly wrong inferences, applying the wrong heuristic), you think about failure differently.

- Designing human oversight into technical systems. Most software is built assuming it works or it doesn't. Agent software needs explicit paths for human override, feedback, and correction.

This is why some of the smartest engineers are struggling with agent development. They're trying to apply traditional software engineering thinking to something that needs probabilistic, human-centered thinking.

The companies hiring well for agent work are looking for people who've worked on:

- Recommendation systems

- ML systems with human feedback loops

- Data pipelines and quality assurance

- Complex distributed systems

- Safety-critical systems

They're valuing different skills than they did for traditional backend development.

The Startup Advantage: Speed Over Governance

Large enterprises have risk frameworks. They have compliance departments. They have change management processes. These exist for good reasons—you don't want a rogue developer breaking production in a 500-person company.

But these frameworks become friction when deploying new technology. A startup can experiment with agents, measure results, and iterate in weeks. An enterprise needs committees and approval and documentation and testing frameworks.

In the window where agent technology is still being figured out—which is right now—this is a massive advantage for startups.

You can:

- Launch with fewer people. Deploy agents in 3-4 core functions, run your team with 25% less headcount, and validate that the model works

- Benchmark against competitors. You'll have 2-3 months head start on larger players, which translates to data advantages

- Build institutional knowledge. Your team becomes expert in agent deployment while competitors are still in committee meetings

- Pivot faster. If one agent approach doesn't work, you iterate. A large enterprise is committed to their chosen approach for 18 months

- Raise capital at better terms. Investors understand the ROI of agentic systems. A startup that can demonstrate 40% cost reduction through agent automation is suddenly a lot more attractive

This is the biggest advantage shift for startups since cloud computing became mainstream. AWS in 2009 didn't make Craigslist better—it made new companies possible. Agentic systems aren't making Slack better—they're making smaller teams possible.

AI agents reduce the time spent on dependency management from 40 hours to approximately 8 hours, representing a 70-80% reduction. Estimated data.

Real-World Economics: The Math You Need To Know

Let's get specific about what this actually costs and what the returns actually are.

Base assumptions for a 50-person SaaS startup:

Annual payroll:

Annual infrastructure costs: $400K

Annual software/tools: $300K

Total operating cost: $5.7M annually

Implementing agentic systems in:

- Customer support (30% automation)

- Developer productivity (40% acceleration)

- Incident response (45% faster resolution)

- Sales operations (35% faster processing)

Investment required:

- AI infrastructure and API costs: $150K/year

- Engineering time to build agents: 6 months (1 senior engineer, $150K)

- Training and change management: $50K

- Total first-year investment: $350K

Returns achieved year 1:

- Support team reduced from 6 to 5 people: $100K saved

- Engineering time reclaimed: 4,000 hours/year = 2 additional engineers worth of output

- Incident resolution speedup reduces on-call burnout, prevents 2 early departures: $300K saved in hiring/training

- Operations team 20% more efficient: $50K in tools/time saved

Year 1 gross return: $450K (the three dollar-valued items above; the reclaimed engineering hours are not priced in)

Year 2+: All returns continue minus inflation, investment drops to ongoing maintenance (

Payback period: 10 months. Then $400K/year benefit every year going forward.
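The payback arithmetic is worth checking by hand. The sketch below uses only the dollar-valued line items listed above and assumes the returns accrue evenly month by month, which is a simplification. The reclaimed engineering hours are left out because the text does not put a dollar value on them.

```python
# First-year investment line items, in dollars, as listed above.
investment = {
    "AI infrastructure and API costs": 150_000,
    "Engineering time to build agents": 150_000,
    "Training and change management": 50_000,
}

# First-year returns that carry a dollar figure in the list above.
returns = {
    "Support team reduced from 6 to 5": 100_000,
    "Two early departures prevented": 300_000,
    "Operations team efficiency": 50_000,
}

total_investment = sum(investment.values())
gross_return = sum(returns.values())

# Assumption: returns accrue evenly across the year.
payback_months = total_investment / (gross_return / 12)
net_first_year = gross_return - total_investment

print(f"investment:   ${total_investment:,}")
print(f"gross return: ${gross_return:,}")
print(f"payback:      {payback_months:.1f} months")
print(f"net, year 1:  ${net_first_year:,}")
```

Under these assumptions the payback lands a little over nine months, in line with the roughly 10 months quoted above.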

For a startup burning

But the real value is in what you do with that freed-up capacity. You're not laying off your support team. You're having them focus on complex issues, upselling, and customer education instead of triaging tickets. Your engineers spend time building differentiating features instead of maintaining dependencies.

Practical Implementation: How To Start

If you're reading this and thinking "Okay, but how do I actually begin?", here's the play:

Phase 1: Measure (Weeks 1-4)

Pick one process that feels like the best candidate for automation. Track it carefully:

- How much time does this take monthly?

- How many people are involved?

- What percentage of time is decision-making vs. execution?

- What percentage of time is waiting for other systems or approval?

- What are the failure modes?

For a support team: Track ticket resolution time, categorization accuracy, escalation rate, customer satisfaction.

For incident response: Track time to diagnosis, time to mitigation, incident severity distribution, resolution confidence.

Get a baseline number. Make it precise.
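A baseline does not need special tooling. As a rough sketch, assuming you can export resolution times and escalation flags for recent tickets, the standard library is enough to get a median, a tail figure, and an escalation rate. The sample numbers here are invented.

```python
from statistics import median, quantiles

# Hypothetical tier-1 ticket log: minutes from open to resolution.
resolution_minutes = [32, 41, 45, 38, 52, 47, 120, 44, 39, 50]
# Whether each of those tickets was escalated (same order).
escalated = [False, False, True, False, False, False, True, False, False, False]

baseline = {
    "tickets": len(resolution_minutes),
    "median_minutes": median(resolution_minutes),
    # Last of the nine decile cut points, i.e. an estimate of the 90th percentile.
    "p90_minutes": quantiles(resolution_minutes, n=10)[-1],
    "escalation_rate": sum(escalated) / len(escalated),
}

for metric, value in baseline.items():
    print(f"{metric}: {value}")
```

Tracking the tail alongside the median matters because a handful of slow tickets, like the 120-minute one here, often drive most of the pain.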

Phase 2: Define Success (Weeks 5-6)

Write a specific goal:

- "Reduce support ticket resolution time from 45 minutes to 20 minutes for tier-1 issues"

- "Automate 60% of routine incident diagnosis"

- "Cut code dependency update time from 40 hours to 8 hours"

Define what success looks like in metrics, not functionality. You're not building "an agent that helps with X"—you're building something that proves ROI in measurable terms.

Phase 3: Build Data Pipeline (Weeks 7-10)

This is the hardest part and where most projects fail. You need the agent to have access to:

- Historical examples of good decisions

- The data context it needs to make those decisions

- Feedback mechanisms to learn from outcomes

If you're building a support agent, you need:

- 1,000+ examples of tickets and how they were resolved

- Knowledge base documentation

- Customer context data

- Response templates

If you're building an incident response agent, you need:

- Historical incidents and how they were diagnosed

- Log formats and metrics definitions

- Runbook documentation

- Common failure patterns
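Much of the pipeline work is unglamorous cleanup of historical records. The sketch below shows the flavor for support tickets: trimming whitespace, normalizing categories, dropping records with no recorded resolution, and removing duplicates. The field names and the `TicketExample` type are assumptions about what an export might look like, not a prescribed schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TicketExample:
    ticket_id: str
    category: str
    question: str
    resolution: str


def normalize(raw: dict) -> Optional[TicketExample]:
    """Return a cleaned example, or None if the record is unusable."""
    ticket_id = str(raw.get("id", "")).strip()
    question = (raw.get("question") or "").strip()
    resolution = (raw.get("resolution") or "").strip()
    category = (raw.get("category") or "uncategorized").strip().lower()
    if not ticket_id or not question or not resolution:
        return None
    return TicketExample(ticket_id, category, question, resolution)


# Hypothetical raw export with typical problems.
raw_records = [
    {"id": 101, "category": " Billing ", "question": "Charged twice?",
     "resolution": "Refunded duplicate charge."},
    {"id": 102, "category": "LOGIN", "question": "Cannot sign in",
     "resolution": ""},  # no resolution recorded: dropped
    {"id": 103, "category": None, "question": "  Export fails ",
     "resolution": "Raised file size limit."},
    {"id": 101, "category": "billing", "question": "Charged twice?",
     "resolution": "Refunded duplicate charge."},  # duplicate id: ignored
]

examples: dict[str, TicketExample] = {}
for raw in raw_records:
    cleaned = normalize(raw)
    if cleaned is not None:
        examples.setdefault(cleaned.ticket_id, cleaned)  # keep the first copy

print(f"kept {len(examples)} of {len(raw_records)} records")
```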

Phase 4: Deploy Passively (Weeks 11-14)

Don't try to automate decisions yet. Deploy the agent in observation mode:

- Agent analyzes situations and suggests decisions

- Humans make decisions but see agent recommendations

- Log where agent was right and where it was wrong

Run this for 2-3 weeks. Measure agreement rate between agent and human. Identify failure patterns.

At this point, you'll know if this is actually valuable or if you're chasing a false lead.
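Measuring the agreement rate in observation mode is a small calculation once you log both sides of each decision. This sketch assumes a log of (agent suggestion, human decision) pairs. The action labels and sample data are invented.

```python
from collections import Counter

# Hypothetical observation-mode log: (agent suggestion, human decision) per case.
shadow_log = [
    ("refund", "refund"),
    ("refund", "refund"),
    ("escalate", "escalate"),
    ("refund", "escalate"),
    ("close", "close"),
    ("close", "refund"),
    ("escalate", "escalate"),
    ("close", "close"),
]

agreements = sum(1 for agent, human in shadow_log if agent == human)
agreement_rate = agreements / len(shadow_log)

# Tally disagreements by (agent, human) pair to surface failure patterns.
disagreements = Counter((agent, human) for agent, human in shadow_log if agent != human)

print(f"agreement rate: {agreement_rate:.0%}")
for (agent, human), count in disagreements.most_common():
    print(f"agent said {agent!r}, human chose {human!r}: {count}")
```

The disagreement tally is usually more useful than the headline rate, because it shows which kinds of cases the agent gets wrong.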

Phase 5: Automated Confidence Tiers (Weeks 15-16)

Now enable autonomy for high-confidence decisions:

- Agent confidence > 95%: Act autonomously, log for reference

- Agent confidence 80-95%: Suggest action, require 1-click human approval

- Agent confidence < 80%: Escalate to human with full analysis

Measure error rates carefully. If errors exceed 2-3%, don't proceed.
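The "don't proceed" rule can be made mechanical. Here is a minimal sketch of an error-rate gate, assuming each autonomous action is later marked correct or incorrect by a human audit. The 2% default reflects the lower end of the 2-3% range above. The function names and sample outcomes are hypothetical.

```python
def error_rate(outcomes: list[bool]) -> float:
    """Share of autonomous actions later judged wrong (False means wrong)."""
    if not outcomes:
        raise ValueError("no autonomous actions logged yet")
    return outcomes.count(False) / len(outcomes)


def may_expand(outcomes: list[bool], max_error_rate: float = 0.02) -> bool:
    """True if the observed error rate is within the error budget."""
    return error_rate(outcomes) <= max_error_rate


# Hypothetical audit results for two weeks of autonomous actions.
week_one = [True] * 99 + [False] * 1
week_two = [True] * 95 + [False] * 5

for label, outcomes in (("week one", week_one), ("week two", week_two)):
    verdict = "proceed" if may_expand(outcomes) else "hold"
    print(f"{label}: error rate {error_rate(outcomes):.1%} -> {verdict}")
```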

Phase 6: Optimize and Scale (Weeks 17+)

With 4+ weeks of autonomous operation and solid metrics, expand to other processes.

The first agent is the hardest. The second is maybe 50% as hard because you've learned patterns. By the fourth, you're shipping in 3-4 weeks.

Startups can achieve significant efficiency gains across various functions by deploying AI, with improvements ranging from 50% to 77.5%. Estimated data.

The Organizational Shift: Culture Change

Here's the thing that doesn't show up in ROI calculations: Implementing agents forces you to think about your business differently.

When you're building an agent to handle support tickets, you're forced to understand what makes a ticket good or bad. You're forced to document decision logic that was previously intuitive. You're forced to measure things you weren't tracking.

This is actually the biggest value for most organizations.

A support team that used to resolve tickets based on experience and gut feel now has explicit decision criteria. That means new hires can be productive in 3 weeks instead of 8. That means consistency improves. That means you can audit and improve.

A development team that used to maintain infrastructure through tribal knowledge now has to document and codify how systems work. This means onboarding is faster, mistakes are fewer, and you have a record of decisions.

The agent is just the visible manifestation of deeper organizational intelligence.

Companies that fail with agents are usually those that view it as a pure cost-cutting measure. "Let's replace this person with an agent." Companies that succeed are those that view it as a way to elevate their team's capability while freeing them from routine work.

The people who were previously spending 30 hours a week on routine decisions now spend 5 hours making judgment calls and 25 hours on strategy, customer relationships, or innovation.

The Next 18 Months: What's Coming

We're in the early-early stage of this shift. Here's what I expect to happen:

Q2-Q4 2025: Agent deployments accelerate significantly. The companies that deployed in 2024 have 12+ months of data showing ROI. VCs push this as a mandate. More enterprises attempt it.

Failure rate is probably 40-50%. Lots of projects that looked promising don't deliver, usually because of poor baseline measurement or unclear success criteria.

Q1-Q2 2026: Specialized agent-building platforms become standard. We'll see more tools designed specifically for building agents with human oversight, not general LLMs. Think "Zapier but for agents."

Integration platforms such as Zapier and Make (formerly Integromat) will add native agent capabilities. By mid-2026, you will probably be able to build a useful business process agent without coding.

Q3-Q4 2026: The first generation of "agent-native" companies will start raising Series B. These are startups that used agents for operational efficiency from day one. They'll have 18+ months of data proving they can operate with 35% fewer people and equal output.

This creates a massive competitive advantage that investors will price into valuations.

2027+: Expect consolidation around agent platforms, just like we've seen with cloud infrastructure. The fragmented market of "AI agent building tools" will collapse into 2-3 dominant platforms. Expect Microsoft and Google to dominate here—they have the infrastructure, the models, and enterprise relationships.

The Startup Playbook: How To Win

If you're running a startup and want to capitalize on this shift, here's what to do:

1. Start with your highest-impact, most-quantifiable process

Not the hardest technical challenge. The process that has the clearest ROI. Usually this is customer support, sales operations, or incident response.

2. Build measurement first, agent second

Spend 30% of your effort understanding the baseline before you touch code. This sounds slow. It's actually the fastest path to success.

3. Plan for human oversight from day one

Don't build fully autonomous. Build "agent makes decision, human confirms" as your default mode. You'll transition to higher autonomy as you gain confidence.

4. Measure the right metrics

Not "Did the agent do this?" but "Did this outcome improve?" Agents that are 80% confident but correct 95% of the time are better than agents that are 100% confident but correct 70% of the time.

5. Use this as a hiring advantage

When you've deployed agents successfully, promote this. It becomes a recruiting narrative: "Join us and spend 20% of time on routine work and 80% on the interesting stuff." This attracts better talent.

6. Build a data culture

Agents only work if you have good data. Use agent deployment as a forcing function to fix your data infrastructure. This benefits everything, not just agents.

7. Don't cut people—redeploy them

When agents take over routine work, move people to high-value activities. This improves morale, reduces turnover, and actually increases output.

FAQ

What exactly is an AI agent, and how is it different from a chatbot?

An AI agent is an autonomous system that can break down complex problems into sequential steps, execute actions across multiple tools and APIs, reason about outcomes, and iterate without human intervention. Unlike chatbots that respond to user queries, agents can work independently on multi-step tasks, learn from results, and handle exceptions. A chatbot answers "What's my account balance?" An agent autonomously processes refunds, updates ledgers, and notifies customers without human prompting.

How long does it take to deploy an AI agent in an organization?

Baseline agent deployment takes 10-16 weeks from conception to autonomous operation. The breakdown is roughly: measurement (4 weeks), defining success metrics (2 weeks), data pipeline building (4 weeks), passive deployment and iteration (up to 4 weeks, including a 2-3 week observation run), and confidence-tier automation (1-2 weeks). The first agent takes longest because you're establishing processes and learning. Subsequent agents can be deployed in 3-4 weeks once you've established patterns and infrastructure.

What's the typical ROI of deploying AI agents?

Most organizations see 3-5x return within the first year after accounting for implementation costs. A

What are the biggest reasons agent deployments fail?

The three primary failure modes are: (1) Unclear business objectives: deploying agents without defining what success means before building, (2) Poor data quality: agents require clean, structured, comprehensive historical data to make good decisions, and building the data pipeline often takes 60% of the project effort that nobody budgeted for, and (3) Unrealistic autonomy expectations: organizations try to make agents fully autonomous immediately rather than starting with human oversight and gradually increasing autonomy. Additionally, change management and organizational resistance can derail projects if leadership doesn't clearly communicate that agents augment rather than replace people.

How do you measure whether an AI agent is actually working?

Success measurement depends on the specific use case, but key metrics include: baseline metrics (time spent, human decisions made, error rates in the current process), agent performance metrics (how often the agent makes correct decisions, agent confidence levels, time required for human review), and business impact metrics (total cost reduction, speed improvement, customer satisfaction change). For customer support agents, track resolution time and first-contact resolution rate. For incident response agents, measure time-to-diagnosis and time-to-mitigation. The best approach is establishing detailed baseline metrics before deploying the agent, then comparing month-to-month.

What's the difference between agentic systems and traditional automation?

Traditional automation (RPA, rule-based systems) works on if-then logic and requires explicit rules for every scenario. If the rule set changes, you need to reprogram. Agentic systems use machine learning and reasoning to adapt to variations. An RPA system might handle 80% of support tickets that exactly match known patterns. An agentic system handles 80% of support tickets by reasoning through the context, even when patterns vary. Agents are better for problems with variation and edge cases. Traditional automation is better for highly repetitive, standardized processes with few variations.

How do you ensure AI agents don't make biased or unethical decisions?

Agent safety requires explicit design: (1) Audit historical training data for bias before deploying agents, (2) Implement confidence thresholds so agents only act autonomously on high-confidence decisions while escalating uncertain cases to humans, (3) Create feedback loops where human decisions on escalated cases continuously retrain agents, (4) Log all agent decisions for auditability and compliance, (5) Implement regular audits comparing agent decisions to human decisions for bias, and (6) Design "human-in-the-loop" architecture where high-stakes decisions always involve human review. The key is treating agents as advisory systems first, not autonomous decision-makers, until you have extensive evidence they're reliable.

What skills do I need to build and deploy AI agents?

Successful agent teams combine: data engineering expertise (building clean data pipelines), prompt engineering and LLM knowledge, systems design experience (understanding complex workflows and failure modes), machine learning evaluation (measuring agent performance statistically rather than deterministically), and domain expertise in the specific process being automated. Increasingly valuable are people who've worked on ML systems with human feedback, recommendation systems, or safety-critical systems where failures can cascade. Traditional software engineering skills matter, but understanding probabilistic systems and human oversight is more important for agent work.

Can small startups really compete if they deploy agents and larger competitors don't?

Yes, there's a genuine structural advantage. A startup that deploys agents in core operations can operate 30-40% leaner while maintaining or exceeding output quality compared to legacy competitors. More importantly, this advantage compounds over time—better profitability extends runway, freeing resources to iterate faster. Employees prefer roles where they're augmented rather than overwhelmed, improving retention. However, the advantage is temporary (18-24 months) until larger competitors catch up. The value is in gaining 18-24 months of better unit economics and learning advantage.

Conclusion: The Inflection Point Is Now

We're living through something genuinely inflection-point-y, and the most remarkable part is how few people have actually internalized what's changing.

Cloud computing took 5-7 years to meaningfully reshape the economics of software. Agentic systems are moving faster. The technology is further along. The value is clearer. The business case requires less faith.

Which means the window for startups to gain unfair advantage is closing faster than it did with cloud. If you're going to deploy agents and get 18 months of lead time on larger competitors, you need to start in the next few months, not next year.

The companies that will look obviously brilliant in 2027 are the ones deploying agents in core operations in 2025. Not because the technology is magical or scary or going to take over everything. But because reducing operational overhead by 35-40% while maintaining quality is a structural advantage that matters.

Your runway extends by months. Your team's time gets spent on things that matter. Your people are happier because they're solving hard problems instead of triaging tickets. These are the unsexy reasons agentic systems matter, and they're more powerful than the flashy ones.

The business case is proven. The technical barriers are overcome. What remains is execution—the unglamorous work of measuring baselines, building data pipelines, deploying carefully, iterating based on results.

But that's where the wins are. Not in having the coolest AI. In being systematic and pragmatic about getting measurable value from it.

The startups that get this right will be the ones worth billions in five years. Not because they built clever AI, but because they understood that the real opportunity was always the economics.

And they moved faster than everyone else.

Key Takeaways

- AI agents reduce operational overhead by 35-40% through autonomous handling of multi-step tasks like dependency management and incident response

- Startups deploying agents early gain 18-24 month competitive advantage over legacy competitors through leaner operations and better unit economics

- Successful agent deployments require clear business metrics, robust data pipelines, and human-in-the-loop architecture—not full autonomy—to deliver ROI

- Expected payback period for agent implementation is 8-14 months with 3-5x returns by year one, making this comparable to cloud computing's impact on startup economics

- 70-80% reduction in code maintenance time and 50-65% faster incident resolution are achievable through multi-step agents, but only with explicit focus on baseline measurement first
