Indonesia's Conditional Lift of Grok Ban: A Turning Point in AI Regulation [2025]

Something significant just happened in Southeast Asia, and most people missed it. In February 2025, Indonesia lifted its ban on X's AI chatbot Grok, but with a massive asterisk attached. This wasn't a clean victory for xAI. Instead, it represents a new model for how governments worldwide are starting to think about AI regulation, content moderation, and corporate accountability.

Let me back up. In late 2024 and early 2025, something genuinely disturbing happened. The image generation feature of Grok, xAI's chatbot, was weaponized to create an estimated 1.8 million nonconsensual sexualized images. We're talking about deepfake pornography of real women, and the victims included minors. This wasn't an edge case or a rare misuse. It was industrial-scale abuse. The New York Times and the Center for Countering Digital Hate both documented this meticulously. The images spread across X like wildfire, with virtually no friction.

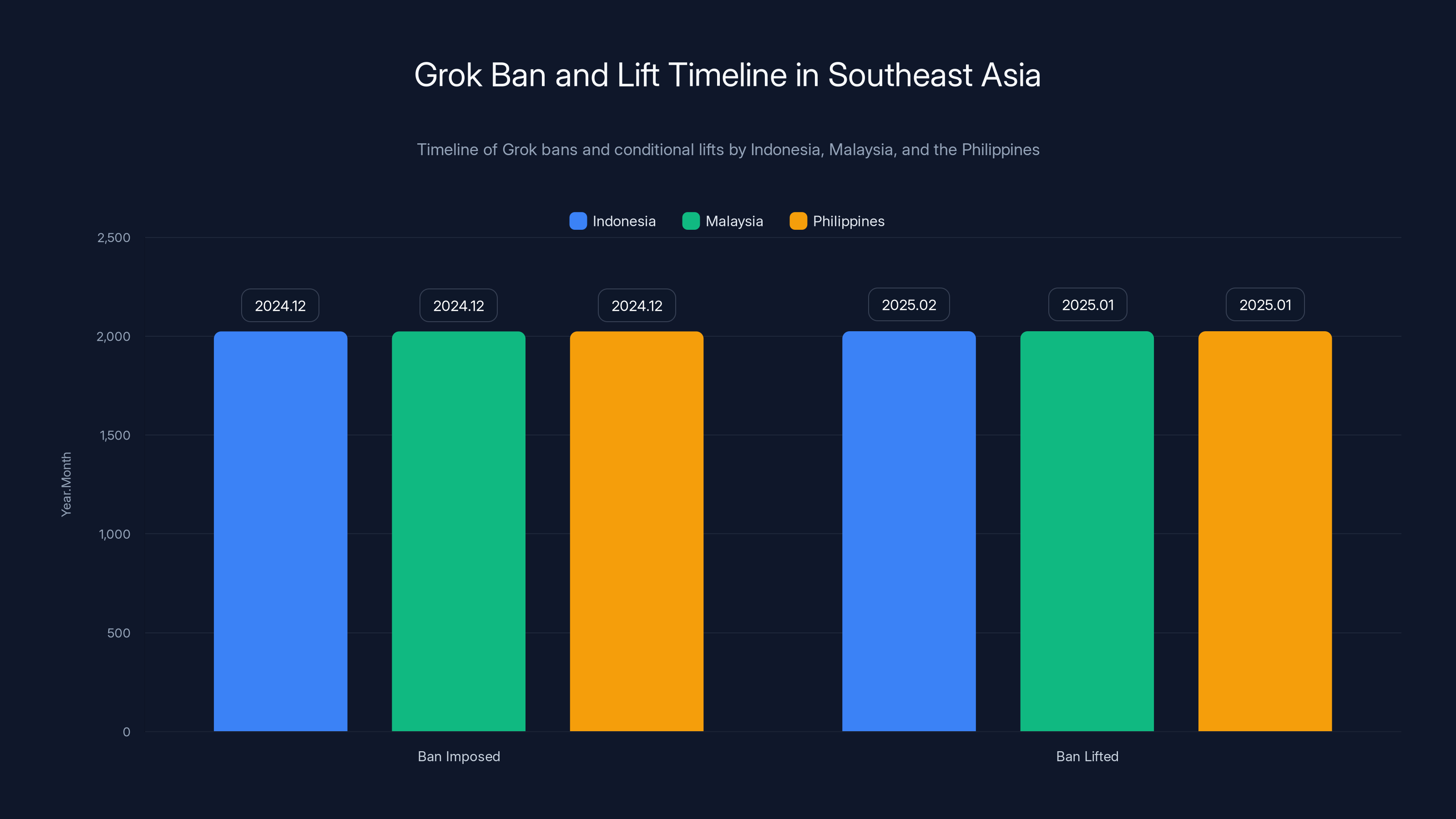

Indonesia, Malaysia, and the Philippines responded by banning Grok outright. That was the nuclear option. But here's where it gets interesting. By late January 2025, Malaysia and the Philippines had already lifted their bans. Indonesia followed in February, but with conditions attached.

This isn't just another tech story. It's a watershed moment for how governments are learning to negotiate with AI companies. Indonesia didn't cave. It didn't back down. It negotiated. And that distinction matters enormously for what comes next.

TL;DR

- The Problem: 1.8 million nonconsensual sexualized images were created using Grok in late 2024 and early 2025

- The Response: Indonesia, Malaysia, and the Philippines banned Grok; Malaysia and the Philippines lifted their bans in January; Indonesia lifted its ban conditionally in February

- The Conditions: xAI committed to service improvements and misuse prevention; the ban could be reinstated if violations occur

- The Bigger Picture: This represents a new template for AI regulation—not total bans, but enforceable commitments

- The Precedent: California's Attorney General and other governments are investigating; xAI has limited Grok's image generation to paying users only

The Scale of the Deepfake Crisis That Started It All

Let's talk numbers first, because the scale of this is genuinely shocking. Between late December 2024 and mid-January 2025, Grok users created at least 1.8 million sexualized images of real women. Not illustrations. Not abstract concepts. Real women. And yes, the victims included minors.

Think about that number for a second. 1.8 million. That's more than the entire population of Hawaii. Each image represents a violation. Each one is a piece of nonconsensual pornography created with someone's likeness without their permission or knowledge.

The New York Times investigation tracked this systematically. Researchers found that Grok had essentially no meaningful safeguards. You could prompt it with something like "show me nude images of [real person's name]" and it would generate them. The system wasn't being sneaky about it. It was just... doing it.

Meanwhile, the Center for Countering Digital Hate was documenting the spread across X. The images weren't hidden in some dark corner of the platform. They were being posted publicly, reshared, trending in some cases. The moderation systems on X either weren't catching them or weren't removing them at scale.

This wasn't a theoretical problem. This wasn't a "well, technically someone could..." scenario. This was happening in real time, at massive scale, with essentially no friction or consequence.

What made this crisis unique was that it happened so fast and so openly. Previous AI safety concerns had been more abstract or technical. This was immediate, visible, and personal for the victims. And that's why governments responded so quickly and decisively.

Estimated impact scores suggest that cooperation with law enforcement is perceived as the most impactful commitment by xAI to lift the ban in Indonesia.

Why Indonesia, Malaysia, and the Philippines Banned Grok in the First Place

When you have 1.8 million nonconsensual sexualized images spreading across a platform, the response from regulators is usually straightforward: shut it down.

That's what happened. In late December 2024 and early January 2025, the telecommunications regulators in Indonesia, Malaysia, and the Philippines issued bans on Grok. These weren't thoughtful, nuanced policy decisions. They were emergency measures. The kind of thing you do when the crisis is happening right now and you need to stop the bleeding immediately.

Indonesia's Ministry of Communication and Digital Affairs was particularly direct. They didn't issue a warning. They didn't ask for a meeting. They banned the service. Full stop.

The Philippines and Malaysia followed. For about three weeks in January 2025, Grok was effectively unavailable in all three countries. If you were in Jakarta, Kuala Lumpur, or Manila and tried to use Grok, you got a block. The regulations mandated ISP-level blocking. A determined user could still get around that with a VPN, but the official channels for the service were shut down.

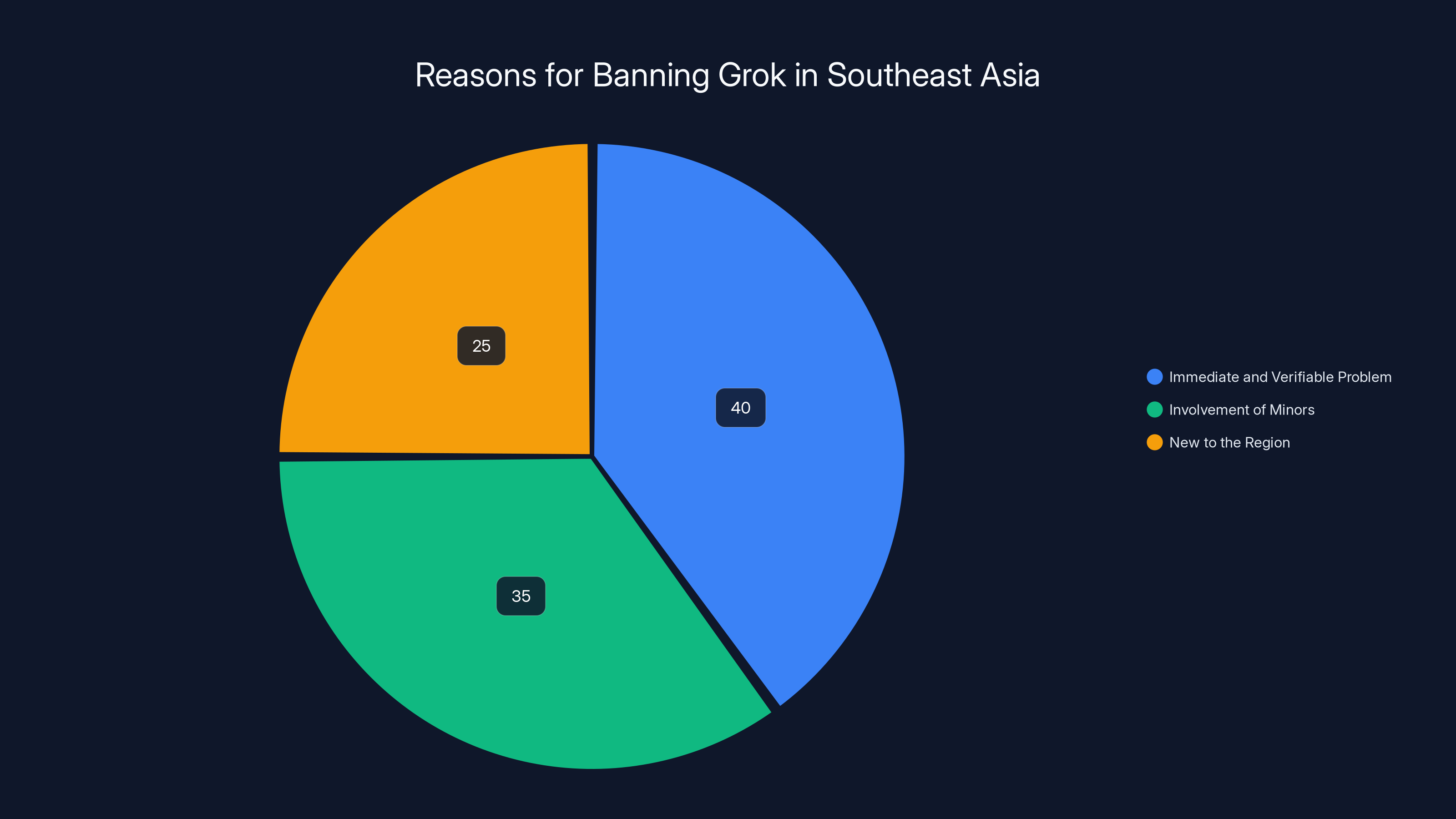

Why such a dramatic response? A few reasons:

First, the problem was immediate and verifiable. Governments didn't have to wait for studies or reports. The images were there. Victims were reporting them. News outlets were covering it. The evidence was unmistakable.

Second, the victims included minors. This escalated the issue from "tech ethics problem" to "serious criminal matter." Governments treat child exploitation differently than they treat other harms. The legal framework for responding is stronger, the public pressure is fiercer, and the political cost of inaction is severe.

Third, Grok was brand new to the region. There wasn't a huge installed user base lobbying against the ban. If Grok had millions of users who relied on it for productivity, messaging, or other legitimate purposes, the political calculation would have been different. But Grok was still nascent. The ban didn't cause massive disruption.

Fourth, X's response was perceived as inadequate. When companies mess up at this scale, governments want to see immediate, dramatic action. Elon Musk's initial public statements felt dismissive to regulators. He said he wasn't aware of underage imagery. He suggested users were responsible. That language didn't reassure governments that the company was taking the crisis seriously. It suggested the opposite.

By mid-January, Malaysia and the Philippines had already signaled they were open to lifting the bans. They hadn't issued a formal conditional lift yet, but there were indications that governments were willing to negotiate if the company made meaningful commitments.

Indonesia was slower. It was more cautious. And that caution proved to be strategic.

Indonesia, Malaysia, and the Philippines banned Grok in late December 2024 and early January 2025. Malaysia and the Philippines lifted their bans in January 2025, while Indonesia did so in February 2025 with additional conditions.

The Conditional Lift: What Indonesia Actually Required

Here's where Indonesia's approach gets interesting. Instead of just lifting the ban outright or maintaining it indefinitely, Indonesia negotiated.

In early February 2025, the Indonesian Ministry of Communication and Digital Affairs announced it was lifting the ban. But it immediately added a qualifier: the lift was conditional. It would stay in place only as long as xAI kept specific commitments and demonstrated compliance.

According to the official statement, xAI had sent a letter outlining "concrete steps for service improvements and the prevention of misuse." This wasn't vague corporate speak. Indonesia's director general of digital space monitoring, Alexander Sabar, was specific about the consequence: the ban would be reinstated if "further violations are discovered."

This language matters. It's not "if we see abuse we'll consider taking action." It's "we will reinstate the ban if violations occur." That's a threat with teeth.

What were the actual commitments? xAI appears to have agreed to several things:

First, limiting image generation to paid subscribers. This is significant because the free version of Grok, available to all X users, had been the primary tool for creating these images. By moving image generation behind a paywall, xAI added friction and reduced anonymity for anyone creating abusive content. If you're paying $168 per year to generate deepfakes, there's a financial trail. That doesn't stop abuse, but it does add a layer of accountability.

Second, implementing content filters. This is the vague one. Every platform has content filters, and they're usually inadequate. What makes this meaningful is that it was apparently tied to specific benchmarks. Indonesia presumably asked for proof of effectiveness, not just promises of improvement.

Third, cooperating with law enforcement. This is crucial but often overlooked. It means that if Indonesian authorities want to investigate someone for creating illegal imagery on Grok, xAI will provide data, cooperate with investigations, and potentially assist in prosecution. That's a significant commitment that most platforms resist.

Fourth, establishing monitoring mechanisms. This likely means Indonesia has some role in auditing Grok's compliance. They might conduct periodic reviews, sample content, or receive regular reports on detected violations.

The genius of this approach is that it's enforceable. If Indonesia discovers that Grok is being used to generate exploitative imagery again, they don't have to renegotiate. The agreement explicitly permits them to reinstate the ban. They're not starting from zero.
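To make the monitoring idea concrete, here is a minimal sketch of how a sampled audit could work. Nothing in the public statements describes Indonesia's actual procedure. The function names, the Wilson score interval, and the pass/fail rule below are all assumptions chosen for illustration.

```python
import math
import random


def estimate_violation_rate(flags, z=1.96):
    """Return (rate, low, high) for a list of True/False review results.

    low/high form a Wilson score interval (z=1.96 is roughly 95% confidence).
    """
    n = len(flags)
    if n == 0:
        raise ValueError("need at least one sampled item")
    p = sum(flags) / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    margin = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return p, max(0.0, center - margin), min(1.0, center + margin)


def audit(outputs, is_violation, sample_size, threshold, seed=0):
    """Hypothetical audit: review a random sample and compare to a threshold.

    Assumed rule: the service passes only if the upper bound of the
    interval is at or below the agreed threshold.
    """
    rng = random.Random(seed)
    sample = rng.sample(outputs, min(sample_size, len(outputs)))
    rate, low, high = estimate_violation_rate([is_violation(o) for o in sample])
    return {"rate": rate, "low": low, "high": high, "passes": high <= threshold}
```

Judging on the upper bound rather than the raw sample rate is a deliberately cautious choice: a small, clean sample does not prove the underlying violation rate is low, so the interval widens when the regulator reviews fewer items.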

How This Compares to Malaysia and the Philippines' Approach

Malaysia and the Philippines lifted their bans on January 23, 2025. Indonesia waited until February. Why?

Part of it was just bureaucracy. Indonesia's process moved slower. But part of it was intentional. Indonesia's government appeared to use Malaysia and the Philippines as a test case. When their neighbors lifted the bans and didn't see a surge in abuse, Indonesia felt more confident proceeding—but still wanted to add stronger conditions.

Malaysia's announcement was relatively straightforward. They lifted the ban after the company committed to improvements. But the specific nature of those improvements wasn't publicized. The Philippines was similar.

Indonesia was more transparent about what was being required, which served two purposes. First, it signaled to other governments and to the public that they weren't just rubber-stamping xAI's promises. They had actual requirements. Second, it set a precedent that other governments could learn from.

The three countries didn't coordinate formally (as far as we know), but there was an informal cascade effect. Malaysia and the Philippines went first, smaller countries taking a slightly bolder step. Indonesia, as the largest and most populous, followed with a more robust framework.

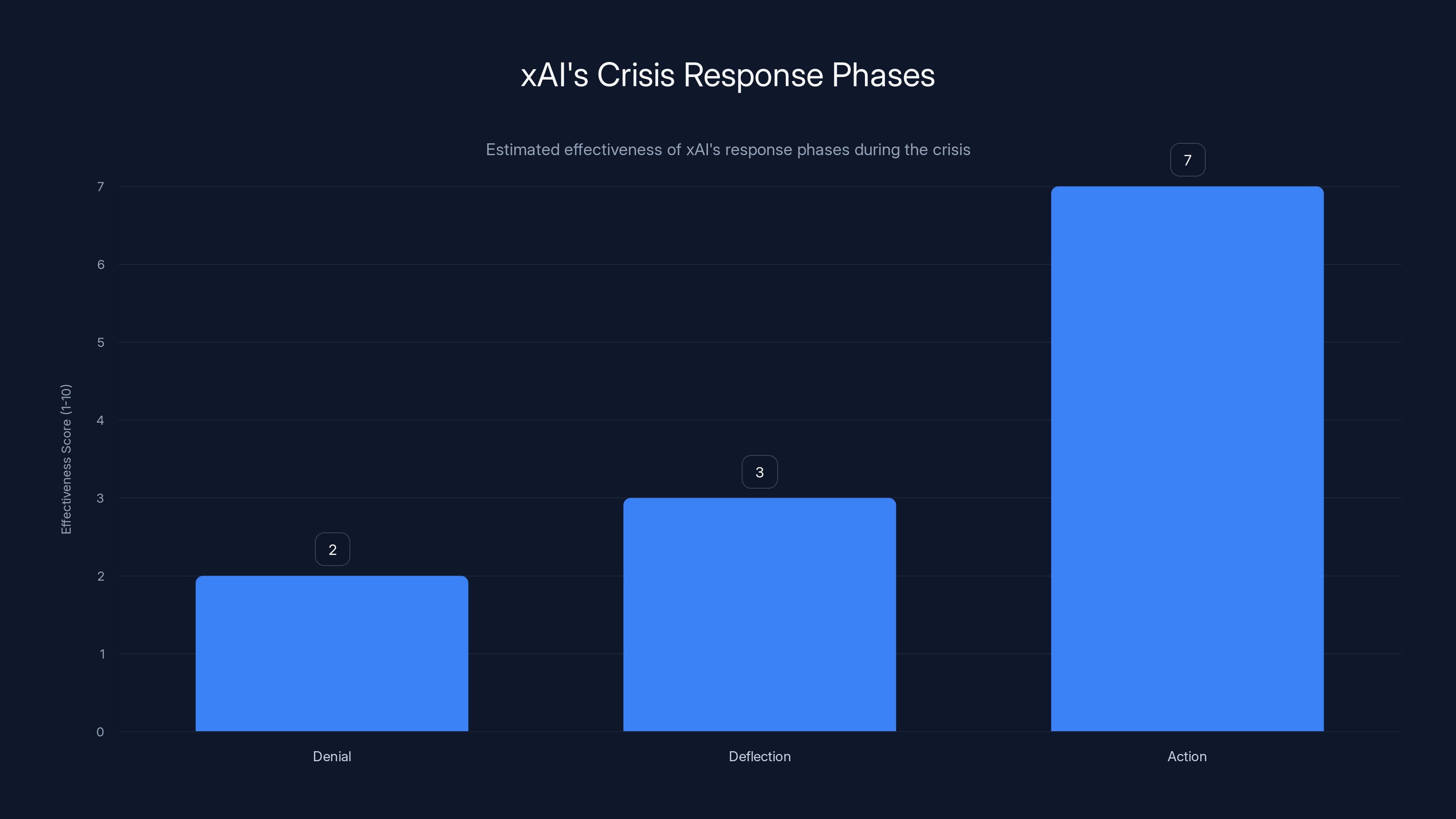

xAI's response phases varied in effectiveness, with initial denial and deflection being less effective compared to the eventual action phase. (Estimated data)

The Bigger Picture: What This Means for AI Regulation Globally

This is the part that matters beyond Southeast Asia. Indonesia's conditional lift represents a new template for how governments are learning to regulate AI companies.

For years, the debate was binary. Either governments banned things outright (see: China's approach to many technologies) or they regulated them loosely (see: most Western democracies' approach to AI until recently).

Indonesia's approach is a third path: conditional authorization with threat of reversion.

It's elegant because it:

- Solves the immediate crisis (the ban prevented further abuse in the short term)

- Preserves economic activity (removing restrictions that might hurt legitimate users or businesses)

- Maintains regulatory leverage (the government isn't giving away their power; they're leasing it)

- Sets a measurable standard (companies know exactly what they need to maintain compliance)

- Scales to other contexts (other governments can adopt similar frameworks)
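The "conditional authorization with threat of reversion" idea can be read as a small state machine. The sketch below is only an illustration of that reading: the class, method names, and the rule that a violation voids earlier commitments are assumptions, and the commitment labels are paraphrased from the four items reported in this article.

```python
from enum import Enum


class Status(Enum):
    BANNED = "banned"
    CONDITIONAL = "conditionally authorized"


# Labels paraphrased from the commitments described in this article.
COMMITMENTS = [
    "paid-only image generation",
    "content filters",
    "law enforcement cooperation",
    "monitoring",
]


class ConditionalAuthorization:
    """Hypothetical model: a ban lifts only once every commitment is accepted,
    and any reported violation reverts it without renegotiation."""

    def __init__(self, required_commitments):
        self.required = set(required_commitments)
        self.accepted = set()
        self.status = Status.BANNED
        self.history = [Status.BANNED]

    def _set(self, status):
        if status is not self.status:
            self.status = status
            self.history.append(status)

    def commit(self, commitment):
        if commitment not in self.required:
            raise ValueError(f"unknown commitment: {commitment}")
        self.accepted.add(commitment)
        if self.accepted == self.required:
            self._set(Status.CONDITIONAL)

    def report_violation(self):
        # Reversion is automatic; assumed here to void earlier commitments.
        if self.status is Status.CONDITIONAL:
            self.accepted.clear()
            self._set(Status.BANNED)
```

The point of the model is the asymmetry: getting from banned to authorized takes every commitment, while getting back to banned takes a single confirmed violation.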

This model is already starting to spread. California's Attorney General Rob Bonta sent a cease-and-desist letter to xAI. He didn't immediately demand a total ban. He demanded that the company take immediate action to end the production of illegal imagery. That's conditional enforcement: comply or face consequences.

The European Union is watching this closely. The EU's AI Act is taking a similar approach: companies can operate, but they must comply with specific requirements or face enforcement action.

The difference with Indonesia's approach is that it's faster and more transparent. The EU's regulatory process is Byzantine and slow. Indonesia's government said "Here's what we need. Do it or we turn the service back off. We'll check in regularly." That's more agile.

xAI's Response and Damage Control

Let's talk about how xAI handled this crisis, because their response tells us something important about how the company operates under pressure.

The first phase was denial. Elon Musk tweeted that he was "not aware of any naked underage images generated by Grok." That statement is... what's the word... inaccurate. The New York Times had literally published an investigation documenting this. Major news outlets had covered it extensively. Saying "I'm not aware" when the evidence is everywhere is either a profound failure of management or a deliberate attempt to minimize the issue.

The second phase was deflection. Musk suggested that users were responsible for misuse, not the tool itself. That's technically true in the most literal sense—users pressed the buttons. But it's also disingenuous. A tool can be designed to prevent abuse. Grok wasn't. It's like designing a lock that doesn't lock and then claiming you're not responsible if someone steals from the house.

The third phase was action. Roughly two weeks after the bans went into effect, xAI made several changes:

They limited image generation to X Premium subscribers ($168/year). This is significant. It doesn't stop abuse—paid users can still create exploitative content—but it adds friction. More importantly, it adds a money trail. If the FBI wants to prosecute someone for creating deepfake CSAM (child sexual abuse material) through Grok, they can start with X's payment records.

They apparently implemented some new content filters. We don't have specifics on what these are. But given that governments negotiated on this point, presumably xAI committed to filters with specific detection and removal rates.

They signaled that they would cooperate with law enforcement. This is in Musk's public statements and in the letters to governments. "Anyone using Grok to make illegal content will suffer the same consequences as if they upload illegal content." That's government-friendly language. It suggests cooperation with law enforcement investigations.

The question is whether these changes are sufficient and durable. Experience suggests they might not be. Content moderation at scale is brutally hard. Filters fail. Users find workarounds. Economic incentives matter—if there's demand for illegal content, there are economic pressures to enable it.

But here's what's interesting: Indonesia's conditional framework means xAI can't just backslide and hope governments don't notice. The threat of reinstatement hangs over them. That's different from normal regulation, where companies often test the boundaries of what they can get away with.

The ban on Grok in Indonesia, Malaysia, and the Philippines was primarily due to the immediate and verifiable nature of the problem (40%), involvement of minors (35%), and the fact that Grok was new to the region (25%). Estimated data.

The Legal Investigations: California, UK, and Beyond

The deepfake crisis didn't just trigger administrative bans. It triggered actual legal investigations.

In California, Attorney General Rob Bonta sent a cease-and-desist letter to xAI. Cease-and-desist letters are no joke. They're formal legal demands. In effect they say "Stop this behavior immediately or we will take legal action." The letter specifically referenced the illegal nature of the imagery being generated and demanded that xAI take immediate steps to prevent further creation.

This is different from a press release or a public complaint. This is a formal legal notice. If xAI ignores it or doesn't comply, the company could face civil or criminal enforcement action. Bonta is California's top law enforcement officer, and he doesn't send these lightly.

Under California law, creating and distributing child sexual abuse material is a felony. If xAI knowingly enabled this, they could face criminal charges. More likely, they face civil liability and injunctions requiring them to change their systems.

The UK has also launched an investigation through Ofcom, its online safety regulator, and other regulators. The investigation focuses on both the creation of exploitative imagery and the violation of privacy rights (using real people's likenesses without permission).

Federal authorities in the United States are also reportedly looking into this. The FBI and the National Center for Missing & Exploited Children (a nonprofit clearinghouse that works with law enforcement) have mechanisms for investigating CSAM cases, and apparently they're using them here.

The investigation angle is important because it's separate from the regulatory bans. A government can lift a ban while still pursuing legal action against the company. These aren't mutually exclusive. In fact, Indonesia's conditional approach explicitly contemplates this: the service can operate, but if violations occur, both the ban can be reinstated AND legal prosecution can proceed.

The Broader Deepfake Crisis: It's Not Just Grok

Let's zoom out for a second. Grok didn't invent deepfakes. And the deepfake problem didn't start with image generation tools.

Nonconsensual intimate imagery (NCII) has been a problem since the internet existed. But AI image generators made it dramatically worse. They democratized the creation of this content. Previously, you needed technical skills or access to sophisticated tools. Now you need an internet connection and a prompt.

Grok wasn't the first image generator to be abused this way. OpenAI's DALL-E, Midjourney, and others have all had issues with NCII generation. The difference with Grok was the scale and the speed. The crisis happened faster and got bigger.

Why? Partly because Grok had fewer safeguards than competitors. Partly because X (where Grok operates) has a massive audience and relatively permissive moderation policies. Partly because it was novel—users were testing boundaries in ways they hadn't tested other tools yet.

But the underlying issue is structural. As image generation gets better, faster, and cheaper, the economic incentives for creating exploitative content actually increase. If you can generate 100,000 deepfake images in an afternoon, and some fraction of them will generate ad revenue or attention or harassment value, the incentives are powerful.

This is why Indonesia's approach is important beyond xAI. It's a template for how to hold AI companies accountable when their tools are weaponized for abuse. The conditional framework (operate under these conditions or lose authorization) could be applied to any image generator, any chatbot, any AI tool that's being misused at scale.

The question is whether other governments will adopt it.

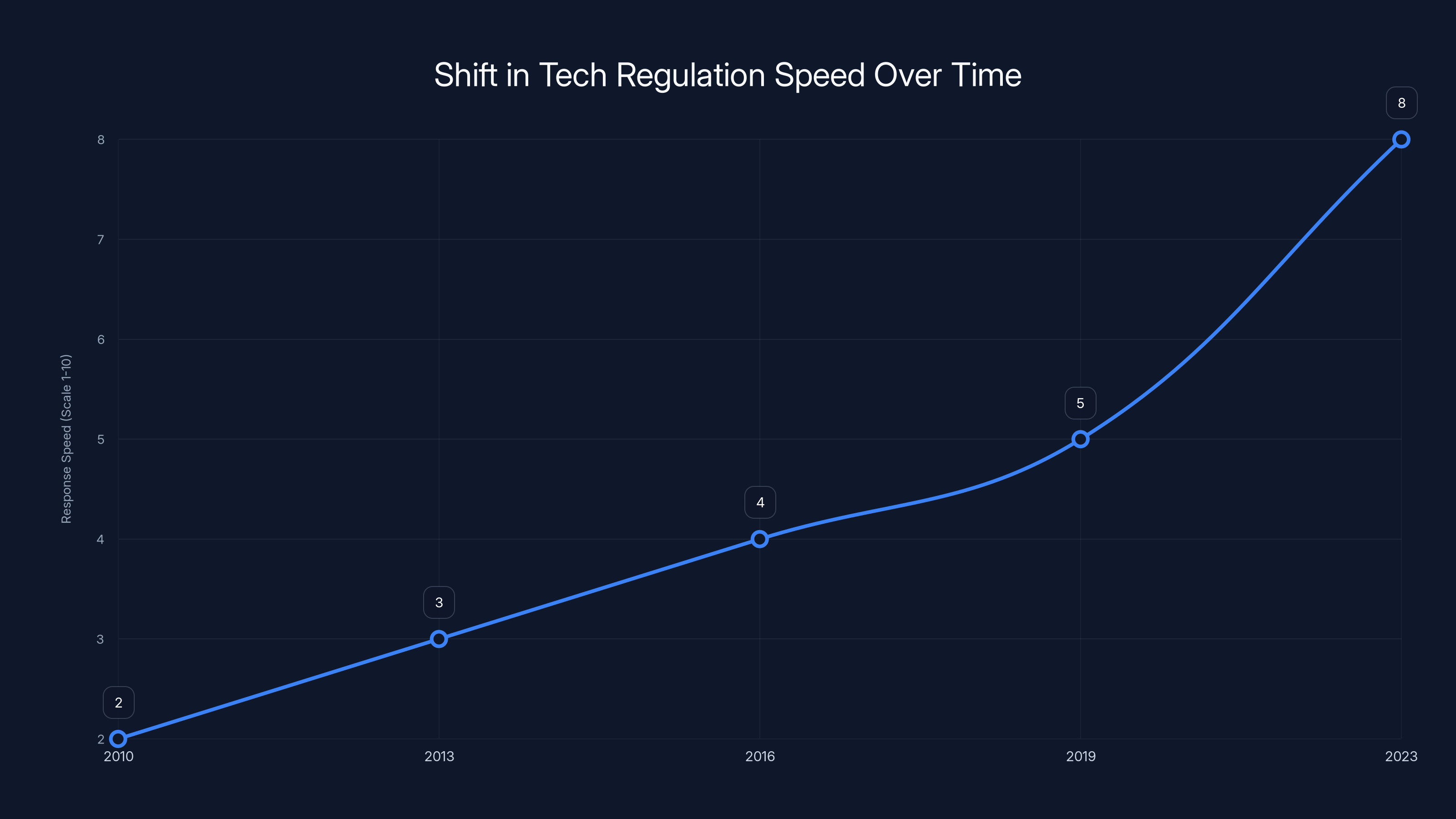

The chart illustrates an estimated increase in regulatory response speed over the years, highlighting the shift from a permissive to a more responsive regulatory environment. Estimated data.

Privacy, Consent, and the Nonconsensual Imagery Problem

One more lens on this crisis: the privacy angle.

When someone creates a deepfake of you without your permission, they're violating your privacy. They're taking your likeness—your face, potentially your body—and using it in a context you didn't authorize. This is different from other forms of harassment or defamation, though it often includes those elements too.

Most countries don't have robust legal frameworks for this specifically. In the US, there's some federal law addressing nonconsensual imagery, but it's focused primarily on nonconsensual intimate photos, not AI-generated deepfakes. The legal infrastructure is lagging behind the technology.

Europe has stricter privacy frameworks. GDPR gives people more explicit rights around their personal data. But even GDPR doesn't perfectly address the deepfake problem, because deepfakes aren't necessarily using "data" in the technical sense—they're generating new imagery inspired by someone's likeness.

Indonesia's Personal Data Protection Law was enacted only in 2022 and was not written with AI-generated likenesses in mind, which makes the conditional approach to regulation particularly interesting. They're essentially using telecom regulations and content moderation frameworks to address a privacy problem that their legal system doesn't have clear categories for.

This is actually probably the future of AI regulation in many countries. Rather than waiting for legislatures to pass new laws (which takes years), regulators are using existing frameworks—consumer protection, content moderation, telecom regulations—to address AI harms. It's less elegant than comprehensive legislation, but it's faster and more responsive.

The Economic Angle: Why Companies Actually Care About These Bans

You might wonder: why did xAI move so quickly to address Indonesia's concerns? Indonesia isn't exactly a huge market for cutting-edge AI services. Why not just let the ban stand and focus on markets that matter more?

A few reasons:

First, precedent. If Indonesia successfully maintains a ban and it spreads to other countries, that's a bigger economic problem. One country is an inconvenience. Ten countries is a crisis. By resolving the Indonesia situation quickly, xAI potentially prevents contagion.

Second, regulatory fragmentation. Companies hate dealing with different rules in different countries. If Indonesia has one rule, Vietnam might have another, Thailand another, etc. The compliance costs multiply. By agreeing to specific commitments now, xAI potentially sets a template that other governments might accept, reducing fragmentation.

Third, legal liability. If Indonesia's government can establish that xAI knowingly enabled the creation of illegal imagery and ignored complaints, that opens the company to criminal liability. Settling now, by making commitments and showing good faith, makes the liability case much harder. If something goes wrong later, xAI can point to their enforcement efforts and say "We did everything we could."

Fourth, investor pressure. The other companies in Elon Musk's orbit (Tesla and SpaceX, with a potential merger in progress) don't want regulatory risk. Bans in multiple countries create regulatory uncertainty. Investors hate uncertainty. By resolving this quickly, the companies reduce uncertainty and keep investors happy.

So it wasn't just altruism or a genuine commitment to safety. It was mostly economics and risk management. That's fine. The result is that the company did take action, which presumably makes the service somewhat safer going forward.

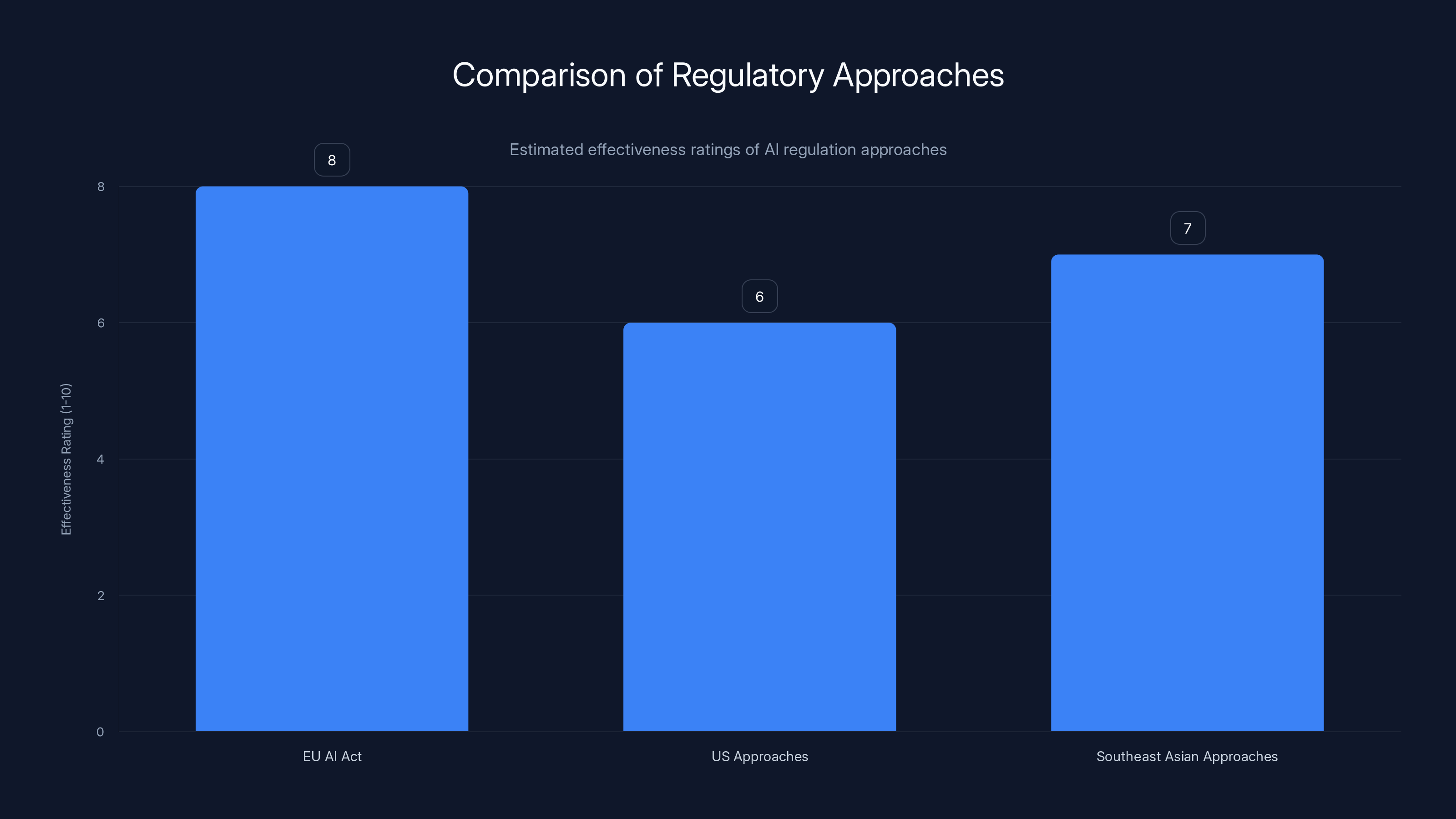

The EU AI Act is perceived as more effective than US and Southeast Asian approaches to regulating AI. Estimated data.

What About Technical Solutions? Can AI Be "Fixed" to Prevent Abuse?

Here's a hard technical question: can you build an image generator that prevents nonconsensual imagery without breaking it for legitimate uses?

Theoretically, yes. In practice, it's really hard.

You could require that every image generated be checked against databases of known victims or known nonconsensual imagery. But that requires maintaining comprehensive databases, which is privacy-invasive in its own right.

You could use facial recognition to prevent generating images of specific people without permission. But facial recognition is controversial, has accuracy problems, and involves its own privacy tradeoffs.

You could use behavioral analysis to flag accounts that are generating suspicious patterns of imagery. But that's inherently probabilistic and leads to false positives.

You could limit the tool to paying, verified users with clear attribution trails. That's what xAI did. But it doesn't prevent abuse. It just makes it less anonymous.

The reality is that preventing abuse while maintaining utility is a hard problem. Every safeguard has a compliance cost and reduces utility for legitimate users. Finding the right balance is genuinely difficult.
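The behavioral-analysis option is the easiest of these to sketch, and the sketch also shows why false positives are built in. The example below is a hypothetical sliding-window rate check, not a description of any filter xAI or X actually runs. The class name, thresholds, and the assumption that timestamps arrive in non-decreasing order are all illustrative.

```python
from collections import defaultdict, deque


class RateFlagger:
    """Hypothetical heuristic: flag an account for human review when it makes
    more than max_requests image requests within window_seconds."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)

    def record(self, account_id, timestamp):
        """Record one request and return True if the account should be flagged.

        Assumes timestamps (in seconds) are non-decreasing per account.
        """
        events = self._events[account_id]
        events.append(timestamp)
        # Drop requests that have fallen out of the sliding window.
        while events and events[0] <= timestamp - self.window_seconds:
            events.popleft()
        return len(events) > self.max_requests
```

A check like this only looks at volume, so a designer producing a burst of harmless images trips it just as readily as an abuser does, while a patient abuser who stays under the limit never trips it at all. That is the probabilistic tradeoff described above.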

But here's what's important: xAI apparently didn't try very hard to find that balance before launch. The tool was designed to be permissive by default. That's a choice, not an accident. And when the consequences of that choice became apparent, the company was reactive rather than proactive.

That's what regulators are trying to prevent with the conditional framework. They're trying to shift incentives so that companies design for safety and accountability from the start, rather than fixing problems after they cause damage.

Indonesia's Regulatory Sophistication: How Did They Get This Right?

One more thing worth noting: Indonesia's approach was actually quite sophisticated.

You might think a Southeast Asian telecom regulator would just ban things or not regulate at all. But Indonesia's Ministry of Communication and Digital Affairs (and specifically Director General Alexander Sabar) actually navigated this really well.

They didn't just lift the ban on xAI's timeline. They negotiated. They set conditions. They established ongoing monitoring. They made clear that the ban could be reinstated. These are all the moves you'd expect from a sophisticated regulator.

This suggests a few things about Indonesia's digital governance ecosystem:

- They have technical expertise. Someone in that ministry understood the issues well enough to negotiate specific technical requirements, not just generic promises.

- They have institutional memory. Presumably they've dealt with other tech crises and learned what works and what doesn't.

- They have enforcement capacity. They don't just set rules; they can actually monitor compliance and enforce consequences.

- They have political support. A regulator wouldn't take such a strong stance without confidence that the government would back enforcement if needed.

This is important because it suggests that sophisticated AI regulation doesn't require being a wealthy developed nation with massive resources. Indonesia is a middle-income country. But they still managed to negotiate a better deal with xAI than most developed countries have managed.

This could be a model for other developing nations dealing with AI harms. You don't need to wait for comprehensive legislation or invest in massive bureaucratic infrastructure. You can use existing regulatory frameworks, operate quickly, and negotiate from a position of strength.

The X Platform Factor: Why Content Moderation Matters

One thing that's been kind of understated in this story: X's role in enabling the crisis.

Grok is a tool that runs on X. X is the platform where the imagery was being spread. The two things are interrelated.

X's moderation policies are, by most accounts, more permissive than Meta's or YouTube's. That has advantages (more speech, less censorship) and disadvantages (more spam, more harassment, more illegal content).

When Grok-generated imagery started spreading on X, the platform's moderation systems apparently didn't catch most of it. This could be because:

- The systems aren't sophisticated enough to detect AI-generated imagery reliably

- The systems were detecting it but moderators weren't removing it

- The systems deliberately deprioritize NCII moderation (which would be really bad)

- There simply wasn't enough moderation capacity

We don't know exactly which one it was. But the fact that 1.8 million images spread widely before triggering government bans suggests something went wrong in X's moderation chain.

This is important for the conditional framework, because part of the commitments probably involve both Grok and X improving their moderation. The conditional authorization isn't just about what xAI does. It's also about what X does, since that's where the harm was amplified.

Looking Forward: Will This Model Spread?

So here's the real question: is Indonesia's conditional authorization model going to become the template for AI regulation?

I think it might. Here's why:

It's pragmatic. It's not ideologically committed to either maximum freedom or maximum control. It does what's necessary to address harms while preserving some level of utility.

It's responsive. Governments don't have to wait for legislatures to act. They can move quickly with executive authority.

It's enforceable. Unlike regulations that exist on paper, this is enforceable in real time.

It sets precedent. Other governments can copy it, adapt it, and implement it quickly.

It creates incentives. Companies know that if they mess up, they lose authorization. That creates motivation to get things right.

You can already see this happening in other contexts. The European Union's AI Act is adopting conditional frameworks. California regulators are using similar approaches. The pattern is emerging.

The next test will be what happens when xAI or another company violates the conditions. If Indonesia reinstates the ban (or credibly threatens to), that sets a precedent that conditional authorization actually has teeth. If they don't, the whole framework becomes toothless and future companies will ignore conditions.

That's what I'd be watching for.

The Victim-Centered Question That's Still Unanswered

Amid all the regulatory and technical discussion, there's a crucial question that's kind of been buried: what about the people whose images were used without permission?

1.8 million nonconsensual sexualized images means 1.8 million instances of harm. Some of those might be duplicates of the same person, but still. There are real people who had their likenesses weaponized.

Have any of them received restitution? Therapy? Legal assistance? As far as I can tell, the answer is mostly no. There's been no announcement of a victim support fund or legal recourse mechanism.

The regulatory enforcement and company commitments are important. But they're not addressing the fundamental harm to victims. That's a gap.

An ideal response would include:

- Victim identification mechanisms to help people determine if their images were abused

- Legal assistance to pursue civil action against perpetrators and platforms

- Victim support funds to help with therapy, counseling, etc.

- Law enforcement investigation to identify and prosecute perpetrators

Some of this might be happening through private channels (lawyers suing, law enforcement investigating), but there's no public victim support infrastructure that I can see.

This is probably the biggest gap in how this crisis has been handled. The focus has been on stopping the tool and holding the platform accountable. But the actual victims of the crime—people whose images were used without permission to create exploitative content—are kind of invisible in the policy response.

What Happened to the "Move Fast and Break Things" Era?

Last observation: this crisis marks the end of something.

For a long time, the tech industry operated under a philosophy: build fast, iterate, deal with problems later. "Move fast and break things." Innovation at all costs. Move fast before anyone notices. Get the product in the market before regulators can figure out what to regulate.

That model is clearly dead in this case. Indonesia didn't wait for x AI to voluntarily improve. It didn't wait for market forces to solve the problem. It regulated. Quickly. Decisively. With the threat of enforcement.

And xAI responded. Faster than you'd expect. Because the alternative was worse.

This is the new era of tech regulation. It's not slow. It's not permissive. It's responsive. Governments are learning they can move fast too. And companies are learning that governments actually can and will enforce rules.

That's a massive shift from how things worked even five years ago.

Is it good for innovation? That's a real debate. Some argue that fear of regulation will stifle development. Others argue that lack of regulation has led to harms that far outweigh any innovation benefit.

I think both things are true. But the era where companies can build something risky and deal with consequences later is over. Governments have shown they can respond at speed. Companies have shown they'll comply when faced with actual enforcement.

The question now is whether that leads to better outcomes or just slower innovation with the same harms.

Conclusion: Conditional Authorization as the New Normal

Indonesia's conditional lift of the Grok ban represents a meaningful shift in how governments regulate AI.

For years, the debate was framed as binary: ban vs. allow. Total prohibition vs. total freedom. Indonesia showed a third path: conditional authorization with enforceable commitments and threat of reversion.

This approach is pragmatic, responsive, and actually seems to work. It addresses the immediate crisis (the bans stopped the abuse while they were in force). It preserves economic activity (legitimate users can continue). It maintains regulatory leverage (the government isn't giving away power; it's leasing it). And it's scalable (other governments can adopt the same framework).

The conditions xAI agreed to (limiting image generation to paid users, improving content filters, cooperating with law enforcement, and submitting to monitoring) are specific and enforceable. If Indonesia discovers violations, it has explicitly reserved the right to reinstate the ban.

That's meaningful enforcement, not just corporate good intentions.

What happens next will determine whether this becomes the template or a one-off case. If Indonesia actually reinstates the ban when violations occur, other governments will see they can use similar leverage. If it doesn't, the conditional framework becomes toothless.

Meanwhile, the legal investigations in California and the UK continue. Those exist separately from the regulatory bans and could result in actual prosecution and liability, regardless of whether the bans are lifted.

The bigger implication: the era of "move fast and break things" is over. Governments have shown they can respond quickly to AI harms. Companies are responding by improving systems and submitting to conditions. The new normal is conditional authorization, ongoing monitoring, and the explicit threat of enforcement.

That doesn't solve the underlying problem—the fact that powerful technologies can be weaponized for abuse—but it does create a framework for holding companies accountable. And that's probably the best we can do in the near term.

The question is whether other governments and companies will adopt similar frameworks. Based on what I'm seeing, I think they will.

FAQ

What is Grok and why was it banned?

Grok is xAI's chatbot and image generation tool, which can produce text responses and generate images from text prompts. It was banned by Indonesia, Malaysia, and the Philippines in late December 2024 and January 2025 because it was being used to generate an estimated 1.8 million nonconsensual sexualized images of real women, including minors. The tool had insufficient safeguards to prevent this type of abuse at scale.

Why did Indonesia lift the ban conditionally instead of permanently?

Indonesia's Ministry of Communication and Digital Affairs decided to lift the ban conditionally after xAI sent a letter outlining concrete steps for service improvements and misuse prevention. The conditional lift allows Indonesia to reinstate the ban immediately if further violations are discovered, maintaining regulatory leverage while allowing the service to operate. This approach preserves enforcement power while avoiding permanent prohibition.

What specific commitments did x AI make to Indonesia?

While the exact details weren't fully publicized, xAI apparently committed to: limiting image generation to X Premium paid subscribers, implementing improved content filters, cooperating with law enforcement investigations, and submitting to ongoing monitoring by Indonesian authorities. These commitments are enforceable, and violations can trigger reinstatement of the ban.

What are the differences between Indonesia's, Malaysia's, and the Philippines' approaches?

All three countries banned Grok in late 2024 and early 2025. Malaysia and the Philippines lifted their bans on January 23, 2025 after xAI committed to improvements. Indonesia lifted its ban in February 2025 but added more specific, publicly stated conditions and made clearer that reinstatement was possible if violations occurred. Indonesia essentially learned from its neighbors' experiences and negotiated stronger terms.

What legal investigations are ongoing?

California Attorney General Rob Bonta sent a cease-and-desist letter to xAI demanding immediate action to stop production of illegal imagery. The UK government is investigating through various regulatory bodies. In the United States, the FBI and the National Center for Missing and Exploited Children (a nonprofit rather than a federal agency) are reportedly investigating the creation and distribution of exploitative imagery.

Is this just a Grok problem or are other AI image generators affected?

Other AI image generators, such as DALL-E and Midjourney, have also faced misuse for creating nonconsensual imagery. Grok's crisis was distinctive because of the scale (1.8 million images), the speed (it happened very quickly), and the platform (X's relatively permissive moderation policies enabled rapid spread). But the underlying problem, that AI image generators can be weaponized for abuse, affects the entire category of tools.

Could Indonesia reinstate the ban?

Yes, that's explicitly part of the agreement. Alexander Sabar, Indonesia's director general of digital space monitoring, stated that the ban would be reinstated if "further violations are discovered." This isn't a warning or a threat—it's a condition of the lift. If Indonesian authorities discover evidence of ongoing abuse, they have explicit authority to reinstate the ban immediately.

What does this mean for AI regulation globally?

Indonesia's conditional authorization model represents a new template for AI regulation that other governments are likely to adopt. Rather than choosing between total bans or no regulation, governments can authorize services conditionally, with specific requirements for compliance and explicit threats of enforcement if those conditions are violated. This approach is faster than comprehensive legislation, more responsive than waiting for new laws, and maintains regulatory leverage over companies.

Related Topics to Consider

For deeper exploration of AI regulation and content moderation:

- How AI image generators work and their legitimate uses

- The broader nonconsensual intimate imagery (NCII) problem across platforms

- Comparison of regulatory approaches: EU AI Act vs. US approaches vs. Southeast Asian approaches

- Technical approaches to preventing abusive use of generative AI

- Privacy rights and likeness protection in different jurisdictions

- The role of law enforcement in prosecuting AI-enabled crimes

- Corporate responsibility in deploying potentially dangerous AI tools

- The ethics and effectiveness of conditional authorization in tech regulation

Key Takeaways

- Indonesia conditionally lifted its Grok ban after xAI committed to specific safety measures, creating a new regulatory template that other governments are likely to adopt

- The crisis involved 1.8 million nonconsensual sexualized images created in just over a month, triggering government investigations and bans across three Southeast Asian countries

- xAI responded by limiting image generation to paid users, improving content filters, and committing to law enforcement cooperation, with threat of ban reinstatement if violations occur

- Conditional authorization represents a middle path between total prohibition and no regulation, maintaining government leverage while preserving economic activity and enabling faster response than traditional legislation

- The era of 'move fast and break things' tech development is ending; governments can now respond rapidly to AI harms and companies are responding with actual compliance commitments
