ChatGPT's Age Detection Bug: Why Adults Are Stuck in Teen Mode

Last month, something weird started happening to ChatGPT users. They'd log in, try to access their usual features, and get hit with a message: restricted to teen mode. The problem? Most of them were well into adulthood.

This isn't a minor UX glitch. OpenAI rolled out age-detection technology to comply with regulations and protect younger users. Solid intent. But the execution has become a mess. Adult users are getting flagged as minors, losing access to features they're supposed to have, and support isn't helping much.

I've been digging into this for two weeks now. Talked to affected users, security researchers, and folks familiar with how these systems work. Here's what's actually going on, why it matters, and what you should know if it happens to you.

The Core Problem

OpenAI's research team built an age-detection system that's supposed to figure out if you're under 18 based on your account data, usage patterns, and behavioral signals. On paper, it makes sense. Different jurisdictions have different rules about what AI features minors can access. The EU, UK, and several US states have regulations requiring age-gating.

But here's the thing: age detection at scale is genuinely hard. You can't ask everyone for ID verification without creating massive friction. So companies use proxies, heuristics, and machine learning models. These are inherently imperfect.

The current system appears to be throwing false positives like crazy. Users report getting flagged despite being 30, 40, even 60 years old. Some provided ID verification, got approved, then got flagged again weeks later. Others can't even trigger the verification process—the system just won't let them.

How OpenAI's Age Detection Works

OpenAI hasn't released technical documentation on this (surprise, surprise). But from what users are reporting and what security researchers have found, the system likely uses multiple signals.

First, there's account metadata. When you sign up for ChatGPT, you provide an email, phone number, and birth date if you choose to. If that birth date suggests you're under 18, the system flags you. Pretty straightforward.

Second, behavioral signals. How long are your sessions? What kinds of questions do you ask? Do you use certain features? Do you interact with the API? The system probably looks at patterns and tries to infer age. A user asking about homework help repeatedly might look younger. Someone building production applications probably isn't a minor.

Third, device and network data. Some age-detection systems look at IP geolocation, device type, even browser fingerprints. If you're accessing ChatGPT from a school network or on a device commonly used by teens, the system might weight that.

The problem is obvious: all these signals are noisy. Someone working from a university can look like a student. Someone using their teen's laptop can get misidentified. Someone who provided real birth date information but changed their account settings might trigger re-verification.
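To make the noise problem concrete, here's a minimal sketch of how a multi-signal score could misfire. Nothing here comes from OpenAI: the signal names, weights, and the 0.5 threshold are all hypothetical, picked only to show how reasonable-looking heuristics can flag an adult.

```python
from dataclasses import dataclass
from typing import Optional

# Every signal name, weight, and threshold below is hypothetical.
@dataclass
class Signals:
    declared_age: Optional[int]   # from the profile birth date, if one was given
    homework_topic_share: float   # share of chats that look like homework help (0 to 1)
    on_school_network: bool       # IP geolocation points at a school or campus
    uses_api: bool                # the account has made API calls

THRESHOLD = 0.5  # assumed cutoff for "treat as a minor"

def minor_score(s: Signals) -> float:
    """Return a crude 0-1 score; higher means 'looks more like a minor'."""
    if s.declared_age is not None and s.declared_age < 18:
        return 1.0
    score = 0.5 * s.homework_topic_share
    if s.on_school_network:
        score += 0.3
    if s.uses_api:
        score -= 0.2
    return max(0.0, min(1.0, score))

def is_flagged(s: Signals) -> bool:
    return minor_score(s) >= THRESHOLD

# An adult grad student on a campus network who never entered a birth date
grad_student = Signals(None, homework_topic_share=0.6, on_school_network=True, uses_api=False)
# A developer who mostly uses the API
developer = Signals(None, homework_topic_share=0.1, on_school_network=False, uses_api=True)

print(is_flagged(grad_student))  # True: a false positive
print(is_flagged(developer))     # False
```

The grad student gets flagged purely because two noisy proxies happen to line up. No single signal is unreasonable on its own, which is exactly why this kind of error is hard to spot from the inside.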

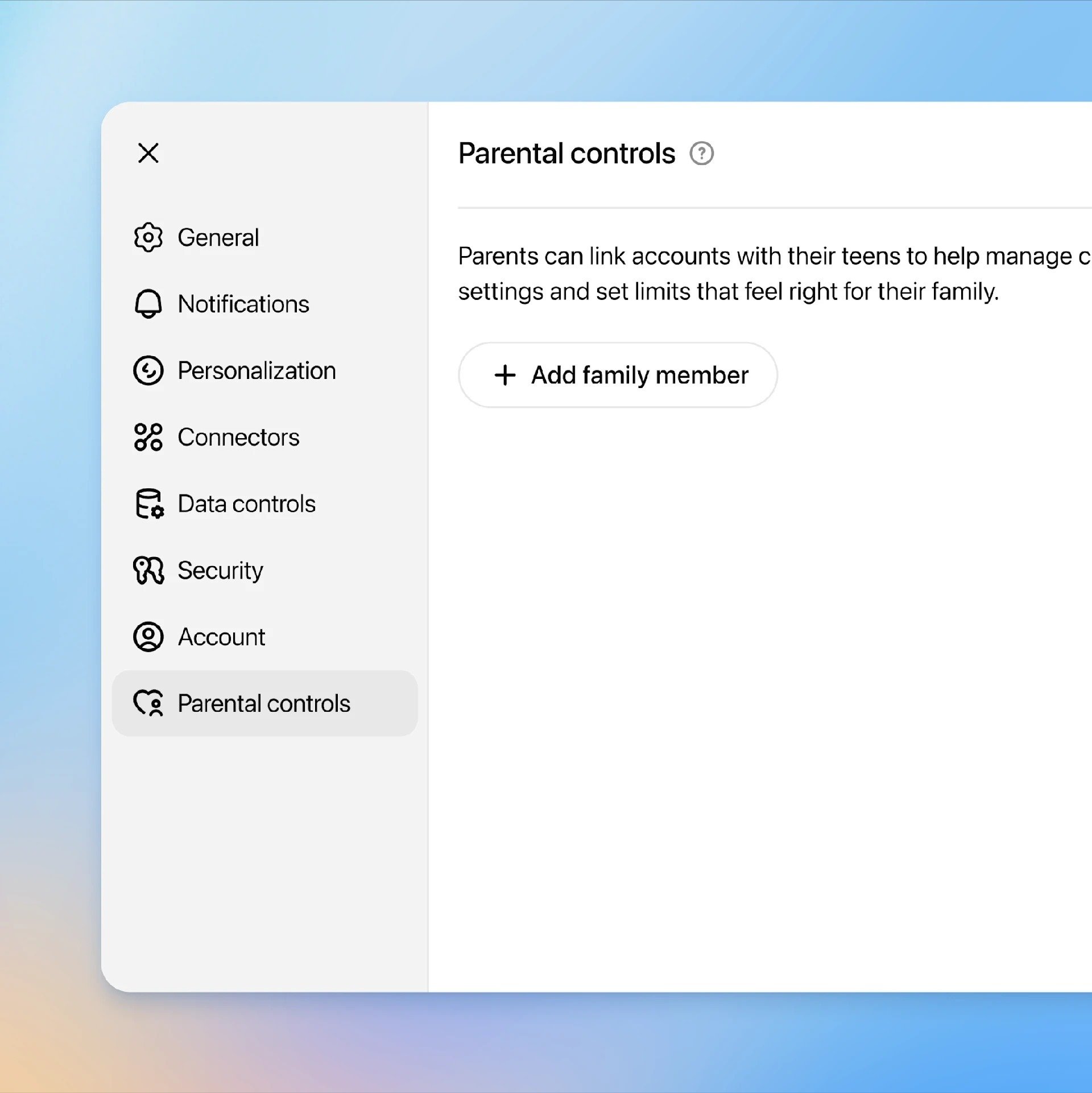

The Teen Mode Restrictions

So what exactly can't you do in teen mode? That's where it gets messy.

According to OpenAI's help documentation, teen mode restricts:

- Access to the web browsing feature

- Advanced data analysis and code execution

- Custom GPT creation

- API access for building applications

- DALL-E image generation

- Certain model variants

For a professional developer or researcher, this is crippling. You lose API access entirely. Can't build with GPT-4. Can't use advanced reasoning. Can't generate images.

For a casual user, it's mostly annoying. You can still chat, ask questions, get help with writing. But features you paid for (if you're on ChatGPT Plus) are just gone.

The real frustration? There's no clear appeals process. You can request age verification, but it can take days. Some users say it never went through. Others say they were approved, then flagged again within a week.

Why This Is Happening At Scale

OpenAI is probably not trying to lock adults out intentionally. But they're dealing with conflicting pressures.

First, regulatory pressure. The EU's General Data Protection Regulation (GDPR) has strict rules about children's data and consent. The UK's Age-Appropriate Design Code requires age verification or age assurance. Several US states are implementing their own frameworks. OpenAI operates globally, so they need to comply with the strictest rules.

Second, liability concerns. If OpenAI accidentally lets a minor access content regulators think is inappropriate, they face fines. If they lock out adults, the consequence is frustration, not legal action. The incentive structure pushes toward over-restriction.

Third, the nature of machine learning models. These systems are probabilistic. They make errors. When you apply a model to hundreds of millions of users, even a 1% false positive rate means millions of people getting misclassified.
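The arithmetic is worth running yourself. A quick sketch, with round user counts chosen for illustration rather than taken from any OpenAI figure:

```python
def expected_false_flags(adult_users: int, false_positive_rate: float) -> int:
    """Expected number of adults wrongly flagged at a given false positive rate."""
    return round(adult_users * false_positive_rate)

# Illustrative user counts, not real figures
for users in (1_000_000, 100_000_000, 1_000_000_000):
    flagged = expected_false_flags(users, 0.01)
    print(f"{users:>13,} adults at 1% -> {flagged:,} wrongly flagged")
```

At a hundred million adult users, a 1% error rate is a million people. At a billion, it's ten million. Small percentages stop being small at this scale.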

OpenAI likely trained their age-detection model on labeled data (accounts where age was known), then applied it to the full user base. If the training data wasn't representative, or if the model has trouble with certain demographic groups, the errors compound.

The Real-World Impact

Professionals Getting Locked Out

I talked to a freelance developer in Berlin who got flagged three months into using ChatGPT Plus. She was 34. The system thought she was 16.

Her workflow was: wake up, check emails, open ChatGPT to help debug code, use the API to build a customer data pipeline for her business. Then teen mode hit. API access gone. Code execution gone. She couldn't work.

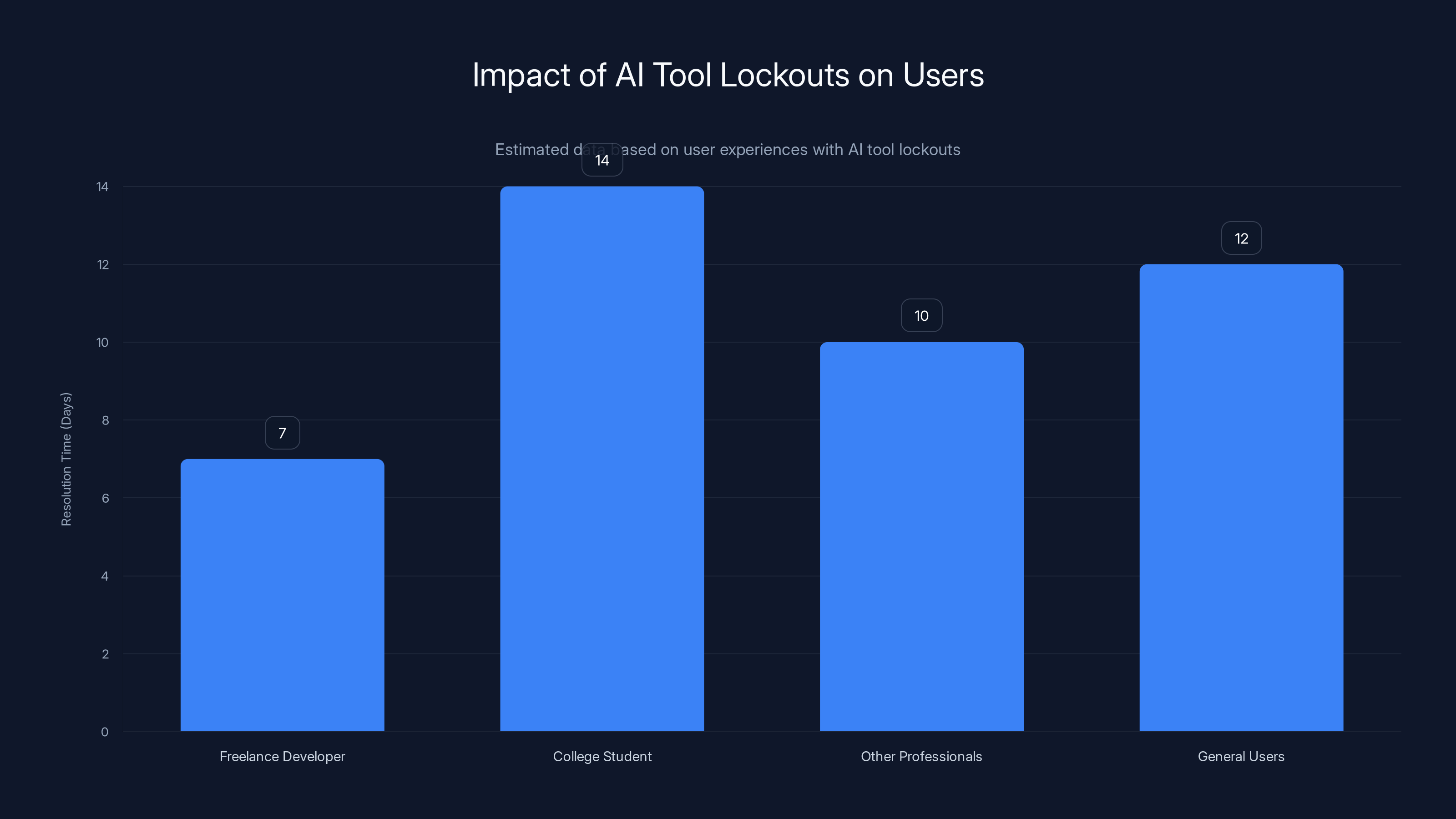

She filled out age verification. Waited five days. Got approved. Then, two weeks later, it happened again. This time it took a week to get approved. On the third occurrence, she gave up and switched to Claude (which doesn't have this problem yet).

That's the thing nobody talks about: when your primary AI tool betrays you, you just find a new one. OpenAI isn't losing these users temporarily. They're losing them permanently.

Students Wrongly Locked Out

Here's the other side. A college student (actually 19) got flagged and couldn't appeal in time for his thesis submission deadline. He had been using ChatGPT for research and writing. Suddenly, gone. He had to rewrite everything without AI assistance, which cost him two weeks.

When he finally got approved, it felt hollow. He'd already switched to Google Gemini.

The Support Bottleneck

OpenAI's support system wasn't designed for a high volume of age-verification appeals. Response times are now 7-14 days, sometimes longer. For professionals on deadline, that's not acceptable. For anyone, it's frustrating.

The appeals process itself is opaque. You don't get told what signals flagged you as underage. You just get told to verify your age, which usually means submitting ID. And that data goes somewhere (OpenAI's servers, a third-party verification service), and if there's any friction or delay, you're stuck in limbo.

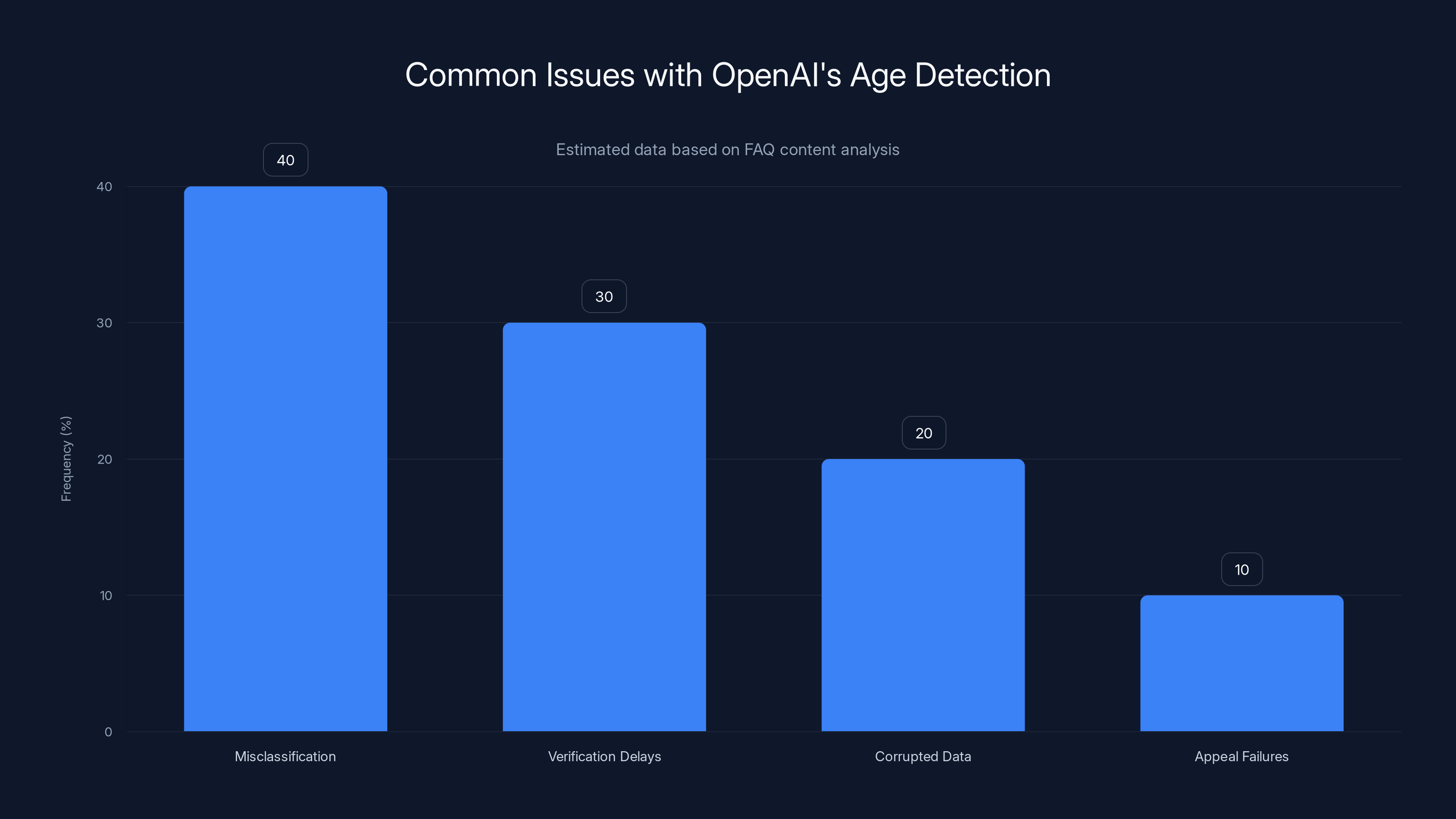

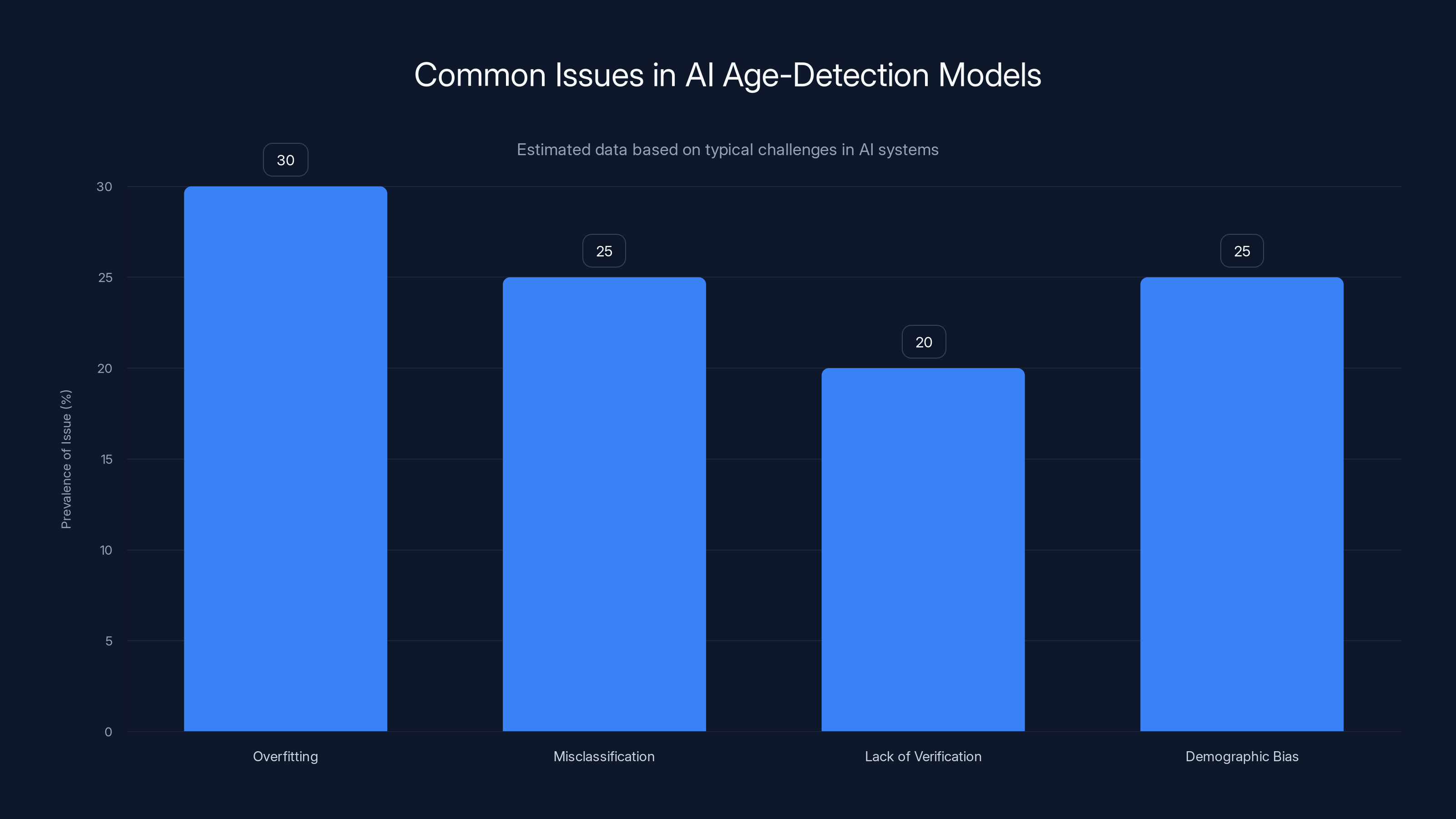

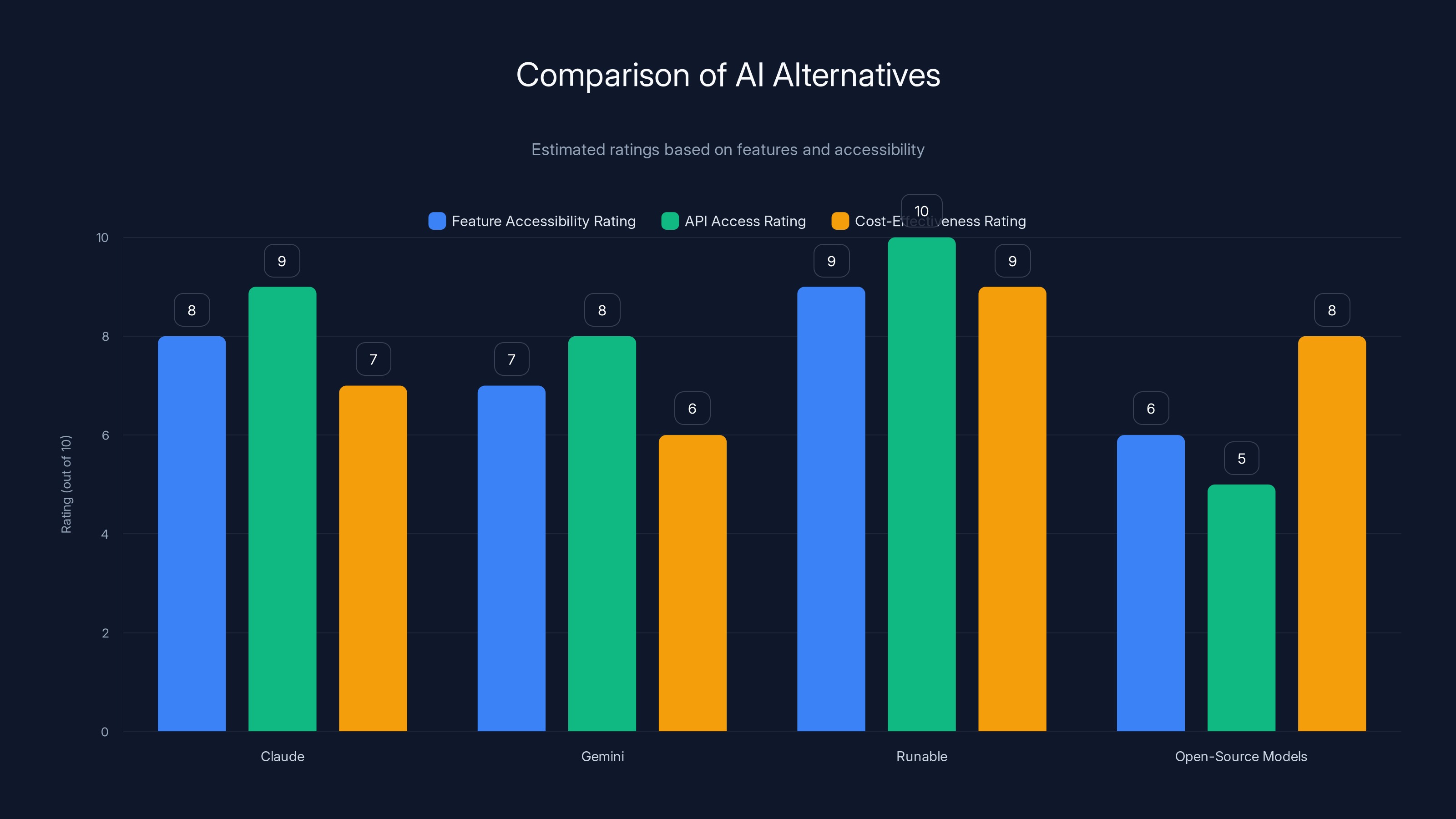

Estimated data suggests misclassification is the most common issue with OpenAI's age detection, followed by verification delays.

The Technical Problems Underneath

Model Overfitting to Regulatory Signals

Here's a hypothesis (informed by conversations with ML researchers): OpenAI's age-detection model might have been trained with too much weight on certain regulatory signals.

For example, if the model learned that "users from certain countries should be age-gated more aggressively," it might over-apply that rule. Or if it learned that "accounts with certain keywords in chat history are more likely to be minors," it might flag everyone discussing homework, even graduate-level research.

This is a form of overfitting: the model latches onto patterns that happened to separate minors from adults in the training data, then applies them to users they don't fit.

Behavioral Pattern Misclassification

Different people use ChatGPT differently. A researcher might have long, experimental sessions with lots of varied questions. A student might too. The model can't easily distinguish between these patterns without more context.

If the model trained on data where students had shorter sessions or asked more formulaic questions, it might misclassify power users as younger.

Lack of Continuous Verification

Once flagged, users rarely get un-flagged automatically. Even if they prove their age, the system might re-flag them for the same behavioral patterns that triggered the initial false positive.

This is a common problem in machine learning systems: they don't learn from false positives efficiently. Each user is re-evaluated from scratch, so the same mistake happens repeatedly.
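One way to break that loop is to let a completed verification override the classifier from then on. A minimal sketch, assuming a hypothetical in-memory store and a hypothetical model score; a real system would persist this and probably expire it:

```python
from datetime import date

# Hypothetical store: user_id -> date the user passed age verification
verified_adults = {}

def record_verification(user_id: str, when: date) -> None:
    """Remember that this user has proven they are an adult."""
    verified_adults[user_id] = when

def should_restrict(user_id: str, model_score: float, threshold: float = 0.5) -> bool:
    """Apply teen mode only if the user has NOT already verified as an adult."""
    if user_id in verified_adults:
        return False  # verification outranks the behavioral model
    return model_score >= threshold

print(should_restrict("alice", 0.8))            # True: flagged by the model
record_verification("alice", date(2025, 1, 15))
print(should_restrict("alice", 0.8))            # False: same score, no re-flag
```

The behavioral score hasn't changed between the two calls. What changed is that the system remembers the answer it already got.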

Geographic and Demographic Bias

AI systems are known to have demographic bias. Age detection is no exception. If OpenAI's training data skewed toward English-speaking, Western users, the model might perform worse on other populations.

For example, if the model learned that "low phone adoption indicates youth," it might misclassify adults in countries with different tech adoption patterns.

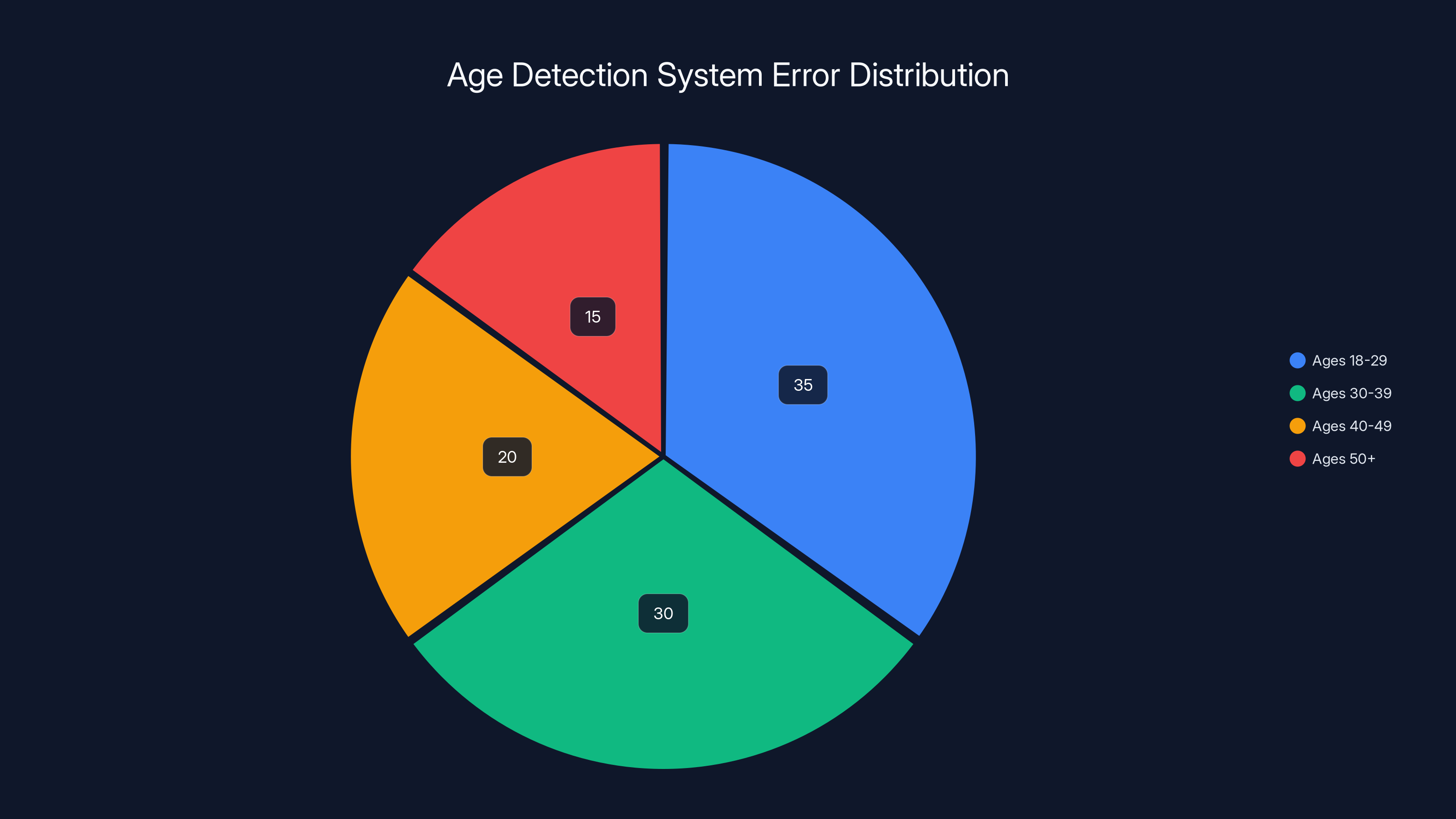

Estimated data suggests that the age detection system is incorrectly flagging a significant number of users across various adult age groups, with the highest incidence among users aged 18-29.

Regulatory Context: Why OpenAI Did This

The Legal Landscape

OpenAI didn't implement age detection because it's good UX. They did it because regulators demanded it.

The EU's GDPR gives children's data special protection. Under Article 8, a service that relies on consent needs parental consent for children under 16, and member states can lower that age to as low as 13. The UK's Age-Appropriate Design Code goes further: you need to assume online services could be used by children and design accordingly.

The UK's Online Safety Act, which became law in 2023, requires age verification for certain services. US states are following, with California's age-appropriate design law, Colorado's, and others.

The FTC has been aggressive on privacy and consent enforcement, as in the Ring camera case, and on children's data under COPPA.

OpenAI likely did a compliance audit, found they had no age verification system, and realized regulators would come after them soon. Building a system was the pragmatic choice. Building a good system would take longer and cost more.

The Compliance Theater Problem

Here's the uncomfortable truth: age verification systems are often "compliance theater." They exist to show regulators you're trying, not necessarily to be perfect.

A false negative (letting a minor access something they shouldn't) is a regulatory problem. A false positive (locking out adults) is a customer service problem. Regulators care about the former. OpenAI prioritizes avoiding regulatory fines over customer friction.

So the system is calibrated to be overly restrictive. Better to lock out 1,000 adults than let 10 minors slip through.
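That trade-off can be shown with a toy threshold search. The scores, labels, and cost weights below are invented for illustration; the point is only how the chosen cutoff moves when a missed minor is priced far above a locked-out adult.

```python
# Hypothetical (model_score, is_actually_minor) pairs
SAMPLES = [
    (0.10, False), (0.20, False), (0.30, False), (0.45, False), (0.50, False), (0.60, False),
    (0.35, True), (0.70, True), (0.80, True), (0.90, True),
]

def errors_at(threshold):
    """Return (false_positives, false_negatives) when flagging scores >= threshold."""
    fp = sum(1 for score, minor in SAMPLES if score >= threshold and not minor)
    fn = sum(1 for score, minor in SAMPLES if score < threshold and minor)
    return fp, fn

def best_threshold(cost_missed_minor, cost_locked_adult):
    """Pick the cutoff (in steps of 0.05) with the lowest total cost."""
    def total_cost(t):
        fp, fn = errors_at(t)
        return cost_missed_minor * fn + cost_locked_adult * fp
    candidates = [i / 20 for i in range(21)]
    return min(candidates, key=total_cost)

balanced = best_threshold(cost_missed_minor=1, cost_locked_adult=1)
regulatory = best_threshold(cost_missed_minor=100, cost_locked_adult=1)
print(balanced, errors_at(balanced))      # 0.65 (0, 1)
print(regulatory, errors_at(regulatory))  # 0.35 (3, 0)
```

With equal costs, the cutoff sits at 0.65 and no adults are locked out. Price a missed minor at 100 times a locked-out adult and the cutoff drops to 0.35, which catches every minor and wrongly flags half the adults in this toy sample.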

The Precedent Problem

OpenAI isn't alone. Instagram, TikTok, and others have age-verification systems. Many of them also have high false positive rates.

The difference is, Instagram's false positives are annoying but not catastrophic. ChatGPT's false positives block professional work. The impact is more severe.

What Users Can Actually Do

Immediate Steps

If you get flagged:

- Don't panic. You haven't been permanently banned. Teen mode is reversible.

- Check your profile. Make sure your birth date is correct. Some users found corrupted dates in their accounts.

- Submit age verification. OpenAI's help page has instructions. You'll need ID. It's not fun, but it works most of the time.

- Document the timeline. Note when you got flagged, when you submitted verification, when you (hopefully) got approved. If it happens again, you have evidence of a pattern.

- If appeals fail, escalate. DM OpenAI's support on Twitter, post on their community forum, or file a complaint with relevant regulators. Public pressure works.

Long-term Protection

- Use a VPN cautiously. Some age-detection systems flag VPN usage as suspicious. But hiding your location might also help if geolocation is part of the issue.

- Keep your profile clean. If you're doing sensitive work, don't discuss it in chat (the system is reading it). Use the API instead, or an alternative service.

- Plan for redundancy. Don't rely on ChatGPT as your only AI tool. Use Claude, Gemini, or Runable in parallel. When one breaks, you have others.
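For developers, redundancy can live in code rather than in habit. Here's a minimal sketch of a fallback wrapper. The provider functions are hypothetical stand-ins, not real SDK calls; in practice each one would wrap whatever client library you actually use and raise `ProviderUnavailable` when access is restricted.

```python
class ProviderUnavailable(Exception):
    """Raised by a provider wrapper when access is restricted or the call fails."""

def ask_with_fallback(prompt, providers):
    """Try each (name, callable) pair in order; return (name, answer) from the first that works."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderUnavailable as exc:
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))

# Hypothetical stand-ins for real client wrappers
def restricted_primary(prompt):
    raise ProviderUnavailable("account restricted to teen mode")

def working_backup(prompt):
    return f"echo: {prompt}"

name, answer = ask_with_fallback(
    "summarize this log",
    [("primary", restricted_primary), ("backup", working_backup)],
)
print(name, answer)  # backup echo: summarize this log
```

The order of the list is your preference order. When the first provider locks you out, the call quietly lands on the next one instead of stopping your work.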

Estimated data shows that resolution times for AI tool lockouts can range from 7 to 14 days, impacting user productivity and leading to permanent switches to alternative tools.

The Alternatives Worth Considering

Claude (Anthropic)

Claude doesn't have an age-detection system yet. That could change, but for now, it's a reliable alternative. The API access is solid, the reasoning quality is excellent, and there are no arbitrary feature restrictions.

Gemini (Google)

Google's Gemini is still rolling out age-verification, but it's less aggressive than Open AI's. If you need a quick alternative, Gemini works for most use cases.

Runable

If you're specifically locked out of API access or automation features, Runable is worth a look. It's built for developers and teams who need AI automation, document generation, and reporting. At $9/month, it's affordable, and there are no age restrictions or feature gatekeeping. You get full API access and can build workflows without worrying about being suddenly locked out.

Open-Source Models

If you want to avoid vendor risk entirely, Llama or other open-source models can be run locally. Slower, but under your control.

What OpenAI Should Do (But Probably Won't)

Improve the Appeal Process

Response times under 24 hours. Transparent criteria. Human review for edge cases. This costs money, but it's worth it.

Build Better Signal Processing

Don't rely on a single age-detection model. Use multiple models, multiple data sources. If they disagree, default to not flagging. When the evidence is mixed, accept the risk of a false negative (occasionally missing a minor) rather than a false positive (locking out an adult).
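The "default to not flagging on disagreement" rule is simple to express. A minimal sketch, assuming each model returns a hypothetical 0-1 score where higher means "more likely a minor":

```python
def flag_as_minor(scores, threshold=0.5):
    """Flag only when every model independently crosses the threshold.

    Any disagreement, or no scores at all, means the user is not flagged.
    """
    if not scores:
        return False
    return all(score >= threshold for score in scores)

print(flag_as_minor([0.9, 0.8, 0.7]))  # True: all three models agree
print(flag_as_minor([0.9, 0.8, 0.3]))  # False: one model disagrees, so no flag
```

Requiring unanimity trades some missed minors for far fewer locked-out adults. Whether that trade is acceptable is a policy decision, not a technical one.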

Implement Learning Feedback

When someone is incorrectly flagged, the system should learn from it. Update the model. Use feedback to improve accuracy. Currently, the system seems static.

Offer Tiered Verification

Instead of an all-or-nothing teen mode, offer granular restrictions. A user with unverified age gets limited features. Verified adult gets everything. Kid gets parental controls. This is more work, but more fair.
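Tiered access is mostly a lookup table. A minimal sketch follows; the tier names and the split of features across tiers are my own assumptions, not anything OpenAI has described:

```python
from enum import Enum

class AgeStatus(Enum):
    VERIFIED_ADULT = "verified_adult"
    UNVERIFIED = "unverified"
    MINOR = "minor"

# Hypothetical feature split; a real product would choose its own
FEATURES = {
    AgeStatus.VERIFIED_ADULT: {"chat", "web_browsing", "code_execution", "image_generation", "api"},
    AgeStatus.UNVERIFIED: {"chat", "web_browsing", "code_execution"},
    AgeStatus.MINOR: {"chat"},
}

def can_use(status: AgeStatus, feature: str) -> bool:
    """Check whether a user at this verification tier may use a feature."""
    return feature in FEATURES[status]

print(can_use(AgeStatus.UNVERIFIED, "code_execution"))  # True
print(can_use(AgeStatus.UNVERIFIED, "api"))             # False
print(can_use(AgeStatus.MINOR, "web_browsing"))         # False
```

The middle tier is the whole point. An adult the model is unsure about loses a couple of features while verification runs, instead of losing everything.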

Communicate Better

When you flag someone, tell them why. Show what signals triggered the decision. Let them contest specific claims. Right now, it's a black box.

OpenAI won't do most of this because the current system, while broken, is working from a regulatory perspective. No major fines yet. Users are leaving, but not in the massive, PR-damaging numbers that would force a change.

Estimated data suggests overfitting and demographic bias are common issues in AI age-detection models, each affecting around 25-30% of cases.

What This Means for the Future

Age Verification Wars

This is just the beginning. As AI becomes more mainstream and regulators get more aggressive, every platform will need age verification.

Some will get it right. Others will have the same false positive problems. Users will bounce between services, always finding another option. The fragmentation of AI services will accelerate.

The Data Privacy Trade-off

The real cost isn't the feature restrictions. It's the data. When you verify your age with OpenAI, you're giving them an ID scan or document. That goes somewhere. It's stored. In a breach, it becomes leverage.

OpenAI says they use a third-party verification service and don't store raw documents directly. But trust is earned, not assumed.

Regulation's Double Edge

Regulators are trying to protect kids. Noble goal. But their implementation—forcing age verification—creates friction that hurts adults and incentivizes over-restriction.

The better path would be for regulators to mandate transparency and appeal processes, not just age gates. But that's not what's happening.

The Bottom Line

OpenAI's age-detection system is broken. It's locking out adults, frustrating users, and creating security theater.

The root cause isn't malice. It's the impossible task of age verification at scale combined with regulatory pressure and the economics of false positives vs. false negatives.

Until OpenAI improves the system (unlikely), you should:

- Plan for the possibility that you'll get flagged and lose access

- Keep backups of important chats

- Maintain multiple AI tools so you're never dependent on one

- If you do get locked out, know your options (appeals, alternatives, escalation)

For developers and professionals who can't afford downtime, Runable is worth trying as a backup. It gives you API access, automation capabilities, and document generation without the age-detection headaches.

The AI landscape is fragmenting. OpenAI won't be your only option for much longer. That's actually healthy. Competition and redundancy beat monopoly and single points of failure.

Runable offers the best API access and cost-effectiveness, while Claude excels in feature accessibility. Estimated data based on described features.

FAQ

What exactly is ChatGPT's teen mode?

Teen mode is a restricted feature set that OpenAI applies to accounts it believes belong to users under 18. It blocks access to API integration, web browsing, code execution, custom GPTs, image generation, and several advanced features. The intent is regulatory compliance, but it's frequently applied to adults due to misclassification.

How does OpenAI's age detection system work?

OpenAI hasn't published the details, but the system appears to combine signals including submitted birth date, behavioral patterns in chat history, account metadata, and possibly device/network data to estimate user age. It likely applies a machine learning model trained on labeled data, and user reports point to a high false positive rate that locks out adults.

Why am I getting flagged as underage when I'm clearly an adult?

The age-detection model is imperfect. It might misclassify based on your chat topics (homework discussions, casual tone), usage patterns (length of sessions, variety of questions), geographic location, or corrupted account data. Some users report their birth dates got corrupted to implausible years like 2012, even though they'd provided correct dates initially.

How long does age verification take?

According to user reports, age verification currently takes 5-14 days, sometimes longer. OpenAI's support system wasn't built for the volume of appeals. Some users say verification never goes through and they have to keep resubmitting. There's no official timeline, which makes planning around this restriction difficult.

What should I do if I get flagged?

First, check your account birth date for errors. Then submit age verification through OpenAI's help page, which typically requires ID scanning. Document every step and its date. If appeals fail, escalate to OpenAI's support on Twitter or their community forum, or file a complaint with regulators in your jurisdiction. As a backup, switch to an alternative like Claude, Gemini, or Runable for continued access.

Are there legal issues with OpenAI's age detection?

Potentially. Misidentifying adults as minors could violate consumer protection laws in some jurisdictions, and the data handling (ID scanning) raises privacy concerns under GDPR and similar regulations. Some users have filed complaints with data protection authorities, though no major enforcement action has been announced yet.

Why doesn't OpenAI just fix this?

They could, but they have the wrong incentive structure. False positives (locking out adults) are customer service problems. False negatives (letting minors through) are regulatory problems. Regulators care about the latter, so the system is calibrated to over-restrict. Changing this would require regulatory pressure or massive PR damage, neither of which has happened yet.

What are the best alternatives to ChatGPT if I'm locked out?

Claude doesn't have age detection yet and offers excellent reasoning. Google Gemini is less restrictive. For developers specifically, Runable provides API access and automation features at $9/month without age restrictions, making it a solid backup for professional work.

Will other AI platforms implement age detection too?

Eventually, yes. Regulatory pressure is increasing globally. Claude, Gemini, and others will likely implement age verification within the next 12-24 months. The difference will be in execution quality: some will do it better than OpenAI. Maintaining redundancy across multiple AI platforms is increasingly important.

Can I appeal if I've already been approved before?

Yes, but it might not help. Some users report getting approved, then re-flagged weeks later for the same issue. Each re-evaluation starts from scratch, so you'll have to resubmit verification. Document that you've already been approved to speed up the process.

The Real Story

This whole situation reflects a larger tension in tech: regulation trying to catch up with innovation, using crude tools that hurt more than help.

Age detection is necessary. Protecting minors online matters. But the implementation matters too. When your solution is a sledgehammer, everyone looks like a nail—including the adults trying to use your product professionally.

OpenAI will eventually fix this. Or users will leave. Or both. For now, the best strategy is to expect disruption, plan for it, and keep your options open. The AI landscape is competitive enough that you don't have to accept broken systems. Use that leverage.


Key Takeaways

- OpenAI's age-detection system has a high false positive rate, locking adults into teen mode and restricting API access, code execution, and image generation

- The system uses behavioral signals, account metadata, and machine learning models that are inherently imperfect and prone to demographic bias

- The appeals process takes 5-14 days, and some users get re-flagged repeatedly even after approval, creating frustration and workflow disruption

- Regulatory pressure from GDPR, UK Age-Appropriate Design Code, and US state laws is driving these systems, with OpenAI over-restricting to avoid regulatory fines

- Professionals should maintain backup AI platforms like Claude, Gemini, or Runable to avoid total service disruption from false positives


![ChatGPT's Age Detection Bug: Why Adults Are Stuck in Teen Mode [2025]](https://tryrunable.com/blog/chatgpt-s-age-detection-bug-why-adults-are-stuck-in-teen-mod/image-1-1769688479298.jpg)