AI-Generated Anti-ICE Videos and Digital Resistance: Understanding the Phenomenon

There's a video circulating on Instagram and Facebook that shows something most people would never witness in real life. A New York City school principal, armed with nothing but a bat, stands between masked immigration enforcement officers and the school entrance. She swings with determination. Instead of escalation, the scene erupts with cheers. "Let me show you why they call me bat girl," she declares. The encounter ends without violence, without bloodshed, without the tragic finality that's become all too common in actual confrontations.

It's cathartic. It's also completely fake.

These videos are part of an exploding ecosystem of AI-generated content pushing back against immigration enforcement. They've gone viral across platforms, racking up millions of views in days. A New York City school principal stopping ICE agents with a bat. A server flinging hot noodles at dining officers. A shop owner standing firm on Fourth Amendment rights. A priest ejecting federal agents from church doors with theology and conviction. Drag queens in neon wigs chasing enforcement officials through neighborhoods.

The timing matters. These videos emerged during a period of intense federal immigration enforcement, including tragic incidents in January that left two US citizens dead at the hands of government officials. Renee Nicole Good, a 37-year-old mother of three, and Alex Pretti, a 37-year-old Department of Veterans Affairs nurse, were both unarmed when fatally shot. The videos flooded social media in response to this crisis.

But here's what makes this moment significant: these aren't authentic recordings of resistance. They're digital fantasy constructed through artificial intelligence. And that distinction matters enormously. The videos reveal something profound about how modern protest movements operate, how technology shapes political messaging, and how the line between cathartic expression and dangerous misinformation continues to blur.

They're beautiful lies. Beautiful, because they imagine a world where people of color stand up to systemic oppression without dying. Beautiful, because they channel collective rage into scenarios where accountability actually exists. Lies, because every frame is algorithmically generated, every interaction fabricated, every outcome a wish rather than reality.

The phenomenon raises hard questions. Are these videos cathartic relief valves that help communities process trauma? Or are they contributing to a broader erosion of public trust in visual evidence? Do they inspire resistance or undermine it? When people see fabricated footage of heroic resistance, how does that shape their understanding of actual confrontations? What happens when algorithms amplify fiction until it drowns out fact?

TL;DR

- AI-generated anti-ICE videos have exploded across social media following fatal encounters in January, garnering millions of views through fantasy scenarios of resistance

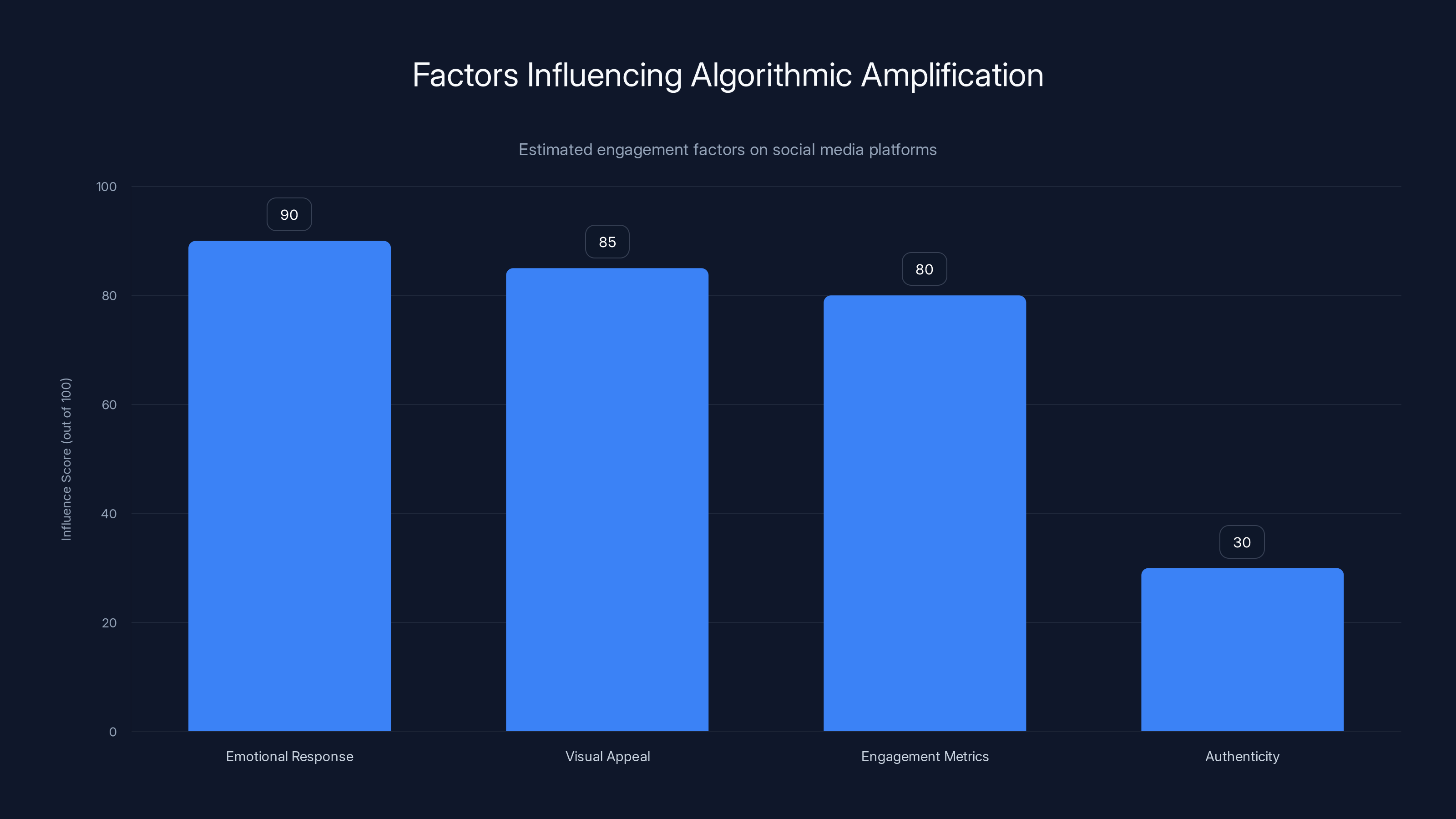

- Platforms like Instagram and Facebook algorithmically amplify emotionally charged, visually striking content, making these videos reach enormous audiences despite being entirely fictional

- The content creates a dual problem: it offers cathartic expression for communities experiencing real trauma while simultaneously contributing to the broader distortion of visual evidence

- Creators face conflicting motivations, ranging from genuine political expression to algorithmic gaming and monetization through controversial content

- The fundamental tension: these videos imagine liberation people cannot find in reality, raising critical questions about AI's role in political discourse and the future of authentic visual evidence

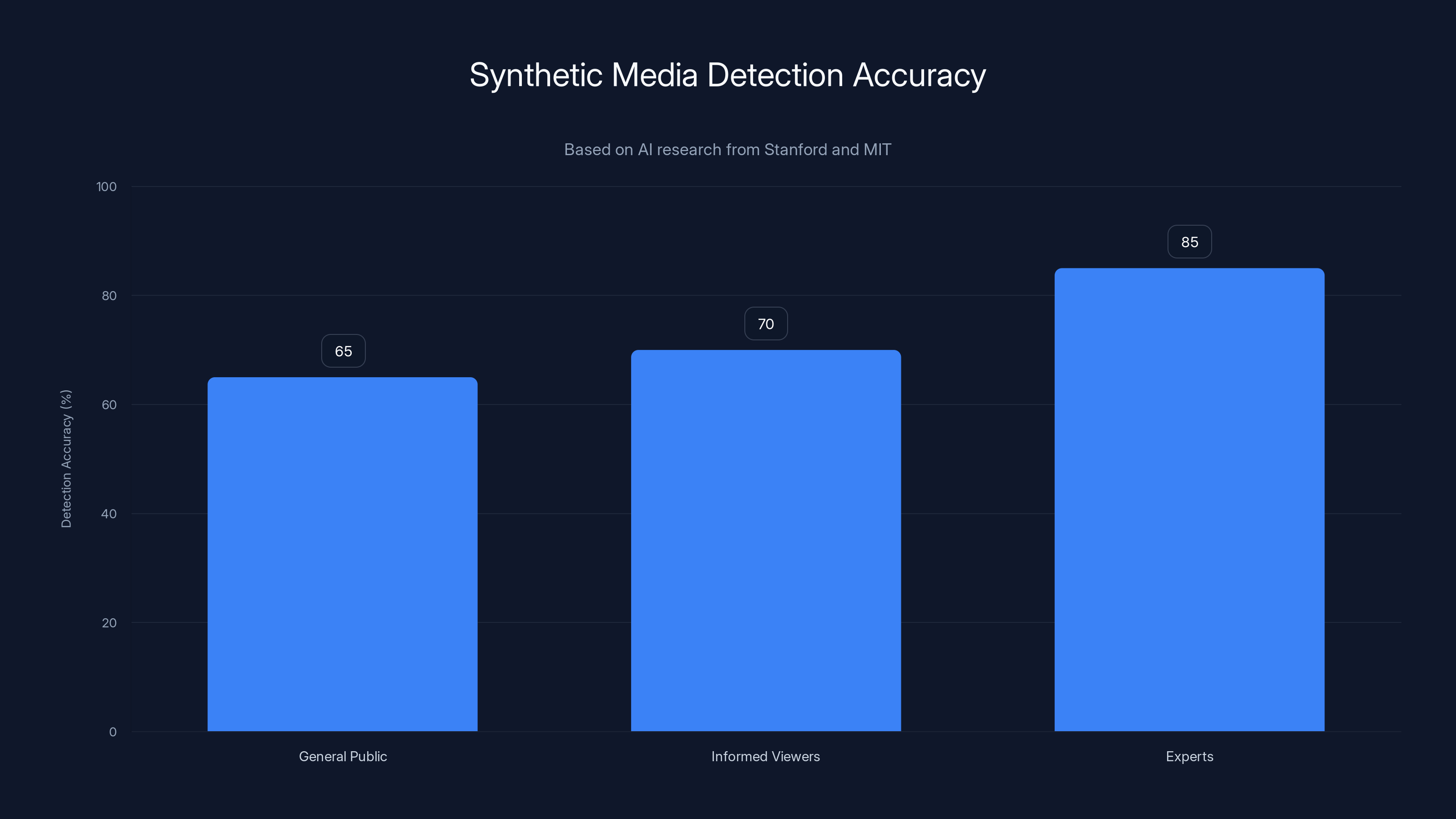

Detection accuracy for AI-generated videos drops significantly below 70% for the general public, highlighting the challenge in distinguishing synthetic media.

Understanding the Phenomenon: What These Videos Actually Are

The anti-ICE videos aren't complicated technically. They use readily available AI video generation tools to create scenarios that feel plausible but are entirely synthetic. The technology has advanced to a point where casual viewers might not immediately recognize the footage as artificially generated. Movement looks roughly natural. Skin tones render with reasonable accuracy. Clothing and environments appear contextually appropriate.

What makes them effective isn't technical sophistication. It's narrative structure. These videos follow the logic of fan fiction, a creative genre where fans reimagine existing stories with alternative outcomes. In this case, the "source material" is the documented reality of immigration enforcement, and the "alternative outcome" is one where enforcement officials face resistance, pushback, and consequences.

Take one of the more viewed examples: ICE agents at a sporting event confronting white tailgaters. The scenario itself carries coded meaning. Immigration enforcement targeting white Americans at leisure? It inverts the actual pattern of enforcement, which disproportionately affects communities of color. The video then escalates this inversion into action. Someone pushes back. Spectators intervene. The officers retreat. One commenter captured the tone perfectly: "This is fake. ICE can't run." The humor, dark though it is, acknowledges the fabrication while celebrating the fantasy.

These aren't isolated incidents. One prolific account operating under the name Mike Wayne has uploaded more than 1,000 videos to Instagram and Facebook since the January killings. The sheer volume indicates this is no longer a niche phenomenon. It's become an organized production, a continuous stream of content feeding algorithmic systems designed to amplify engagement.

The videos also adopt specific aesthetic conventions. Many feature dramatic confrontations in public spaces. Churches, schools, restaurants, sports stadiums. Locations embedded with symbolic significance. The setting choices aren't accidental. They position enforcement officials as invaders of sacred or civilian spaces, violating the sanctity of institutions Americans theoretically protect.

A priest confronting ICE agents carries theological weight. A school principal defending students invokes parental protection. A server flinging noodles at officers while they dine suggests violation of hospitality. Each video taps into cultural narratives about which spaces should remain sacred, which institutions should protect vulnerable populations, and what resistance looks like when it succeeds.

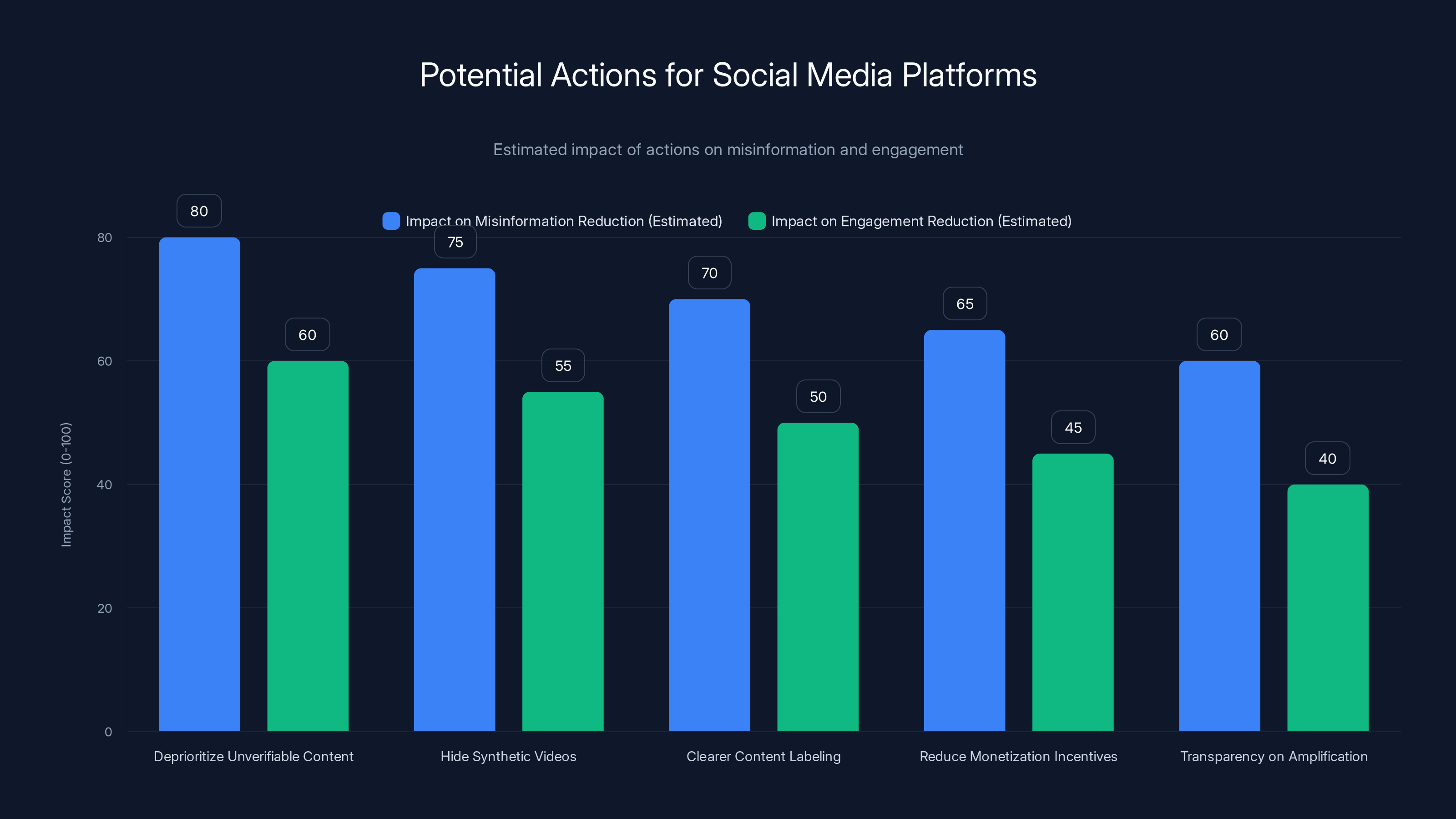

Estimated data shows that actions like deprioritizing unverifiable content and hiding synthetic videos could significantly reduce misinformation but may also reduce user engagement.

The Catharsis Question: Why People Are Creating and Sharing

Understanding why these videos exist requires understanding the emotional context they emerged into. In January, federal enforcement operations intensified dramatically. News cycles filled with stories of raids, deportations, and the tragic deaths of Good and Pretti. For communities directly targeted by immigration enforcement, for immigrant communities living with uncertainty, for Americans horrified by government actions, the psychological weight accumulated.

Catharsis is real. The ability to imagine scenarios where the powerless become powerful, where the threatened become defenders, where systemic injustice meets immediate consequences—that matters psychologically. When actual avenues for justice feel blocked, when legal systems appear designed to protect enforcement rather than constrain it, when protests result in arrests while raids continue unchecked, imaginative resistance fills a void.

Filmmaker and AI creator Willonious Hatcher articulated this directly: "The oppressed have always built what they could not find." The statement contains profound truth. Throughout history, marginalized communities have created art, literature, music, and visual culture depicting the liberation they couldn't access in material reality. Slave narratives imagined freedom before emancipation. Protest songs envisioned justice before laws changed. Political art has always functioned partly as prophecy, partly as therapy.

AI video generation simply gives this ancient impulse new tools. Instead of painted canvases or written narratives, creators can now generate convincing visual sequences showing their desired futures. The technology is just the vehicle. The underlying drive is the same.

But catharsis and problem-solving exist on different planes. A video that feels emotionally satisfying doesn't necessarily advance political goals. In fact, Hatcher raises the critical question that undercuts the catharsis narrative entirely: "The question is not whether these videos are helpful. The question is what they tell us about this country, that people must fabricate images of their own liberation because the real thing remains out of reach."

That's the real weight here. These videos aren't triumphant. They're elegiac. They mourn the gap between desired reality and actual conditions. They acknowledge that material resistance remains impossible for most people, so digital resistance becomes the substitute. That's not healthy political engagement. That's symptom, not solution.

Creators and sharers likely hold multiple motivations simultaneously. Some truly seek cathartic expression. Some want to contribute to anti-ICE discourse on social media. Some are testing what "goes viral" and optimizing for algorithmic amplification. Some believe that saturating social media with anti-ICE content, even fictional content, shifts overall narrative tone. Most probably operate across several motivations without consciously separating them.

The Algorithmic Amplification Problem

These videos would never reach millions of people without algorithmic assistance. Instagram and Facebook's recommendation systems don't care about authenticity. They care about engagement. Does the content generate clicks, likes, comments, shares? Does it hold attention? Does it trigger emotional response?

A fabricated video of ICE agents being confronted and defeated checks every box. It's visually striking. It's emotionally charged. It invokes strong responses. It asks implicit questions ("What would you do in this situation?") that prompt comments. It aligns with political views that large numbers of people already hold, making it shareable across ideological boundaries, among people who support immigration rights and people who simply distrust government enforcement.

The algorithms don't distinguish between authentic documentation and convincing fiction. They optimize for engagement. A brilliant deepfake of political importance generates more engagement than accurate video of administrative procedures. The recommendation systems can't and don't evaluate truthfulness. They measure signals: watch time, shares, likes, comment volume.
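The dynamic described above can be made concrete with a minimal sketch. It assumes a ranking function that is nothing more than a weighted sum of the four signals named in the text (watch time, shares, likes, comment volume). The `Post` class, the weights, and the sample numbers are all hypothetical. Real recommendation systems are far more complex and their parameters are not public. The point is only structural: the scorer never reads the authenticity flag.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    title: str
    watch_seconds: float   # average watch time per view
    shares: int
    likes: int
    comments: int
    is_synthetic: bool     # visible to us in this sketch, never read by the scorer


# Hypothetical weights, chosen only for illustration.
WEIGHTS = {"watch_seconds": 0.5, "shares": 3.0, "likes": 1.0, "comments": 2.0}


def engagement_score(post: Post) -> float:
    """Weighted sum of engagement signals. Authenticity is not an input."""
    return (
        WEIGHTS["watch_seconds"] * post.watch_seconds
        + WEIGHTS["shares"] * post.shares
        + WEIGHTS["likes"] * post.likes
        + WEIGHTS["comments"] * post.comments
    )


def rank_feed(posts: list[Post]) -> list[Post]:
    """Order posts from highest to lowest engagement score."""
    return sorted(posts, key=engagement_score, reverse=True)


if __name__ == "__main__":
    feed = [
        Post("Administrative hearing footage", 8.0, 12, 90, 15, is_synthetic=False),
        Post("AI confrontation clip", 41.0, 900, 5200, 760, is_synthetic=True),
    ]
    for post in rank_feed(feed):
        print(f"{engagement_score(post):>8.1f}  {post.title}")
```

Because `is_synthetic` appears nowhere in `engagement_score`, flipping it changes nothing about where a post lands in the feed. That is the sense in which such a system "can't and doesn't" evaluate truthfulness.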

This creates a structural incentive to create emotionally manipulative content regardless of truthfulness. If you're running an account and trying to maximize reach, you learn what works. Videos of people of color standing up to authority? High engagement. Videos with dramatic action sequences? Higher engagement. Videos that are technically sophisticated and visually convincing? Highest engagement because people watch longer.

The economy of social media content production rewards exactly this kind of fiction. A creator can post a video that took hours to generate, get millions of views, potentially monetize through brand partnerships or engagement-based revenue sharing, all without any pretense that the content documents reality.

Those working in digital literacy and media criticism have started using the term "attention economy" to describe this dynamic. In an attention economy, the scarcest resource is human focus. Platforms compete for attention by showing users whatever keeps them scrolling longest. Truthfulness becomes irrelevant when engagement is the metric.

This isn't conspiracy thinking. This is how these platforms openly function. They've published research documenting that emotionally intense content, particularly content that triggers anger or outrage, generates more engagement than neutral content. They've built systems that explicitly amplify high-engagement content. They understand the incentive structure they've created.

What they've been slower to address is the consequence: when your system rewards emotional intensity regardless of accuracy, you'll inevitably amplify misinformation alongside authentic content. The anti-ICE videos benefit from the same algorithmic boost that spreads vaccine denial, election falsehoods, and conspiracy theories.

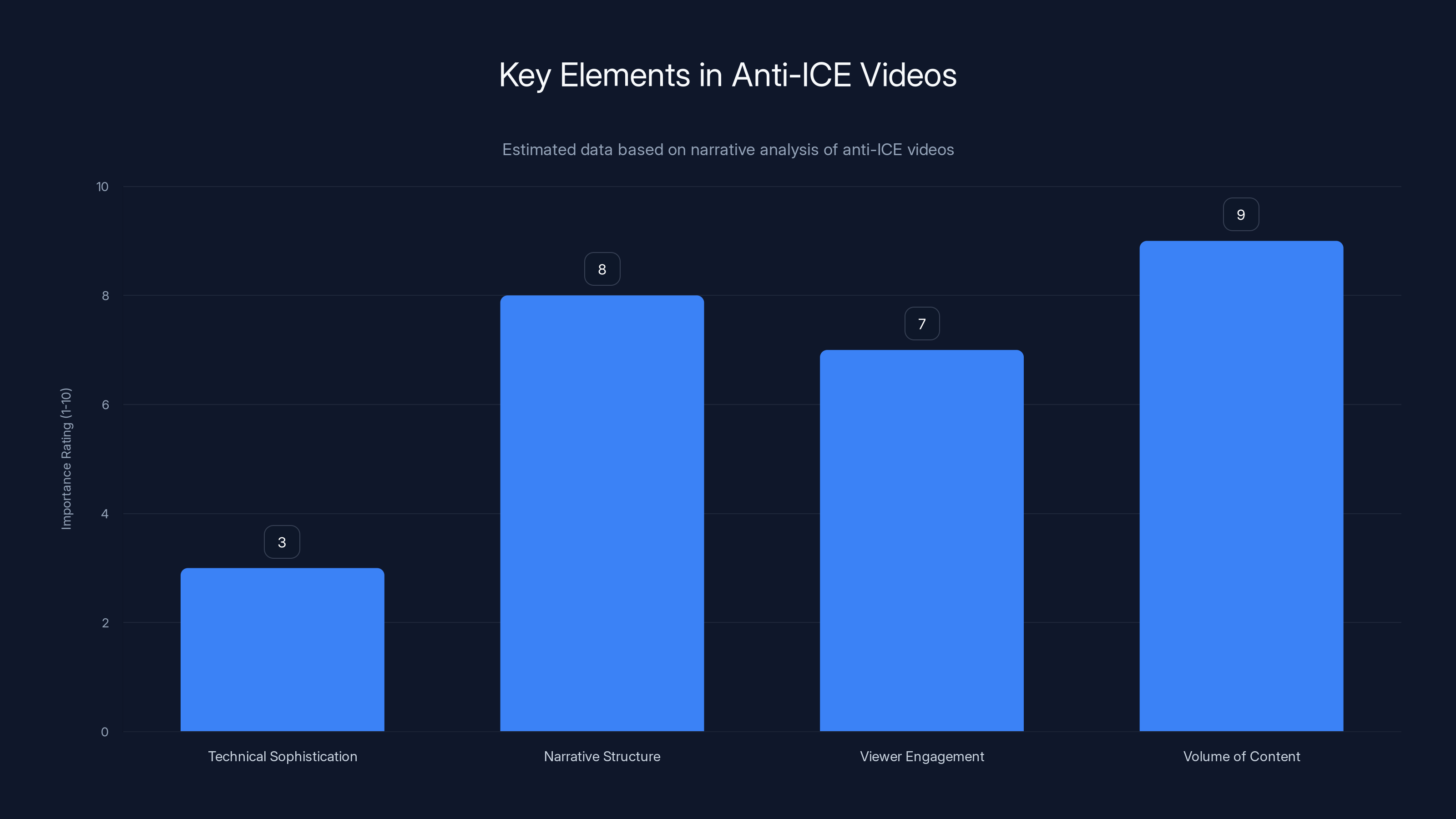

Narrative structure and volume of content are key elements driving the effectiveness and engagement of anti-ICE videos. (Estimated data)

The Misinformation Paradox: Fiction That Feels Like Fact

Here's where the phenomenon becomes genuinely concerning. These videos exist in a category where they're obviously fake if you pay attention, but they degrade public trust in visual evidence even when they're understood to be fictional.

Research on misinformation shows that exposure to falsehoods has downstream effects even after debunking. If people see a false claim repeatedly, and then later learn it's false, they often maintain a residual belief in the false claim. The repetition creates a sense of familiarity that persists even after correction.

The anti-ICE videos operate in a slightly different register, but with similar dynamics. Someone scrolls past a video. It looks realistic enough. The narrative feels plausible. Maybe they don't immediately realize it's synthetic. Or they realize it's synthetic but still feel the emotional hit. Or they understand it's synthetic but don't know how to explain that distinction to someone else who saw it and thought it was real.

After consuming dozens of AI-generated videos, someone's baseline expectation for what video evidence means shifts. Video starts feeling like something that can be immediately fabricated rather than something that documents reality. When that psychological shift happens at scale, it affects trust in actual footage of actual events.

Consider the political consequences. Imagine enforcement officials capture footage of community members blocking agency vehicles or creating confrontations. They release the video. Critics argue it's deepfaked or fabricated. And because the landscape has been saturated with convincing AI-generated anti-enforcement videos, the skepticism has some plausible basis. The authentic footage becomes harder to assert as genuine because the information ecosystem is cluttered with sophisticated fiction.

This is especially dangerous because the anti-ICE videos likely aren't the last wave of politically motivated AI content. As the technology becomes more accessible, we should expect continued proliferation of partisan deepfakes, fictional footage, and synthetic evidence generated for political purposes from across the ideological spectrum. The anti-enforcement videos are just the opening act.

The fundamental problem is that visual literacy hasn't kept pace with visual technology. Most people can't reliably distinguish authentic video from sophisticated AI generation. Media literacy education is spotty at best, virtually absent at worst. So we're living through a period where the technology for creating convincing video forgeries is outpacing the public's ability to recognize forgeries.

In that environment, even if creators of anti-ICE videos intend no malice, even if they're explicitly framing content as fictional fan fiction, the broader ecosystem effect is degradation of video evidence as a category.

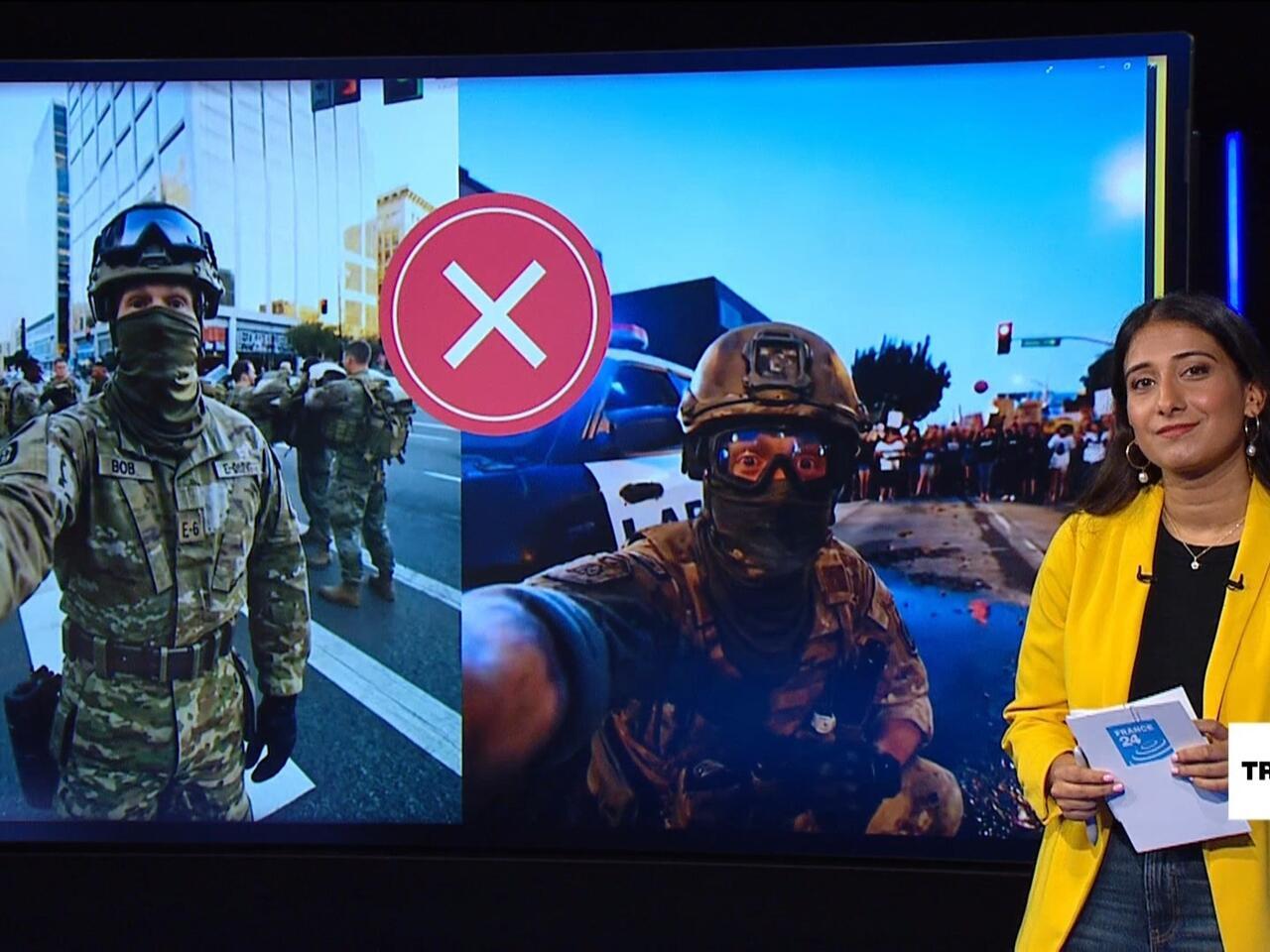

The White House Weaponization of AI: The Other Side of This Story

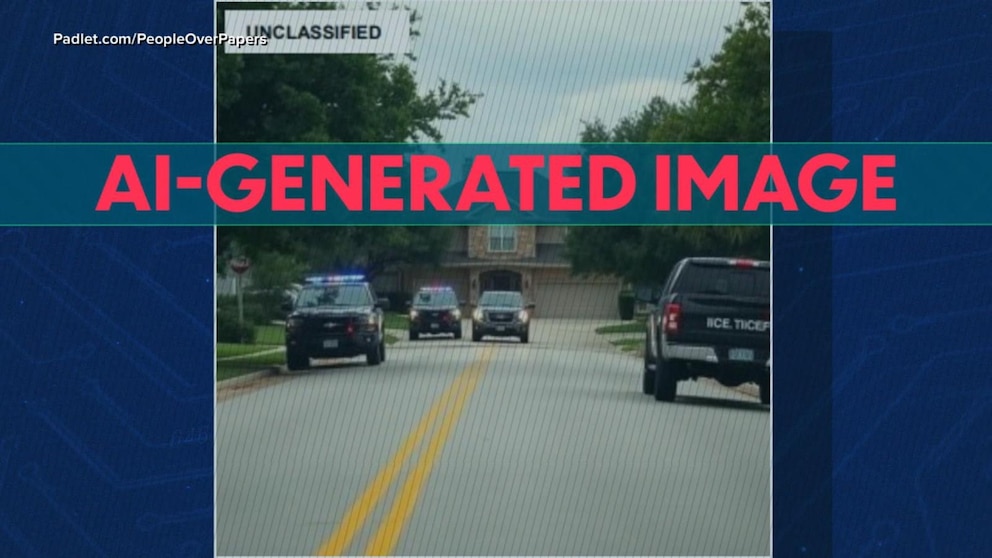

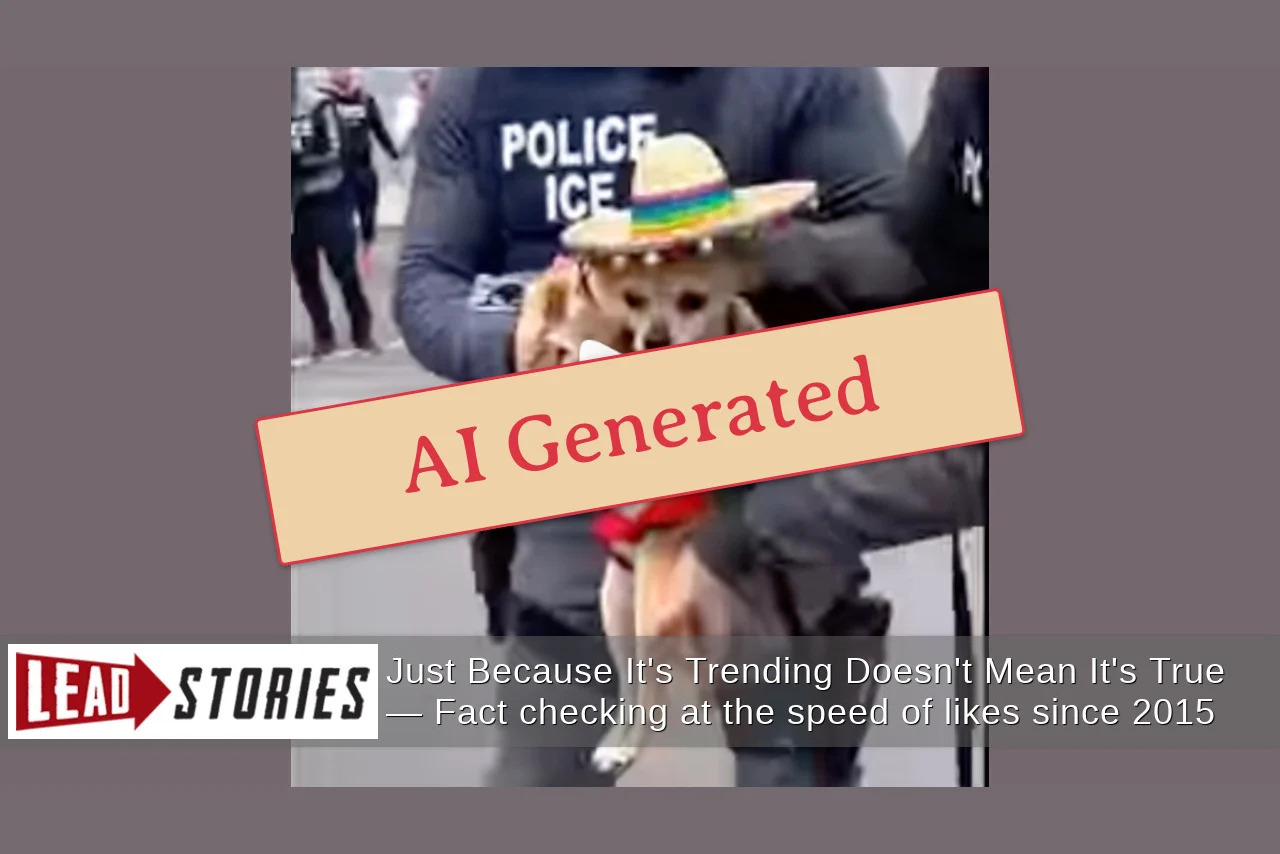

What makes the full context even more troubling is the simultaneous weaponization of AI manipulation by officials themselves. The same week these anti-ICE videos were proliferating, the White House released altered photographs.

One specifically targeted Nekima Levy Armstrong, a civil rights attorney and former Minneapolis NAACP president. She'd been arrested at a peaceful demonstration, and the White House released edited imagery while labeling her a "far-left agitator." The alterations weren't subtle tricks. They were crude photo manipulations intended to discredit her through visual distortion rather than factual argument.

This reveals something crucial about the current information environment. AI and digital manipulation aren't just tools for grassroots activists creating fantasy resistance content. They're also tools for government agencies and official power structures. And they have vastly more resources, distribution capabilities, and credibility to deploy them.

When government agencies can manipulate imagery to discredit activists, and simultaneously claim that activist-generated videos are fraudulent deepfakes, they've essentially occupied both sides of the authenticity debate. They can dismiss genuine activist footage as synthetic while distributing manipulated official imagery and benefiting from credibility assumptions.

The power asymmetry is stark. A teenager with a laptop can generate impressive-looking AI videos that might fool people in their social circle. Official agencies can distribute manipulated imagery through official channels, then have that imagery reported by credible news outlets, then benefit from the amplification. The teenager's content might reach millions through algorithmic distribution. The official content reaches billions through institutional legitimacy.

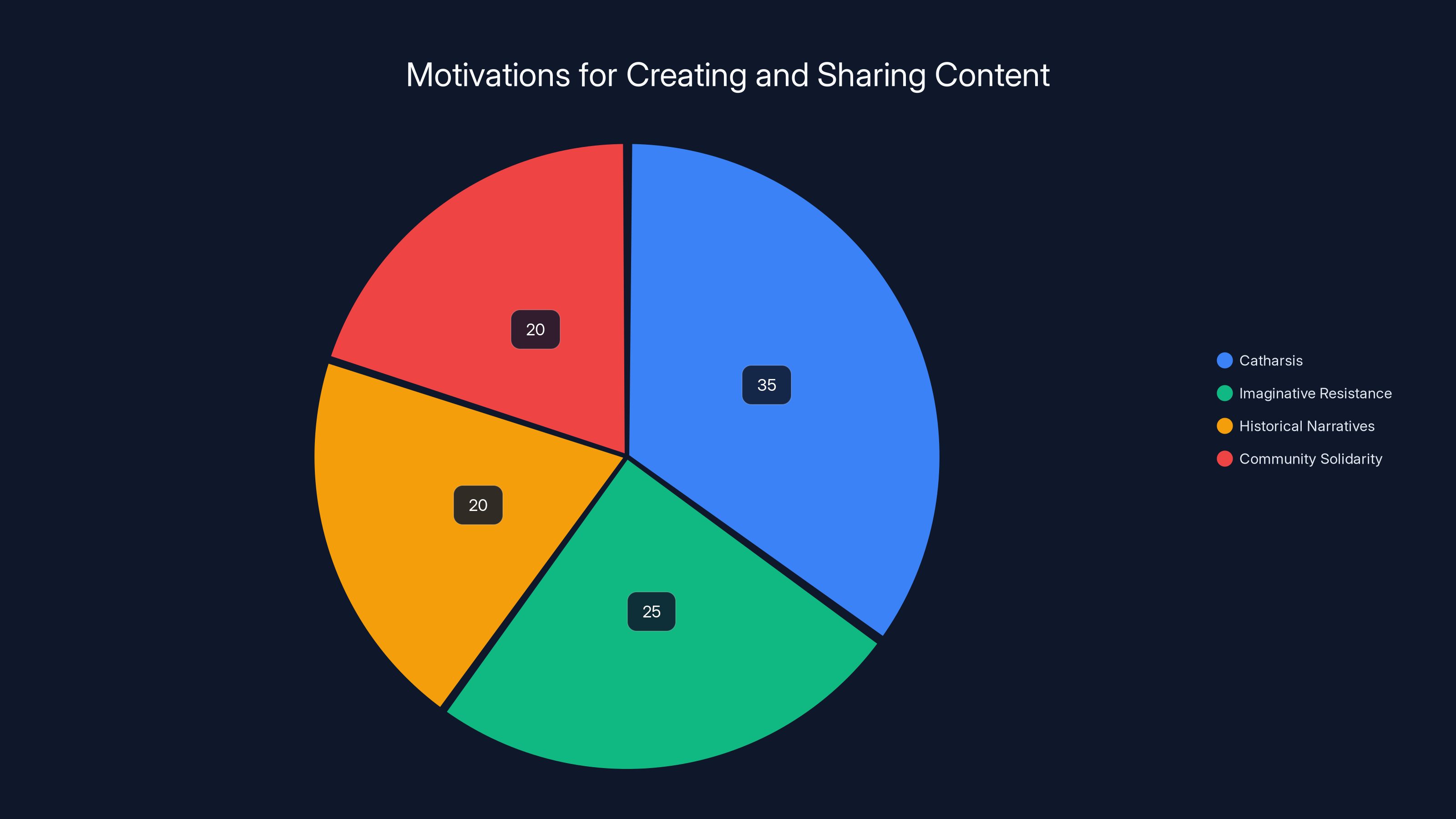

Estimated data shows that catharsis is a primary motivation for creating and sharing content, followed by imaginative resistance and historical narratives.

Monetization, Virality, and Perverse Incentives

Not all creators of anti-ICE content are driven by pure political conviction. Some are explicitly chasing virality and monetization. That's not malicious. It's just how social media content production functions. People can hold multiple motivations simultaneously.

Nicholas Arter, an AI creator who founded the consultancy AI for the Culture, argues that creators operate across a spectrum of intentions. Some are genuinely committed to political expression. Others are, as he notes, "chasing virality or monetization by leaning into controversial or emotionally charged content." These aren't mutually exclusive categories.

The monetization dynamics are real. Accounts with high engagement can earn through Facebook's content monetization program, through brand partnerships with companies wanting to reach engaged audiences, through Patreon or other direct support from followers, or through selling access to their audience to third parties.

When virality is monetizable, the incentive to create emotionally intense, algorithmically optimized content intensifies. And when the content that goes most viral is precisely the kind that either documents traumatic reality or depicts cathartic fantasy, creators optimize for those poles.

Mike Wayne's account, the prolific poster with over 1,000 videos in a few weeks, is presumably optimizing for engagement and monetization. Is Wayne primarily a political activist who's found a way to express anti-ICE sentiment? Or primarily a content creator who's identified what type of content performs best algorithmically? Probably both, and it doesn't really matter. The incentive structure drives the behavior either way.

What AI Creators Themselves Are Saying

The artists and technologists working with these tools are more thoughtful about the phenomenon than media coverage often suggests. They recognize the tensions and contradictions.

Willonious Hatcher frames it as excavating the truth that should be obvious: "These videos are not delusion. They are diagnosis." What they diagnose is a society where marginalized communities must fabricate their own liberation because actual liberation remains materially unavailable. The videos aren't attempts to deceive. They're symptoms of a system that's failed so profoundly that fantasy becomes preferable to reality.

Arter emphasizes that social media has historically served as a distribution channel for voices excluded from institutional media. Pre-AI, marginalized communities used Facebook, Instagram, and TikTok to reach audiences that wouldn't see them on network news. AI doesn't fundamentally change this role. It just changes the tools. Instead of uploading authentic footage of protests, creators can upload synthetic footage of imagined resistance. The underlying impulse is identical.

But Arter also recognizes the fundamental tension. Resistance movements need visual documentation of reality to maintain accountability. If actual video evidence becomes indistinguishable from convincing fabrications, movements lose one of their most powerful tools. When government can point to anti-ICE videos and claim "see, this is what they do, they spread misinformation," they've essentially inoculated their actual behavior against video evidence.

The creators aren't naive about these dynamics. They're working with difficult tools in a difficult moment. They understand that cathartic fantasy and political strategy are different things. But the pressure to create content, to engage audiences, to reach scale, to monetize—that pressure is real and constant.

Emotional response and visual appeal significantly influence algorithmic amplification, often outweighing authenticity. Estimated data.

Social Media Platforms' Complicity and Inaction

Instagram and Facebook have known for years that their algorithms amplify emotionally intense content regardless of truthfulness. They've published internal research documenting this. They've built systems that deliberately maximize engagement over accuracy. And they've done relatively little to address the predictable consequences.

Platforms claim they're not responsible for content moderation at scale. They argue that third-party fact-checkers should identify false content, that users should assess sources critically, that democratic discourse requires some tolerance for misinformation. These arguments aren't entirely wrong, but they also conveniently sidestep the platforms' own role in the equation.

They could deprioritize unverifiable video content in recommendations. They could default to hiding videos that analysis suggests are synthetically generated. They could provide clearer labeling when content hasn't been verified. They could reduce the monetization incentives for viral content. They could be transparent about algorithmic amplification. They could do many things.

They mostly don't. The costs of action (reduced engagement, slower growth, pressure from creators) exceed the benefits (less misinformation, more trust) from their perspective. So the current equilibrium persists. Algorithms optimize for engagement. Emotionally intense content gets amplified. Misinformation spreads alongside accurate content. Synthetic footage becomes indistinguishable from documentation. The commons degrades.

Theoretically, users could self-organize to reject unreliable sources and demand better information. That would require widespread media literacy, which is unevenly distributed. It would require coordination across platforms, which doesn't naturally emerge. It would require platforms to support rather than undermine those efforts, which doesn't happen. So we're stuck in a system where the structural incentives push toward worse information quality despite everyone technically having the ability to do better.

The Question of Accountability and Visual Evidence

Here's what keeps media analysts, legal experts, and policy people up at night: we're moving toward a future where video evidence becomes essentially unusable in legal and governmental contexts because it can no longer be reliably trusted.

Lawyers already struggle with deepfakes in courtroom contexts. How do you establish that a video is authentic when the technology to fabricate convincing video is becoming commodified? You can run forensic analysis, but that's expensive, requires expertise, and sophisticated fabrications might defeat forensic techniques. You can look for tells, but AI-generated content is rapidly improving at eliminating tells.

Court systems depend on some evidentiary baseline. If video evidence becomes unreliable, what replaces it? More reliance on eyewitness testimony? That's demonstrably worse. More reliance on written documentation? That's slower and less accessible. More reliance on physical evidence? That's often absent.

For protest movements and resistance efforts, this is also concerning. Civil rights documentarians have historically relied on video evidence to document government overreach and police violence. If that evidence becomes systematically discounted because the information ecosystem is saturated with convincing fabrications, movements lose a crucial tool.

The anti-ICE videos contribute to this degradation whether they intend to or not. Every AI-generated video of resistance, every fabrication of a dramatic confrontation, chips away at the collective assumption that video documents reality. The more people who see these videos, understand they're synthetic, and then encounter actual footage of actual confrontations, the more the baseline expectation shifts.

What emerges is a kind of epistemic exhaustion. If video can be fabricated, maybe all video is fabricated. Maybe nothing is real. Maybe trust in documentation itself is naive. That's where this path leads.

Repeated exposure to misinformation can maintain belief in false claims even after debunking. Estimated data based on typical misinformation effects.

Global Implications: AI as Political Tool Everywhere

The anti-ICE videos are a localized US phenomenon, but the implications are global. Any movement anywhere can now generate synthetic video evidence of their preferred reality. Resistance movements can create videos showing successful confrontations with government. Governments can create videos showing manufactured evidence of opposition groups committing violence.

Consider what this means for civil conflicts globally. In Myanmar, Venezuela, Hong Kong, Sudan, Syria, and dozens of other countries where governments and opposition movements clash, synthetic video becomes another weapon. Both sides generate convincing footage. Neither side can point to video as reliable evidence. The information environment degrades further.

Authoritarian governments actually benefit more from this dynamic than resistance movements do. Governments have greater resources to generate sophisticated synthetic media. Governments have more distribution channels through state media. Governments can combine synthetic footage with official authority to lend credibility.

When both sides in a conflict are deploying synthetic media, the side with more resources and institutional credibility tends to win the information war. That's usually the government.

So what looks like a kind of democratic tool for grassroots expression—the ability to generate synthetic political videos—might actually accelerate authoritarianization at global scale. Resistance movements burn political capital on generating and distributing videos that become unreliable. Governments use the ensuing epistemic confusion to dismiss all accusations of wrongdoing as fabrications.

Technical Sophistication: How Accessible Is This Technology?

Understanding the likely future of AI-generated political content requires understanding how accessible the tools are. If video generation remains expensive and technically difficult, you'll see limited proliferation. If it becomes cheap and easy, expect exponential growth.

Current trajectory suggests rapid democratization. Tools that required specialized technical knowledge two years ago are now available through simple web interfaces. Tools that cost thousands a year are becoming free-tier accessible. What took filmmakers days to create now takes minutes. Barriers to entry are collapsing.

That democratization sounds theoretically positive. Powerful tools available to everyone, not just institutions. But in practice, it means both freedom movements and authoritarian governments get access simultaneously. Both can deploy the technology. But the side with more resources, institutional support, and distribution channels has the advantage.

So the democratization of AI tools for video generation is simultaneously empowering and destabilizing. It empowers marginalized creators, and it destabilizes information systems that depend on documentary evidence.

Where This Trajectory Leads

Extrapolating from current trends suggests a future that's deeply troubling. As AI tools improve and become more accessible, we should expect a continuous increase in political video synthesis. Not just anti-ICE content. Anti-police content. Anti-government content. Pro-government content. Anti-immigration content. Anti-corporate content. Content pushing every possible political direction.

In that environment, video evidence becomes unreliable. Legal systems that depend on video documentation face legitimacy crises. Protest movements lose a crucial accountability tool. Democratic discourse becomes even more fractured as people increasingly live in separate information ecosystems with separate "video evidence" of reality.

Governments might respond by restricting technology access or implementing heavy-handed content moderation. That has its own dangers, potentially suppressing legitimate expression. Or they might develop new standards for synthetic media verification, similar to blockchain verification or cryptographic proof of authenticity. That's technically feasible but requires coordination and commitment to implementation.

Or we might just accept that video evidence is unreliable and organize political systems accordingly. That's probably the most realistic trajectory. It's also deeply destabilizing because we've structured contemporary accountability partly around visual documentation.

Lessons for Policy Makers and Platform Designers

If we want to address this thoughtfully rather than reactively, several interventions make sense. None are particularly novel, but they're necessary.

First, platforms could deliberately reduce the algorithmic amplification of unverified video content. Not remove it, not censor it, but decline to use it as algorithmic fuel. A video that hasn't been fact-checked could still appear if someone explicitly searches for it, but it wouldn't get recommended broadly. That's a modest intervention that addresses the amplification problem without total censorship.
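As a rough illustration of this first intervention, the sketch below separates two paths: recommendations, where unverified video is demoted, and explicit search, where nothing is filtered. Everything here is an assumption made for the example: the `Video` class, the `verified` flag, and the `UNVERIFIED_DEMOTION` multiplier are hypothetical and do not describe any platform's actual system.

```python
from dataclasses import dataclass

# Hypothetical multiplier applied to unverified video in recommendations only.
UNVERIFIED_DEMOTION = 0.1


@dataclass(frozen=True)
class Video:
    title: str
    engagement: float   # whatever engagement score the platform already computes
    verified: bool      # hypothetical flag set by some fact-checking process


def recommendation_score(video: Video) -> float:
    """Unverified video stays in the catalog but loses most of its boost."""
    if video.verified:
        return video.engagement
    return video.engagement * UNVERIFIED_DEMOTION


def recommend(videos: list[Video], limit: int = 10) -> list[Video]:
    """Broad recommendations, ordered by the demotion-aware score."""
    return sorted(videos, key=recommendation_score, reverse=True)[:limit]


def search(videos: list[Video], query: str) -> list[Video]:
    """Explicit search ignores verification status, so nothing is removed."""
    needle = query.lower()
    return [v for v in videos if needle in v.title.lower()]
```

The design choice is that demotion lives only in `recommendation_score`. A viewer who searches for a clip by name still finds it, which is what distinguishes declining to amplify from removing.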

Second, platforms could be more transparent about their algorithmic mechanics. Users should understand that what they're seeing is curated by engagement-optimizing systems, not neutral representation of reality. That transparency doesn't solve the problem, but it shifts user expectations in useful ways.

Third, media literacy education could expand dramatically. Most people can't assess source credibility or recognize synthetic content. Teaching those skills at scale would address the underlying problem of AI content proliferation. That's expensive and requires educational infrastructure that's currently underfunded, but it's ultimately necessary.

Fourth, law enforcement and judicial systems could develop better standards for video authentication. Cryptographic verification, forensic analysis, evidentiary standards for synthetic media—these frameworks exist but are unevenly implemented. Codifying them makes attribution and verification more reliable.
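To give a sense of what cryptographic verification means in practice, here is a minimal sketch using only Python's standard library. It uses HMAC-SHA256 as a stand-in, which is an assumption made for simplicity: a real provenance scheme would more likely rely on public-key signatures, so that anyone can verify footage without holding the signing secret. The function names `sign_footage` and `verify_footage` are hypothetical.

```python
import hashlib
import hmac


def sign_footage(key: bytes, footage: bytes) -> str:
    """Return a hex HMAC-SHA256 tag binding the footage bytes to the key."""
    return hmac.new(key, footage, hashlib.sha256).hexdigest()


def verify_footage(key: bytes, footage: bytes, tag: str) -> bool:
    """True only if the footage is byte-identical to what was originally signed."""
    expected = sign_footage(key, footage)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    key = b"hypothetical-device-key"
    original = b"raw video bytes captured by the camera"
    tag = sign_footage(key, original)

    print(verify_footage(key, original, tag))
    print(verify_footage(key, original + b" (edited)", tag))
```

Note what this does and does not establish. A valid tag shows the file has not changed since it was signed by a holder of the key. It says nothing about whether the scene in front of the camera was staged, and it offers no help for footage that was never signed in the first place.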

None of these is sufficient on its own. Collectively, they might slow the slide toward epistemic breakdown. But they all require commitment and resources that don't currently exist.

The Authentic Question Underneath All This

Ultimately, what the anti-ICE video phenomenon reveals is a deeper truth about late capitalism, algorithmic mediation, and political power. Willonious Hatcher stated it bluntly: "The question is what they tell us about this country, that people must fabricate images of their own liberation because the real thing remains out of reach."

That's the actual story. Not that AI-generated video is spreading. That's technical reality. Not that platforms are amplifying misinformation. That's algorithmic function. The actual story is that marginalized communities have given up on documenting or accessing material justice and have retreated into fabricating imagined justice.

That's a damning statement about where we are. It's not commentary on AI or social media technology. It's commentary on the failure of existing institutions and power structures to deliver accountability or change. The videos exist because the system they're responding to is so broken that fantasy feels more achievable than reform.

You can't solve that with better content moderation. You can't solve it with media literacy education. You can only solve it by addressing the underlying conditions that make imagined resistance feel more real than material change.

The videos are symptoms. They're sophisticated symptoms enabled by new technology, but still symptoms of systemic failures that predate AI and will persist after it. They're telling us something urgent about what's broken. Whether we listen or just fight the symptom is the actual political question.

FAQ

What exactly are AI-generated anti-ICE videos?

These are synthetic video clips created using artificial intelligence that depict fictional scenarios of people of color successfully resisting or confronting immigration enforcement officers. The videos use AI video generation tools to create convincing but entirely fabricated footage of dramatic confrontations, such as a school principal with a bat stopping ICE agents, servers throwing food at officers, or drag queens chasing agents through streets. They are fiction built for narrative and emotional appeal, and they have spread widely across Instagram and Facebook, drawing millions of views.

How are these videos created and what tools do creators use?

Creators use readily available AI video generation platforms that have become increasingly accessible and affordable. These tools allow users to input text descriptions or parameters and have the AI generate corresponding video sequences. The process has become streamlined enough that what previously required professional filmmaking equipment and expertise can now be done by individuals with minimal technical training. The exact tools vary, but the democratization of video synthesis technology is rapid and ongoing.

Why did these videos emerge specifically during this time period?

The videos proliferated following the January deaths of Renee Nicole Good and Alex Pretti, both unarmed US citizens killed by federal enforcement officials during immigration raids. The videos represent a response to genuine trauma and increased government enforcement activity. Communities experiencing direct threats from immigration enforcement created these videos as a form of imaginative resistance, offering cathartic fantasy during a period of real danger and documented violence.

Are these videos considered misinformation or disinformation?

They exist in a gray area. Most creators are transparent that these are fictional AI-generated fantasies, not documentary evidence. The videos are presented with a fan-fiction aesthetic, and savvy viewers understand them to be synthetic. However, the distinction between obvious fiction and dangerous misinformation becomes blurry when millions of viewers encounter these videos without context. The broader problem is that their proliferation degrades public trust in video evidence generally, making it harder to tell which videos document reality and which are fabricated.

What are the potential harms of these videos spreading widely?

The primary harms are epistemic. Each AI-generated video that circulates trains viewers to expect that video might be fabricated. When actual footage of real confrontations or injustice circulates later, viewers are primed to dismiss it as synthetic. Additionally, these videos can be cited by officials to claim that activist resistance movements spread misinformation, using the existence of fabrications to delegitimize authentic documentation. The broader information environment becomes degraded when visual evidence becomes unreliable as a category.

How do social media algorithms contribute to these videos reaching so many people?

Instagram and Facebook's algorithmic systems prioritize content that generates high engagement (clicks, likes, comments, shares) regardless of whether the content is factually accurate. Anti-ICE videos are emotionally intense, visually striking, and trigger strong responses, making them algorithmically favored. Platforms have known for years that emotional intensity drives engagement, and they've built systems that deliberately amplify such content. This structural incentive means that fabricated emotional content reaches larger audiences than less dramatic documentary evidence would.

What distinguishes these from other forms of protest art or political expression?

Forms of political expression throughout history have included paintings, written narratives, music, and other media depicting desired futures or imagined resistance. These anti-ICE videos follow that tradition but use AI video generation as the medium. The distinction is that contemporary viewers are more likely to mistake video for documentation of reality than they would mistake a painting or poem. Video carries strong assumptions of authenticity. That makes AI-generated video fundamentally different from traditional political art forms: it has greater potential to be misunderstood as factual.

Could these videos inspire actual resistance or are they purely cathartic fantasy?

They're likely both. Some viewers might find them motivating. Others process them as cathartic fantasy that helps them manage traumatic circumstances without necessarily inspiring material action. The psychological function probably varies significantly by viewer. But even if they inspire action, that's distinct from whether they contribute to useful political outcomes. Psychological catharsis doesn't necessarily advance movements' material goals and might actually substitute for effective organizing.

What are governments doing to respond to these videos and AI content?

Responses vary globally. Some governments attempt to suppress the videos through takedowns or restrictions on video generation tools. Others weaponize similar AI technology to create synthetic media that serves their interests while dismissing opposition video as fabricated. The White House example of altered photographs of activist Nekima Levy Armstrong shows how official institutions leverage the confused information environment to their advantage. There's no coordinated global policy response, and governance lags behind technology deployment.

How does this phenomenon relate to broader concerns about deepfakes and synthetic media?

Anti-ICE videos are one manifestation of a larger trend toward AI-generated synthetic media. Similar dynamics apply to politically motivated deepfakes across the ideological spectrum, AI-generated news content, synthetic social media profiles, and doctored imagery. The anti-ICE videos are notable because they're being created by marginalized communities for political expression, but the underlying technical problem, the proliferation of synthetic media that degrades trust in documentation, applies across all uses.

What should policy makers, platforms, and creators do about this?

Multiple interventions are necessary. Platforms could reduce algorithmic amplification of unverified video while remaining transparent about why. Media literacy education could expand dramatically to teach people how to assess source credibility and identify synthetic content. Legal and judicial systems could develop authentication standards for video evidence. Creators could embrace clearer labeling that distinguishes fiction from documentation. Fundamentally, however, the underlying issue is that material conditions remain so unjust that people resort to fabricating imagined liberation rather than achieving actual change. Fixing that requires addressing systemic injustice itself.

Conclusion: What These Videos Actually Reveal

The anti-ICE videos aren't really about video generation technology or algorithmic amplification or social media dysfunction, though all those elements matter. They're about what happens when people lose faith that existing systems will deliver justice.

They're about a moment in American history where immigration enforcement has become sufficiently aggressive that people respond by creating fantasies of successful resistance. They're about communities traumatized by government violence imagining worlds where that violence meets immediate consequences. They're about the exhaustion of expecting change through institutional channels and retreating into imaginative alternatives.

The technology enables this expression but doesn't fundamentally explain it. People have always created art and narrative depicting the liberation they couldn't access materially. What has changed are the tools and distribution mechanisms, not the underlying impulse.

What should concern us isn't that the videos exist. It's what they reveal about the conditions that produce them. When people must fabricate images of their own liberation rather than accessing actual liberation, something fundamental has failed in the political system.

The videos will keep spreading. The technology will keep improving. More people will create more synthetic media for more political purposes. But addressing that sprawl with technical fixes alone, whether better moderation, authentication standards, or media literacy, won't solve the underlying problem. It will just make the symptoms slightly less visible while the disease persists.

The real question we should be asking isn't whether these videos are helpful or harmful to movements. It's why movements feel compelled to retreat into fabricated resistance rather than pursuing material change. Answer that question and you might address something deeper than algorithmic amplification or media literacy. You might address the systemic conditions that generate the need for these videos in the first place.

Until then, expect the videos to keep flowing, algorithms to keep amplifying them, platforms to keep profiting, and the gap between imagined and actual justice to keep widening. The videos are a symptom. Treating symptoms while ignoring the disease just extends suffering.

Key Takeaways

- AI-generated anti-ICE videos reaching millions demonstrate how accessible technology enables both grassroots political expression and information degradation

- Algorithmic systems amplify emotionally intense content regardless of truthfulness, creating structural incentives for misinformation spread

- Proliferation of synthetic media erodes public trust in video evidence generally, threatening documentary accountability mechanisms

- Governments wield greater resources for synthetic media deployment, creating power asymmetries in information warfare

- These videos ultimately diagnose systemic failures that force marginalized communities to fabricate liberation rather than achieve material change

![AI-Generated Anti-ICE Videos and Digital Resistance [2025]](https://tryrunable.com/blog/ai-generated-anti-ice-videos-and-digital-resistance-2025/image-1-1769715574379.jpg)