Infosys and Anthropic Partner to Build Enterprise AI Agents: What It Means for Enterprise AI

When Indian IT giant Infosys announced its partnership with Anthropic, it wasn't just another tech deal. It was a statement about the future of enterprise AI, the survival of legacy IT services, and how large language models are reshaping industries worth hundreds of billions of dollars.

Here's the context. The global IT services market has been built on a simple formula for decades: take highly regulated work, staff it with skilled labor in lower-cost countries, deliver reliable services to enterprises. India's IT services industry alone is worth $280 billion annually. But that model is cracking. When Anthropic launched its enterprise AI tools in early 2025, Indian IT stock prices plummeted. Investors saw the threat immediately: if AI can automate legal research, sales workflows, and customer support, why does a bank need to employ thousands of offshore IT workers?

Infosys' move with Anthropic is essentially a bet that the company can stay relevant by becoming an AI integrator rather than a labor provider. Instead of competing with AI, Infosys wants to deploy it for enterprises that lack the expertise or infrastructure to do it themselves.

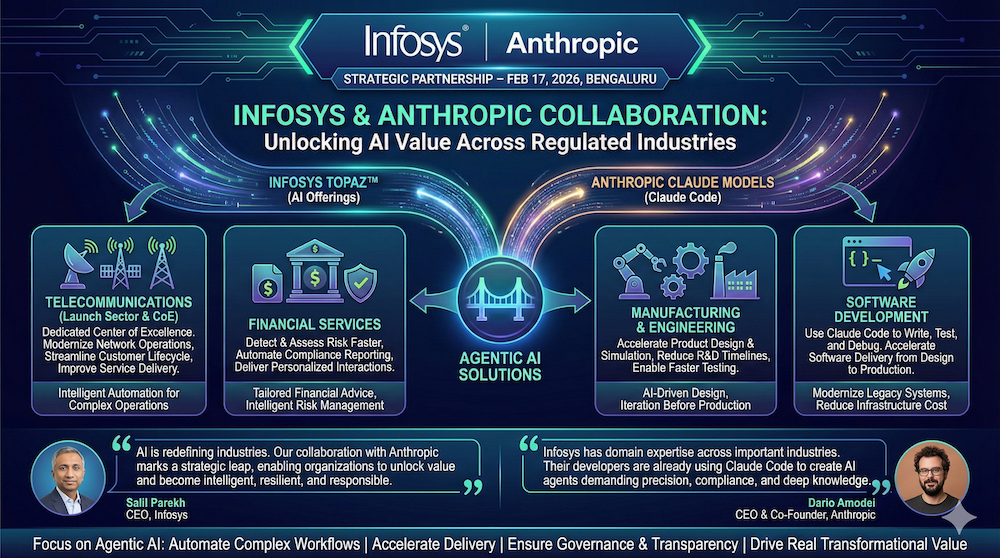

For Anthropic, this partnership solves a fundamental problem: Claude is powerful, but deploying it in heavily regulated industries like banking and insurance requires domain expertise, compliance knowledge, and governance frameworks that a pure AI lab doesn't have. Infosys brings three decades of experience navigating financial services regulations, telecom compliance, and manufacturing operations. Together, they're trying to bridge the gap between what AI can do in a demo and what it can do when real money, real regulations, and real consequences are at stake.

This article dives deep into what this partnership means, why it matters for enterprise AI, and what it reveals about the future of AI-powered business automation.

TL;DR

- The Deal: Infosys is integrating Anthropic's Claude models into its Topaz AI platform to build enterprise-grade AI agents for banking, telecoms, and manufacturing.

- The Timing: Announced at India's AI Impact Summit amid investor fears that AI will disrupt India's $280 billion IT services industry.

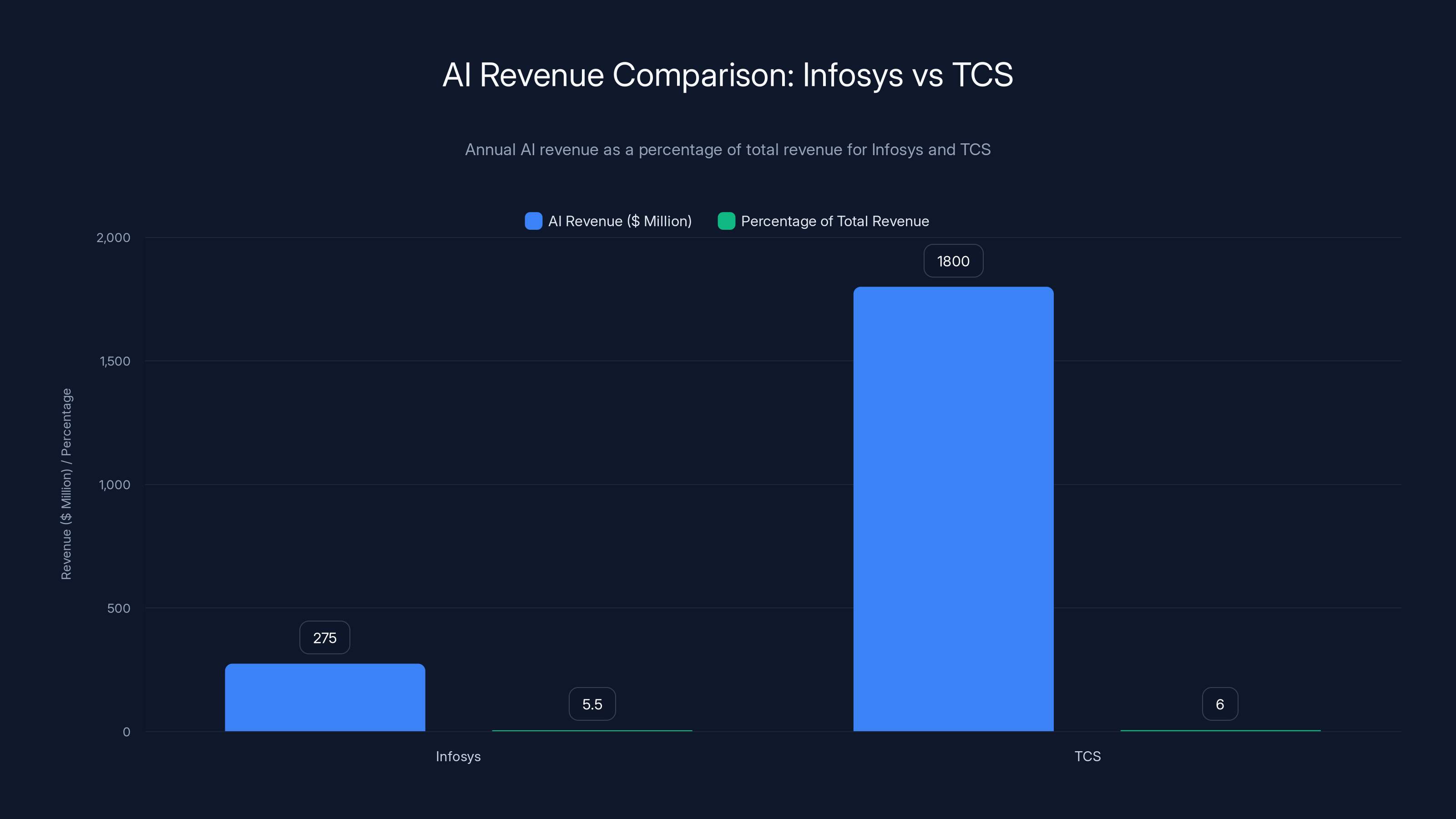

- The Revenue: Infosys generates $1.8 billion annually from AI services.

- The Strategic Play: For Infosys, it's survival—shift from labor provider to AI integrator; for Anthropic, it's access to regulated industries where Claude models are desperately needed.

- The Bottom Line: This partnership signals that enterprise AI won't be deployed by pure-play AI companies alone, but by experienced IT services firms that understand governance, compliance, and complex workflows.

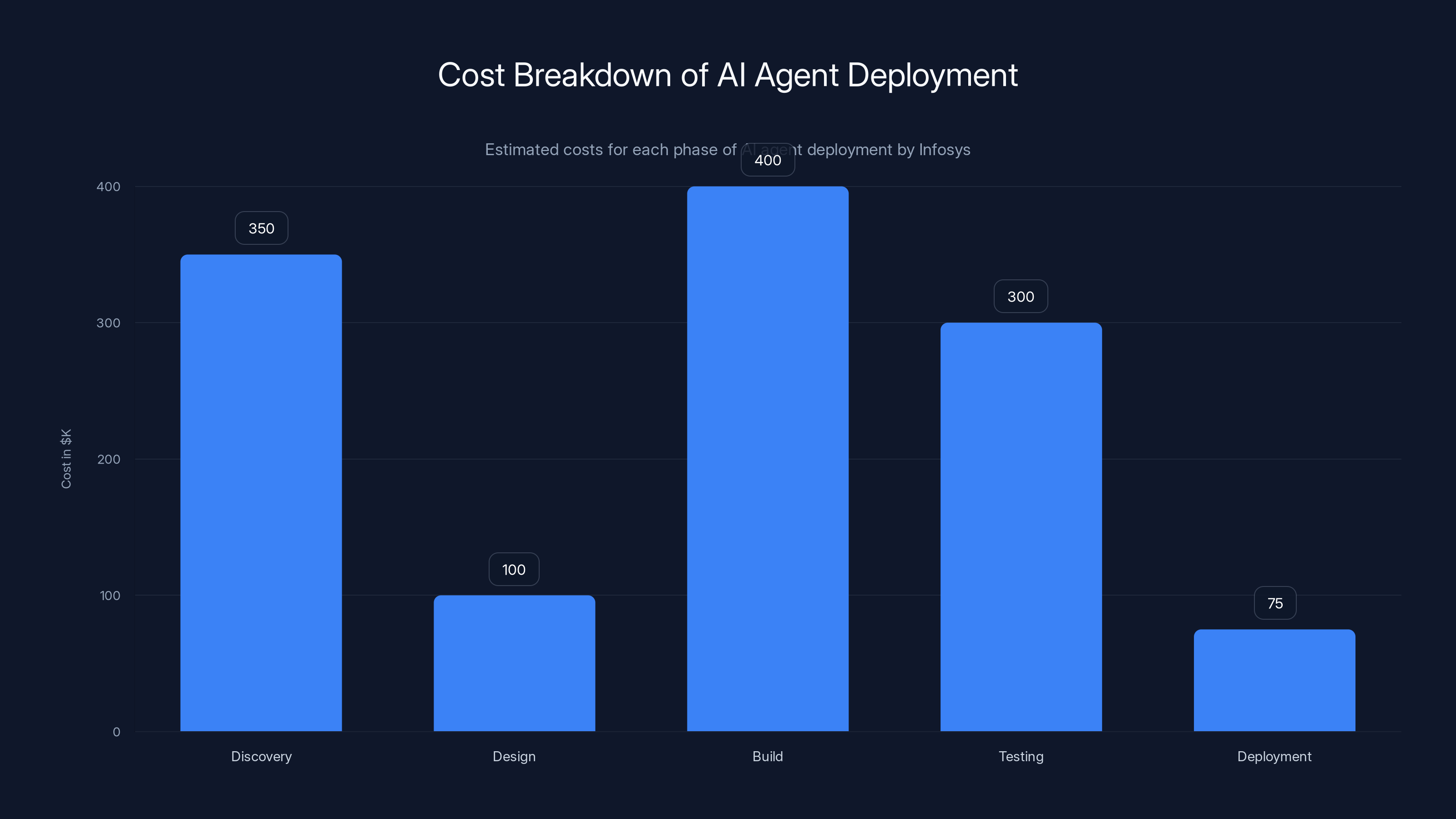

The majority of costs in AI agent deployment are incurred during the Discovery and Build phases.

The Perfect Storm: Why This Partnership Happens Now

Timing in tech rarely feels accidental. The Infosys-Anthropic deal was announced at India's AI Impact Summit in February 2025, just weeks after Anthropic's enterprise AI suite launch sent shockwaves through stock markets. That wasn't coincidence. It was panic meeting opportunity.

Indian IT companies have a fundamental problem: their stock valuations depend on revenue growth, and their revenue growth depends on hiring more people to serve more clients. But if AI can do the work faster, cheaper, and better than people, the entire business model breaks. When Anthropic released tools claiming to automate legal document review, sales pipeline analysis, and marketing campaign research, investors did the math and didn't like the answer.

Consider the numbers. Infosys employs over 300,000 people globally. TCS, its largest competitor, employs over 600,000. These aren't small teams—they're small countries. If even 5% of their workforce becomes redundant due to AI automation, we're talking about layoffs affecting tens of thousands of people. Stock prices don't recover well from those announcements.

But here's what Infosys understood: they can't fight AI. They can only join it or disappear. The company already generates revenue from AI services.

The partnership with Anthropic lets Infosys reframe the narrative. Instead of "Our workers are being replaced by AI," the story becomes "Infosys is the company deploying AI for enterprises." It's the difference between disruption and adaptation. That narrative matters enormously to stock prices and investor confidence.

For Anthropic, the calculus is different but equally strategic. Claude is a remarkable model, but deploying it in production for Fortune 500 companies requires more than raw intelligence. It requires understanding how compliance works in financial services. It requires knowing how telecommunications regulators think. It requires expertise in manufacturing quality control and supply chain logistics. Infosys has spent three decades building that expertise across hundreds of enterprise clients.

Dario Amodei, Anthropic's CEO, said it directly: "There's a big gap between an AI model that works in a demo and one that works in a regulated industry." That gap is worth billions of dollars to bridge. Infosys has the tools, processes, and relationships to cross it.

Anthropically speaking (sorry), this is also why Anthropic opened its first India office in Bengaluru during this same period. India now accounts for 6% of global Claude usage, second only to the United States. Much of that activity is concentrated in software development and coding tasks. But Anthropic's long-term bet is clear: India has the talent density, the regulatory expertise, and the startup culture needed to become a major hub for enterprise AI deployment.

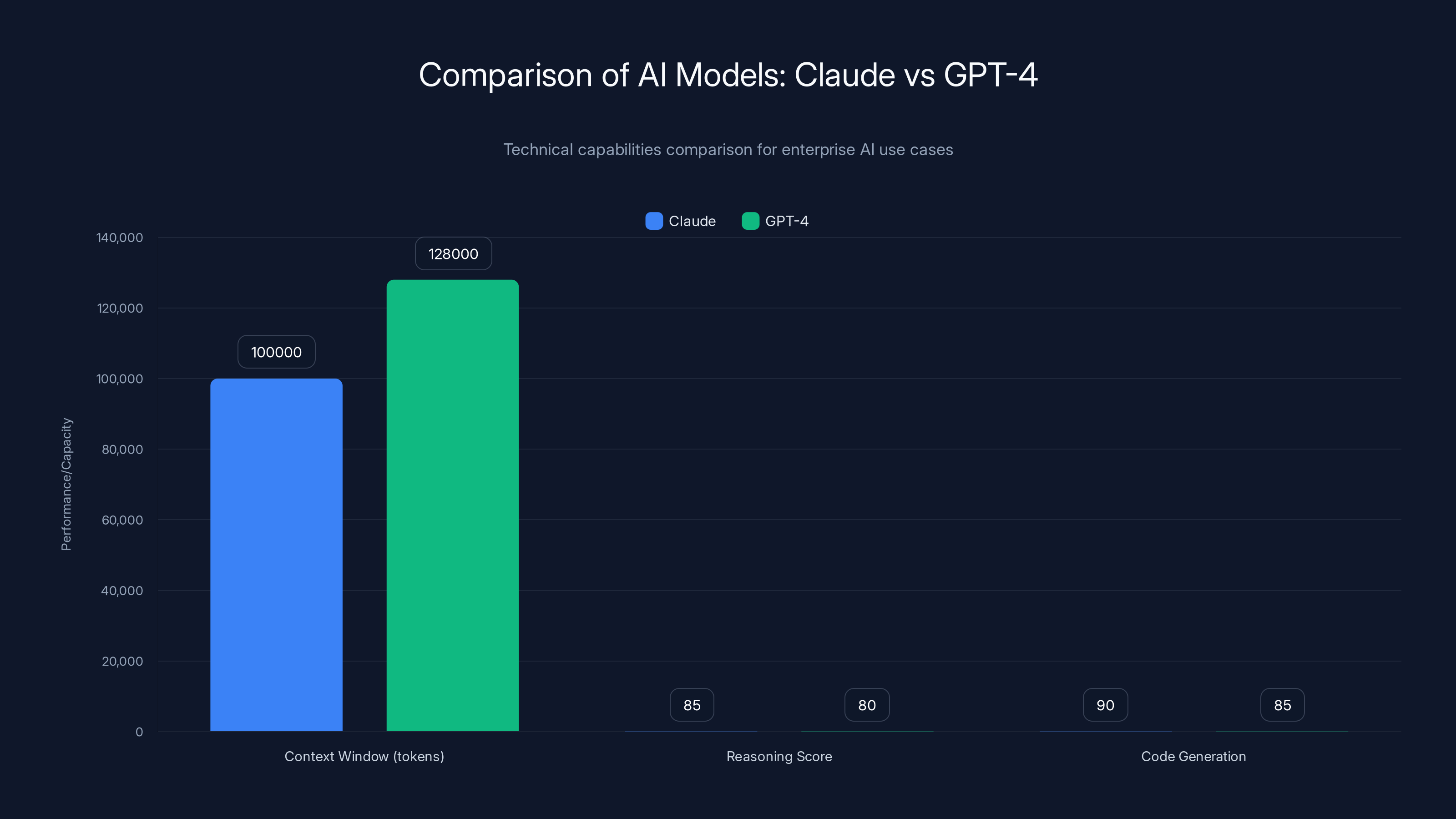

Claude offers a substantial 200,000-token context window and excels in reasoning and code generation, making it suitable for complex enterprise tasks. Estimated data for reasoning and code generation scores.

Understanding the Topaz AI Platform and What Integration Means

Infosys' Topaz AI platform isn't new. The company launched it in 2023 as a cloud-based suite of AI tools for enterprises. Think of it as a control center for AI operations: document automation, code generation, business process optimization, and data analytics all in one place.

The platform currently integrates with multiple AI models and services. Adding Claude changes the game for one specific reason: Anthropic's models are particularly strong at reasoning, following complex instructions, and handling nuanced enterprise scenarios. If you need an AI system to review a 200-page legal contract and flag only the clauses that could create regulatory exposure, Claude is remarkably good at that. If you need AI to analyze a manufacturing defect report and trace it back to root causes in your supply chain, Claude can do that too.

Infosys says it's already using Claude Code internally to help its engineers write, test, and debug code. This is important context. They're not theoretically planning to use Claude. They're actively testing it, building muscle memory with it, and preparing their client-facing teams to deploy it at scale.

When Infosys integrates Claude directly into Topaz, what emerges is a more powerful system. Instead of customers needing to separately manage Infosys' tools AND Anthropic's API, they get a unified interface. The workflow looks something like this:

- Customer defines a business process: "Extract key contract terms from vendor agreements"

- Infosys configures that workflow in Topaz

- Topaz connects to Claude for the reasoning-heavy work

- Claude processes the contracts, flags issues, and returns structured data

- Infosys' platform routes the output to the customer's systems
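Here is a minimal Python sketch of that five-step hand-off. Topaz's actual interfaces aren't public, so `WorkflowConfig`, `run_workflow`, and the stubbed model function are all hypothetical. In a real deployment, `call_model` would wrap a request to Anthropic's API, and `route` would push the record into the customer's systems.

```python
import json
from dataclasses import dataclass
from typing import Callable

# Hypothetical names throughout; Topaz's real interfaces are not public.


@dataclass
class WorkflowConfig:
    """Workflow definition an integrator might configure (step 2)."""
    name: str
    instructions: str        # the business process the customer defined (step 1)
    required_fields: list    # keys the downstream system expects


def run_workflow(config: WorkflowConfig, document: str,
                 call_model: Callable[[str], str],
                 route: Callable[[dict], None]) -> dict:
    # Step 3: send the reasoning-heavy work to the model.
    prompt = (f"{config.instructions}\n"
              f"Respond with a JSON object containing: {', '.join(config.required_fields)}.\n\n"
              f"{document}")
    raw = call_model(prompt)

    # Step 4: the model returns structured data; validate it before trusting it.
    result = json.loads(raw)
    missing = [key for key in config.required_fields if key not in result]
    if missing:
        raise ValueError(f"model output is missing fields: {missing}")

    # Step 5: hand the validated record to the customer's systems.
    route(result)
    return result


def stub_model(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs offline.
    return json.dumps({"vendor": "Acme Corp", "term_months": 24, "auto_renew": True})


delivered = []
config = WorkflowConfig(
    name="vendor-contract-terms",
    instructions="Extract key contract terms from this vendor agreement.",
    required_fields=["vendor", "term_months", "auto_renew"],
)
record = run_workflow(config, "<contract text>", stub_model, delivered.append)
print(record["term_months"])  # 24
```

Validating the model's output before routing it is what keeps the result auditable. A malformed response fails loudly instead of flowing silently into downstream systems.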

The customer sees one system, one vendor relationship, one SLA. They don't care that Claude is powering the brain—they care that the result is reliable, compliant, and auditable.

This integration also opens doors for specialized AI agents. An "agentic" system, in modern AI terminology, is one that can reason about its tasks, plan multi-step workflows, and iterate toward solutions without human intervention in between steps. For example:

Telecom Use Case: A telecom company receives billing inquiries from customers. Instead of routing every inquiry to a human agent, an AI agent could:

- Read the customer's account history (step 1)

- Understand their billing complaint (step 2)

- Check against policies and service agreements (step 3)

- Calculate credits or adjustments if appropriate (step 4)

- Generate a response explaining the resolution (step 5)

- Route only exceptional cases to humans

The agent handles 80% of cases autonomously. Humans handle the 20% that require judgment, compassion, or escalation. Both the company and the customer win: faster resolution, lower costs, better customer experience.
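The billing-inquiry flow can be sketched in a few lines of Python. This is a hypothetical illustration, not Infosys' design. The keyword check in `classify_complaint` stands in for the step where a model like Claude would interpret the customer's message. `AUTO_CREDIT_LIMIT` is an invented policy threshold that decides which cases are routine enough to resolve without a human.

```python
from dataclasses import dataclass


@dataclass
class Resolution:
    escalate: bool
    credit: float = 0.0
    message: str = ""


AUTO_CREDIT_LIMIT = 50.0  # hypothetical policy: larger credits need human approval


def classify_complaint(text: str) -> str:
    # Stand-in for the model's "understand the complaint" step.
    lowered = text.lower()
    if "charged twice" in lowered or "duplicate" in lowered:
        return "duplicate_charge"
    return "other"


def handle_inquiry(charges: list, complaint: str, disputed_id: str) -> Resolution:
    # Step 1: read the account history for the disputed charge.
    amounts = [amount for charge_id, amount in charges if charge_id == disputed_id]
    # Step 2: understand the complaint.
    kind = classify_complaint(complaint)
    # Step 3: check against policy; only a charge billed more than once qualifies.
    if kind != "duplicate_charge" or len(amounts) < 2:
        return Resolution(escalate=True, message="Routed to a human agent.")
    # Step 4: calculate the credit for the extra charges.
    credit = amounts[0] * (len(amounts) - 1)
    if credit > AUTO_CREDIT_LIMIT:
        return Resolution(escalate=True, credit=credit,
                          message="Credit above auto-approval limit; routed to a human agent.")
    # Step 5: generate the customer-facing explanation.
    return Resolution(escalate=False, credit=credit,
                      message=f"We found a duplicate charge and applied a ${credit:.2f} credit.")


history = [("INV-9", 19.99), ("INV-9", 19.99), ("INV-10", 5.00)]
print(handle_inquiry(history, "I was charged twice this month", "INV-9").message)
# We found a duplicate charge and applied a $19.99 credit.
print(handle_inquiry(history, "Why did my plan price change?", "INV-10").escalate)
# True
```

The agent escalates by default. Anything it cannot positively match to a known policy goes to a person, and that default keeps the "route only exceptional cases to humans" step safe.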

This is what Infosys means by "agentic systems." Not fully autonomous robots replacing people, but intelligent systems that handle structured work while humans focus on exceptions and strategy.

The AI Agent Revolution: What "Agentic" Actually Means

Let's pause and define what we're really talking about here, because "AI agents" has become a buzzword that means everything and nothing depending on who's using it.

Traditionally, when you use ChatGPT or Claude, the interaction pattern is straightforward: you write a prompt, you get a response, conversation over. It's stateless: the AI doesn't remember your previous conversation unless you include the context in your current message. It's single-threaded: it generates one response and waits for your next input.

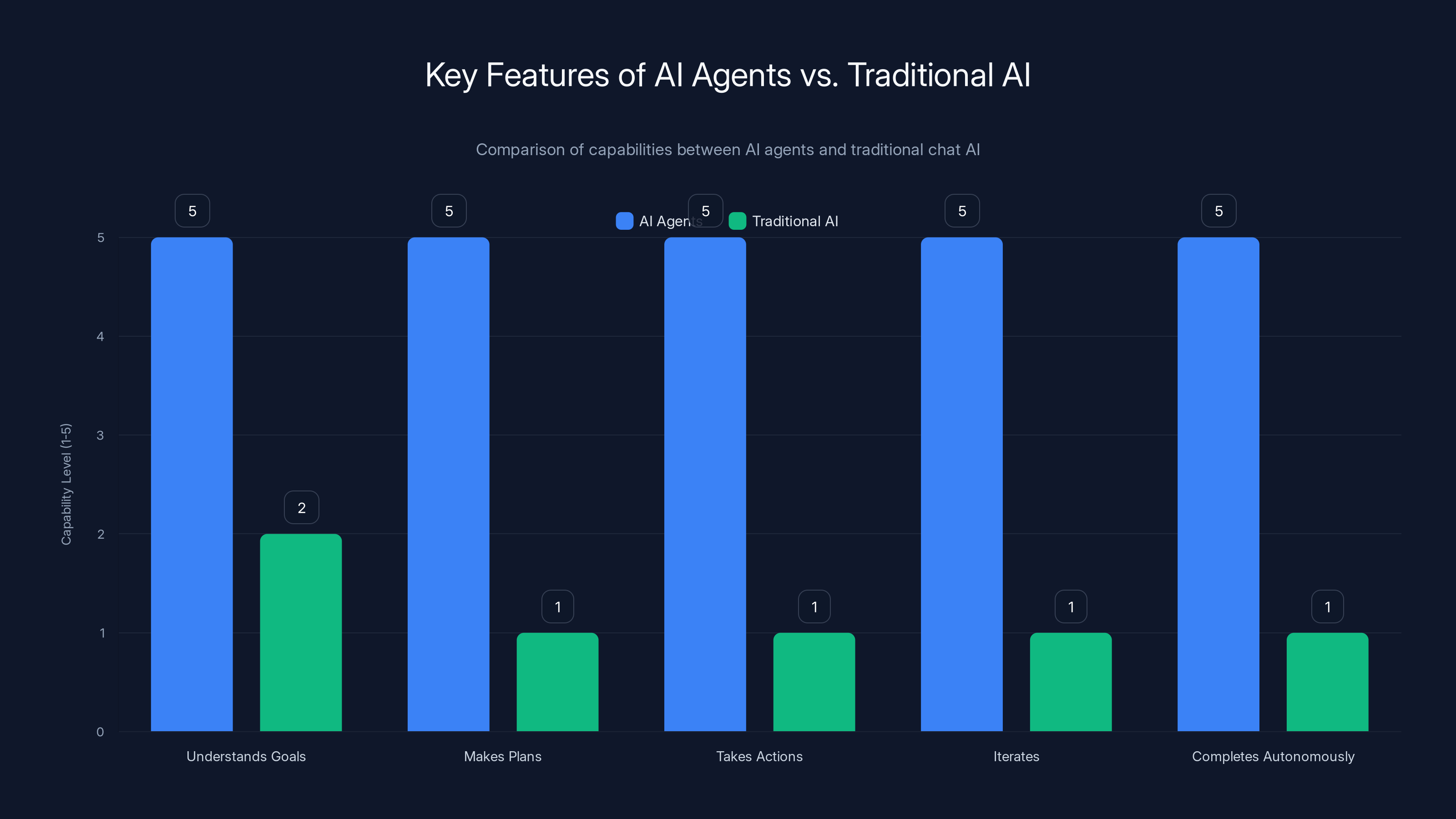

An AI agent, by contrast, is fundamentally different. It's a system that:

- Understands goals - You tell it "Reconcile this month's expense reports" and it understands what success looks like

- Makes plans - It breaks the goal into substeps: collect reports, validate amounts, check policy compliance, flag exceptions

- Takes actions - It doesn't just suggest—it actually executes steps. It calls APIs, queries databases, generates documents

- Iterates - If a step fails, it tries alternatives. If a result looks wrong, it verifies

- Completes autonomously - It keeps going until the goal is achieved, only asking humans for help when truly stuck
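The Python sketch below strips the agent loop to its skeleton: plan, act, try alternatives, verify against the goal, and escalate when stuck. The expense-reconciliation steps, the `POLICY_LIMIT` value, and the simulated outage of the primary data source are invented for illustration. In a real agent, the model itself would typically produce the plan rather than having it hard-coded.

```python
from typing import Callable, List, Tuple

Action = Callable[[dict], bool]          # returns True if the step succeeded
Plan = List[Tuple[str, List[Action]]]    # each step lists alternative actions


def run_agent(plan: Plan, goal_met: Callable[[dict], bool], state: dict) -> dict:
    state.setdefault("log", [])
    for step, alternatives in plan:
        for action in alternatives:      # iterate: try alternatives in order
            if action(state):
                state["log"].append(f"{step}: {action.__name__}")
                break
        else:                            # nothing worked: stop and ask a human
            state["status"] = f"escalated at '{step}'"
            return state
    # Verify the outcome against the goal before declaring success.
    state["status"] = "complete" if goal_met(state) else "escalated: goal not met"
    return state


POLICY_LIMIT = 500.0  # hypothetical per-report spending limit


def fetch_from_api(state: dict) -> bool:
    return False  # simulate the primary data source being unavailable


def fetch_from_export(state: dict) -> bool:
    state["reports"] = [{"id": "R1", "amount": 120.0}, {"id": "R2", "amount": 900.0}]
    return True


def validate_amounts(state: dict) -> bool:
    return all(r["amount"] > 0 for r in state["reports"])


def flag_exceptions(state: dict) -> bool:
    state["exceptions"] = [r["id"] for r in state["reports"] if r["amount"] > POLICY_LIMIT]
    return True


plan: Plan = [
    ("collect reports", [fetch_from_api, fetch_from_export]),
    ("validate amounts", [validate_amounts]),
    ("check policy", [flag_exceptions]),
]
result = run_agent(plan, lambda s: "exceptions" in s, {})
print(result["status"], result["exceptions"])  # complete ['R2']
```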

This is a fundamentally different paradigm from "chat with an AI." An agent is more like an employee who's been given a task and a deadline, understands what resources they have access to, and figures out how to complete the job.

Infosys and Anthropic's partnership is betting that enterprise AI's future is agents, not chat. Here's why that matters:

For enterprises, agents are more valuable than chat because they reduce human-in-the-loop overhead. A human asking ChatGPT questions still requires humans to review answers, validate results, and execute decisions. An agent does all three if it's given the right permissions and guardrails.

For IT services firms, agents are more valuable than chat because they create recurring revenue. Installing an agent that handles your expense reconciliation every month means monthly fees. Selling companies a ChatGPT license is a one-time conversation.

For Anthropic, agents are more valuable than chat because they create a higher cost of switching. Once an enterprise deploys a Claude-powered agent handling their supply chain management, switching to a competitor's model requires retraining the agent, migrating workflows, and re-validating results. High switching costs = stable revenue.

The question investors and enterprises are asking is: how capable do agents need to be before they create significant economic disruption? The answer is "less capable than you think." An agent that handles 60% of a process correctly and flags the remaining 40% for human review is still massively valuable. It cuts human workload by more than half. Most enterprises would celebrate that.
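The arithmetic is worth spelling out, because flagged cases are not the only human cost: someone usually spot-checks a sample of the automated ones too. This Python sketch uses invented volumes (1,000 cases at 12 minutes each) and an assumed 10% spot-check rate at 3 minutes per check.

```python
def human_hours(cases: int, minutes_per_case: float, automated_share: float,
                spot_check_rate: float = 0.0, minutes_per_check: float = 0.0) -> float:
    """Human hours left once an agent handles `automated_share` of cases.

    Flagged cases still take full handling time; a sample of automated
    cases is spot-checked at a (usually much smaller) per-case cost.
    """
    flagged = cases * (1 - automated_share)
    checked = cases * automated_share * spot_check_rate
    return (flagged * minutes_per_case + checked * minutes_per_check) / 60


# Illustrative numbers only: 1,000 cases, 12 minutes each, 10% spot checks at 3 minutes.
baseline = human_hours(1_000, 12, automated_share=0.0)
with_agent = human_hours(1_000, 12, automated_share=0.6,
                         spot_check_rate=0.10, minutes_per_check=3)
print(round(baseline, 1), round(with_agent, 1))            # 200.0 83.0
print(f"{1 - with_agent / baseline:.1%} less human time")  # 58.5% less human time
```

Under these assumptions, a 60/40 split yields a reduction of somewhat less than 60% once review overhead is counted. That is still comfortably more than half.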

This is where Infosys' domain expertise becomes critical. Banking compliance agents need to understand KYC (Know Your Customer) regulations. Healthcare agents need to understand HIPAA. Manufacturing agents need to understand lean processes and quality control. Anthropic can build the foundation, but Infosys knows how to make it industry-specific, regulation-compliant, and integrated with legacy systems that enterprises can't simply rip out and replace.


Why AI Tools Terrify IT Services Companies (And What They're Doing About It)

Let's be direct about something that's discussed in whispers but rarely stated plainly: AI is an existential threat to the IT services business model.

The model has been remarkably stable for 30+ years. A company in the US or Western Europe has a software development need or a business process that requires customization. They hire an Indian IT services company to provide dedicated engineers. Those engineers work remotely, are paid 30-50% less than Western labor, and deliver the same quality. The IT services company earns healthy margins (Infosys and TCS have historically reported operating margins above 20%) and grows revenue by hiring more people to staff more projects.

This works until AI can do the work better, faster, and without needing to hire people at all.

When OpenAI released ChatGPT in November 2022, most IT executives' first thought was "this will reduce our demand." By 2024, the fear was widespread. By 2025, the fear had become reality. Hiring growth at major IT services firms slowed. Stock prices remained under pressure. Investors asked increasingly difficult questions: "If AI can code, why are you hiring coders?"

Infosys, TCS, and other IT services firms faced a choice:

- Ignore the threat - Hope that regulation or economic factors would slow AI adoption (obviously not viable)

- Compete with AI - Try to become better coders than Claude and GPT-4, in which case they'd still lose because AI is faster and cheaper

- Become AI integrators - Shift from being the labor that builds solutions to being the firm that deploys AI solutions for enterprises

Infosys chose option three. The Anthropic partnership is essentially a public declaration of that strategy. We're not going to compete with Claude. We're going to sell Claude to enterprises that can't deploy it themselves.

This requires a different skillset than traditional IT services. Your best people now need to be:

- ML engineers who understand how to fine-tune models

- Data engineers who can structure enterprise data to feed AI systems

- Risk specialists who understand what can go wrong when you deploy AI in regulated industries

- Change management experts who help enterprises adapt to AI-driven workflows

These are harder roles to staff than traditional developers. They're higher-cost. They're in shorter supply. The margin profiles look different.

But here's the strategic insight: margins might be lower, but revenue can be higher. A developer who builds a custom solution for one client one time generates maybe $500K in revenue. An AI agent that processes customer service inquiries for a bank every single month, month after month, year after year, generates millions in cumulative revenue. And once deployed, adding new use cases is relatively cheap—you're mostly configuring Claude differently, not rewriting code from scratch.

TCS, Infosys' largest competitor, is pursuing a similar strategy with OpenAI. Both companies have essentially hedged their bets by partnering with the largest AI labs. They're not trying to build their own LLMs. They're trying to own the "last mile" of enterprise AI deployment: the expensive, complex, regulated part that AI labs don't want to deal with.

Will it work? Maybe. The partnership at least buys time and gives investors a narrative about adaptation rather than disruption. But it doesn't solve the underlying problem: if enterprises can deploy AI more efficiently with fewer consultants, IT services firms will still face headwinds regardless of which AI model they're using.

The Regulatory Moat: Why Established Firms Have an Advantage

Here's something that often gets overlooked in AI discussions: regulation is boring and unsexy and incredibly powerful.

Anthropic could, theoretically, hire a team of regulatory experts and sell Claude directly to banks and insurance companies. They could probably do a decent job of it. But they'd be competing against firms like Infosys that have spent decades understanding how regulatory bodies think, what documentation they require, and how to pass audits.

Consider what happens when a bank wants to deploy an AI system to make lending decisions. This isn't theoretical—multiple banks have already done this or are planning to. The regulatory requirements are daunting:

- Explainability: The bank must be able to explain to regulators WHY the AI denied a loan application. "The model decided" is not an acceptable explanation.

- Auditability: Every decision must be logged and auditable. The bank must be able to reproduce the AI's decision-making on any historical case.

- Fairness testing: The bank must prove that the AI doesn't discriminate based on protected characteristics (race, gender, age, etc.) even indirectly.

- Model governance: The bank must have documented processes for how the model gets updated, who approves updates, and how they validate improvements.

- Fallback procedures: If the AI fails, the bank must have manual processes to continue operations.

- Vendor management: If Anthropic is providing the model, the bank must manage vendor risk, including what happens if Anthropic goes out of business or decides to change terms.
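The explainability and auditability requirements translate fairly directly into engineering. Below is a minimal Python sketch of an append-only decision log, assuming a JSON Lines file as storage. The field names, the placeholder model and prompt version strings, and the rule that a denial must carry reasons are illustrative choices, not any regulator's or vendor's schema.

```python
import hashlib
import json
import os
import tempfile
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class DecisionRecord:
    # Illustrative schema, not a regulatory standard.
    application_id: str
    model_version: str            # exact model identifier, so the decision can be replayed
    prompt_version: str           # version of the instructions and guardrails in force
    inputs: dict                  # the data the model actually saw
    decision: str                 # e.g. "approve", "deny", "refer"
    reasons: List[str]            # plain-language reasons an examiner can read
    reviewed_by: Optional[str] = None  # filled in when a human signs off
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def input_hash(self) -> str:
        # Canonical JSON (sorted keys) so identical inputs always hash identically.
        canonical = json.dumps(self.inputs, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def append_record(path: str, record: DecisionRecord) -> None:
    """Append one JSON line per decision; earlier lines are never rewritten."""
    if record.decision == "deny" and not record.reasons:
        raise ValueError("a denial must carry at least one stated reason")
    entry = asdict(record)
    entry["input_hash"] = record.input_hash()
    with open(path, "a", encoding="utf-8") as log:
        log.write(json.dumps(entry, sort_keys=True) + "\n")


log_path = os.path.join(tempfile.mkdtemp(), "decisions.jsonl")
append_record(log_path, DecisionRecord(
    application_id="APP-42",
    model_version="example-model-id",   # placeholder, not a real model name
    prompt_version="lending-rules-v3",  # placeholder
    inputs={"income": 52000, "debt_to_income": 0.41},
    decision="refer",
    reasons=["Debt-to-income ratio above the 0.40 policy threshold"],
))
```

Hashing a canonical form of the inputs lets an auditor confirm that a replayed decision used exactly the same data. Pinning `model_version` matters because the underlying model can change under the bank's feet.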

Building all of this is not trivial. It's not something you can improvise. It requires expertise in:

- Banking regulations (Basel III internationally; fair lending laws and FCRA in the US; PSD2 and GDPR in Europe; among others)

- AI-specific regulation (which is still being written but increasingly specific)

- Audit and compliance

- Quality assurance for AI systems (which is different from traditional software QA)

Infosys has teams of people who do this work today, for different purposes. They've already navigated the labyrinth. Anthropic would need to either build these teams or partner with someone who has them. They chose partnership.

This regulatory moat extends across industries:

Healthcare: HIPAA compliance, FDA approval processes, medical device regulations. Infosys has experience. Anthropic doesn't.

Telecommunications: Regulatory filing requirements, network reliability standards, data localization rules. Infosys has been in this market for decades. Anthropic is new.

Manufacturing: Quality control standards, supply chain regulations, export controls. Infosys has domain expertise. Anthropic has never shipped a physical product.

The partnership essentially lets both companies play to their strengths: Anthropic focuses on model capability, Infosys focuses on regulatory compliance and integration. The customer relationship goes to Infosys (they know the client, they have the relationships), but the underlying intelligence comes from Anthropic.

For Anthropic, this is a concession—they're not going to be the direct vendor to the enterprise. But it's a smart trade because it gets them into markets where they couldn't effectively operate alone. They get market access without having to build compliance teams.

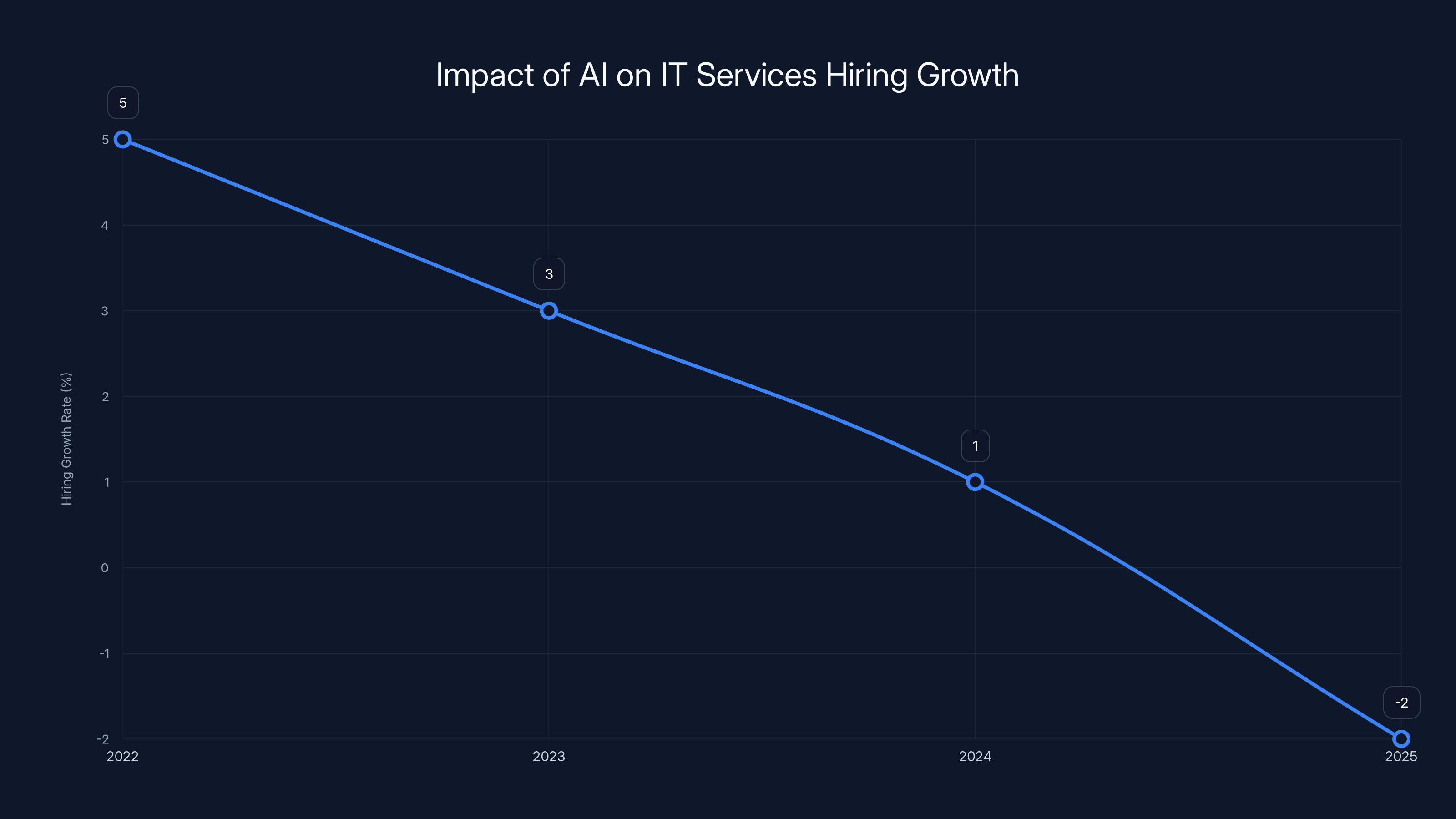

The hiring growth rate at major IT services firms is projected to decline from 5% in 2022 to -2% by 2025 due to the rise of AI tools. Estimated data.

Measuring Success: The Revenue Model for Enterprise AI Agents

Abstract partnerships are fine, but what actually drives value? How does Infosys make money from this? How does Anthropic benefit beyond abstract market access?

Let's think through the economics.

Scenario One: Dedicated AI Agent Deployment

A bank approaches Infosys and says: "We want an AI agent to handle know-your-customer (KYC) verification for new accounts." Here's how the engagement might be structured:

- Discovery phase (2-3 months): Infosys consultants understand the bank's current KYC process, data systems, and regulatory requirements. Cost: $500K.

- Design phase (1 month): Infosys designs the AI agent, including what data it will access, what decisions it will make, and what cases get escalated to humans. Cost: $100K.

- Build phase (2-3 months): Engineers implement the agent using Claude, integrate with the bank's systems, and build monitoring and governance layers. Cost: $500K.

- Testing and compliance (2 months): QA and compliance teams validate the agent works correctly, audit the decisions, and prepare documentation for regulators. Cost: $400K.

- Deployment and training (1 month): The agent goes live, staff are trained, and Infosys sets up 24/7 support. Cost: $100K.

Total project cost: roughly
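A quick tally of the phases helps anchor the economics. The Python below adds up the durations and costs exactly as they appear in the phase list, taking each cost figure at face value in US dollars.

```python
# Phase estimates taken at face value from the list: (phase, (min_months, max_months), cost_usd)
PHASES = [
    ("Discovery", (2, 3), 500_000),
    ("Design", (1, 1), 100_000),
    ("Build", (2, 3), 500_000),
    ("Testing and compliance", (2, 2), 400_000),
    ("Deployment and training", (1, 1), 100_000),
]

total_cost = sum(cost for _, _, cost in PHASES)
min_months = sum(months[0] for _, months, _ in PHASES)
max_months = sum(months[1] for _, months, _ in PHASES)
discovery_and_build = sum(cost for name, _, cost in PHASES if name in ("Discovery", "Build"))

print(f"${total_cost:,} over {min_months}-{max_months} months")
# $1,600,000 over 8-10 months
print(f"Discovery + Build share: {discovery_and_build / total_cost:.1%}")
# Discovery + Build share: 62.5%
```

On these numbers, Discovery and Build together account for 62.5% of the project cost. That matches the earlier observation that those two phases carry most of the spend.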

But here's where it gets interesting. Once deployed, the agent requires ongoing maintenance:

- Monitoring: Infosys watches for degradation in performance, data quality issues, and regulatory changes. Cost: $10K per month.

- Model updates: When Anthropic releases Claude updates, Infosys evaluates whether the new version should be deployed, tests it, and performs compliance validation. Cost: $20K per update, maybe 4-6 per year.

- Operational support: Handling edge cases, investigating why an agent made a particular decision, updating guardrails. Cost: $25K per month.

- Retraining: As new regulations emerge or business rules change, the agent needs retraining. Cost: $40K per change.

Total annual ongoing revenue:
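The same kind of tally works for the ongoing costs. The maintenance list doesn't say how many rule or regulation changes a typical year brings, so the sketch below treats that as an input and assumes two per year purely for illustration. All other figures come from the list, read as US dollars.

```python
MONITORING_PER_MONTH = 10_000
COST_PER_MODEL_UPDATE = 20_000
SUPPORT_PER_MONTH = 25_000
COST_PER_RETRAINING = 40_000


def annual_run_cost(model_updates: int, retraining_changes: int) -> int:
    """Yearly maintenance cost for one deployed agent, in US dollars."""
    return (12 * MONITORING_PER_MONTH
            + model_updates * COST_PER_MODEL_UPDATE
            + 12 * SUPPORT_PER_MONTH
            + retraining_changes * COST_PER_RETRAINING)


# Assumption: 2 retraining changes per year (not stated in the text).
low = annual_run_cost(model_updates=4, retraining_changes=2)
high = annual_run_cost(model_updates=6, retraining_changes=2)
print(f"${low:,} to ${high:,} per agent per year")
# $580,000 to $620,000 per agent per year
```

Operational support dominates. At $300,000 a year, it is roughly half of the total, so the staffing model matters more than the model-update cadence.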

For a large bank, you might deploy 5-10 agents across different functions (KYC, transaction monitoring, fraud detection, lending decisions, etc.). That's

Scale this across a portfolio of enterprise clients, and the revenue becomes substantial. If Infosys lands 10 major enterprise clients with 5 agents each, that's 50 agents generating

Scenario Two: Managed AI Operations Center

Larger enterprises might take a different approach. Instead of deploying specific agents, they engage Infosys to set up a "managed AI operations center"—essentially a team of Infosys specialists who work alongside the enterprise to continuously identify opportunities for AI agents, build them, deploy them, and optimize them.

This looks like:

- 2-3 senior AI engineers (managed by Infosys) working full-time at the client

- 1 compliance/governance specialist

- 1 data engineer

- Support from Infosys' core engineering teams

Cost:

The beauty of this model is stickiness. The specialists are integrated into the client's organization. They understand the business. They've built relationships. The client would face enormous switching costs if they tried to replace them.

For Infosys, this is the dream engagement: high-revenue, high-margin, long-term, low-risk.

What Anthropic Gets Out of This

The deal works for Anthropic too. Infosys commits to using Claude models in its Topaz platform. Each deployed agent makes API calls to Anthropic's infrastructure. Those API calls generate revenue, and Anthropic charges somewhere between

Let's model it out. An AI agent making 1,000 decisions per day (a moderately busy agent) might use:

- 200 input tokens per decision = 200,000 tokens/day

- At the per-token rate assumed here, that comes to about $2/day in API costs

- 365 days/year = $730/year in API revenue per agent
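The per-token rate isn't stated in these bullets, but the $730/year result implies $2 a day for 200,000 tokens. That works out to about $0.01 per 1,000 input tokens, and the sketch below uses it as an assumed rate. Real Claude pricing varies by model and charges input and output tokens at different rates. The function therefore takes output tokens as a separate, optional term, left at zero here as in the text.

```python
def annual_api_cost(decisions_per_day: int, input_tokens: int, usd_per_1k_input: float,
                    output_tokens: int = 0, usd_per_1k_output: float = 0.0,
                    days: int = 365) -> float:
    """Yearly API spend for one agent, in US dollars."""
    daily = decisions_per_day * (input_tokens / 1000 * usd_per_1k_input
                                 + output_tokens / 1000 * usd_per_1k_output)
    return daily * days


# Assumed rate of $0.01 per 1K input tokens, implied by the $2/day figure.
per_agent = annual_api_cost(1_000, 200, usd_per_1k_input=0.01)
print(round(per_agent, 2))        # 730.0
print(round(per_agent * 1_000))   # 730000
```

Output tokens are typically priced higher than input tokens. An agent that writes long responses would therefore cost more than this input-only estimate.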

That sounds small until you scale it. If Infosys deploys 1,000 agents, that's about $730,000 a year in API revenue.

But more importantly, the partnership legitimizes Claude for enterprise use. When Infosys engineers recommend Claude, it's not a vendor pitch—it's an engineer who's tested it extensively in production. That recommendation carries weight.

For Anthropic, legitimacy in enterprise markets is worth far more than the API revenue itself.

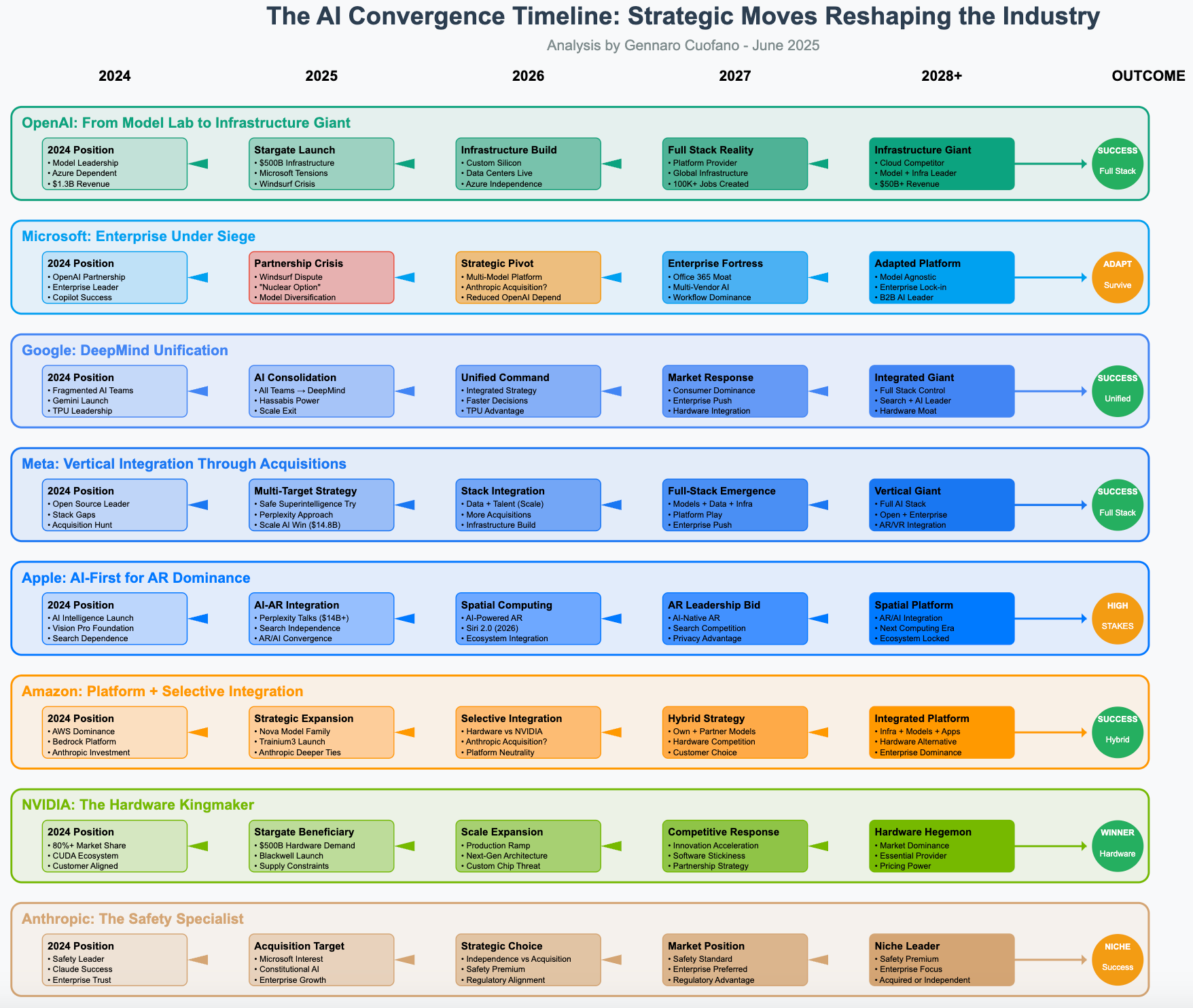

The Global Competitive Landscape: Who's Building AI Agents, and Who's Winning

The Infosys-Anthropic partnership didn't emerge in a vacuum. It's part of a larger industry reshuffling where AI capabilities, cloud platforms, and enterprise services are consolidating.

Let's map the landscape.

Tier 1: The Pure AI Companies

OpenAI, Anthropic, Google DeepMind, and Meta are the foundational model companies. Some of them sell directly to enterprises, but much of their reach comes through APIs and platforms that others build on top of.

Anthropic has tried to differentiate with enterprise features like Claude's 200K-token context window and strong reasoning abilities. OpenAI is focusing on ease of use and ecosystem lock-in through GPT-4 and fine-tuning.

Tier 2: The IT Services Giants

Infosys, Tata Consultancy Services, HCL Technologies, and Accenture are the enterprises' trusted advisors. They have relationships, they have compliance expertise, they have thousands of consultants who can implement solutions.

TCS went with OpenAI for its AI partnerships, while Infosys is now aligned with Anthropic. HCL has partnerships with OpenAI and others. Accenture is positioning itself as a neutral implementer, willing to deploy any model.

Tier 3: The Cloud Platforms

Microsoft Azure, AWS, and Google Cloud are building their own AI capabilities and trying to own the "hosting and infrastructure" layer. Microsoft has invested heavily in OpenAI. AWS is building its own models while also offering access to third-party models, including Anthropic's Claude, through Amazon Bedrock.

Tier 4: The Specialized AI Companies

Salesforce, ServiceNow, HubSpot, and others are embedding AI into their specific applications. Salesforce has Einstein. ServiceNow has its own AI capabilities. These companies are betting that enterprises prefer AI embedded in the tools they already use rather than having to integrate multiple vendors.

The competitive dynamics are becoming clear. Nobody is winning across all layers. Instead, we're seeing specialization:

- OpenAI dominates Tier 1 (pure AI) but is struggling to execute Tier 2 work (enterprise implementation)

- Infosys and TCS are winning Tier 2 (enterprise implementation) but don't have Tier 1 capabilities, so they partner with Anthropic or OpenAI

- Azure and AWS are winning Tier 3 (infrastructure) and trying to expand into Tiers 1 and 2 with limited success

- Salesforce and ServiceNow are winning Tier 4 (embedded AI) by leveraging their existing customer relationships

The Infosys-Anthropic partnership is essentially two players saying: "We're going to dominate Tiers 1 and 2 together." Anthropic does the hard thinking (building and improving Claude). Infosys does the hard selling and implementation work (understanding enterprise needs, navigating compliance, managing consultants).

This is historically how enterprise software works. The application vendors (like Salesforce) focus on features. The implementation partners (like Accenture or Infosys) focus on making those features work in customer environments. The partnership is natural because they're playing different games.

AI agents significantly outperform traditional AI in understanding goals, planning, taking actions, iterating, and completing tasks autonomously. Estimated data.

The India Advantage: Why Anthropic Is Betting on Bengaluru

Anthropic opened its first India office in Bengaluru at roughly the same time as the Infosys partnership announcement. That's not a coincidence. India is becoming the center of gravity for AI services, and not for the reasons people typically think.

Yes, India has talent density: Bengaluru has one of the largest concentrations of software engineers in the world. Yes, cost is favorable compared to the US. But those are commodities; any company can hire Indian engineers remotely.

What's special about India is infrastructure understanding and regulatory knowledge. India has:

- A massive fintech market - India leads the world in real-time digital payment transactions, and NPCI's UPI processes hundreds of millions of transactions a day. Indian fintech experts understand payment flows, fraud detection, and compliance in ways that Western engineers sometimes don't.

- A sophisticated telecom regulatory environment - India's Department of Telecommunications (DoT) is aggressive and sophisticated about spectrum, data localization, and network governance. Telecom companies that operate in India have learned to navigate complexity.

- A booming startup ecosystem - Bengaluru has thousands of startups working on AI, data, and infrastructure. The talent density means that Anthropic can hire people who've already been working on distributed systems, model optimization, and edge computing.

- Manufacturing experience - India's manufacturing sector, while smaller than China's, is growing and has similar complexity around supply chain, quality control, and regulatory navigation.

When Anthropic says India now represents 6% of Claude usage (second only to the US), that's significant. That's not just Western companies using Anthropic's API. That's Indian developers, Indian companies, and Indian enterprises actively using Claude to build products.

For Anthropic, setting up an India office means:

- Getting closer to a growing market of users

- Recruiting talent for building and improving Claude

- Building relationships with enterprises and IT services firms like Infosys

- Understanding how non-English-language markets use LLMs

The geopolitical implications are also notable. US-based AI companies are increasingly aware that US export controls and political tension with China or other countries could affect their business. Having operations in India provides geographic diversification and reduces political risk.

What Could Go Wrong: Risks in the Infosys-Anthropic Partnership

Partnerships look good in press releases. The underlying reality is often messier. Let's identify the risks that could derail this initiative or limit its impact.

Risk 1: Model Degradation Under Enterprise Load

Claude is impressive in demos. But what happens when an Infosys client puts an AI agent in production and it processes 1 million transactions per day? What happens when edge cases emerge that weren't anticipated?

Enterprise deployments are fundamentally different from research demos. In a demo, you control inputs carefully. In production, users do weird things. They find edge cases. They ask the system to handle scenarios that weren't in the training data.

If Claude starts making mistakes under load, Infosys takes the blame with the customer, not Anthropic. This creates tension in the partnership: Infosys is incentivized to limit Claude's responsibility and add human oversight; Anthropic is incentivized to show that Claude can handle autonomy.

Risk 2: The Autonomous Agent Dream Might Be Harder Than Expected

Building an agent that truly operates autonomously (without human review) in regulated environments is hard. Regulators don't like autonomous decision-making for high-stakes decisions. They want explainability, auditability, human accountability.

This means most deployed "agents" will actually be semi-autonomous: they handle 70% of decisions, they escalate 30% to humans. But escalation adds friction. It means you can't hit the ROI targets you projected. Infosys clients might be disappointed.

Risk 3: Competitive Pressure from Other Partnerships

TCS has partnered with OpenAI. Accenture is partnering with multiple AI labs. The market for enterprise AI implementation is not winner-take-all. Infosys might build strong capabilities with Anthropic, only to find that customers prefer GPT-4 or later versions, or decide to switch implementations.

Risk 4: Regulatory Backlash

Governments are increasingly scrutinizing AI deployment in regulated industries. India's government is particularly focused on controlling foreign AI companies. If India decides that all AI systems used in Indian companies must use Indian AI models, this partnership becomes much less valuable.

Alternatively, if the US government decides to restrict AI exports or partnerships with Indian companies, the whole arrangement collapses.

Risk 5: Implementation Complexity Costs More Than Anticipated

The real money in this partnership depends on Infosys being able to implement Claude-based systems cost-effectively. But enterprise implementation is genuinely hard. If the average project runs 50% over budget, Infosys can't hit margin targets, and the partnership becomes unprofitable at scale.
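The arithmetic behind this risk is easy to sketch. Assume a fixed-price engagement priced for the 55% gross margin used in this article's financial projections (the fixed-price structure is our assumption). Any cost overrun then comes straight out of margin:

```python
def realized_margin(planned_margin: float, overrun: float) -> float:
    """Gross margin on a fixed-price project after a cost overrun.

    planned_margin: margin the price was set for, e.g. 0.55 for 55%.
    overrun: fractional cost overrun, e.g. 0.5 for 50% over budget.
    """
    planned_cost_share = 1.0 - planned_margin  # cost as a share of the fixed price
    actual_cost_share = planned_cost_share * (1.0 + overrun)
    return 1.0 - actual_cost_share


def breakeven_overrun(planned_margin: float) -> float:
    """Overrun at which a fixed-price project stops making money."""
    return 1.0 / (1.0 - planned_margin) - 1.0


if __name__ == "__main__":
    for overrun in (0.0, 0.25, 0.5):
        print(f"{overrun:.0%} over budget -> {realized_margin(0.55, overrun):.1%} margin")
    print(f"break-even overrun: {breakeven_overrun(0.55):.0%}")
```

Under these assumptions, a 50% overrun cuts gross margin from 55% to 32.5%, and the project only loses money once costs run roughly 122% over budget.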

Risk 6: Claude Gets Commoditized

Five years from now, several companies may offer models equivalent to or better than Claude. If Claude is no longer a differentiator, the partnership's competitive advantage erodes, and Infosys and Anthropic would both be offering generic AI implementation.

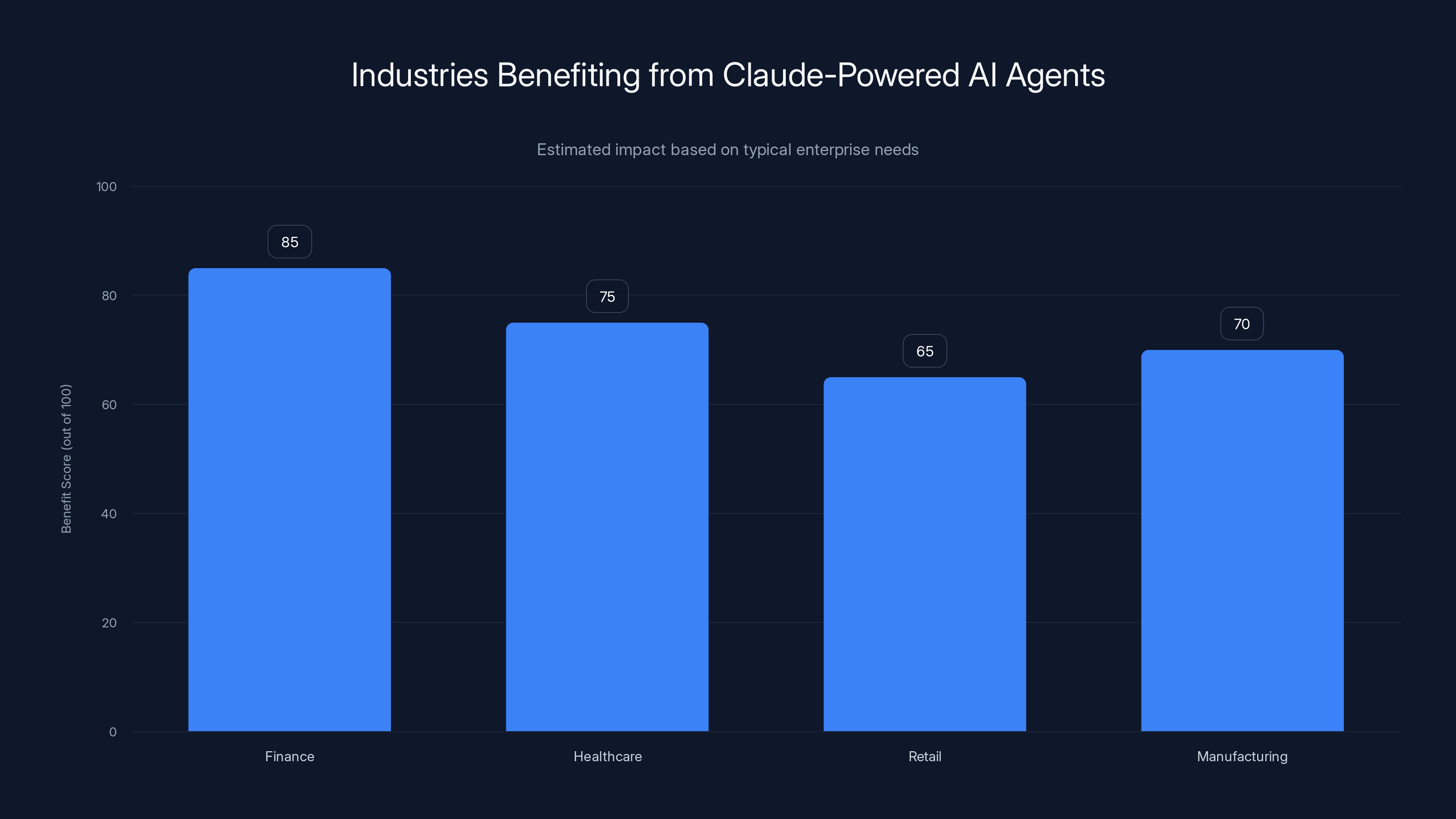

Finance is expected to benefit the most from Claude-powered AI agents because of its heavy reliance on complex reasoning and compliance tasks. Estimated data.

The Technology Stack: What Does Claude Bring to the Table?

We've talked a lot about the partnership strategically, but let's get specific about the technical advantages Anthropic's Claude brings to enterprise AI agents.

Long Context Windows

Claude 3 and later models offer a 200,000-token context window, roughly 150,000 words or more than 500 pages of text. This is critical for enterprise use cases because it means:

- You can load an entire contract into Claude and ask it to analyze the whole thing

- You can provide 10+ documents of context to help Claude understand a complex situation

- You can maintain longer conversation histories without losing context

Compare this to OpenAI's GPT-4 Turbo and GPT-4o, which have 128,000-token windows. The difference isn't massive, but it matters. For enterprise contracts and documents, Claude's context window is comfortably sufficient.
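A rough way to check whether a set of documents will fit is to estimate tokens from word counts. The sketch below uses about 0.75 words per token, the ratio behind the word-count figures quoted for Claude's context window; real token counts depend on the tokenizer and the language of the text, so treat this as a planning estimate only. The `reserve_for_output` parameter and the 200,000-token default are assumptions to adjust for the model you actually use.

```python
WORDS_PER_TOKEN = 0.75  # rough rule of thumb for English prose; varies by tokenizer


def estimate_tokens(text: str) -> int:
    """Very rough token estimate from a whitespace word count."""
    words = len(text.split())
    return round(words / WORDS_PER_TOKEN)


def fits_in_context(documents: list[str],
                    context_window: int = 200_000,
                    reserve_for_output: int = 8_000) -> bool:
    """True if the estimated prompt size still leaves room for the model's reply."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserve_for_output <= context_window


if __name__ == "__main__":
    contract = "word " * 60_000        # stand-in for a ~60,000-word contract
    exhibits = ["word " * 30_000] * 3  # three ~30,000-word supporting documents
    print(estimate_tokens(contract))
    print(fits_in_context([contract]))
    print(fits_in_context([contract, *exhibits]))
```

In this example the contract alone fits comfortably, but adding the three exhibits pushes the estimate past the window, which is the point where you would split the work or retrieve only the relevant sections.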

Strong Reasoning Capabilities

Claude performs notably well on complex reasoning tasks: logic puzzles, multi-step math, and code debugging. In Anthropic's own evaluations, Claude scores highly on benchmarks like LSAT, GRE, and programming challenges.

For enterprise agents, this matters because many enterprise workflows involve reasoning:

- A loan underwriter needs to reason about credit risk based on financial documents

- A supply chain manager needs to reason about optimal routes and inventory levels

- A compliance officer needs to reason about regulatory risk

These reasoning strengths can make Claude better suited to such tasks than models tuned mainly for conversational chat.

Code Generation Capability

Anthropic has been heavily promoting Claude Code, an agentic coding tool that can write, run, and debug code from a developer's terminal. This is powerful for enterprise scenarios like:

- Data transformation and cleaning

- Report generation from raw data

- Integration with legacy systems

- Building one-off tools

Infosys has indicated it's using Claude Code internally for these exact purposes. If that internal use is already running in production, it is a significant endorsement.
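To give a sense of what "data transformation and cleaning" looks like in practice, here is the kind of small, reviewable script a coding agent might be asked to produce when normalizing records exported from a legacy system. The field names, date formats, and currency strings are hypothetical. The point is that generated code like this is short enough for an engineer to read and test before it touches production data.

```python
import csv
import io
from datetime import datetime
from decimal import Decimal

# Hypothetical date formats seen in a legacy export.
# Assumes day-first dates, as in the imagined source system.
DATE_FORMATS = ("%d/%m/%Y", "%Y-%m-%d", "%d-%b-%Y")


def parse_date(raw: str) -> str:
    """Normalize a date string to ISO 8601 (YYYY-MM-DD)."""
    raw = raw.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {raw!r}")


def parse_amount(raw: str) -> Decimal:
    """Turn strings like 'INR 1,25,000.50' or '$1,200' into a Decimal."""
    cleaned = raw.replace("INR", "").replace("$", "").replace(",", "").strip()
    return Decimal(cleaned)


def clean_rows(csv_text: str) -> list[dict]:
    """Parse a legacy CSV export and normalize its ID, date, and amount columns."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        {
            "invoice_id": row["invoice_id"].strip(),
            "date": parse_date(row["date"]),
            "amount": parse_amount(row["amount"]),
        }
        for row in reader
    ]


if __name__ == "__main__":
    legacy = (
        "invoice_id,date,amount\n"
        " A-17 ,03/04/2024,\"INR 1,25,000.50\"\n"
        "A-18,2024-04-05,$1200\n"
        "A-19,07-Apr-2024,\"$1,200\"\n"
    )
    for record in clean_rows(legacy):
        print(record)
```

Using `Decimal` rather than `float` keeps currency amounts exact, which matters once the cleaned data feeds financial reports.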

Constitutional AI and Safety Alignment

Anthropic developed a training approach called Constitutional AI, which trains models against a written set of principles to be helpful, harmless, and honest. Anthropic argues that, in practice, this makes Claude somewhat more reliable than other models at not making things up (hallucinating).

For enterprise use, hallucination is a serious problem. If an AI agent confidently misstates contract terms or regulatory requirements, that's a legal disaster. Anthropic's training approach is designed to reduce this, though it doesn't eliminate it entirely.
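Training alone does not remove the need for application-level checks. One common guardrail, sketched below under our own assumptions, is to require the agent to back every claim with a verbatim quote from the source document and to escalate any answer whose quotes cannot be found there. The `Claim` structure and the whitespace-normalizing match are illustrative choices, not an Anthropic or Infosys API.

```python
import re
from dataclasses import dataclass


@dataclass
class Claim:
    statement: str  # the agent's assertion, e.g. about a contract term
    quote: str      # verbatim supporting text the agent says it relied on


def _normalize(text: str) -> str:
    """Collapse whitespace and lowercase so line breaks don't cause false misses."""
    return re.sub(r"\s+", " ", text).strip().lower()


def unsupported_claims(claims: list[Claim], source: str) -> list[Claim]:
    """Return claims whose quote is empty or does not appear in the source."""
    haystack = _normalize(source)
    return [
        c for c in claims
        if not c.quote.strip() or _normalize(c.quote) not in haystack
    ]


if __name__ == "__main__":
    contract = """Either party may terminate this Agreement
    with ninety (90) days' written notice."""
    claims = [
        Claim("Termination needs 90 days' notice",
              "terminate this Agreement with ninety (90) days' written notice"),
        Claim("Termination needs 30 days' notice",
              "thirty (30) days' written notice"),
    ]
    for c in unsupported_claims(claims, contract):
        print("Escalate for review:", c.statement)
```

A passing check only proves the quote exists, not that the model interpreted it correctly, so high-stakes answers still need human review.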

Capability Across Modalities

Claude can process text and images (Claude 3 and later models can analyze charts, diagrams, and documents supplied as images). For enterprise workflows, this is valuable:

- Review scanned contracts in image format

- Analyze screenshots or PDFs of legacy systems

- Extract data from handwritten forms

This multimodal capability makes Claude more flexible than text-only models.
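As an illustration, here is how a scanned contract page could be packaged for Claude using the image content-block format of Anthropic's Messages API (a base64 `source` with a `media_type`). The sketch only builds the JSON request body and does not send it. The model ID is a placeholder to replace with one your account can access, and the prompt wording is our own.

```python
import base64
import json

MODEL_ID = "your-claude-model-id"  # placeholder: substitute a real model ID
SUPPORTED_TYPES = {"image/jpeg", "image/png", "image/gif", "image/webp"}


def build_image_request(image_bytes: bytes, media_type: str, question: str,
                        max_tokens: int = 1024) -> dict:
    """Build a Messages API request body pairing one image with a text prompt."""
    if media_type not in SUPPORTED_TYPES:
        raise ValueError(f"unsupported media type: {media_type}")
    return {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "image",
                        "source": {
                            "type": "base64",
                            "media_type": media_type,
                            "data": base64.b64encode(image_bytes).decode("ascii"),
                        },
                    },
                    {"type": "text", "text": question},
                ],
            }
        ],
    }


if __name__ == "__main__":
    fake_scan = b"\x89PNG\r\n\x1a\n"  # stand-in bytes; read a real scan in practice
    body = build_image_request(
        fake_scan, "image/png",
        "List the parties, effective date, and termination clause on this contract page.",
    )
    print(json.dumps(body, indent=2)[:300])
```

In practice the same `messages` structure can be passed to the official Python SDK's `client.messages.create`, or the whole body sent to the `/v1/messages` endpoint.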

The combination of these capabilities makes Claude a strong choice for enterprise AI agents. It's not perfect, and it's not the only viable option, but it's a solid technical foundation for the Infosys partnership.

The Customer Acquisition Path: How Will Infosys Actually Sell This?

A great technology and partnership don't automatically translate to revenue. Infosys needs to actually get customers to buy this. How will they do it?

The Account Manager Approach

Infosys likely has existing relationships with hundreds of large enterprises. Account managers for those companies will begin mentioning AI agent capabilities in conversations. "By the way, we've just built the capability to deploy Claude-powered agents for your supply chain processes. Want to explore that?"

This is the traditional IT services playbook: account managers sell based on relationships, not necessarily on deep technical understanding. If an account manager can articulate a use case where an AI agent saves the customer significant money, the customer will engage.

The Proof-of-Concept Approach

For larger deals, Infosys will pitch a "proof of concept" (POC): a 2-3 month engagement where Infosys builds an AI agent for a specific process, deploys it, and measures the impact. If the POC is successful, it becomes a foundation for a larger contract.

POCs are lower-risk for customers (limited budget, limited scope) but they're also how Infosys builds case studies and evidence that the technology works.

The Built-in Approach

Infosys' Topaz AI platform is positioned as an integrated suite. Customers who are already buying Infosys' broader AI and cloud services might discover Claude agent capabilities bundled into their existing contracts. They don't have to buy anything new—they just get access to the new capability.

This is powerful because it doesn't require a separate sales cycle. It's just an extension of existing relationships.

The Vertical-Specific Approach

Infosys has deep expertise in specific industries: financial services, healthcare, telecoms, manufacturing. They'll likely take a vertical-specific approach, marketing Claude agents for specific industry use cases with pitches along these lines (the figures are illustrative):

- To banks: "Claude-powered KYC agents reduce onboarding time by 70%"

- To telecoms: "Claude-powered billing agents reduce customer service costs by 50%"

- To manufacturers: "Claude-powered quality control agents reduce defects by 30%"

Vertical-specific messaging is more credible than generic messaging because it shows you understand the customer's specific challenges.

Will this work? Probably. Infosys has the sales infrastructure, the customer relationships, and the credibility to sell enterprise software. The challenge is execution—actually delivering on the promises made by the sales team. If the first 10 deployments struggle, word will spread and the sales pipeline will slow.

Financial Projections: Can This Actually Be Valuable?

Let's do some math. If the Infosys-Anthropic partnership is real, what would meaningful success look like financially?

Assumptions:

- Infosys can land 50 major enterprise clients over 3 years who each deploy 3-5 Claude-based agents

- That's 150-250 agents in production

- Average annual revenue per agent (including implementation, maintenance, updates): $500K

- Average gross margin: 55%

Calculations:

- At 150 agents: $75M in annual revenue and $41.25M in gross profit

- At 250 agents: $125M in annual revenue and $68.75M in gross profit
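The same projection fits in a few lines of Python, which makes the assumptions easy to vary. The inputs are this article's own assumptions (150 to 250 agents, $500K average annual revenue per agent, 55% gross margin); nothing here is an Infosys forecast.

```python
REVENUE_PER_AGENT = 500_000  # USD per agent per year (article's assumption)
GROSS_MARGIN = 0.55          # article's assumption


def projection(agents: int,
               revenue_per_agent: float = REVENUE_PER_AGENT,
               gross_margin: float = GROSS_MARGIN) -> tuple[float, float]:
    """Return (annual revenue, annual gross profit) in USD."""
    revenue = agents * revenue_per_agent
    return revenue, revenue * gross_margin


if __name__ == "__main__":
    for n in (150, 250):
        revenue, profit = projection(n)
        print(f"{n} agents: ${revenue / 1e6:.0f}M revenue, ${profit / 1e6:.2f}M gross profit")
```

Changing `revenue_per_agent` or `gross_margin` shows how sensitive the result is to those two assumptions.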

For context, Infosys' total revenue in FY2024 was roughly

However, this revenue is higher-margin and more stable (recurring, not project-based) than traditional IT services. From an investor perspective, this is worth more than equivalent revenue from traditional consulting. If Infosys can show that AI services are 10-15% of revenue and growing at 20%+ annually, stock valuations could improve.

For Anthropic, the financial impact is harder to calculate but potentially significant. If Infosys deploys 250 agents, and each agent uses

More importantly, the partnership is a customer reference—the most valuable asset a startup can have. When Anthropic talks to other enterprise prospects, they can say: "Infosys is already deploying Claude in production at scale. Here's what's working."

That reference is worth far more than the API revenue.

The Talent and Capability Challenge: Can Infosys Actually Execute?

Here's the hard part: executing on this partnership requires a different skillset than Infosys traditionally has. Building a large-scale IT services business around AI agents is going to demand:

- AI Research Skills: People who understand how models work, their limitations, and how to evaluate them for specific use cases. Infosys historically hasn't needed these people.

- ML Engineering Skills: People who can build, train, and optimize ML systems. Again, not Infosys' historical strength.

- Governance and Risk Specialists: People who understand how to audit AI decisions, document model behavior, and demonstrate regulatory compliance. This is somewhat adjacent to Infosys' traditional compliance work, but AI-specific.

- Domain Experts: People who understand banking, telecoms, manufacturing, etc. Infosys has these, but they've traditionally been coders who understood the domain. They need to evolve into consultants who can advise on AI strategy.

- Change Management Experts: People who can help enterprises adapt to AI-driven workflows. This is new territory.

Building these teams takes time and costs money. Infosys can hire externally, but integrating external hires into a legacy organization is hard. They can train internally, but that requires investment upfront with payoff later.

More fundamentally, Infosys' organizational structure is built around project delivery. You sell a project, you assign a team, they deliver, the project ends. AI agents and agentic systems are more like products—they're ongoing, they evolve, they require continuous learning and adaptation.

Changing organizational structure is harder than changing strategy. Infosys will likely struggle with this transition, at least initially.

Looking Forward: What Happens Next?

The Infosys-Anthropic partnership is a starting point, not a finish line. Here's what we're likely to see unfold over the next 12-24 months:

In the Next 6 Months:

- Infosys will announce 3-5 proof-of-concept deployments with named customers

- The company will begin hiring ML engineers and AI specialists aggressively

- Infosys will release updates to Topaz AI highlighting Claude integration

- Anthropic will highlight Infosys as a key enterprise deployment partner

In the Next 12 Months:

- Infosys will report AI services revenue of $400M (up from $275M in Q3 2024)

- The company will begin offering certified training programs for "Claude Agent Deployment"

- Infosys will land 2-3 significant enterprise customers with multi-agent deployments

- Competitors (TCS with OpenAI, Accenture with multiple partners) will announce similar initiatives

In the Next 18-24 Months:

- AI agents will become a standard offering, not a differentiator

- Infosys will need to differentiate on industry-specific capabilities or integration excellence, not on Claude itself

- Anthropic will likely partner with additional consulting and IT services firms (Deloitte, EY, McKinsey) to broaden distribution

- The market for enterprise AI implementation will be in a growth phase, with multiple vendors competing

Strategic Inflection Points:

- If Deployed Agents Perform Well: The partnership becomes a virtuous cycle. Successful deployments lead to case studies, which lead to more customers, which lead to more expertise, which leads to better deployments.

- If Deployed Agents Struggle: Infosys faces reputational risk. Failed AI deployments are visible and costly. If the first few deployments underperform, word spreads and the sales pipeline slows.

- If Claude Gets Disrupted: If OpenAI or another competitor releases a significantly better model, the partnership becomes less valuable. Infosys would need to quickly evaluate switching models (which is expensive).

- If Regulation Becomes Hostile: If governments decide that AI deployment requires specific local controls or data governance, the entire partnership model might need to be rearchitected.

Conclusion: The Bigger Picture

The Infosys-Anthropic partnership is not about two companies making a strategic announcement. It's about the fundamental transformation of how enterprise software gets built, deployed, and scaled in the AI era.

For two decades, the enterprise software story was: cloud companies build infrastructure, application vendors build software, implementation partners help customers deploy that software. Salesforce built the CRM, Accenture helped companies deploy it.

The AI era is different. Now, there's a fourth layer: the AI model. And that model (Claude, GPT-4, or any other) can't be deployed effectively without domain expertise, regulatory knowledge, and integration work that sits between the model and the customer.

The Infosys-Anthropic partnership recognizes this reality and positions both companies to win in that middle layer. Anthropic gets distribution and market access. Infosys gets a way to stay relevant as AI changes what enterprise IT services means.

Will it work? Execution matters more than strategy, and enterprise software execution is hard. But the strategic positioning is sound. If Infosys can actually deliver on the promise of deploying reliable, compliant, valuable AI agents for enterprise customers, both companies win. If Infosys struggles with execution, they've invested in a capability that's valuable but difficult to monetize.

For enterprises watching this unfold, the message is clear: AI implementation is not something you do alone. You need vendors who understand both the technology and your business. Partnerships like this one—between AI labs and IT services firms—are going to be critical to enterprise AI success.

The question isn't whether partnerships like Infosys-Anthropic will succeed. The question is how much of the enterprise AI market they'll capture, and how quickly they can scale. Given the talent constraints, the execution challenges, and the competitive pressure from other partnerships, my assessment is that this partnership creates significant opportunity but faces real execution risk.

For investors: watch the proof-of-concept deployments closely. If Infosys can show 3-4 successful customer deployments in the next 18 months with measurable ROI, the partnership thesis becomes more credible. If deployments struggle or get delayed, it signals deeper execution problems.

For enterprises evaluating AI implementation partners: ask hard questions about their specific Claude experience, their team's composition, and their track record with similar deployments. Generic promises about AI agents matter less than specific evidence that a partner can deliver.

This partnership is a big deal. Not because the announcement itself is surprising, but because it signals how enterprise AI will actually get deployed: through trusted advisors who combine technology expertise with domain knowledge. The companies that can do both, at scale, will win the next decade of enterprise software.

FAQ

What is an AI agent?

An AI agent is an autonomous system that understands goals, creates plans to achieve those goals, takes actions against real systems and data, and iterates to solve problems without constant human direction. Unlike chatbots that respond to user input, agents operate independently and can complete multi-step workflows over extended periods. For example, an AI agent might autonomously process expense reports, validate them against policy, route them for approval, and post them to accounting systems.
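The expense-report example can be sketched as a tiny pipeline. In a real agent, a language model would decide which step to take next and call tools through an API; here each step is a plain Python function so the control flow is visible and testable. The policy limits, the approval threshold, and the `post_to_ledger` stub are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical expense policy: per-category limits in USD.
POLICY_LIMITS = {"meals": 75.0, "travel": 1_500.0, "lodging": 300.0}
MANAGER_APPROVAL_ABOVE = 500.0  # hypothetical threshold for human sign-off


@dataclass
class ExpenseReport:
    report_id: str
    category: str
    amount: float
    has_receipt: bool
    log: list[str] = field(default_factory=list)


def validate(report: ExpenseReport) -> list[str]:
    """Check the report against policy; return a list of violations."""
    problems = []
    limit = POLICY_LIMITS.get(report.category)
    if limit is None:
        problems.append(f"unknown category {report.category!r}")
    elif report.amount > limit:
        problems.append(f"{report.amount:.2f} exceeds {report.category} limit {limit:.2f}")
    if not report.has_receipt:
        problems.append("missing receipt")
    return problems


def post_to_ledger(report: ExpenseReport) -> None:
    """Stub standing in for a call into an accounting system."""
    report.log.append("posted to ledger")


def process(report: ExpenseReport) -> str:
    """Validate, route, and post one report; return its final status."""
    problems = validate(report)
    if problems:
        report.log.append("returned to employee: " + "; ".join(problems))
        return "rejected"
    if report.amount > MANAGER_APPROVAL_ABOVE:
        report.log.append("routed to manager for approval")
        return "pending_approval"  # a human decides; the agent stops here
    post_to_ledger(report)
    return "posted"


if __name__ == "__main__":
    reports = [
        ExpenseReport("r1", "meals", 42.0, True),
        ExpenseReport("r2", "travel", 900.0, True),
        ExpenseReport("r3", "lodging", 450.0, False),
    ]
    for r in reports:
        print(r.report_id, process(r), r.log)
```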

How does the Infosys-Anthropic partnership work?

Infosys integrates Anthropic's Claude models directly into its Topaz AI platform, making Claude the underlying intelligence powering AI agents that Infosys sells to enterprise customers. Infosys handles customer relationships, domain expertise, compliance requirements, and implementation work, while Anthropic provides the foundational Claude model and API access. Customers see a single integrated solution from Infosys rather than managing separate vendors.

Why did Infosys partner with Anthropic instead of OpenAI?

While Infosys didn't publicly disclose this reasoning, several factors likely influenced the decision. Claude is particularly strong at reasoning tasks and code generation, which are valuable for enterprise workflows. Anthropic was eager for distribution partnerships, while OpenAI was already aligned with TCS. Additionally, Anthropic's focus on safety and interpretability aligns with enterprise governance needs. The partnership also gives Infosys a differentiation point: TCS went with OpenAI, so Infosys gets to offer something different.

What industries will benefit most from Claude-powered AI agents?

Industries with heavy document processing, regulatory compliance requirements, and structured workflows stand to benefit most: financial services (loan underwriting, compliance review, fraud detection), healthcare (claim processing, clinical documentation), legal services (contract review, due diligence), insurance (underwriting, claims processing), and manufacturing (quality control, supply chain optimization). These industries have complex workflows, regulatory requirements, and processes that AI agents can meaningfully automate.

How much will AI agents cost enterprises?

Pricing varies based on complexity and deployment scope. Initial implementation typically costs

What are the risks of deploying AI agents in regulated industries?

The primary risks are explainability (regulators want to understand why the AI made a decision), accuracy (mistakes can be costly or dangerous), and auditability (all decisions must be logged and reproducible). AI agents must be designed with human oversight for high-stakes decisions, proper governance frameworks, and clear escalation paths. Infosys' role is specifically to manage these risks through compliance expertise and governance processes.
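Auditability is the most mechanical of these requirements. One common pattern, sketched here with Python's standard library under our own assumptions about the record fields, is an append-only decision log in which each entry stores a SHA-256 hash of its contents plus the previous entry's hash, so any later edit to a past decision breaks the chain. This illustrates a hash-chained log; it is not a description of Infosys' governance tooling.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64


def _digest(entry: dict) -> str:
    """Hash the entry's fields (excluding its own hash) in a stable order."""
    payload = json.dumps({k: v for k, v in entry.items() if k != "hash"}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_decision(log: list[dict], case_id: str, decision: str,
                    model: str, rationale: str) -> dict:
    """Append one agent decision to the log, chained to the previous entry."""
    entry = {
        "case_id": case_id,
        "decision": decision,
        "model": model,          # which model/version produced the decision
        "rationale": rationale,  # explanation shown to reviewers
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry["hash"] = _digest(entry)
    log.append(entry)
    return entry


def verify_chain(log: list[dict]) -> bool:
    """Return True if every entry matches its own hash and links to the one before it."""
    prev = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev or entry["hash"] != _digest(entry):
            return False
        prev = entry["hash"]
    return True


if __name__ == "__main__":
    log: list[dict] = []
    append_decision(log, "loan-001", "approve", "model-v1", "income and DTI within policy")
    append_decision(log, "loan-002", "escalate", "model-v1", "missing bank statements")
    print(verify_chain(log))
    log[0]["decision"] = "reject"  # simulate tampering with a past decision
    print(verify_chain(log))
```

The chain detects edits to existing entries but not silent truncation of the newest ones; storing the latest hash in a separate system the agent cannot write to closes that gap.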

How does this partnership affect IT services jobs?

In the short term (1-2 years), there may be modest employment effects as AI agents automate some routine work. However, the partnership actually suggests a different narrative: rather than AI destroying IT services jobs, it's creating a new category of jobs (AI specialists, governance experts, change management roles) that IT services firms can offer. The bigger question is whether these new jobs grow fast enough to offset automation of routine work. Historical precedent suggests transformation rather than net job loss, but transition periods are painful for affected workers.

Can other IT services firms replicate this model?

Yes, but with effort. TCS has its OpenAI partnership, Accenture is partnering broadly, and others are building similar capabilities. The advantage goes to firms that can combine technology capability with domain expertise at scale. The competitive differentiation comes from execution quality and proven track records, not from having a partnership announcement.


Key Takeaways

- Infosys partnering with Anthropic represents a strategic adaptation by IT services firms to stay relevant as AI transforms enterprise software

- AI agents—autonomous systems that complete multi-step workflows without constant human oversight—are positioned as the next major enterprise software category

- The partnership bridges a critical gap: AI labs like Anthropic build powerful models, but enterprises need domain expertise and compliance knowledge to deploy them safely in regulated industries

- Major IT services competitors are pursuing similar strategies with different AI partners (TCS with OpenAI, Accenture with multiple vendors), signaling industry-wide recognition of this trend

- Execution will determine success more than strategy; Infosys must build new talent capabilities in ML engineering, governance, and change management to deliver on partnership promises
